2022-10-10 19:46:41 +08:00

#!/usr/bin/env python3

[Tools] A new, more versatile benchmark output compare tool (#474)

* [Tools] A new, more versatile benchmark output compare tool

Sometimes there is more than one implementation of some functionality,
and the obvious use case is to benchmark them to find out which is better.

Currently, there is no easy way to compare the benchmarking results
in that case. The obvious solution is to have multiple binaries, each one
containing/running one implementation, and each binary must use
exactly the same benchmark family name. That is super bad,
because now the binary name has to carry all the information about the
benchmark family...

What if I told you that is not the solution?

What if we could avoid producing one binary per benchmark family,
with the same family name used in each binary,
and instead keep all the related families in one binary,
with their proper names, AND still be able to compare them?

There are three modes of operation:

1. Just compare two benchmarks, as `compare_bench.py` did:

```
$ ../tools/compare.py benchmarks ./a.out ./a.out
RUNNING: ./a.out --benchmark_out=/tmp/tmprBT5nW
Run on (8 X 4000 MHz CPU s)
2017-11-07 21:16:44
------------------------------------------------------
Benchmark             Time           CPU Iterations
------------------------------------------------------
BM_memcpy/8          36 ns         36 ns   19101577   211.669MB/s
BM_memcpy/64         76 ns         76 ns    9412571   800.199MB/s
BM_memcpy/512        84 ns         84 ns    8249070   5.64771GB/s
BM_memcpy/1024      116 ns        116 ns    6181763   8.19505GB/s
BM_memcpy/8192      643 ns        643 ns    1062855   11.8636GB/s
BM_copy/8           222 ns        222 ns    3137987   34.3772MB/s
BM_copy/64         1608 ns       1608 ns     432758   37.9501MB/s
BM_copy/512       12589 ns      12589 ns      54806   38.7867MB/s
BM_copy/1024      25169 ns      25169 ns      27713   38.8003MB/s
BM_copy/8192     201165 ns     201112 ns       3486   38.8466MB/s
RUNNING: ./a.out --benchmark_out=/tmp/tmpt1wwG_
Run on (8 X 4000 MHz CPU s)
2017-11-07 21:16:53
------------------------------------------------------
Benchmark             Time           CPU Iterations
------------------------------------------------------
BM_memcpy/8          36 ns         36 ns   19397903   211.255MB/s
BM_memcpy/64         73 ns         73 ns    9691174   839.635MB/s
BM_memcpy/512        85 ns         85 ns    8312329   5.60101GB/s
BM_memcpy/1024      118 ns        118 ns    6438774   8.11608GB/s
BM_memcpy/8192      656 ns        656 ns    1068644   11.6277GB/s
BM_copy/8           223 ns        223 ns    3146977   34.2338MB/s
BM_copy/64         1611 ns       1611 ns     435340   37.8751MB/s
BM_copy/512       12622 ns      12622 ns      54818   38.6844MB/s
BM_copy/1024      25257 ns      25239 ns      27779   38.6927MB/s
BM_copy/8192     205013 ns     205010 ns       3479    38.108MB/s
Comparing ./a.out to ./a.out
Benchmark            Time        CPU   Time Old   Time New    CPU Old    CPU New
------------------------------------------------------------------------------------------------------
BM_memcpy/8       +0.0020    +0.0020         36         36         36         36
BM_memcpy/64      -0.0468    -0.0470         76         73         76         73
BM_memcpy/512     +0.0081    +0.0083         84         85         84         85
BM_memcpy/1024    +0.0098    +0.0097        116        118        116        118
BM_memcpy/8192    +0.0200    +0.0203        643        656        643        656
BM_copy/8         +0.0046    +0.0042        222        223        222        223
BM_copy/64        +0.0020    +0.0020       1608       1611       1608       1611
BM_copy/512       +0.0027    +0.0026      12589      12622      12589      12622
BM_copy/1024      +0.0035    +0.0028      25169      25257      25169      25239
BM_copy/8192      +0.0191    +0.0194     201165     205013     201112     205010
```

2. Compare two different filters of one benchmark:

(for simplicity, the benchmark is executed twice)

```
$ ../tools/compare.py filters ./a.out BM_memcpy BM_copy
RUNNING: ./a.out --benchmark_filter=BM_memcpy --benchmark_out=/tmp/tmpBWKk0k
Run on (8 X 4000 MHz CPU s)
2017-11-07 21:37:28
------------------------------------------------------
Benchmark             Time           CPU Iterations
------------------------------------------------------
BM_memcpy/8          36 ns         36 ns   17891491   211.215MB/s
BM_memcpy/64         74 ns         74 ns    9400999   825.646MB/s
BM_memcpy/512        87 ns         87 ns    8027453   5.46126GB/s
BM_memcpy/1024      111 ns        111 ns    6116853    8.5648GB/s
BM_memcpy/8192      657 ns        656 ns    1064679   11.6247GB/s
RUNNING: ./a.out --benchmark_filter=BM_copy --benchmark_out=/tmp/tmpAvWcOM
Run on (8 X 4000 MHz CPU s)
2017-11-07 21:37:33
----------------------------------------------------
Benchmark           Time           CPU Iterations
----------------------------------------------------
BM_copy/8         227 ns        227 ns    3038700   33.6264MB/s
BM_copy/64       1640 ns       1640 ns     426893   37.2154MB/s
BM_copy/512     12804 ns      12801 ns      55417   38.1444MB/s
BM_copy/1024    25409 ns      25407 ns      27516   38.4365MB/s
BM_copy/8192   202986 ns     202990 ns       3454   38.4871MB/s
Comparing BM_memcpy to BM_copy (from ./a.out)
Benchmark                          Time        CPU   Time Old   Time New    CPU Old    CPU New
--------------------------------------------------------------------------------------------------------------------
[BM_memcpy vs. BM_copy]/8       +5.2829    +5.2812         36        227         36        227
[BM_memcpy vs. BM_copy]/64     +21.1719   +21.1856         74       1640         74       1640
[BM_memcpy vs. BM_copy]/512   +145.6487  +145.6097         87      12804         87      12801
[BM_memcpy vs. BM_copy]/1024  +227.1860  +227.1776        111      25409        111      25407
[BM_memcpy vs. BM_copy]/8192  +308.1664  +308.2898        657     202986        656     202990
```

3. Compare filter one from benchmark one to filter two from benchmark two:

(for simplicity, the benchmark is executed twice)

```
$ ../tools/compare.py benchmarksfiltered ./a.out BM_memcpy ./a.out BM_copy
RUNNING: ./a.out --benchmark_filter=BM_memcpy --benchmark_out=/tmp/tmp_FvbYg
Run on (8 X 4000 MHz CPU s)
2017-11-07 21:38:27
------------------------------------------------------
Benchmark             Time           CPU Iterations
------------------------------------------------------
BM_memcpy/8          37 ns         37 ns   18953482   204.118MB/s
BM_memcpy/64         74 ns         74 ns    9206578   828.245MB/s
BM_memcpy/512        91 ns         91 ns    8086195   5.25476GB/s
BM_memcpy/1024      120 ns        120 ns    5804513   7.95662GB/s
BM_memcpy/8192      664 ns        664 ns    1028363   11.4948GB/s
RUNNING: ./a.out --benchmark_filter=BM_copy --benchmark_out=/tmp/tmpDfL5iE
Run on (8 X 4000 MHz CPU s)
2017-11-07 21:38:32
----------------------------------------------------
Benchmark           Time           CPU Iterations
----------------------------------------------------
BM_copy/8         230 ns        230 ns    2985909   33.1161MB/s
BM_copy/64       1654 ns       1653 ns     419408   36.9137MB/s
BM_copy/512     13122 ns      13120 ns      53403   37.2156MB/s
BM_copy/1024    26679 ns      26666 ns      26575   36.6218MB/s
BM_copy/8192   215068 ns     215053 ns       3221   36.3283MB/s
Comparing BM_memcpy (from ./a.out) to BM_copy (from ./a.out)
Benchmark                          Time        CPU   Time Old   Time New    CPU Old    CPU New
--------------------------------------------------------------------------------------------------------------------
[BM_memcpy vs. BM_copy]/8       +5.1649    +5.1637         37        230         37        230
[BM_memcpy vs. BM_copy]/64     +21.4352   +21.4374         74       1654         74       1653
[BM_memcpy vs. BM_copy]/512   +143.6022  +143.5865         91      13122         91      13120
[BM_memcpy vs. BM_copy]/1024  +221.5903  +221.4790        120      26679        120      26666
[BM_memcpy vs. BM_copy]/8192  +322.9059  +323.0096        664     215068        664     215053
```
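In modes 2 and 3, the two runs report differently named families. Rows can only be matched once each run's filter text is replaced by a shared label such as `[BM_memcpy vs. BM_copy]`, which is the form the row names in the comparison tables take. Below is a minimal sketch of that renaming and pairing. It assumes each filter is a regular expression applied with Python's `re` module. `rename_and_pair` is a hypothetical helper, not part of `compare.py` or `gbench`.

```python
import re


def rename_and_pair(names1, names2, filter1, filter2):
    """Pair benchmark names from two runs after replacing each run's
    filter match with a shared "[filter1 vs. filter2]" label.

    Hypothetical helper for illustration; compare.py's own code may differ.
    """
    label = "[%s vs. %s]" % (filter1, filter2)

    def relabel(names, pattern):
        # Keep only names the filter matches and substitute the label for
        # the matched text, e.g. "BM_copy/8" -> "[BM_memcpy vs. BM_copy]/8".
        # A callable replacement stops re.sub from parsing escapes in label.
        return {
            re.sub(pattern, lambda _m: label, name): name
            for name in names
            if re.search(pattern, name)
        }

    renamed1 = relabel(names1, filter1)
    renamed2 = relabel(names2, filter2)
    # Only labels present in both runs can be compared row by row.
    return [
        (common, renamed1[common], renamed2[common])
        for common in renamed1
        if common in renamed2
    ]
```

With the names from mode 2, this pairs `BM_memcpy/8` with `BM_copy/8` under `[BM_memcpy vs. BM_copy]/8`. Names that a filter does not match are dropped rather than compared.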

* [Docs] Document tools/compare.py

* [docs] Document how the change is calculated
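The `Time` and `CPU` change columns in the comparison tables are fractional changes from the old value to the new one. Below is a minimal sketch, assuming the change is (new - old) / |old|. It is presumably computed from the unrounded times, since (73 - 76) / 76 ≈ -0.0395 does not match the -0.0468 shown for `BM_memcpy/64`. `relative_change` and `format_change` are hypothetical names, and the handling of a zero baseline is an assumption.

```python
def relative_change(old: float, new: float) -> float:
    """Return the fractional change from old to new (+0.5 means 50% slower)."""
    if old == 0 and new == 0:
        return 0.0
    if old == 0:
        # Assumption: with a zero baseline, divide by the mean of the two
        # values instead of dividing by zero.
        return (new - old) / ((old + new) / 2)
    return (new - old) / abs(old)


def format_change(change: float) -> str:
    # Signed, four decimal places, matching the comparison tables.
    return "%+.4f" % change
```

For example, `format_change(relative_change(201165, 205013))` gives `+0.0191`, the `Time` entry of the `BM_copy/8192` row in mode 1. Under this formula, `+5.2829` for `[BM_memcpy vs. BM_copy]/8` means `BM_copy` took roughly 6.3 times as long as `BM_memcpy` (227 ns vs. 36 ns).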

2017-11-08 05:35:25 +08:00

Add pre-commit config and GitHub Actions job (#1688)

* Add pre-commit config and GitHub Actions job

Contains the following hooks:

* buildifier - for formatting and linting Bazel files.
* mypy, ruff, isort, black - for Python typechecking, import hygiene,
  static analysis, and formatting.

The pylint CI job was changed to be a pre-commit CI job, where pre-commit
is bootstrapped via Python.

Pylint is currently no longer part of the code checks, but can be re-added
if requested. It was dropped because it does not play nicely with
pre-commit, and much of its functionality is already covered by ruff.

* Add dev extra to pyproject.toml for development installs

* Clarify that pre-commit contains only Python and Bazel hooks

* Add one-line docstrings to Bazel modules

* Apply buildifier pre-commit fixes to Bazel files

* Apply pre-commit fixes to Python files

* Supply --profile=black to isort to prevent conflicts

* Fix nanobind build file formatting

* Add tooling configs to `pyproject.toml`

In particular, set line length 80 for all Python files.

* Reformat all Python files to line length 80, fix return type annotations

Also ignores the `tools/compare.py` and `tools/gbench/report.py` files
for mypy, since they emit a barrage of errors which we can deal with
later. The errors are mostly related to dynamic classmethod definitions.

2023-10-30 23:35:37 +08:00

# type: ignore

"""
compare.py - versatile benchmark output compare tool
"""

import argparse
import json
import os
import sys
import unittest
from argparse import ArgumentParser

import gbench
from gbench import report, util


def check_inputs ( in1 , in2 , flags ) :

"""

Perform checking on the user provided inputs and diagnose any abnormalities

"""

2023-02-07 00:57:07 +08:00

    in1_kind, in1_err = util.classify_input_file(in1)
    in2_kind, in2_err = util.classify_input_file(in2)

Add pre-commit config and GitHub Actions job (#1688)

* Add pre-commit config and GitHub Actions job

Contains the following hooks:

* buildifier - for formatting and linting Bazel files.
* mypy, ruff, isort, black - for Python type checking, import hygiene, static analysis, and formatting.

The pylint CI job was changed to be a pre-commit CI job, where pre-commit is bootstrapped via Python.

Pylint is currently no longer part of the code checks, but can be re-added if requested. The reason for dropping it was that it does not play nicely with pre-commit, and much of its functionality is already covered by ruff.

* Add dev extra to pyproject.toml for development installs
* Clarify that pre-commit contains only Python and Bazel hooks
* Add one-line docstrings to Bazel modules
* Apply buildifier pre-commit fixes to Bazel files
* Apply pre-commit fixes to Python files
* Supply --profile=black to isort to prevent conflicts
* Fix nanobind build file formatting
* Add tooling configs to `pyproject.toml`

In particular, set line length 80 for all Python files.

* Reformat all Python files to line length 80, fix return type annotations

Also ignores the `tools/compare.py` and `tools/gbench/report.py` files for mypy, since they emit a barrage of errors which we can deal with later. The errors are mostly related to dynamic classmethod definition.

2023-10-30 23:35:37 +08:00

    output_file = util.find_benchmark_flag("--benchmark_out=", flags)
    output_type = util.find_benchmark_flag("--benchmark_out_format=", flags)
    if (
        in1_kind == util.IT_Executable
        and in2_kind == util.IT_Executable
        and output_file
    ):
        print(
            (
                "WARNING: '--benchmark_out=%s' will be passed to both "
                "benchmarks causing it to be overwritten"
            )
            % output_file
        )

2023-02-07 00:57:07 +08:00

    if in1_kind == util.IT_JSON and in2_kind == util.IT_JSON:
        # When both sides are JSON the only supported flag is
        # --benchmark_filter=


2023-10-30 23:35:37 +08:00

        for flag in util.remove_benchmark_flags("--benchmark_filter=", flags):
            print(
                "WARNING: passing %s has no effect since both "
                "inputs are JSON" % flag
            )

    if output_type is not None and output_type != "json":
        print(
            (
                "ERROR: passing '--benchmark_out_format=%s' to 'compare.py'"
                " is not supported."
            )
            % output_type
        )


2017-11-08 05:35:25 +08:00

        sys.exit(1)
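`check_inputs` relies on two helpers from `gbench.util`, and their behaviour can be read off from how they are called here. Below is a minimal sketch of hypothetical stand-ins, not the library's code. It assumes that `find_benchmark_flag` returns the value of the last `<prefix><value>` flag (or `None`) and that `remove_benchmark_flags` returns the flags that lack the prefix.

```python
from typing import List, Optional


def find_benchmark_flag(prefix: str, flags: List[str]) -> Optional[str]:
    """Value of the last flag of the form <prefix><value>, or None."""
    assert prefix.startswith("--") and prefix.endswith("=")
    value = None
    for flag in flags:
        if flag.startswith(prefix):
            value = flag[len(prefix):]  # later occurrences win (assumption)
    return value


def remove_benchmark_flags(prefix: str, flags: List[str]) -> List[str]:
    """Copy of flags without the ones that start with prefix."""
    assert prefix.startswith("--") and prefix.endswith("=")
    return [flag for flag in flags if not flag.startswith(prefix)]


if __name__ == "__main__":
    flags = ["--benchmark_filter=BM_copy", "--benchmark_out_format=json"]
    print(find_benchmark_flag("--benchmark_out=", flags))  # None
    print(remove_benchmark_flags("--benchmark_filter=", flags))
```

With these semantics, `--benchmark_out_format=json` does not match the `--benchmark_out=` prefix, because the character after `out` differs. When both inputs are JSON, `check_inputs` warns once for every flag that `remove_benchmark_flags("--benchmark_filter=", flags)` leaves behind.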



def create_parser():
    parser = ArgumentParser(


2023-10-30 23:35:37 +08:00

        description="versatile benchmark output compare tool"
    )

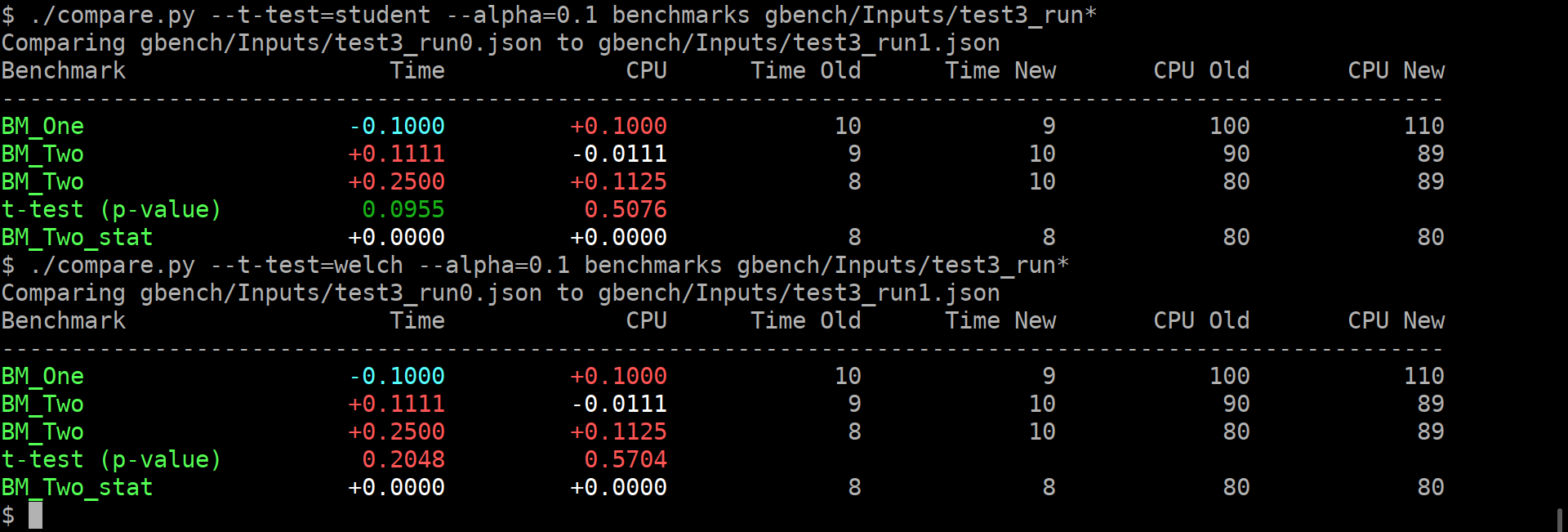

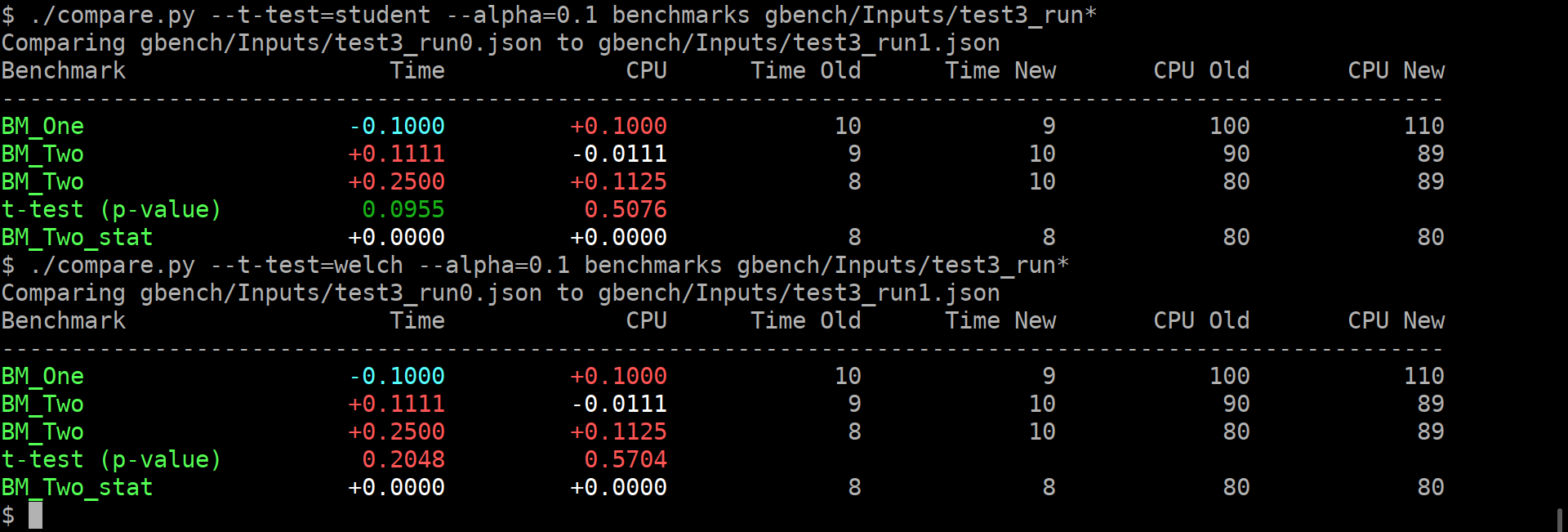

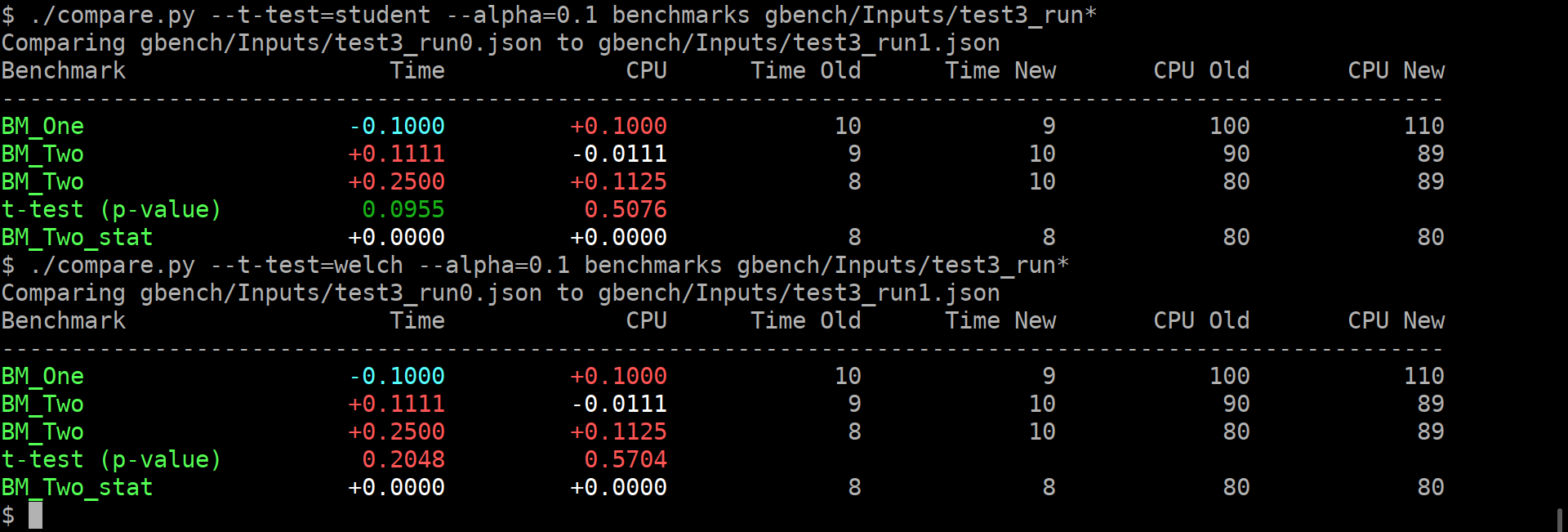

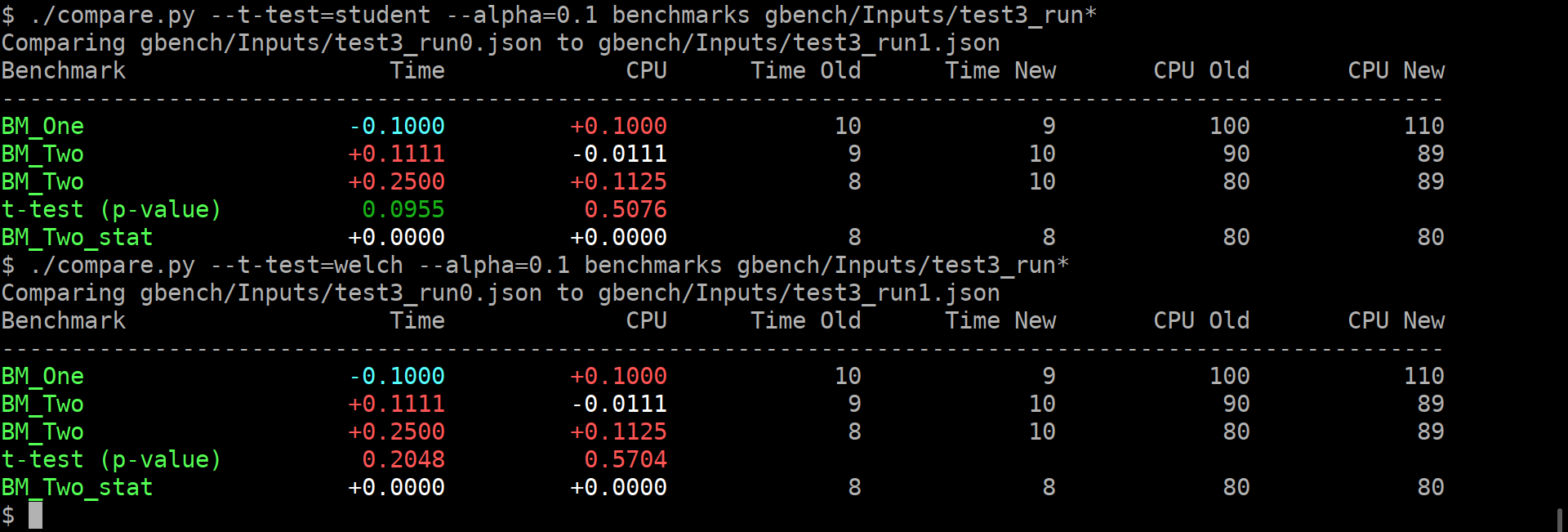

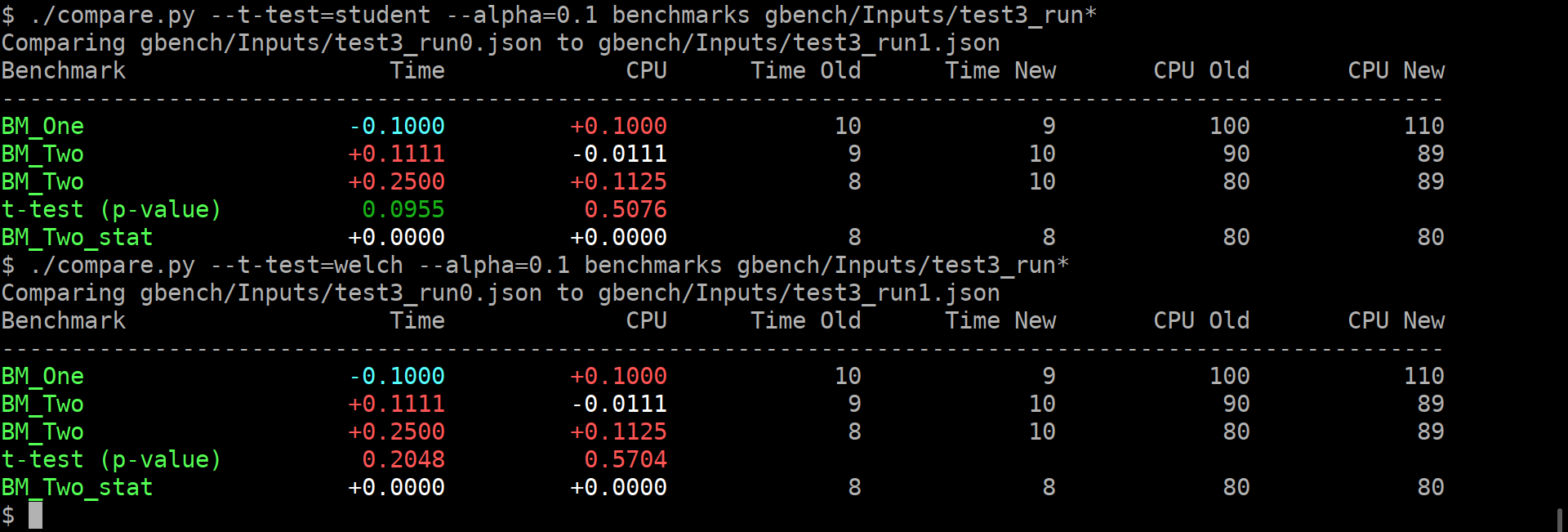

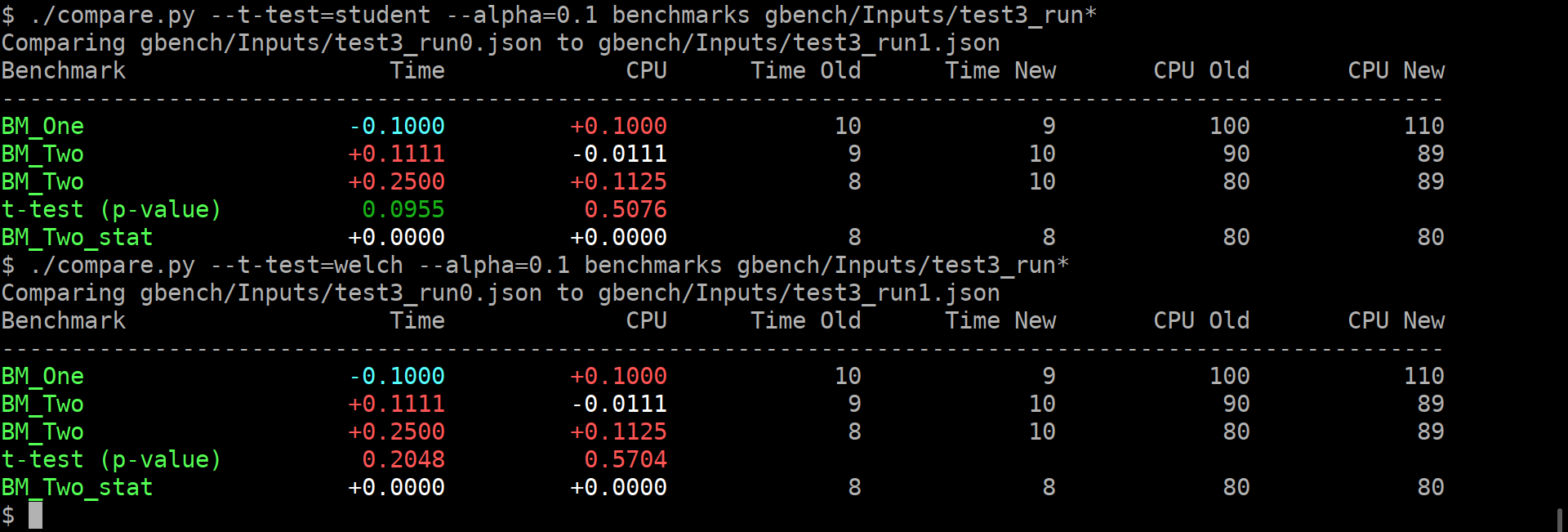

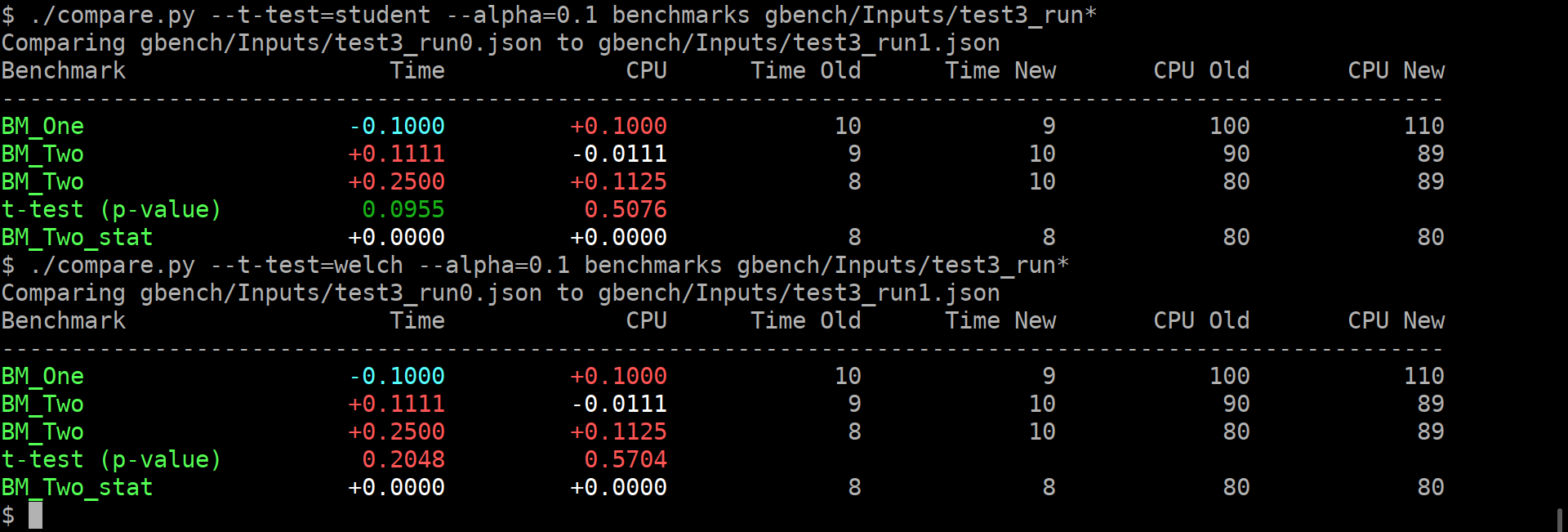

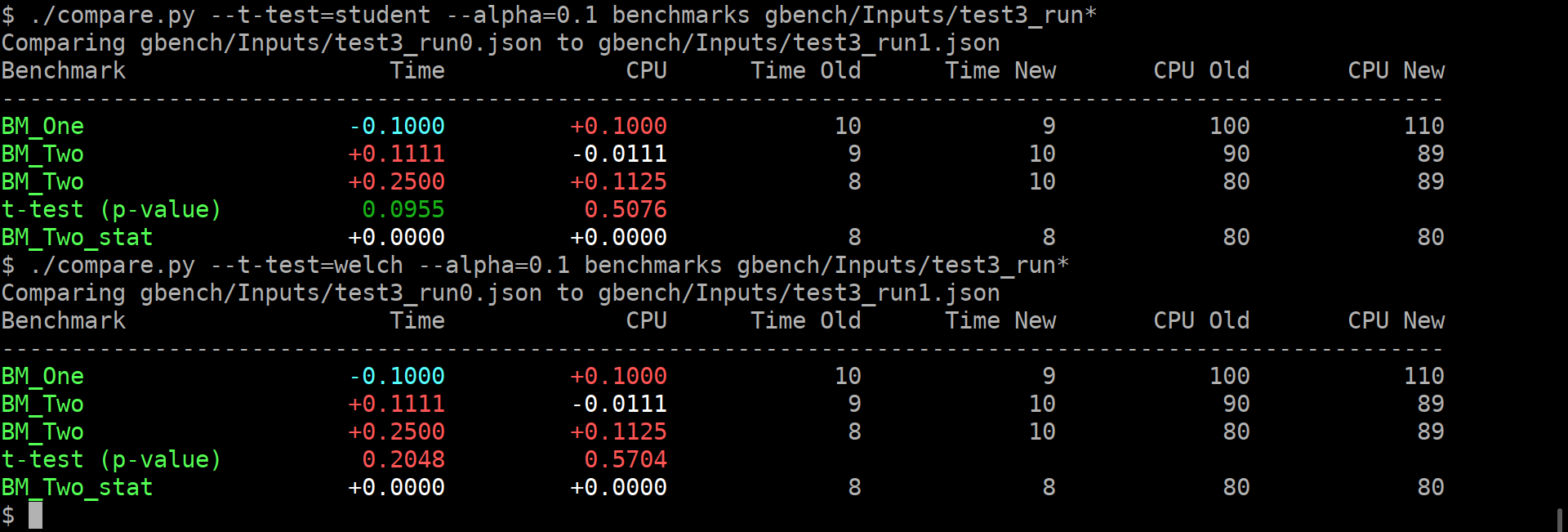

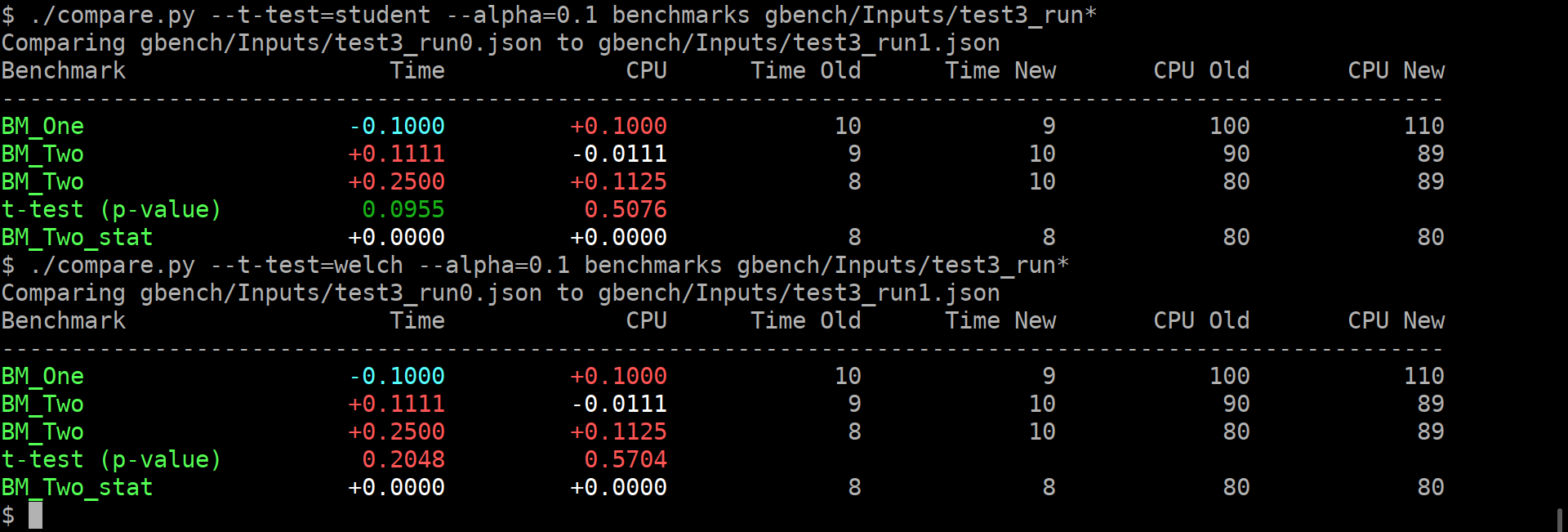

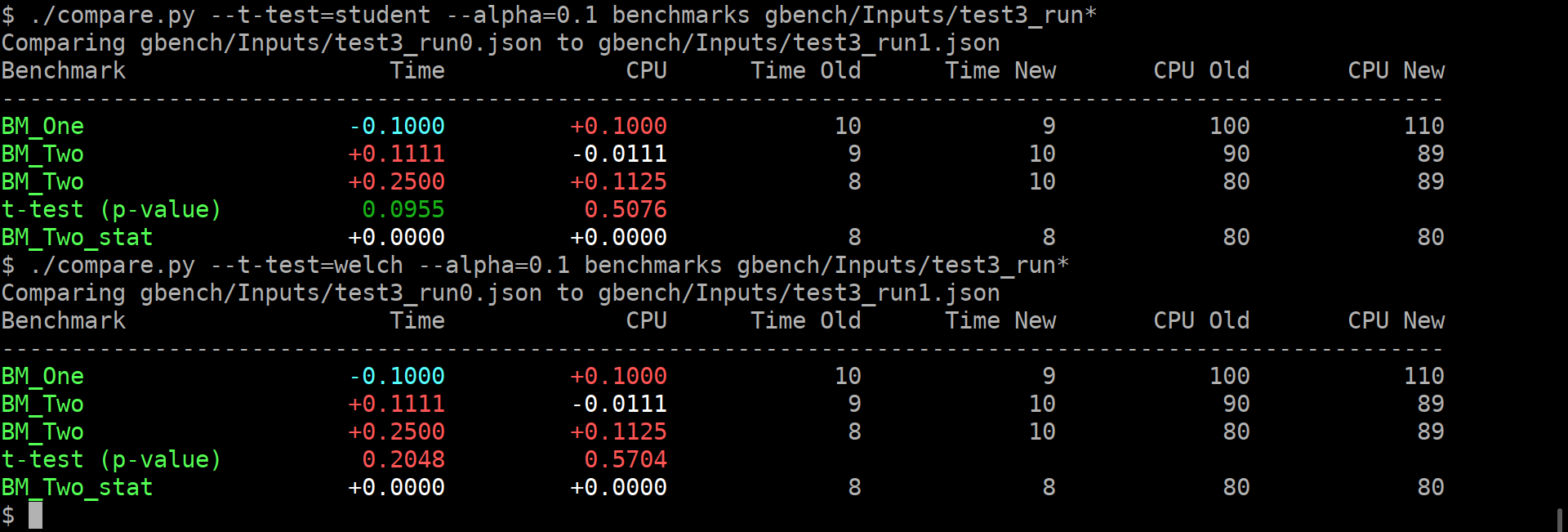

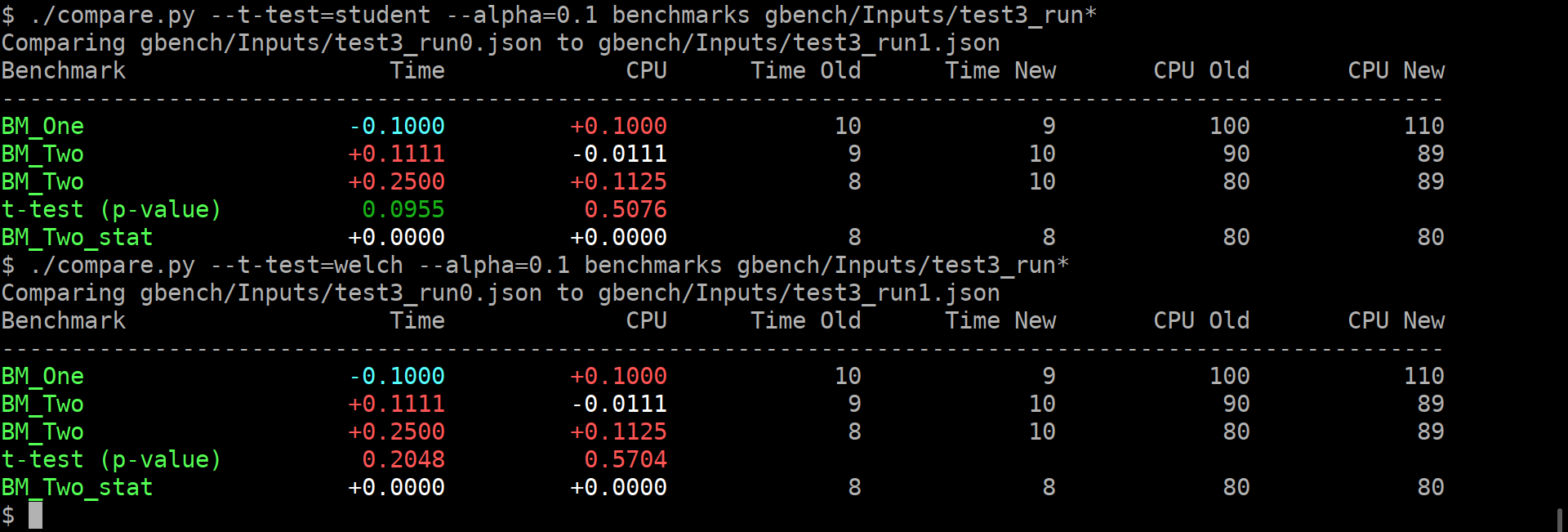

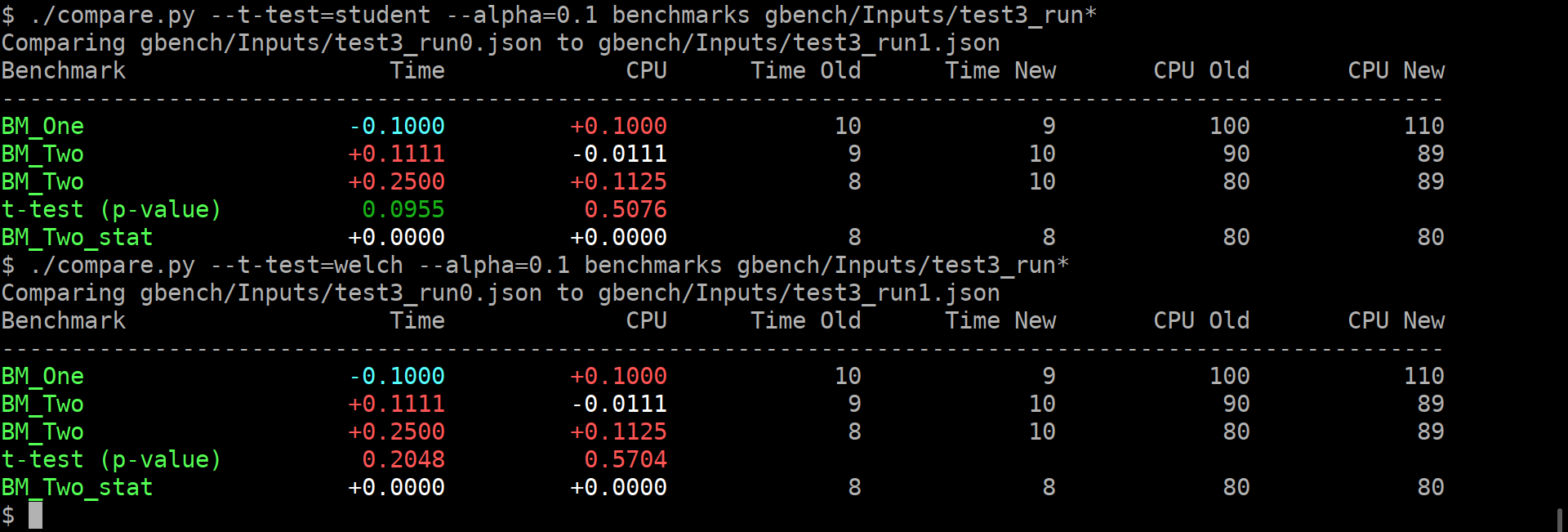

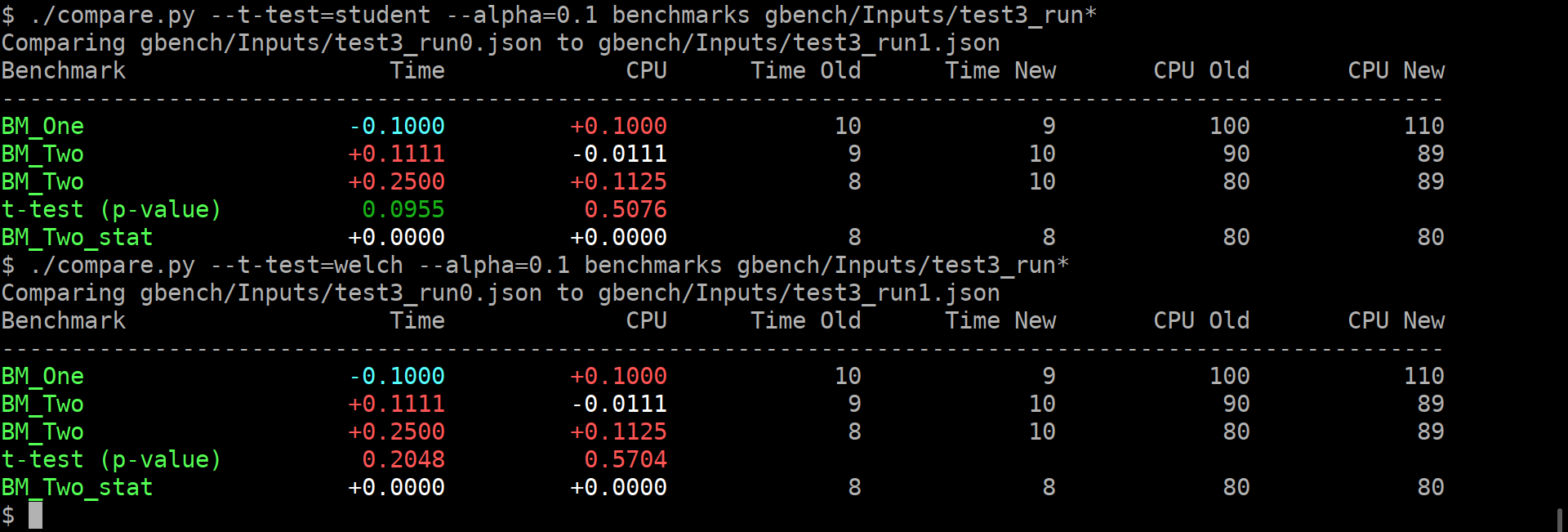

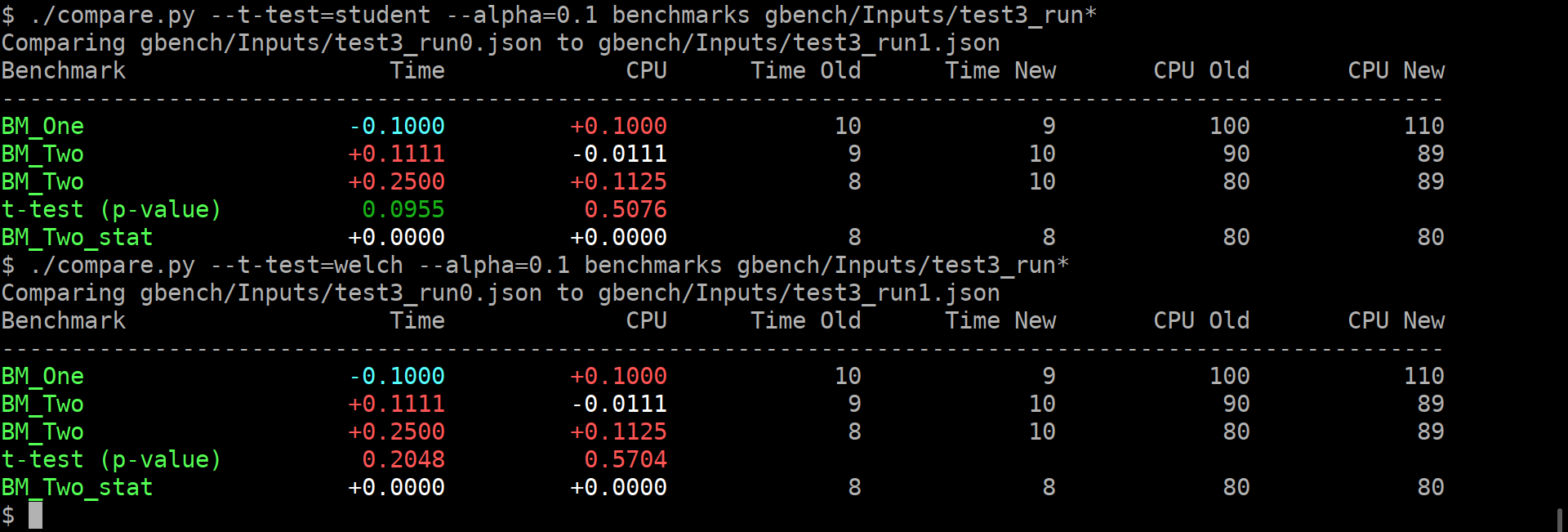

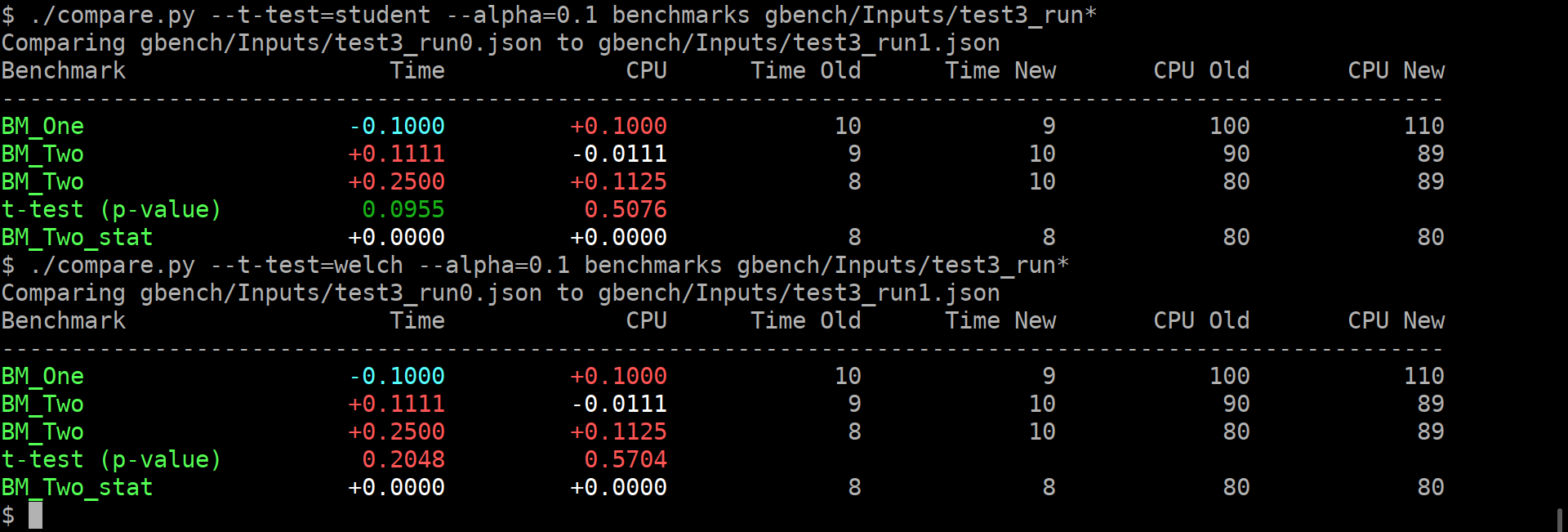

Benchmarking is hard. Making sense of the benchmarking results is even harder. (#593)

The first problem you have to solve yourself. The second one can be aided.

The benchmark library can compute some statistics over the repetitions, which helps with grasping the results somewhat.

But that covers only one set of results. It does not really help to compare two benchmark results, which is the interesting bit. Thankfully, there are these bundled `tools/compare.py` and `tools/compare_bench.py` scripts. They can provide a diff between two benchmarking results. Yay!

Except not really: it's just a diff. While it is very informative and better than nothing, it does not really help answer The Question: am I just looking at the noise? It's like not having these per-benchmark statistics...
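The "diff" in the comparison tables is a fractional change per row, so +0.0500 would mean 5% more time. The minimal sketch below assumes the formula (new - old) / |old|. That assumption is consistent with the rsbench comparison in this file: the first row's CPUTime counters, 0.447302 s and 0.445589 s, give -0.0038, the value shown in its CPU column. `relative_change` is a hypothetical name, and the zero-baseline fallback is an assumption of this sketch.

```python
def relative_change(old: float, new: float) -> float:
    """Fractional change from old to new: (new - old) / |old|.

    Positive means the new value is larger (slower, for a time), negative
    means smaller. The zero-baseline fallback is an assumption of this sketch.
    """
    if old == 0 and new == 0:
        return 0.0
    if old == 0:
        # Hypothetical fallback: compare against the midpoint instead of
        # dividing by zero.
        return (new - old) / ((old + new) / 2)
    return (new - old) / abs(old)


if __name__ == "__main__":
    print(f"{relative_change(100, 105):+.4f}")            # +0.0500
    print(f"{relative_change(0.447302, 0.445589):+.4f}")  # -0.0038
```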

Roughly, we can formulate the question as:

> Are these two benchmarks the same?
>
> Did my change actually change anything, or is the difference below the noise level?

Well, this really sounds like a [null hypothesis](https://en.wikipedia.org/wiki/Null_hypothesis), does it not? So maybe we can use statistics here, and solve all our problems?

lol, no, it won't solve all the problems. But maybe it will act as a tool to better understand the output, just like the usual statistics on the repetitions...

I'm making an assumption here that most people care about the change of the average value, not of the standard deviation. Thus I believe we can use a t-test, be it either [Student's t-test](https://en.wikipedia.org/wiki/Student%27s_t-test) or [Welch's t-test](https://en.wikipedia.org/wiki/Welch%27s_t-test).

**EDIT**: however, after @dominichamon's review, it was decided that it is better to use the more robust [Mann–Whitney U test](https://en.wikipedia.org/wiki/Mann–Whitney_U_test). I'm using [scipy.stats.mannwhitneyu](https://docs.scipy.org/doc/scipy/reference/generated/scipy.stats.mannwhitneyu.html#scipy.stats.mannwhitneyu).
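The tool delegates the test to `scipy.stats.mannwhitneyu`. To show what the test measures without depending on SciPy, here is a swapped-in, minimal sketch of an *exact* (permutation) two-sided Mann-Whitney U test. `u_statistic` and `mann_whitney_exact_p` are hypothetical names. SciPy may choose a different method, for example a normal approximation, so its p-values can differ from this sketch.

```python
from itertools import combinations
from typing import Sequence


def u_statistic(x: Sequence[float], y: Sequence[float]) -> float:
    """Mann-Whitney U for sample x: pairs (a, b) with a > b, ties count 1/2."""
    u = 0.0
    for a in x:
        for b in y:
            if a > b:
                u += 1.0
            elif a == b:
                u += 0.5
    return u


def mann_whitney_exact_p(x: Sequence[float], y: Sequence[float]) -> float:
    """Exact two-sided p-value from the permutation distribution of U.

    Under the null hypothesis every split of the pooled values into groups
    of len(x) and len(y) is equally likely, so the p-value is the fraction
    of splits whose U lies at least as far from its mean, len(x)*len(y)/2,
    as the observed U. There are C(len(x)+len(y), len(x)) splits, so this
    is only practical for small samples.
    """
    pooled = list(x) + list(y)
    n1, n = len(x), len(x) + len(y)
    mean_u = n1 * (n - n1) / 2.0
    observed = abs(u_statistic(x, y) - mean_u)
    extreme = total = 0
    for chosen in combinations(range(n), n1):
        in_x = set(chosen)
        gx = [pooled[i] for i in chosen]
        gy = [pooled[i] for i in range(n) if i not in in_x]
        total += 1
        if abs(u_statistic(gx, gy) - mean_u) >= observed:
            extreme += 1
    return extreme / total


if __name__ == "__main__":
    # 3 vs 3 fully separated samples: only 2 of 20 splits are this extreme.
    print(mann_whitney_exact_p([1.0, 2.0, 3.0], [4.0, 5.0, 6.0]))  # 0.1
```

This also shows why few repetitions are a problem. With 3 repetitions per side and no ties, even complete separation yields an exact two-sided p-value of 0.1, which can never fall below an alpha of 0.05.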

There are two new user-facing knobs:

```
$ ./compare.py --help
usage: compare.py [-h] [-u] [--alpha UTEST_ALPHA]
                  {benchmarks,filters,benchmarksfiltered} ...

versatile benchmark output compare tool

<...>

optional arguments:
  -h, --help           show this help message and exit
  -u, --utest          Do a two-tailed Mann-Whitney U test with the null
                       hypothesis that it is equally likely that a randomly
                       selected value from one sample will be less than or
                       greater than a randomly selected value from a second
                       sample. WARNING: requires **LARGE** (9 or more)
                       number of repetitions to be meaningful!
  --alpha UTEST_ALPHA  significance level alpha. if the calculated p-value is
                       below this value, then the result is said to be
                       statistically significant and the null hypothesis is
                       rejected. (default: 0.0500)
```

Example output:

As you can guess, the alpha does not affect anything but the coloring of the computed p-values. If it is green, then the change in the average values is statistically significant.
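The two knobs in the `--help` output above can be mimicked with `argparse`. The minimal sketch below assumes plain `store_true`/`float` options and one subcommand per mode. `build_parser`, `is_significant` and the positional argument names are hypothetical. As the text says, alpha only decides how a p-value is colored and never changes the p-value itself.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Hypothetical re-creation of the interface shown in the help text."""
    parser = argparse.ArgumentParser(
        description="versatile benchmark output compare tool"
    )
    parser.add_argument(
        "-u", "--utest", action="store_true",
        help="do a two-tailed Mann-Whitney U test",
    )
    parser.add_argument(
        "--alpha", dest="utest_alpha", type=float, default=0.05,
        help="significance level alpha (default: %(default).4f)",
    )
    # One subcommand per mode; the positional names are made up here.
    modes = {
        "benchmarks": ["baseline", "contender"],
        "filters": ["executable", "filter_baseline", "filter_contender"],
        "benchmarksfiltered": [
            "baseline", "filter_baseline", "contender", "filter_contender",
        ],
    }
    subparsers = parser.add_subparsers(dest="mode")
    for mode, positionals in modes.items():
        sub = subparsers.add_parser(mode)
        for name in positionals:
            sub.add_argument(name)
    return parser


def is_significant(pvalue: float, alpha: float) -> bool:
    """Alpha only picks the color: p-values below it count as significant."""
    return pvalue < alpha


if __name__ == "__main__":
    args = build_parser().parse_args(
        ["-u", "--alpha", "0.01", "filters", "./a.out", "BM_memcpy", "BM_copy"]
    )
    print(args.mode, args.filter_contender, args.utest_alpha)
```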

I'm detecting the repetitions by matching names. This way, no changes to the JSON are _needed_.

Caveats:

* This won't work if the JSON is not in the same order as output by the benchmark, or if the parsing does not retain the ordering.
* This won't work if after the grouped repetitions there isn't at least one row with a different name (e.g. a statistic). Since there isn't a knob to disable printing of statistics (only the other way around), I'm not too worried about this.
* **The results will be wrong if the repetition count is different between the two benchmarks being compared.**
* Even though I have added (hopefully full) test coverage, the code of these Python tools is starting to look a bit jumbled.
* So far I have added this only to `tools/compare.py`. Should I add it to `tools/compare_bench.py` too? Or should we deduplicate them (by removing the latter one)?
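Matching repetitions by name, as described above, amounts to grouping consecutive JSON entries that share a `name`. The minimal sketch below uses `itertools.groupby` and assumes each entry is a dict with `name` and `real_time` keys, as in Google Benchmark's JSON output. `group_repetitions` is a hypothetical helper, not the tool's code. Because `groupby` only merges adjacent rows, the sketch inherits the ordering caveat: if a name reappears later, its later group replaces the earlier one.

```python
from itertools import groupby
from typing import Dict, List


def group_repetitions(benchmarks: List[dict]) -> Dict[str, List[float]]:
    """Map each benchmark name to the real_time of its repetitions.

    Only adjacent rows with the same name are grouped, and groups of a
    single row (such as the _mean/_median/_stddev aggregates) are dropped.
    If a name reappears later, its later group replaces the earlier one.
    """
    groups: Dict[str, List[float]] = {}
    for name, rows in groupby(benchmarks, key=lambda row: row["name"]):
        times = [row["real_time"] for row in rows]
        if len(times) > 1:
            groups[name] = times
    return groups


if __name__ == "__main__":
    rows = [
        {"name": "BM_copy/8", "real_time": 222.0},
        {"name": "BM_copy/8", "real_time": 223.0},
        {"name": "BM_copy/8_mean", "real_time": 222.5},
    ]
    print(group_repetitions(rows))  # {'BM_copy/8': [222.0, 223.0]}
```

Dropping single-row groups also drops benchmarks run with one repetition, which is harmless here because the U test needs several samples anyway.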

2018-05-29 18:13:28 +08:00

[Tooling] 'display aggregates only' support. (#674)

Works as you'd expect, both for the benchmark binaries and for the JSON files (naturally, since the tests need to work):

```

$ ~/src/googlebenchmark/tools/compare.py benchmarks ~/rawspeed/build-{0,1}/src/utilities/rsbench/rsbench --benchmark_repetitions=3 *

RUNNING: /home/lebedevri/rawspeed/build-0/src/utilities/rsbench/rsbench --benchmark_repetitions=3 2K4A9927.CR2 2K4A9928.CR2 2K4A9929.CR2 --benchmark_out=/tmp/tmpYoui5H

2018-09-12 20:23:47

Running /home/lebedevri/rawspeed/build-0/src/utilities/rsbench/rsbench

Run on (8 X 4000 MHz CPU s)

CPU Caches:

L1 Data 16K (x8)

L1 Instruction 64K (x4)

L2 Unified 2048K (x4)

L3 Unified 8192K (x1)

-----------------------------------------------------------------------------------------------

Benchmark Time CPU Iterations UserCounters...

-----------------------------------------------------------------------------------------------

2K4A9927.CR2/threads:8/real_time 447 ms 447 ms 2 CPUTime,s=0.447302 CPUTime/WallTime=1.00001 Pixels=52.6643M Pixels/CPUTime=117.738M Pixels/WallTime=117.738M Raws/CPUTime=2.23562 Raws/WallTime=2.23564 WallTime,s=0.4473

2K4A9927.CR2/threads:8/real_time 444 ms 444 ms 2 CPUTime,s=0.444228 CPUTime/WallTime=1.00001 Pixels=52.6643M Pixels/CPUTime=118.552M Pixels/WallTime=118.553M Raws/CPUTime=2.2511 Raws/WallTime=2.25111 WallTime,s=0.444226

2K4A9927.CR2/threads:8/real_time 447 ms 447 ms 2 CPUTime,s=0.446983 CPUTime/WallTime=0.999999 Pixels=52.6643M Pixels/CPUTime=117.822M Pixels/WallTime=117.822M Raws/CPUTime=2.23722 Raws/WallTime=2.23722 WallTime,s=0.446984

2K4A9927.CR2/threads:8/real_time_mean 446 ms 446 ms 2 CPUTime,s=0.446171 CPUTime/WallTime=1 Pixels=52.6643M Pixels/CPUTime=118.037M Pixels/WallTime=118.038M Raws/CPUTime=2.24131 Raws/WallTime=2.24132 WallTime,s=0.44617

2K4A9927.CR2/threads:8/real_time_median 447 ms 447 ms 2 CPUTime,s=0.446983 CPUTime/WallTime=1.00001 Pixels=52.6643M Pixels/CPUTime=117.822M Pixels/WallTime=117.822M Raws/CPUTime=2.23722 Raws/WallTime=2.23722 WallTime,s=0.446984

2K4A9927.CR2/threads:8/real_time_stddev 2 ms 2 ms 2 CPUTime,s=1.69052m CPUTime/WallTime=3.53737u Pixels=0 Pixels/CPUTime=448.178k Pixels/WallTime=448.336k Raws/CPUTime=8.51008m Raws/WallTime=8.51308m WallTime,s=1.6911m

2K4A9928.CR2/threads:8/real_time 563 ms 563 ms 1 CPUTime,s=0.562511 CPUTime/WallTime=0.999824 Pixels=27.9936M Pixels/CPUTime=49.7654M Pixels/WallTime=49.7567M Raws/CPUTime=1.77774 Raws/WallTime=1.77743 WallTime,s=0.56261

2K4A9928.CR2/threads:8/real_time 561 ms 561 ms 1 CPUTime,s=0.561328 CPUTime/WallTime=0.999917 Pixels=27.9936M Pixels/CPUTime=49.8703M Pixels/WallTime=49.8662M Raws/CPUTime=1.78149 Raws/WallTime=1.78134 WallTime,s=0.561375

2K4A9928.CR2/threads:8/real_time 570 ms 570 ms 1 CPUTime,s=0.570423 CPUTime/WallTime=0.999876 Pixels=27.9936M Pixels/CPUTime=49.0752M Pixels/WallTime=49.0691M Raws/CPUTime=1.75308 Raws/WallTime=1.75287 WallTime,s=0.570493

2K4A9928.CR2/threads:8/real_time_mean 565 ms 565 ms 1 CPUTime,s=0.564754 CPUTime/WallTime=0.999872 Pixels=27.9936M Pixels/CPUTime=49.5703M Pixels/WallTime=49.564M Raws/CPUTime=1.77077 Raws/WallTime=1.77055 WallTime,s=0.564826

2K4A9928.CR2/threads:8/real_time_median 563 ms 563 ms 1 CPUTime,s=0.562511 CPUTime/WallTime=0.999876 Pixels=27.9936M Pixels/CPUTime=49.7654M Pixels/WallTime=49.7567M Raws/CPUTime=1.77774 Raws/WallTime=1.77743 WallTime,s=0.56261

2K4A9928.CR2/threads:8/real_time_stddev 5 ms 5 ms 1 CPUTime,s=4.945m CPUTime/WallTime=46.3459u Pixels=0 Pixels/CPUTime=431.997k Pixels/WallTime=432.061k Raws/CPUTime=0.015432 Raws/WallTime=0.0154343 WallTime,s=4.94686m

2K4A9929.CR2/threads:8/real_time 306 ms 306 ms 2 CPUTime,s=0.306476 CPUTime/WallTime=0.999961 Pixels=12.4416M Pixels/CPUTime=40.5957M Pixels/WallTime=40.5941M Raws/CPUTime=3.2629 Raws/WallTime=3.26277 WallTime,s=0.306488

2K4A9929.CR2/threads:8/real_time 310 ms 310 ms 2 CPUTime,s=0.309978 CPUTime/WallTime=0.999939 Pixels=12.4416M Pixels/CPUTime=40.1371M Pixels/WallTime=40.1347M Raws/CPUTime=3.22604 Raws/WallTime=3.22584 WallTime,s=0.309996

2K4A9929.CR2/threads:8/real_time 309 ms 309 ms 2 CPUTime,s=0.30943 CPUTime/WallTime=0.999987 Pixels=12.4416M Pixels/CPUTime=40.2081M Pixels/WallTime=40.2076M Raws/CPUTime=3.23175 Raws/WallTime=3.23171 WallTime,s=0.309434

2K4A9929.CR2/threads:8/real_time_mean 309 ms 309 ms 2 CPUTime,s=0.308628 CPUTime/WallTime=0.999962 Pixels=12.4416M Pixels/CPUTime=40.3136M Pixels/WallTime=40.3121M Raws/CPUTime=3.24023 Raws/WallTime=3.24011 WallTime,s=0.308639

2K4A9929.CR2/threads:8/real_time_median 309 ms 309 ms 2 CPUTime,s=0.30943 CPUTime/WallTime=0.999961 Pixels=12.4416M Pixels/CPUTime=40.2081M Pixels/WallTime=40.2076M Raws/CPUTime=3.23175 Raws/WallTime=3.23171 WallTime,s=0.309434

2K4A9929.CR2/threads:8/real_time_stddev 2 ms 2 ms 2 CPUTime,s=1.88354m CPUTime/WallTime=23.7788u Pixels=0 Pixels/CPUTime=246.821k Pixels/WallTime=246.914k Raws/CPUTime=0.0198384 Raws/WallTime=0.0198458 WallTime,s=1.88442m

RUNNING: /home/lebedevri/rawspeed/build-1/src/utilities/rsbench/rsbench --benchmark_repetitions=3 2K4A9927.CR2 2K4A9928.CR2 2K4A9929.CR2 --benchmark_out=/tmp/tmpRShmmf

2018-09-12 20:23:55

Running /home/lebedevri/rawspeed/build-1/src/utilities/rsbench/rsbench

Run on (8 X 4000 MHz CPU s)

CPU Caches:

L1 Data 16K (x8)

L1 Instruction 64K (x4)

L2 Unified 2048K (x4)

L3 Unified 8192K (x1)

-----------------------------------------------------------------------------------------------

Benchmark Time CPU Iterations UserCounters...

-----------------------------------------------------------------------------------------------

2K4A9927.CR2/threads:8/real_time 446 ms 446 ms 2 CPUTime,s=0.445589 CPUTime/WallTime=1 Pixels=52.6643M Pixels/CPUTime=118.19M Pixels/WallTime=118.19M Raws/CPUTime=2.24422 Raws/WallTime=2.24422 WallTime,s=0.445589

2K4A9927.CR2/threads:8/real_time 446 ms 446 ms 2 CPUTime,s=0.446008 CPUTime/WallTime=1.00001 Pixels=52.6643M Pixels/CPUTime=118.079M Pixels/WallTime=118.08M Raws/CPUTime=2.24211 Raws/WallTime=2.24213 WallTime,s=0.446005

2K4A9927.CR2/threads:8/real_time 448 ms 448 ms 2 CPUTime,s=0.447763 CPUTime/WallTime=0.999994 Pixels=52.6643M Pixels/CPUTime=117.616M Pixels/WallTime=117.616M Raws/CPUTime=2.23332 Raws/WallTime=2.23331 WallTime,s=0.447766

2K4A9927.CR2/threads:8/real_time_mean 446 ms 446 ms 2 CPUTime,s=0.446453 CPUTime/WallTime=1 Pixels=52.6643M Pixels/CPUTime=117.962M Pixels/WallTime=117.962M Raws/CPUTime=2.23988 Raws/WallTime=2.23989 WallTime,s=0.446453

2K4A9927.CR2/threads:8/real_time_median 446 ms 446 ms 2 CPUTime,s=0.446008 CPUTime/WallTime=1 Pixels=52.6643M Pixels/CPUTime=118.079M Pixels/WallTime=118.08M Raws/CPUTime=2.24211 Raws/WallTime=2.24213 WallTime,s=0.446005

2K4A9927.CR2/threads:8/real_time_stddev 1 ms 1 ms 2 CPUTime,s=1.15367m CPUTime/WallTime=6.48501u Pixels=0 Pixels/CPUTime=304.437k Pixels/WallTime=305.025k Raws/CPUTime=5.7807m Raws/WallTime=5.79188m WallTime,s=1.15591m

2K4A9928.CR2/threads:8/real_time 561 ms 561 ms 1 CPUTime,s=0.560856 CPUTime/WallTime=0.999996 Pixels=27.9936M Pixels/CPUTime=49.9123M Pixels/WallTime=49.9121M Raws/CPUTime=1.78299 Raws/WallTime=1.78298 WallTime,s=0.560858

2K4A9928.CR2/threads:8/real_time 560 ms 560 ms 1 CPUTime,s=0.560023 CPUTime/WallTime=1.00001 Pixels=27.9936M Pixels/CPUTime=49.9865M Pixels/WallTime=49.9872M Raws/CPUTime=1.78564 Raws/WallTime=1.78567 WallTime,s=0.560015

2K4A9928.CR2/threads:8/real_time 562 ms 562 ms 1 CPUTime,s=0.562337 CPUTime/WallTime=0.999994 Pixels=27.9936M Pixels/CPUTime=49.7808M Pixels/WallTime=49.7805M Raws/CPUTime=1.77829 Raws/WallTime=1.77828 WallTime,s=0.56234

2K4A9928.CR2/threads:8/real_time_mean 561 ms 561 ms 1 CPUTime,s=0.561072 CPUTime/WallTime=1 Pixels=27.9936M Pixels/CPUTime=49.8932M Pixels/WallTime=49.8933M Raws/CPUTime=1.78231 Raws/WallTime=1.78231 WallTime,s=0.561071

2K4A9928.CR2/threads:8/real_time_median 561 ms 561 ms 1 CPUTime,s=0.560856 CPUTime/WallTime=0.999996 Pixels=27.9936M Pixels/CPUTime=49.9123M Pixels/WallTime=49.9121M Raws/CPUTime=1.78299 Raws/WallTime=1.78298 WallTime,s=0.560858

2K4A9928.CR2/threads:8/real_time_stddev 1 ms 1 ms 1 CPUTime,s=1.17202m CPUTime/WallTime=10.7929u Pixels=0 Pixels/CPUTime=104.164k Pixels/WallTime=104.612k Raws/CPUTime=3.721m Raws/WallTime=3.73701m WallTime,s=1.17706m

2K4A9929.CR2/threads:8/real_time 305 ms 305 ms 2 CPUTime,s=0.305436 CPUTime/WallTime=0.999926 Pixels=12.4416M Pixels/CPUTime=40.7339M Pixels/WallTime=40.7309M Raws/CPUTime=3.27401 Raws/WallTime=3.27376 WallTime,s=0.305459

2K4A9929.CR2/threads:8/real_time 306 ms 306 ms 2 CPUTime,s=0.30576 CPUTime/WallTime=0.999999 Pixels=12.4416M Pixels/CPUTime=40.6908M Pixels/WallTime=40.6908M Raws/CPUTime=3.27054 Raws/WallTime=3.27054 WallTime,s=0.30576

2K4A9929.CR2/threads:8/real_time 307 ms 307 ms 2 CPUTime,s=0.30724 CPUTime/WallTime=0.999991 Pixels=12.4416M Pixels/CPUTime=40.4947M Pixels/WallTime=40.4944M Raws/CPUTime=3.25478 Raws/WallTime=3.25475 WallTime,s=0.307243

2K4A9929.CR2/threads:8/real_time_mean 306 ms 306 ms 2 CPUTime,s=0.306145 CPUTime/WallTime=0.999972 Pixels=12.4416M Pixels/CPUTime=40.6398M Pixels/WallTime=40.6387M Raws/CPUTime=3.26645 Raws/WallTime=3.26635 WallTime,s=0.306154

2K4A9929.CR2/threads:8/real_time_median 306 ms 306 ms 2 CPUTime,s=0.30576 CPUTime/WallTime=0.999991 Pixels=12.4416M Pixels/CPUTime=40.6908M Pixels/WallTime=40.6908M Raws/CPUTime=3.27054 Raws/WallTime=3.27054 WallTime,s=0.30576

2K4A9929.CR2/threads:8/real_time_stddev 1 ms 1 ms 2 CPUTime,s=961.851u CPUTime/WallTime=40.2708u Pixels=0 Pixels/CPUTime=127.481k Pixels/WallTime=126.577k Raws/CPUTime=0.0102463 Raws/WallTime=0.0101737 WallTime,s=955.105u

Comparing /home/lebedevri/rawspeed/build-0/src/utilities/rsbench/rsbench to /home/lebedevri/rawspeed/build-1/src/utilities/rsbench/rsbench
Benchmark                                    Time       CPU  Time Old  Time New   CPU Old   CPU New
---------------------------------------------------------------------------------------------------
2K4A9927.CR2/threads:8/real_time          -0.0038   -0.0038       447       446       447       446
2K4A9927.CR2/threads:8/real_time          +0.0031   +0.0031       444       446       444       446
2K4A9927.CR2/threads:8/real_time          -0.0031   -0.0031       447       446       447       446
2K4A9927.CR2/threads:8/real_time_pvalue    0.6428    0.6428      U Test, Repetitions: 3. WARNING: Results unreliable! 9+ repetitions recommended.
2K4A9927.CR2/threads:8/real_time_mean     +0.0006   +0.0006       446       446       446       446
2K4A9927.CR2/threads:8/real_time_median   -0.0022   -0.0022       447       446       447       446
2K4A9927.CR2/threads:8/real_time_stddev   -0.3161   -0.3175         2         1         2         1
2K4A9928.CR2/threads:8/real_time          -0.0031   -0.0029       563       561       563       561
2K4A9928.CR2/threads:8/real_time          -0.0009   -0.0008       561       561       561       561
2K4A9928.CR2/threads:8/real_time          -0.0169   -0.0168       570       561       570       561
2K4A9928.CR2/threads:8/real_time_pvalue    0.0636    0.0636      U Test, Repetitions: 3. WARNING: Results unreliable! 9+ repetitions recommended.
2K4A9928.CR2/threads:8/real_time_mean     -0.0066   -0.0065       565       561       565       561
2K4A9928.CR2/threads:8/real_time_median   -0.0031   -0.0029       563       561       563       561
2K4A9928.CR2/threads:8/real_time_stddev   -0.7620   -0.7630         5         1         5         1
2K4A9929.CR2/threads:8/real_time          -0.0034   -0.0034       306       305       306       305
2K4A9929.CR2/threads:8/real_time          -0.0146   -0.0146       310       305       310       305
2K4A9929.CR2/threads:8/real_time          -0.0128   -0.0129       309       305       309       305
2K4A9929.CR2/threads:8/real_time_pvalue    0.0636    0.0636      U Test, Repetitions: 3. WARNING: Results unreliable! 9+ repetitions recommended.
2K4A9929.CR2/threads:8/real_time_mean     -0.0081   -0.0080       309       306       309       306
2K4A9929.CR2/threads:8/real_time_median   -0.0119   -0.0119       309       306       309       306
2K4A9929.CR2/threads:8/real_time_stddev   -0.4931   -0.4894         2         1         2         1

$ ~/src/googlebenchmark/tools/compare.py -a benchmarks ~/rawspeed/build-{0,1}/src/utilities/rsbench/rsbench --benchmark_repetitions=3 *

RUNNING: /home/lebedevri/rawspeed/build-0/src/utilities/rsbench/rsbench --benchmark_repetitions=3 2K4A9927.CR2 2K4A9928.CR2 2K4A9929.CR2 --benchmark_display_aggregates_only=true --benchmark_out=/tmp/tmpjrD2I0

2018-09-12 20:24:11

Running /home/lebedevri/rawspeed/build-0/src/utilities/rsbench/rsbench

Run on (8 X 4000 MHz CPU s)

CPU Caches:

L1 Data 16K (x8)

L1 Instruction 64K (x4)

L2 Unified 2048K (x4)

L3 Unified 8192K (x1)

-----------------------------------------------------------------------------------------------

Benchmark Time CPU Iterations UserCounters...

-----------------------------------------------------------------------------------------------

2K4A9927.CR2/threads:8/real_time_mean 446 ms 446 ms 2 CPUTime,s=0.446077 CPUTime/WallTime=1 Pixels=52.6643M Pixels/CPUTime=118.061M Pixels/WallTime=118.062M Raws/CPUTime=2.24177 Raws/WallTime=2.24178 WallTime,s=0.446076

2K4A9927.CR2/threads:8/real_time_median 446 ms 446 ms 2 CPUTime,s=0.445676 CPUTime/WallTime=1 Pixels=52.6643M Pixels/CPUTime=118.167M Pixels/WallTime=118.168M Raws/CPUTime=2.24378 Raws/WallTime=2.2438 WallTime,s=0.445673

2K4A9927.CR2/threads:8/real_time_stddev 1 ms 1 ms 2 CPUTime,s=820.275u CPUTime/WallTime=3.51513u Pixels=0 Pixels/CPUTime=216.876k Pixels/WallTime=217.229k Raws/CPUTime=4.11809m Raws/WallTime=4.12478m WallTime,s=821.607u

2K4A9928.CR2/threads:8/real_time_mean 562 ms 562 ms 1 CPUTime,s=0.561843 CPUTime/WallTime=0.999983 Pixels=27.9936M Pixels/CPUTime=49.8247M Pixels/WallTime=49.8238M Raws/CPUTime=1.77986 Raws/WallTime=1.77983 WallTime,s=0.561852

2K4A9928.CR2/threads:8/real_time_median 562 ms 562 ms 1 CPUTime,s=0.561745 CPUTime/WallTime=0.999995 Pixels=27.9936M Pixels/CPUTime=49.8333M Pixels/WallTime=49.8307M Raws/CPUTime=1.78017 Raws/WallTime=1.78008 WallTime,s=0.561774

2K4A9928.CR2/threads:8/real_time_stddev 1 ms 1 ms 1 CPUTime,s=788.58u CPUTime/WallTime=30.2578u Pixels=0 Pixels/CPUTime=69.914k Pixels/WallTime=69.4978k Raws/CPUTime=2.4975m Raws/WallTime=2.48263m WallTime,s=783.873u

2K4A9929.CR2/threads:8/real_time_mean 306 ms 306 ms 2 CPUTime,s=0.305718 CPUTime/WallTime=1.00001 Pixels=12.4416M Pixels/CPUTime=40.6964M Pixels/WallTime=40.6967M Raws/CPUTime=3.271 Raws/WallTime=3.27102 WallTime,s=0.305716

2K4A9929.CR2/threads:8/real_time_median 306 ms 306 ms 2 CPUTime,s=0.305737 CPUTime/WallTime=1 Pixels=12.4416M Pixels/CPUTime=40.6939M Pixels/WallTime=40.6945M Raws/CPUTime=3.27079 Raws/WallTime=3.27084 WallTime,s=0.305732

2K4A9929.CR2/threads:8/real_time_stddev 1 ms 1 ms 2 CPUTime,s=584.969u CPUTime/WallTime=7.87539u Pixels=0 Pixels/CPUTime=77.8769k Pixels/WallTime=77.9118k Raws/CPUTime=6.2594m Raws/WallTime=6.2622m WallTime,s=585.232u

RUNNING: /home/lebedevri/rawspeed/build-1/src/utilities/rsbench/rsbench --benchmark_repetitions=3 2K4A9927.CR2 2K4A9928.CR2 2K4A9929.CR2 --benchmark_display_aggregates_only=true --benchmark_out=/tmp/tmpN9Xhk2

2018-09-12 20:24:18

Running /home/lebedevri/rawspeed/build-1/src/utilities/rsbench/rsbench

Run on (8 X 4000 MHz CPU s)

CPU Caches:

L1 Data 16K (x8)

L1 Instruction 64K (x4)

L2 Unified 2048K (x4)

L3 Unified 8192K (x1)

-----------------------------------------------------------------------------------------------

Benchmark Time CPU Iterations UserCounters...

-----------------------------------------------------------------------------------------------

2K4A9927.CR2/threads:8/real_time_mean 446 ms 446 ms 2 CPUTime,s=0.445771 CPUTime/WallTime=1.00001 Pixels=52.6643M Pixels/CPUTime=118.142M Pixels/WallTime=118.143M Raws/CPUTime=2.2433 Raws/WallTime=2.24332 WallTime,s=0.445769

2K4A9927.CR2/threads:8/real_time_median 446 ms 446 ms 2 CPUTime,s=0.445719 CPUTime/WallTime=1.00001 Pixels=52.6643M Pixels/CPUTime=118.156M Pixels/WallTime=118.156M Raws/CPUTime=2.24356 Raws/WallTime=2.24358 WallTime,s=0.445717

2K4A9927.CR2/threads:8/real_time_stddev 0 ms 0 ms 2 CPUTime,s=184.23u CPUTime/WallTime=1.69441u Pixels=0 Pixels/CPUTime=48.8185k Pixels/WallTime=49.0086k Raws/CPUTime=926.975u Raws/WallTime=930.585u WallTime,s=184.946u

2K4A9928.CR2/threads:8/real_time_mean 562 ms 562 ms 1 CPUTime,s=0.562071 CPUTime/WallTime=0.999969 Pixels=27.9936M Pixels/CPUTime=49.8045M Pixels/WallTime=49.803M Raws/CPUTime=1.77914 Raws/WallTime=1.77908 WallTime,s=0.562088

2K4A9928.CR2/threads:8/real_time_median 562 ms 562 ms 1 CPUTime,s=0.56237 CPUTime/WallTime=0.999986 Pixels=27.9936M Pixels/CPUTime=49.7779M Pixels/WallTime=49.7772M Raws/CPUTime=1.77819 Raws/WallTime=1.77816 WallTime,s=0.562378

2K4A9928.CR2/threads:8/real_time_stddev 1 ms 1 ms 1 CPUTime,s=967.38u CPUTime/WallTime=35.0024u Pixels=0 Pixels/CPUTime=85.7806k Pixels/WallTime=84.0545k Raws/CPUTime=3.06429m Raws/WallTime=3.00263m WallTime,s=947.993u

2K4A9929.CR2/threads:8/real_time_mean 307 ms 307 ms 2 CPUTime,s=0.306511 CPUTime/WallTime=0.999995 Pixels=12.4416M Pixels/CPUTime=40.5924M Pixels/WallTime=40.5922M Raws/CPUTime=3.26264 Raws/WallTime=3.26262 WallTime,s=0.306513

2K4A9929.CR2/threads:8/real_time_median 306 ms 306 ms 2 CPUTime,s=0.306169 CPUTime/WallTime=0.999999 Pixels=12.4416M Pixels/CPUTime=40.6364M Pixels/WallTime=40.637M Raws/CPUTime=3.26618 Raws/WallTime=3.26622 WallTime,s=0.306164

2K4A9929.CR2/threads:8/real_time_stddev 2 ms 2 ms 2 CPUTime,s=2.25763m CPUTime/WallTime=21.4306u Pixels=0 Pixels/CPUTime=298.503k Pixels/WallTime=299.126k Raws/CPUTime=0.0239924 Raws/WallTime=0.0240424 WallTime,s=2.26242m

Comparing /home/lebedevri/rawspeed/build-0/src/utilities/rsbench/rsbench to /home/lebedevri/rawspeed/build-1/src/utilities/rsbench/rsbench

Benchmark                                     Time      CPU  Time Old  Time New   CPU Old   CPU New
--------------------------------------------------------------------------------------------------------------------------------------
2K4A9927.CR2/threads:8/real_time_pvalue     0.6428   0.6428      U Test, Repetitions: 3. WARNING: Results unreliable! 9+ repetitions recommended.
2K4A9927.CR2/threads:8/real_time_mean      -0.0007  -0.0007       446       446       446       446
2K4A9927.CR2/threads:8/real_time_median    +0.0001  +0.0001       446       446       446       446
2K4A9927.CR2/threads:8/real_time_stddev    -0.7746  -0.7749         1         0         1         0
2K4A9928.CR2/threads:8/real_time_pvalue     0.0636   0.0636      U Test, Repetitions: 3. WARNING: Results unreliable! 9+ repetitions recommended.
2K4A9928.CR2/threads:8/real_time_mean      +0.0004  +0.0004       562       562       562       562
2K4A9928.CR2/threads:8/real_time_median    +0.0011  +0.0011       562       562       562       562
2K4A9928.CR2/threads:8/real_time_stddev    +0.2091  +0.2270         1         1         1         1
2K4A9929.CR2/threads:8/real_time_pvalue     0.0636   0.0636      U Test, Repetitions: 3. WARNING: Results unreliable! 9+ repetitions recommended.
2K4A9929.CR2/threads:8/real_time_mean      +0.0026  +0.0026       306       307       306       307
2K4A9929.CR2/threads:8/real_time_median    +0.0014  +0.0014       306       306       306       306
2K4A9929.CR2/threads:8/real_time_stddev    +2.8652  +2.8585         1         2         1         2

```
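The `Time` and `CPU` columns in the comparison output are relative changes between the old and new run. The following is a minimal sketch, not the tool's actual code, of comparing only the aggregate rows. It assumes the benchmark JSON lists each row under `benchmarks` with `name`, `run_type` (`"aggregate"` or `"iteration"`), `real_time` and `cpu_time` fields. The helper names `aggregates_only`, `relative_change` and `compare_aggregates` are hypothetical, as is the zero-baseline convention. The sample numbers reuse the `WallTime,s` and `CPUTime,s` counters of the `2K4A9929.CR2` mean rows as stand-ins for `real_time` and `cpu_time`.

```python
from typing import Any


def aggregates_only(results: dict[str, Any]) -> list[dict[str, Any]]:
    """Keep only aggregate rows (mean/median/stddev) from a benchmark JSON dict."""
    # Assumption: each entry has "run_type" set to "aggregate" or "iteration".
    return [
        b
        for b in results.get("benchmarks", [])
        if b.get("run_type") == "aggregate"
    ]


def relative_change(old: float, new: float) -> float:
    """Relative difference (new - old) / |old|, as in the Time and CPU columns."""
    if old == 0:
        # Hypothetical convention for a zero baseline.
        return 0.0 if new == 0 else float("inf")
    return (new - old) / abs(old)


def compare_aggregates(
    old_results: dict[str, Any], new_results: dict[str, Any]
) -> list[tuple[str, float, float]]:
    """Pair aggregate rows by name; return (name, time change, CPU change)."""
    old_by_name = {b["name"]: b for b in aggregates_only(old_results)}
    rows = []
    for new in aggregates_only(new_results):
        old = old_by_name.get(new["name"])
        if old is None:
            continue  # Present in only one run: nothing to compare against.
        rows.append(
            (
                new["name"],
                relative_change(old["real_time"], new["real_time"]),
                relative_change(old["cpu_time"], new["cpu_time"]),
            )
        )
    return rows


# Sample input: mean rows use the WallTime,s / CPUTime,s counters shown above;
# the "iteration" row is a dummy entry that the filter should drop.
old_run = {
    "benchmarks": [
        {"name": "2K4A9929.CR2/threads:8/real_time", "run_type": "iteration",
         "real_time": 1.0, "cpu_time": 1.0},
        {"name": "2K4A9929.CR2/threads:8/real_time_mean", "run_type": "aggregate",
         "real_time": 0.305716, "cpu_time": 0.305718},
    ]
}
new_run = {
    "benchmarks": [
        {"name": "2K4A9929.CR2/threads:8/real_time_mean", "run_type": "aggregate",
         "real_time": 0.306513, "cpu_time": 0.306511},
    ]
}

for name, d_time, d_cpu in compare_aggregates(old_run, new_run):
    print(f"{name} {d_time:+.4f} {d_cpu:+.4f}")
    # 2K4A9929.CR2/threads:8/real_time_mean +0.0026 +0.0026
```

Matching rows by name and skipping benchmarks that exist in only one run keeps the sketch simple; dropping the per-repetition rows mirrors what "display aggregates only" does for the report.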

2018-09-17 16:59:39 +08:00

parser.add_argument(

Add pre-commit config and GitHub Actions job (#1688)

* Add pre-commit config and GitHub Actions job

Contains the following hooks:

* buildifier - for formatting and linting Bazel files.

* mypy, ruff, isort, black - for Python typechecking, import hygiene,

static analysis, and formatting.

The pylint CI job was changed to be a pre-commit CI job, where pre-commit

is bootstrapped via Python.

Pylint is no longer part of the

code checks, but can be re-added if requested. It was dropped because

it does not play nicely with pre-commit, and much of its

functionality is already covered by ruff.

* Add dev extra to pyproject.toml for development installs

* Clarify that pre-commit contains only Python and Bazel hooks

* Add one-line docstrings to Bazel modules

* Apply buildifier pre-commit fixes to Bazel files

* Apply pre-commit fixes to Python files

* Supply --profile=black to isort to prevent conflicts

* Fix nanobind build file formatting

* Add tooling configs to `pyproject.toml`

In particular, set line length 80 for all Python files.

* Reformat all Python files to line length 80, fix return type annotations

Also ignores the `tools/compare.py` and `tools/gbench/report.py` files

for mypy, since they emit a barrage of errors which we can deal with

later. The errors are mostly related to dynamic classmethod definition.

2023-10-30 23:35:37 +08:00

"-a",

"--display_aggregates_only",

dest="display_aggregates_only",

[Tooling] 'display aggregates only' support. (#674)

Works as you'd expect, both for the benchmark binaries and

for the JSON files (naturally, since the tests need to work):

```

$ ~/src/googlebenchmark/tools/compare.py benchmarks ~/rawspeed/build-{0,1}/src/utilities/rsbench/rsbench --benchmark_repetitions=3 *

RUNNING: /home/lebedevri/rawspeed/build-0/src/utilities/rsbench/rsbench --benchmark_repetitions=3 2K4A9927.CR2 2K4A9928.CR2 2K4A9929.CR2 --benchmark_out=/tmp/tmpYoui5H

2018-09-12 20:23:47

Running /home/lebedevri/rawspeed/build-0/src/utilities/rsbench/rsbench

Run on (8 X 4000 MHz CPU s)

CPU Caches:

L1 Data 16K (x8)

L1 Instruction 64K (x4)

L2 Unified 2048K (x4)

L3 Unified 8192K (x1)

-----------------------------------------------------------------------------------------------

Benchmark Time CPU Iterations UserCounters...

-----------------------------------------------------------------------------------------------

2K4A9927.CR2/threads:8/real_time 447 ms 447 ms 2 CPUTime,s=0.447302 CPUTime/WallTime=1.00001 Pixels=52.6643M Pixels/CPUTime=117.738M Pixels/WallTime=117.738M Raws/CPUTime=2.23562 Raws/WallTime=2.23564 WallTime,s=0.4473

2K4A9927.CR2/threads:8/real_time 444 ms 444 ms 2 CPUTime,s=0.444228 CPUTime/WallTime=1.00001 Pixels=52.6643M Pixels/CPUTime=118.552M Pixels/WallTime=118.553M Raws/CPUTime=2.2511 Raws/WallTime=2.25111 WallTime,s=0.444226

2K4A9927.CR2/threads:8/real_time 447 ms 447 ms 2 CPUTime,s=0.446983 CPUTime/WallTime=0.999999 Pixels=52.6643M Pixels/CPUTime=117.822M Pixels/WallTime=117.822M Raws/CPUTime=2.23722 Raws/WallTime=2.23722 WallTime,s=0.446984

2K4A9927.CR2/threads:8/real_time_mean 446 ms 446 ms 2 CPUTime,s=0.446171 CPUTime/WallTime=1 Pixels=52.6643M Pixels/CPUTime=118.037M Pixels/WallTime=118.038M Raws/CPUTime=2.24131 Raws/WallTime=2.24132 WallTime,s=0.44617

2K4A9927.CR2/threads:8/real_time_median 447 ms 447 ms 2 CPUTime,s=0.446983 CPUTime/WallTime=1.00001 Pixels=52.6643M Pixels/CPUTime=117.822M Pixels/WallTime=117.822M Raws/CPUTime=2.23722 Raws/WallTime=2.23722 WallTime,s=0.446984

2K4A9927.CR2/threads:8/real_time_stddev 2 ms 2 ms 2 CPUTime,s=1.69052m CPUTime/WallTime=3.53737u Pixels=0 Pixels/CPUTime=448.178k Pixels/WallTime=448.336k Raws/CPUTime=8.51008m Raws/WallTime=8.51308m WallTime,s=1.6911m

2K4A9928.CR2/threads:8/real_time 563 ms 563 ms 1 CPUTime,s=0.562511 CPUTime/WallTime=0.999824 Pixels=27.9936M Pixels/CPUTime=49.7654M Pixels/WallTime=49.7567M Raws/CPUTime=1.77774 Raws/WallTime=1.77743 WallTime,s=0.56261

2K4A9928.CR2/threads:8/real_time 561 ms 561 ms 1 CPUTime,s=0.561328 CPUTime/WallTime=0.999917 Pixels=27.9936M Pixels/CPUTime=49.8703M Pixels/WallTime=49.8662M Raws/CPUTime=1.78149 Raws/WallTime=1.78134 WallTime,s=0.561375

2K4A9928.CR2/threads:8/real_time 570 ms 570 ms 1 CPUTime,s=0.570423 CPUTime/WallTime=0.999876 Pixels=27.9936M Pixels/CPUTime=49.0752M Pixels/WallTime=49.0691M Raws/CPUTime=1.75308 Raws/WallTime=1.75287 WallTime,s=0.570493

2K4A9928.CR2/threads:8/real_time_mean 565 ms 565 ms 1 CPUTime,s=0.564754 CPUTime/WallTime=0.999872 Pixels=27.9936M Pixels/CPUTime=49.5703M Pixels/WallTime=49.564M Raws/CPUTime=1.77077 Raws/WallTime=1.77055 WallTime,s=0.564826

2K4A9928.CR2/threads:8/real_time_median 563 ms 563 ms 1 CPUTime,s=0.562511 CPUTime/WallTime=0.999876 Pixels=27.9936M Pixels/CPUTime=49.7654M Pixels/WallTime=49.7567M Raws/CPUTime=1.77774 Raws/WallTime=1.77743 WallTime,s=0.56261

2K4A9928.CR2/threads:8/real_time_stddev 5 ms 5 ms 1 CPUTime,s=4.945m CPUTime/WallTime=46.3459u Pixels=0 Pixels/CPUTime=431.997k Pixels/WallTime=432.061k Raws/CPUTime=0.015432 Raws/WallTime=0.0154343 WallTime,s=4.94686m

2K4A9929.CR2/threads:8/real_time 306 ms 306 ms 2 CPUTime,s=0.306476 CPUTime/WallTime=0.999961 Pixels=12.4416M Pixels/CPUTime=40.5957M Pixels/WallTime=40.5941M Raws/CPUTime=3.2629 Raws/WallTime=3.26277 WallTime,s=0.306488

2K4A9929.CR2/threads:8/real_time 310 ms 310 ms 2 CPUTime,s=0.309978 CPUTime/WallTime=0.999939 Pixels=12.4416M Pixels/CPUTime=40.1371M Pixels/WallTime=40.1347M Raws/CPUTime=3.22604 Raws/WallTime=3.22584 WallTime,s=0.309996

2K4A9929.CR2/threads:8/real_time 309 ms 309 ms 2 CPUTime,s=0.30943 CPUTime/WallTime=0.999987 Pixels=12.4416M Pixels/CPUTime=40.2081M Pixels/WallTime=40.2076M Raws/CPUTime=3.23175 Raws/WallTime=3.23171 WallTime,s=0.309434

2K4A9929.CR2/threads:8/real_time_mean 309 ms 309 ms 2 CPUTime,s=0.308628 CPUTime/WallTime=0.999962 Pixels=12.4416M Pixels/CPUTime=40.3136M Pixels/WallTime=40.3121M Raws/CPUTime=3.24023 Raws/WallTime=3.24011 WallTime,s=0.308639

2K4A9929.CR2/threads:8/real_time_median 309 ms 309 ms 2 CPUTime,s=0.30943 CPUTime/WallTime=0.999961 Pixels=12.4416M Pixels/CPUTime=40.2081M Pixels/WallTime=40.2076M Raws/CPUTime=3.23175 Raws/WallTime=3.23171 WallTime,s=0.309434

2K4A9929.CR2/threads:8/real_time_stddev 2 ms 2 ms 2 CPUTime,s=1.88354m CPUTime/WallTime=23.7788u Pixels=0 Pixels/CPUTime=246.821k Pixels/WallTime=246.914k Raws/CPUTime=0.0198384 Raws/WallTime=0.0198458 WallTime,s=1.88442m

RUNNING: /home/lebedevri/rawspeed/build-1/src/utilities/rsbench/rsbench --benchmark_repetitions=3 2K4A9927.CR2 2K4A9928.CR2 2K4A9929.CR2 --benchmark_out=/tmp/tmpRShmmf

2018-09-12 20:23:55

Running /home/lebedevri/rawspeed/build-1/src/utilities/rsbench/rsbench

Run on (8 X 4000 MHz CPU s)

CPU Caches:

L1 Data 16K (x8)

L1 Instruction 64K (x4)

L2 Unified 2048K (x4)

L3 Unified 8192K (x1)

-----------------------------------------------------------------------------------------------

Benchmark Time CPU Iterations UserCounters...

-----------------------------------------------------------------------------------------------