25 KiB

Codecademy vs. The BBC Micro

In the late 1970s, the computer, which for decades had been a mysterious, hulking machine that only did the bidding of corporate overlords, suddenly became something the average person could buy and take home. An enthusiastic minority saw how great this was and rushed to get a computer of their own. For many more people, the arrival of the microcomputer triggered helpless anxiety about the future. An ad from a magazine at the time promised that a home computer would “give your child an unfair advantage in school.” It showed a boy in a smart blazer and tie eagerly raising his hand to answer a question, while behind him his dim-witted classmates look on sullenly. The ad and others like it implied that the world was changing quickly and, if you did not immediately learn how to use one of these intimidating new devices, you and your family would be left behind.

In the UK, this anxiety metastasized into concern at the highest levels of government about the competitiveness of the nation. The 1970s had been, on the whole, an underwhelming decade for Great Britain. Both inflation and unemployment had been high. Meanwhile, a series of strikes put London through blackout after blackout. A government report from 1979 fretted that a failure to keep up with trends in computing technology would “add another factor to our poor industrial performance.”1 The country already seemed to be behind in the computing arena—all the great computer companies were American, while integrated circuits were being assembled in Japan and Taiwan.

In an audacious move, the BBC, a public service broadcaster funded by the government, decided that it would solve Britain’s national competitiveness problems by helping Britons everywhere overcome their aversion to computers. It launched the Computer Literacy Project, a multi-pronged educational effort that involved several TV series, a few books, a network of support groups, and a specially built microcomputer known as the BBC Micro. The project was so successful that, by 1983, an editor for BYTE Magazine wrote, “compared to the US, proportionally more of Britain’s population is interested in microcomputers.”2 The editor marveled that there were more people at the Fifth Personal Computer World Show in the UK than had been to that year’s West Coast Computer Faire. Over a sixth of Great Britain watched an episode in the first series produced for the Computer Literacy Project and 1.5 million BBC Micros were ultimately sold.3

An archive containing every TV series produced and all the materials published for the Computer Literacy Project was put on the web last year. I’ve had a huge amount of fun watching the TV series and trying to imagine what it would have been like to learn about computing in the early 1980s. But what’s turned out to be more interesting is how computing was taught. Today, we still worry about technology leaving people behind. Wealthy tech entrepreneurs and governments spend lots of money trying to teach kids “to code.” We have websites like Codecademy that make use of new technologies to teach coding interactively. One would assume that this approach is more effective than a goofy ’80s TV series. But is it?

The Computer Literacy Project

The microcomputer revolution began in 1975 with the release of the Altair 8800. Only two years later, the Apple II, TRS-80, and Commodore PET had all been released. Sales of the new computers exploded. In 1978, the BBC explored the dramatic societal changes these new machines were sure to bring in a documentary called “Now the Chips Are Down.”

The documentary was alarming. Within the first five minutes, the narrator explains that microelectronics will “totally revolutionize our way of life.” As eerie synthesizer music plays, and green pulses of electricity dance around a magnified microprocessor on screen, the narrator argues that the new chips are why “Japan is abandoning its ship building, and why our children will grow up without jobs to go to.” The documentary goes on to explore how robots are being used to automate car assembly and how the European watch industry has lost out to digital watch manufacturers in the United States. It castigates the British government for not doing more to prepare the country for a future of mass unemployment.

The documentary was supposedly shown to the British Cabinet.4 Several government agencies, including the Department of Industry and the Manpower Services Commission, became interested in trying to raise awareness about computers among the British public. The Manpower Services Commission provided funds for a team from the BBC’s education division to travel to Japan, the United States, and other countries on a fact-finding trip. This research team produced a report that cataloged the ways in which microelectronics would indeed mean major changes for industrial manufacturing, labor relations, and office work. In late 1979, it was decided that the BBC should make a ten-part TV series that would help regular Britons “learn how to use and control computers and not feel dominated by them.”5 The project eventually became a multimedia endeavor similar to the Adult Literacy Project, an earlier BBC undertaking involving both a TV series and supplemental courses that helped two million people improve their reading.

The producers behind the Computer Literacy Project were keen for the TV series to feature “hands-on” examples that viewers could try on their own if they had a microcomputer at home. These examples would have to be in BASIC, since that was the language (really the entire shell) used on almost all microcomputers. But the producers faced a thorny problem: Microcomputer manufacturers all had their own dialects of BASIC, so no matter which dialect they picked, they would inevitably alienate some large fraction of their audience. The only real solution was to create a new BASIC—BBC BASIC—and a microcomputer to go along with it. Members of the British public would be able to buy the new microcomputer and follow along without worrying about differences in software or hardware.

The TV producers and presenters at the BBC were not capable of building a microcomputer on their own. So they put together a specification for the computer they had in mind and invited British microcomputer companies to propose a new machine that met the requirements. The specification called for a relatively powerful computer because the BBC producers felt that the machine should be able to run real, useful applications. Technical consultants for the Computer Literacy Project also suggested that, if it had to be a BASIC dialect that was going to be taught to the entire nation, then it had better be a good one. (They may not have phrased it exactly that way, but I bet that’s what they were thinking.) BBC BASIC would make up for some of BASIC’s usual shortcomings by allowing for recursion and local variables.6

The BBC eventually decided that a Cambridge-based company called Acorn Computers would make the BBC Micro. In choosing Acorn, the BBC passed over a proposal from Clive Sinclair, who ran a company called Sinclair Research. Sinclair Research had brought mass-market microcomputing to the UK in 1980 with the Sinclair ZX80. Sinclair’s new computer, the ZX81, was cheap but not powerful enough for the BBC’s purposes. Acorn’s new prototype computer, known internally as the Proton, would be more expensive but more powerful and expandable. The BBC was impressed. The Proton was never marketed or sold as the Proton because it was instead released in December 1981 as the BBC Micro, also affectionately called “The Beeb.” You could get a 16k version for £235 and a 32k version for £335.

In 1980, Acorn was an underdog in the British computing industry. But the BBC Micro helped establish the company’s legacy. Today, the world’s most popular microprocessor instruction set is the ARM architecture. “ARM” now stands for “Advanced RISC Machine,” but originally it stood for “Acorn RISC Machine.” ARM Holdings, the company behind the architecture, was spun out from Acorn in 1990.

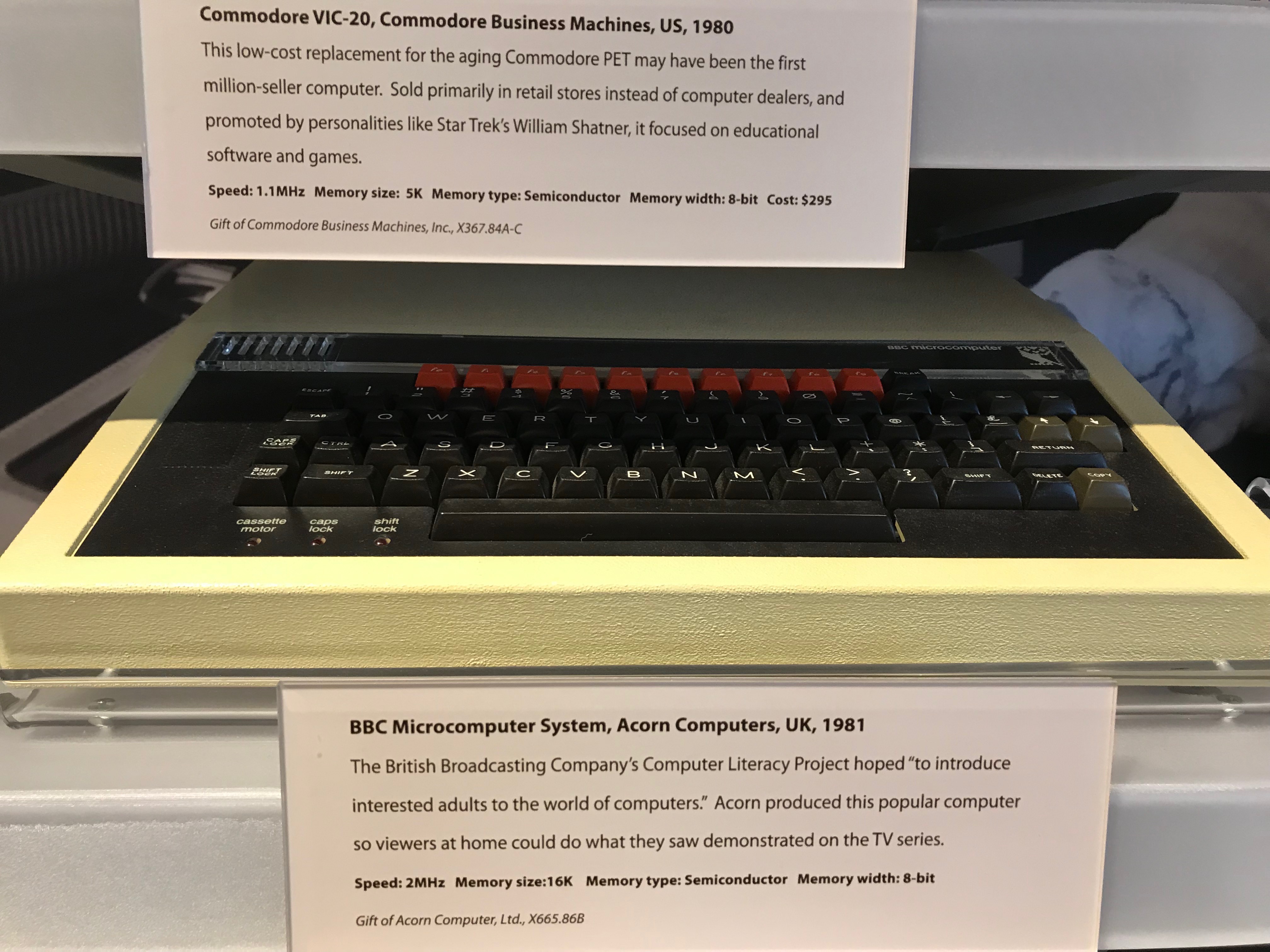

A bad picture of a BBC Micro, taken by me at the Computer History Museum

in Mountain View, California.

A bad picture of a BBC Micro, taken by me at the Computer History Museum

in Mountain View, California.

The Computer Programme

A dozen different TV series were eventually produced as part of the Computer Literacy Project, but the first of them was a ten-part series known as The Computer Programme. The series was broadcast over ten weeks at the beginning of 1982. A million people watched each week-night broadcast of the show; a quarter million watched the reruns on Sunday and Monday afternoon.

The show was hosted by two presenters, Chris Serle and Ian McNaught-Davis. Serle plays the neophyte while McNaught-Davis, who had professional experience programming mainframe computers, plays the expert. This was an inspired setup. It made for awkward transitions—Serle often goes directly from a conversation with McNaught-Davis to a bit of walk-and-talk narration delivered to the camera, and you can’t help but wonder whether McNaught-Davis is still standing there out of frame or what. But it meant that Serle could voice the concerns that the audience would surely have. He can look intimidated by a screenful of BASIC and can ask questions like, “What do all these dollar signs mean?” At several points during the show, Serle and McNaught-Davis sit down in front of a computer and essentially pair program, with McNaught-Davis providing hints here and there while Serle tries to figure it out. It would have been much less relatable if the show had been presented by a single, all-knowing narrator.

The show also made an effort to demonstrate the many practical applications of computing in the lives of regular people. By the early 1980s, the home computer had already begun to be associated with young boys and video games. The producers behind The Computer Programme sought to avoid interviewing “impressively competent youngsters,” as that was likely “to increase the anxieties of older viewers,” a demographic that the show was trying to attract to computing.7 In the first episode of the series, Gill Nevill, the show’s “on location” reporter, interviews a woman that has bought a Commodore PET to help manage her sweet shop. The woman (her name is Phyllis) looks to be 60-something years old, yet she has no trouble using the computer to do her accounting and has even started using her PET to do computer work for other businesses, which sounds like the beginning of a promising freelance career. Phyllis says that she wouldn’t mind if the computer work grew to replace her sweet shop business since she enjoys the computer work more. This interview could instead have been an interview with a teenager about how he had modified Breakout to be faster and more challenging. But that would have been encouraging to almost nobody. On the other hand, if Phyllis, of all people, can use a computer, then surely you can too.

While the show features lots of BASIC programming, what it really wants to teach its audience is how computing works in general. The show explains these general principles with analogies. In the second episode, there is an extended discussion of the Jacquard loom, which accomplishes two things. First, it illustrates that computers are not based only on magical technology invented yesterday—some of the foundational principles of computing go back two hundred years and are about as simple as the idea that you can punch holes in card to control a weaving machine. Second, the interlacing of warp and weft threads is used to demonstrate how a binary choice (does the weft thread go above or below the warp thread?) is enough, when repeated over and over, to produce enormous variation. This segues, of course, into a discussion of how information can be stored using binary digits.

Later in the show there is a section about a steam organ that plays music encoded in a long, segmented roll of punched card. This time the analogy is used to explain subroutines in BASIC. Serle and McNaught-Davis lay out the whole roll of punched card on the floor in the studio, then point out the segments where it looks like a refrain is being repeated. McNaught-Davis explains that a subroutine is what you would get if you cut out those repeated segments of card and somehow added an instruction to go back to the original segment that played the refrain for the first time. This is a brilliant explanation and probably one that stuck around in people’s minds for a long time afterward.

I’ve picked out only a few examples, but I think in general the show excels at demystifying computers by explaining the principles that computers rely on to function. The show could instead have focused on teaching BASIC, but it did not. This, it turns out, was very much a conscious choice. In a retrospective written in 1983, John Radcliffe, the executive producer of the Computer Literacy Project, wrote the following:

If computers were going to be as important as we believed, some genuine understanding of this new subject would be important for everyone, almost as important perhaps as the capacity to read and write. Early ideas, both here and in America, had concentrated on programming as the main route to computer literacy. However, as our thinking progressed, although we recognized the value of “hands-on” experience on personal micros, we began to place less emphasis on programming and more on wider understanding, on relating micros to larger machines, encouraging people to gain experience with a range of applications programs and high-level languages, and relating these to experience in the real world of industry and commerce…. Our belief was that once people had grasped these principles, at their simplest, they would be able to move further forward into the subject.

Later, Radcliffe writes, in a similar vein:

There had been much debate about the main explanatory thrust of the series. One school of thought had argued that it was particularly important for the programmes to give advice on the practical details of learning to use a micro. But we had concluded that if the series was to have any sustained educational value, it had to be a way into the real world of computing, through an explanation of computing principles. This would need to be achieved by a combination of studio demonstration on micros, explanation of principles by analogy, and illustration on film of real-life examples of practical applications. Not only micros, but mini computers and mainframes would be shown.

I love this, particularly the part about mini-computers and mainframes. The producers behind The Computer Programme aimed to help Britons get situated: Where had computing been, and where was it going? What can computers do now, and what might they do in the future? Learning some BASIC was part of answering those questions, but knowing BASIC alone was not seen as enough to make someone computer literate.

Computer Literacy Today

If you google “learn to code,” the first result you see is a link to Codecademy’s website. If there is a modern equivalent to the Computer Literacy Project, something with the same reach and similar aims, then it is Codecademy.

“Learn to code” is Codecademy’s tagline. I don’t think I’m the first person to point this out—in fact, I probably read this somewhere and I’m now ripping it off—but there’s something revealing about using the word “code” instead of “program.” It suggests that the important thing you are learning is how to decode the code, how to look at a screen’s worth of Python and not have your eyes glaze over. I can understand why to the average person this seems like the main hurdle to becoming a professional programmer. Professional programmers spend all day looking at computer monitors covered in gobbledygook, so, if I want to become a professional programmer, I better make sure I can decipher the gobbledygook. But dealing with syntax is not the most challenging part of being a programmer, and it quickly becomes almost irrelevant in the face of much bigger obstacles. Also, armed only with knowledge of a programming language’s syntax, you may be able to read code but you won’t be able to write code to solve a novel problem.

I recently went through Codecademy’s “Code Foundations” course, which is the course that the site recommends you take if you are interested in programming (as opposed to web development or data science) and have never done any programming before. There are a few lessons in there about the history of computer science, but they are perfunctory and poorly researched. (Thank heavens for this noble internet vigilante, who pointed out a particularly egregious error.) The main focus of the course is teaching you about the common structural elements of programming languages: variables, functions, control flow, loops. In other words, the course focuses on what you would need to know to start seeing patterns in the gobbledygook.

To be fair to Codecademy, they offer other courses that look meatier. But even courses such as their “Computer Science Path” course focus almost exclusively on programming and concepts that can be represented in programs. One might argue that this is the whole point—Codecademy’s main feature is that it gives you little interactive programming lessons with automated feedback. There also just isn’t enough room to cover more because there is only so much you can stuff into somebody’s brain in a little automated lesson. But the producers at the BBC tasked with kicking off the Computer Literacy Project also had this problem; they recognized that they were limited by their medium and that “the amount of learning that would take place as a result of the television programmes themselves would be limited.”8 With similar constraints on the volume of information they could convey, they chose to emphasize general principles over learning BASIC. Couldn’t Codecademy replace a lesson or two with an interactive visualization of a Jacquard loom weaving together warp and weft threads?

I’m banging the drum for “general principles” loudly now, so let me just explain what I think they are and why they are important. There’s a book by J. Clark Scott about computers called But How Do It Know? The title comes from the anecdote that opens the book. A salesman is explaining to a group of people that a thermos can keep hot food hot and cold food cold. A member of the audience, astounded by this new invention, asks, “But how do it know?” The joke of course is that the thermos is not perceiving the temperature of the food and then making a decision—the thermos is just constructed so that cold food inevitably stays cold and hot food inevitably stays hot. People anthropomorphize computers in the same way, believing that computers are digital brains that somehow “choose” to do one thing or another based on the code they are fed. But learning a few things about how computers work, even at a rudimentary level, takes the homunculus out of the machine. That’s why the Jacquard loom is such a good go-to illustration. It may at first seem like an incredible device. It reads punch cards and somehow “knows” to weave the right pattern! The reality is mundane: Each row of holes corresponds to a thread, and where there is a hole in that row the corresponding thread gets lifted. Understanding this may not help you do anything new with computers, but it will give you the confidence that you are not dealing with something magical. We should impart this sense of confidence to beginners as soon as we can.

Alas, it’s possible that the real problem is that nobody wants to learn about the Jacquard loom. Judging by how Codecademy emphasizes the professional applications of what it teaches, many people probably start using Codecademy because they believe it will help them “level up” their careers. They believe, not unreasonably, that the primary challenge will be understanding the gobbledygook, so they want to “learn to code.” And they want to do it as quickly as possible, in the hour or two they have each night between dinner and collapsing into bed. Codecademy, which after all is a business, gives these people what they are looking for—not some roundabout explanation involving a machine invented in the 18th century.

The Computer Literacy Project, on the other hand, is what a bunch of producers and civil servants at the BBC thought would be the best way to educate the nation about computing. I admit that it is a bit elitist to suggest we should laud this group of people for teaching the masses what they were incapable of seeking out on their own. But I can’t help but think they got it right. Lots of people first learned about computing using a BBC Micro, and many of these people went on to become successful software developers or game designers. As I’ve written before, I suspect learning about computing at a time when computers were relatively simple was a huge advantage. But perhaps another advantage these people had is shows like The Computer Programme, which strove to teach not just programming but also how and why computers can run programs at all. After watching The Computer Programme, you may not understand all the gobbledygook on a computer screen, but you don’t really need to because you know that, whatever the “code” looks like, the computer is always doing the same basic thing. After a course or two on Codecademy, you understand some flavors of gobbledygook, but to you a computer is just a magical machine that somehow turns gobbledygook into running software. That isn’t computer literacy.

If you enjoyed this post, more like it come out every four weeks! Follow @TwoBitHistory on Twitter or subscribe to the RSS feed to make sure you know when a new post is out.

Previously on TwoBitHistory…

FINALLY some new damn content, amirite?

Wanted to write an article about how Simula bought us object-oriented programming. It did that, but early Simula also flirted with a different vision for how OOP would work. Wrote about that instead!https://t.co/AYIWRRceI6

— TwoBitHistory (@TwoBitHistory) February 1, 2019

-

Robert Albury and David Allen, Microelectronics, report (1979). ↩︎

-

Gregg Williams, “Microcomputing, British Style”, Byte Magazine, 40, January 1983, accessed on March 31, 2019, https://archive.org/stream/byte-magazine-1983-01/1983_01_BYTE_08-01_Looking_Ahead#page/n41/mode/2up. ↩︎

-

John Radcliffe, “Toward Computer Literacy,” Computer Literacy Project Achive, 42, accessed March 31, 2019, https://computer-literacy-project.pilots.bbcconnectedstudio.co.uk/media/Towards Computer Literacy.pdf. ↩︎

-

David Allen, “About the Computer Literacy Project,” Computer Literacy Project Archive, accessed March 31, 2019, https://computer-literacy-project.pilots.bbcconnectedstudio.co.uk/history. ↩︎

-

ibid. ↩︎

-

Williams, 51. ↩︎

-

Radcliffe, 11. ↩︎

-

Radcliffe, 5. ↩︎

via: https://twobithistory.org/2019/03/31/bbc-micro.html

作者:Two-Bit History 选题:lujun9972 译者:译者ID 校对:校对者ID