
Jupyter and data science in Fedora

In the past, kings and leaders used oracles and magicians to help them predict the future — or at least get some good advice due to their supposed power to perceive hidden information. Nowadays, we live in a society obsessed with quantifying everything. So we have data scientists to do this job.

Data scientists use statistical models, numerical techniques and advanced algorithms that didn’t come from statistical disciplines, along with the data that exists in databases, to find, infer and predict data that doesn’t exist yet. Sometimes this data is about the future. That is why we do a lot of predictive and prescriptive analytics.

Here are some questions to which data scientists help find answers:

- Who are the students with a high propensity to abandon the class? For each one, what are the reasons for leaving?

- Which house has a price above or below the fair price? What is the fair price for a certain house?

- What are the hidden groups into which my clients classify themselves?

- Which future problems will this premature child develop?

- How many calls will I get in my call center tomorrow at 11:43 AM?

- Should my bank lend money to this customer or not?

Note how the answers to all these questions are not sitting in any database waiting to be queried. This is all data that doesn’t exist yet and has to be calculated. That is part of the job we data scientists do.

Throughout this article you’ll learn how to prepare a Fedora system as a data scientist’s development environment and also as a production system. Most of the basic software is RPM-packaged, but the most advanced parts can currently only be installed with Python’s pip tool.

Jupyter — the IDE

Most modern data scientists use Python, and an important part of their work is EDA (exploratory data analysis). EDA is a manual, interactive process in which you retrieve data, explore its features, search for correlations, use plots to visualize and understand how the data is shaped, and prototype predictive models.

Jupyter is a web application well suited to this task. It works with notebooks: documents that mix rich text, including beautifully rendered math formulas (thanks to MathJax), with blocks of code and code output, including graphics.

Notebook files have extension .ipynb, which means Interactive Python Notebook.
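Under the hood, an .ipynb file is a JSON document. As a rough illustration (not something the original article shows), the sketch below builds a tiny notebook by hand in the nbformat version 4 layout and reads it back using only Python's standard library. The file name demo.ipynb and the cell contents are made up for the example.

```python
import json
import tempfile
from pathlib import Path

# A minimal notebook in the nbformat 4 layout: one markdown cell, one code cell.
notebook = {
    "nbformat": 4,
    "nbformat_minor": 2,
    "metadata": {},
    "cells": [
        {"cell_type": "markdown", "metadata": {}, "source": ["# Hello, Fedora"]},
        {
            "cell_type": "code",
            "metadata": {},
            "execution_count": None,
            "outputs": [],
            "source": ["print(1 + 1)"],
        },
    ],
}

# Write to a temporary directory so nothing in your home folder is touched.
path = Path(tempfile.mkdtemp()) / "demo.ipynb"  # hypothetical file name
path.write_text(json.dumps(notebook, indent=1))

# Read it back and list the cell types, as any JSON-aware tool could.
loaded = json.loads(path.read_text())
cell_types = [cell["cell_type"] for cell in loaded["cells"]]
print(cell_types)
```

Because the format is plain JSON, notebooks can be inspected, diffed and processed by ordinary scripts, although in day-to-day work you would normally let Jupyter create and save them for you.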

Setting up and running Jupyter

First, install essential packages for Jupyter (using sudo):

$ sudo dnf install python3-notebook mathjax sscg

You might want to install additional and optional Python modules commonly used by data scientists:

$ sudo dnf install python3-seaborn python3-lxml python3-basemap python3-scikit-image python3-scikit-learn python3-sympy python3-dask+dataframe python3-nltk

Set a password to log into the Notebook web interface and avoid those long tokens. Run the following commands from any directory in your terminal:

$ mkdir -p $HOME/.jupyter

$ jupyter notebook password

Now, type a password for yourself. This will create the file $HOME/.jupyter/jupyter_notebook_config.json containing a hash of your password.

Next, prepare for SSL by generating a self-signed HTTPS certificate for Jupyter’s web server:

$ cd $HOME/.jupyter; sscg

Finish configuring Jupyter by editing your $HOME/.jupyter/jupyter_notebook_config.json file. Make it look like this:

{

"NotebookApp": {

"password": "sha1:abf58...87b",

"ip": "*",

"allow_origin": "*",

"allow_remote_access": true,

"open_browser": false,

"websocket_compression_options": {},

"certfile": "/home/aviram/.jupyter/service.pem",

"keyfile": "/home/aviram/.jupyter/service-key.pem",

"notebook_dir": "/home/aviram/Notebooks"

}

}

The paths under /home/aviram must be changed to match your own folders. The password entry was already there after you created your password. The certfile and keyfile entries point to the crypto-related files generated by sscg.
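If you would rather not edit the paths by hand, the configuration above can be generated from your home folder. This is only a sketch: build_config is a hypothetical helper written for this example, and the password value is a placeholder standing in for the hash that jupyter notebook password wrote for you. The demo writes into a temporary folder so that it cannot overwrite a real configuration.

```python
import json
import tempfile
from pathlib import Path


def build_config(home, password_hash):
    """Return the NotebookApp settings shown above, with paths rooted at `home`."""
    jupyter_dir = Path(home) / ".jupyter"
    return {
        "NotebookApp": {
            "password": password_hash,
            "ip": "*",
            "allow_origin": "*",
            "allow_remote_access": True,
            "open_browser": False,
            "websocket_compression_options": {},
            "certfile": str(jupyter_dir / "service.pem"),
            "keyfile": str(jupyter_dir / "service-key.pem"),
            "notebook_dir": str(Path(home) / "Notebooks"),
        }
    }


# Demo against a temporary folder standing in for $HOME.
fake_home = Path(tempfile.mkdtemp())
# Placeholder only: reuse the hash already present in your own config file.
config = build_config(fake_home, "sha1:PLACEHOLDER")

config_path = fake_home / "jupyter_notebook_config.json"
config_path.write_text(json.dumps(config, indent=2))
print(config_path.read_text())
```

To apply this to a real setup you would pass Path.home() and your existing password hash, then write the result to $HOME/.jupyter/jupyter_notebook_config.json.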

Create a folder for your notebook files, as configured in the notebook_dir setting above:

$ mkdir $HOME/Notebooks

Now you are all set. Just run Jupyter Notebook from anywhere on your system by typing:

$ jupyter notebook

Or add this line to your $HOME/.bashrc file to create a shortcut command called jn:

alias jn='jupyter notebook'

After running jupyter notebook (or the jn alias), access https://your-fedora-host.com:8888 from any browser on the network to see the Jupyter user interface. You’ll need to use the password you set up earlier. Then start typing some Python code and markup text.

In addition to the IPython environment, you’ll also get a web-based Unix terminal provided by terminado. Some people might find this useful, while others find it insecure. You can disable this feature in the config file by setting terminals_enabled to false under NotebookApp.

JupyterLab — the next generation of Jupyter

JupyterLab is the next generation of Jupyter, with a better interface and more control over your workspace. At the time of writing it is not RPM-packaged for Fedora, but you can easily install it with pip:

$ pip3 install jupyterlab --user

$ jupyter serverextension enable --py jupyterlab

Then run your regular jupyter notebook command or the jn alias. JupyterLab will be accessible at https://your-fedora-host.com:8888/lab.

Tools used by data scientists

In this section you can get to know some of these tools and how to install them. Unless noted otherwise, each module is already packaged for Fedora and was installed as a prerequisite for the components above.

Numpy

Numpy is an advanced, C-optimized math library designed to work with large in-memory datasets. It provides advanced multidimensional matrix support and operations, including math functions such as log(), exp() and trigonometry.
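As a small illustration of what this looks like in practice, here is a sketch with made-up numbers. It applies log(), exp() and a trigonometric function to a whole matrix at once, then computes a matrix product.

```python
import numpy as np

# A 2x3 matrix of floats held in memory.
matrix = np.array([[1.0, 2.0, 3.0],
                   [4.0, 5.0, 6.0]])

# Math functions apply element by element, with no explicit Python loop.
logs = np.log(matrix)
roundtrip = np.exp(logs)   # exp undoes log, up to floating-point rounding
sines = np.sin(matrix)

# Matrix operations: transpose and matrix product (2x3 times 3x2 gives 2x2).
product = matrix @ matrix.T
print(product)
```

The same expressions work unchanged on arrays with millions of elements, which is where the C-optimized implementation pays off.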

Pandas

In this author’s opinion, Python is THE platform for data science mostly because of Pandas. Built on top of numpy, Pandas makes it easy to prepare and display data. You can think of it as a no-UI spreadsheet, but ready to work with much larger datasets. Pandas helps with data retrieval from a SQL database, CSV or other types of files, manipulation of columns and rows, data filtering and, to some extent, data visualization with matplotlib.

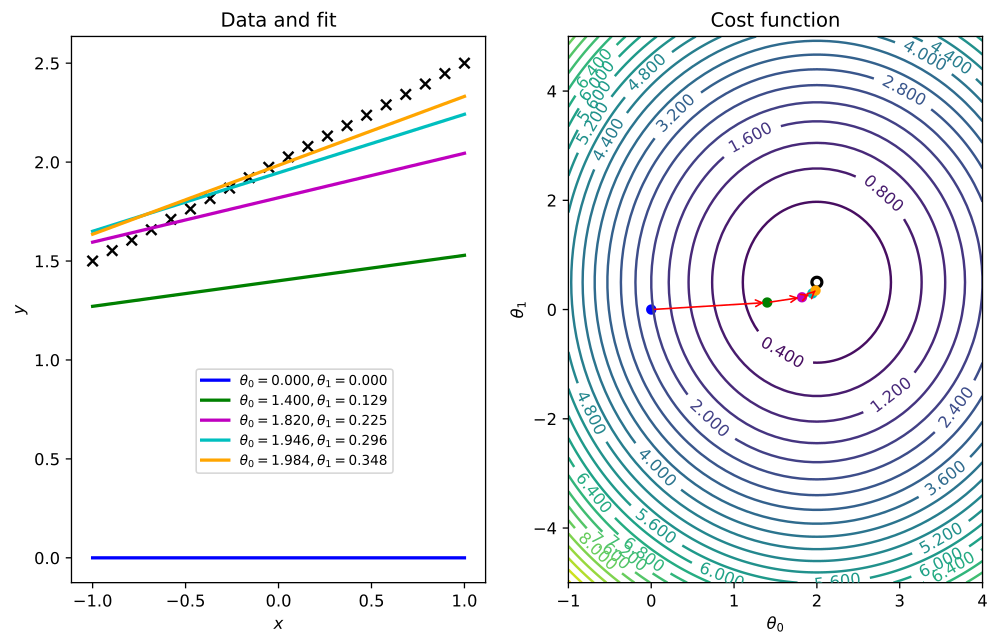
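The kind of work described above can be sketched in a few lines. The house data here is invented for the example and is read from an in-memory CSV string rather than a real file or database.

```python
import io

import pandas as pd

# Invented sample data, standing in for a CSV file on disk.
csv_text = """city,bedrooms,price
Springfield,2,150000
Springfield,3,210000
Shelbyville,2,120000
Shelbyville,4,260000
"""

houses = pd.read_csv(io.StringIO(csv_text))

# Column manipulation: derive a new column from two existing ones.
houses["price_per_bedroom"] = houses["price"] / houses["bedrooms"]

# Row filtering: keep only houses with three or more bedrooms.
large = houses[houses["bedrooms"] >= 3]

# Aggregation: average price per city.
mean_price = houses.groupby("city")["price"].mean()

print(large)
print(mean_price)
```

In a notebook, leaving a DataFrame as the last expression of a cell renders it as a formatted table, which is much of what makes Pandas pleasant for exploratory work.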

Matplotlib

Matplotlib is a library for plotting 2D and 3D data. It has great support for annotations, labels and overlays in graphics.

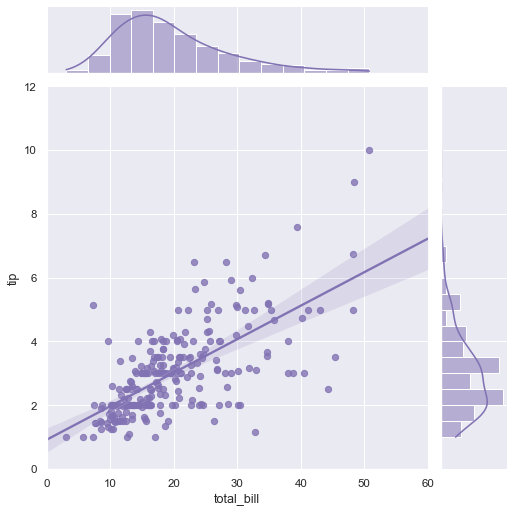
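Here is a minimal sketch of a labeled and annotated plot. It selects the non-interactive Agg backend and saves to a temporary PNG file so that it also runs outside Jupyter; inside a notebook you would normally skip those two steps and let the figure render inline. The file name sine.png is arbitrary.

```python
import math
import tempfile
from pathlib import Path

import matplotlib
matplotlib.use("Agg")  # render to a file; no display is needed
import matplotlib.pyplot as plt

# Sample points of sin(x) between 0 and roughly 2*pi.
xs = [i / 10 for i in range(63)]
ys = [math.sin(x) for x in xs]

fig, ax = plt.subplots()
ax.plot(xs, ys, label="sin(x)")
ax.set_xlabel("x")
ax.set_ylabel("sin(x)")
ax.set_title("A labeled line plot")

# An annotation with an arrow pointing at the maximum of the curve.
ax.annotate("peak", xy=(math.pi / 2, 1.0), xytext=(3.0, 0.8),
            arrowprops={"arrowstyle": "->"})
ax.legend()

out_path = Path(tempfile.mkdtemp()) / "sine.png"  # arbitrary file name
fig.savefig(out_path)
plt.close(fig)
print(out_path)
```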

Seaborn

Built on top of matplotlib, Seaborn’s graphics are optimized for a more statistical comprehension of data. It automatically displays regression lines or Gauss curve approximations of plotted data.

StatsModels

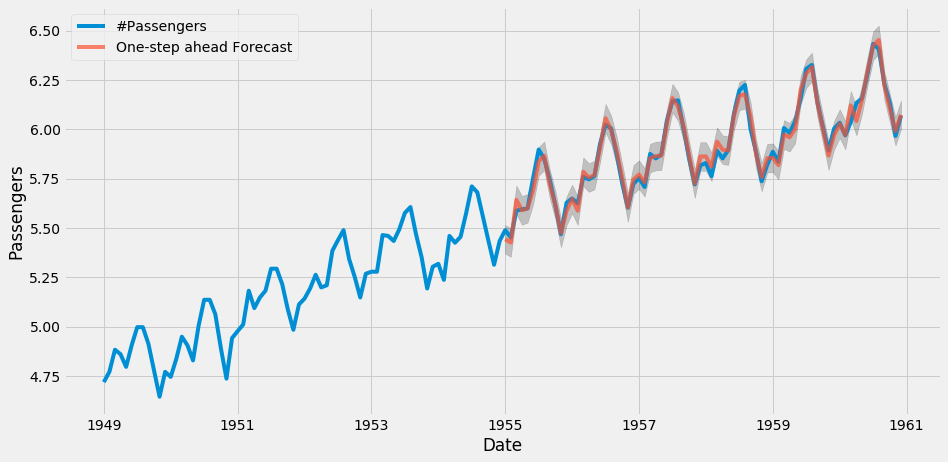

StatsModels provides algorithms for statistical and econometric data analysis, such as linear and logistic regressions. StatsModels is also home to the classical family of time series algorithms known as ARIMA.

Scikit-learn

The central piece of the machine-learning ecosystem, scikit-learn provides predictor algorithms for regression (Elasticnet, Gradient Boosting, Random Forest etc.), classification, and clustering (K-means, DBSCAN etc.). It features a very well designed API. Scikit-learn also has classes for advanced data manipulation, splitting datasets into train and test parts, dimensionality reduction and data pipeline preparation.
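To give a feel for that API, the sketch below splits a dataset into train and test parts, chains a scaling step and a classifier into a pipeline, and scores the result. The data is synthetic, and the choice of StandardScaler with LogisticRegression is just one possible combination picked for illustration.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic two-class dataset: 500 rows, 10 numeric features.
X, y = make_classification(n_samples=500, n_features=10, n_informative=5,
                           class_sep=2.0, random_state=0)

# Hold out 25% of the rows to measure how well the model generalizes.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0)

# A pipeline applies each step in order: scale the features, then classify.
model = make_pipeline(StandardScaler(), LogisticRegression())
model.fit(X_train, y_train)

# For classifiers, score() returns the accuracy on the given data.
accuracy = model.score(X_test, y_test)
print(f"test accuracy: {accuracy:.2f}")
```

Nearly every estimator follows the same fit, predict and score pattern, so swapping in a different algorithm usually means changing a single line.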

XGBoost

XGBoost is one of the most advanced regressors and classifiers in use nowadays. It’s not part of scikit-learn, but it adheres to scikit-learn’s API. XGBoost is not packaged for Fedora and should be installed with pip. It can be accelerated with your NVIDIA GPU, but not through its pip package; for that you need to compile it yourself against CUDA. Install the pip version with:

$ pip3 install xgboost --user

Imbalanced Learn

imbalanced-learn provides ways to under-sample and over-sample data. It is useful in fraud detection scenarios, where the amount of known fraud data is very small compared to non-fraud data. In these cases the known fraud data needs to be augmented so that it carries more weight when training predictors. Install it with pip:

$ pip3 install imbalanced-learn --user

NLTK

The Natural Language Toolkit, or NLTK, helps you work with human language data for purposes such as building chatbots.

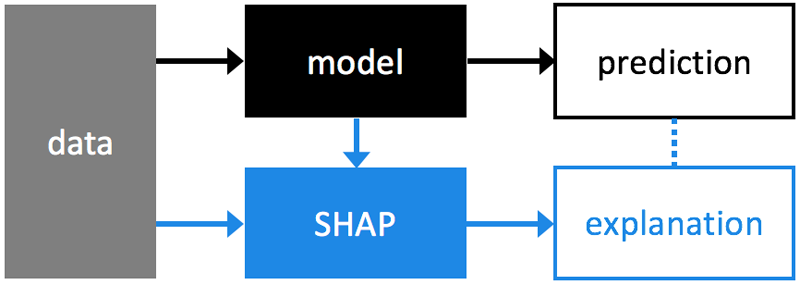

SHAP

Machine learning algorithms are very good at predicting, but they aren’t good at explaining why they made a prediction. SHAP addresses that by analyzing trained models.

Install it with pip:

$ pip3 install shap --user

Keras

Keras is a library for deep learning and neural networks. Install h5py from the Fedora repositories first, then install Keras itself with pip:

$ sudo dnf install python3-h5py

$ pip3 install keras --user

TensorFlow

TensorFlow is a popular neural networks builder. Install it with pip:

$ pip3 install tensorflow --user

Photo courtesy of FolsomNatural on Flickr (CC BY-SA 2.0).

via: https://fedoramagazine.org/jupyter-and-data-science-in-fedora/

Author: Avi Alkalay. Topic selection: lujun9972.