Why simplicity is critical to delivering sturdy applications

In the previous articles in this series, I explained why tackling coding problems all at once, as if they were hordes of zombies, is a mistake. I use a helpful acronym to explain why it's better to approach problems incrementally. ZOMBIES stands for:

- Z – Zero
- O – One
- M – Many (or more complex)
- B – Boundary behaviors
- I – Interface definition
- E – Exercise exceptional behavior
- S – Simple scenarios, simple solutions

In the first four articles in this series, I demonstrated the first six ZOMBIES principles. The first article implemented Zero, which provides the simplest possible path through your code. The second article performed tests with One and Many samples, the third article looked at Boundaries and Interfaces, and the fourth examined Exceptional behavior. In this article, I'll look at the final letter in the acronym: S, which stands for "simple scenarios, simple solutions."

Simple scenarios, simple solutions in action

If you go back and examine all the steps taken to implement the shopping API in this series, you'll see a purposeful decision to always stick to the simplest possible scenarios. In the process, you end up with the simplest possible solutions.

There you have it: ZOMBIES help you deliver sturdy, elegant solutions by adhering to simplicity.

Victory?

It might seem that you're done here, and a less conscientious engineer would very likely declare victory. But enlightened engineers always probe a bit deeper.

One exercise I always recommend is mutation testing. Before you wrap up this exercise and go on to fight new battles, it is wise to give your solution a good shakeout by letting a tool make small, deliberate changes (mutants) to your code and checking whether your tests catch each one. Besides, you have to admit that mutation fits well in a battle against zombies.

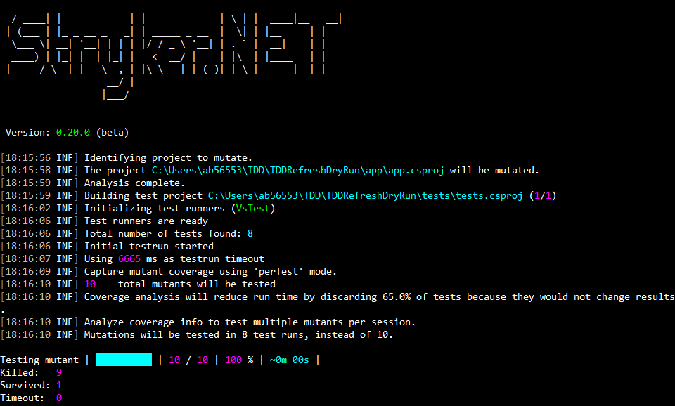

Use the open source Stryker.NET to run mutation tests.

It looks like you have one surviving mutant! That's not a good sign.

What does this mean? Just when you thought you had a rock-solid, sturdy solution, Stryker.NET is telling you that not everything is rosy in your neck of the woods.

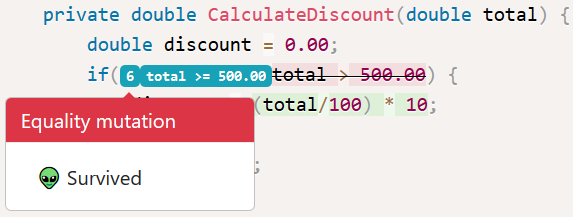

Take a look at the pesky mutant that survived:

The mutation testing tool took the statement:

```csharp
if (total > 500.00) {
```

and mutated it to:

```csharp
if (total >= 500.00) {
```

Then it ran the tests and found that none of them failed after the change. When the processing logic changes and no test fails, you have a surviving mutant.
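To see why no test noticed, here is a minimal C# sketch. It assumes a hypothetical `GrandTotal` function that applies the 10% discount for totals over $500 described later in this article; it is not the series' actual code. It evaluates the original condition and the mutant side by side for totals like those the existing tests use, plus an assumed $600 order, and then for the threshold itself.

```csharp
using System;

// Hypothetical reconstruction of the rule under test (not the series' actual code):
// a 10% discount applies only when the total is strictly greater than $500.
static double GrandTotal(double total) => total > 500.00 ? total * 0.90 : total;

// The surviving mutant: the same rule with ">" replaced by ">=".
static double MutantGrandTotal(double total) => total >= 500.00 ? total * 0.90 : total;

// Totals like those the existing tests use (plus an assumed $600 order), then the threshold itself.
foreach (var amount in new[] { 0.00, 10.00, 30.00, 600.00, 500.00 })
{
    double original = GrandTotal(amount);
    double mutant = MutantGrandTotal(amount);
    string verdict = original == mutant ? "same" : "DIFFERENT";
    Console.WriteLine($"{amount,7:F2}: original {original,7:F2}, mutant {mutant,7:F2} ({verdict})");
}
```

Every total below or above the threshold produces the same result under both versions, so a suite that only checks such totals cannot tell them apart; only a total of exactly $500.00 does.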

Why mutation matters

Why is a surviving mutant a sign of trouble? It's because the processing logic you craft governs the behavior of your system. If the processing logic changes, the behavior should change, too. And if the behavior changes, the expectations encoded in the tests should be violated. If these expectations are not violated, that means that the expectations are not precise enough. You have a loophole in your processing logic.

To fix this, you need to "kill" the surviving mutant. How do you do that? Typically, the fact that a mutant survived means at least one expectation is missing.

Review your tests to see which expectation, if any, is missing:

- You clearly defined the expectation that a newly created basket has zero items (and, by implication, has a $0 grand total).

- You also defined the expectation that adding one item will result in the basket having one item, and if the item price is $10, the grand total will be $10.

- Furthermore, you defined the expectation that adding two items to the basket, one item priced at $10 and the other at $20, results in a grand total of $30.

- You also declared expectations regarding the removal of items from the basket.

- Finally, you defined the expectation that any order total greater than $500 results in a price discount. The business policy rule dictates that in such a case, the discount is 10% of the order's total price.
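Pulled together, those expectations describe code along the lines of the following C# sketch. It is a hypothetical, minimal stand-in for the shopping API, not the author's implementation: the `basket` list, the `RemoveItem(string)` signature, and the top-level-statement layout are assumptions, while `AddItem(Hashtable)` and `CalculateGrandTotal()` follow the calls made in this article's microtests.

```csharp
using System;
using System.Collections;
using System.Collections.Generic;

// Hypothetical, minimal stand-in for the series' shopping API (not the author's code).
// Each item is a Hashtable mapping an item ID to its price, matching the article's AddItem calls.
var basket = new List<Hashtable>();

void AddItem(Hashtable item) => basket.Add(item);

// Hypothetical removal by item ID; the series' actual removal API may differ.
void RemoveItem(string itemId) => basket.RemoveAll(item => item.ContainsKey(itemId));

double CalculateGrandTotal()
{
    double total = 0.00;                    // Zero: an empty basket totals $0
    foreach (var item in basket)            // One/Many: add up every price
        foreach (DictionaryEntry entry in item)
            total += (double)entry.Value;
    if (total > 500.00) {                   // the kind of statement Stryker.NET mutated
        total *= 0.90;                      // 10% discount above the threshold
    }
    return total;
}

AddItem(new Hashtable { { "0001", 10.00 } });
AddItem(new Hashtable { { "0002", 20.00 } });
Console.WriteLine(CalculateGrandTotal()); // 30
RemoveItem("0002");
Console.WriteLine(CalculateGrandTotal()); // 10
```

Nothing in this sketch pins down what happens at exactly $500, which is precisely the gap the mutant exploits.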

What is missing? According to the mutation testing report, you never defined an expectation regarding what business policy rule applies when the order total is exactly $500. You defined what happens if the order total is greater than the $500 threshold and what happens when the order total is less than $500.
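The following C# sketch imitates, in a few lines, what a mutation-testing tool does with this gap; it is a hand-rolled illustration, not how Stryker.NET works internally. The discount comparison is passed in as a function so that a few hypothetical operator swaps can stand in for mutants, and the (total, expected) pairs are illustrative stand-ins for the expectations listed above, with $600 as an assumed over-threshold order.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical discount rule with the comparison passed in, so the operator can be swapped.
// The shipped code corresponds to (a, b) => a > b.
static double GrandTotal(double total, Func<double, double, bool> qualifies) =>
    qualifies(total, 500.00) ? total * 0.90 : total;

// A few illustrative operator swaps (mutants); Stryker.NET chooses its own set of mutations.
var mutants = new Dictionary<string, Func<double, double, bool>>
{
    [">="] = (a, b) => a >= b,
    ["<"] = (a, b) => a < b,
    ["<="] = (a, b) => a <= b,
    ["=="] = (a, b) => a == b,
};

// Illustrative (basket total, expected grand total) pairs: empty basket, $10, $10 + $20,
// and an assumed $600 order that earns the 10% discount.
var expectations = new List<(double Total, double Expected)>
{
    (0.00, 0.00), (10.00, 10.00), (30.00, 30.00), (600.00, 540.00),
};

// A mutant survives if every expectation still holds when it replaces the original operator.
List<string> Survivors(List<(double Total, double Expected)> tests) =>
    mutants
        .Where(m => tests.All(t => Math.Abs(GrandTotal(t.Total, m.Value) - t.Expected) < 1e-9))
        .Select(m => m.Key)
        .ToList();

void Report(List<(double Total, double Expected)> tests)
{
    var survivors = Survivors(tests);
    Console.WriteLine(survivors.Count == 0
        ? "All mutants killed"
        : "Surviving mutants: " + string.Join(", ", survivors));
}

Report(expectations);                 // Surviving mutants: >=
expectations.Add((500.00, 500.00));   // the missing expectation: exactly $500, no discount
Report(expectations);                 // All mutants killed
```

Every swap except `>=` breaks at least one existing expectation; only the exactly-$500 case exposes `>=`, which mirrors the outcome this article reaches with Stryker.NET.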

Define this edge-case expectation:

```csharp
[Fact]
public void Add2ItemsTotal500GrandTotal500() {
    var expectedGrandTotal = 500.00;
    // Fake it first: hard-code an actual value so the new test fails.
    var actualGrandTotal = 450.00;
    Assert.Equal(expectedGrandTotal, actualGrandTotal);
}
```

The first attempt fakes the actual value so that the test fails. You now have nine microtests; eight pass, and the ninth fails:

```
[xUnit.net 00:00:00.57] tests.UnitTest1.Add2ItemsTotal500GrandTotal500 [FAIL]
X tests.UnitTest1.Add2ItemsTotal500GrandTotal500 [2ms]
Error Message:
Assert.Equal() Failure
Expected: 500
Actual: 450
[...]
Test Run Failed.
Total tests: 9
Passed: 8
Failed: 1
Total time: 1.5920 Seconds
```

Now replace the hard-coded value with a concrete example that exercises the shopping API:

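```csharp
[Fact]
public void Add2ItemsTotal500GrandTotal500() {
    var expectedGrandTotal = 500.00;
    // Hashtable comes from System.Collections; shoppingAPI is the API
    // instance the test class already uses.
    Hashtable item1 = new Hashtable();
    item1.Add("0001", 400.00);
    shoppingAPI.AddItem(item1);
    Hashtable item2 = new Hashtable();
    item2.Add("0002", 100.00);
    shoppingAPI.AddItem(item2);
    var actualGrandTotal = shoppingAPI.CalculateGrandTotal();
    // Exactly $500 is not over the threshold, so no discount applies.
    Assert.Equal(expectedGrandTotal, actualGrandTotal);
}
```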

You added two items, one priced at $400, the other at $100, totaling $500. After calculating the grand total, you expect that it will be $500.

Run the tests again. All nine pass!

```
Total tests: 9
Passed: 9
Failed: 0
Total time: 1.0440 Seconds
```

Now for the moment of truth. Will this new expectation kill all the mutants? Run mutation testing again and check the results:

Success! All 10 mutants were killed. Great job; you can now ship this API with confidence.

Epilogue

If there is one takeaway from this exercise, it's the emerging concept of skillful procrastination. It's an essential concept, because many of us tend to rush mindlessly into envisioning the solution before our customers have even finished describing their problem.

Positive procrastination

Procrastination doesn't come easily to software engineers. We're eager to get our hands dirty with the code. We know by heart numerous design patterns, anti-patterns, principles, and ready-made solutions. We're itching to put them into executable code, and we lean toward doing it in large batches. So it is indeed a virtue to hold our horses and carefully consider each step we take.

This exercise shows how ZOMBIES help you take many small, deliberate steps toward a solution. It's one thing to be aware of and agree with the YAGNI ("You aren't gonna need it") principle, but in the heat of battle, those considerations often fly out the window, and you end up throwing in everything and the kitchen sink. That produces bloated, tightly coupled systems.

Iteration and incrementation

Another essential takeaway from this exercise is the realization that the only way to keep a system working at all times is to adopt an iterative approach. You developed the shopping API with some rework; that is, you proceeded by changing code you had already changed. This rework is unavoidable when iterating on a solution.

One problem many teams experience is confusion between iteration and incrementation. These two concepts are fundamentally different.

An incremental approach is based on the idea that you hold a crisp set of requirements (or a blueprint) in your hand, and you build the solution by working incrementally. Basically, you build it piece by piece, and when all the pieces are ready, you put them together, and voilà! The solution is ready to be shipped!

In contrast, in an iterative approach, you are less certain that you know all that needs to be known to deliver the expected value to the paying customer. Because of that realization, you proceed gingerly. You're wary of breaking a system that already works (i.e., a system in a steady state). If you disturb that balance, you always try to disturb it in the least intrusive, least invasive manner. You focus on taking the smallest imaginable batches, then quickly wrapping up your work on each batch. You prefer to have the system back in a steady state in a matter of minutes, sometimes even seconds.

That's why an iterative approach so often follows "fake it 'til you make it." You hard-code many values so that you can verify that a tiny change does not stop the system from running. You then make the changes necessary to replace each hard-coded value with real processing.
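A minimal C# sketch of that rhythm follows. The `GrandTotalFake` and `GrandTotalReal` functions are hypothetical and take a plain list of item prices, a simplification of the article's Hashtable-based API: the hard-coded version keeps the Zero expectation passing, a new expectation exposes the fake, and real processing replaces the constant.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical step 1, "fake it": a hard-coded result satisfies the first (Zero) expectation
// and keeps the system running while nothing real has been built yet.
static double GrandTotalFake(IReadOnlyList<double> prices) => 0.00;

// Hypothetical step 2, "make it": real processing replaces the constant once an expectation demands it.
static double GrandTotalReal(IReadOnlyList<double> prices) => prices.Sum();

var emptyBasket = new List<double>();
var twoItems = new List<double> { 10.00, 20.00 };

Console.WriteLine(GrandTotalFake(emptyBasket) == 0.00); // True: the fake passes the Zero test
Console.WriteLine(GrandTotalFake(twoItems) == 30.00);   // False: the $10 + $20 expectation exposes the fake
Console.WriteLine(GrandTotalReal(twoItems) == 30.00);   // True: real processing meets it
```

Each step changes one thing, so the system is back in a working state as soon as the new expectation passes.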

As a rule of thumb, in an iterative approach, you aim to craft each expectation (a microtest) so that it precipitates only one improvement to the code. You proceed one improvement at a time, and with each improvement, you exercise the system to make sure it is still in a working state. Proceeding this way, you eventually reach the stage where all the expectations have been met and the code has been refactored so that it leaves no surviving mutants.

Once you get to that state, you can be fairly confident that you can ship the solution.

Many thanks to the inimitable Kent Beck, Ron Jeffries, and GeePaw Hill for being a constant inspiration on my journey to software engineering apprenticeship.

And may your journey be filled with ZOMBIES.

via: https://opensource.com/article/21/2/simplicity

Author: Alex Bunardzic · Selected by: lkxed · Translator: translator ID · Proofreader: proofreader ID