mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-09 01:30:10 +08:00

Merge remote-tracking branch 'LCTT/master'

This commit is contained in:

commit

ff7a7f10e1

76

published/20171218 Internet Chemotherapy.md

Normal file

76

published/20171218 Internet Chemotherapy.md

Normal file

@ -0,0 +1,76 @@

|

||||

互联网化疗

|

||||

======

|

||||

|

||||

> LCTT 译注:本文作者 janit0r 被认为是 BrickerBot 病毒的作者。此病毒会攻击物联网上安全性不足的设备并使其断开和其他网络设备的连接。janit0r 宣称他使用这个病毒的目的是保护互联网的安全,避免这些设备被入侵者用于入侵网络上的其他设备。janit0r 称此项目为“互联网化疗”。janit0r 决定在 2017 年 12 月终止这个项目,并在网络上发表了这篇文章。

|

||||

|

||||

> —— 12/10 2017

|

||||

|

||||

### --[ 1 互联网化疗

|

||||

|

||||

<ruby>互联网化疗<rt>Internet Chemotherapy</rt></ruby>是在 2016 年 11 月 到 2017 年 12 月之间的一个为期 13 个月的项目。它曾被称为 “BrickerBot”、“错误的固件升级”、“勒索软件”、“大规模网络瘫痪”,甚至 “前所未有的恐怖行为”。最后一个有点伤人了,费尔南德斯(LCTT 译注:委内瑞拉电信公司 CANTV 的光纤网络曾在 2017 年 8 月受到病毒攻击,公司董事长曼努埃尔·费尔南德斯称这次攻击为[“前所未有的恐怖行为”][1]),但我想我大概不能让所有人都满意吧。

|

||||

|

||||

你可以从 http://91.215.104.140/mod_plaintext.py 下载我的代码模块,它可以基于 http 和 telnet 发送恶意请求(LCTT 译注:这个链接已经失效,不过在 [Github][2] 上有备份)。因为平台的限制,模块里是代码混淆过的单线程 Python 代码,但<ruby>载荷<rt>payload</rd></ruby>(LCTT 译注:payload,指实质的攻击/利用代码)依然是明文,任何合格的程序员应该都能看得懂。看看这里面有多少载荷、0-day 漏洞和入侵技巧,花点时间让自己接受现实。然后想象一下,如果我是一个黑客,致力于创造出强大的 DDoS 生成器来勒索那些最大的互联网服务提供商(ISP)和公司的话,互联网在 2017 年会受到怎样的打击。我完全可以让他们全部陷入混乱,并同时对整个互联网造成巨大的伤害。

|

||||

|

||||

我的 ssh 爬虫太危险了,不能发布出来。它包含很多层面的自动化,可以只利用一个被入侵的路由器就能够在设计有缺陷的 ISP 的网络上平行移动并加以入侵。正是因为我可以征用数以万计的 ISP 的路由器,而这些路由器让我知晓网络上发生的事情并给我提供源源不断的节点用来进行入侵行动,我才得以进行我的反物联网僵尸网络项目。我于 2015 年开始了我的非破坏性的 ISP 的网络清理项目,于是当 Mirai 病毒入侵时我已经做好了准备来做出回应。主动破坏其他人的设备仍然是一个困难的决定,但无比危险的 CVE-2016-10372 漏洞让我别无选择。从那时起我就决定一不做二不休。

|

||||

|

||||

(LCTT 译注:上一段中提到的 Mirai 病毒首次出现在 2016 年 8 月。它可以远程入侵运行 Linux 系统的网络设备并利用这些设备构建僵尸网络。本文作者 janit0r 宣称当 Mirai 入侵时他利用自己的 BrickerBot 病毒强制将数以万计的设备从网络上断开,从而减少 Mirai 病毒可以利用的设备数量。)

|

||||

|

||||

我在此警告你们,我所做的只是权宜之计,它并不足以在未来继续拯救互联网。坏人们正变得更加聪明,潜在存在漏洞的设备数量在持续增加,发生大规模的、能使网络瘫痪的事件只是时间问题。如果你愿意相信,我曾经在一个持续 13 个月的项目中使上千万有漏洞的设备变得无法使用,那么不过分地说,如此严重的事件本已经在 2017 年发生了。

|

||||

|

||||

__你们应该意识到,只需要再有一两个严重的物联网漏洞,我们的网络就会严重瘫痪。__ 考虑到我们的社会现在是多么依赖数字网络,而计算机安全应急响应组(CERT)、ISP 们和政府们又是多么地忽视这种问题的严重性,这种事件造成的伤害是无法估计的。ISP 在持续地部署暴露了控制端口的设备,而且即使像 Shodan 这样的服务可以轻而易举地发现这些问题,国家 CERT 还是似乎并不在意。而很多国家甚至都没有自己的 CERT 。世界上许多最大的 ISP 都没有雇佣任何熟知计算机安全问题的人,而是在出现问题的时候依赖于外来的专家来解决。我曾见识过大型 ISP 在我的僵尸网络的调节之下连续多个月持续受损,但他们还是不能完全解决漏洞(几个好的例子是 BSNL、Telkom ZA、PLDT、某些时候的 PT Telkom,以及南半球大部分的大型 ISP )。只要看看 Telkom ZA 解决他们的 Aztech 调制解调器问题的速度有多慢,你就会开始理解现状有多么令人绝望。在 99% 的情况下,要解决这个问题只需要 ISP 部署合理的访问控制列表,并把部署在用户端的设备(CPE)单独分段就行,但是几个月过去之后他们的技术人员依然没有弄明白。如果 ISP 在经历了几周到几个月的针对他们设备的蓄意攻击之后仍然无法解决问题,我们又怎么能期望他们会注意到并解决 Mirai 在他们网络上造成的问题呢?世界上许多最大的 ISP 对这些事情无知得令人发指,而这毫无疑问是最大的危险,但奇怪的是,这应该也是最容易解决的问题。

|

||||

|

||||

我已经尽自己的责任试着去让互联网多坚持一段时间,但我已经尽力了。接下来要交给你们了。即使很小的行动也是非常重要的。你们能做的事情有:

|

||||

|

||||

* 使用像 Shodan 之类的服务来检查你的 ISP 的安全性,并驱使他们去解决他们网络上开放的 telnet、http、httpd、ssh 和 tr069 等端口。如果需要的话,可以把这篇文章给他们看。从来不存在什么好的理由来让这些端口可以从外界访问。暴露控制端口是业余人士的错误。如果有足够的客户抱怨,他们也许真的会采取行动!

|

||||

* 用你的钱包投票!拒绝购买或使用任何“智能”产品,除非制造商保证这个产品能够而且将会收到及时的安全更新。在把你辛苦赚的钱交给提供商之前,先去查看他们的安全记录。为了更好的安全性,可以多花一些钱。

|

||||

* 游说你本地的政治家和政府官员,让他们改进法案来规范物联网设备,包括路由器、IP 照相机和各种“智能”设备。不论私有还是公有的公司目前都没有足够的动机去在短期内解决该问题。这件事情和汽车或者通用电器的安全标准一样重要。

|

||||

* 考虑给像 GDI 基金会或者 Shadowserver 基金会这种缺少支持的白帽黑客组织贡献你的时间或者其他资源。这些组织和人能产生巨大的影响,并且他们可以很好地发挥你的能力来帮助互联网。

|

||||

* 最后,虽然希望不大,但可以考虑通过设立法律先例来让物联网设备成为一种“<ruby>诱惑性危险品<rt>attractive nuisance</rt></ruby>”(LCTT 译注:attractive nuisance 是美国法律中的一个原则,意思是如果儿童在私人领地上因为某些对儿童有吸引力的危险物品而受伤,领地的主人需要负责,无论受伤的儿童是否是合法进入领地)。如果一个房主可以因为小偷或者侵入者受伤而被追责,我不清楚为什么设备的主人(或者 ISP 和设备制造商)不应该因为他们的危险的设备被远程入侵所造成的伤害而被追责。连带责任原则应该适用于对设备应用层的入侵。如果任何有钱的大型 ISP 不愿意为设立这样的先例而出钱(他们也许的确不会,因为他们害怕这样的先例会反过来让自己吃亏),我们甚至可以在这里还有在欧洲为这个行动而进行众筹。 ISP 们:把你们在用来应对 DDoS 的带宽上省下的可观的成本当做我为这个目标的间接投资,也当做它的好处的证明吧。

|

||||

|

||||

### --[ 2 时间线

|

||||

|

||||

下面是这个项目中一些值得纪念的事件:

|

||||

|

||||

* 2016 年 11 月底的德国电信 Mirai 事故。我匆忙写出的最初的 TR069/64 请求只执行了 `route del default`,不过这已经足够引起 ISP 去注意这个问题,而它引发的新闻头条警告了全球的其他 ISP 来注意这个迫近的危机。

|

||||

* 大约 1 月 11 日 到 12 日,一些位于华盛顿特区的开放了 6789 控制端口的硬盘录像机被 Mirai 入侵并瘫痪,这上了很多头条新闻。我要给 Vemulapalli 点赞,她居然认为 Mirai 加上 `/dev/urandom` 一定是“非常复杂的勒索软件”(LCTT 译注:Archana Vemulapalli 当时是华盛顿市政府的 CTO)。欧洲的那两个可怜人又怎么样了呢?

|

||||

* 2017 年 1 月底发生了第一起真正的大规模 ISP 下线事件。Rogers Canada 的提供商 Hitron 非常粗心地推送了一个在 2323 端口上监听的无验证的 root shell(这可能是一个他们忘记关闭的 debug 接口)。这个惊天的失误很快被 Mirai 的僵尸网络所发现,造成大量设备瘫痪。

|

||||

* 在 2017 年 2 月,我注意到 Mirai 在这一年里的第一次扩张,Netcore/Netis 以及 Broadcom 的基于 CLI (命令行接口)的调制解调器都遭受了攻击。BCM CLI 后来成为了 Mirai 在 2017 年的主要战场,黑客们和我自己都在这一年的余下时间里花大量时间寻找无数 ISP 和设备制造商设置的默认密码。前面代码中的“broadcom” 载荷也许看上去有点奇怪,但它们是统计角度上最可能禁用那些大量的有问题的 BCM CLI 固件的序列。

|

||||

* 在 2017 年 3 月,我大幅提升了我的僵尸网络的节点数量并开始加入更多的网络载荷。这是为了应对包括 Imeij、Amnesia 和 Persirai 在内的僵尸网络的威胁。大规模地禁用这些被入侵的设备也带来了新的一些问题。比如在 Avtech 和 Wificam 设备所泄露的登录信息当中,有一些用户名和密码非常像是用于机场和其他重要设施的,而英国政府官员大概在 2017 年 4 月 1 日关于针对机场和核设施的“实际存在的网络威胁”做出过警告。哎呀。

|

||||

* 这种更加激进的扫描还引起了民间安全研究者的注意,安全公司 Radware 在 2017 年 4 月 6 日发表了一篇关于我的项目的文章。这个公司把它叫做“BrickerBot”。显然,如果我要继续增加我的物联网防御措施的规模,我必须想出更好的网络映射与检测方法来应对蜜罐或者其他有风险的目标。

|

||||

* 2017 年 4 月 11 日左右的时候发生了一件非常不寻常的事情。一开始这看上去和许多其他的 ISP 下线事件相似,一个叫 Sierra Tel 的半本地 ISP 在一些 Zyxel 设备上使用了默认的 telnet 用户名密码 supervisor/zyad1234。一个 Mirai 运行器发现了这些有漏洞的设备,我的僵尸网络紧随其后,2017 年精彩绝伦的 BCM CLI 战争又开启了新的一场战斗。这场战斗并没有持续很久。它本来会和 2017 年的其他数百起 ISP 下线事件一样,如果不是在尘埃落定之后发生的那件非常不寻常的事情的话。令人惊奇的是,这家 ISP 并没有试着把这次网络中断掩盖成某种网络故障、电力超额或错误的固件升级。他们完全没有对客户说谎。相反,他们很快发表了新闻公告,说他们的调制解调器有漏洞,这让他们的客户们得以评估自己可能遭受的风险。这家全世界最诚实的 ISP 为他们值得赞扬的公开行为而收获了什么呢?悲哀的是,它得到的只是批评和不好的名声。这依然是我记忆中最令人沮丧的“为什么我们得不到好东西”的例子,这很有可能也是为什么 99% 的安全错误都被掩盖而真正的受害者被蒙在鼓里的最主要原因。太多时候,“有责任心的信息公开”会直接变成“粉饰太平”的委婉说法。

|

||||

* 在 2017 年 4 月 14 日,国土安全部关于“BrickerBot 对物联网的威胁”做出了警告,我自己的政府把我作为一个网络威胁这件事让我觉得他们很不公平而且目光短浅。跟我相比,对美国人民威胁最大的难道不应该是那些部署缺乏安全性的网络设备的提供商和贩卖不成熟的安全方案的物联网设备制造商吗?如果没有我,数以百万计的人们可能还在用被入侵的设备和网络来处理银行业务和其他需要保密的交易。如果国土安全部里有人读到这篇文章,我强烈建议你重新考虑一下保护国家和公民究竟是什么意思。

|

||||

* 在 2017 年 4 月底,我花了一些时间改进我的 TR069/64 攻击方法,然后在 2017 年 5 月初,一个叫 Wordfence 的公司(现在叫 Defiant)报道称一个曾给 Wordpress 网站造成威胁的基于入侵 TR069 的僵尸网络很明显地衰减了。值得注意的是,同一个僵尸网络在几星期后使用了一个不同的入侵方式暂时回归了(不过这最终也同样被化解了)。

|

||||

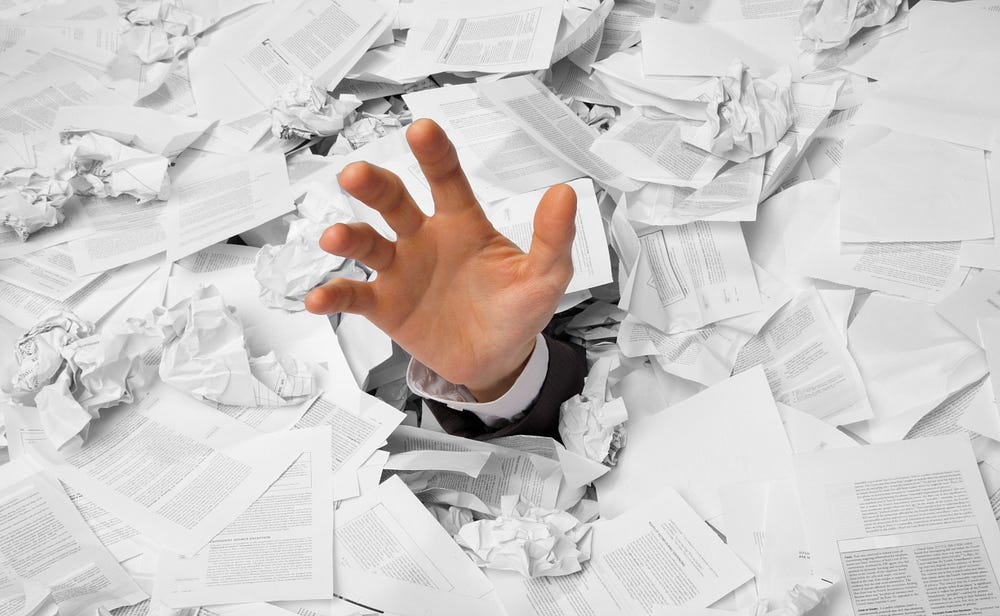

* 在 2017 年 5 月,主机托管公司 Akamai 在它的 2017 年第一季度互联网现状报告中写道,相比于 2016 年第一季度,大型(超过 100 Gbps)DDoS 攻击数减少了 89%,而总体 DDoS 攻击数减少了 30%。鉴于大型 DDoS 攻击是 Mirai 的主要手段,我觉得这给这些月来我在物联网领域的辛苦劳动提供了实际的支持。

|

||||

* 在夏天我持续地改进我的入侵技术军火库,然后在 7 月底我针对亚太互联网络信息中心(APNIC)的 ISP 进行了一些测试。测试结果非常令人吃惊。造成的影响之一是数十万的 BSNL 和 MTNL 调制解调器被禁用,而这次中断事故在印度成为了头条新闻。考虑到当时在印度和中国之间持续升级的地缘政治压力,我觉得这个事故有很大的风险会被归咎于中国所为,于是我很罕见地决定公开承认是我所做。Catalin,我很抱歉你在报道这条新闻之后突然被迫放的“两天的假期”。

|

||||

* 在处理过亚太互联网络信息中心(APNIC)和非洲互联网络信息中心(AfriNIC)的之后,在 2017 年 8 月 9 日我又针对拉丁美洲与加勒比地区互联网络信息中心(LACNIC)进行了大规模的清理,给这个大洲的许多提供商造成了问题。在数百万的 Movilnet 的手机用户失去连接之后,这次攻击在委内瑞拉被大幅报道。虽然我个人反对政府监管互联网,委内瑞拉的这次情况值得注意。许多拉美与加勒比地区的提供商与网络曾在我的僵尸网络的持续调节之下连续数个月逐渐衰弱,但委内瑞拉的提供商很快加强了他们的网络防护并确保了他们的网络设施的安全。我认为这是由于委内瑞拉相比于该地区的其他国家来说进行了更具侵入性的深度包检测。值得思考一下。

|

||||

* F5 实验室在 2017 年 8 月发布了一个题为“狩猎物联网:僵尸物联网的崛起”的报告,研究者们在其中对近期 telnet 活动的平静表达了困惑。研究者们猜测这种活动的减少也许证实了一个或多个非常大型的网络武器正在成型(我想这大概确实是真的)。这篇报告是在我印象中对我的项目的规模最准确的评估,但神奇的是,这些研究者们什么都推断不出来,尽管他们把所有相关的线索都集中到了一页纸上。

|

||||

* 2017 年 8 月,Akamai 的 2017 年第二季度互联网现状报告宣布这是三年以来首个该提供商没有发现任何大规模(超过 100 Gbps)攻击的季度,而且 DDoS 攻击总数相比 2017 年第一季度减少了 28%。这看上去给我的清理工作提供了更多的支持。这个出奇的好消息被主流媒体所完全忽视了,这些媒体有着“流血的才是好新闻”的心态,即使是在信息安全领域。这是我们为什么不能得到好东西的又一个原因。

|

||||

* 在 CVE-2017-7921 和 7923 于 2017 年 9 月公布之后,我决定更密切地关注海康威视公司的设备,然后我惊恐地发现有一个黑客们还没有发现的方法可以用来入侵有漏洞的固件。于是我在 9 月中旬开启了一个全球范围的清理行动。超过一百万台硬盘录像机和摄像机(主要是海康威视和大华出品)在三周内被禁用,然后包括 IPVM.com 在内的媒体为这些攻击写了多篇报道。大华和海康威视在新闻公告中提到或暗示了这些攻击。大量的设备总算得到了固件升级。看到这次清理活动造成的困惑,我决定给这些闭路电视制造商写一篇[简短的总结][3](请原谅在这个粘贴板网站上的不和谐的语言)。这令人震惊的有漏洞而且在关键的安全补丁发布之后仍然在线的设备数量应该能够唤醒所有人,让他们知道现今的物联网补丁升级过程有多么无力。

|

||||

* 2017 年 9 月 28 日左右,Verisign 发表了报告称 2017 年第二季度的 DDoS 攻击数相比第一季度减少了 55%,而且攻击峰值大幅减少了 81%。

|

||||

* 2017 年 11 月 23 日,CDN 供应商 Cloudflare 报道称“近几个月来,Cloudflare 看到试图用垃圾流量挤满我们的网络的简单进攻尝试有了大幅减少”。Cloudflare 推测这可能和他们的政策变化有一定关系,但这些减少也和物联网清理行动有着明显的重合。

|

||||

* 2017 年 11 月底,Akamai 的 2017 年第三季度互联网现状报告称 DDoS 攻击数较前一季度小幅增加了 8%。虽然这相比 2016 年的第三季度已经减少了很多,但这次小幅上涨提醒我们危险仍然存在。

|

||||

* 作为潜在危险的更进一步的提醒,一个叫做“Satori”的新的 Mirai 变种于 2017 年 11 月至 12 月开始冒头。这个僵尸网络仅仅通过一个 0-day 漏洞而达成的增长速度非常值得注意。这起事件凸显了互联网的危险现状,以及我们为什么距离大规模的事故只差一两起物联网入侵。当下一次威胁发生而且没人阻止的时候,会发生什么?Sinkholing 和其他的白帽或“合法”的缓解措施在 2018 年不会有效,就像它们在 2016 年也不曾有效一样。也许未来各国政府可以合作创建一个国际范围的反黑客特别部队来应对特别严重的会影响互联网存续的威胁,但我并不抱太大期望。

|

||||

* 在年末出现了一些危言耸听的新闻报道,有关被一个被称作“Reaper”和“IoTroop”的新的僵尸网络。我知道你们中有些人最终会去嘲笑那些把它的规模估算为一两百万的人,但你们应该理解这些网络安全研究者们对网络上发生的事情以及不由他们掌控的硬件的事情都是一知半解。实际来说,研究者们不可能知道或甚至猜测到大部分有漏洞的设备在僵尸网络出现时已经被禁用了。给“Reaper”一两个新的未经处理的 0-day 漏洞的话,它就会变得和我们最担心的事情一样可怕。

|

||||

|

||||

### --[ 3 临别赠言

|

||||

|

||||

我很抱歉把你们留在这种境况当中,但我的人身安全受到的威胁已经不允许我再继续下去。我树了很多敌人。如果你想要帮忙,请看前面列举的该做的事情。祝你好运。

|

||||

|

||||

也会有人批评我,说我不负责任,但这完全找错了重点。真正的重点是如果一个像我一样没有黑客背景的人可以做到我所做到的事情,那么一个比我厉害的人可以在 2017 年对互联网做比这要可怕太多的事情。我并不是问题本身,我也不是来遵循任何人制定的规则的。我只是报信的人。你越早意识到这点越好。

|

||||

|

||||

-Dr Cyborkian 又名 janit0r,“病入膏肓”的设备的调节者。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via:https://ghostbin.com/paste/q2vq2

|

||||

|

||||

作者:janit0r

|

||||

译者:[yixunx](https://github.com/yixunx)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,

|

||||

[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[1]:https://www.telecompaper.com/news/venezuelan-operators-hit-by-unprecedented-cyberattack--1208384

|

||||

[2]:https://github.com/JeremyNGalloway/mod_plaintext.py

|

||||

[3]:http://depastedihrn3jtw.onion.link/show.php?md5=62d1d87f67a8bf485d43a05ec32b1e6f

|

||||

133

sources/talk/20180201 Custom Embedded Linux Distributions.md

Normal file

133

sources/talk/20180201 Custom Embedded Linux Distributions.md

Normal file

@ -0,0 +1,133 @@

|

||||

Custom Embedded Linux Distributions

|

||||

======

|

||||

### Why Go Custom?

|

||||

|

||||

In the past, many embedded projects used off-the-shelf distributions and stripped them down to bare essentials for a number of reasons. First, removing unused packages reduced storage requirements. Embedded systems are typically shy of large amounts of storage at boot time, and the storage available, in non-volatile memory, can require copying large amounts of the OS to memory to run. Second, removing unused packages reduced possible attack vectors. There is no sense hanging on to potentially vulnerable packages if you don't need them. Finally, removing unused packages reduced distribution management overhead. Having dependencies between packages means keeping them in sync if any one package requires an update from the upstream distribution. That can be a validation nightmare.

|

||||

|

||||

Yet, starting with an existing distribution and removing packages isn't as easy as it sounds. Removing one package might break dependencies held by a variety of other packages, and dependencies can change in the upstream distribution management. Additionally, some packages simply cannot be removed without great pain due to their integrated nature within the boot or runtime process. All of this takes control of the platform outside the project and can lead to unexpected delays in development.

|

||||

|

||||

A popular alternative is to build a custom distribution using build tools available from an upstream distribution provider. Both Gentoo and Debian provide options for this type of bottom-up build. The most popular of these is probably the Debian debootstrap utility. It retrieves prebuilt core components and allows users to cherry-pick the packages of interest in building their platforms. But, debootstrap originally was only for x86 platforms. Although there are ARM (and possibly other) options now, debootstrap and Gentoo's catalyst still take dependency management away from the local project.

|

||||

|

||||

Some people will argue that letting someone else manage the platform software (like Android) is much easier than doing it yourself. But, those distributions are general-purpose, and when you're sitting on a lightweight, resource-limited IoT device, you may think twice about any any advantage that is taken out of your hands.

|

||||

|

||||

### System Bring-Up Primer

|

||||

|

||||

A custom Linux distribution requires a number of software components. The first is the toolchain. A toolchain is a collection of tools for compiling software, including (but not limited to) a compiler, linker, binary manipulation tools and standard C library. Toolchains are built specifically for a target hardware device. A toolchain built on an x86 system that is intended for use with a Raspberry Pi is called a cross-toolchain. When working with small embedded devices with limited memory and storage, it's always best to use a cross-toolchain. Note that even applications written for a specific purpose in a scripted language like JavaScript will need to run on a software platform that needs to be compiled with a cross-toolchain.

|

||||

|

||||

|

||||

|

||||

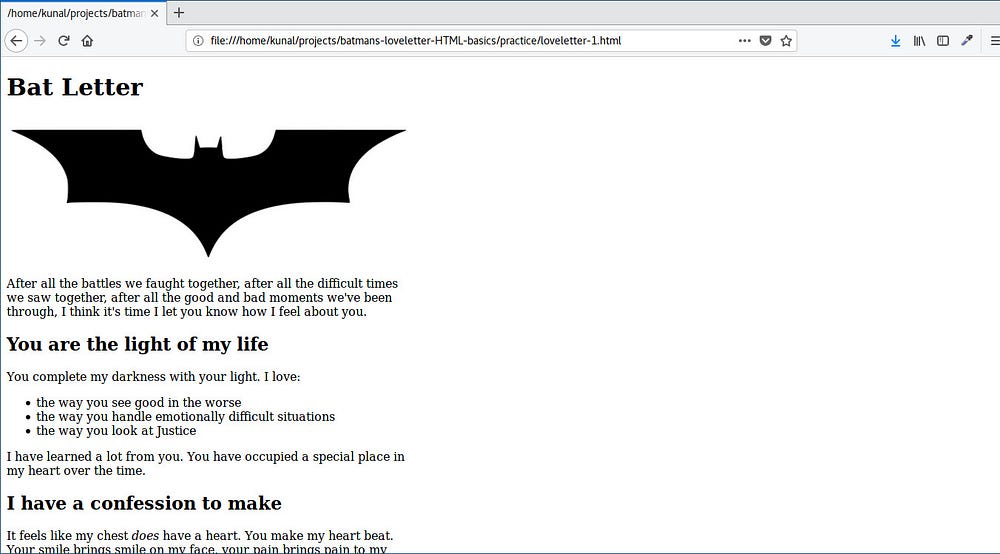

Figure 1\. Compile Dependencies and Boot Order

|

||||

|

||||

The cross-toolchain is used to build software components for the target hardware. The first component needed is a bootloader. When power is applied to a board, the processor (depending on design) attempts to jump to a specific memory location to start running software. That memory location is where a bootloader is stored. Hardware can have a built-in bootloader that can be run directly from its storage location or it may be copied into memory first before it is run. There also can be multiple bootloaders. A first-stage bootloader would reside on the hardware in NAND or NOR flash, for example. Its sole purpose would be to set up the hardware so a second-stage bootloader, such as one stored on an SD card, can be loaded and run.

|

||||

|

||||

Bootloaders have enough knowledge to get the hardware to the point where it can load Linux into memory and jump to it, effectively handing control over to Linux. Linux is an operating system. This means that, by design, it doesn't actually do anything other than monitor the hardware and provide services to higher layer software—aka applications. The [Linux kernel][1] often is accompanied by a variety of firmware blobs. These are software objects that have been precompiled, often containing proprietary IP (intellectual property) for devices used with the hardware platform. When building a custom distribution, it may be necessary to acquire any firmware blobs not provided by the Linux kernel source tree before beginning compilation of the kernel.

|

||||

|

||||

Applications are stored in the root filesystem. The root filesystem is constructed by compiling and collecting a variety of software libraries, tools, scripts and configuration files. Collectively, these all provide the services, such as network configuration and USB device mounting, required by applications the project will run.

|

||||

|

||||

In summary, a complete system build requires the following components:

|

||||

|

||||

1. A cross-toolchain.

|

||||

|

||||

2. One or more bootloaders.

|

||||

|

||||

3. The Linux kernel and associated firmware blobs.

|

||||

|

||||

4. A root filesystem populated with libraries, tools and utilities.

|

||||

|

||||

5. Custom applications.

|

||||

|

||||

### Start with the Right Tools

|

||||

|

||||

The components of the cross-toolchain can be built manually, but it's a complex process. Fortunately, tools exist that make this process easier. The best of them is probably [Crosstool-NG][2]. This project utilizes the same kconfig menu system used by the Linux kernel to configure the bits and pieces of the toolchain. The key to using this tool is finding the correct configuration items for the target platform. This typically includes the following items:

|

||||

|

||||

1. The target architecture, such as ARM or x86.

|

||||

|

||||

2. Endianness: little (typically Intel) or big (typically ARM or others).

|

||||

|

||||

3. CPU type as it's known to the compiler, such as GCC's use of either -mcpu or --with-cpu.

|

||||

|

||||

4. The floating point type supported, if any, by the CPU, such as GCC's use of either -mfpu or --with-fpu.

|

||||

|

||||

5. Specific version information for the binutils package, the C library and the C compiler.

|

||||

|

||||

|

||||

|

||||

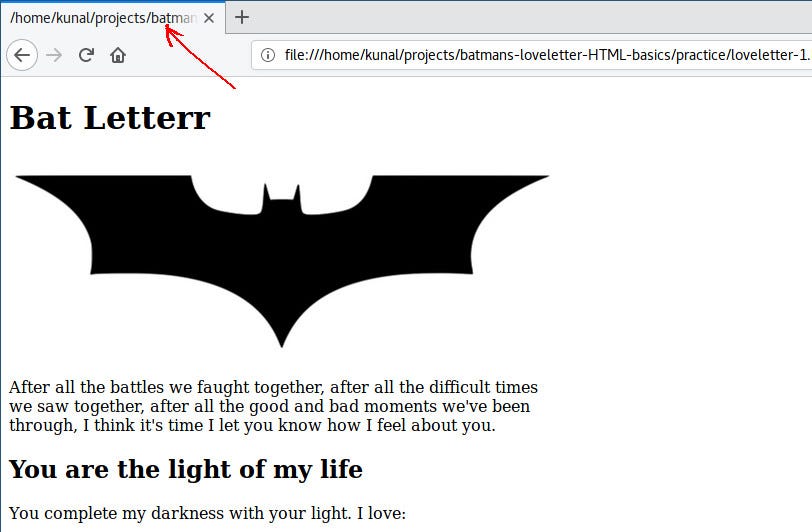

Figure 2. Crosstool-NG Configuration Menu

|

||||

|

||||

The first four are typically available from the processor maker's documentation. It can be hard to find these for relatively new processors, but for the Raspberry Pi or BeagleBoards (and their offspring and off-shoots), you can find the information online at places like the [Embedded Linux Wiki][3].

|

||||

|

||||

The versions of the binutils, C library and C compiler are what will separate the toolchain from any others that might be provided from third parties. First, there are multiple providers of each of these things. Linaro provides bleeding-edge versions for newer processor types, while working to merge support into upstream projects like the GNU C Library. Although you can use a variety of providers, you may want to stick to the stock GNU toolchain or the Linaro versions of the same.

|

||||

|

||||

Another important selection in Crosstool-NG is the version of the Linux kernel. This selection gets headers for use with various toolchain components, but it is does not have to be the same as the Linux kernel you will boot on the target hardware. It's important to choose a kernel that is not newer than the target hardware's kernel. When possible, pick a long-term support kernel that is older than the kernel that will be used on the target hardware.

|

||||

|

||||

For most developers new to custom distribution builds, the toolchain build is the most complex process. Fortunately, binary toolchains are available for many target hardware platforms. If building a custom toolchain becomes problematic, search online at places like the [Embedded Linux Wiki][4] for links to prebuilt toolchains.

|

||||

|

||||

### Booting Options

|

||||

|

||||

The next component to focus on after the toolchain is the bootloader. A bootloader sets up hardware so it can be used by ever more complex software. A first-stage bootloader is often provided by the target platform maker, burned into on-hardware storage like an EEPROM or NOR flash. The first-stage bootloader will make it possible to boot from, for example, an SD card. The Raspberry Pi has such a bootloader, which makes creating a custom bootloader unnecessary.

|

||||

|

||||

Despite that, many projects add a secondary bootloader to perform a variety of tasks. One such task could be to provide a splash animation without using the Linux kernel or userspace tools like plymouth. A more common secondary bootloader task is to make network-based boot or PCI-connected disks available. In those cases, a tertiary bootloader, such as GRUB, may be necessary to get the system running.

|

||||

|

||||

Most important, bootloaders load the Linux kernel and start it running. If the first-stage bootloader doesn't provide a mechanism for passing kernel arguments at boot time, a second-stage bootloader may be necessary.

|

||||

|

||||

A number of open-source bootloaders are available. The [U-Boot project][5] often is used for ARM platforms like the Raspberry Pi. CoreBoot typically is used for x86 platform like the Chromebook. Bootloaders can be very specific to target hardware. The choice of bootloader will depend on overall project requirements and target hardware (search for lists of open-source bootloaders be online).

|

||||

|

||||

### Now Bring the Penguin

|

||||

|

||||

The bootloader will load the Linux kernel into memory and start it running. Linux is like an extended bootloader: it continues hardware setup and prepares to load higher-level software. The core of the kernel will set up and prepare memory for sharing between applications and hardware, prepare task management to allow multiple applications to run at the same time, initialize hardware components that were not configured by the bootloader or were configured incompletely and begin interfaces for human interaction. The kernel may not be configured to do this on its own, however. It may include an embedded lightweight filesystem, known as the initramfs or initrd, that can be created separately from the kernel to assist in hardware setup.

|

||||

|

||||

Another thing the kernel handles is downloading binary blobs, known generically as firmware, to hardware devices. Firmware is pre-compiled object files in formats specific to a particular device that is used to initialize hardware in places that the bootloader and kernel cannot access. Many such firmware objects are available from the Linux kernel source repositories, but many others are available only from specific hardware vendors. Examples of devices that often provide their own firmware include digital TV tuners or WiFi network cards.

|

||||

|

||||

Firmware may be loaded from the initramfs or may be loaded after the kernel starts the init process from the root filesystem. However, creating the kernel often will be the process where obtaining firmware will occur when creating a custom Linux distribution.

|

||||

|

||||

### Lightweight Core Platforms

|

||||

|

||||

The last thing the Linux kernel does is to attempt to run a specific program called the init process. This can be named init or linuxrc or the name of the program can be passed to the kernel by the bootloader. The init process is stored in a file system that the kernel can access. In the case of the initramfs, the file system is stored in memory (either by the kernel itself or by the bootloader placing it there). But the initramfs is not typically complete enough to run more complex applications. So another file system, known as the root file system, is required.

|

||||

|

||||

|

||||

|

||||

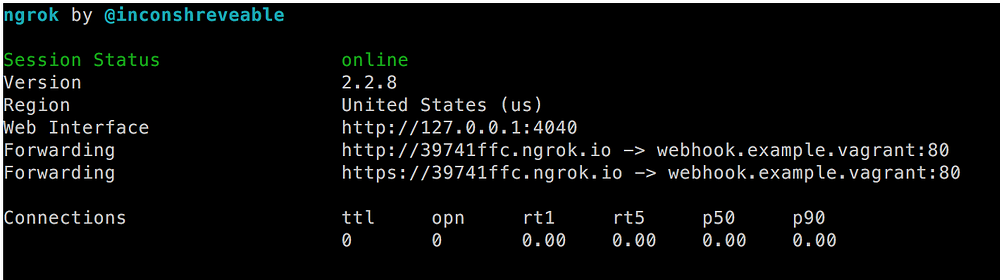

Figure 3\. Buildroot Configuration Menu

|

||||

|

||||

The initramfs filesystem can be built using the Linux kernel itself, but more commonly, it is created using a project called [BusyBox][6]. BusyBox combines a collection of GNU utilities, such as grep or awk, into a single binary in order to reduce the size of the filesystem itself. BusyBox often is used to jump-start the root filesystem's creation.

|

||||

|

||||

But, BusyBox is purposely lightweight. It isn't intended to provide every tool that a target platform will need, and even those it does provide can be feature-reduced. BusyBox has a sister project known as [Buildroot][7], which can be used to get a complete root filesystem, providing a variety of libraries, utilities and scripting languages. Like Crosstool-NG and the Linux kernel, both BusyBox and Buildroot allow custom configuration using the kconfig menu system. More important, the Buildroot system handles dependencies automatically, so selection of a given utility will guarantee that any software it requires also will be built and installed in the root filesystem.

|

||||

|

||||

Buildroot can generate a root filesystem archive in a variety of formats. However, it is important to note that the filesystem only is archived. Individual utilities and libraries are not packaged in either Debian or RPM formats. Using Buildroot will generate a root filesystem image, but its contents are not managed packages. Despite this, Buildroot does provide support for both the opkg and rpm package managers. This means custom applications that will be installed on the root filesystem can be package-managed, even if the root filesystem itself is not.

|

||||

|

||||

### Cross-Compiling and Scripting

|

||||

|

||||

One of Buildroot's features is the ability to generate a staging tree. This directory contains libraries and utilities that can be used to cross-compile other applications. With a staging tree and the cross toolchain, it becomes possible to compile additional applications outside Buildroot on the host system instead of on the target platform. Using rpm or opkg, those applications then can be installed to the root filesystem on the target at runtime using package management software.

|

||||

|

||||

Most custom systems are built around the idea of building applications with scripting languages. If scripting is required on the target platform, a variety of choices are available from Buildroot, including Python, PHP, Lua and JavaScript via Node.js. Support also exists for applications requiring encryption using OpenSSL.

|

||||

|

||||

### What's Next

|

||||

|

||||

The Linux kernel and bootloaders are compiled like most applications. Their build systems are designed to build a specific bit of software. Crosstool-NG and Buildroot are metabuilds. A metabuild is a wrapper build system around a collection of software, each with their own build systems. Alternatives to these include [Yocto][8] and [OpenEmbedded][9]. The benefit of Buildroot is the ease with which it can be wrapped by an even higher-level metabuild to automate customized Linux distribution builds. Doing this opens the option of pointing Buildroot to project-specific cache repositories. Using cache repositories can speed development and offers snapshot builds without worrying about changes to upstream repositories.

|

||||

|

||||

An example implementation of a higher-level build system is [PiBox][10]. PiBox is a metabuild wrapped around all of the tools discussed in this article. Its purpose is to add a common GNU Make target construction around all the tools in order to produce a core platform on which additional software can be built and distributed. The PiBox Media Center and kiosk projects are implementations of application-layer software installed on top of the core platform to produce a purpose-built platform. The [Iron Man project][11] is intended to extend these applications for home automation, integrated with voice control and IoT management.

|

||||

|

||||

But PiBox is nothing without these core software tools and could never run without an in-depth understanding of a complete custom distribution build process. And, PiBox could not exist without the long-term dedication of the teams of developers for these projects who have made custom-distribution-building a task for the masses.

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.linuxjournal.com/content/custom-embedded-linux-distributions

|

||||

|

||||

作者:[Michael J.Hammel][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.linuxjournal.com/user/1000879

|

||||

[1]:https://www.kernel.org

|

||||

[2]:http://crosstool-ng.github.io

|

||||

[3]:https://elinux.org/Main_Page

|

||||

[4]:https://elinux.org/Main_Page

|

||||

[5]:https://www.denx.de/wiki/U-Boot

|

||||

[6]:https://busybox.net

|

||||

[7]:https://buildroot.org

|

||||

[8]:https://www.yoctoproject.org

|

||||

[9]:https://www.openembedded.org/wiki/Main_Page

|

||||

[10]:https://www.piboxproject.com

|

||||

[11]:http://redmine.graphics-muse.org/projects/ironman/wiki/Getting_Started

|

||||

@ -0,0 +1,161 @@

|

||||

Learn your tools: Navigating your Git History

|

||||

============================================================

|

||||

|

||||

Starting a greenfield application everyday is nearly impossible, especially in your daily job. In fact, most of us are facing (somewhat) legacy codebases on a daily basis, and regaining the context of why some feature, or line of code exists in the codebase is very important. This is where `git`, the distributed version control system, is invaluable. Let’s dive in and see how we can use our `git` history and easily navigate through it.

|

||||

|

||||

### Git history

|

||||

|

||||

First and foremost, what is `git` history? As the name says, it is the commit history of a `git` repo. It contains a bunch of commit messages, with their authors’ name, the commit hash and the date of the commit. The easiest way to see the history of a `git`repo, is the `git log` command.

|

||||

|

||||

Sidenote: For the purpose of this post, we will use Ruby on Rails’ repo, the `master`branch. The reason behind this is because Rails has a very good `git` history, with nice commit messages, references and explanations behind every change. Given the size of the codebase, the age and the number of maintainers, it’s certainly one of the best repositories that I have seen. Of course, I am not saying there are no other repositories built with good `git` practices, but this is one that has caught my eye.

|

||||

|

||||

So back to Rails’ repo. If you run `git log` in the Rails’ repo, you will see something like this:

|

||||

|

||||

```

|

||||

commit 66ebbc4952f6cfb37d719f63036441ef98149418Author: Arthur Neves <foo@bar.com>Date: Fri Jun 3 17:17:38 2016 -0400 Dont re-define class SQLite3Adapter on test We were declaring in a few tests, which depending of the order load will cause an error, as the super class could change. see https://github.com/rails/rails/commit/ac1c4e141b20c1067af2c2703db6e1b463b985da#commitcomment-17731383commit 755f6bf3d3d568bc0af2c636be2f6df16c651eb1Merge: 4e85538 f7b850eAuthor: Eileen M. Uchitelle <foo@bar.com>Date: Fri Jun 3 10:21:49 2016 -0400 Merge pull request #25263 from abhishekjain16/doc_accessor_thread [skip ci] Fix grammarcommit f7b850ec9f6036802339e965c8ce74494f731b4aAuthor: Abhishek Jain <foo@bar.com>Date: Fri Jun 3 16:49:21 2016 +0530 [skip ci] Fix grammarcommit 4e85538dddf47877cacc65cea6c050e349af0405Merge: 082a515 cf2158cAuthor: Vijay Dev <foo@bar.com>Date: Fri Jun 3 14:00:47 2016 +0000 Merge branch 'master' of github.com:rails/docrails Conflicts: guides/source/action_cable_overview.mdcommit 082a5158251c6578714132e5c4f71bd39f462d71Merge: 4bd11d4 3bd30d9Author: Yves Senn <foo@bar.com>Date: Fri Jun 3 11:30:19 2016 +0200 Merge pull request #25243 from sukesan1984/add_i18n_validation_test Add i18n_validation_testcommit 4bd11d46de892676830bca51d3040f29200abbfaMerge: 99d8d45 e98caf8Author: Arthur Nogueira Neves <foo@bar.com>Date: Thu Jun 2 22:55:52 2016 -0400 Merge pull request #25258 from alexcameron89/master [skip ci] Make header bullets consistent in engines.mdcommit e98caf81fef54746126d31076c6d346c48ae8e1bAuthor: Alex Kitchens <foo@bar.com>Date: Thu Jun 2 21:26:53 2016 -0500 [skip ci] Make header bullets consistent in engines.md

|

||||

```

|

||||

|

||||

As you can see, the `git log` shows the commit hash, the author and his email and the date of when the commit was created. Of course, `git` being super customisable, it allows you to customise the output format of the `git log` command. Let’s say, we want to just see the first line of the commit message, we could run `git log --oneline`, which will produce a more compact log:

|

||||

|

||||

```

|

||||

66ebbc4 Dont re-define class SQLite3Adapter on test755f6bf Merge pull request #25263 from abhishekjain16/doc_accessor_threadf7b850e [skip ci] Fix grammar4e85538 Merge branch 'master' of github.com:rails/docrails082a515 Merge pull request #25243 from sukesan1984/add_i18n_validation_test4bd11d4 Merge pull request #25258 from alexcameron89/mastere98caf8 [skip ci] Make header bullets consistent in engines.md99d8d45 Merge pull request #25254 from kamipo/fix_debug_helper_test818397c Merge pull request #25240 from matthewd/reloadable-channels2c5a8ba Don't blank pad day of the month when formatting dates14ff8e7 Fix debug helper test

|

||||

```

|

||||

|

||||

To see all of the `git log` options, I recommend checking out manpage of `git log`, available in your terminal via `man git-log` or `git help log`. A tip: if `git log` is a bit scarse or complicated to use, or maybe you are just bored, I recommend checking out various `git` GUIs and command line tools. In the past I’ve used [GitX][1] which was very good, but since the command line feels like home to me, after trying [tig][2] I’ve never looked back.

|

||||

|

||||

### Finding Nemo

|

||||

|

||||

So now, since we know the bare minimum of the `git log` command, let’s see how we can explore the history more effectively in our everyday work.

|

||||

|

||||

Let’s say, hypothetically, we are suspecting an unexpected behaviour in the`String#classify` method and we want to find how and where it has been implemented.

|

||||

|

||||

One of the first commands that you can use, to see where the method is defined, is `git grep`. Simply said, this command prints out lines that match a certain pattern. Now, to find the definition of the method, it’s pretty simple - we can grep for `def classify` and see what we get:

|

||||

|

||||

```

|

||||

➜ git grep 'def classify'activesupport/lib/active_support/core_ext/string/inflections.rb: def classifyactivesupport/lib/active_support/inflector/methods.rb: def classify(table_name)tools/profile: def classify

|

||||

```

|

||||

|

||||

Now, although we can already see where our method is created, we are not sure on which line it is. If we add the `-n` flag to our `git grep` command, `git` will provide the line numbers of the match:

|

||||

|

||||

```

|

||||

➜ git grep -n 'def classify'activesupport/lib/active_support/core_ext/string/inflections.rb:205: def classifyactivesupport/lib/active_support/inflector/methods.rb:186: def classify(table_name)tools/profile:112: def classify

|

||||

```

|

||||

|

||||

Much better, right? Having the context in mind, we can easily figure out that the method that we are looking for lives in `activesupport/lib/active_support/core_ext/string/inflections.rb`, on line 205\. The `classify` method, in all of it’s glory looks like this:

|

||||

|

||||

```

|

||||

# Creates a class name from a plural table name like Rails does for table names to models.# Note that this returns a string and not a class. (To convert to an actual class# follow +classify+ with +constantize+.)## 'ham_and_eggs'.classify # => "HamAndEgg"# 'posts'.classify # => "Post"def classify ActiveSupport::Inflector.classify(self)end

|

||||

```

|

||||

|

||||

Although the method we found is the one we usually call on `String`s, it invokes another method on the `ActiveSupport::Inflector`, with the same name. Having our `git grep` result available, we can easily navigate there, since we can see the second line of the result being`activesupport/lib/active_support/inflector/methods.rb` on line 186\. The method that we are are looking for is:

|

||||

|

||||

```

|

||||

# Creates a class name from a plural table name like Rails does for table# names to models. Note that this returns a string and not a Class (To# convert to an actual class follow +classify+ with #constantize).## classify('ham_and_eggs') # => "HamAndEgg"# classify('posts') # => "Post"## Singular names are not handled correctly:## classify('calculus') # => "Calculus"def classify(table_name) # strip out any leading schema name camelize(singularize(table_name.to_s.sub(/.*\./, ''.freeze)))end

|

||||

```

|

||||

|

||||

Boom! Given the size of Rails, finding this should not take us more than 30 seconds with the help of `git grep`.

|

||||

|

||||

### So, what changed last?

|

||||

|

||||

Now, since we have the method available, we need to figure out what were the changes that this file has gone through. The since we know the correct file name and line number, we can use `git blame`. This command shows what revision and author last modified each line of a file. Let’s see what were the latest changes made to this file:

|

||||

|

||||

```

|

||||

git blame activesupport/lib/active_support/inflector/methods.rb

|

||||

```

|

||||

|

||||

Whoa! Although we get the last change of every line in the file, we are more interested in the specific method (lines 176 to 189). Let’s add a flag to the `git blame` command, that will show the blame of just those lines. Also, we will add the `-s` (suppress) option to the command, to skip the author names and the timestamp of the revision (commit) that changed the line:

|

||||

|

||||

```

|

||||

git blame -L 176,189 -s activesupport/lib/active_support/inflector/methods.rb9fe8e19a 176) # Creates a class name from a plural table name like Rails does for table5ea3f284 177) # names to models. Note that this returns a string and not a Class (To9fe8e19a 178) # convert to an actual class follow +classify+ with #constantize).51cd6bb8 179) #6d077205 180) # classify('ham_and_eggs') # => "HamAndEgg"9fe8e19a 181) # classify('posts') # => "Post"51cd6bb8 182) #51cd6bb8 183) # Singular names are not handled correctly:5ea3f284 184) #66d6e7be 185) # classify('calculus') # => "Calculus"51cd6bb8 186) def classify(table_name)51cd6bb8 187) # strip out any leading schema name5bb1d4d2 188) camelize(singularize(table_name.to_s.sub(/.*\./, ''.freeze)))51cd6bb8 189) end

|

||||

```

|

||||

|

||||

The output of the `git blame` command now shows all of the file lines and their respective revisions. Now, to see a specific revision, or in other words, what each of those revisions changed, we can use the `git show` command. When supplied a revision hash (like `66d6e7be`) as an argument, it will show you the full revision, with the author name, timestamp and the whole revision in it’s glory. Let’s see what actually changed at the latest revision that changed line 188:

|

||||

|

||||

```

|

||||

git show 5bb1d4d2

|

||||

```

|

||||

|

||||

Whoa! Did you test that? If you didn’t, it’s an awesome [commit][3] by [Schneems][4] that made a very interesting performance optimization by using frozen strings, which makes sense in our current context. But, since we are on this hypothetical debugging session, this doesn’t tell much about our current problem. So, how can we see what changes has our method under investigation gone through?

|

||||

|

||||

### Searching the logs

|

||||

|

||||

Now, we are back to the `git` log. The question is, how can we see all the revisions that the `classify` method went under?

|

||||

|

||||

The `git log` command is quite powerful, because it has a rich list of options to apply to it. We can try to see what the `git` log has stored for this file, using the `-p`options, which means show me the patch for this entry in the `git` log:

|

||||

|

||||

```

|

||||

git log -p activesupport/lib/active_support/inflector/methods.rb

|

||||

```

|

||||

|

||||

This will show us a big list of revisions, for every revision of this file. But, just like before, we are interested in the specific file lines. Let’s modify the command a bit, to show us what we need:

|

||||

|

||||

```

|

||||

git log -L 176,189:activesupport/lib/active_support/inflector/methods.rb

|

||||

```

|

||||

|

||||

The `git log` command accepts the `-L` option, which takes the lines range and the filename as arguments. The format might be a bit weird for you, but it translates to:

|

||||

|

||||

```

|

||||

git log -L <start-line>,<end-line>:<path-to-file>

|

||||

```

|

||||

|

||||

When we run this command, we can see the list of revisions for these lines, which will lead us to the first revision that created the method:

|

||||

|

||||

```

|

||||

commit 51xd6bb829c418c5fbf75de1dfbb177233b1b154Author: Foo Bar <foo@bar.com>Date: Tue Jun 7 19:05:09 2011 -0700 Refactordiff --git a/activesupport/lib/active_support/inflector/methods.rb b/activesupport/lib/active_support/inflector/methods.rb--- a/activesupport/lib/active_support/inflector/methods.rb+++ b/activesupport/lib/active_support/inflector/methods.rb@@ -58,0 +135,14 @@+ # Create a class name from a plural table name like Rails does for table names to models.+ # Note that this returns a string and not a Class. (To convert to an actual class+ # follow +classify+ with +constantize+.)+ #+ # Examples:+ # "egg_and_hams".classify # => "EggAndHam"+ # "posts".classify # => "Post"+ #+ # Singular names are not handled correctly:+ # "business".classify # => "Busines"+ def classify(table_name)+ # strip out any leading schema name+ camelize(singularize(table_name.to_s.sub(/.*\./, '')))+ end

|

||||

```

|

||||

|

||||

Now, look at that - it’s a commit from 2011\. Practically, `git` allows us to travel back in time. This is a very good example of why a proper commit message is paramount to regain context, because from the commit message we cannot really regain context of how this method came to be. But, on the flip side, you should **never ever** get frustrated about it, because you are looking at someone that basically gives away his time and energy for free, doing open source work.

|

||||

|

||||

Coming back from that tangent, we are not sure how the initial implementation of the `classify` method came to be, given that the first commit is just a refactor. Now, if you are thinking something within the lines of “but maybe, just maybe, the method was not on the line range 176 to 189, and we should look more broadly in the file”, you are very correct. The revision that we saw said “Refactor” in it’s commit message, which means that the method was actually there, but after that refactor it started to exist on that line range.

|

||||

|

||||

So, how can we confirm this? Well, believe it or not, `git` comes to the rescue again. The `git log` command accepts the `-S` option, which looks for the code change (additions or deletions) for the specified string as an argument to the command. This means that, if we call `git log -S classify`, we can see all of the commits that changed a line that contains that string.

|

||||

|

||||

If you call this command in the Rails repo, you will first see `git` slowing down a bit. But, when you realise that `git` actually parses all of the revisions in the repo to match the string, it’s actually super fast. Again, the power of `git` at your fingertips. So, to find the first version of the `classify` method, we can run:

|

||||

|

||||

```

|

||||

git log -S 'def classify'

|

||||

```

|

||||

|

||||

This will return all of the revisions where this method has been introduced or changed. If you were following along, the last commit in the log that you will see is:

|

||||

|

||||

```

|

||||

commit db045dbbf60b53dbe013ef25554fd013baf88134Author: David Heinemeier Hansson <foo@bar.com>Date: Wed Nov 24 01:04:44 2004 +0000 Initial git-svn-id: http://svn-commit.rubyonrails.org/rails/trunk@4 5ecf4fe2-1ee6-0310-87b1-e25e094e27de

|

||||

```

|

||||

|

||||

How cool is that? It’s the initial commit to Rails, made on a `svn` repo by DHH! This means that `classify` has been around since the beginning of (Rails) time. Now, to see the commit with all of it’s changes, we can run:

|

||||

|

||||

```

|

||||

git show db045dbbf60b53dbe013ef25554fd013baf88134

|

||||

```

|

||||

|

||||

Great, we got to the bottom of it. Now, by using the output from `git log -S 'def classify'` you can track the changes that have happened to this method, combined with the power of the `git log -L` command.

|

||||

|

||||

### Until next time

|

||||

|

||||

Sure, we didn’t really fix any bugs, because we were trying some `git` commands and following along the evolution of the `classify` method. But, nevertheless, `git` is a very powerful tool that we all must learn to use and to embrace. I hope this article gave you a little bit more knowledge of how useful `git` is.

|

||||

|

||||

What are your favourite (or, most effective) ways of navigating through the `git`history?

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

作者简介:

|

||||

|

||||

Backend engineer, interested in Ruby, Go, microservices, building resilient architectures and solving challenges at scale. I coach at Rails Girls in Amsterdam, maintain a list of small gems and often contribute to Open Source.

|

||||

This is where I write about software development, programming languages and everything else that interests me.

|

||||

|

||||

------

|

||||

|

||||

via: https://ieftimov.com/learn-your-tools-navigating-git-history

|

||||

|

||||

作者:[Ilija Eftimov ][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://ieftimov.com/

|

||||

[1]:http://gitx.frim.nl/

|

||||

[2]:https://github.com/jonas/tig

|

||||

[3]:https://github.com/rails/rails/commit/5bb1d4d288d019e276335465d0389fd2f5246bfd

|

||||

[4]:https://twitter.com/schneems

|

||||

139

sources/tech/20170428 Ultimate guide to securing SSH sessions.md

Normal file

139

sources/tech/20170428 Ultimate guide to securing SSH sessions.md

Normal file

@ -0,0 +1,139 @@

|

||||

Ultimate guide to securing SSH sessions

|

||||

======

|

||||

Hi Linux-fanatics, in this tutorial we will be discussing some ways with which we make our ssh server more secure. OpenSSH is currently used by default to work on servers as physical access to servers is very limited. We use ssh to copy/backup files/folders, to remotely execute commands etc. But these ssh connections might not be as secure as we believee & we must make some changes to our default settings to make them more secure.

|

||||

|

||||

Here are steps needed to secure our ssh sessions,

|

||||

|

||||

### Use complex username & password

|

||||

|

||||

This is first of the problem that needs to be addressed, I have known users who have '12345' as their password. It seems they are inviting hackers to get themselves hacked. You should always have a complex password.

|

||||

|

||||

It should have at-least 8 characters with numbers & alphabets, lower case & upper case letter, and also special characters. A good example would be " ** ** _vXdrf23#$wd_**** " , it is not a word so dictionary attack will be useless & has uppercase, lowercase characters, numbers & special characters.

|

||||

|

||||

### Limit user logins

|

||||

|

||||

Not all the users are required to have access to ssh in an organization, so we should make changes to our configuration file to limit user logins. Let's say only Bob & Susan are authorized have access to ssh, so open your configuration file

|

||||

|

||||

```

|

||||

$ vi /etc/ssh/sshd_config

|

||||

```

|

||||

|

||||

& add the allowed users to the bottom of the file

|

||||

|

||||

```

|

||||

AllowUsers bob susan

|

||||

```

|

||||

|

||||

Save the file & restart the service. Now only Bob & Susan will have access to ssh , others won't be able to access ssh.

|

||||

|

||||

### Configure Idle logout time

|

||||

|

||||

|

||||

Once logged into ssh sessions, there is default time before sessions logs out on it own. By default idle logout time is 60 minutes, which according to me is way to much. Consider this, you logged into a session , executed some commands & then went out to get a cup of coffee but you forgot to log-out of the ssh. Just think what could be done in the 60 seconds, let alone in 60 minutes.

|

||||

|

||||

So, its wise to reduce idle log-out time to something around 5 minutes & it can be done in config file only. Open '/etc/ssh/sshd_config' & change the values

|

||||

|

||||

```

|

||||

ClientAliveInterval 300

|

||||

ClientAliveCountMax 0

|

||||

```

|

||||

|

||||

Its in seconds, so configure them accordingly.

|

||||

|

||||

### Disable root logins

|

||||

|

||||

As we know root have access to anything & everything on the server, so we must disable root access through ssh session. Even if it is needed to complete a task that only root can do, we can escalate the privileges of a normal user.

|

||||

|

||||

To disable root access, open your configuration file & change the following parameter

|

||||

|

||||

```

|

||||

PermitRootLogin no

|

||||

ClientAliveCountMax 0

|

||||

```

|

||||

|

||||

This will disable root access to ssh sessions.

|

||||

|

||||

### Enable Protocol 2

|

||||

|

||||

SSH protocol 1 had man in the middle attack issues & other security issues as well, all these issues were addressed in Protocol 2. So protocol 1 must not be used at any cost. To change the protocol , open your sshd_config file & change the following parameter

|

||||

|

||||

```

|

||||

Protocol 2

|

||||

```

|

||||

|

||||

### Enable a warning screen

|

||||

|

||||

It would be a good idea to enable a warning screen stating a warning about misuse of ssh, just before a user logs into the session. To create a warning screen, create a file named **" warning"** in **/etc/** folder (or any other folder) & write something like "We monitor all our sessions on continuously. Don't misuse your access or else you will be prosecuted" or whatever you wish to warn. You can also consult legal team about this warning to make it more official.

|

||||

|

||||

After this file is create, open sshd_config file & enter the following parameter into the file

|

||||

|

||||

```

|

||||

Banner /etc/issue

|

||||

```

|

||||

|

||||

now you warning message will be displayed each time someone tries to access the session.

|

||||

|

||||

### Use non-standard ssh port

|

||||

|

||||

By default, ssh uses port 22 & all the brute force scripts are written for port 22 only. So to make your sessions even more secure, use a non-standard port like 15000. But make sure before selecting a port that its not being used by some other service.

|

||||

|

||||

To change port, open sshd_config & change the following parameter

|

||||

|

||||

```

|

||||

Port 15000

|

||||

```

|

||||

|

||||

Save & restart the service and you can access the ssh only with this new port. To start a session with custom port use the following command

|

||||

|

||||

```

|

||||

$ ssh -p 15000 {server IP}

|

||||

```

|

||||

|

||||

** Note:-** If using firewall, open the port on your firewall & we must also change the SELinux settings if using a custom port for ssh. Run the following command to update the SELinux label

|

||||

|

||||

```

|

||||

$ semanage port -a -t ssh_port_t -p tcp 15000

|

||||

```

|

||||

|

||||

### Limit IP access

|

||||

|

||||

If you have an environment where your server is accessed by only limited number of IP addresses, you can also allow access to those IP addresses only. Open sshd_config file & enter the following with your custom port

|

||||

|

||||

```

|

||||

Port 15000

|

||||

ListenAddress 192.168.1.100

|

||||

ListenAddress 192.168.1.115

|

||||

```

|

||||

|

||||

Now ssh session will only be available to these mentioned IPs with the custom port 15000.

|

||||

|

||||

### Disable empty passwords

|

||||

|

||||

As mentioned already that you should only use complex username & passwords, so using an empty password for remote login is a complete no-no. To disable empty passwords, open sshd_config file & edit the following parameter

|

||||

|

||||

```

|

||||

PermitEmptyPasswords no

|

||||

```

|

||||

|

||||

### Use public/private key based authentication

|

||||

|

||||

Using Public/Private key based authentication has its advantages i.e. you no longer need to enter the password when entering into a session (unless you are using a passphrase to decrypt the key) & no one can have access to your server until & unless they have the right authentication key. Process to setup public/private key based authentication is discussed in [**this tutorial here**][1].

|

||||

|

||||

So, this completes our tutorial on securing your ssh server. If having any doubts or issues, please leave a message in the comment box below.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://linuxtechlab.com/ultimate-guide-to-securing-ssh-sessions/

|

||||

|

||||

作者:[SHUSAIN][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://linuxtechlab.com/author/shsuain/

|

||||

[1]:http://linuxtechlab.com/configure-ssh-server-publicprivate-key/

|

||||

[2]:https://www.facebook.com/techlablinux/

|

||||

[3]:https://twitter.com/LinuxTechLab

|

||||

[4]:https://plus.google.com/+linuxtechlab

|

||||

[5]:http://linuxtechlab.com/contact-us-2/

|

||||

@ -1,125 +0,0 @@

|

||||

How To Fully Update And Upgrade Offline Debian-based Systems

|

||||

======

|

||||

|

||||

|

||||

A while ago we have shown you how to install softwares in any[ **offline Ubuntu**][1] system and any [**offline Arch Linux**][2] system. Today, we will see how to fully update and upgrade offline Debian-based systems. Unlike the previous methods, we do not update/upgrade a single package, but the whole system. This method can be helpful where you don't have an active Internet connection or slow Internet speed.

|

||||

|

||||

### Fully Update And Upgrade Offline Debian-based Systems

|

||||

|

||||

Let us say, you have a system (Windows or Linux) with high-speed Internet connection at work and a Debian or any Debian derived systems with no internet connection or very slow Internet connection(like dial-up) at home. You want to upgrade your offline home system. What would you do? Buy a high speed Internet connection? Not necessary! You still can update or upgrade your offline system with Internet. This is where **Apt-Offline** comes in help.

|

||||

|

||||

As the name says, apt-offline is an Offline APT Package Manager for APT based systems like Debian and Debian derived distributions such as Ubuntu, Linux Mint. Using apt-offline, we can fully update/upgrade our Debian box without the need of connecting it to the Internet. It is cross-platform tool written in the Python Programming Language and has both CLI and graphical interfaces.

|

||||

|

||||

#### Requirements

|

||||

|

||||

* An Internet connected system (Windows or Linux). We call it online system for the sake of easy understanding throughout this guide.

|

||||

* An Offline system (Debian and Debian derived system). We call it offline system.

|

||||

* USB drive or External Hard drive with sufficient space to carry all updated packages.

|

||||

|

||||

|

||||

|

||||

#### Installation

|

||||

|

||||

Apt-Offline is available in the default repositories of Debian and derivatives. If your Online system is running with Debian, Ubuntu, Linux Mint, and other DEB based systems, you can install Apt-Offline using command:

|

||||

```

|

||||

sudo apt-get install apt-offline

|

||||

```

|

||||

|

||||

If your Online runs with any other distro than Debian, git clone Apt-Offline repository:

|

||||

```

|

||||

git clone https://github.com/rickysarraf/apt-offline.git

|

||||

```

|

||||

|

||||

Go the directory and run it from there.

|

||||

```

|

||||

cd apt-offline/

|

||||

```

|

||||

```

|

||||

sudo ./apt-offline

|

||||

```

|

||||

|

||||

#### Steps to do in Offline system (Non-Internet connected system)

|

||||

|

||||

Go to your offline system and create a directory where you want to store the signature file:

|

||||

```

|

||||

mkdir ~/tmp

|

||||

```

|

||||

```

|

||||

cd ~/tmp/

|

||||

```

|

||||

|

||||

You can use any directory of your choice. Then, run the following command to generate the signature file:

|

||||

```

|

||||

sudo apt-offline set apt-offline.sig

|

||||

```

|

||||

|

||||

Sample output would be:

|

||||

```

|

||||

Generating database of files that are needed for an update.

|

||||

|

||||

Generating database of file that are needed for operation upgrade

|

||||

```

|

||||

|

||||

By default, apt-offline will generate database of files that are needed to be update and upgrade. You can use **--` update`** or `**--upgrade** options to create database for either one of these.`

|

||||

|

||||

Copy the entire **tmp** folder in an USB drive or external drive and go to your online system (Internet-enabled system).

|

||||

|

||||

#### Steps to do in Online system

|

||||

|

||||

Plug in your USB drive and go to the temp directory:

|

||||

```

|

||||

cd tmp/

|

||||

```

|

||||

|

||||

Then, run the following command:

|

||||

```

|

||||

sudo apt-offline get apt-offline.sig --threads 5 --bundle apt-offline-bundle.zip

|

||||

```

|

||||

|

||||

Here, "-threads 5" represents the number of APT repositories. You can increase the number if you want to download packages from more repositories. And, "-bundle apt-offline-bundle.zip" option represents all packages will be bundled in a single archive file called **apt-offline-bundle.zip**. This archive file will be saved in your current working directory.

|

||||

|

||||

The above command will download data based on the signature file generated earlier in the offline system.

|

||||

|

||||

[![][3]][4]

|

||||

|

||||

This will take several minutes depending upon the Internet connection speed. Please note that apt-offline is cross platform, so you can use it to download packages on any OS.

|

||||

|

||||

Once completed, copy the **tmp** folder to USB or External drive and return back to the offline system. Make sure your USB device has enough free space to keep all downloaded files, because all packages are available in the tmp folder now.

|

||||

|

||||

#### Steps to do in offline system

|

||||

|

||||

Plug in the device in your offline system and go to the **tmp** directory where you have downloaded all packages earlier.

|

||||

```

|

||||

cd tmp

|

||||

```

|

||||

|

||||

Then, run the following command to install all download packages.

|

||||

```

|

||||

sudo apt-offline install apt-offline-bundle.zip

|

||||

```

|

||||

|

||||

This will update the APT database, so APT will find all required packages in the APT cache.

|

||||

|

||||

**Note:** If both online and offline systems are in the same local network, you can transfer the **tmp** folder to the offline system using "scp" or any other file transfer applications. If both systems are in different places, copy the folder using USB devices.

|

||||

|

||||

And, that's all for now folks. I hope this guide will useful for you. More good stuffs to come. Stay tuned!

|

||||

|

||||

Cheers!

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.ostechnix.com/fully-update-upgrade-offline-debian-based-systems/

|

||||

|

||||

作者:[SK][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.ostechnix.com/author/sk/

|

||||

[1]:https://www.ostechnix.com/install-softwares-offline-ubuntu-16-04/

|

||||

[2]:https://www.ostechnix.com/install-packages-offline-arch-linux/

|

||||

[3]:data:image/gif;base64,R0lGODlhAQABAIAAAAAAAP///yH5BAEAAAAALAAAAAABAAEAAAIBRAA7

|

||||

[4]:http://www.ostechnix.com/wp-content/uploads/2017/11/apt-offline.png ()

|

||||

@ -1,48 +0,0 @@

|

||||

A tour of containerd 1.0

|

||||

======

|

||||

![containerd][1]

|

||||

|

||||

We have done a few talks in the past on different features of containerd, how it was designed, and some of the problems that we have fixed along the way. Containerd is used by Docker, Kubernetes CRI, and a few other projects but this is a post for people who may not know what containerd actually does within these platforms. I would like to do more posts on the feature set and design of containerd in the future but for now, we will start with the basics.

|

||||

|

||||

I think the container ecosystem can be confusing at times. Especially with the terminology that we use. Whats this? A runtime. And this? A runtime… containerd (pronounced " _container-dee "_) as the name implies, not contain nerd as some would like to troll me with, is a container daemon. It was originally built as an integration point for OCI runtimes like runc but over the past six months it has added a lot of functionality to bring it up to par with the needs of modern container platforms like Docker and orchestration systems like Kubernetes.

|

||||

|

||||

So what do you actually get using containerd? You get push and pull functionality as well as image management. You get container lifecycle APIs to create, execute, and manage containers and their tasks. An entire API dedicated to snapshot management and an openly governed project to depend on. Basically everything that you need to build a container platform without having to deal with the underlying OS details. I think the most important part of containerd is having a versioned and stable API that will have bug fixes and security patches backported.

|

||||

|

||||

![containerd][2]

|

||||

|

||||

Since there is no such thing as Linux containers in the kernel, containers are various kernel features tied together, when you are building a large platform or distributed system you want an abstraction layer between your management code and the syscalls and duct tape of features to run a container. That is where containerd lives. It provides a client a layer of stable types that platforms can build on top of without ever having to drop down to the kernel level. It's so much nicer to work with Container, Task, and Snapshot types than it is to manage calls to clone() or mount(). Balanced with the flexibility to directly interact with the runtime or host-machine, these objects avoid the sacrifice of capabilities that typically come with higher-level abstractions. The result is that easy tasks are simple to complete and hard tasks are possible.

|

||||

|

||||

![containerd][3]Containerd was designed to be used by Docker and Kubernetes as well as any other container system that wants to abstract away syscalls or OS specific functionality to run containers on Linux, Windows, Solaris, or other Operating Systems. With these users in mind, we wanted to make sure that containerd has only what they need and nothing that they don't. Realistically this is impossible but at least that is what we try for. While networking is out of scope for containerd, what it doesn't do lets higher level systems have full control. The reason for this is, when you are building a distributed system, networking is a very central aspect. With SDN and service discovery today, networking is way more platform specific than abstracting away netlink calls on linux. Most of the new overlay networks are route based and require routing tables to be updated each time a new container is created or deleted. Service discovery, DNS, etc all have to be notified of these changes as well. It would be a large chunk of code to be able to support all the different network interfaces, hooks, and integration points to support this if we added networking to containerd. What we did instead is opted for a robust events system inside containerd so that multiple consumers can subscribe to the events that they care about. We also expose a [Task API ][4]that lets users create a running task, have the ability to add interfaces to the network namespace of the container, and then start the container's process without the need for complex hooks in various points of a container's lifecycle.

|

||||

|

||||

Another area that has been added to containerd over the past few months is a complete storage and distribution system that supports both OCI and Docker image formats. You have a complete content addressed storage system across the containerd API that works not only for images but also metadata, checkpoints, and arbitrary data attached to containers.

|

||||

|

||||

We also took the time to [rethink how "graphdrivers" work][5]. These are the overlay or block level filesystems that allow images to have layers and you to perform efficient builds. Graphdrivers were initially written by Solomon and I when we added support for devicemapper. Docker only supported AUFS at the time so we modeled the graphdrivers after the overlay filesystem. However, making a block level filesystem such as devicemapper/lvm act like an overlay filesystem proved to be much harder to do in the long run. The interfaces had to expand over time to support different features than what we originally thought would be needed. With containerd, we took a different approach, make overlay filesystems act like a snapshotter instead of vice versa. This was much easier to do as overlay filesystems provide much more flexibility than snapshotting filesystems like BTRFS, ZFS, and devicemapper as they don't have a strict parent/child relationship. This helped us build out [a smaller interface for the snapshotters][6] while still fulfilling the requirements needed from things [like a builder][7] as well as reduce the amount of code needed, making it much easier to maintain in the long run.

|

||||

|

||||

![][8]

|

||||

|

||||

You can find more details about the architecture of containerd in [Stephen Day's Dec 7th 2017 KubeCon SIG Node presentation][9].

|

||||

|

||||

In addition to the technical and design changes in the 1.0 codebase, we also switched the containerd [governance model from the long standing BDFL to a Technical Steering Committee][10] giving the community an independent third party resource to rely on.

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://blog.docker.com/2017/12/containerd-ga-features-2/

|

||||

|

||||

作者:[Michael Crosby][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://blog.docker.com/author/michael/

|

||||

[1]:https://i0.wp.com/blog.docker.com/wp-content/uploads/950cf948-7c08-4df6-afd9-cc9bc417cabe-6.jpg?resize=400%2C120&ssl=1

|

||||

[2]:https://i1.wp.com/blog.docker.com/wp-content/uploads/4a7666e4-ebdb-4a40-b61a-26ac7c3f663e-4.jpg?resize=906%2C470&ssl=1 (containerd)

|

||||

[3]:https://i1.wp.com/blog.docker.com/wp-content/uploads/2a73a4d8-cd40-4187-851f-6104ae3c12ba-1.jpg?resize=1140%2C680&ssl=1

|

||||

[4]:https://github.com/containerd/containerd/blob/master/api/services/tasks/v1/tasks.proto

|

||||

[5]:https://blog.mobyproject.org/where-are-containerds-graph-drivers-145fc9b7255

|

||||

[6]:https://github.com/containerd/containerd/blob/master/api/services/snapshots/v1/snapshots.proto

|

||||

[7]:https://blog.mobyproject.org/introducing-buildkit-17e056cc5317

|

||||

[8]:https://i1.wp.com/blog.docker.com/wp-content/uploads/d0fb5eb9-c561-415d-8d57-e74442a879a2-1.jpg?resize=1140%2C556&ssl=1

|

||||

[9]:https://speakerdeck.com/stevvooe/whats-happening-with-containerd-and-the-cri

|

||||

[10]:https://github.com/containerd/containerd/pull/1748

|

||||

@ -1,197 +0,0 @@

|

||||

How to Create a Docker Image

|

||||

======

|

||||

|

||||

|

||||

|

||||

In the previous [article][1], we learned about how to get started with Docker on Linux, macOS, and Windows. In this article, we will get a basic understanding of creating Docker images. There are prebuilt images available on DockerHub that you can use for your own project, and you can publish your own image there.

|

||||

|

||||

We are going to use prebuilt images to get the base Linux subsystem, as it's a lot of work to build one from scratch. You can get Alpine (the official distro used by Docker Editions), Ubuntu, BusyBox, or scratch. In this example, I will use Ubuntu.

|

||||

|

||||

Before we start building our images, let's "containerize" them! By this I just mean creating directories for all of your Docker images so that you can maintain different projects and stages isolated from each other.

|

||||

```

|

||||

$ mkdir dockerprojects

|

||||

|

||||

cd dockerprojects

|

||||

|

||||

```

|

||||

|

||||

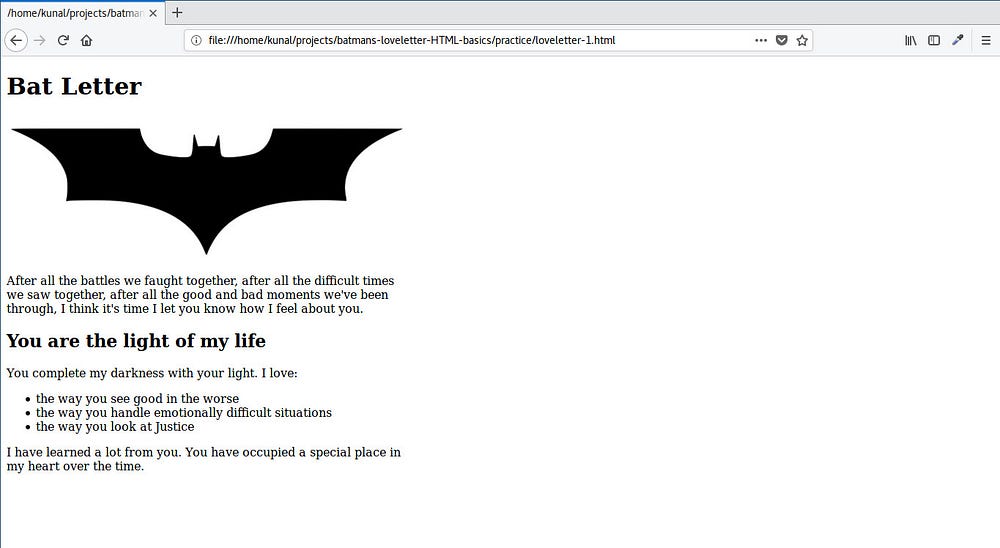

Now create a Dockerfile inside the dockerprojects directory using your favorite text editor; I prefer nano, which is also easy for new users.

|

||||

```

|

||||

$ nano Dockerfile

|

||||

|

||||

```

|

||||

|

||||

And add this line:

|

||||

```

|

||||

FROM Ubuntu

|

||||

|

||||

```

|

||||

|

||||

![m7_f7No0pmZr2iQmEOH5_ID6MDG2oEnODpQZkUL7][2]

|

||||

|

||||

Save it with Ctrl+Exit then Y.

|

||||

|

||||

Now create your new image and provide it with a name (run these commands within the same directory):

|

||||

```

|

||||

$ docker build -t dockp .

|

||||

|

||||

```

|

||||

|

||||

(Note the dot at the end of the command.) This should build successfully, so you'll see:

|

||||

```

|

||||

Sending build context to Docker daemon 2.048kB

|

||||

|

||||

Step 1/1 : FROM ubuntu

|

||||

|

||||

---> 2a4cca5ac898

|

||||

|

||||

Successfully built 2a4cca5ac898