mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-01-16 22:42:21 +08:00

commit

feb2cda84f

@ -0,0 +1,77 @@

|

||||

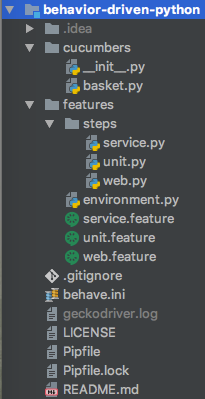

如何在 Ubuntu 上安装 Cinnamon 桌面环境

|

||||

======

|

||||

|

||||

> 这篇教程将会为你展示如何在 Ubuntu 上安装 Cinnamon 桌面环境。

|

||||

|

||||

[Cinnamon][1] 是 [Linux Mint][2] 的默认桌面环境。不同于 Ubuntu 的 Unity 桌面环境,Cinnamon 是一个更加传统而优雅的桌面环境,其带有底部面板和应用菜单。由于 Cinnamon 桌面以及它类 Windows 的用户界面,许多桌面用户[相较于 Ubuntu 更喜欢 Linux Mint][3]。

|

||||

|

||||

现在你无需[安装 Linux Mint][4] 就能够体验到 Cinnamon了。在这篇教程,我将会展示给你如何在 Ubuntu 18.04,16.04 和 14.04 上安装 Cinnamon。

|

||||

|

||||

在 Ubuntu 上安装 Cinnamon 之前,有一些事情需要你注意。有时候,安装的额外桌面环境可能会与你当前的桌面环境有冲突。可能导致会话、应用程序或功能等的崩溃。这就是为什么你需要在做这个决定时谨慎一点的原因。

|

||||

|

||||

### 如何在 Ubuntu 上安装 Cinnamon 桌面环境

|

||||

|

||||

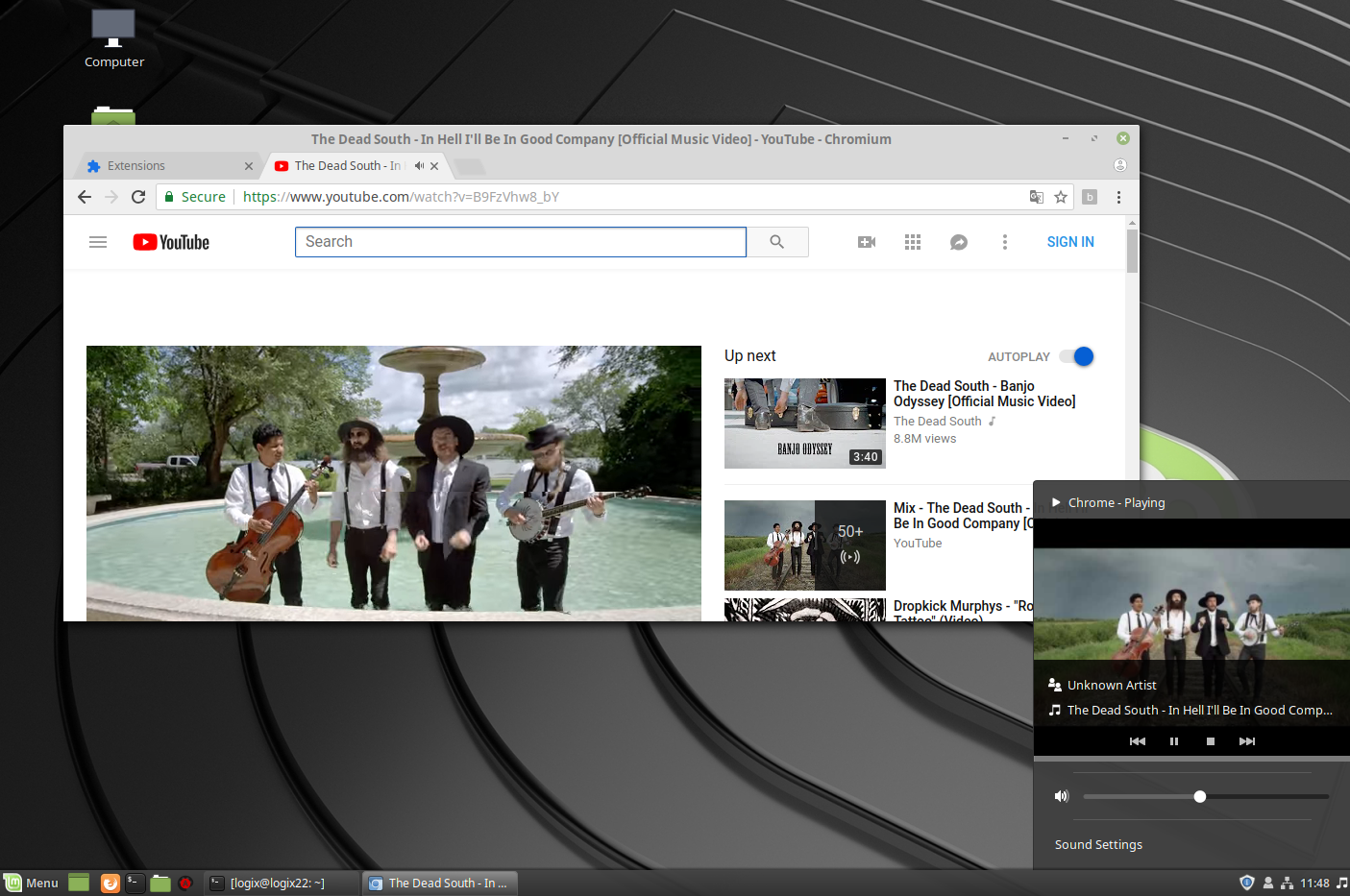

![如何在 Ubuntu 上安装 Cinnamon 桌面环境][5]

|

||||

|

||||

过去有 Cinnamon 团队为 Ubuntu 提供的一系列的官方 PPA,但现在都已经失效了。不过不用担心,还有一个非官方的 PPA,而且它运行的很完美。这个 PPA 里包含了最新的 Cinnamon 版本。

|

||||

|

||||

```

|

||||

sudo add-apt-repository

|

||||

ppa:embrosyn/cinnamon

|

||||

sudo apt update && sudo apt install cinnamon

|

||||

```

|

||||

|

||||

下载的大小大概是 150 MB(如果我没记错的话)。这其中提供的 Nemo(Cinnamon 的文件管理器,基于 Nautilus)和 Cinnamon 控制中心。这些东西提供了一个更加接近于 Linux Mint 的感觉。

|

||||

|

||||

### 在 Ubuntu 上使用 Cinnamon 桌面环境

|

||||

|

||||

Cinnamon 安装完成后,退出当前会话,在登录界面,点击用户名旁边的 Ubuntu 符号:

|

||||

|

||||

|

||||

|

||||

之后,它将会显示所有系统可用的桌面环境。选择 Cinnamon。

|

||||

|

||||

|

||||

|

||||

现在你应该已经登录到有着 Cinnamon 桌面环境的 Ubuntu 中了。你还可以通过同样的方式再回到 Unity 桌面。这里有一张以 Cinnamon 做为桌面环境的 Ubuntu 桌面截图。

|

||||

|

||||

|

||||

|

||||

看起来是不是像极了 Linux Mint。此外,我并没有发现任何有关 Cinnamon 和 Unity 的兼容性问题。在 Unity 和 Cinnamon 来回切换,它们也依旧工作的很完美。

|

||||

|

||||

#### 从 Ubuntu 卸载 Cinnamon

|

||||

|

||||

如果你想卸载 Cinnamon,可以使用 PPA Purge 来完成。首先安装 PPA Purge:

|

||||

|

||||

```

|

||||

sudo apt-get install ppa-purge

|

||||

```

|

||||

|

||||

安装完成之后,使用下面的命令去移除该 PPA:

|

||||

|

||||

```

|

||||

sudo ppa-purge ppa:embrosyn/cinnamon

|

||||

```

|

||||

|

||||

更多的信息,我建议你去阅读 [如何从 Linux 移除 PPA][6] 这篇文章。

|

||||

|

||||

我希望这篇文章能够帮助你在 Ubuntu 上安装 Cinnamon。也可以分享一下你使用 Cinnamon 的经验。

|

||||

|

||||

------

|

||||

|

||||

via: https://itsfoss.com/install-cinnamon-on-ubuntu/

|

||||

|

||||

作者:[Abhishek Prakash][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[dianbanjiu](https://github.com/dianbanjiu)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://itsfoss.com/author/abhishek/

|

||||

[1]: http://cinnamon.linuxmint.com/

|

||||

[2]: http://www.linuxmint.com/

|

||||

[3]: https://itsfoss.com/linux-mint-vs-ubuntu/

|

||||

[4]: https://itsfoss.com/guide-install-linux-mint-16-dual-boot-windows/

|

||||

[5]: https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2018/09/install-cinnamon-ubuntu.png

|

||||

[6]: https://itsfoss.com/how-to-remove-or-delete-ppas-quick-tip/

|

||||

132

published/20171124 How do groups work on Linux.md

Normal file

132

published/20171124 How do groups work on Linux.md

Normal file

@ -0,0 +1,132 @@

|

||||

“用户组”在 Linux 上到底是怎么工作的?

|

||||

========

|

||||

|

||||

嗨!就在上周,我还自认为对 Linux 上的用户和组的工作机制了如指掌。我认为它们的关系是这样的:

|

||||

|

||||

1. 每个进程都属于一个用户(比如用户 `julia`)

|

||||

2. 当这个进程试图读取一个被某个组所拥有的文件时, Linux 会

|

||||

a. 先检查用户`julia` 是否有权限访问文件。(LCTT 译注:此处应该是指检查文件的所有者是否就是 `julia`)

|

||||

b. 检查 `julia` 属于哪些组,并进一步检查在这些组里是否有某个组拥有这个文件或者有权限访问这个文件。

|

||||

3. 如果上述 a、b 任一为真(或者“其它”位设为有权限访问),那么这个进程就有权限访问这个文件。

|

||||

|

||||

比如说,如果一个进程被用户 `julia` 拥有并且 `julia` 在`awesome` 组,那么这个进程就能访问下面这个文件。

|

||||

|

||||

```

|

||||

r--r--r-- 1 root awesome 6872 Sep 24 11:09 file.txt

|

||||

```

|

||||

|

||||

然而上述的机制我并没有考虑得非常清楚,如果你硬要我阐述清楚,我会说进程可能会在**运行时**去检查 `/etc/group` 文件里是否有某些组拥有当前的用户。

|

||||

|

||||

### 然而这并不是 Linux 里“组”的工作机制

|

||||

|

||||

我在上个星期的工作中发现了一件有趣的事,事实证明我前面的理解错了,我对组的工作机制的描述并不准确。特别是 Linux **并不会**在进程每次试图访问一个文件时就去检查这个进程的用户属于哪些组。

|

||||

|

||||

我在读了《[Linux 编程接口][1]》这本书的第九章(“进程资格”)后才恍然大悟(这本书真是太棒了),这才是组真正的工作方式!我意识到之前我并没有真正理解用户和组是怎么工作的,我信心满满的尝试了下面的内容并且验证到底发生了什么,事实证明现在我的理解才是对的。

|

||||

|

||||

### 用户和组权限检查是怎么完成的

|

||||

|

||||

现在这些关键的知识在我看来非常简单! 这本书的第九章上来就告诉我如下事实:用户和组 ID 是**进程的属性**,它们是:

|

||||

|

||||

* 真实用户 ID 和组 ID;

|

||||

* 有效用户 ID 和组 ID;

|

||||

* 保存的 set-user-ID 和保存的 set-group-ID;

|

||||

* 文件系统用户 ID 和组 ID(特定于 Linux);

|

||||

* 补充的组 ID;

|

||||

|

||||

这说明 Linux **实际上**检查一个进程能否访问一个文件所做的组检查是这样的:

|

||||

|

||||

* 检查一个进程的组 ID 和补充组 ID(这些 ID 就在进程的属性里,**并不是**实时在 `/etc/group` 里查找这些 ID)

|

||||

* 检查要访问的文件的访问属性里的组设置

|

||||

* 确定进程对文件是否有权限访问(LCTT 译注:即文件的组是否是以上的组之一)

|

||||

|

||||

通常当访问控制的时候使用的是**有效**用户/组 ID,而不是**真实**用户/组 ID。技术上来说当访问一个文件时使用的是**文件系统**的 ID,它们通常和有效用户/组 ID 一样。(LCTT 译注:这句话针对 Linux 而言。)

|

||||

|

||||

### 将一个用户加入一个组并不会将一个已存在的进程(的用户)加入那个组

|

||||

|

||||

下面是一个有趣的例子:如果我创建了一个新的组:`panda` 组并且将我自己(`bork`)加入到这个组,然后运行 `groups` 来检查我是否在这个组里:结果是我(`bork`)竟然不在这个组?!

|

||||

|

||||

```

|

||||

bork@kiwi~> sudo addgroup panda

|

||||

Adding group `panda' (GID 1001) ...

|

||||

Done.

|

||||

bork@kiwi~> sudo adduser bork panda

|

||||

Adding user `bork' to group `panda' ...

|

||||

Adding user bork to group panda

|

||||

Done.

|

||||

bork@kiwi~> groups

|

||||

bork adm cdrom sudo dip plugdev lpadmin sambashare docker lxd

|

||||

|

||||

```

|

||||

|

||||

`panda` 并不在上面的组里!为了再次确定我们的发现,让我们建一个文件,这个文件被 `panda` 组拥有,看看我能否访问它。

|

||||

|

||||

```

|

||||

$ touch panda-file.txt

|

||||

$ sudo chown root:panda panda-file.txt

|

||||

$ sudo chmod 660 panda-file.txt

|

||||

$ cat panda-file.txt

|

||||

cat: panda-file.txt: Permission denied

|

||||

```

|

||||

|

||||

好吧,确定了,我(`bork`)无法访问 `panda-file.txt`。这一点都不让人吃惊,我的命令解释器并没有将 `panda` 组作为补充组 ID,运行 `adduser bork panda` 并不会改变这一点。

|

||||

|

||||

### 那进程一开始是怎么得到用户的组的呢?

|

||||

|

||||

这真是个非常令人困惑的问题,对吗?如果进程会将组的信息预置到进程的属性里面,进程在初始化的时候怎么取到组的呢?很明显你无法给你自己指定更多的组(否则就会和 Linux 访问控制的初衷相违背了……)

|

||||

|

||||

有一点还是很清楚的:一个新的进程是怎么从我的命令行解释器(`/bash/fish`)里被**执行**而得到它的组的。(新的)进程将拥有我的用户 ID(`bork`),并且进程属性里还有很多组 ID。从我的命令解释器里执行的所有进程是从这个命令解释器里 `fork()` 而来的,所以这个新进程得到了和命令解释器同样的组。

|

||||

|

||||

因此一定存在一个“第一个”进程来把你的组设置到进程属性里,而所有由此进程而衍生的进程将都设置这些组。而那个“第一个”进程就是你的<ruby>登录程序<rt>login shell</rt></ruby>,在我的笔记本电脑上,它是由 `login` 程序(`/bin/login`)实例化而来。登录程序以 root 身份运行,然后调用了一个 C 的库函数 —— `initgroups` 来设置你的进程的组(具体来说是通过读取 `/etc/group` 文件),因为登录程序是以 root 运行的,所以它能设置你的进程的组。

|

||||

|

||||

### 让我们再登录一次

|

||||

|

||||

好了!假如说我们正处于一个登录程序中,而我又想刷新我的进程的组设置,从我们前面所学到的进程是怎么初始化组 ID 的,我应该可以通过再次运行登录程序来刷新我的进程组并启动一个新的登录命令!

|

||||

|

||||

让我们试试下边的方法:

|

||||

|

||||

```

|

||||

$ sudo login bork

|

||||

$ groups

|

||||

bork adm cdrom sudo dip plugdev lpadmin sambashare docker lxd panda

|

||||

$ cat panda-file.txt # it works! I can access the file owned by `panda` now!

|

||||

```

|

||||

|

||||

当然,成功了!现在由登录程序衍生的程序的用户是组 `panda` 的一部分了!太棒了!这并不会影响我其他的已经在运行的登录程序(及其子进程),如果我真的希望“所有的”进程都能对 `panda` 组有访问权限。我必须完全的重启我的登录会话,这意味着我必须退出我的窗口管理器然后再重新登录。(LCTT 译注:即更新进程树的树根进程,这里是窗口管理器进程。)

|

||||

|

||||

### newgrp 命令

|

||||

|

||||

在 Twitter 上有人告诉我如果只是想启动一个刷新了组信息的命令解释器的话,你可以使用 `newgrp`(LCTT 译注:不启动新的命令解释器),如下:

|

||||

|

||||

```

|

||||

sudo addgroup panda

|

||||

sudo adduser bork panda

|

||||

newgrp panda # starts a new shell, and you don't have to be root to run it!

|

||||

```

|

||||

|

||||

你也可以用 `sg panda bash` 来完成同样的效果,这个命令能启动一个`bash` 登录程序,而这个程序就有 `panda` 组。

|

||||

|

||||

### seduid 将设置有效用户 ID

|

||||

|

||||

其实我一直对一个进程如何以 `setuid root` 的权限来运行意味着什么有点似是而非。现在我知道了,事实上所发生的是:`setuid` 设置了

|

||||

“有效用户 ID”! 如果我(`julia`)运行了一个 `setuid root` 的进程( 比如 `passwd`),那么进程的**真实**用户 ID 将为 `julia`,而**有效**用户 ID 将被设置为 `root`。

|

||||

|

||||

`passwd` 需要以 root 权限来运行,但是它能看到进程的真实用户 ID 是 `julia` ,是 `julia` 启动了这个进程,`passwd` 会阻止这个进程修改除了 `julia` 之外的用户密码。

|

||||

|

||||

### 就是这些了!

|

||||

|

||||

在《[Linux 编程接口][1]》这本书里有很多 Linux 上一些功能的罕见使用方法以及 Linux 上所有的事物到底是怎么运行的详细解释,这里我就不一一展开了。那本书棒极了,我上面所说的都在该书的第九章,这章在 1300 页的书里只占了 17 页。

|

||||

|

||||

我最爱这本书的一点是我只用读 17 页关于用户和组是怎么工作的内容,而这区区 17 页就能做到内容完备、详实有用。我不用读完所有的 1300 页书就能得到有用的东西,太棒了!

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://jvns.ca/blog/2017/11/20/groups/

|

||||

|

||||

作者:[Julia Evans][a]

|

||||

译者:[DavidChen](https://github.com/DavidChenLiang)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://jvns.ca/

|

||||

[1]:http://man7.org/tlpi/

|

||||

@ -1,65 +1,58 @@

|

||||

你没听说过的 Go 语言惊人优点

|

||||

============================================================

|

||||

=========

|

||||

|

||||

|

||||

|

||||

来自 [https://github.com/ashleymcnamara/gophers][1] 的图稿

|

||||

*来自 [https://github.com/ashleymcnamara/gophers][1] 的图稿*

|

||||

|

||||

在这篇文章中,我将讨论为什么你需要尝试一下 Go,以及应该从哪里学起。

|

||||

在这篇文章中,我将讨论为什么你需要尝试一下 Go 语言,以及应该从哪里学起。

|

||||

|

||||

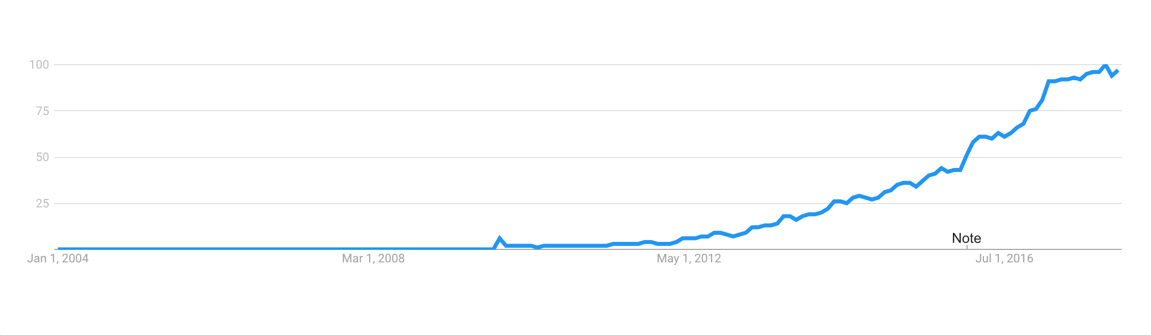

Golang 是可能是最近几年里你经常听人说起的编程语言。尽管它在 2009 年已经发布,但它最近才开始流行起来。

|

||||

Go 语言是可能是最近几年里你经常听人说起的编程语言。尽管它在 2009 年已经发布了,但它最近才开始流行起来。

|

||||

|

||||

|

||||

|

||||

根据 Google 趋势,Golang 语言非常流行。

|

||||

*根据 Google 趋势,Go 语言非常流行。*

|

||||

|

||||

这篇文章不会讨论一些你经常看到的 Golang 的主要特性。

|

||||

这篇文章不会讨论一些你经常看到的 Go 语言的主要特性。

|

||||

|

||||

相反,我想向您介绍一些相当小众但仍然很重要的功能。在您决定尝试Go后,您才会知道这些功能。

|

||||

相反,我想向您介绍一些相当小众但仍然很重要的功能。只有在您决定尝试 Go 语言后,您才会知道这些功能。

|

||||

|

||||

这些都是表面上没有体现出来的惊人特性,但它们可以为您节省数周或数月的工作量。而且这些特性还可以使软件开发更加愉快。

|

||||

|

||||

阅读本文不需要任何语言经验,所以不比担心 Golang 对你来说是新的事物。如果你想了解更多,可以看看我在底部列出的一些额外的链接,。

|

||||

阅读本文不需要任何语言经验,所以不必担心你还不了解 Go 语言。如果你想了解更多,可以看看我在底部列出的一些额外的链接。

|

||||

|

||||

我们将讨论以下主题:

|

||||

|

||||

* GoDoc

|

||||

|

||||

* 静态代码分析

|

||||

|

||||

* 内置的测试和分析框架

|

||||

|

||||

* 竞争条件检测

|

||||

|

||||

* 学习曲线

|

||||

|

||||

* 反射(Reflection)

|

||||

|

||||

* Opinionatedness(专制独裁的 Go)

|

||||

|

||||

* 反射

|

||||

* Opinionatedness

|

||||

* 文化

|

||||

|

||||

请注意,这个列表不遵循任何特定顺序来讨论。

|

||||

|

||||

### GoDoc

|

||||

|

||||

Golang 非常重视代码中的文档,简洁也是如此。

|

||||

Go 语言非常重视代码中的文档,所以也很简洁。

|

||||

|

||||

[GoDoc][4] 是一个静态代码分析工具,可以直接从代码中创建漂亮的文档页面。GoDoc 的一个显着特点是它不使用任何其他的语言,如 JavaDoc,PHPDoc 或 JSDoc 来注释代码中的结构,只需要用英语。

|

||||

[GoDoc][4] 是一个静态代码分析工具,可以直接从代码中创建漂亮的文档页面。GoDoc 的一个显著特点是它不使用任何其他的语言,如 JavaDoc、PHPDoc 或 JSDoc 来注释代码中的结构,只需要用英语。

|

||||

|

||||

它使用从代码中获取的尽可能多的信息来概述、构造和格式化文档。它有多而全的功能,比如:交叉引用,代码示例以及一个指向版本控制系统仓库的链接。

|

||||

它使用从代码中获取的尽可能多的信息来概述、构造和格式化文档。它有多而全的功能,比如:交叉引用、代码示例,并直接链接到你的版本控制系统仓库。

|

||||

|

||||

而你需要做的只有添加一些好的,像 `// MyFunc transforms Foo into Bar` 这样子的注释,而这些注释也会反映在的文档中。你甚至可以添加一些通过网络接口或者在本地可以实际运行的 [代码示例][5]。

|

||||

而你需要做的只有添加一些像 `// MyFunc transforms Foo into Bar` 这样子的老牌注释,而这些注释也会反映在的文档中。你甚至可以添加一些通过网络界面或者在本地可以实际运行的 [代码示例][5]。

|

||||

|

||||

GoDoc 是 Go 的唯一文档引擎,供整个社区使用。这意味着用 Go 编写的每个库或应用程序都具有相同的文档格式。从长远来看,它可以帮你在浏览这些文档时节省大量时间。

|

||||

GoDoc 是 Go 的唯一文档引擎,整个社区都在使用。这意味着用 Go 编写的每个库或应用程序都具有相同的文档格式。从长远来看,它可以帮你在浏览这些文档时节省大量时间。

|

||||

|

||||

例如,这是我最近一个小项目的 GoDoc 页面:[pullkee — GoDoc][6]。

|

||||

|

||||

### 静态代码分析

|

||||

|

||||

Go 严重依赖于静态代码分析。例子包括 godoc 文档,gofmt 代码格式化,golint 代码风格统一,等等。

|

||||

Go 严重依赖于静态代码分析。例如用于文档的 [godoc][7],用于代码格式化的 [gofmt][8],用于代码风格的 [golint][9],等等。

|

||||

|

||||

其中有很多甚至全部包含在类似 [gometalinter][10] 的项目中,这些将它们全部组合成一个实用程序。

|

||||

它们是如此之多,甚至有一个总揽了它们的项目 [gometalinter][10] ,将它们组合成了单一的实用程序。

|

||||

|

||||

这些工具通常作为独立的命令行应用程序实现,并可轻松与任何编码环境集成。

|

||||

|

||||

@ -67,21 +60,21 @@ Go 严重依赖于静态代码分析。例子包括 godoc 文档,gofmt 代码

|

||||

|

||||

创建自己的分析器非常简单,因为 Go 有专门的内置包来解析和加工 Go 源码。

|

||||

|

||||

你可以从这个链接中了解到更多相关内容: [GothamGo Kickoff Meetup: Go Static Analysis Tools by Alan Donovan][11].

|

||||

你可以从这个链接中了解到更多相关内容: [GothamGo Kickoff Meetup: Alan Donovan 的 Go 静态分析工具][11]。

|

||||

|

||||

### 内置的测试和分析框架

|

||||

|

||||

您是否曾尝试为一个从头开始的 Javascript 项目选择测试框架?如果是这样,你可能会明白经历这种分析瘫痪的斗争。您可能也意识到您没有使用其中 80% 的框架。

|

||||

您是否曾尝试为一个从头开始的 JavaScript 项目选择测试框架?如果是这样,你或许会理解经历这种<ruby>过度分析<rt>analysis paralysis</rt></ruby>的痛苦。您可能也意识到您没有使用其中 80% 的框架。

|

||||

|

||||

一旦您需要进行一些可靠的分析,问题就会重复出现。

|

||||

|

||||

Go 附带内置测试工具,旨在简化和提高效率。它为您提供了最简单的 API,并做出最小的假设。您可以将它用于不同类型的测试,分析,甚至可以提供可执行代码示例。

|

||||

Go 附带内置测试工具,旨在简化和提高效率。它为您提供了最简单的 API,并做出最小的假设。您可以将它用于不同类型的测试、分析,甚至可以提供可执行代码示例。

|

||||

|

||||

它可以开箱即用地生成持续集成友好的输出,而且它的用法很简单,只需运行 `go test`。当然,它还支持高级功能,如并行运行测试,跳过标记代码,以及其他更多功能。

|

||||

它可以开箱即用地生成便于持续集成的输出,而且它的用法很简单,只需运行 `go test`。当然,它还支持高级功能,如并行运行测试,跳过标记代码,以及其他更多功能。

|

||||

|

||||

### 竞争条件检测

|

||||

|

||||

您可能已经了解了 Goroutines,它们在 Go 中用于实现并发代码执行。如果你未曾了解过,[这里][12]有一个非常简短的解释。

|

||||

您可能已经听说了 Goroutine,它们在 Go 中用于实现并发代码执行。如果你未曾了解过,[这里][12]有一个非常简短的解释。

|

||||

|

||||

无论具体技术如何,复杂应用中的并发编程都不容易,部分原因在于竞争条件的可能性。

|

||||

|

||||

@ -93,13 +86,13 @@ Go 附带内置测试工具,旨在简化和提高效率。它为您提供了

|

||||

|

||||

### 学习曲线

|

||||

|

||||

您可以在一个晚上学习所有 Go 的语言功能。我是认真的。当然,还有标准库,以及不同,更具体领域的最佳实践。但是两个小时就足以让你自信地编写一个简单的 HTTP 服务器或命令行应用程序。

|

||||

您可以在一个晚上学习**所有**的 Go 语言功能。我是认真的。当然,还有标准库,以及不同的,更具体领域的最佳实践。但是两个小时就足以让你自信地编写一个简单的 HTTP 服务器或命令行应用程序。

|

||||

|

||||

Golang 拥有[出色的文档][14],大部分高级主题已经在博客上进行了介绍:[The Go Programming Language Blog][15]。

|

||||

Go 语言拥有[出色的文档][14],大部分高级主题已经在他们的博客上进行了介绍:[Go 编程语言博客][15]。

|

||||

|

||||

比起 Java(以及 Java 家族的语言),Javascript,Ruby,Python 甚至 PHP,你可以更轻松地把 Go 语言带到你的团队中。由于环境易于设置,您的团队在完成第一个生产代码之前需要进行的投资要小得多。

|

||||

比起 Java(以及 Java 家族的语言)、Javascript、Ruby、Python 甚至 PHP,你可以更轻松地把 Go 语言带到你的团队中。由于环境易于设置,您的团队在完成第一个生产代码之前需要进行的投资要小得多。

|

||||

|

||||

### 反射(Reflection)

|

||||

### 反射

|

||||

|

||||

代码反射本质上是一种隐藏在编译器下并访问有关语言结构的各种元信息的能力,例如变量或函数。

|

||||

|

||||

@ -107,19 +100,19 @@ Golang 拥有[出色的文档][14],大部分高级主题已经在博客上进

|

||||

|

||||

此外,Go [没有实现一个名为泛型的概念][16],这使得以抽象方式处理多种类型更具挑战性。然而,由于泛型带来的复杂程度,许多人认为不实现泛型对语言实际上是有益的。我完全同意。

|

||||

|

||||

根据 Go 的理念(这是一个单独的主题),您应该努力不要过度设计您的解决方案。这也适用于动态类型编程。尽可能坚持使用静态类型,并在确切知道要处理的类型时使用接口(interfaces)。接口在 Go 中非常强大且无处不在。

|

||||

根据 Go 的理念(这是一个单独的主题),您应该努力不要过度设计您的解决方案。这也适用于动态类型编程。尽可能坚持使用静态类型,并在确切知道要处理的类型时使用<ruby>接口<rt>interface</rt></ruby>。接口在 Go 中非常强大且无处不在。

|

||||

|

||||

但是,仍然存在一些情况,你无法知道你处理的数据类型。一个很好的例子是 JSON。您可以在应用程序中来回转换所有类型的数据。字符串,缓冲区,各种数字,嵌套结构等。

|

||||

但是,仍然存在一些情况,你无法知道你处理的数据类型。一个很好的例子是 JSON。您可以在应用程序中来回转换所有类型的数据。字符串、缓冲区、各种数字、嵌套结构等。

|

||||

|

||||

为了解决这个问题,您需要一个工具来检查运行时的数据并根据其类型和结构采取不同行为。反射(Reflect)可以帮到你。Go 拥有一流的反射包,使您的代码能够像 Javascript 这样的语言一样动态。

|

||||

为了解决这个问题,您需要一个工具来检查运行时的数据并根据其类型和结构采取不同行为。<ruby>反射<rt>Reflect</rt></ruby>可以帮到你。Go 拥有一流的反射包,使您的代码能够像 Javascript 这样的语言一样动态。

|

||||

|

||||

一个重要的警告是知道你使用它所带来的代价 - 并且只有知道在没有更简单的方法时才使用它。

|

||||

一个重要的警告是知道你使用它所带来的代价 —— 并且只有知道在没有更简单的方法时才使用它。

|

||||

|

||||

你可以在这里阅读更多相关信息: [反射的法则 — Go 博客][18].

|

||||

|

||||

您还可以在此处阅读 JSON 包源码中的一些实际代码: [src/encoding/json/encode.go — Source Code][19]

|

||||

|

||||

### Opinionatedness

|

||||

### Opinionatedness(专制独裁的 Go)

|

||||

|

||||

顺便问一下,有这样一个单词吗?

|

||||

|

||||

@ -127,9 +120,9 @@ Golang 拥有[出色的文档][14],大部分高级主题已经在博客上进

|

||||

|

||||

这有时候基本上让我卡住了。我需要花时间思考这些事情而不是编写代码并满足用户。

|

||||

|

||||

首先,我应该注意到我完全可以得到这些惯例的来源,它总是来源于你或者你的团队。无论如何,即使是一群经验丰富的 Javascript 开发人员也可以轻松地发现自己拥有完全不同的工具和范例的大部分经验,以实现相同的结果。

|

||||

首先,我应该注意到我完全知道这些惯例的来源,它总是来源于你或者你的团队。无论如何,即使是一群经验丰富的 Javascript 开发人员也很容易发现他们在实现相同的结果时,而大部分的经验却是在完全不同的工具和范例上。

|

||||

|

||||

这导致整个团队中分析的瘫痪,并且使得个体之间更难以相互协作。

|

||||

这导致整个团队中出现过度分析,并且使得个体之间更难以相互协作。

|

||||

|

||||

嗯,Go 是不同的。即使您对如何构建和维护代码有很多强烈的意见,例如:如何命名,要遵循哪些结构模式,如何更好地实现并发。但你只有一个每个人都遵循的风格指南。你只有一个内置在基本工具链中的测试框架。

|

||||

|

||||

@ -141,11 +134,11 @@ Golang 拥有[出色的文档][14],大部分高级主题已经在博客上进

|

||||

|

||||

人们说,每当你学习一门新的口语时,你也会沉浸在说这种语言的人的某些文化中。因此,您学习的语言越多,您可能会有更多的变化。

|

||||

|

||||

编程语言也是如此。无论您将来如何应用新的编程语言,它总能给的带来新的编程视角或某些特别的技术。

|

||||

编程语言也是如此。无论您将来如何应用新的编程语言,它总能给你带来新的编程视角或某些特别的技术。

|

||||

|

||||

无论是函数式编程,模式匹配(pattern matching)还是原型继承(prototypal inheritance)。一旦你学会了它们,你就可以随身携带这些编程思想,这扩展了你作为软件开发人员所拥有的问题解决工具集。它们也改变了你阅读高质量代码的方式。

|

||||

无论是函数式编程,<ruby>模式匹配<rt>pattern matching</rt></ruby>还是<ruby>原型继承<rt>prototypal inheritance</rt></ruby>。一旦你学会了它们,你就可以随身携带这些编程思想,这扩展了你作为软件开发人员所拥有的问题解决工具集。它们也改变了你阅读高质量代码的方式。

|

||||

|

||||

而 Go 在方面有一项了不起的财富。Go 文化的主要支柱是保持简单,脚踏实地的代码,而不会产生许多冗余的抽象概念,并将可维护性放在首位。大部分时间花费在代码的编写工作上,而不是在修补工具和环境或者选择不同的实现方式上,这也是 Go文化的一部分。

|

||||

而 Go 在这方面有一项了不起的财富。Go 文化的主要支柱是保持简单,脚踏实地的代码,而不会产生许多冗余的抽象概念,并将可维护性放在首位。大部分时间花费在代码的编写工作上,而不是在修补工具和环境或者选择不同的实现方式上,这也是 Go 文化的一部分。

|

||||

|

||||

Go 文化也可以总结为:“应当只用一种方法去做一件事”。

|

||||

|

||||

@ -161,12 +154,11 @@ Go 文化也可以总结为:“应当只用一种方法去做一件事”。

|

||||

|

||||

这不是 Go 的所有惊人的优点的完整列表,只是一些被人低估的特性。

|

||||

|

||||

请尝试一下从 [Go 之旅(A Tour of Go)][20]来开始学习 Go,这将是一个令人惊叹的开始。

|

||||

请尝试一下从 [Go 之旅][20] 来开始学习 Go,这将是一个令人惊叹的开始。

|

||||

|

||||

如果您想了解有关 Go 的优点的更多信息,可以查看以下链接:

|

||||

|

||||

* [你为什么要学习 Go? - Keval Patel][2]

|

||||

|

||||

* [告别Node.js - TJ Holowaychuk][3]

|

||||

|

||||

并在评论中分享您的阅读感悟!

|

||||

@ -175,30 +167,16 @@ Go 文化也可以总结为:“应当只用一种方法去做一件事”。

|

||||

|

||||

不断为您的工作寻找最好的工具!

|

||||

|

||||

* * *

|

||||

|

||||

If you like this article, please consider following me for more, and clicking on those funny green little hands right below this text for sharing. 👏👏👏

|

||||

|

||||

Check out my [Github][21] and follow me on [Twitter][22]!

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

作者简介:

|

||||

|

||||

Software Engineer and Traveler. Coding for fun. Javascript enthusiast. Tinkering with Golang. A lot into SOA and Docker. Architect at Velvica.

|

||||

|

||||

------------

|

||||

|

||||

|

||||

-------------------------------------------------------

|

||||

via: https://medium.freecodecamp.org/here-are-some-amazing-advantages-of-go-that-you-dont-hear-much-about-1af99de3b23a

|

||||

|

||||

作者:[Kirill Rogovoy][a]

|

||||

译者:[译者ID](https://github.com/imquanquan)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

译者:[imquanquan](https://github.com/imquanquan)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:

|

||||

[a]:https://twitter.com/krogovoy

|

||||

[1]:https://github.com/ashleymcnamara/gophers

|

||||

[2]:https://medium.com/@kevalpatel2106/why-should-you-learn-go-f607681fad65

|

||||

[3]:https://medium.com/@tjholowaychuk/farewell-node-js-4ba9e7f3e52b

|

||||

181

published/20180516 Manipulating Directories in Linux.md

Normal file

181

published/20180516 Manipulating Directories in Linux.md

Normal file

@ -0,0 +1,181 @@

|

||||

在 Linux 上操作目录

|

||||

======

|

||||

|

||||

|

||||

|

||||

> 让我们继续学习一下 Linux 文件系统的树形结构,并展示一下如何在其中创建你的目录。

|

||||

|

||||

如果你不熟悉本系列(以及 Linux),[请查看我们的第一部分][1]。在那篇文章中,我们贯穿了 Linux 文件系统的树状结构(或者更确切地说是<ruby>文件层次结构标准<rt>File Hierarchy Standard</rt></ruby>,FHS)。我建议你仔细阅读,确保你理解自己能安全的做哪些操作。因为这一次,我将向你展示目录操作的魅力。

|

||||

|

||||

### 新建目录

|

||||

|

||||

在破坏之前,先让我们来创建。首先,打开一个终端窗口并使用命令 `mkdir` 创建一个新目录,如下所示:

|

||||

|

||||

```

|

||||

mkdir <directoryname>

|

||||

```

|

||||

如果你只输入了目录名称,该目录将显示在您当前所在目录中。如果你刚刚打开一个终端,你当前位置为你的家目录。在这个例子中,我们展示了将要创建的目录与你当前所处位置的关系:

|

||||

|

||||

```

|

||||

$ pwd # 告知你当前所在位置(参见第一部分)

|

||||

/home/<username>

|

||||

$ mkdir newdirectory # 创建 /home/<username>/newdirectory

|

||||

```

|

||||

|

||||

(注:你不用输入 `#` 后面的文本。`#` 后面的文本为注释内容,用于解释发生了什么。它会被 shell 忽略,不会被执行)。

|

||||

|

||||

你可以在当前位置中已经存在的某个目录下创建新的目录,方法是在命令行中指定它:

|

||||

|

||||

```

|

||||

mkdir Documents/Letters

|

||||

```

|

||||

|

||||

这将在 `Documents` 目录中创建 `Letters` 目录。

|

||||

|

||||

你还可以在路径中使用 `..` 在当前目录的上一级目录中创建目录。假设你进入刚刚创建的 `Documents/Letters/` 目录,并且想要创建`Documents/Memos/` 目录。你可以这样做:

|

||||

|

||||

```

|

||||

cd Documents/Letters # 进入到你刚刚创建的 Letters/ 目录

|

||||

mkdir ../Memos

|

||||

```

|

||||

|

||||

同样,以上所有内容都是相对于你当前的位置做的。这就是使用了相对路径。

|

||||

|

||||

你还可以使用目录的绝对路径:这意味着告诉 `mkdir` 命令将目录放在和根目录(`/`)有关的位置:

|

||||

|

||||

```

|

||||

mkdir /home/<username>/Documents/Letters

|

||||

```

|

||||

|

||||

在上面的命令中将 `<username>` 更改为你的用户名,这相当于从你的主目录执行 `mkdir Documents/Letters`,通过使用绝对路径你可以在目录树中的任何位置完成这项工作。

|

||||

|

||||

无论你使用相对路径还是绝对路径,只要命令成功执行,`mkdir` 将静默的创建新目录,而没有任何明显的反馈。只有当遇到某种问题时,`mkdir`才会在你敲下回车键后打印一些反馈。

|

||||

|

||||

与大多数其他命令行工具一样,`mkdir` 提供了几个有趣的选项。 `-p` 选项特别有用,因为它允许你嵌套创建目录,即使目录不存在也可以。例如,要在 `Documents/` 中创建一个目录存放写给妈妈的信,你可以这样做:

|

||||

|

||||

```

|

||||

mkdir -p Documents/Letters/Family/Mom

|

||||

```

|

||||

|

||||

`mkdir` 会创建 `Mom/` 之上的整个目录分支,并且也会创建 `Mom/` 目录,无论其上的目录在你敲入该命令时是否已经存在。

|

||||

|

||||

你也可以用空格来分隔目录名,来同时创建几个目录:

|

||||

|

||||

```

|

||||

mkdir Letters Memos Reports

|

||||

```

|

||||

|

||||

这将在当前目录下创建目录 `Letters`、`Memos` 和 `Reports`。

|

||||

|

||||

### 目录名中可怕的空格

|

||||

|

||||

……这带来了目录名称中关于空格的棘手问题。你能在目录名中使用空格吗?是的你可以。那么建议你使用空格吗?不,绝对不建议。空格使一切变得更加复杂,并且可能是危险的操作。

|

||||

|

||||

假设您要创建一个名为 `letters mom/` 的目录。如果你不知道如何更好处理,你可能会输入:

|

||||

|

||||

```

|

||||

mkdir letters mom

|

||||

```

|

||||

|

||||

但这是错误的!错误的!错误的!正如我们在上面介绍的,这将创建两个目录 `letters/` 和 `mom/`,而不是一个目录 `letters mom/`。

|

||||

|

||||

得承认这是一个小麻烦:你所要做的就是删除这两个目录并重新开始,这没什么大不了。

|

||||

|

||||

可是等等!删除目录可是个危险的操作。想象一下,你使用图形工具[Dolphin][2] 或 [Nautilus][3] 创建了目录 `letters mom/`。如果你突然决定从终端删除目录 `letters mom`,并且您在同一目录下有另一个名为 `letters` 的目录,并且该目录中包含重要的文档,结果你为了删除错误的目录尝试了以下操作:

|

||||

|

||||

```

|

||||

rmdir letters mom

|

||||

```

|

||||

|

||||

你将会有删除目录 letters 的风险。这里说“风险”,是因为幸运的是`rmdir` 这条用于删除目录的指令,有一个内置的安全措施,如果你试图删除一个非空目录时,它会发出警告。

|

||||

|

||||

但是,下面这个:

|

||||

|

||||

```

|

||||

rm -Rf letters mom

|

||||

```

|

||||

|

||||

(注:这是删除目录及其内容的一种非常标准的方式)将完全删除 `letters/` 目录,甚至永远不会告诉你刚刚发生了什么。)

|

||||

|

||||

`rm` 命令用于删除文件和目录。当你将它与选项 `-R`(递归删除)和 `-f`(强制删除)一起使用时,它会深入到目录及其子目录中,删除它们包含的所有文件,然后删除子目录本身,然后它将删除所有顶层目录中的文件,再然后是删除目录本身。

|

||||

|

||||

`rm -Rf` 是你必须非常小心处理的命令。

|

||||

|

||||

我的建议是,你可以使用下划线来代替空格,但如果你仍然坚持使用空格,有两种方法可以使它们起作用。您可以使用单引号或双引号,如下所示:

|

||||

|

||||

```

|

||||

mkdir 'letters mom'

|

||||

mkdir "letters dad"

|

||||

```

|

||||

|

||||

或者,你可以转义空格。有些字符对 shell 有特殊意义。正如你所见,空格用于在命令行上分隔选项和参数。 “分离选项和参数”属于“特殊含义”范畴。当你想让 shell 忽略一个字符的特殊含义时,你需要转义,你可以在它前面放一个反斜杠(`\`)如:

|

||||

|

||||

```

|

||||

mkdir letters\ mom

|

||||

mkdir letter\ dad

|

||||

```

|

||||

|

||||

还有其他特殊字符需要转义,如撇号或单引号(`'`),双引号(`“`)和&符号(`&`):

|

||||

|

||||

```

|

||||

mkdir mom\ \&\ dad\'s\ letters

|

||||

```

|

||||

|

||||

我知道你在想什么:如果反斜杠有一个特殊的含义(即告诉 shell 它必须转义下一个字符),这也使它成为一个特殊的字符。然后,你将如何转义转义字符(`\`)?

|

||||

|

||||

事实证明,你转义任何其他特殊字符都是同样的方式:

|

||||

|

||||

```

|

||||

mkdir special\\characters

|

||||

```

|

||||

|

||||

这将生成一个名为 `special\characters/` 的目录。

|

||||

|

||||

感觉困惑?当然。这就是为什么你应该避免在目录名中使用特殊字符,包括空格。

|

||||

|

||||

以防误操作你可以参考下面这个记录特殊字符的列表。(LCTT 译注:此处原文链接丢失。)

|

||||

|

||||

### 总结

|

||||

|

||||

* 使用 `mkdir <directory name>` 创建新目录。

|

||||

* 使用 `rmdir <directory name>` 删除目录(仅在目录为空时才有效)。

|

||||

* 使用 `rm -Rf <directory name>` 来完全删除目录及其内容 —— 请务必谨慎使用。

|

||||

* 使用相对路径创建相对于当前目录的目录: `mkdir newdir`。

|

||||

* 使用绝对路径创建相对于根目录(`/`)的目录: `mkdir /home/<username>/newdir`。

|

||||

* 使用 `..` 在当前目录的上级目录中创建目录: `mkdir ../newdir`。

|

||||

* 你可以通过在命令行上使用空格分隔目录名来创建多个目录: `mkdir onedir twodir threedir`。

|

||||

* 同时创建多个目录时,你可以混合使用相对路径和绝对路径: `mkdir onedir twodir /home/<username>/threedir`。

|

||||

* 在目录名称中使用空格和特殊字符真的会让你很头疼,你最好不要那样做。

|

||||

|

||||

有关更多信息,您可以查看 `mkdir`、`rmdir` 和 `rm` 的手册:

|

||||

|

||||

```

|

||||

man mkdir

|

||||

man rmdir

|

||||

man rm

|

||||

```

|

||||

|

||||

要退出手册页,请按键盘 `q` 键。

|

||||

|

||||

### 下次预告

|

||||

|

||||

在下一部分中,你将学习如何创建、修改和删除文件,以及你需要了解的有关权限和特权的所有信息!

|

||||

|

||||

通过 Linux 基金会和 edX 免费提供的[“Introduction to Linux”][4]课程了解有关Linux的更多信息。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.linux.com/blog/learn/2018/5/manipulating-directories-linux

|

||||

|

||||

作者:[Paul Brown][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[way-ww](https://github.com/way-ww)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.linux.com/users/bro66

|

||||

[1]:https://linux.cn/article-9798-1.html

|

||||

[2]:https://userbase.kde.org/Dolphin

|

||||

[3]:https://projects-old.gnome.org/nautilus/screenshots.html

|

||||

[4]:https://training.linuxfoundation.org/linux-courses/system-administration-training/introduction-to-linux

|

||||

@ -1,80 +1,84 @@

|

||||

这 7 个 Python 库让你写出更易维护的代码

|

||||

让 Python 代码更易维护的七种武器

|

||||

======

|

||||

|

||||

> 检查你的代码的质量,通过这些外部库使其更易维护。

|

||||

|

||||

|

||||

|

||||

> 可读性很重要。

|

||||

> — [Python 之禅(The Zen of Python)][1], Tim Peters

|

||||

> — <ruby>[Python 之禅][1]<rt>The Zen of Python</rt></ruby>,Tim Peters

|

||||

|

||||

尽管很多项目一开始的时候就有可读性和编码标准的要求,但随着项目进入“维护模式”,这些要求都会变得虎头蛇尾。然而,在代码库中保持一致的代码风格和测试标准能够显著减轻维护的压力,也能确保新的开发者能够快速了解项目的情况,同时能更好地保持应用程序的运行良好。

|

||||

随着软件项目进入“维护模式”,对可读性和编码标准的要求很容易落空(甚至从一开始就没有建立过那些标准)。然而,在代码库中保持一致的代码风格和测试标准能够显著减轻维护的压力,也能确保新的开发者能够快速了解项目的情况,同时能更好地全程保持应用程序的质量。

|

||||

|

||||

使用外部库来检查代码的质量不失为保护项目未来可维护性的一个好方法。以下会推荐一些我们最喜爱的[检查代码][2](包括检查 PEP 8 和其它代码风格错误)的库,用它们来强制保持代码风格一致,并确保在项目成熟时有一个可接受的测试覆盖率。

|

||||

|

||||

### 检查你的代码风格

|

||||

|

||||

使用外部库来检查代码运行情况不失为保护项目未来可维护性的一个好方法。以下会推荐一些我们最喜爱的[检查代码][2](包括检查 PEP 8 和其它代码风格错误)的库,用它们来强制保持代码风格一致,并确保在项目成熟时有一个可接受的测试覆盖率。

|

||||

[PEP 8][3] 是 Python 代码风格规范,它规定了类似行长度、缩进、多行表达式、变量命名约定等内容。尽管你的团队自身可能也会有稍微不同于 PEP 8 的代码风格规范,但任何代码风格规范的目标都是在代码库中强制实施一致的标准,使代码的可读性更强、更易于维护。下面三个库就可以用来帮助你美化代码。

|

||||

|

||||

[PEP 8][3]是Python代码风格规范,规定了行长度,缩进,多行表达式、变量命名约定等内容。尽管你的团队自身可能也会有不同于 PEP 8 的代码风格规范,但任何代码风格规范的目标都是在代码库中强制实施一致的标准,使代码的可读性更强、更易于维护。下面三个库就可以用来帮助你美化代码。

|

||||

#### 1、 Pylint

|

||||

|

||||

#### 1\. Pylint

|

||||

[Pylint][4] 是一个检查违反 PEP 8 规范和常见错误的库。它在一些流行的[编辑器和 IDE][5] 中都有集成,也可以单独从命令行运行。

|

||||

|

||||

[Pylint][4] 是一个检查违反 PEP 8 规范和常见错误的库。它在一些流行的编辑器和 IDE 中都有集成,也可以单独从命令行运行。

|

||||

|

||||

执行 `pip install pylint`安装 Pylint 。然后运行 `pylint [options] path/to/dir` 或者 `pylint [options] path/to/module.py` 就可以在命令行中使用 Pylint,它会向控制台输出代码中违反规范和出现错误的地方。

|

||||

执行 `pip install pylint` 安装 Pylint 。然后运行 `pylint [options] path/to/dir` 或者 `pylint [options] path/to/module.py` 就可以在命令行中使用 Pylint,它会向控制台输出代码中违反规范和出现错误的地方。

|

||||

|

||||

你还可以使用 `pylintrc` [配置文件][6]来自定义 Pylint 对哪些代码错误进行检查。

|

||||

|

||||

#### 2\. Flake8

|

||||

#### 2、 Flake8

|

||||

|

||||

对 [Flake8][7] 的描述是“将 PEP 8、Pyflakes(类似 Pylint)、McCabe(代码复杂性检查器)、第三方插件整合到一起,以检查 Python 代码风格和质量的一个 Python 工具”。

|

||||

[Flake8][7] 是“将 PEP 8、Pyflakes(类似 Pylint)、McCabe(代码复杂性检查器)和第三方插件整合到一起,以检查 Python 代码风格和质量的一个 Python 工具”。

|

||||

|

||||

执行 `pip install flake8` 安装 flake8 ,然后执行 `flake8 [options] path/to/dir` 或者 `flake8 [options] path/to/module.py` 可以查看报出的错误和警告。

|

||||

|

||||

和 Pylint 类似,Flake8 允许通过[配置文件][8]来自定义检查的内容。它有非常清晰的文档,包括一些有用的[提交钩子][9],可以将自动检查代码纳入到开发工作流程之中。

|

||||

|

||||

Flake8 也允许集成到一些流行的编辑器和 IDE 当中,但在文档中并没有详细说明。要将 Flake8 集成到喜欢的编辑器或 IDE 中,可以搜索插件(例如 [Sublime Text 的 Flake8 插件][10])。

|

||||

Flake8 也可以集成到一些流行的编辑器和 IDE 当中,但在文档中并没有详细说明。要将 Flake8 集成到喜欢的编辑器或 IDE 中,可以搜索插件(例如 [Sublime Text 的 Flake8 插件][10])。

|

||||

|

||||

#### 3\. Isort

|

||||

#### 3、 Isort

|

||||

|

||||

[Isort][11] 这个库能将你在项目中导入的库按字母顺序,并将其[正确划分为不同部分][12](例如标准库、第三方库,自建的库等)。这样提高了代码的可读性,并且可以在导入的库较多的时候轻松找到各个库。

|

||||

[Isort][11] 这个库能将你在项目中导入的库按字母顺序排序,并将其[正确划分为不同部分][12](例如标准库、第三方库、自建的库等)。这样提高了代码的可读性,并且可以在导入的库较多的时候轻松找到各个库。

|

||||

|

||||

执行 `pip install isort` 安装 isort,然后执行 `isort path/to/module.py` 就可以运行了。文档中还提供了更多的配置项,例如通过配置 `.isort.cfg` 文件来决定 isort 如何处理一个库的多行导入。

|

||||

执行 `pip install isort` 安装 isort,然后执行 `isort path/to/module.py` 就可以运行了。[文档][13]中还提供了更多的配置项,例如通过[配置][14] `.isort.cfg` 文件来决定 isort 如何处理一个库的多行导入。

|

||||

|

||||

和 Flake8、Pylint 一样,isort 也提供了将其与流行的[编辑器和 IDE][15] 集成的插件。

|

||||

|

||||

### 共享代码风格

|

||||

### 分享你的代码风格

|

||||

|

||||

每次文件发生变动之后都用命令行手动检查代码是一件痛苦的事,你可能也不太喜欢通过运行 IDE 中某个插件来实现这个功能。同样地,你的同事可能会用不同的代码检查方式,也许他们的编辑器中也没有安装插件,甚至自己可能也不会严格检查代码和按照警告来更正代码。总之,你共享的代码库将会逐渐地变得混乱且难以阅读。

|

||||

每次文件发生变动之后都用命令行手动检查代码是一件痛苦的事,你可能也不太喜欢通过运行 IDE 中某个插件来实现这个功能。同样地,你的同事可能会用不同的代码检查方式,也许他们的编辑器中也没有那种插件,甚至你自己可能也不会严格检查代码和按照警告来更正代码。总之,你分享出来的代码库将会逐渐地变得混乱且难以阅读。

|

||||

|

||||

一个很好的解决方案是使用一个库,自动将代码按照 PEP 8 规范进行格式化。我们推荐的三个库都有不同的自定义级别来控制如何格式化代码。其中有一些设置较为特殊,例如 Pylint 和 Flake8 ,你需要先行测试,看看是否有你无法忍受蛋有不能修改的默认配置。

|

||||

一个很好的解决方案是使用一个库,自动将代码按照 PEP 8 规范进行格式化。我们推荐的三个库都有不同的自定义级别来控制如何格式化代码。其中有一些设置较为特殊,例如 Pylint 和 Flake8 ,你需要先行测试,看看是否有你无法忍受但又不能修改的默认配置。

|

||||

|

||||

#### 4\. Autopep8

|

||||

#### 4、 Autopep8

|

||||

|

||||

[Autopep8][16] 可以自动格式化指定的模块中的代码,包括重新缩进行,修复缩进,删除多余的空格,并重构常见的比较错误(例如布尔值和 `None` 值)。你可以查看文档中完整的[更正列表][17]。

|

||||

[Autopep8][16] 可以自动格式化指定的模块中的代码,包括重新缩进行、修复缩进、删除多余的空格,并重构常见的比较错误(例如布尔值和 `None` 值)。你可以查看文档中完整的[更正列表][17]。

|

||||

|

||||

运行 `pip install --upgrade autopep8` 安装 autopep8。然后执行 `autopep8 --in-place --aggressive --aggressive <filename>` 就可以重新格式化你的代码。`aggressive` 标记的数量表示 auotopep8 在代码风格控制上有多少控制权。在这里可以详细了解 [aggressive][18] 选项。

|

||||

运行 `pip install --upgrade autopep8` 安装 Autopep8。然后执行 `autopep8 --in-place --aggressive --aggressive <filename>` 就可以重新格式化你的代码。`aggressive` 选项的数量表示 Auotopep8 在代码风格控制上有多少控制权。在这里可以详细了解 [aggressive][18] 选项。

|

||||

|

||||

#### 5\. Yapf

|

||||

#### 5、 Yapf

|

||||

|

||||

[Yapf][19] 是另一种有自己的[配置项][20]列表的重新格式化代码的工具。它与 autopep8 的不同之处在于它不仅会指出代码中违反 PEP 8 规范的地方,还会对没有违反 PEP 8 但代码风格不一致的地方重新格式化,旨在令代码的可读性更强。

|

||||

[Yapf][19] 是另一种有自己的[配置项][20]列表的重新格式化代码的工具。它与 Autopep8 的不同之处在于它不仅会指出代码中违反 PEP 8 规范的地方,还会对没有违反 PEP 8 但代码风格不一致的地方重新格式化,旨在令代码的可读性更强。

|

||||

|

||||

执行`pip install yapf` 安装 Yapf,然后执行 `yapf [options] path/to/dir` 或 `yapf [options] path/to/module.py` 可以对代码重新格式化。

|

||||

执行 `pip install yapf` 安装 Yapf,然后执行 `yapf [options] path/to/dir` 或 `yapf [options] path/to/module.py` 可以对代码重新格式化。[定制选项][20]的完整列表在这里。

|

||||

|

||||

#### 6\. Black

|

||||

#### 6、 Black

|

||||

|

||||

[Black][21] 在代码检查工具当中算是比较新的一个。它与 autopep8 和 Yapf 类似,但限制较多,没有太多的自定义选项。这样的好处是你不需要去决定使用怎么样的代码风格,让 black 来给你做决定就好。你可以在这里查阅 black 的[自定义选项][22]以及[如何在配置文件中对其进行设置][23]。

|

||||

[Black][21] 在代码检查工具当中算是比较新的一个。它与 Autopep8 和 Yapf 类似,但限制较多,没有太多的自定义选项。这样的好处是你不需要去决定使用怎么样的代码风格,让 Black 来给你做决定就好。你可以在这里查阅 Black [有限的自定义选项][22]以及[如何在配置文件中对其进行设置][23]。

|

||||

|

||||

Black 依赖于 Python 3.6+,但它可以格式化用 Python 2 编写的代码。执行 `pip install black` 安装 black,然后执行 `black path/to/dir` 或 `black path/to/module.py` 就可以使用 black 优化你的代码。

|

||||

Black 依赖于 Python 3.6+,但它可以格式化用 Python 2 编写的代码。执行 `pip install black` 安装 Black,然后执行 `black path/to/dir` 或 `black path/to/module.py` 就可以使用 Black 优化你的代码。

|

||||

|

||||

### 检查你的测试覆盖率

|

||||

|

||||

如果你正在进行测试工作,你需要确保提交到代码库的新代码都已经测试通过,并且不会降低测试覆盖率。虽然测试覆盖率不是衡量测试有效性和充分性的唯一指标,但它是确保项目遵循基本测试标准的一种方法。对于计算测试覆盖率,我们推荐使用 Coverage 这个库。

|

||||

如果你正在进行编写测试,你需要确保提交到代码库的新代码都已经测试通过,并且不会降低测试覆盖率。虽然测试覆盖率不是衡量测试有效性和充分性的唯一指标,但它是确保项目遵循基本测试标准的一种方法。对于计算测试覆盖率,我们推荐使用 Coverage 这个库。

|

||||

|

||||

#### 7\. Coverage

|

||||

#### 7、 Coverage

|

||||

|

||||

[Coverage][24] 有数种显示测试覆盖率的方式,包括将结果输出到控制台或 HTML 页面,并指出哪些具体哪些地方没有被覆盖到。你可以通过配置文件自定义 Coverage 检查的内容,让你更方便使用。

|

||||

[Coverage][24] 有数种显示测试覆盖率的方式,包括将结果输出到控制台或 HTML 页面,并指出哪些具体哪些地方没有被覆盖到。你可以通过[配置文件][25]自定义 Coverage 检查的内容,让你更方便使用。

|

||||

|

||||

执行 `pip install coverage` 安装 Converage 。然后执行 `coverage [path/to/module.py] [args]` 可以运行程序并查看输出结果。如果要查看哪些代码行没有被覆盖,执行 `coverage report -m` 即可。

|

||||

|

||||

持续集成(Continuous integration, CI)是在合并和部署代码之前自动检查代码风格错误和测试覆盖率最小值的过程。很多免费或付费的工具都可以用于执行这项工作,具体的过程不在本文中赘述,但 CI 过程是令代码更易读和更易维护的重要步骤,关于这一部分可以参考 [Travis CI][26] 和 [Jenkins][27]。

|

||||

### 持续集成工具

|

||||

|

||||

<ruby>持续集成<rt>Continuous integration</rt></ruby>(CI)是在合并和部署代码之前自动检查代码风格错误和测试覆盖率最小值的过程。很多免费或付费的工具都可以用于执行这项工作,具体的过程不在本文中赘述,但 CI 过程是令代码更易读和更易维护的重要步骤,关于这一部分可以参考 [Travis CI][26] 和 [Jenkins][27]。

|

||||

|

||||

以上这些只是用于检查 Python 代码的各种工具中的其中几个。如果你有其它喜爱的工具,欢迎在评论中分享。

|

||||

|

||||

@ -85,7 +89,7 @@ via: https://opensource.com/article/18/7/7-python-libraries-more-maintainable-co

|

||||

作者:[Jeff Triplett][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[HankChow](https://github.com/HankChow)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -16,12 +16,8 @@ Linux DNS 查询剖析(第四部分)

|

||||

|

||||

在第四部分中,我将介绍容器如何完成 DNS 查询。你想的没错,也不是那么简单。

|

||||

|

||||

* * *

|

||||

|

||||

### 1) Docker 和 DNS

|

||||

|

||||

============================================================

|

||||

|

||||

在 [Linux DNS 查询剖析(第三部分)][3] 中,我们介绍了 `dnsmasq`,其工作方式如下:将 DNS 查询指向到 localhost 地址 `127.0.0.1`,同时启动一个进程监听 `53` 端口并处理查询请求。

|

||||

|

||||

在按上述方式配置 DNS 的主机上,如果运行了一个 Docker 容器,容器内的 `/etc/resolv.conf` 文件会是怎样的呢?

|

||||

@ -72,29 +68,29 @@ google.com. 112 IN A 172.217.23.14

|

||||

|

||||

在这个问题上,Docker 的解决方案是忽略所有可能的复杂情况,即无论主机中使用什么 DNS 服务器,容器内都使用 Google 的 DNS 服务器 `8.8.8.8` 和 `8.8.4.4` 完成 DNS 查询。

|

||||

|

||||

_我的经历:在 2013 年,我遇到了使用 Docker 以来的第一个问题,与 Docker 的这种 DNS 解决方案密切相关。我们公司的网络屏蔽了 `8.8.8.8` 和 `8.8.4.4`,导致容器无法解析域名。_

|

||||

_我的经历:在 2013 年,我遇到了使用 Docker 以来的第一个问题,与 Docker 的这种 DNS 解决方案密切相关。我们公司的网络屏蔽了 `8.8.8.8` 和 `8.8.4.4`,导致容器无法解析域名。_

|

||||

|

||||

这就是 Docker 容器的情况,但对于包括 Kubernetes 在内的容器 _<ruby>编排引擎<rt>orchestrators</rt></ruby>_,情况又有些不同。

|

||||

这就是 Docker 容器的情况,但对于包括 Kubernetes 在内的容器 <ruby>编排引擎<rt>orchestrators</rt></ruby>,情况又有些不同。

|

||||

|

||||

### 2) Kubernetes 和 DNS

|

||||

|

||||

在 Kubernetes 中,最小部署单元是 `pod`;`pod` 是一组相互协作的容器,共享 IP 地址(和其它资源)。

|

||||

在 Kubernetes 中,最小部署单元是 pod;它是一组相互协作的容器,共享 IP 地址(和其它资源)。

|

||||

|

||||

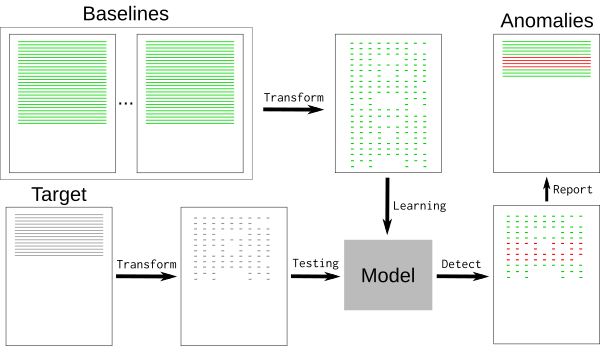

Kubernetes 面临的一个额外的挑战是,将 Kubernetes 服务请求(例如,`myservice.kubernetes.io`)通过对应的<ruby>解析器<rt>resolver</rt></ruby>,转发到具体服务地址对应的<ruby>内网地址<rt>private network</rt></ruby>。这里提到的服务地址被称为归属于“<ruby>集群域<rt>cluster domain</rt></ruby>”。集群域可由管理员配置,根据配置可以是 `cluster.local` 或 `myorg.badger` 等。

|

||||

|

||||

在 Kubernetes 中,你可以为 `pod` 指定如下四种 `pod` 内 DNS 查询的方式。

|

||||

在 Kubernetes 中,你可以为 pod 指定如下四种 pod 内 DNS 查询的方式。

|

||||

|

||||

* Default

|

||||

**Default**

|

||||

|

||||

在这种(名称容易让人误解)的方式中,`pod` 与其所在的主机采用相同的 DNS 查询路径,与前面介绍的主机 DNS 查询一致。我们说这种方式的名称容易让人误解,因为该方式并不是默认选项!`ClusterFirst` 才是默认选项。

|

||||

在这种(名称容易让人误解)的方式中,pod 与其所在的主机采用相同的 DNS 查询路径,与前面介绍的主机 DNS 查询一致。我们说这种方式的名称容易让人误解,因为该方式并不是默认选项!`ClusterFirst` 才是默认选项。

|

||||

|

||||

如果你希望覆盖 `/etc/resolv.conf` 中的条目,你可以添加到 `kubelet` 的配置中。

|

||||

|

||||

* ClusterFirst

|

||||

**ClusterFirst**

|

||||

|

||||

在 `ClusterFirst` 方式中,遇到 DNS 查询请求会做有选择的转发。根据配置的不同,有以下两种方式:

|

||||

|

||||

第一种方式配置相对古老但更简明,即采用一个规则:如果请求的域名不是集群域的子域,那么将其转发到 `pod` 所在的主机。

|

||||

第一种方式配置相对古老但更简明,即采用一个规则:如果请求的域名不是集群域的子域,那么将其转发到 pod 所在的主机。

|

||||

|

||||

第二种方式相对新一些,你可以在内部 DNS 中配置选择性转发。

|

||||

|

||||

@ -115,27 +111,27 @@ data:

|

||||

|

||||

在 `stubDomains` 条目中,可以为特定域名指定特定的 DNS 服务器;而 `upstreamNameservers` 条目则给出,待查询域名不是集群域子域情况下用到的 DNS 服务器。

|

||||

|

||||

这是通过在一个 `pod` 中运行我们熟知的 `dnsmasq` 实现的。

|

||||

这是通过在一个 pod 中运行我们熟知的 `dnsmasq` 实现的。

|

||||

|

||||

|

||||

|

||||

剩下两种选项都比较小众:

|

||||

|

||||

* ClusterFirstWithHostNet

|

||||

**ClusterFirstWithHostNet**

|

||||

|

||||

适用于 `pod` 使用主机网络的情况,例如绕开 Docker 网络配置,直接使用与 `pod` 对应主机相同的网络。

|

||||

适用于 pod 使用主机网络的情况,例如绕开 Docker 网络配置,直接使用与 pod 对应主机相同的网络。

|

||||

|

||||

* None

|

||||

**None**

|

||||

|

||||

`None` 意味着不改变 DNS,但强制要求你在 `pod` <ruby>规范文件<rt>specification</rt></ruby>的 `dnsConfig` 条目中指定 DNS 配置。

|

||||

|

||||

### CoreDNS 即将到来

|

||||

|

||||

除了上面提到的那些,一旦 `CoreDNS` 取代Kubernetes 中的 `kube-dns`,情况还会发生变化。`CoreDNS` 相比 `kube-dns` 具有可配置性更高、效率更高等优势。

|

||||

除了上面提到的那些,一旦 `CoreDNS` 取代 Kubernetes 中的 `kube-dns`,情况还会发生变化。`CoreDNS` 相比 `kube-dns` 具有可配置性更高、效率更高等优势。

|

||||

|

||||

如果想了解更多,参考[这里][5]。

|

||||

|

||||

如果你对 OpenShift 的网络感兴趣,我曾写过一篇[文章][6]可供你参考。但文章中 OpenShift 的版本是 `3.6`,可能有些过时。

|

||||

如果你对 OpenShift 的网络感兴趣,我曾写过一篇[文章][6]可供你参考。但文章中 OpenShift 的版本是 3.6,可能有些过时。

|

||||

|

||||

### 第四部分总结

|

||||

|

||||

@ -152,14 +148,14 @@ via: https://zwischenzugs.com/2018/08/06/anatomy-of-a-linux-dns-lookup-part-iv/

|

||||

|

||||

作者:[zwischenzugs][a]

|

||||

译者:[pinewall](https://github.com/pinewall)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://zwischenzugs.com/

|

||||

[1]:https://zwischenzugs.com/2018/06/08/anatomy-of-a-linux-dns-lookup-part-i/

|

||||

[2]:https://zwischenzugs.com/2018/06/18/anatomy-of-a-linux-dns-lookup-part-ii/

|

||||

[3]:https://zwischenzugs.com/2018/07/06/anatomy-of-a-linux-dns-lookup-part-iii/

|

||||

[1]:https://linux.cn/article-9943-1.html

|

||||

[2]:https://linux.cn/article-9949-1.html

|

||||

[3]:https://linux.cn/article-9972-1.html

|

||||

[4]:https://kubernetes.io/docs/tasks/administer-cluster/dns-custom-nameservers/#impacts-on-pods

|

||||

[5]:https://coredns.io/

|

||||

[6]:https://zwischenzugs.com/2017/10/21/openshift-3-6-dns-in-pictures/

|

||||

@ -0,0 +1,118 @@

|

||||

怎样解决 Ubuntu 中的 “sub process usr bin dpkg returned an error code 1” 错误

|

||||

======

|

||||

|

||||

> 如果你在 Ubuntu Linux 上安装软件时遇到 “sub process usr bin dpkg returned an error code 1”,请按照以下步骤进行修复。

|

||||

|

||||

Ubuntu 和其他基于 Debian 的发行版中的一个常见问题是已经损坏的包。你尝试更新系统或安装新软件包时会遇到类似 “Sub-process /usr/bin/dpkg returned an error code” 的错误。

|

||||

|

||||

这就是前几天发生在我身上的事。我试图在 Ubuntu 中安装一个电台程序时,它给我了这个错误:

|

||||

|

||||

```

|

||||

Unpacking python-gst-1.0 (1.6.2-1build1) ...

|

||||

Selecting previously unselected package radiotray.

|

||||

Preparing to unpack .../radiotray_0.7.3-5ubuntu1_all.deb ...

|

||||

Unpacking radiotray (0.7.3-5ubuntu1) ...

|

||||

Processing triggers for man-db (2.7.5-1) ...

|

||||

Processing triggers for desktop-file-utils (0.22-1ubuntu5.2) ...

|

||||

Processing triggers for bamfdaemon (0.5.3~bzr0+16.04.20180209-0ubuntu1) ...

|

||||

Rebuilding /usr/share/applications/bamf-2.index...

|

||||

Processing triggers for gnome-menus (3.13.3-6ubuntu3.1) ...

|

||||

Processing triggers for mime-support (3.59ubuntu1) ...

|

||||

Setting up polar-bookshelf (1.0.0-beta56) ...

|

||||

ln: failed to create symbolic link '/usr/local/bin/polar-bookshelf': No such file or directory

|

||||

dpkg: error processing package polar-bookshelf (--configure):

|

||||

subprocess installed post-installation script returned error exit status 1

|

||||

Setting up python-appindicator (12.10.1+16.04.20170215-0ubuntu1) ...

|

||||

Setting up python-gst-1.0 (1.6.2-1build1) ...

|

||||

Setting up radiotray (0.7.3-5ubuntu1) ...

|

||||

Errors were encountered while processing:

|

||||

polar-bookshelf

|

||||

E: Sub-process /usr/bin/dpkg returned an error code (1)

|

||||

|

||||

```

|

||||

|

||||

这里最后三行非常重要。

|

||||

|

||||

```

|

||||

Errors were encountered while processing:

|

||||

polar-bookshelf

|

||||

E: Sub-process /usr/bin/dpkg returned an error code (1)

|

||||

```

|

||||

|

||||

它告诉我 polar-bookshelf 包引发了问题。这可能对你如何修复这个错误至关重要。

|

||||

|

||||

### 修复 Sub-process /usr/bin/dpkg returned an error code (1)

|

||||

|

||||

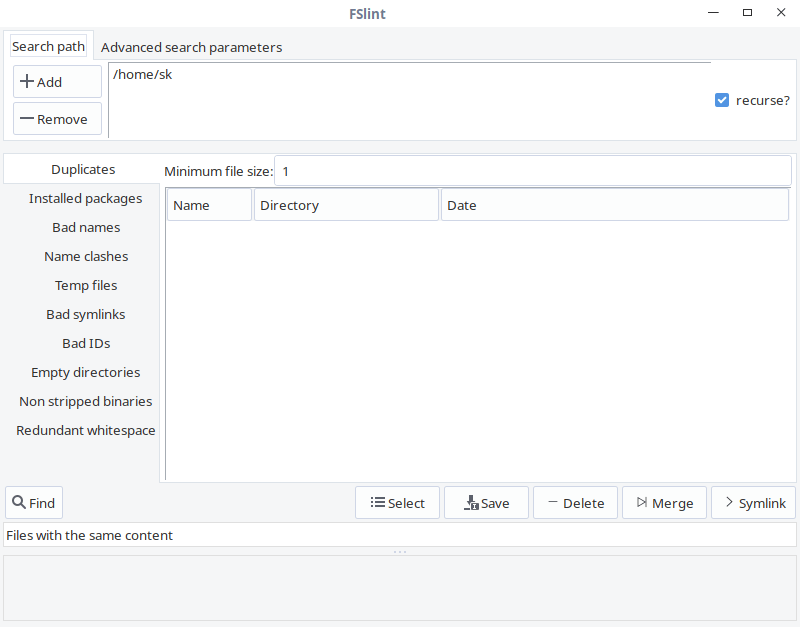

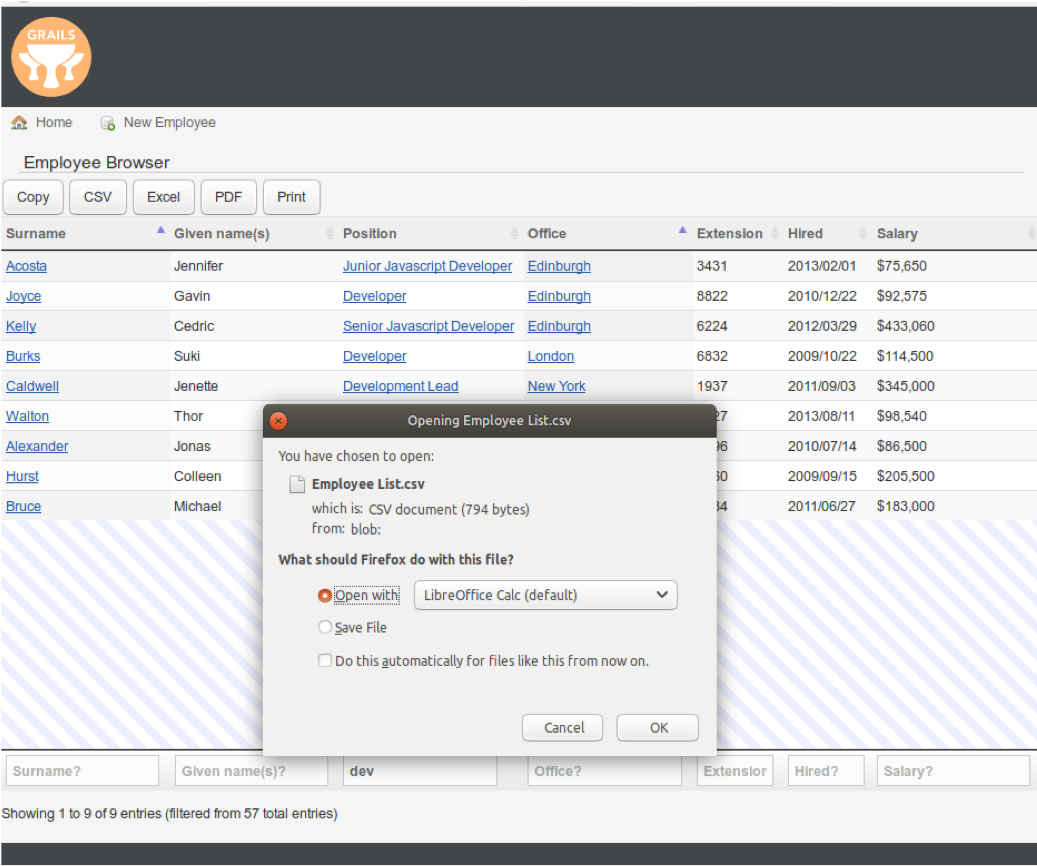

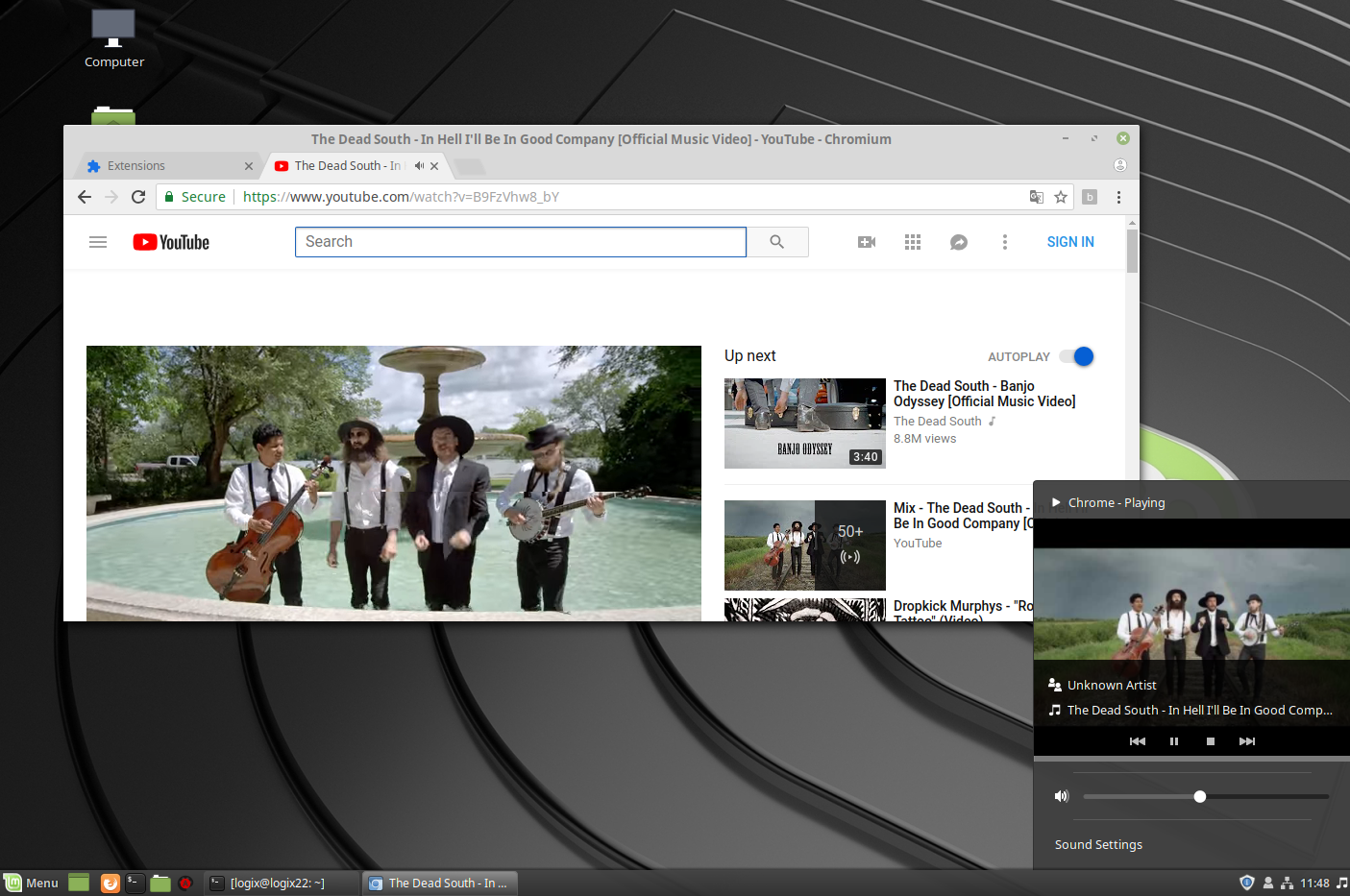

![Fix update errors in Ubuntu Linux][1]

|

||||

|

||||

让我们尝试修复这个损坏的错误包。我将展示几种你可以逐一尝试的方法。最初的那些易于使用,几乎不用动脑子。

|

||||

|

||||

在试了这里讨论的每一种方法之后,你应该尝试运行 `sudo apt update`,接着尝试安装新的包或升级。

|

||||

|

||||

#### 方法 1:重新配包数据库

|

||||

|

||||

你可以尝试的第一种方法是重新配置包数据库。数据库可能在安装包时损坏了。重新配置通常可以解决问题。

|

||||

|

||||

```

|

||||

sudo dpkg --configure -a

|

||||

```

|

||||

|

||||

#### 方法 2:强制安装

|

||||

|

||||

如果是之前包安装过程被中断,你可以尝试强制安装。

|

||||

|

||||

```

|

||||

sudo apt-get install -f

|

||||

```

|

||||

|

||||

#### 方法3:尝试删除有问题的包

|

||||

|

||||

如果这不是你的问题,你可以尝试手动删除包。但不要对 Linux 内核包(以 linux- 开头)执行此操作。

|

||||

|

||||

```

|

||||

sudo apt remove

|

||||

```

|

||||

|

||||

#### 方法 4:删除有问题的包中的信息文件

|

||||

|

||||

这应该是你最后的选择。你可以尝试从 `/var/lib/dpkg/info` 中删除与相关软件包关联的文件。

|

||||

|

||||

**你需要了解一些基本的 Linux 命令来了解发生了什么以及如何对应你的问题**

|

||||

|

||||

就我而言,我在 polar-bookshelf 中遇到问题。所以我查找了与之关联的文件:

|

||||

|

||||

```

|

||||

ls -l /var/lib/dpkg/info | grep -i polar-bookshelf

|

||||

-rw-r--r-- 1 root root 2324811 Aug 14 19:29 polar-bookshelf.list

|

||||

-rw-r--r-- 1 root root 2822824 Aug 10 04:28 polar-bookshelf.md5sums

|

||||

-rwxr-xr-x 1 root root 113 Aug 10 04:28 polar-bookshelf.postinst

|

||||

-rwxr-xr-x 1 root root 84 Aug 10 04:28 polar-bookshelf.postrm

|

||||

```

|

||||

|

||||

现在我需要做的就是删除这些文件:

|

||||

|

||||

```

|

||||

sudo mv /var/lib/dpkg/info/polar-bookshelf.* /tmp

|

||||

```

|

||||

|

||||

使用 `sudo apt update`,接着你应该就能像往常一样安装软件了。

|

||||

|

||||

#### 哪种方法适合你(如果有效)?

|

||||

|

||||

我希望这篇快速文章可以帮助你修复 “E: Sub-process /usr/bin/dpkg returned an error code (1)” 的错误。

|

||||

|

||||

如果它对你有用,是那种方法?你是否设法使用其他方法修复此错误?如果是,请分享一下以帮助其他人解决此问题。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://itsfoss.com/dpkg-returned-an-error-code-1/

|

||||

|

||||

作者:[Abhishek Prakash][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://itsfoss.com/author/abhishek/

|

||||

[1]:https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2018/08/fix-common-update-errors-ubuntu.jpeg

|

||||

@ -0,0 +1,161 @@

|

||||

Bat:一种具有语法高亮和 Git 集成的 Cat 类命令

|

||||

======

|

||||

|

||||

|

||||

|

||||

在类 UNIX 系统中,我们使用 `cat` 命令去打印和连接文件。使用 `cat` 命令,我们能将文件目录打印到到标准输出,合成几个文件为一个目标文件,还有追加几个文件到目标文件中。今天,我偶然发现一个具有相似作用的命令叫做 “Bat” ,它是 `cat` 命令的一个克隆版,具有一些例如语法高亮、 Git 集成和自动分页等非常酷的特性。在这个简略指南中,我们将讲述如何在 Linux 中安装和使用 `bat` 命令。

|

||||

|

||||

### 安装

|

||||

|

||||

Bat 可以在 Arch Linux 的默认软件源中获取。 所以你可以使用 `pacman` 命令在任何基于 arch 的系统上来安装它。

|

||||

|

||||

```

|

||||

$ sudo pacman -S bat

|

||||

```

|

||||

|

||||

在 Debian、Ubuntu、Linux Mint 等系统中,从其[发布页面][1]下载 **.deb** 文件,然后用下面的命令来安装。

|

||||

|

||||

```

|

||||

$ sudo apt install gdebi

|

||||

$ sudo gdebi bat_0.5.0_amd64.deb

|

||||

```

|

||||

|

||||

对于其他系统,你也许需要从软件源编译并安装。确保你已经安装了 Rust 1.26 或者更高版本。

|

||||

|

||||

然后运行以下命令来安装 Bat:

|

||||

|

||||

```

|

||||

$ cargo install bat

|

||||

```

|

||||

|

||||

或者,你可以从 [Linuxbrew][2] 软件包管理中来安装它。

|

||||

|

||||

```

|

||||

$ brew install bat

|

||||

```

|

||||

|

||||

### bat 命令的使用

|

||||

|

||||

`bat` 命令的使用与 `cat` 命令的使用非常相似。

|

||||

|

||||

使用 `bat` 命令创建一个新的文件:

|

||||

|

||||

```

|

||||

$ bat > file.txt

|

||||

```

|

||||

|

||||

使用 `bat` 命令来查看文件内容,只需要:

|

||||

|

||||

```

|

||||

$ bat file.txt

|

||||

```

|

||||

|

||||

你能同时查看多个文件:

|

||||

|

||||

```

|

||||

$ bat file1.txt file2.txt

|

||||

```

|

||||

|

||||

将多个文件的内容合并至一个单独文件中:

|

||||

|

||||

```

|

||||

$ bat file1.txt file2.txt file3.txt > document.txt

|

||||

```

|

||||

|

||||

就像我之前提到的那样,除了浏览和编辑文件以外,`bat` 命令有一些非常酷的特性。

|

||||

|

||||

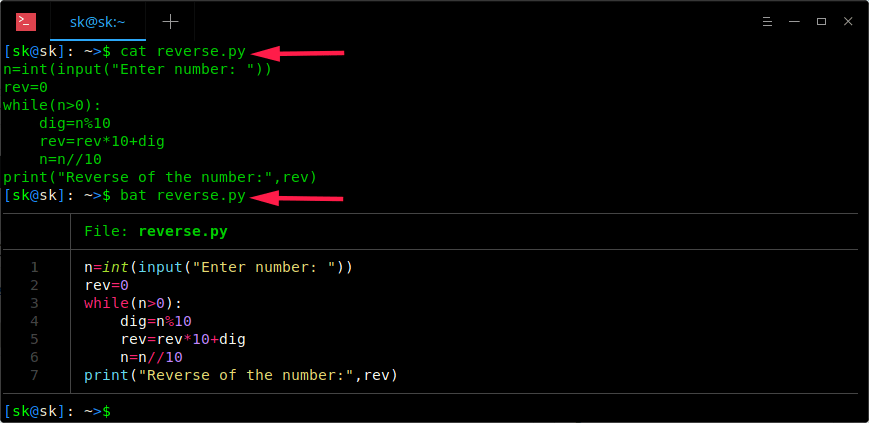

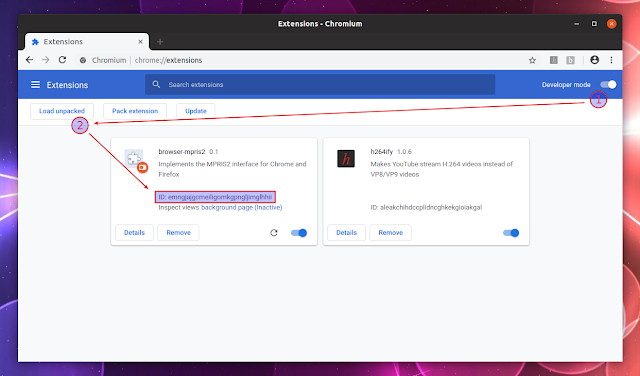

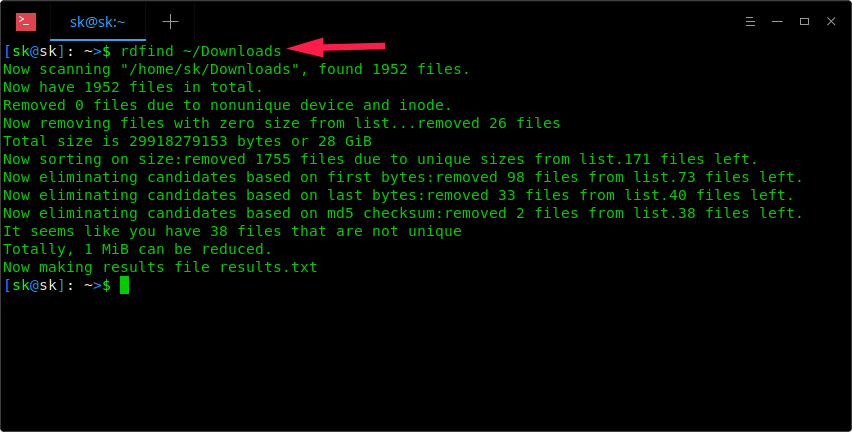

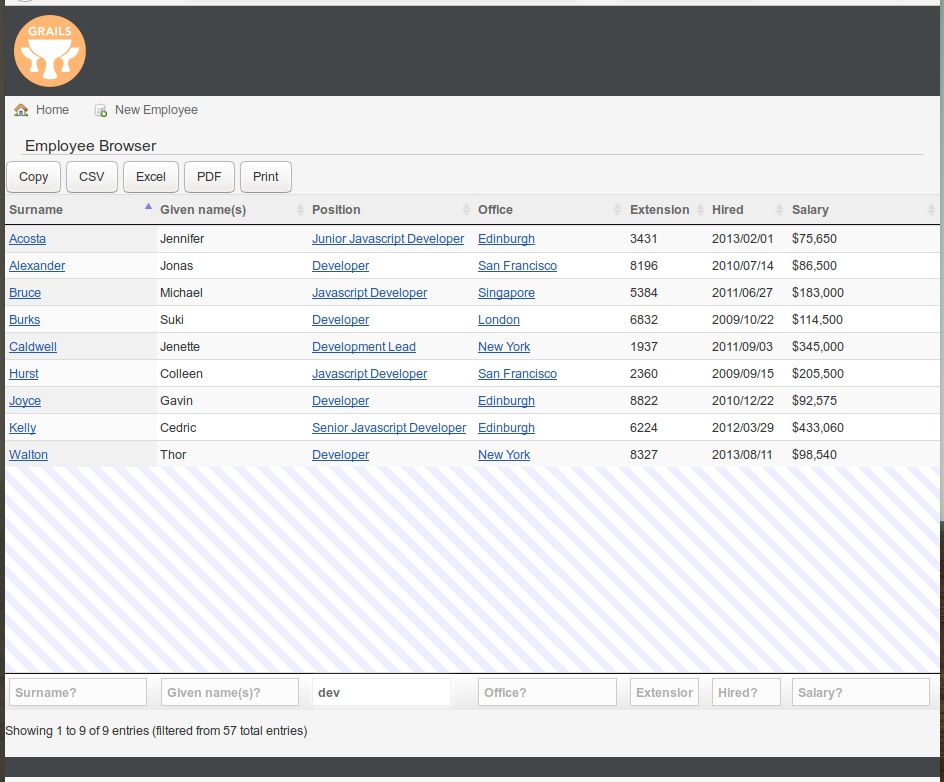

`bat` 命令支持大多数编程和标记语言的<ruby>语法高亮<rt>syntax highlighting</rt></ruby>。比如,下面这个例子。我将使用 `cat` 和 `bat` 命令来展示 `reverse.py` 的内容。

|

||||

|

||||

|

||||

|

||||

你注意到区别了吗? `cat` 命令以纯文本格式显示文件的内容,而 `bat` 命令显示了语法高亮和整齐的文本对齐格式。更好了不是吗?

|

||||

|

||||

如果你只想显示行号(而没有表格)使用 `-n` 标记。

|

||||

|

||||

```

|

||||

$ bat -n reverse.py

|

||||

```

|

||||

|

||||

|

||||

|

||||

另一个 `bat` 命令中值得注意的特性是它支持<ruby>自动分页<rt>automatic paging</rt></ruby>。 它的意思是当文件的输出对于屏幕来说太大的时候,`bat` 命令自动将自己的输出内容传输到 `less` 命令中,所以你可以一页一页的查看输出内容。

|

||||

|

||||

让我给你看一个例子,使用 `cat` 命令查看跨多个页面的文件的内容时,提示符会快速跳至文件的最后一页,你看不到内容的开头和中间部分。

|

||||

|

||||

看一下下面的输出:

|

||||

|

||||

|

||||

|

||||

正如你所看到的,`cat` 命令显示了文章的最后一页。

|

||||

|

||||

所以你也许需要去将使用 `cat` 命令的输出传输到 `less` 命令中去从开头一页一页的查看内容。

|

||||

|

||||

```

|

||||

$ cat reverse.py | less

|

||||

```

|

||||

|

||||

现在你可以使用回车键去一页一页的查看输出。然而当你使用 `bat` 命令时这些都是不必要的。`bat` 命令将自动传输跨越多个页面的文件的输出。

|

||||

|

||||

```

|

||||

$ bat reverse.py

|

||||

```

|

||||

|

||||

|

||||

|

||||

现在按下回车键去往下一页。

|

||||

|

||||

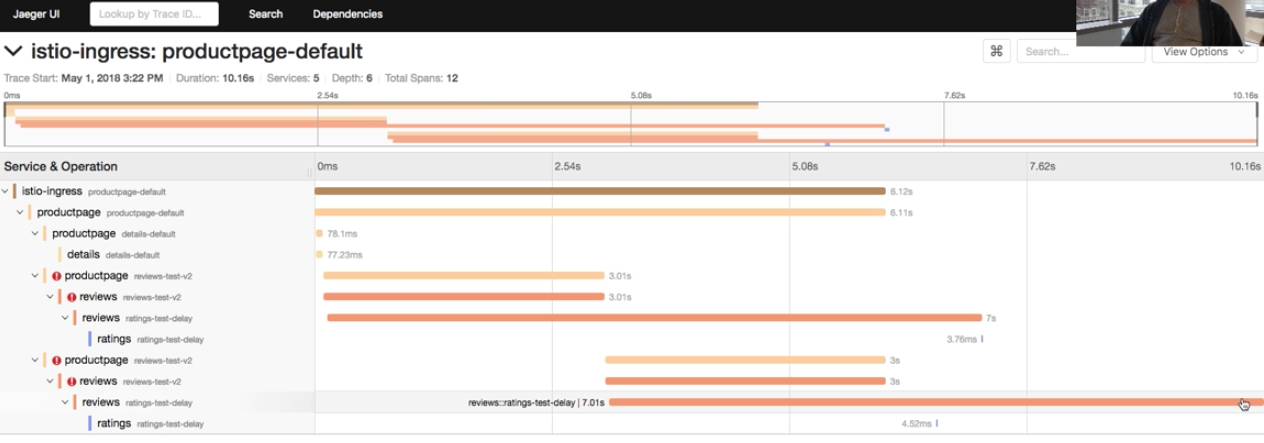

`bat` 命令也支持 <ruby>Git 集成<rt>**GIT integration**</rt></ruby>,这样您就可以轻松查看/编辑 Git 存储库中的文件。 它与 Git 连接可以显示关于索引的修改。(看左栏)

|

||||

|

||||

|

||||

|

||||

### 定制 Bat

|

||||

|

||||

如果你不喜欢默认主题,你也可以修改它。Bat 同样有修改它的选项。

|

||||

|

||||

若要显示可用主题,只需运行:

|

||||

|

||||

```

|

||||

$ bat --list-themes

|

||||

1337

|

||||

DarkNeon

|

||||

Default

|

||||

GitHub

|

||||

Monokai Extended

|

||||

Monokai Extended Bright

|

||||

Monokai Extended Light

|

||||

Monokai Extended Origin

|

||||

TwoDark

|

||||

```

|

||||

|

||||

|

||||

要使用其他主题,例如 TwoDark,请运行:

|

||||

|

||||

```

|

||||

$ bat --theme=TwoDark file.txt

|

||||

```

|

||||

|

||||

如果你想永久改变主题,在你的 shells 启动文件中加入 `export BAT_THEME="TwoDark"`。

|

||||

|

||||

`bat` 还可以选择修改输出的外观。使用 `--style` 选项来修改输出外观。仅显示 Git 的更改和行号但不显示网格和文件头,请使用 `--style=numbers,changes`。

|

||||

|

||||

更多详细信息,请参阅 Bat 项目的 GitHub 库(链接在文末)。

|

||||

|

||||

最好,这就是目前的全部内容了。希望这篇文章会帮到你。更多精彩文章即将到来,敬请关注!

|

||||

|

||||

干杯!

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.ostechnix.com/bat-a-cat-clone-with-syntax-highlighting-and-git-integration/

|

||||

|

||||

作者:[SK][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[z52527](https://github.com/z52527)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.ostechnix.com/author/sk/

|

||||

[1]:https://github.com/sharkdp/bat/releases

|

||||

[2]:https://www.ostechnix.com/linuxbrew-common-package-manager-linux-mac-os-x/

|

||||

@ -1,8 +1,9 @@

|

||||

如何使用 Steam Play 在 Linux 上玩仅限 Windows 的游戏

|

||||

======

|

||||

Steam 的新实验功能允许你在 Linux 上玩仅限 Windows 的游戏。以下是如何在 Steam 中使用此功能。

|

||||

|

||||

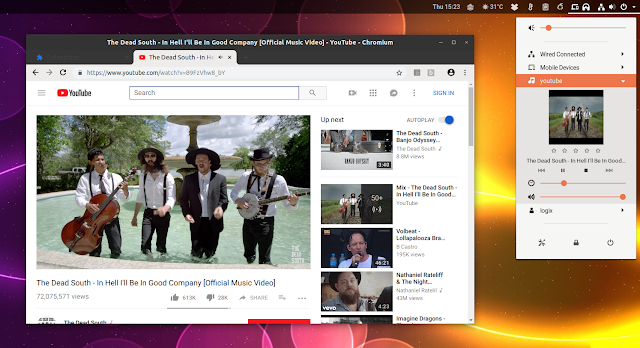

你已经听说过这个消息。游戏发行平台[ Steam 正在实现一个 WINE 分支来允许你玩仅在 Windows 上的游戏][1]。对于 Linux 用户来说,这绝对是一个好消息,因为我们抱怨 Linux 的游戏数量不足。

|

||||

> Steam 的新实验功能允许你在 Linux 上玩仅限 Windows 的游戏。以下是如何在 Steam 中使用此功能。

|

||||

|

||||

你已经听说过这个消息。游戏发行平台 [Steam 正在复刻一个 WINE 分支来允许你玩仅限于 Windows 上的游戏][1]。对于 Linux 用户来说,这绝对是一个好消息,因为我们总抱怨 Linux 的游戏数量不足。

|

||||

|

||||

这个新功能仍处于测试阶段,但你现在可以在 Linux 上试用它并在 Linux 上玩仅限 Windows 的游戏。让我们看看如何做到这一点。

|

||||

|

||||

@ -14,20 +15,19 @@ Steam 的新实验功能允许你在 Linux 上玩仅限 Windows 的游戏。以

|

||||

|

||||

安装了 Steam 并且你已登录到 Steam 帐户,就可以了解如何在 Steam Linux 客户端中启用 Windows 游戏。

|

||||

|

||||

|

||||

#### 步骤 1:进入帐户设置

|

||||

|

||||

运行 Steam 客户端。在左上角,单击 Steam,然后单击 Settings。

|

||||

运行 Steam 客户端。在左上角,单击 “Steam”,然后单击 “Settings”。

|

||||

|

||||

![Enable steam play beta on Linux][4]

|

||||

|

||||

#### 步骤 2:选择加入测试计划

|

||||

|

||||

在“设置”中,从左侧窗口中选择“帐户”,然后单击 “Beta participation” 下的 “CHANGE” 按钮。

|

||||

在“Settings”中,从左侧窗口中选择“Account”,然后单击 “Beta participation” 下的 “CHANGE” 按钮。

|

||||

|

||||

![Enable beta feature in Steam Linux][5]

|

||||

|

||||

你应该在此处选择 Steam Beta Update。

|

||||

你应该在此处选择 “Steam Beta Update”。

|

||||

|

||||

![Enable beta feature in Steam Linux][6]

|

||||

|

||||

@ -37,32 +37,29 @@ Steam 的新实验功能允许你在 Linux 上玩仅限 Windows 的游戏。以

|

||||

|

||||

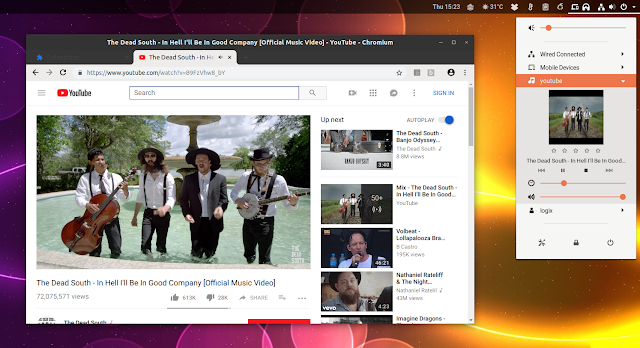

下载好 Steam 新的测试版更新后,它将重新启动。到这里就差不多了。

|

||||

|

||||

再次进入“设置”。你现在可以在左侧窗口看到新的 Steam Play 选项。单击它并选中复选框:

|

||||

再次进入“Settings”。你现在可以在左侧窗口看到新的 “Steam Play” 选项。单击它并选中复选框:

|

||||

|

||||

* Enable Steam Play for supported titles (你可以玩列入白名单的 Windows 游戏)

|

||||

* Enable Steam Play for all titles (你可以尝试玩所有仅限 Windows 的游戏)

|

||||

|

||||

|

||||

|

||||

![Play Windows games on Linux using Steam Play][7]

|

||||

|

||||

我不记得 Steam 是否会再次重启,但我想这是微不足道的。你现在应该可以在 Linux 上看到安装仅限 Windows 的游戏的选项了。

|

||||

我不记得 Steam 是否会再次重启,但我想这无所谓。你现在应该可以在 Linux 上看到安装仅限 Windows 的游戏的选项了。

|

||||

|

||||

比如,我的 Steam 库中有 Age of Empires,正常情况下这个在 Linux 中没有。但我在 Steam Play 测试版启用所有 Windows 游戏后,现在我可以选择在 Linux 上安装 Age of Empires 了。

|

||||

比如,我的 Steam 库中有《Age of Empires》,正常情况下这个在 Linux 中没有。但我在 Steam Play 测试版启用所有 Windows 游戏后,现在我可以选择在 Linux 上安装《Age of Empires》了。

|

||||

|

||||

![Install Windows-only games on Linux using Steam][8]

|

||||

现在可以在 Linux 上安装仅限 Windows 的游戏

|

||||

|

||||

*现在可以在 Linux 上安装仅限 Windows 的游戏*

|

||||

|

||||

### 有关 Steam Play 测试版功能的信息

|

||||

|

||||

在 Linux 上使用 Steam Play 测试版玩仅限 Windows 的游戏有一些事情你需要知道并且牢记。

|

||||

|

||||

* If you have games downloaded on Windows via Steam, you can save some download data by [sharing Steam game files between Linux and Windows][12].

|

||||

* 目前,[只有 27 个 Steam Play 中的 Windows 游戏被列入白名单][9]。这些白名单游戏在 Linux 上无缝运行。

|

||||

* 你可以使用 Steam Play 测试版尝试任何 Windows 游戏,但它可能无法一直运行。有些游戏有时会崩溃,而某些游戏可能根本无法运行。

|

||||

* 目前,[只有 27 个 Steam Play 中的 Windows 游戏被列入白名单][9]。这些白名单游戏可以在 Linux 上无缝运行。

|

||||

* 你可以使用 Steam Play 测试版尝试任何 Windows 游戏,但它可能不是总能运行。有些游戏有时会崩溃,而某些游戏可能根本无法运行。

|

||||

* 在测试版中,你无法 Steam 商店中看到适用于 Linux 的 Windows 限定游戏。你必须自己尝试游戏或参考[这个社区维护的列表][10]以查看该 Windows 游戏的兼容性状态。你也可以通过填写[此表][11]来为列表做出贡献。

|

||||

* 如果你通过 Steam 在 Windows 上下载游戏,那么可以通过[在 Linux 和 Windows 之间共享 Steam 游戏文件][12]来保存一些下载数据。

|

||||

|

||||

* 如果你在 Windows 中通过 Steam 下载了游戏,你可以[在 Linux 和 Windows 之间共享 Steam 游戏文件][12]来节省下载的数据。

|

||||

|

||||

我希望本教程能帮助你在 Linux 上运行仅限 Windows 的游戏。你期待在 Linux 上玩哪些游戏?

|

||||

|

||||

@ -73,12 +70,12 @@ via: https://itsfoss.com/steam-play/

|

||||

作者:[Abhishek Prakash][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://itsfoss.com/author/abhishek/

|

||||

[1]:https://itsfoss.com/steam-play-proton/

|

||||

[1]:https://linux.cn/article-10054-1.html

|

||||

[2]:https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2018/08/play-windows-games-on-linux-featured.jpeg

|

||||

[3]:https://itsfoss.com/install-steam-ubuntu-linux/

|

||||

[4]:https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2018/08/enable-steam-play-beta.jpeg

|

||||

@ -89,4 +86,4 @@ via: https://itsfoss.com/steam-play/

|

||||

[9]:https://steamcommunity.com/games/221410

|

||||

[10]:https://docs.google.com/spreadsheets/d/1DcZZQ4HL_Ol969UbXJmFG8TzOHNnHoj8Q1f8DIFe8-8/htmlview?sle=true#

|

||||

[11]:https://docs.google.com/forms/d/e/1FAIpQLSeefaYQduMST_lg0IsYxZko8tHLKe2vtVZLFaPNycyhY4bidQ/viewform

|

||||

[12]:https://itsfoss.com/share-steam-files-linux-windows/

|

||||

[12]:https://linux.cn/article-8027-1.html

|

||||

@ -1,14 +1,15 @@

|

||||

让你提高效率的 Linux 技巧

|

||||

======

|

||||

想要在 Linux 命令行工作中提高效率,你需要使用一些技巧。

|

||||

|

||||

> 想要在 Linux 命令行工作中提高效率,你需要使用一些技巧。

|

||||

|

||||

|

||||

|

||||

巧妙的 Linux 命令行技巧能让你节省时间、避免出错,还能让你记住和复用各种复杂的命令,专注在需要做的事情本身,而不是做事的方式。以下介绍一些好用的命令行技巧。

|

||||

巧妙的 Linux 命令行技巧能让你节省时间、避免出错,还能让你记住和复用各种复杂的命令,专注在需要做的事情本身,而不是你要怎么做。以下介绍一些好用的命令行技巧。

|

||||

|

||||

### 命令编辑

|

||||

|

||||

如果要对一个已输入的命令进行修改,可以使用 ^a(ctrl + a)或 ^e(ctrl + e)将光标快速移动到命令的开头或命令的末尾。

|

||||

如果要对一个已输入的命令进行修改,可以使用 `^a`(`ctrl + a`)或 `^e`(`ctrl + e`)将光标快速移动到命令的开头或命令的末尾。

|

||||

|

||||

还可以使用 `^` 字符实现对上一个命令的文本替换并重新执行命令,例如 `^before^after^` 相当于把上一个命令中的 `before` 替换为 `after` 然后重新执行一次。

|

||||

|

||||

@ -59,11 +60,11 @@ alias show_dimensions='xdpyinfo | grep '\''dimensions:'\'''

|

||||

|

||||

### 冻结、解冻终端界面

|

||||

|

||||

^s(ctrl + s)将通过执行流量控制命令 XOFF 来停止终端输出内容,这会对 PuTTY 会话和桌面终端窗口产生影响。如果误输入了这个命令,可以使用 ^q(ctrl + q)让终端重新响应。所以只需要记住^q 这个组合键就可以了,毕竟这种情况并不多见。

|

||||

`^s`(`ctrl + s`)将通过执行流量控制命令 XOFF 来停止终端输出内容,这会对 PuTTY 会话和桌面终端窗口产生影响。如果误输入了这个命令,可以使用 `^q`(`ctrl + q`)让终端重新响应。所以只需要记住 `^q` 这个组合键就可以了,毕竟这种情况并不多见。

|

||||

|

||||

### 复用命令

|

||||

|

||||

Linux 提供了很多让用户复用命令的方法,其核心是通过历史缓冲区收集执行过的命令。复用命令的最简单方法是输入 `!` 然后接最近使用过的命令的开头字母;当然也可以按键盘上的向上箭头,直到看到要复用的命令,然后按 Enter 键。还可以先使用 `history` 显示命令历史,然后输入 `!` 后面再接命令历史记录中需要复用的命令旁边的数字。

|

||||

Linux 提供了很多让用户复用命令的方法,其核心是通过历史缓冲区收集执行过的命令。复用命令的最简单方法是输入 `!` 然后接最近使用过的命令的开头字母;当然也可以按键盘上的向上箭头,直到看到要复用的命令,然后按回车键。还可以先使用 `history` 显示命令历史,然后输入 `!` 后面再接命令历史记录中需要复用的命令旁边的数字。

|

||||

|

||||

```

|

||||

!! <== 复用上一条命令

|

||||

@ -129,7 +130,7 @@ $ rm -i <== 请求确认

|

||||

$ unalias rm

|

||||

```

|

||||

|

||||

如果已经将 `rm -i` 默认设置为 `rm` 的别名,但你希望在删除文件之前不必进行确认,则可以将 `unalias` 命令放在一个启动文件(例如 ~/.bashrc)中。

|

||||

如果已经将 `rm -i` 默认设置为 `rm` 的别名,但你希望在删除文件之前不必进行确认,则可以将 `unalias` 命令放在一个启动文件(例如 `~/.bashrc`)中。

|

||||

|

||||

### 使用 sudo

|

||||

|

||||

@ -151,8 +152,6 @@ md () { mkdir -p "$@" && cd "$1"; }

|

||||

|

||||

使用 Linux 命令行是在 Linux 系统上工作最有效也最有趣的方法,但配合命令行技巧和巧妙的别名可以让你获得更好的体验。

|

||||

|

||||

加入 [Facebook][1] 和 [LinkedIn][2] 上的 Network World 社区可以和我们一起讨论。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.networkworld.com/article/3305811/linux/linux-tricks-that-even-you-can-love.html

|

||||

@ -160,7 +159,7 @@ via: https://www.networkworld.com/article/3305811/linux/linux-tricks-that-even-y

|

||||

作者:[Sandra Henry-Stocker][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[HankChow](https://github.com/HankChow)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -3,7 +3,7 @@

|

||||

|

||||

|

||||

|

||||

**APT**, 是 **A** dvanced **P** ackage **T** ool 的缩写,是基于 Debian 的系统的默认包管理器。我们可以使用 APT 安装、更新、升级和删除应用程序。最近,我一直遇到一个奇怪的错误。每当我尝试更新我的 Ubuntu 16.04 时,我都会收到此错误 - **“0% [Connecting to in.archive.ubuntu.com (2001:67c:1560:8001::14)]”** ,同时更新流程会卡住很长时间。我的网络连接没问题,我可以 ping 通所有网站,包括 Ubuntu 官方网站。在搜索了一番谷歌后,我意识到 Ubuntu 镜像有时无法通过 IPv6 访问。在我强制将 APT 包管理器在更新系统时使用 IPv4 代替 IPv6 访问 Ubuntu 镜像后,此问题得以解决。如果你遇到过此错误,可以按照以下说明解决。

|

||||

**APT**, 是 **A** dvanced **P** ackage **T** ool 的缩写,是基于 Debian 的系统的默认包管理器。我们可以使用 APT 安装、更新、升级和删除应用程序。最近,我一直遇到一个奇怪的错误。每当我尝试更新我的 Ubuntu 16.04 时,我都会收到此错误 - **“0% [Connecting to in.archive.ubuntu.com (2001:67c:1560:8001::14)]”** ,同时更新流程会卡住很长时间。我的网络连接没问题,我可以 ping 通所有网站,包括 Ubuntu 官方网站。在搜索了一番谷歌后,我意识到 Ubuntu 镜像站点有时无法通过 IPv6 访问。在我强制将 APT 包管理器在更新系统时使用 IPv4 代替 IPv6 访问 Ubuntu 镜像站点后,此问题得以解决。如果你遇到过此错误,可以按照以下说明解决。

|

||||

|

||||

### 强制 APT 包管理器在 Ubuntu 16.04 中使用 IPv4

|

||||

|

||||

@ -11,13 +11,12 @@

|

||||

|

||||

```

|

||||

$ sudo apt-get -o Acquire::ForceIPv4=true update

|

||||

|

||||

$ sudo apt-get -o Acquire::ForceIPv4=true upgrade

|

||||

```

|

||||

|

||||

瞧!这次更新很快就完成了。

|

||||

|

||||

你还可以使用以下命令在 **/etc/apt/apt.conf.d/99force-ipv4** 中添加以下行,以便将来对所有 **apt-get** 事务保持持久性:

|

||||

你还可以使用以下命令在 `/etc/apt/apt.conf.d/99force-ipv4` 中添加以下行,以便将来对所有 `apt-get` 事务保持持久性:

|

||||

|

||||

```

|

||||

$ echo 'Acquire::ForceIPv4 "true";' | sudo tee /etc/apt/apt.conf.d/99force-ipv4

|

||||

@ -25,7 +24,7 @@ $ echo 'Acquire::ForceIPv4 "true";' | sudo tee /etc/apt/apt.conf.d/99force-ipv4

|

||||

|

||||

**免责声明:**

|

||||

|

||||

我不知道最近是否有人遇到这个问题,但我今天在我的 Ubuntu 16.04 LTS 虚拟机中遇到了至少四五次这样的错误,我按照上面的说法解决了这个问题。我不确定这是推荐的解决方案。请浏览 Ubuntu 论坛来确保此方法合法。由于我只是一个 VM,我只将它用于测试和学习目的,我不介意这种方法的真实性。请自行承担使用风险。

|

||||

我不知道最近是否有人遇到这个问题,但我今天在我的 Ubuntu 16.04 LTS 虚拟机中遇到了至少四、五次这样的错误,我按照上面的说法解决了这个问题。我不确定这是推荐的解决方案。请浏览 Ubuntu 论坛来确保此方法合法。由于我只是一个 VM,我只将它用于测试和学习目的,我不介意这种方法的真实性。请自行承担使用风险。

|

||||

|

||||

希望这有帮助。还有更多的好东西。敬请关注!

|

||||

|

||||

@ -40,7 +39,7 @@ via: https://www.ostechnix.com/how-to-force-apt-package-manager-to-use-ipv4-in-u

|

||||

作者:[SK][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,4 +1,3 @@

|

||||

translating by leowang

|

||||

Moving to Linux from dated Windows machines

|

||||

======

|

||||

|

||||

|

||||

@ -1,5 +1,3 @@

|

||||

translating by ynmlml

|

||||

|

||||

Write Dumb Code

|

||||

======

|

||||

The best way you can contribute to an open source project is to remove lines of code from it. We should endeavor to write code that a novice programmer can easily understand without explanation or that a maintainer can understand without significant time investment.

|

||||

|

||||

@ -1,4 +1,4 @@

|

||||

Translating by FelixYFZ 20 questions DevOps job candidates should be prepared to answer

|

||||

20 questions DevOps job candidates should be prepared to answer Translating by FelixYFZ

|

||||

======

|

||||

|

||||

|

||||

|

||||

@ -1,4 +1,3 @@

|

||||

(translating by runningwater)

|

||||

Microservices Explained

|

||||

======

|

||||

|

||||

|

||||

@ -1,4 +1,3 @@

|

||||

translating by aiwhj

|

||||

3 tips for organizing your open source project's workflow on GitHub

|

||||

======

|

||||

|

||||

|

||||

@ -1,5 +1,3 @@

|

||||

Translating by jessie-pang

|

||||

|

||||

Why moving all your workloads to the cloud is a bad idea

|

||||

======

|

||||

|

||||

|

||||

@ -1,80 +0,0 @@

|

||||

How to Install Cinnamon Desktop on Ubuntu

|

||||

======

|

||||

**This tutorial shows you how to install Cinnamon desktop environment on Ubuntu.**

|

||||

|

||||

[Cinnamon][1] is the default desktop environment of [Linux Mint][2]. Unlike Unity desktop environment in Ubuntu, Cinnamon is more traditional but elegant looking desktop environment with the bottom panel and app menu etc. Many Windows migrants [prefer Linux Mint over Ubuntu][3] because of Cinnamon desktop and its Windows-resembling user interface.

|

||||

|

||||

Now, you don’t need to [install Linux Mint][4] just for trying Cinnamon. In this tutorial, I’ll show you **how to install Cinnamon in Ubuntu 18.04, 16.04 and 14.04**.

|

||||

|

||||

You should note something before you install Cinnamon desktop on Ubuntu. Sometimes, installing additional desktop environments leads to conflict between the desktop environments. This may result in a broken session, broken applications and features etc. This is why you should be careful in making this choice.

|

||||

|

||||

### How to Install Cinnamon on Ubuntu

|

||||

|

||||

![How to install cinnamon desktop on Ubuntu Linux][5]

|

||||

|

||||