mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-02-03 23:40:14 +08:00

commit

fd9c0aff4e

@ -1,59 +1,59 @@

|

|||||||

用户报告:Steam Machines 与 SteamOS 发布一周年记

|

回顾 Steam Machines 与 SteamOS

|

||||||

====

|

====

|

||||||

|

|

||||||

去年今日,在非常符合 Valve 风格的跳票之后大众迎来了 [Steam Machine 的发布][2]。即使是在 Linux 桌面环境对于游戏的支持大步进步的今天,Steam Machines 作为一个平台依然没有飞跃,而 SteamOS 似乎也止步不前。这些由 Valve 发起的项目究竟怎么了?这些项目为何被发起,又是如何失败的?一些改进又是否曾有机会挽救这些项目的成败?

|

去年今日(LCTT 译注:本文发表于 2016 年),在非常符合 Valve 风格的跳票之后,大众迎来了 [Steam Machines 的发布][2]。即使是在 Linux 桌面环境对于游戏的支持大步进步的今天,Steam Machines 作为一个平台依然没有飞跃,而 SteamOS 似乎也止步不前。这些由 Valve 发起的项目究竟怎么了?这些项目为何被发起,又是如何失败的?一些改进又是否曾有机会挽救这些项目的成败?

|

||||||

|

|

||||||

**行业环境**

|

### 行业环境

|

||||||

|

|

||||||

在 2012 年 Windows 8 发布的时候,微软像 iOS 与 Android 那样,为 Windows 集成了一个应用商店。在微软试图推广对触摸体验友好的界面时,为了更好的提供 “Metro” UI 语言指导下的沉浸式触摸体验,他们同时推出了一系列叫做 “WinRT” 的 API。然而为了能够使用这套 API,应用开发者们必须把应用程序通过 Windows 应用商城发布,并且正如其他应用商城那样,微软从中抽成30%。对于 Valve 的 CEO,Gabe Newell (G胖) 而言,这种限制发布平台和抽成行为是让人无法接受的,而且他前瞻地看到了微软利用行业龙头地位来推广 Windows 商店和 Metro 应用对于 Valve 潜在的危险,正如当年微软用 IE 浏览器击垮 Netscape 浏览器一样。

|

在 2012 年 Windows 8 发布的时候,微软像 iOS 与 Android 那样,为 Windows 集成了一个应用商店。在微软试图推广对触摸体验友好的界面时,为了更好的提供 “Metro” UI 语言指导下的沉浸式触摸体验,他们同时推出了一系列叫做 “WinRT” 的 API。然而为了能够使用这套 API,应用开发者们必须把应用程序通过 Windows 应用商城发布,并且正如其它应用商城那样,微软从中抽成 30%。对于 Valve 的 CEO,Gabe Newell (G 胖) 而言,这种限制发布平台和抽成行为是让人无法接受的,而且他前瞻地看到了微软利用行业龙头地位来推广 Windows 商店和 Metro 应用对于 Valve 潜在的危险,正如当年微软用 IE 浏览器击垮 Netscape 浏览器一样。

|

||||||

|

|

||||||

对于 Valve 来说,运行 Windows 的 PC 的优势在于任何人都可以不受操作系统和硬件方的限制运行各种软件。当像 Windows 这样的专有平台对像 Steam 这样的第三方软件限制越来越严格时,应用开发者们自然会想要寻找一个对任何人都更开放和自由的替代品,他们很自然的会想到 Linux 。Linux 本质上只是一套内核,但你可以轻易地使用 GNU 组件,Gnnome 等软件在这套内核上开发出一个操作系统,比如 Ubuntu 就是这么来的。推行 Ubuntu 或者其他 Linux 发行版自然可以为 Valve 提供一个无拘无束的平台,以防止微软或者苹果变成 Valve 作为第三方平台之路上的的敌人,但 Linux 甚至给了 Valve 一个创造新的操作系统平台的机会。

|

对于 Valve 来说,运行 Windows 的 PC 的优势在于任何人都可以不受操作系统和硬件方的限制运行各种软件。当像 Windows 这样的专有平台对像 Steam 这样的第三方软件限制越来越严格时,应用开发者们自然会想要寻找一个对任何人都更开放和自由的替代品,他们很自然的会想到 Linux 。Linux 本质上只是一套内核,但你可以轻易地使用 GNU 组件、Gnome 等软件在这套内核上开发出一个操作系统,比如 Ubuntu 就是这么来的。推行 Ubuntu 或者其他 Linux 发行版自然可以为 Valve 提供一个无拘无束的平台,以防止微软或者苹果变成 Valve 作为第三方平台之路上的的敌人,但 Linux 甚至给了 Valve 一个创造新的操作系统平台的机会。

|

||||||

|

|

||||||

**概念化**

|

### 概念化

|

||||||

|

|

||||||

如果我们把 Steam Machines 叫做主机的话,Valve 当时似乎认定了主机平台是一个机会。为了迎合用户对于电视主机平台用户界面的审美期待,同时也为了让玩家更好地从稍远的距离上在电视上玩游戏,Valve 为 Steam 推出了 Big Picture 模式。Steam Machines 的核心要点是开放性;比方说所有的软件都被设计成可以脱离 Windows 工作,又比如说 Steam Machines 手柄的 CAD 图纸也被公布出来以便支持玩家二次创作。

|

如果我们把 Steam Machines 叫做主机的话,Valve 当时似乎认定了主机平台是一个机会。为了迎合用户对于电视主机平台用户界面的审美期待,同时也为了让玩家更好地从稍远的距离上在电视上玩游戏,Valve 为 Steam 推出了 Big Picture 模式。Steam Machines 的核心要点是开放性;比方说所有的软件都被设计成可以脱离 Windows 工作,又比如说 Steam Machines 手柄的 CAD 图纸也被公布出来以便支持玩家二次创作。

|

||||||

|

|

||||||

原初计划中,Valve 打算设计一款官方的 Steam Machine 作为旗舰机型。但最终,这些机型只在 2013 年的时候作为原型机给与了部分测试者用于测试。Valve 后来也允许像戴尔这样的 OEM 厂商们制造 Steam Machines,并且也赋予了他们定制价格和配置规格的权利。有一家叫做 “Xi3” 的公司展示了他们设计的 Steam Machine 小型机型,那款机型小到可以放在手掌上,这一新闻创造了围绕 Steam Machines 的更多热烈讨论。最终,Valve 决定不自己设计知道 Steam Machines,而全权交给 OEM 合作厂商们。

|

原初计划中,Valve 打算设计一款官方的 Steam Machine 作为旗舰机型。但最终,这些机型只在 2013 年的时候作为原型机给与了部分测试者用于测试。Valve 后来也允许像戴尔这样的 OEM 厂商们制造 Steam Machines,并且也赋予了他们制定价格和配置规格的权利。有一家叫做 “Xi3” 的公司展示了他们设计的 Steam Machine 小型机型,那款机型小到可以放在手掌上,这一新闻创造了围绕 Steam Machines 的更多热烈讨论。最终,Valve 决定不自己设计制造 Steam Machines,而全权交给 OEM 合作厂商们。

|

||||||

|

|

||||||

这一过程中还有很多天马行空的创意被列入考量,比如在手柄上加入生物识别技术,眼球追踪以及动作控制等。在这些最初的想法里,陀螺仪被加入了 Steam Controller 手柄,HTC Vive 的手柄也有各种动作追踪仪器;这些想法可能最初都来源于 Steam 手柄的设计过程中。手柄最初还有些更激进的设计,比如在中心放置一块可定制化并且会随着游戏内容变化的触摸屏。但最后的最后,发布会上的手柄偏向保守了许多,但也有诸如双触摸板和内置软件等黑科技。Valve 也考虑过制作面向笔记本类型硬件的 Steam Machines 和 SteamOS。这个企划最终没有任何成果,但也许 “Smach Z” 手持游戏机会是发展的方向之一。

|

这一过程中还有很多天马行空的创意被列入考量,比如在手柄上加入生物识别技术、眼球追踪以及动作控制等。在这些最初的想法里,陀螺仪被加入了 Steam Controller 手柄,HTC Vive 的手柄也有各种动作追踪仪器;这些想法可能最初都来源于 Steam 手柄的设计过程中。手柄最初还有些更激进的设计,比如在中心放置一块可定制化并且会随着游戏内容变化的触摸屏。但最后的最后,发布会上的手柄偏向保守了许多,但也有诸如双触摸板和内置软件等黑科技。Valve 也考虑过制作面向笔记本类型硬件的 Steam Machines 和 SteamOS。这个企划最终没有任何成果,但也许 “Smach Z” 手持游戏机会是发展的方向之一。

|

||||||

|

|

||||||

在 [2013年九月][3],Valve 对外界宣布了 Steam Machines 和 SteamOS, 并且预告会在 2014 年中发布。前述的 300 台原型机在当年 12 月被分发给了测试者们,随后次年 1 月,2000 台原型机又被分发给了开发者们。SteamOS 也在那段时间被分发给有 Linux 经验的测试者们试用。根据当时的测试反馈,Valve 最终决定把产品发布延期到 2015 年 11 月。

|

在 [2013 年九月][3],Valve 对外界宣布了 Steam Machines 和 SteamOS, 并且预告会在 2014 年中发布。前述的 300 台原型机在当年 12 月分发给了测试者们,随后次年 1 月,又分发给了开发者们 2000 台原型机。SteamOS 也在那段时间分发给有 Linux 经验的测试者们试用。根据当时的测试反馈,Valve 最终决定把产品发布延期到 2015 年 11 月。

|

||||||

|

|

||||||

SteamOS 的延期跳票给合作伙伴带来了问题;戴尔的 Steam Machine 由于早发售了一年结果不得不改为搭配了额外软件甚至运行着 Windows 操作系统的 Alienware Alpha。

|

SteamOS 的延期跳票给合作伙伴带来了问题;戴尔的 Steam Machine 由于早发售了一年,结果不得不改为搭配了额外软件、甚至运行着 Windows 操作系统的 Alienware Alpha。

|

||||||

|

|

||||||

**正式发布**

|

### 正式发布

|

||||||

|

|

||||||

在最终的正式发布会上,Valve 和 OEM 合作商们发布了 Steam Machines,同时 Valve 还推出了 Steam Controller 手柄和 Steam Link 串流游戏设备。Valve 也在线下零售行业比如 GameStop 里开辟了货架空间。在发布会前,有几家 OEM 合作商退出了与 Valve 的合作;比如 Origin PC 和 Falcon Northwest 这两家高端精品主机设计商。他们宣称 Steam 生态的性能问题和一些限制迫使他们决定弃用 SteamOS。

|

在最终的正式发布会上,Valve 和 OEM 合作商们发布了 Steam Machines,同时 Valve 还推出了 Steam Controller 手柄和 Steam Link 串流游戏设备。Valve 也在线下零售行业比如 GameStop 里开辟了货架空间。在发布会前,有几家 OEM 合作商退出了与 Valve 的合作;比如 Origin PC 和 Falcon Northwest 这两家高端精品主机设计商。他们宣称 Steam 生态的性能问题和一些限制迫使他们决定弃用 SteamOS。

|

||||||

|

|

||||||

Steam Machines 在发布后收到了褒贬不一的评价。另一方面 Steam Link 则普遍受到好评,很多人表示愿意在客厅电视旁为他们已有的 PC 系统购买 Steam Link, 而不是购置一台全新的 Steam Machine。Steam Controller 手柄则受到其丰富功能伴随而来的陡峭学习曲线影响,评价一败涂地。然而针对 Steam Machines 的批评则是最猛烈的。诸如 LinusTechTips 这样的评测团体 (译者:YouTube硬件界老大,个人也经常看他们节目) 注意到了主机的明显的不足,其中甚至不乏性能为题。很多厂商的 Machines 都被批评为性价比极低,特别是经过和玩家们自己组装的同配置机器或者电视主机做对比之后。SteamOS 而被批评为兼容性有问题,Bugs 太多,以及性能不及 Windows。在所有 Machines 里,戴尔的 Alienware Alpha 被评价为最有意思的一款,主要是由于品牌价值和机型外观极小的缘故。

|

Steam Machines 在发布后收到了褒贬不一的评价。另一方面 Steam Link 则普遍受到好评,很多人表示愿意在客厅电视旁为他们已有的 PC 系统购买 Steam Link, 而不是购置一台全新的 Steam Machine。Steam Controller 手柄则受到其丰富功能伴随而来的陡峭学习曲线影响,评价一败涂地。然而针对 Steam Machines 的批评则是最猛烈的。诸如 LinusTechTips 这样的评测团体 (LCTT 译注:YouTube 硬件界老大,个人也经常看他们节目)注意到了主机的明显的不足,其中甚至不乏性能为题。很多厂商的 Machines 都被批评为性价比极低,特别是经过和玩家们自己组装的同配置机器或者电视主机做对比之后。SteamOS 而被批评为兼容性有问题,Bug 太多,以及性能不及 Windows。在所有 Machines 里,戴尔的 Alienware Alpha 被评价为最有意思的一款,主要是由于品牌价值和机型外观极小的缘故。

|

||||||

|

|

||||||

通过把 Debian Linux 操作系统作为开发基础,Valve 得以为 SteamOS 平台找到很多原本就存在与 Steam 平台上的 Linux 兼容游戏来作为“首发游戏”。所以起初大家认为在“首发游戏”上 Steam Machines 对比其他新发布的主机优势明显。然而,很多宣称会在新平台上发布的游戏要么跳票要么被中断了。Rocket League 和 Mad Max 在宣布支持新平台整整一年后才真正发布,而 巫师3 和蝙蝠侠:阿克汉姆骑士 甚至从来没有发布在新平台上。就 巫师3 的情况而言,他们的开发者 CD Projekt Red 拒绝承认他们曾经说过要支持新平台;然而他们的游戏曾在宣布支持 Linux 和 SteamOS 的游戏列表里赫然醒目。雪上加霜的是,很多 AAA 级的大作甚至没被宣布移植,虽然最近这种情况稍有所好转了。

|

通过把 Debian Linux 操作系统作为开发基础,Valve 得以为 SteamOS 平台找到很多原本就存在与 Steam 平台上的 Linux 兼容游戏来作为“首发游戏”。所以起初大家认为在“首发游戏”上 Steam Machines 对比其他新发布的主机优势明显。然而,很多宣称会在新平台上发布的游戏要么跳票要么被中断了。Rocket League 和 Mad Max 在宣布支持新平台整整一年后才真正发布,而《巫师 3》和《蝙蝠侠:阿克汉姆骑士》甚至从来没有发布在新平台上。就《巫师 3》的情况而言,他们的开发者 CD Projekt Red 拒绝承认他们曾经说过要支持新平台;然而他们的游戏曾在宣布支持 Linux 和 SteamOS 的游戏列表里赫然醒目。雪上加霜的是,很多 AAA 级的大作甚至没宣布移植,虽然最近这种情况稍有所好转了。

|

||||||

|

|

||||||

**被忽视的**

|

### 被忽视的

|

||||||

|

|

||||||

在 Stame Machines 发售后,Valve 的开发者们很快转移到了其他项目的工作中去了。在当时,VR 项目最为被内部所重视,6 月份的时候大约有 1/3 的员工都在相关项目上工作。Valve 把 VR 视为亟待开发的一片领域,而他们的 Steam 则应该作为分发 VR 内容的生态环境。通过与 HTC 合作生产,Valve 设计并制造出了他们自己的 VR 头戴和手柄,并计划在将来更新换代。然而与此同时,Linux 和 Steam Machines 都渐渐淡出了视野。SteamVR 甚至直到最近才刚刚支持 Linux (其实还没对普通消费者开放使用,只在 SteamDevDays 上展示过对 Linux 的支持),而这一点则让我们怀疑 Valve 在 Stame Machines 和 Linux 的开发上是否下定了足够的决心。

|

在 Stame Machines 发售后,Valve 的开发者们很快转移到了其他项目的工作中去了。在当时,VR 项目最为内部所重视,6 月份的时候大约有 1/3 的员工都在相关项目上工作。Valve 把 VR 视为亟待开发的一片领域,而他们的 Steam 则应该作为分发 VR 内容的生态环境。通过与 HTC 合作生产,Valve 设计并制造出了他们自己的 VR 头戴和手柄,并计划在将来更新换代。然而与此同时,Linux 和 Steam Machines 都渐渐淡出了视野。SteamVR 甚至直到最近才刚刚支持 Linux (其实还没对普通消费者开放使用,只在 SteamDevDays 上展示过对 Linux 的支持),而这一点则让我们怀疑 Valve 在 Stame Machines 和 Linux 的开发上是否下定了足够的决心。

|

||||||

|

|

||||||

SteamOS 自发布以来几乎止步不前。SteamOS 2.0 作为上一个大版本号更新,几乎只是同步了 Debian 上游的变化,而且还需要用户重新安装整个系统,而之后的小补丁也只是在做些上游更新的配合。当 Valve 在其他事关性能和用户体验的项目,例如 Mesa,上进步匪浅的时候,针对 Steam Machines 的相关项目则少有顾及。

|

SteamOS 自发布以来几乎止步不前。SteamOS 2.0 作为上一个大版本号更新,几乎只是同步了 Debian 上游的变化,而且还需要用户重新安装整个系统,而之后的小补丁也只是在做些上游更新的配合。当 Valve 在其他事关性能和用户体验的项目(例如 Mesa)上进步匪浅的时候,针对 Steam Machines 的相关项目则少有顾及。

|

||||||

|

|

||||||

很多原本应有的功能都从未完成。Steam 的内置功能,例如聊天和直播,都依然处于较弱的状态,而且这种落后会影响所有平台上的 Steam 用户体验。更具体来说,Steam 没有像其他主流主机平台一样把诸如 Netflix,Twitch 和 Spotify 之类的服务集成到客户端里,而通过 Steam 内置的浏览器使用这些服务则体验极差,甚至无法使用;而如果要使用第三方软件则需要开启 Terminal,而且很多软件甚至无法支持控制手柄 —— 无论从哪方面讲这样的用户界面体验都糟糕透顶。

|

很多原本应有的功能都从未完成。Steam 的内置功能,例如聊天和直播,都依然处于较弱的状态,而且这种落后会影响所有平台上的 Steam 用户体验。更具体来说,Steam 没有像其他主流主机平台一样把诸如 Netflix、Twitch 和 Spotify 之类的服务集成到客户端里,而通过 Steam 内置的浏览器使用这些服务则体验极差,甚至无法使用;而如果要使用第三方软件则需要开启 Terminal,而且很多软件甚至无法支持控制手柄 —— 无论从哪方面讲这样的用户界面体验都糟糕透顶。

|

||||||

|

|

||||||

Valve 同时也几乎没有花任何力气去推广他们的新平台而选择把一切都交由 OEM 厂商们去做。然而,几乎所有 OEM 合作商们要么是高端主机定制商,要么是电脑生产商,要么是廉价电脑公司(译者:简而言之没有一家有大型宣传渠道)。在所有 OEM 中,只有戴尔是 PC 市场的大碗,也只有他们真正给 Steam Machines 做了广告宣传。

|

Valve 同时也几乎没有花任何力气去推广他们的新平台,而选择把一切都交由 OEM 厂商们去做。然而,几乎所有 OEM 合作商们要么是高端主机定制商,要么是电脑生产商,要么是廉价电脑公司(LCTT 译注:简而言之没有一家有大型宣传渠道)。在所有 OEM 中,只有戴尔是 PC 市场的大碗,也只有他们真正给 Steam Machines 做了广告宣传。

|

||||||

|

|

||||||

最终销量也不尽人意。截至 2016 年 6 月,7 个月间 Steam Controller 手柄的销量在包括捆绑销售的情况下仅销售 500,000 件。这让 Steam Machines 的零售情况差到只能被归类到十万俱乐部的最底部。对比已经存在的巨大 PC 和主机游戏平台,可以说销量极低。

|

最终销量也不尽人意。截至 2016 年 6 月,7 个月间 Steam Controller 手柄的销量在包括捆绑销售的情况下仅销售 500,000 件。这让 Steam Machines 的零售情况差到只能被归类到十万俱乐部的最底部。对比已经存在的巨大 PC 和主机游戏平台,可以说销量极低。

|

||||||

|

|

||||||

**事后诸葛亮**

|

### 事后诸葛亮

|

||||||

|

|

||||||

既然知道了 Steam Machines 的历史,我们又能否总结出失败的原因以及可能存在的翻身改进呢?

|

既然知道了 Steam Machines 的历史,我们又能否总结出失败的原因以及可能存在的翻身改进呢?

|

||||||

|

|

||||||

_视野与目标_

|

#### 视野与目标

|

||||||

|

|

||||||

Steam Machines 从来没搞清楚他们在市场里的定位究竟是什么,也从来没说清楚他们具体有何优势。从 PC 市场的角度来说,自己搭建台式机已经非常普及并且往往让电脑的可以匹配玩家自己的目标,同时升级性也非常好。从主机平台的角度来说,Steam Machines 又被主机本身的相对廉价所打败,虽然算上游戏可能稍微便宜一些,但主机上的用户体验也直白很多。

|

Steam Machines 从来没搞清楚他们在市场里的定位究竟是什么,也从来没说清楚他们具体有何优势。从 PC 市场的角度来说,自己搭建台式机已经非常普及,并且往往可以让电脑可以匹配玩家自己的目标,同时升级性也非常好。从主机平台的角度来说,Steam Machines 又被主机本身的相对廉价所打败,虽然算上游戏可能稍微便宜一些,但主机上的用户体验也直白很多。

|

||||||

|

|

||||||

PC 用户会把多功能性看得很重,他们不仅能用电脑打游戏,也一样能办公和做各种各样的事情。即使 Steam Machines 也是跑着的 SteamOS 操作系统的自由的 Linux 电脑,但操作系统和市场宣传加固了 PC 玩家们对 Steam Machines 是不可定制硬件,低价的更接近主机的印象。即使这些 PC 用户能接受在客厅里购置一台 Steam Machines,他们也有 Steam Link 可以选择,而且很多更小型机比如 NUC 和 Mini-ITX 主板定制机可以让他们搭建更适合放在客厅里的电脑。SteamOS 软件也允许把这些硬件转变为 Steam Machines,但寻求灵活性和兼容性的用户通常都会使用一般 Linux 发行版或者 Windows。二矿最近的 Windows 和 Linux 桌面环境都让维护一般用户的操作系统变得自动化和简单了。

|

PC 用户会把多功能性看得很重,他们不仅能用电脑打游戏,也一样能办公和做各种各样的事情。即使 Steam Machines 也是跑着的 SteamOS 操作系统的自由的 Linux 电脑,但操作系统和市场宣传加深了 PC 玩家们对 Steam Machines 是不可定制的硬件、低价的、更接近主机的印象。即使这些 PC 用户能接受在客厅里购置一台 Steam Machines,他们也有 Steam Link 可以选择,而且很多更小型机比如 NUC 和 Mini-ITX 主板定制机可以让他们搭建更适合放在客厅里的电脑。SteamOS 软件也允许把这些硬件转变为 Steam Machines,但寻求灵活性和兼容性的用户通常都会使用一般 Linux 发行版或者 Windows。何况最近的 Windows 和 Linux 桌面环境都让维护一般用户的操作系统变得自动化和简单了。

|

||||||

|

|

||||||

电视主机用户们则把易用性放在第一。虽然近年来主机的功能也逐渐扩展,比如可以播放视频或者串流,但总体而言用户还是把即插即用即玩,不用担心兼容性和性能问题和低门槛放在第一。主机的使用寿命也往往较常,一般在 4-7 年左右,而统一固定的硬件也让游戏开发者们能针对其更好的优化和调试软件。现在刚刚新起的中代升级,例如天蝎和 PS 4 Pro 则可能会打破这样统一的游戏体验,但无论如何厂商还是会要求开发者们需要保证游戏在原机型上的体验。为了提高用户粘性,主机也会有自己的社交系统和独占游戏。而主机上的游戏也有实体版,以便将来重用或者二手转卖,这对零售商和用户都是好事儿。Steam Machines 则完全没有这方面的保证;即使长的像一台客厅主机,他们却有 PC 高昂的价格和复杂的硬件情况。

|

电视主机用户们则把易用性放在第一。虽然近年来主机的功能也逐渐扩展,比如可以播放视频或者串流,但总体而言用户还是把即插即用即玩、不用担心兼容性和性能问题和低门槛放在第一。主机的使用寿命也往往较长,一般在 4-7 年左右,而统一固定的硬件也让游戏开发者们能针对其更好的优化和调试软件。现在刚刚兴起的中生代升级,例如天蝎和 PS 4 Pro 则可能会打破这样统一的游戏体验,但无论如何厂商还是会要求开发者们需要保证游戏在原机型上的体验。为了提高用户粘性,主机也会有自己的社交系统和独占游戏。而主机上的游戏也有实体版,以便将来重用或者二手转卖,这对零售商和用户都是好事儿。Steam Machines 则完全没有这方面的保证;即使长的像一台客厅主机,他们却有 PC 高昂的价格和复杂的硬件情况。

|

||||||

|

|

||||||

_妥协_

|

#### 妥协

|

||||||

|

|

||||||

综上所述,Steam Machines 可以说是“集大成者”,吸取了两边的缺点,又没有自己明确的定位。更糟糕的是 Steam Machines 还展现了 PC 和主机都没有的毛病,比如没有 AAA 大作,又没有 Netflix 这样的客户端。抛开这些不说,Valve 在提高他们产品这件事上几乎没有出力,甚至没有尝试着解决 PC 和主机两头定位矛盾这一点。

|

综上所述,Steam Machines 可以说是“集大成者”,吸取了两边的缺点,又没有自己明确的定位。更糟糕的是 Steam Machines 还展现了 PC 和主机都没有的毛病,比如没有 AAA 大作,又没有 Netflix 这样的客户端。抛开这些不说,Valve 在提高他们产品这件事上几乎没有出力,甚至没有尝试着解决 PC 和主机两头定位矛盾这一点。

|

||||||

|

|

||||||

@ -61,35 +61,35 @@ _妥协_

|

|||||||

|

|

||||||

而最复杂的是 Steam Machines 多变的硬件情况,这使得用户不仅要考虑价格还要考虑配置,还要考虑这个价格下和别的系统(PC 和主机)比起来划算与否。更关键的是,Valve 无论如何也应该做出某种自动硬件检测机制,这样玩家才能知道是否能玩某个游戏,而且这个测试既得简单明了,又要能支持 Steam 上几乎所有游戏。同时,Valve 还要操心未来游戏对配置需求的变化,比如2016 年的 "A" 等主机三年后该给什么评分呢?

|

而最复杂的是 Steam Machines 多变的硬件情况,这使得用户不仅要考虑价格还要考虑配置,还要考虑这个价格下和别的系统(PC 和主机)比起来划算与否。更关键的是,Valve 无论如何也应该做出某种自动硬件检测机制,这样玩家才能知道是否能玩某个游戏,而且这个测试既得简单明了,又要能支持 Steam 上几乎所有游戏。同时,Valve 还要操心未来游戏对配置需求的变化,比如2016 年的 "A" 等主机三年后该给什么评分呢?

|

||||||

|

|

||||||

_Valve, 个人努力与公司结构_

|

#### Valve, 个人努力与公司结构

|

||||||

|

|

||||||

尽管 Valve 在 Steam 上创造了辉煌,但其公司的内部结构可能对于开发一个像 Steam Machines 一样的平台是有害的。他们几乎没有领导的自由办公结构,以及所有人都可以自由移动到想要工作的项目组里决定了他们具有极大的创新,研发,甚至开发能力。据说 Valve 只愿意招他们眼中的的 "顶尖人才",通过极其严格的筛选标准,并通过让他们在自己认为“有意义”的项目里工作以保持热情。然而这种思路很可能是错误的;拉帮结派总是存在,而 G胖 的话或许比公司手册上写的还管用,而又有人时不时会由于特殊原因被雇佣或解雇。

|

尽管 Valve 在 Steam 上创造了辉煌,但其公司的内部结构可能对于开发一个像 Steam Machines 一样的平台是有害的。他们几乎没有领导的自由办公结构,以及所有人都可以自由移动到想要工作的项目组里决定了他们具有极大的创新,研发,甚至开发能力。据说 Valve 只愿意招他们眼中的的 “顶尖人才”,通过极其严格的筛选标准,并通过让他们在自己认为“有意义”的项目里工作以保持热情。然而这种思路很可能是错误的;拉帮结派总是存在,而 G胖的话或许比公司手册上写的还管用,而又有人时不时会由于特殊原因被雇佣或解雇。

|

||||||

|

|

||||||

正因为如此,很多虽不闪闪发光甚至维护起来有些无聊但又需要大量时间的项目很容易枯萎。Valve 的客服已是被人诟病已久的毛病,玩家经常觉得被无视了,而 Valve 则经常不到万不得已法律要求的情况下绝不行动:例如自动退款系统,就是在澳大利亚和欧盟法律的要求下才被加入的;更有目前还没结案的华盛顿州 CS:GO 物品在线赌博网站一案。

|

正因为如此,很多虽不闪闪发光甚至维护起来有些无聊但又需要大量时间的项目很容易枯萎。Valve 的客服已是被人诟病已久的毛病,玩家经常觉得被无视了,而 Valve 则经常不到万不得已、法律要求的情况下绝不行动:例如自动退款系统,就是在澳大利亚和欧盟法律的要求下才被加入的;更有目前还没结案的华盛顿州 CS:GO 物品在线赌博网站一案。

|

||||||

|

|

||||||

各种因素最后也反映在 Steam Machines 这一项目上。Valve 方面的跳票迫使一些合作方做出了尴尬的决定,比如戴尔提前一年发布了 Alienware Alpha 外观的 Steam Machine 就在一年后的正式发布时显得硬件状况落后了。跳票很可能也导致了游戏数量上的问题。开发者和硬件合作商的对跳票和最终毫无轰动的发布也不明朗。Valve 的 VR 平台干脆直接不支持 Linux,而直到最近,SteamVR 都风风火火迭代了好几次之后,SteamOS 和 Linux 依然不支持 VR。

|

各种因素最后也反映在 Steam Machines 这一项目上。Valve 方面的跳票迫使一些合作方做出了尴尬的决定,比如戴尔提前一年发布了 Alienware Alpha 外观的 Steam Machine 就在一年后的正式发布时显得硬件状况落后了。跳票很可能也导致了游戏数量上的问题。开发者和硬件合作商的对跳票和最终毫无轰动的发布也不明朗。Valve 的 VR 平台干脆直接不支持 Linux,而直到最近,SteamVR 都风风火火迭代了好几次之后,SteamOS 和 Linux 依然不支持 VR。

|

||||||

|

|

||||||

_“长线钓鱼”_

|

#### “长线钓鱼”

|

||||||

|

|

||||||

尽管 Valve 方面对未来的规划毫无透露,有些人依然认为 Valve 在 Steam Machine 和 SteamOS 上是放长线钓大鱼。他们论点是 Steam 本身也是这样的项目 —— 一开始作为游戏补丁平台出现,到现在无敌的游戏零售和玩家社交网络。虽然 Valve 的独占游戏比如 Half-Life 2 和 CS 也帮助了 Steam 平台的传播。但现今我们完全无法看到 Valve 像当初对 Steam 那样上心 Steam Machines。同时现在 Steam Machines 也面临着 Steam 从没碰到过的激烈竞争。而这些竞争里自然也包含 Valve 自己的那些把 Windows 作为平台的 Steam 客户端。

|

尽管 Valve 方面对未来的规划毫无透露,有些人依然认为 Valve 在 Steam Machine 和 SteamOS 上是放长线钓大鱼。他们论点是 Steam 本身也是这样的项目 —— 一开始作为游戏补丁平台出现,到现在无敌的游戏零售和玩家社交网络。虽然 Valve 的独占游戏比如《半条命 2》和 《CS》 也帮助了 Steam 平台的传播。但现今我们完全无法看到 Valve 像当初对 Steam 那样上心 Steam Machines。同时现在 Steam Machines 也面临着 Steam 从没碰到过的激烈竞争。而这些竞争里自然也包含 Valve 自己的那些把 Windows 作为平台的 Steam 客户端。

|

||||||

|

|

||||||

_真正目的_

|

#### 真正目的

|

||||||

|

|

||||||

介于投入在 Steam Machines 上的努力如此之少,有些人怀疑整个产品平台是不是仅仅作为某种博弈的筹码才被开发出来。原初 Steam Machines 就发家于担心微软和苹果通过自己的应用市场垄断游戏的反制手段当中,Valve 寄希望于 Steam Machines 可以在不备之时脱离那些操作系统的支持而运行,同时也是提醒开发者们,也许有一日整个 Steam 平台会独立出来。而当微软和苹果等方面的风口没有继续收紧的情况下,Valve 自然就放慢了开发进度。然而我不这样认为;Valve 其实已经花了不少精力与硬件商和游戏开发者们共同推行这件事,不可能仅仅是为了吓吓他人就终止项目。你可以把这件事想成,微软和 Valve 都在吓唬对方 —— 微软推出了突然收紧的 Windows 8 而 Valve 则展示了一下可以独立门户的能力。

|

鉴于投入在 Steam Machines 上的努力如此之少,有些人怀疑整个产品平台是不是仅仅作为某种博弈的筹码才被开发出来。原初 Steam Machines 就发家于担心微软和苹果通过自己的应用市场垄断游戏的反制手段当中,Valve 寄希望于 Steam Machines 可以在不备之时脱离那些操作系统的支持而运行,同时也是提醒开发者们,也许有一日整个 Steam 平台会独立出来。而当微软和苹果等方面的风口没有继续收紧的情况下,Valve 自然就放慢了开发进度。然而我不这样认为;Valve 其实已经花了不少精力与硬件商和游戏开发者们共同推行这件事,不可能仅仅是为了吓吓他人就终止项目。你可以把这件事想成,微软和 Valve 都在吓唬对方 —— 微软推出了突然收紧的 Windows 8 ,而 Valve 则展示了一下可以独立门户的能力。

|

||||||

|

|

||||||

但即使如此,谁能保证开发者不会愿意跟着微软的封闭环境跑了呢?万一微软最后能提供更好的待遇和用户群体呢?更何况,微软现在正大力推行 Xbox 和 Windows 的交叉和整合,甚至 Xbox 独占游戏也出现在 Windows 上,这一切都没有损害 Windows 原本的平台性定位 —— 谁还能说微软方面不是 Steam 的直接竞争对手呢?

|

但即使如此,谁能保证开发者不会愿意跟着微软的封闭环境跑了呢?万一微软最后能提供更好的待遇和用户群体呢?更何况,微软现在正大力推行 Xbox 和 Windows 的交叉和整合,甚至 Xbox 独占游戏也出现在 Windows 上,这一切都没有损害 Windows 原本的平台性定位 —— 谁还能说微软方面不是 Steam 的直接竞争对手呢?

|

||||||

|

|

||||||

还会有人说这一切一切都是为了推进 Linux 生态环境尽快接纳 PC 游戏,而 Steam Machines 只是想为此大力推一把。但如果是这样,那这个目的实在是性价比极低,因为本愿意支持 Linux 的自然会开发,而 Steam Machines 这一出甚至会让开发者对平台期待额落空从而伤害到他们。

|

还会有人说这一切一切都是为了推进 Linux 生态环境尽快接纳 PC 游戏,而 Steam Machines 只是想为此大力推一把。但如果是这样,那这个目的实在是性价比极低,因为本愿意支持 Linux 的自然会开发,而 Steam Machines 这一出甚至会让开发者对平台期待额落空从而伤害到他们。

|

||||||

|

|

||||||

**大家眼中 Valve 曾经的机会**

|

### 大家眼中 Valve 曾经的机会

|

||||||

|

|

||||||

我认为 Steam Machines 的创意还是很有趣的,而也有一个与之匹配的市场,但就结果而言 Valve 投入的创意和努力还不够多,而定位模糊也伤害了这个产品。我认为 Steam Machines 的优势在于能砍掉 PC 游戏传统的复杂性,比如硬件问题,整机寿命和维护等;但又能拥有游戏便宜,可以打 Mod 等好处,而且也可以做各种定制化以满足用户需求。但他们必须要让产品的核心内容:价格,市场营销,机型产品线还有软件的质量有所保证才行。

|

我认为 Steam Machines 的创意还是很有趣的,而也有一个与之匹配的市场,但就结果而言 Valve 投入的创意和努力还不够多,而定位模糊也伤害了这个产品。我认为 Steam Machines 的优势在于能砍掉 PC 游戏传统的复杂性,比如硬件问题、整机寿命和维护等;但又能拥有游戏便宜,可以打 Mod 等好处,而且也可以做各种定制化以满足用户需求。但他们必须要让产品的核心内容:价格、市场营销、机型产品线还有软件的质量有所保证才行。

|

||||||

|

|

||||||

我认为 Steam Machines 可以做出一点妥协,比如硬件升级性(尽管这一点还是有可能被保留下来的 —— 但也要极为小心整个过程对用户体验的影响)和产品选择性,来减少摩擦成本。PC 一直会是一个并列的选项。想给用户产品可选性带来的只有一个困境,成吨的质量低下的 Steam Machines 根本不能解决。Valve 得自己造一台旗舰机型来指明 Steam Machines 的方向。毫无疑问,Alienware 的产品是最接近理想目标的,但他说到底也不是 Valve 的官方之作。Valve 内部不乏优秀的工业设计人才,如果他们愿意投入足够多的重视,我认为结果也许会值得他们努力。而像戴尔和 HTC 这样的公司则可以用他们丰富的经验帮 Valve 制造成品。直接钦定 Steam Machines 的硬件周期,并且在期间只推出 1-2 台机型也有助于帮助解决问题,更不用说他们还可以依次和开发商们确立性能的基准线。我不知道 OEM 合作商们该怎么办;如果 Valve 专注于自己的几台设备里,OEM 们很可能会变得多余甚至拖平台后腿。

|

我认为 Steam Machines 可以做出一点妥协,比如硬件升级性(尽管这一点还是有可能被保留下来的 —— 但也要极为小心整个过程对用户体验的影响)和产品选择性,来减少摩擦成本。PC 一直会是一个并列的选项。想给用户产品可选性带来的只有一个困境,成吨的质量低下的 Steam Machines 根本不能解决。Valve 得自己造一台旗舰机型来指明 Steam Machines 的方向。毫无疑问,Alienware 的产品是最接近理想目标的,但它说到底也不是 Valve 的官方之作。Valve 内部不乏优秀的工业设计人才,如果他们愿意投入足够多的重视,我认为结果也许会值得他们努力。而像戴尔和 HTC 这样的公司则可以用他们丰富的经验帮 Valve 制造成品。直接钦定 Steam Machines 的硬件周期,并且在期间只推出 1-2 台机型也有助于帮助解决问题,更不用说他们还可以依次和开发商们确立性能的基准线。我不知道 OEM 合作商们该怎么办;如果 Valve 专注于自己的几台设备里,OEM 们很可能会变得多余甚至拖平台后腿。

|

||||||

|

|

||||||

我觉得修复软件问题是最关键的。很多问题在严重拖着 Steam Machines 的后退,比如缺少主机上遍地都是,又能轻易安装在 PC 上的的 Netflix 和 Twitch,即使做好了客厅体验问题依然是严重的败笔。即使 Valve 已经在逐步购买电影的版权以便在 Steam 上发售,我觉得用户还是会倾向于去使用已经在市场上建立口碑的一些串流服务。这些问题需要被严肃地对待,因为玩家日益倾向于把主机作为家庭影院系统的一部分。同时,修复 Steam 客户端和平台的问题也很重要,和更多第三方服务商合作增加内容应该会是个好主意。性能问题和 Linux 下的显卡问题也很严重,不过好在他们最近在慢慢进步。移植游戏也是个问题。类似 Feral Interactive 或者 Aspyr Media 这样的游戏移植商可以帮助扩展 Steam 的商店游戏数量,但联系开发者和出版社可能会有问题,而且这两家移植商经常在移植的容器上搞自己的花样。Valve 已经在帮助游戏工作室自己移植游戏了,比如 Rocket League,不过这种情况很少见,而且就算 Valve 去帮忙了,也是非常符合 Valve 风格的拖拉。而 AAA 大作这一块内容也绝不应该被忽略 —— 近来这方面的情况已经有极大好转了,虽然 Linux 平台的支持好了很多,但在玩家数量不够以及 Valve 为 Steam Machines 提供的开发帮助甚少的情况下,Bethesda 这样的开发商依然不愿意移植游戏;同时,也有像 Denuvo 一样缺乏数字版权管理的公司难以向 Steam Machines 移植游戏。

|

我觉得修复软件问题是最关键的。很多问题在严重拖着 Steam Machines 的后腿,比如缺少主机上遍地都是、又能轻易安装在 PC 上的的 Netflix 和 Twitch,那么即使做好了客厅体验问题依然是严重的败笔。即使 Valve 已经在逐步购买电影的版权以便在 Steam 上发售,我觉得用户还是会倾向于去使用已经在市场上建立口碑的一些流媒体服务。这些问题需要被严肃地对待,因为玩家日益倾向于把主机作为家庭影院系统的一部分。同时,修复 Steam 客户端和平台的问题也很重要,和更多第三方服务商合作增加内容应该会是个好主意。性能问题和 Linux 下的显卡问题也很严重,不过好在他们最近在慢慢进步。移植游戏也是个问题。类似 Feral Interactive 或者 Aspyr Media 这样的游戏移植商可以帮助扩展 Steam 的商店游戏数量,但联系开发者和出版社可能会有问题,而且这两家移植商经常在移植的容器上搞自己的花样。Valve 已经在帮助游戏工作室自己移植游戏了,比如 Rocket League,不过这种情况很少见,而且就算 Valve 去帮忙了,也是非常符合 Valve 拖拉的风格。而 AAA 大作这一块内容也绝不应该被忽略 —— 近来这方面的情况已经有极大好转了,虽然 Linux 平台的支持好了很多,但在玩家数量不够以及 Valve 为 Steam Machines 提供的开发帮助甚少的情况下,Bethesda 这样的开发商依然不愿意移植游戏;同时,也有像 Denuvo 一样缺乏数字版权管理的公司难以向 Steam Machines 移植游戏。

|

||||||

|

|

||||||

在我看来 Valve 需要在除了软件和硬件的地方也多花些功夫。如果他们只有一个机型的话,他们可以很方便的在硬件生产上贴点钱。这样 Steam Machines 的价格就能跻身主机的行列,而且还能比自己组装 PC 要便宜。针对正确的市场群体做营销也很关键,即便我们还不知道目标玩家应该是谁(我个人会对这样的 Steam Machines 感兴趣,而且我有一整堆已经在 Steam 上以相当便宜的价格买好的游戏)。最后,我觉得零售商们其实不会对 Valve 的计划很感冒,毕竟他们要靠卖和倒卖实体游戏赚钱。

|

在我看来 Valve 需要在除了软件和硬件的地方也多花些功夫。如果他们只有一个机型的话,他们可以很方便的在硬件生产上贴点钱。这样 Steam Machines 的价格就能跻身主机的行列,而且还能比自己组装 PC 要便宜。针对正确的市场群体做营销也很关键,即便我们还不知道目标玩家应该是谁(我个人会对这样的 Steam Machines 感兴趣,而且我有一整堆已经在 Steam 上以相当便宜的价格买下的游戏)。最后,我觉得零售商们其实不会对 Valve 的计划很感冒,毕竟他们要靠卖和倒卖实体游戏赚钱。

|

||||||

|

|

||||||

就算 Valve 在产品和平台上采纳过这些改进,我也不知道怎样才能激活 Steam Machines 的全市场潜力。总的来说,Valve 不仅得学习自己的经验教训,还应该参考曾经有过类似尝试的厂商们,比如尝试依靠开放平台的 3DO 和 Pippin;又或者那些从台式机体验的竞争力退赛的那些公司,其实 Valve 如今的情况和他们也有几分相似。亦或者他们也可以观察一下任天堂 Switch —— 毕竟任天堂也在尝试跨界的创新。

|

就算 Valve 在产品和平台上采纳过这些改进,我也不知道怎样才能激活 Steam Machines 的全市场潜力。总的来说,Valve 不仅得学习自己的经验教训,还应该参考曾经有过类似尝试的厂商们,比如尝试依靠开放平台的 3DO 和 Pippin;又或者那些从台式机体验的竞争力退赛的那些公司,其实 Valve 如今的情况和他们也有几分相似。亦或者他们也可以观察一下任天堂 Switch —— 毕竟任天堂也在尝试跨界的创新。

|

||||||

|

|

||||||

@ -103,7 +103,7 @@ via: https://www.gamingonlinux.com/articles/user-editorial-steam-machines-steamo

|

|||||||

|

|

||||||

作者:[calvin][a]

|

作者:[calvin][a]

|

||||||

译者:[Moelf](https://github.com/Moelf)

|

译者:[Moelf](https://github.com/Moelf)

|

||||||

校对:[校对者ID](https://github.com/校对者ID)

|

校对:[wxy](https://github.com/wxy)

|

||||||

|

|

||||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||||

|

|

||||||

@ -1,77 +1,59 @@

|

|||||||

JavaScript 函数式编程介绍

|

JavaScript 函数式编程介绍

|

||||||

============================================================

|

============================================================

|

||||||

|

|

||||||

### 探索函数式编程和通过它让你的程序更具有可读性和易于调试

|

> 探索函数式编程,通过它让你的程序更具有可读性和易于调试

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

>Image credits : Steve Jurvetson via [Flickr][80] (CC-BY-2.0)

|

>Image credits : Steve Jurvetson via [Flickr][80] (CC-BY-2.0)

|

||||||

|

|

||||||

当 Brendan Eich 在 1995 年创造了 JavaScript,他打算[将 Scheme 移植到浏览器里][81] 。Scheme 作为 Lisp 的方言,是一种函数式编程语言。而当 Eich 被告知新的语言应该是一种可以与 Java 相比的脚本语言后,他最终确立了一种拥有 C 风格语法的语言(也和 Java 一样),但将函数视作一等公民。Java 直到版本 8 才从技术上将函数视为一等公民,虽然你可以用匿名类来模拟它。这个特性允许 JavaScript 通过函数式范式编程。

|

当 Brendan Eich 在 1995 年创造 JavaScript 时,他原本打算[将 Scheme 移植到浏览器里][81] 。Scheme 作为 Lisp 的方言,是一种函数式编程语言。而当 Eich 被告知新的语言应该是一种可以与 Java 相比的脚本语言后,他最终确立了一种拥有 C 风格语法的语言(也和 Java 一样),但将函数视作一等公民。而 Java 直到版本 8 才从技术上将函数视为一等公民,虽然你可以用匿名类来模拟它。这个特性允许 JavaScript 通过函数式范式编程。

|

||||||

|

|

||||||

JavaScript 是一个多范式语言,允许你自由地混合和使用面向对象式,过程式和函数式的范式。最近,函数式编程越来越火热。在诸如 [Angular][82] 和 [React ][83]这样的框架中,通过使用不可变数据结构实际上可以提高性能。不可变是函数式编程的核心原则,纯函数和它使得编写和调试程序变得更加容易。使用函数来代替程序的循环可以提高程序的可读性并使它更加优雅。总之,函数式编程拥有很多优点。

|

JavaScript 是一个多范式语言,允许你自由地混合和使用面向对象式、过程式和函数式的编程范式。最近,函数式编程越来越火热。在诸如 [Angular][82] 和 [React][83] 这样的框架中,通过使用不可变数据结构可以切实提高性能。不可变是函数式编程的核心原则,它以及纯函数使得编写和调试程序变得更加容易。使用函数来代替程序的循环可以提高程序的可读性并使它更加优雅。总之,函数式编程拥有很多优点。

|

||||||

|

|

||||||

### 什么不是函数式编程

|

### 什么不是函数式编程

|

||||||

|

|

||||||

在讨论什么是函数式编程前,让我们先排除那些不属于函数式编程的东西。实际上它们是你需要丢弃的语言组件(再见,老朋友):

|

在讨论什么是函数式编程前,让我们先排除那些不属于函数式编程的东西。实际上它们是你需要丢弃的语言组件(再见,老朋友):

|

||||||

|

|

||||||

|

* 循环:

|

||||||

|

* `while`

|

||||||

* 循环

|

* `do...while`

|

||||||

* **while**

|

* `for`

|

||||||

|

* `for...of`

|

||||||

* **do...while**

|

* `for...in`

|

||||||

|

* 用 `var` 或者 `let` 来声明变量

|

||||||

* **for**

|

|

||||||

|

|

||||||

* **for...of**

|

|

||||||

|

|

||||||

* **for...in**

|

|

||||||

* 用 **var** 或者 **let** 来声明变量

|

|

||||||

* 没有返回值的函数

|

* 没有返回值的函数

|

||||||

* 改变对象的属性 (比如: **o.x = 5;**)

|

* 改变对象的属性 (比如: `o.x = 5;`)

|

||||||

* 改变 Array 本身的方法

|

* 改变数组本身的方法:

|

||||||

* **copyWithin**

|

* `copyWithin`

|

||||||

|

* `fill`

|

||||||

* **fill**

|

* `pop`

|

||||||

|

* `push`

|

||||||

* **pop**

|

* `reverse`

|

||||||

|

* `shift`

|

||||||

* **push**

|

* `sort`

|

||||||

|

* `splice`

|

||||||

* **reverse**

|

* `unshift`

|

||||||

|

* 改变映射本身的方法:

|

||||||

* **shift**

|

* `clear`

|

||||||

|

* `delete`

|

||||||

* **sort**

|

* `set`

|

||||||

|

* 改变集合本身的方法:

|

||||||

* **splice**

|

* `add`

|

||||||

|

* `clear`

|

||||||

* **unshift**

|

* `delete`

|

||||||

* 改变 Map 本身的方法

|

|

||||||

* **clear**

|

|

||||||

|

|

||||||

* **delete**

|

|

||||||

|

|

||||||

* **set**

|

|

||||||

* 改变 Set 本身的方法

|

|

||||||

* **add**

|

|

||||||

|

|

||||||

* **clear**

|

|

||||||

|

|

||||||

* **delete**

|

|

||||||

|

|

||||||

脱离这些特性应该如何编写程序呢?这是我们将在后面探索的问题。

|

脱离这些特性应该如何编写程序呢?这是我们将在后面探索的问题。

|

||||||

|

|

||||||

### 纯函数

|

### 纯函数

|

||||||

|

|

||||||

你的程序中包含函数不一定意味着你正在进行函数式编程。函数式范式将纯函数和非纯函数区分开。鼓励你编写纯函数。纯函数必须满足下面的两个属性:

|

你的程序中包含函数不一定意味着你正在进行函数式编程。函数式范式将<ruby>纯函数<rt>pure function</rt></ruby>和<ruby>非纯函数<rt>impure function</rt></ruby>区分开。鼓励你编写纯函数。纯函数必须满足下面的两个属性:

|

||||||

|

|

||||||

* **引用透明:**函数在传入相同的参数后永远返回相同的返回值。这意味着该函数不依赖于任何可变状态。

|

* 引用透明:函数在传入相同的参数后永远返回相同的返回值。这意味着该函数不依赖于任何可变状态。

|

||||||

* **Side-effect free无函数副作用:**函数不能导致任何副作用。副作用可能包括 I/O(比如 向终端或者日志文件写入),改变一个不可变的对象,对变量重新赋值等等。

|

* 无副作用:函数不能导致任何副作用。副作用可能包括 I/O(比如向终端或者日志文件写入),改变一个不可变的对象,对变量重新赋值等等。

|

||||||

|

|

||||||

我们来看一些例子。首先,**multiply** 就是一个纯函数的例子,它在传入相同的参数后永远返回相同的返回值,并且不会导致副作用。

|

|

||||||

|

|

||||||

|

我们来看一些例子。首先,`multiply` 就是一个纯函数的例子,它在传入相同的参数后永远返回相同的返回值,并且不会导致副作用。

|

||||||

|

|

||||||

```

|

```

|

||||||

function multiply(a, b) {

|

function multiply(a, b) {

|

||||||

@ -79,9 +61,7 @@ function multiply(a, b) {

|

|||||||

}

|

}

|

||||||

```

|

```

|

||||||

|

|

||||||

[view raw][5][pure-function-example.js][6] hosted with ❤ by [GitHub][7]

|

下面是非纯函数的例子。`canRide` 函数依赖捕获的 `heightRequirement` 变量。被捕获的变量不一定导致一个函数是非纯函数,除非它是一个可变的变量(或者可以被重新赋值)。这种情况下使用 `let` 来声明这个变量,意味着可以对它重新赋值。`multiply` 函数是非纯函数,因为它会导致在 console 上输出。

|

||||||

|

|

||||||

下面是非纯函数的例子。**canRide** 函数依赖捕获的 **heightRequirement** 变量。被捕获的变量不一定导致一个函数是非纯函数,除非它是一个可变的变量(或者可以被重新赋值)。这种情况下使用 **let** 来声明这个变量,意味着可以对它重新赋值。**multiply** 函数是非纯函数,因为它会导致在 console 上输出。

|

|

||||||

|

|

||||||

```

|

```

|

||||||

let heightRequirement = 46;

|

let heightRequirement = 46;

|

||||||

@ -98,36 +78,27 @@ function multiply(a, b) {

|

|||||||

}

|

}

|

||||||

```

|

```

|

||||||

|

|

||||||

|

|

||||||

[view raw][8][impure-functions.js][9] hosted with ❤ by [GitHub][10]

|

|

||||||

|

|

||||||

下面的列表包含着 JavaScript 内置的非纯函数。你可以指出它们不满足两个属性中的哪个吗?

|

下面的列表包含着 JavaScript 内置的非纯函数。你可以指出它们不满足两个属性中的哪个吗?

|

||||||

|

|

||||||

* **console.log**

|

* `console.log`

|

||||||

|

* `element.addEventListener`

|

||||||

|

* `Math.random`

|

||||||

|

* `Date.now`

|

||||||

|

* `$.ajax` (这里 `$` 代表你使用的 Ajax 库)

|

||||||

|

|

||||||

* **element.addEventListener**

|

理想的程序中所有的函数都是纯函数,但是从上面的函数列表可以看出,任何有意义的程序都将包含非纯函数。大多时候我们需要进行 AJAX 调用,检查当前日期或者获取一个随机数。一个好的经验法则是遵循 80/20 规则:函数中有 80% 应该是纯函数,剩下的 20% 的必要性将不可避免地是非纯函数。

|

||||||

|

|

||||||

* **Math.random**

|

|

||||||

|

|

||||||

* **Date.now**

|

|

||||||

|

|

||||||

* **$.ajax** (**$** 代表你使用的 Ajax 库)

|

|

||||||

|

|

||||||

理想的程序中所有的函数都是纯函数,但是从上面的函数列表可以看出,任何有意义的程序都将包含非纯函数。大多时候我们需要进行 AJAX 调用,检查当前日期或者获取一个随机数。一个好的经验法则是遵循80/20规则:函数中有80%应该是纯函数,剩下的20%的必要性将不可避免地是非纯函数。

|

|

||||||

|

|

||||||

使用纯函数有几个优点:

|

使用纯函数有几个优点:

|

||||||

|

|

||||||

* 它们很容易导出和调试,因为它们不依赖于可变的状态。

|

* 它们很容易导出和调试,因为它们不依赖于可变的状态。

|

||||||

|

|

||||||

* 返回值可以被缓存或者“记忆”来避免以后重复计算。

|

* 返回值可以被缓存或者“记忆”来避免以后重复计算。

|

||||||

|

|

||||||

* 它们很容易测试,因为没有需要模拟(mock)的依赖(比如日志,AJAX,数据库等等)。

|

* 它们很容易测试,因为没有需要模拟(mock)的依赖(比如日志,AJAX,数据库等等)。

|

||||||

|

|

||||||

你编写或者使用的函数返回空(换句话说它没有返回值),那代表它是非纯函数。

|

你编写或者使用的函数返回空(换句话说它没有返回值),那代表它是非纯函数。

|

||||||

|

|

||||||

### 不变性

|

### 不变性

|

||||||

|

|

||||||

让我们回到捕获变量的概念上。来看看 **canRide** 函数。我们认为它是一个非纯函数,因为 **heightRequirement** 变量可以被重新赋值。下面是一个构造出来的例子来说明如何用不可预测的值来对它重新赋值。

|

让我们回到捕获变量的概念上。来看看 `canRide` 函数。我们认为它是一个非纯函数,因为 `heightRequirement` 变量可以被重新赋值。下面是一个构造出来的例子来说明如何用不可预测的值来对它重新赋值。

|

||||||

|

|

||||||

```

|

```

|

||||||

let heightRequirement = 46;

|

let heightRequirement = 46;

|

||||||

@ -146,10 +117,7 @@ const mySonsHeight = 47;

|

|||||||

setInterval(() => console.log(canRide(mySonsHeight)), 500);

|

setInterval(() => console.log(canRide(mySonsHeight)), 500);

|

||||||

```

|

```

|

||||||

|

|

||||||

|

我要再次强调被捕获的变量不一定会使函数成为非纯函数。我们可以通过只是简单地改变 `heightRequirement` 的声明方式来使 `canRide` 函数成为纯函数。

|

||||||

[view raw][11][mutable-state.js][12] hosted with ❤ by [GitHub][13]

|

|

||||||

|

|

||||||

我要再次强调被捕获的变量不一定会使函数成为非纯函数。我们可以通过只是简单地改变 **heightRequirement** 的声明方式来使 **canRide** 函数成为纯函数。

|

|

||||||

|

|

||||||

```

|

```

|

||||||

const heightRequirement = 46;

|

const heightRequirement = 46;

|

||||||

@ -159,10 +127,7 @@ function canRide(height) {

|

|||||||

}

|

}

|

||||||

```

|

```

|

||||||

|

|

||||||

|

通过用 `const` 来声明变量意味着它不能被再次赋值。如果尝试对它重新赋值,运行时引擎将抛出错误;那么,如果用对象来代替数字来存储所有的“常量”怎么样?

|

||||||

[view raw][14][immutable-state.js][15] hosted with ❤ by [GitHub][16]

|

|

||||||

|

|

||||||

通过用 **const** 来声明变量意味着它不能被再次赋值。如果尝试对它重新赋值,运行时引擎将抛出错误;那么,如果用对象来代替数字来存储所有的“常量”怎么样?

|

|

||||||

|

|

||||||

```

|

```

|

||||||

const constants = {

|

const constants = {

|

||||||

@ -175,58 +140,53 @@ function canRide(height) {

|

|||||||

}

|

}

|

||||||

```

|

```

|

||||||

|

|

||||||

|

我们用了 `const` ,所以这个变量不能被重新赋值,但是还有一个问题:这个对象可以被改变。下面的代码展示了,为了真正使其不可变,你不仅需要防止它被重新赋值,你也需要不可变的数据结构。JavaScript 语言提供了 `Object.freeze` 方法来阻止对象被改变。

|

||||||

[view raw][17][captured-mutable-object.js][18] hosted with ❤ by [GitHub][19]

|

|

||||||

|

|

||||||

我们用了 **const** ,所以这个变量不能被重新赋值,但是还有一个问题:这个对象可以被改变。下面的代码展示了,为了真正使其不可变,你不仅需要防止它被重新赋值,你也需要不可变的数据结构。JavaScript 语言提供了 **Object.freeze** 方法来阻止对象被改变。

|

|

||||||

|

|

||||||

```

|

```

|

||||||

'use strict';

|

'use strict';

|

||||||

|

|

||||||

// CASE 1: The object is mutable and the variable can be reassigned.对象的属性是可变的,并且变量可以被再次赋值。

|

// CASE 1: 对象的属性是可变的,并且变量可以被再次赋值。

|

||||||

let o1 = { foo: 'bar' };

|

let o1 = { foo: 'bar' };

|

||||||

|

|

||||||

// Mutate the object改变对象的属性

|

// 改变对象的属性

|

||||||

o1.foo = 'something different';

|

o1.foo = 'something different';

|

||||||

|

|

||||||

// Reassign the variable对变量再次赋值

|

// 对变量再次赋值

|

||||||

o1 = { message: "I'm a completely new object" };

|

o1 = { message: "I'm a completely new object" };

|

||||||

|

|

||||||

|

|

||||||

// CASE 2: The object is still mutable but the variable cannot be reassigned.对象的属性还是可变的,但是变量不能被再次赋值。

|

// CASE 2: 对象的属性还是可变的,但是变量不能被再次赋值。

|

||||||

const o2 = { foo: 'baz' };

|

const o2 = { foo: 'baz' };

|

||||||

|

|

||||||

// Can still mutate the object

|

// 仍然能改变对象

|

||||||

o2.foo = 'Something different, yet again';

|

o2.foo = 'Something different, yet again';

|

||||||

|

|

||||||

// Cannot reassign the variable不能对变量再次赋值

|

// 不能对变量再次赋值

|

||||||

// o2 = { message: 'I will cause an error if you uncomment me' }; // Error!

|

// o2 = { message: 'I will cause an error if you uncomment me' }; // Error!

|

||||||

|

|

||||||

|

|

||||||

// CASE 3: The object is immutable but the variable can be reassigned.对象的属性是不可变的,但是变量可以被再次赋值。

|

// CASE 3: 对象的属性是不可变的,但是变量可以被再次赋值。

|

||||||

let o3 = Object.freeze({ foo: "Can't mutate me" });

|

let o3 = Object.freeze({ foo: "Can't mutate me" });

|

||||||

|

|

||||||

// Cannot mutate the object不能改变对象的属性

|

// 不能改变对象的属性

|

||||||

// o3.foo = 'Come on, uncomment me. I dare ya!'; // Error!

|

// o3.foo = 'Come on, uncomment me. I dare ya!'; // Error!

|

||||||

|

|

||||||

// Can still reassign the variable还是可以对变量再次赋值

|

// 还是可以对变量再次赋值

|

||||||

o3 = { message: "I'm some other object, and I'm even mutable -- so take that!" };

|

o3 = { message: "I'm some other object, and I'm even mutable -- so take that!" };

|

||||||

|

|

||||||

|

|

||||||

// CASE 4: The object is immutable and the variable cannot be reassigned. This is what we want!!!!!!!!对象的属性是不可变的,并且变量不能被再次赋值。这是我们想要的!!!!!!!!

|

// CASE 4: 对象的属性是不可变的,并且变量不能被再次赋值。这是我们想要的!!!!!!!!

|

||||||

const o4 = Object.freeze({ foo: 'never going to change me' });

|

const o4 = Object.freeze({ foo: 'never going to change me' });

|

||||||

|

|

||||||

// Cannot mutate the object不能改变对象的属性

|

// 不能改变对象的属性

|

||||||

// o4.foo = 'talk to the hand' // Error!

|

// o4.foo = 'talk to the hand' // Error!

|

||||||

|

|

||||||

// Cannot reassign the variable不能对变量再次赋值

|

// 不能对变量再次赋值

|

||||||

// o4 = { message: "ain't gonna happen, sorry" }; // Error

|

// o4 = { message: "ain't gonna happen, sorry" }; // Error

|

||||||

```

|

```

|

||||||

|

|

||||||

|

|

||||||

[view raw][20][immutability-vs-reassignment.js][21] hosted with ❤ by [GitHub][22]

|

不变性适用于所有的数据结构,包括数组、映射和集合。它意味着不能调用例如 `Array.prototype.push` 等会导致本身改变的方法,因为它会改变已经存在的数组。可以通过创建一个含有原来元素和新加元素的新数组,而不是将新元素加入一个已经存在的数组。其实所有会导致数组本身被修改的方法都可以通过一个返回修改好的新数组的函数代替。

|

||||||

|

|

||||||

不变性适用于所有的数据结构,包括数组,映射和集合。它意味着不能调用例如 **Array.prototype.push** 等会导致本身改变的方法,因为它会改变已经存在的数组。可以通过创建一个含有原来元素和新加元素的新数组,代替将新元素加入一个已经存在的数组。其实所有会导致数组本身被修改的方法都可以通过一个返回修改好的新数组的函数代替。**这里一段mutator的翻译大概有些啰嗦。**

|

|

||||||

|

|

||||||

```

|

```

|

||||||

'use strict';

|

'use strict';

|

||||||

@ -251,16 +211,13 @@ const f = R.sort(myCompareFunction, a); // R = Ramda

|

|||||||

// Instead of: a.reverse();

|

// Instead of: a.reverse();

|

||||||

const g = R.reverse(a); // R = Ramda

|

const g = R.reverse(a); // R = Ramda

|

||||||

|

|

||||||

// Exercise for the reader留给读者的练习:

|

// 留给读者的练习:

|

||||||

// copyWithin

|

// copyWithin

|

||||||

// fill

|

// fill

|

||||||

// splice

|

// splice

|

||||||

```

|

```

|

||||||

|

|

||||||

|

[映射][84] 和 [集合][85] 也很相似。可以通过返回一个新的修改好的映射或者集合来代替使用会修改其本身的函数。

|

||||||

[view raw][23][array-mutator-method-replacement.js][24] hosted with ❤ by [GitHub][25]

|

|

||||||

|

|

||||||

[**Map**][84] 和 [**Set**][85] 也很相似。可以通过返回一个新的修改好的 Map 或者 Set 来代替使用会修改其本身的函数。

|

|

||||||

|

|

||||||

```

|

```

|

||||||

const map = new Map([

|

const map = new Map([

|

||||||

@ -279,9 +236,6 @@ const map3 = new Map([...map].filter(([key]) => key !== 1));

|

|||||||

const map4 = new Map();

|

const map4 = new Map();

|

||||||

```

|

```

|

||||||

|

|

||||||

|

|

||||||

[view raw][26][map-mutator-method-replacement.js][27] hosted with ❤ by [GitHub][28]

|

|

||||||

|

|

||||||

```

|

```

|

||||||

const set = new Set(['A', 'B', 'C']);

|

const set = new Set(['A', 'B', 'C']);

|

||||||

|

|

||||||

@ -295,19 +249,15 @@ const set3 = new Set([...set].filter(key => key !== 'B'));

|

|||||||

const set4 = new Set();

|

const set4 = new Set();

|

||||||

```

|

```

|

||||||

|

|

||||||

|

我想提一句如果你在使用 TypeScript(我非常喜欢 TypeScript),你可以用 `Readonly<T>`、`ReadonlyArray<T>`、`ReadonlyMap<K, V>` 和 `ReadonlySet<T>` 接口来在编译期检查你是否尝试更改这些对象,有则抛出编译错误。如果在对一个对象字面量或者数组调用 `Object.freeze`,编译器会自动推断它是只读的。由于映射和集合在其内部表达,所以在这些数据结构上调用 `Object.freeze` 不起作用。但是你可以轻松地告诉编译器它们是只读的变量。

|

||||||

|

|

||||||

[view raw][29][set-mutator-method-replacement.js][30] hosted with ❤ by [GitHub][31]

|

|

||||||

|

|

||||||

我想提一句如果你在使用 TypeScript(我非常喜欢TypeScript),你可以用 **Readonly<T>**, **ReadonlyArray<T>**, **ReadonlyMap<K, V>**, 和 **ReadonlySet<T>** 接口来在编译期检查你是否尝试更改这些对象,有则抛出编译错误。如果在对一个对象字面量或者数组调用 **Object.freeze**,编译器会自动推断它是只读的。由于映射和集合在其内部表达,所以在这些数据结构上调用 **Object.freeze** 不起作用。但是你可以轻松地告诉编译器它们是只读的变量。

|

|

||||||

|

|

||||||

### [typescript-readonly.png][32]

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

TypeScript read-only interfaces

|

*TypeScript 只读接口*

|

||||||

|

|

||||||

好,所以我们可以通过创建新的对象来代替修改原来的对象,但是这样不会导致性能损失吗?当然会。确保在你自己的应用中做了性能测试。如果你需要提高性能,可以考虑使用 [Immutable.js][86]。Immutable.js 用[持久的数据结构][91] 实现了[链表][87],,[堆栈][88], [映射][89],[集合][90]和其他数据结构。使用了和比如 Clojure 和 Scala 的函数式语言中相同的技术。

|

好,所以我们可以通过创建新的对象来代替修改原来的对象,但是这样不会导致性能损失吗?当然会。确保在你自己的应用中做了性能测试。如果你需要提高性能,可以考虑使用 [Immutable.js][86]。Immutable.js 用[持久的数据结构][91] 实现了[链表][87]、[堆栈][88]、[映射][89]、[集合][90]和其他数据结构。使用了如同 Clojure 和 Scala 这样的函数式语言中相同的技术。

|

||||||

|

|

||||||

```

|

```

|

||||||

// Use in place of `[]`.

|

// Use in place of `[]`.

|

||||||

@ -338,17 +288,14 @@ console.log([...set1]); // [1, 2, 3, 4]

|

|||||||

console.log([...set2]); // [1, 2, 3, 4, 5]

|

console.log([...set2]); // [1, 2, 3, 4, 5]

|

||||||

```

|

```

|

||||||

|

|

||||||

[view raw][33][immutable-js-demo.js][34] hosted with ❤ by [GitHub][35]

|

### 函数组合

|

||||||

|

|

||||||

### 函数组合(不确定是这个叫法

|

记不记得在中学时我们学过一些像 `(f ∘ g)(x)` 的东西?你那时可能想,“我什么时候会用到这些?”,好了,现在就用到了。你准备好了吗?`f ∘ g`读作 “函数 f 和函数 g 组合”。对它的理解有两种等价的方式,如等式所示: `(f ∘ g)(x) = f(g(x))`。你可以认为 `f ∘ g` 是一个单独的函数,或者视作将调用函数 `g` 的结果作为参数传给函数 `f`。注意这些函数是从右向左依次调用的,先执行 `g`,接下来执行 `f`。

|

||||||

|

|

||||||

好,你准备好听解答了吗?**f ∘ g **读作 **“函数 f 和函数 g 组合”(不确定 没搜到中文资料**。它的定义是 **(f ∘ g)(x) = f(g(x))**,你也可以认为 **f ∘ g** 是一个单独的函数,它将调用函数 **g** 的结果作为参数传给函数 **f**。注意这些函数是从右向左以此调用的,先执行 **g**,接下来执行 **f**。

|

|

||||||

|

|

||||||

关于函数组合的几个要点:

|

关于函数组合的几个要点:

|

||||||

|

|

||||||

1. 我们可以组合任意数量的函数(不仅限于 2 个)。

|

1. 我们可以组合任意数量的函数(不仅限于 2 个)。

|

||||||

|

2. 组合函数的一个方式是简单地把一个函数的输出作为下一个函数的输入(比如 `f(g(x))`)。

|

||||||

2. 组合函数的一个方式是简单地把一个函数的输出作为下一个函数的输入。(比如 **f(g(x))**))

|

|

||||||

|

|

||||||

```

|

```

|

||||||

// h(x) = x + 1

|

// h(x) = x + 1

|

||||||

@ -374,9 +321,7 @@ const y = f(g(h(1)));

|

|||||||

console.log(y); // '4'

|

console.log(y); // '4'

|

||||||

```

|

```

|

||||||

|

|

||||||

[view raw][36][function-composition-basic.js][37] hosted with ❤ by [GitHub][38]

|

[Ramda][92] 和 [lodash][93] 之类的库提供了更优雅的方式来组合函数。我们可以在更多的在数学意义上处理函数组合,而不是简单地将一个函数的返回值传递给下一个函数。我们可以创建一个由这些函数组成的单一复合函数(就是 `(f ∘ g)(x)`)。

|

||||||

|

|

||||||

[Ramda][92] 和 [lodash][93] 之类的库提供了更优雅的方式来组合函数。我们可以在更多的在数学意义上处理函数组合,而不是简单地将一个函数的返回值传递给下一个函数。我们可以创建一个由这些函数组成的单一复合函数(就是,**(f ∘ g)(x)**)。

|

|

||||||

|

|

||||||

```

|

```

|

||||||

// h(x) = x + 1

|

// h(x) = x + 1

|

||||||

@ -406,17 +351,15 @@ const y = composite(1);

|

|||||||

console.log(y); // '4'

|

console.log(y); // '4'

|

||||||

```

|

```

|

||||||

|

|

||||||

[view raw][39][function-composition-elegant.js][40] hosted with ❤ by [GitHub][41]

|

好了,我们可以在 JavaScript 中组合函数了。接下来呢?好,如果你已经入门了函数式编程,理想中你的程序将只有函数的组合。代码里没有循环(`for`, `for...of`, `for...in`, `while`, `do`),基本没有。你可能觉得那是不可能的。并不是这样。我们下面的两个话题是:递归和高阶函数。

|

||||||

|

|

||||||

我们可以在 JavaScript 中组合函数。接下来呢?好,如果你已经入门了函数式编程,理想中你的程序将只有函数的组合。代码里没有循环(**for**, **for...of**, **for...in**, **while**, **do**),基本没有。你说但是那不可能。并不是这样。我们下面的两个话题是:递归和高阶函数。

|

|

||||||

|

|

||||||

### 递归

|

### 递归

|

||||||

|

|

||||||

假设你想实现一个计算数字的阶乘的函数。 让我们回顾一下数学中阶乘的定义:

|

假设你想实现一个计算数字的阶乘的函数。 让我们回顾一下数学中阶乘的定义:

|

||||||

|

|

||||||

**n! = n * (n-1) * (n-2) * ... * 1**.

|

`n! = n * (n-1) * (n-2) * ... * 1`.

|

||||||

|

|

||||||

**n!** 是从 **n** 到 **1** 的所有整数的乘积。我们可以编写一个循环轻松地计算出结果。

|

`n!` 是从 `n` 到 `1` 的所有整数的乘积。我们可以编写一个循环轻松地计算出结果。

|

||||||

|

|

||||||

```

|

```

|

||||||

function iterativeFactorial(n) {

|

function iterativeFactorial(n) {

|

||||||

@ -428,16 +371,13 @@ function iterativeFactorial(n) {

|

|||||||

}

|

}

|

||||||

```

|

```

|

||||||

|

|

||||||

|

注意 `product` 和 `i` 都在循环中被反复重新赋值。这是解决这个问题的标准过程式方法。如何用函数式的方法解决这个问题呢?我们需要消除循环,确保没有变量被重新赋值。递归是函数式程序员的最有力的工具之一。递归需要我们将整体问题分解为类似整体问题的子问题。

|

||||||

|

|

||||||

[view raw][42][iterative-factorial.js][43] hosted with ❤ by [GitHub][44]

|

计算阶乘是一个很好的例子,为了计算 `n!` 我们需要将 n 乘以所有比它小的正整数。它的意思就相当于:

|

||||||

|

|

||||||

注意 **product** 和 **i** 都在循环中被反复重新赋值。这是解决这个问题的标准过程式方法。如何用函数式的方法解决这个问题呢?我们需要消除循环,确保没有变量被重新赋值。递归是函数式程序员的最有力的工具之一。递归需要我们将整体问题分解为类似整体问题的子问题。

|

`n! = n * (n-1)!`

|

||||||

|

|

||||||

计算阶乘是一个很好的例子,为了计算 **n!** 我们需要将 n 乘以所有比它小的正整数。它的意思就相当于:

|

啊哈!我们发现了一个解决 `(n-1)!` 的子问题,它类似于整个问题 `n!`。还有一个需要注意的地方就是基础条件。基础条件告诉我们何时停止递归。 如果我们没有基础条件,那么递归将永远持续。 实际上,如果有太多的递归调用,程序会抛出一个堆栈溢出错误。啊哈!

|

||||||

|

|

||||||

**n! = n * (n-1)!**

|

|

||||||

|

|

||||||

A-ha!我们发现了一个类似于整个问题 **n!** 的子问题来解决 **(n-1)!**。还有一个需要注意的地方就是基础条件。基础条件告诉我们何时停止递归。 如果我们没有基础条件,那么递归将永远持续。 实际上,如果有太多的递归调用,程序会抛出一个堆栈溢出错误。A-HA!

|

|

||||||

|

|

||||||

```

|

```

|

||||||

function recursiveFactorial(n) {

|

function recursiveFactorial(n) {

|

||||||

@ -449,28 +389,21 @@ function recursiveFactorial(n) {

|

|||||||

}

|

}

|

||||||

```

|

```

|

||||||

|

|

||||||

|

然后我们来计算 `recursiveFactorial(20000)` 因为……,为什么不呢?当我们这样做的时候,我们得到了这个结果:

|

||||||

[view raw][45][recursive-factorial.js][46] hosted with ❤ by [GitHub][47]

|

|

||||||

|

|

||||||

然后我们来计算 **recursiveFactorial(20000)** 因为...,为什么不呢?当我们这样做的时候,我们得到了这个结果:

|

|

||||||

|

|

||||||

### [stack-overflow.png][48]

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

堆栈溢出错误

|

*堆栈溢出错误*

|

||||||

|

|

||||||

这里发生了什么?我们得到一个堆栈溢出错误!这不是无穷的递归导致的。我们已经处理了基础条件(**n === 0** 的情况)。那是因为浏览器的堆栈大小是有限的,而我们的代码使用了越过了这个大小的堆栈。每次对 **recursiveFactorial** 的调用导致了新的帧被压入堆栈中,就像一个盒子压在另一个盒子上。每当 **recursiveFactorial** 被调用,一个新的盒子被放在最上面。下图展示了在计算 **recursiveFactorial(3)** 时堆栈的样子。注意在真实的堆栈中,堆栈顶部的帧将存储在执行完成后应该返回的内存地址,但是我选择用变量 **r** 来表示返回值,因为 JavaScript 开发者一般不需要考虑内存地址。

|

这里发生了什么?我们得到一个堆栈溢出错误!这不是无穷的递归导致的。我们已经处理了基础条件(`n === 0` 的情况)。那是因为浏览器的堆栈大小是有限的,而我们的代码使用了越过了这个大小的堆栈。每次对 `recursiveFactorial` 的调用导致了新的帧被压入堆栈中,就像一个盒子压在另一个盒子上。每当 `recursiveFactorial` 被调用,一个新的盒子被放在最上面。下图展示了在计算 `recursiveFactorial(3)` 时堆栈的样子。注意在真实的堆栈中,堆栈顶部的帧将存储在执行完成后应该返回的内存地址,但是我选择用变量 `r` 来表示返回值,因为 JavaScript 开发者一般不需要考虑内存地址。

|

||||||

|

|

||||||

### [stack-frames.png][49]

|

|

||||||

|

|

||||||

")

|

")

|

||||||

|

|

||||||

递归计算 3! 的堆栈(三次乘法)

|

*递归计算 3! 的堆栈(三次乘法)*

|

||||||

|

|

||||||

你可能会想象当计算 **n = 20000** 时堆栈会更高。我们可以做些什么优化它吗?当然可以。作为 **ES2015** (又名 **ES6**) 标准的一部分,有一个优化用来解决这个问题。它被称作_尾调用优化_。它使得浏览器删除或者忽略堆栈帧,当递归函数做的最后一件事是调用自己并返回结果的时候。实际上,这个优化对于相互递归函数也是有效的,但是为了简单起见,我们还是来看单递归函数。

|

你可能会想象当计算 `n = 20000` 时堆栈会更高。我们可以做些什么优化它吗?当然可以。作为 ES2015 (又名 ES6) 标准的一部分,有一个优化用来解决这个问题。它被称作<ruby>尾调用优化<rt>proper tail calls optimization</rt></ruby>(PTC)。当递归函数做的最后一件事是调用自己并返回结果的时候,它使得浏览器删除或者忽略堆栈帧。实际上,这个优化对于相互递归函数也是有效的,但是为了简单起见,我们还是来看单一递归函数。

|

||||||

|

|

||||||

你可能会注意到,在递归函数调用之后,还要进行一次额外的计算(**n * r**)。那意味着浏览器不能通过 PTC 来优化递归;然而,我们可以通过重写函数使最后一步变成递归调用以便优化。一个窍门是将中间结果(在这里是**乘积**)作为参数传递给函数。

|

你可能会注意到,在递归函数调用之后,还要进行一次额外的计算(`n * r`)。那意味着浏览器不能通过 PTC 来优化递归;然而,我们可以通过重写函数使最后一步变成递归调用以便优化。一个窍门是将中间结果(在这里是 `product`)作为参数传递给函数。

|

||||||

|

|

||||||

```

|

```

|

||||||

'use strict';

|

'use strict';

|

||||||

@ -484,30 +417,23 @@ function factorial(n, product = 1) {

|

|||||||

}

|

}

|

||||||

```

|

```

|

||||||

|

|

||||||

|

让我们来看看优化后的计算 `factorial(3)` 时的堆栈。如下图所示,堆栈不会增长到超过两层。原因是我们把必要的信息都传到了递归函数中(比如 `product`)。所以,在 `product` 被更新后,浏览器可以丢弃掉堆栈中原先的帧。你可以在图中看到每次最上面的帧下沉变成了底部的帧,原先底部的帧被丢弃,因为不再需要它了。

|

||||||

[view raw][50][factorial-tail-recursion.js][51] hosted with ❤ by [GitHub][52]

|

|

||||||

|

|

||||||

让我们来看看优化后的计算 **factorial(3)** 时的堆栈。如下图所示,堆栈不会增长到超过两层。原因是我们把必要的信息都传到了递归函数中(比如**乘积**)。所以,在**乘积**被更新后,浏览器可以丢弃掉堆栈中原先的帧。你可以在图中看到每次最上面的帧下沉变成了底部的帧,原先底部的帧被丢弃,因为不再需要它了。

|

|

||||||

|

|

||||||

### [optimized-stack-frames.png][53]

|

|

||||||

|

|

||||||

using PTC")

|

using PTC")

|

||||||

|

|

||||||

递归计算 3! 的堆栈(三次乘法)使用 PTC

|

*递归计算 3! 的堆栈(三次乘法)使用 PTC*

|

||||||

|

|

||||||

现在选一个浏览器运行吧,假设你在使用 Safari,你会得到**无穷大**(它是比在 JavaScript 中能表达的最大值更大的数)。但是我们没有得到堆栈溢出错误,那很不错!现在在其他的浏览器中呢怎么样呢?Safari 可能现在乃至将来是实现 PTC 的唯一一个浏览器。看看下面的兼容性表格:

|

现在选一个浏览器运行吧,假设你在使用 Safari,你会得到 `Infinity`(它是比在 JavaScript 中能表达的最大值更大的数)。但是我们没有得到堆栈溢出错误,那很不错!现在在其他的浏览器中呢怎么样呢?Safari 可能现在乃至将来是实现 PTC 的唯一一个浏览器。看看下面的兼容性表格:

|

||||||

|

|

||||||

### [ptc-compatibility.png][54]

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

PTC 兼容性

|

*PTC 兼容性*

|

||||||

|

|

||||||

其他浏览器提出了一种被称作[语法级尾调用][95](STC)的竞争标准。"Syntactic"意味着你需要用新的语法来标识你想要执行尾递归优化的函数。,即使浏览器还没有广泛支持,但是把你的递归函数写成支持尾递归优化的样子还是一个好主意。

|

其他浏览器提出了一种被称作<ruby>[语法级尾调用][95]<rt>syntactic tail calls</rt></ruby>(STC)的竞争标准。“语法级”意味着你需要用新的语法来标识你想要执行尾递归优化的函数。即使浏览器还没有广泛支持,但是把你的递归函数写成支持尾递归优化的样子还是一个好主意。

|

||||||

|

|

||||||

### 高阶函数

|

### 高阶函数

|

||||||

|

|

||||||

我们已经知道 JavaScript 将函数视作一等公民,可以把函数像其他值一样传递。所以,把一个函数传给另一个函数也很常见。我们也可以让函数返回一个函数。就是它!我们有高阶函数。你可能已经很熟悉几个在 **Array.prototype** 中的高阶函数。比如 **filter**,**map**,和 **reduce** 就在其中。对高阶函数的一种理解是:它是接受(一般会调用)一个回调函数参数的函数。让我们来看看一些内置的高阶函数的例子:

|

我们已经知道 JavaScript 将函数视作一等公民,可以把函数像其他值一样传递。所以,把一个函数传给另一个函数也很常见。我们也可以让函数返回一个函数。就是它!我们有高阶函数。你可能已经很熟悉几个在 `Array.prototype` 中的高阶函数。比如 `filter`、`map` 和 `reduce` 就在其中。对高阶函数的一种理解是:它是接受(一般会调用)一个回调函数参数的函数。让我们来看看一些内置的高阶函数的例子:

|

||||||

|

|

||||||

```

|

```

|

||||||

const vehicles = [

|

const vehicles = [

|

||||||

@ -531,10 +457,7 @@ const averageSUVPrice = vehicles

|

|||||||

console.log(averageSUVPrice); // 33399

|

console.log(averageSUVPrice); // 33399

|

||||||

```

|

```

|

||||||

|

|

||||||

|

注意我们在一个数组对象上调用其方法,这是面向对象编程的特性。如果我们想要更函数式一些,我们可以用 Rmmda 或者 lodash/fp 提供的函数。注意如果我们使用 `R.compose` 的话,需要倒转函数的顺序,因为它从右向左依次调用函数(从底向上);然而,如果我们想从左向右调用函数就像上面的例子,我们可以用 `R.pipe`。下面两个例子用了 Rmmda。注意 Rmmda 有一个 `mean` 函数用来代替 `reduce` 。

|

||||||

[view raw][55][built-in-higher-order-functions.js][56] hosted with ❤ by [GitHub][57]

|

|

||||||

|

|

||||||

注意我们在一个数组对象上调用其方法,这是面向对象编程的特性。如果我们想要更函数式一些,我们可以用 Rmmda 或者 lodash/fp 提供的函数。注意如果我们使用 **R.compose** 的话,需要倒转函数的顺序,因为它从右向左依次调用函数(从底向上);然而,如果我们想从左向右调用函数就像上面的例子,我们可以用 **R.pipe**。下面两个例子用了 Rmmda。注意 Rmmda 有一个 **mean** 函数用来代替 **reduce** 。

|

|

||||||

|

|

||||||

```

|

```

|

||||||

const vehicles = [

|

const vehicles = [

|

||||||

@ -569,14 +492,11 @@ const averageSUVPrice2 = R.compose(

|

|||||||

console.log(averageSUVPrice2); // 33399

|

console.log(averageSUVPrice2); // 33399

|

||||||

```

|

```

|

||||||

|

|

||||||

|

使用函数式方法的优点是清楚地分开了数据(`vehicles`)和逻辑(函数 `filter`,`map` 和 `reduce`)。面向对象的代码相比之下把数据和函数用以方法的对象的形式混合在了一起。

|

||||||

[view raw][58][composing-higher-order-functions.js][59] hosted with ❤ by [GitHub][60]

|

|

||||||

|

|

||||||

使用函数式方法的优点是清楚地分开了数据(**车辆**)和逻辑(函数 **filter**,**map** 和 **reduce**)面向对象的代码相比之下把数据和函数用以方法的对象的形式混合在了一起。

|

|

||||||

|

|

||||||

### 柯里化

|

### 柯里化

|

||||||

|

|

||||||

不规范地说,柯里化是把一个接受 **n** 个参数的函数变成 **n** 个每个接受单个参数的函数的过程。函数的 **arity** 是它接受参数的个数。接受一个参数的函数是 **unary**,两个的是 **binary**,三个的是 **ternary**,**n**个的是 **n-ary**。那么,我们可以把柯里化定义成将一个 **n-ary** 函数转换成 **n** 个 unary 函数的过程。让我们通过简单的例子开始,一个计算两个向量点积的函数。回忆一下线性代数,两个向量 **[a, b, c]** 和 **[x, y, z]** 的点积是 **ax + by + cz**。

|

不规范地说,<ruby>柯里化<rt>currying</rt></ruby>是把一个接受 `n` 个参数的函数变成 `n` 个每个接受单个参数的函数的过程。函数的 `arity` 是它接受参数的个数。接受一个参数的函数是 `unary`,两个的是 `binary`,三个的是 `ternary`,`n` 个的是 `n-ary`。那么,我们可以把柯里化定义成将一个 `n-ary` 函数转换成 `n` 个 `unary` 函数的过程。让我们通过简单的例子开始,一个计算两个向量点积的函数。回忆一下线性代数,两个向量 `[a, b, c]` 和 `[x, y, z]` 的点积是 `ax + by + cz`。

|

||||||

|

|

||||||

```

|

```

|

||||||

function dot(vector1, vector2) {

|

function dot(vector1, vector2) {

|

||||||

@ -589,10 +509,7 @@ const v2 = [4, -2, -1];

|

|||||||

console.log(dot(v1, v2)); // 1(4) + 3(-2) + (-5)(-1) = 4 - 6 + 5 = 3

|

console.log(dot(v1, v2)); // 1(4) + 3(-2) + (-5)(-1) = 4 - 6 + 5 = 3

|

||||||

```

|

```

|

||||||

|

|

||||||

|

`dot` 函数是 binary,因为它接受两个参数;然而我们可以将它手动转换成两个 unary 函数,就像下面的例子。注意 `curriedDot` 是一个 unary 函数,它接受一个向量并返回另一个接受第二个向量的 unary 函数。

|

||||||

[view raw][61][dot-product.js][62] hosted with ❤ by [GitHub][63]

|

|

||||||

|

|

||||||

**dot** 函数是 binary 因为它接受两个参数;然而我们可以将它手动转换成两个 unary 函数,就像下面的例子。注意 **curriedDot** 是一个 unary 函数,它接受一个向量并返回另一个接受第二个向量的 unary 函数。

|

|

||||||

|

|

||||||

```

|

```

|

||||||

function curriedDot(vector1) {

|

function curriedDot(vector1) {

|

||||||

@ -609,9 +526,6 @@ console.log(sumElements([1, 3, -5])); // -1

|

|||||||

console.log(sumElements([4, -2, -1])); // 1

|

console.log(sumElements([4, -2, -1])); // 1

|

||||||

```

|

```

|

||||||

|

|

||||||

|

|

||||||

[view raw][64][manual-currying.js][65] hosted with ❤ by [GitHub][66]

|

|

||||||

|

|

||||||

很幸运,我们不需要把每一个函数都手动转换成柯里化以后的形式。[Ramda][96] 和 [lodash][97] 等库可以为我们做这些工作。实际上,它们是柯里化的混合形式。你既可以每次传递一个参数,也可以像原来一样一次传递所有参数。

|

很幸运,我们不需要把每一个函数都手动转换成柯里化以后的形式。[Ramda][96] 和 [lodash][97] 等库可以为我们做这些工作。实际上,它们是柯里化的混合形式。你既可以每次传递一个参数,也可以像原来一样一次传递所有参数。

|

||||||

|

|

||||||

```

|

```

|

||||||

@ -634,8 +548,6 @@ console.log(sumElements(v2)); // 1

|

|||||||

console.log(curriedDot(v1, v2)); // 3

|

console.log(curriedDot(v1, v2)); // 3

|

||||||

```

|

```

|

||||||

|

|

||||||

[view raw][67][fancy-currying.js][68] hosted with ❤ by [GitHub][69]

|

|

||||||

|

|

||||||

Ramda 和 lodash 都允许你“跳过”一些变量之后再指定它们。它们使用置位符来做这些工作。因为点积的计算可以交换两项。传入向量的顺序不影响结果。让我们换一个例子来阐述如何使用一个置位符。Ramda 使用双下划线作为其置位符。

|

Ramda 和 lodash 都允许你“跳过”一些变量之后再指定它们。它们使用置位符来做这些工作。因为点积的计算可以交换两项。传入向量的顺序不影响结果。让我们换一个例子来阐述如何使用一个置位符。Ramda 使用双下划线作为其置位符。

|

||||||

|

|

||||||

```

|

```

|

||||||

@ -657,11 +569,9 @@ console.log(result);

|

|||||||

// 3: French Hens

|

// 3: French Hens

|

||||||

```

|

```

|

||||||

|

|

||||||

[view raw][70][currying-placeholder.js][71] hosted with ❤ by [GitHub][72]

|

在我们结束探讨柯里化之前最后的议题是<ruby>偏函数应用<rt>partial application</rt></ruby>。偏函数应用和柯里化经常同时出场,尽管它们实际上是不同的概念。一个柯里化的函数还是柯里化的函数,即使没有给它任何参数。偏函数应用,另一方面是仅仅给一个函数传递部分参数而不是所有参数。柯里化是偏函数应用常用的方法之一,但是不是唯一的。

|

||||||

|

|

||||||

在我们结束探讨柯里化之前最后的议题是偏函数应用。偏函数应用和柯里化经常同时出场,尽管它们实际上是不同的概念。一个柯里化的函数还是柯里化的函数,即使没有给它任何参数。偏函数应用,另一方面是仅仅给一个函数传递部分而不是所有参数。柯里化是偏函数应用常用的方法之一,但是不是唯一的。

|

JavaScript 拥有一个内置机制可以不依靠柯里化来做偏函数应用。那就是 [function.prototype.bind][98] 方法。这个方法的一个特殊之处在于,它要求你将 `this` 作为第一个参数传入。 如果你不进行面向对象编程,那么你可以通过传入 `null` 来忽略 `this`。

|

||||||

|

|

||||||

JavaScript 拥有一个内置机制可以不依靠柯里化来偏函数应用。那就是 [**function.prototype.bind**][98] 方法。这个方法的一个特殊之处在于,它要求你将 **this** 作为第一个参数传入。 如果你不进行面向对象编程,那么你可以通过传入 **null** 来忽略 **this**。

|

|

||||||

|

|

||||||

```

|

```

|

||||||

1function giveMe3(item1, item2, item3) {

|

1function giveMe3(item1, item2, item3) {

|

||||||

@ -682,30 +592,25 @@ console.log(result);

|

|||||||

// 3: scissors

|

// 3: scissors

|

||||||

```

|

```

|

||||||

|

|

||||||

|

|

||||||

[view raw][73][partial-application-using-bind.js][74] hosted with ❤ by [GitHub][75]

|

|

||||||

|

|

||||||

### 总结

|

### 总结

|

||||||

|

|

||||||

我希望你享受探索 JavaScript 中函数式编程的过程。对一些人来说,它可能是一个全新的编程范式,但我希望你能尝试它。你会发现你的程序更易于阅读和调试。不变性还将允许你优化 Angular 和 React 的性能。

|

我希望你享受探索 JavaScript 中函数式编程的过程。对一些人来说,它可能是一个全新的编程范式,但我希望你能尝试它。你会发现你的程序更易于阅读和调试。不变性还将允许你优化 Angular 和 React 的性能。

|

||||||

|

|

||||||

_这篇文章基于 Matt 在 OpenWest 的演讲 [JavaScript the Good-er Parts][77]. [OpenWest][78] ~~将~~在 6/12-15 ,2017 在 Salt Lake City, Utah 举行。_

|

_这篇文章基于 Matt 在 OpenWest 的演讲 [JavaScript the Good-er Parts][77]. [OpenWest][78] ~~将~~在 6/12-15 ,2017 在 Salt Lake City, Utah 举行。_

|

||||||

|

|

||||||

--------------------------------------------------------------------------------

|

--------------------------------------------------------------------------------

|

||||||

|

|

||||||

作者简介:

|

作者简介:

|

||||||

|

|

||||||

Matt Banz - Matt graduated from the University of Utah with a degree in mathematics in May of 2008. One month later he landed a job as a web developer, and he's been loving it ever since! In 2013, he earned a Master of Computer Science degree from North Carolina State University. He has taught courses in web development for LDS Business College and the Davis School District Community Education program. He is currently a Senior Front-End Developer for Motorola Solutions.

|

|

||||||

|

|

||||||

Matt Banz - Matt 于 2008 年五月在犹他大学获得了数学学位毕业。一个月后他得到了一份 web 开发者的工作,他从那时起就爱上了它!在 2013 年,他在北卡罗莱纳州立大学获得了计算机科学硕士学位。他在 LDS 商学院和戴维斯学区社区教育计划教授 Web 课程。他现在是就职于 Motorola Solutions 公司的高级前端开发者。

|

Matt Banz - Matt 于 2008 年五月在犹他大学获得了数学学位毕业。一个月后他得到了一份 web 开发者的工作,他从那时起就爱上了它!在 2013 年,他在北卡罗莱纳州立大学获得了计算机科学硕士学位。他在 LDS 商学院和戴维斯学区社区教育计划教授 Web 课程。他现在是就职于 Motorola Solutions 公司的高级前端开发者。

|

||||||

|

|

||||||

--------------

|

--------------

|

||||||

|

|

||||||

via: https://opensource.com/article/17/6/functional-javascript

|

via: https://opensource.com/article/17/6/functional-javascript

|

||||||

|

|

||||||

作者:[Matt Banz ][a]

|

作者:[Matt Banz][a]

|

||||||

译者:[trnhoe](https://github.com/trnhoe)

|

译者:[trnhoe](https://github.com/trnhoe)

|

||||||

校对:[校对者ID](https://github.com/校对者ID)

|

校对:[wxy](https://github.com/wxy)

|

||||||

|

|

||||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||||

|

|

||||||

@ -1,4 +1,4 @@

|

|||||||

[并发服务器: 第一节 —— 简介][18]

|

并发服务器(一):简介

|

||||||

============================================================

|

============================================================

|

||||||

|

|

||||||

这是关于并发网络服务器编程的第一篇教程。我计划测试几个主流的、可以同时处理多个客户端请求的服务器并发模型,基于可扩展性和易实现性对这些模型进行评判。所有的服务器都会监听套接字连接,并且实现一些简单的协议用于与客户端进行通讯。

|

这是关于并发网络服务器编程的第一篇教程。我计划测试几个主流的、可以同时处理多个客户端请求的服务器并发模型,基于可扩展性和易实现性对这些模型进行评判。所有的服务器都会监听套接字连接,并且实现一些简单的协议用于与客户端进行通讯。

|

||||||

@ -6,34 +6,32 @@

|

|||||||

该系列的所有文章:

|

该系列的所有文章:

|

||||||

|

|

||||||

* [第一节 - 简介][7]

|

* [第一节 - 简介][7]

|

||||||

|

|

||||||

* [第二节 - 线程][8]

|

* [第二节 - 线程][8]

|

||||||

|

|

||||||

* [第三节 - 事件驱动][9]

|

* [第三节 - 事件驱动][9]

|

||||||

|

|

||||||

### 协议

|

### 协议

|

||||||

|

|

||||||

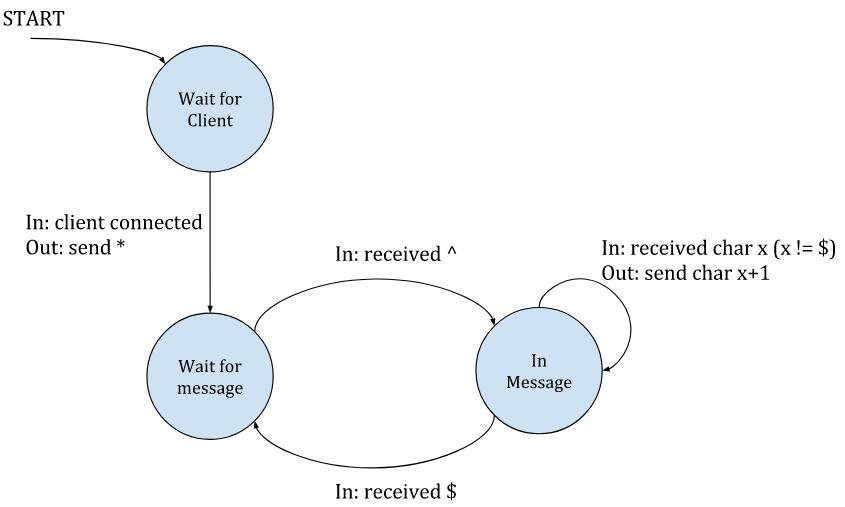

该系列教程所用的协议都非常简单,但足以展示并发服务器设计的许多有趣层面。而且这个协议是 _有状态的_ —— 服务器根据客户端发送的数据改变内部状态,然后根据内部状态产生相应的行为。并非所有的协议都是有状态的 —— 实际上,基于 HTTP 的许多协议是无状态的,但是有状态的协议对于保证重要会议的可靠很常见。

|

该系列教程所用的协议都非常简单,但足以展示并发服务器设计的许多有趣层面。而且这个协议是 _有状态的_ —— 服务器根据客户端发送的数据改变内部状态,然后根据内部状态产生相应的行为。并非所有的协议都是有状态的 —— 实际上,基于 HTTP 的许多协议是无状态的,但是有状态的协议也是很常见,值得认真讨论。

|

||||||

|

|

||||||

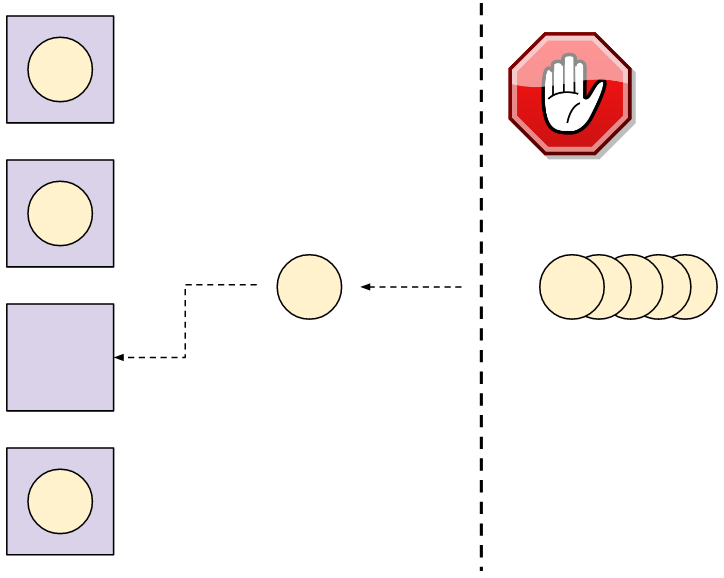

在服务器端看来,这个协议的视图是这样的:

|

在服务器端看来,这个协议的视图是这样的:

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

总之:服务器等待新客户端的连接;当一个客户端连接的时候,服务器会向该客户端发送一个 `*` 字符,进入“等待消息”的状态。在该状态下,服务器会忽略客户端发送的所有字符,除非它看到了一个 `^` 字符,这表示一个新消息的开始。这个时候服务器就会转变为“正在通信”的状态,这时它会向客户端回送数据,把收到的所有字符的每个字节加 1 回送给客户端 [ [1][10] ]。当客户端发送了 `$`字符,服务器就会退回到等待新消息的状态。`^` 和 `$` 字符仅仅用于分隔消息 —— 它们不会被服务器回送。

|

总之:服务器等待新客户端的连接;当一个客户端连接的时候,服务器会向该客户端发送一个 `*` 字符,进入“等待消息”的状态。在该状态下,服务器会忽略客户端发送的所有字符,除非它看到了一个 `^` 字符,这表示一个新消息的开始。这个时候服务器就会转变为“正在通信”的状态,这时它会向客户端回送数据,把收到的所有字符的每个字节加 1 回送给客户端^注1 。当客户端发送了 `$` 字符,服务器就会退回到等待新消息的状态。`^` 和 `$` 字符仅仅用于分隔消息 —— 它们不会被服务器回送。

|

||||||

|

|

||||||

每个状态之后都有个隐藏的箭头指向 “等待客户端” 状态,用来防止客户端断开连接。因此,客户端要表示“我已经结束”的方法很简单,关掉它那一端的连接就好。

|

每个状态之后都有个隐藏的箭头指向 “等待客户端” 状态,用来客户端断开连接。因此,客户端要表示“我已经结束”的方法很简单,关掉它那一端的连接就好。

|

||||||

|

|

||||||

显然,这个协议是真实协议的简化版,真实使用的协议一般包含复杂的报文头,转义字符序列(例如让消息体中可以出现 `$` 符号),额外的状态变化。但是我们这个协议足以完成期望。

|

显然,这个协议是真实协议的简化版,真实使用的协议一般包含复杂的报文头、转义字符序列(例如让消息体中可以出现 `$` 符号),额外的状态变化。但是我们这个协议足以完成期望。

|

||||||

|

|

||||||

另一点:这个系列是引导性的,并假设客户端都工作的很好(虽然可能运行很慢);因此没有设置超时,也没有设置特殊的规则来确保服务器不会因为客户端的恶意行为(或是故障)而出现阻塞,导致不能正常结束。

|

另一点:这个系列是介绍性的,并假设客户端都工作的很好(虽然可能运行很慢);因此没有设置超时,也没有设置特殊的规则来确保服务器不会因为客户端的恶意行为(或是故障)而出现阻塞,导致不能正常结束。

|

||||||

|

|

||||||

### 有序服务器

|

### 顺序服务器

|

||||||

|

|

||||||

这个系列中我们的第一个服务端程序是一个简单的“有序”服务器,用 C 进行编写,除了标准的 POSIX 中用于套接字的内容以外没有使用其它库。服务器程序是有序的,因为它一次只能处理一个客户端的请求;当有客户端连接时,像之前所说的那样,服务器会进入到状态机中,并且不再监听套接字接受新的客户端连接,直到当前的客户端结束连接。显然这不是并发的,而且即便在很少的负载下也不能服务多个客户端,但它对于我们的讨论很有用,因为我们需要的是一个易于理解的基础。

|

这个系列中我们的第一个服务端程序是一个简单的“顺序”服务器,用 C 进行编写,除了标准的 POSIX 中用于套接字的内容以外没有使用其它库。服务器程序是顺序,因为它一次只能处理一个客户端的请求;当有客户端连接时,像之前所说的那样,服务器会进入到状态机中,并且不再监听套接字接受新的客户端连接,直到当前的客户端结束连接。显然这不是并发的,而且即便在很少的负载下也不能服务多个客户端,但它对于我们的讨论很有用,因为我们需要的是一个易于理解的基础。

|

||||||

|

|

||||||

这个服务器的完整代码在 [这里][11];接下来,我会着重于高亮的部分。`main` 函数里面的外层循环用于监听套接字,以便接受新客户端的连接。一旦有客户端进行连接,就会调用 `serve_connection`,这个函数中的代码会一直运行,直到客户端断开连接。

|

这个服务器的完整代码在[这里][11];接下来,我会着重于一些重点的部分。`main` 函数里面的外层循环用于监听套接字,以便接受新客户端的连接。一旦有客户端进行连接,就会调用 `serve_connection`,这个函数中的代码会一直运行,直到客户端断开连接。

|

||||||

|

|

||||||

有序服务器在循环里调用 `accept` 用来监听套接字,并接受新连接:

|

顺序服务器在循环里调用 `accept` 用来监听套接字,并接受新连接:

|

||||||

|

|

||||||

```

|

```

|

||||||

while (1) {

|

while (1) {

|

||||||

@ -105,25 +103,23 @@ void serve_connection(int sockfd) {

|

|||||||

|

|

||||||

它完全是按照状态机协议进行编写的。每次循环的时候,服务器尝试接收客户端的数据。收到 0 字节意味着客户端断开连接,然后循环就会退出。否则,会逐字节检查接收缓存,每一个字节都可能会触发一个状态。

|

它完全是按照状态机协议进行编写的。每次循环的时候,服务器尝试接收客户端的数据。收到 0 字节意味着客户端断开连接,然后循环就会退出。否则,会逐字节检查接收缓存,每一个字节都可能会触发一个状态。

|

||||||

|

|

||||||

`recv` 函数返回接收到的字节数与客户端发送消息的数量完全无关(`^...$` 闭合序列的字节)。因此,在保持状态的循环中,遍历整个缓冲区很重要。而且,每一个接收到的缓冲中可能包含多条信息,但也有可能开始了一个新消息,却没有显式的结束字符;而这个结束字符可能在下一个缓冲中才能收到,这就是处理状态在循环迭代中进行维护的原因。

|

`recv` 函数返回接收到的字节数与客户端发送消息的数量完全无关(`^...$` 闭合序列的字节)。因此,在保持状态的循环中遍历整个缓冲区很重要。而且,每一个接收到的缓冲中可能包含多条信息,但也有可能开始了一个新消息,却没有显式的结束字符;而这个结束字符可能在下一个缓冲中才能收到,这就是处理状态在循环迭代中进行维护的原因。

|

||||||

|

|

||||||

例如,试想主循环中的 `recv` 函数在某次连接中返回了三个非空的缓冲:

|

例如,试想主循环中的 `recv` 函数在某次连接中返回了三个非空的缓冲:

|

||||||

|

|

||||||

1. `^abc$de^abte$f`

|

1. `^abc$de^abte$f`

|

||||||

|

|

||||||

2. `xyz^123`

|

2. `xyz^123`

|

||||||

|

|

||||||

3. `25$^ab$abab`

|

3. `25$^ab$abab`

|

||||||

|

|

||||||

服务端返回的是哪些数据?追踪代码对于理解状态转变很有用。(答案见 [ [2][12] ])

|

服务端返回的是哪些数据?追踪代码对于理解状态转变很有用。(答案见^注[2] )

|

||||||

|

|

||||||

### 多个并发客户端

|

### 多个并发客户端

|

||||||

|

|

||||||

如果多个客户端在同一时刻向有序服务器发起连接会发生什么事情?

|

如果多个客户端在同一时刻向顺序服务器发起连接会发生什么事情?

|

||||||

|

|

||||||

服务器端的代码(以及它的名字 `有序的服务器`)已经说的很清楚了,一次只能处理 _一个_ 客户端的请求。只要服务器在 `serve_connection` 函数中忙于处理客户端的请求,就不会接受别的客户端的连接。只有当前的客户端断开了连接,`serve_connection` 才会返回,然后最外层的循环才能继续执行接受其他客户端的连接。

|

服务器端的代码(以及它的名字 “顺序服务器”)已经说的很清楚了,一次只能处理 _一个_ 客户端的请求。只要服务器在 `serve_connection` 函数中忙于处理客户端的请求,就不会接受别的客户端的连接。只有当前的客户端断开了连接,`serve_connection` 才会返回,然后最外层的循环才能继续执行接受其他客户端的连接。

|

||||||

|

|

||||||

为了演示这个行为,[该系列教程的示例代码][13] 包含了一个 Python 脚本,用于模拟几个想要同时连接服务器的客户端。每一个客户端发送类似之前那样的三个数据缓冲 [ [3][14] ],不过每次发送数据之间会有一定延迟。

|

为了演示这个行为,[该系列教程的示例代码][13] 包含了一个 Python 脚本,用于模拟几个想要同时连接服务器的客户端。每一个客户端发送类似之前那样的三个数据缓冲 ^注3 ,不过每次发送数据之间会有一定延迟。

|

||||||

|

|

||||||

客户端脚本在不同的线程中并发地模拟客户端行为。这是我们的序列化服务器与客户端交互的信息记录:

|

客户端脚本在不同的线程中并发地模拟客户端行为。这是我们的序列化服务器与客户端交互的信息记录:

|

||||||

|

|

||||||

@ -158,30 +154,26 @@ INFO:2017-09-16 14:14:24,176:conn0 received b'36bc1111'

|

|||||||

INFO:2017-09-16 14:14:24,376:conn0 disconnecting

|

INFO:2017-09-16 14:14:24,376:conn0 disconnecting

|

||||||

```

|

```

|

||||||

|

|

||||||

这里要注意连接名:`conn1` 是第一个连接到服务器的,先跟服务器交互了一段时间。接下来的连接 `conn2` —— 在第一个断开连接后,连接到了服务器,然后第三个连接也是一样。就像日志显示的那样,每一个连接让服务器变得繁忙,保持大约 2.2 秒的时间(这实际上是人为地在客户端代码中加入的延迟),在这段时间里别的客户端都不能连接。

|

这里要注意连接名:`conn1` 是第一个连接到服务器的,先跟服务器交互了一段时间。接下来的连接 `conn2` —— 在第一个断开连接后,连接到了服务器,然后第三个连接也是一样。就像日志显示的那样,每一个连接让服务器变得繁忙,持续了大约 2.2 秒的时间(这实际上是人为地在客户端代码中加入的延迟),在这段时间里别的客户端都不能连接。

|

||||||

|

|

||||||

显然,这不是一个可扩展的策略。这个例子中,客户端中加入了延迟,让服务器不能处理别的交互动作。一个智能服务器应该能处理一堆客户端的请求,而这个原始的服务器在结束连接之前一直繁忙(我们将会在之后的章节中看到如何实现智能的服务器)。尽管服务端有延迟,但这不会过度占用CPU;例如,从数据库中查找信息(时间基本上是花在连接到数据库服务器上,或者是花在硬盘中的本地数据库)。

|

显然,这不是一个可扩展的策略。这个例子中,客户端中加入了延迟,让服务器不能处理别的交互动作。一个智能服务器应该能处理一堆客户端的请求,而这个原始的服务器在结束连接之前一直繁忙(我们将会在之后的章节中看到如何实现智能的服务器)。尽管服务端有延迟,但这不会过度占用 CPU;例如,从数据库中查找信息(时间基本上是花在连接到数据库服务器上,或者是花在硬盘中的本地数据库)。

|

||||||

|

|

||||||

### 总结及期望

|

### 总结及期望

|

||||||

|

|

||||||

这个示例服务器达成了两个预期目标:

|

这个示例服务器达成了两个预期目标:

|

||||||

|

|

||||||

1. 首先是介绍了问题范畴和贯彻该系列文章的套接字编程基础。

|

1. 首先是介绍了问题范畴和贯彻该系列文章的套接字编程基础。

|

||||||

|

2. 对于并发服务器编程的抛砖引玉 —— 就像之前的部分所说,顺序服务器还不能在非常轻微的负载下进行扩展,而且没有高效的利用资源。

|

||||||

|

|

||||||

2. 对于并发服务器编程的抛砖引玉 —— 就像之前的部分所说,有序服务器还不能在几个轻微的负载下进行扩展,而且没有高效的利用资源。

|

在看下一篇文章前,确保你已经理解了这里所讲的服务器/客户端协议,还有顺序服务器的代码。我之前介绍过了这个简单的协议;例如 [串行通信分帧][15] 和 [用协程来替代状态机][16]。要学习套接字网络编程的基础,[Beej 的教程][17] 用来入门很不错,但是要深入理解我推荐你还是看本书。

|

||||||

|

|

||||||

在看下一篇文章前,确保你已经理解了这里所讲的服务器/客户端协议,还有有序服务器的代码。我之前介绍过了这个简单的协议;例如 [串行通信分帧][15] 和 [协同运行,作为状态机的替代][16]。要学习套接字网络编程的基础,[Beej's 教程][17] 用来入门很不错,但是要深入理解我推荐你还是看本书。

|

|

||||||

|

|

||||||

如果有什么不清楚的,请在评论区下进行评论或者向我发送邮件。深入理解并发服务器!

|

如果有什么不清楚的,请在评论区下进行评论或者向我发送邮件。深入理解并发服务器!

|

||||||

|

|

||||||

***

|

***

|

||||||

|

|

||||||

|

- 注1:状态转变中的 In/Out 记号是指 [Mealy machine][2]。

|

||||||

[ [1][1] ] 状态转变中的 In/Out 记号是指 [Mealy machine][2]。

|

- 注2:回应的是 `bcdbcuf23436bc`。

|

||||||

|

- 注3:这里在结尾处有一点小区别,加了字符串 `0000` —— 服务器回应这个序列,告诉客户端让其断开连接;这是一个简单的握手协议,确保客户端有足够的时间接收到服务器发送的所有回复。

|

||||||

[ [2][3] ] 回应的是 `bcdbcuf23436bc`。

|

|

||||||

|

|

||||||

[ [3][4] ] 这里在结尾处有一点小区别,加了字符串 `0000` —— 服务器回应这个序列,告诉客户端让其断开连接;这是一个简单的握手协议,确保客户端有足够的时间接收到服务器发送的所有回复。

|

|

||||||

|

|

||||||

--------------------------------------------------------------------------------

|

--------------------------------------------------------------------------------

|

||||||

|

|

||||||

@ -189,7 +181,7 @@ via: https://eli.thegreenplace.net/2017/concurrent-servers-part-1-introduction/

|

|||||||

|

|

||||||

作者:[Eli Bendersky][a]

|

作者:[Eli Bendersky][a]

|

||||||

译者:[GitFuture](https://github.com/GitFuture)

|

译者:[GitFuture](https://github.com/GitFuture)

|

||||||

校对:[校对者ID](https://github.com/校对者ID)

|

校对:[wxy](https://github.com/wxy)

|

||||||

|

|

||||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||||

|

|

||||||

294

published/20171004 Concurrent Servers Part 2 - Threads.md

Normal file

294

published/20171004 Concurrent Servers Part 2 - Threads.md

Normal file

@ -0,0 +1,294 @@

|

|||||||

|

并发服务器(二):线程

|

||||||

|

============================================================

|

||||||

|

|

||||||

|

这是并发网络服务器系列的第二节。[第一节][20] 提出了服务端实现的协议,还有简单的顺序服务器的代码,是这整个系列的基础。

|

||||||

|

|

||||||

|

这一节里,我们来看看怎么用多线程来实现并发,用 C 实现一个最简单的多线程服务器,和用 Python 实现的线程池。

|

||||||

|

|

||||||

|

该系列的所有文章:

|

||||||

|

|

||||||

|

* [第一节 - 简介][8]

|

||||||

|

* [第二节 - 线程][9]

|

||||||

|

* [第三节 - 事件驱动][10]

|

||||||

|

|

||||||

|

### 多线程的方法设计并发服务器

|

||||||

|

|

||||||

|

说起第一节里的顺序服务器的性能,最显而易见的,是在服务器处理客户端连接时,计算机的很多资源都被浪费掉了。尽管假定客户端快速发送完消息,不做任何等待,仍然需要考虑网络通信的开销;网络要比现在的 CPU 慢上百万倍还不止,因此 CPU 运行服务器时会等待接收套接字的流量,而大量的时间都花在完全不必要的等待中。

|

||||||

|

|

||||||

|

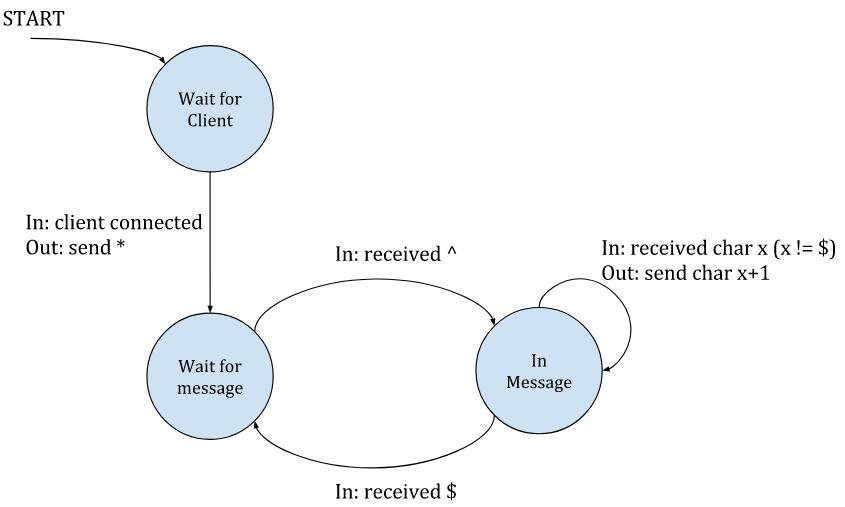

这里是一份示意图,表明顺序时客户端的运行过程:

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

这个图片上有 3 个客户端程序。棱形表示客户端的“到达时间”(即客户端尝试连接服务器的时间)。黑色线条表示“等待时间”(客户端等待服务器真正接受连接所用的时间),有色矩形表示“处理时间”(服务器和客户端使用协议进行交互所用的时间)。有色矩形的末端表示客户端断开连接。

|

||||||

|

|

||||||

|

上图中,绿色和橘色的客户端尽管紧跟在蓝色客户端之后到达服务器,也要等到服务器处理完蓝色客户端的请求。这时绿色客户端得到响应,橘色的还要等待一段时间。

|

||||||

|

|

||||||

|

多线程服务器会开启多个控制线程,让操作系统管理 CPU 的并发(使用多个 CPU 核心)。当客户端连接的时候,创建一个线程与之交互,而在主线程中,服务器能够接受其他的客户端连接。下图是该模式的时间轴:

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

### 每个客户端一个线程,在 C 语言里要用 pthread

|

||||||

|

|

||||||

|

这篇文章的 [第一个示例代码][11] 是一个简单的 “每个客户端一个线程” 的服务器,用 C 语言编写,使用了 [phtreads API][12] 用于实现多线程。这里是主循环代码:

|

||||||

|

|

||||||

|

```

|

||||||

|

while (1) {

|

||||||

|

struct sockaddr_in peer_addr;

|

||||||

|

socklen_t peer_addr_len = sizeof(peer_addr);

|

||||||

|

|

||||||

|

int newsockfd =

|

||||||

|

accept(sockfd, (struct sockaddr*)&peer_addr, &peer_addr_len);

|

||||||

|

|

||||||

|

if (newsockfd < 0) {

|

||||||

|

perror_die("ERROR on accept");

|

||||||

|

}

|

||||||

|

|

||||||

|

report_peer_connected(&peer_addr, peer_addr_len);

|

||||||

|

pthread_t the_thread;

|

||||||

|

|

||||||

|

thread_config_t* config = (thread_config_t*)malloc(sizeof(*config));

|

||||||

|

if (!config) {

|

||||||

|

die("OOM");

|

||||||

|

}

|

||||||

|

config->sockfd = newsockfd;

|

||||||

|

pthread_create(&the_thread, NULL, server_thread, config);

|

||||||

|

|

||||||

|

// 回收线程 —— 在线程结束的时候,它占用的资源会被回收

|

||||||

|

// 因为主线程在一直运行,所以它比服务线程存活更久。

|

||||||

|

pthread_detach(the_thread);

|

||||||

|

}

|

||||||

|

```

|

||||||

|

|

||||||

|

这是 `server_thread` 函数:

|

||||||

|

|

||||||

|

```

|

||||||

|

void* server_thread(void* arg) {

|

||||||

|