mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-12 01:40:10 +08:00

commit

fd4af063f7

122

20171202 docker - Use multi-stage builds.md

Normal file


@ -0,0 +1,122 @@

Docker: Use Multi-Stage Builds
============================================================

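Multi-stage builds are a new feature available in Docker 17.05 and later. For anyone who has struggled to optimize Dockerfiles, they make it possible to do so while keeping the Dockerfiles easy to read and maintain.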

> Acknowledgment: Special thanks to [Alex Ellis][1] for granting permission to use his blog post [Builder pattern vs. Multi-stage builds in Docker][2] as the basis of the examples below.

### Before multi-stage builds

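One of the most challenging things about building images is keeping the image size down. Each instruction in the Dockerfile adds a layer to the image, and you need to remember to clean up any artifacts you don't need before moving on to the next layer. To write a really efficient Dockerfile, you have traditionally needed to use shell tricks and other logic to keep the number of layers as small as possible and to ensure that each layer has the artifacts it needs from the previous layer and nothing else.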

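In practice, it was very common to have one Dockerfile for development (containing everything needed to build your application) and a slimmed-down one for production, containing only your application and exactly what is needed to run it. This has been called the "builder pattern". Maintaining two Dockerfiles is not ideal, however.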

Here is an example of a `Dockerfile.build` and a `Dockerfile` that follow the builder pattern described above:

`Dockerfile.build`:

```
FROM golang:1.7.3
WORKDIR /go/src/github.com/alexellis/href-counter/
RUN go get -d -v golang.org/x/net/html
COPY app.go .
RUN go get -d -v golang.org/x/net/html \
  && CGO_ENABLED=0 GOOS=linux go build -a -installsuffix cgo -o app .
```

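Notice that this example also artificially squashes two `RUN` commands together using the Bash `&&` operator, to avoid creating an additional layer in the image. This is failure-prone and hard to maintain: for example, when inserting another command, it is easy to forget to continue the line with the `\` character.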

`Dockerfile`:

```
FROM alpine:latest
RUN apk --no-cache add ca-certificates
WORKDIR /root/
COPY app .
CMD ["./app"]
```

`build.sh`:

```
#!/bin/sh
echo Building alexellis2/href-counter:build

docker build --build-arg https_proxy=$https_proxy --build-arg http_proxy=$http_proxy \
    -t alexellis2/href-counter:build . -f Dockerfile.build

docker create --name extract alexellis2/href-counter:build
docker cp extract:/go/src/github.com/alexellis/href-counter/app ./app
docker rm -f extract

echo Building alexellis2/href-counter:latest

docker build --no-cache -t alexellis2/href-counter:latest .
rm ./app
```

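When you run the `build.sh` script, it builds the first image, creates a container from it so it can copy the artifact out, and then builds the second image. Both images take up space on your system, and you still have the `app` artifact on your local disk as well.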

Multi-stage builds vastly simplify this situation!

### Use multi-stage builds

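With multi-stage builds, you use multiple `FROM` statements in your Dockerfile. Each `FROM` instruction can use a different base image, and each one begins a new stage of the build. You can selectively copy artifacts from one stage to another, leaving behind everything you don't want in the final image. To show how this works, let's adapt the Dockerfile from the previous section to use multi-stage builds.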

`Dockerfile`:

```
FROM golang:1.7.3
WORKDIR /go/src/github.com/alexellis/href-counter/
RUN go get -d -v golang.org/x/net/html
COPY app.go .
RUN CGO_ENABLED=0 GOOS=linux go build -a -installsuffix cgo -o app .

FROM alpine:latest
RUN apk --no-cache add ca-certificates
WORKDIR /root/
COPY --from=0 /go/src/github.com/alexellis/href-counter/app .
CMD ["./app"]
```

You only need a single Dockerfile, and no separate build script. Just run `docker build`:

```
$ docker build -t alexellis2/href-counter:latest .
```

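The end result is the same tiny production image as before, with a significant reduction in complexity. You don't need to create any intermediate images, and you don't need to extract any artifacts to your local system at all.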

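How does it work? The second `FROM` instruction starts a new build stage with the `alpine:latest` image as its base. The `COPY --from=0` line copies just the built artifact from the previous stage into this new stage. The Go SDK and any intermediate artifacts are left behind and are not saved in the final image.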

### Name your build stages

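By default, the stages are not named, and you refer to them by their integer number, starting with 0 for the first `FROM` instruction. However, you can name a stage by adding `as <NAME>` to its `FROM` instruction. The following example improves the previous one by naming the stages and using the name in the `COPY` instruction. This means that even if the instructions in your Dockerfile are reordered later, the `COPY` won't break.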

```
FROM golang:1.7.3 as builder
WORKDIR /go/src/github.com/alexellis/href-counter/
RUN go get -d -v golang.org/x/net/html
COPY app.go .
RUN CGO_ENABLED=0 GOOS=linux go build -a -installsuffix cgo -o app .

FROM alpine:latest
RUN apk --no-cache add ca-certificates
WORKDIR /root/
COPY --from=builder /go/src/github.com/alexellis/href-counter/app .
CMD ["./app"]
```
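To make the numbering rule concrete, here is a small shell sketch that prints the index and name of each stage in a Dockerfile, so you can see which number a `COPY --from=0` would refer to. The `list_stages` function is hypothetical (it is not part of Docker) and only understands simple `FROM image [AS name]` lines; it does not handle `ARG` substitution, `--platform` flags, or line continuations.

```shell
#!/bin/sh
# list_stages: print "<index> <name>" for each build stage in a Dockerfile.
# Hypothetical helper for illustration, not part of Docker. It only handles
# simple "FROM image [AS name]" lines; unnamed stages are shown as "-".
list_stages() {
    awk -v n=0 '
        # Dockerfile instructions are case-insensitive, so compare upper case.
        toupper($1) == "FROM" {
            name = "-"
            if (NF >= 4 && toupper($3) == "AS") name = $4
            print n, name    # stages are numbered from 0 in FROM order
            n++
        }
    ' "$1"
}
```

Run against the named-stage Dockerfile above, `list_stages Dockerfile` should print `0 builder` and `1 -`, which shows that `COPY --from=builder` and `COPY --from=0` point at the same stage; only the name keeps working if the stages are reordered.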

--------------------------------------------------------------------------------

via: https://docs.docker.com/engine/userguide/eng-image/multistage-build/

Author: [docker][a]
Translator: [iron0x](https://github.com/iron0x)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/).

[a]:https://docs.docker.com/engine/userguide/eng-image/multistage-build/
[1]:https://twitter.com/alexellisuk
[2]:http://blog.alexellis.io/mutli-stage-docker-builds/


179

published/19951001 Writing man Pages Using groff.md

Normal file


@ -0,0 +1,179 @@

Writing man Pages Using groff
===================

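`groff` is the GNU version of the popular nroff/troff text-formatting tools provided on most Unix systems. It is most often used to write man pages, the online documentation for commands, programming interfaces, and so on. In this article, we show you how to write your own man pages with `groff`.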

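Two of the original text-processing systems on Unix were troff and nroff, developed at Bell Labs for the original implementation of Unix (in fact, Unix itself was developed partly to support such a text-processing system). The first version of this text processor was called roff (for "runoff"); later came troff, which generated output for a particular typesetter in use at the time. nroff was a later version that became the standard text processor on Unix systems. groff is the GNU implementation of nroff and troff that is used on Linux systems. It includes several extended features and drivers for a number of printing devices.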

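`groff` can produce documents, articles, and books, much in the same vein as other text-formatting systems such as TeX. However, `groff` (like the original nroff) has one intrinsic feature that TeX and its variants lack: the ability to produce plain ASCII output. While the other systems are great for producing documents to be printed, `groff` can produce plain ASCII to be viewed online (or printed directly as plain text on even the simplest printer). If you need to produce documentation to be viewed online as well as in printed form, `groff` may be the way to go (although there are alternatives, such as Texinfo, Lametex, and others).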

`groff` also has the advantage of being much smaller than TeX; it requires fewer support files and executables than even a minimal TeX distribution.

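One special application of `groff` is formatting Unix man pages. If you are a Unix programmer, you will eventually need to write and produce man pages of some kind. In this article, we introduce `groff` by writing a short man page.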

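Like TeX, `groff` uses a particular text-formatting language to describe how to process the text. This language is somewhat more cryptic than that of systems such as TeX, but also more concise. In addition, `groff` provides several macro packages that are used on top of the basic formatter; each macro package is tailored to a particular type of document. For example, the mgs macros are well suited to writing articles and papers, while the man macros are used for man pages.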

### Writing a man page

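Writing man pages with `groff` is quite simple. For your man page to look like the others, you need to follow several conventions in the source, presented below. In this example, we'll write a man page for a fictional command, `coffee`, which controls your networked coffee machine in various ways.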

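Using any text editor, enter the following source and save it as `coffee.man`. The line numbers used in the explanation below count the lines of this listing starting from 1; they are for reference only and are not part of the file.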

```
.TH COFFEE 1 "23 March 94"
.SH NAME
coffee \- Control remote coffee machine
.SH SYNOPSIS
\fBcoffee\fP [ -h | -b ] [ -t \fItype\fP ]
\fIamount\fP
.SH DESCRIPTION
\fBcoffee\fP queues a request to the remote
coffee machine at the device \fB/dev/cf0\fR.
The required \fIamount\fP argument specifies
the number of cups, generally between 0 and
12 on ISO standard coffee machines.
.SS Options
.TP
\fB-h\fP
Brew hot coffee. Cold is the default.
.TP
\fB-b\fP
Burn coffee. Especially useful when executing
\fBcoffee\fP on behalf of your boss.
.TP
\fB-t \fItype\fR
Specify the type of coffee to brew, where
\fItype\fP is one of \fBcolumbian\fP,
\fBregular\fP, or \fBdecaf\fP.
.SH FILES
.TP
\fC/dev/cf0\fR
The remote coffee machine device
.SH "SEE ALSO"
milk(5), sugar(5)
.SH BUGS
May require human intervention if coffee
supply is exhausted.
```

*Listing 1: Sample man page source*

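Don't let the obscure-looking source frighten you. The character sequences `\fB`, `\fI`, and `\fR` change the font to boldface, italics, and roman type, respectively. `\fP` resets the font to the one previously selected.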

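Other `groff` <ruby>requests<rt>request</rt></ruby> appear on lines beginning with a dot (`.`). On line 1, the `.TH` request sets the title of the man page to `COFFEE`, the man section to `1`, and the date of the man page's latest revision. (Recall that man section 1 is for user commands, section 2 for system calls, and so on. Run `man man` to learn about each section.)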

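On line 2, the `.SH` request starts a <ruby>section<rt>section</rt></ruby> named `NAME`. Note that most Unix man pages use the sections `NAME`, `SYNOPSIS`, `DESCRIPTION`, `FILES`, `SEE ALSO`, `NOTES`, `AUTHOR`, and `BUGS`, in that order, with extra optional sections as needed. This is only a convention for writing man pages; the software does not enforce it.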

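Line 3 gives the name of the command, followed by a dash (written `\-` in the source) and a short description. Use this format in the `NAME` section so that your man page can be added to the whatis database, which is used by the `man -k` and `apropos` commands.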
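The `.TH` and `NAME` conventions above are mechanical enough to script. The following shell sketch prints the first lines of a new man page source; the function name `man_skeleton` and its argument order are made up for this illustration, and the output is only a starting point to fill in by hand.

```shell
#!/bin/sh
# man_skeleton: print the opening lines of a man page source that follow the
# .TH and NAME conventions described above. Hypothetical helper.
# Arguments: NAME SECTION DATE "one-line description"
man_skeleton() {
    # The .TH title is conventionally the command name in upper case.
    title=$(printf '%s' "$1" | tr '[:lower:]' '[:upper:]')
    printf '.TH %s %s "%s"\n' "$title" "$2" "$3"
    printf '.SH NAME\n'
    # "name \- description" is the line the whatis database is built from.
    printf '%s \\- %s\n' "$1" "$4"
    printf '.SH SYNOPSIS\n.SH DESCRIPTION\n'
}
```

For example, `man_skeleton coffee 1 "23 March 94" "Control remote coffee machine"` reproduces lines 1 to 3 of Listing 1, followed by empty `SYNOPSIS` and `DESCRIPTION` sections.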

Lines 4-6 give a synopsis of the `coffee` command. Note that italics (`\fI...\fP`) denote parameters on the command line, and optional arguments are enclosed in square brackets.

Lines 7-12 give a brief description of the command. Boldface is generally used for program and file names.

On line 13, the `.SS` request starts a subsection named `Options`.

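Lines 14-25 then give the list of options, presented as a tagged list. Each item in the list is marked with a `.TP` request; the line after `.TP` is the tag (here, the option), followed by the item's text. For example, lines 14-16: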

```
.TP
\fB-h\fP
Brew hot coffee. Cold is the default.
```

are displayed as:

```
-h     Brew hot coffee. Cold is the default.
```

Lines 26-29 create the `FILES` section of the man page, which describes any files the command might use. A tagged list made with `.TP` requests works well for listing these files.

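Lines 30-31 give the `SEE ALSO` section, which lists other man pages worth consulting. Notice that the string `"SEE ALSO"` following the `.SH` request on line 30 is enclosed in quotes. This is because `.SH` takes the first whitespace-delimited word as the section title, so any title longer than one word must be quoted to form a single argument.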
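The quoting rule for multi-word section titles can be applied mechanically. This hypothetical `sh_heading` function (not part of groff) emits a `.SH` request and wraps the title in double quotes whenever it contains a space:

```shell
#!/bin/sh
# sh_heading: emit a .SH request, quoting the title when it contains a space
# so that .SH receives it as a single argument. Hypothetical helper.
sh_heading() {
    case $1 in
        *" "*) printf '.SH "%s"\n' "$1" ;;   # multi-word title, e.g. SEE ALSO
        *)     printf '.SH %s\n' "$1" ;;     # single word needs no quotes
    esac
}
```

`sh_heading 'SEE ALSO'` prints `.SH "SEE ALSO"`, matching line 30 of Listing 1, while `sh_heading BUGS` prints `.SH BUGS`.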

Finally, lines 32-34 form the `BUGS` section.

### Formatting and installing the man page

To format this man page and view it on your screen, use the following command:

```
$ groff -Tascii -man coffee.man | more
```

The `-Tascii` option tells `groff` to produce plain ASCII output; `-man` tells `groff` to use the man page macro set. If all goes well, the man page should be displayed as follows.

```
COFFEE(1)                                               COFFEE(1)

NAME
       coffee - Control remote coffee machine

SYNOPSIS
       coffee [ -h | -b ] [ -t type ] amount

DESCRIPTION
       coffee queues a request to the remote coffee machine at
       the device /dev/cf0. The required amount argument speci-
       fies the number of cups, generally between 0 and 12 on ISO
       standard coffee machines.

   Options
       -h     Brew hot coffee. Cold is the default.

       -b     Burn coffee. Especially useful when executing cof-
              fee on behalf of your boss.

       -t type
              Specify the type of coffee to brew, where type is
              one of columbian, regular, or decaf.

FILES
       /dev/cf0
              The remote coffee machine device

SEE ALSO
       milk(5), sugar(5)

BUGS
       May require human intervention if coffee supply is
       exhausted.
```

*The formatted man page*

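As mentioned earlier, `groff` can produce other types of output. Using the `-Tps` option instead of `-Tascii` produces PostScript output, which you can save to a file, view with GhostView, or print on a PostScript printer. `-Tdvi` produces device-independent .dvi output, similar to that produced by TeX.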

If you want other users on your system to be able to view the man page, you need to install the groff source in a directory on their `$MANPATH`. Standard man pages live in `/usr/man`, and section 1 man pages belong in `/usr/man/man1`, so the command:

```
$ cp coffee.man /usr/man/man1/coffee.1
```

installs the man page in `/usr/man` for everyone to use (note the `.1` extension instead of `.man`). The next time `man coffee` is run, the man page is automatically reformatted, and the viewable text is saved in `/usr/man/cat1/coffee.1.Z`.

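If you can't copy man page sources directly into `/usr/man` (say, because you are not the system administrator), you can create your own man page directory tree and add it to your `$MANPATH`. The `$MANPATH` environment variable has the same format as `$PATH`; for example, to add the directory `/home/mdw/man` to `$MANPATH`, just use: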

```
$ export MANPATH=/home/mdw/man:$MANPATH
```
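The steps above can be wrapped in a small function for a personal man tree. `install_manpage` is a hypothetical name used only for this sketch; it assumes the `manN/name.N` layout described above and simply creates the section directory before copying:

```shell
#!/bin/sh
# install_manpage: copy a man page source into a man tree using the
# manN/name.N layout described above. Hypothetical helper.
# Arguments: SOURCE NAME SECTION TREE   (e.g. coffee.man coffee 1 "$HOME/man")
install_manpage() {
    src=$1 name=$2 section=$3 tree=$4
    mkdir -p "$tree/man$section" || return 1
    # Use the section number as the extension (.1), not .man.
    cp "$src" "$tree/man$section/$name.$section"
}
```

For example, `install_manpage coffee.man coffee 1 "$HOME/man"` followed by `export MANPATH=$HOME/man:$MANPATH` should let `man coffee` find the page.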

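`groff` and the man macros have many other options and formatting commands. The best way to find them is to look at the files in `/usr/lib/groff`; the `tmac` directory contains the macro files, which usually document the commands they provide. To make `groff` use a particular macro set, use the `-m macro` (or `-macro`) option. For example, to use the mgs macros, use the command: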

```
groff -Tascii -mgs files...
```

The `groff` man page describes this option in more detail.

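Unfortunately, the macro sets provided with `groff` are not well documented. Section 7 man pages cover some of them; for example, `man 7 groff_mm` tells you about the mm macro set. However, this documentation usually covers only the differences and new features of the `groff` implementation, and assumes you already know the original nroff/troff macro sets (known as DWB, the Documenter's Workbench). The best source of information may be a book that covers those classic macro sets in detail. To learn more about writing man pages, you can also read existing man page sources (in `/usr/man`) and compare them with their formatted output.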

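This article is a chapter from *Running Linux*, by Matt Welsh and Lar Kaufman, published by O'Reilly (ISBN 1-56592-100-3). The book also includes tutorials on the various text-formatting systems used under Linux. The material in this issue of *Linux Journal*, together with *Running Linux*, should give you a good head start on using the many text tools available on Linux.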

### Good luck, and happy documenting!

Matt Welsh ([mdw@cs.cornell.edu][1]) is a student and systems programmer at Cornell University, working in the Robotics and Vision Laboratory on real-time machine vision research.

--------------------------------------------------------------------------------

via: http://www.linuxjournal.com/article/1158

Author: [Matt Welsh][a]
Translator: [wxy](https://github.com/wxy)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/).

[a]:http://www.linuxjournal.com/user/800006
[1]:mailto:mdw@cs.cornell.edu


@ -0,0 +1,219 @@

Containing System Services in Red Hat Enterprise Linux (Part 1)
====================

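At the 2017 Red Hat Summit, several people asked me: "We normally use full VMs to isolate network services like DNS and DHCP. Can we use containers instead?" The answer is yes, and here is an example of creating a system container on Red Hat Enterprise Linux 7 as it stands today.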

### Our goal

**Create a network service that can be updated independently of any other system services, yet is easily managed and updated from the host.**

Let's explore setting up a BIND server running under systemd in a container. In this part, we'll look at building our own container and managing the BIND configuration and data files.

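In Part 2 of this series, we'll look at how systemd on the host integrates with systemd in the container. We'll explore managing the service inside the container and enabling it as a service on the host.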

### Creating the BIND container

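To get systemd running easily inside a container, we first need to add two packages on the host: `oci-register-machine` and `oci-systemd-hook`. The `oci-systemd-hook` hook lets us run systemd in a container without using a privileged container or manually configuring tmpfs and cgroups. The `oci-register-machine` hook lets us keep track of the container with systemd tools such as `systemctl` and `machinectl`.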

```
[root@rhel7-host ~]# yum install oci-register-machine oci-systemd-hook
```

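On to creating our BIND container. The [Red Hat Enterprise Linux 7 base image][6] includes systemd as its init system, so we can install and enable BIND just as we would on a typical system. You can [download this Dockerfile from the git repository][8].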

```
[root@rhel7-host bind]# vi Dockerfile

# Dockerfile for BIND
FROM registry.access.redhat.com/rhel7/rhel
ENV container docker
RUN yum -y install bind && \
    yum clean all && \
    systemctl enable named
STOPSIGNAL SIGRTMIN+3
EXPOSE 53
EXPOSE 53/udp
CMD [ "/sbin/init" ]
```

Since we are starting an init system as PID 1, we need to change the signal the docker CLI sends when we tell the container to stop. From the `kill` system call man page (`man 2 kill`):

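> The only signals that can be sent to process ID 1, the init process, are those for which init has explicitly installed <ruby>signal handlers<rt>signal handler</rt></ruby>. This is done to assure the system is not brought down accidentally.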

For the systemd signal handlers, `SIGRTMIN+3` is the signal that corresponds to `systemctl start halt.target`. We also need to expose both the TCP and UDP ports for BIND, since both protocols may be in use.

### Managing data

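With a working BIND service, we still need a way to manage the configuration and zone files. Right now they live inside the container, so we could enter the container any time we want to update the configuration or change a zone file. That isn't ideal from a management perspective. When BIND needs updating, we will have to rebuild the container, so any changes made in the image would be lost. Having to enter the container every time we update a file or restart the service also adds steps and time.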

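Instead, we'll extract the configuration and data files from the container, copy them to the host, and mount them at run time. This way we can easily restart or rebuild the container without losing our changes, and we can edit the configuration and zone files with an editor outside the container. Since this container data looks like "site-specific data served by this system", let's follow the Linux <ruby>Filesystem Hierarchy Standard<rt>File System Hierarchy</rt></ruby> and create `/srv/named` on the local host to keep administrative separation.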

```
[root@rhel7-host ~]# mkdir -p /srv/named/etc

[root@rhel7-host ~]# mkdir -p /srv/named/var/named
```

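*Hint: If you are migrating an existing configuration, you can skip the following steps and copy it directly into the `/srv/named` directories. You may still want to check the GID assigned inside the container by using a temporary container.*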

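Let's build and run a temporary container to examine BIND. With an init process running as PID 1, we can't run the container interactively to get a shell. Instead, we'll exec a shell after the container starts and use `rpm` to check for the important files.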

```
[root@rhel7-host ~]# docker build -t named .

[root@rhel7-host ~]# docker exec -it $( docker run -d named ) /bin/bash

[root@0e77ce00405e /]# rpm -ql bind
```

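For this example, we need `/etc/named.conf` and everything under `/var/named/`. We can extract them with `machinectl`. If more than one container is registered, `machinectl status` shows what is running in any given machine. Once we have the configuration, we can stop the temporary container.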

*If you prefer, the repository also contains a [sample `named.conf` and zone files for `example.com`][8].*

```
[root@rhel7-host bind]# machinectl list

MACHINE                          CLASS     SERVICE
8824c90294d5a36d396c8ab35167937f container docker

[root@rhel7-host ~]# machinectl copy-from 8824c90294d5a36d396c8ab35167937f /etc/named.conf /srv/named/etc/named.conf

[root@rhel7-host ~]# machinectl copy-from 8824c90294d5a36d396c8ab35167937f /var/named /srv/named/var/named

[root@rhel7-host ~]# docker stop infallible_wescoff
```

### Final creation

To build and run the final container, add volume options to mount:

- the file `/srv/named/etc/named.conf` as `/etc/named.conf`
- the directory `/srv/named/var/named` as `/var/named`

Since this is our final container, we'll also give it a meaningful name that we can refer to later.

```
[root@rhel7-host ~]# docker run -d -p 53:53 -p 53:53/udp -v /srv/named/etc/named.conf:/etc/named.conf:Z -v /srv/named/var/named:/var/named:Z --name named-container named
```

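With the final container running, we can change the local configuration files to change how BIND behaves inside the container. The BIND server needs to listen on whatever IP address the container is assigned. Make sure the GID of any new file matches the GID of the rest of the BIND files from the container.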

```
[root@rhel7-host bind]# cp named.conf /srv/named/etc/named.conf

[root@rhel7-host ~]# cp example.com.zone /srv/named/var/named/example.com.zone

[root@rhel7-host ~]# cp example.com.rr.zone /srv/named/var/named/example.com.rr.zone
```
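To check the GID requirement mentioned above after copying files in, a short sketch like the following lists any file under a directory whose numeric group differs from that of a reference file. It assumes GNU `stat` and GNU `find` (as shipped with RHEL 7); `gid_mismatches` is a hypothetical helper name.

```shell
#!/bin/sh
# gid_mismatches: list regular files under DIR whose numeric group ID differs
# from that of REFERENCE. Hypothetical helper; assumes GNU stat and find.
# Arguments: REFERENCE DIR
gid_mismatches() {
    ref_gid=$(stat -c '%g' "$1") || return 1
    # -gid matches a numeric group ID; "!" inverts the test.
    find "$2" -type f ! -gid "$ref_gid" -print
}
```

For example, `gid_mismatches /srv/named/etc/named.conf /srv/named/var/named` prints nothing when every zone file matches; a file it reports can be fixed with `chgrp --reference=/srv/named/etc/named.conf FILE`.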

> Curious why I didn't need to change the SELinux context on the host directories? ^Note 1

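We'll reload the configuration by running the `rndc` binary provided by the container. We can check the BIND logs in the same way, using the journal (`journalctl`). If something goes wrong, you can edit the file on the host and reload the configuration again. Using `host` or `dig` on the host, we can check the containerized service's responses for example.com.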

```
[root@rhel7-host ~]# docker exec -it named-container rndc reload
server reload successful

[root@rhel7-host ~]# docker exec -it named-container journalctl -u named -n
-- Logs begin at Fri 2017-05-12 19:15:18 UTC, end at Fri 2017-05-12 19:29:17 UTC. --
May 12 19:29:17 ac1752c314a7 named[27]: automatic empty zone: 9.E.F.IP6.ARPA
May 12 19:29:17 ac1752c314a7 named[27]: automatic empty zone: A.E.F.IP6.ARPA
May 12 19:29:17 ac1752c314a7 named[27]: automatic empty zone: B.E.F.IP6.ARPA
May 12 19:29:17 ac1752c314a7 named[27]: automatic empty zone: 8.B.D.0.1.0.0.2.IP6.ARPA
May 12 19:29:17 ac1752c314a7 named[27]: reloading configuration succeeded
May 12 19:29:17 ac1752c314a7 named[27]: reloading zones succeeded
May 12 19:29:17 ac1752c314a7 named[27]: zone 1.0.10.in-addr.arpa/IN: loaded serial 2001062601
May 12 19:29:17 ac1752c314a7 named[27]: zone 1.0.10.in-addr.arpa/IN: sending notifies (serial 2001062601)
May 12 19:29:17 ac1752c314a7 named[27]: all zones loaded
May 12 19:29:17 ac1752c314a7 named[27]: running

[root@rhel7-host bind]# host www.example.com localhost
Using domain server:
Name: localhost
Address: ::1#53
Aliases:
www.example.com is an alias for server1.example.com.
server1.example.com is an alias for mail
```

> Your zone file didn't update? It might be your editor, not the serial number. ^Note 2

### The finish line

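We've achieved what we set out to do: serving DNS requests and zone files from a container. We now have a persistent location for managing updates and configuration, and that configuration survives updates to the container.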

In Part 2 of this series, we'll see how to run the container as a normal service on the host.

---

[Follow the RHEL Blog](http://redhatstackblog.wordpress.com/feed/) to receive email updates about Part 2 of this series and other new posts.

---

### Additional resources

- **GitHub repository for the accompanying files:** [https://github.com/nzwulfin/named-container](https://github.com/nzwulfin/named-container)
- **Note 1:** **SELinux context for local files accessed by a container**

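You may have noticed that when I copied the files from the container to the local host, I didn't run `chcon` to change the type of the files on the host to `svirt_sandbox_file_t`. Why didn't it break? Copying a file into `/srv` should label it with type `var_t`. Did I `setenforce 0` (disable SELinux)?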

Of course not; that would make [Dan Walsh cry](https://stopdisablingselinux.com/) (LCTT translator's note: Dan Walsh leads Red Hat's SELinux team and campaigns against disabling SELinux). And yes, `machinectl` did set the label type as expected. Take a look:

Before starting a container:

```
[root@rhel7-host ~]# ls -Z /srv/named/etc/named.conf
-rw-r-----. unconfined_u:object_r:var_t:s0 /srv/named/etc/named.conf
```

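However, at run time I used a volume option that makes Mr. Walsh happy: `:Z`. This part of the command, `-v /srv/named/etc/named.conf:/etc/named.conf:Z`, does two things: first, it says the volume must be relabeled with a private-volume SELinux label; second, it says to mount it read/write.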

After starting the container:

```
[root@rhel7-host ~]# ls -Z /srv/named/etc/named.conf
-rw-r-----. root 25 system_u:object_r:svirt_sandbox_file_t:s0:c821,c956 /srv/named/etc/named.conf
```

- **Note 2:** **VIM backup behavior can change inodes**

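If you edited a configuration file on the local host with `vim` and don't see the change inside the container, you may have inadvertently created a new file that the container doesn't know about. Three `vim` settings affect backup copies during editing: `backup`, `writebackup`, and `backupcopy`.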

I've excerpted the RHEL 7 defaults from the official VIM [backup_table][9]:

```
backup    writebackup
off       on             backup current file, deleted afterwards (default)
```

So we don't leave `~` copies lying around, but we do create a backup while writing. The other setting is `backupcopy`, where `auto` is the default:

```
"yes"   make a copy of the file and overwrite the original one
"no"    rename the file and write a new one
"auto"  one of the previous, what works best
```

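This combination means that when you edit a file, unless `vim` has a reason not to (check the documentation for the logic), you end up with a new file containing your edits, which is renamed over the original file when you save. The file therefore gets a new inode. In most situations that isn't a problem, but here the container's <ruby>bind mount<rt>bind mount</rt></ruby> is sensitive to inode changes. To solve this, you need to change the `backupcopy` behavior.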

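Either in your `vim` session or in your `.vimrc`, add `set backupcopy=yes`. This ensures that the original file is truncated and overwritten, which keeps the inode unchanged and propagates the change into the container.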
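The inode behavior behind this note is easy to reproduce without `vim` or a container. The sketch below (assuming GNU `stat -c %i`; the function name `inode_demo` is hypothetical) saves a file once by overwriting it in place, like `backupcopy=yes`, and once by writing a new file and renaming it over the original, like `backupcopy=no`, and reports whether the original inode survived each step:

```shell
#!/bin/sh
# inode_demo: compare the two save strategies described above.
# Hypothetical helper; assumes GNU coreutils stat (-c %i).
inode_demo() {
    dir=$(mktemp -d) || return 1
    f="$dir/named.conf"
    echo 'options {};' > "$f"
    orig=$(stat -c %i "$f")

    # Like backupcopy=yes: truncate and rewrite the same file.
    echo 'options { listen-on { any; }; };' > "$f"
    [ "$(stat -c %i "$f")" = "$orig" ] && a=kept || a=changed

    # Like backupcopy=no: write a new file, then rename it over the original.
    echo 'options {};' > "$f.new"
    mv "$f.new" "$f"
    [ "$(stat -c %i "$f")" = "$orig" ] && b=kept || b=changed

    rm -rf "$dir"
    echo "overwrite:$a rename:$b"
}
```

It prints `overwrite:kept rename:changed`. A single-file bind mount such as the `named.conf` one above stays attached to the original inode, so only the in-place overwrite is visible inside the container.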

------------

via: http://rhelblog.redhat.com/2017/07/19/containing-system-services-in-red-hat-enterprise-linux-part-1/

Author: [Matt Micene][a]
Translator: [liuxinyu123](https://github.com/liuxinyu123)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/).

[a]:http://rhelblog.redhat.com/2017/07/19/containing-system-services-in-red-hat-enterprise-linux-part-1/
[1]:http://rhelblog.redhat.com/author/mmicenerht/
[2]:http://rhelblog.redhat.com/2017/07/19/containing-system-services-in-red-hat-enterprise-linux-part-1/#repo
[3]:http://rhelblog.redhat.com/2017/07/19/containing-system-services-in-red-hat-enterprise-linux-part-1/#sidebar_1
[4]:http://rhelblog.redhat.com/2017/07/19/containing-system-services-in-red-hat-enterprise-linux-part-1/#sidebar_2
[5]:http://redhatstackblog.wordpress.com/feed/
[6]:https://access.redhat.com/containers
[7]:http://rhelblog.redhat.com/2017/07/19/containing-system-services-in-red-hat-enterprise-linux-part-1/#repo
[8]:https://github.com/nzwulfin/named-container
[9]:http://vimdoc.sourceforge.net/htmldoc/editing.html#backup-table


@ -0,0 +1,75 @@

Executing Commands and Scripts at Startup or Reboot in Linux
======

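Sometimes you need to run certain commands or scripts at reboot or every time the system starts. How do we do that? That is what this article covers. We describe two methods for making CentOS/RHEL and Ubuntu systems run commands and scripts at reboot or startup. Both methods have been tested.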

### Method 1 – Using rc.local

This method uses the `rc.local` file in `/etc/` to run scripts and commands at startup. We add a line to the file that runs the script, so the script is executed every time the system starts.

First, though, we need to make `/etc/rc.local` executable:

```
$ sudo chmod +x /etc/rc.local
```

Then add the script to be executed:

```
$ sudo vi /etc/rc.local
```

At the end of the file (before any final `exit 0` line), add:

```
sh /root/script.sh &
```

Then save the file and exit. Commands can be run from `rc.local` in the same way, but be sure to use the full path of each command. To find a command's full path, run:

```
$ which command
```

For example:

```
$ which shutter
/usr/bin/shutter
```

On CentOS, edit `/etc/rc.d/rc.local` instead of `/etc/rc.local`, and make that file executable first as well.

Note: make sure any script run at startup ends with `exit 0`.
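Because anything placed after a final `exit 0` in `rc.local` never runs, it can help to insert new entries just before that line rather than blindly appending. This is a sketch only: `add_before_exit` is a hypothetical helper, it edits the file given as its first argument, and the line to add should not contain backslashes, because `awk -v` interprets escape sequences.

```shell
#!/bin/sh
# add_before_exit: insert LINE into an rc.local-style FILE just before its
# last "exit 0", or append LINE plus "exit 0" if there is none, so the file
# still ends with "exit 0". Hypothetical helper for illustration.
# Arguments: FILE LINE   (LINE must not contain backslashes)
add_before_exit() {
    file=$1 line=$2
    tmp=$(mktemp) || return 1
    awk -v add="$line" '
        { lines[NR] = $0 }
        $0 ~ /^[ \t]*exit 0[ \t]*$/ { last = NR }   # remember the last "exit 0"
        END {
            for (i = 1; i <= NR; i++) {
                if (i == last) print add
                print lines[i]
            }
            if (!last) { print add; print "exit 0" }
        }
    ' "$file" > "$tmp" && cat "$tmp" > "$file"   # rewrite in place, keeping permissions
    rm -f "$tmp"
}
```

Run as root, `add_before_exit /etc/rc.local 'sh /root/script.sh &'` places the entry just above `exit 0`, and the execute permission set earlier is kept because the file is rewritten in place.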

### Method 2 – Using Crontab

This method is the simplest. We create a cron job that waits 90 seconds after the system starts and then runs the command or script.

To create the cron job, open a terminal and run:

```
$ crontab -e
```

Then enter the following line:

```
@reboot ( sleep 90 ; sh /location/script.sh )
```

Here `/location/script.sh` is the path of the script to be executed.

That's all for this article. If you have any questions, feel free to leave a comment.

--------------------------------------------------------------------------------

via: http://linuxtechlab.com/executing-commands-scripts-at-reboot/

Author: [Shusain][a]
Translator: [lujun9972](https://github.com/lujun9972)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/).

[a]:http://linuxtechlab.com/author/shsuain/


72

published/20170922 How to disable USB storage on Linux.md

Normal file


@ -0,0 +1,72 @@

How to Disable USB Storage on Linux
======

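To protect data from leaking, we use software and hardware firewalls to restrict unauthorized access from outside, but data leaks can also happen from the inside. To eliminate that possibility, organizations limit and monitor internet access and also disable USB storage devices.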

In this tutorial, we discuss three different ways to disable USB storage devices on Linux machines. All three methods have been tested on CentOS 6 and 7 machines. Let's go through them one by one.

(Also read: [Ultimate guide to securing SSH sessions][1])

### Method 1 – Fake install

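In this method, we add the line `install usb-storage /bin/true` to a configuration file, so that any attempt to install the usb-storage module actually runs `/bin/true` instead; that is why this method is called a "fake install". Specifically, create and open a file named `block_usb.conf` (any name will do) in the `/etc/modprobe.d` directory: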

```
$ sudo vim /etc/modprobe.d/block_usb.conf
```

Then add the following line:

```
install usb-storage /bin/true
```

Finally, save the file and exit.

### Method 2 – Removing the USB driver

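This method requires us to delete or move the USB storage driver (`usb-storage.ko`), so that USB storage devices can no longer be accessed. Run the following command to move the driver out of its default location: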

```
$ sudo mv /lib/modules/$(uname -r)/kernel/drivers/usb/storage/usb-storage.ko /home/user1
```

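The driver can no longer be found in its default location, so it cannot be loaded when a USB storage device is connected, and the disk is unusable. This method has one small problem, though: when the kernel is updated, the `usb-storage` module appears in its default location again.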

### Method 3 – Blacklisting USB storage

We can also blacklist usb-storage through the `/etc/modprobe.d/blacklist.conf` file. This file already exists on RHEL/CentOS 6 but may need to be created on 7. To blacklist USB storage, open or create the file with vim:

```
$ sudo vim /etc/modprobe.d/blacklist.conf
```

and enter the following line to blacklist USB storage:

```
blacklist usb-storage
```

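Save the file and exit. The system will now stop `usb-storage` from being loaded automatically, but this method has one major drawback: any privileged user can still load the `usb-storage` module by running: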

```
$ sudo modprobe usb-storage
```

This issue makes the method less than ideal, but it works well against non-privileged users.
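To audit which of the modprobe-based methods above (1 and 3) is configured on a machine, you can scan the `modprobe.d` files. The sketch below is a hypothetical helper, not a standard tool. It only reads `*.conf` files, treats `-` and `_` in the module name as equivalent (as the module tools do), and says nothing about whether the module is currently loaded or whether the driver file was moved (method 2).

```shell
#!/bin/sh
# usb_storage_policy: report which modprobe.d techniques for blocking
# usb-storage are configured in DIR (default /etc/modprobe.d).
# Hypothetical helper; prints "fake-install", "blacklist", both, or "none".
usb_storage_policy() {
    dir=${1:-/etc/modprobe.d}
    confs=$(cat "$dir"/*.conf 2>/dev/null) || true
    result=
    # Method 1: "install usb-storage /bin/true"
    if printf '%s\n' "$confs" | grep -Eq '^[[:space:]]*install[[:space:]]+usb[-_]storage[[:space:]]+/bin/true'; then
        result="fake-install"
    fi
    # Method 3: "blacklist usb-storage"
    if printf '%s\n' "$confs" | grep -Eq '^[[:space:]]*blacklist[[:space:]]+usb[-_]storage([[:space:]]|$)'; then
        result="${result:+$result }blacklist"
    fi
    echo "${result:-none}"
}
```

On a machine configured with both methods it prints `fake-install blacklist`; `lsmod` remains the place to check whether `usb_storage` is loaded right now.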

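Reboot the system after making these changes for them to take effect. Try these methods for disabling USB storage, and let us know if you run into any problems or have any questions.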

--------------------------------------------------------------------------------

via: http://linuxtechlab.com/disable-usb-storage-linux/

Author: [Shusain][a]
Translator: [lujun9972](https://github.com/lujun9972)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/).

[a]:http://linuxtechlab.com/author/shsuain/
[1]:http://linuxtechlab.com/ultimate-guide-to-securing-ssh-sessions/


@ -1,9 +1,9 @@

Concurrent Servers (III): Event-Driven
============================================================

This is part 3 of the concurrent servers series. [Part 1][26] introduced blocking programming, and [Part 2: Threads][27] discussed multithreading as one viable approach to implementing concurrency in a server.

Another common approach to implementing concurrency is called _event-driven programming_, or alternatively _asynchronous_ programming ^Note 1. This approach has many variations, so we'll start with the basics, using some of the fundamental APIs rather than higher-level wrappers. Later articles in the series will cover higher-level abstractions, as well as various hybrid approaches.

All posts in the series:

@ -13,13 +13,13 @@

### Blocking vs. nonblocking I/O

As an introduction to this article, let's discuss the difference between blocking and nonblocking I/O. Blocking I/O is easier to understand, since it is the "normal" way we use I/O APIs. When receiving data from a socket, a call to `recv` _blocks_ until it receives some data from the peer socket on the other end. This is precisely the problem of the sequential server from part 1.

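So blocking I/O has an inherent performance problem. In part 2 we saw one way to tackle it: multiple threads. Even if one thread is blocked on I/O, other threads can keep using the CPU. In fact, blocking I/O is usually very efficient in terms of resource usage while a thread waits: the operating system puts the thread to sleep and only wakes it up when whatever it was waiting for becomes available.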

_Nonblocking_ I/O is a different approach. When a socket is set to nonblocking mode, a call to `recv` (and `send`, but let's focus on receiving for now) returns very quickly, even if there is no data to receive. In that case it returns a special error status ^Note 2 to tell the caller that no data is available at the moment. The caller can then go do something else, or try calling `recv` again.

The best way to demonstrate the difference between blocking and nonblocking `recv` is with some sample code. Here is a small program that listens on a socket and keeps blocking on `recv`; when `recv` returns data, the program reports how many bytes were received ^Note 3:

```
int main(int argc, const char** argv) {

@ -69,8 +69,7 @@ hello # wait for 2 seconds after typing this
socket world
^D # to end the connection>
```

The listening program will print the following:

```
@ -144,7 +143,6 @@ int main(int argc, const char** argv) {

There are a few notable differences from the blocking version:

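1. The `newsockfd` socket returned by `accept` is set to nonblocking mode by calling `fcntl`.

2. When checking the return status of `recv`, we check whether `errno` is set to the value indicating that no data is available to receive. In that case, we just sleep for 200 milliseconds and continue to the next iteration of the loop.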

Testing with `nc` again, here is the output from the nonblocking listener:

@ -183,19 +181,19 @@ Peer disconnected; I'm done.

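As an exercise, add a timestamp to the printouts and convince yourself that the total time elapsed between fruitful calls to `recv` is more or less the delay in typing the lines into `nc`, rounded up to the next 200 ms.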

So there we have it: a nonblocking `recv` makes it possible for the listener to check in with the socket and regain control when no data is available yet. In programming terms, this is called <ruby>polling<rt>polling</rt></ruby>: the main program periodically queries the socket for data to read.

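This may look like a possible solution to the sequential-serving problem. Nonblocking `recv` makes it possible to work with multiple sockets at once, polling them and only handling those that have new data. That is true: concurrent servers _could_ be written this way. In practice, however, they aren't, because the polling approach scales very poorly.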

First, the 200 ms delay I introduced in the code is nice for the demonstration (the listener prints only a few lines of "Calling recv..." between my typing into `nc`, instead of thousands), but it also adds up to 200 ms to the server's response time, which is almost certainly undesirable. In real programs the delay would have to be much shorter, and the shorter the sleep, the more CPU the process consumes. Those are cycles spent just waiting, which isn't great, especially on mobile devices where power matters.

But the bigger problem appears when we actually work with multiple sockets this way. Imagine the listener is handling 1000 clients concurrently. That means that in every loop iteration, it has to do a nonblocking `recv` on _each and every one of those 1000 sockets_, looking for the ones that have data ready. This is terribly inefficient and severely limits the number of clients the server can handle concurrently. There is a catch-22 here: the longer we wait between polls, the less responsive the server is; the shorter we wait, the more CPU we burn on useless polling.

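Frankly, all this polling also feels like wasted work. Surely the operating system knows which sockets actually have data ready, so there should be no need to scan them one by one. Indeed it does, and the rest of this article presents some APIs that let us handle multiple clients much more gracefully.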

|

||||

|

||||

### select

|

||||

|

||||

`select` 的系统调用是轻便的(POSIX),标准 Unix API 中常有的部分。它是为上一节最后一部分描述的问题而设计的 —— 允许一个线程可以监视许多文件描述符 ^[注4][17] 的变化,不用在轮询中执行不必要的代码。我并不打算在这里引入一个关于 `select` 的理解性的教程,有很多网站和书籍讲这个,但是在涉及到问题的相关内容时,我会介绍一下它的 API,然后再展示一个非常复杂的例子。

|

||||

`select` 的系统调用是可移植的(POSIX),是标准 Unix API 中常有的部分。它是为上一节最后一部分描述的问题而设计的 —— 允许一个线程可以监视许多文件描述符 ^注4 的变化,而不用在轮询中执行不必要的代码。我并不打算在这里引入一个关于 `select` 的全面教程,有很多网站和书籍讲这个,但是在涉及到问题的相关内容时,我会介绍一下它的 API,然后再展示一个非常复杂的例子。

|

||||

|

||||

`select` 允许 _多路 I/O_,监视多个文件描述符,查看其中任何一个的 I/O 是否可用。

|

||||

|

||||

@ -209,30 +207,25 @@ int select(int nfds, fd_set *readfds, fd_set *writefds,

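Invoking `select` works as follows:

1. Before the call, the user has to create `fd_set` instances for each kind of event to watch. To watch for both read and write events, both `readfds` and `writefds` have to be created and populated.

2. The user calls `FD_SET` to add the specific descriptors to watch to a set. For example, to watch descriptors 2, 7 and 10 for read events, we call `FD_SET` three times on `readfds`, once each for 2, 7 and 10.

3. `select` is called.

4. When `select` returns (ignoring timeouts for now), it reports how many of the descriptors in the sets passed to it are ready. It also modifies the `readfds` and `writefds` sets so that only the ready descriptors remain marked. All the other descriptors are cleared.

5. At this point the user has to iterate over `readfds` and `writefds` to find which descriptors are ready (using `FD_ISSET`).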
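The five steps above, for read events only, might look like the sketch below. It is not the article's code:

- `wait_readable` and `demo_select` are hypothetical names.
- The demo uses `socketpair` to get connected descriptors without a network.
- Every descriptor is assumed to be below `FD_SETSIZE`.
- The code calls `FD_ZERO` first, because a freshly declared `fd_set` is not guaranteed to be empty.

```c
#include <stddef.h>
#include <sys/select.h>
#include <sys/socket.h>
#include <unistd.h>

/* Steps 1-5 for read events only. Blocks (no timeout) until at least one
 * fd is readable, then copies the ready fds into ready[] (capacity n).
 * Returns the number of ready fds, or -1 if select() fails.
 * Assumes 0 <= fds[i] < FD_SETSIZE for every i. */
int wait_readable(const int *fds, int n, int *ready) {
  fd_set readfds;
  FD_ZERO(&readfds);            /* step 1: a fresh, empty set */
  int maxfd = -1;
  for (int i = 0; i < n; i++) {
    FD_SET(fds[i], &readfds);   /* step 2: mark each fd to watch */
    if (fds[i] > maxfd) {
      maxfd = fds[i];
    }
  }
  /* step 3: the first argument is the highest fd plus one */
  if (select(maxfd + 1, &readfds, NULL, NULL, NULL) < 0) {
    return -1;
  }
  /* steps 4-5: only ready fds are still set; find them with FD_ISSET */
  int count = 0;
  for (int i = 0; i < n; i++) {
    if (FD_ISSET(fds[i], &readfds)) {
      ready[count++] = fds[i];
    }
  }
  return count;
}

/* Usage: two socket pairs, with data written into only the second one. */
int demo_select(void) {
  int a[2], b[2];
  if (socketpair(AF_UNIX, SOCK_STREAM, 0, a) < 0) return -1;
  if (socketpair(AF_UNIX, SOCK_STREAM, 0, b) < 0) return -1;
  send(b[0], "x", 1, 0);
  int fds[2] = {a[1], b[1]};
  int ready[2];
  int n = wait_readable(fds, 2, ready);
  int result = (n == 1 && ready[0] == b[1]) ? n : -1;
  close(a[0]); close(a[1]); close(b[0]); close(b[1]);
  return result;
}
```

`demo_select()` returns `1`, because only the second pair had data written into it.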

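As a complete example, I reimplemented our protocol in a concurrent server that uses `select`. [The full code is here][18]. What follows are the key parts of the code, with explanations. Warning: this code sample is fairly substantial, so feel free to skim it on first reading if you're short on time.

### A concurrent server using select

Using an I/O multiplexing API such as `select` imposes certain constraints on the design of our server. These may not be immediately obvious, but they are worth discussing, because they are key to understanding what event-driven programming is all about.

Most importantly, keep in mind that this approach is, at its core, single-threaded ^Note 5. The server really does _just one thing at a time_. Since we want to handle multiple clients concurrently, we'll have to structure the code in an unusual way.

First, let's talk about the main loop. What does it look like? Imagine our server in the middle of lots of activity: what should it watch for? Two kinds of socket activity:

1. New clients trying to connect. These clients should be `accept`-ed.

2. Connected clients sending data. This data has to go through the protocol described in [part 1][11], possibly with some data sent back to the clients.

Even though these two activities are somewhat different in nature, we have to mix them into the same loop, because there can only be one main loop. The loop revolves around calls to `select`, and each `select` call watches for both kinds of activity described above.

Here is the part of the code that sets up the file descriptor sets and starts the main loop with its call to `select`: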

@ -264,9 +257,7 @@ while (1) {

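A few points of interest here:

1. Every call to `select` overwrites the sets passed to it. The caller therefore has to maintain a "master" set that tracks all the active sockets it monitors across loop iterations.

2. Note that, initially, the only socket we care about is `listener_sockfd`. This is the original socket on which the server accepts new clients.

3. The return value of `select` is the number of ready descriptors among those in the sets passed as arguments. `select` modifies the sets to mark the ready descriptors. The next step is iterating over the descriptors.

```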

@ -298,7 +289,7 @@ for (int fd = 0; fd <= fdset_max && nready > 0; fd++) {

}
```

This part of the loop checks the _readable_ descriptors. Let's skip the listener socket (for the full picture, [see the code][20]) and look at what happens when one of the client sockets is ready. When that happens, we call a _callback_ function named `on_peer_ready_recv` with the socket's file descriptor. This call means that the client connected to that socket sent some data, and a call to `recv` on the socket isn't expected to block ^Note 6. The callback returns a struct of type `fd_status_t`.

```
typedef struct {

@ -307,7 +298,7 @@ typedef struct {

} fd_status_t;
```

This struct tells the main loop whether the socket should be watched for read events, write events, or both. The code above shows how `FD_SET` and `FD_CLR` are called on the appropriate descriptor sets accordingly. The main loop handles a descriptor that is ready for writing the same way, except that it invokes a callback named `on_peer_ready_send`.
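The "master set" bookkeeping and the handling of a callback's returned status can be sketched together. Some assumptions:

- The fields of the article's `fd_status_t` are elided above. `sketch_status_t`, with `want_read`/`want_write`, is a hypothetical stand-in.
- `apply_status` and `one_iteration` are illustrative names.
- The zero timeout exists only so that the sketch never blocks.

The scan reuses the `fd <= fdset_max && nready > 0` condition from the loop header shown earlier.

```c
#include <stdbool.h>
#include <stddef.h>
#include <sys/select.h>
#include <sys/socket.h>

/* Hypothetical stand-in for the article's fd_status_t: which events the
 * main loop should watch next for a given fd. */
typedef struct {
  bool want_read;
  bool want_write;
} sketch_status_t;

/* Apply a callback's verdict to the master sets with FD_SET / FD_CLR. */
void apply_status(int fd, sketch_status_t st,
                  fd_set *master_read, fd_set *master_write) {
  if (st.want_read) {
    FD_SET(fd, master_read);
  } else {
    FD_CLR(fd, master_read);
  }
  if (st.want_write) {
    FD_SET(fd, master_write);
  } else {
    FD_CLR(fd, master_write);
  }
}

/* One main-loop pass over read events. select() overwrites its argument,
 * so it gets a copy of the master set. Ready fds go into out[]; the scan
 * stops as soon as all nready ready descriptors have been found. */
int one_iteration(const fd_set *master_read, int fdset_max, int *out) {
  fd_set readfds = *master_read;  /* struct copy; the master stays intact */
  struct timeval tv = {0, 0};     /* zero timeout: just for this sketch */
  int nready = select(fdset_max + 1, &readfds, NULL, NULL, &tv);
  int count = 0;
  for (int fd = 0; fd <= fdset_max && nready > 0; fd++) {
    if (FD_ISSET(fd, &readfds)) {
      nready--;
      out[count++] = fd;
    }
  }
  return count;  /* 0 on timeout or error (nready <= 0) */
}
```

Copying the master set on every iteration is what keeps `select`'s in-place modification from "forgetting" sockets that simply had no activity this time.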

Now let's take a moment to look at the callback itself:

@ -464,37 +455,36 @@ INFO:2017-09-26 05:29:18,070:conn0 disconnecting

INFO:2017-09-26 05:29:18,070:conn2 disconnecting
```

As in the threaded case, there's no delay between clients: they are all handled concurrently. And yet there are no threads in `select-server`! The main loop _multiplexes_ all the clients, efficiently polling multiple sockets with `select`. Recall the diagrams of sequential vs. multi-threaded client handling from [part 2][22]. For our `select-server`, the time flow for three clients looks like this:

All the clients are handled concurrently within the same thread by multiplexing. The server does some work for one client, switches to another, then to the next, and eventually back to the first client. Note that there's no round-robin scheduling here: clients are handled when they send data, so the order really depends on the clients.

### Synchronous, asynchronous, event-driven, callback-based

The `select-server` code sample is good background for discussing what "asynchronous" programming means and how it relates to event-driven and callback-based programming. All these terms are common in the (rather inconsistent) discussion of concurrent servers.

Let's start with a quote from `select`'s man page:

> select, pselect, FD\_CLR, FD\_ISSET, FD\_SET, FD\_ZERO - synchronous I/O multiplexing

So `select` is for _synchronous_ multiplexing. But I've just presented a substantial code sample that uses `select` to build an _asynchronous_ server. What gives?

The answer is: it depends on your point of view. "Synchronous" is often used as a synonym for blocking, and calls to `select` are indeed blocking. So are the calls to `send` and `recv` in the sequential and threaded servers presented in parts 1 and 2. It is therefore fair to say that `select` is a _synchronous_ API. However, a server design built on `select` can still be _asynchronous_, _callback-based_, or _event-driven_. Note that the `on_peer_*` functions presented here are callbacks. They never block, and they are invoked only when network events occur. They may receive partial data, and they are expected to keep consistent state between invocations.

If you've done any amount of GUI programming, all of this will feel familiar. There's an "event loop", often entirely hidden inside a framework. The application's "business logic" is built out of callbacks that the event loop invokes in response to various events: mouse clicks, menu selections, timers firing, data arriving on sockets, and so on. The most ubiquitous example of this programming model is client-side JavaScript, which is written as a collection of callbacks invoked by user activity on a web page.

### The limitations of select

Using `select` for our first example of an asynchronous server makes sense for presenting the concept, and also because `select` is such a ubiquitous and portable API. But it also has some significant limitations, which show up when the number of watched file descriptors is very large:

1. Limited file descriptor set size.

2. Bad performance.

Let's start with the file descriptor set size. `FD_SETSIZE` is a compile-time constant that is usually 1024 on modern systems. It is hard-coded in `glibc`'s headers and isn't easy to modify, and it limits the number of file descriptors a `select` call can watch to 1024. People want to write servers that handle tens of thousands of concurrent clients, so this problem is very real. There are workarounds, but they aren't portable and aren't easy to use.
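POSIX leaves `FD_SET` undefined for a descriptor that is negative or not below `FD_SETSIZE`. The limit therefore applies to descriptor _values_, not only to how many descriptors are watched. On a system where `FD_SETSIZE` is 1024, a process with 1100 open files cannot `select` on descriptor 1050, even if that is the only descriptor it watches. A defensive wrapper might look like this (`checked_fd_set` is a hypothetical name):

```c
#include <sys/select.h>

/* FD_SET is undefined for fd < 0 or fd >= FD_SETSIZE, so the select()
 * limit is on descriptor values, not just on how many are watched.
 * Returns 0 if fd was added, -1 if select() cannot watch it at all. */
int checked_fd_set(int fd, fd_set *set) {
  if (fd < 0 || fd >= FD_SETSIZE) {
    return -1;
  }
  FD_SET(fd, set);
  return 0;
}
```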

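The bad performance issue is more subtle, but still very serious. When `select` returns, the information it gives the caller is the number of "ready" descriptors plus the updated descriptor sets. The descriptor sets map each descriptor to "ready/not ready", but they provide no efficient way to iterate over just the ready descriptors. If only a single descriptor in the set is ready, in the worst case the caller has to iterate over _the entire set_ to find it. This works fine when only a few descriptors are watched, but once the number grows large, this overhead starts to hurt ^Note 7.

For these reasons, `select` has fallen out of favor for writing high-performance concurrent servers. Every popular OS has its own non-portable APIs that let users write much more efficient event loops. Higher-level interfaces, such as frameworks and high-level languages, usually wrap these APIs in a single portable interface.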

@ -541,30 +531,23 @@ while (1) {

}
```

We configure `epoll` by calling `epoll_ctl`. In this case, the configuration amounts to adding the listening socket to the descriptors `epoll` watches for us. We then allocate a buffer of ready events for `epoll` to fill in. The call to `epoll_wait` in the main loop is where the magic happens. It blocks until one of the watched descriptors is ready (or until a timeout expires) and returns the number of ready descriptors. This time, however, we don't blindly iterate over all the watched descriptors. We know that `epoll_wait` populated the `events` buffer passed to it with the ready events, in positions 0 through `nready-1`, so we iterate only as many times as strictly necessary.

This is a critical difference from `select`. If we're watching 1000 descriptors and two become ready, `epoll_wait` returns `nready=2` and populates the first two elements of the `events` buffer, so we only "iterate" over two descriptors. With `select` we'd still have to iterate over 1000 descriptors to find out which ones are ready. For this reason, `epoll` scales much better than `select` for busy servers with many active sockets.
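Here is a minimal, Linux-only sketch of the `epoll` flow described above, for read events. A real server creates the epoll instance once and keeps it across loop iterations. The hypothetical `epoll_wait_readable` instead creates and closes one per call, only so that it stays self-contained. The point is that the final loop runs `nready` times rather than once per watched descriptor. `MAX_EVENTS` is an arbitrary buffer size chosen for the sketch.

```c
#include <sys/epoll.h>
#include <sys/socket.h>
#include <unistd.h>

#define MAX_EVENTS 16

/* Watch fds for read events and wait once (timeout_ms as for epoll_wait:
 * -1 blocks, 0 returns immediately). epoll_wait fills events[0..nready-1]
 * with ready fds only, so the copy loop runs nready times regardless of n.
 * ready[] must hold MAX_EVENTS entries. Returns nready, or -1 on error. */
int epoll_wait_readable(const int *fds, int n, int *ready, int timeout_ms) {
  int epollfd = epoll_create1(0);
  if (epollfd < 0) {
    return -1;
  }
  for (int i = 0; i < n; i++) {
    struct epoll_event ev;
    ev.events = EPOLLIN;   /* interested in "readable" */
    ev.data.fd = fds[i];   /* handed back to us in the ready event */
    if (epoll_ctl(epollfd, EPOLL_CTL_ADD, fds[i], &ev) < 0) {
      close(epollfd);
      return -1;
    }
  }
  struct epoll_event events[MAX_EVENTS];
  int nready = epoll_wait(epollfd, events, MAX_EVENTS, timeout_ms);
  for (int i = 0; i < nready; i++) {
    ready[i] = events[i].data.fd;
  }
  close(epollfd);
  return nready;
}
```

Passing `-1` as `timeout_ms` blocks until a descriptor is ready; passing `0` returns immediately.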

The rest of the code is straightforward, since we're already familiar with `select-server`. In fact, all the "business logic" of `epoll-server` is the same as in `select-server`: the callbacks consist of the same code.

This similarity invites abstracting the event loop away into a library/framework. I'm going to resist that temptation, because many great programmers have gone down that path before. Instead, in the next article we'll look at libuv, one of the more popular event loop abstractions to emerge recently. Libraries like libuv let us write concurrent asynchronous servers without worrying about the greasy details of the underlying system calls.

* * *

- Note 1: I tried to sort out the practical difference between the two by doing some web browsing and reading, but it quickly became headache-inducing. Opinions range from "they're the same thing", to "one is a subset of the other", to "they're completely different things". When faced with such subjective views, it's best to drop the question entirely and focus on specific examples and use cases instead.
- Note 2: POSIX says this can be either `EAGAIN` or `EWOULDBLOCK`, and portable applications should check for both.
- Note 3: As with all the C samples in this series, the code uses some helper utilities to set up listening sockets. The full code for these utilities is in the `utils` module of this [repository][4].
- Note 4: `select` is not specific to networking/sockets. It can watch arbitrary file descriptors, which could be disk files, pipes, terminals, sockets, or anything else Unix systems represent with file descriptors. In this article we focus on its use with sockets.
- Note 5: There are ways to combine event-driven programming with multiple threads; I'll defer that discussion to a later article.
- Note 6: For various non-trivial reasons it could _still_ block, even after `select` says the socket is ready. Therefore, all sockets opened by this server are set to non-blocking mode. If a call to `recv` or `send` returns `EAGAIN` or `EWOULDBLOCK`, the callbacks simply assume no event really happened. Read the comments in the code sample for more details.
- Note 7: This is still better than the asynchronous polling example presented earlier in this article. Polling has to happen _all the time_, while `select` actually blocks until one or more sockets are ready for reading/writing. As a result, `select` wastes far less CPU time than constant polling.

--------------------------------------------------------------------------------

@ -572,7 +555,7 @@ via: https://eli.thegreenplace.net/2017/concurrent-servers-part-3-event-driven/

Author: [Eli Bendersky][a]
Translator: [GitFuture](https://github.com/GitFuture)
Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

@ -587,9 +570,9 @@ via: https://eli.thegreenplace.net/2017/concurrent-servers-part-3-event-driven/

[8]:https://eli.thegreenplace.net/2017/concurrent-servers-part-3-event-driven/#id9
[9]:https://eli.thegreenplace.net/tag/concurrency
[10]:https://eli.thegreenplace.net/tag/c-c
[11]:https://linux.cn/article-8993-1.html
[12]:https://linux.cn/article-8993-1.html
[13]:https://linux.cn/article-9002-1.html
[14]:http://eli.thegreenplace.net/2017/concurrent-servers-part-3-event-driven/
[15]:https://eli.thegreenplace.net/2017/concurrent-servers-part-3-event-driven/#id11
[16]:https://eli.thegreenplace.net/2017/concurrent-servers-part-3-event-driven/#id12

@ -598,10 +581,10 @@ via: https://eli.thegreenplace.net/2017/concurrent-servers-part-3-event-driven/

[19]:https://eli.thegreenplace.net/2017/concurrent-servers-part-3-event-driven/#id14
[20]:https://github.com/eliben/code-for-blog/blob/master/2017/async-socket-server/select-server.c
[21]:https://eli.thegreenplace.net/2017/concurrent-servers-part-3-event-driven/#id15
[22]:https://linux.cn/article-9002-1.html
[23]:https://eli.thegreenplace.net/2017/concurrent-servers-part-3-event-driven/#id16
[24]:https://github.com/eliben/code-for-blog/blob/master/2017/async-socket-server/epoll-server.c
[25]:https://eli.thegreenplace.net/2017/concurrent-servers-part-3-event-driven/
[26]:https://linux.cn/article-8993-1.html
[27]:https://linux.cn/article-9002-1.html
[28]:https://eli.thegreenplace.net/2017/concurrent-servers-part-3-event-driven/#id10

@ -0,0 +1,80 @@

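Linux Networking Hardware for Beginners: Think Software
===========================================================

> Without routers and bridges we would be lonely little islands. Learn more in this networking tutorial.

[Commons Zero][3] Pixabay

Last week we learned about LAN (local area network) hardware. This week we'll learn about connecting networks to each other, and some cool hacks for mobile broadband.

### Routers

Network routers are everything in computer networking, because routers connect networks; without routers we would be lonely little islands. Figure 1 shows a simple wired LAN and a wireless access point, all connected to the Internet. Computers on the LAN connect to an Ethernet switch, which connects to a firewall or router. The firewall or router in turn connects to whatever your Internet service provider (ISP) provides: a cable box, a modem, a satellite uplink... Like everything in computing, it is likely to be a box with blinking lights. When your packets leave your LAN and venture out into the wide Internet, they travel from router to router until they reach their destination.

*Figure 1: A simple wired LAN and a wireless access point.*

A router can take many forms:

- a nice little specialized box that does only routing
- a bigger box that provides routing, firewall, name services, and a VPN gateway
- a repurposed desktop PC or laptop
- a Raspberry Pi or an Arduino
- a stout little single-board computer such as those from PC Engines

For all but the most demanding uses, ordinary commodity hardware works fine. High-end routers use specially designed hardware to move the maximum number of packets per second. They have multiple data buses, multiple CPUs, and very fast memory. (Look up Juniper and Cisco routers to get a feel for what high-end routers look like, and what's inside them.)

A wireless access point connects to your LAN either as an Ethernet bridge or as a router. A bridge extends the network, so hosts on any port of the bridge are on the same network. A router connects two different networks.

### Network topology

There are several ways to set up your LAN. You can put all hosts on a single flat network, or you can divide them into different subnets. If your switch supports VLANs, you can also divide them into different VLANs.

A flat network is the simplest: just plug every device into the same switch. If you run out of ports on one switch, you can connect more switches together. Some switches have special uplink ports. On others there is no such restriction and you can connect any ports, though you may need crossover Ethernet cables, so check your switch's documentation.

Flat networks are the easiest to administer: you don't need routers, and you don't have to calculate subnets. But they have some downsides. They don't scale well, so as they grow larger they get bogged down by broadcast traffic. Segmenting your LAN provides a bit of security, and dividing it into manageable segments makes larger networks easier to manage. Figure 2 shows a simplified LAN divided into two subnets: one for internal wired and wireless hosts, and one for a host that serves public services. The subnet containing the public-facing servers is called a DMZ, or demilitarized zone, because it is blocked from all access to the internal network. (Ever notice all the macho terminology for jobs that mostly consist of typing on a computer?)

*Figure 2: A simple LAN divided into two subnets.*

Even a network as small as the one in Figure 2 can be set up in several ways. You can put your firewall and router on a single device. You can give the DMZ its own dedicated Internet connection, isolating it completely from your internal network. Which brings us to our next topic: it's all software.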
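To make "calculating subnets" concrete, here is a small sketch that checks whether two IPv4 hosts share a subnet for a given prefix length. If they don't, traffic between them has to go through a router, as between the two subnets in Figure 2. `same_subnet` is a hypothetical helper, and the addresses in the example are made-up private addresses.

```c
#include <arpa/inet.h>
#include <netinet/in.h>
#include <stdint.h>
#include <sys/socket.h>

/* 1 if IPv4 addresses a and b are in the same subnet for prefix length
 * `prefix` (24 means netmask 255.255.255.0), 0 if not, -1 on bad input.
 * Hosts in different subnets can only talk through a router. */
int same_subnet(const char *a, const char *b, int prefix) {
  struct in_addr ia, ib;
  if (prefix < 0 || prefix > 32 ||
      inet_pton(AF_INET, a, &ia) != 1 || inet_pton(AF_INET, b, &ib) != 1) {
    return -1;
  }
  /* Netmask in host byte order; a shift by 32 is undefined in C,
   * so the /0 case is special-cased. */
  uint32_t mask = (prefix == 0) ? 0 : 0xFFFFFFFFu << (32 - prefix);
  uint32_t ha = ntohl(ia.s_addr);
  uint32_t hb = ntohl(ib.s_addr);
  return (ha & mask) == (hb & mask);
}
```

For example, `same_subnet("192.168.1.10", "192.168.2.10", 24)` returns `0`, while the same pair with prefix `16` returns `1`.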

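### Think software

You may have noticed that, of the hardware we have discussed in this short series, only network interfaces, switches, and cabling are special-purpose hardware. Everything else is general-purpose commodity hardware, and it's the software that defines its purpose. Linux is a true networking operating system, and it supports a multitude of network roles:

- gateways and virtual private network gateways
- Ethernet bridges
- web, mail, and file servers
- load balancers and proxies
- quality of service
- multiple authentication methods
- relays and failover

You can run your entire network on standard hardware running Linux. You can even emulate an Ethernet switch with LISA (Linux Switching Appliance) and VDE2.

There are specialized distributions for small hardware, such as DD-WRT, OpenWRT, and the Raspberry Pi distributions. Don't forget the BSDs and their specialized offshoots either, such as the pfSense firewall/router and the FreeNAS network storage server.

You know how some people insist there is a difference between a hardware firewall and a software firewall? There isn't. That's like saying there is a hardware computer and a software computer.

### Port trunking and Ethernet bonding

Trunking and bonding, also called link aggregation, means combining two Ethernet channels into one. Some switches support port trunking: two switch ports are combined into a single link whose bandwidth is the sum of the two. This is an effective way to give a busy server a bigger pipe.

You can do the same thing with Ethernet interfaces. The bonding driver is built into the Linux kernel, so you don't need any special hardware.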
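The toy model below shows round-robin distribution, the idea behind the bonding driver's `balance-rr` mode. It is not the kernel's bonding code. It shows how an aggregated link can carry more traffic than a single member link: outgoing frames are handed to the links in turn. Other bonding modes, such as 802.3ad (LACP), choose links differently. `assign_round_robin` and `count_per_link` are hypothetical names.

```c
#include <stddef.h>

/* Toy model of round-robin link aggregation (the idea behind the Linux
 * bonding driver's balance-rr mode, not its actual code): frame i goes out
 * on link i % nlinks, so n equal links share the load evenly. */
void assign_round_robin(size_t nframes, int nlinks, int *link_of) {
  for (size_t i = 0; i < nframes; i++) {
    link_of[i] = (int)(i % (size_t)nlinks);
  }
}

/* Tally how many frames each link carried; counts[] has nlinks entries. */
void count_per_link(const int *link_of, size_t nframes, int nlinks,
                    int *counts) {
  for (int l = 0; l < nlinks; l++) {
    counts[l] = 0;
  }
  for (size_t i = 0; i < nframes; i++) {
    counts[link_of[i]]++;
  }
}
```

For 5 frames over 2 links, the frames go to links 0, 1, 0, 1, 0, so the two links carry 3 and 2 frames.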

### Bending mobile broadband to your will

I expect mobile broadband to grow and take the place of DSL and cable Internet. I live near a city of 250,000 people, but outside the city limits, getting Internet access is a matter of luck, even though there is plenty of demand. My little corner of the world is 20 minutes from town, yet Internet service providers barely consider serving it. My only option is mobile broadband. There is no dial-up, no satellite Internet (which is lousy anyway), and no DSL, cable, or fiber. That doesn't stop ISPs from stuffing my mailbox with flyers for Xfinity and other high-speed services that I have never seen available in this area.

I tried AT&T, Verizon, and T-Mobile. Verizon has the widest coverage, but Verizon and AT&T are the most expensive. I'm at the edge of T-Mobile's coverage, but they gave me by far the best deal. To make it work, I had to buy a WeBoost signal booster and a ZTE mobile hotspot. Yes, you can use a phone as a hotspot, but a dedicated hotspot has a stronger signal. If you're considering a signal booster, WeBoost is the best choice, because their customer support is superb and they will do their best to help you. Set it up with the help of a little app, [SignalCheck Pro][8], to tune your signal precisely. There is a free version with fewer features, but you won't regret spending two dollars on the pro version.

The little ZTE hotspot supports up to 15 hosts and has basic firewalling. But if you use a device like the Linksys WRT54GL, you can replace the stock firmware with Tomato, OpenWRT, or DD-WRT. Then you have complete control of your firewall rules, routing configuration, and any other services you want to run.

--------------------------------------------------------------------------------

via: https://www.linux.com/learn/intro-to-linux/2017/10/linux-networking-hardware-beginners-think-software

Author: [CARLA SCHRODER][a]
Translator: [FelixYFZ](https://github.com/FelixYFZ)
Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

[a]:https://www.linux.com/users/cschroder
[1]:https://www.linux.com/licenses/category/used-permission
[2]:https://www.linux.com/licenses/category/used-permission
[3]:https://www.linux.com/licenses/category/creative-commons-zero
[4]:https://www.linux.com/files/images/fig-1png-7
[5]:https://www.linux.com/files/images/fig-2png-4
[6]:https://www.linux.com/files/images/soderskar-islandjpg
[7]:https://www.linux.com/learn/intro-to-linux/2017/10/linux-networking-hardware-beginners-lan-hardware
[8]:http://www.bluelinepc.com/signalcheck/

@ -1,43 +1,50 @@

How Eclipse is advancing IoT development
============================================================

> The open source organization's modular approach to development is a good match for the Internet of Things.

Image credits: opensource.com

[Eclipse][3] may not be the first open source organization to work on the Internet of Things (IoT). But long before IoT became a household word, the foundation had been supporting commercially viable open source software development, starting around 2001.

September's Eclipse IoT Day, held together with RedMonk's [ThingMonk 2017][4], highlighted the major role Eclipse plays in [IoT development][5]. Eclipse currently hosts 28 projects that cover most of the needs of IoT projects. During the conference, I talked with [Ian Skerritt][6], who heads marketing for Eclipse, about Eclipse's IoT projects and how to expand them.

### What's new about IoT?

I asked Ian how IoT differs from traditional industrial automation, which has connected factories through sensors and tools for decades. Ian noted that many factories are still not connected.

Additionally, he said, "SCADA [supervisory control and data acquisition] systems and the factory floor technology are very proprietary, very siloed. It's hard to change them, and hard to adapt them... Right now, if you want to set up a manufacturing run, you need to produce hundreds of thousands of units. What manufacturers want is to meet customer demand, with manufacturing processes that are very flexible, so that they can keep producing." That's a big part of what IoT brings to manufacturing.

### Eclipse's approach to IoT

Ian describes Eclipse's IoT work as "core fundamental technology that every IoT solution needs". By using open source technology, "everyone can use it, so they can get broader adoption." He said Eclipse sees IoT as three connected software stacks. At a high level, these stacks mirror the (by now familiar) view of IoT as a network spanning three layers. A given implementation may have more layers, but they generally map to the functions of this three-layer model:

* A software stack for the devices themselves (e.g., the device, endpoint, microcontroller, or sensor hardware).
* Some type of gateway that aggregates information and data from the different sensors and sends it to the network. This layer may also react in real time to what the sensors detect.
* A software stack for the IoT platform on the backend. This backend cloud stores the data and can provide services based on the collected data, such as historical trends and predictive analytics.

The three stacks are described in more detail in Eclipse's whitepaper "[The Three Software Stacks Required for IoT Architectures][7]".

Ian said that when developing a solution within these architectures, "there are very specific things that need to be built, but a lot of the underlying technology can be reused, like messaging protocols and gateway services. It needs a modular approach to scale to the different use cases." This sums up Eclipse's IoT activities: developing modular open source components that can be used to build a range of business-specific services and solutions.

### Eclipse's IoT projects

Among Eclipse's many IoT projects already in use, Ian singled out two prominent ones related to [MQTT][8], a machine-to-machine (M2M) messaging protocol for IoT. Ian described it as "a publish/subscribe messaging protocol designed for oil and gas pipeline monitoring, where power management matters. MQTT has been a great success as a standard that is widely adopted in IoT." [Eclipse Mosquitto][9] is an MQTT broker, and [Eclipse Paho][10] is its client.

[Eclipse Kura][11] is an IoT gateway that, in Ian's words, "provides connectivity for a lot of different protocols". These include Bluetooth, Modbus, CANbus, and OPC Unified Architecture, with more being added all the time. One benefit, he said, is that "instead of you writing your own connectivity, Kura provides it and then connects you to the network via satellite, Ethernet, or anything else." It also handles firewall configuration, network latency, and other functions. Ian also noted, "If the network goes down, it will store messages until it comes back up."

A newer project, [Eclipse Kapua][12], takes a microservices approach to providing different services for an IoT cloud platform. For example, it integrates connectivity, aggregation, management, storage, and analytics. Ian said, "It's up and coming. It isn't fully developed yet, but Eurotech and Red Hat are very active in the project."

Ian says [Eclipse hawkBit][13], which manages software updates, is a "very intriguing project. From a security perspective, if you can't update your device, you've got a huge security hole." Many IoT security incidents involve devices that can't be updated, he said. "HawkBit basically manages the backend of how you do scalable updates across your IoT system."

Indeed, the difficulty of updating software on IoT devices is regularly cited as one of the biggest security challenges. IoT devices aren't always connected and can be very numerous. On top of that, update processes on constrained devices are hard to get consistently right. For this reason, projects for updating IoT software are being pushed forward as an important area.

### Why IoT is a good fit for Eclipse

One trend in IoT development has been a move toward building blocks that are assembled to solve particular business problems, rather than monolithic IoT platforms that span industries and companies. Eclipse's IoT work focuses on three things:

- a number of modular stacks
- projects that provide specific and commonly needed functions
- middleware, gateways, and protocol components that can be bundled as needed for a specific target

--------------------------------------------------------------------------------

@ -46,15 +53,15 @@ Ian 说 [Eclipse hawkBit][13] ,软件更新管理的软件,是一项“非

About the author:

Gordon Haff - Gordon Haff is a cloud expert at Red Hat. He is a frequent speaker at customer and industry conferences and helps develop Red Hat's full-portfolio cloud solutions. He is the author of _Computing Next: How the Cloud Opens the Future_. Before Red Hat, Gordon wrote hundreds of research notes and was frequently quoted in publications such as The New York Times on IT topics and product advice...

--------------------------------------------------------------------------------

via: https://opensource.com/article/17/10/eclipse-and-iot

Author: [Gordon Haff][a]
Translator: [smartgrids](https://github.com/smartgrids)
Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

@ -0,0 +1,135 @@

How to encrypt and decrypt individual files with GPG
=================

Objective: Encrypt individual files with GPG.

Distributions: This will work with any Linux distribution.

Requirements: A Linux install with GPG installed, or root privileges to install it.

Difficulty: Easy

Conventions:

* `#` - requires the given command to be executed with root privileges, either directly as the root user or by use of the `sudo` command
* `$` - the given command can be executed as a regular non-privileged user

### Introduction

Encryption is important. It's absolutely vital for protecting sensitive information. Your personal files are worth encrypting, and GPG provides an excellent solution.

### Install GPG

GPG is widely used, and you can find it in nearly every distribution's repositories. If you don't have it already, install it now.

**Debian/Ubuntu**

```
$ sudo apt install gnupg
```

**Fedora**

```
# dnf install gnupg2
```

**Arch**

```
# pacman -S gnupg
```

**Gentoo**

```
# emerge --ask app-crypt/gnupg
```

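### Create a key

You need a key pair to encrypt and decrypt files. If you already have a GPG key pair, you can use it here. If not, GPG includes a utility to generate one.

```
$ gpg --full-generate-key
```

This command walks you through generating your key step by step. GPG also has a much simpler key generation tool, but that one doesn't let you set the key type, key size, or expiration, so it isn't recommended.

GPG will first ask you for the type of key. Unless you need something specific, use the default.

Next, you need to set the key size. `4096` is a good choice.

After that, you can set an expiration date. Set it to `0` if you want the key never to expire.

Then, enter your name.

Finally, enter your email address.

You can also add a comment if you need one.

Once it has everything, GPG will ask you to verify the information.

GPG will also ask whether you want a passphrase for your key. This is optional, but adds a degree of protection. While generating the key, GPG collects entropy from your activity to make the key stronger. When it's done, GPG displays information about the key you just created.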

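### Basic encryption

Now that you have your key, encrypting files is very easy. Use the following command to create a blank text file in the `/tmp` directory to practice with:

```
$ touch /tmp/test.txt
```

Then encrypt it with GPG. Here the `-e` flag tells GPG that you want to encrypt a file, and the `-r` flag specifies a recipient.

```
$ gpg -e -r "Your Name" /tmp/test.txt
```

GPG needs to know who the file's recipient and sender are. Since this file is for you, there's no need to specify a sender, and you are the recipient.

### Basic decryption

Once you've received an encrypted file, you need to decrypt it. You don't need to specify the key to decrypt with, because that information is encoded in the file. GPG will try the keys it has to decrypt it.

```
$ gpg -d /tmp/test.txt.gpg
```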

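### Sending a file

Say you need to send the file to someone else. For that you need the recipient's public key. How you get it is up to you: they can send it to you directly, or you can fetch it from a keyserver.

Once you have their public key, import it into GPG.

```
$ gpg --import yourfriends.key
```

Like the key you created, these public keys carry a name and an email address. Remember that the other person also needs your public key, for example to verify your signature or to send you encrypted files. Export your public key and send it to them.

```
$ gpg --export -a "Your Name" > your.key
```

Now you can encrypt the file you want to send. It's much like the earlier step, except that you also sign it with `-s` and specify yourself as the sender (the signing key) with `-u`.

```
$ gpg -s -e -u "Your Name" -r "Their Name" /tmp/test.txt
```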

### Closing thoughts

That's all there is to it. GPG has some more advanced options, but you won't need them 99% of the time. GPG is that easy to use. You can also use the key pair you created to send and receive encrypted email in much the same way, though most email clients do this for you automatically once they have the keys.

--------------------------------------------------------------------------------

via: https://linuxconfig.org/how-to-encrypt-and-decrypt-individual-files-with-gpg

Author: [Nick Congleton][a]
Translator: [lujun9972](https://github.com/lujun9972)
Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

[a]:https://linuxconfig.org

@ -1,20 +1,19 @@

How to archive GitHub repositories
====================

Just because a repository isn't actively developed anymore, or you don't want to accept additional contributions, doesn't mean you want to delete it. Now you can archive repositories on GitHub to make them read-only.

[][1]

Archiving a repository makes it read-only to everyone, including the repository owners. This covers editing the repository, issues, pull requests (PRs), labels, milestones, projects, wiki, releases, commits, tags, branches, reactions, and comments. No one can create new issues, pull requests, or comments on an archived repository. You can still fork it, though, so that development can continue elsewhere.

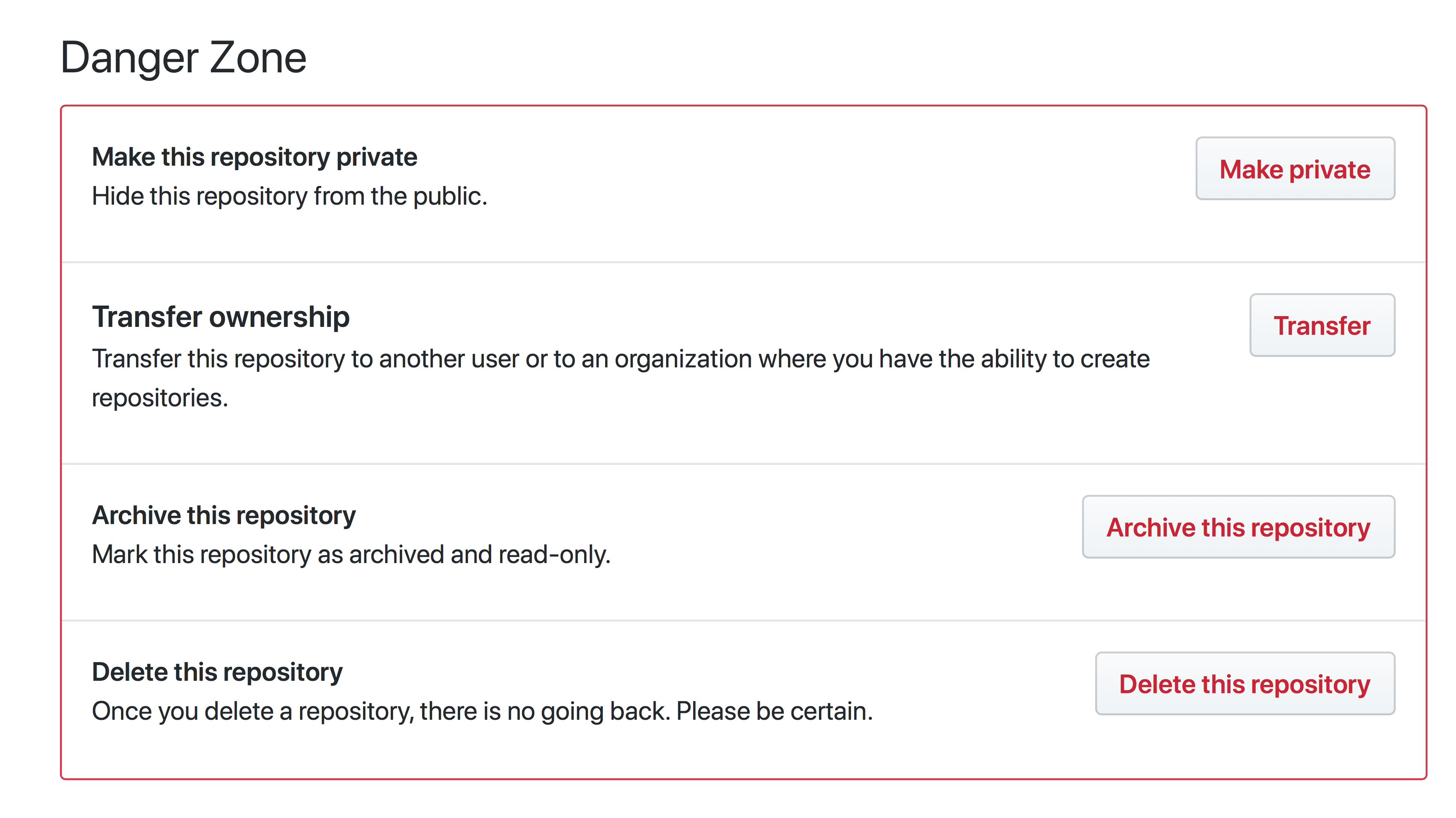

To archive a repository, go to the repository's settings page and click "Archive this repository".

[][2]

Before archiving your repository, make sure you've changed its settings, and consider closing all open issues and pull requests. You should also update your README and description to make it clear to visitors that they can no longer contribute.

If you change your mind and want to unarchive the repository, click "Unarchive this repository" in the same place. Note that most of an archived repository's settings are hidden; you have to unarchive it to change them.

[][3]

@ -24,9 +23,9 @@

via: https://github.com/blog/2460-archiving-repositories

Author: [MikeMcQuaid][a]
Translator: [geekpi](https://github.com/geekpi)
Proofreader: [proofreader ID](https://github.com/校对者ID)