mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-06 01:20:12 +08:00

commit

fc712fcf17

@ -1,26 +1,26 @@

|

||||

Lolcat – 一个在 Linux 终端中输出彩虹特效的命令行工具

|

||||

Lolcat :一个在 Linux 终端中输出彩虹特效的命令行工具

|

||||

================================================================================

|

||||

那些相信 Linux 命令行是单调无聊且没有任何乐趣的人们,你们错了,这里有一些有关 Linux 的文章,它们展示着 Linux 是如何的有趣和“淘气” 。

|

||||

|

||||

- [20 个有趣的 Linux 命令或在终端中 Linux 是有趣的][1]

|

||||

- [6 个有趣的好玩 Linux 命令(在终端中的乐趣)][2]

|

||||

- [在 Linux 终端中的乐趣 – 把玩文字和字符计数][3]

|

||||

- [Linux命令及Linux终端的20个趣事][1]

|

||||

- [终端中的乐趣:6个有趣的Linux命令行工具][2]

|

||||

- [Linux终端的乐趣之把玩字词计数][3]

|

||||

|

||||

在本文中,我将讨论一个名为“lolcat”的应用 – 它在终端中生成彩虹般的颜色。

|

||||

在本文中,我将讨论一个名为“lolcat”的小工具 – 它可以在终端中生成彩虹般的颜色。

|

||||

|

||||

|

||||

|

||||

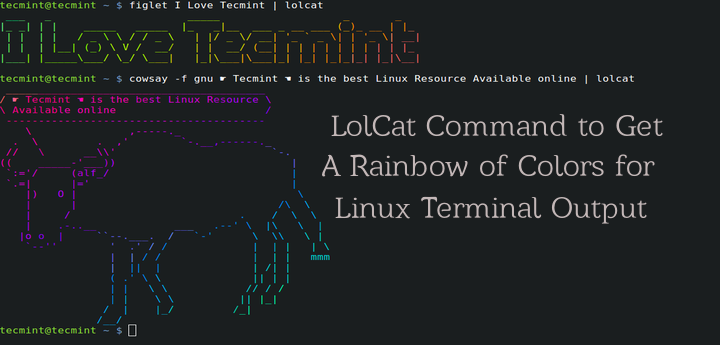

为终端生成彩虹般颜色的输出的 Lolcat 命令

|

||||

*为终端生成彩虹般颜色的输出的 Lolcat 命令*

|

||||

|

||||

#### 何为 lolcat ? ####

|

||||

|

||||

Lolcat 是一个针对 Linux,BSD 和 OSX 平台的应用,它类似于 [cat 命令][4],并为 `cat` 的输出添加彩虹般的色彩。 Lolcat 原本用于在 Linux 终端中为文本添加彩虹般的色彩。

|

||||

Lolcat 是一个针对 Linux,BSD 和 OSX 平台的工具,它类似于 [cat 命令][4],并为 `cat` 的输出添加彩虹般的色彩。 Lolcat 主要用于在 Linux 终端中为文本添加彩虹般的色彩。

|

||||

|

||||

### 在 Linux 中安装 Lolcat ###

|

||||

|

||||

**1. Lolcat 应用在许多 Linux 发行版本的软件仓库中都可获取到,但可获得的版本都有些陈旧,而你可以通过 git 仓库下载和安装最新版本的 lolcat。**

|

||||

**1. Lolcat 工具在许多 Linux 发行版的软件仓库中都可获取到,但可获得的版本都有些陈旧,而你可以通过 git 仓库下载和安装最新版本的 lolcat。**

|

||||

|

||||

由于 Lolcat 是一个 ruby gem 程序,所以在你的系统中安装有最新版本的 RUBY 是必须的。

|

||||

由于 Lolcat 是一个 ruby gem 程序,所以在你的系统中必须安装有最新版本的 RUBY。

|

||||

|

||||

# apt-get install ruby [在基于 APT 的系统中]

|

||||

# yum install ruby [在基于 Yum 的系统中]

|

||||

@ -53,7 +53,7 @@ Lolcat 是一个针对 Linux,BSD 和 OSX 平台的应用,它类似于 [cat

|

||||

|

||||

|

||||

|

||||

Lolcat 的帮助文档

|

||||

*Lolcat 的帮助文档*

|

||||

|

||||

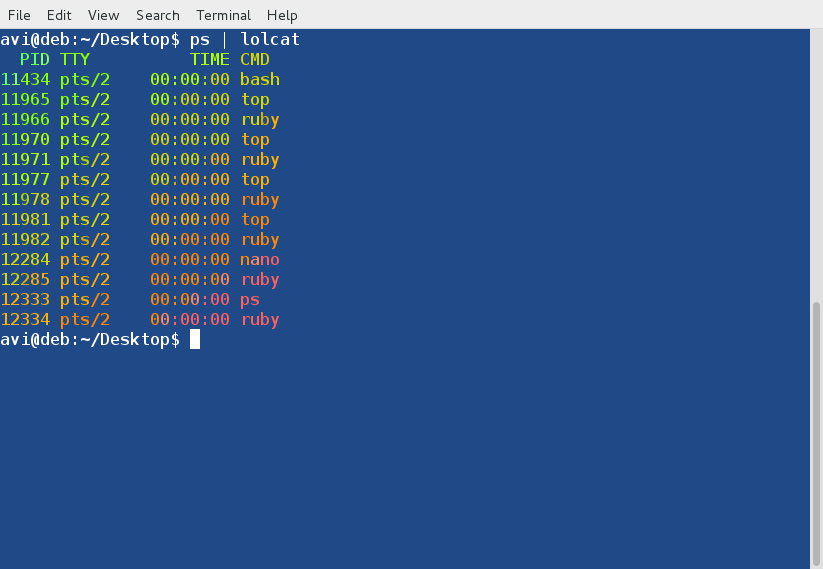

**4. 接着, 通过管道连接 lolcat 和其他命令,例如 ps, date 和 cal:**

|

||||

|

||||

@ -63,15 +63,15 @@ Lolcat 的帮助文档

|

||||

|

||||

|

||||

|

||||

ps 命令的输出

|

||||

*ps 命令的输出*

|

||||

|

||||

|

||||

|

||||

Date 的输出

|

||||

*Date 的输出*

|

||||

|

||||

|

||||

|

||||

Calendar 的输出

|

||||

*Calendar 的输出*

|

||||

|

||||

**5. 使用 lolcat 来展示一个脚本文件的代码:**

|

||||

|

||||

@ -79,18 +79,18 @@ Calendar 的输出

|

||||

|

||||

|

||||

|

||||

用 lolcat 来展示代码

|

||||

*用 lolcat 来展示代码*

|

||||

|

||||

**6. 通过管道连接 lolcat 和 figlet 命令。Figlet 是一个展示由常规的屏幕字符组成的巨大字符串的应用。我们可以通过管道将 figlet 的输出连接到 lolcat 中来给出如下的多彩输出:**

|

||||

**6. 通过管道连接 lolcat 和 figlet 命令。Figlet 是一个展示由常规的屏幕字符组成的巨大字符串的应用。我们可以通过管道将 figlet 的输出连接到 lolcat 中来展示出如下的多彩输出:**

|

||||

|

||||

# echo I ❤ Tecmint | lolcat

|

||||

# figlet I Love Tecmint | lolcat

|

||||

|

||||

|

||||

|

||||

多彩的文字

|

||||

*多彩的文字*

|

||||

|

||||

**注**: 毫无疑问 ❤ 是一个 unicode 字符并且为了安装 figlet,你需要像下面那样使用 yum 和 apt 来得到这个软件包:

|

||||

**注**: 注意, ❤ 是一个 unicode 字符。要安装 figlet,你需要像下面那样使用 yum 和 apt 来得到这个软件包:

|

||||

|

||||

# apt-get figlet

|

||||

# yum install figlet

|

||||

@ -102,7 +102,7 @@ Calendar 的输出

|

||||

|

||||

|

||||

|

||||

动的文本

|

||||

*动的文本*

|

||||

|

||||

这里选项 `-a` 指的是 Animation(动画), `-d` 指的是 duration(持续时间)。在上面的例子中,持续 500 次动画。

|

||||

|

||||

@ -112,7 +112,7 @@ Calendar 的输出

|

||||

|

||||

|

||||

|

||||

多彩地显示文件

|

||||

*多彩地显示文件*

|

||||

|

||||

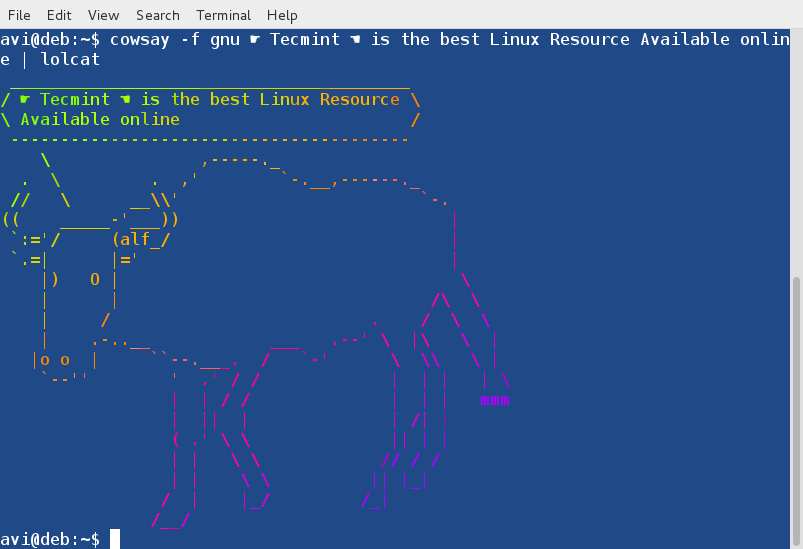

**9. 通过管道连接 lolcat 和 cowsay。cowsay 是一个可配置的正在思考或说话的奶牛,这个程序也支持其他的动物。**

|

||||

|

||||

@ -136,15 +136,15 @@ Calendar 的输出

|

||||

skeleton snowman sodomized-sheep stegosaurus stimpy suse three-eyes turkey

|

||||

turtle tux unipony unipony-smaller vader vader-koala www

|

||||

|

||||

通过管道连接 lolcat 和 cowsay 后的输出,并且使用了‘gnu’cowfile。

|

||||

通过管道连接 lolcat 和 cowsay 后的输出,并且使用了‘gnu’形象的 cowfile。

|

||||

|

||||

# cowsay -f gnu ☛ Tecmint ☚ is the best Linux Resource Available online | lolcat

|

||||

|

||||

|

||||

|

||||

使用 Lolcat 的 Cowsay

|

||||

*使用 Lolcat 的 Cowsay*

|

||||

|

||||

**注**: 你可以在管道中使用 lolcat 和其他任何命令来在终端中得到彩色的输出。

|

||||

**注**: 你可以在将 lolcat 和其他任何命令用管道连接起来在终端中得到彩色的输出。

|

||||

|

||||

**10. 你可以为最常用的命令创建别名来使得命令的输出呈现出彩虹般的色彩。你可以像下面那样为 ‘ls -l‘ 命令创建别名,这个命令输出一个目录中包含内容的列表。**

|

||||

|

||||

@ -153,23 +153,24 @@ Calendar 的输出

|

||||

|

||||

|

||||

|

||||

多彩的 Alias 命令

|

||||

*多彩的 Alias 命令*

|

||||

|

||||

你可以像上面建议的那样,为任何命令创建别名。为了使得别名永久生效,你必须添加相关的代码(上面的代码是 ls -l 的别名) 到 ~/.bashrc 文件中,并确保登出后再重新登录来使得更改生效。

|

||||

你可以像上面建议的那样,为任何命令创建别名。为了使得别名永久生效,你需要添加相关的代码(上面的代码是 ls -l 的别名) 到 ~/.bashrc 文件中,并登出后再重新登录来使得更改生效。

|

||||

|

||||

现在就是这些了。我想知道你是否曾经注意过 lolcat 这个工具?你是否喜欢这篇文章?欢迎在下面的评论环节中给出你的建议和反馈。喜欢并分享我们,帮助我们传播。

|

||||

|

||||

现在就是这些了。我想知道你是否曾经注意过 lolcat 这个应用?你是否喜欢这篇文章?欢迎在下面的评论环节中给出你的建议和反馈。喜欢并分享我们,帮助我们传播。

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.tecmint.com/lolcat-command-to-output-rainbow-of-colors-in-linux-terminal/

|

||||

|

||||

作者:[Avishek Kumar][a]

|

||||

译者:[FSSlc](https://github.com/FSSlc)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.tecmint.com/author/avishek/

|

||||

[1]:http://www.tecmint.com/20-funny-commands-of-linux-or-linux-is-fun-in-terminal/

|

||||

[2]:http://www.tecmint.com/linux-funny-commands/

|

||||

[3]:http://www.tecmint.com/play-with-word-and-character-counts-in-linux/

|

||||

[1]:https://linux.cn/article-2831-1.html

|

||||

[2]:https://linux.cn/article-4128-1.html

|

||||

[3]:https://linux.cn/article-4088-1.html

|

||||

[4]:http://www.tecmint.com/13-basic-cat-command-examples-in-linux/

|

||||

@ -0,0 +1,93 @@

|

||||

LFS中文版手册发布:如何打造自己的 Linux 发行版

|

||||

================================================================================

|

||||

您是否想过打造您自己的 Linux 发行版?每个 Linux 用户在他们使用 Linux 的过程中都想过做一个他们自己的发行版,至少一次。我也不例外,作为一个 Linux 菜鸟,我也考虑过开发一个自己的 Linux 发行版。从头开发一个 Linux 发行版这件事情被称作 Linux From Scratch (LFS)。

|

||||

|

||||

在开始之前,我总结了一些有关 LFS 的内容,如下:

|

||||

|

||||

**1. 那些想要打造他们自己的 Linux 发行版的人应该了解打造一个 Linux 发行版(打造意味着从头开始)与配置一个已有的 Linux 发行版的不同**

|

||||

|

||||

如果您只是想调整下启动屏幕、定制登录页面以及拥有更好的外观和使用体验。您可以选择任何一个 Linux 发行版并且按照您的喜好进行个性化配置。此外,有许多配置工具可以帮助您。

|

||||

|

||||

如果您想打包所有必须的文件、引导加载器和内核,并选择什么该被包括进来,然后依靠自己编译这一切东西。那么您需要的就是 Linux From Scratch (LFS)。

|

||||

|

||||

**注意**:如果您只想要定制 Linux 系统的外表和体验,这个指南并不适合您。但如果您真的想打造一个 Linux 发行版,并且向了解怎么开始以及一些其他的信息,那么这个指南正是为您而写。

|

||||

|

||||

**2. 打造一个 Linux 发行版(LFS)的好处**

|

||||

|

||||

- 您将了解 Linux 系统的内部工作机制

|

||||

- 您将开发一个灵活的适应您需求的系统

|

||||

- 您开发的系统(LFS)将会非常紧凑,因为您对该包含/不该包含什么拥有绝对的掌控

|

||||

- 您开发的系统(LFS)在安全性上会更好

|

||||

|

||||

**3. 打造一个Linux发行版(LFS)的坏处**

|

||||

|

||||

打造一个 Linux 系统意味着将所有需要的东西放在一起并且编译之。这需要许多查阅、耐心和时间。而且您需要一个可用的 Linux 系统和足够的磁盘空间来打造 LFS。

|

||||

|

||||

**4. 有趣的是,Gentoo/GNU Linux 在某种意义上最接近于 LFS。Gentoo 和 LFS 都是完全从源码编译的定制的 Linux 系统**

|

||||

|

||||

**5. 您应该是一个有经验的Linux用户,对编译包、解决依赖有相当的了解,并且是个 shell 脚本的专家。**

|

||||

|

||||

了解一门编程语言(最好是 C 语言)将会使事情变得容易些。但哪怕您是一个新手,只要您是一个优秀的学习者,可以很快的掌握知识,您也可以开始。最重要的是不要在 LFS 过程中丢失您的热情。

|

||||

|

||||

如果您不够坚定,恐怕会在 LFS 进行到一半时放弃。

|

||||

|

||||

**6. 现在您需要一步一步的指导来打造一个 Linux 。LFS 手册是打造 LFS 的官方指南。我们的合作站点 tradepub 也为我们的读者制作了 LFS 的指南,这同样是免费的。 ###

|

||||

|

||||

您可以从下面的链接下载 Linux From Scratch 的电子书:

|

||||

|

||||

[][1]

|

||||

|

||||

下载: [Linux From Scratch][1]

|

||||

|

||||

**7. 当前 LFS 的版本是 7.7,分为 systemd 版本和非 systemd 版本**

|

||||

|

||||

LFS 的官方网站是: http://www.linuxfromscratch.org/

|

||||

|

||||

您可以在官网在线浏览 LFS 以及类似 BLFS 这样的相关项目的手册,也可以下载不同格式的版本。

|

||||

|

||||

- LFS (非 systemd 版本):

|

||||

- PDF 版本: http://www.linuxfromscratch.org/lfs/downloads/stable/LFS-BOOK-7.7.pdf

|

||||

- 单一 HTML 版本: http://www.linuxfromscratch.org/lfs/downloads/stable/LFS-BOOK-7.7-NOCHUNKS.html

|

||||

- 打包的多页 HTML 版本: http://www.linuxfromscratch.org/lfs/downloads/stable/LFS-BOOK-7.7.tar.bz2

|

||||

- LFS (systemd 版本):

|

||||

- PDF 版本: http://www.linuxfromscratch.org/lfs/downloads/7.7-systemd/LFS-BOOK-7.7-systemd.pdf

|

||||

- 单一 HTML 版本: http://www.linuxfromscratch.org/lfs/downloads/7.7-systemd/LFS-BOOK-7.7-systemd-NOCHUNKS.html

|

||||

- 打包的多页 HTML 版本: http://www.linuxfromscratch.org/lfs/downloads/7.7-systemd/LFS-BOOK-7.7-systemd.tar.bz2

|

||||

|

||||

**8. Linux 中国/LCTT 翻译了一份 LFS 手册(7.7,systemd 版本)**

|

||||

|

||||

经过 LCTT 成员的努力,我们终于完成了对 LFS 7.7 systemd 版本手册的翻译。

|

||||

|

||||

手册在线访问地址:https://linux.cn/lfs/LFS-BOOK-7.7-systemd/index.html 。

|

||||

|

||||

其它格式的版本稍后推出。

|

||||

|

||||

感谢参与翻译的成员: wxy, ictlyh, dongfengweixiao, zpl1025, H-mudcup, Yuking-net, kevinSJ 。

|

||||

|

||||

|

||||

### 关于:Linux From Scratch ###

|

||||

|

||||

这本手册是由 LFS 的项目领头人 Gerard Beekmans 创作的, Matthew Burgess 和 Bruse Dubbs 参与编辑,两人都是LFS 项目的联合领导人。这本书内容很广泛,有 338 页之多。

|

||||

|

||||

手册中内容包括:介绍 LFS、准备构建、构建 LFS、建立启动脚本、使 LFS 可以引导,以及附录。其中涵盖了您想知道的 LFS 项目中的所有东西。

|

||||

|

||||

这本手册还给出了编译一个包的预估时间。预估的时间以编译第一个包的时间作为参考。所有的东西都以易于理解的方式呈现,甚至对于新手来说也是这样。

|

||||

|

||||

如果您有充裕的时间并且真正对构建自己的 Linux 发行版感兴趣,那么您绝对不会错过下载这个电子书(免费下载)的机会。您需要做的,便是照着这本手册在一个工作的 Linux 系统(任何 Linux 发行版,足够的磁盘空间即可)中开始构建您自己的 Linux 系统,付出时间和热情。

|

||||

|

||||

如果 Linux 使您着迷,如果您想自己动手构建一个自己的 Linux 发行版,这便是现阶段您应该知道的全部了,其他的信息您可以参考上面链接的手册中的内容。

|

||||

|

||||

请让我了解您阅读/使用这本手册的经历,这本详尽的 LFS 指南的使用是否足够简单?如果您已经构建了一个 LFS 并且想给我们的读者一些建议,欢迎留言和反馈。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.tecmint.com/create-custom-linux-distribution-from-scratch/

|

||||

|

||||

作者:[Avishek Kumar][a]

|

||||

译者:[wwy-hust](https://github.com/wwy-hust)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.tecmint.com/author/avishek/

|

||||

[1]:http://tecmint.tradepub.com/free/w_linu01/prgm.cgi

|

||||

@ -0,0 +1,33 @@

|

||||

Linus Torvalds说那些对人工智能奇点深信不疑的人显然磕了药

|

||||

================================================================================

|

||||

*像往常一样, 他的评论不能只看字面意思*

|

||||

|

||||

|

||||

|

||||

**人工智能是一个非常热门的话题,许多高端人士,包括特斯拉的CEO埃隆·马斯克就曾表示有情感的人工智能技术即将到来,同时这一技术将发展到危险的门槛上。不过Linus Torvalds显然不这么认为,他认为那只是差劲的科幻小说。**

|

||||

|

||||

人工智能激发了人们的创造力已经不是什么新鲜的想法了,不过近段时间关于所谓的人工智能奇点的讨论,引起了诸如埃隆·马斯克和斯蒂芬·霍金表示关心,认为可能会创造出一个怪兽。不只是他们,论坛和评论部分充斥着杞人忧天者,他们不知道该相信谁,或是哪个提出建议的人更聪明。

|

||||

|

||||

事实证明Linux项目创始人Linus Torvalds在这件事上显然有完全不同的观点。他说事实上什么都不会发生,我们也更有理由相信他。人工智能意需要有人编写它的代码,Linus知道编写人工智能代码会遇到的阻力和障碍。他很有可能已经猜到了什么会被涉及到,并且明白为什么人工智能不会成为威胁。

|

||||

|

||||

### Linus Torvalds与人工智能 ###

|

||||

|

||||

Linus Torvalds在[slashdot.org][1]上回答了一些社区中的问题,他的所有观点都十分有趣。他曾对[游戏的未来和Valve][2]发表看法,就像这次关于人工智能一样。虽然他经常是关注一些关于内核和开源的问题,但是他在其他部分也有自己的见解。事实是作为一个问题,人工智能工程是一个他可以从程序员的角度讨论的问题。

|

||||

|

||||

“所以我期待更多有针对性的(和相当棒的)AI,而不是它有多像人。像语言识别、模式识别,这样的东西。我根本找不出在你洗碗的时候,洗碗机和你讨论Sartre(萨特,法国哲学家、小说家、剧作家)有什么危害。真的有‘奇点’这种事吗?是的,我认为那只是科幻小说,还不是好的那种。无休止的指数增长?我说,真的!这些人嗑了什么药了吧?” Linus在Slashdot写道。

|

||||

|

||||

选择相信埃隆·马斯克还是Linus是你的决定,但如果我卷入了这场赌局,我会把钱投给Linus。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://news.softpedia.com/news/linus-torvalds-says-people-who-believe-in-an-ai-singularity-are-on-drugs-486373.shtml

|

||||

|

||||

作者:[Silviu Stahie][a]

|

||||

译者:[martin2011qi](https://github.com/martin2011qi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://news.softpedia.com/editors/browse/silviu-stahie

|

||||

[1]:http://classic.slashdot.org/story/15/06/30/0058243

|

||||

[2]:http://news.softpedia.com/news/linus-torvalds-said-valve-is-exploring-a-second-source-against-microsoft-486266.shtml

|

||||

@ -1,33 +0,0 @@

|

||||

Linus Torvalds Says People Who Believe in AI Singularity Are on Drugs

|

||||

================================================================================

|

||||

*As usual, his comments should not be taken literally*

|

||||

|

||||

|

||||

|

||||

**AI is a very hot topic right now, and many high profile people, including Elon Musk, the head of Tesla, have said that we're going to get sentient AIs soon and that it's going to be a dangerous threshold. It seems that Linus Torvalds doesn't feel the same way, and he thinks that it's just bad Sci-Fi.**

|

||||

|

||||

The idea of AIs turning on their human creators is not something new, but recently the so-called AI singularity has been discussed, and people like Elon Musk and Stephen Hawking expressed concerns about the possibility of creating a monster. And it's not just them, forums and comments sections are full of alarmist people who don't know what to believe or who take for granted the opinions of much smarter people.

|

||||

|

||||

As it turns out, Linus Torvalds, the creator of the Linux project, has a completely different opinion on this matter. In fact, he says that nothing like this will happen, and we have a much better reason to believe him. AI means that someone wrote its code, and Linus knows the power and the obstacles of writing an AI code. He's much likely to guess what's involved and to understands why an AI won't be a threat.

|

||||

|

||||

### Linus Torvalds and AIs ###

|

||||

|

||||

Linus Torvalds answered some questions from the community on [slashdot.org][1], and all his ideas were very interesting. He talked about the [future of gaming and Valve][2], but he also tackled stuff like AI. He's usually asked stuff about the kernel or open source, but he has opinions on other topics as well. As a matter a fact, the AI subject is something that he can actually talk about as a programmer.

|

||||

|

||||

"So I'd expect just more of (and much fancier) rather targeted AI, rather than anything human-like at all. Language recognition, pattern recognition, things like that. I just don't see the situation where you suddenly have some existential crisis because your dishwasher is starting to discuss Sartre with you. The whole 'Singularity' kind of event? Yeah, it's science fiction, and not very good Sci-Fi at that, in my opinion. Unending exponential growth? What drugs are those people on? I mean, really" wrote Linus on Slashdot.

|

||||

|

||||

It's your choice whether to believe Elon Musk or Linus, but if betting were involved, I would put my money on Linus.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://news.softpedia.com/news/linus-torvalds-says-people-who-believe-in-an-ai-singularity-are-on-drugs-486373.shtml

|

||||

|

||||

作者:[Silviu Stahie][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://news.softpedia.com/editors/browse/silviu-stahie

|

||||

[1]:http://classic.slashdot.org/story/15/06/30/0058243

|

||||

[2]:http://news.softpedia.com/news/linus-torvalds-said-valve-is-exploring-a-second-source-against-microsoft-486266.shtml

|

||||

@ -1,113 +0,0 @@

|

||||

Animated Wallpaper Adds Live Backgrounds To Linux Distros

|

||||

================================================================================

|

||||

**We know a lot of you love having a stylish Ubuntu desktop to show off.**

|

||||

|

||||

|

||||

|

||||

Live Wallpaper

|

||||

|

||||

And as Linux makes it so easy to create a stunning workspace with a minimal effort, that’s understandable!

|

||||

|

||||

Today, we’re highlighting — [re-highlighting][2] for those of you with long memories — a free, open-source tool that can add extra bling your OS screenshots and screencasts.

|

||||

|

||||

It’s called **Live Wallpaper** and (as you can probably guess) it will replace the standard static desktop background with an animated alternative powered by OpenGL.

|

||||

|

||||

And the best bit: it can be installed in Ubuntu very easily.

|

||||

|

||||

### Animated Wallpaper Themes ###

|

||||

|

||||

|

||||

|

||||

Live Wallpaper is not the only app of this type, but it is one of the the best.

|

||||

|

||||

It comes with a number of different themes out of the box.

|

||||

|

||||

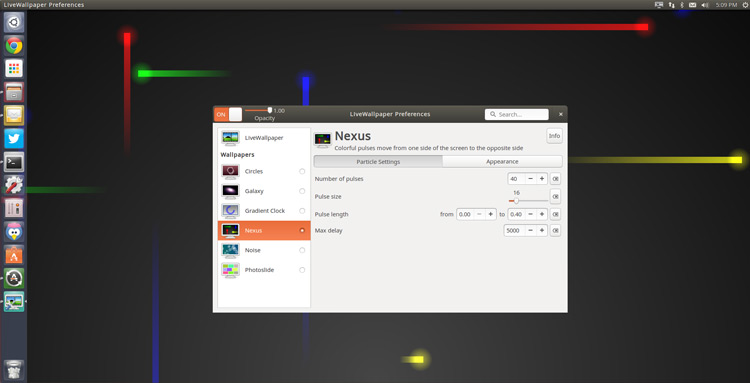

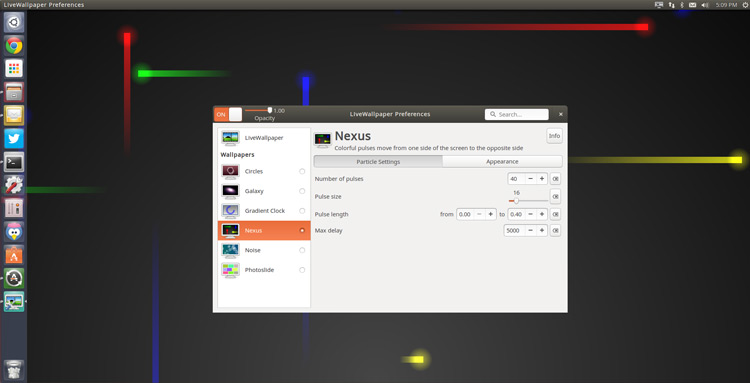

These range from the subtle (‘noise’) to frenetic (‘nexus’), and caters to everything in between. There’s even the obligatory clock wallpaper inspired by the welcome screen of the Ubuntu Phone:

|

||||

|

||||

- Circles — Clock inspired by Ubuntu Phone with ‘evolving circle’ aura

|

||||

- Galaxy — Spinning galaxy that can be resized/repositioned

|

||||

- Gradient Clock — A polar clock overlaid on basic gradient

|

||||

- Nexus — Brightly colored particles fire across screen

|

||||

- Noise — A bokeh design similar to the iOS dynamic wallpaper

|

||||

- Photoslide — Grid of photos from folder (default ~/Photos) animate in/out

|

||||

|

||||

Live Wallpaper is **fully open-source** so there’s nothing to stop imaginative artists with the know-how (and patience) from creating some slick themes of their own.

|

||||

|

||||

### Settings & Features ###

|

||||

|

||||

|

||||

|

||||

Each theme can be configured or customised in some way, though certain themes have more options than others.

|

||||

|

||||

For example, in Nexus (pictured above) you can change the number and colour of the the pulse particles, their size, and their frequency.

|

||||

|

||||

The preferences app also provides a set of **general options** that will apply to all themes. These include:

|

||||

|

||||

- Setting live wallpaper to run on log-in

|

||||

- Setting a custom background that the animation sits on

|

||||

- Adjusting the FPS (including option to show FPS on screen)

|

||||

- Specifying the multi-monitor behaviour

|

||||

|

||||

With so many options available it should be easy to create a background set up that suits you.

|

||||

|

||||

### Drawbacks ###

|

||||

|

||||

#### No Desktop Icons ####

|

||||

|

||||

You can’t add, open or edit files or folders on the desktop while Live Wallpaper is ‘On’.

|

||||

|

||||

The Preferences app does list an option that will, supposedly, let you do this. It may work on really older releases but in our testing, on Ubuntu 14.10, it does nothing.

|

||||

|

||||

One workaround that seems to work for some users of the app on Ubuntu is setting a .png image as the custom background. It doesn’t have to be a transparent .png, simply a .png.

|

||||

|

||||

#### Resource Usage ####

|

||||

|

||||

Animated wallpapers use more system resources than standard background images.

|

||||

|

||||

We’re not talking about 50% load at all times —at least not with this app in our testing— but those on low-power devices and laptops will want to use apps like this cautiously. Use a [system monitoring tool][2] to keep an eye on CPU and GPU load.

|

||||

|

||||

#### Quitting the app ####

|

||||

|

||||

The biggest “bug” for me is the absolute lack of “quit” option.

|

||||

|

||||

Sure, the animated wallpaper can be turned off from the Indicator Applet and the Preferences tool but quitting the app entirely, quitting the indicator applet? Nope. To do that I have to use the ‘pkill livewallpaper’ command in the Terminal.

|

||||

|

||||

### How to Install Live Wallpaper in Ubuntu 14.04 LTS + ###

|

||||

|

||||

|

||||

|

||||

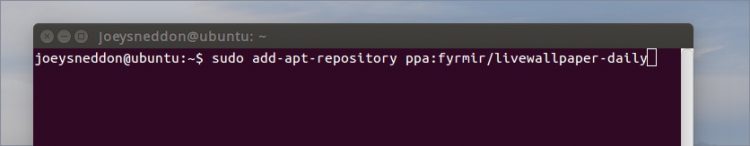

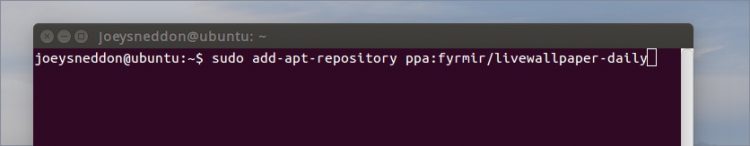

To install Live Wallpaper in Ubuntu 14.04 LTS and above you will first need to add the official PPA for the app to your Software Sources.

|

||||

|

||||

The quickest way to do this is using the Terminal:

|

||||

|

||||

sudo add-apt-repository ppa:fyrmir/livewallpaper-daily

|

||||

|

||||

sudo apt-get update && sudo apt-get install livewallpaper

|

||||

|

||||

You should also install the indicator applet, which lets you quickly and easily turn on/off the animated wallpaper and switch theme from the menu area, and the GUI settings tool so that you can configure each theme based on your tastes.

|

||||

|

||||

sudo apt-get install livewallpaper-config livewallpaper-indicator

|

||||

|

||||

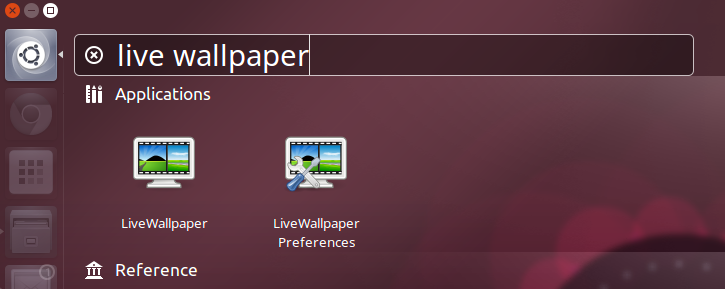

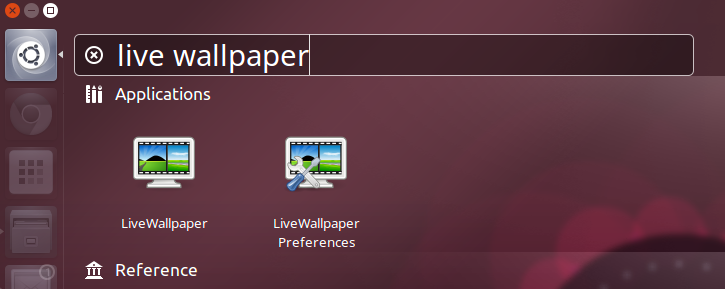

When everything has installed you will be able to launch the app and its preferences tool from the Unity Dash.

|

||||

|

||||

|

||||

|

||||

Annoyingly, the Indicator Applet won’t automatically open after you install it. It does add itself to the start up list, so a quick log out > log in will get it to show.

|

||||

|

||||

### Summary ###

|

||||

|

||||

If you fancy breathing life into a dull desktop, give it a spin — and let us know what you think of it and what animated wallpapers you’d love to see added!

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.omgubuntu.co.uk/2015/05/animated-wallpaper-adds-live-backgrounds-to-linux-distros

|

||||

|

||||

作者:[Joey-Elijah Sneddon][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://plus.google.com/117485690627814051450/?rel=author

|

||||

[1]:http://www.omgubuntu.co.uk/2012/11/live-wallpaper-for-ubuntu

|

||||

[2]:http://www.omgubuntu.co.uk/2011/11/5-system-monitoring-tools-for-ubuntu

|

||||

@ -1,3 +1,5 @@

|

||||

FSSlc Translating

|

||||

|

||||

Backup with these DeDuplicating Encryption Tools

|

||||

================================================================================

|

||||

Data is growing both in volume and value. It is becoming increasingly important to be able to back up and restore this information quickly and reliably. As society has adapted to technology and learned how to depend on computers and mobile devices, there are few that can deal with the reality of losing important data. Of firms that suffer the loss of data, 30% fold within a year, 70% cease trading within five years. This highlights the value of data.

|

||||

@ -155,4 +157,4 @@ via: http://www.linuxlinks.com/article/20150628060000607/BackupTools.html

|

||||

[3]:http://obnam.org/

|

||||

[4]:http://duplicity.nongnu.org/

|

||||

[5]:http://zbackup.org/

|

||||

[6]:https://bup.github.io/

|

||||

[6]:https://bup.github.io/

|

||||

|

||||

@ -1,3 +1,4 @@

|

||||

Translating by ZTinoZ

|

||||

7 command line tools for monitoring your Linux system

|

||||

================================================================================

|

||||

**Here is a selection of basic command line tools that will make your exploration and optimization in Linux easier. **

|

||||

@ -76,4 +77,4 @@ via: http://www.networkworld.com/article/2937219/linux/7-command-line-tools-for-

|

||||

[7]:http://linuxcommand.org/man_pages/vmstat8.html

|

||||

[8]:http://linux.die.net/man/1/ps

|

||||

[9]:http://linux.die.net/man/1/pstree

|

||||

[10]:http://linux.die.net/man/1/iostat

|

||||

[10]:http://linux.die.net/man/1/iostat

|

||||

|

||||

@ -1,745 +0,0 @@

|

||||

translating...

|

||||

|

||||

ZMap Documentation

|

||||

================================================================================

|

||||

1. Getting Started with ZMap

|

||||

1. Scanning Best Practices

|

||||

1. Command Line Arguments

|

||||

1. Additional Information

|

||||

1. TCP SYN Probe Module

|

||||

1. ICMP Echo Probe Module

|

||||

1. UDP Probe Module

|

||||

1. Configuration Files

|

||||

1. Verbosity

|

||||

1. Results Output

|

||||

1. Blacklisting

|

||||

1. Rate Limiting and Sampling

|

||||

1. Sending Multiple Probes

|

||||

1. Extending ZMap

|

||||

1. Sample Applications

|

||||

1. Writing Probe and Output Modules

|

||||

|

||||

----------

|

||||

|

||||

### Getting Started with ZMap ###

|

||||

|

||||

ZMap is designed to perform comprehensive scans of the IPv4 address space or large portions of it. While ZMap is a powerful tool for researchers, please keep in mind that by running ZMap, you are potentially scanning the ENTIRE IPv4 address space at over 1.4 million packets per second. Before performing even small scans, we encourage users to contact their local network administrators and consult our list of scanning best practices.

|

||||

|

||||

By default, ZMap will perform a TCP SYN scan on the specified port at the maximum rate possible. A more conservative configuration that will scan 10,000 random addresses on port 80 at a maximum 10 Mbps can be run as follows:

|

||||

|

||||

$ zmap --bandwidth=10M --target-port=80 --max-targets=10000 --output-file=results.csv

|

||||

|

||||

Or more concisely specified as:

|

||||

|

||||

$ zmap -B 10M -p 80 -n 10000 -o results.csv

|

||||

|

||||

ZMap can also be used to scan specific subnets or CIDR blocks. For example, to scan only 10.0.0.0/8 and 192.168.0.0/16 on port 80, run:

|

||||

|

||||

zmap -p 80 -o results.csv 10.0.0.0/8 192.168.0.0/16

|

||||

|

||||

If the scan started successfully, ZMap will output status updates every one second similar to the following:

|

||||

|

||||

0% (1h51m left); send: 28777 562 Kp/s (560 Kp/s avg); recv: 1192 248 p/s (231 p/s avg); hits: 0.04%

|

||||

0% (1h51m left); send: 34320 554 Kp/s (559 Kp/s avg); recv: 1442 249 p/s (234 p/s avg); hits: 0.04%

|

||||

0% (1h50m left); send: 39676 535 Kp/s (555 Kp/s avg); recv: 1663 220 p/s (232 p/s avg); hits: 0.04%

|

||||

0% (1h50m left); send: 45372 570 Kp/s (557 Kp/s avg); recv: 1890 226 p/s (232 p/s avg); hits: 0.04%

|

||||

|

||||

These updates provide information about the current state of the scan and are of the following form: %-complete (est time remaining); packets-sent curr-send-rate (avg-send-rate); recv: packets-recv recv-rate (avg-recv-rate); hits: hit-rate

|

||||

|

||||

If you do not know the scan rate that your network can support, you may want to experiment with different scan rates or bandwidth limits to find the fastest rate that your network can support before you see decreased results.

|

||||

|

||||

By default, ZMap will output the list of distinct IP addresses that responded successfully (e.g. with a SYN ACK packet) similar to the following. There are several additional formats (e.g. JSON and Redis) for outputting results as well as options for producing programmatically parsable scan statistics. As wells, additional output fields can be specified and the results can be filtered using an output filter.

|

||||

|

||||

115.237.116.119

|

||||

23.9.117.80

|

||||

207.118.204.141

|

||||

217.120.143.111

|

||||

50.195.22.82

|

||||

|

||||

We strongly encourage you to use a blacklist file, to exclude both reserved/unallocated IP space (e.g. multicast, RFC1918), as well as networks that request to be excluded from your scans. By default, ZMap will utilize a simple blacklist file containing reserved and unallocated addresses located at `/etc/zmap/blacklist.conf`. If you find yourself specifying certain settings, such as your maximum bandwidth or blacklist file every time you run ZMap, you can specify these in `/etc/zmap/zmap.conf` or use a custom configuration file.

|

||||

|

||||

If you are attempting to troubleshoot scan related issues, there are several options to help debug. First, it is possible can perform a dry run scan in order to see the packets that would be sent over the network by adding the `--dryrun` flag. As well, it is possible to change the logging verbosity by setting the `--verbosity=n` flag.

|

||||

|

||||

----------

|

||||

|

||||

### Scanning Best Practices ###

|

||||

|

||||

We offer these suggestions for researchers conducting Internet-wide scans as guidelines for good Internet citizenship.

|

||||

|

||||

- Coordinate closely with local network administrators to reduce risks and handle inquiries

|

||||

- Verify that scans will not overwhelm the local network or upstream provider

|

||||

- Signal the benign nature of the scans in web pages and DNS entries of the source addresses

|

||||

- Clearly explain the purpose and scope of the scans in all communications

|

||||

- Provide a simple means of opting out and honor requests promptly

|

||||

- Conduct scans no larger or more frequent than is necessary for research objectives

|

||||

- Spread scan traffic over time or source addresses when feasible

|

||||

|

||||

It should go without saying that scan researchers should refrain from exploiting vulnerabilities or accessing protected resources, and should comply with any special legal requirements in their jurisdictions.

|

||||

|

||||

----------

|

||||

|

||||

### Command Line Arguments ###

|

||||

|

||||

#### Common Options ####

|

||||

|

||||

These options are the most common options when performing a simple scan. We note that some options are dependent on the probe module or output module used (e.g. target port is not used when performing an ICMP Echo Scan).

|

||||

|

||||

|

||||

**-p, --target-port=port**

|

||||

|

||||

TCP port number to scan (e.g. 443)

|

||||

|

||||

**-o, --output-file=name**

|

||||

|

||||

Write results to this file. Use - for stdout

|

||||

|

||||

**-b, --blacklist-file=path**

|

||||

|

||||

File of subnets to exclude, in CIDR notation (e.g. 192.168.0.0/16), one-per line. It is recommended you use this to exclude RFC 1918 addresses, multicast, IANA reserved space, and other IANA special-purpose addresses. An example blacklist file is provided in conf/blacklist.example for this purpose.

|

||||

|

||||

#### Scan Options ####

|

||||

|

||||

**-n, --max-targets=n**

|

||||

|

||||

Cap the number of targets to probe. This can either be a number (e.g. `-n 1000`) or a percentage (e.g. `-n 0.1%`) of the scannable address space (after excluding blacklist)

|

||||

|

||||

**-N, --max-results=n**

|

||||

|

||||

Exit after receiving this many results

|

||||

|

||||

**-t, --max-runtime=secs**

|

||||

|

||||

Cap the length of time for sending packets

|

||||

|

||||

**-r, --rate=pps**

|

||||

|

||||

Set the send rate in packets/sec

|

||||

|

||||

**-B, --bandwidth=bps**

|

||||

|

||||

Set the send rate in bits/second (supports suffixes G, M, and K (e.g. `-B 10M` for 10 mbps). This overrides the `--rate` flag.

|

||||

|

||||

**-c, --cooldown-time=secs**

|

||||

|

||||

How long to continue receiving after sending has completed (default=8)

|

||||

|

||||

**-e, --seed=n**

|

||||

|

||||

Seed used to select address permutation. Use this if you want to scan addresses in the same order for multiple ZMap runs.

|

||||

|

||||

**--shards=n**

|

||||

|

||||

Split the scan up into N shards/partitions among different instances of zmap (default=1). When sharding, `--seed` is required

|

||||

|

||||

**--shard=n**

|

||||

|

||||

Set which shard to scan (default=0). Shards are indexed in the range [0, N), where N is the total number of shards. When sharding `--seed` is required.

|

||||

|

||||

**-T, --sender-threads=n**

|

||||

|

||||

Threads used to send packets (default=1)

|

||||

|

||||

**-P, --probes=n**

|

||||

|

||||

Number of probes to send to each IP (default=1)

|

||||

|

||||

**-d, --dryrun**

|

||||

|

||||

Print out each packet to stdout instead of sending it (useful for debugging)

|

||||

|

||||

#### Network Options ####

|

||||

|

||||

**-s, --source-port=port|range**

|

||||

|

||||

Source port(s) to send packets from

|

||||

|

||||

**-S, --source-ip=ip|range**

|

||||

|

||||

Source address(es) to send packets from. Either single IP or range (e.g. 10.0.0.1-10.0.0.9)

|

||||

|

||||

**-G, --gateway-mac=addr**

|

||||

|

||||

Gateway MAC address to send packets to (in case auto-detection does not work)

|

||||

|

||||

**-i, --interface=name**

|

||||

|

||||

Network interface to use

|

||||

|

||||

#### Probe Options ####

|

||||

|

||||

ZMap allows users to specify and write their own probe modules for use with ZMap. Probe modules are responsible for generating probe packets to send, and processing responses from hosts.

|

||||

|

||||

**--list-probe-modules**

|

||||

|

||||

List available probe modules (e.g. tcp_synscan)

|

||||

|

||||

**-M, --probe-module=name**

|

||||

|

||||

Select probe module (default=tcp_synscan)

|

||||

|

||||

**--probe-args=args**

|

||||

|

||||

Arguments to pass to probe module

|

||||

|

||||

**--list-output-fields**

|

||||

|

||||

List the fields the selected probe module can send to the output module

|

||||

|

||||

#### Output Options ####

|

||||

|

||||

ZMap allows users to specify and write their own output modules for use with ZMap. Output modules are responsible for processing the fieldsets returned by the probe module, and outputing them to the user. Users can specify output fields, and write filters over the output fields.

|

||||

|

||||

**--list-output-modules**

|

||||

|

||||

List available output modules (e.g. tcp_synscan)

|

||||

|

||||

**-O, --output-module=name**

|

||||

|

||||

Select output module (default=csv)

|

||||

|

||||

**--output-args=args**

|

||||

|

||||

Arguments to pass to output module

|

||||

|

||||

**-f, --output-fields=fields**

|

||||

|

||||

Comma-separated list of fields to output

|

||||

|

||||

**--output-filter**

|

||||

|

||||

Specify an output filter over the fields defined by the probe module

|

||||

|

||||

#### Additional Options ####

|

||||

|

||||

**-C, --config=filename**

|

||||

|

||||

Read a configuration file, which can specify any other options.

|

||||

|

||||

**-q, --quiet**

|

||||

|

||||

Do not print status updates once per second

|

||||

|

||||

**-g, --summary**

|

||||

|

||||

Print configuration and summary of results at the end of the scan

|

||||

|

||||

**-v, --verbosity=n**

|

||||

|

||||

Level of log detail (0-5, default=3)

|

||||

|

||||

**-h, --help**

|

||||

|

||||

Print help and exit

|

||||

|

||||

**-V, --version**

|

||||

|

||||

Print version and exit

|

||||

|

||||

----------

|

||||

|

||||

### Additional Information ###

|

||||

|

||||

#### TCP SYN Scans ####

|

||||

|

||||

When performing a TCP SYN scan, ZMap requires a single target port and supports specifying a range of source ports from which the scan will originate.

|

||||

|

||||

**-p, --target-port=port**

|

||||

|

||||

TCP port number to scan (e.g. 443)

|

||||

|

||||

**-s, --source-port=port|range**

|

||||

|

||||

Source port(s) for scan packets (e.g. 40000-50000)

|

||||

|

||||

**Warning!** ZMap relies on the Linux kernel to respond to SYN/ACK packets with RST packets in order to close connections opened by the scanner. This occurs because ZMap sends packets at the Ethernet layer in order to reduce overhead otherwise incurred in the kernel from tracking open TCP connections and performing route lookups. As such, if you have a firewall rule that tracks established connections such as a netfilter rule similar to `-A INPUT -m state --state RELATED,ESTABLISHED -j ACCEPT`, this will block SYN/ACK packets from reaching the kernel. This will not prevent ZMap from recording responses, but it will prevent RST packets from being sent back, ultimately using up a connection on the scanned host until your connection times out. We strongly recommend that you select a set of unused ports on your scanning host which can be allowed access in your firewall and specifying this port range when executing ZMap, with the `-s` flag (e.g. `-s '50000-60000'`).

|

||||

|

||||

#### ICMP Echo Request Scans ####

|

||||

|

||||

While ZMap performs TCP SYN scans by default, it also supports ICMP echo request scans in which an ICMP echo request packet is sent to each host and the type of ICMP response received in reply is denoted. An ICMP scan can be performed by selecting the icmp_echoscan scan module similar to the following:

|

||||

|

||||

$ zmap --probe-module=icmp_echoscan

|

||||

|

||||

#### UDP Datagram Scans ####

|

||||

|

||||

ZMap additionally supports UDP probes, where it will send out an arbitrary UDP datagram to each host, and receive either UDP or ICMP Unreachable responses. ZMap supports four different methods of setting the UDP payload through the --probe-args command-line option. These are 'text' for ASCII-printable payloads, 'hex' for hexadecimal payloads set on the command-line, 'file' for payloads contained in an external file, and 'template' for payloads that require dynamic field generation. In order to obtain the UDP response, make sure that you specify 'data' as one of the fields to report with the -f option.

|

||||

|

||||

The example below will send the two bytes 'ST', a PCAnwywhere 'status' request, to UDP port 5632.

|

||||

|

||||

$ zmap -M udp -p 5632 --probe-args=text:ST -N 100 -f saddr,data -o -

|

||||

|

||||

The example below will send the byte '0x02', a SQL Server 'client broadcast' request, to UDP port 1434.

|

||||

|

||||

$ zmap -M udp -p 1434 --probe-args=hex:02 -N 100 -f saddr,data -o -

|

||||

|

||||

The example below will send a NetBIOS status request to UDP port 137. This uses a payload file that is included with the ZMap distribution.

|

||||

|

||||

$ zmap -M udp -p 1434 --probe-args=file:netbios_137.pkt -N 100 -f saddr,data -o -

|

||||

|

||||

The example below will send a SIP 'OPTIONS' request to UDP port 5060. This uses a template file that is included with the ZMap distribution.

|

||||

|

||||

$ zmap -M udp -p 1434 --probe-args=file:sip_options.tpl -N 100 -f saddr,data -o -

|

||||

|

||||

UDP payload templates are still experimental. You may encounter crashes when more using more than one send thread (-T) and there is a significant decrease in performance compared to static payloads. A template is simply a payload file that contains one or more field specifiers enclosed in a ${} sequence. Some protocols, notably SIP, require the payload to reflect the source and destination of the packet. Other protocols, such as portmapper and DNS, contain fields that should be randomized per request or risk being dropped by multi-homed systems scanned by ZMap.

|

||||

|

||||

The payload template below will send a SIP OPTIONS request to every destination:

|

||||

|

||||

OPTIONS sip:${RAND_ALPHA=8}@${DADDR} SIP/2.0

|

||||

Via: SIP/2.0/UDP ${SADDR}:${SPORT};branch=${RAND_ALPHA=6}.${RAND_DIGIT=10};rport;alias

|

||||

From: sip:${RAND_ALPHA=8}@${SADDR}:${SPORT};tag=${RAND_DIGIT=8}

|

||||

To: sip:${RAND_ALPHA=8}@${DADDR}

|

||||

Call-ID: ${RAND_DIGIT=10}@${SADDR}

|

||||

CSeq: 1 OPTIONS

|

||||

Contact: sip:${RAND_ALPHA=8}@${SADDR}:${SPORT}

|

||||

Content-Length: 0

|

||||

Max-Forwards: 20

|

||||

User-Agent: ${RAND_ALPHA=8}

|

||||

Accept: text/plain

|

||||

|

||||

In the example above, note that line endings are \r\n and the end of this request must contain \r\n\r\n for most SIP implementations to correcly process it. A working example is included in the examples/udp-payloads directory of the ZMap source tree (sip_options.tpl).

|

||||

|

||||

The following template fields are currently implemented:

|

||||

|

||||

|

||||

- **SADDR**: Source IP address in dotted-quad format

|

||||

- **SADDR_N**: Source IP address in network byte order

|

||||

- **DADDR**: Destination IP address in dotted-quad format

|

||||

- **DADDR_N**: Destination IP address in network byte order

|

||||

- **SPORT**: Source port in ascii format

|

||||

- **SPORT_N**: Source port in network byte order

|

||||

- **DPORT**: Destination port in ascii format

|

||||

- **DPORT_N**: Destination port in network byte order

|

||||

- **RAND_BYTE**: Random bytes (0-255), length specified with =(length) parameter

|

||||

- **RAND_DIGIT**: Random digits from 0-9, length specified with =(length) parameter

|

||||

- **RAND_ALPHA**: Random mixed-case letters from A-Z, length specified with =(length) parameter

|

||||

- **RAND_ALPHANUM**: Random mixed-case letters from A-Z and digits from 0-9, length specified with =(length) parameter

|

||||

|

||||

### Configuration Files ###

|

||||

|

||||

ZMap supports configuration files instead of requiring all options to be specified on the command-line. A configuration can be created by specifying one long-name option and the value per line such as:

|

||||

|

||||

interface "eth1"

|

||||

source-ip 1.1.1.4-1.1.1.8

|

||||

gateway-mac b4:23:f9:28:fa:2d # upstream gateway

|

||||

cooldown-time 300 # seconds

|

||||

blacklist-file /etc/zmap/blacklist.conf

|

||||

output-file ~/zmap-output

|

||||

quiet

|

||||

summary

|

||||

|

||||

ZMap can then be run with a configuration file and specifying any additional necessary parameters:

|

||||

|

||||

$ zmap --config=~/.zmap.conf --target-port=443

|

||||

|

||||

### Verbosity ###

|

||||

|

||||

There are several types of on-screen output that ZMap produces. By default, ZMap will print out basic progress information similar to the following every 1 second. This can be disabled by setting the `--quiet` flag.

|

||||

|

||||

0:01 12%; send: 10000 done (15.1 Kp/s avg); recv: 144 143 p/s (141 p/s avg); hits: 1.44%

|

||||

|

||||

ZMap also prints out informational messages during scanner configuration such as the following, which can be controlled with the `--verbosity` argument.

|

||||

|

||||

Aug 11 16:16:12.813 [INFO] zmap: started

|

||||

Aug 11 16:16:12.817 [DEBUG] zmap: no interface provided. will use eth0

|

||||

Aug 11 16:17:03.971 [DEBUG] cyclic: primitive root: 3489180582

|

||||

Aug 11 16:17:03.971 [DEBUG] cyclic: starting point: 46588

|

||||

Aug 11 16:17:03.975 [DEBUG] blacklist: 3717595507 addresses allowed to be scanned

|

||||

Aug 11 16:17:03.975 [DEBUG] send: will send from 1 address on 28233 source ports

|

||||

Aug 11 16:17:03.975 [DEBUG] send: using bandwidth 10000000 bits/s, rate set to 14880 pkt/s

|

||||

Aug 11 16:17:03.985 [DEBUG] recv: thread started

|

||||

|

||||

ZMap also supports printing out a grep-able summary at the end of the scan, similar to below, which can be invoked with the `--summary` flag.

|

||||

|

||||

cnf target-port 443

|

||||

cnf source-port-range-begin 32768

|

||||

cnf source-port-range-end 61000

|

||||

cnf source-addr-range-begin 1.1.1.4

|

||||

cnf source-addr-range-end 1.1.1.8

|

||||

cnf maximum-packets 4294967295

|

||||

cnf maximum-runtime 0

|

||||

cnf permutation-seed 0

|

||||

cnf cooldown-period 300

|

||||

cnf send-interface eth1

|

||||

cnf rate 45000

|

||||

env nprocessors 16

|

||||

exc send-start-time Fri Jan 18 01:47:35 2013

|

||||

exc send-end-time Sat Jan 19 00:47:07 2013

|

||||

exc recv-start-time Fri Jan 18 01:47:35 2013

|

||||

exc recv-end-time Sat Jan 19 00:52:07 2013

|

||||

exc sent 3722335150

|

||||

exc blacklisted 572632145

|

||||

exc first-scanned 1318129262

|

||||

exc hit-rate 0.874102

|

||||

exc synack-received-unique 32537000

|

||||

exc synack-received-total 36689941

|

||||

exc synack-cooldown-received-unique 193

|

||||

exc synack-cooldown-received-total 1543

|

||||

exc rst-received-unique 141901021

|

||||

exc rst-received-total 166779002

|

||||

adv source-port-secret 37952

|

||||

adv permutation-gen 4215763218

|

||||

|

||||

### Results Output ###

|

||||

|

||||

ZMap can produce results in several formats through the use of **output modules**. By default, ZMap only supports **csv** output, however support for **redis** and **json** can be compiled in. The results sent to these output modules may be filtered using an **output filter**. The fields the output module writes are specified by the user. By default, ZMap will return results in csv format and if no output file is specified, ZMap will not produce specific results. It is also possible to write your own output module; see Writing Output Modules for information.

|

||||

|

||||

**-o, --output-file=p**

|

||||

|

||||

File to write output to

|

||||

|

||||

**-O, --output-module=p**

|

||||

|

||||

Invoke a custom output module

|

||||

|

||||

|

||||

**-f, --output-fields=p**

|

||||

|

||||

Comma-separated list of fields to output

|

||||

|

||||

**--output-filter=filter**

|

||||

|

||||

Specify an output filter over fields for a given probe

|

||||

|

||||

**--list-output-modules**

|

||||

|

||||

Lists available output modules

|

||||

|

||||

**--list-output-fields**

|

||||

|

||||

List available output fields for a given probe

|

||||

|

||||

#### Output Fields ####

|

||||

|

||||

ZMap has a variety of fields it can output beyond IP address. These fields can be viewed for a given probe module by running with the `--list-output-fields` flag.

|

||||

|

||||

$ zmap --probe-module="tcp_synscan" --list-output-fields

|

||||

saddr string: source IP address of response

|

||||

saddr-raw int: network order integer form of source IP address

|

||||

daddr string: destination IP address of response

|

||||

daddr-raw int: network order integer form of destination IP address

|

||||

ipid int: IP identification number of response

|

||||

ttl int: time-to-live of response packet

|

||||

sport int: TCP source port

|

||||

dport int: TCP destination port

|

||||

seqnum int: TCP sequence number

|

||||

acknum int: TCP acknowledgement number

|

||||

window int: TCP window

|

||||

classification string: packet classification

|

||||

success int: is response considered success

|

||||

repeat int: is response a repeat response from host

|

||||

cooldown int: Was response received during the cooldown period

|

||||

timestamp-str string: timestamp of when response arrived in ISO8601 format.

|

||||

timestamp-ts int: timestamp of when response arrived in seconds since Epoch

|

||||

timestamp-us int: microsecond part of timestamp (e.g. microseconds since 'timestamp-ts')

|

||||

|

||||

To select which fields to output, any combination of the output fields can be specified as a comma-separated list using the `--output-fields=fields` or `-f` flags. Example:

|

||||

|

||||

$ zmap -p 80 -f "response,saddr,daddr,sport,seq,ack,in_cooldown,is_repeat,timestamp" -o output.csv

|

||||

|

||||

#### Filtering Output ####

|

||||

|

||||

Results generated by a probe module can be filtered before being passed to the output module. Filters are defined over the output fields of a probe module. Filters are written in a simple filtering language, similar to SQL, and are passed to ZMap using the **--output-filter** option. Output filters are commonly used to filter out duplicate results, or to only pass only sucessful responses to the output module.

|

||||

|

||||

Filter expressions are of the form `<fieldname> <operation> <value>`. The type of `<value>` must be either a string or unsigned integer literal, and match the type of `<fieldname>`. The valid operations for integer comparisons are `= !=, <, >, <=, >=`. The operations for string comparisons are =, !=. The `--list-output-fields` flag will print what fields and types are available for the selected probe module, and then exit.

|

||||

|

||||

Compound filter expressions may be constructed by combining filter expressions using parenthesis to specify order of operations, the `&&` (logical AND) and `||` (logical OR) operators.

|

||||

|

||||

**Examples**

|

||||

|

||||

Write a filter for only successful, non-duplicate responses

|

||||

|

||||

--output-filter="success = 1 && repeat = 0"

|

||||

|

||||

Filter for packets that have classification RST and a TTL greater than 10, or for packets with classification SYNACK

|

||||

|

||||

--output-filter="(classification = rst && ttl > 10) || classification = synack"

|

||||

|

||||

#### CSV ####

|

||||

|

||||

The csv module will produce a comma-separated value file of the output fields requested. For example, the following command produces the following CSV in a file called `output.csv`.

|

||||

|

||||

$ zmap -p 80 -f "response,saddr,daddr,sport,seq,ack,in_cooldown,is_repeat,timestamp" -o output.csv

|

||||

|

||||

----------

|

||||

|

||||

response, saddr, daddr, sport, dport, seq, ack, in_cooldown, is_repeat, timestamp

|

||||

synack, 159.174.153.144, 10.0.0.9, 80, 40555, 3050964427, 3515084203, 0, 0,2013-08-15 18:55:47.681

|

||||

rst, 141.209.175.1, 10.0.0.9, 80, 40136, 0, 3272553764, 0, 0,2013-08-15 18:55:47.683

|

||||

rst, 72.36.213.231, 10.0.0.9, 80, 56642, 0, 2037447916, 0, 0,2013-08-15 18:55:47.691

|

||||

rst, 148.8.49.150, 10.0.0.9, 80, 41672, 0, 1135824975, 0, 0,2013-08-15 18:55:47.692

|

||||

rst, 50.165.166.206, 10.0.0.9, 80, 38858, 0, 535206863, 0, 0,2013-08-15 18:55:47.694

|

||||

rst, 65.55.203.135, 10.0.0.9, 80, 50008, 0, 4071709905, 0, 0,2013-08-15 18:55:47.700

|

||||

synack, 50.57.166.186, 10.0.0.9, 80, 60650, 2813653162, 993314545, 0, 0,2013-08-15 18:55:47.704

|

||||

synack, 152.75.208.114, 10.0.0.9, 80, 52498, 460383682, 4040786862, 0, 0,2013-08-15 18:55:47.707

|

||||

synack, 23.72.138.74, 10.0.0.9, 80, 33480, 810393698, 486476355, 0, 0,2013-08-15 18:55:47.710

|

||||

|

||||

#### Redis ####

|

||||

|

||||

The redis output module allows addresses to be added to a Redis queue instead of being saved to file which ultimately allows ZMap to be incorporated with post processing tools.

|

||||

|

||||

**Heads Up!** ZMap does not build with Redis support by default. If you are building ZMap from source, you can build with Redis support by running CMake with `-DWITH_REDIS=ON`.

|

||||

|

||||

### Blacklisting and Whitelisting ###

|

||||

|

||||

ZMap supports both blacklisting and whitelisting network prefixes. If ZMap is not provided with blacklist or whitelist parameters, ZMap will scan all IPv4 addresses (including local, reserved, and multicast addresses). If a blacklist file is specified, network prefixes in the blacklisted segments will not be scanned; if a whitelist file is provided, only network prefixes in the whitelist file will be scanned. A whitelist and blacklist file can be used in coordination; the blacklist has priority over the whitelist (e.g. if you have whitelisted 10.0.0.0/8 and blacklisted 10.1.0.0/16, then 10.1.0.0/16 will not be scanned). Whitelist and blacklist files can be specified on the command-line as follows:

|

||||

|

||||

**-b, --blacklist-file=path**

|

||||

|

||||

File of subnets to blacklist in CIDR notation, e.g. 192.168.0.0/16

|

||||

|

||||

**-w, --whitelist-file=path**

|

||||

|

||||

File of subnets to limit scan to in CIDR notation, e.g. 192.168.0.0/16

|

||||

|

||||

Blacklist files should be formatted with a single network prefix in CIDR notation per line. Comments are allowed using the `#` character. Example:

|

||||

|

||||

# From IANA IPv4 Special-Purpose Address Registry

|

||||

# http://www.iana.org/assignments/iana-ipv4-special-registry/iana-ipv4-special-registry.xhtml

|

||||

# Updated 2013-05-22

|

||||

|

||||

0.0.0.0/8 # RFC1122: "This host on this network"

|

||||

10.0.0.0/8 # RFC1918: Private-Use

|

||||

100.64.0.0/10 # RFC6598: Shared Address Space

|

||||

127.0.0.0/8 # RFC1122: Loopback

|

||||

169.254.0.0/16 # RFC3927: Link Local

|

||||

172.16.0.0/12 # RFC1918: Private-Use

|

||||

192.0.0.0/24 # RFC6890: IETF Protocol Assignments

|

||||

192.0.2.0/24 # RFC5737: Documentation (TEST-NET-1)

|

||||

192.88.99.0/24 # RFC3068: 6to4 Relay Anycast

|

||||

192.168.0.0/16 # RFC1918: Private-Use

|

||||

192.18.0.0/15 # RFC2544: Benchmarking

|

||||

198.51.100.0/24 # RFC5737: Documentation (TEST-NET-2)

|

||||

203.0.113.0/24 # RFC5737: Documentation (TEST-NET-3)

|

||||

240.0.0.0/4 # RFC1112: Reserved

|

||||

255.255.255.255/32 # RFC0919: Limited Broadcast

|

||||

|

||||

# From IANA Multicast Address Space Registry

|

||||

# http://www.iana.org/assignments/multicast-addresses/multicast-addresses.xhtml

|

||||

# Updated 2013-06-25

|

||||

|

||||

224.0.0.0/4 # RFC5771: Multicast/Reserved

|

||||

|

||||

If you are looking to scan only a random portion of the internet, checkout Sampling, instead of using whitelisting and blacklisting.

|

||||

|

||||

**Heads Up!** The default ZMap configuration uses the blacklist file at `/etc/zmap/blacklist.conf`, which contains locally scoped address space and reserved IP ranges. The default configuration can be changed by editing `/etc/zmap/zmap.conf`.

|

||||

|

||||

### Rate Limiting and Sampling ###

|

||||

|

||||

By default, ZMap will scan at the fastest rate that your network adaptor supports. In our experiences on commodity hardware, this is generally around 95-98% of the theoretical speed of gigabit Ethernet, which may be faster than your upstream provider can handle. ZMap will not automatically adjust its send-rate based on your upstream provider. You may need to manually adjust your send-rate to reduce packet drops and incorrect results.

|

||||

|

||||

**-r, --rate=pps**

|

||||

|

||||

Set maximum send rate in packets/sec

|

||||

|

||||

**-B, --bandwidth=bps**

|

||||

|

||||

Set send rate in bits/sec (supports suffixes G, M, and K). This overrides the --rate flag.

|

||||

|

||||

ZMap also allows random sampling of the IPv4 address space by specifying max-targets and/or max-runtime. Because hosts are scanned in a random permutation generated per scan instantiation, limiting a scan to n hosts will perform a random sampling of n hosts. Command-line options:

|

||||

|

||||

**-n, --max-targets=n**

|

||||

|

||||

Cap number of targets to probe

|

||||

|

||||

**-N, --max-results=n**

|

||||

|

||||

Cap number of results (exit after receiving this many positive results)

|

||||

|

||||

**-t, --max-runtime=s**

|

||||

|

||||

Cap length of time for sending packets (in seconds)

|

||||

|

||||

**-s, --seed=n**

|

||||

|

||||

Seed used to select address permutation. Specify the same seed in order to scan addresses in the same order for different ZMap runs.

|

||||

|

||||

For example, if you wanted to scan the same one million hosts on the Internet for multiple scans, you could set a predetermined seed and cap the number of scanned hosts similar to the following:

|

||||

|

||||

zmap -p 443 -s 3 -n 1000000 -o results

|

||||

|

||||

In order to determine which one million hosts were going to be scanned, you could run the scan in dry-run mode which will print out the packets that would be sent instead of performing the actual scan.

|

||||

|

||||

zmap -p 443 -s 3 -n 1000000 --dryrun | grep daddr

|

||||

| awk -F'daddr: ' '{print $2}' | sed 's/ |.*//;'

|

||||

|

||||

### Sending Multiple Packets ###

|

||||

|

||||

ZMap supports sending multiple probes to each host. Increasing this number both increases scan time and hosts reached. However, we find that the increase in scan time (~100% per additional probe) greatly outweighs the increase in hosts reached (~1% per additional probe).

|

||||

|

||||

**-P, --probes=n**

|

||||

|

||||

The number of unique probes to send to each IP (default=1)

|

||||

|

||||

----------

|

||||

|

||||

### Sample Applications ###

|

||||

|

||||

ZMap is designed for initiating contact with a large number of hosts and finding ones that respond positively. However, we realize that many users will want to perform follow-up processing, such as performing an application level handshake. For example, users who perform a TCP SYN scan on port 80 might want to perform a simple GET request and users who scan port 443 may be interested in completing a TLS handshake.

|

||||

|

||||

#### Banner Grab ####

|

||||

|

||||

We have included a sample application, banner-grab, with ZMap that enables users to receive messages from listening TCP servers. Banner-grab connects to the provided servers, optionally sends a message, and prints out the first message received from the server. This tool can be used to fetch banners such as HTTP server responses to specific commands, telnet login prompts, or SSH server strings.

|

||||

|

||||

This example finds 1000 servers listening on port 80, and sends a simple GET request to each, storing their base-64 encoded responses in http-banners.out

|

||||

|

||||

$ zmap -p 80 -N 1000 -B 10M -o - | ./banner-grab-tcp -p 80 -c 500 -d ./http-req > out

|

||||

|

||||

For more details on using `banner-grab`, see the README file in `examples/banner-grab`.

|

||||

|

||||

**Heads Up!** ZMap and banner-grab can have significant performance and accuracy impact on one another if run simultaneously (as in the example). Make sure not to let ZMap saturate banner-grab-tcp's concurrent connections, otherwise banner-grab will fall behind reading stdin, causing ZMap to block on writing stdout. We recommend using a slower scanning rate with ZMap, and increasing the concurrency of banner-grab-tcp to no more than 3000 (Note that > 1000 concurrent connections requires you to use `ulimit -SHn 100000` and `ulimit -HHn 100000` to increase the maximum file descriptors per process). These parameters will of course be dependent on your server performance, and hit-rate; we encourage developers to experiment with small samples before running a large scan.

|

||||

|

||||

#### Forge Socket ####

|

||||

|

||||

We have also included a form of banner-grab, called forge-socket, that reuses the SYN-ACK sent from the server for the connection that ultimately fetches the banner. In `banner-grab-tcp`, ZMap sends a SYN to each server, and listening servers respond with a SYN+ACK. The ZMap host's kernel receives this, and sends a RST, as no active connection is associated with that packet. The banner-grab program must then create a new TCP connection to the same server to fetch data from it.

|

||||

|

||||

In forge-socket, we utilize a kernel module by the same name, that allows us to create a connection with arbitrary TCP parameters. This enables us to suppress the kernel's RST packet, and instead create a socket that will reuse the SYN+ACK's parameters, and send and receive data through this socket as we would any normally connected socket.

|

||||

|

||||

To use forge-socket, you will need the forge-socket kernel module, available from [github][1]. You should git clone `git@github.com:ewust/forge_socket.git` in the ZMap root source directory, and then cd into the forge_socket directory, and run make. Install the kernel module with `insmod forge_socket.ko` as root.

|

||||

|

||||

You must also tell the kernel not to send RST packets. An easy way to disable RST packets system wide is to use **iptables**. `iptables -A OUTPUT -p tcp -m tcp --tcp-flgas RST,RST RST,RST -j DROP` as root will do this, though you may also add an optional --dport X to limit this to the port (X) you are scanning. To remove this after your scan completes, you can run `iptables -D OUTPUT -p tcp -m tcp --tcp-flags RST,RST RST,RST -j DROP` as root.

|

||||

|

||||

Now you should be able to build the forge-socket ZMap example program. To run it, you must use the **extended_file** ZMap output module:

|

||||

|

||||

$ zmap -p 80 -N 1000 -B 10M -O extended_file -o - | \

|

||||

./forge-socket -c 500 -d ./http-req > ./http-banners.out

|

||||

|

||||

See the README in `examples/forge-socket` for more details.

|

||||

|

||||

----------

|

||||

|

||||

### Writing Probe and Output Modules ###

|

||||

|

||||

ZMap can be extended to support different types of scanning through **probe modules** and additional types of results **output through** output modules. Registered probe and output modules can be listed through the command-line interface:

|

||||

|

||||

**--list-probe-modules**

|

||||

|

||||

Lists installed probe modules

|

||||

|

||||

**--list-output-modules**

|

||||

|

||||

Lists installed output modules

|

||||

|

||||

#### Output Modules ####

|

||||

|

||||

ZMap output and post-processing can be extended by implementing and registering **output modules** with the scanner. Output modules receive a callback for every received response packet. While the default provided modules provide simple output, these modules are also capable of performing additional post-processing (e.g. tracking duplicates or outputting numbers in terms of AS instead of IP address)

|

||||

|

||||

Output modules are created by defining a new output_module struct and registering it in [output_modules.c][2]:

|

||||

|

||||

typedef struct output_module {

|

||||

const char *name; // how is output module referenced in the CLI

|

||||

unsigned update_interval; // how often is update called in seconds

|

||||

|

||||

output_init_cb init; // called at scanner initialization

|

||||

output_update_cb start; // called at the beginning of scanner

|

||||

output_update_cb update; // called every update_interval seconds

|

||||

output_update_cb close; // called at scanner termination

|

||||

|

||||

output_packet_cb process_ip; // called when a response is received

|

||||

|

||||

const char *helptext; // Printed when --list-output-modules is called

|

||||

|

||||

} output_module_t;

|

||||

|

||||

Output modules must have a name, which is how they are referenced on the command-line and generally implement `success_ip` and oftentimes `other_ip` callback. The process_ip callback is called for every response packet that is received and passed through the output filter by the current **probe module**. The response may or may not be considered a success (e.g. it could be a TCP RST). These callbacks must define functions that match the `output_packet_cb` definition:

|

||||

|

||||

int (*output_packet_cb) (

|

||||

|

||||

ipaddr_n_t saddr, // IP address of scanned host in network-order

|

||||

ipaddr_n_t daddr, // destination IP address in network-order

|

||||

|

||||

const char* response_type, // send-module classification of packet

|

||||

|

||||

int is_repeat, // {0: first response from host, 1: subsequent responses}

|

||||

int in_cooldown, // {0: not in cooldown state, 1: scanner in cooldown state}

|

||||

|

||||

const u_char* packet, // pointer to struct iphdr of IP packet

|

||||

size_t packet_len // length of packet in bytes

|

||||

);

|

||||

|

||||

An output module can also register callbacks to be executed at scanner initialization (tasks such as opening an output file), start of the scan (tasks such as documenting blacklisted addresses), during regular intervals during the scan (tasks such as progress updates), and close (tasks such as closing any open file descriptors). These callbacks are provided with complete access to the scan configuration and current state:

|

||||

|

||||

int (*output_update_cb)(struct state_conf*, struct state_send*, struct state_recv*);

|

||||

|

||||

which are defined in [output_modules.h][3]. An example is available at [src/output_modules/module_csv.c][4].

|

||||

|

||||

#### Probe Modules ####

|

||||

|

||||