mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-09 01:30:10 +08:00

commit

fc5a359aac

125

published/20180107 7 leadership rules for the DevOps age.md

Normal file

125

published/20180107 7 leadership rules for the DevOps age.md

Normal file

@ -0,0 +1,125 @@

|

||||

DevOps 时代的 7 个领导力准则

|

||||

======

|

||||

|

||||

> DevOps 是一种持续性的改变和提高:那么也准备改变你所珍视的领导力准则吧。

|

||||

|

||||

|

||||

|

||||

如果 [DevOps] 最终更多的是一种文化而非某种技术或者平台,那么请记住:它没有终点线。而是一种持续性的改变和提高——而且最高管理层并不及格。

|

||||

|

||||

然而,如果期望 DevOps 能够帮助获得更多的成果,领导者需要[修订他们的一些传统的方法][2]。让我们考虑 7 个在 DevOps 时代更有效的 IT 领导的想法。

|

||||

|

||||

### 1、 向失败说“是的”

|

||||

|

||||

“失败”这个词在 IT 领域中一直包含着非常具体的意义,而且通常是糟糕的意思:服务器失败、备份失败、硬盘驱动器失败——你的印象就是如此。

|

||||

|

||||

然而一个健康的 DevOps 文化取决于如何重新定义失败——IT 领导者应该在他们的字典里重新定义这个单词,使这个词的含义和“机会”对等起来。

|

||||

|

||||

“在 DevOps 之前,我们曾有一种惩罚失败者的文化,”[Datical][3] 的首席技术官兼联合创始人罗伯特·里夫斯说,“我们学到的仅仅是去避免错误。在 IT 领域避免错误的首要措施就是不要去改变任何东西:不要加速版本迭代的日程,不要迁移到云中,不要去做任何不同的事”

|

||||

|

||||

那是一个旧时代的剧本,里夫斯坦诚的说,它已经不起作用了,事实上,那种停滞实际上是失败。

|

||||

|

||||

“那些缓慢的发布周期并逃避云的公司被恐惧所麻痹——他们将会走向失败,”里夫斯说道。“IT 领导者必须拥抱失败,并把它当做成一个机遇。人们不仅仅从他们的过错中学习,也会从别人的错误中学习。开放和[安全心理][4]的文化促进学习和提高。”

|

||||

|

||||

**[相关文章:[为什么敏捷领导者谈论“失败”必须超越它本义][5]]**

|

||||

|

||||

### 2、 在管理层渗透开发运营的理念

|

||||

|

||||

尽管 DevOps 文化可以在各个方向有机的发展,那些正在从单体、孤立的 IT 实践中转变出来的公司,以及可能遭遇逆风的公司——需要高管层的全面支持。如果缺少了它,你就会传达模糊的信息,而且可能会鼓励那些宁愿被推着走的人,但这是我们一贯的做事方式。[改变文化是困难的][6];人们需要看到高管层完全投入进去并且知道改变已经实际发生了。

|

||||

|

||||

“高层管理必须全力支持 DevOps,才能成功的实现收益”,来自 [Rainforest QA][7] 的首席信息官德里克·蔡说道。

|

||||

|

||||

成为一个 DevOps 商店。德里克指出,涉及到公司的一切,从技术团队到工具到进程到规则和责任。

|

||||

|

||||

“没有高层管理的统一赞助支持,DevOps 的实施将很难成功,”德里克说道,“因此,在转变到 DevOps 之前在高层中保持一致是很重要的。”

|

||||

|

||||

### 3、 不要只是声明 “DevOps”——要明确它

|

||||

|

||||

即使 IT 公司也已经开始张开双臂拥抱 DevOps,也可能不是每个人都在同一个步调上。

|

||||

|

||||

**[参考我们的相关文章,[3 阐明了DevOps和首席技术官们必须在同一进程上][8]]**

|

||||

|

||||

造成这种脱节的一个根本原因是:人们对这个术语的有着不同的定义理解。

|

||||

|

||||

“DevOps 对不同的人可能意味着不同的含义,”德里克解释道,“对高管层和副总裁层来说,要执行明确的 DevOps 的目标,清楚地声明期望的成果,充分理解带来的成果将如何使公司的商业受益,并且能够衡量和报告成功的过程。”

|

||||

|

||||

事实上,在基线定义和远景之外,DevOps 要求正在进行频繁的交流,不是仅仅在小团队里,而是要贯穿到整个组织。IT 领导者必须将它设置为优先。

|

||||

|

||||

“不可避免的,将会有些阻碍,在商业中将会存在失败和破坏,”德里克说道,“领导者们需要清楚的将这个过程向公司的其他人阐述清楚,告诉他们他们作为这个过程的一份子能够期待的结果。”

|

||||

|

||||

### 4、 DevOps 对于商业和技术同样重要

|

||||

|

||||

IT 领导者们成功的将 DevOps 商店的这种文化和实践当做一项商业策略,以及构建和运营软件的方法。DevOps 是将 IT 从支持部门转向战略部门的推动力。

|

||||

|

||||

IT 领导者们必须转变他们的思想和方法,从成本和服务中心转变到驱动商业成果,而且 DevOps 的文化能够通过自动化和强大的协作加速这些成果,来自 [CYBRIC][9] 的首席技术官和联合创始人迈克·凯尔说道。

|

||||

|

||||

事实上,这是一个强烈的趋势,贯穿这些新“规则”,在 DevOps 时代走在前沿。

|

||||

|

||||

“促进创新并且鼓励团队成员去聪明的冒险是 DevOps 文化的一个关键部分,IT 领导者们需要在一个持续的基础上清楚的和他们交流”,凯尔说道。

|

||||

|

||||

“一个高效的 IT 领导者需要比以往任何时候都要积极的参与到业务中去,”来自 [West Monroe Partners][10] 的性能服务部门的主任埃文说道,“每年或季度回顾的日子一去不复返了——[你需要欢迎每两周一次的挤压整理][11],你需要有在年度水平上的思考战略能力,在冲刺阶段的互动能力,在商业期望满足时将会被给予一定的奖励。”

|

||||

|

||||

### 5、 改变妨碍 DevOps 目标的任何事情

|

||||

|

||||

虽然 DevOps 的老兵们普遍认为 DevOps 更多的是一种文化而不是技术,成功取决于通过正确的过程和工具激活文化。当你声称自己的部门是一个 DevOps 商店却拒绝对进程或技术做必要的改变,这就是你买了辆法拉利却使用了用了 20 年的引擎,每次转动钥匙都会冒烟。

|

||||

|

||||

展览 A: [自动化][12]。这是 DevOps 成功的重要并行策略。

|

||||

|

||||

“IT 领导者需要重点强调自动化,”卡伦德说,“这将是 DevOps 的前期投资,但是如果没有它,DevOps 将会很容易被低效吞噬,而且将会无法完整交付。”

|

||||

|

||||

自动化是基石,但改变不止于此。

|

||||

|

||||

“领导者们需要推动自动化、监控和持续交付过程。这意着对现有的实践、过程、团队架构以及规则的很多改变,” 德里克说。“领导者们需要改变一切会阻碍团队去实现完全自动化的因素。”

|

||||

|

||||

### 6、 重新思考团队架构和能力指标

|

||||

|

||||

当你想改变时……如果你桌面上的组织结构图和你过去大部分时候嵌入的名字都是一样的,那么你是时候该考虑改革了。

|

||||

|

||||

“在这个 DevOps 的新时代文化中,IT 执行者需要采取一个全新的方法来组织架构。”凯尔说,“消除组织的边界限制,它会阻碍团队间的合作,允许团队自我组织、敏捷管理。”

|

||||

|

||||

凯尔告诉我们在 DevOps 时代,这种反思也应该拓展应用到其他领域,包括你怎样衡量个人或者团队的成功,甚至是你和人们的互动。

|

||||

|

||||

“根据业务成果和总体的积极影响来衡量主动性,”凯尔建议。“最后,我认为管理中最重要的一个方面是:有同理心。”

|

||||

|

||||

注意很容易收集的到测量值不是 DevOps 真正的指标,[Red Hat] 的技术专家戈登·哈夫写到,“DevOps 应该把指标以某种形式和商业成果绑定在一起”,他指出,“你可能并不真正在乎开发者些了多少代码,是否有一台服务器在深夜硬件损坏,或者是你的测试是多么的全面。你甚至都不直接关注你的网站的响应情况或者是你更新的速度。但是你要注意的是这些指标可能和顾客放弃购物车去竞争对手那里有关,”参考他的文章,[DevOps 指标:你在测量什么?]

|

||||

|

||||

### 7、 丢弃传统的智慧

|

||||

|

||||

如果 DevOps 时代要求关于 IT 领导能力的新的思考方式,那么也就意味着一些旧的方法要被淘汰。但是是哪些呢?

|

||||

|

||||

“说实话,是全部”,凯尔说道,“要摆脱‘因为我们一直都是以这种方法做事的’的心态。过渡到 DevOps 文化是一种彻底的思维模式的转变,不是对瀑布式的过去和变革委员会的一些细微改变。”

|

||||

|

||||

事实上,IT 领导者们认识到真正的变革要求的不只是对旧方法的小小接触。它更多的是要求对之前的进程或者策略的一个重新启动。

|

||||

|

||||

West Monroe Partners 的卡伦德分享了一个阻碍 DevOps 的领导力的例子:未能拥抱 IT 混合模型和现代的基础架构比如说容器和微服务。

|

||||

|

||||

“我所看到的一个大的规则就是架构整合,或者认为在一个同质的环境下长期的维护会更便宜,”卡伦德说。

|

||||

|

||||

**领导者们,想要更多像这样的智慧吗?[注册我们的每周邮件新闻报道][15]。**

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://enterprisersproject.com/article/2018/1/7-leadership-rules-devops-age

|

||||

|

||||

作者:[Kevin Casey][a]

|

||||

译者:[FelixYFZ](https://github.com/FelixYFZ)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://enterprisersproject.com/user/kevin-casey

|

||||

[1]:https://enterprisersproject.com/tags/devops

|

||||

[2]:https://enterprisersproject.com/article/2017/7/devops-requires-dumping-old-it-leadership-ideas

|

||||

[3]:https://www.datical.com/

|

||||

[4]:https://rework.withgoogle.com/guides/understanding-team-effectiveness/steps/foster-psychological-safety/

|

||||

[5]:https://enterprisersproject.com/article/2017/10/why-agile-leaders-must-move-beyond-talking-about-failure?sc_cid=70160000000h0aXAAQ

|

||||

[6]:https://enterprisersproject.com/article/2017/10/how-beat-fear-and-loathing-it-change

|

||||

[7]:https://www.rainforestqa.com/

|

||||

[8]:https://enterprisersproject.com/article/2018/1/3-areas-where-devops-and-cios-must-get-same-page

|

||||

[9]:https://www.cybric.io/

|

||||

[10]:http://www.westmonroepartners.com/

|

||||

[11]:https://www.scrumalliance.org/community/articles/2017/february/product-backlog-grooming

|

||||

[12]:https://www.redhat.com/en/topics/automation?intcmp=701f2000000tjyaAAA

|

||||

[13]:https://www.redhat.com/en?intcmp=701f2000000tjyaAAA

|

||||

[14]:https://enterprisersproject.com/article/2017/7/devops-metrics-are-you-measuring-what-matters

|

||||

[15]:https://enterprisersproject.com/email-newsletter?intcmp=701f2000000tsjPAAQ

|

||||

@ -1,5 +1,5 @@

|

||||

如何使用 Android Things 和 TensorFlow 在物联网上应用机器学习

|

||||

============================================================

|

||||

=============================

|

||||

|

||||

|

||||

|

||||

@ -3,14 +3,11 @@

|

||||

|

||||

> 通过使用 `/etc/passwd` 文件,`getent` 命令,`compgen` 命令这三种方法查看系统中用户的信息

|

||||

|

||||

----------------

|

||||

大家都知道,Linux 系统中用户信息存放在 `/etc/passwd` 文件中。

|

||||

|

||||

这是一个包含每个用户基本信息的文本文件。

|

||||

这是一个包含每个用户基本信息的文本文件。当我们在系统中创建一个用户,新用户的详细信息就会被添加到这个文件中。

|

||||

|

||||

当我们在系统中创建一个用户,新用户的详细信息就会被添加到这个文件中。

|

||||

|

||||

`/etc/passwd` 文件将每个用户的基本信息记录为文件中的一行,一行中包含7个字段。

|

||||

`/etc/passwd` 文件将每个用户的基本信息记录为文件中的一行,一行中包含 7 个字段。

|

||||

|

||||

`/etc/passwd` 文件的一行代表一个单独的用户。该文件将用户的信息分为 3 个部分。

|

||||

|

||||

@ -18,11 +15,6 @@

|

||||

* 第 2 部分:系统定义的账号信息

|

||||

* 第 3 部分:真实用户的账户信息

|

||||

|

||||

** 建议阅读 : **

|

||||

**( # )** [ 如何在 Linux 上查看创建用户的日期 ][1]

|

||||

**( # )** [ 如何在 Linux 上查看 A 用户所属的群组 ][2]

|

||||

**( # )** [ 如何强制用户在下一次登录 Linux 系统时修改密码 ][3]

|

||||

|

||||

第一部分是 `root` 账户,这代表管理员账户,对系统的每个方面都有完全的权力。

|

||||

|

||||

第二部分是系统定义的群组和账户,这些群组和账号是正确安装和更新系统软件所必需的。

|

||||

@ -31,18 +23,24 @@

|

||||

|

||||

在创建新用户时,将修改以下 4 个文件。

|

||||

|

||||

* `/etc/passwd:` 用户账户的详细信息在此文件中更新。

|

||||

* `/etc/shadow:` 用户账户密码在此文件中更新。

|

||||

* `/etc/group:` 新用户群组的详细信息在此文件中更新。

|

||||

* `/etc/gshadow:` 新用户群组密码在此文件中更新。

|

||||

* `/etc/passwd`: 用户账户的详细信息在此文件中更新。

|

||||

* `/etc/shadow`: 用户账户密码在此文件中更新。

|

||||

* `/etc/group`: 新用户群组的详细信息在此文件中更新。

|

||||

* `/etc/gshadow`: 新用户群组密码在此文件中更新。

|

||||

|

||||

** 建议阅读 : **

|

||||

|

||||

- [ 如何在 Linux 上查看创建用户的日期 ][1]

|

||||

- [ 如何在 Linux 上查看 A 用户所属的群组 ][2]

|

||||

- [ 如何强制用户在下一次登录 Linux 系统时修改密码 ][3]

|

||||

|

||||

### 方法 1 :使用 `/etc/passwd` 文件

|

||||

|

||||

使用任何一个像 `cat`,`more`,`less` 等文件操作命令来打印 Linux 系统上创建的用户列表。

|

||||

使用任何一个像 `cat`、`more`、`less` 等文件操作命令来打印 Linux 系统上创建的用户列表。

|

||||

|

||||

`/etc/passwd` 是一个文本文件,其中包含了登录 Linux 系统所必需的每个用户的信息。它保存用户的有用信息,如用户名,密码,用户 ID,群组 ID,用户 ID 信息,用户的家目录和 Shell 。

|

||||

`/etc/passwd` 是一个文本文件,其中包含了登录 Linux 系统所必需的每个用户的信息。它保存用户的有用信息,如用户名、密码、用户 ID、群组 ID、用户 ID 信息、用户的家目录和 Shell 。

|

||||

|

||||

`/etc/passwd` 文件将每个用户的详细信息写为一行,其中包含七个字段,每个字段之间用冒号 “ :” 分隔:

|

||||

`/etc/passwd` 文件将每个用户的详细信息写为一行,其中包含七个字段,每个字段之间用冒号 `:` 分隔:

|

||||

|

||||

```

|

||||

# cat /etc/passwd

|

||||

@ -72,15 +70,15 @@ mageshm:x:506:507:2g Admin - Magesh M:/home/mageshm:/bin/bash

|

||||

```

|

||||

7 个字段的详细信息如下。

|

||||

|

||||

* **`Username ( magesh ):`** 已创建用户的用户名,字符长度 1 个到 12 个字符。

|

||||

* **`Password ( x ):`** 代表加密密码保存在 `/etc/shadow 文件中。

|

||||

* **`User ID ( UID-506 ):`** 代表用户 ID ,每个用户都要有一个唯一的 ID 。UID 号为 0 的是为 `root` 用户保留的,UID 号 1 到 99 是为系统用户保留的,UID 号100-999 是为系统账户和群组保留的。

|

||||

* **`Group ID ( GID-507 ):`** 代表组 ID ,每个群组都要有一个唯一的 GID ,保存在 `/etc/group` 文件中。

|

||||

* **`User ID Info ( 2g Admin - Magesh M):`** 代表命名字段,可以用来描述用户的信息。

|

||||

* **`Home Directory ( /home/mageshm ):`** 代表用户的家目录。

|

||||

* **`shell ( /bin/bash ):`** 代表用户使用的 shell种类。

|

||||

* **用户名** (`magesh`): 已创建用户的用户名,字符长度 1 个到 12 个字符。

|

||||

* **密码**(`x`):代表加密密码保存在 `/etc/shadow 文件中。

|

||||

* **用户 ID(`506`):代表用户的 ID 号,每个用户都要有一个唯一的 ID 。UID 号为 0 的是为 `root` 用户保留的,UID 号 1 到 99 是为系统用户保留的,UID 号 100-999 是为系统账户和群组保留的。

|

||||

* **群组 ID (`507`):代表群组的 ID 号,每个群组都要有一个唯一的 GID ,保存在 `/etc/group` 文件中。

|

||||

* **用户信息(`2g Admin - Magesh M`):代表描述字段,可以用来描述用户的信息(LCTT 译注:此处原文疑有误)。

|

||||

* **家目录(`/home/mageshm`):代表用户的家目录。

|

||||

* **Shell(`/bin/bash`):代表用户使用的 shell 类型。

|

||||

|

||||

你可以使用 **`awk`** 或 **`cut`** 命令仅打印出 Linux 系统中所有用户的用户名列表。显示的结果是相同的。

|

||||

你可以使用 `awk` 或 `cut` 命令仅打印出 Linux 系统中所有用户的用户名列表。显示的结果是相同的。

|

||||

|

||||

```

|

||||

# awk -F':' '{ print $1}' /etc/passwd

|

||||

@ -108,11 +106,10 @@ rpc

|

||||

2daygeek

|

||||

named

|

||||

mageshm

|

||||

|

||||

```

|

||||

### 方法 2 :使用 `getent` 命令

|

||||

|

||||

`getent` 命令显示 `Name Service Switch` 库支持的数据库中的条目。这些库的配置文件为 `/etc/nsswitch.conf`。

|

||||

`getent` 命令显示 Name Service Switch 库支持的数据库中的条目。这些库的配置文件为 `/etc/nsswitch.conf`。

|

||||

|

||||

`getent` 命令显示类似于 `/etc/passwd` 文件的用户详细信息,它将每个用户详细信息显示为包含七个字段的单行。

|

||||

|

||||

@ -140,49 +137,11 @@ rpc:x:32:32:Rpcbind Daemon:/var/cache/rpcbind:/sbin/nologin

|

||||

2daygeek:x:503:504::/home/2daygeek:/bin/bash

|

||||

named:x:25:25:Named:/var/named:/sbin/nologin

|

||||

mageshm:x:506:507:2g Admin - Magesh M:/home/mageshm:/bin/bash

|

||||

|

||||

```

|

||||

|

||||

7 个字段的详细信息如下。

|

||||

7 个字段的详细信息如上所述。(LCTT 译注:此处内容重复,删节)

|

||||

|

||||

* **`Username ( magesh ):`** 已创建用户的用户名,字符长度 1 个到 12 个字符。

|

||||

* **`Password ( x ):` 代表加密密码保存在 `/etc/shadow 文件中。

|

||||

* **`User ID ( UID-506 ):`** 代表用户 ID ,每个用户都要有一个唯一的 ID 。UID 号为 0 的是为 `root` 用户保留的,UID 号 1 到 99 是为系统用户保留的,UID 号100-999 是为系统账户和群组保留的。

|

||||

* **`Group ID ( GID-507 ):`** 代表组 ID ,每个群组都要有一个唯一的 GID ,保存在 `/etc/group` 文件中。

|

||||

* **`User ID Info ( 2g Admin - Magesh M):`** 代表命名字段,可以用来描述用户的信息。

|

||||

* **`Home Directory ( /home/mageshm ):`** 代表用户的家目录。

|

||||

* **`shell ( /bin/bash ):`** 代表用户使用的 shell种类。

|

||||

|

||||

你可以使用 **`awk`** 或 **`cut`** 命令仅打印出 Linux 系统中所有用户的用户名列表。显示的结果是相同的。

|

||||

|

||||

```

|

||||

# getent passwd | awk -F':' '{ print $1}'

|

||||

or

|

||||

# getent passwd | cut -d: -f1

|

||||

root

|

||||

bin

|

||||

daemon

|

||||

adm

|

||||

lp

|

||||

sync

|

||||

shutdown

|

||||

halt

|

||||

mail

|

||||

ftp

|

||||

postfix

|

||||

sshd

|

||||

tcpdump

|

||||

2gadmin

|

||||

apache

|

||||

zabbix

|

||||

mysql

|

||||

zend

|

||||

rpc

|

||||

2daygeek

|

||||

named

|

||||

mageshm

|

||||

|

||||

```

|

||||

你同样可以使用 `awk` 或 `cut` 命令仅打印出 Linux 系统中所有用户的用户名列表。显示的结果是相同的。

|

||||

|

||||

### 方法 3 :使用 `compgen` 命令

|

||||

|

||||

@ -212,7 +171,6 @@ rpc

|

||||

2daygeek

|

||||

named

|

||||

mageshm

|

||||

|

||||

```

|

||||

|

||||

------------------------

|

||||

@ -222,7 +180,7 @@ via: https://www.2daygeek.com/3-methods-to-list-all-the-users-in-linux-system/

|

||||

作者:[Magesh Maruthamuthu][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[SunWave](https://github.com/SunWave)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,21 +1,23 @@

|

||||

使用 Handbrake 转换视频

|

||||

======

|

||||

|

||||

> 这个开源工具可以很简单地将老视频转换为新格式。

|

||||

|

||||

|

||||

|

||||

最近,当我的儿子让我数字化他的高中篮球比赛的一些旧 DVD 时,我立刻知道我会使用 [Handbrake][1]。它是一个开源软件包,可轻松将视频转换为可在 MacOS、Windows、Linux、iOS、Android 和其他平台上播放的格式所需的所有工具。

|

||||

|

||||

Handbrake 是开源的,并在[ GPLv2 许可证][2]下分发。它很容易在 MacOS、Windows 和 Linux 包括 [Fedora][3] 和 [Ubuntu][4] 上安装。在 Linux 中,安装后就可以从命令行使用 `$ handbrake` 或从图形用户界面中选择它。(在我的例子中,是 GNOME 3)

|

||||

最近,当我的儿子让我数字化他的高中篮球比赛的一些旧 DVD 时,我马上就想到了 [Handbrake][1]。它是一个开源软件包,可轻松将视频转换为可在 MacOS、Windows、Linux、iOS、Android 和其他平台上播放的格式所需的所有工具。

|

||||

|

||||

Handbrake 是开源的,并在 [GPLv2 许可证][2]下分发。它很容易在 MacOS、Windows 和 Linux 包括 [Fedora][3] 和 [Ubuntu][4] 上安装。在 Linux 中,安装后就可以从命令行使用 `$ handbrake` 或从图形用户界面中选择它。(我的情况是 GNOME 3)

|

||||

|

||||

|

||||

|

||||

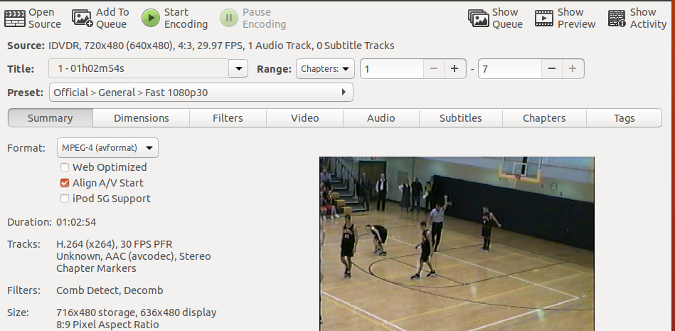

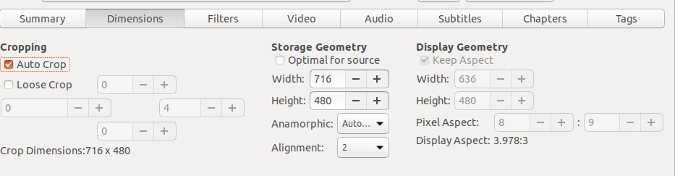

Handbrake 的菜单系统易于使用。单击 **Open Source** 选择要转换的视频源。对于我儿子的篮球视频,它是我的 Linux 笔记本中的 DVD 驱动器。将 DVD 插入驱动器后,软件会识别磁盘的内容。

|

||||

Handbrake 的菜单系统易于使用。单击 “Open Source” 选择要转换的视频源。对于我儿子的篮球视频,它是我的 Linux 笔记本中的 DVD 驱动器。将 DVD 插入驱动器后,软件会识别磁盘的内容。

|

||||

|

||||

|

||||

|

||||

正如你在上面截图中的 Source 旁边看到的那样,Handbrake 将其识别为 720x480 的 DVD,宽高比为 4:3,以每秒 29.97 帧的速度录制,有一个音轨。该软件还能预览视频。

|

||||

正如你在上面截图中的 “Source” 旁边看到的那样,Handbrake 将其识别为 720x480 的 DVD,宽高比为 4:3,以每秒 29.97 帧的速度录制,有一个音轨。该软件还能预览视频。

|

||||

|

||||

如果默认转换设置可以接受,只需按下 **Start Encoding** 按钮(一段时间后,根据处理器的速度),DVD 的内容将被转换并以默认格式 [M4V][5] 保存(可以改变)。

|

||||

如果默认转换设置可以接受,只需按下 “Start Encoding” 按钮(一段时间后,根据处理器的速度),DVD 的内容将被转换并以默认格式 [M4V][5] 保存(可以改变)。

|

||||

|

||||

如果你不喜欢文件名,很容易改变它。

|

||||

|

||||

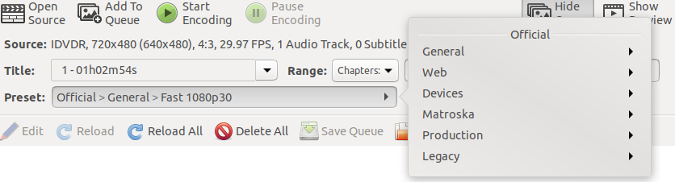

@ -25,7 +27,7 @@ Handbrake 有各种格式、大小和配置的输出选项。例如,它可以

|

||||

|

||||

|

||||

|

||||

你可以在 ”Dimensions“ 选项卡中更改视频输出大小。其他选项卡允许你应用过滤器、更改视频质量和编码、添加或修改音轨,包括字幕和修改章节。“Tags” 选项卡可让你识别输出视频文件中的作者、演员、导演、发布日期等。

|

||||

你可以在 “Dimensions” 选项卡中更改视频输出大小。其他选项卡允许你应用过滤器、更改视频质量和编码、添加或修改音轨,包括字幕和修改章节。“Tags” 选项卡可让你识别输出视频文件中的作者、演员、导演、发布日期等。

|

||||

|

||||

|

||||

|

||||

@ -44,7 +46,7 @@ via: https://opensource.com/article/18/7/handbrake

|

||||

作者:[Don Watkins][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,3 +1,5 @@

|

||||

translating by ynmlml

|

||||

|

||||

Write Dumb Code

|

||||

======

|

||||

The best way you can contribute to an open source project is to remove lines of code from it. We should endeavor to write code that a novice programmer can easily understand without explanation or that a maintainer can understand without significant time investment.

|

||||

|

||||

110

sources/talk/20180731 How to be the lazy sysadmin.md

Normal file

110

sources/talk/20180731 How to be the lazy sysadmin.md

Normal file

@ -0,0 +1,110 @@

|

||||

How to be the lazy sysadmin

|

||||

======

|

||||

|

||||

|

||||

The job of a Linux SysAdmin is always complex and often fraught with various pitfalls and obstacles. Ranging from never having enough time to do everything, to having the Pointy-Haired Boss (PHB) staring over your shoulder while you try to work on the task that she or he just gave you, to having the most critical server in your care crash at the most inopportune time, problems and challenges abound. I have found that becoming the Lazy Sysadmin can help.

|

||||

|

||||

I discuss how to be a lazy SysAdmin in detail in my forthcoming book, [The Linux Philosophy for SysAdmins][1], (Apress), which is scheduled to be available in September. Parts of this article are taken from that book, especially Chapter 9, "Be a Lazy SysAdmin." Let's take a brief look at what it means to be a Lazy SysAdmin before we discuss how to do it.

|

||||

|

||||

### Real vs. fake productivity

|

||||

|

||||

#### Fake productivity

|

||||

|

||||

At one place I worked, the PHB believed in the management style called "management by walking around," the supposition being that anyone who wasn't typing something on their keyboard, or at least examining something on their display, was not being productive. This was a horrible place to work. It had high administrative walls between departments that created many, tiny silos, a heavy overburden of useless paperwork, and excruciatingly long wait times to obtain permission to do anything. For these and other reasons, it was impossible to do anything efficiently—if at all—so we were incredibly non-productive. To look busy, we all had our Look Busy Kits (LBKs), which were just short Bash scripts that showed some activity, or programs like `top`, `htop`, `iotop`, or any monitoring tool that constantly displayed some activity. The ethos of this place made it impossible to be truly productive, and I hated both the place and the fact that it was nearly impossible to accomplish anything worthwhile.

|

||||

|

||||

That horrible place was a nightmare for real SysAdmins. None of us was happy. It took four or five months to accomplish what took only a single morning in other places. We had little real work to do but spent a huge amount of time working to look busy. We had an unspoken contest going to create the best LBK, and that is where we spent most of our time. I only managed to last a few months at that job, but it seemed like a lifetime. If you looked only at the surface of that dungeon, you could say we were lazy because we accomplished almost zero real work.

|

||||

|

||||

This is an extreme example, and it is totally the opposite of what I mean when I say I am a Lazy SysAdmin and being a Lazy SysAdmin is a good thing.

|

||||

|

||||

#### Real productivity

|

||||

|

||||

I am fortunate to have worked for some true managers—they were people who understood that the productivity of a SysAdmin is not measured by how many hours per day are spent banging on a keyboard. After all, even a monkey can bang on a keyboard, but that is no indication of the value of the results.

|

||||

|

||||

As I say in my book:

|

||||

|

||||

> "I am a lazy SysAdmin and yet I am also a very productive SysAdmin. Those two seemingly contradictory statements are not mutually exclusive, rather they are complementary in a very positive way. …

|

||||

>

|

||||

> "A SysAdmin is most productive when thinking—thinking about how to solve existing problems and about how to avoid future problems; thinking about how to monitor Linux computers in order to find clues that anticipate and foreshadow those future problems; thinking about how to make their work more efficient; thinking about how to automate all of those tasks that need to be performed whether every day or once a year.

|

||||

>

|

||||

> "This contemplative aspect of the SysAdmin job is not well known or understood by those who are not SysAdmins—including many of those who manage the SysAdmins, the Pointy Haired Bosses. SysAdmins all approach the contemplative parts of their job in different ways. Some of the SysAdmins I have known found their best ideas at the beach, cycling, participating in marathons, or climbing rock walls. Others think best when sitting quietly or listening to music. Still others think best while reading fiction, studying unrelated disciplines, or even while learning more about Linux. The point is that we all stimulate our creativity in different ways, and many of those creativity boosters do not involve typing a single keystroke on a keyboard. Our true productivity may be completely invisible to those around the SysAdmin."

|

||||

|

||||

There are some simple secrets to being the Lazy SysAdmin—the SysAdmin who accomplishes everything that needs to be done and more, all the while keeping calm and collected while others are running around in a state of panic. Part of this is working efficiently, and part is about preventing problems in the first place.

|

||||

|

||||

### Ways to be the Lazy SysAdmin

|

||||

|

||||

#### Thinking

|

||||

|

||||

I believe the most important secret about being the Lazy SysAdmin is thinking. As in the excerpt above, great SysAdmins spend a significant amount of time thinking about things we can do to work more efficiently, locate anomalies before they become problems, and work smarter, all while considering how to accomplish all of those things and more.

|

||||

|

||||

For example, right now—in addition to writing this article—I am thinking about a project I intend to start as soon as the new parts arrive from Amazon and the local computer store. The motherboard on one of my less critical computers is going bad, and it has been crashing more frequently recently. But my very old and minimal server—the one that handles my email and external websites, as well as providing DHCP and DNS services for the rest of my network—isn't failing but has to deal with intermittent overloads due to external attacks of various types.

|

||||

|

||||

I started by thinking I would just replace the motherboard and its direct components—memory, CPU, and possibly the power supply—in the failing unit. But after thinking about it for a while, I decided I should put the new components into the server and move the old (but still serviceable) ones from the server into the failing system. This would work and take only an hour, or perhaps two, to remove the old components from the server and install the new ones. Then I could take my time replacing the components in the failing computer. Great. So I started generating a mental list of tasks to do to accomplish this.

|

||||

|

||||

However, as I worked the list, I realized that about the only components of the server I wouldn't replace were the case and the hard drive, and the two computers' cases are almost identical. After having this little revelation, I started thinking about replacing the failing computer's components with the new ones and making it my server. Then, after some testing, I would just need to remove the hard drive from my current server and install it in the case with all the new components, change a couple of network configuration items, change the hostname on the KVM switch port, and change the hostname labels on the case, and it should be good to go. This will produce far less server downtime and significantly less stress for me. Also, if something fails, I can simply move the hard drive back to the original server until I can fix the problem with the new one.

|

||||

|

||||

So now I have created a mental list of the tasks I need to do to accomplish this. And—I hope you were watching closely—my fingers never once touched the keyboard while I was working all of this out in my head. My new mental action plan is low risk and involves a much smaller amount of server downtime compared to my original plan.

|

||||

|

||||

When I worked for IBM, I used to see signs all over that said "THINK" in many languages. Thinking can save time and stress and is the main hallmark of a Lazy SysAdmin.

|

||||

|

||||

#### Doing preventative maintenance

|

||||

|

||||

In the mid-1970s, I was hired as a customer engineer at IBM, and my territory consisted of a fairly large number of [unit record machines][2]. That just means that they were heavily mechanical devices that processed punched cards—a few dated from the 1930s. Because these machines were primarily mechanical, their parts often wore out or became maladjusted. Part of my job was to fix them when they broke. The main part of my job—the most important part—was to prevent them from breaking in the first place. The preventative maintenance was intended to replace worn parts before they broke and to lubricate and adjust the moving components to ensure that they were working properly.

|

||||

|

||||

As I say in The Linux Philosophy for SysAdmins:

|

||||

|

||||

> "My managers at IBM understood that was only the tip of the iceberg; they—and I—knew my job was customer satisfaction. Although that usually meant fixing broken hardware, it also meant reducing the number of times the hardware broke. That was good for the customer because they were more productive when their machines were working. It was good for me because I received far fewer calls from those happier customers. I also got to sleep more due to the resultant fewer emergency off-hours callouts. I was being the Lazy [Customer Engineer]. By doing the extra work upfront, I had to do far less work in the long run.

|

||||

>

|

||||

> "This same tenet has become one of the functional tenets of the Linux Philosophy for SysAdmins. As SysAdmins, our time is best spent doing those tasks that minimize future workloads."

|

||||

|

||||

Looking for problems to fix in a Linux computer is the equivalent of project management. I review the system logs looking for hints of problems that might become critical later. If something appears to be a little amiss, or I notice my workstation or a server is not responding as it should, or if the logs show something unusual—all of these can be indicative of an underlying problem that has not generated symptoms obvious to users or the PHB.

|

||||

|

||||

I do frequent checks of the files in `/var/log/`, especially messages and security. One of my more common problems is the many script kiddies who try various types of attacks on my firewall system. And, no, I do not rely on the alleged firewall in the modem/router provided by my ISP. These logs contain a lot of information about the source of the attempted attack and can be very valuable. But it takes a lot of work to scan the logs on various hosts and put solutions into place. So I turn to automation.

|

||||

|

||||

#### Automating

|

||||

|

||||

I have found that a very large percentage of my work can be performed by some form of automation. One of the tenets of the Linux Philosophy for SysAdmins is "automate everything," and this includes boring, drudge tasks like scanning logfiles every day.

|

||||

|

||||

Programs like [Logwatch][3] can monitor your logfiles for anomalous entries and notify you when they occur. Logwatch usually runs as a cron job once a day and sends an email to root on the localhost. You can run Logwatch from the command line and view the results immediately on your display. Now I just need to look at the Logwatch email notification every day.

|

||||

|

||||

But the reality is just getting a notification is not enough, because we can't sit and watch for problems all the time. Sometimes an immediate response is required. Another program I like, one that does all of the work for me—see, this is the real Lazy Admin—is [Fail2Ban][4]. Fail2Ban scans designated logfiles for various types of hacking and intrusion attempts, and if it sees enough sustained activity of a specific type from a particular IP address, it adds an entry to the firewall that blocks any further hacking attempts from that IP address for a specified time. The defaults tend to be around 10 minutes, but I like to specify 12 or 24 hours for most types of attacks. Each type of hacking attack is configured separately, such as those trying to log in via SSH and those attacking a web server.

|

||||

|

||||

#### Writing scripts

|

||||

|

||||

Automation is one of the key components of the Philosophy. Everything that can be automated should be, and the rest should be automated as much as possible. So, I also write a lot of scripts to solve problems, which also means I write scripts to do most of my work for me.

|

||||

|

||||

My scripts save me huge amounts of time because they contain the commands to perform specific tasks, which significantly reduces the amount of typing I need to do. For example, I frequently restart my email server and my spam-fighting software (which needs restarted when configuration changes are made to SpamAssassin's `local.cf` file). Those services must be stopped and restarted in a specific order. So, I wrote a short script with a few commands and stored it in `/usr/local/bin`, where it is accessible. Now, instead of typing several commands and waiting for each to finish before typing the next one—not to mention remembering the correct sequence of commands and the proper syntax of each—I type in a three-character command and leave the rest to my script.

|

||||

|

||||

#### Reducing typing

|

||||

|

||||

Another way to be the Lazy SysAdmin is to reduce the amount of typing we need to do. Besides, my typing skills are really horrible (that is to say I have none—a few clumsy fingers at best). One possible cause for errors is my poor typing, so I try to keep typing to a minimum.

|

||||

|

||||

The vast majority of GNU and Linux core utilities have very short names. They are, however, names that have some meaning. Tools like `cd` for change directory, `ls` for list (the contents of a directory), and `dd` for disk dump are pretty obvious. Short names mean less typing and fewer opportunities for errors to creep in. I think the short names are usually easier to remember.

|

||||

|

||||

When I write shell scripts, I like to keep the names short but meaningful (to me at least) like `rsbu` for Rsync BackUp. In some cases, I like the names a bit longer, such as `doUpdates` to perform system updates. In the latter case, the longer name makes the script's purpose obvious. This saves time because it's easy to remember the script's name.

|

||||

|

||||

Other methods to reduce typing are command line aliases and command line recall and editing. Aliases are simply substitutions that are made by the Bash shell when you type a command. Type the `alias` command and look at the list of aliases that are configured by default. For example, when you enter the command `ls`, the entry `alias ls='ls –color=auto'` substitutes the longer command, so you only need to type two characters instead of 14 to get a listing with colors. You can also use the `alias` command to add your own aliases.

|

||||

|

||||

Command line recall allows you to use the keyboard's Up and Down arrow keys to scroll through your command history. If you need to use the same command again, you can just press the Enter key when you find the one you need. If you need to change the command once you have found it, you can use standard command line editing features to make the changes.

|

||||

|

||||

### Parting thoughts

|

||||

|

||||

It is actually quite a lot of work being the Lazy SysAdmin. But we work smart, rather than working hard. We spend time exploring the hosts we are responsible for and dealing with any little problems long before they become large problems. We spend a lot of time thinking about the best ways to resolve problems, and we think a lot about discovering new ways to work smarter at being the Lazy SysAdmin.

|

||||

|

||||

There are many other ways to be the Lazy SysAdmin besides the few described here. I'm sure you have some of your own; please share them with the rest of us in the comments.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/7/how-be-lazy-sysadmin

|

||||

|

||||

作者:[David Both][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://opensource.com/users/dboth

|

||||

[1]:https://www.apress.com/us/book/9781484237298

|

||||

[2]:https://en.wikipedia.org/wiki/Unit_record_equipment

|

||||

[3]:https://www.techrepublic.com/article/how-to-install-and-use-logwatch-on-linux/

|

||||

[4]:https://www.fail2ban.org/wiki/index.php/Main_Page

|

||||

@ -1,254 +0,0 @@

|

||||

Translating by qhwdw

|

||||

How to reset, revert, and return to previous states in Git

|

||||

======

|

||||

|

||||

|

||||

|

||||

One of the lesser understood (and appreciated) aspects of working with Git is how easy it is to get back to where you were before—that is, how easy it is to undo even major changes in a repository. In this article, we'll take a quick look at how to reset, revert, and completely return to previous states, all with the simplicity and elegance of individual Git commands.

|

||||

|

||||

### Reset

|

||||

|

||||

Let's start with the Git command `reset`. Practically, you can think of it as a "rollback"—it points your local environment back to a previous commit. By "local environment," we mean your local repository, staging area, and working directory.

|

||||

|

||||

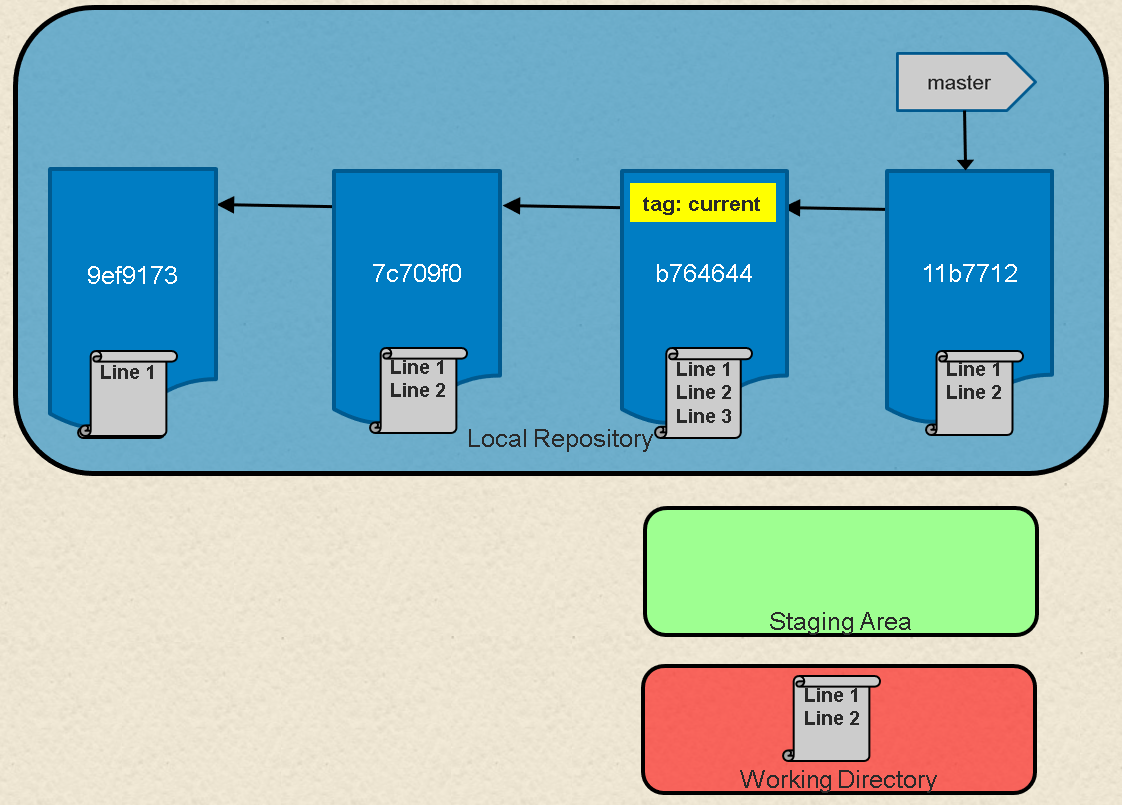

Take a look at Figure 1. Here we have a representation of a series of commits in Git. A branch in Git is simply a named, movable pointer to a specific commit. In this case, our branch master is a pointer to the latest commit in the chain.

|

||||

|

||||

![Local Git environment with repository, staging area, and working directory][2]

|

||||

|

||||

Fig. 1: Local Git environment with repository, staging area, and working directory

|

||||

|

||||

If we look at what's in our master branch now, we can see the chain of commits made so far.

|

||||

```

|

||||

$ git log --oneline

|

||||

b764644 File with three lines

|

||||

7c709f0 File with two lines

|

||||

9ef9173 File with one line

|

||||

```

|

||||

|

||||

`reset` command to do this for us. For example, if we want to reset master to point to the commit two back from the current commit, we could use either of the following methods:

|

||||

|

||||

What happens if we want to roll back to a previous commit. Simple—we can just move the branch pointer. Git supplies thecommand to do this for us. For example, if we want to reset master to point to the commit two back from the current commit, we could use either of the following methods:

|

||||

|

||||

`$ git reset 9ef9173` (using an absolute commit SHA1 value 9ef9173)

|

||||

|

||||

or

|

||||

|

||||

`$ git reset current~2` (using a relative value -2 before the "current" tag)

|

||||

|

||||

Figure 2 shows the results of this operation. After this, if we execute a `git log` command on the current branch (master), we'll see just the one commit.

|

||||

```

|

||||

$ git log --oneline

|

||||

|

||||

9ef9173 File with one line

|

||||

|

||||

```

|

||||

|

||||

![After reset][4]

|

||||

|

||||

Fig. 2: After `reset`

|

||||

|

||||

The `git reset` command also includes options to update the other parts of your local environment with the contents of the commit where you end up. These options include: `hard` to reset the commit being pointed to in the repository, populate the working directory with the contents of the commit, and reset the staging area; `soft` to only reset the pointer in the repository; and `mixed` (the default) to reset the pointer and the staging area.

|

||||

|

||||

Using these options can be useful in targeted circumstances such as `git reset --hard <commit sha1 | reference>``.` This overwrites any local changes you haven't committed. In effect, it resets (clears out) the staging area and overwrites content in the working directory with the content from the commit you reset to. Before you use the `hard` option, be sure that's what you really want to do, since the command overwrites any uncommitted changes.

|

||||

|

||||

### Revert

|

||||

|

||||

The net effect of the `git revert` command is similar to reset, but its approach is different. Where the `reset` command moves the branch pointer back in the chain (typically) to "undo" changes, the `revert` command adds a new commit at the end of the chain to "cancel" changes. The effect is most easily seen by looking at Figure 1 again. If we add a line to a file in each commit in the chain, one way to get back to the version with only two lines is to reset to that commit, i.e., `git reset HEAD~1`.

|

||||

|

||||

Another way to end up with the two-line version is to add a new commit that has the third line removed—effectively canceling out that change. This can be done with a `git revert` command, such as:

|

||||

```

|

||||

$ git revert HEAD

|

||||

|

||||

```

|

||||

|

||||

Because this adds a new commit, Git will prompt for the commit message:

|

||||

```

|

||||

Revert "File with three lines"

|

||||

|

||||

This reverts commit b764644bad524b804577684bf74e7bca3117f554.

|

||||

|

||||

# Please enter the commit message for your changes. Lines starting

|

||||

# with '#' will be ignored, and an empty message aborts the commit.

|

||||

# On branch master

|

||||

# Changes to be committed:

|

||||

# modified: file1.txt

|

||||

#

|

||||

```

|

||||

|

||||

Figure 3 (below) shows the result after the `revert` operation is completed.

|

||||

|

||||

If we do a `git log` now, we'll see a new commit that reflects the contents before the previous commit.

|

||||

```

|

||||

$ git log --oneline

|

||||

11b7712 Revert "File with three lines"

|

||||

b764644 File with three lines

|

||||

7c709f0 File with two lines

|

||||

9ef9173 File with one line

|

||||

```

|

||||

|

||||

Here are the current contents of the file in the working directory:

|

||||

```

|

||||

$ cat <filename>

|

||||

Line 1

|

||||

Line 2

|

||||

```

|

||||

|

||||

#### Revert or reset?

|

||||

|

||||

Why would you choose to do a `revert` over a `reset` operation? If you have already pushed your chain of commits to the remote repository (where others may have pulled your code and started working with it), a revert is a nicer way to cancel out changes for them. This is because the Git workflow works well for picking up additional commits at the end of a branch, but it can be challenging if a set of commits is no longer seen in the chain when someone resets the branch pointer back.

|

||||

|

||||

This brings us to one of the fundamental rules when working with Git in this manner: Making these kinds of changes in your local repository to code you haven't pushed yet is fine. But avoid making changes that rewrite history if the commits have already been pushed to the remote repository and others may be working with them.

|

||||

|

||||

In short, if you rollback, undo, or rewrite the history of a commit chain that others are working with, your colleagues may have a lot more work when they try to merge in changes based on the original chain they pulled. If you must make changes against code that has already been pushed and is being used by others, consider communicating before you make the changes and give people the chance to merge their changes first. Then they can pull a fresh copy after the infringing operation without needing to merge.

|

||||

|

||||

You may have noticed that the original chain of commits was still there after we did the reset. We moved the pointer and reset the code back to a previous commit, but it did not delete any commits. This means that, as long as we know the original commit we were pointing to, we can "restore" back to the previous point by simply resetting back to the original head of the branch:

|

||||

```

|

||||

git reset <sha1 of commit>

|

||||

|

||||

```

|

||||

|

||||

A similar thing happens in most other operations we do in Git when commits are replaced. New commits are created, and the appropriate pointer is moved to the new chain. But the old chain of commits still exists.

|

||||

|

||||

### Rebase

|

||||

|

||||

Now let's look at a branch rebase. Consider that we have two branches—master and feature—with the chain of commits shown in Figure 4 below. Master has the chain `C4->C2->C1->C0` and feature has the chain `C5->C3->C2->C1->C0`.

|

||||

|

||||

![Chain of commits for branches master and feature][6]

|

||||

|

||||

Fig. 4: Chain of commits for branches master and feature

|

||||

|

||||

If we look at the log of commits in the branches, they might look like the following. (The `C` designators for the commit messages are used to make this easier to understand.)

|

||||

```

|

||||

$ git log --oneline master

|

||||

6a92e7a C4

|

||||

259bf36 C2

|

||||

f33ae68 C1

|

||||

5043e79 C0

|

||||

|

||||

$ git log --oneline feature

|

||||

79768b8 C5

|

||||

000f9ae C3

|

||||

259bf36 C2

|

||||

f33ae68 C1

|

||||

5043e79 C0

|

||||

```

|

||||

|

||||

I tell people to think of a rebase as a "merge with history" in Git. Essentially what Git does is take each different commit in one branch and attempt to "replay" the differences onto the other branch.

|

||||

|

||||

So, we can rebase a feature onto master to pick up `C4` (e.g., insert it into feature's chain). Using the basic Git commands, it might look like this:

|

||||

```

|

||||

$ git checkout feature

|

||||

$ git rebase master

|

||||

|

||||

First, rewinding head to replay your work on top of it...

|

||||

Applying: C3

|

||||

Applying: C5

|

||||

```

|

||||

|

||||

Afterward, our chain of commits would look like Figure 5.

|

||||

|

||||

![Chain of commits after the rebase command][8]

|

||||

|

||||

Fig. 5: Chain of commits after the `rebase` command

|

||||

|

||||

Again, looking at the log of commits, we can see the changes.

|

||||

```

|

||||

$ git log --oneline master

|

||||

6a92e7a C4

|

||||

259bf36 C2

|

||||

f33ae68 C1

|

||||

5043e79 C0

|

||||

|

||||

$ git log --oneline feature

|

||||

c4533a5 C5

|

||||

64f2047 C3

|

||||

6a92e7a C4

|

||||

259bf36 C2

|

||||

f33ae68 C1

|

||||

5043e79 C0

|

||||

```

|

||||

|

||||

Notice that we have `C3'` and `C5'`—new commits created as a result of making the changes from the originals "on top of" the existing chain in master. But also notice that the "original" `C3` and `C5` are still there—they just don't have a branch pointing to them anymore.

|

||||

|

||||

If we did this rebase, then decided we didn't like the results and wanted to undo it, it would be as simple as:

|

||||

```

|

||||

$ git reset 79768b8

|

||||

|

||||

```

|

||||

|

||||

With this simple change, our branch would now point back to the same set of commits as before the `rebase` operation—effectively undoing it (Figure 6).

|

||||

|

||||

![After undoing rebase][10]

|

||||

|

||||

Fig. 6: After undoing the `rebase` operation

|

||||

|

||||

What happens if you can't recall what commit a branch pointed to before an operation? Fortunately, Git again helps us out. For most operations that modify pointers in this way, Git remembers the original commit for you. In fact, it stores it in a special reference named `ORIG_HEAD `within the `.git` repository directory. That path is a file containing the most recent reference before it was modified. If we `cat` the file, we can see its contents.

|

||||

```

|

||||

$ cat .git/ORIG_HEAD

|

||||

79768b891f47ce06f13456a7e222536ee47ad2fe

|

||||

```

|

||||

|

||||

We could use the `reset` command, as before, to point back to the original chain. Then the log would show this:

|

||||

```

|

||||

$ git log --oneline feature

|

||||

79768b8 C5

|

||||

000f9ae C3

|

||||

259bf36 C2

|

||||

f33ae68 C1

|

||||

5043e79 C0

|

||||

```

|

||||

|

||||

Another place to get this information is in the reflog. The reflog is a play-by-play listing of switches or changes to references in your local repository. To see it, you can use the `git reflog` command:

|

||||

```

|

||||

$ git reflog

|

||||

79768b8 HEAD@{0}: reset: moving to 79768b

|

||||

c4533a5 HEAD@{1}: rebase finished: returning to refs/heads/feature

|

||||

c4533a5 HEAD@{2}: rebase: C5

|

||||

64f2047 HEAD@{3}: rebase: C3

|

||||

6a92e7a HEAD@{4}: rebase: checkout master

|

||||

79768b8 HEAD@{5}: checkout: moving from feature to feature

|

||||

79768b8 HEAD@{6}: commit: C5

|

||||

000f9ae HEAD@{7}: checkout: moving from master to feature

|

||||

6a92e7a HEAD@{8}: commit: C4

|

||||

259bf36 HEAD@{9}: checkout: moving from feature to master

|

||||

000f9ae HEAD@{10}: commit: C3

|

||||

259bf36 HEAD@{11}: checkout: moving from master to feature

|

||||

259bf36 HEAD@{12}: commit: C2

|

||||

f33ae68 HEAD@{13}: commit: C1

|

||||

5043e79 HEAD@{14}: commit (initial): C0

|

||||

```

|

||||

|

||||

You can then reset to any of the items in that list using the special relative naming format you see in the log:

|

||||

```

|

||||

$ git reset HEAD@{1}

|

||||

|

||||

```

|

||||

|

||||

Once you understand that Git keeps the original chain of commits around when operations "modify" the chain, making changes in Git becomes much less scary. This is one of Git's core strengths: being able to quickly and easily try things out and undo them if they don't work.

|

||||

|

||||

Brent Laster will present [Power Git: Rerere, Bisect, Subtrees, Filter Branch, Worktrees, Submodules, and More][11] at the 20th annual [OSCON][12] event, July 16-19 in Portland, Ore. For more tips and explanations about using Git at any level, checkout Brent's book "[Professional Git][13]," available on Amazon.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/6/git-reset-revert-rebase-commands

|

||||

|

||||

作者:[Brent Laster][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://opensource.com/users/bclaster

|

||||

[1]:/file/401126

|

||||

[2]:https://opensource.com/sites/default/files/uploads/gitcommands1_local-environment.png (Local Git environment with repository, staging area, and working directory)

|

||||

[3]:/file/401131

|

||||

[4]:https://opensource.com/sites/default/files/uploads/gitcommands2_reset.png (After reset)

|

||||

[5]:/file/401141

|

||||

[6]:https://opensource.com/sites/default/files/uploads/gitcommands4_commits-branches.png (Chain of commits for branches master and feature)

|

||||

[7]:/file/401146

|

||||

[8]:https://opensource.com/sites/default/files/uploads/gitcommands5_commits-rebase.png (Chain of commits after the rebase command)

|

||||

[9]:/file/401151

|

||||

[10]:https://opensource.com/sites/default/files/uploads/gitcommands6_rebase-undo.png (After undoing rebase)

|

||||

[11]:https://conferences.oreilly.com/oscon/oscon-or/public/schedule/detail/67142

|

||||

[12]:https://conferences.oreilly.com/oscon/oscon-or

|

||||

[13]:https://www.amazon.com/Professional-Git-Brent-Laster/dp/111928497X/ref=la_B01MTGIINQ_1_2?s=books&ie=UTF8&qid=1528826673&sr=1-2

|

||||

@ -1,128 +0,0 @@

|

||||

How to edit Adobe InDesign files with Scribus and Gedit

|

||||

======

|

||||

|

||||

|

||||

|

||||

To be a good graphic designer, you must be adept at using the profession's tools, which for most designers today are the ones in the proprietary Adobe Creative Suite.

|

||||

|

||||

However, there are times that open source tools will get you out of a jam. For example, imagine you're a commercial printer tasked with printing a file created in Adobe InDesign. You need to make a simple change (e.g., fixing a small typo) to the file, but you don't have immediate access to the Adobe suite. While these situations are admittedly rare, open source tools like desktop publishing software [Scribus][1] and text editor [Gedit][2] can save the day.

|

||||

|

||||

In this article, I'll show you how I edit Adobe InDesign files with Scribus and Gedit. Note that there are many open source graphic design solutions that can be used instead of or in conjunction with Adobe InDesign. For more on this subject, check out my articles: [Expensive tools aren't the only option for graphic design (and never were)][3] and [2 open][4][source][4][Adobe InDesign scripts][4].

|

||||

|

||||

When developing this solution, I read a few blogs on how to edit InDesign files with open source software but did not find what I was looking for. One suggestion I found was to create an EPS from InDesign and open it as an editable file in Scribus, but that did not work. Another suggestion was to create an IDML (an older InDesign file format) document from InDesign and open that in Scribus. That worked much better, so that's the workaround I used in the following examples.

|

||||

|

||||

### Editing a business card

|

||||

|

||||

Opening and editing my InDesign business card file in Scribus worked fairly well. The only issue I had was that the tracking (the space between letters) was a bit off and the upside-down "J" I used to create the lower-case "f" in "Jeff" was flipped. Otherwise, the styles and colors were all intact.

|

||||

|

||||

|

||||

![Business card in Adobe InDesign][6]

|

||||

|

||||

Business card designed in Adobe InDesign.

|

||||

|

||||

![InDesign IDML file opened in Scribus][8]

|

||||

|

||||

InDesign IDML file opened in Scribus.

|

||||

|

||||

### Deleting copy in a paginated book

|

||||

|

||||

The book conversion didn't go as well. The main body of the text was OK, but the table of contents and some of the drop caps and footers were messed up when I opened the InDesign file in Scribus. Still, it produced an editable document. One problem was some of my blockquotes defaulted to Arial font because a character style (apparently carried over from the original Word file) was on top of the paragraph style. This was simple to fix.

|

||||

|

||||

![Book layout in InDesign][10]

|

||||

|

||||

Book layout in InDesign.

|

||||

|

||||

![InDesign IDML file of book layout opened in Scribus][12]

|

||||

|

||||

InDesign IDML file of book layout opened in Scribus.

|

||||

|

||||

Trying to select and delete a page of text produced surprising results. I placed the cursor in the text and hit Command+A (the keyboard shortcut for "select all"). It looked like one page was highlighted. However, that wasn't really true.

|

||||

|

||||

![Selecting text in Scribus][14]

|

||||

|

||||

Selecting text in Scribus.

|

||||

|

||||

When I hit the Delete key, the entire text string (not just the highlighted page) disappeared.

|

||||

|

||||

![Both pages of text deleted in Scribus][16]

|

||||

|

||||

Both pages of text deleted in Scribus.

|

||||

|

||||

Then something even more interesting happened… I hit Command+Z to undo the deletion. When the text came back, the formatting was messed up.

|

||||

|

||||

![Undo delete restored the text, but with bad formatting.][18]

|

||||

|

||||

Command+Z (undo delete) restored the text, but the formatting was bad.

|

||||

|

||||

### Opening a design file in a text editor

|

||||

|

||||

If you open a Scribus file and an InDesign file in a standard text editor (e.g., TextEdit on a Mac), you will see that the Scribus file is very readable whereas the InDesign file is not.

|

||||

|

||||

You can use TextEdit to make changes to either type of file and save it, but the resulting file is useless. Here's the error I got when I tried re-opening the edited file in InDesign.

|

||||

|

||||

![InDesign error message][20]

|

||||

|

||||

InDesign error message.

|

||||

|

||||

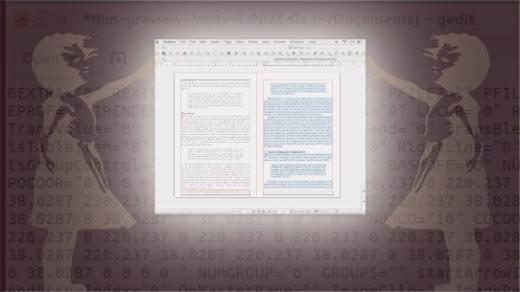

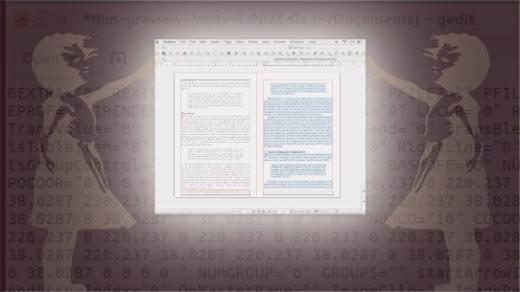

I got much better results when I used Gedit on my Linux Ubuntu machine to edit the Scribus file. I launched Gedit from the command line and voilà, the Scribus file opened, and the changes I made in Gedit were retained.

|

||||

|

||||

![Editing Scribus file in Gedit][22]

|

||||

|

||||

Editing a Scribus file in Gedit.

|

||||

|

||||

![Result of the Gedit edit in Scribus][24]

|

||||

|

||||

Result of the Gedit edit opened in Scribus.

|

||||

|

||||

This could be very useful to a printer that receives a call from a client about a small typo in a project. Instead of waiting to get a new file, the printer could open the Scribus file in Gedit, make the change, and be good to go.

|

||||

|

||||

### Dropping images into a file

|

||||

|

||||

I converted an InDesign doc to an IDML file so I could try dropping in some PDFs using Scribus. It seems Scribus doesn't do this as well as InDesign, as it failed. Instead, I converted my PDFs to JPGs and imported them into Scribus. That worked great. However, when I exported my document as a PDF, I found that the files size was rather large.

|

||||

|

||||

![Huge PDF file][26]

|

||||

|

||||

Exporting Scribus to PDF produced a huge file.

|

||||

|

||||

I'm not sure why this happened—I'll have to investigate it later.

|

||||

|

||||

Do you have any tips for using open source software to edit graphics files? If so, please share them in the comments.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/7/adobe-indesign-open-source-tools

|

||||

|

||||

作者:[Jeff Macharyas][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://opensource.com/users/rikki-endsley

|

||||

[1]:https://www.scribus.net/

|

||||

[2]:https://wiki.gnome.org/Apps/Gedit

|

||||

[3]:https://opensource.com/life/16/8/open-source-alternatives-graphic-design

|

||||

[4]:https://opensource.com/article/17/3/scripts-adobe-indesign

|

||||

[5]:/file/402516

|

||||

[6]:https://opensource.com/sites/default/files/uploads/1-business_card_designed_in_adobe_indesign_cc.png (Business card in Adobe InDesign)

|

||||

[7]:/file/402521

|

||||

[8]:https://opensource.com/sites/default/files/uploads/2-indesign_.idml_file_opened_in_scribus.png (InDesign IDML file opened in Scribus)

|

||||

[9]:/file/402531

|

||||

[10]:https://opensource.com/sites/default/files/uploads/3-book_layout_in_indesign.png (Book layout in InDesign)

|

||||

[11]:/file/402536

|

||||

[12]:https://opensource.com/sites/default/files/uploads/4-indesign_.idml_file_of_book_opened_in_scribus.png (InDesign IDML file of book layout opened in Scribus)

|

||||

[13]:/file/402541

|

||||

[14]:https://opensource.com/sites/default/files/uploads/5-command-a_in_the_scribus_file.png (Selecting text in Scribus)

|

||||

[15]:/file/402546

|

||||

[16]:https://opensource.com/sites/default/files/uploads/6-deleted_text_in_scribus.png (Both pages of text deleted in Scribus)

|

||||

[17]:/file/402551

|

||||

[18]:https://opensource.com/sites/default/files/uploads/7-command-z_in_scribus.png (Undo delete restored the text, but with bad formatting.)

|

||||

[19]:/file/402556

|

||||

[20]:https://opensource.com/sites/default/files/uploads/8-indesign_error_message.png (InDesign error message)

|

||||

[21]:/file/402561

|

||||

[22]:https://opensource.com/sites/default/files/uploads/9-scribus_edited_in_gedit_on_linux.png (Editing Scribus file in Gedit)

|

||||

[23]:/file/402566

|

||||

[24]:https://opensource.com/sites/default/files/uploads/10-scribus_opens_after_gedit_changes.png (Result of the Gedit edit in Scribus)

|

||||

[25]:/file/402571

|

||||

[26]:https://opensource.com/sites/default/files/uploads/11-large_pdf_size.png (Huge PDF file)

|

||||

@ -1,75 +0,0 @@

|

||||

[Moelf](https://github.com/Moelf) Translating

|

||||

Why is Arch Linux So Challenging and What are Its Pros & Cons?

|

||||

======

|

||||

|

||||

|

||||

|

||||

[Arch Linux][1] is among the most popular Linux distributions and it was first released in **2002** , being spear-headed by **Aaron Grifin**. Yes, it aims to provide simplicity, minimalism, and elegance to the OS user but its target audience is not the faint of hearts. Arch encourages community involvement and a user is expected to put in some effort to better comprehend how the system operates.

|

||||

|

||||

Many old-time Linux users know a good amount about **Arch Linux** but you probably don’t if you are new to it considering using it for your everyday computing tasks. I’m no authority on the distro myself but from my experience with it, here are the pros and cons you will experience while using it.

|

||||

|

||||

### 1\. Pro: Build Your Own Linux OS

|

||||

|

||||

Other popular Linux Operating Systems like **Fedora** and **Ubuntu** ship with computers, same as **Windows** and **MacOS**. **Arch** , on the other hand, allows you to develop your OS to your taste. If you are able to achieve this, you will end up with a system that will be able to do exactly as you wish.

|

||||

|

||||

#### Con: Installation is a Hectic Process

|

||||

|

||||

[Installing Arch Linux][2] is far from a walk in a park and since you will be fine-tuning the OS, it will take a while. You will need to have an understanding of various terminal commands and the components you will be working with since you are to pick them yourself. By now, you probably already know that this requires quite a bit of reading.

|

||||

|

||||

### 2\. Pro: No Bloatware and Unnecessary Services

|

||||

|

||||

Since **Arch** allows you to choose your own components, you no longer have to deal with a bunch of software you don’t want. In contrast, OSes like **Ubuntu** come with a huge number of pre-installed desktop and background apps which you may not need and might not be able to know that they exist in the first place, before going on to remove them.

|

||||

|

||||

To put simply, **Arch Linux** saves you post-installation time. **Pacman** , an awesome utility app, is the package manager Arch Linux uses by default. There is an alternative to **Pacman** , called [Pamac][3].

|

||||

|

||||

### 3\. Pro: No System Upgrades

|

||||

|

||||

**Arch Linux** uses the rolling release model and that is awesome. It means that you no longer have to worry about upgrading every now and then. Once you install Arch, say goodbye to upgrading to a new version as updates occur continuously. By default, you will always be using the latest version.

|

||||

|

||||

#### Con: Some Updates Can Break Your System

|

||||

|

||||

While updates flow in continuously, you have to consciously track what comes in. Nobody knows your software’s specific configuration and it’s not tested by anyone but you. So, if you are not careful, things on your machine could break.

|

||||

|

||||

### 4\. Pro: Arch is Community Based

|

||||

|

||||

Linux users generally have one thing in common: The need for independence. Although most Linux distros have less corporate ties, there are still a few you cannot ignore. For instance, a distro based on **Ubuntu** is influenced by whatever decisions Canonical makes.

|

||||

|

||||

If you are trying to become even more independent with the use of your computer, then **Arch Linux** is the way to go. Unlike most systems, Arch has no commercial influence and focuses on the community.

|

||||

|

||||

### 5\. Pro: Arch Wiki is Awesome

|

||||

|

||||

The [Arch Wiki][4] is a super library of everything you need to know about the installation and maintenance of every component in the Linux system. The great thing about this site is that even if you are using a different Linux distro from Arch, you would still find its information relevant. That’s simply because Arch uses the same components as many other Linux distros and its guides and fixes sometimes apply to all.

|

||||

|

||||

### 6\. Pro: Check Out the Arch User Repository

|

||||

|

||||

The [Arch User Repository (AUR)][5] is a huge collection of software packages from members of the community. If you are looking for a Linux program that is not yet available on Arch’s repositories, you can find it on the **AUR** for sure.

|

||||

|

||||

The **AUR** is maintained by users who compile and install packages from source. Users are also allowed to vote on packages which give them (the packages i.e.) higher rankings that make them more visible to potential users.

|

||||

|

||||

#### Ultimately: Is Arch Linux for You?

|

||||

|

||||

**Arch Linux** has way more **pros** than **cons** including the ones that aren’t on this list. The installation process is long and probably too technical for a non-Linux savvy user, but with enough time on your hands and the ability to maximize productivity using wiki guides and the like, you should be good to go.

|

||||

|

||||

**Arch Linux** is a great Linux distro – not in spite of its complexity, but because of it. And it appeals most to those who are ready to do what needs to be done – given that you will have to do your homework and exercise a good amount of patience.

|

||||

|

||||

By the time you build this Operating System from scratch, you would have learned many details about GNU/Linux and would never be ignorant of what’s going on with your PC again.

|

||||

|

||||

What are the **pros** and **cons** of using **Arch Linux** in your experience? And on the whole, why is using it so challenging? Drop your comments in the discussion section below.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.fossmint.com/why-is-arch-linux-so-challenging-what-are-pros-cons/

|

||||

|

||||

作者:[Martins D. Okoi][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.fossmint.com/author/dillivine/

|

||||

[1]:https://www.archlinux.org/

|

||||

[2]:https://www.tecmint.com/arch-linux-installation-and-configuration-guide/

|

||||

[3]:https://www.fossmint.com/pamac-arch-linux-gui-package-manager/

|

||||

[4]:https://wiki.archlinux.org/

|

||||

[5]:https://wiki.archlinux.org/index.php/Arch_User_Repository

|

||||

362

sources/tech/20180719 Building tiny container images.md

Normal file

362

sources/tech/20180719 Building tiny container images.md

Normal file

@ -0,0 +1,362 @@

|

||||

Building tiny container images

|

||||

======

|

||||

|

||||

|

||||

|

||||

When [Docker][1] exploded onto the scene a few years ago, it brought containers and container images to the masses. Although Linux containers existed before then, Docker made it easy to get started with a user-friendly command-line interface and an easy-to-understand way to build images using the Dockerfile format. But while it may be easy to jump in, there are still some nuances and tricks to building container images that are usable, even powerful, but still small in size.

|

||||

|

||||

### First pass: Clean up after yourself

|

||||

|

||||

Some of these examples involve the same kind of cleanup you would use with a traditional server, but more rigorously followed. Smaller image sizes are critical for quickly moving images around, and storing multiple copies of unnecessary data on disk is a waste of resources. Consequently, these techniques should be used more regularly than on a server with lots of dedicated storage.

|

||||

|

||||

An example of this kind of cleanup is removing cached files from an image to recover space. Consider the difference in size between a base image with [Nginx][2] installed by `dnf` with and without the metadata and yum cache cleaned up:

|

||||

```

|

||||

# Dockerfile with cache

|

||||

|

||||

FROM fedora:28

|

||||

|

||||

LABEL maintainer Chris Collins <collins.christopher@gmail.com>

|

||||

|

||||

|

||||

|

||||

RUN dnf install -y nginx

|

||||

|

||||

|

||||

|

||||

-----

|

||||

|

||||

|

||||

|

||||

# Dockerfile w/o cache

|

||||

|

||||

FROM fedora:28

|

||||

|

||||

LABEL maintainer Chris Collins <collins.christopher@gmail.com>

|

||||

|

||||

|

||||

|

||||

RUN dnf install -y nginx \

|

||||

|

||||

&& dnf clean all \

|

||||

|

||||

&& rm -rf /var/cache/yum

|

||||

|

||||

|

||||

|

||||

-----

|

||||

|

||||

|

||||

|

||||

[chris@krang] $ docker build -t cache -f Dockerfile .

|

||||

|

||||

[chris@krang] $ docker images --format "{{.Repository}}: {{.Size}}"

|

||||

|

||||

| head -n 1

|

||||

|

||||

cache: 464 MB

|

||||

|

||||

|

||||

|