mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-24 02:20:09 +08:00

Merge pull request #10546 from oska874/master

2 topic selections. @lujun9972 the topic-selection info can also follow the pattern used here, using a dedicated `[b]` reference to mark it.

This commit is contained in:

commit

fc4b64cff7

@ -0,0 +1,637 @@


# Compiling Lisp to JavaScript From Scratch in 350

In this article we will look at a from-scratch implementation of a compiler from a simple LISP-like calculator language to JavaScript. The complete source code can be found [here][7].

We will:

1. Define our language and write a simple program in it
2. Implement a simple parser combinator library
3. Implement a parser for our language
4. Implement a pretty printer for our language
5. Define a subset of JavaScript for our usage
6. Implement a code translator to the JavaScript subset we defined
7. Glue it all together

Let's start!

### 1\. Defining the language

The main attraction of Lisps is that their syntax already represents a tree, which is why they are so easy to parse. We'll see that soon. But first, let's define our language. Here's a BNF description of our language's syntax:

```
program ::= expr
expr ::= <integer> | <name> | ([<expr>])
```

Basically, our language lets us define one expression at the top level, which it will evaluate. An expression is either an integer, for example `5`, a variable, for example `x`, or a list of expressions, for example `(add x 1)`.

An integer evaluates to itself, a variable evaluates to what it's bound to in the current environment, and a list evaluates to a function call where the first element is the function and the rest are the arguments to the function.

We have some built-in special forms in our language so we can do more interesting stuff:

* let expression: lets us introduce new variables in the environment of the body of the let. The syntax is:

```
let ::= (let ([<letarg>]) <body>)
letarg ::= (<name> <expr>)
body ::= <expr>
```

* lambda expression: evaluates to an anonymous function definition. The syntax is:

```
lambda ::= (lambda ([<name>]) <body>)
```

We also have a few built-in functions: `add`, `mul`, `sub`, `div` and `print`.

Let's see a quick example of a program written in our language:

```
(let
  ((compose
     (lambda (f g)
       (lambda (x) (f (g x)))))
   (square
     (lambda (x) (mul x x)))
   (add1
     (lambda (x) (add x 1))))
  (print ((compose square add1) 5)))
```

This program defines 3 functions: `compose`, `square` and `add1`. It then prints the result of the computation `((compose square add1) 5)`.

I hope this is enough information about the language. Let's start implementing it!

We can define the language in Haskell like this:

```
type Name = String

data Expr
  = ATOM Atom
  | LIST [Expr]
  deriving (Eq, Read, Show)

data Atom
  = Int Int
  | Symbol Name
  deriving (Eq, Read, Show)
```

We can parse programs in the language we defined to an `Expr`. Also, we are giving the new data types `Eq`, `Read` and `Show` instances to aid in testing and debugging. You'll be able to use those in the REPL, for example, to verify all this actually works.
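
As a small illustration of how source text maps onto these types, here is a hand-built tree for `(add x 1)`. The types are copied from above so the snippet compiles by itself; the name `sample` is made up for this sketch and is not part of the original source.

```haskell
-- Standalone copy of the article's types, so this snippet compiles by itself.
type Name = String

data Expr
  = ATOM Atom
  | LIST [Expr]
  deriving (Eq, Read, Show)

data Atom
  = Int Int
  | Symbol Name
  deriving (Eq, Read, Show)

-- Hand-built tree for the source text "(add x 1)".
-- `sample` is a hypothetical name used only for this illustration.
sample :: Expr
sample = LIST [ATOM (Symbol "add"), ATOM (Symbol "x"), ATOM (Int 1)]
```

In GHCi, `show sample` prints the tree using the derived `Show` instance, and `read (show sample) == sample` holds thanks to the derived `Read` and `Eq` instances, which is handy for quick checks while developing the parser.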

The reason we did not define `lambda`, `let` and the other built-in functions as part of the syntax is that we can get away with it in this case. These functions are just a more specific case of a `LIST`. So I decided to leave this to a later phase.

Usually, you would like to define these special cases in the abstract syntax to improve error messages, to enable static analysis and optimizations and such, but we won't do that here, so this is enough for us.

Another thing you would usually like to do is add some annotation to the syntax, for example the location: which file this `Expr` came from, and which row and column in the file. You can use this in later stages to print the location of errors, even if they are not in the parser stage.

* _Exercise 1_ : Add a `Program` data type to include multiple `Expr` sequentially
* _Exercise 2_ : Add location annotation to the syntax tree.

### 2\. Implement a simple parser combinator library

The first thing we are going to do is define an Embedded Domain Specific Language (or EDSL) which we will use to define our language's parser. This is often referred to as a parser combinator library. We are doing this strictly for learning purposes: Haskell has great parsing libraries and you should definitely use them when building real software, or even when just experimenting. One such library is [megaparsec][8].

First let's talk about the idea behind our parser library implementation. In essence, our parser is a function that takes some input, might consume some or all of it, and returns the value it managed to parse along with the rest of the input it didn't parse yet, or throws an error if it failed. Let's write that down.

```
newtype Parser a
  = Parser (ParseString -> Either ParseError (a, ParseString))

data ParseString
  = ParseString Name (Int, Int) String

data ParseError
  = ParseError ParseString Error

type Error = String
```

Here we defined three main new types.

First, `Parser a`, is the parsing function we described before.

Second, `ParseString` is our input or state we carry along. It has three significant parts:

* `Name`: This is the name of the source
* `(Int, Int)`: This is the current location in the source
* `String`: This is the remaining string left to parse

Third, `ParseError` contains the current state of the parser and an error message.

Now we want our parser to be flexible, so we will define a few instances for common type classes for it. These instances will allow us to combine small parsers to make bigger parsers (hence the name 'parser combinators').

The first one is a `Functor` instance. We want a `Functor` instance because we want to be able to define a parser using another parser simply by applying a function on the parsed value. We will see an example of this when we define the parser for our language.

```
instance Functor Parser where
  -- `first` (e.g. from Data.Bifunctor) applies f to the parsed value
  -- and leaves the remaining input untouched.
  fmap f (Parser parser) =
    Parser (\str -> first f <$> parser str)
```

The second instance is an `Applicative` instance. One common use case for this instance is to lift a pure function on multiple parsers.

```
instance Applicative Parser where
  pure x = Parser (\str -> Right (x, str))
  (Parser p1) <*> (Parser p2) =
    Parser $
      \str -> do
        (f, rest)  <- p1 str
        (x, rest') <- p2 rest
        pure (f x, rest')
```

(Note: _We will also implement a Monad instance so we can use do notation here._ )

The third instance is an `Alternative` instance. We want to be able to supply an alternative parser in case one fails.

```
instance Alternative Parser where
  empty = Parser (`throwErr` "Failed consuming input")
  (Parser p1) <|> (Parser p2) =
    Parser $
      \pstr -> case p1 pstr of
        Right result -> Right result
        Left _ -> p2 pstr
```

The fourth instance is a `Monad` instance, so we'll be able to chain parsers.

```
instance Monad Parser where
  (Parser p1) >>= f =
    Parser $
      \str -> case p1 str of
        Left err -> Left err
        Right (rs, rest) ->
          case f rs of
            Parser parser -> parser rest
```

Next, let's define a way to run a parser and a utility function for failure:

```
runParser :: String -> String -> Parser a -> Either ParseError (a, ParseString)
runParser name str (Parser parser) = parser $ ParseString name (0,0) str

throwErr :: ParseString -> String -> Either ParseError a
throwErr ps@(ParseString name (row,col) _) errMsg =
  Left $ ParseError ps $ unlines
    [ "*** " ++ name ++ ": " ++ errMsg
    , "* On row " ++ show row ++ ", column " ++ show col ++ "."
    ]
```

Now we'll start implementing the combinators which are the API and heart of the EDSL.

First, we'll define `oneOf`. `oneOf` will succeed if one of the characters in the list supplied to it is the next character of the input and will fail otherwise.

```
-- Note: `\case` requires the LambdaCase language extension.
oneOf :: [Char] -> Parser Char
oneOf chars =
  Parser $ \case
    ps@(ParseString name (row, col) str) ->
      case str of
        [] -> throwErr ps "Cannot read character of empty string"
        (c:cs) ->
          if c `elem` chars
            then Right (c, ParseString name (row, col+1) cs)
            else throwErr ps $ unlines ["Unexpected character " ++ [c], "Expecting one of: " ++ show chars]
```

`optional` will stop a parser from throwing an error. It will just return `Nothing` on failure.

```
optional :: Parser a -> Parser (Maybe a)
optional (Parser parser) =
  Parser $
    \pstr -> case parser pstr of
      Left _ -> Right (Nothing, pstr)
      Right (x, rest) -> Right (Just x, rest)
```

`many` will try to run a parser repeatedly until it fails. When it does, it'll return a list of successful parses. `many1` will do the same, but will throw an error if it fails to parse at least once.

```
many :: Parser a -> Parser [a]
many parser = go []
  where go cs = (parser >>= \c -> go (c:cs)) <|> pure (reverse cs)

many1 :: Parser a -> Parser [a]
many1 parser =
  (:) <$> parser <*> many parser
```

These next few parsers use the combinators we defined to make more specific parsers:

```
char :: Char -> Parser Char
char c = oneOf [c]

string :: String -> Parser String
string = traverse char

space :: Parser Char
space = oneOf " \n"

spaces :: Parser String
spaces = many space

spaces1 :: Parser String
spaces1 = many1 space

withSpaces :: Parser a -> Parser a
withSpaces parser =
  spaces *> parser <* spaces

parens :: Parser a -> Parser a
parens parser =
     (withSpaces $ char '(')
  *> withSpaces parser
  <* (spaces *> char ')')

sepBy :: Parser a -> Parser b -> Parser [b]
sepBy sep parser = do
  frst <- optional parser
  rest <- many (sep *> parser)
  pure $ maybe rest (:rest) frst
```

Now we have everything we need to start defining a parser for our language.
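
To see how the instances and combinators fit together, here is a compact, self-contained sketch. It is a simplification and not the article's code: the state is a bare `String` (no source name or row/column tracking), errors are plain messages, and the names `P`, `runP`, `oneOfP`, `number` and `pair` are made up for this example. It also borrows `some` from `Control.Applicative`, which plays the role of `many1` above.

```haskell
import Control.Applicative (Alternative (..))

-- Simplified stand-in for the article's Parser: the state is a bare String
-- and errors are plain messages (no source name, no row/column tracking).
newtype P a = P { runP :: String -> Either String (a, String) }

instance Functor P where
  fmap f (P p) = P $ \s -> case p s of
    Left err        -> Left err
    Right (x, rest) -> Right (f x, rest)

instance Applicative P where
  pure x = P $ \s -> Right (x, s)
  P pf <*> P px = P $ \s -> case pf s of
    Left err        -> Left err
    Right (f, rest) -> case px rest of
      Left err         -> Left err
      Right (x, rest') -> Right (f x, rest')

instance Alternative P where
  empty = P $ \_ -> Left "empty"
  P p1 <|> P p2 = P $ \s -> case p1 s of
    Right r -> Right r
    Left _  -> p2 s

-- Succeeds when the next character is one of `cs`.
oneOfP :: [Char] -> P Char
oneOfP cs = P $ \s -> case s of
  (c:rest) | c `elem` cs -> Right (c, rest)
  _                      -> Left ("expected one of " ++ show cs)

-- A number: one or more digits, converted with `read` (Functor at work).
number :: P Int
number = read <$> some (oneOfP ['0'..'9'])

-- Two numbers separated by a comma: a pure function, (,), lifted over
-- two parsers (Applicative at work).
pair :: P (Int, Int)
pair = (,) <$> number <* oneOfP "," <*> number
```

Running `runP pair "12,34"` yields `Right ((12,34),"")`, while an input such as `"12;34"` produces a `Left` with an error message. The same shape, with location tracking added, is what the full `Parser` type provides.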

* _Exercise_ : implement an EOF (end of file/input) parser combinator.

### 3\. Implementing a parser for our language

To define our parser, we'll use the top-down method.

```
parseExpr :: Parser Expr
parseExpr = fmap ATOM parseAtom <|> fmap LIST parseList

parseList :: Parser [Expr]
parseList = parens $ sepBy spaces1 parseExpr

parseAtom :: Parser Atom
parseAtom = parseSymbol <|> parseInt

parseSymbol :: Parser Atom
parseSymbol = fmap Symbol parseName
```

Notice that these four functions are a very high-level description of our language. This demonstrates why Haskell is so nice for parsing. Still, after defining the high-level parts, we need to define the lower-level `parseName` and `parseInt`.

What characters can we use as names in our language? Let's decide to use lowercase letters, digits and underscores, where the first character must be a letter.

```
parseName :: Parser Name
parseName = do
  c  <- oneOf ['a'..'z']
  cs <- many $ oneOf $ ['a'..'z'] ++ "0123456789" ++ "_"
  pure (c:cs)
```

For integers, we want a sequence of digits optionally preceded by '-':

```
parseInt :: Parser Atom
parseInt = do
  sign <- optional $ char '-'
  num <- many1 $ oneOf "0123456789"
  -- Prepend the sign, if any, before converting the digits with `read`.
  let result = read $ maybe num (:num) sign
  pure $ Int result
```

Lastly, we'll define a function to run a parser and get back an `Expr` or an error message.

```
runExprParser :: Name -> String -> Either String Expr
runExprParser name str =
  case runParser name str (withSpaces parseExpr) of
    Left (ParseError _ errMsg) -> Left errMsg
    Right (result, _) -> Right result
```

* _Exercise 1_ : Write a parser for the `Program` type you defined in the first section
* _Exercise 2_ : Rewrite `parseName` in Applicative style
* _Exercise 3_ : Find a way to handle the overflow case in `parseInt` instead of using `read`.

### 4\. Implement a pretty printer for our language

One more thing we'd like to do is be able to print our programs as source code. This is useful for better error messages.

```
-- `bool` comes from Data.Bool and `intercalate` from Data.List.
printExpr :: Expr -> String
printExpr = printExpr' False 0

printAtom :: Atom -> String
printAtom = \case
  Symbol s -> s
  Int i -> show i

printExpr' :: Bool -> Int -> Expr -> String
printExpr' doindent level = \case
  ATOM a -> indent (bool 0 level doindent) (printAtom a)
  LIST (e:es) ->
    indent (bool 0 level doindent) $
      concat
        [ "("
        , printExpr' False (level + 1) e
        , bool "\n" "" (null es)
        , intercalate "\n" $ map (printExpr' True (level + 1)) es
        , ")"
        ]

indent :: Int -> String -> String
indent tabs e = concat (replicate tabs " ") ++ e
```

* _Exercise_ : Write a pretty printer for the `Program` type you defined in the first section

Okay, we wrote around 200 lines so far of what's typically called the front-end of the compiler. We have around 150 more lines to go and three more tasks: we need to define a subset of JS for our usage, define the translator from our language to that subset, and glue the whole thing together. Let's go!

### 5\. Define a subset of JavaScript for our usage

First, we'll define the subset of JavaScript we are going to use:

```
data JSExpr
  = JSInt Int
  | JSSymbol Name
  | JSBinOp JSBinOp JSExpr JSExpr
  | JSLambda [Name] JSExpr
  | JSFunCall JSExpr [JSExpr]
  | JSReturn JSExpr
  deriving (Eq, Show, Read)

type JSBinOp = String
```

This data type represents a JavaScript expression. We have two atoms, `JSInt` and `JSSymbol`, to which we'll translate our language's `Atom`. We have `JSBinOp` to represent a binary operation such as `+` or `*`, `JSLambda` for anonymous functions, same as our `lambda` expression, `JSFunCall`, which we'll use both for calling functions and for introducing new names as in `let`, and `JSReturn` to return values from functions, as that's required in JavaScript.

This `JSExpr` type is an **abstract representation** of a JavaScript expression. We will translate our own `Expr`, which is an abstract representation of our language's expressions, to `JSExpr` and from there to JavaScript. But in order to do that we need to take this `JSExpr` and produce JavaScript code from it. We'll do that by pattern matching on `JSExpr` recursively and emitting JS code as a `String`. This is basically the same thing we did in `printExpr`. We'll also track the scoping of elements so we can indent the generated code in a nice way.

```
printJSOp :: JSBinOp -> String
printJSOp op = op

printJSExpr :: Bool -> Int -> JSExpr -> String
printJSExpr doindent tabs = \case
  JSInt i -> show i
  JSSymbol name -> name
  JSLambda vars expr -> (if doindent then indent tabs else id) $ unlines
    ["function(" ++ intercalate ", " vars ++ ") {"
    ,indent (tabs+1) $ printJSExpr False (tabs+1) expr
    ] ++ indent tabs "}"
  JSBinOp op e1 e2 -> "(" ++ printJSExpr False tabs e1 ++ " " ++ printJSOp op ++ " " ++ printJSExpr False tabs e2 ++ ")"
  JSFunCall f exprs -> "(" ++ printJSExpr False tabs f ++ ")(" ++ intercalate ", " (fmap (printJSExpr False tabs) exprs) ++ ")"
  JSReturn expr -> (if doindent then indent tabs else id) $ "return " ++ printJSExpr False tabs expr ++ ";"
```

* _Exercise 1_ : Add a `JSProgram` type that will hold multiple `JSExpr` and create a function `printJSExprProgram` to generate code for it.
* _Exercise 2_ : Add a new type of `JSExpr` - `JSIf`, and generate code for it.

### 6\. Implement a code translator to the JavaScript subset we defined

We are almost there. In this section we'll create a function to translate `Expr` to `JSExpr`.

The basic idea is simple: we'll translate `ATOM` to `JSSymbol` or `JSInt`, and `LIST` to either a function call or a special case we'll translate later.

```
type TransError = String

translateToJS :: Expr -> Either TransError JSExpr
translateToJS = \case
  ATOM (Symbol s) -> pure $ JSSymbol s
  ATOM (Int i) -> pure $ JSInt i
  LIST xs -> translateList xs

translateList :: [Expr] -> Either TransError JSExpr
translateList = \case
  [] -> Left "translating empty list"
  ATOM (Symbol s):xs
    | Just f <- lookup s builtins ->
      f xs
  f:xs ->
    JSFunCall <$> translateToJS f <*> traverse translateToJS xs
```

`builtins` is a list of special cases to translate, like `lambda` and `let`. Every case gets the list of arguments for it, verifies that it is syntactically valid, and translates it to the equivalent `JSExpr`.

```
type Builtin = [Expr] -> Either TransError JSExpr
type Builtins = [(Name, Builtin)]

builtins :: Builtins
builtins =
  [("lambda", transLambda)
  ,("let", transLet)
  ,("add", transBinOp "add" "+")
  ,("mul", transBinOp "mul" "*")
  ,("sub", transBinOp "sub" "-")
  ,("div", transBinOp "div" "/")
  ,("print", transPrint)
  ]
```

In our case, we treat built-in special forms as special and not first class, so we will not be able to use them as first-class functions and such.

We'll translate a lambda to an anonymous function:

```
transLambda :: [Expr] -> Either TransError JSExpr
transLambda = \case
  [LIST vars, body] -> do
    vars' <- traverse fromSymbol vars
    JSLambda vars' <$> (JSReturn <$> translateToJS body)

  vars ->
    Left $ unlines
      ["Syntax error: unexpected arguments for lambda."
      ,"expecting 2 arguments, the first is the list of vars and the second is the body of the lambda."
      ,"In expression: " ++ show (LIST $ ATOM (Symbol "lambda") : vars)
      ]

fromSymbol :: Expr -> Either String Name
fromSymbol (ATOM (Symbol s)) = Right s
fromSymbol e = Left $ "cannot bind value to non symbol type: " ++ show e
```

We'll translate `let` to a definition of a function with the relevant named arguments and call it with the values, thus introducing the variables in that scope:

```
transLet :: [Expr] -> Either TransError JSExpr
transLet = \case
  [LIST binds, body] -> do
    (vars, vals) <- letParams binds
    vars' <- traverse fromSymbol vars
    JSFunCall . JSLambda vars' <$> (JSReturn <$> translateToJS body) <*> traverse translateToJS vals
   where
    -- Split the (name value) pairs into a list of names and a list of values.
    -- `***` comes from Control.Arrow.
    letParams :: [Expr] -> Either Error ([Expr],[Expr])
    letParams = \case
      [] -> pure ([],[])
      LIST [x,y] : rest -> ((x:) *** (y:)) <$> letParams rest
      x : _ -> Left ("Unexpected argument in let list in expression:\n" ++ printExpr x)

  vars ->
    Left $ unlines
      ["Syntax error: unexpected arguments for let."
      ,"expecting 2 arguments, the first is the list of var/val pairs and the second is the let body."
      ,"In expression:\n" ++ printExpr (LIST $ ATOM (Symbol "let") : vars)
      ]
```
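
To make the shape of that translation concrete, here is a small standalone sketch of what a `let` turns into. It redefines a copy of `JSExpr` so it compiles by itself; `letAsCall` and `example` are hypothetical names introduced for this illustration and do not appear in the original source.

```haskell
type Name = String

-- Standalone copy of the article's JSExpr type.
data JSExpr
  = JSInt Int
  | JSSymbol Name
  | JSBinOp String JSExpr JSExpr
  | JSLambda [Name] JSExpr
  | JSFunCall JSExpr [JSExpr]
  | JSReturn JSExpr
  deriving (Eq, Show)

-- Hypothetical helper: wrap a body in a function over the bound names and
-- call it immediately with the bound values. This is the shape of the
-- JSExpr that a let expression is translated to.
letAsCall :: [(Name, JSExpr)] -> JSExpr -> JSExpr
letAsCall binds body = JSFunCall (JSLambda vars (JSReturn body)) vals
  where (vars, vals) = unzip binds

-- Corresponds to the source program: (let ((x 1) (y 2)) (add x y))
example :: JSExpr
example =
  letAsCall
    [("x", JSInt 1), ("y", JSInt 2)]
    (JSBinOp "+" (JSSymbol "x") (JSSymbol "y"))
```

In other words, the bindings become the parameters of an immediately invoked function and the bound values become its arguments, so `x` and `y` are only in scope inside the body.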

We'll translate an operation that can work on multiple arguments to a chain of binary operations. For example, `(add 1 2 3)` will become `((1 + 2) + 3)`, since `foldl1` nests to the left:

```
transBinOp :: Name -> Name -> [Expr] -> Either TransError JSExpr
transBinOp f _ []   = Left $ "Syntax error: '" ++ f ++ "' expected at least 1 argument, got: 0"
transBinOp _ _ [x]  = translateToJS x
transBinOp _ f list = foldl1 (JSBinOp f) <$> traverse translateToJS list
```
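
The nesting order matters for `sub` and `div`, which are not associative. The following standalone sketch uses a trimmed-down copy of `JSExpr` and two hypothetical helpers, `chain` and `render`, to show how `foldl1` groups the operands; it mirrors the folding in `transBinOp` and the `JSBinOp` case of `printJSExpr`, but is not the article's code.

```haskell
-- Trimmed-down copy of the article's JSExpr, just enough for this example.
data JSExpr
  = JSInt Int
  | JSBinOp String JSExpr JSExpr
  deriving (Eq, Show)

-- Same folding strategy as transBinOp: foldl1 nests to the left.
-- `chain` is a hypothetical name for this illustration.
chain :: String -> [JSExpr] -> JSExpr
chain op = foldl1 (JSBinOp op)

-- Minimal printer mirroring the JSBinOp case of printJSExpr.
render :: JSExpr -> String
render (JSInt i)        = show i
render (JSBinOp op a b) = "(" ++ render a ++ " " ++ op ++ " " ++ render b ++ ")"
```

For instance, `render (chain "-" (map JSInt [10, 2, 3]))` gives `"((10 - 2) - 3)"`, so `(sub 10 2 3)` evaluates to 5 in the generated JavaScript.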

And we'll translate a `print` as a call to `console.log`:

```
transPrint :: [Expr] -> Either TransError JSExpr
transPrint [expr] = JSFunCall (JSSymbol "console.log") . (:[]) <$> translateToJS expr
transPrint xs     = Left $ "Syntax error. print expected 1 arguments, got: " ++ show (length xs)
```

Notice that we could have skipped verifying the syntax if we had parsed those as special cases of `Expr`.

* _Exercise 1_ : Translate `Program` to `JSProgram`
* _Exercise 2_ : add a special case for `if Expr Expr Expr` and translate it to the `JSIf` case you implemented in the last exercise

### 7\. Glue it all together

Finally, we are going to glue this all together. We'll:

1. Read a file
2. Parse it to `Expr`
3. Translate it to `JSExpr`
4. Emit JavaScript code to the standard output

We'll also enable a few flags for testing:

* `--e` will parse and print the abstract representation of the expression (`Expr`)
* `--pp` will parse and pretty print
* `--jse` will parse, translate and print the abstract representation of the resulting JS (`JSExpr`)
* `--ppc` will parse, pretty print and compile

```
main :: IO ()
main = getArgs >>= \case
  [file] ->
    printCompile =<< readFile file
  ["--e",file] ->
    either putStrLn print . runExprParser "--e" =<< readFile file
  ["--pp",file] ->
    either putStrLn (putStrLn . printExpr) . runExprParser "--pp" =<< readFile file
  ["--jse",file] ->
    either print (either putStrLn print . translateToJS) . runExprParser "--jse" =<< readFile file
  ["--ppc",file] ->
    either putStrLn (either putStrLn putStrLn) . fmap (compile . printExpr) . runExprParser "--ppc" =<< readFile file
  _ ->
    putStrLn $ unlines
      ["Usage: runghc Main.hs [ --e, --pp, --jse, --ppc ] <filename>"
      ,"--e print the Expr"
      ,"--pp pretty print Expr"
      ,"--jse print the JSExpr"
      ,"--ppc pretty print Expr and then compile"
      ]

printCompile :: String -> IO ()
printCompile = either putStrLn putStrLn . compile

compile :: String -> Either Error String
compile str = printJSExpr False 0 <$> (translateToJS =<< runExprParser "compile" str)
```

That's it. We have a compiler from our language to JS. Again, you can view the full source file [here][9].

Running our compiler with the example from the first section yields this JavaScript code:

```
$ runhaskell Lisp.hs example.lsp
(function(compose, square, add1) {
  return (console.log)(((compose)(square, add1))(5));
})(function(f, g) {
  return function(x) {
    return (f)((g)(x));
  };
}, function(x) {
  return (x * x);
}, function(x) {
  return (x + 1);
})
```

If you have Node.js installed on your computer, you can run this code by running:

```
$ runhaskell Lisp.hs example.lsp | node -p
36
undefined
```

* _Final exercise_ : instead of compiling an expression, compile a program of multiple expressions.

--------------------------------------------------------------------------------

via: https://gilmi.me/blog/post/2016/10/14/lisp-to-js

Author: [Gil Mizrahi][a]
Topic selected by: [oska874][b]
Translator: [Translator ID](https://github.com/译者ID)
Proofreader: [Proofreader ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

[a]:https://gilmi.me/home
[b]:https://github.com/oska874
[1]:https://gilmi.me/blog/authors/Gil
[2]:https://gilmi.me/blog/tags/compilers
[3]:https://gilmi.me/blog/tags/fp
[4]:https://gilmi.me/blog/tags/haskell
[5]:https://gilmi.me/blog/tags/lisp
[6]:https://gilmi.me/blog/tags/parsing
[7]:https://gist.github.com/soupi/d4ff0727ccb739045fad6cdf533ca7dd
[8]:https://mrkkrp.github.io/megaparsec/
[9]:https://gist.github.com/soupi/d4ff0727ccb739045fad6cdf533ca7dd
[10]:https://gilmi.me/blog/post/2016/10/14/lisp-to-js

205

sources/tech/20180715 Why is Python so slow.md

Normal file

@ -0,0 +1,205 @@


Why is Python so slow?
============================================================

Python is booming in popularity. It is used in DevOps, Data Science, Web Development and Security.

It does not, however, win any medals for speed.

> How does Java compare in terms of speed to C or C++ or C# or Python? The answer depends greatly on the type of application you’re running. No benchmark is perfect, but The Computer Language Benchmarks Game is [a good starting point][5].

I’ve been referring to the Computer Language Benchmarks Game for over a decade; compared with other languages such as Java, C#, Go, JavaScript and C++, Python is [one of the slowest][6]. This includes [JIT][7] (C#, Java) and [AOT][8] (C, C++) compilers, as well as interpreted languages like JavaScript.

_NB: When I say “Python”, I’m talking about the reference implementation of the language, CPython. I will refer to other runtimes in this article._

> I want to answer this question: When Python completes a comparable application 2–10x slower than another language, _why is it slow_ and can’t we _make it faster_?

Here are the top theories:

* “_It’s the GIL (Global Interpreter Lock)_”
* “_It’s because it’s interpreted and not compiled_”
* “_It’s because it’s a dynamically typed language_”

Which one of these reasons has the biggest impact on performance?

### “It’s the GIL”

Modern computers come with CPUs that have multiple cores, and sometimes multiple processors. In order to utilise all this extra processing power, the Operating System defines a low-level structure called a thread, where a process (e.g. Chrome Browser) can spawn multiple threads and have instructions for the system inside. That way if one process is particularly CPU-intensive, that load can be shared across the cores and this effectively makes most applications complete tasks faster.

My Chrome Browser, as I’m writing this article, has 44 threads open. Keep in mind that the structure and API of threading are different between POSIX-based (e.g. Mac OS and Linux) and Windows OS. The operating system also handles the scheduling of threads.

If you haven’t done multi-threaded programming before, a concept you’ll need to quickly become familiar with is locks. Unlike in a single-threaded process, you need to ensure that when changing variables in memory, multiple threads don’t try to access or change the same memory address at the same time.

When CPython creates variables, it allocates the memory and then counts how many references to that variable exist; this is a concept known as reference counting. If the number of references is 0, then it frees that piece of memory from the system. This is why creating a “temporary” variable within, say, the scope of a for loop doesn’t blow up the memory consumption of your application.

The challenge then becomes how CPython locks the reference count when variables are shared between multiple threads. There is a “global interpreter lock” that carefully controls thread execution. The interpreter can only execute one operation at a time, regardless of how many threads it has.

#### What does this mean to the performance of Python application?

|

||||

|

||||

If you have a single-threaded, single interpreter application. It will make no difference to the speed. Removing the GIL would have no impact on the performance of your code.

|

||||

|

||||

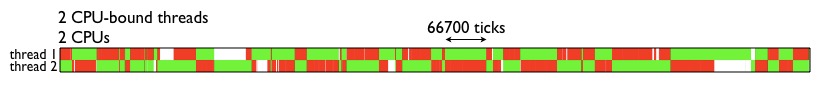

If you wanted to implement concurrency within a single interpreter (Python process) by using threading, and your threads were IO intensive (e.g. Network IO or Disk IO), you would see the consequences of GIL-contention.

|

||||

|

||||

|

||||

From David Beazley’s GIL visualised post [http://dabeaz.blogspot.com/2010/01/python-gil-visualized.html][1]


If you have a web application (e.g. Django) and you’re using WSGI, then each request to your web app is handled by a separate Python interpreter, so there is only 1 lock _per_ request. Because the Python interpreter is slow to start, some WSGI implementations have a “Daemon Mode” [which keeps Python process(es) on the go for you.][9]

#### What about other Python runtimes?


[PyPy has a GIL][10], and it is typically more than 3x faster than CPython.

[Jython does not have a GIL][11] because a Python thread in Jython is represented by a Java thread and benefits from the JVM memory-management system.


#### How does JavaScript do this?


Well, firstly, all JavaScript engines [use mark-and-sweep garbage collection][12]. As stated, the primary need for the GIL comes from CPython’s memory-management algorithm.

JavaScript does not have a GIL, but it is also single-threaded, so it doesn’t require one. JavaScript’s event loop and Promise/callback pattern are how asynchronous programming is achieved in place of concurrency. Python has a similar thing with the asyncio event loop.
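For comparison, here is a minimal sketch of the asyncio event loop on the Python side. The coroutine `fetch` is a hypothetical stand-in for an I/O call and uses `asyncio.sleep` instead of real network traffic.

```python
import asyncio


async def fetch(name, delay):
    """Stand-in for an I/O call; `asyncio.sleep` hands control back to the event loop."""
    await asyncio.sleep(delay)
    return f"{name} done"


async def main():
    # Both coroutines wait concurrently on a single thread.
    # `gather` returns results in the order the awaitables were passed in.
    return await asyncio.gather(fetch("a", 0.02), fetch("b", 0.01))


if __name__ == "__main__":
    print(asyncio.run(main()))  # ['a done', 'b done']
```

Everything here runs on one thread: while one coroutine is waiting, the event loop switches to another, much like callbacks and Promises in JavaScript.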

### “It’s because it’s an interpreted language”

I hear this a lot, and I find it a gross simplification of the way CPython actually works. If you wrote `python myscript.py` at a terminal, CPython would start a long sequence of reading, lexing, parsing, compiling, interpreting and executing that code.
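Some of these stages can be driven by hand from the standard library. The sketch below is an illustration, not a description of CPython's internal code path: it parses a one-line program with `ast.parse`, compiles the tree to a code object with the built-in `compile`, and then executes the bytecode with `exec`.

```python
import ast
import dis

source = "result = 6 * 7"

tree = ast.parse(source)                    # lexing + parsing -> abstract syntax tree
code = compile(tree, "<example>", "exec")   # compiling -> code object holding bytecode

namespace = {}
exec(code, namespace)                       # the interpreter loop executes the bytecode

if __name__ == "__main__":
    print(ast.dump(tree))        # textual view of the syntax tree
    dis.dis(code)                # human-readable bytecode listing
    print(namespace["result"])   # 42
```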

If you’re interested in how that process works, I’ve written about it before:


[Modifying the Python language in 6 minutes: This week I raised my first pull-request to the CPython core project, which was declined :-( but as to not completely… (hackernoon.com)][13]

An important point in that process is the creation of a `.pyc` file: at the compiler stage, the bytecode sequence is written to a file inside `__pycache__/` on Python 3, or in the same directory on Python 2. This doesn’t just apply to your script, but to all of the code you imported, including third-party modules.
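On Python 3 you can ask the standard library where a cached bytecode file would live, and trigger the compile step yourself. This is a small sketch: the helper name `compile_to_pyc` is invented here, and the exact path printed depends on your Python version and on settings such as `PYTHONPYCACHEPREFIX`.

```python
import importlib.util
import os
import py_compile
import tempfile


def compile_to_pyc(source_text):
    """Write `source_text` to a temporary script, byte-compile it, return the .pyc path."""
    workdir = tempfile.mkdtemp()
    script = os.path.join(workdir, "myscript.py")
    with open(script, "w") as handle:
        handle.write(source_text)
    # With no explicit target, py_compile writes to the default cache location.
    return py_compile.compile(script, doraise=True)


if __name__ == "__main__":
    # Where the cache file for a module called myscript.py would be stored.
    print(importlib.util.cache_from_source("myscript.py"))
    # Actually produce a .pyc file and show where it ended up.
    print(compile_to_pyc("print('hello')\n"))
```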

So most of the time (unless you write code which you only ever run once?), Python is interpreting bytecode and executing it locally. Compare that with Java and C#.NET:


> Java compiles to an “Intermediate Language”, and the Java Virtual Machine reads the bytecode and just-in-time compiles it to machine code. The .NET CIL is the same: the .NET Common Language Runtime (CLR) uses just-in-time compilation to machine code.

So, why is Python so much slower than both Java and C# in the benchmarks if they all use a virtual machine and some sort of bytecode? Firstly, .NET and Java are JIT-compiled.

JIT or Just-in-time compilation requires an intermediate language to allow the code to be split into chunks (or frames). Ahead of time (AOT) compilers are designed to ensure that the CPU can understand every line in the code before any interaction takes place.


The JIT itself does not make the execution any faster, because it is still executing the same bytecode sequences. However, a JIT enables optimizations to be made at runtime. A good JIT optimizer will see which parts of the application are being executed a lot; these are called “hot spots”. It will then optimize those bits of code by replacing them with more efficient versions.
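The idea of spotting a hot spot and swapping in a faster version can be mimicked in plain Python. The sketch below is only a toy analogy: a real JIT generates machine code, whereas this decorator (with the invented names `hot_swap`, `sum_to` and `sum_to_fast`) simply counts calls and switches to a hand-written faster function once a threshold is passed.

```python
def hot_swap(threshold, fast_version):
    """Count calls to a function and switch to `fast_version` once it is 'hot'."""
    def decorator(slow_version):
        calls = 0

        def wrapper(*args):
            nonlocal calls
            calls += 1
            if calls > threshold:
                return fast_version(*args)   # 'optimised' path for hot code
            return slow_version(*args)       # generic path while still cold

        wrapper.call_count = lambda: calls   # expose the counter for inspection
        return wrapper
    return decorator


def sum_to_fast(n):
    # Closed-form replacement, valid for non-negative integers n.
    return n * (n + 1) // 2


@hot_swap(threshold=3, fast_version=sum_to_fast)
def sum_to(n):
    # Generic loop, used for the first few calls.
    total = 0
    for i in range(n + 1):
        total += i
    return total


if __name__ == "__main__":
    print([sum_to(100) for _ in range(5)])  # [5050, 5050, 5050, 5050, 5050]
    print(sum_to.call_count())              # 5
```

The first three calls run the loop and the last two use the closed form, yet the caller sees the same results throughout. Keeping the results identical is the property a real optimiser must also guarantee.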

This means that when your application does the same thing again and again, it can be significantly faster. Also, keep in mind that Java and C# are statically-typed languages, so the optimiser can make many more assumptions about the code.

PyPy has a JIT and, as mentioned in the previous section, is significantly faster than CPython. This performance benchmark article goes into more detail:

[Which is the fastest version of Python? Of course, “it depends”, but what does it depend on and how can you assess which is the fastest version of Python for… (hackernoon.com)][15]

#### So why doesn’t CPython use a JIT?


There are downsides to JITs: one of those is startup time. CPython’s startup time is already comparatively slow, and PyPy is 2–3x slower to start than CPython. The Java Virtual Machine is notoriously slow to boot. The .NET CLR gets around this by starting at system startup, but the developers of the CLR also develop the operating system on which the CLR runs.

If you have a single Python process running for a long time, with code that can be optimized because it contains “hot spots”, then a JIT makes a lot of sense.


However, CPython is a general-purpose implementation. So if you were developing command-line applications using Python, having to wait for a JIT to start every time the CLI was called would be horribly slow.


CPython has to try and serve as many use cases as possible. There was the possibility of [plugging a JIT into CPython][17] but this project has largely stalled.


> If you want the benefits of a JIT and you have a workload that suits it, use PyPy.


### “It’s because it’s a dynamically typed language”

In a “statically-typed” language, you have to specify the type of a variable when it is declared. Examples include C, C++, Java, C# and Go.

In a dynamically-typed language, there is still the concept of types, but the type of a variable is dynamic.

```
a = 1
a = "foo"
```

In this toy example, Python rebinds the name `a` to a new object of type `str` and drops the reference to the first object (the integer `1`), so that object’s memory can be reclaimed once nothing else refers to it.
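A quick way to watch this happen is to record the type and identity of whatever `a` refers to before and after the second assignment. The variable names `first_type`, `first_id` and so on are added purely for this illustration.

```python
a = 1
first_type = type(a).__name__     # 'int'
first_id = id(a)

a = "foo"                         # the same name now refers to a different object
second_type = type(a).__name__    # 'str'
second_id = id(a)

if __name__ == "__main__":
    print(first_type, second_type)   # int str
    print(first_id != second_id)     # True
```

The name stays the same, but the object behind it, and therefore its type, changes at runtime.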

Statically-typed languages aren’t designed that way to make your life hard; they are designed that way because of the way the CPU operates. If everything eventually needs to equate to a simple binary operation, you have to convert objects and types down to a low-level data structure.

Python does this for you, you just never see it, nor do you need to care.


Not having to declare the type isn’t what makes Python slow. The design of the Python language enables you to make almost anything dynamic: you can replace the methods on objects at runtime, and you can monkey-patch low-level system calls to a value declared at runtime. Almost anything is possible.
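As a small sketch of how dynamic this is, the example below replaces a method on a class while the program is running. The class `Greeter` and the function `shout` are hypothetical names used only here.

```python
class Greeter:
    def greet(self):
        return "hello"


def shout(self):
    return "HELLO"


g = Greeter()
before = g.greet()        # 'hello'

Greeter.greet = shout     # replace the method on the class at runtime
after = g.greet()         # 'HELLO', even for the instance created earlier

if __name__ == "__main__":
    print(before, after)  # hello HELLO
```

Because any attribute lookup can change between one call and the next, the interpreter cannot simply assume that `g.greet` still points at the function it resolved last time.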

It’s this design that makes it incredibly hard to optimise Python.


To illustrate my point, I’m going to use a syscall tracing tool that works on Mac OS called DTrace. CPython distributions do not come with DTrace built in, so you have to recompile CPython. I’m using 3.6.6 for my demo.

```
# Download and unpack the CPython 3.6.6 source
wget https://github.com/python/cpython/archive/v3.6.6.zip
unzip v3.6.6.zip
# The GitHub archive unpacks into cpython-3.6.6, not v3.6.6
cd cpython-3.6.6
# Build with the DTrace probes enabled
./configure --with-dtrace
make
```

Now `python.exe` will have DTrace tracers throughout the code. [Paul Ross wrote an awesome Lightning Talk on DTrace][19]. You can [download DTrace starter files][20] for Python to measure function calls, execution time, CPU time, syscalls, all sorts of fun. For example:

`sudo dtrace -s toolkit/<tracer>.d -c '../cpython/python.exe script.py'`

The `py_callflow` tracer shows all the function calls in your application.

So, does Python’s dynamic typing make it slow?


* Comparing and converting types is costly: every time a variable is read, written to or referenced, the type is checked.

* It is hard to optimise a language that is so dynamic. The reason many alternatives to Python are so much faster is that they compromise on flexibility in the name of performance.

* [Cython][2], which combines C static types with Python to optimise code where the types are known, [can provide][3] an 84x performance improvement.

### Conclusion


> Python is primarily slow because of its dynamic nature and versatility. It can be used as a tool for all sorts of problems, where more optimised and faster alternatives are probably available.


There are, however, ways of optimising your Python applications: leveraging async, understanding the profiling tools, and considering multiple interpreters.
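As one example of the profiling tools, the standard library ships `cProfile` and `pstats`. The sketch below profiles a deliberately slow function and returns the report as text. The function names `slow_sum` and `profile_report` are made up for this illustration, and the timings in the report will differ from machine to machine.

```python
import cProfile
import io
import pstats


def slow_sum(n):
    """Deliberately naive loop so that it shows up in the profile."""
    total = 0
    for i in range(n):
        total += i * i
    return total


def profile_report(n=100_000):
    """Profile one call to slow_sum and return the formatted statistics."""
    profiler = cProfile.Profile()
    profiler.enable()
    slow_sum(n)
    profiler.disable()

    buffer = io.StringIO()
    stats = pstats.Stats(profiler, stream=buffer)
    # Sort by cumulative time and print the top five entries into the buffer.
    stats.sort_stats("cumulative").print_stats(5)
    return buffer.getvalue()


if __name__ == "__main__":
    print(profile_report())
```

For a whole script, `python -m cProfile myscript.py` gives a similar report without any code changes.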

For applications where startup time is unimportant and the code would benefit from a JIT, consider PyPy.

For parts of your code where performance is critical and you have more statically-typed variables, consider using [Cython][4].


#### Further reading


Jake VDP’s excellent article (although slightly dated) [https://jakevdp.github.io/blog/2014/05/09/why-python-is-slow/][21]

Dave Beazley’s talk on the GIL [http://www.dabeaz.com/python/GIL.pdf][22]

All about JIT compilers [https://hacks.mozilla.org/2017/02/a-crash-course-in-just-in-time-jit-compilers/][23]

--------------------------------------------------------------------------------


via: https://hackernoon.com/why-is-python-so-slow-e5074b6fe55b


Author: [Anthony Shaw][a]

Topic selection: [oska874][b]

Translator: [Translator ID](https://github.com/译者ID)

Proofreader: [Proofreader ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]:https://hackernoon.com/@anthonypjshaw?source=post_header_lockup
[b]:https://github.com/oska874
[1]:http://dabeaz.blogspot.com/2010/01/python-gil-visualized.html
[2]:http://cython.org/
[3]:http://notes-on-cython.readthedocs.io/en/latest/std_dev.html
[4]:http://cython.org/
[5]:http://algs4.cs.princeton.edu/faq/
[6]:https://benchmarksgame-team.pages.debian.net/benchmarksgame/faster/python.html
[7]:https://en.wikipedia.org/wiki/Just-in-time_compilation
[8]:https://en.wikipedia.org/wiki/Ahead-of-time_compilation
[9]:https://www.slideshare.net/GrahamDumpleton/secrets-of-a-wsgi-master
[10]:http://doc.pypy.org/en/latest/faq.html#does-pypy-have-a-gil-why
[11]:http://www.jython.org/jythonbook/en/1.0/Concurrency.html#no-global-interpreter-lock
[12]:https://developer.mozilla.org/en-US/docs/Web/JavaScript/Memory_Management
[13]:https://hackernoon.com/modifying-the-python-language-in-7-minutes-b94b0a99ce14
[14]:https://hackernoon.com/modifying-the-python-language-in-7-minutes-b94b0a99ce14
[15]:https://hackernoon.com/which-is-the-fastest-version-of-python-2ae7c61a6b2b
[16]:https://hackernoon.com/which-is-the-fastest-version-of-python-2ae7c61a6b2b
[17]:https://www.slideshare.net/AnthonyShaw5/pyjion-a-jit-extension-system-for-cpython
[18]:https://github.com/python/cpython/archive/v3.6.6.zip
[19]:https://github.com/paulross/dtrace-py#the-lightning-talk
[20]:https://github.com/paulross/dtrace-py/tree/master/toolkit
[21]:https://jakevdp.github.io/blog/2014/05/09/why-python-is-slow/
[22]:http://www.dabeaz.com/python/GIL.pdf
[23]:https://hacks.mozilla.org/2017/02/a-crash-course-in-just-in-time-jit-compilers/
