mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-01-25 23:11:02 +08:00

johnhoow translating

This commit is contained in:

commit

fb74cc08d5

@ -1,12 +1,14 @@

|

||||

在逻辑卷管理中设置精简资源调配卷——第四部分

|

||||

在LVM中设置精简资源调配卷(第四部分)

|

||||

================================================================================

|

||||

逻辑卷管理有许多特性,比如像快照和精简资源调配。在先前(第三部分中),我们已经介绍了如何为逻辑卷创建快照。在本文中,我们将了解如何在LVM中设置精简资源调配。

|

||||

逻辑卷管理有许多特性,比如像快照和精简资源调配。在先前([第三部分][3]中),我们已经介绍了如何为逻辑卷创建快照。在本文中,我们将了解如何在LVM中设置精简资源调配。

|

||||

|

||||

|

||||

在LVM中设置精简资源调配

|

||||

|

||||

*在LVM中设置精简资源调配*

|

||||

|

||||

### 精简资源调配是什么? ###

|

||||

精简资源调配用于lvm以在精简池中创建虚拟磁盘。我们假定我服务器上有**15GB**的存储容量,而我已经有2个客户各自占去了5GB存储空间。你是第三个客户,你也请求5GB的存储空间。在以前,我们会提供整个5GB的空间(富卷)。然而,你可能只使用5GB中的2GB,其它3GB以后再去填满它。

|

||||

|

||||

精简资源调配用于LVM以在精简池中创建虚拟磁盘。我们假定我服务器上有**15GB**的存储容量,而我已经有2个客户各自占去了5GB存储空间。你是第三个客户,你也请求5GB的存储空间。在以前,我们会提供整个5GB的空间(富卷)。然而,你可能只使用5GB中的2GB,其它3GB以后再去填满它。

|

||||

|

||||

而在精简资源调配中我们所做的是,在其中一个大卷组中定义一个精简池,再在精简池中定义一个精简卷。这样,不管你写入什么文件,它都会保存进去,而你的存储空间看上去就是5GB。然而,这所有5GB空间不会全部铺满整个硬盘。对其它客户也进行同样的操作,就像我说的,那儿已经有两个客户,你是第三个客户。

|

||||

|

||||

@ -20,15 +22,13 @@

|

||||

|

||||

在精简资源调配中,如果我为你定义了5GB空间,它就不会在定义卷时就将整个磁盘空间全部分配,它会根据你的数据写入而增长,希望你看懂了!跟你一样,其它客户也不会使用全部卷,所以还是有机会为一个新客户分配5GB空间的,这称之为过度资源调配。

|

||||

|

||||

但是,必须对各个卷的增长情况进行监控,否则结局会是个灾难。在过度资源调配完成后,如果所有4个客户都极度地写入数据到磁盘,你将碰到问题了。因为这个动作会填满15GB的存储空间,甚至溢出,从而导致这些卷下线。

|

||||

但是,必须对各个卷的增长情况进行监控,否则结局会是个灾难。在过度资源调配完成后,如果所有4个客户都尽量写入数据到磁盘,你将碰到问题了。因为这个动作会填满15GB的存储空间,甚至溢出,从而导致这些卷下线。

|

||||

|

||||

### 需求 ###

|

||||

### 前置阅读 ###

|

||||

|

||||

注:此三篇文章如果发布后可换成发布后链接,原文在前几天更新中

|

||||

|

||||

- [使用LVM在Linux中创建逻辑卷——第一部分][1]

|

||||

- [在Linux中扩展/缩减LVM——第二部分][2]

|

||||

- [在LVM中创建/恢复逻辑卷快照——第三部分][3]

|

||||

- [在Linux中使用LVM构建灵活的磁盘存储(第一部分)][1]

|

||||

- [在Linux中扩展/缩减LVM(第二部分)][2]

|

||||

- [在 LVM中 录制逻辑卷快照并恢复(第三部分)][3]

|

||||

|

||||

#### 我的服务器设置 ####

|

||||

|

||||

@ -42,7 +42,8 @@

|

||||

# vgcreate -s 32M vg_thin /dev/sdb1

|

||||

|

||||

|

||||

列出卷组

|

||||

|

||||

*列出卷组*

|

||||

|

||||

接下来,在创建精简池和精简卷之前,检查逻辑卷有多少空间可用。

|

||||

|

||||

@ -50,7 +51,8 @@

|

||||

# lvs

|

||||

|

||||

|

||||

检查逻辑卷

|

||||

|

||||

*检查逻辑卷*

|

||||

|

||||

我们可以在上面的lvs命令输出中看到,只显示了一些默认逻辑用于文件系统和交换分区。

|

||||

|

||||

@ -62,18 +64,20 @@

|

||||

|

||||

- **-L** – 卷组大小

|

||||

- **–thinpool** – 创建精简池

|

||||

- **tp_tecmint_poolThin** - 精简池名称

|

||||

- **vg_thin** – 我们需要创建精简池的卷组名称

|

||||

- **tp\_tecmint\_poolThin** - 精简池名称

|

||||

- **vg\_thin** – 我们需要创建精简池的卷组名称

|

||||

|

||||

|

||||

创建精简池

|

||||

|

||||

*创建精简池*

|

||||

|

||||

使用‘lvdisplay’命令来查看详细信息。

|

||||

|

||||

# lvdisplay vg_thin/tp_tecmint_pool

|

||||

|

||||

|

||||

逻辑卷信息

|

||||

|

||||

*逻辑卷信息*

|

||||

|

||||

这里,我们还没有在该精简池中创建虚拟精简卷。在图片中,我们可以看到分配的精简池数据为**0.00%**。

|

||||

|

||||

@ -83,16 +87,17 @@

|

||||

|

||||

# lvcreate -V 5G --thin -n thin_vol_client1 vg_thin/tp_tecmint_pool

|

||||

|

||||

我已经在我的**vg_thin**卷组中的**tp_tecmint_pool**内创建了一个精简虚拟卷,取名为**thin_vol_client1**。现在,使用下面的命令来列出逻辑卷。

|

||||

我已经在我的**vg_thin**卷组中的**tp\_tecmint\_pool**内创建了一个精简虚拟卷,取名为**thin\_vol\_client1**。现在,使用下面的命令来列出逻辑卷。

|

||||

|

||||

# lvs

|

||||

|

||||

|

||||

列出逻辑卷

|

||||

|

||||

*列出逻辑卷*

|

||||

|

||||

刚才,我们已经在上面创建了精简卷,这就是为什么没有数据,显示为**0.00%M**。

|

||||

|

||||

好吧,让我为其它2个客户再创建2个精简卷。这里,你可以看到在精简池(**tp_tecmint_pool**)下有3个精简卷了。所以,从这一点上看,我们开始明白,我已经使用所有15GB的精简池。

|

||||

好吧,让我为其它2个客户再创建2个精简卷。这里,你可以看到在精简池(**tp\_tecmint\_pool**)下有3个精简卷了。所以,从这一点上看,我们开始明白,我已经使用所有15GB的精简池。

|

||||

|

||||

|

||||

|

||||

@ -107,14 +112,16 @@

|

||||

# ls -l /mnt/

|

||||

|

||||

|

||||

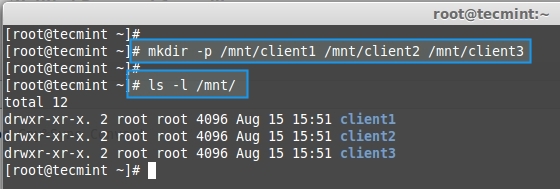

创建挂载点

|

||||

|

||||

*创建挂载点*

|

||||

|

||||

使用‘mkfs’命令为这些创建的精简卷创建文件系统。

|

||||

|

||||

# mkfs.ext4 /dev/vg_thin/thin_vol_client1 && mkfs.ext4 /dev/vg_thin/thin_vol_client2 && mkfs.ext4 /dev/vg_thin/thin_vol_client3

|

||||

|

||||

|

||||

创建文件系统

|

||||

|

||||

*创建文件系统*

|

||||

|

||||

使用‘mount’命令来挂载所有3个客户卷到创建的挂载点。

|

||||

|

||||

@ -125,12 +132,14 @@

|

||||

# df -h

|

||||

|

||||

|

||||

打印挂载点

|

||||

|

||||

*显示挂载点*

|

||||

|

||||

这里,我们可以看到所有3个客户卷已经挂载了,而每个客户卷只使用了3%的数据空间。那么,让我们从桌面添加一些文件到这3个挂载点,以填充一些空间。

|

||||

|

||||

|

||||

添加文件到卷

|

||||

|

||||

*添加文件到卷*

|

||||

|

||||

现在列出挂载点,并查看每个精简卷使用的空间,然后列出精简池来查看池中已使用的大小。

|

||||

|

||||

@ -138,10 +147,12 @@

|

||||

# lvdisplay vg_thin/tp_tecmint_pool

|

||||

|

||||

|

||||

检查挂载点大小

|

||||

|

||||

*检查挂载点大小*

|

||||

|

||||

|

||||

检查精简池大小

|

||||

|

||||

*检查精简池大小*

|

||||

|

||||

上面的命令显示了3个挂载点及其使用大小百分比。

|

||||

|

||||

@ -161,18 +172,20 @@

|

||||

# lvs

|

||||

|

||||

|

||||

创建精简存储

|

||||

|

||||

*创建精简存储*

|

||||

|

||||

在精简池中,我只有15GB大小的空间,但是我已经在精简池中创建了4个卷,其总量达到了20GB。如果4个客户都开始写入数据到他们的卷,并将空间填满,到那时我们将面对严峻的形势。如果不填满空间,那不会有问题。

|

||||

|

||||

现在,我已经创建在**thin_vol_client4**中创建了文件系统,然后挂载到了**/mnt/client4**下,并且拷贝了一些文件到里头。

|

||||

现在,我已经创建在**thin\_vol\_client4**中创建了文件系统,然后挂载到了**/mnt/client4**下,并且拷贝了一些文件到里头。

|

||||

|

||||

# lvs

|

||||

|

||||

|

||||

验证精简存储

|

||||

|

||||

我们可以在上面的图片中看到,新创建的client 4总计使用空间达到了**89.34%**,而精简池的已用空间达到了**59.19**。如果所有这些用户不在过度对卷写入,那么它就不会溢出,下线。要避免溢出,我们需要扩展精简池大小。

|

||||

*验证精简存储*

|

||||

|

||||

我们可以在上面的图片中看到,新创建的client 4总计使用空间达到了**89.34%**,而精简池的已用空间达到了**59.19**。如果所有这些用户不再过度对卷写入,那么它就不会溢出,下线。要避免溢出的话,我们需要扩展精简池大小。

|

||||

|

||||

**重要**:精简池只是一个逻辑卷,因此,如果我们需要对其进行扩展,我们可以使用和扩展逻辑卷一样的命令,但我们不能缩减精简池大小。

|

||||

|

||||

@ -183,16 +196,18 @@

|

||||

# lvextend -L +15G /dev/vg_thin/tp_tecmint_pool

|

||||

|

||||

|

||||

扩展精简存储

|

||||

|

||||

*扩展精简存储*

|

||||

|

||||

接下来,列出精简池大小。

|

||||

|

||||

# lvs

|

||||

|

||||

|

||||

验证精简存储

|

||||

|

||||

前面,我们的**tp_tecmint_pool**大小为15GB,而在对第四个精简卷进行过度资源配置后达到了20GB。现在,它扩展到了30GB,所以我们的过度资源配置又回归常态,而精简卷也不会溢出,下线了。通过这种方式,我们可以添加更多的精简卷到精简池中。

|

||||

*验证精简存储*

|

||||

|

||||

前面,我们的**tp_tecmint_pool**大小为15GB,而在对第四个精简卷进行过度资源配置后达到了20GB。现在,它扩展到了30GB,所以我们的过度资源配置又回归常态,而精简卷也不会溢出下线了。通过这种方式,我们可以添加更多的精简卷到精简池中。

|

||||

|

||||

在本文中,我们已经了解了怎样来使用一个大尺寸的卷组创建一个精简池,以及怎样通过过度资源配置在精简池中创建精简卷和扩着精简池。在下一篇文章中,我们将介绍怎样来移除逻辑卷。

|

||||

|

||||

@ -202,11 +217,11 @@ via: http://www.tecmint.com/setup-thin-provisioning-volumes-in-lvm/

|

||||

|

||||

作者:[Babin Lonston][a]

|

||||

译者:[GOLinux](https://github.com/GOLinux)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.tecmint.com/author/babinlonston/

|

||||

[1]:http://www.tecmint.com/create-lvm-storage-in-linux/

|

||||

[2]:http://www.tecmint.com/extend-and-reduce-lvms-in-linux/

|

||||

[3]:http://www.tecmint.com/take-snapshot-of-logical-volume-and-restore-in-lvm/

|

||||

[1]:http://linux.cn/article-3965-1.html

|

||||

[2]:http://linux.cn/article-3974-1.html

|

||||

[3]:http://linux.cn/article-4145-1.html

|

||||

@ -1,6 +1,6 @@

|

||||

如何在Debian上安装配置ownCloud

|

||||

================================================================================

|

||||

据其官方网站,ownCloud可以让你通过一个网络接口或者WebDAV访问你的文件。它还提供了一个平台,可以轻松地查看、编辑和同步您所有设备的通讯录、日历和书签。尽管ownCloud与广泛使用Dropbox非常相似,但主要区别在于ownCloud是免费的,开源的,从而可以自己的服务器上建立与Dropbox类似的云存储服务。使用ownCloud你可以完整地访问和控制您的私人数据而对存储空间没有限制(除了硬盘容量)或者连客户端的连接数量。

|

||||

据其官方网站,ownCloud可以让你通过一个Web界面或者WebDAV访问你的文件。它还提供了一个平台,可以轻松地查看、编辑和同步您所有设备的通讯录、日历和书签。尽管ownCloud与广泛使用Dropbox非常相似,但主要区别在于ownCloud是免费的,开源的,从而可以自己的服务器上建立与Dropbox类似的云存储服务。使用ownCloud你可以完整地访问和控制您的私人数据,而对存储空间(除了硬盘容量)或客户端的连接数量没有限制。

|

||||

|

||||

ownCloud提供了社区版(免费)和企业版(面向企业的有偿支持)。预编译的ownCloud社区版可以提供了CentOS、Debian、Fedora、openSUSE、,SLE和Ubuntu版本。本教程将演示如何在Debian Wheezy上安装和在配置ownCloud社区版。

|

||||

|

||||

@ -14,7 +14,7 @@ ownCloud提供了社区版(免费)和企业版(面向企业的有偿支持

|

||||

|

||||

|

||||

|

||||

在下一屏职工点击继续:

|

||||

在下一屏中点击继续:

|

||||

|

||||

|

||||

|

||||

@ -36,11 +36,11 @@ ownCloud提供了社区版(免费)和企业版(面向企业的有偿支持

|

||||

# aptitude update

|

||||

# aptitude install owncloud

|

||||

|

||||

打开你的浏览器并定位到你的ownCloud实例中,地址是http://<server-ip>/owncloud:

|

||||

打开你的浏览器并定位到你的ownCloud实例中,地址是 http://服务器 IP/owncloud:

|

||||

|

||||

|

||||

|

||||

注意ownCloud可能会包一个Apache配置错误的警告。使用下面的步骤来解决这个错误来摆脱这些错误信息。

|

||||

注意ownCloud可能会包一个Apache配置错误的警告。使用下面的步骤来解决这个错误来解决这些错误信息。

|

||||

|

||||

a) 编辑 the /etc/apache2/apache2.conf (设置 AllowOverride 为 All):

|

||||

|

||||

@ -70,7 +70,7 @@ d) 刷新浏览器,确认安全警告已经消失

|

||||

|

||||

### 设置数据库 ###

|

||||

|

||||

是时候为ownCloud设置数据库了。

|

||||

这时可以为ownCloud设置数据库了。

|

||||

|

||||

首先登录本地的MySQL/MariaDB数据库:

|

||||

|

||||

@ -83,7 +83,7 @@ d) 刷新浏览器,确认安全警告已经消失

|

||||

mysql> GRANT ALL PRIVILEGES ON owncloud_DB.* TO ‘owncloud-web’@'localhost';

|

||||

mysql> FLUSH PRIVILEGES;

|

||||

|

||||

通过http://<server-ip>/owncloud 进入ownCloud页面,并选择‘Storage & database’ 选项。输入所需的信息(MySQL/MariaDB用户名,密码,数据库和主机名),并点击完成按钮。

|

||||

通过http://服务器 IP/owncloud 进入ownCloud页面,并选择‘Storage & database’ 选项。输入所需的信息(MySQL/MariaDB用户名,密码,数据库和主机名),并点击完成按钮。

|

||||

|

||||

|

||||

|

||||

@ -101,7 +101,7 @@ d) 刷新浏览器,确认安全警告已经消失

|

||||

|

||||

|

||||

|

||||

编辑/etc/apache2/conf.d/owncloud.conf 启用HTTPS。对于余下的NC、R和L重写规则的意义,你可以参考[Apache 文档][2]:

|

||||

编辑/etc/apache2/conf.d/owncloud.conf 启用HTTPS。对于重写规则中的NC、R和L的意义,你可以参考[Apache 文档][2]:

|

||||

|

||||

Alias /owncloud /var/www/owncloud

|

||||

|

||||

@ -197,7 +197,7 @@ via: http://xmodulo.com/2014/08/install-configure-owncloud-debian.html

|

||||

|

||||

作者:[Gabriel Cánepa][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,9 +1,8 @@

|

||||

如何用Puppet和Augeas管理Linux配置

|

||||

================================================================================

|

||||

虽然[Puppet][1](注:此文原文原文中曾今做过,文件名:“20140808 How to install Puppet server and client on CentOS and RHEL.md”,如果翻译发布过,可修改此链接为发布地址)是一个非常独特的和有用的工具,在有些情况下你可以使用一点不同的方法。要修改的配置文件已经在几个不同的服务器上且它们在这时是互补相同的。Puppet实验室的人也意识到了这一点并集成了一个伟大的工具,称之为[Augeas][2],它是专为这种使用情况而设计的。

|

||||

虽然[Puppet][1]是一个真正独特的有用工具,但在有些情况下你可以使用一点不同的方法来用它。比如,你要修改几个服务器上已有的配置文件,而且它们彼此稍有不同。Puppet实验室的人也意识到了这一点,他们在 Puppet 中集成了一个叫做[Augeas][2]的伟大的工具,它是专为这种使用情况而设计的。

|

||||

|

||||

|

||||

Augeas可被认为填补了Puppet能力的缺陷,其中一个特定对象的资源类型(如主机资源来处理/etc/hosts中的条目)还不可用。在这个文档中,您将学习如何使用Augeas来减轻你管理配置文件的负担。

|

||||

Augeas可被认为填补了Puppet能力的空白,比如在其中一个指定对象的资源类型(例如用于维护/etc/hosts中的条目的主机资源)还不可用时。在这个文档中,您将学习如何使用Augeas来减轻你管理配置文件的负担。

|

||||

|

||||

### Augeas是什么? ###

|

||||

|

||||

@ -11,13 +10,13 @@ Augeas基本上就是一个配置编辑工具。它以他们原生的格式解

|

||||

|

||||

### 这篇教程要达成什么目的? ###

|

||||

|

||||

我们会安装并配置Augeas用于我们之前构建的Puppet服务器。我们会使用这个工具创建并测试几个不同的配置文件,并学习如何适当地使用它来管理我们的系统配置。

|

||||

我们会针对[我们之前构建的Puppet服务器][1]安装并配置Augeas。我们会使用这个工具创建并测试几个不同的配置文件,并学习如何适当地使用它来管理我们的系统配置。

|

||||

|

||||

### 先决条件 ###

|

||||

### 前置阅读 ###

|

||||

|

||||

我们需要一台工作的Puppet服务器和客户端。如果你还没有,请先按照我先前的教程来。

|

||||

我们需要一台工作的Puppet服务器和客户端。如果你还没有,请先按照我先前的[教程][1]来。

|

||||

|

||||

Augeas安装包可以在标准CentOS/RHEL仓库中找到。不幸的是,Puppet用到的ruby封装的Augeas只在puppetlabs仓库中(或者[EPEL][4])中才有。如果你系统中还没有这个仓库,请使用下面的命令:

|

||||

Augeas安装包可以在标准CentOS/RHEL仓库中找到。不幸的是,Puppet用到的Augeas的ruby封装只在puppetlabs仓库中(或者[EPEL][4])中才有。如果你系统中还没有这个仓库,请使用下面的命令:

|

||||

|

||||

在CentOS/RHEL 6.5上:

|

||||

|

||||

@ -31,7 +30,7 @@ Augeas安装包可以在标准CentOS/RHEL仓库中找到。不幸的是,Puppet

|

||||

|

||||

# yum install rubyaugeas

|

||||

|

||||

或者如果你是从我的上一篇教程中继续的,使用puppet的方法安装这个包。在/etc/puppet/manifests/site.pp中修改你的custom_utils类,在packages这行中加入“rubyaugeas”。

|

||||

或者如果你是从我的[上一篇教程中继续][1]的,使用puppet的方法安装这个包。在/etc/puppet/manifests/site.pp中修改你的custom_utils类,在packages这行中加入“rubyaugeas”。

|

||||

|

||||

class custom_utils {

|

||||

package { ["nmap","telnet","vimenhanced","traceroute","rubyaugeas"]:

|

||||

@ -54,7 +53,7 @@ Augeas安装包可以在标准CentOS/RHEL仓库中找到。不幸的是,Puppet

|

||||

|

||||

1. 给wheel组加上sudo权限。

|

||||

|

||||

这个例子会向你战士如何在你的GNU/Linux系统中为%wheel组加上sudo权限。

|

||||

这个例子会向你展示如何在你的GNU/Linux系统中为%wheel组加上sudo权限。

|

||||

|

||||

# 安装sudo包

|

||||

package { 'sudo':

|

||||

@ -73,7 +72,7 @@ Augeas安装包可以在标准CentOS/RHEL仓库中找到。不幸的是,Puppet

|

||||

]

|

||||

}

|

||||

|

||||

现在来解释这些代码做了什么:**spec**定义了/etc/sudoers中的用户段,**[user]**定义了数组中给定的用户,所有的定义的斜杠( / ) 后用户的子部分。因此在典型的配置中这个可以这么表达:

|

||||

现在来解释这些代码做了什么:**spec**定义了/etc/sudoers中的用户段,**[user]**定义了数组中给定的用户,所有的定义放在该用户的斜杠( / ) 后那部分。因此在典型的配置中这个可以这么表达:

|

||||

|

||||

user host_group/host host_group/command host_group/command/runas_user

|

||||

|

||||

@ -83,7 +82,7 @@ Augeas安装包可以在标准CentOS/RHEL仓库中找到。不幸的是,Puppet

|

||||

|

||||

2. 添加命令别称

|

||||

|

||||

下面这部分会向你展示如何定义命令别名,他可以在你的sudoer文件中使用。

|

||||

下面这部分会向你展示如何定义命令别名,它可以在你的sudoer文件中使用。

|

||||

|

||||

# 创建新的SERVICE别名,包含了一些基本的特权命令。

|

||||

augeas { 'sudo_cmdalias':

|

||||

@ -97,7 +96,7 @@ Augeas安装包可以在标准CentOS/RHEL仓库中找到。不幸的是,Puppet

|

||||

]

|

||||

}

|

||||

|

||||

sudo命令别名的语法很简单:**Cmnd_Alias**定义了命令别名字段,**[alias/name]**绑定所有给定的别名,/alias/name **SERVICES** 定义真实的别名以及alias/command 是所有命令的数组,每条命令是这个别名的一部分。

|

||||

sudo命令别名的语法很简单:**Cmnd_Alias**定义了命令别名字段,**[alias/name]**绑定所有给定的别名,/alias/name **SERVICES** 定义真实的别名,alias/command 是属于该别名的所有命令的数组。以上将被转换如下:

|

||||

|

||||

Cmnd_Alias SERVICES = /sbin/service , /sbin/chkconfig , /bin/hostname , /sbin/shutdown

|

||||

|

||||

@ -105,12 +104,12 @@ sudo命令别名的语法很简单:**Cmnd_Alias**定义了命令别名字段

|

||||

|

||||

#### 向一个组中加入用户 ####

|

||||

|

||||

要使用Augeas向组中添加用户,你有也许要添加一个新用户,无论是在gid字段后或者在最后一个用户后。我们在这个例子中使用组SVN。这可以通过下面的命令达成:

|

||||

要使用Augeas向组中添加用户,你也许要添加一个新用户,不管是排在 gid 字段还是最后的用户 uid 之后。我们在这个例子中使用SVN组。这可以通过下面的命令达成:

|

||||

|

||||

在Puppet中:

|

||||

|

||||

augeas { 'augeas_mod_group:

|

||||

context => '/files/etc/group', # The target file is /etc/group

|

||||

context => '/files/etc/group', #目标文件是 /etc/group

|

||||

changes => [

|

||||

"ins user after svn/*[self::gid or self::user][last()]",

|

||||

"set svn/user[last()] john",

|

||||

@ -123,14 +122,14 @@ sudo命令别名的语法很简单:**Cmnd_Alias**定义了命令别名字段

|

||||

|

||||

### 总结 ###

|

||||

|

||||

目前为止,你应该对如何在Puppet项目中使用Augeas有一个好想法了。随意地试一下,你肯定会经历官方的Augeas文档。这会帮助你了解如何在你的个人项目中正确地使用Augeas,并且它会想你展示你可以用它节省多少时间。

|

||||

目前为止,你应该对如何在Puppet项目中使用Augeas有点明白了。随意地试一下,你肯定需要浏览官方的Augeas文档。这会帮助你了解如何在你的个人项目中正确地使用Augeas,并且它会让你知道可以用它节省多少时间。

|

||||

|

||||

如有任何问题,欢迎在下面的评论中发布,我会尽力解答和向你建议。

|

||||

|

||||

### 有用的链接 ###

|

||||

|

||||

- [http://www.watzmann.net/categories/augeas.html][6]: contains a lot of tutorials focused on Augeas usage.

|

||||

- [http://projects.puppetlabs.com/projects/1/wiki/puppet_augeas][7]: Puppet wiki with a lot of practical examples.

|

||||

- [http://www.watzmann.net/categories/augeas.html][6]: 包含许多关于 Augeas 使用的教程。

|

||||

- [http://projects.puppetlabs.com/projects/1/wiki/puppet_augeas][7]: Puppet wiki 带有许多实例。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -138,12 +137,12 @@ via: http://xmodulo.com/2014/09/manage-configurations-linux-puppet-augeas.html

|

||||

|

||||

作者:[Jaroslav Štěpánek][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://xmodulo.com/author/jaroslav

|

||||

[1]:http://xmodulo.com/2014/08/install-puppet-server-client-centos-rhel.html

|

||||

[1]:http://linux.cn/article-3959-1.html

|

||||

[2]:http://augeas.net/

|

||||

[3]:http://xmodulo.com/manage-configurations-linux-puppet-augeas.html

|

||||

[4]:http://xmodulo.com/2013/03/how-to-set-up-epel-repository-on-centos.html

|

||||

@ -1,24 +1,24 @@

|

||||

Ansible和Docker的作用和用法

|

||||

================================================================================

|

||||

在 [Docker][1] 和 [Ansible][2] 的技术社区内存在着很多好玩的东西,我希望在你阅读完这篇文章后也能获取到我们对它们的那种热爱。当然,你也会收获一些实践知识,那就是如何通过部署 Ansible 和 Docker 来为 Rails 应用搭建一个完整的服务器环境。

|

||||

在 [Docker][1] 和 [Ansible][2] 的技术社区内存在着很多好玩的东西,我希望在你阅读完这篇文章后也能像我们一样热爱它们。当然,你也会收获一些实践知识,那就是如何通过部署 Ansible 和 Docker 来为 Rails 应用搭建一个完整的服务器环境。

|

||||

|

||||

也许有人会问:你怎么不去用 Heroku?首先,我可以在任何供应商提供的主机上运行 Docker 和 Ansible;其次,相比于方便性,我更偏向于喜欢灵活性。我可以在这种组合中运行任何程序,而不仅仅是 web 应用。最后,我骨子里是一个工匠,我非常理解如何把零件拼凑在一起工作。Heroku 的基础模块是 Linux Container,而 Docker 表现出来的多功能性也是基于这种技术。事实上,Docker 的其中一个座右铭是:容器化是新虚拟化技术。

|

||||

也许有人会问:你怎么不去用 Heroku?首先,我可以在任何供应商提供的主机上运行 Docker 和 Ansible;其次,相比于方便性,我更偏向于喜欢灵活性。我可以在这种组合中运行任何程序,而不仅仅是 web 应用。最后,我骨子里是一个工匠,我非常了解如何把零件拼凑在一起工作。Heroku 的基础模块是 Linux Container,而 Docker 表现出来的多功能性也是基于这种技术。事实上,Docker 的其中一个座右铭是:容器化是新虚拟化技术。

|

||||

|

||||

### 为什么使用 Ansible? ###

|

||||

|

||||

我重度使用 Chef 已经有4年了(LCTT:Chef 是与 puppet 类似的配置管理工具),**基础设施即代码**的观念让我觉得非常无聊。我花费大量时间来管理代码,而不是管理基础设施本身。不论多小的改变,都需要相当大的努力来实现它。使用 [Ansible][3],你可以一手掌握拥有可描述性数据的基础架构,另一只手掌握不同组件之间的交互作用。这种更简单的操作模式让我把精力集中在如何将我的技术设施私有化,提高了我的工作效率。与 Unix 的模式一样,Ansible 提供大量功能简单的模块,我们可以组合这些模块,达到不同的工作要求。

|

||||

|

||||

除了 Python 和 SSH,Ansible 不再依赖其他软件,在它的远端主机上不需要部署代理,也不会留下任何运行痕迹。更厉害的是,它提供一套内建的、可扩展的模块库文件,通过它你可以控制所有:包管理器、云服务供应商、数据库等等等等。

|

||||

除了 Python 和 SSH,Ansible 不再依赖其他软件,在它的远端主机上不需要部署代理,也不会留下任何运行痕迹。更厉害的是,它提供一套内建的、可扩展的模块库文件,通过它你可以控制所有的一切:包管理器、云服务供应商、数据库等等等等。

|

||||

|

||||

### 为什么要使用 Docker? ###

|

||||

|

||||

[Docker][4] 的定位是:提供最可靠、最方便的方式来部署服务。这些服务可以是 mysqld,可以是 redis,可以是 Rails 应用。先聊聊 git,它的快照功能让它可以以最有效的方式发布代码,Docker 的处理方法与它类似。它保证应用可以无视主机环境,随心所欲地跑起来。

|

||||

[Docker][4] 的定位是:提供最可靠、最方便的方式来部署服务。这些服务可以是 mysqld,可以是 redis,可以是 Rails 应用。先聊聊 git 吧,它的快照功能让它可以以最有效的方式发布代码,Docker 的处理方法与它类似。它保证应用可以无视主机环境,随心所欲地跑起来。

|

||||

|

||||

一种最普遍的误解是人们总是把 Docker 容器看成是一个虚拟机,当然,我表示理解你们的误解。Docker 满足[单一功能原则][5],在一个容器里面只跑一个进程,所以一次修改只会影响一个进程,而这些进程可以被重用。这种模型参考了 Unix 的哲学思想,当前还处于试验阶段,并且正变得越来越稳定。

|

||||

|

||||

### 设置选项 ###

|

||||

|

||||

不需要离开终端,我就可以使用 Ansible 来生成以下实例:Amazon Web Services,Linode,Rackspace 以及 DigitalOcean。如果想要更详细的信息,我于1分25秒内在位于阿姆斯特丹的2号数据中心上创建了一个 2GB 的 DigitalOcean 虚拟机。另外的1分50秒用于系统配置,包括设置 Docker 和其他个人选项。当我完成这些基本设定后,就可以部署我的应用了。值得一提的是这个过程中我没有配置任何数据库或程序开发语言,Docker 已经帮我把应用所需要的事情都安排好了。

|

||||

不需要离开终端,我就可以使用 Ansible 来在这些云平台中生成实例:Amazon Web Services,Linode,Rackspace 以及 DigitalOcean。如果想要更详细的信息,我于1分25秒内在位于阿姆斯特丹的2号数据中心上创建了一个 2GB 的 DigitalOcean 虚拟机。另外的1分50秒用于系统配置,包括设置 Docker 和其他个人选项。当我完成这些基本设定后,就可以部署我的应用了。值得一提的是这个过程中我没有配置任何数据库或程序开发语言,Docker 已经帮我把应用所需要的事情都安排好了。

|

||||

|

||||

Ansible 通过 SSH 为远端主机发送命令。我保存在本地 ssh 代理上面的 SSH 密钥会通过 Ansible 提供的 SSH 会话分享到远端主机。当我把应用代码从远端 clone 下来,或者上传到远端时,我就不再需要提供 git 所需的证书了,我的 ssh 代理会帮我通过 git 主机的身份验证程序的。

|

||||

|

||||

@ -65,13 +65,13 @@ CMD 这个步骤是在新的 web 应用容器启动后执行的。在测试环

|

||||

|

||||

没有本地 Docker 镜像,从零开始部署一个中级规模的 Rails 应用大概需要100个 gems,进行100次整体测试,在使用2个核心实例和2GB内存的情况下,这些操作需要花费8分16秒。装上 Ruby、MySQL 和 Redis Docker 镜像后,部署应用花费了4分45秒。另外,如果从一个已存在的主应用镜像编译出一个新的 Docker 应用镜像出来,只需花费2分23秒。综上所述,部署一套新的 Rails 应用,解决其所有依赖关系(包括 MySQL 和 Redis),只需花我2分钟多一点的时间就够了。

|

||||

|

||||

需要指出的一点是,我的应用上运行着一套完全测试套件,跑完测试需要花费额外1分钟时间。尽管是无意的,Docker 可以变成一套简单的持续集成环境,当测试失败后,Docker 会把“test-only”这个容器保留下来,用于分析出错原因。我可以在1分钟之内和我的客户一起验证新代码,保证不同版本的应用之间是完全隔离的,同操作系统也是隔离的。传统虚拟机启动系统时需要花费好几分钟,Docker 容器只花几秒。另外,一旦一个 Dockedr 镜像编译出来,并且针对我的某个版本的应用的测试都被通过,我就可以把这个镜像提交到 Docker 私服 Registry 上,可以被其他 Docker 主机下载下来并启动一个新的 Docker 容器,而这不过需要几秒钟时间。

|

||||

需要指出的一点是,我的应用上运行着一套完全测试套件,跑完测试需要花费额外1分钟时间。尽管是无意的,Docker 可以变成一套简单的持续集成环境,当测试失败后,Docker 会把“test-only”这个容器保留下来,用于分析出错原因。我可以在1分钟之内和我的客户一起验证新代码,保证不同版本的应用之间是完全隔离的,同操作系统也是隔离的。传统虚拟机启动系统时需要花费好几分钟,Docker 容器只花几秒。另外,一旦一个 Dockedr 镜像编译出来,并且针对我的某个版本的应用的测试都被通过,我就可以把这个镜像提交到一个私有的 Docker Registry 上,可以被其他 Docker 主机下载下来并启动一个新的 Docker 容器,而这不过需要几秒钟时间。

|

||||

|

||||

### 总结 ###

|

||||

|

||||

Ansible 让我重新看到管理基础设施的乐趣。Docker 让我有充分的信心能稳定处理应用部署过程中最重要的步骤——交付环节。双剑合璧,威力无穷。

|

||||

|

||||

从无到有搭建一个完整的 Rails 应用可以在12分钟内完成,这种速度放在任何场合都是令人印象深刻的。能获得一个免费的持续集成环境,可以查看不同版本的应用之间的区别,不会影响到同主机上已经在运行的应用,这些功能强大到难以置信,让我感到很兴奋。在文章的最后,我只希望你能感受到我的兴奋。

|

||||

从无到有搭建一个完整的 Rails 应用可以在12分钟内完成,这种速度放在任何场合都是令人印象深刻的。能获得一个免费的持续集成环境,可以查看不同版本的应用之间的区别,不会影响到同主机上已经在运行的应用,这些功能强大到难以置信,让我感到很兴奋。在文章的最后,我只希望你能感受到我的兴奋!

|

||||

|

||||

我在2014年1月伦敦 Docker 会议上讲过这个主题,[已经分享到 Speakerdeck][7]了。

|

||||

|

||||

@ -87,7 +87,7 @@ via: http://thechangelog.com/ansible-docker/

|

||||

|

||||

作者:[Gerhard Lazu][a]

|

||||

译者:[bazz2](https://github.com/bazz2)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -5,7 +5,7 @@

|

||||

|

||||

Git教程往往不会解决这个问题,因为它集中篇幅来教你Git命令和概念,并且不认为你会使用GitHub。[GitHub帮助教程](https://help.github.com/)一定程度上弥补了这一缺陷,但是它每篇文章的关注点都较为狭隘,而且没有提供关于"Git vs GitHub"问题的概念性概述。

|

||||

|

||||

**如果你是习惯于先理解概念,再着手代码的学习者**,而且你也是Git和GitHub的初学者,我建议你先理解清楚什么是fork,为什么?

|

||||

**如果你是习惯于先理解概念,再着手代码的学习者**,而且你也是Git和GitHub的初学者,我建议你先理解清楚什么是fork。为什么呢 ?

|

||||

|

||||

1. Fork是在GitHub起步最普遍的方式。

|

||||

2. Fork只需要很少的Git命令,但是起得作用却非常大。

|

||||

@ -53,15 +53,19 @@ Joe和其余贡献者已经对这个项目做了一些修改,而你将在他

|

||||

|

||||

### 结论

|

||||

|

||||

我希望这是一篇关于GitHub和Git [fork](https://help.github.com/articles/fork-a-repo)有用概述。现在,你已经理解了那些概念,你将会更容易地在实际中执行你的代码。GitHub关于fork和[同步](https://help.github.com/articles/syncing-a-fork)的文章将会给你大部分你需要的代码。

|

||||

我希望这是一篇关于GitHub和Git 的 [fork](https://help.github.com/articles/fork-a-repo)有用概述。现在,你已经理解了那些概念,你将会更容易地在实际中执行你的代码。GitHub关于fork和[同步](https://help.github.com/articles/syncing-a-fork)的文章将会给你大部分你需要的代码。

|

||||

|

||||

如果你是Git的初学者,而且你很喜欢这种学习方式,那么我极力推荐书籍[Pro Git](http://git-scm.com/book)的前两个章节,网上是可以免费查阅的。

|

||||

|

||||

如果你喜欢视频学习,我创建了一个[11部分的视频系列](http://www.dataschool.io/git-and-github-videos-for-beginners/)(总共36分钟),来向初学者介绍Git和GitHub。

|

||||

|

||||

---

|

||||

via: http://www.dataschool.io/simple-guide-to-forks-in-github-and-git/

|

||||

|

||||

作者:[Kevin Markham][a]

|

||||

译者:[su-kaiyao](https://github.com/su-kaiyao)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://disqus.com/home/user/justmarkham/

|

||||

54

published/20141023 6 Minesweeper Clones for Linux.md

Normal file

54

published/20141023 6 Minesweeper Clones for Linux.md

Normal file

@ -0,0 +1,54 @@

|

||||

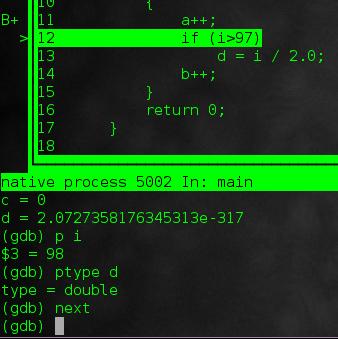

Linux下的6个扫雷游戏的翻版

|

||||

================================================================================

|

||||

Windows 下的扫雷游戏还没玩够么?那么来 Linux 下继续扫雷吧——这是一个雷的时代~~

|

||||

|

||||

### GNOME Mines ###

|

||||

|

||||

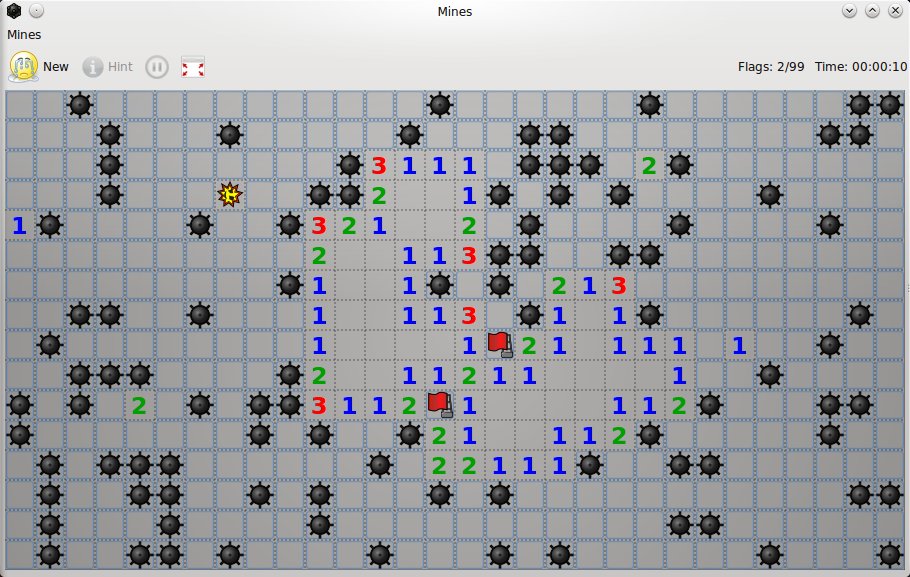

这是GNOME扫雷复制品,允许你从3个不同的预定义表大小(8×8, 16×16, 30×16)中选择其一,或者自定义行列的数量。它能以全屏模式运行,带有高分值、耗时和提示。游戏可以暂停和继续。

|

||||

|

||||

|

||||

|

||||

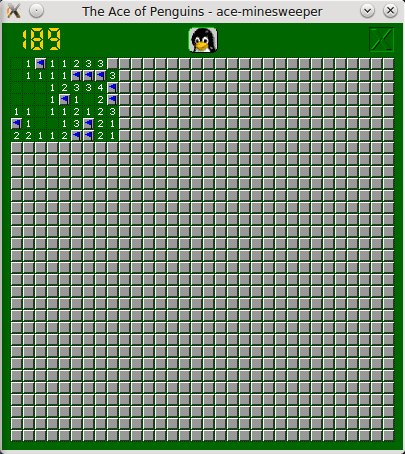

### ace-minesweeper ###

|

||||

|

||||

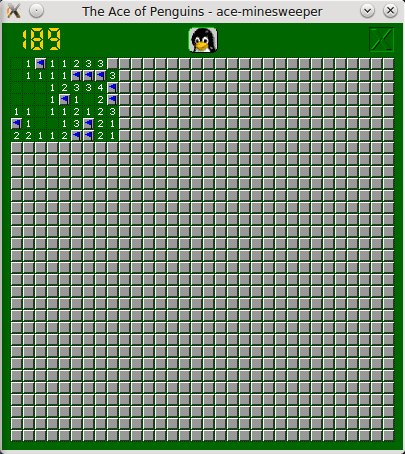

这是一个大的软件包中的游戏,此包中也包含有其它一些游戏,如ace-freecel,ace-solitaire或ace-spider。它有一个以小企鹅为特色的图形化界面,但好像不能调整表的大小。该包在Ubuntu中名为ace-of-penguins。

|

||||

|

||||

|

||||

|

||||

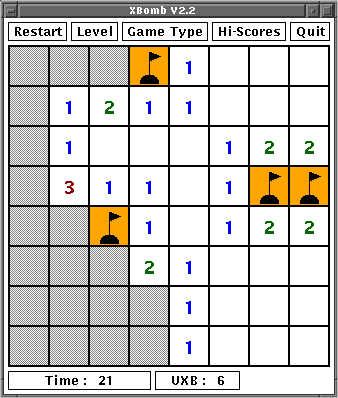

### XBomb ###

|

||||

|

||||

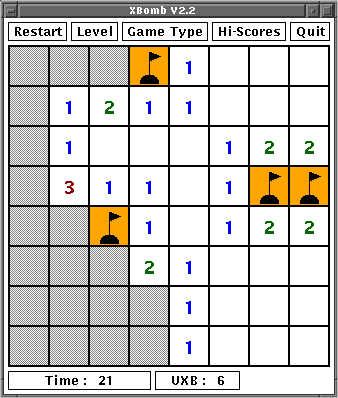

XBomb是针对X Windows系统扫雷游戏,它有三种不同的表尺寸和卡牌风格,包含有不同的外形:六角形、矩形(传统)或三角形。不幸的是,在Ubuntu 14.04中的版本会出现程序分段冲突,所以你可能需要安装另外一个版本。

|

||||

[首页][1]。

|

||||

|

||||

|

||||

|

||||

([图像来源][1])

|

||||

|

||||

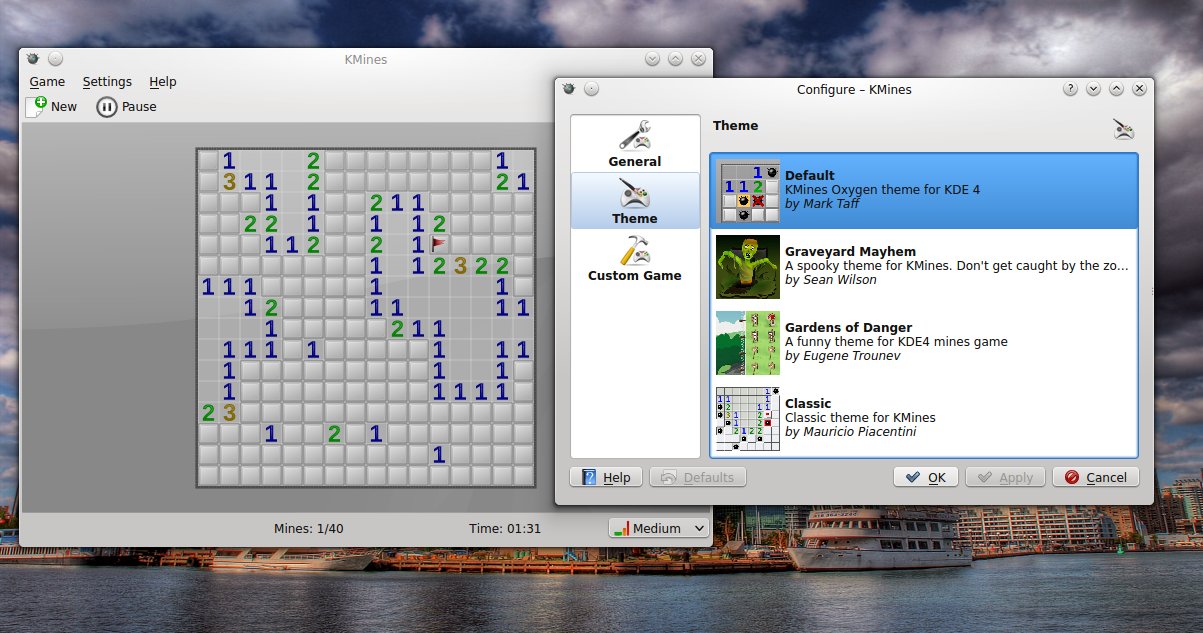

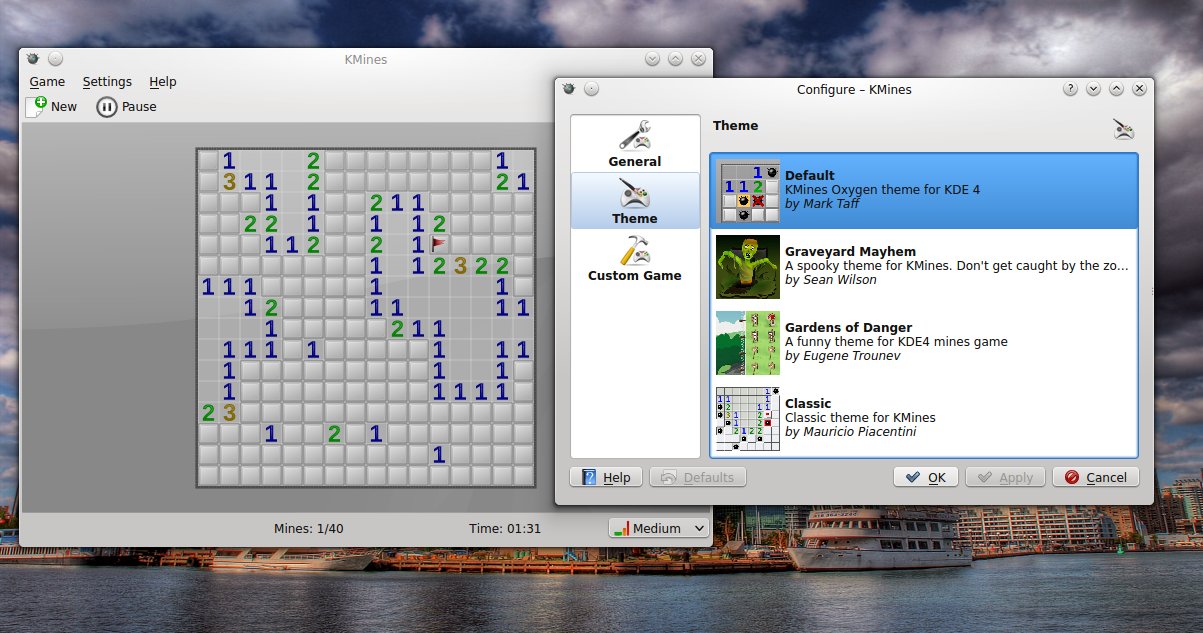

### KMines ###

|

||||

|

||||

KMines是一个KDE游戏,和GNOME Mines类似,有三个内建表尺寸(简易、中等、困单),也可以自定义,支持主题和高分。

|

||||

|

||||

|

||||

|

||||

### freesweep ###

|

||||

|

||||

Freesweep是一个针对终端的扫雷复制品,它可以配置表行列、炸弹比例、颜色,也有一个高分表。

|

||||

|

||||

|

||||

|

||||

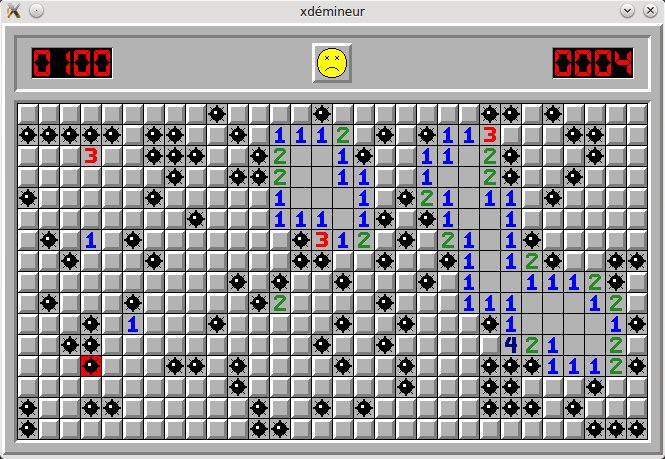

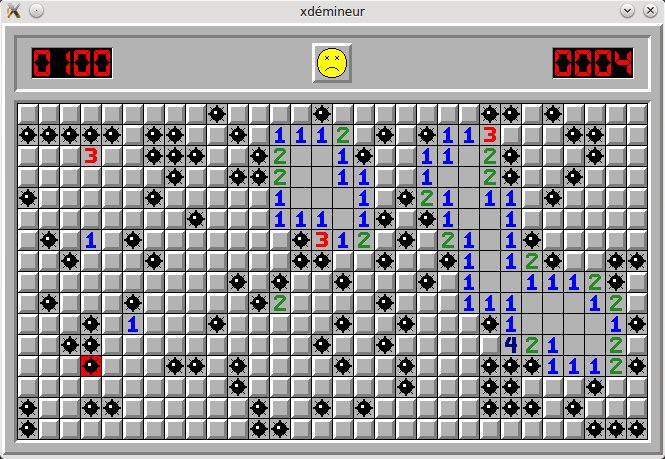

### xdemineur ###

|

||||

|

||||

另外一个针对X的图形化扫雷Xdemineur,和Ace-Minesweeper十分相像,带有一个预定义的表尺寸。

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.tuxarena.com/2014/10/6-minesweeper-clones-for-linux/

|

||||

|

||||

作者:Craciun Dan

|

||||

译者:[GOLinux](https://github.com/GOLinux)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[1]:http://www.gedanken.org.uk/software/xbomb/

|

||||

@ -1,31 +1,30 @@

|

||||

不得不说的磁盘镜像工具

|

||||

Linux 下易用的光盘镜像管理工具

|

||||

================================================================================

|

||||

磁盘镜像包括整个磁盘卷的文件或者是全部的存储设备的数据,比如说硬盘,光盘(DVD,CD,蓝光光碟),磁带机,USB闪存,软盘。一个完整的磁盘镜像应该像在原来的存储设备上一样完整、准确,包括数据和结构信息。

|

||||

磁盘镜像包括了整个磁盘卷的文件或者是全部的存储设备的数据,比如说硬盘,光盘(DVD,CD,蓝光光碟),磁带机,USB闪存,软盘。一个完整的磁盘镜像应该包含与原来的存储设备上一样完整、准确,包括数据和结构信息。

|

||||

|

||||

磁盘镜像文件格式可以是开放的标准,像ISO格式的光盘镜像,或者是专有的特别的软件应用程序。"ISO"这个名字来源于用CD存储的ISO 9660文件系统。但是,当用户转向Linux的时候,经常遇到这样的问题,需要把专有的的镜像格式转换为开放的格式。

|

||||

磁盘镜像文件格式可以是采用开放的标准,像ISO格式的光盘镜像,或者是专有的软件应用程序的特定格式。"ISO"这个名字来源于用CD存储的ISO 9660文件系统。但是,当用户转向Linux的时候,经常遇到这样的问题,需要把专有的的镜像格式转换为开放的格式。

|

||||

|

||||

磁盘镜像有很多不同的用处,像烧录光盘,系统备份,数据恢复,硬盘克隆,电子取证和提供操作系统(即LiveCD/DVDs)。

|

||||

|

||||

有很多不同非方法可以把ISO镜像挂载到Linux系统下。强大的mount 命令给我们提供了一个简单的解决方案。但是如果你需要很多工具来操作磁盘镜像,你可以试一试下面的这些完美的开源工具。

|

||||

有很多不同的方法可以把ISO镜像挂载到Linux系统下。强大的mount 命令给我们提供了一个简单的解决方案。但是如果你需要很多工具来操作磁盘镜像,你可以试一试下面的这些强大的开源工具。

|

||||

|

||||

很多工具还没有看到最新的版本,所以如果你正在寻找一个很好用的开源工具,你也可以加入,一起来为开源做出一点贡献。

|

||||

|

||||

----------

|

||||

### Furius ISO Mount

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

Furius ISO Mount是一个简单易用的开源应用程序用来挂载镜像文件,它支持直接打开ISO,IMG,BIN,MDF和NRG格式的镜像而不用把他们烧录到磁盘。

|

||||

Furius ISO Mount是一个简单易用的开源应用程序,可以用来挂载镜像文件,它支持直接打开ISO,IMG,BIN,MDF和NRG格式的镜像而不用把他们烧录到磁盘。

|

||||

|

||||

特性:

|

||||

|

||||

- 支持自动挂载ISO, IMG, BIN, MDF and NRG镜像文件

|

||||

- 支持通过loop 挂载 UDF 镜像

|

||||

- 支持通过 loop 方式挂载 UDF 镜像

|

||||

- 自动在根目录创建挂载点

|

||||

- 自动解挂镜像文件

|

||||

- 自动删除挂载目录,并返回到主目录之前的状态

|

||||

- 自动存档最近10次挂载历史

|

||||

- 自动记录最近10次挂载历史

|

||||

- 支持挂载多个镜像文件

|

||||

- 支持烧录ISO文件及IMG文件到光盘

|

||||

- 支持MD5校验和SHA1校验

|

||||

@ -33,14 +32,14 @@ Furius ISO Mount是一个简单易用的开源应用程序用来挂载镜像文

|

||||

- 自动创建手动挂载和解挂的日志文件

|

||||

- 语言支持(目前支持保加利亚语,中文(简体),捷克语,荷兰语,法语,德语,匈牙利语,意大利语,希腊语,日语,波兰语,葡萄牙语,俄语,斯洛文尼亚语,西班牙语,瑞典语和土耳其语)

|

||||

|

||||

---

|

||||

- 项目网址: [launchpad.net/furiusisomount/][1]

|

||||

- 开发者: Dean Harris (Marcus Furius)

|

||||

- 许可: GNU GPL v3

|

||||

- 版本号: 0.11.3.1

|

||||

|

||||

----------

|

||||

|

||||

|

||||

###fuseiso

|

||||

|

||||

|

||||

|

||||

@ -50,44 +49,46 @@ fuseiso 是用来挂载ISO文件系统的一个开源的安全模块。

|

||||

|

||||

特性:

|

||||

|

||||

- 支持读ISO,BIN和NRG镜像,包括ISO9660文件系统

|

||||

- 支持普通的ISO9660级别1和级别2

|

||||

- 支持读ISO,BIN和NRG镜像,包括ISO 9660文件系统

|

||||

- 支持普通的ISO 9660级别1和级别2

|

||||

- 支持一些常用的扩展,想Joliet,RockRidge和zisofs

|

||||

- 支持非标准的镜像,包括CloneCD's IMGs 、Alcohol 120%'s MDFs 因为他们的格式看起来恰好像BIN镜像一样

|

||||

|

||||

---

|

||||

|

||||

- 项目网址: [sourceforge.net/projects/fuseiso][2]

|

||||

- 开发者: Dmitry Morozhnikov

|

||||

- 许可: GNU GPL v2

|

||||

- 版本号: 20070708

|

||||

|

||||

----------

|

||||

|

||||

|

||||

###iat

|

||||

|

||||

|

||||

|

||||

iat(Iso9660分析工具)是一个通用的开源工具,能够检测很多不同镜像格式文件的结构,包括BIN,MDF,PDI,CDI,NRG和B5I,并转化成ISO-9660格式.

|

||||

iat(Iso 9660分析工具)是一个通用的开源工具,能够检测很多不同镜像格式文件的结构,包括BIN,MDF,PDI,CDI,NRG和B5I,并转化成ISO 9660格式.

|

||||

|

||||

特性:

|

||||

|

||||

- 支持读(输入)NRG,MDF,PDI,CDI,BIN,CUE 和B5I镜像

|

||||

- 支持用cd 刻录机直接烧录光盘镜像

|

||||

- 支持读取(输入)NRG,MDF,PDI,CDI,BIN,CUE 和B5I镜像

|

||||

- 支持用 cd 刻录机直接烧录光盘镜像

|

||||

- 输出信息包括:进度条,块大小,ECC扇形分区(大小),头分区(大小),镜像偏移地址等等

|

||||

|

||||

---

|

||||

|

||||

- 项目网址: [sourceforge.net/projects/iat.berlios][3]

|

||||

- 开发者: Salvatore Santagati

|

||||

- 许可: GNU GPL v2

|

||||

- 版本号: 0.1.3

|

||||

|

||||

----------

|

||||

|

||||

|

||||

###AcetoneISO

|

||||

|

||||

|

||||

|

||||

AcetoneISO 是一个功能丰富的开源图形化应用程序,用来挂载和管理CD/DVD镜像。

|

||||

|

||||

当你打开这个程序,你就会看到一个图形化的文件管理器用来挂载镜像文件,包括专有的镜像格式,也包括像ISO, BIN, NRG, MDF, IMG 等等,并且允许您执行一系列的动作。

|

||||

当你打开这个程序,你就会看到一个图形化的文件管理器用来挂载镜像文件,包括专有的镜像格式,也包括像ISO, BIN, NRG, MDF, IMG 等等,并且允许您执行一系列的操作。

|

||||

|

||||

AcetoneISO是用QT 4写的,也就是说,对于基于QT的桌面环境能很好的兼容,像KDE,LXQT或是Razor-qt。

|

||||

|

||||

@ -95,57 +96,60 @@ AcetoneISO是用QT 4写的,也就是说,对于基于QT的桌面环境能很

|

||||

|

||||

特性:

|

||||

|

||||

- 支持挂载大多数windowns 镜像,在一个简洁易用的界面

|

||||

- 支持所有镜像格式转换到ISO,或者是从中提取内容。

|

||||

- 支持加密,压缩,解压任何类型的镜像

|

||||

- 支持转换DVD成xvid avi,支持任何格式的转换成xvid avi

|

||||

- 支持从录像里提取声音

|

||||

- 支持从不同格式中提取镜像文件,包括bin mdf nrg img daa dmg cdi b5i bwi pdi

|

||||

- 支持用Kaffeine / VLC / SMplayer播放DVD镜像,可以从Amazon 自动下载。

|

||||

- 支持从文件夹或者是CD/DVD生成ISO镜像

|

||||

- 支持文件MD5校验,或者是生成一个MD5校验码

|

||||

- 支持计算镜像的ShaSums,以128,256和384位的速度

|

||||

- 支持挂载大多数windows 镜像,界面简洁易用

|

||||

- 可以将其所有支持镜像格式转换到ISO,或者是从中提取内容

|

||||

- 加密,压缩,解压任何类型的镜像

|

||||

- 转换DVD成xvid avi,支持将各种常规视频格式转换成xvid avi

|

||||

- 从视频里提取声音

|

||||

- 从不同格式中提取镜像中的文件,包括bin mdf nrg img daa dmg cdi b5i bwi pdi

|

||||

- 用Kaffeine / VLC / SMplayer播放DVD镜像,可以从Amazon 自动下载封面。

|

||||

- 从文件夹或者是CD/DVD生成ISO镜像

|

||||

- 可以做镜像的MD5校验,或者是生成镜像的MD5校验码

|

||||

- 计算镜像的ShaSums(128,256和384位)

|

||||

- 支持加密,解密一个镜像文件

|

||||

- 支持以M字节的速度,分开、合并镜像

|

||||

- 支持高比例压缩镜像成7z 格式

|

||||

- 支持翻录PSX CD成BIN格式,以便在ePSXe/pSX模拟器里运行

|

||||

- 支持修复CUE文件为BIN和IMG格式

|

||||

- 支持把MAC OS的DMG镜像转换成可挂载的镜像

|

||||

- 支持从指定的文件夹中挂载镜像

|

||||

- 支持创建数据库来管理一个大的镜像集合

|

||||

- 支持从CD/DVD 或者是ISO镜像中提取启动文件

|

||||

- 支持备份CD成BIN镜像

|

||||

- 支持简单快速的把DVD翻录成Xvid AVI

|

||||

- 支持简单快速的把常见的视频(avi, mpeg, mov, wmv, asf)转换成Xvid AVI

|

||||

- 支持简单快速的把FLV 换换成AVI 格式

|

||||

- 支持从YouTube和一些视频网站下载视频

|

||||

- 支持提取一个有密码的RAR存档

|

||||

- 支持转换任何的视频到索尼便携式PSP上

|

||||

- 按兆数分拆和合并镜像

|

||||

- 以高压缩比将镜像压缩成7z 格式

|

||||

- 翻录PSX CD成BIN格式,以便在ePSXe/pSX模拟器里运行

|

||||

- 为BIN和IMG格式恢复丢失的 CUE 文件

|

||||

- 把MAC OS的DMG镜像转换成可挂载的镜像

|

||||

- 从指定的文件夹中挂载镜像

|

||||

- 创建数据库来管理一个大的镜像集合

|

||||

- 从CD/DVD 或者是ISO镜像中提取启动文件

|

||||

- 备份CD成BIN镜像

|

||||

- 简单快速的把DVD翻录成Xvid AVI

|

||||

- 简单快速的把常见的视频(avi, mpeg, mov, wmv, asf)转换成Xvid AVI

|

||||

- 简单快速的把FLV 换换成AVI 格式

|

||||

- 从YouTube和一些视频网站下载视频

|

||||

- 提取一个有密码的RAR存档

|

||||

- 支持转换任何的视频到PSP上

|

||||

- 国际化的语言支持支持(英语,意大利语,波兰语,西班牙语,罗马尼亚语,匈牙利语,德语,捷克语和俄语)

|

||||

|

||||

---

|

||||

|

||||

- 项目网址: [sourceforge.net/projects/acetoneiso][4]

|

||||

- 开发者: Marco Di Antonio

|

||||

- 许可: GNU GPL v3

|

||||

- 版本号: 2.3

|

||||

|

||||

----------

|

||||

|

||||

|

||||

###ISO Master

|

||||

|

||||

|

||||

|

||||

ISO Master是一个开源、易用的、图形化CD 镜像编辑器,适用于Linux 和BSD 。可以从ISO 里提取文件,给ISO 添加文件,创建一个可引导的ISO,这些都是在一个可视化的用户界面完成的。可以打开ISO,NRG 和一些MDF文件,但是只能保存成ISO 格式。

|

||||

ISO Master是一个开源、易用的、图形化CD 镜像编辑器,适用于Linux 和BSD 。可以从ISO 里提取文件,给ISO 里面添加文件,创建一个可引导的ISO,这些都是在一个可视化的用户界面完成的。可以打开ISO,NRG 和一些MDF文件,但是只能保存成ISO 格式。

|

||||

|

||||

ISO Master 是基于bkisofs 创建的,一个简单、稳定的阅读库,修改和编写ISO 镜像,支持Joliet, RockRidge 和EL Torito扩展,

|

||||

ISO Master 是基于bkisofs 创建的,这是一个简单、稳定的阅读,修改和编写ISO 镜像的软件库,支持Joliet, RockRidge 和EL Torito扩展,

|

||||

|

||||

特性:

|

||||

|

||||

- 支持读ISO 格式文件(ISO9660, Joliet, RockRidge 和 El Torito),大多数的NRG 格式文件和一些单向的MDF文件,但是,只能保存成ISO 格式

|

||||

- 支持读ISO 格式文件(ISO9660, Joliet, RockRidge 和 El Torito),大多数的NRG 格式文件和一些单轨道的MDF文件,但是,只能保存成ISO 格式

|

||||

- 创建和修改一个CD/DVD 格式文件

|

||||

- 支持CD 格式文件的添加或删除文件和目录

|

||||

- 支持创建可引导的CD/DVD

|

||||

- 国际化的支持

|

||||

|

||||

---

|

||||

|

||||

- 项目网址: [www.littlesvr.ca/isomaster/][5]

|

||||

- 开发者: Andrew Smith

|

||||

- 许可: GNU GPL v2

|

||||

@ -157,7 +161,7 @@ via: http://www.linuxlinks.com/article/20141025082352476/DiskImageTools.html

|

||||

|

||||

作者:Frazer Kline

|

||||

译者:[barney-ro](https://github.com/barney-ro)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,6 +1,6 @@

|

||||

Linux 有问必答 -- 如何修复“hda-duplex not supported in this QEMU binary”(hda-duplex在此QEMU文件中不支持)

|

||||

Linux 有问必答:如何修复“hda-duplex not supported in this QEMU binary”

|

||||

================================================================================

|

||||

> **提问**: 当我尝试在虚拟机中安装一个新的Linux时,虚拟机不能启动且报了下面这个错误:“不支持的配置:hda-duplex在此QEMU文件中不支持。” 我该如何修复?

|

||||

> **提问**: 当我尝试在虚拟机中安装一个新的Linux时,虚拟机不能启动且报了下面这个错误:"unsupported configuration: hda-duplex not supported in this QEMU binary."(“不支持的配置:hda-duplex在此QEMU文件中不支持。”) 我该如何修复?

|

||||

|

||||

这个错误可能来自一个当默认声卡型号不能被识别时的一个qemu bug。

|

||||

|

||||

@ -20,7 +20,7 @@ Linux 有问必答 -- 如何修复“hda-duplex not supported in this QEMU binar

|

||||

|

||||

### 方案二: Virsh ###

|

||||

|

||||

如果你使用的是**virt-manager** 而不是**virt-manager**, 你可以编辑VM相应的配置文件。在<device>节点中查找**sound**节点,并按照下面的默认声卡型号改成**ac97**。

|

||||

如果你使用的是**virsh** 而不是**virt-manager**, 你可以编辑VM相应的配置文件。在<device>节点中查找**sound**节点,并按照下面的默认声卡型号改成**ac97**。

|

||||

|

||||

<devices>

|

||||

. . .

|

||||

@ -35,6 +35,6 @@ Linux 有问必答 -- 如何修复“hda-duplex not supported in this QEMU binar

|

||||

via: http://ask.xmodulo.com/hda-duplex-not-supported-in-this-qemu-binary.html

|

||||

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

@ -1,14 +1,15 @@

|

||||

修复了60个bug的LibreOffice 4.3.4正式发布,4.4版本开发工作有序进行中

|

||||

修复了60个bug的LibreOffice 4.3.4正式发布

|

||||

================================================================================

|

||||

|

||||

|

||||

|

||||

**[上两周][1], 文档基金会带着它的开源LibreOffice生产力套件的又一个次版本回来了。**

|

||||

**[前一段时间][1], 文档基金会带着它的开源LibreOffice生产力套件的又一个小版本更新回来了。**

|

||||

|

||||

LibreOffice 4.3.4,新系列中的第四个次版本,是单独由修复好的bug构成的一个版本,不出乎意料地以点版本形式发行。

|

||||

LibreOffice 4.3.4,新系列中的第四个次版本,该版本只包含 BUG 修复,按计划发布了。

|

||||

|

||||

除了增加了即视感,基金会所说的在developers’ butterfly net上被揪出来并且修复的bug数量大概有:60个左右。

|

||||

可以看到的变化是,如基金会所说的在developers’ butterfly net上被揪出来并且修复的bug数量大概有:60个左右。

|

||||

|

||||

- 排序操作现在还是默认为旧的样式(Calc)

|

||||

- 排序操作现在还是默认为旧式风格(Calc)

|

||||

- 在预览后恢复焦点窗口(Impress)

|

||||

- 图表向导对话框不再是‘切除’式

|

||||

- 修复了记录改变时的字数统计问题 (Writer)

|

||||

@ -28,13 +29,13 @@ LibreOffice 4.3.4,新系列中的第四个次版本,是单独由修复好的

|

||||

|

||||

|

||||

|

||||

来自LibreOffice 4.4的信息栏

|

||||

*来自LibreOffice 4.4的信息栏*

|

||||

|

||||

LibreOffice 4.4应该给予大家多一点希望。

|

||||

LibreOffice 4.4应该会让大家更多期望。

|

||||

|

||||

[维基上讲述了][4]正在进行中的不间断大范围GUI调整,包括一个新的颜色选择器,重新设计的段落行距选择器和一个在凸显部位表示该文件是否为只读模式的信息栏。

|

||||

|

||||

虽然以上大规模的界面变动我知道一些桌面社区的抗议声不断,但是他们还是朝着正确的方向稳步前进。

|

||||

虽然我知道一些桌面社区对这些大规模的界面变动的抗议声不断,但是他们还是朝着正确的方向稳步前进。

|

||||

|

||||

要记住,在一些必要情况下,LibreOffice对于企业和机构来说是一款非常重要的软件。在外观和布局上有任何引人注目的修改都会引发一串连锁效应。

|

||||

|

||||

@ -46,7 +47,7 @@ via: http://www.omgubuntu.co.uk/2014/11/libreoffice-4-3-4-arrives-bundle-bug-fix

|

||||

|

||||

作者:[Joey-Elijah Sneddon][a]

|

||||

译者:[ZTinoZ](https://github.com/ZTinoZ)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,6 +1,6 @@

|

||||

使用GDB命令行调试器调试C/C++程序

|

||||

============================================================

|

||||

没有调试器的情况下编写程序时最糟糕的状况是什么?编译时,跪着祈祷不要出错?用生命在运行可执行程序(blood offering不知道怎么翻译好...)?或者在每一行代码间添加printf("test")语句来定位错误点?如你所知,编写程序时不使用调试器的话是不利的。幸好,linux下调试还是很方便的。大多数人使用的IDE都集成了调试器,但linxu著名的调试器是命令行形式的C/C++调试器GDB。然而,与其他命令行工具一致,DGB需要一定的练习才能完全掌握。这里,我会告诉你GDB的基本情况及使用方法。

|

||||

没有调试器的情况下编写程序时最糟糕的状况是什么?编译时跪着祈祷不要出错?用血祭召唤恶魔帮你运行可执行程序?或者在每一行代码间添加printf("test")语句来定位错误点?如你所知,编写程序时不使用调试器的话是不方便的。幸好,linux下调试还是很方便的。大多数人使用的IDE都集成了调试器,但 linux 最著名的调试器是命令行形式的C/C++调试器GDB。然而,与其他命令行工具一致,DGB需要一定的练习才能完全掌握。这里,我会告诉你GDB的基本情况及使用方法。

|

||||

|

||||

###安装GDB###

|

||||

|

||||

@ -18,11 +18,11 @@ Fedora,CentOS 或 RHEL:

|

||||

|

||||

$sudo yum install gdb

|

||||

|

||||

如果在仓库中找不到的话,可以从官网中下载[official page][1]

|

||||

如果在仓库中找不到的话,可以从[官网中下载][1]。

|

||||

|

||||

###示例代码###

|

||||

|

||||

当学习GDB时,最好有一份代码,动手试验。下列代码是我编写的简单例子,它可以很好的体现GDB的特性。将它拷贝下来并且进行实验。这是最好的方法。

|

||||

当学习GDB时,最好有一份代码,动手试验。下列代码是我编写的简单例子,它可以很好的体现GDB的特性。将它拷贝下来并且进行实验——这是最好的方法。

|

||||

|

||||

#include <stdio.h>

|

||||

#include <stdlib.h>

|

||||

@ -48,21 +48,21 @@ Fedora,CentOS 或 RHEL:

|

||||

|

||||

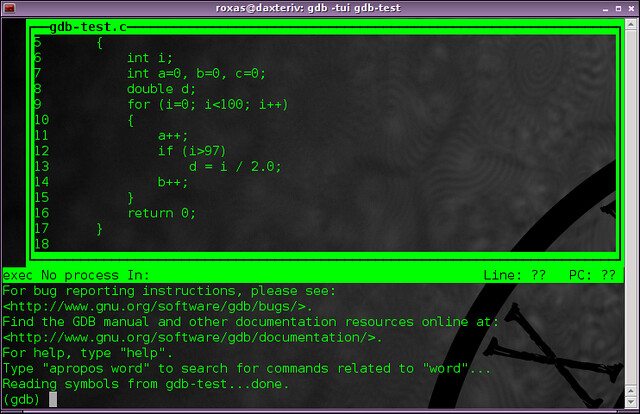

$ gdb -tui [executable's name]

|

||||

|

||||

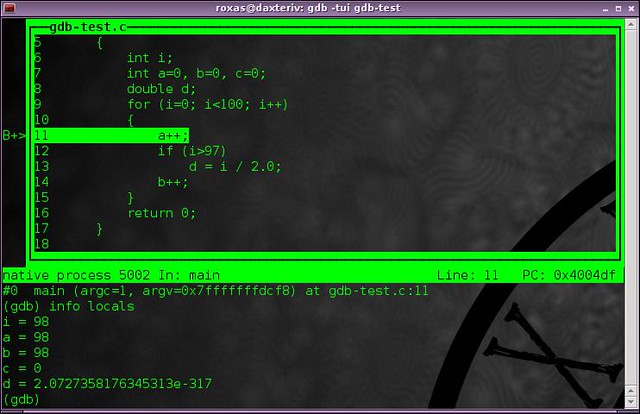

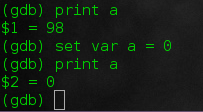

使用”-tui“选项可以将代码显示在一个窗口内(被称为”文本接口”),在这个窗口内可以使用光标来操控,同时在下面输入GDB shell命令。

|

||||

使用”-tui“选项可以将代码显示在一个漂亮的交互式窗口内(所以被称为“文本用户界面 TUI”),在这个窗口内可以使用光标来操控,同时在下面的GDB shell中输入命令。

|

||||

|

||||

|

||||

|

||||

现在我们可以在程序的任何地方设置断点。你可以通过下列命令来为当前源文件的某一行设置断点。

|

||||

|

||||

break [line number]

|

||||

break [行号]

|

||||

|

||||

或者为一个特定的函数设置断点:

|

||||

|

||||

break [function name]

|

||||

break [函数名]

|

||||

|

||||

甚至可以设置条件断点

|

||||

|

||||

break [line number] if [condition]

|

||||

break [行号] if [条件]

|

||||

|

||||

例如,在我们的示例代码中,可以设置如下:

|

||||

|

||||

@ -74,23 +74,25 @@ Fedora,CentOS 或 RHEL:

|

||||

|

||||

最后但也是很重要的是,我们可以设置一个“观察断点”,当这个被观察的变量发生变化时,程序会被停止。

|

||||

|

||||

watch [variable]

|

||||

watch [变量]

|

||||

|

||||

可以设置如下:

|

||||

这里我们可以设置如下:

|

||||

|

||||

watch d

|

||||

|

||||

当d的值发生变化时程序会停止运行(例如,当i>97为真时)。

|

||||

当设置后断点后,使用"run"命令开始运行程序,或按如下所示:

|

||||

|

||||

当设置断点后,使用"run"命令开始运行程序,或按如下所示:

|

||||

|

||||

r [程序的输入参数(如果有的话)]

|

||||

|

||||

gdb中,大多数的单词都可以简写为一个字母。

|

||||

gdb中,大多数的命令单词都可以简写为一个字母。

|

||||

|

||||

不出意外,程序会停留在11行。这里,我们可以做些有趣的事情。下列命令:

|

||||

|

||||

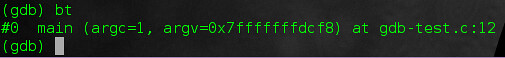

bt

|

||||

|

||||

回溯功能可以让我们知道程序如何到达这条语句的。

|

||||

回溯功能(backtrace)可以让我们知道程序如何到达这条语句的。

|

||||

|

||||

|

||||

|

||||

@ -98,16 +100,15 @@ gdb中,大多数的单词都可以简写为一个字母。

|

||||

|

||||

这条语句会显示所有的局部变量以及它们的值(你可以看到,我没有为d设置初始值,所以它现在的值是任意值)。

|

||||

|

||||

当然

|

||||

当然:

|

||||

|

||||

|

||||

|

||||

p [variable]

|

||||

p [变量]

|

||||

|

||||

这可以显示特定变量的值,但是还有更好的:

|

||||

|

||||

ptype [variable]

|

||||

这个命令可以显示特定变量的值,而更进一步:

|

||||

|

||||

ptype [变量]

|

||||

|

||||

可以显示变量的类型。所以这里可以确定d是double型。

|

||||

|

||||

@ -115,11 +116,11 @@ gdb中,大多数的单词都可以简写为一个字母。

|

||||

|

||||

既然已经到这一步了,我么不妨这么做:

|

||||

|

||||

set var [variable] = [new value]

|

||||

set var [变量] = [新的值]

|

||||

|

||||

这样会覆盖变量的值。不过需要注意,你不能创建一个新的变量或改变变量的类型。我们可以这样做:

|

||||

|

||||

set var a = 0

|

||||

set var a = 0

|

||||

|

||||

|

||||

|

||||

@ -127,17 +128,17 @@ gdb中,大多数的单词都可以简写为一个字母。

|

||||

|

||||

step

|

||||

|

||||

使用如上命令,运行到下一条语句,也可以进入到一个函数里面。或者使用:

|

||||

使用如上命令,运行到下一条语句,有可能进入到一个函数里面。或者使用:

|

||||

|

||||

next

|

||||

|

||||

这可以直接下一条语句,并且不进入子函数内部。

|

||||

这可以直接运行下一条语句,而不进入子函数内部。

|

||||

|

||||

|

||||

|

||||

结束测试后,删除断点:

|

||||

|

||||

delete [line number]

|

||||

delete [行号]

|

||||

|

||||

从当前断点继续运行程序:

|

||||

|

||||

@ -147,7 +148,7 @@ gdb中,大多数的单词都可以简写为一个字母。

|

||||

|

||||

quit

|

||||

|

||||

总结,有了GDB,编译时不用祈祷上帝了,运行时不用血祭(?)了,再也不用printf(“test“)了。当然,这里所讲的并不完整,而且GDB的功能远不止这些。所以我强烈建议你自己更加深入的学习它。我现在感兴趣的是将GDB整合到Vim中。同时,这里有一个[备忘录][2]记录了GDB所有的命令行,以供查阅。

|

||||

总之,有了GDB,编译时不用祈祷上帝了,运行时不用血祭了,再也不用printf(“test“)了。当然,这里所讲的并不完整,而且GDB的功能远远不止于此。所以我强烈建议你自己更加深入的学习它。我现在感兴趣的是将GDB整合到Vim中。同时,这里有一个[备忘录][2]记录了GDB所有的命令行,以供查阅。

|

||||

|

||||

你对GDB有什么看法?你会将它与图形调试器对比吗,它有什么优势呢?对于将GDB集成到Vim有什么看法呢?将你的想法写到评论里。

|

||||

|

||||

@ -157,7 +158,7 @@ via: http://xmodulo.com/gdb-command-line-debugger.html

|

||||

|

||||

作者:[Adrien Brochard][a]

|

||||

译者:[SPccman](https://github.com/SPccman)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,8 +1,7 @@

|

||||

如何将Ubuntu14.04安全的升级到14.10

|

||||

如何将 Ubuntu14.04 安全的升级到14.10

|

||||

================================================================================

|

||||

本文将讨论如何将Ubuntu14.04升级到14.10的beta版。Ubuntu14.10的最终beta版已经发布了

|

||||

|

||||

如果想从Ubuntu14.04/13.10/13.04/12.10/12.04或者更老的版本升级到14.10,只要遵循下面给出的步骤。注意,你不能直接从13.10升级到14.10。你应该想将13.10升级到14.04在从14.04升级到14.10。下面是详细步骤。

|

||||

如果想从Ubuntu14.04/13.10/13.04/12.10/12.04或者更老的版本升级到14.10,只要遵循下面给出的步骤。注意,你不能直接从13.10升级到14.10。你应该先将13.10升级到14.04在从14.04升级到14.10。下面是详细步骤。

|

||||

|

||||

下面的步骤不仅能用于14.10,也兼容于一些像Lubuntu14.10,Kubuntu14.10和Xubuntu14.10等的Ubuntu衍生版本

|

||||

|

||||

@ -90,15 +89,15 @@

|

||||

|

||||

sudo do-release-upgrade -d

|

||||

|

||||

直到屏幕提示你已完成

|

||||

直到屏幕提示你已完成。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.unixmen.com/upgrade-ubuntu-14-04-trusty-ubuntu-14-10-utopic/

|

||||

|

||||

作者:SK

|

||||

译者:[译者ID](https://github.com/johnhoow)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

译者:[johnhoow](https://github.com/johnhoow)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,52 +0,0 @@

|

||||

6 Minesweeper Clones for Linux

|

||||

================================================================================

|

||||

### GNOME Mines ###

|

||||

|

||||

This is the GNOME Minesweeper clone, allowing you to choose from three different pre-defined table sizes (8×8, 16×16, 30×16) or a custom number of rows and columns. It can be ran in fullscreen mode, comes with highscores, elapsed time and hints. The game can be paused and resumed.

|

||||

|

||||

|

||||

|

||||

### ace-minesweeper ###

|

||||

|

||||

This is part of a package that contains some other games too, like ace-freecel, ace-solitaire or ace-spider. It has a graphical interface featuring Tux, but doesn’t seem to come with different table sizes. The package is called ace-of-penguins in Ubuntu.

|

||||

|

||||

|

||||

|

||||

### XBomb ###

|

||||

|

||||

XBomb is a mines game for the X Window System with three different table sizes and tiles which can take different shapes: hexagonal, rectangular (traditional) or triangular. Unfortunately the current version in Ubuntu 14.04 crashes with a segmentation fault, so you may need to install another version to make it work.

|

||||

[Homepage][1]

|

||||

|

||||

|

||||

|

||||

([Image credit][1])

|

||||

|

||||

### KMines ###

|

||||

|

||||

KMines is the a KDE game, and just like GNOME Mines, there are three built-in table sizes (easy, medium, hard) and custom, support for themes and highscores.

|

||||

|

||||

|

||||

|

||||

### freesweep ###

|

||||

|

||||

Freesweep is a Minesweeper clone for the terminal which allows you to configure settings such as table rows and columns, percentage of bombs, colors and also has a highscores table.

|

||||

|

||||

|

||||

|

||||

### xdemineur ###

|

||||

|

||||

Another graphical Minesweeper clone for X, Xdemineur is very much alike Ace-Minesweeper, with one predefined table size.

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.tuxarena.com/2014/10/6-minesweeper-clones-for-linux/

|

||||

|

||||

作者:Craciun Dan

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[1]:http://www.gedanken.org.uk/software/xbomb/

|

||||

@ -1,47 +0,0 @@

|

||||

Qshutdown – An avanced shutdown tool

|

||||

================================================================================

|

||||

qshutdown is a Qt program to shutdown/reboot/suspend/hibernate the computer at a given time or after a certain number of minutes. It shows the time until the corresponding request is send to either the Gnome- or KDE-session-manager, to HAL or to DeviceKit and if none of these works the command ‘sudo shutdown -P now' is used. This program may be useful for people who want to work with the computer only for a certain time.

|

||||

|

||||

qshutdown will show it self 3 times as a warning if there are less than 70 seconds left. (if 1 Minute or local time +1 Minute was set it’ll appear only once.)

|

||||

|

||||

This program uses qdbus to send a shutdown/reboot/suspend/hibernate request to either the gnome- or kde-session-manager, to HAL or to DeviceKit and if none of these works, the command ’sudo shutdown’ will be used (note that when sending the request to HAL or DeviceKit, or the shutdown command is used, the Session will never be saved. If the shutdown command is used, the program will only be able to shutdown and reboot). So if nothing happens when the shutdown- or reboot-time is reached, it means that one lacks the rights for the shutdown command.

|

||||

|

||||

In this case one can do the following:

|

||||

|

||||

Post the following in a terminal: "EDITOR:nano sudo -E visudo" and add this line: "* ALL = NOPASSWD:/sbin/shutdown" whereas * replaces the username or %groupname.

|

||||

|

||||

Configurationfile qshutdown.conf

|

||||

|

||||

The maximum Number of countdown_minutes is 1440 (24 hours).The configurationfile (and logfile) is located at ~/.qshutdown

|

||||

|

||||

For admins:

|

||||

|

||||

With the option Lock_all in qshutdown.conf set to true the user won’t be able to change any settings. If you change the permissions of qshutdown.conf with "sudo chown root -R ~/.qshutdown" and "sudo chmod 744 ~/.qshutdown/qshutdown.conf", the user won’t be able to change anything in the configurationfile.

|

||||

|

||||

### Install Qshutdown in Ubuntu ###

|

||||

|

||||

Open the terminal and run the following command

|

||||

|

||||

sudo apt-get install qshutdown

|

||||

|

||||

### Screenshots ###

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.ubuntugeek.com/qshutdown-an-avanced-shutdown-tool.html

|

||||

|

||||

作者:[ruchi][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.ubuntugeek.com/author/ubuntufix

|

||||

@ -1,4 +1,4 @@

|

||||

[translating by KayGuoWhu]

|

||||

[Translating by Stevearzh]

|

||||

When hackers grow old

|

||||

================================================================================

|

||||

Lately I’ve been wrestling with various members of an ancient and venerable open-source development group which I am not going to name, though people who regularly follow my adventures will probably guess which one it is by the time I’m done venting.

|

||||

|

||||

@ -0,0 +1,55 @@

|

||||

Four ways Linux is headed for no-downtime kernel patching

|

||||

================================================================================

|

||||

|

||||

Credit: Shutterstock

|

||||

|

||||

These technologies are competing to provide the best way to patch the Linux kernel without reboots or downtime

|

||||

|

||||

Nobody loves a reboot, especially not if it involves a late-breaking patch for a kernel-level issue that has to be applied stat.

|

||||

|

||||

To that end, three projects are in the works to provide a mechanism for upgrading the kernel in a running Linux instance without having to reboot anything.

|

||||

|

||||

### Ksplice ###

|

||||

|

||||

The first and original contender is Ksplice, courtesy of a company of the same name founded in 2008. The kernel being replaced does not have to be pre-modified; all it needs is a diff file listing the changes to be made to the kernel source. Ksplice, Inc. offered support for the (free) software as a paid service and supported most common Linux distributions used in production.

|

||||

|

||||

All that changed in 2011, when [Oracle purchased the company][1], rolled the feature into its own Linux distribution, and kept updates for the technology to itself. As a result, other intrepid kernel hackers have been looking for ways to pick up where Ksplice left off, without having to pay the associated Oracle tax.

|

||||

|

||||

### Kgraft ###

|

||||

|

||||

In February 2014, Suse provided the exact solution needed: [Kgraft][2], its kernel-update technology released under a mixed GPLv2/GPLv3 license and not kept close as a proprietary creation. It's since been [submitted][3] as a possible inclusion to the mainline Linux kernel, although Suse has rolled a version of the technology into [Suse Linux Enterprise Server 12][4].

|

||||

|

||||

Kgraft works roughly like Ksplice by using a set of diffs to figure out what parts of the kernel to replace. But unlike Ksplice, Kgraft doesn't need to stop the kernel entirely to replace it. Any running functions can be directed to their old or new kernel-level counterparts until the patching process is finished.

|

||||

|

||||

### Kpatch ###

|

||||

|

||||

Red Hat came up with its own no-reboot kernel-patch mechanism, too. Also introduced earlier this year -- right after Suse's work in that vein, no less -- [Kpatch][5] works in roughly the same manner as Kgraft.

|

||||

|

||||

The main difference, [as outlined][6] by Josh Poimboeuf of Red Hat, is that Kpatch doesn't redirect calls to old kernel functions. Rather, it waits until all function calls have stopped, then swaps in the new kernel. Red Hat's engineers consider this approach safer, with less code to maintain, albeit at the cost of more latency during the patch process.

|

||||

|

||||

Like Kgraft, Kpatch has been submitted for consideration as a possible kernel inclusion and can be used with Linux kernels other than Red Hat's. The bad news is that Kpatch isn't yet considered production-ready by Red Hat. It's included as part of Red Hat Enterprise Linux 7, but only in the form of a technology preview.

|

||||

|

||||

### ...or Kgraft + Kpatch? ###

|

||||

|

||||

A fourth solution [proposed by Red Hat developer Seth Jennings][7] early in November 2014 is a mix of both the Kgraft and Kpatch approaches, using patches built for either one of those solutions. This new approach, Jennings explained, "consists of a live patching 'core' that provides an interface for other 'patch' kernel modules to register patches with the core." This way, the patching process -- specifically, how to deal with any running kernel functions -- can be handled in a more orderly fashion.

|

||||

|

||||

The sheer newness of these proposals means it'll be a while before any of them are officially part of the Linux kernel, although Suse's chosen to move fast and made it a part of its latest enterprise offering. Let's see if Red Hat and Canonical choose to follow suit in the short run as well.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.infoworld.com/article/2851028/linux/four-ways-linux-is-headed-for-no-downtime-kernel-patching.html

|

||||

|

||||

作者:[Serdar Yegulalp][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.infoworld.com/author/Serdar-Yegulalp/

|

||||

[1]:http://www.infoworld.com/article/2622437/open-source-software/oracle-buys-ksplice-for-linux--zero-downtime--tech.html

|

||||

[2]:http://www.infoworld.com/article/2610749/linux/suse-open-sources-live-updater-for-linux-kernel.html

|

||||

[3]:https://lwn.net/Articles/596854/

|

||||

[4]:http://www.infoworld.com/article/2838421/linux/suse-linux-enterprise-12-goes-light-on-docker-heavy-on-reliability.html

|

||||

[5]:https://github.com/dynup/kpatch

|

||||

[6]:https://lwn.net/Articles/597123/

|

||||

[7]:http://lkml.iu.edu/hypermail/linux/kernel/1411.0/04020.html

|

||||

@ -1,78 +0,0 @@

|

||||

alim0x translating

|

||||

|

||||

The history of Android

|

||||

================================================================================

|

||||

|

||||

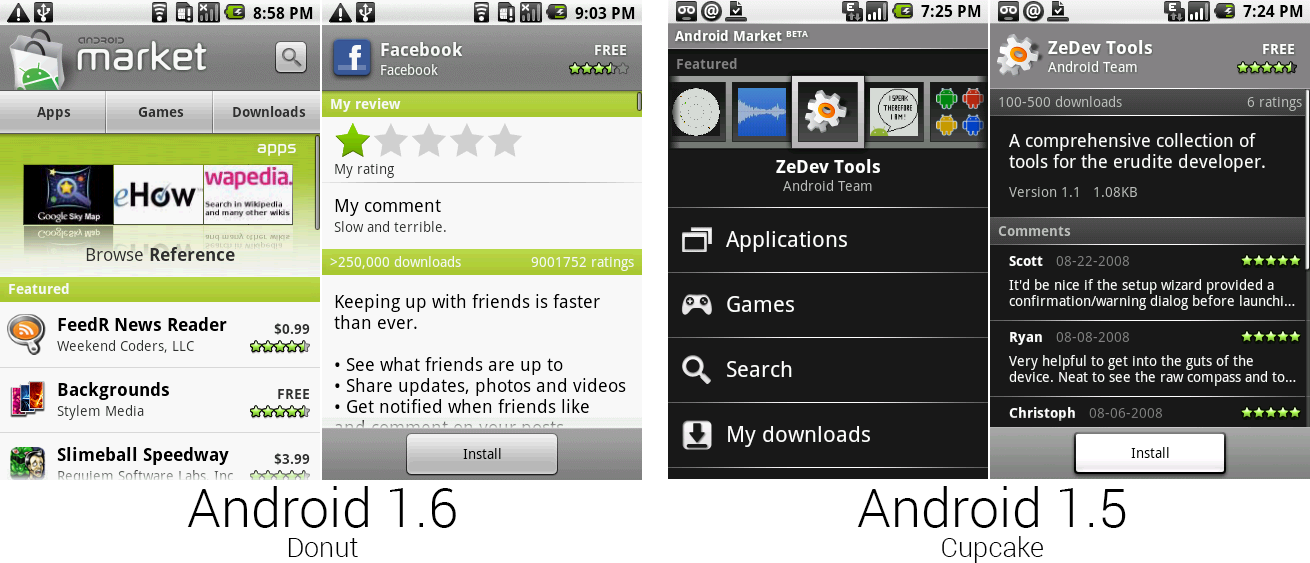

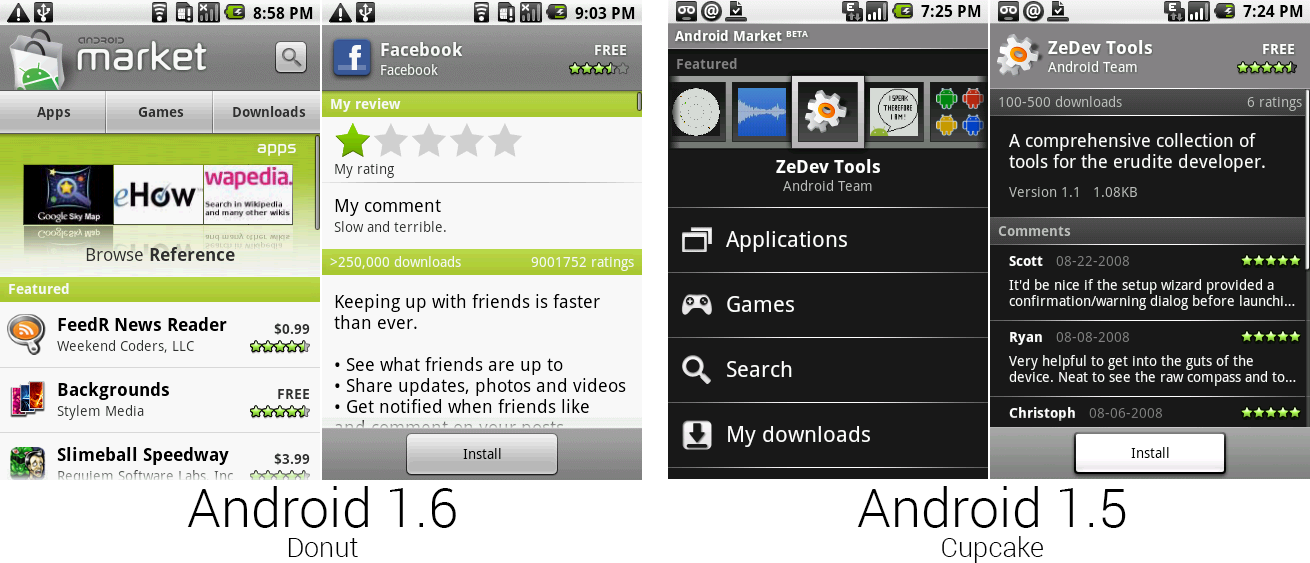

The new Android Market—less black, more white and green.

|

||||

Photo by Ron Amadeo

|

||||

|

||||

### Android 1.6, Donut—CDMA support brings Android to any carrier ###

|

||||

|

||||

The fourth version of Android—1.6, Donut—launched in September 2009, five months after Cupcake hit the market. Despite the myriad of updates, Google was still adding basic functionality to Android. Donut brought support for different screen sizes, CDMA support, and a text-to-speech engine.

|

||||

|

||||

Android 1.6 is a great example of an update that, today, would have little reason to exist as a separate point update. The major improvements basically boiled down to new versions of the Android Market, camera, and YouTube. In the years since, apps like this have been broken out of the OS and can be updated by Google at any time. Before all this modularization work, though, even seemingly minor app updates like this required a full OS update.

|

||||

|

||||

The other big improvement—CDMA support—demonstrated that, despite the version number, Google was still busy getting basic functionality into Android.

|

||||

|

||||

The Android Market was christened as version "1.6" and got a complete overhaul. The original all-black design was tossed in favor of a white app with green highlights—the Android designers were clearly using the Android mascot for inspiration.

|

||||

|

||||

The new market was definitely a new style of app design for Google. The top fifth of the screen was dedicated to a banner logo announcing that this app is indeed the “Android Market." Below the banner were buttons for Apps, Games, and Downloads, and a search button was placed to the right of the banner. Below the navigation was a thumbnail display of featured apps, which could be swiped through. Below that were even more featured apps in a vertically scrolling list.

|

||||

|

||||

|

||||

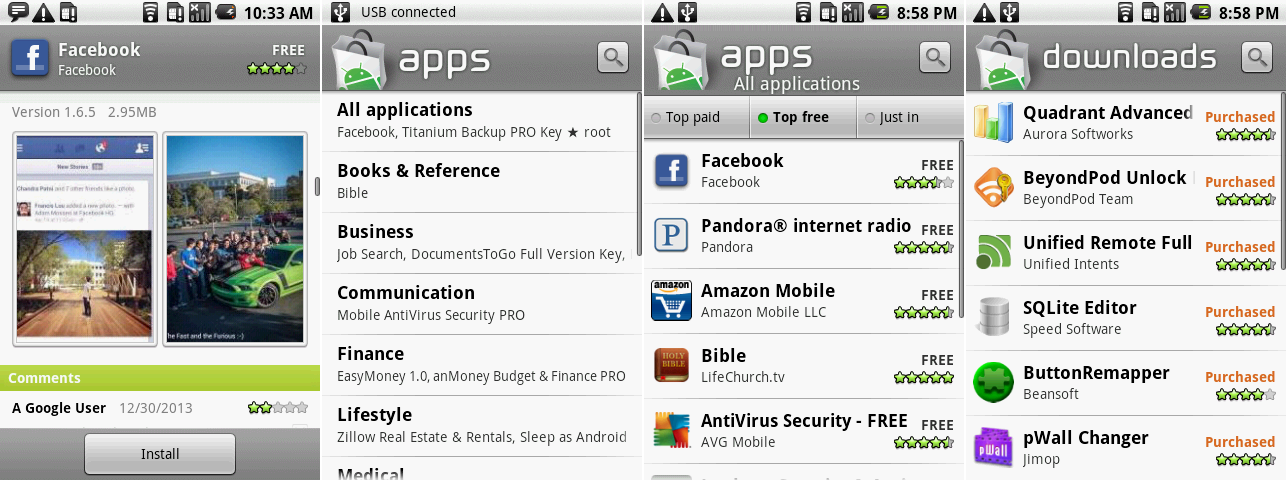

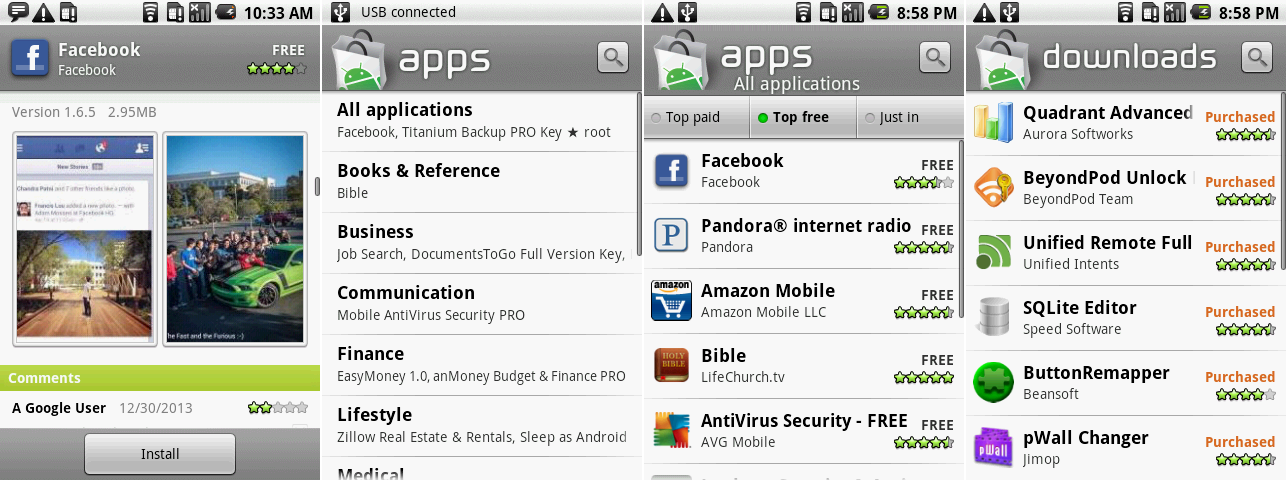

The new Market design, showing an app page with screenshots, the apps categories page, an app top list, and the downloads section.

|

||||

Photo by Ron Amadeo

|

||||

|

||||

The biggest addition to the market was the inclusion of app screenshots. Android users could finally see what an app looked like before installing it—previously they only had a brief description and user reviews to go on. Your personal star review and comment was given top billing, followed by the description, and then finally the screenshots. Viewing the screenshots would often require a bit of scrolling—if you were looking for a well-designed app, it was a lot of work.

|

||||

|

||||

Tapping on App or Games would bring up a category list, which you can see in the second picture, above. After picking a category, more navigation was shown at the top of the screen, where users could see "Top paid," "Top free," or "Just in" apps within a category. While these sorta looked like buttons that would load a new screen, they were really just a clunky tabbed interface. To denote which "tab" was currently active, there were little green lights next to each button. The nicest part of this interface was that the list of apps would scroll infinitely—once you hit the bottom, more apps would load in. This made it easy to look through the list of apps, but opening any app and coming back would lose your spot in the list—you’d be kicked to the top. The downloads section would do something the new Google Play Store still can't do: simply display a list of your purchased apps.

|

||||

|

||||

While the new Market definitely looked better than the old market, cohesion across apps was getting worse and worse. It seemed like each app was made by a different group with no communication about how all Android apps should look.

|

||||

|

||||

|

||||

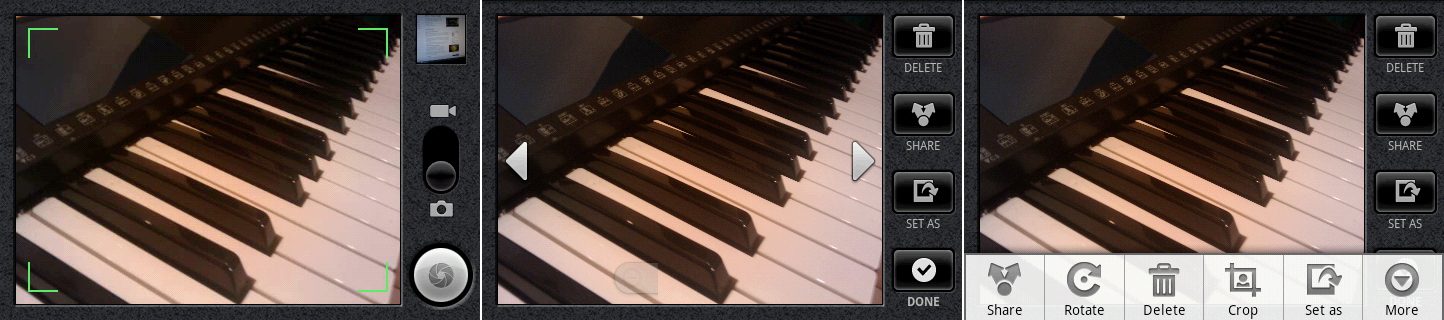

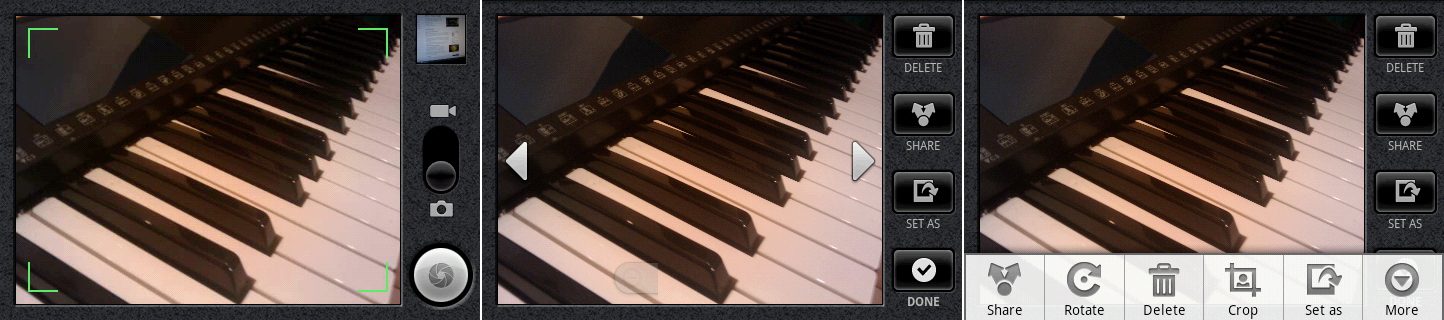

The Camera viewfinder, photo review screen, and menu.

|

||||

Photo by Ron Amadeo

|

||||

|

||||

For instance, the camera app was changed from a full-screen, minimal design to a boxed viewfinder with controls on the side. With the new camera app, Google tried its hand at skeuomorphism, wrapping the whole app in a leather texture roughly replicating the exterior of a classic camera. Switching between the camera and camcorder was done with a literal switch, and below that was the on-screen shutter button.

|

||||

|

||||

Tapping on the previous picture thumbnail no longer launched the gallery, but a custom image viewer that was built in to the camera app. When viewing a picture the leather control area changed the camera controls to picture controls, where you could delete, share a picture, or set the picture as a wallpaper or contact image. There was still no swiping between pictures—that was still done with arrows on either side of the image.

|

||||

|

||||

This second picture shows one of the first examples of designers reducing dependence on the menu button, which the Android team slowly started to realize functioned terribly for discoverability. Many app designers (including those within Google) used the menu as a dumping ground for all sorts of controls and navigational elements. Most users didn't think to hit the menu button, though, and never saw the commands.

|

||||

|

||||

A common theme for future versions of Android would be moving things out of the menu and on to the main screen, making the whole OS more user-friendly. The menu button was completely killed in Android 4.0, and it's only supported in Android for legacy apps.

|

||||

|

||||

|

||||

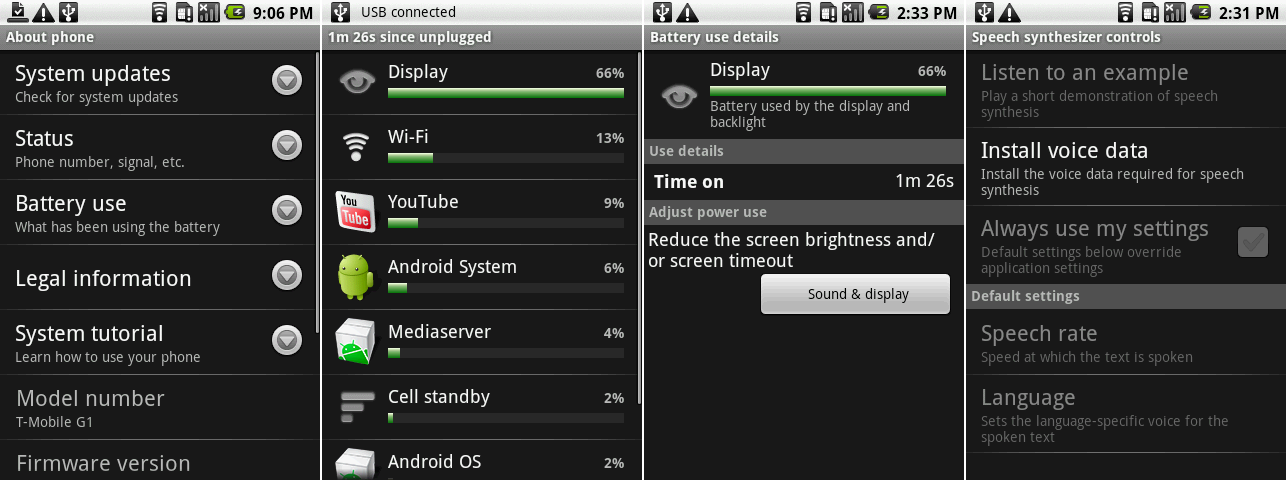

The battery and TTS settings.

|

||||

Photo by Ron Amadeo

|

||||

|

||||

Donut was the first Android version to keep track of battery usage. Buried in the "About phone" menu was an option called "Battery use," which would display battery usage by app and hardware function as a percentage. Tapping on an item would bring up a separate page with relevant stats. Hardware items had buttons to jump directly to their settings, so for instance, you could change the display timeout if you felt the display battery usage was too high.

|

||||

|

||||

Android 1.6 was also the first version to support text-to-speech (TTS) engines, meaning the OS and apps would be able to talk back to you in a robot voice. The “Speech synthesizer controls" would allow you to set the language, choose the speech rate, and (critically) install the voice data from the Android market. Today, Google has its own TTS engine that ships with Android, but it seems Donut was hard coded to accept one specific TTS engine made by SVOX. But SVOX’s engine didn’t ship with Donut, so tapping on “install voice data" linked to an app in the Android Market. (In the years since Donut’s heyday, the app has been taken down. It seems Android 1.6 will never speak again.)

|

||||

|

||||

|

||||

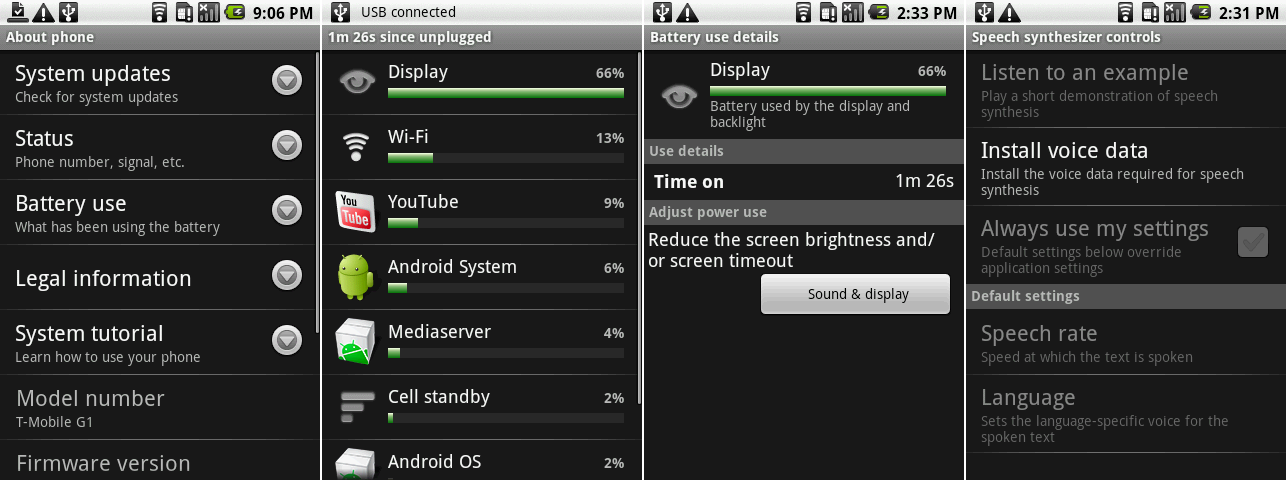

From left to right: new widgets, the search bar UI, the new notification clear button, and the new gallery controls.

|

||||

Photo by Ron Amadeo

|

||||

|

||||

There was more work on the widget front. Donut brought an entirely new widget called "Power control." This comprised on/off switches for common power-hungry features: Wi-FI, Bluetooth, GPS, Sync (to Google's servers), and brightness.

|

||||

|

||||

The search widget was redesigned to be much slimmer looking, and it had an embedded microphone button for voice search. It now had some actual UI to it and did find-as-you-type live searching, which searched not only the Internet, but your applications and history too.

|

||||

|

||||

The "Clear notifications" button has shrunk down considerably and lost the "notifications" text. In later Android versions it would be reduced to just a square button. The Gallery continues the trend of taking functionality out of the menu and putting it in front of the user—the individual picture view gained buttons for "Set as," "Share," and "Delete."

|

||||

|

||||

----------

|

||||

|

||||

|

||||

|

||||

[Ron Amadeo][a] / Ron is the Reviews Editor at Ars Technica, where he specializes in Android OS and Google products. He is always on the hunt for a new gadget and loves to rip things apart to see how they work.

|

||||

|

||||

[@RonAmadeo][t]

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://arstechnica.com/gadgets/2014/06/building-android-a-40000-word-history-of-googles-mobile-os/9/

|

||||

|

||||

译者:[译者ID](https://github.com/译者ID) 校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://arstechnica.com/author/ronamadeo

|

||||

[t]:https://twitter.com/RonAmadeo

|

||||

@ -1,155 +0,0 @@

|

||||

翻译中 by coloka

|

||||

How to Debug CPU Regressions Using Flame Graphs

|

||||

================================================================================

|

||||