mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-02-25 00:50:15 +08:00

Merge remote-tracking branch 'LCTT/master'

This commit is contained in:

commit

fa711aad6b

@ -7,7 +7,7 @@

|

||||

|

||||

|

||||

|

||||

使用这种方式的明显好处就是你可以通过使用他们各自的官方应用访问你的各种云存储。目前,提供官方Linux客户端的服务提供商有[SpiderOak](1), [Dropbox](2), [Ubuntu One](3),[Copy](5)。[Ubuntu One](3)虽不出名但的确是[一个不错的云存储竞争着](4)。[Copy][5]则提供比Dropbox更多的空间,是[Dropbox的替代选择之一](6)。使用这些官方Linux客户端可以保持你的电脑与他们的服务器之间的通信,还可以让你进行属性设置,如选择性同步。

|

||||

使用这种方式的明显好处就是你可以通过使用他们各自的官方应用访问你的各种云存储。目前,提供官方Linux客户端的服务提供商有[SpiderOak][1], [Dropbox][2], [Ubuntu One][3],[Copy][5]。[Ubuntu One][3]虽不出名但的确是[一个不错的云存储竞争着][4]。[Copy][5]则提供比Dropbox更多的空间,是[Dropbox的替代选择之一][6]。使用这些官方Linux客户端可以保持你的电脑与他们的服务器之间的通信,还可以让你进行属性设置,如选择性同步。

|

||||

|

||||

对于普通桌面用户,使用官方客户端是最好的选择,因为官方客户端可以提供最多的功能和最好的兼容性。使用它们也很简单,只需要下载他们对应你的发行版的软件包,然后安装安装完后在运行一下就Ok了。安装客户端时,它一般会指导你完成这些简单的过程。

|

||||

|

||||

@ -25,9 +25,9 @@

|

||||

|

||||

当你运行最后一条命令后,脚本会提醒你这是你第一次运行这个脚本。它将告诉你去浏览一个Dropbox的特定网页以便访问你的账户。它还会告诉你所有你需要放入网站的信息,这是为了让Dropbox给你App Key和App Secret以及赋予这个脚本你给予的访问权限。现在脚本就拥有了访问你账户的合法授权了。

|

||||

|

||||

这些一旦完成,你就可以这个脚本执行各种任务了,例如上传、下载、删除、移动、复制、创建文件夹、查看文件、共享文件、查看文件信息和取消共享。对于全部的语法解释,你可以查看一下[这个页面](9)。

|

||||

这些一旦完成,你就可以这个脚本执行各种任务了,例如上传、下载、删除、移动、复制、创建文件夹、查看文件、共享文件、查看文件信息和取消共享。对于全部的语法解释,你可以查看一下[这个页面][9]。

|

||||

|

||||

###通过[Storage Made Easy](7)将SkyDrive带到Linux上

|

||||

###通过[Storage Made Easy][7]将SkyDrive带到Linux上

|

||||

|

||||

微软并没有提供SkyDrive的官方Linux客户端,这一点也不令人惊讶。但是你并不意味着你不能在Linux上访问SkyDrive,记住:SkyDrive的web版本是可用的。

|

||||

|

||||

@ -41,7 +41,7 @@

|

||||

|

||||

第一次启动时。它会要求你登录,还有询问你要把云存储挂载到什么地方。在你做完了这些后,你就可以浏览你选择的文件夹,你还可以访问你的Storage Made Easy空间以及你的SkyDrive空间了!这种方法对于那些想在Linux上使用SkyDrive的人来说非常好,对于想把他们的多个云存储服务整合到一个地方的人来说也很不错。这种方法的缺点是你无法使用他们各自官方客户端中可以使用的特殊功能。

|

||||

|

||||

因为现在在你的Linux桌面上也可以使用SkyDrive,接下来你可能需要阅读一下我写的[SkyDrive与Google Drive的比较](8)以便于知道究竟哪种更适合于你。

|

||||

因为现在在你的Linux桌面上也可以使用SkyDrive,接下来你可能需要阅读一下我写的[SkyDrive与Google Drive的比较][8]以便于知道究竟哪种更适合于你。

|

||||

|

||||

###结论

|

||||

|

||||

|

||||

125

published/20141223 Defending the Free Linux World.md

Normal file

125

published/20141223 Defending the Free Linux World.md

Normal file

@ -0,0 +1,125 @@

|

||||

守卫自由的 Linux 世界

|

||||

================================================================================

|

||||

|

||||

|

||||

**合作是开源的一部分。OIN 的 CEO Keith Bergelt 解释说,开放创新网络(Open Invention Network)模式允许众多企业和公司决定它们该在哪较量,在哪合作。随着开源的演变,“我们需要为合作创造渠道,否则我们将会有几百个团体把数十亿美元花费到同样的技术上。”**

|

||||

|

||||

[开放创新网络(Open Invention Network)][1],即 OIN,正在全球范围内开展让 Linux 远离专利诉讼的伤害的活动。它的努力得到了一千多个公司的热烈回应,它们的加入让这股力量成为了历史上最大的反专利管理组织。

|

||||

|

||||

开放创新网络以白帽子组织的身份创建于2005年,目的是保护 Linux 免受来自许可证方面的困扰。包括 Google、 IBM、 NEC、 Novell、 Philips、 [Red Hat][2] 和 Sony 这些成员的董事会给予了它可观的经济支持。世界范围内的多个组织通过签署自由 OIN 协议加入了这个社区。

|

||||

|

||||

创立开放创新网络的组织成员把它当作利用知识产权保护 Linux 的大胆尝试。它的商业模式非常的难以理解。它要求它的成员采用免版权许可证,并永远放弃由于 Linux 相关知识产权起诉其他成员的机会。

|

||||

|

||||

然而,从 Linux 收购风波——想想服务器和云平台——那时起,保护 Linux 知识产权的策略就变得越加的迫切。

|

||||

|

||||

在过去的几年里,Linux 的版图曾经历了一场变革。OIN 不必再向人们解释这个组织的定义,也不必再解释为什么 Linux 需要保护。据 OIN 的 CEO Keith Bergelt 说,现在 Linux 的重要性得到了全世界的关注。

|

||||

|

||||

“我们已经见到了一场人们了解到 OIN 如何让合作受益的文化变革,”他对 LinuxInsider 说。

|

||||

|

||||

### 如何运作 ###

|

||||

|

||||

开放创新网络使用专利权的方式创建了一个协作环境。这种方法有助于确保创新的延续。这已经使很多软件厂商、顾客、新型市场和投资者受益。

|

||||

|

||||

开放创新网络的专利证可以让任何公司、公共机构或个人免版权使用。这些权利的获得建立在签署者同意不会专为了维护专利而攻击 Linux 系统的基础上。

|

||||

|

||||

OIN 确保 Linux 的源代码保持开放的状态。这让编程人员、设备厂商、独立软件开发者和公共机构在投资和使用 Linux 时不用过多的担心知识产权的问题。这让对 Linux 进行重新打包、嵌入和使用的公司省了不少钱。

|

||||

|

||||

“随着版权许可证越来越广泛的使用,对 OIN 许可证的需求也变得更加的迫切。现在,人们正在寻找更加简单或更实用的解决方法”,Bergelt 说。

|

||||

|

||||

OIN 法律防御援助对成员是免费的。成员必须承诺不对 OIN 名单上的软件发起专利诉讼。为了保护他们的软件,他们也同意提供他们自己的专利。最终,这些保证将让几十万的交叉许可通过该网络相互连接起来,Bergelt 如此解释道。

|

||||

|

||||

### 填补法律漏洞 ###

|

||||

|

||||

“OIN 正在做的事情是非常必要的。它提供另一层 IP (知识产权)保护,”[休斯顿法律中心大学][3]的副教授 Greg R. Vetter 这样说道。

|

||||

|

||||

他回答 LinuxInsider 说,第二版 GPL 许可证被某些人认为提供了隐含的专利许可,但是律师们更喜欢明确的许可。

|

||||

|

||||

OIN 所提供的许可填补了这个空白。它还明确的覆盖了 Linux 内核。据 Vetter 说,明确的专利许可并不是 GPLv2 中的必要部分,但是这个部分被加入到了 GPLv3 中。(LCTT 译注:Linux 内核采用的是 GPLv2 的许可)

|

||||

|

||||

拿一个在 GPLv3 中写了10000行代码的代码编写者来说。随着时间推移,其他的代码编写者会贡献更多行的代码,也加入到了知识产权中。GPLv3 中的软件专利许可条款将基于所有参与的贡献者的专利,保护全部代码的使用,Vetter 如此说道。

|

||||

|

||||

### 并不完全一样 ###

|

||||

|

||||

专利权和许可证在法律结构上层层叠叠互相覆盖。弄清两者对开源软件的作用就像是穿越雷区。

|

||||

|

||||

Vetter 说“通常,许可证是授予建立在专利和版权法律上的额外权利的法律结构。许可证被认为是给予了人们做一些的可能会侵犯到其他人的知识产权权利的事的许可。”

|

||||

|

||||

Vetter 指出,很多自由开源许可证(例如 Mozilla 公共许可、GNU GPLv3 以及 Apache 软件许可)融合了某些互惠专利权的形式。Vetter 指出,像 BSD 和 MIT 这样旧的许可证不会提到专利。

|

||||

|

||||

一个软件的许可证让其他人可以在某种程度上使用这个编程人员创造的代码。版权对所属权的建立是自动的,只要某个人写或者画了某个原创的东西。然而,版权只覆盖了个别的表达方式和衍生的作品。他并没有涵盖代码的功能性或可用的想法。

|

||||

|

||||

专利涵盖了功能性。专利权还可以被许可。版权可能无法保护某人如何独立地开发对另一个人的代码的实现,但是专利填补了这个小瑕疵,Vetter 解释道。

|

||||

|

||||

### 寻找安全通道 ###

|

||||

|

||||

许可证和专利混合的法律性质可能会对开源开发者产生威胁。据 [Chaotic Moon Studios][4] 的创办者之一、 [IEEE][5] 计算机协会成员 William Hurley 说,对于某些人来说,即使是 GPL 也会成为威胁。

|

||||

|

||||

“在很久以前,开源是个完全不同的世界。被彼此间的尊重和把代码视为艺术而非资产的观点所驱动,那时的程序和代码比现在更加的开放。我相信很多为最好的愿景所做的努力几乎最后总是背负着意外的结果,”Hurley 这样告诉 LinuxInsider。

|

||||

|

||||

他暗示说,成员人数超越了1000人(的组织)可能会在知识产权保护重要性方面意见不一。这可能会继续搅混开源生态系统这滩浑水。

|

||||

|

||||

“最终,这些显现出了围绕着知识产权的常见的一些错误概念。拥有几千个开发者并不会减少风险——而是增加。给出了专利许可的开发者越多,它们看起来就越值钱,”Hurley 说。“它们看起来越值钱,有着类似专利的或者其他知识产权的人就越可能试图利用并从中榨取他们自己的经济利益。”

|

||||

|

||||

### 共享与竞争共存 ###

|

||||

|

||||

竞合策略是开源的一部分。OIN 模型让各个公司能够决定他们将在哪竞争以及在哪合作,Bergelt 解释道。

|

||||

|

||||

“开源演化中的许多改变已经把我们移到了另一个方向上。我们必须为合作创造渠道。否则我们将会有几百个团体把数十亿美元花费到同样的技术上,”他说。

|

||||

|

||||

手机产业的革新就是个很好的例子。各个公司放出了不同的标准。没有共享,没有合作,Bergelt 解释道。

|

||||

|

||||

他说:“这让我们在美国接触技术的能力落后了七到十年。我们接触设备的经验远远落后于世界其他地方的人。在我们用不上 CDMA (Code Division Multiple Access 码分多址访问通信技术)时对 GSM (Global System for Mobile Communications 全球移动通信系统) 还沾沾自喜。”

|

||||

|

||||

### 改变格局 ###

|

||||

|

||||

OIN 在去年经历了激增400个新许可的增长。这意味着着开源有了新趋势。

|

||||

|

||||

Bergelt 说:“市场到达了一个临界点,组织内的人们终于意识到直白地合作和竞争的需要。结果是两件事同时进行。这可能会变得复杂、费力。”

|

||||

|

||||

然而,这个由人们开始考虑合作和竞争的文化革新所驱动的转换过程是可以接受的。他解释说,这也是一个人们怎样拥抱开源的转变——尤其是在 Linux 这个开源社区的领导者项目。

|

||||

|

||||

还有一个迹象是,最具意义的新项目都没有在 GPLv3 许可下开发。

|

||||

|

||||

### 二个总比一个好 ###

|

||||

|

||||

“GPL 极为重要,但是事实是有一堆的许可模型正被使用着。在 Eclipse、Apache 和 Berkeley 许可中,专利问题的相对可解决性通常远远低于在 GPLv3 中的。”Bergelt 说。

|

||||

|

||||

GPLv3 对于解决专利问题是个自然的补充——但是 GPL 自身不足以独自解决围绕专利使用的潜在冲突。所以 OIN 的设计是以能够补充版权许可为目的的,他补充道。

|

||||

|

||||

然而,层层叠叠的专利和许可也许并没有带来多少好处。到最后,专利在几乎所有的案例中都被用于攻击目的——而不是防御目的,Bergelt 暗示说。

|

||||

|

||||

“如果你不准备对其他人采取法律行动,那么对于你的知识产权来说专利可能并不是最佳的法律保护方式”,他说。“我们现在生活在一个对软件——开放的和专有的——误会重重的世界里。这些软件还被错误而过时的专利系统所捆绑。我们每天在工业化和被扼杀的创新中挣扎”,他说。

|

||||

|

||||

### 法院是最后的手段###

|

||||

|

||||

想到 OIN 的出现抑制了诉讼的泛滥就感到十分欣慰,Bergelt 说,或者至少可以说 OIN 的出现扼制了特定的某些威胁。

|

||||

|

||||

“可以说我们让人们放下他们的武器。同时我们正在创建一种新的文化规范。一旦你入股这个模型中的非侵略专利,所产生的相关影响就是对合作的鼓励”,他说。

|

||||

|

||||

如果你愿意承诺合作,你的第一反应就会趋向于不急着起诉。相反的,你会想如何让我们允许你使用我们所拥有的东西并让它为你赚钱,而同时我们也能使用你所拥有的东西,Bergelt 解释道。

|

||||

|

||||

“OIN 是个多面的解决方式。它鼓励签署者创造双赢协议”,他说,“这让起诉成为最逼不得已的行为。那才是它的位置。”

|

||||

|

||||

### 底线###

|

||||

|

||||

Bergelt 坚信,OIN 的运作是为了阻止 Linux 受到专利伤害。在这个需要 Linux 的世界里没有诉讼的地方。

|

||||

|

||||

唯一临近的是与微软的移动之争,这关系到行业的发展前景(原文: The only thing that comes close are the mobile wars with Microsoft, which focus on elements high in the stack. 不太理解,请指正。)。那些来自法律的挑战可能是为了提高包括使用 Linux 产品的所属权的成本,Bergelt 说。

|

||||

|

||||

尽管如此“这些并不是有关 Linux 诉讼”,他说。“他们的重点并不在于 Linux 的核心。他们关注的是 Linux 系统里都有些什么。”

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.linuxinsider.com/story/Defending-the-Free-Linux-World-81512.html

|

||||

|

||||

作者:Jack M. Germain

|

||||

译者:[H-mudcup](https://github.com/H-mudcup)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[1]:http://www.openinventionnetwork.com/

|

||||

[2]:http://www.redhat.com/

|

||||

[3]:http://www.law.uh.edu/

|

||||

[4]:http://www.chaoticmoon.com/

|

||||

[5]:http://www.ieee.org/

|

||||

@ -0,0 +1,348 @@

|

||||

Shilpa Nair 分享的 RedHat Linux 包管理方面的面试经验

|

||||

========================================================================

|

||||

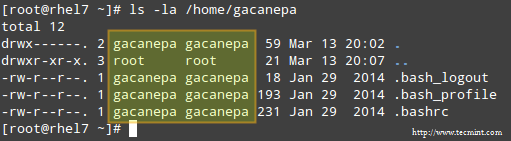

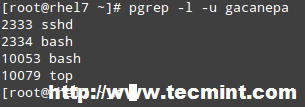

**Shilpa Nair 刚于2015年毕业。她之后去了一家位于 Noida,Delhi 的国家新闻电视台,应聘实习生的岗位。在她去年毕业季的时候,常逛 Tecmint 寻求作业上的帮助。从那时开始,她就常去 Tecmint。**

|

||||

|

||||

|

||||

|

||||

*有关 RPM 方面的 Linux 面试题*

|

||||

|

||||

所有的问题和回答都是 Shilpa Nair 根据回忆重写的。

|

||||

|

||||

> “大家好!我是来自 Delhi 的Shilpa Nair。我不久前才顺利毕业,正寻找一个实习的机会。在大学早期的时候,我就对 UNIX 十分喜爱,所以我也希望这个机会能适合我,满足我的兴趣。我被提问了很多问题,大部分都是关于 RedHat 包管理的基础问题。”

|

||||

|

||||

下面就是我被问到的问题,和对应的回答。我仅贴出了与 RedHat GNU/Linux 包管理相关的,也是主要被提问的。

|

||||

|

||||

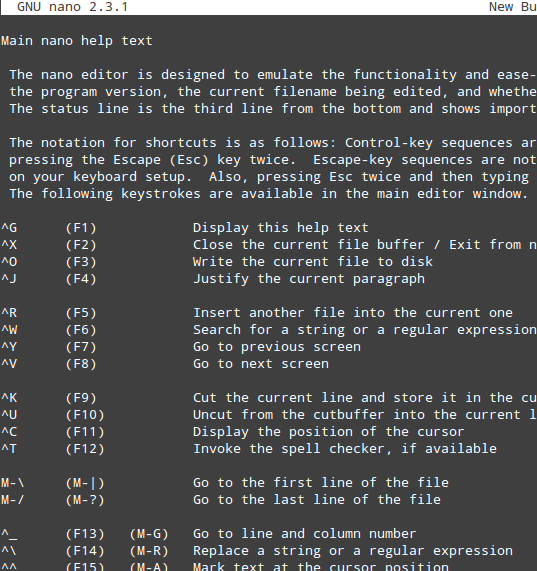

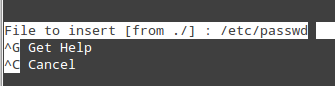

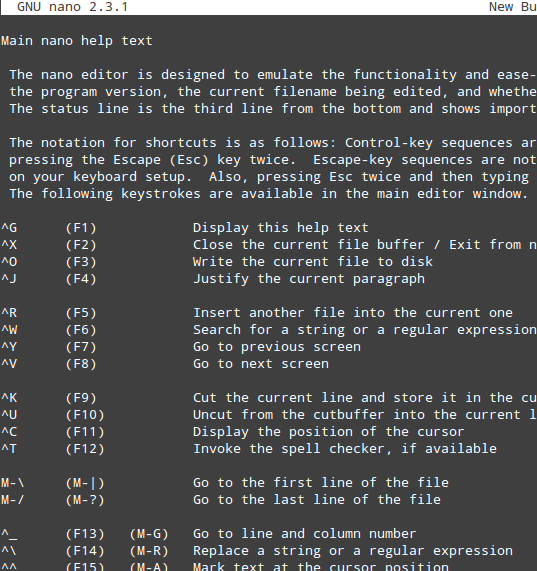

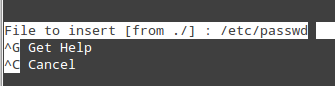

### 1,Linux 里如何查找一个包安装与否?假设你需要确认 ‘nano’ 有没有安装,你怎么做? ###

|

||||

|

||||

**回答**:为了确认 nano 软件包有没有安装,我们可以使用 rpm 命令,配合 -q 和 -a 选项来查询所有已安装的包

|

||||

|

||||

# rpm -qa nano

|

||||

或

|

||||

# rpm -qa | grep -i nano

|

||||

|

||||

nano-2.3.1-10.el7.x86_64

|

||||

|

||||

同时包的名字必须是完整的,不完整的包名会返回到提示符,不打印任何东西,就是说这包(包名字不全)未安装。下面的例子会更好理解些:

|

||||

|

||||

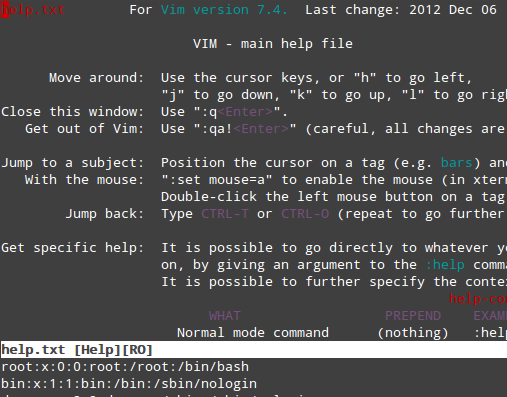

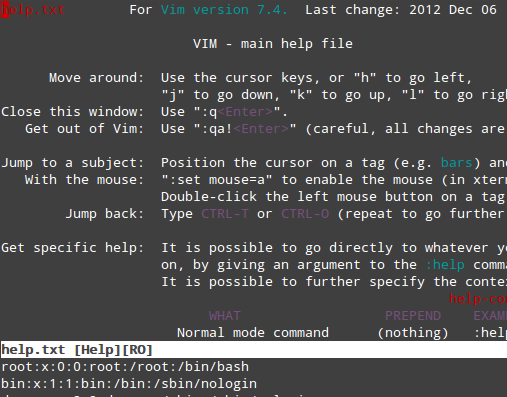

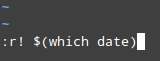

我们通常使用 vim 替代 vi 命令。当时如果我们查找安装包 vi/vim 的时候,我们就会看到标准输出上没有任何结果。

|

||||

|

||||

# vi

|

||||

# vim

|

||||

|

||||

尽管如此,我们仍然可以像上面一样运行 vi/vim 命令来清楚地知道包有没有安装。只是因为我们不知道它的完整包名才不能找到的。如果我们不确切知道完整的文件名,我们可以使用通配符:

|

||||

|

||||

# rpm -qa vim*

|

||||

|

||||

vim-minimal-7.4.160-1.el7.x86_64

|

||||

|

||||

通过这种方式,我们可以获得任何软件包的信息,安装与否。

|

||||

|

||||

### 2. 你如何使用 rpm 命令安装 XYZ 软件包? ###

|

||||

|

||||

**回答**:我们可以使用 rpm 命令安装任何的软件包(*.rpm),像下面这样,选项 -i(安装),-v(冗余或者显示额外的信息)和 -h(在安装过程中,打印#号显示进度)。

|

||||

|

||||

# rpm -ivh peazip-1.11-1.el6.rf.x86_64.rpm

|

||||

|

||||

Preparing... ################################# [100%]

|

||||

Updating / installing...

|

||||

1:peazip-1.11-1.el6.rf ################################# [100%]

|

||||

|

||||

如果要升级一个早期版本的包,应加上 -U 选项,选项 -v 和 -h 可以确保我们得到用 # 号表示的冗余输出,这增加了可读性。

|

||||

|

||||

### 3. 你已经安装了一个软件包(假设是 httpd),现在你想看看软件包创建并安装的所有文件和目录,你会怎么做? ###

|

||||

|

||||

**回答**:使用选项 -l(列出所有文件)和 -q(查询)列出 httpd 软件包安装的所有文件(Linux 哲学:所有的都是文件,包括目录)。

|

||||

|

||||

# rpm -ql httpd

|

||||

|

||||

/etc/httpd

|

||||

/etc/httpd/conf

|

||||

/etc/httpd/conf.d

|

||||

...

|

||||

|

||||

### 4. 假如你要移除一个软件包,叫 postfix。你会怎么做? ###

|

||||

|

||||

**回答**:首先我们需要知道什么包安装了 postfix。查找安装 postfix 的包名后,使用 -e(擦除/卸载软件包)和 -v(冗余输出)两个选项来实现。

|

||||

|

||||

# rpm -qa postfix*

|

||||

|

||||

postfix-2.10.1-6.el7.x86_64

|

||||

|

||||

然后移除 postfix,如下:

|

||||

|

||||

# rpm -ev postfix-2.10.1-6.el7.x86_64

|

||||

|

||||

Preparing packages...

|

||||

postfix-2:3.0.1-2.fc22.x86_64

|

||||

|

||||

### 5. 获得一个已安装包的具体信息,如版本,发行号,安装日期,大小,总结和一个简短的描述。 ###

|

||||

|

||||

**回答**:我们通过使用 rpm 的选项 -qi,后面接包名,可以获得关于一个已安装包的具体信息。

|

||||

|

||||

举个例子,为了获得 openssh 包的具体信息,我需要做的就是:

|

||||

|

||||

# rpm -qi openssh

|

||||

|

||||

[root@tecmint tecmint]# rpm -qi openssh

|

||||

Name : openssh

|

||||

Version : 6.8p1

|

||||

Release : 5.fc22

|

||||

Architecture: x86_64

|

||||

Install Date: Thursday 28 May 2015 12:34:50 PM IST

|

||||

Group : Applications/Internet

|

||||

Size : 1542057

|

||||

License : BSD

|

||||

....

|

||||

|

||||

### 6. 假如你不确定一个指定包的配置文件在哪,比如 httpd。你如何找到所有 httpd 提供的配置文件列表和位置。 ###

|

||||

|

||||

**回答**: 我们需要用选项 -c 接包名,这会列出所有配置文件的名字和他们的位置。

|

||||

|

||||

# rpm -qc httpd

|

||||

|

||||

/etc/httpd/conf.d/autoindex.conf

|

||||

/etc/httpd/conf.d/userdir.conf

|

||||

/etc/httpd/conf.d/welcome.conf

|

||||

/etc/httpd/conf.modules.d/00-base.conf

|

||||

/etc/httpd/conf/httpd.conf

|

||||

/etc/sysconfig/httpd

|

||||

|

||||

相似地,我们可以列出所有相关的文档文件,如下:

|

||||

|

||||

# rpm -qd httpd

|

||||

|

||||

/usr/share/doc/httpd/ABOUT_APACHE

|

||||

/usr/share/doc/httpd/CHANGES

|

||||

/usr/share/doc/httpd/LICENSE

|

||||

...

|

||||

|

||||

我们也可以列出所有相关的证书文件,如下:

|

||||

|

||||

# rpm -qL openssh

|

||||

|

||||

/usr/share/licenses/openssh/LICENCE

|

||||

|

||||

忘了说明上面的选项 -d 和 -L 分别表示 “文档” 和 “证书”,抱歉。

|

||||

|

||||

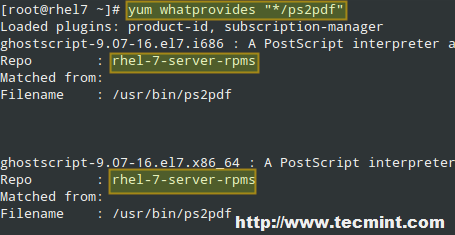

### 7. 你找到了一个配置文件,位于‘/usr/share/alsa/cards/AACI.conf’,现在你不确定该文件属于哪个包。你如何查找出包的名字? ###

|

||||

|

||||

**回答**:当一个包被安装后,相关的信息就存储在了数据库里。所以使用选项 -qf(-f 查询包拥有的文件)很容易追踪谁提供了上述的包。

|

||||

|

||||

# rpm -qf /usr/share/alsa/cards/AACI.conf

|

||||

alsa-lib-1.0.28-2.el7.x86_64

|

||||

|

||||

类似地,我们可以查找(谁提供的)关于任何子包,文档和证书文件的信息。

|

||||

|

||||

### 8. 你如何使用 rpm 查找最近安装的软件列表? ###

|

||||

|

||||

**回答**:如刚刚说的,每一样被安装的文件都记录在了数据库里。所以这并不难,通过查询 rpm 的数据库,找到最近安装软件的列表。

|

||||

|

||||

我们通过运行下面的命令,使用选项 -last(打印出最近安装的软件)达到目的。

|

||||

|

||||

# rpm -qa --last

|

||||

|

||||

上面的命令会打印出所有安装的软件,最近安装的软件在列表的顶部。

|

||||

|

||||

如果我们关心的是找出特定的包,我们可以使用 grep 命令从列表中匹配包(假设是 sqlite ),简单如下:

|

||||

|

||||

# rpm -qa --last | grep -i sqlite

|

||||

|

||||

sqlite-3.8.10.2-1.fc22.x86_64 Thursday 18 June 2015 05:05:43 PM IST

|

||||

|

||||

我们也可以获得10个最近安装的软件列表,简单如下:

|

||||

|

||||

# rpm -qa --last | head

|

||||

|

||||

我们可以重定义一下,输出想要的结果,简单如下:

|

||||

|

||||

# rpm -qa --last | head -n 2

|

||||

|

||||

上面的命令中,-n 代表数目,后面接一个常数值。该命令是打印2个最近安装的软件的列表。

|

||||

|

||||

### 9. 安装一个包之前,你如果要检查其依赖。你会怎么做? ###

|

||||

|

||||

**回答**:检查一个 rpm 包(XYZ.rpm)的依赖,我们可以使用选项 -q(查询包),-p(指定包名)和 -R(查询/列出该包依赖的包,嗯,就是依赖)。

|

||||

|

||||

# rpm -qpR gedit-3.16.1-1.fc22.i686.rpm

|

||||

|

||||

/bin/sh

|

||||

/usr/bin/env

|

||||

glib2(x86-32) >= 2.40.0

|

||||

gsettings-desktop-schemas

|

||||

gtk3(x86-32) >= 3.16

|

||||

gtksourceview3(x86-32) >= 3.16

|

||||

gvfs

|

||||

libX11.so.6

|

||||

...

|

||||

|

||||

### 10. rpm 是不是一个前端的包管理工具呢? ###

|

||||

|

||||

**回答**:**不是!**rpm 是一个后端管理工具,适用于基于 Linux 发行版的 RPM (此处指 Redhat Package Management)。

|

||||

|

||||

[YUM][1],全称 Yellowdog Updater Modified,是一个 RPM 的前端工具。YUM 命令自动完成所有工作,包括解决依赖和其他一切事务。

|

||||

|

||||

最近,[DNF][2](YUM命令升级版)在Fedora 22发行版中取代了 YUM。尽管 YUM 仍然可以在 RHEL 和 CentOS 平台使用,我们也可以安装 dnf,与 YUM 命令共存使用。据说 DNF 较于 YUM 有很多提高。

|

||||

|

||||

知道更多总是好的,保持自我更新。现在我们移步到前端部分来谈谈。

|

||||

|

||||

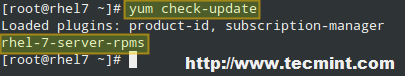

### 11. 你如何列出一个系统上面所有可用的仓库列表。 ###

|

||||

|

||||

**回答**:简单地使用下面的命令,我们就可以列出一个系统上所有可用的仓库列表。

|

||||

|

||||

# yum repolist

|

||||

或

|

||||

# dnf repolist

|

||||

|

||||

Last metadata expiration check performed 0:30:03 ago on Mon Jun 22 16:50:00 2015.

|

||||

repo id repo name status

|

||||

*fedora Fedora 22 - x86_64 44,762

|

||||

ozonos Repository for Ozon OS 61

|

||||

*updates Fedora 22 - x86_64 - Updates

|

||||

|

||||

上面的命令仅会列出可用的仓库。如果你需要列出所有的仓库,不管可用与否,可以这样做。

|

||||

|

||||

# yum repolist all

|

||||

或

|

||||

# dnf repolist all

|

||||

|

||||

Last metadata expiration check performed 0:29:45 ago on Mon Jun 22 16:50:00 2015.

|

||||

repo id repo name status

|

||||

*fedora Fedora 22 - x86_64 enabled: 44,762

|

||||

fedora-debuginfo Fedora 22 - x86_64 - Debug disabled

|

||||

fedora-source Fedora 22 - Source disabled

|

||||

ozonos Repository for Ozon OS enabled: 61

|

||||

*updates Fedora 22 - x86_64 - Updates enabled: 5,018

|

||||

updates-debuginfo Fedora 22 - x86_64 - Updates - Debug

|

||||

|

||||

### 12. 你如何列出一个系统上所有可用并且安装了的包? ###

|

||||

|

||||

**回答**:列出一个系统上所有可用的包,我们可以这样做:

|

||||

|

||||

# yum list available

|

||||

或

|

||||

# dnf list available

|

||||

|

||||

ast metadata expiration check performed 0:34:09 ago on Mon Jun 22 16:50:00 2015.

|

||||

Available Packages

|

||||

0ad.x86_64 0.0.18-1.fc22 fedora

|

||||

0ad-data.noarch 0.0.18-1.fc22 fedora

|

||||

0install.x86_64 2.6.1-2.fc21 fedora

|

||||

0xFFFF.x86_64 0.3.9-11.fc22 fedora

|

||||

2048-cli.x86_64 0.9-4.git20141214.723738c.fc22 fedora

|

||||

2048-cli-nocurses.x86_64 0.9-4.git20141214.723738c.fc22 fedora

|

||||

....

|

||||

|

||||

而列出一个系统上所有已安装的包,我们可以这样做。

|

||||

|

||||

# yum list installed

|

||||

或

|

||||

# dnf list installed

|

||||

|

||||

Last metadata expiration check performed 0:34:30 ago on Mon Jun 22 16:50:00 2015.

|

||||

Installed Packages

|

||||

GeoIP.x86_64 1.6.5-1.fc22 @System

|

||||

GeoIP-GeoLite-data.noarch 2015.05-1.fc22 @System

|

||||

NetworkManager.x86_64 1:1.0.2-1.fc22 @System

|

||||

NetworkManager-libnm.x86_64 1:1.0.2-1.fc22 @System

|

||||

aajohan-comfortaa-fonts.noarch 2.004-4.fc22 @System

|

||||

....

|

||||

|

||||

而要同时满足两个要求的时候,我们可以这样做。

|

||||

|

||||

# yum list

|

||||

或

|

||||

# dnf list

|

||||

|

||||

Last metadata expiration check performed 0:32:56 ago on Mon Jun 22 16:50:00 2015.

|

||||

Installed Packages

|

||||

GeoIP.x86_64 1.6.5-1.fc22 @System

|

||||

GeoIP-GeoLite-data.noarch 2015.05-1.fc22 @System

|

||||

NetworkManager.x86_64 1:1.0.2-1.fc22 @System

|

||||

NetworkManager-libnm.x86_64 1:1.0.2-1.fc22 @System

|

||||

aajohan-comfortaa-fonts.noarch 2.004-4.fc22 @System

|

||||

acl.x86_64 2.2.52-7.fc22 @System

|

||||

....

|

||||

|

||||

### 13. 你会怎么在一个系统上面使用 YUM 或 DNF 分别安装和升级一个包与一组包? ###

|

||||

|

||||

**回答**:安装一个包(假设是 nano),我们可以这样做,

|

||||

|

||||

# yum install nano

|

||||

|

||||

而安装一组包(假设是 Haskell),我们可以这样做,

|

||||

|

||||

# yum groupinstall 'haskell'

|

||||

|

||||

升级一个包(还是 nano),我们可以这样做,

|

||||

|

||||

# yum update nano

|

||||

|

||||

而为了升级一组包(还是 haskell),我们可以这样做,

|

||||

|

||||

# yum groupupdate 'haskell'

|

||||

|

||||

### 14. 你会如何同步一个系统上面的所有安装软件到稳定发行版? ###

|

||||

|

||||

**回答**:我们可以一个系统上(假设是 CentOS 或者 Fedora)的所有包到稳定发行版,如下,

|

||||

|

||||

# yum distro-sync [在 CentOS/ RHEL]

|

||||

或

|

||||

# dnf distro-sync [在 Fedora 20之后版本]

|

||||

|

||||

似乎来面试之前你做了相当不多的功课,很好!在进一步交谈前,我还想问一两个问题。

|

||||

|

||||

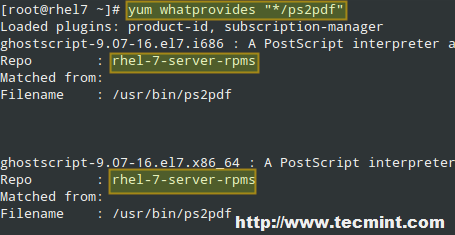

### 15. 你对 YUM 本地仓库熟悉吗?你尝试过建立一个本地 YUM 仓库吗?让我们简单看看你会怎么建立一个本地 YUM 仓库。 ###

|

||||

|

||||

**回答**:首先,感谢你的夸奖。回到问题,我必须承认我对本地 YUM 仓库十分熟悉,并且在我的本地主机上也部署过,作为测试用。

|

||||

|

||||

1、 为了建立本地 YUM 仓库,我们需要安装下面三个包:

|

||||

|

||||

# yum install deltarpm python-deltarpm createrepo

|

||||

|

||||

2、 新建一个目录(假设 /home/$USER/rpm),然后复制 RedHat/CentOS DVD 上的 RPM 包到这个文件夹下

|

||||

|

||||

# mkdir /home/$USER/rpm

|

||||

# cp /path/to/rpm/on/DVD/*.rpm /home/$USER/rpm

|

||||

|

||||

3、 新建基本的库头文件如下。

|

||||

|

||||

# createrepo -v /home/$USER/rpm

|

||||

|

||||

4、 在路径 /etc/yum.repo.d 下创建一个 .repo 文件(如 abc.repo):

|

||||

|

||||

cd /etc/yum.repos.d && cat << EOF abc.repo

|

||||

[local-installation]name=yum-local

|

||||

baseurl=file:///home/$USER/rpm

|

||||

enabled=1

|

||||

gpgcheck=0

|

||||

EOF

|

||||

|

||||

**重要**:用你的用户名替换掉 $USER。

|

||||

|

||||

以上就是创建一个本地 YUM 仓库所要做的全部工作。我们现在可以从这里安装软件了,相对快一些,安全一些,并且最重要的是不需要 Internet 连接。

|

||||

|

||||

好了!面试过程很愉快。我已经问完了。我会将你推荐给 HR。你是一个年轻且十分聪明的候选者,我们很愿意你加入进来。如果你有任何问题,你可以问我。

|

||||

|

||||

**我**:谢谢,这确实是一次愉快的面试,我感到今天非常幸运,可以搞定这次面试...

|

||||

|

||||

显然,不会在这里结束。我问了很多问题,比如他们正在做的项目。我会担任什么角色,负责什么,,,balabalabala

|

||||

|

||||

小伙伴们,这之后的 3 天会经过 HR 轮,到时候所有问题到时候也会被写成文档。希望我当时表现不错。感谢你们所有的祝福。

|

||||

|

||||

谢谢伙伴们和 Tecmint,花时间来编辑我的面试经历。我相信 Tecmint 好伙伴们做了很大的努力,必要要赞一个。当我们与他人分享我们的经历的时候,其他人从我们这里知道了更多,而我们自己则发现了自己的不足。

|

||||

|

||||

这增加了我们的信心。如果你最近也有任何类似的面试经历,别自己蔵着。分享出来!让我们所有人都知道。你可以使用如下的表单来与我们分享你的经历。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.tecmint.com/linux-rpm-package-management-interview-questions/

|

||||

|

||||

作者:[Avishek Kumar][a]

|

||||

译者:[wi-cuckoo](https://github.com/wi-cuckoo)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.tecmint.com/author/avishek/

|

||||

[1]:http://www.tecmint.com/20-linux-yum-yellowdog-updater-modified-commands-for-package-mangement/

|

||||

[2]:https://linux.cn/article-5718-1.html

|

||||

690

published/20150728 Process of the Linux kernel building.md

Normal file

690

published/20150728 Process of the Linux kernel building.md

Normal file

@ -0,0 +1,690 @@

|

||||

你知道 Linux 内核是如何构建的吗?

|

||||

================================================================================

|

||||

|

||||

###介绍

|

||||

|

||||

我不会告诉你怎么在自己的电脑上去构建、安装一个定制化的 Linux 内核,这样的[资料](https://encrypted.google.com/search?q=building+linux+kernel#q=building+linux+kernel+from+source+code)太多了,它们会对你有帮助。本文会告诉你当你在内核源码路径里敲下`make` 时会发生什么。

|

||||

|

||||

当我刚刚开始学习内核代码时,[Makefile](https://github.com/torvalds/linux/blob/master/Makefile) 是我打开的第一个文件,这个文件看起来真令人害怕 :)。那时候这个 [Makefile](https://en.wikipedia.org/wiki/Make_%28software%29) 还只包含了`1591` 行代码,当我开始写本文时,内核已经是[4.2.0的第三个候选版本](https://github.com/torvalds/linux/commit/52721d9d3334c1cb1f76219a161084094ec634dc) 了。

|

||||

|

||||

这个 makefile 是 Linux 内核代码的根 makefile ,内核构建就始于此处。是的,它的内容很多,但是如果你已经读过内核源代码,你就会发现每个包含代码的目录都有一个自己的 makefile。当然了,我们不会去描述每个代码文件是怎么编译链接的,所以我们将只会挑选一些通用的例子来说明问题。而你不会在这里找到构建内核的文档、如何整洁内核代码、[tags](https://en.wikipedia.org/wiki/Ctags) 的生成和[交叉编译](https://en.wikipedia.org/wiki/Cross_compiler) 相关的说明,等等。我们将从`make` 开始,使用标准的内核配置文件,到生成了内核镜像 [bzImage](https://en.wikipedia.org/wiki/Vmlinux#bzImage) 结束。

|

||||

|

||||

如果你已经很了解 [make](https://en.wikipedia.org/wiki/Make_%28software%29) 工具那是最好,但是我也会描述本文出现的相关代码。

|

||||

|

||||

让我们开始吧!

|

||||

|

||||

|

||||

###编译内核前的准备

|

||||

|

||||

在开始编译前要进行很多准备工作。最主要的就是找到并配置好配置文件,`make` 命令要使用到的参数都需要从这些配置文件获取。现在就让我们深入内核的根 `makefile` 吧

|

||||

|

||||

内核的根 `Makefile` 负责构建两个主要的文件:[vmlinux](https://en.wikipedia.org/wiki/Vmlinux) (内核镜像可执行文件)和模块文件。内核的 [Makefile](https://github.com/torvalds/linux/blob/master/Makefile) 从定义如下变量开始:

|

||||

|

||||

```Makefile

|

||||

VERSION = 4

|

||||

PATCHLEVEL = 2

|

||||

SUBLEVEL = 0

|

||||

EXTRAVERSION = -rc3

|

||||

NAME = Hurr durr I'ma sheep

|

||||

```

|

||||

|

||||

这些变量决定了当前内核的版本,并且被使用在很多不同的地方,比如同一个 `Makefile` 中的 `KERNELVERSION` :

|

||||

|

||||

```Makefile

|

||||

KERNELVERSION = $(VERSION)$(if $(PATCHLEVEL),.$(PATCHLEVEL)$(if $(SUBLEVEL),.$(SUBLEVEL)))$(EXTRAVERSION)

|

||||

```

|

||||

|

||||

接下来我们会看到很多`ifeq` 条件判断语句,它们负责检查传递给 `make` 的参数。内核的 `Makefile` 提供了一个特殊的编译选项 `make help` ,这个选项可以生成所有的可用目标和一些能传给 `make` 的有效的命令行参数。举个例子,`make V=1` 会在构建过程中输出详细的编译信息,第一个 `ifeq` 就是检查传递给 make 的 `V=n` 选项。

|

||||

|

||||

```Makefile

|

||||

ifeq ("$(origin V)", "command line")

|

||||

KBUILD_VERBOSE = $(V)

|

||||

endif

|

||||

ifndef KBUILD_VERBOSE

|

||||

KBUILD_VERBOSE = 0

|

||||

endif

|

||||

|

||||

ifeq ($(KBUILD_VERBOSE),1)

|

||||

quiet =

|

||||

Q =

|

||||

else

|

||||

quiet=quiet_

|

||||

Q = @

|

||||

endif

|

||||

|

||||

export quiet Q KBUILD_VERBOSE

|

||||

```

|

||||

如果 `V=n` 这个选项传给了 `make` ,系统就会给变量 `KBUILD_VERBOSE` 选项附上 `V` 的值,否则的话`KBUILD_VERBOSE` 就会为 `0`。然后系统会检查 `KBUILD_VERBOSE` 的值,以此来决定 `quiet` 和`Q` 的值。符号 `@` 控制命令的输出,如果它被放在一个命令之前,这条命令的输出将会是 `CC scripts/mod/empty.o`,而不是`Compiling .... scripts/mod/empty.o`(LCTT 译注:CC 在 makefile 中一般都是编译命令)。在这段最后,系统导出了所有的变量。

|

||||

|

||||

下一个 `ifeq` 语句检查的是传递给 `make` 的选项 `O=/dir`,这个选项允许在指定的目录 `dir` 输出所有的结果文件:

|

||||

|

||||

```Makefile

|

||||

ifeq ($(KBUILD_SRC),)

|

||||

|

||||

ifeq ("$(origin O)", "command line")

|

||||

KBUILD_OUTPUT := $(O)

|

||||

endif

|

||||

|

||||

ifneq ($(KBUILD_OUTPUT),)

|

||||

saved-output := $(KBUILD_OUTPUT)

|

||||

KBUILD_OUTPUT := $(shell mkdir -p $(KBUILD_OUTPUT) && cd $(KBUILD_OUTPUT) \

|

||||

&& /bin/pwd)

|

||||

$(if $(KBUILD_OUTPUT),, \

|

||||

$(error failed to create output directory "$(saved-output)"))

|

||||

|

||||

sub-make: FORCE

|

||||

$(Q)$(MAKE) -C $(KBUILD_OUTPUT) KBUILD_SRC=$(CURDIR) \

|

||||

-f $(CURDIR)/Makefile $(filter-out _all sub-make,$(MAKECMDGOALS))

|

||||

|

||||

skip-makefile := 1

|

||||

endif # ifneq ($(KBUILD_OUTPUT),)

|

||||

endif # ifeq ($(KBUILD_SRC),)

|

||||

```

|

||||

|

||||

系统会检查变量 `KBUILD_SRC`,它代表内核代码的顶层目录,如果它是空的(第一次执行 makefile 时总是空的),我们会设置变量 `KBUILD_OUTPUT` 为传递给选项 `O` 的值(如果这个选项被传进来了)。下一步会检查变量 `KBUILD_OUTPUT` ,如果已经设置好,那么接下来会做以下几件事:

|

||||

|

||||

* 将变量 `KBUILD_OUTPUT` 的值保存到临时变量 `saved-output`;

|

||||

* 尝试创建给定的输出目录;

|

||||

* 检查创建的输出目录,如果失败了就打印错误;

|

||||

* 如果成功创建了输出目录,那么就在新目录重新执行 `make` 命令(参见选项`-C`)。

|

||||

|

||||

下一个 `ifeq` 语句会检查传递给 make 的选项 `C` 和 `M`:

|

||||

|

||||

```Makefile

|

||||

ifeq ("$(origin C)", "command line")

|

||||

KBUILD_CHECKSRC = $(C)

|

||||

endif

|

||||

ifndef KBUILD_CHECKSRC

|

||||

KBUILD_CHECKSRC = 0

|

||||

endif

|

||||

|

||||

ifeq ("$(origin M)", "command line")

|

||||

KBUILD_EXTMOD := $(M)

|

||||

endif

|

||||

```

|

||||

|

||||

第一个选项 `C` 会告诉 `makefile` 需要使用环境变量 `$CHECK` 提供的工具来检查全部 `c` 代码,默认情况下会使用[sparse](https://en.wikipedia.org/wiki/Sparse)。第二个选项 `M` 会用来编译外部模块(本文不做讨论)。

|

||||

|

||||

系统还会检查变量 `KBUILD_SRC`,如果 `KBUILD_SRC` 没有被设置,系统会设置变量 `srctree` 为`.`:

|

||||

|

||||

```Makefile

|

||||

ifeq ($(KBUILD_SRC),)

|

||||

srctree := .

|

||||

endif

|

||||

|

||||

objtree := .

|

||||

src := $(srctree)

|

||||

obj := $(objtree)

|

||||

|

||||

export srctree objtree VPATH

|

||||

```

|

||||

|

||||

这将会告诉 `Makefile` 内核的源码树就在执行 `make` 命令的目录,然后要设置 `objtree` 和其他变量为这个目录,并且将这些变量导出。接着就是要获取 `SUBARCH` 的值,这个变量代表了当前的系统架构(LCTT 译注:一般都指CPU 架构):

|

||||

|

||||

```Makefile

|

||||

SUBARCH := $(shell uname -m | sed -e s/i.86/x86/ -e s/x86_64/x86/ \

|

||||

-e s/sun4u/sparc64/ \

|

||||

-e s/arm.*/arm/ -e s/sa110/arm/ \

|

||||

-e s/s390x/s390/ -e s/parisc64/parisc/ \

|

||||

-e s/ppc.*/powerpc/ -e s/mips.*/mips/ \

|

||||

-e s/sh[234].*/sh/ -e s/aarch64.*/arm64/ )

|

||||

```

|

||||

|

||||

如你所见,系统执行 [uname](https://en.wikipedia.org/wiki/Uname) 得到机器、操作系统和架构的信息。因为我们得到的是 `uname` 的输出,所以我们需要做一些处理再赋给变量 `SUBARCH` 。获得 `SUBARCH` 之后就要设置`SRCARCH` 和 `hfr-arch`,`SRCARCH` 提供了硬件架构相关代码的目录,`hfr-arch` 提供了相关头文件的目录:

|

||||

|

||||

```Makefile

|

||||

ifeq ($(ARCH),i386)

|

||||

SRCARCH := x86

|

||||

endif

|

||||

ifeq ($(ARCH),x86_64)

|

||||

SRCARCH := x86

|

||||

endif

|

||||

|

||||

hdr-arch := $(SRCARCH)

|

||||

```

|

||||

|

||||

注意:`ARCH` 是 `SUBARCH` 的别名。如果没有设置过代表内核配置文件路径的变量 `KCONFIG_CONFIG`,下一步系统会设置它,默认情况下就是 `.config` :

|

||||

|

||||

```Makefile

|

||||

KCONFIG_CONFIG ?= .config

|

||||

export KCONFIG_CONFIG

|

||||

```

|

||||

|

||||

以及编译内核过程中要用到的 [shell](https://en.wikipedia.org/wiki/Shell_%28computing%29)

|

||||

|

||||

```Makefile

|

||||

CONFIG_SHELL := $(shell if [ -x "$$BASH" ]; then echo $$BASH; \

|

||||

else if [ -x /bin/bash ]; then echo /bin/bash; \

|

||||

else echo sh; fi ; fi)

|

||||

```

|

||||

|

||||

接下来就要设置一组和编译内核的编译器相关的变量。我们会设置主机的 `C` 和 `C++` 的编译器及相关配置项:

|

||||

|

||||

```Makefile

|

||||

HOSTCC = gcc

|

||||

HOSTCXX = g++

|

||||

HOSTCFLAGS = -Wall -Wmissing-prototypes -Wstrict-prototypes -O2 -fomit-frame-pointer -std=gnu89

|

||||

HOSTCXXFLAGS = -O2

|

||||

```

|

||||

|

||||

接下来会去适配代表编译器的变量 `CC`,那为什么还要 `HOST*` 这些变量呢?这是因为 `CC` 是编译内核过程中要使用的目标架构的编译器,但是 `HOSTCC` 是要被用来编译一组 `host` 程序的(下面我们就会看到)。

|

||||

|

||||

然后我们就看到变量 `KBUILD_MODULES` 和 `KBUILD_BUILTIN` 的定义,这两个变量决定了我们要编译什么东西(内核、模块或者两者):

|

||||

|

||||

```Makefile

|

||||

KBUILD_MODULES :=

|

||||

KBUILD_BUILTIN := 1

|

||||

|

||||

ifeq ($(MAKECMDGOALS),modules)

|

||||

KBUILD_BUILTIN := $(if $(CONFIG_MODVERSIONS),1)

|

||||

endif

|

||||

```

|

||||

|

||||

在这我们可以看到这些变量的定义,并且,如果们仅仅传递了 `modules` 给 `make`,变量 `KBUILD_BUILTIN` 会依赖于内核配置选项 `CONFIG_MODVERSIONS`。

|

||||

|

||||

下一步操作是引入下面的文件:

|

||||

|

||||

```Makefile

|

||||

include scripts/Kbuild.include

|

||||

```

|

||||

|

||||

文件 [Kbuild](https://github.com/torvalds/linux/blob/master/Documentation/kbuild/kbuild.txt) 或者又叫做 `Kernel Build System` 是一个用来管理构建内核及其模块的特殊框架。`kbuild` 文件的语法与 makefile 一样。文件[scripts/Kbuild.include](https://github.com/torvalds/linux/blob/master/scripts/Kbuild.include) 为 `kbuild` 系统提供了一些常规的定义。因为我们包含了这个 `kbuild` 文件,我们可以看到和不同工具关联的这些变量的定义,这些工具会在内核和模块编译过程中被使用(比如链接器、编译器、来自 [binutils](http://www.gnu.org/software/binutils/) 的二进制工具包 ,等等):

|

||||

|

||||

```Makefile

|

||||

AS = $(CROSS_COMPILE)as

|

||||

LD = $(CROSS_COMPILE)ld

|

||||

CC = $(CROSS_COMPILE)gcc

|

||||

CPP = $(CC) -E

|

||||

AR = $(CROSS_COMPILE)ar

|

||||

NM = $(CROSS_COMPILE)nm

|

||||

STRIP = $(CROSS_COMPILE)strip

|

||||

OBJCOPY = $(CROSS_COMPILE)objcopy

|

||||

OBJDUMP = $(CROSS_COMPILE)objdump

|

||||

AWK = awk

|

||||

...

|

||||

...

|

||||

...

|

||||

```

|

||||

|

||||

在这些定义好的变量后面,我们又定义了两个变量:`USERINCLUDE` 和 `LINUXINCLUDE`。他们包含了头文件的路径(第一个是给用户用的,第二个是给内核用的):

|

||||

|

||||

```Makefile

|

||||

USERINCLUDE := \

|

||||

-I$(srctree)/arch/$(hdr-arch)/include/uapi \

|

||||

-Iarch/$(hdr-arch)/include/generated/uapi \

|

||||

-I$(srctree)/include/uapi \

|

||||

-Iinclude/generated/uapi \

|

||||

-include $(srctree)/include/linux/kconfig.h

|

||||

|

||||

LINUXINCLUDE := \

|

||||

-I$(srctree)/arch/$(hdr-arch)/include \

|

||||

...

|

||||

```

|

||||

|

||||

以及给 C 编译器的标准标志:

|

||||

|

||||

```Makefile

|

||||

KBUILD_CFLAGS := -Wall -Wundef -Wstrict-prototypes -Wno-trigraphs \

|

||||

-fno-strict-aliasing -fno-common \

|

||||

-Werror-implicit-function-declaration \

|

||||

-Wno-format-security \

|

||||

-std=gnu89

|

||||

```

|

||||

|

||||

这并不是最终确定的编译器标志,它们还可以在其他 makefile 里面更新(比如 `arch/` 里面的 kbuild)。变量定义完之后,全部会被导出供其他 makefile 使用。

|

||||

|

||||

下面的两个变量 `RCS_FIND_IGNORE` 和 `RCS_TAR_IGNORE` 包含了被版本控制系统忽略的文件:

|

||||

|

||||

```Makefile

|

||||

export RCS_FIND_IGNORE := \( -name SCCS -o -name BitKeeper -o -name .svn -o \

|

||||

-name CVS -o -name .pc -o -name .hg -o -name .git \) \

|

||||

-prune -o

|

||||

export RCS_TAR_IGNORE := --exclude SCCS --exclude BitKeeper --exclude .svn \

|

||||

--exclude CVS --exclude .pc --exclude .hg --exclude .git

|

||||

```

|

||||

|

||||

这就是全部了,我们已经完成了所有的准备工作,下一个点就是如果构建`vmlinux`。

|

||||

|

||||

###直面内核构建

|

||||

|

||||

现在我们已经完成了所有的准备工作,根 makefile(注:内核根目录下的 makefile)的下一步工作就是和编译内核相关的了。在这之前,我们不会在终端看到 `make` 命令输出的任何东西。但是现在编译的第一步开始了,这里我们需要从内核根 makefile 的 [598](https://github.com/torvalds/linux/blob/master/Makefile#L598) 行开始,这里可以看到目标`vmlinux`:

|

||||

|

||||

```Makefile

|

||||

all: vmlinux

|

||||

include arch/$(SRCARCH)/Makefile

|

||||

```

|

||||

|

||||

不要操心我们略过的从 `export RCS_FIND_IGNORE.....` 到 `all: vmlinux.....` 这一部分 makefile 代码,他们只是负责根据各种配置文件(`make *.config`)生成不同目标内核的,因为之前我就说了这一部分我们只讨论构建内核的通用途径。

|

||||

|

||||

目标 `all:` 是在命令行如果不指定具体目标时默认使用的目标。你可以看到这里包含了架构相关的 makefile(在这里就指的是 [arch/x86/Makefile](https://github.com/torvalds/linux/blob/master/arch/x86/Makefile))。从这一时刻起,我们会从这个 makefile 继续进行下去。如我们所见,目标 `all` 依赖于根 makefile 后面声明的 `vmlinux`:

|

||||

|

||||

```Makefile

|

||||

vmlinux: scripts/link-vmlinux.sh $(vmlinux-deps) FORCE

|

||||

```

|

||||

|

||||

`vmlinux` 是 linux 内核的静态链接可执行文件格式。脚本 [scripts/link-vmlinux.sh](https://github.com/torvalds/linux/blob/master/scripts/link-vmlinux.sh) 把不同的编译好的子模块链接到一起形成了 vmlinux。

|

||||

|

||||

第二个目标是 `vmlinux-deps`,它的定义如下:

|

||||

|

||||

```Makefile

|

||||

vmlinux-deps := $(KBUILD_LDS) $(KBUILD_VMLINUX_INIT) $(KBUILD_VMLINUX_MAIN)

|

||||

```

|

||||

|

||||

它是由内核代码下的每个顶级目录的 `built-in.o` 组成的。之后我们还会检查内核所有的目录,`kbuild` 会编译各个目录下所有的对应 `$(obj-y)` 的源文件。接着调用 `$(LD) -r` 把这些文件合并到一个 `build-in.o` 文件里。此时我们还没有`vmlinux-deps`,所以目标 `vmlinux` 现在还不会被构建。对我而言 `vmlinux-deps` 包含下面的文件:

|

||||

|

||||

```

|

||||

arch/x86/kernel/vmlinux.lds arch/x86/kernel/head_64.o

|

||||

arch/x86/kernel/head64.o arch/x86/kernel/head.o

|

||||

init/built-in.o usr/built-in.o

|

||||

arch/x86/built-in.o kernel/built-in.o

|

||||

mm/built-in.o fs/built-in.o

|

||||

ipc/built-in.o security/built-in.o

|

||||

crypto/built-in.o block/built-in.o

|

||||

lib/lib.a arch/x86/lib/lib.a

|

||||

lib/built-in.o arch/x86/lib/built-in.o

|

||||

drivers/built-in.o sound/built-in.o

|

||||

firmware/built-in.o arch/x86/pci/built-in.o

|

||||

arch/x86/power/built-in.o arch/x86/video/built-in.o

|

||||

net/built-in.o

|

||||

```

|

||||

|

||||

下一个可以被执行的目标如下:

|

||||

|

||||

```Makefile

|

||||

$(sort $(vmlinux-deps)): $(vmlinux-dirs) ;

|

||||

$(vmlinux-dirs): prepare scripts

|

||||

$(Q)$(MAKE) $(build)=$@

|

||||

```

|

||||

|

||||

就像我们看到的,`vmlinux-dir` 依赖于两部分:`prepare` 和 `scripts`。第一个 `prepare` 定义在内核的根 `makefile` 中,准备工作分成三个阶段:

|

||||

|

||||

```Makefile

|

||||

prepare: prepare0

|

||||

prepare0: archprepare FORCE

|

||||

$(Q)$(MAKE) $(build)=.

|

||||

archprepare: archheaders archscripts prepare1 scripts_basic

|

||||

|

||||

prepare1: prepare2 $(version_h) include/generated/utsrelease.h \

|

||||

include/config/auto.conf

|

||||

$(cmd_crmodverdir)

|

||||

prepare2: prepare3 outputmakefile asm-generic

|

||||

```

|

||||

|

||||

第一个 `prepare0` 展开到 `archprepare` ,后者又展开到 `archheader` 和 `archscripts`,这两个变量定义在 `x86_64` 相关的 [Makefile](https://github.com/torvalds/linux/blob/master/arch/x86/Makefile)。让我们看看这个文件。`x86_64` 特定的 makefile 从变量定义开始,这些变量都是和特定架构的配置文件 ([defconfig](https://github.com/torvalds/linux/tree/master/arch/x86/configs),等等)有关联。在定义了编译 [16-bit](https://en.wikipedia.org/wiki/Real_mode) 代码的编译选项之后,根据变量 `BITS` 的值,如果是 `32`, 汇编代码、链接器、以及其它很多东西(全部的定义都可以在[arch/x86/Makefile](https://github.com/torvalds/linux/blob/master/arch/x86/Makefile)找到)对应的参数就是 `i386`,而 `64` 就对应的是 `x86_84`。

|

||||

|

||||

第一个目标是 makefile 生成的系统调用列表(syscall table)中的 `archheaders` :

|

||||

|

||||

```Makefile

|

||||

archheaders:

|

||||

$(Q)$(MAKE) $(build)=arch/x86/entry/syscalls all

|

||||

```

|

||||

|

||||

第二个目标是 makefile 里的 `archscripts`:

|

||||

|

||||

```Makefile

|

||||

archscripts: scripts_basic

|

||||

$(Q)$(MAKE) $(build)=arch/x86/tools relocs

|

||||

```

|

||||

|

||||

我们可以看到 `archscripts` 是依赖于根 [Makefile](https://github.com/torvalds/linux/blob/master/Makefile)里的`scripts_basic` 。首先我们可以看出 `scripts_basic` 是按照 [scripts/basic](https://github.com/torvalds/linux/blob/master/scripts/basic/Makefile) 的 makefile 执行 make 的:

|

||||

|

||||

```Maklefile

|

||||

scripts_basic:

|

||||

$(Q)$(MAKE) $(build)=scripts/basic

|

||||

```

|

||||

|

||||

`scripts/basic/Makefile` 包含了编译两个主机程序 `fixdep` 和 `bin2` 的目标:

|

||||

|

||||

```Makefile

|

||||

hostprogs-y := fixdep

|

||||

hostprogs-$(CONFIG_BUILD_BIN2C) += bin2c

|

||||

always := $(hostprogs-y)

|

||||

|

||||

$(addprefix $(obj)/,$(filter-out fixdep,$(always))): $(obj)/fixdep

|

||||

```

|

||||

|

||||

第一个工具是 `fixdep`:用来优化 [gcc](https://gcc.gnu.org/) 生成的依赖列表,然后在重新编译源文件的时候告诉make。第二个工具是 `bin2c`,它依赖于内核配置选项 `CONFIG_BUILD_BIN2C`,并且它是一个用来将标准输入接口(LCTT 译注:即 stdin)收到的二进制流通过标准输出接口(即:stdout)转换成 C 头文件的非常小的 C 程序。你可能注意到这里有些奇怪的标志,如 `hostprogs-y` 等。这个标志用于所有的 `kbuild` 文件,更多的信息你可以从[documentation](https://github.com/torvalds/linux/blob/master/Documentation/kbuild/makefiles.txt) 获得。在我们这里, `hostprogs-y` 告诉 `kbuild` 这里有个名为 `fixed` 的程序,这个程序会通过和 `Makefile` 相同目录的 `fixdep.c` 编译而来。

|

||||

|

||||

执行 make 之后,终端的第一个输出就是 `kbuild` 的结果:

|

||||

|

||||

```

|

||||

$ make

|

||||

HOSTCC scripts/basic/fixdep

|

||||

```

|

||||

|

||||

当目标 `script_basic` 被执行,目标 `archscripts` 就会 make [arch/x86/tools](https://github.com/torvalds/linux/blob/master/arch/x86/tools/Makefile) 下的 makefile 和目标 `relocs`:

|

||||

|

||||

```Makefile

|

||||

$(Q)$(MAKE) $(build)=arch/x86/tools relocs

|

||||

```

|

||||

|

||||

包含了[重定位](https://en.wikipedia.org/wiki/Relocation_%28computing%29) 的信息的代码 `relocs_32.c` 和 `relocs_64.c` 将会被编译,这可以在`make` 的输出中看到:

|

||||

|

||||

```Makefile

|

||||

HOSTCC arch/x86/tools/relocs_32.o

|

||||

HOSTCC arch/x86/tools/relocs_64.o

|

||||

HOSTCC arch/x86/tools/relocs_common.o

|

||||

HOSTLD arch/x86/tools/relocs

|

||||

```

|

||||

|

||||

在编译完 `relocs.c` 之后会检查 `version.h`:

|

||||

|

||||

```Makefile

|

||||

$(version_h): $(srctree)/Makefile FORCE

|

||||

$(call filechk,version.h)

|

||||

$(Q)rm -f $(old_version_h)

|

||||

```

|

||||

|

||||

我们可以在输出看到它:

|

||||

|

||||

```

|

||||

CHK include/config/kernel.release

|

||||

```

|

||||

|

||||

以及在内核的根 Makefiel 使用 `arch/x86/include/generated/asm` 的目标 `asm-generic` 来构建 `generic` 汇编头文件。在目标 `asm-generic` 之后,`archprepare` 就完成了,所以目标 `prepare0` 会接着被执行,如我上面所写:

|

||||

|

||||

```Makefile

|

||||

prepare0: archprepare FORCE

|

||||

$(Q)$(MAKE) $(build)=.

|

||||

```

|

||||

|

||||

注意 `build`,它是定义在文件 [scripts/Kbuild.include](https://github.com/torvalds/linux/blob/master/scripts/Kbuild.include),内容是这样的:

|

||||

|

||||

```Makefile

|

||||

build := -f $(srctree)/scripts/Makefile.build obj

|

||||

```

|

||||

|

||||

或者在我们的例子中,它就是当前源码目录路径:`.`:

|

||||

|

||||

```Makefile

|

||||

$(Q)$(MAKE) -f $(srctree)/scripts/Makefile.build obj=.

|

||||

```

|

||||

|

||||

脚本 [scripts/Makefile.build](https://github.com/torvalds/linux/blob/master/scripts/Makefile.build) 通过参数 `obj` 给定的目录找到 `Kbuild` 文件,然后引入 `kbuild` 文件:

|

||||

|

||||

```Makefile

|

||||

include $(kbuild-file)

|

||||

```

|

||||

|

||||

并根据这个构建目标。我们这里 `.` 包含了生成 `kernel/bounds.s` 和 `arch/x86/kernel/asm-offsets.s` 的 [Kbuild](https://github.com/torvalds/linux/blob/master/Kbuild) 文件。在此之后,目标 `prepare` 就完成了它的工作。 `vmlinux-dirs` 也依赖于第二个目标 `scripts` ,它会编译接下来的几个程序:`filealias`,`mk_elfconfig`,`modpost` 等等。之后,`scripts/host-programs` 就可以开始编译我们的目标 `vmlinux-dirs` 了。

|

||||

|

||||

首先,我们先来理解一下 `vmlinux-dirs` 都包含了那些东西。在我们的例子中它包含了下列内核目录的路径:

|

||||

|

||||

```

|

||||

init usr arch/x86 kernel mm fs ipc security crypto block

|

||||

drivers sound firmware arch/x86/pci arch/x86/power

|

||||

arch/x86/video net lib arch/x86/lib

|

||||

```

|

||||

|

||||

我们可以在内核的根 [Makefile](https://github.com/torvalds/linux/blob/master/Makefile) 里找到 `vmlinux-dirs` 的定义:

|

||||

|

||||

```Makefile

|

||||

vmlinux-dirs := $(patsubst %/,%,$(filter %/, $(init-y) $(init-m) \

|

||||

$(core-y) $(core-m) $(drivers-y) $(drivers-m) \

|

||||

$(net-y) $(net-m) $(libs-y) $(libs-m)))

|

||||

|

||||

init-y := init/

|

||||

drivers-y := drivers/ sound/ firmware/

|

||||

net-y := net/

|

||||

libs-y := lib/

|

||||

...

|

||||

...

|

||||

...

|

||||

```

|

||||

|

||||

这里我们借助函数 `patsubst` 和 `filter`去掉了每个目录路径里的符号 `/`,并且把结果放到 `vmlinux-dirs` 里。所以我们就有了 `vmlinux-dirs` 里的目录列表,以及下面的代码:

|

||||

|

||||

```Makefile

|

||||

$(vmlinux-dirs): prepare scripts

|

||||

$(Q)$(MAKE) $(build)=$@

|

||||

```

|

||||

|

||||

符号 `$@` 在这里代表了 `vmlinux-dirs`,这就表明程序会递归遍历从 `vmlinux-dirs` 以及它内部的全部目录(依赖于配置),并且在对应的目录下执行 `make` 命令。我们可以在输出看到结果:

|

||||

|

||||

```

|

||||

CC init/main.o

|

||||

CHK include/generated/compile.h

|

||||

CC init/version.o

|

||||

CC init/do_mounts.o

|

||||

...

|

||||

CC arch/x86/crypto/glue_helper.o

|

||||

AS arch/x86/crypto/aes-x86_64-asm_64.o

|

||||

CC arch/x86/crypto/aes_glue.o

|

||||

...

|

||||

AS arch/x86/entry/entry_64.o

|

||||

AS arch/x86/entry/thunk_64.o

|

||||

CC arch/x86/entry/syscall_64.o

|

||||

```

|

||||

|

||||

每个目录下的源代码将会被编译并且链接到 `built-io.o` 里:

|

||||

|

||||

```

|

||||

$ find . -name built-in.o

|

||||

./arch/x86/crypto/built-in.o

|

||||

./arch/x86/crypto/sha-mb/built-in.o

|

||||

./arch/x86/net/built-in.o

|

||||

./init/built-in.o

|

||||

./usr/built-in.o

|

||||

...

|

||||

...

|

||||

```

|

||||

|

||||

好了,所有的 `built-in.o` 都构建完了,现在我们回到目标 `vmlinux` 上。你应该还记得,目标 `vmlinux` 是在内核的根makefile 里。在链接 `vmlinux` 之前,系统会构建 [samples](https://github.com/torvalds/linux/tree/master/samples), [Documentation](https://github.com/torvalds/linux/tree/master/Documentation) 等等,但是如上文所述,我不会在本文描述这些。

|

||||

|

||||

```Makefile

|

||||

vmlinux: scripts/link-vmlinux.sh $(vmlinux-deps) FORCE

|

||||

...

|

||||

...

|

||||

+$(call if_changed,link-vmlinux)

|

||||

```

|

||||

|

||||

你可以看到,调用脚本 [scripts/link-vmlinux.sh](https://github.com/torvalds/linux/blob/master/scripts/link-vmlinux.sh) 的主要目的是把所有的 `built-in.o` 链接成一个静态可执行文件,和生成 [System.map](https://en.wikipedia.org/wiki/System.map)。 最后我们来看看下面的输出:

|

||||

|

||||

```

|

||||

LINK vmlinux

|

||||

LD vmlinux.o

|

||||

MODPOST vmlinux.o

|

||||

GEN .version

|

||||

CHK include/generated/compile.h

|

||||

UPD include/generated/compile.h

|

||||

CC init/version.o

|

||||

LD init/built-in.o

|

||||

KSYM .tmp_kallsyms1.o

|

||||

KSYM .tmp_kallsyms2.o

|

||||

LD vmlinux

|

||||

SORTEX vmlinux

|

||||

SYSMAP System.map

|

||||

```

|

||||

|

||||

`vmlinux` 和`System.map` 生成在内核源码树根目录下。

|

||||

|

||||

```

|

||||

$ ls vmlinux System.map

|

||||

System.map vmlinux

|

||||

```

|

||||

|

||||

这就是全部了,`vmlinux` 构建好了,下一步就是创建 [bzImage](https://en.wikipedia.org/wiki/Vmlinux#bzImage).

|

||||

|

||||

###制作bzImage

|

||||

|

||||

`bzImage` 就是压缩了的 linux 内核镜像。我们可以在构建了 `vmlinux` 之后通过执行 `make bzImage` 获得`bzImage`。同时我们可以仅仅执行 `make` 而不带任何参数也可以生成 `bzImage` ,因为它是在 [arch/x86/kernel/Makefile](https://github.com/torvalds/linux/blob/master/arch/x86/Makefile) 里预定义的、默认生成的镜像:

|

||||

|

||||

```Makefile

|

||||

all: bzImage

|

||||

```

|

||||

|

||||

让我们看看这个目标,它能帮助我们理解这个镜像是怎么构建的。我已经说过了 `bzImage` 是被定义在 [arch/x86/kernel/Makefile](https://github.com/torvalds/linux/blob/master/arch/x86/Makefile),定义如下:

|

||||

|

||||

```Makefile

|

||||

bzImage: vmlinux

|

||||

$(Q)$(MAKE) $(build)=$(boot) $(KBUILD_IMAGE)

|

||||

$(Q)mkdir -p $(objtree)/arch/$(UTS_MACHINE)/boot

|

||||

$(Q)ln -fsn ../../x86/boot/bzImage $(objtree)/arch/$(UTS_MACHINE)/boot/$@

|

||||

```

|

||||

|

||||

在这里我们可以看到第一次为 boot 目录执行 `make`,在我们的例子里是这样的:

|

||||

|

||||

```Makefile

|

||||

boot := arch/x86/boot

|

||||

```

|

||||

|

||||

现在的主要目标是编译目录 `arch/x86/boot` 和 `arch/x86/boot/compressed` 的代码,构建 `setup.bin` 和 `vmlinux.bin`,最后用这两个文件生成 `bzImage`。第一个目标是定义在 [arch/x86/boot/Makefile](https://github.com/torvalds/linux/blob/master/arch/x86/boot/Makefile) 的 `$(obj)/setup.elf`:

|

||||

|

||||

```Makefile

|

||||

$(obj)/setup.elf: $(src)/setup.ld $(SETUP_OBJS) FORCE

|

||||

$(call if_changed,ld)

|

||||

```

|

||||

|

||||

我们已经在目录 `arch/x86/boot` 有了链接脚本 `setup.ld`,和扩展到 `boot` 目录下全部源代码的变量 `SETUP_OBJS` 。我们可以看看第一个输出:

|

||||

|

||||

```Makefile

|

||||

AS arch/x86/boot/bioscall.o

|

||||

CC arch/x86/boot/cmdline.o

|

||||

AS arch/x86/boot/copy.o

|

||||

HOSTCC arch/x86/boot/mkcpustr

|

||||

CPUSTR arch/x86/boot/cpustr.h

|

||||

CC arch/x86/boot/cpu.o

|

||||

CC arch/x86/boot/cpuflags.o

|

||||

CC arch/x86/boot/cpucheck.o

|

||||

CC arch/x86/boot/early_serial_console.o

|

||||

CC arch/x86/boot/edd.o

|

||||

```

|

||||

|

||||

下一个源码文件是 [arch/x86/boot/header.S](https://github.com/torvalds/linux/blob/master/arch/x86/boot/header.S),但是我们不能现在就编译它,因为这个目标依赖于下面两个头文件:

|

||||

|

||||

```Makefile

|

||||

$(obj)/header.o: $(obj)/voffset.h $(obj)/zoffset.h

|

||||

```

|

||||

|

||||

第一个头文件 `voffset.h` 是使用 `sed` 脚本生成的,包含用 `nm` 工具从 `vmlinux` 获取的两个地址:

|

||||

|

||||

```C

|

||||

#define VO__end 0xffffffff82ab0000

|

||||

#define VO__text 0xffffffff81000000

|

||||

```

|

||||

|

||||

这两个地址是内核的起始和结束地址。第二个头文件 `zoffset.h` 在 [arch/x86/boot/compressed/Makefile](https://github.com/torvalds/linux/blob/master/arch/x86/boot/compressed/Makefile) 可以看出是依赖于目标 `vmlinux`的:

|

||||

|

||||

```Makefile

|

||||

$(obj)/zoffset.h: $(obj)/compressed/vmlinux FORCE

|

||||

$(call if_changed,zoffset)

|

||||

```

|

||||

|

||||

目标 `$(obj)/compressed/vmlinux` 依赖于 `vmlinux-objs-y` —— 说明需要编译目录 [arch/x86/boot/compressed](https://github.com/torvalds/linux/tree/master/arch/x86/boot/compressed) 下的源代码,然后生成 `vmlinux.bin`、`vmlinux.bin.bz2`,和编译工具 `mkpiggy`。我们可以在下面的输出看出来:

|

||||

|

||||

```Makefile

|

||||

LDS arch/x86/boot/compressed/vmlinux.lds

|

||||

AS arch/x86/boot/compressed/head_64.o

|

||||

CC arch/x86/boot/compressed/misc.o

|

||||

CC arch/x86/boot/compressed/string.o

|

||||

CC arch/x86/boot/compressed/cmdline.o

|

||||

OBJCOPY arch/x86/boot/compressed/vmlinux.bin

|

||||

BZIP2 arch/x86/boot/compressed/vmlinux.bin.bz2

|

||||

HOSTCC arch/x86/boot/compressed/mkpiggy

|

||||

```

|

||||

|

||||

`vmlinux.bin` 是去掉了调试信息和注释的 `vmlinux` 二进制文件,加上了占用了 `u32` (LCTT 译注:即4-Byte)的长度信息的 `vmlinux.bin.all` 压缩后就是 `vmlinux.bin.bz2`。其中 `vmlinux.bin.all` 包含了 `vmlinux.bin` 和`vmlinux.relocs`(LCTT 译注:vmlinux 的重定位信息),其中 `vmlinux.relocs` 是 `vmlinux` 经过程序 `relocs` 处理之后的 `vmlinux` 镜像(见上文所述)。我们现在已经获取到了这些文件,汇编文件 `piggy.S` 将会被 `mkpiggy` 生成、然后编译:

|

||||

|

||||

```Makefile

|

||||

MKPIGGY arch/x86/boot/compressed/piggy.S

|

||||

AS arch/x86/boot/compressed/piggy.o

|

||||

```

|

||||

|

||||

这个汇编文件会包含经过计算得来的、压缩内核的偏移信息。处理完这个汇编文件,我们就可以看到 `zoffset` 生成了:

|

||||

|

||||

```Makefile

|

||||

ZOFFSET arch/x86/boot/zoffset.h

|

||||

```

|

||||

|

||||

现在 `zoffset.h` 和 `voffset.h` 已经生成了,[arch/x86/boot](https://github.com/torvalds/linux/tree/master/arch/x86/boot/) 里的源文件可以继续编译:

|

||||

|

||||

```Makefile

|

||||

AS arch/x86/boot/header.o

|

||||

CC arch/x86/boot/main.o

|

||||

CC arch/x86/boot/mca.o

|

||||

CC arch/x86/boot/memory.o

|

||||

CC arch/x86/boot/pm.o

|

||||

AS arch/x86/boot/pmjump.o

|

||||

CC arch/x86/boot/printf.o

|

||||

CC arch/x86/boot/regs.o

|

||||

CC arch/x86/boot/string.o

|

||||

CC arch/x86/boot/tty.o

|

||||

CC arch/x86/boot/video.o

|

||||

CC arch/x86/boot/video-mode.o

|

||||

CC arch/x86/boot/video-vga.o

|

||||

CC arch/x86/boot/video-vesa.o

|

||||

CC arch/x86/boot/video-bios.o

|

||||

```

|

||||

|

||||

所有的源代码会被编译,他们最终会被链接到 `setup.elf` :

|

||||

|

||||

```Makefile

|

||||

LD arch/x86/boot/setup.elf

|

||||

```

|

||||

|

||||

或者:

|

||||

|

||||

```

|

||||

ld -m elf_x86_64 -T arch/x86/boot/setup.ld arch/x86/boot/a20.o arch/x86/boot/bioscall.o arch/x86/boot/cmdline.o arch/x86/boot/copy.o arch/x86/boot/cpu.o arch/x86/boot/cpuflags.o arch/x86/boot/cpucheck.o arch/x86/boot/early_serial_console.o arch/x86/boot/edd.o arch/x86/boot/header.o arch/x86/boot/main.o arch/x86/boot/mca.o arch/x86/boot/memory.o arch/x86/boot/pm.o arch/x86/boot/pmjump.o arch/x86/boot/printf.o arch/x86/boot/regs.o arch/x86/boot/string.o arch/x86/boot/tty.o arch/x86/boot/video.o arch/x86/boot/video-mode.o arch/x86/boot/version.o arch/x86/boot/video-vga.o arch/x86/boot/video-vesa.o arch/x86/boot/video-bios.o -o arch/x86/boot/setup.elf

|

||||

```

|

||||

|

||||

最后的两件事是创建包含目录 `arch/x86/boot/*` 下的编译过的代码的 `setup.bin`:

|

||||

|

||||

```

|

||||

objcopy -O binary arch/x86/boot/setup.elf arch/x86/boot/setup.bin

|

||||

```

|

||||

|

||||

以及从 `vmlinux` 生成 `vmlinux.bin` :

|

||||

|

||||

```

|

||||

objcopy -O binary -R .note -R .comment -S arch/x86/boot/compressed/vmlinux arch/x86/boot/vmlinux.bin

|

||||

```

|

||||

|

||||

最最后,我们编译主机程序 [arch/x86/boot/tools/build.c](https://github.com/torvalds/linux/blob/master/arch/x86/boot/tools/build.c),它将会用来把 `setup.bin` 和 `vmlinux.bin` 打包成 `bzImage`:

|

||||

|

||||

```

|

||||

arch/x86/boot/tools/build arch/x86/boot/setup.bin arch/x86/boot/vmlinux.bin arch/x86/boot/zoffset.h arch/x86/boot/bzImage

|

||||

```

|

||||

|

||||

实际上 `bzImage` 就是把 `setup.bin` 和 `vmlinux.bin` 连接到一起。最终我们会看到输出结果,就和那些用源码编译过内核的同行的结果一样:

|

||||

|

||||

```

|

||||

Setup is 16268 bytes (padded to 16384 bytes).

|

||||

System is 4704 kB

|

||||

CRC 94a88f9a

|

||||

Kernel: arch/x86/boot/bzImage is ready (#5)

|

||||

```

|

||||

|

||||

|

||||

全部结束。

|

||||

|

||||

###结论

|

||||

|

||||

这就是本文的结尾部分。本文我们了解了编译内核的全部步骤:从执行 `make` 命令开始,到最后生成 `bzImage`。我知道,linux 内核的 makefile 和构建 linux 的过程第一眼看起来可能比较迷惑,但是这并不是很难。希望本文可以帮助你理解构建 linux 内核的整个流程。

|

||||

|

||||

|

||||

###链接

|

||||

|

||||

* [GNU make util](https://en.wikipedia.org/wiki/Make_%28software%29)

|

||||

* [Linux kernel top Makefile](https://github.com/torvalds/linux/blob/master/Makefile)

|

||||

* [cross-compilation](https://en.wikipedia.org/wiki/Cross_compiler)

|

||||

* [Ctags](https://en.wikipedia.org/wiki/Ctags)

|

||||

* [sparse](https://en.wikipedia.org/wiki/Sparse)

|

||||

* [bzImage](https://en.wikipedia.org/wiki/Vmlinux#bzImage)

|

||||

* [uname](https://en.wikipedia.org/wiki/Uname)

|

||||

* [shell](https://en.wikipedia.org/wiki/Shell_%28computing%29)

|

||||

* [Kbuild](https://github.com/torvalds/linux/blob/master/Documentation/kbuild/kbuild.txt)

|

||||

* [binutils](http://www.gnu.org/software/binutils/)

|

||||

* [gcc](https://gcc.gnu.org/)

|

||||

* [Documentation](https://github.com/torvalds/linux/blob/master/Documentation/kbuild/makefiles.txt)

|

||||

* [System.map](https://en.wikipedia.org/wiki/System.map)

|

||||

* [Relocation](https://en.wikipedia.org/wiki/Relocation_%28computing%29)

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://github.com/0xAX/linux-insides/blob/master/Misc/how_kernel_compiled.md

|

||||

|

||||

译者:[oska874](https://github.com/oska874)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

@ -1,16 +1,17 @@

|

||||

如何在CentOS上安装iTOP(IT操作门户)

|

||||

如何在 CentOS 7 上安装开源 ITIL 门户 iTOP

|

||||

================================================================================

|

||||

iTOP简单来说是一个简单的基于网络的开源IT服务管理工具。它有所有的ITIL功能包括服务台、配置管理、事件管理、问题管理、更改管理和服务管理。iTOP依赖于Apache/IIS、MySQL和PHP,因此它可以运行在任何支持这些软件的操作系统中。因为iTOP是一个网络程序,因此你不必在用户的PC端任何客户端程序。一个简单的浏览器就足够每天的IT环境操作了。

|

||||

|

||||

iTOP是一个简单的基于Web的开源IT服务管理工具。它有所有的ITIL功能,包括服务台、配置管理、事件管理、问题管理、变更管理和服务管理。iTOP依赖于Apache/IIS、MySQL和PHP,因此它可以运行在任何支持这些软件的操作系统中。因为iTOP是一个Web程序,因此你不必在用户的PC端任何客户端程序。一个简单的浏览器就足够每天的IT环境操作了。

|

||||

|

||||

我们要在一台有满足基本需求的LAMP环境的CentOS 7上安装和配置iTOP。

|

||||

|

||||

### 下载 iTOP ###

|

||||

|

||||

iTOP的下载包现在在SOurceForge上,我们可以从这获取它的官方[链接][1]。

|

||||

iTOP的下载包现在在SourceForge上,我们可以从这获取它的官方[链接][1]。

|

||||

|

||||

|

||||

|

||||

我们从这里的连接用wget命令获取压缩文件

|

||||

我们从这里的连接用wget命令获取压缩文件。

|

||||

|

||||

[root@centos-007 ~]# wget http://downloads.sourceforge.net/project/itop/itop/2.1.0/iTop-2.1.0-2127.zip

|

||||

|

||||

@ -40,7 +41,7 @@ iTOP的下载包现在在SOurceForge上,我们可以从这获取它的官方[

|

||||

installation.xml itop-change-mgmt-itil itop-incident-mgmt-itil itop-request-mgmt-itil itop-tickets

|

||||

itop-attachments itop-config itop-knownerror-mgmt itop-service-mgmt itop-virtualization-mgmt

|

||||

|

||||

在解压的目录下,通过不同的数据模型用复制命令迁移需要的扩展从datamodels复制到web扩展目录下。

|

||||

在解压的目录下,使用如下的 cp 命令将不同的数据模型从web 下的 datamodels 目录下复制到 extensions 目录,来迁移需要的扩展。

|

||||

|

||||

[root@centos-7 2.x]# pwd

|

||||

/var/www/html/itop/web/datamodels/2.x

|

||||

@ -50,19 +51,19 @@ iTOP的下载包现在在SOurceForge上,我们可以从这获取它的官方[

|

||||

|

||||

大多数服务端设置和配置已经完成了。最后我们安装web界面来完成安装。

|

||||

|

||||

打开浏览器使用ip地址或者FQDN来访问WordPress web目录。

|

||||

打开浏览器使用ip地址或者完整域名来访问iTop 的 web目录。

|

||||

|

||||

http://servers_ip_address/itop/web/

|

||||

|

||||

你会被重定向到iTOP的web安装页面。让我们按照要求配置,就像在这篇教程中做的那样。

|

||||

|

||||

#### 先决要求验证 ####

|

||||

#### 验证先决要求 ####

|

||||

|

||||

这一步你就会看到验证完成的欢迎界面。如果你看到了一些警告信息,你需要先安装这些软件来解决这些问题。

|

||||

|

||||

|

||||

|

||||

这一步一个叫php mcrypt的可选包丢失了。下载下面的rpm包接着尝试安装php mcrypt包。

|

||||

这一步有一个叫php mcrypt的可选包丢失了。下载下面的rpm包接着尝试安装php mcrypt包。

|

||||

|

||||

[root@centos-7 ~]#yum localinstall php-mcrypt-5.3.3-1.el6.x86_64.rpm libmcrypt-2.5.8-9.el6.x86_64.rpm.

|

||||

|

||||

@ -76,7 +77,7 @@ iTOP的下载包现在在SOurceForge上,我们可以从这获取它的官方[

|

||||

|

||||

#### iTop 许可协议 ####

|

||||

|

||||

勾选同意iTOP所有组件的许可协议并点击“NEXT”。

|

||||

勾选接受 iTOP所有组件的许可协议,并点击“NEXT”。

|

||||

|

||||

|

||||

|

||||

@ -94,7 +95,7 @@ iTOP的下载包现在在SOurceForge上,我们可以从这获取它的官方[

|

||||

|

||||

#### 杂项参数 ####

|

||||

|

||||

让我们选择额外的参数来选择你是否需要安装一个演示内容或者使用全新的数据库,接着下一步。

|

||||

让我们选择额外的参数来选择你是否需要安装一个带有演示内容的数据库或者使用全新的数据库,接着下一步。

|

||||

|

||||

|

||||

|

||||

@ -118,7 +119,7 @@ iTOP的下载包现在在SOurceForge上,我们可以从这获取它的官方[

|

||||

|

||||

#### 改变管理选项 ####

|

||||

|

||||

选择不同的ticket类型以便管理可用选项中的IT设备更改。我们选择ITTL更改管理选项。

|

||||

选择不同的ticket类型以便管理可用选项中的IT设备变更。我们选择ITTL变更管理选项。

|

||||

|

||||

|

||||

|

||||

@ -166,7 +167,7 @@ via: http://linoxide.com/tools/setup-itop-centos-7/

|

||||

|

||||

作者:[Kashif Siddique][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,7 +1,7 @@

|

||||

如何在 Docker 容器中运行支持 OData 的 JBoss 数据虚拟化 GA

|

||||

Howto Run JBoss Data Virtualization GA with OData in Docker Container

|

||||

================================================================================

|

||||

大家好,我们今天来学习如何在一个 Docker 容器中运行支持 OData(译者注:Open Data Protocol,开放数据协议) 的 JBoss 数据虚拟化 6.0.0 GA(译者注:GA,General Availability,具体定义可以查看[WIKI][4])。JBoss 数据虚拟化是数据提供和集成解决方案平台,有多种分散的数据源时,转换为一种数据源统一对待,在正确的时间将所需数据传递给任意的应用或者用户。JBoss 数据虚拟化可以帮助我们将数据快速组合和转换为可重用的商业友好的数据模型,通过开放标准接口简单可用。它提供全面的数据抽取、联合、集成、转换,以及传输功能,将来自一个或多个源的数据组合为可重复使用和共享的灵活数据。要了解更多关于 JBoss 数据虚拟化的信息,可以查看它的[官方文档][1]。Docker 是一个提供开放平台用于打包,装载和以轻量级容器运行任何应用的开源平台。使用 Docker 容器我们可以轻松处理和启用支持 OData 的 JBoss 数据虚拟化。

|

||||

|

||||

大家好,我们今天来学习如何在一个 Docker 容器中运行支持 OData(译者注:Open Data Protocol,开放数据协议) 的 JBoss 数据虚拟化 6.0.0 GA(译者注:GA,General Availability,具体定义可以查看[WIKI][4])。JBoss 数据虚拟化是数据提供和集成解决方案平台,将多种分散的数据源转换为一种数据源统一对待,在正确的时间将所需数据传递给任意的应用或者用户。JBoss 数据虚拟化可以帮助我们将数据快速组合和转换为可重用的商业友好的数据模型,通过开放标准接口简单可用。它提供全面的数据抽取、联合、集成、转换,以及传输功能,将来自一个或多个源的数据组合为可重复使用和共享的灵活数据。要了解更多关于 JBoss 数据虚拟化的信息,可以查看它的[官方文档][1]。Docker 是一个提供开放平台用于打包,装载和以轻量级容器运行任何应用的开源平台。使用 Docker 容器我们可以轻松处理和启用支持 OData 的 JBoss 数据虚拟化。

|

||||

|

||||

下面是该指南中在 Docker 容器中运行支持 OData 的 JBoss 数据虚拟化的简单步骤。

|

||||

|

||||

@ -78,7 +78,6 @@ Howto Run JBoss Data Virtualization GA with OData in Docker Container

|

||||

"LinkLocalIPv6PrefixLen": 0,

|

||||

|

||||

### 6. Web 界面 ###

|

||||

### 6. Web Interface ###

|

||||

|

||||

现在,如果一切如期望的那样进行,当我们用浏览器打开 http://container-ip:8080/ 和 http://container-ip:9990 时会看到支持 oData 的 JBoss 数据虚拟化登录界面和 JBoss 管理界面。管理验证的用户名和密码分别是 admin 和 redhat1!数据虚拟化验证的用户名和密码都是 user。之后,我们可以通过 web 界面在内容间导航。

|

||||

|

||||

@ -94,7 +93,7 @@ via: http://linoxide.com/linux-how-to/run-jboss-data-virtualization-ga-odata-doc

|

||||

|

||||

作者:[Arun Pyasi][a]

|

||||

译者:[ictlyh](http://www.mutouxiaogui.cn/blog)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,10 +1,9 @@

|

||||

|

||||

在 Linux 中怎样将 MySQL 迁移到 MariaDB 上

|

||||

================================================================================

|

||||

|

||||

自从甲骨文收购 MySQL 后,很多 MySQL 的开发者和用户放弃了 MySQL 由于甲骨文对 MySQL 的开发和维护更多倾向于闭门的立场。在社区驱动下,促使更多人移到 MySQL 的另一个分支中,叫 MariaDB。在原有 MySQL 开发人员的带领下,MariaDB 的开发遵循开源的理念,并确保 [它的二进制格式与 MySQL 兼容][1]。Linux 发行版如 Red Hat 家族(Fedora,CentOS,RHEL),Ubuntu 和Mint,openSUSE 和 Debian 已经开始使用,并支持 MariaDB 作为 MySQL 的简易替换品。

|

||||

自从甲骨文收购 MySQL 后,由于甲骨文对 MySQL 的开发和维护更多倾向于闭门的立场,很多 MySQL 的开发者和用户放弃了 MySQL。在社区驱动下,促使更多人移到 MySQL 的另一个叫 MariaDB 的分支。在原有 MySQL 开发人员的带领下,MariaDB 的开发遵循开源的理念,并确保[它的二进制格式与 MySQL 兼容][1]。Linux 发行版如 Red Hat 家族(Fedora,CentOS,RHEL),Ubuntu 和 Mint,openSUSE 和 Debian 已经开始使用,并支持 MariaDB 作为 MySQL 的直接替换品。

|

||||

|

||||

如果想要将 MySQL 中的数据库迁移到 MariaDB 中,这篇文章就是你所期待的。幸运的是,由于他们的二进制兼容性,MySQL-to-MariaDB 迁移过程是非常简单的。如果你按照下面的步骤,将 MySQL 迁移到 MariaDB 会是无痛的。

|

||||

如果你想要将 MySQL 中的数据库迁移到 MariaDB 中,这篇文章就是你所期待的。幸运的是,由于他们的二进制兼容性,MySQL-to-MariaDB 迁移过程是非常简单的。如果你按照下面的步骤,将 MySQL 迁移到 MariaDB 会是无痛的。

|

||||

|

||||

### 准备 MySQL 数据库和表 ###

|

||||

|

||||

@ -69,7 +68,7 @@

|

||||

|

||||

### 安装 MariaDB ###

|

||||

|

||||

在 CentOS/RHEL 7和Ubuntu(14.04或更高版本)上,最新的 MariaDB 包含在其官方源。在 Fedora 上,自19版本后 MariaDB 已经替代了 MySQL。如果你使用的是旧版本或 LTS 类型如 Ubuntu 13.10 或更早的,你仍然可以通过添加其官方仓库来安装 MariaDB。

|

||||

在 CentOS/RHEL 7和Ubuntu(14.04或更高版本)上,最新的 MariaDB 已经包含在其官方源。在 Fedora 上,自19 版本后 MariaDB 已经替代了 MySQL。如果你使用的是旧版本或 LTS 类型如 Ubuntu 13.10 或更早的,你仍然可以通过添加其官方仓库来安装 MariaDB。

|

||||

|

||||

[MariaDB 网站][2] 提供了一个在线工具帮助你依据你的 Linux 发行版中来添加 MariaDB 的官方仓库。此工具为 openSUSE, Arch Linux, Mageia, Fedora, CentOS, RedHat, Mint, Ubuntu, 和 Debian 提供了 MariaDB 的官方仓库.

|

||||

|

||||

@ -103,7 +102,7 @@

|

||||

|

||||

$ sudo yum install MariaDB-server MariaDB-client

|

||||

|

||||

安装了所有必要的软件包后,你可能会被要求为 root 用户创建一个新密码。设置 root 的密码后,别忘了恢复备份的 my.cnf 文件。

|

||||

安装了所有必要的软件包后,你可能会被要求为 MariaDB 的 root 用户创建一个新密码。设置 root 的密码后,别忘了恢复备份的 my.cnf 文件。

|

||||

|

||||

$ sudo cp /opt/my.cnf /etc/mysql/

|

||||

|

||||

@ -111,7 +110,7 @@

|

||||

|

||||

$ sudo service mariadb start

|

||||

|

||||

或者:

|

||||

或:

|

||||

|

||||

$ sudo systemctl start mariadb

|

||||

|

||||

@ -141,13 +140,13 @@

|

||||

|

||||

### 结论 ###

|

||||

|

||||

如你在本教程中看到的,MySQL-to-MariaDB 的迁移并不难。MariaDB 相比 MySQL 有很多新的功能,你应该知道的。至于配置方面,在我的测试情况下,我只是将我旧的 MySQL 配置文件(my.cnf)作为 MariaDB 的配置文件,导入过程完全没有出现任何问题。对于配置文件,我建议你在迁移之前请仔细阅读MariaDB 配置选项的文件,特别是如果你正在使用 MySQL 的特殊配置。

|

||||

如你在本教程中看到的,MySQL-to-MariaDB 的迁移并不难。你应该知道,MariaDB 相比 MySQL 有很多新的功能。至于配置方面,在我的测试情况下,我只是将我旧的 MySQL 配置文件(my.cnf)作为 MariaDB 的配置文件,导入过程完全没有出现任何问题。对于配置文件,我建议你在迁移之前请仔细阅读 MariaDB 配置选项的文件,特别是如果你正在使用 MySQL 的特定配置。

|

||||

|

||||

如果你正在运行更复杂的配置有海量的数据库和表,包括群集或主从复制,看一看 Mozilla IT 和 Operations 团队的 [更详细的指南][3] ,或者 [官方的 MariaDB 文档][4]。

|

||||

如果你正在运行有海量的表、包括群集或主从复制的数据库的复杂配置,看一看 Mozilla IT 和 Operations 团队的 [更详细的指南][3] ,或者 [官方的 MariaDB 文档][4]。

|

||||

|

||||

### 故障排除 ###

|

||||

|

||||

1.在运行 mysqldump 命令备份数据库时出现以下错误。

|

||||

1、 在运行 mysqldump 命令备份数据库时出现以下错误。

|

||||

|

||||

$ mysqldump --all-databases --user=root --password --master-data > backupdb.sql

|

||||

|

||||

@ -155,7 +154,7 @@

|

||||

|

||||

mysqldump: Error: Binlogging on server not active

|

||||

|

||||

通过使用 "--master-data",你要在导出的输出中包含二进制日志信息,这对于数据库的复制和恢复是有用的。但是,二进制日志未在 MySQL 服务器启用。要解决这个错误,修改 my.cnf 文件,并在 [mysqld] 部分添加下面的选项。

|

||||

通过使用 "--master-data",你可以在导出的输出中包含二进制日志信息,这对于数据库的复制和恢复是有用的。但是,二进制日志未在 MySQL 服务器启用。要解决这个错误,修改 my.cnf 文件,并在 [mysqld] 部分添加下面的选项。

|

||||

|

||||

log-bin=mysql-bin

|

||||

|

||||

@ -176,8 +175,8 @@

|

||||

via: http://xmodulo.com/migrate-mysql-to-mariadb-linux.html

|

||||

|

||||

作者:[Kristophorus Hadiono][a]

|

||||

译者:[strugglingyouth](https://github.com/译者ID)

|

||||

校对:[strugglingyouth](https://github.com/校对者ID)

|

||||

译者:[strugglingyouth](https://github.com/strugglingyouth)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||