mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-09 01:30:10 +08:00

Merge branch 'master' of https://github.com/LCTT/TranslateProject into translating

This commit is contained in:

commit

f86d6a6100

@ -1,8 +1,9 @@

|

||||

如何在 Linux 中为每个屏幕设置不同的壁纸

|

||||

======

|

||||

**简介:如果你想在 Ubuntu 18.04 或任何其他 Linux 发行版上使用 GNOME、MATE 或 Budgie 桌面环境在多个显示器上显示不同的壁纸,这个小工具将帮助你实现这一点。**

|

||||

|

||||

多显示器设置通常会在 Linux 上出现多个问题,但我不打算在本文中讨论这些问题。我有一篇关于 Linux 上多显示器支持的文章。

|

||||

> 如果你想在 Ubuntu 18.04 或任何其他 Linux 发行版上使用 GNOME、MATE 或 Budgie 桌面环境在多个显示器上显示不同的壁纸,这个小工具将帮助你实现这一点。

|

||||

|

||||

多显示器设置通常会在 Linux 上出现多个问题,但我不打算在本文中讨论这些问题。我有另外一篇关于 Linux 上多显示器支持的文章。

|

||||

|

||||

如果你使用多台显示器,也许你想为每台显示器设置不同的壁纸。我不确定其他 Linux 发行版和桌面环境,但是 [GNOME 桌面][1] 的 Ubuntu 本身并不提供此功能。

|

||||

|

||||

@ -18,11 +19,11 @@

|

||||

|

||||

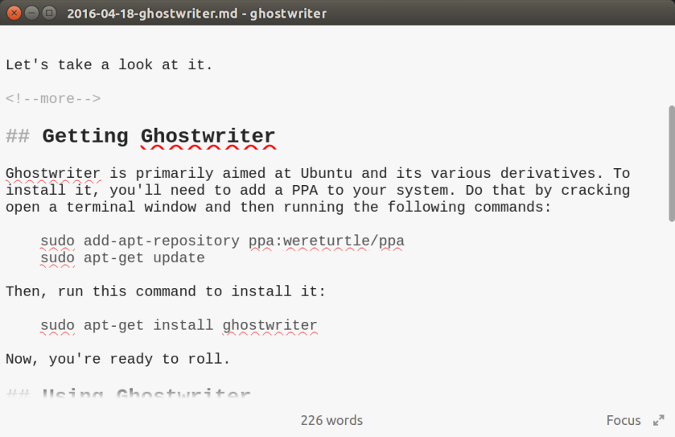

#### 使用 FlatPak 在 Linux 上安装 HydraPaper

|

||||

|

||||

使用 [FlatPak][9] 可以轻松安装 HydraPaper。Ubuntu 18.04已 经提供对 FlatPaks 的支持,所以你需要做的就是下载应用文件并双击在 GNOME 软件中心中打开它。

|

||||

使用 [FlatPak][9] 可以轻松安装 HydraPaper。Ubuntu 18.04 已 经提供对 FlatPaks 的支持,所以你需要做的就是下载应用文件并双击在 GNOME 软件中心中打开它。

|

||||

|

||||

你可以参考这篇文章来了解如何在你的发行版[启用 FlatPak 支持][10]。启用 FlatPak 支持后,只需从 [FlatHub][11] 下载并安装即可。

|

||||

|

||||

[Download HydraPaper][12]

|

||||

- [下载 HydraPaper][12]

|

||||

|

||||

#### 使用 HydraPaper 在不同的显示器上设置不同的背景

|

||||

|

||||

@ -65,13 +66,13 @@ via: https://itsfoss.com/wallpaper-multi-monitor/

|

||||

作者:[Abhishek Prakash][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://itsfoss.com/author/abhishek/

|

||||

[1]:https://www.gnome.org/

|

||||

[2]:https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2018/05/multi-monitor-wallpaper-setup-800x450.jpeg

|

||||

[2]:https://i2.wp.com/itsfoss.com/wp-content/uploads/2018/05/multi-monitor-wallpaper-setup.jpeg?w=800&ssl=1

|

||||

[3]:https://github.com/GabMus/HydraPaper

|

||||

[4]:https://www.gtk.org/

|

||||

[5]:https://itsfoss.com/gnome-tricks-ubuntu/

|

||||

@ -82,8 +83,8 @@ via: https://itsfoss.com/wallpaper-multi-monitor/

|

||||

[10]:https://flatpak.org/setup/

|

||||

[11]:https://flathub.org

|

||||

[12]:https://flathub.org/apps/details/org.gabmus.hydrapaper

|

||||

[13]:https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2018/05/different-wallpaper-each-monitor-hydrapaper-2-800x631.jpeg

|

||||

[14]:https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2018/05/different-wallpaper-each-monitor-hydrapaper-1.jpeg

|

||||

[15]:https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2018/05/different-wallpaper-each-monitor-hydrapaper-3.jpeg

|

||||

[16]:https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2018/05/hydra-paper-dual-monitor-800x450.jpeg

|

||||

[17]:https://www.reddit.com/r/LinuxUsersGroup/

|

||||

[13]:https://i0.wp.com/itsfoss.com/wp-content/uploads/2018/05/different-wallpaper-each-monitor-hydrapaper-2.jpeg?w=800&ssl=1

|

||||

[14]:https://i0.wp.com/itsfoss.com/wp-content/uploads/2018/05/different-wallpaper-each-monitor-hydrapaper-1.jpeg?w=799&ssl=1

|

||||

[15]:https://i1.wp.com/itsfoss.com/wp-content/uploads/2018/05/different-wallpaper-each-monitor-hydrapaper-3.jpeg?w=799&ssl=1

|

||||

[16]:https://i0.wp.com/itsfoss.com/wp-content/uploads/2018/05/hydra-paper-dual-monitor.jpeg?w=800&ssl=1

|

||||

[17]:https://www.reddit.com/r/LinuxUsersGroup/

|

||||

116

published/20181004 Archiving web sites.md

Normal file

116

published/20181004 Archiving web sites.md

Normal file

@ -0,0 +1,116 @@

|

||||

对网站进行归档

|

||||

======

|

||||

|

||||

我最近深入研究了网站归档,因为有些朋友担心遇到糟糕的系统管理或恶意删除时失去对放在网上的内容的控制权。这使得网站归档成为系统管理员工具箱中的重要工具。事实证明,有些网站比其他网站更难归档。本文介绍了对传统网站进行归档的过程,并阐述在面对最新流行单页面应用程序(SPA)的现代网站时,它有哪些不足。

|

||||

|

||||

### 转换为简单网站

|

||||

|

||||

手动编码 HTML 网站的日子早已不复存在。现在的网站是动态的,并使用最新的 JavaScript、PHP 或 Python 框架即时构建。结果,这些网站更加脆弱:数据库崩溃、升级出错或者未修复的漏洞都可能使数据丢失。在我以前是一名 Web 开发人员时,我不得不接受客户这样的想法:希望网站基本上可以永久工作。这种期望与 web 开发“快速行动和破除陈规”的理念不相符。在这方面,使用 [Drupal][2] 内容管理系统(CMS)尤其具有挑战性,因为重大更新会破坏与第三方模块的兼容性,这意味着客户很少承担的起高昂的升级成本。解决方案是将这些网站归档:以实时动态的网站为基础,将其转换为任何 web 服务器可以永久服务的纯 HTML 文件。此过程对你自己的动态网站非常有用,也适用于你想保护但无法控制的第三方网站。

|

||||

|

||||

对于简单的静态网站,古老的 [Wget][3] 程序就可以胜任。然而镜像保存一个完整网站的命令却是错综复杂的:

|

||||

|

||||

```

|

||||

$ nice wget --mirror --execute robots=off --no-verbose --convert-links \

|

||||

--backup-converted --page-requisites --adjust-extension \

|

||||

--base=./ --directory-prefix=./ --span-hosts \

|

||||

--domains=www.example.com,example.com http://www.example.com/

|

||||

```

|

||||

|

||||

以上命令下载了网页的内容,也抓取了指定域名中的所有内容。在对你喜欢的网站执行此操作之前,请考虑此类抓取可能对网站产生的影响。上面的命令故意忽略了 `robots.txt` 规则,就像现在[归档者的习惯做法][4],并以尽可能快的速度归档网站。大多数抓取工具都可以选择在两次抓取间暂停并限制带宽使用,以避免使网站瘫痪。

|

||||

|

||||

上面的命令还将获取 “页面所需(LCTT 译注:单页面所需的所有元素)”,如样式表(CSS)、图像和脚本等。下载的页面内容将会被修改,以便链接也指向本地副本。任何 web 服务器均可托管生成的文件集,从而生成原始网站的静态副本。

|

||||

|

||||

以上所述是事情一切顺利的时候。任何使用过计算机的人都知道事情的进展很少如计划那样;各种各样的事情可以使程序以有趣的方式脱离正轨。比如,在网站上有一段时间很流行日历块。内容管理系统会动态生成这些内容,这会使爬虫程序陷入死循环以尝试检索所有页面。灵巧的归档者可以使用正则表达式(例如 Wget 有一个 `--reject-regex` 选项)来忽略有问题的资源。如果可以访问网站的管理界面,另一个方法是禁用日历、登录表单、评论表单和其他动态区域。一旦网站变成静态的,(那些动态区域)也肯定会停止工作,因此从原始网站中移除这些杂乱的东西也不是全无意义。

|

||||

|

||||

### JavaScript 噩梦

|

||||

|

||||

很不幸,有些网站不仅仅是纯 HTML 文件构建的。比如,在单页面网站中,web 浏览器通过执行一个小的 JavaScript 程序来构建内容。像 Wget 这样的简单用户代理将难以重建这些网站的有意义的静态副本,因为它根本不支持 JavaScript。理论上,网站应该使用[渐进增强][5]技术,在不使用 JavaScript 的情况下提供内容和实现功能,但这些指引很少被人遵循 —— 使用过 [NoScript][6] 或 [uMatrix][7] 等插件的人都知道。

|

||||

|

||||

传统的归档方法有时会以最愚蠢的方式失败。在尝试为一个本地报纸网站([pamplemousse.ca][8])创建备份时,我发现 WordPress 在包含 的 JavaScript 末尾添加了查询字符串(例如:`?ver=1.12.4`)。这会使提供归档服务的 web 服务器不能正确进行内容类型检测,因为其靠文件扩展名来发送正确的 `Content-Type` 头部信息。在 web 浏览器加载此类归档时,这些脚本会加载失败,导致动态网站受损。

|

||||

|

||||

随着 web 向使用浏览器作为执行任意代码的虚拟机转化,依赖于纯 HTML 文件解析的归档方法也需要随之适应。这个问题的解决方案是在抓取时记录(以及重现)服务器提供的 HTTP 头部信息,实际上专业的归档者就使用这种方法。

|

||||

|

||||

### 创建和显示 WARC 文件

|

||||

|

||||

在 <ruby>[互联网档案馆][9]<rt>Internet Archive</rt></ruby> 网站,Brewster Kahle 和 Mike Burner 在 1996 年设计了 [ARC][10] (即 “ARChive”)文件格式,以提供一种聚合其归档工作所产生的百万个小文件的方法。该格式最终标准化为 WARC(“Web ARChive”)[规范][11],并在 2009 年作为 ISO 标准发布,2017 年修订。标准化工作由<ruby>[国际互联网保护联盟][12]<rt>International Internet Preservation Consortium</rt></ruby>(IIPC)领导,据维基百科称,这是一个“*为了协调为未来而保护互联网内容的努力而成立的国际图书馆组织和其他组织*”;它的成员包括<ruby>美国国会图书馆<rt>US Library of Congress</rt></ruby>和互联网档案馆等。后者在其基于 Java 的 [Heritrix crawler][13](LCTT 译注:一种爬虫程序)内部使用了 WARC 格式。

|

||||

|

||||

WARC 在单个压缩文件中聚合了多种资源,像 HTTP 头部信息、文件内容,以及其他元数据。方便的是,Wget 实际上提供了 `--warc` 参数来支持 WARC 格式。不幸的是,web 浏览器不能直接显示 WARC 文件,所以为了访问归档文件,一个查看器或某些格式转换是很有必要的。我所发现的最简单的查看器是 [pywb][14],它以 Python 包的形式运行一个简单的 web 服务器提供一个像“<ruby>时光倒流机网站<rt>Wayback Machine</rt></ruby>”的界面,来浏览 WARC 文件的内容。执行以下命令将会在 `http://localhost:8080/` 地址显示 WARC 文件的内容:

|

||||

|

||||

```

|

||||

$ pip install pywb

|

||||

$ wb-manager init example

|

||||

$ wb-manager add example crawl.warc.gz

|

||||

$ wayback

|

||||

```

|

||||

|

||||

顺便说一句,这个工具是由 [Webrecorder][15] 服务提供者建立的,Webrecoder 服务可以使用 web 浏览器保存动态页面的内容。

|

||||

|

||||

很不幸,pywb 无法加载 Wget 生成的 WARC 文件,因为它[遵循][16]的 [1.0 规范不一致][17],[1.1 规范修复了此问题][17]。就算 Wget 或 pywb 修复了这些问题,Wget 生成的 WARC 文件对我的使用来说不够可靠,所以我找了其他的替代品。引起我注意的爬虫程序简称 [crawl][19]。以下是它的调用方式:

|

||||

|

||||

```

|

||||

$ crawl https://example.com/

|

||||

```

|

||||

|

||||

(它的 README 文件说“非常简单”。)该程序支持一些命令行参数选项,但大多数默认值都是最佳的:它会从其他域获取页面所需(除非使用 `-exclude-related` 参数),但肯定不会递归出域。默认情况下,它会与远程站点建立十个并发连接,这个值可以使用 `-c` 参数更改。但是,最重要的是,生成的 WARC 文件可以使用 pywb 完美加载。

|

||||

|

||||

### 未来的工作和替代方案

|

||||

|

||||

这里还有更多有关使用 WARC 文件的[资源][20]。特别要提的是,这里有一个专门用来归档网站的 Wget 的直接替代品,叫做 [Wpull][21]。它实验性地支持了 [PhantomJS][22] 和 [youtube-dl][23] 的集成,即允许分别下载更复杂的 JavaScript 页面以及流媒体。该程序是一个叫做 [ArchiveBot][24] 的复杂归档工具的基础,ArchiveBot 被那些在 [ArchiveTeam][25] 的“*零散离群的归档者、程序员、作家以及演说家*”使用,他们致力于“*在历史永远丢失之前保存它们*”。集成 PhantomJS 好像并没有如团队期望的那样良好工作,所以 ArchiveTeam 也用其它零散的工具来镜像保存更复杂的网站。例如,[snscrape][26] 将抓取一个社交媒体配置文件以生成要发送到 ArchiveBot 的页面列表。该团队使用的另一个工具是 [crocoite][27],它使用无头模式的 Chrome 浏览器来归档 JavaScript 较多的网站。

|

||||

|

||||

如果没有提到称做“网站复制者”的 [HTTrack][28] 项目,那么这篇文章算不上完整。它工作方式和 Wget 相似,HTTrack 可以对远程站点创建一个本地的副本,但是不幸的是它不支持输出 WRAC 文件。对于不熟悉命令行的小白用户来说,它在人机交互方面显得更有价值。

|

||||

|

||||

同样,在我的研究中,我发现了叫做 [Wget2][29] 的 Wget 的完全重制版本,它支持多线程操作,这可能使它比前身更快。和 Wget 相比,它[舍弃了一些功能][30],但是最值得注意的是拒绝模式、WARC 输出以及 FTP 支持,并增加了 RSS、DNS 缓存以及改进的 TLS 支持。

|

||||

|

||||

最后,我个人对这些工具的愿景是将它们与我现有的书签系统集成起来。目前我在 [Wallabag][31] 中保留了一些有趣的链接,这是一种自托管式的“稍后阅读”服务,意在成为 [Pocket][32](现在由 Mozilla 拥有)的免费替代品。但是 Wallabag 在设计上只保留了文章的“可读”副本,而不是一个完整的拷贝。在某些情况下,“可读版本”实际上[不可读][33],并且 Wallabag 有时[无法解析文章][34]。恰恰相反,像 [bookmark-archiver][35] 或 [reminiscence][36] 这样其他的工具会保存页面的屏幕截图以及完整的 HTML 文件,但遗憾的是,它没有 WRAC 文件所以没有办法更可信的重现网页内容。

|

||||

|

||||

我所经历的有关镜像保存和归档的悲剧就是死数据。幸运的是,业余的归档者可以利用工具将有趣的内容保存到网上。对于那些不想麻烦的人来说,“互联网档案馆”看起来仍然在那里,并且 ArchiveTeam 显然[正在为互联网档案馆本身做备份][37]。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://anarc.at/blog/2018-10-04-archiving-web-sites/

|

||||

|

||||

作者:[Anarcat][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[fuowang](https://github.com/fuowang)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://anarc.at

|

||||

[1]: https://anarc.at/blog

|

||||

[2]: https://drupal.org

|

||||

[3]: https://www.gnu.org/software/wget/

|

||||

[4]: https://blog.archive.org/2017/04/17/robots-txt-meant-for-search-engines-dont-work-well-for-web-archives/

|

||||

[5]: https://en.wikipedia.org/wiki/Progressive_enhancement

|

||||

[6]: https://noscript.net/

|

||||

[7]: https://github.com/gorhill/uMatrix

|

||||

[8]: https://pamplemousse.ca/

|

||||

[9]: https://archive.org

|

||||

[10]: http://www.archive.org/web/researcher/ArcFileFormat.php

|

||||

[11]: https://iipc.github.io/warc-specifications/

|

||||

[12]: https://en.wikipedia.org/wiki/International_Internet_Preservation_Consortium

|

||||

[13]: https://github.com/internetarchive/heritrix3/wiki

|

||||

[14]: https://github.com/webrecorder/pywb

|

||||

[15]: https://webrecorder.io/

|

||||

[16]: https://github.com/webrecorder/pywb/issues/294

|

||||

[17]: https://github.com/iipc/warc-specifications/issues/23

|

||||

[18]: https://github.com/iipc/warc-specifications/pull/24

|

||||

[19]: https://git.autistici.org/ale/crawl/

|

||||

[20]: https://archiveteam.org/index.php?title=The_WARC_Ecosystem

|

||||

[21]: https://github.com/chfoo/wpull

|

||||

[22]: http://phantomjs.org/

|

||||

[23]: http://rg3.github.io/youtube-dl/

|

||||

[24]: https://www.archiveteam.org/index.php?title=ArchiveBot

|

||||

[25]: https://archiveteam.org/

|

||||

[26]: https://github.com/JustAnotherArchivist/snscrape

|

||||

[27]: https://github.com/PromyLOPh/crocoite

|

||||

[28]: http://www.httrack.com/

|

||||

[29]: https://gitlab.com/gnuwget/wget2

|

||||

[30]: https://gitlab.com/gnuwget/wget2/wikis/home

|

||||

[31]: https://wallabag.org/

|

||||

[32]: https://getpocket.com/

|

||||

[33]: https://github.com/wallabag/wallabag/issues/2825

|

||||

[34]: https://github.com/wallabag/wallabag/issues/2914

|

||||

[35]: https://pirate.github.io/bookmark-archiver/

|

||||

[36]: https://github.com/kanishka-linux/reminiscence

|

||||

[37]: http://iabak.archiveteam.org

|

||||

@ -1,89 +1,86 @@

|

||||

实验 3:用户环境

|

||||

Caffeinated 6.828:实验 3:用户环境

|

||||

======

|

||||

### 实验 3:用户环境

|

||||

|

||||

#### 简介

|

||||

### 简介

|

||||

|

||||

在本实验中,你将要实现一个基本的内核功能,要求它能够保护运行的用户模式环境(即:进程)。你将去增强这个 JOS 内核,去配置数据结构以便于保持对用户环境的跟踪、创建一个单一用户环境、将程序镜像加载到用户环境中、并将它启动运行。你也要写出一些 JOS 内核的函数,用来处理任何用户环境生成的系统调用,以及处理由用户环境引进的各种异常。

|

||||

|

||||

**注意:** 在本实验中,术语**_“环境”_** 和**_“进程”_** 是可互换的 —— 它们都表示同一个抽象概念,那就是允许你去运行的程序。我在介绍中使用术语**“环境”**而不是使用传统术语**“进程”**的目的是为了强调一点,那就是 JOS 的环境和 UNIX 的进程提供了不同的接口,并且它们的语义也不相同。

|

||||

**注意:** 在本实验中,术语**“环境”** 和**“进程”** 是可互换的 —— 它们都表示同一个抽象概念,那就是允许你去运行的程序。我在介绍中使用术语**“环境”**而不是使用传统术语**“进程”**的目的是为了强调一点,那就是 JOS 的环境和 UNIX 的进程提供了不同的接口,并且它们的语义也不相同。

|

||||

|

||||

##### 预备知识

|

||||

#### 预备知识

|

||||

|

||||

使用 Git 去提交你自实验 2 以后的更改(如果有的话),获取课程仓库的最新版本,以及创建一个命名为 `lab3` 的本地分支,指向到我们的 lab3 分支上 `origin/lab3` :

|

||||

|

||||

```

|

||||

athena% cd ~/6.828/lab

|

||||

athena% add git

|

||||

athena% git commit -am 'changes to lab2 after handin'

|

||||

Created commit 734fab7: changes to lab2 after handin

|

||||

4 files changed, 42 insertions(+), 9 deletions(-)

|

||||

athena% git pull

|

||||

Already up-to-date.

|

||||

athena% git checkout -b lab3 origin/lab3

|

||||

Branch lab3 set up to track remote branch refs/remotes/origin/lab3.

|

||||

Switched to a new branch "lab3"

|

||||

athena% git merge lab2

|

||||

Merge made by recursive.

|

||||

kern/pmap.c | 42 +++++++++++++++++++

|

||||

1 files changed, 42 insertions(+), 0 deletions(-)

|

||||

athena%

|

||||

athena% cd ~/6.828/lab

|

||||

athena% add git

|

||||

athena% git commit -am 'changes to lab2 after handin'

|

||||

Created commit 734fab7: changes to lab2 after handin

|

||||

4 files changed, 42 insertions(+), 9 deletions(-)

|

||||

athena% git pull

|

||||

Already up-to-date.

|

||||

athena% git checkout -b lab3 origin/lab3

|

||||

Branch lab3 set up to track remote branch refs/remotes/origin/lab3.

|

||||

Switched to a new branch "lab3"

|

||||

athena% git merge lab2

|

||||

Merge made by recursive.

|

||||

kern/pmap.c | 42 +++++++++++++++++++

|

||||

1 files changed, 42 insertions(+), 0 deletions(-)

|

||||

athena%

|

||||

```

|

||||

|

||||

实验 3 包含一些你将探索的新源文件:

|

||||

|

||||

```c

|

||||

inc/ env.h Public definitions for user-mode environments

|

||||

trap.h Public definitions for trap handling

|

||||

syscall.h Public definitions for system calls from user environments to the kernel

|

||||

lib.h Public definitions for the user-mode support library

|

||||

kern/ env.h Kernel-private definitions for user-mode environments

|

||||

env.c Kernel code implementing user-mode environments

|

||||

trap.h Kernel-private trap handling definitions

|

||||

trap.c Trap handling code

|

||||

trapentry.S Assembly-language trap handler entry-points

|

||||

syscall.h Kernel-private definitions for system call handling

|

||||

syscall.c System call implementation code

|

||||

lib/ Makefrag Makefile fragment to build user-mode library, obj/lib/libjos.a

|

||||

entry.S Assembly-language entry-point for user environments

|

||||

libmain.c User-mode library setup code called from entry.S

|

||||

syscall.c User-mode system call stub functions

|

||||

console.c User-mode implementations of putchar and getchar, providing console I/O

|

||||

exit.c User-mode implementation of exit

|

||||

panic.c User-mode implementation of panic

|

||||

user/ * Various test programs to check kernel lab 3 code

|

||||

inc/ env.h Public definitions for user-mode environments

|

||||

trap.h Public definitions for trap handling

|

||||

syscall.h Public definitions for system calls from user environments to the kernel

|

||||

lib.h Public definitions for the user-mode support library

|

||||

kern/ env.h Kernel-private definitions for user-mode environments

|

||||

env.c Kernel code implementing user-mode environments

|

||||

trap.h Kernel-private trap handling definitions

|

||||

trap.c Trap handling code

|

||||

trapentry.S Assembly-language trap handler entry-points

|

||||

syscall.h Kernel-private definitions for system call handling

|

||||

syscall.c System call implementation code

|

||||

lib/ Makefrag Makefile fragment to build user-mode library, obj/lib/libjos.a

|

||||

entry.S Assembly-language entry-point for user environments

|

||||

libmain.c User-mode library setup code called from entry.S

|

||||

syscall.c User-mode system call stub functions

|

||||

console.c User-mode implementations of putchar and getchar, providing console I/O

|

||||

exit.c User-mode implementation of exit

|

||||

panic.c User-mode implementation of panic

|

||||

user/ * Various test programs to check kernel lab 3 code

|

||||

```

|

||||

|

||||

另外,一些在实验 2 中的源文件在实验 3 中将被修改。如果想去查看有什么更改,可以运行:

|

||||

|

||||

```

|

||||

$ git diff lab2

|

||||

|

||||

$ git diff lab2

|

||||

```

|

||||

|

||||

你也可以另外去看一下 [实验工具指南][1],它包含了与本实验有关的调试用户代码方面的信息。

|

||||

|

||||

##### 实验要求

|

||||

#### 实验要求

|

||||

|

||||

本实验分为两部分:Part A 和 Part B。Part A 在本实验完成后一周内提交;你将要提交你的更改和完成的动手实验,在提交之前要确保你的代码通过了 Part A 的所有检查(如果你的代码未通过 Part B 的检查也可以提交)。只需要在第二周提交 Part B 的期限之前代码检查通过即可。

|

||||

|

||||

由于在实验 2 中,你需要做实验中描述的所有正则表达式练习,并且至少通过一个挑战(是指整个实验,不是每个部分)。写出详细的问题答案并张贴在实验中,以及一到两个段落的关于你如何解决你选择的挑战问题的详细描述,并将它放在一个名为 `answers-lab3.txt` 的文件中,并将这个文件放在你的 `lab` 目标的根目录下。(如果你做了多个问题挑战,你仅需要提交其中一个即可)不要忘记使用 `git add answers-lab3.txt` 提交这个文件。

|

||||

|

||||

##### 行内汇编语言

|

||||

#### 行内汇编语言

|

||||

|

||||

在本实验中你可能找到使用了 GCC 的行内汇编语言特性,虽然不使用它也可以完成实验。但至少你需要去理解这些行内汇编语言片段,这些汇编语言("`asm`" 语句)片段已经存在于提供给你的源代码中。你可以在课程 [参考资料][2] 的页面上找到 GCC 行内汇编语言有关的信息。

|

||||

在本实验中你可能发现使用了 GCC 的行内汇编语言特性,虽然不使用它也可以完成实验。但至少你需要去理解这些行内汇编语言片段,这些汇编语言(`asm` 语句)片段已经存在于提供给你的源代码中。你可以在课程 [参考资料][2] 的页面上找到 GCC 行内汇编语言有关的信息。

|

||||

|

||||

#### Part A:用户环境和异常处理

|

||||

### Part A:用户环境和异常处理

|

||||

|

||||

新文件 `inc/env.h` 中包含了在 JOS 中关于用户环境的基本定义。现在就去阅读它。内核使用数据结构 `Env` 去保持对每个用户环境的跟踪。在本实验的开始,你将只创建一个环境,但你需要去设计 JOS 内核支持多环境;实验 4 将带来这个高级特性,允许用户环境去 `fork` 其它环境。

|

||||

|

||||

正如你在 `kern/env.c` 中所看到的,内核维护了与环境相关的三个全局变量:

|

||||

|

||||

```

|

||||

struct Env *envs = NULL; // All environments

|

||||

struct Env *curenv = NULL; // The current env

|

||||

static struct Env *env_free_list; // Free environment list

|

||||

|

||||

struct Env *envs = NULL; // All environments

|

||||

struct Env *curenv = NULL; // The current env

|

||||

static struct Env *env_free_list; // Free environment list

|

||||

```

|

||||

|

||||

一旦 JOS 启动并运行,`envs` 指针指向到一个数组,即数据结构 `Env`,它保存了系统中全部的环境。在我们的设计中,JOS 内核将同时支持最大值为 `NENV` 个的活动的环境,虽然在一般情况下,任何给定时刻运行的环境很少。(`NENV` 是在 `inc/env.h` 中用 `#define` 定义的一个常量)一旦它被分配,对于每个 `NENV` 可能的环境,`envs` 数组将包含一个数据结构 `Env` 的单个实例。

|

||||

@ -92,38 +89,38 @@ JOS 内核在 `env_free_list` 上用数据结构 `Env` 保存了所有不活动

|

||||

|

||||

内核使用符号 `curenv` 来保持对任意给定时刻的 _当前正在运行的环境_ 进行跟踪。在系统引导期间,在第一个环境运行之前,`curenv` 被初始化为 `NULL`。

|

||||

|

||||

##### 环境状态

|

||||

#### 环境状态

|

||||

|

||||

数据结构 `Env` 被定义在文件 `inc/env.h` 中,内容如下:(在后面的实验中将添加更多的字段):

|

||||

|

||||

```c

|

||||

struct Env {

|

||||

struct Trapframe env_tf; // Saved registers

|

||||

struct Env *env_link; // Next free Env

|

||||

envid_t env_id; // Unique environment identifier

|

||||

envid_t env_parent_id; // env_id of this env's parent

|

||||

enum EnvType env_type; // Indicates special system environments

|

||||

unsigned env_status; // Status of the environment

|

||||

uint32_t env_runs; // Number of times environment has run

|

||||

struct Env {

|

||||

struct Trapframe env_tf; // Saved registers

|

||||

struct Env *env_link; // Next free Env

|

||||

envid_t env_id; // Unique environment identifier

|

||||

envid_t env_parent_id; // env_id of this env's parent

|

||||

enum EnvType env_type; // Indicates special system environments

|

||||

unsigned env_status; // Status of the environment

|

||||

uint32_t env_runs; // Number of times environment has run

|

||||

|

||||

// Address space

|

||||

pde_t *env_pgdir; // Kernel virtual address of page dir

|

||||

};

|

||||

// Address space

|

||||

pde_t *env_pgdir; // Kernel virtual address of page dir

|

||||

};

|

||||

```

|

||||

|

||||

以下是数据结构 `Env` 中的字段简介:

|

||||

|

||||

* **env_tf**:

|

||||

* `env_tf`:

|

||||

这个结构定义在 `inc/trap.h` 中,它用于在那个环境不运行时保持它保存在寄存器中的值,即:当内核或一个不同的环境在运行时。当从用户模式切换到内核模式时,内核将保存这些东西,以便于那个环境能够在稍后重新运行时回到中断运行的地方。

|

||||

* **env_link**:

|

||||

* `env_link`:

|

||||

这是一个链接,它链接到在 `env_free_list` 上的下一个 `Env` 上。`env_free_list` 指向到列表上第一个空闲的环境。

|

||||

* **env_id**:

|

||||

* `env_id`:

|

||||

内核在数据结构 `Env` 中保存了一个唯一标识当前环境的值(即:使用数组 `envs` 中的特定槽位)。在一个用户环境终止之后,内核可能给另外的环境重新分配相同的数据结构 `Env` —— 但是新的环境将有一个与已终止的旧的环境不同的 `env_id`,即便是新的环境在数组 `envs` 中复用了同一个槽位。

|

||||

* **env_parent_id**:

|

||||

* `env_parent_id`:

|

||||

内核使用它来保存创建这个环境的父级环境的 `env_id`。通过这种方式,环境就可以形成一个“家族树”,这对于做出“哪个环境可以对谁做什么”这样的安全决策非常有用。

|

||||

* **env_type**:

|

||||

* `env_type`:

|

||||

它用于去区分特定的环境。对于大多数环境,它将是 `ENV_TYPE_USER` 的。在稍后的实验中,针对特定的系统服务环境,我们将引入更多的几种类型。

|

||||

* **env_status**:

|

||||

* `env_status`:

|

||||

这个变量持有以下几个值之一:

|

||||

* `ENV_FREE`:

|

||||

表示那个 `Env` 结构是非活动的,并且因此它还在 `env_free_list` 上。

|

||||

@ -135,59 +132,64 @@ JOS 内核在 `env_free_list` 上用数据结构 `Env` 保存了所有不活动

|

||||

表示那个 `Env` 结构所代表的是一个当前活动的环境,但不是当前准备去运行的:例如,因为它正在因为一个来自其它环境的进程间通讯(IPC)而处于等待状态。

|

||||

* `ENV_DYING`:

|

||||

表示那个 `Env` 结构所表示的是一个僵尸环境。一个僵尸环境将在下一次被内核捕获后被释放。我们在实验 4 之前不会去使用这个标志。

|

||||

* **env_pgdir**:

|

||||

* `env_pgdir`:

|

||||

这个变量持有这个环境的内核虚拟地址的页目录。

|

||||

|

||||

|

||||

|

||||

就像一个 Unix 进程一样,一个 JOS 环境耦合了“线程”和“地址空间”的概念。线程主要由保存的寄存器来定义(`env_tf` 字段),而地址空间由页目录和 `env_pgdir` 所指向的页表所定义。为运行一个环境,内核必须使用保存的寄存器值和相关的地址空间去设置 CPU。

|

||||

|

||||

我们的 `struct Env` 与 xv6 中的 `struct proc` 类似。它们都在一个 `Trapframe` 结构中持有环境(即进程)的用户模式寄存器状态。在 JOS 中,单个的环境并不能像 xv6 中的进程那样拥有它们自己的内核栈。在这里,内核中任意时间只能有一个 JOS 环境处于活动中,因此,JOS 仅需要一个单个的内核栈。

|

||||

|

||||

##### 为环境分配数组

|

||||

#### 为环境分配数组

|

||||

|

||||

在实验 2 的 `mem_init()` 中,你为数组 `pages[]` 分配了内存,它是内核用于对页面分配与否的状态进行跟踪的一个表。你现在将需要去修改 `mem_init()`,以便于后面使用它分配一个与结构 `Env` 类似的数组,这个数组被称为 `envs`。

|

||||

|

||||

```markdown

|

||||

练习 1、修改在 `kern/pmap.c` 中的 `mem_init()`,以用于去分配和映射 `envs` 数组。这个数组完全由 `Env` 结构分配的实例 `NENV` 组成,就像你分配的 `pages` 数组一样。与 `pages` 数组一样,由内存支持的数组 `envs` 也将在 `UENVS`(它的定义在 `inc/memlayout.h` 文件中)中映射用户只读的内存,以便于用户进程能够从这个数组中读取。

|

||||

```

|

||||

> **练习 1**、修改在 `kern/pmap.c` 中的 `mem_init()`,以用于去分配和映射 `envs` 数组。这个数组完全由 `Env` 结构分配的实例 `NENV` 组成,就像你分配的 `pages` 数组一样。与 `pages` 数组一样,由内存支持的数组 `envs` 也将在 `UENVS`(它的定义在 `inc/memlayout.h` 文件中)中映射用户只读的内存,以便于用户进程能够从这个数组中读取。

|

||||

|

||||

你应该去运行你的代码,并确保 `check_kern_pgdir()` 是没有问题的。

|

||||

|

||||

##### 创建和运行环境

|

||||

#### 创建和运行环境

|

||||

|

||||

现在,你将在 `kern/env.c` 中写一些必需的代码去运行一个用户环境。因为我们并没有做一个文件系统,因此,我们将设置内核去加载一个嵌入到内核中的静态的二进制镜像。JOS 内核以一个 ELF 可运行镜像的方式将这个二进制镜像嵌入到内核中。

|

||||

现在,你将在 `kern/env.c` 中写一些必需的代码去运行一个用户环境。因为我们并没有做一个文件系统,因此,我们将设置内核去加载一个嵌入到内核中的静态的二进制镜像。JOS 内核以一个 ELF 可运行镜像的方式将这个二进制镜像嵌入到内核中。

|

||||

|

||||

在实验 3 中,`GNUmakefile` 将在 `obj/user/` 目录中生成一些二进制镜像。如果你看到 `kern/Makefrag`,你将注意到一些奇怪的的东西,它们“链接”这些二进制直接进入到内核中运行,就像 `.o` 文件一样。在链接器命令行上的 `-b binary` 选项,将因此把它们链接为“原生的”不解析的二进制文件,而不是由编译器产生的普通的 `.o` 文件。(就链接器而言,这些文件压根就不是 ELF 镜像文件 —— 它们可以是任何东西,比如,一个文本文件或图片!)如果你在内核构建之后查看 `obj/kern/kernel.sym` ,你将会注意到链接器很奇怪的生成了一些有趣的、命名很费解的符号,比如像 `_binary_obj_user_hello_start`、`_binary_obj_user_hello_end`、以及 `_binary_obj_user_hello_size`。链接器通过改编二进制文件的命令来生成这些符号;这种符号为普通内核代码使用一种引入嵌入式二进制文件的方法。

|

||||

|

||||

在 `kern/init.c` 的 `i386_init()` 中,你将写一些代码在环境中运行这些二进制镜像中的一种。但是,设置用户环境的关键函数还没有实现;将需要你去完成它们。

|

||||

|

||||

```markdown

|

||||

练习 2、在文件 `env.c` 中,写完以下函数的代码:

|

||||

> **练习 2**、在文件 `env.c` 中,写完以下函数的代码:

|

||||

|

||||

* `env_init()`

|

||||

初始化 `envs` 数组中所有的 `Env` 结构,然后把它们添加到 `env_free_list` 中。也称为 `env_init_percpu`,它通过配置硬件,在硬件上为 level 0(内核)权限和 level 3(用户)权限使用单独的段。

|

||||

* `env_setup_vm()`

|

||||

为一个新环境分配一个页目录,并初始化新环境的地址空间的内核部分。

|

||||

* `region_alloc()`

|

||||

为一个新环境分配和映射物理内存

|

||||

* `load_icode()`

|

||||

你将需要去解析一个 ELF 二进制镜像,就像引导加载器那样,然后加载它的内容到一个新环境的用户地址空间中。

|

||||

* `env_create()`

|

||||

使用 `env_alloc` 去分配一个环境,并调用 `load_icode` 去加载一个 ELF 二进制

|

||||

* `env_run()`

|

||||

在用户模式中开始运行一个给定的环境

|

||||

> * `env_init()`

|

||||

|

||||

> 初始化 `envs` 数组中所有的 `Env` 结构,然后把它们添加到 `env_free_list` 中。也称为 `env_init_percpu`,它通过配置硬件,在硬件上为 level 0(内核)权限和 level 3(用户)权限使用单独的段。

|

||||

|

||||

> * `env_setup_vm()`

|

||||

|

||||

在你写这些函数时,你可能会发现新的 cprintf 动词 `%e` 非常有用 -- 它可以输出一个错误代码的相关描述。比如:

|

||||

> 为一个新环境分配一个页目录,并初始化新环境的地址空间的内核部分。

|

||||

|

||||

r = -E_NO_MEM;

|

||||

panic("env_alloc: %e", r);

|

||||

> * `region_alloc()`

|

||||

|

||||

中 panic 将输出消息 "env_alloc: out of memory"。

|

||||

> 为一个新环境分配和映射物理内存

|

||||

|

||||

> * `load_icode()`

|

||||

|

||||

> 你将需要去解析一个 ELF 二进制镜像,就像引导加载器那样,然后加载它的内容到一个新环境的用户地址空间中。

|

||||

|

||||

> * `env_create()`

|

||||

|

||||

> 使用 `env_alloc` 去分配一个环境,并调用 `load_icode` 去加载一个 ELF 二进制

|

||||

|

||||

> * `env_run()`

|

||||

|

||||

> 在用户模式中开始运行一个给定的环境

|

||||

|

||||

> 在你写这些函数时,你可能会发现新的 cprintf 动词 `%e` 非常有用 -- 它可以输出一个错误代码的相关描述。比如:

|

||||

|

||||

> ```

|

||||

r = -E_NO_MEM;

|

||||

panic("env_alloc: %e", r);

|

||||

```

|

||||

|

||||

> 中 panic 将输出消息 "env_alloc: out of memory"。

|

||||

|

||||

下面是用户代码相关的调用图。确保你理解了每一步的用途。

|

||||

|

||||

* `start` (`kern/entry.S`)

|

||||

@ -200,107 +202,94 @@ JOS 内核在 `env_free_list` 上用数据结构 `Env` 保存了所有不活动

|

||||

* `env_run`

|

||||

* `env_pop_tf`

|

||||

|

||||

|

||||

|

||||

在完成以上函数后,你应该去编译内核并在 QEMU 下运行它。如果一切正常,你的系统将进入到用户空间并运行二进制的 `hello` ,直到使用 `int` 指令生成一个系统调用为止。在那个时刻将存在一个问题,因为 JOS 尚未设置硬件去允许从用户空间到内核空间的各种转换。当 CPU 发现没有系统调用中断的服务程序时,它将生成一个一般保护异常,找到那个异常并去处理它,还将生成一个双重故障异常,同样也找到它并处理它,并且最后会出现所谓的“三重故障异常”。通常情况下,你将随后看到 CPU 复位以及系统重引导。虽然对于传统的应用程序(在 [这篇博客文章][3] 中解释了原因)这是重大的问题,但是对于内核开发来说,这是一个痛苦的过程,因此,在打了 6.828 补丁的 QEMU 上,你将可以看到转储的寄存器内容和一个“三重故障”的信息。

|

||||

|

||||

我们马上就会去处理这些问题,但是现在,我们可以使用调试器去检查我们是否进入了用户模式。使用 `make qemu-gdb` 并在 `env_pop_tf` 处设置一个 GDB 断点,它是你进入用户模式之前到达的最后一个函数。使用 `si` 单步进入这个函数;处理器将在 `iret` 指令之后进入用户模式。然后你将会看到在用户环境运行的第一个指令,它将是在 `lib/entry.S` 中的标签 `start` 的第一个指令 `cmpl`。现在,在 `hello` 中的 `sys_cputs()` 的 `int $0x30` 处使用 `b *0x...`(关于用户空间的地址,请查看 `obj/user/hello.asm` )设置断点。这个指令 `int` 是系统调用去显示一个字符到控制台。如果到 `int` 还没有运行,那么可能在你的地址空间设置或程序加载代码时发生了错误;返回去找到问题并解决后重新运行。

|

||||

|

||||

##### 处理中断和异常

|

||||

#### 处理中断和异常

|

||||

|

||||

到目前为止,在用户空间中的第一个系统调用指令 `int $0x30` 已正式寿终正寝了:一旦处理器进入用户模式,将无法返回。因此,现在,你需要去实现基本的异常和系统调用服务程序,因为那样才有可能让内核从用户模式代码中恢复对处理器的控制。你所做的第一件事情就是彻底地掌握 x86 的中断和异常机制的使用。

|

||||

|

||||

```

|

||||

练习 3、如果你对中断和异常机制不熟悉的话,阅读 80386 程序员手册的第 9 章(或 IA-32 开发者手册的第 5 章)。

|

||||

```

|

||||

> **练习 3**、如果你对中断和异常机制不熟悉的话,阅读 80386 程序员手册的第 9 章(或 IA-32 开发者手册的第 5 章)。

|

||||

|

||||

在这个实验中,对于中断、异常、以其它类似的东西,我们将遵循 Intel 的术语习惯。由于如<ruby>异常<rt>exception</rt></ruby>、<ruby>陷阱<rt>trap</rt></ruby>、<ruby>中断<rt>interrupt</rt></ruby>、<ruby>故障<rt>fault</rt></ruby>和<ruby>中止<rt>abort</rt></ruby>这些术语在不同的架构和操作系统上并没有一个统一的标准,我们经常在特定的架构下(如 x86)并不去考虑它们之间的细微差别。当你在本实验以外的地方看到这些术语时,它们的含义可能有细微的差别。

|

||||

|

||||

##### 受保护的控制转移基础

|

||||

#### 受保护的控制转移基础

|

||||

|

||||

异常和中断都是“受保护的控制转移”,它将导致处理器从用户模式切换到内核模式(CPL=0)而不会让用户模式的代码干扰到内核的其它函数或其它的环境。在 Intel 的术语中,一个中断就是一个“受保护的控制转移”,它是由于处理器以外的外部异步事件所引发的,比如外部设备 I/O 活动通知。而异常正好与之相反,它是由当前正在运行的代码所引发的同步的、受保护的控制转移,比如由于发生了一个除零错误或对无效内存的访问。

|

||||

异常和中断都是“受保护的控制转移”,它将导致处理器从用户模式切换到内核模式(`CPL=0`)而不会让用户模式的代码干扰到内核的其它函数或其它的环境。在 Intel 的术语中,一个中断就是一个“受保护的控制转移”,它是由于处理器以外的外部异步事件所引发的,比如外部设备 I/O 活动通知。而异常正好与之相反,它是由当前正在运行的代码所引发的同步的、受保护的控制转移,比如由于发生了一个除零错误或对无效内存的访问。

|

||||

|

||||

为了确保这些受保护的控制转移是真正地受到保护,处理器的中断/异常机制设计是:当中断/异常发生时,当前运行的代码不能随意选择进入内核的位置和方式。而是,处理器在确保内核能够严格控制的条件下才能进入内核。在 x86 上,有两种机制协同来提供这种保护:

|

||||

|

||||

1. **中断描述符表** 处理器确保中断和异常仅能够导致内核进入几个特定的、由内核本身定义好的、明确的入口点,而不是去运行中断或异常发生时的代码。

|

||||

1. **中断描述符表** 处理器确保中断和异常仅能够导致内核进入几个特定的、由内核本身定义好的、明确的入口点,而不是去运行中断或异常发生时的代码。

|

||||

|

||||

x86 允许最多有 256 个不同的中断或异常入口点去进入内核,每个入口点都使用一个不同的中断向量。一个向量是一个介于 0 和 255 之间的数字。一个中断向量是由中断源确定的:不同的设备、错误条件、以及应用程序去请求内核使用不同的向量生成中断。CPU 使用向量作为进入处理器的中断描述符表(IDT)的索引,它是内核设置的内核私有内存,GDT 也是。从这个表中的适当的条目中,处理器将加载:

|

||||

x86 允许最多有 256 个不同的中断或异常入口点去进入内核,每个入口点都使用一个不同的中断向量。一个向量是一个介于 0 和 255 之间的数字。一个中断向量是由中断源确定的:不同的设备、错误条件、以及应用程序去请求内核使用不同的向量生成中断。CPU 使用向量作为进入处理器的中断描述符表(IDT)的索引,它是内核设置的内核私有内存,GDT 也是。从这个表中的适当的条目中,处理器将加载:

|

||||

|

||||

* 将值加载到指令指针寄存器(EIP),指向内核代码设计好的,用于处理这种异常的服务程序。

|

||||

* 将值加载到代码段寄存器(CS),它包含运行权限为 0—1 级别的、要运行的异常服务程序。(在 JOS 中,所有的异常处理程序都运行在内核模式中,运行级别为 level 0。)

|

||||

2. **任务状态描述符表** 处理器在中断或异常发生时,需要一个地方去保存旧的处理器状态,比如,处理器在调用异常服务程序之前的 `EIP` 和 `CS` 的原始值,这样那个异常服务程序就能够稍后通过还原旧的状态来回到中断发生时的代码位置。但是对于已保存的处理器的旧状态必须被保护起来,不能被无权限的用户模式代码访问;否则代码中的 bug 或恶意用户代码将危及内核。

|

||||

* 将值加载到代码段寄存器(CS),它包含运行权限为 0—1 级别的、要运行的异常服务程序。(在 JOS 中,所有的异常处理程序都运行在内核模式中,运行级别为 0。)

|

||||

|

||||

基于这个原因,当一个 x86 处理器产生一个中断或陷阱时,将导致权限级别的变更,从用户模式转换到内核模式,它也将导致在内核的内存中发生栈切换。有一个被称为 TSS 的任务状态描述符表规定段描述符和这个栈所处的地址。处理器在这个新栈上推送 `SS`、`ESP`、`EFLAGS`、`CS`、`EIP`、以及一个可选的错误代码。然后它从中断描述符上加载 `CS` 和 `EIP` 的值,然后设置 `ESP` 和 `SS` 去指向新的栈。

|

||||

2. **任务状态描述符表** 处理器在中断或异常发生时,需要一个地方去保存旧的处理器状态,比如,处理器在调用异常服务程序之前的 `EIP` 和 `CS` 的原始值,这样那个异常服务程序就能够稍后通过还原旧的状态来回到中断发生时的代码位置。但是对于已保存的处理器的旧状态必须被保护起来,不能被无权限的用户模式代码访问;否则代码中的 bug 或恶意用户代码将危及内核。

|

||||

|

||||

虽然 TSS 很大并且默默地为各种用途服务,但是 JOS 仅用它去定义当从用户模式到内核模式的转移发生时,处理器即将切换过去的内核栈。因为在 JOS 中的“内核模式”仅运行在 x86 的 level 0 权限上,当进入内核模式时,处理器使用 TSS 上的 `ESP0` 和 `SS0` 字段去定义内核栈。JOS 并不去使用 TSS 的任何其它字段。

|

||||

基于这个原因,当一个 x86 处理器产生一个中断或陷阱时,将导致权限级别的变更,从用户模式转换到内核模式,它也将导致在内核的内存中发生栈切换。有一个被称为 TSS 的任务状态描述符表规定段描述符和这个栈所处的地址。处理器在这个新栈上推送 `SS`、`ESP`、`EFLAGS`、`CS`、`EIP`、以及一个可选的错误代码。然后它从中断描述符上加载 `CS` 和 `EIP` 的值,然后设置 `ESP` 和 `SS` 去指向新的栈。

|

||||

|

||||

虽然 TSS 很大并且默默地为各种用途服务,但是 JOS 仅用它去定义当从用户模式到内核模式的转移发生时,处理器即将切换过去的内核栈。因为在 JOS 中的“内核模式”仅运行在 x86 的运行级别 0 权限上,当进入内核模式时,处理器使用 TSS 上的 `ESP0` 和 `SS0` 字段去定义内核栈。JOS 并不去使用 TSS 的任何其它字段。

|

||||

|

||||

|

||||

|

||||

##### 异常和中断的类型

|

||||

#### 异常和中断的类型

|

||||

|

||||

所有的 x86 处理器上的同步异常都能够产生一个内部使用的、介于 0 到 31 之间的中断向量,因此它映射到 IDT 就是条目 0-31。例如,一个页故障总是通过向量 14 引发一个异常。大于 31 的中断向量仅用于软件中断,它由 `int` 指令生成,或异步硬件中断,当需要时,它们由外部设备产生。

|

||||

|

||||

在这一节中,我们将扩展 JOS 去处理向量为 0-31 之间的、内部产生的 x86 异常。在下一节中,我们将完成 JOS 的 48(0x30)号软件中断向量,JOS 将(随意选择的)使用它作为系统调用中断向量。在实验 4 中,我们将扩展 JOS 去处理外部生成的硬件中断,比如时钟中断。

|

||||

|

||||

##### 一个示例

|

||||

#### 一个示例

|

||||

|

||||

我们把这些片断综合到一起,通过一个示例来巩固一下。我们假设处理器在用户环境下运行代码,遇到一个除零问题。

|

||||

|

||||

1. 处理器去切换到由 TSS 中的 `SS0` 和 `ESP0` 定义的栈,在 JOS 中,它们各自保存着值 `GD_KD` 和 `KSTACKTOP`。

|

||||

|

||||

2. 处理器在内核栈上推入异常参数,起始地址为 `KSTACKTOP`:

|

||||

1. 处理器去切换到由 TSS 中的 `SS0` 和 `ESP0` 定义的栈,在 JOS 中,它们各自保存着值 `GD_KD` 和 `KSTACKTOP`。

|

||||

2. 处理器在内核栈上推入异常参数,起始地址为 `KSTACKTOP`:

|

||||

|

||||

```

|

||||

+--------------------+ KSTACKTOP

|

||||

| 0x00000 | old SS | " - 4

|

||||

| old ESP | " - 8

|

||||

| old EFLAGS | " - 12

|

||||

| 0x00000 | old CS | " - 16

|

||||

| old EIP | " - 20 <---- ESP

|

||||

+--------------------+

|

||||

```

|

||||

+--------------------+ KSTACKTOP

|

||||

| 0x00000 | old SS | " - 4

|

||||

| old ESP | " - 8

|

||||

| old EFLAGS | " - 12

|

||||

| 0x00000 | old CS | " - 16

|

||||

| old EIP | " - 20 <---- ESP

|

||||

+--------------------+

|

||||

|

||||

```

|

||||

|

||||

3. 由于我们要处理一个除零错误,它将在 x86 上产生一个中断向量 0,处理器读取 IDT 的条目 0,然后设置 `CS:EIP` 去指向由条目描述的处理函数。

|

||||

|

||||

4. 处理服务程序函数将接管控制权并处理异常,例如中止用户环境。

|

||||

|

||||

|

||||

|

||||

3. 由于我们要处理一个除零错误,它将在 x86 上产生一个中断向量 0,处理器读取 IDT 的条目 0,然后设置 `CS:EIP` 去指向由条目描述的处理函数。

|

||||

4. 处理服务程序函数将接管控制权并处理异常,例如中止用户环境。

|

||||

|

||||

对于某些类型的 x86 异常,除了以上的五个“标准的”寄存器外,处理器还推入另一个包含错误代码的寄存器值到栈中。页故障异常,向量号为 14,就是一个重要的示例。查看 80386 手册去确定哪些异常推入一个错误代码,以及错误代码在那个案例中的意义。当处理器推入一个错误代码后,当从用户模式中进入内核模式,异常处理服务程序开始时的栈看起来应该如下所示:

|

||||

|

||||

```

|

||||

+--------------------+ KSTACKTOP

|

||||

| 0x00000 | old SS | " - 4

|

||||

| old ESP | " - 8

|

||||

| old EFLAGS | " - 12

|

||||

| 0x00000 | old CS | " - 16

|

||||

| old EIP | " - 20

|

||||

| error code | " - 24 <---- ESP

|

||||

+--------------------+

|

||||

+--------------------+ KSTACKTOP

|

||||

| 0x00000 | old SS | " - 4

|

||||

| old ESP | " - 8

|

||||

| old EFLAGS | " - 12

|

||||

| 0x00000 | old CS | " - 16

|

||||

| old EIP | " - 20

|

||||

| error code | " - 24 <---- ESP

|

||||

+--------------------+

|

||||

```

|

||||

|

||||

##### 嵌套的异常和中断

|

||||

#### 嵌套的异常和中断

|

||||

|

||||

处理器能够处理来自用户和内核模式中的异常和中断。当收到来自用户模式的异常和中断时才会进入内核模式中,而且,在推送它的旧寄存器状态到栈中和通过 IDT 调用相关的异常服务程序之前,x86 处理器会自动切换栈。如果当异常或中断发生时,处理器已经处于内核模式中(`CS` 寄存器低位两个比特为 0),那么 CPU 只是推入一些值到相同的内核栈中。在这种方式中,内核可以优雅地处理嵌套的异常,嵌套的异常一般由内核本身的代码所引发。在实现保护时,这种功能是非常重要的工具,我们将在稍后的系统调用中看到它。

|

||||

|

||||

如果处理器已经处于内核模式中,并且发生了一个嵌套的异常,由于它并不需要切换栈,它也就不需要去保存旧的 `SS` 或 `ESP` 寄存器。对于不推入错误代码的异常类型,在进入到异常服务程序时,它的内核栈看起来应该如下图:

|

||||

|

||||

```

|

||||

+--------------------+ <---- old ESP

|

||||

| old EFLAGS | " - 4

|

||||

| 0x00000 | old CS | " - 8

|

||||

| old EIP | " - 12

|

||||

+--------------------+

|

||||

+--------------------+ <---- old ESP

|

||||

| old EFLAGS | " - 4

|

||||

| 0x00000 | old CS | " - 8

|

||||

| old EIP | " - 12

|

||||

+--------------------+

|

||||

```

|

||||

|

||||

对于需要推入一个错误代码的异常类型,处理器将在旧的 `EIP` 之后,立即推入一个错误代码,就和前面一样。

|

||||

|

||||

关于处理器的异常嵌套的功能,这里有一个重要的警告。如果处理器正处于内核模式时发生了一个异常,并且不论是什么原因,比如栈空间泄漏,都不会去推送它的旧的状态,那么这时处理器将不能做任何的恢复,它只是简单地重置。毫无疑问,内核应该被设计为禁止发生这种情况。

|

||||

|

||||

##### 设置 IDT

|

||||

#### 设置 IDT

|

||||

|

||||

到目前为止,你应该有了在 JOS 中为了设置 IDT 和处理异常所需的基本信息。现在,我们去设置 IDT 以处理中断向量 0-31(处理器异常)。我们将在本实验的稍后部分处理系统调用,然后在后面的实验中增加中断 32-47(设备 IRQ)。

|

||||

|

||||

@ -311,102 +300,94 @@ x86 允许最多有 256 个不同的中断或异常入口点去进入内核,

|

||||

你将要实现的完整的控制流如下图所描述:

|

||||

|

||||

```c

|

||||

IDT trapentry.S trap.c

|

||||

IDT trapentry.S trap.c

|

||||

|

||||

+----------------+

|

||||

| &handler1 |---------> handler1: trap (struct Trapframe *tf)

|

||||

| | // do stuff {

|

||||

| | call trap // handle the exception/interrupt

|

||||

| | // ... }

|

||||

| &handler1 |----> handler1: trap (struct Trapframe *tf)

|

||||

| | // do stuff {

|

||||

| | call trap // handle the exception/interrupt

|

||||

| | // ... }

|

||||

+----------------+

|

||||

| &handler2 |--------> handler2:

|

||||

| | // do stuff

|

||||

| | call trap

|

||||

| | // ...

|

||||

| &handler2 |----> handler2:

|

||||

| | // do stuff

|

||||

| | call trap

|

||||

| | // ...

|

||||

+----------------+

|

||||

.

|

||||

.

|

||||

.

|

||||

+----------------+

|

||||

| &handlerX |--------> handlerX:

|

||||

| | // do stuff

|

||||

| | call trap

|

||||

| | // ...

|

||||

| &handlerX |----> handlerX:

|

||||

| | // do stuff

|

||||

| | call trap

|

||||

| | // ...

|

||||

+----------------+

|

||||

```

|

||||

|

||||

每个异常或中断都应该在 `trapentry.S` 中有它自己的处理程序,并且 `trap_init()` 应该使用这些处理程序的地址去初始化 IDT。每个处理程序都应该在栈上构建一个 `struct Trapframe`(查看 `inc/trap.h`),然后使用一个指针调用 `trap()`(在 `trap.c` 中)到 `Trapframe`。`trap()` 接着处理异常/中断或派发给一个特定的处理函数。

|

||||

|

||||

```markdown

|

||||

练习 4、编辑 `trapentry.S` 和 `trap.c`,然后实现上面所描述的功能。在 `trapentry.S` 中的宏 `TRAPHANDLER` 和 `TRAPHANDLER_NOEC` 将会帮你,还有在 `inc/trap.h` 中的 T_* defines。你需要在 `trapentry.S` 中为每个定义在 `inc/trap.h` 中的陷阱添加一个入口点(使用这些宏),并且你将有 t、o 提供的 `_alltraps`,这是由宏 `TRAPHANDLER`指向到它。你也需要去修改 `trap_init()` 来初始化 `idt`,以使它指向到每个在 `trapentry.S` 中定义的入口点;宏 `SETGATE` 将有助你实现它。

|

||||

> 练习 4、编辑 `trapentry.S` 和 `trap.c`,然后实现上面所描述的功能。在 `trapentry.S` 中的宏 `TRAPHANDLER` 和 `TRAPHANDLER_NOEC` 将会帮你,还有在 `inc/trap.h` 中的 T_* defines。你需要在 `trapentry.S` 中为每个定义在 `inc/trap.h` 中的陷阱添加一个入口点(使用这些宏),并且你将有 t、o 提供的 `_alltraps`,这是由宏 `TRAPHANDLER`指向到它。你也需要去修改 `trap_init()` 来初始化 `idt`,以使它指向到每个在 `trapentry.S` 中定义的入口点;宏 `SETGATE` 将有助你实现它。

|

||||

|

||||

你的 `_alltraps` 应该:

|

||||

> 你的 `_alltraps` 应该:

|

||||

|

||||

1. 推送值以使栈看上去像一个结构 Trapframe

|

||||

2. 加载 `GD_KD` 到 `%ds` 和 `%es`

|

||||

3. `pushl %esp` 去传递一个指针到 Trapframe 以作为一个 trap() 的参数

|

||||

4. `call trap` (`trap` 能够返回吗?)

|

||||

> 1. 推送值以使栈看上去像一个结构 Trapframe

|

||||

> 2. 加载 `GD_KD` 到 `%ds` 和 `%es`

|

||||

> 3. `pushl %esp` 去传递一个指针到 Trapframe 以作为一个 trap() 的参数

|

||||

> 4. `call trap` (`trap` 能够返回吗?)

|

||||

|

||||

> 考虑使用 `pushal` 指令;它非常适合 `struct Trapframe` 的布局。

|

||||

|

||||

> 使用一些在 `user` 目录中的测试程序来测试你的陷阱处理代码,这些测试程序在生成任何系统调用之前能引发异常,比如 `user/divzero`。在这时,你应该能够成功完成 `divzero`、`softint`、以有 `badsegment` 测试。

|

||||

|

||||

考虑使用 `pushal` 指令;它非常适合 `struct Trapframe` 的布局。

|

||||

.

|

||||

|

||||

使用一些在 `user` 目录中的测试程序来测试你的陷阱处理代码,这些测试程序在生成任何系统调用之前能引发异常,比如 `user/divzero`。在这时,你应该能够成功完成 `divzero`、`softint`、以有 `badsegment` 测试。

|

||||

> **小挑战!**目前,在 `trapentry.S` 中列出的 `TRAPHANDLER` 和他们安装在 `trap.c` 中可能有许多代码非常相似。清除它们。修改 `trapentry.S` 中的宏去自动为 `trap.c` 生成一个表。注意,你可以直接使用 `.text` 和 `.data` 在汇编器中切换放置其中的代码和数据。

|

||||

|

||||

.

|

||||

|

||||

> **问题**

|

||||

|

||||

> 在你的 `answers-lab3.txt` 中回答下列问题:

|

||||

|

||||

> 1. 为每个异常/中断设置一个独立的服务程序函数的目的是什么?(即:如果所有的异常/中断都传递给同一个服务程序,在我们的当前实现中能否提供这样的特性?)

|

||||

> 2. 你需要做什么事情才能让 `user/softint` 程序正常运行?评级脚本预计将会产生一个一般保护故障(trap 13),但是 `softint` 的代码显示为 `int $14`。为什么它产生的中断向量是 13?如果内核允许 `softint` 的 `int $14` 指令去调用内核页故障的服务程序(它的中断向量是 14)会发生什么事情?

|

||||

```

|

||||

|

||||

```markdown

|

||||

小挑战!目前,在 `trapentry.S` 中列出的 `TRAPHANDLER` 和他们安装在 `trap.c` 中可能有许多代码非常相似。清除它们。修改 `trapentry.S` 中的宏去自动为 `trap.c` 生成一个表。注意,你可以直接使用 `.text` 和 `.data` 在汇编器中切换放置其中的代码和数据。

|

||||

```

|

||||

|

||||

```markdown

|

||||

问题

|

||||

|

||||

在你的 `answers-lab3.txt` 中回答下列问题:

|

||||

|

||||

1. 为每个异常/中断设置一个独立的服务程序函数的目的是什么?(即:如果所有的异常/中断都传递给同一个服务程序,在我们的当前实现中能否提供这样的特性?)

|

||||

2. 你需要做什么事情才能让 `user/softint` 程序正常运行?评级脚本预计将会产生一个一般保护故障(trap 13),但是 `softint` 的代码显示为 `int $14`。为什么它产生的中断向量是 13?如果内核允许 `softint` 的 `int $14` 指令去调用内核页故障的服务程序(它的中断向量是 14)会发生什么事情?

|

||||

```

|

||||

|

||||

|

||||

本实验的 Part A 部分结束了。不要忘了去添加 `answers-lab3.txt` 文件,提交你的变更,然后在 Part A 作业的提交截止日期之前运行 `make handin`。

|

||||

|

||||

#### Part B:页故障、断点异常、和系统调用

|

||||

### Part B:页故障、断点异常、和系统调用

|

||||

|

||||

现在,你的内核已经有了最基本的异常处理能力,你将要去继续改进它,来提供依赖异常服务程序的操作系统原语。

|

||||

|

||||

##### 处理页故障

|

||||

#### 处理页故障

|

||||

|

||||

页故障异常,中断向量为 14(`T_PGFLT`),它是一个非常重要的东西,我们将通过本实验和接下来的实验来大量练习它。当处理器产生一个页故障时,处理器将在它的一个特定的控制寄存器(`CR2`)中保存导致这个故障的线性地址(即:虚拟地址)。在 `trap.c` 中我们提供了一个专门处理它的函数的一个雏形,它就是 `page_fault_handler()`,我们将用它来处理页故障异常。

|

||||

|

||||

```markdown

|

||||

练习 5、修改 `trap_dispatch()` 将页故障异常派发到 `page_fault_handler()` 上。你现在应该能够成功测试 `faultread`、`faultreadkernel`、`faultwrite`、和 `faultwritekernel` 了。如果它们中的任何一个不能正常工作,找出问题并修复它。记住,你可以使用 make run- _x_ 或 make run- _x_ -nox 去重引导 JOS 进入到一个特定的用户程序。比如,你可以运行 make run-hello-nox 去运行 the _hello_ user 程序。

|

||||

```

|

||||

> **练习 5**、修改 `trap_dispatch()` 将页故障异常派发到 `page_fault_handler()` 上。你现在应该能够成功测试 `faultread`、`faultreadkernel`、`faultwrite` 和 `faultwritekernel` 了。如果它们中的任何一个不能正常工作,找出问题并修复它。记住,你可以使用 `make run-x` 或 `make run-x-nox` 去重引导 JOS 进入到一个特定的用户程序。比如,你可以运行 `make run-hello-nox` 去运行 `hello` 用户程序。

|

||||

|

||||

下面,你将进一步细化内核的页故障服务程序,因为你要实现系统调用了。

|

||||

|

||||

##### 断点异常

|

||||

#### 断点异常

|

||||

|

||||

断点异常,中断向量为 3(`T_BRKPT`),它一般用在调试上,它在一个程序代码中插入断点,从而使用特定的 1 字节的 `int3` 软件中断指令来临时替换相应的程序指令。在 JOS 中,我们将稍微“滥用”一下这个异常,通过将它打造成一个伪系统调用原语,使得任何用户环境都可以用它来调用 JOS 内核监视器。如果我们将 JOS 内核监视认为是原始调试器,那么这种用法是合适的。例如,在 `lib/panic.c` 中实现的用户模式下的 `panic()` ,它在显示它的 `panic` 消息后运行一个 `int3` 中断。

|

||||

|

||||

```markdown

|

||||

练习 6、修改 `trap_dispatch()`,让它在调用内核监视器时产生一个断点异常。你现在应该可以在 `breakpoint` 上成功完成测试。

|

||||

```

|

||||

> **练习 6**、修改 `trap_dispatch()`,让它在调用内核监视器时产生一个断点异常。你现在应该可以在 `breakpoint` 上成功完成测试。

|

||||

|

||||

```markdown

|

||||

小挑战!修改 JOS 内核监视器,以便于你能够从当前位置(即:在 `int3` 之后,断点异常调用了内核监视器) '继续' 异常,并且因此你就可以一次运行一个单步指令。为了实现单步运行,你需要去理解 `EFLAGS` 寄存器中的某些比特的意义。

|

||||

.

|

||||

|

||||

可选:如果你富有冒险精神,找一些 x86 反汇编的代码 —— 即通过从 QEMU 中、或从 GNU 二进制工具中分离、或你自己编写 —— 然后扩展 JOS 内核监视器,以使它能够反汇编,显示你的每步的指令。结合实验 1 中的符号表,这将是你写的一个真正的内核调试器。

|

||||

```

|

||||

> **小挑战!**修改 JOS 内核监视器,以便于你能够从当前位置(即:在 `int3` 之后,断点异常调用了内核监视器) '继续' 异常,并且因此你就可以一次运行一个单步指令。为了实现单步运行,你需要去理解 `EFLAGS` 寄存器中的某些比特的意义。

|

||||

|

||||

```markdown

|

||||

问题

|

||||

> 可选:如果你富有冒险精神,找一些 x86 反汇编的代码 —— 即通过从 QEMU 中、或从 GNU 二进制工具中分离、或你自己编写 —— 然后扩展 JOS 内核监视器,以使它能够反汇编,显示你的每步的指令。结合实验 1 中的符号表,这将是你写的一个真正的内核调试器。

|

||||

|

||||

3. 在断点测试案例中,根据你在 IDT 中如何初始化断点条目的不同情况(即:你的从 `trap_init` 到 `SETGATE` 的调用),既有可能产生一个断点异常,也有可能产生一个一般保护故障。为什么?为了能够像上面的案例那样工作,你需要如何去设置它,什么样的不正确设置才会触发一个一般保护故障?

|

||||

4. 你认为这些机制的意义是什么?尤其是要考虑 `user/softint` 测试程序的工作原理。

|

||||

```

|

||||

.

|

||||

|

||||

> **问题**

|

||||

|

||||

##### 系统调用

|

||||

> 3. 在断点测试案例中,根据你在 IDT 中如何初始化断点条目的不同情况(即:你的从 `trap_init` 到 `SETGATE` 的调用),既有可能产生一个断点异常,也有可能产生一个一般保护故障。为什么?为了能够像上面的案例那样工作,你需要如何去设置它,什么样的不正确设置才会触发一个一般保护故障?

|

||||

|

||||

> 4. 你认为这些机制的意义是什么?尤其是要考虑 `user/softint` 测试程序的工作原理。

|

||||

|

||||

#### 系统调用

|

||||

|

||||

用户进程请求内核为它做事情就是通过系统调用来实现的。当用户进程请求一个系统调用时,处理器首先进入内核模式,处理器和内核配合去保存用户进程的状态,内核为了完成系统调用会运行有关的代码,然后重新回到用户进程。用户进程如何获得内核的关注以及它如何指定它需要的系统调用的具体细节,这在不同的系统上是不同的。

|

||||

|

||||

@ -414,45 +395,43 @@ x86 允许最多有 256 个不同的中断或异常入口点去进入内核,

|

||||

|

||||

应用程序将在寄存器中传递系统调用号和系统调用参数。通过这种方式,内核就不需要去遍历用户环境的栈或指令流。系统调用号将放在 `%eax` 中,而参数(最多五个)将分别放在 `%edx`、`%ecx`、`%ebx`、`%edi`、和 `%esi` 中。内核将在 `%eax` 中传递返回值。在 `lib/syscall.c` 中的 `syscall()` 中已为你编写了使用一个系统调用的汇编代码。你可以通过阅读它来确保你已经理解了它们都做了什么。

|

||||

|

||||

```markdown

|

||||

练习 7、在内核中为中断向量 `T_SYSCALL` 添加一个服务程序。你将需要去编辑 `kern/trapentry.S` 和 `kern/trap.c` 的 `trap_init()`。还需要去修改 `trap_dispatch()`,以便于通过使用适当的参数来调用 `syscall()` (定义在 `kern/syscall.c`)以处理系统调用中断,然后将系统调用的返回值安排在 `%eax` 中传递给用户进程。最后,你需要去实现 `kern/syscall.c` 中的 `syscall()`。如果系统调用号是无效值,确保 `syscall()` 返回值一定是 `-E_INVAL`。为确保你理解了系统调用的接口,你应该去阅读和掌握 `lib/syscall.c` 文件(尤其是行内汇编的动作),对于在 `inc/syscall.h` 中列出的每个系统调用都需要通过调用相关的内核函数来处理A。

|

||||

> **练习 7**、在内核中为中断向量 `T_SYSCALL` 添加一个服务程序。你将需要去编辑 `kern/trapentry.S` 和 `kern/trap.c` 的 `trap_init()`。还需要去修改 `trap_dispatch()`,以便于通过使用适当的参数来调用 `syscall()` (定义在 `kern/syscall.c`)以处理系统调用中断,然后将系统调用的返回值安排在 `%eax` 中传递给用户进程。最后,你需要去实现 `kern/syscall.c` 中的 `syscall()`。如果系统调用号是无效值,确保 `syscall()` 返回值一定是 `-E_INVAL`。为确保你理解了系统调用的接口,你应该去阅读和掌握 `lib/syscall.c` 文件(尤其是行内汇编的动作),对于在 `inc/syscall.h` 中列出的每个系统调用都需要通过调用相关的内核函数来处理A。

|

||||

|

||||

在你的内核中运行 `user/hello` 程序(make run-hello)。它应该在控制台上输出 "`hello, world`",然后在用户模式中产生一个页故障。如果没有产生页故障,可能意味着你的系统调用服务程序不太正确。现在,你应该有能力成功通过 `testbss` 测试。

|

||||

> 在你的内核中运行 `user/hello` 程序(make run-hello)。它应该在控制台上输出 `hello, world`,然后在用户模式中产生一个页故障。如果没有产生页故障,可能意味着你的系统调用服务程序不太正确。现在,你应该有能力成功通过 `testbss` 测试。

|

||||

|

||||

.

|

||||

|

||||

> 小挑战!使用 `sysenter` 和 `sysexit` 指令而不是使用 `int 0x30` 和 `iret` 来实现系统调用。

|

||||

|

||||

> `sysenter/sysexit` 指令是由 Intel 设计的,它的运行速度要比 `int/iret` 指令快。它使用寄存器而不是栈来做到这一点,并且通过假定了分段寄存器是如何使用的。关于这些指令的详细内容可以在 Intel 参考手册 2B 卷中找到。

|

||||

|

||||

> 在 JOS 中添加对这些指令支持的最容易的方法是,在 `kern/trapentry.S` 中添加一个 `sysenter_handler`,在它里面保存足够多的关于用户环境返回、设置内核环境、推送参数到 `syscall()`、以及直接调用 `syscall()` 的信息。一旦 `syscall()` 返回,它将设置好运行 `sysexit` 指令所需的一切东西。你也将需要在 `kern/init.c` 中添加一些代码,以设置特殊模块寄存器(MSRs)。在 AMD 架构程序员手册第 2 卷的 6.1.2 节中和 Intel 参考手册的 2B 卷的 SYSENTER 上都有关于 MSRs 的很详细的描述。对于如何去写 MSRs,在[这里][4]你可以找到一个添加到 `inc/x86.h` 中的 `wrmsr` 的实现。

|

||||

|

||||

> 最后,`lib/syscall.c` 必须要修改,以便于支持用 `sysenter` 来生成一个系统调用。下面是 `sysenter` 指令的一种可能的寄存器布局:

|

||||

|

||||

> ```

|

||||

eax - syscall number

|

||||

edx, ecx, ebx, edi - arg1, arg2, arg3, arg4

|

||||

esi - return pc

|

||||

ebp - return esp

|

||||

esp - trashed by sysenter

|

||||

```

|

||||

|

||||

```markdown

|

||||

小挑战!使用 `sysenter` 和 `sysexit` 指令而不是使用 `int 0x30` 和 `iret` 来实现系统调用。

|

||||

> GCC 的内联汇编器将自动保存你告诉它的直接加载进寄存器的值。不要忘了同时去保存(`push`)和恢复(`pop`)你使用的其它寄存器,或告诉内联汇编器你正在使用它们。内联汇编器不支持保存 `%ebp`,因此你需要自己去增加一些代码来保存和恢复它们,返回地址可以使用一个像 `leal after_sysenter_label, %%esi` 的指令置入到 `%esi` 中。

|

||||

|

||||

`sysenter/sysexit` 指令是由 Intel 设计的,它的运行速度要比 `int/iret` 指令快。它使用寄存器而不是栈来做到这一点,并且通过假定了分段寄存器是如何使用的。关于这些指令的详细内容可以在 Intel 参考手册 2B 卷中找到。

|

||||

> 注意,它仅支持 4 个参数,因此你需要保留支持 5 个参数的系统调用的旧方法。而且,因为这个快速路径并不更新当前环境的 trap 帧,因此,在我们添加到后续实验中的一些系统调用上,它并不适合。

|

||||

|

||||

在 JOS 中添加对这些指令支持的最容易的方法是,在 `kern/trapentry.S` 中添加一个 `sysenter_handler`,在它里面保存足够多的关于用户环境返回、设置内核环境、推送参数到 `syscall()`、以及直接调用 `syscall()` 的信息。一旦 `syscall()` 返回,它将设置好运行 `sysexit` 指令所需的一切东西。你也将需要在 `kern/init.c` 中添加一些代码,以设置特殊模块寄存器(MSRs)。在 AMD 架构程序员手册第 2 卷的 6.1.2 节中和 Intel 参考手册的 2B 卷的 SYSENTER 上都有关于 MSRs 的很详细的描述。对于如何去写 MSRs,在[这里][4]你可以找到一个添加到 `inc/x86.h` 中的 `wrmsr` 的实现。

|

||||

> 在接下来的实验中我们启用了异步中断,你需要再次去评估一下你的代码。尤其是,当返回到用户进程时,你需要去启用中断,而 `sysexit` 指令并不会为你去做这一动作。

|

||||

|

||||

最后,`lib/syscall.c` 必须要修改,以便于支持用 `sysenter` 来生成一个系统调用。下面是 `sysenter` 指令的一种可能的寄存器布局:

|

||||

|

||||

eax - syscall number

|

||||

edx, ecx, ebx, edi - arg1, arg2, arg3, arg4

|

||||

esi - return pc

|

||||

ebp - return esp

|

||||

esp - trashed by sysenter

|

||||

|

||||

GCC 的内联汇编器将自动保存你告诉它的直接加载进寄存器的值。不要忘了同时去保存(push)和恢复(pop)你使用的其它寄存器,或告诉内联汇编器你正在使用它们。内联汇编器不支持保存 `%ebp`,因此你需要自己去增加一些代码来保存和恢复它们,返回地址可以使用一个像 `leal after_sysenter_label, %%esi` 的指令置入到 `%esi` 中。

|

||||

|

||||

注意,它仅支持 4 个参数,因此你需要保留支持 5 个参数的系统调用的旧方法。而且,因为这个快速路径并不更新当前环境的 trap 帧,因此,在我们添加到后续实验中的一些系统调用上,它并不适合。

|

||||

|

||||

在接下来的实验中我们启用了异步中断,你需要再次去评估一下你的代码。尤其是,当返回到用户进程时,你需要去启用中断,而 `sysexit` 指令并不会为你去做这一动作。

|

||||

```

|

||||

|

||||

##### 启动用户模式

|

||||

#### 启动用户模式

|

||||

|

||||

一个用户程序是从 `lib/entry.S` 的顶部开始运行的。在一些配置之后,代码调用 `lib/libmain.c` 中的 `libmain()`。你应该去修改 `libmain()` 以初始化全局指针 `thisenv`,使它指向到这个环境在数组 `envs[]` 中的 `struct Env`。(注意那个 `lib/entry.S` 中已经定义 `envs` 去指向到在 Part A 中映射的你的设置。)提示:查看 `inc/env.h` 和使用 `sys_getenvid`。

|

||||

|

||||

`libmain()` 接下来调用 `umain`,在 hello 程序的案例中,`umain` 是在 `user/hello.c` 中。注意,它在输出 "`hello, world`” 之后,它尝试去访问 `thisenv->env_id`。这就是为什么前面会发生故障的原因了。现在,你已经正确地初始化了 `thisenv`,它应该不会再发生故障了。如果仍然会发生故障,或许是因为你没有映射 `UENVS` 区域为用户可读取(回到前面 Part A 中 查看 `pmap.c`);这是我们第一次真实地使用 `UENVS` 区域)。

|

||||

|

||||

```markdown

|

||||

练习 8、添加要求的代码到用户库,然后引导你的内核。你应该能够看到 `user/hello` 程序会输出 "`hello, world`" 然后输出 "`i am environment 00001000`"。`user/hello` 接下来会通过调用 `sys_env_destroy()`(查看`lib/libmain.c` 和 `lib/exit.c`)尝试去"退出"。由于内核目前仅支持一个用户环境,它应该会报告它毁坏了唯一的环境,然后进入到内核监视器中。现在你应该能够成功通过 `hello` 的测试。

|

||||

```

|

||||

> **练习 8**、添加要求的代码到用户库,然后引导你的内核。你应该能够看到 `user/hello` 程序会输出 `hello, world` 然后输出 `i am environment 00001000`。`user/hello` 接下来会通过调用 `sys_env_destroy()`(查看`lib/libmain.c` 和 `lib/exit.c`)尝试去“退出”。由于内核目前仅支持一个用户环境,它应该会报告它毁坏了唯一的环境,然后进入到内核监视器中。现在你应该能够成功通过 `hello` 的测试。

|

||||

|

||||

##### 页故障和内存保护

|

||||

#### 页故障和内存保护

|

||||

|

||||

内存保护是一个操作系统中最重要的特性,通过它来保证一个程序中的 bug 不会破坏其它程序或操作系统本身。

|

||||

|

||||

@ -462,10 +441,8 @@ GCC 的内联汇编器将自动保存你告诉它的直接加载进寄存器的

|

||||

|

||||

对于内存保护,系统调用中有一个非常有趣的问题。许多系统调用接口让用户程序传递指针到内核中。这些指针指向用户要读取或写入的缓冲区。然后内核在执行系统调用时废弃这些指针。这样就有两个问题:

|

||||

|

||||

1. 内核中的页故障可能比用户程序中的页故障多的多。如果内核在维护它自己的数据结构时发生页故障,那就是一个内核 bug,而故障服务程序将使整个内核(和整个系统)崩溃。但是当内核废弃了由用户程序传递给它的指针后,它就需要一种方式去记住那些废弃指针所导致的页故障其实是代表用户程序的。

|

||||

2. 一般情况下内核拥有比用户程序更多的权限。用户程序可以传递一个指针到系统调用,而指针指向的区域有可能是内核可以读取或写入而用户程序不可访问的区域。内核必须要非常小心,不能被废弃的这种指针欺骗,因为这可能导致泄露私有信息或破坏内核的完整性。

|

||||

|

||||

|

||||

1. 内核中的页故障可能比用户程序中的页故障多的多。如果内核在维护它自己的数据结构时发生页故障,那就是一个内核 bug,而故障服务程序将使整个内核(和整个系统)崩溃。但是当内核废弃了由用户程序传递给它的指针后,它就需要一种方式去记住那些废弃指针所导致的页故障其实是代表用户程序的。

|

||||

2. 一般情况下内核拥有比用户程序更多的权限。用户程序可以传递一个指针到系统调用,而指针指向的区域有可能是内核可以读取或写入而用户程序不可访问的区域。内核必须要非常小心,不能被废弃的这种指针欺骗,因为这可能导致泄露私有信息或破坏内核的完整性。

|

||||

|

||||

由于以上的原因,内核在处理由用户程序提供的指针时必须格外小心。

|

||||

|

||||

@ -473,32 +450,33 @@ GCC 的内联汇编器将自动保存你告诉它的直接加载进寄存器的

|

||||

|

||||

这样,内核在废弃一个用户提供的指针时就绝不会发生页故障。如果内核出现这种页故障,它应该崩溃并终止。

|

||||

|

||||

```markdown

|

||||

练习 9、如果在内核模式中发生一个页故障,修改 `kern/trap.c` 去崩溃。

|

||||

> **练习 9**、如果在内核模式中发生一个页故障,修改 `kern/trap.c` 去崩溃。

|

||||

|

||||

提示:判断一个页故障是发生在用户模式还是内核模式,去检查 `tf_cs` 的低位比特即可。

|

||||

> 提示:判断一个页故障是发生在用户模式还是内核模式,去检查 `tf_cs` 的低位比特即可。

|

||||

|

||||

阅读 `kern/pmap.c` 中的 `user_mem_assert` 并在那个文件中实现 `user_mem_check`。

|

||||

> 阅读 `kern/pmap.c` 中的 `user_mem_assert` 并在那个文件中实现 `user_mem_check`。

|

||||

|

||||

修改 `kern/syscall.c` 去常态化检查传递给系统调用的参数。

|

||||

> 修改 `kern/syscall.c` 去常态化检查传递给系统调用的参数。

|

||||

|

||||

引导你的内核,运行 `user/buggyhello`。环境将被毁坏,而内核将不会崩溃。你将会看到:

|

||||

> 引导你的内核,运行 `user/buggyhello`。环境将被毁坏,而内核将不会崩溃。你将会看到:

|

||||

|

||||

[00001000] user_mem_check assertion failure for va 00000001

|

||||

[00001000] free env 00001000

|

||||

Destroyed the only environment - nothing more to do!

|

||||

最后,修改在 `kern/kdebug.c` 中的 `debuginfo_eip`,在 `usd`、`stabs`、和 `stabstr` 上调用 `user_mem_check`。如果你现在运行 `user/breakpoint`,你应该能够从内核监视器中运行回溯,然后在内核因页故障崩溃前看到回溯进入到 `lib/libmain.c`。是什么导致了这个页故障?你不需要去修复它,但是你应该明白它是如何发生的。

|

||||

> ```

|

||||

[00001000] user_mem_check assertion failure for va 00000001

|

||||

[00001000] free env 00001000

|

||||

Destroyed the only environment - nothing more to do!

|

||||

```

|

||||

|

||||

> 最后,修改在 `kern/kdebug.c` 中的 `debuginfo_eip`,在 `usd`、`stabs`、和 `stabstr` 上调用 `user_mem_check`。如果你现在运行 `user/breakpoint`,你应该能够从内核监视器中运行回溯,然后在内核因页故障崩溃前看到回溯进入到 `lib/libmain.c`。是什么导致了这个页故障?你不需要去修复它,但是你应该明白它是如何发生的。

|

||||

|

||||

注意,刚才实现的这些机制也同样适用于恶意用户程序(比如 `user/evilhello`)。

|

||||

|

||||

```

|

||||

练习 10、引导你的内核,运行 `user/evilhello`。环境应该被毁坏,并且内核不会崩溃。你应该能看到:

|

||||

> **练习 10**、引导你的内核,运行 `user/evilhello`。环境应该被毁坏,并且内核不会崩溃。你应该能看到:

|

||||

|

||||

[00000000] new env 00001000

|

||||

...

|

||||

[00001000] user_mem_check assertion failure for va f010000c

|

||||

[00001000] free env 00001000

|

||||

> ```

|

||||

[00000000] new env 00001000

|

||||

...

|

||||

[00001000] user_mem_check assertion failure for va f010000c

|

||||

[00001000] free env 00001000

|

||||

```

|

||||

|

||||

**本实验到此结束。**确保你通过了所有的等级测试,并且不要忘记去写下问题的答案,在 `answers-lab3.txt` 中详细描述你的挑战练习的解决方案。提交你的变更并在 `lab` 目录下输入 `make handin` 去提交你的工作。

|

||||

@ -512,13 +490,13 @@ via: https://pdos.csail.mit.edu/6.828/2018/labs/lab3/

|

||||

作者:[csail.mit][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[qhwdw](https://github.com/qhwdw)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://pdos.csail.mit.edu

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://pdos.csail.mit.edu/6.828/2018/labs/labguide.html

|

||||

[1]: https://linux.cn/article-10273-1.html

|

||||

[2]: https://pdos.csail.mit.edu/6.828/2018/labs/reference.html

|

||||

[3]: http://blogs.msdn.com/larryosterman/archive/2005/02/08/369243.aspx

|

||||

[4]: http://ftp.kh.edu.tw/Linux/SuSE/people/garloff/linux/k6mod.c

|

||||

@ -1,6 +1,8 @@

|

||||

提高 Linux 网络浏览器安全性的 5 个建议

|

||||

提高 Linux 的网络浏览器安全性的 5 个建议

|

||||

======

|

||||

|

||||

> 这些简单的步骤可以大大提高您的在线安全性。

|

||||

|

||||

|

||||

|

||||

如果你使用 Linux 桌面但从来不使用网络浏览器,那你算得上是百里挑一。网络浏览器是绝大多数人最常用的工具之一,无论是工作、娱乐、看新闻、社交、理财,对网络浏览器的依赖都比本地应用要多得多。因此,我们需要知道如何使用网络浏览器才是安全的。一直以来都有不法的犯罪分子以及他们建立的网页试图窃取私密的信息。正是由于我们需要通过网络浏览器收发大量的敏感信息,安全性就更是至关重要。

|

||||

|

||||

@ -8,17 +10,14 @@

|

||||

|

||||

### 正确选择浏览器

|

||||

|

||||

尽管我我提出的建议具有普适性,但是正确选择网络浏览器也是很必要的。网络浏览器的更新频率是它安全性的一个重要体现。网络浏览器会不断暴露出新的问题,因此版本越新的网络浏览器修复的问题就越多,也越安全。在主流的网络浏览器当中,2017 年版本更新的发布量排行榜如下:

|

||||

尽管我提出的建议具有普适性,但是正确选择网络浏览器也是很必要的。网络浏览器的更新频率是它安全性的一个重要体现。网络浏览器会不断暴露出新的问题,因此版本越新的网络浏览器修复的问题就越多,也越安全。在主流的网络浏览器当中,2017 年版本更新的发布量排行榜如下:

|

||||

|

||||

1. Chrome 发布了 8 个更新(Chromium 全年跟进发布了大量安全补丁)。

|

||||

2. Firefox 发布了 7 个更新。

|

||||

3. Edge 发布了 2 个更新。

|

||||

4. Safari 发布了 1 个更新(苹果也会每年发布 5 到 6 个安全补丁)。

|

||||

1. Chrome 发布了 8 个更新(Chromium 全年跟进发布了大量安全补丁)。

|

||||

2. Firefox 发布了 7 个更新。

|

||||

3. Edge 发布了 2 个更新。

|

||||

4. Safari 发布了 1 个更新(苹果也会每年发布 5 到 6 个安全补丁)。

|

||||

|

||||

|

||||

|

||||

|

||||

网络浏览器会经常发布更新,同时用户方面也要及时升级到最新的版本,否则毫无意义了。尽管大部分流行的 Linux 发行版都会自动更新网络浏览器到最新版本,但还是有一些 Linux 发行版不会自动进行更新,所以最好还是手动保持浏览器更新到最新版本。这就意味着你所使用的 Linux 发行版对应的标准软件库中存放的很可能就不是最新版本的网络浏览器,在这种情况下,你可以随时从网络浏览器开发者提供的最新版本下载页中进行下载安装。

|

||||

网络浏览器会经常发布更新,同时用户也要及时升级到最新的版本,否则毫无意义了。尽管大部分流行的 Linux 发行版都会自动更新网络浏览器到最新版本,但还是有一些 Linux 发行版不会自动进行更新,所以最好还是手动保持浏览器更新到最新版本。这就意味着你所使用的 Linux 发行版对应的标准软件库中存放的很可能就不是最新版本的网络浏览器,在这种情况下,你可以随时从网络浏览器开发者提供的最新版本下载页中进行下载安装。

|

||||

|

||||

如果你是一个勇于探索的人,你还可以尝试使用测试版或者<ruby>每日构建<rt>daily build</rt></ruby>版的网络浏览器,不过,这些版本将伴随着不能稳定运行的可能性。在基于 Ubuntu 的发行版中,你可以使用到每日构建版的 Firefox,只需要执行以下命令添加所需的存储库:

|

||||

|

||||

@ -43,60 +42,47 @@ sudo apt-get install firefox

|

||||

|

||||

### 保护好密码

|

||||

|

||||

有的人可能会认为,每次都需要重复输入密码,这样的操作太麻烦了。在 Firefox 中,如果你既想保护好自己的密码,又不想经常输入密码,就可以通过 Master Password 这一款内置的工具来实现你的需求。起用了这个工具之后,需要输入正确的主密码,才能后续使用保存在浏览器中的其它密码。你可以按照以下步骤进行操作:

|

||||

|

||||

1. 打开 Firefox。

|

||||

|

||||

2. 点击菜单按钮。

|

||||

|

||||

3. 点击“偏好设置”。

|

||||

|

||||

4. 在偏好设置页面,点击“隐私与安全”。

|

||||

|

||||

5. 在页面中勾选“使用主密码”选项(图 1)。

|

||||

|

||||

6. 确认以后,输入新的主密码(图 2)。

|

||||

|

||||

7. 重启 Firefox。

|

||||

|

||||

|

||||

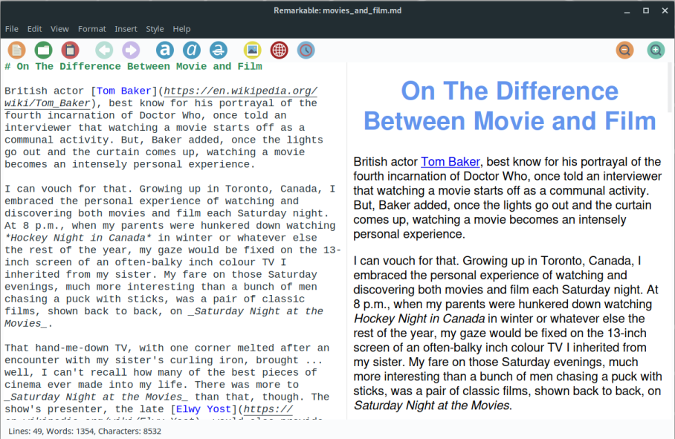

有的人可能会认为,每次都需要重复输入密码,这样的操作太麻烦了。在 Firefox 中,如果你既想保护好自己的密码,又不想经常输入密码,就可以通过<ruby>主密码<rt>Master Password</rt></ruby>这一款内置的工具来实现你的需求。起用了这个工具之后,需要输入正确的主密码,才能后续使用保存在浏览器中的其它密码。你可以按照以下步骤进行操作:

|

||||

|

||||

1. 打开 Firefox。

|

||||

2. 点击菜单按钮。

|

||||

3. 点击“偏好设置”。

|

||||

4. 在偏好设置页面,点击“隐私与安全”。

|

||||

5. 在页面中勾选“使用主密码”选项(图 1)。

|

||||

6. 确认以后,输入新的主密码(图 2)。

|

||||

7. 重启 Firefox。

|

||||

|

||||

![Master Password][3]

|

||||

|

||||

图 1: Firefox 偏好设置页中的主密码设置。

|

||||

*图 1: Firefox 偏好设置页中的主密码设置。*

|

||||

|

||||

![Setting password][6]

|

||||

|

||||

图 2:在 Firefox 中设置主密码。

|

||||

*图 2:在 Firefox 中设置主密码。*

|

||||

|

||||

### 了解你使用的扩展和插件

|

||||

|

||||

大多数网络浏览器在保护隐私方面都有很多扩展,你可以根据自己的需求选择不同的扩展。而我自己则选择了一下这些扩展:

|

||||

|

||||

* [Firefox Multi-Account Containers][7] \- 允许将某些站点配置为在容器化选项卡中打开。

|

||||

* [Facebook Container][8] \- 始终在容器化选项卡中打开 Facebook(这个扩展需要 Firefox Multi-Account Containers)。

|

||||

* [Avast Online Security][9] \- 识别并拦截已知的钓鱼网站,并显示网站的安全评级(由超过 4 亿用户的 Avast 社区支持)。

|

||||

* [Mining Blocker][10] \- 拦截所有使用 CPU 的挖矿工具。

|

||||

* [PassFF][11] \- 通过集成 `pass` (一个 UNIX 密码管理器)以安全存储密码。

|

||||

* [Privacy Badger][12] \- 自动拦截网站跟踪。

|

||||

* [uBlock Origin][13] \- 拦截已知的网站跟踪。

|

||||

|

||||

* [Firefox Multi-Account Containers][7] —— 允许将某些站点配置为在容器化选项卡中打开。

|

||||

* [Facebook Container][8] —— 始终在容器化选项卡中打开 Facebook(这个扩展需要 Firefox Multi-Account Containers)。

|

||||

* [Avast Online Security][9] —— 识别并拦截已知的钓鱼网站,并显示网站的安全评级(由超过 4 亿用户的 Avast 社区支持)。

|

||||

* [Mining Blocker][10] —— 拦截所有使用 CPU 的挖矿工具。

|

||||

* [PassFF][11] —— 通过集成 `pass` (一个 UNIX 密码管理器)以安全存储密码。

|

||||

* [Privacy Badger][12] —— 自动拦截网站跟踪。

|

||||

* [uBlock Origin][13] —— 拦截已知的网站跟踪。

|

||||

|

||||

除此以外,以下这些浏览器还有很多安全方面的扩展:

|

||||

|

||||

+ [Firefox][2]

|

||||

|

||||

+ [Chrome、Chromium,、Vivaldi][5]

|

||||

|

||||

+ [Opera][14]

|

||||

|

||||

|

||||

但并非每一个网络浏览器都会向用户提供扩展或插件。例如 Midoria 就只有少量可以开启或关闭的内置插件(图 3),同时这些轻量级浏览器的第三方插件也相当缺乏。

|

||||

|

||||

![Midori Browser][15]

|

||||

|

||||

图 3:Midori 浏览器的插件窗口。

|

||||

*图 3:Midori 浏览器的插件窗口。*

|

||||

|

||||

### 虚拟化

|

||||

|

||||

@ -115,7 +101,7 @@ via: https://www.linux.com/learn/intro-to-linux/2018/11/5-easy-tips-linux-web-br

|

||||

作者:[Jack Wallen][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[HankChow](https://github.com/HankChow)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

108

sources/talk/20181129 9 top tech-recruiting mistakes to avoid.md

Normal file

108

sources/talk/20181129 9 top tech-recruiting mistakes to avoid.md

Normal file

@ -0,0 +1,108 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: subject: (9 top tech-recruiting mistakes to avoid)

|

||||

[#]: via: (https://opensource.com/article/18/11/top-tech-recruiting-mistakes-avoid)

|

||||

[#]: author: (Rikki Endsley https://opensource.com/users/rikki-endsley)

|

||||

[#]: url: ( )

|

||||

|

||||

9 top tech-recruiting mistakes to avoid

|

||||

======

|

||||

We round up common mistakes tech recruiters make and a few best practices to adopt instead.

|

||||

|

||||

|

||||

Some of my best friends and colleagues are tech recruiters, and a bunch of my favorite humans are on the job hunt. With these fine folks in mind, I decided to help them connect by finding out what kinds of recruiting efforts stand out to potential hires. I reached out to my colleagues and contacts and asked them what they like (and hate) when it comes to recruiting, then I rounded up a list of top tech-recruiting mistakes to avoid and best practices to use instead.

|

||||

|

||||

### 9 common tech recruiting mistakes to avoid

|

||||

|

||||

Don’t even think about reaching out to a potential candidate without doing due diligence on their background, experience, and expertise. Not knowing what skills a recruit has and what kind of work they’ve done in the past instantly turns off job seekers. Recruiters who make it clear that they’ve done their homework signal that they aren’t planning to waste a candidate’s time and stand a better chance of piquing their interest from the beginning. Common recruiting mistakes also include:

|

||||

|

||||

**1\. Sending form letters — or even worse, broken form letters.**

|

||||

|

||||

Unless someone is job-seeking and not getting many offers, chances are your form letter won’t stand out or get much interest. And if your form letter is broken and has [NAME] where a candidate’s name should appear, forget about getting any qualified candidate responses.

|

||||

|

||||

**2\. Blowing the salutation.**

|

||||

|

||||

Be sure to spell the candidate’s name correctly, and typing “Mr.” or “Miss” in the greeting could be a big mistake. For example, I can’t tell you how many “Mr. Endsley” messages I get every month, and “Miss Endsley” won’t sit well with me, either.

|

||||

|

||||

**3\. Not understanding what the recruit does.**

|

||||

|

||||

Potential candidates can tell whether you’ve done your homework, dug through their LinkedIn profiles, and have a grasp of their backgrounds, experiences, and areas of interest. Reaching out to a UX designer about an engineering role won’t get you good results.

|

||||

|

||||

**4\. Sending unsolicited contact requests.**

|

||||

|

||||

Don’t send LinkedIn connection requests to people you don’t know. Just don’t do it.

|

||||

|

||||

**5\. Being too general about the job position.**

|

||||

|

||||

Kill the coy. Share as many details you can about the role, including any must-have and nice-to-have skills. Draw potential recruits a picture of what the work looks like to make qualified candidates take notice.

|

||||

|

||||

**6\. Being too vague or mysterious about the team and company.**

|

||||

|

||||

Tech professionals with in-demand skills and experience are being more selective about what kind of team dynamics they walk into and [organizational ethics][1]. Letting potential hires know right away what the team looks like (e.g., small vs. large, remote vs. on-site) and which company you represent can save everyone a lot of time.

|

||||

|

||||

**7\. Having unrealistic or overly specific requirements.**

|

||||

|

||||

Does the right candidate really need to be an expert in every technology and programming language and hold multiple degrees? Be clear about what skills a candidate needs vs. what skills might come in handy or can be acquired on the job.

|

||||

|

||||

**8\. Getting too cutesy or culture-y in a description.**

|

||||

|

||||

Not all of us consider office puppies, free booze, and ping pong to be job perks. If this is the messaging you lead with, you’re going to attract a pretty specific kind of applicant and quickly narrow down your list of potential candidates.

|

||||

|

||||

Also [avoid terms][2] like “guys,” “rock star,” and “recent graduate,” which can translate to “women, minorities, and anyone over 25 need not apply.” Ouch.

|

||||

|

||||

**9\. Asking for referrals to other potential candidates.**

|

||||

|

||||

This is a no-win for recruiters. For example, I’m happy to recommend job seekers in my network for roles, but lots of other folks working in tech see this as lazy recruiting. The safest approach might be to ask a candidate later in the process, after you’ve developed a friendly working relationship and you've both agreed that this role doesn’t quite fit their interests or expertise. Then they might have other contacts in mind for it.

|

||||

|

||||

### 6 best practices for tech recruiters

|

||||

|

||||

**1\. Provide a sincere and personalized greeting.**

|

||||

|

||||

A personalized greeting goes a long way. Let potential candidates know you understand their previous work experience, you’re familiar with what they do, and that you have an idea of what they want to be doing.

|

||||

|

||||

|

||||

**2\. Offer a transparent description of the team and the role.**

|

||||

|

||||

Be as clear as you can when describing the team and the role. Using words like “rock star” or “fast-paced team” does nothing to help the potential candidate visualize what they’d be walking into. Is this a small team? Remote team?

|

||||

|

||||

Being mysterious about the role won’t build intrigue, so opt for transparency.

|

||||

|

||||

**3\. Give the company name.**

|

||||

|

||||

Telling job candidates that the organization is a startup or a Fortune 500 company also won’t work as well being transparent about the organization from the beginning. How can anyone know whether they’re interested in a role if they don’t know which organization they’d be joining?

|

||||

|

||||

**4\. Be persistent and specific.**

|

||||

|

||||

In addition to providing the job description and company name, specifying the salary range and why you think the potential candidate is a good fit for the role stands out. Also consider specifying the work authorization and whether the organization provides visa sponsorships, relocation, and additional benefits beyond an hourly or annual salary. If you have a good feeling about a candidate who might not be a 100% technical fit with the job posting, let them know and open those lines of communication.

|

||||

|

||||

**5\. Invite potential candidates to local recruiting events.**

|

||||

|

||||

The event should provide brief presentations about the company, refreshments, and a networking opportunity. Here’s your chance to show off the culture (i.e., people) part of your organization in person.

|

||||

|

||||

**6\. Maintain a relationship with potential recruits.**

|

||||

|

||||