mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-24 02:20:09 +08:00

commit

f51b24dae9

published

20170410 Writing a Time Series Database from Scratch.md20170414 5 projects for Raspberry Pi at home.md20180324 Memories of writing a parser for man pages.md20180416 How To Resize Active-Primary root Partition Using GParted Utility.md20180604 BootISO - A Simple Bash Script To Securely Create A Bootable USB Device From ISO File.md20180831 Get desktop notifications from Emacs shell commands .md20180914 A day in the life of a log message.md20190109 GoAccess - A Real-Time Web Server Log Analyzer And Interactive Viewer.md20190308 Blockchain 2.0 - Explaining Smart Contracts And Its Types -Part 5.md20190331 How to build a mobile particulate matter sensor with a Raspberry Pi.md20190404 Running LEDs in reverse could cool computers.md20190409 5 Linux rookie mistakes.md20190410 How we built a Linux desktop app with Electron.md20190423 Epic Games Store is Now Available on Linux Thanks to Lutris.md20190423 How to identify same-content files on Linux.md20190509 5 essential values for the DevOps mindset.md20190517 10 Places Where You Can Buy Linux Computers.md20190517 Using Testinfra with Ansible to verify server state.md20190520 Getting Started With Docker.md20190520 When IoT systems fail- The risk of having bad IoT data.md20190522 Securing telnet connections with stunnel.md20190525 4 Ways to Run Linux Commands in Windows.md20190527 5 GNOME keyboard shortcuts to be more productive.md20190527 A deeper dive into Linux permissions.md20190527 How To Check Available Security Updates On Red Hat (RHEL) And CentOS System.md20190527 How to write a good C main function.md20190529 NVMe on Linux.md20190531 Unity Editor is Now Officially Available for Linux.md20190531 Why translation platforms matter.md20190604 How To Verify NTP Setup (Sync) is Working or Not In Linux.md20190604 Two Methods To Check Or List Installed Security Updates on Redhat (RHEL) And CentOS System.md20190606 How Linux can help with your spelling.md20190607 5 reasons to use Kubernetes.md20190608 An open source bionic leg, Python data pipeline, data breach detection, and more news.md20190610 Expand And Unexpand Commands Tutorial With Examples.md20190610 Graviton- A Minimalist Open Source Code Editor.md20190610 Neofetch - Display Linux system Information In Terminal.md20190610 Screen Command Examples To Manage Multiple Terminal Sessions.md20190610 Search Linux Applications On AppImage, Flathub And Snapcraft Platforms.md20190610 Try a new game on Free RPG Day.md20190610 Welcoming Blockchain 3.0.md20190613 Ubuntu Kylin- The Official Chinese Version of Ubuntu.md

scripts/check

sources

news

20190606 Cisco to buy IoT security, management firm Sentryo.md20190606 Zorin OS Becomes Even More Awesome With Zorin 15 Release.md20190607 Free and Open Source Trello Alternative OpenProject 9 Released.md

talk

20170320 An Ubuntu User-s Review Of Dell XPS 13 Ubuntu Edition.md20171222 18 Cyber-Security Trends Organizations Need to Brace for in 2018.md20180209 A review of Virtual Labs virtualization solutions for MOOCs - WebLog Pro Olivier Berger.md20180705 New Training Options Address Demand for Blockchain Skills.md20180802 How blockchain will influence open source.md20180816 Debian Turns 25- Here are Some Interesting Facts About Debian Linux.md20181004 Interview With Peter Ganten, CEO of Univention GmbH.md20190228 Why CLAs aren-t good for open source.md20190311 Discuss everything Fedora.md20190322 How to save time with TiDB.md20190410 Google partners with Intel, HPE and Lenovo for hybrid cloud.md20190410 HPE and Nutanix partner for hyperconverged private cloud systems.md20190418 Cisco warns WLAN controller, 9000 series router and IOS-XE users to patch urgent security holes.md20190418 Fujitsu completes design of exascale supercomputer, promises to productize it.md20190419 Intel follows AMD-s lead (again) into single-socket Xeon servers.md20190424 IoT roundup- VMware, Nokia beef up their IoT.md20190425 Dell EMC and Cisco renew converged infrastructure alliance.md20190429 Venerable Cisco Catalyst 6000 switches ousted by new Catalyst 9600.md20190520 When IoT systems fail- The risk of having bad IoT data.md20190528 Managed WAN and the cloud-native SD-WAN.md20190528 Moving to the Cloud- SD-WAN Matters.md20190528 With Cray buy, HPE rules but does not own the supercomputing market.md20190529 Cisco security spotlights Microsoft Office 365 e-mail phishing increase.md20190529 Nvidia launches edge computing platform for AI processing.md20190529 Satellite-based internet possible by year-end, says SpaceX.md20190529 Survey finds SD-WANs are hot, but satisfaction with telcos is not.md20190601 HPE Synergy For Dummies.md20190601 True Hyperconvergence at Scale- HPE Simplivity With Composable Fabric.md20190602 IoT Roundup- New research on IoT security, Microsoft leans into IoT.md20190603 It-s time for the IoT to -optimize for trust.md20190604 5G will augment Wi-Fi, not replace it.md20190604 Data center workloads become more complex despite promises to the contrary.md20190604 Moving to the Cloud- SD-WAN Matters- Part 2.md20190605 Cisco will use AI-ML to boost intent-based networking.md20190606 Cloud adoption drives the evolution of application delivery controllers.md20190606 For enterprise storage, persistent memory is here to stay.md20190606 Juniper- Security could help drive interest in SDN.md20190606 Self-learning sensor chips won-t need networks.md20190606 What to do when yesterday-s technology won-t meet today-s support needs.md20190611 6 ways to make enterprise IoT cost effective.md20190611 Cisco launches a developer-community cert program.md20190611 The carbon footprints of IT shops that train AI models are huge.md20190612 Cisco offers cloud-based security for SD-WAN resources.md20190612 Dell and Cisco extend VxBlock integration with new features.md20190612 IoT security vs. privacy- Which is a bigger issue.md20190612 Software Defined Perimeter (SDP)- Creating a new network perimeter.md20190612 When to use 5G, when to use Wi-Fi 6.md20190613 Data centers should sell spare UPS capacity to the grid.md20190613 Oracle updates Exadata at long last with AI and machine learning abilities.md20190614 Report- Mirai tries to hook its tentacles into SD-WAN.md20190614 Western Digital launches open-source zettabyte storage initiative.md20190617 5 transferable higher-education skills.md20190617 Use ImageGlass to quickly view JPG images as a slideshow.md20190619 Cisco connects with IBM in to simplify hybrid cloud deployment.md

@ -0,0 +1,484 @@

|

||||

从零写一个时间序列数据库

|

||||

==================

|

||||

|

||||

编者按:Prometheus 是 CNCF 旗下的开源监控告警解决方案,它已经成为 Kubernetes 生态圈中的核心监控系统。本文作者 Fabian Reinartz 是 Prometheus 的核心开发者,这篇文章是其于 2017 年写的一篇关于 Prometheus 中的时间序列数据库的设计思考,虽然写作时间有点久了,但是其中的考虑和思路非常值得参考。长文预警,请坐下来慢慢品味。

|

||||

|

||||

---

|

||||

|

||||

|

||||

|

||||

我从事监控工作。特别是在 [Prometheus][2] 上,监控系统包含一个自定义的时间序列数据库,并且集成在 [Kubernetes][3] 上。

|

||||

|

||||

在许多方面上 Kubernetes 展现出了 Prometheus 所有的设计用途。它使得<ruby>持续部署<rt>continuous deployments</rt></ruby>,<ruby>弹性伸缩<rt>auto scaling</rt></ruby>和其他<ruby>高动态环境<rt>highly dynamic environments</rt></ruby>下的功能可以轻易地访问。查询语句和操作模型以及其它概念决策使得 Prometheus 特别适合这种环境。但是,如果监控的工作负载动态程度显著地增加,这就会给监控系统本身带来新的压力。考虑到这一点,我们就可以特别致力于在高动态或<ruby>瞬态服务<rt>transient services</rt></ruby>环境下提升它的表现,而不是回过头来解决 Prometheus 已经解决的很好的问题。

|

||||

|

||||

Prometheus 的存储层在历史以来都展现出卓越的性能,单一服务器就能够以每秒数百万个时间序列的速度摄入多达一百万个样本,同时只占用了很少的磁盘空间。尽管当前的存储做的很好,但我依旧提出一个新设计的存储子系统,它可以修正现存解决方案的缺点,并具备处理更大规模数据的能力。

|

||||

|

||||

> 备注:我没有数据库方面的背景。我说的东西可能是错的并让你误入歧途。你可以在 Freenode 的 #prometheus 频道上对我(fabxc)提出你的批评。

|

||||

|

||||

## 问题,难题,问题域

|

||||

|

||||

首先,快速地概览一下我们要完成的东西和它的关键难题。我们可以先看一下 Prometheus 当前的做法 ,它为什么做的这么好,以及我们打算用新设计解决哪些问题。

|

||||

|

||||

### 时间序列数据

|

||||

|

||||

我们有一个收集一段时间数据的系统。

|

||||

|

||||

```

|

||||

identifier -> (t0, v0), (t1, v1), (t2, v2), (t3, v3), ....

|

||||

```

|

||||

|

||||

每个数据点是一个时间戳和值的元组。在监控中,时间戳是一个整数,值可以是任意数字。64 位浮点数对于计数器和测量值来说是一个好的表示方法,因此我们将会使用它。一系列严格单调递增的时间戳数据点是一个序列,它由标识符所引用。我们的标识符是一个带有<ruby>标签维度<rt>label dimensions</rt></ruby>字典的度量名称。标签维度划分了单一指标的测量空间。每一个指标名称加上一个唯一标签集就成了它自己的时间序列,它有一个与之关联的<ruby>数据流<rt>value stream</rt></ruby>。

|

||||

|

||||

这是一个典型的<ruby>序列标识符<rt>series identifier</rt></ruby>集,它是统计请求指标的一部分:

|

||||

|

||||

```

|

||||

requests_total{path="/status", method="GET", instance=”10.0.0.1:80”}

|

||||

requests_total{path="/status", method="POST", instance=”10.0.0.3:80”}

|

||||

requests_total{path="/", method="GET", instance=”10.0.0.2:80”}

|

||||

```

|

||||

|

||||

让我们简化一下表示方法:度量名称可以当作另一个维度标签,在我们的例子中是 `__name__`。对于查询语句,可以对它进行特殊处理,但与我们存储的方式无关,我们后面也会见到。

|

||||

|

||||

```

|

||||

{__name__="requests_total", path="/status", method="GET", instance=”10.0.0.1:80”}

|

||||

{__name__="requests_total", path="/status", method="POST", instance=”10.0.0.3:80”}

|

||||

{__name__="requests_total", path="/", method="GET", instance=”10.0.0.2:80”}

|

||||

```

|

||||

|

||||

我们想通过标签来查询时间序列数据。在最简单的情况下,使用 `{__name__="requests_total"}` 选择所有属于 `requests_total` 指标的数据。对于所有选择的序列,我们在给定的时间窗口内获取数据点。

|

||||

|

||||

在更复杂的语句中,我们或许想一次性选择满足多个标签的序列,并且表示比相等条件更复杂的情况。例如,非语句(`method!="GET"`)或正则表达式匹配(`method=~"PUT|POST"`)。

|

||||

|

||||

这些在很大程度上定义了存储的数据和它的获取方式。

|

||||

|

||||

### 纵与横

|

||||

|

||||

在简化的视图中,所有的数据点可以分布在二维平面上。水平维度代表着时间,序列标识符域经纵轴展开。

|

||||

|

||||

```

|

||||

series

|

||||

^

|

||||

| . . . . . . . . . . . . . . . . . . . . . . {__name__="request_total", method="GET"}

|

||||

| . . . . . . . . . . . . . . . . . . . . . . {__name__="request_total", method="POST"}

|

||||

| . . . . . . .

|

||||

| . . . . . . . . . . . . . . . . . . . ...

|

||||

| . . . . . . . . . . . . . . . . . . . . .

|

||||

| . . . . . . . . . . . . . . . . . . . . . {__name__="errors_total", method="POST"}

|

||||

| . . . . . . . . . . . . . . . . . {__name__="errors_total", method="GET"}

|

||||

| . . . . . . . . . . . . . .

|

||||

| . . . . . . . . . . . . . . . . . . . ...

|

||||

| . . . . . . . . . . . . . . . . . . . .

|

||||

v

|

||||

<-------------------- time --------------------->

|

||||

```

|

||||

|

||||

Prometheus 通过定期地抓取一组时间序列的当前值来获取数据点。我们从中获取到的实体称为目标。因此,写入模式完全地垂直且高度并发,因为来自每个目标的样本是独立摄入的。

|

||||

|

||||

这里提供一些测量的规模:单一 Prometheus 实例从数万个目标中收集数据点,每个数据点都暴露在数百到数千个不同的时间序列中。

|

||||

|

||||

在每秒采集数百万数据点这种规模下,批量写入是一个不能妥协的性能要求。在磁盘上分散地写入单个数据点会相当地缓慢。因此,我们想要按顺序写入更大的数据块。

|

||||

|

||||

对于旋转式磁盘,它的磁头始终得在物理上向不同的扇区上移动,这是一个不足为奇的事实。而虽然我们都知道 SSD 具有快速随机写入的特点,但事实上它不能修改单个字节,只能写入一页或更多页的 4KiB 数据量。这就意味着写入 16 字节的样本相当于写入满满一个 4Kib 的页。这一行为就是所谓的[写入放大][4],这种特性会损耗你的 SSD。因此它不仅影响速度,而且还毫不夸张地在几天或几个周内破坏掉你的硬件。

|

||||

|

||||

关于此问题更深层次的资料,[“Coding for SSDs”系列][5]博客是极好的资源。让我们想想主要的用处:顺序写入和批量写入分别对于旋转式磁盘和 SSD 来说都是理想的写入模式。大道至简。

|

||||

|

||||

查询模式比起写入模式明显更不同。我们可以查询单一序列的一个数据点,也可以对 10000 个序列查询一个数据点,还可以查询一个序列几个周的数据点,甚至是 10000 个序列几个周的数据点。因此在我们的二维平面上,查询范围不是完全水平或垂直的,而是二者形成矩形似的组合。

|

||||

|

||||

[记录规则][6]可以减轻已知查询的问题,但对于<ruby>点对点<rt>ad-hoc</rt></ruby>查询来说并不是一个通用的解决方法。

|

||||

|

||||

我们知道我们想要批量地写入,但我们得到的仅仅是一系列垂直数据点的集合。当查询一段时间窗口内的数据点时,我们不仅很难弄清楚在哪才能找到这些单独的点,而且不得不从磁盘上大量随机的地方读取。也许一条查询语句会有数百万的样本,即使在最快的 SSD 上也会很慢。读入也会从磁盘上获取更多的数据而不仅仅是 16 字节的样本。SSD 会加载一整页,HDD 至少会读取整个扇区。不论哪一种,我们都在浪费宝贵的读取吞吐量。

|

||||

|

||||

因此在理想情况下,同一序列的样本将按顺序存储,这样我们就能通过尽可能少的读取来扫描它们。最重要的是,我们仅需要知道序列的起始位置就能访问所有的数据点。

|

||||

|

||||

显然,将收集到的数据写入磁盘的理想模式与能够显著提高查询效率的布局之间存在着明显的抵触。这是我们 TSDB 需要解决的一个基本问题。

|

||||

|

||||

#### 当前的解决方法

|

||||

|

||||

是时候看一下当前 Prometheus 是如何存储数据来解决这一问题的,让我们称它为“V2”。

|

||||

|

||||

我们创建一个时间序列的文件,它包含所有样本并按顺序存储。因为每几秒附加一个样本数据到所有文件中非常昂贵,我们在内存中打包 1Kib 样本序列的数据块,一旦打包完成就附加这些数据块到单独的文件中。这一方法解决了大部分问题。写入目前是批量的,样本也是按顺序存储的。基于给定的同一序列的样本相对之前的数据仅发生非常小的改变这一特性,它还支持非常高效的压缩格式。Facebook 在他们 Gorilla TSDB 上的论文中描述了一个相似的基于数据块的方法,并且[引入了一种压缩格式][7],它能够减少 16 字节的样本到平均 1.37 字节。V2 存储使用了包含 Gorilla 变体等在内的各种压缩格式。

|

||||

|

||||

```

|

||||

+----------+---------+---------+---------+---------+ series A

|

||||

+----------+---------+---------+---------+---------+

|

||||

+----------+---------+---------+---------+---------+ series B

|

||||

+----------+---------+---------+---------+---------+

|

||||

. . .

|

||||

+----------+---------+---------+---------+---------+---------+ series XYZ

|

||||

+----------+---------+---------+---------+---------+---------+

|

||||

chunk 1 chunk 2 chunk 3 ...

|

||||

```

|

||||

|

||||

尽管基于块存储的方法非常棒,但为每个序列保存一个独立的文件会给 V2 存储带来麻烦,因为:

|

||||

|

||||

* 实际上,我们需要的文件比当前收集数据的时间序列数量要多得多。多出的部分在<ruby>序列分流<rt>Series Churn</rt></ruby>上。有几百万个文件,迟早会使用光文件系统中的 [inode][1]。这种情况我们只能通过重新格式化来恢复磁盘,这种方式是最具有破坏性的。我们通常不想为了适应一个应用程序而格式化磁盘。

|

||||

* 即使是分块写入,每秒也会产生数千块的数据块并且准备持久化。这依然需要每秒数千次的磁盘写入。尽管通过为每个序列打包好多个块来缓解,但这反过来还是增加了等待持久化数据的总内存占用。

|

||||

* 要保持所有文件打开来进行读写是不可行的。特别是因为 99% 的数据在 24 小时之后不再会被查询到。如果查询它,我们就得打开数千个文件,找到并读取相关的数据点到内存中,然后再关掉。这样做就会引起很高的查询延迟,数据块缓存加剧会导致新的问题,这一点在“资源消耗”一节另作讲述。

|

||||

* 最终,旧的数据需要被删除,并且数据需要从数百万文件的头部删除。这就意味着删除实际上是写密集型操作。此外,循环遍历数百万文件并且进行分析通常会导致这一过程花费数小时。当它完成时,可能又得重新来过。喔天,继续删除旧文件又会进一步导致 SSD 产生写入放大。

|

||||

* 目前所积累的数据块仅维持在内存中。如果应用崩溃,数据就会丢失。为了避免这种情况,内存状态会定期的保存在磁盘上,这比我们能接受数据丢失窗口要长的多。恢复检查点也会花费数分钟,导致很长的重启周期。

|

||||

|

||||

我们能够从现有的设计中学到的关键部分是数据块的概念,我们当然希望保留这个概念。最新的数据块会保持在内存中一般也是好的主意。毕竟,最新的数据会大量的查询到。

|

||||

|

||||

一个时间序列对应一个文件,这个概念是我们想要替换掉的。

|

||||

|

||||

### 序列分流

|

||||

|

||||

在 Prometheus 的<ruby>上下文<rt>context</rt></ruby>中,我们使用术语<ruby>序列分流<rt>series churn</rt></ruby>来描述一个时间序列集合变得不活跃,即不再接收数据点,取而代之的是出现一组新的活跃序列。

|

||||

|

||||

例如,由给定微服务实例产生的所有序列都有一个相应的“instance”标签来标识其来源。如果我们为微服务执行了<ruby>滚动更新<rt>rolling update</rt></ruby>,并且为每个实例替换一个新的版本,序列分流便会发生。在更加动态的环境中,这些事情基本上每小时都会发生。像 Kubernetes 这样的<ruby>集群编排<rt>Cluster orchestration</rt></ruby>系统允许应用连续性的自动伸缩和频繁的滚动更新,这样也许会创建成千上万个新的应用程序实例,并且伴随着全新的时间序列集合,每天都是如此。

|

||||

|

||||

```

|

||||

series

|

||||

^

|

||||

| . . . . . .

|

||||

| . . . . . .

|

||||

| . . . . . .

|

||||

| . . . . . . .

|

||||

| . . . . . . .

|

||||

| . . . . . . .

|

||||

| . . . . . .

|

||||

| . . . . . .

|

||||

| . . . . .

|

||||

| . . . . .

|

||||

| . . . . .

|

||||

v

|

||||

<-------------------- time --------------------->

|

||||

```

|

||||

|

||||

所以即便整个基础设施的规模基本保持不变,过一段时间后数据库内的时间序列还是会成线性增长。尽管 Prometheus 很愿意采集 1000 万个时间序列数据,但要想在 10 亿个序列中找到数据,查询效果还是会受到严重的影响。

|

||||

|

||||

#### 当前解决方案

|

||||

|

||||

当前 Prometheus 的 V2 存储系统对所有当前保存的序列拥有基于 LevelDB 的索引。它允许查询语句含有给定的<ruby>标签对<rt>label pair</rt></ruby>,但是缺乏可伸缩的方法来从不同的标签选集中组合查询结果。

|

||||

|

||||

例如,从所有的序列中选择标签 `__name__="requests_total"` 非常高效,但是选择 `instance="A" AND __name__="requests_total"` 就有了可伸缩性的问题。我们稍后会重新考虑导致这一点的原因和能够提升查找延迟的调整方法。

|

||||

|

||||

事实上正是这个问题才催生出了对更好的存储系统的最初探索。Prometheus 需要为查找亿万个时间序列改进索引方法。

|

||||

|

||||

### 资源消耗

|

||||

|

||||

当试图扩展 Prometheus(或其他任何事情,真的)时,资源消耗是永恒不变的话题之一。但真正困扰用户的并不是对资源的绝对渴求。事实上,由于给定的需求,Prometheus 管理着令人难以置信的吞吐量。问题更在于面对变化时的相对未知性与不稳定性。通过其架构设计,V2 存储系统缓慢地构建了样本数据块,这一点导致内存占用随时间递增。当数据块完成之后,它们可以写到磁盘上并从内存中清除。最终,Prometheus 的内存使用到达稳定状态。直到监测环境发生了改变——每次我们扩展应用或者进行滚动更新,序列分流都会增加内存、CPU、磁盘 I/O 的使用。

|

||||

|

||||

如果变更正在进行,那么它最终还是会到达一个稳定的状态,但比起更加静态的环境,它的资源消耗会显著地提高。过渡时间通常为数个小时,而且难以确定最大资源使用量。

|

||||

|

||||

为每个时间序列保存一个文件这种方法也使得一个单个查询就很容易崩溃 Prometheus 进程。当查询的数据没有缓存在内存中,查询的序列文件就会被打开,然后将含有相关数据点的数据块读入内存。如果数据量超出内存可用量,Prometheus 就会因 OOM 被杀死而退出。

|

||||

|

||||

在查询语句完成之后,加载的数据便可以被再次释放掉,但通常会缓存更长的时间,以便更快地查询相同的数据。后者看起来是件不错的事情。

|

||||

|

||||

最后,我们看看之前提到的 SSD 的写入放大,以及 Prometheus 是如何通过批量写入来解决这个问题的。尽管如此,在许多地方还是存在因为批量太小以及数据未精确对齐页边界而导致的写入放大。对于更大规模的 Prometheus 服务器,现实当中会发现缩减硬件寿命的问题。这一点对于高写入吞吐量的数据库应用来说仍然相当普遍,但我们应该放眼看看是否可以解决它。

|

||||

|

||||

### 重新开始

|

||||

|

||||

到目前为止我们对于问题域、V2 存储系统是如何解决它的,以及设计上存在的问题有了一个清晰的认识。我们也看到了许多很棒的想法,这些或多或少都可以拿来直接使用。V2 存储系统相当数量的问题都可以通过改进和部分的重新设计来解决,但为了好玩(当然,在我仔细的验证想法之后),我决定试着写一个完整的时间序列数据库——从头开始,即向文件系统写入字节。

|

||||

|

||||

性能与资源使用这种最关键的部分直接影响了存储格式的选取。我们需要为数据找到正确的算法和磁盘布局来实现一个高性能的存储层。

|

||||

|

||||

这就是我解决问题的捷径——跳过令人头疼、失败的想法,数不尽的草图,泪水与绝望。

|

||||

|

||||

### V3—宏观设计

|

||||

|

||||

我们存储系统的宏观布局是什么?简而言之,是当我们在数据文件夹里运行 `tree` 命令时显示的一切。看看它能给我们带来怎样一副惊喜的画面。

|

||||

|

||||

```

|

||||

$ tree ./data

|

||||

./data

|

||||

+-- b-000001

|

||||

| +-- chunks

|

||||

| | +-- 000001

|

||||

| | +-- 000002

|

||||

| | +-- 000003

|

||||

| +-- index

|

||||

| +-- meta.json

|

||||

+-- b-000004

|

||||

| +-- chunks

|

||||

| | +-- 000001

|

||||

| +-- index

|

||||

| +-- meta.json

|

||||

+-- b-000005

|

||||

| +-- chunks

|

||||

| | +-- 000001

|

||||

| +-- index

|

||||

| +-- meta.json

|

||||

+-- b-000006

|

||||

+-- meta.json

|

||||

+-- wal

|

||||

+-- 000001

|

||||

+-- 000002

|

||||

+-- 000003

|

||||

```

|

||||

|

||||

在最顶层,我们有一系列以 `b-` 为前缀编号的<ruby>块<rt>block</rt></ruby>。每个块中显然保存了索引文件和含有更多编号文件的 `chunk` 文件夹。`chunks` 目录只包含不同序列<ruby>数据点的原始块<rt>raw chunks of data points</rt><ruby>。与 V2 存储系统一样,这使得通过时间窗口读取序列数据非常高效并且允许我们使用相同的有效压缩算法。这一点被证实行之有效,我们也打算沿用。显然,这里并不存在含有单个序列的文件,而是一堆保存着许多序列的数据块。

|

||||

|

||||

`index` 文件的存在应该不足为奇。让我们假设它拥有黑魔法,可以让我们找到标签、可能的值、整个时间序列和存放数据点的数据块。

|

||||

|

||||

但为什么这里有好几个文件夹都是索引和块文件的布局?并且为什么存在最后一个包含 `wal` 文件夹?理解这两个疑问便能解决九成的问题。

|

||||

|

||||

#### 许多小型数据库

|

||||

|

||||

我们分割横轴,即将时间域分割为不重叠的块。每一块扮演着完全独立的数据库,它包含该时间窗口所有的时间序列数据。因此,它拥有自己的索引和一系列块文件。

|

||||

|

||||

```

|

||||

|

||||

t0 t1 t2 t3 now

|

||||

+-----------+ +-----------+ +-----------+ +-----------+

|

||||

| | | | | | | | +------------+

|

||||

| | | | | | | mutable | <--- write ---- ┤ Prometheus |

|

||||

| | | | | | | | +------------+

|

||||

+-----------+ +-----------+ +-----------+ +-----------+ ^

|

||||

+--------------+-------+------+--------------+ |

|

||||

| query

|

||||

| |

|

||||

merge -------------------------------------------------+

|

||||

```

|

||||

|

||||

每一块的数据都是<ruby>不可变的<rt>immutable</rt></ruby>。当然,当我们采集新数据时,我们必须能向最近的块中添加新的序列和样本。对于该数据块,所有新的数据都将写入内存中的数据库中,它与我们的持久化的数据块一样提供了查找属性。内存中的数据结构可以高效地更新。为了防止数据丢失,所有传入的数据同样被写入临时的<ruby>预写日志<rt>write ahead log</rt></ruby>中,这就是 `wal` 文件夹中的一些列文件,我们可以在重新启动时通过它们重新填充内存数据库。

|

||||

|

||||

所有这些文件都带有序列化格式,有我们所期望的所有东西:许多标志、偏移量、变体和 CRC32 校验和。纸上得来终觉浅,绝知此事要躬行。

|

||||

|

||||

这种布局允许我们扩展查询范围到所有相关的块上。每个块上的部分结果最终合并成完整的结果。

|

||||

|

||||

这种横向分割增加了一些很棒的功能:

|

||||

|

||||

* 当查询一个时间范围,我们可以简单地忽略所有范围之外的数据块。通过减少需要检查的数据集,它可以初步解决序列分流的问题。

|

||||

* 当完成一个块,我们可以通过顺序的写入大文件从内存数据库中保存数据。这样可以避免任何的写入放大,并且 SSD 与 HDD 均适用。

|

||||

* 我们延续了 V2 存储系统的一个好的特性,最近使用而被多次查询的数据块,总是保留在内存中。

|

||||

* 很好,我们也不再受限于 1KiB 的数据块尺寸,以使数据在磁盘上更好地对齐。我们可以挑选对单个数据点和压缩格式最合理的尺寸。

|

||||

* 删除旧数据变得极为简单快捷。我们仅仅只需删除一个文件夹。记住,在旧的存储系统中我们不得不花数个小时分析并重写数亿个文件。

|

||||

|

||||

每个块还包含了 `meta.json` 文件。它简单地保存了关于块的存储状态和包含的数据,以便轻松了解存储状态及其包含的数据。

|

||||

|

||||

##### mmap

|

||||

|

||||

将数百万个小文件合并为少数几个大文件使得我们用很小的开销就能保持所有的文件都打开。这就解除了对 [mmap(2)][8] 的使用的阻碍,这是一个允许我们通过文件透明地回传虚拟内存的系统调用。简单起见,你可以将其视为<ruby>交换空间<rt>swap space</rt></ruby>,只是我们所有的数据已经保存在了磁盘上,并且当数据换出内存后不再会发生写入。

|

||||

|

||||

这意味着我们可以当作所有数据库的内容都视为在内存中却不占用任何物理内存。仅当我们访问数据库文件某些字节范围时,操作系统才会从磁盘上<ruby>惰性加载<rt>lazy load</rt></ruby>页数据。这使得我们将所有数据持久化相关的内存管理都交给了操作系统。通常,操作系统更有资格作出这样的决定,因为它可以全面了解整个机器和进程。查询的数据可以相当积极的缓存进内存,但内存压力会使得页被换出。如果机器拥有未使用的内存,Prometheus 目前将会高兴地缓存整个数据库,但是一旦其他进程需要,它就会立刻返回那些内存。

|

||||

|

||||

因此,查询不再轻易地使我们的进程 OOM,因为查询的是更多的持久化的数据而不是装入内存中的数据。内存缓存大小变得完全自适应,并且仅当查询真正需要时数据才会被加载。

|

||||

|

||||

就个人理解,这就是当今大多数数据库的工作方式,如果磁盘格式允许,这是一种理想的方式,——除非有人自信能在这个过程中超越操作系统。我们做了很少的工作但确实从外面获得了很多功能。

|

||||

|

||||

#### 压缩

|

||||

|

||||

存储系统需要定期“切”出新块并将之前完成的块写入到磁盘中。仅在块成功的持久化之后,才会被删除之前用来恢复内存块的日志文件(wal)。

|

||||

|

||||

我们希望将每个块的保存时间设置的相对短一些(通常配置为 2 小时),以避免内存中积累太多的数据。当查询多个块,我们必须将它们的结果合并为一个整体的结果。合并过程显然会消耗资源,一个星期的查询不应该由超过 80 个的部分结果所组成。

|

||||

|

||||

为了实现两者,我们引入<ruby>压缩<rt>compaction</rt></ruby>。压缩描述了一个过程:取一个或更多个数据块并将其写入一个可能更大的块中。它也可以在此过程中修改现有的数据。例如,清除已经删除的数据,或重建样本块以提升查询性能。

|

||||

|

||||

```

|

||||

|

||||

t0 t1 t2 t3 t4 now

|

||||

+------------+ +----------+ +-----------+ +-----------+ +-----------+

|

||||

| 1 | | 2 | | 3 | | 4 | | 5 mutable | before

|

||||

+------------+ +----------+ +-----------+ +-----------+ +-----------+

|

||||

+-----------------------------------------+ +-----------+ +-----------+

|

||||

| 1 compacted | | 4 | | 5 mutable | after (option A)

|

||||

+-----------------------------------------+ +-----------+ +-----------+

|

||||

+--------------------------+ +--------------------------+ +-----------+

|

||||

| 1 compacted | | 3 compacted | | 5 mutable | after (option B)

|

||||

+--------------------------+ +--------------------------+ +-----------+

|

||||

```

|

||||

|

||||

在这个例子中我们有顺序块 `[1,2,3,4]`。块 1、2、3 可以压缩在一起,新的布局将会是 `[1,4]`。或者,将它们成对压缩为 `[1,3]`。所有的时间序列数据仍然存在,但现在整体上保存在更少的块中。这极大程度地缩减了查询时间的消耗,因为需要合并的部分查询结果变得更少了。

|

||||

|

||||

#### 保留

|

||||

|

||||

我们看到了删除旧的数据在 V2 存储系统中是一个缓慢的过程,并且消耗 CPU、内存和磁盘。如何才能在我们基于块的设计上清除旧的数据?相当简单,只要删除我们配置的保留时间窗口里没有数据的块文件夹即可。在下面的例子中,块 1 可以被安全地删除,而块 2 则必须一直保留,直到它落在保留窗口边界之外。

|

||||

|

||||

```

|

||||

|

|

||||

+------------+ +----+-----+ +-----------+ +-----------+ +-----------+

|

||||

| 1 | | 2 | | | 3 | | 4 | | 5 | . . .

|

||||

+------------+ +----+-----+ +-----------+ +-----------+ +-----------+

|

||||

|

|

||||

|

|

||||

retention boundary

|

||||

```

|

||||

|

||||

随着我们不断压缩先前压缩的块,旧数据越大,块可能变得越大。因此必须为其设置一个上限,以防数据块扩展到整个数据库而损失我们设计的最初优势。

|

||||

|

||||

方便的是,这一点也限制了部分存在于保留窗口内部分存在于保留窗口外的块的磁盘消耗总量。例如上面例子中的块 2。当设置了最大块尺寸为总保留窗口的 10% 后,我们保留块 2 的总开销也有了 10% 的上限。

|

||||

|

||||

总结一下,保留与删除从非常昂贵到了几乎没有成本。

|

||||

|

||||

> 如果你读到这里并有一些数据库的背景知识,现在你也许会问:这些都是最新的技术吗?——并不是;而且可能还会做的更好。

|

||||

>

|

||||

> 在内存中批量处理数据,在预写日志中跟踪,并定期写入到磁盘的模式在现在相当普遍。

|

||||

>

|

||||

> 我们看到的好处无论在什么领域的数据里都是适用的。遵循这一方法最著名的开源案例是 LevelDB、Cassandra、InfluxDB 和 HBase。关键是避免重复发明劣质的轮子,采用经过验证的方法,并正确地运用它们。

|

||||

>

|

||||

> 脱离场景添加你自己的黑魔法是一种不太可能的情况。

|

||||

|

||||

### 索引

|

||||

|

||||

研究存储改进的最初想法是解决序列分流的问题。基于块的布局减少了查询所要考虑的序列总数。因此假设我们索引查找的复杂度是 `O(n^2)`,我们就要设法减少 n 个相当数量的复杂度,之后就相当于改进 `O(n^2)` 复杂度。——恩,等等……糟糕。

|

||||

|

||||

快速回顾一下“算法 101”课上提醒我们的,在理论上它并未带来任何好处。如果之前就很糟糕,那么现在也一样。理论是如此的残酷。

|

||||

|

||||

实际上,我们大多数的查询已经可以相当快响应。但是,跨越整个时间范围的查询仍然很慢,尽管只需要找到少部分数据。追溯到所有这些工作之前,最初我用来解决这个问题的想法是:我们需要一个更大容量的[倒排索引][9]。

|

||||

|

||||

倒排索引基于数据项内容的子集提供了一种快速的查找方式。简单地说,我可以通过标签 `app="nginx"` 查找所有的序列而无需遍历每个文件来看它是否包含该标签。

|

||||

|

||||

为此,每个序列被赋上一个唯一的 ID ,通过该 ID 可以恒定时间内检索它(`O(1)`)。在这个例子中 ID 就是我们的正向索引。

|

||||

|

||||

> 示例:如果 ID 为 10、29、9 的序列包含标签 `app="nginx"`,那么 “nginx”的倒排索引就是简单的列表 `[10, 29, 9]`,它就能用来快速地获取所有包含标签的序列。即使有 200 多亿个数据序列也不会影响查找速度。

|

||||

|

||||

简而言之,如果 `n` 是我们序列总数,`m` 是给定查询结果的大小,使用索引的查询复杂度现在就是 `O(m)`。查询语句依据它获取数据的数量 `m` 而不是被搜索的数据体 `n` 进行缩放是一个很好的特性,因为 `m` 一般相当小。

|

||||

|

||||

为了简单起见,我们假设可以在恒定时间内查找到倒排索引对应的列表。

|

||||

|

||||

实际上,这几乎就是 V2 存储系统具有的倒排索引,也是提供在数百万序列中查询性能的最低需求。敏锐的人会注意到,在最坏情况下,所有的序列都含有标签,因此 `m` 又成了 `O(n)`。这一点在预料之中,也相当合理。如果你查询所有的数据,它自然就会花费更多时间。一旦我们牵扯上了更复杂的查询语句就会有问题出现。

|

||||

|

||||

#### 标签组合

|

||||

|

||||

与数百万个序列相关的标签很常见。假设横向扩展着数百个实例的“foo”微服务,并且每个实例拥有数千个序列。每个序列都会带有标签 `app="foo"`。当然,用户通常不会查询所有的序列而是会通过进一步的标签来限制查询。例如,我想知道服务实例接收到了多少请求,那么查询语句便是 `__name__="requests_total" AND app="foo"`。

|

||||

|

||||

为了找到满足两个标签选择子的所有序列,我们得到每一个标签的倒排索引列表并取其交集。结果集通常会比任何一个输入列表小一个数量级。因为每个输入列表最坏情况下的大小为 `O(n)`,所以在嵌套地为每个列表进行<ruby>暴力求解<rt>brute force solution</rt><ruby>下,运行时间为 `O(n^2)`。相同的成本也适用于其他的集合操作,例如取并集(`app="foo" OR app="bar"`)。当在查询语句上添加更多标签选择子,耗费就会指数增长到 `O(n^3)`、`O(n^4)`、`O(n^5)`……`O(n^k)`。通过改变执行顺序,可以使用很多技巧以优化运行效率。越复杂,越是需要关于数据特征和标签之间相关性的知识。这引入了大量的复杂度,但是并没有减少算法的最坏运行时间。

|

||||

|

||||

这便是 V2 存储系统使用的基本方法,幸运的是,看似微小的改动就能获得显著的提升。如果我们假设倒排索引中的 ID 都是排序好的会怎么样?

|

||||

|

||||

假设这个例子的列表用于我们最初的查询:

|

||||

|

||||

```

|

||||

__name__="requests_total" -> [ 9999, 1000, 1001, 2000000, 2000001, 2000002, 2000003 ]

|

||||

app="foo" -> [ 1, 3, 10, 11, 12, 100, 311, 320, 1000, 1001, 10002 ]

|

||||

|

||||

intersection => [ 1000, 1001 ]

|

||||

```

|

||||

|

||||

它的交集相当小。我们可以为每个列表的起始位置设置游标,每次从最小的游标处移动来找到交集。当二者的数字相等,我们就添加它到结果中并移动二者的游标。总体上,我们以锯齿形扫描两个列表,因此总耗费是 `O(2n)=O(n)`,因为我们总是在一个列表上移动。

|

||||

|

||||

两个以上列表的不同集合操作也类似。因此 `k` 个集合操作仅仅改变了因子 `O(k*n)` 而不是最坏情况下查找运行时间的指数 `O(n^k)`。

|

||||

|

||||

我在这里所描述的是几乎所有[全文搜索引擎][10]使用的标准搜索索引的简化版本。每个序列描述符都视作一个简短的“文档”,每个标签(名称 + 固定值)作为其中的“单词”。我们可以忽略搜索引擎索引中通常遇到的很多附加数据,例如单词位置和和频率。

|

||||

|

||||

关于改进实际运行时间的方法似乎存在无穷无尽的研究,它们通常都是对输入数据做一些假设。不出意料的是,还有大量技术来压缩倒排索引,其中各有利弊。因为我们的“文档”比较小,而且“单词”在所有的序列里大量重复,压缩变得几乎无关紧要。例如,一个真实的数据集约有 440 万个序列与大约 12 个标签,每个标签拥有少于 5000 个单独的标签。对于最初的存储版本,我们坚持使用基本的方法而不压缩,仅做微小的调整来跳过大范围非交叉的 ID。

|

||||

|

||||

尽管维持排序好的 ID 听起来很简单,但实践过程中不是总能完成的。例如,V2 存储系统为新的序列赋上一个哈希值来当作 ID,我们就不能轻易地排序倒排索引。

|

||||

|

||||

另一个艰巨的任务是当磁盘上的数据被更新或删除掉后修改其索引。通常,最简单的方法是重新计算并写入,但是要保证数据库在此期间可查询且具有一致性。V3 存储系统通过每块上具有的独立不可变索引来解决这一问题,该索引仅通过压缩时的重写来进行修改。只有可变块上的索引需要被更新,它完全保存在内存中。

|

||||

|

||||

## 基准测试

|

||||

|

||||

我从存储的基准测试开始了初步的开发,它基于现实世界数据集中提取的大约 440 万个序列描述符,并生成合成数据点以输入到这些序列中。这个阶段的开发仅仅测试了单独的存储系统,对于快速找到性能瓶颈和高并发负载场景下的触发死锁至关重要。

|

||||

|

||||

在完成概念性的开发实施之后,该基准测试能够在我的 Macbook Pro 上维持每秒 2000 万的吞吐量 —— 并且这都是在打开着十几个 Chrome 的页面和 Slack 的时候。因此,尽管这听起来都很棒,它这也表明推动这项测试没有的进一步价值(或者是没有在高随机环境下运行)。毕竟,它是合成的数据,因此在除了良好的第一印象外没有多大价值。比起最初的设计目标高出 20 倍,是时候将它部署到真正的 Prometheus 服务器上了,为它添加更多现实环境中的开销和场景。

|

||||

|

||||

我们实际上没有可重现的 Prometheus 基准测试配置,特别是没有对于不同版本的 A/B 测试。亡羊补牢为时不晚,[不过现在就有一个了][11]!

|

||||

|

||||

我们的工具可以让我们声明性地定义基准测试场景,然后部署到 AWS 的 Kubernetes 集群上。尽管对于全面的基准测试来说不是最好环境,但它肯定比 64 核 128GB 内存的专用<ruby>裸机服务器<rt>bare metal servers</rt></ruby>更能反映出我们的用户群体。

|

||||

|

||||

我们部署了两个 Prometheus 1.5.2 服务器(V2 存储系统)和两个来自 2.0 开发分支的 Prometheus (V3 存储系统)。每个 Prometheus 运行在配备 SSD 的专用服务器上。我们将横向扩展的应用部署在了工作节点上,并且让其暴露典型的微服务度量。此外,Kubernetes 集群本身和节点也被监控着。整套系统由另一个 Meta-Prometheus 所监督,它监控每个 Prometheus 的健康状况和性能。

|

||||

|

||||

为了模拟序列分流,微服务定期的扩展和收缩来移除旧的 pod 并衍生新的 pod,生成新的序列。通过选择“典型”的查询来模拟查询负载,对每个 Prometheus 版本都执行一次。

|

||||

|

||||

总体上,伸缩与查询的负载以及采样频率极大的超出了 Prometheus 的生产部署。例如,我们每隔 15 分钟换出 60% 的微服务实例去产生序列分流。在现代的基础设施上,一天仅大约会发生 1-5 次。这就保证了我们的 V3 设计足以处理未来几年的工作负载。就结果而言,Prometheus 1.5.2 和 2.0 之间的性能差异在极端的环境下会变得更大。

|

||||

|

||||

总而言之,我们每秒从 850 个目标里收集大约 11 万份样本,每次暴露 50 万个序列。

|

||||

|

||||

在此系统运行一段时间之后,我们可以看一下数字。我们评估了两个版本在 12 个小时之后到达稳定时的几个指标。

|

||||

|

||||

> 请注意从 Prometheus 图形界面的截图中轻微截断的 Y 轴

|

||||

|

||||

|

||||

|

||||

*堆内存使用(GB)*

|

||||

|

||||

内存资源的使用对用户来说是最为困扰的问题,因为它相对的不可预测且可能导致进程崩溃。

|

||||

|

||||

显然,查询的服务器正在消耗内存,这很大程度上归咎于查询引擎的开销,这一点可以当作以后优化的主题。总的来说,Prometheus 2.0 的内存消耗减少了 3-4 倍。大约 6 小时之后,在 Prometheus 1.5 上有一个明显的峰值,与我们设置的 6 小时的保留边界相对应。因为删除操作成本非常高,所以资源消耗急剧提升。这一点在下面几张图中均有体现。

|

||||

|

||||

|

||||

|

||||

*CPU 使用(核心/秒)*

|

||||

|

||||

类似的模式也体现在 CPU 使用上,但是查询的服务器与非查询的服务器之间的差异尤为明显。每秒获取大约 11 万个数据需要 0.5 核心/秒的 CPU 资源,比起评估查询所花费的 CPU 时间,我们的新存储系统 CPU 消耗可忽略不计。总的来说,新存储需要的 CPU 资源减少了 3 到 10 倍。

|

||||

|

||||

|

||||

|

||||

*磁盘写入(MB/秒)*

|

||||

|

||||

迄今为止最引人注目和意想不到的改进表现在我们的磁盘写入利用率上。这就清楚的说明了为什么 Prometheus 1.5 很容易造成 SSD 损耗。我们看到最初的上升发生在第一个块被持久化到序列文件中的时期,然后一旦删除操作引发了重写就会带来第二个上升。令人惊讶的是,查询的服务器与非查询的服务器显示出了非常不同的利用率。

|

||||

|

||||

在另一方面,Prometheus 2.0 每秒仅向其预写日志写入大约一兆字节。当块被压缩到磁盘时,写入定期地出现峰值。这在总体上节省了:惊人的 97-99%。

|

||||

|

||||

|

||||

|

||||

*磁盘大小(GB)*

|

||||

|

||||

与磁盘写入密切相关的是总磁盘空间占用量。由于我们对样本(这是我们的大部分数据)几乎使用了相同的压缩算法,因此磁盘占用量应当相同。在更为稳定的系统中,这样做很大程度上是正确地,但是因为我们需要处理高的序列分流,所以还要考虑每个序列的开销。

|

||||

|

||||

如我们所见,Prometheus 1.5 在这两个版本达到稳定状态之前,使用的存储空间因其保留操作而急速上升。Prometheus 2.0 似乎在每个序列上的开销显著降低。我们可以清楚的看到预写日志线性地充满整个存储空间,然后当压缩完成后瞬间下降。事实上对于两个 Prometheus 2.0 服务器,它们的曲线并不是完全匹配的,这一点需要进一步的调查。

|

||||

|

||||

前景大好。剩下最重要的部分是查询延迟。新的索引应当优化了查找的复杂度。没有实质上发生改变的是处理数据的过程,例如 `rate()` 函数或聚合。这些就是查询引擎要做的东西了。

|

||||

|

||||

|

||||

|

||||

*第 99 个百分位查询延迟(秒)*

|

||||

|

||||

数据完全符合预期。在 Prometheus 1.5 上,查询延迟随着存储的序列而增加。只有在保留操作开始且旧的序列被删除后才会趋于稳定。作为对比,Prometheus 2.0 从一开始就保持在合适的位置。

|

||||

|

||||

我们需要花一些心思在数据是如何被采集上,对服务器发出的查询请求通过对以下方面的估计来选择:范围查询和即时查询的组合,进行更轻或更重的计算,访问更多或更少的文件。它并不需要代表真实世界里查询的分布。也不能代表冷数据的查询性能,我们可以假设所有的样本数据都是保存在内存中的热数据。

|

||||

|

||||

尽管如此,我们可以相当自信地说,整体查询效果对序列分流变得非常有弹性,并且在高压基准测试场景下提升了 4 倍的性能。在更为静态的环境下,我们可以假设查询时间大多数花费在了查询引擎上,改善程度明显较低。

|

||||

|

||||

|

||||

|

||||

*摄入的样本/秒*

|

||||

|

||||

最后,快速地看一下不同 Prometheus 服务器的摄入率。我们可以看到搭载 V3 存储系统的两个服务器具有相同的摄入速率。在几个小时之后变得不稳定,这是因为不同的基准测试集群节点由于高负载变得无响应,与 Prometheus 实例无关。(两个 2.0 的曲线完全匹配这一事实希望足够具有说服力)

|

||||

|

||||

尽管还有更多 CPU 和内存资源,两个 Prometheus 1.5.2 服务器的摄入率大大降低。序列分流的高压导致了无法采集更多的数据。

|

||||

|

||||

那么现在每秒可以摄入的<ruby>绝对最大<rt>absolute maximum</rt></ruby>样本数是多少?

|

||||

|

||||

但是现在你可以摄取的每秒绝对最大样本数是多少?

|

||||

|

||||

我不知道 —— 虽然这是一个相当容易的优化指标,但除了稳固的基线性能之外,它并不是特别有意义。

|

||||

|

||||

有很多因素都会影响 Prometheus 数据流量,而且没有一个单独的数字能够描述捕获质量。最大摄入率在历史上是一个导致基准出现偏差的度量,并且忽视了更多重要的层面,例如查询性能和对序列分流的弹性。关于资源使用线性增长的大致猜想通过一些基本的测试被证实。很容易推断出其中的原因。

|

||||

|

||||

我们的基准测试模拟了高动态环境下 Prometheus 的压力,它比起真实世界中的更大。结果表明,虽然运行在没有优化的云服务器上,但是已经超出了预期的效果。最终,成功将取决于用户反馈而不是基准数字。

|

||||

|

||||

> 注意:在撰写本文的同时,Prometheus 1.6 正在开发当中,它允许更可靠地配置最大内存使用量,并且可能会显著地减少整体的消耗,有利于稍微提高 CPU 使用率。我没有重复对此进行测试,因为整体结果变化不大,尤其是面对高序列分流的情况。

|

||||

|

||||

## 总结

|

||||

|

||||

Prometheus 开始应对高基数序列与单独样本的吞吐量。这仍然是一项富有挑战性的任务,但是新的存储系统似乎向我们展示了未来的一些好东西。

|

||||

|

||||

第一个配备 V3 存储系统的 [alpha 版本 Prometheus 2.0][12] 已经可以用来测试了。在早期阶段预计还会出现崩溃,死锁和其他 bug。

|

||||

|

||||

存储系统的代码可以在[这个单独的项目中找到][13]。Prometheus 对于寻找高效本地存储时间序列数据库的应用来说可能非常有用,这一点令人非常惊讶。

|

||||

|

||||

> 这里需要感谢很多人作出的贡献,以下排名不分先后:

|

||||

|

||||

> Bjoern Rabenstein 和 Julius Volz 在 V2 存储引擎上的打磨工作以及 V3 存储系统的反馈,这为新一代的设计奠定了基础。

|

||||

|

||||

> Wilhelm Bierbaum 对新设计不断的建议与见解作出了很大的贡献。Brian Brazil 不断的反馈确保了我们最终得到的是语义上合理的方法。与 Peter Bourgon 深刻的讨论验证了设计并形成了这篇文章。

|

||||

|

||||

> 别忘了我们整个 CoreOS 团队与公司对于这项工作的赞助与支持。感谢所有那些听我一遍遍唠叨 SSD、浮点数、序列化格式的同学。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://fabxc.org/blog/2017-04-10-writing-a-tsdb/

|

||||

|

||||

作者:[Fabian Reinartz][a]

|

||||

译者:[LuuMing](https://github.com/LuuMing)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://twitter.com/fabxc

|

||||

[1]:https://en.wikipedia.org/wiki/Inode

|

||||

[2]:https://prometheus.io/

|

||||

[3]:https://kubernetes.io/

|

||||

[4]:https://en.wikipedia.org/wiki/Write_amplification

|

||||

[5]:http://codecapsule.com/2014/02/12/coding-for-ssds-part-1-introduction-and-table-of-contents/

|

||||

[6]:https://prometheus.io/docs/practices/rules/

|

||||

[7]:http://www.vldb.org/pvldb/vol8/p1816-teller.pdf

|

||||

[8]:https://en.wikipedia.org/wiki/Mmap

|

||||

[9]:https://en.wikipedia.org/wiki/Inverted_index

|

||||

[10]:https://en.wikipedia.org/wiki/Search_engine_indexing#Inverted_indices

|

||||

[11]:https://github.com/prometheus/prombench

|

||||

[12]:https://prometheus.io/blog/2017/04/10/promehteus-20-sneak-peak/

|

||||

[13]:https://github.com/prometheus/tsdb

|

||||

149

published/20170414 5 projects for Raspberry Pi at home.md

Normal file

149

published/20170414 5 projects for Raspberry Pi at home.md

Normal file

@ -0,0 +1,149 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (warmfrog)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-10936-1.html)

|

||||

[#]: subject: (5 projects for Raspberry Pi at home)

|

||||

[#]: via: (https://opensource.com/article/17/4/5-projects-raspberry-pi-home)

|

||||

[#]: author: (Ben Nuttall https://opensource.com/users/bennuttall)

|

||||

|

||||

5 个可在家中使用的树莓派项目

|

||||

======================================

|

||||

|

||||

![5 projects for Raspberry Pi at home][1]

|

||||

|

||||

[树莓派][2] 电脑可被用来进行多种设置用于不同的目的。显然它在教育市场帮助学生在教室和创客空间中学习编程与创客技巧方面占有一席之地,它在工作场所和工厂中有大量行业应用。我打算介绍五个你可能想要在你的家中构建的项目。

|

||||

|

||||

### 媒体中心

|

||||

|

||||

在家中人们常用树莓派作为媒体中心来服务多媒体文件。它很容易搭建,树莓派提供了大量的 GPU(图形处理单元)运算能力来在大屏电视上渲染你的高清电视节目和电影。将 [Kodi][3](从前的 XBMC)运行在树莓派上是一个很棒的方式,它可以播放你的硬盘或网络存储上的任何媒体。你同样可以安装一个插件来播放 YouTube 视频。

|

||||

|

||||

还有几个略微不同的选择,最常见的是 [OSMC][4](开源媒体中心)和 [LibreELEC][5],都是基于 Kodi 的。它们在放映媒体内容方面表现的都非常好,但是 OSMC 有一个更酷炫的用户界面,而 LibreElec 更轻量级。你要做的只是选择一个发行版,下载镜像并安装到一个 SD 卡中(或者仅仅使用 [NOOBS][6]),启动,然后就准备好了。

|

||||

|

||||

![LibreElec ][7]

|

||||

|

||||

*LibreElec;树莓派基金会, CC BY-SA*

|

||||

|

||||

![OSMC][8]

|

||||

|

||||

*OSMC.tv, 版权所有, 授权使用*

|

||||

|

||||

在往下走之前,你需要决定[使用哪种树莓派][9]。这些发行版在任何树莓派(1、2、3 或 Zero)上都能运行,视频播放在这些树莓派中的任何一个上都能胜任。除了 Pi 3(和 Zero W)有内置 Wi-Fi,唯一可察觉的不同是用户界面的反应速度,在 Pi 3 上更快。Pi 2 也不会慢太多,所以如果你不需要 Wi-Fi 它也是可以的,但是当切换菜单时,你会注意到 Pi 3 比 Pi 1 和 Zero 表现的更好。

|

||||

|

||||

### SSH 网关

|

||||

|

||||

如果你想从外部网络访问你的家庭局域网的电脑和设备,你必须打开这些设备的端口来允许外部访问。在互联网中开放这些端口有安全风险,意味着你总是你总是处于被攻击、滥用或者其他各种未授权访问的风险中。然而,如果你在你的网络中安装一个树莓派,并且设置端口映射来仅允许通过 SSH 访问树莓派,你可以这么用来作为一个安全的网关来跳到网络中的其他树莓派和 PC。

|

||||

|

||||

大多数路由允许你配置端口映射规则。你需要给你的树莓派一个固定的内网 IP 地址来设置你的路由器端口 22 映射到你的树莓派端口 22。如果你的网络服务提供商给你提供了一个静态 IP 地址,你能够通过 SSH 和主机的 IP 地址访问(例如,`ssh pi@123.45.56.78`)。如果你有一个域名,你可以配置一个子域名指向这个 IP 地址,所以你没必要记住它(例如,`ssh pi@home.mydomain.com`)。

|

||||

|

||||

![][11]

|

||||

|

||||

然而,如果你不想将树莓派暴露在互联网上,你应该非常小心,不要让你的网络处于危险之中。如果你遵循一些简单的步骤来使它更安全:

|

||||

|

||||

1. 大多数人建议你更换你的登录密码(有道理,默认密码 “raspberry” 是众所周知的),但是这不能阻挡暴力攻击。你可以改变你的密码并添加一个双重验证(所以你需要你的密码*和*一个手机生成的与时间相关的密码),这么做更安全。但是,我相信最好的方法阻止入侵者访问你的树莓派是在你的 SSH 配置中[禁止密码认证][12],这样只能通过 SSH 密匙进入。这意味着任何试图猜测你的密码尝试登录的人都不会成功。只有你的私有密匙可以访问。简单来说,很多人建议将 SSH 端口从默认的 22 换成其他的,但是通过简单的 [Nmap][13] 扫描你的 IP 地址,你信任的 SSH 端口就会暴露。

|

||||

2. 最好,不要在这个树莓派上运行其他的软件,这样你不会意外暴露其他东西。如果你想要运行其他软件,你最好在网络中的其他树莓派上运行,它们没有暴露在互联网上。确保你经常升级来保证你的包是最新的,尤其是 `openssh-server` 包,这样你的安全缺陷就被打补丁了。

|

||||

3. 安装 [sshblack][14] 或 [fail2ban][15] 来将任何表露出恶意的用户加入黑名单,例如试图暴力破解你的 SSH 密码。

|

||||

|

||||

使树莓派安全后,让它在线,你将可以在世界的任何地方登录你的网络。一旦你登录到你的树莓派,你可以用 SSH 访问本地网络上的局域网地址(例如,192.168.1.31)访问其他设备。如果你在这些设备上有密码,用密码就好了。如果它们同样只允许 SSH 密匙,你需要确保你的密匙通过 SSH 转发,使用 `-A` 参数:`ssh -A pi@123.45.67.89`。

|

||||

|

||||

### CCTV / 宠物相机

|

||||

|

||||

另一个很棒的家庭项目是安装一个相机模块来拍照和录视频,录制并保存文件,在内网或者外网中进行流式传输。你想这么做有很多原因,但两个常见的情况是一个家庭安防相机或监控你的宠物。

|

||||

|

||||

[树莓派相机模块][16] 是一个优秀的配件。它提供全高清的相片和视频,包括很多高级配置,很[容易编程][17]。[红外线相机][18]用于这种目的是非常理想的,通过一个红外线 LED(树莓派可以控制的),你就能够在黑暗中看见东西。

|

||||

|

||||

如果你想通过一定频率拍摄静态图片来留意某件事,你可以仅仅写一个简短的 [Python][19] 脚本或者使用命令行工具 [raspistill][20], 在 [Cron][21] 中规划它多次运行。你可能想将它们保存到 [Dropbox][22] 或另一个网络服务,上传到一个网络服务器,你甚至可以创建一个[web 应用][23]来显示他们。

|

||||

|

||||

如果你想要在内网或外网中流式传输视频,那也相当简单。在 [picamera 文档][24]中(在 “web streaming” 章节)有一个简单的 MJPEG(Motion JPEG)例子。简单下载或者拷贝代码到文件中,运行并访问树莓派的 IP 地址的 8000 端口,你会看见你的相机的直播输出。

|

||||

|

||||

有一个更高级的流式传输项目 [pistreaming][25] 也可以,它通过在网络服务器中用 [JSMpeg][26] (一个 JavaScript 视频播放器)和一个用于相机流的单独运行的 websocket。这种方法性能更好,并且和之前的例子一样简单,但是如果要在互联网中流式传输,则需要包含更多代码,并且需要你开放两个端口。

|

||||

|

||||

一旦你的网络流建立起来,你可以将你的相机放在你想要的地方。我用一个来观察我的宠物龟:

|

||||

|

||||

![Tortoise ][27]

|

||||

|

||||

*Ben Nuttall, CC BY-SA*

|

||||

|

||||

如果你想控制相机位置,你可以用一个舵机。一个优雅的方案是用 Pimoroni 的 [Pan-Tilt HAT][28],它可以让你简单的在二维方向上移动相机。为了与 pistreaming 集成,可以看看该项目的 [pantilthat 分支][29].

|

||||

|

||||

![Pan-tilt][30]

|

||||

|

||||

*Pimoroni.com, Copyright, 授权使用*

|

||||

|

||||

如果你想将你的树莓派放到户外,你将需要一个防水的外围附件,并且需要一种给树莓派供电的方式。POE(通过以太网提供电力)电缆是一个不错的实现方式。

|

||||

|

||||

### 家庭自动化或物联网

|

||||

|

||||

现在是 2017 年(LCTT 译注:此文发表时间),到处都有很多物联网设备,尤其是家中。我们的电灯有 Wi-Fi,我们的面包烤箱比过去更智能,我们的茶壶处于俄国攻击的风险中,除非你确保你的设备安全,不然别将没有必要的设备连接到互联网,之后你可以在家中充分的利用物联网设备来完成自动化任务。

|

||||

|

||||

市场上有大量你可以购买或订阅的服务,像 Nest Thermostat 或 Philips Hue 电灯泡,允许你通过你的手机控制你的温度或者你的亮度,无论你是否在家。你可以用一个树莓派来催动这些设备的电源,通过一系列规则包括时间甚至是传感器来完成自动交互。用 Philips Hue,你做不到的当你进房间时打开灯光,但是有一个树莓派和一个运动传感器,你可以用 Python API 来打开灯光。类似地,当你在家的时候你可以通过配置你的 Nest 打开加热系统,但是如果你想在房间里至少有两个人时才打开呢?写一些 Python 代码来检查网络中有哪些手机,如果至少有两个,告诉 Nest 来打开加热器。

|

||||

|

||||

不用选择集成已存在的物联网设备,你可以用简单的组件来做的更多。一个自制的窃贼警报器,一个自动化的鸡笼门开关,一个夜灯,一个音乐盒,一个定时的加热灯,一个自动化的备份服务器,一个打印服务器,或者任何你能想到的。

|

||||

|

||||

### Tor 协议和屏蔽广告

|

||||

|

||||

Adafruit 的 [Onion Pi][31] 是一个 [Tor][32] 协议来使你的网络通讯匿名,允许你使用互联网而不用担心窥探者和各种形式的监视。跟随 Adafruit 的指南来设置 Onion Pi,你会找到一个舒服的匿名的浏览体验。

|

||||

|

||||

![Onion-Pi][33]

|

||||

|

||||

*Onion-pi from Adafruit, Copyright, 授权使用*

|

||||

|

||||

![Pi-hole][34]

|

||||

|

||||

可以在你的网络中安装一个树莓派来拦截所有的网络交通并过滤所有广告。简单下载 [Pi-hole][35] 软件到 Pi 中,你的网络中的所有设备都将没有广告(甚至屏蔽你的移动设备应用内的广告)。

|

||||

|

||||

树莓派在家中有很多用法。你在家里用树莓派来干什么?你想用它干什么?

|

||||

|

||||

在下方评论让我们知道。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/17/4/5-projects-raspberry-pi-home

|

||||

|

||||

作者:[Ben Nuttall][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[warmfrog](https://github.com/warmfrog)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/bennuttall

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/raspberry_pi_home_automation.png?itok=2TnmJpD8 (5 projects for Raspberry Pi at home)

|

||||

[2]: https://www.raspberrypi.org/

|

||||

[3]: https://kodi.tv/

|

||||

[4]: https://osmc.tv/

|

||||

[5]: https://libreelec.tv/

|

||||

[6]: https://www.raspberrypi.org/downloads/noobs/

|

||||

[7]: https://opensource.com/sites/default/files/libreelec_0.png (LibreElec )

|

||||

[8]: https://opensource.com/sites/default/files/osmc.png (OSMC)

|

||||

[9]: https://opensource.com/life/16/10/which-raspberry-pi-should-you-choose-your-project

|

||||

[10]: mailto:pi@home.mydomain.com

|

||||

[11]: https://opensource.com/sites/default/files/resize/screenshot_from_2017-04-07_15-13-01-700x380.png

|

||||

[12]: http://stackoverflow.com/questions/20898384/ssh-disable-password-authentication

|

||||

[13]: https://nmap.org/

|

||||

[14]: http://www.pettingers.org/code/sshblack.html

|

||||

[15]: https://www.fail2ban.org/wiki/index.php/Main_Page

|

||||

[16]: https://www.raspberrypi.org/products/camera-module-v2/

|

||||

[17]: https://opensource.com/life/15/6/raspberry-pi-camera-projects

|

||||

[18]: https://www.raspberrypi.org/products/pi-noir-camera-v2/

|

||||

[19]: http://picamera.readthedocs.io/

|

||||

[20]: https://www.raspberrypi.org/documentation/usage/camera/raspicam/raspistill.md

|

||||

[21]: https://www.raspberrypi.org/documentation/linux/usage/cron.md

|

||||

[22]: https://github.com/RZRZR/plant-cam

|

||||

[23]: https://github.com/bennuttall/bett-bot

|

||||

[24]: http://picamera.readthedocs.io/en/release-1.13/recipes2.html#web-streaming

|

||||

[25]: https://github.com/waveform80/pistreaming

|

||||

[26]: http://jsmpeg.com/

|

||||

[27]: https://opensource.com/sites/default/files/tortoise.jpg (Tortoise)

|

||||

[28]: https://shop.pimoroni.com/products/pan-tilt-hat

|

||||

[29]: https://github.com/waveform80/pistreaming/tree/pantilthat

|

||||

[30]: https://opensource.com/sites/default/files/pan-tilt.gif (Pan-tilt)

|

||||

[31]: https://learn.adafruit.com/onion-pi/overview

|

||||

[32]: https://www.torproject.org/

|

||||

[33]: https://opensource.com/sites/default/files/onion-pi.jpg (Onion-Pi)

|

||||

[34]: https://opensource.com/sites/default/files/resize/pi-hole-250x250.png (Pi-hole)

|

||||

[35]: https://pi-hole.net/

|

||||

|

||||

|

||||

|

||||

103

published/20180324 Memories of writing a parser for man pages.md

Normal file

103

published/20180324 Memories of writing a parser for man pages.md

Normal file

@ -0,0 +1,103 @@

|

||||

为 man 手册页编写解析器的备忘录

|

||||

======

|

||||

|

||||

|

||||

我一般都很喜欢无所事事,但有时候太无聊了也不行 —— 2015 年的一个星期天下午就是这样,我决定开始写一个开源项目来让我不那么无聊。

|

||||

|

||||

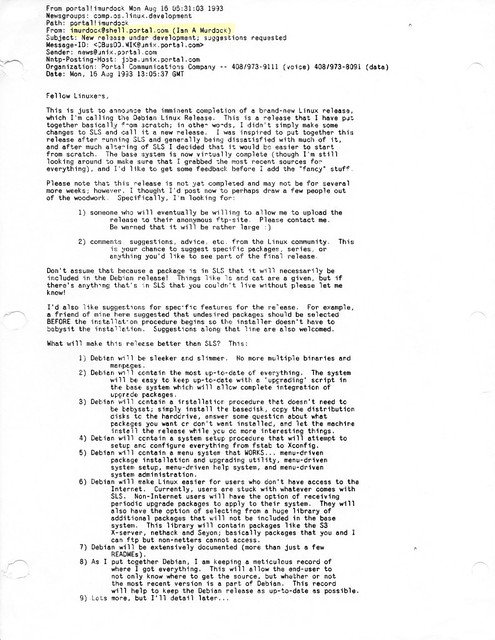

在我寻求创意时,我偶然发现了一个请求,要求构建一个由 [Mathias Bynens][2] 提出的“[按 Web 标准构建的 Man 手册页查看器][1]”。没有考虑太多,我开始使用 JavaScript 编写一个手册页解析器,经过大量的反复思考,最终做出了一个 [Jroff][3]。

|

||||

|

||||

那时候,我非常熟悉手册页这个概念,而且使用过很多次,但我知道的仅止于此,我不知道它们是如何生成的,或者是否有一个标准。在经过两年后,我有了一些关于此事的想法。

|

||||

|

||||

### man 手册页是如何写的

|

||||

|

||||

当时令我感到惊讶的第一件事是,手册页的核心只是存储在系统某处的纯文本文件(你可以使用 `manpath` 命令检查这些目录)。

|

||||

|

||||

此文件中不仅包含文档,还包含使用了 20 世纪 70 年代名为 `troff` 的排版系统的格式化信息。

|

||||

|

||||

> troff 及其 GNU 实现 groff 是处理文档的文本描述以生成适合打印的排版版本的程序。**它更像是“你所描述的即你得到的”,而不是你所见即所得的。**

|

||||

>

|

||||

> - 摘自 [troff.org][4]

|

||||

|

||||

如果你对排版格式毫不熟悉,可以将它们视为 steroids 期刊用的 Markdown,但其灵活性带来的就是更复杂的语法:

|

||||

|

||||

![groff-compressor][5]

|

||||

|

||||

`groff` 文件可以手工编写,也可以使用许多不同的工具从其他格式生成,如 Markdown、Latex、HTML 等。

|

||||

|

||||

为什么 `groff` 和 man 手册页绑在一起是有历史原因的,其格式[随时间有变化][6],它的血统由一系列类似命名的程序组成:RUNOFF > roff > nroff > troff > groff。

|

||||

|

||||

但这并不一定意味着 `groff` 与手册页有多紧密的关系,它是一种通用格式,已被用于[书籍][7],甚至用于[照相排版][8]。

|

||||

|

||||

此外,值得注意的是 `groff` 也可以调用后处理器将其中间输出结果转换为最终格式,这对于终端显示来说不一定是 ascii !一些支持的格式是:TeX DVI、HTML、Canon、HP LaserJet4 兼容格式、PostScript、utf8 等等。

|

||||

|

||||

### 宏

|

||||

|

||||

该格式的其他很酷的功能是它的可扩展性,你可以编写宏来增强其基本功能。

|

||||

|

||||

鉴于 *nix 系统的悠久历史,有几个可以根据你想要生成的输出而将特定功能组合在一起的宏包,例如 `man`、`mdoc`、`mom`、`ms`、`mm` 等等。

|

||||

|

||||

手册页通常使用 `man` 和 `mdoc` 宏包编写。

|

||||

|

||||

区分原生的 `groff` 命令和宏的方式是通过标准 `groff` 包大写其宏名称。对于 `man` 宏包,每个宏的名称都是大写的,如 `.PP`、`.TH`、`.SH` 等。对于 `mdoc` 宏包,只有第一个字母是大写的: `.Pp`、`.Dt`、`.Sh`。

|

||||

|

||||

![groff-example][9]

|

||||

|

||||

### 挑战

|

||||

|

||||

无论你是考虑编写自己的 `groff` 解析器,还是只是好奇,这些都是我发现的一些更具挑战性的问题。

|

||||

|

||||

#### 上下文敏感的语法

|

||||

|

||||

表面上,`groff` 的语法是上下文无关的,遗憾的是,因为宏描述的是主体不透明的令牌,所以包中的宏集合本身可能不会实现上下文无关的语法。

|

||||

|

||||

这导致我在那时做不出来一个解析器生成器(不管好坏)。

|

||||

|

||||

#### 嵌套的宏

|

||||

|

||||

`mdoc` 宏包中的大多数宏都是可调用的,这差不多意味着宏可以用作其他宏的参数,例如,你看看这个:

|

||||

|

||||

* 宏 `Fl`(Flag)会在其参数中添加破折号,因此 `Fl s` 会生成 `-s`

|

||||

* 宏 `Ar`(Argument)提供了定义参数的工具

|

||||

* 宏 `Op`(Optional)会将其参数括在括号中,因为这是将某些东西定义为可选的标准习惯用法

|

||||

* 以下组合 `.Op Fl s Ar file ` 将生成 `[-s file]`,因为 `Op` 宏可以嵌套。

|

||||

|

||||

#### 缺乏适合初学者的资源

|

||||

|

||||

让我感到困惑的是缺乏一个规范的、定义明确的、清晰的来源,网上有很多信息,这些信息对读者来说很重要,需要时间来掌握。

|

||||

|

||||

### 有趣的宏

|

||||

|

||||

总结一下,我会向你提供一个非常简短的宏列表,我在开发 jroff 时发现它很有趣:

|

||||

|

||||

`man` 宏包:

|

||||

|

||||

* `.TH`:用 `man` 宏包编写手册页时,你的第一个不是注释的行必须是这个宏,它接受五个参数:`title`、`section`、`date`、`source`、`manual`。

|

||||

* `.BI`:粗体加斜体(特别适用于函数格式)

|

||||

* `.BR`:粗体加正体(特别适用于参考其他手册页)

|

||||

|

||||

`mdoc` 宏包:

|

||||

|

||||

* `.Dd`、`.Dt`、`.Os`:类似于 `man` 宏包需要 `.TH`,`mdoc` 宏也需要这三个宏,需要按特定顺序使用。它们的缩写分别代表:文档日期、文档标题和操作系统。

|

||||

* `.Bl`、`.It`、`.El`:这三个宏用于创建列表,它们的名称不言自明:开始列表、项目和结束列表。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://monades.roperzh.com/memories-writing-parser-man-pages/

|

||||

|

||||

作者:[Roberto Dip][a]

|

||||

译者:[wxy](https://github.com/wxy)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://monades.roperzh.com

|

||||

[1]:https://github.com/h5bp/lazyweb-requests/issues/114

|

||||

[2]:https://mathiasbynens.be/

|

||||

[3]:jroff

|

||||

[4]:https://www.troff.org/

|

||||

[5]:https://user-images.githubusercontent.com/4419992/37868021-2e74027c-2f7f-11e8-894b-80829ce39435.gif

|

||||

[6]:https://manpages.bsd.lv/history.html

|

||||

[7]:https://rkrishnan.org/posts/2016-03-07-how-is-gopl-typeset.html

|

||||

[8]:https://en.wikipedia.org/wiki/Phototypesetting

|

||||

[9]:https://user-images.githubusercontent.com/4419992/37866838-e602ad78-2f6e-11e8-97a9-2a4494c766ae.jpg

|

||||

@ -0,0 +1,191 @@

|

||||

如何使用 GParted 实用工具缩放根分区

|

||||

======

|

||||

|

||||

今天,我们将讨论磁盘分区。这是 Linux 中的一个好话题。这允许用户来重新调整在 Linux 中的活动 root 分区。

|

||||

|

||||

在这篇文章中,我们将教你如何使用 GParted 缩放在 Linux 上的活动根分区。

|

||||

|

||||

比如说,当我们安装 Ubuntu 操作系统时,并没有恰当地配置,我们的系统仅有 30 GB 磁盘。我们需要安装另一个操作系统,因此我们想在其中制作第二个分区。

|

||||

|

||||

虽然不建议重新调整活动分区。然而,我们要执行这个操作,因为没有其它方法来释放系统分区。

|

||||

|

||||

> 注意:在执行这个动作前,确保你备份了重要的数据,因为如果一些东西出错(例如,电源故障或你的系统重启),你可以得以保留你的数据。

|

||||

|

||||

### Gparted 是什么

|

||||

|

||||

[GParted][1] 是一个自由的分区管理器,它使你能够缩放、复制和移动分区,而不丢失数据。通过使用 GParted 的 Live 可启动镜像,我们可以使用 GParted 应用程序的所有功能。GParted Live 可以使你能够在 GNU/Linux 以及其它的操作系统上使用 GParted,例如,Windows 或 Mac OS X 。

|

||||

|

||||

#### 1) 使用 df 命令检查磁盘空间利用率

|

||||

|

||||

我只是想使用 `df` 命令向你显示我的分区。`df` 命令输出清楚地表明我仅有一个分区。

|

||||

|

||||

```

|

||||

$ df -h

|

||||

Filesystem Size Used Avail Use% Mounted on

|

||||

/dev/sda1 30G 3.4G 26.2G 16% /

|

||||

none 4.0K 0 4.0K 0% /sys/fs/cgroup

|

||||

udev 487M 4.0K 487M 1% /dev

|

||||

tmpfs 100M 844K 99M 1% /run

|

||||

none 5.0M 0 5.0M 0% /run/lock

|

||||

none 498M 152K 497M 1% /run/shm

|

||||

none 100M 52K 100M 1% /run/user

|

||||

```

|

||||

|

||||

#### 2) 使用 fdisk 命令检查磁盘分区

|

||||

|

||||

我将使用 `fdisk` 命令验证这一点。

|

||||

|

||||

```

|

||||

$ sudo fdisk -l

|

||||

[sudo] password for daygeek:

|

||||

|

||||

Disk /dev/sda: 33.1 GB, 33129218048 bytes

|

||||

255 heads, 63 sectors/track, 4027 cylinders, total 64705504 sectors

|

||||

Units = sectors of 1 * 512 = 512 bytes

|

||||

Sector size (logical/physical): 512 bytes / 512 bytes

|

||||

I/O size (minimum/optimal): 512 bytes / 512 bytes

|

||||

Disk identifier: 0x000473a3

|

||||

|

||||

Device Boot Start End Blocks Id System

|

||||

/dev/sda1 * 2048 62609407 31303680 83 Linux

|

||||

/dev/sda2 62611454 64704511 1046529 5 Extended

|

||||

/dev/sda5 62611456 64704511 1046528 82 Linux swap / Solaris

|

||||

```

|

||||

|

||||

#### 3) 下载 GParted live ISO 镜像

|

||||

|

||||

使用下面的命令来执行下载 GParted live ISO。

|

||||

|

||||

```

|

||||

$ wget https://downloads.sourceforge.net/gparted/gparted-live-0.31.0-1-amd64.iso

|

||||

```

|

||||

|

||||

#### 4) 使用 GParted Live 安装介质启动你的系统

|

||||

|

||||

使用 GParted Live 安装介质(如烧录的 CD/DVD 或 USB 或 ISO 镜像)启动你的系统。你将获得类似于下面屏幕的输出。在这里选择 “GParted Live (Default settings)” ,并敲击回车按键。

|

||||

|

||||

![][3]

|

||||

|

||||

#### 5) 键盘选择

|

||||

|

||||

默认情况下,它选择第二个选项,按下回车即可。

|

||||

|

||||

![][4]

|

||||

|

||||

#### 6) 语言选择

|

||||

|

||||

默认情况下,它选择 “33” 美国英语,按下回车即可。

|

||||

|

||||

![][5]

|

||||

|

||||

#### 7) 模式选择(图形用户界面或命令行)

|

||||

|

||||

默认情况下,它选择 “0” 图形用户界面模式,按下回车即可。

|

||||

|

||||

![][6]

|

||||

|

||||

#### 8) 加载 GParted Live 屏幕

|

||||

|

||||

现在,GParted Live 屏幕已经加载,它显示我以前创建的分区列表。

|

||||

|

||||

![][7]

|

||||

|

||||

#### 9) 如何重新调整根分区大小

|

||||

|

||||

选择你想重新调整大小的根分区,在这里仅有一个分区,所以我将编辑这个分区以便于安装另一个操作系统。

|

||||

|

||||

![][8]

|

||||

|

||||

为做到这一点,按下 “Resize/Move” 按钮来重新调整分区大小。

|

||||

|

||||

![][9]

|

||||

|

||||

现在,在第一个框中输入你想从这个分区中取出的大小。我将索要 “10GB”,所以,我添加 “10240MB”,并让该对话框的其余部分为默认值,然后点击 “Resize/Move” 按钮。

|

||||

|

||||

![][10]

|

||||

|

||||

它将再次要求你确认重新调整分区的大小,因为你正在编辑活动的系统分区,然后点击 “Ok”。

|

||||

|

||||

![][11]

|

||||

|

||||

分区从 30GB 缩小到 20GB 已经成功。也显示 10GB 未分配的磁盘空间。

|

||||

|

||||

![][12]

|

||||

|

||||

最后点击 “Apply” 按钮来执行下面剩余的操作。

|

||||

|

||||

![][13]

|

||||

|

||||

`e2fsck` 是一个文件系统检查实用程序,自动修复文件系统中与 HDD 相关的坏扇道、I/O 错误。

|

||||

|

||||

![][14]

|

||||

|

||||

`resize2fs` 程序将重新调整 ext2、ext3 或 ext4 文件系统的大小。它可以被用于扩大或缩小一个位于设备上的未挂载的文件系统。

|

||||

|

||||

![][15]

|

||||

|

||||

`e2image` 程序将保存位于设备上的关键的 ext2、ext3 或 ext4 文件系统的元数据到一个指定文件中。

|

||||

|

||||

![][16]

|

||||

|

||||

所有的操作完成,关闭对话框。

|

||||

|

||||

![][17]

|

||||

|

||||

现在,我们可以看到未分配的 “10GB” 磁盘分区。

|

||||

|

||||

![][18]

|

||||

|

||||

重启系统来检查这一结果。

|

||||

|

||||

![][19]

|

||||

|

||||

#### 10) 检查剩余空间

|

||||

|

||||

重新登录系统,并使用 `fdisk` 命令来查看在分区中可用的空间。是的,我可以看到这个分区上未分配的 “10GB” 磁盘空间。

|

||||

|

||||

```

|

||||

$ sudo parted /dev/sda print free

|

||||

[sudo] password for daygeek:

|

||||

Model: ATA VBOX HARDDISK (scsi)

|

||||

Disk /dev/sda: 32.2GB

|

||||

Sector size (logical/physical): 512B/512B

|

||||

Partition Table: msdos

|

||||

Disk Flags:

|

||||

|

||||

Number Start End Size Type File system Flags

|

||||

32.3kB 10.7GB 10.7GB Free Space

|

||||

1 10.7GB 32.2GB 21.5GB primary ext4 boot

|

||||

```

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.2daygeek.com/how-to-resize-active-primary-root-partition-in-linux-using-gparted-utility/

|

||||

|

||||

作者:[Magesh Maruthamuthu][a]

|

||||

译者:[robsean](https://github.com/robsean)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.2daygeek.com/author/magesh/

|

||||

[1]:https://gparted.org/

|

||||

[2]:data:image/gif;base64,R0lGODlhAQABAIAAAAAAAP///yH5BAEAAAAALAAAAAABAAEAAAIBRAA7

|

||||

[3]:https://www.2daygeek.com/wp-content/uploads/2014/08/how-to-resize-active-primary-root-partition-in-linux-using-gparted-utility-1.png

|

||||

[4]:https://www.2daygeek.com/wp-content/uploads/2014/08/how-to-resize-active-primary-root-partition-in-linux-using-gparted-utility-2.png

|

||||

[5]:https://www.2daygeek.com/wp-content/uploads/2014/08/how-to-resize-active-primary-root-partition-in-linux-using-gparted-utility-3.png

|

||||

[6]:https://www.2daygeek.com/wp-content/uploads/2014/08/how-to-resize-active-primary-root-partition-in-linux-using-gparted-utility-4.png

|

||||

[7]:https://www.2daygeek.com/wp-content/uploads/2014/08/how-to-resize-active-primary-root-partition-in-linux-using-gparted-utility-5.png

|

||||

[8]:https://www.2daygeek.com/wp-content/uploads/2014/08/how-to-resize-active-primary-root-partition-in-linux-using-gparted-utility-6.png

|

||||

[9]:https://www.2daygeek.com/wp-content/uploads/2014/08/how-to-resize-active-primary-root-partition-in-linux-using-gparted-utility-7.png

|

||||

[10]:https://www.2daygeek.com/wp-content/uploads/2014/08/how-to-resize-active-primary-root-partition-in-linux-using-gparted-utility-8.png

|

||||

[11]:https://www.2daygeek.com/wp-content/uploads/2014/08/how-to-resize-active-primary-root-partition-in-linux-using-gparted-utility-9.png

|

||||

[12]:https://www.2daygeek.com/wp-content/uploads/2014/08/how-to-resize-active-primary-root-partition-in-linux-using-gparted-utility-10.png

|

||||

[13]:https://www.2daygeek.com/wp-content/uploads/2014/08/how-to-resize-active-primary-root-partition-in-linux-using-gparted-utility-11.png

|

||||

[14]:https://www.2daygeek.com/wp-content/uploads/2014/08/how-to-resize-active-primary-root-partition-in-linux-using-gparted-utility-12.png

|

||||

[15]:https://www.2daygeek.com/wp-content/uploads/2014/08/how-to-resize-active-primary-root-partition-in-linux-using-gparted-utility-13.png

|

||||

[16]:https://www.2daygeek.com/wp-content/uploads/2014/08/how-to-resize-active-primary-root-partition-in-linux-using-gparted-utility-14.png

|

||||

[17]:https://www.2daygeek.com/wp-content/uploads/2014/08/how-to-resize-active-primary-root-partition-in-linux-using-gparted-utility-15.png

|

||||

[18]:https://www.2daygeek.com/wp-content/uploads/2014/08/how-to-resize-active-primary-root-partition-in-linux-using-gparted-utility-16.png

|

||||

[19]:https://www.2daygeek.com/wp-content/uploads/2014/08/how-to-resize-active-primary-root-partition-in-linux-using-gparted-utility-17.png

|

||||

@ -0,0 +1,172 @@

|

||||

BootISO:从 ISO 文件中创建一个可启动的 USB 设备

|

||||

======

|

||||

|

||||

|

||||

|

||||

为了安装操作系统,我们中的大多数人(包括我)经常从 ISO 文件中创建一个可启动的 USB 设备。为达到这个目的,在 Linux 中有很多自由可用的应用程序。甚至在过去我们写了几篇介绍这种实用程序的文章。

|

||||

|

||||

每个人使用不同的应用程序,每个应用程序有它们自己的特色和功能。在这些应用程序中,一些应用程序属于 CLI 程序,一些应用程序则是 GUI 的。

|

||||

|

||||

今天,我们将讨论名为 BootISO 的实用程序类似工具。它是一个简单的 bash 脚本,允许用户来从 ISO 文件中创建一个可启动的 USB 设备。

|

||||

|

||||

很多 Linux 管理员使用 `dd` 命令开创建可启动的 ISO ,它是一个著名的原生方法,但是与此同时,它也是一个非常危险的命令。因此,小心,当你用 `dd` 命令执行一些动作时。

|

||||

|

||||

建议阅读:

|

||||

|

||||

- [Etcher:从一个 ISO 镜像中创建一个可启动的 USB 驱动器 & SD 卡的简单方法][1]

|

||||

- [在 Linux 上使用 dd 命令来从一个 ISO 镜像中创建一个可启动的 USB 驱动器][2]

|

||||

|

||||

### BootISO 是什么

|

||||

|

||||

[BootISO][3] 是一个简单的 bash 脚本,允许用户来安全的从一个 ISO 文件中创建一个可启动的 USB 设备,它是用 bash 编写的。

|

||||

|

||||

它不提供任何图形用户界面而是提供了大量的选项,可以让初学者顺利地在 Linux 上来创建一个可启动的 USB 设备。因为它是一个智能工具,能自动地选择连接到系统上的 USB 设备。

|

||||

|

||||

当系统有多个 USB 设备连接,它将打印出列表。当你手动选择了另一个硬盘而不是 USB 时,在这种情况下,它将安全地退出,而不会在硬盘上写入任何东西。

|

||||

|

||||

这个脚本也将检查依赖关系,并提示用户安装,它可以与所有的软件包管理器一起工作,例如 apt-get、yum、dnf、pacman 和 zypper。

|

||||

|

||||

### BootISO 的功能

|

||||

|

||||

* 它检查选择的 ISO 是否是正确的 mime 类型。如果不是,那么退出。

|

||||

* 如果你选择除 USB 设备以外的任何其它的磁盘(本地硬盘),BootISO 将自动地退出。

|

||||

* 当你有多个驱动器时,BootISO 允许用户选择想要使用的 USB 驱动器。

|

||||

* 在擦除和分区 USB 设备前,BootISO 会提示用户确认。

|

||||

* BootISO 将正确地处理来自一个命令的任何错误,并退出。

|

||||

* BootISO 在遇到问题退出时将调用一个清理例行程序。

|

||||

|

||||

### 如何在 Linux 中安装 BootISO

|

||||

|

||||

在 Linux 中安装 BootISO 有几个可用的方法,但是,我建议用户使用下面的方法安装。

|

||||

|

||||

```

|

||||

$ curl -L https://git.io/bootiso -O

|

||||

$ chmod +x bootiso

|

||||

$ sudo mv bootiso /usr/local/bin/

|

||||

```

|

||||

|

||||

一旦 BootISO 已经安装,运行下面的命令来列出可用的 USB 设备。

|

||||

|

||||

```

|

||||

$ bootiso -l

|

||||

|

||||

Listing USB drives available in your system:

|

||||

NAME HOTPLUG SIZE STATE TYPE

|

||||

sdd 1 32G running disk

|

||||

```

|

||||

|

||||

如果你仅有一个 USB 设备,那么简单地运行下面的命令来从一个 ISO 文件中创建一个可启动的 USB 设备。

|

||||

|

||||

```

|

||||

$ bootiso /path/to/iso file

|

||||

```

|

||||

|

||||

```

|

||||

$ bootiso /opt/iso_images/archlinux-2018.05.01-x86_64.iso

|

||||

Granting root privileges for bootiso.

|

||||

Listing USB drives available in your system:

|

||||

NAME HOTPLUG SIZE STATE TYPE

|

||||

sdd 1 32G running disk

|

||||

Autoselecting `sdd' (only USB device candidate)

|

||||

The selected device `/dev/sdd' is connected through USB.

|

||||

Created ISO mount point at `/tmp/iso.vXo'

|

||||

`bootiso' is about to wipe out the content of device `/dev/sdd'.

|

||||

Are you sure you want to proceed? (y/n)>y

|

||||

Erasing contents of /dev/sdd...

|

||||

Creating FAT32 partition on `/dev/sdd1'...

|

||||

Created USB device mount point at `/tmp/usb.0j5'

|

||||

Copying files from ISO to USB device with `rsync'

|

||||

Synchronizing writes on device `/dev/sdd'

|

||||

`bootiso' took 250 seconds to write ISO to USB device with `rsync' method.

|

||||

ISO succesfully unmounted.

|

||||

USB device succesfully unmounted.

|

||||

USB device succesfully ejected.

|

||||

You can safely remove it !

|

||||

```

|

||||

|

||||

当你有多个 USB 设备时,可以使用 `--device` 选项指明你的设备名称。

|

||||

|

||||

```

|

||||

$ bootiso -d /dev/sde /opt/iso_images/archlinux-2018.05.01-x86_64.iso

|

||||

```

|

||||

|

||||

默认情况下,BootISO 使用 `rsync` 命令来执行所有的动作,如果你想使用 `dd` 命令代替它,使用下面的格式。

|

||||

|

||||

```

|

||||

$ bootiso --dd -d /dev/sde /opt/iso_images/archlinux-2018.05.01-x86_64.iso

|

||||

```

|

||||

|

||||

如果你想跳过 mime 类型检查,BootISO 实用程序带有下面的选项。

|

||||

|

||||

```

|

||||

$ bootiso --no-mime-check -d /dev/sde /opt/iso_images/archlinux-2018.05.01-x86_64.iso

|

||||

```

|

||||

|

||||

为 BootISO 添加下面的选项来跳过在擦除和分区 USB 设备前的用户确认。

|

||||

|

||||

```

|

||||

$ bootiso -y -d /dev/sde /opt/iso_images/archlinux-2018.05.01-x86_64.iso

|

||||

```

|

||||

|

||||

连同 `-y` 选项一起,启用自动选择 USB 设备。

|

||||

|

||||

```

|

||||

$ bootiso -y -a /opt/iso_images/archlinux-2018.05.01-x86_64.iso

|

||||

```

|

||||

|

||||

为知道更多的 BootISO 选项,运行下面的命令。

|

||||

|

||||

```

|

||||

$ bootiso -h

|

||||

Create a bootable USB from any ISO securely.

|

||||

Usage: bootiso [...]

|

||||

|

||||

Options

|

||||

|

||||

-h, --help, help Display this help message and exit.

|

||||

-v, --version Display version and exit.

|

||||

-d, --device Select block file as USB device.

|

||||

If is not connected through USB, `bootiso' will fail and exit.

|

||||

Device block files are usually situated in /dev/sXX or /dev/hXX.

|

||||

You will be prompted to select a device if you don't use this option.

|

||||

-b, --bootloader Install a bootloader with syslinux (safe mode) for non-hybrid ISOs. Does not work with `--dd' option.

|

||||

-y, --assume-yes `bootiso' won't prompt the user for confirmation before erasing and partitioning USB device.

|

||||

Use at your own risks.

|

||||

-a, --autoselect Enable autoselecting USB devices in conjunction with -y option.

|

||||

Autoselect will automatically select a USB drive device if there is exactly one connected to the system.

|

||||

Enabled by default when neither -d nor --no-usb-check options are given.

|

||||

-J, --no-eject Do not eject device after unmounting.

|

||||

-l, --list-usb-drives List available USB drives.

|

||||

-M, --no-mime-check `bootiso' won't assert that selected ISO file has the right mime-type.

|

||||

-s, --strict-mime-check Disallow loose application/octet-stream mime type in ISO file.

|

||||

-- POSIX end of options.

|

||||

--dd Use `dd' utility instead of mounting + `rsync'.

|

||||

Does not allow bootloader installation with syslinux.

|

||||

--no-usb-check `bootiso' won't assert that selected device is a USB (connected through USB bus).

|

||||

Use at your own risks.

|

||||

|

||||

Readme

|

||||

|

||||

Bootiso v2.5.2.

|

||||

Author: Jules Samuel Randolph

|

||||

Bugs and new features: https://github.com/jsamr/bootiso/issues

|

||||

If you like bootiso, please help the community by making it visible:

|

||||

* star the project at https://github.com/jsamr/bootiso

|

||||

* upvote those SE post: https://goo.gl/BNRmvm https://goo.gl/YDBvFe

|

||||

```

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.2daygeek.com/bootiso-a-simple-bash-script-to-securely-create-a-bootable-usb-device-in-linux-from-iso-file/

|

||||

|

||||

作者:[Prakash Subramanian][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[robsean](https://github.com/robsean)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.2daygeek.com/author/prakash/

|

||||

[1]:https://www.2daygeek.com/etcher-easy-way-to-create-a-bootable-usb-drive-sd-card-from-an-iso-image-on-linux/

|

||||

[2]:https://www.2daygeek.com/create-a-bootable-usb-drive-from-an-iso-image-using-dd-command-on-linux/

|

||||

[3]:https://github.com/jsamr/bootiso

|

||||

@ -1,21 +1,22 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (lujun9972)

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-10977-1.html)

|

||||

[#]: subject: (Get desktop notifications from Emacs shell commands ·)

|

||||

[#]: via: (https://blog.hoetzel.info/post/eshell-notifications/)

|

||||

[#]: author: (Jürgen Hötzel https://blog.hoetzel.info)

|

||||

|

||||

Get desktop notifications from Emacs shell commands ·

|

||||

让 Emacs shell 命令发送桌面通知

|

||||

======

|

||||

When interacting with the operating systems I always use [Eshell][1] because it integrates seamlessly with Emacs, supports (remote) [TRAMP][2] file names and also works nice on Windows.

|

||||

|

||||

After starting shell commands (like long running build jobs) I often lose track the task when switching buffers.

|

||||

我总是使用 [Eshell][1] 来与操作系统进行交互,因为它与 Emacs 无缝整合、支持处理 (远程) [TRAMP][2] 文件,而且在 Windows 上也能工作得很好。

|

||||

|

||||

Thanks to Emacs [hooks][3] mechanism you can customize Emacs to call a elisp function when an external command finishes.

|

||||

启动 shell 命令后 (比如耗时严重的构建任务) 我经常会由于切换缓冲区而忘了追踪任务的运行状态。

|

||||

|

||||

I use [John Wiegleys][4] excellent [alert][5] package to send desktop notifications:

|

||||

多亏了 Emacs 的 [钩子][3] 机制,你可以配置 Emacs 在某个外部命令完成后调用一个 elisp 函数。

|

||||

|

||||

我使用 [John Wiegleys][4] 所编写的超棒的 [alert][5] 包来发送桌面通知:

|

||||

|

||||

```

|

||||

(require 'alert)

|

||||

@ -32,7 +33,7 @@ I use [John Wiegleys][4] excellent [alert][5] package to send desktop notificati

|

||||

(add-hook 'eshell-kill-hook #'eshell-command-alert)

|

||||

```

|

||||

|

||||

[alert][5] rules can be setup programmatically. In my case I only want to get notified if the corresponding buffer is not visible:

|

||||

[alert][5] 的规则可以用程序来设置。就我这个情况来看,我只需要当对应的缓冲区不可见时得到通知:

|

||||

|

||||

```

|

||||

(alert-add-rule :status '(buried) ;only send alert when buffer not visible

|

||||

@ -40,7 +41,8 @@ I use [John Wiegleys][4] excellent [alert][5] package to send desktop notificati

|

||||

:style 'notifications)

|

||||

```

|

||||

|

||||

This even works on [TRAMP][2] buffers. Below is a screenshot showing a Gnome desktop notification of a failed `make` command.

|

||||

|

||||

这甚至对于 [TRAMP][2] 也一样生效。下面这个截屏展示了失败的 `make` 命令产生的 Gnome 桌面通知。

|

||||

|

||||

![../../img/eshell.png][6]

|

||||

|

||||

@ -51,7 +53,7 @@ via: https://blog.hoetzel.info/post/eshell-notifications/

|

||||

作者:[Jürgen Hötzel][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[lujun9972](https://github.com/lujun9972)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -62,4 +64,4 @@ via: https://blog.hoetzel.info/post/eshell-notifications/

|

||||

[3]: https://www.gnu.org/software/emacs/manual/html_node/emacs/Hooks.html (hooks)

|

||||

[4]: https://github.com/jwiegley (John Wiegleys)

|

||||

[5]: https://github.com/jwiegley/alert (alert)

|

||||

[6]: https://blog.hoetzel.info/img/eshell.png (../../img/eshell.png)

|

||||

[6]: https://blog.hoetzel.info/img/eshell.png

|

||||

56

published/20180914 A day in the life of a log message.md

Normal file

56

published/20180914 A day in the life of a log message.md

Normal file

@ -0,0 +1,56 @@

|

||||

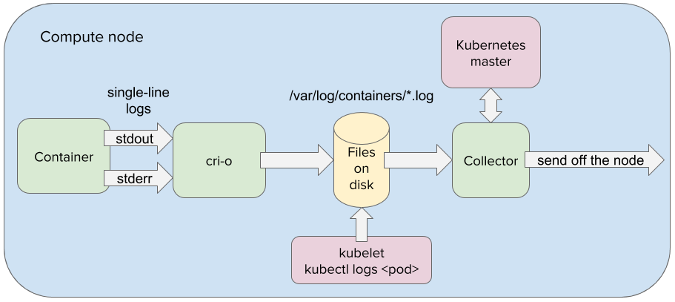

一条日志消息的现代生活

|

||||

======

|

||||

|

||||

> 从一条日志消息的角度来巡览现代分布式系统。

|

||||

|

||||