Mirror of https://github.com/LCTT/TranslateProject.git, synced 2025-02-03 23:40:14 +08:00

Merge branch 'master' of https://github.com/LCTT/TranslateProject

Commit: f1da0e6828

@ -5,15 +5,16 @@ How to Play Sound Through Two or More Output Devices on Linux

Handling audio on Linux can be a real pain. PulseAudio has been a mixed blessing: some things it does better, but other things have become more complicated. Handling audio output is one of them.

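If you want to enable multiple audio outputs on your Linux PC, all you need is a simple utility that enables an additional sound device on a virtual interface. It's much simpler than it seems.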

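You may wonder why you would want to do this. A common case is playing video from your computer on a TV: you can use the speakers on both the PC and the TV at the same time.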

### Install Paprefs

The easiest way to enable audio playback through multiple output devices is a simple graphical tool called “paprefs”, short for PulseAudio Preferences.

It's available in the Ubuntu repositories and can be installed directly with apt.

```
sudo apt install paprefs
```

@ -24,17 +25,17 @@ sudo apt install paprefs

Although it's a graphical tool, it's probably easier to launch it by typing `paprefs` on the command line as a regular user.

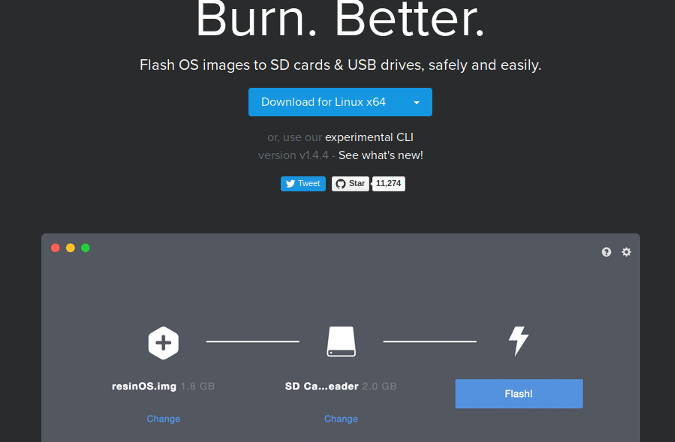

The window that opens has several tabs with settings you can adjust. Choose the last tab, “Simultaneous Output.”

![Paprefs on Ubuntu][1]

There isn't much on this tab, just a checkbox to enable the setting.

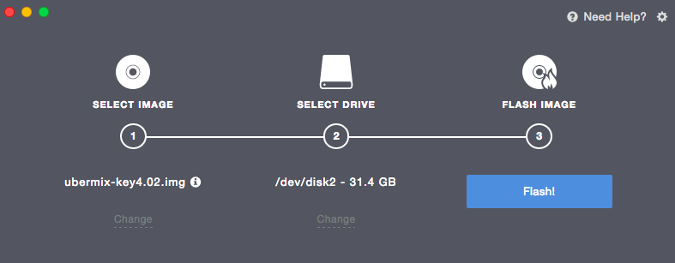

Next, open your regular sound preferences. Their location varies between distributions; on Ubuntu, they are in the GNOME system settings.

![Enable Simultaneous Audio][2]

With the sound preferences open, select the “Output” tab and choose the “Simultaneous output” radio button. It is now your default output.

### Test It

@ -42,7 +43,7 @@ sudo apt install paprefs

If everything went well, you should hear sound coming from all connected devices.

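That's all there is to it. This works best when you have several devices, such as an HDMI port and a standard analog output, though you can certainly try other configurations. Keep in mind that there is only one volume control, so you will need to adjust the physical output devices accordingly.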

--------------------------------------------------------------------------------

@ -51,7 +52,7 @@ via: https://www.maketecheasier.com/play-sound-through-multiple-devices-linux/

Author: [Nick Congleton][a]
Translator: [lujun9972](https://github.com/lujun9972)
Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国 (Linux China)](https://linux.cn/).

@ -1,28 +1,25 @@

Asynchronous Processing in Go with Kafka and MongoDB
============================================================

In my previous blog post, “[My First Go Microservice using MongoDB and Docker Multi-Stage Builds][9]”, I created a Go microservice sample that exposes a REST-style HTTP endpoint and saves the data received from an HTTP POST to a MongoDB database.

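In this example, I decoupled saving the data to MongoDB and created another microservice to handle it. I also added Kafka as the messaging layer, so each microservice can asynchronously work on the things it is concerned with.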

> In case you have time to watch, I recorded the whole process of this blog post in [this video][1] :)

Here is the high-level architecture of this simple asynchronous processing example with two microservices.

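Microservice 1 is a REST-style microservice that receives data from a /POST HTTP call. After receiving the request, it retrieves the data from the HTTP request and saves it to Kafka. After saving, it responds to the caller with the same data it received via /POST.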

Microservice 2 subscribes to a topic in Kafka, the topic where Microservice 1 saves its data. Once the microservice consumes a message, it saves the data to MongoDB.
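
To make the decoupling concrete, here is a minimal Go sketch that uses no Kafka at all: a buffered channel stands in for the Kafka topic and an in-memory slice stands in for the MongoDB collection. The `Job` fields and the names `publish`, `consume`, and `store` are hypothetical, not the author's code. The point is only that the producer side returns as soon as the message is queued, while the consumer side saves it on its own schedule.

```go
package main

import (
	"encoding/json"
	"fmt"
	"sync"
)

// Job is a hypothetical payload; the real struct is in the author's repository.
type Job struct {
	Title       string `json:"title"`
	Description string `json:"description"`
}

// topic is an in-memory stand-in for the Kafka topic between the two services.
type topic chan []byte

// publish plays the role of Microservice 1: serialize the job and hand it off.
// It returns as soon as the message is queued on the channel.
func publish(t topic, job Job) error {
	b, err := json.Marshal(job)
	if err != nil {
		return err
	}
	t <- b
	return nil
}

// store is a stand-in for the MongoDB collection.
type store struct {
	mu   sync.Mutex
	jobs []Job
}

func (s *store) save(j Job) {
	s.mu.Lock()
	defer s.mu.Unlock()
	s.jobs = append(s.jobs, j)
}

func (s *store) count() int {
	s.mu.Lock()
	defer s.mu.Unlock()
	return len(s.jobs)
}

// consume plays the role of Microservice 2: read messages until the topic is
// closed, decode each one, and save it. It closes done when it finishes.
func consume(t topic, s *store, done chan<- struct{}) {
	defer close(done)
	for msg := range t {
		var j Job
		if err := json.Unmarshal(msg, &j); err != nil {
			fmt.Println("skipping malformed message:", err)
			continue
		}
		s.save(j)
	}
}

func main() {
	t := make(topic, 10)
	s := &store{}
	done := make(chan struct{})
	go consume(t, s, done)

	for i := 1; i <= 3; i++ {
		if err := publish(t, Job{Title: fmt.Sprintf("job-%d", i)}); err != nil {
			fmt.Println("publish failed:", err)
		}
	}
	close(t)
	<-done
	fmt.Println("jobs saved:", s.count()) // jobs saved: 3
}
```

In the real setup the channel becomes a Kafka topic, so the two services can run as separate processes and the consumer can be restarted without losing queued messages.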

Before you proceed, you need a few things in place to run these microservices:

1. [Download Kafka][2]. The version I used is kafka_2.11-1.1.0.
2. Install [librdkafka][3]. Unfortunately, this library must be present on the target system.
3. Install the [Kafka Go client][4].
4. Run MongoDB. See my [previous post][5] for this; there I used a MongoDB Docker image.

Let's get started!

@ -32,14 +29,12 @@

```
$ cd /<download path>/kafka_2.11-1.1.0
$ bin/zookeeper-server-start.sh config/zookeeper.properties
```

Next, run Kafka. I'm using port 9092 to connect to Kafka. If you need to change the port, just configure it in `config/server.properties`. If you are a beginner like me, I suggest sticking with the default port for now.

```
$ bin/kafka-server-start.sh config/server.properties
```

With Kafka running, we need MongoDB. That's easy: just use this `docker-compose.yml`.

@ -61,17 +56,15 @@ volumes:

networks:
  network1:
```

Run the MongoDB Docker container with Docker Compose.

```
docker-compose up
```

Here is the relevant code of Microservice 1. I just modified my previous example to save to Kafka instead of MongoDB:

[rest-to-kafka/rest-kafka-sample.go][10]

@ -133,15 +126,13 @@ func saveJobToKafka(job Job) {

}, nil)
}
}
```

Here is the code of Microservice 2. I covered the saving part in my previous blog post, so the important part here is consuming data from Kafka:

[kafka-to-mongo/kafka-mongo-sample.go][11]

```
func main() {

//Create MongoDB session
@ -206,14 +197,12 @@ func saveJobToMongo(jobString string) {
fmt.Printf("Saved to MongoDB : %s", jobString)

}
```

Let's demo it. Run Microservice 1, and make sure Kafka is running.

```
$ go run rest-kafka-sample.go
```

I used Postman to send data to Microservice 1.

@ -228,7 +217,6 @@ $ go run rest-kafka-sample.go

```
$ go run kafka-mongo-sample.go
```

Now you'll see Microservice 2 consume the data and save it to MongoDB.

@ -239,27 +227,26 @@ $ go run kafka-mongo-sample.go

The complete source code can be found here:

[https://github.com/donvito/learngo/tree/master/rest-kafka-mongo-microservice][12]

Shameless plug: if you like this post, please follow me on Twitter [@donvito][6]. I tweet about Docker, Kubernetes, GoLang, Cloud, DevOps, Agile and Startups. You're also welcome to connect with me on [GitHub][7] and [LinkedIn][8].

[Video](https://youtu.be/xa0Yia1jdu8)

Enjoy!

--------------------------------------------------------------------------------

via: https://www.melvinvivas.com/developing-microservices-using-kafka-and-mongodb/

Author: [Melvin Vivas][a]
Translator: [qhwdw](https://github.com/qhwdw)
Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国 (Linux China)](https://linux.cn/).

[a]:https://www.melvinvivas.com/author/melvin/
[1]:https://youtu.be/xa0Yia1jdu8
[2]:https://kafka.apache.org/downloads
[3]:https://github.com/confluentinc/confluent-kafka-go
[4]:https://github.com/confluentinc/confluent-kafka-go

@ -0,0 +1,194 @@

Yaourt Is Dead! Use These Alternatives on Arch
======

**Brief: Yaourt was the most popular AUR helper, but it is no longer being developed. In this article, we list the best Yaourt alternatives for Arch-based distributions.**

The [Arch User Repository][1] (commonly known as the AUR) is a community-driven software repository for Arch users. The closest equivalent for Debian/Ubuntu users is a PPA.

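The AUR contains software that is not officially endorsed by [Arch Linux][2]. If someone wants to publish software or packages for Arch, they can make them available through this community repository. This gives end users access to more software than the default repositories provide.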

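So how do you use the AUR? In short, you need an additional tool to install software from it. Arch's package manager, [pacman][3], does not support the AUR directly. The “special tools” that do are called [AUR helpers][4].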

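Yaourt (Yet AnOther User Repository Tool) is (was) a `pacman` wrapper that makes it easy to install AUR software on Arch Linux. It uses essentially the same syntax as `pacman`. Yaourt has good support for searching and installing from the AUR, as well as for resolving conflicts and maintaining package dependencies.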

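However, Yaourt's development has slowed considerably, and it is even [listed][5] as “Discontinued or problematic” on the Arch Wiki. [Many Arch users consider it insecure][6] and have started looking for other AUR helpers.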

![AUR helpers other than Yaourt][7]

In this article, we look at the best Yaourt alternatives for installing software from the AUR.

### Best AUR Helpers

I have deliberately left out popular choices such as Trizen and Packer because they, too, are listed as “Discontinued or problematic”.

#### 1. aurman

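[aurman][8] is one of the best AUR helpers and a worthy replacement for Yaourt. Its syntax is very similar to `pacman`'s, and it supports all `pacman` operations. You can search the AUR, resolve package dependencies, check the contents of a PKGBUILD before building an AUR package, and more.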

Features of aurman:

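* aurman supports all `pacman` operations and adds reliable dependency resolution, conflict detection, and split package support
* A threaded sudo loop runs in the background, so you only need to enter your administrator password once per installation
* Supports development packages and distinguishes between explicitly and implicitly installed packages
* Supports searching AUR packages and repositories
* Lets you review and edit PKGBUILDs before building AUR packages
* Can be used as a standalone [dependency solver][9]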
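
At its core, resolving dependencies for a build means ordering packages so that each one is built after the packages it depends on. The Go sketch below shows that core idea as a depth-first topological sort with cycle detection. It is a generic illustration with a made-up package graph, not aurman's actual solver, which also handles the conflicts and split packages mentioned in the list above.

```go
package main

import (
	"fmt"
	"sort"
)

// buildOrder returns an order in which packages can be built so that every
// package comes after its dependencies (depth-first topological sort).
// deps maps a package name to the packages it depends on.
func buildOrder(deps map[string][]string, targets []string) ([]string, error) {
	const (
		unvisited = iota
		visiting
		done
	)
	state := map[string]int{} // missing keys read as unvisited
	var order []string

	var visit func(p string, path []string) error
	visit = func(p string, path []string) error {
		switch state[p] {
		case done:
			return nil
		case visiting:
			return fmt.Errorf("dependency cycle: %v -> %s", path, p)
		}
		state[p] = visiting
		ds := append([]string(nil), deps[p]...)
		sort.Strings(ds) // deterministic output
		next := append(append([]string(nil), path...), p)
		for _, d := range ds {
			if err := visit(d, next); err != nil {
				return err
			}
		}
		state[p] = done
		order = append(order, p) // all dependencies are already in order
		return nil
	}

	for _, t := range targets {
		if err := visit(t, nil); err != nil {
			return nil, err
		}
	}
	return order, nil
}

func main() {
	// Hypothetical AUR-style dependency graph.
	deps := map[string][]string{
		"app":     {"libfoo", "libbar"},
		"libfoo":  {"libcore"},
		"libbar":  {"libcore"},
		"libcore": nil,
	}
	order, err := buildOrder(deps, []string{"app"})
	fmt.Println(order, err) // [libcore libbar libfoo app] <nil>
}
```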

Installing aurman:

```
git clone https://aur.archlinux.org/aurman.git
cd aurman
makepkg -si
```

Using aurman:

Search by name:

```
aurman -Ss <package-name>
```

Install:

```
aurman -S <package-name>
```

#### 2. yay

[yay][10] is the next best AUR helper. Written in Go, it aims to provide a `pacman` interface with minimal user input, yaourt-like search, and almost no dependencies.

Features of yay:

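* `yay` provides AUR tab completion and downloads PKGBUILDs from ABS or the AUR
* Supports narrowing searches and does not need to source PKGBUILDs
* The `yay` binary has no dependencies other than `pacman`
* Provides advanced dependency resolution and can remove make dependencies after building
* Supports colored output when color is enabled in `/etc/pacman.conf`
* `yay` can be configured to handle only AUR packages or only repository packages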

Installing yay:

You can clone it with `git` and build it:

```
git clone https://aur.archlinux.org/yay.git
cd yay
makepkg -si
```

Using yay:

Search:

```
yay -Ss <package-name>
```

Install:

```
yay -S <package-name>
```

#### 3. pakku

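[Pakku][11] is another pacman wrapper that is still in its early stages of development. Being early does not make it inferior to any other AUR helper, though. Pakku supports searching and installing from the AUR well, and it can also remove unneeded make dependencies after installation.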

Features of pakku:

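* Search for and install software from the AUR
* View files and changes between builds
* Build packages from the official repositories and remove make dependencies afterwards
* PKGBUILD retrieval and pacman integration
* A pacman-like user interface and option support
* Supports the pacman configuration and does not need to source PKGBUILDs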

Installing pakku:

```
git clone https://aur.archlinux.org/pakku.git
cd pakku
makepkg -si
```

Using pakku:

Search:

```
pakku -Ss spotify
```

Install:

```
pakku -S spotify
```

#### 4. aurutils

[aurutils][12] is essentially a collection of scripts that automate working with the AUR. It can search the AUR, check for updates, and resolve package dependencies.

Features of aurutils:

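* aurutils uses a local repository to support pacman files, and all packages work with `--asdeps`
* Separate repositories can be used for different tasks
* `aursync -u` updates the local repository in one step
* `aursearch` supports pkgbase, long-format, and raw output
* Can ignore specified packages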

Installing aurutils:

```
git clone https://aur.archlinux.org/aurutils.git
cd aurutils
makepkg -si
```

Using aurutils:

Search:

```
aursearch <package-name>
```

Install:

```
aursync <package-name>
```

If you already have Yaourt or another AUR helper, you can use it to install any of these packages directly.

### Wrapping Up

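Arch Linux has [many AUR helpers][4] that can automate everyday AUR tasks. Many users still use Yaourt for AUR-related work, and everyone has their own preferences. Let us know in the comments what you use on Arch and what your experience has been.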

--------------------------------------------------------------------------------

via: https://itsfoss.com/best-aur-helpers/

Author: [Ambarish Kumar][a]
Topic selection: [lujun9972](https://github.com/lujun9972)
Translator: [Moelf](https://github.com/Moelf)
Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国 (Linux China)](https://linux.cn/).

[a]:https://itsfoss.com/author/ambarish/
[1]:https://wiki.archlinux.org/index.php/Arch_User_Repository
[2]:https://www.archlinux.org/
[3]:https://wiki.archlinux.org/index.php/pacman
[4]:https://wiki.archlinux.org/index.php/AUR_helpers
[5]:https://wiki.archlinux.org/index.php/AUR_helpers#Comparison_table
[6]:https://www.reddit.com/r/archlinux/comments/4azqyb/whats_so_bad_with_yaourt/
[7]:https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2018/06/no-yaourt-arch-800x450.jpeg
[8]:https://github.com/polygamma/aurman
[9]:https://github.com/polygamma/aurman/wiki/Using-aurman-as-dependency-solver
[10]:https://github.com/Jguer/yay
[11]:https://github.com/kitsunyan/pakku
[12]:https://github.com/AladW/aurutils

209

published/20180806 What is CI-CD.md

Normal file

@ -0,0 +1,209 @@

What Is CI/CD?
======

The terms continuous integration (CI) and continuous delivery (CD) come up often in software development. But what do they really mean?

When talking about software development, the terms continuous integration (CI) and continuous delivery (CD) are mentioned often. But what do they really mean? In this article, I explain the meaning and significance behind these and related terms, such as continuous testing and continuous deployment.

### Overview

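An assembly line in a factory produces consumer goods from raw materials in a fast, automated, reproducible manner. Similarly, a software delivery pipeline produces releases from source code in a fast, automated, and reproducible manner. The overall design for how this is done is called “continuous delivery” (CD). The process that kicks off the assembly line is called “continuous integration” (CI). The process that ensures quality is called “continuous testing”, and the process that makes the end product available to users is called “continuous deployment”. The experts who make all of this run simply, smoothly, and efficiently are known as DevOps practitioners.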

### What does “continuous” mean?

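“Continuous” describes many different processes that follow the practices I describe here. It doesn't mean “always running”; it means “always ready to run”. In the context of creating software, it also includes several core concepts/best practices. These are: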

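* **Frequent releases**: The goal behind continuous practices is to deliver quality software at frequent intervals. The frequency is variable and can be defined by the team or company. For some products, once a quarter, month, week, or day may be frequent enough. For others, multiple releases a day may be desired and feasible. Continuous can also take on an “occasional, as-needed” aspect. The end goal is the same: deliver high-quality software updates to end users through a repeatable, reliable process. Often this can be done with little or no user interaction or even awareness (think device updates).
* **Automated processes**: The key to achieving this frequency is automating nearly every aspect of software production. This includes building, testing, analysis, versioning, and, in some cases, deployment.
* **Repeatable**: If the automated processes always behave the same way given the same inputs, processing should be repeatable. That is, if we feed in a given historical version of the code, we should get the same deliverable as output. This assumes we also have the same versions of external dependencies (that is, deliverables not created by us that this version of the code uses). Ideally, it also means the processes in the pipeline can themselves be versioned and re-created (see the DevOps discussion later on).
* **Fast processing**: “Fast” is a relative term here, but regardless of how often software is updated or released, continuous processes are expected to turn source code into deliverables efficiently. Automation takes care of much of this, but automated processes can still be slow. For example, a round of integrated testing that takes most of a day may be too slow for a product that needs several new release candidates per day.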
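
The repeatability property can be illustrated with a small Go sketch: derive a build identifier from the source revision plus the pinned versions of external dependencies, so identical inputs always yield the same identifier and any changed input yields a different one. The function name `buildID` and the example versions are hypothetical; real build systems hash far more (toolchain, flags, environment), but the principle is the same.

```go
package main

import (
	"crypto/sha256"
	"encoding/hex"
	"fmt"
	"sort"
)

// buildID derives an identifier from everything that goes into a build:
// the source revision plus pinned versions of external dependencies.
// Same inputs give the same ID; any changed input gives a different ID.
func buildID(sourceRev string, deps map[string]string) string {
	names := make([]string, 0, len(deps))
	for name := range deps {
		names = append(names, name)
	}
	sort.Strings(names) // map iteration order is random; sort for determinism

	h := sha256.New()
	h.Write([]byte("src=" + sourceRev + "\n"))
	for _, n := range names {
		h.Write([]byte(n + "=" + deps[n] + "\n"))
	}
	return hex.EncodeToString(h.Sum(nil))[:12]
}

func main() {
	deps := map[string]string{"junit": "5.2.0", "guava": "25.1"} // hypothetical pins
	fmt.Println(buildID("a1b2c3", deps))
	fmt.Println(buildID("a1b2c3", deps)) // identical inputs, identical ID
}
```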

### What is a “continuous delivery pipeline”?

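The different tasks and jobs that transform source code into a releasable product are usually strung together into a software “pipeline”, where the successful completion of one automated process kicks off the next process in the sequence. Such pipelines go by many names, such as continuous delivery pipeline, deployment pipeline, and software development pipeline. Broadly speaking, a supervisor application manages the definition, running, monitoring, and reporting of the different parts of the pipeline as they execute.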

### How does a continuous delivery pipeline work?

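The actual implementation of a software delivery pipeline can vary widely. Many applications can be used in a pipeline for source tracking, building, testing, gathering metrics, managing versions, and so on. But the overall workflow is generally the same. A single orchestration/workflow application manages the overall pipeline, and each process runs as a separate job or is stage-managed by that application. Typically, the individual jobs are defined in a syntax and structure that the orchestration application understands and can manage as a workflow.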

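Jobs perform one or more functions (building, testing, deploying, and so on). Each job may use a different technology, or several. The key is that the jobs are automated, efficient, and repeatable. If a job succeeds, the workflow manager triggers the next job in the pipeline. If a job fails, the workflow manager alerts developers, testers, and others so they can correct the problem as quickly as possible. Because the process is automated, errors are found much faster than by running a set of manual processes. This quick identification of errors is called <ruby>fail fast<rt>fail fast</rt></ruby>, and it is just as valuable as reaching the end of the pipeline.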

### What is meant by “fail fast”?

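One of a pipeline's jobs is to process changes quickly. Another is to monitor the different tasks/jobs that create the release. Because code that doesn't compile or fails a test can hold up the pipeline, it's important to notify users of such situations quickly. Fail fast refers to the pipeline finding problems as early as possible and quickly notifying users, so the problem can be corrected and the code resubmitted for another run through the pipeline. Often the pipeline can look at the history to determine who made the change and notify that person and their team.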

### Should every continuous delivery pipeline be fully automated?

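Nearly all parts of the pipeline should be automated. For some parts, it may make sense to have a point for human intervention/interaction. One example is user-acceptance testing (having end users try out the software and make sure it does what they want/expect). Another is deployment to production environments, where groups may want more human control. And, of course, human intervention is required if the code is incorrect or breaks.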

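With that background on what “continuous” means, let's look at the different types of continuous processes and what each means in the context of a software pipeline.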

### What is “continuous integration”?

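Continuous integration (CI) is the process of automatically detecting, pulling, building, and (in most cases) unit testing source code as it changes. CI is the activity that starts the pipeline (although certain pre-validations, often called <ruby>pre-flight checks<rt>pre-flight checks</rt></ruby>, are sometimes placed ahead of CI).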

The goal of CI is to quickly make sure that a developer's newly committed change is good and suitable for further use in the code base.

### How does continuous integration work?

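The basic idea is an automated process that watches one or more source code repositories for changes. When a change is pushed to a repository, the process detects it, downloads a copy, builds it, and runs any associated unit tests.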

### How does continuous integration detect changes?

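Today, the watching process is usually an application like [Jenkins][1], which also orchestrates all (or most) of the processes running in the pipeline; monitoring for changes is just one of its functions. The watching application can detect changes in several ways. These include: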

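* **Polling**: The monitoring program repeatedly asks the source management system, “Is there anything new in the repositories I'm interested in?” When the source management system has new changes, the monitoring program “wakes up” and does its work: it pulls the new code and builds/tests it.
* **Periodic**: The monitoring program is configured to kick off a build periodically, whether or not the source has changed. Ideally, if nothing has changed, nothing new is built, so this adds little extra cost.
* **Push**: This is the inverse of the monitoring program checking with the source management system. Here, the source management system is configured to “push” a notification to the monitoring program when a change is committed to a repository. Most commonly this is done with a webhook: a program that is “hooked” to run when new code is pushed and sends a notification over the internet to the monitoring program. For this to work, the monitoring program must have an open port that can receive the webhook information over the network.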

### What are “pre-checks” (aka “pre-flight checks”)?

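Additional validations can be done before code enters the repository and triggers continuous integration. These follow best practices such as <ruby>test builds<rt>test build</rt></ruby> and <ruby>code reviews<rt>code review</rt></ruby>. They are usually built into the development process before the code enters the pipeline, but some pipelines also include them as part of their monitored processes or workflows.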

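For example, a tool called [Gerrit][2] allows formal code reviews, validations, and test builds after a developer pushes code but before it is allowed into the ([Git][3] remote) repository. Gerrit sits between the developer's workspace and the Git remote repository. It “catches” pushes from the developer and can run pass/fail validations to make sure they pass before being allowed into the repository. This can include detecting the proposed change and kicking off a test build (a form of CI). It also lets groups do formal code reviews at that point. This adds an extra measure of confidence that the change will not break anything when it is merged into the code base.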

### What are “unit tests”?

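Unit tests (also known as “commit tests”) are small, focused tests written by developers to ensure new code works in isolation. “In isolation” here means not depending on or calling other code that isn't directly accessible, and not depending on external data sources or other modules. If the code needs such dependencies to run, those resources can be represented by <ruby>mocks<rt>mock</rt></ruby>. A mock is a <ruby>code stub<rt>code stub</rt></ruby> that looks like the resource and can return values but doesn't implement any functionality.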

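In most organizations, developers are responsible for creating unit tests that prove their code works. In fact, one model, known as <ruby>test-driven development<rt>test-driven development</rt></ruby> (TDD), requires unit tests to be designed first, as the basis for clearly identifying what the code should do. Because such code can change quickly and extensively, the tests must also run quickly.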

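In the continuous integration workflow, a developer writes or updates code in their local working environment and uses unit tests to make sure the newly developed function or method works. Typically, these tests take the form of assertions that a given set of inputs to a function or method produces a given set of outputs. They also generally check that error conditions are properly flagged and handled. Various unit-testing frameworks can help, such as [JUnit][4] for Java development.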
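
Here is what a unit test with a mock can look like, as a Go sketch. `Convert` is the unit under test. Its only dependency is the hypothetical `RateSource` interface, which the test replaces with `mockRates`, a stub that returns canned values without doing any real lookup. The assertions check normal outputs and an error condition. In a real Go project these checks would live in a `_test.go` file using the `testing` package; they run from `main` here only to keep the example in one file.

```go
package main

import (
	"errors"
	"fmt"
)

// RateSource is an external dependency (e.g. a hypothetical currency service).
// A unit test does not call the real thing; it substitutes a mock.
type RateSource interface {
	Rate(from, to string) (float64, error)
}

// Convert is the unit under test. It depends only on the RateSource interface.
func Convert(rs RateSource, amount float64, from, to string) (float64, error) {
	if amount < 0 {
		return 0, errors.New("negative amount")
	}
	r, err := rs.Rate(from, to)
	if err != nil {
		return 0, fmt.Errorf("rate lookup: %w", err)
	}
	return amount * r, nil
}

// mockRates is a code stub: it looks like a RateSource and returns canned
// values, but implements no real functionality.
type mockRates map[string]float64

func (m mockRates) Rate(from, to string) (float64, error) {
	r, ok := m[from+"->"+to]
	if !ok {
		return 0, errors.New("unknown pair")
	}
	return r, nil
}

func main() {
	mock := mockRates{"USD->EUR": 0.5} // canned, made-up rate
	cases := []struct {
		amount  float64
		want    float64
		wantErr bool
	}{
		{10, 5, false},
		{0, 0, false},
		{-1, 0, true}, // the error condition must be flagged
	}
	for _, c := range cases {
		got, err := Convert(mock, c.amount, "USD", "EUR")
		if (err != nil) != c.wantErr || got != c.want {
			panic(fmt.Sprintf("Convert(%v) = %v, %v", c.amount, got, err))
		}
	}
	_, err := Convert(mock, 1, "USD", "JPY")
	fmt.Println("all assertions passed; unknown pair gives:", err)
}
```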

### What is “continuous testing”?

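Continuous testing is the practice of running automated tests of broadening scope as code moves through the CD pipeline. Unit testing is typically integrated with the build process as part of the CI stage and focuses on testing code in isolation from the other code it interacts with.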

Beyond that, various forms of testing can or should take place. These can include:

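* **Integration testing** validates that components and services work together correctly.
* **Functional testing** validates that executing functions in the product produces the expected results.
* **Acceptance testing** measures certain characteristics of the product against acceptable criteria, such as performance, scalability, stress handling, and capacity.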

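Not all of these may be present in an automated pipeline, and the boundaries between some types of testing are blurry. But the goal of continuous testing in a delivery pipeline is always the same: to prove, through successive levels of testing, that the code is of sufficient quality to be used in the release in progress. Building on the continuous principle of being fast, a secondary goal is to find problems quickly and alert the development team. This is usually called fail fast.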

### Besides testing, what other kinds of validation can be done on code in the pipeline?

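Besides whether tests pass or fail, there are applications that can tell us how many source code lines are executed (covered) by our test cases. This is an example of a metric computed over the code. This metric is called <ruby>code coverage<rt>code-coverage</rt></ruby> and can be measured with tools such as [JaCoCo][5] for Java.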

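Many other kinds of metrics exist, such as lines of code, complexity, and comparative analysis of code structure. Tools such as [SonarQube][6] can examine source code and compute these metrics. Users can also set thresholds defining the ranges they are willing to accept as “passing” for these metrics. The pipeline can then check the computed values against these thresholds and stop processing if a value falls outside the acceptable range. Applications such as SonarQube are highly configurable and can be set to check only what the team cares about.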
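
The threshold check described above is essentially a quality gate. The following Go sketch computes line coverage from hypothetical counts, compares it and a complexity figure against team-chosen limits, and reports violations so the pipeline can stop. The `Metrics` and `Thresholds` types are made up for illustration and do not mirror the report format of JaCoCo, SonarQube, or any other tool.

```go
package main

import "fmt"

// Metrics holds values a coverage or static-analysis tool might report.
// The field names are illustrative, not any tool's actual report format.
type Metrics struct {
	CoveredLines int
	TotalLines   int
	Complexity   int // e.g. the highest complexity of any single function
}

// Thresholds are the team-chosen "passing" limits.
type Thresholds struct {
	MinCoverage   float64 // percent
	MaxComplexity int
}

// Coverage returns line coverage as a percentage.
func (m Metrics) Coverage() float64 {
	if m.TotalLines == 0 {
		return 0
	}
	return 100 * float64(m.CoveredLines) / float64(m.TotalLines)
}

// qualityGate returns the list of violations. An empty list means the
// pipeline may continue; otherwise processing should stop.
func qualityGate(m Metrics, t Thresholds) []string {
	var v []string
	if c := m.Coverage(); c < t.MinCoverage {
		v = append(v, fmt.Sprintf("coverage %.1f%% < %.1f%%", c, t.MinCoverage))
	}
	if m.Complexity > t.MaxComplexity {
		v = append(v, fmt.Sprintf("complexity %d > %d", m.Complexity, t.MaxComplexity))
	}
	return v
}

func main() {
	limits := Thresholds{MinCoverage: 80, MaxComplexity: 15}
	m := Metrics{CoveredLines: 720, TotalLines: 1000, Complexity: 12}
	if v := qualityGate(m, limits); len(v) > 0 {
		fmt.Println("gate FAILED:", v) // gate FAILED: [coverage 72.0% < 80.0%]
		return
	}
	fmt.Println("gate passed")
}
```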

### What is “continuous delivery”?

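Continuous delivery (CD) generally refers to the whole chain of processes (the pipeline) that automatically picks up source code changes and runs them through build, test, packaging, and related operations to produce a deployable release, largely without human intervention.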

The goals of CD in producing software are automation, efficiency, reliability, reproducibility, and quality assurance (through continuous testing).

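CD encompasses CI (automatically detecting source code changes, running the build, and running unit tests to validate the changes), continuous testing (running various tests on the code to establish its quality), and, optionally, continuous deployment (making releases from the pipeline automatically available to users).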

### How are multiple versions identified/tracked in a pipeline?

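Versioning is a key concept for CD and pipelines. Continuous implies being able to integrate new code frequently and make updated releases available. But that doesn't mean everyone always wants the “latest and greatest”. This is especially true for internal teams that want to develop or test against a known, stable release. So it's important that the pipeline versions the objects it creates and can easily store and retrieve them.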

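The objects the pipeline creates from source code are generally called <ruby>artifacts<rt>artifact</rt></ruby>. Artifacts should have versions applied to them when they are built. The recommended strategy for assigning version numbers to artifacts is called <ruby>semantic versioning<rt>semantic versioning</rt></ruby>. (This also applies to the versions of dependent artifacts brought in from external sources.)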

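Semantic version numbers have three parts: <ruby>major<rt>major</rt></ruby>, <ruby>minor<rt>minor</rt></ruby>, and <ruby>patch<rt>patch</rt></ruby>. (For example, 1.4.3 means major version 1, minor version 4, and patch version 3.) The idea is that a change in one of these parts indicates the level of update in the artifact. The major version is incremented only for incompatible API changes. The minor version is incremented when functionality is added in a <ruby>backward-compatible<rt>backward-compatible</rt></ruby> manner. The patch version is incremented for backward-compatible bug fixes. These are recommended guidelines, and teams are free to vary the approach as long as they do so consistently and in a way that is well understood across the organization. For example, a number that increases with every build done for a release can go in the patch field.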
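
The bump rules can be expressed in a few lines of Go. This sketch handles only the `MAJOR.MINOR.PATCH` core (no pre-release or build metadata) and follows the semantic versioning convention that bumping a field resets the fields to its right to zero. `Version`, `Parse`, and `Bump` are illustrative names, not a particular library's API.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// Version is a MAJOR.MINOR.PATCH semantic version.
type Version struct{ Major, Minor, Patch int }

// Parse reads a plain "MAJOR.MINOR.PATCH" string.
func Parse(s string) (Version, error) {
	parts := strings.Split(s, ".")
	if len(parts) != 3 {
		return Version{}, fmt.Errorf("want MAJOR.MINOR.PATCH, got %q", s)
	}
	var n [3]int
	for i, p := range parts {
		v, err := strconv.Atoi(p)
		if err != nil || v < 0 {
			return Version{}, fmt.Errorf("bad component %q in %q", p, s)
		}
		n[i] = v
	}
	return Version{n[0], n[1], n[2]}, nil
}

func (v Version) String() string { return fmt.Sprintf("%d.%d.%d", v.Major, v.Minor, v.Patch) }

// Bump applies the semantic versioning rules for the given kind of change.
func (v Version) Bump(kind string) Version {
	switch kind {
	case "major": // incompatible API change
		return Version{v.Major + 1, 0, 0}
	case "minor": // backward-compatible new functionality
		return Version{v.Major, v.Minor + 1, 0}
	case "patch": // backward-compatible bug fix
		return Version{v.Major, v.Minor, v.Patch + 1}
	default: // unknown kind: leave the version unchanged
		return v
	}
}

func main() {
	v, err := Parse("1.4.3")
	if err != nil {
		panic(err)
	}
	fmt.Println(v.Bump("major"), v.Bump("minor"), v.Bump("patch")) // 2.0.0 1.5.0 1.4.4
}
```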

### How are artifacts “promoted”?

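Teams can assign <ruby>promotion<rt>promotion</rt></ruby> levels to artifacts to indicate whether they are suitable for testing, production, and so on. There are many approaches. Applications such as Jenkins or [Artifactory][7] can handle promotion. Or a simple scheme can add a label to the end of the version string. For example, `-snapshot` can indicate that the latest version (snapshot) of the code was used to build the artifact. Various promotion strategies or tools can then “promote” the artifact to other levels, such as `-milestone` or `-production`, as a marker of the artifact's stability and readiness for release.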
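
A suffix-based promotion scheme like this can be sketched as moving a version one step up an ordered list of labels. The ladder used here (snapshot, then milestone, then production) is an assumption built from the example suffixes above; tools such as Jenkins or Artifactory implement promotion in their own ways, and `promote` is a hypothetical name.

```go
package main

import (
	"fmt"
	"strings"
)

// levels is a hypothetical promotion ladder built from the example suffixes.
var levels = []string{"snapshot", "milestone", "production"}

// promote moves a version such as "1.4.3-snapshot" one level up the ladder,
// e.g. to "1.4.3-milestone". It refuses to promote past the top level.
func promote(version string) (string, error) {
	i := strings.LastIndex(version, "-")
	if i < 0 {
		return "", fmt.Errorf("%q has no promotion label", version)
	}
	base, label := version[:i], version[i+1:]
	for n, l := range levels {
		if l == label {
			if n == len(levels)-1 {
				return "", fmt.Errorf("%q is already at the top level", version)
			}
			return base + "-" + levels[n+1], nil
		}
	}
	return "", fmt.Errorf("unknown label %q", label)
}

func main() {
	v := "1.4.3-snapshot"
	for {
		next, err := promote(v)
		if err != nil {
			fmt.Println("stop:", err)
			break
		}
		fmt.Println(v, "->", next)
		v = next
	}
}
```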

### How are multiple artifact versions stored and accessed?

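Versioned artifacts built from source code can be stored by applications that manage <ruby>artifact repositories<rt>artifact repository</rt></ruby>. An artifact repository is like version control for built artifacts. Applications such as Artifactory or [Nexus][8] can accept versioned artifacts, store and track them, and provide ways to retrieve them.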

Pipeline users can specify the versions they want to use and have the pipeline pull in those versions.

### What is “continuous deployment”?

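Continuous deployment (CD) refers to the idea of automatically taking a release produced by the continuous delivery pipeline and making it available to end users. Depending on how users install the software, this may mean automatically deploying to a cloud environment, making an app update available (such as for an app on a phone), updating a website, or simply updating the list of available releases.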

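An important point here is that just because continuous deployment is possible doesn't mean every set of deliverables coming out of the pipeline is always deployed. It means that every set of deliverables going through the pipeline is proven to be “deployable”. This is accomplished largely through the successive levels of continuous testing (see the section on continuous testing in this article).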

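Whether a release built by the pipeline is deployed can be controlled by human decisions, or by various methods for “trying out” a release before fully deploying it.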

### What are some ways to test a deployment before rolling it out to all users?

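Because rolling back or undoing a deployment for all users can be costly (both technically and in users' perception), many techniques have been developed for “trying out” new functionality and easily “undoing” it if problems are found. These include: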

#### Blue/green testing/deployment

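In this approach to deploying software, two identical hosting environments are maintained: a “blue” one and a “green” one. (The colors have no significance; they only serve as identifiers.) At any given time, one of them is the production environment and the other is the candidate (staging) environment.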

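In front of these instances sits a router or similar system that serves as the customer “gateway” to the product or application. By pointing the router at the blue or the green instance, customer traffic can be directed to the desired deployment. This way, switching which instance (blue or green) receives traffic is quick, simple, and transparent to users.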

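When a new release is ready for testing, it can be deployed to the non-production environment. After it has been tested and approved, the router can be changed to send incoming production traffic to it (so it becomes the new production site). The environment that was formerly production is then available for the next release candidate.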

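Likewise, if a problem is found in the latest deployment and the previous production instance is still available, a simple change can send customer traffic back to it, effectively taking the problematic instance “offline” and rolling back to the previous version. The problematic new deployment can then be fixed in the other environment.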
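
The blue/green mechanics come down to a router that holds a reference to the live environment and swaps it in one step. In this Go sketch (`Router`, `Env`, and the method names are hypothetical), `Deploy` puts a release on the idle environment, `Switch` cuts traffic over, and calling `Switch` again is the rollback, because the previous production environment is still deployed.

```go
package main

import (
	"fmt"
	"sync"
)

// Env is one of the two identical hosting environments.
type Env struct {
	Name    string
	Version string
}

// Router is the customer "gateway": all traffic goes to the live environment.
type Router struct {
	mu   sync.RWMutex
	live *Env
	idle *Env
}

// Serve reports which environment and version handle a request.
func (r *Router) Serve() string {
	r.mu.RLock()
	defer r.mu.RUnlock()
	return r.live.Name + " serving " + r.live.Version
}

// Deploy installs a release candidate on the idle (non-production) environment.
func (r *Router) Deploy(version string) {
	r.mu.Lock()
	defer r.mu.Unlock()
	r.idle = &Env{Name: r.idle.Name, Version: version}
}

// Switch points traffic at the other environment. The old production
// environment stays deployed, so calling Switch again is a rollback.
func (r *Router) Switch() {
	r.mu.Lock()
	defer r.mu.Unlock()
	r.live, r.idle = r.idle, r.live
}

func main() {
	r := &Router{live: &Env{"blue", "1.0"}, idle: &Env{"green", "1.0"}}
	r.Deploy("1.1")        // the candidate goes to green
	r.Switch()             // green becomes production
	fmt.Println(r.Serve()) // green serving 1.1
	r.Switch()             // problem found: roll back
	fmt.Println(r.Serve()) // blue serving 1.0
}
```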

#### Canary testing/deployment

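In some cases, switching the whole deployment via blue/green environments may not be feasible or desirable. Another approach is <ruby>canary<rt>canary</rt></ruby> testing/deployment. In this model, a portion of customer traffic is rerouted to the new version. For example, a new version of a search service can be deployed alongside the current production version. Then 10% of search queries can be routed to the new version to test it in the production environment.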

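If the new version handles that limited traffic without problems, more traffic can gradually be routed to it. If problems still don't appear, the share of traffic going to the new version can be increased over time until 100% of the traffic goes to it. This effectively “retires” the previous version of the service and puts the new version into effect for all customers.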

#### Feature toggles

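For new functionality that may need to be turned off easily (if a problem is found), developers can add <ruby>feature toggles<rt>feature toggles</rt></ruby>. A toggle is an `if-then` switch in the code that activates the new code only when a data value is set. This data value can live in a globally accessible location that the deployed application checks to decide whether to execute the new code. If the value is set, the code runs; if not, it doesn't.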

This gives developers a remote “kill switch” to turn off the new functionality if a problem is found after deployment to production.
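
A feature toggle is a guarded branch plus a shared place to read the flag from. In this Go sketch the "globally accessible location" is an in-memory map behind a lock. That is an assumption made for the example; a deployed application would more likely read flags from a configuration service or database so they can be flipped remotely. `Toggles`, `search`, and the flag name `new-search` are hypothetical.

```go
package main

import (
	"fmt"
	"sync"
)

// Toggles holds feature-flag values in a place every request can read.
type Toggles struct {
	mu    sync.RWMutex
	flags map[string]bool
}

func NewToggles() *Toggles { return &Toggles{flags: map[string]bool{}} }

// Set turns a flag on or off (the remote "kill switch" in production).
func (t *Toggles) Set(name string, on bool) {
	t.mu.Lock()
	defer t.mu.Unlock()
	t.flags[name] = on
}

// Enabled reports whether a flag is on; unknown flags are off.
func (t *Toggles) Enabled(name string) bool {
	t.mu.RLock()
	defer t.mu.RUnlock()
	return t.flags[name]
}

// search shows the if-then switch: the new code path runs only when the flag is set.
func search(t *Toggles, q string) string {
	if t.Enabled("new-search") {
		return "new engine results for " + q
	}
	return "old engine results for " + q
}

func main() {
	flags := NewToggles()
	flags.Set("new-search", true)
	fmt.Println(search(flags, "kafka")) // new engine results for kafka
	flags.Set("new-search", false)      // problem found: flip the kill switch
	fmt.Println(search(flags, "kafka")) // old engine results for kafka
}
```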

#### Dark launch

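In a <ruby>dark launch<rt>dark launch</rt></ruby>, code is incrementally tested/deployed into production, but users don't see the changes (hence “dark” in the name). For example, in the production release, some portion of web queries might be redirected to a service that queries a new data source. Developers can collect this information for analysis without exposing anything about the interface, transactions, or results to users.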

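The idea is to get real information about how a candidate change performs under production load without affecting users or changing their experience. Over time, more load can be routed to it until either a problem is found or the new functionality is deemed ready for everyone. Feature flags can in fact be used to implement the mechanics of a dark launch.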
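
A dark launch can be sketched as shadow traffic: every request is answered by the current implementation, while a sampled subset is also sent to the candidate in the background, and only the comparison is recorded for analysis. In this Go sketch `darkLauncher` and its fields are hypothetical; the `sample` function plays the role of the feature flag that controls how much load is mirrored.

```go
package main

import (
	"fmt"
	"sync"
)

// darkLauncher answers every request with the current implementation and,
// for sampled requests, also calls the candidate in the background. The
// candidate's answer is only compared and recorded; users never see it.
type darkLauncher struct {
	current    func(q string) string
	candidate  func(q string) string
	sample     func(q string) bool // acts like a feature flag controlling mirrored load
	wg         sync.WaitGroup
	mu         sync.Mutex
	mismatches []string
}

func (d *darkLauncher) Handle(q string) string {
	res := d.current(q)
	if d.sample(q) {
		d.wg.Add(1)
		go func() {
			defer d.wg.Done()
			if alt := d.candidate(q); alt != res {
				d.mu.Lock()
				d.mismatches = append(d.mismatches, q)
				d.mu.Unlock()
			}
		}()
	}
	return res // users only ever see the current result
}

func main() {
	d := &darkLauncher{
		current: func(q string) string { return "v1:" + q },
		candidate: func(q string) string {
			if q == "b" {
				return "v2:unexpected" // simulated behavior difference
			}
			return "v1:" + q
		},
		sample: func(string) bool { return true }, // mirror everything in this demo
	}
	for _, q := range []string{"a", "b", "c"} {
		fmt.Println(d.Handle(q))
	}
	d.wg.Wait()
	fmt.Println("mismatches for analysis:", d.mismatches)
}
```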

### What is DevOps?

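[DevOps][9] is a set of ideas and recommended practices for making it easier for development and operations teams to work together on developing and releasing software. Historically, development teams built products but did not install/deploy them in a regular, repeatable way, as customers would. That set of install/deploy tasks (and other support tasks) was left to the operations team late in the cycle. This often led to confusion and problems, because the operations team was brought in late and had to make things work in a short time. Development teams were often at a disadvantage too: because they hadn't sufficiently tested the product's install/deploy functionality, they could be surprised by problems that emerged during that process.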

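This often caused a serious disconnect and lack of cooperation between development and operations teams. The DevOps philosophy advocates ways of working in which development and operations collaborate throughout the entire development cycle, as continuous delivery does.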

### How does continuous delivery intersect with DevOps?

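The continuous delivery pipeline is an implementation of several DevOps ideals. The later stages of product development, such as packaging and deployment, can be done on every run of the pipeline rather than waiting for a specific point in the development cycle. Likewise, both development and operations can clearly see, from development through deployment, when things work and when they don't. For a continuous delivery pipeline run to succeed, it must pass not only the development-related processes but also the operations-related ones.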

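Taking this further, DevOps suggests that the infrastructure implementing the pipeline should also be treated as code. That is, it should be automatically provisioned, trackable, easy to change, and able to trigger a new pipeline run when it changes. This can be done by implementing the pipeline as code.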

### What is “pipeline-as-code”?

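<ruby>Pipeline-as-code<rt>pipeline-as-code</rt></ruby> is a general term for creating pipeline jobs/tasks by writing code, just as developers write code for products. The goal is to express the pipeline implementation as code so it can be stored with the code, reviewed, tracked, and easily rebuilt if something goes wrong and the pipeline must be stopped. Several tools allow this, such as [Jenkins 2][1].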

### How does DevOps affect the infrastructure for producing software?

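Traditionally, the individual hardware systems used in pipelines were configured with software (operating systems, applications, development tools, and so on) one at a time. In the extreme, each system was a custom, hand-crafted setup. This meant that when a system had problems or needed updating, that was often a custom task too. This approach runs counter to the fundamental continuous delivery ideal of having easily reproducible and trackable environments.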

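Over the years, many applications have been developed to standardize provisioning (installing and configuring) systems. Likewise, <ruby>virtual machines<rt>virtual machine</rt></ruby> (VMs) were developed as programs that emulate computers running on top of other computers. VMs require a supervisory program (a hypervisor) to run on the underlying host system, and each needs its own copy of an operating system.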

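Then came <ruby>containers<rt>container</rt></ruby>. Containers are similar to VMs in concept but work differently. Instead of requiring a separate program and a copy of an operating system, they use existing operating system constructs to carve out isolated space. As a result, they provide VM-like isolation without the overhead.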

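Because VMs and containers are created from stored definitions, they can easily be destroyed and re-created without affecting the host systems they run on. This makes the systems that run pipelines re-creatable as well. In addition, for containers we can track changes to their definition files, just as we do for source code.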

So, if we hit a problem in a VM or container, it may be easier and faster to destroy and re-create it than to debug and fix it in place.

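This also means that any change to the pipeline code can trigger a new pipeline run (via CI), just as a change to product code would. This is one of the core DevOps ideas about infrastructure.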

---

via: https://opensource.com/article/18/8/what-cicd

Author: [Brent Laster][a]
Topic selection: [lujun9972](https://github.com/lujun9972)
Translator: [pityonline](https://github.com/pityonline)
Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国 (Linux China)](https://linux.cn/).

[a]: https://opensource.com/users/bclaster
[1]: https://jenkins.io
[2]: https://www.gerritcodereview.com
[3]: https://opensource.com/resources/what-is-git
[4]: https://junit.org/junit5/
[5]: https://www.eclemma.org/jacoco/
[6]: https://www.sonarqube.org/
[7]: https://jfrog.com/artifactory/
[8]: https://www.sonatype.com/nexus-repository-sonatype
[9]: https://opensource.com/resources/devops

@ -1,111 +0,0 @@

My Lisp Experiences and the Development of GNU Emacs
======

> (Transcript of Richard Stallman's Speech, 28 Oct 2002, at the International Lisp Conference).

Since none of my usual speeches have anything to do with Lisp, none of them were appropriate for today. So I'm going to have to wing it. Since I've done enough things in my career connected with Lisp I should be able to say something interesting.

My first experience with Lisp was when I read the Lisp 1.5 manual in high school. That's when I had my mind blown by the idea that there could be a computer language like that. The first time I had a chance to do anything with Lisp was when I was a freshman at Harvard and I wrote a Lisp interpreter for the PDP-11. It was a very small machine — it had something like 8k of memory — and I managed to write the interpreter in a thousand instructions. This gave me some room for a little bit of data. That was before I got to see what real software was like, that did real system jobs.

I began doing work on a real Lisp implementation with JonL White once I started working at MIT. I got hired at the Artificial Intelligence Lab not by JonL, but by Russ Noftsker, which was most ironic considering what was to come — he must have really regretted that day.

During the 1970s, before my life became politicized by horrible events, I was just going along making one extension after another for various programs, and most of them did not have anything to do with Lisp. But, along the way, I wrote a text editor, Emacs. The interesting idea about Emacs was that it had a programming language, and the user's editing commands would be written in that interpreted programming language, so that you could load new commands into your editor while you were editing. You could edit the programs you were using and then go on editing with them. So, we had a system that was useful for things other than programming, and yet you could program it while you were using it. I don't know if it was the first one of those, but it certainly was the first editor like that.

This spirit of building up gigantic, complicated programs to use in your own editing, and then exchanging them with other people, fueled the spirit of free-wheeling cooperation that we had at the AI Lab then. The idea was that you could give a copy of any program you had to someone who wanted a copy of it. We shared programs to whomever wanted to use them, they were human knowledge. So even though there was no organized political thought relating the way we shared software to the design of Emacs, I'm convinced that there was a connection between them, an unconscious connection perhaps. I think that it's the nature of the way we lived at the AI Lab that led to Emacs and made it what it was.

The original Emacs did not have Lisp in it. The lower level language, the non-interpreted language — was PDP-10 Assembler. The interpreter we wrote in that actually wasn't written for Emacs, it was written for TECO. It was our text editor, and was an extremely ugly programming language, as ugly as could possibly be. The reason was that it wasn't designed to be a programming language, it was designed to be an editor and command language. There were commands like ‘5l’, meaning ‘move five lines’, or ‘i’ and then a string and then an ESC to insert that string. You would type a string that was a series of commands, which was called a command string. You would end it with ESC ESC, and it would get executed.

Well, people wanted to extend this language with programming facilities, so they added some. For instance, one of the first was a looping construct, which was < >. You would put those around things and it would loop. There were other cryptic commands that could be used to conditionally exit the loop. To make Emacs, we (1) added facilities to have subroutines with names. Before that, it was sort of like Basic, and the subroutines could only have single letters as their names. That was hard to program big programs with, so we added code so they could have longer names. Actually, there were some rather sophisticated facilities; I think that Lisp got its unwind-protect facility from TECO.

We started putting in rather sophisticated facilities, all with the ugliest syntax you could ever think of, and it worked — people were able to write large programs in it anyway. The obvious lesson was that a language like TECO, which wasn't designed to be a programming language, was the wrong way to go. The language that you build your extensions on shouldn't be thought of as a programming language in afterthought; it should be designed as a programming language. In fact, we discovered that the best programming language for that purpose was Lisp.

It was Bernie Greenberg, who discovered that it was (2). He wrote a version of Emacs in Multics MacLisp, and he wrote his commands in MacLisp in a straightforward fashion. The editor itself was written entirely in Lisp. Multics Emacs proved to be a great success — programming new editing commands was so convenient that even the secretaries in his office started learning how to use it. They used a manual someone had written which showed how to extend Emacs, but didn't say it was a programming. So the secretaries, who believed they couldn't do programming, weren't scared off. They read the manual, discovered they could do useful things and they learned to program.

So Bernie saw that an application — a program that does something useful for you — which has Lisp inside it and which you could extend by rewriting the Lisp programs, is actually a very good way for people to learn programming. It gives them a chance to write small programs that are useful for them, which in most arenas you can't possibly do. They can get encouragement for their own practical use — at the stage where it's the hardest — where they don't believe they can program, until they get to the point where they are programmers.

At that point, people began to wonder how they could get something like this on a platform where they didn't have a full-service Lisp implementation. Multics MacLisp had a compiler as well as an interpreter (it was a full-fledged Lisp system), but people wanted to implement something like that on other systems where they had not already written a Lisp compiler. Well, if you didn't have the Lisp compiler you couldn't write the whole editor in Lisp; it would be too slow, especially redisplay, if it had to run interpreted Lisp. So we developed a hybrid technique. The idea was to write a Lisp interpreter and the lower level parts of the editor together, so that parts of the editor were built-in Lisp facilities. Those would be whatever parts we felt we had to optimize. This was a technique that we had already consciously practiced in the original Emacs, because there were certain fairly high level features which we re-implemented in machine language, making them into TECO primitives. For instance, there was a TECO primitive to fill a paragraph (actually, to do most of the work of filling a paragraph, because some of the less time-consuming parts of the job would be done at the higher level by a TECO program). You could do the whole job by writing a TECO program, but that was too slow, so we optimized it by putting part of it in machine language. We used the same idea here (in the hybrid technique), that most of the editor would be written in Lisp, but certain parts of it that had to run particularly fast would be written at a lower level.

Therefore, when I wrote my second implementation of Emacs, I followed the same kind of design. The low level language was not machine language anymore, it was C. C was a good, efficient language for portable programs to run in a Unix-like operating system. There was a Lisp interpreter, but I implemented facilities for special purpose editing jobs directly in C — manipulating editor buffers, inserting leading text, reading and writing files, redisplaying the buffer on the screen, managing editor windows.

Now, this was not the first Emacs that was written in C and ran on Unix. The first was written by James Gosling, and was referred to as GosMacs. A strange thing happened with him. In the beginning, he seemed to be influenced by the same spirit of sharing and cooperation of the original Emacs. I first released the original Emacs to people at MIT. Someone wanted to port it to run on Twenex — it originally only ran on the Incompatible Timesharing System we used at MIT. They ported it to Twenex, which meant that there were a few hundred installations around the world that could potentially use it. We started distributing it to them, with the rule that “you had to send back all of your improvements” so we could all benefit. No one ever tried to enforce that, but as far as I know people did cooperate.

Gosling did, at first, seem to participate in this spirit. He wrote in a manual that he called the program Emacs hoping that others in the community would improve it until it was worthy of that name. That's the right approach to take towards a community — to ask them to join in and make the program better. But after that he seemed to change the spirit, and sold it to a company.

At that time I was working on the GNU system (a free software Unix-like operating system that many people erroneously call “Linux”). There was no free software Emacs editor that ran on Unix. I did, however, have a friend who had participated in developing Gosling's Emacs. Gosling had given him, by email, permission to distribute his own version. He proposed to me that I use that version. Then I discovered that Gosling's Emacs did not have a real Lisp. It had a programming language that was known as ‘mocklisp’, which looks syntactically like Lisp, but didn't have the data structures of Lisp. So programs were not data, and vital elements of Lisp were missing. Its data structures were strings, numbers and a few other specialized things.

I concluded I couldn't use it and had to replace it all, the first step of which was to write an actual Lisp interpreter. I gradually adapted every part of the editor based on real Lisp data structures, rather than ad hoc data structures, making the data structures of the internals of the editor exposable and manipulable by the user's Lisp programs.

The one exception was redisplay. For a long time, redisplay was sort of an alternate world. The editor would enter the world of redisplay and things would go on with very special data structures that were not safe for garbage collection, not safe for interruption, and you couldn't run any Lisp programs during that. We've changed that since — it's now possible to run Lisp code during redisplay. It's quite a convenient thing.

This second Emacs program was ‘free software’ in the modern sense of the term — it was part of an explicit political campaign to make software free. The essence of this campaign was that everybody should be free to do the things we did in the old days at MIT, working together on software and working with whomever wanted to work with us. That is the basis for the free software movement — the experience I had, the life that I've lived at the MIT AI lab — to be working on human knowledge, and not be standing in the way of anybody's further using and further disseminating human knowledge.

At the time, you could make a computer that was about the same price range as other computers that weren't meant for Lisp, except that it would run Lisp much faster than they would, and with full type checking in every operation as well. Ordinary computers typically forced you to choose between execution speed and good typechecking. So yes, you could have a Lisp compiler and run your programs fast, but when they tried to take `car` of a number, it got nonsensical results and eventually crashed at some point.

The Lisp machine was able to execute instructions about as fast as those other machines, but each instruction — a car instruction would do data typechecking — so when you tried to get the car of a number in a compiled program, it would give you an immediate error. We built the machine and had a Lisp operating system for it. It was written almost entirely in Lisp, the only exceptions being parts written in the microcode. People became interested in manufacturing them, which meant they should start a company.

There were two different ideas about what this company should be like. Greenblatt wanted to start what he called a “hacker” company. This meant it would be a company run by hackers and would operate in a way conducive to hackers. Another goal was to maintain the AI Lab culture (3). Unfortunately, Greenblatt didn't have any business experience, so other people in the Lisp machine group said they doubted whether he could succeed. They thought that his plan to avoid outside investment wouldn't work.

Why did he want to avoid outside investment? Because when a company has outside investors, they take control and they don't let you have any scruples. And eventually, if you have any scruples, they also replace you as the manager.

So Greenblatt had the idea that he would find a customer who would pay in advance to buy the parts. They would build machines and deliver them; with profits from those parts, they would then be able to buy parts for a few more machines, sell those and then buy parts for a larger number of machines, and so on. The other people in the group thought that this couldn't possibly work.

Greenblatt then recruited Russell Noftsker, the man who had hired me, who had subsequently left the AI Lab and created a successful company. Russell was believed to have an aptitude for business. He demonstrated this aptitude for business by saying to the other people in the group, “Let's ditch Greenblatt, forget his ideas, and we'll make another company.” Stabbing in the back, clearly a real businessman. Those people decided they would form a company called Symbolics. They would get outside investment, not have scruples, and do everything possible to win.

But Greenblatt didn't give up. He and the few people loyal to him decided to start Lisp Machines Inc. anyway and go ahead with their plans. And what do you know, they succeeded! They got the first customer and were paid in advance. They built machines and sold them, and built more machines and more machines. They actually succeeded even though they didn't have the help of most of the people in the group. Symbolics also got off to a successful start, so you had two competing Lisp machine companies. When Symbolics saw that LMI was not going to fall flat on its face, they started looking for ways to destroy it.

Thus, the abandonment of our lab was followed by “war” in our lab. The abandonment happened when Symbolics hired away all the hackers, except me and the few who worked at LMI part-time. Then they invoked a rule and eliminated people who worked part-time for MIT, so they had to leave entirely, which left only me. The AI lab was now helpless. And MIT had made a very foolish arrangement with these two companies. It was a three-way contract where both companies licensed the use of Lisp machine system sources. These companies were required to let MIT use their changes. But it didn't say in the contract that MIT was entitled to put them into the MIT Lisp machine systems that both companies had licensed. Nobody had envisioned that the AI lab's hacker group would be wiped out, but it was.

So Symbolics came up with a plan (4). They said to the lab, “We will continue making our changes to the system available for you to use, but you can't put it into the MIT Lisp machine system. Instead, we'll give you access to Symbolics' Lisp machine system, and you can run it, but that's all you can do.”

This, in effect, meant that they demanded that we had to choose a side, and use either the MIT version of the system or the Symbolics version. Whichever choice we made determined which system our improvements went to. If we worked on and improved the Symbolics version, we would be supporting Symbolics alone. If we used and improved the MIT version of the system, we would be doing work available to both companies, but Symbolics saw that we would be supporting LMI because we would be helping them continue to exist. So we were not allowed to be neutral anymore.

Up until that point, I hadn't taken the side of either company, although it made me miserable to see what had happened to our community and the software. But now, Symbolics had forced the issue. So, in an effort to help keep Lisp Machines Inc. going (5) — I began duplicating all of the improvements Symbolics had made to the Lisp machine system. I wrote the equivalent improvements again myself (i.e., the code was my own).

After a while (6), I came to the conclusion that it would be best if I didn't even look at their code. When they made a beta announcement that gave the release notes, I would see what the features were and then implement them. By the time they had a real release, I did too.

In this way, for two years, I prevented them from wiping out Lisp Machines Incorporated, and the two companies went on. But, I didn't want to spend years and years punishing someone, just thwarting an evil deed. I figured they had been punished pretty thoroughly because they were stuck with competition that was not leaving or going to disappear (7). Meanwhile, it was time to start building a new community to replace the one that their actions and others had wiped out.

The Lisp community in the 70s was not limited to the MIT AI Lab, and the hackers were not all at MIT. The war that Symbolics started was what wiped out MIT, but there were other events going on then. There were people giving up on cooperation, and together this wiped out the community and there wasn't much left.

Once I stopped punishing Symbolics, I had to figure out what to do next. I had to make a free operating system, that was clear — the only way that people could work together and share was with a free operating system.

At first, I thought of making a Lisp-based system, but I realized that wouldn't be a good idea technically. To have something like the Lisp machine system, you needed special purpose microcode. That's what made it possible to run programs as fast as other computers would run their programs and still get the benefit of typechecking. Without that, you would be reduced to something like the Lisp compilers for other machines. The programs would be faster, but unstable. Now that's okay if you're running one program on a timesharing system — if one program crashes, that's not a disaster, that's something your program occasionally does. But that didn't make it good for writing the operating system in, so I rejected the idea of making a system like the Lisp machine.

I decided instead to make a Unix-like operating system that would have Lisp implementations to run as user programs. The kernel wouldn't be written in Lisp, but we'd have Lisp. So the development of that operating system, the GNU operating system, is what led me to write the GNU Emacs. In doing this, I aimed to make the absolute minimal possible Lisp implementation. The size of the programs was a tremendous concern.

There were people in those days, in 1985, who had one-megabyte machines without virtual memory. They wanted to be able to use GNU Emacs. This meant I had to keep the program as small as possible.

For instance, at the time the only looping construct was ‘while’, which was extremely simple. There was no way to break out of the ‘while’ statement, you just had to do a catch and a throw, or test a variable that ran the loop. That shows how far I was pushing to keep things small. We didn't have ‘caar’ and ‘cadr’ and so on; “squeeze out everything possible” was the spirit of GNU Emacs, the spirit of Emacs Lisp, from the beginning.

Obviously, machines are bigger now, and we don't do it that way any more. We put in ‘caar’ and ‘cadr’ and so on, and we might put in another looping construct one of these days. We're willing to extend it some now, but we don't want to extend it to the level of Common Lisp. I implemented Common Lisp once on the Lisp machine, and I'm not all that happy with it. One thing I don't like terribly much is keyword arguments (8). They don't seem quite Lispy to me; I'll do it sometimes but I minimize the times when I do that.

That was not the end of the GNU projects involved with Lisp. Later on around 1995, we were looking into starting a graphical desktop project. It was clear that for the programs on the desktop, we wanted a programming language to write a lot of it in to make it easily extensible, like the editor. The question was what it should be.

At the time, TCL was being pushed heavily for this purpose. I had a very low opinion of TCL, basically because it wasn't Lisp. It looks a tiny bit like Lisp, but semantically it isn't, and it's not as clean. Then someone showed me an ad where Sun was trying to hire somebody to work on TCL to make it the “de-facto standard extension language” of the world. And I thought, “We've got to stop that from happening.” So we started to make Scheme the standard extensibility language for GNU. Not Common Lisp, because it was too large. The idea was that we would have a Scheme interpreter designed to be linked into applications in the same way TCL was linked into applications. We would then recommend that as the preferred extensibility package for all GNU programs.

There's an interesting benefit you can get from using such a powerful language as a version of Lisp as your primary extensibility language. You can implement other languages by translating them into your primary language. If your primary language is TCL, you can't very easily implement Lisp by translating it into TCL. But if your primary language is Lisp, it's not that hard to implement other things by translating them. Our idea was that if each extensible application supported Scheme, you could write an implementation of TCL or Python or Perl in Scheme that translates that program into Scheme. Then you could load that into any application and customize it in your favorite language and it would work with other customizations as well.

As long as the extensibility languages are weak, the users have to use only the language you provided them. Which means that people who love any given language have to compete for the choice of the developers of applications, saying “Please, application developer, put my language into your application, not his language.” Then the users get no choices at all: whichever application they're using comes with one language and they're stuck with [that language]. But when you have a powerful language that can implement others by translating into it, then you give the user a choice of language and we don't have to have a language war anymore. That's what we're hoping ‘Guile’, our Scheme interpreter, will do. We had a person working last summer finishing up a translator from Python to Scheme. I don't know if it's entirely finished yet, but for anyone interested in this project, please get in touch. So that's the plan we have for the future.

I haven't been speaking about free software, but let me briefly tell you a little bit about what that means. Free software does not refer to price; it doesn't mean that you get it for free. (You may have paid for a copy, or gotten a copy gratis.) It means that you have freedom as a user. The crucial thing is that you are free to run the program, free to study what it does, free to change it to suit your needs, free to redistribute copies to others and free to publish improved, extended versions. This is what free software means. If you are using a non-free program, you have lost crucial freedom, so don't ever do that.

The purpose of the GNU project is to make it easier for people to reject freedom-trampling, user-dominating, non-free software by providing free software to replace it. For those who don't have the moral courage to reject the non-free software, when that means some practical inconvenience, what we try to do is give a free alternative so that you can move to freedom with less of a mess and less of a sacrifice in practical terms. The less sacrifice the better. We want to make it easier for you to live in freedom, to cooperate.

This is a matter of the freedom to cooperate. We're used to thinking of freedom and cooperation with society as if they are opposites. But here they're on the same side. With free software you are free to cooperate with other people as well as free to help yourself. With non-free software, somebody is dominating you and keeping people divided. You're not allowed to share with them, you're not free to cooperate or help society, any more than you're free to help yourself. Divided and helpless is the state of users using non-free software.

We've produced a tremendous range of free software. We've done what people said we could never do; we have two operating systems of free software. We have many applications and we obviously have a lot farther to go. So we need your help. I would like to ask you to volunteer for the GNU project; help us develop free software for more jobs. Take a look at [http://www.gnu.org/help][1] to find suggestions for how to help. If you want to order things, there's a link to that from the home page. If you want to read about philosophical issues, look in /philosophy. If you're looking for free software to use, look in /directory, which lists about 1900 packages now (which is a fraction of all the free software out there). Please write more and contribute to us. My book of essays, “Free Software and Free Society”, is on sale and can be purchased at [www.gnu.org][2]. Happy hacking!

--------------------------------------------------------------------------------

via: https://www.gnu.org/gnu/rms-lisp.html

Author: [Richard Stallman][a]
Topic selection: [lujun9972](https://github.com/lujun9972)
Translator: [译者ID](https://github.com/译者ID)
Proofreader: [校对者ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[a]:https://www.gnu.org

[1]:https://www.gnu.org/help/

[2]:http://www.gnu.org/

@ -0,0 +1,63 @@

Tips for Success with Open Source Certification
======

In today’s technology arena, open source is pervasive. The [2018 Open Source Jobs Report][1] found that hiring open source talent is a priority for 83 percent of hiring managers, and half are looking for candidates holding certifications. And yet, 87 percent of hiring managers also cite difficulty in finding the right open source skills and expertise. This article is the second in a weekly series on the growing importance of open source certification.

In the [first article][2], we focused on why certification matters now more than ever. Here, we’ll focus on the kinds of certifications that are making a difference, and what is involved in completing necessary training and passing the performance-based exams that lead to certification, with tips from Clyde Seepersad, General Manager of Training and Certification at The Linux Foundation.

### Performance-based exams

So, what are the details on getting certified and what are the differences between major types of certification? Most types of open source credentials and certification that you can obtain are performance-based. In many cases, trainees are required to demonstrate their skills directly from the command line.

“You're going to be asked to do something live on the system, and then at the end, we're going to evaluate that system to see if you were successful in accomplishing the task,” said Seepersad. This approach obviously differs from multiple choice exams and other tests where candidate answers are put in front of you. Often, certification programs involve online self-paced courses, so you can learn at your own speed, but the exams can be tough and require demonstration of expertise. That’s part of why the certifications that they lead to are valuable.

### Certification options

Many people are familiar with the certifications offered by The Linux Foundation, including the [Linux Foundation Certified System Administrator][3] (LFCS) and [Linux Foundation Certified Engineer][4] (LFCE) certifications. The Linux Foundation intentionally maintains separation between its training and certification programs and uses an independent proctoring solution to monitor candidates. It also requires that all certifications be renewed every two years, which gives potential employers confidence that skills are current and have been recently demonstrated.

“Note that there are no prerequisites,” Seepersad said. “What that means is that if you're an experienced Linux engineer, and you think the LFCE, the certified engineer credential, is the right one for you…, you're allowed to do what we call ‘challenge the exams.’ If you think you're ready for the LFCE, you can sign up for the LFCE without having to have gone through and taken and passed the LFCS.”

Seepersad noted that the LFCS credential is great for people starting their careers, and the LFCE credential is valuable for many people who have experience with Linux, such as volunteer experience, and now want to demonstrate the breadth and depth of their skills for employers. He also said that the LFCS and LFCE coursework prepares trainees to work with various Linux distributions. Other certification options, such as the [Kubernetes Fundamentals][5] and [Essentials of OpenStack Administration][6] courses and exams, have also made a difference for many people, as cloud adoption has increased around the world.

Seepersad added that certification can make a difference if you are seeking a promotion. “Being able to show that you're over the bar in terms of certification at the engineer level can be a great way to get yourself into the consideration set for that next promotion,” he said.

### Tips for Success

In terms of practical advice for taking an exam, Seepersad offered a number of tips:

* Set the date, and don’t procrastinate.

* Look through the online exam descriptions and get any training needed to be able to show fluency with the required skill sets.

* Practice on a live Linux system. This can involve downloading a free terminal emulator or other software and actually performing tasks that you will be tested on.

Seepersad also noted some common mistakes that people make when taking their exams. These include spending too long on a small set of questions, wasting too much time looking through documentation and reference tools, and applying changes without testing them in the work environment.

With open source certification playing an increasingly important role in securing a rewarding career, stay tuned for more certification details in this article series, including how to prepare for certification.

[Learn more about Linux training and certification.][7]

--------------------------------------------------------------------------------

via: https://www.linux.com/blog/sysadmin-cert/2018/7/tips-success-open-source-certification

Author: [Sam Dean][a]
Topic selection: [lujun9972](https://github.com/lujun9972)
Translator: [译者ID](https://github.com/译者ID)
Proofreader: [校对者ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[a]:https://www.linux.com/users/sam-dean

[1]:https://www.linuxfoundation.org/publications/open-source-jobs-report-2018/

[2]:https://www.linux.com/blog/sysadmin-cert/2018/7/5-reasons-open-source-certification-matters-more-ever

[3]:https://training.linuxfoundation.org/certification/lfcs

[4]:https://training.linuxfoundation.org/certification/lfce

[5]:https://training.linuxfoundation.org/linux-courses/system-administration-training/kubernetes-fundamentals

[6]:https://training.linuxfoundation.org/linux-courses/system-administration-training/openstack-administration-fundamentals

[7]:https://training.linuxfoundation.org/certification

@ -1,186 +0,0 @@

FSSlc translating

View The Contents Of An Archive Or Compressed File Without Extracting It
======

In this tutorial, we are going to learn how to view the contents of an archive and/or compressed file without actually extracting it in Unix-like operating systems. Before going further, let us be clear about archives and compressed files, because there is a significant difference between them. Archiving is the process of combining multiple files or folders (or both) into a single file; the resulting file is not compressed. Compressing reduces the size of a file; a common approach is to first archive multiple files or folders into a single file and then compress the result. An archive is not necessarily a compressed file, but a compressed file can be an archive. Clear? Well, let us get to the topic.
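To make the distinction concrete, here is a minimal sketch, assuming GNU tar and gzip are installed. The `demo` directory and its two files are hypothetical. `tar -cf` only archives (bundles files into one uncompressed file), `gzip` only compresses a single file, and `tar -czf` does both in one step.

```shell
set -e
# Throwaway working directory with two hypothetical sample files.
workdir=$(mktemp -d)
mkdir "$workdir/demo"
printf 'hello\n' > "$workdir/demo/a.txt"
printf 'world\n' > "$workdir/demo/b.txt"

# Archive only: bundle the directory into one uncompressed .tar file.
# -C switches into $workdir first so member names start with "demo/".
tar -cf "$workdir/demo.tar" -C "$workdir" demo

# Compress only: gzip replaces demo.tar with demo.tar.gz.
gzip "$workdir/demo.tar"

# Archive and compress in one step: -z filters the archive through gzip.
tar -czf "$workdir/demo2.tar.gz" -C "$workdir" demo

ls "$workdir"
```

Both `demo.tar.gz` and `demo2.tar.gz` hold the same archived files; only the way they were produced differs.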

### View The Contents Of An Archive Or Compressed File Without Extracting It

Thanks to the Linux community, there are many command line applications available to do this. Let us look at some of them with examples.

**1\. Using Vim Editor**

Vim is not just an editor; it can do numerous other things. The following command displays the contents of a compressed archive file without decompressing it:

```
$ vim ostechnix.tar.gz
```

![][2]

You can even browse through the archive and open the text files (if there are any) in it. To open a text file, move the cursor onto its name using the arrow keys and hit ENTER.

**2\. Using Tar command**

To list the contents of a tar archive file, run:

```
$ tar -tf ostechnix.tar
ostechnix/
ostechnix/image.jpg
ostechnix/file.pdf
ostechnix/song.mp3
```

Or, use the **-v** flag to view detailed properties of each file in the archive, such as permissions, owner, group, size, and modification date:

```
$ tar -tvf ostechnix.tar
drwxr-xr-x sk/users       0 2018-07-02 19:30 ostechnix/
-rw-r--r-- sk/users   53632 2018-06-29 15:57 ostechnix/image.jpg
-rw-r--r-- sk/users  156831 2018-06-04 12:37 ostechnix/file.pdf
-rw-r--r-- sk/users 9702219 2018-04-25 20:35 ostechnix/song.mp3
```
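If you want to try these commands without the `ostechnix` example files, here is a minimal sketch, assuming GNU tar; the `sample` directory and its files are hypothetical. It also lists a gzip-compressed archive with `-z`, and uses `-O` (`--to-stdout`) to print a single member to the terminal instead of extracting it to disk.

```shell
set -e
# Build a small hypothetical archive to experiment with.
workdir=$(mktemp -d)
mkdir "$workdir/sample"
printf 'line one\n' > "$workdir/sample/notes.txt"
printf 'a,b\n' > "$workdir/sample/data.csv"
tar -cf "$workdir/sample.tar" -C "$workdir" sample
tar -czf "$workdir/sample.tar.gz" -C "$workdir" sample

# Member names only.
tar -tf "$workdir/sample.tar"

# Long listing: permissions, owner/group, size, modification time.
tar -tvf "$workdir/sample.tar"

# The gzip-compressed archive; -z decompresses on the fly.
tar -tzf "$workdir/sample.tar.gz"

# Print one member's contents to stdout; nothing is written to disk.
tar -xOf "$workdir/sample.tar" sample/notes.txt
```

Recent versions of GNU tar also detect gzip compression automatically when reading from a file, so `tar -tf sample.tar.gz` usually works without `-z`.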

**3\. Using Rar command**

To view the contents of a rar file, simply do:

```
$ rar v ostechnix.rar

RAR 5.60 Copyright (c) 1993-2018 Alexander Roshal 24 Jun 2018
Trial version Type 'rar -?' for help

Archive: ostechnix.rar
Details: RAR 5

Attributes Size Packed Ratio Date Time Checksum Name
----------- --------- -------- ----- ---------- ----- -------- ----
-rw-r--r-- 53632 52166 97% 2018-06-29 15:57 70260AC4 ostechnix/image.jpg
-rw-r--r-- 156831 139094 88% 2018-06-04 12:37 C66C545E ostechnix/file.pdf
-rw-r--r-- 9702219 9658527 99% 2018-04-25 20:35 DD875AC4 ostechnix/song.mp3
----------- --------- -------- ----- ---------- ----- -------- ----
9912682 9849787 99% 3
```

**4\. Using Unrar command**

You can also do the same using **unrar** with its **l** (list) command, as shown below:

```
$ unrar l ostechnix.rar

UNRAR 5.60 freeware Copyright (c) 1993-2018 Alexander Roshal

Archive: ostechnix.rar
Details: RAR 5

Attributes Size Date Time Name
----------- --------- ---------- ----- ----
-rw-r--r-- 53632 2018-06-29 15:57 ostechnix/image.jpg
-rw-r--r-- 156831 2018-06-04 12:37 ostechnix/file.pdf
-rw-r--r-- 9702219 2018-04-25 20:35 ostechnix/song.mp3
----------- --------- ---------- ----- ----
9912682 3
```

**5\. Using Zip command**

To view the contents of a zip file without extracting it, use the following zip command:

```
$ zip -sf ostechnix.zip
Archive contains:
Life advices.jpg
Total 1 entries (597219 bytes)
```

**6\. Using Unzip command**

You can also use the **unzip** command with the **-l** flag to display the contents of a zip file, as shown below:

```
$ unzip -l ostechnix.zip
Archive: ostechnix.zip
Length Date Time Name
--------- ---------- ----- ----
597219 2018-04-09 12:48 Life advices.jpg
--------- -------
597219 1 file
```

**7\. Using Zipinfo command**

```
$ zipinfo ostechnix.zip
Archive: ostechnix.zip
Zip file size: 584859 bytes, number of entries: 1
-rw-r--r-- 6.3 unx 597219 bx defN 18-Apr-09 12:48 Life advices.jpg
1 file, 597219 bytes uncompressed, 584693 bytes compressed: 2.1%
```

As you can see, the above command displays the contents of the zip file along with their permissions, modification dates, and compression percentage.

**8\. Using Zcat command**

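To print the decompressed contents of a gzip-compressed file to the terminal without extracting it to disk, use the **zcat** command. Note that for a `.tar.gz` file this prints the raw contents of the tar archive, including binary data, rather than a tidy list of file names:

```
$ zcat ostechnix.tar.gz
```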

The **zcat** command is equivalent to **gunzip -c**, so you can also use the following command to view the contents of the archive/compressed file:

```
$ gunzip -c ostechnix.tar.gz
```
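For a `.tar.gz` file, `zcat` and `gunzip -c` emit the raw tar stream rather than a list of names. To get just the names, one option is to pipe that stream back into tar, using `-f -` so tar reads the archive from standard input. Below is a minimal sketch, assuming GNU gzip and tar on Linux; `sample.tar.gz` is a hypothetical archive created on the fly. It also shows `gzip -l`, which reports compressed and uncompressed sizes without extracting anything.

```shell
set -e
# Create a small hypothetical .tar.gz to inspect.
workdir=$(mktemp -d)
mkdir "$workdir/sample"
printf 'hello\n' > "$workdir/sample/readme.txt"
tar -czf "$workdir/sample.tar.gz" -C "$workdir" sample

# Decompress to stdout and let tar list the archive it reads from stdin.
zcat "$workdir/sample.tar.gz" | tar -tf -

# The same thing with gunzip -c.
gunzip -c "$workdir/sample.tar.gz" | tar -tf -

# Compressed size, uncompressed size, ratio, and uncompressed file name.
gzip -l "$workdir/sample.tar.gz"
```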

**9\. Using Zless command**

To view the contents of an archive/compressed file using the **zless** command, simply do:

```
$ zless ostechnix.tar.gz
```

This command works like the **less** command, displaying the decompressed output page by page.

**10\. Using Less command**

As you might already know, the **less** command can be used to open a file for interactive reading, allowing scrolling and search.

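Run the following command to view the contents of an archive/compressed file using the **less** command. This relies on less being configured with an input preprocessor such as lesspipe (through the LESSOPEN environment variable), which many distributions set up by default; without it, less treats the file as binary data:

```
$ less ostechnix.tar.gz
```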

And that’s all for now. You now know how to view the contents of an archive or compressed file using various commands in Linux. I hope you find this useful. More good stuff to come. Stay tuned!

Cheers!

--------------------------------------------------------------------------------
