mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-01-10 22:21:11 +08:00

commit

f1a7a9c15a

@ -0,0 +1,214 @@

|

|||||||

|

free:一个在 Linux 中检查内存使用情况的标准命令

|

||||||

|

============================================================

|

||||||

|

|

||||||

|

我们都知道, IT 基础设施方面的大多数服务器(包括世界顶级的超级计算机)都运行在 Linux 平台上,因为和其他操作系统相比, Linux 更加灵活。有的操作系统对于一些微乎其微的改动和补丁更新都需要重启,但是 Linux 不需要,只有对于一些关键补丁的更新, Linux 才会需要重启。

|

||||||

|

|

||||||

|

Linux 系统管理员面临的一大挑战是如何在没有任何停机时间的情况下维护系统的良好运行。管理内存使用是 Linux 管理员又一个具有挑战性的任务。`free` 是 Linux 中一个标准的并且被广泛使用的命令,它被用来分析内存统计(空闲和已用)。今天,我们将要讨论 `free` 命令以及它的一些有用选项。

|

||||||

|

|

||||||

|

推荐文章:

|

||||||

|

|

||||||

|

* [smem - Linux 内存报告/统计工具][1]

|

||||||

|

* [vmstat - 一个报告虚拟内存统计的标准而又漂亮的工具][2]

|

||||||

|

|

||||||

|

### Free 命令是什么

|

||||||

|

|

||||||

|

free 命令能够显示系统中物理上的空闲(free)和已用(used)内存,还有交换(swap)内存,同时,也能显示被内核使用的缓冲(buffers)和缓存(caches)。这些信息是通过解析文件 `/proc/meninfo` 而收集到的。

|

||||||

|

|

||||||

|

### 显示系统内存

|

||||||

|

|

||||||

|

不带任何选项运行 `free` 命令会显示系统内存,包括空闲(free)、已用(used)、交换(swap)、缓冲(buffers)、缓存(caches)和交换(swap)的内存总数。

|

||||||

|

|

||||||

|

```

|

||||||

|

# free

|

||||||

|

total used free shared buffers cached

|

||||||

|

Mem: 32869744 25434276 7435468 0 412032 23361716

|

||||||

|

-/+ buffers/cache: 1660528 31209216

|

||||||

|

Swap: 4095992 0 4095992

|

||||||

|

```

|

||||||

|

|

||||||

|

输出有三行:

|

||||||

|

|

||||||

|

* 第一行:表明全部内存、已用内存、空闲内存、共用内存(主要被 tmpfs(`/proc/meninfo` 中的 `Shmem` 项)使用)、用于缓冲的内存以及缓存内容大小。

|

||||||

|

* 全部:全部已安装内存(`/proc/meminfo` 中的 `MemTotal` 项)

|

||||||

|

* 已用:已用内存(全部计算 - 空间+缓冲+缓存)

|

||||||

|

* 空闲:未使用内存(`/proc/meminfo` 中的 `MemFree` 项)

|

||||||

|

* 共用:主要被 tmpfs 使用的内存(`/proc/meminfo` 中的 `Shmem` 项)

|

||||||

|

* 缓冲:被内核缓冲使用的内存(`/proc/meminfo` 中的 `Buffers` 项)

|

||||||

|

* 缓存:被页面缓存和 slab 使用的内存(`/proc/meminfo` 中的 `Cached` 和 `SSReclaimable` 项)

|

||||||

|

* 第二行:表明已用和空闲的缓冲/缓存

|

||||||

|

* 第三行:表明总交换内存(`/proc/meminfo` 中的 `SwapTotal` 项)、空闲内存(`/proc/meminfo` 中的 `SwapFree` 项)和已用交换内存。

|

||||||

|

|

||||||

|

### 以 MB 为单位显示系统内存

|

||||||

|

|

||||||

|

默认情况下, `free` 命令以 `KB - Kilobytes` 为单位输出系统内存,这对于绝大多数管理员来说会有一点迷糊(当系统内存很大的时候,我们中的许多人需要把输出转化为以 MB 为单位,从而才能够理解内存大小)。为了避免这个迷惑,我们在 ‘free’ 命令后面加上 `-m` 选项,就可以立即得到以 ‘MB - Megabytes’ 为单位的输出。

|

||||||

|

|

||||||

|

```

|

||||||

|

# free -m

|

||||||

|

total used free shared buffers cached

|

||||||

|

Mem: 32099 24838 7261 0 402 22814

|

||||||

|

-/+ buffers/cache: 1621 30477

|

||||||

|

Swap: 3999 0 3999

|

||||||

|

```

|

||||||

|

|

||||||

|

如何从上面的输出中检查剩余多少空闲内存?主要基于已用(used)和空闲(free)两列。你可能在想,你只有很低的空闲内存,因为它只有 `10%`, 为什么?

|

||||||

|

|

||||||

|

- 全部实际可用内存 = (全部内存 - 第 2 行的已用内存)

|

||||||

|

- 全部内存 = 32099

|

||||||

|

- 实际已用内存 = 1621 ( = 全部内存 - 缓冲 - 缓存)

|

||||||

|

- 全部实际可用内存 = 30477

|

||||||

|

|

||||||

|

如果你的 Linux 版本是最新的,那么有一个查看实际空闲内存的选项,叫做可用(`available`) ,对于旧的版本,请看显示 `-/+ buffers/cache` 那一行对应的空闲(`free`)一列。

|

||||||

|

|

||||||

|

如何从上面的输出中检查有多少实际已用内存?基于已用(used)和空闲(free)一列。你可能想,你已经使用了超过 `95%` 的内存。

|

||||||

|

|

||||||

|

- 全部实际已用内存 = 第一列已用 - (第一列缓冲 + 第一列缓存)

|

||||||

|

- 已用内存 = 24838

|

||||||

|

- 已用缓冲 = 402

|

||||||

|

- 已用缓存 = 22814

|

||||||

|

- 全部实际已用内存 = 1621

|

||||||

|

|

||||||

|

### 以 GB 为单位显示内存

|

||||||

|

|

||||||

|

默认情况下, `free` 命令会以 `KB - kilobytes` 为单位显示输出,这对于大多数管理员来说会有一些迷惑,所以我们使用上面的选项来获得以 `MB - Megabytes` 为单位的输出。但是,当服务器的内存很大(超过 100 GB 或 200 GB)时,上面的选项也会让人很迷惑。所以,在这个时候,我们可以在 `free` 命令后面加上 `-g` 选项,从而立即得到以 `GB - Gigabytes` 为单位的输出。

|

||||||

|

|

||||||

|

```

|

||||||

|

# free -g

|

||||||

|

total used free shared buffers cached

|

||||||

|

Mem: 31 24 7 0 0 22

|

||||||

|

-/+ buffers/cache: 1 29

|

||||||

|

Swap: 3 0 3

|

||||||

|

```

|

||||||

|

|

||||||

|

### 显示全部内存行

|

||||||

|

|

||||||

|

默认情况下, `free` 命令的输出只有三行(内存、缓冲/缓存以及交换)。为了统一以单独一行显示(全部(内存+交换)、已用(内存+(已用-缓冲/缓存)+交换)以及空闲(内存+(已用-缓冲/缓存)+交换),在 ‘free’ 命令后面加上 `-t` 选项。

|

||||||

|

|

||||||

|

```

|

||||||

|

# free -t

|

||||||

|

total used free shared buffers cached

|

||||||

|

Mem: 32869744 25434276 7435468 0 412032 23361716

|

||||||

|

-/+ buffers/cache: 1660528 31209216

|

||||||

|

Swap: 4095992 0 4095992

|

||||||

|

Total: 36965736 27094804 42740676

|

||||||

|

```

|

||||||

|

|

||||||

|

### 按延迟运行 free 命令以便更好的统计

|

||||||

|

|

||||||

|

默认情况下, free 命令只会显示一次统计输出,这是不足够进一步排除故障的,所以,可以通过添加延迟(延迟是指在几秒后再次更新)来定期统计内存活动。如果你想以两秒的延迟运行 free 命令,可以使用下面的命令(如果你想要更多的延迟,你可以按照你的意愿更改数值)。

|

||||||

|

|

||||||

|

下面的命令将会每 2 秒运行一次直到你退出:

|

||||||

|

|

||||||

|

```

|

||||||

|

# free -s 2

|

||||||

|

total used free shared buffers cached

|

||||||

|

Mem: 32849392 25935844 6913548 188 182424 24632796

|

||||||

|

-/+ buffers/cache: 1120624 31728768

|

||||||

|

Swap: 20970492 0 20970492

|

||||||

|

|

||||||

|

total used free shared buffers cached

|

||||||

|

Mem: 32849392 25935288 6914104 188 182424 24632796

|

||||||

|

-/+ buffers/cache: 1120068 31729324

|

||||||

|

Swap: 20970492 0 20970492

|

||||||

|

|

||||||

|

total used free shared buffers cached

|

||||||

|

Mem: 32849392 25934968 6914424 188 182424 24632796

|

||||||

|

-/+ buffers/cache: 1119748 31729644

|

||||||

|

Swap: 20970492 0 20970492

|

||||||

|

```

|

||||||

|

|

||||||

|

### 按延迟和具体次数运行 free 命令

|

||||||

|

|

||||||

|

另外,你可以按延迟和具体次数运行 free 命令,一旦达到某个次数,便自动退出。

|

||||||

|

|

||||||

|

下面的命令将会每 2 秒运行一次 free 命令,计数 5 次以后自动退出。

|

||||||

|

|

||||||

|

```

|

||||||

|

# free -s 2 -c 5

|

||||||

|

total used free shared buffers cached

|

||||||

|

Mem: 32849392 25931052 6918340 188 182424 24632796

|

||||||

|

-/+ buffers/cache: 1115832 31733560

|

||||||

|

Swap: 20970492 0 20970492

|

||||||

|

|

||||||

|

total used free shared buffers cached

|

||||||

|

Mem: 32849392 25931192 6918200 188 182424 24632796

|

||||||

|

-/+ buffers/cache: 1115972 31733420

|

||||||

|

Swap: 20970492 0 20970492

|

||||||

|

|

||||||

|

total used free shared buffers cached

|

||||||

|

Mem: 32849392 25931348 6918044 188 182424 24632796

|

||||||

|

-/+ buffers/cache: 1116128 31733264

|

||||||

|

Swap: 20970492 0 20970492

|

||||||

|

|

||||||

|

total used free shared buffers cached

|

||||||

|

Mem: 32849392 25931316 6918076 188 182424 24632796

|

||||||

|

-/+ buffers/cache: 1116096 31733296

|

||||||

|

Swap: 20970492 0 20970492

|

||||||

|

|

||||||

|

total used free shared buffers cached

|

||||||

|

Mem: 32849392 25931308 6918084 188 182424 24632796

|

||||||

|

-/+ buffers/cache: 1116088 31733304

|

||||||

|

Swap: 20970492 0 20970492

|

||||||

|

```

|

||||||

|

|

||||||

|

### 人类可读格式

|

||||||

|

|

||||||

|

为了以人类可读的格式输出,在 `free` 命令的后面加上 `-h` 选项,和其他选项比如 `-m` 和 `-g` 相比,这将会更人性化输出(自动使用 GB 和 MB 单位)。

|

||||||

|

|

||||||

|

```

|

||||||

|

# free -h

|

||||||

|

total used free shared buff/cache available

|

||||||

|

Mem: 2.0G 1.6G 138M 20M 188M 161M

|

||||||

|

Swap: 2.0G 1.8G 249M

|

||||||

|

```

|

||||||

|

|

||||||

|

### 取消缓冲区和缓存内存输出

|

||||||

|

|

||||||

|

默认情况下, `缓冲/缓存` 内存输出是同时输出的。为了取消缓冲和缓存内存的输出,可以在 `free` 命令后面加上 `-w` 选项。(该选项在版本 3.3.12 上可用)

|

||||||

|

|

||||||

|

注意比较上面有`缓冲/缓存`的输出。

|

||||||

|

|

||||||

|

```

|

||||||

|

# free -wh

|

||||||

|

total used free shared buffers cache available

|

||||||

|

Mem: 2.0G 1.6G 137M 20M 8.1M 183M 163M

|

||||||

|

Swap: 2.0G 1.8G 249M

|

||||||

|

```

|

||||||

|

|

||||||

|

### 显示最低和最高的内存统计

|

||||||

|

|

||||||

|

默认情况下, `free` 命令不会显示最低和最高的内存统计。为了显示最低和最高的内存统计,在 free 命令后面加上 `-l` 选项。

|

||||||

|

|

||||||

|

```

|

||||||

|

# free -l

|

||||||

|

total used free shared buffers cached

|

||||||

|

Mem: 32849392 25931336 6918056 188 182424 24632808

|

||||||

|

Low: 32849392 25931336 6918056

|

||||||

|

High: 0 0 0

|

||||||

|

-/+ buffers/cache: 1116104 31733288

|

||||||

|

Swap: 20970492 0 20970492

|

||||||

|

```

|

||||||

|

|

||||||

|

### 阅读关于 free 命令的更过信息

|

||||||

|

|

||||||

|

如果你想了解 free 命令的更多可用选项,只需查看其 man 手册。

|

||||||

|

|

||||||

|

```

|

||||||

|

# free --help

|

||||||

|

or

|

||||||

|

# man free

|

||||||

|

```

|

||||||

|

|

||||||

|

--------------------------------------------------------------------------------

|

||||||

|

|

||||||

|

via: http://www.2daygeek.com/free-command-to-check-memory-usage-statistics-in-linux/

|

||||||

|

|

||||||

|

作者:[MAGESH MARUTHAMUTHU][a]

|

||||||

|

译者:[ucasFL](https://github.com/ucasFL)

|

||||||

|

校对:[wxy](https://github.com/wxy)

|

||||||

|

|

||||||

|

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||||

|

|

||||||

|

[a]:http://www.2daygeek.com/author/magesh/

|

||||||

|

[1]:http://www.2daygeek.com/smem-linux-memory-usage-statistics-reporting-tool/

|

||||||

|

[2]:http://www.2daygeek.com/linux-vmstat-command-examples-tool-report-virtual-memory-statistics/

|

||||||

|

[3]:http://www.2daygeek.com/author/magesh/

|

||||||

139

published/20170227 How to setup a Linux server on Amazon AWS.md

Normal file

139

published/20170227 How to setup a Linux server on Amazon AWS.md

Normal file

@ -0,0 +1,139 @@

|

|||||||

|

如何在 Amazon AWS 上设置一台 Linux 服务器

|

||||||

|

============================================================

|

||||||

|

|

||||||

|

AWS(Amazon Web Services)是全球领先的云服务器提供商之一。你可以使用 AWS 平台在一分钟内设置完服务器。在 AWS 上,你可以微调服务器的许多技术细节,如 CPU 数量,内存和磁盘空间,磁盘类型(更快的 SSD 或者经典的 IDE)等。关于 AWS 最好的一点是,你只需要为你使用到的服务付费。在开始之前,AWS 提供了一个名为 “Free Tier” 的特殊帐户,你可以免费使用一年的 AWS 技术服务,但会有一些小限制,例如,你每个月使用服务器时长不能超过 750 小时,超过这个他们就会向你收费。你可以在 [aws 官网][3]上查看所有相关的规则。

|

||||||

|

|

||||||

|

因为我的这篇文章是关于在 AWS 上创建 Linux 服务器,因此拥有 “Free Tier” 帐户是先决条件。要注册帐户,你可以使用此[链接][4]。请注意,你需要在创建帐户时输入信用卡详细信息。

|

||||||

|

|

||||||

|

让我们假设你已经创建了 “Free Tier” 帐户。

|

||||||

|

|

||||||

|

在继续之前,你必须了解 AWS 中的一些术语以了解设置:

|

||||||

|

|

||||||

|

1. EC2(弹性计算云):此术语用于虚拟机。

|

||||||

|

2. AMI(Amazon 机器镜像):表示操作系统实例。

|

||||||

|

3. EBS(弹性块存储):AWS 中的一种存储环境类型。

|

||||||

|

|

||||||

|

通过以下链接登录 AWS 控制台:[https://console.aws.amazon.com/][5] 。

|

||||||

|

|

||||||

|

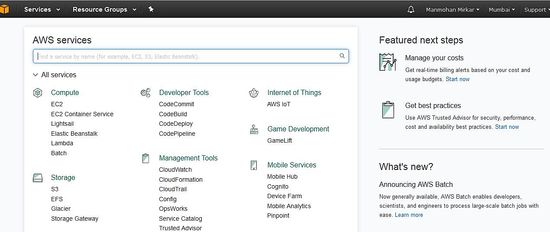

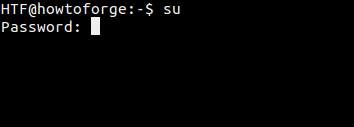

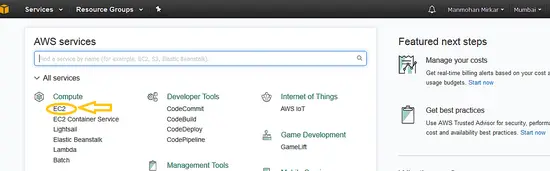

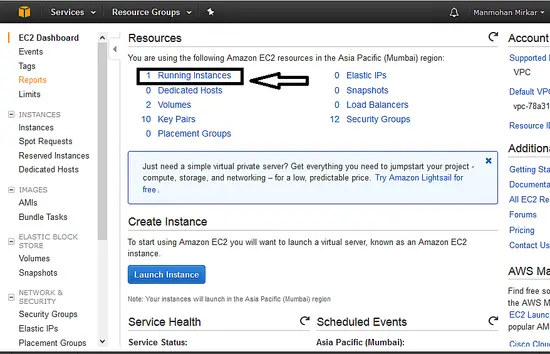

AWS 控制台将如下所示:

|

||||||

|

|

||||||

|

[

|

||||||

|

|

||||||

|

][6]

|

||||||

|

|

||||||

|

### 在 AWS 中设置 Linux VM

|

||||||

|

|

||||||

|

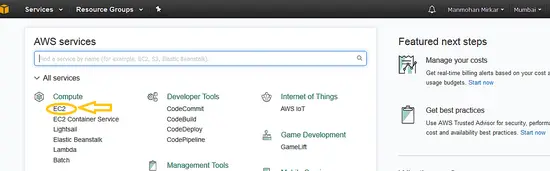

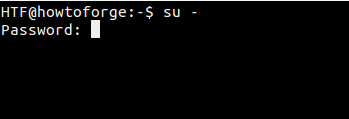

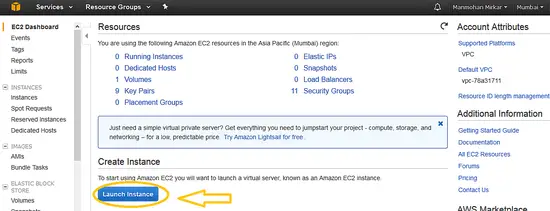

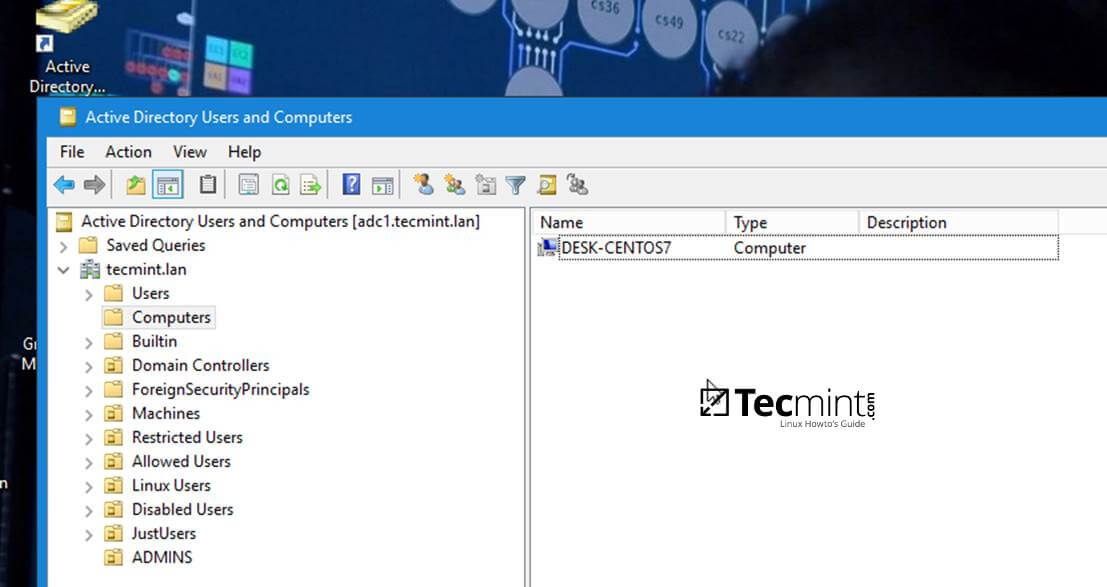

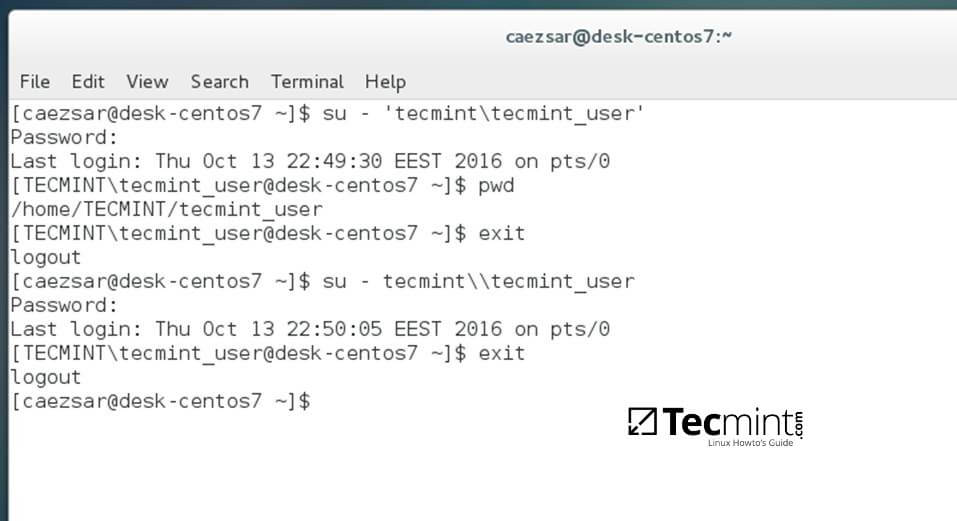

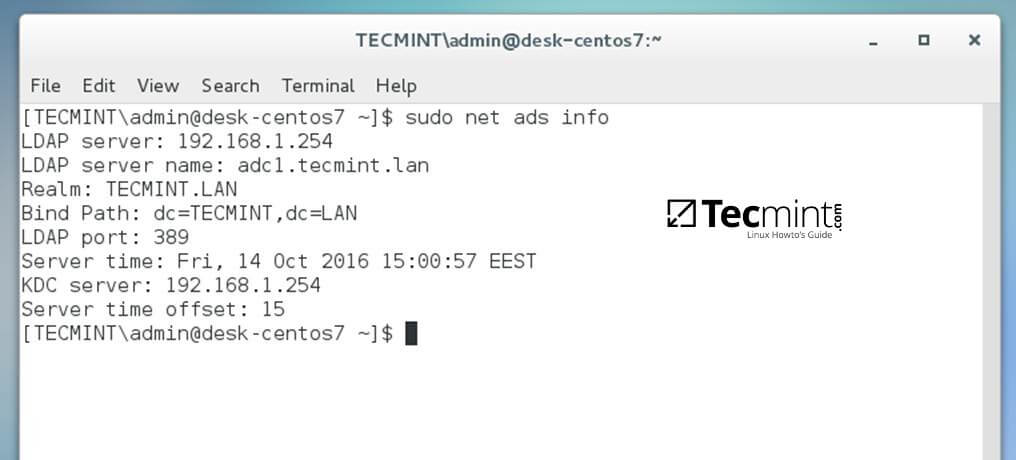

1、 创建一个 EC2(虚拟机)实例:在开始安装系统之前,你必须在 AWS 中创建一台虚拟机。要创建虚拟机,在“<ruby>计算<rt>compute</rt></ruby>”菜单下点击 EC2:

|

||||||

|

|

||||||

|

[

|

||||||

|

|

||||||

|

][7]

|

||||||

|

|

||||||

|

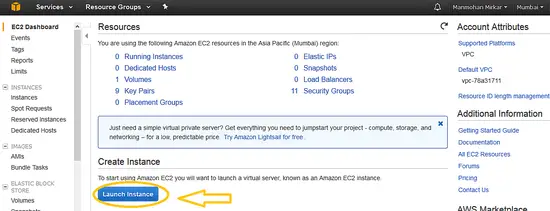

2、 现在在<ruby>创建实例<rt>Create instance</rt></ruby>下点击<ruby>“启动实例”<rt>Launch Instance</rt></ruby>按钮。

|

||||||

|

|

||||||

|

[

|

||||||

|

|

||||||

|

][8]

|

||||||

|

|

||||||

|

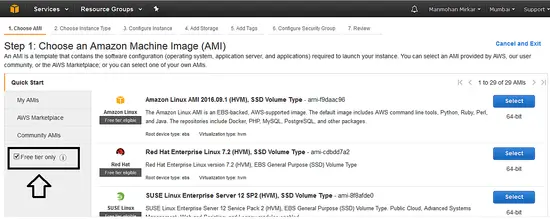

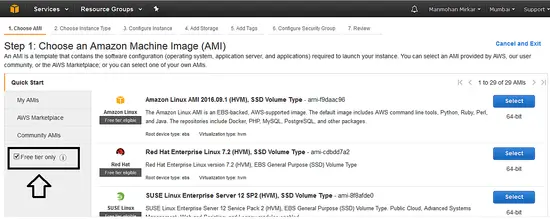

3、 现在,当你使用的是一个 “Free Tier” 帐号,接着最好选择 “Free Tier” 单选按钮以便 AWS 可以过滤出可以免费使用的实例。这可以让你不用为使用 AWS 的资源而付费。

|

||||||

|

|

||||||

|

[

|

||||||

|

|

||||||

|

][9]

|

||||||

|

|

||||||

|

4、 要继续操作,请选择以下选项:

|

||||||

|

|

||||||

|

a、 在经典实例向导中选择一个 AMI(Amazon Machine Image),然后选择使用 **Red Hat Enterprise Linux 7.2(HVM),SSD 存储**

|

||||||

|

|

||||||

|

b、 选择 “**t2.micro**” 作为实例详细信息。

|

||||||

|

|

||||||

|

c、 **配置实例详细信息**:不要更改任何内容,只需单击下一步。

|

||||||

|

|

||||||

|

d、 **添加存储**:不要更改任何内容,只需点击下一步,因为此时我们将使用默认的 10(GiB)硬盘。

|

||||||

|

|

||||||

|

e、 **添加标签**:不要更改任何内容只需点击下一步。

|

||||||

|

|

||||||

|

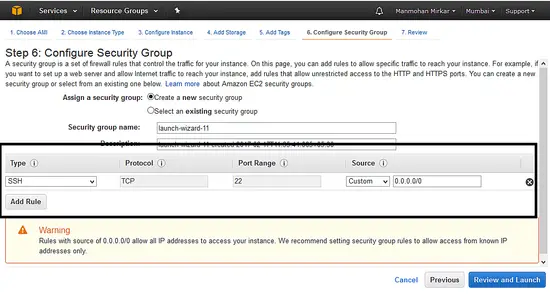

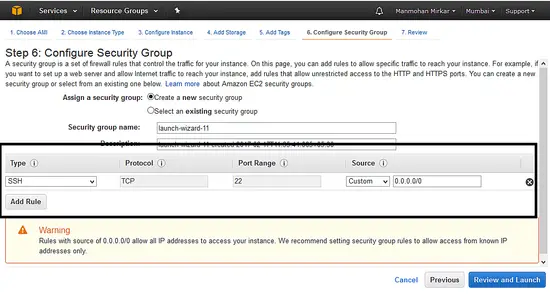

f、 **配置安全组**:现在选择用于 ssh 的 22 端口,以便你可以在任何地方访问此服务器。

|

||||||

|

|

||||||

|

[

|

||||||

|

|

||||||

|

][10]

|

||||||

|

|

||||||

|

g、 选择“<ruby>查看并启动<rt>Review and Launch</rt></ruby>”按钮。

|

||||||

|

|

||||||

|

h、 如果所有的详情都无误,点击 “<ruby>启动<rt>Launch</rt></ruby>”按钮。

|

||||||

|

|

||||||

|

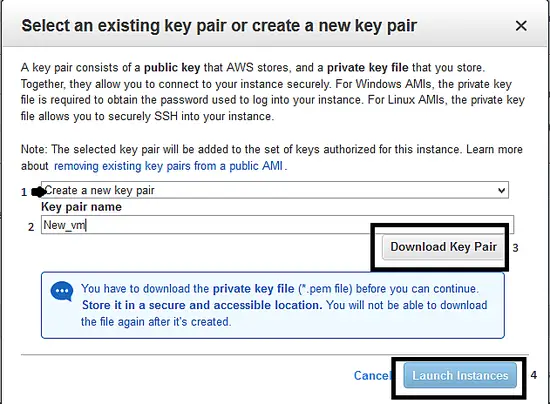

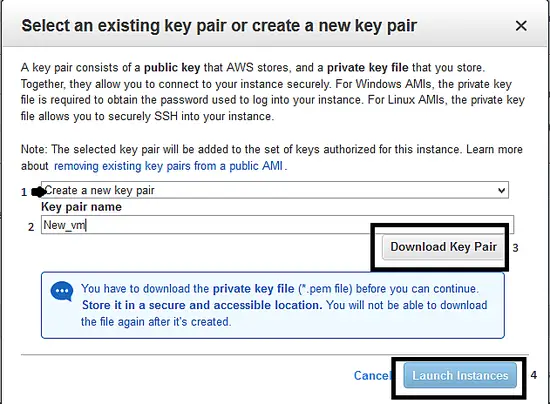

i、 单击“<ruby>启动<rt>Launch</rt></ruby>”按钮后,系统会像下面那样弹出一个窗口以创建“密钥对”:选择选项“<ruby>创建密钥对<rt>create a new key pair</rt></ruby>”,并给密钥对起个名字,然后下载下来。在使用 ssh 连接到服务器时,需要此密钥对。最后,单击“<ruby>启动实例<rt>Launch Instance</rt></ruby>”按钮。

|

||||||

|

|

||||||

|

[

|

||||||

|

|

||||||

|

][11]

|

||||||

|

|

||||||

|

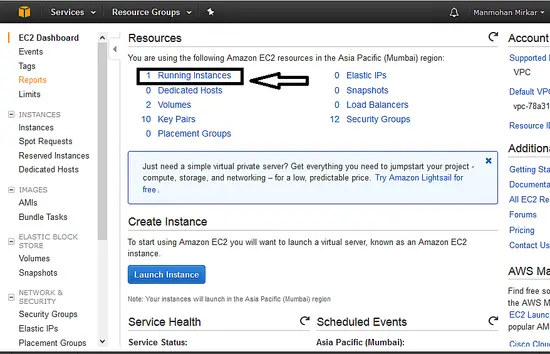

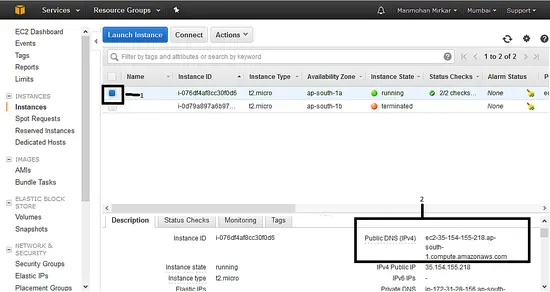

j、 点击“<ruby>启动实例<rt>Launch Instance</rt></ruby>”按钮后,转到左上角的服务。选择“<ruby>计算<rt>compute</rt></ruby>”--> “EC2”。现在点击“<ruby>运行实例<rt>Running Instances</rt></ruby>”:

|

||||||

|

|

||||||

|

[

|

||||||

|

|

||||||

|

][12]

|

||||||

|

|

||||||

|

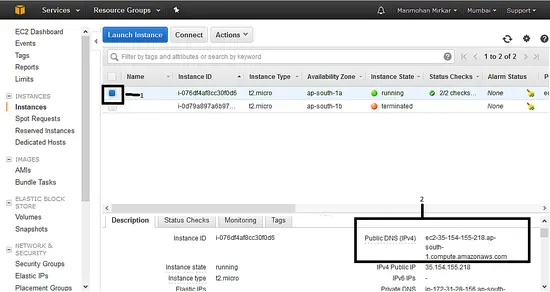

k、 现在你可以看到,你的新 VM 的状态是 “<ruby>运行中<rt>running</rt></ruby>”。选择实例,请记下登录到服务器所需的 “<ruby>公开 DNS 名称<rt>Public DNS</rt></ruby>”。

|

||||||

|

|

||||||

|

[

|

||||||

|

|

||||||

|

][13]

|

||||||

|

|

||||||

|

现在你已完成创建一台运行 Linux 的 VM。要连接到服务器,请按照以下步骤操作。

|

||||||

|

|

||||||

|

### 从 Windows 中连接到 EC2 实例

|

||||||

|

|

||||||

|

1、 首先,你需要有 putty gen 和 Putty exe 用于从 Windows 连接到服务器(或 Linux 上的 SSH 命令)。你可以通过下面的[链接][14]下载 putty。

|

||||||

|

|

||||||

|

2、 现在打开 putty gen :`puttygen.exe`。

|

||||||

|

|

||||||

|

3、 你需要单击 “Load” 按钮,浏览并选择你从亚马逊上面下载的密钥对文件(pem 文件)。

|

||||||

|

|

||||||

|

4、 你需要选择 “ssh2-RSA” 选项,然后单击保存私钥按钮。请在下一个弹出窗口中选择 “yes”。

|

||||||

|

|

||||||

|

5、 将文件以扩展名 `.ppk` 保存。

|

||||||

|

|

||||||

|

6、 现在你需要打开 `putty.exe`。在左侧菜单中点击 “connect”,然后选择 “SSH”,然后选择 “Auth”。你需要单击浏览按钮来选择我们在步骤 4 中创建的 .ppk 文件。

|

||||||

|

|

||||||

|

7、 现在点击 “session” 菜单,并在“host name” 中粘贴在本教程中 “k” 步骤中的 DNS 值,然后点击 “open” 按钮。

|

||||||

|

|

||||||

|

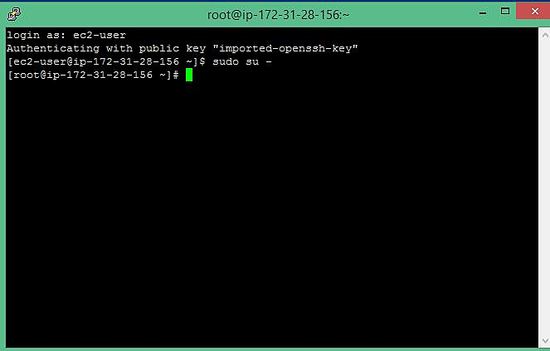

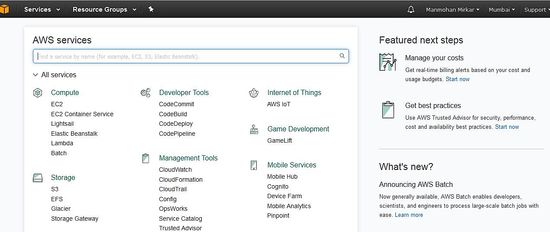

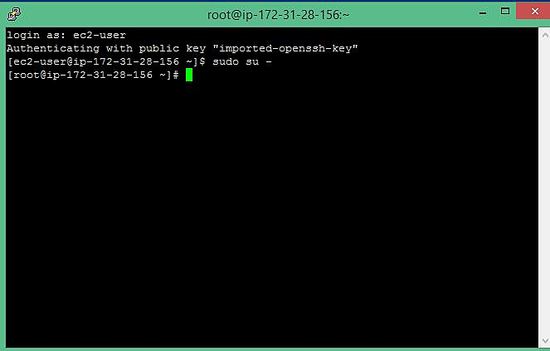

8、 在要求用户名和密码时,输入 `ec2-user` 和空白密码,然后输入下面的命令。

|

||||||

|

|

||||||

|

```

|

||||||

|

$ sudo su -

|

||||||

|

```

|

||||||

|

|

||||||

|

哈哈,你现在是在 AWS 云上托管的 Linux 服务器上的主人啦。

|

||||||

|

|

||||||

|

[

|

||||||

|

|

||||||

|

][15]

|

||||||

|

|

||||||

|

--------------------------------------------------------------------------------

|

||||||

|

|

||||||

|

via: https://www.howtoforge.com/tutorial/how-to-setup-linux-server-with-aws/

|

||||||

|

|

||||||

|

作者:[MANMOHAN MIRKAR][a]

|

||||||

|

译者:[geekpi](https://github.com/geekpi)

|

||||||

|

校对:[jasminepeng](https://github.com/jasminepeng)

|

||||||

|

|

||||||

|

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||||

|

|

||||||

|

[a]:https://www.howtoforge.com/tutorial/how-to-setup-linux-server-with-aws/

|

||||||

|

[1]:https://www.howtoforge.com/tutorial/how-to-setup-linux-server-with-aws/#setup-a-linux-vm-in-aws

|

||||||

|

[2]:https://www.howtoforge.com/tutorial/how-to-setup-linux-server-with-aws/#connect-to-an-ec-instance-from-windows

|

||||||

|

[3]:http://aws.amazon.com/free/

|

||||||

|

[4]:http://aws.amazon.com/ec2/

|

||||||

|

[5]:https://console.aws.amazon.com/

|

||||||

|

[6]:https://www.howtoforge.com/images/how_to_setup_linux_server_with_aws/big/aws_console.JPG

|

||||||

|

[7]:https://www.howtoforge.com/images/how_to_setup_linux_server_with_aws/big/aws_console_ec21.png

|

||||||

|

[8]:https://www.howtoforge.com/images/how_to_setup_linux_server_with_aws/big/aws_launch_ec2.png

|

||||||

|

[9]:https://www.howtoforge.com/images/how_to_setup_linux_server_with_aws/big/aws_free_tier_radio1.png

|

||||||

|

[10]:https://www.howtoforge.com/images/how_to_setup_linux_server_with_aws/big/aws_ssh_port1.png

|

||||||

|

[11]:https://www.howtoforge.com/images/how_to_setup_linux_server_with_aws/big/aws_key_pair.png

|

||||||

|

[12]:https://www.howtoforge.com/images/how_to_setup_linux_server_with_aws/big/aws_running_instance.png

|

||||||

|

[13]:https://www.howtoforge.com/images/how_to_setup_linux_server_with_aws/big/aws_dns_value.png

|

||||||

|

[14]:http://www.chiark.greenend.org.uk/~sgtatham/putty/latest.html

|

||||||

|

[15]:https://www.howtoforge.com/images/how_to_setup_linux_server_with_aws/big/aws_putty1.JPG

|

||||||

@ -0,0 +1,111 @@

|

|||||||

|

如何在 Linux 中安装最新的 Python 3.6 版本

|

||||||

|

============================================================

|

||||||

|

|

||||||

|

在这篇文章中,我将展示如何在 CentOS/RHEL 7、Debian 以及它的衍生版本比如 Ubuntu(最新的 Ubuntu 16.04 LTS 版本已经安装了最新的 Python 版本)或 Linux Mint 上安装和使用 Python 3.x 。我们的重点是安装可用于命令行的核心语言工具。

|

||||||

|

|

||||||

|

然后,我们也会阐述如何安装 Python IDLE - 一个基于 GUI 的工具,它允许我们运行 Python 代码和创建独立函数。

|

||||||

|

|

||||||

|

### 在 Linux 中安装 Python 3.6

|

||||||

|

|

||||||

|

在我写这篇文章的时候(2017 年三月中旬),在 CentOS 和 Debian 8 中可用的最新 Python 版本分别是 Python 3.4 和 Python 3.5 。

|

||||||

|

|

||||||

|

虽然我们可以使用 [yum][1] 和 [aptitude][2](或 [apt-get][3])安装核心安装包以及它们的依赖,但在这儿,我将阐述如何使用源代码进行安装。

|

||||||

|

|

||||||

|

为什么?理由很简单:这样我们能够获取语言的最新的稳定发行版(3.6),并且提供了一种和 Linux 版本无关的安装方法。

|

||||||

|

|

||||||

|

在 CentOS 7 中安装 Python 之前,请确保系统中已经有了所有必要的开发依赖:

|

||||||

|

|

||||||

|

```

|

||||||

|

# yum -y groupinstall development

|

||||||

|

# yum -y install zlib-devel

|

||||||

|

```

|

||||||

|

|

||||||

|

在 Debian 中,我们需要安装 gcc、make 和 zlib 压缩/解压缩库:

|

||||||

|

|

||||||

|

```

|

||||||

|

# aptitude -y install gcc make zlib1g-dev

|

||||||

|

```

|

||||||

|

|

||||||

|

运行下面的命令来安装 Python 3.6:

|

||||||

|

|

||||||

|

```

|

||||||

|

# wget https://www.python.org/ftp/python/3.6.0/Python-3.6.0.tar.xz

|

||||||

|

# tar xJf Python-3.6.0.tar.xz

|

||||||

|

# cd Python-3.6.0

|

||||||

|

# ./configure

|

||||||

|

# make && make install

|

||||||

|

```

|

||||||

|

|

||||||

|

现在,放松一下,或者饿的话去吃个三明治,因为这可能需要花费一些时间。安装完成以后,使用 `which` 命令来查看主要二进制代码的位置:

|

||||||

|

|

||||||

|

```

|

||||||

|

# which python3

|

||||||

|

# python3 -V

|

||||||

|

```

|

||||||

|

|

||||||

|

上面的命令的输出应该和这相似:

|

||||||

|

|

||||||

|

[

|

||||||

|

|

||||||

|

][4]

|

||||||

|

|

||||||

|

*查看 Linux 系统中的 Python 版本*

|

||||||

|

|

||||||

|

要退出 Python 提示符,只需输入:

|

||||||

|

|

||||||

|

```

|

||||||

|

quit()

|

||||||

|

或

|

||||||

|

exit()

|

||||||

|

```

|

||||||

|

|

||||||

|

然后按回车键。

|

||||||

|

|

||||||

|

恭喜!Python 3.6 已经安装在你的系统上了。

|

||||||

|

|

||||||

|

### 在 Linux 中安装 Python IDLE

|

||||||

|

|

||||||

|

Python IDLE 是一个基于 GUI 的 Python 工具。如果你想安装 Python IDLE,请安装叫做 idle(Debian)或 python-tools(CentOS)的包:

|

||||||

|

|

||||||

|

```

|

||||||

|

# apt-get install idle [On Debian]

|

||||||

|

# yum install python-tools [On CentOS]

|

||||||

|

```

|

||||||

|

|

||||||

|

输入下面的命令启动 Python IDLE:

|

||||||

|

|

||||||

|

```

|

||||||

|

# idle

|

||||||

|

```

|

||||||

|

|

||||||

|

### 总结

|

||||||

|

|

||||||

|

在这篇文章中,我们阐述了如何从源代码安装最新的 Python 稳定版本。

|

||||||

|

|

||||||

|

最后但不是不重要,如果你之前使用 Python 2,那么你可能需要看一下 [从 Python 2 迁移到 Python 3 的官方文档][5]。这是一个可以读入 Python 2 代码,然后转化为有效的 Python 3 代码的程序。

|

||||||

|

|

||||||

|

你有任何关于这篇文章的问题或想法吗?请使用下面的评论栏与我们联系

|

||||||

|

|

||||||

|

--------------------------------------------------------------------------------

|

||||||

|

|

||||||

|

作者简介:

|

||||||

|

|

||||||

|

Gabriel Cánepa - 一位来自阿根廷圣路易斯梅塞德斯镇 (Villa Mercedes, San Luis, Argentina) 的 GNU/Linux 系统管理员,Web 开发者。就职于一家世界领先级的消费品公司,乐于在每天的工作中能使用 FOSS 工具来提高生产力。

|

||||||

|

|

||||||

|

--------------------------------------------------------------------------------

|

||||||

|

|

||||||

|

via: http://www.tecmint.com/install-python-in-linux/

|

||||||

|

|

||||||

|

作者:[Gabriel Cánepa][a]

|

||||||

|

译者:[ucasFL](https://github.com/ucasFL)

|

||||||

|

校对:[wxy](https://github.com/wxy)

|

||||||

|

|

||||||

|

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||||

|

|

||||||

|

[a]:http://www.tecmint.com/author/gacanepa/

|

||||||

|

|

||||||

|

[1]:http://www.tecmint.com/20-linux-yum-yellowdog-updater-modified-commands-for-package-mangement/

|

||||||

|

[2]:http://www.tecmint.com/linux-package-management/

|

||||||

|

[3]:http://www.tecmint.com/useful-basic-commands-of-apt-get-and-apt-cache-for-package-management/

|

||||||

|

[4]:http://www.tecmint.com/wp-content/uploads/2017/03/Check-Python-Version-in-Linux.png

|

||||||

|

[5]:https://docs.python.org/3.6/library/2to3.html

|

||||||

@ -1,14 +0,0 @@

|

|||||||

Programmer Levels

|

|

||||||

=======

|

|

||||||

|

|

||||||

|

|

||||||

via: http://turnoff.us/geek/programmer-leves/

|

|

||||||

|

|

||||||

|

|

||||||

作者:[Daniel Stori][a]

|

|

||||||

译者:[译者ID](https://github.com/译者ID)

|

|

||||||

校对:[校对者ID](https://github.com/校对者ID)

|

|

||||||

|

|

||||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

|

||||||

|

|

||||||

[a]: http://turnoff.us/about/

|

|

||||||

@ -1,277 +0,0 @@

|

|||||||

Index of turnoff.us

|

|

||||||

====================

|

|

||||||

|

|

||||||

* ### [Ode To My Family][1]

|

|

||||||

|

|

||||||

* ### [The Depressed Developer 11][2]

|

|

||||||

|

|

||||||

* ### [The Jealous Process][3]

|

|

||||||

|

|

||||||

* ### [#!S][4]

|

|

||||||

|

|

||||||

* ### [The Depressed Developer 10][5]

|

|

||||||

|

|

||||||

* ### [User Space Election][6]

|

|

||||||

|

|

||||||

* ### [The Depressed Developer 7][7]

|

|

||||||

|

|

||||||

* ### [The War for Port 80][8]

|

|

||||||

|

|

||||||

* ### [The Depressed Developer 6][9]

|

|

||||||

|

|

||||||

* ### [Adopt a good cause, DON'T SIGKILL][10]

|

|

||||||

|

|

||||||

* ### [Happy 0b11111100001][11]

|

|

||||||

|

|

||||||

* ### [Bash History][12]

|

|

||||||

|

|

||||||

* ### [My (Dev) Morning Routine][13]

|

|

||||||

|

|

||||||

* ### [The Depressed Developer 5][14]

|

|

||||||

|

|

||||||

* ### [The Real Reason Not To Share a Mutable State][15]

|

|

||||||

|

|

||||||

* ### [Any Given Day][16]

|

|

||||||

|

|

||||||

* ### [One Last Question][17]

|

|

||||||

|

|

||||||

* ### [The Depressed Developer 3][18]

|

|

||||||

|

|

||||||

* ### [The Depressed Developer 4][19]

|

|

||||||

|

|

||||||

* ### [The Depressed Developer 2][20]

|

|

||||||

|

|

||||||

* ### [The Depressed Developer][21]

|

|

||||||

|

|

||||||

* ### [Protocols][22]

|

|

||||||

|

|

||||||

* ### [The Lord of The Matrix][23]

|

|

||||||

|

|

||||||

* ### [Inheritance versus Composition][24]

|

|

||||||

|

|

||||||

* ### [Coding From Anthill][25]

|

|

||||||

|

|

||||||

* ### [Life of Embedded Processes][26]

|

|

||||||

|

|

||||||

* ### [Deadline][27]

|

|

||||||

|

|

||||||

* ### [Ubuntu Core][28]

|

|

||||||

|

|

||||||

* ### [The Truth About Google][29]

|

|

||||||

|

|

||||||

* ### [Inside the Linux Kernel][30]

|

|

||||||

|

|

||||||

* ### [Programmer Levels][31]

|

|

||||||

|

|

||||||

* ### [Microservices][32]

|

|

||||||

|

|

||||||

* ### [Binary Tree][33]

|

|

||||||

|

|

||||||

* ### [Annoying Software 4 - Checkbox vs Radio Button][34]

|

|

||||||

|

|

||||||

* ### [Zombie Processes][35]

|

|

||||||

|

|

||||||

* ### [Poprocks and Coke][36]

|

|

||||||

|

|

||||||

* ### [jhamlet][37]

|

|

||||||

|

|

||||||

* ### [Java Thread Life][38]

|

|

||||||

|

|

||||||

* ### [Stranger Things - In The SysAdmin's World][39]

|

|

||||||

|

|

||||||

* ### [Who Killed MySQL? - Epilogue][40]

|

|

||||||

|

|

||||||

* ### [Sometimes They Are][41]

|

|

||||||

|

|

||||||

* ### [Dotnet on Linux][42]

|

|

||||||

|

|

||||||

* ### [Who Killed MySQL?][43]

|

|

||||||

|

|

||||||

* ### [To VI Or Not To VI][44]

|

|

||||||

|

|

||||||

* ### [Brothers Conflict (at linux kernel)][45]

|

|

||||||

|

|

||||||

* ### [Big Numbers][46]

|

|

||||||

|

|

||||||

* ### [The Codeless Developer][47]

|

|

||||||

|

|

||||||

* ### [Introducing the OOM Killer][48]

|

|

||||||

|

|

||||||

* ### [Reactive and Boring][49]

|

|

||||||

|

|

||||||

* ### [Hype Detected][50]

|

|

||||||

|

|

||||||

* ### [3rd World Daily News][51]

|

|

||||||

|

|

||||||

* ### [Java Evolution Parade][52]

|

|

||||||

|

|

||||||

* ### [The Opposite of RIP][53]

|

|

||||||

|

|

||||||

* ### [How I Met Your Mother][54]

|

|

||||||

|

|

||||||

* ### [Schrödinger's Cat Last Declarations][55]

|

|

||||||

|

|

||||||

* ### [Bash on Windows][56]

|

|

||||||

|

|

||||||

* ### [Ubuntu Updates][57]

|

|

||||||

|

|

||||||

* ### [SQL Server on Linux Part 2][58]

|

|

||||||

|

|

||||||

* ### [About JavaScript Developers][59]

|

|

||||||

|

|

||||||

* ### [The Real Reason to Not Use SIGKILL][60]

|

|

||||||

|

|

||||||

* ### [Java 20 - Predictions][61]

|

|

||||||

|

|

||||||

* ### [Do the Evolution, Baby!][62]

|

|

||||||

|

|

||||||

* ### [SQL Server on Linux][63]

|

|

||||||

|

|

||||||

* ### [When Just-In-Time Is Justin Time][64]

|

|

||||||

|

|

||||||

* ### [The Agile Restaurant][65]

|

|

||||||

|

|

||||||

* ### [I Love Windows PowerShell][66]

|

|

||||||

|

|

||||||

* ### [Linux Master Hero][67]

|

|

||||||

|

|

||||||

* ### [Doing a Great Job Together][68]

|

|

||||||

|

|

||||||

* ### [Geek Rivalries][69]

|

|

||||||

|

|

||||||

* ### [A Java Nightmare][70]

|

|

||||||

|

|

||||||

* ### [Java Family Crisis][71]

|

|

||||||

|

|

||||||

* ### [Annoying Software 3 - The Date Situation][72]

|

|

||||||

|

|

||||||

* ### [The (Sometimes Hard) Cloud Journey][73]

|

|

||||||

|

|

||||||

* ### [Thread.Sleep Room][74]

|

|

||||||

|

|

||||||

* ### [Web Server Upgrade Training][75]

|

|

||||||

|

|

||||||

* ### [Life in a Web Server][76]

|

|

||||||

|

|

||||||

* ### [Mastering RegExp][77]

|

|

||||||

|

|

||||||

* ### [Java Collections in Duck Life][78]

|

|

||||||

|

|

||||||

* ### [Java Garbage Collection Explained][79]

|

|

||||||

|

|

||||||

* ### [Waze vs Battery][80]

|

|

||||||

|

|

||||||

* ### [Masks][81]

|

|

||||||

|

|

||||||

* ### [Big Data Marriage][82]

|

|

||||||

|

|

||||||

* ### [Annoying Software 2][83]

|

|

||||||

|

|

||||||

* ### [Annoying Software][84]

|

|

||||||

|

|

||||||

* ### [Advanced Species][85]

|

|

||||||

|

|

||||||

* ### [The Modern Evil][86]

|

|

||||||

|

|

||||||

* ### [Software Testing][87]

|

|

||||||

|

|

||||||

* ### [Arduino Project][88]

|

|

||||||

|

|

||||||

* ### [Tales of DOS][89]

|

|

||||||

|

|

||||||

* ### [Developers][90]

|

|

||||||

|

|

||||||

* ### [TCP Buddies][91]

|

|

||||||

|

|

||||||

|

|

||||||

[1]:https://turnoff.us/geek/ode-to-my-family

|

|

||||||

[2]:https://turnoff.us/geek/the-depressed-developer-11

|

|

||||||

[3]:https://turnoff.us/geek/the-jealous-process

|

|

||||||

[4]:https://turnoff.us/geek/shebang

|

|

||||||

[5]:https://turnoff.us/geek/the-depressed-developer-10

|

|

||||||

[6]:https://turnoff.us/geek/user-space-election

|

|

||||||

[7]:https://turnoff.us/geek/the-depressed-developer-7

|

|

||||||

[8]:https://turnoff.us/geek/apache-vs-nginx

|

|

||||||

[9]:https://turnoff.us/geek/the-depressed-developer-6

|

|

||||||

[10]:https://turnoff.us/geek/dont-sigkill-2

|

|

||||||

[11]:https://turnoff.us/geek/2016-2017

|

|

||||||

[12]:https://turnoff.us/geek/bash-history

|

|

||||||

[13]:https://turnoff.us/geek/my-morning-routine

|

|

||||||

[14]:https://turnoff.us/geek/the-depressed-developer-5

|

|

||||||

[15]:https://turnoff.us/geek/dont-share-mutable-state

|

|

||||||

[16]:https://turnoff.us/geek/sql-injection

|

|

||||||

[17]:https://turnoff.us/geek/one-last-question

|

|

||||||

[18]:https://turnoff.us/geek/the-depressed-developer-3

|

|

||||||

[19]:https://turnoff.us/geek/the-depressed-developer-4

|

|

||||||

[20]:https://turnoff.us/geek/the-depressed-developer-2

|

|

||||||

[21]:https://turnoff.us/geek/the-depressed-developer

|

|

||||||

[22]:https://turnoff.us/geek/protocols

|

|

||||||

[23]:https://turnoff.us/geek/the-lord-of-the-matrix

|

|

||||||

[24]:https://turnoff.us/geek/inheritance-versus-composition

|

|

||||||

[25]:https://turnoff.us/geek/ant

|

|

||||||

[26]:https://turnoff.us/geek/ubuntu-core-2

|

|

||||||

[27]:https://turnoff.us/geek/deadline

|

|

||||||

[28]:https://turnoff.us/geek/ubuntu-core

|

|

||||||

[29]:https://turnoff.us/geek/the-truth-about-google

|

|

||||||

[30]:https://turnoff.us/geek/inside-the-linux-kernel

|

|

||||||

[31]:https://turnoff.us/geek/programmer-leves

|

|

||||||

[32]:https://turnoff.us/geek/microservices

|

|

||||||

[33]:https://turnoff.us/geek/binary-tree

|

|

||||||

[34]:https://turnoff.us/geek/annoying-software-4

|

|

||||||

[35]:https://turnoff.us/geek/zombie-processes

|

|

||||||

[36]:https://turnoff.us/geek/poprocks-and-coke

|

|

||||||

[37]:https://turnoff.us/geek/jhamlet

|

|

||||||

[38]:https://turnoff.us/geek/java-thread-life

|

|

||||||

[39]:https://turnoff.us/geek/stranger-things-sysadmin-world

|

|

||||||

[40]:https://turnoff.us/geek/who-killed-mysql-epilogue

|

|

||||||

[41]:https://turnoff.us/geek/sad-robot

|

|

||||||

[42]:https://turnoff.us/geek/dotnet-on-linux

|

|

||||||

[43]:https://turnoff.us/geek/who-killed-mysql

|

|

||||||

[44]:https://turnoff.us/geek/to-vi-or-not-to-vi

|

|

||||||

[45]:https://turnoff.us/geek/brothers-conflict

|

|

||||||

[46]:https://turnoff.us/geek/big-numbers

|

|

||||||

[47]:https://turnoff.us/geek/codeless

|

|

||||||

[48]:https://turnoff.us/geek/oom-killer

|

|

||||||

[49]:https://turnoff.us/geek/reactive-and-boring

|

|

||||||

[50]:https://turnoff.us/geek/tech-adoption

|

|

||||||

[51]:https://turnoff.us/geek/3rd-world-news

|

|

||||||

[52]:https://turnoff.us/geek/java-evolution-parade

|

|

||||||

[53]:https://turnoff.us/geek/opposite-of-rip

|

|

||||||

[54]:https://turnoff.us/geek/how-i-met-your-mother

|

|

||||||

[55]:https://turnoff.us/geek/schrodinger-cat

|

|

||||||

[56]:https://turnoff.us/geek/bash-on-windows

|

|

||||||

[57]:https://turnoff.us/geek/ubuntu-updates

|

|

||||||

[58]:https://turnoff.us/geek/sql-server-on-linux-2

|

|

||||||

[59]:https://turnoff.us/geek/love-ecma6

|

|

||||||

[60]:https://turnoff.us/geek/dont-sigkill

|

|

||||||

[61]:https://turnoff.us/geek/java20-predictions

|

|

||||||

[62]:https://turnoff.us/geek/its-evolution-baby

|

|

||||||

[63]:https://turnoff.us/geek/sql-server-on-linux

|

|

||||||

[64]:https://turnoff.us/geek/lazy-justin-is-late-again

|

|

||||||

[65]:https://turnoff.us/geek/agile-restaurant

|

|

||||||

[66]:https://turnoff.us/geek/love-powershell

|

|

||||||

[67]:https://turnoff.us/geek/linux-master-hero

|

|

||||||

[68]:https://turnoff.us/geek/duke-tux

|

|

||||||

[69]:https://turnoff.us/geek/geek-rivalries

|

|

||||||

[70]:https://turnoff.us/geek/a-java-nightmare

|

|

||||||

[71]:https://turnoff.us/geek/java-family-crisis

|

|

||||||

[72]:https://turnoff.us/geek/annoying-software-3

|

|

||||||

[73]:https://turnoff.us/geek/cloud-sometimes-hard-journey

|

|

||||||

[74]:https://turnoff.us/geek/thread-sleep-room

|

|

||||||

[75]:https://turnoff.us/geek/webserver-upgrade-training

|

|

||||||

[76]:https://turnoff.us/geek/life-in-a-web-server

|

|

||||||

[77]:https://turnoff.us/geek/mastering-regexp

|

|

||||||

[78]:https://turnoff.us/geek/java-collections

|

|

||||||

[79]:https://turnoff.us/geek/java-gc-explained

|

|

||||||

[80]:https://turnoff.us/geek/waze-vs-battery

|

|

||||||

[81]:https://turnoff.us/geek/masks

|

|

||||||

[82]:https://turnoff.us/geek/bigdata-marriage

|

|

||||||

[83]:https://turnoff.us/geek/annoying-software-2

|

|

||||||

[84]:https://turnoff.us/geek/annoying-software

|

|

||||||

[85]:https://turnoff.us/geek/advanced-species

|

|

||||||

[86]:https://turnoff.us/geek/modern-evil

|

|

||||||

[87]:https://turnoff.us/geek/software-test

|

|

||||||

[88]:https://turnoff.us/geek/arduino-project

|

|

||||||

[89]:https://turnoff.us/geek/tales-of-dos

|

|

||||||

[90]:https://turnoff.us/geek/developers

|

|

||||||

[91]:https://turnoff.us/geek/tcp-buddies

|

|

||||||

@ -0,0 +1,97 @@

|

|||||||

|

Why do you use Linux and open source software?

|

||||||

|

============================================================

|

||||||

|

|

||||||

|

>LinuxQuestions.org readers share reasons they use Linux and open source technologies. How will Opensource.com readers respond?

|

||||||

|

|

||||||

|

|

||||||

|

>Image by : opensource.com

|

||||||

|

|

||||||

|

As I mentioned when [The Queue][4] launched, although typically I will answer questions from readers, sometimes I'll switch that around and ask readers a question. I haven't done so since that initial column, so it's overdue. I recently asked two related questions at LinuxQuestions.org and the response was overwhelming. Let's see how the Opensource.com community answers both questions, and how those responses compare and contrast to those on LQ.

|

||||||

|

|

||||||

|

### Why do you use Linux?

|

||||||

|

|

||||||

|

The first question I asked the LinuxQuestions.org community is: **[What are the reasons you use Linux?][1]**

|

||||||

|

|

||||||

|

### Answer highlights

|

||||||

|

|

||||||

|

_oldwierdal_ : I use Linux because it is fast, safe, and reliable. With contributors from all over the world, it has become, perhaps, the most advanced and innovative software available. And, here is the icing on the red-velvet cake; It is free!

|

||||||

|

|

||||||

|

_Timothy Miller_ : I started using it because it was free as in beer and I was poor so couldn't afford to keep buying new Windows licenses.

|

||||||

|

|

||||||

|

_ondoho_ : Because it's a global community effort, self-governed grassroot operating system. Because it's free in every sense. Because there's good reason to trust in it.

|

||||||

|

|

||||||

|

_joham34_ : Stable, free, safe, runs in low specs PCs, nice support community, little to no danger for viruses.

|

||||||

|

|

||||||

|

_Ook_ : I use Linux because it just works, something Windows never did well for me. I don't have to waste time and money getting it going and keeping it going.

|

||||||

|

|

||||||

|

_rhamel_ : I am very concerned about the loss of privacy as a whole on the internet. I recognize that compromises have to be made between privacy and convenience. I may be fooling myself but I think Linux gives me at least the possibility of some measure of privacy.

|

||||||

|

|

||||||

|

_educateme_ : I use Linux because of the open-minded, learning-hungry, passionately helpful community. And, it's free.

|

||||||

|

|

||||||

|

_colinetsegers_ : Why I use Linux? There's not only one reason. In short I would say:

|

||||||

|

|

||||||

|

1. The philosophy of free shared knowledge.

|

||||||

|

2. Feeling safe while surfing the web.

|

||||||

|

3. Lots of free and useful software.

|

||||||

|

|

||||||

|

_bamunds_ : Because I love freedom.

|

||||||

|

|

||||||

|

_cecilskinner1989_ : I use linux for two reasons: stability and privacy.

|

||||||

|

|

||||||

|

### Why do you use open source software?

|

||||||

|

|

||||||

|

The second questions is, more broadly: **[What are the reasons you use open source software?][2]** You'll notice that, although there is a fair amount of overlap here, the general tone is different, with some sentiments receiving more emphasis, and others less.

|

||||||

|

|

||||||

|

### Answer highlights

|

||||||

|

|

||||||

|

_robert leleu_ : Warm and cooperative atmosphere is the main reason of my addiction to open source.

|

||||||

|

|

||||||

|

_cjturner_ : Open Source is an answer to the Pareto Principle as applied to Applications; OOTB, a software package ends up meeting 80% of your requirements, and you have to get the other 20% done. Open Source gives you a mechanism and a community to share this burden, putting your own effort (if you have the skills) or money into your high-priority requirements.

|

||||||

|

|

||||||

|

_Timothy Miller_ : I like the knowledge that I _can_ examine the source code to verify that the software is secure if I so choose.

|

||||||

|

|

||||||

|

_teckk_ : There are no burdensome licensing requirements or DRM and it's available to everyone.

|

||||||

|

|

||||||

|

_rokytnji_ : Beer money. Motorcycle parts. Grandkids birthday presents.

|

||||||

|

|

||||||

|

_timl_ : Privacy is impossible without free software

|

||||||

|

|

||||||

|

_hazel_ : I like the philosophy of free software, but I wouldn't use it just for philosophical reasons if Linux was a bad OS. I use Linux because I love Linux, and because you can get it for free as in free beer. The fact that it's also free as in free speech is a bonus, because it makes me feel good about using it. But if I find that a piece of hardware on my machine needs proprietary firmware, I'll use proprietary firmware.

|

||||||

|

|

||||||

|

_lm8_ : I use open source software because I don't have to worry about it going obsolete when a company goes out of business or decides to stop supporting it. I can continue to update and maintain the software myself. I can also customize it if the software does almost everything I want, but it would be nice to have a few more features. I also like open source because I can share my favorite programs with friend and coworkers.

|

||||||

|

|

||||||

|

_donguitar_ : Because it empowers me and enables me to empower others.

|

||||||

|

|

||||||

|

### Your turn

|

||||||

|

|

||||||

|

So, what are the reasons _**you**_ use Linux? What are the reasons _**you**_ use open source software? Let us know in the comments.

|

||||||

|

|

||||||

|

### Fill The Queue

|

||||||

|

|

||||||

|

Lastly, what questions would you like to see answered in a future article? From questions on building and maintaining communities, to what you'd like to know about contributing to an open source project, to questions more technical in nature—[submit your Linux and open source questions][5].

|

||||||

|

|

||||||

|

--------------------------------------------------------------------------------

|

||||||

|

|

||||||

|

作者简介:

|

||||||

|

|

||||||

|

Jeremy Garcia - Jeremy Garcia is the founder of LinuxQuestions.org and an ardent but realistic open source advocate. Follow Jeremy on Twitter: @linuxquestions

|

||||||

|

|

||||||

|

------------------

|

||||||

|

|

||||||

|

via: https://opensource.com/article/17/3/why-do-you-use-linux-and-open-source-software

|

||||||

|

|

||||||

|

作者:[Jeremy Garcia ][a]

|

||||||

|

译者:[译者ID](https://github.com/译者ID)

|

||||||

|

校对:[校对者ID](https://github.com/校对者ID)

|

||||||

|

|

||||||

|

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||||

|

|

||||||

|

[a]:https://opensource.com/users/jeremy-garcia

|

||||||

|

[1]:http://www.linuxquestions.org/questions/linux-general-1/what-are-the-reasons-you-use-linux-4175600842/

|

||||||

|

[2]:http://www.linuxquestions.org/questions/linux-general-1/what-are-the-reasons-you-use-open-source-software-4175600843/

|

||||||

|

[3]:https://opensource.com/article/17/3/why-do-you-use-linux-and-open-source-software?rate=lVazcbF6Oern5CpV86PgNrRNZltZ8aJZwrUp7SrZIAw

|

||||||

|

[4]:https://opensource.com/tags/queue-column

|

||||||

|

[5]:https://opensource.com/thequeue-submit-question

|

||||||

|

[6]:https://opensource.com/user/86816/feed

|

||||||

|

[7]:https://opensource.com/article/17/3/why-do-you-use-linux-and-open-source-software#comments

|

||||||

|

[8]:https://opensource.com/users/jeremy-garcia

|

||||||

@ -1,176 +0,0 @@

|

|||||||

translating by xiaow6

|

|

||||||

Your visual how-to guide for SELinux policy enforcement

|

|

||||||

============================================================

|

|

||||||

|

|

||||||

|

|

||||||

>Image by : opensource.com

|

|

||||||

|

|

||||||

We are celebrating the SELinux 10th year anversary this year. Hard to believe it. SELinux was first introduced in Fedora Core 3 and later in Red Hat Enterprise Linux 4. For those who have never used SELinux, or would like an explanation...

|

|

||||||

|

|

||||||

More Linux resources

|

|

||||||

|

|

||||||

* [What is Linux?][1]

|

|

||||||

* [What are Linux containers?][2]

|

|

||||||

* [Managing devices in Linux][3]

|

|

||||||

* [Download Now: Linux commands cheat sheet][4]

|

|

||||||

* [Our latest Linux articles][5]

|

|

||||||

|

|

||||||

SElinux is a labeling system. Every process has a label. Every file/directory object in the operating system has a label. Even network ports, devices, and potentially hostnames have labels assigned to them. We write rules to control the access of a process label to an a object label like a file. We call this _policy_ . The kernel enforces the rules. Sometimes this enforcement is called Mandatory Access Control (MAC).

|

|

||||||

|

|

||||||

The owner of an object does not have discretion over the security attributes of a object. Standard Linux access control, owner/group + permission flags like rwx, is often called Discretionary Access Control (DAC). SELinux has no concept of UID or ownership of files. Everything is controlled by the labels. Meaning an SELinux system can be setup without an all powerful root process.

|

|

||||||

|

|

||||||

**Note:** _SELinux does not let you side step DAC Controls. SELinux is a parallel enforcement model. An application has to be allowed by BOTH SELinux and DAC to do certain activities. This can lead to confusion for administrators because the process gets Permission Denied. Administrators see Permission Denied means something is wrong with DAC, not SELinux labels._

|

|

||||||

|

|

||||||

### Type enforcement

|

|

||||||

|

|

||||||

Lets look a little further into the labels. The SELinux primary model or enforcement is called _type enforcement_ . Basically this means we define the label on a process based on its type, and the label on a file system object based on its type.

|

|

||||||

|

|

||||||

_Analogy_

|

|

||||||

|

|

||||||

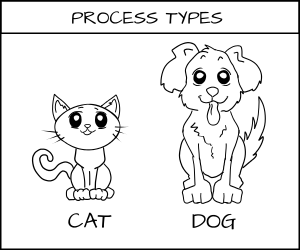

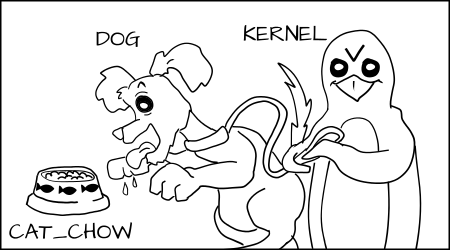

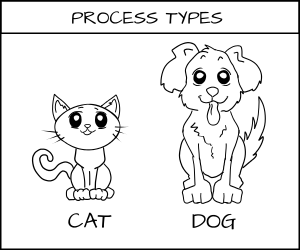

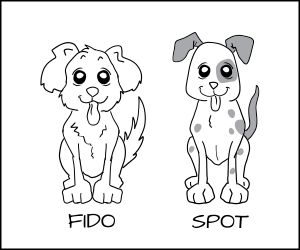

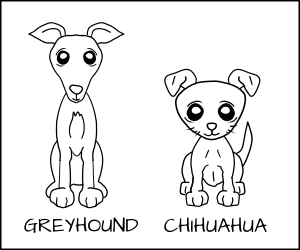

Imagine a system where we define types on objects like cats and dogs. A cat and dog are process types.

|

|

||||||

|

|

||||||

_*all cartoons by [Máirín Duffy][6]_

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

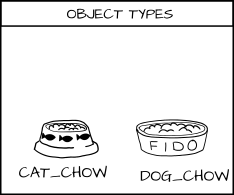

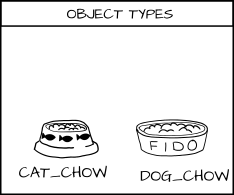

We have a class of objects that they want to interact with which we call food. And I want to add types to the food, _cat_food_ and _dog_food_ .

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

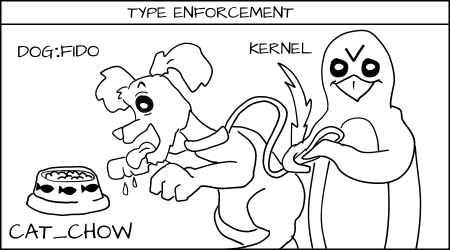

As a policy writer, I would say that a dog has permission to eat _dog_chow_ food and a cat has permission to eat _cat_chow_ food. In SELinux we would write this rule in policy.

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

allow cat cat_chow:food eat;

|

|

||||||

|

|

||||||

allow dog dog_chow:food eat;

|

|

||||||

|

|

||||||

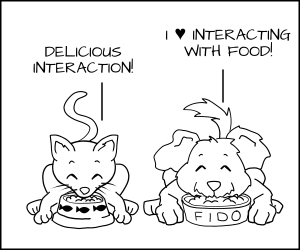

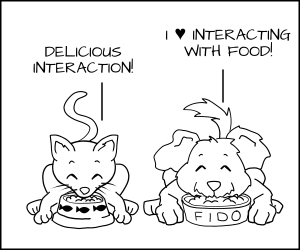

With these rules the kernel would allow the cat process to eat food labeled _cat_chow _ and the dog to eat food labeled _dog_chow_ .

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

But in an SELinux system everything is denied by default. This means that if the dog process tried to eat the _cat_chow_ , the kernel would prevent it.

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

Likewise cats would not be allowed to touch dog food.

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

_Real world_

|

|

||||||

|

|

||||||

We label Apache processes as _httpd_t_ and we label Apache content as _httpd_sys_content_t _ and _httpd_sys_content_rw_t_ . Imagine we have credit card data stored in a mySQL database which is labeled _msyqld_data_t_ . If an Apache process is hacked, the hacker could get control of the _httpd_t process_ and would be allowed to read _httpd_sys_content_t_ files and write to _httpd_sys_content_rw_t_ . But the hacker would not be allowed to read the credit card data ( _mysqld_data_t_ ) even if the process was running as root. In this case SELinux has mitigated the break in.

|

|

||||||

|

|

||||||

### MCS enforcement

|

|

||||||

|

|

||||||

_Analogy _

|

|

||||||

|

|

||||||

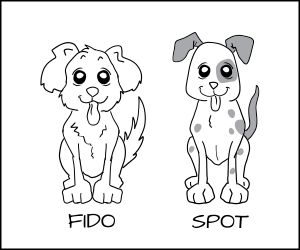

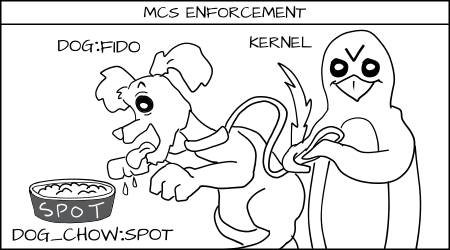

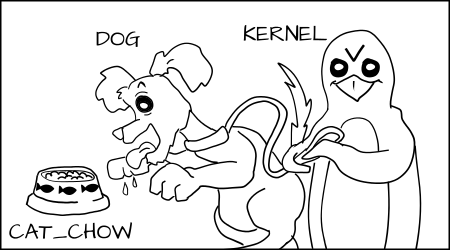

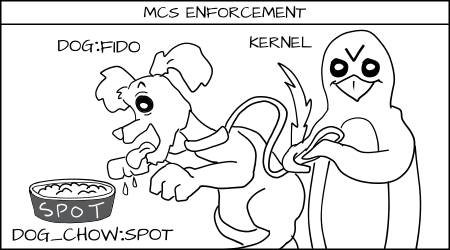

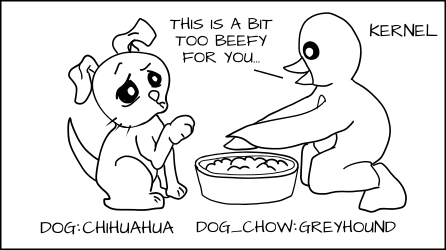

Above, we typed the dog process and cat process, but what happens if you have multiple dogs processes: Fido and Spot. You want to stop Fido from eating Spot's _dog_chow_ .

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

One solution would be to create lots of new types, like _Fido_dog_ and _Fido_dog_chow_ . But, this will quickly become unruly because all dogs have pretty much the same permissions.

|

|

||||||

|

|

||||||

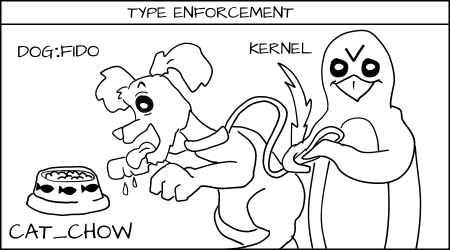

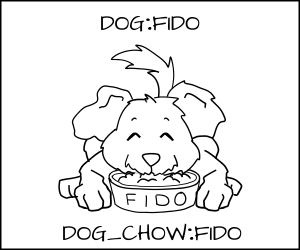

To handle this we developed a new form of enforcement, which we call Multi Category Security (MCS). In MCS, we add another section of the label which we can apply to the dog process and to the dog_chow food. Now we label the dog process as _dog:random1 _ (Fido) and _dog:random2_ (Spot).

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

We label the dog chow as _dog_chow:random1 (Fido)_ and _dog_chow:random2_ (Spot).

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

MCS rules say that if the type enforcement rules are OK and the random MCS labels match exactly, then the access is allowed, if not it is denied.

|

|

||||||

|

|

||||||

Fido (dog:random1) trying to eat _cat_chow:food_ is denied by type enforcement.

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

Fido (dog:random1) is allowed to eat _dog_chow:random1._

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

Fido (dog:random1) denied to eat spot's ( _dog_chow:random2_ ) food.

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

_Real world_

|

|

||||||

|

|

||||||

In computer systems we often have lots of processes all with the same access, but we want them separated from each other. We sometimes call this a _multi-tenant environment_ . The best example of this is virtual machines. If I have a server running lots of virtual machines, and one of them gets hacked, I want to prevent it from attacking the other virtual machines and virtual machine images. But in a type enforcement system the KVM virtual machine is labeled _svirt_t_ and the image is labeled _svirt_image_t_ . We have rules that say _svirt_t_ can read/write/delete content labeled _svirt_image_t_ . With libvirt we implemented not only type enforcement separation, but also MCS separation. When libvirt is about to launch a virtual machine it picks out a random MCS label like _s0:c1,c2_ , it then assigns the _svirt_image_t:s0:c1,c2_ label to all of the content that the virtual machine is going to need to manage. Finally, it launches the virtual machine as _svirt_t:s0:c1,c2_ . Then, the SELinux kernel controls that _svirt_t:s0:c1,c2_ can not write to _svirt_image_t:s0:c3,c4_ , even if the virtual machine is controled by a hacker and takes it over. Even if it is running as root.

|

|

||||||

|

|

||||||

We use [similar separation][8] in OpenShift. Each gear (user/app process)runs with the same SELinux type (openshift_t). Policy defines the rules controlling the access of the gear type and a unique MCS label to make sure one gear can not interact with other gears.

|

|

||||||

|

|

||||||

Watch [this short video][9] on what would happen if an Openshift gear became root.

|

|

||||||

|

|

||||||

### MLS enforcement

|

|

||||||

|

|

||||||

Another form of SELinux enforcement, used much less frequently, is called Multi Level Security (MLS); it was developed back in the 60s and is used mainly in trusted operating systems like Trusted Solaris.

|

|

||||||

|

|

||||||

The main idea is to control processes based on the level of the data they will be using. A _secret _ process can not read _top secret_ data.

|

|

||||||

|

|

||||||

MLS is very similar to MCS, except it adds a concept of dominance to enforcement. Where MCS labels have to match exactly, one MLS label can dominate another MLS label and get access.

|

|

||||||

|

|

||||||

_Analogy_

|

|

||||||

|

|

||||||

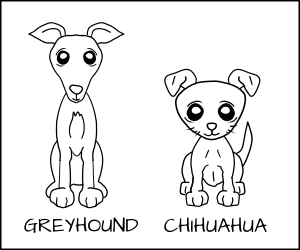

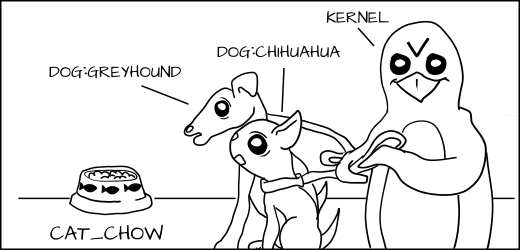

Instead of talking about different dogs, we now look at different breeds. We might have a Greyhound and a Chihuahua.

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

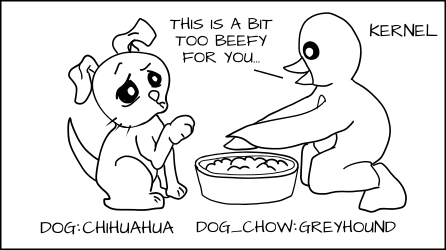

We might want to allow the Greyhound to eat any dog food, but a Chihuahua could choke if it tried to eat Greyhound dog food.

|

|

||||||

|

|

||||||

We want to label the Greyhound as _dog:Greyhound_ and his dog food as _dog_chow:Greyhound, _ and label the Chihuahua as _dog:Chihuahua_ and his food as _dog_chow:Chihuahua_ .

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

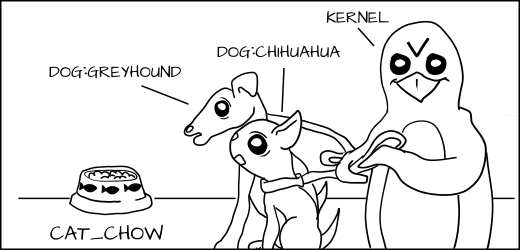

With the MLS policy, we would have the MLS Greyhound label dominate the Chihuahua label. This means _dog:Greyhound_ is allowed to eat _dog_chow:Greyhound _ and _dog_chow:Chihuahua_ .

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

But _dog:Chihuahua_ is not allowed to eat _dog_chow:Greyhound_ .

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

Of course, _dog:Greyhound_ and _dog:Chihuahua_ are still prevented from eating _cat_chow:Siamese_ by type enforcement, even if the MLS type Greyhound dominates Siamese.

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

_Real world_

|

|

||||||

|

|

||||||

I could have two Apache servers: one running as _httpd_t:TopSecret_ and another running as _httpd_t:Secret_ . If the Apache process _httpd_t:Secret_ were hacked, the hacker could read _httpd_sys_content_t:Secret_ but would be prevented from reading _httpd_sys_content_t:TopSecret_ .

|

|

||||||

|

|

||||||

However, if the Apache server running _httpd_t:TopSecret_ was hacked, it could read _httpd_sys_content_t:Secret data_ as well as _httpd_sys_content_t:TopSecret_ .

|

|

||||||

|

|

||||||

We use the MLS in military environments where a user might only be allowed to see _secret _ data, but another user on the same system could read _top secret_ data.

|

|

||||||

|

|

||||||

### Conclusion

|

|

||||||

|

|

||||||

SELinux is a powerful labeling system, controlling access granted to individual processes by the kernel. The primary feature of this is type enforcement where rules define the access allowed to a process is allowed based on the labeled type of the process and the labeled type of the object. Two additional controls have been added to separate processes with the same type from each other called MCS, total separtion from each other, and MLS, allowing for process domination.

|

|

||||||

|

|

||||||

--------------------------------------------------------------------------------

|

|

||||||

|

|

||||||

作者简介:

|

|

||||||

|

|

||||||

Daniel J Walsh - Daniel Walsh has worked in the computer security field for almost 30 years. Dan joined Red Hat in August 2001.

|

|

||||||

|

|

||||||

-------------------------

|

|

||||||

|

|

||||||

via: https://opensource.com/business/13/11/selinux-policy-guide

|

|

||||||

|

|

||||||

作者:[Daniel J Walsh ][a]

|

|

||||||

译者:[译者ID](https://github.com/译者ID)

|

|

||||||

校对:[校对者ID](https://github.com/校对者ID)

|

|

||||||

|

|

||||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

|

||||||

|

|

||||||

[a]:https://opensource.com/users/rhatdan

|

|

||||||

[1]:https://opensource.com/resources/what-is-linux?src=linux_resource_menu

|

|

||||||

[2]:https://opensource.com/resources/what-are-linux-containers?src=linux_resource_menu

|

|

||||||

[3]:https://opensource.com/article/16/11/managing-devices-linux?src=linux_resource_menu

|

|

||||||

[4]:https://developers.redhat.com/promotions/linux-cheatsheet/?intcmp=7016000000127cYAAQ

|

|

||||||

[5]:https://opensource.com/tags/linux?src=linux_resource_menu

|

|

||||||

[6]:https://opensource.com/users/mairin

|

|

||||||

[7]:https://opensource.com/business/13/11/selinux-policy-guide?rate=XNCbBUJpG2rjpCoRumnDzQw-VsLWBEh-9G2hdHyB31I

|

|

||||||

[8]:http://people.fedoraproject.org/~dwalsh/SELinux/Presentations/openshift_selinux.ogv

|

|

||||||

[9]:http://people.fedoraproject.org/~dwalsh/SELinux/Presentations/openshift_selinux.ogv

|

|

||||||

[10]:https://opensource.com/user/16673/feed

|

|

||||||

[11]:https://opensource.com/business/13/11/selinux-policy-guide#comments

|

|

||||||

[12]:https://opensource.com/users/rhatdan

|

|

||||||

@ -1,4 +1,4 @@

|

|||||||

|

[kenxx](https://github.com/kenxx)

|

||||||

|

|

||||||

[Why (most) High Level Languages are Slow][7]

|

[Why (most) High Level Languages are Slow][7]

|

||||||

============================================================

|

============================================================

|

||||||

@ -98,7 +98,7 @@ I typically blog graphics, languages, performance, and such. Feel free to hit me

|

|||||||

via: https://www.sebastiansylvan.com/post/why-most-high-level-languages-are-slow

|

via: https://www.sebastiansylvan.com/post/why-most-high-level-languages-are-slow

|

||||||

|

|

||||||

作者:[Sebastian Sylvan ][a]

|

作者:[Sebastian Sylvan ][a]

|

||||||

译者:[译者ID](https://github.com/译者ID)

|

译者:[kenxx](https://github.com/kenxx)

|

||||||

校对:[校对者ID](https://github.com/校对者ID)

|

校对:[校对者ID](https://github.com/校对者ID)

|

||||||

|

|

||||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||||

|

|||||||

@ -0,0 +1,255 @@

|

|||||||

|

5 ways to change GRUB background in Kali Linux

|

||||||

|

============================================================

|

||||||

|

|

||||||

|

|

||||||

|

This is a simple guide on how to change GRUB background in Kali Linux (i.e. it’s actually Kali Linux GRUB splash image). Kali dev team did few things that seems almost too much work, so in this article I will explain one of two things about GRUB and somewhat make this post little unnecessarily long and boring cause I like to write! So here goes …

|

||||||

|

|

||||||

|

[

|

||||||

|

|

||||||

|

][10]

|

||||||

|

|

||||||

|

### Finding GRUB settings

|

||||||

|

|

||||||

|

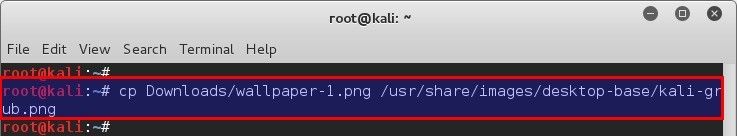

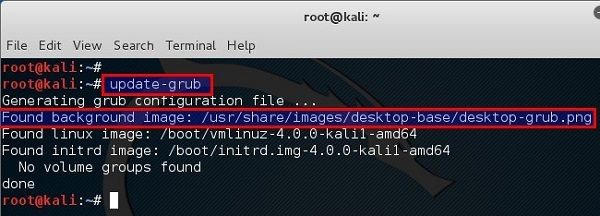

This is usually the first issue everyone faces, where do I look? There’s a many ways to find GRUB settings. Users might have their own opinion but I always found that `update-grub` is the easiest way. If you run `update-grub` in a VMWare/VirtualBox, you will see something like this:

|

||||||

|

|

||||||

|

```

|

||||||

|

root@kali:~# update-grub

|

||||||

|

Generating grub configuration file ...

|

||||||

|

Found background image: /usr/share/images/desktop-base/desktop-grub.png

|

||||||

|

Found linux image: /boot/vmlinuz-4.0.0-kali1-amd64

|

||||||

|

Found initrd image: /boot/initrd.img-4.0.0-kali1-amd64

|

||||||

|

No volume groups found

|

||||||

|

done

|

||||||

|

root@kali:~#

|

||||||

|

```

|

||||||

|

|

||||||

|

If you’re using a Dual Boot, Triple Boot then you will see GRUB goes in and finds other OS’es as well. However, the part we’re interested is the background image part, in my case this is what I see (you will see exactly the same thing):

|

||||||

|

|

||||||

|

```

|

||||||

|

Found background image: /usr/share/images/desktop-base/desktop-grub.png

|

||||||

|

```

|

||||||

|

|

||||||

|

### GRUB splash image search order

|

||||||

|

|

||||||

|

In grub-2.02, it will search for the splash image in the following order for a Debian based system:

|

||||||

|

|

||||||

|

1. GRUB_BACKGROUND line in `/etc/default/grub`

|

||||||

|

2. First image found in `/boot/grub/` ( more images found, it will be taken alphanumerically )

|

||||||

|

3. The image specified in `/usr/share/desktop-base/grub_background.sh`

|

||||||

|

4. The file listed in the WALLPAPER line in `/etc/grub.d/05_debian_theme`

|

||||||

|

|

||||||

|

Now hang onto this info and we will soon revisit it.

|

||||||

|

|

||||||

|

### Kali Linux GRUB splash image

|

||||||

|

|

||||||

|

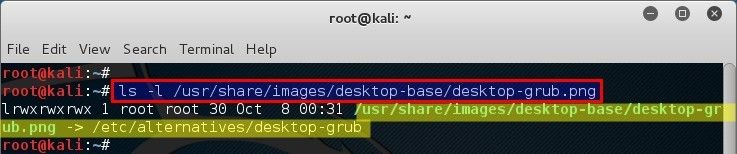

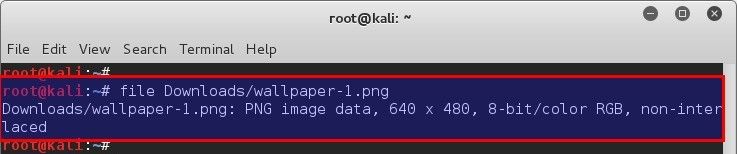

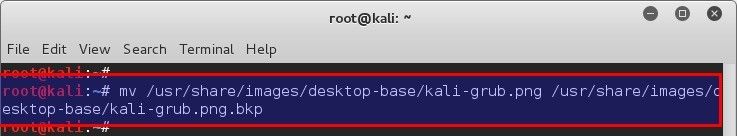

As I use Kali Linux (cause I like do stuff), we found that Kali is using a background image from here: `/usr/share/images/desktop-base/desktop-grub.png`

|

||||||

|

|

||||||

|

Just to be sure, let’s check that `.png` file and it’s properties.

|

||||||

|

|

||||||

|

```

|

||||||

|

root@kali:~#

|

||||||

|

root@kali:~# ls -l /usr/share/images/desktop-base/desktop-grub.png

|

||||||

|

lrwxrwxrwx 1 root root 30 Oct 8 00:31 /usr/share/images/desktop-base/desktop-grub.png -> /etc/alternatives/desktop-grub

|

||||||

|

root@kali:~#

|

||||||

|

```

|

||||||

|

|

||||||

|

[

|

||||||

|

|

||||||

|

][11]

|

||||||

|

|

||||||

|

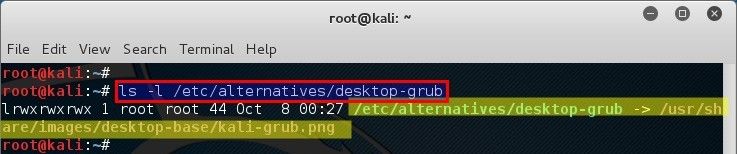

What? It’s just a symbolic link to `/etc/alternatives/desktop-grub` file? But `/etc/alternatives/desktop-grub` is not an image file. Looks like I need to check that file and it’s properties as well.

|

||||||

|

|

||||||

|

```

|

||||||

|

root@kali:~#

|

||||||

|

root@kali:~# ls -l /etc/alternatives/desktop-grub

|

||||||