mirror of

https://github.com/LCTT/TranslateProject.git

synced 2024-12-26 21:30:55 +08:00

commit

f065a1b9d4

@ -1,37 +1,39 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (robsean)

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-10850-1.html)

|

||||

[#]: subject: (Build a game framework with Python using the module Pygame)

|

||||

[#]: via: (https://opensource.com/article/17/12/game-framework-python)

|

||||

[#]: author: (Seth Kenlon https://opensource.com/users/seth)

|

||||

|

||||

使用 Python 和 Pygame 模块构建一个游戏框架

|

||||

======

|

||||

这系列的第一篇通过创建一个简单的骰子游戏来探究 Python。现在是来从零制作你自己的游戏的时间。

|

||||

|

||||

> 这系列的第一篇通过创建一个简单的骰子游戏来探究 Python。现在是来从零制作你自己的游戏的时间。

|

||||

|

||||

|

||||

|

||||

在我的 [这系列的第一篇文章][1] 中, 我已经讲解如何使用 Python 创建一个简单的,基于文本的骰子游戏。这次,我将展示如何使用 Python 和 Pygame 模块来创建一个图形化游戏。它将占用一些文章来得到一个确实完成一些东西的游戏,但是在这系列的结尾,你将有一个更好的理解,如何查找和学习新的 Python 模块和如何从其基础上构建一个应用程序。

|

||||

在我的[这系列的第一篇文章][1] 中, 我已经讲解如何使用 Python 创建一个简单的、基于文本的骰子游戏。这次,我将展示如何使用 Python 模块 Pygame 来创建一个图形化游戏。它将需要几篇文章才能来得到一个确实做成一些东西的游戏,但是到这系列的结尾,你将更好地理解如何查找和学习新的 Python 模块和如何从其基础上构建一个应用程序。

|

||||

|

||||

在开始前,你必须安装 [Pygame][2]。

|

||||

|

||||

### 安装新的 Python 模块

|

||||

|

||||

这里有一些方法来安装 Python 模块,但是最通用的两个是:

|

||||

有几种方法来安装 Python 模块,但是最通用的两个是:

|

||||

|

||||

* 从你的发行版的软件存储库

|

||||

* 使用 Python 的软件包管理器,pip

|

||||

* 使用 Python 的软件包管理器 `pip`

|

||||

|

||||

两个方法都工作很好,并且每一个都有它自己的一套优势。如果你是在 Linux 或 BSD 上开发,促使你的发行版的软件存储库确保自动及时更新。

|

||||

两个方法都工作的很好,并且每一个都有它自己的一套优势。如果你是在 Linux 或 BSD 上开发,可以利用你的发行版的软件存储库来自动和及时地更新。

|

||||

|

||||

然而,使用 Python 的内置软件包管理器给予你控制更新模块时间的能力。而且,它不是明确指定操作系统的,意味着,即使当你不是在你常用的开发机器上时,你也可以使用它。pip 的其它的优势是允许模块局部安装,如果你没有一台正在使用的计算机的权限,它是有用的。

|

||||

然而,使用 Python 的内置软件包管理器可以给予你控制更新模块时间的能力。而且,它不是特定于操作系统的,这意味着,即使当你不是在你常用的开发机器上时,你也可以使用它。`pip` 的其它的优势是允许本地安装模块,如果你没有正在使用的计算机的管理权限,这是有用的。

|

||||

|

||||

### 使用 pip

|

||||

|

||||

如果 Python 和 Python3 都安装在你的系统上,你想使用的命令很可能是 `pip3`,它区分来自Python 2.x 的 `pip` 的命令。如果你不确定,先尝试 `pip3`。

|

||||

如果 Python 和 Python3 都安装在你的系统上,你想使用的命令很可能是 `pip3`,它用来区分 Python 2.x 的 `pip` 的命令。如果你不确定,先尝试 `pip3`。

|

||||

|

||||

`pip` 命令有些像大多数 Linux 软件包管理器的工作。你可以使用 `search` 搜索 Pythin 模块,然后使用 `install` 安装它们。如果你没有你正在使用的计算机的权限来安装软件,你可以使用 `--user` 选项来仅仅安装模块到你的 home 目录。

|

||||

`pip` 命令有些像大多数 Linux 软件包管理器一样工作。你可以使用 `search` 搜索 Python 模块,然后使用 `install` 安装它们。如果你没有你正在使用的计算机的管理权限来安装软件,你可以使用 `--user` 选项来仅仅安装模块到你的家目录。

|

||||

|

||||

```

|

||||

$ pip3 search pygame

|

||||

@ -44,11 +46,11 @@ pygame_cffi (0.2.1) - A cffi-based SDL wrapper that copies the

|

||||

$ pip3 install Pygame --user

|

||||

```

|

||||

|

||||

Pygame 是一个 Python 模块,这意味着它仅仅是一套可以被使用在你的 Python 程序中库。换句话说,它不是一个你启动的程序,像 [IDLE][3] 或 [Ninja-IDE][4] 一样。

|

||||

Pygame 是一个 Python 模块,这意味着它仅仅是一套可以使用在你的 Python 程序中的库。换句话说,它不是一个像 [IDLE][3] 或 [Ninja-IDE][4] 一样可以让你启动的程序。
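Because Pygame is a library rather than a program you launch, one simple way to confirm the install worked is to ask Python whether it can find the module. The following is a minimal sketch using only the standard library; the helper name `is_installed` is hypothetical and is not part of pip or Pygame.

```python
import importlib.util


def is_installed(module_name):
    """Return True if Python can locate a top-level module by name."""
    return importlib.util.find_spec(module_name) is not None


if __name__ == "__main__":
    # 'pygame' is the import name of the package installed with pip3 above.
    print("pygame available:", is_installed("pygame"))
```

If this prints `False`, the module was probably installed for a different Python version than the one running the script.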

|

||||

|

||||

### Pygame 新手入门

|

||||

|

||||

一个电子游戏需要一个故事背景;一个发生的地点。在 Python 中,有两种不同的方法来创建你的故事背景:

|

||||

一个电子游戏需要一个背景设定:故事发生的地点。在 Python 中,有两种不同的方法来创建你的故事背景:

|

||||

|

||||

* 设置一种背景颜色

|

||||

* 设置一张背景图片

|

||||

@ -57,15 +59,15 @@ Pygame 是一个 Python 模块,这意味着它仅仅是一套可以被使用

|

||||

|

||||

### 设置你的 Pygame 脚本

|

||||

|

||||

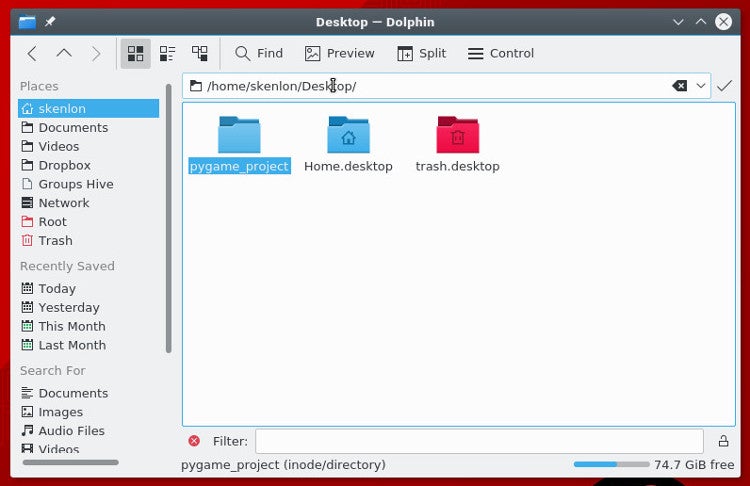

为了开始一个新的 Pygame 脚本,在计算机上创建一个文件夹。游戏的全部文件被放在这个目录中。在工程文件夹内部保持所需要的所有的文件来运行游戏是极其重要的。

|

||||

要开始一个新的 Pygame 工程,先在计算机上创建一个文件夹。游戏的全部文件被放在这个目录中。在你的工程文件夹内部保持所需要的所有的文件来运行游戏是极其重要的。

|

||||

|

||||

|

||||

|

||||

一个 Python 脚本以文件类型,你的姓名,和你想使用的协议开始。使用一个开放源码协议,以便你的朋友可以改善你的游戏并与你一起分享他们的更改:

|

||||

一个 Python 脚本以文件类型、你的姓名,和你想使用的许可证开始。使用一个开放源码许可证,以便你的朋友可以改善你的游戏并与你一起分享他们的更改:

|

||||

|

||||

```

|

||||

#!/usr/bin/env python3

|

||||

# Seth Kenlon 编写

|

||||

# by Seth Kenlon

|

||||

|

||||

## GPLv3

|

||||

# This program is free software: you can redistribute it and/or

|

||||

@ -75,14 +77,14 @@ Pygame 是一个 Python 模块,这意味着它仅仅是一套可以被使用

|

||||

#

|

||||

# This program is distributed in the hope that it will be useful, but

|

||||

# WITHOUT ANY WARRANTY; without even the implied warranty of

|

||||

# MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU

|

||||

# MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU

|

||||

# General Public License for more details.

|

||||

#

|

||||

# You should have received a copy of the GNU General Public License

|

||||

# along with this program. If not, see <http://www.gnu.org/licenses/>.

|

||||

# along with this program. If not, see <http://www.gnu.org/licenses/>.

|

||||

```

|

||||

|

||||

然后,你告诉 Python 你想使用的模块。一些模块是常见的 Python 库,当然,你想包括一个你刚刚安装的,Pygame 。

|

||||

然后,你告诉 Python 你想使用的模块。一些模块是常见的 Python 库,当然,你想包括一个你刚刚安装的 Pygame 模块。

|

||||

|

||||

```

|

||||

import pygame # 加载 pygame 关键字

|

||||

@ -90,7 +92,7 @@ import sys # 让 python 使用你的文件系统

|

||||

import os # 帮助 python 识别你的操作系统

|

||||

```

|

||||

|

||||

由于你将用这个脚本文件工作很多,在文件中制作成段落是有帮助的,以便你知道在哪里放原料。使用语句块注释来做这些,这些注释仅在看你的源文件代码时是可见的。在你的代码中创建三个语句块。

|

||||

由于你将用这个脚本文件做很多工作,在文件中分成段落是有帮助的,以便你知道在哪里放代码。你可以使用块注释来做这些,这些注释仅在看你的源文件代码时是可见的。在你的代码中创建三个块。

|

||||

|

||||

```

|

||||

'''

|

||||

@ -114,7 +116,7 @@ Main Loop

|

||||

|

||||

接下来,为你的游戏设置窗口大小。注意,不是每一个人都有大计算机屏幕,所以,最好使用一个适合大多数人的计算机的屏幕大小。

|

||||

|

||||

这里有一个方法来切换全屏模式,很多现代电子游戏做的方法,但是,由于你刚刚开始,保存它简单和仅设置一个大小。

|

||||

这里有一个方法来切换全屏模式,很多现代电子游戏都会这样做,但是,由于你刚刚开始,简单起见仅设置一个大小即可。

|

||||

|

||||

```

|

||||

'''

|

||||

@ -124,7 +126,7 @@ worldx = 960

|

||||

worldy = 720

|

||||

```

|

||||

|

||||

在一个脚本中使用 Pygame 引擎前,你需要一些基本的设置。你必需设置帧频,启动它的内部时钟,然后开始 (`init`) Pygame 。

|

||||

在脚本中使用 Pygame 引擎前,你需要一些基本的设置。你必须设置帧频,启动它的内部时钟,然后开始 (`init`)Pygame 。

|

||||

|

||||

```

|

||||

fps = 40 # 帧频

|

||||

@ -137,17 +139,15 @@ pygame.init()

|

||||

|

||||

### 设置背景

|

||||

|

||||

在你继续前,打开一个图形应用程序,并为你的游戏世界创建一个背景。在你的工程目录中的 `images` 文件夹内部保存它为 `stage.png` 。

|

||||

在你继续前,打开一个图形应用程序,为你的游戏世界创建一个背景。在你的工程目录中的 `images` 文件夹内部保存它为 `stage.png` 。

|

||||

|

||||

这里有一些你可以使用的自由图形应用程序。

|

||||

|

||||

* [Krita][5] 是一个专业级绘图原料模拟器,它可以被用于创建漂亮的图片。如果你对电子游戏创建艺术作品非常感兴趣,你甚至可以购买一系列的[游戏艺术作品教程][6].

|

||||

* [Pinta][7] 是一个基本的,易于学习的绘图应用程序。

|

||||

* [Inkscape][8] 是一个矢量图形应用程序。使用它来绘制形状,线,样条曲线,和 Bézier 曲线。

|

||||

* [Krita][5] 是一个专业级绘图素材模拟器,它可以被用于创建漂亮的图片。如果你对创建电子游戏艺术作品非常感兴趣,你甚至可以购买一系列的[游戏艺术作品教程][6]。

|

||||

* [Pinta][7] 是一个基本的,易于学习的绘图应用程序。

|

||||

* [Inkscape][8] 是一个矢量图形应用程序。使用它来绘制形状、线、样条曲线和贝塞尔曲线。

|

||||

|

||||

|

||||

|

||||

你的图像不必很复杂,你可以以后回去更改它。一旦你有它,在你文件的 setup 部分添加这些代码:

|

||||

你的图像不必很复杂,你可以以后回去更改它。一旦有了它,在你文件的 Setup 部分添加这些代码:

|

||||

|

||||

```

|

||||

world = pygame.display.set_mode([worldx,worldy])

|

||||

@ -155,13 +155,13 @@ backdrop = pygame.image.load(os.path.join('images','stage.png')).convert()

|

||||

backdropbox = world.get_rect()

|

||||

```

|

||||

|

||||

如果你仅仅用一种颜色来填充你的游戏的背景,你需要做的全部是:

|

||||

如果你仅仅用一种颜色来填充你的游戏的背景,你需要做的就是:

|

||||

|

||||

```

|

||||

world = pygame.display.set_mode([worldx,worldy])

|

||||

```

|

||||

|

||||

你也必需定义一个来使用的颜色。在你的 setup 部分,使用红,绿,蓝 (RGB) 的值来创建一些颜色的定义。

|

||||

你也必须定义颜色以使用。在你的 Setup 部分,使用红、绿、蓝 (RGB) 的值来创建一些颜色的定义。

|

||||

|

||||

```

|

||||

'''

|

||||

@ -173,13 +173,13 @@ BLACK = (23,23,23 )

|

||||

WHITE = (254,254,254)

|

||||

```
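Graphics applications usually display colors as hexadecimal strings, while Pygame takes RGB tuples like the ones defined above. The sketch below converts a tuple into its hex form so the two can be compared; `rgb_to_hex` is a hypothetical helper written for illustration, not a Pygame function.

```python
def rgb_to_hex(rgb):
    """Format an (R, G, B) tuple of 0-255 integers as '#rrggbb'."""
    for channel in rgb:
        if not 0 <= channel <= 255:
            raise ValueError("each channel must be between 0 and 255")
    return "#{:02x}{:02x}{:02x}".format(*rgb)


BLACK = (23, 23, 23)
WHITE = (254, 254, 254)

print(rgb_to_hex(BLACK))  # prints #171717
print(rgb_to_hex(WHITE))  # prints #fefefe
```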

|

||||

|

||||

在这点上,你能理论上启动你的游戏。问题是,它可能仅持续一毫秒。

|

||||

至此,你理论上可以启动你的游戏了。问题是,它可能仅持续了一毫秒。

|

||||

|

||||

为证明这一点,保存你的文件为 `your-name_game.py` (用你真实的名称替换 `your-name` )。然后启动你的游戏。

|

||||

为证明这一点,保存你的文件为 `your-name_game.py`(用你真实的名称替换 `your-name`)。然后启动你的游戏。

|

||||

|

||||

如果你正在使用 IDLE ,通过选择来自 Run 菜单的 `Run Module` 来运行你的游戏。

|

||||

如果你正在使用 IDLE,通过选择来自 “Run” 菜单的 “Run Module” 来运行你的游戏。

|

||||

|

||||

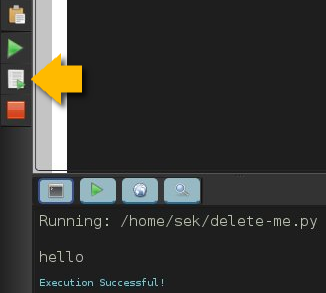

如果你正在使用 Ninja ,在左侧按钮条中单击 `Run file` 按钮。

|

||||

如果你正在使用 Ninja,在左侧按钮条中单击 “Run file” 按钮。

|

||||

|

||||

|

||||

|

||||

@ -189,27 +189,27 @@ WHITE = (254,254,254)

|

||||

$ python3 ./your-name_game.py

|

||||

```

|

||||

|

||||

如果你正在使用 Windows ,使用这命令:

|

||||

如果你正在使用 Windows,使用这命令:

|

||||

|

||||

```

|

||||

py.exe your-name_game.py

|

||||

```

|

||||

|

||||

你启动它,不过不要期望很多,因为你的游戏现在仅仅持续几毫秒。你可以在下一部分中修复它。

|

||||

启动它,不过不要期望很多,因为你的游戏现在仅仅持续几毫秒。你可以在下一部分中修复它。

|

||||

|

||||

### 循环

|

||||

|

||||

除非另有说明,一个 Python 脚本运行一次并仅一次。近来计算机的运行速度是非常快的,所以你的 Python 脚本运行时间少于1秒钟。

|

||||

除非另有说明,一个 Python 脚本运行一次并仅一次。近来计算机的运行速度是非常快的,所以你的 Python 脚本运行时间会少于 1 秒钟。

|

||||

|

||||

为强制你的游戏来处于足够长的打开和活跃状态来让人看到它(更不要说玩它),使用一个 `while` 循环。为使你的游戏保存打开,你可以设置一个变量为一些值,然后告诉一个 `while` 循环只要变量保持未更改则一直保存循环。

|

||||

|

||||

这经常被称为一个"主循环",你可以使用术语 `main` 作为你的变量。在你的 setup 部分的任意位置添加这些代码:

|

||||

这经常被称为一个“主循环”,你可以使用术语 `main` 作为你的变量。在你的 Setup 部分的任意位置添加代码:

|

||||

|

||||

```

|

||||

main = True

|

||||

```

|

||||

|

||||

在主循环期间,使用 Pygame 关键字来检查是否在键盘上的按键已经被按下或释放。添加这些代码到你的主循环部分:

|

||||

在主循环期间,使用 Pygame 关键字来检查键盘上的按键是否已经被按下或释放。添加这些代码到你的主循环部分:

|

||||

|

||||

```

|

||||

'''

|

||||

@ -228,7 +228,7 @@ while main == True:

|

||||

main = False

|

||||

```

|

||||

|

||||

也在你的循环中,刷新你世界的背景。

|

||||

也是在你的循环中,刷新你世界的背景。

|

||||

|

||||

如果你使用一个图片作为背景:

|

||||

|

||||

@ -242,33 +242,33 @@ world.blit(backdrop, backdropbox)

|

||||

world.fill(BLUE)

|

||||

```

|

||||

|

||||

最后,告诉 Pygame 来刷新在屏幕上的所有内容并推进游戏的内部时钟。

|

||||

最后,告诉 Pygame 来重新刷新屏幕上的所有内容,并推进游戏的内部时钟。

|

||||

|

||||

```

|

||||

pygame.display.flip()

|

||||

clock.tick(fps)

|

||||

```
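`clock.tick(fps)` limits how often the loop runs, so each pass through the loop gets a fixed time budget. Here is a quick sketch of that arithmetic for the `fps = 40` value set earlier; `frame_budget_ms` is a hypothetical helper used only for illustration.

```python
def frame_budget_ms(fps):
    """Return the time available per frame, in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


fps = 40
print(frame_budget_ms(fps))  # prints 25.0
```

Everything the loop does in one pass (checking events, drawing the backdrop, flipping the display) needs to fit in roughly that window for the game to hold its frame rate.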

|

||||

|

||||

保存你的文件,再次运行它来查看曾经创建的最无趣的游戏。

|

||||

保存你的文件,再次运行它来查看你曾经创建的最无趣的游戏。

|

||||

|

||||

退出游戏,在你的键盘上按 `q` 键。

|

||||

|

||||

在这系列的 [下一篇文章][9] 中,我将向你演示,如何加强你当前空的游戏世界,所以,继续学习并创建一些将要使用的图形!

|

||||

在这系列的 [下一篇文章][9] 中,我将向你演示,如何加强你当前空空如也的游戏世界,所以,继续学习并创建一些将要使用的图形!

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

通过: https://opensource.com/article/17/12/game-framework-python

|

||||

via: https://opensource.com/article/17/12/game-framework-python

|

||||

|

||||

作者:[Seth Kenlon][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[robsean](https://github.com/robsean)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/seth

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://opensource.com/article/17/10/python-101

|

||||

[1]: https://linux.cn/article-9071-1.html

|

||||

[2]: http://www.pygame.org/wiki/about

|

||||

[3]: https://en.wikipedia.org/wiki/IDLE

|

||||

[4]: http://ninja-ide.org/

|

||||

163

published/20171215 How to add a player to your Python game.md

Normal file

@ -0,0 +1,163 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (cycoe)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-10858-1.html)

|

||||

[#]: subject: (How to add a player to your Python game)

|

||||

[#]: via: (https://opensource.com/article/17/12/game-python-add-a-player)

|

||||

[#]: author: (Seth Kenlon https://opensource.com/users/seth)

|

||||

|

||||

如何在你的 Python 游戏中添加一个玩家

|

||||

======

|

||||

> 这是用 Python 从头开始构建游戏的系列文章的第三部分。

|

||||

|

||||

|

||||

|

||||

在 [这个系列的第一篇文章][1] 中,我解释了如何使用 Python 创建一个简单的基于文本的骰子游戏。在第二部分中,我向你们展示了如何从头开始构建游戏,即从 [创建游戏的环境][2] 开始。但是每个游戏都需要一名玩家,并且每个玩家都需要一个可操控的角色,这也就是我们接下来要在这个系列的第三部分中需要做的。

|

||||

|

||||

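In Pygame, the icon or avatar that a player controls is called a sprite. If you don't have any graphics to use for a player sprite yet, you can create some yourself with [Krita][3] or [Inkscape][4]. If you lack confidence in your artistic skills, you can also search [OpenClipArt.org][5] or [OpenGameArt.org][6] for ready-made images. If you did not create a separate `images` folder while following the previous article, create one now inside your Python project directory. Put every image you want to use in your game into the `images` folder.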

|

||||

|

||||

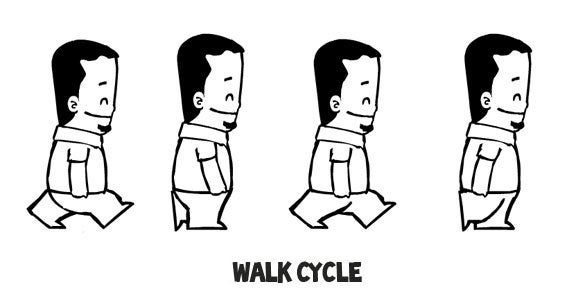

为了使你的游戏真正的刺激,你应该为你的英雄使用一张动态的妖精图片。这意味着你需要绘制更多的素材,并且它们要大不相同。最常见的动画就是走路循环,通过一系列的图像让你的妖精看起来像是在走路。走路循环最快捷粗糙的版本需要四张图像。

|

||||

|

||||

|

||||

|

||||

注意:这篇文章中的代码示例同时兼容静止的和动态的玩家妖精。

|

||||

|

||||

将你的玩家妖精命名为 `hero.png`。如果你正在创建一个动态的妖精,则需要在名字后面加上一个数字,从 `hero1.png` 开始。

|

||||

|

||||

### 创建一个 Python 类

|

||||

|

||||

在 Python 中,当你在创建一个你想要显示在屏幕上的对象时,你需要创建一个类。

|

||||

|

||||

在你的 Python 脚本靠近顶端的位置,加入如下代码来创建一个玩家。在以下的代码示例中,前三行已经在你正在处理的 Python 脚本中:

|

||||

|

||||

```

|

||||

import pygame

|

||||

import sys

|

||||

import os # 以下是新代码

|

||||

|

||||

class Player(pygame.sprite.Sprite):

|

||||

'''

|

||||

生成一个玩家

|

||||

'''

|

||||

def __init__(self):

|

||||

pygame.sprite.Sprite.__init__(self)

|

||||

self.images = []

|

||||

img = pygame.image.load(os.path.join('images','hero.png')).convert()

|

||||

self.images.append(img)

|

||||

self.image = self.images[0]

|

||||

self.rect = self.image.get_rect()

|

||||

```

|

||||

|

||||

如果你的可操控角色拥有一个走路循环,在 `images` 文件夹中将对应图片保存为 `hero1.png` 到 `hero4.png` 的独立文件。

|

||||

|

||||

使用一个循环来告诉 Python 遍历每个文件。

|

||||

|

||||

```

|

||||

'''

|

||||

对象

|

||||

'''

|

||||

|

||||

class Player(pygame.sprite.Sprite):

|

||||

'''

|

||||

生成一个玩家

|

||||

'''

|

||||

def __init__(self):

|

||||

pygame.sprite.Sprite.__init__(self)

|

||||

self.images = []

|

||||

for i in range(1,5):

|

||||

img = pygame.image.load(os.path.join('images','hero' + str(i) + '.png')).convert()

|

||||

self.images.append(img)

|

||||

self.image = self.images[0]

|

||||

self.rect = self.image.get_rect()

|

||||

```
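To see exactly which files the `for` loop above asks Pygame to load, you can build the same paths without loading anything. This sketch assumes the four-frame walk cycle named `hero1.png` through `hero4.png`; `walk_cycle_paths` is a hypothetical helper, not part of the article's game code.

```python
import os


def walk_cycle_paths(frames=4, folder="images", prefix="hero"):
    """Build the image paths for a walk cycle, numbered from 1."""
    return [
        os.path.join(folder, prefix + str(i) + ".png")
        for i in range(1, frames + 1)  # range(1, 5) yields 1, 2, 3, 4
    ]


for path in walk_cycle_paths():
    # Report whether each expected frame is actually on disk.
    print(path, "(found)" if os.path.exists(path) else "(missing)")
```

Running this from the project directory before starting the game is a cheap way to catch a misnamed frame.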

|

||||

|

||||

### 将玩家带入游戏世界

|

||||

|

||||

现在已经创建好了一个 Player 类,你需要使用它在你的游戏世界中生成一个玩家妖精。如果你不调用 Player 类,那它永远不会起作用,(游戏世界中)也就不会有玩家。你可以通过立马运行你的游戏来验证一下。游戏会像上一篇文章末尾看到的那样运行,并得到明确的结果:一个空荡荡的游戏世界。

|

||||

|

||||

为了将一个玩家妖精带到你的游戏世界,你必须通过调用 Player 类来生成一个妖精,并将它加入到 Pygame 的妖精组中。在如下的代码示例中,前三行是已经存在的代码,你需要在其后添加代码:

|

||||

|

||||

```

|

||||

world = pygame.display.set_mode([worldx,worldy])

|

||||

backdrop = pygame.image.load(os.path.join('images','stage.png')).convert()

|

||||

backdropbox = world.get_rect()

|

||||

|

||||

# 以下是新代码

|

||||

|

||||

player = Player() # 生成玩家

|

||||

player.rect.x = 0 # 移动 x 坐标

|

||||

player.rect.y = 0 # 移动 y 坐标

|

||||

player_list = pygame.sprite.Group()

|

||||

player_list.add(player)

|

||||

```

|

||||

|

||||

尝试启动你的游戏来看看发生了什么。高能预警:它不会像你预期的那样工作,当你启动你的项目,玩家妖精没有出现。事实上它生成了,只不过只出现了一毫秒。你要如何修复一个只出现了一毫秒的东西呢?你可能回想起上一篇文章中,你需要在主循环中添加一些东西。为了使玩家的存在时间超过一毫秒,你需要告诉 Python 在每次循环中都绘制一次。

|

||||

|

||||

将你的循环底部的语句更改如下:

|

||||

|

||||

```

|

||||

world.blit(backdrop, backdropbox)

|

||||

player_list.draw(world) # draw the player

|

||||

pygame.display.flip()

|

||||

clock.tick(fps)

|

||||

```

|

||||

|

||||

现在启动你的游戏,你的玩家出现了!

|

||||

|

||||

### 设置 alpha 通道

|

||||

|

||||

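Depending on how you created your player sprite, it may have a colored block around it. What you are seeing is the space that an alpha channel ought to occupy. It is meant to be the "color" of invisibility, but Python does not yet know to make it invisible. What you see, then, is the space inside the bounding box (or "hit box", in modern gaming terms) around the sprite.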

|

||||

|

||||

|

||||

|

||||

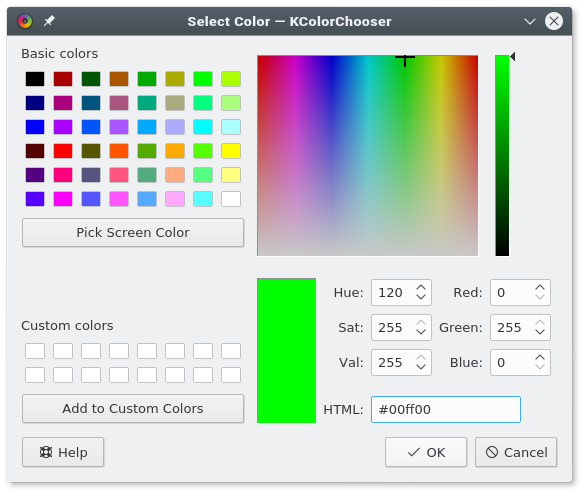

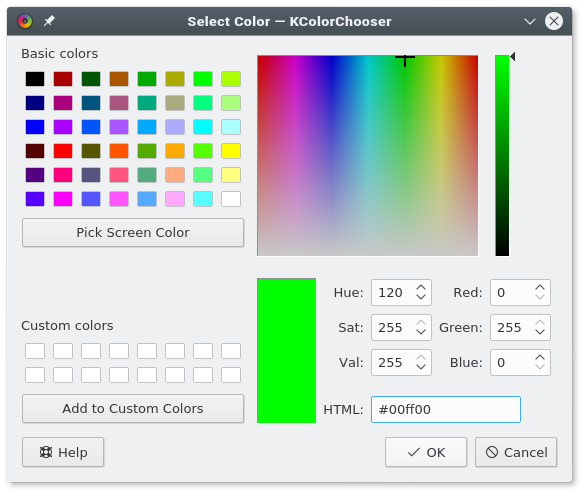

你可以通过设置一个 alpha 通道和 RGB 值来告诉 Python 使哪种颜色不可见。如果你不知道你使用 alpha 通道的图像的 RGB 值,你可以使用 Krita 或 Inkscape 打开它,并使用一种独特的颜色,比如 `#00ff00`(差不多是“绿屏绿”)来填充图像周围的空白区域。记下颜色对应的十六进制值(此处为 `#00ff00`,绿屏绿)并将其作为 alpha 通道用于你的 Python 脚本。

|

||||

|

||||

使用 alpha 通道需要在你的妖精生成相关代码中添加如下两行。类似第一行的代码已经存在于你的脚本中,你只需要添加另外两行:

|

||||

|

||||

```

|

||||

img = pygame.image.load(os.path.join('images','hero' + str(i) + '.png')).convert()

|

||||

img.convert_alpha() # 优化 alpha

|

||||

img.set_colorkey(ALPHA) # 设置 alpha

|

||||

```

|

||||

|

||||

除非你告诉它,否则 Python 不知道将哪种颜色作为 alpha 通道。在你代码的设置相关区域,添加一些颜色定义。将如下的变量定义添加于你的设置相关区域的任意位置:

|

||||

|

||||

```

|

||||

ALPHA = (0, 255, 0)

|

||||

```

|

||||

|

||||

在以上示例代码中,`0,255,0` 被我们使用,它在 RGB 中所代表的值与 `#00ff00` 在十六进制中所代表的值相同。你可以通过一个优秀的图像应用程序,如 [GIMP][7]、Krita 或 Inkscape,来获取所有这些颜色值。或者,你可以使用一个优秀的系统级颜色选择器,如 [KColorChooser][8],来检测颜色。
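The relationship between `#00ff00` and `0,255,0` is a plain base-16 conversion, two hex digits per channel. A small sketch of that conversion follows; `hex_to_rgb` is a hypothetical helper for illustration, not something Pygame provides under that name.

```python
def hex_to_rgb(hex_color):
    """Convert a '#rrggbb' string to an (R, G, B) tuple of integers."""
    value = hex_color.lstrip("#")
    if len(value) != 6:
        raise ValueError("expected a color in the form #rrggbb")
    # Read two hex digits at a time: red, then green, then blue.
    return tuple(int(value[i:i + 2], 16) for i in (0, 2, 4))


ALPHA = hex_to_rgb("#00ff00")
print(ALPHA)  # prints (0, 255, 0)
```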

|

||||

|

||||

|

||||

|

||||

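If your graphics application renders your sprite's background as some other value, adjust the value of your `ALPHA` variable as needed. Whatever value you choose for alpha, that color will be made "invisible". RGB values are matched exactly, so if you need 000 for alpha but also want 000 for the black lines in your drawing, just change the lines in your drawing to 111. That is close enough to black that nobody but a computer can tell the difference.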

|

||||

|

||||

运行你的游戏查看结果。

|

||||

|

||||

|

||||

|

||||

在 [这个系列的第四篇文章][9] 中,我会向你们展示如何使你的妖精动起来。多么的激动人心啊!

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/17/12/game-python-add-a-player

|

||||

|

||||

作者:[Seth Kenlon][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[cycoe](https://github.com/cycoe)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/seth

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://linux.cn/article-9071-1.html

|

||||

[2]: https://linux.cn/article-10850-1.html

|

||||

[3]: http://krita.org

|

||||

[4]: http://inkscape.org

|

||||

[5]: http://openclipart.org

|

||||

[6]: https://opengameart.org/

|

||||

[7]: http://gimp.org

|

||||

[8]: https://github.com/KDE/kcolorchooser

|

||||

[9]: https://opensource.com/article/17/12/program-game-python-part-4-moving-your-sprite

|

||||

@ -1,21 +1,22 @@

|

||||

没有恶棍,英雄又将如何?如何向你的 Python 游戏中添加一个敌人

|

||||

如何向你的 Python 游戏中添加一个敌人

|

||||

======

|

||||

|

||||

> 在本系列的第五部分,学习如何增加一个坏蛋与你的好人战斗。

|

||||

|

||||

|

||||

|

||||

在本系列的前几篇文章中(参见 [第一部分][1]、[第二部分][2]、[第三部分][3] 以及 [第四部分][4]),你已经学习了如何使用 Pygame 和 Python 在一个空白的视频游戏世界中生成一个可玩的角色。但没有恶棍,英雄又将如何?

|

||||

|

||||

如果你没有敌人,那将会是一个非常无聊的游戏。所以在此篇文章中,你将为你的游戏添加一个敌人并构建一个用于创建关卡的框架。

|

||||

|

||||

在对玩家妖精实现全部功能仍有许多事情可做之前,跳向敌人似乎就很奇怪。但你已经学到了很多东西,创造恶棍与与创造玩家妖精非常相似。所以放轻松,使用你已经掌握的知识,看看能挑起怎样一些麻烦。

|

||||

在对玩家妖精实现全部功能之前,就来实现一个敌人似乎就很奇怪。但你已经学到了很多东西,创造恶棍与与创造玩家妖精非常相似。所以放轻松,使用你已经掌握的知识,看看能挑起怎样一些麻烦。

|

||||

|

||||

针对本次训练,你能够从 [Open Game Art][5] 下载一些预创建的素材。此处是我使用的一些素材:

|

||||

|

||||

|

||||

+ 印加花砖(译注:游戏中使用的花砖贴图)

|

||||

+ 印加花砖(LCTT 译注:游戏中使用的花砖贴图)

|

||||

+ 一些侵略者

|

||||

+ 妖精、角色、物体以及特效

|

||||

|

||||

|

||||

### 创造敌方妖精

|

||||

|

||||

是的,不管你意识到与否,你其实已经知道如何去实现敌人。这个过程与创造一个玩家妖精非常相似:

|

||||

@ -24,40 +25,27 @@

|

||||

2. 创建 `update` 方法使得敌人能够检测碰撞

|

||||

3. 创建 `move` 方法使得敌人能够四处游荡

|

||||

|

||||

|

||||

|

||||

从类入手。从概念上看,它与你的 Player 类大体相同。你设置一张或者一组图片,然后设置妖精的初始位置。

|

||||

从类入手。从概念上看,它与你的 `Player` 类大体相同。你设置一张或者一组图片,然后设置妖精的初始位置。

|

||||

|

||||

在继续下一步之前,确保你有一张你的敌人的图像,即使只是一张临时图像。将图像放在你的游戏项目的 `images` 目录(你放置你的玩家图像的相同目录)。

|

||||

|

||||

如果所有的活物都拥有动画,那么游戏看起来会好得多。为敌方妖精设置动画与为玩家妖精设置动画具有相同的方式。但现在,为了保持简单,我们使用一个没有动画的妖精。

|

||||

|

||||

在你代码 `objects` 节的顶部,使用以下代码创建一个叫做 `Enemy` 的类:

|

||||

|

||||

```

|

||||

class Enemy(pygame.sprite.Sprite):

|

||||

|

||||

'''

|

||||

|

||||

生成一个敌人

|

||||

|

||||

'''

|

||||

|

||||

def __init__(self,x,y,img):

|

||||

|

||||

pygame.sprite.Sprite.__init__(self)

|

||||

|

||||

self.image = pygame.image.load(os.path.join('images',img))

|

||||

|

||||

self.image.convert_alpha()

|

||||

|

||||

self.image.set_colorkey(ALPHA)

|

||||

|

||||

self.rect = self.image.get_rect()

|

||||

|

||||

self.rect.x = x

|

||||

|

||||

self.rect.y = y

|

||||

|

||||

```

|

||||

|

||||

如果你想让你的敌人动起来,使用让你的玩家拥有动画的 [相同方式][4]。

|

||||

@ -67,25 +55,21 @@ class Enemy(pygame.sprite.Sprite):

|

||||

你能够通过告诉类,妖精应使用哪张图像,应出现在世界上的什么地方,来生成不只一个敌人。这意味着,你能够使用相同的敌人类,在游戏世界的任意地方生成任意数量的敌方妖精。你需要做的仅仅是调用这个类,并告诉它应使用哪张图像,以及你期望生成点的 X 和 Y 坐标。

|

||||

|

||||

再次,这从原则上与生成一个玩家精灵相似。在你脚本的 `setup` 节添加如下代码:

|

||||

|

||||

```

|

||||

enemy = Enemy(20,200,'yeti.png') # 生成敌人

|

||||

|

||||

enemy_list = pygame.sprite.Group() # 创建敌人组

|

||||

|

||||

enemy_list.add(enemy) # 将敌人加入敌人组

|

||||

|

||||

```

|

||||

|

||||

在示例代码中,X 坐标为 20,Y 坐标为 200。你可能需要根据你的敌方妖精的大小,来调整这些数字,但尽量生成在一个地方,使得你的玩家妖精能够到它。`Yeti.png` 是用于敌人的图像。

|

||||

在示例代码中,X 坐标为 20,Y 坐标为 200。你可能需要根据你的敌方妖精的大小,来调整这些数字,但尽量生成在一个范围内,使得你的玩家妖精能够碰到它。`Yeti.png` 是用于敌人的图像。

|

||||

|

||||

接下来,将敌人组的所有敌人绘制在屏幕上。现在,你只有一个敌人,如果你想要更多你可以稍后添加。一但你将一个敌人加入敌人组,它就会在主循环中被绘制在屏幕上。中间这一行是你需要添加的新行:

|

||||

|

||||

```

|

||||

player_list.draw(world)

|

||||

|

||||

enemy_list.draw(world) # 刷新敌人

|

||||

|

||||

pygame.display.flip()

|

||||

|

||||

```

|

||||

|

||||

启动你的游戏,你的敌人会出现在游戏世界中你选择的 X 和 Y 坐标处。

|

||||

@ -96,42 +80,31 @@ enemy_list.add(enemy) # 将敌人加入敌人组

|

||||

|

||||

思考一下“关卡”是什么。你如何知道你是在游戏中的一个特定关卡中呢?

|

||||

|

||||

你可以把关卡想成一系列项目的集合。就像你刚刚创建的这个平台中,一个关卡,包含了平台、敌人放置、赃物等的一个特定排列。你可以创建一个类,用来在你的玩家附近创建关卡。最终,当你创建了超过一个关卡,你就可以在你的玩家达到特定目标时,使用这个类生成下一个关卡。

|

||||

你可以把关卡想成一系列项目的集合。就像你刚刚创建的这个平台中,一个关卡,包含了平台、敌人放置、战利品等的一个特定排列。你可以创建一个类,用来在你的玩家附近创建关卡。最终,当你创建了一个以上的关卡,你就可以在你的玩家达到特定目标时,使用这个类生成下一个关卡。

|

||||

|

||||

将你写的用于生成敌人及其群组的代码,移动到一个每次生成新关卡时都会被调用的新函数中。你需要做一些修改,使得每次你创建新关卡时,你都能够创建一些敌人。

|

||||

|

||||

```

|

||||

class Level():

|

||||

|

||||

def bad(lvl,eloc):

|

||||

|

||||

if lvl == 1:

|

||||

|

||||

enemy = Enemy(eloc[0],eloc[1],'yeti.png') # 生成敌人

|

||||

|

||||

enemy_list = pygame.sprite.Group() # 生成敌人组

|

||||

|

||||

enemy_list.add(enemy) # 将敌人加入敌人组

|

||||

|

||||

if lvl == 2:

|

||||

|

||||

print("Level " + str(lvl) )

|

||||

|

||||

|

||||

|

||||

return enemy_list

|

||||

|

||||

```

|

||||

|

||||

`return` 语句确保了当你调用 `Level.bad` 方法时,你将会得到一个 `enemy_list` 变量包含了所有你定义的敌人。
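One thing to watch in the `Level.bad` example: if the `enemy_list` lines sit inside the `if lvl == 1:` branch, as their order suggests, then calling it with any other level reaches `return enemy_list` before that name exists. A data-driven variant is sketched below in plain Python, with tuples standing in for sprites so that it runs without Pygame. `LEVEL_ENEMIES` and `enemies_for_level` are hypothetical names, and the level 2 entry is deliberately left empty.

```python
# Spawn table: level number -> list of (x, y, image file) tuples.
# The level 1 values mirror the example above; level 2 is a placeholder.
LEVEL_ENEMIES = {
    1: [(200, 20, "yeti.png")],
    2: [],
}


def enemies_for_level(lvl):
    """Return the spawn list for a level, or an empty list if none is defined."""
    return list(LEVEL_ENEMIES.get(lvl, []))


print(enemies_for_level(1))  # prints [(200, 20, 'yeti.png')]
print(enemies_for_level(3))  # prints []
```

In the game itself, each tuple would be passed to `Enemy(x, y, img)` and the resulting sprite added to a `pygame.sprite.Group`, so the function always has a group to return.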

|

||||

|

||||

因为你现在将创造敌人作为每个关卡的一部分,你的 `setup` 部分也需要做些更改。不同于创造一个敌人,取而代之的是你必须去定义敌人在那里生成,以及敌人属于哪个关卡。

|

||||

|

||||

```

|

||||

eloc = []

|

||||

|

||||

eloc = [200,20]

|

||||

|

||||

enemy_list = Level.bad( 1, eloc )

|

||||

|

||||

```

|

||||

|

||||

再次运行游戏来确认你的关卡生成正确。与往常一样,你应该会看到你的玩家,并且能看到你在本章节中添加的敌人。

|

||||

@ -140,31 +113,27 @@ enemy_list = Level.bad( 1, eloc )

|

||||

|

||||

一个敌人如果对玩家没有效果,那么它不太算得上是一个敌人。当玩家与敌人发生碰撞时,他们通常会对玩家造成伤害。

|

||||

|

||||

因为你可能想要去跟踪玩家的生命值,因此碰撞检测发生在 Player 类,而不是 Enemy 类中。当然如果你想,你也可以跟踪敌人的生命值。它们之间的逻辑与代码大体相似,现在,我们只需要跟踪玩家的生命值。

|

||||

因为你可能想要去跟踪玩家的生命值,因此碰撞检测发生在 `Player` 类,而不是 `Enemy` 类中。当然如果你想,你也可以跟踪敌人的生命值。它们之间的逻辑与代码大体相似,现在,我们只需要跟踪玩家的生命值。

|

||||

|

||||

为了跟踪玩家的生命值,你必须为它确定一个变量。代码示例中的第一行是上下文提示,那么将第二行代码添加到你的 Player 类中:

|

||||

|

||||

```

|

||||

self.frame = 0

|

||||

|

||||

self.health = 10

|

||||

|

||||

```

|

||||

|

||||

在你 Player 类的 `update` 方法中,添加如下代码块:

|

||||

在你 `Player` 类的 `update` 方法中,添加如下代码块:

|

||||

|

||||

```

|

||||

hit_list = pygame.sprite.spritecollide(self, enemy_list, False)

|

||||

|

||||

for enemy in hit_list:

|

||||

|

||||

self.health -= 1

|

||||

|

||||

print(self.health)

|

||||

|

||||

```

|

||||

|

||||

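This code uses Pygame's `sprite.spritecollide` function to build a list, called `hit_list`, of every sprite in `enemy_list` whose hit box touches the hit box of the player sprite doing the check. The `for` loop then runs once for each enemy in that list, deducting one point of player health each time.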

|

||||

|

||||

一旦这段代码出现在你 Player 类的 `update` 方法,并且 `update` 方法在你的主循环中被调用,Pygame 会在每个时钟 tick 检测一次碰撞。

|

||||

一旦这段代码出现在你 `Player` 类的 `update` 方法,并且 `update` 方法在你的主循环中被调用,Pygame 会在每个时钟滴答中检测一次碰撞。
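By default, `pygame.sprite.spritecollide` decides a hit by checking whether the sprites' `rect` rectangles overlap. The idea can be sketched in plain Python with `(x, y, width, height)` tuples; `rects_overlap` is a hypothetical helper written for illustration and is not Pygame's own implementation.

```python
def rects_overlap(a, b):
    """Return True if two (x, y, width, height) rectangles overlap."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    # Overlap requires the rectangles to intersect on both axes.
    return ax < bx + bw and bx < ax + aw and ay < by + bh and by < ay + ah


player = (0, 0, 64, 64)
enemy_near = (50, 50, 64, 64)
enemy_far = (200, 20, 64, 64)

print(rects_overlap(player, enemy_near))  # prints True
print(rects_overlap(player, enemy_far))   # prints False
```

Because the check runs on every tick, a player who stays in contact with an enemy keeps losing health for as long as the rectangles overlap.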

|

||||

|

||||

### 移动敌人

|

||||

|

||||

@ -176,60 +145,41 @@ enemy_list = Level.bad( 1, eloc )

|

||||

|

||||

举个例子,你告诉你的敌方妖精向右移动 10 步,向左移动 10 步。但敌方妖精不会计数,因此你需要创建一个变量来跟踪你的敌人已经移动了多少步,并根据计数变量的值来向左或向右移动你的敌人。

|

||||

|

||||

首先,在你的 Enemy 类中创建计数变量。添加以下代码示例中的最后一行代码:

|

||||

首先,在你的 `Enemy` 类中创建计数变量。添加以下代码示例中的最后一行代码:

|

||||

|

||||

```

|

||||

self.rect = self.image.get_rect()

|

||||

|

||||

self.rect.x = x

|

||||

|

||||

self.rect.y = y

|

||||

|

||||

self.counter = 0 # 计数变量

|

||||

|

||||

```

|

||||

|

||||

然后,在你的 Enemy 类中创建一个 `move` 方法。使用 if-else 循环来创建一个所谓的死循环:

|

||||

Next, create a `move` method in your `Enemy` class. Use an if-else statement to create what is effectively an infinite loop:

|

||||

|

||||

* 如果计数在 0 到 100 之间,向右移动;

|

||||

* 如果计数在 100 到 200 之间,向左移动;

|

||||

* 如果计数大于 200,则将计数重置为 0。

|

||||

|

||||

|

||||

|

||||

死循环没有终点,因为循环判断条件永远为真,所以它将永远循环下去。在此情况下,计数器总是介于 0 到 100 或 100 到 200 之间,因此敌人会永远地从左向右再从右向左移动。

|

||||

|

||||

你用于敌人在每个方向上移动距离的具体值,取决于你的屏幕尺寸,更确切地说,取决于你的敌人移动的平台大小。从较小的值开始,依据习惯逐步提高数值。首先进行如下尝试:

|

||||

|

||||

```

|

||||

def move(self):

|

||||

|

||||

'''

|

||||

|

||||

敌人移动

|

||||

|

||||

'''

|

||||

|

||||

distance = 80

|

||||

|

||||

speed = 8

|

||||

|

||||

|

||||

|

||||

if self.counter >= 0 and self.counter <= distance:

|

||||

|

||||

self.rect.x += speed

|

||||

|

||||

elif self.counter >= distance and self.counter <= distance*2:

|

||||

|

||||

self.rect.x -= speed

|

||||

|

||||

else:

|

||||

|

||||

self.counter = 0

|

||||

|

||||

|

||||

|

||||

self.counter += 1

|

||||

|

||||

```

|

||||

|

||||

你可以根据需要调整距离和速度。
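To get a feel for how `distance` and `speed` interact before running the game, the counter logic of `move` can be replayed in plain Python. This is a sketch that copies the same comparisons into a standalone function; `patrol_positions` is a hypothetical name, and the small values are chosen only to keep the output short.

```python
def patrol_positions(steps, distance, speed, start_x=0):
    """Replay the counter logic from move() and record x after each call."""
    x = start_x
    counter = 0
    positions = []
    for _ in range(steps):
        if 0 <= counter <= distance:
            x += speed          # first leg: move right
        elif distance <= counter <= distance * 2:
            x -= speed          # second leg: move left
        else:
            counter = 0         # past both legs: start over
        counter += 1
        positions.append(x)
    return positions


print(patrol_positions(steps=6, distance=2, speed=1))  # prints [1, 2, 3, 2, 1, 1]
```

The enemy walks right, walks back, then holds still for one tick while the counter resets. It ends the first pass one step to the right of where it started, because the first leg also counts the counter value 0.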

|

||||

@ -237,13 +187,11 @@ enemy_list = Level.bad( 1, eloc )

|

||||

当你现在启动游戏,这段代码有效果吗?

|

||||

|

||||

当然不,你应该也知道原因。你必须在主循环中调用 `move` 方法。如下示例代码中的第一行是上下文提示,那么添加最后两行代码:

|

||||

|

||||

```

|

||||

enemy_list.draw(world) #refresh enemy

|

||||

|

||||

for e in enemy_list:

|

||||

|

||||

e.move()

|

||||

|

||||

```

|

||||

|

||||

启动你的游戏看看当你打击敌人时发生了什么。你可能需要调整妖精的生成地点,使得你的玩家和敌人能够碰撞。当他们发生碰撞时,查看 [IDLE][6] 或 [Ninja-IDE][7] 的控制台,你可以看到生命值正在被扣除。

|

||||

@ -261,15 +209,15 @@ via: https://opensource.com/article/18/5/pygame-enemy

|

||||

作者:[Seth Kenlon][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[cycoe](https://github.com/cycoe)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/seth

|

||||

[1]:https://opensource.com/article/17/10/python-101

|

||||

[2]:https://opensource.com/article/17/12/game-framework-python

|

||||

[3]:https://opensource.com/article/17/12/game-python-add-a-player

|

||||

[4]:https://opensource.com/article/17/12/game-python-moving-player

|

||||

[1]:https://linux.cn/article-9071-1.html

|

||||

[2]:https://linux.cn/article-10850-1.html

|

||||

[3]:https://linux.cn/article-10858-1.html

|

||||

[4]:https://linux.cn/article-10874-1.html

|

||||

[5]:https://opengameart.org

|

||||

[6]:https://docs.python.org/3/library/idle.html

|

||||

[7]:http://ninja-ide.org/

|

||||

@ -0,0 +1,596 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (wxy)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-10848-1.html)

|

||||

[#]: subject: (TLP – An Advanced Power Management Tool That Improve Battery Life On Linux Laptop)

|

||||

[#]: via: (https://www.2daygeek.com/tlp-increase-optimize-linux-laptop-battery-life/)

|

||||

[#]: author: (Magesh Maruthamuthu https://www.2daygeek.com/author/magesh/)

|

||||

|

||||

TLP:一个可以延长 Linux 笔记本电池寿命的高级电源管理工具

|

||||

======

|

||||

|

||||

|

||||

|

||||

笔记本电池是针对 Windows 操作系统进行了高度优化的,当我在笔记本电脑中使用 Windows 操作系统时,我已经意识到这一点,但对于 Linux 来说却不一样。

|

||||

|

||||

多年来,Linux 在电池优化方面取得了很大进步,但我们仍然需要做一些必要的事情来改善 Linux 中笔记本电脑的电池寿命。

|

||||

|

||||

当我考虑延长电池寿命时,我没有多少选择,但我觉得 TLP 对我来说是一个更好的解决方案,所以我会继续使用它。

|

||||

|

||||

在本教程中,我们将详细讨论 TLP 以延长电池寿命。

|

||||

|

||||

我们之前在我们的网站上写过三篇关于 Linux [笔记本电池节电工具][1] 的文章:[PowerTOP][2] 和 [电池充电状态][3]。

|

||||

|

||||

### TLP

|

||||

|

||||

[TLP][4] 是一款自由开源的高级电源管理工具,可在不进行任何配置更改的情况下延长电池寿命。

|

||||

|

||||

由于它的默认配置已针对电池寿命进行了优化,因此你可能只需要安装,然后就忘记它吧。

|

||||

|

||||

此外,它可以高度定制化,以满足你的特定要求。TLP 是一个具有自动后台任务的纯命令行工具。它不包含GUI。

|

||||

|

||||

TLP 适用于各种品牌的笔记本电脑。设置电池充电阈值仅适用于 IBM/Lenovo ThinkPad。

|

||||

|

||||

所有 TLP 设置都存储在 `/etc/default/tlp` 中。其默认配置提供了开箱即用的优化的节能设置。

|

||||

|

||||

以下 TLP 设置可用于自定义,如果需要,你可以相应地进行必要的更改。

|

||||

|

||||

### TLP 功能

|

||||

|

||||

* 内核笔记本电脑模式和脏缓冲区超时

|

||||

* 处理器频率调整,包括 “turbo boost”/“turbo core”

|

||||

* 限制最大/最小的 P 状态以控制 CPU 的功耗

|

||||

* HWP 能源性能提示

|

||||

* 用于多核/超线程的功率感知进程调度程序

|

||||

* 处理器性能与节能策略(`x86_energy_perf_policy`)

|

||||

* 硬盘高级电源管理级别(APM)和降速超时(按磁盘)

|

||||

* AHCI 链路电源管理(ALPM)与设备黑名单

|

||||

* PCIe 活动状态电源管理(PCIe ASPM)

|

||||

* PCI(e) 总线设备的运行时电源管理

|

||||

* Radeon 图形电源管理(KMS 和 DPM)

|

||||

* Wifi 省电模式

|

||||

* 关闭驱动器托架中的光盘驱动器

|

||||

* 音频省电模式

|

||||

* I/O 调度程序(按磁盘)

|

||||

* USB 自动暂停,支持设备黑名单/白名单(输入设备自动排除)

|

||||

* 在系统启动和关闭时启用或禁用集成的 wifi、蓝牙或 wwan 设备

|

||||

* 在系统启动时恢复无线电设备状态(从之前的关机时的状态)

|

||||

* 无线电设备向导:在网络连接/断开和停靠/取消停靠时切换无线电

|

||||

* 禁用 LAN 唤醒

|

||||

* 挂起/休眠后恢复集成的 WWAN 和蓝牙状态

|

||||

* 英特尔处理器的动态电源降低 —— 需要内核和 PHC-Patch 支持

|

||||

* 电池充电阈值 —— 仅限 ThinkPad

|

||||

* 重新校准电池 —— 仅限 ThinkPad

|

||||

|

||||

### 如何在 Linux 上安装 TLP

|

||||

|

||||

TLP 包在大多数发行版官方存储库中都可用,因此,使用发行版的 [包管理器][5] 来安装它。

|

||||

|

||||

对于 Fedora 系统,使用 [DNF 命令][6] 安装 TLP。

|

||||

|

||||

```

|

||||

$ sudo dnf install tlp tlp-rdw

|

||||

```

|

||||

|

||||

ThinkPad 需要一些附加软件包。

|

||||

|

||||

```

|

||||

$ sudo dnf install https://download1.rpmfusion.org/free/fedora/rpmfusion-free-release-$(rpm -E %fedora).noarch.rpm

|

||||

$ sudo dnf install http://repo.linrunner.de/fedora/tlp/repos/releases/tlp-release.fc$(rpm -E %fedora).noarch.rpm

|

||||

$ sudo dnf install akmod-tp_smapi akmod-acpi_call kernel-devel

|

||||

```

|

||||

|

||||

Install smartmontools to display S.M.A.R.T. data in `tlp-stat`.

|

||||

|

||||

```

|

||||

$ sudo dnf install smartmontools

|

||||

```

|

||||

|

||||

对于 Debian/Ubuntu 系统,使用 [APT-GET 命令][7] 或 [APT 命令][8] 安装 TLP。

|

||||

|

||||

```

|

||||

$ sudo apt install tlp tlp-rdw

|

||||

```

|

||||

|

||||

ThinkPad 需要一些附加软件包。

|

||||

|

||||

```

|

||||

$ sudo apt-get install tp-smapi-dkms acpi-call-dkms

|

||||

```

|

||||

|

||||

Install smartmontools to display S.M.A.R.T. data in `tlp-stat`.

|

||||

|

||||

```

|

||||

$ sudo apt-get install smartmontools

|

||||

```

|

||||

|

||||

当基于 Ubuntu 的系统的官方软件包过时时,请使用以下 PPA 存储库,该存储库提供最新版本。运行以下命令以使用 PPA 安装 TLP。

|

||||

|

||||

```

|

||||

$ sudo add-apt-repository ppa:linrunner/tlp

|

||||

$ sudo apt-get update

|

||||

$ sudo apt-get install tlp

|

||||

```

|

||||

|

||||

对于基于 Arch Linux 的系统,使用 [Pacman 命令][9] 安装 TLP。

|

||||

|

||||

```

|

||||

$ sudo pacman -S tlp tlp-rdw

|

||||

```

|

||||

|

||||

ThinkPad 需要一些附加软件包。

|

||||

|

||||

```

|

||||

$ sudo pacman -S tp_smapi acpi_call

|

||||

```

|

||||

|

||||

Install smartmontools to display S.M.A.R.T. data in `tlp-stat`.

|

||||

|

||||

```

|

||||

$ sudo pacman -S smartmontools

|

||||

```

|

||||

|

||||

对于基于 Arch Linux 的系统,在启动时启用 TLP 和 TLP-Sleep 服务。

|

||||

|

||||

```

|

||||

$ sudo systemctl enable tlp.service

|

||||

$ sudo systemctl enable tlp-sleep.service

|

||||

```

|

||||

|

||||

对于基于 Arch Linux 的系统,你还应该屏蔽以下服务以避免冲突,并确保 TLP 的无线电设备切换选项的正确操作。

|

||||

|

||||

```

|

||||

$ sudo systemctl mask systemd-rfkill.service

|

||||

$ sudo systemctl mask systemd-rfkill.socket

|

||||

```

|

||||

|

||||

对于 RHEL/CentOS 系统,使用 [YUM 命令][10] 安装 TLP。

|

||||

|

||||

```

|

||||

$ sudo yum install tlp tlp-rdw

|

||||

```

|

||||

|

||||

Install smartmontools to display S.M.A.R.T. data in `tlp-stat`.

|

||||

|

||||

```

|

||||

$ sudo yum install smartmontools

|

||||

```

|

||||

|

||||

对于 openSUSE Leap 系统,使用 [Zypper 命令][11] 安装 TLP。

|

||||

|

||||

```

|

||||

$ sudo zypper install tlp

|

||||

```

|

||||

|

||||

Install smartmontools to display S.M.A.R.T. data in `tlp-stat`.

|

||||

|

||||

```

|

||||

$ sudo zypper install smartmontools

|

||||

```

|

||||

|

||||

成功安装 TLP 后,使用以下命令启动服务。

|

||||

|

||||

```

|

||||

$ sudo systemctl start tlp.service

|

||||

```

|

||||

|

||||

### 使用方法

|

||||

|

||||

#### 显示电池信息

|

||||

|

||||

```

|

||||

$ sudo tlp-stat -b

|

||||

或

|

||||

$ sudo tlp-stat --battery

|

||||

```

|

||||

|

||||

```

|

||||

--- TLP 1.1 --------------------------------------------

|

||||

|

||||

+++ Battery Status

|

||||

/sys/class/power_supply/BAT0/manufacturer = SMP

|

||||

/sys/class/power_supply/BAT0/model_name = L14M4P23

|

||||

/sys/class/power_supply/BAT0/cycle_count = (not supported)

|

||||

/sys/class/power_supply/BAT0/energy_full_design = 60000 [mWh]

|

||||

/sys/class/power_supply/BAT0/energy_full = 48850 [mWh]

|

||||

/sys/class/power_supply/BAT0/energy_now = 48850 [mWh]

|

||||

/sys/class/power_supply/BAT0/power_now = 0 [mW]

|

||||

/sys/class/power_supply/BAT0/status = Full

|

||||

|

||||

Charge = 100.0 [%]

|

||||

Capacity = 81.4 [%]

|

||||

```
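
The `Charge` and `Capacity` lines in this report appear to follow from the sysfs energy values printed above them: charge as `energy_now` relative to `energy_full`, and capacity as `energy_full` relative to `energy_full_design`. The sketch below reproduces that arithmetic under this assumption; the function name `battery_percentages` is hypothetical and not part of TLP.

```python
def battery_percentages(energy_now, energy_full, energy_full_design):
    """Return (charge, capacity) in percent, rounded to one decimal place.

    Assumes charge = now / full and capacity = full / design, which
    matches the numbers in the sample report.
    """
    charge = round(100 * energy_now / energy_full, 1)
    capacity = round(100 * energy_full / energy_full_design, 1)
    return charge, capacity


# Values in mWh copied from the sample output above.
charge, capacity = battery_percentages(48850, 48850, 60000)
print(f"Charge   = {charge} [%]")
print(f"Capacity = {capacity} [%]")
```

A capacity well below 100 % suggests the battery can no longer hold its design energy, which is the kind of wear this report helps you track.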

|

||||

|

||||

#### Show disk information

```
$ sudo tlp-stat -d
or
$ sudo tlp-stat --disk
```

|

||||

|

||||

```

|

||||

--- TLP 1.1 --------------------------------------------

|

||||

|

||||

+++ Storage Devices

|

||||

/dev/sda:

|

||||

Model = WDC WD10SPCX-24HWST1

|

||||

Firmware = 02.01A02

|

||||

APM Level = 128

|

||||

Status = active/idle

|

||||

Scheduler = mq-deadline

|

||||

|

||||

Runtime PM: control = on, autosuspend_delay = (not available)

|

||||

|

||||

SMART info:

|

||||

4 Start_Stop_Count = 18787

|

||||

5 Reallocated_Sector_Ct = 0

|

||||

9 Power_On_Hours = 606 [h]

|

||||

12 Power_Cycle_Count = 1792

|

||||

193 Load_Cycle_Count = 25775

|

||||

194 Temperature_Celsius = 31 [°C]

|

||||

|

||||

|

||||

+++ AHCI Link Power Management (ALPM)

|

||||

/sys/class/scsi_host/host0/link_power_management_policy = med_power_with_dipm

|

||||

/sys/class/scsi_host/host1/link_power_management_policy = med_power_with_dipm

|

||||

/sys/class/scsi_host/host2/link_power_management_policy = med_power_with_dipm

|

||||

/sys/class/scsi_host/host3/link_power_management_policy = med_power_with_dipm

|

||||

|

||||

+++ AHCI Host Controller Runtime Power Management

|

||||

/sys/bus/pci/devices/0000:00:17.0/ata1/power/control = on

|

||||

/sys/bus/pci/devices/0000:00:17.0/ata2/power/control = on

|

||||

/sys/bus/pci/devices/0000:00:17.0/ata3/power/control = on

|

||||

/sys/bus/pci/devices/0000:00:17.0/ata4/power/control = on

|

||||

```

|

||||

|

||||

#### Show PCI device information

```
$ sudo tlp-stat -e
or
$ sudo tlp-stat --pcie
```

|

||||

|

||||

```

|

||||

|

||||

--- TLP 1.1 --------------------------------------------

|

||||

|

||||

+++ Runtime Power Management

|

||||

Device blacklist = (not configured)

|

||||

Driver blacklist = amdgpu nouveau nvidia radeon pcieport

|

||||

|

||||

/sys/bus/pci/devices/0000:00:00.0/power/control = auto (0x060000, Host bridge, skl_uncore)

|

||||

/sys/bus/pci/devices/0000:00:01.0/power/control = auto (0x060400, PCI bridge, pcieport)

|

||||

/sys/bus/pci/devices/0000:00:02.0/power/control = auto (0x030000, VGA compatible controller, i915)

|

||||

/sys/bus/pci/devices/0000:00:14.0/power/control = auto (0x0c0330, USB controller, xhci_hcd)

|

||||

|

||||

......

|

||||

```

|

||||

|

||||

#### Show graphics card information

```
$ sudo tlp-stat -g
or
$ sudo tlp-stat --graphics
```

|

||||

|

||||

```

|

||||

--- TLP 1.1 --------------------------------------------

|

||||

|

||||

+++ Intel Graphics

|

||||

/sys/module/i915/parameters/enable_dc = -1 (use per-chip default)

|

||||

/sys/module/i915/parameters/enable_fbc = 1 (enabled)

|

||||

/sys/module/i915/parameters/enable_psr = 0 (disabled)

|

||||

/sys/module/i915/parameters/modeset = -1 (use per-chip default)

|

||||

```

|

||||

|

||||

#### Show processor information

```
$ sudo tlp-stat -p
or
$ sudo tlp-stat --processor
```

|

||||

|

||||

```

|

||||

--- TLP 1.1 --------------------------------------------

|

||||

|

||||

+++ Processor

|

||||

CPU model = Intel(R) Core(TM) i7-6700HQ CPU @ 2.60GHz

|

||||

|

||||

/sys/devices/system/cpu/cpu0/cpufreq/scaling_driver = intel_pstate

|

||||

/sys/devices/system/cpu/cpu0/cpufreq/scaling_governor = powersave

|

||||

/sys/devices/system/cpu/cpu0/cpufreq/scaling_available_governors = performance powersave

|

||||

/sys/devices/system/cpu/cpu0/cpufreq/scaling_min_freq = 800000 [kHz]

|

||||

/sys/devices/system/cpu/cpu0/cpufreq/scaling_max_freq = 3500000 [kHz]

|

||||

/sys/devices/system/cpu/cpu0/cpufreq/energy_performance_preference = balance_power

|

||||

/sys/devices/system/cpu/cpu0/cpufreq/energy_performance_available_preferences = default performance balance_performance balance_power power

|

||||

|

||||

......

|

||||

|

||||

/sys/devices/system/cpu/intel_pstate/min_perf_pct = 22 [%]

|

||||

/sys/devices/system/cpu/intel_pstate/max_perf_pct = 100 [%]

|

||||

/sys/devices/system/cpu/intel_pstate/no_turbo = 0

|

||||

/sys/devices/system/cpu/intel_pstate/turbo_pct = 33 [%]

|

||||

/sys/devices/system/cpu/intel_pstate/num_pstates = 28

|

||||

|

||||

x86_energy_perf_policy: program not installed.

|

||||

|

||||

/sys/module/workqueue/parameters/power_efficient = Y

|

||||

/proc/sys/kernel/nmi_watchdog = 0

|

||||

|

||||

+++ Undervolting

|

||||

PHC kernel not available.

|

||||

```

|

||||

|

||||

#### Show system data information

```
$ sudo tlp-stat -s
or
$ sudo tlp-stat --system
```

|

||||

|

||||

```

|

||||

--- TLP 1.1 --------------------------------------------

|

||||

|

||||

+++ System Info

|

||||

System = LENOVO Lenovo ideapad Y700-15ISK 80NV

|

||||

BIOS = CDCN35WW

|

||||

Release = "Manjaro Linux"

|

||||

Kernel = 4.19.6-1-MANJARO #1 SMP PREEMPT Sat Dec 1 12:21:26 UTC 2018 x86_64

|

||||

/proc/cmdline = BOOT_IMAGE=/boot/vmlinuz-4.19-x86_64 root=UUID=69d9dd18-36be-4631-9ebb-78f05fe3217f rw quiet resume=UUID=a2092b92-af29-4760-8e68-7a201922573b

|

||||

Init system = systemd

|

||||

Boot mode = BIOS (CSM, Legacy)

|

||||

|

||||

+++ TLP Status

|

||||

State = enabled

|

||||

Last run = 11:04:00 IST, 596 sec(s) ago

|

||||

Mode = battery

|

||||

Power source = battery

|

||||

```

|

||||

|

||||

#### Show temperature and fan speed information

```
$ sudo tlp-stat -t
or
$ sudo tlp-stat --temp
```

|

||||

|

||||

```

|

||||

--- TLP 1.1 --------------------------------------------

|

||||

|

||||

+++ Temperatures

|

||||

CPU temp = 36 [°C]

|

||||

Fan speed = (not available)

|

||||

```
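
Most `tlp-stat` report lines share a `key = value` shape, so they are easy to collect for scripting. The following is a minimal sketch, not a TLP feature: `parse_tlp_stat` is a hypothetical helper, and it assumes you have already captured the report text, for example by redirecting the command's output to a file.

```python
def parse_tlp_stat(text):
    """Collect 'key = value' lines from tlp-stat output into a dict."""
    values = {}
    for line in text.splitlines():
        # Skip the banner ("--- TLP ..."), section titles ("+++ ...")
        # and any line that is not a key/value pair.
        if line.startswith(("---", "+++")) or " = " not in line:
            continue
        key, value = line.split(" = ", 1)
        values[key.strip()] = value.strip()
    return values


# Sample text copied from the temperature report above.
sample = """--- TLP 1.1 --------------------------------------------

+++ Temperatures
CPU temp = 36 [°C]
Fan speed = (not available)
"""

report = parse_tlp_stat(sample)
print(report["CPU temp"])
```

Values are kept as strings, units included, because the report mixes numbers with notes such as `(not available)`.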

|

||||

|

||||

#### Show USB device data information

```
$ sudo tlp-stat -u
or
$ sudo tlp-stat --usb
```

|

||||

|

||||

```

|

||||

--- TLP 1.1 --------------------------------------------

|

||||

|

||||

+++ USB

|

||||

Autosuspend = disabled

|

||||

Device whitelist = (not configured)

|

||||

Device blacklist = (not configured)

|

||||

Bluetooth blacklist = disabled

|

||||

Phone blacklist = disabled

|

||||

WWAN blacklist = enabled

|

||||

|

||||

Bus 002 Device 001 ID 1d6b:0003 control = auto, autosuspend_delay_ms = 0 -- Linux Foundation 3.0 root hub (hub)

|

||||

Bus 001 Device 003 ID 174f:14e8 control = auto, autosuspend_delay_ms = 2000 -- Syntek (uvcvideo)

|

||||

|

||||

......

|

||||

```

|

||||

|

||||

#### Show warnings

```
$ sudo tlp-stat -w
or
$ sudo tlp-stat --warn
```

|

||||

|

||||

```

|

||||

--- TLP 1.1 --------------------------------------------

|

||||

|

||||

No warnings detected.

|

||||

```

|

||||

|

||||

#### Status report with configuration and all active settings

```
$ sudo tlp-stat
```

|

||||

|

||||

```

|

||||

--- TLP 1.1 --------------------------------------------

|

||||

|

||||

+++ Configured Settings: /etc/default/tlp

|

||||

TLP_ENABLE=1

|

||||

TLP_DEFAULT_MODE=AC

|

||||

TLP_PERSISTENT_DEFAULT=0

|

||||

DISK_IDLE_SECS_ON_AC=0

|

||||

DISK_IDLE_SECS_ON_BAT=2

|

||||

MAX_LOST_WORK_SECS_ON_AC=15

|

||||

MAX_LOST_WORK_SECS_ON_BAT=60

|

||||

|

||||

......

|

||||

|

||||

+++ System Info

|

||||

System = LENOVO Lenovo ideapad Y700-15ISK 80NV

|

||||

BIOS = CDCN35WW

|

||||

Release = "Manjaro Linux"

|

||||

Kernel = 4.19.6-1-MANJARO #1 SMP PREEMPT Sat Dec 1 12:21:26 UTC 2018 x86_64

|

||||

/proc/cmdline = BOOT_IMAGE=/boot/vmlinuz-4.19-x86_64 root=UUID=69d9dd18-36be-4631-9ebb-78f05fe3217f rw quiet resume=UUID=a2092b92-af29-4760-8e68-7a201922573b

|

||||

Init system = systemd

|

||||

Boot mode = BIOS (CSM, Legacy)

|

||||

|

||||

+++ TLP Status

|

||||

State = enabled

|

||||

Last run = 11:04:00 IST, 684 sec(s) ago

|

||||

Mode = battery

|

||||

Power source = battery

|

||||

|

||||

+++ Processor

|

||||

CPU model = Intel(R) Core(TM) i7-6700HQ CPU @ 2.60GHz

|

||||

|

||||

/sys/devices/system/cpu/cpu0/cpufreq/scaling_driver = intel_pstate

|

||||

/sys/devices/system/cpu/cpu0/cpufreq/scaling_governor = powersave

|

||||

/sys/devices/system/cpu/cpu0/cpufreq/scaling_available_governors = performance powersave

|

||||

|

||||

......

|

||||

|

||||

/sys/devices/system/cpu/intel_pstate/min_perf_pct = 22 [%]

|

||||

/sys/devices/system/cpu/intel_pstate/max_perf_pct = 100 [%]

|

||||

/sys/devices/system/cpu/intel_pstate/no_turbo = 0

|

||||

/sys/devices/system/cpu/intel_pstate/turbo_pct = 33 [%]

|

||||

/sys/devices/system/cpu/intel_pstate/num_pstates = 28

|

||||

|

||||

x86_energy_perf_policy: program not installed.

|

||||

|

||||

/sys/module/workqueue/parameters/power_efficient = Y

|

||||

/proc/sys/kernel/nmi_watchdog = 0

|

||||

|

||||

+++ Undervolting

|

||||

PHC kernel not available.

|

||||

|

||||

+++ Temperatures

|

||||

CPU temp = 42 [°C]

|

||||

Fan speed = (not available)

|

||||

|

||||

+++ File System

|

||||

/proc/sys/vm/laptop_mode = 2

|

||||

/proc/sys/vm/dirty_writeback_centisecs = 6000

|

||||

/proc/sys/vm/dirty_expire_centisecs = 6000

|

||||

/proc/sys/vm/dirty_ratio = 20

|

||||

/proc/sys/vm/dirty_background_ratio = 10

|

||||

|

||||

+++ Storage Devices

|

||||

/dev/sda:

|

||||

Model = WDC WD10SPCX-24HWST1

|

||||

Firmware = 02.01A02

|

||||

APM Level = 128

|

||||

Status = active/idle

|

||||

Scheduler = mq-deadline

|

||||

|

||||

Runtime PM: control = on, autosuspend_delay = (not available)

|

||||

|

||||

SMART info:

|

||||

4 Start_Stop_Count = 18787

|

||||

5 Reallocated_Sector_Ct = 0

|

||||

9 Power_On_Hours = 606 [h]

|

||||

12 Power_Cycle_Count = 1792

|

||||

193 Load_Cycle_Count = 25777

|

||||

194 Temperature_Celsius = 31 [°C]

|

||||

|

||||

|

||||

+++ AHCI Link Power Management (ALPM)

|

||||

/sys/class/scsi_host/host0/link_power_management_policy = med_power_with_dipm

|

||||

/sys/class/scsi_host/host1/link_power_management_policy = med_power_with_dipm

|

||||

/sys/class/scsi_host/host2/link_power_management_policy = med_power_with_dipm

|

||||

/sys/class/scsi_host/host3/link_power_management_policy = med_power_with_dipm

|

||||

|

||||

+++ AHCI Host Controller Runtime Power Management

|

||||

/sys/bus/pci/devices/0000:00:17.0/ata1/power/control = on

|

||||

/sys/bus/pci/devices/0000:00:17.0/ata2/power/control = on

|

||||

/sys/bus/pci/devices/0000:00:17.0/ata3/power/control = on

|

||||

/sys/bus/pci/devices/0000:00:17.0/ata4/power/control = on

|

||||

|

||||

+++ PCIe Active State Power Management

|

||||

/sys/module/pcie_aspm/parameters/policy = powersave

|

||||

|

||||

+++ Intel Graphics

|

||||

/sys/module/i915/parameters/enable_dc = -1 (use per-chip default)

|

||||

/sys/module/i915/parameters/enable_fbc = 1 (enabled)

|

||||

/sys/module/i915/parameters/enable_psr = 0 (disabled)

|

||||

/sys/module/i915/parameters/modeset = -1 (use per-chip default)

|

||||

|

||||

+++ Wireless

|

||||

bluetooth = on

|

||||

wifi = on

|

||||

wwan = none (no device)

|

||||

|

||||

hci0(btusb) : bluetooth, not connected

|

||||

wlp8s0(iwlwifi) : wifi, connected, power management = on

|

||||

|

||||

+++ Audio

|

||||

/sys/module/snd_hda_intel/parameters/power_save = 1

|

||||

/sys/module/snd_hda_intel/parameters/power_save_controller = Y

|

||||

|

||||

+++ Runtime Power Management

|

||||

Device blacklist = (not configured)

|

||||

Driver blacklist = amdgpu nouveau nvidia radeon pcieport

|

||||

|

||||

/sys/bus/pci/devices/0000:00:00.0/power/control = auto (0x060000, Host bridge, skl_uncore)

|

||||

/sys/bus/pci/devices/0000:00:01.0/power/control = auto (0x060400, PCI bridge, pcieport)

|

||||

/sys/bus/pci/devices/0000:00:02.0/power/control = auto (0x030000, VGA compatible controller, i915)

|

||||

|

||||

......

|

||||

|

||||

+++ USB

|

||||

Autosuspend = disabled

|

||||

Device whitelist = (not configured)

|

||||

Device blacklist = (not configured)

|

||||

Bluetooth blacklist = disabled

|

||||

Phone blacklist = disabled

|

||||

WWAN blacklist = enabled

|

||||

|

||||

Bus 002 Device 001 ID 1d6b:0003 control = auto, autosuspend_delay_ms = 0 -- Linux Foundation 3.0 root hub (hub)

|

||||

Bus 001 Device 003 ID 174f:14e8 control = auto, autosuspend_delay_ms = 2000 -- Syntek (uvcvideo)

|

||||

Bus 001 Device 002 ID 17ef:6053 control = on, autosuspend_delay_ms = 2000 -- Lenovo (usbhid)

|

||||

Bus 001 Device 004 ID 8087:0a2b control = auto, autosuspend_delay_ms = 2000 -- Intel Corp. (btusb)

|

||||

Bus 001 Device 001 ID 1d6b:0002 control = auto, autosuspend_delay_ms = 0 -- Linux Foundation 2.0 root hub (hub)

|

||||

|

||||

+++ Battery Status

|

||||

/sys/class/power_supply/BAT0/manufacturer = SMP

|

||||

/sys/class/power_supply/BAT0/model_name = L14M4P23

|

||||

/sys/class/power_supply/BAT0/cycle_count = (not supported)

|

||||

/sys/class/power_supply/BAT0/energy_full_design = 60000 [mWh]

|

||||

/sys/class/power_supply/BAT0/energy_full = 51690 [mWh]

|

||||

/sys/class/power_supply/BAT0/energy_now = 50140 [mWh]

|

||||

/sys/class/power_supply/BAT0/power_now = 12185 [mW]

|

||||

/sys/class/power_supply/BAT0/status = Discharging

|

||||

|

||||

Charge = 97.0 [%]

|

||||

Capacity = 86.2 [%]

|

||||

```

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.2daygeek.com/tlp-increase-optimize-linux-laptop-battery-life/

|

||||

|

||||

Author: [Magesh Maruthamuthu][a]
Topic selection: [lujun9972][b]
Translator: [wxy](https://github.com/wxy)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux.cn](https://linux.cn/)

|

||||

|

||||

[a]: https://www.2daygeek.com/author/magesh/

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://www.2daygeek.com/check-laptop-battery-status-and-charging-state-in-linux-terminal/

|

||||

[2]: https://www.2daygeek.com/powertop-monitors-laptop-battery-usage-linux/

|

||||

[3]: https://www.2daygeek.com/monitor-laptop-battery-charging-state-linux/

|

||||

[4]: https://linrunner.de/en/tlp/docs/tlp-linux-advanced-power-management.html

|

||||

[5]: https://www.2daygeek.com/category/package-management/

|

||||

[6]: https://www.2daygeek.com/dnf-command-examples-manage-packages-fedora-system/

|

||||

[7]: https://www.2daygeek.com/apt-get-apt-cache-command-examples-manage-packages-debian-ubuntu-systems/

|

||||

[8]: https://www.2daygeek.com/apt-command-examples-manage-packages-debian-ubuntu-systems/

|

||||

[9]: https://www.2daygeek.com/pacman-command-examples-manage-packages-arch-linux-system/

|

||||

[10]: https://www.2daygeek.com/yum-command-examples-manage-packages-rhel-centos-systems/

|

||||

[11]: https://www.2daygeek.com/zypper-command-examples-manage-packages-opensuse-system/

|

||||

@ -0,0 +1,354 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (cycoe)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-10874-1.html)

|

||||

[#]: subject: (Using Pygame to move your game character around)

|

||||

[#]: via: (https://opensource.com/article/17/12/game-python-moving-player)

|

||||

[#]: author: (Seth Kenlon https://opensource.com/users/seth)

|

||||

|

||||

Using Pygame to move your game character around
======

> In part four of this series, learn how to code the controls needed to move a game character.

|

||||

|

||||

|

||||

|

||||

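In the first article of this series, I explained how to use Python to create a simple [text-based dice game][1]. In the second part, I showed you how to build a game from scratch, starting with [creating the game's environment][2]. Then, in the third installment, we [created a player sprite][3] and made it spawn in your (rather empty) game world. As you have probably noticed, a game isn't much fun if you can't move your character around. In this article, we'll use Pygame to add keyboard controls so you can direct your character's movement.

Pygame has functions for adding other kinds of controls, but if you are typing out Python code you certainly have a keyboard, so that is the control method we'll use. Once you understand keyboard controls, you can explore the other options on your own.

In the second article of this series, you created a key to quit the game, and the principle is the same for movement keys. Getting your character to move, however, is a little more complex.

Let's start with the easy part: setting up the controller keys.

### Setting up keys for controlling your player sprite

Open your Python game script in IDLE, Ninja-IDE, or a text editor.

Because the game must constantly "listen" for keyboard events, the code you write needs to run continuously. Can you figure out where to put code that has to run for the whole duration of the game?

If you answered "in the main loop," you are correct! Remember that unless code is in a loop, it runs (at most) only once. If it is hidden away in a class or function that never gets used, it may not run at all.

To make Python monitor incoming key presses, add the following code to the main loop. The code doesn't make anything happen yet, so use `print` statements to signal success. This is a common debugging technique.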

|

||||

|

||||

```
while main == True:
    for event in pygame.event.get():
        if event.type == pygame.QUIT:
            pygame.quit(); sys.exit()
            main = False

        if event.type == pygame.KEYDOWN:
            if event.key == pygame.K_LEFT or event.key == ord('a'):
                print('left')
            if event.key == pygame.K_RIGHT or event.key == ord('d'):
                print('right')
            if event.key == pygame.K_UP or event.key == ord('w'):
                print('jump')

        if event.type == pygame.KEYUP:
            if event.key == pygame.K_LEFT or event.key == ord('a'):
                print('left stop')
            if event.key == pygame.K_RIGHT or event.key == ord('d'):
                print('right stop')
            if event.key == ord('q'):
                pygame.quit()
                sys.exit()
                main = False
```

|

||||

|

||||

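Some people prefer to control player characters with the `W`, `A`, `S`, and `D` keys, while others prefer the arrow keys. Be sure to include both options.

Note: It is vital that you consider all of your users when programming. If you write code that works only for you, you will very likely be the only person who uses your application. More importantly, if you look for a job writing code for money, you are expected to write code that works for everyone. Giving your users choices, such as the option to use either the arrow keys or WASD, is a sign of a good programmer.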
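
One way to keep both key sets in step is a lookup table that maps every accepted key to an action. The sketch below is illustrative only: `KEY_BINDINGS` and `action_for` are hypothetical names, and plain strings stand in for Pygame's key constants so that the example runs without Pygame installed.

```python
# Hypothetical key names; a real game would store pygame.K_LEFT,
# ord('a'), and so on instead of these strings.
KEY_BINDINGS = {
    "left": {"LEFT", "a"},
    "right": {"RIGHT", "d"},
    "jump": {"UP", "w"},
}


def action_for(key):
    """Return the action bound to a key, or None if the key is unbound."""
    for action, keys in KEY_BINDINGS.items():
        if key in keys:
            return action
    return None


print(action_for("a"))      # arrow-key and WASD users get the same action
print(action_for("RIGHT"))
print(action_for("x"))      # unbound keys are ignored
```

With a table like this, supporting another control scheme means adding an entry rather than another `if` branch.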

|

||||

|

||||

Launch your game with Python, and watch the console window for output as you press the left, right, and up arrow keys or the `A`, `D`, and `W` keys.

|

||||

|

||||

```

|

||||

$ python ./your-name_game.py

|

||||

left

|

||||

left stop

|

||||

right

|

||||

right stop

|

||||

jump

|

||||

```

|

||||

|

||||

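This confirms that Pygame detects key presses correctly. Now it is time to do the hard work of making the sprite move.

### Coding the player movement function

To make your sprite move, you must create a property for your sprite that represents movement. When your sprite is not moving, this variable is set to `0`.

If you are animating your sprite, or decide to animate it in the future, you must also track frames so that the walk cycle stays on track.

Create the following variables in the `Player` class. The first two lines are for context (if you have been following along, they are already in your code), so add only the last three: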

|

||||

|

||||

```
    def __init__(self):
        pygame.sprite.Sprite.__init__(self)
        self.movex = 0 # move along X
        self.movey = 0 # move along Y
        self.frame = 0 # count frames
```

|

||||

|

||||

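With those variables set, it is time to code the sprite's movement.

The player sprite doesn't need to respond to controls all the time; sometimes it is not moving. The code that controls the sprite is only one small part of everything the player sprite can do. In Python, when you want an object to do something independently of the rest of its code, you place the new code in a function. Python functions start with the keyword `def`, which stands for define.

Create the following function in your `Player` class to add some number of pixels to your sprite's position on screen. Don't worry yet about how many pixels you add; that is decided in later code.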

|

||||

|

||||

```
    def control(self,x,y):
        '''
        control player movement
        '''
        self.movex += x
        self.movey += y
```

|

||||

|

||||

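To move a sprite in Pygame, you have to tell Python to redraw the sprite in its new location, and where that new location is.

Because the player sprite isn't always moving, the update only needs to be one function within the `Player` class. Add this function after the `control` function you created earlier.

To make it appear that the sprite is walking (or flying, or whatever your sprite is supposed to do), you need to change its position on screen when the appropriate key is pressed. To move it across the screen, you redefine its position, given by the `self.rect.x` and `self.rect.y` properties, as its current position plus whatever amount of `movex` or `movey` is applied. (The number of pixels to move is set later.)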

|

||||

|

||||

```
    def update(self):
        '''
        Update sprite position
        '''
        self.rect.x = self.rect.x + self.movex
```

|

||||

|

||||

Do the same thing for the Y position:

|

||||

|

||||

```
        self.rect.y = self.rect.y + self.movey
```

|

||||

|

||||

For animation, advance the animation frame whenever your sprite is moving, and use the corresponding frame as the player image:

|

||||

|

||||

```
        # moving left
        if self.movex < 0:
            self.frame += 1
            if self.frame > 3*ani:
                self.frame = 0
            self.image = self.images[self.frame//ani]

        # moving right
        # (the +4 offset expects right-facing frames at indexes 4-7)
        if self.movex > 0:
            self.frame += 1
            if self.frame > 3*ani:
                self.frame = 0
            self.image = self.images[(self.frame//ani)+4]
```
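
To see which image index the frame counter selects, the counting logic can be run on its own. This is a sketch under one assumption: `ani` is not defined in the snippet above, so the value `4` used here is a guess at a typical setting, and `next_frame` is a hypothetical helper that mirrors the two counter lines of `update`.

```python
ani = 4  # assumed value: how many game ticks each image stays on screen


def next_frame(frame):
    """Advance the counter as update() does, wrapping once it passes 3*ani."""
    frame += 1
    if frame > 3 * ani:
        frame = 0
    return frame


frame = 0
indexes = []
for _ in range(14):
    frame = next_frame(frame)
    indexes.append(frame // ani)  # the index used to pick from self.images

print(indexes)
```

The integer division keeps each image on screen for several ticks, so the walk cycle advances more slowly than the game's frame rate, and the index never goes above 3.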

|

||||

|

||||

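Tell the code how many pixels to add to your sprite's position by setting a variable, then use that variable when triggering the functions of your player sprite.

First, create the variable in your setup section. In the following code, the first two lines are for context, so add only the third line to your script: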

|

||||

|

||||

```
player_list = pygame.sprite.Group()
player_list.add(player)
steps = 10  # how many pixels to move
```

|

||||

|

||||

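Now that you have the appropriate function and variable, use your key presses to trigger the function and pass the variable to your sprite.

To do this, replace the `print` statements in your main loop with the player sprite's name (`player`), the function (`.control`), and the number of steps along the X axis and Y axis that you want the player sprite to move with each loop.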

|

||||

|

||||

```
        if event.type == pygame.KEYDOWN:
            if event.key == pygame.K_LEFT or event.key == ord('a'):
                player.control(-steps,0)
            if event.key == pygame.K_RIGHT or event.key == ord('d'):
                player.control(steps,0)
            if event.key == pygame.K_UP or event.key == ord('w'):
                print('jump')

        if event.type == pygame.KEYUP:
            if event.key == pygame.K_LEFT or event.key == ord('a'):
                player.control(steps,0)
            if event.key == pygame.K_RIGHT or event.key == ord('d'):
                player.control(-steps,0)
            if event.key == ord('q'):
                pygame.quit()
                sys.exit()
                main = False
```

|

||||

|

||||

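Remember, the `steps` variable represents how many pixels your sprite moves when a key is pressed. If pressing `D` or the right arrow adds 10 pixels to your sprite's movement, then when you release that key you must subtract 10 (`-steps`) to return your sprite's momentum to 0.

Try your game now. Warning: it won't do what you expect.

Why doesn't your sprite move yet? Because the main loop doesn't call the `update` function.

Add the following code to your main loop to tell Python to update the position of your player sprite. Add the line with the comment: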

|

||||

|

||||

```
    player.update()  # update player position
    player_list.draw(world)
    pygame.display.flip()
    clock.tick(fps)
```
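
The interplay between `control` and `update` can be checked without opening a game window. The sketch below is a Pygame-free stand-in, not the tutorial's `Player` class: `Rect` and `Mover` are hypothetical names that copy only the movement logic shown earlier.

```python
class Rect:
    """Stand-in for pygame.Rect that holds only a position."""

    def __init__(self):
        self.x = 0
        self.y = 0


class Mover:
    """Hypothetical, Pygame-free copy of the Player movement logic."""

    def __init__(self):
        self.movex = 0
        self.movey = 0
        self.rect = Rect()

    def control(self, x, y):
        self.movex += x
        self.movey += y

    def update(self):
        self.rect.x = self.rect.x + self.movex
        self.rect.y = self.rect.y + self.movey


steps = 10
player = Mover()

player.control(steps, 0)    # key pressed: movement is set...
pressed_x = player.rect.x   # ...but the position is unchanged until update()
for _ in range(3):          # three passes of the main loop
    player.update()
player.control(-steps, 0)   # key released: movement returns to 0
player.update()             # no further change in position

print(pressed_x, player.rect.x, player.movex)
```

This is why the sprite stays put until the main loop calls `update`: `control` only records the intended movement, and `update` is what applies it on every pass.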

|

||||

|

||||

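Launch your game again to watch your player sprite move back and forth across the screen at your command. There is no vertical movement yet, because those functions will be controlled by gravity, but that is a lesson for another article.

In the meantime, if you have a joystick, try reading Pygame's documentation for its [joystick][4] module and see whether you can make your sprite move that way. Alternatively, see whether you can get the [mouse][5] to interact with your sprite.

Most importantly, have fun!

### All the code used in this tutorial

For your reference, here is all the code used in this series of articles so far.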

|

||||

|

||||

```

|

||||

#!/usr/bin/env python3
# draw a world
# add a player and player control
# add player movement

# GNU All-Permissive License
# Copying and distribution of this file, with or without modification,
# are permitted in any medium without royalty provided the copyright
# notice and this notice are preserved. This file is offered as-is,
# without any warranty.

import pygame
import sys
import os

'''
Objects
'''

class Player(pygame.sprite.Sprite):
    '''
    Spawn a player
    '''
    def __init__(self):
        pygame.sprite.Sprite.__init__(self)
        self.movex = 0
        self.movey = 0
        self.frame = 0
        self.images = []
        for i in range(1,5):
            img = pygame.image.load(os.path.join('images','hero' + str(i) + '.png')).convert()
            img.convert_alpha()
            img.set_colorkey(ALPHA)
            self.images.append(img)
        self.image = self.images[0]
        self.rect = self.image.get_rect()

    def control(self,x,y):
        '''
        control player movement
        '''
        self.movex += x

|