Mirror of https://github.com/LCTT/TranslateProject.git (synced 2025-01-25 23:11:02 +08:00)

Commit ef44d4ba46: Merge branch 'master' of https://github.com/LCTT/TranslateProject into new

@ -1,11 +1,12 @@

|

||||

Free DOS 的简单介绍

|

||||

FreeDOS 的简单介绍

|

||||

======

|

||||

> 学习如何穿行于 C:\ 提示符下,就像上世纪 90 年代的 DOS 高手一样。

|

||||

|

||||

|

||||

|

||||

FreeDOS 是一个古老的操作系统,但是对于多数人而言它又是陌生的。在 1994 年,我和几个开发者一起 [开发 FreeDOS][1]--一个完整、自由、DOS 兼容的操作系统,你可以用它来玩经典的 DOS 游戏、运行遗留的商业软件或者开发嵌入式系统。任何在 MS-DOS 下工作的程序在 FreeDOS 下也可以运行。

|

||||

FreeDOS 是一个古老的操作系统,但是对于多数人而言它又是陌生的。在 1994 年,我和几个开发者一起 [开发了 FreeDOS][1] —— 这是一个完整、自由、兼容 DOS 的操作系统,你可以用它来玩经典的 DOS 游戏、运行过时的商业软件或者开发嵌入式系统。任何在 MS-DOS 下工作的程序在 FreeDOS 下也可以运行。

|

||||

|

||||

在 1994 年,任何一个曾经使用过微软专利的 MS-DOS 的人都会迅速地熟悉 FreeDOS。这是设计而为之的;FreeDOS 尽可能地去模仿 MS-DOS。结果,1990 年代的 DOS 用户能够直接转换到 FreeDOS。但是,时代变了。今天,开源的开发者们对于 Linux 命令行更熟悉或者他们可能倾向于像 [GNOME][2] 一样的图形桌面环境,这导致 FreeDOS 命令行界面最初看起来像个异类。

|

||||

在 1994 年,任何一个曾经使用过微软的商业版 MS-DOS 的人都会迅速地熟悉 FreeDOS。这是设计而为之的;FreeDOS 尽可能地去模仿 MS-DOS。结果,1990 年代的 DOS 用户能够直接转换到 FreeDOS。但是,时代变了。今天,开源的开发者们对于 Linux 命令行更熟悉,或者他们可能倾向于像 [GNOME][2] 一样的图形桌面环境,这导致 FreeDOS 命令行界面最初看起来像个异类。

|

||||

|

||||

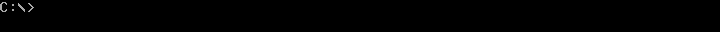

新的用户通常会问,“我已经安装了 [FreeDOS][3],但是如何使用呢?”。如果你之前并没有使用过 DOS,那么闪烁的 `C:\>` DOS 提示符看起来会有点不太友好,而且可能有点吓人。这份 FreeDOS 的简单介绍将带你起步。它只提供了基础:如何浏览以及如何查看文件。如果你想了解比这里提及的更多的知识,访问 [FreeDOS 维基][4]。

|

||||

|

||||

@ -15,13 +16,13 @@ FreeDOS 是一个古老的操作系统,但是对于多数人而言它又是陌

|

||||

|

||||

|

||||

|

||||

DOS 是在个人电脑从软盘运行时期创建的一个“磁盘操作系统”。甚至当电脑支持硬盘了,在 1980 年代和 1990 年代,频繁地在不同的驱动器之间切换也是很普遍的。举例来说,你可能想将最重要的文件都备份一份拷贝到软盘中。

|

||||

DOS 是在个人电脑从软盘运行时期创建的一个“<ruby>磁盘操作系统<rt>disk operating system</rt></ruby>”。甚至当电脑支持硬盘了,在 1980 年代和 1990 年代,频繁地在不同的驱动器之间切换也是很普遍的。举例来说,你可能想将最重要的文件都备份一份拷贝到软盘中。

|

||||

|

||||

DOS 使用一个字母来指代每个驱动器。早期的电脑仅拥有两个软盘驱动器,他们被分配了 `A:` 和 `B:` 盘符。硬盘上的第一个分区盘符是 `C:` ,然后其它的盘符依次这样分配下去。提示符中的 `C:` 表示你正在使用第一个硬盘的第一个分区。

|

||||

|

||||

从 1983 年的 PC-DOS 2.0 开始,DOS 也支持目录和子目录,非常类似 Linux 文件系统中的目录和子目录。但是跟 Linux 不一样的是,DOS 目录名由 `\` 分隔而不是 `/`。将这个与驱动器字母合起来看,提示符中的 `C:\` 表示你正在 `C:` 盘的顶端或者“根”目录。
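To make the separator difference concrete, here is a minimal sketch that rewrites a DOS-style path with forward slashes, the way a Linux user would expect to read it. The helper name `to_unix_path` and the sample path `C:\FDOS\BIN` are illustrative assumptions, not something FreeDOS itself provides.

```shell
# to_unix_path: hypothetical helper that swaps DOS backslashes for forward slashes.
to_unix_path() {
  # printf '%s' prints the argument literally, so backslashes are not treated as escapes;
  # tr then maps every backslash to a forward slash and leaves the drive letter alone.
  printf '%s\n' "$1" | tr '\\' '/'
}

to_unix_path 'C:\FDOS\BIN'
```

The drive letter stays in place because Linux has no direct equivalent; only the directory separator changes.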

|

||||

|

||||

`>` 修饰符提示你输入 DOS 命令的地方,就像众多 Linux shell 的 `$`。`>` 前面的部分告诉你当前的工作目录,然后你在 `>` 提示符这输入命令。

|

||||

`>` 符号是提示你输入 DOS 命令的地方,就像众多 Linux shell 的 `$`。`>` 前面的部分告诉你当前的工作目录,然后你在 `>` 提示符这输入命令。

|

||||

|

||||

### 在 DOS 中找到你的使用方式

|

||||

|

||||

@ -33,7 +34,7 @@ DOS 使用一个字母来指代每个驱动器。早期的电脑仅拥有两个

|

||||

|

||||

|

||||

|

||||

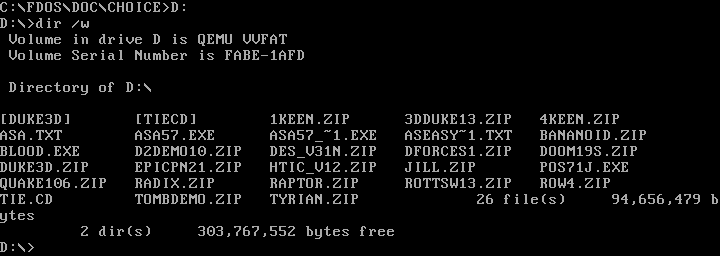

如果你不想显示单个文件大小的额外细节,你可以在 `DIR` 命令中使用 `/w` 选项来显示一个“宽泛”文件夹。注意,Linux 用户使用连字号(`-`)或者双连字号(`--`)来开启命令行选项,而 DOS 使用斜线字符(`/`)。

|

||||

如果你不想显示单个文件大小的额外细节,你可以在 `DIR` 命令中使用 `/w` 选项来显示一个“宽”的目录列表。注意,Linux 用户使用连字号(`-`)或者双连字号(`--`)来开始命令行选项,而 DOS 使用斜线字符(`/`)。

|

||||

|

||||

|

||||

|

||||

@ -64,7 +65,7 @@ FreeDOS 也从 Linux 那借鉴了一些特性:你可以使用 `CD -` 跳转回

|

||||

|

||||

|

||||

|

||||

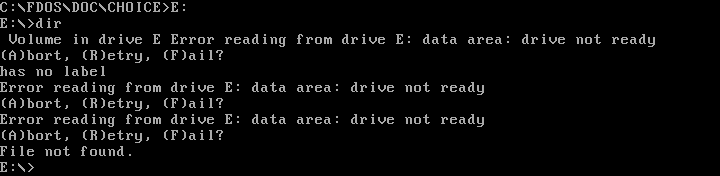

小心不要尝试切换到一个不存在的磁盘。DOS 可能会将它设置为工作磁盘,但是如果你尝试在那做任何事,你将会遇到略微臭名昭著的“退出、重试、失败” DOS 错误信息。

|

||||

小心不要尝试切换到一个不存在的磁盘。DOS 可能会将它设置为工作磁盘,但是如果你尝试在那做任何事,你将会遇到略微臭名昭著的“<ruby>退出、重试、失败<rt>Abort, Retry, Fail</rt></ruby>” DOS 错误信息。

|

||||

|

||||

|

||||

|

||||

@ -86,7 +87,7 @@ FreeDOS 也从 Linux 那借鉴了一些特性:你可以使用 `CD -` 跳转回

|

||||

|

||||

在 FreeDOS 下,针对每个命令你都能够使用 `/?` 参数来获取简要的说明。举例来说,`EDIT /?` 会告诉你编辑器的用法和选项。或者你可以输入 `HELP` 来使用交互式帮助系统。

|

||||

|

||||

像任何一个 DOS 一样,FreeDOS 被认为是一个简单的操作系统。仅使用一些基本命令就可以轻松浏览 DOS 文件系统。那么启动一个 QEMU 会话,安装 FreeDOS,然后尝试一下 DOS 命令行界面。也许它现在看起来就没那么吓人了。

|

||||

像任何一个 DOS 一样,FreeDOS 被认为是一个简单的操作系统。仅使用一些基本命令就可以轻松浏览 DOS 文件系统。那么,启动一个 QEMU 会话,安装 FreeDOS,然后尝试一下 DOS 命令行界面吧。也许它现在看起来就没那么吓人了。

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

@ -96,7 +97,7 @@ via: https://opensource.com/article/18/4/gentle-introduction-freedos

|

||||

作者:[Jim Hall][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[icecoobe](https://github.com/icecoobe)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,50 +1,59 @@

|

||||

显卡工作原理简介

|

||||

极致技术探索:显卡工作原理

|

||||

======

|

||||

|

||||

![AMD-Polaris][1]

|

||||

|

||||

自从 sdfx 推出最初的 Voodoo 加速器以来,不起眼的显卡对你的 PC 是否可以玩游戏起到决定性作用,PC 上任何其它设备都无法与其相比。其它组件当然也很重要,但对于一个拥有 32GB 内存、价值 500 美金的 CPU 和 基于 PCIe 的存储设备的高端 PC,如果使用 10 年前的显卡,都无法以最高分辨率和细节质量运行当前<ruby>最高品质的游戏<rt>AAA titles</rt></ruby>,会发生卡顿甚至无响应。显卡(也常被称为 GPU, 或<ruby>图形处理单元<rt>Graphic Processing Unit</rt></ruby>)对游戏性能影响极大,我们反复强调这一点;但我们通常并不会深入了解显卡的工作原理。

|

||||

自从 3dfx 推出最初的 Voodoo 加速器以来,不起眼的显卡对你的 PC 是否可以玩游戏起到决定性作用,PC 上任何其它设备都无法与其相比。其它组件当然也很重要,但对于一个拥有 32GB 内存、价值 500 美金的 CPU 和基于 PCIe 的存储设备的高端 PC,如果使用 10 年前的显卡,都无法以最高分辨率和细节质量运行当前<ruby>最高品质的游戏<rt>AAA titles</rt></ruby>,会发生卡顿甚至无响应。显卡(也常被称为 GPU,即<ruby>图形处理单元<rt>Graphics Processing Unit</rt></ruby>)对游戏性能影响极大,我们反复强调这一点;但我们通常并不会深入了解显卡的工作原理。

|

||||

|

||||

出于实际考虑,本文将概述 GPU 的上层功能特性,内容包括 AMD 显卡、Nvidia 显卡、Intel 集成显卡以及 Intel 后续可能发布的独立显卡之间共同的部分。也应该适用于 Apple, Imagination Technologies, Qualcomm, ARM 和 其它显卡生产商发布的移动平台 GPU。

|

||||

出于实际考虑,本文将概述 GPU 的上层功能特性,内容包括 AMD 显卡、Nvidia 显卡、Intel 集成显卡以及 Intel 后续可能发布的独立显卡之间共同的部分。也应该适用于 Apple、Imagination Technologies、Qualcomm、ARM 和其它显卡生产商发布的移动平台 GPU。

|

||||

|

||||

### 我们为何不使用 CPU 进行渲染?

|

||||

|

||||

我要说明的第一点是我们为何不直接使用 CPU 完成游戏中的渲染工作。坦率的说,在理论上你确实可以直接使用 CPU 完成<ruby>渲染<rt>rendering</rt></ruby>工作。在显卡没有广泛普及之前,早期的 3D 游戏就是完全基于 CPU 运行的,例如 Ultima Underworld(LCTT 译注:中文名为 _地下创世纪_ ,下文中简称 UU)。UU 是一个很特别的例子,原因如下:与 Doom (LCTT 译注:中文名 _毁灭战士_)相比,UU 具有一个更高级的渲染引擎,全面支持<ruby>向上或向下查找<rt>looking up and down</rt></ruby>以及一些在当时比较高级的特性,例如<ruby>纹理映射<rt>texture mapping</rt></ruby>。但为支持这些高级特性,需要付出高昂的代价,很少有人可以拥有真正能运行起 UU 的 PC。

|

||||

我要说明的第一点是我们为何不直接使用 CPU 完成游戏中的渲染工作。坦率地说,在理论上你确实可以直接使用 CPU 完成<ruby>渲染<rt>rendering</rt></ruby>工作。在显卡没有广泛普及之前,早期的 3D 游戏就是完全基于 CPU 运行的,例如《<ruby>地下创世纪<rt>Ultima Underworld</rt></ruby>》(下文中简称 UU)。UU 是一个很特别的例子,原因如下:与《<ruby>毁灭战士<rt>Doom</rt></ruby>》相比,UU 具有一个更高级的渲染引擎,全面支持“向上或向下看”以及一些在当时比较高级的特性,例如<ruby>纹理映射<rt>texture mapping</rt></ruby>。但为支持这些高级特性,需要付出高昂的代价,很少有人可以拥有真正能运行起 UU 的 PC。

|

||||

|

||||

|

||||

|

||||

对于早期的 3D 游戏,包括 Half Life 和 Quake II 在内的很多游戏,内部包含一个软件渲染器,让没有 3D 加速器的玩家也可以玩游戏。但现代游戏都弃用了这种方式,原因很简单:CPU 是设计用于通用任务的微处理器,意味着缺少 GPU 提供的<ruby>专用硬件<rt>specialized hardware</rt></ruby>和<ruby>功能<rt>capabilities</rt></ruby>。对于 18 年前使用软件渲染的那些游戏,当代 CPU 可以轻松胜任;但对于当代最高品质的游戏,除非明显降低<ruby>景象质量<rt>scene</rt></ruby>、分辨率和各种虚拟特效,否则现有的 CPU 都无法胜任。

|

||||

*地下创世纪,图片来自 [GOG](https://www.gog.com/game/ultima_underworld_1_2)*

|

||||

|

||||

对于早期的 3D 游戏,包括《<ruby>半条命<rt>Half Life</rt></ruby>》和《<ruby>雷神之锤 2<rt>Quake II</rt></ruby>》在内的很多游戏,内部包含一个软件渲染器,让没有 3D 加速器的玩家也可以玩游戏。但现代游戏都弃用了这种方式,原因很简单:CPU 是设计用于通用任务的微处理器,意味着缺少 GPU 提供的<ruby>专用硬件<rt>specialized hardware</rt></ruby>和<ruby>功能<rt>capabilities</rt></ruby>。对于 18 年前使用软件渲染的那些游戏,当代 CPU 可以轻松胜任;但对于当代最高品质的游戏,除非明显降低<ruby>景象质量<rt>scene</rt></ruby>、分辨率和各种虚拟特效,否则现有的 CPU 都无法胜任。

|

||||

|

||||

### 什么是 GPU ?

|

||||

|

||||

GPU 是一种包含一系列专用硬件特性的设备,其中这些特性可以让各种 3D 引擎更好地执行代码,包括<ruby>形状构建<rt>geometry setup</rt></ruby>,纹理映射,<ruby>访存<rt>memory access</rt></ruby>和<ruby>着色器<rt>shaders</rt></ruby>等。3D 引擎的功能特性影响着设计者如何设计 GPU。可能有人还记得,AMD HD5000 系列使用 VLIW5 <ruby>架构<rt>archtecture</rt></ruby>;但在更高端的 HD 6000 系列中使用了 VLIW4 架构。通过 GCN (LCTT 译注:GCN 是 Graphics Core Next 的缩写,字面意思是下一代图形核心,既是若干代微体系结构的代号,也是指令集的名称),AMD 改变了并行化的实现方法,提高了每个时钟周期的有效性能。

|

||||

GPU 是一种包含一系列专用硬件特性的设备,其中这些特性可以让各种 3D 引擎更好地执行代码,包括<ruby>形状构建<rt>geometry setup</rt></ruby>,纹理映射,<ruby>访存<rt>memory access</rt></ruby>和<ruby>着色器<rt>shaders</rt></ruby>等。3D 引擎的功能特性影响着设计者如何设计 GPU。可能有人还记得,AMD HD 5000 系列使用 VLIW5 <ruby>架构<rt>architecture</rt></ruby>;但在更高端的 HD 6000 系列中使用了 VLIW4 架构。通过 GCN (LCTT 译注:GCN 是 Graphics Core Next 的缩写,字面意思是“下一代图形核心”,既是若干代微体系结构的代号,也是指令集的名称),AMD 改变了并行化的实现方法,提高了每个时钟周期的有效性能。

|

||||

|

||||

|

||||

|

||||

*“GPU 革命”的前两块奠基石属于 AMD 和 NV;而“第三个时代”则独属于 AMD。*

|

||||

|

||||

Nvidia 在发布首款 GeForce 256 时(大致对应 Microsoft 推出 DirectX7 的时间点)提出了 GPU 这个术语,这款 GPU 支持在硬件上执行转换和<ruby>光照计算<rt>lighting calculation</rt></ruby>。将专用功能直接集成到硬件中是早期 GPU 的显著技术特点。很多专用功能还在(以一种极为不同的方式)使用,毕竟对于特定类型的工作任务,使用<ruby>片上<rt>on-chip</rt></ruby>专用计算资源明显比使用一组<ruby>可编程单元<rt>programmable cores</rt></ruby>要更加高效和快速。

|

||||

|

||||

GPU 和 CPU 的核心有很多差异,但我们可以按如下方式比较其上层特性。CPU 一般被设计成尽可能快速和高效的执行单线程代码。虽然 <ruby>同时多线程<rt>SMT, Simultaneous multithreading</rt></ruby> 或 <ruby>超线程<rt>Hyper-Threading</rt></ruby>在这方面有所改进,但我们实际上通过堆叠众多高效率的单线程核心来扩展多线程性能。AMD 的 32 核心/64 线程 Epyc CPU 已经是我们能买到的核心数最多的 CPU;相比而言,Nvidia 最低端的 Pascal GPU 都拥有 384 个核心。但相比 CPU 的核心,GPU 所谓的核心是处理能力低得多的的处理单元。

|

||||

GPU 和 CPU 的核心有很多差异,但我们可以按如下方式比较其上层特性。CPU 一般被设计成尽可能快速和高效地执行单线程代码。虽然 <ruby>同时多线程<rt>Simultaneous multithreading</rt></ruby>(SMT)或 <ruby>超线程<rt>Hyper-Threading</rt></ruby>(HT)在这方面有所改进,但我们实际上通过堆叠众多高效率的单线程核心来扩展多线程性能。AMD 的 32 核心/64 线程 Epyc CPU 已经是我们能买到的核心数最多的 CPU;相比而言,Nvidia 最低端的 Pascal GPU 都拥有 384 个核心。但相比 CPU 的核心,GPU 所谓的核心是处理能力低得多的处理单元。

|

||||

|

||||

**注意:** 简单比较 GPU 核心数,无法比较或评估 AMD 与 Nvidia 的相对游戏性能。在同样 GPU 系列(例如 Nvidia 的 GeForce GTX 10 系列,或 AMD 的 RX 4xx 或 5xx 系列)的情况下,更高的 GPU 核心数往往意味着更高的性能。

|

||||

|

||||

你无法只根据核心数比较不同供应商或核心系列的 GPU 之间的性能,这是因为不同的架构对应的效率各不相同。与 CPU 不同,GPU 被设计用于并行计算。AMD 和 Nvidia 在结构上都划分为计算资源<ruby>块<rt>block</rt></ruby>。Nvidia 将这些块称之为<ruby>流处理器<rt>SM, Streaming Multiprocessor</rt></ruby>,而 AMD 则称之为<ruby>计算单元<rt>Compute Unit</rt></ruby>。

|

||||

你无法只根据核心数比较不同供应商或核心系列的 GPU 之间的性能,这是因为不同的架构对应的效率各不相同。与 CPU 不同,GPU 被设计用于并行计算。AMD 和 Nvidia 在结构上都划分为计算资源<ruby>块<rt>block</rt></ruby>。Nvidia 将这些块称之为<ruby>流处理器<rt>Streaming Multiprocessor</rt></ruby>(SM),而 AMD 则称之为<ruby>计算单元<rt>Compute Unit</rt></ruby>(CU)。

|

||||

|

||||

|

||||

|

||||

每个块都包含如下组件:一组核心,一个<ruby>调度器<rt>scheduler</rt></ruby>,一个<ruby>寄存器文件<rt>register file</rt></ruby>,指令缓存,纹理和 L1 缓存以及纹理<ruby>映射单元<rt>mapping units</rt></ruby>。SM/CU 可以被认为是 GPU 中最小的可工作块。SM/CU 没有涵盖全部的功能单元,例如视频解码引擎,实际在屏幕绘图所需的渲染输出,以及与<ruby>板载<rt>onboard</rt></ruby><ruby>显存<rt>VRAM, Video Memory</rt></ruby>通信相关的<ruby>内存接口<rt>memory interfaces</rt></ruby>都不在 SM/CU 的范围内;但当 AMD 提到一个 APU 拥有 8 或 11 个 Vega 计算单元时,所指的是(等价的)<ruby>硅晶块<rt>block of silicon</rt></ruby>数目。如果你查看任意一款 GPU 的模块设计图,你会发现图中 SM/CU 是反复出现很多次的部分。

|

||||

*一个 Pascal 流处理器(SM)。*

|

||||

|

||||

每个块都包含如下组件:一组核心、一个<ruby>调度器<rt>scheduler</rt></ruby>、一个<ruby>寄存器文件<rt>register file</rt></ruby>、指令缓存、纹理和 L1 缓存以及纹理<ruby>映射单元<rt>mapping unit</rt></ruby>。SM/CU 可以被认为是 GPU 中最小的可工作块。SM/CU 没有涵盖全部的功能单元,例如视频解码引擎,实际在屏幕绘图所需的渲染输出,以及与<ruby>板载<rt>onboard</rt></ruby><ruby>显存<rt>Video Memory</rt></ruby>(VRAM)通信相关的<ruby>内存接口<rt>memory interfaces</rt></ruby>都不在 SM/CU 的范围内;但当 AMD 提到一个 APU 拥有 8 或 11 个 Vega 计算单元时,所指的是(等价的)<ruby>硅晶块<rt>block of silicon</rt></ruby>数目。如果你查看任意一款 GPU 的模块设计图,你会发现图中 SM/CU 是反复出现很多次的部分。

|

||||

|

||||

|

||||

|

||||

*这是 Pascal 的全平面图*

|

||||

|

||||

GPU 中的 SM/CU 数目越多,每个时钟周期内可以并行完成的工作也越多。渲染是一种通常被认为是“高度并行”的计算问题,意味着随着核心数增加带来的可扩展性很高。

|

||||

|

||||

当我们讨论 GPU 设计时,我们通常会使用一种形如 4096:160:64 的格式,其中第一个数字代表核心数。在核心系列(如 GTX970/GTX 980/GTX 980 Ti, 如 RX 560/RX 580 等等)一致的情况下,核心数越高,GPU 也就相对更快。

|

||||

当我们讨论 GPU 设计时,我们通常会使用一种形如 4096:160:64 的格式,其中第一个数字代表核心数。在核心系列(如 GTX970/GTX 980/GTX 980 Ti,如 RX 560/RX 580 等等)一致的情况下,核心数越高,GPU 也就相对更快。
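As a small illustration of the `4096:160:64` notation, the sketch below splits such a string into its three fields. The article states that the first number is the core count and the second the texture mapping units; reading the third as render outputs (ROPs) is the usual convention and is treated as an assumption here, as is the helper name `parse_gpu_spec`.

```shell
# parse_gpu_spec: hypothetical helper that splits a "cores:TMUs:ROPs" string.
parse_gpu_spec() {
  # IFS=: applies only to this read, so the colon acts as the field separator
  IFS=: read -r cores tmus rops <<EOF
$1
EOF
  echo "cores=$cores tmus=$tmus rops=$rops"
}

parse_gpu_spec "4096:160:64"
```

Comparing the first field is only meaningful between GPUs of the same family, as the paragraph above notes.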

|

||||

|

||||

### 纹理映射和渲染输出

|

||||

|

||||

GPU 的另外两个主要组件是纹理映射单元和渲染输出。设计中的纹理映射单元数目决定了最大的<ruby>纹素<rt>texel</rt></ruby>输出以及可以多快的处理并将纹理映射到对象上。早期的 3D 游戏很少用到纹理,这是因为绘制 3D 多边形形状的工作有较大的难度。纹理其实并不是 3D 游戏必须的,但不使用纹理的现代游戏屈指可数。

|

||||

|

||||

GPU 中的纹理映射单元数目用 4096:160:64 指标中的第二个数字表示。AMD,Nvidia 和 Intel 一般都等比例变更指标中的数字。换句话说,如果你找到一个指标为 4096:160:64 的 GPU,同系列中不会出现指标为 4096:320:64 的 GPU。纹理映射绝对有可能成为游戏的瓶颈,但产品系列中次高级别的 GPU 往往提供更多的核心和纹理映射单元(是否拥有更高的渲染输出单元取决于 GPU 系列和显卡的指标)。

|

||||

GPU 中的纹理映射单元数目用 4096:160:64 指标中的第二个数字表示。AMD、Nvidia 和 Intel 一般都等比例变更指标中的数字。换句话说,如果你找到一个指标为 4096:160:64 的 GPU,同系列中不会出现指标为 4096:320:64 的 GPU。纹理映射绝对有可能成为游戏的瓶颈,但产品系列中次高级别的 GPU 往往提供更多的核心和纹理映射单元(是否拥有更高的渲染输出单元取决于 GPU 系列和显卡的指标)。

|

||||

|

||||

<ruby>渲染输出单元<rt>Render outputs, ROPs</rt></ruby>(有时也叫做<ruby>光栅操作管道<rt>raster operations pipelines</rt></ruby>是 GPU 输出汇集成图像的场所,图像最终会在显示器或电视上呈现。渲染输出单元的数目乘以 GPU 的时钟频率决定了<ruby>像素填充速率<rt>pixel fill rate</rt></ruby>。渲染输出单元数目越多意味着可以同时输出的像素越多。渲染输出单元还处理<ruby>抗锯齿<rt>antialiasing</rt></ruby>,启用抗锯齿(尤其是<ruby>超级采样<rt>supersampled</rt></ruby>抗锯齿)会导致游戏填充速率受限。

|

||||

<ruby>渲染输出单元<rt>Render outputs</rt></ruby>(ROP),有时也叫做<ruby>光栅操作管道<rt>raster operations pipelines</rt></ruby>是 GPU 输出汇集成图像的场所,图像最终会在显示器或电视上呈现。渲染输出单元的数目乘以 GPU 的时钟频率决定了<ruby>像素填充速率<rt>pixel fill rate</rt></ruby>。渲染输出单元数目越多意味着可以同时输出的像素越多。渲染输出单元还处理<ruby>抗锯齿<rt>antialiasing</rt></ruby>,启用抗锯齿(尤其是<ruby>超级采样<rt>supersampled</rt></ruby>抗锯齿)会导致游戏填充速率受限。

|

||||
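The fill-rate relationship described above (render outputs multiplied by clock frequency) can be sketched as simple arithmetic. The 64 ROPs come from the `4096:160:64` example; the 1500 MHz clock is a made-up figure for illustration, and the result is a theoretical peak that assumes one pixel per ROP per clock.

```shell
# pixel_fill_rate: hypothetical helper; $1 = number of ROPs, $2 = GPU clock in MHz.
pixel_fill_rate() {
  # ROPs x MHz gives megapixels per second (theoretical peak)
  echo $(( $1 * $2 ))
}

pixel_fill_rate 64 1500   # assumed clock of 1500 MHz; prints the rate in Mpixel/s
```

Real games rarely reach this peak, since antialiasing and memory bandwidth limit the achievable rate.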

|

||||

### 显存带宽与显存容量

|

||||

|

||||

@ -52,11 +61,11 @@ GPU 中的纹理映射单元数目用 4096:160:64 指标中的第二个数字表

|

||||

|

||||

在某些情况下,显存带宽不足会成为 GPU 的显著瓶颈。以 Ryzen 5 2400G 为例的 AMD APU 就是严重带宽受限的,以至于提高 DDR4 的时钟频率可以显著提高整体性能。导致瓶颈的显存带宽阈值,也与游戏引擎和游戏使用的分辨率相关。

|

||||

|

||||

板载内存大小也是 GPU 的重要指标。如果按指定细节级别或分辨率运行所需的显存量超过了可用的资源量,游戏通常仍可以运行,但会使用 CPU 的主存存储额外的纹理数据;而从 DRAM 中提取数据比从板载显存中提取数据要慢得多。这会导致游戏在板载的快速访问内存池和系统内存中共同提取数据时出现明显的卡顿。

|

||||

板载内存大小也是 GPU 的重要指标。如果按指定细节级别或分辨率运行所需的显存量超过了可用的资源量,游戏通常仍可以运行,但会使用 CPU 的主存来存储额外的纹理数据;而从 DRAM 中提取数据比从板载显存中提取数据要慢得多。这会导致游戏在板载的快速访问内存池和系统内存中共同提取数据时出现明显的卡顿。

|

||||

|

||||

有一点我们需要留意,GPU 生产厂家通常为一款低端或中端 GPU 配置比通常更大的显存,这是他们为产品提价的一种常用手段。很难说大显存是否更具有吸引力,毕竟需要具体问题具体分析。大多数情况下,用更高的价格购买一款仅显存更高的显卡是不划算的。经验规律告诉我们,低端显卡遇到显存瓶颈之前就会碰到其它瓶颈。如果存在疑问,可以查看相关评论,例如 4G 版本或其它数目的版本是否性能超过 2G 版本。更多情况下,如果其它指标都相同,购买大显存版本并不值得。

|

||||

有一点我们需要留意,GPU 生产厂家通常为一款低端或中端 GPU 配置比通常更大的显存,这是他们为产品提价的一种常用手段。很难说大显存是否更具有吸引力,毕竟需要具体问题具体分析。大多数情况下,用更高的价格购买一款仅是显存更高的显卡是不划算的。经验规律告诉我们,低端显卡遇到显存瓶颈之前就会碰到其它瓶颈。如果存在疑问,可以查看相关评论,例如 4G 版本或其它数目的版本是否性能超过 2G 版本。更多情况下,如果其它指标都相同,购买大显存版本并不值得。

|

||||

|

||||

查看我们的[极致技术讲解][2]系列,深入了解更多当前最热的技术话题。

|

||||

查看我们的[极致技术探索][2]系列,深入了解更多当前最热的技术话题。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -65,7 +74,7 @@ via: https://www.extremetech.com/gaming/269335-how-graphics-cards-work

|

||||

作者:[Joel Hruska][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[pinewall](https://github.com/pinewall)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,5 +1,6 @@

|

||||

查看一个归档或压缩文件的内容而无需解压它

|

||||

======

|

||||

|

||||

|

||||

|

||||

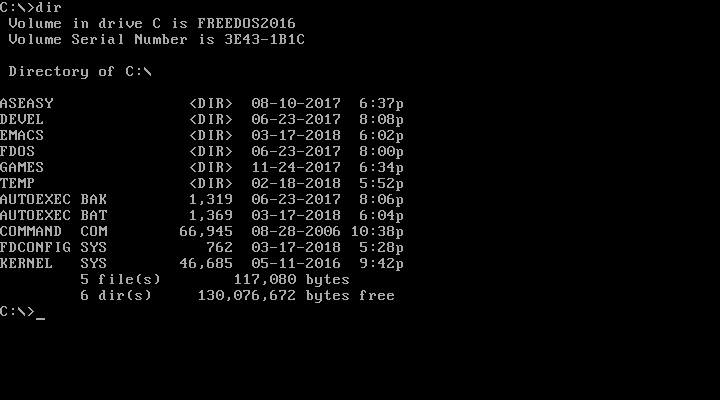

在本教程中,我们将学习如何在类 Unix 系统中查看一个归档或者压缩文件的内容而无需实际解压它。在深入之前,让我们先厘清归档和压缩文件的概念,它们之间有显著不同。归档是将多个文件或者目录归并到一个文件的过程,因此这个生成的文件是没有被压缩过的。而压缩则是结合多个文件或者目录到一个文件并最终压缩这个文件的方法。归档文件不是一个压缩文件,但压缩文件可以是一个归档文件,清楚了吗?好,那就让我们进入今天的主题。

|

||||

@ -8,44 +9,44 @@

|

||||

|

||||

得益于 Linux 社区,有很多命令行工具可以来达成上面的目标。下面就让我们来看看使用它们的一些示例。

|

||||

|

||||

**1 使用 Vim 编辑器**

|

||||

#### 1、使用 vim 编辑器

|

||||

|

||||

Vim 不只是一个编辑器,使用它我们可以干很多事情。下面的命令展示的是在没有解压的情况下使用 Vim 查看一个压缩的归档文件的内容:

|

||||

vim 不只是一个编辑器,使用它我们可以干很多事情。下面的命令展示的是在没有解压的情况下使用 vim 查看一个压缩的归档文件的内容:

|

||||

|

||||

```

|

||||

$ vim ostechnix.tar.gz

|

||||

|

||||

```

|

||||

|

||||

![][2]

|

||||

|

||||

你甚至还可以浏览归档文件的内容,打开其中的文本文件(假如有的话)。要打开一个文本文件,只需要用方向键将鼠标的游标放置到文件的前面,然后敲 ENTER 键来打开它。

|

||||

|

||||

**2 使用 Tar 命令**

|

||||

#### 2、使用 tar 命令

|

||||

|

||||

为了列出一个 tar 归档文件的内容,可以运行:

|

||||

|

||||

```

|

||||

$ tar -tf ostechnix.tar

|

||||

ostechnix/

|

||||

ostechnix/image.jpg

|

||||

ostechnix/file.pdf

|

||||

ostechnix/song.mp3

|

||||

|

||||

```

|

||||

|

||||

或者使用 **-v** 选项来查看归档文件的具体属性,例如它的文件所有者、属组、创建日期等等。

|

||||

或者使用 `-v` 选项来查看归档文件的具体属性,例如它的文件所有者、属组、创建日期等等。

|

||||

|

||||

```

|

||||

$ tar -tvf ostechnix.tar

|

||||

drwxr-xr-x sk/users 0 2018-07-02 19:30 ostechnix/

|

||||

-rw-r--r-- sk/users 53632 2018-06-29 15:57 ostechnix/image.jpg

|

||||

-rw-r--r-- sk/users 156831 2018-06-04 12:37 ostechnix/file.pdf

|

||||

-rw-r--r-- sk/users 9702219 2018-04-25 20:35 ostechnix/song.mp3

|

||||

|

||||

```
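The listings above use an uncompressed `.tar` file. As a minimal sketch, the same approach works for a gzip-compressed archive by adding `-z`; the scratch directory and the file names `demo` and `note.txt` below are placeholders created only for this example.

```shell
# Build a tiny gzip-compressed archive in a scratch directory.
workdir=$(mktemp -d)
mkdir "$workdir/demo"
echo "hello" > "$workdir/demo/note.txt"
tar -czf "$workdir/demo.tar.gz" -C "$workdir" demo

# -t lists the members, -z filters through gzip, -f names the archive.
# Nothing is extracted to disk.
tar -tzf "$workdir/demo.tar.gz"
```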

|

||||

|

||||

**3 使用 Rar 命令**

|

||||

#### 3、使用 rar 命令

|

||||

|

||||

要查看一个 rar 文件的内容,只需要执行:

|

||||

|

||||

```

|

||||

$ rar v ostechnix.rar

|

||||

|

||||

@ -62,12 +63,12 @@ Attributes Size Packed Ratio Date Time Checksum Name

|

||||

-rw-r--r-- 9702219 9658527 99% 2018-04-25 20:35 DD875AC4 ostechnix/song.mp3

|

||||

----------- --------- -------- ----- ---------- ----- -------- ----

|

||||

9912682 9849787 99% 3

|

||||

|

||||

```

|

||||

|

||||

**4 使用 Unrar 命令**

|

||||

#### 4、使用 unrar 命令

|

||||

|

||||

你也可以使用带有 `l` 选项的 `unrar` 来做到与上面相同的事情,展示如下:

|

||||

|

||||

你也可以使用带有 **l** 选项的 **Unrar** 来做到与上面相同的事情,展示如下:

|

||||

```

|

||||

$ unrar l ostechnix.rar

|

||||

|

||||

@ -83,23 +84,23 @@ Attributes Size Date Time Name

|

||||

-rw-r--r-- 9702219 2018-04-25 20:35 ostechnix/song.mp3

|

||||

----------- --------- ---------- ----- ----

|

||||

9912682 3

|

||||

|

||||

```

|

||||

|

||||

**5 使用 Zip 命令**

|

||||

#### 5、使用 zip 命令

|

||||

|

||||

为了查看一个 zip 文件的内容而无需解压它,可以使用下面的 `zip` 命令:

|

||||

|

||||

为了查看一个 zip 文件的内容而无需解压它,可以使用下面的 **zip** 命令:

|

||||

```

|

||||

$ zip -sf ostechnix.zip

|

||||

Archive contains:

|

||||

Life advices.jpg

|

||||

Total 1 entries (597219 bytes)

|

||||

|

||||

```

|

||||

|

||||

**6. 使用 Unzip 命令**

|

||||

#### 6、使用 unzip 命令

|

||||

|

||||

你也可以像下面这样使用 `-l` 选项的 `unzip` 命令来呈现一个 zip 文件的内容:

|

||||

|

||||

你也可以像下面这样使用 **-l** 选项的 **Unzip** 命令来呈现一个 zip 文件的内容:

|

||||

```

|

||||

$ unzip -l ostechnix.zip

|

||||

Archive: ostechnix.zip

|

||||

@ -108,10 +109,9 @@ Length Date Time Name

|

||||

597219 2018-04-09 12:48 Life advices.jpg

|

||||

--------- -------

|

||||

597219 1 file

|

||||

|

||||

```

|

||||

|

||||

**7 使用 Zipinfo 命令**

|

||||

#### 7、使用 zipinfo 命令

|

||||

|

||||

```

|

||||

$ zipinfo ostechnix.zip

|

||||

@ -119,43 +119,42 @@ Archive: ostechnix.zip

|

||||

Zip file size: 584859 bytes, number of entries: 1

|

||||

-rw-r--r-- 6.3 unx 597219 bx defN 18-Apr-09 12:48 Life advices.jpg

|

||||

1 file, 597219 bytes uncompressed, 584693 bytes compressed: 2.1%

|

||||

|

||||

```

|

||||

|

||||

如你所见,上面的命令展示了一个 zip 文件的内容、它的权限、创建日期和压缩百分比等等信息。

|

||||

|

||||

**8. 使用 Zcat 命令**

|

||||

#### 8、使用 zcat 命令

|

||||

|

||||

要查看一个压缩的归档文件的内容而不解压它,可以使用 `zcat` 命令:

|

||||

|

||||

要一个压缩的归档文件的内容而不解压它,使用 **zcat** 命令,我们可以得到:

|

||||

```

|

||||

$ zcat ostechnix.tar.gz

|

||||

|

||||

```

|

||||

|

||||

zcat 和 `gunzip -c` 命令相同。所以你可以使用下面的命令来查看归档或者压缩文件的内容:

|

||||

`zcat` 和 `gunzip -c` 命令相同。所以你可以使用下面的命令来查看归档或者压缩文件的内容:

|

||||

|

||||

```

|

||||

$ gunzip -c ostechnix.tar.gz

|

||||

|

||||

```
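One caveat worth sketching: on a `.tar.gz` file, `gunzip -c` writes the decompressed tar stream itself to the terminal, not a file listing. Piping that stream into `tar -tf -` gives a readable list without writing anything to disk. The archive and file names below are placeholders created for the example.

```shell
# Create a small compressed archive to work with.
workdir=$(mktemp -d)
echo "hello" > "$workdir/note.txt"
tar -czf "$workdir/demo.tar.gz" -C "$workdir" note.txt

# gunzip -c decompresses to stdout; tar -tf - reads the tar stream from stdin and lists it.
listing=$(gunzip -c "$workdir/demo.tar.gz" | tar -tf -)
echo "$listing"
```

For a single gzip-compressed text file (not a tar archive), plain `gunzip -c` is enough to read the text.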

|

||||

|

||||

**9. 使用 Zless 命令**

|

||||

#### 9、使用 zless 命令

|

||||

|

||||

要使用 zless 命令来查看一个归档或者压缩文件的内容,只需:

|

||||

|

||||

要使用 Zless 命令来查看一个归档或者压缩文件的内容,只需:

|

||||

```

|

||||

$ zless ostechnix.tar.gz

|

||||

|

||||

```

|

||||

|

||||

这个命令类似于 `less` 命令,它将一页一页地展示其输出。

|

||||

|

||||

**10. 使用 Less 命令**

|

||||

#### 10、使用 less 命令

|

||||

|

||||

可能你已经知道 **less** 命令可以打开文件来交互式地阅读它,并且它支持滚动和搜索。

|

||||

可能你已经知道 `less` 命令可以打开文件来交互式地阅读它,并且它支持滚动和搜索。

|

||||

|

||||

运行下面的命令来使用 `less` 命令查看一个归档或者压缩文件的内容:

|

||||

|

||||

运行下面的命令来使用 less 命令查看一个归档或者压缩文件的内容:

|

||||

```

|

||||

$ less ostechnix.tar.gz

|

||||

|

||||

```

|

||||

|

||||

上面便是全部的内容了。现在你知道了如何在 Linux 中使用各种命令查看一个归档或者压缩文件的内容了。希望本文对你有用。更多好的内容将呈现给大家,希望继续关注我们!

|

||||

@ -169,7 +168,7 @@ via: https://www.ostechnix.com/how-to-view-the-contents-of-an-archive-or-compres

|

||||

作者:[SK][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[FSSlc](https://github.com/FSSlc)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,17 +1,19 @@

|

||||

MPV 播放器:Linux 下的极简视频播放器

|

||||

======

|

||||

MPV 是一个开源的,跨平台视频播放器,带有极简的 GUI 界面以及丰富的命令行控制。

|

||||

|

||||

> MPV 是一个开源的,跨平台视频播放器,带有极简的 GUI 界面以及丰富的命令行控制。

|

||||

|

||||

VLC 可能是 Linux 或者其他平台下最好的视频播放器。我已经使用 VLC 很多年了,它现在仍是我最喜欢的播放器。

|

||||

|

||||

不过最近,我倾向于使用简洁界面的极简应用。这也是我偶然发现 MPV 的原因。我太喜欢这个软件,并把它加入了 [Ubuntu 最佳应用][1]列表里。

|

||||

|

||||

[MPV][2] 是一个开源的视频播放器,有 Linux,Windows,MacOS,BSD 以及 Android 等平台下的版本。它实际上是从 [MPlayer][3] 分支出来的。

|

||||

[MPV][2] 是一个开源的视频播放器,有 Linux、Windows、MacOS、BSD 以及 Android 等平台下的版本。它实际上是从 [MPlayer][3] 分支出来的。

|

||||

|

||||

它的图形界面只有必须的元素而且非常整洁。

|

||||

|

||||

![MPV 播放器在 Linux 下的界面][4]

|

||||

MPV 播放器

|

||||

|

||||

*MPV 播放器*

|

||||

|

||||

### MPV 的功能

|

||||

|

||||

@ -24,20 +26,18 @@ MPV 有标准播放器该有的所有功能。你可以播放各种视频,以

|

||||

* 可以通过命令行播放 YouTube 等流媒体视频。

|

||||

* 命令行模式的 MPV 可以嵌入到网页或其他应用中。

|

||||

|

||||

|

||||

|

||||

尽管 MPV 播放器只有极简的界面以及有限的选项,但请不要怀疑它的功能。它主要的能力都来自命令行版本。

|

||||

|

||||

只需要输入命令 mpv --list-options,然后你会看到它所提供的 447 个不同的选项。但是本文不会介绍 MPV 的高级应用。让我们看看作为一个普通的桌面视频播放器,它能有多么优秀。

|

||||

只需要输入命令 `mpv --list-options`,然后你会看到它所提供的 447 个不同的选项。但是本文不会介绍 MPV 的高级应用。让我们看看作为一个普通的桌面视频播放器,它能有多么优秀。

|

||||

|

||||

### 在 Linux 上安装 MPV

|

||||

|

||||

MPV 是一个常用应用,加入了大多数 Linux 发行版默认仓库里。在软件中心里搜索一下就可以了。

|

||||

|

||||

我可以确认在 Ubuntu 的软件中心里能找到。你可以在里面选择安装,或者通过下面的命令安装:

|

||||

|

||||

```

|

||||

sudo apt install mpv

|

||||

|

||||

```

|

||||

|

||||

你可以在 [MPV 网站][5]上查看其他平台的安装指引。

|

||||

@ -47,13 +47,14 @@ sudo apt install mpv

|

||||

在安装完成以后,你可以通过鼠标右键点击视频文件,然后在列表里选择 MPV 来播放。

|

||||

|

||||

![MPV 播放器界面][6]

|

||||

MPV 播放器界面

|

||||

|

||||

*MPV 播放器界面*

|

||||

|

||||

整个界面只有一个控制面板,只有在鼠标移动到播放窗口上才会显示出来。控制面板上有播放/暂停,选择视频轨道,切换音轨,字幕以及全屏等选项。

|

||||

|

||||

MPV 的默认大小取决于你所播放视频的画质。比如一个 240p 的视频,播放窗口会比较小,而在全高清显示器上播放 1080p 视频时,会几乎占满整个屏幕。不管视频大小,你总是可以在播放窗口上双击鼠标切换成全屏。

|

||||

|

||||

#### The subtitle struggle

|

||||

#### 字幕

|

||||

|

||||

如果你的视频带有字幕,MPV 会[自动加载字幕][7],你也可以选择关闭。不过,如果你想使用其他外挂字幕文件,不能直接在播放器界面上操作。

|

||||

|

||||

@ -66,17 +67,18 @@ MPV 的默认大小取决于你所播放视频的画质。比如一个 240p 的

|

||||

要播放在线视频,你只能使用命令行模式的 MPV。

|

||||

|

||||

打开终端窗口,然后用类似下面的方式来播放:

|

||||

|

||||

```

|

||||

mpv <URL_of_Video>

|

||||

|

||||

```
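If this command is going into a script, a small guard avoids a confusing error on machines where MPV is missing. The wrapper name `play_url` is an assumption made for this sketch; it only checks that an `mpv` command exists and then passes the URL through unchanged.

```shell
# play_url: hypothetical wrapper that plays a URL with mpv only when mpv is available.
play_url() {
  if command -v mpv >/dev/null 2>&1; then
    mpv "$1"
  else
    echo "mpv not found; install it first (for example: sudo apt install mpv)" >&2
    return 127
  fi
}
```

Usage would be `play_url <URL_of_Video>`, with the same placeholder as in the command above.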

|

||||

|

||||

![在 Linux 桌面上使用 MPV 播放 YouTube 视频][8]

|

||||

在 Linux 桌面上使用 MPV 播放 YouTube 视频

|

||||

|

||||

*在 Linux 桌面上使用 MPV 播放 YouTube 视频*

|

||||

|

||||

用 MPV 播放 YouTube 视频的体验不怎么好。它总是在缓冲缓冲,有点烦。

|

||||

|

||||

#### 是否需要安装 MPV 播放器?

|

||||

### 是否安装 MPV 播放器?

|

||||

|

||||

这个看你自己。如果你想体验各种应用,大可以试试 MPV。否则,默认的视频播放器或者 VLC 就足够了。

|

||||

|

||||

@ -95,7 +97,7 @@ via: https://itsfoss.com/mpv-video-player/

|

||||

作者:[Abhishek Prakash][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[zpl1025](https://github.com/zpl1025)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,95 @@

|

||||

How blockchain can complement open source

|

||||

======

|

||||

|

||||

|

||||

|

||||

[The Cathedral and The Bazaar][1] is a classic open source story, written 20 years ago by Eric Steven Raymond. In the story, Eric describes a revolutionary new software development model in which complex software projects are built with little or no central management. This new model is open source.

|

||||

|

||||

Eric's story compares two models:

|

||||

|

||||

* The classic model (represented by the cathedral), in which software is crafted by a small group of individuals in a closed and controlled environment through slow and stable releases.

|

||||

* And the new model (represented by the bazaar), in which software is crafted in an open environment where individuals can participate freely but still produce a stable and coherent system.

|

||||

|

||||

|

||||

|

||||

Some of the reasons open source is so successful can be traced back to the founding principles Eric describes. Releasing early, releasing often, and accepting the fact that many heads are inevitably better than one allows open source projects to tap into the world’s pool of talent (and few companies can match that using the closed source model).

|

||||

|

||||

Two decades after Eric's reflective analysis of the hacker community, we see open source becoming dominant. It is no longer a model only for scratching a developer’s personal itch, but instead, the place where innovation happens. Even the world's [largest][2] software companies are transitioning to this model in order to continue dominating.

|

||||

|

||||

### A barter system

|

||||

|

||||

If we look closely at how the open source model works in practice, we realize that it is a closed system, exclusive only to open source developers and techies. The only way to influence the direction of a project is by joining the open source community, understanding the written and the unwritten rules, learning how to contribute, the coding standards, etc., and doing it yourself.

|

||||

|

||||

This is how the bazaar works, and it is where the barter system analogy comes from. A barter system is a method of exchanging services and goods in return for other services and goods. In the bazaar—where the software is built—that means in order to take something, you must also be a producer yourself and give something back in return. And that is by exchanging your time and knowledge for getting something done. A bazaar is a place where open source developers interact with other open source developers and produce open source software the open source way.

|

||||

|

||||

The barter system is a great step forward and an evolution from the state of self-sufficiency where everybody must be a jack of all trades. The bazaar (open source model) using the barter system allows people with common interests and different skills to gather, collaborate, and create something that no individual can create on their own. The barter system is simple and lacks complex problems of the modern monetary systems, but it also has some limitations, such as:

|

||||

|

||||

* Lack of divisibility: In the absence of a common medium of exchange, a large indivisible commodity/value cannot be exchanged for a smaller commodity/value. For example, if you want to make even a small change to an open source project, you may still face a high entry barrier.

|

||||

* Storing value: If a project is important to your company, you may want to have a large investment/commitment in it. But since it is a barter system among open source developers, the only way to have a strong say is by employing many open source committers, and that is not always possible.

|

||||

* Transferring value: If you have invested in a project (trained employees, hired open source developers) and want to move focus to another project, it is not possible to transfer expertise, reputation, and influence quickly.

|

||||

* Temporal decoupling: The barter system does not provide a good mechanism for deferred or advance commitments. In the open source world, that means a user cannot express commitment or interest in a project in a measurable way in advance, or continuously for future periods.

|

||||

|

||||

|

||||

|

||||

Below, we will explore how to address these limitations using the back door to the bazaar.

|

||||

|

||||

### A currency system

|

||||

|

||||

People hang out at the bazaar for different reasons: Some are there to learn, some are there to scratch a personal developer's itch, and some work for large software farms. The only way to have a say in the bazaar is to become part of the open source community and join the barter system, so to gain credibility in the open source world, many large software companies employ these developers and pay them money. This represents the use of a currency system to influence the bazaar. Open source is no longer only for scratching the personal developer itch. It also accounts for a significant part of overall software production worldwide, and there are many who want to have an influence.

|

||||

|

||||

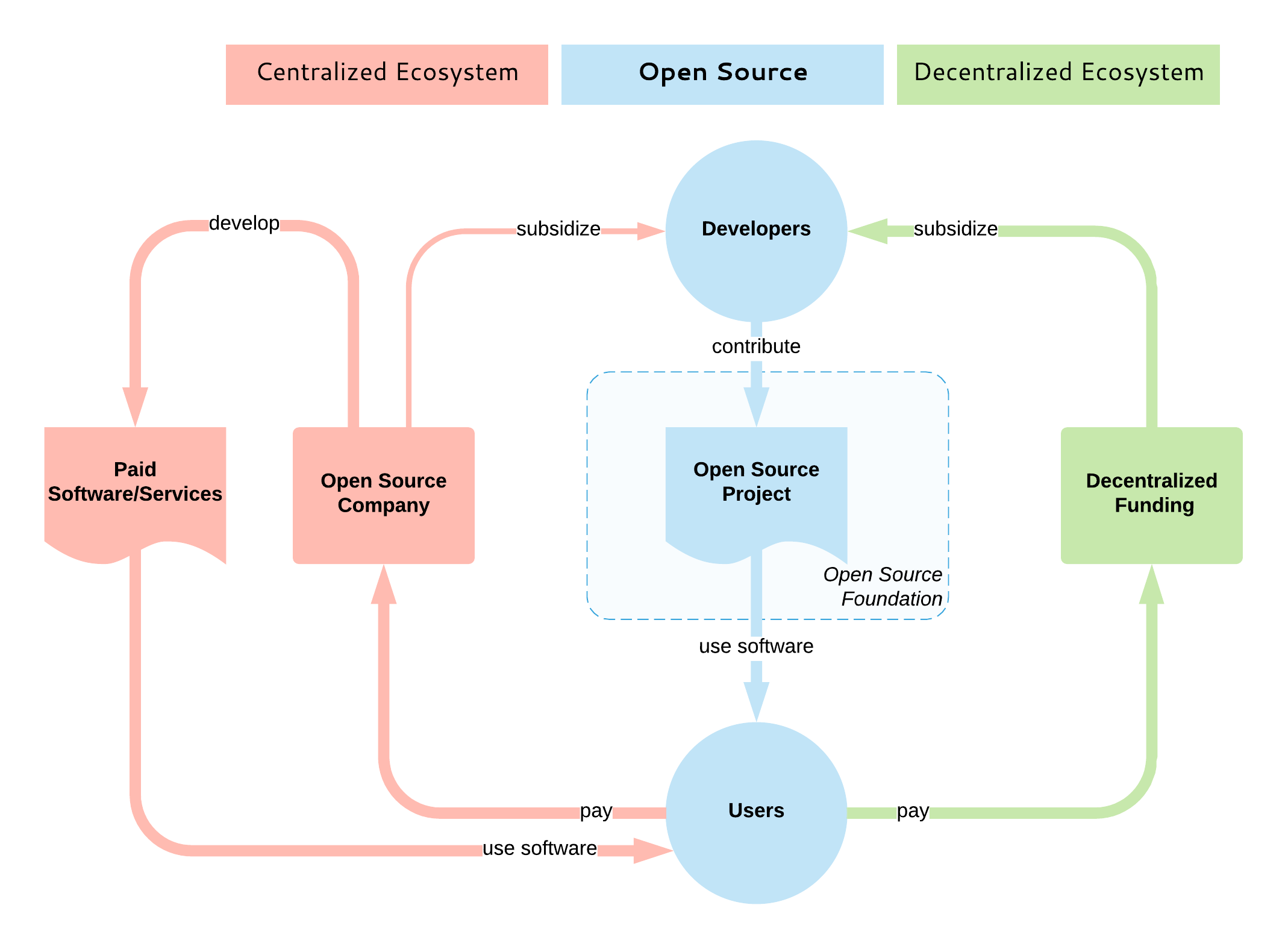

Open source sets the guiding principles through which developers interact and build a coherent system in a distributed way. It dictates how a project is governed, how software is built, and how the output is distributed to users. It is an open consensus model that lets decentralized entities build quality software together. But the open source model does not cover how open source is subsidized. Whether it is sponsored, directly or indirectly, through intrinsic or extrinsic motivators is irrelevant to the bazaar.

|

||||

|

||||

|

||||

|

||||

Currently, there is no equivalent of the decentralized open source development model for subsidization purposes. The majority of open source subsidization is centralized, where typically one company dominates a project by employing the majority of the open source developers of that project. And to be honest, this is currently the best-case scenario, as it guarantees that the developers will be paid for a long period and the project will continue to flourish.

|

||||

|

||||

There are also exceptions to the project monopoly scenario: For example, some Cloud Native Computing Foundation projects are developed by a large number of competing companies. Also, the Apache Software Foundation aims for its projects not to be dominated by a single vendor by encouraging diverse contributors, but most of the popular projects, in reality, are still single-vendor projects.

|

||||

|

||||

What we are missing is an open and decentralized model that works like the bazaar, without central coordination and ownership, where consumers (open source users) and producers (open source developers) interact with each other, driven by market forces and open source value. In order to complement open source, such a model must also be open and decentralized, and this is why I think blockchain technology would [fit best here][3].

|

||||

|

||||

Most of the existing blockchain (and non-blockchain) platforms that aim to subsidize open source development are targeting primarily bug bounties, small and piecemeal tasks. A few also focus on funding new open source projects. But not many aim to provide mechanisms for sustaining continued development of open source projects—basically, a system that would emulate the behavior of an open source service provider company, or open core, open source-based SaaS product company: ensuring developers get continued and predictable incentives and guiding the project development based on the priorities of the incentivizers; i.e., the users. Such a model would address the limitations of the barter system listed above:

|

||||

|

||||

* Allow divisibility: If you want something small fixed, you can pay a small amount rather than the full premium of becoming an open source developer for a project.

|

||||

* Storing value: You can invest a large amount into a project and ensure both its continued development and that your voice is heard.

|

||||

* Transferring value: At any point, you can stop investing in the project and move funds into other projects.

|

||||

* Temporal decoupling: Allow regular recurring payments and subscriptions.

|

||||

|

||||

|

||||

|

||||

There would also be other benefits, purely from the fact that such a blockchain-based system is transparent and decentralized: quantifying a project’s value/usefulness based on its users’ commitment, open roadmap commitment, decentralized decision making, etc.

|

||||

|

||||

### Conclusion

|

||||

|

||||

On the one hand, we see large companies hiring open source developers and acquiring open source startups and even foundational platforms (such as Microsoft buying GitHub). Many, if not most, long-running successful open source projects are centralized around a single vendor. The significance of open source and its centralization is a fact.

|

||||

|

||||

On the other hand, the challenges around [sustaining open source][4] software are becoming more apparent, and many are investigating this space and its foundational issues more deeply. There are a few projects with high visibility and a large number of contributors, but there are also many other still-important projects that lack enough contributors and maintainers.

|

||||

|

||||

There are [many efforts][3] trying to address the challenges of open source through blockchain. These projects should improve the transparency, decentralization, and subsidization and establish a direct link between open source users and developers. This space is still very young, but it is progressing quickly, and with time, the bazaar is going to have a cryptocurrency system.

|

||||

|

||||

Given enough time and adequate technology, decentralization is happening at many levels:

|

||||

|

||||

* The internet is a decentralized medium that has unlocked the world’s potential for sharing and acquiring knowledge.
* Open source is a decentralized collaboration model that has unlocked the world’s potential for innovation.
* Similarly, blockchain can complement open source and become the decentralized open source subsidization model.

|

||||

|

||||

|

||||

|

||||

Follow me on [Twitter][5] for other posts in this space.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/9/barter-currency-system

|

||||

|

||||

Author: [Bilgin Ibryam][a]

Topic selection: [lujun9972](https://github.com/lujun9972)

Translator: [translator ID](https://github.com/译者ID)

Proofreader: [proofreader ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

[a]: https://opensource.com/users/bibryam
[1]: http://catb.org/
[2]: http://oss.cash/
[3]: https://opensource.com/article/18/8/open-source-tokenomics
[4]: https://www.youtube.com/watch?v=VS6IpvTWwkQ
[5]: http://twitter.com/bibryam

|

||||

48

sources/talk/20180904 Why schools of the future are open.md

Normal file


@ -0,0 +1,48 @@

|

||||

Why schools of the future are open

|

||||

======

|

||||

|

||||

|

||||

|

||||

Someone recently asked me what education will look like in the modern era. My response: Much like it has for the last 100 years. How's that for a pessimistic view of our education system?

|

||||

|

||||

It's not a pessimistic view as much as it is a pragmatic one. Anyone who spends time in schools could walk away feeling similarly, given that the ways we teach young people are stubbornly resistant to change. As schools in the United States begin a new year, most students are returning to classrooms where desks are lined up in rows, the instructional environment is primarily teacher-centred, progress is measured by Carnegie units and A-F grading, and collaboration is often considered cheating.

|

||||

|

||||

Were we able to point to evidence that this industrialized model was producing the kind of results that are required, where every child is given the personal attention needed to grow a love of learning and develop the skills needed to thrive in today's innovation economy, then we could very well be satisfied with the status quo. But any honest and objective look at current metrics speaks to the need for fundamental change.

|

||||

|

||||

But my view isn't a pessimistic one. In fact, it's quite optimistic.

|

||||

|

||||

For as easy as it is to dwell on what's wrong with our current education model, I also know of example after example of where education stakeholders are willing to step out of what's comfortable and challenge this system that is so immune to change. Teachers are demanding more collaboration with peers and more ways to be open and transparent about prototyping ideas that lead to true innovation for students—not just repackaging of traditional methods with technology. Administrators are enabling deeper, more connected learning to real-world applications through community-focused, project-based learning—not just jumping through hoops of "doing projects" in isolated classrooms. And parents are demanding that the joy and wonder of learning return to the culture of their schools that have been corrupted by an emphasis on test prep.

|

||||

|

||||

These and other types of cultural changes are never easy, especially in an environment so reluctant to take risks in the face of political backlash from any dip in test scores (regardless of statistical significance). So why am I optimistic that we are approaching a tipping point where the type of changes we desperately need can indeed overcome the inertia that has thwarted them for too long?

|

||||

|

||||

Because there is something else in the water at this point in our modern era that was not present before: an ethos of openness, catalyzed by digital technology.

|

||||

|

||||

Think for a moment: If you need to learn how to speak basic French for an upcoming trip to France, where do you turn? You could sign up for a course at a local community college or check out a book from the library, but in all likelihood, you'll access a free online video and learn the basics you will need for your trip. Never before in human history has free, on-demand learning been so accessible. In fact, one can sign up right now for a free, online course from MIT on "[Special Topics in Mathematics with Applications: Linear Algebra and the Calculus of Variations][1]." Sign me up!

|

||||

|

||||

Why do schools such as MIT, Stanford, and Harvard offer free access to their courses? Why are people and corporations willing to openly share what was once tightly controlled intellectual property? Why are people all over the planet willing to invest their time—for no pay—to help with citizen science projects?

|

||||

|

||||

There is something else in the water at this point in our modern era that was not present before: an ethos of openness, catalyzed by digital technology.

|

||||

|

||||

In his wonderful book [Open: How We'll Work Live and Learn in the Future][2], author David Price clearly describes how informal, social learning is becoming the new norm of learning, especially among young people accustomed to being able to get the "just in time" knowledge they need. Through a series of case studies, Price paints a clear picture of what happens when traditional institutions don't adapt to this new reality and thus become less and less relevant. That's the missing ingredient that has the crowdsourced power of creating positive disruption.

|

||||

|

||||

What Price points out (and what people are now demanding at a grassroots level) is nothing short of an open movement, one recognizing that open collaboration and free exchange of ideas have already disrupted ecosystems from music to software to publishing. And more than any top-down driven "reform," this expectation for openness has the potential to fundamentally alter an educational system that has resisted change for too long. In fact, one of the hallmarks of the open ethos is that it expects the transparent and fair democratization of knowledge for the benefit of all. So what better ecosystem for such an ethos to thrive than within the one that seeks to prepare young people to inherit the world and make it better?

|

||||

|

||||

Sure, the pessimist in me says that my earlier prediction about the future of education may indeed be the state of education in the short-term future. But I am also very optimistic that this prediction will be proven to be dead wrong. I know that I and many other kindred-spirit educators are working every day to ensure that it's wrong. Won't you join me as we start a movement to help our schools [transform into open organizations][3]—to transition from an outdated, legacy model to one that is more open, nimble, and responsive to the needs of every student and the communities they serve?

|

||||

|

||||

That's a true education model appropriate for the modern era.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/open-organization/18/9/modern-education-open-education

|

||||

|

||||

Author: [Ben Owens][a]

Topic selection: [lujun9972](https://github.com/lujun9972)

Translator: [translator ID](https://github.com/译者ID)

Proofreader: [proofreader ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

[a]: https://opensource.com/users/engineerteacher
[1]: https://ocw.mit.edu/courses/mechanical-engineering/2-035-special-topics-in-mathematics-with-applications-linear-algebra-and-the-calculus-of-variations-spring-2007/
[2]: https://www.goodreads.com/book/show/18730272-open
[3]: https://opensource.com/open-organization/resources/open-org-definition

|

||||

@ -0,0 +1,234 @@

|

||||

Translating by qhwdw

|

||||

|

||||

# Caffeinated 6.828: Lab 2: Memory Management

|

||||

|

||||

### Introduction

|

||||

|

||||

In this lab, you will write the memory management code for your operating system. Memory management has two components.

|

||||

|

||||

The first component is a physical memory allocator for the kernel, so that the kernel can allocate memory and later free it. Your allocator will operate in units of 4096 bytes, called pages. Your task will be to maintain data structures that record which physical pages are free and which are allocated, and how many processes are sharing each allocated page. You will also write the routines to allocate and free pages of memory.

|

||||

|

||||

The second component of memory management is virtual memory, which maps the virtual addresses used by kernel and user software to addresses in physical memory. The x86 hardware’s memory management unit (MMU) performs the mapping when instructions use memory, consulting a set of page tables. You will modify JOS to set up the MMU’s page tables according to a specification we provide.

|

||||

|

||||

### Getting started

|

||||

|

||||

In this and future labs you will progressively build up your kernel. We will also provide you with some additional source. To fetch that source, use Git to commit changes you’ve made since handing in lab 1 (if any), fetch the latest version of the course repository, and then create a local branch called lab2 based on our lab2 branch, origin/lab2:

|

||||

|

||||

```
athena% cd ~/6.828/lab
athena% add git
athena% git pull
Already up-to-date.
athena% git checkout -b lab2 origin/lab2
Branch lab2 set up to track remote branch refs/remotes/origin/lab2.
Switched to a new branch "lab2"
athena%
```

|

||||

|

||||

You will now need to merge the changes you made in your lab1 branch into the lab2 branch, as follows:

|

||||

|

||||

```
athena% git merge lab1
Merge made by recursive.
 kern/kdebug.c  |   11 +++++++++--
 kern/monitor.c |   19 +++++++++++++++++++
 lib/printfmt.c |    7 +++----
 3 files changed, 31 insertions(+), 6 deletions(-)
athena%
```

|

||||

|

||||

Lab 2 contains the following new source files, which you should browse through:

|

||||

|

||||

- inc/memlayout.h
- kern/pmap.c
- kern/pmap.h
- kern/kclock.h
- kern/kclock.c

|

||||

|

||||

memlayout.h describes the layout of the virtual address space that you must implement by modifying pmap.c. memlayout.h and pmap.h define the PageInfo structure that you’ll use to keep track of which pages of physical memory are free. kclock.c and kclock.h manipulate the PC’s battery-backed clock and CMOS RAM hardware, in which the BIOS records the amount of physical memory the PC contains, among other things. The code in pmap.c needs to read this device hardware in order to figure out how much physical memory there is, but that part of the code is done for you: you do not need to know the details of how the CMOS hardware works.

|

||||

|

||||

Pay particular attention to memlayout.h and pmap.h, since this lab requires you to use and understand many of the definitions they contain. You may want to review inc/mmu.h, too, as it also contains a number of definitions that will be useful for this lab.

|

||||

|

||||

Before beginning the lab, don’t forget to add exokernel to get the 6.828 version of QEMU.

|

||||

|

||||

### Hand-In Procedure

|

||||

|

||||

When you are ready to hand in your lab code and write-up, add your answers-lab2.txt to the Git repository, commit your changes, and then run make handin.

|

||||

|

||||

```
athena% git add answers-lab2.txt
athena% git commit -am "my answer to lab2"
[lab2 a823de9] my answer to lab2
 4 files changed, 87 insertions(+), 10 deletions(-)
athena% make handin
```

|

||||

|

||||

### Part 1: Physical Page Management

|

||||

|

||||

The operating system must keep track of which parts of physical RAM are free and which are currently in use. JOS manages the PC’s physical memory with page granularity so that it can use the MMU to map and protect each piece of allocated memory.

|

||||

|

||||

You’ll now write the physical page allocator. It keeps track of which pages are free with a linked list of struct PageInfo objects, each corresponding to a physical page. You need to write the physical page allocator before you can write the rest of the virtual memory implementation, because your page table management code will need to allocate physical memory in which to store page tables.
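
The free-list idea described above can be sketched in ordinary user-space C. This is only a toy model under stated assumptions: the pool size `NPAGES`, the `toy_` function names, and the field layout of `struct PageInfo` are invented for illustration, and the real JOS code must additionally keep pages that are already in use off the free list.

```c
#include <assert.h>
#include <stddef.h>

#define PGSIZE 4096   /* bytes per page, as stated in the lab text */
#define NPAGES 8      /* tiny pool, chosen only for this illustration */

/* Hypothetical stand-in for the PageInfo structure: one entry per physical page. */
struct PageInfo {
    struct PageInfo *pp_link;  /* next page on the free list, NULL if allocated */
    unsigned short pp_ref;     /* number of references to this page */
};

static struct PageInfo pages[NPAGES];
static struct PageInfo *page_free_list;

/* Put every page on the free list (a real kernel must skip pages in use). */
static void toy_page_init(void)
{
    page_free_list = NULL;
    for (size_t i = 0; i < NPAGES; i++) {
        pages[i].pp_ref = 0;
        pages[i].pp_link = page_free_list;
        page_free_list = &pages[i];
    }
}

/* Pop one page off the free list; returns NULL when no page is left. */
static struct PageInfo *toy_page_alloc(void)
{
    struct PageInfo *pp = page_free_list;
    if (pp == NULL)
        return NULL;
    page_free_list = pp->pp_link;
    pp->pp_link = NULL;
    return pp;
}

/* Push a page back onto the free list; only valid when nothing references it. */
static void toy_page_free(struct PageInfo *pp)
{
    assert(pp->pp_ref == 0 && pp->pp_link == NULL);
    pp->pp_link = page_free_list;
    page_free_list = pp;
}

/* Physical address of the page described by a PageInfo entry. */
static size_t toy_page2pa(const struct PageInfo *pp)
{
    return (size_t)(pp - pages) * PGSIZE;
}
```

Because each `PageInfo` sits at a fixed index in the `pages` array, the index alone determines the physical address, so the list nodes never need to store addresses themselves.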

|

||||

|

||||

> Exercise 1
>
> In the file kern/pmap.c, you must implement code for the following functions (probably in the order given).
>
> - boot_alloc()
> - mem_init() (only up to the call to check_page_free_list())
> - page_init()
> - page_alloc()
> - page_free()
>
> check_page_free_list() and check_page_alloc() test your physical page allocator. You should boot JOS and see whether check_page_alloc() reports success. Fix your code so that it passes. You may find it helpful to add your own assert()s to verify that your assumptions are correct.

|

||||

|

||||

This lab, and all the 6.828 labs, will require you to do a bit of detective work to figure out exactly what you need to do. This assignment does not describe all the details of the code you’ll have to add to JOS. Look for comments in the parts of the JOS source that you have to modify; those comments often contain specifications and hints. You will also need to look at related parts of JOS, at the Intel manuals, and perhaps at your 6.004 or 6.033 notes.

|

||||

|

||||

### Part 2: Virtual Memory

|

||||

|

||||

Before doing anything else, familiarize yourself with the x86’s protected-mode memory management architecture: namely segmentation and page translation.

|

||||

|

||||

> Exercise 2
>
> Look at chapters 5 and 6 of the Intel 80386 Reference Manual, if you haven’t done so already. Read the sections about page translation and page-based protection closely (5.2 and 6.4). We recommend that you also skim the sections about segmentation; while JOS uses paging for virtual memory and protection, segment translation and segment-based protection cannot be disabled on the x86, so you will need a basic understanding of it.

|

||||

|

||||

### Virtual, Linear, and Physical Addresses

|

||||

|

||||

In x86 terminology, a virtual address consists of a segment selector and an offset within the segment. A linear address is what you get after segment translation but before page translation. A physical address is what you finally get after both segment and page translation and what ultimately goes out on the hardware bus to your RAM.
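
As a rough illustration of the page-translation step, the sketch below splits a 32-bit linear address into the three fields used by the 80386's two-level paging with 4KB pages: a 10-bit page directory index, a 10-bit page table index, and a 12-bit offset within the page. The helper names are invented for this example; the definitions you should actually use in the lab are the ones in inc/mmu.h.

```c
#include <assert.h>
#include <stdint.h>

/* Illustrative helpers (hypothetical names), assuming 4KB pages and two-level paging. */

/* Bits 31..22: index into the page directory (1024 entries). */
static uint32_t pd_index(uint32_t la)
{
    return (la >> 22) & 0x3FF;
}

/* Bits 21..12: index into the selected page table (1024 entries). */
static uint32_t pt_index(uint32_t la)
{
    return (la >> 12) & 0x3FF;
}

/* Bits 11..0: byte offset within the 4096-byte page. */
static uint32_t pg_offset(uint32_t la)
{
    return la & 0xFFF;
}
```

For instance, the linear address 0x00801234 decomposes into directory index 2, table index 1, and offset 0x234.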

|

||||

|

||||

|

||||

|

||||

Recall that in part 3 of lab 1, we installed a simple page table so that the kernel could run at its link address of 0xf0100000, even though it is actually loaded in physical memory just above the ROM BIOS at 0x00100000. This page table mapped only 4MB of memory. In the virtual memory layout you are going to set up for JOS in this lab, we’ll expand this to map the first 256MB of physical memory starting at virtual address 0xf0000000 and to map a number of other regions of virtual memory.

|

||||

|

||||

> Exercise 3
>
> While GDB can only access QEMU’s memory by virtual address, it’s often useful to be able to inspect physical memory while setting up virtual memory. Review the QEMU monitor commands from the lab tools guide, especially the xp command, which lets you inspect physical memory. To access the QEMU monitor, press Ctrl-a c in the terminal (the same binding returns to the serial console).
>
> Use the xp command in the QEMU monitor and the x command in GDB to inspect memory at corresponding physical and virtual addresses and make sure you see the same data.
>
> Our patched version of QEMU provides an info pg command that may also prove useful: it shows a compact but detailed representation of the current page tables, including all mapped memory ranges, permissions, and flags. Stock QEMU also provides an info mem command that shows an overview of which ranges of virtual memory are mapped and with what permissions.

|

||||

|

||||

From code executing on the CPU, once we’re in protected mode (which we entered first thing in boot/boot.S), there’s no way to directly use a linear or physical address. All memory references are interpreted as virtual addresses and translated by the MMU, which means all pointers in C are virtual addresses.

|

||||

|

||||

The JOS kernel often needs to manipulate addresses as opaque values or as integers, without dereferencing them, for example in the physical memory allocator. Sometimes these are virtual addresses, and sometimes they are physical addresses. To help document the code, the JOS source distinguishes the two cases: the type uintptr_t represents opaque virtual addresses, and physaddr_t represents physical addresses. Both these types are really just synonyms for 32-bit integers (uint32_t), so the compiler won’t stop you from assigning one type to another! Since they are integer types (not pointers), the compiler will complain if you try to dereference them.

|

||||

|

||||

The JOS kernel can dereference a uintptr_t by first casting it to a pointer type. In contrast, the kernel can’t sensibly dereference a physical address, since the MMU translates all memory references. If you cast a physaddr_t to a pointer and dereference it, you may be able to load and store to the resulting address (the hardware will interpret it as a virtual address), but you probably won’t get the memory location you intended.

|

||||

|

||||

To summarize:

|

||||

|

||||

| C type       | Address type |
| ------------ | ------------ |
| `T*`         | Virtual      |
| `uintptr_t`  | Virtual      |
| `physaddr_t` | Physical     |

|

||||

|

||||

> Question
>
> Assuming that the following JOS kernel code is correct, what type should variable x have, uintptr_t or physaddr_t?

|

||||

>

|

||||

>

|

||||

>

|

||||

|

||||

The JOS kernel sometimes needs to read or modify memory for which it knows only the physical address. For example, adding a mapping to a page table may require allocating physical memory to store a page directory and then initializing that memory. However, the kernel, like any other software, cannot bypass virtual memory translation and thus cannot directly load and store to physical addresses. One reason JOS remaps all of physical memory starting from physical address 0 at virtual address 0xf0000000 is to help the kernel read and write memory for which it knows just the physical address. In order to translate a physical address into a virtual address that the kernel can actually read and write, the kernel must add 0xf0000000 to the physical address to find its corresponding virtual address in the remapped region. You should use KADDR(pa) to do that addition.

|

||||

|

||||

The JOS kernel also sometimes needs to be able to find a physical address given the virtual address of the memory in which a kernel data structure is stored. Kernel global variables and memory allocated by boot_alloc() are in the region where the kernel was loaded, starting at 0xf0000000, the very region where we mapped all of physical memory. Thus, to turn a virtual address in this region into a physical address, the kernel can simply subtract 0xf0000000. You should use PADDR(va) to do that subtraction.
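
The add-and-subtract relationship between the two address kinds can be sketched as follows. This is a user-space approximation, not the JOS macros themselves: the `toy_` functions, the `kvaddr_t` type name, and the `REMAP_SIZE` bound (taken from the 256MB figure mentioned earlier) are assumptions made for the example, and the real KADDR and PADDR may perform different sanity checks.

```c
#include <assert.h>
#include <stdint.h>

typedef uint32_t physaddr_t;  /* physical address, a plain 32-bit integer as in the text */
typedef uint32_t kvaddr_t;    /* hypothetical name for a 32-bit kernel virtual address */

#define KERNBASE   0xF0000000u  /* start of the remapped region, per the text */
#define REMAP_SIZE 0x10000000u  /* 256MB of remapped physical memory (assumed bound) */

/* Physical -> kernel virtual: add KERNBASE. Returns -1 if pa is outside the remap. */
static int toy_kaddr(physaddr_t pa, kvaddr_t *va_out)
{
    if (pa >= REMAP_SIZE)
        return -1;
    *va_out = pa + KERNBASE;
    return 0;
}

/* Kernel virtual -> physical: subtract KERNBASE. Returns -1 if va is below KERNBASE. */
static int toy_paddr(kvaddr_t va, physaddr_t *pa_out)
{
    if (va < KERNBASE)
        return -1;
    *pa_out = va - KERNBASE;
    return 0;
}
```

Keeping the two kinds of address in differently named types does not give compiler enforcement, since both are integers, but it documents which conversion a given value still needs.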

|

||||

|

||||

### Reference counting

|

||||

|

||||

In future labs you will often have the same physical page mapped at multiple virtual addresses simultaneously (or in the address spaces of multiple environments). You will keep a count of the number of references to each physical page in the pp_ref field of the struct PageInfo corresponding to the physical page. When this count goes to zero for a physical page, that page can be freed because it is no longer used. In general, this count should be equal to the number of times the physical page appears below UTOP in all page tables (the mappings above UTOP are mostly set up at boot time by the kernel and should never be freed, so there’s no need to reference count them). We’ll also use it to keep track of the number of pointers we keep to the page directory pages and, in turn, of the number of references the page directories have to page table pages.

|

||||

|

||||

Be careful when using page_alloc. The page it returns will always have a reference count of 0, so pp_ref should be incremented as soon as you’ve done something with the returned page (like inserting it into a page table). Sometimes this is handled by other functions (for example, page_insert) and sometimes the function calling page_alloc must do it directly.
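
The counting discipline described here can be modelled in a few lines of C. The sketch is deliberately simplified and its names are hypothetical: `toy_map_page` and `toy_unmap_page` stand in for whatever code installs or removes a mapping, and the `on_free_list` flag replaces the real free-list manipulation.

```c
#include <assert.h>
#include <stdint.h>

/* Simplified stand-in for the per-page bookkeeping structure. */
struct PageInfo {
    uint16_t pp_ref;     /* number of mappings that refer to this page */
    int on_free_list;    /* 1 once the page has been handed back (illustrative flag) */
};

/* Called whenever a new mapping to the page is installed. */
static void toy_map_page(struct PageInfo *pp)
{
    pp->pp_ref++;
}

/* Called whenever a mapping is removed; frees the page when the count hits zero.
 * Returns 1 if the page was freed, 0 if other references remain. */
static int toy_unmap_page(struct PageInfo *pp)
{
    assert(pp->pp_ref > 0);
    if (--pp->pp_ref == 0) {
        pp->on_free_list = 1;
        return 1;
    }
    return 0;
}
```

A freshly allocated page starts with a count of 0, which is why the caller (or a helper such as page_insert) has to increment it once the page is actually put to use.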

|

||||

|

||||

### Page Table Management

|

||||

|

||||

Now you’ll write a set of routines to manage page tables: to insert and remove linear-to-physical mappings, and to create page table pages when needed.

|

||||

|

||||

> Exercise 4
>
> In the file kern/pmap.c, you must implement code for the following functions.
>
> - pgdir_walk()
> - boot_map_region()
> - page_lookup()
> - page_remove()
> - page_insert()
>
> check_page(), called from mem_init(), tests your page table management routines. You should make sure it reports success before proceeding.

|

||||

|

||||

### Part 3: Kernel Address Space

|

||||

|

||||

JOS divides the processor’s 32-bit linear address space into two parts. User environments (processes), which we will begin loading and running in lab 3, will have control over the layout and contents of the lower part, while the kernel always maintains complete control over the upper part. The dividing line is defined somewhat arbitrarily by the symbol ULIM in inc/memlayout.h, reserving approximately 256MB of virtual address space for the kernel. This explains why we needed to give the kernel such a high link address in lab 1: otherwise there would not be enough room in the kernel’s virtual address space to map in a user environment below it at the same time.

|

||||

|

||||

You’ll find it helpful to refer to the JOS memory layout diagram in inc/memlayout.h both for this part and for later labs.

|

||||

|

||||

### Permissions and Fault Isolation

|

||||

|

||||

Since kernel and user memory are both present in each environment’s address space, we will have to use permission bits in our x86 page tables to allow user code access only to the user part of the address space. Otherwise bugs in user code might overwrite kernel data, causing a crash or more subtle malfunction; user code might also be able to steal other environments’ private data.

|

||||

|

||||

The user environment will have no permission to any of the memory above ULIM, while the kernel will be able to read and write this memory. For the address range [UTOP,ULIM), both the kernel and the user environment have the same permission: they can read but not write this address range. This range of addresses is used to expose certain kernel data structures read-only to the user environment. Lastly, the address space below UTOP is for the user environment to use; the user environment will set permissions for accessing this memory.
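
To make the three cases concrete, here is a small sketch of how the low permission bits of an x86 page table entry decide whether user-mode code may touch a page. The bit values are the standard x86 present, writable, and user flags; the constant names and the `user_may_access` helper are assumptions made for this example, and the check looks at a single entry only, whereas the hardware combines the page directory and page table entries.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define PTE_P 0x001u  /* present */
#define PTE_W 0x002u  /* writable */
#define PTE_U 0x004u  /* accessible from user mode */

/* Would a user-mode access through this single entry be allowed? */
static bool user_may_access(uint32_t entry, bool is_write)
{
    if (!(entry & PTE_P))
        return false;   /* not mapped at all */
    if (!(entry & PTE_U))
        return false;   /* kernel-only page, like memory above ULIM */
    if (is_write && !(entry & PTE_W))
        return false;   /* read-only for the user, like [UTOP, ULIM) */
    return true;
}
```

Under this model, a page above ULIM would carry `PTE_P | PTE_W`, a page in [UTOP, ULIM) would carry `PTE_P | PTE_U`, and ordinary user memory would carry all three bits.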

|

||||

|

||||

### Initializing the Kernel Address Space

|

||||

|

||||

Now you’ll set up the address space above UTOP: the kernel part of the address space. inc/memlayout.h shows the layout you should use. You’ll use the functions you just wrote to set up the appropriate linear to physical mappings.

|

||||

|

||||

> Exercise 5
>
> Fill in the missing code in mem_init() after the call to check_page().

|

||||

|

||||

Your code should now pass the check_kern_pgdir() and check_page_installed_pgdir() checks.

|

||||

|

||||

> Question
>
> 1. What entries (rows) in the page directory have been filled in at this point? What addresses do they map and where do they point? In other words, fill out this table as much as possible:
>
>    | Entry | Base Virtual Address | Points to (logically)                 |
>    | ----- | -------------------- | ------------------------------------- |
>    | 1023  | ?                    | Page table for top 4MB of phys memory |
>    | 1022  | ?                    | ?                                     |
>    | .     | ?                    | ?                                     |
>    | .     | ?                    | ?                                     |
>    | .     | ?                    | ?                                     |
>    | 2     | 0x00800000           | ?                                     |
>    | 1     | 0x00400000           | ?                                     |
>    | 0     | 0x00000000           | [see next question]                   |
>
> 2. (From Lecture 3) We have placed the kernel and user environment in the same address space. Why will user programs not be able to read or write the kernel’s memory? What specific mechanisms protect the kernel memory?
>
> 3. What is the maximum amount of physical memory that this operating system can support? Why?
>
> 4. How much space overhead is there for managing memory, if we actually had the maximum amount of physical memory? How is this overhead broken down?
>
> 5. Revisit the page table setup in kern/entry.S and kern/entrypgdir.c. Immediately after we turn on paging, EIP is still a low number (a little over 1MB). At what point do we transition to running at an EIP above KERNBASE? What makes it possible for us to continue executing at a low EIP between when we enable paging and when we begin running at an EIP above KERNBASE? Why is this transition necessary?

|

||||

|

||||

### Address Space Layout Alternatives

|

||||

|

||||

The address space layout we use in JOS is not the only one possible. An operating system might map the kernel at low linear addresses while leaving the upper part of the linear address space for user processes. x86 kernels generally do not take this approach, however, because one of the x86’s backward-compatibility modes, known as virtual 8086 mode, is “hard-wired” in the processor to use the bottom part of the linear address space, and thus cannot be used at all if the kernel is mapped there.

|

||||

|

||||

It is even possible, though much more difficult, to design the kernel so as not to have to reserve any fixed portion of the processor’s linear or virtual address space for itself, but instead effectively to allow user-level processes unrestricted use of the entire 4GB of virtual address space - while still fully protecting the kernel from these processes and protecting different processes from each other!

|

||||

|

||||

Generalize the kernel’s memory allocation system to support pages of a variety of power-of-two allocation unit sizes from 4KB up to some reasonable maximum of your choice. Be sure you have some way to divide larger allocation units into smaller ones on demand, and to coalesce multiple small allocation units back into larger units when possible. Think about the issues that might arise in such a system.
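
One common way to organize power-of-two allocation units is a buddy-style scheme, which the text does not name and which is offered here only as one possible direction. The sketch below covers just the arithmetic: picking the smallest power-of-two unit that fits a request, and locating the neighbouring block a unit would be merged with. `MAX_ORDER` and both function names are assumptions invented for the example.

```c
#include <assert.h>
#include <stddef.h>

#define PGSIZE    4096  /* smallest allocation unit, as in the lab */
#define MAX_ORDER 10    /* largest unit is PGSIZE << 10 = 4MB (arbitrary choice) */

/* Smallest order such that (PGSIZE << order) >= bytes, or -1 if the request is too large. */
static int order_for_size(size_t bytes)
{
    size_t unit = PGSIZE;
    for (int order = 0; order <= MAX_ORDER; order++, unit <<= 1) {
        if (unit >= bytes)
            return order;
    }
    return -1;
}

/* Address of the equally sized neighbour ("buddy") that a free block of the
 * given order could be coalesced with. addr must be aligned to the block size. */
static size_t buddy_of(size_t addr, int order)
{
    return addr ^ ((size_t)PGSIZE << order);
}
```

Splitting a large unit yields two buddies of the next lower order, and two free buddies can be merged back into their parent, which is the divide-and-coalesce behaviour the paragraph asks for.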

|

||||

|

||||

This completes the lab. Make sure you pass all of the make grade tests and don’t forget to write up your answers to the questions in answers-lab2.txt. Commit your changes (including adding answers-lab2.txt) and type make handin in the lab directory to hand in your lab.

|

||||

|

||||

------

|

||||

|

||||

via: <https://sipb.mit.edu/iap/6.828/lab/lab2/>

|

||||

|

||||

Author: [MIT](https://sipb.mit.edu/iap/6.828/lab/lab2/)

Translator: [translator ID](https://github.com/%E8%AF%91%E8%80%85ID)

Proofreader: [proofreader ID](https://github.com/%E6%A0%A1%E5%AF%B9%E8%80%85ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

|

||||

@ -1,193 +0,0 @@

|

||||

translating by distant1219

|

||||

|

||||

An Introduction to Using Git

|

||||

======

|

||||

|

||||

If you’re a developer, then you know your way around development tools. You’ve spent years studying one or more programming languages and have perfected your skills. You can develop with GUI tools or from the command line. On your own, nothing can stop you. You code as if your mind and your fingers are one to create elegant, perfectly commented source for an app you know will take the world by storm.

|

||||

|

||||

But what happens when you’re tasked with collaborating on a project? Or what about when that app you’ve developed becomes bigger than just you? What’s the next step? If you want to successfully collaborate with other developers, you’ll want to make use of a distributed version control system. With such a system, collaborating on a project becomes incredibly efficient and reliable. One such system is [Git][1]. Along with Git comes a handy repository called [GitHub][2], where you can house your projects, such that a team can check out and check in code.

|

||||

|

||||

I will walk you through the very basics of getting Git up and running and using it with GitHub, so the development on your game-changing app can be taken to the next level. I’ll be demonstrating on Ubuntu 18.04, so if your distribution of choice is different, you’ll only need to modify the Git install commands to suit your distribution’s package manager.

|

||||

|

||||

### Git and GitHub

|

||||

|

||||

The first thing to do is create a free GitHub account. Head over to the [GitHub signup page][3] and fill out the necessary information. Once you’ve done that, you’re ready to move on to installing Git (you can actually do these two steps in any order).

|

||||

|

||||

Installing Git is simple. Open up a terminal window and issue the command:

|

||||

```
sudo apt install git-all
```

|

||||

|

||||

This will include a rather large number of dependencies, but you’ll wind up with everything you need to work with Git and GitHub.

|

||||

|

||||

On a side note: I use Git quite a bit to download source for application installation. There are times when a piece of software isn’t available via the built-in package manager. Instead of downloading the source files from a third-party location, I’ll often go to the project’s Git page and clone the package like so:

|

||||

```
git clone ADDRESS
```

|

||||

|

||||

Where ADDRESS is the URL given on the software’s Git page.

|

||||

Doing this almost always ensures I am installing the latest release of a package.


### Create a local repository and add a file


The next step is to create a local repository on your system (we’ll call it newproject and house it in ~/). Open up a terminal window and issue the commands:


```
cd ~/
mkdir newproject
cd newproject
```


Now we must initialize the repository. In the ~/newproject folder, issue the command git init. When the command completes, you should see that the empty Git repository has been created (Figure 1).


![new repository][5]


Figure 1: Our new repository has been initialized.


[Used with permission][6]
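If you’d like to confirm the result without relying on the message alone, you can ask Git directly. The following is a small sketch that uses a temporary directory rather than ~/newproject, so you can try it safely; the variable names repo and inside are mine.

```shell
# Create a scratch directory and initialize an empty repository in it.
repo=$(mktemp -d)
git init -q "$repo"

# Ask Git whether that directory is now a working tree.
inside=$(git -C "$repo" rev-parse --is-inside-work-tree)
echo "$inside"    # prints: true

# The repository data itself lives in the hidden .git folder.
ls -d "$repo/.git"
```

The same two checks work in ~/newproject: run git rev-parse --is-inside-work-tree from within the folder, or simply look for the hidden .git directory.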


Next we need to add a file to the project. From within the root folder (~/newproject) issue the command:


```
touch readme.txt
```


You will now have an empty file in your repository. Issue the command git status to verify that Git is aware of the new file (Figure 2).


![readme][8]


Figure 2: Git knows about our readme.txt file.


[Used with permission][6]
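The full git status output is meant for people. If you ever want the same information in a compact, script-friendly form, the --porcelain option is worth knowing. This is just an illustrative sketch in a scratch repository (the repo and status variable names are assumptions of mine), showing how an untracked file is reported.

```shell
# Set up a scratch repository containing one brand-new, empty file.
repo=$(mktemp -d)
git init -q "$repo"
touch "$repo/readme.txt"

# --porcelain prints one short line per file; "??" marks a file
# that Git can see but is not yet tracking.
status=$(git -C "$repo" status --porcelain)
echo "$status"    # prints: ?? readme.txt
```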


Even though Git is aware of the file, it hasn’t actually been added to the project. To do that, issue the command:


```
git add readme.txt
```


Once you’ve done that, issue the git status command again to see that readme.txt is now considered a new file in the project (Figure 3).


![file added][10]


Figure 3: Our file has now been added to the staging environment.


[Used with permission][6]
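To make the difference between "Git can see the file" and "the file is staged" a bit more concrete, here is a short sketch that peeks at the staging area before and after git add. It runs in a scratch repository, and the variable names (repo, before, after) are only there for illustration.

```shell
# Scratch repository with one new, untracked file.
repo=$(mktemp -d)
git init -q "$repo"
touch "$repo/readme.txt"

# git ls-files lists what is currently in the staging area (the index).
before=$(git -C "$repo" ls-files)

# Stage the file, then look again.
git -C "$repo" add readme.txt
after=$(git -C "$repo" ls-files)

echo "before: '$before'"    # prints: before: ''
echo "after:  '$after'"     # prints: after:  'readme.txt'
```

Nothing has been committed at this point; the file is simply queued up for the next commit.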


### Your first commit


With the new file in the staging environment, you are now ready to create your first commit. What is a commit? Easy: A commit is a record of the files you’ve changed within the project. Creating the commit is actually quite simple. It is important, however, that you include a descriptive message for the commit. By doing this, you are adding notes about what the commit contains (such as what changes you’ve made to the file). Before we do this, however, we have to tell Git who we are. To do this, issue the commands:


```
git config --global user.email EMAIL
git config --global user.name "FULL NAME"
```


Where EMAIL is your email address and FULL NAME is your name.
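The --global option stores your identity for every repository on your account. If you would rather set it for a single project only (say, a work address for one repository), you can leave --global off while inside that repository. The sketch below tries this in a scratch repository and reads the values back; "Jane Doe" and jane@example.com are placeholders, not values from this tutorial.

```shell
# Scratch repository to experiment in.
repo=$(mktemp -d)
git init -q "$repo"

# Without --global, the values are written to this repository's .git/config only.
git -C "$repo" config user.email "jane@example.com"
git -C "$repo" config user.name "Jane Doe"

# Read the values back to confirm what Git will record in commits here.
name=$(git -C "$repo" config user.name)
email=$(git -C "$repo" config user.email)
echo "$name <$email>"    # prints: Jane Doe <jane@example.com>
```

Note the plain straight quotes around the name. Curly quotes pasted from a web page are not understood by the shell and will split the name into separate arguments.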


Now we can create the commit by issuing the command:


```
