mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-02-28 01:01:09 +08:00

Merge remote-tracking branch 'LCTT/master'

commit

ef37bcadc7

@ -1,6 +1,8 @@

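Configuring local storage in Linux with Stratis
======

> With a focus on ease of use, Stratis gives desktop users a powerful set of advanced storage features.

Desktop Linux users configure local storage rarely, or only when installing the system. Linux storage technology has advanced slowly enough that many storage tools from 20 years ago are still in wide use today. Storage technology has improved a great deal since then, though, so why not enjoy the benefits of the new features?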

@ -9,29 +11,29 @@

### Using advanced storage features simply and reliably

Stratis aims to make three things easier: initial storage configuration; making later changes; and using advanced storage features, including snapshots, thin provisioning, and even tiering.

Stratis aims to make three things easier: initial storage configuration; later changes; and using advanced storage features, including snapshots, thin provisioning, and even tiering.

### Stratis: a volume-managing filesystem

Stratis is a volume-managing filesystem (VMF), similar to [ZFS][1] and [Btrfs][2]. It is built on the core idea of a storage "pool", an idea shared by the various VMFs and by standalone volume managers such as [LVM][3]. A pool is created from one or more disks (or partitions), and volumes are then created in the pool. Unlike traditional disk partitioning with [fdisk][4] or [GParted][5], the user does not have to specify how volumes are laid out within the pool.

A VMF goes a step further and integrates the filesystem layer. The user does not deploy a filesystem of choice on top of a volume, because the filesystem and the volume are merged into a single conceptual tree of files (ZFS calls it a dataset, Btrfs a subvolume, and Stratis a filesystem). Its data lives in the pool, and its size is limited only by the total capacity of the pool.

Seen another way: just as a filesystem abstracts the actual location of the storage blocks that make up a single file within it, a VMF abstracts the actual location of the storage blocks that make up a single filesystem within the pool.

The pool also makes other useful features possible. Some of them follow naturally from a typical VMF implementation, such as filesystem snapshots, since multiple filesystems in a pool can share physical data blocks. Others, such as redundancy, tiering, and integrity, also make sense, because the pool is the central place where the operating system manages these features for all filesystems.
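
To make the pool idea concrete, here is a minimal Python sketch of a pool whose filesystems take blocks from shared capacity only when written to, and whose snapshots share blocks with their origin. The `Pool` and `Filesystem` classes and their methods are hypothetical illustrations, not the Stratis API or its on-disk design, and the toy never frees blocks.

```python
class Pool:
    """A toy storage pool: a fixed number of blocks shared by all filesystems."""

    def __init__(self, total_blocks):
        self.total_blocks = total_blocks
        self.used_blocks = 0
        self._next_id = 0

    def allocate(self):
        """Hand out one physical block id, or fail when the pool is full."""
        if self.used_blocks >= self.total_blocks:
            raise RuntimeError("pool is out of space")
        self.used_blocks += 1
        self._next_id += 1
        return self._next_id


class Filesystem:
    """A toy filesystem: a mapping from logical block numbers to pool block ids."""

    def __init__(self, pool, blocks=None):
        self.pool = pool
        self.blocks = dict(blocks or {})

    def write(self, logical_block):
        # Thin provisioning: space is taken from the pool only on write.
        # A fresh block is also allocated when overwriting, so a snapshot that
        # shares the old block keeps pointing at the original data.
        self.blocks[logical_block] = self.pool.allocate()

    def snapshot(self):
        # The snapshot copies only the mapping; the physical blocks are shared.
        return Filesystem(self.pool, self.blocks)


pool = Pool(total_blocks=100)
home = Filesystem(pool)
for n in range(10):
    home.write(n)
snap = home.snapshot()      # consumes no additional pool blocks
home.write(0)               # copy-on-write: one new block for the changed data
print(pool.used_blocks)     # 11
```

Neither filesystem has a size of its own in this sketch; the only limit either one can hit is the pool's `total_blocks`.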

As a result, a VMF is simpler to set up and manage than separate volume-manager and filesystem layers, and its advanced storage features are easier to enable.

### How does Stratis differ from ZFS and Btrfs?

As a new project, Stratis can learn from the projects that came before it. [Part 2][6] takes a deeper look at which designs Stratis adopts from ZFS, Btrfs, and LVM. In summary, the differences in Stratis come from observing how well features have been supported in practice, from changes in how individuals use computers and how computers are automated, and from changes in the underlying hardware.

First, Stratis emphasizes ease of use and safety. This matters for individual users, who may go a long time between interactions with Stratis. If those interactions are unfriendly, and especially if there is a chance of losing data, most people would rather give up the new features and keep using a more basic filesystem.

Second, APIs and DevOps-style automation matter far more today than they did years ago. By providing an excellent API, Stratis supports automation, so people can use Stratis directly through their automation tools.

Second, APIs and DevOps-style automation matter far more today than they did years ago. Stratis provides a first-class API that supports automation, so people can use Stratis directly through their automation tools.

Third, SSDs have grown significantly in both capacity and market share. Much of the code in earlier filesystems was written to compensate for the slow access times of rotational media, but those optimizations matter less for flash-based media. Even when a pool is too large to be built entirely from SSDs, an SSD can still serve as a caching tier and provide a good performance boost. Given the good performance of SSDs, Stratis focuses its pool design on flexibility and reliability.

Finally, Stratis has a markedly different implementation model from ZFS and Btrfs (I discuss this further in [Part 2][6]). This means that some things are harder for Stratis to implement, while others are easier. It also speeds up Stratis development.

@ -56,7 +58,7 @@ via: https://opensource.com/article/18/4/stratis-easy-use-local-storage-manageme

Author: [Andy Grover][a]
Topic selection: [lujun9972](https://github.com/lujun9972)
Translator: [pinewall](https://github.com/pinewall)
Proofreader: [校对者ID](https://github.com/校对者ID)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

@ -1,4 +1,4 @@

Source code line counter and analyzer
Ohcount: source code line counter and analyzer
======

@ -9,26 +9,25 @@

### Ohcount – code line counter

**Installation**

#### Installation

Ohcount is available in the default repositories of Debian, Ubuntu, and their derivatives, so you can install it with the APT package manager as shown below.

```
$ sudo apt-get install ohcount
```

**Usage**

#### Usage

Using Ohcount is very simple.

All you have to do is go to the directory whose code you want to analyze and run the program.

As an example, I will analyze the source code of the [**coursera-dl**][2] program.

As an example, I will analyze the source code of the [coursera-dl][2] program.

```
$ cd coursera-dl-master/
$ ohcount
```

Here is the line count summary for Coursera-dl:

@ -38,6 +37,7 @@ $ ohcount

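As you can see, the Coursera-dl source code contains 141 files in total. The first column gives the name of each programming language found in the source. The second column shows the number of files in each language. The third column shows the number of lines of code in each language. The fourth and fifth columns show the number of comment lines in the code and their percentage. The sixth column shows the number of blank lines. The seventh and last column shows the total number of lines for each language and for coursera-dl as a whole.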
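
To illustrate what the columns in that summary measure, here is a rough Python sketch that classifies the lines of a Python source string as code, comment, or blank. The `classify_lines` function is a hypothetical helper that only understands full-line `#` comments, and computing the comment percentage over code plus comment lines is an assumption; this is not how ohcount itself parses source.

```python
from collections import Counter


def classify_lines(source):
    """Count code, comment, and blank lines in a Python source string."""
    counts = Counter(code=0, comment=0, blank=0)
    for line in source.splitlines():
        stripped = line.strip()
        if not stripped:
            counts["blank"] += 1
        elif stripped.startswith("#"):
            counts["comment"] += 1
        else:
            counts["code"] += 1
    return counts


sample = "# greet the user\n\nname = 'world'\nprint('hello', name)\n"
counts = classify_lines(sample)
total = sum(counts.values())
# Assumption: the percentage is taken over code plus comment lines.
comment_ratio = 100 * counts["comment"] / (counts["code"] + counts["comment"])
print(counts["code"], counts["comment"], counts["blank"], total)  # 2 1 1 4
print(f"{comment_ratio:.1f}%")                                    # 33.3%
```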

Alternatively, pass the full path directly, as shown below.

```
$ ohcount coursera-dl-master/
```

@ -47,52 +47,52 @@ $ ohcount coursera-dl-master/

|

||||

|

||||

如果你不想每次都输入完整目录路径,只需 cd 进入它,然后使用 ohcount 来分析该目录中的代码。

|

||||

|

||||

要计算每个文件的代码行数,请使用 **-i** 标志。

|

||||

要计算每个文件的代码行数,请使用 `-i` 标志。

|

||||

|

||||

```

|

||||

$ ohcount -i

|

||||

|

||||

```

|

||||

|

||||

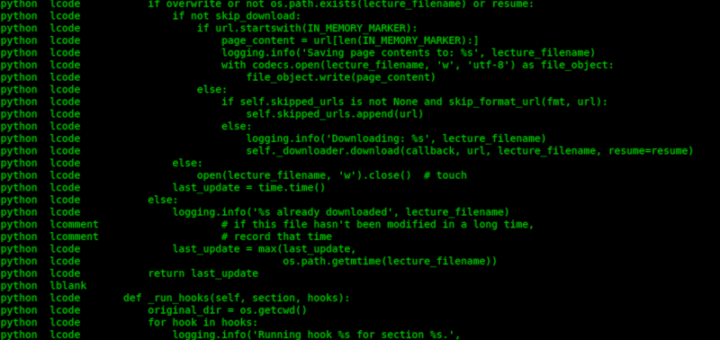

**示例输出:**

|

||||

示例输出:

|

||||

|

||||

![][5]

|

||||

|

||||

当您使用 **-a** 标志时,ohcount 还可以显示带标注的源码。

|

||||

当您使用 `-a` 标志时,ohcount 还可以显示带标注的源码。

|

||||

|

||||

```

|

||||

$ ohcount -a

|

||||

|

||||

```

|

||||

|

||||

![][6]

|

||||

|

||||

如你所见,显示了目录中所有源代码的内容。每行都以制表符分隔的语言名称和语义分类(代码、注释或空白)为前缀。

|

||||

|

||||

有时候,你只是想知道源码中使用的许可证。为此,请使用 **-l** 标志。

|

||||

有时候,你只是想知道源码中使用的许可证。为此,请使用 `-l` 标志。

|

||||

|

||||

```

|

||||

$ ohcount -l

|

||||

lgpl3, coursera_dl.py

|

||||

gpl coursera_dl.py

|

||||

|

||||

```

|

||||

|

||||

另一个可用选项是 **-re**,用于将原始实体信息打印到屏幕(主要用于调试)。

|

||||

另一个可用选项是 `-re`,用于将原始实体信息打印到屏幕(主要用于调试)。

|

||||

|

||||

```

|

||||

$ ohcount -re

|

||||

|

||||

```

|

||||

|

||||

要递归地查找给定路径内的所有源码文件,请使用 **-d** 标志。

|

||||

要递归地查找给定路径内的所有源码文件,请使用 `-d` 标志。

|

||||

|

||||

```

|

||||

$ ohcount -d

|

||||

|

||||

```

|

||||

|

||||

上述命令将显示当前工作目录中的所有源码文件,每个文件名将以制表符分隔的语言名称为前缀。

|

||||

|

||||

要了解更多详细信息和支持的选项,请运行:

|

||||

|

||||

```

|

||||

$ ohcount --help

|

||||

|

||||

```

|

||||

|

||||

对于想要分析自己或其他开发人员开发的代码,并检查代码的行数,用于编写这些代码的语言以及代码的许可证详细信息等,ohcount 非常有用。

|

||||

@ -101,8 +101,6 @@ $ ohcount --help

Cheers!

--------------------------------------------------------------------------------

via: https://www.ostechnix.com/ohcount-the-source-code-line-counter-and-analyzer/

@ -110,7 +108,7 @@ via: https://www.ostechnix.com/ohcount-the-source-code-line-counter-and-analyzer

Author: [SK][a]
Topic selection: [lujun9972](https://github.com/lujun9972)
Translator: [geekpi](https://github.com/geekpi)
Proofreader: [校对者ID](https://github.com/校对者ID)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

@ -0,0 +1,111 @@

My Lisp Experiences and the Development of GNU Emacs
======

> (Transcript of Richard Stallman's Speech, 28 Oct 2002, at the International Lisp Conference).


Since none of my usual speeches have anything to do with Lisp, none of them were appropriate for today. So I'm going to have to wing it. Since I've done enough things in my career connected with Lisp I should be able to say something interesting.


My first experience with Lisp was when I read the Lisp 1.5 manual in high school. That's when I had my mind blown by the idea that there could be a computer language like that. The first time I had a chance to do anything with Lisp was when I was a freshman at Harvard and I wrote a Lisp interpreter for the PDP-11. It was a very small machine — it had something like 8k of memory — and I managed to write the interpreter in a thousand instructions. This gave me some room for a little bit of data. That was before I got to see what real software was like, that did real system jobs.


I began doing work on a real Lisp implementation with JonL White once I started working at MIT. I got hired at the Artificial Intelligence Lab not by JonL, but by Russ Noftsker, which was most ironic considering what was to come — he must have really regretted that day.


During the 1970s, before my life became politicized by horrible events, I was just going along making one extension after another for various programs, and most of them did not have anything to do with Lisp. But, along the way, I wrote a text editor, Emacs. The interesting idea about Emacs was that it had a programming language, and the user's editing commands would be written in that interpreted programming language, so that you could load new commands into your editor while you were editing. You could edit the programs you were using and then go on editing with them. So, we had a system that was useful for things other than programming, and yet you could program it while you were using it. I don't know if it was the first one of those, but it certainly was the first editor like that.


This spirit of building up gigantic, complicated programs to use in your own editing, and then exchanging them with other people, fueled the spirit of free-wheeling cooperation that we had at the AI Lab then. The idea was that you could give a copy of any program you had to someone who wanted a copy of it. We shared programs with whoever wanted to use them; they were human knowledge. So even though there was no organized political thought relating the way we shared software to the design of Emacs, I'm convinced that there was a connection between them, an unconscious connection perhaps. I think that it's the nature of the way we lived at the AI Lab that led to Emacs and made it what it was.


The original Emacs did not have Lisp in it. The lower level language, the non-interpreted language, was PDP-10 Assembler. The interpreter we wrote in that actually wasn't written for Emacs, it was written for TECO. TECO was our text editor, and was an extremely ugly programming language, as ugly as could possibly be. The reason was that it wasn't designed to be a programming language, it was designed to be an editor and command language. There were commands like ‘5l’, meaning ‘move five lines’, or ‘i’ and then a string and then an ESC to insert that string. You would type a string that was a series of commands, which was called a command string. You would end it with ESC ESC, and it would get executed.


Well, people wanted to extend this language with programming facilities, so they added some. For instance, one of the first was a looping construct, which was < >. You would put those around things and it would loop. There were other cryptic commands that could be used to conditionally exit the loop. To make Emacs, we (1) added facilities to have subroutines with names. Before that, it was sort of like Basic, and the subroutines could only have single letters as their names. That was hard to program big programs with, so we added code so they could have longer names. Actually, there were some rather sophisticated facilities; I think that Lisp got its unwind-protect facility from TECO.


We started putting in rather sophisticated facilities, all with the ugliest syntax you could ever think of, and it worked: people were able to write large programs in it anyway. The obvious lesson was that a language like TECO, which wasn't designed to be a programming language, was the wrong way to go. The language that you build your extensions on shouldn't be thought of as a programming language as an afterthought; it should be designed as a programming language. In fact, we discovered that the best programming language for that purpose was Lisp.


It was Bernie Greenberg who discovered that it was (2). He wrote a version of Emacs in Multics MacLisp, and he wrote his commands in MacLisp in a straightforward fashion. The editor itself was written entirely in Lisp. Multics Emacs proved to be a great success: programming new editing commands was so convenient that even the secretaries in his office started learning how to use it. They used a manual someone had written which showed how to extend Emacs, but didn't say it was programming. So the secretaries, who believed they couldn't do programming, weren't scared off. They read the manual, discovered they could do useful things and they learned to program.


So Bernie saw that an application — a program that does something useful for you — which has Lisp inside it and which you could extend by rewriting the Lisp programs, is actually a very good way for people to learn programming. It gives them a chance to write small programs that are useful for them, which in most arenas you can't possibly do. They can get encouragement for their own practical use — at the stage where it's the hardest — where they don't believe they can program, until they get to the point where they are programmers.


At that point, people began to wonder how they could get something like this on a platform where they didn't have a full-service Lisp implementation. Multics MacLisp had a compiler as well as an interpreter (it was a full-fledged Lisp system), but people wanted to implement something like that on other systems where they had not already written a Lisp compiler. Well, if you didn't have the Lisp compiler you couldn't write the whole editor in Lisp: it would be too slow, especially redisplay, if it had to run interpreted Lisp. So we developed a hybrid technique. The idea was to write a Lisp interpreter and the lower level parts of the editor together, so that parts of the editor were built-in Lisp facilities. Those would be whatever parts we felt we had to optimize. This was a technique that we had already consciously practiced in the original Emacs, because there were certain fairly high level features which we re-implemented in machine language, making them into TECO primitives. For instance, there was a TECO primitive to fill a paragraph (actually, to do most of the work of filling a paragraph, because some of the less time-consuming parts of the job would be done at the higher level by a TECO program). You could do the whole job by writing a TECO program, but that was too slow, so we optimized it by putting part of it in machine language. We used the same idea here (in the hybrid technique), that most of the editor would be written in Lisp, but certain parts of it that had to run particularly fast would be written at a lower level.
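
The hybrid design can be sketched in Python as a tiny interpreter whose table of primitives holds both cheap glue operations and one "optimized" built-in. Everything here (`PRIMITIVES`, `fill_paragraph`, `evaluate`) is a hypothetical illustration in which Python stands in for the lower-level language; it is not code from any Emacs.

```python
import textwrap


def fill_paragraph(text, width):
    """Time-critical primitive, implemented directly in the host language."""
    return textwrap.fill(" ".join(text.split()), width)


# Primitives that the interpreter exposes to extension code.
PRIMITIVES = {
    "fill-paragraph": fill_paragraph,
    "concat": lambda *parts: "".join(parts),
    "upcase": lambda s: s.upper(),
}


def evaluate(expr):
    """Evaluate a nested-list expression such as ["upcase", "abc"]."""
    if not isinstance(expr, list):
        return expr  # strings and numbers evaluate to themselves
    name, *args = expr
    return PRIMITIVES[name](*(evaluate(arg) for arg in args))


# Extension code stays in the interpreted language and calls the fast built-in.
program = ["fill-paragraph",
           ["concat", "most of the editor ", "is written in Lisp"],
           20]
print(evaluate(program))
```

The interpreted program decides what to do, while the expensive work of re-wrapping the text happens inside a single primitive call.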


Therefore, when I wrote my second implementation of Emacs, I followed the same kind of design. The low level language was not machine language anymore, it was C. C was a good, efficient language for portable programs to run in a Unix-like operating system. There was a Lisp interpreter, but I implemented facilities for special purpose editing jobs directly in C — manipulating editor buffers, inserting leading text, reading and writing files, redisplaying the buffer on the screen, managing editor windows.


Now, this was not the first Emacs that was written in C and ran on Unix. The first was written by James Gosling, and was referred to as GosMacs. A strange thing happened with him. In the beginning, he seemed to be influenced by the same spirit of sharing and cooperation of the original Emacs. I first released the original Emacs to people at MIT. Someone wanted to port it to run on Twenex — it originally only ran on the Incompatible Timesharing System we used at MIT. They ported it to Twenex, which meant that there were a few hundred installations around the world that could potentially use it. We started distributing it to them, with the rule that “you had to send back all of your improvements” so we could all benefit. No one ever tried to enforce that, but as far as I know people did cooperate.


Gosling did, at first, seem to participate in this spirit. He wrote in a manual that he called the program Emacs hoping that others in the community would improve it until it was worthy of that name. That's the right approach to take towards a community — to ask them to join in and make the program better. But after that he seemed to change the spirit, and sold it to a company.


At that time I was working on the GNU system (a free software Unix-like operating system that many people erroneously call “Linux”). There was no free software Emacs editor that ran on Unix. I did, however, have a friend who had participated in developing Gosling's Emacs. Gosling had given him, by email, permission to distribute his own version. He proposed to me that I use that version. Then I discovered that Gosling's Emacs did not have a real Lisp. It had a programming language that was known as ‘mocklisp’, which looked syntactically like Lisp, but didn't have the data structures of Lisp. So programs were not data, and vital elements of Lisp were missing. Its data structures were strings, numbers and a few other specialized things.


I concluded I couldn't use it and had to replace it all, the first step of which was to write an actual Lisp interpreter. I gradually adapted every part of the editor based on real Lisp data structures, rather than ad hoc data structures, making the data structures of the internals of the editor exposable and manipulable by the user's Lisp programs.


The one exception was redisplay. For a long time, redisplay was sort of an alternate world. The editor would enter the world of redisplay and things would go on with very special data structures that were not safe for garbage collection, not safe for interruption, and you couldn't run any Lisp programs during that. We've changed that since — it's now possible to run Lisp code during redisplay. It's quite a convenient thing.


This second Emacs program was ‘free software’ in the modern sense of the term — it was part of an explicit political campaign to make software free. The essence of this campaign was that everybody should be free to do the things we did in the old days at MIT, working together on software and working with whomever wanted to work with us. That is the basis for the free software movement — the experience I had, the life that I've lived at the MIT AI lab — to be working on human knowledge, and not be standing in the way of anybody's further using and further disseminating human knowledge.


At the time, you could make a computer that was about the same price range as other computers that weren't meant for Lisp, except that it would run Lisp much faster than they would, and with full type checking in every operation as well. Ordinary computers typically forced you to choose between execution speed and good typechecking. So yes, you could have a Lisp compiler and run your programs fast, but when they tried to take `car` of a number, it got nonsensical results and eventually crashed at some point.


The Lisp machine was able to execute instructions about as fast as those other machines, but each instruction (a car instruction, for example) would do data typechecking, so when you tried to get the car of a number in a compiled program, it would give you an immediate error. We built the machine and had a Lisp operating system for it. It was written almost entirely in Lisp, the only exceptions being parts written in the microcode. People became interested in manufacturing them, which meant they should start a company.
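
The difference between the two behaviors can be sketched in Python. The `Cons`, `checked_car`, and `unchecked_car` names are hypothetical, and Python stands in for hardware here, so this only illustrates the effect of checking the operand type on every operation, not how the Lisp machine microcode worked.

```python
class Cons:
    """A Lisp-style pair."""

    def __init__(self, car, cdr):
        self.car = car
        self.cdr = cdr


def checked_car(obj):
    """Fail immediately, at the faulty operation, if obj is not a pair."""
    if not isinstance(obj, Cons):
        raise TypeError(f"car: expected a cons, got {type(obj).__name__}")
    return obj.car


def unchecked_car(memory, address):
    """Treat any number as an address and return whatever is stored there."""
    return memory[address % len(memory)]


memory = ["junk", "more junk", "unrelated data"]
pair = Cons(1, None)
print(checked_car(pair))         # 1
print(unchecked_car(memory, 7))  # a meaningless value, and no error is raised
try:
    checked_car(7)
except TypeError as err:
    print(err)                   # car: expected a cons, got int
```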


There were two different ideas about what this company should be like. Greenblatt wanted to start what he called a “hacker” company. This meant it would be a company run by hackers and would operate in a way conducive to hackers. Another goal was to maintain the AI Lab culture (3). Unfortunately, Greenblatt didn't have any business experience, so other people in the Lisp machine group said they doubted whether he could succeed. They thought that his plan to avoid outside investment wouldn't work.


Why did he want to avoid outside investment? Because when a company has outside investors, they take control and they don't let you have any scruples. And eventually, if you have any scruples, they also replace you as the manager.


So Greenblatt had the idea that he would find a customer who would pay in advance to buy the parts. They would build machines and deliver them; with profits from those parts, they would then be able to buy parts for a few more machines, sell those and then buy parts for a larger number of machines, and so on. The other people in the group thought that this couldn't possibly work.


Greenblatt then recruited Russell Noftsker, the man who had hired me, who had subsequently left the AI Lab and created a successful company. Russell was believed to have an aptitude for business. He demonstrated this aptitude for business by saying to the other people in the group, “Let's ditch Greenblatt, forget his ideas, and we'll make another company.” Stabbing in the back, clearly a real businessman. Those people decided they would form a company called Symbolics. They would get outside investment, not have scruples, and do everything possible to win.


But Greenblatt didn't give up. He and the few people loyal to him decided to start Lisp Machines Inc. anyway and go ahead with their plans. And what do you know, they succeeded! They got the first customer and were paid in advance. They built machines and sold them, and built more machines and more machines. They actually succeeded even though they didn't have the help of most of the people in the group. Symbolics also got off to a successful start, so you had two competing Lisp machine companies. When Symbolics saw that LMI was not going to fall flat on its face, they started looking for ways to destroy it.


Thus, the abandonment of our lab was followed by “war” in our lab. The abandonment happened when Symbolics hired away all the hackers, except me and the few who worked at LMI part-time. Then they invoked a rule and eliminated people who worked part-time for MIT, so they had to leave entirely, which left only me. The AI lab was now helpless. And MIT had made a very foolish arrangement with these two companies. It was a three-way contract where both companies licensed the use of Lisp machine system sources. These companies were required to let MIT use their changes. But it didn't say in the contract that MIT was entitled to put them into the MIT Lisp machine systems that both companies had licensed. Nobody had envisioned that the AI lab's hacker group would be wiped out, but it was.


So Symbolics came up with a plan (4). They said to the lab, “We will continue making our changes to the system available for you to use, but you can't put it into the MIT Lisp machine system. Instead, we'll give you access to Symbolics' Lisp machine system, and you can run it, but that's all you can do.”


This, in effect, meant that they demanded that we had to choose a side, and use either the MIT version of the system or the Symbolics version. Whichever choice we made determined which system our improvements went to. If we worked on and improved the Symbolics version, we would be supporting Symbolics alone. If we used and improved the MIT version of the system, we would be doing work available to both companies, but Symbolics saw that we would be supporting LMI because we would be helping them continue to exist. So we were not allowed to be neutral anymore.


Up until that point, I hadn't taken the side of either company, although it made me miserable to see what had happened to our community and the software. But now, Symbolics had forced the issue. So, in an effort to help keep Lisp Machines Inc. going (5), I began duplicating all of the improvements Symbolics had made to the Lisp machine system. I wrote the equivalent improvements again myself (i.e., the code was my own).


After a while (6), I came to the conclusion that it would be best if I didn't even look at their code. When they made a beta announcement that gave the release notes, I would see what the features were and then implement them. By the time they had a real release, I did too.


In this way, for two years, I prevented them from wiping out Lisp Machines Incorporated, and the two companies went on. But, I didn't want to spend years and years punishing someone, just thwarting an evil deed. I figured they had been punished pretty thoroughly because they were stuck with competition that was not leaving or going to disappear (7). Meanwhile, it was time to start building a new community to replace the one that their actions and others had wiped out.


The Lisp community in the 70s was not limited to the MIT AI Lab, and the hackers were not all at MIT. The war that Symbolics started was what wiped out MIT, but there were other events going on then. There were people giving up on cooperation, and together this wiped out the community and there wasn't much left.


Once I stopped punishing Symbolics, I had to figure out what to do next. I had to make a free operating system, that was clear — the only way that people could work together and share was with a free operating system.


At first, I thought of making a Lisp-based system, but I realized that wouldn't be a good idea technically. To have something like the Lisp machine system, you needed special purpose microcode. That's what made it possible to run programs as fast as other computers would run their programs and still get the benefit of typechecking. Without that, you would be reduced to something like the Lisp compilers for other machines. The programs would be faster, but unstable. Now that's okay if you're running one program on a timesharing system — if one program crashes, that's not a disaster, that's something your program occasionally does. But that didn't make it good for writing the operating system in, so I rejected the idea of making a system like the Lisp machine.


I decided instead to make a Unix-like operating system that would have Lisp implementations to run as user programs. The kernel wouldn't be written in Lisp, but we'd have Lisp. So the development of that operating system, the GNU operating system, is what led me to write the GNU Emacs. In doing this, I aimed to make the absolute minimal possible Lisp implementation. The size of the programs was a tremendous concern.


There were people in those days, in 1985, who had one-megabyte machines without virtual memory. They wanted to be able to use GNU Emacs. This meant I had to keep the program as small as possible.


For instance, at the time the only looping construct was ‘while’, which was extremely simple. There was no way to break out of the ‘while’ statement, you just had to do a catch and a throw, or test a variable that ran the loop. That shows how far I was pushing to keep things small. We didn't have ‘caar’ and ‘cadr’ and so on; “squeeze out everything possible” was the spirit of GNU Emacs, the spirit of Emacs Lisp, from the beginning.
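
The catch-and-throw idiom for leaving a loop can be imitated in Python with an exception. `Throw`, `catch`, and `throw` below are hypothetical helpers that mimic the Lisp forms of the same names; this is an illustration of the idea, not Emacs Lisp.

```python
class Throw(Exception):
    """Carries a tag and a value up the stack to the matching catch."""

    def __init__(self, tag, value):
        super().__init__(tag)
        self.tag = tag
        self.value = value


def throw(tag, value):
    raise Throw(tag, value)


def catch(tag, body):
    """Run body(); if it throws to this tag, return the thrown value."""
    try:
        return body()
    except Throw as signal:
        if signal.tag != tag:
            raise  # not ours: let an outer catch handle it
        return signal.value


def first_negative(numbers):
    def loop():
        i = 0
        while i < len(numbers):  # the only looping construct, with no "break"
            if numbers[i] < 0:
                throw("found", numbers[i])
            i += 1
        return None

    return catch("found", loop)


print(first_negative([3, 1, -4, 1, -5]))  # -4
print(first_negative([3, 1]))             # None
```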


Obviously, machines are bigger now, and we don't do it that way any more. We put in ‘caar’ and ‘cadr’ and so on, and we might put in another looping construct one of these days. We're willing to extend it some now, but we don't want to extend it to the level of Common Lisp. I implemented Common Lisp once on the Lisp machine, and I'm not all that happy with it. One thing I don't like terribly much is keyword arguments (8). They don't seem quite Lispy to me; I'll do it sometimes but I minimize the times when I do that.


That was not the end of the GNU projects involved with Lisp. Later on around 1995, we were looking into starting a graphical desktop project. It was clear that for the programs on the desktop, we wanted a programming language to write a lot of it in to make it easily extensible, like the editor. The question was what it should be.


At the time, TCL was being pushed heavily for this purpose. I had a very low opinion of TCL, basically because it wasn't Lisp. It looks a tiny bit like Lisp, but semantically it isn't, and it's not as clean. Then someone showed me an ad where Sun was trying to hire somebody to work on TCL to make it the “de-facto standard extension language” of the world. And I thought, “We've got to stop that from happening.” So we started to make Scheme the standard extensibility language for GNU. Not Common Lisp, because it was too large. The idea was that we would have a Scheme interpreter designed to be linked into applications in the same way TCL was linked into applications. We would then recommend that as the preferred extensibility package for all GNU programs.


There's an interesting benefit you can get from using such a powerful language as a version of Lisp as your primary extensibility language. You can implement other languages by translating them into your primary language. If your primary language is TCL, you can't very easily implement Lisp by translating it into TCL. But if your primary language is Lisp, it's not that hard to implement other things by translating them. Our idea was that if each extensible application supported Scheme, you could write an implementation of TCL or Python or Perl in Scheme that translates that program into Scheme. Then you could load that into any application and customize it in your favorite language and it would work with other customizations as well.
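
As a rough sketch of implementing one language by translating it into another, the Python below translates a tiny prefix-notation arithmetic language into Python source and then evaluates the result. Python plays the role of the primary language here, and `tokenize`, `parse`, and `translate` are hypothetical helpers; this is not how Guile's translators are written.

```python
def tokenize(text):
    """Split "(+ 1 (* 2 3))" into parentheses and atoms."""
    return text.replace("(", " ( ").replace(")", " ) ").split()


def parse(tokens):
    """Build nested lists from the token stream, consuming it left to right."""
    token = tokens.pop(0)
    if token != "(":
        return token
    items = []
    while tokens[0] != ")":
        items.append(parse(tokens))
    tokens.pop(0)  # drop the closing parenthesis
    return items


def translate(expr):
    """Translate a parsed expression into Python source text."""
    if isinstance(expr, str):
        return str(int(expr))  # only integer literals are supported
    operator, *operands = expr
    if operator not in ("+", "-", "*"):
        raise ValueError(f"unsupported operator: {operator}")
    return "(" + f" {operator} ".join(translate(o) for o in operands) + ")"


source = "(+ 1 (* 2 3) (- 10 4))"
python_source = translate(parse(tokenize(source)))
print(python_source)        # (1 + (2 * 3) + (10 - 4))
print(eval(python_source))  # 13
```

Once the translated text exists, it runs with the full power of the host language, which is the property the paragraph above relies on.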


As long as the extensibility languages are weak, the users have to use only the language you provided them. Which means that people who love any given language have to compete for the choice of the developers of applications, saying “Please, application developer, put my language into your application, not his language.” Then the users get no choices at all: whichever application they're using comes with one language and they're stuck with [that language]. But when you have a powerful language that can implement others by translating into it, then you give the user a choice of language and we don't have to have a language war anymore. That's what we're hoping ‘Guile’, our Scheme interpreter, will do. We had a person working last summer finishing up a translator from Python to Scheme. I don't know if it's entirely finished yet, but for anyone interested in this project, please get in touch. So that's the plan we have for the future.


I haven't been speaking about free software, but let me briefly tell you a little bit about what that means. Free software does not refer to price; it doesn't mean that you get it for free. (You may have paid for a copy, or gotten a copy gratis.) It means that you have freedom as a user. The crucial thing is that you are free to run the program, free to study what it does, free to change it to suit your needs, free to redistribute copies to others and free to publish improved, extended versions. This is what free software means. If you are using a non-free program, you have lost crucial freedom, so don't ever do that.

|

||||

|

||||

The purpose of the GNU project is to make it easier for people to reject freedom-trampling, user-dominating, non-free software by providing free software to replace it. For those who don't have the moral courage to reject the non-free software, when that means some practical inconvenience, what we try to do is give a free alternative so that you can move to freedom with less of a mess and less of a sacrifice in practical terms. The less sacrifice the better. We want to make it easier for you to live in freedom, to cooperate.

|

||||

|

||||

This is a matter of the freedom to cooperate. We're used to thinking of freedom and cooperation with society as if they are opposites. But here they're on the same side. With free software you are free to cooperate with other people as well as free to help yourself. With non-free software, somebody is dominating you and keeping people divided. You're not allowed to share with them, you're not free to cooperate or help society, any more than you're free to help yourself. Divided and helpless is the state of users using non-free software.

|

||||

|

||||

We've produced a tremendous range of free software. We've done what people said we could never do; we have two operating systems of free software. We have many applications and we obviously have a lot farther to go. So we need your help. I would like to ask you to volunteer for the GNU project; help us develop free software for more jobs. Take a look at [http://www.gnu.org/help][1] to find suggestions for how to help. If you want to order things, there's a link to that from the home page. If you want to read about philosophical issues, look in /philosophy. If you're looking for free software to use, look in /directory, which lists about 1900 packages now (which is a fraction of all the free software out there). Please write more and contribute to us. My book of essays, “Free Software and Free Society”, is on sale and can be purchased at [www.gnu.org][2]. Happy hacking!

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.gnu.org/gnu/rms-lisp.html

|

||||

|

||||

Author: [Richard Stallman][a]

Topic selection: [lujun9972](https://github.com/lujun9972)

Translator: [translator ID](https://github.com/译者ID)

Proofreader: [proofreader ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China (Linux中国)](https://linux.cn/)

|

||||

|

||||

[a]:https://www.gnu.org

|

||||

[1]:https://www.gnu.org/help/

|

||||

[2]:http://www.gnu.org/

|

||||

@ -1,5 +1,6 @@

|

||||

Intel and AMD Reveal New Processor Designs

|

||||

======

|

||||

[translating by softpaopao](#)

|

||||

|

||||

|

||||

|

||||

|

||||

@ -1,135 +0,0 @@

|

||||

translating---geekpi

|

||||

|

||||

A CLI Game To Learn Vim Commands

|

||||

======

|

||||

|

||||

|

||||

|

||||

Howdy, Vim users! Today, I stumbled upon a cool utility to sharpen your Vim skills. Vim is a great editor for writing and editing code. However, some of you (including me) are still struggling with its steep learning curve. Not anymore! Meet **PacVim**, a CLI game that helps you learn Vim commands. PacVim is inspired by the classic game [**PacMan**][1] and gives you plenty of practice with Vim commands in a fun and interesting way. Simply put, PacVim is a fun, free way to learn Vim commands in depth. Please do not confuse PacMan with [**pacman**][2] (the Arch Linux package manager). PacMan is a classic, popular arcade game released in the 1980s.

|

||||

|

||||

In this brief guide, we will see how to install and use PacVim in Linux.

|

||||

|

||||

### Install PacVim

|

||||

|

||||

First, install the **Ncurses** library and **development tools** as described in the following links.

|

||||

|

||||

Please note that this game may not compile and install properly without gcc version 4.8.X or higher. I tested PacVim on Ubuntu 18.04 LTS and it worked perfectly.

|

||||

|

||||

Once Ncurses and gcc are installed, run the following commands to install PacVim.

|

||||

```
$ git clone https://github.com/jmoon018/PacVim.git
$ cd PacVim
$ sudo make install
```

|

||||

|

||||

## Learn Vim Commands Using PacVim

|

||||

|

||||

### Start PacVim game

|

||||

|

||||

To play this game, just run:

|

||||

```
$ pacvim [LEVEL_NUMBER] [MODE]
```

|

||||

|

||||

For example, the following command starts the game at level 5 in normal mode.

|

||||

```
$ pacvim 5 n
```

|

||||

|

||||

Here, **“5”** represents the level and **“n”** represents the mode. There are two modes:

|

||||

|

||||

* **n** – normal mode.

|

||||

* **h** – hard mode.

|

||||

|

||||

|

||||

|

||||

The default mode is h, which is hard.

|

||||

|

||||

To start from the beginning (level 0), just run:

|

||||

```
$ pacvim
```

|

||||

|

||||

Here is the sample output from my Ubuntu 18.04 LTS system.

|

||||

|

||||

![][4]

|

||||

|

||||

To begin the game, just press **ENTER**.

|

||||

|

||||

![][5]

|

||||

|

||||

Now start playing the game. Read the next chapter to know how to play.

|

||||

|

||||

To quit, press **ESC** or **q**.

|

||||

|

||||

The following command starts the game at level 5 in hard mode.

|

||||

```
$ pacvim 5 h
```

|

||||

|

||||

Or,

|

||||

```
$ pacvim 5
```

|

||||

|

||||

### How to play PacVim?

|

||||

|

||||

The usage of PacVim is very similar to PacMan.

|

||||

|

||||

You must run over all the characters on the screen while avoiding the ghosts (the red color characters).

|

||||

|

||||

PacVim has two special obstacles:

|

||||

|

||||

1. You cannot move into the walls (yellow color). You must use vim motions to jump over them.

|

||||

2. If you step on a tilde character (cyan `~`), you lose!

|

||||

|

||||

|

||||

|

||||

You are given three lives. You gain a life each time you beat level 0, 3, 6, 9, etc. There are 10 levels in total, starting from 0 to 9. After beating the 9th level, the game is reset to the 0th level, but the ghosts move faster.

|

||||

|

||||

**Winning conditions**

|

||||

|

||||

Use vim commands to move the cursor over the letters and highlight them. After all letters are highlighted, you win and proceed to the next level.

|

||||

|

||||

**Losing conditions**

|

||||

|

||||

If you touch a ghost (indicated by a **red G**) or a **tilde** character, you lose a life. If you have fewer than 0 lives, you lose the entire game.

|

||||

|

||||

Here is the list of implemented commands:

|

||||

|

||||

| key | what it does |
|-----|--------------|
| q | quit the game |
| h | move left |
| j | move down |
| k | move up |
| l | move right |
| w | move forward to next word beginning |
| W | move forward to next WORD beginning |
| e | move forward to next word ending |
| E | move forward to next WORD ending |
| b | move backward to next word beginning |
| B | move backward to next WORD beginning |
| $ | move to the end of the line |
| 0 | move to the beginning of the line |
| gg/1G | move to the beginning of the first line |
| numberG | move to the beginning of the line given by number |
| G | move to the beginning of the last line |
| ^ | move to the first word at the current line |
| & | 1337 cheatz (beat current level) |

|

||||

|

||||

After playing a couple of levels, you may notice a slight improvement in your Vim usage. Keep playing this game once in a while until you have mastered Vim.

|

||||

|

||||

And, that’s all for now. Hope this was useful. Playing PacVim is fun and interesting, and it keeps you occupied. At the same time, you should be able to learn enough Vim commands thoroughly. Give it a try, you won’t be disappointed.

|

||||

|

||||

More good stuff to come. Stay tuned!

|

||||

|

||||

Cheers!

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.ostechnix.com/pacvim-a-cli-game-to-learn-vim-commands/

|

||||

|

||||

Author: [SK][a]

Topic selection: [lujun9972](https://github.com/lujun9972)

Translator: [translator ID](https://github.com/译者ID)

Proofreader: [proofreader ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China (Linux中国)](https://linux.cn/)

|

||||

|

||||

[a]:https://www.ostechnix.com/author/sk/

|

||||

[1]:https://en.wikipedia.org/wiki/Pac-Man

|

||||

[2]:https://www.ostechnix.com/getting-started-pacman/

|

||||

[4]:http://www.ostechnix.com/wp-content/uploads/2018/05/pacvim-1.png

|

||||

[5]:http://www.ostechnix.com/wp-content/uploads/2018/05/pacvim-2.png

|

||||

@ -1,3 +1,5 @@

|

||||

translating---geekpi

|

||||

|

||||

How To Disable Built-in Webcam In Linux

|

||||

======

|

||||

|

||||

|

||||

@ -0,0 +1,152 @@

|

||||

Find If A Package Is Available For Your Linux Distribution

|

||||

======

|

||||

|

||||

|

||||

|

||||

Sometimes, you might wonder how to find out whether a package is available for your Linux distribution. Or, you simply want to know which version of a package is available for your distribution. If so, well, it’s your lucky day. I know a tool that can get you such information. Meet **“Whohas”** – a command line tool that allows querying several package lists at once. Currently, it supports Arch, Debian, Fedora, Gentoo, Mandriva, openSUSE, Slackware, Source Mage, Ubuntu, FreeBSD, NetBSD, OpenBSD, Fink, MacPorts and Cygwin. Using this little tool, package maintainers can easily find ebuilds, pkgbuilds and similar package definitions from other distributions. Whohas is free, open source and written in the Perl programming language.

|

||||

|

||||

### Find If A Package Is Available For Your Linux Distribution

|

||||

|

||||

**Installing Whohas**

|

||||

|

||||

Whohas is available in the default repositories of Debian, Ubuntu, and Linux Mint. If you’re using any DEB-based system, you can install it using the command:

|

||||

```
$ sudo apt-get install whohas
```

|

||||

|

||||

For Arch-based systems, it is available in [**AUR**][1]. You can use any AUR helper program to install it.

|

||||

|

||||

Using [**Packer**][2]:

|

||||

```
$ packer -S whohas
```

|

||||

|

||||

Using [**Trizen**][3]:

|

||||

```
$ trizen -S whohas
```

|

||||

|

||||

Using [**Yay**][4]:

|

||||

```
$ yay -S whohas
```

|

||||

|

||||

Using [**Yaourt**][5]:

|

||||

```
$ yaourt -S whohas
```

|

||||

|

||||

On other Linux distributions, download the Whohas source from [**here**][6] and compile and install it manually.

|

||||

|

||||

**Usage**

|

||||

|

||||

The main objective of the Whohas tool is to let you know:

|

||||

|

||||

* Which distribution provides packages on which the user depends.

|

||||

* What version of a given package is in use in each distribution, or in each release of a distribution.

|

||||

|

||||

|

||||

|

||||

Let us find which distributions contain a specific package, for example **vim**. To do so, run:

|

||||

```
$ whohas vim
```

|

||||

|

||||

This command will show all distributions that contain the vim package, along with the available version of the package, its size, repository and download URL.

|

||||

|

||||

![][8]

|

||||

|

||||

You can even sort the results in alphabetical order by distribution by piping the output to the “sort” command like below.

|

||||

```
$ whohas vim | sort
```

|

||||

|

||||

Please note that the above commands will display all packages whose names start with **vim**, for example vim-spell, vimcommander, vimpager etc. You can narrow down the search to the exact package by using the grep command with a space before, after, or on both sides of your package name like below.

|

||||

```
$ whohas vim | sort | grep " vim"
$ whohas vim | sort | grep "vim "
$ whohas vim | sort | grep " vim "
```

|

||||

|

||||

The space before the package name matches packages whose names begin with your search term. The space after the package name matches packages whose names end with your search term. The space on both sides of the search term displays only the exact match.
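To see what each of the three patterns selects, here is a small Python sketch that mimics the plain substring matching `grep` performs for them. The sample lines are hypothetical and only approximate the whitespace-separated column layout of whohas output; the package names and versions are made up for illustration.

```python
# Hypothetical whohas-style output lines: distribution, package name, version.
# These values are invented for illustration, not real whohas output.
SAMPLE_LINES = [
    "Arch        vim            8.1.0022-1",
    "Arch        vim-spell      20180524-1",
    "Arch        gvim           8.1.0022-1",
    "Debian      vimpager       2.06-1",
]


def grep(lines, needle):
    """Mimic plain `grep needle`: keep lines that contain the substring."""
    return [line for line in lines if needle in line]


def names(lines):
    """Extract the package-name column (second field) from each line."""
    return [line.split()[1] for line in lines]


leading = names(grep(SAMPLE_LINES, " vim"))   # space before the term
trailing = names(grep(SAMPLE_LINES, "vim "))  # space after the term
both = names(grep(SAMPLE_LINES, " vim "))     # space on both sides

print(leading)
print(trailing)
print(both)
```

With these sample lines, the leading-space pattern keeps `vim`, `vim-spell` and `vimpager`, the trailing-space pattern keeps `vim` and `gvim`, and the pattern with spaces on both sides keeps only `vim`.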

|

||||

|

||||

Alternatively, you could simply use the “--strict” option like below.

|

||||

```
$ whohas --strict vim
```

|

||||

|

||||

Sometimes, you want to know if a package is available for a specific distribution only. For example, to find if vim package is available in Arch Linux, run:

|

||||

```
$ whohas vim | grep "^Arch"
```

|

||||

|

||||

The distribution names are abbreviated as “archlinux”, “cygwin”, “debian”, “fedora”, “fink”, “freebsd”, “gentoo”, “mandriva”, “macports”, “netbsd”, “openbsd”, “opensuse”, “slackware”, “sourcemage”, and “ubuntu”.

|

||||

|

||||

You can also get the same results by using the **-d** option like below.

|

||||

```
$ whohas -d archlinux vim
```

|

||||

|

||||

This command will search for vim packages in the Arch Linux distribution only.

|

||||

|

||||

To search in multiple distributions, for example Arch Linux and Ubuntu, use the following command instead.

|

||||

```
$ whohas -d archlinux,ubuntu vim
```

|

||||

|

||||

You can even find which distributions have the “whohas” package.

|

||||

```
$ whohas whohas
```

|

||||

|

||||

For more details, refer to the man pages.

|

||||

```
$ man whohas
```

|

||||

|

||||

All package managers can easily find the available package versions in their own repositories. However, Whohas can help you compare the available versions of a package across different distributions and see which ones have it available now. Give it a try, you won’t be disappointed.

|

||||

|

||||

And, that’s all for now. Hope this was useful. More good stuff to come. Stay tuned!

|

||||

|

||||

Cheers!

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.ostechnix.com/find-if-a-package-is-available-for-your-linux-distribution/

|

||||

|

||||

Author: [SK][a]

Topic selection: [lujun9972](https://github.com/lujun9972)

Translator: [translator ID](https://github.com/译者ID)

Proofreader: [proofreader ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China (Linux中国)](https://linux.cn/)

|

||||

|

||||

[a]:https://www.ostechnix.com/author/sk/

|

||||

[1]:https://aur.archlinux.org/packages/whohas/

|

||||

[2]:https://www.ostechnix.com/install-packer-arch-linux-2/

|

||||

[3]:https://www.ostechnix.com/trizen-lightweight-aur-package-manager-arch-based-systems/

|

||||

[4]:https://www.ostechnix.com/yay-found-yet-another-reliable-aur-helper/

|

||||

[5]:https://www.ostechnix.com/install-yaourt-arch-linux/

|

||||

[6]:http://www.philippwesche.org/200811/whohas/intro.html

|

||||

[8]:http://www.ostechnix.com/wp-content/uploads/2018/06/whohas-1.png

|

||||

@ -0,0 +1,77 @@

|

||||

GitLab’s Ultimate & Gold Plans Are Now Free For Open-Source Projects

|

||||

======

|

||||

A lot has happened in the open-source community recently. First, [Microsoft acquired GitHub][1], and then people started to look for [GitHub alternatives][2] without even taking a second to think about it, while Linus Torvalds released [Linux Kernel 4.17][3]. Well, if you’ve been following us, I assume that you know all that.

|

||||

|

||||

But, today, GitLab made a smart move by making some of its high-tier plans free for educational institutes and open-source projects. There couldn’t be a better time to offer something like this when a lot of developers are interested in migrating their open-source projects to GitLab.

|

||||

|

||||

### GitLab’s premium plans are now free for open source projects and educational institutes

|

||||

|

||||

![GitLab Logo][4]

|

||||

|

||||

In a [blog post][5] today, GitLab announced that the **Ultimate** and Gold plans are now free for educational institutes and open-source projects. While we already know why GitLab made this move (darn perfect timing!), they did explain their motive for making it free:

|

||||

|

||||

> We make GitLab free for education because we want students to use our most advanced features. Many universities already run GitLab. If the students use the advanced features of GitLab Ultimate and Gold they will take their experiences with these advanced features to their workplaces.

|

||||

>

|

||||

> We would love to have more open source projects use GitLab. Public projects on GitLab.com already have all the features of GitLab Ultimate. And projects like [Gnome][6] and [Debian][7] already run their own server with the open source version of GitLab. With today’s announcement, open source projects that are comfortable running on proprietary software can use all the features GitLab has to offer while allowing us to have a sustainable business model by charging non-open-source organizations.

|

||||

|

||||

### What are these ‘free’ plans offered by GitLab?

|

||||

|

||||

![GitLab Pricing][8]

|

||||

|

||||

GitLab has two categories of offerings. One is the software that you could host on your own cloud hosting service like [Digital Ocean][9]. The other is providing GitLab software as a service where the hosting is managed by GitLab itself and you get an account on GitLab.com.

|

||||

|

||||

![GitLab Pricing for hosted service][10]

|

||||

|

||||

Gold is the highest offering in the hosted category while Ultimate is the highest offering in the self-hosted category.

|

||||

|

||||

You can get more details about their features on the GitLab pricing page. Do note that support is not included in this offer. You have to purchase it separately.

|

||||

|

||||

### You have to meet certain criteria to qualify for this offer

|

||||

|

||||

GitLab also mentioned who is eligible for the offer. Here’s what they wrote in their blog post:

|

||||

|

||||

> 1. **Educational institutions:** any institution whose purposes directly relate to learning, teaching, and/or training by a qualified educational institution, faculty, or student. Educational purposes do not include commercial, professional, or any other for-profit purposes.

|

||||

>

|

||||

> 2. **Open source projects:** any project that uses a [standard open source license][11] and is non-commercial. It should not have paid support or paid contributors.

|

||||

>

|

||||

>

|

||||

|

||||

|

||||

Although the free plan does not include support, you can still pay an additional fee of 4.95 USD per user per month, which is a very fair price when you are in dire need of an expert to help resolve an issue.

|

||||

|

||||

GitLab also added a note for the students:

|

||||

|

||||

> To reduce the administrative burden for GitLab, only educational institutions can apply on behalf of their students. If you’re a student and your educational institution does not apply, you can use public projects on GitLab.com with all functionality, use private projects with the free functionality, or pay yourself.

|

||||

|

||||

### Wrapping Up

|

||||

|

||||

Now that GitLab is stepping up its game, what do you think about it?

|

||||

|

||||

Do you have a project hosted on [GitHub][12]? Will you be switching over? Or, luckily, have you been using GitLab from the start?

|

||||

|

||||

Let us know your thoughts in the comments section below.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://itsfoss.com/gitlab-free-open-source/

|

||||

|

||||

Author: [Ankush Das][a]

Topic selection: [lujun9972](https://github.com/lujun9972)

Translator: [translator ID](https://github.com/译者ID)

Proofreader: [proofreader ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China (Linux中国)](https://linux.cn/)

|

||||

|

||||

[a]:https://itsfoss.com/author/ankush/

|

||||

[1]:https://itsfoss.com/microsoft-github/

|

||||

[2]:https://itsfoss.com/github-alternatives/

|

||||

[3]:https://itsfoss.com/linux-kernel-4-17/

|

||||

[4]:https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2018/06/GitLab-logo-800x450.png

|

||||

[5]:https://about.gitlab.com/2018/06/05/gitlab-ultimate-and-gold-free-for-education-and-open-source/

|

||||

[6]:https://www.gnome.org/news/2018/05/gnome-moves-to-gitlab-2/

|

||||

[7]:https://salsa.debian.org/public

|

||||

[8]:https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2018/06/gitlab-pricing.jpeg

|

||||

[9]:https://m.do.co/c/d58840562553

|

||||

[10]:https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2018/06/gitlab-hosted-service-800x273.jpeg

|

||||

[11]:https://itsfoss.com/open-source-licenses-explained/

|

||||

[12]:https://github.com/

|

||||

@ -0,0 +1,309 @@

|

||||

Using MQTT to send and receive data for your next project

|

||||

======

|

||||

|

||||

|

||||

|

||||

Last November we bought an electric car, and it raised an interesting question: When should we charge it? I was concerned about having the lowest emissions for the electricity used to charge the car, so this is a specific question: What is the rate of CO2 emissions per kWh at any given time, and when during the day is it at its lowest?

|

||||

|

||||

### Finding the data

|

||||

|

||||

I live in New York State. About 80% of our electricity comes from in-state generation, mostly through natural gas, hydro dams (much of it from Niagara Falls), nuclear, and a bit of wind, solar, and other fossil fuels. The entire system is managed by the [New York Independent System Operator][1] (NYISO), a not-for-profit entity that was set up to balance the needs of power generators, consumers, and regulatory bodies to keep the lights on in New York.

|

||||

|

||||

Although there is no official public API, as part of its mission, NYISO makes [a lot of open data][2] available for public consumption. This includes reporting on what fuels are being consumed to generate power, at five-minute intervals, throughout the state. These are published as CSV files on a public archive and updated throughout the day. If you know the number of megawatts coming from different kinds of fuels, you can make a reasonable approximation of how much CO2 is being emitted at any given time.

|

||||

|

||||

We should always be kind when building tools to collect and process open data to avoid overloading those systems. Instead of sending everyone to their archive service to download the files all the time, we can do better. We can create a low-overhead event stream that people can subscribe to and get updates as they happen. We can do that with [MQTT][3]. The target for my project ([ny-power.org][4]) was inclusion in the [Home Assistant][5] project, an open source home automation platform that has hundreds of thousands of users. If all of these users were hitting this CSV server all the time, NYISO might need to restrict access to it.

|

||||

|

||||

### What is MQTT?

|

||||

|

||||

MQTT is a publish/subscribe (pubsub) wire protocol designed with small devices in mind. Pubsub systems work like a message bus. You send a message to a topic, and any software with a subscription for that topic gets a copy of your message. As a sender, you never really know who is listening; you just provide your information to a set of topics and listen for any other topics you might care about. It's like walking into a party and listening for interesting conversations to join.

|

||||

|

||||

This can make for extremely efficient applications. Clients subscribe to a narrow selection of topics and only receive the information they are looking for. This saves both processing time and network bandwidth.
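The publish/subscribe idea described above can be sketched in a few lines of Python. This is a toy in-memory stand-in, not an MQTT client or broker: the `ToyBus` class and its methods are hypothetical names invented for illustration, and topics are matched by exact string only.

```python
from collections import defaultdict


class ToyBus:
    """In-memory stand-in for a pub/sub broker (exact-match topics only)."""

    def __init__(self):
        # topic -> list of callbacks registered for that topic
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, callback):
        self._subscribers[topic].append(callback)

    def publish(self, topic, message):
        # The sender does not know who, if anyone, is listening.
        callbacks = self._subscribers.get(topic, [])
        for callback in callbacks:
            callback(topic, message)
        return len(callbacks)


bus = ToyBus()
received = []
bus.subscribe(
    "ny-power/upstream/fuel-mix/Hydro",
    lambda topic, message: received.append((topic, message)),
)

# One subscriber is listening on the Hydro topic, nobody on Wind.
delivered = bus.publish("ny-power/upstream/fuel-mix/Hydro", "3229")
ignored = bus.publish("ny-power/upstream/fuel-mix/Wind", "41")
```

The subscriber only ever sees the Hydro message; the Wind message is published the same way but costs the subscriber nothing, which is the efficiency argument in miniature.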

|

||||

|

||||

As an open standard, MQTT has many open source implementations of both clients and servers. There are client libraries for every language you could imagine, even a library you can embed in Arduino for making sensor networks. There are many servers to choose from. My go-to is the [Mosquitto][6] server from Eclipse, as it's small, written in C, and can handle tens of thousands of subscribers without breaking a sweat.

|

||||

|

||||

### Why I like MQTT

|

||||

|

||||

Over the past two decades, we've come up with tried and true models for software applications to ask questions of services. Do I have more email? What is the current weather? Should I buy this thing now? This pattern of "ask/receive" works well much of the time; however, in a world awash with data, there are other patterns we need. The MQTT pubsub model is powerful where lots of data is published inbound to the system. Clients can subscribe to narrow slices of data and receive updates instantly when that data comes in.

|

||||

|

||||

MQTT also has additional interesting features, such as "last-will-and-testament" messages, which make it possible to distinguish between silence because there is no relevant data and silence because your data collectors have crashed. MQTT also has retained messages, which provide the last message on a topic to clients when they first connect. This is extremely useful for topics that update slowly.
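To make the two features concrete, here is a simplified Python model of a broker that keeps retained messages and publishes a client's last-will message when its connection drops. It is only a sketch: `ToyBroker`, its method names, and the `ny-power/status/pump` topic are hypothetical, and a real broker handles far more (sessions, QoS, keep-alive timers). Retaining the will message is a choice made here for illustration.

```python
class ToyBroker:
    """Simplified model of retained messages and last-will handling."""

    def __init__(self):
        self._subscribers = {}  # topic -> list of callbacks
        self._retained = {}     # topic -> last retained message
        self._wills = {}        # client id -> (topic, message)

    def subscribe(self, topic, callback):
        self._subscribers.setdefault(topic, []).append(callback)
        # A new subscriber immediately receives the retained message, if any.
        if topic in self._retained:
            callback(topic, self._retained[topic])

    def publish(self, topic, message, retain=False):
        if retain:
            self._retained[topic] = message
        for callback in self._subscribers.get(topic, []):
            callback(topic, message)

    def connect(self, client_id, will_topic, will_message):
        # The client registers its will up front, while it is still healthy.
        self._wills[client_id] = (will_topic, will_message)

    def connection_lost(self, client_id):
        # The broker, not the crashed client, publishes the will.
        topic, message = self._wills.pop(client_id)
        self.publish(topic, message, retain=True)


broker = ToyBroker()
broker.connect("pump", "ny-power/status/pump", "offline")
broker.publish("ny-power/computed/co2", "152.9", retain=True)

seen = []
broker.subscribe("ny-power/computed/co2", lambda t, m: seen.append((t, m)))
broker.subscribe("ny-power/status/pump", lambda t, m: seen.append((t, m)))
broker.connection_lost("pump")
```

The late subscriber gets the retained CO2 value straight away, and the `offline` message tells it that later silence means the collector is down rather than that there is nothing to report.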

|

||||

|

||||

In my work with the Home Assistant project, I've found this message bus model works extremely well for heterogeneous systems. If you dive into the Internet of Things space, you'll quickly run into MQTT everywhere.

|

||||

|

||||

### Our first MQTT stream

|

||||

|

||||

One of NYISO's CSV files is the real-time fuel mix. Every five minutes, it's updated with the fuel sources and power generated (in megawatts) during that time period.

|

||||

|

||||

The CSV file looks something like this:

|

||||

|

||||

| Time Stamp | Time Zone | Fuel Category | Gen MW |
|---|---|---|---|
| 05/09/2018 00:05:00 | EDT | Dual Fuel | 1400 |
| 05/09/2018 00:05:00 | EDT | Natural Gas | 2144 |
| 05/09/2018 00:05:00 | EDT | Nuclear | 4114 |
| 05/09/2018 00:05:00 | EDT | Other Fossil Fuels | 4 |
| 05/09/2018 00:05:00 | EDT | Other Renewables | 226 |
| 05/09/2018 00:05:00 | EDT | Wind | 41 |
| 05/09/2018 00:05:00 | EDT | Hydro | 3229 |
| 05/09/2018 00:10:00 | EDT | Dual Fuel | 1307 |
| 05/09/2018 00:10:00 | EDT | Natural Gas | 2092 |
| 05/09/2018 00:10:00 | EDT | Nuclear | 4115 |
| 05/09/2018 00:10:00 | EDT | Other Fossil Fuels | 4 |
| 05/09/2018 00:10:00 | EDT | Other Renewables | 224 |
| 05/09/2018 00:10:00 | EDT | Wind | 40 |
| 05/09/2018 00:10:00 | EDT | Hydro | 3166 |

|

||||

|

||||

The only odd thing in the table is the dual-fuel category. Most natural gas plants in New York can also burn other fossil fuel to generate power. During cold snaps in the winter, the natural gas supply gets constrained, and its use for home heating is prioritized over power generation. This happens at a low enough frequency that we can consider dual fuel to be natural gas (for our calculations).

|

||||

|

||||

The file is updated throughout the day. I created a simple data pump that polls for the file every minute and looks for updates. It publishes any new entries out to the MQTT server into a set of topics that largely mirror this CSV file. The payload is turned into a JSON object that is easy to parse from nearly any programming language.

|

||||

```
ny-power/upstream/fuel-mix/Hydro {"units": "MW", "value": 3229, "ts": "05/09/2018 00:05:00"}
ny-power/upstream/fuel-mix/Dual Fuel {"units": "MW", "value": 1400, "ts": "05/09/2018 00:05:00"}
ny-power/upstream/fuel-mix/Natural Gas {"units": "MW", "value": 2144, "ts": "05/09/2018 00:05:00"}
ny-power/upstream/fuel-mix/Other Fossil Fuels {"units": "MW", "value": 4, "ts": "05/09/2018 00:05:00"}
ny-power/upstream/fuel-mix/Wind {"units": "MW", "value": 41, "ts": "05/09/2018 00:05:00"}
ny-power/upstream/fuel-mix/Other Renewables {"units": "MW", "value": 226, "ts": "05/09/2018 00:05:00"}
ny-power/upstream/fuel-mix/Nuclear {"units": "MW", "value": 4114, "ts": "05/09/2018 00:05:00"}
```

|

||||

|

||||

This direct reflection is a good first step in turning open data into open events. We'll be converting this into a CO2 intensity, but other applications might want these raw feeds to do other calculations with them.
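The translation from CSV rows to topics and JSON payloads might look roughly like the sketch below. It is not the project's actual data pump: the comma-separated layout, the column names (taken from the table shown earlier), and the `rows_to_messages` helper are assumptions made for illustration, and the polling and publishing steps are left out.

```python
import csv
import io
import json

# Assumed file layout, based on the column names in the table shown earlier.
SAMPLE_CSV = """Time Stamp,Time Zone,Fuel Category,Gen MW
05/09/2018 00:05:00,EDT,Hydro,3229
05/09/2018 00:05:00,EDT,Nuclear,4114
"""

TOPIC_ROOT = "ny-power/upstream/fuel-mix"


def rows_to_messages(csv_text):
    """Turn each CSV row into a (topic, JSON payload) pair."""
    messages = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        # One topic per fuel category, mirroring the CSV structure.
        topic = f"{TOPIC_ROOT}/{row['Fuel Category']}"
        payload = json.dumps(
            {"units": "MW", "value": int(row["Gen MW"]), "ts": row["Time Stamp"]}
        )
        messages.append((topic, payload))
    return messages


messages = rows_to_messages(SAMPLE_CSV)
for topic, payload in messages:
    print(topic, payload)
```

Keeping the topic tied to the fuel category and the payload to a flat JSON object means a consumer needs nothing beyond a JSON parser to use the feed.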

|

||||

|

||||

### MQTT topics

|

||||

|

||||

Topics and topic structures are one of MQTT's major design points. Unlike more "enterprisey" message buses, in MQTT topics are not preregistered. A sender can create topics on the fly; the only hard limit in the MQTT specification is that a topic name fits in 65,535 bytes of UTF-8, although individual brokers may enforce a shorter maximum. The `/` character is special; it's used to create topic hierarchies. As we'll soon see, you can subscribe to slices of data in these hierarchies.

|

||||

|

||||

Out of the box with Mosquitto, every client can publish to any topic. While it's great for prototyping, before going to production you'll want to add an access control list (ACL) to restrict writing to authorized applications. For example, my app's tree is accessible to everyone in read-only format, but only clients with specific credentials can publish to it.

|

||||

|

||||

There is no automatic schema around topics nor a way to discover all the possible topics that clients will publish to. You'll have to encode that understanding directly into any application that consumes the MQTT bus.

|

||||

|

||||

So how should you design your topics? The best practice is to start with an application-specific root name, in our case, `ny-power`. After that, build a hierarchy as deep as you need for efficient subscription. The `upstream` tree will contain data that comes directly from an upstream source without any processing. Our `fuel-mix` category is a specific type of data. We may add others later.

|

||||

|

||||

### Subscribing to topics

|

||||

|

||||

Subscriptions in MQTT are simple string matches. For processing efficiency, only two wildcards are allowed:

|

||||

|

||||

* `#` matches everything recursively to the end

|

||||

* `+` matches only until the next `/` character

|

||||

|

||||

|

||||

|

||||

It's easiest to explain this with some examples:

|

||||

```
ny-power/# - match everything published by the ny-power app
ny-power/upstream/# - match all raw data
ny-power/upstream/fuel-mix/+ - match all fuel types
ny-power/+/+/Hydro - match everything about Hydro power that's
       nested 2 deep (even if it's not in the upstream tree)
```
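The matching rules for the two wildcards can be written out as a short Python function. This is a minimal sketch for illustration, not code from any MQTT library: `topic_matches` is a hypothetical helper, and it assumes the subscription pattern is well formed (in particular, that `#` only appears as the last level).

```python
def topic_matches(pattern, topic):
    """Return True if `topic` matches the subscription `pattern`.

    `+` matches exactly one level; `#` matches the rest of the topic.
    Assumes a well-formed pattern where `#` is the final level.
    """
    pattern_levels = pattern.split("/")
    topic_levels = topic.split("/")
    for i, level in enumerate(pattern_levels):
        if level == "#":
            # Everything from here to the end matches.
            return True
        if i >= len(topic_levels):
            # The pattern is deeper than the topic.
            return False
        if level != "+" and level != topic_levels[i]:
            return False
    # No `#` seen, so the depths must agree exactly.
    return len(pattern_levels) == len(topic_levels)


hydro = "ny-power/upstream/fuel-mix/Hydro"
print(topic_matches("ny-power/#", hydro))
print(topic_matches("ny-power/upstream/fuel-mix/+", hydro))
print(topic_matches("ny-power/+/+/Hydro", hydro))
print(topic_matches("ny-power/+/+/Hydro", "ny-power/computed/co2"))
```

The first three calls match and the last one does not, because `ny-power/computed/co2` is only three levels deep while the pattern requires four.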

|

||||

|

||||

A wide subscription like `ny-power/#` is common for low-volume applications. Just get everything over the network and handle it in your own application. This works poorly for high-volume applications, as most of the network bandwidth will be wasted as you drop most of the messages on the floor.

|

||||

|

||||

To stay performant at higher volumes, applications will do some clever topic slices like `ny-power/+/+/Hydro` to get exactly the cross-section of data they need.

|

||||

|

||||

### Adding our next layer of data

From this point forward, everything in the application will work off existing MQTT streams. The first additional layer of data computes the CO2 intensity of the power being generated.

Using the 2016 [U.S. Energy Information Administration][7] numbers for total emissions and total power by fuel type in New York, we can come up with an [average emissions rate][8] per megawatt hour of power.

This calculation is encapsulated in a dedicated microservice. It subscribes to `ny-power/upstream/fuel-mix/+`, which matches all upstream fuel-mix entries from the data pump, performs the calculation, and publishes the result to a new topic tree:

```
ny-power/computed/co2 {"units": "g / kWh", "value": 152.9486, "ts": "05/09/2018 00:05:00"}
```
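
The calculation itself can be sketched as a generation-weighted average of per-fuel emission rates. This is only an illustration under stated assumptions: the emission factors below are made-up placeholders rather than the EIA-derived values, the fuel-mix input is assumed to be in MW per fuel type, and the function names are hypothetical.

```javascript
// Placeholder emission factors in g CO2 per kWh. These numbers are invented
// for illustration; they are NOT the EIA-derived rates the service uses.
const PLACEHOLDER_FACTORS = { "Natural Gas": 400, "Hydro": 0, "Nuclear": 0 };

// fuelMixMW maps fuel type -> current generation in MW (assumed input shape).
function co2Intensity(fuelMixMW, factors) {
  let totalMW = 0;
  let weighted = 0;
  for (const [fuel, mw] of Object.entries(fuelMixMW)) {
    if (!(fuel in factors)) {
      throw new Error("no emission factor for " + fuel);
    }
    totalMW += mw;
    weighted += mw * factors[fuel];
  }
  if (totalMW === 0) throw new Error("fuel mix is empty");
  // Weighted average: each fuel contributes in proportion to its output.
  return weighted / totalMW;
}

// Wrap the result in the same payload shape as the message shown above.
function co2Message(fuelMixMW, factors, ts) {
  return JSON.stringify({
    units: "g / kWh",
    value: co2Intensity(fuelMixMW, factors),
    ts: ts,
  });
}

console.log(co2Message({ "Natural Gas": 1000, "Hydro": 3000 }, PLACEHOLDER_FACTORS, "05/09/2018 00:05:00"));
```

In a real service this function would run each time a new fuel-mix message arrives, and the resulting string would be published to `ny-power/computed/co2`.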

In turn, another process subscribes to this topic tree and archives the data into an [InfluxDB][9] instance. It then publishes a 24-hour time series to `ny-power/archive/co2/24h`, which makes it easy to graph the recent changes.
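
The windowing step can be illustrated with an in-memory stand-in for the database query. This is a sketch only: the real archiver reads from InfluxDB, and the point shape used here (`ts` as epoch milliseconds) and the name `last24h` are assumptions.

```javascript
const DAY_MS = 24 * 60 * 60 * 1000;

// points: array of { ts: epoch milliseconds, value: number } (assumed shape).
// Returns the points from the 24 hours up to nowMs, oldest first.
function last24h(points, nowMs) {
  return points
    .filter((p) => p.ts > nowMs - DAY_MS && p.ts <= nowMs)
    .sort((a, b) => a.ts - b.ts);
}

const now = Date.now();
const series = last24h(
  [
    { ts: now - 2 * DAY_MS, value: 140.2 }, // too old, dropped
    { ts: now - 60 * 1000, value: 152.9 },
    { ts: now - 5 * 60 * 1000, value: 151.3 },
  ],
  now
);
console.log(JSON.stringify(series));
```

Publishing the whole window as one message means a graphing client can draw the chart from a single payload instead of replaying a day of individual readings.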

This layered model works well because the logic in each of these programs can stay independent of the others. In a more complicated system, they may not even be written in the same programming language. We don't care, because the interchange format is MQTT messages, with well-known topics and JSON payloads.

### Consuming from the command line

To get a feel for MQTT in action, it's useful to just attach to a bus and watch the messages flow. The `mosquitto_sub` program included in the `mosquitto-clients` package is a simple way to do that.

After you've installed it, you need to provide a server hostname and the topic you'd like to listen to. The `-v` flag is important if you want to see the topics being posted to. Without that, you'll see only the payloads.

```
mosquitto_sub -h mqtt.ny-power.org -t ny-power/# -v
```

Whenever I'm writing or debugging an MQTT application, I always have a terminal with `mosquitto_sub` running.

### Accessing MQTT directly from the web

We now have an application providing an open event stream. We can connect to it with our microservices and, with some command-line tooling, it's on the internet for all to see. But the web is still king, so it's important to get it directly into a user's browser.

The MQTT folks thought about this one. The protocol is designed to work over [TCP][10] and [WebSockets][12]; a related variant, MQTT-SN, covers [UDP][11] and other non-TCP transports. WebSockets are supported by all major browsers as a way to retain persistent connections for real-time applications.

The Eclipse project has a JavaScript implementation of MQTT called [Paho][13], which can be included in your application. The pattern is to connect to the host, set up some subscriptions, and then react to messages as they are received.

```

// ny-power web console application
var client = new Paho.MQTT.Client(mqttHost, Number("80"), "client-" + Math.random());

// set callback handlers
client.onMessageArrived = onMessageArrived;

// connect the client
client.reconnect = true;