Mirror of https://github.com/LCTT/TranslateProject.git

synced 2025-01-22 23:00:57 +08:00

[Translated] How to analyze Squid logs with SARG log analyzer on CentOS

Commit edb91d2e30 (parent 5bb5a79c70)

@ -1,9 +1,6 @@

Translating-----geekpi

How to analyze Squid logs with SARG log analyzer on CentOS
================================================================================

In a [previous tutorial][1], we showed how to configure a transparent proxy with Squid on CentOS. Squid provides many useful features, but analyzing a raw Squid log file is not straightforward. For example, how would you analyze the timestamps and the number of hits in the following Squid log?

```
1404788984.429 1162 172.17.1.23 TCP_MISS/302 436 GET http://facebook.com/ - DIRECT/173.252.110.27 text/html
1404788985.046 12416 172.17.1.23 TCP_MISS/200 4169 CONNECT stats.pusher.com:443 - DIRECT/173.255.223.127 -
```

@ -15,24 +12,24 @@ In a [previous tutorial][1], we show how to configure a transparent proxy with S

```
1404788990.849 2151 172.17.1.23 TCP_MISS/200 76809 CONNECT fbstatic-a.akamaihd.net:443 - DIRECT/184.26.162.35 -
1404788991.140 611 172.17.1.23 TCP_MISS/200 110073 CONNECT fbstatic-a.akamaihd.net:443 - DIRECT/184.26.162.35 -
```
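Each of these lines is in Squid's native access.log format: a Unix timestamp with milliseconds, the elapsed time in milliseconds, the client address, the result code and HTTP status, the reply size in bytes, the request method, the URL, the user ident, the hierarchy code with the peer, and the content type. To show why a report generator helps, here is a minimal hand-rolled summary in shell and awk. It is only a sketch: it assumes the native format (not a custom `logformat`), and `squid_summary` is a hypothetical name. It prints, per client, the request count, total bytes, and the first and last request times as epoch seconds.

```shell
#!/bin/sh
# Sketch: summarize a Squid access.log written in the native format
#   time elapsed client code/status bytes method URL ident hierarchy/peer type
# Output, one line per client, busiest client first:
#   client requests total_bytes first_epoch last_epoch
squid_summary() {
    awk '
    {
        ip = $3
        hits[ip]++
        bytes[ip] += $5
        t = int($1)                      # drop the millisecond fraction
        if (!(ip in first) || t < first[ip]) first[ip] = t
        if (t > last[ip]) last[ip] = t
    }
    END {
        for (ip in hits)
            printf "%s %d %d %d %d\n", ip, hits[ip], bytes[ip], first[ip], last[ip]
    }' "$@" | sort -k2,2nr
}

# Usage (path is the assumed default for the CentOS squid package):
#   squid_summary /var/log/squid/access.log
```

For the four lines shown above, this prints `172.17.1.23 4 191487 1404788984 1404788991`, and GNU `date -d @1404788984` turns an epoch value into a readable time. Anything beyond this, such as per-site totals or per-day breakdowns, is where a tool like SARG becomes worthwhile.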

SARG (Squid Analysis Report Generator) is a web-based tool that creates reports from Squid logs. It presents the network traffic handled by Squid in an easy-to-understand form, and it is easy to set up and maintain. In the following tutorial, we show **how to set up SARG on a CentOS platform**.

We start by installing the necessary dependencies with yum.

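```
# yum install gcc make wget httpd cronie
```

On CentOS 6 and later, the crond service is provided by the cronie package, so that is the package name to install.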

Next, start the necessary services and enable them at boot.

```
# service httpd start; service crond start
# chkconfig httpd on; chkconfig crond on
```

Now we download and extract SARG.

```
# wget http://downloads.sourceforge.net/project/sarg/sarg/sarg-2.3.8/sarg-2.3.8.tar.gz
# tar zxvf sarg-2.3.8.tar.gz
# cd sarg-2.3.8
```
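If you later want a different release, the download steps can be wrapped in a small shell function. This is a sketch under one assumption: that other SARG releases on SourceForge use the same path layout as the 2.3.8 URL above. `sarg_url` and `fetch_sarg` are hypothetical names.

```shell
#!/bin/sh
# Sketch: parameterize the download steps by SARG version.
# ASSUMPTION: other releases use the same SourceForge path layout as 2.3.8.
sarg_url() {
    printf 'http://downloads.sourceforge.net/project/sarg/sarg/sarg-%s/sarg-%s.tar.gz\n' "$1" "$1"
}

# Download, unpack and enter the source tree. Shell functions run in the
# caller's shell, so the final cd persists. Stops at the first failing step.
fetch_sarg() {
    v=$1
    wget -O "sarg-$v.tar.gz" "$(sarg_url "$v")" &&
        tar zxvf "sarg-$v.tar.gz" &&
        cd "sarg-$v"
}

# Usage: fetch_sarg 2.3.8
```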

**NOTE**: On 64-bit Linux, the source code in log.c needs to be patched as follows.

```
1506c1506
< if (fprintf(ufile->file, "%s\t%s\t%s\t%s\t%"PRIi64"\t%s\t%ld\t%s\n",dia,hora,ip,url,nbytes,code,elap_time,smartfilter)<=0) {
```

@ -47,13 +44,13 @@ Now we download and extract SARG.

```
---
> printf("LEN=\t%"PRIi64"\n",(int64_t)nbytes);
```

Then build and install SARG as follows.

```
# ./configure
# make
# make install
```

After SARG is installed, the configuration file can be modified to match your requirements. The following is an example SARG configuration.

```
# vim /usr/local/etc/sarg.conf
```

@ -66,19 +63,19 @@ After SARG is installed, the configuration file can be modified to match your re

```
## we don't want multiple reports for single day/week/month ##
overwrite_report yes
```
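Editing with vim is fine for a one-off setup. For scripted installs, a helper like the hypothetical `set_conf_option` below can set a directive non-interactively. It is a sketch that assumes sarg.conf's one-directive-per-line `key value` layout with `#` comments, GNU sed (for `-i` and `-E`), and values that contain no `|`, `&` or backslash.

```shell
#!/bin/sh
# Sketch: set "KEY VALUE" in a sarg.conf-style file non-interactively.
# ASSUMPTIONS: one "key value" directive per line, "#" starts a comment,
# KEY contains only letters, digits and underscores, VALUE contains no
# "|", "&" or backslash (they are special in the sed replacement below),
# and GNU sed is available for -i and -E.
set_conf_option() {
    conf=$1 key=$2 value=$3
    if grep -Eq "^[#[:space:]]*${key}[[:space:]]" "$conf"; then
        # Replace the active or commented-out line(s) for KEY in place.
        sed -i -E "s|^[#[:space:]]*${key}[[:space:]].*|${key} ${value}|" "$conf"
    else
        # KEY is not present at all: append it.
        printf '%s %s\n' "$key" "$value" >> "$conf"
    fi
}
```

For example, `set_conf_option /usr/local/etc/sarg.conf overwrite_report yes` reproduces the setting shown above.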

Now it's time for a test run. We run the sarg command in debug mode to see whether there are any errors.

```
# sarg -x
```

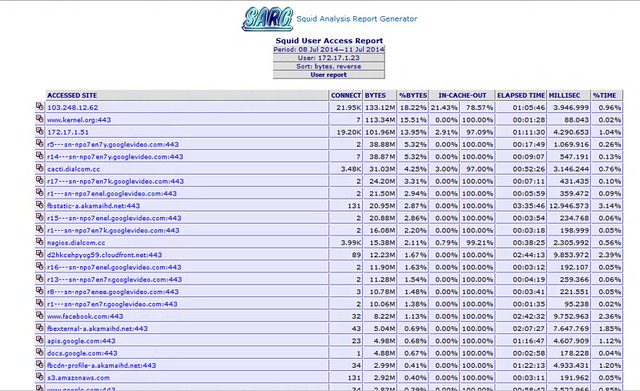

If all goes well, sarg analyzes the Squid logs and creates reports in /var/www/html/squid-reports. The reports can then be viewed in a web browser at `http://<server-IP>/squid-reports/`.
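If the test run does not go well, one thing worth checking is whether sarg.conf points at the access.log that Squid actually writes. The sketch below, with the hypothetical names `sarg_access_log` and `check_access_log`, prints the first active `access_log` line from sarg.conf and checks that the file is readable. It assumes the stock directive name `access_log` and the config path used earlier in this tutorial.

```shell
#!/bin/sh
# Sketch: show which log sarg.conf points at and check that it is readable.
# ASSUMPTION: the log file is set by an uncommented "access_log PATH" line.
sarg_access_log() {
    conf=${1:-/usr/local/etc/sarg.conf}
    # Commented lines start with "#access_log", so $1 does not match them.
    awk '$1 == "access_log" { print $2; exit }' "$conf"
}

check_access_log() {
    log=$(sarg_access_log "$1")
    if [ -z "$log" ]; then
        echo "no active access_log line in ${1:-/usr/local/etc/sarg.conf}" >&2
        return 1
    fi
    if [ ! -r "$log" ]; then
        echo "access_log points at an unreadable file: $log" >&2
        return 1
    fi
    echo "$log"
}

# Usage: check_access_log /usr/local/etc/sarg.conf
```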

SARG can create daily, weekly, and monthly reports. The time range is specified with the "-d" parameter, which takes values of the form day-n, week-n, or month-n, where n is the number of days, weeks, or months to go back. For example, with week-1, SARG generates a report for the previous week; with day-2, it prepares reports for the previous two days.
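Before wiring a period value into cron, it can help to reject typos such as `days-1`. The sketch below accepts only the three forms described above; requiring n to be a positive integer is an assumption of this example, not something the tutorial states. `is_sarg_period` and `run_sarg_period` are hypothetical names, and the sarg path is the one used in the cron job in this tutorial.

```shell
#!/bin/sh
# Sketch: accept only day-n, week-n or month-n (n a positive integer,
# an assumption of this example) before handing the value to sarg -d.
is_sarg_period() {
    case $1 in
        day-*|week-*|month-*) n=${1#*-} ;;
        *) return 1 ;;
    esac
    # Reject an empty n, non-digits, and zero or leading zeros.
    case $n in
        ''|*[!0-9]*|0*) return 1 ;;
    esac
    return 0
}

run_sarg_period() {
    if is_sarg_period "$1"; then
        /usr/local/bin/sarg -d "$1"
    else
        echo "unsupported period: $1 (expected day-n, week-n or month-n)" >&2
        return 1
    fi
}

# Usage: run_sarg_period week-1
```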

As a demonstration, we will set up a cron job that runs SARG daily.

```
# vim /etc/cron.daily/sarg
```

@ -87,30 +84,31 @@ As a demonstration, we will prepare a cron job to run SARG daily.

```
#!/bin/sh
/usr/local/bin/sarg -d day-1
```

The cron script needs execute permission.

```
# chmod 755 /etc/cron.daily/sarg
```
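The same pattern extends to weekly and monthly reports. The hypothetical `install_sarg_cron` below writes one script per period into /etc/cron.daily, /etc/cron.weekly and /etc/cron.monthly and applies the same chmod. It is a sketch that assumes cron processes those directories on your system and that sarg lives at /usr/local/bin/sarg, as above; the optional argument is a root prefix so you can try it in a scratch directory first.

```shell
#!/bin/sh
# Sketch: install sarg cron jobs for daily, weekly and monthly reports.
# ASSUMPTIONS: cron runs /etc/cron.{daily,weekly,monthly} on this system and
# sarg is installed at /usr/local/bin/sarg. The optional first argument is a
# root prefix (default: empty, i.e. the real /etc) for trying it out safely.
install_sarg_cron() {
    root=${1:-}
    for pair in daily:day-1 weekly:week-1 monthly:month-1; do
        dir=${pair%%:*}                  # daily | weekly | monthly
        period=${pair#*:}                # day-1 | week-1 | month-1
        target="$root/etc/cron.$dir/sarg"
        mkdir -p "$root/etc/cron.$dir" || return 1
        printf '#!/bin/sh\n/usr/local/bin/sarg -d %s\n' "$period" > "$target" || return 1
        chmod 755 "$target" || return 1
    done
}
```

Run `install_sarg_cron` as root to install into the real /etc, or `install_sarg_cron /tmp/sarg-test` to inspect the generated files first.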

SARG should now prepare daily reports on the traffic handled by Squid. These reports can easily be accessed from the SARG web interface.

To sum up, SARG is a web-based tool that analyzes Squid logs and presents the results in an informative way. System administrators can use SARG to monitor which sites are being accessed and to keep track of the most visited sites and the most active users. This tutorial covers a working SARG configuration, which you can customize further to match your requirements.

Hope this helps.

----------

[Sarmed Rahman][w]

- [Twitter profile][t]

- [LinkedIn profile][l]

Sarmed Rahman is an IT professional in the Internet industry in Bangladesh. He writes tutorial articles on technology every now and then, from a belief that knowledge grows through sharing. In his free time, he loves gaming and spending time with his friends.

--------------------------------------------------------------------------------

via: http://xmodulo.com/2014/07/analyze-squid-logs-sarg-log-analyzer-centos.html

Translator: [geekpi](https://github.com/geekpi) Proofreader: [校对者ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](http://linux.cn/).
