Mirror of https://github.com/LCTT/TranslateProject.git

Synced 2025-01-25 23:11:02 +08:00

Merge remote-tracking branch 'upstream/master' into Scout-out-code-problems-with-SonarQube

Commit ecdff70c2e

@ -4,9 +4,9 @@ script:

  - 'if [ "$TRAVIS_PULL_REQUEST" = "false" ]; then sh ./scripts/badge.sh; fi'
branches:
  only:
    - master
  except:
    - gh-pages
git:
  submodules: false
deploy:

@ -1,40 +1,41 @@

SDKMAN: A Command-Line Tool to Easily Manage Multiple Software Development Kits (SDKs)
======

Are you a developer who often installs and tests applications against different SDKs? I have good news for you! Meet **SDKMAN**, a command-line tool that helps you easily manage multiple SDKs. It provides a convenient way to install, switch, list, and remove SDKs. With SDKMAN, you can easily manage parallel versions of multiple SDKs on any Unix-like operating system. It lets developers install SDKs for the JVM such as Java, Groovy, Scala, Kotlin and Ceylon, Ant, Gradle, Grails, Maven, SBT, Spark, Spring Boot, Vert.x, and many other supported SDKs. SDKMAN is free, lightweight, open source, and written in **Bash**.

### Installing SDKMAN

Installing SDKMAN is easy. First, make sure the `zip` and `unzip` applications are installed. Both are available in the default repositories of most Linux distributions.

For example, to install them on Debian-based systems, run:

```
$ sudo apt-get install zip unzip

```
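Before running the installer, you can check that the tools it depends on are actually on your `PATH`. This is a minimal sketch. It assumes the commands to check are `zip` and `unzip` (from the text above) plus `curl` (used by the install command). `missing_cmds` is a hypothetical helper name, not part of SDKMAN.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print every command from the argument list
# (default: zip unzip curl) that cannot be found on the PATH.
missing_cmds() {
  local cmd
  [ "$#" -eq 0 ] && set -- zip unzip curl
  for cmd in "$@"; do
    command -v "$cmd" >/dev/null 2>&1 || echo "$cmd"
  done
}

# Usage: warn before running the SDKMAN installer.
# missing=$(missing_cmds)
# [ -z "$missing" ] || echo "Please install:" $missing
```

Empty output means every listed command was found.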

Then install SDKMAN with the following command:

```
$ curl -s "https://get.sdkman.io" | bash
```

After the installation completes, run:

```
$ source "$HOME/.sdkman/bin/sdkman-init.sh"
```

If you want to install it to a custom location, such as `/usr/local/`, you can do this:

```
$ export SDKMAN_DIR="/usr/local/sdkman" && curl -s "https://get.sdkman.io" | bash
```

Make sure your user has sufficient permissions to access this directory.
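One way to act on that advice before exporting `SDKMAN_DIR` is to test whether your user can write to the target directory. If the directory does not exist yet, the sketch tests its nearest existing parent instead. `can_write_to` is a hypothetical helper. It only checks the write permission bit for the current user, so when run as root it always succeeds.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if the current user could create files at
# the given path. If the path does not exist yet, walk up to the nearest
# existing parent and test that instead (the loop stops at "/" or ".").
can_write_to() {
  local dir=$1
  while [ ! -e "$dir" ]; do
    dir=$(dirname "$dir")
  done
  [ -d "$dir" ] && [ -w "$dir" ]
}

# Usage (before the custom-location install shown above):
# can_write_to /usr/local/sdkman || echo "No write access: choose another SDKMAN_DIR or adjust permissions"
```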

Finally, verify the installation with:

```
$ sdk version
==== BROADCAST =================================================================
@ -44,7 +45,6 @@ $ sdk version
================================================================================
SDKMAN 5.7.2+323
```

Congratulations! SDKMAN is installed. Next, let's see how to install and manage SDKs.

@ -52,12 +52,13 @@ SDKMAN 5.7.2+323

### Managing multiple SDKs

To list the available SDKs, run:

```
$ sdk list
```

Sample output:

```
================================================================================
Available Candidates
@ -79,18 +80,18 @@ used to pilot any type of process which can be described in terms of targets and
tasks.
: $ sdk install ant
```

As you can see, SDKMAN lists one SDK at a time, along with its description, official website, and installation command. Press Enter to move on to the next one.

To install a new SDK, for example the Java JDK, run:

```
$ sdk install java
```

Sample output:

```
Downloading: java 8.0.172-zulu
@ -106,30 +107,30 @@ Installing: java 8.0.172-zulu
Done installing!
Setting java 8.0.172-zulu as default.
```

If you already have other versions of the SDK installed, SDKMAN asks whether you want to make the version you just installed the **default version**. Answering `Yes` makes it the default.

To install another version of an SDK, run:

```
$ sdk install ant 1.10.1
```

If you already have a local installation of an SDK, you can use it as a local version like this:

```
$ sdk install groovy 3.0.0-SNAPSHOT /path/to/groovy-3.0.0-SNAPSHOT
```

To list the versions of an SDK:

```
$ sdk list ant
```

Sample output:

```
================================================================================
Available Ant Versions
@ -145,32 +146,31 @@ Available Ant Versions
* - installed
> - currently in use
================================================================================
```

As mentioned earlier, if you have multiple versions installed, SDKMAN asks whether you want to make the version you are installing the **default version**. You can answer Yes to set it as the default, or set it later with the following command:

```
$ sdk default ant 1.9.9
```

This command makes Apache Ant 1.9.9 the default version.

You can switch to any installed version in the current shell whenever you need it by running:

```
$ sdk use ant 1.9.9
```

To check the current version of a specific SDK, for example Java, run:

```
$ sdk current java
Using java version 8.0.172-zulu
```

To check the versions of all SDKs currently in use, run:

```
$ sdk current

@ -178,36 +178,35 @@ Using:

ant: 1.10.1
java: 8.0.172-zulu
```

To upgrade an outdated SDK, run:

```
$ sdk upgrade scala
```

You can also check which of your installed SDKs are outdated:

```
$ sdk upgrade
```

SDKMAN has an offline mode that lets it keep working when you are offline. You can enable or disable offline mode at any time with:

```
$ sdk offline enable
$ sdk offline disable
```

To remove an installed SDK, run:

```
$ sdk uninstall ant 1.9.9
```

For more details, see the help section:

```
$ sdk help

@ -231,72 +230,68 @@ update

flush <broadcast|archives|temp>
candidate : the SDK to install: groovy, scala, grails, gradle, kotlin, etc.
use list command for comprehensive list of candidates
eg: $ sdk list
version : where optional, defaults to latest stable if not provided
eg: $ sdk install groovy
```

### Updating SDKMAN

If a new version is available, you can install it with:

```
$ sdk selfupdate
```

SDKMAN also checks for updates periodically and tells you how to update:

```
WARNING: SDKMAN is out-of-date and requires an update.
$ sdk update
Adding new candidates(s): scala
```

### Clearing the cache

It is a good idea to clean the cache, including downloaded SDK binaries, from time to time. Just run:

```
$ sdk flush archives
```

It can also clean the temporary folder to save some space:

```
$ sdk flush temp
```

### Uninstalling SDKMAN

If you don't need or like SDKMAN, you can back it up and remove it with the following commands:

```
$ tar zcvf ~/sdkman-backup_$(date +%F-%Hh%M).tar.gz -C ~/ .sdkman
$ rm -rf ~/.sdkman

```
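If you later change your mind, you can unpack the archive created by the `tar` command above back into your home directory. This sketch assumes the archive contains a top-level `.sdkman` directory, as produced by `-C ~/ .sdkman`. `restore_sdkman` is a hypothetical helper that refuses to overwrite an existing installation.

```shell
#!/usr/bin/env bash
# Hypothetical helper: restore an SDKMAN backup made with
#   tar zcvf <archive> -C ~/ .sdkman
# Entries are stored relative to the home directory, so extracting with
# -C <target> recreates <target>/.sdkman (target defaults to $HOME).
restore_sdkman() {
  local archive=$1 target=${2:-$HOME}
  if [ -e "$target/.sdkman" ]; then
    echo "refusing to overwrite existing $target/.sdkman" >&2
    return 1
  fi
  tar xzf "$archive" -C "$target"
}

# Usage:
# restore_sdkman ~/sdkman-backup_<timestamp>.tar.gz
```

Restoring the directory alone does not re-enable SDKMAN if you have also removed the initialization lines from your shell startup files. You would need to add those lines back.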

Finally, open your `.bashrc`, `.bash_profile`, and/or `.profile`, then find and delete the following lines (your paths will point to your own home directory instead of `/home/sk`):

```
#THIS MUST BE AT THE END OF THE FILE FOR SDKMAN TO WORK!!!
export SDKMAN_DIR="/home/sk/.sdkman"
[[ -s "/home/sk/.sdkman/bin/sdkman-init.sh" ]] && source "/home/sk/.sdkman/bin/sdkman-init.sh"
```

If you use ZSH, remove these lines from `.zshrc` instead.
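To confirm nothing was missed, you can search your shell startup files for leftover SDKMAN lines. This sketch assumes the usual file names mentioned above. `find_sdkman_lines` is a hypothetical helper that prints `file:line:text` for every case-insensitive match of `sdkman` and silently skips files that don't exist.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list lines mentioning "sdkman" (any case) in the
# given files, or in the usual shell startup files when no files are given.
find_sdkman_lines() {
  [ "$#" -eq 0 ] && set -- ~/.bashrc ~/.bash_profile ~/.profile ~/.zshrc
  local f
  for f in "$@"; do
    # -H: show file name, -n: show line number, -i: ignore case
    [ -f "$f" ] && grep -Hni 'sdkman' "$f"
  done
  return 0
}

# Usage: no output means no SDKMAN lines are left.
# find_sdkman_lines
```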

That's all for now. I hope SDKMAN helps you. More good stuff is on the way. Stay tuned!

Cheers!

:)

--------------------------------------------------------------------------------

via: https://www.ostechnix.com/sdkman-a-cli-tool-to-easily-manage-multiple-software-development-kits/

@ -304,7 +299,7 @@ via: https://www.ostechnix.com/sdkman-a-cli-tool-to-easily-manage-multiple-softw

Author: [SK][a]

Selected by: [lujun9972](https://github.com/lujun9972)

Translated by: [dianbanjiu](https://github.com/dianbanjiu)

Proofread by: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

@ -1,9 +1,9 @@

How to Quickly Serve Files and Folders over HTTP in Linux
======

There are many ways to make a single file or an entire directory available to other systems on your local network through a web browser. I tested these methods on my Ubuntu test machine, and they work as described below. If you want to know how to serve files and folders over HTTP easily and quickly on a Unix-like operating system, one of the following methods will certainly help.

### Serving files and folders over HTTP in Linux

@ -13,50 +13,59 @@

We have written a brief guide on setting up a simple HTTP server to share files and directories instantly; see the link below. This method is very handy if you have a system with Python installed.

- [How to set up a simple file server using SimpleHTTPServer](https://www.ostechnix.com/how-to-setup-a-file-server-in-minutes-using-python/)

#### Method 2 - Using Quickserve (Python)

This method is for Arch Linux and its derivatives. See the link below for details.

- [How to instantly share files and folders in Arch Linux](https://www.ostechnix.com/instantly-share-files-folders-arch-linux/)

#### Method 3 - Using Ruby

In this method, we use Ruby to serve files and folders over HTTP on Unix-like systems. Install Ruby and Rails as described in the following link.

- [Install Ruby on Rails in CentOS and Ubuntu](https://www.ostechnix.com/install-ruby-rails-ubuntu-16-04/)

Once Ruby is installed, go to the directory you want to share over the network, for example ostechnix:

```
$ cd ostechnix
```

Then run the following command:

```
$ ruby -run -ehttpd . -p8000
[2018-08-10 16:02:55] INFO WEBrick 1.4.2
[2018-08-10 16:02:55] INFO ruby 2.5.1 (2018-03-29) [x86_64-linux]
[2018-08-10 16:02:55] INFO WEBrick::HTTPServer#start: pid=5859 port=8000
```

Make sure port 8000 is open in your router or firewall. If the port is already used by another service, use a different port.
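To follow the advice about using a different port, you can probe for the first free local port before starting the server. This sketch relies on Bash's `/dev/tcp` redirection, which is a Bash feature and not available in plain `sh`. It only checks whether something is listening on 127.0.0.1, not whether your router or firewall allows the port. `port_in_use` and `first_free_port` are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Succeed if something accepts TCP connections on 127.0.0.1:<port>.
# The connection is opened in a subshell, so it is closed when the subshell exits.
port_in_use() {
  (exec 3<>"/dev/tcp/127.0.0.1/$1") 2>/dev/null
}

# Print the first port, starting at <start> (default 8000), that nothing is
# listening on locally. Give up after <tries> attempts (default 20).
first_free_port() {
  local port=${1:-8000} tries=${2:-20}
  while [ "$tries" -gt 0 ]; do
    if ! port_in_use "$port"; then
      echo "$port"
      return 0
    fi
    port=$((port + 1))
    tries=$((tries - 1))
  done
  return 1
}

# Usage with the Ruby one-liner above:
# ruby -run -ehttpd . -p"$(first_free_port 8000)"
```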

|

||||

|

||||

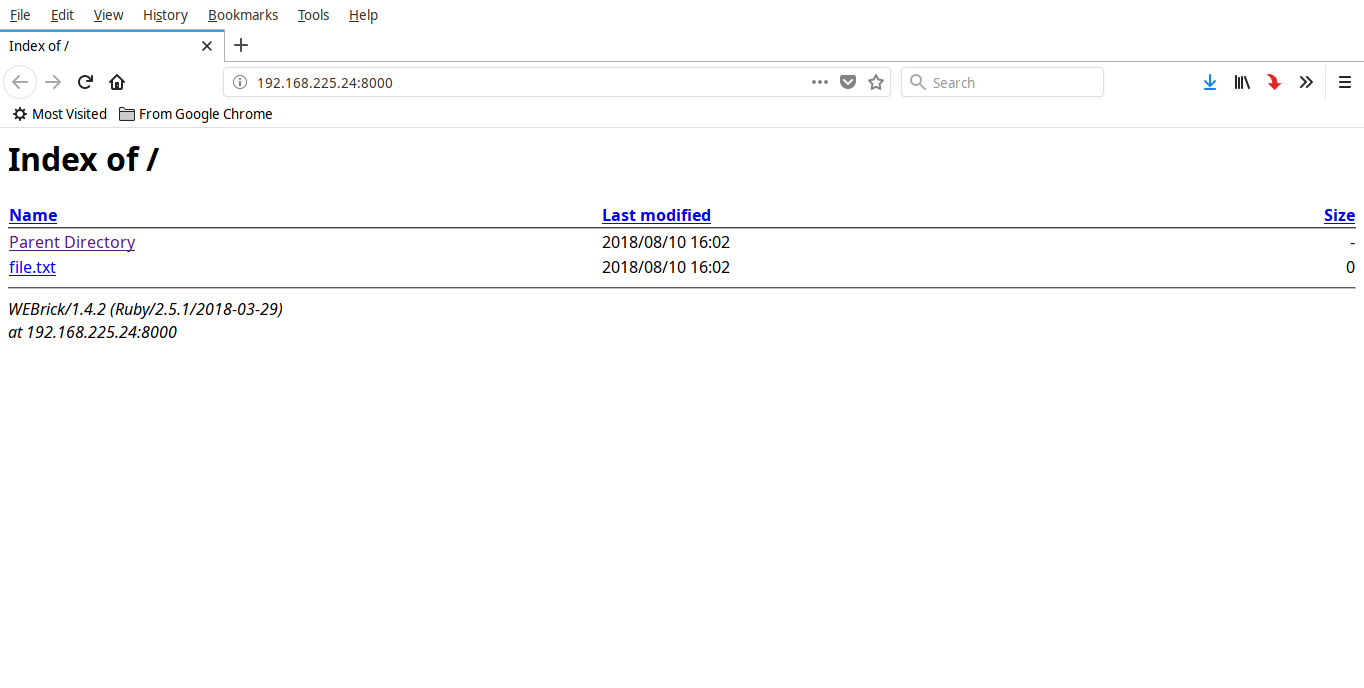

现在你可以使用 URL 从任何远程系统访问此文件夹的内容 - **http:// <ip-address>:8000**。

|

||||

现在你可以使用 URL 从任何远程系统访问此文件夹的内容 - `http:// <ip-address>:8000`。

|

||||

|

||||

|

||||

|

||||

要停止共享,请按 **CTRL+C**。

|

||||

要停止共享,请按 `CTRL+C`。

|

||||

|

||||

#### 方法 4 - 使用 Http-server(NodeJS)

|

||||

|

||||

[**Http-server**][1] 是一个用 NodeJS 编写的简单的可用于生产的命令行 http-server。它不需要要配置,可用于通过 Web 浏览器即时共享文件和目录。

|

||||

[Http-server][1] 是一个用 NodeJS 编写的简单的可用于生产环境的命令行 http 服务器。它不需要配置,可用于通过 Web 浏览器即时共享文件和目录。

|

||||

|

||||

按如下所述安装 NodeJS。

|

||||

|

||||

- [如何在 Linux 上安装 NodeJS](https://www.ostechnix.com/install-node-js-linux/)

|

||||

|

||||

安装 NodeJS 后,运行以下命令安装 http-server。

|

||||

|

||||

```

|

||||

$ npm install -g http-server

|

||||

|

||||

```

|

||||

|

||||

现在进入任何目录并通过 HTTP 共享其内容,如下所示。

|

||||

|

||||

```

|

||||

$ cd ostechnix

|

||||

|

||||

@ -67,80 +76,81 @@ Available on:

http://192.168.225.24:8000
http://192.168.225.20:8000
Hit CTRL-C to stop the server
```

Now you can access the contents of this folder from any remote system using the URL `http://<ip-address>:8000`.

To stop sharing, press `CTRL+C`.

#### Method 5 - Using Miniserve (Rust)

[**Miniserve**][2] is another command-line program that lets you quickly serve files over HTTP. It is a very fast, easy-to-use, cross-platform program written in the Rust programming language. Unlike the programs and methods above, it supports authentication, so you can set a username and password for your shares.

Install Rust on your Linux system by following the link below.

- [Install the Rust programming language in Linux](https://www.ostechnix.com/install-rust-programming-language-in-linux/)

Once Rust is installed, run the following command to install miniserve:

```
$ cargo install miniserve
```

Alternatively, you can download the binary from its [releases page][3] and make it executable:

```
$ chmod +x miniserve-linux
```

You can then run it with the following command (assuming the miniserve binary was downloaded to the current working directory):

```
$ ./miniserve-linux <path-to-share>

```
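The usage examples below call `miniserve` directly rather than `./miniserve-linux`. If you downloaded the binary instead of using `cargo install`, one way to match those examples is to copy it into a directory on your `PATH` under the name `miniserve`. This sketch assumes `~/.local/bin` is on your `PATH`, which is common on many distributions but not guaranteed. `install_miniserve` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy a downloaded miniserve binary into a bin
# directory (default ~/.local/bin) as "miniserve", with mode 755.
install_miniserve() {
  local src=${1:-./miniserve-linux} bindir=${2:-$HOME/.local/bin}
  mkdir -p "$bindir"
  install -m 755 "$src" "$bindir/miniserve"
}

# Usage:
# install_miniserve ./miniserve-linux
# command -v miniserve   # should print the installed path if the directory is on PATH
```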

**Usage**

To serve a directory:

```
$ miniserve <path-to-directory>
```

**Example:**

```
$ miniserve /home/sk/ostechnix/
miniserve v0.2.0
Serving path /home/sk/ostechnix at http://[::]:8080, http://localhost:8080
Quit by pressing CTRL-C
```

Now you can access the share from the local system using the URL `http://localhost:8080`, or from a remote system using `http://<ip-address>:8080`.

To serve a single file:

```
$ miniserve <path-to-file>
```

**Example:**

```
$ miniserve ostechnix/file.txt
```

To serve a file or folder with a username and password:

```
$ miniserve --auth joe:123 <path-to-share>
```

To bind to multiple interfaces:

```
$ miniserve -i 192.168.225.1 -i 10.10.0.1 -i ::1 -- <path-to-share>
```

As you can see, I have covered only 5 methods. The link at the end of this guide lists several more, so test those too. Also, bookmark this guide and check back from time to time for new methods.

@ -149,7 +159,9 @@ $ miniserve -i 192.168.225.1 -i 10.10.0.1 -i ::1 -- <path-to-share>

Cheers!

### Resources

- [Big list of http static server one-liners](https://gist.github.com/willurd/5720255)

--------------------------------------------------------------------------------

@ -158,7 +170,7 @@ via: https://www.ostechnix.com/how-to-quickly-serve-files-and-folders-over-http-

Author: [SK][a]

Selected by: [lujun9972](https://github.com/lujun9972)

Translated by: [geekpi](https://github.com/geekpi)

Proofread by: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

@ -1,22 +1,23 @@

4 open source invoicing tools for small businesses
======

> Manage your billing and collect payments easily with simple, web-based invoicing software.

Whatever your reasons for starting a small business, the key to keeping it going is getting paid. Getting paid means sending your clients invoices.

Creating invoices with LibreOffice Writer or LibreOffice Calc is easy, but sometimes you need more than that: a more professional look, and a way of tracking invoices that reminds you when to follow up on the ones you've sent.

There is a wide range of commercial, closed-source invoicing tools out there. But open source offerings are just as good as their closed-source commercial counterparts, and maybe even more flexible.

Let's look at four web-based open source invoicing tools that are well suited to freelancers and small businesses on a tight budget. I covered two of them in 2014, in an [earlier version][1] of this article. All four are easy to use, and you can use them on any device.

### Invoice Ninja

I'm not a big fan of the word *ninja*. Despite that, I like [Invoice Ninja][2]. A lot. It combines a simple interface with a set of features that let you create, manage, and send invoices to clients and customers.

You can easily configure multiple clients, track payments and outstanding invoices, generate quotes, and email invoices. Unlike its competitors, Invoice Ninja [integrates][3] with more than 40 popular payment gateways, including PayPal, Stripe, WePay, and Apple Pay.

You can use Invoice Ninja by [downloading][4] a version to install on your own server or by getting an account with the [hosted version][5]. There is a free version and a paid version that costs US$8 a month.

@ -34,7 +35,7 @@ InvoicePlane does more than generate or track invoices. For tasks or goods, you can also

[OpenSourceBilling][9] is billed by its developers as “very simple billing software”, and the description is well deserved. It has one of the cleanest interfaces around, and configuring and using it is a breeze.

OpenSourceBilling stands out for its business intelligence dashboard, which tracks your current and previous invoices as well as any outstanding payments. It organizes this information into charts, which makes it easy to read.

You can configure a lot of information on your invoices. With a few mouse clicks and keystrokes, you can add items, tax rates, client names, and payment terms. OpenSourceBilling saves this information across all your invoices, both new and old.

@ -57,7 +58,7 @@ via: https://opensource.com/article/18/10/open-source-invoicing-tools

Author: [Scott Nesbitt][a]

Selected by: [lujun9972](https://github.com/lujun9972)

Translated by: [fuowang](https://github.com/fuowang)

Proofread by: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

@ -1,4 +1,5 @@

KeeWeb: An Open Source, Cross-Platform Password Manager
======

@ -6,64 +7,60 @@

|

||||

|

||||

**KeePass** 就是一个这样的开源密码管理工具,它有一个官方客户端,但功能非常简单。也有许多 PC 端和手机端的其他密码管理工具,并且与 KeePass 存储加密密码的文件格式兼容。其中一个就是 **KeeWeb**。

|

||||

|

||||

KeeWeb 是一个开源、跨平台的密码管理工具,具有云同步,键盘快捷键和插件等功能。KeeWeb使用 Electron 框架,这意味着它可以在 Windows,Linux 和 Mac OS 上运行。

|

||||

KeeWeb 是一个开源、跨平台的密码管理工具,具有云同步,键盘快捷键和插件等功能。KeeWeb使用 Electron 框架,这意味着它可以在 Windows、Linux 和 Mac OS 上运行。

|

||||

|

||||

### KeeWeb 的使用

|

||||

|

||||

有两种方式可以使用 KeeWeb。第一种无需安装,直接在网页上使用,第二中就是在本地系统中安装 KeeWeb 客户端。

|

||||

|

||||

**在网页上使用 KeeWeb**

|

||||

#### 在网页上使用 KeeWeb

|

||||

|

||||

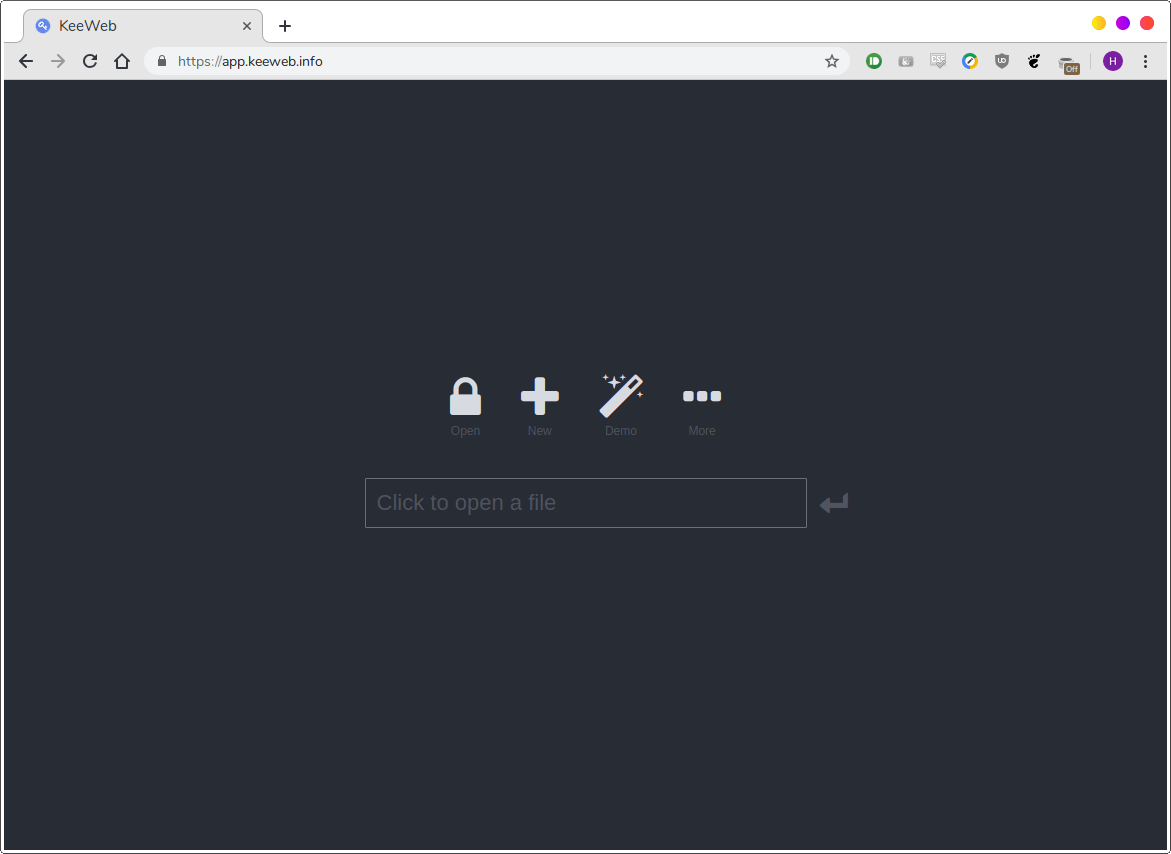

如果不想在系统中安装应用,可以去 [**https://app.keeweb.info/**][1] 使用KeeWeb。

|

||||

如果不想在系统中安装应用,可以去 [https://app.keeweb.info/][1] 使用KeeWeb。

|

||||

|

||||

|

||||

|

||||

网页端具有桌面客户端的所有功能,当然也需要联网才能进行使用。

|

||||

|

||||

**在计算机中安装 KeeWeb**

|

||||

#### 在计算机中安装 KeeWeb

|

||||

|

||||

如果喜欢客户端的舒适性和离线可用性,也可以将其安装在系统中。

|

||||

|

||||

如果使用Ubuntu/Debian,你可以去 [**releases pages**][2] 下载 KeeWeb 最新的 **.deb ** 文件,然后通过下面的命令进行安装:

|

||||

如果使用 Ubuntu/Debian,你可以去 [发布页][2] 下载 KeeWeb 最新的 .deb 文件,然后通过下面的命令进行安装:

|

||||

|

||||

```

|

||||

$ sudo dpkg -i KeeWeb-1.6.3.linux.x64.deb

|

||||

|

||||

```

|

||||

|

||||

如果用的是 Arch,在 [**AUR**][3] 上也有 KeeWeb,可以使用任何 AUR 助手进行安装,例如 [**Yay**][4]:

|

||||

如果用的是 Arch,在 [AUR][3] 上也有 KeeWeb,可以使用任何 AUR 助手进行安装,例如 [Yay][4]:

|

||||

|

||||

```

|

||||

$ yay -S keeweb

|

||||

|

||||

```

|

||||

|

||||

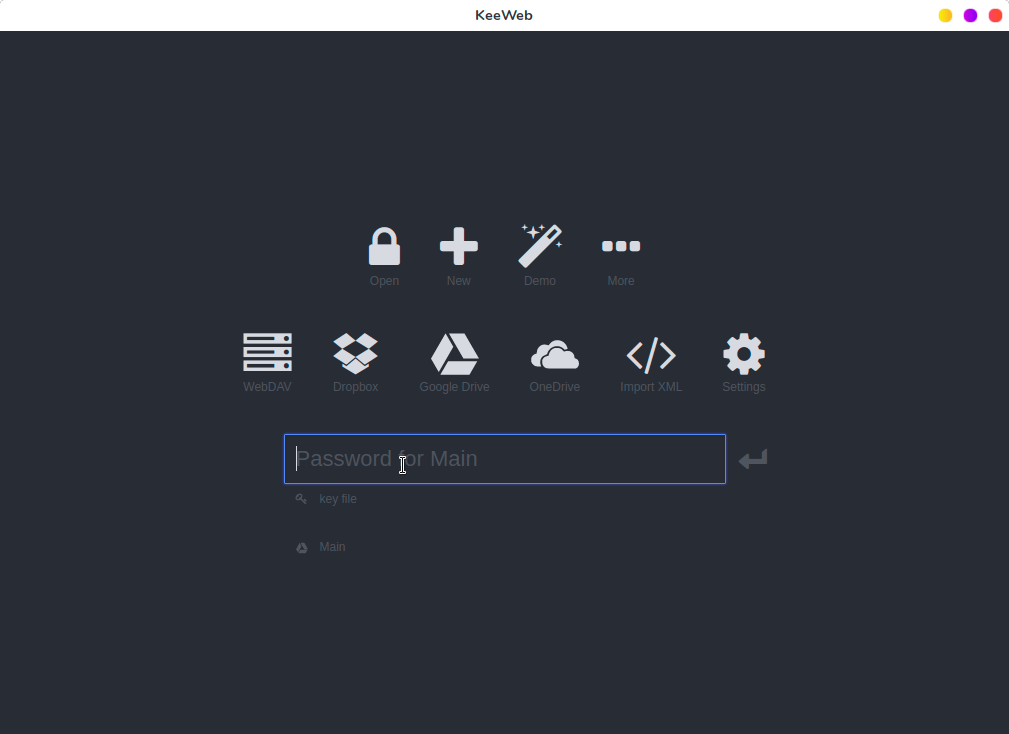

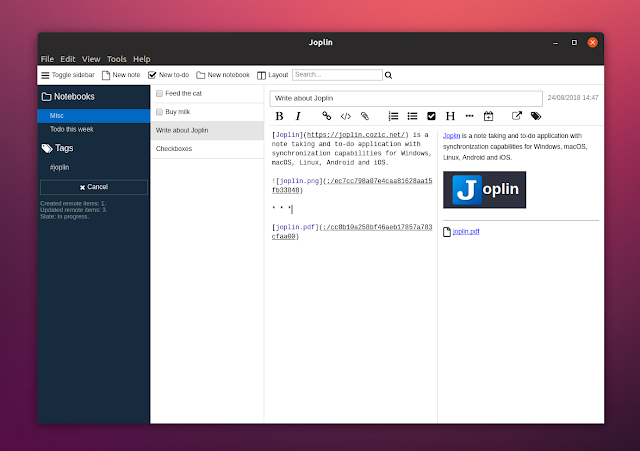

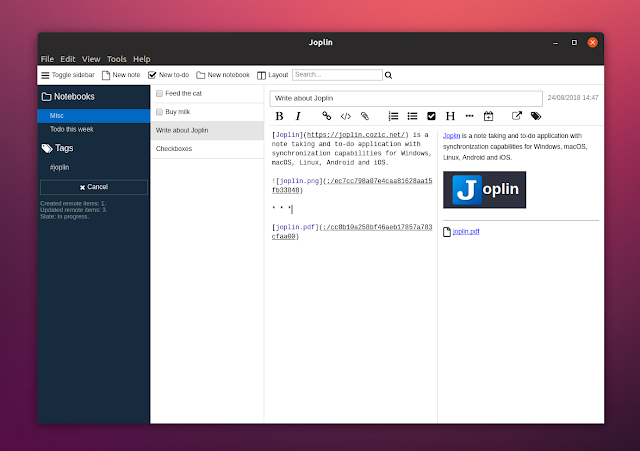

安装后,从菜单中或应用程序启动器启动 KeeWeb。默认界面长这样:

|

||||

安装后,从菜单中或应用程序启动器启动 KeeWeb。默认界面如下:

|

||||

|

||||

|

||||

|

||||

### 总体布局

|

||||

|

||||

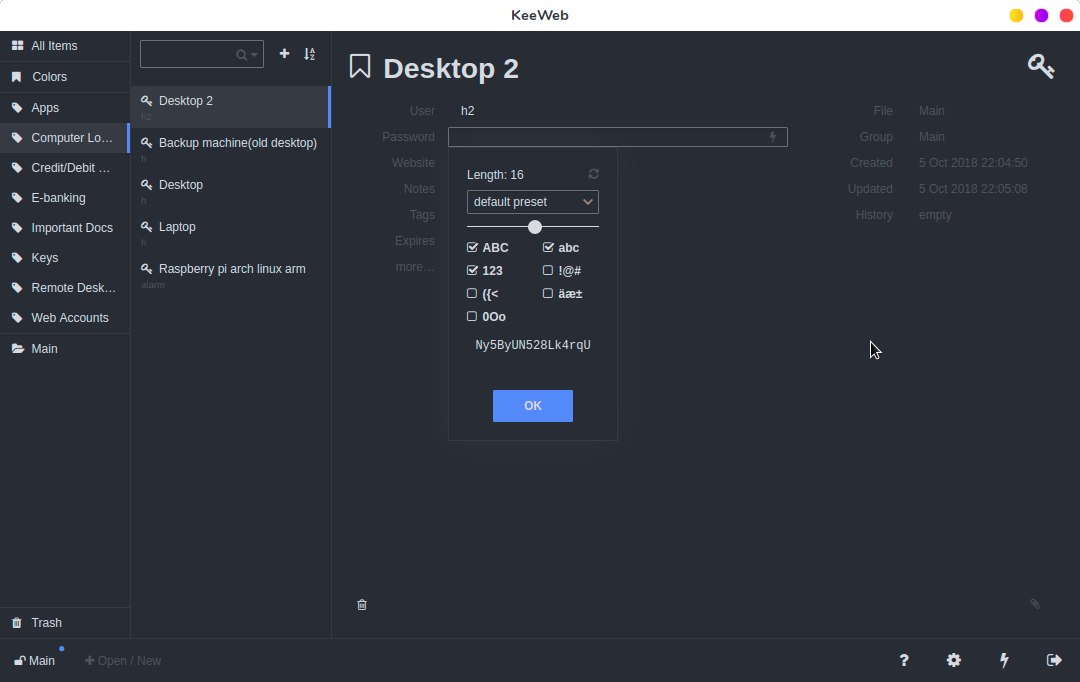

KeeWeb 界面主要显示所有密码的列表,在左侧展示所有标签。单击标签将对密码进行过滤,只显示带有那个标签的密码。在右侧,显示所选帐户的所有字段。你可以设置用户名,密码,网址,或者添加自定义的备注。你甚至可以创建自己的字段并将其标记为安全字段,这在存储信用卡信息等内容时非常有用。你只需单击即可复制密码。 KeeWeb 还显示账户的创建和修改日期。已删除的密码会保留在回收站中,可以在其中还原或永久删除。

|

||||

KeeWeb 界面主要显示所有密码的列表,在左侧展示所有标签。单击标签将对密码进行筛选,只显示带有那个标签的密码。在右侧,显示所选帐户的所有字段。你可以设置用户名、密码、网址,或者添加自定义的备注。你甚至可以创建自己的字段并将其标记为安全字段,这在存储信用卡信息等内容时非常有用。你只需单击即可复制密码。 KeeWeb 还显示账户的创建和修改日期。已删除的密码会保留在回收站中,可以在其中还原或永久删除。

|

||||

|

||||

|

||||

|

||||

### KeeWeb 功能

|

||||

|

||||

**云同步**

|

||||

#### 云同步

|

||||

|

||||

KeeWeb 的主要功能之一是支持各种远程位置和云服务。除了加载本地文件,你可以从以下位置打开文件:

|

||||

|

||||

```

|

||||

1. WebDAV Servers

|

||||

2. Google Drive

|

||||

3. Dropbox

|

||||

4. OneDrive

|

||||

```

|

||||

|

||||

这意味着如果你使用多台计算机,就可以在它们之间同步密码文件,因此不必担心某台设备无法访问所有密码。

|

||||

|

||||

**密码生成器**

|

||||

#### 密码生成器

|

||||

|

||||

|

||||

|

||||

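@ -71,13 +68,13 @@ One of KeeWeb's main features is its support for a variety of remote locations and cloud services. Besides

To that end, KeeWeb has a built-in password generator that can generate custom passwords of a specific length containing the characters you specify.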
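The idea behind such a generator can be shown in a few lines of shell. This is not KeeWeb's implementation. It is a minimal sketch that reads random bytes from `/dev/urandom`, keeps only those in a chosen `tr`-style character set, and stops at the requested length. `gen_password` and its defaults (20 characters, letters and digits) are assumptions for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a random password of <length> characters
# (default 20) drawn only from the tr-style character set <charset>
# (default: ASCII letters and digits).
gen_password() {
  local length=${1:-20} charset=${2:-A-Za-z0-9}
  # LC_ALL=C makes tr treat the random input as raw bytes;
  # -dc deletes every byte that is NOT in the character set.
  LC_ALL=C tr -dc "$charset" </dev/urandom | head -c "$length"
  echo
}

# Usage:
# gen_password 24 'A-Za-z0-9!@#%'
```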

|

||||

|

||||

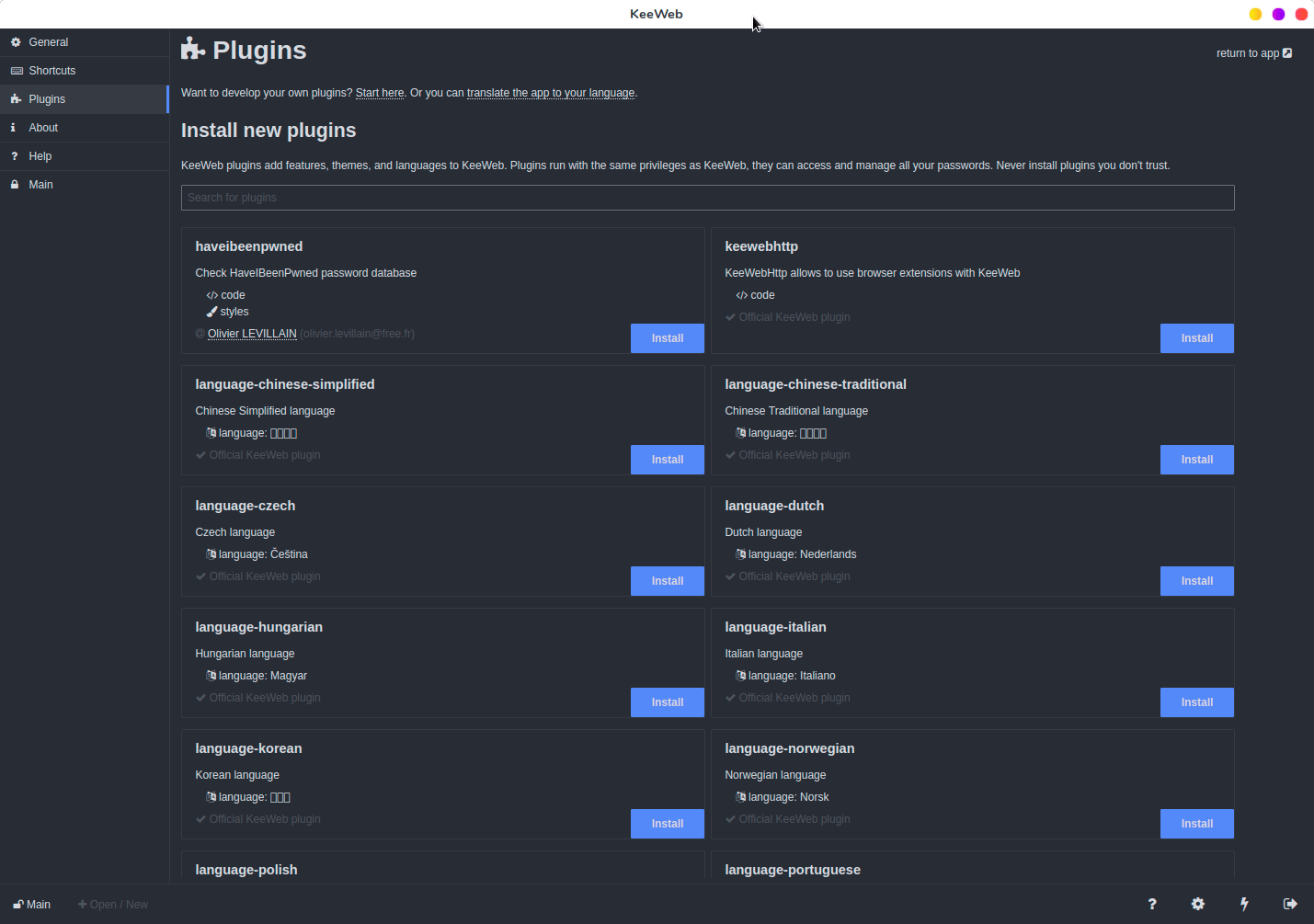

**插件**

|

||||

#### 插件

|

||||

|

||||

|

||||

|

||||

你可以使用插件扩展 KeeWeb 的功能。 其中一些插件用于更改界面语言,而其他插件则添加新功能,例如访问 **<https://haveibeenpwned.com>** 以查看密码是否暴露。

|

||||

你可以使用插件扩展 KeeWeb 的功能。其中一些插件用于更改界面语言,而其他插件则添加新功能,例如访问 https://haveibeenpwned.com 以查看密码是否暴露。

|

||||

|

||||

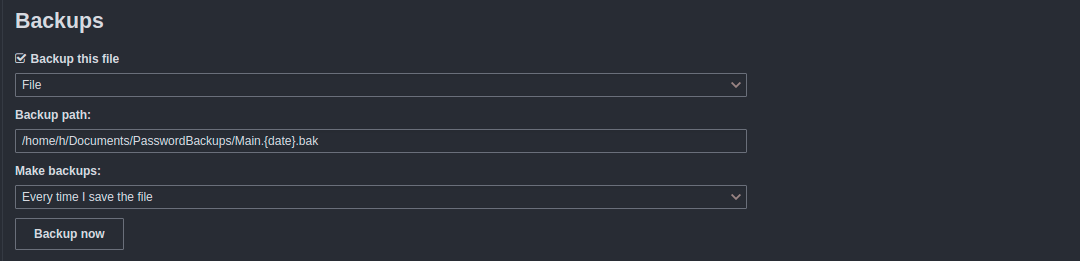

**本地备份**

|

||||

#### 本地备份

|

||||

|

||||

|

||||

|

||||

@ -94,7 +91,7 @@ via: https://www.ostechnix.com/keeweb-an-open-source-cross-platform-password-man

Author: [EDITOR][a]

Selected by: [lujun9972](https://github.com/lujun9972)

Translated by: [jlztan](https://github.com/jlztan)

Proofread by: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

@ -1,7 +1,7 @@

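Design faster web pages, part 2: Image replacement
======

Welcome back to this series of articles on building faster web pages. The previous [article][1] covered what you can achieve through image compression alone. The example started with 1.2MB of “browser fat” and slimmed down to 488.9KB. That's still not fast enough! So this article continues the browser's diet. Some of what we do along the way may seem a bit crazy, but once it's done, you'll understand why.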

@ -21,17 +21,15 @@ $ sudo dnf install inkscape

![The Getfedora page, with its images marked][5]

This analysis works better graphically, which is why it starts with a screenshot. The screenshot above marks all the graphical elements on the page. The Fedora websites team has already taken measures to replace images in two cases (perhaps four, which would be even better). The social media icons became glyphs from a font, and the language selector became an SVG.

We have several options for replacement:

+ CSS3
+ Fonts
+ SVG
+ HTML5 Canvas

#### HTML5 Canvas

Briefly, HTML5 Canvas is an HTML element that lets you draw on it with a scripting language (usually JavaScript), although it isn't widely used yet. Because you can draw on it with a script, the element can also be used for animations. Examples of what HTML Canvas can do include [triangle patterns][6], [animated waves][7], and [text animation][8]. In this case, however, it doesn't seem to be the best choice either.

@ -42,7 +40,7 @@ $ sudo dnf install inkscape

#### Fonts

Another way is to decorate web pages with fonts; [Fontawesome][9] is popular for this. In this example, you could use a font to replace the “Flavor” and “Spin” icons. This approach has one negative effect, but it's easy to fix, and we'll cover it in the next part of this series.

#### SVG

@ -94,13 +92,13 @@ inkscape:connector-curvature="0" />

![Inkscape - node tool activated][10]

There are five unnecessary nodes in this example, the ones in the middle of the straight lines. To remove them, select them one by one with the activated node tool and press the `Del` key. Then select the nodes that define the line and turn them back into corners using the tool in the toolbar.

![Inkscape - tool to turn nodes into corners][11]

If you don't fix these corners, the curve is still defined by handles, and those handles are saved, which increases the file size. You have to clean up these nodes by hand, because this can't be done effectively automatically. Now you're ready for the next stage.

Use the “Save as” function and choose “Optimized SVG”. A dialog opens where you can choose which elements to remove or keep.

![Inkscape - “Save as” “Optimized SVG”][12]

@ -121,7 +119,7 @@ insgesamt 928K

```
-rw-rw-r--. 1 user user 112K 19. Feb 19:05 greyscale-pattern-opti.svg.gz
```

This is the output of a small test I ran to illustrate this topic. You should see that the raster graphic, the PNG, is already compressed and can't be compressed any further. The SVG, an XML file, is the opposite: it is a text file, so it can be compressed to less than a quarter of its original size. As a result, it is now about 50 KB smaller than the PNG.
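You can reproduce the effect with any text-based SVG. This sketch builds a deliberately repetitive SVG of 500 thin rectangles, compresses it with `gzip -9`, and prints both sizes in bytes using `wc -c`. The test file is an assumption chosen to make the result obvious; real files will usually compress less dramatically. `make_test_svg` and `compare_gzip` are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print "<original bytes> <gzipped bytes>" for a file.
compare_gzip() {
  local orig gz
  orig=$(wc -c <"$1")
  gz=$(gzip -9 -c "$1" | wc -c)
  # $((...)) strips any padding that some wc implementations add.
  echo $((orig)) $((gz))
}

# Hypothetical helper: write a simple, highly repetitive SVG to stdout.
make_test_svg() {
  local i=0
  echo '<svg xmlns="http://www.w3.org/2000/svg" width="500" height="500">'
  while [ "$i" -lt 500 ]; do
    echo "  <rect x=\"$i\" y=\"0\" width=\"1\" height=\"500\" fill=\"#808080\"/>"
    i=$((i + 1))
  done
  echo '</svg>'
}

# Usage:
# make_test_svg > pattern.svg
# compare_gzip pattern.svg
```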

Modern browsers can handle compressed files natively. That's why many web servers enable mod_deflate (Apache) or gzip (Nginx), which saves space during transfer. You can check whether your server has it enabled [here][13].
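You can also check from the command line by requesting a compressed response and looking at the `Content-Encoding` response header. This sketch assumes `curl` is installed. `https://example.com/` is only a placeholder URL, and `reports_gzip` and `check_compression` are hypothetical helpers. Servers usually compress only some content types, so test the kind of file you care about, such as an `.svg`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read HTTP response headers on stdin and succeed if
# they declare a gzip- or deflate-encoded body.
reports_gzip() {
  grep -qiE '^content-encoding:[[:space:]]*(gzip|deflate)'
}

# Fetch only the headers (-D - dumps them to stdout, -o /dev/null discards
# the body) while advertising gzip/deflate support.
check_compression() {
  curl -s -o /dev/null -D - -H 'Accept-Encoding: gzip, deflate' "$1" | reports_gzip
}

# Usage (placeholder URL):
# check_compression https://example.com/ && echo "compressed" || echo "not compressed"
```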

@ -129,18 +127,16 @@ insgesamt 928K

First, nobody wants to optimize every SVG by hand in Inkscape. You can run Inkscape from the command line without the GUI, but you won't find an option there to convert an Inkscape SVG into an optimized SVG; that way you can only export raster images. But there are alternatives:

* SVGO (development appears to be inactive)
* Scour

In this example, we'll use `scour` for the optimization. First, install it:

```
$ sudo dnf install scour
```

To optimize an SVG file automatically, run `scour` like this:

```
[user@localhost ]$ scour INPUT.svg OUTPUT.svg -p 3 --create-groups --renderer-workaround --strip-xml-prolog --remove-descriptive-elements --enable-comment-stripping --disable-embed-rasters --no-line-breaks --enable-id-stripping --shorten-ids
```

@ -156,13 +152,13 @@ via: https://fedoramagazine.org/design-faster-web-pages-part-2-image-replacement

Author: [Sirko Kemter][a]

Selected by: [lujun9972][b]

Translated by: [StdioA](https://github.com/StdioA)

Proofread by: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[a]: https://fedoramagazine.org/author/gnokii/
[b]: https://github.com/lujun9972
[1]: https://linux.cn/article-10166-1.html
[2]: https://fedoramagazine.org/howto-use-sudo/
[3]: https://fedoramagazine.org/?s=Inkscape
[4]: https://getfedora.org

@ -1,10 +1,11 @@

Edit videos with Pitivi on Fedora
======

Want to make a video of your adventures this weekend? There are many options for editing video. However, if you're looking for a video editor that is easy to pick up and also available in the official Fedora repositories, give [Pitivi][1] a try.

Pitivi is an open source, non-linear video editor that uses the GStreamer framework. Out of the box on Fedora, Pitivi supports OGG, WebM, and a range of other formats. Support for more video formats is available through GStreamer plugins. Pitivi is also tightly integrated with the GNOME desktop, so its UI will feel at home alongside the other newer applications on Fedora Workstation.

### Installing Pitivi on Fedora

@ -20,7 +21,7 @@ sudo dnf install pitivi

### Basic editing

Pitivi has a variety of built-in tools for editing clips quickly and effectively. Simply import videos, audio, and images into the Pitivi media library, then drag them onto the timeline. Beyond simple fade transitions on the timeline, Pitivi also lets you easily split, trim, and group parts of clips.

![][3]

@ -40,7 +41,7 @@ via: https://fedoramagazine.org/edit-your-videos-with-pitivi-on-fedora/

Author: [Ryan Lerch][a]

Selected by: [lujun9972][b]

Translated by: [geekpi](https://github.com/geekpi)

Proofread by: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

@ -1,38 +1,39 @@

How to set up WordPress on a Raspberry Pi
======

> This simple tutorial gets your WordPress site running on a Raspberry Pi.

WordPress is a very popular open source blogging platform and content management system (CMS). It's easy to set up, and it has an active community of developers who build websites and create themes and plugins for others to use.

Although getting a hosting package with one-click WordPress setup is easy, it's also simple to set up your own on a Linux server using just the command line, and the Raspberry Pi is a perfectly good way to try it out and learn something along the way.

The four components of a commonly used web stack are Linux, Apache, MySQL, and PHP. Here's what you need to know about each of them.

### Linux

The Raspberry Pi runs Raspbian, a Debian-based Linux distribution optimized to run well on Raspberry Pi hardware. You have two options: Desktop or Lite. The Desktop version comes with a familiar desktop and lots of educational software and programming tools, such as the LibreOffice suite, Minecraft, and a web browser. The Lite version has no desktop environment, so it is command-line only and includes just the essential software.

This tutorial works with either version, but if you use Lite, you'll need another computer to access your site.

### Apache

Apache is a popular web server application you can install on the Raspberry Pi to serve web pages. On its own, Apache can serve static HTML files over HTTP. With additional modules, it can also serve dynamic web pages using scripting languages such as PHP.

Installing Apache is very simple. Open a terminal window and type the following command:

```
sudo apt install apache2 -y
```

|

||||

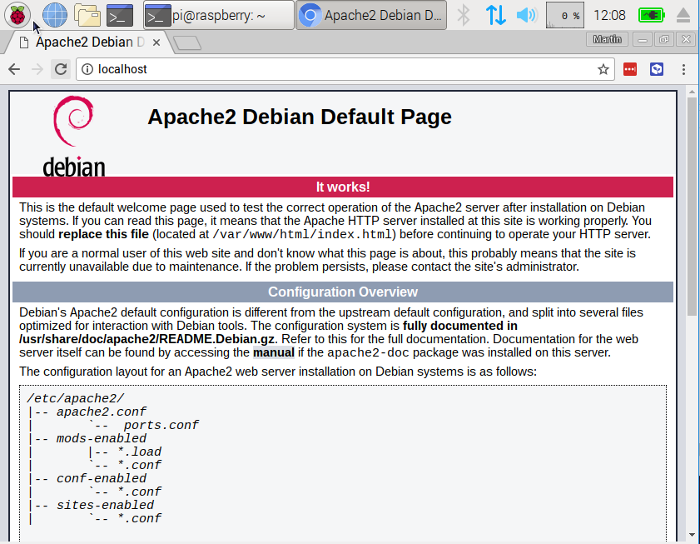

Apache 默认放了一个测试文件在一个 web 目录中,你可以从你的电脑或是你网络中的其他计算机进行访问。只需要打开 web 浏览器,然后输入地址 **<http://localhost>**。或者(特别是你使用的是 Raspbian Lite 的话)输入你的 Pi 的 IP 地址代替 **localhost**。你应该会在你的浏览器窗口中看到这样的内容:

|

||||

|

||||

Apache 默认放了一个测试文件在一个 web 目录中,你可以从你的电脑或是你网络中的其他计算机进行访问。只需要打开 web 浏览器,然后输入地址 `<http://localhost>`。或者(特别是你使用的是 Raspbian Lite 的话)输入你的树莓派的 IP 地址代替 `localhost`。你应该会在你的浏览器窗口中看到这样的内容:

|

||||

|

||||

|

||||

|

||||

这意味着你的 Apache 已经开始工作了!

|

||||

|

||||

这个默认的网页仅仅是你文件系统里的一个文件。它在你本地的 **/var/www/html/index/html**。你可以使用 [Leafpad][2] 文本编辑器写一些 HTML 去替换这个文件的内容。

|

||||

这个默认的网页仅仅是你文件系统里的一个文件。它在你本地的 `/var/www/html/index/html`。你可以使用 [Leafpad][2] 文本编辑器写一些 HTML 去替换这个文件的内容。

|

||||

|

||||

```

|

||||

cd /var/www/html/

|

||||

@ -43,27 +44,27 @@ sudo leafpad index.html

### MySQL

MySQL (pronounced “my S-Q-L” or “my sequel”) is a popular database engine. Like PHP, it is very widely used on web servers, which is why projects like WordPress use it and partly why those projects are so popular.

Install MySQL Server by entering the following command in a terminal window (LCTT note: what actually gets installed is MariaDB, a fork of MySQL):

```
sudo apt-get install mysql-server -y
```

WordPress uses MySQL to store posts, pages, user data, and lots of other content.

### PHP

PHP is a preprocessor: it is code that runs when the server receives a request for a web page from a web browser. It works out what needs to be shown on the page, then sends that page to the browser. Unlike static HTML, PHP can show different content under different circumstances. PHP is a very popular language on the web; large projects such as Facebook and Wikipedia are written in PHP.

Install PHP and the MySQL extension:

```
sudo apt-get install php php-mysql -y
```

Delete `index.html` and create `index.php`:

```
sudo rm index.html
```

@ -82,16 +83,16 @@ sudo leafpad index.php

### WordPress

You can download WordPress from [wordpress.org][3] with the `wget` command. The latest WordPress is always available at [wordpress.org/latest.tar.gz][4], so you can grab it directly without looking it up on the website. At the time of writing, that is version 4.9.8.

Make sure you are in the `/var/www/html` directory, then delete everything in it:

```
cd /var/www/html/
sudo rm *
```

Download WordPress with `wget`, extract it, and move the contents of the extracted `wordpress` directory into the `html` directory:

```
sudo wget http://wordpress.org/latest.tar.gz
@ -99,13 +100,13 @@ sudo tar xzf latest.tar.gz
sudo mv wordpress/* .
```

Now you can delete the tarball and the empty `wordpress` directory:

```
sudo rm -rf wordpress latest.tar.gz

```
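If you prefer, you can collapse the extract, move, and cleanup steps into one. GNU tar's `--strip-components=1` option drops the leading `wordpress/` directory while extracting, so the files land directly in the web root. This sketch assumes `latest.tar.gz` has already been downloaded. `extract_wordpress` is a hypothetical helper, and for `/var/www/html` you would still need `sudo`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: extract a WordPress tarball whose contents live under
# a top-level "wordpress/" directory straight into <target>.
extract_wordpress() {
  local tarball=$1 target=${2:-/var/www/html}
  mkdir -p "$target"
  # --strip-components=1 removes the leading "wordpress/" path element.
  tar xzf "$tarball" -C "$target" --strip-components=1
}

# Usage (the equivalent single command with sudo):
# sudo tar xzf latest.tar.gz -C /var/www/html --strip-components=1
```

Unlike `mv wordpress/* .`, this approach does not depend on shell globbing. As a result, it would also pick up any hidden files at the top level of the archive. You still need the ownership change described below.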

Run the **ls** or **tree -L 1** command to see what a WordPress project contains:

Run the `ls` or `tree -L 1` command to see what a WordPress project contains:

```

.

@ -132,9 +133,9 @@ sudo rm -rf wordpress latest.tar.gz

3 directories, 16 files

```

This is the source of a default WordPress installation. The **wp-content** directory is where you make the edits that customize your installation.

This is the source of a default WordPress installation. The `wp-content` directory is where you make the edits that customize your installation.

You should now change the ownership of all these files to the Apache user:

You should now change the ownership of all these files to `www-data`, the user Apache runs as:

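```

sudo chown -R www-data: .

@ -152,24 +153,27 @@ sudo mysql_secure_installation

You will be asked a series of questions. No password is set initially, but you should set one in the next step. Make sure you enter a password you will remember, because you'll need it later to connect WordPress to the database. Press Enter to accept each of the remaining questions.

When it has finished, you will see the messages "All done!" and "Thanks for using MariaDB!".

When it has finished, you will see the messages “All done!” and “Thanks for using MariaDB!”.

Run the **mysql** command in the terminal window:

Run the `mysql` command in the terminal window:

```

sudo mysql -uroot -p

```

Enter the root password you created. You will be greeted by the message “Welcome to the MariaDB monitor.” At the **MariaDB [(none)] >** prompt, create the database for your WordPress installation:

Enter the root password you created (LCTT translator's note: this is the MySQL root password, not the root password of the Linux system). You will be greeted by the message “Welcome to the MariaDB monitor.” At the “MariaDB [(none)] >” prompt, create the database for your WordPress installation: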

```

create database wordpress;

```

Note the semicolon at the end of the statement. If the command succeeds, you should see this:

```

Query OK, 1 row affected (0.00 sec)

```

Grant database privileges to the root user, entering your password at the end of the statement:

```

GRANT ALL PRIVILEGES ON wordpress.* TO 'root'@'localhost' IDENTIFIED BY 'YOURPASSWORD';

@ -181,13 +185,13 @@ GRANT ALL PRIVILEGES ON wordpress.* TO 'root'@'localhost' IDENTIFIED BY 'YOURPAS

FLUSH PRIVILEGES;

```

Exit the MariaDB prompt with **Ctrl+D** to return to the Bash shell.

Exit the MariaDB prompt with `Ctrl+D` to return to the Bash shell.

### WordPress 配置

|

||||

|

||||

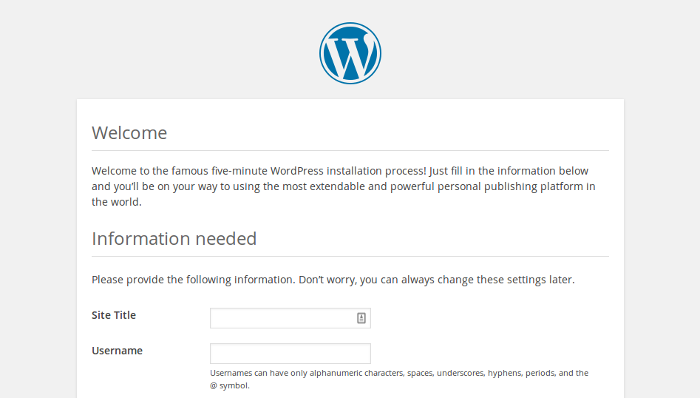

在你的 Raspberry Pi 打开网页浏览器,地址栏输入 **<http://localhost>**。选择一个你想要在 WordPress 使用的语言,然后点击 **继续**。你将会看到 WordPress 的欢迎界面。点击 **让我们开始吧** 按钮。

|

||||

在你的 树莓派 打开网页浏览器,地址栏输入 `http://localhost`。选择一个你想要在 WordPress 使用的语言,然后点击“Continue”。你将会看到 WordPress 的欢迎界面。点击 “Let's go!” 按钮。

|

||||

|

||||

按照下面这样填写基本的站点信息:

|

||||

按照下面这样填写基本的站点信息:

|

||||

|

||||

```

|

||||

Database Name: wordpress

|

||||

@ -197,22 +201,23 @@ Database Host: localhost

|

||||

Table Prefix: wp_

|

||||

```

|

||||

|

||||

点击 **提交** 继续,然后点击 **运行安装**。

|

||||

点击 “Submit” 继续,然后点击 “Run the install”。

|

||||

|

||||

|

||||

|

||||

按下面的格式填写:为你的站点设置一个标题、创建一个用户名和密码、输入你的 email 地址。点击 **安装 WordPress** 按钮,然后使用你刚刚创建的账号登录,你现在已经登录,而且你的站点已经设置好了,你可以在浏览器地址栏输入 **<http://localhost/wp-admin>** 查看你的网站。

|

||||

按下面的格式填写:为你的站点设置一个标题、创建一个用户名和密码、输入你的 email 地址。点击 “Install WordPress” 按钮,然后使用你刚刚创建的账号登录,你现在已经登录,而且你的站点已经设置好了,你可以在浏览器地址栏输入 `http://localhost/wp-admin` 查看你的网站。

|

||||

|

||||

### Permalinks

It's a good idea to change your permalinks to make your URLs friendlier.

It's a good idea to change your permalink settings to make your URLs friendlier.

To do this, log in to WordPress and go to the dashboard. Go to **Settings**, then **Permalinks**. Select the **Post name** option and click **Save Changes**. You'll then need to enable Apache's **rewrite** module.

To do this, log in to WordPress and go to the dashboard. Go to “Settings”, then “Permalinks”. Select the “Post name” option and click “Save Changes”. You'll then need to enable Apache's `rewrite` module.

```

sudo a2enmod rewrite

```

You'll also need to tell the virtual host serving the site to allow requests to be rewritten. Edit the Apache configuration file for your virtual host

You'll also need to tell the virtual host serving the site to allow requests to be rewritten. Edit the Apache configuration file for your virtual host:

```

sudo leafpad /etc/apache2/sites-available/000-default.conf

@ -226,7 +231,7 @@ sudo leafpad /etc/apache2/sites-available/000-default.conf

</Directory>

```

Make sure this sits inside a block like **< VirtualHost \*:80>**

Make sure this sits inside a block like `<VirtualHost *:80>`:

```

<VirtualHost *:80>

@ -244,17 +249,16 @@ sudo systemctl restart apache2

### What next?

WordPress is very customizable. Click your site name in the banner at the top of the page to go to the dashboard. From there, you can change the theme, add pages and posts, edit the menu, add plugins, and do lots more.

Here are some interesting things you can try on the Raspberry Pi's web server:

* Add pages and posts to your website

* Install different themes from the Appearance menu

* Customize your website's theme or create your own

* Use your web server to display useful information for people on your network

Don't forget, the Raspberry Pi is a Linux computer. You can follow the same steps to install WordPress on a server running Debian or Ubuntu.

--------------------------------------------------------------------------------

@ -263,7 +267,7 @@ via: https://opensource.com/article/18/10/setting-wordpress-raspberry-pi

Author: [Ben Nuttall][a]

Selected by: [lujun9972][b]

Translator: [dianbanjiu](https://github.com/dianbanjiu)

Proofreader: [proofreader ID](https://github.com/校对者ID)

Proofreader: [wxy](https://github.com/wxy)

This article was compiled and translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

@ -1,13 +1,13 @@

Understanding Linux Links (Part 2)

======

> We continue our series with a look at some quirks you may not know about.

In [the first part of this series][1], we looked at hard links and soft links and saw that links are useful in many situations. Linking may seem simple enough, but it has some non-obvious quirks you need to be aware of, and that's what this article covers. Consider, for example, the link to `libblah` we created in the previous article. Notice how we created the link from within the destination folder:

```

cd /usr/local/lib

ln -s /usr/lib/libblah

```

@ -15,35 +15,32 @@ ln -s /usr/lib/libblah

```

cd /usr/lib

ln -s libblah /usr/local/lib

```

That is, creating the link from inside the original folder and pointing it at the destination folder will not work.

The reason is that `ln` treats it as if you were linking from inside `/usr/local/lib` to `/usr/local/lib`, and creates a link from `libblah` to `libblah` within `/usr/local/lib`. This happens because all the link records is the name of the file (`libblah`), not its path, so the result is a broken link.

However, consider this:

```

cd /usr/lib

ln -s /usr/lib/libblah /usr/local/lib

```

That does work. It would also work from wherever in the filesystem you ran it. Using absolute paths is simply the best practice: spell out the whole path, from the root (`/`) down to the file or directory you need.
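
The difference comes down to what a symbolic link stores. `ln -s` records its first argument as a plain string. A relative string is then resolved relative to the directory that contains the link, not the directory you ran `ln` from. The minimal sketch below reproduces both cases in a throwaway directory that stands in for `/usr/lib` and `/usr/local/lib`. The file contents are made up.

```shell
# Throwaway tree standing in for /usr/lib and /usr/local/lib.
root="$(mktemp -d)"
mkdir -p "$root/usr/lib" "$root/usr/local/lib"
echo 'fake library' > "$root/usr/lib/libblah"

# Relative target, run from inside the original folder (as in the article).
cd "$root/usr/lib" || exit 1
ln -s libblah "$root/usr/local/lib"

# The link stores the bare name "libblah", which is resolved inside
# usr/local/lib, so the link ends up pointing at itself.
readlink "$root/usr/local/lib/libblah"    # prints: libblah
if [ -e "$root/usr/local/lib/libblah" ]; then echo 'works'; else echo 'broken link'; fi

# Absolute target: works no matter which directory ln is run from.
rm "$root/usr/local/lib/libblah"
cd / || exit 1
ln -s "$root/usr/lib/libblah" "$root/usr/local/lib"
cat "$root/usr/local/lib/libblah"         # prints: fake library
```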

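Another thing to note is that, as long as `/usr/lib` and `/usr/local/lib` are on the same partition, making a hard link like this:

```

cd /usr/lib

ln libblah /usr/local/lib

```

will also work. A hard link points straight at the file's data instead of storing a path, so `ln` resolves `libblah` only once, from the current directory, when it creates the link.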
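
You can check that a hard link does not depend on the original path by deleting the original name and seeing what survives. Here is a minimal sketch in a throwaway directory. The file names are illustrative.

```shell
dir="$(mktemp -d)"
cd "$dir" || exit 1

echo 'important data' > original
ln original hard       # a second directory entry for the same inode
ln -s original soft    # a separate small file that stores the path "original"

# The first column (the inode number) is the same for both hard-linked names.
ls -li original hard

rm original            # removes one name; the data still has another

cat hard               # prints: important data
if [ -e soft ]; then echo 'soft link still works'; else echo 'soft link is dangling'; fi
```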

If a hard link doesn't work, you may be trying to create it across partitions. Say you have `fileA` on partition A, mounted at `/path/to/partitionA/directory`. If you want to link `fileA` into `/path/to/partitionB/directory`, which is on partition B, this will not work:

```

ln /path/to/partitionA/directory/file /path/to/partitionB/directory

@ -63,15 +60,15 @@ ln -s /path/to/some/directory /path/to/some/other/directory

This creates a link to `/path/to/some/directory` inside `/path/to/some/other/directory` without any problem.

If you try the same thing with a hard link, you get an error saying it is not allowed. The reason is that it could cause endless recursion. Suppose directory B is inside directory A, and you then link A inside B. Directory A now contains directory B, which contains A, which contains B again, and so on ad infinitum.
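
The refusal is easy to observe. Here is a minimal sketch in a throwaway directory. The exact error message differs between `ln` implementations, so the example only checks whether the command succeeded.

```shell
dir="$(mktemp -d)"
mkdir -p "$dir/A/B"

# A hard link to a directory is refused, which rules out the A -> B -> A loop.
if ln "$dir/A" "$dir/A/B/A-hard" 2>/dev/null; then
  echo 'hard link created (unexpected)'
else
  echo 'hard link to a directory refused'
fi

# A symbolic link to the same directory is allowed, loop and all.
ln -s "$dir/A" "$dir/A/B/A-soft"
ls "$dir/A/B/A-soft/B/A-soft/B"    # the path can keep going round the loop
```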

You can of course build this kind of recursion with soft links, but why would you want to?

### Should I use a hard link or a soft link?

In general, you can use soft links everywhere and for everything. In fact, some situations only allow soft links. That said, hard links are slightly more efficient: they take up less disk space and are faster to access. On most machines you can ignore this tiny difference, because disks keep getting bigger and faster and the gap in space and speed is negligible. However, if you run Linux on an embedded system with little storage and a low-powered processor, you may want to consider hard links.

Another reason to use hard links is that they are much harder to break. If you accidentally move or delete the file a soft link points to, the soft link breaks and points to something that no longer exists. This can't happen with a hard link, because a hard link points directly to the data on the disk. In fact, that disk space is not flagged as free until the last hard link pointing to it has been removed from the filesystem.

Soft links, on the other hand, can do more than hard links. They can point to anything, file or directory, and they can also point to files and directories on a different partition. These two differences alone are often enough to make the choice for you.

@ -79,7 +76,7 @@ ln -s /path/to/some/directory /path/to/some/other/directory

We have now covered files and directories and the tools for manipulating them. Are you ready to move on to the tools that let you explore the directory hierarchy, find data inside files, and examine directories? That's what we'll do in the next installment. See you then!

You can learn more about Linux through the free [Introduction to Linux][2] course from the Linux Foundation and edX.

You can learn more about Linux through the free “[Introduction to Linux][2]” course from the Linux Foundation and edX.

--------------------------------------------------------------------------------

@ -87,12 +84,12 @@ via: https://www.linux.com/blog/2018/10/understanding-linux-links-part-2

Author: [Paul Brown][a]

Selected by: [lujun9972][b]

Translator: [translator ID](https://github.com/译者ID)

Proofreader: [proofreader ID](https://github.com/校对者ID)

Translator: [Jamkr](https://github.com/Jamkr)

Proofreader: [wxy](https://github.com/wxy)

This article was compiled and translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]: https://www.linux.com/users/bro66

[b]: https://github.com/lujun9972

[1]: https://www.linux.com/blog/intro-to-linux/2018/10/linux-links-part-1

[1]: https://linux.cn/article-10173-1.html

[2]: https://training.linuxfoundation.org/linux-courses/system-administration-training/introduction-to-linux

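@ -3,33 +3,34 @@

A pipe sends the output of one command/program/process to another command/program/process for further processing. With pipes we can chain several commands together, so that the standard output of one command becomes the standard input of the next. A vertical bar character (|) between two or more Linux commands marks a pipe between them. The general syntax of a pipeline is as follows:

A pipe sends the output of one command/program/process to another command/program/process for further processing. With pipes we can chain several commands together, so that the standard output of one command becomes the standard input of the next. A vertical bar character (`|`) between two or more Linux commands marks a pipe between them. The general syntax of a pipeline is as follows:

```

Command-1 | Command-2 | Command-3 | …| Command-N

```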
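
Here is a concrete instance of that syntax: a short pipeline in which each command reads the previous command's standard output on its standard input. The word list is made-up sample data, and `top_word` is just an illustrative name.

```shell
# Report the most frequent word in some sample input, with its count.
top_word() {
  printf 'apple\nbanana\napple\ncherry\napple\n' \
    | sort \
    | uniq -c \
    | sort -rn \
    | head -n 1 \
    | awk '{ print $2, $1 }'
}

top_word    # prints: apple 3
```

`uniq -c` only merges adjacent duplicate lines, which is why the first `sort` is needed.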

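`Ultimate Plumber` (`UP` for short) is a command-line tool that previews the output of a pipeline instantly. If you use pipes a lot on Linux, it helps you get more out of them. It shows the results of the pipeline in a scrollable window as you type, so you can build complex pipelines easily.

Ultimate Plumber (UP for short) is a command-line tool that previews the output of a pipeline instantly. If you use pipes a lot on Linux, it helps you get more out of them. It shows the results of the pipeline in a scrollable window as you type, so you can build complex pipelines easily.

This guide explains how to install `UP` and use it to make writing complex pipelines easy.

This guide explains how to install UP and use it to make writing complex pipelines easy.

**Important warning:**

Use `UP` with caution in production! You can accidentally delete important data while using it, so be especially careful when combining it with the `rm` or `dd` commands. Don't say you weren't warned.

Use UP with caution in production! You can accidentally delete important data while using it, so be especially careful when combining it with the `rm` or `dd` commands. Don't say you weren't warned.

### Previewing Linux pipes instantly with Ultimate Plumber

Here is a simple example of how to use `UP`. To pipe the output of the `lshw` command into `UP`, type the following command in the terminal and press Enter:

Here is a simple example of how to use `up`. To pipe the output of the `lshw` command into `up`, type the following command in the terminal and press Enter:

```

$ lshw |& up

```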
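
The `|&` in that command is Bash shorthand for `2>&1 |`. It sends standard error into the pipe along with standard output, so anything `lshw` writes to standard error also reaches `up`. `|&` is not POSIX, so this minimal sketch uses the long form. The `noisy` function is a hypothetical stand-in for any command that writes to both streams.

```shell
# Hypothetical stand-in for a command that writes to both streams.
noisy() {
  echo 'normal output'
  echo 'a warning' >&2
}

# Plain pipe: only standard output goes through; the warning still
# lands on the terminal, outside the pipe. wc counts 1 line.
noisy | wc -l

# What Bash's |& means: merge standard error into standard output
# before the pipe. wc counts 2 lines.
noisy 2>&1 | wc -l
```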

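You will see an input box at the top of the screen, as shown below.

As you type, enter a pipe character and press Enter to run the commands you have typed so far. `Ultimate Plumber` instantly shows the pipeline's output in the scrollable window below. You can browse the results with the `PgUp`/`PgDn` keys or the `ctrl + ←`/`ctrl + →` key combinations.

As you type, enter a pipe character and press Enter to run the commands you have typed so far. Ultimate Plumber instantly shows the pipeline's output in the scrollable window below. You can browse the results with the `PgUp`/`PgDn` keys or the `ctrl + ←`/`ctrl + →` key combinations.

Once you are happy with the result, press `ctrl + x` to exit `UP`. The pipeline you wrote is saved to a file named `up1.sh` in the current working directory. If that name is already taken, the file is named `up2.sh`, `up3.sh`, and so on, up to the 1000th file. If you don't want to save the pipeline, exit with `ctrl + c` instead.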
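
The naming rule described above can be sketched in a few lines of shell: the saved file gets the first free name from `up1.sh` to `up1000.sh`. This only illustrates the behaviour as described and is not UP's own code. `next_up_name` is a hypothetical helper.

```shell
# Print the first unused name among up1.sh ... up1000.sh in the current
# directory; return non-zero if all 1000 names are taken.
next_up_name() {
  i=1
  while [ "$i" -le 1000 ]; do
    if [ ! -e "up$i.sh" ]; then
      echo "up$i.sh"
      return 0
    fi
    i=$((i + 1))
  done
  return 1
}

# Usage: in a scratch directory where up1.sh and up2.sh already exist.
cd "$(mktemp -d)" || exit 1
touch up1.sh up2.sh
next_up_name    # prints: up3.sh
```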

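@ -41,29 +42,29 @@ $ cat up2.sh

grep network -A5 | grep : | cut -d: -f2- | paste - -

```

If the commands piped into `UP` take a long time to run, a tilde (~) character appears in the top-left corner of the terminal window. It means `UP` is still waiting for input from the previous command. In that case, you may want to press `ctrl + s` to temporarily freeze `UP`'s input buffer, and `ctrl + q` to unfreeze it. `Ultimate Plumber`'s input buffer is normally 40 MB; once that limit is reached, a plus sign appears in the top-left corner of the screen.

If the commands piped into `up` take a long time to run, a tilde (~) character appears in the top-left corner of the terminal window. It means `up` is still waiting for input from the previous command. In that case, you may want to press `ctrl + s` to temporarily freeze `up`'s input buffer, and `ctrl + q` to unfreeze it. Ultimate Plumber's input buffer is normally 40 MB; once that limit is reached, a plus sign appears in the top-left corner of the screen.

Here is a short demo of the `up` command:

Here is a short demo of the `UP` command: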

### Installing Ultimate Plumber

If you like the tool, you can install it on your Linux system. Installation is simple: just run the following two commands in the terminal to install `UP`.

If you like the tool, you can install it on your Linux system. Installation is simple: just run the following two commands in the terminal to install `up`.

First, download the latest binary from the Ultimate Plumber [releases page][1] and put it somewhere on your system's path, such as `/usr/local/bin/`.

```

$ sudo wget -O /usr/local/bin/up https://github.com/akavel/up/releases/download/v0.2.1/up

```

Then make the `UP` binary executable:

Then make the `up` binary executable:

```

$ sudo chmod a+x /usr/local/bin/up

```

That's it: `UP` is installed, and you can start writing your pipelines.

That's it: `up` is installed, and you can start writing your pipelines.

--------------------------------------------------------------------------------

@ -73,7 +74,7 @@ via: https://www.ostechnix.com/ultimate-plumber-writing-linux-pipes-with-instant

Author: [SK][a]

Selected by: [lujun9972][b]

Translator: [HankChow](https://github.com/HankChow)

Proofreader: [proofreader ID](https://github.com/校对者ID)

Proofreader: [wxy](https://github.com/wxy)

This article was compiled and translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

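@ -1,32 +1,28 @@

Essential tips for machine learning with Python

======

> Use Python to get to grips with machine learning, artificial intelligence, and deep learning.

Getting started with machine learning isn't hard. Besides Massive Open Online Courses (MOOCs), there are many other excellent free resources. Here are a few approaches I have found useful.

1. Start with some videos, articles, or books on the subject, such as [The Master Algorithm: How the Quest for the Ultimate Learning Machine Will Remake Our World][29]; you will certainly enjoy these [interactive pages about machine learning][30].

2. You need to understand the differences between the buzzwords “machine learning”, “artificial intelligence”, “deep learning”, “data science”, “computer vision”, and “robotics”. You can check out talks by experts in these fields, such as [this video by data scientist Brandon Rohrer][1].

3. Be clear about your own learning goals and choose a suitable [Coursera course][3], or take an open online course from a university. For example, [the University of Washington's courses][4] are very good.

4. Follow good blogs: the [KDnuggets][32] blog, [Mark Meloon][33]'s blog, [Brandon Rohrer][34]'s blog, and the [Open AI][35] blog are all recommended.

5. If you are very interested in online courses, later in this article you'll find guidance on how to [choose the right MOOC][31].

6. Most importantly, develop an interest in these technologies. Join some good social forums and focus on reading and understanding, so that you get a solid grasp of where these technologies come from and where they are heading. Think actively about how to apply the principles of machine learning or data science in your daily life and work. For example, build a simple regression model to predict the cost of your next lunch. Or download your historical electricity usage data from your power company's website and run a simple time-series analysis in Excel to look for a pattern. Once you have developed a strong interest in these technologies, you can watch the video below.

1. Start with some good YouTube videos, and read some articles or books on the subject, such as *[The Master Algorithm: How the Quest for the Ultimate Learning Machine Will Remake Our World][29]*; I'm also sure you'll love these [cool interactive pages about machine learning][30].

2. You need to understand the differences between the buzzwords “machine learning”, “artificial intelligence”, “deep learning”, “data science”, “computer vision”, and “robotics”. You can read or listen to talks by experts in these fields, such as [this great video by the influential data scientist Brandon Rohrer][1], or this video on [the differences between the various roles][2] in data science.

3. Be clear about your own learning goals and choose a suitable [Coursera course][3], or take an open online course from a university, such as [the University of Washington's courses][4], which are very good.

4. Follow good blogs: the [KDnuggets][32] blog, [Mark Meloon][33]'s blog, [Brandon Rohrer][34]'s blog, and the [Open AI][35] research blog are all recommended.

5. If you are keen on online courses, later in this article you'll find guidance on how to [choose the right MOOC][31].

6. Most importantly, develop an interest in these technologies. Join some good social forums and don't get drawn in by sensational headlines and news. Focus on reading and understanding, so that you get a solid grasp of where these technologies come from and where they are heading. Think actively about how to apply the principles of machine learning or data science in your daily life and work. For example, build a simple regression model to predict the cost of your next lunch. Or download your historical electricity usage data from your power company's website and run a simple time-series analysis in Excel to look for a pattern. Once you have developed a strong interest in these technologies, you can watch the video below.

<https://www.youtube.com/embed/IpGxLWOIZy4>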

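### Is Python the best language for machine learning and AI?

Unless you are a professional researcher working on purely theoretical proofs of complex algorithms, you need to know at least one high-level programming language and the related domain knowledge as a newcomer to machine learning. Most of the time you will be working out how to apply machine learning algorithms to real problems, and that requires a foundation of programming skill.

Unless you are a professional researcher working on purely theoretical proofs of complex algorithms, you need to know at least one high-level programming language as a newcomer to machine learning. Most of the time you will be working out how to apply existing machine learning algorithms to real problems, and that requires a foundation of programming skill.

Which language is best for data science? The debate never stops. If you're up for it, take a look at this FreeCodeCamp article on [data science languages][6], or KDnuggets' in-depth discussion of [Python and R][7].

Which language is best for data science? The debate never stops. If you're up for it, take a look at this FreeCodeCamp article on [data science languages][6], or KDnuggets' in-depth look at [the Python vs. R debate][7].

Python is widely regarded as efficient to develop, deploy, and maintain. Compared with more traditional languages such as Java, C, and C++, its syntax is simpler and higher-level. Python also has an active community, a broad open source culture, hundreds of high-quality libraries dedicated to machine learning, and strong support from industry giants (including Google, Dropbox, Airbnb, and others).

### Essential Python libraries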

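@ -46,7 +42,7 @@ Pandas is the most popular library in the Python ecosystem for general-purpose data analysis

* Selecting subsets of data

* Computing across rows and columns

* Finding and filling in missing data

* Applying operations to independent groups within the data

* Reshaping data into different forms

* Combining multiple datasets

* Advanced time-series functionality

@ -68,7 +64,7 @@ Pandas is the most popular library in the Python ecosystem for general-purpose data analysis

#### Scikit-learn

Scikit-learn is an important general-purpose Python package for machine learning. It implements a wide range of [classification][16], [regression][17], and [clustering][18] algorithms, including [support vector machines][19], [random forests][20], [gradient boosting][21], [k-means][22], and [DBSCAN][23]. It works together with NumPy, Python's numerical library, and [SciPy][24], its scientific computing library. It provides supervised and unsupervised learning algorithms through a consistent interface. Scikit-learn is robust enough to run reliably in production, and it does well on ease of use, code quality, collaborative development, documentation, and performance. See this introduction to [machine learning with Scikit-learn][25], or this [simple demo of a machine learning use case with Scikit-learn][26].

Scikit-learn is an important general-purpose Python package for machine learning. It implements a wide range of [classification][16], [regression][17], and [clustering][18] algorithms, including [support vector machines][19], [random forests][20], [gradient boosting][21], [k-means][22], and [DBSCAN][23]. It works together with NumPy, Python's numerical library, and [SciPy][24], its scientific computing library. It provides supervised and unsupervised learning algorithms through a consistent interface. Scikit-learn is robust enough to run reliably in production, and it does well on ease of use, code quality, collaborative development, documentation, and performance. See [this introduction to machine learning with Scikit-learn][25], or [this simple demo of a machine learning use case with Scikit-learn][26].

This article is licensed under [CC BY-SA 4.0][28] and was first published on [Heartbeat][27].

@ -79,7 +75,7 @@ via: https://opensource.com/article/18/10/machine-learning-python-essential-hack

Author: [Tirthajyoti Sarkar][a]

Selected by: [lujun9972][b]

Translator: [HankChow](https://github.com/HankChow)

Proofreader: [proofreader ID](https://github.com/校对者ID)

Proofreader: [wxy](https://github.com/wxy)

This article was compiled and translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)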

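@ -0,0 +1,58 @@

8 scary commands that haunt the terminal

======

> Welcome to the spookier side of Linux.

It's that time of year again: the weather turns cooler, the leaves change color, and kids everywhere dress up as ghosts, goblins, and zombies. (LCTT translator's note: this article was originally published for Halloween.) But did you know that Unix (and Linux) and its various offshoots are full of creepy things too? Let's take a look at some of the spookier aspects of the operating systems we know and love.

### Demigods (daemons)

Unix just wouldn't be the same without all the <ruby>daemons<rt>守护进程</rt></ruby> lurking in the system. A daemon is a process that runs in the background and provides useful services to both the user and the operating system itself, such as SSH, FTP, HTTP, and so on.

### Zombies (zombie processes)

Every now and then a zombie shows up: a process that has died but refuses to go away. When one appears, you have no choice but to use whatever tools you have to get rid of it. A zombie usually means something is wrong with the process that spawned it.

### Killing (kill)

You can use `kill` to get rid of a zombie: since the zombie itself is already dead and ignores signals, you signal or kill its parent. You can also use `kill` on any process that is hurting your system. Have a process using too much RAM or too many CPU cycles? Kill it with the `kill` command.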
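
Here is a minimal sketch of the basic `kill` workflow. A background `sleep` stands in for the misbehaving process.

```shell
# Start a stand-in "misbehaving" process in the background.
sleep 300 &
pid=$!

# kill sends SIGTERM by default, asking the process to exit.
# (kill -KILL "$pid" forces it, for processes that ignore SIGTERM.)
kill "$pid"

# Collect its exit status so it doesn't linger as a zombie of this shell.
wait "$pid" 2>/dev/null || true

# kill -0 sends no signal; it only tests whether the PID still exists.
if kill -0 "$pid" 2>/dev/null; then
  echo "process $pid is still running"
else
  echo "process $pid is gone"
fi
```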

### Cats (cat)

`cat` has nothing to do with felines and everything to do with working on files: `cat` is short for “concatenate”. You can even use this handy command to view the contents of a file.
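
This minimal sketch shows both uses: concatenating files, which is where the name comes from, and simply printing one. The file names and contents are made up.

```shell
dir="$(mktemp -d)"
printf 'first\n' > "$dir/part1.txt"
printf 'second\n' > "$dir/part2.txt"

# Concatenate: write both files, in order, into a new file.
cat "$dir/part1.txt" "$dir/part2.txt" > "$dir/whole.txt"

# View: with a single argument, cat just prints the file.
cat "$dir/whole.txt"    # prints "first", then "second"
```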

### Tails (tail)

The `tail` command is useful when you want to see the last n lines of a file. It's also great for monitoring a file.
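
Here is a minimal sketch of both uses, with a made-up log file. The monitoring form is commented out because it runs until interrupted.

```shell
log="$(mktemp)"
printf 'line 1\nline 2\nline 3\nline 4\nline 5\n' > "$log"

# Show the last n lines of a file (here n = 2).
tail -n 2 "$log"    # prints "line 4", then "line 5"

# To monitor a growing file instead, follow it (runs until Ctrl+C):
# tail -f "$log"
```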

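### Witches (which)

No, it's not the witch kind. `which` prints the location of the file for the command you pass to it. For example, `which python` prints the location of the `python` your shell would run, which is the first one found on your `PATH`.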
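
`which` reports only the first match on your `PATH`. Some `which` implementations accept `-a` to list every match. A portable alternative is to walk `PATH` yourself. The minimal sketch below does that. It skips empty `PATH` entries, which would otherwise mean the current directory. `which_all` is a hypothetical helper.

```shell
# Print every executable file named $1 found along $PATH, in PATH order.
which_all() {
  name="$1"
  old_ifs="$IFS"
  IFS=':'
  for dir in $PATH; do
    # Skip empty entries; report only regular files that are executable.
    if [ -n "$dir" ] && [ -f "$dir/$name" ] && [ -x "$dir/$name" ]; then
      printf '%s\n' "$dir/$name"
    fi
  done
  IFS="$old_ifs"
}

which_all sh    # one line per sh found, the one that would run first at the top
```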

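### Crypts (crypt)

The `crypt` command, known these days as `mcrypt`, is handy when you want to encrypt the contents of a file so that no one but you can read it. Like most Unix commands, you can use `crypt` on its own or call it from a system script.

### Shreds (shred)

The `shred` command is handy when you want not only to delete a file but also to make sure nobody can ever recover it. Deleting a file with `rm` isn't enough; you also need to overwrite the space the file used to occupy. That's where `shred` comes in.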
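
Here is a minimal sketch using `shred` from GNU coreutils. `-n` sets the number of overwrite passes, `-z` adds a final pass of zeros, and `-u` removes the file afterwards. The coreutils documentation also cautions that overwriting in place may not be effective on journaling or copy-on-write filesystems, or on some storage hardware.

```shell
secret="$(mktemp)"
printf 'made-up secret data\n' > "$secret"

# One pass of random data, a final pass of zeros, then delete the file.
shred -n 1 -z -u "$secret"

[ -e "$secret" ] || echo 'file overwritten and removed'
```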

These are just a few of the creepy things you'll find lurking in Unix. Do you know any other spooky commands? Feel free to let me know.

Happy Halloween! (LCTT: Sadly we only finished translating afterwards, so the scares arrive a little late :D)

--------------------------------------------------------------------------------

via: https://opensource.com/article/18/10/spookier-side-unix-linux

Author: [Patrick H.Mullins][a]

Selected by: [lujun9972][b]

Translator: [geekpi](https://github.com/geekpi)

Proofreader: [wxy](https://github.com/wxy)

This article was compiled and translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]: https://opensource.com/users/pmullins

[b]: https://github.com/lujun9972

@ -1,4 +1,4 @@

#!/bin/bash

#!/bin/sh

# PR check script

set -e

@ -22,7 +22,12 @@ do_analyze() {

# Count each operation for each category

REGEX="$(get_operation_regex "$STAT" "$TYPE")"

OTHER_REGEX="${OTHER_REGEX}|${REGEX}"

eval "${TYPE}_${STAT}=\"\$(grep -Ec '$REGEX' /tmp/changes)\"" || true

CHANGES_FILE="/tmp/changes_${TYPE}_${STAT}"

eval "grep -E '$REGEX' /tmp/changes" \

| sed 's/^[^\/]*\///g' \

| sort > "$CHANGES_FILE" || true

sed 's/^.*\///g' "$CHANGES_FILE" > "${CHANGES_FILE}_basename"

eval "${TYPE}_${STAT}=$(wc -l < "$CHANGES_FILE")"

eval echo "${TYPE}_${STAT}=\$${TYPE}_${STAT}"

done

done

@ -1,4 +1,4 @@

#!/bin/bash

#!/bin/sh

# Check script status

set -e

@ -1,4 +1,4 @@

#!/bin/bash

#!/bin/sh

# Collect PR file changes

set -e

@ -31,7 +31,16 @@ git --no-pager show --summary "${MERGE_BASE}..HEAD"

echo "[collect] Writing out the list of file changes..."

git diff "$MERGE_BASE" HEAD --no-renames --name-status > /tmp/changes

RAW_CHANGES="$(git diff "$MERGE_BASE" HEAD --no-renames --name-status -z \

| tr '\0' '\n')"

[ -z "$RAW_CHANGES" ] && {

echo "[collect] No changes, exiting..."

exit 1

}

echo "$RAW_CHANGES" | while read -r STAT; do

read -r NAME

echo "${STAT} ${NAME}"

done > /tmp/changes

echo "[collect] List of file changes written:"

cat /tmp/changes

{ [ -z "$(cat /tmp/changes)" ] && echo "(no changes)"; } || true
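
The new loop relies on the `-z` output format of `git diff --name-status`. Each record is a status field followed by a path, each terminated by a NUL byte, and paths are printed verbatim instead of being quoted. After `tr` turns the NULs into newlines, every record takes up two lines, which is why the loop calls `read` twice. The sketch below replays that logic on hand-written sample input, so no repository is needed. `pair_name_status` and the sample paths are hypothetical.

```shell
# Hypothetical helper mirroring the loop above: turn NUL-separated
# "STATUS\0PATH\0" records into "STATUS PATH" lines.
pair_name_status() {
  tr '\0' '\n' | while read -r stat; do
    read -r name
    printf '%s %s\n' "$stat" "$name"
  done
}

# Sample input standing in for `git diff --name-status -z` output.
printf 'A\0sources/tech/20181020 Some title.md\0D\0sources/talk/old.md\0' \
  | pair_name_status
```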

@ -10,9 +10,10 @@ export TSL_DIR='translated' # translated

export PUB_DIR='published' # published

# Define the matching rules

export CATE_PATTERN='(news|talk|tech)' # category

export CATE_PATTERN='(talk|tech)' # category

export FILE_PATTERN='[0-9]{8} [a-zA-Z0-9_.,() -]*\.md' # file name

# Get the regular expression used to match an operation

# Usage: get_operation_regex STATUS TYPE

#

# STATUS is one of:

@ -26,5 +27,50 @@ export FILE_PATTERN='[0-9]{8} [a-zA-Z0-9_.,() -]*\.md' # file name

get_operation_regex() {

STAT="$1"

TYPE="$2"

echo "^${STAT}\\s+\"?$(eval echo "\$${TYPE}_DIR")/"

}
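
`get_operation_regex` builds a pattern of the form `^STATUS\s+"?DIR/`, where DIR is the value of `SRC_DIR`, `TSL_DIR` or `PUB_DIR`. The scripts now run under `/bin/sh`, which matters in two ways. First, `\s` is a GNU extension and is not defined in POSIX extended regular expressions. Second, `echo` is not guaranteed to pass backslashes through unchanged in every shell. The sketch below is a more portable variant that uses `printf` and the `[[:space:]]` class. The function name is hypothetical. `SRC_DIR='sources'` is also an assumption, since this hunk does not show `SRC_DIR`.

```shell
# Directory variables mirroring the *_DIR exports (SRC_DIR's value is assumed).
SRC_DIR='sources'
TSL_DIR='translated'
PUB_DIR='published'

# Portable sketch of get_operation_regex: status, whitespace, optional quote, dir.
operation_regex() {
  op_stat="$1"
  op_type="$2"
  eval "op_dir=\${${op_type}_DIR}"
  printf '^%s[[:space:]]+"?%s/\n' "$op_stat" "$op_dir"
}

operation_regex A SRC    # prints: ^A[[:space:]]+"?sources/

# Count added source files in a sample change list.
printf 'A sources/tech/a.md\nA translated/tech/b.md\nD sources/talk/c.md\n' \
  | grep -Ec "$(operation_regex A SRC)"    # prints: 1
```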

# Make sure two change lists are identical

# Usage: ensure_identical X_TYPE X_STATUS Y_TYPE Y_STATUS COMPARE_NAMES_ONLY

#

# STATUS is one of:

# - A: added

# - M: modified

# - D: deleted

# TYPE is one of:

# - SRC: untranslated

# - TSL: translated

# - PUB: published

ensure_identical() {

TYPE_X="$1"

STAT_X="$2"

TYPE_Y="$3"

STAT_Y="$4"

NAME_ONLY="$5"

SUFFIX=

[ -n "$NAME_ONLY" ] && SUFFIX="_basename"

X_FILE="/tmp/changes_${TYPE_X}_${STAT_X}${SUFFIX}"

Y_FILE="/tmp/changes_${TYPE_Y}_${STAT_Y}${SUFFIX}"

cmp "$X_FILE" "$Y_FILE" 2> /dev/null

}
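
`ensure_identical` succeeds only when two change lists are byte-for-byte identical. Judging from `do_analyze` above, each `/tmp/changes_TYPE_STAT` file holds sorted paths with the status and the top-level directory stripped, so a line such as `D sources/tech/x.md` becomes `tech/x.md`. That is why a file moved from one tree into another under the same category path compares equal. Plain `cmp` reports differences on standard output, so the script's `2> /dev/null` only hides errors such as a missing file. `cmp -s` suppresses the difference report as well. A minimal sketch with hypothetical list contents:

```shell
# Hypothetical stand-ins for /tmp/changes_SRC_D and /tmp/changes_TSL_A,
# already stripped of status and top-level directory, plus a non-matching list.
tmp="$(mktemp -d)"
printf 'tech/20181020 Some title.md\n' > "$tmp/changes_SRC_D"
printf 'tech/20181020 Some title.md\n' > "$tmp/changes_TSL_A"
printf 'talk/20181020 Some title.md\n' > "$tmp/changes_other"

# Same test as ensure_identical; -s keeps cmp quiet, so only the exit status
# (0 = identical, 1 = different, >1 = trouble such as a missing file) is used.
same_list() {
  cmp -s "$1" "$2"
}

if same_list "$tmp/changes_SRC_D" "$tmp/changes_TSL_A"; then
  echo 'SRC D and TSL A match'
fi
if ! same_list "$tmp/changes_SRC_D" "$tmp/changes_other"; then
  echo 'different category path, so the lists differ'
fi
```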

# Check the article categories

# Usage: check_category TYPE STATUS

#

# STATUS is one of:

# - A: added

# - M: modified

# - D: deleted

# TYPE is one of:

# - SRC: untranslated

# - TSL: translated

check_category() {

TYPE="$1"

STAT="$2"

CHANGES="/tmp/changes_${TYPE}_${STAT}"

! grep -Eqv "^${CATE_PATTERN}/" "$CHANGES"

}
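
The `! grep -Eqv` line is a double negative. `grep -v` succeeds if at least one line does *not* start with an allowed category, and the leading `!` inverts that. So `check_category` succeeds only when every listed path starts with an allowed category. One consequence is that an empty list also passes. A minimal sketch with hypothetical sample paths and the updated `CATE_PATTERN`:

```shell
CATE_PATTERN='(talk|tech)'    # the updated pattern from the diff above

# Succeeds only if every line of the file starts with an allowed category.
all_in_category() {
  ! grep -Eqv "^${CATE_PATTERN}/" "$1"
}

tmp="$(mktemp -d)"
printf 'tech/a.md\ntalk/b.md\n' > "$tmp/ok"
printf 'tech/a.md\nnews/c.md\n' > "$tmp/bad"
: > "$tmp/empty"

all_in_category "$tmp/ok" && echo 'ok: every path is in an allowed category'
all_in_category "$tmp/bad" || echo 'bad: news/ is not an allowed category'
all_in_category "$tmp/empty" && echo 'empty: passes too'
```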

@ -1,4 +1,4 @@

#!/bin/bash

#!/bin/sh

# Match PR rules

set -e

@ -27,31 +27,39 @@ rule_bypass_check() {

# Add source: add at least one source article

rule_source_added() {

[ "$SRC_A" -ge 1 ] \

&& check_category SRC A \

&& [ "$TOTAL" -eq "$SRC_A" ] && echo "Matched rule: added ${SRC_A} source article(s)"

}

# Claim translation: only one source article can be claimed

rule_translation_requested() {

[ "$SRC_M" -eq 1 ] \

&& check_category SRC M \

&& [ "$TOTAL" -eq 1 ] && echo "Matched rule: translation claimed"

}

# Submit translation: only one translation can be submitted

rule_translation_completed() {

[ "$SRC_D" -eq 1 ] && [ "$TSL_A" -eq 1 ] \

&& ensure_identical SRC D TSL A \

&& check_category SRC D \

&& check_category TSL A \