mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-24 02:20:09 +08:00

commit

ec632df55e

@ -8,14 +8,13 @@

|

||||

3. CPU 和内存瓶颈

|

||||

4. 网络瓶颈

|

||||

|

||||

|

||||

### 1. top - 进程活动监控命令

|

||||

|

||||

top 命令显示 Linux 的进程。它提供了一个系统的实时动态视图,即实际的进程活动。默认情况下,它显示在服务器上运行的 CPU 占用率最高的任务,并且每五秒更新一次。

|

||||

`top` 命令会显示 Linux 的进程。它提供了一个运行中系统的实时动态视图,即实际的进程活动。默认情况下,它显示在服务器上运行的 CPU 占用率最高的任务,并且每五秒更新一次。

|

||||

|

||||

|

||||

|

||||

图 01:Linux top 命令

|

||||

*图 01:Linux top 命令*

|

||||

|

||||

#### top 的常用快捷键

|

||||

|

||||

@ -23,22 +22,24 @@ top 命令显示 Linux 的进程。它提供了一个系统的实时动态视图

|

||||

|

||||

| 快捷键 | 用法 |

|

||||

| ---- | -------------------------------------- |

|

||||

| t | 是否显示总结信息 |

|

||||

| m | 是否显示内存信息 |

|

||||

| A | 根据各种系统资源的利用率对进程进行排序,有助于快速识别系统中性能不佳的任务。 |

|

||||

| f | 进入 top 的交互式配置屏幕,用于根据特定的需求而设置 top 的显示。 |

|

||||

| o | 交互式地调整 top 每一列的顺序。 |

|

||||

| r | 调整优先级(renice) |

|

||||

| k | 杀掉进程(kill) |

|

||||

| z | 开启或关闭彩色或黑白模式 |

|

||||

| `t` | 是否显示汇总信息 |

|

||||

| `m` | 是否显示内存信息 |

|

||||

| `A` | 根据各种系统资源的利用率对进程进行排序,有助于快速识别系统中性能不佳的任务。 |

|

||||

| `f` | 进入 `top` 的交互式配置屏幕,用于根据特定的需求而设置 `top` 的显示。 |

|

||||

| `o` | 交互式地调整 `top` 每一列的顺序。 |

|

||||

| `r` | 调整优先级(`renice`) |

|

||||

| `k` | 杀掉进程(`kill`) |

|

||||

| `z` | 切换彩色或黑白模式 |

|

||||

|

||||

相关链接:[Linux 如何查看 CPU 利用率?][1]

|

||||

|

||||

### 2. vmstat - 虚拟内存统计

|

||||

|

||||

vmstat 命令报告有关进程、内存、分页、块 IO、陷阱和 cpu 活动等信息。

|

||||

`vmstat` 命令报告有关进程、内存、分页、块 IO、中断和 CPU 活动等信息。

|

||||

|

||||

`# vmstat 3`

|

||||

```

|

||||

# vmstat 3

|

||||

```

|

||||

|

||||

输出示例:

|

||||

|

||||

@ -56,11 +57,15 @@ procs -----------memory---------- ---swap-- -----io---- --system-- -----cpu-----

|

||||

|

||||

#### 显示 Slab 缓存的利用率

|

||||

|

||||

`# vmstat -m`

|

||||

```

|

||||

# vmstat -m

|

||||

```

|

||||

|

||||

#### 获取有关活动和非活动内存页面的信息

|

||||

|

||||

`# vmstat -a`

|

||||

```

|

||||

# vmstat -a

|

||||

```

|

||||

|

||||

相关链接:[如何查看 Linux 的资源利用率从而找到系统瓶颈?][2]

|

||||

|

||||

@ -84,9 +89,11 @@ root pts/1 10.1.3.145 17:43 0.00s 0.03s 0.00s w

|

||||

|

||||

### 4. uptime - Linux 系统运行了多久

|

||||

|

||||

uptime 命令可以用来查看服务器运行了多长时间:当前时间、已运行的时间、当前登录的用户连接数,以及过去 1 分钟、5 分钟和 15 分钟的系统负载平均值。

|

||||

`uptime` 命令可以用来查看服务器运行了多长时间:当前时间、已运行的时间、当前登录的用户连接数,以及过去 1 分钟、5 分钟和 15 分钟的系统负载平均值。

|

||||

|

||||

`# uptime`

|

||||

```

|

||||

# uptime

|

||||

```

|

||||

|

||||

输出示例:

|

||||

|

||||

@ -94,13 +101,15 @@ uptime 命令可以用来查看服务器运行了多长时间:当前时间、

|

||||

18:02:41 up 41 days, 23:42, 1 user, load average: 0.00, 0.00, 0.00

|

||||

```

|

||||

|

||||

1 可以被认为是最佳负载值。不同的系统会有不同的负载:对于单核 CPU 系统来说,1 到 3 的负载值是可以接受的;而对于 SMP(对称多处理)系统来说,负载可以是 6 到 10。

|

||||

`1` 可以被认为是最佳负载值。不同的系统会有不同的负载:对于单核 CPU 系统来说,`1` 到 `3` 的负载值是可以接受的;而对于 SMP(对称多处理)系统来说,负载可以是 `6` 到 `10`。

|

||||

|

||||

### 5. ps - 显示系统进程

|

||||

|

||||

ps 命令显示当前运行的进程。要显示所有的进程,请使用 -A 或 -e 选项:

|

||||

`ps` 命令显示当前运行的进程。要显示所有的进程,请使用 `-A` 或 `-e` 选项:

|

||||

|

||||

`# ps -A`

|

||||

```

|

||||

# ps -A

|

||||

```

|

||||

|

||||

输出示例:

|

||||

|

||||

@ -132,23 +141,31 @@ ps 命令显示当前运行的进程。要显示所有的进程,请使用 -A

|

||||

55704 pts/1 00:00:00 ps

|

||||

```

|

||||

|

||||

ps 与 top 类似,但它提供了更多的信息。

|

||||

`ps` 与 `top` 类似,但它提供了更多的信息。

|

||||

|

||||

#### 显示长输出格式

|

||||

|

||||

`# ps -Al`

|

||||

```

|

||||

# ps -Al

|

||||

```

|

||||

|

||||

显示完整输出格式(它将显示传递给进程的命令行参数):

|

||||

|

||||

`# ps -AlF`

|

||||

```

|

||||

# ps -AlF

|

||||

```

|

||||

|

||||

#### 显示线程(轻量级进程(LWP)和线程的数量(NLWP))

|

||||

|

||||

`# ps -AlFH`

|

||||

```

|

||||

# ps -AlFH

|

||||

```

|

||||

|

||||

#### 在进程后显示线程

|

||||

|

||||

`# ps -AlLm`

|

||||

```

|

||||

# ps -AlLm

|

||||

```

|

||||

|

||||

#### 显示系统上所有的进程

|

||||

|

||||

@ -162,7 +179,7 @@ ps 与 top 类似,但它提供了更多的信息。

|

||||

```

|

||||

# ps -ejH

|

||||

# ps axjf

|

||||

# [pstree][4]

|

||||

# pstree

|

||||

```

|

||||

|

||||

#### 显示进程的安全信息

|

||||

@ -192,11 +209,15 @@ ps 与 top 类似,但它提供了更多的信息。

|

||||

```

|

||||

# ps -C lighttpd -o pid=

|

||||

```

|

||||

|

||||

或

|

||||

|

||||

```

|

||||

# pgrep lighttpd

|

||||

```

|

||||

|

||||

或

|

||||

|

||||

```

|

||||

# pgrep -u vivek php-cgi

|

||||

```

|

||||

@ -215,15 +236,19 @@ ps 与 top 类似,但它提供了更多的信息。

|

||||

|

||||

#### 找出占用 CPU 资源最多的前 10 个进程

|

||||

|

||||

`# ps -auxf | sort -nr -k 3 | head -10`

|

||||

```

|

||||

# ps -auxf | sort -nr -k 3 | head -10

|

||||

```

|

||||

|

||||

相关链接:[显示 Linux 上所有运行的进程][5]

|

||||

|

||||

### 6. free - 内存使用情况

|

||||

|

||||

free 命令显示了系统的可用和已用的物理内存及交换内存的总量,以及内核用到的缓存空间。

|

||||

`free` 命令显示了系统的可用和已用的物理内存及交换内存的总量,以及内核用到的缓存空间。

|

||||

|

||||

`# free `

|

||||

```

|

||||

# free

|

||||

```

|

||||

|

||||

输出示例:

|

||||

|

||||

@ -242,9 +267,11 @@ Swap: 1052248 0 1052248

|

||||

|

||||

### 7. iostat - CPU 平均负载和磁盘活动

|

||||

|

||||

iostat 命令用于汇报 CPU 的使用情况,以及设备、分区和网络文件系统(NFS)的 IO 统计信息。

|

||||

`iostat` 命令用于汇报 CPU 的使用情况,以及设备、分区和网络文件系统(NFS)的 IO 统计信息。

|

||||

|

||||

`# iostat `

|

||||

```

|

||||

# iostat

|

||||

```

|

||||

|

||||

输出示例:

|

||||

|

||||

@ -265,17 +292,21 @@ sda3 0.00 0.00 0.00 1615 0

|

||||

|

||||

### 8. sar - 监控、收集和汇报系统活动

|

||||

|

||||

sar 命令用于收集、汇报和保存系统活动信息。要查看网络统计,请输入:

|

||||

`sar` 命令用于收集、汇报和保存系统活动信息。要查看网络统计,请输入:

|

||||

|

||||

`# sar -n DEV | more`

|

||||

```

|

||||

# sar -n DEV | more

|

||||

```

|

||||

|

||||

显示 24 日的网络统计:

|

||||

|

||||

`# sar -n DEV -f /var/log/sa/sa24 | more`

|

||||

|

||||

您还可以使用 sar 显示实时使用情况:

|

||||

您还可以使用 `sar` 显示实时使用情况:

|

||||

|

||||

`# sar 4 5`

|

||||

```

|

||||

# sar 4 5

|

||||

```

|

||||

|

||||

输出示例:

|

||||

|

||||

@ -295,12 +326,13 @@ Average: all 2.02 0.00 0.27 0.01 0.00 97.70

|

||||

+ [如何将 Linux 系统资源利用率的数据写入文件中][53]

|

||||

+ [如何使用 kSar 创建 sar 性能图以找出系统瓶颈][54]

|

||||

|

||||

|

||||

### 9. mpstat - 监控多处理器的使用情况

|

||||

|

||||

mpstat 命令显示每个可用处理器的使用情况,编号从 0 开始。命令 mpstat -P ALL 显示了每个处理器的平均使用率:

|

||||

`mpstat` 命令显示每个可用处理器的使用情况,编号从 0 开始。命令 `mpstat -P ALL` 显示了每个处理器的平均使用率:

|

||||

|

||||

`# mpstat -P ALL`

|

||||

```

|

||||

# mpstat -P ALL

|

||||

```

|

||||

|

||||

输出示例:

|

||||

|

||||

@ -323,13 +355,17 @@ Linux 2.6.18-128.1.14.el5 (www03.nixcraft.in) 06/26/2009

|

||||

|

||||

### 10. pmap - 监控进程的内存使用情况

|

||||

|

||||

pmap 命令用以显示进程的内存映射,使用此命令可以查找内存瓶颈。

|

||||

`pmap` 命令用以显示进程的内存映射,使用此命令可以查找内存瓶颈。

|

||||

|

||||

`# pmap -d PID`

|

||||

```

|

||||

# pmap -d PID

|

||||

```

|

||||

|

||||

显示 PID 为 47394 的进程的内存信息,请输入:

|

||||

|

||||

`# pmap -d 47394`

|

||||

```

|

||||

# pmap -d 47394

|

||||

```

|

||||

|

||||

输出示例:

|

||||

|

||||

@ -362,16 +398,15 @@ mapped: 933712K writeable/private: 4304K shared: 768000K

|

||||

|

||||

最后一行非常重要:

|

||||

|

||||

* **mapped: 933712K** 映射到文件的内存量

|

||||

* **writeable/private: 4304K** 私有地址空间

|

||||

* **shared: 768000K** 此进程与其他进程共享的地址空间

|

||||

|

||||

* `mapped: 933712K` 映射到文件的内存量

|

||||

* `writeable/private: 4304K` 私有地址空间

|

||||

* `shared: 768000K` 此进程与其他进程共享的地址空间

|

||||

|

||||

相关链接:[使用 pmap 命令查看 Linux 上单个程序或进程使用的内存][8]

|

||||

|

||||

### 11. netstat - Linux 网络统计监控工具

|

||||

|

||||

netstat 命令显示网络连接、路由表、接口统计、伪装连接和多播连接等信息。

|

||||

`netstat` 命令显示网络连接、路由表、接口统计、伪装连接和多播连接等信息。

|

||||

|

||||

```

|

||||

# netstat -tulpn

|

||||

@ -380,27 +415,32 @@ netstat 命令显示网络连接、路由表、接口统计、伪装连接和多

|

||||

|

||||

### 12. ss - 网络统计

|

||||

|

||||

ss 命令用于获取套接字统计信息。它可以显示类似于 netstat 的信息。不过 netstat 几乎要过时了,ss 命令更具优势。要显示所有 TCP 或 UDP 套接字:

|

||||

`ss` 命令用于获取套接字统计信息。它可以显示类似于 `netstat` 的信息。不过 `netstat` 几乎要过时了,`ss` 命令更具优势。要显示所有 TCP 或 UDP 套接字:

|

||||

|

||||

`# ss -t -a`

|

||||

```

|

||||

# ss -t -a

|

||||

```

|

||||

|

||||

或

|

||||

|

||||

`# ss -u -a `

|

||||

```

|

||||

# ss -u -a

|

||||

```

|

||||

|

||||

显示所有带有 SELinux 安全上下文(Security Context)的 TCP 套接字:

|

||||

显示所有带有 SELinux <ruby>安全上下文<rt>Security Context</rt></ruby>的 TCP 套接字:

|

||||

|

||||

`# ss -t -a -Z `

|

||||

```

|

||||

# ss -t -a -Z

|

||||

```

|

||||

|

||||

请参阅以下关于 ss 和 netstat 命令的资料:

|

||||

请参阅以下关于 `ss` 和 `netstat` 命令的资料:

|

||||

|

||||

+ [ss:显示 Linux TCP / UDP 网络套接字信息][56]

|

||||

+ [使用 netstat 命令获取有关特定 IP 地址连接的详细信息][57]

|

||||

|

||||

|

||||

### 13. iptraf - 获取实时网络统计信息

|

||||

|

||||

iptraf 命令是一个基于 ncurses 的交互式 IP 网络监控工具。它可以生成多种网络统计信息,包括 TCP 信息、UDP 计数、ICMP 和 OSPF 信息、以太网负载信息、节点统计信息、IP 校验错误等。它以简单的格式提供了以下信息:

|

||||

`iptraf` 命令是一个基于 ncurses 的交互式 IP 网络监控工具。它可以生成多种网络统计信息,包括 TCP 信息、UDP 计数、ICMP 和 OSPF 信息、以太网负载信息、节点统计信息、IP 校验错误等。它以简单的格式提供了以下信息:

|

||||

|

||||

* 基于 TCP 连接的网络流量统计

|

||||

* 基于网络接口的 IP 流量统计

|

||||

@ -410,41 +450,53 @@ iptraf 命令是一个基于 ncurses 的交互式 IP 网络监控工具。它可

|

||||

|

||||

![Fig.02: General interface statistics: IP traffic statistics by network interface ][9]

|

||||

|

||||

图 02:常规接口统计:基于网络接口的 IP 流量统计

|

||||

*图 02:常规接口统计:基于网络接口的 IP 流量统计*

|

||||

|

||||

![Fig.03 Network traffic statistics by TCP connection][10]

|

||||

|

||||

图 03:基于 TCP 连接的网络流量统计

|

||||

*图 03:基于 TCP 连接的网络流量统计*

|

||||

|

||||

相关链接:[在 Centos / RHEL / Fedora Linux 上安装 IPTraf 以获取网络统计信息][11]

|

||||

|

||||

### 14. tcpdump - 详细的网络流量分析

|

||||

|

||||

tcpdump 命令是简单的分析网络通信的命令。您需要充分了解 TCP/IP 协议才便于使用此工具。例如,要显示有关 DNS 的流量信息,请输入:

|

||||

`tcpdump` 命令是简单的分析网络通信的命令。您需要充分了解 TCP/IP 协议才便于使用此工具。例如,要显示有关 DNS 的流量信息,请输入:

|

||||

|

||||

`# tcpdump -i eth1 'udp port 53'`

|

||||

```

|

||||

# tcpdump -i eth1 'udp port 53'

|

||||

```

|

||||

|

||||

查看所有去往和来自端口 80 的 IPv4 HTTP 数据包,仅打印真正包含数据的包,而不是像 SYN、FIN 和仅含 ACK 这类的数据包,请输入:

|

||||

|

||||

`# tcpdump 'tcp port 80 and (((ip[2:2] - ((ip[0]&0xf)<<2)) - ((tcp[12]&0xf0)>>2)) != 0)'`

|

||||

```

|

||||

# tcpdump 'tcp port 80 and (((ip[2:2] - ((ip[0]&0xf)<<2)) - ((tcp[12]&0xf0)>>2)) != 0)'

|

||||

```

|

||||

|

||||

显示所有目标地址为 202.54.1.5 的 FTP 会话,请输入:

|

||||

|

||||

`# tcpdump -i eth1 'dst 202.54.1.5 and (port 21 or 20'`

|

||||

```

|

||||

# tcpdump -i eth1 'dst 202.54.1.5 and (port 21 or 20'

|

||||

```

|

||||

|

||||

打印所有目标地址为 192.168.1.5 的 HTTP 会话:

|

||||

|

||||

`# tcpdump -ni eth0 'dst 192.168.1.5 and tcp and port http'`

|

||||

```

|

||||

# tcpdump -ni eth0 'dst 192.168.1.5 and tcp and port http'

|

||||

```

|

||||

|

||||

使用 [wireshark][12] 查看文件的详细内容,请输入:

|

||||

|

||||

`# tcpdump -n -i eth1 -s 0 -w output.txt src or dst port 80`

|

||||

```

|

||||

# tcpdump -n -i eth1 -s 0 -w output.txt src or dst port 80

|

||||

```

|

||||

|

||||

### 15. iotop - I/O 监控

|

||||

|

||||

iotop 命令利用 Linux 内核监控 I/O 使用情况,它按进程或线程的顺序显示 I/O 使用情况。

|

||||

`iotop` 命令利用 Linux 内核监控 I/O 使用情况,它按进程或线程的顺序显示 I/O 使用情况。

|

||||

|

||||

`$ sudo iotop`

|

||||

```

|

||||

$ sudo iotop

|

||||

```

|

||||

|

||||

输出示例:

|

||||

|

||||

@ -454,9 +506,11 @@ iotop 命令利用 Linux 内核监控 I/O 使用情况,它按进程或线程

|

||||

|

||||

### 16. htop - 交互式的进程查看器

|

||||

|

||||

htop 是一款免费并开源的基于 ncurses 的 Linux 进程查看器。它比 top 命令更简单易用。您无需使用 PID、无需离开 htop 界面,便可以杀掉进程或调整其调度优先级。

|

||||

`htop` 是一款免费并开源的基于 ncurses 的 Linux 进程查看器。它比 `top` 命令更简单易用。您无需使用 PID、无需离开 `htop` 界面,便可以杀掉进程或调整其调度优先级。

|

||||

|

||||

`$ htop`

|

||||

```

|

||||

$ htop

|

||||

```

|

||||

|

||||

输出示例:

|

||||

|

||||

@ -464,40 +518,40 @@ htop 是一款免费并开源的基于 ncurses 的 Linux 进程查看器。它

|

||||

|

||||

相关链接:[CentOS / RHEL:安装 htop——交互式文本模式进程查看器][58]

|

||||

|

||||

|

||||

### 17. atop - 高级版系统与进程监控工具

|

||||

|

||||

atop 是一个非常强大的交互式 Linux 系统负载监控器,它从性能的角度显示最关键的硬件资源信息。您可以快速查看 CPU、内存、磁盘和网络性能。它还可以从进程的级别显示哪些进程造成了相关 CPU 和内存的负载。

|

||||

`atop` 是一个非常强大的交互式 Linux 系统负载监控器,它从性能的角度显示最关键的硬件资源信息。您可以快速查看 CPU、内存、磁盘和网络性能。它还可以从进程的级别显示哪些进程造成了相关 CPU 和内存的负载。

|

||||

|

||||

`$ atop`

|

||||

```

|

||||

$ atop

|

||||

```

|

||||

|

||||

![atop Command Line Tools to Monitor Linux Performance][16]

|

||||

|

||||

相关链接:[CentOS / RHEL:安装 atop 工具——高级系统和进程监控器][59]

|

||||

|

||||

|

||||

### 18. ac 和 lastcomm

|

||||

|

||||

您一定需要监控 Linux 服务器上的进程和登录活动吧。psacct 或 acct 软件包中包含了多个用于监控进程活动的工具,包括:

|

||||

您一定需要监控 Linux 服务器上的进程和登录活动吧。`psacct` 或 `acct` 软件包中包含了多个用于监控进程活动的工具,包括:

|

||||

|

||||

|

||||

1. ac 命令:显示有关用户连接时间的统计信息

|

||||

1. `ac` 命令:显示有关用户连接时间的统计信息

|

||||

2. [lastcomm 命令][17]:显示已执行过的命令

|

||||

3. accton 命令:打开或关闭进程账号记录功能

|

||||

4. sa 命令:进程账号记录信息的摘要

|

||||

3. `accton` 命令:打开或关闭进程账号记录功能

|

||||

4. `sa` 命令:进程账号记录信息的摘要

|

||||

|

||||

相关链接:[如何对 Linux 系统的活动做详细的跟踪记录][18]

|

||||

|

||||

### 19. monit - 进程监控器

|

||||

|

||||

Monit 是一个免费且开源的进程监控软件,它可以自动重启停掉的服务。您也可以使用 Systemd、daemontools 或其他类似工具来达到同样的目的。[本教程演示如何在 Debian 或 Ubuntu Linux 上安装和配置 monit 作为进程监控器][19]。

|

||||

`monit` 是一个免费且开源的进程监控软件,它可以自动重启停掉的服务。您也可以使用 Systemd、daemontools 或其他类似工具来达到同样的目的。[本教程演示如何在 Debian 或 Ubuntu Linux 上安装和配置 monit 作为进程监控器][19]。

|

||||

|

||||

|

||||

### 20. nethogs - 找出占用带宽的进程

|

||||

### 20. NetHogs - 找出占用带宽的进程

|

||||

|

||||

NetHogs 是一个轻便的网络监控工具,它按照进程名称(如 Firefox、wget 等)对带宽进行分组。如果网络流量突然爆发,启动 NetHogs,您将看到哪个进程(PID)导致了带宽激增。

|

||||

|

||||

`$ sudo nethogs`

|

||||

```

|

||||

$ sudo nethogs

|

||||

```

|

||||

|

||||

![nethogs linux monitoring tools open source][20]

|

||||

|

||||

@ -505,31 +559,37 @@ NetHogs 是一个轻便的网络监控工具,它按照进程名称(如 Firef

|

||||

|

||||

### 21. iftop - 显示主机上网络接口的带宽使用情况

|

||||

|

||||

iftop 命令监听指定接口(如 eth0)上的网络通信情况。[它显示了一对主机的带宽使用情况][22]。

|

||||

`iftop` 命令监听指定接口(如 eth0)上的网络通信情况。[它显示了一对主机的带宽使用情况][22]。

|

||||

|

||||

`$ sudo iftop`

|

||||

```

|

||||

$ sudo iftop

|

||||

```

|

||||

|

||||

![iftop in action][23]

|

||||

|

||||

### 22. vnstat - 基于控制台的网络流量监控工具

|

||||

|

||||

vnstat 是一个简单易用的基于控制台的网络流量监视器,它为指定网络接口保留每小时、每天和每月网络流量日志。

|

||||

`vnstat` 是一个简单易用的基于控制台的网络流量监视器,它为指定网络接口保留每小时、每天和每月网络流量日志。

|

||||

|

||||

`$ vnstat `

|

||||

```

|

||||

$ vnstat

|

||||

```

|

||||

|

||||

![vnstat linux network traffic monitor][25]

|

||||

|

||||

相关链接:

|

||||

|

||||

+ [为 ADSL 或专用远程 Linux 服务器保留日常网络流量日志][60]

|

||||

+ [CentOS / RHEL:安装 vnStat 网络流量监控器以保留日常网络流量日志][61]

|

||||

+ [CentOS / RHEL:使用 PHP 网页前端接口查看 Vnstat 图表][62]

|

||||

|

||||

|

||||

### 23. nmon - Linux 系统管理员的调优和基准测量工具

|

||||

|

||||

nmon 是 Linux 系统管理员用于性能调优的利器,它在命令行显示 CPU、内存、网络、磁盘、文件系统、NFS、消耗资源最多的进程和分区信息。

|

||||

`nmon` 是 Linux 系统管理员用于性能调优的利器,它在命令行显示 CPU、内存、网络、磁盘、文件系统、NFS、消耗资源最多的进程和分区信息。

|

||||

|

||||

`$ nmon`

|

||||

```

|

||||

$ nmon

|

||||

```

|

||||

|

||||

![nmon command][26]

|

||||

|

||||

@ -537,9 +597,11 @@ nmon 是 Linux 系统管理员用于性能调优的利器,它在命令行显

|

||||

|

||||

### 24. glances - 密切关注 Linux 系统

|

||||

|

||||

glances 是一款开源的跨平台监控工具。它在小小的屏幕上提供了大量的信息,还可以用作客户端-服务器架构。

|

||||

`glances` 是一款开源的跨平台监控工具。它在小小的屏幕上提供了大量的信息,还可以工作于客户端-服务器模式下。

|

||||

|

||||

`$ glances`

|

||||

```

|

||||

$ glances

|

||||

```

|

||||

|

||||

![Glances][28]

|

||||

|

||||

@ -547,11 +609,11 @@ glances 是一款开源的跨平台监控工具。它在小小的屏幕上提供

|

||||

|

||||

### 25. strace - 查看系统调用

|

||||

|

||||

想要跟踪 Linux 系统的调用和信号吗?试试 strace 命令吧。它对于调试网页服务器和其他服务器问题很有用。了解如何利用其 [追踪进程][30] 并查看它在做什么。

|

||||

想要跟踪 Linux 系统的调用和信号吗?试试 `strace` 命令吧。它对于调试网页服务器和其他服务器问题很有用。了解如何利用其 [追踪进程][30] 并查看它在做什么。

|

||||

|

||||

### 26. /proc/ 文件系统 - 各种内核信息

|

||||

### 26. /proc 文件系统 - 各种内核信息

|

||||

|

||||

/proc 文件系统提供了不同硬件设备和 Linux 内核的详细信息。更多详细信息,请参阅 [Linux 内核 /proc][31] 文档。常见的 /proc 例子:

|

||||

`/proc` 文件系统提供了不同硬件设备和 Linux 内核的详细信息。更多详细信息,请参阅 [Linux 内核 /proc][31] 文档。常见的 `/proc` 例子:

|

||||

|

||||

```

|

||||

# cat /proc/cpuinfo

|

||||

@ -562,23 +624,23 @@ glances 是一款开源的跨平台监控工具。它在小小的屏幕上提供

|

||||

|

||||

### 27. Nagios - Linux 服务器和网络监控

|

||||

|

||||

[Nagios][32] 是一款普遍使用的开源系统和网络监控软件。您可以轻松地监控所有主机、网络设备和服务,当状态异常和恢复正常时它都会发出警报通知。[FAN][33] 是“全自动 Nagios”的缩写。FAN 的目标是提供包含由 Nagios 社区提供的大多数工具包的 Nagios 安装。FAN 提供了标准 ISO 格式的 CDRom 镜像,使安装变得更加容易。除此之外,为了改善 Nagios 的用户体验,发行版还包含了大量的工具。

|

||||

[Nagios][32] 是一款普遍使用的开源系统和网络监控软件。您可以轻松地监控所有主机、网络设备和服务,当状态异常和恢复正常时它都会发出警报通知。[FAN][33] 是“全自动 Nagios”的缩写。FAN 的目标是提供包含由 Nagios 社区提供的大多数工具包的 Nagios 安装。FAN 提供了标准 ISO 格式的 CD-Rom 镜像,使安装变得更加容易。除此之外,为了改善 Nagios 的用户体验,发行版还包含了大量的工具。

|

||||

|

||||

### 28. Cacti - 基于 Web 的 Linux 监控工具

|

||||

|

||||

Cacti 是一个完整的网络图形化解决方案,旨在充分利用 RRDTool 的数据存储和图形功能。Cacti 提供了快速轮询器、高级图形模板、多种数据采集方法和用户管理功能。这些功能被包装在一个直观易用的界面中,确保可以实现从局域网到拥有数百台设备的复杂网络上的安装。它可以提供有关网络、CPU、内存、登录用户、Apache、DNS 服务器等的数据。了解如何在 CentOS / RHEL 下 [安装和配置 Cacti 网络图形化工具][34]。

|

||||

|

||||

### 29. KDE System Guard - 实时系统报告和图形化显示

|

||||

### 29. KDE 系统监控器 - 实时系统报告和图形化显示

|

||||

|

||||

KSysguard 是 KDE 桌面的网络化系统监控程序。这个工具可以通过 ssh 会话运行。它提供了许多功能,比如监控本地和远程主机的客户端-服务器架构。前端图形界面使用传感器来检索信息。传感器可以返回简单的值或更复杂的信息,如表格。每种类型的信息都有一个或多个显示界面,并被组织成工作表的形式,这些工作表可以分别保存和加载。所以,KSysguard 不仅是一个简单的任务管理器,还是一个控制大型服务器平台的强大工具。

|

||||

KSysguard 是 KDE 桌面的网络化系统监控程序。这个工具可以通过 ssh 会话运行。它提供了许多功能,比如可以监控本地和远程主机的客户端-服务器模式。前端图形界面使用传感器来检索信息。传感器可以返回简单的值或更复杂的信息,如表格。每种类型的信息都有一个或多个显示界面,并被组织成工作表的形式,这些工作表可以分别保存和加载。所以,KSysguard 不仅是一个简单的任务管理器,还是一个控制大型服务器平台的强大工具。

|

||||

|

||||

![Fig.05 KDE System Guard][35]

|

||||

|

||||

图 05:KDE System Guard {图片来源:维基百科}

|

||||

*图 05:KDE System Guard {图片来源:维基百科}*

|

||||

|

||||

详细用法,请参阅 [KSysguard 手册][36]。

|

||||

|

||||

### 30. Gnome 系统监控器

|

||||

### 30. GNOME 系统监控器

|

||||

|

||||

系统监控程序能够显示系统基本信息,并监控系统进程、系统资源使用情况和文件系统。您还可以用其修改系统行为。虽然不如 KDE System Guard 强大,但它提供的基本信息对新用户还是有用的:

|

||||

|

||||

@ -598,7 +660,7 @@ KSysguard 是 KDE 桌面的网络化系统监控程序。这个工具可以通

|

||||

|

||||

![Fig.06 The Gnome System Monitor application][37]

|

||||

|

||||

图 06:Gnome 系统监控程序

|

||||

*图 06:Gnome 系统监控程序*

|

||||

|

||||

### 福利:其他工具

|

||||

|

||||

@ -606,16 +668,15 @@ KSysguard 是 KDE 桌面的网络化系统监控程序。这个工具可以通

|

||||

|

||||

* [nmap][38] - 扫描服务器的开放端口

|

||||

* [lsof][39] - 列出打开的文件和网络连接等

|

||||

* [ntop][40] 网页工具 - ntop 是查看网络使用情况的最佳工具,与 top 命令之于进程的方式类似,即网络流量监控工具。您可以查看网络状态和 UDP、TCP、DNS、HTTP 等协议的流量分发。

|

||||

* [Conky][41] - X Window 系统的另一个很好的监控工具。它具有很高的可配置性,能够监视许多系统变量,包括 CPU 状态、内存、交换空间、磁盘存储、温度、进程、网络接口、电池、系统消息和电子邮件等。

|

||||

* [ntop][40] 基于网页的工具 - `ntop` 是查看网络使用情况的最佳工具,与 `top` 命令之于进程的方式类似,即网络流量监控工具。您可以查看网络状态和 UDP、TCP、DNS、HTTP 等协议的流量分发。

|

||||

* [Conky][41] - X Window 系统下的另一个很好的监控工具。它具有很高的可配置性,能够监视许多系统变量,包括 CPU 状态、内存、交换空间、磁盘存储、温度、进程、网络接口、电池、系统消息和电子邮件等。

|

||||

* [GKrellM][42] - 它可以用来监控 CPU 状态、主内存、硬盘、网络接口、本地和远程邮箱及其他信息。

|

||||

* [mtr][43] - mtr 将 traceroute 和 ping 程序的功能结合在一个网络诊断工具中。

|

||||

* [mtr][43] - `mtr` 将 `traceroute` 和 `ping` 程序的功能结合在一个网络诊断工具中。

|

||||

* [vtop][44] - 图形化活动监控终端

|

||||

|

||||

|

||||

如果您有其他推荐的系统监控工具,欢迎在评论区分享。

|

||||

|

||||

#### 关于作者

|

||||

### 关于作者

|

||||

|

||||

作者 Vivek Gite 是 nixCraft 的创建者,也是经验丰富的系统管理员,以及 Linux 操作系统和 Unix shell 脚本的培训师。他的客户遍布全球,行业涉及 IT、教育、国防航天研究以及非营利部门等。您可以在 [Twitter][45]、[Facebook][46] 和 [Google+][47] 上关注他。

|

||||

|

||||

@ -625,7 +686,7 @@ via: https://www.cyberciti.biz/tips/top-linux-monitoring-tools.html

|

||||

|

||||

作者:[Vivek Gite][a]

|

||||

译者:[jessie-pang](https://github.com/jessie-pang)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,23 +1,22 @@

|

||||

Torrent 提速 - 为什么总是无济于事

|

||||

Torrent 提速为什么总是无济于事

|

||||

======

|

||||

|

||||

|

||||

|

||||

|

||||

是不是总是想要 **更快的 torrent 速度**?不管现在的速度有多块,但总是无法对此满足。我们对 torrent 速度的痴迷使我们经常从包括 YouTube 视频在内的许多网站上寻找并应用各种所谓的技巧。但是相信我,从小到大我就没发现哪个技巧有用过。因此本文我们就就来看看,为什么尝试提高 torrent 速度是行不通的。

|

||||

|

||||

## 影响速度的因素

|

||||

### 影响速度的因素

|

||||

|

||||

### 本地因素

|

||||

#### 本地因素

|

||||

|

||||

从下图中可以看到 3 台电脑分别对应的 A,B,C 三个用户。A 和 B 本地相连,而 C 的位置则比较远,它与本地之间有 1,2,3 三个连接点。

|

||||

从下图中可以看到 3 台电脑分别对应的 A、B、C 三个用户。A 和 B 本地相连,而 C 的位置则比较远,它与本地之间有 1、2、3 三个连接点。

|

||||

|

||||

[![][1]][2]

|

||||

|

||||

若用户 A 和用户 B 之间要分享文件,他们之间直接分享就能达到最大速度了而无需使用 torrent。这个速度跟互联网什么的都没有关系。

|

||||

|

||||

+ 网线的性能

|

||||

|

||||

+ 网卡的性能

|

||||

|

||||

+ 路由器的性能

|

||||

|

||||

当谈到 torrent 的时候,人们都是在说一些很复杂的东西,但是却总是不得要点。

|

||||

@ -30,7 +29,7 @@ Torrent 提速 - 为什么总是无济于事

|

||||

|

||||

即使你把目标降到 30 Megabytes,然而你连接到路由器的电缆/网线的性能最多只有 100 megabits 也就是 10 MegaBytes。这是一个纯粹的瓶颈问题,由一个薄弱的环节影响到了其他强健部分,也就是说这个传输速率只能达到 10 Megabytes,即电缆的极限速度。现在想象有一个 torrent 即使能够用最大速度进行下载,那也会由于你的硬件不够强大而导致瓶颈。

|

||||

|

||||

### 外部因素

|

||||

#### 外部因素

|

||||

|

||||

现在再来看一下这幅图。用户 C 在很遥远的某个地方。甚至可能在另一个国家。

|

||||

|

||||

@ -40,24 +39,23 @@ Torrent 提速 - 为什么总是无济于事

|

||||

|

||||

第二,由于 C 与本地之间多个有连接点,其中一个点就有可能成为瓶颈所在,可能由于繁重的流量和相对薄弱的硬件导致了缓慢的速度。

|

||||

|

||||

### Seeders( 译者注:做种者) 与 Leechers( 译者注:只下载不做种的人)

|

||||

#### 做种者与吸血者

|

||||

|

||||

关于此已经有了太多的讨论,总的想法就是搜索更多的种子,但要注意上面的那些因素,一个很好的种子提供者但是跟我之间的连接不好的话那也是无济于事的。通常,这不可能发生,因为我们也不是唯一下载这个资源的人,一般都会有一些在本地的人已经下载好了这个文件并已经在做种了。

|

||||

关于此已经有了太多的讨论,总的想法就是搜索更多的种子,但要注意上面的那些因素,有一个很好的种子提供者,但是跟我之间的连接不好的话那也是无济于事的。通常,这不可能发生,因为我们也不是唯一下载这个资源的人,一般都会有一些在本地的人已经下载好了这个文件并已经在做种了。

|

||||

|

||||

## 结论

|

||||

### 结论

|

||||

|

||||

我们尝试搞清楚哪些因素影响了 torrent 速度的好坏。不管我们如何用软件进行优化,大多数时候是这是由于物理瓶颈导致的。我从来不关心那些软件,使用默认配置对我来说就够了。

|

||||

|

||||

希望你会喜欢这篇文章,有什么想法敬请留言。

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.theitstuff.com/increase-torrent-speed-will-never-work

|

||||

|

||||

作者:[Rishabh Kandari][a]

|

||||

译者:[lujun9972](https://github.com/lujun9972)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,73 @@

|

||||

会话与 Cookie:用户登录的原理是什么?

|

||||

======

|

||||

|

||||

Facebook、 Gmail、 Twitter 是我们每天都会用的网站(LCTT 译注:才不是呢)。它们的共同点在于都需要你登录进去后才能做进一步的操作。只有你通过认证并登录后才能在 twitter 发推,在 Facebook 上评论,以及在 Gmail上处理电子邮件。

|

||||

|

||||

[][1]

|

||||

|

||||

那么登录的原理是什么?网站是如何认证的?它怎么知道是哪个用户从哪儿登录进来的?下面我们来对这些问题进行一一解答。

|

||||

|

||||

### 用户登录的原理是什么?

|

||||

|

||||

每次你在网站的登录页面中输入用户名和密码时,这些信息都会发送到服务器。服务器随后会将你的密码与服务器中的密码进行验证。如果两者不匹配,则你会得到一个错误密码的提示。如果两者匹配,则成功登录。

|

||||

|

||||

### 登录时发生了什么?

|

||||

|

||||

登录后,web 服务器会初始化一个<ruby>会话<rt>session</rt></ruby>并在你的浏览器中设置一个 cookie 变量。该 cookie 变量用于作为新建会话的一个引用。搞晕了?让我们说的再简单一点。

|

||||

|

||||

### 会话的原理是什么?

|

||||

|

||||

服务器在用户名和密码都正确的情况下会初始化一个会话。会话的定义很复杂,你可以把它理解为“关系的开始”。

|

||||

|

||||

[][2]

|

||||

|

||||

认证通过后,服务器就开始跟你展开一段关系了。由于服务器不能象我们人类一样看东西,它会在我们的浏览器中设置一个 cookie 来将我们的关系从其他人与服务器的关系标识出来。

|

||||

|

||||

### 什么是 Cookie?

|

||||

|

||||

cookie 是网站在你的浏览器中存储的一小段数据。你应该已经见过他们了。

|

||||

|

||||

[][3]

|

||||

|

||||

当你登录后,服务器为你创建一段关系或者说一个会话,然后将唯一标识这个会话的会话 id 以 cookie 的形式存储在你的浏览器中。

|

||||

|

||||

### 什么意思?

|

||||

|

||||

所有这些东西存在的原因在于识别出你来,这样当你写评论或者发推时,服务器能知道是谁在发评论,是谁在发推。

|

||||

|

||||

当你登录后,会产生一个包含会话 id 的 cookie。这样,这个会话 id 就被赋予了那个输入正确用户名和密码的人了。

|

||||

|

||||

[][4]

|

||||

|

||||

也就是说,会话 id 被赋予给了拥有这个账户的人了。之后,所有在网站上产生的行为,服务器都能通过他们的会话 id 来判断是由谁发起的。

|

||||

|

||||

### 如何让我保持登录状态?

|

||||

|

||||

会话有一定的时间限制。这一点与现实生活中不一样,现实生活中的关系可以在不见面的情况下持续很长一段时间,而会话具有时间限制。你必须要不断地通过一些动作来告诉服务器你还在线。否则的话,服务器会关掉这个会话,而你会被登出。

|

||||

|

||||

[][5]

|

||||

|

||||

不过在某些网站上可以启用“保持登录”功能,这样服务器会将另一个唯一变量以 cookie 的形式保存到我们的浏览器中。这个唯一变量会通过与服务器上的变量进行对比来实现自动登录。若有人盗取了这个唯一标识(我们称之为 cookie stealing),他们就能访问你的账户了。

|

||||

|

||||

### 结论

|

||||

|

||||

我们讨论了登录系统的工作原理以及网站是如何进行认证的。我们还学到了什么是会话和 cookies,以及它们在登录机制中的作用。

|

||||

|

||||

我们希望你们以及理解了用户登录的工作原理,如有疑问,欢迎提问。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.theitstuff.com/sessions-cookies-user-login-work

|

||||

|

||||

作者:[Rishabh Kandari][a]

|

||||

译者:[lujun9972](https://github.com/lujun9972)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.theitstuff.com/author/reevkandari

|

||||

[1]:http://www.theitstuff.com/wp-content/uploads/2017/10/Untitled-design-1.jpg

|

||||

[2]:http://www.theitstuff.com/wp-content/uploads/2017/10/pasted-image-0-9.png

|

||||

[3]:http://www.theitstuff.com/wp-content/uploads/2017/10/pasted-image-0-1-4.png

|

||||

[4]:http://www.theitstuff.com/wp-content/uploads/2017/10/pasted-image-0-2-3-e1508926255472.png

|

||||

[5]:http://www.theitstuff.com/wp-content/uploads/2017/10/pasted-image-0-3-3-e1508926314117.png

|

||||

50

published/20171211 A tour of containerd 1.0.md

Normal file

50

published/20171211 A tour of containerd 1.0.md

Normal file

@ -0,0 +1,50 @@

|

||||

containerd 1.0 探索之旅

|

||||

======

|

||||

|

||||

我们在过去的文章中讨论了一些 containerd 的不同特性,它是如何设计的,以及随着时间推移已经修复的一些问题。containerd 被用于 Docker、Kubernetes CRI、以及一些其它的项目,在这些平台中事实上都使用了 containerd,而许多人并不知道 containerd 存在于这些平台之中,这篇文章就是为这些人所写的。我将来会写更多的关于 containerd 的设计以及特性集方面的文章,但是现在,让我们从它的基础知识开始。

|

||||

|

||||

![containerd][1]

|

||||

|

||||

我认为容器生态系统有时候可能很复杂。尤其是我们所使用的术语。它是什么?一个运行时,还是别的?一个运行时 … containerd(它的发音是 “container-dee”)正如它的名字,它是一个容器守护进程,而不是一些人忽悠我的“<ruby>收集<rt>contain</rt></ruby><ruby>迷<rt>nerd</rt></ruby>”。它最初是作为 OCI 运行时(就像 runc 一样)的集成点而构建的,在过去的六个月中它增加了许多特性,使其达到了像 Docker 这样的现代容器平台以及像 Kubernetes 这样的编排平台的需求。

|

||||

|

||||

那么,你使用 containerd 能去做些什么呢?你可以拥有推送或拉取功能以及镜像管理。可以拥有容器生命周期 API 去创建、运行、以及管理容器和它们的任务。一个完整的专门用于快照管理的 API,以及一个其所依赖的开放治理的项目。如果你需要去构建一个容器平台,基本上你不需要去处理任何底层操作系统细节方面的事情。我认为关于 containerd 中最重要的部分是,它有一个版本化的并且有 bug 修复和安全补丁的稳定 API。

|

||||

|

||||

![containerd][2]

|

||||

|

||||

由于在内核中没有一个 Linux 容器这样的东西,因此容器是多种内核特性捆绑在一起而成的,当你构建一个大型平台或者分布式系统时,你需要在你的管理代码和系统调用之间构建一个抽象层,然后将这些特性捆绑粘接在一起去运行一个容器。而这个抽象层就是 containerd 的所在之处。它为稳定类型的平台层提供了一个客户端,这样平台可以构建在顶部而无需进入到内核级。因此,可以让使用容器、任务、和快照类型的工作相比通过管理调用去 clone() 或者 mount() 要友好的多。与灵活性相平衡,直接与运行时或者宿主机交互,这些对象避免了常规的高级抽象所带来的性能牺牲。结果是简单的任务很容易完成,而困难的任务也变得更有可能完成。

|

||||

|

||||

![containerd][3]

|

||||

|

||||

containerd 被设计用于 Docker 和 Kubernetes、以及想去抽象出系统调用或者在 Linux、Windows、Solaris 以及其它的操作系统上特定的功能去运行容器的其它容器系统。考虑到这些用户的想法,我们希望确保 containerd 只拥有它们所需要的东西,而没有它们不希望的东西。事实上这是不太可能的,但是至少我们想去尝试一下。虽然网络不在 containerd 的范围之内,它并不能做成让高级系统可以完全控制的东西。原因是,当你构建一个分布式系统时,网络是非常中心的地方。现在,对于 SDN 和服务发现,相比于在 Linux 上抽象出 netlink 调用,网络是更特殊的平台。大多数新的网络都是基于路由的,并且每次一个新的容器被创建或者删除时,都会请求更新路由表。服务发现、DNS 等等都需要及时被通知到这些改变。如果在 containerd 中添加对网络的管理,为了能够支持不同的网络接口、钩子、以及集成点,将会在 containerd 中增加很大的一块代码。而我们的选择是,在 containerd 中做一个健壮的事件系统,以便于多个消费者可以去订阅它们所关心的事件。我们也公开发布了一个 [任务 API][4],它可以让用户去创建一个运行任务,也可以在一个容器的网络命名空间中添加一个接口,以及在一个容器的生命周期中的任何时候,无需复杂的钩子来启用容器的进程。

|

||||

|

||||

在过去的几个月中另一个添加到 containerd 中的领域是完整的存储,以及支持 OCI 和 Docker 镜像格式的分布式系统。有了一个跨 containerd API 的完整的目录地址存储系统,它不仅适用于镜像,也适用于元数据、检查点、以及附加到容器的任何数据。

|

||||

|

||||

我们也花时间去 [重新考虑如何使用 “图驱动” 工作][5]。这些是叠加的或者允许镜像分层的块级文件系统,可以使你执行的构建更加高效。当我们添加对 devicemapper 的支持时,<ruby>图驱动<rt>graphdrivers</rt></ruby>最初是由 Solomon 和我写的。Docker 在那个时候仅支持 AUFS,因此我们在叠加文件系统之后,对图驱动进行了建模。但是,做一个像 devicemapper/lvm 这样的块级文件系统,就如同一个堆叠文件系统一样,从长远来看是非常困难的。这些接口必须基于时间的推移进行扩展,以支持我们最初认为并不需要的那些不同的特性。对于 containerd,我们使用了一个不同的方法,像快照一样做一个堆叠文件系统而不是相反。这样做起来更容易,因为堆叠文件系统比起像 BTRFS、ZFS 以及 devicemapper 这样的快照文件系统提供了更好的灵活性。因为这些文件系统没有严格的父/子关系。这有助于我们去构建出 [快照的一个小型接口][6],同时还能满足 [构建者][7] 的要求,还能减少了需要的代码数量,从长远来看这样更易于维护。

|

||||

|

||||

![][8]

|

||||

|

||||

你可以在 [Stephen Day 2017/12/7 在 KubeCon SIG Node 上的演讲][9]找到更多关于 containerd 的架构方面的详细资料。

|

||||

|

||||

除了在 1.0 代码库中的技术和设计上的更改之外,我们也将 [containerd 管理模式从长期 BDFL 模式转换为技术委员会][10],为社区提供一个独立的可信任的第三方资源。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://blog.docker.com/2017/12/containerd-ga-features-2/

|

||||

|

||||

作者:[Michael Crosby][a]

|

||||

译者:[qhwdw](https://github.com/qhwdw)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://blog.docker.com/author/michael/

|

||||

[1]:https://i0.wp.com/blog.docker.com/wp-content/uploads/950cf948-7c08-4df6-afd9-cc9bc417cabe-6.jpg?resize=400%2C120&amp;ssl=1

|

||||

[2]:https://i1.wp.com/blog.docker.com/wp-content/uploads/4a7666e4-ebdb-4a40-b61a-26ac7c3f663e-4.jpg?resize=906%2C470&amp;ssl=1 "containerd"

|

||||

[3]:https://i1.wp.com/blog.docker.com/wp-content/uploads/2a73a4d8-cd40-4187-851f-6104ae3c12ba-1.jpg?resize=1140%2C680&amp;ssl=1

|

||||

[4]:https://github.com/containerd/containerd/blob/master/api/services/tasks/v1/tasks.proto

|

||||

[5]:https://blog.mobyproject.org/where-are-containerds-graph-drivers-145fc9b7255

|

||||

[6]:https://github.com/containerd/containerd/blob/master/api/services/snapshots/v1/snapshots.proto

|

||||

[7]:https://blog.mobyproject.org/introducing-buildkit-17e056cc5317

|

||||

[8]:https://i1.wp.com/blog.docker.com/wp-content/uploads/d0fb5eb9-c561-415d-8d57-e74442a879a2-1.jpg?resize=1140%2C556&amp;ssl=1

|

||||

[9]:https://speakerdeck.com/stevvooe/whats-happening-with-containerd-and-the-cri

|

||||

[10]:https://github.com/containerd/containerd/pull/1748

|

||||

@ -0,0 +1,125 @@

|

||||

如何在 Ubuntu 16.04 上安装和使用 Encryptpad

|

||||

==============

|

||||

|

||||

EncryptPad 是一个自由开源软件,它通过简单方便的图形界面和命令行接口来查看和修改加密的文本,它使用 OpenPGP RFC 4880 文件格式。通过 EncryptPad,你可以很容易的加密或者解密文件。你能够像保存密码、信用卡信息等私人信息,并使用密码或者密钥文件来访问。

|

||||

|

||||

### 特性

|

||||

|

||||

- 支持 windows、Linux 和 Max OS。

|

||||

- 可定制的密码生成器,可生成健壮的密码。

|

||||

- 随机的密钥文件和密码生成器。

|

||||

- 支持 GPG 和 EPD 文件格式。

|

||||

- 能够通过 CURL 自动从远程远程仓库下载密钥。

|

||||

- 密钥文件的路径能够存储在加密的文件中。如果这样做的话,你不需要每次打开文件都指定密钥文件。

|

||||

- 提供只读模式来防止文件被修改。

|

||||

- 可加密二进制文件,例如图片、视频、归档等。

|

||||

|

||||

|

||||

在这份教程中,我们将学习如何在 Ubuntu 16.04 中安装和使用 EncryptPad。

|

||||

|

||||

### 环境要求

|

||||

|

||||

- 在系统上安装了 Ubuntu 16.04 桌面版本。

|

||||

- 在系统上有 `sudo` 的权限的普通用户。

|

||||

|

||||

### 安装 EncryptPad

|

||||

|

||||

在默认情况下,EncryPad 在 Ubuntu 16.04 的默认仓库是不存在的。你需要安装一个额外的仓库。你能够通过下面的命令来添加它 :

|

||||

|

||||

```

|

||||

sudo apt-add-repository ppa:nilaimogard/webupd8

|

||||

```

|

||||

|

||||

下一步,用下面的命令来更新仓库:

|

||||

|

||||

```

|

||||

sudo apt-get update -y

|

||||

```

|

||||

|

||||

最后一步,通过下面命令安装 EncryptPad:

|

||||

|

||||

```

|

||||

sudo apt-get install encryptpad encryptcli -y

|

||||

```

|

||||

|

||||

当 EncryptPad 安装完成后,你可以在 Ubuntu 的 Dash 上找到它。

|

||||

|

||||

### 使用 EncryptPad 生成密钥和密码

|

||||

|

||||

现在,在 Ubunntu Dash 上输入 `encryptpad`,你能够在你的屏幕上看到下面的图片 :

|

||||

|

||||

[![Ubuntu DeskTop][1]][2]

|

||||

|

||||

下一步,点击 EncryptPad 的图标。你能够看到 EncryptPad 的界面,它是一个简单的文本编辑器,带有顶部菜单栏。

|

||||

|

||||

[![EncryptPad screen][3]][4]

|

||||

|

||||

首先,你需要生成一个密钥文件和密码用于加密/解密任务。点击顶部菜单栏中的 “Encryption->Generate Key”,你会看见下面的界面:

|

||||

|

||||

[![Generate key][5]][6]

|

||||

|

||||

选择文件保存的路径,点击 “OK” 按钮,你将看到下面的界面:

|

||||

|

||||

[![select path][7]][8]

|

||||

|

||||

输入密钥文件的密码,点击 “OK” 按钮 ,你将看到下面的界面:

|

||||

|

||||

[![last step][9]][10]

|

||||

|

||||

点击 “yes” 按钮来完成该过程。

|

||||

|

||||

### 加密和解密文件

|

||||

|

||||

现在,密钥文件和密码都已经生成了。可以执行加密和解密操作了。在这个文件编辑器中打开一个文件文件,点击 “encryption” 图标 ,你会看见下面的界面:

|

||||

|

||||

[![Encry operation][11]][12]

|

||||

|

||||

提供需要加密的文件和指定输出的文件,提供密码和前面产生的密钥文件。点击 “Start” 按钮来开始加密的进程。当文件被成功的加密,会出现下面的界面:

|

||||

|

||||

[![Success Encrypt][13]][14]

|

||||

|

||||

文件已经被该密码和密钥文件加密了。

|

||||

|

||||

如果你想解密被加密后的文件,打开 EncryptPad ,点击 “File Encryption” ,选择 “Decryption” 操作,提供加密文件的位置和你要保存输出的解密文件的位置,然后提供密钥文件地址,点击 “Start” 按钮,它将要求你输入密码,输入你先前加密使用的密码,点击 “OK” 按钮开始解密过程。当该过程成功完成,你会看到 “File has been decrypted successfully” 的消息 。

|

||||

|

||||

|

||||

[![decrypt ][16]][17]

|

||||

[![][18]][18]

|

||||

[![][13]]

|

||||

|

||||

|

||||

**注意:**

|

||||

|

||||

如果你遗忘了你的密码或者丢失了密钥文件,就没有其他的方法可以打开你的加密信息了。对于 EncrypePad 所支持的格式是没有后门的。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

via: https://www.howtoforge.com/tutorial/how-to-install-and-use-encryptpad-on-ubuntu-1604/

|

||||

|

||||

作者:[Hitesh Jethva][a]

|

||||

译者:[singledo](https://github.com/singledo)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

|

||||

[a]:https://www.howtoforge.com

|

||||

[1]:https://www.howtoforge.com/images/how_to_install_and_use_encryptpad_on_ubuntu_1604/Screenshot-of-encryptpad-dash.png

|

||||

[2]:https://www.howtoforge.com/images/how_to_install_and_use_encryptpad_on_ubuntu_1604/big/Screenshot-of-encryptpad-dash.png

|

||||

[3]:https://www.howtoforge.com/images/how_to_install_and_use_encryptpad_on_ubuntu_1604/Screenshot-of-encryptpad-dashboard.png

|

||||

[4]:https://www.howtoforge.com/images/how_to_install_and_use_encryptpad_on_ubuntu_1604/big/Screenshot-of-encryptpad-dashboard.png

|

||||

[5]:https://www.howtoforge.com/images/how_to_install_and_use_encryptpad_on_ubuntu_1604/Screenshot-of-encryptpad-generate-key.png

|

||||

[6]:https://www.howtoforge.com/images/how_to_install_and_use_encryptpad_on_ubuntu_1604/big/Screenshot-of-encryptpad-generate-key.png

|

||||

[7]:https://www.howtoforge.com/images/how_to_install_and_use_encryptpad_on_ubuntu_1604/Screenshot-of-encryptpad-generate-passphrase.png

|

||||

[8]:https://www.howtoforge.com/images/how_to_install_and_use_encryptpad_on_ubuntu_1604/big/Screenshot-of-encryptpad-generate-passphrase.png

|

||||

[9]:https://www.howtoforge.com/images/how_to_install_and_use_encryptpad_on_ubuntu_1604/Screenshot-of-encryptpad-use-key-file.png

|

||||

[10]:https://www.howtoforge.com/images/how_to_install_and_use_encryptpad_on_ubuntu_1604/big/Screenshot-of-encryptpad-use-key-file.png

|

||||

[11]:https://www.howtoforge.com/images/how_to_install_and_use_encryptpad_on_ubuntu_1604/Screenshot-of-encryptpad-start-encryption.png

|

||||

[12]:https://www.howtoforge.com/images/how_to_install_and_use_encryptpad_on_ubuntu_1604/big/Screenshot-of-encryptpad-start-encryption.png

|

||||

[13]:https://www.howtoforge.com/images/how_to_install_and_use_encryptpad_on_ubuntu_1604/Screenshot-of-encryptpad-file-encrypted-successfully.png

|

||||

[14]:https://www.howtoforge.com/images/how_to_install_and_use_encryptpad_on_ubuntu_1604/big/Screenshot-of-encryptpad-file-encrypted-successfully.png

|

||||

[15]:https://www.howtoforge.com/images/how_to_install_and_use_encryptpad_on_ubuntu_1604/Screenshot-of-encryptpad-decryption-page.png

|

||||

[16]:https://www.howtoforge.com/images/how_to_install_and_use_encryptpad_on_ubuntu_1604/big/Screenshot-of-encryptpad-decryption-page.png

|

||||

[17]:https://www.howtoforge.com/images/how_to_install_and_use_encryptpad_on_ubuntu_1604/Screenshot-of-encryptpad-decryption-passphrase.png

|

||||

[18]:https://www.howtoforge.com/images/how_to_install_and_use_encryptpad_on_ubuntu_1604/big/Screenshot-of-encryptpad-decryption-passphrase.png

|

||||

[19]:https://www.howtoforge.com/images/how_to_install_and_use_encryptpad_on_ubuntu_1604/Screenshot-of-encryptpad-decryption-successfully.png

|

||||

[20]:https://www.howtoforge.com/images/how_to_install_and_use_encryptpad_on_ubuntu_1604/big/Screenshot-of-encryptpad-decryption-successfully.png

|

||||

@ -1,11 +1,11 @@

|

||||

Docker 化编译的软件 ┈ Tianon's Ramblings ✿

|

||||

如何 Docker 化编译的软件

|

||||

======

|

||||

我最近在 [docker-library/php][1] 仓库中关闭了大量问题,最老的(并且是最长的)讨论之一是关于安装编译扩展的依赖关系,我写了一个[中篇评论][2]解释了我如何用通常的方式为我想要的软件 Docker 化的。

|

||||

|

||||

I'm going to copy most of that comment here and perhaps expand a little bit more in order to have a better/cleaner place to link to!

|

||||

我要在这复制大部分的评论,或许扩展一点点,以便有一个更好的/更干净的链接!

|

||||

我最近在 [docker-library/php][1] 仓库中关闭了大量问题,最老的(并且是最长的)讨论之一是关于安装编译扩展的依赖关系,我写了一个[中等篇幅的评论][2]解释了我如何用常规的方式为我想要的软件进行 Docker 化的。

|

||||

|

||||

我第一步是编写 `Dockerfile` 的原始版本:下载源码,运行 `./configure && make` 等,清理。然后我尝试构建我的原始版本,并希望在这过程中看到错误消息。(对真的!)

|

||||

我要在这里复制大部分的评论内容,或许扩展一点点,以便有一个更好的/更干净的链接!

|

||||

|

||||

我第一步是编写 `Dockerfile` 的原始版本:下载源码,运行 `./configure && make` 等,清理。然后我尝试构建我的原始版本,并希望在这过程中看到错误消息。(对,真的!)

|

||||

|

||||

错误信息通常以 `error: could not find "xyz.h"` 或 `error: libxyz development headers not found` 的形式出现。

|

||||

|

||||

@ -13,9 +13,9 @@ I'm going to copy most of that comment here and perhaps expand a little bit more

|

||||

|

||||

如果我在 Alpine 中构建,我将使用 <https://pkgs.alpinelinux.org/contents> 进行类似的搜索。

|

||||

|

||||

“libxyz development headers” 在某种程度上也是一样的,但是根据我的经验,对于这些 Google 对开发者来说效果更好,因为不同的发行版和项目会以不同的名字来调用这些开发包,所以有时候更难确切的知道哪一个是“正确”的。

|

||||

“libxyz development headers” 在某种程度上也是一样的,但是根据我的经验,对于这些用 Google 对开发者来说效果更好,因为不同的发行版和项目会以不同的名字来调用这些开发包,所以有时候更难确切的知道哪一个是“正确”的。

|

||||

|

||||

当我得到包名后,我将这个包名称添加到我的 `Dockerfile` 中,清理之后,然后重复操作。最终通常会构建成功。偶尔我发现某些库不在 Debian 或 Alpine 中,或者是不够新的,由此我必须从源码构建它,但这些情况在我的经验中很少见 - 因人而异。

|

||||

当我得到包名后,我将这个包名称添加到我的 `Dockerfile` 中,清理之后,然后重复操作。最终通常会构建成功。偶尔我发现某些库不在 Debian 或 Alpine 中,或者是不够新的,由此我必须从源码构建它,但这些情况在我的经验中很少见 —— 因人而异。

|

||||

|

||||

我还会经常查看 Debian(通过 <https://sources.debian.org>)或 Alpine(通过 <https://git.alpinelinux.org/cgit/aports/tree>)我要编译的软件包源码,特别关注 `Build-Depends`(如 [`php7.0=7.0.26-1` 的 `debian/control` 文件][3])以及/或者 `makedepends` (如 [`php7` 的 `APKBUILD` 文件][4])用于包名线索。

|

||||

|

||||

@ -31,7 +31,7 @@ via: https://tianon.github.io/post/2017/12/26/dockerize-compiled-software.html

|

||||

|

||||

作者:[Tianon Gravi][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,108 @@

|

||||

用一些超酷的功能使 Vim 变得更强大

|

||||

======

|

||||

|

||||

|

||||

|

||||

Vim 是每个 Linux 发行版]中不可或缺的一部分,也是 Linux 用户最常用的工具(当然是基于终端的)。至少,这个说法对我来说是成立的。人们可能会在利用什么工具进行程序设计更好方面产生争议,的确 Vim 可能不是一个好的选择,因为有很多不同的 IDE 或其它类似于 Sublime Text 3,Atom 等使程序设计变得更加容易的成熟的文本编辑器。

|

||||

|

||||

### 我的感想

|

||||

|

||||

但我认为,Vim 应该从一开始就以我们想要的方式运作,而其它编辑器让我们按照已经设计好的方式工作,实际上不是我们想要的工作方式。我不会过多地谈论其它编辑器,因为我没有过多地使用过它们(我对 Vim 情有独钟)。

|

||||

|

||||

不管怎样,让我们用 Vim 来做一些事情吧,它完全可以胜任。

|

||||

|

||||

### 利用 Vim 进行程序设计

|

||||

|

||||

#### 执行代码

|

||||

|

||||

|

||||

考虑一个场景,当我们使用 Vim 设计 C++ 代码并需要编译和运行它时,该怎么做呢。

|

||||

|

||||

(a). 我们通过 `Ctrl + Z` 返回到终端,或者利用 `:wq` 保存并退出。

|

||||

|

||||

(b). 但是任务还没有结束,接下来需要在终端上输入类似于 `g++ fileName.cxx` 的命令进行编译。

|

||||

|

||||

(c). 接下来需要键入 `./a.out` 执行它。

|

||||

|

||||

为了让我们的 C++ 代码在 shell 中运行,需要做很多事情。但这似乎并不是利用 Vim 操作的方法( Vim 总是倾向于把几乎所有操作方法利用一两个按键实现)。那么,做这些事情的 Vim 的方式究竟是什么?

|

||||

|

||||

#### Vim 方式

|

||||

|

||||

Vim 不仅仅是一个文本编辑器,它是一种编辑文本的编程语言。这种帮助我们扩展 Vim 功能的编程语言是 “VimScript”(LCTT 译注: Vim 脚本)。

|

||||

|

||||

因此,在 VimScript 的帮助下,我们可以只需一个按键轻松地将编译和运行代码的任务自动化。

|

||||

|

||||

[][2]

|

||||

|

||||

以上是在我的 `.vimrc` 配置文件里创建的一个名为 `CPP()` 函数的片段。

|

||||

|

||||

#### 利用 VimScript 创建函数

|

||||

|

||||

在 VimScript 中创建函数的语法非常简单。它以关键字 `func` 开头,然后是函数名(在 VimScript 中函数名必须以大写字母开头,否则 Vim 将提示错误)。在函数的结尾用关键词 `endfunc`。

|

||||

|

||||

在函数的主体中,可以看到 `exec` 语句,无论您在 `exec` 关键字之后写什么,都会在 Vim 的命令模式上执行(记住,就是在 Vim 窗口的底部以 `:` 开始的命令)。现在,传递给 `exec` 的字符串是(LCTT 译注:`:!clear && g++ % && ./a.out`) -

|

||||

|

||||

[][3]

|

||||

|

||||

|

||||

当这个函数被调用时,它首先清除终端屏幕,因此只能看到输出,接着利用 `g++` 执行正在处理的文件,然后运行由前一步编译而形成的 `a.out` 文件。

|

||||

|

||||

#### 将 `Ctrl+r` 映射为运行 C++ 代码。

|

||||

|

||||

我将语句 `call CPP()` 映射到键组合 `Ctrl+r`,以便我现在可以按 `Ctrl+r` 来执行我的 C++ 代码,无需手动输入`:call CPP()` ,然后按回车键。

|

||||

|

||||

#### 最终结果

|

||||

|

||||

我们终于找到了 Vim 方式的操作方法。现在,你只需按一个(组合)键,你编写的 C++ 代码就输出在你的屏幕上,你不需要键入所有冗长的命令了。这也节省了你的时间。

|

||||

|

||||

我们也可以为其他语言实现这类功能。

|

||||

|

||||

[][4]

|

||||

|

||||

对于Python:您可以按下 `Ctrl+e` 解释执行您的代码。

|

||||

|

||||

[][5]

|

||||

|

||||

|

||||

对于Java:您现在可以按下 `Ctrl+j`,它将首先编译您的 Java 代码,然后执行您的 Java 类文件并显示输出。

|

||||

|

||||

### 进一步提高

|

||||

|

||||

所以,这就是如何在 Vim 中操作的方法。现在,我们来看看如何在 Vim 中实现所有这些。我们可以直接在 Vim 中使用这些代码片段,而另一种方法是使用 Vim 中的自动命令 `autocmd`。`autocmd` 的优点是这些命令无需用户调用,它们在用户所提供的任何特定条件下自动执行。

|

||||

|

||||

我想用 `autocmd` 实现这个,而不是对每种语言使用不同的映射,执行不同程序设计语言编译出的代码。

|

||||

|

||||

[][6]

|

||||

|

||||

在这里做的是,为所有的定义了执行相应文件类型代码的函数编写了自动命令。

|

||||

|

||||

会发生什么?当我打开任何上述提到的文件类型的缓冲区, Vim 会自动将 `Ctrl + r` 映射到函数调用,而 `<CR>` 表示回车键,这样就不需要每完成一个独立的任务就按一次回车键了。

|

||||

|

||||

为了实现这个功能,您只需将函数片段添加到 `.vimrc` 文件中,然后将所有这些 `autocmd` 也一并添加进去。这样,当您下一次打开 Vim 时,Vim 将拥有所有相应的功能来执行所有具有相同绑定键的代码。

|

||||

|

||||

### 总结

|

||||

|

||||

就这些了。希望这些能让你更爱 Vim 。我目前正在探究 Vim 中的一些内容,正阅读文档,补充 `.vimrc` 文件,当我研究出一些成果后我会再次与你分享。

|

||||

|

||||

如果你想看一下我现在的 `.vimrc` 文件,这是我的 Github 账户的链接: [MyVimrc][7]。

|

||||

|

||||

期待你的好评。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.linuxandubuntu.com/home/making-vim-even-more-awesome-with-these-cool-features

|

||||

|

||||

作者:[LINUXANDUBUNTU][a]

|

||||

译者:[stevenzdg988](https://github.com/stevenzdg988)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.linuxandubuntu.com

|

||||

[1]:http://www.linuxandubuntu.com/home/category/distros

|

||||

[2]:http://www.linuxandubuntu.com/uploads/2/1/1/5/21152474/vim_orig.png

|

||||

[3]:http://www.linuxandubuntu.com/uploads/2/1/1/5/21152474/vim_1_orig.png

|

||||

[4]:http://www.linuxandubuntu.com/uploads/2/1/1/5/21152474/vim_2_orig.png

|

||||

[5]:http://www.linuxandubuntu.com/uploads/2/1/1/5/21152474/vim_3_orig.png

|

||||

[6]:http://www.linuxandubuntu.com/uploads/2/1/1/5/21152474/vim_4_orig.png

|

||||

[7]:https://github.com/phenomenal-ab/VIm-Configurations/blob/master/.vimrc

|

||||

@ -1,40 +1,41 @@

|

||||

Telnet,爱一直在

|

||||

======

|

||||

Telnet, 是系统管理员登录远程服务器的协议和工具。然而,由于所有的通信都没有加密,包括密码,都是明文发送的。Telnet 在 SSH 被开发出来之后就基本弃用了。

|

||||

|

||||

Telnet,是系统管理员登录远程服务器的一种协议和工具。然而,由于所有的通信都没有加密,包括密码,都是明文发送的。Telnet 在 SSH 被开发出来之后就基本弃用了。

|

||||

|

||||

登录远程服务器,你可能不会也从未考虑过它。但这并不意味着 `telnet` 命令在调试远程连接问题时不是一个实用的工具。

|

||||

|

||||

本教程中,我们将探索使用 `telnet` 解决所有常见问题,“我怎么又连不上啦?”

|

||||

本教程中,我们将探索使用 `telnet` 解决所有常见问题:“我怎么又连不上啦?”

|

||||

|

||||

这种讨厌的问题通常会在安装了像web服务器、邮件服务器、ssh服务器、Samba服务器等诸如此类的事之后遇到,用户无法连接服务器。

|

||||

这种讨厌的问题通常会在安装了像 Web服务器、邮件服务器、ssh 服务器、Samba 服务器等诸如此类的事之后遇到,用户无法连接服务器。

|

||||

|

||||

`telnet` 不会解决问题但可以很快缩小问题的范围。

|

||||

|

||||

`telnet` 用来调试网络问题的简单命令和语法:

|

||||

|

||||

```

|

||||

telnet <hostname or IP> <port>

|

||||

|

||||

```

|

||||

|

||||

因为 `telnet` 最初通过端口建立连接不会发送任何数据,适用于任何协议包括加密协议。

|

||||

因为 `telnet` 最初通过端口建立连接不会发送任何数据,适用于任何协议,包括加密协议。

|

||||

|

||||

连接问题服务器有四个可能会遇到的主要问题。我们会研究这四个问题,研究他们意味着什么以及如何解决。

|

||||

连接问题服务器有四个可能会遇到的主要问题。我们会研究这四个问题,研究它们意味着什么以及如何解决。

|

||||

|

||||

本教程默认已经在 `samba.example.com` 安装了 [Samba][1] 服务器而且本地客户无法连上服务器。

|

||||

|

||||

### Error 1 - 连接挂起

|

||||

|

||||

首先,我们需要试着用 `telnet` 连接 Samba 服务器。使用下列命令 (Samba 监听端口445):

|

||||

|

||||

```

|

||||

telnet samba.example.com 445

|

||||

|

||||

```

|

||||

|

||||

有时连接会莫名停止:

|

||||

|

||||

```

|

||||

telnet samba.example.com 445

|

||||

Trying 172.31.25.31...

|

||||

|

||||

```

|

||||

|

||||

这意味着 `telnet` 没有收到任何回应来建立连接。有两个可能的原因:

|

||||

@ -43,10 +44,10 @@ Trying 172.31.25.31...

|

||||

2. 防火墙拦截了你的请求。

|

||||

|

||||

|

||||

为了排除第 1 点,对服务器上进行一个快速 [`mtr samba.example.com`][2] 。如果服务器是可达的,那么便是防火墙(注意:防火墙总是存在的)。

|

||||

|

||||

为了排除 **1.** 在服务器上运行一个快速 [`mtr samba.example.com`][2] 。如果服务器是可达的那么便是防火墙(注意:防火墙总是存在的)。

|

||||

首先用 `iptables -L -v -n` 命令检查服务器本身有没有防火墙,没有的话你能看到以下内容:

|

||||

|

||||

首先用 `iptables -L -v -n` 命令检查服务器本身有没有防火墙, 没有的话你能看到以下内容:

|

||||

```

|

||||

iptables -L -v -n

|

||||

Chain INPUT (policy ACCEPT 0 packets, 0 bytes)

|

||||

@ -57,41 +58,38 @@ Chain FORWARD (policy ACCEPT 0 packets, 0 bytes)

|

||||

|

||||

Chain OUTPUT (policy ACCEPT 0 packets, 0 bytes)

|

||||

pkts bytes target prot opt in out source destination

|

||||

|

||||

```

|

||||

|

||||

如果你看到其他东西那可能就是问题所在了。为了检验,停止 `iptables` 一下并再次运行 `telnet samba.example.com 445` 看看你是否能连接。如果你还是不能连接看看你的提供商或企业有没有防火墙拦截你。

|

||||

|

||||

### Error 2 - DNS 问题

|

||||

|

||||

DNS问题通常发生在你正使用的主机名没有解析到 IP 地址。错误如下:

|

||||

DNS 问题通常发生在你正使用的主机名没有解析到 IP 地址。错误如下:

|

||||

|

||||

```

|

||||

telnet samba.example.com 445

|

||||

Server lookup failure: samba.example.com:445, Name or service not known

|

||||

|

||||

```

|

||||

|

||||

第一步是把主机名替换成服务器的IP地址。如果你可以连上那么就是主机名的问题。

|

||||

第一步是把主机名替换成服务器的 IP 地址。如果你可以连上那么就是主机名的问题。

|

||||

|

||||

有很多发生的原因(以下是我见过的):

|

||||

|

||||

1. 域注册了吗?用 `whois` 来检验。

|

||||

2. 域过期了吗?用 `whois` 来检验。

|

||||

1. 域名注册了吗?用 `whois` 来检验。

|

||||

2. 域名过期了吗?用 `whois` 来检验。

|

||||

3. 是否使用正确的主机名?用 `dig` 或 `host` 来确保你使用的主机名解析到正确的 IP。

|

||||

4. 你的 **A** 记录正确吗?确保你没有偶然创建类似 `smaba.example.com` 的 **A** 记录。

|

||||

|

||||

|

||||

|

||||

一定要多检查几次拼写和主机名是否正确(是 `samba.example.com` 还是 `samba1.example.com`)这些经常会困扰你特别是长、难或外来主机名。

|

||||

一定要多检查几次拼写和主机名是否正确(是 `samba.example.com` 还是 `samba1.example.com`)?这些经常会困扰你,特别是比较长、难记或其它国家的主机名。

|

||||

|

||||

### Error 3 - 服务器没有侦听端口

|

||||

|

||||

这种错误发生在 `telnet` 可达服务器但是指定端口没有监听。就像这样:

|

||||

|

||||

```

|

||||

telnet samba.example.com 445

|

||||

Trying 172.31.25.31...

|

||||

telnet: Unable to connect to remote host: Connection refused

|

||||

|

||||

```

|

||||

|

||||

有这些原因:

|

||||

@ -100,18 +98,16 @@ telnet: Unable to connect to remote host: Connection refused

|

||||

2. 你的应用服务器没有侦听预期的端口。在服务器上运行 `netstat -plunt` 来查看它究竟在干什么并看哪个端口才是对的,实际正在监听中的。

|

||||

3. 应用服务器没有运行。这可能突然而又悄悄地发生在你启动应用服务器之后。启动服务器运行 `ps auxf` 或 `systemctl status application.service` 查看运行。

|

||||

|

||||

|

||||

|

||||

### Error 4 - 连接被服务器关闭

|

||||

|

||||

这种错误发生在连接成功建立但是应用服务器建立的安全措施一连上就将其结束。错误如下:

|

||||

|

||||

```

|

||||

telnet samba.example.com 445

|

||||

Trying 172.31.25.31...

|

||||

Connected to samba.example.com.

|

||||

Escape character is '^]'.

|

||||

<EFBFBD><EFBFBD>Connection closed by foreign host.

|

||||

|

||||

Connection closed by foreign host.

|

||||

```

|

||||

|

||||

最后一行 `Connection closed by foreign host.` 意味着连接被服务器主动终止。为了修复这个问题,需要看看应用服务器的安全设置确保你的 IP 或用户允许连接。

|

||||

@ -119,17 +115,18 @@ Escape character is '^]'.

|

||||

### 成功连接

|

||||

|

||||

成功的 `telnet` 连接如下:

|

||||

|

||||

```

|

||||

telnet samba.example.com 445

|

||||

Trying 172.31.25.31...

|

||||

Connected to samba.example.com.

|

||||

Escape character is '^]'.

|

||||

|

||||

```

|

||||

|

||||

连接会保持一段时间只要你连接的应用服务器时限没到。

|

||||

|

||||

输入 `CTRL+]` 中止连接然后当你看到 `telnet>` 提示,输入 "quit" 并点击 ENTER 例:

|

||||

输入 `CTRL+]` 中止连接,然后当你看到 `telnet>` 提示,输入 `quit` 并按回车:

|

||||

|

||||

```

|

||||

telnet samba.example.com 445

|

||||

Trying 172.31.25.31...

|

||||

@ -138,12 +135,11 @@ Escape character is '^]'.

|

||||

^]

|

||||

telnet> quit

|

||||

Connection closed.

|

||||

|

||||

```

|

||||

|

||||

### 总结

|

||||

|

||||

客户程序连不上服务器的原因有很多。确切原理很难确定特别是当客户是图形用户界面提供很少或没有错误信息。用 `telnet` 并观察输出可以让你很快确定问题所在节约很多时间。

|

||||

客户程序连不上服务器的原因有很多。确切原因很难确定,特别是当客户是图形用户界面提供很少或没有错误信息。用 `telnet` 并观察输出可以让你很快确定问题所在节约很多时间。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -151,7 +147,7 @@ via: https://bash-prompt.net/guides/telnet/

|

||||

|

||||

作者:[Elliot Cooper][a]

|

||||

译者:[XYenChi](https://github.com/XYenChi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -3,68 +3,70 @@

|

||||

|

||||

|

||||

|

||||

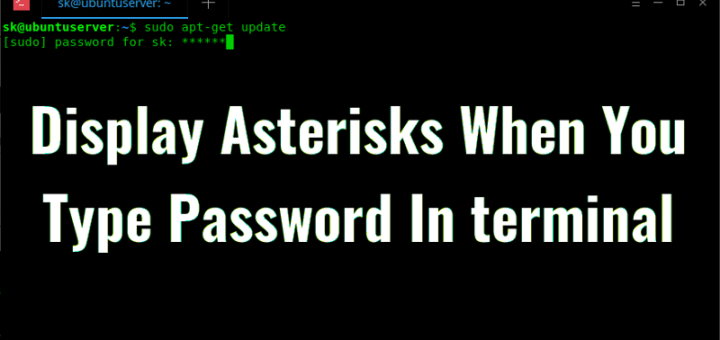

当你在 Web 浏览器或任何 GUI 登录中输入密码时,密码会被标记成星号 ******** 或圆形符号 ••••••••••••• 。这是内置的安全机制,以防止你附近的用户看到你的密码。但是当你在终端输入密码来执行任何 **sudo** 或 **su** 的管理任务时,你不会在输入密码的时候看见星号或者圆形符号。它不会有任何输入密码的视觉指示,也不会有任何光标移动,什么也没有。你不知道你是否输入了所有的字符。你只会看到一个空白的屏幕!

|

||||

当你在 Web 浏览器或任何 GUI 登录中输入密码时,密码会被标记成星号 `********` 或圆点符号 `•••••••••••••` 。这是内置的安全机制,以防止你附近的用户看到你的密码。但是当你在终端输入密码来执行任何 `sudo` 或 `su` 的管理任务时,你不会在输入密码的时候看见星号或者圆点符号。它不会有任何输入密码的视觉指示,也不会有任何光标移动,什么也没有。你不知道你是否输入了所有的字符。你只会看到一个空白的屏幕!

|

||||

|

||||

看看下面的截图。

|

||||

|

||||

![][2]

|

||||

|

||||

正如你在上面的图片中看到的,我已经输入了密码,但没有任何指示(星号或圆形符号)。现在,我不确定我是否输入了所有密码。这个安全机制也可以防止你附近的人猜测密码长度。当然,这种行为可以改变。这是本指南要说的。这并不困难。请继续阅读。

|

||||

正如你在上面的图片中看到的,我已经输入了密码,但没有任何指示(星号或圆点符号)。现在,我不确定我是否输入了所有密码。这个安全机制也可以防止你附近的人猜测密码长度。当然,这种行为可以改变。这是本指南要说的。这并不困难。请继续阅读。

|

||||

|

||||

#### 当你在终端输入密码时显示星号

|

||||

|

||||

要在终端输入密码时显示星号,我们需要在 **“/etc/sudoers”** 中做一些小修改。在做任何更改之前,最好备份这个文件。为此,只需运行:

|

||||

要在终端输入密码时显示星号,我们需要在 `/etc/sudoers` 中做一些小修改。在做任何更改之前,最好备份这个文件。为此,只需运行:

|

||||

|

||||

```

|

||||

sudo cp /etc/sudoers{,.bak}

|

||||

```

|

||||

|

||||

上述命令将 /etc/sudoers 备份成名为 /etc/sudoers.bak。你可以恢复它,以防万一在编辑文件后出错。

|

||||

上述命令将 `/etc/sudoers` 备份成名为 `/etc/sudoers.bak`。你可以恢复它,以防万一在编辑文件后出错。

|

||||

|

||||

接下来,使用下面的命令编辑 `/etc/sudoers`:

|

||||

|

||||

接下来,使用下面的命令编辑 **“/etc/sudoers”**:

|

||||

```

|

||||

sudo visudo

|

||||

```

|

||||

|

||||

找到下面这行:

|

||||

|

||||

```

|

||||

Defaults env_reset

|

||||

```

|

||||

|

||||

![][3]

|

||||

|

||||

在该行的末尾添加一个额外的单词 **“,pwfeedback”**,如下所示。

|

||||

在该行的末尾添加一个额外的单词 `,pwfeedback`,如下所示。

|

||||

|

||||

```

|

||||

Defaults env_reset,pwfeedback

|

||||

```

|

||||

|

||||

![][4]

|

||||

|

||||

然后,按下 **“CTRL + x”** 和 **“y”** 保存并关闭文件。重新启动终端以使更改生效。

|

||||

然后,按下 `CTRL + x` 和 `y` 保存并关闭文件。重新启动终端以使更改生效。

|

||||

|

||||

现在,当你在终端输入密码时,你会看到星号。

|

||||

|

||||

![][5]

|

||||

|

||||

如果你对在终端输入密码时看不到密码感到不舒服,那么这个小技巧会有帮助。请注意,当你输入输入密码时其他用户就可以预测你的密码长度。如果你不介意,请按照上述方法进行更改,以使你的密码可见(当然,标记为星号!)。

|

||||

如果你对在终端输入密码时看不到密码感到不舒服,那么这个小技巧会有帮助。请注意,当你输入输入密码时其他用户就可以预测你的密码长度。如果你不介意,请按照上述方法进行更改,以使你的密码可见(当然,显示为星号!)。

|

||||

|

||||

现在就是这样了。还有更好的东西。敬请关注!

|

||||

|

||||

干杯!

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.ostechnix.com/display-asterisks-type-password-terminal/

|

||||

|

||||

作者:[SK][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.ostechnix.com/author/sk/

|

||||

[2]:http://www.ostechnix.com/wp-content/uploads/2018/01/password-1.png ()

|

||||

[3]:http://www.ostechnix.com/wp-content/uploads/2018/01/visudo-1.png ()

|

||||

[4]:http://www.ostechnix.com/wp-content/uploads/2018/01/visudo-1-1.png ()

|

||||

[5]:http://www.ostechnix.com/wp-content/uploads/2018/01/visudo-2.png ()

|

||||

[2]:http://www.ostechnix.com/wp-content/uploads/2018/01/password-1.png

|

||||

[3]:http://www.ostechnix.com/wp-content/uploads/2018/01/visudo-1.png

|

||||

[4]:http://www.ostechnix.com/wp-content/uploads/2018/01/visudo-1-1.png

|

||||

[5]:http://www.ostechnix.com/wp-content/uploads/2018/01/visudo-2.png

|

||||

@ -3,19 +3,19 @@ Kali Linux 是什么,你需要它吗?

|

||||

|

||||

|

||||

|

||||

如果你听到一个 13 岁的黑客吹嘘它是多么的牛逼,是有可能的,因为有 Kali Linux 的存在。尽管有可能会被称为“脚本小子”,但是事实上,Kali 仍旧是安全专家手头的重要工具(或工具集)。

|

||||

如果你听到一个 13 岁的黑客吹嘘他是多么的牛逼,是有可能的,因为有 Kali Linux 的存在。尽管有可能会被称为“脚本小子”,但是事实上,Kali 仍旧是安全专家手头的重要工具(或工具集)。

|

||||

|

||||

Kali 是一个基于 Debian 的 Linux 发行版。它的目标就是为了简单;在一个实用的工具包里尽可能多的包含渗透和审计工具。Kali 实现了这个目标。大多数做安全测试的开源工具都被囊括在内。

|

||||

Kali 是一个基于 Debian 的 Linux 发行版。它的目标就是为了简单:在一个实用的工具包里尽可能多的包含渗透和审计工具。Kali 实现了这个目标。大多数做安全测试的开源工具都被囊括在内。

|

||||

|

||||

**相关** : [4 个极好的为隐私和案例设计的 Linux 发行版][1]

|

||||

**相关** : [4 个极好的为隐私和安全设计的 Linux 发行版][1]

|

||||

|

||||

### 为什么是 Kali?

|

||||

|

||||

![Kali Linux Desktop][2]

|

||||

|

||||

[Kali][3] 是由 Offensive Security (https://www.offensive-security.com/)公司开发和维护的。它在安全领域是一家知名的、值得信赖的公司,它甚至还有一些受人尊敬的认证,来对安全从业人员做资格认证。

|

||||

[Kali][3] 是由 [Offensive Security](https://www.offensive-security.com/) 公司开发和维护的。它在安全领域是一家知名的、值得信赖的公司,它甚至还有一些受人尊敬的认证,来对安全从业人员做资格认证。

|

||||

|

||||

Kali 也是一个简便的安全解决方案。Kali 并不要求你自己去维护一个 Linux,或者收集你自己的软件和依赖。它是一个“交钥匙工程”。所有这些繁杂的工作都不需要你去考虑,因此,你只需要专注于要审计的真实工作上,而不需要去考虑准备测试系统。

|

||||

Kali 也是一个简便的安全解决方案。Kali 并不要求你自己去维护一个 Linux 系统,或者你自己去收集软件和依赖项。它是一个“交钥匙工程”。所有这些繁杂的工作都不需要你去考虑,因此,你只需要专注于要审计的真实工作上,而不需要去考虑准备测试系统。

|

||||

|

||||

### 如何使用它?

|

||||

|

||||

@ -61,7 +61,7 @@ via: https://www.maketecheasier.com/what-is-kali-linux-and-do-you-need-it/

|

||||

|

||||

作者:[Nick Congleton][a]

|

||||

译者:[qhwdw](https://github.com/qhwdw)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,33 +1,43 @@

|

||||

如何使用 Ansible 创建 AWS ec2 密钥

|

||||

======

|

||||

我想使用 Ansible 工具创建 Amazon EC2 密钥对。不想使用 AWS CLI 来创建。可以使用 Ansible 来创建 AWS ec2 密钥吗?

|

||||

|

||||

你需要使用 Ansible 的 ec2_key 模块。这个模块依赖于 python-boto 2.5 版本或者更高版本。 boto 只不过是亚马逊 Web 服务的一个 Python API。你可以将 boto 用于 Amazon S3,Amazon EC2 等其他服务。简而言之,你需要安装 ansible 和 boto 模块。我们一起来看下如何安装 boto 并结合 Ansible 使用。

|

||||

**我想使用 Ansible 工具创建 Amazon EC2 密钥对。不想使用 AWS CLI 来创建。可以使用 Ansible 来创建 AWS ec2 密钥吗?**

|

||||

|

||||

你需要使用 Ansible 的 ec2_key 模块。这个模块依赖于 python-boto 2.5 版本或者更高版本。 boto 是亚马逊 Web 服务的一个 Python API。你可以将 boto 用于 Amazon S3、Amazon EC2 等其他服务。简而言之,你需要安装 Ansible 和 boto 模块。我们一起来看下如何安装 boto 并结合 Ansible 使用。

|

||||

|

||||

### 第一步 - 在 Ubuntu 上安装最新版本的 Ansible

|

||||

|

||||

你必须[给你的系统配置 PPA 来安装最新版的 Ansible][2]。为了管理你从各种 PPA(Personal Package Archives)安装软件的仓库,你可以上传 Ubuntu 源码包并编译,然后通过 Launchpad 以 apt 仓库的形式发布。键入如下命令 [apt-get 命令][3]或者 [apt 命令][4]:

|

||||

|

||||

### 第一步 - [在 Ubuntu 上安装最新版本的 Ansible][1]

|

||||

你必须[给你的系统配置 PPA 来安装最新版的 ansible][2]。为了管理你从各种 PPA(Personal Package Archives) 安装软件的仓库,你可以上传 Ubuntu 源码包并编译,然后通过 Launchpad 以 apt 仓库的形式发布。键入如下命令 [apt-get 命令][3]或者 [apt 命令][4]:

|

||||

```

|

||||

$ sudo apt update

|

||||

$ sudo apt upgrade

|

||||

$ sudo apt install software-properties-common

|

||||

```

|

||||

接下来给你的系统的软件源中添加 ppa:ansible/ansible

|

||||

|

||||

接下来给你的系统的软件源中添加 `ppa:ansible/ansible`。

|

||||

|

||||

```

|

||||

$ sudo apt-add-repository ppa:ansible/ansible

|

||||

```

|

||||

更新你的仓库并安装ansible:

|

||||

|

||||

更新你的仓库并安装 Ansible:

|

||||

|

||||

```

|

||||

$ sudo apt update

|

||||

$ sudo apt install ansible

|

||||

```

|

||||

|

||||

安装 boto:

|

||||

|

||||

```

|

||||

$ pip3 install boto3

|

||||

```

|

||||

|

||||

#### 关于在CentOS/RHEL 7.x上安装Ansible的注意事项

|

||||

#### 关于在CentOS/RHEL 7.x上安装 Ansible 的注意事项

|

||||

|

||||

你[需要在 CentOS 和 RHEL 7.x 上配置 EPEL 源][5]和 [yum命令][6]

|

||||

|

||||

```

|

||||

$ cd /tmp

|

||||

$ wget https://dl.fedoraproject.org/pub/epel/epel-release-latest-7.noarch.rpm

|

||||

@ -35,14 +45,17 @@ $ ls *.rpm

|

||||

$ sudo yum install epel-release-latest-7.noarch.rpm

|

||||

$ sudo yum install ansible

|

||||

```

|

||||

|

||||

安装 boto:

|

||||

|

||||

```

|

||||

$ pip install boto3

|

||||

```

|

||||

|

||||

### 第二步 2 – 配置 boto

|

||||

|

||||

你需要配置 AWS credentials/API 密钥。参考 “[AWS Security Credentials][7]” 文档如何创建 API key。用 mkdir 命令创建一个名为 ~/.aws 的目录,然后配置 API key:

|

||||

你需要配置 AWS credentials/API 密钥。参考 “[AWS Security Credentials][7]” 文档如何创建 API key。用 `mkdir` 命令创建一个名为 `~/.aws` 的目录,然后配置 API key:

|

||||

|

||||

```

|

||||

$ mkdir -pv ~/.aws/

|

||||

$ vi ~/.aws/credentials

|

||||

@ -54,14 +67,20 @@ aws_secret_access_key = YOUR-SECRET-ACCESS-KEY-HERE

|

||||

```

|

||||

|

||||

还需要配置默认 [AWS 区域][8]:

|

||||

`$ vi ~/.aws/config`

|

||||

|

||||

```

|

||||

$ vi ~/.aws/config

|

||||

```

|

||||

|

||||

输出样例如下:

|

||||

|

||||

```

|

||||

[default]

|

||||

region = us-west-1

|

||||

```

|

||||

|

||||

通过创建一个简单的名为 test-boto.py 的 python 程序来测试你的 boto 配置是否正确:

|

||||

通过创建一个简单的名为 `test-boto.py` 的 Python 程序来测试你的 boto 配置是否正确:

|

||||

|

||||

```

|

||||

#!/usr/bin/python3

|

||||

# A simple program to test boto and print s3 bucket names

|

||||

@ -72,20 +91,25 @@ for b in t.buckets.all():

|

||||

```

|

||||

|

||||

按下面方式来运行该程序:

|

||||

`$ python3 test-boto.py`

|

||||

|

||||

```

|

||||

$ python3 test-boto.py

|

||||

```

|

||||

|

||||

输出样例:

|

||||

|

||||

```

|

||||

nixcraft-images

|

||||

nixcraft-backups-cbz

|

||||

nixcraft-backups-forum

|

||||

|

||||

```

|

||||

|

||||

上面输出可以确定 Python-boto 可以使用 AWS API 正常工作。

|

||||

|

||||

### 步骤 3 - 使用 Ansible 创建 AWS ec2 密钥

|

||||

|

||||

创建一个名为 ec2.key.yml 的 playbook,如下所示:

|

||||

创建一个名为 `ec2.key.yml` 的剧本,如下所示:

|

||||

|

||||

```

|

||||

---

|

||||

- hosts: local

|

||||

@ -106,44 +130,54 @@ nixcraft-backups-forum

|

||||

|

||||

其中,

|

||||

|

||||

* ec2_key: – ec2 密钥对。

|

||||

* name: nixcraft_key – 密钥对的名称。

|

||||

* region: us-west-1 – 使用的 AWS 区域。

|

||||