mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-09 01:30:10 +08:00

Merge remote-tracking branch 'LCTT/master'

This commit is contained in:

commit

eb4273b8a4

@ -1,8 +1,11 @@

|

||||

在 Linux 上用 DNS 实现简单的负载均衡

|

||||

======

|

||||

|

||||

> DNS 轮询将多个服务器映射到同一个主机名,并没有为这里展示的魔法做更多的工作。

|

||||

|

||||

|

||||

如果你的后端服务器是由多台服务器构成的,比如集群化或者镜像的 Web 或者文件服务器,通过一个负载均衡器提供了单一的入口点。业务繁忙的大型电商花费大量的资金在高端负载均衡器上,用它来执行各种各样的任务:代理、缓存、状况检查、SSL 处理、可配置的优先级、流量整形等很多任务。

|

||||

|

||||

如果你的后端服务器是由多台服务器构成的,比如集群化或者镜像的 Web 或者文件服务器,通过负载均衡器提供了单一的入口点。业务繁忙的大型电商在高端负载均衡器上花费了大量的资金,用它来执行各种各样的任务:代理、缓存、状况检查、SSL 处理、可配置的优先级、流量整形等很多任务。

|

||||

|

||||

但是你并不需要做那么多工作的负载均衡器。你需要的是一个跨服务器分发负载的简单方法,它能够提供故障切换,并且不太在意它是否高效和完美。DNS 轮询和使用轮询的子域委派是实现这个目标的两种简单方法。

|

||||

|

||||

@ -12,11 +15,12 @@ DNS 轮询是将多台服务器映射到同一个主机名上,当用户访问

|

||||

|

||||

### DNS 轮询

|

||||

|

||||

轮询和旅鸫鸟(robins)没有任何关系,据我喜欢的图书管理员说,它最初是一个法语短语,_`ruban rond`_、或者 `round ribbon`。很久以前,法国政府官员以不分级的圆形、波浪形、或者辐条形状去签署请愿书以掩盖请愿书的发起人。

|

||||

轮询和<ruby>旅鸫鸟<rt>robins</rt></ruby>没有任何关系,据我相熟的图书管理员说,它最初是一个法语短语,_ruban rond_、或者 _round ribbon_。很久以前,法国政府官员以不分级的圆形、波浪线、或者直线形状来在请愿书上签字,以盖住原来的发起人。

|

||||

|

||||

DNS 轮询也是不分级的,简单配置一个服务器列表,然后将请求转到每个服务器上。它并不做真正的负载均衡,因为它根本就不测量负载,也没有状况检查,因此如果一个服务器宕机,请求仍然会发送到那个宕机的服务器上。它的优点就是简单。如果你有一个小的文件或者 Web 服务器集群,想通过一个简单的方法在它们之间分散负载,那么 DNS 轮询很适合你。

|

||||

|

||||

你所做的全部配置就是创建多条 A 或者 AAAA 记录,映射多台服务器到单个的主机名。这个 BIND 示例同时使用了 IPv4 和 IPv6 私有地址类:

|

||||

|

||||

```

|

||||

fileserv.example.com. IN A 172.16.10.10

|

||||

fileserv.example.com. IN A 172.16.10.11

|

||||

@ -25,10 +29,10 @@ fileserv.example.com. IN A 172.16.10.12

|

||||

fileserv.example.com. IN AAAA fd02:faea:f561:8fa0:1::10

|

||||

fileserv.example.com. IN AAAA fd02:faea:f561:8fa0:1::11

|

||||

fileserv.example.com. IN AAAA fd02:faea:f561:8fa0:1::12

|

||||

|

||||

```

|

||||

|

||||

Dnsmasq 在 _/etc/hosts_ 文件中保存 A 和 AAAA 记录:

|

||||

Dnsmasq 在 `/etc/hosts` 文件中保存 A 和 AAAA 记录:

|

||||

|

||||

```

|

||||

172.16.1.10 fileserv fileserv.example.com

|

||||

172.16.1.11 fileserv fileserv.example.com

|

||||

@ -36,15 +40,14 @@ Dnsmasq 在 _/etc/hosts_ 文件中保存 A 和 AAAA 记录:

|

||||

fd02:faea:f561:8fa0:1::10 fileserv fileserv.example.com

|

||||

fd02:faea:f561:8fa0:1::11 fileserv fileserv.example.com

|

||||

fd02:faea:f561:8fa0:1::12 fileserv fileserv.example.com

|

||||

|

||||

```

|

||||

|

||||

请注意这些示例都是很简化的,解析完全合格域名有多种方法,因此,关于如何配置 DNS 请自行学习。

|

||||

|

||||

使用 `dig` 命令去检查你的配置能否按预期工作。将 `ns.example.com` 替换为你的域名服务器:

|

||||

|

||||

```

|

||||

$ dig @ns.example.com fileserv A fileserv AAA

|

||||

|

||||

```

|

||||

|

||||

它将同时显示出 IPv4 和 IPv6 的轮询记录。

|

||||

@ -56,6 +59,7 @@ $ dig @ns.example.com fileserv A fileserv AAA

|

||||

这种方法需要多台域名服务器。在最简化的场景中,你需要一台主域名服务器和两个子域,每个子域都有它们自己的域名服务器。在子域服务器上配置你的轮询记录,然后在你的主域名服务器上配置委派。

|

||||

|

||||

在主域名服务器上的 BIND 中,你至少需要两个额外的配置,一个区声明以及在区数据文件中的 A/AAAA 记录。主域名服务器中的委派应该像如下的内容:

|

||||

|

||||

```

|

||||

ns1.sub.example.com. IN A 172.16.1.20

|

||||

ns1.sub.example.com. IN AAAA fd02:faea:f561:8fa0:1::20

|

||||

@ -64,64 +68,65 @@ ns2.sub.example.com. IN AAA fd02:faea:f561:8fa0:1::21

|

||||

|

||||

sub.example.com. IN NS ns1.sub.example.com.

|

||||

sub.example.com. IN NS ns2.sub.example.com.

|

||||

|

||||

```

|

||||

|

||||

接下来的每台子域服务器上有它们自己的区文件。在这里它的关键点是每个服务器去返回它**自己的** IP 地址。在 `named.conf` 中的区声明,所有的服务上都是一样的:

|

||||

|

||||

```

|

||||

zone "sub.example.com" {

|

||||

type master;

|

||||

file "db.sub.example.com";

|

||||

type master;

|

||||

file "db.sub.example.com";

|

||||

};

|

||||

|

||||

```

|

||||

|

||||

然后数据文件也是相同的,除了那个 A/AAAA 记录使用的是各个服务器自己的 IP 地址。SOA 记录都指向到主域名服务器:

|

||||

|

||||

```

|

||||

; first subdomain name server

|

||||

$ORIGIN sub.example.com.

|

||||

$TTL 60

|

||||

sub.example.com IN SOA ns1.example.com. admin.example.com. (

|

||||

2018123456 ; serial

|

||||

3H ; refresh

|

||||

15 ; retry

|

||||

3600000 ; expire

|

||||

sub.example.com IN SOA ns1.example.com. admin.example.com. (

|

||||

2018123456 ; serial

|

||||

3H ; refresh

|

||||

15 ; retry

|

||||

3600000 ; expire

|

||||

)

|

||||

|

||||

sub.example.com. IN NS ns1.sub.example.com.

|

||||

sub.example.com. IN A 172.16.1.20

|

||||

ns1.sub.example.com. IN AAAA fd02:faea:f561:8fa0:1::20

|

||||

|

||||

sub.example.com. IN A 172.16.1.20

|

||||

ns1.sub.example.com. IN AAAA fd02:faea:f561:8fa0:1::20

|

||||

; second subdomain name server

|

||||

$ORIGIN sub.example.com.

|

||||

$TTL 60

|

||||

sub.example.com IN SOA ns1.example.com. admin.example.com. (

|

||||

2018234567 ; serial

|

||||

3H ; refresh

|

||||

15 ; retry

|

||||

3600000 ; expire

|

||||

sub.example.com IN SOA ns1.example.com. admin.example.com. (

|

||||

2018234567 ; serial

|

||||

3H ; refresh

|

||||

15 ; retry

|

||||

3600000 ; expire

|

||||

)

|

||||

|

||||

sub.example.com. IN NS ns1.sub.example.com.

|

||||

sub.example.com. IN A 172.16.1.21

|

||||

ns2.sub.example.com. IN AAAA fd02:faea:f561:8fa0:1::21

|

||||

|

||||

sub.example.com. IN A 172.16.1.21

|

||||

ns2.sub.example.com. IN AAAA fd02:faea:f561:8fa0:1::21

|

||||

```

|

||||

|

||||

接下来生成子域服务器上的轮询记录,方法和前面一样。现在你已经有了多个域名服务器来处理到你的子域的请求。再说一次,BIND 是很复杂的,做同一件事情它有多种方法,因此,给你留的家庭作业是找出适合你使用的最佳配置方法。

|

||||

|

||||

在 Dnsmasq 中做子域委派很容易。在你的主域名服务器上的 `dnsmasq.conf` 文件中添加如下的行,去指向到子域的域名服务器:

|

||||

|

||||

```

|

||||

server=/sub.example.com/172.16.1.20

|

||||

server=/sub.example.com/172.16.1.21

|

||||

server=/sub.example.com/fd02:faea:f561:8fa0:1::20

|

||||

server=/sub.example.com/fd02:faea:f561:8fa0:1::21

|

||||

|

||||

```

|

||||

|

||||

然后在子域的域名服务器上的 `/etc/hosts` 中配置轮询。

|

||||

|

||||

获取配置方法的详细内容和帮助,请参考这些资源:~~(致校对:这里的资源链接全部丢失了!!)~~

|

||||

获取配置方法的详细内容和帮助,请参考这些资源:

|

||||

|

||||

- [Dnsmasq][2]

|

||||

- [DNS and BIND, 5th Edition][3]

|

||||

|

||||

通过来自 Linux 基金会和 edX 的免费课程 ["Linux 入门" ][1] 学习更多 Linux 的知识。

|

||||

|

||||

@ -131,9 +136,11 @@ via: https://www.linux.com/learn/intro-to-linux/2018/3/simple-load-balancing-dns

|

||||

|

||||

作者:[CARLA SCHRODER][a]

|

||||

译者:[qhwdw](https://github.com/qhwdw)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.linux.com/users/cschroder

|

||||

[1]:https://training.linuxfoundation.org/linux-courses/system-administration-training/introduction-to-linux

|

||||

[2]:http://www.thekelleys.org.uk/dnsmasq/doc.html

|

||||

[3]:http://shop.oreilly.com/product/9780596100575.do

|

||||

@ -1,61 +1,56 @@

|

||||

# 在命令行中整理数据

|

||||

如何在命令行中整理数据

|

||||

=========

|

||||

|

||||

> 命令行审计不会影响数据库,因为它使用从数据库中释放的数据。

|

||||

|

||||

|

||||

|

||||

我兼职做数据审计。把我想象成一个校对者,处理数据表格而不是一页一页的文章。这些表是从关系数据库导出的,并且规模相当小:100,000 到 1,000,000条记录,50 到 200个字段。

|

||||

我兼职做数据审计。把我想象成一个校对者,校对的是数据表格而不是一页一页的文章。这些表是从关系数据库导出的,并且规模相当小:100,000 到 1,000,000条记录,50 到 200 个字段。

|

||||

|

||||

我从来没有见过没有错误的数据表。您可能认为,这种混乱并不局限于重复记录、拼写和格式错误以及放置在错误字段中的数据项。我还发现:

|

||||

我从来没有见过没有错误的数据表。如你所能想到的,这种混乱并不局限于重复记录、拼写和格式错误以及放置在错误字段中的数据项。我还发现:

|

||||

|

||||

* 损坏的记录分布在几行上,因为数据项具有内嵌的换行符

|

||||

* 损坏的记录分布在几行上,因为数据项具有内嵌的换行符

|

||||

* 在同一记录中一个字段中的数据项与另一个字段中的数据项不一致

|

||||

* 使用截断数据项的记录,通常是因为非常长的字符串被硬塞到具有50或100字符限制的字段中

|

||||

* 字符编码失败产生称为[乱码][1]

|

||||

* 使用截断数据项的记录,通常是因为非常长的字符串被硬塞到具有 50 或 100 字符限制的字段中

|

||||

* 字符编码失败产生称为[乱码][1]的垃圾

|

||||

* 不可见的[控制字符][2],其中一些会导致数据处理错误

|

||||

* 由上一个程序插入的[替换字符][3]和神秘的问号,这导致了不知道数据的编码是什么

|

||||

* 由上一个程序插入的[替换字符][3]和神秘的问号,这是由于不知道数据的编码是什么

|

||||

|

||||

解决这些问题并不困难,但找到它们存在非技术障碍。首先,每个人都不愿处理数据错误。在我看到表格之前,数据所有者或管理人员可能已经经历了数据悲伤的所有五个阶段:

|

||||

解决这些问题并不困难,但找到它们存在非技术障碍。首先,每个人都不愿处理数据错误。在我看到表格之前,数据所有者或管理人员可能已经经历了<ruby>数据悲伤<rt>Data Grief</rt></ruby>的所有五个阶段:

|

||||

|

||||

1. 我们的数据没有错误。

|

||||

2. 好吧,也许有一些错误,但它们并不重要。

|

||||

3. 好的,有很多错误;我们会让我们的内部人员处理它们。

|

||||

4. 我们已经开始修复一些错误,但这很耗时间;我们将在迁移到新的数据库软件时执行此操作。

|

||||

5. 移至新数据库时,我们没有时间整理数据; 我们需要一些帮助。

|

||||

|

||||

1. 好吧,也许有一些错误,但它们并不重要。

|

||||

2. 好的,有很多错误; 我们会让我们的内部人员处理它们。

|

||||

3. 我们已经开始修复一些错误,但这很耗时间; 我们将在迁移到新的数据库软件时执行此操作。

|

||||

4. 1.移至新数据库时,我们没有时间整理数据; 我们可以使用一些帮助。

|

||||

|

||||

第二个阻碍进展的是相信数据整理需要专用的应用程序——要么是昂贵的专有程序,要么是优秀的开源程序 [OpenRefine][4] 。为了解决专用应用程序无法解决的问题,数据管理人员可能会向程序员寻求帮助,比如擅长 [Python][5] 或 [R][6] 的人。

|

||||

第二个阻碍进展的是相信数据整理需要专用的应用程序——要么是昂贵的专有程序,要么是优秀的开源程序 [OpenRefine][4] 。为了解决专用应用程序无法解决的问题,数据管理人员可能会向程序员寻求帮助,比如擅长 [Python][5] 或 [R][6] 的人。

|

||||

|

||||

但是数据审计和整理通常不需要专用的应用程序。纯文本数据表已经存在了几十年,文本处理工具也是如此。打开 Bash shell,您将拥有一个工具箱,其中装载了强大的文本处理器,如 `grep`、`cut`、`paste`、`sort`、`uniq`、`tr` 和 `awk`。它们快速、可靠、易于使用。

|

||||

|

||||

我在命令行上执行所有的数据审计工作,并且在 “[cookbook][7]” 网站上发布了许多数据审计技巧。我经常将操作存储为函数和 shell 脚本(参见下面的示例)。

|

||||

|

||||

是的,命令行方法要求将要审计的数据从数据库中导出。 是的,审计结果需要稍后在数据库中进行编辑,或者(数据库允许)整理的数据项作为替换杂乱的数据项导入其中。

|

||||

是的,命令行方法要求将要审计的数据从数据库中导出。而且,审计结果需要稍后在数据库中进行编辑,或者(数据库允许)将整理的数据项导入其中,以替换杂乱的数据项。

|

||||

|

||||

但其优势是显著的。awk 将在普通的台式机或笔记本电脑上以几秒钟的时间处理数百万条记录。不复杂的正则表达式将找到您可以想象的所有数据错误。所有这些都将安全地发生在数据库结构之外:命令行审计不会影响数据库,因为它使用从数据库中释放的数据。

|

||||

|

||||

受过 Unix 培训的读者此时会沾沾自喜。他们还记得许多年前用这些方法操纵命令行上的数据。从那时起,计算机的处理能力和 RAM 得到了显著提高,标准命令行工具的效率大大提高。数据审计从来都不是更快或更容易的。现在微软的 Windows 10 可以运行 Bash 和 GNU/Linux 程序了,Windows 用户也可以用 Unix 和 Linux 的座右铭来处理混乱的数据:保持冷静,打开一个终端。

|

||||

受过 Unix 培训的读者此时会沾沾自喜。他们还记得许多年前用这些方法操纵命令行上的数据。从那时起,计算机的处理能力和 RAM 得到了显著提高,标准命令行工具的效率大大提高。数据审计从来没有这么快、这么容易过。现在微软的 Windows 10 可以运行 Bash 和 GNU/Linux 程序了,Windows 用户也可以用 Unix 和 Linux 的座右铭来处理混乱的数据:保持冷静,打开一个终端。

|

||||

|

||||

|

||||

![Tshirt, Keep Calm and Open A Terminal][9]

|

||||

|

||||

图片:Robert Mesibov,CC BY

|

||||

### 例子

|

||||

|

||||

### 例子:

|

||||

假设我想在一个大的表中的特定字段中找到最长的数据项。 这不是一个真正的数据审计任务,但它会显示 shell 工具的工作方式。 为了演示目的,我将使用制表符分隔的表 `full0` ,它有 1,122,023 条记录(加上一个标题行)和 49 个字段,我会查看 36 号字段。(我得到字段编号的函数在我的[网站][10]上有解释)

|

||||

|

||||

假设我想在一个大的表中的特定字段中找到最长的数据项。 这不是一个真正的数据审计任务,但它会显示 shell 工具的工作方式。 为了演示目的,我将使用制表符分隔的表 `full0` ,它有 1,122,023 条记录(加上一个标题行)和 49 个字段,我会查看 36 号字段.(我得到字段编号的函数在我的[网站][10]上有解释)

|

||||

|

||||

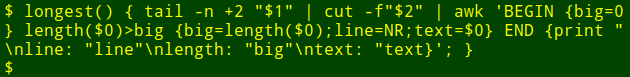

首先,使用 `tail` 命令从表 `full0` 移除标题行,结果管道至 `cut` 命令,截取第 36 个字段,接下来,管道至 `awk` ,这里有一个初始化为 0 的变量 `big` ,然后 `awk` 开始检测第一行数据项的长度,如果长度大于 0 , `awk` 将会设置 `big` 变量为新的长度,同时存储行数到变量 `line` 中。整个数据项存储在变量 `text` 中。然后 `awk` 开始轮流处理剩余的 1,122,022 记录项。同时,如果发现更长的数据项时,更新 3 个变量。最后,它打印出行号,数据项的长度,以及最长数据项的内容。(在下面的代码中,为了清晰起见,将代码分为几行)

|

||||

首先,使用 `tail` 命令从表 `full0` 移除标题行,结果管道至 `cut` 命令,截取第 36 个字段,接下来,管道至 `awk` ,这里有一个初始化为 0 的变量 `big` ,然后 `awk` 开始检测第一行数据项的长度,如果长度大于 0 ,`awk` 将会设置 `big` 变量为新的长度,同时存储行数到变量 `line` 中。整个数据项存储在变量 `text` 中。然后 `awk` 开始轮流处理剩余的 1,122,022 记录项。同时,如果发现更长的数据项时,更新 3 个变量。最后,它打印出行号、数据项的长度,以及最长数据项的内容。(在下面的代码中,为了清晰起见,将代码分为几行)

|

||||

|

||||

```

|

||||

<code>tail -n +2 full0 \

|

||||

|

||||

tail -n +2 full0 \

|

||||

> | cut -f36 \

|

||||

|

||||

> | awk 'BEGIN {big=0} length($0)>big \

|

||||

|

||||

> {big=length($0);line=NR;text=$0} \

|

||||

|

||||

> END {print "\nline: "line"\nlength: "big"\ntext: "text}' </code>

|

||||

|

||||

> END {print "\nline: "line"\nlength: "big"\ntext: "text}'

|

||||

```

|

||||

|

||||

大约花了多长时间?我的电脑大约用了 4 秒钟(core i5,8GB RAM);

|

||||

@ -66,25 +61,19 @@

|

||||

|

||||

|

||||

|

||||

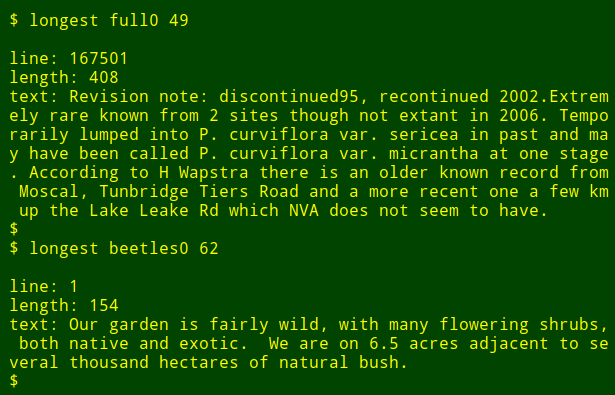

现在,我重新运行这个命令,在另一个文件中找另一个字段中最长的数据项而不需要去记忆命令是如何写的:

|

||||

现在,我可以以函数的方式重新运行这个命令,在另一个文件中的另一个字段中找最长的数据项,而不需要去记忆这个命令是如何写的:

|

||||

|

||||

|

||||

|

||||

最后调整一下,我还可以输出我要查询字段的名称,我只需要使用 `head` 命令抽取表格第一行的标题行,然后将结果管道至 `tr` 命令,将制表位转换为换行,然后将结果管道至 `tail` 和 `head` 命令,打印出第二个参数在列表中名称,第二个参数就是字段号。字段的名字就存储到变量 `field` 中,然后将他传向 `awk` ,通过变量 `fld` 打印出来。(译者注:按照下面的代码,编号的方式应该是从右向左)

|

||||

最后调整一下,我还可以输出我要查询字段的名称,我只需要使用 `head` 命令抽取表格第一行的标题行,然后将结果管道至 `tr` 命令,将制表位转换为换行,然后将结果管道至 `tail` 和 `head` 命令,打印出第二个参数在列表中名称,第二个参数就是字段号。字段的名字就存储到变量 `field` 中,然后将它传向 `awk` ,通过变量 `fld` 打印出来。(LCTT 译注:按照下面的代码,编号的方式应该是从右向左)

|

||||

|

||||

```

|

||||

<code>longest() { field=$(head -n 1 "$1" | tr '\t' '\n' | tail -n +"$2" | head -n 1); \

|

||||

|

||||

longest() { field=$(head -n 1 "$1" | tr '\t' '\n' | tail -n +"$2" | head -n 1); \

|

||||

tail -n +2 "$1" \

|

||||

|

||||

| cut -f"$2" | \

|

||||

|

||||

awk -v fld="$field" 'BEGIN {big=0} length($0)>big \

|

||||

|

||||

{big=length($0);line=NR;text=$0}

|

||||

|

||||

END {print "\nfield: "fld"\nline: "line"\nlength: "big"\ntext: "text}'; }</code>

|

||||

|

||||

END {print "\nfield: "fld"\nline: "line"\nlength: "big"\ntext: "text}'; }

|

||||

```

|

||||

|

||||

|

||||

@ -98,7 +87,7 @@ via: https://opensource.com/article/18/5/command-line-data-auditing

|

||||

作者:[Bob Mesibov][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[amwps290](https://github.com/amwps290)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,3 +1,5 @@

|

||||

translating---geekpi

|

||||

|

||||

Let's migrate away from GitHub

|

||||

======

|

||||

As many of you heard today, [Microsoft is acquiring GitHub][1]. What this means for the future of GitHub is not yet clear, but [the folks at Gitlab][2] think Microsoft's end goal is to integrate GitHub in their Azure empire. To me, this makes a lot of sense.

|

||||

|

||||

@ -1,167 +0,0 @@

|

||||

Translating by qhwdw

|

||||

Token ERC Comparison for Fungible Tokens – Blockchainers

|

||||

======

|

||||

“The good thing about standards is that there are so many to choose from.” [_Andrew S. Tanenbaum_][1]

|

||||

|

||||

### Current State of Token Standards

|

||||

|

||||

The current state of Token standards on the Ethereum platform is surprisingly simple: ERC-20 Token Standard is the only accepted and adopted (as [EIP-20][2]) standard for a Token interface.

|

||||

|

||||

Proposed in 2015, it has finally been accepted at the end of 2017.

|

||||

|

||||

In the meantime, many Ethereum Requests for Comments (ERC) have been proposed which address shortcomings of the ERC-20, which partly were caused by changes in the Ethereum platform itself, eg. the fix for the re-entrancy bug with [EIP-150][3]. Other ERC propose enhancements to the ERC-20 Token model. These enhancements were identified by experiences gathered due to the broad adoption of the Ethereum blockchain and the ERC-20 Token standard. The actual usage of the ERC-20 Token interface resulted in new demands and requirements to address non-functional requirements like permissioning and operations.

|

||||

|

||||

This blogpost should give a superficial, but complete, overview of all proposals for Token(-like) standards on the Ethereum platform. This comparison tries to be objective but most certainly will fail in doing so.

|

||||

|

||||

### The Mother of all Token Standards: ERC-20

|

||||

|

||||

There are dozens of [very good][4] and detailed description of the ERC-20, which will not be repeated here. Just the core concepts relevant for comparing the proposals are mentioned in this post.

|

||||

|

||||

#### The Withdraw Pattern

|

||||

|

||||

Users trying to understand the ERC-20 interface and especially the usage pattern for _transfer_ ing Tokens _from_ one externally owned account (EOA), ie. an end-user (“Alice”), to a smart contract, have a hard time getting the approve/transferFrom pattern right.

|

||||

|

||||

![][5]

|

||||

|

||||

From a software engineering perspective, this withdraw pattern is very similar to the [Hollywood principle][6] (“Don’t call us, we’ll call you!”). The idea is that the call chain is reversed: during the ERC-20 Token transfer, the Token doesn’t call the contract, but the contract does the call transferFrom on the Token.

|

||||

|

||||

While the Hollywood Principle is often used to implement Separation-of-Concerns (SoC), in Ethereum it is a security pattern to avoid having the Token contract to call an unknown function on an external contract. This behaviour was necessary due to the [Call Depth Attack][7] until [EIP-150][3] was activated. After this hard fork, the re-entrancy bug was not possible anymore and the withdraw pattern did not provide any more security than calling the Token directly.

|

||||

|

||||

But why should it be a problem now, the usage might be somehow clumsy, but we can fix this in the DApp frontend, right?

|

||||

|

||||

So, let’s see what happens if a user used transfer to send Tokens to a smart contract. Alice calls transfer on the Token contract with the contract address

|

||||

|

||||

**….aaaaand it’s gone!**

|

||||

|

||||

That’s right, the Tokens are gone. Most likely, nobody will ever get the Tokens back. But Alice is not alone, as Dexaran, inventor of ERC-223, found out, about $400.000 in tokens (let’s just say _a lot_ due to the high volatility of ETH) are irretrievably lost for all of us due to users accidentally sending Tokens to smart contracts.

|

||||

|

||||

Even if the contract developer was extremely user friendly and altruistic, he couldn’t create the contract so that it could react to getting Tokens transferred to it and eg. return them, as the contract will never be notified of this transfer and the event is only emitted on the Token contract.

|

||||

|

||||

From a software engineering perspective that’s a severe shortcoming of ERC-20. If an event occurs (and for the sake of simplicity, we are now assuming Ethereum transactions are actually events), there should be a notification to the parties involved. However, there is an event, but it’s triggered in the Token smart contract which the receiving contract cannot know.

|

||||

|

||||

Currently, it’s not possible to prevent users sending Tokens to smart contracts and losing them forever using the unintuitive transfer on the ERC-20 Token contract.

|

||||

|

||||

### The Empire Strikes Back: ERC-223

|

||||

|

||||

The first attempt at fixing the problems of ERC-20 was proposed by [Dexaran][8]. The main issue solved by this proposal is the different handling of EOA and smart contract accounts.

|

||||

|

||||

The compelling strategy is to reverse the calling chain (and with [EIP-150][3] solved this is now possible) and use a pre-defined callback (tokenFallback) on the receiving smart contract. If this callback is not implemented, the transfer will fail (costing all gas for the sender, a common criticism for ERC-223).

|

||||

|

||||

![][9]

|

||||

|

||||

#### Pros:

|

||||

|

||||

* Establishes a new interface, intentionally being not compliant to ERC-20 with respect to the deprecated functions

|

||||

|

||||

* Allows contract developers to handle incoming tokens (eg. accept/reject) since event pattern is followed

|

||||

|

||||

* Uses one transaction instead of two (transfer vs. approve/transferFrom) and thus saves gas and Blockchain storage

|

||||

|

||||

|

||||

|

||||

|

||||

#### Cons:

|

||||

|

||||

* If tokenFallback doesn’t exist then the contract fallback function is executed, this might have unintended side-effects

|

||||

|

||||

* If contracts assume that transfer works with Tokens, eg. for sending Tokens to specific contracts like multi-sig wallets, this would fail with ERC-223 Tokens, making it impossible to move them (ie. they are lost)

|

||||

|

||||

|

||||

### The Pragmatic Programmer: ERC-677

|

||||

|

||||

The [ERC-667 transferAndCall Token Standard][10] tries to marriage the ERC-20 and ERC-223. The idea is to introduce a transferAndCall function to the ERC-20, but keep the standard as is. ERC-223 intentionally is not completely backwards compatible, since the approve/allowance pattern is not needed anymore and was therefore removed.

|

||||

|

||||

The main goal of ERC-667 is backward compatibility, providing a safe way for new contracts to transfer tokens to external contracts.

|

||||

|

||||

![][11]

|

||||

|

||||

#### Pros:

|

||||

|

||||

* Easy to adapt for new Tokens

|

||||

|

||||

* Compatible to ERC-20

|

||||

|

||||

* Adapter for ERC-20 to use ERC-20 safely

|

||||

|

||||

#### Cons:

|

||||

|

||||

* No real innovations. A compromise of ERC-20 and ERC-223

|

||||

|

||||

* Current implementation [is not finished][12]

|

||||

|

||||

|

||||

### The Reunion: ERC-777

|

||||

|

||||

[ERC-777 A New Advanced Token Standard][13] was introduced to establish an evolved Token standard which learned from misconceptions like approve() with a value and the aforementioned send-tokens-to-contract-issue.

|

||||

|

||||

Additionally, the ERC-777 uses the new standard [ERC-820: Pseudo-introspection using a registry contract][14] which allows for registering meta-data for contracts to provide a simple type of introspection. This allows for backwards compatibility and other functionality extensions, depending on the ITokenRecipient returned by a EIP-820 lookup on the to address, and the functions implemented by the target contract.

|

||||

|

||||

ERC-777 adds a lot of learnings from using ERC-20 Tokens, eg. white-listed operators, providing Ether-compliant interfaces with send(…), using the ERC-820 to override and adapt functionality for backwards compatibility.

|

||||

|

||||

![][15]

|

||||

|

||||

#### Pros:

|

||||

|

||||

* Well thought and evolved interface for tokens, learnings from ERC-20 usage

|

||||

|

||||

* Uses the new standard request ERC-820 for introspection, allowing for added functionality

|

||||

|

||||

* White-listed operators are very useful and are more necessary than approve/allowance , which was often left infinite

|

||||

|

||||

|

||||

#### Cons:

|

||||

|

||||

* Is just starting, complex construction with dependent contract calls

|

||||

|

||||

* Dependencies raise the probability of security issues: first security issues have been [identified (and solved)][16] not in the ERC-777, but in the even newer ERC-820

|

||||

|

||||

|

||||

|

||||

|

||||

### (Pure Subjective) Conclusion

|

||||

|

||||

For now, if you want to go with the “industry standard” you have to choose ERC-20. It is widely supported and well understood. However, it has its flaws, the biggest one being the risk of non-professional users actually losing money due to design and specification issues. ERC-223 is a very good and theoretically founded answer for the issues in ERC-20 and should be considered a good alternative standard. Implementing both interfaces in a new token is not complicated and allows for reduced gas usage.

|

||||

|

||||

A pragmatic solution to the event and money loss problem is ERC-677, however it doesn’t offer enough innovation to establish itself as a standard. It could however be a good candidate for an ERC-20 2.0.

|

||||

|

||||

ERC-777 is an advanced token standard which should be the legitimate successor to ERC-20, it offers great concepts which are needed on the matured Ethereum platform, like white-listed operators, and allows for extension in an elegant way. Due to its complexity and dependency on other new standards, it will take time till the first ERC-777 tokens will be on the Mainnet.

|

||||

|

||||

### Links

|

||||

|

||||

[1] Security Issues with approve/transferFrom-Pattern in ERC-20: <https://drive.google.com/file/d/0ByMtMw2hul0EN3NCaVFHSFdxRzA/view>

|

||||

|

||||

[2] No Event Handling in ERC-20: <https://docs.google.com/document/d/1Feh5sP6oQL1-1NHi-X1dbgT3ch2WdhbXRevDN681Jv4>

|

||||

|

||||

[3] Statement for ERC-20 failures and history: <https://github.com/ethereum/EIPs/issues/223#issuecomment-317979258>

|

||||

|

||||

[4] List of differences ERC-20/223: <https://ethereum.stackexchange.com/questions/17054/erc20-vs-erc223-list-of-differences>

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://blockchainers.org/index.php/2018/02/08/token-erc-comparison-for-fungible-tokens/

|

||||

|

||||

作者:[Alexander Culum][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://blockchainers.org/index.php/author/alex/

|

||||

[1]:https://www.goodreads.com/quotes/589703-the-good-thing-about-standards-is-that-there-are-so

|

||||

[2]:https://github.com/ethereum/EIPs/blob/master/EIPS/eip-20.md

|

||||

[3]:https://github.com/ethereum/EIPs/blob/master/EIPS/eip-150.md

|

||||

[4]:https://medium.com/@jgm.orinoco/understanding-erc-20-token-contracts-a809a7310aa5

|

||||

[5]:http://blockchainers.org/wp-content/uploads/2018/02/ERC-20-Token-Transfer-2.png

|

||||

[6]:http://matthewtmead.com/blog/hollywood-principle-dont-call-us-well-call-you-4/

|

||||

[7]:https://consensys.github.io/smart-contract-best-practices/known_attacks/

|

||||

[8]:https://github.com/Dexaran

|

||||

[9]:http://blockchainers.org/wp-content/uploads/2018/02/ERC-223-Token-Transfer-1.png

|

||||

[10]:https://github.com/ethereum/EIPs/issues/677

|

||||

[11]:http://blockchainers.org/wp-content/uploads/2018/02/ERC-677-Token-Transfer.png

|

||||

[12]:https://github.com/ethereum/EIPs/issues/677#issuecomment-353871138

|

||||

[13]:https://github.com/ethereum/EIPs/issues/777

|

||||

[14]:https://github.com/ethereum/EIPs/issues/820

|

||||

[15]:http://blockchainers.org/wp-content/uploads/2018/02/ERC-777-Token-Transfer.png

|

||||

[16]:https://github.com/ethereum/EIPs/issues/820#issuecomment-362049573

|

||||

@ -1,72 +0,0 @@

|

||||

translating----geekpi

|

||||

|

||||

Python Debugging Tips

|

||||

======

|

||||

When it comes to debugging, there’s a lot of choices that you can make. It is hard to give generic advice that always works (other than “Have you tried turning it off and back on?”).

|

||||

|

||||

Here are a few of my favorite Python Debugging tips.

|

||||

|

||||

### Make a branch

|

||||

|

||||

Trust me on this. Even if you never intend to commit the changes back upstream, you will be glad your experiments are contained within their own branch.

|

||||

|

||||

If nothing else, it makes cleanup a lot easier!

|

||||

|

||||

### Install pdb++

|

||||

|

||||

Seriously. It makes you life easier if you are on the command line.

|

||||

|

||||

All that pdb++ does is replace the standard pdb module with 100% PURE AWESOMENESS. Here’s what you get when you `pip install pdbpp`:

|

||||

|

||||

* A Colorized prompt!

|

||||

* tab completion! (perfect for poking around!)

|

||||

* It slices! It dices!

|

||||

|

||||

|

||||

|

||||

Ok, maybe the last one is a little bit much… But in all seriousness, installing pdb++ is well worth your time.

|

||||

|

||||

### Poke around

|

||||

|

||||

Sometimes the best approach is to just mess around and see what happens. Put a break point in an “obvious” spot and make sure it gets hit. Pepper the code with `print()` and/or `logging.debug()` statements and see where the code execution goes.

|

||||

|

||||

Examine the arguments being passed into your functions. Check the versions of the libraries (if things are getting really desperate).

|

||||

|

||||

### Only change one thing at a time

|

||||

|

||||

Once you are poking around a bit you are going to get ideas on things you could do. But before you start slinging code, take a step back and think about what you could change, and then only change 1 thing.

|

||||

|

||||

Once you’ve made the change, then test and see if you are closer to resolving the issue. If not, change the thing back, and try something else.

|

||||

|

||||

Changing only one thing allows you to know what does and doesn’t work. Plus once you do get it working, your new commit is going to be much smaller (because there will be less changes).

|

||||

|

||||

This is pretty much what one does in the Scientific Process: only change one variable at a time. By allowing yourself to see and measure the results of one change you will save your sanity and arrive at a working solution faster.

|

||||

|

||||

### Assume nothing, ask questions

|

||||

|

||||

Occasionally a developer (not you of course!) will be in a hurry and whip out some questionable code. When you go through to debug this code you need to stop and make sure you understand what it is trying to accomplish.

|

||||

|

||||

Make no assumptions. Just because the code is in the `model.py` file doesn’t mean it won’t try to render some HTML.

|

||||

|

||||

Likewise, double check all of your external connections before you do anything destructive! Going to delete some configuration data? MAKE SURE YOU ARE NOT CONNECTED TO YOUR PRODUCTION SYSTEM.

|

||||

|

||||

### Be clever, but not too clever

|

||||

|

||||

Sometimes we write code that is so amazingly awesome it is not obvious how it does what it does.

|

||||

|

||||

While we might feel smart when we publish that code, more often than not we will wind up feeling dumb later on when the code breaks and we have to remember how it works to figure out why it isn’t working.

|

||||

|

||||

Keep an eye out for any sections of code that look either overly complicated and long, or extremely short. These could be places where complexity is hiding and causing your bugs.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://pythondebugging.com/articles/python-debugging-tips

|

||||

|

||||

作者:[PythonDebugging.com][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://pythondebugging.com

|

||||

@ -1,95 +0,0 @@

|

||||

Use this vi setup to keep and organize your notes

|

||||

======

|

||||

|

||||

|

||||

|

||||

The idea of using vi to manage a wiki for your notes may seem unconventional, but when you're using vi in your daily work, it makes a lot of sense.

|

||||

|

||||

As a software developer, it’s just easier to write my notes in the same tool I use to code. I want my notes to be only an editor command away, available wherever I am, and managed the same way I handle my code. That's why I created a vi-based setup for my personal knowledge base. In a nutshell: I use the vi plugin [Vimwiki][1] to manage my wiki locally on my laptop, I use Git to version it (and keep a central, updated version), and I use GitLab for online editing (for example, on my mobile device).

|

||||

|

||||

### Why it makes sense to use a wiki for note-keeping

|

||||

|

||||

I've tried many different tools to keep track of notes, write down fleeting thoughts, and structure tasks I shouldn’t forget. These include offline notebooks (yes, that involves paper), special note-keeping software, and mind-mapping software.

|

||||

|

||||

All these solutions have positives, but none fit all of my needs. For example, [mind maps][2] are a great way to visualize what’s in your mind (hence the name), but the tools I tried provided poor searching functionality. (The same thing is true for paper notes.) Also, it’s often hard to read mind maps after time passes, so they don’t work very well for long-term note keeping.

|

||||

|

||||

One day while setting up a [DokuWiki][3] for a collaboration project, I found that the wiki structure fits most of my requirements. With a wiki, you can create notes (like you would in any text editor) and create links between your notes. If a link points to a non-existent page (maybe because you wanted a piece of information to be on its own page but haven’t set it up yet), the wiki will create that page for you. These features make a wiki a good fit for quickly writing things as they come to your mind, while still keeping your notes in a page structure that is easy to browse and search for keywords.

|

||||

|

||||

While this sounds promising, and setting up DokuWiki is not difficult, I found it a bit too much work to set up a whole wiki just for keeping track of my notes. After some research, I found Vimwiki, a Vi plugin that does what I want. Since I use Vi every day, keeping notes is very similar to editing code. Also, it’s even easier to create a page in Vimwiki than DokuWiki—all you have to do is press Enter while your cursor hovers over a word. If there isn’t already a page with that name, Vimwiki will create it for you.

|

||||

|

||||

To take my plan to use my everyday tools for note-keeping a step further, I’m not only using my favorite IDE to write notes but also my favorite code management tools—Git and GitLab—to distribute notes across my various machines and be able to access them online. I’m also using Markdown syntax in GitLab's online Markdown editor to write this article.

|

||||

|

||||

### Setting up Vimwiki

|

||||

|

||||

Installing Vimwiki is easy using your existing plugin manager: Just add `vimwiki/vimwiki` to your plugins. In my preferred plugin manager, Vundle, you just add the line `Plugin 'vimwiki/vimwiki'` in your `~/.vimrc` followed by a `:source ~/.vimrc|PluginInstall`.

|

||||

|

||||

Following is a piece of my `~.vimrc` showing a bit of Vimwiki configuration. You can learn more about installing and using this tool on the [Vimwiki page][1].

|

||||

```

|

||||

let wiki_1 = {}

|

||||

let wiki_1.path = '~/vimwiki_work_md/'

|

||||

let wiki_1.syntax = 'markdown'

|

||||

let wiki_1.ext = '.md'

|

||||

|

||||

let wiki_2 = {}

|

||||

let wiki_2.path = '~/vimwiki_personal_md/'

|

||||

let wiki_2.syntax = 'markdown'

|

||||

let wiki_2.ext = '.md'

|

||||

|

||||

let g:vimwiki_list = [wiki_1, wiki_2]

|

||||

let g:vimwiki_ext2syntax = {'.md': 'markdown', '.markdown': 'markdown', '.mdown': 'markdown'}

|

||||

```

|

||||

|

||||

Another advantage of my approach, which you can see in the configuration, is that I can easily divide my personal and work-related notes without switching the note-keeping software. I want my personal notes accessible everywhere, but I don’t want to sync my work-related notes to my private GitLab and computer. This was easier to set up in Vimwiki compared to the other software I tried.

|

||||

|

||||

The configuration tells Vimwiki there are two different wikis and I want to use Markdown syntax in both (again, because I’m used to Markdown from my daily work). It also tells Vimwiki the folders where to store the wiki pages.

|

||||

|

||||

If you navigate to the folders where the wiki pages are stored, you will find your wiki’s flat Markdown pages without any special Vimwiki context. That makes it easy to initialize a Git repository and sync your wiki to a central repository.

|

||||

|

||||

### Synchronizing your wiki to GitLab

|

||||

|

||||

The steps to check out a GitLab project to your local Vimwiki folder are nearly the same as you’d use for any GitHub repository. I just prefer to keep my notes in a private GitLab repository, so I keep a GitLab instance running for my personal projects.

|

||||

|

||||

GitLab has a wiki functionality that allows you to create wiki pages for your projects. Those wikis are Git repositories themselves. And they use Markdown syntax. You get where this is leading.

|

||||

|

||||

Just initialize the wiki you want to synchronize with the wiki of a project you created for your notes:

|

||||

```

|

||||

cd ~/vimwiki_personal_md/

|

||||

git init

|

||||

git remote add origin git@your.gitlab.com:your_user/vimwiki_personal_md.wiki

|

||||

git add .

|

||||

git commit -m "Initial commit"

|

||||

git push -u origin master

|

||||

```

|

||||

|

||||

These steps can be copied from the page where you land after creating a new project on GitLab. The only thing to change is the `.wiki` at the end of the repository URL (instead of `.git`), which tells it to clone the wiki repository instead of the project itself.

|

||||

|

||||

That’s it! Now you can manage your notes with Git and edit them in GitLab’s wiki user interface.

|

||||

|

||||

But maybe (like me) you don’t want to manually create commits for every note you add to your notebook. To solve this problem, I use the Vim plugin [chazy/dirsettings][4]. I added a `.vimdir` file with the following content to `~/vimwiki_personal_md`:

|

||||

```

|

||||

:cd %:p:h

|

||||

silent! !git pull > /dev/null

|

||||

:e!

|

||||

autocmd! BufWritePost * silent! !git add .;git commit -m "vim autocommit" > /dev/null; git push > /dev/null&

|

||||

```

|

||||

|

||||

This pulls the latest version of my wiki every time I open a wiki file and publishes my changes after every `:w` command. Doing this should keep your local copy in sync with the central repo. If you have merge conflicts, you may need to resolve them (as usual).

|

||||

|

||||

For now, this is the way I interact with my knowledge base, and I’m quite happy with it. Please let me know what you think about this approach. And please share in the comments your favorite way to keep track of your notes.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/6/vimwiki-gitlab-notes

|

||||

|

||||

作者:[Manuel Dewald][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://opensource.com/users/ntlx

|

||||

[1]:http://vimwiki.github.io/

|

||||

[2]:https://opensource.com/article/17/8/mind-maps-creative-dashboard

|

||||

[3]:https://www.dokuwiki.org/dokuwiki

|

||||

[4]:https://github.com/chazy/dirsettings

|

||||

@ -1,41 +1,40 @@

|

||||

Translating by qhwdw

|

||||

Learn Blockchains by Building One

|

||||

通过构建一个区块链来学习区块链技术

|

||||

======

|

||||

|

||||

|

||||

You’re here because, like me, you’re psyched about the rise of Cryptocurrencies. And you want to know how Blockchains work—the fundamental technology behind them.

|

||||

你看到这篇文章是因为和我一样,对加密货币的大热而感到兴奋。并且想知道区块链是如何工作的 —— 它们背后的技术是什么。

|

||||

|

||||

But understanding Blockchains isn’t easy—or at least wasn’t for me. I trudged through dense videos, followed porous tutorials, and dealt with the amplified frustration of too few examples.

|

||||

但是理解区块链并不容易 —— 至少对我来说是这样。我徜徉在各种难懂的视频中,并且因为示例太少而陷入深深的挫败感中。

|

||||

|

||||

I like learning by doing. It forces me to deal with the subject matter at a code level, which gets it sticking. If you do the same, at the end of this guide you’ll have a functioning Blockchain with a solid grasp of how they work.

|

||||

我喜欢在实践中学习。这迫使我去处理被卡在代码级别上的难题。如果你也是这么做的,在本指南结束的时候,你将拥有一个功能正常的区块链,并且实实在在地理解了它的工作原理。

|

||||

|

||||

### Before you get started…

|

||||

### 开始之前 …

|

||||

|

||||

Remember that a blockchain is an _immutable, sequential_ chain of records called Blocks. They can contain transactions, files or any data you like, really. But the important thing is that they’re _chained_ together using _hashes_ .

|

||||

记住,区块链是一个 _不可更改的、有序的_ 被称为区块的记录链。它们可以包括事务~~(交易???校对确认一下,下同)~~、文件或者任何你希望的真实数据。最重要的是它们是通过使用_哈希_链接到一起的。

|

||||

|

||||

If you aren’t sure what a hash is, [here’s an explanation][1].

|

||||

如果你不知道哈希是什么,[这里有解释][1]。

|

||||

|

||||

**_Who is this guide aimed at?_** You should be comfy reading and writing some basic Python, as well as have some understanding of how HTTP requests work, since we’ll be talking to our Blockchain over HTTP.

|

||||

**_本指南的目标读者是谁?_** 你应该能很容易地读和写一些基本的 Python 代码,并能够理解 HTTP 请求是如何工作的,因为我们讨论的区块链将基于 HTTP。

|

||||

|

||||

**_What do I need?_** Make sure that [Python 3.6][2]+ (along with `pip`) is installed. You’ll also need to install Flask and the wonderful Requests library:

|

||||

**_我需要做什么?_** 确保安装了 [Python 3.6][2]+(以及 `pip`),还需要去安装 Flask 和非常好用的 Requests 库:

|

||||

|

||||

```

|

||||

pip install Flask==0.12.2 requests==2.18.4

|

||||

```

|

||||

|

||||

Oh, you’ll also need an HTTP Client, like [Postman][3] or cURL. But anything will do.

|

||||

当然,你也需要一个 HTTP 客户端,像 [Postman][3] 或者 cURL。哪个都行。

|

||||

|

||||

**_Where’s the final code?_** The source code is [available here][4].

|

||||

**_最终的代码在哪里可以找到?_** 源代码在 [这里][4]。

|

||||

|

||||

* * *

|

||||

|

||||

### Step 1: Building a Blockchain

|

||||

### 第 1 步:构建一个区块链

|

||||

|

||||

Open up your favourite text editor or IDE, personally I ❤️ [PyCharm][5]. Create a new file, called `blockchain.py`. We’ll only use a single file, but if you get lost, you can always refer to the [source code][6].

|

||||

打开你喜欢的文本编辑器或者 IDE,我个人 ❤️ [PyCharm][5]。创建一个名为 `blockchain.py` 的新文件。我将使用一个单个的文件,如果你看晕了,可以去参考 [源代码][6]。

|

||||

|

||||

#### Representing a Blockchain

|

||||

#### 描述一个区块链

|

||||

|

||||

We’ll create a `Blockchain` class whose constructor creates an initial empty list (to store our blockchain), and another to store transactions. Here’s the blueprint for our class:

|

||||

我们将创建一个 `Blockchain` 类,它的构造函数将去初始化一个空列表(去存储我们的区块链),以及另一个列表去保存事务。下面是我们的类规划:

|

||||

|

||||

```

|

||||

class Blockchain(object):

|

||||

@ -63,13 +62,13 @@ pass

|

||||

```

|

||||

|

||||

|

||||

Our Blockchain class is responsible for managing the chain. It will store transactions and have some helper methods for adding new blocks to the chain. Let’s start fleshing out some methods.

|

||||

我们的区块链类负责管理链。它将存储事务并且有一些为链中增加新区块的助理性质的方法。现在我们开始去充实一些类的方法。

|

||||

|

||||

#### What does a Block look like?

|

||||

#### 一个区块是什么样子的?

|

||||

|

||||

Each Block has an index, a timestamp (in Unix time), a list of transactions, a proof (more on that later), and the hash of the previous Block.

|

||||

每个区块有一个索引、一个时间戳(Unix 时间)、一个事务的列表、一个证明(后面会详细解释)、以及前一个区块的哈希。

|

||||

|

||||

Here’s an example of what a single Block looks like:

|

||||

单个区块的示例应该是下面的样子:

|

||||

|

||||

```

|

||||

block = {

|

||||

@ -87,13 +86,13 @@ block = {

|

||||

}

|

||||

```

|

||||

|

||||

At this point, the idea of a chain should be apparent—each new block contains within itself, the hash of the previous Block. This is crucial because it’s what gives blockchains immutability: If an attacker corrupted an earlier Block in the chain then all subsequent blocks will contain incorrect hashes.

|

||||

此刻,链的概念应该非常明显 —— 每个新区块包含它自身的信息和前一个区域的哈希。这一点非常重要,因为这就是区块链不可更改的原因:如果攻击者修改了一个早期的区块,那么所有的后续区块将包含错误的哈希。

|

||||

|

||||

Does this make sense? If it doesn’t, take some time to let it sink in—it’s the core idea behind blockchains.

|

||||

这样做有意义吗?如果没有,就让时间来埋葬它吧 —— 这就是区块链背后的核心思想。

|

||||

|

||||

#### Adding Transactions to a Block

|

||||

#### 添加事务到一个区块

|

||||

|

||||

We’ll need a way of adding transactions to a Block. Our new_transaction() method is responsible for this, and it’s pretty straight-forward:

|

||||

我们将需要一种区块中添加事务的方式。我们的 `new_transaction()` 就是做这个的,它非常简单明了:

|

||||

|

||||

```

|

||||

class Blockchain(object):

|

||||

@ -117,13 +116,13 @@ class Blockchain(object):

|

||||

return self.last_block['index'] + 1

|

||||

```

|

||||

|

||||

After new_transaction() adds a transaction to the list, it returns the index of the block which the transaction will be added to—the next one to be mined. This will be useful later on, to the user submitting the transaction.

|

||||

在 `new_transaction()` 运行后将在列表中添加一个事务,它返回添加事务后的那个区块的索引 —— 那个区块接下来将被挖矿。提交事务的用户后面会用到这些。

|

||||

|

||||

#### Creating new Blocks

|

||||

#### 创建新区块

|

||||

|

||||

When our Blockchain is instantiated we’ll need to seed it with a genesis block—a block with no predecessors. We’ll also need to add a “proof” to our genesis block which is the result of mining (or proof of work). We’ll talk more about mining later.

|

||||

当我们的区块链被实例化后,我们需要一个创世区块(一个没有祖先的区块)来播种它。我们也需要去添加一些 “证明” 到创世区块,它是挖矿(工作量证明 PoW)的成果。我们在后面将讨论更多挖矿的内容。

|

||||

|

||||

In addition to creating the genesis block in our constructor, we’ll also flesh out the methods for new_block(), new_transaction() and hash():

|

||||

除了在我们的构造函数中创建创世区块之外,我们还需要写一些方法,如 `new_block()`、`new_transaction()` 以及 `hash()`:

|

||||

|

||||

```

|

||||

import hashlib

|

||||

@ -194,15 +193,15 @@ class Blockchain(object):

|

||||

return hashlib.sha256(block_string).hexdigest()

|

||||

```

|

||||

|

||||

The above should be straight-forward—I’ve added some comments and docstrings to help keep it clear. We’re almost done with representing our blockchain. But at this point, you must be wondering how new blocks are created, forged or mined.

|

||||

上面的内容简单明了 —— 我添加了一些注释和文档字符串,以使代码清晰可读。到此为止,表示我们的区块链基本上要完成了。但是,你肯定想知道新区块是如何被创建、打造或者挖矿的。

|

||||

|

||||

#### Understanding Proof of Work

|

||||

#### 理解工作量证明

|

||||

|

||||

A Proof of Work algorithm (PoW) is how new Blocks are created or mined on the blockchain. The goal of PoW is to discover a number which solves a problem. The number must be difficult to find but easy to verify—computationally speaking—by anyone on the network. This is the core idea behind Proof of Work.

|

||||

一个工作量证明(PoW)算法是在区块链上创建或者挖出新区块的方法。PoW 的目标是去撞出一个能够解决问题的数字。这个数字必须满足“找到它很困难但是验证它很容易”的条件 —— 网络上的任何人都可以计算它。这就是 PoW 背后的核心思想。

|

||||

|

||||

We’ll look at a very simple example to help this sink in.

|

||||

我们来看一个非常简单的示例来帮助你了解它。

|

||||

|

||||

Let’s decide that the hash of some integer x multiplied by another y must end in 0\. So, hash(x * y) = ac23dc...0\. And for this simplified example, let’s fix x = 5\. Implementing this in Python:

|

||||

我们来解决一个问题,一些整数 x 乘以另外一个整数 y 的结果的哈希值必须以 0 结束。因此,hash(x * y) = ac23dc…0。为简单起见,我们先把 x = 5 固定下来。在 Python 中的实现如下:

|

||||

|

||||

```

|

||||

from hashlib import sha256

|

||||

@ -216,19 +215,19 @@ while sha256(f'{x*y}'.encode()).hexdigest()[-1] != "0":

|

||||

print(f'The solution is y = {y}')

|

||||

```

|

||||

|

||||

The solution here is y = 21\. Since, the produced hash ends in 0:

|

||||

在这里的答案是 y = 21。因为它产生的哈希值是以 0 结尾的:

|

||||

|

||||

```

|

||||

hash(5 * 21) = 1253e9373e...5e3600155e860

|

||||

```

|

||||

|

||||

The network is able to easily verify their solution.

|

||||

网络上的任何人都可以很容易地去核验它的答案。

|

||||

|

||||

#### Implementing basic Proof of Work

|

||||

#### 实现基本的 PoW

|

||||

|

||||

Let’s implement a similar algorithm for our blockchain. Our rule will be similar to the example above:

|

||||

为我们的区块链来实现一个简单的算法。我们的规则与上面的示例类似:

|

||||

|

||||

> Find a number p that when hashed with the previous block’s solution a hash with 4 leading 0s is produced.

|

||||

> 找出一个数字 p,它与前一个区块的答案进行哈希运算得到一个哈希值,这个哈希值的前四位必须是由 0 组成。

|

||||

|

||||

```

|

||||

import hashlib

|

||||

@ -270,27 +269,27 @@ class Blockchain(object):

|

||||

return guess_hash[:4] == "0000"

|

||||

```

|

||||

|

||||

To adjust the difficulty of the algorithm, we could modify the number of leading zeroes. But 4 is sufficient. You’ll find out that the addition of a single leading zero makes a mammoth difference to the time required to find a solution.

|

||||

为了调整算法的难度,我们可以修改前导 0 的数量。但是 4 个零已经足够难了。你会发现,将前导 0 的数量每增加一,那么找到正确答案所需要的时间难度将大幅增加。

|

||||

|

||||

Our class is almost complete and we’re ready to begin interacting with it using HTTP requests.

|

||||

我们的类基本完成了,现在我们开始去使用 HTTP 请求与它交互。

|

||||

|

||||

* * *

|

||||

|

||||

### Step 2: Our Blockchain as an API

|

||||

### 第 2 步:以 API 方式去访问我们的区块链

|

||||

|

||||

We’re going to use the Python Flask Framework. It’s a micro-framework and it makes it easy to map endpoints to Python functions. This allows us talk to our blockchain over the web using HTTP requests.

|

||||

我们将去使用 Python Flask 框架。它是个微框架,使用它去做端点到 Python 函数的映射很容易。这样我们可以使用 HTTP 请求基于 web 来与我们的区块链对话。

|

||||

|

||||

We’ll create three methods:

|

||||

我们将创建三个方法:

|

||||

|

||||

* `/transactions/new` to create a new transaction to a block

|

||||

* `/transactions/new` 在一个区块上创建一个新事务

|

||||

|

||||

* `/mine` to tell our server to mine a new block.

|

||||

* `/mine` 告诉我们的服务器去挖矿一个新区块

|

||||

|

||||

* `/chain` to return the full Blockchain.

|

||||

* `/chain` 返回完整的区块链

|

||||

|

||||

#### Setting up Flask

|

||||

#### 配置 Flask

|

||||

|

||||

Our “server” will form a single node in our blockchain network. Let’s create some boilerplate code:

|

||||

我们的 “服务器” 将在我们的区块链网络中产生一个单个的节点。我们来创建一些样板代码:

|

||||

|

||||

```

|

||||

import hashlib

|

||||

@ -336,25 +335,25 @@ if __name__ == '__main__':

|

||||

app.run(host='0.0.0.0', port=5000)

|

||||

```

|

||||

|

||||

A brief explanation of what we’ve added above:

|

||||

对上面的代码,我们做添加一些详细的解释:

|

||||

|

||||

* Line 15: Instantiates our Node. Read more about Flask [here][7].

|

||||

* Line 15:实例化我们的节点。更多关于 Flask 的知识读 [这里][7]。

|

||||

|

||||

* Line 18: Create a random name for our node.

|

||||

* Line 18:为我们的节点创建一个随机的名字。

|

||||

|

||||

* Line 21: Instantiate our Blockchain class.

|

||||

* Line 21:实例化我们的区块链类。

|

||||

|

||||

* Line 24–26: Create the /mine endpoint, which is a GET request.

|

||||

* Line 24–26:创建 /mine 端点,这是一个 GET 请求。

|

||||

|

||||

* Line 28–30: Create the /transactions/new endpoint, which is a POST request, since we’ll be sending data to it.

|

||||

* Line 28–30:创建 /transactions/new 端点,这是一个 POST 请求,因为我们要发送数据给它。

|

||||

|

||||

* Line 32–38: Create the /chain endpoint, which returns the full Blockchain.

|

||||

* Line 32–38:创建 /chain 端点,它返回全部区块链。

|

||||

|

||||

* Line 40–41: Runs the server on port 5000.

|

||||

* Line 40–41:在 5000 端口上运行服务器。

|

||||

|

||||

#### The Transactions Endpoint

|

||||

#### 事务端点

|

||||

|

||||

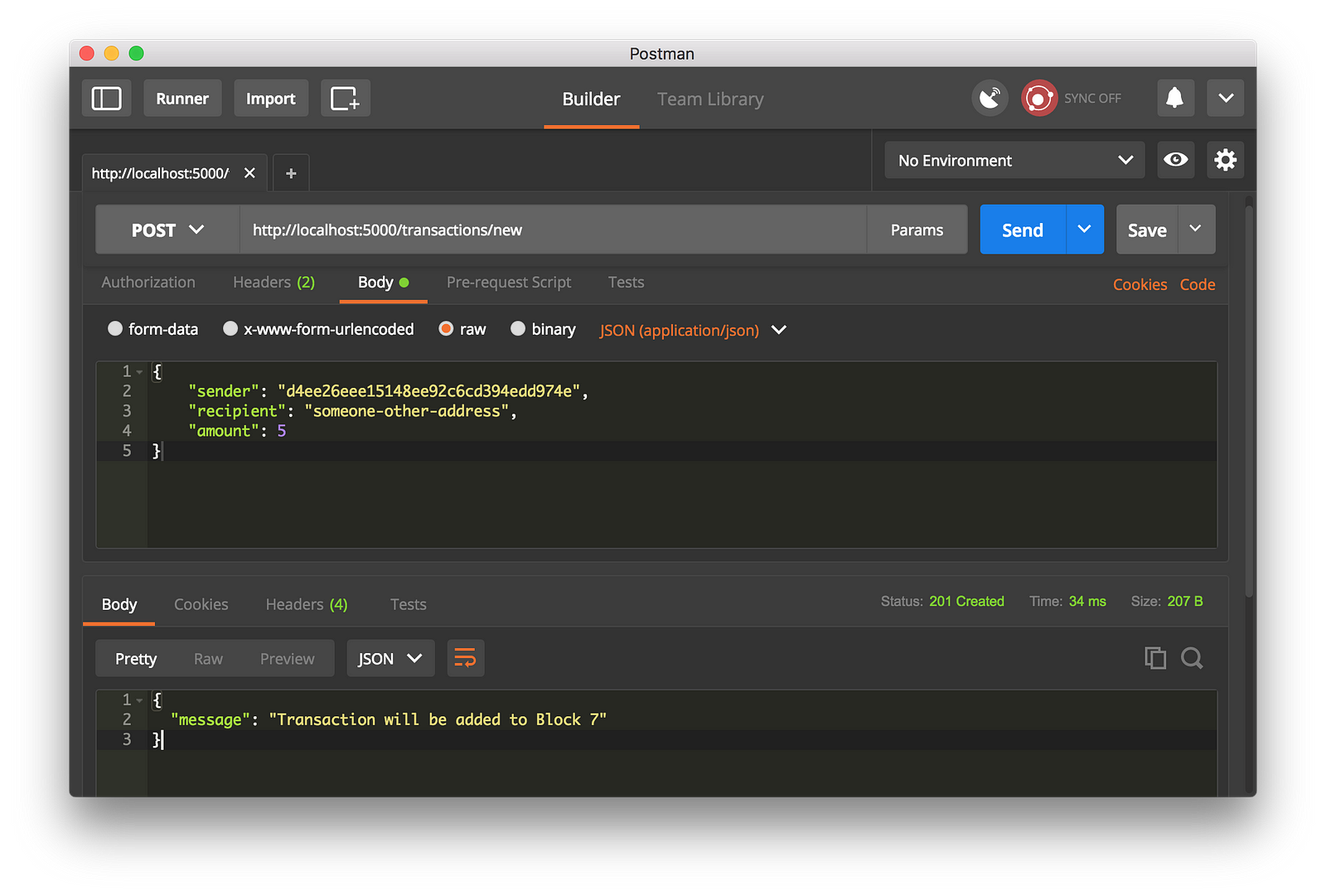

This is what the request for a transaction will look like. It’s what the user sends to the server:

|

||||

这就是对一个事务的请求,它是用户发送给服务器的:

|

||||

|

||||

```

|

||||

{ "sender": "my address", "recipient": "someone else's address", "amount": 5}

|

||||

@ -386,17 +385,17 @@ def new_transaction():

|

||||

response = {'message': f'Transaction will be added to Block {index}'}

|

||||

return jsonify(response), 201

|

||||

```

|

||||

A method for creating Transactions

|

||||

创建事务的方法

|

||||

|

||||

#### The Mining Endpoint

|

||||

#### 挖矿端点

|

||||

|

||||

Our mining endpoint is where the magic happens, and it’s easy. It has to do three things:

|

||||

我们的挖矿端点是见证奇迹的地方,它实现起来很容易。它要做三件事情:

|

||||

|

||||

1. Calculate the Proof of Work

|

||||

1. 计算工作量证明

|

||||

|

||||

2. Reward the miner (us) by adding a transaction granting us 1 coin

|

||||

2. 因为矿工(我们)添加一个事务而获得报酬,奖励矿工(我们) 1 个硬币

|

||||

|

||||

3. Forge the new Block by adding it to the chain

|

||||

3. 通过将它添加到链上而打造一个新区块

|

||||

|

||||

```

|

||||

import hashlib

|

||||

@ -438,34 +437,34 @@ def mine():

|

||||

return jsonify(response), 200

|

||||

```

|

||||

|

||||

Note that the recipient of the mined block is the address of our node. And most of what we’ve done here is just interact with the methods on our Blockchain class. At this point, we’re done, and can start interacting with our blockchain.

|

||||

注意,挖掘出的区块的接收方是我们的节点地址。现在,我们所做的大部分工作都只是与我们的区块链类的方法进行交互的。到目前为止,我们已经做到了,现在开始与我们的区块链去交互。

|

||||

|

||||

### Step 3: Interacting with our Blockchain

|

||||

### 第 3 步:与我们的区块链去交互

|

||||

|

||||

You can use plain old cURL or Postman to interact with our API over a network.

|

||||

你可以使用简单的 cURL 或者 Postman 通过网络与我们的 API 去交互。

|

||||

|

||||

Fire up the server:

|

||||

启动服务器:

|

||||

|

||||

```

|

||||

$ python blockchain.py

|

||||

```

|

||||

|

||||

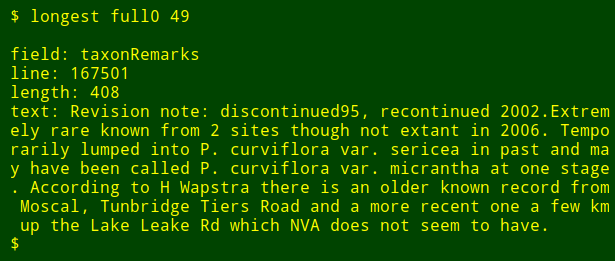

Let’s try mining a block by making a GET request to http://localhost:5000/mine:

|

||||

我们通过生成一个 GET 请求到 http://localhost:5000/mine 去尝试挖一个区块:

|

||||

|

||||

|

||||

Using Postman to make a GET request

|

||||

使用 Postman 去生成一个 GET 请求

|

||||

|

||||

Let’s create a new transaction by making a POST request tohttp://localhost:5000/transactions/new with a body containing our transaction structure:

|

||||

我们通过生成一个 POST 请求到 http://localhost:5000/transactions/new 去创建一个区块,它带有一个包含我们的事务结构的 `Body`:

|

||||

|

||||

|

||||

Using Postman to make a POST request

|

||||

使用 Postman 去生成一个 POST 请求

|

||||

|

||||

If you aren’t using Postman, then you can make the equivalent request using cURL:

|

||||

如果你不使用 Postman,也可以使用 cURL 去生成一个等价的请求:

|

||||

|

||||

```

|

||||

$ curl -X POST -H "Content-Type: application/json" -d '{ "sender": "d4ee26eee15148ee92c6cd394edd974e", "recipient": "someone-other-address", "amount": 5}' "http://localhost:5000/transactions/new"

|

||||

```

|

||||

I restarted my server, and mined two blocks, to give 3 in total. Let’s inspect the full chain by requesting http://localhost:5000/chain:

|

||||

我重启动我的服务器,然后我挖到了两个区块,这样总共有了3 个区块。我们通过请求 http://localhost:5000/chain 来检查整个区块链:

|

||||

```

|

||||

{

|

||||

"chain": [

|

||||

@ -505,19 +504,19 @@ I restarted my server, and mined two blocks, to give 3 in total. Let’s inspect

|

||||

],

|

||||

"length": 3

|

||||

```

|

||||

### Step 4: Consensus

|

||||

### 第 4 步:共识

|

||||

|

||||

This is very cool. We’ve got a basic Blockchain that accepts transactions and allows us to mine new Blocks. But the whole point of Blockchains is that they should be decentralized. And if they’re decentralized, how on earth do we ensure that they all reflect the same chain? This is called the problem of Consensus, and we’ll have to implement a Consensus Algorithm if we want more than one node in our network.

|

||||

这是很酷的一个地方。我们已经有了一个基本的区块链,它可以接收事务并允许我们去挖掘出新区块。但是区块链的整个重点在于它是去中心化的。而如果它们是去中心化的,那我们如何才能确保它们表示在同一个区块链上?这就是共识问题,如果我们希望在我们的网络上有多于一个的节点运行,那么我们将必须去实现一个共识算法。

|

||||

|

||||

#### Registering new Nodes

|

||||

#### 注册新节点

|

||||

|

||||

Before we can implement a Consensus Algorithm, we need a way to let a node know about neighbouring nodes on the network. Each node on our network should keep a registry of other nodes on the network. Thus, we’ll need some more endpoints:

|

||||

在我们能实现一个共识算法之前,我们需要一个办法去让一个节点知道网络上的邻居节点。我们网络上的每个节点都保留有一个该网络上其它节点的注册信息。因此,我们需要更多的端点:

|

||||

|

||||

1. /nodes/register to accept a list of new nodes in the form of URLs.

|

||||

1. /nodes/register 以 URLs 的形式去接受一个新节点列表

|

||||

|

||||

2. /nodes/resolve to implement our Consensus Algorithm, which resolves any conflicts—to ensure a node has the correct chain.

|

||||

2. /nodes/resolve 去实现我们的共识算法,由它来解决任何的冲突 —— 确保节点有一个正确的链。

|

||||

|

||||

We’ll need to modify our Blockchain’s constructor and provide a method for registering nodes:

|

||||

我们需要去修改我们的区块链的构造函数,来提供一个注册节点的方法:

|

||||

|

||||

```

|

||||

...

|

||||

@ -541,13 +540,13 @@ class Blockchain(object):

|

||||

parsed_url = urlparse(address)

|

||||

self.nodes.add(parsed_url.netloc)

|

||||

```

|

||||

A method for adding neighbouring nodes to our Network

|

||||

一个添加邻居节点到我们的网络的方法

|

||||

|

||||

Note that we’ve used a set() to hold the list of nodes. This is a cheap way of ensuring that the addition of new nodes is idempotent—meaning that no matter how many times we add a specific node, it appears exactly once.

|

||||

注意,我们将使用一个 `set()` 去保存节点列表。这是一个非常合算的方式,它将确保添加的内容是幂等的 —— 这意味着不论你将特定的节点添加多少次,它都是精确地只出现一次。

|

||||

|

||||

#### Implementing the Consensus Algorithm

|

||||

#### 实现共识算法

|

||||

|

||||

As mentioned, a conflict is when one node has a different chain to another node. To resolve this, we’ll make the rule that the longest valid chain is authoritative. In other words, the longest chain on the network is the de-facto one. Using this algorithm, we reach Consensus amongst the nodes in our network.

|

||||

正如前面提到的,当一个节点与另一个节点有不同的链时就会产生冲突。为解决冲突,我们制定一个规则,即最长的有效的链才是权威的链。换句话说就是,网络上最长的链就是事实上的区块链。使用这个算法,可以在我们的网络上节点之间达到共识。

|

||||

|

||||

```

|

||||

...

|

||||

@ -619,11 +618,11 @@ class Blockchain(object)

|

||||

return False

|

||||

```

|

||||

|

||||

The first method valid_chain() is responsible for checking if a chain is valid by looping through each block and verifying both the hash and the proof.

|

||||

第一个方法 `valid_chain()` 是负责来检查链是否有效,它通过遍历区块链上的每个区块并验证它们的哈希和工作量证明来检查这个区块链是否有效。

|

||||

|

||||

resolve_conflicts() is a method which loops through all our neighbouring nodes, downloads their chains and verifies them using the above method. If a valid chain is found, whose length is greater than ours, we replace ours.

|

||||

`resolve_conflicts()` 方法用于遍历所有的邻居节点,下载它们的链并使用上面的方法去验证它们是否有效。如果找到有效的链,确定谁是最长的链,然后我们就用最长的链来替换我们的当前的链。

|

||||

|

||||

Let’s register the two endpoints to our API, one for adding neighbouring nodes and the another for resolving conflicts:

|

||||

在我们的 API 上来注册两个端点,一个用于添加邻居节点,另一个用于解决冲突:

|

||||

|

||||

```

|

||||

@app.route('/nodes/register', methods=['POST'])

|

||||

@ -662,31 +661,30 @@ def consensus():

|

||||

return jsonify(response), 200

|

||||

```

|

||||

|

||||

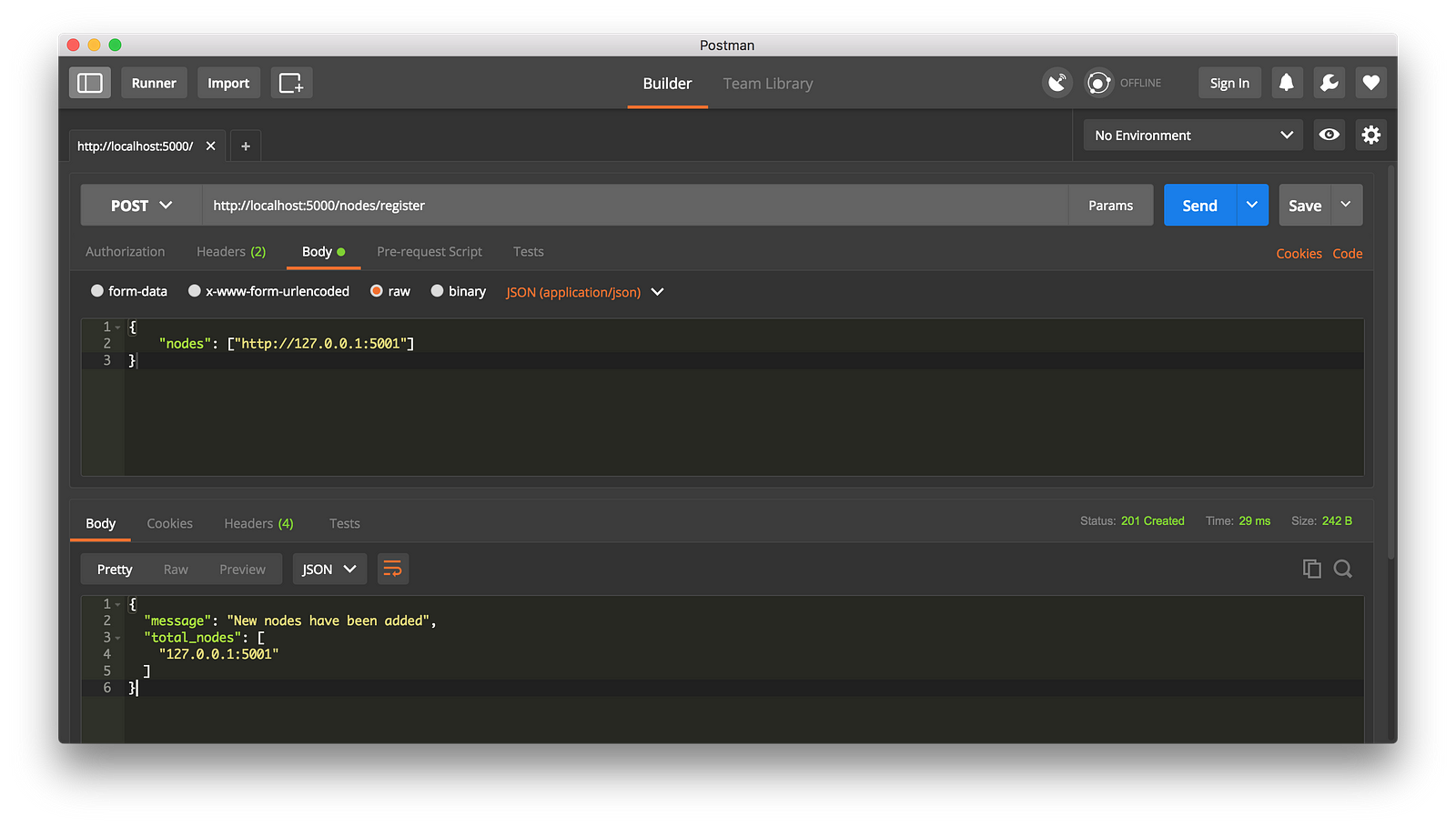

At this point you can grab a different machine if you like, and spin up different nodes on your network. Or spin up processes using different ports on the same machine. I spun up another node on my machine, on a different port, and registered it with my current node. Thus, I have two nodes: [http://localhost:5000][9] and http://localhost:5001.

|

||||

这种情况下,如果你愿意可以使用不同的机器来做,然后在你的网络上启动不同的节点。或者是在同一台机器上使用不同的端口启动另一个进程。我是在我的机器上使用了不同的端口启动了另一个节点,并将它注册到了当前的节点上。因此,我现在有了两个节点:[http://localhost:5000][9] 和 http://localhost:5001。

|

||||

|

||||

|

||||

Registering a new Node

|

||||

注册一个新节点

|

||||

|

||||

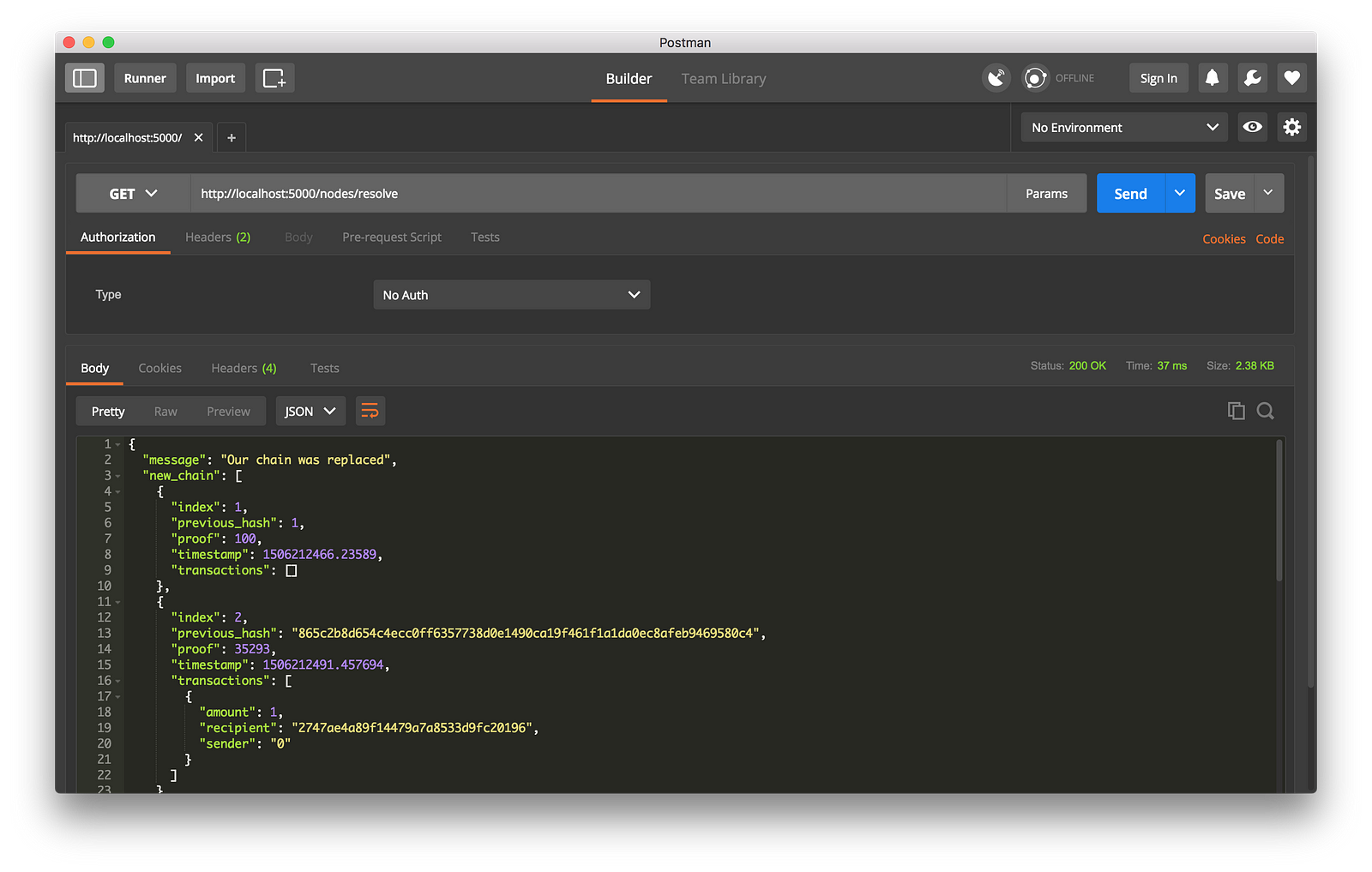

I then mined some new Blocks on node 2, to ensure the chain was longer. Afterward, I called GET /nodes/resolve on node 1, where the chain was replaced by the Consensus Algorithm:

|

||||

我接着在节点 2 上挖出一些新区块,以确保这个链是最长的。之后我在节点 1 上以 `GET` 方式调用了 `/nodes/resolve`,这时,节点 1 上的链被共识算法替换成节点 2 上的链了:

|

||||

|

||||

|

||||

Consensus Algorithm at Work

|

||||

工作中的共识算法

|

||||

|

||||

And that’s a wrap... Go get some friends together to help test out your Blockchain.

|

||||

然后将它们封装起来 … 找一些朋友来帮你一起测试你的区块链。

|

||||

|

||||

* * *

|

||||

|

||||

I hope that this has inspired you to create something new. I’m ecstatic about Cryptocurrencies because I believe that Blockchains will rapidly change the way we think about economies, governments and record-keeping.

|

||||

|

||||

**Update:** I’m planning on following up with a Part 2, where we’ll extend our Blockchain to have a Transaction Validation Mechanism as well as discuss some ways in which you can productionize your Blockchain.

|

||||

我希望以上内容能够鼓舞你去创建一些新的东西。我是加密货币的狂热拥护者,因此我相信区块链将迅速改变我们对经济、政府和记录保存的看法。

|

||||

|

||||

**更新:** 我正计划继续它的第二部分,其中我将扩展我们的区块链,使它具备事务验证机制,同时讨论一些你可以在其上产生你自己的区块链的方式。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://hackernoon.com/learn-blockchains-by-building-one-117428612f46

|

||||

|

||||

作者:[Daniel van Flymen][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

译者:[qhwdw](https://github.com/qhwdw)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

@ -0,0 +1,165 @@

|

||||

对可互换通证的通证 ERC 的比较 – Blockchainers

|

||||

======

|

||||

“对于标准来说,最好的事情莫过于大量的人都去选择使用它。“ [_Andrew S. Tanenbaum_][1]

|

||||

|

||||

### 通证标准的现状

|

||||

|

||||

在以太坊平台上,通证标准的现状出奇的简单:ERC-20 通证标准是通证接口中唯一被采用( [EIP-20][2])和接受的通证标准。

|

||||

|

||||

它在 2015 年被提出,最终接受是在 2017 年末。

|

||||

|

||||

在此期间,提出了许多解决 ERC-20 缺点的以太坊意见征集(ERC),其中的一部分是因为以太坊平台自身变更所导致的,比如,由 [EIP-150][3] 修复的重入(re-entrancy) bug。其它 ERC 提出的对 ERC-20 通证模型的强化。这些强化是通过采集大量的以太坊区块链和 ERC-20 通证标准的使用经验所确定的。ERC-20 通证接口的实际应用产生了新的要求和需要,比如像权限和操作方面的非功能性需求。

|

||||

|

||||

这篇文章将浅显但完整地对以太坊平台上提出的所有通证(类)标准进行简单概述。我将尽可能客观地去做比较,但不可避免地仍有一些不客观的地方。

|

||||

|

||||

### 通证标准之母:ERC-20

|

||||

|

||||

有成打的 [非常好的][4] 关于 ERC-20 的详细描述,在这里就不一一列出了。只对在文章中提到的相关核心概念做个比较。

|

||||

|

||||

#### 提取模式

|

||||

|

||||

用户们尽可能地去理解 ERC-20 接口,尤其是从一个外部所有者帐户(EOA)_转账_ 通证的模式,即一个终端用户(“Alice”)到一个智能合约,很难去获得 approve/transferFrom 模式权利。

|

||||

|

||||

![][5]

|

||||

|

||||

从软件工程师的角度看,这个提取模式非常类似于 [好莱坞原则][6] (“不要给我们打电话,我们会给你打电话的!”)。那个调用链的创意正好相反:在 ERC-20 通证转账中,通证不能调用合约,但是合约可以调用通证上的 `transferFrom`。

|

||||

|

||||

虽然好莱坞原则经常用于去实现关注点分离(SoC),但在以太坊中它是一个安全模式,目的是为了防止通证合约去调用外部合约上的未知的函数。这种行为是非常有必要的,因为 [Call Depth Attack][7] 直到 [EIP-150][3] 才被启用。在硬分叉之后,这个重入bug 将不再可能出现了,并且提取模式不提供任何比直接通证调用更好的安全性了。

|

||||

|

||||

但是,为什么现在它成了一个问题呢?可能是由于某些原因,它的用法设计有些欠佳,但是我们可以通过前端的 DApp 来修复这个问题,对吗?

|

||||

|

||||

因此,我们来看一看,如果一个用户使用 `transfer` 去转账一些通证到智能合约会发生什么事情。Alice 在带合约地址的通证合约上调用 `transfer`

|

||||

|

||||

**….aaaaand 它不见了!**

|

||||

|

||||

是的,通证没有了。很有可能,没有任何人再能拿回通证了。但是像 Alice 的这种做法并不鲜见,正如 ERC-223 的发明者 Dexaran 所发现的,大约有 $400.000 的通证(由于 ETH 波动很大,我们只能说很多)是由于用户意外发送到智能合约中,并因此而丢失。

|

||||

|

||||

即便合约开发者是一个非常友好和无私的用户,他也不能创建一个合约以便将它收到的通证返还给你。因为合约并不会提示这类转账,并且事件仅在通证合约上发出。

|

||||

|

||||

从软件工程师的角度来看,那就是 ERC-20 的重大缺点。如果发生一个事件(为简单起见,我们现在假设以太坊交易是真实事件),对参与的当事人将有一个提示。但是,这个事件是在通证智能合约中触发的,合约接收方是无法知道它的。

|

||||

|

||||

目前,还不能做到防止用户向智能合约发送通证,并且在 ERC-20 通证合约上使用不直观转账将导致这些发送的通证永远丢失。

|

||||

|

||||

### 帝国反击战:ERC-223

|

||||

|

||||

[Dexaran][8] 第一个提出尝试去修复 ERC-20 的问题。这个提议通过将 EOA 和智能合约账户做不同的处理的方式来解决这个问题。

|

||||

|

||||

强制的策略是去反转调用链(并且使用 [EIP-150][3] 解决它现在能做到了),并且在正接收的智能合约上使用一个预定义的回调(tokenFallback)。如果回调没有实现,转账将失败(将消耗掉发送方的汽油,这是 ERC-223 被批的最常见的一个地方)。

|

||||

|

||||

![][9]

|

||||

|

||||

#### 好处:

|

||||

|

||||

* 创建一个新接口,有意不遵守 ERC-20 关于弃权的功能

|

||||

|

||||

* 允许合约开发者去处理收到的通证(即:接受/拒绝)并因此遵守事件模式

|

||||

|

||||

* 用一个交易来代替两个交易(transfer vs. approve/transferFrom)并且节省了汽油和区域链的存储空间

|

||||

|

||||

|

||||

|

||||

|

||||

#### 坏处:

|

||||

|

||||

* 如果 `tokenFallback` 不存在,那么合约的 `fallback` 功能将运行,这可能会产生意料之外的副作用

|

||||

|

||||

* 如果合约假设使用通证转账,比如,发送通证到一个特定的像多签名钱包一样的账户,这将使 ERC-223 通证失败,它将不能转移(即它们会丢失)。

|

||||

|

||||

|

||||

### 程序员修练之道:ERC-677

|

||||

|

||||

[ERC-667 transferAndCall 通证标准][10] 尝试将 ERC-20 和 ERC-223 结合起来。这个创意是在 ERC-20 中引入一个 `transferAndCall` 函数,并保持标准不变。ERC-223 有意不完全向后兼容,由于不再需要 approve/allowance 模式,并因此将它删除。

|

||||

|

||||

ERC-667 的主要目标是向后兼容,为新合约向外部合约转账提供一个安全的方法。

|

||||

|

||||

![][11]

|

||||

|

||||

#### 好处:

|

||||

|

||||

* 容易适用新的通证

|

||||

|

||||

* 兼容 ERC-20

|

||||

|

||||

* 为 ERC-20 设计的适配器用于安全使用 ERC-20

|

||||

|

||||

#### 坏处:

|

||||

|

||||

* 不是真正的新方法。只是一个 ERC-20 和 ERC-223 的折衷

|

||||

|

||||

* 目前实现 [尚未完成][12]

|

||||

|

||||

|

||||

### 重逢:ERC-777

|

||||

|

||||

[ERC-777 一个新的先进的通证标准][13],引入它是为了建立一个演进的通证标准,它是吸取了像带值的 `approve()` 以及上面提到的将通证发送到合约这样的错误观念的教训之后得来的演进后标准。

|

||||

|

||||

另外,ERC-777 使用了新标准 [ERC-820:使用一个注册合约的伪内省][14],它允许为合约注册元数据以提供一个简单的内省类型。并考虑到了向后兼容和其它的功能扩展,这些取决于由一个 EIP-820 查找到的地址返回的 `ITokenRecipient`,和由目标合约实现的函数。

|

||||

|