mirror of

https://github.com/LCTT/TranslateProject.git

synced 2024-12-26 21:30:55 +08:00

Merge remote-tracking branch 'lctt/master'

This commit is contained in:

commit

eaa0194ceb

@ -0,0 +1,230 @@

|

||||

Linux 包管理基础:apt、yum、dnf 和 pkg

|

||||

========================

|

||||

|

||||

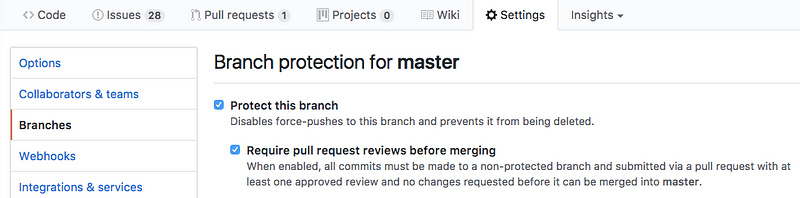

![Package_Management_tw_mostov.png-307.8kB][1]

|

||||

|

||||

### 介绍

|

||||

|

||||

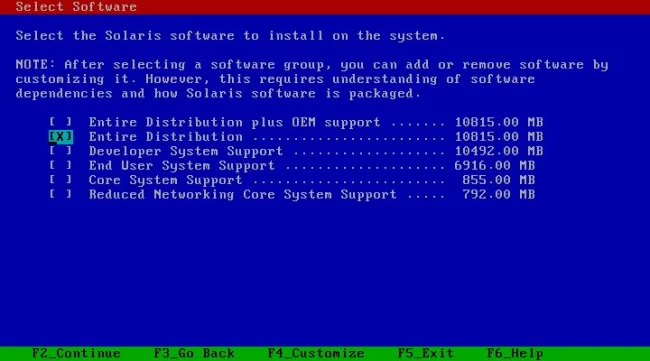

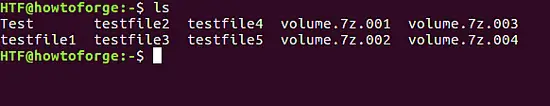

大多数现代的类 Unix 操作系统都提供了一种中心化的机制用来搜索和安装软件。软件通常都是存放在存储库中,并通过包的形式进行分发。处理包的工作被称为包管理。包提供了操作系统的基本组件,以及共享的库、应用程序、服务和文档。

|

||||

|

||||

包管理系统除了安装软件外,它还提供了工具来更新已经安装的包。包存储库有助于确保你的系统中使用的代码是经过审查的,并且软件的安装版本已经得到了开发人员和包维护人员的认可。

|

||||

|

||||

在配置服务器或开发环境时,我们最好了解下包在官方存储库之外的情况。某个发行版的稳定版本中的包有可能已经过时了,尤其是那些新的或者快速迭代的软件。然而,包管理无论对于系统管理员还是开发人员来说都是至关重要的技能,而已打包的软件对于主流 Linux 发行版来说也是一笔巨大的财富。

|

||||

|

||||

本指南旨在快速地介绍下在多种 Linux 发行版中查找、安装和升级软件包的基础知识,并帮助您将这些内容在多个系统之间进行交叉对比。

|

||||

|

||||

### 包管理系统:简要概述

|

||||

|

||||

大多数包系统都是围绕包文件的集合构建的。包文件通常是一个存档文件,它包含已编译的二进制文件和软件的其他资源,以及安装脚本。包文件同时也包含有价值的元数据,包括它们的依赖项,以及安装和运行它们所需的其他包的列表。

|

||||

|

||||

虽然这些包管理系统的功能和优点大致相同,但打包格式和工具却因平台而异:

|

||||

|

||||

| 操作系统 | 格式 | 工具 |

|

||||

| --- | --- | --- |

|

||||

| Debian | `.deb` | `apt`, `apt-cache`, `apt-get`, `dpkg` |

|

||||

| Ubuntu | `.deb` | `apt`, `apt-cache`, `apt-get`, `dpkg` |

|

||||

| CentOS | `.rpm` | `yum` |

|

||||

| Fedora | `.rpm` | `dnf` |

|

||||

| FreeBSD | Ports, `.txz` | `make`, `pkg` |

|

||||

|

||||

Debian 及其衍生版,如 Ubuntu、Linux Mint 和 Raspbian,它们的包格式是 `.deb`。APT 这款先进的包管理工具提供了大多数常见的操作命令:搜索存储库、安装软件包及其依赖项,并管理升级。在本地系统中,我们还可以使用 `dpkg` 程序来安装单个的 `deb` 文件,APT 命令作为底层 `dpkg` 的前端,有时也会直接调用它。

|

||||

|

||||

最近发布的 debian 衍生版大多数都包含了 `apt` 命令,它提供了一个简洁统一的接口,可用于通常由 `apt-get` 和 `apt-cache` 命令处理的常见操作。这个命令是可选的,但使用它可以简化一些任务。

|

||||

|

||||

CentOS、Fedora 和其它 Red Hat 家族成员使用 RPM 文件。在 CentOS 中,通过 `yum` 来与单独的包文件和存储库进行交互。

|

||||

|

||||

在最近的 Fedora 版本中,`yum` 已经被 `dnf` 取代,`dnf` 是它的一个现代化的分支,它保留了大部分 `yum` 的接口。

|

||||

|

||||

FreeBSD 的二进制包系统由 `pkg` 命令管理。FreeBSD 还提供了 `Ports` 集合,这是一个存在于本地的目录结构和工具,它允许用户获取源码后使用 Makefile 直接从源码编译和安装包。

|

||||

|

||||

### 更新包列表

|

||||

|

||||

大多数系统在本地都会有一个和远程存储库对应的包数据库,在安装或升级包之前最好更新一下这个数据库。另外,`yum` 和 `dnf` 在执行一些操作之前也会自动检查更新。当然你可以在任何时候对系统进行更新。

|

||||

|

||||

| 系统 | 命令 |

|

||||

| --- | --- |

|

||||

| Debian / Ubuntu | `sudo apt-get update` |

|

||||

| | `sudo apt update` |

|

||||

| CentOS | `yum check-update` |

|

||||

| Fedora | `dnf check-update` |

|

||||

| FreeBSD Packages | `sudo pkg update` |

|

||||

| FreeBSD Ports | `sudo portsnap fetch update` |

|

||||

|

||||

### 更新已安装的包

|

||||

|

||||

在没有包系统的情况下,想确保机器上所有已安装的软件都保持在最新的状态是一个很艰巨的任务。你将不得不跟踪数百个不同包的上游更改和安全警报。虽然包管理器并不能解决升级软件时遇到的所有问题,但它确实使你能够使用一些命令来维护大多数系统组件。

|

||||

|

||||

在 FreeBSD 上,升级已安装的 ports 可能会引入破坏性的改变,有些步骤还需要进行手动配置,所以在通过 `portmaster` 更新之前最好阅读下 `/usr/ports/UPDATING` 的内容。

|

||||

|

||||

| 系统 | 命令 | 说明 |

|

||||

| --- | --- | --- |

|

||||

| Debian / Ubuntu | `sudo apt-get upgrade` | 只更新已安装的包 |

|

||||

| | `sudo apt-get dist-upgrade` | 可能会增加或删除包以满足新的依赖项 |

|

||||

| | `sudo apt upgrade` | 和 `apt-get upgrade` 类似 |

|

||||

| | `sudo apt full-upgrade` | 和 `apt-get dist-upgrade` 类似 |

|

||||

| CentOS | `sudo yum update` | |

|

||||

| Fedora | `sudo dnf upgrade` | |

|

||||

| FreeBSD Packages | `sudo pkg upgrade` | |

|

||||

| FreeBSD Ports | `less /usr/ports/UPDATING` | 使用 `less` 来查看 ports 的更新提示(使用上下光标键滚动,按 q 退出)。 |

|

||||

| | `cd /usr/ports/ports-mgmt/portmaster && sudo make install && sudo portmaster -a` | 安装 `portmaster` 然后使用它更新已安装的 ports |

|

||||

|

||||

### 搜索某个包

|

||||

|

||||

大多数发行版都提供针对包集合的图形化或菜单驱动的工具,我们可以分类浏览软件,这也是一个发现新软件的好方法。然而,查找包最快和最有效的方法是使用命令行工具进行搜索。

|

||||

|

||||

| 系统 | 命令 | 说明 |

|

||||

| --- | --- | --- |

|

||||

| Debian / Ubuntu | `apt-cache search search_string` | |

|

||||

| | `apt search search_string` | |

|

||||

| CentOS | `yum search search_string` | |

|

||||

| | `yum search all search_string` | 搜索所有的字段,包括描述 |

|

||||

| Fedora | `dnf search search_string` | |

|

||||

| | `dnf search all search_string` | 搜索所有的字段,包括描述 |

|

||||

| FreeBSD Packages | `pkg search search_string` | 通过名字进行搜索 |

|

||||

| | `pkg search -f search_string` | 通过名字进行搜索并返回完整的描述 |

|

||||

| | `pkg search -D search_string` | 搜索描述 |

|

||||

| FreeBSD Ports | `cd /usr/ports && make search name=package` | 通过名字进行搜索 |

|

||||

| | `cd /usr/ports && make search key=search_string` | 搜索评论、描述和依赖 |

|

||||

|

||||

### 查看某个软件包的信息

|

||||

|

||||

在安装软件包之前,我们可以通过仔细阅读包的描述来获得很多有用的信息。除了人类可读的文本之外,这些内容通常包括像版本号这样的元数据和包的依赖项列表。

|

||||

|

||||

| 系统 | 命令 | 说明 |

|

||||

| --- | --- | --- |

|

||||

| Debian / Ubuntu | `apt-cache show package` | 显示有关包的本地缓存信息 |

|

||||

| | `apt show package` | |

|

||||

| | `dpkg -s package` | 显示包的当前安装状态 |

|

||||

| CentOS | `yum info package` | |

|

||||

| | `yum deplist package` | 列出包的依赖 |

|

||||

| Fedora | `dnf info package` | |

|

||||

| | `dnf repoquery --requires package` | 列出包的依赖 |

|

||||

| FreeBSD Packages | `pkg info package` | 显示已安装的包的信息 |

|

||||

| FreeBSD Ports | `cd /usr/ports/category/port && cat pkg-descr` | |

|

||||

|

||||

### 从存储库安装包

|

||||

|

||||

知道包名后,通常可以用一个命令来安装它及其依赖。你也可以一次性安装多个包,只需将它们全部列出来即可。

|

||||

|

||||

| 系统 | 命令 | 说明 |

|

||||

| --- | --- | --- |

|

||||

| Debian / Ubuntu | `sudo apt-get install package` | |

|

||||

| | `sudo apt-get install package1 package2 ...` | 安装所有列出来的包 |

|

||||

| | `sudo apt-get install -y package` | 在 `apt` 提示是否继续的地方直接默认 `yes` |

|

||||

| | `sudo apt install package` | 显示一个彩色的进度条 |

|

||||

| CentOS | `sudo yum install package` | |

|

||||

| | `sudo yum install package1 package2 ...` | 安装所有列出来的包 |

|

||||

| | `sudo yum install -y package` | 在 `yum` 提示是否继续的地方直接默认 `yes` |

|

||||

| Fedora | `sudo dnf install package` | |

|

||||

| | `sudo dnf install package1 package2 ...` | 安装所有列出来的包 |

|

||||

| | `sudo dnf install -y package` | 在 `dnf` 提示是否继续的地方直接默认 `yes` |

|

||||

| FreeBSD Packages | `sudo pkg install package` | |

|

||||

| | `sudo pkg install package1 package2 ...` | 安装所有列出来的包 |

|

||||

| FreeBSD Ports | `cd /usr/ports/category/port && sudo make install` | 从源码构建安装一个 port |

|

||||

|

||||

### 从本地文件系统安装一个包

|

||||

|

||||

对于一个给定的操作系统,有时有些软件官方并没有提供相应的包,那么开发人员或供应商将需要提供包文件的下载。你通常可以通过 web 浏览器检索这些包,或者通过命令行 `curl` 来检索这些信息。将包下载到目标系统后,我们通常可以通过单个命令来安装它。

|

||||

|

||||

在 Debian 派生的系统上,`dpkg` 用来处理单个的包文件。如果一个包有未满足的依赖项,那么我们可以使用 `gdebi` 从官方存储库中检索它们。

|

||||

|

||||

在 CentOS 和 Fedora 系统上,`yum` 和 `dnf` 用于安装单个的文件,并且会处理需要的依赖。

|

||||

|

||||

| 系统 | 命令 | 说明 |

|

||||

| --- | --- | --- |

|

||||

| Debian / Ubuntu | `sudo dpkg -i package.deb` | |

|

||||

| | `sudo apt-get install -y gdebi && sudo gdebi package.deb` | 安装 `gdebi`,然后使用 `gdebi` 安装 `package.deb` 并处理缺失的依赖|

|

||||

| CentOS | `sudo yum install package.rpm` | |

|

||||

| Fedora | `sudo dnf install package.rpm` | |

|

||||

| FreeBSD Packages | `sudo pkg add package.txz` | |

|

||||

| | `sudo pkg add -f package.txz` | 即使已经安装的包也会重新安装 |

|

||||

|

||||

### 删除一个或多个已安装的包

|

||||

|

||||

由于包管理器知道给定的软件包提供了哪些文件,因此如果某个软件不再需要了,它通常可以干净利落地从系统中清除这些文件。

|

||||

|

||||

| 系统 | 命令 | 说明 |

|

||||

| --- | --- | --- |

|

||||

| Debian / Ubuntu | `sudo apt-get remove package` | |

|

||||

| | `sudo apt remove package` | |

|

||||

| | `sudo apt-get autoremove` | 删除不需要的包 |

|

||||

| CentOS | `sudo yum remove package` | |

|

||||

| Fedora | `sudo dnf erase package` | |

|

||||

| FreeBSD Packages | `sudo pkg delete package` | |

|

||||

| | `sudo pkg autoremove` | 删除不需要的包 |

|

||||

| FreeBSD Ports | `sudo pkg delete package` | |

|

||||

| | `cd /usr/ports/path_to_port && make deinstall` | 卸载 port |

|

||||

|

||||

### `apt` 命令

|

||||

|

||||

Debian 家族发行版的管理员通常熟悉 `apt-get` 和 `apt-cache`。较少为人所知的是简化的 `apt` 接口,它是专为交互式使用而设计的。

|

||||

|

||||

| 传统命令 | 等价的 `apt` 命令 |

|

||||

| --- | --- |

|

||||

| `apt-get update` | `apt update` |

|

||||

| `apt-get dist-upgrade` | `apt full-upgrade` |

|

||||

| `apt-cache search string` | `apt search string` |

|

||||

| `apt-get install package` | `apt install package` |

|

||||

| `apt-get remove package` | `apt remove package` |

|

||||

| `apt-get purge package` | `apt purge package` |

|

||||

|

||||

虽然 `apt` 通常是一个特定操作的快捷方式,但它并不能完全替代传统的工具,它的接口可能会随着版本的不同而发生变化,以提高可用性。如果你在脚本或 shell 管道中使用包管理命令,那么最好还是坚持使用 `apt-get` 和 `apt-cache`。

|

||||

|

||||

### 获取帮助

|

||||

|

||||

除了基于 web 的文档,请记住我们可以通过 shell 从 Unix 手册页(通常称为 man 页面)中获得大多数的命令。比如要阅读某页,可以使用 `man`:

|

||||

|

||||

```

|

||||

man page

|

||||

|

||||

```

|

||||

|

||||

在 `man` 中,你可以用箭头键导航。按 `/` 搜索页面内的文本,使用 `q` 退出。

|

||||

|

||||

| 系统 | 命令 | 说明 |

|

||||

| --- | --- | --- |

|

||||

| Debian / Ubuntu | `man apt-get` | 更新本地包数据库以及与包一起工作 |

|

||||

| | `man apt-cache` | 在本地的包数据库中搜索 |

|

||||

| | `man dpkg` | 和单独的包文件一起工作以及能查询已安装的包 |

|

||||

| | `man apt` | 通过更简洁,用户友好的接口进行最基本的操作 |

|

||||

| CentOS | `man yum` | |

|

||||

| Fedora | `man dnf` | |

|

||||

| FreeBSD Packages | `man pkg` | 和预先编译的二进制包一起工作 |

|

||||

| FreeBSD Ports | `man ports` | 和 Ports 集合一起工作 |

|

||||

|

||||

### 结论和进一步的阅读

|

||||

|

||||

本指南通过对多个系统间进行交叉对比概述了一下包管理系统的基本操作,但只涉及了这个复杂主题的表面。对于特定系统更详细的信息,可以参考以下资源:

|

||||

|

||||

* [这份指南][2] 详细介绍了 Ubuntu 和 Debian 的软件包管理。

|

||||

* 这里有一份 CentOS 官方的指南 [使用 yum 管理软件][3]

|

||||

* 这里有一个有关 Fedora 的 `dnf` 的 [wifi 页面][4] 以及一份有关 `dnf` [官方的手册][5]

|

||||

* [这份指南][6] 讲述了如何使用 `pkg` 在 FreeBSD 上进行包管理

|

||||

* 这本 [FreeBSD Handbook][7] 有一节讲述了[如何使用 Ports 集合][8]

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.digitalocean.com/community/tutorials/package-management-basics-apt-yum-dnf-pkg

|

||||

|

||||

译者后记:

|

||||

|

||||

从经典的 `configure` && `make` && `make install` 三部曲到 `dpkg`,从需要手处理依赖关系的 `dpkg` 到全自动化的 `apt-get`,恩~,你有没有想过接下来会是什么?译者只能说可能会是 `Snaps`,如果你还没有听过这个东东,你也许需要关注下这个公众号了:**Snapcraft**

|

||||

|

||||

作者:[Brennen Bearnes][a]

|

||||

译者:[Snapcrafter](https://github.com/Snapcrafter)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.digitalocean.com/community/users/bpb

|

||||

|

||||

|

||||

[1]: http://static.zybuluo.com/apollomoon/g9kiere2xuo1511ls1hi9w9w/Package_Management_tw_mostov.png

|

||||

[2]:https://www.digitalocean.com/community/tutorials/ubuntu-and-debian-package-management-essentials

|

||||

[3]: https://www.centos.org/docs/5/html/yum/

|

||||

[4]: https://fedoraproject.org/wiki/Dnf

|

||||

[5]: https://dnf.readthedocs.org/en/latest/index.html

|

||||

[6]: https://www.digitalocean.com/community/tutorials/how-to-manage-packages-on-freebsd-10-1-with-pkg

|

||||

[7]:https://www.freebsd.org/doc/handbook/

|

||||

[8]: https://www.freebsd.org/doc/handbook/ports-using.html

|

||||

[9]:https://www.freebsd.org/doc/handbook/ports-using.html

|

||||

@ -0,0 +1,173 @@

|

||||

使用 snapcraft 将 snap 包发布到商店

|

||||

==================

|

||||

|

||||

|

||||

|

||||

Ubuntu Core 已经正式发布(LCTT 译注:指 2016 年 11 月发布的 Ubuntu Snappy Core 16 ),也许是时候让你的 snap 包进入商店了!

|

||||

|

||||

### 交付和商店的概念

|

||||

|

||||

首先回顾一下我们是怎么通过商店管理 snap 包的吧。

|

||||

|

||||

每次你上传 snap 包,商店都会为其分配一个修订版本号,并且商店中针对特定 snap 包 的版本号都是唯一的。

|

||||

|

||||

但是第一次上传 snap 包的时候,我们首先要为其注册一个还没有被使用的名字,这很容易。

|

||||

|

||||

商店中所有的修订版本都可以释放到多个通道中,这些通道只是概念上定义的,以便给用户一个稳定或风险等级的参照,这些通道有:

|

||||

|

||||

* 稳定(stable)

|

||||

* 候选(candidate)

|

||||

* 测试(beta)

|

||||

* 边缘(edge)

|

||||

|

||||

理想情况下,如果我们设置了 CI/CD 过程,那么每天或在每次更新源码时都会将其推送到边缘通道。在此过程中有两件事需要考虑。

|

||||

|

||||

首先在开始的时候,你最好制作一个不受限制的 snap 包,因为在这种新范例下,snap 包的大部分功能都能不受限制地工作。考虑到这一点,你的项目开始时 `confinement` 将被设置为 `devmode`(LCTT 译注:这是 `snapcraft.yaml` 中的一个键及其可选值)。这使得你在开发的早期阶段,仍然可以让你的 snap 包进入商店。一旦所有的东西都得到了 snap 包运行的安全模型的充分支持,那么就可以将 `confinement` 修改为 `strict`。

|

||||

|

||||

好了,假设你在限制方面已经做好了,并且也开始了一个对应边缘通道的 CI/CD 过程,但是如果你也想确保在某些情况下,早期版本 master 分支新的迭代永远也不会进入稳定或候选通道,那么我们可以使用 `gadge` 设置。如果 snap 包的 `gadge` 设置为 `devel` (LCTT注:这是 `snapcraft.yaml` 中的一个键及其可选值),商店将会永远禁止你将 snap 包释放到稳定和候选通道。

|

||||

|

||||

在这个过程中,我们有时可能想要发布一个修订版本到测试通道,以便让有些用户更愿意去跟踪它(一个好的发布管理流程应该比一个随机的日常构建更有用)。这个阶段结束后,如果希望人们仍然能保持更新,我们可以选择关闭测试通道,从一个特定的时间点开始我们只计划发布到候选和稳定通道,通过关闭测试通道我们将使该通道跟随稳定列表中的下一个开放通道,在这里是候选通道。而如果候选通道跟随的是稳定通道后,那么最终得到是稳定通道了。

|

||||

|

||||

### 进入 Snapcraft

|

||||

|

||||

那么所有这些给定的概念是如何在 snapcraft 中配合使用的?首先我们需要登录:

|

||||

|

||||

```

|

||||

$ snapcraft login

|

||||

Enter your Ubuntu One SSO credentials.

|

||||

Email: sxxxxx.sxxxxxx@canonical.com

|

||||

Password: **************

|

||||

Second-factor auth: 123456

|

||||

```

|

||||

|

||||

在登录之后,我们就可以开始注册 snap 了。例如,我们想要注册一个虚构的 snap 包 awesome-database:

|

||||

|

||||

```

|

||||

$ snapcraft register awesome-database

|

||||

We always want to ensure that users get the software they expect

|

||||

for a particular name.

|

||||

|

||||

If needed, we will rename snaps to ensure that a particular name

|

||||

reflects the software most widely expected by our community.

|

||||

|

||||

For example, most people would expect ‘thunderbird’ to be published by

|

||||

Mozilla. They would also expect to be able to get other snaps of

|

||||

Thunderbird as 'thunderbird-sergiusens'.

|

||||

|

||||

Would you say that MOST users will expect 'a' to come from

|

||||

you, and be the software you intend to publish there? [y/N]: y

|

||||

|

||||

You are now the publisher for 'awesome-database'

|

||||

```

|

||||

|

||||

假设我们已经构建了 snap 包,接下来我们要做的就是把它上传到商店。我们可以在同一个命令中使用快捷方式和 `--release` 选项:

|

||||

|

||||

```

|

||||

$ snapcraft push awesome-databse_0.1_amd64.snap --release edge

|

||||

Uploading awesome-database_0.1_amd64.snap [=================] 100%

|

||||

Processing....

|

||||

Revision 1 of 'awesome-database' created.

|

||||

|

||||

Channel Version Revision

|

||||

stable - -

|

||||

candidate - -

|

||||

beta - -

|

||||

edge 0.1 1

|

||||

|

||||

The edge channel is now open.

|

||||

```

|

||||

|

||||

如果我们试图将其发布到稳定通道,商店将会阻止我们:

|

||||

|

||||

```

|

||||

$ snapcraft release awesome-database 1 stable

|

||||

Revision 1 (devmode) cannot target a stable channel (stable, grade: devel)

|

||||

```

|

||||

|

||||

这样我们不会搞砸,也不会让我们的忠实用户使用它。现在,我们将最终推出一个值得发布到稳定通道的修订版本:

|

||||

|

||||

```

|

||||

$ snapcraft push awesome-databse_0.1_amd64.snap

|

||||

Uploading awesome-database_0.1_amd64.snap [=================] 100%

|

||||

Processing....

|

||||

Revision 10 of 'awesome-database' created.

|

||||

```

|

||||

|

||||

注意,<ruby>版本号<rt>version</rt></ruby>(LCTT 译注:这里指的是 snap 包名中 `0.1` 这个版本号)只是一个友好的标识符,真正重要的是商店为我们生成的<ruby>修订版本号<rt>Revision</rt></ruby>(LCTT 译注:这里生成的修订版本号为 `10`)。现在让我们把它释放到稳定通道:

|

||||

|

||||

```

|

||||

$ snapcraft release awesome-database 10 stable

|

||||

Channel Version Revision

|

||||

stable 0.1 10

|

||||

candidate ^ ^

|

||||

beta ^ ^

|

||||

edge 0.1 10

|

||||

|

||||

The 'stable' channel is now open.

|

||||

```

|

||||

|

||||

在这个针对我们正在使用架构最终的通道映射视图中,可以看到边缘通道将会被固定在修订版本 10 上,并且测试和候选通道将会跟随现在修订版本为 10 的稳定通道。由于某些原因,我们决定将专注于稳定性并让我们的 CI/CD 推送到测试通道。这意味着我们的边缘通道将会略微过时,为了避免这种情况,我们可以关闭这个通道:

|

||||

|

||||

```

|

||||

$ snapcraft close awesome-database edge

|

||||

Arch Channel Version Revision

|

||||

amd64 stable 0.1 10

|

||||

candidate ^ ^

|

||||

beta ^ ^

|

||||

edge ^ ^

|

||||

|

||||

The edge channel is now closed.

|

||||

```

|

||||

|

||||

在当前状态下,所有通道都跟随着稳定通道,因此订阅了候选、测试和边缘通道的人也将跟踪稳定通道的改动。比如就算修订版本 11 只发布到稳定通道,其他通道的人们也能看到它。

|

||||

|

||||

这个清单还提供了完整的体系结构视图,在本例中,我们只使用了 amd64。

|

||||

|

||||

### 获得更多的信息

|

||||

|

||||

有时过了一段时间,我们想知道商店中的某个 snap 包的历史记录和现在的状态是什么样的,这里有两个命令,一个是直截了当输出当前的状态,它会给我们一个熟悉的结果:

|

||||

|

||||

```

|

||||

$ snapcraft status awesome-database

|

||||

Arch Channel Version Revision

|

||||

amd64 stable 0.1 10

|

||||

candidate ^ ^

|

||||

beta ^ ^

|

||||

edge ^ ^

|

||||

```

|

||||

|

||||

我们也可以通过下面的命令获得完整的历史记录:

|

||||

|

||||

```

|

||||

$ snapcraft history awesome-database

|

||||

Rev. Uploaded Arch Version Channels

|

||||

3 2016-09-30T12:46:21Z amd64 0.1 stable*

|

||||

...

|

||||

...

|

||||

...

|

||||

2 2016-09-30T12:38:20Z amd64 0.1 -

|

||||

1 2016-09-30T12:33:55Z amd64 0.1 -

|

||||

```

|

||||

|

||||

### 结束语

|

||||

|

||||

希望这篇文章能让你对商店能做的事情有一个大概的了解,并让更多的人开始使用它!

|

||||

|

||||

--------------------------------

|

||||

|

||||

via: https://insights.ubuntu.com/2016/11/15/making-your-snaps-available-to-the-store-using-snapcraft/

|

||||

|

||||

*译者简介:*

|

||||

|

||||

> snapcraft.io 的钉子户,对 Ubuntu Core、Snaps 和 Snapcraft 有着浓厚的兴趣,并致力于将这些还在快速发展的新技术通过翻译或原创的方式介绍到中文世界。有兴趣的小伙伴也可以关注译者个人的公众号: `Snapcraft`,近期会在上面连载几篇有关 Core snap 发布策略、交付流程和验证流程的文章,欢迎围观 :)

|

||||

|

||||

|

||||

作者:[Sergio Schvezov][a]

|

||||

译者:[Snapcrafter](https://github.com/Snapcrafter)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://insights.ubuntu.com/author/sergio-schvezov/

|

||||

[1]:https://insights.ubuntu.com/author/sergio-schvezov/

|

||||

[2]:http://snapcraft.io/docs/build-snaps/publish

|

||||

155

published/20170101 What is Kubernetes.md

Normal file

155

published/20170101 What is Kubernetes.md

Normal file

@ -0,0 +1,155 @@

|

||||

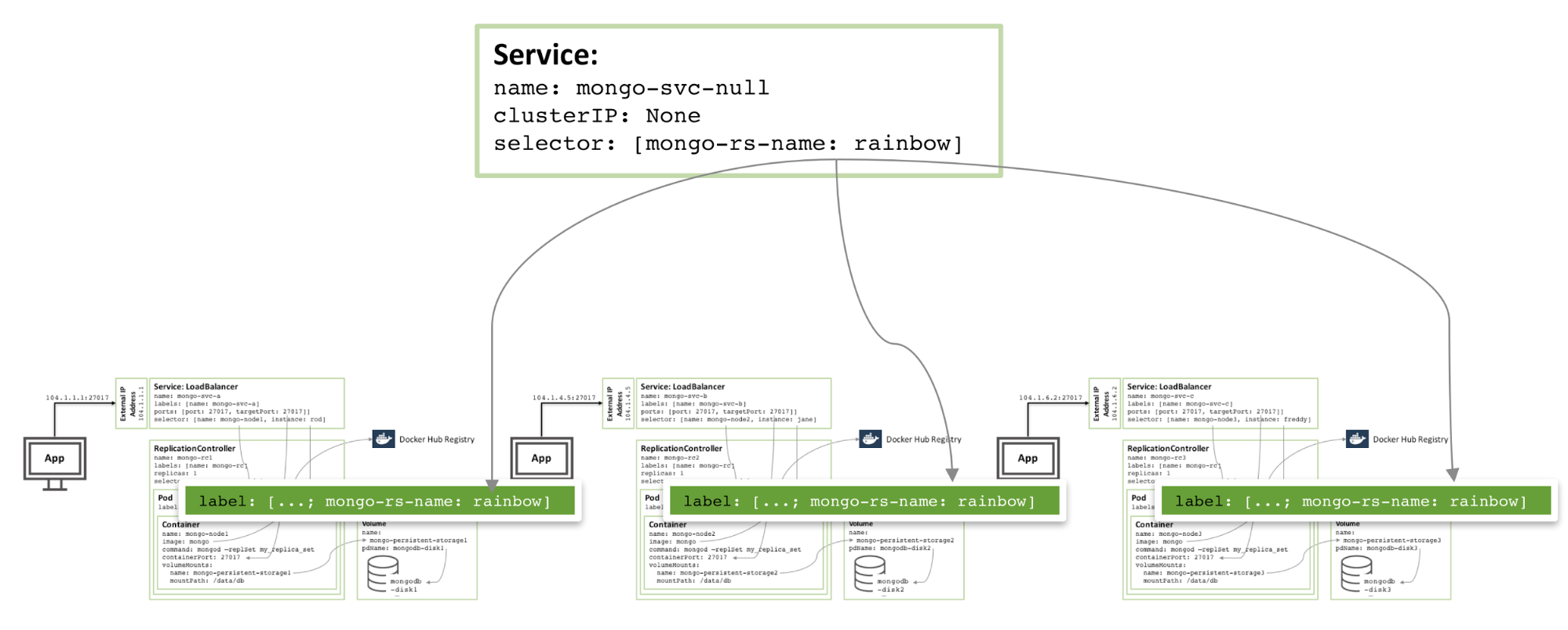

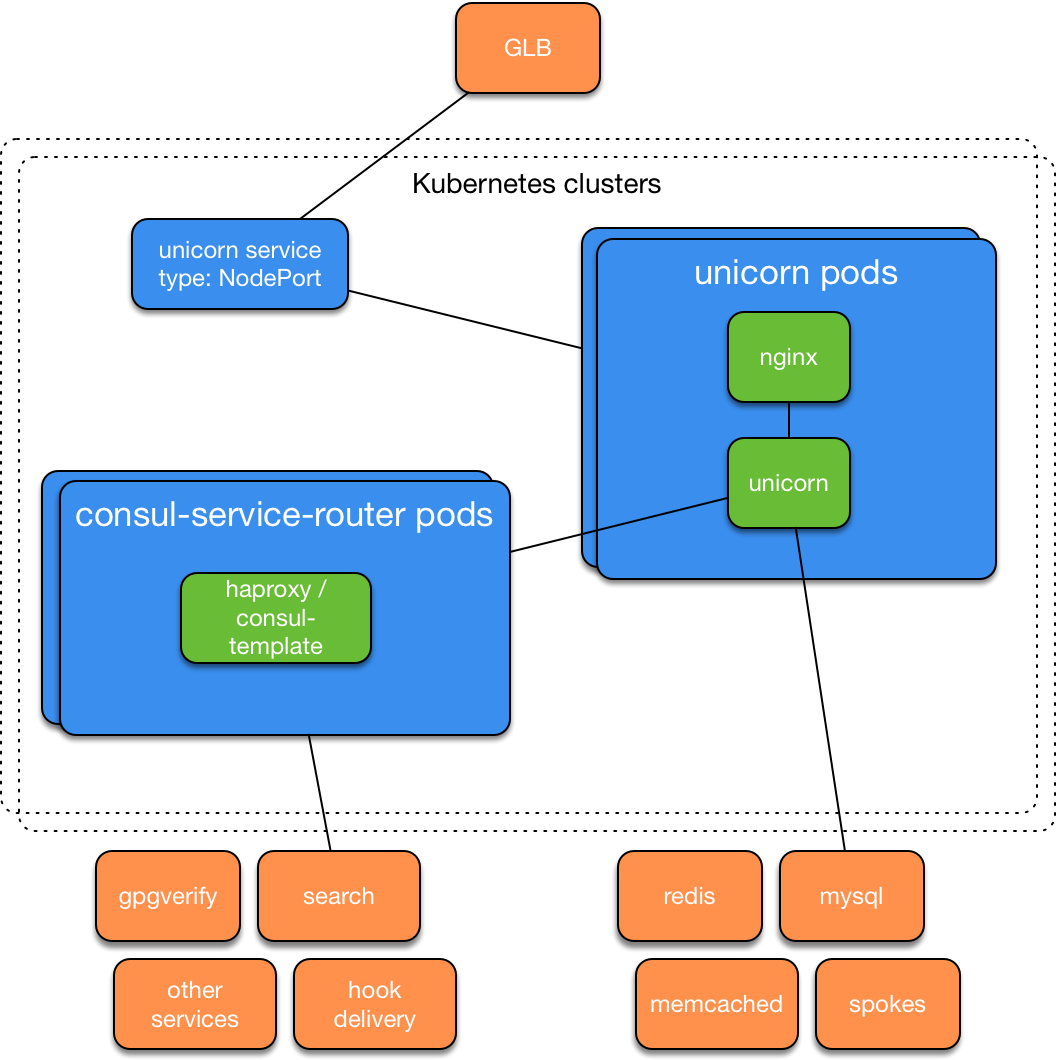

一文了解 Kubernetes 是什么?

|

||||

============================================================

|

||||

|

||||

这是一篇 Kubernetes 的概览。

|

||||

|

||||

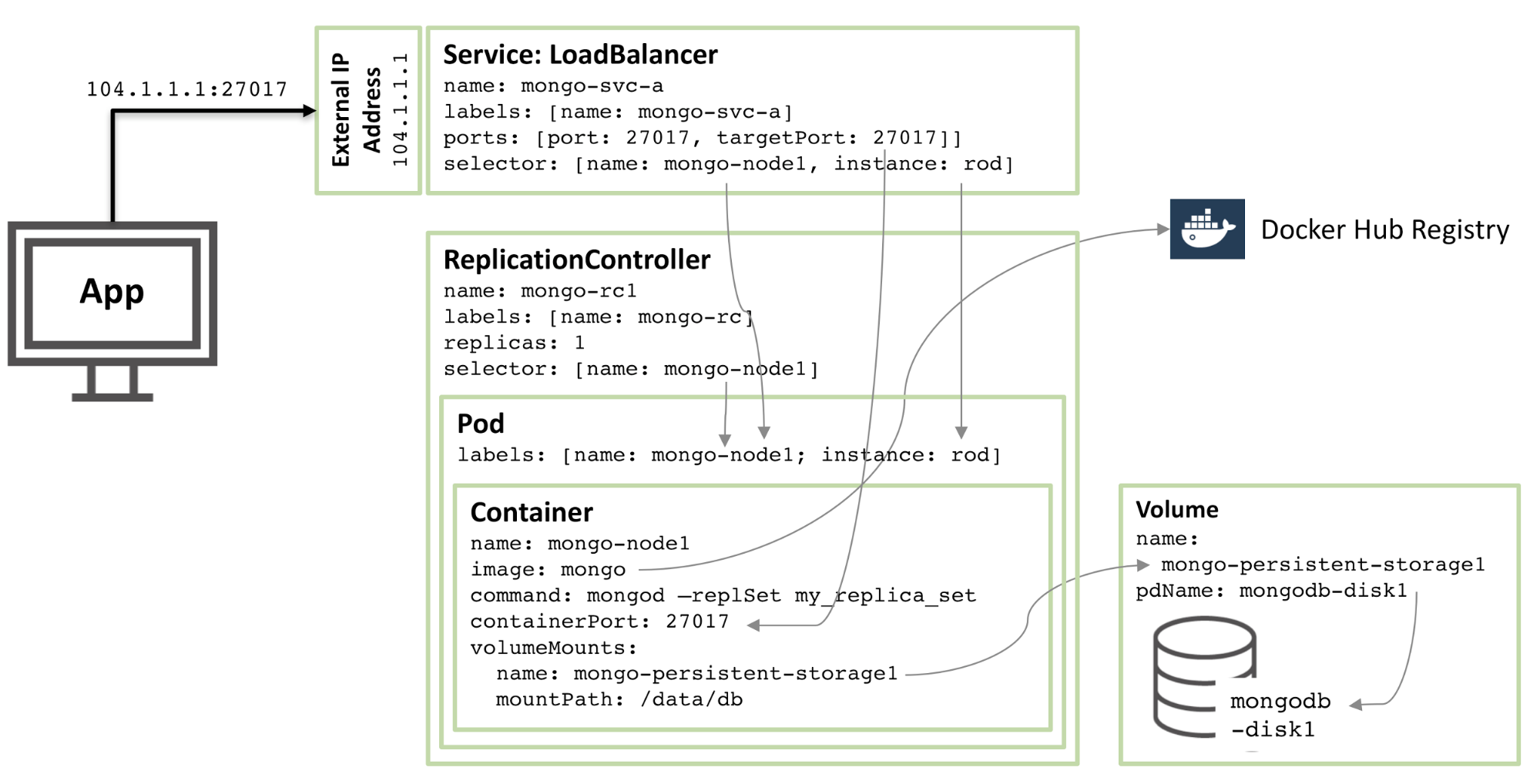

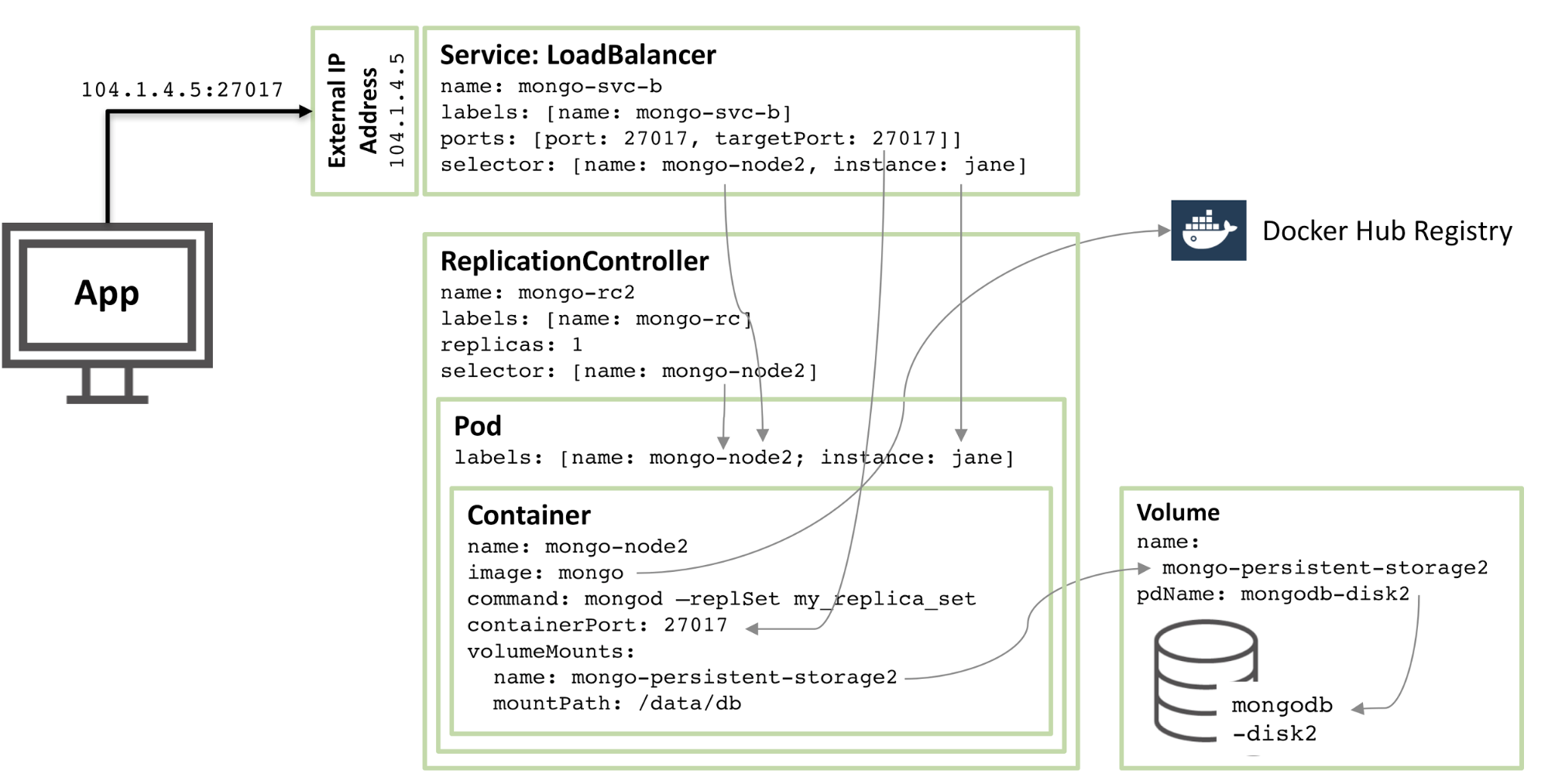

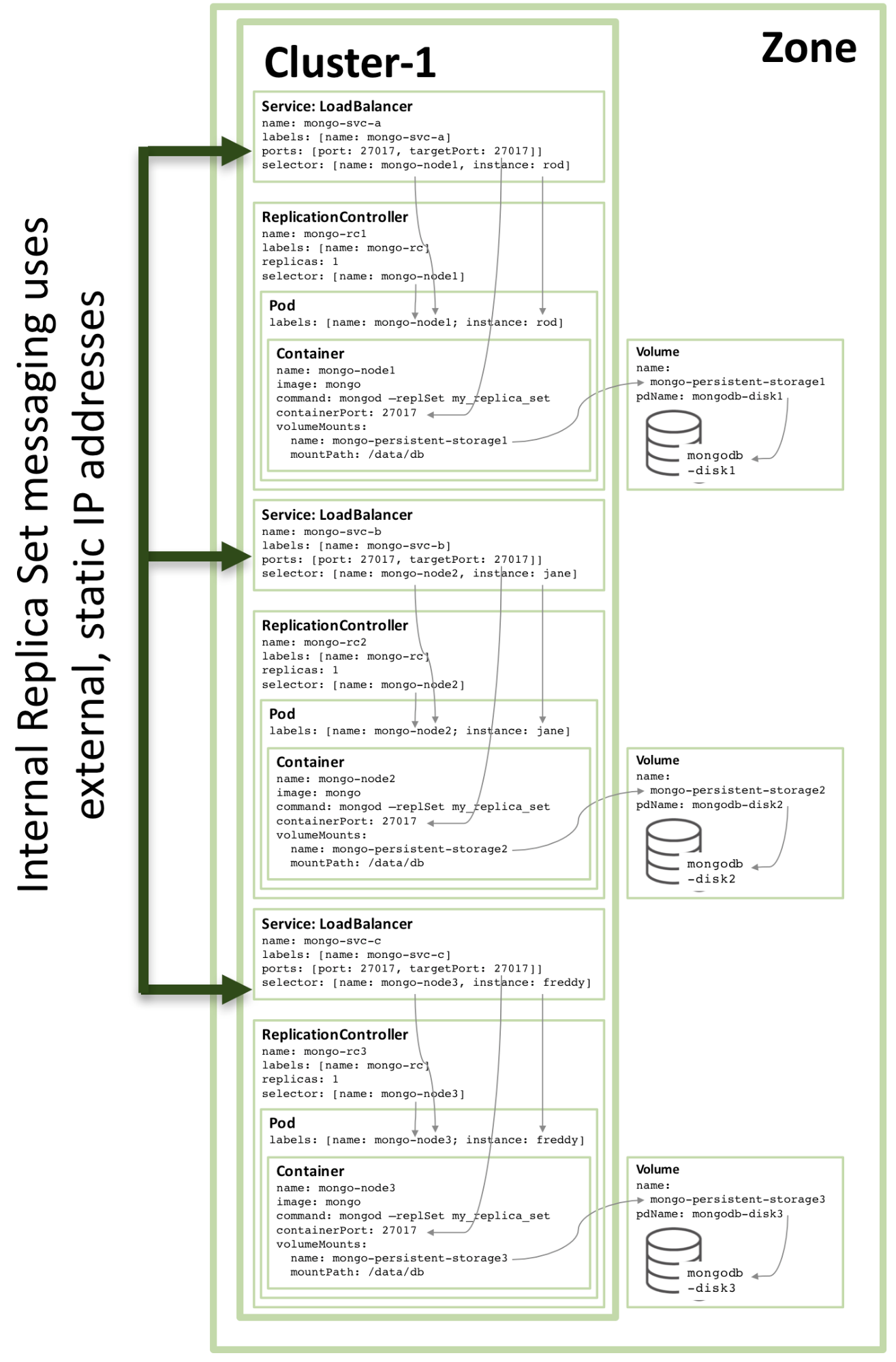

Kubernetes 是一个[自动化部署、伸缩和操作应用程序容器的开源平台][25]。

|

||||

|

||||

使用 Kubernetes,你可以快速、高效地满足用户以下的需求:

|

||||

|

||||

* 快速精准地部署应用程序

|

||||

* 即时伸缩你的应用程序

|

||||

* 无缝展现新特征

|

||||

* 限制硬件用量仅为所需资源

|

||||

|

||||

我们的目标是培育一个工具和组件的生态系统,以减缓在公有云或私有云中运行的程序的压力。

|

||||

|

||||

#### Kubernetes 的优势

|

||||

|

||||

* **可移动**: 公有云、私有云、混合云、多态云

|

||||

* **可扩展**: 模块化、插件化、可挂载、可组合

|

||||

* **自修复**: 自动部署、自动重启、自动复制、自动伸缩

|

||||

|

||||

Google 公司于 2014 年启动了 Kubernetes 项目。Kubernetes 是在 [Google 的长达 15 年的成规模的产品级任务的经验下][26]构建的,结合了来自社区的最佳创意和实践经验。

|

||||

|

||||

### 为什么选择容器?

|

||||

|

||||

想要知道你为什么要选择使用 [容器][27]?

|

||||

|

||||

|

||||

|

||||

程序部署的_传统方法_是指通过操作系统包管理器在主机上安装程序。这样做的缺点是,容易混淆程序之间以及程序和主机系统之间的可执行文件、配置文件、库、生命周期。为了达到精准展现和精准回撤,你可以搭建一台不可变的虚拟机镜像。但是虚拟机体量往往过于庞大而且不可转移。

|

||||

|

||||

容器部署的_新的方式_是基于操作系统级别的虚拟化,而非硬件虚拟化。容器彼此是隔离的,与宿主机也是隔离的:它们有自己的文件系统,彼此之间不能看到对方的进程,分配到的计算资源都是有限制的。它们比虚拟机更容易搭建。并且由于和基础架构、宿主机文件系统是解耦的,它们可以在不同类型的云上或操作系统上转移。

|

||||

|

||||

正因为容器又小又快,每一个容器镜像都可以打包装载一个程序。这种一对一的“程序 - 镜像”联系带给了容器诸多便捷。有了容器,静态容器镜像可以在编译/发布时期创建,而非部署时期。因此,每个应用不必再等待和整个应用栈其它部分进行整合,也不必和产品基础架构环境之间进行妥协。在编译/发布时期生成容器镜像建立了一个持续地把开发转化为产品的环境。相似地,容器远比虚拟机更加透明,尤其在设备监控和管理上。这一点,在容器的进程生命周期被基础架构管理而非被容器内的进程监督器隐藏掉时,尤为显著。最终,随着每个容器内都装载了单一的程序,管理容器就等于管理或部署整个应用。

|

||||

|

||||

容器优势总结:

|

||||

|

||||

* **敏捷的应用创建与部署**:相比虚拟机镜像,容器镜像的创建更简便、更高效。

|

||||

* **持续的开发、集成,以及部署**:在快速回滚下提供可靠、高频的容器镜像编译和部署(基于镜像的不可变性)。

|

||||

* **开发与运营的关注点分离**:由于容器镜像是在编译/发布期创建的,因此整个过程与基础架构解耦。

|

||||

* **跨开发、测试、产品阶段的环境稳定性**:在笔记本电脑上的运行结果和在云上完全一致。

|

||||

* **在云平台与 OS 上分发的可转移性**:可以在 Ubuntu、RHEL、CoreOS、预置系统、Google 容器引擎,乃至其它各类平台上运行。

|

||||

* **以应用为核心的管理**: 从在虚拟硬件上运行系统,到在利用逻辑资源的系统上运行程序,从而提升了系统的抽象层级。

|

||||

* **松散耦联、分布式、弹性、无拘束的[微服务][5]**:整个应用被分散为更小、更独立的模块,并且这些模块可以被动态地部署和管理,而不再是存储在大型的单用途机器上的臃肿的单一应用栈。

|

||||

* **资源隔离**:增加程序表现的可预见性。

|

||||

* **资源利用率**:高效且密集。

|

||||

|

||||

#### 为什么我需要 Kubernetes,它能做什么?

|

||||

|

||||

至少,Kubernetes 能在实体机或虚拟机集群上调度和运行程序容器。而且,Kubernetes 也能让开发者斩断联系着实体机或虚拟机的“锁链”,从**以主机为中心**的架构跃至**以容器为中心**的架构。该架构最终提供给开发者诸多内在的优势和便利。Kubernetes 提供给基础架构以真正的**以容器为中心**的开发环境。

|

||||

|

||||

Kubernetes 满足了一系列产品内运行程序的普通需求,诸如:

|

||||

|

||||

* [协调辅助进程][9],协助应用程序整合,维护一对一“程序 - 镜像”模型。

|

||||

* [挂载存储系统][10]

|

||||

* [分布式机密信息][11]

|

||||

* [检查程序状态][12]

|

||||

* [复制应用实例][13]

|

||||

* [使用横向荚式自动缩放][14]

|

||||

* [命名与发现][15]

|

||||

* [负载均衡][16]

|

||||

* [滚动更新][17]

|

||||

* [资源监控][18]

|

||||

* [访问并读取日志][19]

|

||||

* [程序调试][20]

|

||||

* [提供验证与授权][21]

|

||||

|

||||

以上兼具平台即服务(PaaS)的简化和基础架构即服务(IaaS)的灵活,并促进了在平台服务提供商之间的迁移。

|

||||

|

||||

#### Kubernetes 是一个什么样的平台?

|

||||

|

||||

虽然 Kubernetes 提供了非常多的功能,总会有更多受益于新特性的新场景出现。针对特定应用的工作流程,能被流水线化以加速开发速度。特别的编排起初是可接受的,这往往需要拥有健壮的大规模自动化机制。这也是为什么 Kubernetes 也被设计为一个构建组件和工具的生态系统的平台,使其更容易地部署、缩放、管理应用程序。

|

||||

|

||||

[<ruby>标签<rt>label</rt></ruby>][28]可以让用户按照自己的喜好组织资源。 [<ruby>注释<rt>annotation</rt></ruby>][29]让用户在资源里添加客户信息,以优化工作流程,为管理工具提供一个标示调试状态的简单方法。

|

||||

|

||||

此外,[Kubernetes 控制面板][30]是由开发者和用户均可使用的同样的 [API][31] 构建的。用户可以编写自己的控制器,比如 [<ruby>调度器<rt>scheduler</rt></ruby>][32],使用可以被通用的[命令行工具][34]识别的[他们自己的 API][33]。

|

||||

|

||||

这种[设计][35]让大量的其它系统也能构建于 Kubernetes 之上。

|

||||

|

||||

#### Kubernetes 不是什么?

|

||||

|

||||

Kubernetes 不是传统的、全包容的平台即服务(Paas)系统。它尊重用户的选择,这很重要。

|

||||

|

||||

Kubernetes:

|

||||

|

||||

* 并不限制支持的程序类型。它并不检测程序的框架 (例如,[Wildfly][22]),也不限制运行时支持的语言集合 (比如, Java、Python、Ruby),也不仅仅迎合 [12 因子应用程序][23],也不区分 _应用_ 与 _服务_ 。Kubernetes 旨在支持尽可能多种类的工作负载,包括无状态的、有状态的和处理数据的工作负载。如果某程序在容器内运行良好,它在 Kubernetes 上只可能运行地更好。

|

||||

* 不提供中间件(例如消息总线)、数据处理框架(例如 Spark)、数据库(例如 mysql),也不把集群存储系统(例如 Ceph)作为内置服务。但是以上程序都可以在 Kubernetes 上运行。

|

||||

* 没有“点击即部署”这类的服务市场存在。

|

||||

* 不部署源代码,也不编译程序。持续集成 (CI) 工作流程是不同的用户和项目拥有其各自不同的需求和表现的地方。所以,Kubernetes 支持分层 CI 工作流程,却并不监听每层的工作状态。

|

||||

* 允许用户自行选择日志、监控、预警系统。( Kubernetes 提供一些集成工具以保证这一概念得到执行)

|

||||

* 不提供也不管理一套完整的应用程序配置语言/系统(例如 [jsonnet][24])。

|

||||

* 不提供也不配合任何完整的机器配置、维护、管理、自我修复系统。

|

||||

|

||||

另一方面,大量的 PaaS 系统运行_在_ Kubernetes 上,诸如 [Openshift][36]、[Deis][37],以及 [Eldarion][38]。你也可以开发你的自定义 PaaS,整合上你自选的 CI 系统,或者只在 Kubernetes 上部署容器镜像。

|

||||

|

||||

因为 Kubernetes 运营在应用程序层面而不是在硬件层面,它提供了一些 PaaS 所通常提供的常见的适用功能,比如部署、伸缩、负载平衡、日志和监控。然而,Kubernetes 并非铁板一块,这些默认的解决方案是可供选择,可自行增加或删除的。

|

||||

|

||||

|

||||

而且, Kubernetes 不只是一个_编排系统_ 。事实上,它满足了编排的需求。 _编排_ 的技术定义是,一个定义好的工作流程的执行:先做 A,再做 B,最后做 C。相反地, Kubernetes 囊括了一系列独立、可组合的控制流程,它们持续驱动当前状态向需求的状态发展。从 A 到 C 的具体过程并不唯一。集中化控制也并不是必须的;这种方式更像是_编舞_。这将使系统更易用、更高效、更健壮、复用性、扩展性更强。

|

||||

|

||||

#### Kubernetes 这个单词的含义?k8s?

|

||||

|

||||

**Kubernetes** 这个单词来自于希腊语,含义是 _舵手_ 或 _领航员_ 。其词根是 _governor_ 和 [cybernetic][39]。 _K8s_ 是它的缩写,用 8 字替代了“ubernete”。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://kubernetes.io/docs/concepts/overview/what-is-kubernetes/

|

||||

|

||||

作者:[kubernetes.io][a]

|

||||

译者:[songshuang00](https://github.com/songsuhang00)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://kubernetes.io/

|

||||

[1]:https://kubernetes.io/docs/concepts/overview/what-is-kubernetes/#why-do-i-need-kubernetes-and-what-can-it-do

|

||||

[2]:https://kubernetes.io/docs/concepts/overview/what-is-kubernetes/#how-is-kubernetes-a-platform

|

||||

[3]:https://kubernetes.io/docs/concepts/overview/what-is-kubernetes/#what-kubernetes-is-not

|

||||

[4]:https://kubernetes.io/docs/concepts/overview/what-is-kubernetes/#what-does-kubernetes-mean-k8s

|

||||

[5]:https://martinfowler.com/articles/microservices.html

|

||||

[6]:https://kubernetes.io/docs/concepts/overview/what-is-kubernetes/#kubernetes-is

|

||||

[7]:https://kubernetes.io/docs/concepts/overview/what-is-kubernetes/#why-containers

|

||||

[8]:https://kubernetes.io/docs/concepts/overview/what-is-kubernetes/#whats-next

|

||||

[9]:https://kubernetes.io/docs/concepts/workloads/pods/pod/

|

||||

[10]:https://kubernetes.io/docs/concepts/storage/volumes/

|

||||

[11]:https://kubernetes.io/docs/concepts/configuration/secret/

|

||||

[12]:https://kubernetes.io/docs/tasks/configure-pod-container/configure-liveness-readiness-probes/

|

||||

[13]:https://kubernetes.io/docs/concepts/workloads/controllers/replicationcontroller/

|

||||

[14]:https://kubernetes.io/docs/tasks/run-application/horizontal-pod-autoscale/

|

||||

[15]:https://kubernetes.io/docs/concepts/services-networking/connect-applications-service/

|

||||

[16]:https://kubernetes.io/docs/concepts/services-networking/service/

|

||||

[17]:https://kubernetes.io/docs/tasks/run-application/rolling-update-replication-controller/

|

||||

[18]:https://kubernetes.io/docs/tasks/debug-application-cluster/resource-usage-monitoring/

|

||||

[19]:https://kubernetes.io/docs/concepts/cluster-administration/logging/

|

||||

[20]:https://kubernetes.io/docs/tasks/debug-application-cluster/debug-application-introspection/

|

||||

[21]:https://kubernetes.io/docs/admin/authorization/

|

||||

[22]:http://wildfly.org/

|

||||

[23]:https://12factor.net/

|

||||

[24]:https://github.com/google/jsonnet

|

||||

[25]:http://www.slideshare.net/BrianGrant11/wso2con-us-2015-kubernetes-a-platform-for-automating-deployment-scaling-and-operations

|

||||

[26]:https://research.google.com/pubs/pub43438.html

|

||||

[27]:https://aucouranton.com/2014/06/13/linux-containers-parallels-lxc-openvz-docker-and-more/

|

||||

[28]:https://kubernetes.io/docs/concepts/overview/working-with-objects/labels/

|

||||

[29]:https://kubernetes.io/docs/concepts/overview/working-with-objects/annotations/

|

||||

[30]:https://kubernetes.io/docs/concepts/overview/components/

|

||||

[31]:https://kubernetes.io/docs/reference/api-overview/

|

||||

[32]:https://git.k8s.io/community/contributors/devel/scheduler.md

|

||||

[33]:https://git.k8s.io/community/contributors/design-proposals/extending-api.md

|

||||

[34]:https://kubernetes.io/docs/user-guide/kubectl-overview/

|

||||

[35]:https://github.com/kubernetes/community/blob/master/contributors/design-proposals/principles.md

|

||||

[36]:https://www.openshift.org/

|

||||

[37]:http://deis.io/

|

||||

[38]:http://eldarion.cloud/

|

||||

[39]:http://www.etymonline.com/index.php?term=cybernetics

|

||||

@ -0,0 +1,234 @@

|

||||

一个时代的结束:Solaris 系统的那些年,那些事

|

||||

=================================

|

||||

|

||||

|

||||

|

||||

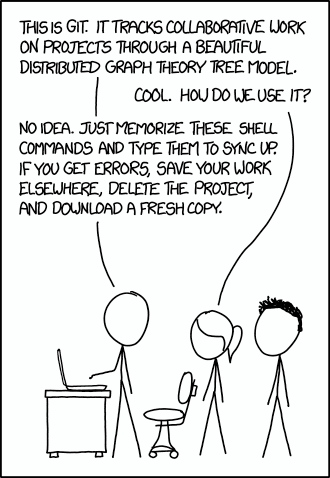

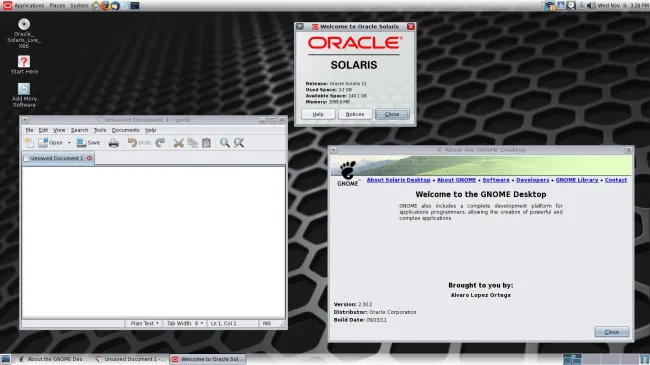

现在看来,Oracle 公司正在通过取消 Solaris 12 而[终止 Solaris 的功能开发][42],这里我们要回顾下多年来在 Phoronix 上最受欢迎的 Solaris 重大事件和新闻。

|

||||

|

||||

这里有许多关于 Solaris 的有趣/重要的回忆。

|

||||

|

||||

[

|

||||

|

||||

][1]

|

||||

|

||||

在 Sun Microsystems 时期,我真的对 Solaris 很感兴趣。在 Phoronix 上我们一直重点关注 Linux 的同时,经常也有 Solaris 的文章出现。 Solaris 玩起来很有趣,OpenSolaris/SXCE 是伟大的产物,我将 Phoronix 测试套件移植到 Solaris 上,我们与 Sun Microsystems 人员有密切的联系,也出现在 Sun 的许多活动中。

|

||||

|

||||

[

|

||||

|

||||

][2]

|

||||

|

||||

_在那些日子里 Sun 有一些相当独特的活动..._

|

||||

|

||||

不幸的是,自从 Oracle 公司收购了 Sun 公司, Solaris 就如坠入深渊一样。最大的打击大概是 Oracle 结束了 OpenSolaris ,并将所有 Solaris 的工作转移到专有模式...

|

||||

|

||||

[

|

||||

|

||||

][3]

|

||||

|

||||

在 Sun 时代的 Solaris 有很多美好的回忆,所以 Oracle 在其计划中抹去了 Solaris 12 之后,我经常在 Phoronix 上翻回去看一些之前 Solaris 的经典文章,期待着能从 Oracle 听到 “Solaris 11” 下一代的消息,重启 Solaris 项目的开发。

|

||||

|

||||

[

|

||||

|

||||

][4]

|

||||

|

||||

虽然在后 Solaris 的世界中,看到 Oracle 对 ZFS 所做的事情以及他们在基于 RHEL 的 Oracle Enterprise Linux 上下的重注将会很有趣,但时间将会告诉我们一切。

|

||||

|

||||

[

|

||||

|

||||

][5]

|

||||

|

||||

无论如何,这是回顾自 2004 年以来我们最受欢迎的 Solaris 文章:

|

||||

|

||||

### 2016/12/1 [Oracle 或许会罐藏 Solaris][20]

|

||||

|

||||

Oracle 可能正在拔掉 Solaris 的电源插头,据一些新的传闻说。

|

||||

|

||||

### 2013/6/9 [OpenSXCE 2013.05 拯救 Solaris 社区][17]

|

||||

|

||||

作为 Solaris 社区版的社区复兴,OpenSXCE 2013.05 出现在网上。

|

||||

|

||||

### 2013/2/2 [Solaris 12 可能最终带来 Radeon KMS 驱动程序][16]

|

||||

|

||||

看起来,Oracle 可能正在准备发布自己的 AMD Radeon 内核模式设置(KMS)驱动程序,并引入到 Oracle Solaris 12 中。

|

||||

|

||||

### 2012/10/4 [Oracle Solaris 11.1 提供 300 个以上增强功能][25]

|

||||

|

||||

Oracle昨天在旧金山的 Oracle OpenWorld 会议上发布了 Solaris 11.1 。

|

||||

|

||||

[

|

||||

|

||||

][26]

|

||||

|

||||

### 2012/1/9 [Oracle 尚未澄清 Solaris 11 内核来源][19]

|

||||

|

||||

一个月前,Phoronix 是第一个注意到 Solaris 11 内核源代码通过 Torrent 站点泄漏到网上的信息。一个月后,甲骨文还没有正式评论这个情况。

|

||||

|

||||

### 2011/12/19 [Oracle Solaris 11 内核源代码泄漏][15]

|

||||

|

||||

似乎 Solaris 11的内核源代码在过去的一个周末被泄露到了网上。

|

||||

|

||||

### 2011/8/25 [对于 BSD,Solaris 的 GPU 驱动程序的悲惨状态][24]

|

||||

|

||||

昨天在邮件列表上出现了关于干掉所有旧式 Mesa 驱动程序的讨论。这些旧驱动程序没有被积极维护,支持复古的图形处理器,并且没有更新支持新的 Mesa 功能。英特尔和其他开发人员正在努力清理 Mesa 核心,以将来增强这一开源图形库。这种清理 Mesa,对 BSD 和 Solaris 用户也有一些影响。

|

||||

|

||||

### 2010/8/13 [告别 OpenSolaris,Oracle 刚刚把它干掉][8]

|

||||

|

||||

Oracle 终于宣布了他们对 Solaris 操作系统和 OpenSolaris 平台的计划,而且不是好消息。OpenSolaris 将实际死亡,未来将不会有更多的 Solaris 版本出现 - 包括长期延期的 2010 年版本。Solaris 仍然会继续存在,现在 Oracle 正在忙于明年发布的 Solaris 11,但仅在 Oracle 的企业版之后才会发布 “Solaris 11 Express” 作为 OpenSolaris 的类似产品。

|

||||

|

||||

### 2010/2/22 [Oracle 仍然要对 OpenSolaris 进行更改][12]

|

||||

|

||||

自从 Oracle 完成对 Sun Microsystems 的收购以来,已经有了许多变化,这个 Sun 最初支持的开源项目现在已经不再被 Oracle 支持,并且对其余的开源产品进行了重大改变。 Oracle 表现出并不太开放的意图的开源项目之一是 OpenSolaris 。 Solaris Express 社区版(SXCE)上个月已经关闭,并且也没有预计 3 月份发布的下一个 OpenSolaris 版本(OpenSolaris 2010.03)的信息流出。

|

||||

|

||||

### 2007/9/10 [Solaris Express 社区版 Build 72][9]

|

||||

|

||||

对于那些想要在 “印第安纳项目” 发布之前尝试 OpenSolaris 软件中最新最好的软件的人来说,现在可以使用 Solaris Express 社区版 Build 72。Solaris Express 社区版(SXCE)Build 72 可以从 OpenSolaris.org 下载。同时,预计将在下个月推出 Sun 的 “印第安纳项目” 项目的预览版。

|

||||

|

||||

### 2007/9/6 [ATI R500/600 驱动要支持 Solaris 了?][6]

|

||||

|

||||

虽然没有可用于 Solaris/OpenSolaris 或 * BSD 的 ATI fglrx 驱动程序,现在 AMD 将向 X.Org 开发人员和开源驱动程序交付规范,但对于任何使用 ATI 的 Radeon X1000 “R500” 或者 HD 2000“R600” 系列的 Solaris 用户来说,这肯定是有希望的。将于下周发布的开源 X.Org 驱动程序距离成熟尚远,但应该能够相对容易地移植到使用 X.Org 的 Solaris 和其他操作系统上。 AMD 今天宣布的针对的是 Linux 社区,但它也可以帮助使用 ATI 硬件的 Solaris/OpenSolaris 用户。特别是随着印第安纳项目的即将推出,开源 R500/600 驱动程序移植就只是时间问题了。

|

||||

|

||||

### 2007/9/5 [Solaris Express 社区版 Build 71][7]

|

||||

|

||||

Solaris Express 社区版(SXCE)现已推出 Build 71。您可以在 OpenSolaris.org 中找到有关 Solaris Express 社区版 Build 71 的更多信息。另外,在 Linux 内核峰会上,AMD 将提供 GPU 规格的消息,由此产生的 X.Org 驱动程序将来可能会导致 ATI 硬件上 Solaris/OpenSolaris 有所改善。

|

||||

|

||||

### 2007/8/27 [Linux 的 Solaris 容器][11]

|

||||

|

||||

Sun Microsystems 已经宣布,他们将很快支持适用于 Linux 应用程序的 Solaris 容器。这样可以在 Solaris 下运行 Linux 应用程序,而无需对二进制包进行任何修改。适用于 Linux 的 Solaris 容器将允许从 Linux 到 Solaris 的平滑迁移,协助跨平台开发以及其他优势。当该支持到来时,这个时代就“快到了”。

|

||||

|

||||

### 2007/8/23 [OpenSolaris 开发者峰会][10]

|

||||

|

||||

今天早些时候在 OpenSolaris 论坛上发布了第一次 OpenSolaris 开发人员峰会的消息。这次峰会将在十月份在加州大学圣克鲁斯分校举行。 Sara Dornsife 将这次峰会描述为“不是与演示文稿或参展商举行会议,而是一个亲自参与的协作工作会议,以计划下一期的印第安纳项目。” 伊恩·默多克(Ian Murdock) 将在这个“印第安纳项目”中进行主题演讲,但除此之外,该计划仍在计划之中。 Phoronix 可能会继续跟踪此事件,您可以在 Solaris 论坛上讨论此次峰会。

|

||||

|

||||

### 2007/8/18 [Solaris Express 社区版 Build 70][21]

|

||||

|

||||

名叫 "Nevada" 的 Solaris Express 社区版 Build 70 (SXCE snv_70) 现在已经发布。有关下载链接的通知可以在 OpenSolaris 论坛中找到。还有公布了其网络存储的 Build 71 版本,包括来自 Qlogic 的光纤通道 HBA 驱动程序的源代码。

|

||||

|

||||

### 2007/8/16 [IBM 使用 Sun Solaris 的系统][14]

|

||||

|

||||

Sun Microsystems 和 IBM正在举行电话会议,他们刚刚宣布,IBM 将开始在服务器上使用 Sun 的 Solaris 操作系统。这些 IBM 服务器包括基于 x86 的服务器系统以及 Blade Center 服务器。官方新闻稿刚刚发布,可以在 sun 新闻室阅读。

|

||||

|

||||

### 2007/8/9 [OpenSolaris 不会与 Linux 合并][18]

|

||||

|

||||

在旧金山的 LinuxWorld 2007 上,Andrew Morton 在主题演讲中表示, OpenSolaris 的关键组件不会出现在 Linux 内核中。事实上,莫顿甚至表示 “非常遗憾 OpenSolaris 活着”。OpenSolaris 的一些关键组件包括 Zones、ZFS 和 DTrace 。虽然印第安纳州项目有可能将这些项目转变为 GPLv3 项目... 更多信息参见 ZDNET。

|

||||

|

||||

### 2007/7/27 [Solaris Xen 已经更新][13]

|

||||

|

||||

已经有一段时间了,Solaris Xen 终于更新了。约翰·莱文(John Levon)表示,这一最新版本基于 Xen 3.0.4 和 Solaris “Nevada” Build 66。这一最新版本的改进包括 PAE 支持、HVM 支持、新的 virt-manager 工具、改进的调试支持以及管理域支持。可以在 Sun 的网站上找到 2007 年 7 月 Solaris Xen 更新的下载。

|

||||

|

||||

### 2007/7/25 [Solaris 10 7/07 HW 版本][22]

|

||||

|

||||

Solaris 10 7/07 HW 版本的文档已经上线。如 Solaris 发行注记中所述,Solaris 10 7/07 仅适用于 SPARC Enterprise M4000-M9000 服务器,并且没有 x86/x64 版本可用。所有平台的最新 Solaris 更新是 Solaris 10 11/06 。您可以在 Phoronix 论坛中讨论 Solaris 7/07。

|

||||

|

||||

### 2007/7/16 [来自英特尔的 Solaris 电信服务器][23]

|

||||

|

||||

今天宣布推出符合 NEBS、ETSI 和 ATCA 合规性的英特尔体系的 Sun Solaris 电信机架服务器和刀片服务器。在这些新的运营商级平台中,英特尔运营商级机架式服务器 TIGW1U 支持 Linux 和 Solaris 10,而 Intel NetStructure MPCBL0050 SBC 也将支持这两种操作系统。今天的新闻稿可以在这里阅读。

|

||||

|

||||

然后是 Solaris 分类中最受欢迎的特色文章:

|

||||

|

||||

### [Ubuntu vs. OpenSolaris vs. FreeBSD 基准测试][27]

|

||||

|

||||

在过去的几个星期里,我们提供了几篇关于 Ubuntu Linux 性能的深入文章。我们已经开始提供 Ubuntu 7.04 到 8.10 的基准测试,并且发现这款受欢迎的 Linux 发行版的性能随着时间的推移而变慢,随之而来的是 Mac OS X 10.5 对比 Ubuntu 8.10 的基准测试和其他文章。在本文中,我们正在比较 Ubuntu 8.10 的 64 位性能与 OpenSolaris 2008.11 和 FreeBSD 7.1 的最新测试版本。

|

||||

|

||||

### [NVIDIA 的性能:Windows vs. Linux vs. Solaris][28]

|

||||

|

||||

本周早些时候,我们预览了 Quadro FX1700,它是 NVIDIA 的中端工作站显卡之一,基于 G84GL 内核,而 G84GL 内核又源于消费级 GeForce 8600 系列。该 PCI Express 显卡提供 512MB 的视频内存,具有两个双链路 DVI 连接,并支持 OpenGL 2.1 ,同时保持最大功耗仅为 42 瓦。正如我们在预览文章中提到的,我们将不仅在 Linux 下查看此显卡的性能,还要在 Microsoft Windows 和 Sun 的 Solaris 中测试此工作站解决方案。在今天的这篇文章中,我们正在这样做,因为我们测试了 NVIDIA Quadro FX1700 512MB 与这些操作系统及其各自的二进制显示驱动程序。

|

||||

|

||||

### [FreeBSD 8.0 对比 Linux、OpenSolaris][29]

|

||||

|

||||

在 FreeBSD 8.0 的稳定版本发布的上周,我们终于可以把它放在测试台上,并用 Phoronix 测试套件进行了全面的了解。我们将 FreeBSD 8.0 的性能与早期的 FreeBSD 7.2 版本以及 Fedora 12 和 Ubuntu 9.10 还有 Sun OS 端的 OpenSolaris 2010.02 b127 快照进行了比较。

|

||||

|

||||

### [Fedora、Debian、FreeBSD、OpenBSD、OpenSolaris 基准测试][30]

|

||||

|

||||

上周我们发布了第一个 Debian GNU/kFreeBSD 基准测试,将 FreeBSD 内核捆绑在 Debian GNU 用户的 Debian GNU/Linux 上,比较了这款 Debian 系统的 32 位和 64 位性能。 我们现在扩展了这个比较,使许多其他操作系统与 Debian GNU/Linux 和 Debian GNU/kFreeBSD 的 6.0 Squeeze 快照直接进行比较,如 Fedora 12,FreeBSD 7.2,FreeBSD 8.0,OpenBSD 4.6 和 OpenSolaris 2009.06 。

|

||||

|

||||

### [AMD 上海皓龙:Linux vs. OpenSolaris 基准测试][31]

|

||||

|

||||

1月份,当我们研究了四款皓龙 2384 型号时,我们在 Linux 上发布了关于 AMD 上海皓龙 CPU 的综述。与早期的 AMD 巴塞罗那处理器 Ubuntu Linux 相比,这些 45nm 四核工作站服务器处理器的性能非常好,但是在运行 Sun OpenSolaris 操作系统时,性能如何?今天浏览的是 AMD 双核的基准测试,运行 OpenSolaris 2008.11、Ubuntu 8.10 和即将推出的 Ubuntu 9.04 版本。

|

||||

|

||||

### [OpenSolaris vs. Linux 内核基准][32]

|

||||

|

||||

本周早些时候,我们提供了 Ubuntu 9.04 与 Mac OS X 10.5.6 的基准测试,发现 Leopard 操作系统(Mac)在大多数测试中的表现要优于 Jaunty Jackalope (Ubuntu),至少在 Ubuntu 32 位是这样的。我们今天又回过来进行更多的操作系统基准测试,但这次我们正在比较 Linux 和 Sun OpenSolaris 内核的性能。我们使用的 Nexenta Core 2 操作系统将 OpenSolaris 内核与 GNU/Ubuntu 用户界面组合在同一个 Ubuntu 软件包中,但使用了 Linux 内核的 32 位和 64 位 Ubuntu 服务器安装进行测试。

|

||||

|

||||

### [Netbook 性能:Ubuntu vs. OpenSolaris][33]

|

||||

|

||||

过去,我们已经发布了 OpenSolaris vs. Linux Kernel 基准测试以及类似的文章,关注 Sun 的 OpenSolaris 与流行的 Linux 发行版的性能。我们已经看过高端 AMD 工作站的性能,但是我们从来没有比较上网本上的 OpenSolaris 和 Linux 性能。直到今天,在本文中,我们将比较戴尔 Inspiron Mini 9 上网本上的 OpenSolaris 2009.06 和 Ubuntu 9.04 的结果。

|

||||

|

||||

### [NVIDIA 图形:Linux vs. Solaris][34]

|

||||

|

||||

在 Phoronix,我们不断探索 Linux 下的不同显示驱动程序,在我们评估了 Sun 的检查工具并测试了 Solaris 主板以及覆盖其他几个领域之后,我们还没有执行图形驱动程序 Linux 和 Solaris 之间的比较。直到今天。由于印第安纳州项目,我们对 Solaris 更感兴趣,我们决定终于通过 NVIDIA 专有驱动程序提供我们在 Linux 和 Solaris 之间的第一次定量图形比较。

|

||||

|

||||

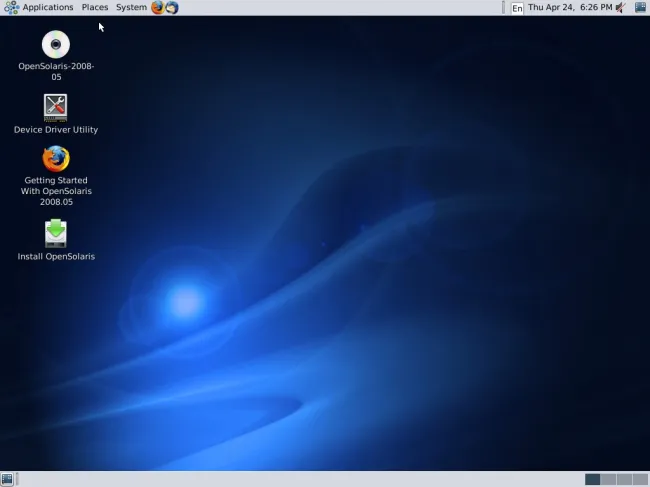

### [OpenSolaris 2008.05 向 Solaris 提供了一个新面孔][35]

|

||||

|

||||

2月初,Sun Microsystems 发布了印第安纳项目的第二个预览版本。对于那些人来说,印第安纳州项目是 Sun 的 Ian Murdock 领导的项目的代号,旨在通过解决 Solaris 的长期可用性问题,将 OpenSolaris 推向更多的台式机和笔记本电脑。我们没有对预览 2 留下什么深刻印象,因为它没有比普通用户感兴趣的 GNU/Linux 桌面更有优势。然而,随着 5 月份推出的 OpenSolaris 2008.05 印第安纳项目发布,Sun Microsystems 今天发布了该操作系统的最终测试副本。当最后看到项目印第安纳时, 我们对这个新的 OpenSolaris 版本的最初体验是远远优于我们不到三月前的体验的。

|

||||

|

||||

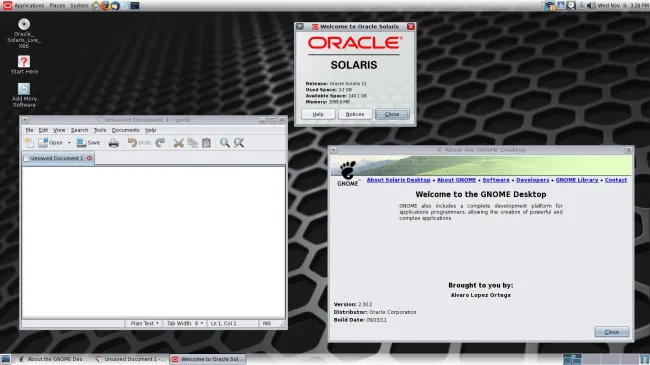

### [快速概览 Oracle Solaris 11][36]

|

||||

|

||||

Solaris 11 在周三发布,是七年来这个前 Sun 操作系统的第一个主要更新。在过去七年中,Solaris 家族发生了很大变化,OpenSolaris 在那个时候已经到来,但在本文中,简要介绍了全新的 Oracle Solaris 11 版本。

|

||||

|

||||

### [OpenSolaris、BSD & Linux 的新基准测试][37]

|

||||

|

||||

今天早些时候,我们对以原生的内核模块支持的 Linux 上的 ZFS 进行了基准测试,该原生模块将被公开提供,以将这个 Sun/Oracle 文件系统覆盖到更多的 Linux 用户。现在,尽管作为一个附加奖励,我们碰巧有了基于 OpenSolaris 的最新发行版的新基准,包括 OpenSolaris、OpenIndiana 和 Augustiner-Schweinshaxe,与 PC-BSD、Fedora 和 Ubuntu相比。

|

||||

|

||||

### [FreeBSD/PC-BSD 9.1 针对 Linux、Solaris、BSD 的基准][38]

|

||||

|

||||

虽然 FreeBSD 9.1 尚未正式发布,但是基于 FreeBSD 的 PC-BSD 9.1 “Isotope”版本本月已经可用。本文中的性能指标是 64 位版本的 PC-BSD 9.1 与 DragonFlyBSD 3.0.3、Oracle Solaris Express 11.1、CentOS 6.3、Ubuntu 12.10 以及 Ubuntu 13.04 开发快照的比较。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

作者简介:

|

||||

|

||||

Michael Larabel 是 Phoronix.com 的作者,并于 2004 年创立了该网站,该网站重点是丰富多样的 Linux 硬件体验。 Michael 撰写了超过10,000 篇文章,涵盖了 Linux 硬件支持,Linux 性能,图形驱动程序等主题。 Michael 也是 Phoronix 测试套件、 Phoromatic 和 OpenBenchmarking.org 自动化基准测试软件的主要开发人员。可以通过 Twitter 关注他或通过 MichaelLarabel.com 联系他。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.phoronix.com/scan.php?page=news_item&px=Solaris-2017-Look-Back

|

||||

|

||||

作者:[Michael Larabel][a]

|

||||

译者:[MonkeyDEcho](https://github.com/MonkeyDEcho)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.michaellarabel.com/

|

||||

[1]:http://www.phoronix.com/image-viewer.php?id=982&image=sun_sxce81_03_lrg

|

||||

[2]:http://www.phoronix.com/image-viewer.php?id=711&image=java7_bash_13_lrg

|

||||

[3]:http://www.phoronix.com/image-viewer.php?id=sun_sxce_farewell&image=sun_sxce_07_lrg

|

||||

[4]:http://www.phoronix.com/image-viewer.php?id=solaris_200805&image=opensolaris_indiana_03b_lrg

|

||||

[5]:http://www.phoronix.com/image-viewer.php?id=oracle_solaris_11&image=oracle_solaris11_02_lrg

|

||||

[6]:http://www.phoronix.com/scan.php?page=news_item&px=NjA0Mg

|

||||

[7]:http://www.phoronix.com/scan.php?page=news_item&px=NjAzNQ

|

||||

[8]:http://www.phoronix.com/scan.php?page=news_item&px=ODUwNQ

|

||||

[9]:http://www.phoronix.com/scan.php?page=news_item&px=NjA0Nw

|

||||

[10]:http://www.phoronix.com/scan.php?page=news_item&px=NjAwNA

|

||||

[11]:http://www.phoronix.com/scan.php?page=news_item&px=NjAxMQ

|

||||

[12]:http://www.phoronix.com/scan.php?page=news_item&px=ODAwNg

|

||||

[13]:http://www.phoronix.com/scan.php?page=news_item&px=NTkzMQ

|

||||

[14]:http://www.phoronix.com/scan.php?page=news_item&px=NTk4NA

|

||||

[15]:http://www.phoronix.com/scan.php?page=news_item&px=MTAzMDE

|

||||

[16]:http://www.phoronix.com/scan.php?page=news_item&px=MTI5MTU

|

||||

[17]:http://www.phoronix.com/scan.php?page=news_item&px=MTM4Njc

|

||||

[18]:http://www.phoronix.com/scan.php?page=news_item&px=NTk2Ng

|

||||

[19]:http://www.phoronix.com/scan.php?page=news_item&px=MTAzOTc

|

||||

[20]:http://www.phoronix.com/scan.php?page=news_item&px=Oracle-Solaris-Demise-Rumors

|

||||

[21]:http://www.phoronix.com/scan.php?page=news_item&px=NTk4Nw

|

||||

[22]:http://www.phoronix.com/scan.php?page=news_item&px=NTkyMA

|

||||

[23]:http://www.phoronix.com/scan.php?page=news_item&px=NTg5Nw

|

||||

[24]:http://www.phoronix.com/scan.php?page=news_item&px=OTgzNA

|

||||

[25]:http://www.phoronix.com/scan.php?page=news_item&px=MTE5OTQ

|

||||

[26]:http://www.phoronix.com/image-viewer.php?id=opensolaris_200906&image=opensolaris_200906_06_lrg

|

||||

[27]:http://www.phoronix.com/vr.php?view=13149

|

||||

[28]:http://www.phoronix.com/vr.php?view=11968

|

||||

[29]:http://www.phoronix.com/vr.php?view=14407

|

||||

[30]:http://www.phoronix.com/vr.php?view=14533

|

||||

[31]:http://www.phoronix.com/vr.php?view=13475

|

||||

[32]:http://www.phoronix.com/vr.php?view=13826

|

||||

[33]:http://www.phoronix.com/vr.php?view=14039

|

||||

[34]:http://www.phoronix.com/vr.php?view=10301

|

||||

[35]:http://www.phoronix.com/vr.php?view=12269

|

||||

[36]:http://www.phoronix.com/vr.php?view=16681

|

||||

[37]:http://www.phoronix.com/vr.php?view=15476

|

||||

[38]:http://www.phoronix.com/vr.php?view=18291

|

||||

[39]:http://www.michaellarabel.com/

|

||||

[40]:https://www.phoronix.com/scan.php?page=news_topic&q=Oracle

|

||||

[41]:https://www.phoronix.com/forums/node/925794

|

||||

[42]:http://www.phoronix.com/scan.php?page=news_item&px=No-Solaris-12

|

||||

@ -0,0 +1,124 @@

|

||||

给中级 Meld 用户的有用技巧

|

||||

============================================================

|

||||

|

||||

Meld 是 Linux 上功能丰富的可视化比较和合并工具。如果你是第一次接触,你可以进入我们的[初学者指南][5],了解该程序的工作原理,如果你已经阅读过或正在使用 Meld 进行基本的比较/合并任务,你将很高兴了解本教程的东西,在本教程中,我们将讨论一些非常有用的技巧,这将让你使用工具的体验更好。

|

||||

|

||||

_但在我们跳到安装和解释部分之前,值得一提的是,本教程中介绍的所有说明和示例已在 Ubuntu 14.04 上进行了测试,而我们使用的 Meld 版本为 3.14.2_。

|

||||

|

||||

### 1、 跳转

|

||||

|

||||

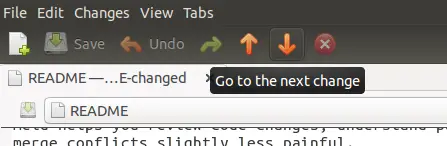

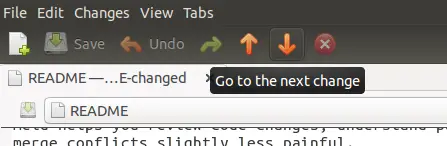

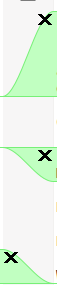

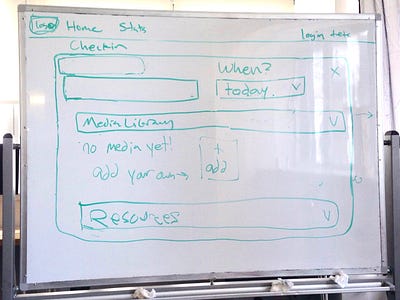

你可能已经知道(我们也在初学者指南中也提到过这一点),标准滚动不是在使用 Meld 时在更改之间跳转的唯一方法 - 你可以使用向上和向下箭头键轻松地从一个更改跳转到另一个更改位于编辑区域上方的窗格中:

|

||||

|

||||

[

|

||||

|

||||

][6]

|

||||

|

||||

但是,这需要你将鼠标指针移动到这些箭头,然后再次单击其中一个(取决于你要去哪里 - 向上或向下)。你会很高兴知道,存在另一种更简单的方式来跳转:只需使用鼠标的滚轮即可在鼠标指针位于中央更改栏上时进行滚动。

|

||||

|

||||

[

|

||||

|

||||

][7]

|

||||

|

||||

这样,你就可以在视线不离开或者分心的情况下进行跳转,

|

||||

|

||||

### 2、 可以对更改进行的操作

|

||||

|

||||

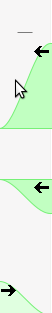

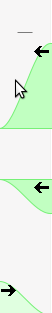

看下上一节的最后一个屏幕截图。你知道那些黑箭头做什么吧?默认情况下,它们允许你执行合并/更改操作 - 当没有冲突时进行合并,并在同一行发生冲突时进行更改。

|

||||

|

||||

但是你知道你可以根据需要删除个别的更改么?是的,这是可能的。为此,你需要做的是在处理更改时按下 Shift 键。你会观察到箭头被变成了十字架。

|

||||

|

||||

[

|

||||

|

||||

][8]

|

||||

|

||||

只需点击其中任何一个,相应的更改将被删除。

|

||||

|

||||

不仅是删除,你还可以确保冲突的更改不会在合并时更改行。例如,以下是一个冲突变化的例子:

|

||||

|

||||

[

|

||||

|

||||

][9]

|

||||

|

||||

现在,如果你点击任意两个黑色箭头,箭头指向的行将被改变,并且将变得与其他文件的相应行相似。只要你想这样做,这是没问题的。但是,如果你不想要更改任何行呢?相反,目的是将更改的行在相应行的上方或下方插入到其他文件中。

|

||||

|

||||

我想说的是,例如,在上面的截图中,需要在 “test23” 之上或之下添加 “test 2”,而不是将 “test23” 更改为 “test2”。你会很高兴知道在 Meld 中这是可能的。就像你按下 Shift 键删除注释一样,在这种情况下,你必须按下 Ctrl 键。

|

||||

|

||||

你会观察到当前操作将被更改为插入 - 双箭头图标将确认这一点 。

|

||||

|

||||

[

|

||||

|

||||

][10]

|

||||

|

||||

从箭头的方向看,此操作可帮助用户将当前更改插入到其他文件中的相应更改 (如所选择的)。

|

||||

|

||||

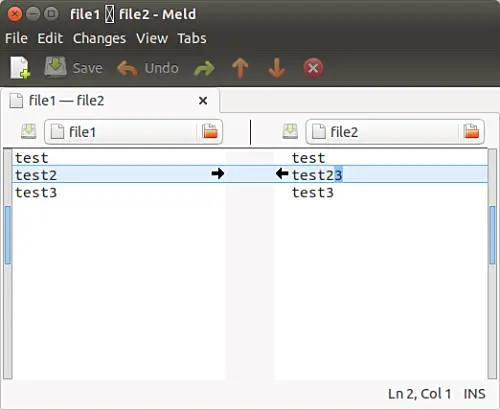

### 3、 自定义文件在 Meld 的编辑器区域中显示的方式

|

||||

|

||||

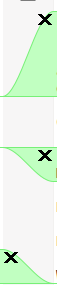

有时候,你希望 Meld 的编辑区域中的文字大小变大(为了更好或更舒适的浏览),或者你希望文本行被包含而不是脱离视觉区域(意味着你不要想使用底部的水平滚动条)。

|

||||

|

||||

Meld 在 _Editor_ 选项卡(_Edit->Preferences->Editor_)的 _Preferences_ 菜单中提供了一些显示和字体相关的自定义选项,你可以进行这些调整:

|

||||

|

||||

[

|

||||

|

||||

][11]

|

||||

|

||||

在这里你可以看到,默认情况下,Meld 使用系统定义的字体宽度。只需取消选中 _Font_ 类别下的框,你将有大量的字体类型和大小选项可供选择。

|

||||

|

||||

然后在 _Display_ 部分,你将看到我们正在讨论的所有自定义选项:你可以设置 Tab 宽度、告诉工具是否插入空格而不是 tab、启用/禁用文本换行、使Meld显示行号和空白(在某些情况下非常有用)以及使用语法突出显示。

|

||||

|

||||

### 4、 过滤文本

|

||||

|

||||

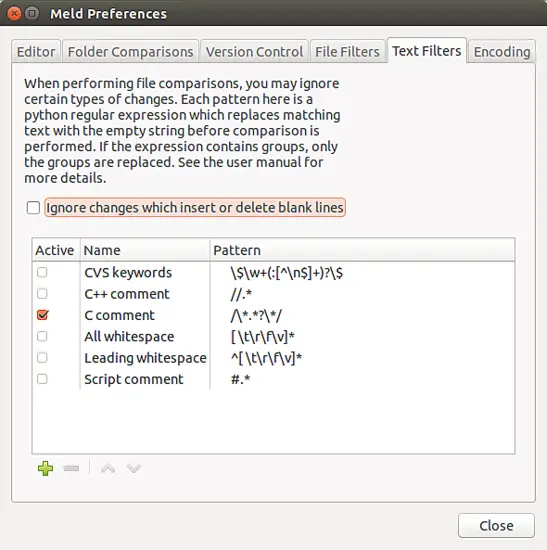

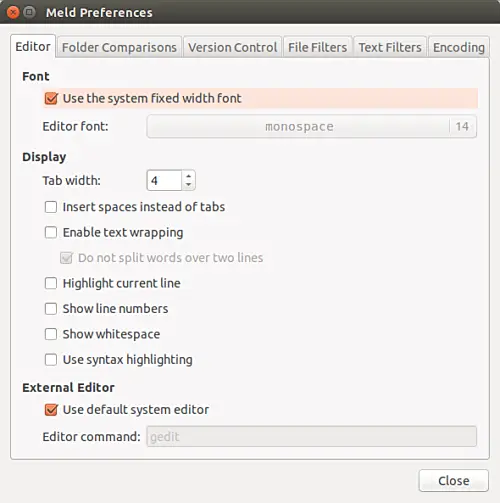

有时候,并不是所有的修改都是对你很重要的。例如,在比较两个 C 编程文件时,你可能不希望 Meld 显示注释中的更改,因为你只想专注于与代码相关的更改。因此,在这种情况下,你可以告诉 Meld 过滤(或忽略)与注释相关的更改。

|

||||

|

||||

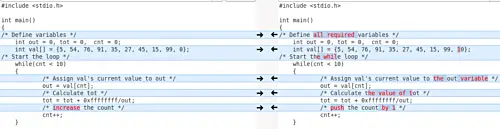

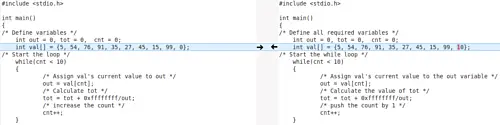

例如,这里是 Meld 中的一个比较,其中由工具高亮了注释相关更改:

|

||||

|

||||

[

|

||||

|

||||

][12]

|

||||

|

||||

而在这种情况下,Meld 忽略了相同的变化,仅关注与代码相关的变更:

|

||||

|

||||

[

|

||||

|

||||

][13]

|

||||

|

||||

很酷,不是吗?那么这是怎么回事?为此,我是在 “_Edit->Preferences->Text Filters_” 标签中启用了 “C comments” 文本过滤器:

|

||||

|

||||

[

|

||||

|

||||

][14]

|

||||

|

||||

如你所见,除了 “C comments” 之外,你还可以过滤掉 C++ 注释、脚本注释、引导或所有的空格等。此外,你还可以为你处理的任何特定情况定义自定义文本过滤器。例如,如果你正在处理日志文件,并且不希望 Meld 高亮显示特定模式开头的行中的更改,则可以为该情况定义自定义文本过滤器。

|

||||

|

||||

但是,请记住,要定义一个新的文本过滤器,你需要了解 Python 语言以及如何使用该语言创建正则表达式。

|

||||

|

||||

### 总结

|

||||

|

||||

这里讨论的所有四个技巧都不是很难理解和使用(当然,除了你想立即创建自定义文本过滤器),一旦你开始使用它们,你会认为他们是真的有好处。这里的关键是要继续练习,否则你学到的任何技巧不久后都会忘记。

|

||||

|

||||

你还知道或者使用其他任何中级 Meld 的贴士和技巧么?如果有的话,欢迎你在下面的评论中分享。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.howtoforge.com/tutorial/beginners-guide-to-visual-merge-tool-meld-on-linux-part-2/

|

||||

|

||||

作者:[Ansh][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.howtoforge.com/tutorial/beginners-guide-to-visual-merge-tool-meld-on-linux-part-2/

|

||||

[1]:https://www.howtoforge.com/tutorial/beginners-guide-to-visual-merge-tool-meld-on-linux-part-2/#-navigation

|

||||

[2]:https://www.howtoforge.com/tutorial/beginners-guide-to-visual-merge-tool-meld-on-linux-part-2/#-things-you-can-do-with-changes

|

||||

[3]:https://www.howtoforge.com/tutorial/beginners-guide-to-visual-merge-tool-meld-on-linux-part-2/#-filtering-text

|

||||

[4]:https://www.howtoforge.com/tutorial/beginners-guide-to-visual-merge-tool-meld-on-linux-part-2/#conclusion

|

||||

[5]:https://linux.cn/article-8402-1.html

|

||||

[6]:https://www.howtoforge.com/images/beginners-guide-to-visual-merge-tool-meld-on-linux-part-2/big/meld-go-next-prev-9.png

|

||||

[7]:https://www.howtoforge.com/images/beginners-guide-to-visual-merge-tool-meld-on-linux-part-2/big/meld-center-area-scrolling.png

|

||||

[8]:https://www.howtoforge.com/images/beginners-guide-to-visual-merge-tool-meld-on-linux-part-2/big/meld-delete-changes.png

|

||||

[9]:https://www.howtoforge.com/images/beginners-guide-to-visual-merge-tool-meld-on-linux-part-2/big/meld-conflicting-change.png

|

||||

[10]:https://www.howtoforge.com/images/beginners-guide-to-visual-merge-tool-meld-on-linux-part-2/big/meld-ctrl-insert.png

|

||||

[11]:https://www.howtoforge.com/images/beginners-guide-to-visual-merge-tool-meld-on-linux-part-2/big/meld-editor-tab.png

|

||||

[12]:https://www.howtoforge.com/images/beginners-guide-to-visual-merge-tool-meld-on-linux-part-2/big/meld-changes-with-comments.png

|

||||

[13]:https://www.howtoforge.com/images/beginners-guide-to-visual-merge-tool-meld-on-linux-part-2/big/meld-changes-without-comments.png

|

||||

[14]:https://www.howtoforge.com/images/beginners-guide-to-visual-merge-tool-meld-on-linux-part-2/big/meld-text-filters.png

|

||||

@ -0,0 +1,68 @@

|

||||

Linux 容器轻松应对性能工程

|

||||

============================================================

|

||||

|

||||

|

||||

图片来源: CC0 Public Domain

|

||||

|

||||

应用程序的性能决定了软件能多快完成预期任务。这回答有关应用程序的几个问题,例如:

|

||||

|

||||

* 峰值负载下的响应时间

|

||||

* 与替代方案相比,它易于使用,受支持的功能和用例

|

||||

* 运营成本(CPU 使用率、内存需求、数据吞吐量、带宽等)

|

||||

|

||||

该性能分析的价值超出了服务负载所需的计算资源或满足峰值需求所需的应用实例数量的估计。性能显然与成功企业的基本要素挂钩。它揭示了用户的总体体验,包括确定什么会拖慢客户预期的响应时间,通过设计满足带宽要求的内容交付来提高客户粘性,选择最佳设备,最终帮助企业发展业务。

|

||||

|

||||

### 问题

|

||||

|

||||

当然,这是对业务服务的性能工程价值的过度简化。为了理解在完成我刚刚所描述事情背后的挑战,让我们把它放到一个真实的稍微有点复杂的场景中。

|

||||

|

||||

|

||||

|

||||

现实世界的应用程序可能托管在云端。应用程序可以利用非常大(或概念上是无穷大)的计算资源。在硬件和软件方面的需求将通过云来满足。从事开发工作的开发人员将使用云交付功能来实现更快的编码和部署。云托管不是免费的,但成本开销与应用程序的资源需求成正比。

|

||||

|

||||

除了<ruby>搜索即服务<rt>Search as a Service</rt></ruby>(SaaS)、<ruby>平台即服务<rt>Platform as a Service</rt></ruby>(PaaS)、<ruby>基础设施即服务<rt>Infrastructure as a Service</rt></ruby>(IaaS)以及<ruby>负载平衡即服务<rt>Load Balancing as a Service</rt></ruby>(LBaaS)之外,当云端管理托管程序的流量时,开发人员可能还会使用这些快速增长的云服务中的一个或多个:

|

||||

|

||||

* <ruby>安全即服务<rt>Security as a Service</rt></ruby> (SECaaS),可满足软件和用户的安全需求

|

||||

* <ruby>数据即服务<rt>Data as a Service</rt></ruby> (DaaS),为应用提供了用户需求的数据

|

||||

* <ruby>登录即服务<rt>Logging as a Service</rt></ruby> (LaaS),DaaS 的近亲,提供了日志传递和使用的分析指标

|

||||

* <ruby>搜索即服务<rt>Search as a Service</rt></ruby> (SaaS),用于应用程序的分析和大数据需求

|

||||

* <ruby>网络即服务<rt>Network as a Service</rt></ruby> (NaaS),用于通过公共网络发送和接收数据

|

||||

|

||||

云服务也呈指数级增长,因为它们使得开发人员更容易编写复杂的应用程序。除了软件复杂性之外,所有这些分布式组件的相互作用变得越来越多。用户群变得更加多元化。该软件的需求列表变得更长。对其他服务的依赖性变大。由于这些因素,这个生态系统的缺陷会引发性能问题的多米诺效应。

|

||||

|

||||

例如,假设你有一个精心编写的应用程序,它遵循安全编码实践,旨在满足不同的负载要求,并经过彻底测试。另外假设你已经将基础架构和分析工作结合起来,以支持基本的性能要求。在系统的实现、设计和架构中建立性能标准需要做些什么?软件如何跟上不断变化的市场需求和新兴技术?如何测量关键参数以调整系统以获得最佳性能?如何使系统具有弹性和自我恢复能力?你如何更快地识别任何潜在的性能问题,并尽早解决?

|

||||

|

||||

### 进入容器

|

||||

|

||||

软件[容器][2]以[微服务][3]设计或面向服务的架构(SoA)的优点为基础,提高了性能,因为包含更小的、自足的代码块的系统更容易编码,对其它系统组件有更清晰、定义良好的依赖。测试更容易,包括围绕资源利用和内存过度消耗的问题比在宏架构中更容易确定。

|

||||

|

||||

当扩容系统以增加负载能力时,容器应用程序的复制快速而简单。安全漏洞能更好地隔离。补丁可以独立版本化并快速部署。性能监控更有针对性,测量更可靠。你还可以重写和“改版”资源密集型代码,以满足不断变化的性能要求。

|

||||

|

||||

容器启动快速,停止也快速。它比虚拟机(VM)有更高效资源利用和更好的进程隔离。容器没有空闲内存和 CPU 闲置。它们允许多个应用程序共享机器,而不会丢失数据或性能。容器使应用程序可移植,因此开发人员可以构建并将应用程序发送到任何支持容器技术的 Linux 服务器上,而不必担心性能损失。容器生存在其内,并遵守其集群管理器(如 Cloud Foundry 的 Diego、[Kubernetes][4]、Apache Mesos 和 Docker Swarm)所规定的配额(比如包括存储、计算和对象计数配额)。

|

||||

|

||||

容器在性能方面表现出色,而即将到来的 “serverless” 计算(也称为<ruby>功能即服务<rt>Function as a Service</rt></ruby>(FaaS))的浪潮将扩大容器的优势。在 FaaS 时代,这些临时性或短期的容器将带来超越应用程序性能的优势,直接转化为在云中托管的间接成本的节省。如果容器的工作更快,那么它的寿命就会更短,而且计算量负载纯粹是按需的。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

作者简介:

|

||||

|

||||

Garima 是 Red Hat 的工程经理,专注于 OpenShift 容器平台。在加入 Red Hat 之前,Garima 帮助 Akamai Technologies&MathWorks Inc. 开创了创新。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/17/2/performance-container-world

|

||||

|

||||

作者:[Garima][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://opensource.com/users/garimavsharma

|

||||

[1]:https://opensource.com/article/17/2/performance-container-world?rate=RozKaIY39AZNxbayqFkUmtkkhoGdctOVuGOAJqVJII8

|

||||

[2]:https://opensource.com/resources/what-are-linux-containers

|

||||

[3]:https://opensource.com/resources/what-are-microservices

|

||||

[4]:https://opensource.com/resources/what-is-kubernetes

|

||||

[5]:https://opensource.com/user/109286/feed

|

||||

[6]:https://opensource.com/article/17/2/performance-container-world#comments

|

||||

[7]:https://opensource.com/users/garimavsharma

|

||||

@ -0,0 +1,61 @@

|

||||

在 Kali Linux 的 Wireshark 中过滤数据包

|

||||

==================

|

||||

|

||||

### 介绍

|

||||

|

||||

数据包过滤可让你专注于你感兴趣的确定数据集。如你所见,Wireshark 默认会抓取_所有_数据包。这可能会妨碍你寻找具体的数据。 Wireshark 提供了两个功能强大的过滤工具,让你简单而无痛地获得精确的数据。

|

||||

|

||||

Wireshark 可以通过两种方式过滤数据包。它可以通过只收集某些数据包来过滤,或者在抓取数据包后进行过滤。当然,这些可以彼此结合使用,并且它们各自的用处取决于收集的数据和信息的多少。

|

||||

|

||||

### 布尔表达式和比较运算符

|

||||

|

||||

Wireshark 有很多很棒的内置过滤器。当开始输入任何一个过滤器字段时,你将看到它们会自动补完。这些过滤器大多数对应于用户对数据包的常见分组方式,比如仅过滤 HTTP 请求就是一个很好的例子。

|

||||

|

||||

对于其他的,Wireshark 使用布尔表达式和/或比较运算符。如果你曾经做过任何编程,你应该熟悉布尔表达式。他们是使用 `and`、`or`、`not` 来验证声明或表达式的真假。比较运算符要简单得多,它们只是确定两件或更多件事情是否彼此相等、大于或小于。

|

||||

|

||||

### 过滤抓包

|

||||

|

||||

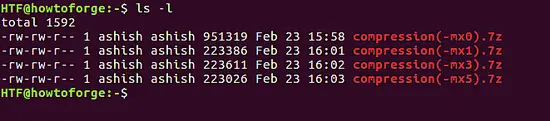

在深入自定义抓包过滤器之前,请先查看 Wireshark 已经内置的内容。单击顶部菜单上的 “Capture” 选项卡,然后点击 “Options”。可用接口下面是可以编写抓包过滤器的行。直接移到左边一个标有 “Capture Filter” 的按钮上。点击它,你将看到一个新的对话框,其中包含内置的抓包过滤器列表。看看里面有些什么。

|

||||

|

||||

|

||||

|

||||

在对话框的底部,有一个用于创建并保存抓包过滤器的表单。按左边的 “New” 按钮。它将创建一个填充有默认数据的新的抓包过滤器。要保存新的过滤器,只需将实际需要的名称和表达式替换原来的默认值,然后单击“Ok”。过滤器将被保存并应用。使用此工具,你可以编写并保存多个不同的过滤器,以便它们将来可以再次使用。

|

||||

|

||||

抓包有自己的过滤语法。对于比较,它不使用等于号,并使用 `>` 和 `<` 来用于大于或小于。对于布尔值来说,它使用 `and`、`or` 和 `not`。

|

||||

|

||||

例如,如果你只想监听 80 端口的流量,你可以使用这样的表达式:`port 80`。如果你只想从特定的 IP 监听端口 80,你可以使用 `port 80 and host 192.168.1.20`。如你所见,抓包过滤器有特定的关键字。这些关键字用于告诉 Wireshark 如何监控数据包以及哪一个数据是要找的。例如,`host` 用于查看来自 IP 的所有流量。`src` 用于查看源自该 IP 的流量。与之相反,`dst` 只监听目标到这个 IP 的流量。要查看一组 IP 或网络上的流量,请使用 `net`。

|

||||

|

||||

### 过滤结果

|

||||

|

||||

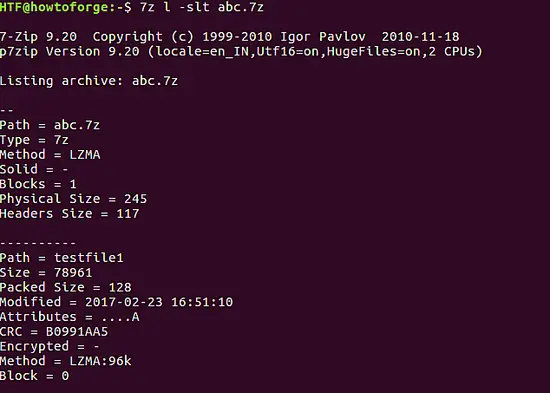

界面的底部菜单栏是专门用于过滤结果的菜单栏。此过滤器不会更改 Wireshark 收集的数据,它只允许你更轻松地对其进行排序。有一个文本字段用于输入新的过滤器表达式,并带有一个下拉箭头以查看以前输入的过滤器。旁边是一个标为 “Expression” 的按钮,另外还有一些用于清除和保存当前表达式的按钮。

|

||||

|

||||

点击 “Expression” 按钮。你将看到一个小窗口,其中包含多个选项。左边一栏有大量的条目,每个都有附加的折叠子列表。你可以用这些来过滤所有不同的协议、字段和信息。你不可能看完所有,所以最好是大概看下。你应该注意到了一些熟悉的选项,如 HTTP、SSL 和 TCP。

|

||||

|

||||

|

||||

|

||||

子列表包含可以过滤的不同部分和请求方法。你可以看到通过 GET 和 POST 请求过滤 HTTP 请求。

|

||||

|

||||

你还可以在中间看到运算符列表。通过从每列中选择条目,你可以使用此窗口创建过滤器,而不用记住 Wireshark 可以过滤的每个条目。对于过滤结果,比较运算符使用一组特定的符号。 `==` 用于确定是否相等。`>` 用于确定一件东西是否大于另一个东西,`<` 找出是否小一些。 `>=` 和 `<=` 分别用于大于等于和小于等于。它们可用于确定数据包是否包含正确的值或按大小过滤。使用 `==` 仅过滤 HTTP GET 请求的示例如下:`http.request.method == "GET"`。

|

||||

|

||||

布尔运算符基于多个条件将小的表达式串到一起。不像是抓包所使用的单词,它使用三个基本的符号来做到这一点。`&&` 代表 “与”。当使用时,`&&` 两边的两个语句都必须为真值才行,以便 Wireshark 来过滤这些包。`||` 表示 “或”。只要两个表达式任何一个为真值,它就会被过滤。如果你正在查找所有的 GET 和 POST 请求,你可以这样使用 `||`:`(http.request.method == "GET") || (http.request.method == "POST")`。`!` 是 “非” 运算符。它会寻找除了指定的东西之外的所有东西。例如,`!http` 将展示除了 HTTP 请求之外的所有东西。

|

||||

|

||||

### 总结思考

|

||||

|

||||

过滤 Wireshark 可以让你有效监控网络流量。熟悉可以使用的选项并习惯你可以创建过滤器的强大表达式需要一些时间。然而一旦你学会了,你将能够快速收集和查找你要的网络数据,而无需梳理长长的数据包或进行大量的工作。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://linuxconfig.org/filtering-packets-in-wireshark-on-kali-linux

|

||||

|

||||

作者:[Nick Congleton][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://linuxconfig.org/filtering-packets-in-wireshark-on-kali-linux

|

||||

[1]:https://linuxconfig.org/filtering-packets-in-wireshark-on-kali-linux#h1-introduction

|

||||

[2]:https://linuxconfig.org/filtering-packets-in-wireshark-on-kali-linux#h2-boolean-expressions-and-comparison-operators

|

||||

[3]:https://linuxconfig.org/filtering-packets-in-wireshark-on-kali-linux#h3-filtering-capture

|

||||

[4]:https://linuxconfig.org/filtering-packets-in-wireshark-on-kali-linux#h4-filtering-results

|

||||

[5]:https://linuxconfig.org/filtering-packets-in-wireshark-on-kali-linux#h5-closing-thoughts

|

||||

@ -0,0 +1,68 @@

|

||||

开源优先:私营公司宣言

|

||||

============================================================

|

||||

|

||||

|

||||

|

||||

这是一个宣言,任何私人组织都可以用来构建其协作转型。请阅读并让我知道你的看法。

|

||||

|

||||

我[在 Linux TODO 小组中作了一个演讲][3]使用了这篇文章作为我的材料。对于那些不熟悉 TODO 小组的人,他们是在商业公司支持开源领导力的组织。相互依赖是很重要的,因为法律、安全和其他共享的知识对于开源社区向前推进是非常重要的。尤其是因为我们需要同时代表商业和公共社区的最佳利益。

|

||||

|

||||

“开源优先”意味着我们在考虑供应商出品的产品以满足我们的需求之前,首先考虑开源。要正确使用开源技术,你需要做的不仅仅是消费,还需要你的参与,以确保开源技术长期存在。要参与开源工作,你需要将工程师的工作时间分别分配给你的公司和开源项目。我们期望将开源贡献意图以及内部协作带到私营公司。我们需要定义、建立和维护一种贡献、协作和择优工作的文化。

|

||||

|

||||

### 开放花园开发

|

||||

|

||||

我们的私营公司致力于通过对技术界的贡献,成为技术的领导者。这不仅仅是使用开源代码,成为领导者需要参与。成为领导者还需要与公司以外的团体(社区)进行各种类型的参与。这些社区围绕一个特定的研发项目进行组织。每个社区的参与就像为公司工作一样。重大成果需要大量的参与。

|

||||

|

||||

### 编码更多,生活更好

|

||||

|

||||

我们必须对计算资源慷慨,对空间吝啬,并鼓励由此产生的凌乱而有创造力的结果。允许人们使用他们的业务的这些工具将改变他们。我们必须有自发的互动。我们必须通过协作来构建鼓励创造性的线上以及线下空间。无法实时联系对方,协作就不能进行。

|

||||

|

||||

### 通过精英体制创新

|

||||

|

||||

我们必须创建一个精英阶层。思想素质要超过群体结构和在其中的职位任期。按业绩晋升鼓励每个人都成为更好的人和雇员。当我们成为最好的坏人时, 充满激情的人之间的争论将会发生。我们的文化应该有鼓励异议的义务。强烈的意见和想法将会变成热情的职业道德。这些想法和意见可以来自而且应该来自所有人。它不应该改变你是谁,而是应该关心你做什么。随着精英体制的进行,我们会投资未经许可就能正确行事的团队。

|

||||

|

||||

### 项目到产品

|

||||

|

||||

由于我们的私营公司拥抱开源贡献,我们还必须在研发项目中的上游工作和实现最终产品之间实现明确的分离。项目是研发工作,快速失败以及开发功能是常态。产品是你投入生产,拥有 SLA,并使用研发项目的成果。分离至少需要分离项目和产品的仓库。正常的分离包含在项目和产品上工作的不同社区。每个社区都需要大量的贡献和参与。为了使这些活动保持独立,需要有一个客户功能以及项目到产品的 bug 修复请求的工作流程。

|

||||

|

||||

接下来,我们会强调在私营公司创建、支持和扩展开源中的主要步骤。

|

||||

|

||||

### 技术上有天赋的人的学校

|

||||

|

||||

高手必须指导没有经验的人。当你学习新技能时,你将它们传给下一个人。当你训练下一个人时,你会面临新的挑战。永远不要期待在一个位置很长时间。获得技能,变得强大,通过学习,然后继续前进。

|

||||

|

||||

### 找到最适合你的人

|

||||

|

||||

我们热爱我们的工作。我们非常喜欢它,我们想和我们的朋友一起工作。我们是一个比我们公司大的社区的一部分。我们应该永远记住招募最好的人与我们一起工作。即使不是为我们公司工作,我们将会为我们周围的人找到很棒的工作。这样的想法使雇用很棒的人成为一种生活方式。随着招聘变得普遍,那么审查和帮助新员工就会变得容易了。

|

||||

|

||||

### 即将写的

|

||||

|

||||

我将在我的博客上发布关于每个宗旨的[更多细节][4],敬请关注。

|

||||

|

||||

_这篇文章最初发表在[ Sean Robert 的博客][1]上。CC BY 许可。_

|

||||

|

||||

(题图: opensource.com)

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

作者简介:

|

||||

|

||||

Sean A Roberts - -以同理心为主导,同时专注于结果。我实践精英体制。在这里发现的智慧。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/17/2/open-source-first

|

||||

|

||||

作者:[Sean A Roberts][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://opensource.com/users/sarob

|

||||

[1]:https://sarob.com/2017/01/open-source-first/

|

||||

[2]:https://opensource.com/article/17/2/open-source-first?rate=CKF77ZVh5e_DpnmSlOKTH-MuFBumAp-tIw-Rza94iEI

|

||||

[3]:https://sarob.com/2017/01/todo-open-source-presentation-17-january-2017/

|

||||

[4]:https://sarob.com/2017/02/open-source-first-project-product/

|

||||

[5]:https://opensource.com/user/117441/feed

|

||||

[6]:https://opensource.com/users/sarob

|

||||

@ -0,0 +1,222 @@

|

||||

Linux 开机引导和启动过程详解

|

||||

===========

|

||||

|

||||

|

||||

> 你是否曾经对操作系统为何能够执行应用程序而感到疑惑?那么本文将为你揭开操作系统引导与启动的面纱。

|

||||

|

||||

理解操作系统开机引导和启动过程对于配置操作系统和解决相关启动问题是至关重要的。该文章陈述了 [GRUB2 引导装载程序][1]开机引导装载内核的过程和 [systemd 初始化系统][2]执行开机启动操作系统的过程。

|

||||

|

||||

事实上,操作系统的启动分为两个阶段:<ruby>引导<rt>boot</rt></ruby>和<ruby>启动<rt>startup</rt></ruby>。引导阶段开始于打开电源开关,结束于内核初始化完成和 systemd 进程成功运行。启动阶段接管了剩余工作,直到操作系统进入可操作状态。

|

||||

|

||||

总体来说,Linux 的开机引导和启动过程是相当容易理解,下文将分节对于不同步骤进行详细说明。

|

||||

|

||||

- BIOS 上电自检(POST)

|

||||

- 引导装载程序 (GRUB2)

|

||||

- 内核初始化

|

||||

- 启动 systemd,其是所有进程之父。

|

||||

|

||||

注意,本文以 GRUB2 和 systemd 为载体讲述操作系统的开机引导和启动过程,是因为这二者是目前主流的 linux 发行版本所使用的引导装载程序和初始化软件。当然另外一些过去使用的相关软件仍然在一些 Linux 发行版本中使用。

|

||||

|

||||

### 引导过程

|

||||

|

||||

引导过程能以两种方式之一初始化。其一,如果系统处于关机状态,那么打开电源按钮将开启系统引导过程。其二,如果操作系统已经运行在一个本地用户(该用户可以是 root 或其他非特权用户),那么用户可以借助图形界面或命令行界面通过编程方式发起一个重启操作,从而触发系统引导过程。重启包括了一个关机和重新开始的操作。

|

||||

|

||||

#### BIOS 上电自检(POST)

|

||||

|

||||

上电自检过程中其实 Linux 没有什么也没做,上电自检主要由硬件的部分来完成,这对于所有操作系统都一样。当电脑接通电源,电脑开始执行 BIOS(<ruby>基本输入输出系统<rt>Basic I/O System</rt></ruby>)的 POST(<ruby>上电自检<rt>Power On Self Test</rt></ruby>)过程。

|

||||

|

||||

在 1981 年,IBM 设计的第一台个人电脑中,BIOS 被设计为用来初始化硬件组件。POST 作为 BIOS 的组成部分,用于检验电脑硬件基本功能是否正常。如果 POST 失败,那么这个电脑就不能使用,引导过程也将就此中断。

|

||||

|

||||

BIOS 上电自检确认硬件的基本功能正常,然后产生一个 BIOS [中断][3] INT 13H,该中断指向某个接入的可引导设备的引导扇区。它所找到的包含有效的引导记录的第一个引导扇区将被装载到内存中,并且控制权也将从引导扇区转移到此段代码。

|

||||

|

||||

引导扇区是引导加载器真正的第一阶段。大多数 Linux 发行版本使用的引导加载器有三种:GRUB、GRUB2 和 LILO。GRUB2 是最新的,也是相对于其他老的同类程序使用最广泛的。

|

||||

|

||||

#### GRUB2

|

||||

|

||||

GRUB2 全称是 GRand Unified BootLoader,Version 2(第二版大一统引导装载程序)。它是目前流行的大部分 Linux 发行版本的主要引导加载程序。GRUB2 是一个用于计算机寻找操作系统内核并加载其到内存的智能程序。由于 GRUB 这个单词比 GRUB2 更易于书写和阅读,在下文中,除特殊指明以外,GRUB 将代指 GRUB2。

|

||||

|

||||

GRUB 被设计为兼容操作系统[多重引导规范][4],它能够用来引导不同版本的 Linux 和其他的开源操作系统;它还能链式加载专有操作系统的引导记录。

|

||||

|

||||

GRUB 允许用户从任何给定的 Linux 发行版本的几个不同内核中选择一个进行引导。这个特性使得操作系统,在因为关键软件不兼容或其它某些原因升级失败时,具备引导到先前版本的内核的能力。GRUB 能够通过文件 `/boot/grub/grub.conf` 进行配置。(LCTT 译注:此处指 GRUB1)

|

||||

|

||||

GRUB1 现在已经逐步被弃用,在大多数现代发行版上它已经被 GRUB2 所替换,GRUB2 是在 GRUB1 的基础上重写完成。基于 Red Hat 的发行版大约是在 Fedora 15 和 CentOS/RHEL 7 时升级到 GRUB2 的。GRUB2 提供了与 GRUB1 同样的引导功能,但是 GRUB2 也是一个类似主框架(mainframe)系统上的基于命令行的前置操作系统(Pre-OS)环境,使得在预引导阶段配置更为方便和易操作。GRUB2 通过 `/boot/grub2/grub.cfg` 进行配置。

|

||||

|

||||

两个 GRUB 的最主要作用都是将内核加载到内存并运行。两个版本的 GRUB 的基本工作方式一致,其主要阶段也保持相同,都可分为 3 个阶段。在本文将以 GRUB2 为例进行讨论其工作过程。GRUB 或 GRUB2 的配置,以及 GRUB2 的命令使用均超过本文范围,不会在文中进行介绍。

|

||||

|

||||

虽然 GRUB2 并未在其三个引导阶段中正式使用这些<ruby>阶段<rt>stage</rt></ruby>名词,但是为了讨论方便,我们在本文中使用它们。

|

||||

|

||||

##### 阶段 1

|

||||

|

||||

如上文 POST(上电自检)阶段提到的,在 POST 阶段结束时,BIOS 将查找在接入的磁盘中查找引导记录,其通常位于 MBR(<ruby>主引导记录<rt>Master Boot Record</rt></ruby>),它加载它找到的第一个引导记录中到内存中,并开始执行此代码。引导代码(及阶段 1 代码)必须非常小,因为它必须连同分区表放到硬盘的第一个 512 字节的扇区中。 在[传统的常规 MBR][5] 中,引导代码实际所占用的空间大小为 446 字节。这个阶段 1 的 446 字节的文件通常被叫做引导镜像(boot.img),其中不包含设备的分区信息,分区是一般单独添加到引导记录中。

|

||||

|

||||

由于引导记录必须非常的小,它不可能非常智能,且不能理解文件系统结构。因此阶段 1 的唯一功能就是定位并加载阶段 1.5 的代码。为了完成此任务,阶段 1.5 的代码必须位于引导记录与设备第一个分区之间的位置。在加载阶段 1.5 代码进入内存后,控制权将由阶段 1 转移到阶段 1.5。

|

||||

|

||||

##### 阶段 1.5

|

||||

|

||||

如上所述,阶段 1.5 的代码必须位于引导记录与设备第一个分区之间的位置。该空间由于历史上的技术原因而空闲。第一个分区的开始位置在扇区 63 和 MBR(扇区 0)之间遗留下 62 个 512 字节的扇区(共 31744 字节),该区域用于存储阶段 1.5 的代码镜像 core.img 文件。该文件大小为 25389 字节,故此区域有足够大小的空间用来存储 core.img。

|

||||

|

||||

因为有更大的存储空间用于阶段 1.5,且该空间足够容纳一些通用的文件系统驱动程序,如标准的 EXT 和其它的 Linux 文件系统,如 FAT 和 NTFS 等。GRUB2 的 core.img 远比更老的 GRUB1 阶段 1.5 更复杂且更强大。这意味着 GRUB2 的阶段 2 能够放在标准的 EXT 文件系统内,但是不能放在逻辑卷内。故阶段 2 的文件可以存放于 `/boot` 文件系统中,一般在 `/boot/grub2` 目录下。

|

||||

|

||||

注意 `/boot` 目录必须放在一个 GRUB 所支持的文件系统(并不是所有的文件系统均可)。阶段 1.5 的功能是开始执行存放阶段 2 文件的 `/boot` 文件系统的驱动程序,并加载相关的驱动程序。

|

||||

|

||||

##### 阶段 2

|

||||

|

||||

GRUB 阶段 2 所有的文件都已存放于 `/boot/grub2` 目录及其几个子目录之下。该阶段没有一个类似于阶段 1 与阶段 1.5 的镜像文件。相应地,该阶段主要需要从 `/boot/grub2/i386-pc` 目录下加载一些内核运行时模块。

|

||||

|

||||

GRUB 阶段 2 的主要功能是定位和加载 Linux 内核到内存中,并转移控制权到内核。内核的相关文件位于 `/boot` 目录下,这些内核文件可以通过其文件名进行识别,其文件名均带有前缀 vmlinuz。你可以列出 `/boot` 目录中的内容来查看操作系统中当前已经安装的内核。

|

||||

|

||||

GRUB2 跟 GRUB1 类似,支持从 Linux 内核选择之一引导启动。Red Hat 包管理器(DNF)支持保留多个内核版本,以防最新版本内核发生问题而无法启动时,可以恢复老版本的内核。默认情况下,GRUB 提供了一个已安装内核的预引导菜单,其中包括问题诊断菜单(recuse)以及恢复菜单(如果配置已经设置恢复镜像)。

|

||||

|

||||

阶段 2 加载选定的内核到内存中,并转移控制权到内核代码。

|

||||

|

||||

#### 内核

|

||||

|

||||

内核文件都是以一种自解压的压缩格式存储以节省空间,它与一个初始化的内存映像和存储设备映射表都存储于 `/boot` 目录之下。

|

||||

|

||||

在选定的内核加载到内存中并开始执行后,在其进行任何工作之前,内核文件首先必须从压缩格式解压自身。一旦内核自解压完成,则加载 [systemd][6] 进程(其是老式 System V 系统的 [init][7] 程序的替代品),并转移控制权到 systemd。

|

||||

|

||||

这就是引导过程的结束。此刻,Linux 内核和 systemd 处于运行状态,但是由于没有其他任何程序在执行,故其不能执行任何有关用户的功能性任务。

|

||||

|

||||

### 启动过程

|

||||

|

||||

启动过程紧随引导过程之后,启动过程使 Linux 系统进入可操作状态,并能够执行用户功能性任务。

|

||||

|

||||

#### systemd

|

||||

|

||||

systemd 是所有进程的父进程。它负责将 Linux 主机带到一个用户可操作状态(可以执行功能任务)。systemd 的一些功能远较旧式 init 程序更丰富,可以管理运行中的 Linux 主机的许多方面,包括挂载文件系统,以及开启和管理 Linux 主机的系统服务等。但是 systemd 的任何与系统启动过程无关的功能均不在此文的讨论范围。

|

||||

|

||||

首先,systemd 挂载在 `/etc/fstab` 中配置的文件系统,包括内存交换文件或分区。据此,systemd 必须能够访问位于 `/etc` 目录下的配置文件,包括它自己的。systemd 借助其配置文件 `/etc/systemd/system/default.target` 决定 Linux 系统应该启动达到哪个状态(或<ruby>目标态<rt>target</rt></ruby>)。`default.target` 是一个真实的 target 文件的符号链接。对于桌面系统,其链接到 `graphical.target`,该文件相当于旧式 systemV init 方式的 **runlevel 5**。对于一个服务器操作系统来说,`default.target` 更多是默认链接到 `multi-user.target`, 相当于 systemV 系统的 **runlevel 3**。 `emergency.target` 相当于单用户模式。

|

||||

|

||||