mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-06 01:20:12 +08:00

commit

e96e20dcbe

@ -1,44 +1,43 @@

|

||||

2017 年 Linux 上最好的 9 个免费视频编辑软件

|

||||

Linux 上最好的 9 个免费视频编辑软件(2018)

|

||||

======

|

||||

**概要:这里介绍 Linux 上几个最好的视频编辑器,介绍他们的特性、利与弊,以及如何在你的 Linux 发行版上安装它们。**

|

||||

|

||||

![Linux 上最好的视频编辑器][1]

|

||||

|

||||

> 概要:这里介绍 Linux 上几个最好的视频编辑器,介绍它们的特性、利与弊,以及如何在你的 Linux 发行版上安装它们。

|

||||

|

||||

![Linux 上最好的视频编辑器][2]

|

||||

|

||||

我们曾经在一篇短文中讨论过[ Linux 上最好的照片管理应用][3],[Linux 上最好的代码编辑器][4]。今天我们将讨论 **Linux 上最好的视频编辑软件**。

|

||||

我们曾经在一篇短文中讨论过 [Linux 上最好的照片管理应用][3],[Linux 上最好的代码编辑器][4]。今天我们将讨论 **Linux 上最好的视频编辑软件**。

|

||||

|

||||

当谈到免费视频编辑软件,Windows Movie Maker 和 iMovie 是大部分人经常推荐的。

|

||||

|

||||

很不幸,上述两者在 GNU/Linux 上都不可用。但是不必担心,我们为你汇集了一个**最好的视频编辑器**清单。

|

||||

|

||||

## Linux 上最好的视频编辑器

|

||||

### Linux 上最好的视频编辑器

|

||||

|

||||

接下来让我们一起看看这些最好的视频编辑软件。如果你觉得文章读起来太长,这里有一个快速摘要。你可以点击链接跳转到文章的相关章节:

|

||||

接下来让我们一起看看这些最好的视频编辑软件。如果你觉得文章读起来太长,这里有一个快速摘要。

|

||||

|

||||

视频编辑器 主要用途 类型

|

||||

Kdenlive 通用视频编辑 免费开源

|

||||

OpenShot 通用视频编辑 免费开源

|

||||

Shotcut 通用视频编辑 免费开源

|

||||

Flowblade 通用视频编辑 免费开源

|

||||

Lightworks 专业级视频编辑 免费增值

|

||||

Blender 专业级三维编辑 免费开源

|

||||

Cinelerra 通用视频编辑 免费开源

|

||||

DaVinci 专业级视频处理编辑 免费增值

|

||||

VidCutter 简单视频拆分合并 免费开源

|

||||

| 视频编辑器 | 主要用途 | 类型 |

|

||||

|----------|---------|-----|

|

||||

| Kdenlive | 通用视频编辑 | 自由开源 |

|

||||

| OpenShot | 通用视频编辑 | 自由开源 |

|

||||

| Shotcut | 通用视频编辑 | 自由开源 |

|

||||

| Flowblade | 通用视频编辑 | 自由开源 |

|

||||

| Lightworks | 专业级视频编辑 | 免费增值 |

|

||||

| Blender | 专业级三维编辑 | 自由开源 |

|

||||

| Cinelerra | 通用视频编辑 | 自由开源 |

|

||||

| DaVinci | 专业级视频处理编辑 | 免费增值 |

|

||||

| VidCutter | 简单视频拆分合并 | 自由开源 |

|

||||

|

||||

### 1\. Kdenlive

|

||||

|

||||

![Kdenlive - Ubuntu 上的免费视频编辑器][1]

|

||||

### 1、 Kdenlive

|

||||

|

||||

![Kdenlive - Ubuntu 上的免费视频编辑器][5]

|

||||

[Kdenlive][6] 是 [KDE][8] 上的一个免费且[开源][7]的视频编辑软件,支持双视频监控,多轨时间线,剪辑列表,支持自定义布局,基本效果,以及基本过渡。

|

||||

|

||||

[Kdenlive][6] 是 [KDE][8] 上的一个自由且[开源][7]的视频编辑软件,支持双视频监控、多轨时间线、剪辑列表、自定义布局、基本效果,以及基本过渡效果。

|

||||

|

||||

它支持多种文件格式和多种摄像机、相机,包括低分辨率摄像机(Raw 和 AVI DV 编辑),Mpeg2,mpeg4 和 h264 AVCHD(小型相机和便携式摄像机),高分辨率摄像机文件,包括 HDV 和 AVCHD 摄像机,专业摄像机,包括 XDCAM-HD™ 流, IMX™ (D10) 流,DVCAM (D10),DVCAM,DVCPRO™,DVCPRO50™ 流以及 DNxHD™ 流。

|

||||

它支持多种文件格式和多种摄像机、相机,包括低分辨率摄像机(Raw 和 AVI DV 编辑)、mpeg2、mpeg4 和 h264 AVCHD(小型相机和便携式摄像机)、高分辨率摄像机文件(包括 HDV 和 AVCHD 摄像机)、专业摄像机(包括 XDCAM-HD™ 流、IMX™ (D10) 流、DVCAM (D10)、DVCAM、DVCPRO™、DVCPRO50™ 流以及 DNxHD™ 流)。

|

||||

|

||||

如果你正寻找 Linux 上 iMovie 的替代品,Kdenlive 会是你最好的选择。

|

||||

|

||||

#### Kdenlive 特性

|

||||

Kdenlive 特性:

|

||||

|

||||

* 多轨视频编辑

|

||||

* 多种音视频格式支持

|

||||

@ -51,50 +50,44 @@ VidCutter 简单视频拆分合并 免费开源

|

||||

* 广泛的硬件支持

|

||||

* 关键帧效果

|

||||

|

||||

|

||||

|

||||

#### 优点

|

||||

优点:

|

||||

|

||||

* 通用视频编辑器

|

||||

* 对于那些熟悉视频编辑的人来说并不太复杂

|

||||

|

||||

缺点:

|

||||

|

||||

|

||||

#### 缺点

|

||||

* 如果你想找的是极致简单的编辑软件,它可能还是令你有些困惑

|

||||

* KDE 应用程序以臃肿而臭名昭著

|

||||

|

||||

|

||||

|

||||

#### 安装 Kdenlive

|

||||

|

||||

Kdenlive 适用于所有主要的 Linux 发行版。你只需在软件中心搜索即可。[Kdenlive 网站的下载部分][9]提供了各种软件包。

|

||||

|

||||

命令行爱好者可以通过在 Debian 和基于 Ubuntu 的 Linux 发行版中运行以下命令从终端安装它:

|

||||

命令行爱好者可以通过在 Debian 和基于 Ubuntu 的 Linux 发行版中运行以下命令从终端安装它:

|

||||

|

||||

```

|

||||

sudo apt install kdenlive

|

||||

```

|

||||

|

||||

### 2\. OpenShot

|

||||

|

||||

![Openshot - ubuntu 上的免费视频编辑器][1]

|

||||

### 2、 OpenShot

|

||||

|

||||

![Openshot - ubuntu 上的免费视频编辑器][10]

|

||||

|

||||

[OpenShot][11] 是 Linux 上的另一个多用途视频编辑器。OpenShot 可以帮助你创建具有过渡和效果的视频。你还可以调整声音大小。当然,它支持大多数格式和编解码器。

|

||||

|

||||

你还可以将视频导出至 DVD,上传至 YouTube,Vimeo,Xbox 360 以及许多常见的视频格式。OpenShot 比 Kdenlive 要简单一些。因此,如果你需要界面简单的视频编辑器,OpenShot 是一个不错的选择。

|

||||

你还可以将视频导出至 DVD,上传至 YouTube、Vimeo、Xbox 360 以及许多常见的视频格式。OpenShot 比 Kdenlive 要简单一些。因此,如果你需要界面简单的视频编辑器,OpenShot 是一个不错的选择。

|

||||

|

||||

它还有个简洁的[开始使用 Openshot][12] 文档。

|

||||

|

||||

#### OpenShot 特性

|

||||

OpenShot 特性:

|

||||

|

||||

* 跨平台,可在Linux,macOS 和 Windows 上使用

|

||||

* 跨平台,可在 Linux、macOS 和 Windows 上使用

|

||||

* 支持多种视频,音频和图像格式

|

||||

* 强大的基于曲线的关键帧动画

|

||||

* 桌面集成与拖放支持

|

||||

* 不受限制的音视频轨道或图层

|

||||

* 可剪辑调整大小,缩放,修剪,捕捉,旋转和剪切

|

||||

* 可剪辑调整大小、缩放、修剪、捕捉、旋转和剪切

|

||||

* 视频转换可实时预览

|

||||

* 合成,图像层叠和水印

|

||||

* 标题模板,标题创建,子标题

|

||||

@ -107,96 +100,81 @@ sudo apt install kdenlive

|

||||

* 音频混合和编辑

|

||||

* 数字视频效果,包括亮度,伽玛,色调,灰度,色度键等

|

||||

|

||||

|

||||

|

||||

#### 优点

|

||||

优点:

|

||||

|

||||

* 用于一般视频编辑需求的通用视频编辑器

|

||||

* 可在 Windows 和 macOS 以及 Linux 上使用

|

||||

|

||||

|

||||

|

||||

#### 缺点

|

||||

缺点:

|

||||

|

||||

* 软件用起来可能很简单,但如果你对视频编辑非常陌生,那么肯定需要一个曲折学习的过程

|

||||

* 你可能仍然没有达到专业级电影制作编辑软件的水准

|

||||

|

||||

|

||||

|

||||

#### 安装 OpenShot

|

||||

|

||||

OpenShot 也可以在所有主流 Linux 发行版的软件仓库中使用。你只需在软件中心搜索即可。你也可以从[官方页面][13]中获取它。

|

||||

|

||||

在 Debian 和基于 Ubuntu 的 Linux 发行版中,我最喜欢运行以下命令来安装它:

|

||||

在 Debian 和基于 Ubuntu 的 Linux 发行版中,我最喜欢运行以下命令来安装它:

|

||||

|

||||

```

|

||||

sudo apt install openshot

|

||||

```

|

||||

|

||||

### 3\. Shotcut

|

||||

|

||||

![Shotcut Linux 视频编辑器][1]

|

||||

### 3、 Shotcut

|

||||

|

||||

![Shotcut Linux 视频编辑器][14]

|

||||

|

||||

[Shotcut][15] 是 Linux 上的另一个编辑器,可以和 Kdenlive 与 OpenShot 归为同一联盟。虽然它确实与上面讨论的其他两个软件有类似的功能,但 Shotcut 更先进的地方是支持4K视频。

|

||||

[Shotcut][15] 是 Linux 上的另一个编辑器,可以和 Kdenlive 与 OpenShot 归为同一联盟。虽然它确实与上面讨论的其他两个软件有类似的功能,但 Shotcut 更先进的地方是支持 4K 视频。

|

||||

|

||||

支持许多音频,视频格式,过渡和效果是 Shotcut 的众多功能中的一部分。它也支持外部监视器。

|

||||

支持许多音频、视频格式,过渡和效果是 Shotcut 的众多功能中的一部分。它也支持外部监视器。

|

||||

|

||||

这里有一系列视频教程让你[轻松上手 Shotcut][16]。它也可在 Windows 和 macOS 上使用,因此你也可以在其他操作系统上学习。

|

||||

|

||||

#### Shotcut 特性

|

||||

Shotcut 特性:

|

||||

|

||||

* 跨平台,可在 Linux,macOS 和 Windows 上使用

|

||||

* 跨平台,可在 Linux、macOS 和 Windows 上使用

|

||||

* 支持各种视频,音频和图像格式

|

||||

* 原生时间线编辑

|

||||

* 混合并匹配项目中的分辨率和帧速率

|

||||

* 音频滤波,混音和效果

|

||||

* 音频滤波、混音和效果

|

||||

* 视频转换和过滤

|

||||

* 具有缩略图和波形的多轨时间轴

|

||||

* 无限制撤消和重做播放列表编辑,包括历史记录视图

|

||||

* 剪辑调整大小,缩放,修剪,捕捉,旋转和剪切

|

||||

* 剪辑调整大小、缩放、修剪、捕捉、旋转和剪切

|

||||

* 使用纹波选项修剪源剪辑播放器或时间轴

|

||||

* 在额外系统显示/监视器上的外部监察

|

||||

* 硬件支持

|

||||

|

||||

|

||||

|

||||

You can read more about its features [here][17].

|

||||

|

||||

#### 优点

|

||||

优点:

|

||||

|

||||

* 用于常见视频编辑需求的通用视频编辑器

|

||||

* 支持 4K 视频

|

||||

* 可在 Windows,macOS 以及 Linux 上使用

|

||||

|

||||

|

||||

|

||||

#### 缺点

|

||||

缺点:

|

||||

|

||||

* 功能太多降低了软件的易用性

|

||||

|

||||

|

||||

|

||||

#### 安装 Shotcut

|

||||

|

||||

Shotcut 以 [Snap][18] 格式提供。你可以在 Ubuntu 软件中心找到它。对于其他发行版,你可以从此[下载页面][19]获取可执行文件来安装。

|

||||

|

||||

### 4\. Flowblade

|

||||

|

||||

![Flowblade ubuntu 上的视频编辑器][1]

|

||||

### 4、 Flowblade

|

||||

|

||||

![Flowblade ubuntu 上的视频编辑器][20]

|

||||

|

||||

[Flowblade][21] 是 Linux 上的一个多轨非线性视频编辑器。与上面讨论的一样,这也是一个免费开源的软件。它具有时尚和现代化的用户界面。

|

||||

[Flowblade][21] 是 Linux 上的一个多轨非线性视频编辑器。与上面讨论的一样,这也是一个自由开源的软件。它具有时尚和现代化的用户界面。

|

||||

|

||||

用 Python 编写,它的设计初衷是快速且准确。Flowblade 专注于在 Linux 和其他免费平台上提供最佳体验。所以它没有在 Windows 和 OS X 上运行的版本。Linux 用户专享其实感觉也不错的。

|

||||

用 Python 编写,它的设计初衷是快速且准确。Flowblade 专注于在 Linux 和其他自由平台上提供最佳体验。所以它没有在 Windows 和 OS X 上运行的版本。Linux 用户专享其实感觉也不错的。

|

||||

|

||||

你也可以查看这个不错的[文档][22]来帮助你使用它的所有功能。

|

||||

|

||||

#### Flowblade 特性

|

||||

Flowblade 特性:

|

||||

|

||||

* 轻量级应用

|

||||

* 为简单的任务提供简单的界面,如拆分,合并,覆盖等

|

||||

* 为简单的任务提供简单的界面,如拆分、合并、覆盖等

|

||||

* 大量的音视频效果和过滤器

|

||||

* 支持[代理编辑][23]

|

||||

* 支持拖拽

|

||||

@ -206,39 +184,32 @@ Shotcut 以 [Snap][18] 格式提供。你可以在 Ubuntu 软件中心找到它

|

||||

* 视频转换和过滤器

|

||||

* 具有缩略图和波形的多轨时间轴

|

||||

|

||||

|

||||

|

||||

你可以在 [Flowblade 特性][24]里阅读关于它的更多信息。

|

||||

|

||||

#### 优点

|

||||

优点:

|

||||

|

||||

* 轻量

|

||||

* 适用于通用视频编辑

|

||||

|

||||

|

||||

|

||||

#### 缺点

|

||||

缺点:

|

||||

|

||||

* 不支持其他平台

|

||||

|

||||

|

||||

|

||||

#### 安装 Flowblade

|

||||

|

||||

Flowblade 应当在所有主流 Linux 发行版的软件仓库中都可以找到。你可以从软件中心安装它。也可以在[下载页面][25]查看更多信息。

|

||||

|

||||

Alternatively, on Ubuntu and Ubuntu-based systems you can install Flowblade with the following command:

|

||||

|

||||

```

|

||||

sudo apt install flowblade

|

||||

```

|

||||

|

||||

### 5\. Lightworks

|

||||

|

||||

![Lightworks 运行在 ubuntu 16.04][1]

|

||||

### 5、 Lightworks

|

||||

|

||||

![Lightworks 运行在 ubuntu 16.04][26]

|

||||

|

||||

如果你在寻找一个具有更多特性的视频编辑器,这就是你想要的。[Lightworks][27] 是一个跨平台的专业视频编辑器,可以在 Linux,Mac OS X 以及 Windows上使用。

|

||||

If you are looking for a video editor with more features, this is the one. [Lightworks][27] is a cross-platform professional video editor available for Linux, Mac OS X, and Windows.

|

||||

|

||||

它是一款屡获殊荣的专业[非线性编辑][28](NLE)软件,支持高达 4K 的分辨率以及 SD 和 HD 格式的视频。

|

||||

|

||||

@ -249,13 +220,11 @@ Lightwokrs 有两个版本:

|

||||

* Lightworks 免费版

|

||||

* Lightworks 专业版

|

||||

|

||||

|

||||

|

||||

专业版有更多功能,比如支持更高的分辨率,支持 4K 和 蓝光视频等。

|

||||

专业版有更多功能,比如支持更高的分辨率,支持 4K 和蓝光视频等。

|

||||

|

||||

这个[页面][29]有广泛的可用文档。你也可以参考 [Lightworks 视频向导页][30]的视频。

|

||||

|

||||

#### Lightworks 特性

|

||||

Lightworks 特性:

|

||||

|

||||

* 跨平台

|

||||

* 简单直观的用户界面

|

||||

@ -267,27 +236,19 @@ Lightwokrs 有两个版本:

|

||||

* 支持拖拽

|

||||

* 各种音频和视频效果和滤镜

|

||||

|

||||

|

||||

|

||||

#### 优点

|

||||

优点:

|

||||

|

||||

* 专业,功能丰富的视频编辑器

|

||||

|

||||

|

||||

|

||||

#### 缺点

|

||||

缺点:

|

||||

|

||||

* 免费版有使用限制

|

||||

|

||||

|

||||

|

||||

#### 安装 Lightworks

|

||||

|

||||

Lightworks 为 Debian 和基于 Ubuntu 的 Linux 提供了 DEB 安装包,为基于 Fedora 的 Linux 发行版提供了RPM 安装包。你可以在[下载页面][31]找到安装包。

|

||||

|

||||

### 6\. Blender

|

||||

|

||||

![Blender 运行在 Ubuntu 16.04][1]

|

||||

### 6、 Blender

|

||||

|

||||

![Blender 运行在 Ubuntu 16.04][32]

|

||||

|

||||

@ -295,48 +256,40 @@ Lightworks 为 Debian 和基于 Ubuntu 的 Linux 提供了 DEB 安装包,为

|

||||

|

||||

虽然最初设计用于制作 3D 模型,但它也具有多种格式视频的编辑功能。

|

||||

|

||||

#### Blender 特性

|

||||

|

||||

* 实时预览,亮度波形,色度矢量显示和直方图显示

|

||||

* 音频混合,同步,擦洗和波形可视化

|

||||

* 最多32个轨道,用于添加视频,图像,音频,场景,面具和效果

|

||||

* 速度控制,调整图层,过渡,关键帧,过滤器等

|

||||

|

||||

Blender 特性:

|

||||

|

||||

* 实时预览、亮度波形、色度矢量显示和直方图显示

|

||||

* 音频混合、同步、擦洗和波形可视化

|

||||

* 最多32个轨道,用于添加视频、图像、音频、场景、面具和效果

|

||||

* 速度控制、调整图层、过渡、关键帧、过滤器等

|

||||

|

||||

你可以在[这里][34]阅读更多相关特性。

|

||||

|

||||

#### 优点

|

||||

优点:

|

||||

|

||||

* 跨平台

|

||||

* 专业级视频编辑

|

||||

|

||||

|

||||

|

||||

#### 缺点

|

||||

缺点:

|

||||

|

||||

* 复杂

|

||||

* 主要用于制作 3D 动画,不专门用于常规视频编辑

|

||||

|

||||

|

||||

|

||||

#### 安装 Blender

|

||||

|

||||

Blender 的最新版本可以从[下载页面][35]下载。

|

||||

|

||||

### 7\. Cinelerra

|

||||

|

||||

![Cinelerra Linux 上的视频编辑器][1]

|

||||

### 7、 Cinelerra

|

||||

|

||||

![Cinelerra Linux 上的视频编辑器][36]

|

||||

|

||||

[Cinelerra][37] 从 1998 年发布以来,已被下载超过500万次。它是 2003 年第一个在 64 位系统上提供非线性编辑的视频编辑器。当时它是Linux用户的首选视频编辑器,但随后一些开发人员丢弃了此项目,它也随之失去了光彩。

|

||||

[Cinelerra][37] 从 1998 年发布以来,已被下载超过 500 万次。它是 2003 年第一个在 64 位系统上提供非线性编辑的视频编辑器。当时它是 Linux 用户的首选视频编辑器,但随后一些开发人员丢弃了此项目,它也随之失去了光彩。

|

||||

|

||||

好消息是它正回到正轨并且良好地再次发展。

|

||||

|

||||

如果你想了解关于 Cinelerra 项目是如何开始的,这里有些[有趣的背景故事][38]。

|

||||

|

||||

#### Cinelerra 特性

|

||||

Cinelerra 特性:

|

||||

|

||||

* 非线性编辑

|

||||

* 支持 HD 视频

|

||||

@ -345,27 +298,20 @@ Blender 的最新版本可以从[下载页面][35]下载。

|

||||

* 不受限制的图层数量

|

||||

* 拆分窗格编辑

|

||||

|

||||

|

||||

|

||||

#### 优点

|

||||

优点:

|

||||

|

||||

* 通用视频编辑器

|

||||

|

||||

|

||||

|

||||

#### 缺点

|

||||

缺点:

|

||||

|

||||

* 不适用于新手

|

||||

* 没有可用的安装包

|

||||

|

||||

|

||||

|

||||

#### 安装 Cinelerra

|

||||

|

||||

你可以从 [SourceForge][39] 下载源码。更多相关信息请看[下载页面][40]。

|

||||

|

||||

### 8\. DaVinci Resolve

|

||||

|

||||

![DaVinci Resolve 视频编辑器][1]

|

||||

### 8、 DaVinci Resolve

|

||||

|

||||

![DaVinci Resolve 视频编辑器][41]

|

||||

|

||||

@ -375,10 +321,10 @@ DaVinci Resolve 不是常规的视频编辑器。它是一个成熟的编辑工

|

||||

|

||||

DaVinci Resolve 不开源。类似于 LightWorks,它也为 Linux 提供一个免费版本。专业版售价是 $300。

|

||||

|

||||

#### DaVinci Resolve 特性

|

||||

DaVinci Resolve 特性:

|

||||

|

||||

* 高性能播放引擎

|

||||

* 支持所有类型的编辑类型,如覆盖,插入,波纹覆盖,替换,适合填充,末尾追加

|

||||

* 支持所有类型的编辑类型,如覆盖、插入、波纹覆盖、替换、适合填充、末尾追加

|

||||

* 高级修剪

|

||||

* 音频叠加

|

||||

* Multicam Editing 可实现实时编辑来自多个摄像机的镜头

|

||||

@ -387,60 +333,48 @@ DaVinci Resolve 不开源。类似于 LightWorks,它也为 Linux 提供一个

|

||||

* 时间轴曲线编辑器

|

||||

* VFX 的非线性编辑

|

||||

|

||||

优点:

|

||||

|

||||

|

||||

#### 优点

|

||||

* 跨平台

|

||||

* 专业级视频编辑器

|

||||

|

||||

|

||||

|

||||

#### 缺点

|

||||

缺点:

|

||||

|

||||

* 不适用于通用视频编辑

|

||||

* 不开源

|

||||

* 免费版本中有些功能无法使用

|

||||

|

||||

|

||||

|

||||

#### 安装 DaVinci Resolve

|

||||

|

||||

你可以从[这个页面][42]下载 DaVinci Resolve。你需要注册,哪怕仅仅下载免费版。

|

||||

|

||||

### 9\. VidCutter

|

||||

|

||||

![VidCutter Linux 上的视频编辑器][1]

|

||||

### 9、 VidCutter

|

||||

|

||||

![VidCutter Linux 上的视频编辑器][43]

|

||||

|

||||

不像这篇文章讨论的其他视频编辑器,[VidCutter][44] 非常简单。除了分割和合并视频之外,它没有其他太多功能。但有时你正好需要 VidCutter 提供的这些功能。

|

||||

|

||||

#### VidCutter 特性

|

||||

VidCutter 特性:

|

||||

|

||||

* 适用于Linux,Windows 和 MacOS 的跨平台应用程序

|

||||

* 支持绝大多数常见视频格式,例如:AVI,MP4,MPEG 1/2,WMV,MP3,MOV,3GP,FLV 等等

|

||||

* 适用于Linux、Windows 和 MacOS 的跨平台应用程序

|

||||

* 支持绝大多数常见视频格式,例如:AVI、MP4、MPEG 1/2、WMV、MP3、MOV、3GP、FLV 等等

|

||||

* 界面简单

|

||||

* 修剪和合并视频,仅此而已

|

||||

|

||||

|

||||

|

||||

#### 优点

|

||||

|

||||

优点:

|

||||

|

||||

* 跨平台

|

||||

* 很适合做简单的视频分割和合并

|

||||

|

||||

|

||||

|

||||

#### 缺点

|

||||

缺点:

|

||||

|

||||

* 不适合用于通用视频编辑

|

||||

* 经常崩溃

|

||||

|

||||

|

||||

|

||||

#### 安装 VidCutter

|

||||

|

||||

如果你使用的是基于 Ubuntu 的 Linux 发行版,你可以使用这个官方 PPA(译者注:PPA,个人软件包档案,PersonalPackageArchives):

|

||||

如果你使用的是基于 Ubuntu 的 Linux 发行版,你可以使用这个官方 PPA(LCTT 译注:PPA,个人软件包档案,PersonalPackageArchives):

|

||||

|

||||

```

|

||||

sudo add-apt-repository ppa:ozmartian/apps

|

||||

sudo apt-get update

|

||||

@ -457,17 +391,17 @@ Arch Linux 用户可以轻松的使用 AUR 安装它。对于其他 Linux 发行

|

||||

|

||||

If you need more than that, **OpenShot** or **Kdenlive** are good choices. Both suit beginners and run well on systems with standard specifications.

|

||||

|

||||

如果你拥有一台高端计算机并且需要高级功能,可以使用 **Lightworks** 或者 **DaVinci Resolve**。如果你在寻找更高级的工具用于制作 3D 作品,If you are looking for more advanced features for 3D works,**Blender** 就得到了你的支持。

|

||||

If you have a high-end computer and need advanced features, you can use **Lightworks** or **DaVinci Resolve**. If you are looking for more advanced features for 3D work, **Blender** is the one to pick.

|

||||

|

||||

这就是关于 **Linux 上最好的视频编辑软件**我所能表达的全部内容,像Ubuntu,Linux Mint,Elementary,以及其他 Linux 发行版。向我们分享你最喜欢的视频编辑器。

|

||||

That covers the **best video editing software for Linux**, whether you run Ubuntu, Linux Mint, Elementary, or another distribution. Share your favorite video editor with us.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://itsfoss.com/best-video-editing-software-linux/

|

||||

|

||||

作者:[It'S Foss Team][a]

|

||||

作者:[itsfoss][a]

|

||||

译者:[fuowang](https://github.com/fuowang)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,19 +1,20 @@

|

||||

在 Linux 上使用 systemd 设置定时器

|

||||

======

|

||||

> 学习使用 systemd 创建启动你的游戏服务器的定时器。

|

||||

|

||||

|

||||

|

||||

之前,我们看到了如何[手动的][1]、[在开机与关机时][2]、[在启用某个设备时][3]、[在文件系统发生改变时][4]启用与禁用 systemd 服务。

|

||||

之前,我们看到了如何[手动的][1]、[在开机与关机时][2]、[在启用某个设备时][3]、[在文件系统发生改变时][4] 启用与禁用 systemd 服务。

|

||||

|

||||

定时器增加了另一种启动服务的方式,基于...时间。尽管与定时任务很相似,但 systemd 定时器稍微地灵活一些。让我们看看它是怎么工作的。

|

||||

定时器增加了另一种启动服务的方式,基于……时间。尽管与定时任务很相似,但 systemd 定时器稍微地灵活一些。让我们看看它是怎么工作的。

|

||||

|

||||

### “定时运行”

|

||||

|

||||

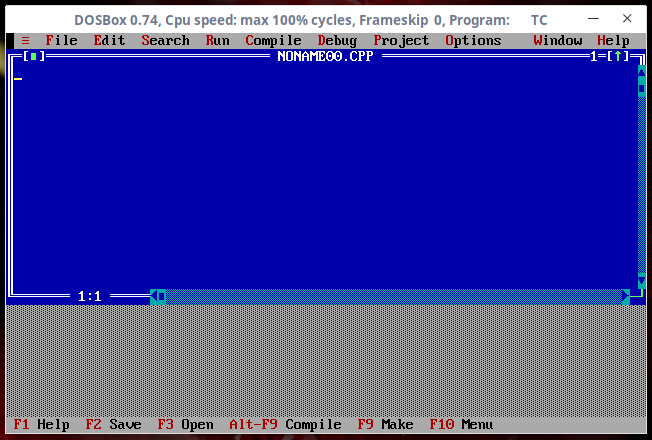

让我们展开[本系列前两篇文章][2]中[你所设置的 ][1] [Minetest][5] 服务器作为如何使用定时器单元的第一个例子。如果你还没有读过那几篇文章,可以现在去看看。

|

||||

让我们展开[本系列前两篇文章][2]中[你所设置的][1] [Minetest][5] 服务器作为如何使用定时器单元的第一个例子。如果你还没有读过那几篇文章,可以现在去看看。

|

||||

|

||||

你将通过创建一个定时器来改进 Minetest 服务器,使得在定时器启动 1 分钟后运行游戏服务器而不是立即运行。这样做的原因可能是,在启动之前可能会用到其他的服务,例如发邮件给其他玩家告诉他们游戏已经准备就绪,你要确保其他的服务(例如网络)在开始前完全启动并运行。

|

||||

你将通过创建一个定时器来“改进” Minetest 服务器,使得在服务器启动 1 分钟后运行游戏服务器而不是立即运行。这样做的原因可能是,在启动之前可能会用到其他的服务,例如发邮件给其他玩家告诉他们游戏已经准备就绪,你要确保其他的服务(例如网络)在开始前完全启动并运行。

|

||||

|

||||

跳到最底下,你的 `_minetest.timer_` 单元看起来就像这样:

|

||||

最终,你的 `minetest.timer` 单元看起来就像这样:

|

||||

|

||||

```

|

||||

# minetest.timer

|

||||

@ -26,23 +27,22 @@ Unit=minetest.service

|

||||

|

||||

[Install]

|

||||

WantedBy=basic.target

|

||||

|

||||

```

|

||||

|

||||

一点也不难吧。

|

||||

|

||||

通常,开头是 `[Unit]` 和一段描述单元作用的信息,这儿没什么新东西。`[Timer]` 这一节是新出现的,但它的作用不言自明:它包含了何时启动服务,启动哪个服务的信息。在这个例子当中,`OnBootSec` 是告诉 systemd 在系统启动后运行服务的指令。

|

||||

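As usual, the unit opens with `[Unit]` and a description of what the unit does, so there is nothing new there. The `[Timer]` section is new, but it is fairly self-explanatory: it holds the information on when the service will be triggered and which service to trigger. In this example, `OnBootSec` tells systemd how long after the system boots it should start the service.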

|

||||

|

||||

其他的指令有:

|

||||

|

||||

* `OnActiveSec=`,告诉 systemd 在定时器启动后多长时间运行服务。

|

||||

* `OnStartupSec=`,同样的,它告诉 systemd 在 systemd 进程启动后多长时间运行服务。

|

||||

* `OnUnitActiveSec=`,告诉 systemd 在上次由定时器激活的服务启动后多长时间运行服务。

|

||||

* `OnUnitInactiveSec=`,告诉 systemd 在上次由定时器激活的服务停用后多长时间运行服务。

|

||||

* `OnActiveSec=`,告诉 systemd 在定时器启动后多长时间运行服务。

|

||||

* `OnStartupSec=`,同样的,它告诉 systemd 在 systemd 进程启动后多长时间运行服务。

|

||||

* `OnUnitActiveSec=`,告诉 systemd 在上次由定时器激活的服务启动后多长时间运行服务。

|

||||

* `OnUnitInactiveSec=`,告诉 systemd 在上次由定时器激活的服务停用后多长时间运行服务。
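The directives listed above can be combined in a single `[Timer]` section. The following is only a sketch under stated assumptions: `example.timer` and `example.service` are hypothetical unit names, the 15-minute interval is an arbitrary illustration, and the file is written to a scratch directory rather than `/etc/systemd/system`.

```shell
#!/bin/sh
# Sketch only: write a hypothetical example.timer into a scratch directory
# so nothing under /etc/systemd/system is touched.
unit_dir=$(mktemp -d)

cat > "$unit_dir/example.timer" <<'EOF'
# example.timer (hypothetical unit name)
[Unit]
Description=Run example.service 1 minute after boot, then every 15 minutes

[Timer]
# First activation: 1 minute after the system boots
OnBootSec=1 min
# Later activations: 15 minutes after the service was last started
OnUnitActiveSec=15 min
Unit=example.service

[Install]
# Same target the article uses for minetest.timer
WantedBy=basic.target
EOF

# Show the generated unit file
cat "$unit_dir/example.timer"
```

Combining `OnBootSec=` with `OnUnitActiveSec=` is one common way to get "once shortly after boot, then periodically" behaviour. Whether that suits a game server depends on the setup.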

|

||||

|

||||

继续 `_minetest.timer_` 单元,`basic.target` 通常用作<ruby>后期引导服务<rt>late boot services</rt></ruby>的<ruby>同步点<rt>synchronization point</rt></ruby>。这就意味着它可以让 `_minetest.timer_` 单元运行在安装完<ruby>本地挂载点<rt>local mount points</rt></ruby>或交换设备,套接字、定时器、路径单元和其他基本的初始化进程之后。就像在[第二篇文章中 systemd 单元][2]里解释的那样,`_targets_` 就像<ruby>旧的运行等级<rt>old run levels</rt></ruby>,可以将你的计算机置于某个状态,或像这样告诉你的服务在达到某个状态后开始运行。

|

||||

继续 `minetest.timer` 单元,`basic.target` 通常用作<ruby>后期引导服务<rt>late boot services</rt></ruby>的<ruby>同步点<rt>synchronization point</rt></ruby>。这就意味着它可以让 `minetest.timer` 单元运行在安装完<ruby>本地挂载点<rt>local mount points</rt></ruby>或交换设备,套接字、定时器、路径单元和其他基本的初始化进程之后。就像在[第二篇文章中 systemd 单元][2]里解释的那样,`targets` 就像<ruby>旧的运行等级<rt>old run levels</rt></ruby>一样,可以将你的计算机置于某个状态,或像这样告诉你的服务在达到某个状态后开始运行。

|

||||

|

||||

在前两篇文章中你配置的`_minetest.service_`文件[最终][2]看起来就像这样:

|

||||

在前两篇文章中你配置的 `minetest.service` 文件[最终][2]看起来就像这样:

|

||||

|

||||

```

|

||||

# minetest.service

|

||||

@ -64,10 +64,9 @@ ExecStop= /bin/kill -2 $MAINPID

|

||||

|

||||

[Install]

|

||||

WantedBy= multi-user.target

|

||||

|

||||

```

|

||||

|

||||

这儿没什么需要修改的。但是你需要将 `_mtsendmail.sh_`(发送你的 email 的脚本)从:

|

||||

这儿没什么需要修改的。但是你需要将 `mtsendmail.sh`(发送你的 email 的脚本)从:

|

||||

|

||||

```

|

||||

#!/bin/bash

|

||||

@ -75,7 +74,6 @@ WantedBy= multi-user.target

|

||||

sleep 20

|

||||

echo $1 | mutt -F /home/<username>/.muttrc -s "$2" my_minetest@mailing_list.com

|

||||

sleep 10

|

||||

|

||||

```

|

||||

|

||||

改成:

|

||||

@ -84,40 +82,37 @@ sleep 10

|

||||

#!/bin/bash

|

||||

# mtsendmail.sh

|

||||

echo $1 | mutt -F /home/paul/.muttrc -s "$2" pbrown@mykolab.com

|

||||

|

||||

```

|

||||

|

||||

你做的事是去除掉 Bash 脚本中那些蹩脚的停顿。Systemd 现在正在等待。

|

||||

你做的事是去除掉 Bash 脚本中那些蹩脚的停顿。Systemd 现在来做等待。

|

||||

|

||||

### 让它运行起来

|

||||

|

||||

确保一切运作正常,禁用 `_minetest.service_`:

|

||||

确保一切运作正常,禁用 `minetest.service`:

|

||||

|

||||

```

|

||||

sudo systemctl disable minetest

|

||||

|

||||

```

|

||||

|

||||

这使得系统启动时它不会一同启动;然后,相反地,启用 `_minetest.timer_`:

|

||||

这使得系统启动时它不会一同启动;然后,相反地,启用 `minetest.timer`:

|

||||

|

||||

```

|

||||

sudo systemctl enable minetest.timer

|

||||

|

||||

```
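Before rebooting, it may help to confirm that the service is no longer enabled and the timer is. The loop below is a minimal sketch, assuming the unit names used in this article. It relies only on `systemctl is-enabled` and falls back to a message on systems without `systemctl`.

```shell
#!/bin/sh
# Sketch: report whether each unit is enabled at boot.
units="minetest.service minetest.timer"

for unit in $units; do
    if command -v systemctl >/dev/null 2>&1; then
        # is-enabled prints a state such as "enabled" or "disabled"; it exits
        # non-zero for anything not enabled, so the exit status is ignored.
        state=$(systemctl is-enabled "$unit" 2>/dev/null || true)
    else
        state="systemctl not available"
    fi
    printf '%s: %s\n' "$unit" "${state:-unknown}"
done
```

After the two commands above, you would expect `minetest.service` to be reported as disabled and `minetest.timer` as enabled.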

|

||||

|

||||

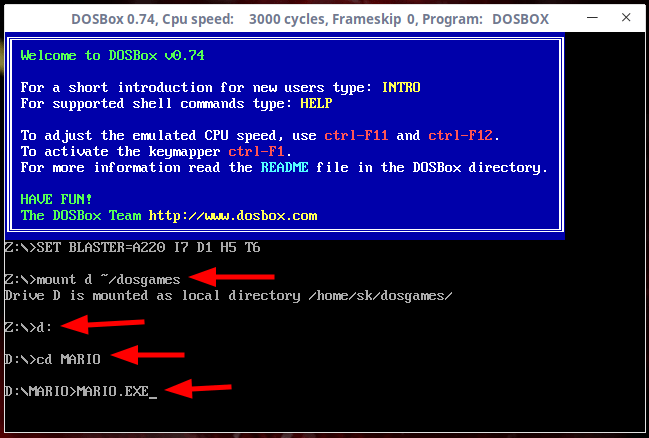

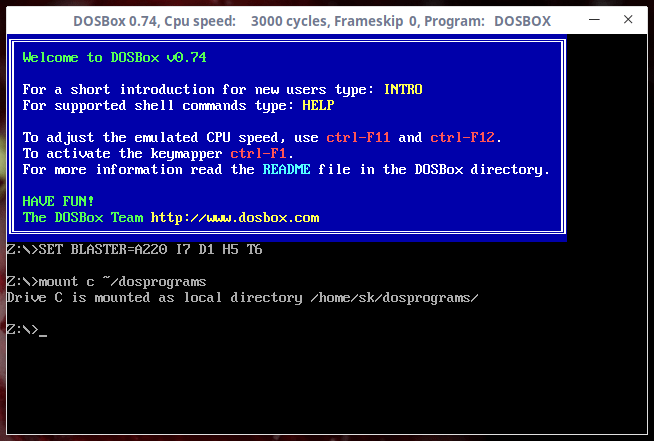

现在你就可以重启服务器了,当运行`sudo journalctl -u minetest.*`后,你就会看到 `_minetest.timer_` 单元执行后大约一分钟,`_minetest.service_` 单元开始运行。

|

||||

Now you can reboot your server. When you run `sudo journalctl -u minetest.*`, you will see that the `minetest.service` unit starts about one minute after the `minetest.timer` unit is executed.

|

||||

|

||||

![minetest timer][7]

|

||||

|

||||

图 1:minetest.timer 运行大约 1 分钟后 minetest.service 开始运行

|

||||

|

||||

[经许可使用][8]

|

||||

*图 1:minetest.timer 运行大约 1 分钟后 minetest.service 开始运行*

|

||||

|

||||

### 时间的问题

|

||||

|

||||

`_minetest.timer_` 在 systemd 的日志里显示的启动时间为 09:08:33 而 `_minetest.service` 启动时间是 09:09:18,它们之间少于 1 分钟,关于这件事有几点需要说明一下:首先,请记住我们说过 `OnBootSec=` 指令是从引导完成后开始计算服务启动的时间。当 `_minetest.timer_` 的时间到来时,引导已经在几秒之前完成了。

|

||||

`minetest.timer` 在 systemd 的日志里显示的启动时间为 09:08:33 而 `minetest.service` 启动时间是 09:09:18,它们之间少于 1 分钟,关于这件事有几点需要说明一下:首先,请记住我们说过 `OnBootSec=` 指令是从引导完成后开始计算服务启动的时间。当 `minetest.timer` 的时间到来时,引导已经在几秒之前完成了。

|

||||

|

||||

另一件事情是 systemd 给自己设置了一个<ruby>误差幅度<rt>margin of error</rt></ruby>(默认是 1 分钟)来运行东西。这有助于在多个<ruby>资源密集型进程<rt>resource-intensive processes</rt></ruby>同时运行时分配负载:通过分配 1 分钟的时间,systemd 可以等待某些进程关闭。这也意味着 `_minetest.service_`会在引导完成后的 1~2 分钟之间启动。但精确的时间谁也不知道。

|

||||

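Another thing is that systemd gives itself a margin of error (1 minute by default) for running things. This helps spread the load when several resource-intensive processes would otherwise run at the same time: with a 1-minute margin, systemd can wait for some processes to power down. It also means that `minetest.service` will start somewhere between 1 and 2 minutes after boot has completed, but exactly when within that window is not known.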

|

||||

|

||||

作为记录,你可以用 `AccuracySec=` 指令[修改误差幅度][9]。

|

||||

顺便一提,你可以用 `AccuracySec=` 指令[修改误差幅度][9]。
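One way to try `AccuracySec=` without editing the timer unit itself is a drop-in file. The sketch below assumes a drop-in named `accuracy.conf` (a hypothetical name) and a 1-second window chosen purely for illustration. It writes into a scratch directory. On a real system the file would normally live under `/etc/systemd/system/minetest.timer.d/`, and you would run `systemctl daemon-reload` afterwards.

```shell
#!/bin/sh
# Sketch: create a drop-in that narrows the timer's scheduling window.
# Written to a scratch directory; copy it into place manually if wanted.
dropin_dir="$(mktemp -d)/minetest.timer.d"
mkdir -p "$dropin_dir"

cat > "$dropin_dir/accuracy.conf" <<'EOF'
[Timer]
# Shrink the default 1-minute margin of error to 1 second (illustrative value)
AccuracySec=1s
EOF

cat "$dropin_dir/accuracy.conf"
```

A tighter window gives more predictable start times, at the cost of giving systemd less room to batch timers that fire close together.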

|

||||

|

||||

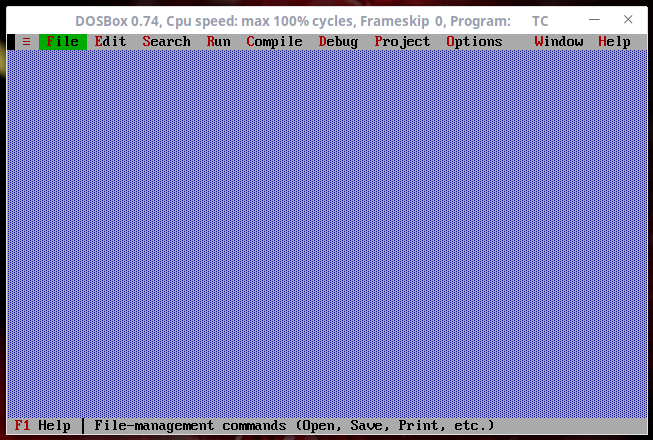

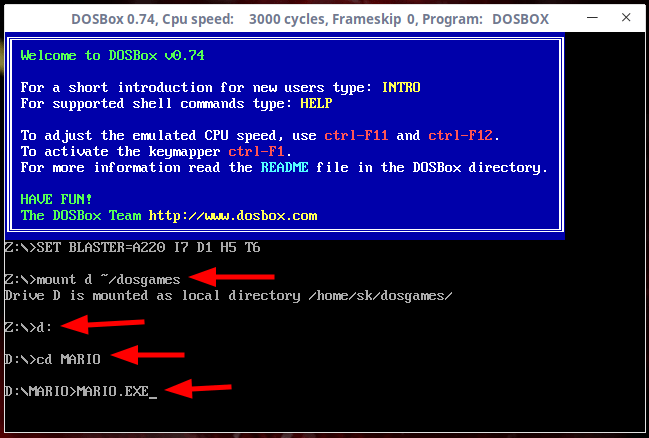

你也可以检查系统上所有的定时器何时运行或是上次运行的时间:

|

||||

|

||||

@ -127,9 +122,7 @@ systemctl list-timers --all

|

||||

|

||||

![check timer][11]

|

||||

|

||||

图 2:检查定时器何时运行或上次运行的时间

|

||||

|

||||

[经许可使用][8]

|

||||

*图 2:检查定时器何时运行或上次运行的时间*

|

||||

|

||||

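One last thing to consider is the format you use to express time spans. Systemd is very flexible here: `2 h`, `2 hours`, or `2hr` all mean 2 hours. For seconds you can use `seconds`, `second`, `sec`, and `s`, and likewise for minutes: `minutes`, `minute`, `min`, and `m`. See `man systemd.time` for every time unit systemd understands.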
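If you want to check how systemd reads a particular spelling, reasonably recent systemd releases include a `timespan` verb in `systemd-analyze`. The sketch below assumes that verb is available. Older installations may not have it, in which case each span is reported as unparseable or the check is skipped.

```shell
#!/bin/sh
# Spellings taken from the paragraph above; each should mean 2 hours.
spans="2 h|2 hours|2hr"

if command -v systemd-analyze >/dev/null 2>&1; then
    old_ifs=$IFS
    IFS='|'                       # split the list on "|" so spaces survive
    for span in $spans; do
        systemd-analyze timespan "$span" || echo "could not parse: $span"
    done
    IFS=$old_ifs
else
    echo "systemd-analyze not found; skipping"
fi
```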

|

||||

|

||||

@ -148,13 +141,13 @@ via: https://www.linux.com/blog/learn/intro-to-linux/2018/7/setting-timer-system

|

||||

作者:[Paul Brown][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[LuuMing](https://github.com/LuuMing)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.linux.com/users/bro66

|

||||

[1]:https://www.linux.com/blog/learn/intro-to-linux/2018/5/writing-systemd-services-fun-and-profit

|

||||

[2]:https://www.linux.com/blog/learn/2018/5/systemd-services-beyond-starting-and-stopping

|

||||

[1]:https://linux.cn/article-9700-1.html

|

||||

[2]:https://linux.cn/article-9703-1.html

|

||||

[3]:https://www.linux.com/blog/intro-to-linux/2018/6/systemd-services-reacting-change

|

||||

[4]:https://www.linux.com/blog/learn/intro-to-linux/2018/6/systemd-services-monitoring-files-and-directories

|

||||

[5]:https://www.minetest.net/

|

||||

103

published/20180827 Top 10 Raspberry Pi blogs to follow.md

Normal file


@ -0,0 +1,103 @@

|

||||

10 个最值得关注的树莓派博客

|

||||

======

|

||||

|

||||

> 如果你正在计划你的下一个树莓派项目,那么这些博客或许有帮助。

|

||||

|

||||

|

||||

|

||||

网上有很多很棒的树莓派爱好者网站、教程、代码仓库、YouTube 频道和其他资源。以下是我最喜欢的十大树莓派博客,排名不分先后。

|

||||

|

||||

### 1、Raspberry Pi Spy

|

||||

|

||||

树莓派粉丝 Matt Hawkins 从很早开始就在他的网站 [Raspberry Pi Spy][4] 上撰写了大量全面且信息丰富的教程。我从这个网站上直接学到了很多东西,而且 Matt 似乎也总是涵盖到众多主题的第一个人。在我学习使用树莓派的前三年里,多次在这个网站得到帮助。

|

||||

|

||||

值得庆幸的是,这个不断采用新技术的网站仍然很强大。我希望看到它继续存在下去,让新社区成员在需要时得到帮助。

|

||||

|

||||

### 2、Adafruit

|

||||

|

||||

[Adafruit][1] 是硬件黑客中知名品牌之一。该公司制作和销售漂亮的硬件,并提供由员工、社区成员,甚至 Lady Ada 女士自己编写的优秀教程。

|

||||

|

||||

除了网上商店,Adafruit 还经营一个博客,这个博客充满了来自世界各地的精彩内容。在博客上可以查看树莓派的类别,特别是在工作日的最后一天,会在 Adafruit Towers 举办名为 [Friday is Pi Day][1] 的活动。

|

||||

|

||||

### 3、Recantha 的 Raspberry Pi Pod

|

||||

|

||||

Mike Horne(Recantha)是英国一位重要的树莓派社区成员,负责 [CamJam 和 Potton Pi&Pint][2](剑桥的两个树莓派社团)以及 [Pi Wars][3] (一年一度的树莓派机器人竞赛)。他为其他人建立树莓派社团提供建议,并且总是有时间帮助初学者。Horne 和他的共同组织者 Tim Richardson 一起开发了 CamJam Edu Kit (一系列小巧且价格合理的套件,适合初学者使用 Python 学习物理计算)。

|

||||

|

||||

除此之外,他还运营着 [Pi Pod][18],这是一个包含了世界各地树莓派相关内容的博客。它可能是这个列表中更新最频繁的树莓派博客,所以这是一个把握树莓派社区动向的极好方式。

|

||||

|

||||

### 4、Raspberry Pi official blog

|

||||

|

||||

必须提一下[树莓派基金会][19]的官方博客,这个博客涵盖了基金会的硬件、软件、教育、社区、慈善和青年编码俱乐部的一系列内容。博客上的大型主题是家庭数字化、教育授权,以及硬件版本和软件更新的官方新闻。

|

||||

|

||||

该博客自 [2011 年][5] 运行至今,并提供了自那时以来所有 1800 多个帖子的 [存档][6] 。你也可以在 Twitter 上关注[@raspberrypi_otd][7],这是我用 [Python][8] 创建的机器人(教程在这里:[Opensource.com][9])。Twitter 机器人推送来自博客存档的过去几年同一天的树莓派帖子。

|

||||

|

||||

### 5、RasPi.tv

|

||||

|

||||

另一位开创性的树莓派社区成员是 Alex Eames,通过他的博客和 YouTube 频道 [RasPi.tv][20],他很早就加入了树莓派社区。他的网站为很多创客项目提供高质量、精心制作的视频教程和书面指南。

|

||||

|

||||

Alex 的网站 [RasP.iO][10] 制作了一系列树莓派附加板和配件,包括方便的 GPIO 端口引脚、电路板测量尺等等。他的博客也拓展到了 [Arduino][11]、[WEMO][12] 以及其他小网站。

|

||||

|

||||

### 6、pyimagesearch

|

||||

|

||||

虽然不是严格的树莓派博客(名称中的“py”是“Python”,而不是“树莓派”),但该网站的 [树莓派栏目][13] 有着大量内容。 Adrian Rosebrock 获得了计算机视觉和机器学习领域的博士学位,他的博客旨在分享他在学习和制作自己的计算机视觉项目时所学到的机器学习技巧。

|

||||

|

||||

如果你想使用树莓派的相机模块学习面部或物体识别,来这个网站就对了。Adrian 在图像识别领域的深度学习和人工智能知识和实际应用是首屈一指的,而且他编写了自己的项目,这样任何人都可以进行尝试。

|

||||

|

||||

### 7、Raspberry Pi Roundup

|

||||

|

||||

这个[博客][21]由英国官方树莓派经销商之一 The Pi Hut 进行维护,会有每周的树莓派新闻。这是另一个很好的资源,可以紧跟树莓派社区的最新资讯,而且之前的文章也值得回顾。

|

||||

|

||||

### 8、Dave Akerman

|

||||

|

||||

[Dave Akerman][22] 是研究高空热气球的一流专家,他分享使用树莓派以最低的成本进行热气球发射方面的知识和经验。他会在一张由热气球拍摄的平流层照片下面对本次发射进行评论,也会对个人发射树莓派热气球给出自己的建议。

|

||||

|

||||

查看 Dave 的[博客][22],了解精彩的临近空间摄影作品。

|

||||

|

||||

### 9、Pimoroni

|

||||

|

||||

[Pimoroni][23] 是一家世界知名的树莓派经销商,其总部位于英国谢菲尔德。这家经销商制作了著名的 [树莓派彩虹保护壳][14],并推出了许多极好的定制附加板和配件。

|

||||

|

||||

Pimoroni 的博客布局与其硬件设计和品牌推广一样精美,博文内容非常适合创客和业余爱好者在家进行创作,并且可以在有趣的 YouTube 频道 [Bilge Tank][15] 上找到。

|

||||

|

||||

### 10、Stuff About Code

|

||||

|

||||

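Martin O'Hanlon went from Raspberry Pi community member to Raspberry Pi Foundation employee. He started out building Minecraft hacks on the Pi for fun and recently joined the Foundation as a content editor. Luckily, Martin's new job has not stopped him from updating his [blog][24] and sharing useful tidbits with the world.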

|

||||

|

||||

As well as lots of Minecraft content, you will find Martin O'Hanlon's contributions on the Python libraries [Blue Dot][16] and [guizero][17], along with general Raspberry Pi tips.

|

||||

|

||||

------

|

||||

|

||||

via: https://opensource.com/article/18/8/top-10-raspberry-pi-blogs-follow

|

||||

|

||||

作者:[Ben Nuttall][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[jlztan](https://github.com/jlztan)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/bennuttall

|

||||

[1]: https://blog.adafruit.com/category/raspberry-pi/

|

||||

[2]: https://camjam.me/?page_id=753

|

||||

[3]: https://piwars.org/

|

||||

[4]: https://www.raspberrypi-spy.co.uk/

|

||||

[5]: https://www.raspberrypi.org/blog/first-post/

|

||||

[6]: https://www.raspberrypi.org/blog/archive/

|

||||

[7]: https://twitter.com/raspberrypi_otd

|

||||

[8]: https://github.com/bennuttall/rpi-otd-bot/blob/master/src/bot.py

|

||||

[9]: https://opensource.com/article/17/8/raspberry-pi-twitter-bot

|

||||

[10]: https://rasp.io/

|

||||

[11]: https://www.arduino.cc/

|

||||

[12]: http://community.wemo.com/

|

||||

[13]: https://www.pyimagesearch.com/category/raspberry-pi/

|

||||

[14]: https://shop.pimoroni.com/products/pibow-for-raspberry-pi-3-b-plus

|

||||

[15]: https://www.youtube.com/channel/UCuiDNTaTdPTGZZzHm0iriGQ

|

||||

[16]: https://bluedot.readthedocs.io/en/latest/#

|

||||

[17]: https://lawsie.github.io/guizero/

|

||||

[18]: https://www.recantha.co.uk/blog/

|

||||

[19]: https://www.raspberrypi.org/blog/

|

||||

[20]: https://rasp.tv/

|

||||

[21]: https://thepihut.com/blogs/raspberry-pi-roundup

|

||||

[22]: http://www.daveakerman.com/

|

||||

[23]: https://blog.pimoroni.com/

|

||||

[24]: https://www.stuffaboutcode.com/

|

||||

@ -1,13 +1,13 @@

|

||||

Gifski – 一个跨平台的高质量 GIF 编码器

|

||||

Gifski:一个跨平台的高质量 GIF 编码器

|

||||

======

|

||||

|

||||

|

||||

|

||||

作为一名文字工作者,我需要在我的文章中添加图片。有时为了更容易讲清楚某个概念,我还会添加视频或者 gif 动图,相比于文字,通过视频或者 gif 格式的输出,读者可以更容易地理解我的指导。前些天,我已经写了篇文章来介绍针对 Linux 的功能丰富的强大截屏工具 [**Flameshot**][1]。今天,我将向你展示如何从一段视频或者一些图片来制作高质量的 gif 动图。这个工具就是 **Gifski**,一个跨平台、开源、基于 **Pngquant** 的高质量命令行 GIF 编码器。

|

||||

作为一名文字工作者,我需要在我的文章中添加图片。有时为了更容易讲清楚某个概念,我还会添加视频或者 gif 动图,相比于文字,通过视频或者 gif 格式的输出,读者可以更容易地理解我的指导。前些天,我已经写了篇文章来介绍针对 Linux 的功能丰富的强大截屏工具 [Flameshot][1]。今天,我将向你展示如何从一段视频或者一些图片来制作高质量的 gif 动图。这个工具就是 **Gifski**,一个跨平台、开源、基于 **Pngquant** 的高质量命令行 GIF 编码器。

|

||||

|

||||

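For those wondering what pngquant is: it is a command-line lossy compressor for PNG images, and the best PNG compressor I have used. It can reduce PNG file sizes by up to **70%** with little visible loss of quality while preserving full alpha transparency. The compressed images work in all web browsers and operating systems. Gifski is built on pngquant and uses its features to create high-quality GIF animations with thousands of colors per frame. Gifski also needs **ffmpeg** to convert video into PNG frames.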

|

||||

|

||||

### **安装 Gifski**

|

||||

### 安装 Gifski

|

||||

|

||||

首先需要确保你安装了 FFMpeg 和 Pngquant。

|

||||

|

||||

@ -15,31 +15,34 @@ FFmpeg 在大多数的 Linux 发行版的默认软件仓库中都可以获取到

|

||||

|

||||

- [在 Linux 中如何安装 FFmpeg](https://www.ostechnix.com/install-ffmpeg-linux/)

|

||||

|

||||

Pngquant 可以从 [**AUR**][2] 中获取到。要在基于 Arch 的系统安装它,使用任意一个 AUR 帮助程序即可,例如下面示例中的 [**Yay**][3]:

|

||||

Pngquant 可以从 [AUR][2] 中获取到。要在基于 Arch 的系统安装它,使用任意一个 AUR 帮助程序即可,例如下面示例中的 [Yay][3]:

|

||||

|

||||

```

|

||||

$ yay -S pngquant

|

||||

```

|

||||

|

||||

在基于 Debian 的系统中,运行:

|

||||

|

||||

```

|

||||

$ sudo apt install pngquant

|

||||

```

|

||||

|

||||

假如在你使用的发行版中没有 pngquant,你可以从源码编译并安装它。为此你还需要安装 **`libpng-dev`** 包。

|

||||

假如在你使用的发行版中没有 pngquant,你可以从源码编译并安装它。为此你还需要安装 `libpng-dev` 包。

|

||||

|

||||

```

|

||||

$ git clone --recursive https://github.com/kornelski/pngquant.git
$ cd pngquant

|

||||

|

||||

$ make

|

||||

|

||||

$ sudo make install

|

||||

```

|

||||

|

||||

安装完上述依赖后,再安装 Gifski。假如你已经安装了 [**Rust**][4] 编程语言,你可以使用 **cargo** 来安装它:

|

||||

安装完上述依赖后,再安装 Gifski。假如你已经安装了 [Rust][4] 编程语言,你可以使用 **cargo** 来安装它:

|

||||

|

||||

```

|

||||

$ cargo install gifski

|

||||

```

|

||||

|

||||

另外,你还可以使用 [**Linuxbrew**][5] 包管理器来安装它:

|

||||

另外,你还可以使用 [Linuxbrew][5] 包管理器来安装它:

|

||||

|

||||

```

|

||||

$ brew install gifski

|

||||

```

|

||||

@ -49,6 +52,7 @@ $ brew install gifski

|

||||

### 使用 Gifski 来创建高质量的 GIF 动图

|

||||

|

||||

进入你保存 PNG 图片的目录,然后运行下面的命令来从这些图片创建 GIF 动图:

|

||||

|

||||

```

|

||||

$ gifski -o file.gif *.png

|

||||

```

|

||||

@ -64,12 +68,13 @@ Gifski 还有其他的特性,例如:

|

||||

* 以给定顺序来编码图片,而不是以排序的结果来编码

|

||||

|

||||

为了创建特定大小的 GIF 动图,例如宽为 800,高为 400,可以使用下面的命令:

|

||||

|

||||

```

|

||||

$ gifski -o file.gif -W 800 -H 400 *.png

|

||||

|

||||

```

|

||||

|

||||

你可以设定 GIF 动图在每秒钟展示多少帧,默认值是 **20**。为此,可以运行下面的命令:

|

||||

|

||||

```

|

||||

$ gifski -o file.gif --fps 1 *.png

|

||||

```

|

||||

@ -77,42 +82,49 @@ $ gifski -o file.gif --fps 1 *.png

|

||||

在上面的例子中,我指定每秒钟展示 1 帧。

|

||||

|

||||

我们还能够以特定质量(1-100 范围内)来编码。显然,更低的质量将生成更小的文件,更高的质量将生成更大的 GIF 动图文件。

|

||||

|

||||

```

|

||||

$ gifski -o file.gif --quality 50 *.png

|

||||

```

|

||||

|

||||

当需要编码大量图片时,Gifski 将会花费更多时间。如果想要编码过程加快到通常速度的 3 倍左右,可以运行:

|

||||

|

||||

```

|

||||

$ gifski -o file.gif --fast *.png

|

||||

```

|

||||

|

||||

请注意上面的命令产生的 GIF 动图文件将减少 10% 的质量并且文件大小也会更大。

|

||||

请注意上面的命令产生的 GIF 动图文件将减少 10% 的质量,并且文件大小也会更大。

|

||||

|

||||

如果想让图片以某个给定的顺序(而不是通过排序)精确地被编码,可以使用 `--nosort` 选项。

|

||||

|

||||

如果想让图片以某个给定的顺序(而不是通过排序)精确地被编码,可以使用 **`--nosort`** 选项。

|

||||

```

|

||||

$ gifski -o file.gif --nosort *.png

|

||||

```

|

||||

|

||||

假如你不想让 GIF 循环播放,只需要使用 **`--once`** 选项即可:

|

||||

假如你不想让 GIF 循环播放,只需要使用 `--once` 选项即可:

|

||||

|

||||

```

|

||||

$ gifski -o file.gif --once *.png

|

||||

```
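The options described above can be combined in one invocation. The following is a sketch, not a recommended preset: the output name, dimensions, frame rate, and quality are illustrative values, and the script only prints the command when `gifski` or the PNG frames are missing.

```shell
#!/bin/sh
# Sketch: assemble one gifski command from the options described above.
out="demo.gif"      # hypothetical output name
width=800
height=400
fps=10              # illustrative; the text gives 20 as the default
quality=80          # illustrative; valid range is 1-100

# Store the command in the positional parameters so it can be shown or run
set -- gifski -o "$out" -W "$width" -H "$height" --fps "$fps" --quality "$quality"

if command -v gifski >/dev/null 2>&1 && ls ./*.png >/dev/null 2>&1; then
    "$@" ./*.png
else
    # Nothing to encode here, so just show the command that would run.
    echo "would run: $* *.png"
fi
```

Adding `--fast`, `--nosort`, or `--once` to the `set --` line works the same way.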

|

||||

|

||||

**从视频创建 GIF 动图**

|

||||

### 从视频创建 GIF 动图

|

||||

|

||||

有时或许你想从一个视频创建 GIF 动图。这也是可以做到的,这时候 FFmpeg 便能提供帮助。首先像下面这样,将视频转换成一系列的 PNG 图片:

|

||||

|

||||

```

|

||||

$ ffmpeg -i video.mp4 frame%04d.png

|

||||

```

|

||||

|

||||

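The command above creates images named “frame0001.png”, “frame0002.png”, “frame0003.png” and so on from the video file `video.mp4` (the `%04d` is replaced by the frame number, zero-padded to four digits), and saves them in the current working directory.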

|

||||

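The command above creates images named “frame0001.png”, “frame0002.png”, “frame0003.png” and so on from the video file `video.mp4` (the `%04d` is replaced by the frame number, zero-padded to four digits), and saves them in the current working directory.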
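The `%04d` pattern follows the usual `printf` convention, so its effect can be previewed in the shell without FFmpeg. This is only an illustration; `frame_name` is a hypothetical helper.

```shell
# frame_name N -> the file name the pattern frame%04d.png yields for frame N
frame_name() {
    printf 'frame%04d.png\n' "$1"
}

frame_name 1     # frame0001.png
frame_name 42    # frame0042.png
```

Because the numbers are zero-padded, the resulting file names sort in frame order when expanded by `*.png`.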

|

||||

|

||||

Once the images are converted, just run the following command to make the GIF animation:

|

||||

|

||||

```

|

||||

$ gifski -o file.gif *.png

|

||||

```

|

||||

|

||||

For more details, see its help section:

|

||||

|

||||

```

|

||||

$ gifski -h

|

||||

```

|

||||

@ -129,17 +141,17 @@ $ gifski -h

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.ostechnix.com/gifski-a-cross-platform-high-quality-gif-encoder/

|

||||

via: https://www.ostechnix.com/gifski-a-cross-platform-high-quality-gif-encoder/

|

||||

|

||||

Author: [SK][a]

|

||||

Topic selection: [lujun9972](https://github.com/lujun9972)

|

||||

Translator: [FSSlc](https://github.com/FSSlc)

|

||||

Proofreader: [proofreader ID](https://github.com/校对者ID)

|

||||

Proofreader: [wxy](https://github.com/wxy)

|

||||

|

||||

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

|

||||

|

||||

[a]: https://www.ostechnix.com/author/sk/

|

||||

[1]: https://www.ostechnix.com/flameshot-a-simple-yet-powerful-feature-rich-screenshot-tool/

|

||||

[1]: https://linux.cn/article-10180-1.html

|

||||

[2]: https://aur.archlinux.org/packages/pngquant/

|

||||

[3]: https://www.ostechnix.com/yay-found-yet-another-reliable-aur-helper/

|

||||

[4]: https://www.ostechnix.com/install-rust-programming-language-in-linux/

|

||||

68

published/20180907 6 open source tools for writing a book.md

Normal file

68

published/20180907 6 open source tools for writing a book.md

Normal file

@ -0,0 +1,68 @@

|

||||

6 open source tools for writing a book

|

||||

======

|

||||

> These versatile, free tools can meet all your needs for writing, editing, and producing your own book.

|

||||

|

||||

|

||||

|

||||

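I first used and contributed to free and open source software in 1993, and I have been an open source developer and evangelist ever since. Although the project I am best remembered for is the [FreeDOS Project][1], an open source implementation of the DOS operating system, I have written or contributed to dozens of open source software projects.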

|

||||

|

||||

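I recently wrote a book about FreeDOS. I wrote *[Using FreeDOS][2]* to celebrate the 24th anniversary of FreeDOS. It is a collection of material on installing and using FreeDOS and on my favorite DOS programs, along with quick-reference guides to the DOS command line and DOS batch programming. I have been working on the book for the past few months, with the help of an excellent professional editor.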

|

||||

|

||||

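*Using FreeDOS* is available under the Creative Commons Attribution (cc-by) International Public License. You can download the EPUB and PDF versions for free from the [FreeDOS e-books][2] website. (I also plan to offer a print edition for those who prefer paper.)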

|

||||

|

||||

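The book was produced almost entirely with open source software. I would like to share some thoughts on the tools I used to create, edit, and produce *Using FreeDOS*.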

|

||||

|

||||

### Google Docs

|

||||

|

||||

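[Google Docs][3] is the only tool I used that is not open source software. I uploaded my first draft to Google Docs so that I could collaborate with my editor. I am sure there are open source collaboration tools, but Google Docs lets two people edit the same document at the same time, leave comments, suggest edits, and track changes (not to mention its paragraph styles and the ability to download the finished document), which made it a valuable part of the editing process.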

|

||||

|

||||

### LibreOffice

|

||||

|

||||

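I started with [LibreOffice][4] 6.0, but I ended up finishing the book with LibreOffice 6.1. I like LibreOffice's rich support for styles. Paragraph styles make it easy to apply a style to titles, headers, body text, sample code, and other text. Character styles let me change the appearance of text within a paragraph, such as inline sample code or a different style to indicate a file name. Graphic styles let me apply certain styles to screenshots and other images. Page styles let me easily modify the layout and appearance of pages.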

|

||||

|

||||

### GIMP

|

||||

|

||||

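My book includes many screenshots of DOS programs, website screenshots, and the FreeDOS logo. I used [GIMP][5] to modify the images for the book. Usually this was just cropping or resizing an image, but while preparing the print edition I used GIMP to create a few images better suited to the print layout.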

|

||||

|

||||

### Inkscape

|

||||

|

||||

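Most of the FreeDOS logos and the fish mascot are in SVG format, and I used [Inkscape][6] to tweak them. When preparing the PDF version of the e-book, I wanted a simple blue banner at the top of the page with the FreeDOS logo in the corner. After some experimenting, I found it easier to create the SVG banner I wanted in Inkscape and then paste it into the header.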

|

||||

|

||||

### ImageMagick

|

||||

|

||||

While GIMP would do the job well, sometimes it is faster to run an [ImageMagick][7] command over a set of images, for example to convert them to PNG format or to resize them.
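A batch conversion of this kind might look like the sketch below. It assumes the inputs are PPM files in the current directory and that ImageMagick's `convert` command is installed (newer ImageMagick releases prefer `magick`); `png_name` is a hypothetical helper, not something from the article.

```shell
# png_name FILE.ppm -> FILE.png (string manipulation only, no conversion)
png_name() {
    printf '%s\n' "${1%.ppm}.png"
}

# Convert every PPM file in the current directory, if ImageMagick is available.
if command -v convert >/dev/null 2>&1; then
    for f in *.ppm; do
        [ -e "$f" ] || continue   # no .ppm files: the glob stays literal, so skip it
        convert "$f" "$(png_name "$f")"
    done
fi
```

A single loop like this replaces opening and exporting each image by hand in GIMP.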

|

||||

|

||||

### Sigil

|

||||

|

||||

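LibreOffice can export directly to EPUB, but it is not a great converter. I have not tried creating an EPUB with LibreOffice 6.1, but LibreOffice 6.0 did not include my images. It also added styles in odd ways. I used [Sigil][8] to tweak the EPUB and make everything look right. Sigil even has a preview function, so you can see what the EPUB will look like.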

|

||||

|

||||

### QEMU

|

||||

|

||||

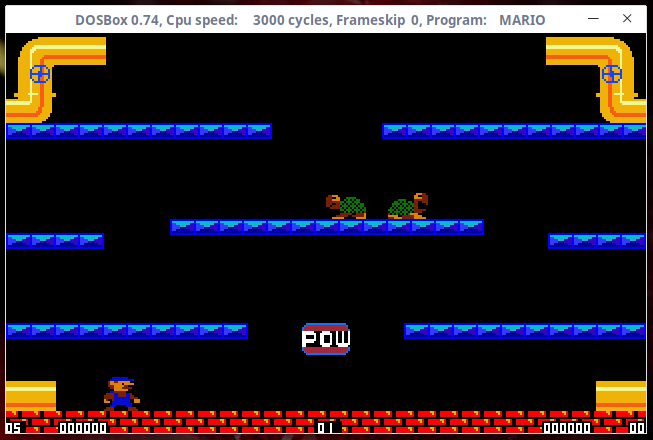

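Because this book is about installing and running FreeDOS, I needed to actually run FreeDOS. You can boot FreeDOS in any PC emulator, including VirtualBox, QEMU, GNOME Boxes, PCem, and Bochs. But I like the simplicity of [QEMU][9]. The QEMU console lets you dump the screen in PPM format, which is ideal for grabbing screenshots to include in the book.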

|

||||

|

||||

Of course, I have to mention running [GNOME][10] on [Linux][11]. I use the [Fedora][12] distribution of Linux.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/9/writing-book-open-source-tools

|

||||

|

||||

Author: [Jim Hall][a]

|

||||

Topic selection: [lujun9972](https://github.com/lujun9972)

|

||||

Translator: [geekpi](https://github.com/geekpi)

|

||||

Proofreader: [wxy](https://github.com/wxy)

|

||||

|

||||

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

|

||||

|

||||

[a]: https://opensource.com/users/jim-hall

|

||||

[1]: http://www.freedos.org/

|

||||

[2]: http://www.freedos.org/ebook/

|

||||

[3]: https://www.google.com/docs/about/

|

||||

[4]: https://www.libreoffice.org/

|

||||

[5]: https://www.gimp.org/

|

||||

[6]: https://inkscape.org/

|

||||

[7]: https://www.imagemagick.org/

|

||||

[8]: https://sigil-ebook.com/

|

||||

[9]: https://www.qemu.org/

|

||||

[10]: https://www.gnome.org/

|

||||

[11]: https://www.kernel.org/

|

||||

[12]: https://getfedora.org/

|

||||

@ -1,10 +1,11 @@

|

||||

5 tips for choosing the right open source database

|

||||

======

|

||||

|

||||

> Choosing a critical application leaves no room for error.

|

||||

|

||||

|

||||

|

||||

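You may find yourself needing to choose a suitable open source database. But whether you are an open source veteran or a newcomer, this is a daunting task.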

|

||||

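You may find yourself needing to choose a suitable open source database. But whether you are an open source veteran or a newcomer, this is a daunting task.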

|

||||

|

||||

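Over the past few years, more and more enterprises have adopted open source technology. In response to this trend, many open source application companies promise that their solutions can solve every problem and handle every workload. These promises should not be taken at face value; choosing open source applications is important and difficult, especially for something as critical as a database.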

|

||||

|

||||

@ -20,7 +21,7 @@

|

||||

|

||||

### Understand your workload

|

||||

|

||||

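Although open source database technologies keep gaining features, these newly added features are not broadly applicable. For example, MongoDB added transaction support, MySQL added JSON storage, and so on. The general trend among open source databases is to keep adding features, but many people make the mistake of not choosing the most suitable tool for the job (perhaps an overconfident developer, perhaps a manager with tunnel vision), and the business ultimately suffers. Worst of all, an unsuitable tool often works fine early on, but as the business grows it quickly hits a bottleneck; a more suitable tool can still be swapped in at that point, but at a much higher cost.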

|

||||

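Although open source database technologies keep gaining features, these newly added features are not broadly applicable. For example, MongoDB added transaction support, MySQL added JSON storage, and so on. The general trend among open source databases is to keep adding features, but many people make the mistake of not choosing the most suitable tool for the job (perhaps an overconfident developer, perhaps a manager with tunnel vision), and the business ultimately suffers. Worst of all, an unsuitable tool often works fine early on, but as the business grows it quickly hits a bottleneck; a more suitable tool can still be swapped in at that point, but at a much higher cost.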

|

||||

|

||||

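For example, if you need a data analytics warehouse, a relational database may not be a suitable choice; if your transaction-processing application demands strict data integrity and consistency, do not consider NoSQL.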

|

||||

|

||||

@ -30,7 +31,7 @@

|

||||

|

||||

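Battery Ventures, a technology-focused investment firm, recently launched the [BOSS Index][2] for tracking the most popular open source projects. It offers a detailed look at some widely adopted and active open source projects. Unsurprisingly, database technologies dominate the list, making up half of the top ten. The BOSS Index is a good starting point for anyone new to open source databases. Of course, open source technology providers also offer solutions to many common, typical problems.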

|

||||

|

||||

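In my view, whatever you are trying to do has probably already been done by someone else. Even if those pioneers' solutions do not fit your needs exactly, you can learn from their successes and failures and adapt them into a solution that suits you.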

|

||||

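In my view, whatever you are trying to do has probably already been done by someone else. Even if those pioneers' solutions do not fit your needs exactly, you can learn from their successes and failures and adapt them into a solution that suits you.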

|

||||

|

||||

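If you adopt a cutting-edge technology, that is your chance to explore. If your workload happens to suit a new open source database technology, go ahead and try it. Those who go first always meet unexpected challenges and rewards.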

|

||||

|

||||

@ -46,7 +47,7 @@ Battery Ventures, a technology-focused investment firm, recently launched a

|

||||

|

||||

### When in doubt, ask the experts

|

||||

|

||||

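If you are still unsure whether your database choice is right, you can discuss it on forums, on websites, or with the software's providers. Researching whether various open source databases meet your needs is worthwhile, because you will always discover technologies you did not know about. The open source community is the place where this information is shared.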

|

||||

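If you are still unsure whether your database choice is right, you can discuss it on forums, on websites, or with the software's providers. Researching whether various open source databases meet your needs is worthwhile, because you will always discover technologies you did not know about. The open source community is the place where this information is shared.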

|

||||

|

||||

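When you engage with open source software and its providers, there is one important thing to keep in mind. Many companies have open-core business models that encourage adoption of their database software. You can take their advice and guidance with a grain of salt, and use your own abilities to research and explore alternatives.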

|

||||

|

||||

@ -62,7 +63,7 @@ via: https://opensource.com/article/18/10/tips-choosing-right-open-source-databa

|

||||

Author: [Barrett Chambers][a]

|

||||

Topic selection: [lujun9972][b]

|

||||

Translator: [HankChow](https://github.com/HankChow)

|

||||

Proofreader: [proofreader ID](https://github.com/校对者ID)

|

||||

Proofreader: [wxy](https://github.com/wxy)

|

||||

|

||||

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

|

||||

|

||||

@ -1,37 +1,39 @@

|

||||

Four open source Android email clients

|

||||

Four open source Android email clients

|

||||

======

|

||||

Email is not extinct yet, and most of it now comes from mobile devices.

|

||||

|

||||

> Email is not extinct yet, and most of it now comes from mobile devices.

|

||||

|

||||

|

||||

|

||||

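Some young people now call email "communication for old people," but the fact is that email is far from dead. Although [collaboration tools][1], social media, and text messaging are widely used, they are not ready to replace email as an essential tool for business (and social) communication.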

|

||||

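Some young people now call email "communication for old people," but the fact is that email is far from dead. Although [collaboration tools][1], social media, and text messaging are widely used, they are not ready to replace email as an essential tool for business (and social) communication.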

|

||||

|

||||

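Given that email is not going away, and that (according to many studies) people read their email on mobile devices, having a good mobile email client is critical. If you are an Android user who wants an open source email client, things get a little tricky.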

|

||||

|

||||

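We offer four open source Android email clients to choose from. Two of them can be downloaded from [Google Play][2], the official Android app store. You can also find them in open source Android app repositories such as [Fossdroid][3] or [F-Droid][4]. (Specific download details for each app are given below.)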

|

||||

|

||||

### K-9 Mail

|

||||

|

||||

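[K-9 Mail][5] has been around almost as long as Android itself; it originated as a patch to the Android 1.0 email client. It supports IMAP and WebDAV, multiple users, attachments, emoji, and other classic email client features. Its [user documentation][6] offers help with installation, setup, security, reading and sending email, and more.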

|

||||

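[K-9 Mail][5] has been around almost as long as Android itself; it originated as a patch to the Android 1.0 email client. It supports IMAP and WebDAV, multiple users, attachments, emoji, and other classic email client features. Its [user documentation][6] offers help with installation, setup, security, reading and sending email, and more.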

|

||||

|

||||

K-9 is open source under the [Apache 2.0][7] license, and its [source code][8] is available on GitHub. The app can be downloaded from [Google Play][9], [Amazon][10], and [F-Droid][11].

|

||||

|

||||

### p≡p

|

||||

|

||||

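As its full name, "Pretty Easy Privacy," suggests, [p≡p][12] focuses on privacy and secure communication. It provides automatic, end-to-end encryption of emails and attachments (but requires your recipient to be able to encrypt email as well; otherwise, p≡p warns you that your email will be sent unencrypted).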

|

||||

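As its full name, "Pretty Easy Privacy," suggests, [p≡p][12] focuses on privacy and secure communication. It provides automatic, end-to-end encryption of emails and attachments (but requires your recipient to be able to encrypt email as well; otherwise, p≡p warns you that your email will be sent unencrypted).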

|

||||

|

||||

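You can get the [source code][13] from GitLab (under the [GPLv3][14] license), and the [documentation][15] is available on the app's website. The app can be downloaded for free from [Fossdroid][16] or for a nominal fee from [Google Play][17].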

|

||||

|

||||

### InboxPager

|

||||

|

||||

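[InboxPager][18] lets you send and receive email over SSL/TLS, which also means you may need to do some setup if your email provider (such as Gmail) does not enable this by default. (Fortunately, InboxPager provides [setup instructions][19] for Gmail.) It also supports OpenPGP encryption through the OpenKeychain app.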

|

||||

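[InboxPager][18] lets you send and receive email over SSL/TLS, which also means you may need to do some setup if your email provider (such as Gmail) does not enable this by default. (Fortunately, InboxPager provides [setup instructions][19] for Gmail.) It also supports OpenPGP encryption through the OpenKeychain app.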

|

||||

|

||||

InboxPager is licensed under [GPLv3][20], its source code is available on GitHub, and the app can be downloaded from [F-Droid][21].

|

||||

|

||||

### FairEmail

|

||||

|

||||

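[FairEmail][22] is a minimalist email client whose features focus on reading and writing messages, without any extras that might slow the client down. It supports multiple accounts and users, message threading, encryption, and more.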

|

||||

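[FairEmail][22] is a minimalist email client whose features focus on reading and writing messages, without any extras that might slow the client down. It supports multiple accounts and users, message threading, encryption, and more.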

|

||||

|

||||

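It is open source under the [GPLv3][23] license, and its [source code][24] is available on GitHub. You can download FairEmail from [Fossdroid][25]; those interested in the Google Play version can get the app by [testing the software][26].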

|

||||

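It is open source under the [GPLv3][23] license, and its [source code][24] is available on GitHub. You can download FairEmail from [Fossdroid][25]; those interested in the Google Play version can get the app by [testing the software][26].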

|

||||

|

||||

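There are certainly more open source Android email clients (or enhanced versions of the ones above); active developers may want to take note. If you know of other good apps, share them with us in the comments.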

|

||||

|

||||

@ -42,7 +44,7 @@ via: https://opensource.com/article/18/10/open-source-android-email-clients

|

||||

Author: [Opensource.com][a]

|

||||

Topic selection: [lujun9972][b]

|

||||

Translator: [zianglei][c]

|

||||

Proofreader: [proofreader ID](https://github.com/校对者ID)

|

||||

Proofreader: [wxy](https://github.com/wxy)

|

||||

|

||||

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

|

||||

|

||||

@ -1,3 +1,5 @@

|

||||

thecyanbird translating

|

||||

|

||||

The Rise and Rise of JSON

|

||||

======

|

||||

JSON has taken over the world. Today, when any two applications communicate with each other across the internet, odds are they do so using JSON. It has been adopted by all the big players: Of the ten most popular web APIs, a list consisting mostly of APIs offered by major companies like Google, Facebook, and Twitter, only one API exposes data in XML rather than JSON. Twitter, to take an illustrative example from that list, supported XML until 2013, when it released a new version of its API that dropped XML in favor of using JSON exclusively. JSON has also been widely adopted by the programming rank and file: According to Stack Overflow, a question and answer site for programmers, more questions are now asked about JSON than about any other data interchange format.

|

||||

|

||||

@ -1,118 +0,0 @@

|

||||

thecyanbird translating

|

||||

|

||||

Where Vim Came From

|

||||

======

|

||||

I recently stumbled across a file format known as Intel HEX. As far as I can gather, Intel HEX files (which use the `.hex` extension) are meant to make binary images less opaque by encoding them as lines of hexadecimal digits. Apparently they are used by people who program microcontrollers or need to burn data into ROM. In any case, when I opened up a HEX file in Vim for the first time, I discovered something shocking. Here was this file format that, at least to me, was deeply esoteric, but Vim already knew all about it. Each line of a HEX file is a record divided into different fields—Vim had gone ahead and colored each of the fields a different color. `set ft?` I asked, in awe. `filetype=hex`, Vim answered, triumphant.

|

||||

|

||||

Vim is everywhere. It is used by so many people that something like HEX file support shouldn’t be a surprise. Vim comes pre-installed on Mac OS and has a large constituency in the Linux world. It is familiar even to people that hate it, because enough popular command line tools will throw users into Vim by default that the uninitiated getting trapped in Vim has become [a meme][1]. There are major websites, including Facebook, that will scroll down when you press the `j` key and up when you press the `k` key—the unlikely high-water mark of Vim’s spread through digital culture.

|

||||

|

||||

And yet Vim is also a mystery. Unlike React, for example, which everyone knows is developed and maintained by Facebook, Vim has no obvious sponsor. Despite its ubiquity and importance, there doesn’t seem to be any kind of committee or organization that makes decisions about Vim. You could spend several minutes poking around the [Vim website][2] without getting a better idea of who created Vim or why. If you launch Vim without giving it a file argument, then you will see Vim’s startup message, which says that Vim is developed by “Bram Moolenaar et al.” But that doesn’t tell you much. Who is Bram Moolenaar and who are his shadowy confederates?

|

||||

|

||||

Perhaps more importantly, while we’re asking questions, why does exiting Vim involve typing `:wq`? Sure, it’s a “write” operation followed by a “quit” operation, but that is not a particularly intuitive convention. Who decided that copying text should instead be called “yanking”? Why is `:%s/foo/bar/gc` short for “find and replace”? Vim’s idiosyncrasies seem too arbitrary to have been made up, but then where did they come from?

|

||||

|

||||

The answer, as is so often the case, begins with that ancient crucible of computing, Bell Labs. In some sense, Vim is only the latest iteration of a piece of software—call it the “wq text editor”—that has been continuously developed and improved since the dawn of the Unix epoch.

|

||||

|

||||

### Ken Thompson Writes a Line Editor

|

||||

|

||||

In 1966, Bell Labs hired Ken Thompson. Thompson had just completed a Master’s degree in Electrical Engineering and Computer Science at the University of California, Berkeley. While there, he had used a text editor called QED, written for the Berkeley Timesharing System between 1965 and 1966. One of the first things Thompson did after arriving at Bell Labs was rewrite QED for the MIT Compatible Time-Sharing System. He would later write another version of QED for the Multics project. Along the way, he expanded the program so that users could search for lines in a file and make substitutions using regular expressions.

|

||||

|

||||

The Multics project, which like the Berkeley Timesharing System sought to create a commercially viable time-sharing operating system, was a partnership between MIT, General Electric, and Bell Labs. AT&T eventually decided the project was going nowhere and pulled out. Thompson and fellow Bell Labs researcher Dennis Ritchie, now without access to a time-sharing system and missing the “feel of interactive computing” that such systems offered, set about creating their own version, which would eventually be known as Unix. In August 1969, while his wife and young son were away on vacation in California, Thompson put together the basic components of the new system, allocating “a week each to the operating system, the shell, the editor, and the assembler.”

|

||||

|

||||

The editor would be called `ed`. It was based on QED but was not an exact re-implementation. Thompson decided to ditch certain QED features. Regular expression support was pared back so that only relatively simple regular expressions would be understood. QED allowed users to edit several files at once by opening multiple buffers, but `ed` would only work with one buffer at a time. And whereas QED could execute a buffer containing commands, `ed` would do no such thing. These simplifications may have been called for. Dennis Ritchie has said that going without QED’s advanced regular expressions was “not much of a loss.”

|

||||

|

||||

`ed` is now a part of the POSIX specification, so if you have a POSIX-compliant system, you will have it installed on your computer. It’s worth playing around with, because many of the `ed` commands are today a part of Vim. In order to write a buffer to disk, for example, you have to use the `w` command. In order to quit the editor, you have to use the `q` command. These two commands can be specified on the same line at once—hence, `wq`. Like Vim, `ed` is a modal editor; to enter input mode from command mode you would use the insert command (`i`), the append command (`a`), or the change command (`c`), depending on how you are trying to transform your text. `ed` also introduced the `s/foo/bar/g` syntax for finding and replacing, or “substituting,” text.
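The `s/foo/bar/g` syntax outlived `ed` itself: `sed`, the stream editor descended from it, accepts the same form, which makes it easy to try without opening an editor. The sketch below uses `sed` purely as a stand-in for the `ed` command; the sample sentence is an arbitrary choice.

```shell
# Replace every occurrence of "nice" with "terrible" on each input line.
printf "Isn't it a nice day?\n" | sed 's/nice/terrible/g'
```

In Vim the same substitution over a whole file would be typed as `:%s/nice/terrible/g`.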

|

||||

|

||||

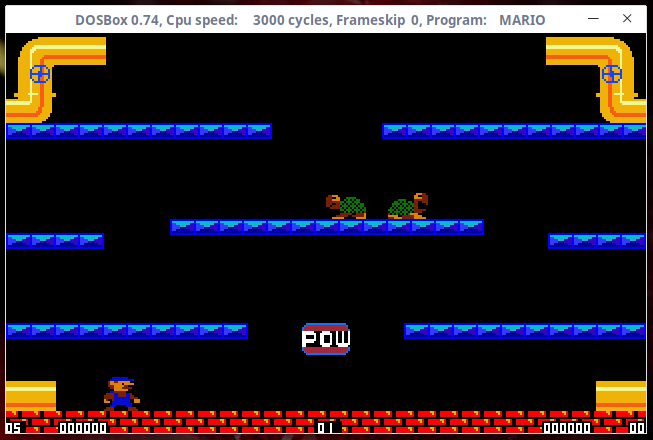

Given all these similarities, you might expect the average Vim user to have no trouble using `ed`. But `ed` is not at all like Vim in another important respect. `ed` is a true line editor. It was written and widely used in the days of the teletype printer. When Ken Thompson and Dennis Ritchie were hacking away at Unix, they looked like this:

|

||||

|

||||

![Ken Thompson interacting with a PDP-11 via teletype.][3]

|

||||

|

||||

`ed` doesn’t allow you to edit lines in place among the other lines of the open buffer, or move a cursor around, because `ed` would have to reprint the entire file every time you made a change to it. There was no mechanism in 1969 for `ed` to “clear” the contents of the screen, because the screen was just a sheet of paper and everything that had already been output had been output in ink. When necessary, you can ask `ed` to print out a range of lines for you using the list command (`l`), but most of the time you are operating on text that you can’t see. Using `ed` is thus a little like trying to find your way around a dark house with an underpowered flashlight. You can only see so much at once, so you have to try your best to remember where everything is.

|

||||

|

||||

Here’s an example of an `ed` session. I’ve added comments (after the `#` character) explaining the purpose of each line, though if these were actually entered `ed` wouldn’t recognize them as comments and would complain:

|

||||

|

||||

```

|

||||

[sinclairtarget 09:49 ~]$ ed

|

||||

i # Enter input mode

|

||||

Hello world!

|

||||

|

||||

Isn't it a nice day?

|

||||

. # Finish input

|

||||

1,2l # List lines 1 to 2

|

||||

Hello world!$

|

||||

$

|

||||

2d # Delete line 2

|

||||

,l # List entire buffer

|

||||

Hello world!$

|

||||

Isn't it a nice day?$

|

||||

s/nice/terrible/g # Substitute globally

|

||||

,l

|

||||

Hello world!$

|

||||

Isn't it a terrible day?$

|

||||

w foo.txt # Write to foo.txt

|

||||

38 # (bytes written)

|

||||

q # Quit

|

||||

[sinclairtarget 10:50 ~]$ cat foo.txt

|

||||

Hello world!

|

||||

Isn't it a terrible day?

|

||||

```

|

||||

|

||||

As you can see, `ed` is not an especially talkative program.

|

||||

|

||||

### Bill Joy Writes a Text Editor

|

||||

|

||||

`ed` worked well enough for Thompson and Ritchie. Others found it difficult to use and it acquired a reputation for being a particularly egregious example of Unix’s hostility toward the novice. In 1975, a man named George Coulouris developed an improved version of `ed` on the Unix system installed at Queen Mary’s College, London. Coulouris wrote his editor to take advantage of the video displays that he had available at Queen Mary’s. Unlike `ed`, Coulouris’ program allowed users to edit a single line in place on screen, navigating through the line keystroke by keystroke (imagine using Vim on one line at a time). Coulouris called his program `em`, or “editor for mortals,” which he had supposedly been inspired to do after Thompson paid a visit to Queen Mary’s, saw the program Coulouris had built, and dismissed it, saying that he had no need to see the state of a file while editing it.

|

||||

|

||||

In 1976, Coulouris brought `em` with him to UC Berkeley, where he spent the summer as a visitor to the CS department. This was exactly ten years after Ken Thompson had left Berkeley to work at Bell Labs. At Berkeley, Coulouris met Bill Joy, a graduate student working on the Berkeley Software Distribution (BSD). Coulouris showed `em` to Joy, who, starting with Coulouris’ source code, built out an improved version of `ed` called `ex`, for “extended `ed`.” Version 1.1 of `ex` was bundled with the first release of BSD Unix in 1978. `ex` was largely compatible with `ed`, but it added two more modes: an “open” mode, which enabled the kind of single-line editing that had been possible with `em`, and a “visual” mode, which took over the whole screen and enabled live editing of an entire file as we are used to today.

|

||||

|

||||

For the second release of BSD in 1979, an executable named `vi` was introduced that did little more than open `ex` in visual mode.

|

||||

|

||||

`ex`/`vi` (henceforth `vi`) established most of the conventions we now associate with Vim that weren’t already a part of `ed`. The video terminal that Joy was using was a Lear Siegler ADM-3A, which had a keyboard with no cursor keys. Instead, arrows were painted on the `h`, `j`, `k`, and `l` keys, which is why Joy used those keys for cursor movement in `vi`. The escape key on the ADM-3A keyboard was also where today we would find the tab key, which explains how such a hard-to-reach key was ever assigned an operation as common as exiting a mode. The `:` character that prefixes commands also comes from `vi`, which in regular mode (i.e. the mode entered by running `ex`) used `:` as a prompt. This addressed a long-standing complaint about `ed`, which, once launched, greets users with utter silence. In visual mode, saving and quitting now involved typing the classic `:wq`. “Yanking” and “putting,” marks, and the `set` command for setting options were all part of the original `vi`. The features we use in the course of basic text editing today in Vim are largely `vi` features.

|

||||

|

||||

![A Lear Siegler ADM-3A keyboard.][4]

|

||||

|

||||

`vi` was the only text editor bundled with BSD Unix other than `ed`. At the time, Emacs could cost hundreds of dollars (this was before GNU Emacs), so `vi` became enormously popular. But `vi` was a direct descendant of `ed`, which meant that the source code could not be modified without an AT&T source license. This motivated several people to create open-source versions of `vi`. STEVIE (ST Editor for VI Enthusiasts) appeared in 1987, Elvis appeared in 1990, and `nvi` appeared in 1994. Some of these clones added extra features like syntax highlighting and split windows. Elvis in particular saw many of its features incorporated into Vim, since so many Elvis users pushed for their inclusion.

|

||||

|

||||

### Bram Moolenaar Writes Vim

|

||||

|

||||

“Vim”, which now abbreviates “Vi Improved”, originally stood for “Vi Imitation.” Like many of the other `vi` clones, Vim began as an attempt to replicate `vi` on a platform where it was not available. Bram Moolenaar, a Dutch software engineer working for a photocopier company in Venlo, the Netherlands, wanted something like `vi` for his brand-new Amiga 2000. Moolenaar had grown used to using `vi` on the Unix systems at his university and it was now “in his fingers.” So in 1988, using the existing STEVIE `vi` clone as a starting point, Moolenaar began work on Vim.

|

||||

|

||||

Moolenaar had access to STEVIE because STEVIE had previously appeared on something called a Fred Fish disk. Fred Fish was an American programmer who mailed out a floppy disk every month with a curated selection of the best open-source software available for the Amiga platform. Anyone could request a disk for nothing more than the price of postage. Several versions of STEVIE were released on Fred Fish disks. The version that Moolenaar used had been released on Fred Fish disk 256. (Disappointingly, Fred Fish disks seem to have nothing to do with [Freddi Fish][5].)

|

||||

|

||||

Moolenaar liked STEVIE but quickly noticed that there were many `vi` commands missing. So, for the first release of Vim, Moolenaar made `vi` compatibility his priority. Someone else had written a series of `vi` macros that, when run through a properly `vi`-compatible editor, could solve a [randomly generated maze][6]. Moolenaar was able to get these macros working in Vim. In 1991, Vim was released for the first time on Fred Fish disk 591 as “Vi Imitation.” Moolenaar had added some features (including multi-level undo and a “quickfix” mode for compiler errors) that meant that Vim had surpassed `vi`. But Vim would remain “Vi Imitation” until Vim 2.0, released in 1993 via FTP.

|

||||

|

||||

Moolenaar, with the occasional help of various internet collaborators, added features to Vim at a steady clip. Vim 2.0 introduced support for the `wrap` option and for horizontal scrolling through long lines of text. Vim 3.0 added support for split windows and buffers, a feature inspired by the `vi` clone `nvi`. Vim also now saved each buffer to a swap file, so that edited text could survive a crash. Vimscript made its first appearance in Vim 5.0, along with support for syntax highlighting. All the while, Vim’s popularity was growing. It was ported to MS-DOS, to Windows, to Mac, and even to Unix, where it competed with the original `vi`.

|

||||

|

||||

In 2006, Vim was voted the most popular editor among Linux Journal readers. Today, according to Stack Overflow’s 2018 Developer Survey, Vim is the most popular text-mode (i.e. terminal emulator) editor, used by 25.8% of all software developers (and 40% of Sysadmin/DevOps people). For a while, during the late 1980s and throughout the 1990s, programmers waged the “Editor Wars,” which pitted Emacs users against `vi` (and eventually Vim) users. While Emacs certainly still has a following, some people think that the Editor Wars are over and that Vim won. The 2018 Stack Overflow Developer Survey suggests that this is true; only 4.1% of respondents used Emacs.

|

||||

|

||||

How did Vim become so successful? Obviously people like the features that Vim has to offer. But I would argue that the long history behind Vim illustrates that it had more advantages than just its feature set. Vim’s codebase dates back only to 1988, when Moolenaar began working on it. The “wq text editor,” on the other hand (the broader vision of how a Unix-y text editor should work) goes back a half-century. The “wq text editor” had a few different concrete expressions, but thanks in part to the unusual attention paid to backward compatibility by both Bill Joy and Bram Moolenaar, good ideas accumulated gradually over time. The “wq text editor,” in that sense, is one of the longest-running and most successful open-source projects, having enjoyed contributions from some of the greatest minds in the computing world. I don’t think the “startup-company-throws-away-all-precedents-and-creates-disruptive-new-software” approach to development is necessarily bad, but Vim is a reminder that the collaborative and incremental approach can also yield wonders.

|

||||

|

||||

If you enjoyed this post, more like it come out every two weeks! Follow [@TwoBitHistory][7] on Twitter or subscribe to the [RSS feed][8] to make sure you know when a new post is out.

|

||||

|

||||

Previously on TwoBitHistory…

|

||||

|

||||

> New post! This time we're taking a look at the Altair 8800, the very first home computer, and how to simulate it on your modern PC.<https://t.co/s2sB5njrkd>

|

||||

>

|

||||

> — TwoBitHistory (@TwoBitHistory) [July 22, 2018][9]

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://twobithistory.org/2018/08/05/where-vim-came-from.html

|

||||

|

||||

Author: [Two-Bit History][a]

|

||||

Topic selection: [lujun9972][b]

|

||||

Translator: [translator ID](https://github.com/译者ID)

|

||||

Proofreader: [proofreader ID](https://github.com/校对者ID)

|

||||

|

||||

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

|

||||

|

||||

[a]: https://twobithistory.org

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://stackoverflow.blog/wp-content/uploads/2017/05/meme.jpeg

|

||||

[2]: https://www.vim.org/

|

||||

[3]: https://upload.wikimedia.org/wikipedia/commons/8/8f/Ken_Thompson_%28sitting%29_and_Dennis_Ritchie_at_PDP-11_%282876612463%29.jpg

|

||||

[4]: https://vintagecomputer.ca/wp-content/uploads/2015/01/LSI-ADM3A-full-keyboard.jpg

|

||||

[5]: https://en.wikipedia.org/wiki/Freddi_Fish

|

||||