mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-21 02:10:11 +08:00

Merge remote-tracking branch 'LCTT/master'

This commit is contained in:

commit

e80bbbd082

@ -1,62 +1,63 @@

|

||||

在终端显示世界地图

|

||||

MapSCII:在终端显示世界地图

|

||||

======

|

||||

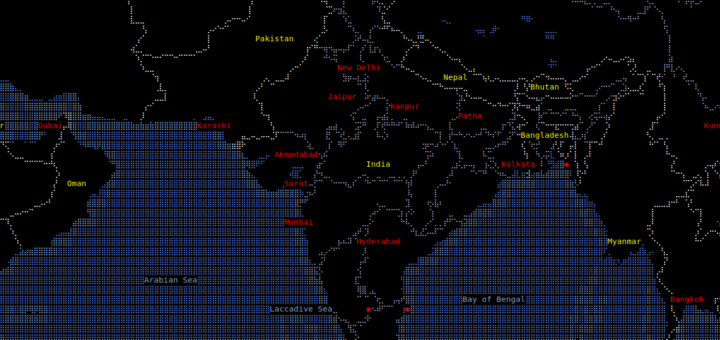

我偶然发现了一个有趣的工具。在终端的世界地图!是的,这太酷了。向 **MapSCII** 问好,这是可在 xterm 兼容终端渲染的盲文和 ASCII 世界地图。它支持 GNU/Linux、Mac OS 和 Windows。我以为这是另一个在 GitHub 上托管的项目。但是我错了!他们做了令人印象深刻的事。我们可以使用我们的鼠标指针在世界地图的任何地方拖拽放大和缩小。其他显著的特性是:

|

||||

|

||||

|

||||

|

||||

我偶然发现了一个有趣的工具。在终端里的世界地图!是的,这太酷了。给 `MapSCII` 打 call,这是可在 xterm 兼容终端上渲染的布莱叶盲文和 ASCII 世界地图。它支持 GNU/Linux、Mac OS 和 Windows。我原以为它只不过是一个在 GitHub 上托管的项目而已,但是我错了!他们做的事令人印象深刻。我们可以使用我们的鼠标指针在世界地图的任何地方拖拽放大和缩小。其他显著的特性是:

|

||||

|

||||

* 发现任何特定地点周围的兴趣点

|

||||

* 高度可定制的图层样式,带有[ Mapbox 样式][1]支持

|

||||

* 连接到任何公共或私有矢量贴片服务器

|

||||

* 高度可定制的图层样式,支持 [Mapbox 样式][1]

|

||||

* 可连接到任何公共或私有的矢量贴片服务器

|

||||

* 或者使用已经提供并已优化的基于 [OSM2VectorTiles][2] 服务器

|

||||

* 离线工作,发现本地 [VectorTile][3]/[MBTiles][4]

|

||||

* 可以离线工作并发现本地的 [VectorTile][3]/[MBTiles][4]

|

||||

* 兼容大多数 Linux 和 OSX 终端

|

||||

* 高度优化算法的流畅体验

|

||||

|

||||

|

||||

|

||||

### 使用 MapSCII 在终端中显示世界地图

|

||||

|

||||

要打开地图,只需从终端运行以下命令:

|

||||

|

||||

```

|

||||

telnet mapscii.me

|

||||

```

|

||||

|

||||

这是我终端上的世界地图。

|

||||

|

||||

[![][5]][6]

|

||||

![][6]

|

||||

|

||||

很酷,是吗?

|

||||

|

||||

要切换到盲文视图,请按 **c**。

|

||||

要切换到布莱叶盲文视图,请按 `c`。

|

||||

|

||||

[![][5]][7]

|

||||

![][7]

|

||||

|

||||

Type **c** again to switch back to the previous format **.**

|

||||

再次输入 **c** 切回以前的格式。

|

||||

再次输入 `c` 切回以前的格式。

|

||||

|

||||

要滚动地图,请使用**向上**、向下**、**向左**、**向右**箭头键。要放大/缩小位置,请使用 **a** 和 **a** 键。另外,你可以使用鼠标的滚轮进行放大或缩小。要退出地图,请按 **q**。

|

||||

要滚动地图,请使用“向上”、“向下”、“向左”、“向右”箭头键。要放大/缩小位置,请使用 `a` 和 `z` 键。另外,你可以使用鼠标的滚轮进行放大或缩小。要退出地图,请按 `q`。

|

||||

|

||||

就像我已经说过的,不要认为这是一个简单的项目。点击地图上的任何位置,然后按 **“a”** 放大。

|

||||

就像我已经说过的,不要认为这是一个简单的项目。点击地图上的任何位置,然后按 `a` 放大。

|

||||

|

||||

放大后,下面是一些示例截图。

|

||||

|

||||

[![][5]][8]

|

||||

![][8]

|

||||

|

||||

我可以放大查看我的国家(印度)的州。

|

||||

|

||||

[![][5]][9]

|

||||

![][9]

|

||||

|

||||

和州内的地区(Tamilnadu):

|

||||

|

||||

[![][5]][10]

|

||||

![][10]

|

||||

|

||||

甚至是地区内的镇 [Taluks][11]:

|

||||

|

||||

[![][5]][12]

|

||||

![][12]

|

||||

|

||||

还有,我完成学业的地方:

|

||||

|

||||

[![][5]][13]

|

||||

![][13]

|

||||

|

||||

即使它只是一个最小的城镇,MapSCII 也能准确地显示出来。 MapSCII 使用 [**OpenStreetMap**][14] 来收集数据。

|

||||

即使它只是一个最小的城镇,MapSCII 也能准确地显示出来。 MapSCII 使用 [OpenStreetMap][14] 来收集数据。

|

||||

|

||||

### 在本地安装 MapSCII

|

||||

|

||||

@ -64,15 +65,16 @@ Type **c** again to switch back to the previous format **.**

|

||||

|

||||

确保你的系统上已经安装了 Node.js。如果还没有,请参阅以下链接。

|

||||

|

||||

[Install NodeJS on Linux][15]

|

||||

- [在 Linux 上安装 NodeJS][15]

|

||||

|

||||

然后,运行以下命令来安装它。

|

||||

|

||||

```

|

||||

sudo npm install -g mapscii

|

||||

|

||||

```

|

||||

|

||||

要启动 MapSCII,请运行:

|

||||

|

||||

```

|

||||

mapscii

|

||||

```

|

||||

@ -81,15 +83,13 @@ mapscii

|

||||

|

||||

干杯!

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.ostechnix.com/mapscii-world-map-terminal/

|

||||

|

||||

作者:[SK][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -99,13 +99,13 @@ via: https://www.ostechnix.com/mapscii-world-map-terminal/

|

||||

[3]:https://github.com/mapbox/vector-tile-spec

|

||||

[4]:https://github.com/mapbox/mbtiles-spec

|

||||

[5]:data:image/gif;base64,R0lGODlhAQABAIAAAAAAAP///yH5BAEAAAAALAAAAAABAAEAAAIBRAA7

|

||||

[6]:http://www.ostechnix.com/wp-content/uploads/2018/01/MapSCII-1-2.png ()

|

||||

[7]:http://www.ostechnix.com/wp-content/uploads/2018/01/MapSCII-2.png ()

|

||||

[8]:http://www.ostechnix.com/wp-content/uploads/2018/01/MapSCII-3.png ()

|

||||

[9]:http://www.ostechnix.com/wp-content/uploads/2018/01/MapSCII-4.png ()

|

||||

[10]:http://www.ostechnix.com/wp-content/uploads/2018/01/MapSCII-5.png ()

|

||||

[6]:http://www.ostechnix.com/wp-content/uploads/2018/01/MapSCII-1-2.png

|

||||

[7]:http://www.ostechnix.com/wp-content/uploads/2018/01/MapSCII-2.png

|

||||

[8]:http://www.ostechnix.com/wp-content/uploads/2018/01/MapSCII-3.png

|

||||

[9]:http://www.ostechnix.com/wp-content/uploads/2018/01/MapSCII-4.png

|

||||

[10]:http://www.ostechnix.com/wp-content/uploads/2018/01/MapSCII-5.png

|

||||

[11]:https://en.wikipedia.org/wiki/Tehsils_of_India

|

||||

[12]:http://www.ostechnix.com/wp-content/uploads/2018/01/MapSCII-6.png ()

|

||||

[13]:http://www.ostechnix.com/wp-content/uploads/2018/01/MapSCII-7.png ()

|

||||

[12]:http://www.ostechnix.com/wp-content/uploads/2018/01/MapSCII-6.png

|

||||

[13]:http://www.ostechnix.com/wp-content/uploads/2018/01/MapSCII-7.png

|

||||

[14]:https://www.openstreetmap.org/

|

||||

[15]:https://www.ostechnix.com/install-node-js-linux/

|

||||

@ -0,0 +1,170 @@

|

||||

8 个你不一定全都了解的 rm 命令示例

|

||||

======

|

||||

|

||||

删除文件和复制/移动文件一样,都是很基础的操作。在 Linux 中,有一个专门的命令 `rm`,可用于完成所有删除相关的操作。在本文中,我们将用些容易理解的例子来讨论这个命令的基本使用。

|

||||

|

||||

但在我们开始前,值得指出的是本文所有示例都在 Ubuntu 16.04 LTS 中测试过。

|

||||

|

||||

### Linux rm 命令概述

|

||||

|

||||

通俗的讲,我们可以认为 `rm` 命令是用于删除文件和目录的。下面是此命令的语法:

|

||||

|

||||

```

|

||||

rm [选项]... [要删除的文件/目录]...

|

||||

```

|

||||

|

||||

下面是命令使用说明:

|

||||

|

||||

> GUN 版本 `rm` 命令的手册文档。`rm` 删除每个指定的文件,默认情况下不删除目录。

|

||||

|

||||

> 当删除的文件超过三个或者提供了选项 `-r`、`-R` 或 `--recursive`(LCTT 译注:表示递归删除目录中的文件)时,如果给出 `-I`(LCTT 译注:大写的 I)或 `--interactive=once` 选项(LCTT 译注:表示开启交互一次),则 `rm` 命令会提示用户是否继续整个删除操作,如果用户回应不是确认(LCTT 译注:即没有回复 `y`),则整个命令立刻终止。

|

||||

|

||||

> 另外,如果被删除文件是不可写的,标准输入是终端,这时如果没有提供 `-f` 或 `--force` 选项,或者提供了 `-i`(LCTT 译注:小写的 i) 或 `--interactive=always` 选项,`rm` 会提示用户是否要删除此文件,如果用户回应不是确认(LCTT 译注:即没有回复 `y`),则跳过此文件。

|

||||

|

||||

|

||||

下面这些问答式例子会让你更好的理解这个命令的使用。

|

||||

|

||||

### Q1. 如何用 rm 命令删除文件?

|

||||

|

||||

这是非常简单和直观的。你只需要把文件名(如果文件不是在当前目录中,则还需要添加文件路径)传入给 `rm` 命令即可。

|

||||

|

||||

(LCTT 译注:可以用空格隔开传入多个文件名称。)

|

||||

|

||||

```

|

||||

rm 文件1 文件2 ...

|

||||

```

|

||||

如:

|

||||

|

||||

```

|

||||

rm testfile.txt

|

||||

```

|

||||

|

||||

[![How to remove files using rm command][1]][2]

|

||||

|

||||

### Q2. 如何用 `rm` 命令删除目录?

|

||||

|

||||

如果你试图删除一个目录,你需要提供 `-r` 选项。否则 `rm` 会抛出一个错误告诉你正试图删除一个目录。

|

||||

|

||||

(LCTT 译注:`-r` 表示递归地删除目录下的所有文件和目录。)

|

||||

|

||||

```

|

||||

rm -r [目录名称]

|

||||

```

|

||||

|

||||

如:

|

||||

|

||||

```

|

||||

rm -r testdir

|

||||

```

|

||||

|

||||

[![How to remove directories using rm command][3]][4]

|

||||

|

||||

### Q3. 如何让删除操作前有确认提示?

|

||||

|

||||

如果你希望在每个删除操作完成前都有确认提示,可以使用 `-i` 选项。

|

||||

|

||||

```

|

||||

rm -i [文件/目录]

|

||||

```

|

||||

|

||||

比如,你想要删除一个目录“testdir”,但需要每个删除操作都有确认提示,你可以这么做:

|

||||

|

||||

```

|

||||

rm -r -i testdir

|

||||

```

|

||||

|

||||

[![How to make rm prompt before every removal][5]][6]

|

||||

|

||||

### Q4. 如何让 rm 忽略不存在的文件或目录?

|

||||

|

||||

如果你删除一个不存在的文件或目录时,`rm` 命令会抛出一个错误,如:

|

||||

|

||||

[![Linux rm command example][7]][8]

|

||||

|

||||

然而,如果你愿意,你可以使用 `-f` 选项(LCTT 译注:即 “force”)让此次操作强制执行,忽略错误提示。

|

||||

|

||||

```

|

||||

rm -f [文件...]

|

||||

```

|

||||

|

||||

[![How to force rm to ignore nonexistent files][9]][10]

|

||||

|

||||

### Q5. 如何让 rm 仅在某些场景下确认删除?

|

||||

|

||||

选项 `-I`,可保证在删除超过 3 个文件时或递归删除时(LCTT 译注: 如删除目录)仅提示一次确认。

|

||||

|

||||

比如,下面的截图展示了 `-I` 选项的作用——当两个文件被删除时没有提示,当超过 3 个文件时会有提示。

|

||||

|

||||

[![How to make rm prompt only in some scenarios][11]][12]

|

||||

|

||||

### Q6. 当删除根目录是 rm 是如何工作的?

|

||||

|

||||

当然,删除根目录(`/`)是 Linux 用户最不想要的操作。这也就是为什么默认 `rm` 命令不支持在根目录上执行递归删除操作。(LCTT 译注:早期的 `rm` 命令并无此预防行为。)

|

||||

|

||||

[![How rm works when dealing with root directory][13]][14]

|

||||

|

||||

然而,如果你非得完成这个操作,你需要使用 `--no-preserve-root` 选项。当提供此选项,`rm` 就不会特殊处理根目录(`/`)了。

|

||||

|

||||

假如你想知道在哪些场景下 Linux 用户会删除他们的根目录,点击[这里][15]。

|

||||

|

||||

### Q7. 如何让 rm 仅删除空目录?

|

||||

|

||||

假如你需要 `rm` 在删除目录时仅删除空目录,你可以使用 `-d` 选项。

|

||||

|

||||

```

|

||||

rm -d [目录]

|

||||

```

|

||||

|

||||

下面的截图展示 `-d` 选项的用途——仅空目录被删除了。

|

||||

|

||||

[![How to make rm only remove empty directories][16]][17]

|

||||

|

||||

### Q8. 如何让 rm 显示当前删除操作的详情?

|

||||

|

||||

如果你想 rm 显示当前操作完成时的详细情况,使用 `-v` 选项可以做到。

|

||||

|

||||

```

|

||||

rm -v [文件/目录]

|

||||

```

|

||||

|

||||

如:

|

||||

|

||||

[![How to force rm to emit details of operation it is performing][18]][19]

|

||||

|

||||

### 结论

|

||||

|

||||

考虑到 `rm` 命令提供的功能,可以说其是 Linux 中使用频率最高的命令之一了(就像 [cp][20] 和 `mv` 一样)。在本文中,我们涉及到了其提供的几乎所有主要选项。`rm` 命令有些学习曲线,因此在你日常工作中开始使用此命令之前

|

||||

你将需要花费些时间去练习它的选项。更多的信息,请点击此命令的 [man 手册页][21]。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.howtoforge.com/linux-rm-command/

|

||||

|

||||

作者:[Himanshu Arora][a]

|

||||

译者:[yizhuoyan](https://github.com/yizhuoyan)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.howtoforge.com

|

||||

[1]:https://www.howtoforge.com/images/command-tutorial/rm-basic-usage.png

|

||||

[2]:https://www.howtoforge.com/images/command-tutorial/big/rm-basic-usage.png

|

||||

[3]:https://www.howtoforge.com/images/command-tutorial/rm-r.png

|

||||

[4]:https://www.howtoforge.com/images/command-tutorial/big/rm-r.png

|

||||

[5]:https://www.howtoforge.com/images/command-tutorial/rm-i-option.png

|

||||

[6]:https://www.howtoforge.com/images/command-tutorial/big/rm-i-option.png

|

||||

[7]:https://www.howtoforge.com/images/command-tutorial/rm-non-ext-error.png

|

||||

[8]:https://www.howtoforge.com/images/command-tutorial/big/rm-non-ext-error.png

|

||||

[9]:https://www.howtoforge.com/images/command-tutorial/rm-f-option.png

|

||||

[10]:https://www.howtoforge.com/images/command-tutorial/big/rm-f-option.png

|

||||

[11]:https://www.howtoforge.com/images/command-tutorial/rm-I-option.png

|

||||

[12]:https://www.howtoforge.com/images/command-tutorial/big/rm-I-option.png

|

||||

[13]:https://www.howtoforge.com/images/command-tutorial/rm-root-default.png

|

||||

[14]:https://www.howtoforge.com/images/command-tutorial/big/rm-root-default.png

|

||||

[15]:https://superuser.com/questions/742334/is-there-a-scenario-where-rm-rf-no-preserve-root-is-needed

|

||||

[16]:https://www.howtoforge.com/images/command-tutorial/rm-d-option.png

|

||||

[17]:https://www.howtoforge.com/images/command-tutorial/big/rm-d-option.png

|

||||

[18]:https://www.howtoforge.com/images/command-tutorial/rm-v-option.png

|

||||

[19]:https://www.howtoforge.com/images/command-tutorial/big/rm-v-option.png

|

||||

[20]:https://www.howtoforge.com/linux-cp-command/

|

||||

[21]:https://linux.die.net/man/1/rm

|

||||

@ -0,0 +1,75 @@

|

||||

Building Slack for the Linux community and adopting snaps

|

||||

======

|

||||

![][1]

|

||||

|

||||

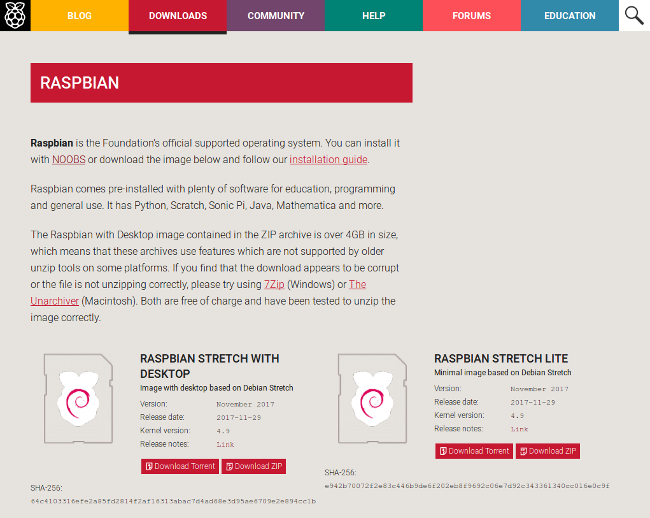

Used by millions around the world, [Slack][2] is an enterprise software platform that allows teams and businesses of all sizes to communicate effectively. Slack works seamlessly with other software tools within a single integrated environment, providing an accessible archive of an organisation’s communications, information and projects. Although Slack has grown at a rapid rate in the 4 years since their inception, their desktop engineering team who work across Windows, MacOS and Linux consists of just 4 people currently. We spoke to Felix Rieseberg, Staff Software Engineer, who works on this team following the release of Slack’s first [snap last month][3] to discover more about the company’s attitude to the Linux community and why they decided to build a snap.

|

||||

|

||||

[Install Slack snap][4]

|

||||

|

||||

### Can you tell us about the Slack snap which has been published?

|

||||

|

||||

We launched our first snap last month as a new way to distribute to our Linux community. In the enterprise space, we find that people tend to adopt new technology at a slower pace than consumers, so we will continue to offer a .deb package.

|

||||

|

||||

### What level of interest do you see for Slack from the Linux community?

|

||||

|

||||

I’m excited that interest for Slack is growing across all platforms, so it is hard for us to say whether the interest coming out of the Linux community is different from the one we’re generally seeing. However, it is important for us to meet users wherever they do their work. We have a dedicated QA engineer focusing entirely on Linux and we really do try hard to deliver the best possible experience.

|

||||

|

||||

We generally find it is a little harder to build for Linux, than say Windows, as there is a less predictable base to work from – and this is an area where the Linux community truly shines. We have a fairly large number of users that are quite helpful when it comes to reporting bugs and hunting root causes down.

|

||||

|

||||

### How did you find out about snaps?

|

||||

|

||||

Martin Wimpress at Canonical reached out to me and explained the concept of snaps. Honestly, initially I was hesitant – even though I use Ubuntu – because it seemed like another standard to build and maintain. However, once understanding the benefits I was convinced it was a worthwhile investment.

|

||||

|

||||

### What was the appeal of snaps that made you decide to invest in them?

|

||||

|

||||

Without doubt, the biggest reason we decided to build the snap is the updating feature. We at Slack make heavy use of web technologies, which in turn allows us to offer a wide variety of features – like the integration of YouTube videos or Spotify playlists. Much like a browser, that means that we frequently need to update the application.

|

||||

|

||||

On macOS and Windows, we already had a dedicated auto-updater that doesn’t require the user to even think about updates. We have found that any sort of interruption, even for an update, is an annoyance that we’d like to avoid. Therefore, the automatic updates via snaps seemed far more seamless and easy.

|

||||

|

||||

### How does building snaps compare to other forms of packaging you produce? How easy was it to integrate with your existing infrastructure and process?

|

||||

|

||||

As far as Linux is concerned, we have not tried other “new” packaging formats, but we’ll never say never. Snaps were an easy choice given that the majority of our Linux customers do use Ubuntu. The fact that snaps also run on other distributions was a decent bonus. I think it is really neat how Canonical is making snaps cross-distro rather than focusing on just Ubuntu.

|

||||

|

||||

Building it was surprisingly easy: We have one unified build process that creates installers and packages – and our snap creation simply takes the .deb package and churns out a snap. For other technologies, we sometimes had to build in-house tools to support our buildchain, but the `snapcraft` tool turned out to be just the right thing. The team at Canonical were incredibly helpful to push it through as we did experience a few problems along the way.

|

||||

|

||||

### How do you see the store changing the way users find and install your software?

|

||||

|

||||

What is really unique about Slack is that people don’t just stumble upon it – they know about it from elsewhere and actively try to find it. Therefore, our levels of awareness are already high but having the snap available in the store, I hope, will make installation a lot easier for our users.

|

||||

|

||||

We always try to do the best for our users. The more convinced we become that it is better than other installation options, the more we will recommend the snap to our users.

|

||||

|

||||

### What are your expectations or already seen savings by using snaps instead of having to package for other distros?

|

||||

|

||||

We expect the snap to offer more convenience for our users and ensure they enjoy using Slack more. From our side, the snap will save time on customer support as users won’t be stuck on previous versions which will naturally resolve a lot of issues. Having the snap is an additional bonus for us and something to build on, rather than displacing anything we already have.

|

||||

|

||||

### What release channels (edge/beta/candidate/stable) in the store are you using or plan to use, if any?

|

||||

|

||||

We used the edge channel exclusively in the development to share with the team at Canonical. Slack for Linux as a whole is still in beta, but long-term, having the options for channels is interesting and being able to release versions to interested customers a little earlier will certainly be beneficial.

|

||||

|

||||

### How do you think packaging your software as a snap helps your users? Did you get any feedback from them?

|

||||

|

||||

Installation and updating generally being easier will be the big benefit to our users. Long-term, the question is “Will users that installed the snap experience less problems than other customers?” I have a decent amount of hope that the built-in dependencies in snaps make it likely.

|

||||

|

||||

### What advice or knowledge would you share with developers who are new to snaps?

|

||||

|

||||

I would recommend starting with the Debian package to build your snap – that was shockingly easy. It also starts the scope smaller to avoid being overwhelmed. It is a fairly small time investment and probably worth it. Also if you can, try to find someone at Canonical to work with – they have amazing engineers.

|

||||

|

||||

### Where do you see the biggest opportunity for development?

|

||||

|

||||

We are taking it step by step currently – first get people on the snap, and build from there. People using it will already be more secure as they will benefit from the latest updates.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://insights.ubuntu.com/2018/02/06/building-slack-for-the-linux-community-and-adopting-snaps/

|

||||

|

||||

作者:[Sarah][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://insights.ubuntu.com/author/sarahfd/

|

||||

[1]:https://insights.ubuntu.com/wp-content/uploads/a115/Slack_linux_screenshot@2x-2.png

|

||||

[2]:https://slack.com/

|

||||

[3]:https://insights.ubuntu.com/2018/01/18/canonical-brings-slack-to-the-snap-ecosystem/

|

||||

[4]:https://snapcraft.io/slack/

|

||||

@ -0,0 +1,49 @@

|

||||

How to start an open source program in your company

|

||||

======

|

||||

|

||||

|

||||

|

||||

Many internet-scale companies, including Google, Facebook, and Twitter, have established formal open source programs (sometimes referred to as open source program offices, or OSPOs for short), a designated place where open source consumption and production is supported inside a company. With such an office in place, any business can execute its open source strategies in clear terms, giving the company tools needed to make open source a success. An open source program office's responsibilities may include establishing policies for code use, distribution, selection, and auditing; engaging with open source communities; training developers; and ensuring legal compliance.

|

||||

|

||||

Internet-scale companies aren't the only ones establishing open source programs; studies show that [65% of companies][1] across industries are using and contributing to open source. In the last couple of years we’ve seen [VMware][2], [Amazon][3], [Microsoft][4], and even the [UK government][5] hire open source leaders and/or create open source programs. Having an open source strategy has become critical for businesses and even governments, and all organizations should be following in their footsteps.

|

||||

|

||||

### How to start an open source program

|

||||

|

||||

Although each open source office will be customized to a specific organization’s needs, there are standard steps that every company goes through. These include:

|

||||

|

||||

* **Finding a leader:** Identifying the right person to lead the open source program is the first step. The [TODO Group][6] maintains a list of [sample job descriptions][7] that may be helpful in finding candidates.

|

||||

* **Deciding on the program structure:** There are a variety of ways to fit an open source program office into an organization's existing structure, depending on its focus. Companies with large intellectual property portfolios may be most comfortable placing the office within the legal department. Engineering-driven organizations may choose to place the office in an engineering department, especially if the focus of the office is to improve developer productivity. Others may want the office to be within the marketing department to support sales of open source products. For inspiration, the TODO Group offers [open source program case studies][8] that can be useful.

|

||||

* **Setting policies and processes:** There needs to be a standardized method for implementing the organization’s open source strategy. The policies, which should require as little oversight as possible, lay out the requirements and rules for working with open source across the organization. They should be clearly defined, easily accessible, and even automated with tooling. Ideally, employees should be able to question policies and provide recommendations for improving or revising them. Numerous organizations active in open source, such as Google, [publish their policies publicly][9], which can be a good place to start. The TODO Group offers examples of other [open source policies][10] organizations can use as resources.

|

||||

|

||||

|

||||

|

||||

### A worthy step

|

||||

|

||||

Opening an open source program office is a big step for most organizations, especially if they are (or are transitioning into) a software company. The benefits to the organization are tremendous and will more than make up for the investment in the long run—not only in employee satisfaction but also in developer efficiency. There are many resources to help on the journey. The TODO Group guides [How to Create an Open Source Program][11], [Measuring Your Open Source Program's Success][12], and [Tools for Managing Open Source Programs][13] are great starting points.

|

||||

|

||||

Open source will truly be sustainable as more companies formalize programs to contribute back to these projects. I hope these resources are useful to you, and I wish you luck on your open source program journey.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/1/how-start-open-source-program-your-company

|

||||

|

||||

作者:[Chris Aniszczyk][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://opensource.com/users/caniszczyk

|

||||

[1]:https://www.blackducksoftware.com/2016-future-of-open-source

|

||||

[2]:http://www.cio.com/article/3095843/open-source-tools/vmware-today-has-a-strong-investment-in-open-source-dirk-hohndel.html

|

||||

[3]:http://fortune.com/2016/12/01/amazon-open-source-guru/

|

||||

[4]:https://opensource.microsoft.com/

|

||||

[5]:https://www.linkedin.com/jobs/view/169669924

|

||||

[6]:http://todogroup.org

|

||||

[7]:https://github.com/todogroup/job-descriptions

|

||||

[8]:https://github.com/todogroup/guides/tree/master/casestudies

|

||||

[9]:https://opensource.google.com/docs/why/

|

||||

[10]:https://github.com/todogroup/policies

|

||||

[11]:https://github.com/todogroup/guides/blob/master/creating-an-open-source-program.md

|

||||

[12]:https://github.com/todogroup/guides/blob/master/measuring-your-open-source-program.md

|

||||

[13]:https://github.com/todogroup/guides/blob/master/tools-for-managing-open-source-programs.md

|

||||

@ -0,0 +1,99 @@

|

||||

UQDS: A software-development process that puts quality first

|

||||

======

|

||||

|

||||

|

||||

|

||||

The Ultimate Quality Development System (UQDS) is a software development process that provides clear guidelines for how to use branches, tickets, and code reviews. It was invented more than a decade ago by Divmod and adopted by [Twisted][1], an event-driven framework for Python that underlies popular commercial platforms like HipChat as well as open source projects like Scrapy (a web scraper).

|

||||

|

||||

Divmod, sadly, is no longer around—it has gone the way of many startups. Luckily, since many of its products were open source, its legacy lives on.

|

||||

|

||||

When Twisted was a young project, there was no clear process for when code was "good enough" to go in. As a result, while some parts were highly polished and reliable, others were alpha quality software—with no way to tell which was which. UQDS was designed as a process to help an existing project with definite quality challenges ramp up its quality while continuing to add features and become more useful.

|

||||

|

||||

UQDS has helped the Twisted project evolve from having frequent regressions and needing multiple release candidates to get a working version, to achieving its current reputation of stability and reliability.

|

||||

|

||||

### UQDS's building blocks

|

||||

|

||||

UQDS was invented by Divmod back in 2006. At that time, Continuous Integration (CI) was in its infancy and modern version control systems, which allow easy branch merging, were barely proofs of concept. Although Divmod did not have today's modern tooling, it put together CI, some ad-hoc tooling to make [Subversion branches][2] work, and a lot of thought into a working process. Thus the UQDS methodology was born.

|

||||

|

||||

UQDS is based upon fundamental building blocks, each with their own carefully considered best practices:

|

||||

|

||||

1. Tickets

|

||||

2. Branches

|

||||

3. Tests

|

||||

4. Reviews

|

||||

5. No exceptions

|

||||

|

||||

|

||||

|

||||

Let's go into each of those in a little more detail.

|

||||

|

||||

#### Tickets

|

||||

|

||||

In a project using the UQDS methodology, no change is allowed to happen if it's not accompanied by a ticket. This creates a written record of what change is needed and—more importantly—why.

|

||||

|

||||

* Tickets should define clear, measurable goals.

|

||||

* Work on a ticket does not begin until the ticket contains goals that are clearly defined.

|

||||

|

||||

|

||||

|

||||

#### Branches

|

||||

|

||||

Branches in UQDS are tightly coupled with tickets. Each branch must solve one complete ticket, no more and no less. If a branch addresses either more or less than a single ticket, it means there was a problem with the ticket definition—or with the branch. Tickets might be split or merged, or a branch split and merged, until congruence is achieved.

|

||||

|

||||

Enforcing that each branch addresses no more nor less than a single ticket—which corresponds to one logical, measurable change—allows a project using UQDS to have fine-grained control over the commits: A single change can be reverted or changes may even be applied in a different order than they were committed. This helps the project maintain a stable and clean codebase.

|

||||

|

||||

#### Tests

|

||||

|

||||

UQDS relies upon automated testing of all sorts, including unit, integration, regression, and static tests. In order for this to work, all relevant tests must pass at all times. Tests that don't pass must either be fixed or, if no longer relevant, be removed entirely.

|

||||

|

||||

Tests are also coupled with tickets. All new work must include tests that demonstrate that the ticket goals are fully met. Without this, the work won't be merged no matter how good it may seem to be.

|

||||

|

||||

A side effect of the focus on tests is that the only platforms that a UQDS-using project can say it supports are those on which the tests run with a CI framework—and where passing the test on the platform is a condition for merging a branch. Without this restriction on supported platforms, the quality of the project is not Ultimate.

|

||||

|

||||

#### Reviews

|

||||

|

||||

While automated tests are important to the quality ensured by UQDS, the methodology never loses sight of the human factor. Every branch commit requires code review, and each review must follow very strict rules:

|

||||

|

||||

1. Each commit must be reviewed by a different person than the author.

|

||||

2. Start with a comment thanking the contributor for their work.

|

||||

3. Make a note of something that the contributor did especially well (e.g., "that's the perfect name for that variable!").

|

||||

4. Make a note of something that could be done better (e.g., "this line could use a comment explaining the choices.").

|

||||

5. Finish with directions for an explicit next step, typically either merge as-is, fix and merge, or fix and submit for re-review.

|

||||

|

||||

|

||||

|

||||

These rules respect the time and effort of the contributor while also increasing the sharing of knowledge and ideas. The explicit next step allows the contributor to have a clear idea on how to make progress.

|

||||

|

||||

#### No exceptions

|

||||

|

||||

In any process, it's easy to come up with reasons why you might need to flex the rules just a little bit to let this thing or that thing slide through the system. The most important fundamental building block of UQDS is that there are no exceptions. The entire community works together to make sure that the rules do not flex, not for any reason whatsoever.

|

||||

|

||||

Knowing that all code has been approved by a different person than the author, that the code has complete test coverage, that each branch corresponds to a single ticket, and that this ticket is well considered and complete brings a piece of mind that is too valuable to risk losing, even for a single small exception. The goal is quality, and quality does not come from compromise.

|

||||

|

||||

### A downside to UQDS

|

||||

|

||||

While UQDS has helped Twisted become a highly stable and reliable project, this reliability hasn't come without cost. We quickly found that the review requirements caused a slowdown and backlog of commits to review, leading to slower development. The answer to this wasn't to compromise on quality by getting rid of UQDS; it was to refocus the community priorities such that reviewing commits became one of the most important ways to contribute to the project.

|

||||

|

||||

To help with this, the community developed a bot in the [Twisted IRC channel][3] that will reply to the command `review tickets` with a list of tickets that still need review. The [Twisted review queue][4] website returns a prioritized list of tickets for review. Finally, the entire community keeps close tabs on the number of tickets that need review. It's become an important metric the community uses to gauge the health of the project.

|

||||

|

||||

### Learn more

|

||||

|

||||

The best way to learn about UQDS is to [join the Twisted Community][5] and see it in action. If you'd like more information about the methodology and how it might help your project reach a high level of reliability and stability, have a look at the [UQDS documentation][6] in the Twisted wiki.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/2/uqds

|

||||

|

||||

作者:[Moshe Zadka][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://opensource.com/users/moshez

|

||||

[1]:https://twistedmatrix.com/trac/

|

||||

[2]:http://structure.usc.edu/svn/svn.branchmerge.html

|

||||

[3]:http://webchat.freenode.net/?channels=%23twisted

|

||||

[4]:https://twisted.reviews

|

||||

[5]:https://twistedmatrix.com/trac/wiki/TwistedCommunity

|

||||

[6]:https://twistedmatrix.com/trac/wiki/UltimateQualityDevelopmentSystem

|

||||

@ -0,0 +1,102 @@

|

||||

Why Linux is better than Windows or macOS for security

|

||||

======

|

||||

|

||||

|

||||

|

||||

Enterprises invest a lot of time, effort and money in keeping their systems secure. The most security-conscious might have a security operations center. They of course use firewalls and antivirus tools. They probably spend a lot of time monitoring their networks, looking for telltale anomalies that could indicate a breach. What with IDS, SIEM and NGFWs, they deploy a veritable alphabet of defenses.

|

||||

|

||||

But how many have given much thought to one of the cornerstones of their digital operations: the operating systems deployed on the workforce’s PCs? Was security even a factor when the desktop OS was selected?

|

||||

|

||||

This raises a question that every IT person should be able to answer: Which operating system is the most secure for general deployment?

|

||||

|

||||

We asked some experts what they think of the security of these three choices: Windows, the ever-more-complex platform that’s easily the most popular desktop system; macOS X, the FreeBSD Unix-based operating system that powers Apple Macintosh systems; and Linux, by which we mean all the various Linux distributions and related Unix-based systems.

|

||||

|

||||

### How we got here

|

||||

|

||||

One reason enterprises might not have evaluated the security of the OS they deployed to the workforce is that they made the choice years ago. Go back far enough and all operating systems were reasonably safe, because the business of hacking into them and stealing data or installing malware was in its infancy. And once an OS choice is made, it’s hard to consider a change. Few IT organizations would want the headache of moving a globally dispersed workforce to an entirely new OS. Heck, they get enough pushback when they move users to a new version of their OS of choice.

|

||||

|

||||

Still, would it be wise to reconsider? Are the three leading desktop OSes different enough in their approach to security to make a change worthwhile?

|

||||

|

||||

Certainly the threats confronting enterprise systems have changed in the last few years. Attacks have become far more sophisticated. The lone teen hacker that once dominated the public imagination has been supplanted by well-organized networks of criminals and shadowy, government-funded organizations with vast computing resources.

|

||||

|

||||

Like many of you, I have firsthand experience of the threats that are out there: I have been infected by malware and viruses on numerous Windows computers, and I even had macro viruses that infected files on my Mac. More recently, a widespread automated hack circumvented the security on my website and infected it with malware. The effects of such malware were always initially subtle, something you wouldn’t even notice, until the malware ended up so deeply embedded in the system that performance started to suffer noticeably. One striking thing about the infestations was that I was never specifically targeted by the miscreants; nowadays, it’s as easy to attack 100,000 computers with a botnet as it is to attack a dozen.

|

||||

|

||||

### Does the OS really matter?

|

||||

|

||||

The OS you deploy to your users does make a difference for your security stance, but it isn’t a sure safeguard. For one thing, a breach these days is more likely to come about because an attacker probed your users, not your systems. A [survey][1] of hackers who attended a recent DEFCON conference revealed that “84 percent use social engineering as part of their attack strategy.” Deploying a secure operating system is an important starting point, but without user education, strong firewalls and constant vigilance, even the most secure networks can be invaded. And of course there’s always the risk of user-downloaded software, extensions, utilities, plug-ins and other software that appears benign but becomes a path for malware to appear on the system.

|

||||

|

||||

And no matter which platform you choose, one of the best ways to keep your system secure is to ensure that you apply software updates promptly. Once a patch is in the wild, after all, the hackers can reverse engineer it and find a new exploit they can use in their next wave of attacks.

|

||||

|

||||

And don’t forget the basics. Don’t use root, and don’t grant guest access to even older servers on the network. Teach your users how to pick really good passwords and arm them with tools such as [1Password][2] that make it easier for them to have different passwords on every account and website they use.

|

||||

|

||||

Because the bottom line is that every decision you make regarding your systems will affect your security, even the operating system your users do their work on.

|

||||

|

||||

**[ To comment on this story, visit[Computerworld's Facebook page][3]. ]**

|

||||

|

||||

### Windows, the popular choice

|

||||

|

||||

If you’re a security manager, it is extremely likely that the questions raised by this article could be rephrased like so: Would we be more secure if we moved away from Microsoft Windows? To say that Windows dominates the enterprise market is to understate the case. [NetMarketShare][4] estimates that a staggering 88% of all computers on the internet are running a version of Windows.

|

||||

|

||||

If your systems fall within that 88%, you’re probably aware that Microsoft has continued to beef up security in the Windows system. Among its improvements have been rewriting and re-rewriting its operating system codebase, adding its own antivirus software system, improving firewalls and implementing a sandbox architecture, where programs can’t access the memory space of the OS or other applications.

|

||||

|

||||

But the popularity of Windows is a problem in itself. The security of an operating system can depend to a large degree on the size of its installed base. For malware authors, Windows provides a massive playing field. Concentrating on it gives them the most bang for their efforts.

|

||||

As Troy Wilkinson, CEO of Axiom Cyber Solutions, explains, “Windows always comes in last in the security world for a number of reasons, mainly because of the adoption rate of consumers. With a large number of Windows-based personal computers on the market, hackers historically have targeted these systems the most.”

|

||||

|

||||

It’s certainly true that, from Melissa to WannaCry and beyond, much of the malware the world has seen has been aimed at Windows systems.

|

||||

|

||||

### macOS X and security through obscurity

|

||||

|

||||

If the most popular OS is always going to be the biggest target, then can using a less popular option ensure security? That idea is a new take on the old — and entirely discredited — concept of “security through obscurity,” which held that keeping the inner workings of software proprietary and therefore secret was the best way to defend against attacks.

|

||||

|

||||

Wilkinson flatly states that macOS X “is more secure than Windows,” but he hastens to add that “macOS used to be considered a fully secure operating system with little chance of security flaws, but in recent years we have seen hackers crafting additional exploits against macOS.”

|

||||

|

||||

In other words, the attackers are branching out and not ignoring the Mac universe.

|

||||

|

||||

Security researcher Lee Muson of Comparitech says that “macOS is likely to be the pick of the bunch” when it comes to choosing a more secure OS, but he cautions that it is not impenetrable, as once thought. Its advantage is that “it still benefits from a touch of security through obscurity versus the still much larger target presented by Microsoft’s offering.”

|

||||

|

||||

Joe Moore of Wolf Solutions gives Apple a bit more credit, saying that “off the shelf, macOS X has a great track record when it comes to security, in part because it isn’t as widely targeted as Windows and in part because Apple does a pretty good job of staying on top of security issues.”

|

||||

|

||||

### And the winner is …

|

||||

|

||||

You probably knew this from the beginning: The clear consensus among experts is that Linux is the most secure operating system. But while it’s the OS of choice for servers, enterprises deploying it on the desktop are few and far between.

|

||||

|

||||

And if you did decide that Linux was the way to go, you would still have to decide which distribution of the Linux system to choose, and things get a bit more complicated there. Users are going to want a UI that seems familiar, and you are going to want the most secure OS.

|

||||

|

||||

As Moore explains, “Linux has the potential to be the most secure, but requires the user be something of a power user.” So, not for everyone.

|

||||

|

||||

Linux distros that target security as a primary feature include [Parrot Linux][5], a Debian-based distro that Moore says provides numerous security-related tools right out of the box.

|

||||

|

||||

Of course, an important differentiator is that Linux is open source. The fact that coders can read and comment upon each other’s work might seem like a security nightmare, but it actually turns out to be an important reason why Linux is so secure, says Igor Bidenko, CISO of Simplex Solutions. “Linux is the most secure OS, as its source is open. Anyone can review it and make sure there are no bugs or back doors.”

|

||||

|

||||

Wilkinson elaborates that “Linux and Unix-based operating systems have less exploitable security flaws known to the information security world. Linux code is reviewed by the tech community, which lends itself to security: By having that much oversight, there are fewer vulnerabilities, bugs and threats.”

|

||||

|

||||

That’s a subtle and perhaps counterintuitive explanation, but by having dozens — or sometimes hundreds — of people read through every line of code in the operating system, the code is actually more robust and the chance of flaws slipping into the wild is diminished. That had a lot to do with why PC World came right out and said Linux is more secure. As Katherine Noyes [explains][6], “Microsoft may tout its large team of paid developers, but it’s unlikely that team can compare with a global base of Linux user-developers around the globe. Security can only benefit through all those extra eyeballs.”

|

||||

|

||||

Another factor cited by PC World is Linux’s better user privileges model: Windows users “are generally given administrator access by default, which means they pretty much have access to everything on the system,” according to Noyes’ article. Linux, in contrast, greatly restricts “root.”

|

||||

|

||||

Noyes also noted that the diversity possible within Linux environments is a better hedge against attacks than the typical Windows monoculture: There are simply a lot of different distributions of Linux available. And some of them are differentiated in ways that specifically address security concerns. Security Researcher Lee Muson of Comparitech offers this suggestion for a Linux distro: “The[Qubes OS][7] is as good a starting point with Linux as you can find right now, with an [endorsement from Edward Snowden][8] massively overshadowing its own extremely humble claims.” Other security experts point to specialized secure Linux distributions such as [Tails Linux][9], designed to run securely and anonymously directly from a USB flash drive or similar external device.

|

||||

|

||||

### Building security momentum

|

||||

|

||||

Inertia is a powerful force. Although there is clear consensus that Linux is the safest choice for the desktop, there has been no stampede to dump Windows and Mac machines in favor of it. Nonetheless, a small but significant increase in Linux adoption would probably result in safer computing for everyone, because in market share loss is one sure way to get Microsoft’s and Apple’s attention. In other words, if enough users switch to Linux on the desktop, Windows and Mac PCs are very likely to become more secure platforms.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.computerworld.com/article/3252823/linux/why-linux-is-better-than-windows-or-macos-for-security.html

|

||||

|

||||

作者:[Dave Taylor][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.computerworld.com/author/Dave-Taylor/

|

||||

[1]:https://www.esecurityplanet.com/hackers/fully-84-percent-of-hackers-leverage-social-engineering-in-attacks.html

|

||||

[2]:http://www.1password.com

|

||||

[3]:https://www.facebook.com/Computerworld/posts/10156160917029680

|

||||

[4]:https://www.netmarketshare.com/operating-system-market-share.aspx?options=%7B%22filter%22%3A%7B%22%24and%22%3A%5B%7B%22deviceType%22%3A%7B%22%24in%22%3A%5B%22Desktop%2Flaptop%22%5D%7D%7D%5D%7D%2C%22dateLabel%22%3A%22Trend%22%2C%22attributes%22%3A%22share%22%2C%22group%22%3A%22platform%22%2C%22sort%22%3A%7B%22share%22%3A-1%7D%2C%22id%22%3A%22platformsDesktop%22%2C%22dateInterval%22%3A%22Monthly%22%2C%22dateStart%22%3A%222017-02%22%2C%22dateEnd%22%3A%222018-01%22%2C%22segments%22%3A%22-1000%22%7D

|

||||

[5]:https://www.parrotsec.org/

|

||||

[6]:https://www.pcworld.com/article/202452/why_linux_is_more_secure_than_windows.html

|

||||

[7]:https://www.qubes-os.org/

|

||||

[8]:https://twitter.com/snowden/status/781493632293605376?lang=en

|

||||

[9]:https://tails.boum.org/about/index.en.html

|

||||

@ -0,0 +1,295 @@

|

||||

Prevent Files And Folders From Accidental Deletion Or Modification In Linux

|

||||

======

|

||||

|

||||

|

||||

|

||||

Some times, I accidentally “SHIFT+DELETE” my data. Yes, I am an idiot who don’t double check what I am exactly going to delete. And, I am too dumb or lazy to backup the data. Result? Data loss! They are gone in a fraction of second. I do it every now and then. If you’re anything like me, I’ve got a good news. There is a simple, yet useful commandline utility called **“chattr”** (abbreviation of **Ch** ange **Attr** ibute) which can be used to prevent files and folders from accidental deletion or modification in Unix-like distributions. It applies/removes certain attributes to a file or folder in your Linux system. So the users can’t delete or modify the files and folders either accidentally or intentionally, even as root user. Sounds useful, isn’t it?

|

||||

|

||||

In this brief tutorial, we are going to see how to use chattr in real time in-order to prevent files and folders from accidental deletion in Linux.

|

||||

|

||||

### Prevent Files And Folders From Accidental Deletion Or Modification In Linux

|

||||

|

||||

By default, Chattr is available in most modern Linux operating systems. Let us see some examples.

|

||||

|

||||

The default syntax of chattr command is:

|

||||

```

|

||||

chattr [operator] [switch] [filename]

|

||||

|

||||

```

|

||||

|

||||

chattr has the following operators.

|

||||

|

||||

* The operator **‘+’** causes the selected attributes to be added to the existing attributes of the files;

|

||||

* The operator **‘-‘** causes them to be removed;

|

||||

* The operator **‘=’** causes them to be the only attributes that the files have.

|

||||

|

||||

|

||||

|

||||

Chattr has different attributes namely – **aAcCdDeijsStTu**. Each letter applies a particular attributes to a file.

|

||||

|

||||

* **a** – append only,

|

||||

* **A** – no atime updates,

|

||||

* **c** – compressed,

|

||||

* **C** – no copy on write,

|

||||

* **d** – no dump,

|

||||

* **D** – synchronous directory updates,

|

||||

* **e** – extent format,

|

||||

* **i** – immutable,

|

||||

* **j** – data journalling,

|

||||

* **P** – project hierarchy,

|

||||

* **s** – secure deletion,

|

||||

* **S** – synchronous updates,

|

||||

* **t** – no tail-merging,

|

||||

* **T** – top of directory hierarchy,

|

||||

* **u** – undeletable.

|

||||

|

||||

|

||||

|

||||

In this tutorial, we are going to discuss the usage of two attributes, namely **a** , **i** which are used to prevent the deletion of files and folders. That’s what our topic today, isn’t? Indeed!

|

||||

|

||||

### Prevent files from accidental deletion

|

||||

|

||||

Let me create a file called **file.txt** in my current directory.

|

||||

```

|

||||

$ touch file.txt

|

||||

|

||||

```

|

||||

|

||||

Now, I am going to apply **“i”** attribute which makes the file immutable. It means you can’t delete, modify the file, even if you’re the file owner and the root user.

|

||||

```

|

||||

$ sudo chattr +i file.txt

|

||||

|

||||

```

|

||||

|

||||

You can check the file attributes using command:

|

||||

```

|

||||

$ lsattr file.txt

|

||||

|

||||

```

|

||||

|

||||

**Sample output:**

|

||||

```

|

||||

----i---------e---- file.txt

|

||||

|

||||

```

|

||||

|

||||

Now, try to remove the file either as a normal user or with sudo privileges.

|

||||

```

|

||||

$ rm file.txt

|

||||

|

||||

```

|

||||

|

||||

**Sample output:**

|

||||

```

|

||||

rm: cannot remove 'file.txt': Operation not permitted

|

||||

|

||||

```

|

||||

|

||||

Let me try with sudo command:

|

||||

```

|

||||

$ sudo rm file.txt

|

||||

|

||||

```

|

||||

|

||||

**Sample output:**

|

||||

```

|

||||

rm: cannot remove 'file.txt': Operation not permitted

|

||||

|

||||

```

|

||||

|

||||

Let us try to append some contents in the text file.

|

||||

```

|

||||

$ echo 'Hello World!' >> file.txt

|

||||

|

||||

```

|

||||

|

||||

**Sample output:**

|

||||

```

|

||||

bash: file.txt: Operation not permitted

|

||||

|

||||

```

|

||||

|

||||

Try with **sudo** privilege:

|

||||

```

|

||||

$ sudo echo 'Hello World!' >> file.txt

|

||||

|

||||

```

|

||||

|

||||

**Sample output:**

|

||||

```

|

||||

bash: file.txt: Operation not permitted

|

||||

|

||||

```

|

||||

|

||||

As you noticed in the above outputs, We can’t delete or modify the file even as root user or the file owner.

|

||||

|

||||

To revoke attributes, just use **“-i”** switch as shown below.

|

||||

```

|

||||

$ sudo chattr -i file.txt

|

||||

|

||||

```

|

||||

|

||||

Now, the immutable attribute has been removed. You can now delete or modify the file.

|

||||

```

|

||||

$ rm file.txt

|

||||

|

||||

```

|

||||

|

||||

Similarly, you can restrict the directories from accidental deletion or modification as described in the next section.

|

||||

|

||||

### Prevent folders from accidental deletion and modification

|

||||

|

||||

Create a directory called dir1 and a file called file.txt inside this directory.

|

||||

```

|

||||

$ mkdir dir1 && touch dir1/file.txt

|

||||

|

||||

```

|

||||

|

||||

Now, make this directory and its contents (file.txt) immutable using command:

|

||||

```

|

||||

$ sudo chattr -R +i dir1

|

||||

|

||||

```

|

||||

|

||||

Where,

|

||||

|

||||

* **-R** – will make the dir1 and its contents immutable recursively.

|

||||

* **+i** – makes the directory immutable.

|

||||

|

||||

|

||||

|

||||

Now, try to delete the directory either as normal user or using sudo user.

|

||||

```

|

||||

$ rm -fr dir1

|

||||

|

||||

$ sudo rm -fr dir1

|

||||

|

||||

```

|

||||

|

||||

You will get the following output:

|

||||

```

|

||||

rm: cannot remove 'dir1/file.txt': Operation not permitted

|

||||

|

||||

```

|

||||

|

||||

Try to append some contents in the file using “echo” command. Did you make it? Of course, you couldn’t!

|

||||

|

||||

To revoke the attributes back, run:

|

||||

```

|

||||

$ sudo chattr -R -i dir1

|

||||

|

||||

```

|

||||

|

||||

Now, you can delete or modify the contents of this directory as usual.

|

||||

|

||||

### Prevent files and folders from accidental deletion, but allow append operation

|

||||

|

||||

We know now how to prevent files and folders from accidental deletion and modification. Next, we are going to prevent files and folders from deletion, but allow the file for writing in append mode only. That means you can’t edit, modify the existing data in the file, rename the file, and delete the file. You can only open the file for writing in append mode.

|

||||

|

||||

To set append mode attribution to a file/directory, we do the following.

|

||||

|

||||

**For files:**

|

||||

```

|

||||

$ sudo chattr +a file.txt

|

||||

|

||||

```

|

||||

|

||||

**For directories: **

|

||||

```

|

||||

$ sudo chattr -R +a dir1

|

||||

|

||||

```

|

||||

|

||||

A file/folder with the ‘a’ attribute set can only be open in append mode for writing.

|

||||

|

||||

Add some contents to the file(s) to check whether it works or not.

|

||||

```

|

||||

$ echo 'Hello World!' >> file.txt

|

||||

|

||||

$ echo 'Hello World!' >> dir1/file.txt

|

||||

|

||||

```

|

||||

|

||||

Check the file contents using cat command:

|

||||

```

|

||||

$ cat file.txt

|

||||

|

||||

$ cat dir1/file.txt

|

||||

|

||||

```

|

||||

|

||||

**Sample output:**

|

||||

```

|

||||

Hello World!

|

||||

|

||||

```

|

||||

|

||||

You will see that you can now be able to append the contents. It means we can modify the files and folders.

|

||||

|

||||

Let us try to delete the file or folder now.

|

||||

```

|

||||

$ rm file.txt

|

||||

|

||||

```

|

||||

|

||||

**Output:**

|

||||

```

|

||||

rm: cannot remove 'file.txt': Operation not permitted

|

||||

|

||||

```

|

||||

|

||||

Let us try to delete the folder:

|

||||

```

|

||||

$ rm -fr dir1/

|

||||

|

||||

```

|

||||

|

||||

**Sample output:**

|

||||

```

|

||||

rm: cannot remove 'dir1/file.txt': Operation not permitted

|

||||

|

||||

```

|

||||

|

||||

To remove the attributes, run the following commands:

|

||||

|

||||

**For files:**

|

||||

```

|

||||

$ sudo chattr -R -a file.txt

|

||||

|

||||

```

|

||||

|

||||

**For directories: **

|

||||

```

|

||||

$ sudo chattr -R -a dir1/

|

||||

|

||||

```

|

||||

|

||||

Now, you can delete or modify the files and folders as usual.

|

||||

|

||||

For more details, refer the man pages.

|

||||

```

|

||||

man chattr

|

||||

|

||||

```

|

||||

|

||||

### Wrapping up

|

||||

|

||||

Data protection is one of the main job of a System administrator. There are numerous free and commercial data protection software are available on the market. Luckily, we’ve got this built-in tool that helps us to protect the data from accidental deletion or modification. Chattr can be used as additional tool to protect the important system files and data in your Linux system.

|

||||

|

||||

And, that’s all for today. Hope this helps. I will be soon here with another useful article. Until then, stay tuned with OSTechNix!

|

||||

|

||||

Cheers!

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.ostechnix.com/prevent-files-folders-accidental-deletion-modification-linux/

|

||||

|

||||

作者:[SK][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.ostechnix.com/author/sk/

|

||||

@ -1,97 +0,0 @@

|

||||

translating---geekpi

|

||||

|

||||

Monitoring network bandwidth with iftop command

|

||||

======

|

||||

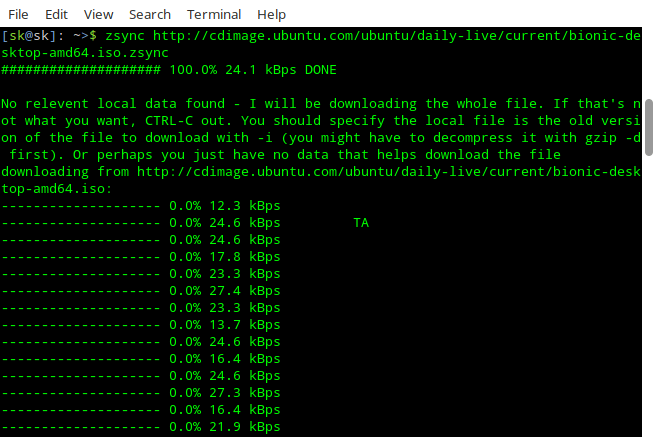

System Admins are required to monitor IT infrastructure to make sure that everything is up & running. We have to monitor performance of hardware i.e memory, hdds & CPUs etc & so does we have to monitor our network. We need to make sure that our network is not being over utilised or our applications, websites might not work. In this tutorial, we are going to learn to use IFTOP utility.

|

||||

|

||||

( **Recommended read** :[ **Resource monitoring using Nagios**][1], [**Tools for checking system info**,][2] [**Important logs to monitor**][3])

|

||||

|

||||

Iftop is network monitoring utility that provides real time real time bandwidth monitoring. Iftop measures total data moving in & out of the individual socket connections i.e. it captures packets moving in and out via network adapter & than sums those up to find the bandwidth being utilized.

|

||||

|

||||

## Installation on Debian/Ubuntu

|

||||

|

||||

Iftop is available with default repositories of Debian/Ubuntu & can be simply installed using the command below,

|

||||

|

||||

```

|

||||

$ sudo apt-get install iftop

|

||||

```

|

||||

|

||||

## Installation on RHEL/Centos using yum

|

||||

|

||||

For installing iftop on CentOS or RHEL, we need to enable EPEL repository. To enable repository, run the following on your terminal,

|

||||

|

||||

### RHEL/CentOS 7

|

||||

|

||||

```

|

||||

$ rpm -Uvh https://dl.fedoraproject.org/pub/epel/7/x86_64/e/epel-release-7-10.noarch.rpm

|

||||

```

|

||||

|

||||

### RHEL/CentOS 6 (64 Bit)

|

||||

|

||||

```

|

||||

$ rpm -Uvh http://download.fedoraproject.org/pub/epel/6/x86_64/epel-release-6-8.noarch.rpm

|

||||

```

|

||||

|

||||

### RHEL/CentOS 6 (32 Bit)

|

||||

|

||||

```

|

||||

$ rpm -Uvh http://dl.fedoraproject.org/pub/epel/6/i386/epel-release-6-8.noarch.rpm

|

||||

```

|

||||

|

||||

After epel repository has been installed, we can now install iftop by running,

|

||||

|

||||

```

|

||||

$ yum install iftop

|

||||

```

|

||||

|

||||

This will install iftop utility on your system. We will now use it to monitor our network,

|

||||

|

||||

## Using IFTOP

|

||||

|

||||

You can start using iftop by opening your terminal windown & type,

|

||||

|

||||

```

|

||||

$ iftop

|

||||

```

|

||||

|

||||

![network monitoring][5]

|

||||

|

||||

You will now be presented with network activity happening on your machine. You can also use

|

||||

|

||||

```

|

||||

$ iftop -n

|

||||

```

|

||||

|

||||

Which will present the network information on your screen but with '-n' , you will not be presented with the names related to IP addresses but only ip addresses. This option allows for some bandwidth to be saved, which goes into resolving IP addresses to names.

|

||||

|

||||

Now we can also see all the commands that can be used with iftop. Once you have ran iftop, press 'h' button on the keyboard to see all the commands that can be used with iftop.

|

||||

|

||||

![network monitoring][7]

|

||||

|

||||

To monitor a particular network interface, we can mention interface with iftop,

|

||||

|

||||

```

|

||||

$ iftop -I enp0s3

|

||||

```

|

||||

|

||||

You can check further options that are used with iftop using help, as mentioned above. But these mentioned examples are only what you might to monitor network.

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://linuxtechlab.com/monitoring-network-bandwidth-iftop-command/

|

||||

|

||||

作者:[SHUSAIN][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://linuxtechlab.com/author/shsuain/

|

||||

[1]:http://linuxtechlab.com/installing-configuring-nagios-server/

|

||||

[2]:http://linuxtechlab.com/commands-system-hardware-info/

|

||||

[3]:http://linuxtechlab.com/important-logs-monitor-identify-issues/

|

||||

[4]:https://i1.wp.com/linuxtechlab.com/wp-content/plugins/a3-lazy-load/assets/images/lazy_placeholder.gif?resize=661%2C424

|

||||

[5]:https://i0.wp.com/linuxtechlab.com/wp-content/uploads/2017/04/iftop-1.jpg?resize=661%2C424

|

||||

[6]:https://i1.wp.com/linuxtechlab.com/wp-content/plugins/a3-lazy-load/assets/images/lazy_placeholder.gif?resize=663%2C416

|

||||

[7]:https://i0.wp.com/linuxtechlab.com/wp-content/uploads/2017/04/iftop-help.jpg?resize=663%2C416

|

||||

193

sources/tech/20180123 Migrating to Linux- The Command Line.md

Normal file

193

sources/tech/20180123 Migrating to Linux- The Command Line.md

Normal file

@ -0,0 +1,193 @@

|

||||

Migrating to Linux: The Command Line

|

||||

======

|

||||

|

||||

|

||||

|

||||

This is the fourth article in our series on migrating to Linux. If you missed the previous installments, we've covered [Linux for new users][1], [files and filesystems][2], and [graphical environments][3]. Linux is everywhere. It's used to run most Internet services like web servers, email servers, and others. It's also used in your cell phone, your car console, and a whole lot more. So, you might be curious to try out Linux and learn more about how it works.

|

||||

|

||||

Under Linux, the command line is very useful. On desktop Linux systems, although the command line is optional, you will often see people have a command line window open alongside other application windows. On Internet servers, and when Linux is running in a device, the command line is often the only way to interact directly with the system. So, it's good to know at least some command line basics.

|

||||

|

||||

In the command line (often called a shell in Linux), everything is done by entering commands. You can list files, move files, display the contents of files, edit files, and more, even display web pages, all from the command line.

|

||||

|

||||

If you are already familiar with using the command line in Windows (either CMD.EXE or PowerShell), you may want to jump down to the section titled Familiar with Windows Command Line? and read that first.

|

||||

|

||||

### Navigating

|

||||

|

||||

In the command line, there is the concept of the current working directory (Note: A folder and a directory are synonymous, and in Linux they're usually called directories). Many commands will look in this directory by default if no other directory path is specified. For example, typing ls to list files, will list files in this working directory. For example:

|

||||

```

|

||||

$ ls

|

||||

Desktop Documents Downloads Music Pictures README.txt Videos

|

||||

```

|

||||

|

||||

The command, ls Documents, will instead list files in the Documents directory:

|

||||

```

|

||||

$ ls Documents

|

||||

report.txt todo.txt EmailHowTo.pdf

|

||||

```

|

||||

|

||||

You can display the current working directory by typing pwd. For example:

|