mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-01-28 23:20:10 +08:00

Merge branch 'master' of https://github.com/LCTT/TranslateProject

This commit is contained in:

commit

e7da8a74ec

@ -1,42 +1,42 @@

|

||||

Ubuntu每日贴士——保护你的Home文件夹

|

||||

================================================================================

|

||||

几天之前,我们向大家展示了如何在Ubuntu中改变您的home文件夹,以便只有授权用户才能够看到您文件夹中的内容。我们说过,“adduser”命令创建的用户目录,目录里面内容是所有人可读的。这意味着:默认情况下,您的机器上所有有帐号的用户,都能够浏览您home文件夹里面的内容。

|

||||

Ubuntu每日小技巧——保护你的Home文件夹

|

||||

=================================

|

||||

|

||||

几天之前,我们向大家展示了如何在Ubuntu中改变您的home文件夹,以便只有授权用户才能够看到您文件夹中的内容。我们说过,“adduser”命令创建的用户目录,目录里面内容是所有人可读的。这意味着:默认情况下,您的机器上所有有帐号的用户,都能够浏览您home文件夹里面的内容。

|

||||

|

||||

要想阅读之前的文章,[请点击这里][2].在那篇文章中,我们还介绍了如何设置权限,可以让您的home文件夹不被任何人浏览。

|

||||

这篇博客中提到,还可以通过加密文件目录的方式来获得同样的效果。当home文件夹被加密后,未经授权的用户将既不能看到也不能访问该目录。

|

||||

|

||||

在这篇博客里,还可以看到如何通过加密文件目录的方式来获得同样的效果。当home文件夹被加密后,未经授权的用户将既不能看到也不能访问该目录。

|

||||

|

||||

加密home文件夹并不是在每个环境中对每个人都适用,所以在实际使用该功能之前,请确信自己真的需要它。

|

||||

要加密home目录,请登录到Ubuntu并运行以下命令。

|

||||

|

||||

要使用加密home目录的功能,请登录到Ubuntu并运行以下命令。

|

||||

|

||||

sudo apt-get install ecryptfs-utils

|

||||

|

||||

当加密当前home文件夹时,你是无法进行系统登录的,必须创建一个临时账户并登录进去。之后再运行下面这些命令,来加密你的home文件夹。

|

||||

使用你当前的账户名代替USERNAME。

|

||||

你是无法在登录后加密当前home文件夹的,必须创建一个临时账户并登录进去。之后再运行下面这些命令,来加密你的home文件夹。

|

||||

|

||||

使用你当前的账户名代替下面的USERNAME。

|

||||

|

||||

sudo ecryptfs-migrate-home -u USERNAME

|

||||

|

||||

当以临时用户的身份登录时,由于你的帐号拥有root或admin权限,就需要运行**su**+用户名,以自己的身份运行命令。系统会提示你输入密码。

|

||||

当以临时用户的身份登录后,为使你的帐号拥有root或admin权限,就需要以自己的身份运行 **su**+用户名的命令。系统会提示你输入密码。

|

||||

|

||||

su USERNAME

|

||||

|

||||

使用具有root或admin权限的帐号代替USERNAME。

|

||||

使用具有使用root或admin权限的帐号(译注:即拥有su权限的账号)代替USERNAME。

|

||||

|

||||

在这之后,运行**ecryptfs-migrate-home –u USERNAME**命令加密home文件夹。

|

||||

在这之后,运行 **ecryptfs-migrate-home –u USERNAME** 命令加密home文件夹。

|

||||

|

||||

要想在Ubuntu创建一个用户,运行下面的命令。

|

||||

|

||||

sudo adduser USERNAME

|

||||

|

||||

要想在Ubuntu中删除的用户,运行下面的命令。

|

||||

|

||||

sudo deluser USERNAME

|

||||

|

||||

登录后,你将会看到如下截图的界面,包含更多关于加密home文件夹的信息。

|

||||

使用被加密的账号第一次登录后,你将会看到如下截图的界面,包含更多关于加密home文件夹的信息。

|

||||

|

||||

|

||||

|

||||

要创建带有加密home目录的用户,运行下面的命令:

|

||||

要创建带有加密home目录的用户,运行下面的命令:

|

||||

|

||||

adduser –encrypt-home USERNAME

|

||||

|

||||

试试看吧!

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.liberiangeek.net/2013/09/daily-ubuntu-tips-protect-home-folders/

|

||||

@ -8,13 +8,13 @@ Gnome Control Center允许用户使用大量的工具应用程序来对他们的

|

||||

|

||||

**GNOME Control Center 3.10.1的功能亮点:**

|

||||

|

||||

- 修正了一些内存泄露;

|

||||

- 修正了一些内存泄露问题;

|

||||

- 创建目录时使用一致的权限;

|

||||

- 鼠标移动速度设置不会再复位;

|

||||

- 没有启用远程控制功能时屏幕共享可正常使用;

|

||||

- 相同名字的文件夹不会再被选定为媒体共享文件夹;

|

||||

- 当要启用DLNA,必须使MediaExport插件启用;

|

||||

- 在“标题栏”的按钮图标已经对齐。

|

||||

- 顶栏的按钮图标已经对齐。

|

||||

|

||||

有关变更、更新及Bug修复等情况的完整列表信息可以在官网[变更日志][1]中查看。

|

||||

|

||||

@ -0,0 +1,33 @@

|

||||

Mark Shuttleworth将出席下月5~8号香港OpenStack峰会并演讲

|

||||

================================================================================

|

||||

|

||||

通过分析[Canonical][1],调查者不难发现几个改变,其中包括愿景、奋斗目标和行动准则等,都已经逐渐地将Canonical定位到业界之巅,通过在所有相关业绩和计算环境方面的成绩,它成为了领导创新发展的非常重要的部分。

|

||||

|

||||

Ubuntu桌面环境为那些追求稳定、快速、安全而优美的个人用户、公司企业和国家部门支撑了3000万台计算机的运行,这是一个巨大的成功,然而,这个桌面系统只是Canonical跨过层层困难到达IT世界之巅的蓬勃运动的一部分。

|

||||

|

||||

在云计算方面,Canonical已经深入而积极参与到OpenStack的创建上。作为最流行、可靠的、快速的开源云平台,Ubuntu正是OpenStack所基于的操作系统,这个云平台是汇集了NASA、HP和世界上的专家们,通力合作的所开发的开放云平台。

|

||||

|

||||

Ubuntu是公司和开发者所渴望使用的强大的OpenStack[原生的][2]操作系统,Ubuntu提供了众多优势和优点:Ubuntu和OpenStack发布时间是同步的,同步的发行使得OpenStack能在最新的Utuntu下运行,Canonical提供支持,包括产品、服务等,来支持最佳的OpenStack的管理和操作等。

|

||||

|

||||

**OpenStack峰会**是一个专家们的重要集会,在这里讨论、提出和分析OpenStack各个方面的内容,也包括丰富的展览、案例研究,以来自创新开发者的基调为特色,也有开发者集会和工作分享,最主要的是专家们会讨论关于目前和将来的OpenStack和云计算的格局。

|

||||

|

||||

|

||||

|

||||

这次OpenStack峰会将会于11月5号到8号在香港举行,可以[在此注册参会][3]。

|

||||

|

||||

Canonical的**Mark Shuttleworth**已经确定会出席在香港举行的OpenStack峰会,他将做一个讲演,围绕交互性,进一步加强Ubuntu和OpenStack的结合等,并揭示关于未来的创新目标的新细节、计划和Canonical为Ubuntu所做的成就。

|

||||

|

||||

更多细节请点击 [https://www.openstack.org/summit/hk][4]

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://iloveubuntu.net/mark-shuttleworth-attend-and-conduct-keynote-openstack-summit-hong-kong-november-5th-8th-2013

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

译者:[Vic___](http://blog.csdn.net/Vic___) 校对:[wxy](https://github.com/wxy)

|

||||

|

||||

[1]:http://www.canonical.com/

|

||||

[2]:http://www.ubuntu.com/cloud/tools/openstack

|

||||

[3]:https://www.eventbrite.com/event/6786581849/o21

|

||||

[4]:https://www.openstack.org/summit/hk

|

||||

@ -1,14 +1,13 @@

|

||||

现在可以预订System 76 Ubuntu触摸笔记本了!!!

|

||||

|

||||

================================================================================

|

||||

现在可以预订System 76的Ubuntu触摸笔记本了!!!

|

||||

===================================

|

||||

|

||||

|

||||

|

||||

**Ubuntu PC 制造商 System 76 已经公布了一款搭载Ubuntu 13.10的触摸笔记本.**

|

||||

|

||||

Darter Ultra Thin 14.1寸高清**搭载Ubuntu多点触摸显示**,0.9''的厚度,地盘重4.60英镑(约2kg),电池大约能支持5个小时 - 受Linux电池管理的影响,这是令人震撼的.

|

||||

Darter Ultra Thin 14.1寸高清笔记本 **搭载了Ubuntu多点触摸显示**,0.9英寸的厚度,约重4.60磅(大约2公斤)。令人吃惊的是,虽然受到了Linux电池管理缺陷的影响,电池居然能支持5个小时。

|

||||

|

||||

在笔记本的触摸屏附近也提供很多传统的输入设备,也就是多点的触摸板和巧克力键盘.

|

||||

除了触摸屏外,也提供了传统的输入设备,如多点触摸板和巧克力式的键盘。

|

||||

|

||||

|

||||

|

||||

@ -16,30 +15,30 @@ Darter Ultra Thin 14.1寸高清**搭载Ubuntu多点触摸显示**,0.9''的厚度

|

||||

|

||||

|

||||

|

||||

普通版**定价在899美元左右**,它带有:

|

||||

普通版 **定价在899美元左右**,它带有:

|

||||

|

||||

- Inel i5-4200U @ 1.5Ghz (双核)

|

||||

- 4GB DDR3 RAM

|

||||

- Intel HD 4400 显卡

|

||||

- 500 GB 5400 RPM HDD

|

||||

- 集成WIFI和蓝牙

|

||||

- 1MP 网络摄像头

|

||||

- 一百万像素的网络摄像头

|

||||

|

||||

对于所有System 76电脑来说,你可以通过提高规格和添加可选的额外设备来定制你的神机。Dater提供的可选项包括有:

|

||||

|

||||

对于所有System 76电脑来说,你可以通过提高规格和添加可选的额外设备来精饰你的梦幻机.Dater提供的可选项包括有:

|

||||

-

|

||||

- Inter 酷睿i5和i7 CPU

|

||||

- 能扩展到16GB的DDR3 RAM

|

||||

- 双储存,包括SSD + HDD的联合体.

|

||||

|

||||

提供所有必要的端口:

|

||||

|

||||

提供所有必要的端口:

|

||||

- HDMI 输出

|

||||

- 以太网

|

||||

- 2个USB3.0插口

|

||||

- 分开的耳机和麦克风插孔

|

||||

- SD 读卡器

|

||||

|

||||

更多关于Dater Thin的信息请转向System 76站点,到10月28号后,你可以预订Dater Thin,只需5美元在美国的快递费.

|

||||

更多关于Dater Thin的信息请访问System 76站点,到10月28号前,你都可以预订Dater Thin。

|

||||

|

||||

- [System76 Darter UltraThin 笔记本][1]

|

||||

|

||||

@ -49,6 +48,6 @@ via: http://www.omgubuntu.co.uk/2013/10/system76-touchscreen-ubuntu-laptop-avail

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

译者:[Luoxcat](https://github.com/Luoxcat) 校对:[校对者ID](https://github.com/校对者ID)

|

||||

译者:[Luoxcat](https://github.com/Luoxcat) 校对:[wxy](https://github.com/wxy)

|

||||

|

||||

[1]:https://www.system76.com/laptops/model/daru4

|

||||

@ -1,8 +1,9 @@

|

||||

Ubuntu 13.10 发布 - 升级是否是必须的 ?

|

||||

================================================================================

|

||||

Ubuntu 13.10 发布 - 值得升级吗 ?

|

||||

===================================

|

||||

|

||||

|

||||

|

||||

**今天是Ubuntu 13.10 发布的日子。经过6个月紧锣密鼓地开发,‘Saucy Salamander’ 终于可供下载了。**

|

||||

**经过6个月紧锣密鼓地开发,‘Saucy Salamander’ 终于发布了。**

|

||||

|

||||

拥有超过两千万的用户基础,Ubuntu的每次更新,无论最终变动的结果多么得琐碎、细小,都能引起关注。这次发布也不例外。

|

||||

|

||||

@ -12,60 +13,61 @@ Ubuntu 13.10 发布 - 升级是否是必须的 ?

|

||||

|

||||

**下载 Ubuntu 13.10**:[http://releases.ubuntu.com/13.10/][1]

|

||||

|

||||

## “Ubuntu 13.10 令人厌烦的” - 网络 ##

|

||||

## “Ubuntu 13.10 令人厌烦的” - 来自互联网

|

||||

|

||||

我见过许多人-科技记者,博主,还有评论家,用“令人讨厌的”字眼来形容Ubuntu 13.10。

|

||||

我见过许多人,有科技记者、博主,还有评论家,用“令人讨厌的”字眼来形容Ubuntu 13.10。

|

||||

|

||||

诚然,Saucy Salamander比之先前的版本并没有为桌面版添加多少新的特色,但是新的版本确实变动了,也改善了,只不过大多数变动相较而言很小。

|

||||

诚然,Saucy Salamander比之先前的版本并没有为桌面用户添加多少新的特色,但是新的版本确实变化了,也改善了,只不过 **大多数** 变动相较而言很小。

|

||||

|

||||

强调一下这里的‘most’。

|

||||

强调一下这里的‘大多数’。

|

||||

|

||||

**工具箱花样繁多**

|

||||

|

||||

|

||||

|

||||

*这些东西究竟有多大用处呢?*

|

||||

|

||||

Unity的新的 搜索建议 功能是新版本中大的亮点。你的每一次搜索,都会把大范围在线资源的相关信息整合到一起,然后利用语义智能把筛选出来的东西放到工具箱。

|

||||

Unity的新的_搜索建议_功能是新版本中大的亮点。你的每一次搜索,都会把大范围的在线资源的相关信息整合到一起,然后利用语义智能把筛选出来的东西放到Dash中。

|

||||

|

||||

*Amazon, eBay, Etsy, Wikipedia, Weather Channel, SoundCloud* - 信息来源超过50个网站

|

||||

|

||||

> ‘…莫名其妙,乱七八糟。’

|

||||

|

||||

字面上貌似这个功能挺有用的:按下tab键,,你就可以绕过浏览器找到任何你想搜寻的东西,随便一些东西,然后从桌面上就可以浏览结果。

|

||||

|

||||

事实上,帮助挺少,阻碍倒是不小。那么多的web服务都给一个搜索的关键字条目提供结果 - 无论看起来有多无碍 -

|

||||

都导致工具箱充斥着莫名其妙、乱七八糟、不相关的东西。

|

||||

事实上,帮助挺少,阻碍倒是不小。那么多的web服务都给一个搜索的关键字条目提供结果 - 无论看起来有多无碍 - 都导致工具箱充斥着莫名其妙、乱七八糟、不相关的东西。

|

||||

|

||||

> ‘以当前这种形式,该功能还超越不了浏览器体验’

|

||||

|

||||

也不是没有尝试过结束这种混乱,代之以秩序井然。给查找结果分门别类,比如,购物,音乐,视频。由结果过滤器来控制泛滥的信息。

|

||||

|

||||

但是,坦白说,以当前这种形式,该功能还超越不了浏览器体验。谷歌就比较聪明,知道自己要找什么,把结果以一种易于浏览,易于过滤的形式呈现出来。

|

||||

|

||||

Ubuntu开发者称**搜索结果会变得越来越相关的**,因为该服务可以从用户那里自主学习。让我们拭目以待。

|

||||

Ubuntu开发者称 **搜索结果会变得越来越相关的**,因为该服务可以从用户那里自主学习。让我们拭目以待。

|

||||

|

||||

**关闭搜索建议功能**

|

||||

|

||||

关闭范围建议功能

|

||||

|

||||

|

||||

*每一个范围都可以单独关闭*

|

||||

*每一个搜索范围都可以单独关闭*

|

||||

|

||||

关闭“搜索建议”功能很简单,听从我的建议,就可以单独关闭给你带来混乱结果的范围。这样你就能够继续使用该功能,同时又过滤掉不相干的东西。

|

||||

|

||||

## Ubuntu 13.10 桌面 ##

|

||||

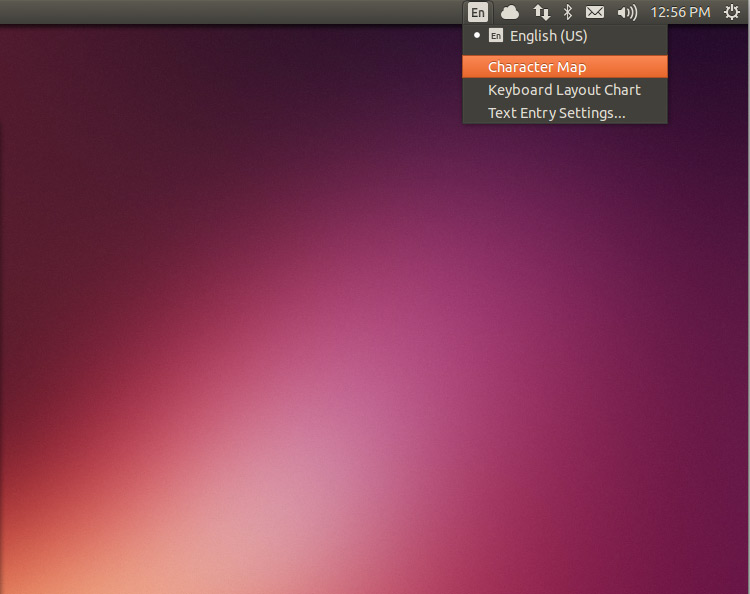

**Indicator Keyboard**

|

||||

**指示灯键盘**

|

||||

|

||||

**键盘指示器**

|

||||

|

||||

|

||||

无论你需要与否,新的‘指示灯键盘’已经添加到Ubuntu中,该功能使得在多种语言之间的切换更容易。

|

||||

无论你需要与否,新的‘键盘指示器’已经添加到Ubuntu中,该功能使得在多种语言之间的切换更容易。

|

||||

|

||||

可以在Text Entry Settings上面找到该功能入口,关闭它,然后取消紧挨着‘Show Current Input Source in Menu Bar’勾选框。

|

||||

|

||||

**Ubuntu 登陆**

|

||||

**Ubuntu 登录**

|

||||

|

||||

|

||||

登陆、注册页面也加到了Ubuntu安装程序里头,安装后就不需要再配置账号了。

|

||||

登录、注册页面也加到了Ubuntu安装程序里头,安装后就不需要再配置账号了。

|

||||

|

||||

**性能**

|

||||

|

||||

Unity 7正常运行远远超出原先预定的时间(14.04 LTS默认情况下,在4月到期),其中一些急需维修了。

|

||||

Unity 7的使用已经远超原先预定的时间(14.04 LTS默认情况下,在4月到期),其中一些急需改进了。

|

||||

|

||||

我自己并没有切身体验一下,但是那些体验过的人们都注意到该发行版性能有显著提升。

|

||||

|

||||

@ -78,10 +80,10 @@ Shotwell都已经预安装好了。

|

||||

|

||||

Ubuntu仓库也包含一些其他的流行的应用,比如[Geary mail client][2] 和比较受欢迎的图片编辑器 GIMP。

|

||||

|

||||

Finally, [GTK3 apps now look better under Ubuntu’s default theme][3].

|

||||

最后, [使用Ubuntu默认主题,GTK3 应用看起来好多了][3].

|

||||

|

||||

## 总结 ##

|

||||

|

||||

> 一个健壮、可靠的发行版,与其说是崭新的开始,不如说是一个脚注。

|

||||

|

||||

Ubuntu 13.10是一个健壮、可靠的发行版, 巩固了其作为“走出去”的Linux发行版的地位,对新用户和丰富的专业人员均适用。

|

||||

@ -94,8 +96,8 @@ via: http://www.omgubuntu.co.uk/2013/10/ubuntu-13-10-review-available-for-downlo

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

译者:[译者ID](https://github.com/l3b2w1) 校对:[校对者ID](https://github.com/校对者ID)

|

||||

译者:[l3b2w1](https://github.com/l3b2w1) 校对:[wxy](https://github.com/wxy)

|

||||

|

||||

[1]:http://releases.ubuntu.com/13.10/

|

||||

[2]:http://www.omgubuntu.co.uk/2013/10/geary-0-4-released-with-new-look-new-features

|

||||

[3]:http://www.omgubuntu.co.uk/2013/08/ub untu-themes-fix-coming-to-saucy

|

||||

[3]:http://www.omgubuntu.co.uk/2013/08/ubuntu-themes-fix-coming-to-saucy

|

||||

@ -1,37 +0,0 @@

|

||||

crowner翻译

|

||||

Daily Ubuntu Tips – Understanding The App Menus And Buttons

|

||||

================================================================================

|

||||

Ubuntu is a decent operating system. It can do almost anything a modern OS can do and sometimes, even better. If you’re new to Ubuntu, there are some things you won’t know right away. Things that are common to power users may not be so common to you and this series called ‘Daily Ubuntu Tips’ is here to help you, the new users learn how to configure and manage Ubuntu easily.

|

||||

|

||||

Ubuntu comes with a menu bar. The main menu bar is the dark strip at the top of your screen which contains the status menu or indicator with (Date/Time, volume button), the App menus and Windows management buttons.

|

||||

|

||||

Windows management buttons are at the top left corner of the main menu (dark strip). When you open an application, the buttons on the main menu at the top left corner with close, minimize, maximize and restore is called Windows management buttons.

|

||||

|

||||

The App menus is located at the right of the Windows management button. It shows application menus when they are opened.

|

||||

|

||||

By default, Ubuntu hides the app menus and windows management buttons unless you move your mouse to the left corner, you wouldn’t be able to see them. If you open an application and can’t find the menu, just move your mouse to the left corner of your screen to show it.

|

||||

|

||||

If this is confusing and you want to disable the app menus so that each application can have its own menu, then continue below.

|

||||

|

||||

To uninstall or remove the app menus, run the commands below.

|

||||

|

||||

sudo apt-get autoremove indicator-appmenu

|

||||

|

||||

Running the command above will remove the app menu also known as global-menu. Now for the change to take effect, log out and log back in.

|

||||

|

||||

Now when you open applications in Ubuntu, each application will show its own menus instead of hiding it on the global menu or main menu.

|

||||

|

||||

|

||||

|

||||

That’s it! To go back to what it was, run the commands below

|

||||

|

||||

sudo apt-get install indicator-appmenu

|

||||

|

||||

Enjoy!

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.liberiangeek.net/2013/09/daily-ubuntu-tips-understanding-app-menus-buttons/

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

译者:[译者ID](https://github.com/译者ID) 校对:[校对者ID](https://github.com/校对者ID)

|

||||

@ -1,3 +1,4 @@

|

||||

翻译 hello world

|

||||

Install Apache With SSL in Ubuntu 13.10

|

||||

================================================================================

|

||||

In this short tutorial let me show you how to install Apache with SSL support. My testbox details are given below:

|

||||

@ -93,4 +94,4 @@ via: http://www.unixmen.com/install-apache-ssl-ubuntu-13-10/

|

||||

|

||||

译者:[译者ID](https://github.com/译者ID) 校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,292 +0,0 @@

|

||||

coolpigs is translating this article

|

||||

|

||||

Installing a Desktop Algorithmic Trading Research Environment using Ubuntu Linux and Python

|

||||

================================================================================

|

||||

In this article I want to discuss how to set up a robust, efficient and interactive development environment for algorithmic trading strategy research making use of Ubuntu Desktop Linux and the Python programming language. We will utilise this environment for nearly all subsequent algorithmic trading articles.

|

||||

|

||||

To create the research environment we will install the following software tools, all of which are open-source and free to download:

|

||||

|

||||

- [Oracle VirtualBox][1] - For virtualisation of the operating system

|

||||

- [Ubuntu Desktop Linux][2] - As our virtual operating system

|

||||

- [Python][3] - The core programming environment

|

||||

- [NumPy][4]/[SciPy][5] - For fast, efficient array/matrix calculation

|

||||

- [IPython][6] - For visual interactive development with Python

|

||||

- [matplotlib][7] - For graphical visualisation of data

|

||||

- [pandas][8] - For data "wrangling" and time series analysis

|

||||

- [scikit-learn][9] - For machine learning and artificial intelligence algorithms

|

||||

|

||||

These tools (coupled with a suitable [securities master database][10]) will allow us to create a rapid interactive strategy research environment. Pandas is designed for "data wrangling" and can import and cleanse time series data very efficiently. NumPy/SciPy running underneath keeps the system extremely well optimised. IPython/matplotlib (and the qtconsole described below) allow interactive visualisation of results and rapid iteration. scikit-learn allows us to apply machine learning techniques to our strategies to further enhance performance.

|

||||

|

||||

Note that I've written the tutorial so that Windows or Mac OSX users who are unwilling or unable to install Ubuntu Linux directly can still follow along by using VirtualBox. VirtualBox allows us to create a "Virtual Machine" inside the host system that can emulate a guest operating system without affecting the host in any way. This allows experimentation with Ubuntu and the Python tools before committing to a full installation. For those who already have Ubuntu Desktop installed, you can skip to the section on "Installing the Python Research Environment Packages on Ubuntu".

|

||||

|

||||

## Installing VirtualBox and Ubuntu Linux ##

|

||||

|

||||

This section of the tutorial regarding VirtualBox installation has been written for a Mac OSX system, but will easily translate to a Windows host environment. Once VirtualBox has been installed the procedure will be the same for any underlying host operating system.

|

||||

|

||||

Before we begin installing the software we need to go ahead and download both Ubuntu and VirtualBox.

|

||||

|

||||

**Downloading the Ubuntu Desktop disk image**

|

||||

|

||||

Open up your favourite web browser and navigate to the [Ubuntu Desktop][11] homepage then select Ubuntu 13.04:

|

||||

|

||||

|

||||

|

||||

*Download Ubuntu 13.04 (64-bit if appropriate)*

|

||||

|

||||

You will be asked to contribute a donation although this is optional. Once you have reached the download page make sure to select Ubuntu 13.04. You'll need to choose whether you want the 32-bit or 64-bit version. It is likely you'll have a 64-bit system, but if you're in doubt, then choose 32-bit. On a Mac OSX system the Ubuntu Desktop ISO disk image will be stored in your Downloads directory. We will make use of it later once we have installed VirtualBox.

|

||||

|

||||

**Downloading and Installing VirtualBox**

|

||||

|

||||

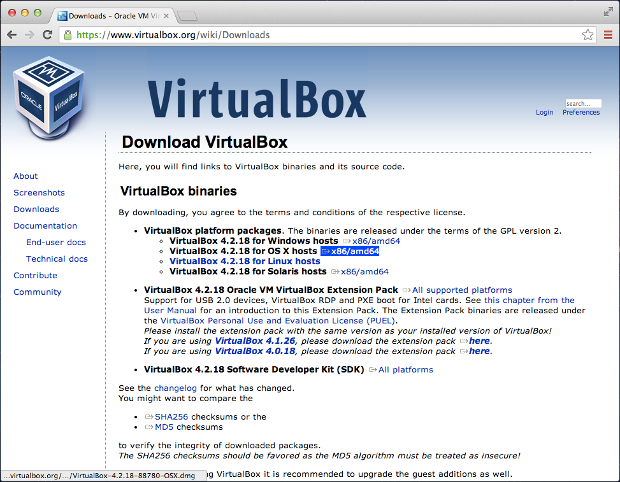

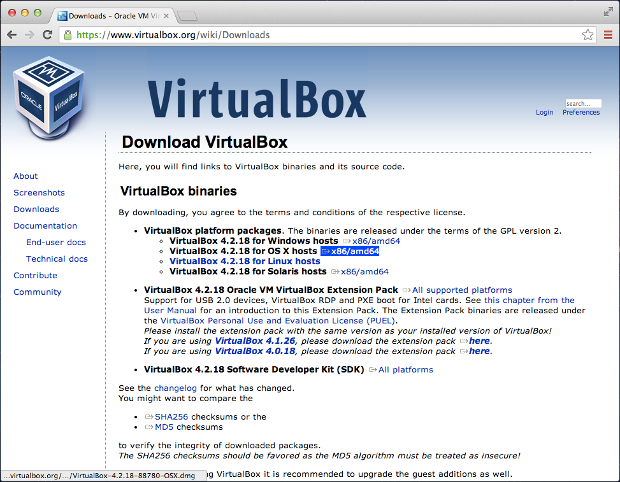

Now that we've downloaded Ubuntu we need to go and obtain the latest version of Oracle's VirtualBox software. Click [here][12] to visit the website and select the version for your particular host (for the purposes of this tutorial we need Mac OSX):

|

||||

|

||||

|

||||

|

||||

*Oracle VirtualBox download page*

|

||||

|

||||

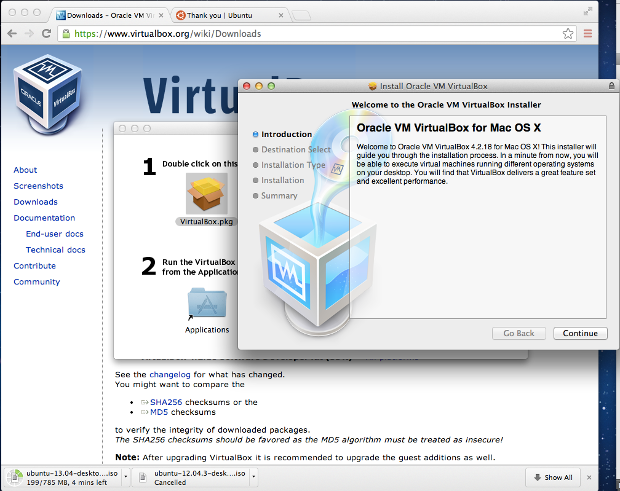

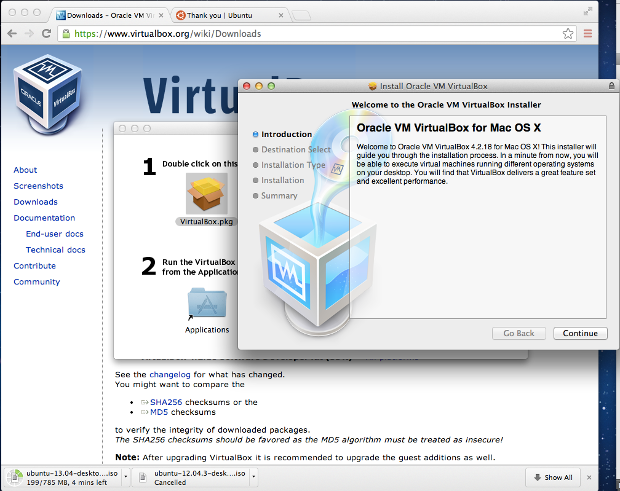

Once the file has been downloaded we need to run it and click on the package icon (this will vary somewhat in Windows but will be a similar process):

|

||||

|

||||

|

||||

|

||||

*Double-click the package icon to install VirtualBox*

|

||||

|

||||

Once the package has opened, we follow the installation instructions, keeping the defaults as they are (unless you feel the need to change them!). Now that VirtualBox has been installed we can open it from the Applications folder (which can be found with Finder). It puts VirtualBox on the icon dock while running, so you may wish to keep it there permanently if you want to examine Ubuntu Linux more closely in the future before committing to a full install:

|

||||

|

||||

|

||||

|

||||

*VirtualBox with no disk images yet*

|

||||

|

||||

We are now going to create a new 'virtual box' (i.e. virtualised operating system) by clicking on the New icon, which looks like a cog. I've called mine "Ubuntu Desktop 13.04 Algorithmic Trading" (so you may wish to use something similarly descriptive!):

|

||||

|

||||

|

||||

|

||||

*Naming our new virtual environment*

|

||||

|

||||

Choose the amount of RAM you wish to allocate to the virtual system. I've kept it at 512Mb since this is only a "test" system. A 'real' backtesting engine would likely use a native installation (and thus allocate significantly more memory) for efficiency reasons:

|

||||

|

||||

|

||||

|

||||

*Choose the amount of RAM for the virtual disk*

|

||||

|

||||

Create a virtual hard drive and use the recommended 8Gb, with a VirtualBox Disk Image, dynamically allocated, with the same name as the VirtualBox Image above:

|

||||

|

||||

|

||||

|

||||

*Choosing the type of hard disk used by the image*

|

||||

|

||||

You will now see a complete system with listed details:

|

||||

|

||||

|

||||

|

||||

*The virtual image has been created*

|

||||

|

||||

We now need to tell VirtualBox to include a virtual 'CD drive' for the new disk image so that we can pretend to boot our new Ubuntu disk image from this CD drive. Head to the Settings section, click on the "Storage" tab and add a Disk. You will need to navigate to the Ubuntu Disk image ISO file stored in your Downloads directly (or wherever you downloaded Ubuntu to). Select it and then save the settings:

|

||||

|

||||

|

||||

|

||||

*Choosing the Ubuntu Desktop ISO on first boot*

|

||||

|

||||

Now we are ready to boot up our Ubuntu image and get it installed. Click on "Start" and then on "OK" when you see the message about Host Capture of the Mouse/Keyboard. Note that on my Mac OSX, the host capture key is the Left Cmd key (i.e. Left Apple key). You will now be presented with the Ubuntu Desktop installation screen. Click on "Install Ubuntu":

|

||||

|

||||

|

||||

|

||||

*Click on Install Ubuntu to get started*

|

||||

|

||||

Make sure to tick both boxes to install the proprietary MP3 and Wi-Fi drivers:

|

||||

|

||||

|

||||

|

||||

*Install the proprietary drivers for MP3 and Wi-Fi*

|

||||

|

||||

You will now see a screen asking how you would like to store the data created for the operating system. Don't panic about the "Erase Disk and Install Ubuntu" option. It does NOT mean that it will erase your normal hard disk! It actually refers to the virtual disk it is using to run Ubuntu in, which is safe to erase (there isn't anything on there anyway, as we've just created it). Carry on with the install and you will be presented with a screen asking for your location and subsequently, your keyboard layout:

|

||||

|

||||

|

||||

|

||||

*Select your geographical location*

|

||||

|

||||

Enter in your user credentials, making sure to remember your password as you'll need it later on for installing packages:

|

||||

|

||||

|

||||

|

||||

*Enter your username and password (this password is the administrator password)*

|

||||

|

||||

Ubuntu will now install the files. It should be relatively quick as it is just copying from the hard disk to the hard disk! Eventually it will complete and the VirtualBox will restart. If it doesn't restart on its own, you can go to the menu and force a Shutdown. You will be brought back to the Ubuntu Login Screen:

|

||||

|

||||

|

||||

|

||||

*The Ubuntu Desktop login screen*

|

||||

|

||||

Login with your username and password from above and you will see your shiny new Ubuntu desktop:

|

||||

|

||||

|

||||

|

||||

*The Unity interface to the Ubuntu Desktop after logging in*

|

||||

|

||||

The last thing to do is click on the Firefox icon to test that the internet/networking functionality is correct by visiting a website (I picked QuantStart.com, funnily enough!):

|

||||

|

||||

|

||||

|

||||

The Ubuntu Desktop login screen

|

||||

|

||||

Now that the Ubuntu Desktop is installed we can begin installing the algorithmic trading research environment packages.

|

||||

|

||||

## Installing the Python Research Environment Packages on Ubuntu ##

|

||||

|

||||

Click on the search button at the top-left of the screen and type "Terminal" into the box to bring up the command-line interface. Double-click the terminal icon to launch the Terminal:

|

||||

|

||||

|

||||

|

||||

*The Ubuntu Desktop login screen*

|

||||

|

||||

All subsequent commands will need to be typed into this terminal.

|

||||

|

||||

The first thing to do on any brand new Ubuntu Linux system is to update and upgrade the packages. The former tells Ubuntu about new packages that are available, while the latter actually performs the process of replacing older packages with newer versions. Run the following commands (you will be prompted for your passwords):

|

||||

|

||||

sudo apt-get -y update

|

||||

sudo apt-get -y upgrade

|

||||

|

||||

*Note that the -y prefix tells Ubuntu that you want to accept 'yes' to all yes/no questions. "sudo" is a Ubuntu/Debian Linux command that allows other commands to be executed with administrator privileges. Since we are installing our packages sitewide, we need 'root access' to the machine and thus must make use of 'sudo'.*

|

||||

|

||||

You may get an error message here:

|

||||

|

||||

E: Could not get lock /var/lib/dpkg/lock - open (11: Resource temporarily unavailable)

|

||||

|

||||

To remedy it just run "sudo apt-get -y update" again or take a look at this site for additional commands to run in case the first does not work ([http://penreturns.rc.my/2012/02/could-not-get-lock-varlibaptlistslock.html][13]).

|

||||

|

||||

Once both of those updating commands have been successfully executed we now need to install Python, NumPy/SciPy, matplotlib, pandas, scikit-learn and IPython. We will start by installing the Python development packages and compilers needed to compile all of the software:

|

||||

|

||||

sudo apt-get install python-pip python-dev python2.7-dev build-essential liblapack-dev libblas-dev

|

||||

|

||||

Once the necessary packages are installed we can go ahead and install NumPy via pip, the Python package manager. Pip will download a zip file of the package and then compile it from the source code for us. Bear in mind that it will take some time to compile, probably 10-20 minutes!

|

||||

|

||||

sudo pip install numpy

|

||||

|

||||

Once NumPy has been installed we need to check that it works before proceeding. If you look in the terminal you'll see your username followed by your computer name. In my case it is `mhallsmoore@algobox`, which is followed by the prompt. At the prompt type `python` and then try importing NumPy. We will test that it works by calculating the mean average of a list:

|

||||

|

||||

mhallsmoore@algobox:~$ python

|

||||

Python 2.7.4 (default, Sep 26 2013, 03:20:26)

|

||||

[GCC 4.7.3] on linux2

|

||||

Type "help", "copyright", "credits" or "license" for more information.

|

||||

>>> import numpy

|

||||

>>> from numpy import mean

|

||||

>>> mean([1,2,3])

|

||||

2.0

|

||||

>>> exit()

|

||||

|

||||

Now that NumPy has been successfully installed we want to install the Python Scientific library known as SciPy. However it has a few package dependencies of its own including the ATLAS library and the GNU Fortran compiler:

|

||||

|

||||

sudo apt-get install libatlas-base-dev gfortran

|

||||

|

||||

We are ready to install SciPy now, with pip. This will take quite a long time (approx 20 minutes, depending upon your computer) so it might be worth going and grabbing a coffee:

|

||||

|

||||

sudo pip install scipy

|

||||

|

||||

Phew! SciPy has now been installed. Let's test it out by calculating the standard deviation of a list of integers:

|

||||

|

||||

mhallsmoore@algobox:~$ python

|

||||

Python 2.7.4 (default, Sep 26 2013, 03:20:26)

|

||||

[GCC 4.7.3] on linux2

|

||||

Type "help", "copyright", "credits" or "license" for more information.

|

||||

>>> import scipy

|

||||

>>> from scipy import std

|

||||

>>> std([1,2,3])

|

||||

0.81649658092772603

|

||||

>>> exit()

|

||||

|

||||

Next we need to install the dependency packages for matplotlib, the Python graphing library. Since matplotlib is a Python package, we cannot use pip to install the underlying libraries for working with PNGs, JPEGs and freetype fonts, so we need Ubuntu to install them for us:

|

||||

|

||||

sudo apt-get install libpng-dev libjpeg8-dev libfreetype6-dev

|

||||

|

||||

Now we can install matplotlib:

|

||||

|

||||

sudo pip install matplotlib

|

||||

|

||||

We're now going to install the data analysis and machine learning libraries pandas and scikit-learn. We don't need any additional dependencies at this stage as they're covered by NumPy and SciPy:

|

||||

|

||||

sudo pip install -U scikit-learn

|

||||

sudo pip install pandas

|

||||

|

||||

We should test scikit-learn:

|

||||

|

||||

mhallsmoore@algobox:~$ python

|

||||

Python 2.7.4 (default, Sep 26 2013, 03:20:26)

|

||||

[GCC 4.7.3] on linux2

|

||||

Type "help", "copyright", "credits" or "license" for more information.

|

||||

>>> from sklearn load datasets

|

||||

>>> iris = datasets.load_iris()

|

||||

>>> iris

|

||||

..

|

||||

..

|

||||

'petal width (cm)']}

|

||||

>>>

|

||||

|

||||

In addition, we should also test pandas:

|

||||

|

||||

>>> from pandas import DataFrame

|

||||

>>> pd = DataFrame()

|

||||

>>> pd

|

||||

Empty DataFrame

|

||||

Columns: []

|

||||

Index: []

|

||||

>>> exit()

|

||||

|

||||

Finally, we want to instal IPython. This is an interactive Python interpreter that provides a significantly more streamlined workflow compared to using the standard Python console. In later tutorials I will outline the full usefulness of IPython for algorithmic trading development:

|

||||

|

||||

sudo pip install ipython

|

||||

|

||||

While IPython is sufficiently useful on its own, it can be made even more powerful by including the qtconsole, which provides the ability to inline matplotlib visualisations. However, it takes a little bit more work to get this up and running.

|

||||

|

||||

First, we need to install the the [Qt library][14]. For this you may need to update your packages again (I did!):

|

||||

|

||||

sudo apt-get update

|

||||

|

||||

Now we can install Qt:

|

||||

|

||||

sudo apt-get install libqt4-core libqt4-gui libqt4-dev

|

||||

|

||||

The qtconsole has a few additional packages, namely the ZMQ and Pygments libraries:

|

||||

|

||||

sudo apt-get install libzmq-dev

|

||||

sudo pip install pyzmq

|

||||

sudo pip install pygments

|

||||

|

||||

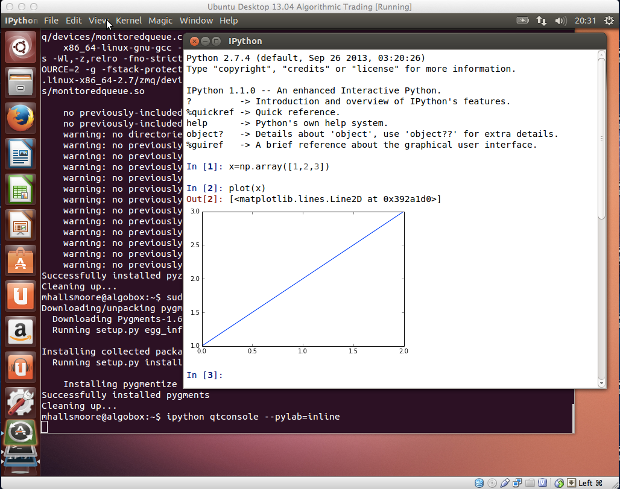

Finally we are ready to launch IPython with the qtconsole:

|

||||

|

||||

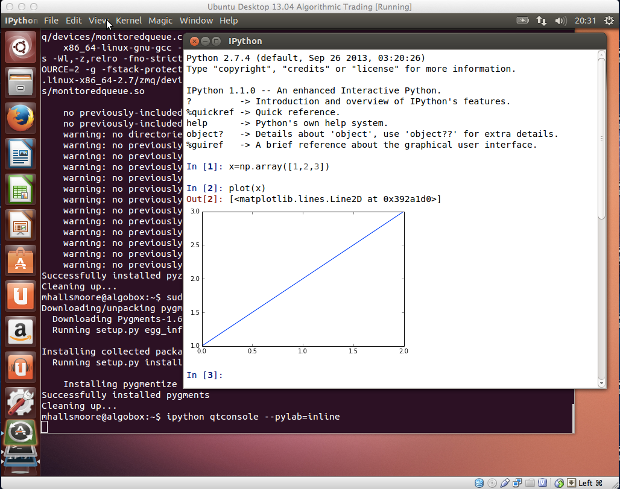

ipython qtconsole --pylab=inline

|

||||

|

||||

Then we can make a (rather simple!) plot by typing the following commands (I've included the IPython numbered input/outut which you do not need to type):

|

||||

|

||||

In [1]: x=np.array([1,2,3])

|

||||

|

||||

In [2]: plot(x)

|

||||

Out[2]: [<matplotlib.lines.Line2D at 0x392a1d0>]

|

||||

|

||||

This produces the following inline chart:

|

||||

|

||||

|

||||

|

||||

*IPython with qtconsole displaying an inline chart*

|

||||

|

||||

That's it for the installation procedure. We now have an extremely robust, efficient and interactive algorithmic trading research environment at our fingertips. In subsequent articles I will be detailing how IPython, matplotlib, pandas and scikit-learn can be combined to successfully research and backtest quantitative trading strategies in a straightforward manner.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://quantstart.com/articles/Installing-a-Desktop-Algorithmic-Trading-Research-Environment-using-Ubuntu-Linux-and-Python

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

译者:[译者ID](https://github.com/译者ID) 校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

[1]:https://www.virtualbox.org/

|

||||

[2]:http://www.ubuntu.com/desktop

|

||||

[3]:http://python.org/

|

||||

[4]:http://www.numpy.org/

|

||||

[5]:http://www.scipy.org/

|

||||

[6]:http://ipython.org/

|

||||

[7]:http://matplotlib.org/

|

||||

[8]:http://pandas.pydata.org/

|

||||

[9]:http://scikit-learn.org/

|

||||

[10]:http://quantstart.com/articles/Securities-Master-Database-with-MySQL-and-Python

|

||||

[11]:http://www.ubuntu.com/desktop

|

||||

[12]:https://www.virtualbox.org/

|

||||

[13]:http://penreturns.rc.my/2012/02/could-not-get-lock-varlibaptlistslock.html

|

||||

[14]:http://qt-project.org/downloads

|

||||

@ -1,403 +0,0 @@

|

||||

[这篇我领了]

|

||||

NoSQL comparison

|

||||

================

|

||||

|

||||

While SQL databases are insanely useful tools, their monopoly in the last decades is coming to an end. And it's just time: I can't even count the things that were forced into relational databases, but never really fitted them. (That being said, relational databases will always be the best for the stuff that has relations.)

|

||||

|

||||

But, the differences between NoSQL databases are much bigger than ever was between one SQL database and another. This means that it is a bigger responsibility on software architects to choose the appropriate one for a project right at the beginning.

|

||||

|

||||

In this light, here is a comparison of [Cassandra][], [Mongodb][], [CouchDB][], [Redis][], [Riak][], [Couchbase (ex-Membase)][], [Hypertable][], [ElasticSearch][], [Accumulo][], [VoltDB][], [Kyoto Tycoon][], [Scalaris][], [Neo4j][] and [HBase][]:

|

||||

|

||||

##The most popular ones

|

||||

|

||||

###MongoDB (2.2)

|

||||

|

||||

**Written in:** C++

|

||||

|

||||

**Main point:** Retains some friendly properties of SQL. (Query, index)

|

||||

|

||||

**License:** AGPL (Drivers: Apache)

|

||||

|

||||

**Protocol:** Custom, binary (BSON)

|

||||

|

||||

- Master/slave replication (auto failover with replica sets)

|

||||

- Sharding built-in

|

||||

- Queries are javascript expressions

|

||||

- Run arbitrary javascript functions server-side

|

||||

- Better update-in-place than CouchDB

|

||||

- Uses memory mapped files for data storage

|

||||

- Performance over features

|

||||

- Journaling (with --journal) is best turned on

|

||||

- On 32bit systems, limited to ~2.5Gb

|

||||

- An empty database takes up 192Mb

|

||||

- GridFS to store big data + metadata (not actually an FS)

|

||||

- Has geospatial indexing

|

||||

- Data center aware

|

||||

|

||||

**Best used:** If you need dynamic queries. If you prefer to define indexes, not map/reduce functions. If you need good performance on a big DB. If you wanted CouchDB, but your data changes too much, filling up disks.

|

||||

|

||||

**For example:** For most things that you would do with MySQL or PostgreSQL, but having predefined columns really holds you back.

|

||||

|

||||

|

||||

###Riak (V1.2)

|

||||

|

||||

**Written in:** Erlang & C, some JavaScript

|

||||

|

||||

**Main point:** Fault tolerance

|

||||

|

||||

**License:** Apache

|

||||

|

||||

**Protocol:** HTTP/REST or custom binary

|

||||

|

||||

- Stores blobs

|

||||

- Tunable trade-offs for distribution and replication

|

||||

- Pre- and post-commit hooks in JavaScript or Erlang, for validation and security.

|

||||

- Map/reduce in JavaScript or Erlang

|

||||

- Links & link walking: use it as a graph database

|

||||

- Secondary indices: but only one at once

|

||||

- Large object support (Luwak)

|

||||

- Comes in "open source" and "enterprise" editions

|

||||

- Full-text search, indexing, querying with Riak Search

|

||||

- In the process of migrating the storing backend from "Bitcask" to Google's "LevelDB"

|

||||

- Masterless multi-site replication replication and SNMP monitoring are commercially licensed

|

||||

|

||||

**Best used:** If you want something Dynamo-like data storage, but no way you're gonna deal with the bloat and complexity. If you need very good single-site scalability, availability and fault-tolerance, but you're ready to pay for multi-site replication.

|

||||

|

||||

**For example:** Point-of-sales data collection. Factory control systems. Places where even seconds of downtime hurt. Could be used as a well-update-able web server.

|

||||

|

||||

###CouchDB (V1.2)

|

||||

|

||||

**Written in:** Erlang

|

||||

|

||||

**Main point:** DB consistency, ease of use

|

||||

|

||||

**License:** Apache

|

||||

|

||||

**Protocol:** HTTP/REST

|

||||

|

||||

- Bi-directional (!) replication,

|

||||

- continuous or ad-hoc,

|

||||

- with conflict detection,

|

||||

- thus, master-master replication. (!)

|

||||

- MVCC - write operations do not block reads

|

||||

- Previous versions of documents are available

|

||||

- Crash-only (reliable) design

|

||||

- Needs compacting from time to time

|

||||

- Views: embedded map/reduce

|

||||

- Formatting views: lists & shows

|

||||

- Server-side document validation possible

|

||||

- Authentication possible

|

||||

- Real-time updates via '_changes' (!)

|

||||

- Attachment handling

|

||||

- thus, CouchApps (standalone js apps)

|

||||

|

||||

**Best used:** For accumulating, occasionally changing data, on which pre-defined queries are to be run. Places where versioning is important.

|

||||

|

||||

**For example:** CRM, CMS systems. Master-master replication is an especially interesting feature, allowing easy multi-site deployments.

|

||||

|

||||

###Redis (V2.4)

|

||||

|

||||

**Written in:** C/C++

|

||||

|

||||

**Main point:** Blazing fast

|

||||

|

||||

**License:** BSD

|

||||

|

||||

**Protocol:** Telnet-like

|

||||

|

||||

- Disk-backed in-memory database,

|

||||

- Currently without disk-swap (VM and Diskstore were abandoned)

|

||||

- Master-slave replication

|

||||

- Simple values or hash tables by keys,

|

||||

- but complex operations like ZREVRANGEBYSCORE.

|

||||

- INCR & co (good for rate limiting or statistics)

|

||||

- Has sets (also union/diff/inter)

|

||||

- Has lists (also a queue; blocking pop)

|

||||

- Has hashes (objects of multiple fields)

|

||||

- Sorted sets (high score table, good for range queries)

|

||||

- Redis has transactions (!)

|

||||

- Values can be set to expire (as in a cache)

|

||||

- Pub/Sub lets one implement messaging (!)

|

||||

|

||||

**Best used:** For rapidly changing data with a foreseeable database size (should fit mostly in memory).

|

||||

|

||||

**For example:** Stock prices. Analytics. Real-time data collection. Real-time communication. And wherever you used memcached before.

|

||||

|

||||

##Clones of Google's Bigtable

|

||||

|

||||

###HBase (V0.92.0)

|

||||

|

||||

**Written in:** Java

|

||||

|

||||

**Main point:** Billions of rows X millions of columns

|

||||

|

||||

**License:** Apache

|

||||

|

||||

**Protocol:** HTTP/REST (also Thrift)

|

||||

|

||||

- Modeled after Google's BigTable

|

||||

- Uses Hadoop's HDFS as storage

|

||||

- Map/reduce with Hadoop

|

||||

- Query predicate push down via server side scan and get filters

|

||||

- Optimizations for real time queries

|

||||

- A high performance Thrift gateway

|

||||

- HTTP supports XML, Protobuf, and binary

|

||||

- Jruby-based (JIRB) shell

|

||||

- Rolling restart for configuration changes and minor upgrades

|

||||

- Random access performance is like MySQL

|

||||

- A cluster consists of several different types of nodes

|

||||

|

||||

**Best used:** Hadoop is probably still the best way to run Map/Reduce jobs on huge datasets. Best if you use the Hadoop/HDFS stack already.

|

||||

|

||||

**For example:** Search engines. Analysing log data. Any place where scanning huge, two-dimensional join-less tables are a requirement.

|

||||

|

||||

###Cassandra (1.2)

|

||||

|

||||

**Written in:** Java

|

||||

|

||||

**Main point:** Best of BigTable and Dynamo

|

||||

|

||||

**License:** Apache

|

||||

|

||||

**Protocol:** Thrift & custom binary CQL3

|

||||

|

||||

- Tunable trade-offs for distribution and replication (N, R, W)

|

||||

- Querying by column, range of keys (Requires indices on anything that you want to search on)

|

||||

- BigTable-like features: columns, column families

|

||||

- Can be used as a distributed hash-table, with an "SQL-like" language, CQL (but no JOIN!)

|

||||

- Data can have expiration (set on INSERT)

|

||||

- Writes can be much faster than reads (when reads are disk-bound)

|

||||

- Map/reduce possible with Apache Hadoop

|

||||

- All nodes are similar, as opposed to Hadoop/HBase

|

||||

- Very good and reliable cross-datacenter replication

|

||||

|

||||

**Best used:** When you write more than you read (logging). If every component of the system must be in Java. ("No one gets fired for choosing Apache's stuff.")

|

||||

|

||||

**For example:** Banking, financial industry (though not necessarily for financial transactions, but these industries are much bigger than that.) Writes are faster than reads, so one natural niche is data analysis.

|

||||

|

||||

###Hypertable (0.9.6.5)

|

||||

|

||||

**Written in:** C++

|

||||

|

||||

**Main point:** A faster, smaller HBase

|

||||

|

||||

**License:** GPL 2.0

|

||||

|

||||

**Protocol:** Thrift, C++ library, or HQL shell

|

||||

|

||||

- Implements Google's BigTable design

|

||||

- Run on Hadoop's HDFS

|

||||

- Uses its own, "SQL-like" language, HQL

|

||||

- Can search by key, by cell, or for values in column families.

|

||||

- Search can be limited to key/column ranges.

|

||||

- Sponsored by Baidu

|

||||

- Retains the last N historical values

|

||||

- Tables are in namespaces

|

||||

- Map/reduce with Hadoop

|

||||

|

||||

**Best used:** If you need a better HBase.

|

||||

|

||||

**For example:** Same as HBase, since it's basically a replacement: Search engines. Analysing log data. Any place where scanning huge, two-dimensional join-less tables are a requirement.

|

||||

|

||||

###Accumulo (1.4)

|

||||

|

||||

**Written in:** Java and C++

|

||||

|

||||

**Main point:** A BigTable with Cell-level security

|

||||

|

||||

**License:** Apache

|

||||

|

||||

**Protocol:** Thrift

|

||||

|

||||

- Another BigTable clone, also runs of top of Hadoop

|

||||

- Cell-level security

|

||||

- Bigger rows than memory are allowed

|

||||

- Keeps a memory map outside Java, in C++ STL

|

||||

- Map/reduce using Hadoop's facitlities (ZooKeeper & co)

|

||||

- Some server-side programming

|

||||

|

||||

**Best used:** If you need a different HBase.

|

||||

|

||||

**For example:** Same as HBase, since it's basically a replacement: Search engines. Analysing log data. Any place where scanning huge, two-dimensional join-less tables are a requirement.

|

||||

|

||||

##Special-purpose

|

||||

|

||||

###Neo4j (V1.5M02)

|

||||

|

||||

**Written in:** Java

|

||||

|

||||

**Main point:** Graph database - connected data

|

||||

|

||||

**License:** GPL, some features AGPL/commercial

|

||||

|

||||

**Protocol:** HTTP/REST (or embedding in Java)

|

||||

|

||||

- Standalone, or embeddable into Java applications

|

||||

- Full ACID conformity (including durable data)

|

||||

- Both nodes and relationships can have metadata

|

||||

- Integrated pattern-matching-based query language ("Cypher")

|

||||

- Also the "Gremlin" graph traversal language can be used

|

||||

- Indexing of nodes and relationships

|

||||

- Nice self-contained web admin

|

||||

- Advanced path-finding with multiple algorithms

|

||||

- Indexing of keys and relationships

|

||||

- Optimized for reads

|

||||

- Has transactions (in the Java API)

|

||||

- Scriptable in Groovy

|

||||

- Online backup, advanced monitoring and High Availability is AGPL/commercial licensed

|

||||

|

||||

**Best used:** For graph-style, rich or complex, interconnected data. Neo4j is quite different from the others in this sense.

|

||||

|

||||

**For example:** For searching routes in social relations, public transport links, road maps, or network topologies.

|

||||

|

||||

###ElasticSearch (0.20.1)

|

||||

|

||||

**Written in:** Java

|

||||

|

||||

**Main point:** Advanced Search

|

||||

|

||||

**License:** Apache

|

||||

|

||||

**Protocol:** JSON over HTTP (Plugins: Thrift, memcached)

|

||||

|

||||

- Stores JSON documents

|

||||

- Has versioning

|

||||

- Parent and children documents

|

||||

- Documents can time out

|

||||

- Very versatile and sophisticated querying, scriptable

|

||||

- Write consistency: one, quorum or all

|

||||

- Sorting by score (!)

|

||||

- Geo distance sorting

|

||||

- Fuzzy searches (approximate date, etc) (!)

|

||||

- Asynchronous replication

|

||||

- Atomic, scripted updates (good for counters, etc)

|

||||

- Can maintain automatic "stats groups" (good for debugging)

|

||||

- Still depends very much on only one developer (kimchy).

|

||||

|

||||

**Best used:** When you have objects with (flexible) fields, and you need "advanced search" functionality.

|

||||

|

||||

**For example:** A dating service that handles age difference, geographic location, tastes and dislikes, etc. Or a leaderboard system that depends on many variables.

|

||||

|

||||

##The "long tail"

|

||||

|

||||

(Not widely known, but definitely worthy ones)

|

||||

|

||||

###Couchbase (ex-Membase) (2.0)

|

||||

|

||||

**Written in:** Erlang & C

|

||||

|

||||

**Main point:** Memcache compatible, but with persistence and clustering

|

||||

|

||||

**License:** Apache

|

||||

|

||||

**Protocol:** memcached + extensions

|

||||

|

||||

- Very fast (200k+/sec) access of data by key

|

||||

- Persistence to disk

|

||||

- All nodes are identical (master-master replication)

|

||||

- Provides memcached-style in-memory caching buckets, too

|

||||

- Write de-duplication to reduce IO

|

||||

- Friendly cluster-management web GUI

|

||||

- Connection proxy for connection pooling and multiplexing (Moxi)

|

||||

- Incremental map/reduce

|

||||

- Cross-datacenter replication

|

||||

|

||||

**Best used:** Any application where low-latency data access, high concurrency support and high availability is a requirement.

|

||||

|

||||

**For example:** Low-latency use-cases like ad targeting or highly-concurrent web apps like online gaming (e.g. Zynga).

|

||||

|

||||

###VoltDB (2.8.4.1)

|

||||

|

||||

**Written in:** Java

|

||||

|

||||

**Main point:** Fast transactions and rapidly changing data

|

||||

|

||||

**License:** GPL 3

|

||||

|

||||

**Protocol:** Proprietary

|

||||

|

||||

- In-memory relational database.

|

||||

- Can export data into Hadoop

|

||||

- Supports ANSI SQL

|

||||

- Stored procedures in Java

|

||||

- Cross-datacenter replication

|

||||

|

||||

**Best used:** Where you need to act fast on massive amounts of incoming data.

|

||||

|

||||

**For example:** Point-of-sales data analysis. Factory control systems.

|

||||

|

||||

###Scalaris (0.5)

|

||||

|

||||

**Written in:** Erlang

|

||||

|

||||

**Main point:** Distributed P2P key-value store

|

||||

|

||||

**License:** Apache

|

||||

|

||||

**Protocol:** Proprietary & JSON-RPC

|

||||

|

||||

- In-memory (disk when using Tokyo Cabinet as a backend)

|

||||

- Uses YAWS as a web server

|

||||

- Has transactions (an adapted Paxos commit)

|

||||

- Consistent, distributed write operations

|

||||

- From CAP, values Consistency over Availability (in case of network partitioning, only the bigger partition - works)

|

||||

|

||||

**Best used:** If you like Erlang and wanted to use Mnesia or DETS or ETS, but you need something that is accessible from more languages (and scales much better than ETS or DETS).

|

||||

|

||||

**For example:** In an Erlang-based system when you want to give access to the DB to Python, Ruby or Java programmers.

|

||||

|

||||

###Kyoto Tycoon (0.9.56)

|

||||

|

||||

**Written in:** C++

|

||||

|

||||

**Main point:** A lightweight network DBM

|

||||

|

||||

**License:** GPL

|

||||

|

||||

**Protocol:** HTTP (TSV-RPC or REST)

|

||||

|

||||

- Based on Kyoto Cabinet, Tokyo Cabinet's successor

|

||||

- Multitudes of storage backends: Hash, Tree, Dir, etc (everything from Kyoto Cabinet)

|

||||

- Kyoto Cabinet can do 1M+ insert/select operations per sec (but Tycoon does less because of overhead)

|

||||

- Lua on the server side

|

||||

- Language bindings for C, Java, Python, Ruby, Perl, Lua, etc

|

||||

- Uses the "visitor" pattern

|

||||

- Hot backup, asynchronous replication

|

||||

- background snapshot of in-memory databases

|

||||

- Auto expiration (can be used as a cache server)

|

||||

|

||||

**Best used:** When you want to choose the backend storage algorithm engine very precisely. When speed is of the essence.

|

||||

|

||||

**For example:** Caching server. Stock prices. Analytics. Real-time data collection. Real-time communication. And wherever you used memcached before.

|

||||

|

||||

Of course, all these systems have much more features than what's listed here. I only wanted to list the key points that I base my decisions on. Also, development of all are very fast, so things are bound to change.

|

||||

|

||||

P.s.: And no, there's no date on this review. There are version numbers, since I update the databases one by one, not at the same time. And believe me, the basic properties of databases don't change that much.

|

||||

|

||||

---

|

||||

|

||||

via: http://kkovacs.eu/cassandra-vs-mongodb-vs-couchdb-vs-redis

|

||||

|

||||

本文由 [LCTT][] 原创翻译,[Linux中国][] 荣誉推出

|

||||

|

||||

译者:[译者ID][] 校对:[校对者ID][]

|

||||

|

||||

[LCTT]:https://github.com/LCTT/TranslateProject

|

||||

[Linux中国]:http://linux.cn/portal.php

|

||||

[译者ID]:http://linux.cn/space/译者ID

|

||||

[校对者ID]:http://linux.cn/space/校对者ID

|

||||

|

||||

[Cassandra]:http://cassandra.apache.org/

|

||||

[Mongodb]:http://www.mongodb.org/

|

||||

[CouchDB]:http://couchdb.apache.org/

|

||||

[Redis]:http://redis.io/

|

||||

[Riak]:http://basho.com/riak/

|

||||

[Couchbase (ex-Membase)]:http://www.couchbase.org/membase

|

||||

[Hypertable]:http://hypertable.org/

|

||||

[ElasticSearch]:http://www.elasticsearch.org/

|

||||

[Accumulo]:http://accumulo.apache.org/

|

||||

[VoltDB]:http://voltdb.com/

|

||||

[Kyoto Tycoon]:http://fallabs.com/kyototycoon/

|

||||

[Scalaris]:https://code.google.com/p/scalaris/

|

||||

[Neo4j]:http://neo4j.org/

|

||||

[HBase]:http://hbase.apache.org/

|

||||

@ -1,68 +0,0 @@

|

||||

00 About the author

|

||||

================================================================================

|

||||

[][1]

|

||||

|

||||

Feel free to post or email me (DevynCJohnson@Gmail.com) suggestions for this series, both on topics for future articles in the series and on how the series can be made better and more interesting.

|

||||

|

||||

I write two articles a week for Linux.org. One is the Linux kernel series and the other is any random Linux topic. Feel free to email suggestions on what you would like to read about in the "random article". I would like to write something that will draw numerous readers to the site. My goal is to write an article that has 10,000+ readers in one week.

|

||||

|

||||

Soon, I will also write tutorials on how to install some of the popular Linux distros, so if there is a particular one you want to read about, email me.

|

||||

|

||||

Check out my wallpapers on [http://gnome-look.org/usermanager/search.php?username=DevynCJohnson&action=contents&PHPSESSID=32424677ef4d9dffed020d06ef2522ac][2]

|

||||

|

||||

My AI project:

|

||||

|

||||

- [https://launchpad.net/neobot][3]

|

||||

|

||||

Ubuntu 13.10 (AMD64)

|

||||

|

||||

- [https://launchpad.net/~devyncjohnson-d][4]

|

||||

- [DevynCJohnson@Gmail.com][5]

|

||||

|

||||

|

||||

|

||||

**Gender**:Male

|

||||

|

||||

**Birthday**:Aug 31, 1994 (Age: 19)

|

||||

|

||||

**Home page**:https://launchpad.net/~devyncjohnson-d

|

||||

|

||||

**Location**:United States

|

||||

|

||||

Devyn Collier Johnson was home-schooled by his two wonderful parents and has graduated one university and is now attending another. His father, Jarret Wayne Buse, has many computer certifications, and Jarret has written and published many books on computers. He also does some programming, and he has given Devyn help and ideas for his artificial intelligence program. His mother, Cassandra Ann Johnson, is a stay-at-home mother, home-schooling his many siblings. Devyn Collier Johnson lives in Indiana with his parents and focuses his time on college and personal computer programming.

|

||||

|

||||

Devyn Collier Johnson graduated high-school at age sixteen. He attends college as a commuting student maintaining the Dean's list. He majors in electrical technology engineering. Devyn Collier Johnson has learned many computer languages. Some he taught himself while others his father taught him and helped him understand. Some of the languages he knows include Xaiml, AIML, Unix Shell, Python3, VPython, PyQT, PyGTK, Coffeescript, GEL, SED, HTML4/5, CSS3, SVG, and XML. Devyn knows bits and pieces of some other languages. He earned four computer certifications in April 2012 and those four being NCLA, Linux+, LPIC-1, and DCTS. His Linux Professional ID is LPI000254694.

|

||||

|

||||

In July 2012, Devyn Collier Johnson decided to make his chatterbot from scratch. He designed his own markup language (Xaiml) and AI engine (ProgramPY-SH or Pysh). On March 3, 2013, Devyn published his new chatterbot on Launchpad.net. The bot is named Neo which is from the Proto-Indo European word for "new".

|

||||

|

||||

Devyn maintains a few other projects. He makes Opera and Firefox themes ([https://addons.mozilla.org/en-US/firefox/user/DevynCJohnson/][6]) ([https://my.opera.com/devyncjohnson/account/][7]); he also has many other graphic design projects. Most of his programming projects are hosted on [https://launchpad.net/~devyncjohnson-d][4], and some are mirrored on Sourceforge.net. Some other miscellaneous projects can be found in the links below.

|

||||

|

||||

- [http://askubuntu.com/users/158340/devyn-collier-johnson][8]

|

||||

- [http://unix.stackexchange.com/users/40770/devyn-collier-johnson][9]

|

||||

- [http://stackoverflow.com/users/2354783/devyn-collier-johnson][10]

|

||||

- [http://www.linux.org/members/devyncjohnson.4843/][1]

|

||||

- [http://gnome-look.org/usermanager/search.php?username=DevynCJohnson][11]

|

||||

- [http://www.creatity.com/?user=1449&action=detailUser][12]

|

||||

- [http://openclipart.org/user-detail/DevynCJohnson][13]

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.linux.org/members/devyncjohnson.4843/

|

||||

|

||||

译者:[译者ID](https://github.com/译者ID) 校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[1]:http://www.linux.org/members/devyncjohnson.4843/

|

||||

[2]:http://gnome-look.org/usermanager/search.php?username=DevynCJohnson&action=contents&PHPSESSID=32424677ef4d9dffed020d06ef2522ac

|

||||

[3]:https://launchpad.net/neobot

|

||||

[4]:https://launchpad.net/~devyncjohnson-d

|

||||

[5]:DevynCJohnson@Gmail.com

|

||||

[6]:https://addons.mozilla.org/en-US/firefox/user/DevynCJohnson/

|

||||

[7]:https://my.opera.com/devyncjohnson/account/

|

||||

[8]:http://askubuntu.com/users/158340/devyn-collier-johnson

|

||||

[9]:http://unix.stackexchange.com/users/40770/devyn-collier-johnson

|

||||

[10]:http://stackoverflow.com/users/2354783/devyn-collier-johnson

|

||||

[11]:http://gnome-look.org/usermanager/search.php?username=DevynCJohnson

|

||||

[12]:http://www.creatity.com/?user=1449&action=detailUser

|

||||

[13]:http://openclipart.org/user-detail/DevynCJohnson

|

||||

@ -1,37 +0,0 @@

|

||||

Translating----------geekpi

|

||||

|

||||

01 The Linux Kernel: Introduction

|

||||

================================================================================

|

||||

In 1991, a Finnish student named Linus Benedict Torvalds made the kernel of a now popular operating system. He released Linux version 0.01 on September 1991, and on February 1992, he licensed the kernel under the GPL license. The GNU General Public License (GPL) allows people to use, own, modify, and distribute the source code legally and free of charge. This permits the kernel to become very popular because anyone may download it for free. Now that anyone can make their own kernel, it may be helpful to know how to obtain, edit, configure, compile, and install the Linux kernel.

|

||||

|

||||

A kernel is the core of an operating system. The operating system is all of the programs that manages the hardware and allows users to run applications on a computer. The kernel controls the hardware and applications. Applications do not communicate with the hardware directly, instead they go to the kernel. In summary, software runs on the kernel and the kernel operates the hardware. Without a kernel, a computer is a useless object.

|

||||

|

||||

There are many reasons for a user to want to make their own kernel. Many users may want to make a kernel that only contains the code needed to run on their system. For instance, my kernel contains drivers for FireWire devices, but my computer lacks these ports. When the system boots up, time and RAM space is wasted on drivers for devices that my system does not have installed. If I wanted to streamline my kernel, I could make my own kernel that does not have FireWire drivers. As for another reason, a user may own a device with a special piece of hardware, but the kernel that came with their latest version of Ubuntu lacks the needed driver. This user could download the latest kernel (which is a few versions ahead of Ubuntu's Linux kernels) and make their own kernel that has the needed driver. However, these are two of the most common reasons for users wanting to make their own Linux kernels.

|

||||

|

||||

Before we download a kernel, we should discuss some important definitions and facts. The Linux kernel is a monolithic kernel. This means that the whole operating system is on the RAM reserved as kernel space. To clarify, the kernel is put on the RAM. The space used by the kernel is reserved for the kernel. Only the kernel may use the reserved kernel space. The kernel owns that space on the RAM until the system is shutdown. In contrast to kernel space, there is user space. User space is the space on the RAM that the user's programs own. Applications like web browsers, video games, word processors, media players, the wallpaper, themes, etc. are all on the user space of the RAM. When an application is closed, any program may use the newly freed space. With kernel space, once the RAM space is taken, nothing else can have that space.

|

||||

|

||||

The Linux kernel is also a preemptive multitasking kernel. This means that the kernel will pause some tasks to ensure that every application gets a chance to use the CPU. For instance, if an application is running but is waiting for some data, the kernel will put that application on hold and allow another program to use the newly freed CPU resources until the data arrives. Otherwise, the system would be wasting resources for tasks that are waiting for data or another program to execute. The kernel will force programs to wait for the CPU or stop using the CPU. Applications cannot unpause or use the CPU without the kernel allowing them to do so.

|

||||

|

||||

The Linux kernel makes devices appear as files in the folder /dev. USB ports, for instance, are located in /dev/bus/usb. The hard-drive partitions are seen in /dev/disk/by-label. It is because of this feature that many people say "On Linux, everything is a file.". If a user wanted to access data on their memory card, for example, they cannot access the data through these device files.

|

||||

|

||||

The Linux kernel is portable. Portability is one of the best features that makes Linux popular. Portability is the ability for the kernel to work on a wide variety of processors and systems. Some of the processor types that the kernel supports include Alpha, AMD, ARM, C6X, Intel, x86, Microblaze, MIPS, PowerPC, SPARC, UltraSPARC, etc. This is not a complete list.

|

||||

|

||||

In the boot folder (/boot), users will see a "vmlinux" or a "vmlinuz" file. Both are compiled Linux kernels. The one that ends in a "z" is compressed. The "vm" stands for virtual memory. On systems with SPARC processors, users will see a zImage file instead. A small number of users may find a bzImage file; this is also a compressed Linux kernel. No matter which one a user owns, they are all bootable files that should not be changed unless the user knows what they are doing. Otherwise, their system can be made unbootable - the system will not turn on.

|

||||

|

||||

Source code is the coding of the program. With source code, programmers can make changes to the kernel and see how the kernel works.

|

||||

|

||||

### Downloading the Kernel: ###

|

||||

|

||||

Now, that we understand more about the kernel, it is time to download the source code. Go to [kernel.org][1] and click the large download button. Once the download is finished, uncompress the downloaded file.

|

||||

|

||||

For this article, I am using the source code for Linux kernel 3.9.4. All of the instructions in this article series are the same (or nearly the same) for all versions of the kernel.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.linux.org/threads/%EF%BB%BFthe-linux-kernel-introduction.4203/

|

||||

|

||||

译者:[译者ID](https://github.com/译者ID) 校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[1]:https://www.kernel.org/

|

||||

@ -1,134 +0,0 @@

|

||||

02 The Linux Kernel: The Source Code

|

||||

================================================================================

|

||||