mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-12 01:40:10 +08:00

Merge pull request #2 from LCTT/master

Update 20160913 Ryver - Why You Should Be Using It instead of Slack.md

This commit is contained in:

commit

e5c4fd5b6b

published/20160604 How to Build Your First Slack Bot with Python.md

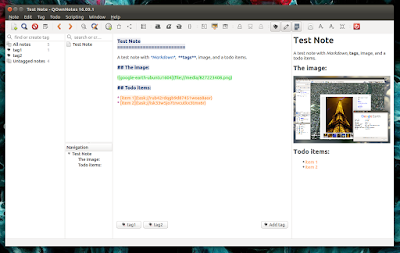

How to Build Your First Slack Bot with Python
============

[Bots](https://www.fullstackpython.com/bots.html) are a useful way to interact with chat services such as [Slack](https://slack.com/). If you have never built a bot before, this post provides an easy starter tutorial for combining the [Slack API](https://api.slack.com/) with Python to create your first bot.

We'll walk through setting up your development environment, obtaining a Slack API bot token, and coding a simple bot in Python.

### Tools We Need

Our bot, which we will name "StarterBot", requires Python and the Slack API. To run our Python code we need:

* Either [Python 2 or Python 3](https://www.fullstackpython.com/python-2-or-3.html)
* [pip](https://pip.pypa.io/en/stable/) and [virtualenv](https://virtualenv.pypa.io/en/stable/) to handle Python [application dependencies](https://www.fullstackpython.com/application-dependencies.html)
* A [free Slack account](https://slack.com/) on a team where you have API access, or sign up for the [Slack Developer Hangout team](http://dev4slack.xoxco.com/)
* The official Python [slackclient](https://github.com/slackhq/python-slackclient) code library built by the Slack team
* A [Slack API testing token](https://api.slack.com/tokens)

It is also useful to have the [Slack API docs](https://api.slack.com/) handy while you're building this tutorial.

All code for this tutorial is available open source under the MIT license in the [slack-starterbot](https://github.com/mattmakai/slack-starterbot) public repository.

### Establishing Our Environment

We now know what tools we need for our project, so let's get our development environment set up. Go to the terminal (or Command Prompt on Windows) and change into the directory where you want to store this project. Within that directory, create a new virtualenv to isolate our application dependencies from other Python projects.

```
virtualenv starterbot
```

Activate the virtualenv:

```
source starterbot/bin/activate
```

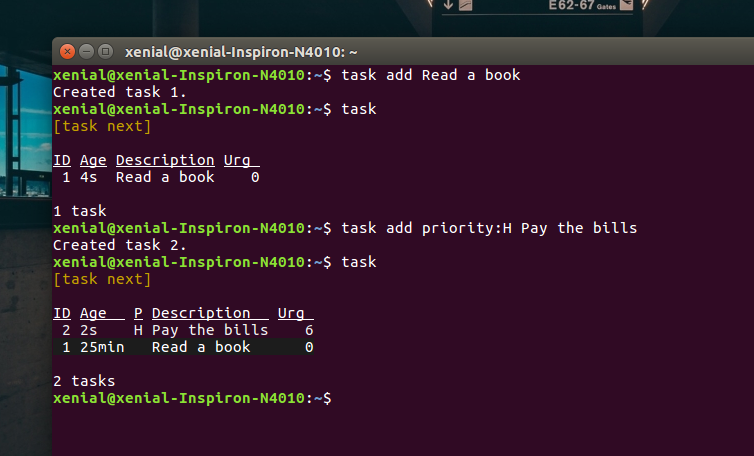

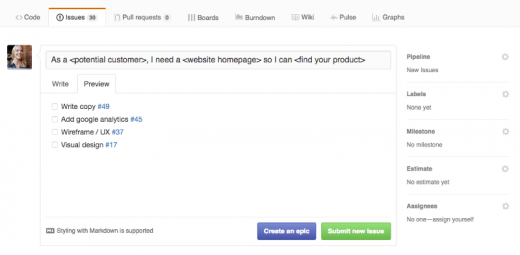

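Your prompt should now look like the one in this screenshot.

The official slackclient API helper library built by Slack can send and receive messages from a Slack channel. This tutorial uses the 1.x `SlackClient` API, which was removed in slackclient 2.0, so install the 1.x series with this `pip` command:

```
pip install "slackclient<2"
```

When `pip` is finished, you should see output like this and you'll be back at the prompt.

We also need to obtain an access token for our Slack team so our bot can use it to connect to the Slack API.

### Slack Real Time Messaging (RTM) API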

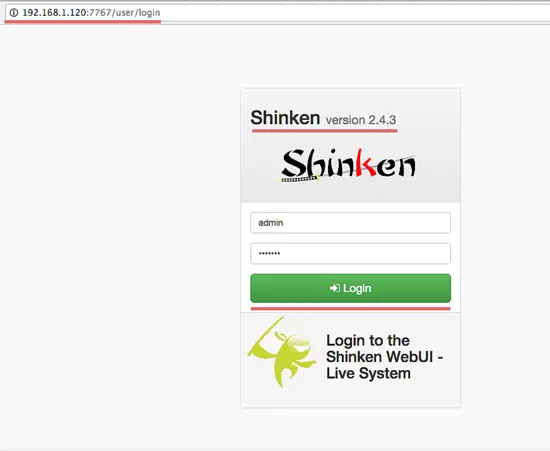

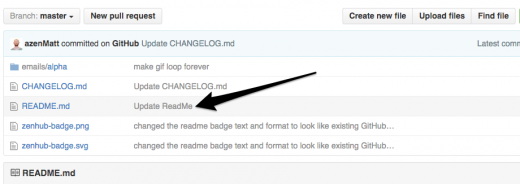

Slack grants programmatic access to its messaging channels via a [web API](https://www.fullstackpython.com/application-programming-interfaces.html). Head over to the [Slack web API page](https://api.slack.com/) and sign up to create your own Slack team. You can also sign in to an existing account where you have administrative privileges.

After you have signed in, go to the [Bot Users page](https://api.slack.com/bot-users).

Name your bot "starterbot" and then click the "Add bot integration" button.

The page will reload and you will see a newly generated access token. You can also change the logo to a custom design; for example, I gave this bot the "Full Stack Python" logo.

Click the "Save Integration" button at the bottom of the page. Your bot is now ready to connect to the Slack API.

A common practice for Python developers is to export secret tokens as environment variables. Export the Slack token under the name `SLACK_BOT_TOKEN`:

```
export SLACK_BOT_TOKEN='your slack token pasted here'
```

Nice, now we are authorized to use the Slack API as a bot.

There is one more piece of information we need to build our bot: our bot's ID. Next we will write a short script to obtain that ID from the Slack API.

### Obtaining Our Bot's ID

It is finally time to write some Python code! We'll warm up by coding a short Python script that obtains StarterBot's ID. The ID varies from one Slack team to another.

We need the ID because it lets our application determine whether messages parsed from the Slack RTM API are directed at StarterBot. The script also tests that our `SLACK_BOT_TOKEN` environment variable is properly set.

Create a new file named print_bot_id.py and fill it with the following code:

```
import os
from slackclient import SlackClient


BOT_NAME = 'starterbot'

slack_client = SlackClient(os.environ.get('SLACK_BOT_TOKEN'))


if __name__ == "__main__":
    api_call = slack_client.api_call("users.list")
    if api_call.get('ok'):
        # retrieve all users so we can find our bot
        users = api_call.get('members')
        for user in users:
            if 'name' in user and user.get('name') == BOT_NAME:
                print("Bot ID for '" + user['name'] + "' is " + user.get('id'))
                break
        else:
            # the loop finished without finding a user with that name
            print("could not find bot user with the name " + BOT_NAME)
    else:
        # the API call itself failed, for example because of an invalid token
        print("users.list call failed: " + str(api_call.get('error')))
```

Our code imports the SlackClient and instantiates it with the `SLACK_BOT_TOKEN` we set as an environment variable. When the script is executed with the `python` command, it calls the Slack API to list all Slack users and prints the ID of the one whose name matches "starterbot".
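The user lookup in print_bot_id.py can be separated from the network call, which makes it easy to check without a token. A minimal sketch, assuming each member is a dict with `name` and `id` keys as the script above expects; `find_bot_id` and the sample IDs are made up for illustration:

```python
def find_bot_id(members, bot_name):
    """Return the "id" of the member whose "name" equals bot_name, or None."""
    for user in members:
        if user.get("name") == bot_name:
            return user.get("id")
    return None


# Hypothetical sample shaped like the "members" list of a users.list response
sample_members = [
    {"name": "slackbot", "id": "USLACKBOT"},
    {"name": "starterbot", "id": "U0EXAMPLE"},
]

print(find_bot_id(sample_members, "starterbot"))
```

In print_bot_id.py this would be called as `find_bot_id(api_call.get('members'), BOT_NAME)`, leaving the script itself responsible only for the API call and the printing.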

Run the script to obtain the bot's ID:

```
python print_bot_id.py
```

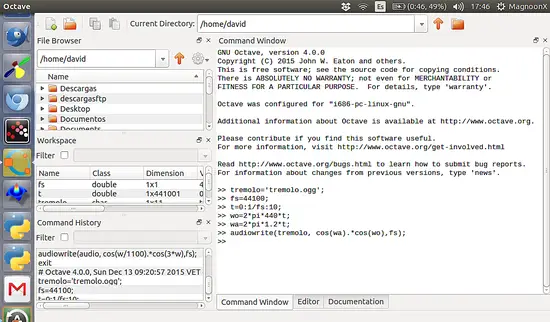

When it runs, the script prints a single line of output that gives us our bot's ID.

Copy the unique ID that your script prints out, and export it as an environment variable named `BOT_ID`:

```
(starterbot)$ export BOT_ID='bot id returned by script'
```

The script only needs to be run once to get the bot ID. We can now use that ID in the Python application that will run StarterBot.

### Coding Our StarterBot

Now we have everything we need to write the StarterBot code. Create a new file named starterbot.py and include the following code in it.

```
import os
import time
from slackclient import SlackClient
```

The `os` and `SlackClient` imports should look familiar because we already used them in print_bot_id.py.

With our dependencies imported, we can use them to obtain the environment variable values and then instantiate the Slack client.

```
# starterbot's ID as an environment variable
BOT_ID = os.environ.get("BOT_ID")

# constants
AT_BOT = "<@" + BOT_ID + ">:"
EXAMPLE_COMMAND = "do"

# instantiate Slack client
slack_client = SlackClient(os.environ.get('SLACK_BOT_TOKEN'))
```

The code instantiates the `SlackClient` client with our `SLACK_BOT_TOKEN`, exported as an environment variable.
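One fragile spot in the constants above: if `BOT_ID` was never exported, `os.environ.get("BOT_ID")` returns `None`, and building `AT_BOT` raises a `TypeError` before the bot even connects. A possible refinement, not part of the original tutorial, is a small fail-fast helper; `require_env` is a hypothetical name:

```python
import os


def require_env(name, environ=os.environ):
    """Return environment variable `name`, or stop with a readable message.

    Hypothetical helper (not part of slackclient); `environ` is injectable so
    the function can be tried without touching the real environment.
    """
    value = environ.get(name)
    if not value:
        raise SystemExit(
            "Environment variable {} is not set; export it first.".format(name))
    return value


# Possible usage in starterbot.py instead of os.environ.get(...):
#   BOT_ID = require_env("BOT_ID")
#   slack_client = SlackClient(require_env("SLACK_BOT_TOKEN"))
```

Raising `SystemExit` prints the message and exits without a traceback, which suits a small command-line bot.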

```
if __name__ == "__main__":
    READ_WEBSOCKET_DELAY = 1 # 1 second delay between reading from firehose
    if slack_client.rtm_connect():
        print("StarterBot connected and running!")
        while True:
            command, channel = parse_slack_output(slack_client.rtm_read())
            if command and channel:
                handle_command(command, channel)
            time.sleep(READ_WEBSOCKET_DELAY)
    else:
        print("Connection failed. Invalid Slack token or bot ID?")
```

The Slack client connects to the Slack RTM API WebSocket and then loops continuously, parsing messages from the firehose. If any of those messages are directed at StarterBot, a function named `handle_command` determines what to do.
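The loop above is hard to try out without a live Slack connection. As a sketch under stated assumptions, the same read, parse, dispatch, sleep cycle can take its collaborators as parameters; `run_bot` and its arguments are hypothetical names, with `read_events`, `parse` and `handle` standing in for `slack_client.rtm_read`, `parse_slack_output` and `handle_command`:

```python
import time


def run_bot(read_events, parse, handle, delay=1.0, max_iterations=None,
            sleep=time.sleep):
    """Poll for events, dispatch any command found, then pause.

    max_iterations=None loops forever, like the tutorial's `while True`;
    a number lets a test stop the loop.
    """
    iterations = 0
    while max_iterations is None or iterations < max_iterations:
        iterations += 1
        command, channel = parse(read_events())
        if command and channel:
            handle(command, channel)
        sleep(delay)
    return iterations
```

In starterbot.py this could be called as `run_bot(slack_client.rtm_read, parse_slack_output, handle_command, delay=READ_WEBSOCKET_DELAY)` once `rtm_connect()` succeeds; injecting `sleep` keeps tests fast.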

Next, add two new functions to parse Slack output and handle commands.

```
def handle_command(command, channel):
    """
        Receives commands directed at the bot and determines if they
        are valid commands. If so, then acts on the commands. If not,
        returns back what it needs for clarification.
    """
    response = "Not sure what you mean. Use the *" + EXAMPLE_COMMAND + \
               "* command with numbers, delimited by spaces."
    if command.startswith(EXAMPLE_COMMAND):
        response = "Sure...write some more code then I can do that!"
    slack_client.api_call("chat.postMessage", channel=channel,
                          text=response, as_user=True)


def parse_slack_output(slack_rtm_output):
    """
        The Slack Real Time Messaging API is an events firehose.
        This parsing function returns None unless a message is
        directed at the Bot, based on its ID.
    """
    output_list = slack_rtm_output
    if output_list and len(output_list) > 0:
        for output in output_list:
            if output and 'text' in output and AT_BOT in output['text']:
                # return text after the @ mention, whitespace removed
                return output['text'].split(AT_BOT)[1].strip().lower(), \
                       output['channel']
    return None, None
```

The `parse_slack_output` function takes messages from Slack and determines whether they are directed at our StarterBot. Messages that start with a direct mention of our bot's ID are then handled by our code, which for now just posts a message back to the Slack channel telling the user to write some more Python code!
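The decision inside `handle_command` does not depend on Slack at all. A sketch of one way to separate it, so the reply text can be checked without posting to a channel; `choose_response` is a hypothetical name and the strings are copied from the function above:

```python
EXAMPLE_COMMAND = "do"


def choose_response(command, example_command=EXAMPLE_COMMAND):
    """Return the reply text that handle_command would post for `command`."""
    if command.startswith(example_command):
        return "Sure...write some more code then I can do that!"
    return ("Not sure what you mean. Use the *" + example_command +
            "* command with numbers, delimited by spaces.")


# handle_command could then shrink to:
#   slack_client.api_call("chat.postMessage", channel=channel,
#                         text=choose_response(command), as_user=True)
```

New commands can then be added to `choose_response` and tested on their own before they ever reach a Slack channel.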

Here is how the entire program looks when it is all put together (you can also [view the file on GitHub](https://github.com/mattmakai/slack-starterbot/blob/master/starterbot.py)):

```
import os
import time
from slackclient import SlackClient


# starterbot's ID as an environment variable
BOT_ID = os.environ.get("BOT_ID")

# constants
AT_BOT = "<@" + BOT_ID + ">:"
EXAMPLE_COMMAND = "do"

# instantiate Slack client
slack_client = SlackClient(os.environ.get('SLACK_BOT_TOKEN'))


def handle_command(command, channel):
    """
        Receives commands directed at the bot and determines if they
        are valid commands. If so, then acts on the commands. If not,
        returns back what it needs for clarification.
    """
    response = "Not sure what you mean. Use the *" + EXAMPLE_COMMAND + \
               "* command with numbers, delimited by spaces."
    if command.startswith(EXAMPLE_COMMAND):
        response = "Sure...write some more code then I can do that!"
    slack_client.api_call("chat.postMessage", channel=channel,
                          text=response, as_user=True)


def parse_slack_output(slack_rtm_output):
    """
        The Slack Real Time Messaging API is an events firehose.
        This parsing function returns None unless a message is
        directed at the Bot, based on its ID.
    """
    output_list = slack_rtm_output
    if output_list and len(output_list) > 0:
        for output in output_list:
            if output and 'text' in output and AT_BOT in output['text']:
                # return text after the @ mention, whitespace removed
                return output['text'].split(AT_BOT)[1].strip().lower(), \
                       output['channel']
    return None, None


if __name__ == "__main__":
    READ_WEBSOCKET_DELAY = 1 # 1 second delay between reading from firehose
    if slack_client.rtm_connect():
        print("StarterBot connected and running!")
        while True:
            command, channel = parse_slack_output(slack_client.rtm_read())
            if command and channel:
                handle_command(command, channel)
            time.sleep(READ_WEBSOCKET_DELAY)
    else:
        print("Connection failed. Invalid Slack token or bot ID?")
```

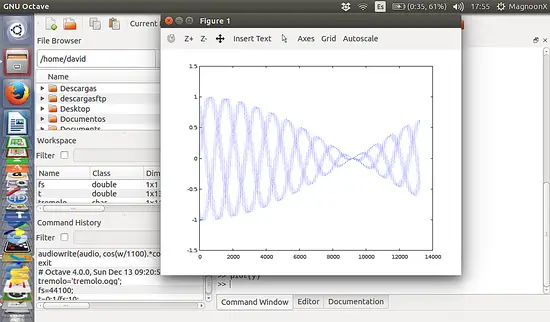

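Now that all of our code is in place, we can run StarterBot on the command line with `python starterbot.py`.

In Slack, create a new channel and invite StarterBot, or invite it to an existing channel.

Now start giving StarterBot commands in your channel.

If you have trouble getting a response from your bot, there is one change you may need to make. As written above in this tutorial, the line `AT_BOT = "<@" + BOT_ID + ">:"` requires a colon after the "@starterbot" mention (or whatever you named your own bot). Remove the `:` from the end of the `AT_BOT` string. Slack clients seem to need a colon after an `@` mention of a person's name, but that seems inconsistent.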
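Rather than editing `AT_BOT` back and forth, a parser can accept the mention with or without the colon. A minimal sketch, assuming the mention arrives in the message text as `<@BOTID>` as in the code above; `parse_mention` is a hypothetical helper:

```python
def parse_mention(text, bot_id):
    """Return the lower-cased command after "<@bot_id>" (colon optional), or None."""
    mention = "<@" + bot_id + ">"
    if mention not in text:
        return None
    rest = text.split(mention, 1)[1]
    # Drop an optional ":" right after the mention, then surrounding whitespace
    return rest.lstrip(":").strip().lower()
```

Inside `parse_slack_output`, the membership test and the `split(AT_BOT)` call could then be replaced by `command = parse_mention(output['text'], BOT_ID)`, returning `command, output['channel']` when it is not `None`.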

### Wrapping Up

Alright, now you've got a simple StarterBot with plenty of places in the code where you can add whatever features you want to build.

There is a whole lot more that could be done using the Slack RTM API and Python. Check out these posts to learn what else you could do:

* Attach a persistent [relational database](https://www.fullstackpython.com/databases.html) or [NoSQL back-end](https://www.fullstackpython.com/no-sql-datastore.html) such as [PostgreSQL](https://www.fullstackpython.com/postgresql.html), [MySQL](https://www.fullstackpython.com/mysql.html) or [SQLite](https://www.fullstackpython.com/sqlite.html) to save and retrieve user data
* Add another channel for interacting with the bot, such as [SMS](https://www.twilio.com/blog/2016/05/build-sms-slack-bot-python.html) or [phone calls](https://www.twilio.com/blog/2016/05/add-phone-calling-slack-python.html)
* [Integrate other web APIs](https://www.fullstackpython.com/api-integration.html) such as [GitHub](https://developer.github.com/v3/), [Twilio](https://www.twilio.com/docs) or [api.ai](https://docs.api.ai/)

Questions? Contact me via Twitter [@fullstackpython](https://twitter.com/fullstackpython) or [@mattmakai](https://twitter.com/mattmakai). I'm also on GitHub with the username [mattmakai](https://github.com/mattmakai).

Interested in this post? Fork [this page on GitHub](https://github.com/mattmakai/fullstackpython.com/blob/gh-pages/source/content/posts/160604-build-first-slack-bot-python.markdown).

--------------------------------------------------------------------------------

via: https://www.fullstackpython.com/blog/build-first-slack-bot-python.html

Author: [Matt Makai][a]

Translator: [jiajia9linuxer](https://github.com/jiajia9linuxer)

Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

[a]: https://www.fullstackpython.com/about-author.html

published/20160812 What is copyleft.md

What is Copyleft?
=============

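If you've spent much time in open source projects, you have probably seen the term "copyleft" used (GNU's explanation: [Chinese][1], [English][2]). While the term is quite common, many people don't understand it. Software licensing is a subject of debate at least as heated as text editors and packaging formats. An expert understanding of copyleft could fill many books, but this article can be a starting point on your road to copyleft enlightenment.

### What is copyright?

Before we can understand copyleft, we must first introduce the concept of copyright. Copyleft is not a legal framework separate from copyright; it exists within the rules of copyright. So, what is copyright?

The exact definition varies by jurisdiction, but the essence is this: the author of a work has a limited monopoly on the copying (hence the term "copyright"), performance, and so on of the work. In the United States, the Constitution explicitly gives Congress the task of creating copyright laws to "promote the Progress of Science and useful Arts."

Unlike in the past, copyright now attaches to a work immediately, and no registration is required. By default, all rights are reserved. That means no one can republish, perform, or modify a work without the author's permission. This "permission" is a license, and it may come with certain conditions attached.

For a more thorough introduction to copyright, Coursera's [Copyright for Educators & Librarians](https://www.coursera.org/learn/copyright-for-education) is an excellent course.

### What is copyleft?

Bear with me; there is one more step before we discuss what copyleft is. First, let's explain what open source means. All open source licenses, per the [Open Source Initiative's definition](https://opensource.org/osd), must allow distribution in source form, among other forms. Anyone who receives open source software has the right to inspect and modify the code.

Where copyleft licenses differ from so-called "permissive" licenses is that copyleft licenses require derivative works to carry the same copyleft license. I like to think of the difference this way: permissive licenses give the most freedom to the immediate downstream developers (including the ability to use open source code in closed source projects), whereas copyleft licenses give the most freedom to end users.

The GNU Project gives this simple definition of copyleft ([Chinese][3], [English][4]): "the rule that when redistributing the program, you cannot add restrictions to deny other people the central freedoms [of free software]." This can be considered the authoritative definition, since the various versions of the [GNU General Public License (GPL)](https://www.gnu.org/licenses/gpl.html) remain the most widely used copyleft licenses.

### Copyleft in software

While the GPL family are the best-known copyleft licenses, they are not the only ones. The [Mozilla Public License (MPL)](https://www.mozilla.org/en-US/MPL/) and the [Eclipse Public License (EPL)](https://www.eclipse.org/legal/epl-v10.html) are also well known. Many [other copyleft licenses](https://tldrlegal.com/licenses/tags/Copyleft) exist with less adoption.

As explained in the previous section, a copyleft license means downstream projects cannot add restrictions on the use of the software. This is best illustrated with an example. If I write a program called MyCoolProgram and distribute it under a copyleft license, you have the freedom to use and modify it. You can distribute versions with your changes, but you must give your users the same freedoms I gave you. If I had used a permissive license instead, you would be free to incorporate it into a closed software project whose source you do not provide.

Just as important as what you must do with MyCoolProgram is what you don't have to do. You don't have to use exactly the same license I did, as long as the terms are compatible (although, for simplicity, downstream projects usually do use the same license). You don't have to contribute your changes back to me, but it is generally considered good form to do so, especially when the changes are bug fixes.

### Copyleft outside of software

Although the idea of copyleft began in the software world, it exists outside of it as well. "Do what you want, as long as you preserve the right of others to do the same" is the distinguishing characteristic of the Creative Commons Attribution-ShareAlike license ([Chinese][5], [English][6]) used for written works, visual art, and so on (CC BY-SA 4.0 is the default license for contributions to Opensource.com, and it is also the content license used by many open source sites, including [Linux.cn][7]). The [GNU Free Documentation License](https://www.gnu.org/licenses/fdl.html) is another example of copyleft in a non-software license. Using software licenses for non-software works is generally discouraged.

### Should I choose a copyleft license?

Pages upon pages could be (and have been) written about which license a project should use. My advice is to first narrow the list of licenses down to those that match your philosophy and project goals. GitHub's [choosealicense.com](http://choosealicense.com/) is a good way to find a license that fits your needs. [tl;drLegal](https://tldrlegal.com/) explains many common and uncommon software licenses in plain language. Also consider the ecosystem your project lives in: projects around a particular language or technology often use the same or similar licenses. If you want your project to work well alongside others, you may want to make sure the license you choose is compatible.

For more information about copyleft, see the [Copyleft Guide](https://copyleft.org/).

--------------------------------------------------------------------------------

via: https://opensource.com/resources/what-is-copyleft

Author: [Ben Cotton][a]

Translator: [yangmingming](https://github.com/yangmingming)

Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

[a]: https://opensource.com/users/bcotton
[1]: https://www.gnu.org/licenses/copyleft.zh-cn.html
[2]: https://www.gnu.org/licenses/copyleft.en.html
[3]: https://www.gnu.org/philosophy/free-sw.zh-cn.html
[4]: https://www.gnu.org/philosophy/free-sw.en.html
[5]: https://creativecommons.org/licenses/by-sa/4.0/deed.zh
[6]: https://creativecommons.org/licenses/by-sa/4.0/
[7]: https://linux.cn/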


Deploying React with Zero Configuration
========================

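So you want to build an app with [React][1]? "[Getting started][2]" is easy... and then what?

React is a library for building user interfaces, which make up only one part of an app. An app also has all the other parts (styles, routers, npm modules, ES6 code, bundling and more), and deciding on them and figuring out how to use them wears developers down. This has become known as [JavaScript fatigue][3]. Despite this complexity, usage of React continues to grow.

The community answers this challenge by sharing [boilerplates][4]. These boilerplates reveal the profusion of architectural choices developers face. The official "Getting Started" seems far removed from a real, working application.

### New, Zero-configuration Experience

Inspired by the developer experience of [Ember.js][5] and [Elm][6], the folks at Facebook wanted to provide an easy, straightforward path. They created a [new way to develop React apps][10]: `create-react-app`. In the three weeks since its initial public release, it has received tremendous community attention (over 8,000 GitHub stars) and support (many pull requests).

`create-react-app` is different from many past attempts at boilerplates and starter kits. It targets zero configuration ([convention over configuration][7]), letting developers focus on what is distinctive about their application.

A powerful side effect of zero configuration is that the tools can now evolve in the background. Zero configuration lays the foundation for a tools ecosystem that brings automation and developer delight far beyond React itself.

### Zero-configuration Deploy to Heroku

Thanks to the zero-configuration foundation laid by create-react-app, zero-configuration deployment seemed within reach. Since these new apps all share a common, default architecture, the build process can be automated and configured with intelligent defaults. So [we created this community buildpack to experiment with zero-configuration deployment on Heroku][8].

#### Create and Deploy a React App in Two Minutes

You can get started building React apps for free on Heroku.

```
npm install -g create-react-app
create-react-app my-app
cd my-app
git init
heroku create -b https://github.com/mars/create-react-app-buildpack.git
git add .
git commit -m "react-create-app on Heroku"
git push heroku master
heroku open
```

Try it yourself [using the buildpack docs][9].

### Growing Up from Zero Config

create-react-app is very new (currently at version 0.2), and because its goal is a simple developer experience, more advanced use cases are not supported (or may never be). For example, it does not support server-side rendering or customized bundles.

To support greater control, create-react-app includes the `npm run eject` command. Eject unpacks all the tooling (config files and package.json dependencies) into the app's directory, so you can customize it to your heart's content. Once you have ejected, the changes you make may require switching to a custom deployment with the Node.js or static buildpacks. Always make such project changes through a branch and pull request, so they can be easily undone. Heroku's Review Apps are perfect for testing changes to the deployment.

We will be tracking progress on create-react-app and adapting the buildpack to support more advanced use cases as they become available. Happy deploying!

--------------------------------------------------------------------------------

via: https://blog.heroku.com/deploying-react-with-zero-configuration

Author: [Mars Hall][a]

Translator: [zky001](https://github.com/zky001)

Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

[a]: https://blog.heroku.com/deploying-react-with-zero-configuration
[1]: https://facebook.github.io/react/
[2]: https://facebook.github.io/react/docs/getting-started.html
[3]: https://medium.com/@ericclemmons/javascript-fatigue-48d4011b6fc4
[4]: https://github.com/search?q=react+boilerplate
[5]: http://emberjs.com/
[6]: http://elm-lang.org/
[7]: http://rubyonrails.org/doctrine/#convention-over-configuration
[8]: https://github.com/mars/create-react-app-buildpack
[9]: https://github.com/mars/create-react-app-buildpack#usage
[10]: https://github.com/facebookincubator/create-react-app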

published/201609/20160506 Setup honeypot in Kali Linux.md

Setting Up a Honeypot in Kali Linux
=========================

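Pentbox is a security suite containing many tools that make penetration testing simple and streamlined. It is written in Ruby and oriented to GNU/Linux, with support for Windows, macOS and any other system with Ruby installed. In this short article we will explain how to set up a honeypot in Kali Linux. If you don't know what a honeypot is: "a honeypot is a computer security mechanism set to detect, deflect, or, in some manner, counteract attempts at unauthorized use of information systems."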

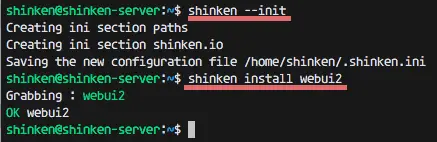

### Download Pentbox

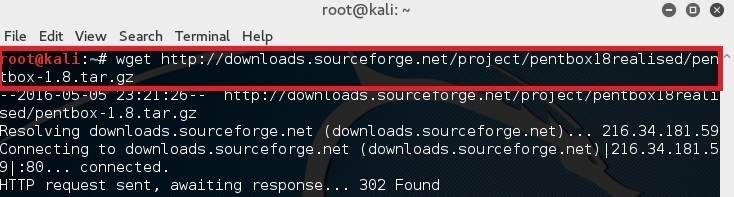

Simply type the following command in your terminal to download pentbox-1.8:

```
root@kali:~# wget http://downloads.sourceforge.net/project/pentbox18realised/pentbox-1.8.tar.gz
```

### Extract the pentbox files

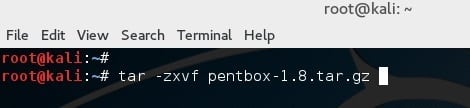

Extract the file using the following command:

```
root@kali:~# tar -zxvf pentbox-1.8.tar.gz
```

### Run the pentbox Ruby script

Change into the pentbox directory:

```
root@kali:~# cd pentbox-1.8/
```

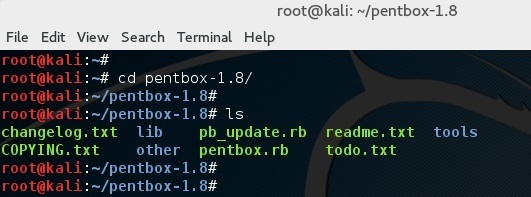

Run pentbox using the following command:

```
root@kali:~# ./pentbox.rb
```

### Set up a honeypot

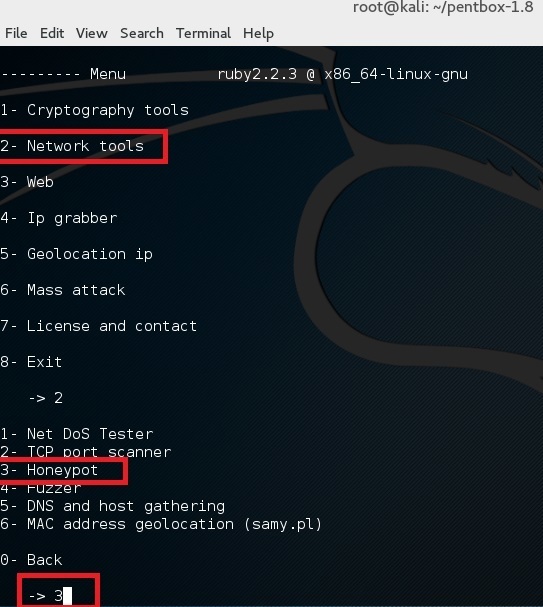

Use option 2 (Network Tools) and then its option 3 (Honeypot).

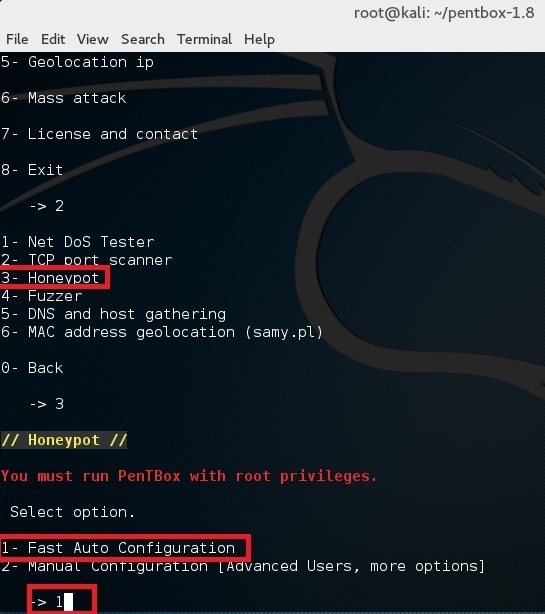

Finally, for our first test, choose option 1 (Fast Auto Configuration).

This opens a honeypot on port 80. Open a browser and go to http://192.168.160.128 (where 192.168.160.128 is your own IP address). You should see an "Access denied" error.

In your terminal you should see "HONEYPOT ACTIVATED ON PORT 80" followed by "INTRUSION ATTEMPT DETECTED".
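Pentbox's own source is not shown here. As a rough illustration of what a low-interaction honeypot of this kind does, here is a hypothetical Python sketch (not Pentbox's implementation): it accepts TCP connections, logs each one as an intrusion attempt, and answers with an HTTP 403 "Access denied" reply. Binding to port 80 or 22 normally requires root:

```python
import datetime
import socket

# Canned reply, similar in spirit to the "Access denied" page described above
RESPONSE = (b"HTTP/1.1 403 Forbidden\r\n"
            b"Content-Type: text/plain\r\n"
            b"Content-Length: 13\r\n"
            b"Connection: close\r\n"
            b"\r\n"
            b"Access denied")


def serve_honeypot(server, max_connections=None, log=print):
    """Log every connection on a listening socket and answer with RESPONSE.

    max_connections=None serves forever; a number lets a test stop early.
    """
    handled = 0
    while max_connections is None or handled < max_connections:
        conn, (peer_ip, peer_port) = server.accept()
        with conn:
            conn.settimeout(5)
            try:
                # Keep the first line of whatever the client sent, if anything
                first_line = conn.recv(1024).split(b"\r\n", 1)[0]
            except OSError:  # includes socket.timeout
                first_line = b""
            stamp = datetime.datetime.now().isoformat(timespec="seconds")
            log("INTRUSION ATTEMPT DETECTED from {}:{} at {} {!r}".format(
                peer_ip, peer_port, stamp, first_line))
            conn.sendall(RESPONSE)
        handled += 1


def run_honeypot(port=80, host="0.0.0.0"):
    """Open the listener and serve forever."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server:
        server.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
        server.bind((host, port))
        server.listen()
        print("HONEYPOT ACTIVATED ON PORT {}".format(port))
        serve_honeypot(server)
```

Reading the client's request before replying matters: closing a socket with unread data can make the kernel send a reset, and the browser would then show a connection error instead of the "Access denied" text.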


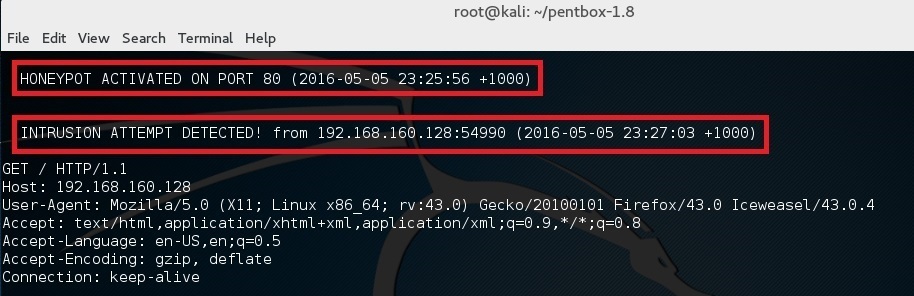

Now, if you had chosen option 2 (Manual Configuration) at that same step, you would see more options:

Repeat the same steps, but this time choose port 22 (the SSH port). Then set up port forwarding on your home router so that external port 22 is forwarded to port 22 on this machine. Alternatively, set up this honeypot on a VPS in the cloud.

You'll be amazed at how many machines are constantly scanning the SSH port. Know what you should do next? Hack them back! Mwahaha!

If video is more your thing, here's a video on setting up a honeypot:

<https://youtu.be/NufOMiktplA>

--------------------------------------------------------------------------------

via: https://www.blackmoreops.com/2016/05/06/setup-honeypot-in-kali-linux/

Author: [blackmoreops.com][a]

Translator: [wcnnbdk1](https://github.com/wcnnbdk1)

Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

[a]: https://www.blackmoreops.com/


Writing an Online Multiplayer Game with Python and Asyncio (Part 1)
===================================================================

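Have you ever done asynchronous programming in Python? In this article I'll show you how, using a [working example][1]: the popular snake game, designed for multiple players.

- [Play the game here][2].

### 1. Introduction

Massively multiplayer online games (MMOs) are undoubtedly one of the main trends of our time, in both technology and culture. For a long time, writing a server for an MMO game involved massive budgets and complex low-level programming techniques, but things have changed rapidly in recent years. Modern frameworks based on dynamic languages can handle thousands of parallel user connections on moderate hardware. At the same time, the HTML5 and WebSockets standards have made it possible to create real-time, graphics-based game clients that run directly in a web browser, without any extensions.

Python may not be the most popular tool for creating scalable non-blocking servers, especially compared to Node.js, the most popular choice in this area. But recent versions of Python are changing that. The introduction of [asyncio][3] and the dedicated [async/await][4] syntax make asynchronous code look like regular blocking code, which makes Python a worthy language for asynchronous programming. So I will try to use these new features to create a multiplayer online game.

### 2. Going asynchronous

A game server should handle as many simultaneous user connections as possible and process them all in real time. A typical solution is to create threads, but that does not solve the problem here. Running thousands of threads requires the CPU to switch between them constantly (this is called context switching), which creates a lot of overhead and makes it very inefficient. Using processes is even worse because, on top of that, they take up a lot of memory. Python has one more problem: its interpreter (CPython) is not designed for multithreading; instead it aims for maximum performance in single-threaded applications. That is why it uses the GIL (global interpreter lock), a mechanism that does not allow multiple threads to run Python code at the same time, in order to prevent uncontrolled use of shared objects. Normally the interpreter switches to another thread while the current one is waiting for something, usually a response from I/O (for example, a response from a web server). This allows non-blocking I/O operations in your app, because each operation blocks only one thread rather than the whole server. However, it also makes the general multithreading approach nearly useless, because it does not let you execute Python code in parallel, even on a multi-core CPU. At the same time, it is entirely possible to have non-blocking I/O in a single thread, which removes the need for frequent context switching.

Actually, you can implement single-threaded non-blocking I/O in pure Python. All you need is the standard [select][5] module, which lets you write an event loop that waits for I/O on non-blocking sockets. However, this approach requires you to define all of the app's logic in one place, and before long your app turns into a very complex state machine. There are frameworks that simplify this task; popular ones are [tornado][6] and [twisted][7]. They are used to implement complex protocols with callbacks (similar to Node.js). Such a framework runs its own event loop and invokes your callbacks on defined events. This may be the way to go in some situations, but it still requires programming in callback style, which makes your code fragmented. Compare that with writing synchronous code and running multiple copies of it concurrently, as we would with normal threads. Why shouldn't that be possible in a single thread?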
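To make the `select` approach concrete, here is a minimal single-threaded echo server driven by `select.select()`. It is a sketch, not code from the game: `serve_echo` and its `max_events` parameter are hypothetical, the latter there only so the loop can stop in a test. Even this tiny example shows how all connection handling ends up in one loop:

```python
import select
import socket


def serve_echo(server, max_events=None, timeout=1.0):
    """Echo data back to clients using one thread and select.select().

    `server` must be a bound, listening socket. The loop stops after
    `max_events` readiness events (None = never) or when `timeout` seconds
    pass with no activity.
    """
    server.setblocking(False)
    readers = [server]
    events = 0
    while max_events is None or events < max_events:
        ready, _, _ = select.select(readers, [], [], timeout)
        if not ready:
            break  # nothing happened within the timeout
        for sock in ready:
            events += 1
            if sock is server:
                conn, _addr = server.accept()  # a new client is waiting
                conn.setblocking(False)
                readers.append(conn)
            else:
                data = sock.recv(4096)
                if data:
                    sock.sendall(data)  # echo; fine for small payloads only
                else:
                    readers.remove(sock)  # the client closed its side
                    sock.close()
    for sock in readers[1:]:
        sock.close()  # close any client connections still open
```

Every new kind of message would need another branch in this loop, which is exactly the state-machine growth described above.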

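This is where the concept of microthreads comes in. The idea is to execute tasks concurrently within a single thread. When a task calls a blocking function, behind the scenes control goes to a "manager" (or "scheduler") that runs an event loop. When some event is ready to be processed, the manager passes execution to the task waiting for it. That task runs until it reaches a blocking call, and then it returns execution to the manager.

> Microthreads are also called lightweight threads or green threads (a term that comes from Java). Tasks running concurrently in such pseudo-threads are called tasklets, greenlets or coroutines.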
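The manager/task hand-off can be illustrated with plain Python generators, where each `yield` stands in for a blocking call. This toy round-robin scheduler illustrates the idea only; it performs no I/O and is not how asyncio is implemented internally:

```python
from collections import deque


def task(name, steps, log):
    """A toy microthread: each `yield` hands control back to the scheduler."""
    for i in range(steps):
        log.append(f"{name} step {i}")
        yield  # pretend we hit a blocking call here


def run(tasks):
    """Round-robin scheduler: resume each task until it yields, then move on."""
    ready = deque(tasks)
    while ready:
        current = ready.popleft()
        try:
            next(current)      # run the task up to its next yield
        except StopIteration:
            continue           # the task finished; drop it
        ready.append(current)  # not finished yet: queue it again


log = []
run([task("A", 2, log), task("B", 3, log)])
print(log)
```

The two tasks interleave even though only one of them runs at any moment, which is the property asyncio provides at a much larger scale.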

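One of the first implementations of microthreads in Python was [Stackless Python][8]. It became famous because it is used in a very successful online game called [EVE online][9]. This MMO claims to have a persistent "universe" in which thousands of players engage in different activities, all happening in real time. Stackless is a standalone Python interpreter that replaces the standard function call stack and controls the program's flow directly to reduce context-switching overhead. Though very effective, this solution has been less popular than "soft" libraries that work with the standard interpreter. Packages such as [eventlet][10] and [gevent][11] come with patched standard I/O libraries whose I/O functions pass execution to an internal event loop. This makes it easy to turn normal blocking code into non-blocking code. One downside of this approach is that it is not obvious from the code which calls are non-blocking. Newer versions of Python introduced native coroutines as an advanced form of generators. Later, in Python 3.4, the asyncio library was introduced; it relies on native coroutines to provide single-threaded concurrency. But only in Python 3.5 did coroutines become part of the Python language itself, described with the new keywords `async` and `await`. Here is a simple example that uses asyncio to run tasks concurrently.

```
import asyncio


async def my_task(seconds):
    print("start sleeping for {} seconds".format(seconds))
    await asyncio.sleep(seconds)
    print("end sleeping for {} seconds".format(seconds))


async def main():
    # run both coroutines concurrently and wait until both have finished
    await asyncio.gather(my_task(1), my_task(2))


# asyncio.run() (Python 3.7+) creates an event loop, runs main() and closes the loop
asyncio.run(main())
```

We start two tasks, one sleeping for 1 second and the other for 2 seconds. The output is:

```
start sleeping for 1 seconds
start sleeping for 2 seconds
end sleeping for 1 seconds
end sleeping for 2 seconds
```

As you can see, the coroutines do not block each other: the second task starts before the first one finishes. This happens because `asyncio.sleep` is a coroutine that returns execution to the scheduler until the time is up.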
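One way to confirm that the coroutines really overlap is to time them. In this sketch (the delays are arbitrary), sleeps of 0.25 and 0.3 seconds run through `asyncio.gather`; run one after the other they would take about 0.55 seconds, while concurrently the total should be close to the longer of the two:

```python
import asyncio
import time


async def sleeper(seconds):
    # asyncio.sleep suspends this coroutine and hands control back to the loop
    await asyncio.sleep(seconds)
    return seconds


async def timed_gather(*delays):
    start = time.perf_counter()
    results = await asyncio.gather(*(sleeper(d) for d in delays))
    return results, time.perf_counter() - start


results, elapsed = asyncio.run(timed_gather(0.25, 0.3))
print(results, "took about {:.2f} s".format(elapsed))
```

`gather` also returns the results in the order the coroutines were passed, regardless of which one finished first.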

In the next part, we will use coroutine-based tasks to create a game loop.

--------------------------------------------------------------------------------

via: https://7webpages.com/blog/writing-online-multiplayer-game-with-python-asyncio-getting-asynchronous/

Author: [Kyrylo Subbotin][a]

Translator: [xinglianfly](https://github.com/xinglianfly)

Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

[a]: https://7webpages.com/blog/writing-online-multiplayer-game-with-python-asyncio-getting-asynchronous/
[1]: http://snakepit-game.com/
[2]: http://snakepit-game.com/
[3]: https://docs.python.org/3/library/asyncio.html
[4]: https://docs.python.org/3/whatsnew/3.5.html#whatsnew-pep-492
[5]: https://docs.python.org/2/library/select.html
[6]: http://www.tornadoweb.org/
[7]: http://twistedmatrix.com/
[8]: http://www.stackless.com/
[9]: http://www.eveonline.com/
[10]: http://eventlet.net/
[11]: http://www.gevent.org/


Should Linux Distributions Disable IPv4-mapped IPv6 Addresses?
=============================================

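By all appearances, the Internet's transition to IPv6 has been a slow affair. In recent years, though, perhaps driven by the exhaustion of IPv4 address space, IPv6 usage has been [on the rise][1]. Correspondingly, developers are interested in making sure their software works with both IPv4 and IPv6. But, as a recent discussion on the OpenBSD mailing list highlighted, a mechanism designed to make the transition to IPv6 easier may also make networks less secure, and the default configuration of Linux distributions may not be safe.

### Address mapping

IPv6 may look like IPv4 in many ways, but it is a different protocol with a different address space. Server programs that want to accept connections over either protocol must open separate sockets for the two address families: `AF_INET` for IPv4 and `AF_INET6` for IPv6. In particular, a program that wants to accept connections on any of the host's interfaces over both protocols needs to create an `AF_INET` socket bound to the all-zeros wildcard address (`0.0.0.0`) and an `AF_INET6` socket bound to the IPv6 equivalent (written `::`). It must then listen for connections on both sockets, or so one would think.

Years ago, in [RFC 3493][2], the IETF specified a mechanism by which a program can work with both protocols through a single IPv6 socket. With a socket enabled for this behavior, the program only needs to bind to `::` to accept connections over both protocols on all interfaces. When an IPv4 connection is made to the bound port, the source address is mapped into IPv6 as described in [RFC 2373][3]. So, for example, a program using this mode would see an incoming connection from `192.168.1.1` as coming from `::ffff:192.168.1.1` (this mixed notation is how such addresses are usually written). The program can also open connections to IPv4 addresses using the same mapping.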
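Python's standard `ipaddress` module can show the mapping described above. A small sketch; `to_mapped` and `unmap` are hypothetical helper names, and `unmap` is the kind of normalization a server might apply before comparing a peer address against IPv4-based access rules:

```python
import ipaddress


def to_mapped(ipv4):
    """Return the IPv4-mapped IPv6 address (::ffff:a.b.c.d) for an IPv4 address."""
    v4 = ipaddress.IPv4Address(ipv4)
    # 80 zero bits, then 16 one bits (0xffff), then the 32-bit IPv4 address
    return ipaddress.IPv6Address((0xFFFF << 32) | int(v4))


def unmap(address):
    """Return the embedded IPv4 address if `address` is IPv4-mapped, else the address unchanged."""
    ip = ipaddress.ip_address(address)
    if ip.version == 6 and ip.ipv4_mapped is not None:
        return ip.ipv4_mapped
    return ip
```

With this, a peer reported as `::ffff:192.168.1.1` on a mapped socket normalizes to `192.168.1.1`, so a rule written for the IPv4 address still matches.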

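The RFC requires this behavior to be the default, so most systems implement it that way. There are exceptions, though, and OpenBSD is one of them; there, a program that wants to work with both protocols has no choice but to create two separate sockets. But a program that opens two sockets on Linux will run into trouble: both the IPv4 and the IPv6 socket will try to bind to the IPv4 address, so whichever comes second will fail. In other words, a program that binds a socket to `::` on a given port will be bound to that port on both the IPv6 `::` and the IPv4 `0.0.0.0` addresses. If the program then tries to bind an IPv4 socket to the same port on `0.0.0.0`, the operation will fail because the port is already in use.

There is, of course, a way around this problem: the program can call `setsockopt()` to turn on the `IPV6_V6ONLY` option. A program that opens two sockets and sets `IPV6_V6ONLY` should be portable across all systems.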
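A sketch of that portable two-socket approach in Python, assuming a host where the standard `socket` module exposes `IPV6_V6ONLY` (Linux, the BSDs and Windows do). `open_listeners` is a hypothetical name; it binds IPv4 first and then binds an IPv6-only socket to the same port, skipping IPv6 if the host cannot provide it:

```python
import socket


def open_listeners(port=0):
    """Return [IPv4 listener] or [IPv4 listener, IPv6-only listener] on one port."""
    v4 = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    v4.bind(("0.0.0.0", port))
    v4.listen()
    port = v4.getsockname()[1]  # with port=0 the kernel picked one; reuse it
    listeners = [v4]
    try:
        v6 = socket.socket(socket.AF_INET6, socket.SOCK_STREAM)
    except OSError:
        return listeners  # this host cannot create IPv6 sockets at all
    try:
        # Restrict the IPv6 socket to IPv6 traffic. Without this, on Linux with
        # net.ipv6.bindv6only=0 the "::" socket would also claim the IPv4
        # wildcard, and this bind would typically fail with EADDRINUSE.
        v6.setsockopt(socket.IPPROTO_IPV6, socket.IPV6_V6ONLY, 1)
        v6.bind(("::", port))
        v6.listen()
        listeners.append(v6)
    except OSError:
        v6.close()  # e.g. IPv6 disabled on this host; keep the IPv4 listener
    return listeners
```

Setting the option explicitly, rather than relying on the system default, is what makes the same code behave alike on OpenBSD and on Linux.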

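Readers may not be shocked to learn that not every program gets this right. One such program, it turns out, is the [OpenNTPD][4] implementation of the Network Time Protocol. Brent Cook recently [submitted a small patch][5] to the upstream OpenNTPD source that adds the necessary `setsockopt()` call; it was also submitted to OpenBSD. That patch looks unlikely to be accepted, though, most likely for OpenBSD-style reasons. (LCTT translator's note: as mentioned above, OpenBSD is not affected by this problem.)

### Security concerns

As mentioned above, OpenBSD does not support IPv4-mapped IPv6 sockets at all. Even if a program explicitly tries to enable address mapping by setting the `IPV6_V6ONLY` option to 0, its author will be disappointed, because the setting has no effect on OpenBSD systems. The reasoning behind this decision is that mapping raises some security concerns. There are many kinds of attacks against open interfaces, but they all come back to the fact that there are two paths to the same port, each with its own access-control rules.

Any given server system may have firewall rules describing which ports may be accessed. There may also be mechanisms in place such as TCP wrappers or a BPF-based filter, or a router on the network that does stateful protocol filtering. The result can be gaps in firewall protection and all kinds of potential confusion, because the same IPv4 address can be reached over two different protocols. If address mapping is done at the network edge, things get even more complicated; see [this 2003 draft RFC][6], which describes some further attack scenarios that arise if mapped addresses are propagated between hosts.

Changing systems and software to handle IPv4-mapped IPv6 addresses properly can certainly be done. But that increases the overall complexity of the system, and it is a safe bet that such changes have not actually been made everywhere they need to be. As Theo de Raadt [put it][7]:

> **Sometimes people put a bad idea into an RFC. Later they discover the idea is impossible and throw it back into the garbage bin. The result is that the concepts become so complicated that everyone has to be a full-time expert in both administration and coding.**

It is also not at all clear how many of those full-time experts are actually configuring the systems and networks that use IPv4-mapped IPv6 addresses.

One might argue that, although IPv4-mapped IPv6 addresses create security risks, there should be no harm in changing a program to turn off address mapping on systems that implement it. But Theo [argues][8] that this should not be done, for two reasons. The first is that there are many broken programs out there that will never be fixed. The real reason, though, is to put pressure on distributions to turn off address mapping by default. As he put it: "**Eventually someone will understand the harm is systematic, and change the system default to be 'secure by default'.**"

### Address mapping on Linux

On Linux systems, address mapping is controlled by a sysctl knob called `net.ipv6.bindv6only`; it defaults to 0 (address mapping enabled). Administrators (or distributions) can turn mapping off by setting it to 1, but they would be well advised to make sure their software works properly before deploying such a system in production. A quick survey suggests that none of the major distributions change this default; Debian [changed the default][9] back in 2009 for "squeeze", but the change broke enough packages (such as [anything containing Java][10]) that, [after a fair amount of Debian-style discussion][11], it was reverted to the original setting. It seems that quite a few programs depend on mapping being enabled by default.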
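On Linux the current value of this knob can be read from `/proc/sys/net/ipv6/bindv6only` (the same value `sysctl net.ipv6.bindv6only` reports). A small sketch for checking it from Python; the function names are hypothetical:

```python
from pathlib import Path

SYSCTL_PATH = "/proc/sys/net/ipv6/bindv6only"


def parse_bindv6only(text):
    """Map the sysctl's text ("0" or "1") to a bool: True means IPv6-only sockets by default."""
    value = text.strip()
    if value not in ("0", "1"):
        raise ValueError("unexpected bindv6only value: {!r}".format(value))
    return value == "1"


def read_bindv6only(path=SYSCTL_PATH):
    """Return True/False for the running kernel, or None if the file is unavailable."""
    try:
        return parse_bindv6only(Path(path).read_text())
    except OSError:
        return None  # not Linux, or no IPv6 support on this kernel
```

On a stock distribution this is expected to return `False` (mapping enabled), matching the default described above.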

OpenBSD has a tradition of breaking things outside its core system in the name of "secure by default"; Linux distributions tend to find such changes much harder to make. So distributions, which generally prefer not to field complaints from their users, are unlikely to change the bindv6only default anytime soon. The good news is that this behavior has been the default for many years, yet examples of it being exploited are hard to find. But, as we all know, nobody can guarantee that such an exploit will never happen.

--------------------------------------------------------------------------------

via: https://lwn.net/Articles/688462/

Author: [Jonathan Corbet][a]

Translator: [alim0x](https://github.com/alim0x)

Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

[a]: https://lwn.net/
[1]: https://www.google.com/intl/en/ipv6/statistics.html
[2]: https://tools.ietf.org/html/rfc3493#section-3.7
[3]: https://tools.ietf.org/html/rfc2373#page-10
[4]: https://github.com/openntpd-portable/
[5]: https://lwn.net/Articles/688464/
[6]: https://tools.ietf.org/html/draft-itojun-v6ops-v4mapped-harmful-02
[7]: https://lwn.net/Articles/688465/
[8]: https://lwn.net/Articles/688466/
[9]: https://lists.debian.org/debian-devel/2009/10/msg00541.html
[10]: https://bugs.debian.org/cgi-bin/bugreport.cgi?bug=560056
[11]: https://lists.debian.org/debian-devel/2010/04/msg00099.html

@ -0,0 +1,236 @@

|

||||

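Writing an Online Multiplayer Game with Python and Asyncio (Part 2)
==================================================================

> Have you ever done asynchronous programming in Python? In this article I'll show you how, using a [working example][1]: a popular snake game designed for multiple players.

For the introduction and theory, see "[Part 1: Getting Asynchronous][2]".

- [Play the game here][1].

### 3. Writing the game loop

The game loop is the heart of every game. It runs continuously to read player input, update the game state and render the result on the screen.

In online games, the game loop is split into a client part and a server part, so there are usually two loops communicating over the network. The client's role is usually to:

- capture player input, such as key presses or mouse movement;
- pass that input to the server;
- receive the data it needs to render.

The server processes all the data coming from the players and updates the game state. It performs the calculations needed for the next frame and sends the results back to the clients, for example the new positions of game objects.

It is very important not to mix up the client and server roles without a solid reason. If you do game logic calculations on the client side, you can easily get out of sync with the other clients. Your game also becomes easy to cheat, since a client can simply send arbitrary data.

> One iteration of the game loop is called a tick. A tick is an event meaning that the current game loop iteration is over and the data for the next frame (or frames) is ready.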
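Here is a minimal sketch of a server-side tick loop using only `asyncio`, with no networking. An `asyncio.Queue` stands in for player input. On each tick the loop drains the queue, updates the authoritative state and records the snapshot that would be sent to the clients. The names are illustrative, not the article's code.

```python
import asyncio

async def run_ticks(state, inputs, ticks, interval=0.0):
    """Server-side loop: each tick drains queued input, updates the
    authoritative state and records the snapshot clients would render."""
    snapshots = []
    for tick in range(ticks):
        while not inputs.empty():              # everything sent since the last tick
            player, delta = inputs.get_nowait()
            state[player] = state.get(player, 0) + delta
        snapshots.append((tick, dict(state)))  # would be broadcast to clients
        await asyncio.sleep(interval)          # let other tasks run until the next tick
    return snapshots

async def main():
    inputs = asyncio.Queue()
    inputs.put_nowait(("alice", 1))
    inputs.put_nowait(("bob", 2))
    return await run_ticks({}, inputs, ticks=2)
```

Because clients only enqueue input and never touch `state` directly, the server stays the single source of truth.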

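All of the following examples use the same client, which connects to the server from a web page using WebSocket. It runs a simple loop that sends key codes to the server and displays every message received from the server. [Client source code][4].

### Example 3.1: Basic game loop

> [Example 3.1 source code][5].

We use the [aiohttp][6] library to create the game server. It lets you build web servers and clients on top of asyncio. A nice feature of this library is that it supports both plain HTTP requests and websockets, so we don't need a separate web server to serve the game's HTML page.

Here is how we start the server:

```
import asyncio
from aiohttp import web

# game_loop(), wshandler() and handle() are defined in the full example source
app = web.Application()
app["sockets"] = []  # websockets of all connected clients

# start the game loop as a background task
asyncio.ensure_future(game_loop(app))

app.router.add_route('GET', '/connect', wshandler)
app.router.add_route('GET', '/', handle)

web.run_app(app)
```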

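`web.run_app` is a shortcut that creates the server's main task and runs the `asyncio` event loop through the loop's `run_forever()` method. I suggest reading its source to see exactly how the server is created and shut down.

The `app` variable is a dict-like object that handlers use to share data across all connected clients. We use it to store the list of connected sockets, which is later used to send messages to every connected client.

The `asyncio.ensure_future()` call starts the main game loop task, which sends a tick message to the clients every 2 seconds. This task runs concurrently with the web server, in the same asyncio event loop.

There are two web request handlers:

- `handle` serves the HTML page.
- `wshandler` is the main websocket server task, which handles the interaction with the client.

Each connected client gets its own `wshandler` task in the event loop. This task adds the client's socket to the list, so that the `game_loop` task can send messages to all clients. It then echoes every keystroke back to the client, along with a message.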
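To see the pattern without aiohttp, here is a stdlib-only sketch in which plain lists stand in for client websockets. The shared `clients` list plays the role of `app["sockets"]`: one task broadcasts ticks to every list, while each session echoes its own keystrokes. All names are hypothetical, and `asyncio.sleep(0)` replaces the real 2-second interval.

```python
import asyncio

async def game_loop(clients, ticks):
    # Plays the role of the example's game_loop: broadcast to every client.
    for n in range(ticks):
        await asyncio.sleep(0)          # yield; the real loop sleeps 2 seconds
        for inbox in clients:
            inbox.append("tick {}".format(n))

async def session(clients, keys):
    # Plays the role of wshandler: register the client, then echo its keys.
    inbox = []
    clients.append(inbox)
    for key in keys:
        inbox.append("key {}".format(key))
        await asyncio.sleep(0)          # the real handler awaits ws.receive()
    return inbox

async def main():
    clients = []                        # stands in for app["sockets"]
    loop_task = asyncio.ensure_future(game_loop(clients, ticks=2))
    a, b = await asyncio.gather(session(clients, [37]), session(clients, [38, 39]))
    await loop_task
    return a, b
```

Each session sees only its own echoes, but every session receives the broadcast ticks, because they all share one list of inboxes.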

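The tasks we start run their worker loops inside the main `asyncio` event loop. Switching between tasks happens whenever one of them uses an `await` statement to wait for a coroutine to complete. For example:

- `asyncio.sleep` simply hands execution back to the scheduler for a given amount of time.
- `ws.receive` waits for a message from the websocket; while it waits, the scheduler may switch to another task.

Open the main page in a browser and, once it has connected to the server, try pressing some keys. The server echoes their key codes back. Every 2 seconds, the displayed number is overwritten by the tick message that the game loop sends to all clients.

We have just created a server that processes client keystrokes. Meanwhile, the main game loop does some work in the background and periodically updates all clients at once.

### Example 3.2: Starting the game loop on request

> [Example 3.2 source code][7]

In the previous example, the game loop runs for the whole lifetime of the server. In practice, however, it usually makes no sense to run the game loop when nobody is connected.

There may also be several "game rooms" on the same server. In that scenario, a player "creates" a game session that other users can join, for example a match in a multiplayer game or a raid in an MMO. The game loop starts only when the game session begins.

In this example, we use a global flag to track whether the game loop is running, and start the loop when the first user connects. Initially the game loop is not running and the flag is set to `False`. The game loop is started from the client handler:

```
if not app["game_is_running"]:
    asyncio.ensure_future(game_loop(app))
```

The flag is set to `True` while `game_loop()` is running, and back to `False` when all clients have disconnected.

### Example 3.3: Managing tasks

> [Example 3.3 source code][8]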

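This example shows how to work with task objects. Instead of using a flag, we store the game loop's task directly in the application's global dictionary. In a simple case like this one, that is not necessarily the best approach, but sometimes you need to keep control over all the tasks you have started.

```
if app["game_loop"] is None or \
   app["game_loop"].cancelled():
    app["game_loop"] = asyncio.ensure_future(game_loop(app))
```

Here `ensure_future()` returns the task object, which we keep in the global dictionary. When all users have disconnected, we cancel the task like this:

```
app["game_loop"].cancel()
```

The `cancel()` call asks the scheduler to stop running the coroutine. A `CancelledError` is thrown into the coroutine at its next `await`, and the task ends up in the cancelled state, which you can check later with the `cancelled()` method.

One caveat is worth mentioning. Suppose you hold an external reference to a task object and an exception occurs while the task runs. The exception is not raised; instead, it is stored on the task, and you can check for it with the `exception()` method.

Silent failures like this are not helpful when debugging, so you may prefer to have all exceptions raised. You can do that by explicitly calling `result()` on each task once it has finished, which is what a done callback like this does:

```
app["game_loop"].add_done_callback(lambda t: t.result())
```

If we intend to cancel this task in our own code and don't want a `CancelledError` to be raised, we add a check for the cancelled state:

```
app["game_loop"].add_done_callback(lambda t: t.result()
                                   if not t.cancelled() else None)
```

Note that this is needed only if you keep a reference to the task object. In the previous example, all exceptions are raised directly, without any extra callbacks.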
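The following sketch uses only `asyncio` and exercises the done-callback logic above on two tasks:

- a task that crashes has its exception surfaced by the callback;
- a task that we cancel ourselves is skipped.

A custom loop exception handler collects what the callback raises. This is needed because exceptions raised in callbacks are reported through that handler rather than propagated to the caller. The coroutine and helper names are hypothetical.

```python
import asyncio

def surface_errors(task):
    """Done callback: re-raise the task's exception unless it was cancelled."""
    if not task.cancelled():
        task.result()

async def game_loop(ticks):
    for _ in range(ticks):
        await asyncio.sleep(0.01)
    raise RuntimeError("game loop crashed")

async def main():
    reported = []
    loop = asyncio.get_running_loop()
    # Exceptions raised inside callbacks end up in the loop's exception handler.
    loop.set_exception_handler(lambda lp, ctx: reported.append(ctx.get("exception")))

    crashing = asyncio.ensure_future(game_loop(2))
    crashing.add_done_callback(surface_errors)

    stopped = asyncio.ensure_future(game_loop(1000))
    stopped.add_done_callback(surface_errors)
    await asyncio.sleep(0.05)
    stopped.cancel()                    # e.g. all players have disconnected

    await asyncio.gather(crashing, stopped, return_exceptions=True)
    await asyncio.sleep(0)              # let any remaining done callbacks run
    return crashing, stopped, reported
```

Running it reports exactly one error, the `RuntimeError` from the crashed task. The deliberately cancelled task stays quiet.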

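### Example 3.4: Waiting for multiple events

> [Example 3.4 source code][9]

In many cases, a client handler needs to wait for several kinds of events besides messages from the client. For example:

- if your game has a time limit, you may need to wait for a signal from a timer;
- you may need to wait for a message from another process over a pipe;
- you may need to wait for a message from another server on the network via a distributed messaging system.

For simplicity, this example is based on Example 3.1, but here we use a `Condition` object to keep the game loop in sync with the connected clients. We don't keep a global list of sockets, because the sockets are used only inside the handler. When a game loop iteration finishes, we notify all clients with the `Condition.notify_all()` method. This enables a publish/subscribe pattern within the `asyncio` event loop.

To wait for both events, we first wrap each awaitable in a task using `ensure_future()`:

```
if not recv_task:
    recv_task = asyncio.ensure_future(ws.receive())
if not tick_task:
    await tick.acquire()
    tick_task = asyncio.ensure_future(tick.wait())
```

Before calling `Condition.wait()`, we need to acquire the condition's underlying lock, which is why we call `tick.acquire()` first. Once `tick.wait()` is called, the lock is released so that other coroutines can use it. When we are notified, however, the lock is acquired again, so we must release it with `tick.release()` after the notification arrives.

We use the `asyncio.wait()` coroutine to wait for the two tasks:

```
done, pending = await asyncio.wait(
    [recv_task,
     tick_task],
    return_when=asyncio.FIRST_COMPLETED)
```

This blocks until any task in the list completes. It then returns two sets: the tasks that are done and the tasks that are still pending. When a task is done, we set its variable to `None`, so that it is created again on the next iteration.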
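Here is a runnable, stdlib-only sketch of the same pattern. An `asyncio.Queue` stands in for the websocket, so `inbox.get()` replaces `ws.receive()`, and a short `game_loop` publishes two ticks. The lock handling mirrors the description above; the function names are illustrative.

```python
import asyncio

async def game_loop(tick, ticks, interval=0.01):
    """Publisher: wake every handler waiting on `tick` once per tick."""
    for _ in range(ticks):
        await asyncio.sleep(interval)
        async with tick:                  # notify_all() needs the lock held
            tick.notify_all()

async def handler(tick, inbox, log, ticks_wanted):
    """Subscriber: react to whichever comes first, a client message or a tick."""
    recv_task = tick_task = None
    ticks_seen = 0
    while ticks_seen < ticks_wanted:
        if recv_task is None:
            recv_task = asyncio.ensure_future(inbox.get())  # stands in for ws.receive()
        if tick_task is None:
            await tick.acquire()                            # wait() requires the lock
            tick_task = asyncio.ensure_future(tick.wait())
        done, pending = await asyncio.wait(
            [recv_task, tick_task], return_when=asyncio.FIRST_COMPLETED)
        if recv_task in done:
            log.append(recv_task.result())
            recv_task = None              # recreate it on the next iteration
        if tick_task in done:
            tick.release()                # wait() re-acquired the lock on wake-up
            log.append("tick")
            ticks_seen += 1
            tick_task = None
    if recv_task is not None:
        recv_task.cancel()                # stop waiting for client input

async def main():
    tick, inbox, log = asyncio.Condition(), asyncio.Queue(), []
    inbox.put_nowait("key 38")            # a client message that is already waiting
    loop_task = asyncio.ensure_future(game_loop(tick, ticks=2))
    await handler(tick, inbox, log, ticks_wanted=2)
    await loop_task
    return log
```

The queued message is handled first, and the handler then wakes once per published tick.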

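### Example 3.5: Combining with threads

> [Example 3.5 source code][10]

In this example, we combine the `asyncio` loop with threads by running the main game loop in a separate thread. As I mentioned before, truly parallel execution of Python code is impossible because of the `GIL`, so using other threads for heavy computation is not a good idea.

There is, however, a good reason to combine threads with `asyncio`: you may need libraries that don't support `asyncio`. Calling such libraries in the main thread would block the loop, so the only way to use them asynchronously is to call them from a different thread.

We run the game loop with the `asyncio` loop's `run_in_executor()` method and a `ThreadPoolExecutor`. Note that `game_loop()` is no longer a coroutine: it is an ordinary function executed in another thread. We still need to interact with the main thread, though, to notify clients about game events.

`asyncio` itself is not thread-safe, but it provides ways to run code in the event loop from other threads:

- `call_soon_threadsafe()` for plain functions;
- `run_coroutine_threadsafe()` for coroutines.

We put the code that notifies clients of a game tick into the `notify()` coroutine, and submit it from the other thread to the main event loop.

```
import asyncio
import threading
from time import sleep

# `tick` is the module-level asyncio.Condition shared with the handlers
def game_loop(asyncio_loop):
    print("Game loop thread id {}".format(threading.get_ident()))
    # a coroutine that the event loop runs in the main thread
    async def notify():
        print("Notify thread id {}".format(threading.get_ident()))
        await tick.acquire()
        tick.notify_all()
        tick.release()

    while 1:
        task = asyncio.run_coroutine_threadsafe(notify(), asyncio_loop)
        # blocking the thread
        sleep(1)
        # make sure the task has finished
        task.result()
```

When you run this example, you will see that "Notify thread id" is equal to "Main thread id", because the `notify()` coroutine runs in the main thread. Meanwhile, `sleep(1)` runs in the other thread, so it doesn't block the main event loop.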
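Here is a self-contained sketch of the same pattern that stops after a few ticks. `run_in_executor(None, ...)` runs the blocking loop in the default `ThreadPoolExecutor`, and `run_coroutine_threadsafe()` hands each notification back to the event loop thread. Recording thread ids lets you check the claim above; the names are illustrative.

```python
import asyncio
import threading
import time

def game_loop(asyncio_loop, ticks, seen):
    """Runs in a worker thread and hands each tick back to the event loop."""
    async def notify(n):
        # Executed by the event loop, i.e. in the main thread.
        seen.append((n, threading.get_ident()))

    for n in range(ticks):
        time.sleep(0.01)   # a blocking call only blocks this worker thread
        future = asyncio.run_coroutine_threadsafe(notify(n), asyncio_loop)
        future.result()    # wait until the loop has actually run notify()

async def main(ticks=3):
    loop = asyncio.get_running_loop()
    seen = []
    # None selects the loop's default ThreadPoolExecutor.
    await loop.run_in_executor(None, game_loop, loop, ticks, seen)
    return threading.get_ident(), seen
```

Every `notify()` call reports the main thread's id, even though the ticks are produced by the worker thread.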

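### Example 3.6: Multiple processes and scaling

> [Example 3.6 source code][11]

A single-threaded server may work well, but it can use only one CPU core. To scale the service beyond one core, we need to run multiple processes, each with its own event loop, and the processes need a way to exchange messages or share game data.

Games also often need heavy computation, such as path finding, and such tasks sometimes cannot finish within a single game tick. Doing heavy computation inside coroutines is not recommended, because it blocks event processing. In such cases, it may make more sense to hand the heavy task to another process running in parallel.

The easiest way to use multiple cores is to start several single-core servers like the ones in the previous examples, each on a different port. You can manage them with `supervisord` or a similar process control system. You will then need a load balancer, such as `HAProxy`, to distribute connecting clients among the processes.

There are already adapters that connect asyncio to some popular messaging and storage systems, for example:

- [aiomcache][12] for memcached
- [aiozmq][13] for ZeroMQ
- [aioredis][14] for Redis storage, with pub/sub support

You can find other packages on GitHub or PyPI; most of their names start with `aio`.

Network services can be effective for storing persistent state and exchanging some kinds of information. But if you need real-time processing with inter-process communication, their performance may not be enough. In that case, standard Unix pipes may be a better fit. `asyncio` supports pipes, and the `aiohttp` repository contains a [very low-level example of a server that uses pipes][15].

In the current example, we use Python's high-level [multiprocessing][16] library to start heavy computations on different cores, with `multiprocessing.Queue` for messages between processes. Unfortunately, the current `multiprocessing` implementation is not compatible with `asyncio`, so every blocking call would block the event loop.

This is exactly where threads help: if we run the `multiprocessing` code in a different thread, it doesn't block the main thread. All we need to do is move all inter-process communication into another thread. This example shows how. It is very similar to the multithreading example above, except that the thread creates a new process.

```
import asyncio
import os
from multiprocessing import Process, Queue
from time import sleep

# `tick` is the module-level asyncio.Condition shared with the handlers
def game_loop(asyncio_loop):
    # coroutine to run in main thread
    async def notify():
        await tick.acquire()
        tick.notify_all()
        tick.release()

    queue = Queue()

    # function to run in a different process
    # (a nested function works as a Process target only with the "fork" start method)
    def worker():
        while 1:
            print("doing heavy calculation in process {}".format(os.getpid()))
            sleep(1)
            queue.put("calculation result")

    Process(target=worker).start()

    while 1:
        # blocks this thread but not main thread with event loop
        result = queue.get()
        print("getting {} in process {}".format(result, os.getpid()))
        task = asyncio.run_coroutine_threadsafe(notify(), asyncio_loop)
        task.result()
```

Here we run the `worker()` function in another process. It contains a loop that performs the heavy computation and puts each result into `queue`, an instance of `multiprocessing.Queue`. We then read the results in another thread and notify the clients through the main event loop, just as in Example 3.5.

This example is heavily simplified: it doesn't terminate the process properly. In a real game, we would probably also need a second queue to pass data to the `worker`.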
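The same idea can also be sketched with `loop.run_in_executor()`, which runs each blocking `queue.get()` in the loop's default thread pool instead of a hand-written thread. The sketch assumes a POSIX system, because it explicitly requests the `fork` start method. Squaring numbers is a stand-in for the heavy calculation, and the helper names are hypothetical.

```python
import asyncio
import multiprocessing as mp
import os

def worker(queue, jobs):
    """Runs in a child process; squaring stands in for a heavy calculation."""
    for n in jobs:
        queue.put((n * n, os.getpid()))
    queue.put(None)  # sentinel: no more results

async def collect(queue):
    """Drain results without blocking the event loop: each blocking
    queue.get() runs in the loop's default thread pool."""
    loop = asyncio.get_running_loop()
    results = []
    while True:
        item = await loop.run_in_executor(None, queue.get)
        if item is None:
            return results
        results.append(item)

def run(jobs):
    ctx = mp.get_context("fork")   # assumption: a POSIX system with fork()
    queue = ctx.Queue()
    proc = ctx.Process(target=worker, args=(queue, jobs))
    proc.start()                   # start the child before the loop spawns threads
    results = asyncio.run(collect(queue))
    proc.join()
    return results
```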

There is a project called [aioprocessing][17] that wraps `multiprocessing` to make it compatible with `asyncio`. Under the hood, however, it uses exactly the same approach as the example above: it creates processes from threads. It doesn't give you any advantage beyond hiding these tricks behind a simple interface. Hopefully, a future version of Python will give us a coroutine-based `multiprocessing` library with `asyncio` support.

> Note! If you start a separate thread or child process from the main thread or process to run another `asyncio` event loop, you need to create that loop explicitly with `asyncio.new_event_loop()`. Otherwise, the program may not work correctly.

--------------------------------------------------------------------------------

via: https://7webpages.com/blog/writing-online-multiplayer-game-with-python-and-asyncio-writing-game-loop/

Author: [Kyrylo Subbotin][a]
Translator: [chunyang-wen](https://github.com/chunyang-wen)
Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]: https://7webpages.com/blog/writing-online-multiplayer-game-with-python-and-asyncio-writing-game-loop/
[1]: http://snakepit-game.com/
[2]: https://linux.cn/article-7767-1.html
[3]: http://snakepit-game.com/
[4]: https://github.com/7WebPages/snakepit-game/blob/master/simple/index.html
[5]: https://github.com/7WebPages/snakepit-game/blob/master/simple/game_loop_basic.py
[6]: http://aiohttp.readthedocs.org/
[7]: https://github.com/7WebPages/snakepit-game/blob/master/simple/game_loop_handler.py
[8]: https://github.com/7WebPages/snakepit-game/blob/master/simple/game_loop_global.py
[9]: https://github.com/7WebPages/snakepit-game/blob/master/simple/game_loop_wait.py
[10]: https://github.com/7WebPages/snakepit-game/blob/master/simple/game_loop_thread.py
[11]: https://github.com/7WebPages/snakepit-game/blob/master/simple/game_loop_process.py
[12]: https://github.com/aio-libs/aiomcache
[13]: https://github.com/aio-libs/aiozmq
[14]: https://github.com/aio-libs/aioredis
[15]: https://github.com/KeepSafe/aiohttp/blob/master/examples/mpsrv.py
[16]: https://docs.python.org/3.5/library/multiprocessing.html
[17]: https://github.com/dano/aioprocessing

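Web Service Efficiency at Instagram with Python
===============================================

Instagram currently runs the world's largest deployment of the Django web framework, which is written entirely in Python. We initially chose Python for its renowned simplicity and practicality, which fit well with our philosophy of "do the simple thing first". But simplicity can come at the cost of efficiency.

Instagram has doubled in size over the last two years and recently crossed 500 million users, so we urgently need to make our web service as efficient as possible so that the platform can keep scaling smoothly. In the past year, we've made our efficiency program a priority. Over the last six months, we've been able to keep up with user growth without adding new capacity to our Django tiers. In this post, we'll share some of the tools we built and how we use them to optimize our daily deployment flow.

### Why efficiency?

Instagram, like all software, is limited by physical constraints such as servers and datacenter power. With these constraints in mind, there are two main goals we want to achieve with our efficiency program:

1. Instagram should be able to keep serving traffic normally, with continuous code pushes, even if one data center region is lost because of a natural disaster, regional network issues and so on.
2. Instagram should be able to freely roll out new products and features without being blocked by capacity.

To meet these goals, we realized we needed to continuously monitor our system and fight regressions.

### Defining efficiency

Web services are usually bottlenecked by the CPU time available on each server. In this context, efficiency means using the same CPU resources to do more work, that is, serving more user requests per second (RPS).

As we looked for ways to optimize, our first big challenge was to quantify our current efficiency. Until then, we had measured efficiency as "average CPU time per request", but that metric has inherent limitations:

1. **Diversity of devices**. CPU time is not an ideal measure of CPU resources, because it is affected by both the CPU model and the CPU load.
2. **Requests affect the data**. CPU resources per request is not an ideal measure either, because adding or removing lightweight or heavyweight requests also shifts a per-request efficiency metric.

CPU instructions are a better metric than CPU time, because they report the same number for the same request regardless of CPU model and CPU load. Instead of tying all our data to each user request, we chose a metric normalized "per active user". We eventually settled on measuring efficiency as "CPU instructions per active user during the peak minute". With the new metric in place, our next step was to learn more about our regressions by profiling Django.

### Profiling Django web services

By profiling our Django web services, we wanted to answer two main questions:

1. Is there a CPU regression?
2. What causes the CPU regression, and how do we fix it?

To answer the first question, we need to track the "CPU instructions per active user" metric. If this metric increases, we know a CPU regression has occurred.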

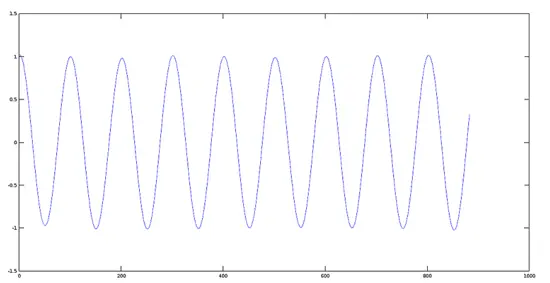

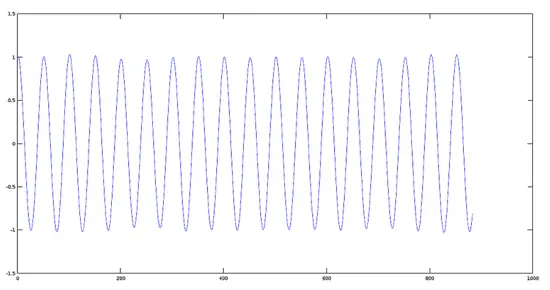

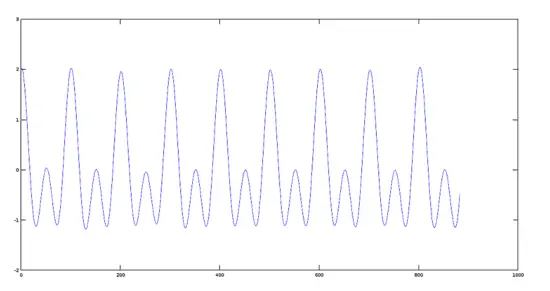

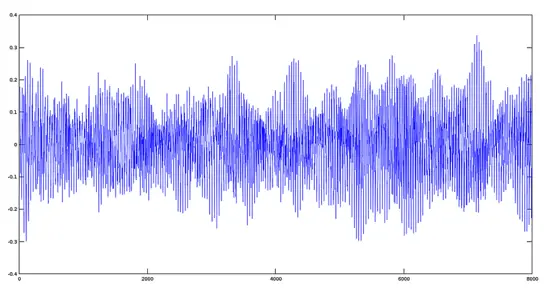

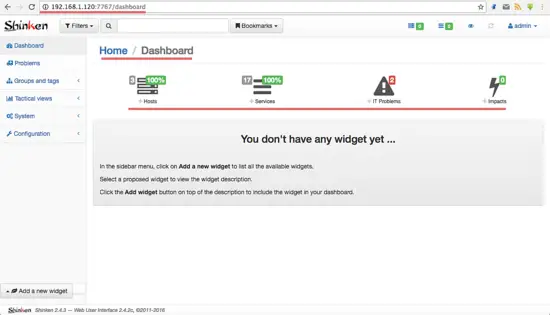

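The tool we built for this is called Dynostats. Dynostats uses Django middleware to sample user requests at a certain rate, recording key efficiency and performance metrics such as:

- total CPU instructions;
- end-to-end request latency;
- time spent accessing memcache and database services.

Each request also carries a lot of metadata that can be used for aggregation, such as the endpoint name, the HTTP response code, the name of the server that handled the request and the hash of the latest commit.

Having both kinds of data in a single request record is especially powerful. It lets us slice the data along different dimensions, which helps us narrow down the cause of any CPU regression. For example, we can aggregate all requests by endpoint name, as shown in the time series chart below, which makes it obvious whether a regression happened on a particular endpoint.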
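Here is a hypothetical, framework-agnostic sketch of the idea; it is not Dynostats itself. It records a metric for a random sample of requests together with their metadata, then aggregates along any metadata field, such as the endpoint name.

```python
import random
from collections import defaultdict

class SampledStats:
    """Hypothetical Dynostats-like collector (illustrative, not Instagram's code)."""

    def __init__(self, sample_rate, rng=None):
        self.sample_rate = sample_rate
        self.rng = rng or random.Random()
        self.records = []

    def maybe_record(self, cost, **metadata):
        # Keep roughly `sample_rate` of all requests.
        if self.rng.random() < self.sample_rate:
            self.records.append({"cost": cost, **metadata})

    def mean_by(self, field):
        # Slice the sampled records along one metadata dimension.
        totals, counts = defaultdict(float), defaultdict(int)
        for rec in self.records:
            totals[rec[field]] += rec["cost"]
            counts[rec[field]] += 1
        return {key: totals[key] / counts[key] for key in totals}
```

The same records can be grouped by `endpoint`, `server` or a commit hash without collecting anything new, which is what makes a single rich record per request useful.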

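CPU instructions are important for measuring efficiency, but they are also hard to get at. Python has no common library for direct access to CPU hardware counters, the programmable CPU registers that measure performance metrics such as CPU instructions.

The Linux kernel, however, provides the `perf_event_open` system call. Python's `ctypes` bridge lets us call the standard C library's `syscall` function. It also provides C-compatible data types, so we can program the hardware counters and read data from them.

With Dynostats, we can already find CPU regressions and dig into their causes, such as which endpoint is affected most, or who committed the change that actually caused the regression. However, when developers are notified that their change has caused a CPU regression, they often have a hard time finding the problem. If it were obvious, the regression probably wouldn't have been committed in the first place!

That is why we needed a Python profiler that developers can use to find the root cause of a regression once Dynostats has detected it. Rather than starting from scratch, we decided to adapt an existing Python profiler, cProfile. The cProfile module normally provides a set of statistics describing how long and how often various parts of a program execute.

Instead of measuring time, we replaced cProfile's timer with a CPU instruction counter that reads from the hardware counters. The data is generated at the end of each sampled request and sent to a data pipeline. We also send metadata similar to what Dynostats has, such as server name, cluster, region and endpoint name.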
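cProfile accepts any zero-argument callable as its timer, so the swap can be sketched with the standard library alone. The counter below is hypothetical: it merely advances by a fixed step on every read, standing in for the `perf_event_open`-based instruction counter described above.

```python
import cProfile
import pstats

class FakeInstructionCounter:
    """Hypothetical stand-in for a hardware counter read via perf_event_open."""

    def __init__(self, step=10):
        self.value = 0
        self.step = step

    def __call__(self):
        self.value += self.step   # pretend `step` instructions ran since the last read
        return self.value

def handler(n):
    return sum(i * i for i in range(n))

# An integer-returning timer plus a timeunit: reported totals are counts * timeunit.
profiler = cProfile.Profile(FakeInstructionCounter(), 1.0)
profiler.enable()
handler(100)
profiler.disable()

stats = pstats.Stats(profiler)
stats.sort_stats("cumulative")
```

With a real counter, the per-function totals in `stats` would be instruction counts rather than seconds.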

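On the other side of the data pipeline, we created a tailer that consumes the data. The tailer's main job is to parse the cProfile statistics and create entities that represent CPU instructions at the level of individual Python functions. This lets us aggregate CPU instructions by Python function, which makes it much easier to find out which function caused a CPU regression.

### Monitoring and alerting

At Instagram, we [deploy our backend 30 to 50 times a day][1], and any one of these deployments can introduce a CPU regression. Because each deployment usually contains at least one diff, it is easy to pin down any regression.

Our efficiency monitoring scans the CPU instructions in Dynostats before and after each deployment and sends an alert when the change exceeds a certain threshold. For CPU regressions that build up over a longer period, we also have a detector that scans daily and weekly changes for the most heavily loaded endpoints.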
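Here is a minimal sketch of such a before/after check. It normalizes by active users, as in the metric defined earlier; the 5% threshold and the sample numbers are invented for illustration.

```python
def per_active_user(total_instructions, active_users):
    """CPU instructions per active user during the peak minute."""
    return total_instructions / active_users

def regression_alert(before, after, threshold=0.05):
    """Return the relative change if it exceeds `threshold`, else None.

    `before` and `after` are (total_instructions, active_users) pairs
    measured around a deploy; the 5% default is a made-up example value."""
    old = per_active_user(*before)
    new = per_active_user(*after)
    change = (new - old) / old
    return change if change > threshold else None
```

Because the metric is normalized, doubling traffic while doubling total instructions raises no alert. A 10% increase per active user at constant traffic does.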

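Deploying new changes is not the only thing that can trigger a CPU regression. In many cases, new features and new code paths are controlled by global environment variables (GEVs), and it is very common to roll out a new feature to a subset of users on a planned schedule.

We added this information to each request as extra metadata fields, both in Dynostats and in the cProfile statistics. Grouping requests by these fields reveals possible CPU regressions caused by GEV changes, which lets us catch them before they hurt performance.

### What's next?

Dynostats and our customized cProfile, together with the monitoring and alerting built to support them, can effectively identify most of the culprits behind CPU regressions. These developments have helped us recover more than 50% of unnecessary CPU regressions that would otherwise have gone unnoticed.

There are still areas we can improve, and they can easily be built into Instagram's daily deployment flow:

1. The CPU instruction metric is supposed to be more stable than other metrics such as CPU time, but we still observe variance that gives us headaches. Keeping the signal-to-noise ratio reasonably high is important, so that developers can focus on real regressions. This could be improved by introducing confidence intervals and alerting only when confidence is high. The change thresholds could also be set differently for different endpoints.
2. One limitation of detecting CPU regressions caused by GEV changes is that we have to enable logging of these comparisons in Dynostats manually. As the number of GEVs grows and more features are developed, this doesn't scale well. Instead, an automated framework could schedule the logging of these comparisons, iterate through all GEVs and send an alert when a regression is detected.
3. cProfile needs some enhancements to better handle wrapper functions and their children.

Given the work we have put into building an efficiency framework for Instagram's web service, we are confident that we will keep scaling our service with Python. We have also started investing more in the Python language itself, and have begun exploring a move from Python 2 to Python 3. We will keep exploring and experimenting to further improve infrastructure and developer efficiency, and we look forward to sharing more soon.

Min Ni is a software engineer at Instagram.

--------------------------------------------------------------------------------

via: https://engineering.instagram.com/web-service-efficiency-at-instagram-with-python-4976d078e366#.tiakuoi4p

Author: [Min Ni][a]
Translator: [ChrisLeeGit](https://github.com/chrisleegit)
Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]: https://engineering.instagram.com/@InstagramEng?source=post_header_lockup
[1]: https://engineering.instagram.com/continuous-deployment-at-instagram-1e18548f01d1#.p5adp7kcz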

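Writing an Online Multiplayer Game with Python and Asyncio (Part 3)
=================================================================

> In this series, we build an asynchronous Python program based on the multiplayer [snake game][1]. The previous article focused on [writing the game loop][2], and part 1 of the series covered how to [get asynchronous][3].

- Source code is [here][4]

### 4. Making a complete game

#### 4.1 Project overview

In this part, we review the design of a complete online game: a classic snake game with multiplayer support. You can try it yourself at <http://snakepit-game.com>. The source code is in this [GitHub repository][5]. The game consists of the following files:

- [server.py][6] - handles the main game loop and connections.
- [game.py][7] - the main `Game` class, which implements the game logic and most of the game's network protocol.
- [player.py][8] - the `Player` class, which holds each individual player's data and their snake's representation. This class is responsible for getting the player's input and moving the snake accordingly.
- [datatypes.py][9] - basic data structures.
- [settings.py][10] - game settings, with descriptions in the comments.
- [index.html][11] - all of the client's HTML and JavaScript code in a single file.

#### 4.2 Inside the game loop

Multiplayer snake is a good example to learn from because it is simple. Every snake moves by one cell per frame, and frames change at a very low rate, so you can watch how the game engine actually works. Because the game is slow, player key presses don't take effect immediately. They are recorded first, and then used to compute the next frame at the end of a game loop iteration.

> Modern action games have much higher frame rates, and the server's frame rate usually differs from the client's. The client frame rate usually depends on the client's hardware, while the server frame rate is fixed.
>
> A client may render several frames from the data of a single game "tick". This allows smooth animation, limited only by the client's performance. In that case, the server sends not only the current positions of objects but also their direction of movement, speed and acceleration.
>
> The client frame rate is called FPS (frames per second), and the server frame rate is called TPS (ticks per second). In this snake game the two are equal: each frame displayed on the client is computed during one server "tick".

We use a text-mode-like playing field, which is actually an HTML table made of one-character-wide cells. All game objects are represented by characters of different colors in this table. Most of the time, the client sends key codes to the server, and the field is updated on every "tick". An update from the server consists of the coordinates and colors of the characters that need to change.

So we keep all game logic on the server and send the client only the data it needs for rendering. This also minimizes the ways the game can be hacked by tampering with the data sent over the network.

#### 4.3 How does it work?

For simplicity, the server in this game is similar to Example 3.2. But instead of a global list of all connected websockets, we use a single `Game` object that the whole server can access. A `Game` instance contains a list of `Player` objects (in the `self._players` attribute), one for each player connected to this game, holding their personal data and websocket objects.

Keeping all game-related data in a `Game` object makes it easy to add multiple game rooms, if we ever want that feature. We would then maintain several `Game` objects, creating one for each game that is started.

All interaction between client and server is done through messages encoded as JSON. A message from the client that contains only a number is the code of the key the player pressed. Other client messages use the following format:

```
[command, arg1, arg2, ... argN ]
```

Messages from the server are sent as a list, because several messages usually need to go out at once (mostly render data):

```
[[command, arg1, arg2, ... argN ], ... ]
```

At the end of each game loop iteration, the next frame is computed and the data is sent to all clients. Of course, we don't send a complete frame each time, only a list of the changes between the two frames.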
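The message formats and the frame diff can be sketched with the standard `json` module. The frame representation here is an assumption made for the sketch: a dict mapping `(x, y)` to `(char, color)`, with both frames containing the same cells. The game's real code uses its own `Game._field` structure, and the helper names are hypothetical.

```python
import json

def frame_diff(old, new):
    """Build 'render' commands for the cells that differ between two frames."""
    commands = []
    for (x, y), (char, color) in sorted(new.items()):
        if old.get((x, y)) != (char, color):
            commands.append(["render", x, y, char, color])
    return commands

def encode_batch(commands):
    # Server messages go out as one JSON list of [command, args...] lists.
    return json.dumps(commands)

def decode_client(raw):
    """A bare number is a key code; anything else is [command, arg1, ...]."""
    msg = json.loads(raw)
    if isinstance(msg, int):
        return ("key", msg)
    return (msg[0], msg[1:])
```

Only cells that actually changed produce a `render` command, which keeps each tick's payload small.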

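Note that a player who connects to the server doesn't join the game immediately. The connection starts in spectator mode, in which the player can watch others play. The user can then press "Join":

- if a game is already running, or the previous session ended and "game over" is still on the screen, this joins the existing game;
- if no game is running (there are no other players), this creates a new game, and the field is cleared before the game starts.

The game field is stored in the `Game._field` attribute. This is a two-dimensional array, built from nested lists, that holds the state of the field internally. Each element of the array represents one cell of the field, which is eventually rendered as a cell of the HTML table. Each element is of type `Char`, a `namedtuple` made of a character and a color.

It is important to keep the game field synchronized across all connected clients, so every update to the field must be made together with sending the corresponding message to the clients. This is done by `Game.apply_render()`, which takes a list of `Draw` objects and uses it both to update the field internally and to send render messages to the clients.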
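Here is a minimal sketch of that idea. The `Char` and `Draw` field names are assumptions for illustration; see `datatypes.py` in the repository for the real definitions. The list `sent` stands in for broadcasting to every websocket.

```python
from collections import namedtuple

# Field names are assumptions for this sketch, not copied from datatypes.py.
Char = namedtuple("Char", "char color")
Draw = namedtuple("Draw", "x y char color")

class Game:
    def __init__(self, width, height):
        self._field = [[Char(" ", 0) for _ in range(width)] for _ in range(height)]
        self.sent = []  # stands in for messages broadcast to every websocket

    def apply_render(self, draws):
        """Update the field and build render messages from the same list,
        so the server's state and the clients' view cannot drift apart."""
        messages = []
        for d in draws:
            self._field[d.y][d.x] = Char(d.char, d.color)
            messages.append(["render", *d])   # matches [x, y, char, color]
        self.sent.append(messages)
```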

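> We use `namedtuple` not only because it is a convenient way to represent simple data structures, but also because it takes less space than a `dict` when encoded as a JSON message. If you need to send complex data structures in a real game loop, consider serializing them into a simpler, shorter format first. You can even pack them into a binary format (for example BSON instead of JSON) to reduce network traffic.

The `Player` object holds the snake, represented as a `deque`. This data type is similar to `list` but is more efficient at adding and removing elements at both ends, which makes it ideal for representing a snake.

The main method is `Player.render_move()`, which returns the render data for moving the player's snake to its next position. Basically, it renders the snake's head in the new position and removes the element that represented the tail in the previous frame. If the snake has eaten a digit and is growing, the tail stays in place for the corresponding number of frames.

The snake's render data is used by the main class's `Game.next_frame()` method, which implements all of the game logic. This method renders the moves of all snakes, checks for obstacles in front of each snake, and spawns digits and "stones". `game_loop()` calls it directly on every "tick" to generate the next frame.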
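Here is a hypothetical sketch of a `deque`-based snake. The class and attribute names are illustrative, not copied from `player.py`. It also assumes that eating digit N makes the snake grow by N cells; the text above only says that the tail stays put for "the corresponding number of frames".

```python
from collections import deque

class Snake:
    """Illustrative sketch of the snake movement logic described above."""

    def __init__(self, cells, direction):
        self.body = deque(cells)       # body[0] is the head
        self.direction = direction     # (dx, dy)
        self.grow = 0                  # frames during which the tail stays put

    def eat(self, digit):
        self.grow += digit             # assumption: digit N adds N cells

    def render_move(self):
        """Return (cells to draw, cells to erase) for one frame."""
        hx, hy = self.body[0]
        dx, dy = self.direction
        head = (hx + dx, hy + dy)
        self.body.appendleft(head)     # O(1) at the head end
        if self.grow:
            self.grow -= 1
            return [head], []
        tail = self.body.pop()         # O(1) at the tail end
        return [head], [tail]
```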

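If there is an obstacle in front of a snake's head, `Game.next_frame()` calls `Game.game_over()`. This notifies all clients that the snake has died: `player.render_game_over()` is called to turn the snake into stones, and the top scores table is updated.

The `Player` object's `alive` flag is set to `False`, so the player is skipped when the following frames are rendered, until they join the game again. When no snakes are left alive, "game over" is displayed on the field, and the main game loop stops and sets the `game.running` flag to `False`. The field is cleared the next time a player presses "Join".

Digits and stones are also spawned while each next frame is rendered, based on random values. The probabilities of spawning digits or stones can be changed in `settings.py`. Note that digits are spawned for every live snake on the field, so the more snakes there are, the more digits appear, and every snake has enough food to eat.

#### 4.4 Network protocol

List of messages sent by the client: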

| Command | Parameters | Description |
|:--|:--|:--|
| new_player | [name] | Sets the player's nickname |
| join | | Player joins the game |

List of messages sent by the server:

| Command | Parameters | Description |
|:--|:--|:--|
| handshake | [id] | Assigns an ID to a player |
| world | [[(char, color), ...], ...] | Initializes the game field (world map) |
| reset_world | | Clears the actual map, replacing all characters with spaces |
| render | [x, y, char, color] | Displays a character at a position |
| p_joined | [id, name, color, score] | A new player has joined the game |
| p_gameover | [id] | The game is over for a player |
| p_score | [id, score] | Sets a player's score |
| top_scores | [[name, score, color], ...] | Updates the top scores table |

A typical message exchange sequence:

| Client -> Server | Server -> Client | Server -> All clients | Comments |
|:--|:--|:--|:--|
| new_player | | | Name is passed to the server |
| | handshake | | ID is assigned |
| | world | | Initial world map is passed |
| | top_scores | | Top scores table is received |
| join | | | Player presses "Join", game loop starts |
| | | reset_world | Clients are told to clear the game field |
| | | render, render, ... | First game "tick", first frame is rendered |
| (key code) | | | Player presses a key |
| | | render, render, ... | Second frame is rendered |
| | | p_score | Snake has eaten a digit |
| | | render, render, ... | Third frame is rendered |
| | | | ... repeated for a number of frames ... |
| | | p_gameover | Snake died trying to eat an obstacle |
| | | top_scores | Top scores table is updated (if needed) |

### 5. Summary

To be honest, I really enjoy the new asynchronous features in Python. The new syntax is a real improvement, and asynchronous code is now easy to read: it is obvious which calls are non-blocking and where a switch to another green thread can happen. So now I can say that Python is a good tool for asynchronous programming.

SnakePit has become very popular within the 7WebPages team. If you decide to take a break at your company, don't forget to leave us feedback on [Twitter][12] or [Facebook][13].

--------------------------------------------------------------------------------

via: https://7webpages.com/blog/writing-online-multiplayer-game-with-python-and-asyncio-part-3/

Author: [Kyrylo Subbotin][a]
Translator: [chunyang-wen](https://github.com/chunyang-wen)
Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]: https://7webpages.com/blog/writing-online-multiplayer-game-with-python-and-asyncio-part-3/
[1]: http://snakepit-game.com/
[2]: https://linux.cn/article-7784-1.html
[3]: https://linux.cn/article-7767-1.html
[4]: https://github.com/7WebPages/snakepit-game
[5]: https://github.com/7WebPages/snakepit-game
[6]: https://github.com/7WebPages/snakepit-game/blob/master/server.py
[7]: https://github.com/7WebPages/snakepit-game/blob/master/game.py
[8]: https://github.com/7WebPages/snakepit-game/blob/master/player.py
[9]: https://github.com/7WebPages/snakepit-game/blob/master/datatypes.py
[10]: https://github.com/7WebPages/snakepit-game/blob/master/settings.py
[11]: https://github.com/7WebPages/snakepit-game/blob/master/index.html
[12]: https://twitter.com/7WebPages
[13]: https://www.facebook.com/7WebPages/

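Building a Simple Python Framework from Scratch
===================================

Why would you want to build your own web framework? I can think of a few reasons:

- You have a novel idea that you think will replace other frameworks
- You want to gain some fame
- Your problem is so unusual that existing frameworks don't fit
- You are curious about how web frameworks work, because you want to become a better web developer.

The rest of this article focuses on the last point. It walks through the design and implementation step by step, to show what I learned from building a small server and framework. You can find the complete code for this project in this [code repository][1].

I hope this article encourages more people to give it a try, because it really is fun. It taught me how web applications work, and it was much easier than I expected!

### Scope

A framework handles parts of the work such as the request-response cycle, authentication, database access and template generation. Web developers use frameworks because most web applications share a lot of the same functionality, and reimplementing it for every project makes little sense.

Bigger frameworks such as Rails and Django provide a high level of abstraction, or are "batteries-included", one of Python's mottos, meaning that everything you need comes with it. Implementing all of these features could take thousands of hours, so in this project we focus on a small subset of them. Before writing any code, I'll list the required features and the constraints.

Features:

- Handle HTTP GET and POST requests. You can get a rough overview of HTTP from [this wiki article][2].
- Be asynchronous (I *love* Python 3's asyncio module).
- Simple routing logic with parameter capturing.
- A simple user-facing API, like other micro-frameworks.
- Authentication support, because it's cool to learn :)

Constraints:

- Only a small subset of HTTP/1.1 is supported: no transfer-encoding, no HTTP authentication (http-auth), no content-encoding (such as gzip) and no [persistent connections][3].
- No MIME type detection for responses; users have to set it manually.
- No WSGI; only plain TCP connections are handled.
- No database support.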

I think a small use case makes the above more concrete and also demonstrates the framework's API:

```
from diy_framework import App, Router
from diy_framework.http_utils import Response


# GET simple route
async def home(r):
    rsp = Response()
    rsp.set_header('Content-Type', 'text/html')
    rsp.body = '<html><body><b>test</b></body></html>'
    return rsp


# GET route + params
async def welcome(r, name):
    return "Welcome {}".format(name)

# POST route + body param
async def parse_form(r):
    if r.method == 'GET':
        return 'form'
    else:
        # form fields map to lists of values, so default to a one-item list
        name = r.body.get('name', [''])[0]
        password = r.body.get('password', [''])[0]

        return "{0}:{1}".format(name, password)

# application = router + http server
router = Router()
router.add_routes({
    r'/welcome/{name}': welcome,
    r'/': home,
    r'/login': parse_form,})

app = App(router)
app.start_server()
```

The user defines a few async functions that return either a string or a `Response` object. They then map these functions to strings representing routes, and finally start handling requests with a single function call (`start_server`).
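One way such route templates could be matched is sketched below, assuming that a `{name}` placeholder captures a single path segment. This is not the project's actual `Router`, and the `get_handler` method name is hypothetical.

```python
import re

class Router:
    """Minimal sketch: maps route templates like '/welcome/{name}' to handlers."""

    def __init__(self):
        self.routes = []

    def add_routes(self, mapping):
        for template, handler in mapping.items():
            # '{name}' becomes a named group matching one path segment.
            pattern = re.sub(r"\{(\w+)\}", r"(?P<\1>[^/]+)", template)
            self.routes.append((re.compile("^" + pattern + "$"), handler))

    def get_handler(self, path):
        """Return (handler, captured params) for the first matching route."""
        for regex, handler in self.routes:
            match = regex.match(path)
            if match:
                return handler, match.groupdict()
        raise LookupError(path)
```

The captured parameters can then be passed to the handler as keyword arguments, which is how `welcome(r, name)` above could receive `name`.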

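With the design in place, I broke it down into a few parts that I needed to write:

- A part that accepts TCP connections and schedules an async function to handle them
- A part that parses raw text into some kind of abstract container
- A part that decides which function to call for each request
- A part that brings all of the above together and gives developers a simple interface

I started by writing tests describing what each part should do. After a few refactorings, the design settled into several parts that are fairly decoupled from each other. That is great, because each part can be studied on its own. Here are the concrete implementations of the abstractions listed above:

- An HTTPServer object, which is initialized with a Router object and an http_parser module.
- HTTPConnection objects, each representing a single client HTTP connection and handling its request-response cycle. Each one uses the http_parser module to parse the incoming bytes into a Request object, uses a Router instance to find and call the right function to generate a response, and finally sends the response back to the client.
- A pair of Request and Response objects that give the user a friendly way to work with what are essentially byte strings. The user doesn't need to know the exact message format and delimiters.
- A Router object that holds "route: function" pairs. It provides a method for adding pairs and can look up the function for a given URL path.
- Finally, an App object, which holds the configuration and uses it to create an HTTPServer instance.

Let's go through these parts, starting with `HTTPConnection`.

### Modelling asynchronous connections

To satisfy the constraints above, every HTTP request is a separate TCP connection. This makes request handling slower, because setting up multiple TCP connections is relatively expensive (DNS lookup, the TCP three-way handshake, [slow start][4] and so on), but it is much easier to model.

For this task I chose the relatively high-level [asyncio-stream][5] module, which is built on top of [asyncio's transports and protocols][6]. I strongly recommend reading the corresponding code in the standard library; it's very interesting!

An `HTTPConnection` instance handles several tasks:

1. It uses an `asyncio.StreamReader` object to read data incrementally from the TCP connection and stores it in a buffer.
2. After each read, it tries to parse the buffered data into a `Request` object.
3. Once the complete request has arrived, it generates a reply and sends it back to the client through an `asyncio.StreamWriter` object.
4. It also times out idle connections and handles errors.

You can browse the complete code of this class [here][7]. I'll go through it part by part. For simplicity, I have removed the docstrings.

```
class HTTPConnection(object):
    def __init__(self, http_server, reader, writer):
        self.router = http_server.router
        self.http_parser = http_server.http_parser
        self.loop = http_server.loop

        self._reader = reader
        self._writer = writer
        self._buffer = bytearray()
        self._conn_timeout = None
        self.request = Request()
```

The `__init__` method isn't very interesting; it just collects some objects for later use. It stores a `router` object, an `http_parser` object and a `loop` object, used respectively to generate responses, to parse requests and to schedule tasks on the event loop.

Next, it stores the reader/writer pair that represents the TCP connection, and an empty [bytearray][8] that serves as a buffer for raw bytes. `_conn_timeout` holds an [asyncio.Handle][9] instance used to manage the timeout logic. Finally, it stores a single instance of `Request`.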
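The timeout bookkeeping can be sketched with `loop.call_later()`, which returns an `asyncio.TimerHandle` (a subclass of `asyncio.Handle`). Every time data arrives, you cancel the pending timer and start a new one. The class and method names here are hypothetical; in the article, the `_reset_conn_timeout()` method plays this role.

```python
import asyncio

class ConnTimeout:
    """Hypothetical helper: runs `on_timeout` after `seconds` without activity."""

    def __init__(self, loop, seconds, on_timeout):
        self.loop = loop
        self.seconds = seconds
        self.on_timeout = on_timeout
        self._handle = None            # an asyncio.TimerHandle once armed

    def reset(self):
        # Called whenever data arrives: drop the old timer, start a new countdown.
        self.cancel()
        self._handle = self.loop.call_later(self.seconds, self.on_timeout)

    def cancel(self):
        if self._handle is not None:
            self._handle.cancel()
            self._handle = None
```

As long as `reset()` is called more often than the timeout, the callback never fires. Once the connection goes quiet, it fires exactly once.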

The following code is the core functionality for receiving and sending data:

```
async def handle_request(self):
    try:
        while not self.request.finished and not self._reader.at_eof():
            data = await self._reader.read(1024)
            if data:
                self._reset_conn_timeout()
                await self.process_data(data)
        if self.request.finished:
            await self.reply()
        elif self._reader.at_eof():
            raise BadRequestException()
    except (NotFoundException,
            BadRequestException) as e:
        self.error_reply(e.code, body=Response.reason_phrases[e.code])
    except Exception as e:
        self.error_reply(500, body=Response.reason_phrases[500])

    self.close_connection()
```

Everything is wrapped in a `try-except` block, so that any exception raised while parsing the request or building the response is caught and an error response is sent back to the client.

The `while` loop keeps reading the request until one of two things happens:

- the parser sets `self.request.finished` to True;
- the client closes the connection, which makes `self._reader.at_eof()` return True.

In each iteration, the code tries to read data from the `StreamReader` and builds `self.request` incrementally by calling `self.process_data(data)`. The connection timeout is reset every time data is read.
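The loop can be exercised without a network by feeding bytes into an `asyncio.StreamReader` by hand. In this sketch, finding the blank line that ends the HTTP headers is a crude stand-in for the parser setting `request.finished`. The function name is hypothetical.

```python
import asyncio

async def read_request_head(reader, chunk_size=1024):
    """Read until the blank line that ends the HTTP headers, like the loop
    above; raise if the client closes the connection first."""
    buffer = bytearray()
    while not reader.at_eof():
        data = await reader.read(chunk_size)
        if data:
            buffer += data              # the real code resets the timeout here
            if b"\r\n\r\n" in buffer:   # crude stand-in for request.finished
                return bytes(buffer)
    raise ValueError("connection closed before the request was complete")

async def feed_and_read(chunks, chunk_size=8):
    reader = asyncio.StreamReader()
    for chunk in chunks:
        reader.feed_data(chunk)
    reader.feed_eof()                   # the "client" closes its side
    return await read_request_head(reader, chunk_size)
```

A complete request is returned even though it arrives in 8-byte pieces. A truncated one ends in an error, the analogue of `BadRequestException` above.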