mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-15 01:50:08 +08:00

Merge branch 'master' of https://github.com/LCTT/TranslateProject into new

This commit is contained in:

commit

e5b39dc6a6

@ -0,0 +1,69 @@

|

||||

如何在家中使用 SSH 和 SFTP 协议

|

||||

======

|

||||

|

||||

> 通过 SSH 和 SFTP 协议,我们能够访问其他设备,有效而且安全的传输文件等等。

|

||||

|

||||

|

||||

|

||||

几年前,我决定配置另外一台电脑,以便我能在工作时访问它来传输我所需要的文件。要做到这一点,最基本的一步是要求你的网络提供商(ISP)提供一个固定的地址。

|

||||

|

||||

有一个不必要但很重要的步骤,就是保证你的这个可以访问的系统是安全的。在我的这种情况下,我计划只在工作场所访问它,所以我能够限定访问的 IP 地址。即使如此,你依然要尽多的采用安全措施。一旦你建立起来这个系统,全世界的人们马上就能尝试访问你的系统。这是非常令人惊奇及恐慌的。你能通过日志文件来发现这一点。我推测有探测机器人在尽其所能的搜索那些没有安全措施的系统。

|

||||

|

||||

在我设置好系统不久后,我觉得这种访问没什么大用,为此,我将它关闭了以便不再为它操心。尽管如此,只要架设了它,在家庭网络中使用 SSH 和 SFTP 还是有点用的。

|

||||

|

||||

当然,有一个必备条件,这个另外的电脑必须已经开机了,至于电脑是否登录与否无所谓的。你也需要知道其 IP 地址。有两个方法能够知道,一个是通过浏览器访问你的路由器,一般情况下你的地址格式类似于 192.168.1.254 这样。通过一些搜索,很容易找出当前是开机的并且接在 eth0 或者 wifi 上的系统。如何识别你所要找到的电脑可能是个挑战。

|

||||

|

||||

更容易找到这个电脑的方式是,打开 shell,输入 :

|

||||

|

||||

```

|

||||

ifconfig

|

||||

```

|

||||

|

||||

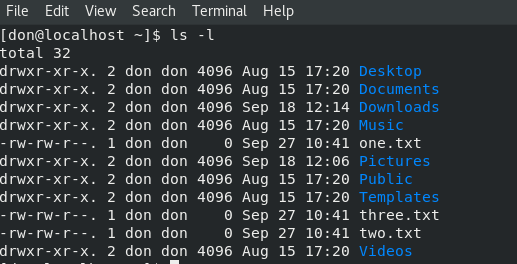

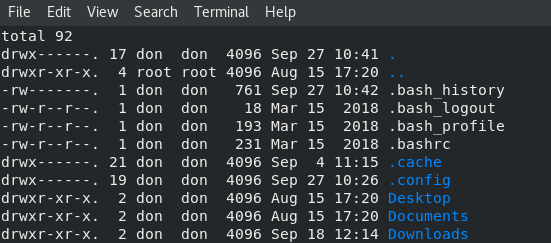

命令会输出一些信息,你所需要的信息在 `inet` 后面,看起来和 192.168.1.234 类似。当你发现这个后,回到你要访问这台主机的客户端电脑,在命令行中输入 :

|

||||

|

||||

```

|

||||

ssh gregp@192.168.1.234

|

||||

```

|

||||

|

||||

如果要让上面的命令能够正常执行,`gregp` 必须是该主机系统中正确的用户名。你会被询问其密码。如果你键入的密码和用户名都是正确的,你将通过 shell 环境连接上了这台电脑。我坦诚,对于 SSH 我并不是经常使用的。我偶尔使用它,我能够运行 `dnf` 来更新我所常使用电脑之外的其它电脑。通常,我用 SFTP :

|

||||

|

||||

```

|

||||

sftp grego@192.168.1.234

|

||||

```

|

||||

|

||||

我更需要用简单的方法来把一个文件传输到另一个电脑。相对于闪存棒和额外的设备,它更加方便,耗时更少。

|

||||

|

||||

一旦连接建立成功,SFTP 有两个基本的命令,`get`,从主机接收文件 ;`put`,向主机发送文件。在连接之前,我经常在客户端移动到我想接收或者传输的文件夹下。在连接之后,你将处于一个顶层目录里,比如 `home/gregp`。一旦连接成功,你可以像在客户端一样的使用 `cd`,改变你在主机上的工作路径。你也许需要用 `ls` 来确认你的位置。

|

||||

|

||||

如果你想改变你的客户端的工作目录。用 `lcd` 命令( 即 local change directory 的意思)。同样的,用 `lls` 来显示客户端工作目录的内容。

|

||||

|

||||

如果主机上没有你想要的目录名,你该怎么办?用 `mkdir` 在主机上创建一个新的目录。或者你可以将整个目录的文件全拷贝到主机 :

|

||||

|

||||

```

|

||||

put -r thisDir/

|

||||

```

|

||||

|

||||

这将在主机上创建该目录并复制它的全部文件和子目录到主机上。这种传输是非常快速的,能达到硬件的上限。不像在互联网传输一样遇到网络瓶颈。要查看你能在 SFTP 会话中能够使用的命令列表:

|

||||

|

||||

```

|

||||

man sftp

|

||||

```

|

||||

|

||||

我也能够在我的电脑上的 Windows 虚拟机内用 SFTP,这是配置一个虚拟机而不是一个双系统的另外一个优势。这让我能够在系统的 Linux 部分移入或者移出文件。而我只需要在 Windows 中使用一个客户端就行。

|

||||

|

||||

你能够使用 SSH 或 SFTP 访问通过网线或者 WIFI 连接到你路由器的任何设备。这里,我使用了一个叫做 [SSHDroid][1] 的应用,能够在被动模式下运行 SSH。换句话来说,你能够用你的电脑访问作为主机的 Android 设备。近来我还发现了另外一个应用,[Admin Hands][2],不管你的客户端是平板还是手机,都能使用 SSH 或者 SFTP 操作。这个应用对于备份和手机分享照片是极好的。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/10/ssh-sftp-home-network

|

||||

|

||||

作者:[Geg Pittman][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[singledo](https://github.com/singledo)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/greg-p

|

||||

[1]: https://play.google.com/store/apps/details?id=berserker.android.apps.sshdroid

|

||||

[2]: https://play.google.com/store/apps/details?id=com.arpaplus.adminhands&hl=en_US

|

||||

@ -1,6 +1,7 @@

|

||||

使用 Python 为你的油箱加油

|

||||

======

|

||||

我来介绍一下我是如何使用 Python 来节省成本的。

|

||||

|

||||

> 我来介绍一下我是如何使用 Python 来节省成本的。

|

||||

|

||||

|

||||

|

||||

@ -82,7 +83,7 @@ while i < 21: # 20 次迭代 (加油次数)

|

||||

|

||||

如你所见,这个调整会令混合汽油号数始终略高于 91。当然,我的油量表并没有 1/12 的刻度,但是 7/12 略小于 5/8,我可以近似地计算。

|

||||

|

||||

一个更简单地方案是每次都首先加满 93 号汽油,然后在油箱半满时加入 89 号汽油直到耗尽,这可能会是我的常规方案。但就我个人而言,这种方法并不太好,有时甚至会产生一些麻烦。但对于长途旅行来说,这种方案会相对简便一些。有时我也会因为油价突然下跌而购买一些汽油,所以,这个方案是我可以考虑的一系列选项之一。

|

||||

一个更简单地方案是每次都首先加满 93 号汽油,然后在油箱半满时加入 89 号汽油直到耗尽,这可能会是我的常规方案。就我个人而言,这种方法并不太好,有时甚至会产生一些麻烦。但对于长途旅行来说,这种方案会相对简便一些。有时我也会因为油价突然下跌而购买一些汽油,所以,这个方案是我可以考虑的一系列选项之一。

|

||||

|

||||

当然最重要的是:开车不写码,写码不开车!

|

||||

|

||||

@ -93,7 +94,7 @@ via: https://opensource.com/article/18/10/python-gas-pump

|

||||

作者:[Greg Pittman][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[HankChow](https://github.com/HankChow)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,24 +1,25 @@

|

||||

如何创建和维护你的Man手册

|

||||

如何创建和维护你自己的 man 手册

|

||||

======

|

||||

|

||||

|

||||

|

||||

我们已经讨论了一些[Man手册的替代方案] [1]。 这些替代方案主要用于学习简洁的Linux命令示例,而无需通过全面过于详细的手册页。 如果你正在寻找一种快速而简单的方法来轻松快速地学习Linux命令,那么这些替代方案值得尝试。 现在,你可能正在考虑 - 如何为Linux命令创建自己的man-like帮助页面? 这时**“Um”**就派上用场了。 Um是一个命令行实用程序,用于轻松创建和维护包含你到目前为止所了解的所有命令的Man页面。

|

||||

我们已经讨论了一些 [man 手册的替代方案][1]。 这些替代方案主要用于学习简洁的 Linux 命令示例,而无需通过全面而过于详细的手册页。 如果你正在寻找一种快速而简单的方法来轻松快速地学习 Linux 命令,那么这些替代方案值得尝试。 现在,你可能正在考虑 —— 如何为 Linux 命令创建自己的 man 式的帮助页面? 这时 “Um” 就派上用场了。 Um 是一个命令行实用程序,可以用于轻松创建和维护包含你到目前为止所了解的所有命令的 man 页面。

|

||||

|

||||

通过创建自己的手册页,你可以在手册页中避免大量不必要的细节,并且只包含你需要记住的内容。 如果你想创建自己的一套man-like页面,“Um”也能为你提供帮助。 在这个简短的教程中,我们将学习如何安装“Um”命令以及如何创建自己的man手册页。

|

||||

通过创建自己的手册页,你可以在手册页中避免大量不必要的细节,并且只包含你需要记住的内容。 如果你想创建自己的一套 man 式的页面,“Um” 也能为你提供帮助。 在这个简短的教程中,我们将学习如何安装 “Um” 命令以及如何创建自己的 man 手册页。

|

||||

|

||||

### 安装 Um

|

||||

|

||||

Um适用于Linux和Mac OS。 目前,它只能在Linux系统中使用** Linuxbrew **软件包管理器来进行安装。 如果你尚未安装Linuxbrew,请参考以下链接。

|

||||

Um 适用于 Linux 和Mac OS。 目前,它只能在 Linux 系统中使用 Linuxbrew 软件包管理器来进行安装。 如果你尚未安装 Linuxbrew,请参考以下链接:

|

||||

|

||||

安装Linuxbrew后,运行以下命令安装Um实用程序。

|

||||

- [Linuxbrew:一个用于 Linux 和 MacOS 的通用包管理器][3]

|

||||

|

||||

安装 Linuxbrew 后,运行以下命令安装 Um 实用程序。

|

||||

|

||||

```

|

||||

$ brew install sinclairtarget/wst/um

|

||||

|

||||

```

|

||||

|

||||

如果你会看到类似下面的输出,恭喜你! Um已经安装好并且可以使用了。

|

||||

如果你会看到类似下面的输出,恭喜你! Um 已经安装好并且可以使用了。

|

||||

|

||||

```

|

||||

[...]

|

||||

@ -49,88 +50,78 @@ Emacs Lisp files have been installed to:

|

||||

==> um

|

||||

Bash completion has been installed to:

|

||||

/home/linuxbrew/.linuxbrew/etc/bash_completion.d

|

||||

|

||||

```

|

||||

|

||||

在制作你的man手册页之前,你需要为Um启用bash补全。

|

||||

在制作你的 man 手册页之前,你需要为 Um 启用 bash 补全。

|

||||

|

||||

要开启bash'补全,首先你需要打开 **~/.bash_profile** 文件:

|

||||

要开启 bash 补全,首先你需要打开 `~/.bash_profile` 文件:

|

||||

|

||||

```

|

||||

$ nano ~/.bash_profile

|

||||

|

||||

```

|

||||

|

||||

并在其中添加以下内容:

|

||||

并在其中添加以下内容:

|

||||

|

||||

```

|

||||

if [ -f $(brew --prefix)/etc/bash_completion.d/um-completion.sh ]; then

|

||||

. $(brew --prefix)/etc/bash_completion.d/um-completion.sh

|

||||

fi

|

||||

|

||||

```

|

||||

|

||||

保存并关闭文件。运行以下命令以更新更改。

|

||||

|

||||

```

|

||||

$ source ~/.bash_profile

|

||||

|

||||

```

|

||||

|

||||

准备工作全部完成。让我们继续创建我们的第一个man手册页。

|

||||

准备工作全部完成。让我们继续创建我们的第一个 man 手册页。

|

||||

|

||||

### 创建并维护自己的man手册

|

||||

|

||||

### 创建并维护自己的Man手册

|

||||

|

||||

如果你想为“dpkg”命令创建自己的Man手册。请运行:

|

||||

如果你想为 `dpkg` 命令创建自己的 man 手册。请运行:

|

||||

|

||||

```

|

||||

$ um edit dpkg

|

||||

|

||||

```

|

||||

|

||||

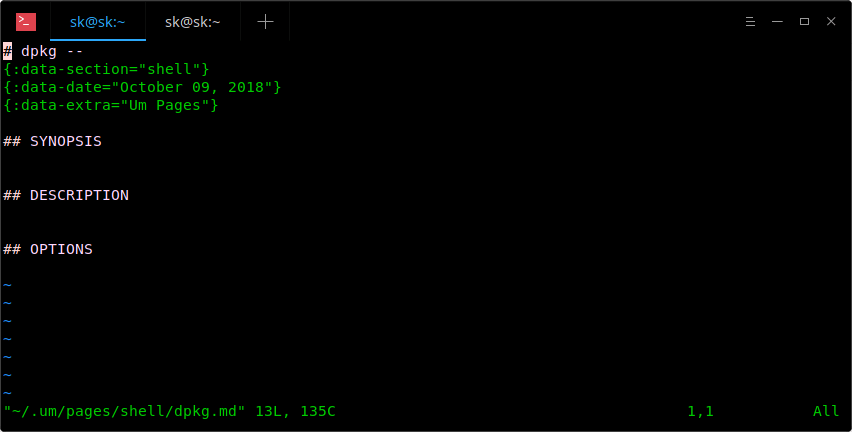

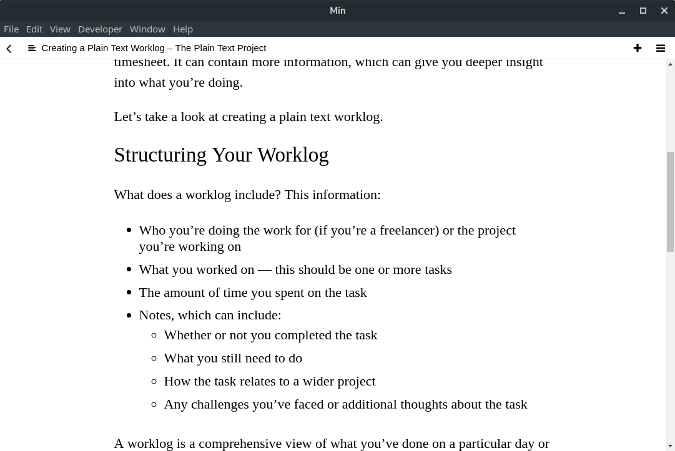

上面的命令将在默认编辑器中打开markdown模板:

|

||||

上面的命令将在默认编辑器中打开 markdown 模板:

|

||||

|

||||

|

||||

|

||||

我的默认编辑器是Vi,因此上面的命令会在Vi编辑器中打开它。现在,开始在此模板中添加有关“dpkg”命令的所有内容。

|

||||

我的默认编辑器是 Vi,因此上面的命令会在 Vi 编辑器中打开它。现在,开始在此模板中添加有关 `dpkg` 命令的所有内容。

|

||||

|

||||

下面是一个示例:

|

||||

下面是一个示例:

|

||||

|

||||

|

||||

|

||||

正如你在上图的输出中看到的,我为dpkg命令添加了概要,描述和两个参数选项。 你可以在Man手册中添加你所需要的所有部分。不过你也要确保为每个部分提供了适当且易于理解的标题。 完成后,保存并退出文件(如果使用Vi编辑器,请按ESC键并键入:wq)。

|

||||

正如你在上图的输出中看到的,我为 `dpkg` 命令添加了概要,描述和两个参数选项。 你可以在 man 手册中添加你所需要的所有部分。不过你也要确保为每个部分提供了适当且易于理解的标题。 完成后,保存并退出文件(如果使用 Vi 编辑器,请按 `ESC` 键并键入`:wq`)。

|

||||

|

||||

最后,使用以下命令查看新创建的Man手册页:

|

||||

最后,使用以下命令查看新创建的 man 手册页:

|

||||

|

||||

```

|

||||

$ um dpkg

|

||||

|

||||

```

|

||||

|

||||

|

||||

|

||||

如你所见,dpkg的Man手册页看起来与官方手册页完全相同。 如果要在手册页中编辑和/或添加更多详细信息,请再次运行相同的命令并添加更多详细信息。

|

||||

如你所见,`dpkg` 的 man 手册页看起来与官方手册页完全相同。 如果要在手册页中编辑和/或添加更多详细信息,请再次运行相同的命令并添加更多详细信息。

|

||||

|

||||

```

|

||||

$ um edit dpkg

|

||||

|

||||

```

|

||||

|

||||

要使用Um查看新创建的Man手册页列表,请运行:

|

||||

要使用 Um 查看新创建的 man 手册页列表,请运行:

|

||||

|

||||

```

|

||||

$ um list

|

||||

|

||||

```

|

||||

|

||||

所有手册页将保存在主目录中名为**`.um` **的目录下

|

||||

所有手册页将保存在主目录中名为 `.um` 的目录下

|

||||

|

||||

以防万一,如果你不想要某个特定页面,只需删除它,如下所示。

|

||||

|

||||

```

|

||||

$ um rm dpkg

|

||||

|

||||

```

|

||||

|

||||

要查看帮助部分和所有可用的常规选项,请运行:

|

||||

@ -151,7 +142,6 @@ Subcommands:

|

||||

um topics List all topics.

|

||||

um (c)onfig [config key] Display configuration environment.

|

||||

um (h)elp [sub-command] Display this help message, or the help message for a sub-command.

|

||||

|

||||

```

|

||||

|

||||

### 配置 Um

|

||||

@ -166,22 +156,18 @@ pager = less

|

||||

pages_directory = /home/sk/.um/pages

|

||||

default_topic = shell

|

||||

pages_ext = .md

|

||||

|

||||

```

|

||||

|

||||

在此文件中,你可以根据需要编辑和更改** pager **,** editor **,** default_topic **,** pages_directory **和** pages_ext **选项的值。 比如说,如果你想在** [Dropbox] [2] **文件夹中保存新创建的Um页面,只需更改/.um/umconfig**文件中** pages_directory **的值并将其更改为Dropbox文件夹即可。

|

||||

在此文件中,你可以根据需要编辑和更改 `pager`、`editor`、`default_topic`、`pages_directory` 和 `pages_ext` 选项的值。 比如说,如果你想在 [Dropbox][2] 文件夹中保存新创建的 Um 页面,只需更改 `~/.um/umconfig` 文件中 `pages_directory` 的值并将其更改为 Dropbox 文件夹即可。

|

||||

|

||||

```

|

||||

pages_directory = /Users/myusername/Dropbox/um

|

||||

|

||||

```

|

||||

|

||||

这就是全部内容,希望这些能对你有用,更多好的内容敬请关注!

|

||||

|

||||

干杯!

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.ostechnix.com/how-to-create-and-maintain-your-own-man-pages/

|

||||

@ -189,7 +175,7 @@ via: https://www.ostechnix.com/how-to-create-and-maintain-your-own-man-pages/

|

||||

作者:[SK][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[way-ww](https://github.com/way-ww)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -197,3 +183,4 @@ via: https://www.ostechnix.com/how-to-create-and-maintain-your-own-man-pages/

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://www.ostechnix.com/3-good-alternatives-man-pages-every-linux-user-know/

|

||||

[2]: https://www.ostechnix.com/install-dropbox-in-ubuntu-18-04-lts-desktop/

|

||||

[3]: https://www.ostechnix.com/linuxbrew-common-package-manager-linux-mac-os-x/

|

||||

File diff suppressed because it is too large

Load Diff

@ -1,3 +1,4 @@

|

||||

LuuMing translating

|

||||

Setting Up a Timer with systemd in Linux

|

||||

======

|

||||

|

||||

|

||||

@ -1,299 +0,0 @@

|

||||

Translating by DavidChenLiang

|

||||

|

||||

CLI: improved

|

||||

======

|

||||

I'm not sure many web developers can get away without visiting the command line. As for me, I've been using the command line since 1997, first at university when I felt both super cool l33t-hacker and simultaneously utterly out of my depth.

|

||||

|

||||

Over the years my command line habits have improved and I often search for smarter tools for the jobs I commonly do. With that said, here's my current list of improved CLI tools.

|

||||

|

||||

|

||||

### Ignoring my improvements

|

||||

|

||||

In a number of cases I've aliased the new and improved command line tool over the original (as with `cat` and `ping`).

|

||||

|

||||

If I want to run the original command, which is sometimes I do need to do, then there's two ways I can do this (I'm on a Mac so your mileage may vary):

|

||||

```

|

||||

$ \cat # ignore aliases named "cat" - explanation: https://stackoverflow.com/a/16506263/22617

|

||||

$ command cat # ignore functions and aliases

|

||||

|

||||

```

|

||||

|

||||

### bat > cat

|

||||

|

||||

`cat` is used to print the contents of a file, but given more time spent in the command line, features like syntax highlighting come in very handy. I found [ccat][3] which offers highlighting then I found [bat][4] which has highlighting, paging, line numbers and git integration.

|

||||

|

||||

The `bat` command also allows me to search during output (only if the output is longer than the screen height) using the `/` key binding (similarly to `less` searching).

|

||||

|

||||

![Simple bat output][5]

|

||||

|

||||

I've also aliased `bat` to the `cat` command:

|

||||

```

|

||||

alias cat='bat'

|

||||

|

||||

```

|

||||

|

||||

💾 [Installation directions][4]

|

||||

|

||||

### prettyping > ping

|

||||

|

||||

`ping` is incredibly useful, and probably my goto tool for the "oh crap is X down/does my internet work!!!". But `prettyping` ("pretty ping" not "pre typing"!) gives ping a really nice output and just makes me feel like the command line is a bit more welcoming.

|

||||

|

||||

![/images/cli-improved/ping.gif][6]

|

||||

|

||||

I've also aliased `ping` to the `prettyping` command:

|

||||

```

|

||||

alias ping='prettyping --nolegend'

|

||||

|

||||

```

|

||||

|

||||

💾 [Installation directions][7]

|

||||

|

||||

### fzf > ctrl+r

|

||||

|

||||

In the terminal, using `ctrl+r` will allow you to [search backwards][8] through your history. It's a nice trick, albeit a bit fiddly.

|

||||

|

||||

The `fzf` tool is a **huge** enhancement on `ctrl+r`. It's a fuzzy search against the terminal history, with a fully interactive preview of the possible matches.

|

||||

|

||||

In addition to searching through the history, `fzf` can also preview and open files, which is what I've done in the video below:

|

||||

|

||||

For this preview effect, I created an alias called `preview` which combines `fzf` with `bat` for the preview and a custom key binding to open VS Code:

|

||||

```

|

||||

alias preview="fzf --preview 'bat --color \"always\" {}'"

|

||||

# add support for ctrl+o to open selected file in VS Code

|

||||

export FZF_DEFAULT_OPTS="--bind='ctrl-o:execute(code {})+abort'"

|

||||

|

||||

```

|

||||

|

||||

💾 [Installation directions][9]

|

||||

|

||||

### htop > top

|

||||

|

||||

`top` is my goto tool for quickly diagnosing why the CPU on the machine is running hard or my fan is whirring. I also use these tools in production. Annoyingly (to me!) `top` on the Mac is vastly different (and inferior IMHO) to `top` on linux.

|

||||

|

||||

However, `htop` is an improvement on both regular `top` and crappy-mac `top`. Lots of colour coding, keyboard bindings and different views which have helped me in the past to understand which processes belong to which.

|

||||

|

||||

Handy key bindings include:

|

||||

|

||||

* P - sort by CPU

|

||||

* M - sort by memory usage

|

||||

* F4 - filter processes by string (to narrow to just "node" for instance)

|

||||

* space - mark a single process so I can watch if the process is spiking

|

||||

|

||||

|

||||

|

||||

![htop output][10]

|

||||

|

||||

There is a weird bug in Mac Sierra that can be overcome by running `htop` as root (I can't remember exactly what the bug is, but this alias fixes it - though annoying that I have to enter my password every now and again):

|

||||

```

|

||||

alias top="sudo htop" # alias top and fix high sierra bug

|

||||

|

||||

```

|

||||

|

||||

💾 [Installation directions][11]

|

||||

|

||||

### diff-so-fancy > diff

|

||||

|

||||

I'm pretty sure I picked this one up from Paul Irish some years ago. Although I rarely fire up `diff` manually, my git commands use diff all the time. `diff-so-fancy` gives me both colour coding but also character highlight of changes.

|

||||

|

||||

![diff so fancy][12]

|

||||

|

||||

Then in my `~/.gitconfig` I have included the following entry to enable `diff-so-fancy` on `git diff` and `git show`:

|

||||

```

|

||||

[pager]

|

||||

diff = diff-so-fancy | less --tabs=1,5 -RFX

|

||||

show = diff-so-fancy | less --tabs=1,5 -RFX

|

||||

|

||||

```

|

||||

|

||||

💾 [Installation directions][13]

|

||||

|

||||

### fd > find

|

||||

|

||||

Although I use a Mac, I've never been a fan of Spotlight (I found it sluggish, hard to remember the keywords, the database update would hammer my CPU and generally useless!). I use [Alfred][14] a lot, but even the finder feature doesn't serve me well.

|

||||

|

||||

I tend to turn the command line to find files, but `find` is always a bit of a pain to remember the right expression to find what I want (and indeed the Mac flavour is slightly different non-mac find which adds to frustration).

|

||||

|

||||

`fd` is a great replacement (by the same individual who wrote `bat`). It is very fast and the common use cases I need to search with are simple to remember.

|

||||

|

||||

A few handy commands:

|

||||

```

|

||||

$ fd cli # all filenames containing "cli"

|

||||

$ fd -e md # all with .md extension

|

||||

$ fd cli -x wc -w # find "cli" and run `wc -w` on each file

|

||||

|

||||

```

|

||||

|

||||

![fd output][15]

|

||||

|

||||

💾 [Installation directions][16]

|

||||

|

||||

### ncdu > du

|

||||

|

||||

Knowing where disk space is being taking up is a fairly important task for me. I've used the Mac app [Disk Daisy][17] but I find that it can be a little slow to actually yield results.

|

||||

|

||||

The `du -sh` command is what I'll use in the terminal (`-sh` means summary and human readable), but often I'll want to dig into the directories taking up the space.

|

||||

|

||||

`ncdu` is a nice alternative. It offers an interactive interface and allows for quickly scanning which folders or files are responsible for taking up space and it's very quick to navigate. (Though any time I want to scan my entire home directory, it's going to take a long time, regardless of the tool - my directory is about 550gb).

|

||||

|

||||

Once I've found a directory I want to manage (to delete, move or compress files), I'll use the cmd + click the pathname at the top of the screen in [iTerm2][18] to launch finder to that directory.

|

||||

|

||||

![ncdu output][19]

|

||||

|

||||

There's another [alternative called nnn][20] which offers a slightly nicer interface and although it does file sizes and usage by default, it's actually a fully fledged file manager.

|

||||

|

||||

My `ncdu` is aliased to the following:

|

||||

```

|

||||

alias du="ncdu --color dark -rr -x --exclude .git --exclude node_modules"

|

||||

|

||||

```

|

||||

|

||||

The options are:

|

||||

|

||||

* `--color dark` \- use a colour scheme

|

||||

* `-rr` \- read-only mode (prevents delete and spawn shell)

|

||||

* `--exclude` ignore directories I won't do anything about

|

||||

|

||||

|

||||

|

||||

💾 [Installation directions][21]

|

||||

|

||||

### tldr > man

|

||||

|

||||

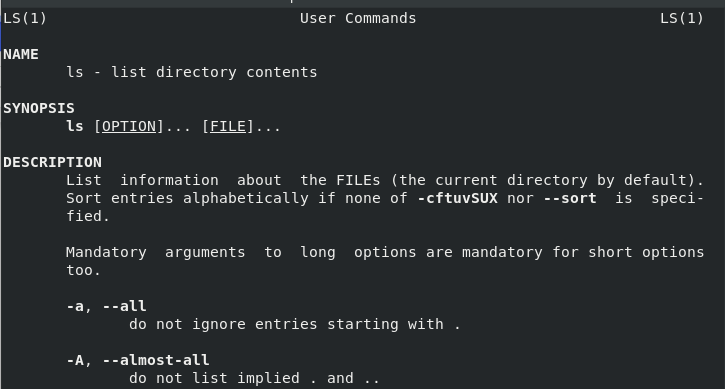

It's amazing that nearly every single command line tool comes with a manual via `man <command>`, but navigating the `man` output can be sometimes a little confusing, plus it can be daunting given all the technical information that's included in the manual output.

|

||||

|

||||

This is where the TL;DR project comes in. It's a community driven documentation system that's available from the command line. So far in my own usage, I've not come across a command that's not been documented, but you can [also contribute too][22].

|

||||

|

||||

![TLDR output for 'fd'][23]

|

||||

|

||||

As a nicety, I've also aliased `tldr` to `help` (since it's quicker to type!):

|

||||

```

|

||||

alias help='tldr'

|

||||

|

||||

```

|

||||

|

||||

💾 [Installation directions][24]

|

||||

|

||||

### ack || ag > grep

|

||||

|

||||

`grep` is no doubt a powerful tool on the command line, but over the years it's been superseded by a number of tools. Two of which are `ack` and `ag`.

|

||||

|

||||

I personally flitter between `ack` and `ag` without really remembering which I prefer (that's to say they're both very good and very similar!). I tend to default to `ack` only because it rolls of my fingers a little easier. Plus, `ack` comes with the mega `ack --bar` argument (I'll let you experiment)!

|

||||

|

||||

Both `ack` and `ag` will (by default) use a regular expression to search, and extremely pertinent to my work, I can specify the file types to search within using flags like `--js` or `--html` (though here `ag` includes more files in the js filter than `ack`).

|

||||

|

||||

Both tools also support the usual `grep` options, like `-B` and `-A` for before and after context in the grep.

|

||||

|

||||

![ack in action][25]

|

||||

|

||||

Since `ack` doesn't come with markdown support (and I write a lot in markdown), I've got this customisation in my `~/.ackrc` file:

|

||||

```

|

||||

--type-set=md=.md,.mkd,.markdown

|

||||

--pager=less -FRX

|

||||

|

||||

```

|

||||

|

||||

💾 Installation directions: [ack][26], [ag][27]

|

||||

|

||||

[Futher reading on ack & ag][28]

|

||||

|

||||

### jq > grep et al

|

||||

|

||||

I'm a massive fanboy of [jq][29]. At first I struggled with the syntax, but I've since come around to the query language and use `jq` on a near daily basis (whereas before I'd either drop into node, use grep or use a tool called [json][30] which is very basic in comparison).

|

||||

|

||||

I've even started the process of writing a jq tutorial series (2,500 words and counting) and have published a [web tool][31] and a native mac app (yet to be released).

|

||||

|

||||

`jq` allows me to pass in JSON and transform the source very easily so that the JSON result fits my requirements. One such example allows me to update all my node dependencies in one command (broken into multiple lines for readability):

|

||||

```

|

||||

$ npm i $(echo $(\

|

||||

npm outdated --json | \

|

||||

jq -r 'to_entries | .[] | "\(.key)@\(.value.latest)"' \

|

||||

))

|

||||

|

||||

```

|

||||

|

||||

The above command will list all the node dependencies that are out of date, and use npm's JSON output format, then transform the source JSON from this:

|

||||

```

|

||||

{

|

||||

"node-jq": {

|

||||

"current": "0.7.0",

|

||||

"wanted": "0.7.0",

|

||||

"latest": "1.2.0",

|

||||

"location": "node_modules/node-jq"

|

||||

},

|

||||

"uuid": {

|

||||

"current": "3.1.0",

|

||||

"wanted": "3.2.1",

|

||||

"latest": "3.2.1",

|

||||

"location": "node_modules/uuid"

|

||||

}

|

||||

}

|

||||

|

||||

```

|

||||

|

||||

…to this:

|

||||

|

||||

That result is then fed into the `npm install` command and voilà, I'm all upgraded (using the sledgehammer approach).

|

||||

|

||||

### Honourable mentions

|

||||

|

||||

Some of the other tools that I've started poking around with, but haven't used too often (with the exception of ponysay, which appears when I start a new terminal session!):

|

||||

|

||||

* [ponysay][32] > cowsay

|

||||

* [csvkit][33] > awk et al

|

||||

* [noti][34] > `display notification`

|

||||

* [entr][35] > watch

|

||||

|

||||

|

||||

|

||||

### What about you?

|

||||

|

||||

So that's my list. How about you? What daily command line tools have you improved? I'd love to know.

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://remysharp.com/2018/08/23/cli-improved

|

||||

|

||||

作者:[Remy Sharp][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://remysharp.com

|

||||

[1]: https://remysharp.com/images/terminal-600.jpg

|

||||

[2]: https://training.leftlogic.com/buy/terminal/cli2?coupon=READERS-DISCOUNT&utm_source=blog&utm_medium=banner&utm_campaign=remysharp-discount

|

||||

[3]: https://github.com/jingweno/ccat

|

||||

[4]: https://github.com/sharkdp/bat

|

||||

[5]: https://remysharp.com/images/cli-improved/bat.gif (Sample bat output)

|

||||

[6]: https://remysharp.com/images/cli-improved/ping.gif (Sample ping output)

|

||||

[7]: http://denilson.sa.nom.br/prettyping/

|

||||

[8]: https://lifehacker.com/278888/ctrl%252Br-to-search-and-other-terminal-history-tricks

|

||||

[9]: https://github.com/junegunn/fzf

|

||||

[10]: https://remysharp.com/images/cli-improved/htop.jpg (Sample htop output)

|

||||

[11]: http://hisham.hm/htop/

|

||||

[12]: https://remysharp.com/images/cli-improved/diff-so-fancy.jpg (Sample diff output)

|

||||

[13]: https://github.com/so-fancy/diff-so-fancy

|

||||

[14]: https://www.alfredapp.com/

|

||||

[15]: https://remysharp.com/images/cli-improved/fd.png (Sample fd output)

|

||||

[16]: https://github.com/sharkdp/fd/

|

||||

[17]: https://daisydiskapp.com/

|

||||

[18]: https://www.iterm2.com/

|

||||

[19]: https://remysharp.com/images/cli-improved/ncdu.png (Sample ncdu output)

|

||||

[20]: https://github.com/jarun/nnn

|

||||

[21]: https://dev.yorhel.nl/ncdu

|

||||

[22]: https://github.com/tldr-pages/tldr#contributing

|

||||

[23]: https://remysharp.com/images/cli-improved/tldr.png (Sample tldr output for 'fd')

|

||||

[24]: http://tldr-pages.github.io/

|

||||

[25]: https://remysharp.com/images/cli-improved/ack.png (Sample ack output with grep args)

|

||||

[26]: https://beyondgrep.com

|

||||

[27]: https://github.com/ggreer/the_silver_searcher

|

||||

[28]: http://conqueringthecommandline.com/book/ack_ag

|

||||

[29]: https://stedolan.github.io/jq

|

||||

[30]: http://trentm.com/json/

|

||||

[31]: https://jqterm.com

|

||||

[32]: https://github.com/erkin/ponysay

|

||||

[33]: https://csvkit.readthedocs.io/en/1.0.3/

|

||||

[34]: https://github.com/variadico/noti

|

||||

[35]: http://www.entrproject.org/

|

||||

@ -1,397 +0,0 @@

|

||||

translating by Flowsnow

|

||||

|

||||

How to build rpm packages

|

||||

======

|

||||

|

||||

Save time and effort installing files and scripts across multiple hosts.

|

||||

|

||||

|

||||

|

||||

I have used rpm-based package managers to install software on Red Hat and Fedora Linux since I started using Linux more than 20 years ago. I have used the **rpm** program itself, **yum** , and **DNF** , which is a close descendant of yum, to install and update packages on my Linux hosts. The yum and DNF tools are wrappers around the rpm utility that provide additional functionality, such as the ability to find and install package dependencies.

|

||||

|

||||

Over the years I have created a number of Bash scripts, some of which have separate configuration files, that I like to install on most of my new computers and virtual machines. It reached the point that it took a great deal of time to install all of these packages, so I decided to automate that process by creating an rpm package that I could copy to the target hosts and install all of these files in their proper locations. Although the **rpm** tool was formerly used to build rpm packages, that function was removed and a new tool,was created to build new rpms.

|

||||

|

||||

When I started this project, I found very little information about creating rpm packages, but I managed to find a book, Maximum RPM, that helped me figure it out. That book is now somewhat out of date, as is the vast majority of information I have found. It is also out of print, and used copies go for hundreds of dollars. The online version of [Maximum RPM][1] is available at no charge and is kept up to date. The [RPM website][2] also has links to other websites that have a lot of documentation about rpm. What other information there is tends to be brief and apparently assumes that you already have a good deal of knowledge about the process.

|

||||

|

||||

In addition, every one of the documents I found assumes that the code needs to be compiled from sources as in a development environment. I am not a developer. I am a sysadmin, and we sysadmins have different needs because we don’t—or we shouldn’t—compile code to use for administrative tasks; we should use shell scripts. So we have no source code in the sense that it is something that needs to be compiled into binary executables. What we have is a source that is also the executable.

|

||||

|

||||

For the most part, this project should be performed as the non-root user student. Rpms should never be built by root, but only by non-privileged users. I will indicate which parts should be performed as root and which by a non-root, unprivileged user.

|

||||

|

||||

### Preparation

|

||||

|

||||

First, open one terminal session and `su` to root. Be sure to use the `-` option to ensure that the complete root environment is enabled. I do not believe that sysadmins should use `sudo` for any administrative tasks. Find out why in my personal blog post: [Real SysAdmins don’t sudo][3].

|

||||

|

||||

```

|

||||

[student@testvm1 ~]$ su -

|

||||

Password:

|

||||

[root@testvm1 ~]#

|

||||

```

|

||||

|

||||

Create a student user that can be used for this project and set a password for that user.

|

||||

|

||||

```

|

||||

[root@testvm1 ~]# useradd -c "Student User" student

|

||||

[root@testvm1 ~]# passwd student

|

||||

Changing password for user student.

|

||||

New password: <Enter the password>

|

||||

Retype new password: <Enter the password>

|

||||

passwd: all authentication tokens updated successfully.

|

||||

[root@testvm1 ~]#

|

||||

```

|

||||

|

||||

Building rpm packages requires the `rpm-build` package, which is likely not already installed. Install it now as root. Note that this command will also install several dependencies. The number may vary, depending upon the packages already installed on your host; it installed a total of 17 packages on my test VM, which is pretty minimal.

|

||||

|

||||

```

|

||||

dnf install -y rpm-build

|

||||

```

|

||||

|

||||

The rest of this project should be performed as the user student unless otherwise explicitly directed. Open another terminal session and use `su` to switch to that user to perform the rest of these steps. Download a tarball that I have prepared of a development directory structure, utils.tar, from GitHub using the following command:

|

||||

|

||||

```

|

||||

wget https://github.com/opensourceway/how-to-rpm/raw/master/utils.tar

|

||||

```

|

||||

|

||||

This tarball includes all of the files and Bash scripts that will be installed by the final rpm. There is also a complete spec file, which you can use to build the rpm. We will go into detail about each section of the spec file.

|

||||

|

||||

As user student, using your home directory as your present working directory (pwd), untar the tarball.

|

||||

|

||||

```

|

||||

[student@testvm1 ~]$ cd ; tar -xvf utils.tar

|

||||

```

|

||||

|

||||

Use the `tree` command to verify that the directory structure of ~/development and the contained files looks like the following output:

|

||||

|

||||

```

|

||||

[student@testvm1 ~]$ tree development/

|

||||

development/

|

||||

├── license

|

||||

│ ├── Copyright.and.GPL.Notice.txt

|

||||

│ └── GPL_LICENSE.txt

|

||||

├── scripts

|

||||

│ ├── create_motd

|

||||

│ ├── die

|

||||

│ ├── mymotd

|

||||

│ └── sysdata

|

||||

└── spec

|

||||

└── utils.spec

|

||||

|

||||

3 directories, 7 files

|

||||

[student@testvm1 ~]$

|

||||

```

|

||||

|

||||

The `mymotd` script creates a “Message Of The Day” data stream that is sent to stdout. The `create_motd` script runs the `mymotd` scripts and redirects the output to the /etc/motd file. This file is used to display a daily message to users who log in remotely using SSH.

|

||||

|

||||

The `die` script is my own script that wraps the `kill` command in a bit of code that can find running programs that match a specified string and kill them. It uses `kill -9` to ensure that they cannot ignore the kill message.

|

||||

|

||||

The `sysdata` script can spew tens of thousands of lines of data about your computer hardware, the installed version of Linux, all installed packages, and the metadata of your hard drives. I use it to document the state of a host at a point in time. I can later use it for reference. I used to do this to maintain a record of hosts that I installed for customers.

|

||||

|

||||

You may need to change ownership of these files and directories to student.student. Do this, if necessary, using the following command:

|

||||

|

||||

```

|

||||

chown -R student.student development

|

||||

```

|

||||

|

||||

Most of the files and directories in this tree will be installed on Fedora systems by the rpm you create during this project.

|

||||

|

||||

### Creating the build directory structure

|

||||

|

||||

The `rpmbuild` command requires a very specific directory structure. You must create this directory structure yourself because no automated way is provided. Create the following directory structure in your home directory:

|

||||

|

||||

```

|

||||

~ ─ rpmbuild

|

||||

├── RPMS

|

||||

│ └── noarch

|

||||

├── SOURCES

|

||||

├── SPECS

|

||||

└── SRPMS

|

||||

```

|

||||

|

||||

We will not create the rpmbuild/RPMS/X86_64 directory because that would be architecture-specific for 64-bit compiled binaries. We have shell scripts that are not architecture-specific. In reality, we won’t be using the SRPMS directory either, which would contain source files for the compiler.

|

||||

|

||||

### Examining the spec file

|

||||

|

||||

Each spec file has a number of sections, some of which may be ignored or omitted, depending upon the specific circumstances of the rpm build. This particular spec file is not an example of a minimal file required to work, but it is a good example of a moderately complex spec file that packages files that do not need to be compiled. If a compile were required, it would be performed in the `%build` section, which is omitted from this spec file because it is not required.

|

||||

|

||||

#### Preamble

|

||||

|

||||

This is the only section of the spec file that does not have a label. It consists of much of the information you see when the command `rpm -qi [Package Name]` is run. Each datum is a single line which consists of a tag, which identifies it and text data for the value of the tag.

|

||||

|

||||

```

|

||||

###############################################################################

|

||||

# Spec file for utils

|

||||

################################################################################

|

||||

# Configured to be built by user student or other non-root user

|

||||

################################################################################

|

||||

#

|

||||

Summary: Utility scripts for testing RPM creation

|

||||

Name: utils

|

||||

Version: 1.0.0

|

||||

Release: 1

|

||||

License: GPL

|

||||

URL: http://www.both.org

|

||||

Group: System

|

||||

Packager: David Both

|

||||

Requires: bash

|

||||

Requires: screen

|

||||

Requires: mc

|

||||

Requires: dmidecode

|

||||

BuildRoot: ~/rpmbuild/

|

||||

|

||||

# Build with the following syntax:

|

||||

# rpmbuild --target noarch -bb utils.spec

|

||||

```

|

||||

|

||||

Comment lines are ignored by the `rpmbuild` program. I always like to add a comment to this section that contains the exact syntax of the `rpmbuild` command required to create the package. The Summary tag is a short description of the package. The Name, Version, and Release tags are used to create the name of the rpm file, as in utils-1.00-1.rpm. Incrementing the release and version numbers lets you create rpms that can be used to update older ones.

|

||||

|

||||

The License tag defines the license under which the package is released. I always use a variation of the GPL. Specifying the license is important to clarify the fact that the software contained in the package is open source. This is also why I included the license and GPL statement in the files that will be installed.

|

||||

|

||||

The URL is usually the web page of the project or project owner. In this case, it is my personal web page.

|

||||

|

||||

The Group tag is interesting and is usually used for GUI applications. The value of the Group tag determines which group of icons in the applications menu will contain the icon for the executable in this package. Used in conjunction with the Icon tag (which we are not using here), the Group tag allows adding the icon and the required information to launch a program into the applications menu structure.

|

||||

|

||||

The Packager tag is used to specify the person or organization responsible for maintaining and creating the package.

|

||||

|

||||

The Requires statements define the dependencies for this rpm. Each is a package name. If one of the specified packages is not present, the DNF installation utility will try to locate it in one of the defined repositories defined in /etc/yum.repos.d and install it if it exists. If DNF cannot find one or more of the required packages, it will throw an error indicating which packages are missing and terminate.

|

||||

|

||||

The BuildRoot line specifies the top-level directory in which the `rpmbuild` tool will find the spec file and in which it will create temporary directories while it builds the package. The finished package will be stored in the noarch subdirectory that we specified earlier. The comment showing the command syntax used to build this package includes the option `–target noarch`, which defines the target architecture. Because these are Bash scripts, they are not associated with a specific CPU architecture. If this option were omitted, the build would be targeted to the architecture of the CPU on which the build is being performed.

|

||||

|

||||

The `rpmbuild` program can target many different architectures, and using the `--target` option allows us to build architecture-specific packages on a host with a different architecture from the one on which the build is performed. So I could build a package intended for use on an i686 architecture on an x86_64 host, and vice versa.

|

||||

|

||||

Change the packager name to yours and the URL to your own website if you have one.

|

||||

|

||||

#### %description

|

||||

|

||||

The `%description` section of the spec file contains a description of the rpm package. It can be very short or can contain many lines of information. Our `%description` section is rather terse.

|

||||

|

||||

```

|

||||

%description

|

||||

A collection of utility scripts for testing RPM creation.

|

||||

```

|

||||

|

||||

#### %prep

|

||||

|

||||

The `%prep` section is the first script that is executed during the build process. This script is not executed during the installation of the package.

|

||||

|

||||

This script is just a Bash shell script. It prepares the build directory, creating directories used for the build as required and copying the appropriate files into their respective directories. This would include the sources required for a complete compile as part of the build.

|

||||

|

||||

The $RPM_BUILD_ROOT directory represents the root directory of an installed system. The directories created in the $RPM_BUILD_ROOT directory are fully qualified paths, such as /user/local/share/utils, /usr/local/bin, and so on, in a live filesystem.

|

||||

|

||||

In the case of our package, we have no pre-compile sources as all of our programs are Bash scripts. So we simply copy those scripts and other files into the directories where they belong in the installed system.

|

||||

|

||||

```

|

||||

%prep

|

||||

################################################################################

|

||||

# Create the build tree and copy the files from the development directories #

|

||||

# into the build tree. #

|

||||

################################################################################

|

||||

echo "BUILDROOT = $RPM_BUILD_ROOT"

|

||||

mkdir -p $RPM_BUILD_ROOT/usr/local/bin/

|

||||

mkdir -p $RPM_BUILD_ROOT/usr/local/share/utils

|

||||

|

||||

cp /home/student/development/utils/scripts/* $RPM_BUILD_ROOT/usr/local/bin

|

||||

cp /home/student/development/utils/license/* $RPM_BUILD_ROOT/usr/local/share/utils

|

||||

cp /home/student/development/utils/spec/* $RPM_BUILD_ROOT/usr/local/share/utils

|

||||

|

||||

exit

|

||||

```

|

||||

|

||||

Note that the exit statement at the end of this section is required.

|

||||

|

||||

#### %files

|

||||

|

||||

This section of the spec file defines the files to be installed and their locations in the directory tree. It also specifies the file attributes and the owner and group owner for each file to be installed. The file permissions and ownerships are optional, but I recommend that they be explicitly set to eliminate any chance for those attributes to be incorrect or ambiguous when installed. Directories are created as required during the installation if they do not already exist.

|

||||

|

||||

```

|

||||

%files

|

||||

%attr(0744, root, root) /usr/local/bin/*

|

||||

%attr(0644, root, root) /usr/local/share/utils/*

|

||||

```

|

||||

|

||||

#### %pre

|

||||

|

||||

This section is empty in our lab project’s spec file. This would be the place to put any scripts that are required to run during installation of the rpm but prior to the installation of the files.

|

||||

|

||||

#### %post

|

||||

|

||||

This section of the spec file is another Bash script. This one runs after the installation of files. This section can be pretty much anything you need or want it to be, including creating files, running system commands, and restarting services to reinitialize them after making configuration changes. The `%post` script for our rpm package performs some of those tasks.

|

||||

|

||||

```

|

||||

%post

|

||||

################################################################################

|

||||

# Set up MOTD scripts #

|

||||

################################################################################

|

||||

cd /etc

|

||||

# Save the old MOTD if it exists

|

||||

if [ -e motd ]

|

||||

then

|

||||

cp motd motd.orig

|

||||

fi

|

||||

# If not there already, Add link to create_motd to cron.daily

|

||||

cd /etc/cron.daily

|

||||

if [ ! -e create_motd ]

|

||||

then

|

||||

ln -s /usr/local/bin/create_motd

|

||||

fi

|

||||

# create the MOTD for the first time

|

||||

/usr/local/bin/mymotd > /etc/motd

|

||||

```

|

||||

|

||||

The comments included in this script should make its purpose clear.

|

||||

|

||||

#### %postun

|

||||

|

||||

This section contains a script that would be run after the rpm package is uninstalled. Using rpm or DNF to remove a package removes all of the files listed in the `%files` section, but it does not remove files or links created by the `%post` section, so we need to handle that in this section.

|

||||

|

||||

This script usually consists of cleanup tasks that simply erasing the files previously installed by the rpm cannot accomplish. In the case of our package, it includes removing the link created by the `%post` script and restoring the saved original of the motd file.

|

||||

|

||||

```

|

||||

%postun

|

||||

# remove installed files and links

|

||||

rm /etc/cron.daily/create_motd

|

||||

|

||||

# Restore the original MOTD if it was backed up

|

||||

if [ -e /etc/motd.orig ]

|

||||

then

|

||||

mv -f /etc/motd.orig /etc/motd

|

||||

fi

|

||||

```

|

||||

|

||||

#### %clean

|

||||

|

||||

This Bash script performs cleanup after the rpm build process. The two lines in the `%clean` section below remove the build directories created by the `rpm-build` command. In many cases, additional cleanup may also be required.

|

||||

|

||||

```

|

||||

%clean

|

||||

rm -rf $RPM_BUILD_ROOT/usr/local/bin

|

||||

rm -rf $RPM_BUILD_ROOT/usr/local/share/utils

|

||||

```

|

||||

|

||||

#### %changelog

|

||||

|

||||

This optional text section contains a list of changes to the rpm and files it contains. The newest changes are recorded at the top of this section.

|

||||

|

||||

```

|

||||

%changelog

|

||||

* Wed Aug 29 2018 Your Name <Youremail@yourdomain.com>

|

||||

- The original package includes several useful scripts. it is

|

||||

primarily intended to be used to illustrate the process of

|

||||

building an RPM.

|

||||

```

|

||||

|

||||

Replace the data in the header line with your own name and email address.

|

||||

|

||||

### Building the rpm

|

||||

|

||||

The spec file must be in the SPECS directory of the rpmbuild tree. I find it easiest to create a link to the actual spec file in that directory so that it can be edited in the development directory and there is no need to copy it to the SPECS directory. Make the SPECS directory your pwd, then create the link.

|

||||

|

||||

```

|

||||

cd ~/rpmbuild/SPECS/

|

||||

ln -s ~/development/spec/utils.spec

|

||||

```

|

||||

|

||||

Run the following command to build the rpm. It should only take a moment to create the rpm if no errors occur.

|

||||

|

||||

```

|

||||

rpmbuild --target noarch -bb utils.spec

|

||||

```

|

||||

|

||||

Check in the ~/rpmbuild/RPMS/noarch directory to verify that the new rpm exists there.

|

||||

|

||||

```

|

||||

[student@testvm1 ~]$ cd rpmbuild/RPMS/noarch/

|

||||

[student@testvm1 noarch]$ ll

|

||||

total 24

|

||||

-rw-rw-r--. 1 student student 24364 Aug 30 10:00 utils-1.0.0-1.noarch.rpm

|

||||

[student@testvm1 noarch]$

|

||||

```

|

||||

|

||||

### Testing the rpm

|

||||

|

||||

As root, install the rpm to verify that it installs correctly and that the files are installed in the correct directories. The exact name of the rpm will depend upon the values you used for the tags in the Preamble section, but if you used the ones in the sample, the rpm name will be as shown in the sample command below:

|

||||

|

||||

```

|

||||

[root@testvm1 ~]# cd /home/student/rpmbuild/RPMS/noarch/

|

||||

[root@testvm1 noarch]# ll

|

||||

total 24

|

||||

-rw-rw-r--. 1 student student 24364 Aug 30 10:00 utils-1.0.0-1.noarch.rpm

|

||||

[root@testvm1 noarch]# rpm -ivh utils-1.0.0-1.noarch.rpm

|

||||

Preparing... ################################# [100%]

|

||||

Updating / installing...

|

||||

1:utils-1.0.0-1 ################################# [100%]

|

||||

```

|

||||

|

||||

Check /usr/local/bin to ensure that the new files are there. You should also verify that the create_motd link in /etc/cron.daily has been created.

|

||||

|

||||

Use the `rpm -q --changelog utils` command to view the changelog. View the files installed by the package using the `rpm -ql utils` command (that is a lowercase L in `ql`.)

|

||||

|

||||

```

|

||||

[root@testvm1 noarch]# rpm -q --changelog utils

|

||||

* Wed Aug 29 2018 Your Name <Youremail@yourdomain.com>

|

||||

- The original package includes several useful scripts. it is

|

||||

primarily intended to be used to illustrate the process of

|

||||

building an RPM.

|

||||

|

||||

[root@testvm1 noarch]# rpm -ql utils

|

||||

/usr/local/bin/create_motd

|

||||

/usr/local/bin/die

|

||||

/usr/local/bin/mymotd

|

||||

/usr/local/bin/sysdata

|

||||

/usr/local/share/utils/Copyright.and.GPL.Notice.txt

|

||||

/usr/local/share/utils/GPL_LICENSE.txt

|

||||

/usr/local/share/utils/utils.spec

|

||||

[root@testvm1 noarch]#

|

||||

```

|

||||

|

||||

Remove the package.

|

||||

|

||||

```

|

||||

rpm -e utils

|

||||

```

|

||||

|

||||

### Experimenting

|

||||

|

||||

Now you will change the spec file to require a package that does not exist. This will simulate a dependency that cannot be met. Add the following line immediately under the existing Requires line:

|

||||

|

||||

```

|

||||

Requires: badrequire

|

||||

```

|

||||

|

||||

Build the package and attempt to install it. What message is displayed?

|

||||

|

||||

We used the `rpm` command to install and delete the `utils` package. Try installing the package with yum or DNF. You must be in the same directory as the package or specify the full path to the package for this to work.

|

||||

|

||||

### Conclusion

|

||||

|

||||

There are many tags and a couple sections that we did not cover in this look at the basics of creating an rpm package. The resources listed below can provide more information. Building rpm packages is not difficult; you just need the right information. I hope this helps you—it took me months to figure things out on my own.

|

||||

|

||||

We did not cover building from source code, but if you are a developer, that should be a simple step from this point.

|

||||

|

||||

Creating rpm packages is another good way to be a lazy sysadmin and save time and effort. It provides an easy method for distributing and installing the scripts and other files that we as sysadmins need to install on many hosts.

|

||||

|

||||

### Resources

|

||||

|

||||

* Edward C. Baily, Maximum RPM, Sams Publishing, 2000, ISBN 0-672-31105-4

|

||||

|

||||

* Edward C. Baily, [Maximum RPM][1], updated online version

|

||||

|

||||

* [RPM Documentation][4]: This web page lists most of the available online documentation for rpm. It includes many links to other websites and information about rpm.

|

||||

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/9/how-build-rpm-packages

|

||||

|

||||

作者:[David Both][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/dboth

|

||||

[1]: http://ftp.rpm.org/max-rpm/

|

||||

[2]: http://rpm.org/index.html

|

||||

[3]: http://www.both.org/?p=960

|

||||

[4]: http://rpm.org/documentation.html

|

||||

@ -1,110 +0,0 @@

|

||||

translating by ypingcn

|

||||

|

||||

Control your data with Syncthing: An open source synchronization tool

|

||||

======

|

||||

Decide how to store and share your personal information.

|

||||

|

||||

|

||||

|

||||

These days, some of our most important possessions—from pictures and videos of family and friends to financial and medical documents—are data. And even as cloud storage services are booming, so there are concerns about privacy and lack of control over our personal data. From the PRISM surveillance program to Google [letting app developers scan your personal emails][1], the news is full of reports that should give us all pause regarding the security of our personal information.

|

||||

|

||||

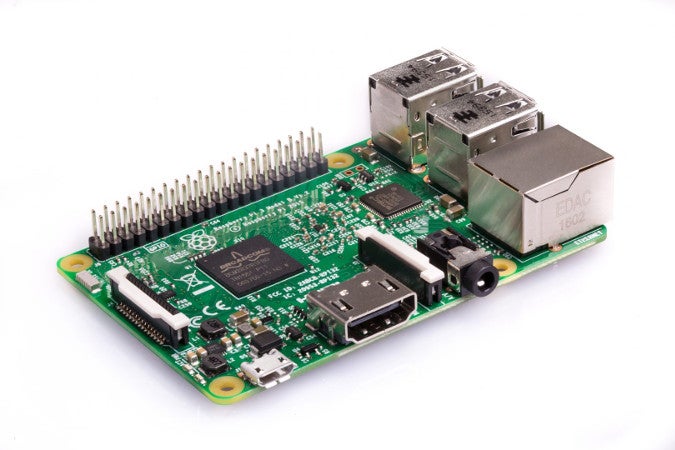

[Syncthing][2] can help put your mind at ease. An open source peer-to-peer file synchronization tool that runs on Linux, Windows, Mac, Android, and others (sorry, no iOS), Syncthing uses its own protocol, called [Block Exchange Protocol][3]. In brief, Syncthing lets you synchronize your data across many devices without owning a server.

|

||||

|

||||

### Linux

|

||||

|

||||

In this post, I will explain how to install and synchronize files between a Linux computer and an Android phone.

|

||||

|

||||

Syncthing is readily available for most popular distributions. Fedora 28 includes the latest version.

|

||||

|

||||

To install Syncthing in Fedora, you can either search for it in Software Center or execute the following command:

|

||||

|

||||

```

|

||||

sudo dnf install syncthing syncthing-gtk

|

||||

|

||||

```

|

||||

|

||||

Once it’s installed, open it. You’ll be welcomed by an assistant to help configure Syncthing. Click **Next** until it asks to configure the WebUI. The safest option is to keep the option **Listen on localhost**. That will disable the web interface and keep unauthorized users away.

|

||||

|

||||

![Syncthing in Setup WebUI dialog box][5]

|

||||

|

||||

Syncthing in Setup WebUI dialog box

|

||||

|

||||

Close the dialog. Now that Syncthing is installed, it’s time to share a folder, connect a device, and start syncing. But first, let’s continue with your other client.

|

||||

|

||||

### Android

|

||||

|

||||

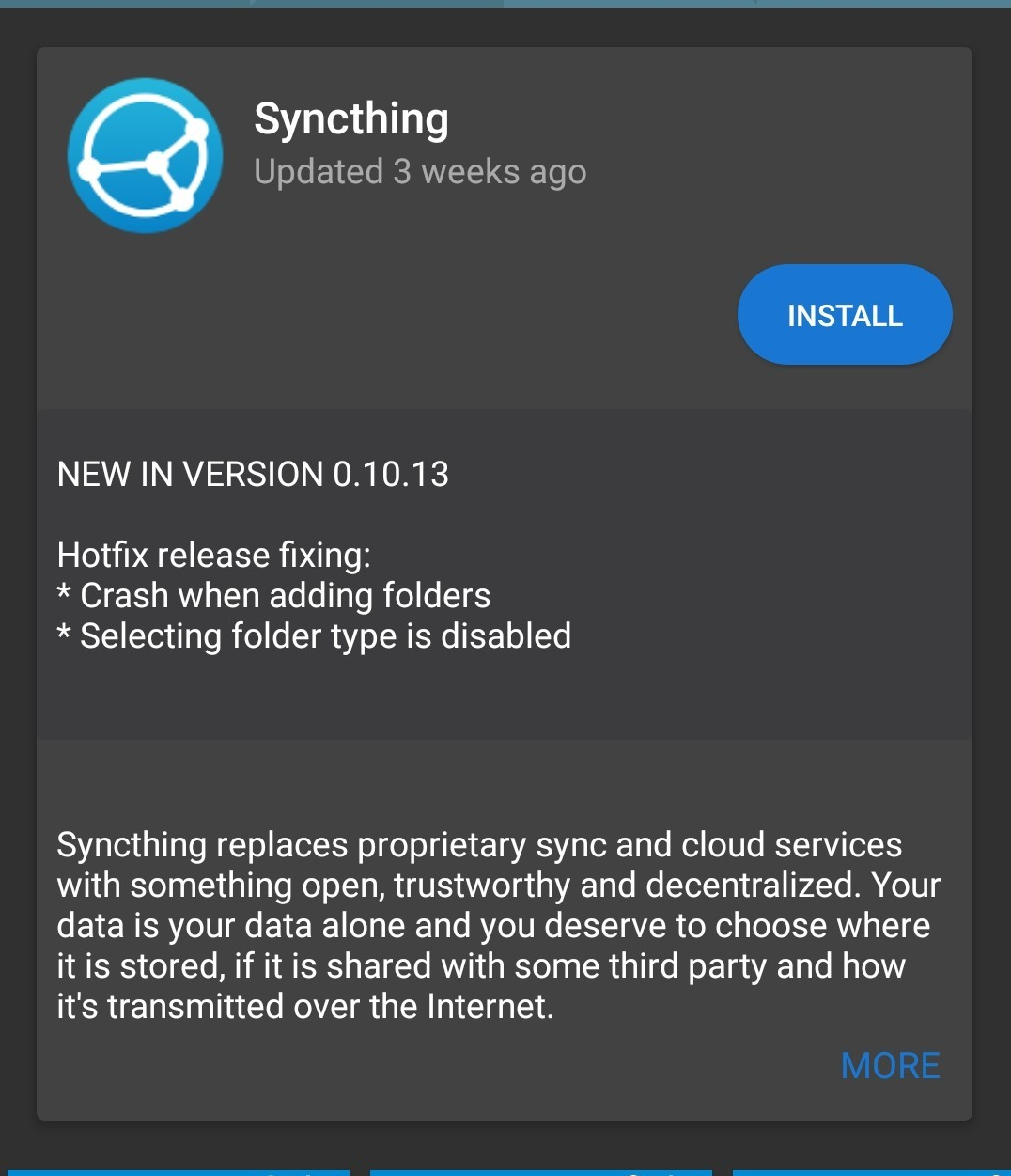

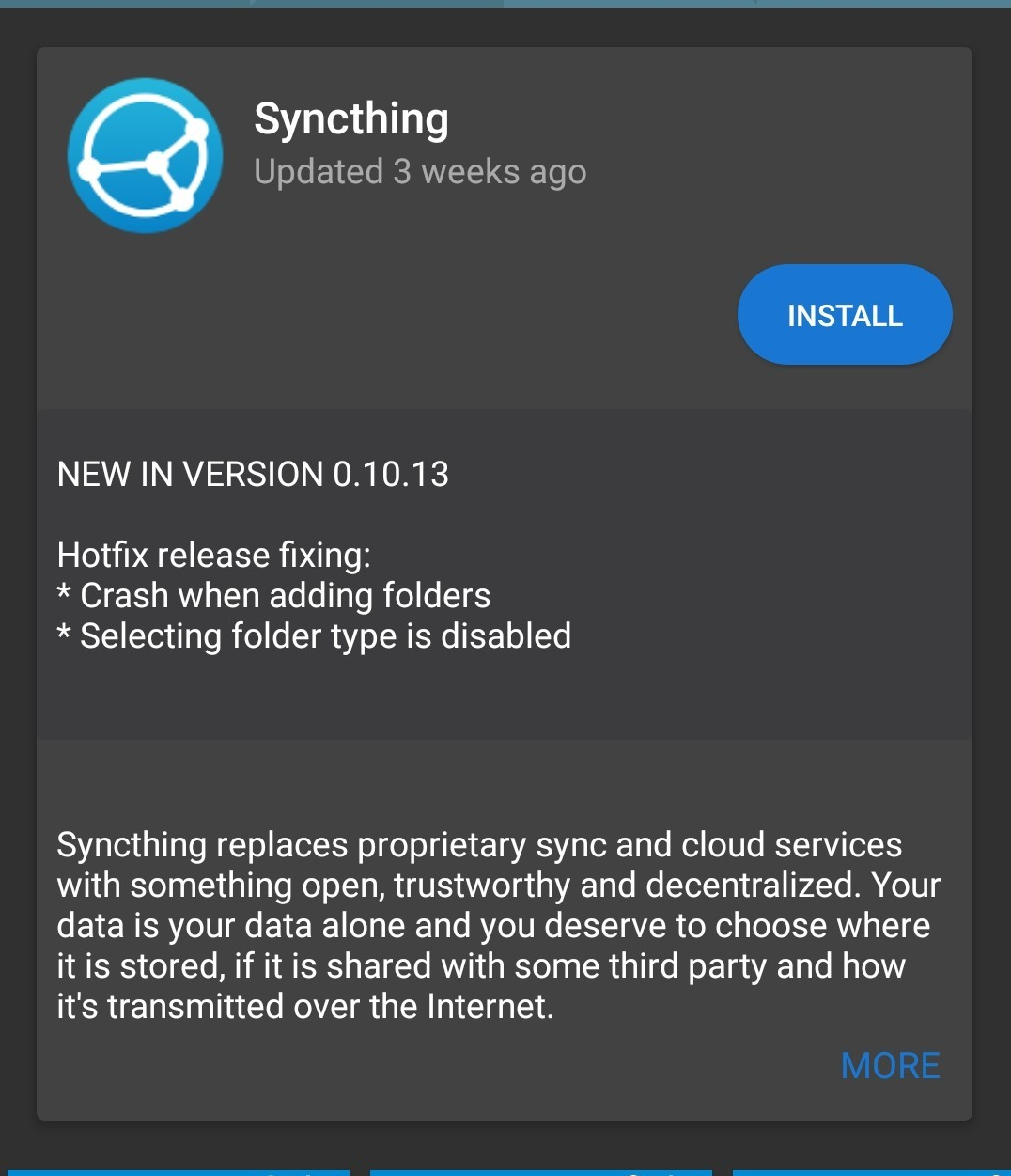

Syncthing is available in Google Play and in F-Droid app stores.

|

||||

|

||||

|

||||

|

||||

Once the application is installed, you’ll be welcomed by a wizard. Grant Syncthing permissions to your storage. You might be asked to disable battery optimization for this application. It is safe to do so as we will optimize the app to synchronize only when plugged in and connected to a wireless network.

|

||||

|

||||

Click on the main menu icon and go to **Settings** , then **Run Conditions**. Tick **Always run in** **the background** , **Run only when charging** , and **Run only on wifi**. Now your Android client is ready to exchange files with your devices.

|

||||

|

||||

There are two important concepts to remember in Syncthing: folders and devices. Folders are what you want to share, but you must have a device to share with. Syncthing allows you to share individual folders with different devices. Devices are added by exchanging device IDs. A device ID is a unique, cryptographically secure identifier that is created when Syncthing starts for the first time.

|

||||

|

||||

### Connecting devices

|

||||

|

||||

Now let’s connect your Linux machine and your Android client.

|

||||

|

||||

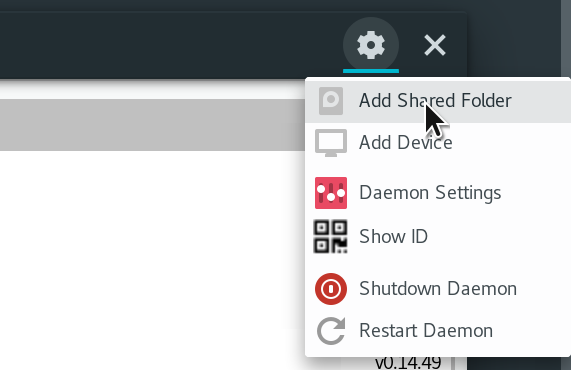

In your Linux computer, open Syncthing, click on the **Settings** icon and click **Show ID**. A QR code will show up.

|

||||

|

||||

In your Android mobile, open Syncthing. In the main screen, click the **Devices** tab and press the **+** symbol. In the first field, press the QR code symbol to open the QR scanner.

|

||||

|

||||

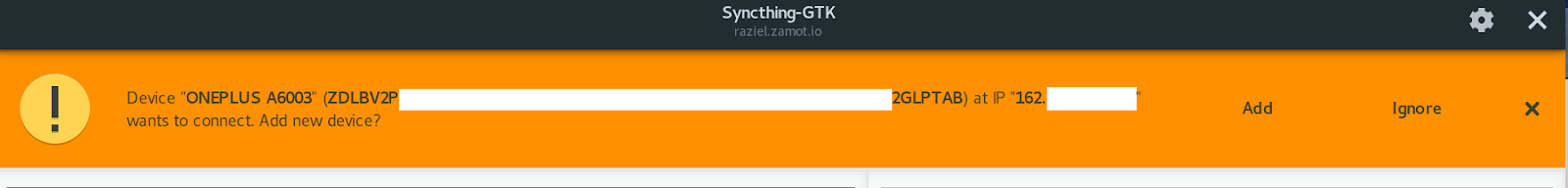

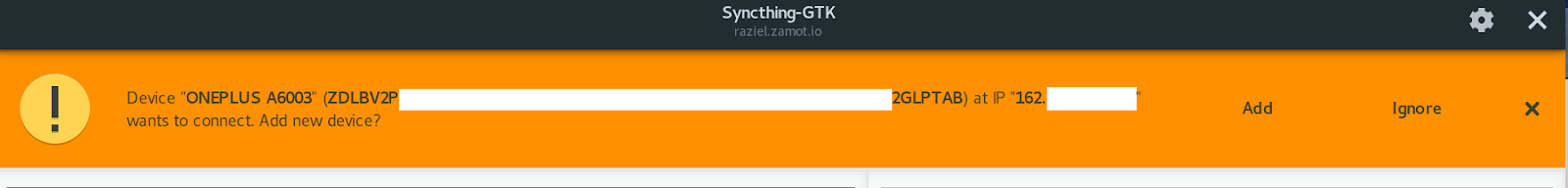

Point your mobile camera to the computer QR code. The Device ID** **field will be populated with your desktop client Device ID. Give it a friendly name and save. Because adding a device goes two ways, you now need to confirm on the computer client that you want to add the Android mobile. It might take a couple of minutes for your computer client to ask for confirmation. When it does, click **Add**.

|

||||

|

||||

|

||||

|

||||

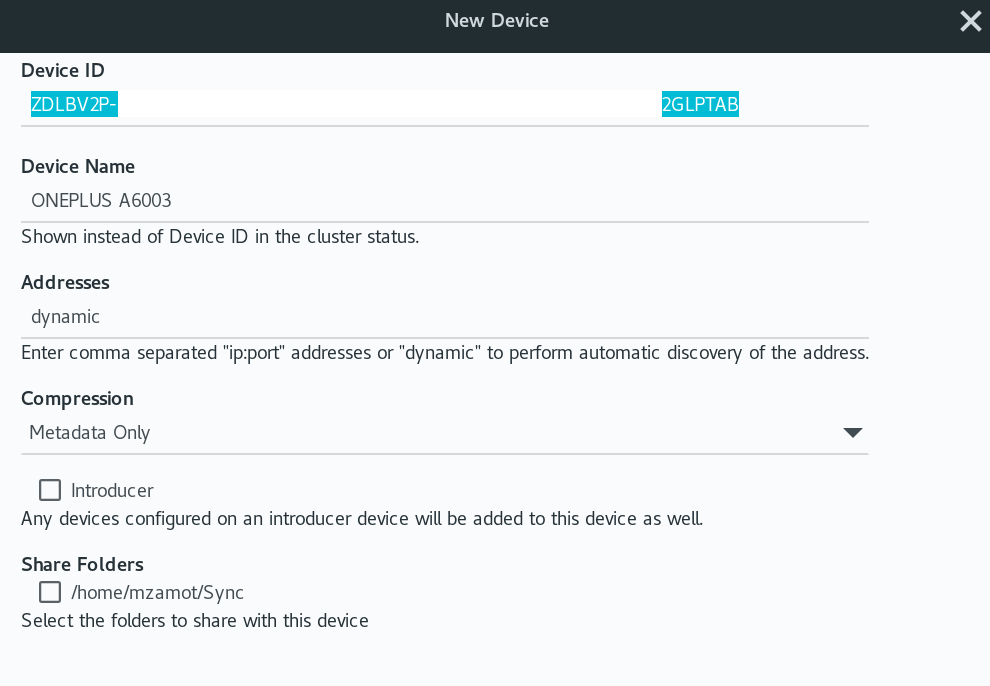

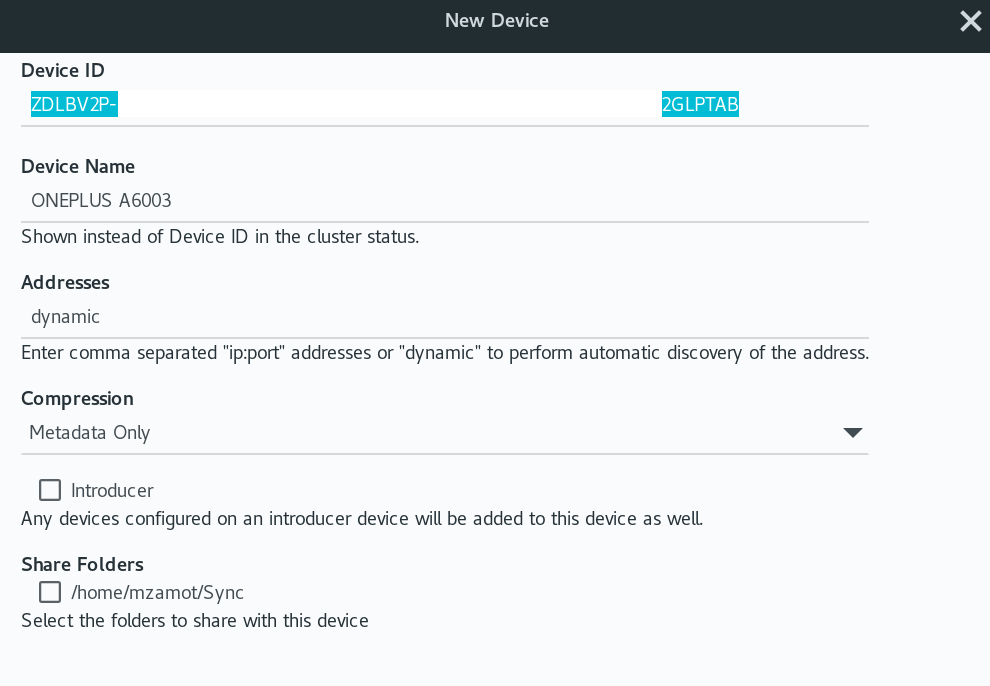

In the **New Device** window, you can verify and configure some options about your new device, like the **Device Name** and **Addresses**. If you keep dynamic, it will try to auto-discover the device IP, but if you want to force one, you can add it in this field. If you already created a folder (more on this later), you can also share it with this new device.

|

||||

|

||||

|

||||

|

||||

Your computer and Android are now paired and ready to exchange files. (If you have more than one computer or mobile phone, simply repeat these steps.)

|

||||

|

||||

### Sharing folders

|

||||

|

||||

Now that the devices you want to sync are already connected, it’s time to share a folder. You can share folders on your computer and the devices you add to that folder will get a copy.

|

||||

|

||||

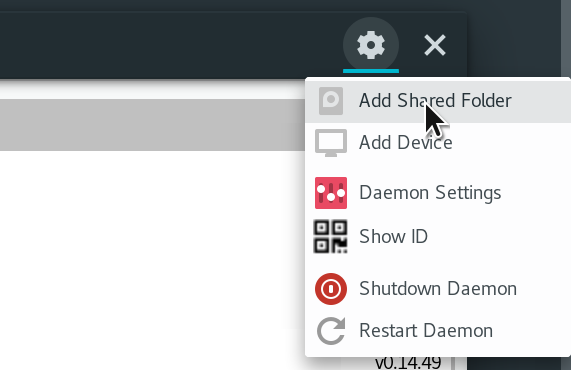

To share a folder, go to **Settings** and click **Add Shared Folder** :

|

||||

|

||||

|

||||

|

||||

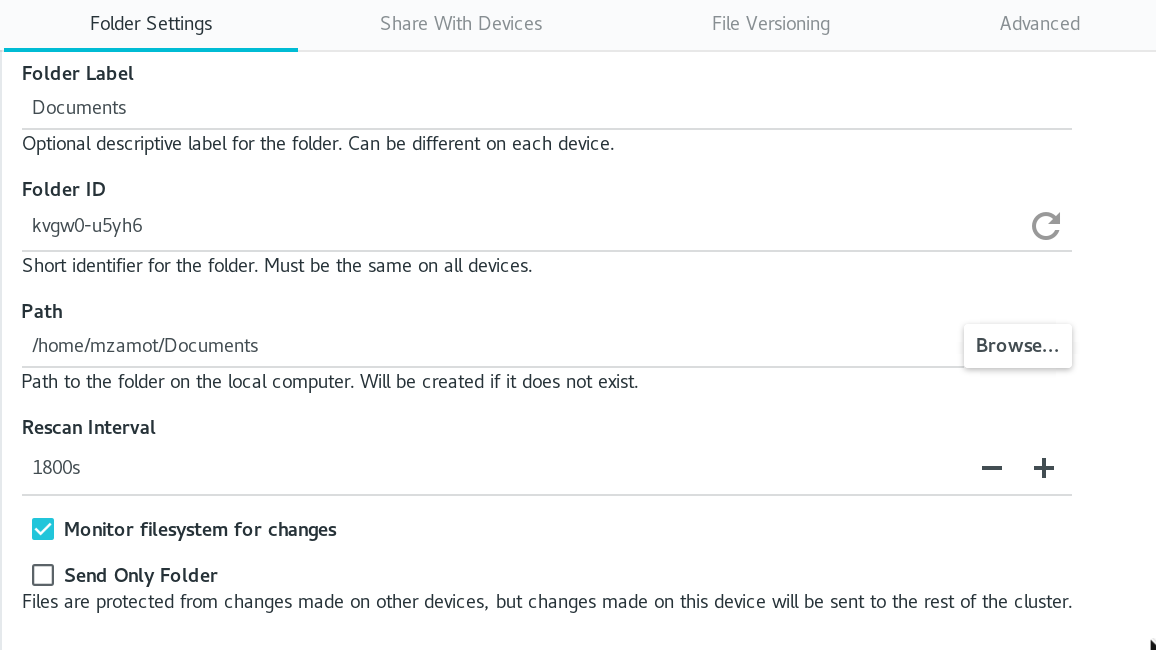

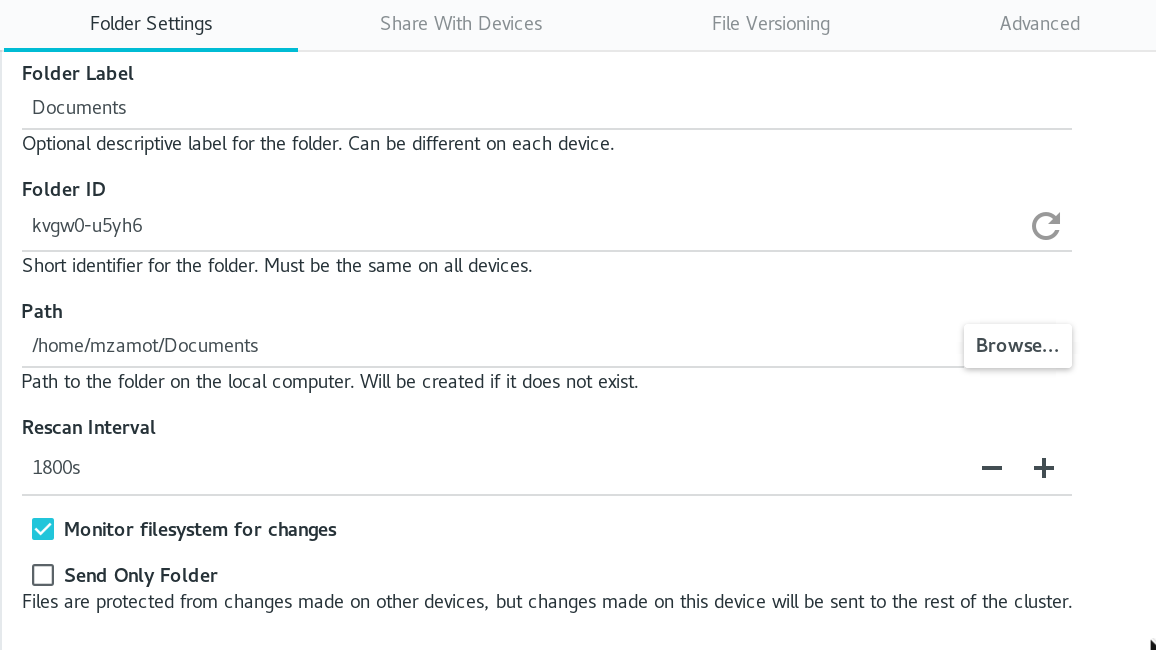

In the next window, enter the information of the folder you want to share:

|

||||

|

||||

|

||||

|

||||

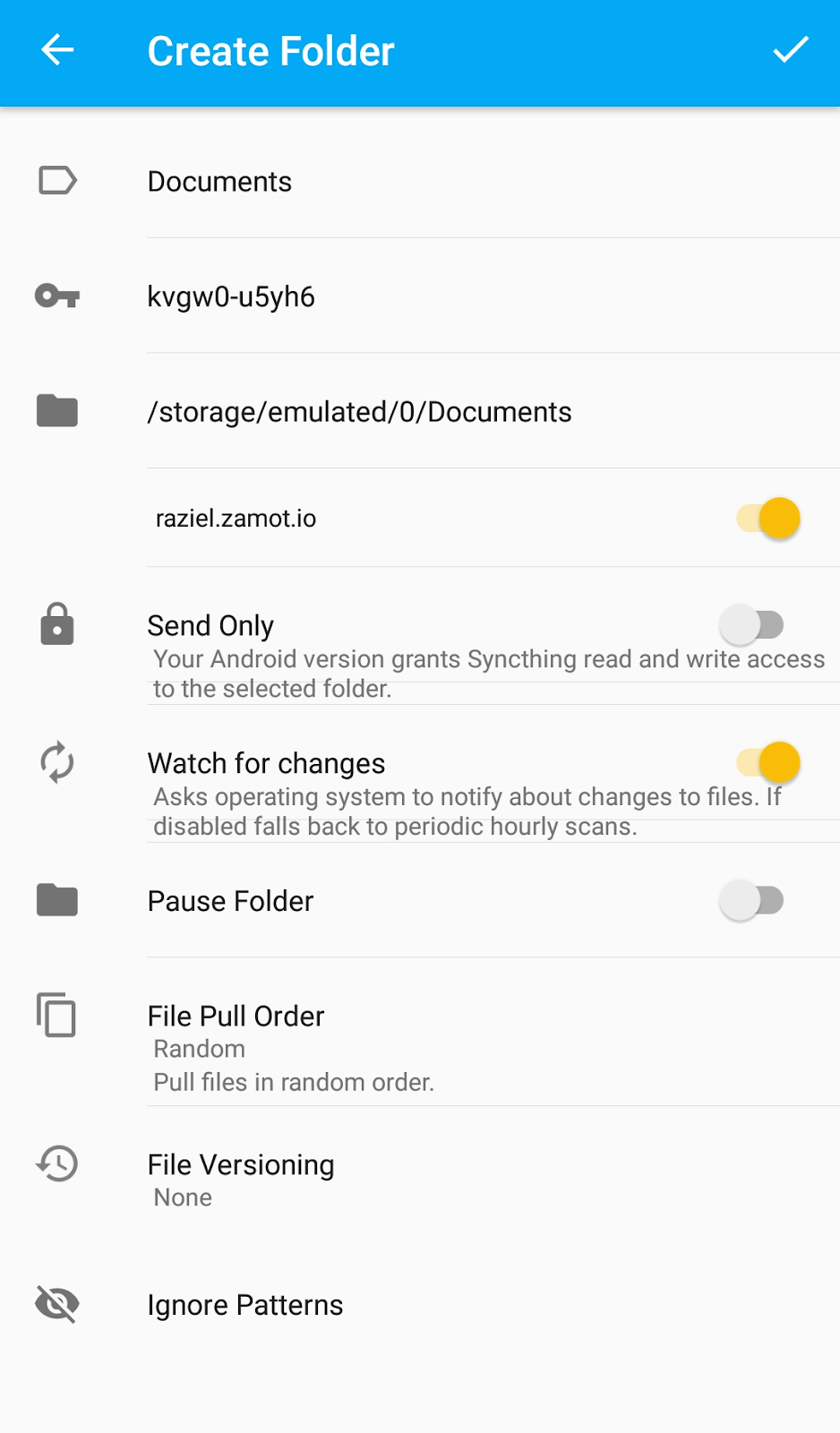

You can use any label you want. **Folder ID** will be generated randomly and will be used to identify the folder between the clients. In **Path** , click **Browse** and locate the folder you want to share. If you want Syncthing to monitor the folder for changes (such as deletes, new files, etc.), click **Monitor filesystem for changes**.

|

||||

|

||||

Remember, when you share a folder, any change that happens on the other clients will be reflected on every single device. That means that if you share a folder containing pictures with other computers or mobile devices, changes in these other clients will be reflected everywhere. If this is not what you want, you can make your folder “Send Only” so it will send files to the clients, but the other clients’ changes won’t be synced.

|

||||

|

||||

When this is done, go to **Share with Devices** and select the hosts you want to sync with your folder:

|

||||

|

||||

All the devices you select will need to accept the share request; you will get a notification from the devices:

|

||||

|

||||

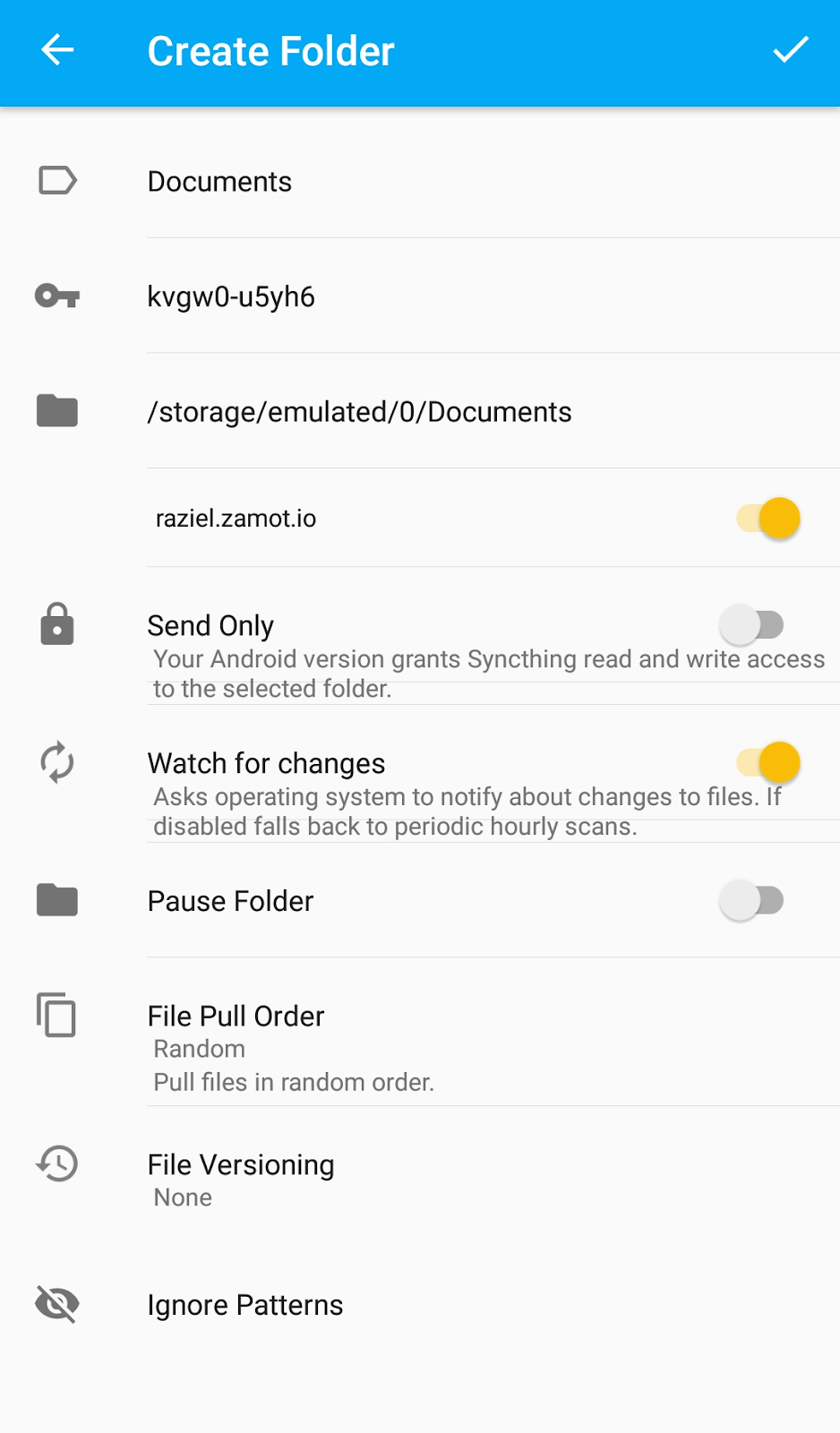

Just as when you shared the folder, you must configure the new shared folder:

|

||||

|

||||

|

||||

|

||||

Again, here you can define any label, but the ID must match each client. In the folder option, select the destination for the folder and its files. Remember that any change done in this folder will be reflected with every device allowed in the folder.

|

||||

|

||||

These are the steps to connect devices and share folders with Syncthing. It might take a few minutes to start copying, depending on your network settings or if you are not on the same network.

|

||||

|

||||

Syncthing offers many more great features and options. Try it—and take control of your data.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/9/take-control-your-data-syncthing

|

||||

|

||||

作者:[Michael Zamot][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/mzamot

|

||||

[1]: https://gizmodo.com/google-says-it-doesnt-go-through-your-inbox-anymore-bu-1827299695

|

||||

[2]: https://syncthing.net/

|

||||

[3]: https://docs.syncthing.net/specs/bep-v1.html

|

||||

[4]: /file/410191

|

||||

[5]: https://opensource.com/sites/default/files/uploads/syncthing1.png (Syncthing in Setup WebUI dialog box)

|

||||

@ -1,61 +1,59 @@

|

||||

translating by hopefully2333

|

||||

|

||||

Play Windows games on Fedora with Steam Play and Proton

|

||||

在 Fedora 上使用 Steam play 和 Proton 来玩 Windows 游戏

|

||||

======

|

||||

|

||||

|

||||

|

||||

Some weeks ago, Steam [announced][1] a new addition to Steam Play with Linux support for Windows games using Proton, a fork from WINE. This capability is still in beta, and not all games work. Here are some more details about Steam and Proton.

|

||||

几周前,Steam 宣布要给 Steam Play 增加一个新组件,用于支持在 Linux 平台上使用 Proton 来玩 Windows 的游戏,这个组件是 WINE 的一个分支。这个功能仍然处于测试阶段,且并非对所有游戏都有效。这里有一些关于 Steam 和 Proton 的细节。

|

||||

|

||||

According to the Steam website, there are new features in the beta release:

|

||||

据 Steam 网站称,测试版本中有以下这些新功能:

|

||||

|

||||

* Windows games with no Linux version currently available can now be installed and run directly from the Linux Steam client, complete with native Steamworks and OpenVR support.

|

||||

* DirectX 11 and 12 implementations are now based on Vulkan, which improves game compatibility and reduces performance impact.

|

||||

* Fullscreen support has been improved. Fullscreen games seamlessly stretch to the desired display without interfering with the native monitor resolution or requiring the use of a virtual desktop.

|

||||

* Improved game controller support. Games automatically recognize all controllers supported by Steam. Expect more out-of-the-box controller compatibility than even the original version of the game.

|

||||

* Performance for multi-threaded games has been greatly improved compared to vanilla WINE.

|

||||

* 现在没有 Linux 版本的 Windows 游戏可以直接从 Linux 上的 Steam 客户端进行安装和运行,并且有完整、原生的 Steamworks 和 OpenVR 的支持。

|

||||

* 现在 DirectX 11 和 12 的实现都基于 Vulkan,它可以提高游戏的兼容性并减小游戏性能收到的影响。

|

||||

* 全屏支持已经得到了改进,全屏游戏时可以无缝扩展到所需的显示程度,而不会干扰到显示屏本身的分辨率或者说需要使用虚拟桌面。

|

||||

* 改进了对游戏控制器的支持,游戏自动识别所有 Steam 支持的控制器,比起游戏的原始版本,能够获得更多开箱即用的控制器兼容性。

|

||||

* 和 vanilla WINE 比起来,游戏的多线程性能得到了极大的提高。

|

||||

|

||||

|

||||

|

||||

### Installation

|

||||

### 安装

|

||||

|

||||

If you’re interested in trying Steam with Proton out, just follow these easy steps. (Note that you can ignore the first steps to enable the Steam Beta if you have the [latest updated version of Steam installed][2]. In that case you no longer need Steam Beta to use Proton.)

|

||||

如果你有兴趣,想尝试一下 Steam 和 Proton。请按照下面这些简单的步骤进行操作。(请注意,如果你已经安装了最新版本的 Steam,可以忽略启用 Steam 测试版这个第一步。在这种情况下,你不再需要通过 Steam 测试版来使用 Proton。)

|

||||

|

||||

Open up Steam and log in to your account. This example screenshot shows support for only 22 games before enabling Proton.

|

||||

打开 Steam 并登陆到你的帐户,这个截屏示例显示的是在使用 Proton 之前仅支持22个游戏。

|

||||

|

||||

![][3]

|

||||

|

||||

Now click on Steam option on top of the client. This displays a drop down menu. Then select Settings.

|

||||

现在点击客户端顶部的 Steam 选项,这会显示一个下拉菜单。然后选择设置。

|

||||

|

||||

![][4]

|

||||

|

||||

Now the settings window pops up. Select the Account option and next to Beta participation, click on change.

|

||||

现在弹出了设置窗口,选择账户选项,并在 Beta participation 旁边,点击更改。

|

||||

|

||||

![][5]

|

||||

|

||||

Now change None to Steam Beta Update.

|

||||

现在将 None 更改为 Steam Beta Update。

|

||||

|

||||

![][6]

|

||||

|

||||

Click on OK and a prompt asks you to restart.

|

||||

点击确定,然后系统会提示你重新启动。

|

||||

|

||||

![][7]

|

||||

|

||||

Let Steam download the update. This can take a while depending on your internet speed and computer resources.

|

||||

让 Steam 下载更新,这会需要一段时间,具体需要多久这要取决于你的网络速度和电脑配置。

|

||||

|

||||

![][8]

|

||||

|

||||