mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-01-25 23:11:02 +08:00

Merge branch 'master' of https://github.com/LCTT/TranslateProject

merge from LCTT

commit

e572bbb3d4

@ -0,0 +1,70 @@

|

||||

Tips for success when getting started with Ansible
======

> Key points for automating your data center with Ansible.

|

||||

|

||||

|

||||

|

||||

Ansible 是一个开源自动化工具,可以从中央控制节点统一配置服务器、安装软件或执行各种 IT 任务。它采用一对多、<ruby>无客户端<rt>agentless</rt></ruby>的机制,从控制节点上通过 SSH 发送指令给远端的客户机来完成任务(当然除了 SSH 外也可以用别的协议)。

|

||||

|

||||

Ansible 的主要使用群体是系统管理员,他们经常会周期性地执行一些安装、配置应用的工作。尽管如此,一些非特权用户也可以使用 Ansible,例如数据库管理员就可以通过 Ansible 用 `mysql` 这个用户来创建数据库、添加数据库用户、定义访问权限等。

|

||||

|

||||

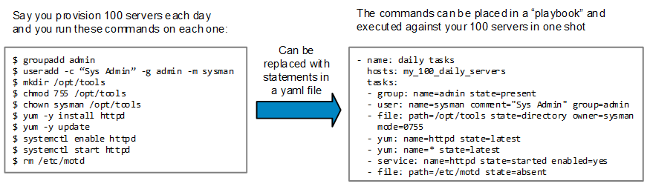

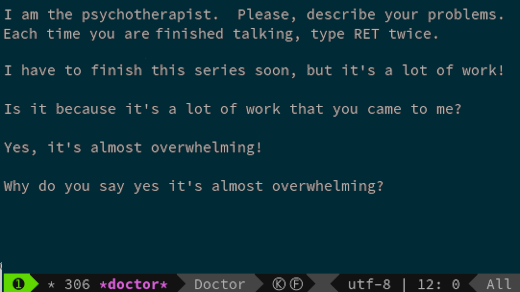

让我们来看一个简单的使用场景,一位系统管理员每天要配置 100 台服务器,并且必须在每台机器上执行一系列 Bash 命令,然后交付给用户。

|

||||

|

||||

|

||||

|

||||

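This is a simple example, but it should illustrate how easily commands can be specified in YAML files and executed on remote servers. In a heterogeneous environment, conditional statements can be added so that certain commands run only on certain servers (e.g., run `yum` commands only on systems that are not Ubuntu or Debian).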
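As a rough sketch of the kind of conditional task described above, the following shell snippet writes a minimal playbook to disk. The file name `site.yml`, the `webservers` inventory group, and the `httpd` package are illustrative assumptions, not taken from the original article. The `when:` clause restricts the `yum` task to hosts whose `ansible_os_family` fact is not `Debian`, which covers both Debian and Ubuntu.

```shell
#!/bin/sh
# Write a small, hypothetical playbook; nothing here contacts a real host.
cat > site.yml <<'EOF'
---
- hosts: webservers        # assumed inventory group
  become: true
  tasks:
    - name: Install httpd on non-Debian systems only
      yum:
        name: httpd
        state: present
      when: ansible_os_family != "Debian"
EOF

# With Ansible installed, a dry run would look like:
#   ansible-playbook --check site.yml
echo "wrote site.yml"
```

Keeping the condition on the task, instead of maintaining two command lists by hand, lets one inventory mix distributions.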

|

||||

|

||||

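One important feature of Ansible is that a playbook describes a desired end state for a computer system, so a playbook can be run repeatedly against a server without changing its final state (LCTT translator's note: that is, it is idempotent). If a task has already been carried out (e.g., "user `sysman` already exists"), Ansible simply skips it and moves on to the next task.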
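The idea of idempotence can be illustrated in plain shell. This is only an analogy under stated assumptions, not how Ansible is implemented: the hypothetical helper `ensure_line` appends a line to a file only when it is missing, so running it twice leaves the file in the same state as running it once.

```shell
#!/bin/sh
# ensure_line FILE LINE: append LINE to FILE only if it is not already present.
ensure_line() {
    grep -qxF -- "$2" "$1" 2>/dev/null || printf '%s\n' "$2" >> "$1"
}

state_file=$(mktemp)
ensure_line "$state_file" "sysman"   # first run: the line is added
ensure_line "$state_file" "sysman"   # second run: nothing changes
count=$(wc -l < "$state_file" | tr -d ' ')
echo "lines after two runs: $count"
```

A naive `echo sysman >> file` would instead grow the file on every run, which is the behavior a state-describing tool is meant to avoid.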

|

||||

|

||||

### 定义

|

||||

|

||||

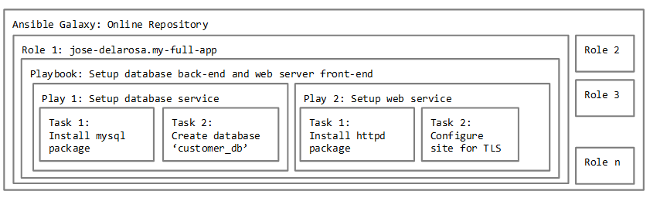

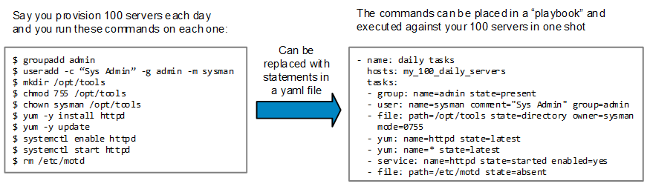

* <ruby>任务<rt>task</rt></ruby>:是工作的最小单位,它可以是个动作,比如“安装一个数据库服务”、“安装一个 web 服务器”、“创建一条防火墙规则”或者“把这个配置文件拷贝到那个服务器上去”。

|

||||

* <ruby>动作<rt>play</rt></ruby>: 由任务组成,例如,一个动作的内容是要“设置一个数据库,给 web 服务用”,这就包含了如下任务:1)安装数据库包;2)设置数据库管理员密码;3)创建数据库实例;4)为该实例分配权限。

|

||||

* <ruby>剧本<rt>playbook</rt></ruby>:(LCTT 译注:playbook 原指美式橄榄球队的[战术手册][5],也常指“剧本”,此处惯例采用“剧本”译名)由动作组成,一个剧本可能像这样:“设置我的网站,包含后端数据库”,其中的动作包括:1)设置数据库服务器;2)设置 web 服务器。

|

||||

* <ruby>角色<rt>role</rt></ruby>:用来保存和组织剧本,以便分享和再次使用它们。还拿上个例子来说,如果你需要一个全新的 web 服务器,就可以用别人已经写好并分享出来的角色来设置。因为角色是高度可配置的(如果编写正确的话),可以根据部署需求轻松地复用它们。

|

||||

* <ruby>[Ansible 星系][1]<rt>Ansible Galaxy</rt></ruby>:是一个在线仓库,里面保存的是由社区成员上传的角色,方便彼此分享。它与 GitHub 紧密集成,因此这些角色可以先在 Git 仓库里组织好,然后通过 Ansible 星系分享出来。

|

||||

|

||||

这些定义以及它们之间的关系可以用下图来描述:

|

||||

|

||||

|

||||

|

||||

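Note that the example above is just one way to organize tasks. We could just as well split the playbooks that install the database and the web server into separate roles. The most common roles on Ansible Galaxy install and configure individual applications; see these examples for installing [mysql][2] and [httpd][3].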

|

||||

|

||||

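### Tips for writing playbooks

The best resource for learning Ansible is its [official documentation][4]. And, as with anything else, a search engine is your friend. I recommend starting with simple tasks such as installing applications or creating users. Here are some useful guidelines:

* When testing, use a small subset of servers so that your plays run faster. If they succeed on one server, they will most likely succeed on the others.

* Always do a dry run before the real one to make sure every command works (run with the `--check` flag).

* Test as often as you need to without fear of breaking things. Tasks describe a desired state, so if the system is already in that state, the task is simply skipped.

* Make sure all host names defined in `/etc/ansible/hosts` are resolvable.

* Because communication with remote hosts happens over SSH, the control node must accept their host keys, so you have two options: 1) exchange keys with the remote hosts before you start; or 2) be ready to type "yes" to accept the SSH host key of each new remote host you begin managing (LCTT translator's note: a less secure alternative is to set `StrictHostKeyChecking` to "no" in the control node's ssh configuration).

* Although you can combine tasks for different Linux distributions in one playbook, it is cleaner to write a separate playbook for each distribution.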

|

||||

|

||||

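### Summary

Ansible is a great choice for automating your data center because it:

* Is agentless, so it is easier to install than other automation tools.

* Stores instructions in YAML files (JSON is also supported), which is simpler than writing shell scripts.

* Is open source, so you can contribute to it and make it even better!

How have you used Ansible to automate your data center? Share your experience in the comments.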

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/2/tips-success-when-getting-started-ansible

|

||||

|

||||

Author: [Jose Delarosa][a]

Translator: [jdh8383](https://github.com/jdh8383)

Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux.cn](https://linux.cn/)

|

||||

|

||||

[a]:https://opensource.com/users/jdelaros1

|

||||

[1]:https://galaxy.ansible.com/

|

||||

[2]:https://galaxy.ansible.com/bennojoy/mysql/

|

||||

[3]:https://galaxy.ansible.com/xcezx/httpd/

|

||||

[4]:http://docs.ansible.com/

|

||||

[5]:https://usafootball.com/football-playbook/

|

||||

@ -0,0 +1,80 @@

|

||||

3 open source tools for scholarly publishing
======

> The scholarly publishing industry is worth more than $26 billion a year.

|

||||

|

||||

|

||||

|

||||

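One industry that lags behind others in adopting digital or open source tools is the competitive and lucrative world of scholarly publishing. According to figures published in [The Guardian][1] by Stephen Buranyi last year, the industry is worth more than £19 billion ($26 billion), yet its systems for selecting, publishing, and sharing even the most important scientific research still carry many of the constraints of print media. New digital-era technologies present a huge opportunity to accelerate discovery, make science collaborative instead of competitive, and redirect investment from infrastructure development into research that benefits society.

The non-profit [eLife initiative][2] was established by research funders to break this impasse through the use of digital and open source technology. In addition to publishing an open-access journal for important advances in life science and biomedical research, eLife has made itself into a platform for experimentation and for showcasing innovation in research communication, with most of this experimentation based around the open source ethos.

Working on open publishing infrastructure projects gives us the opportunity to accelerate the reach and adoption of science and technology and to improve the user experience. We believe this opportunity is important for moving the scholarly publishing industry forward. Speaking very generally, the user experience of open source products is often underdeveloped, which can sometimes discourage people from using them. As part of our investment in open source software (OSS) development, we place a strong emphasis on user experience in order to encourage more users to adopt these products.

All of our code is open source, and we actively encourage community participation in our projects. For us this means faster iteration, more experimentation, greater transparency, and wider reach for our work.

The projects we are involved in, such as the development of Libero (formerly known as [eLife Continuum][3]) and the [Reproducible Document Stack][4], along with our recent collaboration with [Hypothesis][5], show how OSS can be used to bring about positive changes in the assessment, publication, and communication of new discoveries.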

|

||||

|

||||

### Libero

|

||||

|

||||

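Libero is a suite of services and applications for publishers that includes a post-production publishing system, a full front-end user interface pattern suite, Libero's Lens Reader, an open API, and a search and recommendation engine.

Last year we took a user-driven approach to redesigning the front end of Libero, resulting in less distracting site "furniture" and a greater focus on research articles. We tested and iterated on all the core functions of the site with members of the eLife community to ensure the best possible reading experience for everyone. The site's new API also provides simpler access to content for machine readability, including text mining, machine learning, and online application development.

The content on our website and the patterns that drive the new design are all open source, to encourage future product development by eLife and by other publishers who wish to use them.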

|

||||

|

||||

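### The Reproducible Document Stack

In collaboration with [Substance][6] and [Stencila][7], eLife is also engaged in a project to create the Reproducible Document Stack (RDS): an open stack of tools for authoring, compiling, and publishing computationally reproducible manuscripts online.

Today a growing number of researchers are able to document their computational experiments through languages such as [R Markdown][8] and [Python][9]. These can serve as important parts of the experimental record, but although they can be shared independently of or alongside the resulting research article, traditional publishing workflows tend to treat them as secondary content. To publish a paper, researchers using these languages often have little option but to submit their computational results as "flattened" figures. This loses much of the value of the experiment and the reusability of the code and data. Electronic notebook solutions such as [Jupyter][10] do let researchers publish in a reusable, executable form, but this remains a supplement to the published manuscript, not an integral part of it.

The [Reproducible Document Stack][11] project aims to address these challenges by developing and publishing a working prototype of a reproducible manuscript that treats code and data as integral parts of the document and demonstrates a complete end-to-end technology stack from authoring through to publication. It will ultimately allow authors to submit their manuscripts in a format that includes embedded code blocks and computed outputs (statistical results, tables, or graphs), and to have those remain visible and executable throughout the publication process. Publishers will then be able to preserve these assets directly as integral parts of the published online article.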

|

||||

|

||||

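### Open annotation with Hypothesis

More recently, we introduced open annotation in collaboration with [Hypothesis][12], enabling users of our website to write comments, highlight important sections of articles, and engage with the online reading public.

Through this collaboration, the open source Hypothesis software was customized with new moderation features, single sign-on authentication, and user-interface customization options, giving publishers more control over its implementation on their own sites. These enhancements are already driving higher-quality discussions around published scholarly content.

The tool can be integrated seamlessly into publishers' websites; the scholarly publishing platform [PubFactory][13] and the content solutions provider [Ingenta][14] have already taken advantage of its improved feature set. [HighWire][15] and [Silverchair][16] also offer their publishers the opportunity to implement the service.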

|

||||

|

||||

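### Other industries and open source software

Over time, we hope to see more publishers adopt Hypothesis, Libero, and other open source projects to help them foster the discovery and reuse of important scientific research. But the opportunities for innovation that eLife has found can also be leveraged by other industries, since this software and other OSS technologies are prevalent there as well.

The world of data science would be nowhere without high-quality, well-supported open source software and the communities built around it; [TensorFlow][17] is a leading example. Thanks to OSS and its communities, all areas of AI and machine learning have advanced far faster than other areas of computing. Similar is the explosive growth of Linux as a cloud web host, followed by Docker containers, and now Kubernetes, one of the most popular open source projects on GitHub.

All of these technologies enable organizations to do more with less and to focus on innovation instead of reinventing the wheel. And in the end, that is the real benefit of OSS: it lets us all learn from each other's failures while building on each other's successes.

We are always looking for opportunities to engage with the best talent and ideas at the interface of research and technology. You can find out more about these opportunities on [eLife Labs][18] or by contacting [innovation@elifesciences.org][19].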

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/3/scientific-publishing-software

|

||||

|

||||

Author: [Paul Shannon][a]

Translator: [tomjlw](https://github.com/tomjlw)

Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux.cn](https://linux.cn/)

|

||||

|

||||

[a]:https://opensource.com/users/pshannon

|

||||

[1]:https://www.theguardian.com/science/2017/jun/27/profitable-business-scientific-publishing-bad-for-science

|

||||

[2]:https://elifesciences.org/about

|

||||

[3]:https://elifesciences.org/inside-elife/33e4127f/elife-introduces-continuum-a-new-open-source-tool-for-publishing

|

||||

[4]:https://elifesciences.org/for-the-press/e6038800/elife-supports-development-of-open-technology-stack-for-publishing-reproducible-manuscripts-online

|

||||

[5]:https://elifesciences.org/for-the-press/81d42f7d/elife-enhances-open-annotation-with-hypothesis-to-promote-scientific-discussion-online

|

||||

[6]:https://github.com/substance

|

||||

[7]:https://github.com/stencila/stencila

|

||||

[8]:https://rmarkdown.rstudio.com/

|

||||

[9]:https://www.python.org/

|

||||

[10]:http://jupyter.org/

|

||||

[11]:https://elifesciences.org/labs/7dbeb390/reproducible-document-stack-supporting-the-next-generation-research-article

|

||||

[12]:https://github.com/hypothesis

|

||||

[13]:http://www.pubfactory.com/

|

||||

[14]:http://www.ingenta.com/

|

||||

[15]:https://github.com/highwire

|

||||

[16]:https://www.silverchair.com/community/silverchair-universe/hypothesis/

|

||||

[17]:https://www.tensorflow.org/

|

||||

[18]:https://elifesciences.org/labs

|

||||

[19]:mailto:innovation@elifesciences.org

|

||||

@ -1,16 +1,18 @@

|

||||

[#]:collector:(lujun9972)

|

||||

[#]:translator:(lujun9972)

|

||||

[#]:reviewer:( )

|

||||

[#]:publisher:( )

|

||||

[#]:url:( )

|

||||

[#]:subject:(Schedule a visit with the Emacs psychiatrist)

|

||||

[#]:via:(https://opensource.com/article/18/12/linux-toy-eliza)

|

||||

[#]:author:(Jason Baker https://opensource.com/users/jason-baker)

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (lujun9972)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-10601-1.html)

|

||||

[#]: subject: (Schedule a visit with the Emacs psychiatrist)

|

||||

[#]: via: (https://opensource.com/article/18/12/linux-toy-eliza)

|

||||

[#]: author: (Jason Baker https://opensource.com/users/jason-baker)

|

||||

|

||||

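Schedule a visit with the Emacs psychiatrist
======

Eliza is a natural language processing chatbot hiding inside one of Linux's most popular text editors.

> Eliza is a natural language processing chatbot hiding inside one of Linux's most popular text editors.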

|

||||

|

||||

|

||||

|

||||

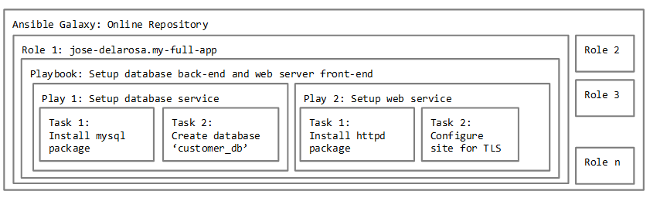

欢迎你,今天时期 24 天的 Linux 命令行玩具的又一天。如果你是第一次访问本系列,你可能会问什么是命令行玩具呢。我们将会逐步确定这个概念,但一般来说,它可能是一个游戏,或任何能让你在终端玩的开心的其他东西。

|

||||

|

||||

@ -22,24 +24,23 @@ Eliza 是一个隐藏于某个 Linux 最流行文本编辑器中的自然语言

|

||||

|

||||

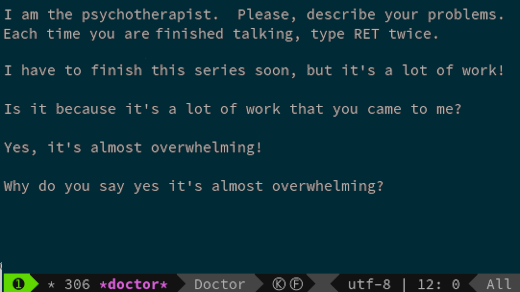

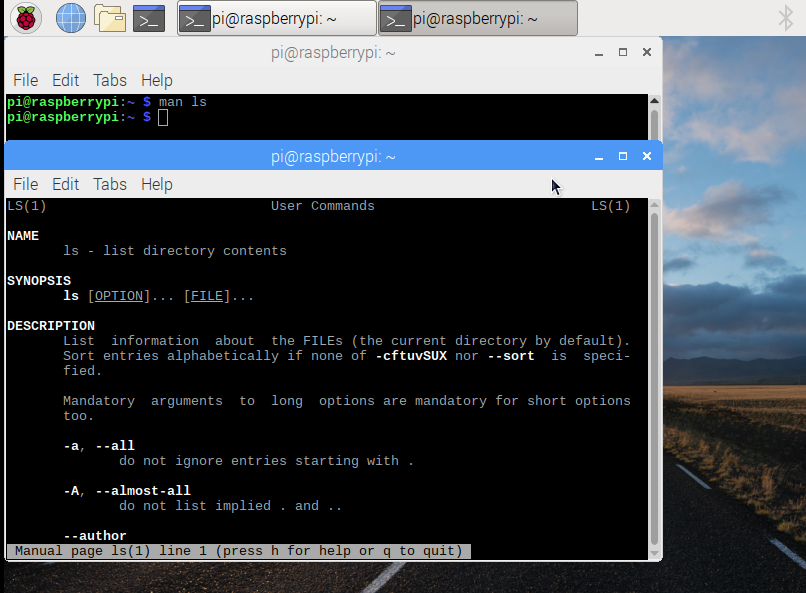

要启动 [Eliza][1],首先,你需要启动 Emacs。很有可能 Emacs 已经安装在你的系统中了,但若没有,它基本上也肯定在你默认的软件仓库中。

|

||||

|

||||

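Since I've been insisting that the tools in this series run in the terminal, launch Emacs with the **-nw** flag to keep it within your terminal emulator.

Since I've been insisting that the tools in this series run in the terminal, launch Emacs with the `-nw` flag to keep it within your terminal emulator.

```
$ emacs -nw
```

In Emacs, type M-x doctor to launch Eliza. For those of us like me from a Vim background who have no idea what this means, just hit escape, type x, and then type doctor. Then, share all of your holiday frustrations.

In Emacs, type `M-x doctor` to launch Eliza. For those of us like me from a Vim background who have no idea what this means, just hit `escape`, type `x`, and then type `doctor`. Then, share all of your holiday frustrations.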

|

||||

|

||||

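Eliza has a long history, dating back to the mid-1960s at the MIT Artificial Intelligence Lab. [Wikipedia ][2] has a detailed account of its history.

Eliza isn't the only amusement inside Emacs. Check out the [manual ][3] for a whole list of fun toys.

Eliza has a long history, dating back to the mid-1960s at the MIT Artificial Intelligence Lab. [Wikipedia][2] has a detailed account of its history.

Eliza isn't the only amusement inside Emacs. Check out the [manual][3] for a whole list of fun toys.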

|

||||

|

||||

![Linux toy:eliza animated][5]

|

||||

|

||||

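Do you have a favorite command-line toy that you think I ought to include? We're running out of time, but I'd still love to hear your suggestions. Let me know in the comments below, and I'll check it out. And let me know what you thought of today's amusement.

Be sure to check out yesterday's toy, [Head to the arcade in your Linux terminal with this Pac-man clone ][6], and come back tomorrow for another!

Be sure to check out yesterday's toy, [Head to the arcade in your Linux terminal with this Pac-man clone][6], and come back tomorrow for another!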

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -48,7 +49,7 @@ via: https://opensource.com/article/18/12/linux-toy-eliza

|

||||

Author: [Jason Baker][a]

Topic selection: [lujun9972][b]

Translator: [lujun9972](https://github.com/lujun9972)

Proofreader: [Proofreader ID](https://github.com/校对者ID)

Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux.cn](https://linux.cn/)

|

||||

|

||||

@ -1,8 +1,8 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (geekpi)

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-10602-1.html)

|

||||

[#]: subject: (Midori: A Lightweight Open Source Web Browser)

|

||||

[#]: via: (https://itsfoss.com/midori-browser)

|

||||

[#]: author: (Abhishek Prakash https://itsfoss.com/author/abhishek/)

|

||||

@ -10,13 +10,13 @@

|

||||

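Midori: A lightweight open source web browser
======

**Here's a quick review of Midori, the lightweight, fast, open source web browser that has made a comeback**

> Here's a quick review of Midori, the lightweight, fast, open source web browser that has made a comeback.

If you are looking for a lightweight [alternative web browser][1], try Midori.

[Midori][2] is an open source web browser that focuses more on being lightweight than on providing a ton of features.

If you have never heard of Midori, you might think that it is a new application, but Midori was in fact first released in 2007.

If you have never heard of Midori, you might think that it is a new application, but Midori's first release actually dates back to 2007.

Because it focused on speed, Midori soon gathered a niche following and became the default browser in lightweight Linux distributions such as Bodhi Linux and SliTaz.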

|

||||

|

||||

@ -28,25 +28,23 @@ Midori:轻量级开源 Web 浏览器

|

||||

|

||||

![Midori web browser][4]

|

||||

|

||||

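Here are some of the main features of the Midori browser

* Written in Vala, with GTK+3 and the WebKit rendering engine.

* Tabs, windows, and session management

* Speed dial

* Saves tabs for the next session by default

* Written in Vala, using GTK+3 and the WebKit rendering engine.

* Tabs, windows, and session management.

* Speed dial.

* Saves tabs for the next session by default.

* Uses DuckDuckGo as the default search engine. It can be changed to Google or Yahoo.

* Bookmark management

* Customizable and extensible interface

* Extension modules can be written in C and Vala

* Supports HTML5

* Bookmark management.

* Customizable and extensible interface.

* Extension modules can be written in C and Vala.

* Supports HTML5.

* A limited set of extensions, including an ad blocker and colorful tabs. No third-party extensions.

* Table history

* Private browsing

* Available for Linux and Windows

* Form history.

* Private browsing.

* Available for Linux and Windows.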

|

||||

|

||||

|

||||

|

||||

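Trivia: Midori is a Japanese word that means green. If you were guessing something because of that, the Midori developers are not actually Japanese.

Trivia: Midori is a Japanese word that means green. In case that led you to guess otherwise, the Midori developers are not actually Japanese.

### Experiencing Midori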

|

||||

|

||||

@ -54,7 +52,7 @@ Midori:轻量级开源 Web 浏览器

|

||||

|

||||

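I have been using Midori for the past few days. The experience has mostly been fine. It supports HTML5 and renders websites quickly. The ad blocker works fine. The browsing experience is fairly smooth, as you would expect from any standard web browser.

The lack of extensions has always been a weak point of Midori so I am not going to talk about that.

The lack of extensions has always been a weak point of Midori, so I am not going to talk about that.

What I did notice is that it doesn't support international languages. I couldn't find a way to add new language support. It could not render Hindi fonts at all, and I am guessing it's the same with other non-[Romance languages][6].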

|

||||

|

||||

@ -70,7 +68,7 @@ Midori 没有像 Chrome 那样吃我的内存,所以这是一个很大的优

|

||||

|

||||

If you are using Ubuntu, you can find Midori (the Snap version) in the Software Center and install it from there.

|

||||

|

||||

![Midori browser is available in Ubuntu Software Center][8]Midori browser is available in Ubuntu Software Center

|

||||

![Midori browser is available in Ubuntu Software Center][8]

|

||||

|

||||

For other Linux distributions, make sure that you have [Snap support enabled][9], and then you can install Midori using the command below:

|

||||

|

||||

@ -80,6 +78,8 @@ sudo snap install midori

|

||||

|

||||

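Alternatively, you can compile it from source. You can download the code from Midori's website.

- [Download Midori](https://www.midori-browser.org/download/)

If you like Midori and want to help this open source project, please donate to them or [buy Midori merchandise from their shop][10].

Are you using Midori, or have you ever tried it? How was your experience? What other web browser do you prefer? Please share your views in the comment section below.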

|

||||

@ -91,7 +91,7 @@ via: https://itsfoss.com/midori-browser

|

||||

Author: [Abhishek Prakash][a]

Topic selection: [lujun9972][b]

Translator: [geekpi](https://github.com/geekpi)

Proofreader: [Proofreader ID](https://github.com/校对者ID)

Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux.cn](https://linux.cn/)

|

||||

|

||||

@ -0,0 +1,127 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (leommxj)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-10592-1.html)

|

||||

[#]: subject: (How ASLR protects Linux systems from buffer overflow attacks)

|

||||

[#]: via: (https://www.networkworld.com/article/3331199/linux/what-does-aslr-do-for-linux.html)

|

||||

[#]: author: (Sandra Henry-Stocker https://www.networkworld.com/author/Sandra-Henry_Stocker/)

|

||||

|

||||

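How ASLR protects Linux systems from buffer overflow attacks
======

> Address space layout randomization (ASLR) is a memory exploitation mitigation technique used on both Linux and Windows systems. Learn how to tell whether it's running, how to enable or disable it, and how it works.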

|

||||

|

||||

|

||||

|

||||

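Address Space Layout Randomization (ASLR) is a memory-protection mechanism that operating systems use to guard against buffer-overflow attacks. It makes the memory addresses of running processes unpredictable, so flaws or vulnerabilities associated with those processes are harder to exploit.

ASLR is used today on Linux, Windows, and macOS. It first appeared on Linux in 2005. In 2007, the technique was deployed on Windows and macOS. Although ASLR provides the same function on each of these systems, it is implemented differently on each.

The effectiveness of ASLR depends on the entire address space layout remaining unknown to the attacker. In addition, only executables compiled as Position Independent Executable (PIE) programs can get the maximum protection from ASLR, because only then are all sections of the code loaded at random locations. PIE machine code executes properly regardless of its absolute address.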

|

||||

|

||||

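### ASLR limitations

Although ASLR makes exploiting system vulnerabilities more difficult, its ability to protect systems is limited. It's important to understand that ASLR:

* Doesn't *resolve* vulnerabilities, but makes exploiting them harder

* Doesn't track or report vulnerabilities

* Doesn't protect binaries that were not built with ASLR support

* Isn't immune to circumvention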

|

||||

|

||||

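### How ASLR works

ASLR increases the control-flow integrity of a system by randomizing the offsets in the memory layout that an attacker relies on when mounting a buffer-overflow attack, which makes a successful attack harder.

ASLR is generally considered more effective on 64-bit systems, because they provide much greater entropy (randomization potential).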

|

||||

|

||||

### Is ASLR working on your Linux system?

Either of the two commands shown below will tell you whether ASLR is enabled on your system:

```
$ cat /proc/sys/kernel/randomize_va_space
2
$ sysctl -a --pattern randomize
kernel.randomize_va_space = 2
```

The value (`2`) shown in the output above indicates that ASLR is working in full randomization mode. The value will be one of the following:

|

||||

|

||||

```
0 = Disabled
1 = Conservative Randomization
2 = Full Randomization
```
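The lookup above can be wrapped in a small script. This is a minimal sketch, assuming a Linux system that exposes `/proc/sys/kernel/randomize_va_space`; the function name `describe_aslr` is a hypothetical helper introduced here for illustration.

```shell
#!/bin/sh
# describe_aslr VALUE: map a randomize_va_space value to a description.
describe_aslr() {
    case "$1" in
        0) echo "disabled" ;;
        1) echo "conservative randomization" ;;
        2) echo "full randomization" ;;
        *) echo "unknown value: $1" ;;
    esac
}

setting_file=/proc/sys/kernel/randomize_va_space
if [ -r "$setting_file" ]; then
    value=$(cat "$setting_file")
    echo "ASLR: $(describe_aslr "$value")"
else
    echo "ASLR: $setting_file not readable (not Linux?)"
fi
```

Reading the file does not require root; only changing the setting does.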

|

||||

|

||||

If you disable ASLR and run the commands below, you should notice that the addresses displayed in the two `ldd` outputs are identical. The `ldd` command works by loading the shared objects and showing where they end up in memory.

```
$ sudo sysctl -w kernel.randomize_va_space=0 <== disable
[sudo] password for shs:
kernel.randomize_va_space = 0
$ ldd /bin/bash
        linux-vdso.so.1 (0x00007ffff7fd1000) <== same addresses
        libtinfo.so.6 => /lib/x86_64-linux-gnu/libtinfo.so.6 (0x00007ffff7c69000)
        libdl.so.2 => /lib/x86_64-linux-gnu/libdl.so.2 (0x00007ffff7c63000)
        libc.so.6 => /lib/x86_64-linux-gnu/libc.so.6 (0x00007ffff7a79000)
        /lib64/ld-linux-x86-64.so.2 (0x00007ffff7fd3000)
$ ldd /bin/bash
        linux-vdso.so.1 (0x00007ffff7fd1000) <== same addresses
        libtinfo.so.6 => /lib/x86_64-linux-gnu/libtinfo.so.6 (0x00007ffff7c69000)
        libdl.so.2 => /lib/x86_64-linux-gnu/libdl.so.2 (0x00007ffff7c63000)
        libc.so.6 => /lib/x86_64-linux-gnu/libc.so.6 (0x00007ffff7a79000)
        /lib64/ld-linux-x86-64.so.2 (0x00007ffff7fd3000)
```

|

||||

|

||||

If the value is set back to `2` to enable ASLR, you will see that the addresses change each time you run `ldd`.

```
$ sudo sysctl -w kernel.randomize_va_space=2 <== enable
[sudo] password for shs:
kernel.randomize_va_space = 2
$ ldd /bin/bash
        linux-vdso.so.1 (0x00007fff47d0e000) <== first set of addresses
        libtinfo.so.6 => /lib/x86_64-linux-gnu/libtinfo.so.6 (0x00007f1cb7ce0000)
        libdl.so.2 => /lib/x86_64-linux-gnu/libdl.so.2 (0x00007f1cb7cda000)
        libc.so.6 => /lib/x86_64-linux-gnu/libc.so.6 (0x00007f1cb7af0000)
        /lib64/ld-linux-x86-64.so.2 (0x00007f1cb8045000)
$ ldd /bin/bash
        linux-vdso.so.1 (0x00007ffe1cbd7000) <== second set of addresses
        libtinfo.so.6 => /lib/x86_64-linux-gnu/libtinfo.so.6 (0x00007fed59742000)
        libdl.so.2 => /lib/x86_64-linux-gnu/libdl.so.2 (0x00007fed5973c000)
        libc.so.6 => /lib/x86_64-linux-gnu/libc.so.6 (0x00007fed59552000)
        /lib64/ld-linux-x86-64.so.2 (0x00007fed59aa7000)
```
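The same comparison can be made without `ldd` or `sudo`. The sketch below is an illustration under stated assumptions, not part of the original article: it assumes a Linux `/proc` filesystem and a `grep` that supports `-m1`, and the helper name `stack_range` is hypothetical. It looks at the `[stack]` mapping of two freshly started processes and reports whether the address ranges match.

```shell
#!/bin/sh
# stack_range: print the address range of the [stack] mapping of a fresh process.
stack_range() {
    # grep reads its own /proc/self/maps, so each call inspects a new process.
    grep -m1 '\[stack\]' /proc/self/maps 2>/dev/null | cut -d' ' -f1
}

first=$(stack_range)
second=$(stack_range)
if [ -z "$first" ]; then
    verdict="unavailable"     # /proc/self/maps missing, e.g. not on Linux
elif [ "$first" = "$second" ]; then
    verdict="identical"       # what one would expect with randomize_va_space=0
else
    verdict="different"       # what one would expect with ASLR enabled
fi
echo "stack mappings: $verdict"
```

With ASLR enabled, two runs could in principle still land on the same range by chance, so a single "identical" result is a hint, not proof that randomization is off.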

|

||||

|

||||

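### Attempting to bypass ASLR

Despite its advantages, attempts to bypass ASLR are not uncommon and fall into several categories:

* Using address leaks

* Gaining access to data relative to particular addresses

* Exploiting implementation weaknesses that allow attackers to guess addresses when entropy is low or when the ASLR implementation is faulty

* Using side-channel attacks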

|

||||

|

||||

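### Wrap-up

ASLR is of great value, especially when run on 64-bit systems and implemented properly. While not immune to circumvention, it does make exploiting system vulnerabilities considerably more difficult. Here is a reference that goes into more detail [on the effectiveness of full ASLR on 64-bit Linux][2], and here is a paper describing one technique for [bypassing ASLR][3] using branch predictors.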

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.networkworld.com/article/3331199/linux/what-does-aslr-do-for-linux.html

|

||||

|

||||

Author: [Sandra Henry-Stocker][a]

Topic selection: [lujun9972][b]

Translator: [leommxj](https://github.com/leommxj)

Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux.cn](https://linux.cn/)

|

||||

|

||||

[a]: https://www.networkworld.com/author/Sandra-Henry_Stocker/

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://www.networkworld.com/article/3242170/linux/invaluable-tips-and-tricks-for-troubleshooting-linux.html

|

||||

[2]: https://cybersecurity.upv.es/attacks/offset2lib/offset2lib-paper.pdf

|

||||

[3]: http://www.cs.ucr.edu/~nael/pubs/micro16.pdf

|

||||

[4]: https://www.facebook.com/NetworkWorld/

|

||||

[5]: https://www.linkedin.com/company/network-world

|

||||

164

published/20190212 Ampersands and File Descriptors in Bash.md

Normal file

@ -0,0 +1,164 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (zero-mk)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-10591-1.html)

|

||||

[#]: subject: (Ampersands and File Descriptors in Bash)

|

||||

[#]: via: (https://www.linux.com/blog/learn/2019/2/ampersands-and-file-descriptors-bash)

|

||||

[#]: author: (Paul Brown https://www.linux.com/users/bro66)

|

||||

|

||||

Ampersands and file descriptors in Bash
======

> Learn how to combine "&" with angle brackets and get more out of the command line.

|

||||

|

||||

|

||||

|

||||

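In our quest to examine all the clutter (`&`, `|`, `;`, `>`, `<`, `{`, `[`, `(`, `)`, `]`, `}`, and so on) that is peppered throughout most chained Bash commands, [we have been taking a closer look at the ampersand symbol (`&`)][1].

[Last time, we saw how you can use & to push processes that may take a long time to complete into the background][1]. But `&`, in combination with angle brackets, can also be used to redirect input or output elsewhere.

In the [previous tutorials on][2] [angle brackets][3], you saw how to use `>` like this:

```
ls > list.txt
```

to send the output of `ls` to the file `list.txt`.

Now we are going to see that this is actually shorthand for:

```
ls 1> list.txt
```

In this context, `1` is a file descriptor that points to standard output (`stdout`).

In a similar fashion, `2` points to standard error (`stderr`), and in the following command:

```
ls 2> error.log
```

all error messages are sent to the file `error.log`.

To recap: `1>` is standard output (`stdout`) and `2>` is standard error (`stderr`).

There is a third standard file descriptor, `0<`, the standard input (`stdin`). You can tell it is an input because the arrow (`<`) points into the `0`, while for `1` and `2` the arrows (`>`) point outward.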
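A short sketch can make the three descriptors concrete. The function `emit` and the file names below are hypothetical, introduced only for this illustration: `emit` writes one line to each output stream, `1>` and `2>` send them to separate files, and `0<` feeds one of those files back in as standard input.

```shell
#!/bin/sh
# emit: write one line to stdout (fd 1) and one line to stderr (fd 2).
emit() {
    echo "to stdout"
    echo "to stderr" >&2
}

workdir=$(mktemp -d)
emit 1> "$workdir/out.txt" 2> "$workdir/err.txt"   # split the two streams
read -r first_line 0< "$workdir/out.txt"           # fd 0: read stdin from a file
echo "out.txt: $first_line"
echo "err.txt: $(cat "$workdir/err.txt")"
```

Writing `1>` and `0<` in full is never required, since `>` and `<` mean the same thing, but spelling the numbers out makes it obvious which stream each redirection touches.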

|

||||

|

||||

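### What are the standard file descriptors good for?

If you have been following this series in order, you have already used standard output (`1>`) several times in its shorthand form: `>`.

Things like `stderr` (`2`) are also handy when, for example, you know that your command is going to throw an error, but what Bash tells you about it is not useful and you don't need to see it. If you want to make a directory in your `home/` directory, for example:

```
mkdir newdir
```

and `newdir/` already exists, `mkdir` will show an error. But why would you care? (OK, in some circumstances you may care, but not always.) At the end of the day, `newdir` will be there one way or another for you to fill with stuff. You can suppress the error message by pushing it into the void, which is `/dev/null`:

```
mkdir newdir 2> /dev/null
```

This is not just a matter of "let's not show ugly and irrelevant error messages because they are annoying", as there may be circumstances in which an error message causes a cascade of errors elsewhere. Say, for example, you want to find all the `.service` files under `/etc`. You could do this:

```
find /etc -iname "*.service"
```

But it turns out that on most systems `find` prints many lines of errors, because a regular user does not have read access to some of the folders under `/etc`. That makes the correct output cumbersome to read and, if `find` is part of a larger script, it could cause the next command in line to fail.

Instead, you can do this:

```
find /etc -iname "*.service" 2> /dev/null
```

And you get only the results you are looking for.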
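One point worth keeping in mind is that hiding the message does not hide the failure. The sketch below uses a temporary directory and illustrative variable names that are not from the original article: the second `mkdir` still returns a non-zero exit status, and `find` still delivers its regular results on standard output while its complaint about a missing path is discarded.

```shell
#!/bin/sh
workdir=$(mktemp -d)
mkdir "$workdir/newdir"

# The second mkdir fails because the directory exists; 2> /dev/null hides the message.
status=0
mkdir "$workdir/newdir" 2> /dev/null || status=$?
echo "exit status: $status"

# stdout is untouched by 2>, so normal results still flow down the pipe.
found=$(find "$workdir" "$workdir/missing" -type d 2> /dev/null | wc -l | tr -d ' ')
echo "directories found: $found"
```

A script that needs to react to the failure can therefore still test the exit status even though nothing was printed.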

|

||||

|

||||

### A primer on file descriptors

There are some caveats to having separate file descriptors for `stdout` and `stderr`, though. If you want to store the output in a file, doing this:

```
find /etc -iname "*.service" 1> services.txt
```

would work fine, because `1>` means "send standard output, and only standard output (NOT standard error), somewhere".

But herein lies a problem: what if you *do* want to keep a record in the file of the errors the command throws, along with the non-erroneous results? The instruction above won't do that, because it ONLY writes the correct results from `find`, and

```
find /etc -iname "*.service" 2> services.txt
```

will ONLY write the errors thrown by the command.

How do we get both? Try the following command:

```
find /etc -iname "*.service" &> services.txt
```

... and say hello to `&` again!

|

||||

|

||||

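We have been saying all along that `stdin` (`0`), `stdout` (`1`), and `stderr` (`2`) are *file descriptors*. A file descriptor is a special construct that points to a channel to a file, either for reading, or writing, or both. This comes from the old UNIX philosophy of treating everything as a file. Want to write to a device? Treat it as a file. Want to write to a socket and send data over a network? Treat it as a file. Want to read from and write to a file? Well, obviously, treat it as a file.

So, when managing where the output and errors from a command go, treat the destination as a file. Hence, when you open them to read and write to them, they all get file descriptors.

This has interesting effects. You can, for example, send contents from one file descriptor to another:

```
find /etc -iname "*.service" 1> services.txt 2>&1
```

This sends `stderr` to `stdout`, and `stdout` is redirected to a file, `services.txt`.

And there it is again: the `&`, signaling to Bash that `1` is the destination file descriptor.

Another thing with the standard file descriptors is that, when you redirect from one to another, the order in which you do this is a bit counterintuitive. Take the command above, for example. It looks like it has been written the wrong way around. You may be reading it like this: "send the output to a file and then send errors to the standard output." It would seem the error output comes too late and is sent when the output to `1` is already done.

But that is not how file descriptors work. A file descriptor is not a placeholder for the file, but for the input and/or output channel to the file. In this case, when you do `1> services.txt`, you are saying "open a write channel to `services.txt` and leave it open". `1` is the name of the channel you are going to use, and it remains open until the end of the line.

If you still think it is the wrong way around, try this:

```
find /etc -iname "*.service" 2>&1 1>services.txt
```

and notice how it doesn't work; notice how errors get sent to the terminal and only the non-erroneous output (that is, `stdout`) gets pushed to `services.txt`.

That is because Bash sets up the redirections from left to right. Think about it like this: when Bash gets to `2>&1`, `stdout` (`1`) is still a channel that points to the terminal, so `2` is made to point to the terminal as well. If `find` reports an error, it is popped into `2` and away it goes to the terminal!

Then, at the end of the command, Bash sees that you want to open `stdout` (`1`) as a channel to the `services.txt` file. Results that are not errors go through channel `1` into the file.

By contrast, in

```
find /etc -iname "*.service" 1>services.txt 2>&1
```

`1` is pointing at `services.txt` right from the beginning, so anything that pops into `2` gets sent through `1`, which is already pointing at its final destination, `services.txt`, and that is why it works.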
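The effect of the ordering can be checked without touching `/etc`. In this sketch, `emit` is a hypothetical stand-in for `find` that writes one line to each stream, and the file names are illustrative. The second call is wrapped in a command substitution so that whatever escapes the file is captured in a variable instead of landing on the terminal.

```shell
#!/bin/sh
emit() {
    echo "result"
    echo "error" >&2
}

workdir=$(mktemp -d)

# File first, then 2>&1: fd 2 is duplicated from fd 1 after fd 1 points at the file.
emit 1> "$workdir/both.txt" 2>&1

# 2>&1 first: fd 2 copies the *current* fd 1 (here, the captured output),
# and only afterwards is fd 1 redirected to the file.
leaked=$(emit 2>&1 1> "$workdir/only_out.txt")

echo "both.txt has $(wc -l < "$workdir/both.txt" | tr -d ' ') lines"
echo "only_out.txt has $(wc -l < "$workdir/only_out.txt" | tr -d ' ') lines"
echo "escaped the file: $leaked"
```

In the first form both lines end up in the file; in the second, only the regular output does, and the error line goes wherever standard output pointed before the file redirection was set up.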

|

||||

|

||||

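In any case, as mentioned above, `&>` is shorthand for "both standard output and standard error"; that is, `&> services.txt` is equivalent to `1> services.txt 2>&1`.

This is probably all a bit much, but don't worry about it. Redirecting file descriptors is commonplace in Bash command lines and scripts, and you will learn more about file descriptors as this series progresses. See you next week!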

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.linux.com/blog/learn/2019/2/ampersands-and-file-descriptors-bash

|

||||

|

||||

Author: [Paul Brown][a]

Topic selection: [lujun9972][b]

Translator: [zero-mk](https://github.com/zero-mk)

Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux.cn](https://linux.cn/)

|

||||

|

||||

[a]: https://www.linux.com/users/bro66

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://linux.cn/article-10587-1.html

|

||||

[2]: https://linux.cn/article-10502-1.html

|

||||

[3]: https://linux.cn/article-10529-1.html

|

||||

@ -0,0 +1,218 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (An-DJ)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-10595-1.html)

|

||||

[#]: subject: (How To Check CPU, Memory And Swap Utilization Percentage In Linux?)

|

||||

[#]: via: (https://www.2daygeek.com/linux-check-cpu-memory-swap-utilization-percentage/)

|

||||

[#]: author: (Vinoth Kumar https://www.2daygeek.com/author/vinoth/)

|

||||

|

||||

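How to check CPU, memory, and swap utilization percentages in Linux?
======

Linux has many commands and options for checking memory usage, but I haven't seen much information about checking the memory utilization percentage.

Most of the time we only check memory usage without thinking about what percentage is in use. If you want that information, you've come to the right page; we'll walk through it in detail here.

This tutorial will help you determine memory utilization when you face frequent high memory usage on a Linux server.

At the same time, if you use `free -m` or `free -g`, the utilization is not described very clearly.

These formatting commands are advanced Linux commands. They will be most useful to Linux experts and intermediate Linux users.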

|

||||

|

||||

### Method 1: How to check the memory utilization percentage in Linux?

We can use the following combination of commands to do this. In this method, we combine the `free` and `awk` commands to get the memory utilization percentage.

If you are looking for other articles related to memory, follow the links below: the [free command][1], the [smem command][2], the [ps_mem command][3], the [vmstat command][4], and [multiple ways to check the size of physical memory][5].

To get the memory utilization without a percent sign:

```
$ free -t | awk 'NR == 2 {print "Current Memory Utilization is : " $3/$2*100}'
or
$ free -t | awk 'FNR == 2 {print "Current Memory Utilization is : " $3/$2*100}'

Current Memory Utilization is : 20.4194
```

To get the swap utilization without a percent sign:

```
$ free -t | awk 'NR == 3 {print "Current Swap Utilization is : " $3/$2*100}'
or
$ free -t | awk 'FNR == 3 {print "Current Swap Utilization is : " $3/$2*100}'

Current Swap Utilization is : 0
```

To get the memory utilization with a percent sign and two decimal places:

```
$ free -t | awk 'NR == 2 {printf("Current Memory Utilization is : %.2f%%\n", $3/$2*100)}'
or
$ free -t | awk 'FNR == 2 {printf("Current Memory Utilization is : %.2f%%\n", $3/$2*100)}'

Current Memory Utilization is : 20.42%
```

To get the swap utilization with a percent sign and two decimal places:

```
$ free -t | awk 'NR == 3 {printf("Current Swap Utilization is : %.2f%%\n", $3/$2*100)}'
or
$ free -t | awk 'FNR == 3 {printf("Current Swap Utilization is : %.2f%%\n", $3/$2*100)}'

Current Swap Utilization is : 0.00%
```

If you are looking for other articles related to swap, follow the links below: [creating and extending swap using LVM (Logical Volume Manager)][6], [multiple ways to create or extend swap space][7], and [multiple ways to create, remove, and mount a swap file][8].

|

||||

|

||||

The output of the `free` command makes this clearer:

```
$ free
              total        used        free      shared  buff/cache   available
Mem:          15867        3730        9868        1189        2269       10640
Swap:         17454           0       17454
Total:        33322        3730       27322
```

|

||||

|

||||

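The details are as follows:

* `free`: a standard command for checking memory usage in Linux.

* `awk`: a powerful command specialized for processing text data.

* `FNR == 2`: `FNR` is the record (line) number within the current input file; the condition selects line 2 of that file.

* `NR == 2`: `NR` is the total number of records (lines) read so far across all input; with a single input stream, as here, it also selects line 2.

* `$3/$2*100`: divides column 3 by column 2 and multiplies the result by 100.

* `printf`: formats and prints data.

* `%.2f%`: by default, a floating-point number is printed with 6 digits after the decimal point; `.2` limits it to two. The trailing `%` prints a literal percent sign, which is written as `%%` inside an awk format string.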
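The pieces above can be combined into one reusable helper. This is a sketch under stated assumptions: the `sample` text is the `free` listing shown earlier (it appears to come from `free -t -m`), and the function name `utilization` is hypothetical. It selects rows by their label instead of by line number and guards against a zero total, which would occur on a system without swap.

```shell
#!/bin/sh
# Sample output copied from the `free` listing shown earlier in the article.
sample='              total        used        free      shared  buff/cache   available
Mem:          15867        3730        9868        1189        2269       10640
Swap:         17454           0       17454
Total:        33322        3730       27322'

# utilization LABEL: print used/total as a percentage for the row starting with LABEL.
utilization() {
    printf '%s\n' "$sample" |
        awk -v label="$1" '$1 == label { if ($2 > 0) printf("%.2f%%\n", $3/$2*100); else print "n/a" }'
}

mem_pct=$(utilization "Mem:")
swap_pct=$(utilization "Swap:")
echo "Memory: $mem_pct  Swap: $swap_pct"
```

On a live system, replacing `printf '%s\n' "$sample"` with `free -t` would apply the same calculation to current values.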

|

||||

|

||||

### Method 2: How to check the memory utilization percentage in Linux?

We can use the following combination of commands to do this. In this method, we combine the `free`, `grep`, and `awk` commands to get the memory utilization percentage.

To get the memory utilization without a percent sign:

```
$ free -t | grep Mem | awk '{print "Current Memory Utilization is : " $3/$2*100}'
Current Memory Utilization is : 20.4228
```

To get the swap utilization without a percent sign:

```
$ free -t | grep Swap | awk '{print "Current Swap Utilization is : " $3/$2*100}'
Current Swap Utilization is : 0
```

To get the memory utilization with a percent sign and two decimal places:

```
$ free -t | grep Mem | awk '{printf("Current Memory Utilization is : %.2f%%\n", $3/$2*100)}'
Current Memory Utilization is : 20.43%
```

To get the swap utilization with a percent sign and two decimal places:

```
$ free -t | grep Swap | awk '{printf("Current Swap Utilization is : %.2f%%\n", $3/$2*100)}'
Current Swap Utilization is : 0.00%
```

|

||||

|

||||

### Method 1: How to check CPU utilization on Linux

A combination of the `top` and `awk` commands (using awk's `print` statement) gives the CPU utilization as a percentage.

For more on CPU monitoring (LCTT translator's note: the original text mistakenly said "memory"), see the articles on the [top command][9], [htop command][10], [atop command][11], and [Glances command][12].

If `top` reports each CPU on its own line, use the following method.

```
$ top -b -n1 | grep ^%Cpu
%Cpu0 : 5.3 us, 0.0 sy, 0.0 ni, 94.7 id, 0.0 wa, 0.0 hi, 0.0 si, 0.0 st
%Cpu1 : 0.0 us, 0.0 sy, 0.0 ni,100.0 id, 0.0 wa, 0.0 hi, 0.0 si, 0.0 st
%Cpu2 : 0.0 us, 0.0 sy, 0.0 ni, 94.7 id, 0.0 wa, 0.0 hi, 5.3 si, 0.0 st
%Cpu3 : 5.3 us, 0.0 sy, 0.0 ni, 94.7 id, 0.0 wa, 0.0 hi, 0.0 si, 0.0 st
%Cpu4 : 10.5 us, 15.8 sy, 0.0 ni, 73.7 id, 0.0 wa, 0.0 hi, 0.0 si, 0.0 st
%Cpu5 : 0.0 us, 5.0 sy, 0.0 ni, 95.0 id, 0.0 wa, 0.0 hi, 0.0 si, 0.0 st
%Cpu6 : 5.3 us, 0.0 sy, 0.0 ni, 94.7 id, 0.0 wa, 0.0 hi, 0.0 si, 0.0 st
%Cpu7 : 5.3 us, 0.0 sy, 0.0 ni, 94.7 id, 0.0 wa, 0.0 hi, 0.0 si, 0.0 st
```

|

||||

|

||||

To get the CPU utilization without a percent sign:

```
$ top -b -n1 | grep ^%Cpu | awk '{cpu+=$9}END{print "Current CPU Utilization is : " 100-cpu/NR}'
Current CPU Utilization is : 21.05
```

|

||||

|

||||

To get the CPU utilization with a percent sign and two decimal places:

```
$ top -b -n1 | grep ^%Cpu | awk '{cpu+=$9}END{printf("Current CPU Utilization is : %.2f%%\n", 100-cpu/NR)}'
Current CPU Utilization is : 14.81%
```
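One caveat with reading the idle value from field 9: in the sample output above, the `%Cpu1` line prints `ni,100.0` with no space, so awk's field 9 on that line is `id,` rather than a number, and the line counts as 0% idle. The sketch below is one possible workaround, not the original author's method. It assumes the idle value is always the number directly before ` id`, and the helper name `cpu_busy_pct` is hypothetical.

```shell
# cpu_busy_pct: read `top -b -n1` output on stdin and print the average
# busy percentage across all %Cpu lines (hypothetical helper name).
cpu_busy_pct() {
    awk '
        /^%Cpu/ {
            # Locate "<number> id" wherever it falls, instead of relying on $9
            if (match($0, /[0-9.]+ id/)) {
                idle += substr($0, RSTART, RLENGTH - 3)   # drop the " id" suffix
                n++
            }
        }
        END { if (n) printf("%.2f%%\n", 100 - idle / n) }'
}

# Two sample lines, one of them with the fused "ni,100.0" field
printf '%%Cpu0 : 5.3 us, 0.0 sy, 0.0 ni, 94.7 id\n%%Cpu1 : 0.0 us, 0.0 sy, 0.0 ni,100.0 id\n' | cpu_busy_pct   # 2.65%
```

On a live system this could be used as `top -b -n1 | cpu_busy_pct`. Locales that print decimal commas would need a different pattern.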

|

||||

|

||||

### Method 2: How to check CPU utilization on Linux

A combination of the `top` and `awk` commands (using awk's `print` or `printf` statement) gives the CPU utilization as a percentage.

If `top` reports all CPUs together on a single line, use the following method.

```
$ top -b -n1 | grep ^%Cpu
%Cpu(s): 15.3 us, 7.2 sy, 0.8 ni, 69.0 id, 6.7 wa, 0.0 hi, 1.0 si, 0.0 st
```

|

||||

|

||||

To get the CPU utilization without a percent sign:

```
$ top -b -n1 | grep ^%Cpu | awk '{print "Current CPU Utilization is : " 100-$8}'
Current CPU Utilization is : 5.6
```

|

||||

|

||||

To get the CPU utilization with a percent sign and two decimal places:

```
$ top -b -n1 | grep ^%Cpu | awk '{printf("Current CPU Utilization is : %.2f%%\n", 100-$8)}'
Current CPU Utilization is : 5.40%
```

|

||||

|

||||

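Here are some details:

* `top`: a very handy command for viewing the processes currently running on a Linux system.
* `-b`: runs `top` in batch mode, which is useful for sending its output to other programs or a file, or when running it on a remote system from a local one.
* `-n1`: the number of iterations (here, one).
* `^%Cpu`: filters the lines that start with `%Cpu`.
* `awk`: a powerful command for processing text data.
* `cpu+=$9`: for each line, adds column 9 (the idle percentage) to the variable `cpu`.
* `printf`: formats and prints data.
* `%.2f%`: `%f` prints six digits after the decimal point by default; `.2` limits the output to two. The `%` that follows is meant to print a literal percent sign (portably written `%%`).
* `100-cpu/NR`: prints the average CPU utilization: the summed idle values are divided by the number of lines (`NR`), and the result is subtracted from 100.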

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.2daygeek.com/linux-check-cpu-memory-swap-utilization-percentage/

Author: [Vinoth Kumar][a]
Topic selection: [lujun9972][b]
Translator: [An-DJ](https://github.com/An-DJ)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/).

|

||||

|

||||

[a]: https://www.2daygeek.com/author/vinoth/
[b]: https://github.com/lujun9972
[1]: https://www.2daygeek.com/free-command-to-check-memory-usage-statistics-in-linux/
[2]: https://www.2daygeek.com/smem-linux-memory-usage-statistics-reporting-tool/
[3]: https://www.2daygeek.com/ps_mem-report-core-memory-usage-accurately-in-linux/
[4]: https://www.2daygeek.com/linux-vmstat-command-examples-tool-report-virtual-memory-statistics/
[5]: https://www.2daygeek.com/easy-ways-to-check-size-of-physical-memory-ram-in-linux/
[6]: https://www.2daygeek.com/how-to-create-extend-swap-partition-in-linux-using-lvm/
[7]: https://www.2daygeek.com/add-extend-increase-swap-space-memory-file-partition-linux/
[8]: https://www.2daygeek.com/shell-script-create-add-extend-swap-space-linux/
[9]: https://www.2daygeek.com/linux-top-command-linux-system-performance-monitoring-tool/
[10]: https://www.2daygeek.com/linux-htop-command-linux-system-performance-resource-monitoring-tool/
[11]: https://www.2daygeek.com/atop-system-process-performance-monitoring-tool/
[12]: https://www.2daygeek.com/install-glances-advanced-real-time-linux-system-performance-monitoring-tool-on-centos-fedora-ubuntu-debian-opensuse-arch-linux/

|

||||

194

published/20190219 Logical - in Bash.md

Normal file


@ -0,0 +1,194 @@

|

||||

[#]: collector: "lujun9972"
[#]: translator: "zero-mk"
[#]: reviewer: "wxy"
[#]: publisher: "wxy"
[#]: url: "https://linux.cn/article-10596-1.html"
[#]: subject: "Logical & in Bash"
[#]: via: "https://www.linux.com/blog/learn/2019/2/logical-ampersand-bash"
[#]: author: "Paul Brown https://www.linux.com/users/bro66"

|

||||

|

||||

Logical & in Bash
======

> In Bash, you can use & as the AND (logical and) operator.

|

||||

|

||||

|

||||

|

||||

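One might think the `&` in the previous two articles meant much the same thing, but it does not. The [first article showed how putting & at the end of a command sends it to the background][1] and then went on to process management, while the second treated [ & as a way to refer to file descriptors][2], which showed that, combined with `<` and `>`, it lets you redirect input or output elsewhere.

But we have not yet looked at `&` as an AND operator. So let's do that.

### & is a bitwise operator

If you are familiar with binary operations, you will have heard of AND and OR. These are bitwise operations that act on the individual bits of a binary number. In Bash, `&` is the AND operator and `|` is the OR operator: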

|

||||

|

||||

**AND**:

```
0 & 0 = 0
0 & 1 = 0
1 & 0 = 0
1 & 1 = 1
```

**OR**:

```
0 | 0 = 0
0 | 1 = 1
1 | 0 = 1
1 | 1 = 1
```

|

||||

|

||||

You can try this by ANDing any two numbers and printing the result with `echo`:

```
$ echo $(( 2 & 3 )) # 00000010 AND 00000011 = 00000010
2
$ echo $(( 120 & 97 )) # 01111000 AND 01100001 = 01100000
96
```

The same goes for OR (`|`):

```
$ echo $(( 2 | 3 )) # 00000010 OR 00000011 = 00000011
3
$ echo $(( 120 | 97 )) # 01111000 OR 01100001 = 01111001
121
```

|

||||

|

||||

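Notes:

1. `(( ... ))` tells Bash that what sits between the double parentheses is some kind of arithmetic or logical operation. `(( 2 + 2 ))`, `(( 5 % 2 ))` (`%` is the [modulo][3] operator), and `((( 5 % 2 ) + 1))` (which equals 2) all work.
2. [As with variables][4], use `$` to extract the value so you can use it.
3. Whitespace does not matter: `((2+3))` is equivalent to `(( 2+3 ))` and `(( 2 + 3 ))`.
4. Bash only operates on integers. Try `(( 5 / 2 ))` and you get `2`; try `(( 2.5 & 7 ))` and you get an error. Then again, using anything other than integers in a bitwise operation (which is what we are discussing here) is usually something you should not do anyway.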
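A common practical use of bitwise AND and OR is packing several on/off flags into one integer. The following is a small illustrative sketch. The flag names and the `has_flag` helper are hypothetical, and it uses only the `$(( ... ))` form discussed above.

```shell
# Hypothetical permission flags, one bit each
READ=4 WRITE=2 EXEC=1

perms=$(( READ | EXEC ))    # OR combines flags: 100 | 001 = 101 (5)

# has_flag VALUE FLAG: succeeds when every bit of FLAG is set in VALUE
has_flag() {
    [ $(( $1 & $2 )) -eq "$2" ]
}

has_flag "$perms" "$READ"  && echo "read: yes"   # prints "read: yes"
has_flag "$perms" "$WRITE" || echo "write: no"   # prints "write: no"
```

AND keeps only the bits present in both operands, so comparing the result with the flag itself tells you whether that flag is set.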

|

||||

|

||||

**Tip:** To see what a decimal number looks like in binary, you can use `bc`, a command-line calculator that comes preinstalled on most Linux distributions. For example:

```
bc <<< "obase=2; 97"
```

This converts `97` to binary (the `o` in `obase` stands for "output").

```
bc <<< "ibase=2; 11001011"
```

This converts `11001011` to decimal (the `i` in `ibase` stands for "input").

|

||||

|

||||

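### && is a logical operator

Although it follows the same logic as its bitwise cousin, Bash's `&&` operator can yield only two results: `1` ("true") and `0` ("false"). For Bash, any number that is not `0` is "true" and any number equal to `0` is "false". Anything that is not a number is also "false" (in an arithmetic context, an unset name such as `b` evaluates to 0):

```
$ echo $(( 4 && 5 )) # both non-zero numbers, both true = true
1
$ echo $(( 0 && 5 )) # one is zero, so one is false = false
0
$ echo $(( b && 5 )) # one is not a number, so one is false = false
0
```

Like `&&`, OR has `||`, and it works just as you would expect.

All of this is simple enough... until it comes to a command's exit status.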

|

||||

|

||||

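### && is a logical operator for command exit status

[As we saw in earlier articles][2], a command may print error messages as it runs. More importantly for today's discussion, it also returns a number when it ends. This number is called the "return code" (or exit status); if it is 0, the command ran into no problems. Any other number means something went wrong, even if the command completed.

So 0 is good and any other number signals a problem, and in the context of return codes, 0 means "true" and everything else means "false". Yes, this is **the exact opposite of what you know from logical operations**, but what can you do? Different contexts, different rules. The usefulness of this will become apparent soon.

Moving on!

Return codes are stored *temporarily* in the [special variable][5] `?`. Yes, I know: another confusing choice. Be that as it may, [remember from our article on variables][4] that you read the value in a variable with the `$` sign; the same applies here. So, if you want to know whether a command ran without a hitch, read `?` as `$?` right after the command finishes and before running anything else.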

|

||||

|

||||

Try it with:

```
$ find /etc -iname "*.service"
find: '/etc/audisp/plugins.d': Permission denied
/etc/systemd/system/dbus-org.freedesktop.nm-dispatcher.service
/etc/systemd/system/dbus-org.freedesktop.ModemManager1.service
[......]
```

[As you saw in the previous article][2], running `find` on `/etc` as a regular user usually throws errors, because it tries to read subdirectories you do not have permission to access.

So, if you run...

```
echo $?
```

... right after `find`, it prints `1`, indicating that there was an error.

(Note: if you run `echo $?` twice in a row, the second one prints `0`. That is because `$?` then holds the return code of the first `echo $?`, which presumably ran without a problem. So the first lesson in using `$?` is: **use `$?` straight away** or store it somewhere safe, such as a variable, or you will lose it.)
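To make the point about losing `$?` concrete, here is a minimal sketch that saves the return code in a variable before any other command can overwrite it. The variable name `status` is just an illustrative choice.

```shell
false            # a command that always fails with return code 1
status=$?        # save the return code right away
echo "checking"  # any other command overwrites $?
echo "saved: $status, current: $?"   # prints "saved: 1, current: 0"
```

The saved copy still holds `1`, while `$?` itself now reflects the successful `echo` that ran in between.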

|

||||

|

||||

One direct use of `?` is to fold it into a chain of commands, so that if anything fails, Bash stops running the commands that follow. For example, you may be familiar with the process of building and compiling an application's source code. You can run the steps by hand, one after another:

```
$ configure
.
.
.
$ make
.
.
.
$ make install
.
.
.
```

|

||||

|

||||

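You can also put all three on one line...

```
$ configure; make; make install
```

... and hope for the best.

Why? Because this approach has a drawback: if, say, `configure` fails, Bash will still try to run `make` and `make install`, even though there is nothing to make and, in fact, nothing to install.

The smarter way is:

```
$ configure && make && make install
```

This takes the exit code from each command and uses it as an operand in a chained `&&` operation.

But here is the thing: Bash knows the whole chain fails if `configure` returns a non-zero result. If that happens, it does not need to run `make` to check its exit code, since the result will be a failure regardless. So it skips `make` and just passes the non-zero result on to the next step. And since `configure && make` passed on a failure, Bash does not need to run `make install` either. This means that you can join a long list of commands with `&&` and, as soon as one fails, save time because the remaining commands are skipped.

You can do something similar with `||`, the OR logical operator: Bash runs the command on the right only if the one on the left fails, so a chain keeps going until one command succeeds.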
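The short-circuit behaviour of `&&` and `||` can be observed without building anything. The sketch below uses two hypothetical stand-in functions, `step_ok` and `step_fail`, in place of real commands such as `configure` and `make`.

```shell
# Stand-ins for real commands: each reports that it ran, then succeeds or fails
step_ok()   { echo "ran $1"; return 0; }
step_fail() { echo "ran $1"; return 1; }

# "install" never runs, because "make" fails and && stops the chain
step_ok configure && step_fail make && step_ok install
echo "chain exit status: $?"     # prints "chain exit status: 1"

# "fallback" runs only because "primary" failed
step_fail primary || step_ok fallback
```

The first chain prints `ran configure` and `ran make` but never `ran install`; the second prints both `ran primary` and `ran fallback`.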

|

||||

|

||||

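Given all this (and what we covered earlier), you should now have a clearer idea of what the command line we showed [at the beginning of this article][1] does:

```
mkdir test_dir 2>/dev/null || touch backup/dir/images.txt && find . -iname "*jpg" > backup/dir/images.txt &
```

So, assuming you run it from a directory where you have read and write permissions, what does it do, and how does it do it? How does it avoid unseemly errors that could break the run? Next week, besides giving you the answers, we will be dealing with parentheses: don't miss it!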

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.linux.com/blog/learn/2019/2/logical-ampersand-bash

Author: [Paul Brown][a]
Topic selection: [lujun9972][b]
Translator: [zero-MK](https://github.com/zero-mk)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/).

|

||||

|

||||

[a]: https://www.linux.com/users/bro66
[b]: https://github.com/lujun9972
[1]: https://linux.cn/article-10587-1.html
[2]: https://linux.cn/article-10591-1.html
[3]: https://en.wikipedia.org/wiki/Modulo_operation
[4]: https://www.linux.com/blog/learn/2018/12/bash-variables-environmental-and-otherwise
[5]: https://www.gnu.org/software/bash/manual/html_node/Special-Parameters.html

|

||||

@ -0,0 +1,135 @@

|

||||

[#]: collector: (lujun9972)
[#]: translator: (geekpi)
[#]: reviewer: (wxy)
[#]: publisher: (wxy)
[#]: url: (https://linux.cn/article-10600-1.html)
[#]: subject: (An Automated Way To Install Essential Applications On Ubuntu)
[#]: via: (https://www.ostechnix.com/an-automated-way-to-install-essential-applications-on-ubuntu/)
[#]: author: (SK https://www.ostechnix.com/author/sk/)

|

||||

|

||||

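An Automated Way To Install Essential Applications On Ubuntu
======

A default Ubuntu installation does not ship with every application you need. You may spend hours on the web, or ask other Linux users for help, to find and install the applications you need on Ubuntu. If you are a newcomer, you will certainly need even more time to learn how to search for and install applications from the command line (with `apt-get` or `dpkg`) or from the Ubuntu Software Center. Some users, newcomers in particular, may want to install every application they like quickly and easily. If you are one of them, don't worry. In this guide, we will see how to install essential applications on Ubuntu with a simple command-line program called "Alfred".

Alfred is a free, open source script written in Python. It uses Zenity to create a simple graphical interface that lets users select and install applications of their choice with a few mouse clicks. You do not have to spend hours hunting for all the necessary applications, PPAs, debs, AppImages, snaps, or flatpaks. Alfred brings the common applications, tools, and utilities together under one roof and installs the selected ones automatically. If you have recently migrated from Windows to Ubuntu Linux, Alfred helps you do an unattended software installation on a freshly installed Ubuntu system without much user intervention. Note that there is also a macOS app with a similar name, but the two serve different purposes.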

|

||||

|

||||

### Installing Alfred on Ubuntu

Installing Alfred is easy: just download the script and launch it.

```
$ wget https://raw.githubusercontent.com/derkomai/alfred/master/alfred.py
$ python3 alfred.py
```

Alternatively, download the script with `wget` as shown above and copy `alfred.py` to a directory in your `$PATH`:

```
$ sudo cp alfred.py /usr/local/bin/alfred
```

Make it executable:

```
$ sudo chmod +x /usr/local/bin/alfred
```

And launch it with the command:

```
$ alfred
```

|

||||

|

||||

### Install essential applications on Ubuntu quickly and easily with the Alfred script

Launch the Alfred script as described above. This is what Alfred's default interface looks like.

![][2]

As you can see, Alfred lists many of the most commonly used application types, such as:

* Web browsers,
* Mail clients,
* Messengers,
* Cloud storage clients,
* Hardware drivers,
* Codecs,
* Developer tools,
* Android,
* Text editors,
* Git,
* Kernel update tool,
* Audio/video players,
* Screenshot tools,
* Screen recorders,
* Video encoders,
* Streaming apps,
* 3D modelling and animation tools,
* Image viewers and editors,
* CAD software,
* PDF tools,
* Gaming emulators,
* Disk management tools,
* Encryption tools,
* Password managers,
* Archive tools,
* FTP software,
* System resource monitors,
* Application launchers, and more.

|

||||

|

||||

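You can pick any one or more applications and install them at once. Here, I am going to install the "Developer bundle", so I select it and click the OK button.

![][3]

The Alfred script will now automatically add the necessary repositories and PPAs to your Ubuntu system and start installing the selected applications.

![][4]

Once the installation is complete, you will see the following message.

![][5]

Congratulations! The selected packages have been installed.

You can [check the recently installed applications on Ubuntu][6] with the following command:

```
$ grep " install " /var/log/dpkg.log
```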
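If you only want the date and package name rather than the full log lines, a small awk filter can trim the output. This is a rough sketch that assumes the usual `dpkg.log` layout of date, time, action, package, and versions; the helper name `recent_installs` is hypothetical.

```shell
# recent_installs: read dpkg.log lines on stdin and print "date package"
# for each "install" action (hypothetical helper; assumes the log layout
# "DATE TIME ACTION PACKAGE OLD-VERSION NEW-VERSION").
recent_installs() {
    awk '$3 == "install" { print $1, $4 }'
}

# Sample log lines with an illustrative package and version
printf '2019-02-20 10:15:32 install git:amd64 <none> 1:2.17.1\n2019-02-20 10:15:33 status installed git:amd64 1:2.17.1\n' | recent_installs
```

On a live system this could be used as `recent_installs < /var/log/dpkg.log`.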

|

||||

|

||||

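You may need to reboot your system to use some of the installed applications. You can install any other program from the list in the same way.

For your information, there is a similar script named `post_install.sh`, written by a different developer. It works the same way as Alfred but offers a somewhat different set of applications. See the following link for more details.

- [Ubuntu Post Installation Script](https://www.ostechnix.com/ubuntu-post-installation-script/)

These two scripts let lazy users, newcomers especially, install most of the common applications, tools, updates, and utilities they want on Ubuntu Linux quickly and easily with a few mouse clicks, without relying on official or unofficial documentation.

That's all for now. I hope this was useful. More good stuff to come. Stay tuned!

Cheers!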

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.ostechnix.com/an-automated-way-to-install-essential-applications-on-ubuntu/

Author: [SK][a]
Topic selection: [lujun9972][b]
Translator: [geekpi](https://github.com/geekpi)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/).

|

||||

|

||||

[a]: https://www.ostechnix.com/author/sk/
[b]: https://github.com/lujun9972
[1]: data:image/gif;base64,R0lGODlhAQABAIAAAAAAAP///yH5BAEAAAAALAAAAAABAAEAAAIBRAA7
[2]: http://www.ostechnix.com/wp-content/uploads/2019/02/alfred-1.png
[3]: http://www.ostechnix.com/wp-content/uploads/2019/02/alfred-2.png
[4]: http://www.ostechnix.com/wp-content/uploads/2019/02/alfred-4.png
[5]: http://www.ostechnix.com/wp-content/uploads/2019/02/alfred-5-1.png
[6]: https://www.ostechnix.com/list-installed-packages-sorted-installation-date-linux/

|

||||

@ -1,60 +1,56 @@

|

||||

[#]: collector: "lujun9972"

|

||||

[#]: translator: "zero-mk"

|

||||

[#]: reviewer: " "

|

||||

[#]: publisher: " "

|

||||

[#]: url: " "

|

||||

[#]: reviewer: "wxy"

|

||||

[#]: publisher: "wxy"

|

||||

[#]: url: "https://linux.cn/article-10593-1.html"

|

||||

[#]: subject: "Bash-Insulter : A Script That Insults An User When Typing A Wrong Command"

|

||||

[#]: via: "https://www.2daygeek.com/bash-insulter-insults-the-user-when-typing-wrong-command/"

|

||||

[#]: author: "Magesh Maruthamuthu https://www.2daygeek.com/author/magesh/"

|

||||

|

||||

Bash-Insulter : 一个在输入错误命令时侮辱用户的脚本

|

||||

Bash-Insulter:一个在输入错误命令时嘲讽用户的脚本

|

||||

======

|

||||

|

||||

这是一个非常有趣的脚本,每当用户在终端输入错误的命令时,它都会侮辱用户。

|

||||

这是一个非常有趣的脚本,每当用户在终端输入错误的命令时,它都会嘲讽用户。

|

||||

|

||||

它让你在处理一些问题时感到快乐。

|

||||

它让你在解决一些问题时会感到快乐。有的人在受到终端嘲讽的时候感到不愉快。但是,当我受到终端的批评时,我真的很开心。

|

||||

|

||||

有的人在受到终端侮辱的时候感到不愉快。但是,当我受到终端的侮辱时,我真的很开心。

|

||||

|

||||

这是一个有趣的CLI(译者注:command-line interface) 工具,在你弄错的时候,会用随机短语侮辱你。

|

||||

|

||||

此外,它允许您添加自己的短语。

|

||||

这是一个有趣的 CLI 工具,在你弄错的时候,会用随机短语嘲讽你。此外,它允许你添加自己的短语。

|

||||

|

||||

### 如何在 Linux 上安装 Bash-Insulter?

|

||||

|

||||

在安装 Bash-Insulter 之前,请确保您的系统上安装了 git。如果没有,请使用以下命令安装它。

|

||||

在安装 Bash-Insulter 之前,请确保你的系统上安装了 git。如果没有,请使用以下命令安装它。

|

||||

|

||||

对于 **`Fedora`** 系统, 请使用 **[DNF 命令][1]** 安装 git

|

||||

对于 Fedora 系统, 请使用 [DNF 命令][1] 安装 git。

|

||||

|

||||

```

|

||||

$ sudo dnf install git

|

||||

```

|

||||

|

||||

对于 **`Debian/Ubuntu`** 系统,,请使用 **[APT-GET 命令][2]** 或者 **[APT 命令][3]** 安装 git。

|

||||

对于 Debian/Ubuntu 系统,请使用 [APT-GET 命令][2] 或者 [APT 命令][3] 安装 git。

|

||||

|

||||

```

|

||||

$ sudo apt install git

|

||||

```

|

||||

|

||||

对于基于 **`Arch Linux`** 的系统, 请使用 **[Pacman 命令][4]** 安装 git。

|

||||

对于基于 Arch Linux 的系统,请使用 [Pacman 命令][4] 安装 git。

|

||||

|

||||

```

|

||||

$ sudo pacman -S git

|

||||

```

|

||||

|

||||

对于 **`RHEL/CentOS`** systems, 请使用 **[YUM 命令][5]** 安装 git。

|

||||

对于 RHEL/CentOS 系统,请使用 [YUM 命令][5] 安装 git。

|

||||

|

||||

```

|

||||

$ sudo yum install git

|

||||

```

|

||||

|

||||

对于 **`openSUSE Leap`** system, 请使用 **[Zypper 命令][6]** 安装 git。

|

||||

对于 openSUSE Leap 系统,请使用 [Zypper 命令][6] 安装 git。

|

||||

|

||||

```

|

||||

$ sudo zypper install git

|

||||

```

|

||||

|

||||

我们可以通过克隆(clone)开发人员的github存储库轻松地安装它。

|

||||

我们可以通过<ruby>克隆<rt>clone</rt></ruby>开发人员的 GitHub 存储库轻松地安装它。

|

||||

|

||||

首先克隆 Bash-insulter 存储库。

|

||||

|

||||

@ -85,7 +81,7 @@ fi

|

||||

$ sudo source /etc/bash.bashrc

|

||||

```

|

||||

|

||||

你想测试一下安装是否生效吗?你可以试试在终端上输入一些错误的命令,看看它如何侮辱你。

|

||||

你想测试一下安装是否生效吗?你可以试试在终端上输入一些错误的命令,看看它如何嘲讽你。

|

||||

|

||||

```

|

||||

$ unam -a

|

||||

@ -95,9 +91,7 @@ $ pin 2daygeek.com

|

||||

|

||||

![][8]

|

||||

|

||||

如果您想附加您自己的短语,则导航到以下文件并更新它

|

||||

|

||||

您可以在 `messages` 部分中添加短语。

|

||||

如果你想附加你自己的短语,则导航到以下文件并更新它。你可以在 `messages` 部分中添加短语。

|

||||

|

||||

```

|

||||

# vi /etc/bash.command-not-found

|

||||

@ -178,7 +172,7 @@ via: https://www.2daygeek.com/bash-insulter-insults-the-user-when-typing-wrong-c

|

||||

作者:[Magesh Maruthamuthu][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[zero-mk](https://github.com/zero-mk)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,13 +1,13 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (geekpi)

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-10594-1.html)

|

||||

[#]: subject: (Regex groups and numerals)

|

||||

[#]: via: (https://leancrew.com/all-this/2019/02/regex-groups-and-numerals/)

|

||||

[#]: author: (Dr.Drang https://leancrew.com)

|

||||

|

||||

正则组和数字

|

||||

正则表达式的分组和数字

|

||||

======

|

||||

|

||||

大约一周前,我在编辑一个程序时想要更改一些变量名。我之前认为这将是一个简单的正则表达式查找/替换。只是这没有我想象的那么简单。

|

||||

@ -16,9 +16,9 @@

|

||||

|

||||

![Mistaken BBEdit replacement pattern][2]

|

||||

|

||||

我不能简单地 `30` 替换为 `10`,因为代码中有一些与变量无关的数字 `10`。我认为我很聪明,所以我不想写三个非正则表达式替换,`a10`、`v10` 和 `x10` 每个一个。但是我却没有注意到替换模式中的蓝色。如果我这样做了,我会看到 BBEdit 将我的替换模式解释为“匹配组 13,后面跟着 `0`,而不是”匹配组 1,后面跟着 `30`,后者是我想要的。由于匹配组 13 是空白的,因此所有变量名都会被替换为 `0`。

|

||||

我不能简单地 `30` 替换为 `10`,因为代码中有一些与变量无关的数字 `10`。我认为我很聪明,所以我不想写三个非正则表达式替换,`a10`、`v10` 和 `x10`,每个一个。但是我却没有注意到替换模式中的蓝色。如果我这样做了,我会看到 BBEdit 将我的替换模式解释为“匹配组 13,后面跟着 `0`,而不是”匹配组 1,后面跟着 `30`,后者是我想要的。由于匹配组 13 是空白的,因此所有变量名都会被替换为 `0`。

|

||||

|

||||

你看,BBEdit 可以在搜索模式中匹配多达 99 个组,严格来说,我们应该在替换模式中引用它们时使用两位数字。但在大多数情况下,我们可以使用 `\1` 到 `\9` 而不是 `\01` 到 `\09`,因为这没有歧义。换句话说,如果我尝试将 `a10`、`v10` 和 `x10` 更改为 `az`、`vz` 和 `xz`,那么使用 `\1z`的替换模式就可以了。因为后面的 `z` 意味着不会误解释该模式中 `\1`。

|

||||

你看,BBEdit 可以在搜索模式中匹配多达 99 个分组,严格来说,我们应该在替换模式中引用它们时使用两位数字。但在大多数情况下,我们可以使用 `\1` 到 `\9` 而不是 `\01` 到 `\09`,因为这没有歧义。换句话说,如果我尝试将 `a10`、`v10` 和 `x10` 更改为 `az`、`vz` 和 `xz`,那么使用 `\1z`的替换模式就可以了。因为后面的 `z` 意味着不会误解释该模式中 `\1`。

|

||||

|

||||

因此,在撤消替换后,我将模式更改为这样:

|

||||

|

||||

@ -30,10 +30,9 @@

|

||||

|

||||

![Named BBEdit replacement pattern][4]

|

||||

|

||||

在任何情况下,我从来都没有使用过命名组,无论正则表达式是在文本编辑器还是在脚本中。我的总体感觉是,如果模式复杂到我必须使用变量来跟踪所有组,那么我应该停下来并将问题分解为更小的部分。

|

||||

我从来都没有使用过命名组,无论正则表达式是在文本编辑器还是在脚本中。我的总体感觉是,如果模式复杂到我必须使用变量来跟踪所有组,那么我应该停下来并将问题分解为更小的部分。

|

||||

|

||||

By the way, you may have heard that BBEdit is celebrating its [25th anniversary][5] of not sucking. When a well-documented app has such a long history, the manual starts to accumulate delightful callbacks to the olden days. As I was looking up the notation for named groups in the BBEdit manual, I ran across this note:

|

||||

顺便说一下,你可能已经听说 BBEdit 正在庆祝它诞生[25周年][5]。当一个有良好文档的应用有如此历史时,手册的积累能让人愉快地回到过去的日子。当我在 BBEdit 手册中查找命名组的表示法时,我遇到了这个说明:

|

||||

顺便说一下,你可能已经听说 BBEdit 正在庆祝它诞生[25周年][5]。当一个有良好文档的应用有如此长的历史时,手册的积累能让人愉快地回到过去的日子。当我在 BBEdit 手册中查找命名组的表示法时,我遇到了这个说明:

|

||||

|

||||

![BBEdit regex manual excerpt][6]

|

||||

|

||||

@ -45,8 +44,8 @@ via: https://leancrew.com/all-this/2019/02/regex-groups-and-numerals/

|

||||

|

||||

作者:[Dr.Drang][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

译者:[s](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,5 +1,5 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: translator: (mokshal)

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: subject: (5 reasons to give Linux for the holidays)

|

||||

|

||||

@ -1,54 +0,0 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (alim0x)

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (Booting Linux faster)

|

||||

[#]: via: (https://opensource.com/article/19/1/booting-linux-faster)

|

||||

[#]: author: (Stewart Smith https://opensource.com/users/stewart-ibm)

|

||||

|

||||

Booting Linux faster

|

||||

======

|

||||

Doing Linux kernel and firmware development leads to lots of reboots and lots of wasted time.

|

||||

|

||||

Of all the computers I've ever owned or used, the one that booted the quickest was from the 1980s; by the time your hand moved from the power switch to the keyboard, the BASIC interpreter was ready for your commands. Modern computers take anywhere from 15 seconds for a laptop to minutes for a small home server to boot. Why is there such a difference in boot times?

|

||||

|

||||

A microcomputer from the 1980s that booted straight to a BASIC prompt had a very simple CPU that started fetching and executing instructions from a memory address immediately upon getting power. Since these systems had BASIC in ROM, there was no loading time—you got to the BASIC prompt really quickly. More complex systems of that same era, such as the IBM PC or Macintosh, took a significant time to boot (~30 seconds), although this was mostly due to having to read the operating system (OS) off a floppy disk. Only a handful of seconds were spent in firmware before being able to load an OS.

|

||||

|

||||

Modern servers typically spend minutes, rather than seconds, in firmware before getting to the point of booting an OS from disk. This is largely due to modern systems' increased complexity. No longer can a CPU just come up and start executing instructions at full speed; we've become accustomed to CPU frequency scaling, idle states that save a lot of power, and multiple CPU cores. In fact, inside modern CPUs are a surprising number of simpler CPUs that help start the main CPU cores and provide runtime services such as throttling the frequency when it gets too hot. On most CPU architectures, the code running on these cores inside your CPU is provided as opaque binary blobs.

|

||||

|

||||

On OpenPOWER systems, every instruction executed on every core inside the CPU is open source software. On machines with [OpenBMC][1] (such as IBM's AC922 system and Raptor's TALOS II and Blackbird systems), this extends to the code running on the Baseboard Management Controller as well. This means we can get a tremendous amount of insight into what takes so long from the time you plug in a power cable to the time a familiar login prompt is displayed.

|

||||

|

||||

If you're part of a team that works on the Linux kernel, you probably boot a lot of kernels. If you're part of a team that works on firmware, you're probably going to boot a lot of different firmware images, followed by an OS to ensure your firmware still works. If we can reduce the hardware's boot time, these teams can become more productive, and end users may be grateful when they're setting up systems or rebooting to install firmware or OS updates.

|

||||

|

||||

Over the years, many improvements have been made to Linux distributions' boot time. Modern init systems deal well with doing things concurrently and on-demand. On a modern system, once the kernel starts executing, it can take very few seconds to get to a login prompt. This handful of seconds are not the place to optimize boot time; we have to go earlier: before we get to the OS.

|

||||

|

||||

On OpenPOWER systems, the firmware loads an OS by booting a Linux kernel stored in the firmware flash chip that runs a userspace program called [Petitboot][2] to find the disk that holds the OS the user wants to boot and [kexec][3][()][3] to it. This code reuse leverages the efforts that have gone into making Linux boot quicker. Even so, we found places in our kernel config and userspace where we could improve and easily shave seconds off boot time. With these optimizations, booting the Petitboot environment is a single-digit percentage of boot time, so we had to find more improvements elsewhere.

|

||||

|

||||

Before the Petitboot environment starts, there's a prior bit of firmware called [Skiboot][4], and before that there's [Hostboot][5]. Prior to Hostboot is the [Self-Boot Engine][6], a separate core on the die that gets a single CPU core up and executing instructions out of Level 3 cache. These components are where we can make the most headway in reducing boot time, as they take up the overwhelming majority of it. Perhaps some of these components aren't optimized enough or doing as much in parallel as they could be?

|

||||

|

||||

Another avenue of attack is reboot time rather than boot time. On a reboot, do we really need to reinitialize all the hardware?

|

||||

|

||||