mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-27 02:30:10 +08:00

commit

e3991b36a9

38

README.md

38

README.md

@ -30,6 +30,26 @@ LCTT的组成

|

||||

|

||||

请阅读[WIKI](https://github.com/LCTT/TranslateProject/wiki)。

|

||||

|

||||

历史

|

||||

-------------------------------

|

||||

|

||||

* 2013/09/10 倡议并得到了大家的积极响应,成立翻译组。

|

||||

* 2013/09/11 采用github进行翻译协作,并开始进行选题翻译。

|

||||

* 2013/09/16 公开发布了翻译组成立消息后,又有新的成员申请加入了。并从此建立见习成员制度。

|

||||

* 2013/09/24 鉴于大家使用Github的水平不一,容易导致主仓库的一些错误,因此换成了常规的fork+PR的模式来进行翻译流程。

|

||||

* 2013/10/11 根据对LCTT的贡献,划分了Core Translators组,最先的加入成员是vito-L和tinyeyeser。

|

||||

* 2013/10/12 取消对LINUX.CN注册用户的依赖,在QQ群内、文章内都采用github的注册ID。

|

||||

* 2013/10/18 正式启动man翻译计划。

|

||||

* 2013/11/10 举行第一次北京线下聚会。

|

||||

* 2014/01/02 增加了Core Translators 成员: geekpi。

|

||||

* 2014/05/04 更换了新的QQ群:198889102

|

||||

* 2014/05/16 增加了Core Translators 成员: will.qian、vizv。

|

||||

* 2014/06/18 由于GOLinux令人惊叹的翻译速度和不错的翻译质量,升级为Core Translators成员。

|

||||

* 2014/09/09 LCTT 一周年,做一年[总结](http://linux.cn/article-3784-1.html)。并将曾任 CORE 的成员分组为 Senior,以表彰他们的贡献。

|

||||

* 2014/10/08 提升bazz2为Core Translators成员。

|

||||

* 2014/11/04 提升zpl1025为Core Translators成员。

|

||||

* 2014/12/25 提升runningwater为Core Translators成员。

|

||||

|

||||

活跃成员

|

||||

-------------------------------

|

||||

|

||||

@ -119,21 +139,3 @@ LCTT的组成

|

||||

|

||||

谢谢大家的支持!

|

||||

|

||||

历史

|

||||

-------------------------------

|

||||

|

||||

* 2013/09/10 倡议并得到了大家的积极响应,成立翻译组。

|

||||

* 2013/09/11 采用github进行翻译协作,并开始进行选题翻译。

|

||||

* 2013/09/16 公开发布了翻译组成立消息后,又有新的成员申请加入了。并从此建立见习成员制度。

|

||||

* 2013/09/24 鉴于大家使用Github的水平不一,容易导致主仓库的一些错误,因此换成了常规的fork+PR的模式来进行翻译流程。

|

||||

* 2013/10/11 根据对LCTT的贡献,划分了Core Translators组,最先的加入成员是vito-L和tinyeyeser。

|

||||

* 2013/10/12 取消对LINUX.CN注册用户的依赖,在QQ群内、文章内都采用github的注册ID。

|

||||

* 2013/10/18 正式启动man翻译计划。

|

||||

* 2013/11/10 举行第一次北京线下聚会。

|

||||

* 2014/01/02 增加了Core Translators 成员: geekpi。

|

||||

* 2014/05/04 更换了新的QQ群:198889102

|

||||

* 2014/05/16 增加了Core Translators 成员: will.qian、vizv。

|

||||

* 2014/06/18 由于GOLinux令人惊叹的翻译速度和不错的翻译质量,升级为Core Translators成员。

|

||||

* 2014/09/09 LCTT 一周年,做一年[总结](http://linux.cn/article-3784-1.html)。并将曾任 CORE 的成员分组为 Senior,以表彰他们的贡献。

|

||||

* 2014/10/08 提升bazz2为Core Translators成员。

|

||||

* 2014/11/04 提升zpl1025为Core Translators成员。

|

||||

@ -8,23 +8,23 @@ Linux能够提供消费者想要的东西吗?

|

||||

|

||||

Linux需要深深凝视自己的水晶球,仔细体会那场浏览器大战留下的尘埃,然后留意一下这点建议:

|

||||

|

||||

如果你不能提供他们想要的,他们就会离开。

|

||||

> 如果你不能提供他们想要的,他们就会离开。

|

||||

|

||||

而这种事与愿违的另一个例子是Windows 8。消费者不喜欢那套界面。而微软却坚持使用,因为这是把所有东西搬到Surface平板上所必须的。相同的情况也可能发生在Canonical和Ubuntu Unity身上 -- 尽管它们的目标并不是单一独特地针对平板电脑来设计(所以,整套界面在桌面系统上仍然很实用而且直观)。

|

||||

|

||||

一直以来,Linux开发者和设计者们看上去都按照他们自己的想法来做事情。他们过分在意“吃你自家的狗粮”这句话了。以至于他们忘记了一件非常重要的事情:

|

||||

|

||||

没有新用户,他们的“根基”也仅仅只属于他们自己。

|

||||

> 没有新用户,他们的“根基”也仅仅只属于他们自己。

|

||||

|

||||

换句话说,唱诗班不仅仅是被传道,他们也同时在宣传。让我给你看三个案例来完全掌握这一点。

|

||||

|

||||

- 多年以来,有在Linux系统中替代活动目录(Active Directory)的需求。我很想把这个名称换成LDAP,但是你真的用过LDAP吗?那就是个噩梦。开发者们也努力了想让LDAP能易用一点,但是没一个做到了。而让我很震惊的是这样一个从多用户环境下发展起来的平台居然没有一个能和AD正面较量的功能。这需要一组开发人员,从头开始建立一个AD的开源替代。这对那些寻求从微软产品迁移的中型企业来说是非常大的福利。但是在这个产品做好之前,他们还不能开始迁移。

|

||||

- 多年以来,一直有在Linux系统中替代活动目录(Active Directory)的需求。我很想把这个名称换成LDAP,但是你真的用过LDAP吗?那就是个噩梦。开发者们也努力了想让LDAP能易用一点,但是没一个做到了。而让我很震惊的是这样一个从多用户环境下发展起来的平台居然没有一个能和AD正面较量的功能。这需要一组开发人员,从头开始建立一个AD的开源替代。这对那些寻求从微软产品迁移的中型企业来说是非常大的福利。但是在这个产品做好之前,他们还不能开始迁移。

|

||||

- 另一个从微软激发的需求是Exchange/Outlook。是,我也知道许多人都开始用云。但是,事实上中等和大型规模生意仍然依赖于Exchange/Outlook组合,直到能有更好的产品出现。而这将非常有希望发生在开源社区。整个拼图的一小块已经摆好了(虽然还需要一些工作)- 群件客户端,Evolution。如果有人能够从Zimbra拉出一个分支,然后重新设计成可以配合Evolution(甚至Thunderbird)来提供服务实现Exchange的简单替代,那这个游戏就不是这么玩了,而消费者获得的利益将是巨大的。

|

||||

- 便宜,便宜,还是便宜。这是大多数人都得咽下去的苦药片 - 但是消费者(和生意)就是希望便宜。看看去年一年Chromebook的销量吧。现在,搜索一下Linux笔记本看能不能找到700美元以下的。而只用三分之一的价格,就可以买到一个让你够用的Chromebook(一个使用了Linux内核的平台)。但是因为Linux仍然是一个细分市场,很难降低成本。像红帽那种公司也许可以改变现状。他们也已经推出了服务器硬件。为什么不推出一些和Chromebook有类似定位但是却运行完整Linux环境的低价中档笔记本呢?(请看“[Cloudbook是Linux的未来吗?][1]”)其中的关键是这种设备要低成本并且符合普通消费者的要求。不要站在游戏玩家/开发者的角度去思考了,记住普通消费者真正的需求 - 一个网页浏览器,不会有更多了。这是Chromebook为什么可以这么轻松地成功。Google精确地知道消费者想要什么,然后推出相应的产品。而面对Linux,一些公司仍然认为他们吸引买家的唯一途径是高端昂贵的硬件。而有一点讽刺的是,口水战中最经常听到的却是Linux只能在更慢更旧的硬件上运行。

|

||||

|

||||

最后,Linux需要看一看乔布斯传(Book Of Jobs),搞清楚如何说服消费者们他们真正要的就是Linux。在生意上和在家里 -- 每个人都可以享受到Linux带来的好处。说真的,开源社区怎么可能做不到这点呢?Linux本身就已经带有很多漂亮的时髦术语标签:稳定性,可靠性,安全性,云,免费 -- 再加上Linux实际已经进入到绝大多数人手中了(只是他们自己还不清楚罢了)。现在是时候让他们知道这一点了。如果你是用Android或者Chromebooks,那么你就在用(某种形式上的)Linux。

|

||||

最后,Linux需要看一看乔布斯传(Book Of Jobs),搞清楚如何说服消费者们他们真正要的就是Linux。在公司里和在家里 -- 每个人都可以享受到Linux带来的好处。说真的,开源社区怎么可能做不到这点呢?Linux本身就已经带有很多漂亮的时髦术语标签:稳定性、可靠性、安全性、云、免费 -- 再加上Linux实际已经进入到绝大多数人手中了(只是他们自己还不清楚罢了)。现在是时候让他们知道这一点了。如果你是用Android或者Chromebooks,那么你就在用(某种形式上的)Linux。

|

||||

|

||||

搞清楚消费者需求一直以来都是Linux社区的绊脚石。而且我知道 -- 太多的Linux开发都基于某个开发者有个特殊的想法。这意味着这些开发都针对的“微型市场”。是时候,无论如何,让Linux开发社区能够进行全球性思考了。“一般用户有什么需求,我们怎么满足他们?”让我提几个最基本的点。

|

||||

搞清楚消费者需求一直以来都是Linux社区的绊脚石。而且我知道 -- 太多的Linux开发都基于某个开发者有个特殊的想法。这意味着这些开发都针对的“微型市场”。是时候了,无论如何,让Linux开发社区能够进行全球性思考了。“一般用户有什么需求,我们怎么满足他们?”让我提几个最基本的点。

|

||||

|

||||

一般用户想要:

|

||||

|

||||

@ -43,7 +43,7 @@ via: http://www.techrepublic.com/article/will-linux-ever-be-able-to-give-consume

|

||||

|

||||

作者:[Jack Wallen][a]

|

||||

译者:[zpl1025](https://github.com/zpl1025)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,27 +1,26 @@

|

||||

让下载更方便

|

||||

================================================================================

|

||||

下载管理器是一个电脑程序,专门处理下载文件,优化带宽占用,以及让下载更有条理等任务。有些网页浏览器,例如Firefox,也集成了一个下载管理器作为功能,但是它们的方式还是没有专门的下载管理器(或者浏览器插件)那么专业,没有最佳地使用带宽,也没有好用的文件管理功能。

|

||||

下载管理器是一个电脑程序,专门处理下载文件,优化带宽占用,以及让下载更有条理等任务。有些网页浏览器,例如Firefox,也集成了一个下载管理器作为功能,但是它们的使用方式还是没有专门的下载管理器(或者浏览器插件)那么专业,没有最佳地使用带宽,也没有好用的文件管理功能。

|

||||

|

||||

对于那些经常下载的人,使用一个好的下载管理器会更有帮助。它能够最大化下载速度(加速下载),断点续传以及制定下载计划,让下载更安全也更有价值。下载管理器已经没有之前流行了,但是最好的下载管理器还是很实用,包括和浏览器的紧密结合,支持类似YouTube的主流网站,以及更多。

|

||||

|

||||

有好几个能在Linux下工作都非常优秀的开源下载管理器,以至于让人无从选择。我整理了一个摘要,是我喜欢的下载管理器,以及Firefox里的一个非常好用的下载插件。这里列出的每一个程序都是开源许可发布的。

|

||||

|

||||

----------

|

||||

|

||||

|

||||

###uGet

|

||||

|

||||

|

||||

|

||||

uGet是一个轻量级,容易使用,功能完备的开源下载管理器。uGet允许用户从不同的源并行下载来加快速度,添加文件到下载序列,暂停或继续下载,提供高级分类管理,和浏览器集成,监控剪贴板,批量下载,支持26种语言,以及其他许多功能。

|

||||

|

||||

uGet是一个成熟的软件;保持开发超过11年。在这个时间里,它发展成一个非常多功能的下载管理器,拥有一套很高价值的功能集,还保持了易用性。

|

||||

uGet是一个成熟的软件;持续开发超过了11年。在这段时间里,它发展成一个非常多功能的下载管理器,拥有一套很高价值的功能集,还保持了易用性。

|

||||

|

||||

uGet是用C语言开发的,使用了cURL作为底层支持,以及应用库libcurl。uGet有非常好的平台兼容性。它一开始是Linux系统下的项目,但是被移植到在Mac OS X,FreeBSD,Android和Windows平台运行。

|

||||

|

||||

#### 功能点: ####

|

||||

|

||||

- 容易使用

|

||||

- 下载队列可以让下载任务按任意多或少或你希望的数量同时进行。

|

||||

- 下载队列可以让下载任务按任意数量或你希望的数量同时进行。

|

||||

- 断点续传

|

||||

- 默认分类

|

||||

- 完美实现的剪贴板监控功能

|

||||

@ -43,19 +42,19 @@ uGet是用C语言开发的,使用了cURL作为底层支持,以及应用库li

|

||||

- 支持GnuTLS

|

||||

- 支持26种语言,包括:阿拉伯语,白俄罗斯语,简体中文,繁体中文,捷克语,丹麦语,英语(默认),法语,格鲁吉亚语,德语,匈牙利语,印尼语,意大利语,波兰语,葡萄牙语(巴西),俄语,西班牙语,土耳其语,乌克兰语,以及越南语。

|

||||

|

||||

---

|

||||

|

||||

- 网站:[ugetdm.com][1]

|

||||

- 开发人员:C.H. Huang and contributors

|

||||

- 许可:GNU LGPL 2.1

|

||||

- 版本:1.10.5

|

||||

|

||||

----------

|

||||

|

||||

|

||||

###DownThemAll!

|

||||

|

||||

|

||||

|

||||

DownThemAll!是一个小巧的,可靠的以及易用的,开源下载管理器/加速器,是Firefox的一个组件。它可以让用户下载一个页面上所有链接和图片以及更多功能。它可以让用户完全控制下载任务,随时分配下载速度以及同时下载的任务数量。通过使用Metalinks或者手动添加镜像的方式,可以同时从不同的服务器下载同一个文件。

|

||||

DownThemAll!是一个小巧可靠的、易用的开源下载管理器/加速器,是Firefox的一个组件。它可以让用户下载一个页面上所有链接和图片,还有更多功能。它可以让用户完全控制下载任务,随时分配下载速度以及同时下载的任务数量。通过使用Metalinks或者手动添加镜像的方式,可以同时从不同的服务器下载同一个文件。

|

||||

|

||||

DownThemAll会根据你要下载的文件大小,切割成不同的部分,然后并行下载。

|

||||

|

||||

@ -69,6 +68,7 @@ DownThemAll会根据你要下载的文件大小,切割成不同的部分,然

|

||||

- 高级重命名选项

|

||||

- 暂停和继续下载任务

|

||||

|

||||

---

|

||||

|

||||

- 网站:[addons.mozilla.org/en-US/firefox/addon/downthemall][2]

|

||||

- 开发人员:Federico Parodi, Stefano Verna, Nils Maier

|

||||

@ -77,13 +77,13 @@ DownThemAll会根据你要下载的文件大小,切割成不同的部分,然

|

||||

|

||||

----------

|

||||

|

||||

|

||||

###JDownloader

|

||||

|

||||

|

||||

|

||||

JDownloader是一个免费,开源的下载管理工具,拥有一个大型社区的开发者支持,让下载更简单和快捷。用户可以开始,停止或暂停下载,设置带宽限制,自动解压缩包,以及更多功能。它提供了一个容易扩展的框架。

|

||||

|

||||

JDownloader简化了从一键下载网站下载文件。它还支持从不同并行资源下载,手势识别,自动文件解压缩以及更多功能。另外,还支持许多“加密链接”网站-所以你只需要复制粘贴“加密的”链接,然后JDownloader会处理剩下的事情。JDownloader还能导入CCF,RSDF和DLC文件。

|

||||

JDownloader简化了从一键下载网站下载文件。它还支持从不同并行资源下载、手势识别、自动文件解压缩以及更多功能。另外,还支持许多“加密链接”网站-所以你只需要复制粘贴“加密的”链接,然后JDownloader会处理剩下的事情。JDownloader还能导入CCF,RSDF和DLC文件。

|

||||

|

||||

#### 功能点: ####

|

||||

|

||||

@ -98,6 +98,7 @@ JDownloader简化了从一键下载网站下载文件。它还支持从不同并

|

||||

- 网页更新

|

||||

- 集成包管理器支持额外模块(例如,Webinterface,Shutdown)

|

||||

|

||||

---

|

||||

|

||||

- 网站:[jdownloader.org][3]

|

||||

- 开发人员:AppWork UG

|

||||

@ -106,11 +107,11 @@ JDownloader简化了从一键下载网站下载文件。它还支持从不同并

|

||||

|

||||

----------

|

||||

|

||||

|

||||

###FreeRapid Downloader

|

||||

|

||||

|

||||

|

||||

FreeRapid Downloader是一个易用的开源下载程序,支持从Rapidshare,Youtube,Facebook,Picasa和其他文件分享网站下载。他的下载引擎基于一些插件,所以可以从特殊站点下载。

|

||||

FreeRapid Downloader是一个易用的开源下载程序,支持从Rapidshare,Youtube,Facebook,Picasa和其他文件分享网站下载。他的下载引擎基于一些插件,所以可以从那些特别的站点下载。

|

||||

|

||||

对于需要针对特定文件分享网站的下载管理器用户来说,FreeRapid Downloader是理想的选择。

|

||||

|

||||

@ -133,6 +134,7 @@ FreeRapid Downloader使用Java语言编写。需要至少Sun Java 7.0版本才

|

||||

- 支持多国语言:英语,保加利亚语,捷克语,芬兰语,葡萄牙语,斯洛伐克语,匈牙利语,简体中文,以及其他

|

||||

- 支持超过700个站点

|

||||

|

||||

---

|

||||

|

||||

- 网站:[wordrider.net/freerapid/][4]

|

||||

- 开发人员:Vity and contributors

|

||||

@ -141,7 +143,7 @@ FreeRapid Downloader使用Java语言编写。需要至少Sun Java 7.0版本才

|

||||

|

||||

----------

|

||||

|

||||

|

||||

###FlashGot

|

||||

|

||||

|

||||

|

||||

@ -151,7 +153,7 @@ FlashGot把所支持的所有下载管理器统一成Firefox中的一个下载

|

||||

|

||||

#### 功能点: ####

|

||||

|

||||

- Linux下支持:Aria, Axel Download Accelerator, cURL, Downloader 4 X, FatRat, GNOME Gwget, FatRat, JDownloader, KDE KGet, pyLoad, SteadyFlow, uGet, wxDFast, 和wxDownload Fast

|

||||

- Linux下支持:Aria, Axel Download Accelerator, cURL, Downloader 4 X, FatRat, GNOME Gwget, FatRat, JDownloader, KDE KGet, pyLoad, SteadyFlow, uGet, wxDFast 和 wxDownload Fast

|

||||

- 支持图库功能,可以帮助把原来分散在不同页面的系列资源,整合到一个所有媒体库页面中,然后可以轻松迅速地“下载所有”

|

||||

- FlashGot Link会使用默认下载管理器下载当前鼠标选中的链接

|

||||

- FlashGot Selection

|

||||

@ -160,12 +162,13 @@ FlashGot把所支持的所有下载管理器统一成Firefox中的一个下载

|

||||

- FlashGot Media

|

||||

- 抓取页面里所有链接

|

||||

- 抓取所有标签栏的所有链接

|

||||

- 链接过滤(例如,只下载指定类型文件)

|

||||

- 链接过滤(例如只下载指定类型文件)

|

||||

- 在网页上抓取点击所产生的所有链接

|

||||

- 支持从大多数链接保护和文件托管服务器直接和批量下载

|

||||

- 隐私选项

|

||||

- 支持国际化

|

||||

|

||||

---

|

||||

|

||||

- 网站:[flashgot.net][5]

|

||||

- 开发人员:Giorgio Maone

|

||||

@ -178,7 +181,7 @@ via: http://www.linuxlinks.com/article/20140913062041384/DownloadManagers.html

|

||||

|

||||

作者:Frazer Kline

|

||||

译者:[zpl1025](https://github.com/zpl1025)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,79 @@

|

||||

如何在Ubuntu桌面上使用Steam Music音乐播放器

|

||||

================================================================================

|

||||

|

||||

|

||||

**‘音乐让人们走到一起’ 麦当娜曾这样唱道。但是Steam的新音乐播放器特性能否很好的混搭小资与叛逆?**

|

||||

|

||||

如果你曾与世隔绝,充耳不闻,你就会错过与Steam Music的相识。它的特性并不是全新的。从今年的早些时候开始,它就已经以这样或那样的形式进行了测试。

|

||||

|

||||

但Steam客户端最近一次在Windows、Mac和Linux上的定期更新中,所有的客户端都能使用它了。你会问为什么一个游戏客户端会添加一个音乐播放器呢?当然是为了让你能一边玩游戏一边一边听你最喜欢的音乐了。

|

||||

|

||||

别担心:在游戏的音乐声中再加上你自己的音乐,听起来并不会像你想象的那么糟(哈哈)。Steam会帮你减少或消除游戏的背景音乐,但在混音器中保持效果音的高音量,以便于你能和平时一样听到那些叮,嘭和各种爆炸声。

|

||||

|

||||

### 使用Steam Music音乐播放器 ###

|

||||

|

||||

|

||||

|

||||

*大图模式*

|

||||

|

||||

任何使用最新版客户端的人都能使用Steam Music音乐播放器。它是个相当简单的附加程序:它让你能从你的电脑中添加、浏览并播放音乐。

|

||||

|

||||

播放器可以以两种方式进入:桌面和(超棒的)Steam大图模式。在两种方式下,控制播放都超级简单。

|

||||

|

||||

作为一个Rhythmbox的对手或是Spotify的继承者,把**为玩游戏时放音乐而设计**作为特点一点也不吸引人。事实上,他没有任何可购买音乐的商店,也没有整合Rdio,Grooveshark这类在线服务或是桌面服务。没错,你的多媒体键在Linux的播放器上完全不能用。

|

||||

|

||||

Valve说他们“*……计划增加更多的功能以便用户能以新的方式体验Steam Music。我们才刚刚开始。*”

|

||||

|

||||

#### Steam Music的重要特性:####

|

||||

|

||||

- 只能播放MP3文件

|

||||

- 与游戏中的音乐相融

|

||||

- 在游戏中可以控制音乐

|

||||

- 播放器可以在桌面上或在大图模式下运行

|

||||

- 基于播放列表的播放方式

|

||||

|

||||

**它没有整合到Ubuntu的声音菜单里,而且目前也不支持键盘上的多媒体键。**

|

||||

|

||||

### 在Ubuntu上使用Steam Music播放器 ###

|

||||

|

||||

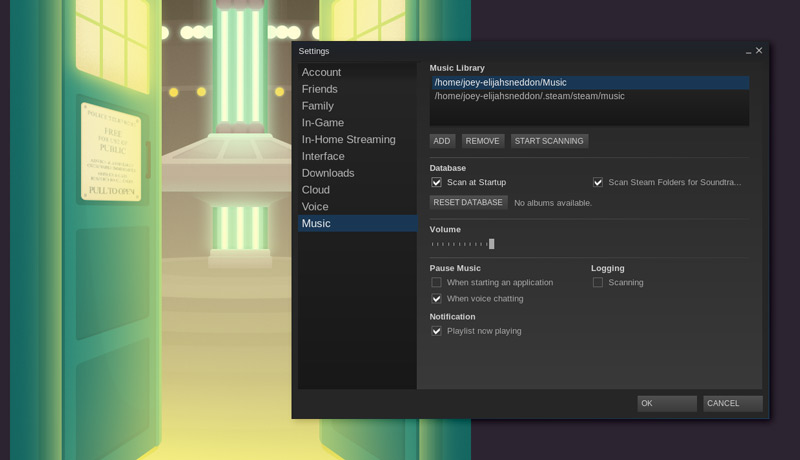

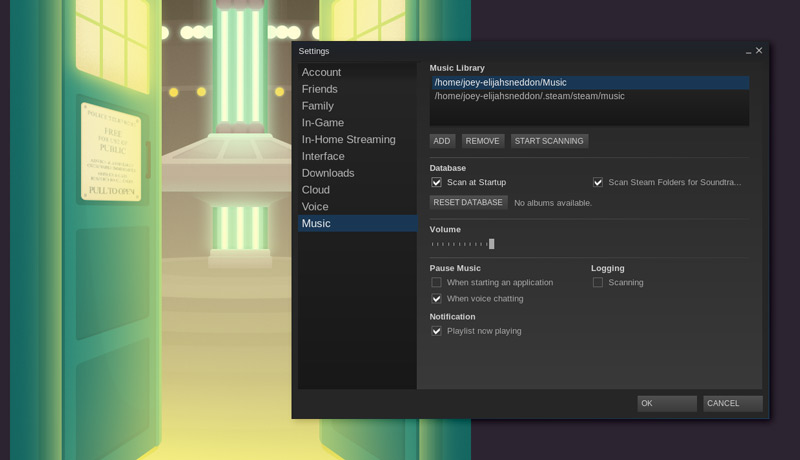

显然,添加音乐是你播放音乐前的第一件事。在Ubuntu上,默认设置下,Steam会自动添加两个文件夹:Home下的标准Music目录和它自带的Steam Music文件夹。任何可下载的音轨都保存在其中。

|

||||

|

||||

注意:目前**Steam Music只能播放MP3文件**。如果你的大部分音乐都是其他文件格式(比如.acc、.m4a等等),这些文件不会被添加也不能被播放。

|

||||

|

||||

若想添加其他的文件夹或重新扫描:

|

||||

|

||||

- 到**View > Settings > Music**。

|

||||

- 点击‘**Add**‘将其他位置的文件夹添加到已列出两个文件夹的列表下。

|

||||

- 点击‘**Start Scanning**’

|

||||

|

||||

|

||||

|

||||

你还可以在这个对话框中调整其他设置,包括‘scan at start’。如果你经常添加新音乐而且很容易忘记手动启动扫描,请标记此项。你还可以选择当路径变化时是否显示提示,设置默认的音量,还能调整当你打开一个应用软件或语音聊天时的播放状态的改变。

|

||||

|

||||

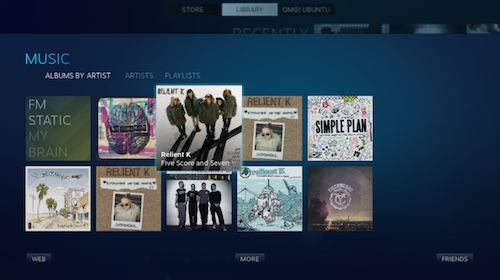

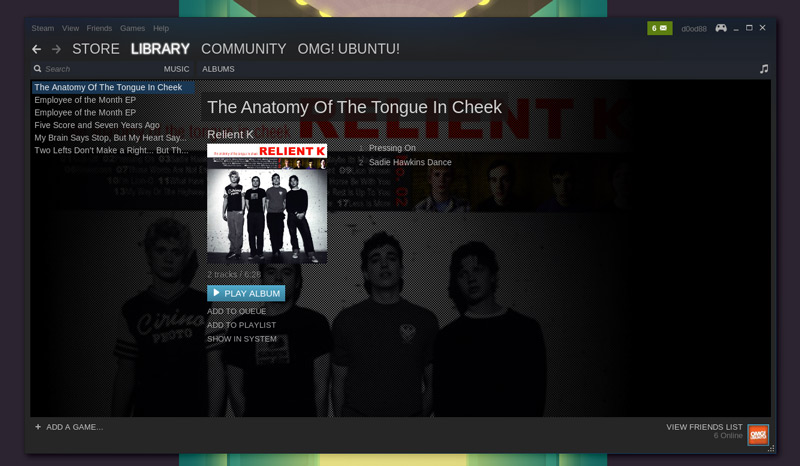

一旦你的音乐源成功的被添加并扫描后,你就可以通过主客户端的**Library > Music**区域浏览你的音乐了。

|

||||

|

||||

|

||||

|

||||

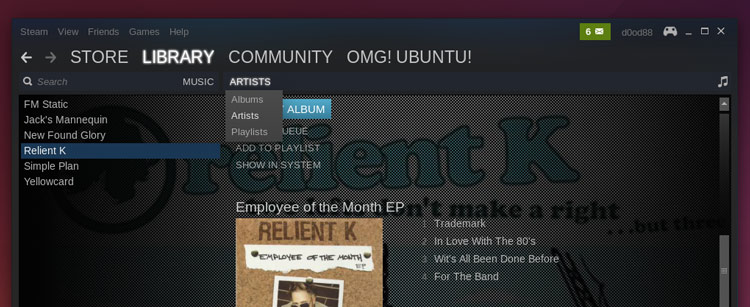

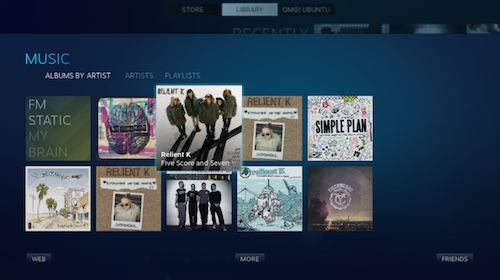

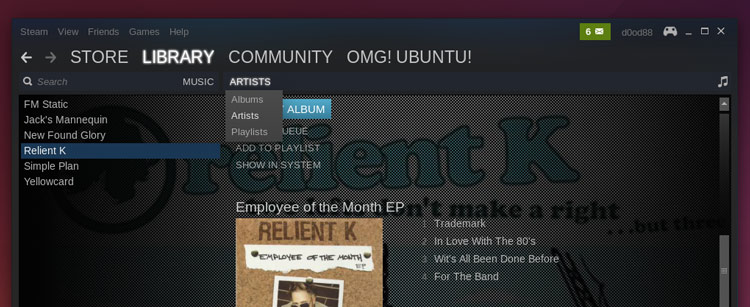

Steam Music会默认的将音乐按照专辑进行分组。若想按照乐队名进行浏览,你需要点击‘Albums’然后从下拉菜单中选择‘Artists’。

|

||||

|

||||

|

||||

|

||||

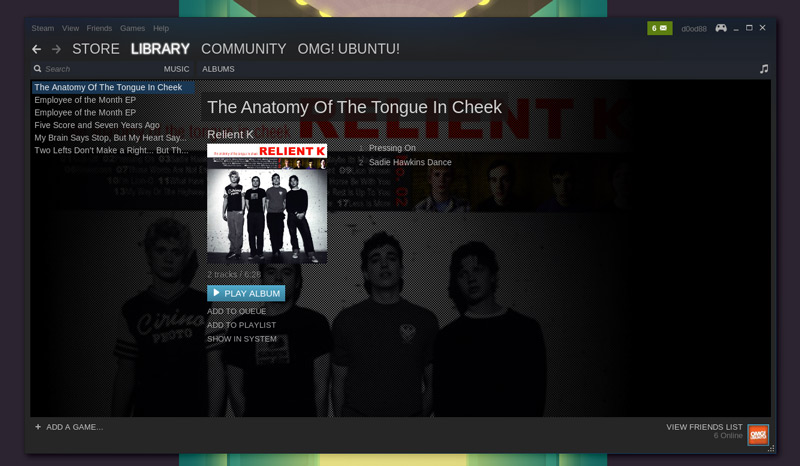

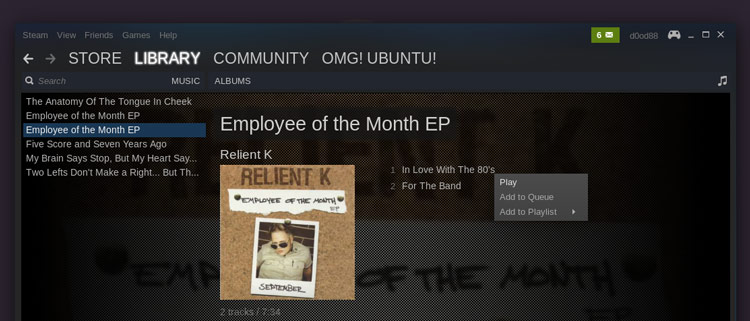

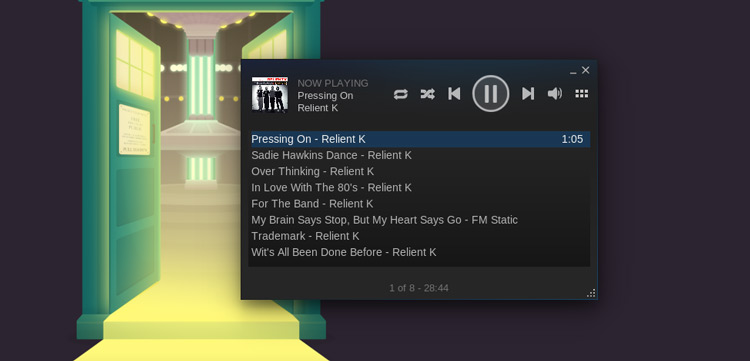

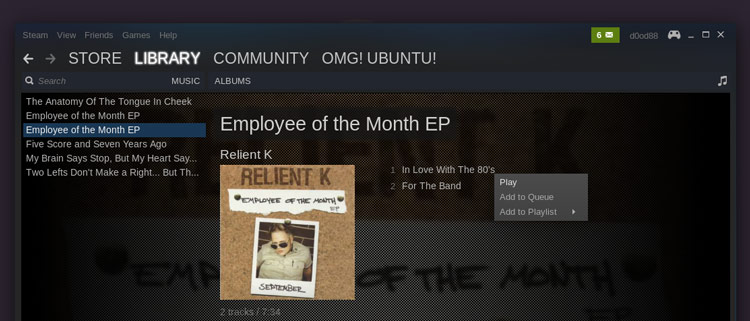

Steam Music是一个以‘队列’方式工作的系统。你可以通过双击浏览器里的音乐或右键单击并选择‘Add to Queue’来把音乐添加到播放队列里。

|

||||

|

||||

|

||||

|

||||

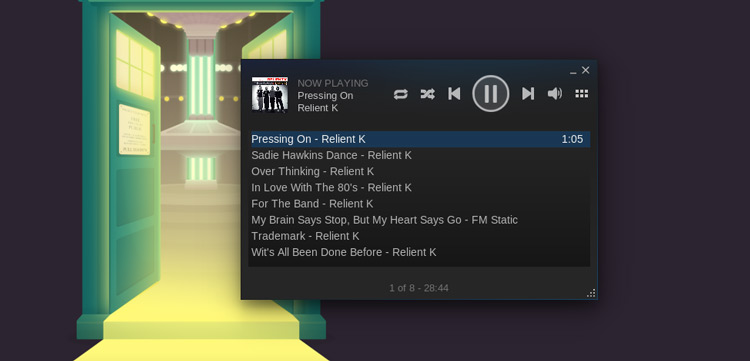

若想**启动桌面播放器**请点击右上角的音符图标或通过**View > Music Player**菜单。

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.omgubuntu.co.uk/2014/10/use-steam-music-player-linux

|

||||

|

||||

作者:[Joey-Elijah Sneddon][a]

|

||||

译者:[H-mudcup](https://github.com/H-mudcup)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://plus.google.com/117485690627814051450/?rel=author

|

||||

@ -1,4 +1,4 @@

|

||||

Linux问答时间--如何在CentOS上安装phpMyAdmin

|

||||

Linux有问必答:如何在CentOS上安装phpMyAdmin

|

||||

================================================================================

|

||||

> **问题**:我正在CentOS上运行一个MySQL/MariaDB服务,并且我想要通过网络接口来用phpMyAdmin来管理数据库。在CentOS上安装phpMyAdmin的最佳方法是什么?

|

||||

|

||||

@ -108,7 +108,7 @@ phpMyAdmin是一款以PHP为基础,基于Web的MySQL/MariaDB数据库管理工

|

||||

|

||||

### 测试phpMyAdmin ###

|

||||

|

||||

测试phpMyAdmin是否设置成功,访问这个页面:http://<web-server-ip-addresss>/phpmyadmin

|

||||

测试phpMyAdmin是否设置成功,访问这个页面:http://\<web-server-ip-addresss>/phpmyadmin

|

||||

|

||||

|

||||

|

||||

@ -153,14 +153,14 @@ phpMyAdmin是一款以PHP为基础,基于Web的MySQL/MariaDB数据库管理工

|

||||

via: http://ask.xmodulo.com/install-phpmyadmin-centos.html

|

||||

|

||||

译者:[ZTinoZ](https://github.com/ZTinoZ)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[1]:http://xmodulo.com/set-web-based-database-management-system-adminer.html

|

||||

[2]:http://xmodulo.com/install-lamp-stack-centos.html

|

||||

[3]:http://xmodulo.com/install-lemp-stack-centos.html

|

||||

[4]:http://xmodulo.com/how-to-set-up-epel-repository-on-centos.html

|

||||

[2]:http://linux.cn/article-1567-1.html

|

||||

[3]:http://linux.cn/article-4314-1.html

|

||||

[4]:http://linux.cn/article-2324-1.html

|

||||

[5]:

|

||||

[6]:

|

||||

[7]:

|

||||

@ -1,6 +1,6 @@

|

||||

Linux的十条SCP传输命令

|

||||

十个 SCP 传输命令例子

|

||||

================================================================================

|

||||

Linux系统管理员应该很熟悉**CLI**环境,因为在Linux服务器中是不安装**GUI**的。**SSH**可能是Linux系统管理员通过远程方式安全管理服务器的最流行协议。在**SSH**命令中内置了一种叫**SCP**的命令,用来在服务器之间安全传输文件。

|

||||

Linux系统管理员应该很熟悉**CLI**环境,因为通常在Linux服务器中是不安装**GUI**的。**SSH**可能是Linux系统管理员通过远程方式安全管理服务器的最流行协议。在**SSH**命令中内置了一种叫**SCP**的命令,用来在服务器之间安全传输文件。

|

||||

|

||||

|

||||

|

||||

@ -10,7 +10,7 @@ Linux系统管理员应该很熟悉**CLI**环境,因为在Linux服务器中是

|

||||

|

||||

scp source_file_name username@destination_host:destination_folder

|

||||

|

||||

**SCP**命令有很多参数供你使用,这里指的是每次都会用到的参数。

|

||||

**SCP**命令有很多可以使用的参数,这里指的是每次都会用到的参数。

|

||||

|

||||

### 用-v参数来提供SCP进程的详细信息 ###

|

||||

|

||||

@ -53,7 +53,7 @@ Linux系统管理员应该很熟悉**CLI**环境,因为在Linux服务器中是

|

||||

|

||||

### 用-C参数来让文件传输更快 ###

|

||||

|

||||

有一个参数能让传输文件更快,就是“**-C**”参数,它的作用是不停压缩所传输的文件。它特别之处在于压缩是在网络中进行,当文件传到目标服务器时,它会变回压缩之前的原始大小。

|

||||

有一个参数能让传输文件更快,就是“**-C**”参数,它的作用是不停压缩所传输的文件。它特别之处在于压缩是在网络传输中进行,当文件传到目标服务器时,它会变回压缩之前的原始大小。

|

||||

|

||||

来看看这些命令,我们使用一个**93 Mb**的单一文件来做例子。

|

||||

|

||||

@ -121,18 +121,18 @@ Linux系统管理员应该很熟悉**CLI**环境,因为在Linux服务器中是

|

||||

|

||||

看到了吧,压缩了文件之后,传输过程在**162.5**秒内就完成了,速度是不用“**-C**”参数的10倍。如果你要通过网络拷贝很多份文件,那么“**-C**”参数能帮你节省掉很多时间。

|

||||

|

||||

有一点我们需要注意,这个压缩的方法不是适用于所有文件。当源文件已经被压缩过了,那就没办法再压缩了。诸如那些像**.zip**,**.rar**,**pictures**和**.iso**的文件,用“**-C**”参数就无效。

|

||||

有一点我们需要注意,这个压缩的方法不是适用于所有文件。当源文件已经被压缩过了,那就没办法再压缩很多了。诸如那些像**.zip**,**.rar**,**pictures**和**.iso**的文件,用“**-C**”参数就没什么意义。

|

||||

|

||||

### 选择其它加密算法来加密文件 ###

|

||||

|

||||

**SCP**默认是用“**AES-128**”加密算法来加密文件的。如果你想要改用其它加密算法来加密文件,你可以用“**-c**”参数。我们来瞧瞧。

|

||||

**SCP**默认是用“**AES-128**”加密算法来加密传输的。如果你想要改用其它加密算法来加密传输,你可以用“**-c**”参数。我们来瞧瞧。

|

||||

|

||||

pungki@mint ~/Documents $ scp -c 3des Label.pdf mrarianto@202.x.x.x:.

|

||||

|

||||

mrarianto@202.x.x.x's password:

|

||||

Label.pdf 100% 3672KB 282.5KB/s 00:13

|

||||

|

||||

上述命令是告诉**SCP**用**3des algorithm**来加密文件。要注意这个参数是“**-c**”而不是“**-C**“。

|

||||

上述命令是告诉**SCP**用**3des algorithm**来加密文件。要注意这个参数是“**-c**”(小写)而不是“**-C**“(大写)。

|

||||

|

||||

### 限制带宽使用 ###

|

||||

|

||||

@ -143,24 +143,24 @@ Linux系统管理员应该很熟悉**CLI**环境,因为在Linux服务器中是

|

||||

mrarianto@202.x.x.x's password:

|

||||

Label.pdf 100% 3672KB 50.3KB/s 01:13

|

||||

|

||||

在“**-l**”参数后面的这个**400**值意思是我们给**SCP**进程限制了带宽为**50 KB/秒**。有一点要记住,带宽是以**千比特/秒** (**kbps**)表示的,**8 比特**等于**1 字节**。

|

||||

在“**-l**”参数后面的这个**400**值意思是我们给**SCP**进程限制了带宽为**50 KB/秒**。有一点要记住,带宽是以**千比特/秒** (**kbps**)表示的,而**8 比特**等于**1 字节**。

|

||||

|

||||

因为**SCP**是用**千字节/秒** (**KB/s**)计算的,所以如果你想要限制**SCP**的最大带宽只有**50 KB/s**,你就需要设置成**50 x 8 = 400**。

|

||||

|

||||

### 指定端口 ###

|

||||

|

||||

通常**SCP**是把**22**作为默认端口。但是为了安全起见,你可以改成其它端口。比如说,我们想用**2249**端口,命令如下所示。

|

||||

通常**SCP**是把**22**作为默认端口。但是为了安全起见SSH 监听端口改成其它端口。比如说,我们想用**2249**端口,这种情况下就要指定端口。命令如下所示。

|

||||

|

||||

pungki@mint ~/Documents $ scp -P 2249 Label.pdf mrarianto@202.x.x.x:.

|

||||

|

||||

mrarianto@202.x.x.x's password:

|

||||

Label.pdf 100% 3672KB 262.3KB/s 00:14

|

||||

|

||||

确认一下写的是大写字母“**P**”而不是“**p**“,因为“**p**”已经被用来保留源文件的修改时间和模式。

|

||||

确认一下写的是大写字母“**P**”而不是“**p**“,因为“**p**”已经被用来保留源文件的修改时间和模式(LCTT 译注:和 ssh 命令不同了)。

|

||||

|

||||

### 递归拷贝文件和文件夹 ###

|

||||

|

||||

有时我们需要拷贝文件夹及其内部的所有**文件** / **文件夹**,我们如果能用一条命令解决问题那就更好了。**SCP**用“**-r**”参数就能做到。

|

||||

有时我们需要拷贝文件夹及其内部的所有**文件**/**子文件夹**,我们如果能用一条命令解决问题那就更好了。**SCP**用“**-r**”参数就能做到。

|

||||

|

||||

pungki@mint ~/Documents $ scp -r documents mrarianto@202.x.x.x:.

|

||||

|

||||

@ -172,7 +172,7 @@ Linux系统管理员应该很熟悉**CLI**环境,因为在Linux服务器中是

|

||||

|

||||

### 禁用进度条和警告/诊断信息 ###

|

||||

|

||||

如果你不想从SCP中看到进度条和警告/诊断信息,你可以用“**-q**”参数来禁用它们,举例如下。

|

||||

如果你不想从SCP中看到进度条和警告/诊断信息,你可以用“**-q**”参数来静默它们,举例如下。

|

||||

|

||||

pungki@mint ~/Documents $ scp -q Label.pdf mrarianto@202.x.x.x:.

|

||||

|

||||

@ -207,7 +207,7 @@ Linux系统管理员应该很熟悉**CLI**环境,因为在Linux服务器中是

|

||||

|

||||

### 选择不同的ssh_config文件 ###

|

||||

|

||||

对于经常在公司网络和公共网络之间切换的移动用户来说,一直改变SCP的设置显然是很痛苦的。如果我们能放一个不同的**ssh_config**文件来匹配我们的需求那就很好了。

|

||||

对于经常在公司网络和公共网络之间切换的移动用户来说,一直改变SCP的设置显然是很痛苦的。如果我们能放一个保存不同配置的**ssh_config**文件来匹配我们的需求那就很好了。

|

||||

|

||||

#### 以下是一个简单的场景 ####

|

||||

|

||||

@ -231,7 +231,7 @@ via: http://www.tecmint.com/scp-commands-examples/

|

||||

|

||||

作者:[Pungki Arianto][a]

|

||||

译者:[ZTinoZ](https://github.com/ZTinoZ)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,44 +1,46 @@

|

||||

2014年会是 "Linux桌面年"吗?

|

||||

================================================================================

|

||||

> 现在谈起Linux桌面终于能头头是道了

|

||||

> Linux桌面现在终于发出最强音!

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

**看来Linux在2014年有很多改变,许多用户都表示今年Linux的确有进步,但是仅凭这个就能断定2014年就是"Linux桌面年"吗?**

|

||||

|

||||

"Linux桌面年"这句话,在过去几年就被传诵得像句颂歌一样,可以说是在试图用一种比较有意义的方式来标记它的发展进程。此类事情目前还没有发生过,在我们的见证下也从无先例,所以这就不难理解为什么Linux用户会用这个角度去看待这句话。

|

||||

|

||||

大多数软件和硬件领域不太会有这种快速的进步,都以较慢的速度发展,但是对于那些在工业领域有更好眼光的人来说,事情就会变得疯狂。即使有可能,针对某一时刻或某一事件还是比较困难的,但是Linux在几年的过程中还是以指数方式迅速发展成长。

|

||||

|

||||

|

||||

|

||||

|

||||

### Linux桌面年这句话不可轻言 ###

|

||||

|

||||

没有一个比较权威的人和机构能判定Linux桌面年已经到来或者已经过去,所以我们只能尝试根据迄今为止我们所看到的和用户所反映的去推断。有一些人比较保守,改变对他们影响不大,还有一些人则比较激进,永远不知满足。这真的要取决于你的见解了。

|

||||

|

||||

点燃这一切的火花似乎就是Linux上的Steam平台,尽管在这变成现实之前我们已经看到了一些Linux游戏已经开始有重要的动作了。在任何情况下,Valve都可能是我们今天所看到的一系列复苏事件的催化剂。

|

||||

|

||||

在过去的十年里,Linux桌面以一种缓慢的速度在发展,并没有什么真正的改变。创新肯定是有的,但是市场份额几乎还是保持不变。无论桌面变得多么酷或Linux相比之前的任何一版多出了多少特点,很大程度上还是在原地踏步,包括那些制作专有软件的公司,他们的参与度一直很小,基本上就忽略掉了Linux。

|

||||

|

||||

|

||||

在过去的十年里,Linux桌面以一种缓慢的速度在发展,并没有什么真正的改变。创新肯定是有的,但是市场份额几乎还是保持不变。无论桌面变得多么酷或Linux相比之前的任何一版多出了多少特点,很大程度上还是在原地踏步,包括那些开发商业软件的公司,他们的参与度一直很小,基本上就忽略掉了Linux。

|

||||

|

||||

|

||||

|

||||

|

||||

现在,相比过去的十年里,更多的公司表现出了对Linux平台的浓厚兴趣。或许这是一种自然地演变,Valve并没有做什么,但是Linux最终还是达到了一个能被普通用户接受并理解的水平,并不只是因为令人着迷的开源技术。

|

||||

|

||||

驱动程序能力强了,游戏工作室就会定期移植游戏,在Linux中我们前所未见的应用和中间件就会开始出现。Linux内核发展达到了难以置信的速度,大多数发行版的安装过程通常都不怎么难,所有这一切都只是冰山一角。

|

||||

|

||||

|

||||

|

||||

所以,当有人问你2014年是不是Linux桌面年时,你可以说“是的!”,因为Linux桌面完全统治了2014年。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://news.softpedia.com/news/Was-2014-The-Year-of-Linux-Desktop-467036.shtml

|

||||

|

||||

作者:[Silviu Stahie ][a]

|

||||

作者:[Silviu Stahie][a]

|

||||

译者:[ZTinoZ](https://github.com/ZTinoZ)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,12 +1,12 @@

|

||||

Attic——重复数据删除备份程序

|

||||

Attic——删除重复数据的备份程序

|

||||

================================================================================

|

||||

Attic是一个Python写的重复数据删除备份程序,其主要目标是提供一种高效安全的数据备份方式。重复数据消除技术的使用使得Attic适用于日常备份,因为它可以只存储那些修改过的数据。

|

||||

Attic是一个Python写的删除重复数据的备份程序,其主要目标是提供一种高效安全的数据备份方式。重复数据消除技术的使用使得Attic适用于日常备份,因为它可以只存储那些修改过的数据。

|

||||

|

||||

### Attic特性 ###

|

||||

|

||||

#### 空间高效存储 ####

|

||||

|

||||

可变块大小重复数据消除技术用于减少检测到的冗余数据存储字节数量。每个文件被分割成若干可变长度组块,只有那些从没见过的组块会被压缩并添加到仓库中。

|

||||

可变块大小重复数据消除技术用于减少检测到的冗余数据存储字节数量。每个文件被分割成若干可变长度组块,只有那些从没见过的组合块会被压缩并添加到仓库中。

|

||||

|

||||

#### 可选数据加密 ####

|

||||

|

||||

@ -44,29 +44,29 @@ Attic可以通过SSH将数据存储到安装有Attic的远程主机上。

|

||||

|

||||

该备份将更快些,也更小些,因为只有之前从没见过的新数据会被存储。--stats选项会让Attic输出关于新创建的归档的统计数据,比如唯一数据(不和其它归档共享)的数量:

|

||||

|

||||

归档名:Tuesday

|

||||

归档指纹:387a5e3f9b0e792e91ce87134b0f4bfe17677d9248cb5337f3fbf3a8e157942a

|

||||

开始时间: Tue Mar 25 12:00:10 2014

|

||||

结束时间: Tue Mar 25 12:00:10 2014

|

||||

持续时间: 0.08 seconds

|

||||

文件数量: 358

|

||||

最初大小 压缩后大小 重复数据删除后大小

|

||||

本归档: 57.16 MB 46.78 MB 151.67 kB

|

||||

所有归档:114.02 MB 93.46 MB 44.81 MB

|

||||

归档名:Tuesday

|

||||

归档指纹:387a5e3f9b0e792e91ce87134b0f4bfe17677d9248cb5337f3fbf3a8e157942a

|

||||

开始时间: Tue Mar 25 12:00:10 2014

|

||||

结束时间: Tue Mar 25 12:00:10 2014

|

||||

持续时间: 0.08 seconds

|

||||

文件数量: 358

|

||||

最初大小 压缩后大小 重复数据删除后大小

|

||||

本归档: 57.16 MB 46.78 MB 151.67 kB

|

||||

所有归档:114.02 MB 93.46 MB 44.81 MB

|

||||

|

||||

列出仓库中所有归档:

|

||||

|

||||

$ attic list /somewhere/my-repository.attic

|

||||

|

||||

Monday Mon Mar 24 11:59:35 2014

|

||||

Tuesday Tue Mar 25 12:00:10 2014

|

||||

Monday Mon Mar 24 11:59:35 2014

|

||||

Tuesday Tue Mar 25 12:00:10 2014

|

||||

|

||||

列出Monday归档的内容:

|

||||

|

||||

$ attic list /somewhere/my-repository.attic::Monday

|

||||

|

||||

drwxr-xr-x user group 0 Jan 06 15:22 home/user/Documents

|

||||

-rw-r--r-- user group 7961 Nov 17 2012 home/user/Documents/Important.doc

|

||||

drwxr-xr-x user group 0 Jan 06 15:22 home/user/Documents

|

||||

-rw-r--r-- user group 7961 Nov 17 2012 home/user/Documents/Important.doc

|

||||

|

||||

恢复Monday归档:

|

||||

|

||||

@ -76,7 +76,7 @@ drwxr-xr-x user group 0 Jan 06 15:22 home/user/Documents

|

||||

|

||||

$ attic delete /somwhere/my-backup.attic::Monday

|

||||

|

||||

详情请查阅[Attic文档][1]

|

||||

详情请查阅[Attic文档][1]。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -84,7 +84,7 @@ via: http://www.ubuntugeek.com/attic-deduplicating-backup-program.html

|

||||

|

||||

作者:[ruchi][a]

|

||||

译者:[GOLinux](https://github.com/GOLinux)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,4 +1,4 @@

|

||||

红帽反驳:“grinch”算不上Linux漏洞

|

||||

红帽反驳:“grinch(鬼精灵)”算不上Linux漏洞

|

||||

================================================================================

|

||||

|

||||

|

||||

@ -14,7 +14,7 @@

|

||||

|

||||

Alert Logic 称攻击者可以使用第三方Linux 软件框架Policy Kit (Polkit)达到利用“鬼精灵”漏洞的目的。Polkit旨在帮助用户安装与运行软件包,此开源程序由红帽维护。Alert Logic 声称,允许用户安装软件程序的过程中往往需要超级用户权限,如此一来,Polkit也在不经意间或通过其它形式为恶意程序的运行洞开方便之门。

|

||||

|

||||

红帽对此不以为意,表示系统就是这么设计的,换句话说,“鬼精灵”不是臭虫而是一项特性。

|

||||

红帽对此不以为意,表示系统就是这么设计的,换句话说,**“鬼精灵”不是臭虫而是一项特性。**

|

||||

|

||||

安全监控公司Threat Stack联合创造人 Jen Andre [就此在一篇博客][4]中写道:“如果你任由用户通过使用那些利用了Policykit的软件,无需密码就可以在系统上安装任何软件,实际上也就绕过了Linux内在授权与访问控制。”

|

||||

|

||||

@ -52,7 +52,7 @@ via:http://www.computerworld.com/article/2861392/security0/the-grinch-isnt-a-lin

|

||||

|

||||

作者:[Joab Jackson][a]

|

||||

译者:[yupmoon](https://github.com/yupmoon)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,47 @@

|

||||

Linux有问必答:如何在Debian下安装闭源软件包

|

||||

================================================================================

|

||||

> **提问**: 我需要在Debian下安装特定的闭源设备驱动。然而, 我无法在Debian中找到并安装软件包。如何在Debian下安装闭源软件包?

|

||||

|

||||

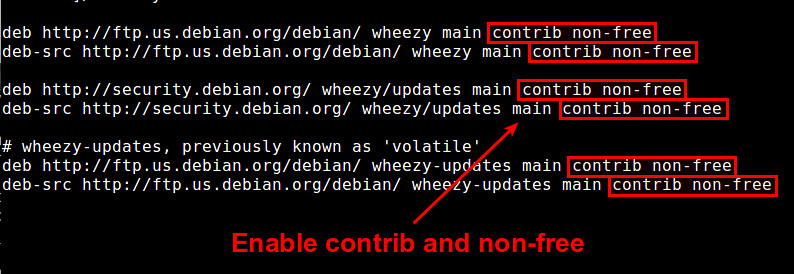

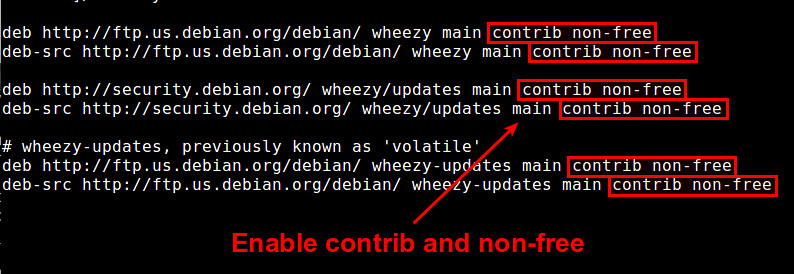

Debian是一个拥有[48,000][1]软件包的发行版. 这些软件包被分为三类: main, contrib 和 non-free, 主要是根据许可证要求, 参照[Debian开源软件指南][2] (DFSG)。

|

||||

|

||||

main软件仓库包括符合DFSG的开源软件。contrib也包括符合DFSG的开源软件,但是依赖闭源软件来编译或者执行。non-free包括不符合DFSG的、可再分发的闭源软件。main仓库被认为是Debian项目的一部分,但是contrib和non-free不是。后两者只是为了用户的方便而维护和提供。

|

||||

|

||||

如果你想一直能够在Debian上安装闭源软件包,你需要添加contrib和non-free软件仓库。这样做,用文本编辑器打开 /etc/apt/sources.list 添加"contrib non-free""到每个源。

|

||||

|

||||

下面是适用于 Debian Wheezy的 /etc/apt/sources.list 例子。

|

||||

|

||||

deb http://ftp.us.debian.org/debian/ wheezy main contrib non-free

|

||||

deb-src http://ftp.us.debian.org/debian/ wheezy main contrib non-free

|

||||

|

||||

deb http://security.debian.org/ wheezy/updates main contrib non-free

|

||||

deb-src http://security.debian.org/ wheezy/updates main contrib non-free

|

||||

|

||||

# wheezy-updates, 之前叫做 'volatile'

|

||||

deb http://ftp.us.debian.org/debian/ wheezy-updates main contrib non-free

|

||||

deb-src http://ftp.us.debian.org/debian/ wheezy-updates main contrib non-free

|

||||

|

||||

|

||||

|

||||

修改完源后, 运行下面命令去下载contrib和non-free软件仓库的文件索引。

|

||||

|

||||

$ sudo apt-get update

|

||||

|

||||

如果你用 aptitude, 运行下面命令。

|

||||

|

||||

$ sudo aptitude update

|

||||

|

||||

现在你在Debian上搜索和安装任何闭源软件包。

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://ask.xmodulo.com/install-nonfree-packages-debian.html

|

||||

|

||||

译者:[mtunique](https://github.com/mtunique)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[1]:https://packages.debian.org/stable/allpackages?format=txt.gz

|

||||

[2]:https://www.debian.org/social_contract.html#guidelines

|

||||

@ -1,44 +0,0 @@

|

||||

Linus Torvalds Launches Linux Kernel 3.19 RC1, One of the Biggest So Far

|

||||

================================================================================

|

||||

> new development cycle for Linux kernel has started

|

||||

|

||||

|

||||

|

||||

**The first Linux kernel Release Candidate has been made available in the 3.19 branch and it looks like it's one of the biggest ones so far. Linux Torvalds surprised everyone with an early launch, but it's easy to understand why.**

|

||||

|

||||

The Linux kernel development cycle has been refreshed with a new released, 3.19. Given the fact that the 3.18 branch reached stable status just a couple of weeks ago, today's release was not completely unexpected. The holidays are coming and many of the developers and maintainers will probably take a break. Usually, a new RC is launched on a weekly basis, but users might see a slight delay this time.

|

||||

|

||||

There is no mention of the regression problem that was identified in Linux kernel 3.18, but it's pretty certain that they are still working to fix it. On the other hand, Linux did say that this is a very large released, in fact it's one of the biggest ones made until now. It's likely that many devs wanted to push their patches before the holidays, so the next RC should be a smaller.

|

||||

|

||||

### Linux kernel 3.19 RC1 marks the start of a new cycle ###

|

||||

|

||||

The size of the releases has been increasing, along with the frequency. The development cycle for the kernel usually takes about 8 to 10 weeks and it seldom happens to be more than that, which brings a nice predictability for the project.

|

||||

|

||||

"That said, maybe there aren't any real stragglers - and judging by the size of rc1, there really can't have been much. Not only do I think there are more commits than there were in linux-next, this is one of the bigger rc1's (at least by commits) historically. We've had bigger ones (3.10 and 3.15 both had large merge windows leading up to them), but this was definitely not a small merge window."

|

||||

|

||||

"In the 'big picture', this looks like a fairly normal release. About two thirds driver updates, with about half of the rest being architecture updates (and no, the new nios2 patches are not at all dominant, it's about half ARM, with the new nios2 support being less than 10% of the arch updates by lines overall)," [reads][1] the announcement made by Linus Torvalds.

|

||||

|

||||

More details about this RC can be found on the official mailing list.

|

||||

|

||||

#### Download Linux kernel 3.19 RC1 source package: ####

|

||||

|

||||

- [tar.xz (3.18.1 Stable)][3]File size: 77.2 MB

|

||||

- [tar.xz (3.19 RC1 Unstable)][4]

|

||||

|

||||

It you want to test it, you will need to compile it yourself although it's advisable to not use a production machines.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://news.softpedia.com/news/Linus-Torvalds-Launches-Linux-kernel-3-19-RC1-One-of-the-Biggest-So-Far-468043.shtml

|

||||

|

||||

作者:[Silviu Stahie ][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://news.softpedia.com/editors/browse/silviu-stahie

|

||||

[1]:http://lkml.iu.edu/hypermail/linux/kernel/1412.2/02480.html

|

||||

[2]:http://linux.softpedia.com/get/System/Operating-Systems/Kernels/Linux-Kernel-Development-8069.shtml

|

||||

[3]:https://www.kernel.org/pub/linux/kernel/v3.x/linux-3.18.1.tar.xz

|

||||

[4]:https://www.kernel.org/pub/linux/kernel/v3.x/testing/linux-3.19-rc1.tar.xz

|

||||

@ -1,79 +0,0 @@

|

||||

How To Use Steam Music Player on Ubuntu Desktop

|

||||

================================================================================

|

||||

|

||||

|

||||

**‘Music makes the people come together’ Madonna once sang. But can Steam’s new music player feature mix the bourgeoisie and the rebel as well?**

|

||||

|

||||

If you’ve been living under a rock, ears pressed tight to a granite roof, word of Steam Music may have passed you by. The feature isn’t entirely new. It’s been in testing in some form or another since earlier this year.

|

||||

|

||||

But in the latest stable update of the Steam client on Windows, Mac and Linux it is now available to all. Why does a gaming client need to add a music player, you ask? To let you play your favourite music while gaming, of course.

|

||||

|

||||

Don’t worry: playing your music over in-game music is not as bad as it sounds (har har) on paper. Steam reduces/cancels out the game soundtrack in favour of your tunes, but keeps sound effects high in the mix so you can hear the plings, boops and blams all the same.

|

||||

|

||||

### Using Steam Music Player ###

|

||||

|

||||

|

||||

|

||||

Music in Big Picture Mode

|

||||

|

||||

Steam Music Player is available to anyone running the latest version of the client. It’s a pretty simple addition: it lets you add, browse and play music from your computer.

|

||||

|

||||

The player element itself is accessible on the desktop and when playing in Steam’s (awesome) Big Picture mode. In both instances, controlling playback is made dead simple.

|

||||

|

||||

As the feature is **designed for playing music while gaming** it is not pitching itself as a rival for Rhythmbox or successor to Spotify. In fact, there’s no store to purchase music from and no integration with online services like Rdio, Grooveshark, etc. or the desktop. Nope, your keyboard media keys won’t work with the player in Linux.

|

||||

|

||||

Valve say they “*…plan to add more features so you can experience Steam music in new ways. We’re just getting started.*”

|

||||

|

||||

#### Steam Music Key Features: ####

|

||||

|

||||

- Plays MP3s only

|

||||

- Mixes with in-game soundtrack

|

||||

- Music controls available in game

|

||||

- Player can run on the desktop or in Big Picture mode

|

||||

- Playlist/queue based playback

|

||||

|

||||

**It does not integrate with the Ubuntu Sound Menu and does not currently support keyboard media keys.**

|

||||

|

||||

### Using Steam Music on Ubuntu ###

|

||||

|

||||

The first thing to do before you can play music is to add some. On Ubuntu, by default, Steam automatically adds two folders: the standard Music directory in Home, and its own Steam Music folder, where any downloadable soundtracks are stored.

|

||||

|

||||

Note: at present **Steam Music only plays MP3s**. If the bulk of your music is in a different file format (e.g., .aac, .m4a, etc.) it won’t be added and cannot be played.

|

||||

|

||||

To add an additional source or scan files in those already listed:

|

||||

|

||||

- Head to **View > Settings > Music**.

|

||||

- Click ‘**Add**‘ to add a folder in a different location to the two listed entries

|

||||

- Hit ‘**Start Scanning**’

|

||||

|

||||

|

||||

|

||||

This dialog is also where you can adjust other preferences, including a ‘scan at start’. If you routinely add new music and are prone to forgetting to manually initiate a scan, tick this one on. You can also choose whether to see notifications on track change, set the default volume levels, and adjust playback behaviour when opening an app or taking a voice chat.

|

||||

|

||||

Once your music sources have been successfully added and scanned you are all set to browse through your entries from the **Library > Music** section of the main client.

|

||||

|

||||

|

||||

|

||||

The Steam Music section groups music by album title by default. To browse by band name you need to click the ‘Albums’ header and then select ‘Artists’ from the drop down menu.

|

||||

|

||||

|

||||

|

||||

Steam Music works off of a ‘queue’ system. You can add music to the queue by double-clicking on a track in the browser or by right-clicking and selecting ‘Add to Queue’.

|

||||

|

||||

|

||||

|

||||

To **launch the desktop player** click the musical note emblem in the upper-right hand corner or through the **View > Music Player** menu.

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.omgubuntu.co.uk/2014/10/use-steam-music-player-linux

|

||||

|

||||

作者:[Joey-Elijah Sneddon][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://plus.google.com/117485690627814051450/?rel=author

|

||||

@ -0,0 +1,47 @@

|

||||

How to Download Music from Grooveshark with a Linux OS

|

||||

================================================================================

|

||||

> The solution is actually much simpler than you think

|

||||

|

||||

|

||||

|

||||

**Grooveshark is a great online platform for people who want to listen to music, and there are a number of ways to download music from there. Groovesquid is just one of the applications that let users get music from Grooveshark, and it's multiplatform.**

|

||||

|

||||

If there is a service that streams something online, then there is a way to download the stuff that you are just watching or listening. As it turns out, it's not that difficult and there are a ton of solutions, no matter the platform. For example, there are dozens of YouTube downloaders and it stands to reason that it's not all that difficult to get stuff from Grooveshark either.

|

||||

|

||||

Now, there is the problem of legality. Like many other applications out there, Groovesquid is not actually illegal. It's the user's fault if they do something illegal with an application. The same reasoning can be applied to apps like utorrent or Bittorrent. As long as you don't touch copyrighted material, there are no problems in using Groovesquid.

|

||||

|

||||

### Groovesquid is fast and efficient ###

|

||||

|

||||

The only problem that you could find with Groovesquid is the fact that it's based on Java and that's never a good sign. This is a good way to ensure that an application runs on all the platforms, but it's an issue when it comes to the interface. It's not great, but it doesn't really matter all that much for users, especially since the app is doing a great job.

|

||||

|

||||

There is one caveat though. Groovesquid is a free application, but in order to remain free, it has to display an ad on the right side of the menu. This shouldn't be a problem for most people, but it's a good idea to mention that right from the start.

|

||||

|

||||

From a usability point of view, the application is pretty straightforward. Users can download a single song by entering the link in the top field, but the purpose of that field can be changed by accessing the small drop-down menu to its left. From there, it's possible to change to Song, Popular, Albums, Playlist, and Artist. Some of the options provide access to things like the most popular song on Grooveshark and other options allow you to download an entire playlist, for example.

|

||||

|

||||

You can download Groovesquid 0.7.0

|

||||

|

||||

- [jar][1] File size: 3.8 MB

|

||||

- [tar.gz][2] File size: 549 KB

|

||||

|

||||

You will get a Jar file and all you have to do is to make it executable and let Java do the rest.

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://news.softpedia.com/news/How-to-Download-Music-from-Grooveshark-with-a-Linux-OS-468268.shtml

|

||||

|

||||

作者:[Silviu Stahie][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://news.softpedia.com/editors/browse/silviu-stahie

|

||||

[1]:https://github.com/groovesquid/groovesquid/releases/download/v0.7.0/Groovesquid.jar

|

||||

[2]:https://github.com/groovesquid/groovesquid/archive/v0.7.0.tar.gz

|

||||

@ -1,157 +0,0 @@

|

||||

disylee占个坑~

|

||||

Docker: Present and Future

|

||||

================================================================================

|

||||

### Docker - the story so far ###

|

||||

|

||||

Docker is a toolset for Linux containers designed to ‘build, ship and run’ distributed applications. It was first released as an open source project by DotCloud in March 2013. The project quickly became popular, leading to DotCloud rebranded as Docker Inc (and ultimately [selling off their original PaaS business][1]). [Docker 1.0][2] was released in June 2014, and the monthly release cadence that led up to the June release has been sustained since.

|

||||

|

||||

The 1.0 release marked the point where Docker Inc considered the platform sufficiently mature to be used in production (with the company and partners providing paid for support options). The monthly release of point updates shows that the project is still evolving quickly, adding new features, and addressing issues as they are found. The project has however successfully decoupled ‘ship’ from ‘run’, so images sourced from any version of Docker can be used with any other version (with both forward and backward compatibility), something that provides a stable foundation for Docker use despite rapid change.

|

||||

|

||||

The growth of Docker into one of the most popular open source projects could be perceived as hype, but there is a great deal of substance. Docker has attracted support from many brand names across the industry, including Amazon, Canonical, CenturyLink, Google, IBM, Microsoft, New Relic, Pivotal, Red Hat and VMware. This is making it almost ubiquitously available wherever Linux can be found. In addition to the big names many startups are growing up around Docker, or changing direction to be better aligned with Docker. Those partnerships (large and small) are helping to drive rapid evolution of the core project and its surrounding ecosystem.

|

||||

|

||||

### A brief technical overview of Docker ###

|

||||

|

||||

Docker makes use of Linux kernel facilities such as [cGroups][3], namespaces and [SElinux][4] to provide isolation between containers. At first Docker was a front end for the [LXC][5] container management subsystem, but release 0.9 introduced [libcontainer][6], which is a native Go language library that provides the interface between user space and the kernel.

|

||||

|

||||

Containers sit on top of a union file system, such as [AUFS][7], which allows for the sharing of components such as operating system images and installed libraries across multiple containers. The layering approach in the filesystem is also exploited by the [Dockerfile][8] DevOps tool, which is able to cache operations that have already completed successfully. This can greatly speed up test cycles by taking out the wait time usually taken to install operating systems and application dependencies. Shared libraries between containers can also reduce RAM footprint.

|

||||

|

||||

A container is started from an image, which may be locally created, cached locally, or downloaded from a registry. Docker Inc operates the [Docker Hub public registry][9], which hosts official repositories for a variety of operating systems, middleware and databases. Organisations and individuals can host public repositories for images at Docker Hub, and there are also subscription services for hosting private repositories. Since an uploaded image could contain almost anything Docker Hub provides an automated build facility (that was previously called ‘trusted build’) where images are constructed from a Dockerfile that serves as a manifest for the contents of the image.

|

||||

|

||||

### Containers versus VMs ###

|

||||

|

||||

Containers are potentially much more efficient than VMs because they’re able to share a single kernel and share application libraries. This can lead to substantially smaller RAM footprints even when compared to virtualisation systems that can make use of RAM overcommitment. Storage footprints can also be reduced where deployed containers share underlying image layers. IBM’s Boden Russel has done [benchmarking][10] that illustrates these differences.

|

||||

|

||||

Containers also present a lower systems overhead than VMs, so the performance of an application inside a container will generally be the same or better versus the same application running within a VM. A team of IBM researchers have published a [performance comparison of virtual machines and Linux containers][11].

|

||||

|

||||

One area where containers are weaker than VMs is isolation. VMs can take advantage of ring -1 [hardware isolation][12] such as that provided by Intel’s VT-d and VT-x technologies. Such isolation prevents VMs from ‘breaking out’ and interfering with each other. Containers don’t yet have any form of hardware isolation, which makes them susceptible to exploits. A proof of concept attack named [Shocker][13] showed that Docker versions prior to 1.0 were vulnerable. Although Docker 1.0 fixed the particular issue exploited by Shocker, Docker CTO Solomon Hykes [stated][14], “When we feel comfortable saying that Docker out-of-the-box can safely contain untrusted uid0 programs, we will say so clearly.”. Hykes’s statement acknowledges that other exploits and associated risks remain, and that more work will need to be done before containers can become trustworthy.

|

||||

|

||||

For many use cases the choice of containers or VMs is a false dichotomy. Docker works well within a VM, which allows it to be used on existing virtual infrastructure, private clouds and public clouds. It’s also possible to run VMs inside containers, which is something that Google uses as part of its cloud platform. Given the widespread availability of infrastructure as a service (IaaS) that provides VMs on demand it’s reasonable to expect that containers and VMs will be used together for years to come. It’s also possible that container management and virtualisation technologies might be brought together to provide a best of both worlds approach; so a hardware trust anchored micro virtualisation implementation behind libcontainer could integrate with the Docker tool chain and ecosystem at the front end, but use a different back end that provides better isolation. Micro virtualisation (such as Bromium’s [vSentry][15] and VMware’s [Project Fargo][16]) is already used in desktop environments to provide hardware based isolation between applications, so similar approaches could be used along with libcontainer as an alternative to the container mechanisms in the Linux kernel.

|

||||

|

||||

### ‘Dockerizing’ applications ###

|

||||

|

||||

Pretty much any Linux application can run inside a Docker container. There are no limitations on choice of languages or frameworks. The only practical limitation is what a container is allowed to do from an operating system perspective. Even that bar can be lowered by running containers in privileged mode, which substantially reduces controls (and correspondingly increases risk of the containerised application being able to cause damage to the host operating system).

|

||||

|

||||

Containers are started from images, and images can be made from running containers. There are essentially two ways to get applications into containers - manually and Dockerfile..

|

||||

|

||||

#### Manual builds ####

|

||||

|

||||

A manual build starts by launching a container with a base operating system image. An interactive terminal can then be used to install applications and dependencies using the package manager offered by the chosen flavour of Linux. Zef Hemel provides a walk through of the process in his article ‘[Using Linux Containers to Support Portable Application Deployment][17]’. Once the application is installed the container can be pushed to a registry (such as Docker Hub) or exported into a tar file.

|

||||

|

||||

#### Dockerfile ####

|

||||

|

||||

Dockerfile is a system for scripting the construction of Docker containers. Each Dockerfile specifies the base image to start from and then a series of commands that are run in the container and/or files that are added to the container. The Dockerfile can also specify ports to be exposed, the working directory when a container is started and the default command on startup. Containers built with Dockerfiles can be pushed or exported just like manual builds. Dockerfiles can also be used in Docker Hub’s automated build system so that images are built from scratch in a system under the control of Docker Inc with the source of that image visible to anybody that might use it.

|

||||

|

||||

#### One process? ####

|

||||

|

||||

Whether images are built manually or with Dockerfile a key consideration is that only a single process is invoked when the container is launched. For a container serving a single purpose, such as running an application server, running a single process isn’t an issue (and some argue that containers should only have a single process). For situations where it’s desirable to have multiple processes running inside a container a [supervisor][18] process must be launched that can then spawn the other desired processes. There is no init system within containers, so anything that relies on systemd, upstart or similar won’t work without modification.

|

||||

|

||||

### Containers and microservices ###

|

||||

|

||||

A full description of the philosophy and benefits of using a microservices architecture is beyond the scope of this article (and well covered in the [InfoQ eMag: Microservices][19]). Containers are however a convenient way to bundle and deploy instances of microservices.

|

||||

|

||||

Whilst most practical examples of large scale microservices deployments to date have been on top of (large numbers of) VMs, containers offer the opportunity to deploy at a smaller scale. The ability for containers to have a shared RAM and disk footprint for operating systems, libraries common application code also means that deploying multiple versions of services side by side can be made very efficient.

|

||||

|

||||

### Connecting containers ###

|

||||

|

||||

Small applications will fit inside a single container, but in many cases an application will be spread across multiple containers. Docker’s success has spawned a flurry of new application compositing tools, orchestration tools and platform as a service (PaaS) implementations. Behind most of these efforts is a desire to simplify the process of constructing an application from a set of interconnected containers. Many tools also help with scaling, fault tolerance, performance management and version control of deployed assets.

|

||||

|

||||

#### Connectivity ####

|

||||

|

||||

Docker’s networking capabilities are fairly primitive. Services within containers can be made accessible to other containers on the same host, and Docker can also map ports onto the host operating system to make services available across a network. The officially sponsored approach to connectivity is [libchan][20], which is a library that provides Go like [channels][21] over the network. Until libchan finds its way into applications there’s room for third parties to provide complementary network services. For example, [Flocker][22] has taken a proxy based approach to make services portable across hosts (along with their underlying storage).

|

||||

|

||||

#### Compositing ####

|

||||

|

||||

Docker has native mechanisms for linking containers together where metadata about a dependency can be passed into the dependent container and consumed within as environment variables and hosts entries. Application compositing tools like [Fig][23] and [geard][24] express the dependency graph inside a single file so that multiple containers can be brought together into a coherent system. CenturyLink’s [Panamax][25] compositing tool takes a similar underlying approach to Fig and geard, but adds a web based user interface, and integrates directly with GitHub so that applications can be shared.

|

||||

|

||||

#### Orchestration ####

|

||||

|

||||

Orchestration systems like [Decking][26], New Relic’s [Centurion][27] and Google’s [Kubernetes][28] all aim to help with the deployment and life cycle management of containers. There are also numerous examples (such as [Mesosphere][29]) of [Apache Mesos][30] (and particularly its [Marathon][31] framework for long running applications) being used along with Docker. By providing an abstraction between the application needs (e.g. expressed as a requirement for CPU cores and memory) and underlying infrastructure, the orchestration tools provide decoupling that’s designed to simplify both application development and data centre operations. There is such a variety of orchestration systems because many have emerged from internal systems previously developed to manage large scale deployments of containers; for example Kubernetes is based on Google’s [Omega][32] system that’s used to manage containers across the Google estate.

|

||||

|

||||

Whilst there is some degree of functional overlap between the compositing tools and the orchestration tools there are also ways that they can complement each other. For example Fig might be used to describe how containers interact functionally whilst Kubernetes pods might be used to provide monitoring and scaling.

|

||||

|

||||

#### Platforms (as a Service) ####

|

||||

|

||||

A number of Docker native PaaS implementations such as [Deis][33] and [Flynn][34] have emerged to take advantage of the fact that Linux containers provide a great degree of developer flexibility (rather than being ‘opinionated’ about a given set of languages and frameworks). Other platforms such as CloudFoundry, OpenShift and Apcera Continuum have taken the route of integrating Docker based functionality into their existing systems, so that applications based on Docker images (or the Dockerfiles that make them) can be deployed and managed alongside of apps using previously supported languages and frameworks.

|

||||

|

||||

### All the clouds ###

|

||||

|

||||

Since Docker can run in any Linux VM with a reasonably up to date kernel it can run in pretty much every cloud offering IaaS. Many of the major cloud providers have announced additional support for Docker and its ecosystem.

|

||||

|

||||

Amazon have introduced Docker into their Elastic Beanstalk system (which is an orchestration service over underlying IaaS). Google have Docker enabled ‘managed VMs’, which provide a halfway house between the PaaS of App Engine and the IaaS of Compute Engine. Microsoft and IBM have both announced services based on Kubernetes so that multi container applications can be deployed and managed on their clouds.

|

||||

|

||||

To provide a consistent interface to the wide variety of back ends now available the Docker team have introduced [libswarm][35], which will integrate with a multitude of clouds and resource management systems. One of the stated aims of libswarm is to ‘avoid vendor lock-in by swapping any service out with another’. This is accomplished by presenting a consistent set of services (with associated APIs) that attach to implementation specific back ends. For example the Docker server service presents the Docker remote API to a local Docker command line tool so that containers can be managed on an array of service providers.

|

||||

|

||||

New service types based on Docker are still in their infancy. London based Orchard labs offered a Docker hosting service, but Docker Inc said that the service wouldn’t be a priority after acquiring Orchard. Docker Inc has also sold its previous DotCloud PaaS business to cloudControl. Services based on older container management systems such as [OpenVZ][36] are already commonplace, so to a certain extent Docker needs to prove its worth to hosting providers.

|

||||

|

||||

### Docker and the distros ###

|

||||

|

||||

Docker has already become a standard feature of major Linux distributions like Ubuntu, Red Hat Enterprise Linux (RHEL) and CentOS. Unfortunately the distributions move at a different pace to the Docker project, so the versions found in a distribution can be well behind the latest available. For example Ubuntu 14.04 was released with Docker 0.9.1, and that didn’t change on the point release upgrade to Ubuntu 14.04.1 (by which time Docker was at 1.1.2). There are also namespace issues in official repositories since Docker was also the name of a KDE system tray; so with Ubuntu 14.04 the package name and command line tool are both ‘docker.io’.

|

||||

|

||||

Things aren’t much different in the Enterprise Linux world. CentOS 7 comes with Docker 0.11.1, a development release that precedes Docker Inc’s announcement of production readiness with Docker 1.0. Linux distribution users that want the latest version for promised stability, performance and security will be better off following the [installation instructions][37] and using repositories hosted by Docker Inc rather than taking the version included in their distribution.

|

||||

|

||||

The arrival of Docker has spawned new Linux distributions such as [CoreOS][38] and Red Hat’s [Project Atomic][39] that are designed to be a minimal environment for running containers. These distributions come with newer kernels and Docker versions than the traditional distributions. They also have lower memory and disk footprints. The new distributions also come with new tools for managing large scale deployments such as [fleet][40] ‘a distributed init system’ and [etcd][41] for metadata management. There are also new mechanisms for updating the distribution itself so that the latest versions of the kernel and Docker can be used. This acknowledges that one of the effects of using Docker is that it pushes attention away from the distribution and its package management solution, making the Linux kernel (and Docker subsystem using it) more important.

|

||||

|

||||

New distributions might be the best way of running Docker, but traditional distributions and their package managers remain very important within containers. Docker Hub hosts official images for Debian, Ubuntu, and CentOS. There’s also a ‘semi-official’ repository for Fedora images. RHEL images aren’t available in Docker Hub, as they’re distributed directly from Red Hat. This means that the automated build mechanism on Docker Hub is only available to those using pure open source distributions (and willing to trust the provenance of the base images curated by the Docker Inc team).

|

||||

|

||||

Whilst Docker Hub integrates with source control systems such as GitHub and Bitbucket for automated builds the package managers used during the build process create a complex relationship between a build specification (in a Dockerfile) and the image resulting from a build. Non deterministic results from the build process isn’t specifically a Docker problem - it’s a result of how package managers work. A build done one day will get a given version, and a build done another time may get a later version, which is why package managers have upgrade facilities. The container abstraction (caring less about the contents of a container) along with container proliferation (because of lightweight resource utilisation) is however likely to make this a pain point that gets associated with Docker.

|

||||

|

||||

### The future of Docker ###

|

||||

|

||||

Docker Inc has set a clear path on the development of core capabilities (libcontainer), cross service management (libswarm) and messaging between containers (libchan). Meanwhile the company has already shown a willingness to consume its own ecosystem with the Orchard Labs acquisition. There is however more to Docker than Docker Inc, with contributions to the project coming from big names like Google, IBM and Red Hat. With a benevolent dictator in the shape of CTO Solomon Hykes at the helm there is a clear nexus of technical leadership for both the company and the project. Over its first 18 months the project has shown an ability to move fast by using its own output, and there are no signs of that abating.

|

||||

|

||||

Many investors are looking at the features matrix for VMware’s ESX/vSphere platform from a decade ago and figuring out where the gaps (and opportunities) lie between enterprise expectations driven by the popularity of VMs and the existing Docker ecosystem. Areas like networking, storage and fine grained version management (for the contents of containers) are presently underserved by the existing Docker ecosystem, and provide opportunities for both startups and incumbents.

|

||||

|

||||

Over time it’s likely that the distinction between VMs and containers (the ‘run’ part of Docker) will become less important, which will push attention to the ‘build’ and ‘ship’ aspects. The changes here will make the question of ‘what happens to Docker?’ much less important than ‘what happens to the IT industry as a result of Docker?’.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.infoq.com/articles/docker-future

|

||||

|

||||

作者:[Chris Swan][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.infoq.com/author/Chris-Swan

|

||||

[1]:http://blog.dotcloud.com/dotcloud-paas-joins-cloudcontrol

|

||||

[2]:http://www.infoq.com/news/2014/06/docker_1.0

|

||||

[3]:https://www.kernel.org/doc/Documentation/cgroups/cgroups.txt

|

||||

[4]:http://selinuxproject.org/page/Main_Page

|

||||

[5]:https://linuxcontainers.org/

|

||||

[6]:http://blog.docker.com/2014/03/docker-0-9-introducing-execution-drivers-and-libcontainer/

|

||||

[7]:http://aufs.sourceforge.net/aufs.html

|

||||

[8]:https://docs.docker.com/reference/builder/

|

||||

[9]:https://registry.hub.docker.com/

|

||||

[10]:http://bodenr.blogspot.co.uk/2014/05/kvm-and-docker-lxc-benchmarking-with.html?m=1

|

||||

[11]:http://domino.research.ibm.com/library/cyberdig.nsf/papers/0929052195DD819C85257D2300681E7B/$File/rc25482.pdf

|

||||

[12]:https://en.wikipedia.org/wiki/X86_virtualization#Hardware-assisted_virtualization

|

||||

[13]:http://stealth.openwall.net/xSports/shocker.c

|

||||

[14]:https://news.ycombinator.com/item?id=7910117

|

||||

[15]:http://www.bromium.com/products/vsentry.html

|

||||

[16]:http://cto.vmware.com/vmware-docker-better-together/

|

||||

[17]:http://www.infoq.com/articles/docker-containers

|

||||

[18]:http://docs.docker.com/articles/using_supervisord/

|

||||

[19]:http://www.infoq.com/minibooks/emag-microservices

|

||||

[20]:https://github.com/docker/libchan

|

||||

[21]:https://gobyexample.com/channels

|

||||

[22]:http://www.infoq.com/news/2014/08/clusterhq-launch-flocker

|

||||

[23]:http://www.fig.sh/

|

||||

[24]:http://openshift.github.io/geard/

|

||||

[25]:http://panamax.io/

|

||||

[26]:http://decking.io/

|

||||

[27]:https://github.com/newrelic/centurion

|

||||

[28]:https://github.com/GoogleCloudPlatform/kubernetes

|

||||

[29]:https://mesosphere.io/2013/09/26/docker-on-mesos/

|

||||

[30]:http://mesos.apache.org/

|

||||

[31]:https://github.com/mesosphere/marathon

|

||||

[32]:http://static.googleusercontent.com/media/research.google.com/en/us/pubs/archive/41684.pdf

|

||||

[33]:http://deis.io/

|

||||

[34]:https://flynn.io/

|

||||

[35]:https://github.com/docker/libswarm

|

||||

[36]:http://openvz.org/Main_Page

|

||||

[37]:https://docs.docker.com/installation/#installation

|

||||

[38]:https://coreos.com/

|

||||

[39]:http://www.projectatomic.io/

|

||||

[40]:https://github.com/coreos/fleet

|

||||

[41]:https://github.com/coreos/etcd

|

||||

@ -0,0 +1,100 @@

|

||||