mirror of

https://github.com/LCTT/TranslateProject.git

synced 2024-12-26 21:30:55 +08:00

commit

e1b781b024

@ -0,0 +1,217 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (wxy)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-12667-1.html)

|

||||

[#]: subject: (Program hardware from the Linux command line)

|

||||

[#]: via: (https://opensource.com/article/20/9/hardware-command-line)

|

||||

[#]: author: (Alan Smithee https://opensource.com/users/alansmithee)

|

||||

|

||||

使用 RT-Thread 的 FinSH 对硬件进行编程

|

||||

======

|

||||

|

||||

> 由于物联网(IoT)的兴起,对硬件进行编程变得越来越普遍。RT-Thread 可以让你可以用 FinSH 从 Linux 命令行与设备进行沟通、

|

||||

|

||||

|

||||

|

||||

RT-Thread 是一个开源的[实时操作系统][2],用于对物联网(IoT)设备进行编程。FinSH 是 [RT-Thread][3] 的命令行组件,它提供了一套操作界面,使用户可以从命令行与设备进行沟通。它主要用于调试或查看系统信息。

|

||||

|

||||

通常情况下,开发调试使用硬件调试器和 `printf` 日志来显示。但在某些情况下,这两种方法并不是很有用,因为它是从运行的内容中抽象出来的,而且它们可能很难解析。不过 RT-Thread 是一个多线程系统,当你想知道一个正在运行的线程的状态,或者手动控制系统的当前状态时,这很有帮助。因为它是多线程的,所以你能够拥有一个交互式的 shell,你可以直接在设备上输入命令、调用函数来获取你需要的信息,或者控制程序的行为。如果你只习惯于 Linux 或 BSD 等现代操作系统,这在你看来可能很普通,但对于硬件黑客来说,这是极其奢侈的,远超将串行电缆直接连线到电路板上以获取一丝错误的做法。

|

||||

|

||||

FinSH 有两种模式。

|

||||

|

||||

* C 语言解释器模式,称为 c-style。

|

||||

* 传统的命令行模式,称为 msh(模块 shell)。

|

||||

|

||||

在 C 语言解释器模式下,FinSH 可以解析执行大部分 C 语言的表达式,并使用函数调用访问系统上的函数和全局变量。它还可以从命令行创建变量。

|

||||

|

||||

在 msh 模式下,FinSH 的操作与 Bash 等传统 shell 类似。

|

||||

|

||||

### GNU 命令标准

|

||||

|

||||

当我们在开发 FinSH 时,我们了解到,在编写命令行应用程序之前,你需要熟悉 GNU 命令行标准。这个标准实践的框架有助于给界面带入熟悉感,这有助于开发人员在使用时感到舒适和高效。

|

||||

|

||||

一个完整的 GNU 命令主要由四个部分组成。

|

||||

|

||||

1. 命令名(可执行文件):命令行程序的名称;

|

||||

2. 子命令:命令程序的子函数名称。

|

||||

3. 选项:子命令函数的配置选项。

|

||||

4. 参数:子命令函数配置选项的相应参数。

|

||||

|

||||

你可以在任何命令中看到这一点。以 Git 为例:

|

||||

|

||||

```

|

||||

git reset --hard HEAD~1

|

||||

```

|

||||

|

||||

这一点可以分解为:

|

||||

|

||||

![GNU command line standards][4]

|

||||

|

||||

可执行的命令是 `git`,子命令是 `reset`,使用的选项是 `--head`,参数是 `HEAD~1`。

|

||||

|

||||

再举个例子:

|

||||

|

||||

```

|

||||

systemctl enable --now firewalld

|

||||

```

|

||||

|

||||

可执行的命令是 `systemctl`,子命令是 `enable`,选项是 `--now`,参数是 `firewalld`。

|

||||

|

||||

想象一下,你想用 RT-Thread 编写一个符合 GNU 标准的命令行程序。FinSH 拥有你所需要的一切,并且会按照预期运行你的代码。更棒的是,你可以依靠这种合规性,让你可以自信地移植你最喜欢的 Linux 程序。

|

||||

|

||||

### 编写一个优雅的命令行程序

|

||||

|

||||

下面是一个 RT-Thread 运行命令的例子,RT-Thread 开发人员每天都在使用这个命令:

|

||||

|

||||

```

|

||||

usage: env.py package [-h] [--force-update] [--update] [--list] [--wizard]

|

||||

[--upgrade] [--printenv]

|

||||

|

||||

optional arguments:

|

||||

-h, --help show this help message and exit

|

||||

--force-update force update and clean packages, install or remove the

|

||||

packages by your settings in menuconfig

|

||||

--update update packages, install or remove the packages by your

|

||||

settings in menuconfig

|

||||

--list list target packages

|

||||

--wizard create a new package with wizard

|

||||

--upgrade upgrade local packages list and ENV scripts from git repo

|

||||

--printenv print environmental variables to check

|

||||

```

|

||||

|

||||

正如你所看到的那样,它看起来很熟悉,行为就像你可能已经在 Linux 或 BSD 上运行的大多数 POSIX 应用程序一样。当使用不正确或不充分的语法时,它会提供帮助,它支持长选项和短选项。这种通用的用户界面对于任何使用过 Unix 终端的人来说都是熟悉的。

|

||||

|

||||

### 选项种类

|

||||

|

||||

选项的种类很多,按长短可分为两大类。

|

||||

|

||||

1. 短选项:由一个连字符加一个字母组成,如 `pkgs -h` 中的 `-h` 选项。

|

||||

2. 长选项:由两个连字符加上单词或字母组成,例如,`scons- --target-mdk5` 中的 `--target` 选项。

|

||||

|

||||

你可以把这些选项分为三类,由它们是否有参数来决定。

|

||||

|

||||

1. 没有参数:该选项后面不能有参数。

|

||||

2. 参数必选:选项后面必须有参数。

|

||||

3. 参数可选:选项后可以有参数,但不是必需的。

|

||||

|

||||

正如你对大多数 Linux 命令的期望,FinSH 的选项解析非常灵活。它可以根据空格或等号作为定界符来区分一个选项和一个参数,或者仅仅通过提取选项本身并假设后面的内容是参数(换句话说,完全没有定界符)。

|

||||

|

||||

* `wavplay -v 50`

|

||||

* `wavplay -v50`

|

||||

* `wavplay --vol=50`

|

||||

|

||||

### 使用 optparse

|

||||

|

||||

如果你曾经写过命令行程序,你可能会知道,一般来说,你所选择的语言有一个叫做 optparse 的库或模块。它是提供给程序员的,所以作为命令的一部分输入的选项(比如 `-v` 或 `--verbose`)可以与命令的其他部分进行*解析*。这可以帮助你的代码从一个子命令或参数中获取一个选项。

|

||||

|

||||

当为 FinSH 编写一个命令时,`optparse` 包希望使用这种格式:

|

||||

|

||||

```

|

||||

MSH_CMD_EXPORT_ALIAS(pkgs, pkgs, this is test cmd.);

|

||||

```

|

||||

|

||||

你可以使用长形式或短形式,或者同时使用两种形式来实现选项。例如:

|

||||

|

||||

```

|

||||

static struct optparse_long long_opts[] =

|

||||

{

|

||||

{"help" , 'h', OPTPARSE_NONE}, // Long command: help, corresponding to short command h, without arguments.

|

||||

{"force-update", 0 , OPTPARSE_NONE}, // Long comman: force-update, without arguments

|

||||

{"update" , 0 , OPTPARSE_NONE},

|

||||

{"list" , 0 , OPTPARSE_NONE},

|

||||

{"wizard" , 0 , OPTPARSE_NONE},

|

||||

{"upgrade" , 0 , OPTPARSE_NONE},

|

||||

{"printenv" , 0 , OPTPARSE_NONE},

|

||||

{ NULL , 0 , OPTPARSE_NONE}

|

||||

};

|

||||

```

|

||||

|

||||

创建完选项后,写出每个选项及其参数的命令和说明:

|

||||

|

||||

```

|

||||

static void usage(void)

|

||||

{

|

||||

rt_kprintf("usage: env.py package [-h] [--force-update] [--update] [--list] [--wizard]\n");

|

||||

rt_kprintf(" [--upgrade] [--printenv]\n\n");

|

||||

rt_kprintf("optional arguments:\n");

|

||||

rt_kprintf(" -h, --help show this help message and exit\n");

|

||||

rt_kprintf(" --force-update force update and clean packages, install or remove the\n");

|

||||

rt_kprintf(" packages by your settings in menuconfig\n");

|

||||

rt_kprintf(" --update update packages, install or remove the packages by your\n");

|

||||

rt_kprintf(" settings in menuconfig\n");

|

||||

rt_kprintf(" --list list target packages\n");

|

||||

rt_kprintf(" --wizard create a new package with wizard\n");

|

||||

rt_kprintf(" --upgrade upgrade local packages list and ENV scripts from git repo\n");

|

||||

rt_kprintf(" --printenv print environmental variables to check\n");

|

||||

}

|

||||

```

|

||||

|

||||

下一步是解析。虽然你还没有实现它的功能,但解析后的代码框架是一样的:

|

||||

|

||||

```

|

||||

int pkgs(int argc, char **argv)

|

||||

{

|

||||

int ch;

|

||||

int option_index;

|

||||

struct optparse options;

|

||||

|

||||

if(argc == 1)

|

||||

{

|

||||

usage();

|

||||

return RT_EOK;

|

||||

}

|

||||

|

||||

optparse_init(&options, argv);

|

||||

while((ch = optparse_long(&options, long_opts, &option_index)) != -1)

|

||||

{

|

||||

ch = ch;

|

||||

|

||||

rt_kprintf("\n");

|

||||

rt_kprintf("optopt = %c\n", options.optopt);

|

||||

rt_kprintf("optarg = %s\n", options.optarg);

|

||||

rt_kprintf("optind = %d\n", options.optind);

|

||||

rt_kprintf("option_index = %d\n", option_index);

|

||||

}

|

||||

rt_kprintf("\n");

|

||||

|

||||

return RT_EOK;

|

||||

}

|

||||

```

|

||||

|

||||

这里是函数头文件:

|

||||

|

||||

```

|

||||

#include "optparse.h"

|

||||

#include "finsh.h"

|

||||

```

|

||||

|

||||

然后,编译并下载到设备上。

|

||||

|

||||

![Output][6]

|

||||

|

||||

### 硬件黑客

|

||||

|

||||

对硬件进行编程似乎很吓人,但随着物联网的发展,它变得越来越普遍。并不是所有的东西都可以或者应该在树莓派上运行,但在 RT-Thread,FinSH 可以让你保持熟悉的 Linux 感觉。

|

||||

|

||||

如果你对在裸机上编码感到好奇,不妨试试 RT-Thread。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/20/9/hardware-command-line

|

||||

|

||||

作者:[Alan Smithee][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[wxy](https://github.com/wxy)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/alansmithee

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/command_line_prompt.png?itok=wbGiJ_yg (Command line prompt)

|

||||

[2]: https://opensource.com/article/20/6/open-source-rtos

|

||||

[3]: https://github.com/RT-Thread/rt-thread

|

||||

[4]: https://opensource.com/sites/default/files/uploads/command-line-apps_2.png (GNU command line standards)

|

||||

[5]: https://creativecommons.org/licenses/by-sa/4.0/

|

||||

[6]: https://opensource.com/sites/default/files/uploads/command-line-apps_3.png (Output)

|

||||

@ -1,34 +1,36 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (geekpi)

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-12663-1.html)

|

||||

[#]: subject: (What's new with rdiff-backup?)

|

||||

[#]: via: (https://opensource.com/article/20/9/rdiff-backup-linux)

|

||||

[#]: author: (Patrik Dufresne https://opensource.com/users/patrik-dufresne)

|

||||

|

||||

rdiff-backup 有什么新功能?

|

||||

11 年后重新打造的 rdiff-backup 2.0 有什么新功能?

|

||||

======

|

||||

长期的 Linux 备份方案向 Python 3 的迁移为添加许多新功能提供了机会。

|

||||

![Hand putting a Linux file folder into a drawer][1]

|

||||

|

||||

> 这个老牌 Linux 备份方案迁移到了 Python 3 提供了添加许多新功能的机会。

|

||||

|

||||

|

||||

|

||||

2020 年 3 月,[rdiff-backup][2] 升级到了 2.0 版,这距离上一个主要版本已经过去了 11 年。2020 年初 Python 2 的废弃是这次更新的动力,但它为开发团队提供了整合其他功能和优势的机会。

|

||||

|

||||

大约二十年来,rdiff-backup 帮助 Linux 用户在本地或远程维护他们的数据的完整备份,而无需无谓地消耗资源。这是因为这个开源解决方案可以进行反向增量备份,只备份从上一次备份中改变的文件。

|

||||

大约二十年来,`rdiff-backup` 帮助 Linux 用户在本地或远程维护他们的数据的完整备份,而无需无谓地消耗资源。这是因为这个开源解决方案可以进行反向增量备份,只备份从上一次备份中改变的文件。

|

||||

|

||||

改版(或说,重生)得益于一个新的、自组织的开发团队(由来自 [IKUS Software][3] 的 Eric Zolf 和 Patrik Dufresne 领导,以及来自 [Seravo][4]的 Otto Kekäläinen 领导)的努力,为了所有 rdiff-backup 用户的利益,他们齐心协力。

|

||||

这次改版(或者说,重生)得益于一个新的、自组织的开发团队(由来自 [IKUS Software][3] 的 Eric Zolf 和 Patrik Dufresne,以及来自 [Seravo][4] 的 Otto Kekäläinen 共同领导)的努力,为了所有 `rdiff-backup` 用户的利益,他们齐心协力。

|

||||

|

||||

### rdiff-backup 的新功能

|

||||

|

||||

在 Eric 的带领下,随着向 Python 3 的迁移,项目被迁移到了一个新的、不受企业限制的[仓库][5],以欢迎贡献。团队还整合了多年来提交的所有补丁,包括稀疏文件支持和硬链接的修复。

|

||||

在 Eric 的带领下,随着向 Python 3 的迁移,项目被迁移到了一个新的、不受企业限制的[仓库][5],以欢迎贡献。团队还整合了多年来提交的所有补丁,包括对稀疏文件的支持和对硬链接的修复。

|

||||

|

||||

#### 用 Travis CI 实现自动化

|

||||

|

||||

另一个巨大的改进是增加了一个使用开源 [Travis CI][6] 的持续集成/持续交付 (CI/CD) 管道。这允许在各种环境下测试 rdiff-backup,从而确保变化不会影响方案的稳定性。CI/CD 管道包括集成所有主要平台的构建和二进制发布。

|

||||

另一个巨大的改进是增加了一个使用开源 [Travis CI][6] 的持续集成/持续交付(CI/CD)管道。这允许在各种环境下测试 `rdiff-backup`,从而确保变化不会影响方案的稳定性。CI/CD 管道包括集成所有主要平台的构建和二进制发布。

|

||||

|

||||

#### 使用 yum 和 apt 轻松安装

|

||||

|

||||

新的 rdiff-backup 解决方案可以运行在所有主流的 Linux 发行版上,包括 Fedora、Red Hat、Elementary、Debian 等。Frank 和 Otto 付出了艰辛的努力,提供了开放仓库以方便访问和安装。你可以使用你的软件包管理器安装 rdiff-backup,或者按照 GitHub 项目页面上的[分步说明][7]进行安装。

|

||||

新的 `rdiff-backup` 解决方案可以运行在所有主流的 Linux 发行版上,包括 Fedora、Red Hat、Elementary、Debian 等。Frank 和 Otto 付出了艰辛的努力,提供了开放的仓库以方便访问和安装。你可以使用你的软件包管理器安装 `rdiff-backup`,或者按照 GitHub 项目页面上的[分步说明][7]进行安装。

|

||||

|

||||

#### 新的主页

|

||||

|

||||

@ -36,7 +38,7 @@ rdiff-backup 有什么新功能?

|

||||

|

||||

### 如何使用 rdiff-backup

|

||||

|

||||

如果你是 rdiff-backup 的新手,你可能会对它的易用性感到惊讶。一个备份方案需要让你对备份和恢复过程感到舒适,而不是吓人。

|

||||

如果你是 `rdiff-backup` 的新手,你可能会对它的易用性感到惊讶。备份方案应该让你对备份和恢复过程感到舒适,而不是吓人。

|

||||

|

||||

#### 开始备份

|

||||

|

||||

@ -44,61 +46,55 @@ rdiff-backup 有什么新功能?

|

||||

|

||||

例如,要备份到名为 `my_backup_drive` 的本地驱动器,请输入:

|

||||

|

||||

|

||||

```

|

||||

`$ rdiff-backup /home/tux/ /run/media/tux/my_backup_drive/`

|

||||

$ rdiff-backup /home/tux/ /run/media/tux/my_backup_drive/

|

||||

```

|

||||

|

||||

要将数据备份到异地存储,请使用远程服务器的位置,并在 `::` 后面指向备份驱动器的挂载点:

|

||||

|

||||

|

||||

```

|

||||

`$ rdiff-backup /home/tux/ tux@example.com::/my_backup_drive/`

|

||||

$ rdiff-backup /home/tux/ tux@example.com::/my_backup_drive/

|

||||

```

|

||||

|

||||

你可能需要[设置 SSH 密钥][8]来使这个过程不费力。

|

||||

你可能需要[设置 SSH 密钥][8]来使这个过程更轻松。

|

||||

|

||||

#### 还原文件

|

||||

|

||||

做备份的原因是有时文件会丢失。为了使恢复尽可能简单,你甚至不需要 rdiff-backup 来恢复文件(虽然使用 `rdiff-backup` 命令提供了一些方便)。

|

||||

做备份的原因是有时文件会丢失。为了使恢复尽可能简单,你甚至不需要 `rdiff-backup` 来恢复文件(虽然使用 `rdiff-backup` 命令提供了一些方便)。

|

||||

|

||||

如果你需要从备份驱动器中获取一个文件,你可以使用 `cp` 将其从备份驱动器复制到本地系统,或者对于远程驱动器使用 `scp` 命令。

|

||||

|

||||

对于本地驱动器,使用:

|

||||

|

||||

|

||||

```

|

||||

`$ cp _run_media/tux/my_backup_drive/Documents/example.txt \ ~/Documents`

|

||||

$ cp _run_media/tux/my_backup_drive/Documents/example.txt ~/Documents

|

||||

```

|

||||

|

||||

或者用于远程驱动器:

|

||||

|

||||

|

||||

```

|

||||

`$ scp tux@example.com::/my_backup_drive/Documents/example.txt \ ~/Documents`

|

||||

$ scp tux@example.com::/my_backup_drive/Documents/example.txt ~/Documents

|

||||

```

|

||||

|

||||

然而,使用 `rdiff-backup` 命令提供了其他选项,包括 `--restore-as-of`。这允许你指定你要恢复的文件的哪个版本。

|

||||

|

||||

例如,假设你想恢复一个文件在四天前的版本:

|

||||

|

||||

|

||||

```

|

||||

`$ rdiff-backup --restore-as-of 4D \ /run/media/tux/foo.txt ~/foo_4D.txt`

|

||||

$ rdiff-backup --restore-as-of 4D /run/media/tux/foo.txt ~/foo_4D.txt

|

||||

```

|

||||

|

||||

你也可以用 `rdiff-backup` 来获取最新版本:

|

||||

|

||||

|

||||

```

|

||||

`$ rdiff-backup --restore-as-of now \ /run/media/tux/foo.txt ~/foo_4D.txt`

|

||||

$ rdiff-backup --restore-as-of now /run/media/tux/foo.txt ~/foo_4D.txt`

|

||||

```

|

||||

|

||||

就是这么简单。另外,rdiff-backup 还有很多其他选项,例如,你可以从列表中排除文件,从一个远程备份到另一个远程等等,这些你可以在[文档][9]中了解。

|

||||

就是这么简单。另外,`rdiff-backup` 还有很多其他选项,例如,你可以从列表中排除文件,从一个远程备份到另一个远程等等,这些你可以在[文档][9]中了解。

|

||||

|

||||

### 总结

|

||||

|

||||

我们的开发团队希望用户能够喜欢这个改版后的开源 rdiff-backup 方案,这是我们不断努力的结晶。我们也感谢我们的贡献者,他们真正展示了开源的力量。

|

||||

我们的开发团队希望用户能够喜欢这个改版后的开源 `rdiff-backup` 方案,这是我们不断努力的结晶。我们也感谢我们的贡献者,他们真正展示了开源的力量。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -107,7 +103,7 @@ via: https://opensource.com/article/20/9/rdiff-backup-linux

|

||||

作者:[Patrik Dufresne][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,37 +1,38 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (geekpi)

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-12666-1.html)

|

||||

[#]: subject: (How to Fix “Repository is not valid yet” Error in Ubuntu Linux)

|

||||

[#]: via: (https://itsfoss.com/fix-repository-not-valid-yet-error-ubuntu/)

|

||||

[#]: author: (Abhishek Prakash https://itsfoss.com/author/abhishek/)

|

||||

|

||||

如何修复 Ubuntu Linux中 的 ”Repository is not valid yet“ 错误

|

||||

如何修复 Ubuntu Linux 中的 “Release file is not valid yet” 错误

|

||||

======

|

||||

|

||||

我最近[在我的树莓派上安装了 Ubuntu 服务器][1]。我[在 Ubuntu 终端连接上了 Wi-Fi][2],然后做了我在安装任何 Linux 系统后都会做的事情,那就是更新系统。

|

||||

|

||||

当我使用 ”sudo apt update“ 命令时,它给了一个对我而言特别的错误。它报出仓库的发布文件在某个时间段内无效。

|

||||

当我使用 `sudo apt update` 命令时,它给了一个对我而言特别的错误。它报出仓库的发布文件在某个时间段内无效。

|

||||

|

||||

**E: Release file for <http://ports.ubuntu.com/ubuntu-ports/dists/focal-security/InRelease> is not valid yet (invalid for another 159d 15h 20min 52s). Updates for this repository will not be applied.**

|

||||

> E: Release file for <http://ports.ubuntu.com/ubuntu-ports/dists/focal-security/InRelease> is not valid yet (invalid for another 159d 15h 20min 52s). Updates for this repository will not be applied.**

|

||||

|

||||

下面是完整输出:

|

||||

|

||||

```

|

||||

[email protected]:~$ sudo apt update

|

||||

Hit:1 http://ports.ubuntu.com/ubuntu-ports focal InRelease

|

||||

Get:2 http://ports.ubuntu.com/ubuntu-ports focal-updates InRelease [111 kB]

|

||||

Get:3 http://ports.ubuntu.com/ubuntu-ports focal-backports InRelease [98.3 kB]

|

||||

Get:4 http://ports.ubuntu.com/ubuntu-ports focal-security InRelease [107 kB]

|

||||

ubuntu@ubuntu:~$ sudo apt update

|

||||

Hit:1 http://ports.ubuntu.com/ubuntu-ports focal InRelease

|

||||

Get:2 http://ports.ubuntu.com/ubuntu-ports focal-updates InRelease [111 kB]

|

||||

Get:3 http://ports.ubuntu.com/ubuntu-ports focal-backports InRelease [98.3 kB]

|

||||

Get:4 http://ports.ubuntu.com/ubuntu-ports focal-security InRelease [107 kB]

|

||||

Reading package lists... Done

|

||||

E: Release file for http://ports.ubuntu.com/ubuntu-ports/dists/focal/InRelease is not valid yet (invalid for another 21d 23h 17min 25s). Updates for this repository will not be applied.

|

||||

E: Release file for http://ports.ubuntu.com/ubuntu-ports/dists/focal-updates/InRelease is not valid yet (invalid for another 159d 15h 21min 2s). Updates for this repository will not be applied.

|

||||

E: Release file for http://ports.ubuntu.com/ubuntu-ports/dists/focal-backports/InRelease is not valid yet (invalid for another 159d 15h 21min 32s). Updates for this repository will not be applied.

|

||||

E: Release file for http://ports.ubuntu.com/ubuntu-ports/dists/focal-security/InRelease is not valid yet (invalid for another 159d 15h 20min 52s). Updates for this repository will not be applied.

|

||||

|

||||

```

|

||||

|

||||

### 修复 Ubuntu 和其他 Linux 发行版中 ”release file is not valid yet“ 的错误。

|

||||

### 修复 Ubuntu 和其他 Linux 发行版中 “Release file is not valid yet” 的错误。

|

||||

|

||||

![][3]

|

||||

|

||||

@ -63,7 +64,7 @@ Architectures: amd64 arm64 armhf i386 ppc64el riscv64 s390x

|

||||

sudo timedatectl set-local-rtc 1

|

||||

```

|

||||

|

||||

timedatectl 命令可以让你在 Linux 上配置时间、日期和[更改时区][4]。

|

||||

`timedatectl` 命令可以让你在 Linux 上配置时间、日期和[更改时区][4]。

|

||||

|

||||

你应该不需要重新启动。它可以立即工作,你可以通过[更新你的 Ubuntu 系统][5]再次验证它。

|

||||

|

||||

@ -84,7 +85,7 @@ via: https://itsfoss.com/fix-repository-not-valid-yet-error-ubuntu/

|

||||

作者:[Abhishek Prakash][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,325 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (geekpi)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-12670-1.html)

|

||||

[#]: subject: (How to Create/Configure LVM in Linux)

|

||||

[#]: via: (https://www.2daygeek.com/create-lvm-storage-logical-volume-manager-in-linux/)

|

||||

[#]: author: (Magesh Maruthamuthu https://www.2daygeek.com/author/magesh/)

|

||||

|

||||

如何在 Linux 中创建/配置 LVM(逻辑卷管理)

|

||||

======

|

||||

|

||||

|

||||

|

||||

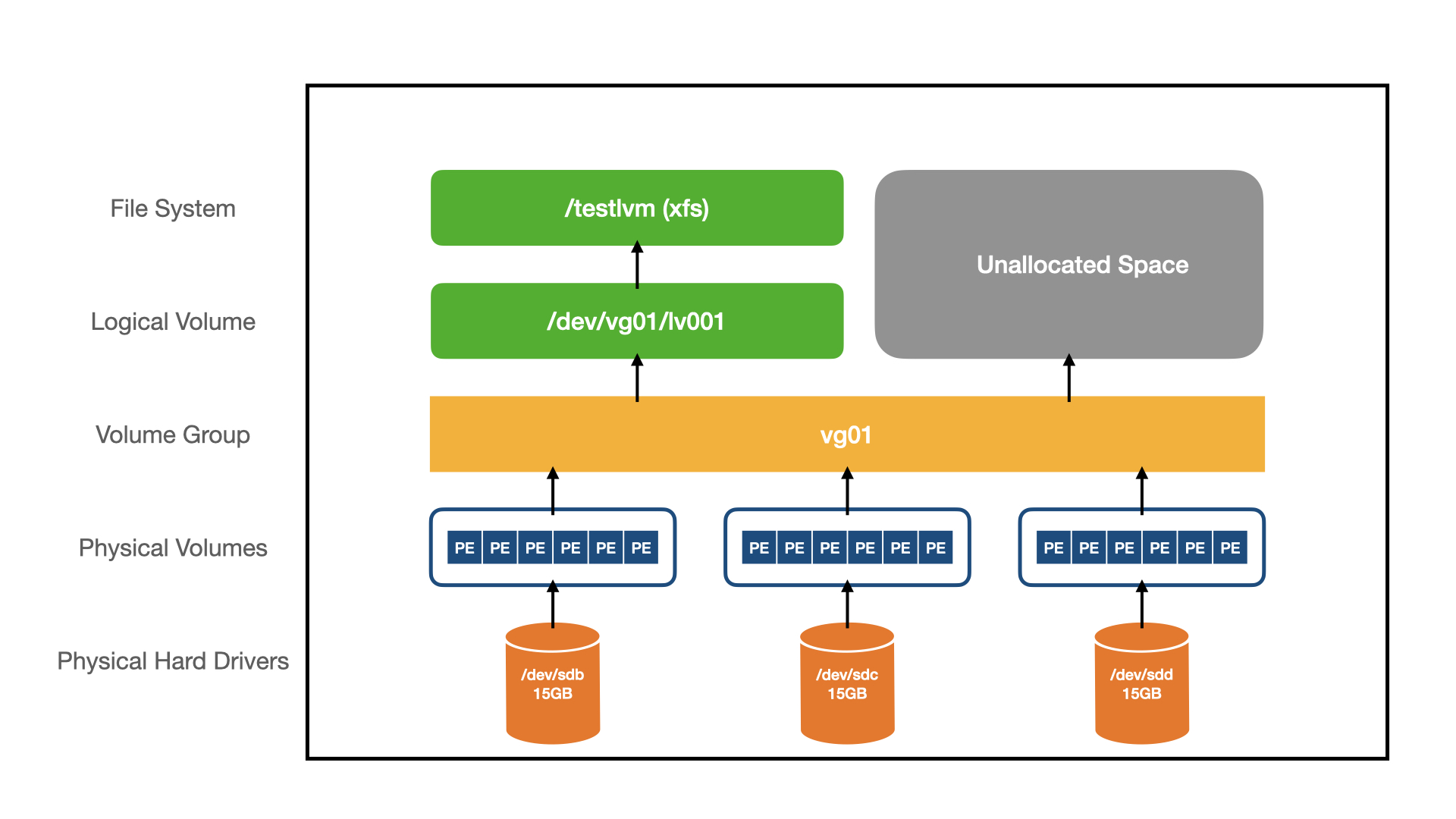

<ruby>逻辑卷管理<rt>Logical Volume Management</rt></ruby>(LVM)在 Linux 系统中扮演着重要的角色,它可以提高可用性、磁盘 I/O、性能和磁盘管理的能力。

|

||||

|

||||

LVM 是一种被广泛使用的技术,对于磁盘管理来说,它是非常灵活的。

|

||||

|

||||

它在物理磁盘和文件系统之间增加了一个额外的层,允许你创建一个逻辑卷而不是物理磁盘。

|

||||

|

||||

LVM 允许你在需要的时候轻松地调整、扩展和减少逻辑卷的大小。

|

||||

|

||||

|

||||

|

||||

### 如何创建 LVM 物理卷?

|

||||

|

||||

你可以使用任何磁盘、RAID 阵列、SAN 磁盘或分区作为 LVM <ruby>物理卷<rt>Physical Volume</rt></ruby>(PV)。

|

||||

|

||||

让我们想象一下,你已经添加了三个磁盘,它们是 `/dev/sdb`、`/dev/sdc` 和 `/dev/sdd`。

|

||||

|

||||

运行以下命令来[发现 Linux 中新添加的 LUN 或磁盘][2]:

|

||||

|

||||

```

|

||||

# ls /sys/class/scsi_host

|

||||

host0

|

||||

```

|

||||

|

||||

```

|

||||

# echo "- - -" > /sys/class/scsi_host/host0/scan

|

||||

```

|

||||

|

||||

```

|

||||

# fdisk -l

|

||||

```

|

||||

|

||||

**创建物理卷 (`pvcreate`) 的一般语法:**

|

||||

|

||||

```

|

||||

pvcreate [物理卷名]

|

||||

```

|

||||

|

||||

当在系统中检测到磁盘,使用 `pvcreate` 命令初始化 LVM PV:

|

||||

|

||||

```

|

||||

# pvcreate /dev/sdb /dev/sdc /dev/sdd

|

||||

Physical volume "/dev/sdb" successfully created

|

||||

Physical volume "/dev/sdc" successfully created

|

||||

Physical volume "/dev/sdd" successfully created

|

||||

```

|

||||

|

||||

**请注意:**

|

||||

|

||||

* 上面的命令将删除给定磁盘 `/dev/sdb`、`/dev/sdc` 和 `/dev/sdd` 上的所有数据。

|

||||

* 物理磁盘可以直接添加到 LVM PV 中,而不必是磁盘分区。

|

||||

|

||||

使用 `pvdisplay` 和 `pvs` 命令来显示你创建的 PV。`pvs` 命令显示的是摘要输出,`pvdisplay` 显示的是 PV 的详细输出:

|

||||

|

||||

```

|

||||

# pvs

|

||||

PV VG Fmt Attr PSize PFree

|

||||

/dev/sdb lvm2 a-- 15.00g 15.00g

|

||||

/dev/sdc lvm2 a-- 15.00g 15.00g

|

||||

/dev/sdd lvm2 a-- 15.00g 15.00g

|

||||

```

|

||||

|

||||

```

|

||||

# pvdisplay

|

||||

|

||||

"/dev/sdb" is a new physical volume of "15.00 GiB"

|

||||

--- NEW Physical volume ---

|

||||

PV Name /dev/sdb

|

||||

VG Name

|

||||

PV Size 15.00 GiB

|

||||

Allocatable NO

|

||||

PE Size 0

|

||||

Total PE 0

|

||||

Free PE 0

|

||||

Allocated PE 0

|

||||

PV UUID 69d9dd18-36be-4631-9ebb-78f05fe3217f

|

||||

|

||||

"/dev/sdc" is a new physical volume of "15.00 GiB"

|

||||

--- NEW Physical volume ---

|

||||

PV Name /dev/sdc

|

||||

VG Name

|

||||

PV Size 15.00 GiB

|

||||

Allocatable NO

|

||||

PE Size 0

|

||||

Total PE 0

|

||||

Free PE 0

|

||||

Allocated PE 0

|

||||

PV UUID a2092b92-af29-4760-8e68-7a201922573b

|

||||

|

||||

"/dev/sdd" is a new physical volume of "15.00 GiB"

|

||||

--- NEW Physical volume ---

|

||||

PV Name /dev/sdd

|

||||

VG Name

|

||||

PV Size 15.00 GiB

|

||||

Allocatable NO

|

||||

PE Size 0

|

||||

Total PE 0

|

||||

Free PE 0

|

||||

Allocated PE 0

|

||||

PV UUID d92fa769-e00f-4fd7-b6ed-ecf7224af7faS

|

||||

```

|

||||

|

||||

### 如何创建一个卷组

|

||||

|

||||

<ruby>卷组<rt>Volume Group</rt></ruby>(VG)是 LVM 结构中的另一层。基本上,卷组由你创建的 LVM 物理卷组成,你可以将物理卷添加到现有的卷组中,或者根据需要为物理卷创建新的卷组。

|

||||

|

||||

**创建卷组 (`vgcreate`) 的一般语法:**

|

||||

|

||||

```

|

||||

vgcreate [卷组名] [物理卷名]

|

||||

```

|

||||

|

||||

使用以下命令将一个新的物理卷添加到新的卷组中:

|

||||

|

||||

```

|

||||

# vgcreate vg01 /dev/sdb /dev/sdc /dev/sdd

|

||||

Volume group "vg01" successfully created

|

||||

```

|

||||

|

||||

**请注意:**默认情况下,它使用 4MB 的<ruby>物理范围<rt>Physical Extent</rt></ruby>(PE),但你可以根据你的需要改变它。

|

||||

|

||||

使用 `vgs` 和 `vgdisplay` 命令来显示你创建的 VG 的信息:

|

||||

|

||||

```

|

||||

# vgs vg01

|

||||

VG #PV #LV #SN Attr VSize VFree

|

||||

vg01 3 0 0 wz--n- 44.99g 44.99g

|

||||

```

|

||||

|

||||

```

|

||||

# vgdisplay vg01

|

||||

--- Volume group ---

|

||||

VG Name vg01

|

||||

System ID

|

||||

Format lvm2

|

||||

Metadata Areas 3

|

||||

Metadata Sequence No 1

|

||||

VG Access read/write

|

||||

VG Status resizable

|

||||

MAX LV 0

|

||||

Cur LV 0

|

||||

Open LV 0

|

||||

Max PV 0

|

||||

Cur PV 3

|

||||

Act PV 3

|

||||

VG Size 44.99 GiB

|

||||

PE Size 4.00 MiB

|

||||

Total PE 11511

|

||||

Alloc PE / Size 0 / 0

|

||||

Free PE / Size 11511 / 44.99 GiB

|

||||

VG UUID d17e3c31-e2c9-4f11-809c-94a549bc43b7

|

||||

```

|

||||

|

||||

### 如何扩展卷组

|

||||

|

||||

如果 VG 没有空间,请使用以下命令将新的物理卷添加到现有卷组中。

|

||||

|

||||

**卷组扩展 (`vgextend`)的一般语法:**

|

||||

|

||||

```

|

||||

vgextend [已有卷组名] [物理卷名]

|

||||

```

|

||||

|

||||

```

|

||||

# vgextend vg01 /dev/sde

|

||||

Volume group "vg01" successfully extended

|

||||

```

|

||||

|

||||

### 如何以 GB 为单位创建逻辑卷?

|

||||

|

||||

<ruby>逻辑卷<rt>Logical Volume</rt></ruby>(LV)是 LVM 结构中的顶层。逻辑卷是由卷组创建的块设备。它作为一个虚拟磁盘分区,可以使用 LVM 命令轻松管理。

|

||||

|

||||

你可以使用 `lvcreate` 命令创建一个新的逻辑卷。

|

||||

|

||||

**创建逻辑卷(`lvcreate`) 的一般语法:**

|

||||

|

||||

```

|

||||

lvcreate –n [逻辑卷名] –L [逻辑卷大小] [要创建的 LV 所在的卷组名称]

|

||||

```

|

||||

|

||||

运行下面的命令,创建一个大小为 10GB 的逻辑卷 `lv001`:

|

||||

|

||||

```

|

||||

# lvcreate -n lv001 -L 10G vg01

|

||||

Logical volume "lv001" created

|

||||

```

|

||||

|

||||

使用 `lvs` 和 `lvdisplay` 命令来显示你所创建的 LV 的信息:

|

||||

|

||||

```

|

||||

# lvs /dev/vg01/lvol01

|

||||

LV VG Attr LSize Pool Origin Data% Move Log Cpy%Sync Convert

|

||||

lv001 vg01 mwi-a-m-- 10.00g lv001_mlog 100.00

|

||||

```

|

||||

|

||||

```

|

||||

# lvdisplay /dev/vg01/lv001

|

||||

--- Logical volume ---

|

||||

LV Path /dev/vg01/lv001

|

||||

LV Name lv001

|

||||

VG Name vg01

|

||||

LV UUID ca307aa4-0866-49b1-8184-004025789e63

|

||||

LV Write Access read/write

|

||||

LV Creation host, time localhost.localdomain, 2020-09-10 11:43:05 -0700

|

||||

LV Status available

|

||||

# open 0

|

||||

LV Size 10.00 GiB

|

||||

Current LE 2560

|

||||

Segments 1

|

||||

Allocation inherit

|

||||

Read ahead sectors auto

|

||||

- currently set to 256

|

||||

Block device 253:4

|

||||

```

|

||||

|

||||

### 如何以 PE 大小创建逻辑卷?

|

||||

|

||||

或者,你可以使用物理范围(PE)大小创建逻辑卷。

|

||||

|

||||

### 如何计算 PE 值?

|

||||

|

||||

很简单,例如,如果你有一个 10GB 的卷组,那么 PE 大小是多少?

|

||||

|

||||

默认情况下,它使用 4MB 的物理范围,但可以通过运行 `vgdisplay` 命令来检查正确的 PE 大小,因为这可以根据需求进行更改。

|

||||

|

||||

```

|

||||

10GB = 10240MB / 4MB (PE 大小) = 2560 PE

|

||||

```

|

||||

|

||||

**用 PE 大小创建逻辑卷 (`lvcreate`) 的一般语法:**

|

||||

|

||||

```

|

||||

lvcreate –n [逻辑卷名] –l [物理扩展 (PE) 大小] [要创建的 LV 所在的卷组名称]

|

||||

```

|

||||

|

||||

要使用 PE 大小创建 10GB 的逻辑卷,命令如下:

|

||||

|

||||

```

|

||||

# lvcreate -n lv001 -l 2560 vg01

|

||||

```

|

||||

|

||||

### 如何创建文件系统

|

||||

|

||||

在创建有效的文件系统之前,你不能使用逻辑卷。

|

||||

|

||||

**创建文件系统的一般语法:**

|

||||

|

||||

```

|

||||

mkfs –t [文件系统类型] /dev/[LV 所在的卷组名称]/[LV 名称]

|

||||

```

|

||||

|

||||

使用以下命令将逻辑卷 `lv001` 格式化为 ext4 文件系统:

|

||||

|

||||

```

|

||||

# mkfs -t ext4 /dev/vg01/lv001

|

||||

```

|

||||

|

||||

对于 xfs 文件系统:

|

||||

|

||||

```

|

||||

# mkfs -t xfs /dev/vg01/lv001

|

||||

```

|

||||

|

||||

### 挂载逻辑卷

|

||||

|

||||

最后,你需要挂载逻辑卷来使用它。确保在 `/etc/fstab` 中添加一个条目,以便系统启动时自动加载。

|

||||

|

||||

创建一个目录来挂载逻辑卷:

|

||||

|

||||

```

|

||||

# mkdir /lvmtest

|

||||

```

|

||||

|

||||

使用挂载命令[挂载逻辑卷][3]:

|

||||

|

||||

```

|

||||

# mount /dev/vg01/lv001 /lvmtest

|

||||

```

|

||||

|

||||

在 [/etc/fstab 文件][4]中添加新的逻辑卷详细信息,以便系统启动时自动挂载:

|

||||

|

||||

```

|

||||

# vi /etc/fstab

|

||||

/dev/vg01/lv001 /lvmtest xfs defaults 0 0

|

||||

```

|

||||

|

||||

使用 [df 命令][5]检查新挂载的卷:

|

||||

|

||||

```

|

||||

# df -h /lvmtest

|

||||

Filesystem Size Used Avail Use% Mounted on

|

||||

/dev/mapper/vg01-lv001 15360M 34M 15326M 4% /lvmtest

|

||||

```

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.2daygeek.com/create-lvm-storage-logical-volume-manager-in-linux/

|

||||

|

||||

作者:[Magesh Maruthamuthu][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://www.2daygeek.com/author/magesh/

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://www.2daygeek.com/wp-content/uploads/2020/09/create-lvm-storage-logical-volume-manager-in-linux-2.png

|

||||

[2]: https://www.2daygeek.com/scan-detect-luns-scsi-disks-on-redhat-centos-oracle-linux/

|

||||

[3]: https://www.2daygeek.com/mount-unmount-file-system-partition-in-linux/

|

||||

[4]: https://www.2daygeek.com/understanding-linux-etc-fstab-file/

|

||||

[5]: https://www.2daygeek.com/linux-check-disk-space-usage-df-command/

|

||||

@ -1,31 +1,30 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (geekpi)

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (How to Extend/Increase LVM’s (Logical Volume Resize) in Linux)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-12673-1.html)

|

||||

[#]: subject: (How to Extend/Increase LVM’s in Linux)

|

||||

[#]: via: (https://www.2daygeek.com/extend-increase-resize-lvm-logical-volume-in-linux/)

|

||||

[#]: author: (Magesh Maruthamuthu https://www.2daygeek.com/author/magesh/)

|

||||

|

||||

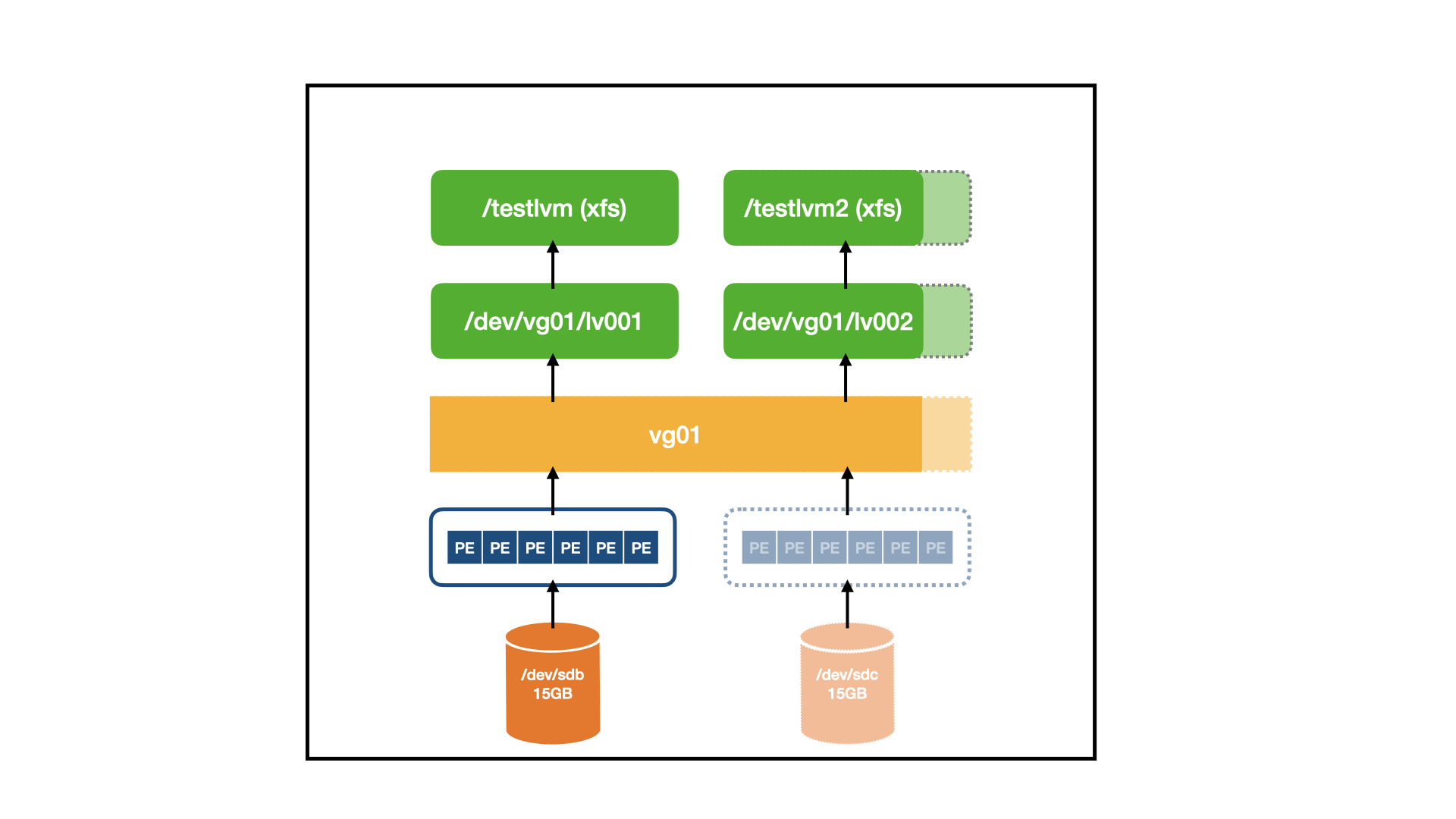

如何在 Linux 中扩展/增加 LVM 大小(逻辑卷调整)?

|

||||

如何在 Linux 中扩展/增加 LVM 大小(逻辑卷调整)

|

||||

======

|

||||

|

||||

|

||||

|

||||

扩展逻辑卷非常简单,只需要很少的步骤,而且不需要卸载某个逻辑卷就可以在线完成。

|

||||

|

||||

LVM 的主要目的是灵活的磁盘管理,当你需要的时候,可以很方便地调整、扩展和缩小逻辑卷的大小。

|

||||

|

||||

如果你是逻辑卷管理 (LVM) 新手,我建议你从我们之前的文章开始学习。

|

||||

如果你是逻辑卷管理(LVM) 新手,我建议你从我们之前的文章开始学习。

|

||||

|

||||

* **第一部分:[如何在 Linux 中创建/配 置LVM(逻辑卷管理)][1]**

|

||||

* **第一部分:[如何在 Linux 中创建/配置 LVM(逻辑卷管理)][1]**

|

||||

|

||||

|

||||

|

||||

![][2]

|

||||

|

||||

|

||||

扩展逻辑卷涉及到以下步骤:

|

||||

|

||||

|

||||

* 检查 LV 所在的卷组中是否有足够的未分配磁盘空间

|

||||

* 检查逻辑卷(LV)所在的卷组中是否有足够的未分配磁盘空间

|

||||

* 如果有,你可以使用这些空间来扩展逻辑卷

|

||||

* 如果没有,请向系统中添加新的磁盘或 LUN

|

||||

* 将物理磁盘转换为物理卷(PV)

|

||||

@ -34,13 +33,11 @@ LVM 的主要目的是灵活的磁盘管理,当你需要的时候,可以很

|

||||

* 扩大文件系统

|

||||

* 检查扩展的文件系统大小

|

||||

|

||||

|

||||

|

||||

### 如何创建 LVM 物理卷?

|

||||

|

||||

使用 pvcreate 命令创建 LVM 物理卷。

|

||||

使用 `pvcreate` 命令创建 LVM 物理卷。

|

||||

|

||||

当在操作系统中检测到磁盘,使用 pvcreate 命令初始化 LVM PV(物理卷)。

|

||||

当在操作系统中检测到磁盘,使用 `pvcreate` 命令初始化 LVM 物理卷:

|

||||

|

||||

```

|

||||

# pvcreate /dev/sdc

|

||||

@ -49,12 +46,10 @@ Physical volume "/dev/sdc" successfully created

|

||||

|

||||

**请注意:**

|

||||

|

||||

* 上面的命令将删除磁盘 /dev/sdc 上的所有数据。

|

||||

* 物理磁盘可以直接添加到 LVM PV 中,而不是磁盘分区。

|

||||

* 上面的命令将删除磁盘 `/dev/sdc` 上的所有数据。

|

||||

* 物理磁盘可以直接添加到 LVM 物理卷中,而不是磁盘分区。

|

||||

|

||||

|

||||

|

||||

使用 pvdisplay 命令来显示你所创建的 PV。

|

||||

使用 `pvdisplay` 命令来显示你所创建的物理卷:

|

||||

|

||||

```

|

||||

# pvdisplay /dev/sdc

|

||||

@ -74,14 +69,14 @@ PV UUID 69d9dd18-36be-4631-9ebb-78f05fe3217f

|

||||

|

||||

### 如何扩展卷组

|

||||

|

||||

使用以下命令在现有的卷组中添加一个新的物理卷。

|

||||

使用以下命令在现有的卷组(VG)中添加一个新的物理卷:

|

||||

|

||||

```

|

||||

# vgextend vg01 /dev/sdc

|

||||

Volume group "vg01" successfully extended

|

||||

```

|

||||

|

||||

使用 vgdisplay 命令来显示你所创建的 PV。

|

||||

使用 `vgdisplay` 命令来显示你所创建的物理卷:

|

||||

|

||||

```

|

||||

# vgdisplay vg01

|

||||

@ -111,13 +106,13 @@ VG UUID d17e3c31-e2c9-4f11-809c-94a549bc43b7

|

||||

|

||||

使用以下命令增加现有逻辑卷大小。

|

||||

|

||||

**逻辑卷扩展 (lvextend) 的常用语法。**

|

||||

**逻辑卷扩展(`lvextend`)的常用语法:**

|

||||

|

||||

```

|

||||

lvextend [要增加的额外空间] [现有逻辑卷名称]

|

||||

```

|

||||

|

||||

使用下面的命令将现有的逻辑卷增加 10GB。

|

||||

使用下面的命令将现有的逻辑卷增加 10GB:

|

||||

|

||||

```

|

||||

# lvextend -L +10G /dev/mapper/vg01-lv002

|

||||

@ -126,33 +121,33 @@ Size of logical volume vg01/lv002 changed from 5.00 GiB (1280 extents) to 15.00

|

||||

Logical volume var successfully resized

|

||||

```

|

||||

|

||||

使用 PE 大小来扩展逻辑卷。

|

||||

使用 PE 大小来扩展逻辑卷:

|

||||

|

||||

```

|

||||

# lvextend -l +2560 /dev/mapper/vg01-lv002

|

||||

```

|

||||

|

||||

要使用百分比 (%) 扩展逻辑卷,请使用以下命令。

|

||||

要使用百分比(%)扩展逻辑卷,请使用以下命令:

|

||||

|

||||

```

|

||||

# lvextend -l +40%FREE /dev/mapper/vg01-lv002

|

||||

```

|

||||

|

||||

现在,逻辑卷已经扩展,你需要调整文件系统的大小以扩展逻辑卷内的空间。

|

||||

现在,逻辑卷已经扩展,你需要调整文件系统的大小以扩展逻辑卷内的空间:

|

||||

|

||||

对于基于 ext3 和 ext4 的文件系统,运行以下命令。

|

||||

对于基于 ext3 和 ext4 的文件系统,运行以下命令:

|

||||

|

||||

```

|

||||

# resize2fs /dev/mapper/vg01-lv002

|

||||

```

|

||||

|

||||

对于xfs文件系统,使用以下命令。

|

||||

对于 xfs 文件系统,使用以下命令:

|

||||

|

||||

```

|

||||

# xfs_growfs /dev/mapper/vg01-lv002

|

||||

```

|

||||

|

||||

使用 **[df 命令][3]**查看文件系统大小。

|

||||

使用 [df 命令][3]查看文件系统大小:

|

||||

|

||||

```

|

||||

# df -h /lvmtest1

|

||||

@ -167,12 +162,12 @@ via: https://www.2daygeek.com/extend-increase-resize-lvm-logical-volume-in-linux

|

||||

作者:[Magesh Maruthamuthu][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://www.2daygeek.com/author/magesh/

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://www.2daygeek.com/create-lvm-storage-logical-volume-manager-in-linux/

|

||||

[2]: data:image/gif;base64,R0lGODlhAQABAIAAAAAAAP///yH5BAEAAAAALAAAAAABAAEAAAIBRAA7

|

||||

[1]: https://linux.cn/article-12670-1.html

|

||||

[2]: https://www.2daygeek.com/wp-content/uploads/2020/09/extend-increase-resize-lvm-logical-volume-in-linux-3.png

|

||||

[3]: https://www.2daygeek.com/linux-check-disk-space-usage-df-command/

|

||||

@ -1,154 +1,158 @@

|

||||

[#]: collector: "lujun9972"

|

||||

[#]: translator: "lxbwolf"

|

||||

[#]: reviewer: " "

|

||||

[#]: publisher: " "

|

||||

[#]: url: " "

|

||||

[#]: reviewer: "wxy"

|

||||

[#]: publisher: "wxy"

|

||||

[#]: url: "https://linux.cn/article-12671-1.html"

|

||||

[#]: subject: "10 Open Source Static Site Generators to Create Fast and Resource-Friendly Websites"

|

||||

[#]: via: "https://itsfoss.com/open-source-static-site-generators/"

|

||||

[#]: author: "Ankush Das https://itsfoss.com/author/ankush/"

|

||||

|

||||

10 个用来创建快速和资源友好网站的静态网站生成工具

|

||||

10 大静态网站生成工具

|

||||

======

|

||||

|

||||

_**摘要:在寻找部署静态网页的方法吗?这几个开源的静态网站生成工具可以帮你迅速部署界面优美、功能强大的静态网站,无需掌握复杂的 HTML 和 CSS 技能。**_

|

||||

|

||||

|

||||

> 在寻找部署静态网页的方法吗?这几个开源的静态网站生成工具可以帮你迅速部署界面优美、功能强大的静态网站,无需掌握复杂的 HTML 和 CSS 技能。

|

||||

|

||||

### 静态网站是什么?

|

||||

|

||||

技术上来讲,一个静态网站的网页不是由服务器动态生成的。HTML、CSS 和 JavaScript 文件就静静地躺在服务器的某个路径下,它们的内容与终端用户接收到时看到的是一样的。源码文件已经提前编译好了,源码在每次请求后都不会变化。

|

||||

技术上来讲,静态网站是指网页不是由服务器动态生成的。HTML、CSS 和 JavaScript 文件就静静地躺在服务器的某个路径下,它们的内容与终端用户接收到的版本是一样的。原始的源码文件已经提前编译好了,源码在每次请求后都不会变化。

|

||||

|

||||

It’s FOSS 是一个依赖多个数据库的动态网站,网页是在你的浏览器发出请求时即时生成和服务的。大部分网站是动态的,你与这些网站互动时,会有大量的内容在变化。

|

||||

Linux.CN 是一个依赖多个数据库的动态网站,当有浏览器的请求时,网页就会生成并提供服务。大部分网站是动态的,你与这些网站互动时,大量的内容会经常改变。

|

||||

|

||||

静态网站有一些好处,比如加载时间更短,请求的服务器资源更少,更安全(有争议?)。

|

||||

静态网站有一些好处,比如加载时间更短,请求的服务器资源更少、更安全(值得商榷)。

|

||||

|

||||

传统意义上,静态网站更适合于创建只有少量网页、内容变化不频繁的小网站。

|

||||

传统上,静态网站更适合于创建只有少量网页、内容变化不频繁的小网站。

|

||||

|

||||

然而,当静态网站生成工具出现后,静态网站的适用范围越来越大。你还可以使用这些工具搭建博客网站。

|

||||

然而,随着静态网站生成工具出现后,静态网站的适用范围越来越大。你还可以使用这些工具搭建博客网站。

|

||||

|

||||

我列出了几个开源的静态网站生成工具,这些工具可以帮你搭建界面优美的网站。

|

||||

我整理了几个开源的静态网站生成工具,这些工具可以帮你搭建界面优美的网站。

|

||||

|

||||

### 最好的开源静态网站生成工具

|

||||

|

||||

请注意,静态网站不会提供很复杂的功能。如果你需要复杂的功能,那么你可以参考适用于动态网站的[最好的开源 CMS][1]列表

|

||||

请注意,静态网站不会提供很复杂的功能。如果你需要复杂的功能,那么你可以参考适用于动态网站的[最佳开源 CMS][1]列表。

|

||||

|

||||

#### 1\. Jekyll

|

||||

#### 1、Jekyll

|

||||

|

||||

![][2]

|

||||

|

||||

Jekyll 是用 [Ruby][3] 写的最受欢迎的开源静态生成工具之一。实际上,Jekyll 是 [GitHub 页面][4] 的引擎,它可以让你免费用 GitHub 维护自己的网站。

|

||||

Jekyll 是用 [Ruby][3] 写的最受欢迎的开源静态生成工具之一。实际上,Jekyll 是 [GitHub 页面][4] 的引擎,它可以让你免费用 GitHub 托管网站。

|

||||

|

||||

你可以很轻松地跨平台配置 Jekyll,包括 Ubuntu。它利用 [Markdown][5]、[Liquid][5](模板语言)、HTML 和 CSS 来生成静态的网页文件。如果你要搭建一个没有广告或推广自己工具或服务的产品页的博客网站,它是个不错的选择。

|

||||

|

||||

它还支持从常见的 CMS(<ruby>内容管理系统<rt>Content management system</rt></ruby>)如 Ghost、WordPress、Drupal 7 迁移你的博客。你可以管理永久链接、类别、页面、文章,还可以自定义布局,这些功能都很强大。因此,即使你已经有了一个网站,如果你想转成静态网站,Jekyll 会是一个完美的解决方案。你可以参考[官方文档][6]或 [GitHub 页面][7]了解更多内容。

|

||||

|

||||

[Jekyll][8]

|

||||

- [Jekyll][8]

|

||||

|

||||

#### 2\. Hugo

|

||||

#### 2、Hugo

|

||||

|

||||

![][9]

|

||||

|

||||

Hugo 是另一个很受欢迎的用于搭建静态网站的开源框架。它是用 [Go 语言][10]写的。

|

||||

|

||||

它运行速度快,使用简单,可靠性高。如果你需要,它也可以提供更高级的主题。它还提供了能提高你效率的实用快捷键。无论是组合展示网站还是博客网站,Hogo 都有能力管理大量的内容类型。

|

||||

它运行速度快、使用简单、可靠性高。如果你需要,它也可以提供更高级的主题。它还提供了一些有用的快捷方式来帮助你轻松完成任务。无论是组合展示网站还是博客网站,Hogo 都有能力管理大量的内容类型。

|

||||

|

||||

如果你想使用 Hugo,你可以参照它的[官方文档][11]或它的 [GitHub 页面][12]来安装以及了解更多相关的使用方法。你还可以用 Hugo 在 GitHub 页面或 CDN(如果有需要)部署网站。

|

||||

如果你想使用 Hugo,你可以参照它的[官方文档][11]或它的 [GitHub 页面][12]来安装以及了解更多相关的使用方法。如果需要的话,你还可以将 Hugo 部署在 GitHub 页面或任何 CDN 上。

|

||||

|

||||

[Hugo][13]

|

||||

- [Hugo][13]

|

||||

|

||||

#### 3\. Hexo

|

||||

#### 3、Hexo

|

||||

|

||||

![][14]

|

||||

|

||||

Hexo 基于 [Node.js][15] 的一个有趣的开源框架。像其他的工具一样,你可以用它搭建相当快速的网站,不仅如此,它还提供了丰富的主题和插件。

|

||||

Hexo 是一个有趣的开源框架,基于 [Node.js][15]。像其他的工具一样,你可以用它搭建相当快速的网站,不仅如此,它还提供了丰富的主题和插件。

|

||||

|

||||

它还根据用户的每个需求提供了强大的 API 来扩展功能。如果你已经有一个网站,你可以用它的[迁移][16]扩展轻松完成迁移工作。

|

||||

|

||||

你可以参照[官方文档][17]或 [GitHub 页面][18] 来使用 Hexo。

|

||||

|

||||

[Hexo][19]

|

||||

- [Hexo][19]

|

||||

|

||||

#### 4\. Gatsby

|

||||

#### 4、Gatsby

|

||||

|

||||

![][20]

|

||||

|

||||

Gatsby 是一个不断发展的流行开源网站生成框架。它使用 [React.js][21] 来生成快速、界面优美的网站。

|

||||

Gatsby 是一个越来越流行的开源网站生成框架。它使用 [React.js][21] 来生成快速、界面优美的网站。

|

||||

|

||||

几年前在一个实验性的项目中,我曾经非常想尝试一下这个工具,它提供的成千上万的新插件和主题的能力让我印象深刻。与其他静态网站生成工具不同的是,你可以用 Gatsby 在不损失任何功能的前提下来生成静态网站。

|

||||

几年前在一个实验性的项目中,我曾经非常想尝试一下这个工具,它提供的成千上万的新插件和主题的能力让我印象深刻。与其他静态网站生成工具不同的是,你可以使用 Gatsby 生成一个网站,并在不损失任何功能的情况下获得静态网站的好处。

|

||||

|

||||

它提供了与很多流行的服务的整合功能。当然,你可以不使用它的复杂的功能,或选择一个流行的 CMS 与它配合使用,这也会很有趣。你可以查看他们的[官方文档][22]或它的 [GitHub 页面][23]了解更多内容。

|

||||

它提供了与很多流行的服务的整合功能。当然,你可以不使用它的复杂的功能,或将其与你选择的流行 CMS 配合使用,这也会很有趣。你可以查看他们的[官方文档][22]或它的 [GitHub 页面][23]了解更多内容。

|

||||

|

||||

[Gatsby][24]

|

||||

- [Gatsby][24]

|

||||

|

||||

#### 5\. VuePress

|

||||

#### 5、VuePress

|

||||

|

||||

![][25]

|

||||

|

||||

VuePress 是基于 [Vue.js][26] 的静态网站生成工具,同时也是开源的渐进式 JavaScript 框架。

|

||||

VuePress 是由 [Vue.js][26] 支持的静态网站生成工具,而 Vue.js 是一个开源的渐进式 JavaScript 框架。

|

||||

|

||||

如果你了解 HTML、CSS 和 JavaScript,那么你可以无压力地使用 VuePress。如果你想在搭建网站时抢先别人一步,那么你应该找几个有用的插件和主题。此外,看起来 Vue.js 更新地一直很活跃,很多开发者都在关注 Vue.js,这是一件好事。

|

||||

如果你了解 HTML、CSS 和 JavaScript,那么你可以无压力地使用 VuePress。你应该可以找到几个有用的插件和主题来为你的网站建设开个头。此外,看起来 Vue.js 的更新一直很活跃,很多开发者都在关注 Vue.js,这是一件好事。

|

||||

|

||||

你可以参照他们的[官方文档][27]和 [GitHub 页面][28]了解更多。

|

||||

|

||||

[VuePress][29]

|

||||

- [VuePress][29]

|

||||

|

||||

#### 6\. Nuxt.js

|

||||

#### 6、Nuxt.js

|

||||

|

||||

![][30]

|

||||

|

||||

Nuxt.js 使用 Vue.js 和 Node.js,但它致力于模块化,并且有能力依赖服务端而非客户端。不仅如此,它还志在通过描述详尽的错误和其他方面更详细的文档来为开发者提供直观的体验。

|

||||

Nuxt.js 使用了 Vue.js 和 Node.js,但它致力于模块化,并且有能力依赖服务端而非客户端。不仅如此,它的目标是为开发者提供直观的体验,并提供描述性错误,以及详细的文档等。

|

||||

|

||||

正如它声称的那样,在你用来搭建静态网站的所有工具中,Nuxt.js 在功能和灵活性两个方面都是佼佼者。他们还提供了一个 [Nuxt 线上沙盒][31]让你直接测试。

|

||||

正如它声称的那样,在你用来搭建静态网站的所有工具中,Nuxt.js 可以做到功能和灵活性两全其美。他们还提供了一个 [Nuxt 线上沙盒][31],让你不费吹灰之力就能直接测试它。

|

||||

|

||||

你可以查看它的 [GitHub 页面][32]和[官方网站][33]了解更多。

|

||||

|

||||

#### 7\. Docusaurus

|

||||

- [Nuxt.js][33]

|

||||

|

||||

#### 7、Docusaurus

|

||||

|

||||

![][34]

|

||||

|

||||

Docusaurus 是一个为搭建文档类网站量身定制的有趣的开源静态网站生成工具。它还是 [Facebook 开源计划][35]的一个项目。

|

||||

Docusaurus 是一个有趣的开源静态网站生成工具,为搭建文档类网站量身定制。它还是 [Facebook 开源计划][35]的一个项目。

|

||||

|

||||

Docusaurus 是用 React 构建的。你可以使用所有必要的功能,像文档版本管理、文档搜索,还有大部分已经预先配置好的翻译。如果你想为你的产品或服务搭建一个文档网站,那么可以试试 Docusaurus。

|

||||

Docusaurus 是用 React 构建的。你可以使用所有的基本功能,像文档版本管理、文档搜索和翻译大多是预先配置的。如果你想为你的产品或服务搭建一个文档网站,那么可以试试 Docusaurus。

|

||||

|

||||

你可以从它的 [GitHub 页面][36]和它的[官网][37]获取更多信息。

|

||||

|

||||

[Docusaurus][37]

|

||||

- [Docusaurus][37]

|

||||

|

||||

#### 8\. Eleventy

|

||||

#### 8、Eleventy

|

||||

|

||||

![][38]

|

||||

|

||||

Eleventy 自称是 Jekyll 的替代品,志在为创建更快的静态网站提供更简单的方式。

|

||||

Eleventy 自称是 Jekyll 的替代品,旨在以更简单的方法来制作更快的静态网站。

|

||||

|

||||

使用 Eleventy 看起来很简单,它也提供了能解决你的问题的文档。如果你想找一个简单的静态网站生成工具,Eleventy 似乎会是一个有趣的选择。

|

||||

它似乎很容易上手,而且它还提供了适当的文档来帮助你。如果你想找一个简单的静态网站生成工具,Eleventy 似乎会是一个有趣的选择。

|

||||

|

||||

你可以参照它的 [GitHub 页面][39]和[官网][40]来了解更多的细节。

|

||||

|

||||

[Eleventy][40]

|

||||

- [Eleventy][40]

|

||||

|

||||

#### 9\. Publii

|

||||

#### 9、Publii

|

||||

|

||||

![][41]

|

||||

|

||||

Publii 是一个令人印象深刻的开源 CMS,它能使生成一个静态网站变得很容易。它是用 [Electron][42] 和 Vue.js 构建的。如果有需要,你也可以把你的文章从 WorkPress 网站迁移过来。此外,它还提供了与 GitHub 页面、Netlify 及其它类似服务的一键同步功能。

|

||||

|

||||

利用 Publii 生成的静态网站,自带所见即所得编辑器。你可以从[官网][43]下载它,或者从它的 [GitHub 页面][44]了解更多信息。

|

||||

如果你利用 Publii 生成一个静态网站,你还可以得到一个所见即所得的编辑器。你可以从[官网][43]下载它,或者从它的 [GitHub 页面][44]了解更多信息。

|

||||

|

||||

[Publii][43]

|

||||

- [Publii][43]

|

||||

|

||||

#### 10\. Primo

|

||||

#### 10、Primo

|

||||

|

||||

![][45]

|

||||

|

||||

一个有趣的开源静态网站生成工具,目前开发工作仍很活跃。虽然与其他的静态生成工具相比,它还不是一个成熟的解决方案,有些功能还不完善,但它是一个独一无二的项目。

|

||||

一个有趣的开源静态网站生成工具,目前开发工作仍很活跃。虽然与其他的静态生成工具相比,它还不是一个成熟的解决方案,有些功能还不完善,但它是一个独特的项目。

|

||||

|

||||

Primo 志在使用可视化的构建器帮你构建和搭建网站,这样你就可以轻松编辑和部署到任意主机上。

|

||||

Primo 旨在使用可视化的构建器帮你构建和搭建网站,这样你就可以轻松编辑和部署到任意主机上。

|

||||

|

||||

你可以参照[官网][46]或查看它的 [GitHub 页面][47]了解更多信息。

|

||||

|

||||

[Primo][46]

|

||||

- [Primo][46]

|

||||

|

||||

### 结语

|

||||

|

||||

还有很多文章中没有列出的网站生成工具。然而,我已经尽力写出了能提供最快的加载速度、最好的安全性和令人印象最深刻的灵活性的最好的静态生成工具了。

|

||||

还有很多文章中没有列出的网站生成工具。然而,我试图提到最好的静态生成器,为您提供最快的加载时间,最好的安全性和令人印象深刻的灵活性。

|

||||

|

||||

列表中没有你最喜欢的工具?在下面的评论中告诉我。

|

||||

|

||||

@ -159,7 +163,7 @@ via: https://itsfoss.com/open-source-static-site-generators/

|

||||

作者:[Ankush Das][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[lxbwolf](https://github.com/lxbwolf)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,201 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (wxy)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-12674-1.html)

|

||||

[#]: subject: (Recovering deleted files on Linux with testdisk)

|

||||

[#]: via: (https://www.networkworld.com/article/3575524/recovering-deleted-files-on-linux-with-testdisk.html)

|

||||

[#]: author: (Sandra Henry-Stocker https://www.networkworld.com/author/Sandra-Henry_Stocker/)

|

||||

|

||||

用 testdisk 恢复 Linux 上已删除的文件

|

||||

======

|

||||

|

||||

> 这篇文章介绍了 testdisk,这是恢复最近删除的文件(以及用其他方式修复分区)的工具之一,非常方便。

|

||||

|

||||

|

||||

|

||||

当你在 Linux 系统上删除一个文件时,它不一定会永远消失,特别是当你最近才刚刚删除了它的时候。

|

||||

|

||||

除非你用 `shred` 等工具把它擦掉,否则数据仍然会放在你的磁盘上 —— 而恢复已删除文件的最佳工具之一 `testdisk` 可以帮助你拯救它。虽然 `testdisk` 具有广泛的功能,包括恢复丢失或损坏的分区和使不能启动磁盘可以重新启动,但它也经常被用来恢复被误删的文件。

|

||||

|

||||

在本篇文章中,我们就来看看如何使用 `testdisk` 恢复已删除的文件,以及该过程中的每一步是怎样的。由于这个过程需要不少的步骤,所以当你做了几次之后,你可能会觉得操作起来会更加得心应手。

|

||||

|

||||

### 安装 testdisk

|

||||

|

||||

可以使用 `apt install testdisk` 或 `yum install testdisk` 等命令安装 `testdisk`。有趣的是,它不仅是一个 Linux 工具,而且还适用于 MacOS、Solaris 和 Windows。

|

||||

|

||||

文档可在 [cgsecurity.org][1] 中找到。

|

||||

|

||||

### 恢复文件

|

||||

|

||||

首先,你必须以 `root` 身份登录,或者有 `sudo` 权限才能使用 `testdisk`。如果你没有 `sudo` 访问权限,你会在这个过程一开始就被踢出,而如果你选择创建了一个日志文件的话,最终会有这样的消息:

|

||||

|

||||

```

|

||||

TestDisk exited normally.

|

||||

jdoe is not in the sudoers file. This incident will be reported.

|

||||

```

|

||||

|

||||

当你用 `testdisk` 恢复被删除的文件时,你最终会将恢复的文件放在你启动该工具的目录下,而这些文件会属于 `root`。出于这个原因,我喜欢在 `/home/recovery` 这样的目录下启动。一旦文件被成功地还原和验证,就可以将它们移回它们的所属位置,并将它们的所有权也恢复。

|

||||

|

||||

在你可以写入的选定目录下开始:

|

||||

|

||||

```

|

||||

$ cd /home/recovery

|

||||

$ testdisk

|

||||

```

|

||||

|

||||

`testdisk` 提供的第一页信息描述了该工具并显示了一些选项。至少在刚开始,创建个日志文件是个好主意,因为它提供的信息可能会被证明是有用的。下面是如何做的:

|

||||

|

||||

```

|

||||

Use arrow keys to select, then press Enter key:

|

||||

>[ Create ] Create a new log file

|

||||

[ Append ] Append information to log file

|

||||

[ No Log ] Don’t record anything

|

||||

```

|

||||

|

||||

左边的 `>` 以及你看到的反转的字体和背景颜色指出了你按下回车键后将使用的选项。在这个例子中,我们选择了创建日志文件。

|

||||

|

||||

然后会提示你输入密码(除非你最近使用过 `sudo`)。

|

||||

|

||||

下一步是选择被删除文件所存储的磁盘分区(如果没有高亮显示的话)。根据需要使用上下箭头移动到它。然后点两次右箭头,当 “Proceed” 高亮显示时按回车键。

|

||||

|

||||

```

|

||||

Select a media (use Arrow keys, then press Enter):

|

||||

Disk /dev/sda - 120 GB / 111 GiB - SSD2SC120G1CS1754D117-551

|

||||

>Disk /dev/sdb - 500 GB / 465 GiB - SAMSUNG HE502HJ

|

||||

Disk /dev/loop0 - 13 MB / 13 MiB (RO)

|

||||

Disk /dev/loop1 - 101 MB / 96 MiB (RO)

|

||||

Disk /dev/loop10 - 148 MB / 141 MiB (RO)

|

||||

Disk /dev/loop11 - 36 MB / 35 MiB (RO)

|

||||

Disk /dev/loop12 - 52 MB / 49 MiB (RO)

|

||||

Disk /dev/loop13 - 78 MB / 75 MiB (RO)

|

||||

Disk /dev/loop14 - 173 MB / 165 MiB (RO)

|

||||

Disk /dev/loop15 - 169 MB / 161 MiB (RO)

|

||||

>[Previous] [ Next ] [Proceed ] [ Quit ]

|

||||

```

|

||||

|

||||

在这个例子中,被删除的文件在 `/dev/sdb` 的主目录下。

|

||||

|

||||

此时,`testdisk` 应该已经选择了合适的分区类型。

|

||||

|

||||

```

|

||||

Disk /dev/sdb - 500 GB / 465 GiB - SAMSUNG HE502HJ

|

||||

|

||||

Please select the partition table type, press Enter when done.

|

||||

[Intel ] Intel/PC partition

|

||||

>[EFI GPT] EFI GPT partition map (Mac i386, some x86_64...)

|

||||

[Humax ] Humax partition table

|

||||

[Mac ] Apple partition map (legacy)

|

||||

[None ] Non partitioned media

|

||||

[Sun ] Sun Solaris partition

|

||||

[XBox ] XBox partition

|

||||

[Return ] Return to disk selection

|

||||

```

|

||||

|

||||

在下一步中,按向下箭头指向 “[ Advanced ] Filesystem Utils”。

|

||||

|

||||

```

|

||||

[ Analyse ] Analyse current partition structure and search for lost partitions

|

||||

>[ Advanced ] Filesystem Utils

|

||||

[ Geometry ] Change disk geometry

|

||||

[ Options ] Modify options

|

||||

[ Quit ] Return to disk selection

|

||||

```

|

||||

|

||||

接下来,查看选定的分区。

|

||||

|

||||

```

|

||||

Partition Start End Size in sectors

|

||||

> 1 P Linux filesys. data 2048 910155775 910153728 [drive2]

|

||||

```

|

||||

|

||||

然后按右箭头选择底部的 “[ List ]”,按回车键。

|

||||

|

||||

```

|

||||

[ Type ] [Superblock] >[ List ] [Image Creation] [ Quit ]

|

||||

```

|

||||

|

||||

请注意,它看起来就像我们从根目录 `/` 开始,但实际上这是我们正在工作的文件系统的基点。在这个例子中,就是 `/home`。

|

||||

|

||||

```

|

||||

Directory / <== 开始点

|

||||

|

||||

>drwxr-xr-x 0 0 4096 23-Sep-2020 17:46 .

|

||||

drwxr-xr-x 0 0 4096 23-Sep-2020 17:46 ..

|

||||

drwx——— 0 0 16384 22-Sep-2020 11:30 lost+found

|

||||

drwxr-xr-x 1008 1008 4096 9-Jul-2019 14:10 dorothy

|

||||

drwxr-xr-x 1001 1001 4096 22-Sep-2020 12:12 nemo

|

||||

drwxr-xr-x 1005 1005 4096 19-Jan-2020 11:49 eel

|

||||

drwxrwxrwx 0 0 4096 25-Sep-2020 08:08 recovery

|

||||

...

|

||||

```

|

||||

|

||||

接下来,我们按箭头指向具体的主目录。

|

||||

|

||||

```

|

||||

drwxr-xr-x 1016 1016 4096 17-Feb-2020 16:40 gino

|

||||

>drwxr-xr-x 1000 1000 20480 25-Sep-2020 08:00 shs

|

||||

```

|

||||

|

||||

按回车键移动到该目录,然后根据需要向下箭头移动到子目录。注意,如果选错了,可以选择列表顶部附近的 `..` 返回。

|

||||

|

||||

如果找不到文件,可以按 `/`(就像在 `vi` 中开始搜索时一样),提示你输入文件名或其中的一部分。

|

||||

|

||||

```

|

||||

Directory /shs <== current location

|

||||

Previous

|

||||

...

|

||||

-rw-rw-r— 1000 1000 426 8-Apr-2019 19:09 2-min-topics

|

||||

>-rw-rw-r— 1000 1000 24667 8-Feb-2019 08:57 Up_on_the_Roof.pdf

|

||||

```

|

||||

|

||||

一旦你找到需要恢复的文件,按 `c` 选择它。

|

||||

|

||||

注意:你会在屏幕底部看到有用的说明:

|

||||

|

||||

```

|

||||

Use Left arrow to go back, Right to change directory, h to hide deleted files

|

||||

q to quit, : to select the current file, a to select all files

|

||||

C to copy the selected files, c to copy the current file <==

|

||||

```

|

||||

|

||||

这时,你就可以在起始目录内选择恢复该文件的位置了(参见前面的说明,在将文件移回原点之前,先在一个合适的地方进行检查)。在这种情况下,`/home/recovery` 目录没有子目录,所以这就是我们的恢复点。

|

||||

|

||||

注意:你会在屏幕底部看到有用的说明:

|

||||

|

||||

```

|

||||

Please select a destination where /shs/Up_on_the_Roof.pdf will be copied.

|

||||

Keys: Arrow keys to select another directory

|

||||

C when the destination is correct

|

||||

Q to quit

|

||||

Directory /home/recovery <== 恢复位置

|

||||

```

|

||||

|

||||

一旦你看到 “Copy done! 1 ok, 0 failed” 的绿色字样,你就会知道文件已经恢复了。

|

||||

|

||||

在这种情况下,文件被留在 `/home/recovery/shs` 下(起始目录,附加所选目录)。

|

||||

|

||||

在将文件移回原来的位置之前,你可能应该先验证恢复的文件看起来是否正确。确保你也恢复了原来的所有者和组,因为此时文件由 root 拥有。

|

||||

|

||||

**注意:** 对于文件恢复过程中的很多步骤,你可以使用退出(按 `q` 或“[ Quit ]”)来返回上一步。如果你愿意,可以选择退出选项一直回到该过程中的第一步,也可以选择按下 `^c` 立即退出。

|

||||

|

||||

#### 恢复训练

|

||||

|

||||

使用 `testdisk` 恢复文件相对来说没有痛苦,但有些复杂。在恐慌时间到来之前,最好先练习一下恢复文件,让自己有机会熟悉这个过程。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.networkworld.com/article/3575524/recovering-deleted-files-on-linux-with-testdisk.html

|

||||

|

||||

作者:[Sandra Henry-Stocker][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[wxy](https://github.com/wxy)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://www.networkworld.com/author/Sandra-Henry_Stocker/

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://www.cgsecurity.org/testdisk.pdf

|

||||

[2]: https://www.facebook.com/NetworkWorld/

|

||||

[3]: https://www.linkedin.com/company/network-world

|

||||

@ -1,5 +1,5 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: translator: (rakino)

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

|

||||

@ -0,0 +1,117 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (5 ways to conduct user research with an open source mindset)

|

||||

[#]: via: (https://opensource.com/article/20/9/open-source-user-research)

|

||||

[#]: author: (Alana Fialkoff https://opensource.com/users/alana)

|

||||

|

||||

5 ways to conduct user research with an open source mindset

|

||||

======

|

||||

See how this team elevates their user research experiences and results

|

||||

with an open source approach.

|

||||

![Mobile devices are a big part of our daily lives][1]

|

||||

|

||||

There are common beliefs about user experiences—the best ones are user-centered, iterative, and intuitive. When user experience (UX) research is conducted, user stories about these experiences are collected—but the research methods chosen inform user experiences, too.

|

||||

|

||||

So, what makes for an engaging research experience, and how can methods evolve alongside products to better connect with users?

|

||||

|

||||

[Red Hat's User Experience Design (UXD) research team][2] has the answer: a community-centered, open source mindset.

|

||||

|

||||

As a UX writer on Red Hat's UXD team, I create new design documentation, empower team voices, and share Red Hat's open source story. My passion lies in using content to connect and inspire others. On our [Twitter][3] and [Medium][4] channels, we share thought leadership about UX writing, research, development, and design, all to amplify and grow our open source community. This community is at the heart of what we do. So when I learned how the research team centers community throughout their user testing, I leaped at the chance to tell their story.

|

||||

|

||||

### Approaching research with an open source mindset

|

||||

|

||||

Red Hat's UXD team creates in the open, and this ideology applies to their research, too. Thinking the open source way involves adopting a community-first and community-driven frame of mind. New ideas can come from anywhere, and an open source mindset embraces these varied voices and perspectives.

|

||||

|

||||

Structuring research with an open source mindset means each research technique should be driven by two angles—we don't just want to learn about our users; we want to learn from them, too.

|

||||

|

||||

To satisfy both of these user-focused objectives, we make research decisions backed by other voices, not just our own. By sourcing input from beyond our team, we design research experiences that are truly tailored to the communities we serve.

|

||||

|

||||

Guiding questions help us streamline this process. Some questions the team's researchers use to develop their methods include:

|

||||

|

||||

* Does this method amplify user voices?

|

||||

* Are we facilitating open communication with our users?

|

||||

* Does this method build a meaningful connection with our user base?

|

||||

* What kind of experience does this technique build? Is it memorable? Engaging?

|

||||

|

||||

|

||||

|

||||

Notice a trend? Each question hinges on community.

|

||||

|

||||

### Building research experiences like user experiences

|

||||

|

||||

Researchers approach their UX research as a user experience, too. We want our research sessions to have the same qualities as our user interfaces:

|

||||

|

||||

* Memorable

|

||||

* Engaging

|

||||

* Intuitive

|

||||

* User-centered

|

||||

|

||||

|

||||

|

||||

Research offers in-depth engagement with our user base, so it's important to tailor our techniques to that community. When we conduct user research, we learn more about how our users engage with our products and use that knowledge to improve them. We can use that same process to shape future research sessions.

|

||||

|

||||

Open source, user-centered research—sounds great. But how can we actually achieve it?

|

||||

|

||||

Let's take a look at five collaborative techniques we use to design more immersive user research experiences the open source way.

|

||||

|

||||

### Evolve research methods to build engaging experiences and strong connections

|

||||

|

||||

Tailor research methods to the target audience, with a goal to create a connective experience. The tools at our disposal vary largely depending on our venue (in-person vs. virtual), so this approach lets us get creative.

|

||||

|

||||

* **Add dimension**: Are you conducting a survey? Consider appealing your users' senses offscreen. We've expanded our research to the third dimension using strings, LEGOs, and card diagrams to collect data in more tactile ways.

|

||||

* **Streamline**: Are your research methods efficient and time-conscious? There's only one way to find out. Follow up with users about their experience post-session. If they say a survey or form was cumbersome, consider condensing your longer questions into smaller, more digestible ones.

|

||||

* **Simplify**: Use what you know about your user base to customize your techniques. A busy community working in enterprise IT, for example, might only have time to fill out a brief form. Structure your methods so that they're navigable and intuitive for your specific audience.

|

||||

|

||||

|

||||

|

||||

### Guide research with research

|

||||

|

||||

Contextualize questions around user's needs. Explore their goals. Identify their cares, difficulties, and thoughts on product performance. Use these findings to design more meaningful research sessions, and check in often. As our products evolve with our users, our research methods do, too.

|

||||

|

||||

### Keep an open mind

|

||||

|

||||

Work with the community to disprove our own assumptions. Lean into the spirit of open source by engaging with others across the team, company, user base, and industry. Use these communities like a sounding board for ideas and welcome their feedback. Community-centered conversations take place in environments like:

|

||||

|

||||

* Team meetings and brainstorms

|

||||

* Company calls and research shares

|

||||

* Conferences and industry panels

|

||||

* Internal and external blogging platforms

|

||||

* Other thought leadership forums

|

||||

|

||||

|

||||

|

||||

This means speaking at monthly meetings, messaging across team channels, and presenting ideas at annual thought leadership events, where a multitude of voices meet from across the industry to share their experience and expertise.

|

||||

|

||||

### Communicate, communicate, communicate

|

||||

|

||||

With an open source mindset comes open communication. Spark meaningful conversations with users and keep those channels open beyond formal research sessions. Community-driven research techniques start with just that: community. Invest in a strong connection with users to invite deeper insights and facilitate more impactful sessions.

|

||||

|

||||

### Experiment with new research techniques and take a user-centered, UX approach

|

||||

|

||||

Prototype. Test. Iterate. Repeat. The methods will morph as the open source approach takes shape.

|

||||

|

||||

That's the magic of conducting research in the open: dynamic change, driven by a deeper connection to the community.

|

||||

|

||||

Learn more about how Red Hat's UXD team conducts research by checking out [DevConf.US][5], where individuals across the open source community come together to talk all things tech, open source, and UX.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/20/9/open-source-user-research

|

||||

|

||||

作者:[Alana Fialkoff][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/alana

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/mobile-demo-device-phone.png?itok=y9cHLI_F (Mobile devices are a big part of our daily lives)

|

||||