Mirror of https://github.com/LCTT/TranslateProject.git, synced 2025-02-25 00:50:15 +08:00

Commit e196d7a1aa

@@ -1,160 +0,0 @@

How to Manage Software RAIDs in Linux with the ‘Mdadm’ Tool – Part 9
================================================================================

Regardless of your previous experience with RAID arrays, and whether or not you followed all of the tutorials in [this RAID series][1], managing software RAIDs in Linux is not a very complicated task once you have become acquainted with the `mdadm --manage` command.

Manage Raid Devices with Mdadm in Linux – Part 9

In this tutorial we will review the functionality provided by this tool, so that you have it handy when you need it.

#### RAID Testing Scenario ####

As in the previous article of this series, for simplicity we will use a RAID 1 (mirror) array that consists of two 8 GB disks (/dev/sdb and /dev/sdc) and an initial spare device (/dev/sdd), but the commands and concepts presented here apply to other types of setups as well. That said, feel free to go ahead and add this page to your browser’s bookmarks, and let’s get started.

|

|

||||||

|

|

||||||

### Understanding mdadm Options and Usage ###

|

|

||||||

|

|

||||||

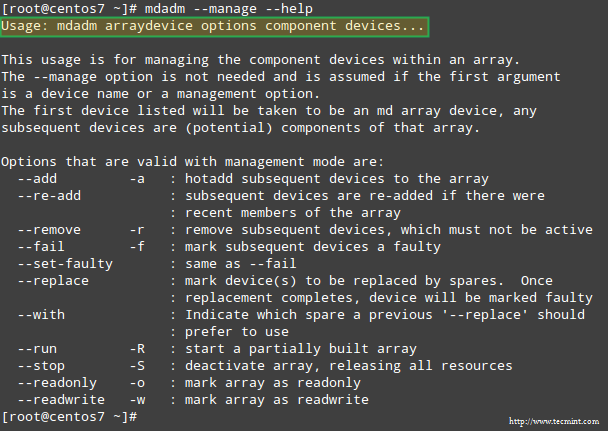

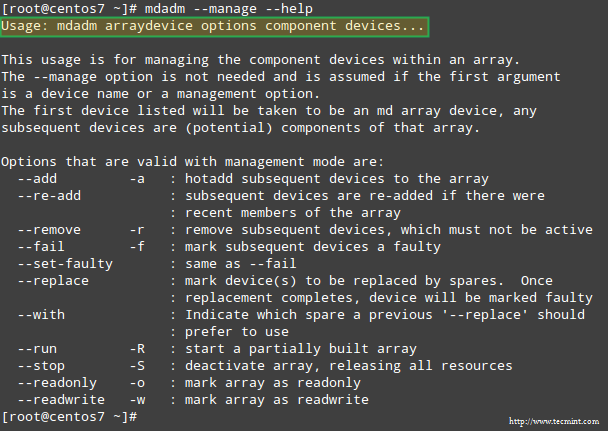

Fortunately, mdadm provides a `built-in --help` flag that provides explanations and documentation for each of the main options.

|

|

||||||

|

|

||||||

Thus, let’s start by typing:

|

|

||||||

|

|

||||||

# mdadm --manage --help

|

|

||||||

|

|

||||||

to see what are the tasks that `mdadm --manage` will allow us to perform and how:

Manage RAID with mdadm Tool

As we can see in the above image, managing a RAID array involves performing the following tasks at one time or another:

- (Re-)adding a device to the array.
- Marking a device as faulty.
- Removing a faulty device from the array.
- Replacing the faulty device with a spare one.
- Starting an array that’s partially built.
- Stopping an array.
- Marking an array as ro (read-only) or rw (read-write).
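
Each task in this list corresponds to one of the manage-mode options that `mdadm --manage --help` documents. The lookup below is a minimal sketch that records that mapping; `manage_flag_for_task` is a hypothetical helper name of our own, not part of mdadm, and it only prints the option without running anything. Check the help output of your own mdadm version to confirm the option names.

```sh
#!/bin/sh
# Hypothetical helper: map each task from the list above to the
# corresponding mdadm manage-mode option. It only prints the option;
# it never runs mdadm.
manage_flag_for_task() {
    case "$1" in
        add)       echo "--add" ;;       # add a device (e.g. as a spare)
        re-add)    echo "--re-add" ;;    # re-add a recent member
        fail)      echo "--fail" ;;      # mark a device as faulty
        remove)    echo "--remove" ;;    # remove a non-active device
        replace)   echo "--replace" ;;   # may be followed by --with
        run)       echo "--run" ;;       # start a partially built array
        stop)      echo "--stop" ;;      # deactivate the array
        readonly)  echo "--readonly" ;;  # mark the array ro
        readwrite) echo "--readwrite" ;; # mark the array rw
        *)         echo "unknown task: $1" >&2; return 1 ;;
    esac
}
```

For example, `manage_flag_for_task fail` prints `--fail`, the option used in Example 2 below.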

### Managing RAID Devices with mdadm Tool ###

Note that if you omit the `--manage` option, mdadm assumes management mode anyway, as long as the array device is given before any other options. Keep this fact in mind to avoid running into trouble further down the road.

The highlighted text in the previous image shows the basic syntax for managing RAIDs:

```
# mdadm --manage RAID options devices
```
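
To make the `mdadm --manage RAID options devices` pattern concrete, here is a minimal sketch of a wrapper. The name `md_manage` and the `DRY_RUN` variable are our own assumptions, not mdadm features. By default the wrapper only prints the command it would run, which is a convenient way to double-check device names before touching a real array; set `DRY_RUN=0` to actually execute mdadm (as root).

```sh
#!/bin/sh
# Hypothetical wrapper around `mdadm --manage RAID options devices`.
# $1 is the RAID array (e.g. /dev/md0); the rest are options and devices.
# With DRY_RUN unset or 1, only print the command; with DRY_RUN=0, run it.
md_manage() {
    array=$1
    shift
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "mdadm --manage $array $*"
    else
        mdadm --manage "$array" "$@"
    fi
}
```

For instance, `md_manage /dev/md0 --add /dev/sdd1` prints `mdadm --manage /dev/md0 --add /dev/sdd1`, while `DRY_RUN=0 md_manage /dev/md0 --add /dev/sdd1` would run it.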

Let’s illustrate with a few examples.

#### Example 1: Add a device to the RAID array ####

You will typically add a new device when replacing a faulty one, or when you have a spare device that you want to keep handy in case of a failure:

```
# mdadm --manage /dev/md0 --add /dev/sdd1
```

Add Device to Raid Array
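
One way to confirm that the new device really joined as a spare is to look at `/proc/mdstat`, where spare members carry an `(S)` suffix (for example `sdd1[2](S)`). The function below is a small sketch that extracts those names for one array. `md_spares` is a hypothetical name, and the optional second argument, which lets you point it at a saved copy of mdstat, exists only for testing.

```sh
#!/bin/sh
# Print the spare members of an array as listed in /proc/mdstat.
# $1: array name as shown in mdstat (e.g. md0)
# $2: optional path to an mdstat file (defaults to /proc/mdstat)
md_spares() {
    awk -v md="$1" '
        $1 == md {
            # Member tokens look like sdd1[2](S); "(S)" marks a spare.
            for (i = 3; i <= NF; i++)
                if ($i ~ /\(S\)$/) {
                    name = $i
                    sub(/\[.*/, "", name)   # drop the "[2](S)" suffix
                    print name
                }
        }' "${2:-/proc/mdstat}"
}
```

Right after running the command above, `md_spares md0` should print `sdd1`.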

|

|

||||||

|

|

||||||

#### Example 2: Marking a RAID device as faulty and removing it from the array ####

|

|

||||||

|

|

||||||

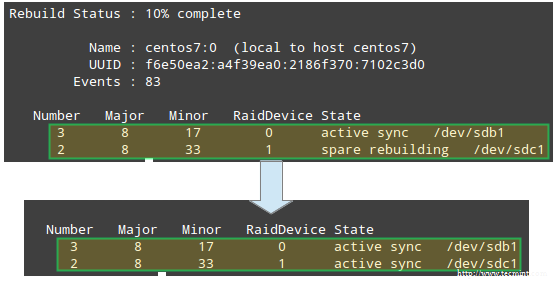

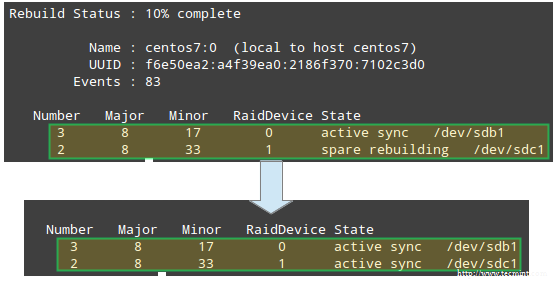

This is a mandatory step before logically removing the device from the array, and later physically pulling it out from the machine – in that order (if you miss one of these steps you may end up causing actual damage to the device):

|

|

||||||

|

|

||||||

# mdadm --manage /dev/md0 --fail /dev/sdb1

|

|

||||||

|

|

||||||

Note how the spare device added in the previous example is used to automatically replace the failed disk. Not only that, but the [recovery and rebuilding of raid data][2] start immediately as well:

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

Recover and Rebuild Raid Data
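
While the spare is being rebuilt, `/proc/mdstat` shows a progress line such as `recovery = 12.6% (...)` under the array. The sketch below, a hypothetical helper rather than part of mdadm, pulls that percentage out for one array so that a script can report it. It prints nothing when no recovery, resync, reshape or check is in progress.

```sh
#!/bin/sh
# Print the current rebuild progress (e.g. "12.6%") of an array, if any.
# $1: array name as shown in /proc/mdstat (e.g. md0)
# $2: optional mdstat file (defaults to /proc/mdstat)
md_recovery_progress() {
    awk -v md="$1" '
        $1 == md { inblock = 1; next }        # start of this array
        inblock && NF == 0 { inblock = 0 }    # blank line ends the block
        inblock && match($0, /(recovery|resync|reshape|check) *= *[0-9.]+%/) {
            s = substr($0, RSTART, RLENGTH)
            sub(/.*= */, "", s)               # keep only the percentage
            print s
            exit
        }' "${2:-/proc/mdstat}"
}
```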

Once the device has been manually marked as failed, it can be safely removed from the array:

```
# mdadm --manage /dev/md0 --remove /dev/sdb1
```
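
Because the order matters (fail first, then remove), it can help to wrap the two steps in a small function that stops if the first one fails. This is a sketch under our own naming (`run`, `fail_and_remove` and `DRY_RUN` are hypothetical). With the default `DRY_RUN=1` it only prints the two mdadm commands from this example instead of executing them.

```sh
#!/bin/sh
# Run a command, or only print it when DRY_RUN is unset or 1.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Mark a member as faulty, then remove it from the array.
# $1: array device (e.g. /dev/md0); $2: member device (e.g. /dev/sdb1)
fail_and_remove() {
    run mdadm --manage "$1" --fail "$2" || return 1
    run mdadm --manage "$1" --remove "$2"
}
```

Only after the remove step has succeeded should the disk be physically pulled from the machine.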

|

|

||||||

|

|

||||||

#### Example 3: Re-adding a device that was part of the array which had been removed previously ####

|

|

||||||

|

|

||||||

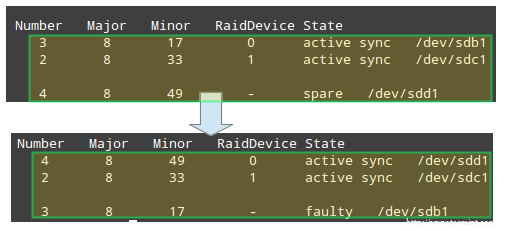

Up to this point, we have a working RAID 1 array that consists of 2 active devices: /dev/sdc1 and /dev/sdd1. If we attempt to re-add /dev/sdb1 to /dev/md0 right now:

|

|

||||||

|

|

||||||

# mdadm --manage /dev/md0 --re-add /dev/sdb1

|

|

||||||

|

|

||||||

we will run into an error:

|

|

||||||

|

|

||||||

mdadm: --re-add for /dev/sdb1 to /dev/md0 is not possible

|

|

||||||

because the array is already made up of the maximum possible number of drives. So we have 2 choices: a) add /dev/sdb1 as a spare, as shown in Example #1, or b) remove /dev/sdd1 from the array and then re-add /dev/sdb1.
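
You can check beforehand whether an array has a free slot by reading the `[n/m]` field that `/proc/mdstat` prints on the line below the array name. The first number is how many devices the array is configured for, and the second is how many of them are currently working, so `[2/2]` means a two-disk mirror with no vacancy. The helper below is a hypothetical sketch that prints both numbers.

```sh
#!/bin/sh
# Print "<configured> <working>" from the [n/m] field of an array in mdstat.
# $1: array name (e.g. md0); $2: optional mdstat file (default /proc/mdstat)
md_member_counts() {
    awk -v md="$1" '
        $1 == md { inblock = 1; next }
        inblock && NF == 0 { inblock = 0 }
        inblock && match($0, /\[[0-9]+\/[0-9]+\]/) {
            s = substr($0, RSTART + 1, RLENGTH - 2)   # strip the brackets
            split(s, n, "/")
            print n[1], n[2]
            exit
        }' "${2:-/proc/mdstat}"
}
```

When both numbers are equal (for example `2 2`), every slot is in use, which is the situation described above.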

We choose option b), and start by stopping the array so that we can reassemble it afterwards:

```
# mdadm --stop /dev/md0
# mdadm --assemble /dev/md0 /dev/sdb1 /dev/sdc1
```

If the above command does not successfully add /dev/sdb1 back to the array, use the command from Example #1 to do it.
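
To script that fallback, you could first check whether the device already appears in the array's line of `/proc/mdstat`, and only run the `--add` command from Example #1 if it does not. `md_has_member` below is a hypothetical helper sketching that check. It matches the device name as mdstat shows it (for example `sdb1`, without `/dev/`), whatever its role (active, spare or faulty).

```sh
#!/bin/sh
# Succeed if device $2 (e.g. sdb1) is listed as a member of array $1
# (e.g. md0) in mdstat ($3, default /proc/mdstat); fail otherwise.
md_has_member() {
    awk -v md="$1" -v dev="$2" '
        $1 == md {
            for (i = 3; i <= NF; i++) {
                name = $i
                sub(/\[.*/, "", name)   # sdb1[0](F) -> sdb1
                if (name == dev) found = 1
            }
        }
        END { exit (found ? 0 : 1) }' "${3:-/proc/mdstat}"
}
```

Used as root, this could look like `md_has_member md0 sdb1 || mdadm --manage /dev/md0 --add /dev/sdb1`.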

Although mdadm will initially detect the newly added device as a spare, it will start rebuilding the data, and once it is done it should recognize the device as an active part of the RAID:

Raid Rebuild Status
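
If a script has to block until the rebuild finishes, mdadm's misc mode offers `mdadm --wait /dev/md0`. The sketch below does the same kind of thing by hand, polling `/proc/mdstat` for a recovery, resync, reshape or check line under the array. The function names are hypothetical, and the default 10-second interval is an arbitrary choice.

```sh
#!/bin/sh
# Succeed while array $1 (e.g. md0) reports a recovery, resync, reshape or
# check in mdstat ($2, default /proc/mdstat).
md_busy() {
    awk -v md="$1" '
        $1 == md { inblock = 1; next }
        inblock && NF == 0 { inblock = 0 }
        inblock && /(recovery|resync|reshape|check) *=/ { busy = 1 }
        END { exit (busy ? 0 : 1) }' "${2:-/proc/mdstat}"
}

# Poll every $3 seconds (default 10) until array $1 is no longer busy.
md_wait_idle() {
    while md_busy "$1" "${2:-/proc/mdstat}"; do
        sleep "${3:-10}"
    done
}
```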

|

|

||||||

|

|

||||||

#### Example 4: Replace a Raid device with a specific disk ####

|

|

||||||

|

|

||||||

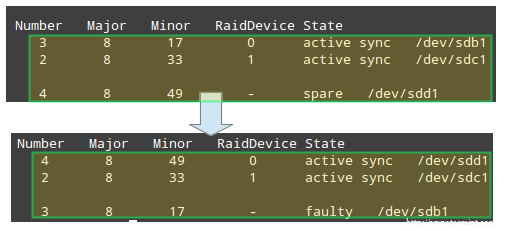

Replacing a disk in the array with a spare one is as easy as:

|

|

||||||

|

|

||||||

# mdadm --manage /dev/md0 --replace /dev/sdb1 --with /dev/sdd1

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

Replace Raid Device

|

|

||||||

|

|

||||||

This results in the device following the `--with` switch being added to the RAID while the disk indicated through `--replace` being marked as faulty:

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

Check Raid Rebuild Status
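
After `--replace` finishes, the old disk stays listed in the array as faulty, shown with an `(F)` suffix in `/proc/mdstat` (for example `sdb1[0](F)`), and it still has to be removed as in Example 2. The sketch below lists such members and prints the matching `--remove` commands for review rather than running them. The helper names are hypothetical, and the sketch assumes the member devices live directly under `/dev`.

```sh
#!/bin/sh
# Print the members of array $1 (e.g. md0) that mdstat ($2, default
# /proc/mdstat) marks as faulty with "(F)".
md_faulty() {
    awk -v md="$1" '
        $1 == md {
            for (i = 3; i <= NF; i++)
                if ($i ~ /\(F\)$/) {
                    name = $i
                    sub(/\[.*/, "", name)   # sdb1[0](F) -> sdb1
                    print name
                }
        }' "${2:-/proc/mdstat}"
}

# Print (do not run) an mdadm --remove command for each faulty member.
md_remove_faulty_cmds() {
    md_faulty "$1" "${2:-/proc/mdstat}" | while read -r dev; do
        echo "mdadm --manage /dev/$1 --remove /dev/$dev"
    done
}
```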

|

|

||||||

|

|

||||||

#### Example 5: Marking an Raid array as ro or rw ####

|

|

||||||

|

|

||||||

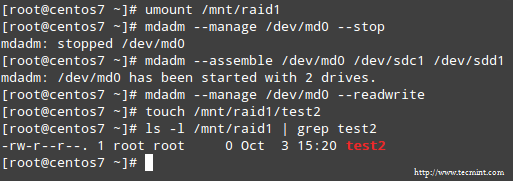

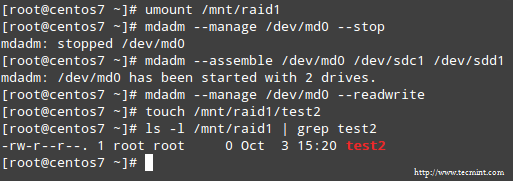

After creating the array, you must have created a filesystem on top of it and mounted it on a directory in order to use it. What you probably didn’t know then is that you can mark the RAID as ro, thus allowing only read operations to be performed on it, or rw, in order to write to the device as well.

|

|

||||||

|

|

||||||

To mark the device as ro, it needs to be unmounted first:

|

|

||||||

|

|

||||||

# umount /mnt/raid1

|

|

||||||

# mdadm --manage /dev/md0 --readonly

|

|

||||||

# mount /mnt/raid1

|

|

||||||

# touch /mnt/raid1/test1

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

Set Permissions on Raid Array
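
Besides the failing `touch`, you can check the array's current mode directly in sysfs: the md driver exposes it in `/sys/block/md0/md/array_state`, where values such as `readonly` or `read-auto` indicate a read-only array and values such as `clean` or `active` a writable one. The helper below is a hypothetical sketch that reads that file and summarizes it; the optional second argument replaces `/sys/block` so that it can be tried against a copy.

```sh
#!/bin/sh
# Summarize the mode of array $1 (e.g. md0) from its sysfs array_state.
# $2: optional directory to use instead of /sys/block (for testing).
md_mode() {
    state=$(cat "${2:-/sys/block}/$1/md/array_state") || return 1
    case "$state" in
        readonly|read-auto) echo "ro ($state)" ;;
        clear|inactive)     echo "stopped ($state)" ;;
        *)                  echo "rw ($state)" ;;   # clean, active, ...
    esac
}
```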

To configure the array to allow write operations as well, use the `--readwrite` option. Note that you will need to unmount the device and stop it before setting the rw flag, and mount it again before writing to it:

```
# umount /mnt/raid1
# mdadm --manage /dev/md0 --stop
# mdadm --assemble /dev/md0 /dev/sdc1 /dev/sdd1
# mdadm --manage /dev/md0 --readwrite
# mount /mnt/raid1
# touch /mnt/raid1/test2
```

Allow Read Write Permission on Raid
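
Whether the final `touch` succeeds also depends on how the filesystem itself is mounted: a filesystem mounted while the array was read-only may have been mounted read-only as well. The hypothetical helper below sketches a check for that by reading `/proc/mounts`, whose fourth field holds the mount options and starts with `ro` or `rw`. It assumes the mount point contains no spaces, which `/proc/mounts` would escape.

```sh
#!/bin/sh
# Succeed if mount point $1 (e.g. /mnt/raid1) is mounted read-only
# according to the mounts table $2 (default /proc/mounts).
mount_is_ro() {
    awk -v mp="$1" '
        $2 == mp {
            split($4, opt, ",")
            ro = (opt[1] == "ro")   # the last matching entry wins
        }
        END { exit (ro ? 0 : 1) }' "${2:-/proc/mounts}"
}
```

For example, `mount_is_ro /mnt/raid1 && echo "still read-only"` warns you before the write test.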

### Summary ###

Throughout this series we have explained how to set up a variety of software RAID arrays that are used in enterprise environments. If you followed the articles and the examples they provide, you are prepared to leverage the power of software RAIDs in Linux.

Should you happen to have questions or suggestions, feel free to contact us using the form below.


--------------------------------------------------------------------------------


via: http://www.tecmint.com/manage-software-raid-devices-in-linux-with-mdadm/

Author: [GABRIEL CÁNEPA][a]

Translator: [译者ID](https://github.com/译者ID)

Proofreader: [校对者ID](https://github.com/校对者ID)

This article was originally compiled and translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)


[a]:http://www.tecmint.com/author/gacanepa/


[1]:http://www.tecmint.com/understanding-raid-setup-in-linux/


[2]:http://www.tecmint.com/recover-data-and-rebuild-failed-software-raid/


@@ -0,0 +1,161 @@

How to Manage Software RAID in Linux with the ‘Mdadm’ Tool – Part 9
================================================================================

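Regardless of whether you have previous experience with RAID arrays, and whether or not you have completed all of the tutorials in [this RAID series][1], once you are familiar with the `mdadm --manage` command in Linux, managing software RAID is not a very complicated task.

Managing RAID Devices with mdadm in Linux – Part 9

In this tutorial, we will go over the functionality provided by this tool, so that it comes in handy when you need it.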

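#### RAID Testing Scenario ####

As in the previous article of this series, we will use a simple RAID 1 (mirror) array made up of two 8 GB disks (/dev/sdb and /dev/sdc) and a spare device (/dev/sdd) for the demonstration, but the methods used here also apply to other types of configurations. With that said, feel free to add this page to your browser’s bookmarks, and let’s get started.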

### Understanding mdadm Options and Usage ###

Fortunately, mdadm has a built-in `--help` flag that provides documentation for each of the main options.

So, let’s start by typing:

```
# mdadm --manage --help
```

to see which tasks `mdadm --manage` allows us to perform:

Managing RAID with the mdadm tool

As we can see in the image above, managing a RAID array may involve performing the following tasks at one time or another:

- (Re-)adding a device to the array.
- Marking a device as faulty.
- Removing a faulty device from the array.
- Replacing a faulty device with a spare one.
- Starting a partially built array.
- Stopping an array.
- Marking an array as ro (read-only) or rw (read-write).

### Managing RAID Devices with the mdadm Tool ###

Note that if you omit the `--manage` option, mdadm uses management mode by default. Keep this in mind to avoid trouble later on.

The highlighted text in the image above shows the basic syntax for managing RAIDs:

```
# mdadm --manage RAID options devices
```

Let’s demonstrate with a few examples.

#### Example 1: Adding a device to a RAID array ####

You will usually add a new device to replace a faulty one, or to keep a spare partition available so that it can take over promptly when a failure occurs:

```
# mdadm --manage /dev/md0 --add /dev/sdd1
```

Adding a device to a RAID array

#### Example 2: Marking a RAID device as faulty and removing it from the array ####

This is a mandatory step before removing the device from the logical array and then taking it out of the machine. Mind the order (if you get these steps wrong, you may end up damaging the actual device):

```
# mdadm --manage /dev/md0 --fail /dev/sdb1
```

Note how the spare device added in the previous example automatically replaces the failed disk. Right after that, the [recovery and rebuilding of RAID data][2] begins:

Recovering and rebuilding RAID data

Once the device has been manually marked as faulty, you can safely remove it from the array:

```
# mdadm --manage /dev/md0 --remove /dev/sdb1
```

#### Example 3: Re-adding a device that was previously removed from the array ####

So far, we have a working RAID 1 array made up of 2 active devices: /dev/sdc1 and /dev/sdd1. Now let’s try to re-add /dev/sdb1 to /dev/md0:

```
# mdadm --manage /dev/md0 --re-add /dev/sdb1
```

We will run into an error:

```
mdadm: --re-add for /dev/sdb1 to /dev/md0 is not possible
```

because the array already contains the maximum number of disks. So we have two choices: a) add /dev/sdb1 as a spare, as in Example 1, or b) remove /dev/sdd1 from the array and then re-add /dev/sdb1.

We choose option b): first stop the array, then reassemble it:

```
# mdadm --stop /dev/md0
# mdadm --assemble /dev/md0 /dev/sdb1 /dev/sdc1
```

If the command above does not successfully add /dev/sdb1 to the array, use the command from Example 1 to do it.

mdadm will initially detect the newly added device as a spare and then start rebuilding the data; once the rebuild is done, the device should be recognized as an active part of the RAID:

RAID rebuild status

#### Example 4: Replacing a RAID device with a specific disk ####

Replacing a disk in the array with a spare disk is simple:

```
# mdadm --manage /dev/md0 --replace /dev/sdb1 --with /dev/sdd1
```

Replacing a RAID device

This adds the device given after `--with` to the RAID, while the disk given after `--replace` is marked as faulty:

Checking RAID rebuild status

#### Example 5: Marking a RAID array as ro or rw ####

After creating the array, you must have created a filesystem on it and mounted it on a directory in order to use it. What you may not know is that the RAID can also be set to ro, which makes it read-only, or to rw, which allows writing to the device as well as reading from it.

To mark the device as ro, you first need to unmount it:

```
# umount /mnt/raid1
# mdadm --manage /dev/md0 --readonly
# mount /mnt/raid1
# touch /mnt/raid1/test1     # should fail, since the array is now read-only
```

Setting permissions on a RAID array

To configure the array to allow write operations, use the `--readwrite` option. Note that before setting the rw flag, you need to unmount the device and stop it, and afterwards mount it again before writing to it:

```
# umount /mnt/raid1
# mdadm --manage /dev/md0 --stop
# mdadm --assemble /dev/md0 /dev/sdc1 /dev/sdd1
# mdadm --manage /dev/md0 --readwrite
# mount /mnt/raid1
# touch /mnt/raid1/test2
```

Configuring RAID to allow read-write operations

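### Summary ###

In this series, we have explained how to set up the kinds of software RAID arrays used in enterprise environments. If you follow the examples provided in these articles, you will be able to make full use of software RAID in Linux.

If you have any questions or suggestions, feel free to contact us using the form below.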


--------------------------------------------------------------------------------


via: http://www.tecmint.com/manage-software-raid-devices-in-linux-with-mdadm/

Author: [GABRIEL CÁNEPA][a]

Translator: [strugglingyouth](https://github.com/strugglingyouth)

Proofreader: [校对者ID](https://github.com/校对者ID)

This article was originally compiled and translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)


[a]:http://www.tecmint.com/author/gacanepa/


[1]:http://www.tecmint.com/understanding-raid-setup-in-linux/


[2]:http://www.tecmint.com/recover-data-and-rebuild-failed-software-raid/
