mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-01-22 23:00:57 +08:00

commit

e18da6de6d

@ -0,0 +1,55 @@

|

||||

用 Apache Calcite 构建强大的实时流式应用

|

||||

==============

|

||||

|

||||

|

||||

|

||||

Calcite 是一个数据框架,它允许你创建自定义数据库功能,微软开发者 Atri Sharma 在 Apache 2016 年 11 月 14-16 日在西班牙塞维利亚举行的 Big Data Europe 中对此进行了讲演。

|

||||

|

||||

[Creative Commons Zero][2] Wikimedia Commons: Parent Géry

|

||||

|

||||

[Apache Calcite][7] 数据管理框架包含了典型的数据库管理系统的许多部分,但省略了如数据的存储和处理数据的算法等其他部分。 Microsoft 的 Azure Data Lake 的软件工程师 Atri Sharma 在西班牙塞维利亚的 [Apache:Big Data][6] 会议上的演讲中讨论了使用 [Apache Calcite][5] 的高级查询规划能力。我们与 Sharma 讨论了解有关 Calcite 的更多信息,以及现有程序如何利用其功能。

|

||||

|

||||

|

||||

|

||||

*Atri Sharma,微软 Azure Data Lake 的软件工程师,已经[授权使用][1]*

|

||||

|

||||

**Linux.com:你能提供一些关于 Apache Calcite 的背景吗? 它有什么作用?

|

||||

|

||||

Atri Sharma:Calcite 是一个框架,它是许多数据库内核的基础。Calcite 允许你构建自定义的数据库功能来使用 Calcite 所需的资源。例如,Hive 使用 Calcite 进行基于成本的查询优化、Drill 和 Kylin 使用 Calcite 进行 SQL 解析和优化、Apex 使用 Calcite 进行流式 SQL。

|

||||

|

||||

**Linux.com:有哪些是使得 Apache Calcite 与其他框架不同的特性?

|

||||

|

||||

Atri:Calcite 是独一无二的,它允许你建立自己的数据平台。 Calcite 不直接管理你的数据,而是允许你使用 Calcite 的库来定义你自己的组件。 例如,它允许使用 Calcite 中可用的 Planner 定义你的自定义查询优化器,而不是提供通用查询优化器。

|

||||

|

||||

**Linux.com:Apache Calcite 本身不会存储或处理数据。 它如何影响程序开发?

|

||||

|

||||

Atri:Calcite 是数据库内核中的依赖项。它针对的是希望扩展其功能,而无需从头开始编写大量功能的的数据管理平台。

|

||||

|

||||

** Linux.com:谁应该使用它? 你能举几个例子吗?**

|

||||

|

||||

Atri:任何旨在扩展其功能的数据管理平台都应使用 Calcite。 我们是你下一个高性能数据库的基础!

|

||||

|

||||

具体来说,我认为最大的例子是 Hive 使用 Calcite 用于查询优化、Flink 解析和流 SQL 处理。 Hive 和 Flink 是成熟的数据管理引擎,并将 Calcite 用于相当专业的用途。这是对 Calcite 应用进一步加强数据管理平台核心的一个好的案例研究。

|

||||

|

||||

**Linux.com:你有哪些期待的新功能?

|

||||

|

||||

Atri:流式 SQL 增强是令我非常兴奋的事情。这些功能令人兴奋,因为它们将使 Calcite 的用户能够更快地开发实时流式应用程序,并且这些程序的强大和功能将是多方面的。流式应用程序是新的事实,并且在流式 SQL 中具有查询优化的优点对于大部分人将是非常有用的。此外,关于暂存表的讨论还在进行,所以请继续关注!

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.linux.com/news/build-strong-real-time-streaming-apps-apache-calcite

|

||||

|

||||

作者:[AMBER ANKERHOLZ][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 组织编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.linux.com/users/aankerholz

|

||||

[1]:https://www.linux.com/licenses/category/used-permission

|

||||

[2]:https://www.linux.com/licenses/category/creative-commons-zero

|

||||

[3]:https://www.linux.com/files/images/atri-sharmajpg

|

||||

[4]:https://www.linux.com/files/images/calcitejpg

|

||||

[5]:https://calcite.apache.org/

|

||||

[6]:http://events.linuxfoundation.org/events/apache-big-data-europe

|

||||

[7]:https://calcite.apache.org/

|

||||

@ -6,16 +6,17 @@

|

||||

|

||||

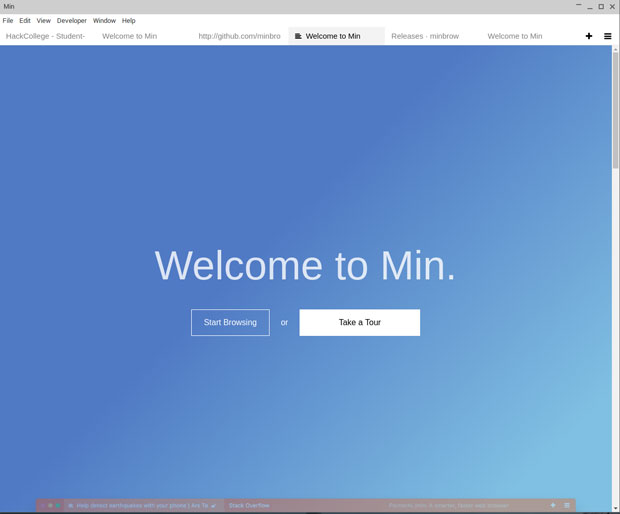

在软件设计中,“简单”并不意味着功能低级、有待改进。你如果喜欢花哨工具比较少的文本编辑器和笔记程序,那么在 Min 浏览器中会有同样舒适的感觉。

|

||||

|

||||

我经常在台式机和笔记本电脑上使用 Google Chrome、Chromium和 Firefox。我研究了它们的很多附加功能,所以我在长期的研究和工作中可以享用它们的特色服务。

|

||||

我经常在台式机和笔记本电脑上使用 Google Chrome、Chromium 和 Firefox。我研究了它们的很多附加功能,所以我在长期的研究和工作中可以享用它们的特色服务。

|

||||

|

||||

然而,有时我希望有个快速、整洁的替代品来上网。随着多个项目的进行,我需要很快打开一大批选项卡甚至是独立窗口的强大浏览器。

|

||||

然而,有时我希望有个快速、整洁的替代品来上网。随着多个项目的进行,我需要一个可以很快打开一大批选项卡甚至是独立窗口的强大浏览器。

|

||||

|

||||

我试过其他浏览器但很少能令我满意。替代品通常有一套独特的花哨的附件和功能,它们会让我开小差。

|

||||

|

||||

Min 浏览器就不这样。它是一个易于使用,并在 GitHub 开源的 web 浏览器,不会使我分心。

|

||||

|

||||

|

||||

Min 浏览器是精简的浏览器,提供了简单的功能以及快速的响应。只是不要指望马上上手。

|

||||

|

||||

*Min 浏览器是精简的浏览器,提供了简单的功能以及快速的响应。只是不要指望马上能上手。*

|

||||

|

||||

### 它做些什么

|

||||

|

||||

@ -25,7 +26,7 @@ Min 浏览器提供了 Debian Linux、Windows 和 Mac 机器的版本。它不

|

||||

|

||||

其中一个主要原因是其内置的广告拦截功能。开箱即用的 Min 浏览器不需要配置或寻找兼容的第三方应用程序来拦截广告。

|

||||

|

||||

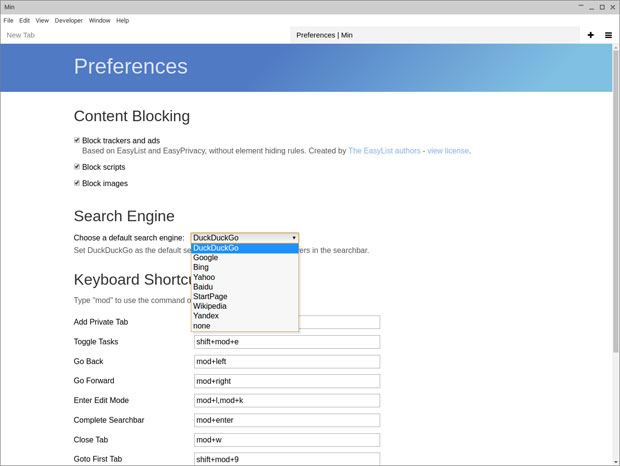

在 Edit/Preferences 中,你可以通过三个选项来设置阻止的内容。它很容易修改屏蔽策略来满足你的喜好。阻止跟踪器和广告选项使用 EasyList 和 EasyPrivacy。 如果没有其他原因,请保持此选项选中。

|

||||

在 Edit/Preferences 菜单中,你可以通过三个选项来设置阻止的内容。它很容易修改屏蔽策略来满足你的喜好。阻止跟踪器和广告选项使用 EasyList 和 EasyPrivacy。 如果没有其他原因,请保持此选项选中。

|

||||

|

||||

你还可以阻止脚本和图像。这样做可以最大限度地提高网站加载速度,并能有效防御恶意代码。

|

||||

|

||||

@ -37,12 +38,13 @@ Min 浏览器提供了 Debian Linux、Windows 和 Mac 机器的版本。它不

|

||||

|

||||

这种方法很节省时间,因为你不必先进入搜索引擎窗口。 还有一个好处是可以搜索你的书签。

|

||||

|

||||

在 Edit/Preferences 菜单中,选择默认的搜索引擎。该列表包括 DuckDuckGo、Google、Bing、Yahoo、Baidu、Wikipedia 和 Yandex。

|

||||

在 Edit/Preferences 菜单中,可以选择默认的搜索引擎。该列表包括 DuckDuckGo、Google、Bing、Yahoo、Baidu、Wikipedia 和 Yandex。

|

||||

|

||||

尝试将 DuckDuckGo 作为默认搜索引擎。 Min 默认使用这个引擎,但你也能更换。

|

||||

|

||||

|

||||

Min 浏览器的搜索功能是 URL 栏的一部分。Min 利用搜索引擎 DuckDuckGo 和维基百科的内容。你可以直接在 web 地址栏中输入要搜索的东西。

|

||||

|

||||

*Min 浏览器的搜索功能是 URL 栏的一部分。Min 会使用搜索引擎 DuckDuckGo 和维基百科的内容。你可以直接在 web 地址栏中输入要搜索的东西。*

|

||||

|

||||

搜索栏会非常快速地显示问题的答案。它会使用 DuckDuckGo 的信息,包括维基百科条目、计算器和其它的内容。

|

||||

|

||||

@ -54,10 +56,9 @@ Min 允许你使用模糊搜索快速跳转到任何网站。它能立即向你

|

||||

|

||||

我喜欢在当前标签旁边打开标签的方式。你不必设置此选项。它在默认情况下没有其他选择,但这也有道理。

|

||||

|

||||

[

|

||||

|

||||

][2]

|

||||

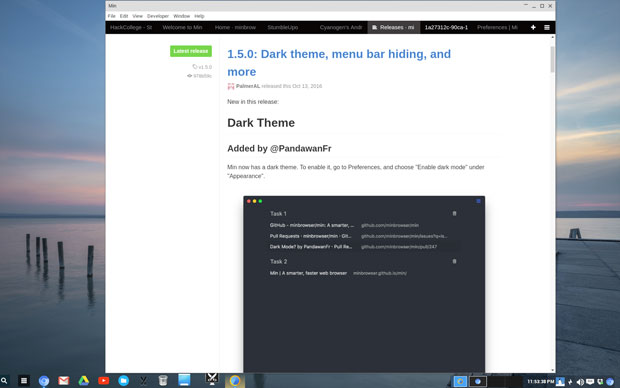

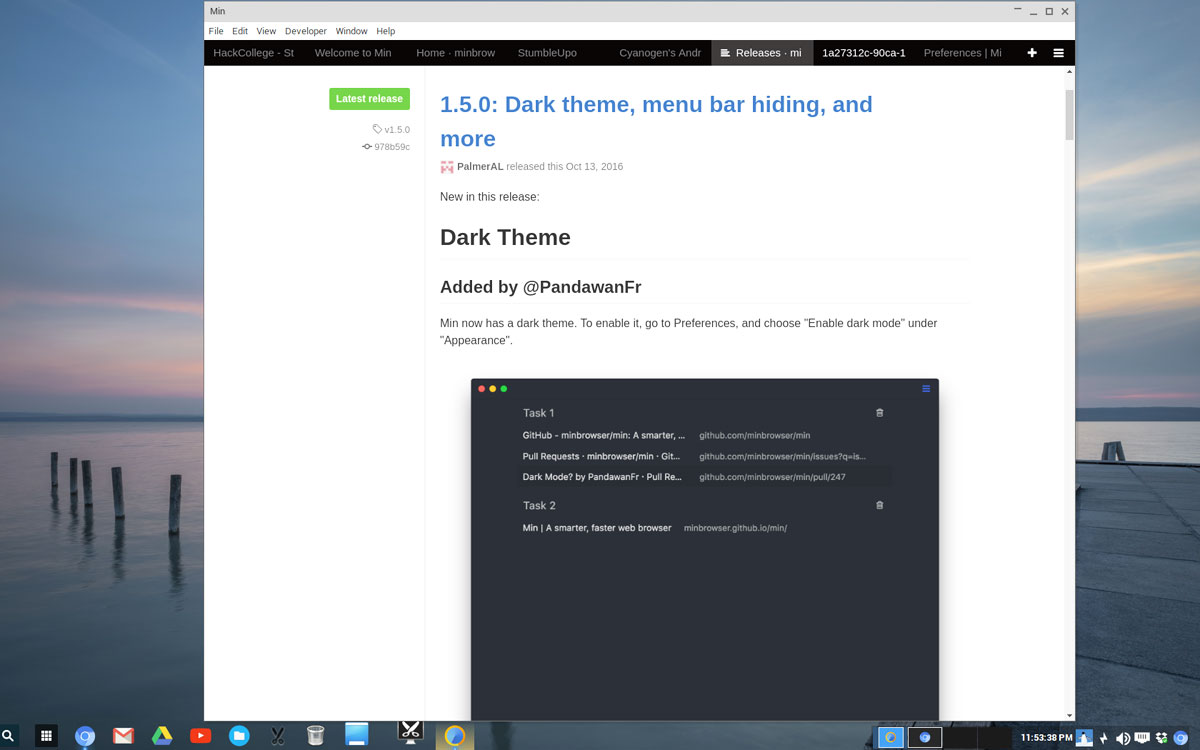

Min 的一个很酷的功能是将标签整理到任务栏中,这样你随时都可以搜索。(点击图片放大)

|

||||

|

||||

|

||||

*Min 的一个很酷的功能是将标签整理到任务栏中,这样你随时都可以搜索。*

|

||||

|

||||

不点击标签,过一会儿它就会消失。这使你可以专注于当前的任务,而不会分心。

|

||||

|

||||

@ -67,11 +68,11 @@ Min 不需要附加工具来控制多个标签。浏览器会显示标签列表

|

||||

|

||||

Min 在“视图”菜单中有一个可选的“聚焦模式”。启用后,除了你打开的选项卡外,它会隐藏其它所有选项卡。 你必须返回到菜单,关闭“聚焦模式”,才能打开新选项卡。

|

||||

|

||||

任务功能还可以帮助你保持专注。你可以在“文件(File)”菜单或使用 Ctrl+Shift+N 创建任务。如果要打开新选项卡,可以在“文件”菜单中选择该选项,或使用 Control+T。

|

||||

任务功能还可以帮助你保持专注。你可以在 File 菜单或使用 `Ctrl+Shift+N` 创建任务。如果要打开新选项卡,可以在 File 菜单中选择该选项,或使用 `Control+T`。

|

||||

|

||||

按照你的风格打开新任务。我喜欢按组来管理和显示标签,这组标签与工作项目或研究的某些部分相关。我可以在任何时间重新打开整个列表,从而轻松快速的方式找到我的浏览记录。

|

||||

|

||||

另一个好用的功能是可以在 tab 区域找到段落对齐按钮。单击它启用阅读模式。此模式会保存文章以供将来参考,并删除页面上的一切,以便你可以专注于阅读任务。

|

||||

另一个好用的功能是可以在选项卡区域找到段落对齐按钮。单击它启用阅读模式。此模式会保存文章以供将来参考,并删除页面上的一切,以便你可以专注于阅读任务。

|

||||

|

||||

### 并不完美

|

||||

|

||||

@ -91,7 +92,7 @@ Min 并不是一个功能完善、丰富的 web 浏览器。你在功能完善

|

||||

|

||||

我越使用 Min 浏览器,我越觉得它高效 - 但是当你第一次使用它时要小心。

|

||||

|

||||

Min 并不复杂,也不难操作 - 它只是有点古怪。你必须要玩弄一下才能明白它如何使用。

|

||||

Min 并不复杂,也不难操作 - 它只是有点古怪。你必须要体验一下才能明白它如何使用。

|

||||

|

||||

### 想要提建议么?

|

||||

|

||||

@ -105,11 +106,11 @@ Min 并不复杂,也不难操作 - 它只是有点古怪。你必须要玩弄一

|

||||

|

||||

作者简介:

|

||||

|

||||

Jack M. Germain 从苹果 II 和 PC 的早期起就一直在写关于计算机技术。他仍然有他原来的 IBM PC-Jr 和一些其他遗留的 DOS 和 Windows 盒子。他为 Linux 桌面的开源世界留下过共享软件。他运行几个版本的 Windows 和 Linux 操作系统,还通常不能决定是否用他的平板电脑、上网本或 Android 智能手机,还是用他的台式机或笔记本电脑。你可以在 Google+ 上与他联系。

|

||||

Jack M. Germain 从苹果 II 和 PC 的早期起就一直在写关于计算机技术。他仍然有他原来的 IBM PC-Jr 和一些其他遗留的 DOS 和 Windows 机器。他为 Linux 桌面的开源世界留下过共享软件。他运行几个版本的 Windows 和 Linux 操作系统,还通常不能决定是否用他的平板电脑、上网本或 Android 智能手机,还是用他的台式机或笔记本电脑。你可以在 Google+ 上与他联系。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.linuxinsider.com/story/84212.html?rss=1

|

||||

via: http://www.linuxinsider.com/story/84212.html

|

||||

|

||||

作者:[Jack M. Germain][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

@ -1,33 +1,33 @@

|

||||

如何在 Vim 中使用标志和文本选择操作

|

||||

如何在 Vim 中进行文本选择操作和使用标志

|

||||

============================================================

|

||||

|

||||

基于图形界面的文本或源代码编辑器,提供了一些诸如文本选择的功能。我是想说,可能大多数人不觉得这是一个功能。不过像 Vim 这种基于命令行的编辑器就不是这样。当你仅使用键盘操作 Vim 的时候,就需要学习特定的命令来选择你想要的文本。在这个教程中,我们将详细讨论文本选择这一功能以及 Vim 中的标志功能。

|

||||

|

||||

在此之前需要说明的是,本教程中所提到的例子、命令和指令都是在 Ubuntu 16.04 的环境下测试的。Vim 的版本是 7.4。

|

||||

|

||||

# Vim 的文本选择功能

|

||||

### Vim 的文本选择功能

|

||||

|

||||

我们假设你已经具备了 Vim 编辑器的基本知识(如果没有,可以先阅读[这篇文章][2])。你应该知道,'d' 命令能够剪切/删除一行内容。如果你想要剪切 3 行的话,可以重复命令 3 次。不过,如果需要剪切 15 行呢?重复 ‘d’ 命令 15 次是个实用的解决方法吗?

|

||||

我们假设你已经具备了 Vim 编辑器的基本知识(如果没有,可以先阅读[这篇文章][2])。你应该知道,`d` 命令能够剪切/删除一行内容。如果你想要剪切 3 行的话,可以重复命令 3 次。不过,如果需要剪切 15 行呢?重复 `d` 命令 15 次是个实用的解决方法吗?

|

||||

|

||||

显然不是。这种情况下的最佳方法是,选中你想要剪切/删除的行,再运行 ‘d’ 命令。举个例子:

|

||||

显然不是。这种情况下的最佳方法是,选中你想要剪切/删除的行,再运行 `d` 命令。举个例子:

|

||||

|

||||

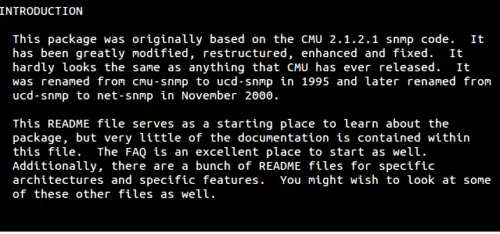

假如我想要剪切/删除下面截图中 INTRODUCTION 小节的第一段:

|

||||

|

||||

[][3]

|

||||

|

||||

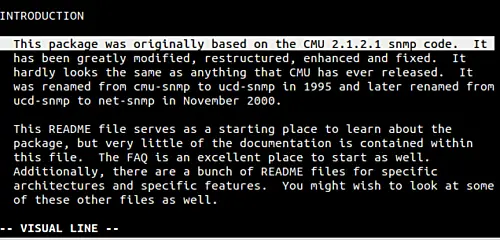

那么我的做法是:将光标放在第一行的开始,(确保退出了 Insert 模式)按下 'V'(Shift+v)命令。这时 Vim 会开启视图模式,并选中第一行。

|

||||

那么我的做法是:将光标放在第一行的开始,(确保退出了 Insert 模式)按下 `V`(即 `Shift+v`)命令。这时 Vim 会开启视图模式,并选中第一行。

|

||||

|

||||

[][4]

|

||||

|

||||

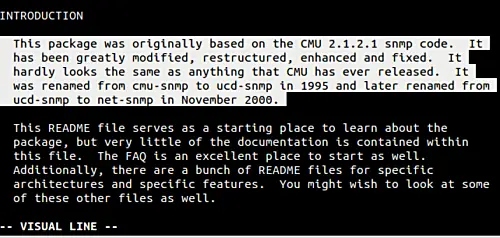

现在,我可以使用方向键'下',来选中整个段落。

|

||||

现在,我可以使用方向键“下”,来选中整个段落。

|

||||

|

||||

[][5]

|

||||

|

||||

这就是我们想要的,对吧!现在只需按 'd'键,就可以剪切/删除选中的段落了。当然,除了剪切/删除,你可以对选中的文本做任何操作。

|

||||

这就是我们想要的,对吧!现在只需按 `d` 键,就可以剪切/删除选中的段落了。当然,除了剪切/删除,你可以对选中的文本做任何操作。

|

||||

|

||||

这给我们带来了另一个重要的问题:当我们不需要删除整行的时候,该怎么做呢?也就是说,我们刚才讨论的解决方法,仅适用于想要对整行做操作的情况。那么如果我们只想删除段落的前三句话呢?

|

||||

|

||||

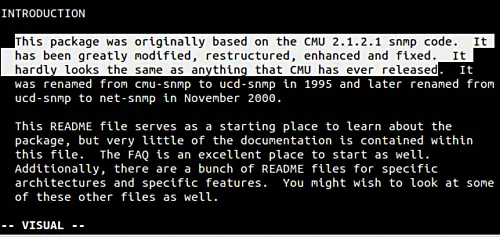

其实也有相应的命令 - 只需用 'v' 来代替 'V'(不包括单引号)即可。在下面的例子中,我使用 'v' 来选中段落的前三句话:

|

||||

其实也有相应的命令 - 只需用小写 `v` 来代替大写 `V` 即可。在下面的例子中,我使用 `v` 来选中段落的前三句话:

|

||||

|

||||

[][6]

|

||||

|

||||

@ -35,25 +35,25 @@

|

||||

|

||||

[][7]

|

||||

|

||||

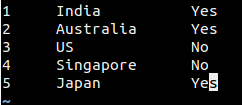

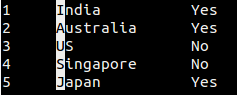

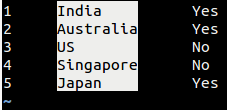

假设我们只需选择文本的第二列,即国家的名字。这种情况下,你可以将光标放在这一列的第一个字母上,按 Ctrl+v 一次。然后,按方向键'下',选中每个国家名字的第一个字母:

|

||||

假设我们只需选择文本的第二列,即国家的名字。这种情况下,你可以将光标放在这一列的第一个字母上,按 `Ctrl+v` 一次。然后,按方向键“下”,选中每个国家名字的第一个字母:

|

||||

|

||||

[][8]

|

||||

|

||||

然后按方向键'右',选中这一列。

|

||||

然后按方向键“右”,选中这一列。

|

||||

|

||||

[][9]

|

||||

|

||||

**小窍门**:如果你之前选中了某个文本块,现在想重新选中那个文本块,只需在命令模式下按 'gv' 即可。

|

||||

**小窍门**:如果你之前选中了某个文本块,现在想重新选中那个文本块,只需在命令模式下按 `gv` 即可。

|

||||

|

||||

# 使用标志

|

||||

### 使用标志

|

||||

|

||||

有时候,你在处理一个很大的文件(例如源代码文件或者一个 shell 脚本),可能想要切换到一个特定的位置,然后再回到刚才所在的行。如果这两行的位置不远,或者你并不常做这类操作,那么这不是什么问题。

|

||||

|

||||

但是,如果你需要频繁地在当前位置和一些较远的行之间切换,那么最好的方法就是使用标志。你只需标记当前的位置,然后就能够通过标志名,从文件的任意位置回到当前的位置。

|

||||

|

||||

在 Vim 中,我们使用 m 命令紧跟一个字母来标记一行(字母表示标志名,可用小写的 a-z)。例如 ma。然后你可以使用命令 'a (包括左单引号)回到标志为 a 的行。

|

||||

在 Vim 中,我们使用 `m` 命令紧跟一个字母来标记一行(字母表示标志名,可用小写的 `a` - `z`)。例如 `ma`。然后你可以使用命令 `'a` (包括左侧的单引号)回到标志为 `a` 的行。

|

||||

|

||||

**小窍门**:你可以使用单引号来跳转到标志行的第一个字符,或使用反引号来跳转到标志行的特定列。

|

||||

**小窍门**:你可以使用“单引号” `'` 来跳转到标志行的第一个字符,或使用“反引号” ` 来跳转到标志行的特定列。

|

||||

|

||||

Vim 的标志功能还有很多其他的用法。例如,你可以先标记一行,然后将光标移到其他行,运行下面的命令:

|

||||

|

||||

@ -65,43 +65,37 @@ d'[标志名]

|

||||

|

||||

在 Vim 官方文档中,有一个重要的内容:

|

||||

|

||||

```

|

||||

每个文件有一些由小写字母(a-z)定义的标志。此外,还存在一些由大写字母(A-Z)定义的全局标志,它们定义了一个特定文件的某个位置。例如,你可能在同时编辑十个文件,每个文件都可以有标志 a,但是只有一个文件能够有标志 A。

|

||||

```

|

||||

> 每个文件有一些由小写字母(`a`-`z`)定义的标志。此外,还存在一些由大写字母(`A`-`Z`)定义的全局标志,它们定义了一个特定文件的某个位置。例如,你可能在同时编辑十个文件,每个文件都可以有标志 `a`,但是只有一个文件能够有标志 `A`。

|

||||

|

||||

我们已经讨论了使用小写字母作为 Vim 标志的基本用法,以及它们的便捷之处。下面的这段摘录讲解的足够清晰:

|

||||

|

||||

```

|

||||

由于种种局限性,大写字母标志可能乍一看不如小写字母标志好用,但它可以用作一种快速的文件书签。例如,打开 .vimrc 文件,按下 mV,然后退出。下次再想要编辑 .vimrc 文件的时候,按下 'V 就能够打开它。

|

||||

```

|

||||

> 由于种种局限性,大写字母标志可能乍一看不如小写字母标志好用,但它可以用作一种快速的文件书签。例如,打开 `.vimrc` 文件,按下 `mV`,然后退出。下次再想要编辑 `.vimrc` 文件的时候,按下 `'V` 就能够打开它。

|

||||

|

||||

最后,我们使用 'delmarks' 命令来删除标志。例如:

|

||||

最后,我们使用 `delmarks` 命令来删除标志。例如:

|

||||

|

||||

```

|

||||

:delmarks a

|

||||

```

|

||||

|

||||

这一命令将从文件中删除一个标志。当然,你也可以删除标志行,这样标志将被自动删除。你可以在 [Vim 文档][11] 中找到关于标志的更多信息。

|

||||

这一命令将从文件中删除一个标志。当然,你也可以删除标志所在的行,这样标志将被自动删除。你可以在 [Vim 文档][11] 中找到关于标志的更多信息。

|

||||

|

||||

# 总结

|

||||

### 总结

|

||||

|

||||

当你开始使用 Vim 作为首选编辑器的时候,类似于这篇教程中提到的功能将会是非常有用的工具,能够节省大量的时间。你得承认,这里介绍的文本选择和标志功能几乎不怎么需要学习,所需要的只是一点练习。

|

||||

|

||||

你可以在 [HowtoForge][1] 上找到更多有关 Vim 的文章。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.howtoforge.com/tutorial/how-to-use-markers-and-perform-text-selection-in-vim/

|

||||

|

||||

作者:[Himanshu Arora][a]

|

||||

译者:[Cathon](https://github.com/Cathon)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.howtoforge.com/tutorial/how-to-use-markers-and-perform-text-selection-in-vim/

|

||||

[1]:https://www.howtoforge.com/tutorials/shell/

|

||||

[2]:https://www.howtoforge.com/vim-basics

|

||||

[2]:https://linux.cn/article-8143-1.html

|

||||

[3]:https://www.howtoforge.com/images/how-to-use-markers-and-perform-text-selection-in-vim/big/vim-select-example.png

|

||||

[4]:https://www.howtoforge.com/images/how-to-use-markers-and-perform-text-selection-in-vim/big/vim-select-initiated.png

|

||||

[5]:https://www.howtoforge.com/images/how-to-use-markers-and-perform-text-selection-in-vim/big/vim-select-working.png

|

||||

94

published/20170327 Using vi-mode in your shell.md

Normal file

94

published/20170327 Using vi-mode in your shell.md

Normal file

@ -0,0 +1,94 @@

|

||||

在 shell 中使用 vi 模式

|

||||

============================================================

|

||||

|

||||

> 介绍在命令行编辑中使用 vi 模式。

|

||||

|

||||

|

||||

|

||||

>图片提供: opensource.com

|

||||

|

||||

作为一名大型开源社区的参与者,更确切地说,作为 [Fedora 项目][2]的成员,我有机会与许多人会面并讨论各种有趣的技术主题。我最喜欢的主题是“命令行”或者说 [shell][3],因为了解人们如何熟练使用 shell 可以让你深入地了解他们的想法,他们喜欢什么样的工作流程,以及某种程度上是什么激发了他们的灵感。许多开发和运维人员在互联网上公开分享他们的“ dot 文件”(他们的 shell 配置文件的常见俚语),这将是一个有趣的协作机会,让每个人都能从对命令行有丰富经验的人中学习提示和技巧并分享快捷方式以及有效率的技巧。

|

||||

|

||||

今天我在这里会为你介绍 shell 中的 vi 模式。

|

||||

|

||||

在计算和操作系统的庞大生态系统中有[很多 shell][4]。然而,在 Linux 世界中,[bash][5] 已经成为事实上的标准,并在在撰写本文时,它是所有主要 Linux 发行版上的默认 shell。因此,它就是我所说的 shell。需要注意的是,bash 在其他类 UNIX 操作系统上也是一个相当受欢迎的选项,所以它可能跟你用的差别不大(对于 Windows 用户,可以用 [cygwin][6])。

|

||||

|

||||

在探索 shell 时,首先要做的是在其中输入命令并得到输出,如下所示:

|

||||

|

||||

```

|

||||

$ echo "Hello World!"

|

||||

Hello World!

|

||||

```

|

||||

|

||||

这是常见的练习,可能每个人都做过。没接触过的人和新手可能没有意识到 [bash][7] shell 的默认输入模式是 [Emacs][8] 模式,也就是说命令行中所用的行编辑功能都将使用 [Emacs 风格的“键盘快捷键”][9]。(行编辑功能实际上是由 [GNU Readline][10] 进行的。)

|

||||

|

||||

例如,如果你输入了 `echo "Hello Wrld!"`,并意识到你想要快速跳回一个单词(空格分隔)来修改打字错误,而无需按住左箭头键,那么你可以同时按下 `Alt+b`,光标会将向后跳到 `W`。

|

||||

|

||||

```

|

||||

$ echo "Hello Wrld!"

|

||||

^

|

||||

Cursor is here.

|

||||

```

|

||||

|

||||

这只是使用提供给 shell 用户的诸多 Emacs 快捷键组合之一完成的。还有其他更多东西,如复制文本、粘贴文本、删除文本以及使用快捷方式来编辑文本。使用复杂的快捷键组合并记住可能看起来很愚蠢,但是在使用较长的命令或从 shell 历史记录中调用一个命令并想再次编辑执行时,它们可能会非常强大。

|

||||

|

||||

尽管 Emacs 的键盘绑定都不错,如果你对 Emacs 编辑器熟悉或者发现它们很容易使用也不错,但是仍有一些人觉得 “vi 风格”的键盘绑定更舒服,因为他们经常使用 vi 编辑器(通常是 [vim][11] 或 [nvim][12])。bash shell(再说一次,通过 GNU Readline)可以为我们提供这个功能。要启用它,需要执行命令 `$ set -o vi`。

|

||||

|

||||

就像魔术一样,你现在处于 vi 模式了,现在可以使用 vi 风格的键绑定来轻松地进行编辑,以便复制文本、删除文本、并跳转到文本行中的不同位置。这与 Emacs 模式在功能方面没有太大的不同,但是它在你_如何_与 shell 进行交互执行操作上有一些差别,根据你的喜好这是一个强大的选择。

|

||||

|

||||

我们来看看先前的例子,但是在这种情况下一旦你在 shell 中进入 vi 模式,你就处于 INSERT 模式中,这意味着你可以和以前一样输入命令,现在点击 **Esc** 键,你将处于 NORMAL 模式,你可以自由浏览并进行文字修改。

|

||||

|

||||

看看先前的例子,如果你输入了 `echo "Hello Wrld!"`,并意识到你想跳回一个单词(再说一次,用空格分隔的单词)来修复那个打字错误,那么你可以点击 `Esc` 从 INSERT 模式变为 NORMAL 模式。然后,您可以输入 `B`(即 `Shift+b`),光标就能像以前那样回到前面了。(有关 vi 模式的更多信息,请参阅[这里][13]。):

|

||||

|

||||

```

|

||||

$ echo "Hello Wrld!"

|

||||

^

|

||||

Cursor is here.

|

||||

```

|

||||

|

||||

现在,对于 vi/vim/nvim 用户来说,你会惊喜地发现你可以一直使用相同的快捷键,而不仅仅是在编辑器中编写代码或文档的时候。如果你从未了解过这些,并且想要了解更多,那么我可能会建议你看看这个[交互式 vim 教程][14],看看 vi 风格的编辑是否有你所不知道的。

|

||||

|

||||

如果你喜欢在此风格下与 shell 交互,那么你可以在主目录中的 `~/.bashrc` 文件底部添加下面的行来持久设置它。

|

||||

|

||||

```

|

||||

set -o vi

|

||||

```

|

||||

|

||||

对于 emacs 模式的用户,希望这可以让你快速并愉快地看到 shell 的“另一面”。在结束之前,我认为每个人都应该使用任意一个让他们更有效率的编辑器和 shell 行编辑模式,如果你使用 vi 模式并且这篇文章给你展开了新的一页,那么恭喜你!现在就变得更有效率吧。

|

||||

|

||||

玩得愉快!

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

作者简介:

|

||||

|

||||

Adam Miller 是 Fedora 工程团队成员,专注于 Fedora 发布工程。他的工作包括下一代构建系统、自动化、RPM 包维护和基础架构部署。Adam 在山姆休斯顿州立大学完成了计算机科学学士学位与信息保障与安全科学硕士学位。他是一名红帽认证工程师(Cert#110-008-810),也是开源社区的活跃成员,并对 Fedora 项目(FAS 帐户名称:maxamillion)贡献有着悠久的历史。

|

||||

|

||||

|

||||

------------------------

|

||||

via: https://opensource.com/article/17/3/fun-vi-mode-your-shell

|

||||

|

||||

作者:[Adam Miller][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://opensource.com/users/maxamillion

|

||||

[1]:https://opensource.com/article/17/3/fun-vi-mode-your-shell?rate=5_eAB9UtByHOiZMysPcewU4Zz6hOrLwdcgIpu2Ub4vo

|

||||

[2]:https://getfedora.org/

|

||||

[3]:https://opensource.com/business/16/3/top-linux-shells

|

||||

[4]:https://opensource.com/business/16/3/top-linux-shells

|

||||

[5]:https://tiswww.case.edu/php/chet/bash/bashtop.html

|

||||

[6]:http://cygwin.org/

|

||||

[7]:https://tiswww.case.edu/php/chet/bash/bashtop.html

|

||||

[8]:https://www.gnu.org/software/emacs/

|

||||

[9]:https://en.wikipedia.org/wiki/GNU_Readline#Emacs_keyboard_shortcuts

|

||||

[10]:http://cnswww.cns.cwru.edu/php/chet/readline/rltop.html

|

||||

[11]:http://www.vim.org/

|

||||

[12]:https://neovim.io/

|

||||

[13]:https://en.wikibooks.org/wiki/Learning_the_vi_Editor/Vim/Modes

|

||||

[14]:http://www.openvim.com/tutorial.html

|

||||

[15]:https://opensource.com/user/10726/feed

|

||||

[16]:https://opensource.com/article/17/3/fun-vi-mode-your-shell#comments

|

||||

[17]:https://opensource.com/users/maxamillion

|

||||

155

sources/talk/20170320 Education of a Programmer.md

Normal file

155

sources/talk/20170320 Education of a Programmer.md

Normal file

@ -0,0 +1,155 @@

|

||||

Education of a Programmer

|

||||

============================================================

|

||||

|

||||

_When I left Microsoft in October 2016 after almost 21 years there and almost 35 years in the industry, I took some time to reflect on what I had learned over all those years. This is a lightly edited version of that post. Pardon the length!_

|

||||

|

||||

There are an amazing number of things you need to know to be a proficient programmer — details of languages, APIs, algorithms, data structures, systems and tools. These things change all the time — new languages and programming environments spring up and there always seems to be some hot new tool or language that “everyone” is using. It is important to stay current and proficient. A carpenter needs to know how to pick the right hammer and nail for the job and needs to be competent at driving the nail straight and true.

|

||||

|

||||

At the same time, I’ve found that there are some concepts and strategies that are applicable over a wide range of scenarios and across decades. We have seen multiple orders of magnitude change in the performance and capability of our underlying devices and yet certain ways of thinking about the design of systems still say relevant. These are more fundamental than any specific implementation. Understanding these recurring themes is hugely helpful in both the analysis and design of the complex systems we build.

|

||||

|

||||

Humility and Ego

|

||||

|

||||

This is not limited to programming, but in an area like computing which exhibits so much constant change, one needs a healthy balance of humility and ego. There is always more to learn and there is always someone who can help you learn it — if you are willing and open to that learning. One needs both the humility to recognize and acknowledge what you don’t know and the ego that gives you confidence to master a new area and apply what you already know. The biggest challenges I have seen are when someone works in a single deep area for a long time and “forgets” how good they are at learning new things. The best learning comes from actually getting hands dirty and building something, even if it is just a prototype or hack. The best programmers I know have had both a broad understanding of technology while at the same time have taken the time to go deep into some technology and become the expert. The deepest learning happens when you struggle with truly hard problems.

|

||||

|

||||

End to End Argument

|

||||

|

||||

Back in 1981, Jerry Saltzer, Dave Reed and Dave Clark were doing early work on the Internet and distributed systems and wrote up their [classic description][4] of the end to end argument. There is much misinformation out there on the Internet so it can be useful to go back and read the original paper. They were humble in not claiming invention — from their perspective this was a common engineering strategy that applies in many areas, not just in communications. They were simply writing it down and gathering examples. A minor paraphrasing is:

|

||||

|

||||

When implementing some function in a system, it can be implemented correctly and completely only with the knowledge and participation of the endpoints of the system. In some cases, a partial implementation in some internal component of the system may be important for performance reasons.

|

||||

|

||||

The SRC paper calls this an “argument”, although it has been elevated to a “principle” on Wikipedia and in other places. In fact, it is better to think of it as an argument — as they detail, one of the hardest problem for a system designer is to determine how to divide responsibilities between components of a system. This ends up being a discussion that involves weighing the pros and cons as you divide up functionality, isolate complexity and try to design a reliable, performant system that will be flexible to evolving requirements. There is no simple set of rules to follow.

|

||||

|

||||

Much of the discussion on the Internet focuses on communications systems, but the end-to-end argument applies in a much wider set of circumstances. One example in distributed systems is the idea of “eventual consistency”. An eventually consistent system can optimize and simplify by letting elements of the system get into a temporarily inconsistent state, knowing that there is a larger end-to-end process that can resolve these inconsistencies. I like the example of a scaled-out ordering system (e.g. as used by Amazon) that doesn’t require every request go through a central inventory control choke point. This lack of a central control point might allow two endpoints to sell the same last book copy, but the overall system needs some type of resolution system in any case, e.g. by notifying the customer that the book has been backordered. That last book might end up getting run over by a forklift in the warehouse before the order is fulfilled anyway. Once you realize an end-to-end resolution system is required and is in place, the internal design of the system can be optimized to take advantage of it.

|

||||

|

||||

In fact, it is this design flexibility in the service of either ongoing performance optimization or delivering other system features that makes this end-to-end approach so powerful. End-to-end thinking often allows internal performance flexibility which makes the overall system more robust and adaptable to changes in the characteristics of each of the components. This makes an end-to-end approach “anti-fragile” and resilient to change over time.

|

||||

|

||||

An implication of the end-to-end approach is that you want to be extremely careful about adding layers and functionality that eliminates overall performance flexibility. (Or other flexibility, but performance, especially latency, tends to be special.) If you expose the raw performance of the layers you are built on, end-to-end approaches can take advantage of that performance to optimize for their specific requirements. If you chew up that performance, even in the service of providing significant value-add functionality, you eliminate design flexibility.

|

||||

|

||||

The end-to-end argument intersects with organizational design when you have a system that is large and complex enough to assign whole teams to internal components. The natural tendency of those teams is to extend the functionality of those components, often in ways that start to eliminate design flexibility for applications trying to deliver end-to-end functionality built on top of them.

|

||||

|

||||

One of the challenges in applying the end-to-end approach is determining where the end is. “Little fleas have lesser fleas… and so on ad infinitum.”

|

||||

|

||||

Concentrating Complexity

|

||||

|

||||

Coding is an incredibly precise art, with each line of execution required for correct operation of the program. But this is misleading. Programs are not uniform in the overall complexity of their components or the complexity of how those components interact. The most robust programs isolate complexity in a way that lets significant parts of the system appear simple and straightforward and interact in simple ways with other components in the system. Complexity hiding can be isomorphic with other design approaches like information hiding and data abstraction but I find there is a different design sensibility if you really focus on identifying where the complexity lies and how you are isolating it.

|

||||

|

||||

The example I’ve returned to over and over again in my [writing][5] is the screen repaint algorithm that was used by early character video terminal editors like VI and EMACS. The early video terminals implemented control sequences for the core action of painting characters as well as additional display functions to optimize redisplay like scrolling the current lines up or down or inserting new lines or moving characters within a line. Each of those commands had different costs and those costs varied across different manufacturer’s devices. (See [TERMCAP][6] for links to code and a fuller history.) A full-screen application like a text editor wanted to update the screen as quickly as possible and therefore needed to optimize its use of these control sequences to transition the screen from one state to another.

|

||||

|

||||

These applications were designed so this underlying complexity was hidden. The parts of the system that modify the text buffer (where most innovation in functionality happens) completely ignore how these changes are converted into screen update commands. This is possible because the performance cost of computing the optimal set of updates for _any_ change in the content is swamped by the performance cost of actually executing the update commands on the terminal itself. It is a common pattern in systems design that performance analysis plays a key part in determining how and where to hide complexity. The screen update process can be asynchronous to the changes in the underlying text buffer and can be independent of the actual historical sequence of changes to the buffer. It is not important _how_ the buffer changed, but only _what_ changed. This combination of asynchronous coupling, elimination of the combinatorics of historical path dependence in the interaction between components and having a natural way for interactions to efficiently batch together are common characteristics used to hide coupling complexity.

|

||||

|

||||

Success in hiding complexity is determined not by the component doing the hiding but by the consumers of that component. This is one reason why it is often so critical for a component provider to actually be responsible for at least some piece of the end-to-end use of that component. They need to have clear optics into how the rest of the system interacts with their component and how (and whether) complexity leaks out. This often shows up as feedback like “this component is hard to use” — which typically means that it is not effectively hiding the internal complexity or did not pick a functional boundary that was amenable to hiding that complexity.

|

||||

|

||||

Layering and Componentization

|

||||

|

||||

It is the fundamental role of a system designer to determine how to break down a system into components and layers; to make decisions about what to build and what to pick up from elsewhere. Open Source may keep money from changing hands in this “build vs. buy” decision but the dynamics are the same. An important element in large scale engineering is understanding how these decisions will play out over time. Change fundamentally underlies everything we do as programmers, so these design choices are not only evaluated in the moment, but are evaluated in the years to come as the product continues to evolve.

|

||||

|

||||

Here are a few things about system decomposition that end up having a large element of time in them and therefore tend to take longer to learn and appreciate.

|

||||

|

||||

* Layers are leaky. Layers (or abstractions) are [fundamentally leaky][1]. These leaks have consequences immediately but also have consequences over time, in two ways. One consequence is that the characteristics of the layer leak through and permeate more of the system than you realize. These might be assumptions about specific performance characteristics or behavior ordering that is not an explicit part of the layer contract. This means that you generally are more _vulnerable_ to changes in the internal behavior of the component that you understood. A second consequence is it also means you are more _dependent_ on that internal behavior than is obvious, so if you consider changing that layer the consequences and challenges are probably larger than you thought.

|

||||

* Layers are too functional. It is almost a truism that a component you adopt will have more functionality than you actually require. In some cases, the decision to use it is based on leveraging that functionality for future uses. You adopt specifically because you want to “get on the train” and leverage the ongoing work that will go into that component. There are a few consequences of building on this highly functional layer. 1) The component will often make trade-offs that are biased by functionality that you do not actually require. 2) The component will embed complexity and constraints because of functionality you do not require and those constraints will impede future evolution of that component. 3) There will be more surface area to leak into your application. Some of that leakage will be due to true “leaky abstractions” and some will be explicit (but generally poorly controlled) increased dependence on the full capabilities of the component. Office is big enough that we found that for any layer we built on, we eventually fully explored its functionality in some part of the system. While that might appear to be positive (we are more completely leveraging the component), all uses are not equally valuable. So we end up having a massive cost to move from one layer to another based on this long-tail of often lower value and poorly recognized use cases. 4) The additional functionality creates complexity and opportunities for misuse. An XML validation API we used would optionally dynamically download the schema definition if it was specified as part of the XML tree. This was mistakenly turned on in our basic file parsing code which resulted in both a massive performance degradation as well as an (unintentional) distributed denial of service attack on a w3c.org web server. (These are colloquially known as “land mine” APIs.)

|

||||

* Layers get replaced. Requirements evolve, systems evolve, components are abandoned. You eventually need to replace that layer or component. This is true for external component dependencies as well as internal ones. This means that the issues above will end up becoming important.

|

||||

* Your build vs. buy decision will change. This is partly a corollary of above. This does not mean the decision to build or buy was wrong at the time. Often there was no appropriate component when you started and it only becomes available later. Or alternatively, you use a component but eventually find that it does not match your evolving requirements and your requirements are narrow enough, well-understood or so core to your value proposition that it makes sense to own it yourself. It does mean that you need to be just as concerned about leaky layers permeating more of the system for layers you build as well as for layers you adopt.

|

||||

* Layers get thick. As soon as you have defined a layer, it starts to accrete functionality. The layer is the natural throttle point to optimize for your usage patterns. The difficulty with a thick layer is that it tends to reduce your ability to leverage ongoing innovation in underlying layers. In some sense this is why OS companies hate thick layers built on top of their core evolving functionality — the pace at which innovation can be adopted is inherently slowed. One disciplined approach to avoid this is to disallow any additional state storage in an adaptor layer. Microsoft Foundation Classes took this general approach in building on top of Win32\. It is inevitably cheaper in the short term to just accrete functionality on to an existing layer (leading to all the eventual problems above) rather than refactoring and recomponentizing. A system designer who understands this looks for opportunities to break apart and simplify components rather than accrete more and more functionality within them.

|

||||

|

||||

Einsteinian Universe

|

||||

|

||||

I had been designing asynchronous distributed systems for decades but was struck by this quote from Pat Helland, a SQL architect, at an internal Microsoft talk. “We live in an Einsteinian universe — there is no such thing as simultaneity. “ When building distributed systems — and virtually everything we build is a distributed system — you cannot hide the distributed nature of the system. It’s just physics. This is one of the reasons I’ve always felt Remote Procedure Call, and especially “transparent” RPC that explicitly tries to hide the distributed nature of the interaction, is fundamentally wrong-headed. You need to embrace the distributed nature of the system since the implications almost always need to be plumbed completely through the system design and into the user experience.

|

||||

|

||||

Embracing the distributed nature of the system leads to a number of things:

|

||||

|

||||

* You think through the implications to the user experience from the start rather than trying to patch on error handling, cancellation and status reporting as an afterthought.

|

||||

* You use asynchronous techniques to couple components. Synchronous coupling is _impossible._ If something appears synchronous, it’s because some internal layer has tried to hide the asynchrony and in doing so has obscured (but definitely not hidden) a fundamental characteristic of the runtime behavior of the system.

|

||||

* You recognize and explicitly design for interacting state machines and that these states represent robust long-lived internal system states (rather than ad-hoc, ephemeral and undiscoverable state encoded by the value of variables in a deep call stack).

|

||||

* You recognize that failure is expected. The only guaranteed way to detect failure in a distributed system is to simply decide you have waited “too long”. This naturally means that [cancellation is first-class][2]. Some layer of the system (perhaps plumbed through to the user) will need to decide it has waited too long and cancel the interaction. Cancelling is only about reestablishing local state and reclaiming local resources — there is no way to reliably propagate that cancellation through the system. It can sometimes be useful to have a low-cost, unreliable way to attempt to propagate cancellation as a performance optimization.

|

||||

* You recognize that cancellation is not rollback since it is just reclaiming local resources and state. If rollback is necessary, it needs to be an end-to-end feature.

|

||||

* You accept that you can never really know the state of a distributed component. As soon as you discover the state, it may have changed. When you send an operation, it may be lost in transit, it might be processed but the response is lost, or it may take some significant amount of time to process so the remote state ultimately transitions at some arbitrary time in the future. This leads to approaches like idempotent operations and the ability to robustly and efficiently rediscover remote state rather than expecting that distributed components can reliably track state in parallel. The concept of “[eventual consistency][3]” succinctly captures many of these ideas.

|

||||

|

||||

I like to say you should “revel in the asynchrony”. Rather than trying to hide it, you accept it and design for it. When you see a technique like idempotency or immutability, you recognize them as ways of embracing the fundamental nature of the universe, not just one more design tool in your toolbox.

|

||||

|

||||

Performance

|

||||

|

||||

I am sure Don Knuth is horrified by how misunderstood his partial quote “Premature optimization is the root of all evil” has been. In fact, performance, and the incredible exponential improvements in performance that have continued for over 6 decades (or more than 10 decades depending on how willing you are to project these trends through discrete transistors, vacuum tubes and electromechanical relays), underlie all of the amazing innovation we have seen in our industry and all the change rippling through the economy as “software eats the world”.

|

||||

|

||||

A key thing to recognize about this exponential change is that while all components of the system are experiencing exponential change, these exponentials are divergent. So the rate of increase in capacity of a hard disk changes at a different rate from the capacity of memory or the speed of the CPU or the latency between memory and CPU. Even when trends are driven by the same underlying technology, exponentials diverge. [Latency improvements fundamentally trail bandwidth improvements][7]. Exponential change tends to look linear when you are close to it or over short periods but the effects over time can be overwhelming. This overwhelming change in the relationship between the performance of components of the system forces reevaluation of design decisions on a regular basis.

|

||||

|

||||

A consequence of this is that design decisions that made sense at one point no longer make sense after a few years. Or in some cases an approach that made sense two decades ago starts to look like a good trade-off again. Modern memory mapping has characteristics that look more like process swapping of the early time-sharing days than it does like demand paging. (This does sometimes result in old codgers like myself claiming that “that’s just the same approach we used back in ‘75” — ignoring the fact that it didn’t make sense for 40 years and now does again because some balance between two components — maybe flash and NAND rather than disk and core memory — has come to resemble a previous relationship).

|

||||

|

||||

Important transitions happen when these exponentials cross human constraints. So you move from a limit of two to the sixteenth characters (which a single user can type in a few hours) to two to the thirty-second (which is beyond what a single person can type). So you can capture a digital image with higher resolution than the human eye can perceive. Or you can store an entire music collection on a hard disk small enough to fit in your pocket. Or you can store a digitized video recording on a hard disk. And then later the ability to stream that recording in real time makes it possible to “record” it by storing it once centrally rather than repeatedly on thousands of local hard disks.

|

||||

|

||||

The things that stay as a fundamental constraint are three dimensions and the speed of light. We’re back to that Einsteinian universe. We will always have memory hierarchies — they are fundamental to the laws of physics. You will always have stable storage and IO, memory, computation and communications. The relative capacity, latency and bandwidth of these elements will change, but the system is always about how these elements fit together and the balance and tradeoffs between them. Jim Gray was the master of this analysis.

|

||||

|

||||

Another consequence of the fundamentals of 3D and the speed of light is that much of performance analysis is about three things: locality, locality, locality. Whether it is packing data on disk, managing processor cache hierarchies, or coalescing data into a communications packet, how data is packed together, the patterns for how you touch that data with locality over time and the patterns of how you transfer that data between components is fundamental to performance. Focusing on less code operating on less data with more locality over space and time is a good way to cut through the noise.

|

||||

|

||||

Jon Devaan used to say “design the data, not the code”. This also generally means when looking at the structure of a system, I’m less interested in seeing how the code interacts — I want to see how the data interacts and flows. If someone tries to explain a system by describing the code structure and does not understand the rate and volume of data flow, they do not understand the system.

|

||||

|

||||

A memory hierarchy also implies we will always have caches — even if some system layer is trying to hide it. Caches are fundamental but also dangerous. Caches are trying to leverage the runtime behavior of the code to change the pattern of interaction between different components in the system. They inherently need to model that behavior, even if that model is implicit in how they fill and invalidate the cache and test for a cache hit. If the model is poor _or becomes_ poor as the behavior changes, the cache will not operate as expected. A simple guideline is that caches _must_ be instrumented — their behavior will degrade over time because of changing behavior of the application and the changing nature and balance of the performance characteristics of the components you are modeling. Every long-time programmer has cache horror stories.

|

||||

|

||||

I was lucky that my early career was spent at BBN, one of the birthplaces of the Internet. It was very natural to think about communications between asynchronous components as the natural way systems connect. Flow control and queueing theory are fundamental to communications systems and more generally the way that any asynchronous system operates. Flow control is inherently resource management (managing the capacity of a channel) but resource management is the more fundamental concern. Flow control also is inherently an end-to-end responsibility, so thinking about asynchronous systems in an end-to-end way comes very naturally. The story of [buffer bloat][8]is well worth understanding in this context because it demonstrates how lack of understanding the dynamics of end-to-end behavior coupled with technology “improvements” (larger buffers in routers) resulted in very long-running problems in the overall network infrastructure.

|

||||

|

||||

The concept of “light speed” is one that I’ve found useful in analyzing any system. A light speed analysis doesn’t start with the current performance, it asks “what is the best theoretical performance I could achieve with this design?” What is the real information content being transferred and at what rate of change? What is the underlying latency and bandwidth between components? A light speed analysis forces a designer to have a deeper appreciation for whether their approach could ever achieve the performance goals or whether they need to rethink their basic approach. It also forces a deeper understanding of where performance is being consumed and whether this is inherent or potentially due to some misbehavior. From a constructive point of view, it forces a system designer to understand what are the true performance characteristics of their building blocks rather than focusing on the other functional characteristics.

|

||||

|

||||

I spent much of my career building graphical applications. A user sitting at one end of the system defines a key constant and constraint in any such system. The human visual and nervous system is not experiencing exponential change. The system is inherently constrained, which means a system designer can leverage ( _must_ leverage) those constraints, e.g. by virtualization (limiting how much of the underlying data model needs to be mapped into view data structures) or by limiting the rate of screen update to the perception limits of the human visual system.

|

||||

|

||||

The Nature of Complexity

|

||||

|

||||

I have struggled with complexity my entire career. Why do systems and apps get complex? Why doesn’t development within an application domain get easier over time as the infrastructure gets more powerful rather than getting harder and more constrained? In fact, one of our key approaches for managing complexity is to “walk away” and start fresh. Often new tools or languages force us to start from scratch which means that developers end up conflating the benefits of the tool with the benefits of the clean start. The clean start is what is fundamental. This is not to say that some new tool, platform or language might not be a great thing, but I can guarantee it will not solve the problem of complexity growth. The simplest way of controlling complexity growth is to build a smaller system with fewer developers.

|

||||

|

||||

Of course, in many cases “walking away” is not an alternative — the Office business is built on hugely valuable and complex assets. With OneNote, Office “walked away” from the complexity of Word in order to innovate along a different dimension. Sway is another example where Office decided that we needed to free ourselves from constraints in order to really leverage key environmental changes and the opportunity to take fundamentally different design approaches. With the Word, Excel and PowerPoint web apps, we decided that the linkage with our immensely valuable data formats was too fundamental to walk away from and that has served as a significant and ongoing constraint on development.

|

||||

|

||||

I was influenced by Fred Brook’s “[No Silver Bullet][9]” essay about accident and essence in software development. There is much irreducible complexity embedded in the essence of what the software is trying to model. I just recently re-read that essay and found it surprising on re-reading that two of the trends he imbued with the most power to impact future developer productivity were increasing emphasis on “buy” in the “build vs. buy” decision — foreshadowing the change that open-source and cloud infrastructure has had. The other trend was the move to more “organic” or “biological” incremental approaches over more purely constructivist approaches. A modern reader sees that as the shift to agile and continuous development processes. This in 1986!

|

||||

|

||||

I have been much taken with the work of Stuart Kauffman on the fundamental nature of complexity. Kauffman builds up from a simple model of Boolean networks (“[NK models][10]”) and then explores the application of this fundamentally mathematical construct to things like systems of interacting molecules, genetic networks, ecosystems, economic systems and (in a limited way) computer systems to understand the mathematical underpinning to emergent ordered behavior and its relationship to chaotic behavior. In a highly connected system, you inherently have a system of conflicting constraints that makes it (mathematically) hard to evolve that system forward (viewed as an optimization problem over a rugged landscape). A fundamental way of controlling this complexity is to batch the system into independent elements and limit the interconnections between elements (essentially reducing both “N” and “K” in the NK model). Of course this feels natural to a system designer applying techniques of complexity hiding, information hiding and data abstraction and using loose asynchronous coupling to limit interactions between components.

|

||||

|

||||

A challenge we always face is that many of the ways we want to evolve our systems cut across all dimensions. Real-time co-authoring has been a very concrete (and complex) recent example for the Office apps.

|

||||

|

||||

Complexity in our data models often equates with “power”. An inherent challenge in designing user experiences is that we need to map a limited set of gestures into a transition in the underlying data model state space. Increasing the dimensions of the state space inevitably creates ambiguity in the user gesture. This is “[just math][11]” which means that often times the most fundamental way to ensure that a system stays “easy to use” is to constrain the underlying data model.

|

||||

|

||||

Management

|

||||

|

||||

I started taking leadership roles in high school (student council president!) and always found it natural to take on larger responsibilities. At the same time, I was always proud that I continued to be a full-time programmer through every management stage. VP of development for Office finally pushed me over the edge and away from day-to-day programming. I’ve enjoyed returning to programming as I stepped away from that job over the last year — it is an incredibly creative and fulfilling activity (and maybe a little frustrating at times as you chase down that “last” bug).

|

||||

|

||||

Despite having been a “manager” for over a decade by the time I arrived at Microsoft, I really learned about management after my arrival in 1996\. Microsoft reinforced that “engineering leadership is technical leadership”. This aligned with my perspective and helped me both accept and grow into larger management responsibilities.

|

||||

|

||||

The thing that most resonated with me on my arrival was the fundamental culture of transparency in Office. The manager’s job was to design and use transparent processes to drive the project. Transparency is not simple, automatic, or a matter of good intentions — it needs to be designed into the system. The best transparency comes by being able to track progress as the granular output of individual engineers in their day-to-day activity (work items completed, bugs opened and fixed, scenarios complete). Beware subjective red/green/yellow, thumbs-up/thumbs-down dashboards!

|

||||

|

||||

I used to say my job was to design feedback loops. Transparent processes provide a way for every participant in the process — from individual engineer to manager to exec to use the data being tracked to drive the process and result and understand the role they are playing in the overall project goals. Ultimately transparency ends up being a great tool for empowerment — the manager can invest more and more local control in those closest to the problem because of confidence they have visibility to the progress being made. Coordination emerges naturally.

|

||||

|

||||

Key to this is that the goal has actually been properly framed (including key resource constraints like ship schedule). Decision-making that needs to constantly flow up and down the management chain usually reflects poor framing of goals and constraints by management.

|

||||

|

||||

I was at Beyond Software when I really internalized the importance of having a singular leader over a project. The engineering manager departed (later to hire me away for FrontPage) and all four of the leads were hesitant to step into the role — not least because we did not know how long we were going to stick around. We were all very technically sharp and got along well so we decided to work as peers to lead the project. It was a mess. The one obvious problem is that we had no strategy for allocating resources between the pre-existing groups — one of the top responsibilities of management! The deep accountability one feels when you know you are personally in charge was missing. We had no leader really accountable for unifying goals and defining constraints.

|

||||

|

||||

I have a visceral memory of the first time I fully appreciated the importance of _listening_ for a leader. I had just taken on the role of Group Development Manager for Word, OneNote, Publisher and Text Services. There was a significant controversy about how we were organizing the text services team and I went around to each of the key participants, heard what they had to say and then integrated and wrote up all I had heard. When I showed the write-up to one of the key participants, his reaction was “wow, you really heard what I had to say”! All of the largest issues I drove as a manager (e.g. cross-platform and the shift to continuous engineering) involved carefully listening to all the players. Listening is an active process that involves trying to understand the perspectives and then writing up what I learned and testing it to validate my understanding. When a key hard decision needed to happen, by the time the call was made everyone knew they had been heard and understood (whether they agreed with the decision or not).

|

||||

|

||||

It was the previous job, as FrontPage development manager, where I internalized the “operational dilemma” inherent in decision making with partial information. The longer you wait, the more information you will have to make a decision. But the longer you wait, the less flexibility you will have to actually implement it. At some point you just need to make a call.

|

||||

|

||||

Designing an organization involves a similar tension. You want to increase the resource domain so that a consistent prioritization framework can be applied across a larger set of resources. But the larger the resource domain, the harder it is to actually have all the information you need to make good decisions. An organizational design is about balancing these two factors. Software complicates this because characteristics of the software can cut across the design in an arbitrary dimensionality. Office has used [shared teams][12] to address both these issues (prioritization and resources) by having cross-cutting teams that can share work (add resources) with the teams they are building for.

|

||||

|

||||

One dirty little secret you learn as you move up the management ladder is that you and your new peers aren’t suddenly smarter because you now have more responsibility. This reinforces that the organization as a whole better be smarter than the leader at the top. Empowering every level to own their decisions within a consistent framing is the key approach to making this true. Listening and making yourself accountable to the organization for articulating and explaining the reasoning behind your decisions is another key strategy. Surprisingly, fear of making a dumb decision can be a useful motivator for ensuring you articulate your reasoning clearly and make sure you listen to all inputs.

|

||||

|

||||

Conclusion

|

||||

|

||||

At the end of my interview round for my first job out of college, the recruiter asked if I was more interested in working on “systems” or “apps”. I didn’t really understand the question. Hard, interesting problems arise at every level of the software stack and I’ve had fun plumbing all of them. Keep learning.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://hackernoon.com/education-of-a-programmer-aaecf2d35312

|

||||

|

||||

作者:[ Terry Crowley][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://hackernoon.com/@terrycrowley

|

||||

[1]:https://medium.com/@terrycrowley/leaky-by-design-7b423142ece0#.x67udeg0a

|

||||

[2]:https://medium.com/@terrycrowley/how-to-think-about-cancellation-3516fc342ae#.3pfjc5b54

|

||||

[3]:http://queue.acm.org/detail.cfm?id=2462076

|

||||

[4]:http://web.mit.edu/Saltzer/www/publications/endtoend/endtoend.pdf

|

||||

[5]:https://medium.com/@terrycrowley/model-view-controller-and-loose-coupling-6370f76e9cde#.o4gnupqzq

|

||||

[6]:https://en.wikipedia.org/wiki/Termcap

|

||||

[7]:http://www.ll.mit.edu/HPEC/agendas/proc04/invited/patterson_keynote.pdf

|

||||

[8]:https://en.wikipedia.org/wiki/Bufferbloat

|

||||

[9]:http://worrydream.com/refs/Brooks-NoSilverBullet.pdf

|

||||

[10]:https://en.wikipedia.org/wiki/NK_model

|

||||

[11]:https://medium.com/@terrycrowley/the-math-of-easy-to-use-14645f819201#.untmk9eq7

|

||||

[12]:https://medium.com/@terrycrowley/breaking-conways-law-a0fdf8500413#.gqaqf1c5k

|

||||

@ -0,0 +1,53 @@

|

||||

Why do developers who could work anywhere flock to the world’s most expensive cities?

|

||||

============================================================

|

||||

|

||||

|

||||

Politicians and economists [lament][10] that certain alpha regions — SF, LA, NYC, Boston, Toronto, London, Paris — attract all the best jobs while becoming repellently expensive, reducing economic mobility and contributing to further bifurcation between haves and have-nots. But why don’t the best jobs move elsewhere?

|

||||

|

||||

Of course, many of them can’t. The average financier in NYC or London (until Brexit annihilates London’s banking industry, of course…) would be laughed out of the office, and not invited back, if they told their boss they wanted to henceforth work from Chiang Mai.

|

||||

|

||||

But this isn’t true of (much of) the software field. The average web/app developer might have such a request declined; but they would not be laughed at, or fired. The demand for good developers greatly outstrips supply, and in this era of Skype and Slack, there’s nothing about software development that requires meatspace interactions.

|

||||

|

||||

(This is even more true of writers, of course; I did in fact post this piece from Pohnpei. But writers don’t have anything like the leverage of software developers.)

|

||||

|

||||

Some people will tell you that remote teams are inherently less effective and productive than localized ones, or that “serendipitous collisions” are so important that every employee must be forced to the same physical location every day so that these collisions can be manufactured. These people are wrong, as long as the team in question is small — on the order of handfuls, dozens or scores, rather than hundreds or thousands — and flexible.

|

||||

|

||||

I should know: at [HappyFunCorp][11], we work extensively with remote teams, and actively recruit remote developers, and it works out fantastically well. A day in which I interact and collaborate with developers in Stockholm, São Paulo, Shanghai, Brooklyn and New Delhi, from my own home base in San Francisco, is not at all unusual.

|

||||

|

||||

At this point, whether it’s a good idea is almost irrelevant, though. Supply and demand is such that any sufficiently skilled developer could become a so-called digital nomad if they really wanted to. But many who could, do not. I recently spent some time in Reykjavik at a house Airbnb-ed for the month by an ever-shifting crew of temporary remote workers, keeping East Coast time to keep up with their jobs, while spending mornings and weekends exploring Iceland — but almost all of us then returned to live in the Bay Area.

|

||||

|

||||

Economically, of course, this is insane. Moving to and working from Southeast Asia would save us thousands of dollars a month in rent alone. So why do people who could live in Costa Rica on a San Francisco salary, or in Berlin while charging NYC rates, choose not to do so? Why are allegedly hardheaded engineers so financially irrational?

|

||||

|

||||

Of course there are social and cultural reasons. Chiang Mai is very nice, but doesn’t have the Met, or steampunk masquerade parties or 50 foodie restaurants within a 15-minute walk. Berlin is lovely, but doesn’t offer kite surfing, or Sierra hiking or California weather. Neither promises an effectively limitless population of people with whom you share values and a first language.

|

||||

|

||||

And yet I think there’s much more to it than this. I believe there’s a more fundamental economic divide opening than the one between haves and have-nots. I think we are witnessing a growing rift between the world’s Extremistan cities, in which truly extraordinary things can be achieved, and its Mediocristan towns, in which you can work and make money and be happy but never achieve greatness. (Labels stolen from the great Nassim Taleb.)

|

||||

|

||||

The arts have long had Extremistan cities. That’s why aspiring writers move to New York City, and even directors and actors who found international success are still drawn to L.A. like moths to a klieg light. Now it is true of tech, too. Even if you don’t even want to try to (help) build something extraordinary — and the startup myth is so powerful today that it’s a very rare engineer indeed who hasn’t at least dreamed about it — the prospect of being _where great things happen_ is intoxicatingly enticing.

|

||||

|

||||

But the interesting thing about this is that it could, in theory, change; because — as of quite recently — distributed, decentralized teams can, in fact, achieve extraordinary things. The cards are arguably stacked against them, because VCs tend to be quite myopic. But no law dictates that unicorns may only be born in California and a handful of other secondary territories; and it seems likely that, for better or worse, Extremistan is spreading. It would be pleasantly paradoxical if that expansion ultimately leads to _lower_ rents in the Mission.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://techcrunch.com/2017/04/02/why-do-developers-who-could-work-anywhere-flock-to-the-worlds-most-expensive-cities/

|

||||

|

||||

作者:[ Jon Evans ][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://techcrunch.com/author/jon-evans/

|

||||

[1]:https://techcrunch.com/2017/04/02/why-do-developers-who-could-work-anywhere-flock-to-the-worlds-most-expensive-cities/#comments

|

||||

[2]:https://techcrunch.com/2017/04/02/why-do-developers-who-could-work-anywhere-flock-to-the-worlds-most-expensive-cities/#

|

||||

[3]:http://twitter.com/share?via=techcrunch&url=http://tcrn.ch/2owXJ0C&text=Why%20do%20developers%20who%20could%20work%20anywhere%20flock%20to%20the%20world%E2%80%99s%20most%20expensive%C2%A0cities%3F&hashtags=

|

||||

[4]:https://www.linkedin.com/shareArticle?mini=true&url=https%3A%2F%2Ftechcrunch.com%2F2017%2F04%2F02%2Fwhy-do-developers-who-could-work-anywhere-flock-to-the-worlds-most-expensive-cities%2F&title=Why%20do%20developers%20who%20could%20work%20anywhere%20flock%20to%20the%20world%E2%80%99s%20most%20expensive%C2%A0cities%3F

|

||||

[5]:https://plus.google.com/share?url=https://techcrunch.com/2017/04/02/why-do-developers-who-could-work-anywhere-flock-to-the-worlds-most-expensive-cities/

|

||||

[6]:http://www.reddit.com/submit?url=https://techcrunch.com/2017/04/02/why-do-developers-who-could-work-anywhere-flock-to-the-worlds-most-expensive-cities/&title=Why%20do%20developers%20who%20could%20work%20anywhere%20flock%20to%20the%20world%E2%80%99s%20most%20expensive%C2%A0cities%3F

|

||||

[7]:http://www.stumbleupon.com/badge/?url=https://techcrunch.com/2017/04/02/why-do-developers-who-could-work-anywhere-flock-to-the-worlds-most-expensive-cities/

|

||||

[8]:mailto:?subject=Why%20do%20developers%20who%20could%20work%20anywhere%20flock%20to%20the%20world%E2%80%99s%20most%20expensive%C2%A0cities?&body=Article:%20https://techcrunch.com/2017/04/02/why-do-developers-who-could-work-anywhere-flock-to-the-worlds-most-expensive-cities/

|

||||

[9]:https://share.flipboard.com/bookmarklet/popout?v=2&title=Why%20do%20developers%20who%20could%20work%20anywhere%20flock%20to%20the%20world%E2%80%99s%20most%20expensive%C2%A0cities%3F&url=https://techcrunch.com/2017/04/02/why-do-developers-who-could-work-anywhere-flock-to-the-worlds-most-expensive-cities/

|

||||

[10]:https://mobile.twitter.com/Noahpinion/status/846054187288866

|

||||

[11]:http://happyfuncorp.com/

|

||||

[12]:https://twitter.com/rezendi

|

||||

[13]:https://techcrunch.com/author/jon-evans/

|

||||

[14]:https://techcrunch.com/2017/04/01/discussing-the-limits-of-artificial-intelligence/

|

||||

@ -1,142 +0,0 @@

|

||||

[Data-Oriented Hash Table][1]

|

||||

============================================================

|

||||

|

||||

In recent years, there’s been a lot of discussion and interest in “data-oriented design”—a programming style that emphasizes thinking about how your data is laid out in memory, how you access it and how many cache misses it’s going to incur. With memory reads taking orders of magnitude longer for cache misses than hits, the number of misses is often the key metric to optimize. It’s not just about performance-sensitive code—data structures designed without sufficient attention to memory effects may be a big contributor to the general slowness and bloatiness of software.

|

||||

|

||||

The central tenet of cache-efficient data structures is to keep things flat and linear. For example, under most circumstances, to store a sequence of items you should prefer a flat array over a linked list—every pointer you have to chase to find your data adds a likely cache miss, while flat arrays can be prefetched and enable the memory system to operate at peak efficiency.

|

||||

|

||||

This is pretty obvious if you know a little about how the memory hierarchy works—but it’s still a good idea to test things sometimes, even if they’re “obvious”! [Baptiste Wicht tested `std::vector` vs `std::list` vs `std::deque`][4] (the latter of which is commonly implemented as a chunked array, i.e. an array of arrays) a couple of years ago. The results are mostly in line with what you’d expect, but there are a few counterintuitive findings. For instance, inserting or removing values in the middle of the sequence—something lists are supposed to be good at—is actually faster with an array, if the elements are a POD type and no bigger than 64 bytes (i.e. one cache line) or so! It turns out to actually be faster to shift around the array elements on insertion/removal than to first traverse the list to find the right position and then patch a few pointers to insert/remove one element. That’s because of the many cache misses in the list traversal, compared to relatively few for the array shift. (For larger element sizes, non-POD types, or if you already have a pointer into the list, the list wins, as you’d expect.)

|

||||

|

||||

Thanks to data like Baptiste’s, we know a good deal about how memory layout affects sequence containers. But what about associative containers, i.e. hash tables? There have been some expert recommendations: [Chandler Carruth tells us to use open addressing with local probing][5] so that we don’t have to chase pointers, and [Mike Acton suggests segregating keys from values][6] in memory so that we get more keys per cache line, improving locality when we have to look at multiple keys. These ideas make good sense, but again, it’s a good idea to test things, and I couldn’t find any data. So I had to collect some of my own!

|

||||

|

||||

### [][7]The Tests

|

||||

|

||||

I tested four different quick-and-dirty hash table implementations, as well as `std::unordered_map`. All five used the same hash function, Bob Jenkins’ [SpookyHash][8] with 64-bit hash values. (I didn’t test different hash functions, as that wasn’t the point here; I’m also not looking at total memory consumption in my analysis.) The implementations are identified by short codes in the results tables:

|

||||

|

||||

* **UM**: `std::unordered_map`. In both VS2012 and libstdc++-v3 (used by both gcc and clang), UM is implemented as a linked list containing all the elements, and an array of buckets that store iterators into the list. In VS2012, it’s a doubly-linked list and each bucket stores both begin and end iterators; in libstdc++, it’s a singly-linked list and each bucket stores just a begin iterator. In both cases, the list nodes are individually allocated and freed. Max load factor is 1.

|

||||

* **Ch**: separate chaining—each bucket points to a singly-linked list of element nodes. The element nodes are stored in a flat array pool, to avoid allocating each node individually. Unused nodes are kept on a free list. Max load factor is 1.

|

||||

* **OL**: open addressing with linear probing—each bucket stores a 62-bit hash, a 2-bit state (empty, filled, or removed), key, and value. Max load factor is 2/3.

|

||||

* **DO1**: “data-oriented 1”—like OL, but the hashes and states are segregated from the keys and values, in two separate flat arrays.

|

||||

* **DO2**: “data-oriented 2”—like OL, but the hashes/states, keys, and values are segregated in three separate flat arrays.

|

||||

|

||||

All my implementations, as well as VS2012’s UM, use power-of-2 sizes by default, growing by 2x upon exceeding their max load factor. In libstdc++, UM uses prime-number sizes by default and grows to the next prime upon exceeding its max load factor. However, I don’t think these details are very important for performance. The prime-number thing is a hedge against poor hash functions that don’t have enough entropy in their lower bits, but we’re using a good hash function.

|

||||

|

||||

The OL, DO1 and DO2 implementations will collectively be referred to as OA (open addressing), since we’ll find later that their performance characteristics are often pretty similar.

|

||||

|

||||

For each of these implementations, I timed several different operations, at element counts from 100K to 1M and for payload sizes (i.e. total key+value size) from 8 to 4K bytes. For my purposes, keys and values were always POD types and keys were always 8 bytes (except for the 8-byte payload, in which key and value were 4 bytes each). I kept the keys to a consistent size because my purpose here was to test memory effects, not hash function performance. Each test was repeated 5 times and the minimum timing was taken.

|

||||

|

||||

The operations tested were:

|

||||

|

||||

* **Fill**: insert a randomly shuffled sequence of unique keys into the table.

|

||||

* **Presized fill**: like Fill, but first reserve enough memory for all the keys we’ll insert, to prevent rehashing and reallocing during the fill process.

|

||||

* **Lookup**: perform 100K lookups of random keys, all of which are in the table.

|

||||

* **Failed lookup**: perform 100K lookups of random keys, none of which are in the table.

|

||||

* **Remove**: remove a randomly chosen half of the elements from a table.

|

||||

* **Destruct**: destroy a table and free its memory.

|

||||

|

||||