mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-01-25 23:11:02 +08:00

Merge remote-tracking branch 'LCTT/master'

commit

e078cde621

134

published/20171005 Reasons Kubernetes is cool.md

Normal file

@ -0,0 +1,134 @@

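Reasons Kubernetes is cool
============================================================

When I first started learning Kubernetes (about a year and a half ago?), I really didn't understand why I should care about it.

After working with Kubernetes full-time for a little over three months, I have gradually come to understand why I would want to use it. (I'm still very far from being a Kubernetes expert!) Hopefully this post will help you understand what Kubernetes can do!

I will try to explain some of the reasons I find Kubernetes interesting without using the words "cloud native", "orchestration", "container", or any other Kubernetes-specific terminology :). I'm explaining this mostly from the perspective of a Kubernetes operator / infrastructure engineer, since my job right now is to set up Kubernetes and make it work well.

I'm not going to try to address the question "should you use Kubernetes for your production systems?" at all. That is a very complicated question, not least because "in production" has totally different requirements depending on what you're doing.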

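### Kubernetes lets you run code in production without setting up new servers

I was first sold on Kubernetes in a conversation with my partner Kamal.

Here's an approximate transcript:

* Kamal: With Kubernetes you can set up a new service with a single command.

* Julia: I don't believe that's possible.

* Kamal: Like this: you write a configuration file, apply it, and then you have an HTTP service running in production.

* Julia: But today I need to create a new AWS instance, write a Puppet manifest, set up service discovery, configure my load balancers, configure our deployment software, and make sure DNS is working. That takes at least 4 hours if nothing goes wrong.

* Kamal: Yeah. With Kubernetes you don't have to do any of that. You can set up a new HTTP service in 5 minutes and it will just run automatically. As long as you have spare capacity in your cluster, it just works!

* Julia: There's got to be a catch.

There kind of is a catch: setting up a production Kubernetes cluster is (in my experience) definitely not easy. (See [Kubernetes The Hard Way][3] for what's involved in getting started.) But we're not going to go into that right now.

So the first cool thing about Kubernetes is that it has the potential to make life much easier for developers who want to deploy new software into production. That's cool, and it's actually true: once you have a working Kubernetes cluster, you really can set up a production HTTP service (run the program, set up a load balancer, give it a DNS name, and so on) with just one configuration file, in about 5 minutes. It's really fun to see.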

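### Kubernetes gives you better visibility into, and control over, the code you run in production

In my opinion, you can't understand Kubernetes without understanding etcd. So let's talk about etcd first!

Imagine that I asked you today, "Tell me about every application you have running in production: which host is it running on? Is it healthy? Does it have a DNS name attached to it?" I don't know about you, but I would need to look in a lot of different places to answer those questions, and it would take me quite a while. There is definitely no single API I could query.

In Kubernetes, all the state in your cluster (running applications ("pods"), nodes, DNS names, cron jobs, and more) is stored in a single database (etcd). Every Kubernetes component is stateless, and basically works by:

* Reading state from etcd (e.g. "the list of pods assigned to node 1")

* Making changes (e.g. "run pod A on node 1")

* Updating the state in etcd (e.g. "set the state of pod A to 'running'")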
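
The read, change, update cycle in the list above can be sketched in a few lines of Python. This is a toy model under stated assumptions, not the Kubernetes API: the `store` dict standing in for etcd, the `reconcile` function, and the pod fields are all names invented for illustration.

```python
# Toy model: a plain dict stands in for etcd. None of these names come
# from Kubernetes itself; they are hypothetical and for illustration only.
def reconcile(store, node):
    """One pass of a stateless component: read state, act, write state back."""
    started = []
    for name, pod in sorted(store["pods"].items()):
        # 1. Read state: pods assigned to this node that are not running yet.
        if pod["node"] == node and pod["status"] == "pending":
            # 2. Make the change (a real component would start the process here).
            started.append(name)
            # 3. Record the new state in the store.
            pod["status"] = "running"
    return started


store = {
    "pods": {
        "pod-a": {"node": "node-1", "status": "pending"},
        "pod-b": {"node": "node-2", "status": "pending"},
    }
}

first = reconcile(store, "node-1")
# The function keeps nothing in memory between calls, so a second pass
# (think: the component was restarted) re-reads the store and finds no work.
second = reconcile(store, "node-1")
print(first, second)
```

Because every decision is derived from the store, running the pass again is harmless, which is the property the rest of the article relies on.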

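This means that if you want to answer a question like "how many nginx pods are running right now in that availability zone?", you can answer it by querying a single unified API (the Kubernetes API). And you have exactly the same access to that API as every other Kubernetes component.

This also means that you have easy control over everything running in Kubernetes. Say you want to:

* Implement a complicated custom rollout strategy (deploy 1 thing, wait 2 minutes, deploy 5 more, wait 3.7 minutes, and so on)

* Automatically [start a new web server][1] every time a branch is pushed to GitHub

* Monitor all your running applications to make sure each of them has a reasonable memory limit

All you need to do is write a program that talks to the Kubernetes API (a "controller").

Another very exciting thing about the Kubernetes API is that you are not limited to the functionality Kubernetes provides out of the box! If you have your own ideas about how your software should be deployed, created, or monitored, you can write code against the Kubernetes API to do it. It lets you do pretty much whatever you need.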

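### If every Kubernetes component dies, your code will still keep running

One thing I was promised (by various blog posts :)) about Kubernetes was "if the Kubernetes API server and everything else dies, it's fine, your code will just keep running". I thought this sounded cool in theory, but I wasn't sure it was actually true.

So far it seems to be true!

I have taken etcd down on a running cluster a few times now, and this is what happened:

1. All the code that was running kept running

2. Nothing _new_ could happen (you can't deploy new code or make changes, and cron jobs stop working)

3. When everything came back, the cluster caught up on whatever it had missed

This does mean that if etcd goes down and one of your applications crashes or something else goes wrong, it can't come back up until etcd returns.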

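### Kubernetes' design is pretty resilient to bugs

Like any piece of software, Kubernetes has bugs. For example, right now the controller manager in our cluster leaks memory, and the scheduler crashes pretty regularly. Bugs are obviously bad, but I have found that Kubernetes' design helps mitigate the impact of bugs in many of its core components.

If you restart any component, what happens is:

* It reads all of its relevant state from etcd

* It starts doing whatever it thinks it needs to do based on that state (scheduling pods, garbage-collecting completed pods, scheduling cron jobs, deploying as needed, and so on)

Because the components don't keep any state in memory, you can restart them at any time, and that can help mitigate a variety of bugs.

For example, say you have a memory leak in your controller manager. Because the controller manager is stateless, you can simply restart it every hour, or whenever you suspect it might be causing an inconsistency. Or say you ran into a bug in the scheduler where it sometimes forgets about pods and never schedules them. You could mitigate that by restarting the scheduler every 10 minutes. (We don't do that, we fix the bug instead, but you _could_ :))

So I feel I can trust Kubernetes' design to help keep the state of the cluster consistent even when there are bugs in its core components. And in general, the software's quality improves over time. The only stateful thing you have to operate is etcd.

Not to harp on this "state" thing too much, but I think it's cool that in Kubernetes the only thing you need a backup and restore plan for is etcd (unless you use persistent volumes for your pods). I think that makes operating Kubernetes a little easier than you might expect.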

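### Implementing new distributed systems on top of Kubernetes is relatively easy

Suppose you want to implement a distributed cron job scheduling system! Doing that from scratch is a ton of work. But implementing one inside Kubernetes is much easier! (It's still not trivial; it is a distributed system, after all.)

The first time I read the code for the Kubernetes cron job controller, I was really delighted by how simple it was. The main logic is about 400 lines of Go. Go read it! => [cronjob_controller.go][4] <=

Basically, what the cron job controller does is:

* Every 10 seconds:

    * List all the cron jobs that exist

    * Check whether any of them need to run right now

    * If so, create a new Job object to be scheduled and actually run by other Kubernetes controllers

    * Clean up finished jobs

    * Repeat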
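
The loop described above can be sketched in Python. This is a minimal sketch under loud assumptions, not the code in `cronjob_controller.go`: the `sync_once` function, the dict layout, and the fixed `interval` (used here in place of real cron schedule parsing) are all hypothetical.

```python
from datetime import datetime, timedelta


def sync_once(cron_jobs, jobs, now):
    """One pass of a toy cron controller over plain dicts (hypothetical model)."""
    for name, cron in cron_jobs.items():
        # Check whether this cron job needs to run right now.
        if now >= cron["next_run"]:
            # Create a job object; in Kubernetes another controller would run it.
            jobs.append({"cron": name, "created": now, "done": False})
            cron["next_run"] = now + cron["interval"]
    # Clean up finished jobs.
    jobs[:] = [job for job in jobs if not job["done"]]


start = datetime(2017, 10, 5, 12, 0, 0)
cron_jobs = {"backup": {"interval": timedelta(minutes=1), "next_run": start}}
jobs = []

# Simulate the "every 10 seconds" loop without sleeping: seven passes
# cover the instants 0 s, 10 s, ..., 60 s after the start time.
for tick in range(7):
    sync_once(cron_jobs, jobs, start + timedelta(seconds=10 * tick))

print(len(jobs), cron_jobs["backup"]["next_run"])
```

Passing `now` in as an argument, rather than reading the clock inside the function, keeps each pass deterministic and easy to test.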

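The Kubernetes model is pretty constrained (it has this pattern of resources defined in etcd, with controllers reading those resources and updating etcd), and I think this relatively opinionated, constrained model makes it easier to develop your own distributed systems inside the Kubernetes framework.

Kamal introduced me to the idea that "Kubernetes is a good platform for writing your own distributed systems" rather than just "Kubernetes is a distributed system you can use", and I think it's really interesting. He built a prototype of a [system that runs an HTTP service for every branch you push to GitHub][5]. It took him a weekend and about 800 lines of Go, which I think is incredible!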

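### Kubernetes lets you do some amazing things (but isn't easy)

I started out by saying "Kubernetes lets you do these magical things: you can spin up so much infrastructure with a single configuration file, it's amazing". And that's true!

What I mean by "Kubernetes isn't easy" is that Kubernetes has a lot of moving parts, and learning how to successfully operate a highly available Kubernetes cluster is a lot of work. I find that with many of the abstractions it gives me, I need to understand what is underneath them in order to debug issues and configure things properly. I love learning new things, so this doesn't make me upset or angry, but I think it's important to know :)

One specific example of "I can't just rely on the abstractions" is that I have had to learn a lot about [how networking works on Linux][6] to feel even slightly confident setting up Kubernetes networking, far more than I had ever learned about networking before. This is very fun but pretty time-consuming. At some point I might write more about what is hard and interesting about setting up Kubernetes networking.

Or I wrote a [2000-word blog post][7] about all the details I had to learn about Kubernetes' different options for certificate authorities in order to set up my Kubernetes CAs successfully.

I think some managed Kubernetes systems, like GKE (Google's Kubernetes product), may be simpler because they make a lot of decisions for you, but I haven't tried any of them.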

--------------------------------------------------------------------------------

via: https://jvns.ca/blog/2017/10/05/reasons-kubernetes-is-cool/

Author: [Julia Evans][a]

Translator: [qhwdw](https://github.com/qhwdw)

Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]:https://jvns.ca/about

[1]:https://github.com/kamalmarhubi/kubereview

[2]:https://jvns.ca/categories/kubernetes

[3]:https://github.com/kelseyhightower/kubernetes-the-hard-way

[4]:https://github.com/kubernetes/kubernetes/blob/e4551d50e57c089aab6f67333412d3ca64bc09ae/pkg/controller/cronjob/cronjob_controller.go

[5]:https://github.com/kamalmarhubi/kubereview

[6]:https://jvns.ca/blog/2016/12/22/container-networking/

[7]:https://jvns.ca/blog/2017/08/05/how-kubernetes-certificates-work/

106

published/20180103 Creating an Offline YUM repository for LAN.md

Normal file

@ -0,0 +1,106 @@

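Creating an offline Yum repository for a LAN
======

In an earlier tutorial, we discussed [how to create your own Yum repository from an ISO image or from an online Yum repository][1]. Creating your own Yum repository is a good idea, but it is hardly worth the effort if your network has only 2 or 3 Linux machines. If your network has a large number of Linux servers that need regular updates, or many servers that cannot reach the internet directly, then your own Yum repository becomes very worthwhile.

When many Linux servers each pull updates directly from the internet, the data consumption is considerable. To save bandwidth, we can create an offline Yum repository and share it on the local network. The other Linux machines on the network can then fetch updates from the local repository, which saves data and also gives good transfer speeds.

We can share the Yum repository in either of two ways:

* Using a web server (Apache)

* Using an FTP server (vsftpd)

Before starting with either method, we need to create a Yum repository by following the [earlier tutorial][1].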

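### Using a web server

First, install the web server (Apache) on the Yum server, which we assume has the IP address `192.168.1.100`. The Yum repository is already configured on this system, so we can install the Apache web server with the `yum` command:

```
$ yum install httpd
```

Next, copy all the rpm packages to Apache's default document root, `/var/www/html`. Since we have already copied the packages to `/YUM`, we can instead create a symbolic link inside `/var/www/html` that points to `/YUM`. Note that `ln -s` takes the existing target first and the name of the new link second:

```
$ ln -s /YUM /var/www/html/CentOS
```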
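
The argument order of a symbolic link is easy to get backwards, so here is a small sketch that checks it in a throwaway directory. It assumes nothing about the real server: the `YUM` and `html` directories and the `example.rpm` file are placeholders created under a temporary path, and `os.symlink(src, dst)` follows the same "existing target first, new link second" order as `ln -s`.

```python
import os
import tempfile

# Work in a throwaway directory; "YUM" and "html" only mirror the
# article's paths and do not touch the real /YUM or /var/www/html.
root = tempfile.mkdtemp()
repo_dir = os.path.join(root, "YUM")
web_root = os.path.join(root, "html")
os.makedirs(repo_dir)
os.makedirs(web_root)

# A placeholder package so the directory has something to list.
open(os.path.join(repo_dir, "example.rpm"), "w").close()

# Same order as `ln -s TARGET LINK_NAME`: the existing directory first,
# then the path of the link to create inside the web root.
link = os.path.join(web_root, "CentOS")
os.symlink(repo_dir, link)

# Listing the link shows the contents of the repository directory.
print(os.listdir(link))
```

If the listing shows the placeholder package, the link points the right way; swapping the two arguments would instead try to create a link at the repository path.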

Restart the web server to apply the changes:

```
$ systemctl restart httpd
```

#### Configuring the client machine

That completes the server-side configuration. Now we need to configure the client to fetch updates from the offline Yum repository we created. Here we assume the client's IP address is `192.168.1.101`.

Create a file named `offline-yum.repo` in the `/etc/yum.repos.d` directory with the following contents:

```
$ vi /etc/yum.repos.d/offline-yum.repo
```

```
[offline-yum]
name=Local YUM
baseurl=http://192.168.1.100/CentOS/7
gpgcheck=0
enabled=1
```
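
A `.repo` file is INI-style text, so its basic shape can be checked before it is copied to many clients. The sketch below uses Python's standard `configparser`; the `check_repo` function and the `REPO_TEXT` constant are names made up for this example, and the rule that a repository id may contain only letters, digits, and `-_.:` is an assumption about what yum accepts rather than something the article states.

```python
import configparser
import string

# Assumption: characters yum accepts in a repository id.
ALLOWED_ID_CHARS = set(string.ascii_letters + string.digits + "-_.:")

REPO_TEXT = """\
[offline-yum]
name=Local YUM
baseurl=http://192.168.1.100/CentOS/7
gpgcheck=0
enabled=1
"""


def check_repo(text):
    """Return a list of problems found in the text of a .repo file."""
    parser = configparser.ConfigParser()
    try:
        parser.read_string(text)
    except configparser.MissingSectionHeaderError:
        return ["missing [repo-id] section header"]
    problems = []
    for repo_id in parser.sections():
        if not set(repo_id) <= ALLOWED_ID_CHARS:
            problems.append(f"{repo_id}: unexpected character in repo id")
        if "baseurl" not in parser[repo_id]:
            problems.append(f"{repo_id}: no baseurl")
    return problems


print(check_repo(REPO_TEXT))
```

This only checks structure; it does not contact the server, so a clean result still needs to be confirmed with `yum` on a client.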

The client is now configured as well. Try installing or updating a package with `yum` to confirm that the repository works.

### Using an FTP server

To share the Yum repository over FTP, first install the required package, vsftpd:

```
$ yum install vsftpd
```

The default public directory for vsftpd is `/var/ftp/pub`, so you can either copy the rpm packages into that directory or create a symbolic link there that points to `/YUM`. If anonymous users are confined to the FTP root, as is usual with vsftpd, a link that points outside it may not be followed; in that case, copy the packages instead.

```
$ ln -s /YUM /var/ftp/pub/CentOS
```

Restart the service to apply the changes:

```
$ systemctl restart vsftpd
```

#### Configuring the client machine

As before, create a file named `offline-yum.repo` in `/etc/yum.repos.d` with the following contents:

```
$ vi /etc/yum.repos.d/offline-yum.repo
```

```
[offline-yum]
name=Local YUM
baseurl=ftp://192.168.1.100/pub/CentOS/7
gpgcheck=0
enabled=1
```

The client can now receive updates over FTP. To configure the vsftpd server to share files with other Linux systems, [read this guide][2].

Both methods work well, so pick whichever you prefer. If you have any questions or comments, feel free to leave them in the comment box below.

--------------------------------------------------------------------------------

via: http://linuxtechlab.com/offline-yum-repository-for-lan/

Author: [Shusain][a]

Translator: [lujun9972](https://github.com/lujun9972)

Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]:http://linuxtechlab.com/author/shsuain/

[1]:https://linux.cn/article-9296-1.html

[2]:http://linuxtechlab.com/ftp-secure-installation-configuration/

@ -0,0 +1,138 @@

A history of low-level Linux container runtimes
============================================================

### "Container runtime" is an overloaded term.

Image credits : Rikki Endsley. [CC BY-SA 4.0][12]

At Red Hat we like to say, "Containers are Linux—Linux is Containers." Here is what this means. Traditional containers are processes on a system that usually have the following three characteristics:

### 1\. Resource constraints

When you run lots of containers on a system, you do not want to have any container monopolize the operating system, so we use resource constraints to control things like CPU, memory, network bandwidth, etc. The Linux kernel provides the cgroups feature, which can be configured to control the container process resources.

### 2\. Security constraints

Usually, you do not want your containers being able to attack each other or attack the host system. We take advantage of several features of the Linux kernel to set up security separation, such as SELinux, seccomp, capabilities, etc.

### 3\. Virtual separation

Container processes should not have a view of any processes outside the container. They should be on their own network. Container processes need to be able to bind to port 80 in different containers. Each container needs a different view of its image, needs its own root filesystem (rootfs). In Linux we use kernel namespaces to provide virtual separation.

Therefore, a process that runs in a cgroup, has security settings, and runs in namespaces can be called a container. Looking at PID 1, systemd, on a Red Hat Enterprise Linux 7 system, you see that systemd runs in a cgroup.

```
# tail -1 /proc/1/cgroup
1:name=systemd:/
```

The `ps` command shows you that the system process has an SELinux label ...

```
# ps -eZ | grep systemd
system_u:system_r:init_t:s0 1 ? 00:00:48 systemd
```

and capabilities.

```
# grep Cap /proc/1/status
...
CapEff: 0000001fffffffff
CapBnd: 0000001fffffffff
CapBnd: 0000003fffffffff
```
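
Each `Cap` value in that output is a hexadecimal bitmask in which bit N stands for capability number N. A minimal sketch of decoding one mask, assuming only that layout (the `capability_bits` helper is a name made up here, and the mask is the one printed above):

```python
def capability_bits(mask_hex):
    """Return the capability numbers whose bits are set in a Cap* mask."""
    mask = int(mask_hex, 16)
    # Test every bit position up to the highest set bit.
    return [bit for bit in range(mask.bit_length()) if (mask >> bit) & 1]


bits = capability_bits("0000001fffffffff")
# Number of capabilities granted, plus the lowest and highest numbers.
print(len(bits), bits[0], bits[-1])
```

Mapping the numbers back to names such as `CAP_CHOWN` (number 0) requires the kernel's capability header or a tool that knows the table; the sketch deliberately stops at the numbers.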

Finally, if you look at the `/proc/1/ns` subdir, you will see the namespace that systemd runs in.

```
# ls -l /proc/1/ns
lrwxrwxrwx. 1 root root 0 Jan 11 11:46 mnt -> mnt:[4026531840]
lrwxrwxrwx. 1 root root 0 Jan 11 11:46 net -> net:[4026532009]
lrwxrwxrwx. 1 root root 0 Jan 11 11:46 pid -> pid:[4026531836]
...
```
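
The same information is available to any process about itself through `/proc/self/ns`. The sketch below reads those links with the standard `os` module; the `namespaces` helper is a hypothetical name, and it returns an empty dict on systems without a Linux-style `/proc` rather than failing.

```python
import os


def namespaces(pid="self"):
    """Map namespace name to its identifier for a process ({} if unavailable)."""
    ns_dir = f"/proc/{pid}/ns"
    result = {}
    try:
        names = os.listdir(ns_dir)
    except OSError:
        # Not Linux, no such process, or no permission to look.
        return result
    for name in names:
        try:
            # Each entry is a symbolic link whose target names the namespace.
            result[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            continue
    return result


mine = namespaces()
print(mine.get("pid"), mine.get("net"))
```

Two processes share a namespace when the identifiers for that entry match, which is one way to see that an ordinary process and PID 1 usually sit in the same namespaces unless a container tool has changed them.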

If PID 1 (and really every other process on the system) has resource constraints, security settings, and namespaces, I argue that every process on the system is in a container.

Container runtime tools just modify these resource constraints, security settings, and namespaces. Then the Linux kernel executes the processes. After the container is launched, the container runtime can monitor PID 1 inside the container or the container's `stdin`/`stdout`—the container runtime manages the lifecycles of these processes.

### Container runtimes

You might say to yourself, well systemd sounds pretty similar to a container runtime. Well, after having several email discussions about why container runtimes do not use `systemd-nspawn` as a tool for launching containers, I decided it would be worth discussing container runtimes and giving some historical context.

[Docker][13] is often called a container runtime, but "container runtime" is an overloaded term. When folks talk about a "container runtime," they're really talking about higher-level tools like Docker, [CRI-O][14], and [RKT][15] that come with developer functionality. They are API driven. They include concepts like pulling the container image from the container registry, setting up the storage, and finally launching the container. Launching the container often involves running a specialized tool that configures the kernel to run the container, and these are also referred to as "container runtimes." I will refer to them as "low-level container runtimes." Daemons like Docker and CRI-O, as well as command-line tools like [Podman][16] and [Buildah][17], should probably be called "container managers" instead.

When Docker was originally written, it launched containers using the `lxc` toolset, which predates `systemd-nspawn`. Red Hat's original work with Docker was to try to integrate [`libvirt`][6] (`libvirt-lxc`) into Docker as an alternative to the `lxc` tools, which were not supported in RHEL. `libvirt-lxc` also did not use `systemd-nspawn`. At that time, the systemd team was saying that `systemd-nspawn` was only a tool for testing, not for production.

At the same time, the upstream Docker developers, including some members of my Red Hat team, decided they wanted a golang-native way to launch containers, rather than launching a separate application. Work began on libcontainer, as a native golang library for launching containers. Red Hat engineering decided that this was the best path forward and dropped `libvirt-lxc`.

Later, the [Open Container Initiative][18] (OCI) was formed, partly because people wanted to be able to launch containers in additional ways. Traditional namespace-separated containers were popular, but people also wanted virtual machine-level isolation. Intel and [Hyper.sh][19] were working on KVM-separated containers, and Microsoft was working on Windows-based containers. The OCI wanted a standard specification defining what a container is, so the [OCI Runtime Specification][20] was born.

The OCI Runtime Specification defines a JSON file format that describes what binary should be run, how it should be contained, and the location of the rootfs of the container. Tools can generate this JSON file. Then other tools can read this JSON file and execute a container on the rootfs. The libcontainer parts of Docker were broken out and donated to the OCI. The upstream Docker engineers and our engineers helped create a new frontend tool to read the OCI Runtime Specification JSON file and interact with libcontainer to run the container. This tool, called [`runc`][7], was also donated to the OCI. While `runc` can read the OCI JSON file, users are left to generate it themselves. `runc` has since become the most popular low-level container runtime. Almost all container-management tools support `runc`, including CRI-O, Docker, Buildah, Podman, and [Cloud Foundry Garden][21]. Since then, other tools have also implemented the OCI Runtime Spec to execute OCI-compliant containers.
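
To make the "tools can generate this JSON file" step concrete, here is a minimal sketch that builds a small runtime configuration with Python's `json` module. The `minimal_oci_config` function is a hypothetical helper; the field names (`ociVersion`, `process.args`, `process.cwd`, `root.path`, `root.readonly`, `linux.namespaces`) follow the OCI Runtime Specification, but the result is deliberately incomplete, is not validated against the spec, and the version string is an assumption.

```python
import json


def minimal_oci_config(args, rootfs="rootfs"):
    """Build a deliberately small OCI-style config.json as a string."""
    config = {
        # Assumed spec version; adjust to what your runtime expects.
        "ociVersion": "1.0.0",
        # What binary to run, and from which working directory.
        "process": {"args": list(args), "cwd": "/"},
        # Where the container's root filesystem lives, relative to the bundle.
        "root": {"path": rootfs, "readonly": True},
        # How it should be contained: which namespaces to create.
        "linux": {
            "namespaces": [{"type": kind} for kind in ("pid", "mount", "network")]
        },
    }
    return json.dumps(config, indent=2)


print(minimal_oci_config(["sleep", "60"]))
```

In practice a complete file is usually produced by a container manager or a generator command rather than written by hand; the sketch only shows which of the three questions from the paragraph above each section answers.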

Both [Clear Containers][22] and Hyper.sh's `runV` tools were created to use the OCI Runtime Specification to execute KVM-based containers, and they are combining their efforts in a new project called [Kata][23]. Last year, Oracle created a demonstration version of an OCI runtime tool called [RailCar][24], written in Rust. It's been two months since the GitHub project was last updated, so it's unclear if it is still in development. A couple of years ago, Vincent Batts worked on adding a tool, [`nspawn-oci`][8], that interpreted an OCI Runtime Specification file and launched `systemd-nspawn`, but no one really picked up on it, and it was not a native implementation.

If someone wants to implement a native `systemd-nspawn --oci OCI-SPEC.json` and get it accepted by the systemd team for support, then CRI-O, Docker, and eventually Podman would be able to use it in addition to `runc` and Clear Container/runV ([Kata][25]). (No one on my team is working on this.)

The bottom line is, back three or four years, the upstream developers wanted to write a low-level golang tool for launching containers, and this tool ended up becoming `runc`. Those developers at the time had a C-based tool for doing this called `lxc` and moved away from it. I am pretty sure that at the time they made the decision to build libcontainer, they would not have been interested in `systemd-nspawn` or any other non-native (golang) way of running "namespace" separated containers.

### About the author

Daniel J Walsh - Daniel Walsh has worked in the computer security field for almost 30 years. Dan joined Red Hat in August 2001. Dan has led the RHEL Docker enablement team since August 2013, but has been working on container technology for several years. He has led the SELinux project, concentrating on the application space and policy development. Dan helped develop sVirt, Secure Virtualization. He also created the SELinux Sandbox, the Xguest user and the Secure Kiosk. Previously, Dan worked Netect/Bindview... [More about me][10]

--------------------------------------------------------------------------------

via: https://opensource.com/article/18/1/history-low-level-container-runtimes

Author: [Daniel J Walsh ][a]

Translator: [译者ID](https://github.com/译者ID)

Proofreader: [校对者ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]:https://opensource.com/users/rhatdan

[1]:https://opensource.com/resources/what-are-linux-containers?utm_campaign=containers&intcmp=70160000000h1s6AAA

[2]:https://opensource.com/resources/what-docker?utm_campaign=containers&intcmp=70160000000h1s6AAA

[3]:https://opensource.com/resources/what-is-kubernetes?utm_campaign=containers&intcmp=70160000000h1s6AAA

[4]:https://developers.redhat.com/blog/2016/01/13/a-practical-introduction-to-docker-container-terminology/?utm_campaign=containers&intcmp=70160000000h1s6AAA

[5]:https://opensource.com/article/18/1/history-low-level-container-runtimes?rate=05T2m7ayQ7DRxtzQFjGcfBAlaTF5ffHN-EH1kEqSt9Q

[6]:https://libvirt.org/

[7]:https://github.com/opencontainers/runc

[8]:https://github.com/vbatts/nspawn-oci

[9]:https://opensource.com/users/rhatdan

[10]:https://opensource.com/users/rhatdan

[11]:https://opensource.com/user/16673/feed

[12]:https://creativecommons.org/licenses/by-sa/4.0/

[13]:https://github.com/docker

[14]:https://github.com/kubernetes-incubator/cri-o

[15]:https://github.com/rkt/rkt

[16]:https://github.com/projectatomic/libpod/tree/master/cmd/podman

[17]:https://github.com/projectatomic/buildah

[18]:https://www.opencontainers.org/

[19]:https://www.hyper.sh/

[20]:https://github.com/opencontainers/runtime-spec

[21]:https://github.com/cloudfoundry/garden

[22]:https://clearlinux.org/containers

[23]:https://clearlinux.org/containers

[24]:https://github.com/oracle/railcar

[25]:https://github.com/kata-containers

[26]:https://opensource.com/users/rhatdan

[27]:https://opensource.com/users/rhatdan

[28]:https://opensource.com/users/rhatdan

[29]:https://opensource.com/tags/containers

[30]:https://opensource.com/tags/linux

[31]:https://opensource.com/tags/containers-column

@ -0,0 +1,94 @@

6 pivotal moments in open source history
============================================================

### Here's how open source developed from a printer jam solution at MIT to a major development model in the tech industry today.

Image credits : [Alan Levine][4]. [CC0 1.0][5]

Open source has taken a prominent role in the IT industry today. It is everywhere from the smallest embedded systems to the biggest supercomputer, from the phone in your pocket to the software running the websites and infrastructure of the companies we engage with every day. Let's explore how we got here and discuss key moments from the past 40 years that have paved a path to the current day.

### 1\. RMS and the printer

In the late 1970s, [Richard M. Stallman (RMS)][6] was a staff programmer at MIT. His department, like those at many universities at the time, shared a PDP-10 computer and a single printer. One problem they encountered was that paper would regularly jam in the printer, causing a string of print jobs to pile up in a queue until someone fixed the jam. To get around this problem, the MIT staff came up with a nice social hack: They wrote code for the printer driver so that when it jammed, a message would be sent to everyone who was currently waiting for a print job: "The printer is jammed, please fix it." This way, it was never stuck for long.

In 1980, the lab accepted a donation of a brand-new laser printer. When Stallman asked for the source code for the printer driver, however, so he could reimplement the social hack to have the system notify users on a paper jam, he was told that this was proprietary information. He heard of a researcher in a different university who had the source code for a research project, and when the opportunity arose, he asked this colleague to share it—and was shocked when they refused. They had signed an NDA, which Stallman took as a betrayal of the hacker culture.

The late '70s and early '80s represented an era where software, which had traditionally been given away with the hardware in source code form, was seen to be valuable. Increasingly, MIT researchers were starting software companies, and selling licenses to the software was key to their business models. NDAs and proprietary software licenses became the norms, and the best programmers were hired from universities like MIT to work on private development projects where they could no longer share or collaborate.

As a reaction to this, Stallman resolved that he would create a complete operating system that would not deprive users of the freedom to understand how it worked, and would allow them to make changes if they wished. It was the birth of the free software movement.

### 2\. Creation of GNU and the advent of free software

By late 1983, Stallman was ready to announce his project and recruit supporters and helpers. In September 1983, [he announced the creation of the GNU project][7] (GNU stands for GNU's Not Unix—a recursive acronym). The goal of the project was to clone the Unix operating system to create a system that would give complete freedom to users.

In January 1984, he started working full-time on the project, first creating a compiler system (GCC) and various operating system utilities. Early in 1985, he published "[The GNU Manifesto][8]," which was a call to arms for programmers to join the effort, and launched the Free Software Foundation in order to accept donations to support the work. This document is the founding charter of the free software movement.

### 3\. The writing of the GPL

Until 1989, software written and released by the [Free Software Foundation][9] and RMS did not have a single license. Emacs was released under the Emacs license, GCC was released under the GCC license, and so on; however, after a company called Unipress forced Stallman to stop distributing copies of an Emacs implementation they had acquired from James Gosling (of Java fame), he felt that a license to secure user freedoms was important.

The first version of the GNU General Public License was released in 1989, and it encapsulated the values of copyleft (a play on words—what is the opposite of copyright?): You may use, copy, distribute, and modify the software covered by the license, but if you make changes, you must share the modified source code alongside the modified binaries. This simple requirement to share modified software, in combination with the advent of the internet in the 1990s, is what enabled the decentralized, collaborative development model of the free software movement to flourish.

### 4\. "The Cathedral and the Bazaar"

By the mid-1990s, Linux was starting to take off, and free software had become more mainstream (or perhaps "less fringe" would be more accurate). The Linux kernel was being developed in a way that was completely different from anything people had seen before, and it was very successful doing so. Out of the chaos of the kernel community came order, and a fast-moving project.

In 1997, Eric S. Raymond published the seminal essay, "[The Cathedral and the Bazaar][10]," comparing and contrasting the development methodologies and social structure of GCC and the Linux kernel and talking about his own experiences with a "bazaar" development model with the Fetchmail project. Many of the principles that Raymond describes in this essay would later become central to agile development and the DevOps movement: "release early, release often," refactoring of code, and treating users as co-developers are all fundamental to modern software development.

This essay has been credited with bringing free software to a broader audience, and with convincing executives at software companies at the time that releasing their software under a free software license was the right thing to do. Raymond went on to be instrumental in the coining of the term "open source" and the creation of the Open Source Initiative.

"The Cathedral and the Bazaar" was credited as a key document in the 1998 release of the source code for the Netscape web browser Mozilla. At the time, this was the first major release of an existing, widely used piece of desktop software as free software, which brought it further into the public eye.

### 5\. Open source

As far back as 1985, the ambiguous nature of the word "free", used to describe software freedom, was identified as problematic by RMS himself. In the GNU Manifesto, he identified "give away" and "for free" as terms that confused zero price and user freedom. "Free as in freedom," "Speech not beer," and similar mantras were common when free software hit a mainstream audience in the late 1990s, but a number of prominent community figures argued that a term was needed that made the concept more accessible to the general public.

After Netscape released the source code for Mozilla in 1998 (see #4), a group of people, including Eric Raymond, Bruce Perens, Michael Tiemann, Jon "Maddog" Hall, and many of the leading lights of the free software world, gathered in Palo Alto to discuss an alternative term. The term "open source" was [coined by Christine Peterson][11] to describe free software, and the Open Source Initiative was later founded by Bruce Perens and Eric Raymond. The fundamental difference with proprietary software, they argued, was the availability of the source code, and so this was what should be put forward first in the branding.

Later that year, at a summit organized by Tim O'Reilly, an extended group of some of the most influential people in the free software world at the time gathered to debate various new brands for free software. In the end, "open source" edged out "sourceware," and open source began to be adopted by many projects in the community.

There was some disagreement, however. Richard Stallman and the Free Software Foundation continued to champion the term "free software," because to them, the fundamental difference with proprietary software was user freedom, and the availability of source code was just a means to that end. Stallman argued that removing the focus on freedom would lead to a future where source code would be available, but the user of the software would not be able to avail of the freedom to modify the software. With the advent of web-deployed software-as-a-service and open source firmware embedded in devices, the battle continues to be waged today.

### 6\. Corporate investment in open source—VA Linux, Red Hat, IBM

In the late 1990s, a series of high-profile events led to a huge increase in the professionalization of free and open source software. Among these, the highest-profile events were the IPOs of VA Linux and Red Hat in 1999\. Both companies had massive gains in share price on their opening days as publicly traded companies, proving that open source was now going commercial and mainstream.

Also in 1999, IBM announced that they were supporting Linux by investing $1 billion in its development, making it less risky for traditional enterprise users. The following year, Sun Microsystems released the source code to its cross-platform office suite, StarOffice, and created the [OpenOffice.org][12] project.

|

||||

|

||||

The combined effect of massive Silicon Valley funding of open source projects, Wall Street's attention to young companies built around open source software, and the market credibility that tech giants like IBM and Sun Microsystems brought drove the massive adoption of open source. That adoption, together with the embrace of the open development model that helped it thrive, has led to the dominance of Linux and open source in the tech industry today.

|

||||

|

||||

_Which pivotal moments would you add to the list? Let us know in the comments._

|

||||

|

||||

### About the author

|

||||

|

||||

Dave Neary - Dave Neary is a member of the Open Source and Standards team at Red Hat, helping make open source projects important to Red Hat successful. Dave has been around the free and open source software world, wearing many different hats, since sending his first patch to the GIMP in 1999. [More about me][2]

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/2/pivotal-moments-history-open-source

|

||||

|

||||

Author: [Dave Neary][a]

Translator: [translator ID](https://github.com/译者ID)

Proofreader: [proofreader ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

[a]:https://opensource.com/users/dneary
[1]:https://opensource.com/article/18/2/pivotal-moments-history-open-source?rate=gsG-JrjfROWACP7i9KUoqmH14JDff8-31C2IlNPPyu8
[2]:https://opensource.com/users/dneary
[3]:https://opensource.com/user/16681/feed
[4]:https://www.flickr.com/photos/cogdog/6476689463/in/photolist-aSjJ8H-qHAvo4-54QttY-ofm5ZJ-9NnUjX-tFxS7Y-bPPjtH-hPYow-bCndCk-6NpFvF-5yQ1xv-7EWMXZ-48RAjB-5EzYo3-qAFAdk-9gGty4-a2BBgY-bJsTcF-pWXATc-6EBTmq-SkBnSJ-57QJco-ddn815-cqt5qG-ddmYSc-pkYxRz-awf3n2-Rvnoxa-iEMfeG-bVfq5-jXy74D-meCC1v-qx22rx-fMScsJ-ci1435-ie8P5-oUSXhp-xJSm9-bHgApk-mX7ggz-bpsxd7-8ukud7-aEDmBj-qWkytq-ofwhdM-b7zSeD-ddn5G7-ddn5gb-qCxnB2-S74vsk
[5]:https://creativecommons.org/publicdomain/zero/1.0/
[6]:https://en.wikipedia.org/wiki/Richard_Stallman
[7]:https://groups.google.com/forum/#!original/net.unix-wizards/8twfRPM79u0/1xlglzrWrU0J
[8]:https://www.gnu.org/gnu/manifesto.en.html
[9]:https://www.fsf.org/
[10]:https://en.wikipedia.org/wiki/The_Cathedral_and_the_Bazaar
[11]:https://opensource.com/article/18/2/coining-term-open-source-software
[12]:http://www.openoffice.org/
[13]:https://opensource.com/users/dneary
[14]:https://opensource.com/users/dneary
[15]:https://opensource.com/users/dneary
[16]:https://opensource.com/article/18/2/pivotal-moments-history-open-source#comments
[17]:https://opensource.com/tags/licensing

|

||||

103

sources/talk/20180201 How I coined the term open source.md

Normal file

@ -0,0 +1,103 @@

|

||||

How I coined the term 'open source'

|

||||

============================================================

|

||||

|

||||

### Christine Peterson finally publishes her account of that fateful day, 20 years ago.

|

||||

|

||||

|

||||

Image by : opensource.com

|

||||

|

||||

In a few days, on February 3, the 20th anniversary of the introduction of the term "[open source software][6]" is upon us. As open source software grows in popularity and powers some of the most robust and important innovations of our time, we reflect on its rise to prominence.

|

||||

|

||||

I am the originator of the term "open source software" and came up with it while executive director at Foresight Institute. Not a software developer like the rest, I thank Linux programmer Todd Anderson for supporting the term and proposing it to the group.

|

||||

|

||||

This is my account of how I came up with it, how it was proposed, and the subsequent reactions. Of course, there are a number of accounts of the coining of the term, for example by Eric Raymond and Richard Stallman, yet this is mine, written on January 2, 2006.

|

||||

|

||||

It has never been published, until today.

|

||||

|

||||

* * *

|

||||

|

||||

The introduction of the term "open source software" was a deliberate effort to make this field of endeavor more understandable to newcomers and to business, which was viewed as necessary to its spread to a broader community of users. The problem with the main earlier label, "free software," was not its political connotations, but that—to newcomers—its seeming focus on price is distracting. A term was needed that focuses on the key issue of source code and that does not immediately confuse those new to the concept. The first term that came along at the right time and fulfilled these requirements was rapidly adopted: open source.

|

||||

|

||||

This term had long been used in an "intelligence" (i.e., spying) context, but to my knowledge, use of the term with respect to software prior to 1998 has not been confirmed. The account below describes how the term [open source software][7] caught on and became the name of both an industry and a movement.

|

||||

|

||||

### Meetings on computer security

|

||||

|

||||

In late 1997, weekly meetings were being held at Foresight Institute to discuss computer security. Foresight is a nonprofit think tank focused on nanotechnology and artificial intelligence, and software security is regarded as central to the reliability and security of both. We had identified free software as a promising approach to improving software security and reliability and were looking for ways to promote it. Interest in free software was starting to grow outside the programming community, and it was increasingly clear that an opportunity was coming to change the world. However, just how to do this was unclear, and we were groping for strategies.

|

||||

|

||||

At these meetings, we discussed the need for a new term due to the confusion factor. The argument was as follows: those new to the term "free software" assume it is referring to the price. Oldtimers must then launch into an explanation, usually given as follows: "We mean free as in freedom, not free as in beer." At this point, a discussion on software has turned into one about the price of an alcoholic beverage. The problem was not that explaining the meaning is impossible—the problem was that the name for an important idea should not be so confusing to newcomers. A clearer term was needed. No political issues were raised regarding the free software term; the issue was its lack of clarity to those new to the concept.

|

||||

|

||||

### Releasing Netscape

|

||||

|

||||

On February 2, 1998, Eric Raymond arrived on a visit to work with Netscape on the plan to release the browser code under a free-software-style license. We held a meeting that night at Foresight's office in Los Altos to strategize and refine our message. In addition to Eric and me, active participants included Brian Behlendorf, Michael Tiemann, Todd Anderson, Mark S. Miller, and Ka-Ping Yee. But at that meeting, the field was still described as free software or, by Brian, "source code available" software.

|

||||

|

||||

While in town, Eric used Foresight as a base of operations. At one point during his visit, he was called to the phone to talk with a couple of Netscape legal and/or marketing staff. When he was finished, I asked to be put on the phone with them—one man and one woman, perhaps Mitchell Baker—so I could bring up the need for a new term. They agreed in principle immediately, but no specific term was agreed upon.

|

||||

|

||||

Between meetings that week, I was still focused on the need for a better name and came up with the term "open source software." While not ideal, it struck me as good enough. I ran it by at least four others: Eric Drexler, Mark Miller, and Todd Anderson liked it, while a friend in marketing and public relations felt the term "open" had been overused and abused and believed we could do better. He was right in theory; however, I didn't have a better idea, so I thought I would try to go ahead and introduce it. In hindsight, I should have simply proposed it to Eric Raymond, but I didn't know him well at the time, so I took an indirect strategy instead.

|

||||

|

||||

Todd had agreed strongly about the need for a new term and offered to assist in getting the term introduced. This was helpful because, as a non-programmer, my influence within the free software community was weak. My work in nanotechnology education at Foresight was a plus, but not enough for me to be taken very seriously on free software questions. As a Linux programmer, Todd would be listened to more closely.

|

||||

|

||||

### The key meeting

|

||||

|

||||

Later that week, on February 5, 1998, a group was assembled at VA Research to brainstorm on strategy. Attending—in addition to Eric Raymond, Todd, and me—were Larry Augustin, Sam Ockman, and attending by phone, Jon "maddog" Hall.

|

||||

|

||||

The primary topic was promotion strategy, especially which companies to approach. I said little, but was looking for an opportunity to introduce the proposed term. I felt that it wouldn't work for me to just blurt out, "All you technical people should start using my new term." Most of those attending didn't know me, and for all I knew, they might not even agree that a new term was greatly needed, or even somewhat desirable.

|

||||

|

||||

Fortunately, Todd was on the ball. Instead of making an assertion that the community should use this specific new term, he did something less directive—a smart thing to do with this community of strong-willed individuals. He simply used the term in a sentence on another topic—just dropped it into the conversation to see what happened. I went on alert, hoping for a response, but there was none at first. The discussion continued on the original topic. It seemed only he and I had noticed the usage.

|

||||

|

||||

Not so—memetic evolution was in action. A few minutes later, one of the others used the term, evidently without noticing, still discussing a topic other than terminology. Todd and I looked at each other out of the corners of our eyes to check: yes, we had both noticed what happened. I was excited—it might work! But I kept quiet: I still had low status in this group. Probably some were wondering why Eric had invited me at all.

|

||||

|

||||

Toward the end of the meeting, the [question of terminology][8] was brought up explicitly, probably by Todd or Eric. Maddog mentioned "freely distributable" as an earlier term, and "cooperatively developed" as a newer term. Eric listed "free software," "open source," and "sourceware" as the main options. Todd advocated the "open source" model, and Eric endorsed this. I didn't say much, letting Todd and Eric pull the (loose, informal) consensus together around the open source name. It was clear that to most of those at the meeting, the name change was not the most important thing discussed there; a relatively minor issue. Only about 10% of my notes from this meeting are on the terminology question.

|

||||

|

||||

But I was elated. These were some key leaders in the community, and they liked the new name, or at least didn't object. This was a very good sign. There was probably not much more I could do to help; Eric Raymond was far better positioned to spread the new meme, and he did. Bruce Perens signed on to the effort immediately, helping set up [Opensource.org][9] and playing a key role in spreading the new term.

|

||||

|

||||

For the name to succeed, it was necessary, or at least highly desirable, that Tim O'Reilly agree and actively use it in his many projects on behalf of the community. Also helpful would be use of the term in the upcoming official release of the Netscape Navigator code. By late February, both O'Reilly & Associates and Netscape had started to use the term.

|

||||

|

||||

### Getting the name out

|

||||

|

||||

After this, there was a period during which the term was promoted by Eric Raymond to the media, by Tim O'Reilly to business, and by both to the programming community. It seemed to spread very quickly.

|

||||

|

||||

On April 7, 1998, Tim O'Reilly held a meeting of key leaders in the field. Announced in advance as the first "[Freeware Summit][10]," by April 14 it was referred to as the first "[Open Source Summit][11]."

|

||||

|

||||

These months were extremely exciting for open source. Every week, it seemed, a new company announced plans to participate. Reading Slashdot became a necessity, even for those like me who were only peripherally involved. I strongly believe that the new term was helpful in enabling this rapid spread into business, which then enabled wider use by the public.

|

||||

|

||||

A quick Google search indicates that "open source" appears more often than "free software," but there still is substantial use of the free software term, which remains useful and should be included when communicating with audiences who prefer it.

|

||||

|

||||

### A happy twinge

|

||||

|

||||

When an [early account][12] of the terminology change written by Eric Raymond was posted on the Open Source Initiative website, I was listed as being at the VA brainstorming meeting, but not as the originator of the term. This was my own fault; I had neglected to tell Eric the details. My impulse was to let it pass and stay in the background, but Todd felt otherwise. He suggested to me that one day I would be glad to be known as the person who coined the name "open source software." He explained the situation to Eric, who promptly updated his site.

|

||||

|

||||

Coming up with a phrase is a small contribution, but I admit to being grateful to those who remember to credit me with it. Every time I hear it, which is very often now, it gives me a little happy twinge.

|

||||

|

||||

The big credit for persuading the community goes to Eric Raymond and Tim O'Reilly, who made it happen. Thanks to them for crediting me, and to Todd Anderson for his role throughout. The above is not a complete account of open source history; apologies to the many key players whose names do not appear. Those seeking a more complete account should refer to the links in this article and elsewhere on the net.

|

||||

|

||||

### About the author

|

||||

|

||||

Christine Peterson - Christine Peterson writes, lectures, and briefs the media on coming powerful technologies, especially nanotechnology, artificial intelligence, and longevity. She is Cofounder and Past President of Foresight Institute, the leading nanotech public interest group. Foresight educates the public, technical community, and policymakers on coming powerful technologies and how to guide their long-term impact. She serves on the Advisory Board of the [Machine Intelligence...][2] [More about Christine Peterson][3]

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/2/coining-term-open-source-software

|

||||

|

||||

Author: [Christine Peterson][a]

Translator: [translator ID](https://github.com/译者ID)

Proofreader: [proofreader ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

[a]:https://opensource.com/users/christine-peterson
[1]:https://opensource.com/article/18/2/coining-term-open-source-software?rate=HFz31Mwyy6f09l9uhm5T_OFJEmUuAwpI61FY-fSo3Gc
[2]:http://intelligence.org/
[3]:https://opensource.com/users/christine-peterson
[4]:https://opensource.com/users/christine-peterson
[5]:https://opensource.com/user/206091/feed
[6]:https://opensource.com/resources/what-open-source
[7]:https://opensource.org/osd
[8]:https://wiki2.org/en/Alternative_terms_for_free_software
[9]:https://opensource.org/
[10]:http://www.oreilly.com/pub/pr/636
[11]:http://www.oreilly.com/pub/pr/796
[12]:https://ipfs.io/ipfs/QmXoypizjW3WknFiJnKLwHCnL72vedxjQkDDP1mXWo6uco/wiki/Alternative_terms_for_free_software.html
[13]:https://opensource.com/users/christine-peterson
[14]:https://opensource.com/users/christine-peterson
[15]:https://opensource.com/users/christine-peterson
[16]:https://opensource.com/article/18/2/coining-term-open-source-software#comments

|

||||

@ -0,0 +1,75 @@

|

||||

Open source software: 20 years and counting

|

||||

============================================================

|

||||

|

||||

### On the 20th anniversary of the coining of the term "open source software," how did it rise to dominance and what's next?

|

||||

|

||||

|

||||

Image by : opensource.com

|

||||

|

||||

Twenty years ago, in February 1998, the term "open source" was first applied to software. Soon afterwards, the Open Source Definition was created and the seeds that became the Open Source Initiative (OSI) were sown. As the OSD’s author [Bruce Perens relates][9],

|

||||

|

||||

> “Open source” is the proper name of a campaign to promote the pre-existing concept of free software to business, and to certify licenses to a rule set.

|

||||

|

||||

Twenty years later, that campaign has proven wildly successful, beyond the imagination of anyone involved at the time. Today open source software is literally everywhere. It is the foundation for the internet and the web. It powers the computers and mobile devices we all use, as well as the networks they connect to. Without it, cloud computing and the nascent Internet of Things would be impossible to scale and perhaps to create. It has enabled new ways of doing business to be tested and proven, allowing giant corporations like Google and Facebook to start from the top of a mountain others already climbed.

|

||||

|

||||

Like any human creation, it has a dark side as well. It has also unlocked dystopian possibilities for surveillance and the inevitably consequent authoritarian control. It has provided criminals with new ways to cheat their victims and unleashed the darkness of bullying delivered anonymously and at scale. It allows destructive fanatics to organize in secret without the inconvenience of meeting. All of these are shadows cast by useful capabilities, just as every human tool throughout history has been used both to feed and care and to harm and control. We need to help the upcoming generation strive for irreproachable innovation. As [Richard Feynman said][10],

|

||||

|

||||

> To every man is given the key to the gates of heaven. The same key opens the gates of hell.

|

||||

|

||||

As open source has matured, the way it is discussed and understood has also matured. The first decade was one of advocacy and controversy, while the second was marked by adoption and adaptation.

|

||||

|

||||

1. In the first decade, the key question concerned business models—“how can I contribute freely yet still be paid?”—while during the second, more people asked about governance—“how can I participate yet keep control/not be controlled?”

|

||||

|

||||

2. Open source projects of the first decade were predominantly replacements for off-the-shelf products; in the second decade, they were increasingly components of larger solutions.

|

||||

|

||||

3. Projects of the first decade were often run by informal groups of individuals; in the second decade, they were frequently run by charities created on a project-by-project basis.

|

||||

|

||||

4. Open source developers of the first decade were frequently devoted to a single project and often worked in their spare time. In the second decade, they were increasingly employed to work on a specific technology—professional specialists.

|

||||

|

||||

5. While open source was always intended as a way to promote software freedom, during the first decade, conflict arose with those preferring the term “free software.” In the second decade, this conflict was largely ignored as open source adoption accelerated.

|

||||

|

||||

So what will the third decade bring?

|

||||

|

||||

1. _The complexity business model_ —The predominant business model will involve monetizing the solution of the complexity arising from the integration of many open source parts, especially from deployment and scaling. Governance needs will reflect this.

|

||||

|

||||

2. _Open source mosaics_ —Open source projects will be predominantly families of component parts, together with being built into stacks of components. The resultant larger solutions will be a mosaic of open source parts.

|

||||

|

||||

3. _Families of projects_ —More and more projects will be hosted by consortia/trade associations like the Linux Foundation and OpenStack, and by general-purpose charities like Apache and the Software Freedom Conservancy.

|

||||

|

||||

4. _Professional generalists_ —Open source developers will increasingly be employed to integrate many technologies into complex solutions and will contribute to a range of projects.

|

||||

|

||||

5. _Software freedom redux_ —As new problems arise, software freedom (the application of the Four Freedoms to user and developer flexibility) will increasingly be applied to identify solutions that work for collaborative communities and independent deployers.

|

||||

|

||||

I’ll be expounding on all this in conference keynotes around the world during 2018\. Watch for [OSI’s 20th Anniversary World Tour][11]!

|

||||

|

||||

_This article was originally published on [Meshed Insights Ltd.][2] and is reprinted with permission. This article, as well as my work at OSI, is supported by [Patreon patrons][3]._

|

||||

|

||||

### About the author

|

||||

|

||||

Simon Phipps - Computer industry and open source veteran Simon Phipps started [Public Software][4], a European host for open source projects, and volunteers as President at OSI and a director at The Document Foundation. His posts are sponsored by [Patreon patrons][5] - become one if you'd like to see more! Over a 30+ year career he has been involved at a strategic level in some of the world’s leading... [More about Simon Phipps][6]

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/2/open-source-20-years-and-counting

|

||||

|

||||

Author: [Simon Phipps][a]

Translator: [translator ID](https://github.com/译者ID)

Proofreader: [proofreader ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

[a]:https://opensource.com/users/simonphipps
[1]:https://opensource.com/article/18/2/open-source-20-years-and-counting?rate=TZxa8jxR6VBcYukor0FDsTH38HxUrr7Mt8QRcn0sC2I
[2]:https://meshedinsights.com/2017/12/21/20-years-and-counting/
[3]:https://patreon.com/webmink
[4]:https://publicsoftware.eu/
[5]:https://patreon.com/webmink
[6]:https://opensource.com/users/simonphipps
[7]:https://opensource.com/users/simonphipps
[8]:https://opensource.com/user/12532/feed
[9]:https://perens.com/2017/09/26/on-usage-of-the-phrase-open-source/
[10]:https://www.brainpickings.org/2013/07/19/richard-feynman-science-morality-poem/
[11]:https://opensource.org/node/905
[12]:https://opensource.com/users/simonphipps
[13]:https://opensource.com/users/simonphipps
[14]:https://opensource.com/users/simonphipps

|

||||

@ -0,0 +1,102 @@

|

||||

How to Install Tripwire IDS (Intrusion Detection System) on Linux

|

||||

============================================================

|

||||

|

||||

|

||||

Tripwire is a popular Linux Intrusion Detection System (IDS) that runs on a system to detect whether unauthorized filesystem changes have occurred over time.

On CentOS and RHEL, tripwire is not part of the official repositories. However, the tripwire package can be installed from the [EPEL repositories][1].

To begin, enable the EPEL repositories on your CentOS or RHEL system by issuing the command below.

```
# yum install epel-release
```

|

||||

|

||||

After you’ve enabled the EPEL repositories, update the system with the following command.

```
# yum update
```

|

||||

|

||||

After the update process finishes, install the Tripwire IDS software by executing the command below.

```
# yum install tripwire
```

|

||||

|

||||

Fortunately, tripwire is part of the default Ubuntu and Debian repositories and can be installed with the following commands.

```
$ sudo apt update
$ sudo apt install tripwire
```
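The two installation paths above can be folded into one helper. The following is a minimal sketch rather than part of the original instructions: the function name `tripwire_install_steps` is hypothetical, and it assumes the distribution is identified by the `ID` field of `/etc/os-release` (for example `centos`, `rhel`, `ubuntu`, or `debian`). It only prints the commands shown in this article instead of running them.

```shell
#!/bin/sh
# Hypothetical helper: print the install commands from this article for a
# given distribution ID (the ID field of /etc/os-release, e.g. "centos").
tripwire_install_steps() {
    case "$1" in
        centos|rhel)
            # On these systems tripwire comes from the EPEL repositories.
            echo "yum install epel-release"
            echo "yum update"
            echo "yum install tripwire"
            ;;
        ubuntu|debian)
            # Here tripwire is in the default repositories.
            echo "apt update"
            echo "apt install tripwire"
            ;;
        *)
            echo "unsupported distribution: $1" >&2
            return 1
            ;;
    esac
}
```

One way to use it would be `. /etc/os-release && tripwire_install_steps "$ID"`, then review the printed commands and run them as root.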

|

||||

|

||||

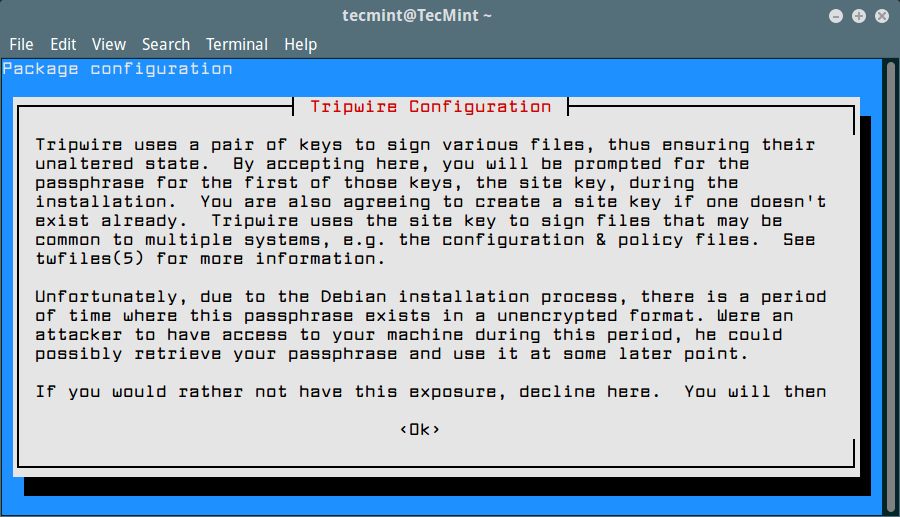

On Ubuntu and Debian, the tripwire installer will ask you to choose and confirm a site key passphrase and a local key passphrase. Tripwire uses these keys to secure its configuration files.

[Create Tripwire Site and Local Key][2]

|

||||

|

||||

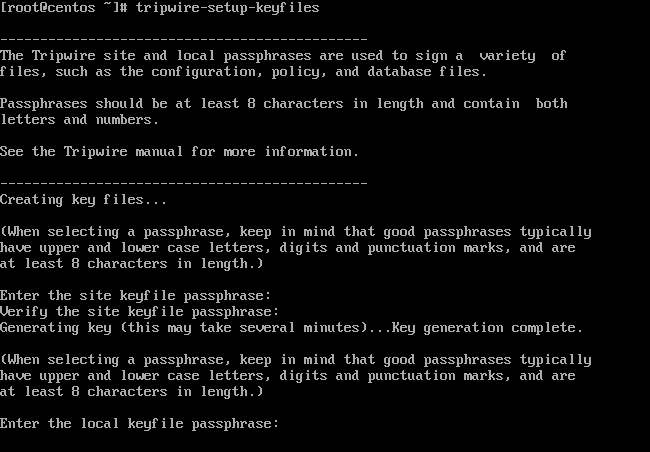

On CentOS and RHEL, you need to create the tripwire keys with the command below and supply a passphrase for the site key and the local key.

```
# tripwire-setup-keyfiles
```

[Create Tripwire Keys][3]

|

||||

|

||||

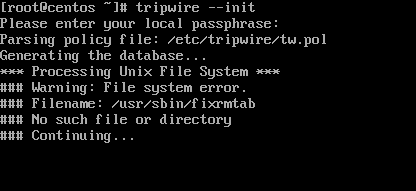

To validate your system, you need to initialize the Tripwire database with the following command. Because the database has not been initialized yet, tripwire will display a lot of false-positive warnings.

```
# tripwire --init
```

[Initialize Tripwire Database][4]

|

||||

|

||||

Finally, generate a tripwire system report to check the configuration by issuing the commands below. Use the `--help` switch to list all options of the tripwire check command.

```
# tripwire --check --help
# tripwire --check
```
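When `tripwire --check` runs from a script or a cron job, its exit status can be inspected instead of reading the full report. The sketch below is not from the original article and rests on an assumption that should be verified against the tripwire(8) man page on your system: that in integrity-checking mode the exit status is a bit mask (1 = file added, 2 = file removed, 4 = file modified, 8 = error during the check). The function name `describe_tripwire_status` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper. ASSUMPTION (check tripwire(8) on your system): the exit
# status of `tripwire --check` is a bit mask:
#   1 = file added, 2 = file removed, 4 = file modified, 8 = error during check
describe_tripwire_status() {
    status=$1
    if [ "$status" -eq 0 ]; then
        echo "no violations"
        return 0
    fi
    # Test each bit in turn and print one word per bit that is set.
    [ $(( status & 1 )) -ne 0 ] && echo "added"
    [ $(( status & 2 )) -ne 0 ] && echo "removed"
    [ $(( status & 4 )) -ne 0 ] && echo "modified"
    [ $(( status & 8 )) -ne 0 ] && echo "error"
    return 0
}
```

A possible use is `tripwire --check; describe_tripwire_status $?`, which would print one line per kind of change if the assumed bit mask holds.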

|

||||

|

||||

After the tripwire check command completes, review the report, which is saved with the `.twr` extension in the /var/lib/tripwire/report/ directory. Before opening it with your favorite text editor, you need to convert it to a text file.

```
# twprint --print-report --twrfile /var/lib/tripwire/report/tecmint-20170727-235255.twr > report.txt
# vi report.txt
```
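The report file name in the command above contains a host name and a timestamp, so it differs on every run. As a small sketch (the function name `latest_twr` is hypothetical and not part of Tripwire), the newest report in a directory could be located as follows. The default directory is the one named in this article, and the `-nt` file test is assumed to be available, as it is in bash and dash.

```shell
#!/bin/bash
# Hypothetical helper: print the most recently modified .twr file in a
# directory (default: the report directory used in this article).
latest_twr() {
    dir=${1:-/var/lib/tripwire/report}
    newest=
    for f in "$dir"/*.twr; do
        [ -e "$f" ] || continue          # the glob matched nothing
        if [ -z "$newest" ] || [ "$f" -nt "$newest" ]; then
            newest=$f                    # $f is newer than the best so far
        fi
    done
    if [ -z "$newest" ]; then
        return 1                         # no report found
    fi
    printf '%s\n' "$newest"
}
```

With that in place, the conversion step could be written as `twprint --print-report --twrfile "$(latest_twr)" > report.txt`.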

|

||||

[Tripwire System Report][5]

|

||||

|

||||

That’s it! You have successfully installed Tripwire on your Linux server. I hope you can now easily configure your [Tripwire IDS][6].

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

About the author:

I'm a computer-addicted guy and a fan of open source and Linux-based system software, with about 4 years of experience with Linux desktop and server distributions and bash scripting.

|

||||

|

||||

-------

|

||||

|

||||

via: https://www.tecmint.com/install-tripwire-ids-intrusion-detection-system-on-linux/

|

||||

|

||||

Author: [Matei Cezar][a]

Translator: [translator ID](https://github.com/译者ID)

Proofreader: [proofreader ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

[a]:https://www.tecmint.com/author/cezarmatei/
[1]:https://www.tecmint.com/how-to-enable-epel-repository-for-rhel-centos-6-5/
[2]:https://www.tecmint.com/wp-content/uploads/2018/01/Create-Site-and-Local-key.png
[3]:https://www.tecmint.com/wp-content/uploads/2018/01/Create-Tripwire-Keys.png
[4]:https://www.tecmint.com/wp-content/uploads/2018/01/Initialize-Tripwire-Database.png
[5]:https://www.tecmint.com/wp-content/uploads/2018/01/Tripwire-System-Report.png
[6]:https://www.tripwire.com/
[7]:https://www.tecmint.com/author/cezarmatei/
[8]:https://www.tecmint.com/10-useful-free-linux-ebooks-for-newbies-and-administrators/
[9]:https://www.tecmint.com/free-linux-shell-scripting-books/

|

||||


@ -0,0 +1,153 @@

|

||||

Building a Linux-based HPC system on the Raspberry Pi with Ansible

|

||||

============================================================

|

||||

|

||||

### Create a high-performance computing cluster with low-cost hardware and open source software.

|

||||

|

||||

|

||||

Image by : opensource.com

|

||||

|

||||

In my [previous article for Opensource.com][14], I introduced the [OpenHPC][15] project, which aims to accelerate innovation in high-performance computing (HPC). This article goes a step further by using OpenHPC's capabilities to build a small HPC system. To call it an _HPC system_ might sound bigger than it is, so maybe it is better to say this is a system based on the [Cluster Building Recipes][16] published by the OpenHPC project.

|

||||

|

||||

The resulting cluster consists of two Raspberry Pi 3 systems acting as compute nodes and one virtual machine acting as the master node:

|

||||

|

||||

|

||||

|

||||

|

||||

My master node is running CentOS on x86_64 and my compute nodes are running a slightly modified CentOS on aarch64.

|

||||

|

||||

This is what the setup looks like in real life:

|

||||

|

||||

|

||||

|

||||

|

||||

To set up my system like an HPC system, I followed some of the steps from OpenHPC's Cluster Building Recipes [install guide for CentOS 7.4/aarch64 + Warewulf + Slurm][17] (PDF). This recipe includes provisioning instructions using [Warewulf][18]; because I manually installed my three systems, I skipped the Warewulf parts and created an [Ansible playbook][19] for the steps I took.

|

||||

|

||||

|

||||

Once my cluster was set up by the [Ansible][26] playbooks, I could start to submit jobs to my resource manager. The resource manager, [Slurm][27] in my case, is the instance in the cluster that decides where and when my jobs are executed. One possibility to start a simple job on the cluster is:

|

||||

```
[ohpc@centos01 ~]$ srun hostname
calvin
```

|

||||

|

||||

If I need more resources, I can tell Slurm that I want to run my command on eight CPUs:

|

||||

|

||||

```
[ohpc@centos01 ~]$ srun -n 8 hostname
hobbes
hobbes
hobbes
hobbes
calvin
calvin
calvin
calvin
```

|

||||

|

||||

In the first example, Slurm ran the specified command (`hostname`) on a single CPU, and in the second example Slurm ran the command on eight CPUs. One of my compute nodes is named `calvin` and the other is named `hobbes`; that can be seen in the output of the above commands. Each of the compute nodes is a Raspberry Pi 3 with four CPU cores.
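The distribution of tasks across the nodes can be summarized by counting the host names that `srun` prints. This is an illustrative sketch only: the function name `tasks_per_host` is hypothetical, and it relies on nothing beyond the standard `sort`, `uniq`, and `awk` tools.

```shell
#!/bin/sh
# Hypothetical helper: read one host name per line on stdin and print
# "<host> <task count>" for each distinct host, sorted by host name.
tasks_per_host() {
    sort | uniq -c | awk '{ print $2, $1 }'
}
```

Piping the eight-task run through it, as in `srun -n 8 hostname | tasks_per_host`, would print `calvin 4` and `hobbes 4` for the output shown above, matching the four CPU cores of each Raspberry Pi 3.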

|

||||

|

||||

Another way to submit jobs to my cluster is the command `sbatch`, which can be used to execute scripts with the output written to a file instead of my terminal.

|

||||

|

||||

```
[ohpc@centos01 ~]$ cat script1.sh
#!/bin/sh
date
hostname
sleep 10
date
[ohpc@centos01 ~]$ sbatch script1.sh
Submitted batch job 101
```

|

||||

|

||||

This will create an output file called `slurm-101.out` with the following content:

|

||||

|

||||

```

|

||||

Mon 11 Dec 16:42:31 UTC 2017

|

||||

calvin

|

||||

Mon 11 Dec 16:42:41 UTC 2017

|

||||

```

|

||||

|

||||

Simple, serial command-line tools are enough to demonstrate the basic functionality of the resource manager, but they are a bit boring after doing all the work to set up an HPC-like system.

|

||||

|

||||

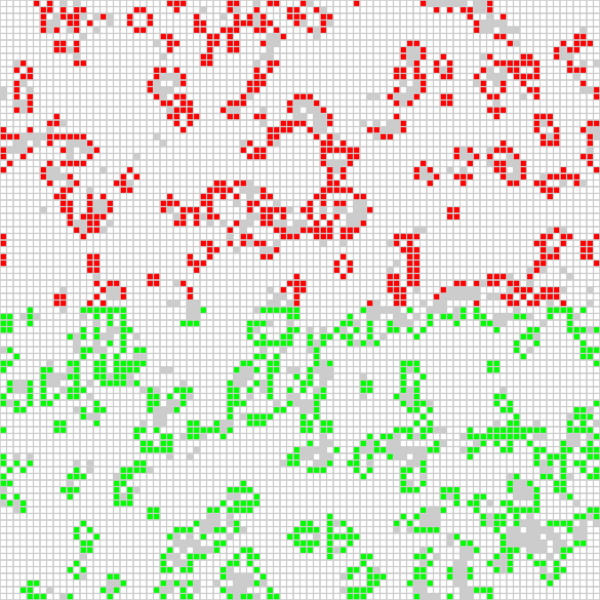

A more interesting application is running an [Open MPI][20] parallelized job on all available CPUs on the cluster. I'm using an application based on [Game of Life][21], which was used in a [video][22] called "Running Game of Life across multiple architectures with Red Hat Enterprise Linux." Compared with the previously used MPI-based Game of Life implementation, the version now running on my cluster additionally colors the cells differently for each host involved. The following script starts the application interactively with graphical output:

|

||||

|

||||

```

|

||||

$ cat life.mpi

|

||||

#!/bin/bash

|

||||

|

||||

module load gnu6 openmpi3

|

||||

|

||||

if [[ "$SLURM_PROCID" != "0" ]]; then

|

||||

exit

|

||||

fi

|

||||

|

||||

mpirun ./mpi_life -a -p -b

|

||||

```

|

||||

|

||||

I start the job with the following command, which tells Slurm to allocate eight CPUs for the job:

|

||||

|

||||

```

|

||||

$ srun -n 8 --x11 life.mpi

|

||||

```
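The `SLURM_PROCID` check in `life.mpi` deserves a note. As far as I understand it, `srun -n 8` launches the script once per task and sets `SLURM_PROCID` to the task's ID, so without the guard `mpirun` would be called eight times. The sketch below illustrates only that guard; the function name `is_first_task` is hypothetical, and the task IDs are passed in by hand so the example runs without Slurm.

```shell
#!/bin/sh
# Hypothetical helper: succeeds only for task 0 of a job step.
# Uses the first argument as the task ID, falling back to
# SLURM_PROCID (set by srun) and then to 0.
is_first_task() {
    task_id="${1:-${SLURM_PROCID:-0}}"
    [ "$task_id" = "0" ]
}

# Simulate four tasks of one job step.
for task in 0 1 2 3; do
    if is_first_task "$task"; then
        echo "task $task: would call mpirun"
    else
        echo "task $task: exits immediately"
    fi
done
```

Only task 0 goes on to start the MPI application, which can then use all CPUs that Slurm allocated to the job.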

|

||||

|

||||

For demonstration purposes, the job has a graphical interface that shows the current result of the calculation:

|

||||

|

||||

|

||||

|

||||

|

||||

The positions of the red cells are calculated on one of the compute nodes, and the green cells are calculated on the other compute node. I can also tell the Game of Life program to color the cells differently for each CPU used (there are four per compute node), which leads to the following output:

|

||||

|

||||

|

||||

|

||||

|

||||
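The relationship between the two coloring modes can be sketched with a little arithmetic. This is an illustration under an assumption, not taken from the application's source: it assumes the eight MPI ranks are assigned to the nodes in contiguous blocks of four, which depends on how Slurm distributes the tasks. The names `cpus_per_node` and `describe_rank` are hypothetical.

```shell
#!/bin/sh
# Assumption: four CPUs per node (Raspberry Pi 3) and ranks placed
# on nodes in contiguous blocks; actual placement may differ.
cpus_per_node=4

# Hypothetical helper: print the node index and the CPU index within
# that node for a given MPI rank.
describe_rank() {
    r="$1"
    echo "rank $r -> node $((r / cpus_per_node)), local CPU $((r % cpus_per_node))"
}

i=0
while [ "$i" -lt 8 ]; do
    describe_rank "$i"
    i=$((i + 1))
done
```

Coloring by host corresponds to the node index (two colors), while coloring by CPU distinguishes all eight ranks.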

Thanks to the installation recipes and the software packages provided by OpenHPC, I was able to set up two compute nodes and a master node in an HPC-type configuration. I can submit jobs to my resource manager, and I can use the software provided by OpenHPC to start MPI applications utilizing all my Raspberry Pis' CPUs.

|

||||

|

||||

* * *

|

||||

|

||||

_To learn more about using OpenHPC to build a Raspberry Pi cluster, please attend Adrian Reber's talks at [DevConf.cz 2018][10], January 26-28, in Brno, Czech Republic, and at the [CentOS Dojo 2018][11], on February 2, in Brussels._

|

||||

|

||||

### About the author

|

||||

|

||||

Adrian Reber - Adrian is a Senior Software Engineer at Red Hat and has been migrating processes since at least 2010. He started migrating processes in a high-performance computing environment, and at some point he had migrated so many processes that he got a PhD for it. Since joining Red Hat, he has been migrating containers. Occasionally he still migrates single processes, and he is still interested in high-performance computing topics. [More about me][12]

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||