mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-21 02:10:11 +08:00

commit

deed12ab17

87

published/20171120 Adopting Kubernetes step by step.md

Normal file

87

published/20171120 Adopting Kubernetes step by step.md

Normal file

@ -0,0 +1,87 @@

|

||||

一步步采用 Kubernetes

|

||||

============================================================

|

||||

|

||||

### 为什么选择 Docker 和 Kubernetes 呢?

|

||||

|

||||

容器允许我们构建、发布和运行分布式应用。它们使应用程序摆脱了机器限制,可以让我们以一定的方式创建一个复杂的应用程序。

|

||||

|

||||

使用容器编写应用程序可以使开发、QA 更加接近生产环境(如果你努力这样做的话)。通过这样做,可以更快地发布修改,并且可以更快地测试整个系统。

|

||||

|

||||

[Docker][1] 这个容器式平台就是为此为生,可以使软件独立于云提供商。

|

||||

|

||||

但是,即使使用容器,移植应用程序到任何一个云提供商(或私有云)所需的工作量也是不可忽视的。应用程序通常需要自动伸缩组、持久远程磁盘、自动发现等。但是每个云提供商都有不同的机制。如果你想使用这些功能,很快你就会变的依赖于云提供商。

|

||||

|

||||

这正是 [Kubernetes][2] 登场的时候。它是一个容器<ruby>编排<rt>orchestration</rt></ruby>系统,它允许您以一定的标准管理、缩放和部署应用程序的不同部分,并且成为其中的重要工具。它的可移植抽象层兼容主要云的提供商(Google Cloud,Amazon Web Services 和 Microsoft Azure 都支持 Kubernetes)。

|

||||

|

||||

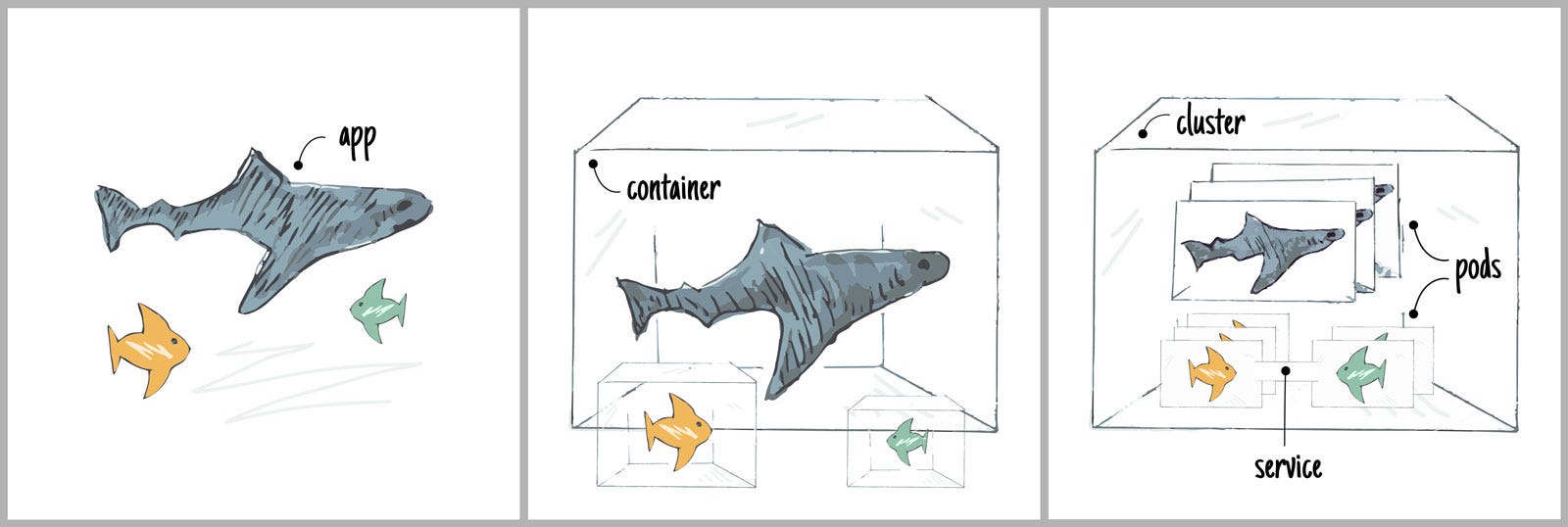

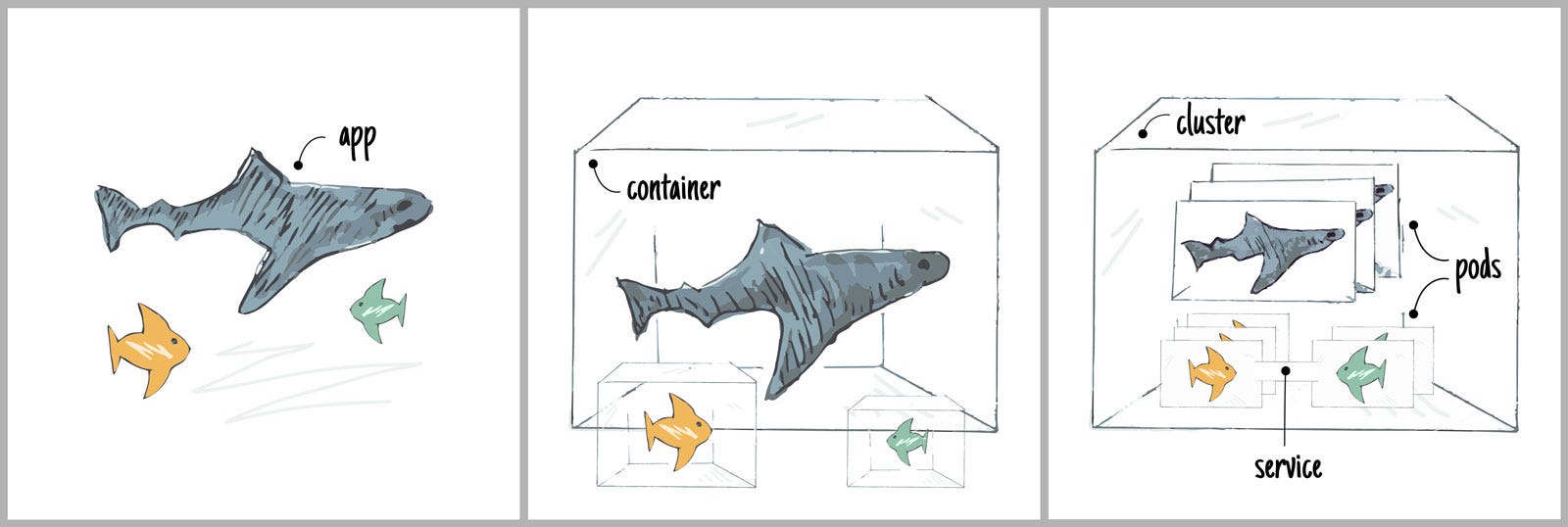

可以这样想象一下应用程序、容器和 Kubernetes。应用程序可以视为一条身边的鲨鱼,它存在于海洋中(在这个例子中,海洋就是您的机器)。海洋中可能还有其他一些宝贵的东西,但是你不希望你的鲨鱼与小丑鱼有什么关系。所以需要把你的鲨鱼(你的应用程序)移动到一个密封的水族馆中(容器)。这很不错,但不是特别的健壮。你的水族馆可能会被打破,或者你想建立一个通道连接到其他鱼类生活的另一个水族馆。也许你想要许多这样的水族馆,以防需要清洁或维护……这正是应用 Kubernetes 集群的作用。

|

||||

|

||||

|

||||

|

||||

*进化到 Kubernetes*

|

||||

|

||||

主流云提供商对 Kubernetes 提供了支持,从开发环境到生产环境,它使您和您的团队能够更容易地拥有几乎相同的环境。这是因为 Kubernetes 不依赖专有软件、服务或基础设施。

|

||||

|

||||

事实上,您可以在您的机器中使用与生产环境相同的部件启动应用程序,从而缩小了开发和生产环境之间的差距。这使得开发人员更了解应用程序是如何构建在一起的,尽管他们可能只负责应用程序的一部分。这也使得在开发流程中的应用程序更容易的快速完成测试。

|

||||

|

||||

### 如何使用 Kubernetes 工作?

|

||||

|

||||

随着更多的人采用 Kubernetes,新的问题出现了;应该如何针对基于集群环境进行开发?假设有 3 个环境,开发、质量保证和生产, 他们如何适应 Kubernetes?这些环境之间仍然存在着差异,无论是在开发周期(例如:在运行中的应用程序中我的代码的变化上花费时间)还是与数据相关的(例如:我不应该在我的质量保证环境中测试生产数据,因为它里面有敏感信息)。

|

||||

|

||||

那么,我是否应该总是在 Kubernetes 集群中编码、构建映像、重新部署服务,在我编写代码时重新创建部署和服务?或者,我是否不应该尽力让我的开发环境也成为一个 Kubernetes 集群(或一组集群)呢?还是,我应该以混合方式工作?

|

||||

|

||||

|

||||

|

||||

*用本地集群进行开发*

|

||||

|

||||

如果继续我们之前的比喻,上图两边的洞表示当使其保持在一个开发集群中的同时修改应用程序的一种方式。这通常通过[卷][4]来实现

|

||||

|

||||

### Kubernetes 系列

|

||||

|

||||

本 Kubernetes 系列资源是开源的,可以在这里找到: [https://github.com/red-gate/ks][5] 。

|

||||

|

||||

我们写这个系列作为以不同的方式构建软件的练习。我们试图约束自己在所有环境中都使用 Kubernetes,以便我们可以探索这些技术对数据和数据库的开发和管理造成影响。

|

||||

|

||||

这个系列从使用 Kubernetes 创建基本的 React 应用程序开始,并逐渐演变为能够覆盖我们更多开发需求的系列。最后,我们将覆盖所有应用程序的开发需求,并且理解在数据库生命周期中如何最好地迎合容器和集群。

|

||||

|

||||

以下是这个系列的前 5 部分:

|

||||

|

||||

1. ks1:使用 Kubernetes 构建一个 React 应用程序

|

||||

2. ks2:使用 minikube 检测 React 代码的更改

|

||||

3. ks3:添加一个提供 API 的 Python Web 服务器

|

||||

4. ks4:使 minikube 检测 Python 代码的更改

|

||||

5. ks5:创建一个测试环境

|

||||

|

||||

本系列的第二部分将添加一个数据库,并尝试找出最好的方式来开发我们的应用程序。

|

||||

|

||||

通过在各种环境中运行 Kubernetes,我们被迫在解决新问题的同时也尽量保持开发周期。我们不断尝试 Kubernetes,并越来越习惯它。通过这样做,开发团队都可以对生产环境负责,这并不困难,因为所有环境(从开发到生产)都以相同的方式进行管理。

|

||||

|

||||

### 下一步是什么?

|

||||

|

||||

我们将通过整合数据库和练习来继续这个系列,以找到使用 Kubernetes 获得数据库生命周期的最佳体验方法。

|

||||

|

||||

这个 Kubernetes 系列是由 Redgate 研发部门 Foundry 提供。我们正在努力使数据和容器的管理变得更加容易,所以如果您正在处理数据和容器,我们希望听到您的意见,请直接联系我们的开发团队。 [_foundry@red-gate.com_][6]

|

||||

|

||||

* * *

|

||||

|

||||

我们正在招聘。您是否有兴趣开发产品、创建[未来技术][7] 并采取类似创业的方法(没有风险)?看看我们的[软件工程师 - 未来技术][8]的角色吧,并阅读更多关于在 [英国剑桥][9]的 Redgate 工作的信息。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://medium.com/ingeniouslysimple/adopting-kubernetes-step-by-step-f93093c13dfe

|

||||

|

||||

作者:[santiago arias][a]

|

||||

译者:[aiwhj](https://github.com/aiwhj)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://medium.com/@santiaago?source=post_header_lockup

|

||||

[1]:https://www.docker.com/what-docker

|

||||

[2]:https://kubernetes.io/

|

||||

[3]:https://www.google.co.uk/search?biw=723&bih=753&tbm=isch&sa=1&ei=p-YCWpbtN8atkwWc8ZyQAQ&q=nemo+fish&oq=nemo+fish&gs_l=psy-ab.3..0i67k1l2j0l2j0i67k1j0l5.5128.9271.0.9566.9.9.0.0.0.0.81.532.9.9.0....0...1.1.64.psy-ab..0.9.526...0i7i30k1j0i7i10i30k1j0i13k1j0i10k1.0.FbAf9xXxTEM

|

||||

[4]:https://kubernetes.io/docs/concepts/storage/volumes/

|

||||

[5]:https://github.com/red-gate/ks

|

||||

[6]:mailto:foundry@red-gate.com

|

||||

[7]:https://www.red-gate.com/foundry/

|

||||

[8]:https://www.red-gate.com/our-company/careers/current-opportunities/software-engineer-future-technologies

|

||||

[9]:https://www.red-gate.com/our-company/careers/living-in-cambridge

|

||||

295

sources/tech/20170216 25 Free Books To Learn Linux For Free.md

Normal file

295

sources/tech/20170216 25 Free Books To Learn Linux For Free.md

Normal file

@ -0,0 +1,295 @@

|

||||

25 Free Books To Learn Linux For Free

|

||||

======

|

||||

Brief: In this article, I'll share with you the best resource to **learn Linux for free**. This is a collection of websites, online video courses and free eBooks.

|

||||

|

||||

**How to learn Linux?**

|

||||

|

||||

This is perhaps the most commonly asked question in our Facebook group for Linux users.

|

||||

|

||||

The answer to this simple looking question 'how to learn Linux' is not at all simple.

|

||||

|

||||

Problem is that different people have different meanings of learning Linux.

|

||||

|

||||

* If someone has never used Linux, be it command line or desktop version, that person might be just wondering to know more about it.

|

||||

* If someone uses Windows as the desktop but have to use Linux command line at work, that person might be interested in learning Linux commands.

|

||||

* If someone has been using Linux for sometimes and is aware of the basics but he/she might want to go to the next level.

|

||||

* If someone is just interested in getting your way around a specific Linux distribution.

|

||||

* If someone is trying to improve or learn Bash scripting which is almost synonymous with Linux command line.

|

||||

* If someone is willing to make a career as a Linux SysAdmin or trying to improve his/her sysadmin skills.

|

||||

|

||||

|

||||

|

||||

You see, the answer to "how do I learn Linux" depends on what kind of Linux knowledge you are seeking. And for this purpose, I have collected a bunch of resources that you could use for learning Linux.

|

||||

|

||||

These free resources include eBooks, video courses, websites etc. And these are divided into sub-categories so that you can easily find what you are looking for when you seek to learn Linux.

|

||||

|

||||

Again, there is no **best way to learn Linux**. It totally up to you how you go about learning Linux, by online web portals, downloaded eBooks, video courses or something else.

|

||||

|

||||

Let's see how you can learn Linux.

|

||||

|

||||

**Disclaimer** : All the books listed here are legal to download. The sources mentioned here are the official sources, as per my knowledge. However, if you find it otherwise, please let me know so that I can take appropriate action.

|

||||

|

||||

![Best Free eBooks to learn Linux for Free][1]

|

||||

|

||||

## 1. Free materials to learn Linux for absolute beginners

|

||||

|

||||

So perhaps you have just heard of Linux from your friends or from a discussion online. You are intrigued about the hype around Linux and you are overwhelmed by the vast information available on the internet but just cannot figure out exactly where to look for to know more about Linux.

|

||||

|

||||

Worry not. Most of us, if not all, have been to your stage.

|

||||

|

||||

### Introduction to Linux by Linux Foundation [Video Course]

|

||||

|

||||

If you have no idea about what is Linux and you want to get started with it, I suggest you to go ahead with the free video course provided by the [Linux Foundation][2] on [edX][3]. Consider it an official course by the organization that 'maintains' Linux. And yes, it is endorsed by [Linus Torvalds][4], the father of Linux himself.

|

||||

|

||||

[Introduction To Linux][5]

|

||||

|

||||

### Linux Journey [Online Portal]

|

||||

|

||||

Not official and perhaps not very popular. But this little website is the perfect place for a no non-sense Linux learning for beginners.

|

||||

|

||||

The website is designed beautifully and is well organized based on the topics. It also has interactive quizzes that you can take after reading a section or chapter. My advice, bookmark this website:

|

||||

|

||||

[Linux Journey][6]

|

||||

|

||||

### Learn Linux in 5 Days [eBook]

|

||||

|

||||

This brilliant eBook is available for free exclusively to It's FOSS readers all thanks to [Linux Training Academy][7].

|

||||

|

||||

Written for absolute beginners in mind, this free Linux eBook gives you a quick overview of Linux, common Linux commands and other things that you need to learn to get started with Linux.

|

||||

|

||||

You can download the book from the page below:

|

||||

|

||||

[Learn Linux In 5 Days][8]

|

||||

|

||||

### The Ultimate Linux Newbie Guide [eBook]

|

||||

|

||||

This is a free to download eBook for Linux beginners. The eBook starts with explaining what is Linux and then go on to provide more practical usage of Linux as a desktop.

|

||||

|

||||

You can download the latest version of this eBook from the link below:

|

||||

|

||||

[The Ultimate Linux Newbie Guide][9]

|

||||

|

||||

## 2. Free Linux eBooks for Beginners to Advanced

|

||||

|

||||

This section lists out those Linux eBooks that are 'complete' in nature.

|

||||

|

||||

What I mean is that these are like academic textbooks that focus on each and every aspects of Linux, well most of it. You can read those as an absolute beginner or you can read those for deeper understanding as an intermediate Linux user. You can also use them for reference even if you are at expert level.

|

||||

|

||||

### Introduction to Linux [eBook]

|

||||

|

||||

Introduction to Linux is a free eBook from [The Linux Documentation Project][10] and it is one of the most popular free Linux books out there. Though I think some parts of this book needs to be updated, it is still a very good book to teach you about Linux, its file system, command line, networking and other related stuff.

|

||||

|

||||

[Introduction To Linux][11]

|

||||

|

||||

### Linux Fundamentals [eBook]

|

||||

|

||||

This free eBook by Paul Cobbaut teaches you about Linux history, installation and focuses on the basic Linux commands you should know. You can get the book from the link below:

|

||||

|

||||

[Linux Fundamentals][12]

|

||||

|

||||

### Advanced Linux Programming [eBook]

|

||||

|

||||

As the name suggests, this is for advanced users who are or want to develop software for Linux. It deals with sophisticated features such as multiprocessing, multi-threading, interprocess communication, and interaction with hardware devices.

|

||||

|

||||

Following the book will help you develop a faster, reliable and secure program that uses the full capability of a GNU/Linux system.

|

||||

|

||||

[Advanced Linux Programming][13]

|

||||

|

||||

### Linux From Scratch [eBook]

|

||||

|

||||

If you think you know enough about Linux and you are a pro, then why not create your own Linux distribution? Linux From Scratch (LFS) is a project that provides you with step-by-step instructions for building your own custom Linux system, entirely from source code.

|

||||

|

||||

Call it DIY Linux but this is a great way to put your Linux expertise to the next level.

|

||||

|

||||

There are various sub-parts of this project, you can check it out on its website and download the books from there.

|

||||

|

||||

[Linux From Scratch][14]

|

||||

|

||||

## 3. Free eBooks to learn Linux command line and Shell scripting

|

||||

|

||||

The real power of Linux lies in the command line and if you want to conquer Linux, you must learn Linux command line and Shell scripting.

|

||||

|

||||

In fact, if you have to work on Linux terminal on your job, having a good knowledge of Linux command line will actually help you in your tasks and perhaps help you in advancing your career as well (as you'll be more efficient).

|

||||

|

||||

In this section, we'll see various Linux commands free eBooks.

|

||||

|

||||

### GNU/Linux Command−Line Tools Summary [eBook]

|

||||

|

||||

This eBook from The Linux Documentation Project is a good place to begin with Linux command line and get acquainted with Shell scripting.

|

||||

|

||||

[GNU/Linux Command−Line Tools Summary][15]

|

||||

|

||||

### Bash Reference Manual from GNU [eBook]

|

||||

|

||||

This is a free eBook to download from [GNU][16]. As the name suggests, it deals with Bash Shell (if I can call that). This book has over 175 pages and it covers a number of topics around Linux command line in Bash.

|

||||

|

||||

You can get it from the link below:

|

||||

|

||||

[Bash Reference Manual][17]

|

||||

|

||||

### The Linux Command Line [eBook]

|

||||

|

||||

This 500+ pages of free eBook by William Shotts is the MUST HAVE for anyone who is serious about learning Linux command line.

|

||||

|

||||

Even if you think you know things about Linux, you'll be amazed at how much this book still teaches you.

|

||||

|

||||

It covers things from beginners to advanced level. I bet that you'll be a hell lot of better Linux user after reading this book. Download it and keep it with you always.

|

||||

|

||||

[The Linux Command Line][18]

|

||||

|

||||

### Bash Guide for Beginners [eBook]

|

||||

|

||||

If you just want to get started with Bash scripting, this could be a good companion for you. The Linux Documentation Project is behind this eBook again and it's the same author who wrote Introduction to Linux eBook (discussed earlier in this article).

|

||||

|

||||

[Bash Guide for Beginners][19]

|

||||

|

||||

### Advanced Bash-Scripting Guide [eBook]

|

||||

|

||||

If you think you already know basics of Bash scripting and you want to take your skills to the next level, this is what you need. This book has over 900+ pages of various advanced commands and their examples.

|

||||

|

||||

[Advanced Bash-Scripting Guide][20]

|

||||

|

||||

### The AWK Programming Language [eBook]

|

||||

|

||||

Not the prettiest book here but if you really need to go deeper with your scripts, this old-yet-gold book could be helpful.

|

||||

|

||||

[The AWK Programming Language][21]

|

||||

|

||||

### Linux 101 Hacks [eBook]

|

||||

|

||||

This 270 pages eBook from The Geek Stuff teaches you the essentials of Linux command lines with easy to follow practical examples. You can get the book from the link below:

|

||||

|

||||

[Linux 101 Hacks][22]

|

||||

|

||||

## 4. Distribution specific free learning material

|

||||

|

||||

This section deals with material that are dedicated to a certain Linux distribution. What we saw so far was the Linux in general, more focused on file systems, commands and other core stuff.

|

||||

|

||||

These books, on the other hand, can be termed as manual or getting started guide for various Linux distributions. So if you are using a certain Linux distribution or planning to use it, you can refer to these resources. And yes, these books are more desktop Linux focused.

|

||||

|

||||

I would also like to add that most Linux distributions have their own wiki or documentation section which are often pretty vast. You can always refer to them when you are online.

|

||||

|

||||

### Ubuntu Manual

|

||||

|

||||

Needless to say that this eBook is for Ubuntu users. It's an independent project that provides Ubuntu manual in the form of free eBook. It is updated for each version of Ubuntu.

|

||||

|

||||

The book is rightly called manual because it is basically a composition of step by step instruction and aimed at absolute beginners to Ubuntu. So, you get to know Unity desktop, how to go around it and find applications etc.

|

||||

|

||||

It's a must have if you never used Ubuntu Unity because it helps you to figure out how to use Ubuntu for your daily usage.

|

||||

|

||||

[Ubuntu Manual][23]

|

||||

|

||||

### For Linux Mint: Just Tell Me Damnit! [eBook]

|

||||

|

||||

A very basic eBook that focuses on Linux Mint. It shows you how to install Linux Mint in a virtual machine, how to find software, install updates and customize the Linux Mint desktop.

|

||||

|

||||

You can download the eBook from the link below:

|

||||

|

||||

[Just Tell Me Damnit!][24]

|

||||

|

||||

### Solus Linux Manual [eBook]

|

||||

|

||||

Caution! This used to be the official manual from Solus Linux but I cannot find its mentioned on Solus Project's website anymore. I don't know if it's outdated or not. But in any case, a little something about Solu Linux won't really hurt, will it?

|

||||

|

||||

[Solus Linux User Guide][25]

|

||||

|

||||

## 5. Free eBooks for SysAdmin

|

||||

|

||||

This section is dedicated to the SysAdmins, the superheroes for developers. I have listed a few free eBooks here for SysAdmin which will surely help anyone who is already a SysAdmin or aspirs to be one. I must add that you should also focus on essential Linux command lines as it will make your job easier.

|

||||

|

||||

### The Debian Administration's Handbook [eBook]

|

||||

|

||||

If you use Debian Linux for your servers, this is your bible. Book starts with Debian history, installation, package management etc and then moves on to cover topics like [LAMP][26], virtual machines, storage management and other core sysadmin stuff.

|

||||

|

||||

[The Debian Administration's Handbook][27]

|

||||

|

||||

### Advanced Linux System Administration [eBook]

|

||||

|

||||

This is an ideal book if you are preparing for [LPI certification][28]. The book deals straightway to the topics essential for sysadmins. So knowledge of Linux command line is a prerequisite in this case.

|

||||

|

||||

[Advanced Linux System Administration][29]

|

||||

|

||||

### Linux System Administration [eBook]

|

||||

|

||||

Another free eBook by Paul Cobbaut. The 370 pages long eBook covers networking, disk management, user management, kernel management, library management etc.

|

||||

|

||||

[Linux System Administration][30]

|

||||

|

||||

### Linux Servers [eBook]

|

||||

|

||||

One more eBook from Paul Cobbaut of [linux-training.be][31]. This book covers web servers, mysql, DHCP, DNS, Samba and other file servers.

|

||||

|

||||

[Linux Servers][32]

|

||||

|

||||

### Linux Networking [eBook]

|

||||

|

||||

Networking is the bread and butter of a SysAdmin, and this book by Paul Cobbaut (again) is a good reference material.

|

||||

|

||||

[Linux Networking][33]

|

||||

|

||||

### Linux Storage [eBook]

|

||||

|

||||

This book by Paul Cobbaut (yes, him again) explains disk management on Linux in detail and introduces a lot of other storage-related technologies.

|

||||

|

||||

[Linux Storage][34]

|

||||

|

||||

### Linux Security [eBook]

|

||||

|

||||

This is the last eBook by Paul Cobbaut in our list here. Security is one of the most important part of a sysadmin's job. This book focuses on file permissions, acls, SELinux, users and passwords etc.

|

||||

|

||||

[Linux Security][35]

|

||||

|

||||

## Your favorite Linux learning material?

|

||||

|

||||

I know that this is a good collection of free Linux eBooks. But this could always be made better.

|

||||

|

||||

If you have some other resources that could be helpful in learning Linux, do share with us. Please note to share only the legal downloads so that I can update this article with your suggestion(s) without any problem.

|

||||

|

||||

I hope you find this article helpful in learning Linux. Your feedback is welcome :)

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://itsfoss.com/learn-linux-for-free/

|

||||

|

||||

作者:[Abhishek Prakash][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://itsfoss.com/author/abhishek/

|

||||

[1]:https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2017/02/free-ebooks-linux-800x450.png

|

||||

[2]:https://www.linuxfoundation.org/

|

||||

[3]:https://www.edx.org

|

||||

[4]:https://www.youtube.com/watch?v=eE-ovSOQK0Y

|

||||

[5]:https://www.edx.org/course/introduction-linux-linuxfoundationx-lfs101x-0

|

||||

[6]:https://linuxjourney.com/

|

||||

[7]:https://www.linuxtrainingacademy.com/

|

||||

[8]:https://courses.linuxtrainingacademy.com/itsfoss-ll5d/

|

||||

[9]:https://linuxnewbieguide.org/ulngebook/

|

||||

[10]:http://www.tldp.org/index.html

|

||||

[11]:http://tldp.org/LDP/intro-linux/intro-linux.pdf

|

||||

[12]:http://linux-training.be/linuxfun.pdf

|

||||

[13]:http://advancedlinuxprogramming.com/alp-folder/advanced-linux-programming.pdf

|

||||

[14]:http://www.linuxfromscratch.org/

|

||||

[15]:http://tldp.org/LDP/GNU-Linux-Tools-Summary/GNU-Linux-Tools-Summary.pdf

|

||||

[16]:https://www.gnu.org/home.en.html

|

||||

[17]:https://www.gnu.org/software/bash/manual/bash.pdf

|

||||

[18]:http://linuxcommand.org/tlcl.php

|

||||

[19]:http://www.tldp.org/LDP/Bash-Beginners-Guide/Bash-Beginners-Guide.pdf

|

||||

[20]:http://www.tldp.org/LDP/abs/abs-guide.pdf

|

||||

[21]:https://ia802309.us.archive.org/25/items/pdfy-MgN0H1joIoDVoIC7/The_AWK_Programming_Language.pdf

|

||||

[22]:http://www.thegeekstuff.com/linux-101-hacks-ebook/

|

||||

[23]:https://ubuntu-manual.org/

|

||||

[24]:http://downtoearthlinux.com/resources/just-tell-me-damnit/

|

||||

[25]:https://drive.google.com/file/d/0B5Ymf8oYXx-PWTVJR0pmM3daZUE/view

|

||||

[26]:https://en.wikipedia.org/wiki/LAMP_(software_bundle)

|

||||

[27]:https://debian-handbook.info/about-the-book/

|

||||

[28]:https://www.lpi.org/our-certifications/getting-started

|

||||

[29]:http://www.nongnu.org/lpi-manuals/manual/pdf/GNU-FDL-OO-LPI-201-0.1.pdf

|

||||

[30]:http://linux-training.be/linuxsys.pdf

|

||||

[31]:http://linux-training.be/

|

||||

[32]:http://linux-training.be/linuxsrv.pdf

|

||||

[33]:http://linux-training.be/linuxnet.pdf

|

||||

[34]:http://linux-training.be/linuxsto.pdf

|

||||

[35]:http://linux-training.be/linuxsec.pdf

|

||||

114

sources/tech/20170502 A beginner-s guide to Raspberry Pi 3.md

Normal file

114

sources/tech/20170502 A beginner-s guide to Raspberry Pi 3.md

Normal file

@ -0,0 +1,114 @@

|

||||

A beginner’s guide to Raspberry Pi 3

|

||||

======

|

||||

|

||||

This article is part of a weekly series where I'll create new projects using Raspberry Pi 3. The first article of the series focusses on getting you started and will cover the installation of Raspbian, with PIXEL desktop, setting up networking and some basics.

|

||||

|

||||

### What you need:

|

||||

|

||||

* A Raspberry Pi 3

|

||||

* A 5v 2mAh power supply with mini USB pin

|

||||

* Micro SD card with at least 8GB capacity

|

||||

* Wi-Fi or Ethernet cable

|

||||

* Heat sink

|

||||

* Keyboard and mouse

|

||||

* a PC monitor

|

||||

* A Mac or PC to prepare microSD card.

|

||||

|

||||

|

||||

|

||||

There are many Linux-based operating systems available for Raspberry Pi that you can install directly, but if you're new to the Pi, I suggest NOOBS, the official OS installer for Raspberry Pi that simplifies the process of installing an OS on the device.

|

||||

|

||||

Download NOOBS from [this link][1] on your system. It's a compressed .zip file. If you're on MacOS, just double click on it and MacOS will automatically uncompress the files. If you are on Windows, right-click on it, and select "extract here."

|

||||

|

||||

**[ Give yourself a technology career advantage with[InfoWorld's Deep Dive technology reports and Computerworld's career trends reports][2]. GET A 15% DISCOUNT through Jan. 15, 2017: Use code 8TIISZ4Z. ]**

|

||||

|

||||

If you're running desktop Linux, then how to unzip it really depends on the desktop environment you are running, as different DEs have different ways of doing the same thing. So the easiest way is to use the command line.

|

||||

|

||||

`$ unzip NOOBS.zip`

|

||||

|

||||

Irrespective of the operating system, open the unzipped file and check if the file structure looks like this:

|

||||

|

||||

![content][3] Swapnil Bhartiya

|

||||

|

||||

Now plug the Micro SD card to your PC and format it to the FAT32 file system. On MacOS, use the Disk Utility tool and format the Micro SD card:

|

||||

|

||||

![format][4] Swapnil Bhartiya

|

||||

|

||||

On Windows, just right click on the card and choose the formatting option. If you're on desktop Linux, different DEs use different tools, and covering all the DEs is beyond the scope of this story. I have written a tutorial [using the command line interface on Linux][5] to format an SD card with Fat32 file system.

|

||||

|

||||

Once you have the card formatted in the Fat32 partition, just copy the content of the downloaded NOOBS directory into the root directory of the device. If you are on MacOS or Linux, just rsync the content of NOOBS to the SD card. Open Terminal app in MacOS or Linux and run the rsync command in this format:

|

||||

|

||||

`rsync -avzP /path_of_NOOBS /path_of_sdcard`

|

||||

|

||||

Make sure to select the root directory of the sd card. In my case (on MacOS), it was:

|

||||

|

||||

`rsync -avzP /Users/swapnil/Downloads/NOOBS_v2_2_0/ /Volumes/U/`

|

||||

|

||||

Or you can copy and paste the content. Just make sure that all the files inside the NOOBS directory are copied into the root directory of the Micro SD Card and not inside any sub-directory.

|

||||

|

||||

Now plug the Micro SD Card into the Raspberry Pi 3, connect the monitor, the keyboard and power supply. If you do have wired network, I recommend using it as you will get faster download speed to download and install the base operating system. The device will boot into NOOBS that offers a couple of distributions to install. Choose Raspbian from the first option and follow the on-screen instructions.

|

||||

|

||||

![raspi config][6] Swapnil Bhartiya

|

||||

|

||||

Once the installation is complete, Pi will reboot, and you will be greeted with Raspbian. Now it's time to configure it and run system updates. In most cases, we use Raspberry Pi in headless mode and manage it remotely over the networking using SSH. Which means you don't have to plug in a monitor or keyboard to manage your Pi.

|

||||

|

||||

First of all, we need to configure the network if you are using Wi-Fi. Click on the network icon on the top panel, and select the network from the list and provide it with the password.

|

||||

|

||||

![wireless][7] Swapnil Bhartiya

|

||||

|

||||

Congrats, you are connected wirelessly. Before we proceed with the next step, we need to find the IP address of the device so we can manage it remotely.

|

||||

|

||||

Open Terminal and run this command:

|

||||

|

||||

`ifconfig`

|

||||

|

||||

Now, note down the IP address of the device in the wlan0 section. It should be listed as "inet addr."

|

||||

|

||||

Now it's time to enable SSH and configure the system. Open the terminal on Pi and open raspi-config tool.

|

||||

|

||||

`sudo raspi-config`

|

||||

|

||||

The default user and password for Raspberry Pi is "pi" and "raspberry" respectively. You'll need the password for the above command. The first option of Raspi Config tool is to change the default password, and I heavily recommend changing the password, especially if you want to use it over the network.

|

||||

|

||||

The second option is to change the hostname, which can be useful if you have more than one Pi on the network. A hostname makes it easier to identify each device on the network.

|

||||

|

||||

Then go to Interfacing Options and enable Camera, SSH, and VNC. If you're using the device for an application that involves multimedia, such as a home theater system or PC, then you may also want to change the audio output option. By default the output is set to HDMI, but if you're using external speakers, you need to change the set-up. Go to the Advanced Option tab of Raspi Config tool, and go to Audio. There choose 3.5mm as the default out.

|

||||

|

||||

[Tip: Use arrow keys to navigate and then Enter key to choose. ]

|

||||

|

||||

Once all these changes are applied, the Pi will reboot. You can unplug the monitor and keyboard from your Pi as we will be managing it over the network. Now open Terminal on your local machine. If you're on Windows, you can use Putty or read my article to install Ubuntu Bash on Windows 10.

|

||||

|

||||

Then ssh into your system:

|

||||

|

||||

`ssh pi@IP_ADDRESS_OF_Pi`

|

||||

|

||||

In my case it was:

|

||||

|

||||

`ssh pi@10.0.0.161`

|

||||

|

||||

Provide it with the password and Eureka!, you are logged into your Pi and can now manage the device from a remote machine. If you want to manage your Raspberry Pi over the Internet, read my article on [enabling RealVNC on your machine][8].

|

||||

|

||||

In the next follow-up article, I will talk about using Raspberry Pi to manage your 3D printer remotely.

|

||||

|

||||

**This article is published as part of the IDG Contributor Network.[Want to Join?][9]**

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.infoworld.com/article/3176488/linux/a-beginner-s-guide-to-raspberry-pi-3.html

|

||||

|

||||

作者:[Swapnil Bhartiya][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.infoworld.com/author/Swapnil-Bhartiya/

|

||||

[1]:https://www.raspberrypi.org/downloads/noobs/

|

||||

[2]:http://idgenterprise.selz.com

|

||||

[3]:https://images.techhive.com/images/article/2017/03/content-100711633-large.jpg

|

||||

[4]:https://images.techhive.com/images/article/2017/03/format-100711635-large.jpg

|

||||

[5]:http://www.cio.com/article/3176034/linux/how-to-format-an-sd-card-in-linux.html

|

||||

[6]:https://images.techhive.com/images/article/2017/03/raspi-config-100711634-large.jpg

|

||||

[7]:https://images.techhive.com/images/article/2017/03/wireless-100711636-large.jpeg

|

||||

[8]:http://www.infoworld.com/article/3171682/internet-of-things/how-to-access-your-raspberry-pi-remotely-over-the-internet.html

|

||||

[9]:https://www.infoworld.com/contributor-network/signup.html

|

||||

81

sources/tech/20170924 Simulate System Loads.md

Normal file

81

sources/tech/20170924 Simulate System Loads.md

Normal file

@ -0,0 +1,81 @@

|

||||

translating by lujun9972

|

||||

Simulate System Loads

|

||||

======

|

||||

Sysadmins often need to discover how the performance of an application is affected when the system is under certain types of load. This means that an artificial load must be re-created. It is, of course, possible to install dedicated tools to do this but this option isn't always desirable or possible.

|

||||

|

||||

Every Linux distribution comes with all the tools needed to create load. They are not as configurable as dedicated tools but they will always be present and you already know how to use them.

|

||||

|

||||

### CPU

|

||||

|

||||

The following command will generate a CPU load by compressing a stream of random data and then sending it to `/dev/null`:

|

||||

```

|

||||

cat /dev/urandom | gzip -9 > /dev/null

|

||||

|

||||

```

|

||||

|

||||

If you require a greater load or have a multi-core system simply keep compressing and decompressing the data as many times as you need e.g.:

|

||||

```

|

||||

cat /dev/urandom | gzip -9 | gzip -d | gzip -9 | gzip -d > /dev/null

|

||||

|

||||

```

|

||||

|

||||

Use `CTRL+C` to end the process.

|

||||

|

||||

### RAM

|

||||

|

||||

The following process will reduce the amount of free RAM. It does this by creating a file system in RAM and then writing files to it. You can use up as much RAM as you need to by simply writing more files.

|

||||

|

||||

First, create a mount point then mount a `ramfs` filesystem there:

|

||||

```

|

||||

mkdir z

|

||||

mount -t ramfs ramfs z/

|

||||

|

||||

```

|

||||

|

||||

Then, use `dd` to create a file under that directory. Here a 128MB file is created:

|

||||

```

|

||||

dd if=/dev/zero of=z/file bs=1M count=128

|

||||

|

||||

```

|

||||

|

||||

The size of the file can be set by changing the following operands:

|

||||

|

||||

* **bs=** Block Size. This can be set to any number followed **B** for bytes, **K** for kilobytes, **M** for megabytes or **G** for gigabytes.

|

||||

* **count=** The number of blocks to write.

|

||||

|

||||

|

||||

|

||||

### Disk

|

||||

|

||||

We will create disk I/O by firstly creating a file, and then use a for loop to repeatedly copy it.

|

||||

|

||||

This command uses `dd` to generate a 1GB file of zeros:

|

||||

```

|

||||

dd if=/dev/zero of=loadfile bs=1M count=1024

|

||||

|

||||

```

|

||||

|

||||

The following command starts a for loop that runs 10 times. Each time it runs it will copy `loadfile` over `loadfile1`:

|

||||

```

|

||||

for i in {1..10}; do cp loadfile loadfile1; done

|

||||

|

||||

```

|

||||

|

||||

If you want it to run for a longer or shorter time change the second number in `{1..10}`.

|

||||

|

||||

If you prefer the process to run forever until you kill it with `CTRL+C` use the following command:

|

||||

```

|

||||

while true; do cp loadfile loadfile1; done

|

||||

|

||||

```

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://bash-prompt.net/guides/create-system-load/

|

||||

|

||||

作者:[Elliot Cooper][a]

|

||||

译者:[lujun9972](https://github.com/lujun9972)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://bash-prompt.net

|

||||

@ -0,0 +1,159 @@

|

||||

translating by lujun9972

|

||||

How To Easily Find Awesome Projects And Resources Hosted In GitHub

|

||||

======

|

||||

|

||||

|

||||

Everyday there are hundreds of new additions to the **GitHub** website. Since GitHub has thousands of stuffs, you would be exhausted when searching for a good project. Fortunately, a group of contributors have made a curated lists of awesome stuffs hosted in GitHub. These lists contains a lot of awesome stuffs grouped under different categories such as programming, database, editors, gaming, entertainment and many more. It makes our life much easier to find out any project, software, resource, library, books and all other stuffs hosted in GitHub. A fellow GitHub user went one step ahead and created a command-line utility called **" Awesome-finder"** to find awesome projects and resources on awesome series repositories. This utility helps us to browse through the curated list of awesome lists without leaving the Terminal, without using browser of course.

|

||||

|

||||

In this brief guide, I will show you how to easily browse through the curated list of awesome lists in Unix-like systems.

|

||||

|

||||

### Awesome-finder - Easily Find Awesome Projects And Resources Hosted In GitHub

|

||||

|

||||

#### Installing Awesome-finder

|

||||

|

||||

Awesome can be easily installed using **pip** , a package manager for installing programs developed using Python programming language.

|

||||

|

||||

On **Arch Linux** and its derivatives like **Antergos** , **Manjaro Linux** , you can install pip using command:

|

||||

```

|

||||

sudo pacman -S python-pip

|

||||

```

|

||||

|

||||

On **RHEL** , **CentOS** :

|

||||

```

|

||||

sudo yum install epel-release

|

||||

```

|

||||

```

|

||||

sudo yum install python-pip

|

||||

```

|

||||

|

||||

On **Fedora** :

|

||||

```

|

||||

sudo dnf install epel-release

|

||||

```

|

||||

```

|

||||

sudo dnf install python-pip

|

||||

```

|

||||

|

||||

On **Debian** , **Ubuntu** , **Linux Mint** :

|

||||

```

|

||||

sudo apt-get install python-pip

|

||||

```

|

||||

|

||||

On **SUSE** , **openSUSE** :

|

||||

```

|

||||

sudo zypper install python-pip

|

||||

```

|

||||

|

||||

Once PIP installed, run the following command to install 'Awesome-finder' utility.

|

||||

```

|

||||

sudo pip install awesome-finder

|

||||

```

|

||||

|

||||

#### Usage

|

||||

|

||||

Awesome-finder currently lists the stuffs from the following awesome topics (repositories, of course) from GitHub site:

|

||||

|

||||

* awesome

|

||||

* awesome-android

|

||||

* awesome-elixir

|

||||

* awesome-go

|

||||

* awesome-ios

|

||||

* awesome-java

|

||||

* awesome-javascript

|

||||

* awesome-php

|

||||

* awesome-python

|

||||

* awesome-ruby

|

||||

* awesome-rust

|

||||

* awesome-scala

|

||||

* awesome-swift

|

||||

|

||||

|

||||

|

||||

This list will be updated on regular basis.

|

||||

|

||||

For instance, to view the curated list from awesome-go repository, just type:

|

||||

```

|

||||

awesome go

|

||||

```

|

||||

|

||||

You will see all popular stuffs written using "Go", sorted by alphabetical order.

|

||||

|

||||

[![][1]][2]

|

||||

|

||||

You can navigate through the list using **UP/DOWN** arrows. Once you found the stuff you looking for, choose it and hit **ENTER** key to open the link in your default web browser.

|

||||

|

||||

Similarly,

|

||||

|

||||

* "awesome android" command will search the **awesome-android** repository.

|

||||

* "awesome awesome" command will search the **awesome** repository.

|

||||

* "awesome elixir" command will search the **awesome-elixir**.

|

||||

* "awesome go" will search the **awesome-go**.

|

||||

* "awesome ios" will search the **awesome-ios**.

|

||||

* "awesome java" will search the **awesome-java**.

|

||||

* "awesome javascript" will search the **awesome-javascript**.

|

||||

* "awesome php" will search the **awesome-php**.

|

||||

* "awesome python" will search the **awesome-python**.

|

||||

* "awesome ruby" will search the **awesome-ruby**.

|

||||

* "awesome rust" will search the **awesome-rust**.

|

||||

* "awesome scala" will search the **awesome-scala**.

|

||||

* "awesome swift" will search the **awesome-swift**.

|

||||

|

||||

|

||||

|

||||

Also, it automatically displays the suggestions as you type in the prompt. For instance when I type "dj", it displays the stuffs related to Django.

|

||||

|

||||

[![][1]][3]

|

||||

|

||||

If you wanted to find the awesome things from latest awesome-<topic> (not use cache), use -f or -force flag:

|

||||

```

|

||||

awesome <topic> -f (--force)

|

||||

|

||||

```

|

||||

|

||||

**Example:**

|

||||

```

|

||||

awesome python -f

|

||||

```

|

||||

|

||||

Or,

|

||||

```

|

||||

awesome python --force

|

||||

```

|

||||

|

||||

The above command will display the curated list of stuffs from **awesome-python** GitHub repository.

|

||||

|

||||

Awesome, isn't it?

|

||||

|

||||

To exit from this utility, press **ESC** key. To display help, type:

|

||||

```

|

||||

awesome -h

|

||||

```

|

||||

|

||||

And, that's all for now. Hope this helps. If you find our guides useful, please share them on your social, professional networks, so everyone will benefit from them. Good good stuffs to come. Stay tuned!

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.ostechnix.com/easily-find-awesome-projects-resources-hosted-github/

|

||||

|

||||

作者:[SK][a]

|

||||

译者:[lujun9972](https://github.com/lujun9972)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.ostechnix.com/author/sk/

|

||||

[1]:data:image/gif;base64,R0lGODlhAQABAIAAAAAAAP///yH5BAEAAAAALAAAAAABAAEAAAIBRAA7

|

||||

[2]:http://www.ostechnix.com/wp-content/uploads/2017/09/sk@sk_008-1.png ()

|

||||

[3]:http://www.ostechnix.com/wp-content/uploads/2017/09/sk@sk_009.png ()

|

||||

[4]:https://www.ostechnix.com/easily-find-awesome-projects-resources-hosted-github/?share=reddit (Click to share on Reddit)

|

||||

[5]:https://www.ostechnix.com/easily-find-awesome-projects-resources-hosted-github/?share=twitter (Click to share on Twitter)

|

||||

[6]:https://www.ostechnix.com/easily-find-awesome-projects-resources-hosted-github/?share=facebook (Click to share on Facebook)

|

||||

[7]:https://www.ostechnix.com/easily-find-awesome-projects-resources-hosted-github/?share=google-plus-1 (Click to share on Google+)

|

||||

[8]:https://www.ostechnix.com/easily-find-awesome-projects-resources-hosted-github/?share=linkedin (Click to share on LinkedIn)

|

||||

[9]:https://www.ostechnix.com/easily-find-awesome-projects-resources-hosted-github/?share=pocket (Click to share on Pocket)

|

||||

[10]:whatsapp://send?text=How%20To%20Easily%20Find%20Awesome%20Projects%20And%20Resources%20Hosted%20In%20GitHub%20https%3A%2F%2Fwww.ostechnix.com%2Feasily-find-awesome-projects-resources-hosted-github%2F (Click to share on WhatsApp)

|

||||

[11]:https://www.ostechnix.com/easily-find-awesome-projects-resources-hosted-github/?share=telegram (Click to share on Telegram)

|

||||

[12]:https://www.ostechnix.com/easily-find-awesome-projects-resources-hosted-github/?share=email (Click to email this to a friend)

|

||||

[13]:https://www.ostechnix.com/easily-find-awesome-projects-resources-hosted-github/#print (Click to print)

|

||||

@ -0,0 +1,81 @@

|

||||

A 3-step process for making more transparent decisions

|

||||

======

|

||||

|

||||

|

||||

One of the most powerful ways to make your work as a leader more transparent is to take an existing process, open it up for feedback from your team, and then change the process to account for this feedback. The following exercise makes transparency more tangible, and it helps develop the "muscle memory" needed for continually evaluating and adjusting your work with transparency in mind.

|

||||

|

||||

I would argue that you can undertake this activity this with any process--even processes that might seem "off limits," like the promotion or salary adjustment processes. But if that's too big for a first bite, then you might consider beginning with a less sensitive process, such as the travel approval process or your system for searching for candidates to fill open positions on your team. (I've done this with our hiring process and promotion processes, for example.)

|

||||

|

||||

Opening up processes and making them more transparent builds your credibility and enhances trust with team members. It forces you to "walk the transparency walk" in ways that might challenge your assumptions or comfort level. Working this way does create additional work, particularly at the beginning of the process--but, ultimately, this works well for holding managers (like me) accountable to team members, and it creates more consistency.

|

||||

|

||||

### Phase 1: Pick a process

|

||||

|

||||

**Step 1.** Think of a common or routine process your team uses, but one that is not generally open for scrutiny. Some examples might include:

|

||||

|

||||

* Hiring: How are job descriptions created, interview teams selected, candidates screened and final hiring decisions made?

|

||||

* Planning: How are your team or organizational goals determined for the year or quarter?

|

||||

* Promotions: How do you select candidates for promotion, consider them, and decide who gets promoted?

|

||||

* Manager performance appraisals: Who receives the opportunity to provide feedback on manager performance, and how are they able to do it?

|

||||

* Travel: How is the travel budget apportioned, and how do you make decisions about whether to approval travel (or whether to nominate someone for travel)?

|

||||

|

||||

|

||||

|

||||

One of the above examples may resonate with you, or you may identify something else that you feel is more appropriate. Perhaps you've received questions about a particular process, or you find yourself explaining the rationale for a particular kind of decision frequently. Choose something that you are able to control or influence--and something you believe your constituents care about.

|

||||

|

||||

**Step 2.** Now answer the following questions about the process:

|

||||

|

||||

* Is the process currently documented in a place that all constituents know about and can access? If not, go ahead and create that documentation now (it doesn't have to be too detailed; just explain the different steps of the process and how it works). You may find that the process isn't clear or consistent enough to document. In that case, document it the way you think it should work in the ideal case.

|

||||

* Does the completed process documentation explain how decisions are made at various points? For example, in a travel approval process, does it explain how a decision to approve or deny a request is made?

|

||||

* What are the inputs of the process? For example, when determining departmental goals for the year, what data is used for key performance indicators? Whose feedback is sought and incorporated? Who has the opportunity to review or "sign off"?

|

||||

* What assumptions does this process make? For example, in promotion decisions, do you assume that all candidates for promotion will be put forward by their managers at the appropriate time?

|

||||

* What are the outputs of the process? For example, in assessing the performance of the managers, is the result shared with the manager being evaluated? Are any aspects of the review shared more broadly with the manager's direct reports (areas for improvement, for example)?

|

||||

|

||||

|

||||

|

||||

Avoid making judgements when answering the above questions. If the process doesn't clearly explain how a decision is made, that might be fine. The questions are simply an opportunity to assess the current state.

|

||||

|

||||

Next, revise the documentation of the process until you are satisfied that it adequately explains the process and anticipates the potential questions.

|

||||

|

||||

### Phase 2: Gather feedback

|

||||

|

||||

The next phase involves sharing the process with your constituents and asking for feedback. Sharing is easier said than done.

|

||||

|

||||

**Step 1.** Encourage people to provide feedback. Consider a variety of mechanisms for doing this:

|

||||

|

||||

* Post the process somewhere people can find it internally and note where they can make comments or provide feedback. A Google document works great with the ability to comment on specific text or suggest changes directly in the text.

|

||||

* Share the process document via email, inviting feedback

|

||||

* Mention the process document and ask for feedback during team meetings or one-on-one conversations

|

||||

* Give people a time window within which to provide feedback, and send periodic reminders during that window.

|

||||

|

||||

|

||||

|

||||

If you don't get much feedback, don't assume that silence is equal to endorsement. Try asking people directly if they have any idea why feedback is not coming in. Are people too busy? Is the process not as important to people as you thought? Have you effectively articulated what you're asking for?

|

||||

|

||||

**Step 2.** Iterate. As you get feedback about the process, engage the team in revising and iterating on the process. Incorporate ideas and suggestions for improvement, and ask for confirmation that the intended feedback has been applied. If you don't agree with a suggestion, be open to the discussion and ask yourself why you don't agree and what the merits are of one method versus another.

|

||||

|

||||

Setting a timebox for collecting feedback and iterating is helpful to move things forward. Once feedback has been collected and reviewed, discussed and applied, post the final process for the team to review.

|

||||

|

||||

### Phase 3: Implement

|

||||

|

||||

Implementing a process is often the hardest phase of the initiative. But if you've taken account of feedback when revising your process, people should already been anticipating it and will likely be more supportive. The documentation you have from the iterative process above is a great tool to keep you accountable on the implementation.

|

||||

|

||||

**Step 1.** Review requirements for implementation. Many processes that can benefit from increased transparency simply require doing things a little differently, but you do want to review whether you need any other support (tooling, for example).

|

||||

|

||||

**Step 2.** Set a timeline for implementation. Review the timeline with constituents so they know what to expect. If the new process requires a process change for others, be sure to provide enough time for people to adapt to the new behavior, and provide communication and reminders.

|

||||

|

||||

**Step 3.** Follow up. After using the process for 3-6 months, check in with your constituents to see how it's going. Is the new process more transparent? More effective? More predictable? Do you have any lessons learned that could be used to improve the process further?

|

||||

|

||||

### About The Author

|

||||

Sam Knuth;I Have The Privilege To Lead The Customer Content Services Team At Red Hat;Which Produces All Of The Documentation We Provide For Our Customers. Our Goal Is To Provide Customers With The Insights They Need To Be Successful With Open Source Technology In The Enterprise. Connect With Me On Twitter

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/open-organization/17/9/exercise-in-transparent-decisions

|

||||

|

||||

作者:[a][Sam Knuth]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://opensource.com/users/samfw

|

||||

@ -0,0 +1,131 @@

|

||||

How to Use the ZFS Filesystem on Ubuntu Linux

|

||||

======

|

||||

There are a myriad of [filesystems available for Linux][1]. So why try a new one? They all work, right? They're not all the same, and some have some very distinct advantages, like ZFS.

|

||||

|

||||

### Why ZFS

|

||||

|

||||

ZFS is awesome. It's a truly modern filesystem with built-in capabilities that make sense for handling loads of data.

|

||||

|

||||

Now, if you're considering ZFS for your ultra-fast NVMe SSD, it might not be the best option. It's slower than others. That's okay, though. It was designed to store huge amounts of data and keep it safe.

|

||||

|

||||

ZFS eliminates the need to set up traditional RAID arrays. Instead, you can create ZFS pools, and even add drives to those pools at any time. ZFS pools behave almost exactly like RAID, but the functionality is built right into the filesystem.

|

||||

|

||||

ZFS also acts like a replacement for LVM, allowing you to partition and manage partitions on the fly without the need to handle things at a lower level and worry about the associated risks.

|

||||

|

||||

It's also a CoW filesystem. Without getting too technical, that means that ZFS protects your data from gradual corruption over time. ZFS creates checksums of files and lets you roll back those files to a previous working version.

|

||||

|

||||

### Installing ZFS

|

||||

|

||||

![Install ZFS on Ubuntu][2]

|

||||

|

||||

Installing ZFS on Ubuntu is very easy, though the process is slightly different for Ubuntu LTS and the latest releases.

|

||||

|

||||

**Ubuntu 16.04 LTS**

|

||||

```

|

||||

sudo apt install zfs

|

||||

```

|

||||

|

||||

**Ubuntu 17.04 and Later**

|

||||

```

|

||||

sudo apt install zfsutils

|

||||

```

|

||||

|

||||

After you have the utilities installed, you can create ZFS drives and partitions using the tools provided by ZFS.

|

||||

|

||||

### Creating Pools

|

||||

|

||||

![Create ZFS Pool][3]

|

||||

|

||||

Pools are the rough equivalent of RAID in ZFS. They are flexible and can easily be manipulated.

|

||||

|

||||

#### RAID0

|

||||

|

||||

RAID0 just pools your drives into what behaves like one giant drive. It can increase your drive speeds, but if one of your drives fails, you're probably going to be out of luck.

|

||||

|

||||

To achieve RAID0 with ZFS, just create a plain pool.

|

||||

```

|

||||

sudo zpool create your-pool /dev/sdc /dev/sdd

|

||||

```

|

||||

|

||||

#### RAID1/MIRROR

|

||||

|

||||

You can achieve RAID1 functionality with the `mirror` keyword in ZFS. Raid1 creates a 1-to-1 copy of your drive. This means that your data is constantly backed up. It also increases performance. Of course, you use half of your storage to the duplication.

|

||||

```

|

||||

sudo zpool create your-pool mirror /dev/sdc /dev/sdd

|

||||

```

|

||||

|

||||

#### RAID5/RAIDZ1

|

||||

|

||||

ZFS implements RAID5 functionality as RAIDZ1. RAID5 requires drives in multiples of three and allows you to keep 2/3 of your storage space by writing backup parity data to 1/3 of the drive space. If one drive fails, the array will remain online, but the failed drive should be replaced ASAP.

|

||||

```

|

||||

sudo zpool create your-pool raidz1 /dev/sdc /dev/sdd /dev/sde

|

||||

```

|

||||

|

||||

#### RAID6/RAIDZ2

|

||||

|

||||

RAID6 is almost exactly like RAID5, but it works in multiples of four instead of multiples of three. It doubles the parity data to allow up to two drives to fail without bringing the array down.

|

||||

```

|

||||

sudo zpool create your-pool raidz2 /dev/sdc /dev/sdd /dev/sde /dev/sdf

|

||||

```

|

||||

|

||||

#### RAID10/Striped Mirror

|

||||

|

||||

RAID10 aims to be the best of both worlds by providing both a speed increase and data redundancy with striping. You need drives in multiples of four and will only have access to half of the space. You can create a pool in RAID10 by creating two mirrors in the same pool command.

|

||||

```

|

||||

sudo zpool create your-pool mirror /dev/sdc /dev/sdd mirror /dev/sde /dev/sdf

|

||||

```

|

||||

|

||||

### Working With Pools

|

||||

|

||||

![ZFS pool Status][4]

|

||||

|

||||

There are also some management tools that you have to work with your pools once you've created them. First, check the status of your pools.

|

||||

```

|

||||

sudo zpool status

|

||||

```

|

||||

|

||||

#### Updates

|

||||

|

||||

When you update ZFS you'll need to update your pools, too. Your pools will notify you of any updates when you check their status. To update a pool, run the following command.

|

||||

```

|

||||

sudo zpool upgrade your-pool

|

||||

```

|

||||

|

||||

You can also upgrade them all.

|

||||

```

|

||||

sudo zpool upgrade -a

|

||||

```

|

||||

|

||||

#### Adding Drives

|

||||

|

||||

You can also add drives to your pools at any time. Tell `zpool` the name of the pool and the location of the drive, and it'll take care of everything.

|

||||

```

|

||||

sudo zpool add your-pool /dev/sdx

|

||||

```

|

||||

|

||||

### Other Thoughts

|

||||

|

||||

![ZFS in File Browser][5]

|

||||

|

||||

ZFS creates a directory in the root filesystem for your pools. You can browse to them by name using your GUI file manager or the CLI.

|

||||

|

||||

ZFS is awesomely powerful, and there are plenty of other things that you can do with it, too, but these are the basics. It is an excellent filesystem for working with loads of storage, even if it is just a RAID array of hard drives that you use for your files. ZFS works excellently with NAS systems, too.

|

||||

|

||||

Regardless of how stable and robust ZFS is, it's always best to back up your data when you implement something new on your hard drives.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.maketecheasier.com/use-zfs-filesystem-ubuntu-linux/

|

||||

|

||||

作者:[Nick Congleton][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.maketecheasier.com/author/nickcongleton/

|

||||

[1]:https://www.maketecheasier.com/best-linux-filesystem-for-ssd/

|

||||

[2]:https://www.maketecheasier.com/assets/uploads/2017/09/zfs-install.jpg (Install ZFS on Ubuntu)

|

||||

[3]:https://www.maketecheasier.com/assets/uploads/2017/09/zfs-create-pool.jpg (Create ZFS Pool)

|

||||

[4]:https://www.maketecheasier.com/assets/uploads/2017/09/zfs-pool-status.jpg (ZFS pool Status)

|

||||

[5]:https://www.maketecheasier.com/assets/uploads/2017/09/zfs-pool-open.jpg (ZFS in File Browser)

|

||||

@ -0,0 +1,119 @@

|

||||

translating by lujun9972

|

||||

Connect To Wifi From The Linux Command Line

|

||||

======

|

||||

|

||||

### Objective

|

||||

|

||||

Configure WiFi using only command line utilities.

|

||||

|

||||

### Distributions

|

||||

|

||||

This will work on any major Linux distribution.

|

||||

|

||||

### Requirements

|

||||

|

||||

A working Linux install with root privileges and a compatible wireless network adapter.

|

||||

|

||||

### Difficulty

|

||||

|

||||

Easy

|

||||

|

||||

### Conventions

|

||||

|

||||

* **#** \- requires given command to be executed with root privileges either directly as a root user or by use of `sudo` command

|

||||

* **$** \- given command to be executed as a regular non-privileged user

|

||||

|

||||

|

||||

|

||||

### Introduction

|

||||

|

||||

Lots of people like graphical utilities for managing their computers, but plenty don't too. If you prefer command line utilities, managing WiFi can be a real pain. Well, it doesn't have to be.

|

||||

|

||||

wpa_supplicant can be used as a command line utility. You can actually set it up easily with a simple configuration file.

|

||||

|

||||

### Scan For Your Network

|

||||

|

||||

If you already know your network information, you can skip this step. If not, its a good way to figure out some info about the network you're connecting to.

|

||||

|

||||

wpa_supplicant comes with a tool called `wpa_cli` which provides a command line interface to manage your WiFi connections. You can actually use it to set up everything, but setting up a configuration file seems a bit easier.

|

||||

|

||||

Run `wpa_cli` with root privileges, then scan for networks.

|

||||

```

|

||||

|

||||

# wpa_cli

|

||||

> scan

|

||||

|

||||

```

|

||||

|

||||

The scan will take a couple of minutes, and show you the networks in your area. Notate the one you want to connect to. Type `quit` to exit.

|

||||

|

||||

### Generate a Block and Encrypt Your Password

|

||||

|

||||

There's an even more convenient utility that you can use to begin setting up your configuration file. It takes the name of your network and the password and creates a file with a configuration block for that network with the password encrypted, so it's not stored in plain text.

|

||||

```

|

||||

|

||||

# wpa_passphrase networkname password > /etc/wpa_supplicant/wpa_supplicant.conf

|

||||

|

||||

```

|

||||

|

||||

### Tailor Your Configuration

|

||||

|

||||

Now, you have a configuration file located at `/etc/wpa_supplicant/wpa_supplicant.conf`. It's not much, just the network block with your network name and password, but you can build it out from there.

|

||||

|

||||

Your file up in your favorite editor, and start by deleting the commented out password line. Then, add the following line to the top of the configuration.

|

||||

```

|

||||

ctrl_interface=DIR=/var/run/wpa_supplicant GROUP=wheel

|

||||

|

||||

```

|

||||

|

||||

It just lets users in the `wheel` group manage wpa_supplicant. It can be convenient.

|

||||

|

||||

Add the rest of this to the network block itself.

|

||||

|

||||

If you're connecting to a hidden network, you can add the following line to tell wpa_supplicant to scan it first.

|

||||

```

|

||||

scan_ssid=1

|

||||

|

||||

```

|

||||

|

||||

Next, set the protocol and key management settings. These settings correspond to WPA2.

|

||||

```

|

||||

proto=RSN

|

||||

key_mgmt=WPA-PSK

|

||||

|

||||

```

|

||||

|

||||

The group and pairwise settings tell wpa_supplicant if you're using CCMP, TKIP, or both. For best security, you should only be using CCMP.

|

||||

```

|

||||

group=CCMP

|

||||

pairwise=CCMP

|

||||

|

||||

```

|

||||

|

||||

Finally, set the priority of the network. Higher values will connect first.

|

||||

```

|

||||

priority=10

|

||||

|

||||

```

|

||||

|

||||

|

||||

![Complete WPA_Supplicant Settings][1]

|

||||

Save your configuration and restart wpa_supplicant for the changes to take effect.

|

||||

|

||||

### Closing Thoughts

|

||||

|

||||

Certainly, this method isn't the best for configuring wireless networks on-the-fly, but it works very well for the networks that you connect to on a regular basis.

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://linuxconfig.org/connect-to-wifi-from-the-linux-command-line

|

||||

|

||||

作者:[Nick Congleton][a]

|

||||

译者:[lujun9972](https://github.com/lujun9972)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://linuxconfig.org

|

||||

[1]:https://linuxconfig.org/images/wpa-cli-config.jpg

|

||||

@ -0,0 +1,100 @@

|

||||

Linux Gunzip Command Explained with Examples

|

||||

======

|

||||

|

||||

We have [already discussed][1] the **gzip** command in Linux. For starters, the tool is used to compress or expand files. To uncompress, the command offers a command line option **-d** , which can be used in the following way:

|

||||

|

||||

gzip -d [compressed-file-name]

|

||||

|

||||

However, there's an entirely different tool that you can use for uncompressing or expanding archives created by gzip. The tool in question is **gunzip**. In this article, we will discuss the gunzip command using some easy to understand examples. Please note that all examples/instructions mentioned in the tutorial have been tested on Ubuntu 16.04.

|

||||

|

||||

### Linux gunzip command

|