mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-12 01:40:10 +08:00

commit

decc148937

@ -1,67 +1,70 @@

|

||||

|

||||

Ubunto可以实现这功能吗?-回答4个新用户最常问的问题

|

||||

Ubuntu 有这功能吗?-回答4个新用户最常问的问题

|

||||

================================================================================

|

||||

|

||||

|

||||

**在谷歌输入‘Can Ubunt[u]’,一系列的自动建议会展现在你面前。这些建议都是根据最近搜索用户最频繁检索而形成的。

|

||||

|

||||

对于Linux老用户来说,他们都胸有成竹的回答这些问题。但是对于新用户或者那些还在探索类似Ubuntu是否是值得分配的人,他们不是十分清楚这些答案。这都是中肯,真实而且是基本的问题。

|

||||

对于Linux老用户来说,他们都胸有成竹的回答这些问题。但是对于新用户或者那些还在探索类似Ubuntu这样的发行版是否适合的人来说,他们不是十分清楚这些答案。这都是中肯,真实而且是基本的问题。

|

||||

|

||||

所以,在这片文章,我将会去回答4个最常会被搜索到的"Can Ubuntu...?"问题。

|

||||

|

||||

### Ubuntu可以取代Windows吗?###

|

||||

|

||||

|

||||

Windows 并不是每个人都喜欢 - 或者说是必须的。

|

||||

|

||||

是的。Ubutu(和其他Linux发行版)是可以安装到任何一台有能力运行微软系统的电脑。

|

||||

*Windows 并不是每个人都喜欢或都必须的*

|

||||

|

||||

无论你觉得 **应不应该** 取代它,不变的是,这取决于你自己的需求。

|

||||

是的。Ubuntu(和其他Linux发行版)是可以安装到任何一台有能力运行微软系统的电脑。

|

||||

|

||||

无论你觉得**应不应该**取代它,要不要替换只取决于你自己的需求。

|

||||

|

||||

例如,你在上大学,所需的软件都只是Windows而已。暂时而言,你是不需要完全更换你的系统。对于工作也是同样的道理。如果你工作所用到的软件只是微软Office, Adobe Creative Suite 或者是一个AutoCAD应用程序,不是很建议你更换系统,坚持你现在所用的软件就足够了。

|

||||

|

||||

但是对于那些用Ubuntu完全取代微软的我们,Ubuntu 提供一个安全的桌面工作环境。这个桌面工作环境可以运行与支持很广的硬件环境。基本上,每个东西都有软件的支持,从办公套件到网页浏览器,视频应用程序,音乐应用程序到游戏。

|

||||

但是对于那些用Ubuntu完全取代微软系统的我们,Ubuntu 提供了一个安全的桌面工作环境。这个桌面工作环境可以运行与支持很广的硬件环境。基本上,每个东西都有软件的支持,从办公套件到网页浏览器,视频应用程序,音乐应用程序到游戏。

|

||||

|

||||

### Ubuntu 可以运行 .exe文件吗?###

|

||||

|

||||

|

||||

你可以在Ubuntu运行一些Windows应用程序

|

||||

|

||||

是可以的,尽管这些程序不是一步安装到位,或者不能保证安装成功。这是因为这些软件版本本来就是在Windows下运行的。 这些程序本来就与其他桌面操作系统不兼容,包括Mac OS X 或者 Android (安卓系统)。

|

||||

*你可以在Ubuntu运行一些Windows应用程序*

|

||||

|

||||

那些专门为Ubuntu(和其他Linux发行版本)的软件安装包都是带有“.deb”的文件后缀名。它们的安装过程与安装 .exe 的程序是一样的 -双击安装包,然后根据屏幕提示完成安装。

|

||||

是可以的,尽管这些程序不是一步到位,或者不能保证运行成功。这是因为这些软件原本就是在Windows下运行的,本来就与其他桌面操作系统不兼容,包括Mac OS X 或者 Android (安卓系统)。

|

||||

|

||||

但是Linux是很多样化的。 使用一个名为"Wine"的兼容层,可以运行许多当下很流行的应用程序。 (Wine不是一个模拟器,但是简单来讲是一个速记本。)这些程序不会像在Windows下运行得那么顺畅,或者有着出色的用户界面。然而,它足以满足日常的工作要求。

|

||||

那些专门为Ubuntu(和其他 Debian 系列的 Linux 发行版本)的软件安装包都是带有“.deb”的文件后缀名。它们的安装过程与安装 .exe 的程序是一样的 -双击安装包,然后根据屏幕提示完成安装。 (LCTT 译注:RedHat 系统采用.rpm 文件,其它的也有各种不同的安装包格式,等等,作为初学者,你可以当成是各种压缩包格式来理解)

|

||||

|

||||

一些很出名的Windows软件是可以通过Wine来运行在Ubuntu操作系统上,这包括老版本的Photoshop和微软办公室软件。 有关兼容软件的列表 [参照Wine应用程序数据库][1].

|

||||

但是Linux是很多样化的。它使用一个名为"Wine"的兼容层,可以运行许多当下很流行的应用程序。 (Wine不是一个模拟器,但是简单来看可以当成一个快捷方式。)这些程序不会像在Windows下运行得那么顺畅,或者有着出色的用户界面。然而,它足以满足日常的工作要求。

|

||||

|

||||

一些很出名的Windows软件是可以通过Wine来运行在Ubuntu操作系统上,这包括老版本的Photoshop和微软办公室软件。 有关兼容软件的列表,[参照Wine应用程序数据库][1]。

|

||||

|

||||

### Ubuntu会有病毒吗?###

|

||||

|

||||

|

||||

它可能有错误,但是它并有病毒

|

||||

|

||||

*它可能有错误,但是它并有病毒*

|

||||

|

||||

理论上,它会有病毒。但是,实际上它没有。

|

||||

|

||||

Linux发行版本是建立在一个病毒,蠕虫,隐匿程序都很难被安装,运行或者造成很大影响的环境之下的。

|

||||

|

||||

例如,很多应用程序都是在没有特别管理权限要求,以普通用户权限运行的。病毒的访问系统关键部分的请求也是需要用户管理权限的。很多软件的提供都是从那些维护良好的而且集中的资源库,例如Ubuntu软件中心,而不是一些不知名的网站。 由于这样的管理使得安装一些受感染的软件的几率可以忽略不计。

|

||||

例如,很多应用程序都是在没有特别管理权限要求,以普通用户权限运行的。病毒要访问系统关键部分的请求也是需要用户管理权限的。很多软件的提供都是从那些维护良好的而且集中的资源库,例如Ubuntu软件中心,而不是一些不知名的网站。 由于这样的管理使得安装一些受感染的软件的几率可以忽略不计。

|

||||

|

||||

你应不应该在Ubuntu系统安装杀毒软件?这取决于你自己。为了自己的安心,或者如果你经常通过Wine来使用Windows软件,或者双系统,你可以安装ClamAV。它是一个免费的开源的病毒扫描应用程序。你可以在Ubuntu软件中心找到它。

|

||||

|

||||

你可以在Ubuntu维基百科了解更多关于病毒在Linux或者Ubuntu的信息。 [Ubuntu 维基百科][2].

|

||||

你可以在Ubuntu维基百科了解更多关于病毒在Linux或者Ubuntu的信息。 [Ubuntu 维基百科][2]。

|

||||

|

||||

### 在Ubuntu上可以玩游戏吗?###

|

||||

|

||||

|

||||

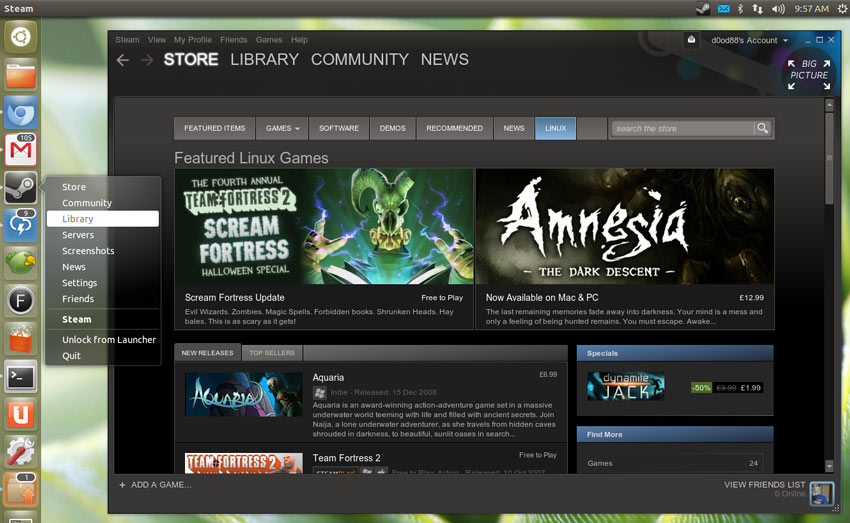

Steam有着上百个专门为Linux设计的高质量游戏。

|

||||

|

||||

当然可以!Ubuntu有着多样化的游戏,从传统简单的2D象棋,拼字游戏和扫雷游戏,到很现代化AAA级别的对显卡要求强的游戏。

|

||||

*Steam有着上百个专门为Linux设计的高质量游戏*

|

||||

|

||||

你首先去到 **Ubuntu 软件中心**。这里你会找到很多免费的,开源的和付钱的游戏,包括广受好评的独立制作游戏,像World of Goo 和Braid。当然也有其他传统游戏的提供,例如,Pychess(国际象棋),four-in-a-row和Scrabble clones(猜字拼字游戏)。

|

||||

当然可以!Ubuntu有着多样化的游戏,从传统简单的2D象棋,拼字游戏和扫雷游戏,到很现代化的AAA级别的要求显卡很强的游戏。

|

||||

|

||||

对于游戏狂热爱好者,你可以点击**Steam for Linux**. 在这里你可以找到各种这样最新最好玩的游戏。

|

||||

你首先可以去 **Ubuntu 软件中心**。这里你会找到很多免费的,开源的和收费的游戏,包括广受好评的独立制作游戏,像World of Goo 和Braid。当然也有其他传统游戏的提供,例如,Pychess(国际象棋),four-in-a-row(四子棋)和Scrabble clones(猜字拼字游戏)。

|

||||

|

||||

另外,记得留意这个网站 [Humble Bundle][3]。这些“只买你想要的”的套餐只会持续每个月里面的两周。作为游戏平台,它是Linux特别友好的支持者。因为每当一些新游戏出来的时候,它都保证可以在Linux下搜索到。

|

||||

对于游戏狂热爱好者,你可以安装**Steam for Linux**。在这里你可以找到各种这样最新最好玩的游戏。

|

||||

|

||||

另外,记得留意这个网站:[Humble Bundle][3]。每个月都会有两周的这种“只买你想要的”的套餐。作为游戏平台,它是对Linux特别友好的支持者。因为每当一些新游戏出来的时候,它都保证可以在Linux下搜索到。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -69,7 +72,7 @@ via: http://www.omgubuntu.co.uk/2014/08/ubuntu-can-play-games-replace-windows-qu

|

||||

|

||||

作者:[Joey-Elijah Sneddon][a]

|

||||

译者:[Shaohao Lin](https://github.com/shaohaolin)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,10 +1,10 @@

|

||||

使用 GIT 备份 linux 上的网页

|

||||

使用 GIT 备份 linux 上的网页文件

|

||||

================================================================================

|

||||

|

||||

|

||||

BUP 并不单纯是 Git, 而是一款基于 Git 的软件. 一般情况下, 我使用 rsync 来备份我的文件, 而且迄今为止一直工作的很好. 唯一的不足就是无法把文件恢复到某个特定的时间点. 因此, 我开始寻找替代品, 结果发现了 BUP, 一款基于 git 的软件, 它将数据存储在一个仓库中, 并且有将数据恢复到特定时间点的选项.

|

||||

|

||||

要使用 BUP, 你先要初始化一个空的仓库, 然后备份所有文件. 当 BUP 完成一次备份是, 它会创建一个还原点, 你可以过后还原到这里. 它还会创建所有文件的索引, 包括文件的属性和验校和. 当要进行下一个备份是, BUP 会对比文件的属性和验校和, 只保存发生变化的数据. 这样可以节省很多空间.

|

||||

要使用 BUP, 你先要初始化一个空的仓库, 然后备份所有文件. 当 BUP 完成一次备份是, 它会创建一个还原点, 你可以过后还原到这里. 它还会创建所有文件的索引, 包括文件的属性和验校和. 当要进行下一个备份时, BUP 会对比文件的属性和验校和, 只保存发生变化的数据. 这样可以节省很多空间.

|

||||

|

||||

### 安装 BUP (在 Centos 6 & 7 上测试通过) ###

|

||||

|

||||

@ -20,7 +20,8 @@ BUP 并不单纯是 Git, 而是一款基于 Git 的软件. 一般情况下, 我

|

||||

[techarena51@vps ~]$ make test

|

||||

[techarena51@vps ~]$ sudo make install

|

||||

|

||||

对于 debian/ubuntu 用户, 你可以使用 "apt-get build-dep bup". 要获得更多的信心, 可以查看 https://github.com/bup/bup

|

||||

对于 debian/ubuntu 用户, 你可以使用 "apt-get build-dep bup". 要获得更多的信息, 可以查看 https://github.com/bup/bup

|

||||

|

||||

在 CentOS 7 上, 当你运行 "make test" 时可能会出错, 但你可以继续运行 "make install".

|

||||

|

||||

第一步时初始化一个空的仓库, 就像 git 一样.

|

||||

@ -49,7 +50,7 @@ BUP 并不单纯是 Git, 而是一款基于 Git 的软件. 一般情况下, 我

|

||||

|

||||

"BUP save" 会把所有内容分块, 然后把它们作为对象储存. "-n" 选项指定备份名.

|

||||

|

||||

你可以查看一系列备份和已备份文件.

|

||||

你可以查看备份列表和已备份文件.

|

||||

|

||||

[techarena51@vps ~]$ bup ls

|

||||

local-etc techarena51 test

|

||||

@ -88,13 +89,13 @@ BUP 并不单纯是 Git, 而是一款基于 Git 的软件. 一般情况下, 我

|

||||

|

||||

唯一的缺点是你不能把文件恢复到另一个服务器, 你必须通过 SCP 或者 rsync 手动复制文件.

|

||||

|

||||

通过集成的 web 服务器查看备份

|

||||

通过集成的 web 服务器查看备份.

|

||||

|

||||

bup web

|

||||

#specific port

|

||||

bup web :8181

|

||||

|

||||

你可以使用 shell 脚本来运行 bup, 并建立一个每日运行的定时任务

|

||||

你可以使用 shell 脚本来运行 bup, 并建立一个每日运行的定时任务.

|

||||

|

||||

#!/bin/bash

|

||||

|

||||

@ -103,7 +104,7 @@ BUP 并不单纯是 Git, 而是一款基于 Git 的软件. 一般情况下, 我

|

||||

|

||||

BUP 并不完美, 但它的确能够很好地完成任务. 我当然非常愿意看到这个项目的进一步开发, 希望以后能够增加远程恢复的功能.

|

||||

|

||||

你也许喜欢阅读 使用[inotify-tools][1], 一篇关于实时文件同步的文章.

|

||||

你也许喜欢阅读这篇——使用[inotify-tools][1]实时文件同步.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -111,7 +112,7 @@ via: http://techarena51.com/index.php/using-git-backup-website-files-on-linux/

|

||||

|

||||

作者:[Leo G][a]

|

||||

译者:[wangjiezhe](https://github.com/wangjiezhe)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[Caroline](https://github.com/carolinewuyan)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,43 +1,43 @@

|

||||

Linux日历程序California 0.2 发布了

|

||||

================================================================================

|

||||

**随着[上月的Geary和Shotwell的更新][1],非盈利软件套装Yobra又回来了,这次带来的是新的[California][2]日历程序的发布。**

|

||||

**随着[上月的Geary和Shotwell的更新][1],非盈利软件套装Yobra又回来了,同时带来了是新的[California][2]日历程序。**

|

||||

|

||||

一个合格的桌面日历是工作井井有条(和想要井井有条)的必备工具。[广受欢迎Chrome Web Store上的Sunrise应用][3]的发布意味着选择并不像以前那么少了。California又为这个撑腰了。

|

||||

一个合格的桌面日历是工作井井有条(以及想要井井有条)的必备工具。[Chrome Web Store上广受欢迎的Sunrise应用][3]的发布让我们的选择比以前更丰富了,而California又为之增添了新的生力军!

|

||||

|

||||

Yorba的Jim Nelson在Yorba博客上写道:“发生了很多变化“,接着写道:“初次发布比我想的加入了更多的特性。”

|

||||

Yorba的Jim Nelson在Yorba博客上写道:“发生了很多变化“,接着写道:“...很高兴的告诉大家,初次发布比我预想的加入了更多的特性。”

|

||||

|

||||

|

||||

|

||||

California 0.2在GNOME上看上去棒极了。

|

||||

*California 0.2在GNOME上看上去棒极了。*

|

||||

|

||||

最突出的是添加了“自然语言”解析器。这使得添加事件更容易。相反,你可以直接输入“**在下午2点就Nachos会见Sam”接着California就会自动把它安排下接下来的星期一的下午两点,而不必你手动输入位的信息(日期,时间等等)。

|

||||

最突出变化的是添加了“自然语言”解析器。这使得添加事件更容易。你可以直接输入“**在下午2点就Nachos会见Sam**”接着California就会自动把它安排下接下来的星期一的下午两点,而不必你手动输入位的信息(日期,时间等等)。(LCTT 译注:显然你只能输入英文才行)

|

||||

|

||||

|

||||

|

||||

|

||||

当我们在5月份回顾开发版本时这个特性也能工作了,甚至修复了一个问题:重复事件。

|

||||

这个功能和我们我们在5月份评估开发版本时一样好用,甚至还修复了一个bug:事件重复。

|

||||

|

||||

要创建一个重复时间(比如:“每个星期四搜索自己的名字”),你需要在日期前包含文字“every”(每个)。要确保地点也在内(比如:中午12点和Samba De Amigo在Boston Tea Party喝咖啡)。条目中需要有“at”或者“@”。

|

||||

|

||||

至于详细信息,我们可以见[GNOME Wiki上的快速添加页面][4]

|

||||

至于详细信息,我们可以见[GNOME Wiki上的快速添加页面][4]:

|

||||

|

||||

其他的改变包括:

|

||||

|

||||

- 通过‘月’和‘周’查看事件

|

||||

-以‘月’和‘周’视图查看事件

|

||||

-添加/删除 Google,CalDAV和web(.ics)日历

|

||||

- 改进数据服务器整合

|

||||

-添加/编辑/啥是拿出远程事件(包括重复事件)

|

||||

-自然语言计划

|

||||

-F1在线帮助快捷键

|

||||

- 新的动画和弹出窗口

|

||||

-改进数据服务器整合

|

||||

-添加/编辑/删除远程事件(包括重复事件)

|

||||

-用自然语言安排计划

|

||||

-按下F1获取在线帮助

|

||||

-新的动画和弹出窗口

|

||||

|

||||

### 在Ubuntu 14.10上安装 California 0.2 ###

|

||||

|

||||

由于是GNOME 3的程序,可以说这下面程序看起来和感受上更好。

|

||||

作为一个GNOME 3的程序,它在 Gnome 3下运行的外观和体验会更好。

|

||||

|

||||

Yorba没有忽略Ubuntu用户。他们已经努力(也可以说是耐心地)地解决导致Ubuntu需要同时安装GTK+和GNOME的主题问题。结果就是在Ubuntu上程序可能看上去有点错位,但是同样工作的很好。

|

||||

不过,Yorba也没有忽略Ubuntu用户。他们已经努力(也可以说是耐心地)地解决导致Ubuntu需要同时安装GTK+和GNOME的主题问题。结果就是在Ubuntu上程序可能看上去有点错位,但是同样工作的很好。

|

||||

|

||||

California 0.2在[Yorba稳定版软件PPA][5]中可以下载,且只针对Ubuntu 14.10。

|

||||

California 0.2在[Yorba稳定版软件PPA][5]中可以下载,只用于Ubuntu 14.10。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -45,7 +45,7 @@ via: http://www.omgubuntu.co.uk/2014/10/california-calendar-natural-language-par

|

||||

|

||||

作者:[Joey-Elijah Sneddon][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -14,13 +14,13 @@ Torvalds以他典型的[放任式][1]的口吻在Linux内核邮件列表中解

|

||||

|

||||

### Linux 3.17有哪些新的? ###

|

||||

|

||||

最为一个新的发布,Linux 3.17 加入了最新的改进,硬件支持,修复等等。范围从有迷惑性的 - 比如:[memfd 和 文件密封补丁][2] - 到大多数人感兴趣的,比如最新硬件的支持。

|

||||

最新版本的 Linux 3.17 加入了最新的改进,硬件支持,修复等等。范围从不明觉厉的 - 比如:[memfd 和 文件密封补丁][2] - 到大多数人感兴趣的,比如最新硬件的支持。

|

||||

|

||||

下面是这次发布的一些亮点的列表,但她们并不详尽。

|

||||

下面是这次发布的一些亮点的列表,但它们并不详尽:

|

||||

|

||||

- Microsoft Xbox One 控制器支持 (没有震动)

|

||||

- Microsoft Xbox One 控制器支持 (没有震动反馈)

|

||||

- 额外的Sony SIXAXIS支持改进

|

||||

- 东芝 “Active Protection Sensor” 支持

|

||||

- 东芝 “主动防护感应器” 支持

|

||||

- 新的包括Rockchip RK3288和AllWinner A23 SoC的ARM芯片支持

|

||||

- 安全计算设备上的“跨线程过滤设置”

|

||||

- 基于Broadcom BCM7XXX板卡的支持(用在不同的机顶盒上)

|

||||

@ -32,9 +32,9 @@ Torvalds以他典型的[放任式][1]的口吻在Linux内核邮件列表中解

|

||||

|

||||

虽然被列为稳定版,但是目前对于大多数人而言只有很少的功能需要我们“现在去安装”。

|

||||

|

||||

但是如果你很耐心- **更重要的是**-有足够的技能去处理从中导致的问题,你可以通过在由Canonical维护的主线内核存档中安装一系列合适的包来在你的Ubuntu 14.10中安装Linux 3.17

|

||||

但是如果你很耐心——**更重要的是**——有足够的技能去处理从中导致的问题,你可以通过在由Canonical维护的主线内核存档中找到一系列合适的包安装在你的Ubuntu 14.10中,升级到Linux 3.17。

|

||||

|

||||

**除非你知道你正在做什么,不要尝试从下面的链接中安装任何东西。**

|

||||

**警告:除非你知道你正在做什么,不要尝试从下面的链接中安装任何东西。**

|

||||

|

||||

- [访问Ubuntu内核主线存档][3]

|

||||

|

||||

@ -44,7 +44,7 @@ via: http://www.omgubuntu.co.uk/2014/10/linux-kernel-3-17-whats-new-improved

|

||||

|

||||

作者:[Joey-Elijah Sneddon][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,219 @@

|

||||

Compact Text Editors Great for Remote Editing and Much More

|

||||

================================================================================

|

||||

A text editor is software used for editing plain text files. This type of software has many different uses including modifying configuration files, writing programming language source code, jotting down thoughts, or even making a grocery list. Given that editors can be used for such a diverse range of activities, it is worth spending the time finding an editor that best suites your preferences.

|

||||

|

||||

Whatever the level of sophistication of the editor, they typically have a common set of functionality, such as searching/replacing text, formatting text, importing files, as well as moving text within the file.

|

||||

|

||||

All of these text editors are console based applications which make them ideal for editing files on remote machines. Textadept also provides a graphical user interface, but remains fast and minimalist.

|

||||

|

||||

Console based applications are also light on system resources (very useful on low spec machines), can be faster and more efficient than their graphical counterparts, they do not stop working when X needs to be restarted, and are great for scripting purposes.

|

||||

|

||||

I have selected my favorite open source text editors that are frugal on system resources.

|

||||

|

||||

----------

|

||||

|

||||

### Textadept ###

|

||||

|

||||

|

||||

|

||||

Textadept is a fast, minimalist, and extensible cross-platform open source text editor for programmers. This open source application is written in a mixture of C and Lua and has been optimized for speed and minimalism over the years.

|

||||

|

||||

Textadept is an ideal editor for programmers who want endless extensibility options without sacrificing speed or succumbing to code bloat and featuritis.

|

||||

|

||||

There is also a version available for the terminal, which only depends on ncurses; great for editing on remote machines.

|

||||

|

||||

#### Features include: ####

|

||||

|

||||

|

||||

- Lightweight

|

||||

- Minimal design maximizes screen real estate

|

||||

- Self-contained executable – no installation necessary

|

||||

- Entirely keyboard driven

|

||||

- Unlimited split views (GUI version) split the editor window as many times as you like either horizontally or vertically. Please note that Textadept is not a tabbed editor

|

||||

- Support for over 80 programming languages

|

||||

- Powerful snippets and key commands

|

||||

- Code autocompletion and API lookup

|

||||

- Unparalleled extensibility

|

||||

- Bookmarks

|

||||

- Find and Replace

|

||||

- Find in Files

|

||||

- Buffer-based word completion

|

||||

- Adeptsense autocomplete symbols for programming languages and display API documentation

|

||||

- Themes: light, dark, and term

|

||||

- Uses lexers to assign names to buffer elements like comments, strings, and keywords

|

||||

- Sessions

|

||||

- Snapopen

|

||||

- Available modules include support for Java, Python, Ruby and recent file lists

|

||||

- Conforms with the Gnome HIG Human Interface Guidelines

|

||||

- Modules include support for Java, Python, Ruby and recent file lists

|

||||

- Support for editing Lua code. Syntax autocomplete and LuaDoc is available for many Textadept objects as well as Lua’s standard libraries

|

||||

|

||||

- Website: [foicica.com/textadept][1]

|

||||

- Developer: Mitchell and contributors

|

||||

- License: MIT License

|

||||

- Version Number: 7.7

|

||||

|

||||

----------

|

||||

|

||||

### Vim ###

|

||||

|

||||

|

||||

|

||||

Vim is an advanced text editor that seeks to provide the power of the editor 'Vi', with a more complete feature set.

|

||||

|

||||

This editor is very useful for editing programs and other plain ASCII files. All commands are given with normal keyboard characters, so those who can type with ten fingers can work very fast. Additionally, function keys can be defined by the user, and the mouse can be used.

|

||||

|

||||

Vim is often called a "programmer's editor," and is so useful for programming that many consider it to be an entire Integrated Development Environment. However, this application is not only intended for programmers. Vim is highly regarded for all kinds of text editing, from composing email to editing configuration files.

|

||||

|

||||

Vim's interface is based on commands given in a text user interface. Although its graphical user interface, gVim, adds menus and toolbars for commonly used commands, the software's entire functionality is still reliant on its command line mode.

|

||||

|

||||

#### Features include: ####

|

||||

|

||||

|

||||

- 3 modes:

|

||||

- - Command mode

|

||||

- - Insert mode

|

||||

- - Command line mode

|

||||

- Unlimited undo

|

||||

- Multiple windows and buffers

|

||||

- Flexible insert mode

|

||||

- Syntax highlighting highlight portions of the buffer in different colors or styles, based on the type of file being edited

|

||||

- Interactive commands

|

||||

- - Marking a line

|

||||

- - vi line buffers

|

||||

- - Shift a block of code

|

||||

- Block operators

|

||||

- Command line history

|

||||

- Extended regular expressions

|

||||

- Edit compressed/archive files (gzip, bzip2, zip, tar)

|

||||

- Filename completion

|

||||

- Block operations

|

||||

- Jump tags

|

||||

- Folding text

|

||||

- Indenting

|

||||

- ctags and cscope intergration

|

||||

- 100% vi compatibility mode

|

||||

- Plugins to add/extend functionality

|

||||

- Macros

|

||||

- vimscript, Vim's internal scripting language

|

||||

- Unicode support

|

||||

- Multi-language support

|

||||

- Integrated On-line help

|

||||

|

||||

- Website: [www.vim.org][2]

|

||||

- Developer: Bram Moolenaar

|

||||

- License: GNU GPL compatible (charityware)

|

||||

- Version Number: 7.4

|

||||

|

||||

----------

|

||||

|

||||

### ne ###

|

||||

|

||||

|

||||

|

||||

ne is a full screen open source text editor. It is intended to be an easier to learn alternative to vi, yet still portable across POSIX-compliant operating systems.

|

||||

|

||||

ne is easy to use for the beginner, but powerful and fully configurable for the wizard, and most sparing in its resource usage.

|

||||

|

||||

#### Features include: ####

|

||||

|

||||

|

||||

- Three user interfaces: control keystrokes, command line, and menus; keystrokes and menus are completely configurable

|

||||

- Syntax highlighting

|

||||

- Full support for UTF-8 files, including multiple-column characters

|

||||

- The number of documents and clips, the dimensions of the display, and the file/line lengths are limited only by the integer size of the machine

|

||||

- Simple scripting language where scripts can be generated via an idiotproof record/play method

|

||||

- Unlimited undo/redo capability (can be disabled with a command)

|

||||

- Automatic preferences system based on the extension of the file name being edited

|

||||

- Automatic completion of prefixes using words in your documents as dictionary

|

||||

- File requester with completion features for easy file retrieval;

|

||||

- Extended regular expression search and replace à la emacs and vi

|

||||

- A very compact memory model easily load and modify very large files

|

||||

- Editing of binary files

|

||||

|

||||

- Website: [ne.di.unimi.it][3]

|

||||

- Developer: Sebastiano Vigna (original developer). Additional features added by Todd M. Lewis

|

||||

- License: GNU GPL v3

|

||||

- Version Number: 2.5

|

||||

|

||||

----------

|

||||

|

||||

### Zile ###

|

||||

|

||||

|

||||

|

||||

Zile Is Lossy Emacs (Zile) is a small Emacs clone. Zile is a customizable, self-documenting real-time display editor. Zile was written to be as similar as possible to Emacs; every Emacs user should feel comfortable with Zile.

|

||||

|

||||

Zile is distinguished by a very small RAM memory footprint, of approximately 130kB, and quick editing sessions. It is 8-bit clean, allowing it to be used on any sort of file.

|

||||

|

||||

#### Features include: ####

|

||||

|

||||

- Small but fast and powerful

|

||||

- Multi buffer editing with multi level undo

|

||||

- Multi window

|

||||

- Killing, yanking and registers

|

||||

- Minibuffer completion

|

||||

- Auto fill (word wrap)

|

||||

- Registers

|

||||

- Looks like Emacs. Key sequences, function and variable names are identical with Emacs's

|

||||

- Killing

|

||||

- Yanking

|

||||

- Auto line ending detection

|

||||

|

||||

- Website: [www.gnu.org/software/zile][4]

|

||||

- Developer: Reuben Thomas, Sandro Sigala, David A. Capello

|

||||

- License: GNU GPL v2

|

||||

- Version Number: 2.4.11

|

||||

|

||||

----------

|

||||

|

||||

### nano ###

|

||||

|

||||

|

||||

|

||||

nano is a curses-based text editor. It is a clone of Pico, the editor of the Pine email client.

|

||||

|

||||

The nano project was started in 1999 due to licensing issues with the Pine suite (Pine was not distributed under a free software license), and also because Pico lacked some essential features.

|

||||

|

||||

nano aims to emulate the functionality and easy-to-use interface of Pico, while offering additional functionality, but without the tight mailer integration of the Pine/Pico package.

|

||||

|

||||

nano, like Pico, is keyboard-oriented, controlled with control keys.

|

||||

|

||||

#### Features include: ####

|

||||

|

||||

- Interactive search and replace

|

||||

- Color syntax highlighting

|

||||

- Go to line and column number

|

||||

- Auto-indentation

|

||||

- Feature toggles

|

||||

- UTF-8 support

|

||||

- Mixed file format auto-conversion

|

||||

- Verbatim input mode

|

||||

- Multiple file buffers

|

||||

- Smooth scrolling

|

||||

- Bracket matching

|

||||

- Customizable quoting string

|

||||

- Backup files

|

||||

- Internationalization support

|

||||

- Filename tab completion

|

||||

|

||||

- Website: [nano-editor.org][5]

|

||||

- Developer: Chris Allegretta, David Lawrence, Jordi Mallach, Adam Rogoyski, Robert Siemborski, Rocco Corsi, David Benbennick, Mike Frysinger

|

||||

- License: GNU GPL v3

|

||||

- Version Number: 2.2.6

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.linuxlinks.com/article/20141011073917230/TextEditors.html

|

||||

|

||||

作者:Frazer Kline

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[1]:http://foicica.com/textadept/

|

||||

[2]:http://www.vim.org/

|

||||

[3]:http://ne.di.unimi.it/

|

||||

[4]:http://www.gnu.org/software/zile/

|

||||

[5]:http://nano-editor.org/

|

||||

55

sources/share/20141013 UbuTricks 14.10.08.md

Normal file

55

sources/share/20141013 UbuTricks 14.10.08.md

Normal file

@ -0,0 +1,55 @@

|

||||

UbuTricks 14.10.08

|

||||

================================================================================

|

||||

> An Ubuntu utility that allows you to install the latest versions of popular apps and games

|

||||

|

||||

UbuTricks is a freely distributed script written in Bash and designed from the ground up to help you install the latest version of the most acclaimed games and graphical applications on your Ubuntu Linux operating system, as well as on various other Ubuntu derivatives.

|

||||

|

||||

|

||||

|

||||

### What apps can I install with UbuTricks? ###

|

||||

|

||||

Currently, the latest versions of the Calibre, Fotoxx, Geary, GIMP, Google Earth, HexChat, jAlbum, Kdenlive, LibreOffice, PCManFM, Qmmp, QuiteRSS, QupZilla, Shutter, SMPlayer, Ubuntu Tweak, Wine and XBMC (Kodi), PlayOnLinux, Red Notebook, NeonView, Sunflower, Pale Moon, QupZilla Next, FrostWire and RSSOwl applications can be installed with UbuTricks.

|

||||

|

||||

### What games can I install with UbuTricks? ###

|

||||

|

||||

In addition, the latest versions of the 0 A.D., Battle for Wesnoth, Transmageddon, Unvanquished and VCMI (Heroes III Engine) games can be installed with the UbuTricks program. Users can also install the latest version of the Cinnamon and LXQt desktop environments.

|

||||

|

||||

### Getting started with UbuTricks ###

|

||||

|

||||

The program is distributed as a .sh file (shell script) that can be run from the command-line using the “sh ubutricks.sh” command (without quotes) or make it executable and double-click it from your Home folder or desktop. All you have to do is to select and app or game and click the OK button to install it.

|

||||

|

||||

### How does it work? ###

|

||||

|

||||

When accessed for the first time, the program will display a welcome screen from the get-to, notifying users about how it actually works. There are three methods to install an app or game, via PPA, DEB file or source tarball. Please note that apps and games will be automatically downloaded and installed.

|

||||

|

||||

### What distributions are supported? ###

|

||||

|

||||

Several versions of the Ubuntu Linux operating systems are supported, but if not specified, it will default to the current stable version, Ubuntu 14.04 LTS (Trusty Tahr). At the moment, the program will not work if you don’t have the gksu package installed on your Ubuntu box. It is based on Zenity, which should be installed too.

|

||||

|

||||

|

||||

|

||||

- last updated on:October 9th, 2014, 11:29 GMT

|

||||

- price:FREE!

|

||||

- developed by:Dan Craciun

|

||||

- homepage:[www.tuxarena.com][1]

|

||||

- license type:[GPL (GNU General Public License)][3]

|

||||

- category:ROOT \ Desktop Environment \ Tools

|

||||

|

||||

### Download for UbuTricks: ###

|

||||

|

||||

- [ubutricks.sh][2]

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://linux.softpedia.com/get/Desktop-Environment/Tools/UbuTricks-103626.shtml

|

||||

|

||||

作者:[Marius Nestor][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.softpedia.com/editors/browse/marius-nestor

|

||||

[1]:http://www.tuxarena.com/apps/ubutricks/

|

||||

[2]:http://www.tuxarena.com/intro/files/ubutricks.sh

|

||||

[3]:http://www.gnu.org/licenses/gpl-2.0.html

|

||||

@ -0,0 +1,60 @@

|

||||

What is good reference management software on Linux

|

||||

================================================================================

|

||||

Have you ever written a paper so long that you thought you would never see the end of it? If so, you know that the worst part is not dedicating hours on it, but rather that once you are done, you still have to order and format your references into a structured convention-following bibliography. Hopefully for you, Linux has the solution: bibliography/reference management tools. Using the power of BibTex, these programs can help you import your citation sources, and spit out a structured bibliography. Here is a non-exhaustive list of open-source reference management software on Linux.

|

||||

|

||||

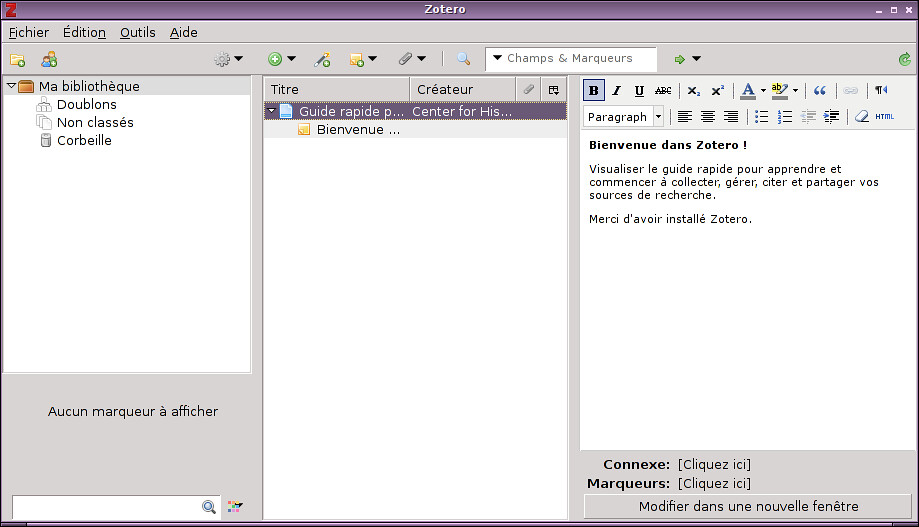

### 1. Zotero ###

|

||||

|

||||

|

||||

|

||||

Surely the most famous tool for collecting references, [Zotero][1] is known for being a browser extension. However, there also exists a convenient Linux stand alone program. Among its biggest advantages, Zotero is easy to use, and can be coupled with LibreOffice or other text editors to manage the bibliography of documents. I personally appreciate the interface and the plugin manager. However, Zotero is quickly limited if you have a lot of needs about your bibliography.

|

||||

|

||||

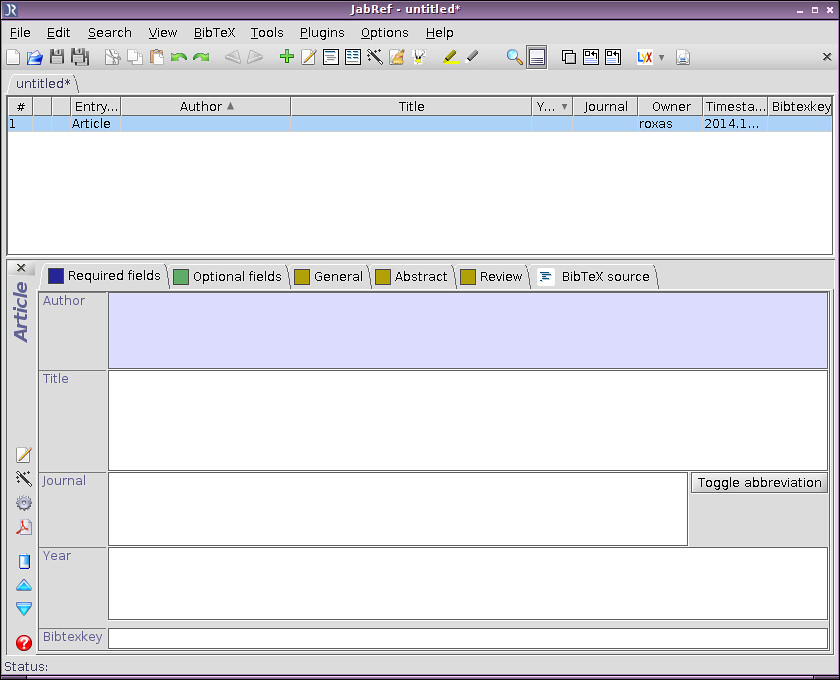

### 2. JabRef ###

|

||||

|

||||

|

||||

|

||||

[JabRef][2] is one of the most advanced tools out there for citation management. You can import from a plethora of format, lookup entries from external databases (like Google Scholar), and export straight to your favorite editor. JabRef integrates your environment nicely, and can even support plugins. And as a final touch, JabRef can connect to your own SQL database. The only downside to all of this is of course the learning curve.

|

||||

|

||||

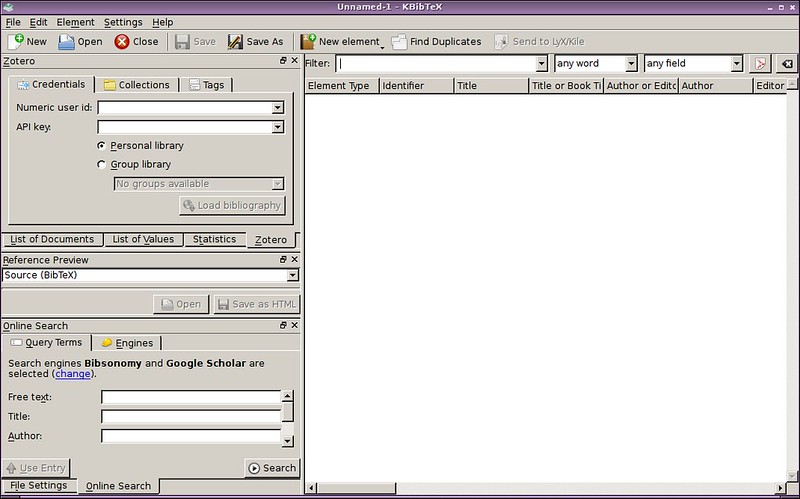

### 3. KBibTex ###

|

||||

|

||||

|

||||

|

||||

For KDE adepts, the desktop environment has its own dedicated bibliography manager called [KBibTex][3]. And as you might expect from a program of this caliber, the promised quality is delivered. The software is highly customizable, from the shortcuts to the behavior and appearance. It is easy to find duplicates, to preview the results, and to export directly to a LaTeX editor. But the best feature in my opinion is the integration of Bibsonomy, Google Scholar, and even your Zotero account. The only downside is that the interface seems a bit cluttered at first. Hopefully spending enough time in the settings should fix that.

|

||||

|

||||

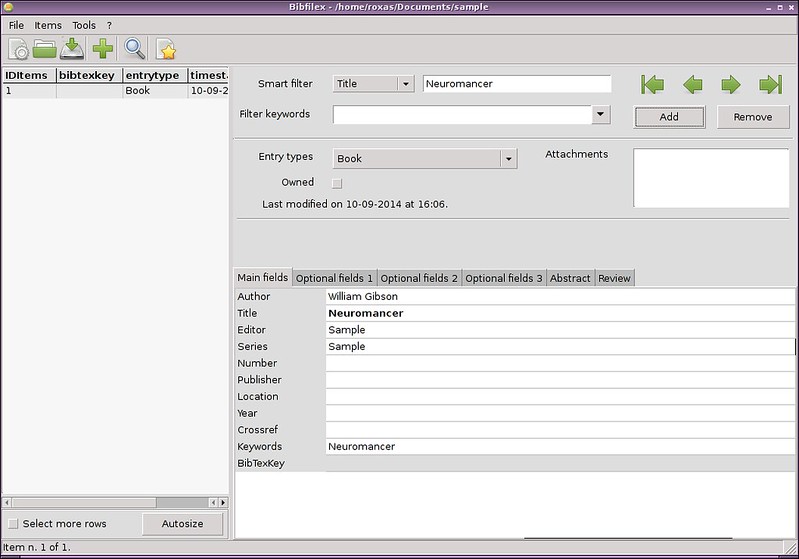

### 4. Bibfilex ###

|

||||

|

||||

|

||||

|

||||

Capable of running in both Gtk and Qt environment, [Bibfilex][4] is a user friendly bibliography management tool based on Biblatex. Less advanced than JabRef or KBibTex, it is fast and lightweight. Definitely a smart choice for making a bibliography quickly without thinking too much. The interface is slick and reflects just the necessary functions. I give it extra credits for the complete manual that you can get from the official [download page][5]

|

||||

|

||||

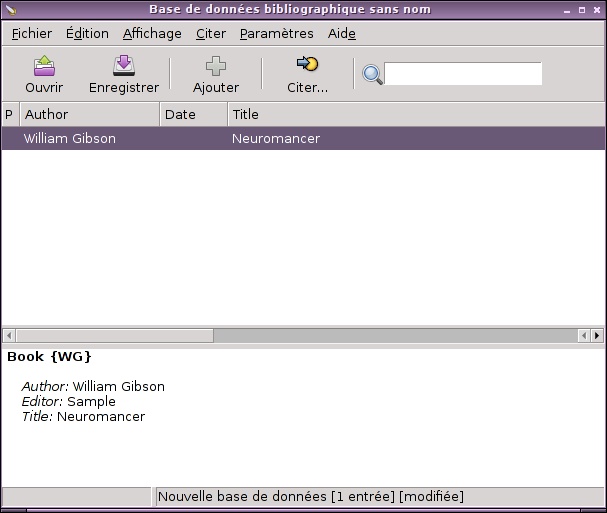

5. Pybliographer

|

||||

|

||||

|

||||

|

||||

As indicated by its name, [Pybliographer][6] is a non-graphical tool for bibliography management written in Python. I personally like to use Pybliographic as the graphical front-end. The interface is extremely clear and minimalist. If you just have a few references to export and don't really have time to learn how to use an extensive piece of software, Pybliographer is the place to go. A bit like Bibfilex, the intent is on user-friendliness and quick use.

|

||||

|

||||

### 6. Referencer ###

|

||||

|

||||

|

||||

|

||||

Probably my biggest surprise when doing this list, [Referencer][7] is really appealing to the eye. Capable of integrating itself perfectly with Gnome, it can find and import your documents, look up their reference on the web, and export to LyX, while being sexy and really well designed. The few shortcuts and plugins are a good bonus along with the library style interface.

|

||||

|

||||

To conclude, thanks to these tools, you will not have to worry about long papers anymore, or at least not about the reference section. What did we miss? Is there a bibliography management tool that you prefer? Let us know in the comments.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://xmodulo.com/reference-management-software-linux.html

|

||||

|

||||

作者:[Adrien Brochard][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://xmodulo.com/author/adrien

|

||||

[1]:https://www.zotero.org/

|

||||

[2]:http://jabref.sourceforge.net/

|

||||

[3]:http://home.gna.org/kbibtex/

|

||||

[4]:https://sites.google.com/site/bibfilex/

|

||||

[5]:https://sites.google.com/site/bibfilex/download

|

||||

[6]:http://pybliographer.org/

|

||||

[7]:https://launchpad.net/referencer

|

||||

@ -1,89 +0,0 @@

|

||||

[felixonmars translating...]

|

||||

|

||||

10 Open Source Cloning Software For Linux Users

|

||||

================================================================================

|

||||

> These cloning software take all disk data, convert them into a single .img file and you can copy it to another hard drive.

|

||||

|

||||

|

||||

|

||||

Disk cloning means copying data from a hard disk to another one and you can do this by simple copy & paste. But you cannot copy the hidden files and folders and not the in-use files too. That's when you need a cloning software which can also help you in saving a back-up image from your files and folders. The cloning software takes all disk data, convert them into a single .img file and you can copy it to another hard drive. Here we give you the best 10 Open Source Cloning software:

|

||||

|

||||

### 1. [Clonezilla][1]: ###

|

||||

|

||||

Clonezilla is a Live CD based on Ubuntu and Debian. It clones all your hard drive data and take a backup just like Norton Ghost on Windows but in a more effective way. Clonezilla support many filesystems like ext2, ext3, ext4, btrfs, xfs and others. It also supports BIOS, UEFI, MPR and GPT partitions.

|

||||

|

||||

|

||||

|

||||

### 2. [Redo Backup][2]: ###

|

||||

|

||||

Redo Bakcup is another Live CD tool which clones your drivers easily. It is free and Open Source Live System which has its licence under GPL 3. Its main features include easy GUI boots from CD, no installation, restoration of Linux and Windows systems, access to files with out any log-in, recovery of deleted files and more.

|

||||

|

||||

|

||||

|

||||

### 3. [Mondo Rescue][3]: ###

|

||||

|

||||

Mondo doesn't work like other software. It doesn’t convert your hard drivers into an .img file. It converts them into an .iso image and with Mondo you can also create a custom Live CD using “mindi” which is a special tool developed by Mondo Rescue to clone your data from the Live CD. It supports most Linux distributions, FreeBSD, and it is licensed under GPL.

|

||||

|

||||

|

||||

|

||||

### 4. [Partimage][4]: ###

|

||||

|

||||

This is an open-source software backup, which works under Linux system, by default. It's also available to install from the package manager for most Linux distributions and if you don’t have a Linux system then you can use “SystemRescueCd”. It is a Live CD which includes Partimage by default to do the cloning process that you want. Partimage is very fast in cloning hard drivers.

|

||||

|

||||

|

||||

|

||||

### 5. [FSArchiver][5]: ###

|

||||

|

||||

FSArchiver is a follow-up to Partimage, and it is again a good tool to clone hard disks. It supports cloning Ext4 partitions and NTFS partitions, basic file attributes like owner, permissions, extended attributes like those used by SELinux, basic file system attributes for all Linux file systems and so on.

|

||||

|

||||

### 6. [Partclone][6]: ###

|

||||

|

||||

Partclone is a free tool which clones and restores partitions. Written in C it first appeared in 2007 and it supports many filesystems like ext2, ext3, ext4, xfs, nfs, reiserfs, reiser4, hfs+, btrfs. It is very simple to use and it's licensed under GPL.

|

||||

|

||||

### 7. [doClone][7]: ###

|

||||

|

||||

doClone is a free software project which is developed to clone Linux system partitions easily. It's written in C++ and it supports up to 12 different filesystems. It can preform Grub bootloader restoration and can also transform the clone image to another computer via LAN. It also provides support to live cloning which means you will eb able to clone from the system even if it's running.

|

||||

|

||||

|

||||

|

||||

### 8. [Macrium Reflect Free Edition][8]: ###

|

||||

|

||||

Macrium Reflect Free Edition is claimed to be one of the fastest disk cloning utilities which supports only Windows file systems. It is a fairly straightforward user interface. This software does disk imaging and disk cloning and also allows you to access images from the file manager. It allows you to create a Linux rescue CD and it is compatible with Windows Vista and 7.

|

||||

|

||||

|

||||

|

||||

### 9. [DriveImage XML][9]: ###

|

||||

|

||||

DriveImage XML uses Microsoft VSS for creation of images, quite reliably. With this software you can create "hot" images from a disk, which is still running. XML files store images, which means you can access them from any supporting third-party software. DriveImage XML also allows restoring an image to a machine without any reboot. This software is also compatible with Windows XP, Windows Server 2003, Vista, and 7.

|

||||

|

||||

|

||||

|

||||

### 10. [Paragon Backup & Recovery Free][10]: ###

|

||||

|

||||

Paragon Backup & Recovery Free does a great job when it comes to managing scheduled imaging. This is a free software but it's for personal use only.

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.efytimes.com/e1/fullnews.asp?edid=148039

|

||||

|

||||

作者:Sanchari Banerjee

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[1]:http://clonezilla.org/

|

||||

[2]:http://redobackup.org/

|

||||

[3]:http://www.mondorescue.org/

|

||||

[4]:http://www.partimage.org/Main_Page

|

||||

[5]:http://www.fsarchiver.org/Main_Page

|

||||

[6]:http://www.partclone.org/

|

||||

[7]:http://doclone.nongnu.org/

|

||||

[8]:http://www.macrium.com/reflectfree.aspx

|

||||

[9]:http://www.runtime.org/driveimage-xml.htm

|

||||

[10]:http://www.paragon-software.com/home/br-free/

|

||||

@ -1,66 +0,0 @@

|

||||

zpl1025

|

||||

What Linux Users Should Know About Open Hardware

|

||||

================================================================================

|

||||

> What Linux users don't know about manufacturing open hardware can lead them to disappointment.

|

||||

|

||||

Business and free software have been intertwined for years, but the two often misunderstand one another. That's not surprising -- what is just a business to one is way of life for the other. But the misunderstanding can be painful, which is why debunking it is a worth the effort.

|

||||

|

||||

An increasingly common case in point: the growing attempts at open hardware, whether from Canonical, Jolla, MakePlayLive, or any of half a dozen others. Whether pundit or end-user, the average free software user reacts with exaggerated enthusiasm when a new piece of hardware is announced, then retreats into disillusionment as delay follows delay, often ending in the cancellation of the entire product.

|

||||

|

||||

It's a cycle that does no one any good, and often breeds distrust – and all because the average Linux user has no idea what's happening behind the news.

|

||||

|

||||

My own experience with bringing products to market is long behind me. However, nothing I have heard suggests that anything has changed. Bringing open hardware or any other product to market remains not just a brutal business, but one heavily stacked against newcomers.

|

||||

|

||||

### Searching for Partners ###

|

||||

|

||||

Both the manufacturing and distribution of digital products is controlled by a relatively small number of companies, whose time can sometimes be booked months in advance. Profit margins can be tight, so like movie studios that buy the rights to an ancient sit-com, the manufacturers usually hope to clone the success of the latest hot product. As Aaron Seigo told me when talking about his efforts to develop the Vivaldi tablet, the manufacturers would much rather prefer someone else take the risk of doing anything new.

|

||||

|

||||

Not only that, but they would prefer to deal with someone with an existing sales record who is likely to bring repeat business.

|

||||

|

||||

Besides, the average newcomer is looking at a product run of a few thousand units. A chip manufacturer would much rather deal with Apple or Samsung, whose order is more likely in the hundreds of thousands.

|

||||

|

||||

Faced with this situation, the makers of open hardware are likely to find themselves cascading down into the list of manufacturers until they can find a second or third tier manufacturer that is willing to take a chance on a small run of something new.

|

||||

|

||||

They might be reduced to buying off-the-shelf components and assembling units themselves, as Seigo tried with Vivaldi. Alternatively, they might do as Canonical did, and find established partners that encourage the industry to take a gamble. Even if they succeed, they have usually taken months longer than they expected in their initial naivety.

|

||||

|

||||

### Staggering to Market ###

|

||||

|

||||

However, finding a manufacturer is only the first obstacle. As Raspberry Pi found out, even if the open hardware producers want only free software in their product, the manufacturers will probably insist that firmware or drivers stay proprietary in the name of protecting trade secrets.

|

||||

|

||||

This situation is guaranteed to set off criticism from potential users, but the open hardware producers have no choice except to compromise their vision. Looking for another manufacturer is not a solution, partly because to do so means more delays, but largely because completely free-licensed hardware does not exist. The industry giants like Samsung have no interest in free hardware, and, being new, the open hardware producers have no clout to demand any.

|

||||

|

||||

Besides, even if free hardware was available, manufacturers could probably not guarantee that it would be used in the next production run. The producers might easily find themselves re-fighting the same battle every time they needed more units.

|

||||

|

||||

As if all this is not enough, at this point the open hardware producer has probably spent 6-12 months haggling. The chances are, the industry standards have shifted, and they may have to start from the beginning again by upgrading specs.

|

||||

|

||||

### A Short and Brutal Shelf Life ###

|

||||

|

||||

Despite these obstacles, hardware with some degree of openness does sometimes get released. But remember the challenges of finding a manufacturer? They have to be repeated all over again with the distributors -- and not just once, but region by region.

|

||||

|

||||

Typically, the distributors are just as conservative as the manufacturers, and just as cautious about dealing with newcomers and new ideas. Even if they agree to add a product to their catalog, the distributors can easily decide not to encourage their representatives to promote it, which means that in a few months they have effectively removed it from the shelves.

|

||||

|

||||

Of course, online sales are a possibility. But meanwhile, the hardware has to be stored somewhere, adding to the cost. Production runs on demand are expensive even in the unlikely event that they are available, and even unassembled units need storage.

|

||||

|

||||

### Weighing the Odds ###

|

||||

|

||||

I have been generalizing wildly here, but anyone who has ever been involved in producing anything will recognize what I am describing as the norm. And just to make matters worse, open hardware producers typically discover the situation as they are going through it. Inevitably, they make mistakes, which adds still more delays.

|

||||

|

||||

But the point is, if you have any sense of the process at all, your knowledge is going to change how you react to news of another attempt at hardware. The process means that, unless a company has been in serious stealth mode, an announcement that a product will be out in six months will rapidly prove to be an outdate guestimate. 12-18 months is more likely, and the obstacles I describe may mean that the product will never actually be released.

|

||||

|

||||

For example, as I write, people are waiting for the emergence of the first Steam Machines, the Linux-based gaming consoles. They are convinced that the Steam Machines will utterly transform both Linux and gaming.

|

||||

|

||||

As a market category, Steam Machines may do better than other new products, because those who are developing them at least have experience developing software products. However, none of the dozen or so Steam Machines in development have produced more than a prototype after almost a year, and none are likely to be available for buying until halfway through 2015. Given the realities of hardware manufacturing, we will be lucky if half of them see daylight. In fact, a release of 2-4 might be more realistic.

|

||||

|

||||

I make that prediction with next to no knowledge of any of the individual efforts. But, having some sense of how hardware manufacturing works, I suspect that it is likely to be closer to what happens next year than all the predictions of a new Golden Age for Linux and gaming. I would be entirely happy being wrong, but the fact remains: what is surprising is not that so many Linux-associated hardware products fail, but that any succeed even briefly.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.datamation.com/open-source/what-linux-users-should-know-about-open-hardware-1.html

|

||||

|

||||

作者:[Bruce Byfield][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.datamation.com/author/Bruce-Byfield-6030.html

|

||||

@ -1,201 +0,0 @@

|

||||

诗诗来翻译!disylee

|

||||

How to configure a network printer and scanner on Ubuntu desktop

|

||||

================================================================================

|

||||

In a [previous article][1](注:这篇文章在2014年8月12号的原文里做过,不知道翻译了没有,如果翻译发布了,发布此文章的时候可改成翻译后的链接), we discussed how to install several kinds of printers (and also a network scanner) in a Linux server. Today we will deal with the other end of the line: how to access the network printer/scanner devices from a desktop client.

|

||||

|

||||

### Network Environment ###

|

||||

|

||||

For this setup, our server's (Debian Wheezy 7.2) IP address is 192.168.0.10, and our client's (Ubuntu 12.04) IP address is 192.168.0.105. Note that both boxes are on the same network (192.168.0.0/24). If we want to allow printing from other networks, we need to modify the following section in the cupsd.conf file on the sever:

|

||||

|

||||

<Location />

|

||||

Order allow,deny

|

||||

Allow localhost

|

||||

Allow from XXX.YYY.ZZZ.*

|

||||

</Location>

|

||||

|

||||

(in the above example, we grant access to the printer from localhost and from any system whose IPv4 address starts with XXX.YYY.ZZZ)

|

||||

|

||||

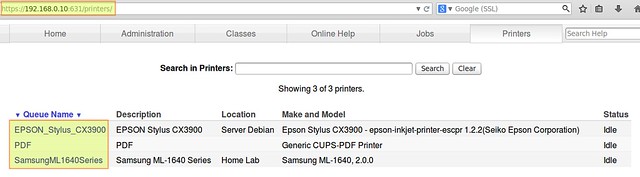

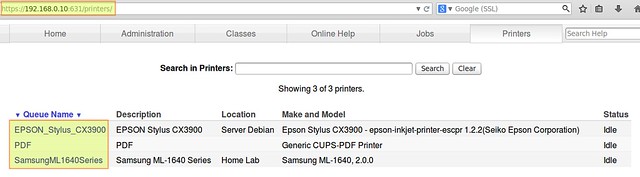

To verify which printers are available on our server, we can either use lpstat command on the server, or browse to the https://192.168.0.10:631/printers page.

|

||||

|

||||

root@debian:~# lpstat -a

|

||||

|

||||

----------

|

||||

|

||||

EPSON_Stylus_CX3900 accepting requests since Mon 18 Aug 2014 10:49:33 AM WARST

|

||||

PDF accepting requests since Mon 06 May 2013 04:46:11 PM WARST

|

||||

SamsungML1640Series accepting requests since Wed 13 Aug 2014 10:13:47 PM WARST

|

||||

|

||||

|

||||

|

||||

### Installing Network Printers in Ubuntu Desktop ###

|

||||

|

||||

In our Ubuntu 12.04 client, we will open the "Printing" menu (Dash -> Printing). Note that in other distributions the name may differ a little (such as "Printers" or "Print & Fax", for example):

|

||||

|

||||

|

||||

|

||||

No printers have been added to our Ubuntu client yet:

|

||||

|

||||

|

||||

|

||||

Here are the steps to install a network printer on Ubuntu desktop client.

|

||||

|

||||

**1)** The "Add" button will fire up the "New Printer" menu. We will choose "Network printer" -> "Find Network Printer" and enter the IP address of our server, then click "Find":

|

||||

|

||||

|

||||

|

||||

**2)** At the bottom we will see the names of the available printers. Let's choose the Samsung printer and press "Forward":

|

||||

|

||||

|

||||

|

||||

**3)** We will be asked to fill in some information about our printer. When we're done, we'll click on "Apply":

|

||||

|

||||

|

||||

|

||||

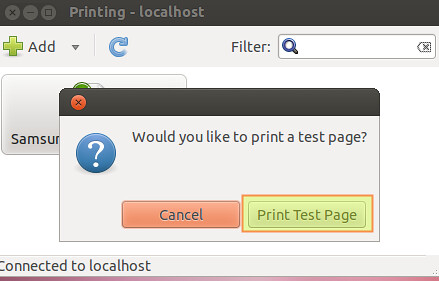

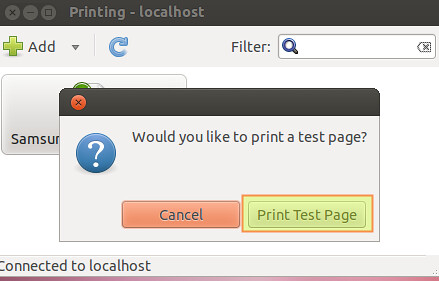

**4)** We will then be asked whether we want to print a test page. Let’s click on "Print test page":

|

||||

|

||||

|

||||

|

||||

The print job was created with local id 2:

|

||||

|

||||

|

||||

|

||||

5) Using our server's CUPS web interface, we can observe that the print job has been submitted successfully (Printers -> SamsungML1640Series -> Show completed jobs):

|

||||

|

||||

|

||||

|

||||

We can also display this same information by running the following command on the printer server:

|

||||

|

||||

root@debian:~# cat /var/log/cups/page_log | grep -i samsung

|

||||

|

||||

----------

|

||||

|

||||

SamsungML1640Series root 27 [13/Aug/2014:22:15:34 -0300] 1 1 - localhost Test Page - -

|

||||

SamsungML1640Series gacanepa 28 [18/Aug/2014:11:28:50 -0300] 1 1 - 192.168.0.105 Test Page - -

|

||||

SamsungML1640Series gacanepa 29 [18/Aug/2014:11:45:57 -0300] 1 1 - 192.168.0.105 Test Page - -

|

||||

|

||||

The page_log log file shows every page that has been printed, along with the user who sent the print job, the date & time, and the client's IPv4 address.

|

||||

|

||||

To install the Epson inkjet and PDF printers, we need to repeat steps 1 through 5, and choose the right print queue each time. For example, in the image below we are selecting the PDF printer:

|

||||

|

||||

|

||||

|

||||

However, please note that according to the [CUPS-PDF documentation][2], by default:

|

||||

|

||||

> PDF files will be placed in subdirectories named after the owner of the print job. In case the owner cannot be identified (i.e. does not exist on the server) the output is placed in the directory for anonymous operation (if not disabled in cups-pdf.conf - defaults to /var/spool/cups-pdf/ANONYMOUS/).

|

||||

|

||||

These default directories can be modified by changing the value of the **Out** and **AnonDirName** variables in the /etc/cups/cups-pdf.conf file. Here, ${HOME} is expanded to the user's home directory:

|

||||

|

||||

Out ${HOME}/PDF

|

||||

AnonDirName /var/spool/cups-pdf/ANONYMOUS

|

||||

|

||||

### Network Printing Examples ###

|

||||

|

||||

#### Example #1 ####

|

||||

|

||||

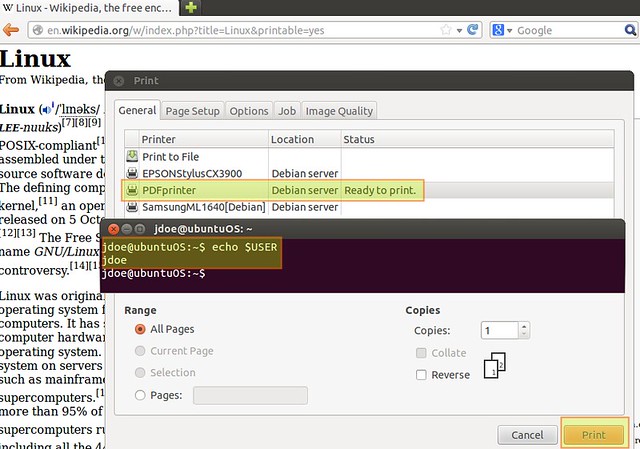

Printing from Ubuntu 12.04, logged on locally as gacanepa (an account with the same name exists on the printer server).

|

||||

|

||||

|

||||

|

||||

After printing to the PDF printer, let's check the contents of the /home/gacanepa/PDF directory on the printer server:

|

||||

|

||||

root@debian:~# ls -l /home/gacanepa/PDF

|

||||

|

||||

----------

|

||||

|

||||

total 368

|

||||

-rw------- 1 gacanepa gacanepa 279176 Aug 18 13:49 Test_Page.pdf

|

||||

-rw------- 1 gacanepa gacanepa 7994 Aug 18 13:50 Untitled1.pdf

|

||||

-rw------- 1 gacanepa gacanepa 74911 Aug 18 14:36 Welcome_to_Conference_-_Thomas_S__Monson.pdf

|

||||

|

||||

The PDF files are created with permissions set to 600 (-rw-------), which means that only the owner (gacanepa in this case) can have access to them. We can change this behavior by editing the value of the **UserUMask** variable in the /etc/cups/cups-pdf.conf file. For example, a umask of 0033 will cause the PDF printer to create files with all permissions for the owner, but read-only privileges to all others.

|

||||

|

||||

root@debian:~# grep -i UserUMask /etc/cups/cups-pdf.conf

|

||||

|

||||

----------

|

||||

|

||||

### Key: UserUMask

|

||||

UserUMask 0033

|

||||

|

||||

For those unfamiliar with umask (aka user file-creation mode mask), it acts as a set of permissions that can be used to control the default file permissions that are set for new files when they are created. Given a certain umask, the final file permissions are calculated by performing a bitwise boolean AND operation between the file base permissions (0666) and the unary bitwise complement of the umask. Thus, for a umask set to 0033, the default permissions for new files will be NOT (0033) AND 0666 = 644 (read / write / execute privileges for the owner, read-only for all others.

|

||||

|

||||

### Example #2 ###

|

||||

|

||||

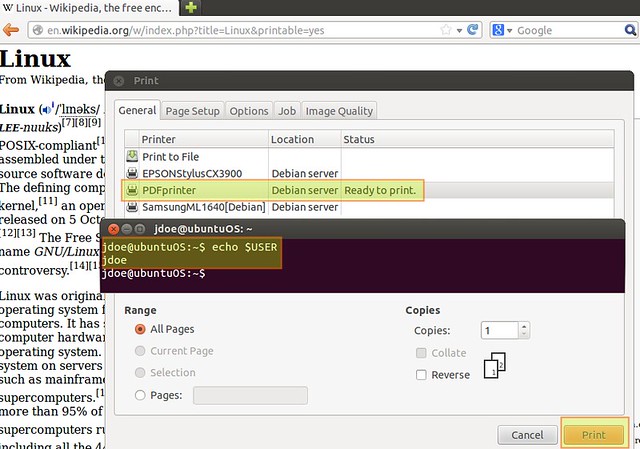

Printing from Ubuntu 12.04, logged on locally as jdoe (an account with the same name doesn't exist on the server).

|

||||

|

||||

|

||||

|

||||

root@debian:~# ls -l /var/spool/cups-pdf/ANONYMOUS

|

||||

|

||||

----------

|

||||

|

||||

total 5428

|

||||

-rw-rw-rw- 1 nobody nogroup 5543070 Aug 18 15:57 Linux_-_Wikipedia__the_free_encyclopedia.pdf

|

||||

|

||||

The PDF files are created with permissions set to 666 (-rw-rw-rw-), which means that everyone has access to them. We can change this behavior by editing the value of the **AnonUMask** variable in the /etc/cups/cups-pdf.conf file.

|

||||

|

||||

At this point, you may be wondering about this: Why bother to install a network PDF printer when most (if not all) current Linux desktop distributions come with a built-in "Print to file" utility that allows users to create PDF files on-the-fly?

|

||||

|

||||

There are a couple of benefits of using a network PDF printer:

|

||||

|

||||

- A network printer (of whatever kind) lets you print directly from the command line without having to open the file first.

|

||||

- In a network with other operating system installed on the clients, a PDF network printer spares the system administrator from having to install a PDF creator utility on each individual machine (and also the danger of allowing end-users to install such tools).

|

||||

- The network PDF printer allows to print directly to a network share with configurable permissions, as we have seen.

|

||||

|

||||

### Installing a Network Scanner in Ubuntu Desktop ###

|

||||

|

||||

Here are the steps to installing and accessing a network scanner from Ubuntu desktop client. It is assumed that the network scanner server is already up and running as described [here][3].

|

||||

|

||||

**1)** Let us first check whether there is a scanner available on our Ubuntu client host. Without any prior setup, you will see the message saying that "No scanners were identified."

|

||||

|

||||

$ scanimage -L

|

||||

|

||||

|

||||

|

||||

**2)** Now we need to enable saned daemon which comes pre-installed on Ubuntu desktop. To enable it, we need to edit the /etc/default/saned file, and set the RUN variable to yes:

|

||||

|

||||

$ sudo vim /etc/default/saned

|

||||

|

||||

----------

|

||||

|

||||

# Set to yes to start saned

|

||||

RUN=yes

|

||||

|

||||

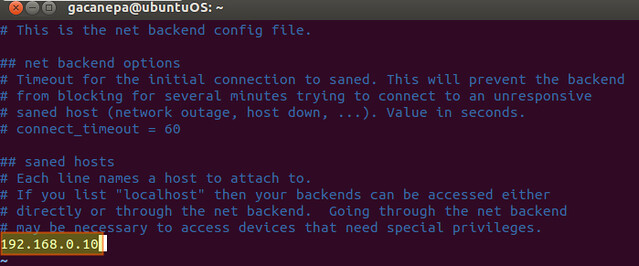

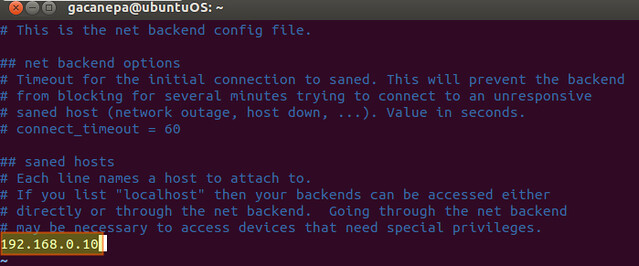

**3)** Let's edit the /etc/sane.d/net.conf file, and add the IP address of the server where the scanner is installed:

|

||||

|

||||

|

||||

|

||||

**4)** Restart saned:

|

||||

|

||||

$ sudo service saned restart

|

||||

|

||||

**5)** Let's see if the scanner is available now:

|

||||

|

||||

|

||||

|

||||

Now we can open "Simple Scan" (or other scanning utility) and start scanning documents. We can rotate, crop, and save the resulting image:

|

||||

|

||||

|

||||

|

||||

### Summary ###

|

||||

|

||||

Having one or more network printers and scanner is a nice convenience in any office or home network, and offers several advantages at the same time. To name a few:

|

||||

|

||||

- Multiple users (connecting from different platforms / places) are able to send print jobs to the printer's queue.

|

||||

- Cost and maintenance savings can be achieved due to hardware sharing.

|

||||

|

||||

I hope this article helps you make use of those advantages.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://xmodulo.com/2014/08/configure-network-printer-scanner-ubuntu-desktop.html

|

||||

|

||||

作者:[Gabriel Cánepa][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://xmodulo.com/author/gabriel

|

||||

[1]:http://xmodulo.com/2014/08/usb-network-printer-and-scanner-server-debian.html

|

||||

[2]:http://www.cups-pdf.de/documentation.shtml

|

||||

[3]:http://xmodulo.com/2014/08/usb-network-printer-and-scanner-server-debian.html#scanner

|

||||

@ -1,138 +0,0 @@

|

||||

johnhoow translating...

|

||||

# Practical Lessons in Peer Code Review #

|

||||

|

||||

Millions of years ago, apes descended from the trees, evolved opposable thumbs and—eventually—turned into human beings.

|

||||

|

||||

We see mandatory code reviews in a similar light: something that separates human from beast on the rolling grasslands of the software

|

||||

development savanna.

|

||||

|

||||

Nonetheless, I sometimes hear comments like these from our team members:

|

||||

|

||||

"Code reviews on this project are a waste of time."

|

||||

"I don't have time to do code reviews."

|

||||

"My release is delayed because my dastardly colleague hasn't done my review yet."

|

||||

"Can you believe my colleague wants me to change something in my code? Please explain to them that the delicate balance of the universe will

|

||||

be disrupted if my pristine, elegant code is altered in any way."

|

||||

|

||||

### Why do we do code reviews? ###

|

||||

|

||||

Let us remember, first of all, why we do code reviews. One of the most important goals of any professional software developer is to

|

||||

continually improve the quality of their work. Even if your team is packed with talented programmers, you aren't going to distinguish

|

||||

yourselves from a capable freelancer unless you work as a team. Code reviews are one of the most important ways to achieve this. In

|

||||

particular, they:

|

||||

|

||||

provide a second pair of eyes to find defects and better ways of doing something.

|

||||

ensure that at least one other person is familiar with your code.

|

||||

help train new staff by exposing them to the code of more experienced developers.

|

||||

promote knowledge sharing by exposing both the reviewer and reviewee to the good ideas and practices of the other.

|

||||

encourage developers to be more thorough in their work since they know it will be reviewed by one of their colleagues.

|

||||

|

||||

### Doing thorough reviews ###

|

||||

|

||||

However, these goals cannot be achieved unless appropriate time and care are devoted to reviews. Just scrolling through a patch, making sure

|

||||

that the indentation is correct and that all the variables use lower camel case, does not constitute a thorough code review. It is

|

||||

instructive to consider pair programming, which is a fairly popular practice and adds an overhead of 100% to all development time, as the

|

||||

baseline for code review effort. You can spend a lot of time on code reviews and still use much less overall engineer time than pair

|

||||

programming.

|

||||

|

||||

My feeling is that something around 25% of the original development time should be spent on code reviews. For example, if a developer takes

|

||||

two days to implement a story, the reviewer should spend roughly four hours reviewing it.

|

||||

|

||||

Of course, it isn't primarily important how much time you spend on a review as long as the review is done correctly. Specifically, you must

|

||||

understand the code you are reviewing. This doesn't just mean that you know the syntax of the language it is written in. It means that you

|

||||

must understand how the code fits into the larger context of the application, component or library it is part of. If you don't grasp all the

|

||||

implications of every line of code, then your reviews are not going to be very valuable. This is why good reviews cannot be done quickly: it

|

||||

takes time to investigate the various code paths that can trigger a given function, to ensure that third-party APIs are used correctly

|

||||

(including any edge cases) and so forth.

|

||||

|

||||

In addition to looking for defects or other problems in the code you are reviewing, you should ensure that:

|

||||

|

||||

All necessary tests are included.

|

||||

Appropriate design documentation has been written.

|

||||

Even developers who are good about writing tests and documentation don't always remember to update them when they change their code. A

|

||||

gentle nudge from the code reviewer when appropriate is vital to ensure that they don't go stale over time.

|

||||

|

||||

### Preventing code review overload ###

|

||||

|

||||

If your team does mandatory code reviews, there is the danger that your code review backlog will build up to the point where it is

|

||||

unmanageable. If you don't do any reviews for two weeks, you can easily have several days of reviews to catch up on. This means that your

|

||||

own development work will take a large and unexpected hit when you finally decide to deal with them. It also makes it a lot harder to do

|

||||

good reviews since proper code reviews require intense and sustained mental effort. It is difficult to keep this up for days on end.

|

||||

|

||||

For this reason, developers should strive to empty their review backlog every day. One approach is to tackle reviews first thing in the

|

||||

morning. By doing all outstanding reviews before you start your own development work, you can keep the review situation from getting out of

|

||||

hand. Some might prefer to do reviews before or after the midday break or at the end of the day. Whenever you do them, by considering code

|

||||

reviews as part of your regular daily work and not a distraction, you avoid:

|

||||

|

||||

Not having time to deal with your review backlog.

|

||||

Delaying a release because your reviews aren't done yet.

|

||||

Posting reviews that are no longer relevant since the code has changed so much in the meantime.

|

||||

Doing poor reviews since you have to rush through them at the last minute.

|

||||

|

||||

### Writing reviewable code ###

|

||||

|

||||

The reviewer is not always the one responsible for out-of-control review backlogs. If my colleague spends a week adding code willy-nilly

|

||||

across a large project then the patch they post is going to be really hard to review. There will be too much to get through in one session.

|

||||

It will be difficult to understand the purpose and underlying architecture of the code.

|

||||

|

||||

This is one of many reasons why it is important to split your work into manageable units. We use scrum methodology so the appropriate unit

|

||||

for us is the story. By making an effort to organize our work by story and submit reviews that pertain only to the specific story we are

|

||||

working on, we write code that is much easier to review. Your team may use another methodology but the principle is the same.

|

||||

|

||||

There are other prerequisites to writing reviewable code. If there are tricky architectural decisions to be made, it makes sense to meet

|

||||

with the reviewer beforehand to discuss them. This will make it much easier for the reviewer to understand your code, since they will know

|

||||