, so we put it into the `css`method of response object we have (line `46`). After that, we just need to get the URL of the blog post. It is easily achieved by `'./a/@href'` XPath string, which takes the `href` attribute of tag found as direct child of our

.

+

+### Finding traffic data

+

+The next task is estimating the number of views per day each of the blogs receives. There are [various options][45] to get such data, both free and paid. After quick googling I decided to stick to this simple and free to use website [www.statshow.com][46]. The Spider for this website should take as an input blog URLs we’ve obtained in the previous step, go through them and add traffic information. Spider initialization looks like this:

+

+```

+class TrafficSpider(scrapy.Spider):

+ name = 'traffic'

+ allowed_domains = ['www.statshow.com']

+

+ def __init__(self, blogs_data):

+ super(TrafficSpider, self).__init__()

+ self.blogs_data = blogs_data

+```

+

+[view raw][12][traffic.py][13] hosted with

+

+ by [GitHub][14]

+

+`blogs_data` is expected to be list of dictionaries in the form: `{"rank": 70, "url": "www.stat.washington.edu", "query": "Python"}`.

+

+Request building function looks like this:

+

+```

+ def start_requests(self):

+ url_template = urllib.parse.urlunparse(

+ ['http', self.allowed_domains[0], '/www/{path}', '', '', ''])

+ for blog in self.blogs_data:

+ url = url_template.format(path=blog['url'])

+ request = SplashRequest(url, endpoint='render.html',

+ args={'wait': 0.5}, meta={'blog': blog})

+ yield request

+```

+

+[view raw][15][traffic.py][16] hosted with

+

+ by [GitHub][17]

+

+It’s quite simple, we just add `/www/web-site-url/` string to the `'www.statshow.com'` url.

+

+Now let’s see how does the parser look:

+

+```

+ def parse(self, response):

+ site_data = response.xpath('//div[@id="box_1"]/span/text()').extract()

+ views_data = list(filter(lambda r: '$' not in r, site_data))

+ if views_data:

+ blog_data = response.meta.get('blog')

+ traffic_data = {

+ 'daily_page_views': int(views_data[0].translate({ord(','): None})),

+ 'daily_visitors': int(views_data[1].translate({ord(','): None}))

+ }

+ blog_data.update(traffic_data)

+ yield blog_data

+```

+

+[view raw][18][traffic.py][19] hosted with

+

+ by [GitHub][20]

+

+Similarly to the blog parsing routine, we just make our way through the sample return page of the StatShow and track down the elements containing daily page views and daily visitors. Both of these parameters identify website popularity, so we’ll just pick page views for our analysis.

+

+### Part II: Analysis

+

+The next part is analyzing all the data we got after scraping. We then visualize the prepared data sets with the lib called [Bokeh][47]. I don’t give the runner/visualization code here but it can be found in the [GitHub repo][48] in addition to everything else you see in this post.

+

+The initial result set has few outlying items representing websites with HUGE amount of traffic (such as google.com, linkedin.com, Oracle.com etc.). They obviously shouldn’t be considered. Even if some of those have blogs, they aren’t language specific. That’s why we filter the outliers based on the approach suggested in [this StackOverflow answer][36].

+

+### Language popularity comparison

+

+At first, let’s just make a head-to-head comparison of all the languages we have and see which one has most daily views among the top 100 blogs.

+

+Here’s the function that can take care of such a task:

+

+

+```

+def get_languages_popularity(data):

+ query_sorted_data = sorted(data, key=itemgetter('query'))

+ result = {'languages': [], 'views': []}

+ popularity = []

+ for k, group in groupby(query_sorted_data, key=itemgetter('query')):

+ group = list(group)

+ daily_page_views = map(lambda r: int(r['daily_page_views']), group)

+ total_page_views = sum(daily_page_views)

+ popularity.append((group[0]['query'], total_page_views))

+ sorted_popularity = sorted(popularity, key=itemgetter(1), reverse=True)

+ languages, views = zip(*sorted_popularity)

+ result['languages'] = languages

+ result['views'] = views

+ return result

+

+```

+

+[view raw][21][analysis.py][22] hosted with

+

+ by [GitHub][23]

+

+Here we first group our data by languages (‘query’ key in the dict) and then use python’s `groupby`wonderful function borrowed from SQL to generate groups of items from our data list, each representing some programming language. Afterwards, we calculate total page views for each language on line `14` and then add tuples of the form `('Language', rank)` in the `popularity`list. After the loop, we sort the popularity data based on the total views and unpack these tuples in 2 separate lists and return those in the `result` variable.

+

+There was some huge deviation in the initial dataset. I checked what was going on and realized that if I make query “C” in the [blogsearchengine.org][37], I get lots of irrelevant links, containing “C” letter somewhere. So, I had to exclude C from the analysis. It almost doesn’t happen with “R” in contrast as well as other C-like names: “C++”, “C#”.

+

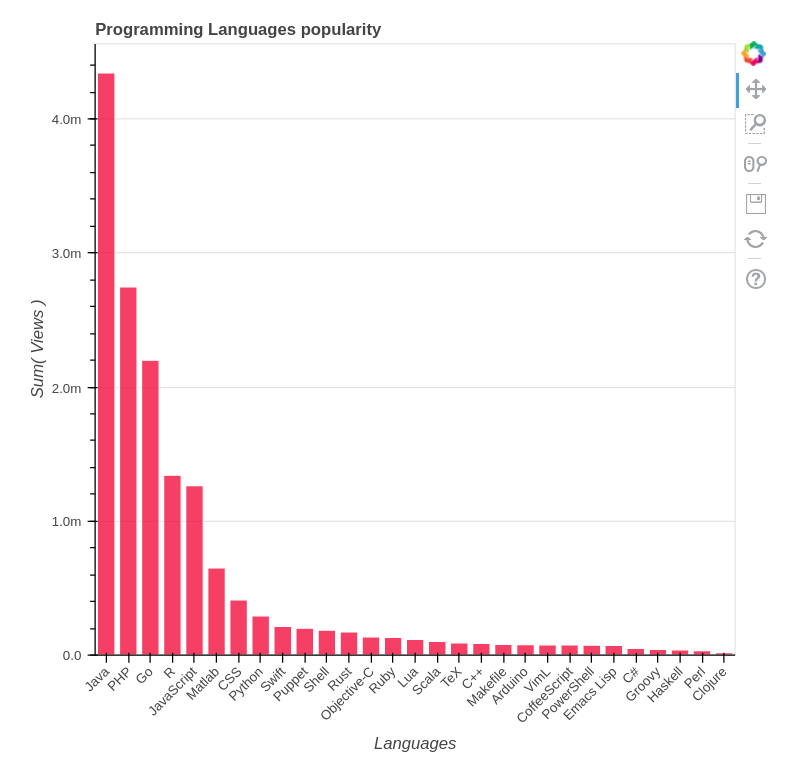

+So, if we remove C from the consideration and look at other languages, we can see the following picture:

+

+

+

+Evaluation. Java made it with over 4 million views daily, PHP and Go have over 2 million, R and JavaScript close up the “million scorers” list.

+

+### Daily Page Views vs Google Ranking

+

+Let’s now take a look at the connection between the number of daily views and Google ranking of blogs. Logically, less popular blogs should be further in ranking, It’s not so easy though, as other factors influence ranking as well, for example, if the article in the less popular blog is more recent, it’ll likely pop up first.

+

+The data preparation is performed in the following fashion:

+

+```

+def get_languages_popularity(data):

+ query_sorted_data = sorted(data, key=itemgetter('query'))

+ result = {'languages': [], 'views': []}

+ popularity = []

+ for k, group in groupby(query_sorted_data, key=itemgetter('query')):

+ group = list(group)

+ daily_page_views = map(lambda r: int(r['daily_page_views']), group)

+ total_page_views = sum(daily_page_views)

+ popularity.append((group[0]['query'], total_page_views))

+ sorted_popularity = sorted(popularity, key=itemgetter(1), reverse=True)

+ languages, views = zip(*sorted_popularity)

+ result['languages'] = languages

+ result['views'] = views

+ return result

+```

+

+[view raw][24][analysis.py][25] hosted with

+

+ by [GitHub][26]

+

+The function accepts scraped data and list of languages to consider. We sort the data in the same way we did for languages popularity. Afterwards, in a similar language grouping loop, we build `(rank, views_number)` tuples (with 1-based ranks) that are being converted to 2 separate lists. This pair of lists is then written to the resulting dictionary.

+

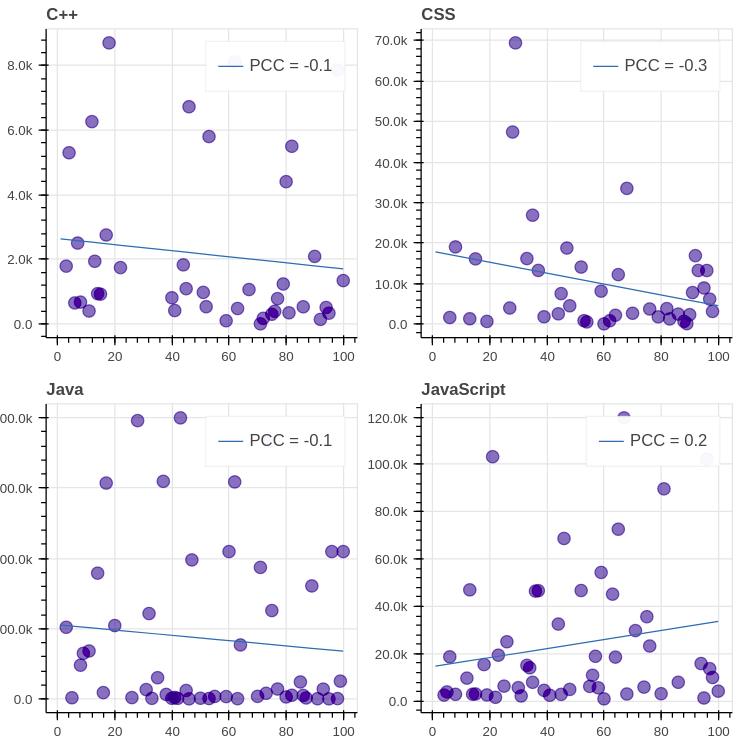

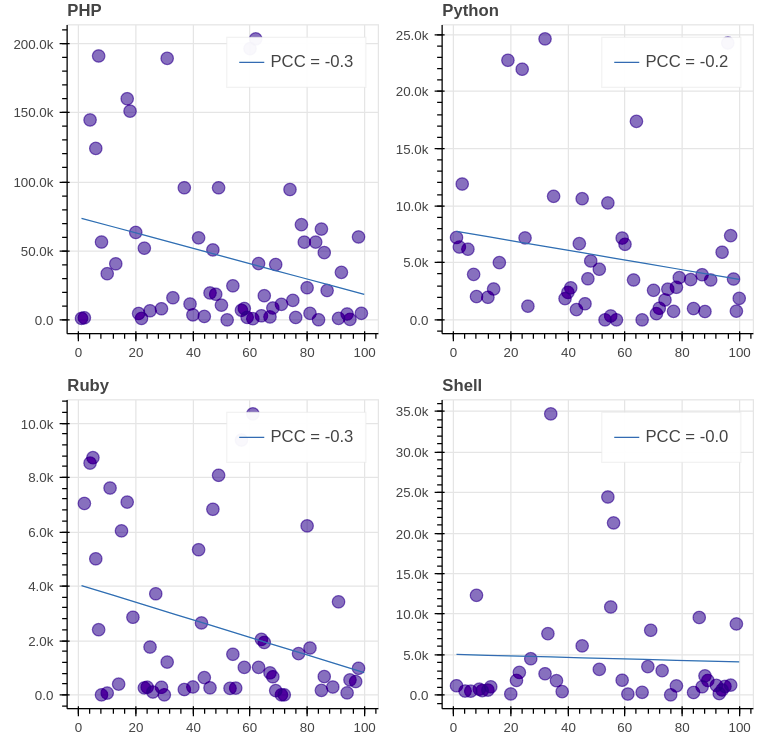

+The results for the top 8 GitHub languages (except C) are the following:

+

+

+

+

+

+Evaluation. We see that the [PCC (Pearson correlation coefficient)][49] of all graphs is far from 1/-1, which signifies lack of correlation between the daily views and the ranking. It’s important to note though that in most of the graphs (7 out of 8) the correlation is negative, which means that decrease in ranking leads to decrease in views indeed.

+

+### Conclusion

+

+So, according to our analysis, Java is by far most popular programming language, followed by PHP, Go, R and JavaScript. Neither of top 8 languages has a strong correlation between daily views and ranking in Google, so you can definitely get high in search results even if you’re just starting your blogging path. What exactly is required for that top hit a topic for another discussion though.

+

+These results are quite biased and can’t be taken into consideration without additional analysis. At first, it would be a good idea to collect more traffic feeds for an extended period of time and then analyze the mean (median?) values of daily views and rankings. Maybe I’ll return to it sometime in the future.

+

+### References

+

+1. Scraping:

+

+1. [blog.scrapinghub.com: Handling Javascript In Scrapy With Splash][27]

+

+2. [BlogSearchEngine.org][28]

+

+3. [twingly.com: Twingly Real-Time Blog Search][29]

+

+4. [searchblogspot.com: finding blogs on blogspot platform][30]

+

+3. Traffic estimation:

+

+1. [labnol.org: Find Out How Much Traffic a Website Gets][31]

+

+2. [quora.com: What are the best free tools that estimate visitor traffic…][32]

+

+3. [StatShow.com: The Stats Maker][33]

+

+--------------------------------------------------------------------------------

+

+via: https://www.databrawl.com/2017/10/08/blog-analysis/

+

+作者:[Serge Mosin ][a]

+译者:[译者ID](https://github.com/译者ID)

+校对:[校对者ID](https://github.com/校对者ID)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]:https://www.databrawl.com/author/svmosingmail-com/

+[1]:https://bokeh.pydata.org/

+[2]:https://bokeh.pydata.org/

+[3]:https://gist.github.com/Greyvend/f730ccd5dc1e7eacc4f27b0c9da86eee/raw/4ebb94aa41e9ab25fc79af26b49272b2eff47e00/blogs.py

+[4]:https://gist.github.com/Greyvend/f730ccd5dc1e7eacc4f27b0c9da86eee#file-blogs-py

+[5]:https://github.com/

+[6]:https://gist.github.com/Greyvend/f730ccd5dc1e7eacc4f27b0c9da86eee/raw/4ebb94aa41e9ab25fc79af26b49272b2eff47e00/blogs.py

+[7]:https://gist.github.com/Greyvend/f730ccd5dc1e7eacc4f27b0c9da86eee#file-blogs-py

+[8]:https://github.com/

+[9]:https://gist.github.com/Greyvend/f730ccd5dc1e7eacc4f27b0c9da86eee/raw/4ebb94aa41e9ab25fc79af26b49272b2eff47e00/blogs.py

+[10]:https://gist.github.com/Greyvend/f730ccd5dc1e7eacc4f27b0c9da86eee#file-blogs-py

+[11]:https://github.com/

+[12]:https://gist.github.com/Greyvend/f730ccd5dc1e7eacc4f27b0c9da86eee/raw/4ebb94aa41e9ab25fc79af26b49272b2eff47e00/traffic.py

+[13]:https://gist.github.com/Greyvend/f730ccd5dc1e7eacc4f27b0c9da86eee#file-traffic-py

+[14]:https://github.com/

+[15]:https://gist.github.com/Greyvend/f730ccd5dc1e7eacc4f27b0c9da86eee/raw/4ebb94aa41e9ab25fc79af26b49272b2eff47e00/traffic.py

+[16]:https://gist.github.com/Greyvend/f730ccd5dc1e7eacc4f27b0c9da86eee#file-traffic-py

+[17]:https://github.com/

+[18]:https://gist.github.com/Greyvend/f730ccd5dc1e7eacc4f27b0c9da86eee/raw/4ebb94aa41e9ab25fc79af26b49272b2eff47e00/traffic.py

+[19]:https://gist.github.com/Greyvend/f730ccd5dc1e7eacc4f27b0c9da86eee#file-traffic-py

+[20]:https://github.com/

+[21]:https://gist.github.com/Greyvend/f730ccd5dc1e7eacc4f27b0c9da86eee/raw/4ebb94aa41e9ab25fc79af26b49272b2eff47e00/analysis.py

+[22]:https://gist.github.com/Greyvend/f730ccd5dc1e7eacc4f27b0c9da86eee#file-analysis-py

+[23]:https://github.com/

+[24]:https://gist.github.com/Greyvend/f730ccd5dc1e7eacc4f27b0c9da86eee/raw/4ebb94aa41e9ab25fc79af26b49272b2eff47e00/analysis.py

+[25]:https://gist.github.com/Greyvend/f730ccd5dc1e7eacc4f27b0c9da86eee#file-analysis-py

+[26]:https://github.com/

+[27]:https://blog.scrapinghub.com/2015/03/02/handling-javascript-in-scrapy-with-splash/

+[28]:http://www.blogsearchengine.org/

+[29]:https://www.twingly.com/

+[30]:http://www.searchblogspot.com/

+[31]:https://www.labnol.org/internet/find-website-traffic-hits/8008/

+[32]:https://www.quora.com/What-are-the-best-free-tools-that-estimate-visitor-traffic-for-a-given-page-on-a-particular-website-that-you-do-not-own-or-operate-3rd-party-sites

+[33]:http://www.statshow.com/

+[34]:https://docs.scrapy.org/en/latest/intro/tutorial.html

+[35]:https://blog.scrapinghub.com/2015/03/02/handling-javascript-in-scrapy-with-splash/

+[36]:https://stackoverflow.com/a/16562028/1573766

+[37]:http://blogsearchengine.org/

+[38]:https://github.com/Databrawl/blog_analysis

+[39]:https://scrapy.org/

+[40]:https://github.com/scrapinghub/splash

+[41]:https://en.wikipedia.org/wiki/Google_Custom_Search

+[42]:http://www.blogsearchengine.org/

+[43]:http://www.blogsearchengine.org/

+[44]:https://doc.scrapy.org/en/latest/topics/shell.html

+[45]:https://www.labnol.org/internet/find-website-traffic-hits/8008/

+[46]:http://www.statshow.com/

+[47]:https://bokeh.pydata.org/en/latest/

+[48]:https://github.com/Databrawl/blog_analysis

+[49]:https://en.wikipedia.org/wiki/Pearson_correlation_coefficient

+[50]:https://www.databrawl.com/author/svmosingmail-com/

+[51]:https://www.databrawl.com/2017/10/08/

diff --git a/sources/tech/20171009 Building an Open Standard for Distributed Messaging Introducing OpenMessaging.md b/sources/tech/20171009 Building an Open Standard for Distributed Messaging Introducing OpenMessaging.md

new file mode 100644

index 0000000000..13cb4bfcbb

--- /dev/null

+++ b/sources/tech/20171009 Building an Open Standard for Distributed Messaging Introducing OpenMessaging.md

@@ -0,0 +1,52 @@

+Building an Open Standard for Distributed Messaging: Introducing OpenMessaging

+============================================================

+

+

+Through a collaborative effort from enterprises and communities invested in cloud, big data, and standard APIs, I’m excited to welcome the OpenMessaging project to The Linux Foundation. The OpenMessaging community’s goal is to create a globally adopted, vendor-neutral, and open standard for distributed messaging that can be deployed in cloud, on-premise, and hybrid use cases.

+

+Alibaba, Yahoo!, Didi, and Streamlio are the founding project contributors. The Linux Foundation has worked with the initial project community to establish a governance model and structure for the long-term benefit of the ecosystem working on a messaging API standard.

+

+As more companies and developers move toward cloud native applications, challenges are developing at scale with messaging and streaming applications. These include interoperability issues between platforms, lack of compatibility between wire-level protocols and a lack of standard benchmarking across systems.

+

+In particular, when data transfers across different messaging and streaming platforms, compatibility problems arise, meaning additional work and maintenance cost. Existing solutions lack standardized guidelines for load balance, fault tolerance, administration, security, and streaming features. Current systems don’t satisfy the needs of modern cloud-oriented messaging and streaming applications. This can lead to redundant work for developers and makes it difficult or impossible to meet cutting-edge business demands around IoT, edge computing, smart cities, and more.

+

+Contributors to OpenMessaging are looking to improve distributed messaging by:

+

+* Creating a global, cloud-oriented, vendor-neutral industry standard for distributed messaging

+

+* Facilitating a standard benchmark for testing applications

+

+* Enabling platform independence

+

+* Targeting cloud data streaming and messaging requirements with scalability, flexibility, isolation, and security built in

+

+* Fostering a growing community of contributing developers

+

+You can learn more about the new project and how to participate here: [http://openmessaging.cloud][1]

+

+These are some of the organizations supporting OpenMessaging:

+

+“We have focused on the messaging and streaming field for years, during which we explored Corba notification, JMS and other standards to try to solve our stickiest business requirements. After evaluating the available alternatives, Alibaba chose to create a new cloud-oriented messaging standard, OpenMessaging, which is a vendor-neutral and language-independent and provides industrial guidelines for areas like finance, e-commerce, IoT, and big data. Moreover, it aims to develop messaging and streaming applications across heterogeneous systems and platforms. We hope it can be open, simple, scalable, and interoperable. In addition, we want to build an ecosystem according to this standard, such as benchmark, computation, and various connectors. We would like to have new contributions and hope everyone can work together to push the OpenMessaging standard forward.” _— Von Gosling, senior architect at Alibaba, co-creator of Apache RocketMQ, and original initiator of OpenMessaging_

+

+“As the sophistication and scale of applications’ messaging needs continue to grow, lack of a standard interface has created complexity and inflexibility barriers for developers and organizations. Streamlio is excited to work with other leaders to launch the OpenMessaging standards initiative in order to give customers easy access to high-performance, low-latency messaging solutions like Apache Pulsar that offer the durability, consistency, and availability that organizations require.” _— Matteo Merli, software engineer at Streamlio, co-creator of Apache Pulsar, and member of Apache BookKeeper PMC_

+

+“Oath–a Verizon subsidiary of leading media and tech brands including Yahoo and AOL– supports open, collaborative initiatives and is glad to join the OpenMessaging project.” _— _ _Joe Francis, director, Core Platforms_

+

+“In Didi, we have defined a private set of producer API and consumer API to hide differences among open source MQs such as Apache Kafka, Apache RocketMQ, etc. as well as to provide additional customized features. We are planning to release these to the open source community. So far, we have accumulated a lot of experience on MQs and API unification, and are willing to work in OpenMessaging to construct a common standard of APIs together with others. We sincerely believe that a unified and widely accepted API standard can benefit MQ technology and applications that rely on it.” _— Neil Qi, architect at Didi_

+

+“There are many different open source messaging solutions, including Apache ActiveMQ, Apache RocketMQ, Apache Pulsar, and Apache Kafka. The lack of an industry-wide, scalable messaging standard makes evaluating a suitable solution difficult. We are excited to support the joint effort from multiple open source projects working together to define a scalable, open messaging specification. Apache BookKeeper has been successfully deployed in production at Yahoo (via Apache Pulsar) and Twitter (via Apache DistributedLog) as their durable, high-performance, low-latency storage foundation for their enterprise-grade messaging systems. We are excited to join the OpenMessaging effort to help other projects address common problems like low-latency durability, consistency and availability in messaging solutions.” _— Sijie Guo, co-founder of Streamlio, PMC chair of Apache BookKeeper, and co-creator of Apache DistributedLog_

+

+--------------------------------------------------------------------------------

+

+via: https://www.linuxfoundation.org/blog/building-open-standard-distributed-messaging-introducing-openmessaging/

+

+作者:[Mike Dolan][a]

+译者:[译者ID](https://github.com/译者ID)

+校对:[校对者ID](https://github.com/校对者ID)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]:https://www.linuxfoundation.org/author/mdolan/

+[1]:http://openmessaging.cloud/

+[2]:https://www.linuxfoundation.org/author/mdolan/

+[3]:https://www.linuxfoundation.org/category/blog/

diff --git a/sources/tech/20171009 Considering Pythons Target Audience.md b/sources/tech/20171009 Considering Pythons Target Audience.md

new file mode 100644

index 0000000000..8ca5c86be7

--- /dev/null

+++ b/sources/tech/20171009 Considering Pythons Target Audience.md

@@ -0,0 +1,283 @@

+[Considering Python's Target Audience][40]

+============================================================

+

+Who is Python being designed for?

+

+* [Use cases for Python's reference interpreter][8]

+

+* [Which audience does CPython primarily serve?][9]

+

+* [Why is this relevant to anything?][10]

+

+* [Where does PyPI fit into the picture?][11]

+

+* [Why are some APIs changed when adding them to the standard library?][12]

+

+* [Why are some APIs added in provisional form?][13]

+

+* [Why are only some standard library APIs upgraded?][14]

+

+* [Will any parts of the standard library ever be independently versioned?][15]

+

+* [Why do these considerations matter?][16]

+

+Several years ago, I [highlighted][38] "CPython moves both too fast and too slowly" as one of the more common causes of conflict both within the python-dev mailing list, as well as between the active CPython core developers and folks that decide that participating in that process wouldn't be an effective use of their personal time and energy.

+

+I still consider that to be the case, but it's also a point I've spent a lot of time reflecting on in the intervening years, as I wrote that original article while I was still working for Boeing Defence Australia. The following month, I left Boeing for Red Hat Asia-Pacific, and started gaining a redistributor level perspective on [open source supply chain management][39] in large enterprises.

+

+### [Use cases for Python's reference interpreter][17]

+

+While it's a gross oversimplification, I tend to break down CPython's use cases as follows (note that these categories aren't fully distinct, they're just aimed at focusing my thinking on different factors influencing the rollout of new software features and versions):

+

+* Education: educator's main interest is in teaching ways of modelling and manipulating the world computationally, _not_ writing or maintaining production software). Examples:

+ * Australia's [Digital Curriculum][1]

+

+ * Lorena A. Barba's [AeroPython][2]

+

+* Personal automation & hobby projects: software where the main, and often only, user is the individual that wrote it. Examples:

+ * my Digital Blasphemy [image download notebook][3]

+

+ * Paul Fenwick's (Inter)National [Rick Astley Hotline][4]

+

+* Organisational process automation: software where the main, and often only, user is the organisation it was originally written to benefit. Examples:

+ * CPython's [core workflow tools][5]

+

+ * Development, build & release management tooling for Linux distros

+

+* Set-and-forget infrastructure: software where, for sometimes debatable reasons, in-life upgrades to the software itself are nigh impossible, but upgrades to the underlying platform may be feasible. Examples:

+ * most self-managed corporate and institutional infrastructure (where properly funded sustaining engineering plans are disturbingly rare)

+

+ * grant funded software (where maintenance typically ends when the initial grant runs out)

+

+ * software with strict certification requirements (where recertification is too expensive for routine updates to be economically viable unless absolutely essential)

+

+ * Embedded software systems without auto-upgrade capabilities

+

+* Continuously upgraded infrastructure: software with a robust sustaining engineering model, where dependency and platform upgrades are considered routine, and no more concerning than any other code change. Examples:

+ * Facebook's Python service infrastructure

+

+ * Rolling release Linux distributions

+

+ * most public PaaS and serverless environments (Heroku, OpenShift, AWS Lambda, Google Cloud Functions, Azure Cloud Functions, etc)

+

+* Intermittently upgraded standard operating environments: environments that do carry out routine upgrades to their core components, but those upgrades occur on a cycle measured in years, rather than weeks or months. Examples:

+ * [VFX Platform][6]

+

+ * LTS Linux distributions

+

+ * CPython and the Python standard library

+

+ * Infrastructure management & orchestration tools (e.g. OpenStack, Ansible)

+

+ * Hardware control systems

+

+* Ephemeral software: software that tends to be used once and then discarded or ignored, rather than being subsequently upgraded in place. Examples:

+ * Ad hoc automation scripts

+

+ * Single-player games with a defined "end" (once you've finished them, even if you forget to uninstall them, you probably won't reinstall them on a new device)

+

+ * Single-player games with little or no persistent state (if you uninstall and reinstall them, it doesn't change much about your play experience)

+

+ * Event-specific applications (the application was tied to a specific physical event, and once the event is over, that app doesn't matter any more)

+

+* Regular use applications: software that tends to be regularly upgraded after deployment. Examples:

+ * Business management software

+

+ * Personal & professional productivity applications (e.g. Blender)

+

+ * Developer tools & services (e.g. Mercurial, Buildbot, Roundup)

+

+ * Multi-player games, and other games with significant persistent state, but no real defined "end"

+

+ * Embedded software systems with auto-upgrade capabilities

+

+* Shared abstraction layers: software components that are designed to make it possible to work effectively in a particular problem domain even if you don't personally grasp all the intricacies of that domain yet. Examples:

+ * most runtime libraries and frameworks fall into this category (e.g. Django, Flask, Pyramid, SQL Alchemy, NumPy, SciPy, requests)

+

+ * many testing and type inference tools also fit here (e.g. pytest, Hypothesis, vcrpy, behave, mypy)

+

+ * plugins for other applications (e.g. Blender plugins, OpenStack hardware adapters)

+

+ * the standard library itself represents the baseline "world according to Python" (and that's an [incredibly complex][7] world view)

+

+### [Which audience does CPython primarily serve?][18]

+

+Ultimately, the main audiences that CPython and the standard library specifically serve are those that, for whatever reason, aren't adequately served by the combination of a more limited standard library and the installation of explicitly declared third party dependencies from PyPI.

+

+To oversimplify the above review of different usage and deployment models even further, it's possible to summarise the single largest split in Python's user base as the one between those that are using Python as a _scripting language_ for some environment of interest, and those that are using it as an _application development language_ , where the eventual artifact that will be distributed is something other than the script that they're working on.

+

+Typical developer behaviours when using Python as a scripting language include:

+

+* the main working unit consists of a single Python file (or Jupyter notebook!), rather than a directory of Python and metadata files

+

+* there's no separate build step of any kind - the script is distributed _as_ a script, similar to the way standalone shell scripts are distributed

+

+* there's no separate install step (other than downloading the file to an appropriate location), as it is expected that the required runtime environment will be preconfigured on the destination system

+

+* no explicit dependencies stated, except perhaps a minimum Python version, or else a statement of the expected execution environment. If dependencies outside the standard library are needed, they're expected to be provided by the environment being scripted (whether that's an operating system, a data analysis platform, or an application that embeds a Python runtime)

+

+* no separate test suite, with the main test of correctness being "Did the script do what you wanted it to do with the input that you gave it?"

+

+* if testing prior to live execution is needed, it will be in the form of a "dry run" or "preview" mode that conveys to the user what the software _would_ do if run that way

+

+* if static code analysis tools are used at all, it's via integration into the user's software development environment, rather than being set up separately for each individual script

+

+By contrast, typical developer behaviours when using Python as an application development language include:

+

+* the main working unit consists of a directory of Python and metadata files, rather than a single Python file

+

+* these is a separate build step to prepare the application for publication, even if it's just bundling the files together into a Python sdist, wheel or zipapp archive

+

+* whether there's a separate install step to prepare the application for use will depend on how the application is packaged, and what the supported target environments are

+

+* external dependencies are expressed in a metadata file, either directly in the project directory (e.g. `pyproject.toml`, `requirements.txt`, `Pipfile`), or as part of the generated publication archive (e.g. `setup.py`, `flit.ini`)

+

+* a separate test suite exists, either as unit tests for the Python API, integration tests for the functional interfaces, or a combination of the two

+

+* usage of static analysis tools is configured at the project level as part of its testing regime, rather than being dependent on

+

+As a result of that split, the main purpose that CPython and the standard library end up serving is to define the redistributor independent baseline of assumed functionality for educational and ad hoc Python scripting environments 3-5 years after the corresponding CPython feature release.

+

+For ad hoc scripting use cases, that 3-5 year latency stems from a combination of delays in redistributors making new releases available to their users, and users of those redistributed versions taking time to revise their standard operating environments.

+

+In the case of educational environments, educators need that kind of time to review the new features and decide whether or not to incorporate them into the courses they offer their students.

+

+### [Why is this relevant to anything?][19]

+

+This post was largely inspired by the Twitter discussion following on from [this comment of mine][20] citing the Provisional API status defined in [PEP 411][21] as an example of an open source project issuing a de facto invitation to users to participate more actively in the design & development process as co-creators, rather than only passively consuming already final designs.

+

+The responses included several expressions of frustration regarding the difficulty of supporting provisional APIs in higher level libraries, without those libraries making the provisional status transitive, and hence limiting support for any related features to only the latest version of the provisional API, and not any of the earlier iterations.

+

+My [main reaction][22] was to suggest that open source publishers should impose whatever support limitations they need to impose to make their ongoing maintenance efforts personally sustainable. That means that if supporting older iterations of provisional APIs is a pain, then they should only be supported if the project developers themselves need that, or if somebody is paying them for the inconvenience. This is similar to my view on whether or not volunteer-driven projects should support older commercial LTS Python releases for free when it's a hassle for them to do: I [don't think they should][23], as I expect most such demands to be stemming from poorly managed institutional inertia, rather than from genuine need (and if the need _is_ genuine, then it should instead be possible to find some means of paying to have it addressed).

+

+However, my [second reaction][24], was to realise that even though I've touched on this topic over the years (e.g. in the original 2011 article linked above, as well as in Python 3 Q & A answers [here][25], [here][26], and [here][27], and to a lesser degree in last year's article on the [Python Packaging Ecosystem][28]), I've never really attempted to directly explain the impact it has on the standard library design process.

+

+And without that background, some aspects of the design process, such as the introduction of provisional APIs, or the introduction of inspired-by-but-not-the-same-as, seem completely nonsensical, as they appear to be an attempt to standardise APIs without actually standardising them.

+

+### [Where does PyPI fit into the picture?][29]

+

+The first hurdle that _any_ proposal sent to python-ideas or python-dev has to clear is answering the question "Why isn't a module on PyPI good enough?". The vast majority of proposals fail at this step, but there are several common themes for getting past it:

+

+* rather than downloading a suitable third party library, novices may be prone to copying & pasting bad advice from the internet at large (e.g. this is why the `secrets` library now exists: to make it less likely people will use the `random` module, which is intended for games and statistical simulations, for security-sensitive purposes)

+

+* the module is intended to provide a reference implementation and to enable interoperability between otherwise competing implementations, rather than necessarily being all things to all people (e.g. `asyncio`, `wsgiref`, `unittest``, and `logging` all fall into this category)

+

+* the module is intended for use in other parts of the standard library (e.g. `enum` falls into this category, as does `unittest`)

+

+* the module is designed to support a syntactic addition to the language (e.g. the `contextlib`, `asyncio` and `typing` modules fall into this category)

+

+* the module is just plain useful for ad hoc scripting purposes (e.g. `pathlib`, and `ipaddress` fall into this category)

+

+* the module is useful in an educational context (e.g. the `statistics` module allows for interactive exploration of statistic concepts, even if you wouldn't necessarily want to use it for full-fledged statistical analysis)

+

+Passing this initial "Is PyPI obviously good enough?" check isn't enough to ensure that a module will be accepted for inclusion into the standard library, but it's enough to shift the question to become "Would including the proposed library result in a net improvement to the typical introductory Python software developer experience over the next few years?"

+

+The introduction of `ensurepip` and `venv` modules into the standard library also makes it clear to redistributors that we expect Python level packaging and installation tools to be supported in addition to any platform specific distribution mechanisms.

+

+### [Why are some APIs changed when adding them to the standard library?][30]

+

+While existing third party modules are sometimes adopted wholesale into the standard library, in other cases, what actually gets added is a redesigned and reimplemented API that draws on the user experience of the existing API, but drops or revises some details based on the additional design considerations and privileges that go with being part of the language's reference implementation.

+

+For example, unlike its popular third party predecessor, `path.py`, ``pathlib` does _not_ define string subclasses, but instead independent types. Solving the resulting interoperability challenges led to the definition of the filesystem path protocol, allowing a wider range of objects to be used with interfaces that work with filesystem paths.

+

+The API design for the `ipaddress` module was adjusted to explicitly separate host interface definitions (IP addresses associated with particular IP networks) from the definitions of addresses and networks in order to serve as a better tool for teaching IP addressing concepts, whereas the original `ipaddr` module is less strict in the way it uses networking terminology.

+

+In other cases, standard library modules are constructed as a synthesis of multiple existing approaches, and may also rely on syntactic features that didn't exist when the APIs for pre-existing libraries were defined. Both of these considerations apply for the `asyncio` and `typing` modules, while the latter consideration applies for the `dataclasses` API being considered in PEP 557 (which can be summarised as "like attrs, but using variable annotations for field declarations").

+

+The working theory for these kinds of changes is that the existing libraries aren't going away, and their maintainers often aren't all that interested in putitng up with the constraints associated with standard library maintenance (in particular, the relatively slow release cadence). In such cases, it's fairly common for the documentation of the standard library version to feature a "See Also" link pointing to the original module, especially if the third party version offers additional features and flexibility that were omitted from the standard library module.

+

+### [Why are some APIs added in provisional form?][31]

+

+While CPython does maintain an API deprecation policy, we generally prefer not to use it without a compelling justification (this is especially the case while other projects are attempting to maintain compatibility with Python 2.7).

+

+However, when adding new APIs that are inspired by existing third party ones without being exact copies of them, there's a higher than usual risk that some of the design decisions may turn out to be problematic in practice.

+

+When we consider the risk of such changes to be higher than usual, we'll mark the related APIs as provisional, indicating that conservative end users may want to avoid relying on them at all, and that developers of shared abstraction layers may want to consider imposing stricter than usual constraints on which versions of the provisional API they're prepared to support.

+

+### [Why are only some standard library APIs upgraded?][32]

+

+The short answer here is that the main APIs that get upgraded are those where:

+

+* there isn't likely to be a lot of external churn driving additional updates

+

+* there are clear benefits for either ad hoc scripting use cases or else in encouraging future interoperability between multiple third party solutions

+

+* a credible proposal is submitted by folks interested in doing the work

+

+If the limitations of an existing module are mainly noticeable when using the module for application development purposes (e.g. `datetime`), if redistributors already tend to make an improved alternative third party option readily available (e.g. `requests`), or if there's a genuine conflict between the release cadence of the standard library and the needs of the package in question (e.g. `certifi`), then the incentives to propose a change to the standard library version tend to be significantly reduced.

+

+This is essentially the inverse to the question about PyPI above: since PyPI usually _is_ a sufficiently good distribution mechanism for application developer experience enhancements, it makes sense for such enhancements to be distributed that way, allowing redistributors and platform providers to make their own decisions about what they want to include as part of their default offering.

+

+Changing CPython and the standard library only comes into play when there is perceived value in changing the capabilities that can be assumed to be present by default in 3-5 years time.

+

+### [Will any parts of the standard library ever be independently versioned?][33]

+

+Yes, it's likely the bundling model used for `ensurepip` (where CPython releases bundle a recent version of `pip` without actually making it part of the standard library) may be applied to other modules in the future.

+

+The most probable first candidate for that treatment would be the `distutils` build system, as switching to such a model would allow the build system to be more readily kept consistent across multiple releases.

+

+Other potential candidates for this kind of treatment would be the Tcl/Tk graphics bindings, and the IDLE editor, which are already unbundled and turned into an optional addon installations by a number of redistributors.

+

+### [Why do these considerations matter?][34]

+

+By the very nature of things, the folks that tend to be most actively involved in open source development are those folks working on open source applications and shared abstraction layers.

+

+The folks writing ad hoc scripts or designing educational exercises for their students often won't even think of themselves as software developers - they're teachers, system administrators, data analysts, quants, epidemiologists, physicists, biologists, business analysts, market researchers, animators, graphical designers, etc.

+

+When all we have to worry about for a language is the application developer experience, then we can make a lot of simplifying assumptions around what people know, the kinds of tools they're using, the kinds of development processes they're following, and the ways they're going to be building and deploying their software.

+

+Things get significantly more complicated when an application runtime _also_ enjoys broad popularity as a scripting engine. Doing either job well is already difficult, and balancing the needs of both audiences as part of a single project leads to frequent incomprehension and disbelief on both sides.

+

+This post isn't intended to claim that we never make incorrect decisions as part of the CPython development process - it's merely pointing out that the most reasonable reaction to seemingly nonsensical feature additions to the Python standard library is going to be "I'm not part of the intended target audience for that addition" rather than "I have no interest in that, so it must be a useless and pointless addition of no value to anyone, added purely to annoy me".

+

+--------------------------------------------------------------------------------

+

+via: http://www.curiousefficiency.org/posts/2017/10/considering-pythons-target-audience.html

+

+作者:[Nick Coghlan ][a]

+译者:[译者ID](https://github.com/译者ID)

+校对:[校对者ID](https://github.com/校对者ID)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]:http://www.curiousefficiency.org/pages/about.html

+[1]:https://aca.edu.au/#home-unpack

+[2]:https://github.com/barbagroup/AeroPython

+[3]:https://nbviewer.jupyter.org/urls/bitbucket.org/ncoghlan/misc/raw/default/notebooks/Digital%20Blasphemy.ipynb

+[4]:https://github.com/pjf/rickastley

+[5]:https://github.com/python/core-workflow

+[6]:http://www.vfxplatform.com/

+[7]:http://www.curiousefficiency.org/posts/2015/10/languages-to-improve-your-python.html#broadening-our-horizons

+[8]:http://www.curiousefficiency.org/posts/2017/10/considering-pythons-target-audience.html#use-cases-for-python-s-reference-interpreter

+[9]:http://www.curiousefficiency.org/posts/2017/10/considering-pythons-target-audience.html#which-audience-does-cpython-primarily-serve

+[10]:http://www.curiousefficiency.org/posts/2017/10/considering-pythons-target-audience.html#why-is-this-relevant-to-anything

+[11]:http://www.curiousefficiency.org/posts/2017/10/considering-pythons-target-audience.html#where-does-pypi-fit-into-the-picture

+[12]:http://www.curiousefficiency.org/posts/2017/10/considering-pythons-target-audience.html#why-are-some-apis-changed-when-adding-them-to-the-standard-library

+[13]:http://www.curiousefficiency.org/posts/2017/10/considering-pythons-target-audience.html#why-are-some-apis-added-in-provisional-form

+[14]:http://www.curiousefficiency.org/posts/2017/10/considering-pythons-target-audience.html#why-are-only-some-standard-library-apis-upgraded

+[15]:http://www.curiousefficiency.org/posts/2017/10/considering-pythons-target-audience.html#will-any-parts-of-the-standard-library-ever-be-independently-versioned

+[16]:http://www.curiousefficiency.org/posts/2017/10/considering-pythons-target-audience.html#why-do-these-considerations-matter

+[17]:http://www.curiousefficiency.org/posts/2017/10/considering-pythons-target-audience.html#id1

+[18]:http://www.curiousefficiency.org/posts/2017/10/considering-pythons-target-audience.html#id2

+[19]:http://www.curiousefficiency.org/posts/2017/10/considering-pythons-target-audience.html#id3

+[20]:https://twitter.com/ncoghlan_dev/status/916994106819088384

+[21]:https://www.python.org/dev/peps/pep-0411/

+[22]:https://twitter.com/ncoghlan_dev/status/917092464355241984

+[23]:http://www.curiousefficiency.org/posts/2015/04/stop-supporting-python26.html

+[24]:https://twitter.com/ncoghlan_dev/status/917088410162012160

+[25]:http://python-notes.curiousefficiency.org/en/latest/python3/questions_and_answers.html#wouldn-t-a-python-2-8-release-help-ease-the-transition

+[26]:http://python-notes.curiousefficiency.org/en/latest/python3/questions_and_answers.html#doesn-t-this-make-python-look-like-an-immature-and-unstable-platform

+[27]:http://python-notes.curiousefficiency.org/en/latest/python3/questions_and_answers.html#what-about-insert-other-shiny-new-feature-here

+[28]:http://www.curiousefficiency.org/posts/2016/09/python-packaging-ecosystem.html

+[29]:http://www.curiousefficiency.org/posts/2017/10/considering-pythons-target-audience.html#id4

+[30]:http://www.curiousefficiency.org/posts/2017/10/considering-pythons-target-audience.html#id5

+[31]:http://www.curiousefficiency.org/posts/2017/10/considering-pythons-target-audience.html#id6

+[32]:http://www.curiousefficiency.org/posts/2017/10/considering-pythons-target-audience.html#id7

+[33]:http://www.curiousefficiency.org/posts/2017/10/considering-pythons-target-audience.html#id8

+[34]:http://www.curiousefficiency.org/posts/2017/10/considering-pythons-target-audience.html#id9

+[35]:http://www.curiousefficiency.org/posts/2017/10/considering-pythons-target-audience.html#

+[36]:http://www.curiousefficiency.org/posts/2017/10/considering-pythons-target-audience.html#disqus_thread

+[37]:http://www.curiousefficiency.org/posts/2017/10/considering-pythons-target-audience.rst

+[38]:http://www.curiousefficiency.org/posts/2011/04/musings-on-culture-of-python-dev.html

+[39]:http://community.redhat.com/blog/2015/02/the-quid-pro-quo-of-open-infrastructure/

+[40]:http://www.curiousefficiency.org/posts/2017/10/considering-pythons-target-audience.html#

diff --git a/sources/tech/20171009 CyberShaolin Teaching the Next Generation of Cybersecurity Experts.md b/sources/tech/20171009 CyberShaolin Teaching the Next Generation of Cybersecurity Experts.md

new file mode 100644

index 0000000000..23704fa46d

--- /dev/null

+++ b/sources/tech/20171009 CyberShaolin Teaching the Next Generation of Cybersecurity Experts.md

@@ -0,0 +1,57 @@

+CyberShaolin: Teaching the Next Generation of Cybersecurity Experts

+============================================================

+

+

+

+Reuben Paul, co-founder of CyberShaolin, will speak at Open Source Summit in Prague, highlighting the importance of cybersecurity awareness for kids.

+

+Reuben Paul is not the only kid who plays video games, but his fascination with games and computers set him on a unique journey of curiosity that led to an early interest in cybersecurity education and advocacy and the creation of CyberShaolin, an organization that helps children understand the threat of cyberattacks. Paul, who is now 11 years old, will present a keynote talk at [Open Source Summit in Prague][2], sharing his experiences and highlighting insecurities in toys, devices, and other technologies in daily use.

+

+

+

+Reuben Paul, co-founder of CyberShaolin

+

+We interviewed Paul to hear the story of his journey and to discuss CyberShaolin and its mission to educate, equip, and empower kids (and their parents) with knowledge of cybersecurity dangers and defenses. Linux.com: When did your fascination with computers start? Reuben Paul: My fascination with computers started with video games. I like mobile phone games as well as console video games. When I was about 5 years old (I think), I was playing the “Asphalt” racing game by Gameloft on my phone. It was a simple but fun game. I had to touch on the right side of the phone to go fast and touch the left side of the phone to slow down. I asked my dad, “How does the game know where I touch?”

+

+He researched and found out that the phone screen was an xy coordinate system and so he told me that if the x value was greater than half the width of the phone screen, then it was a touch on the right side. Otherwise, it was a touch on the left side. To help me better understand how this worked, he gave me the equation to graph a straight line, which was y = mx + b and asked, “Can you find the y value for each x value?” After about 30 minutes, I calculated the y value for each of the x values he gave me.

+

+“When my dad realized that I was able to learn some fundamental logics of programming, he introduced me to Scratch and I wrote my first game — called “Big Fish eats Small Fish” — using the x and y values of the mouse pointer in the game. Then I just kept falling in love with computers.Paul, who is now 11 years old, will present a keynote talk at [Open Source Summit in Prague][1], sharing his experiences and highlighting insecurities in toys, devices, and other technologies in daily use.

+

+Linux.com: What got you interested in cybersecurity? Paul: My dad, Mano Paul, used to train his business clients on cybersecurity. Whenever he worked from his home office, I would listen to his phone conversations. By the time I was 6 years old, I knew about things like the Internet, firewalls, and the cloud. When my dad realized I had the interest and the potential for learning, he started teaching me security topics like social engineering techniques, cloning websites, man-in-the-middle attack techniques, hacking mobile apps, and more. The first time I got a meterpreter shell from a test target machine, I felt like Peter Parker who had just discovered his Spiderman abilities.

+

+Linux.com: How and why did you start CyberShaolin? Paul: When I was 8 years old, I gave my first talk on “InfoSec from the mouth of babes (or an 8 year old)” in DerbyCon. It was in September of 2014\. After that conference, I received several invitations and before the end of 2014, I had keynoted at three other conferences.

+

+So, when kids started hearing me speak at these different conferences, they started writing to me and asking me to teach them. I told my parents that I wanted to teach other kids, and they asked me how. I said, “Maybe I can make some videos and publish them on channels like YouTube.” They asked me if I wanted to charge for my videos, and I said “No.” I want my videos to be free and accessible to any child anywhere in the world. This is how CyberShaolin was created.

+

+Linux.com: What’s the goal of CyberShaolin? Paul: CyberShaolin is the non-profit organization that my parents helped me found. Its mission is to educate, equip, and empower kids (and their parents) with knowledge of cybersecurity dangers and defenses, using videos and other training material that I develop in my spare time from school, along with kung fu, gymnastics, swimming, inline hockey, piano, and drums. I have published about a dozen videos so far on the www.CyberShaolin.org website and plan to develop more. I would also like to make games and comics to support security learning.

+

+CyberShaolin comes from two words: Cyber and Shaolin. The word cyber is of course from technology. Shaolin comes from the kung fu martial art form in which my dad and are I are both second degree black belt holders. In kung fu, we have belts to show our progress of knowledge, and you can think of CyberShaolin like digital kung fu where kids can become Cyber Black Belts, after learning and taking tests on our website.

+

+Linux.com: How important do you think is it for children to understand cybersecurity? Paul: We are living in a time when technology and devices are not only in our homes but also in our schools and pretty much any place you go. The world is also getting very connected with the Internet of Things, which can easily become the Internet of Threats. Children are one of the main users of these technologies and devices. Unfortunately, these devices and apps on these devices are not very secure and can cause serious problems to children and families. For example, I recently (in May 2017) demonstrated how I could hack into a smart toy teddy bear and turn it into a remote spying device. Children are also the next generation. If they are not aware and trained in cybersecurity, then the future (our future) will not be very good.

+

+Linux.com: How does the project help children? Paul:As I mentioned before, CyberShaolin’s mission is to educate, equip, and empower kids (and their parents) with knowledge of cybersecurity dangers and defenses.

+

+As kids are educated about cybersecurity dangers like cyber bullying, man-in-the-middle, phishing, privacy, online threats, mobile threats, etc., they will be equipped with knowledge and skills, which will empower them to make cyber-wise decisions and stay safe and secure in cyberspace. And, just as I would never use my kung fu skills to harm someone, I expect all CyberShaolin graduates to use their cyber kung fu skills to create a secure future, for the good of humanity.

+

+--------------------------------------------------------------------------------

+作者简介:

+

+Swapnil Bhartiya is a journalist and writer who has been covering Linux and Open Source for more than 10 years.

+

+-------------------------

+

+via: https://www.linuxfoundation.org/blog/cybershaolin-teaching-next-generation-cybersecurity-experts/

+

+作者:[Swapnil Bhartiya][a]

+译者:[译者ID](https://github.com/译者ID)

+校对:[校对者ID](https://github.com/校对者ID)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]:https://www.linuxfoundation.org/author/sbhartiya/

+[1]:http://events.linuxfoundation.org/events/open-source-summit-europe

+[2]:http://events.linuxfoundation.org/events/open-source-summit-europe

+[3]:https://www.linuxfoundation.org/author/sbhartiya/

+[4]:https://www.linuxfoundation.org/category/blog/

+[5]:https://www.linuxfoundation.org/category/campaigns/events-campaigns/

+[6]:https://www.linuxfoundation.org/category/blog/qa/

diff --git a/sources/tech/20171009 Examining network connections on Linux systems.md b/sources/tech/20171009 Examining network connections on Linux systems.md

new file mode 100644

index 0000000000..299aef18e2

--- /dev/null

+++ b/sources/tech/20171009 Examining network connections on Linux systems.md

@@ -0,0 +1,217 @@

+Examining network connections on Linux systems

+============================================================

+

+### Linux systems provide a lot of useful commands for reviewing network configuration and connections. Here's a look at a few, including ifquery, ifup, ifdown and ifconfig.

+

+

+There are a lot of commands available on Linux for looking at network settings and connections. In today's post, we're going to run through some very handy commands and see how they work.

+

+### ifquery command

+

+One very useful command is the **ifquery** command. This command should give you a quick list of network interfaces. However, you might only see something like this —showing only the loopback interface:

+

+```

+$ ifquery --list

+lo

+```

+

+If this is the case, your **/etc/network/interfaces** file doesn't include information on network interfaces except for the loopback interface. You can add lines like the last two in the example below — assuming DHCP is used to assign addresses — if you'd like it to be more useful.

+

+```

+# interfaces(5) file used by ifup(8) and ifdown(8)

+auto lo

+iface lo inet loopback

+auto eth0

+iface eth0 inet dhcp

+```

+

+### ifup and ifdown commands

+

+The related **ifup** and **ifdown** commands can be used to bring network connections up and shut them down as needed provided this file has the required descriptive data. Just keep in mind that "if" means "interface" in these commands just as it does in the **ifconfig** command, not "if" as in "if I only had a brain".

+

+

+

+### ifconfig command

+

+The **ifconfig** command, on the other hand, doesn't read the /etc/network/interfaces file at all and still provides quite a bit of useful information on network interfaces -- configuration data along with packet counts that tell you how busy each interface has been. The ifconfig command can also be used to shut down and restart network interfaces (e.g., ifconfig eth0 down).

+

+```

+$ ifconfig eth0

+eth0 Link encap:Ethernet HWaddr 00:1e:4f:c8:43:fc

+ inet addr:192.168.0.6 Bcast:192.168.0.255 Mask:255.255.255.0

+ inet6 addr: fe80::b44b:bdb6:2527:6ae9/64 Scope:Link

+ UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

+ RX packets:60474 errors:0 dropped:0 overruns:0 frame:0

+ TX packets:33463 errors:0 dropped:0 overruns:0 carrier:0

+ collisions:0 txqueuelen:1000

+ RX bytes:43922053 (43.9 MB) TX bytes:4000460 (4.0 MB)

+ Interrupt:21 Memory:fe9e0000-fea00000

+```

+

+The RX and TX packet counts in this output are extremely low. In addition, no errors or packet collisions have been reported. The **uptime** command will likely confirm that this system has only recently been rebooted.

+

+The broadcast (Bcast) and network mask (Mask) addresses shown above indicate that the system is operating on a Class C equivalent network (the default) so local addresses will range from 192.168.0.1 to 192.168.0.254.

+

+### netstat command

+

+The **netstat** command provides information on routing and network connections. The **netstat -rn** command displays the system's routing table.

+

+

+

+```

+$ netstat -rn

+Kernel IP routing table

+Destination Gateway Genmask Flags MSS Window irtt Iface

+0.0.0.0 192.168.0.1 0.0.0.0 UG 0 0 0 eth0

+169.254.0.0 0.0.0.0 255.255.0.0 U 0 0 0 eth0

+192.168.0.0 0.0.0.0 255.255.255.0 U 0 0 0 eth0

+```

+

+That **169.254.0.0** entry in the above output is only necessary if you are using or planning to use link-local communications. You can comment out the related lines in the **/etc/network/if-up.d/avahi-autoipd** file like this if this is not the case:

+

+```

+$ tail -12 /etc/network/if-up.d/avahi-autoipd

+#if [ -x /bin/ip ]; then

+# # route already present?

+# ip route show | grep -q '^169.254.0.0/16[[:space:]]' && exit 0

+#

+# /bin/ip route add 169.254.0.0/16 dev $IFACE metric 1000 scope link

+#elif [ -x /sbin/route ]; then

+# # route already present?

+# /sbin/route -n | egrep -q "^169.254.0.0[[:space:]]" && exit 0

+#

+# /sbin/route add -net 169.254.0.0 netmask 255.255.0.0 dev $IFACE metric 1000

+#fi

+```

+

+### netstat -a command

+

+The **netstat -a** command will display **_all_** network connections. To limit this to listening and established connections (generally much more useful), use the **netstat -at** command instead.

+

+```

+$ netstat -at

+Active Internet connections (servers and established)

+Proto Recv-Q Send-Q Local Address Foreign Address State

+tcp 0 0 *:ssh *:* LISTEN

+tcp 0 0 localhost:ipp *:* LISTEN

+tcp 0 0 localhost:smtp *:* LISTEN

+tcp 0 256 192.168.0.6:ssh 192.168.0.32:53550 ESTABLISHED

+tcp6 0 0 [::]:http [::]:* LISTEN

+tcp6 0 0 [::]:ssh [::]:* LISTEN

+tcp6 0 0 ip6-localhost:ipp [::]:* LISTEN

+tcp6 0 0 ip6-localhost:smtp [::]:* LISTEN

+```

+

+### netstat -rn command

+

+The **netstat -rn** command displays the system's routing table. The 192.168.0.1 address is the local gateway (Flags=UG).

+

+```

+$ netstat -rn

+Kernel IP routing table

+Destination Gateway Genmask Flags MSS Window irtt Iface

+0.0.0.0 192.168.0.1 0.0.0.0 UG 0 0 0 eth0

+192.168.0.0 0.0.0.0 255.255.255.0 U 0 0 0 eth0

+```

+

+### host command

+

+The **host** command works a lot like **nslookup** by looking up the remote system's IP address, but also provides the system's mail handler.

+

+```

+$ host world.std.com

+world.std.com has address 192.74.137.5

+world.std.com mail is handled by 10 smtp.theworld.com.

+```

+

+### nslookup command

+

+The **nslookup** also provides information on the system (in this case, the local system) that is providing DNS lookup services.

+

+```

+$ nslookup world.std.com

+Server: 127.0.1.1

+Address: 127.0.1.1#53

+

+Non-authoritative answer:

+Name: world.std.com

+Address: 192.74.137.5

+```

+

+### dig command

+

+The **dig** command provides quitea lot of information on connecting to a remote system -- including the name server we are communicating with and how long the query takes to respond and is often used for troubleshooting.

+

+```

+$ dig world.std.com

+

+; <<>> DiG 9.10.3-P4-Ubuntu <<>> world.std.com

+;; global options: +cmd

+;; Got answer:

+;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 28679

+;; flags: qr rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 1

+

+;; OPT PSEUDOSECTION:

+; EDNS: version: 0, flags:; udp: 512

+;; QUESTION SECTION:

+;world.std.com. IN A

+

+;; ANSWER SECTION:

+world.std.com. 78146 IN A 192.74.137.5

+

+;; Query time: 37 msec

+;; SERVER: 127.0.1.1#53(127.0.1.1)

+;; WHEN: Mon Oct 09 13:26:46 EDT 2017

+;; MSG SIZE rcvd: 58

+```

+

+### nmap command

+

+The **nmap** command is most frequently used to probe remote systems, but can also be used to report on the services being offered by the local system. In the output below, we can see that ssh is available for logins, that smtp is servicing email, that a web site is active, and that an ipp print service is running.

+

+```

+$ nmap localhost

+

+Starting Nmap 7.01 ( https://nmap.org ) at 2017-10-09 15:01 EDT

+Nmap scan report for localhost (127.0.0.1)

+Host is up (0.00016s latency).

+Not shown: 996 closed ports

+PORT STATE SERVICE

+22/tcp open ssh

+25/tcp open smtp

+80/tcp open http

+631/tcp open ipp

+

+Nmap done: 1 IP address (1 host up) scanned in 0.09 seconds

+```

+

+Linux systems provide a lot of useful commands for reviewing their network configuration and connections. If you run out of commands to explore, keep in mind that **apropos network** might point you toward even more.

+

+--------------------------------------------------------------------------------

+

+via: https://www.networkworld.com/article/3230519/linux/examining-network-connections-on-linux-systems.html

+

+作者:[Sandra Henry-Stocker][a]

+译者:[译者ID](https://github.com/译者ID)

+校对:[校对者ID](https://github.com/校对者ID)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]:https://www.networkworld.com/author/Sandra-Henry_Stocker/

+[1]:https://www.networkworld.com/article/3221393/linux/review-considering-oracle-linux-is-a-no-brainer-if-you-re-an-oracle-shop.html

+[2]:https://www.networkworld.com/article/3221393/linux/review-considering-oracle-linux-is-a-no-brainer-if-you-re-an-oracle-shop.html#tk.nww_nsdr_ndxprmomod

+[3]:https://www.networkworld.com/article/3221423/linux/review-suse-linux-enterprise-server-12-sp2-scales-well-supports-3rd-party-virtualization.html

+[4]:https://www.networkworld.com/article/3221423/linux/review-suse-linux-enterprise-server-12-sp2-scales-well-supports-3rd-party-virtualization.html#tk.nww_nsdr_ndxprmomod

+[5]:https://www.networkworld.com/article/3221476/linux/review-free-linux-fedora-server-offers-upgrades-as-they-become-available-no-wait.html

+[6]:https://www.networkworld.com/article/3221476/linux/review-free-linux-fedora-server-offers-upgrades-as-they-become-available-no-wait.html#tk.nww_nsdr_ndxprmomod

+[7]:https://www.networkworld.com/article/3227929/linux/making-good-use-of-the-files-in-proc.html

+[8]:https://www.networkworld.com/article/3221415/linux/linux-commands-for-managing-partitioning-troubleshooting.html

+[9]:https://www.networkworld.com/article/2225768/cisco-subnet/dual-protocol-routing-with-raspberry-pi.html

+[10]:https://www.networkworld.com/video/51206/solo-drone-has-linux-smarts-gopro-mount

+[11]:https://www.networkworld.com/insider

+[12]:https://www.networkworld.com/article/3227929/linux/making-good-use-of-the-files-in-proc.html

+[13]:https://www.networkworld.com/article/3221415/linux/linux-commands-for-managing-partitioning-troubleshooting.html

+[14]:https://www.networkworld.com/video/51206/solo-drone-has-linux-smarts-gopro-mount

+[15]:https://www.networkworld.com/video/51206/solo-drone-has-linux-smarts-gopro-mount

+[16]:https://www.flickr.com/photos/cogdog/4317096083/in/photolist-7zufg6-8JS2ym-bmDGsu-cnYW2C-mnrvP-a1s6VU-4ThA5-33B4ME-7GHEod-ERKLhX-5iPi6m-dTZAW6-UC6wyi-dRCJAZ-dq4wxW-peQyWU-8AGfjw-8wGAqs-4oLjd2-4T6pXM-dQua38-UKngxR-5kQwHN-ejjXMo-q4YvvL-7AUF3h-39ya27-7HiWfp-TosWda-6L3BZn-uST4Hi-TkRW8U-H7zBu-oDkNvU-6T2pZg-dQEbs9-39hxfS-5pBhQL-eR6iKT-7dgDwk-W15qVn-nVQHN3-mdRj8-75tqVh-RajJsC-7gympc-7dwxjt-9EadYN-p1qH1G-6rZhh6

+[17]:https://creativecommons.org/licenses/by/2.0/legalcode

diff --git a/sources/tech/20171010 Changes in Password Best Practices.md b/sources/tech/20171010 Changes in Password Best Practices.md

new file mode 100644

index 0000000000..8b1c611171

--- /dev/null

+++ b/sources/tech/20171010 Changes in Password Best Practices.md

@@ -0,0 +1,35 @@

+translating----geekpi

+

+### Changes in Password Best Practices

+

+NIST recently published its four-volume [_SP800-63b Digital Identity Guidelines_][3] . Among other things, it makes three important suggestions when it comes to passwords:

+

+1. Stop it with the annoying password complexity rules. They make passwords harder to remember. They increase errors because artificially complex passwords are harder to type in. And they [don't help][1] that much. It's better to allow people to use pass phrases.

+

+2. Stop it with password expiration. That was an [old idea for an old way][2] we used computers. Today, don't make people change their passwords unless there's indication of compromise.

+

+3. Let people use password managers. This is how we deal with all the passwords we need.

+

+These password rules were failed attempts to [fix the user][4]. Better we fix the security systems.

+

+--------------------------------------------------------------------------------

+

+作者简介:

+

+I've been writing about security issues on my blog since 2004, and in my monthly newsletter since 1998. I write books, articles, and academic papers. Currently, I'm the Chief Technology Officer of IBM Resilient, a fellow at Harvard's Berkman Center, and a board member of EFF.

+

+-----------------

+

+via: https://www.schneier.com/blog/archives/2017/10/changes_in_pass.html

+

+作者:[Bruce Schneier][a]

+译者:[译者ID](https://github.com/译者ID)

+校对:[校对者ID](https://github.com/校对者ID)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]:https://www.schneier.com/blog/about/

+[1]:https://www.wsj.com/articles/the-man-who-wrote-those-password-rules-has-a-new-tip-n3v-r-m1-d-1502124118

+[2]:https://securingthehuman.sans.org/blog/2017/03/23/time-for-password-expiration-to-die

+[3]:http://nvlpubs.nist.gov/nistpubs/SpecialPublications/NIST.SP.800-63b.pdf

+[4]:http://ieeexplore.ieee.org/document/7676198/?reload=true

diff --git a/sources/tech/20171010 In Device We Trust Measure Twice Compute Once with Xen Linux TPM 2.0 and TXT.md b/sources/tech/20171010 In Device We Trust Measure Twice Compute Once with Xen Linux TPM 2.0 and TXT.md

new file mode 100644

index 0000000000..20c14074c6

--- /dev/null

+++ b/sources/tech/20171010 In Device We Trust Measure Twice Compute Once with Xen Linux TPM 2.0 and TXT.md

@@ -0,0 +1,94 @@

+In Device We Trust: Measure Twice, Compute Once with Xen, Linux, TPM 2.0 and TXT

+============================================================

+

+

+

+Xen virtualization enables innovative applications to be economically integrated with measured, interoperable software components on general-purpose hardware.[Creative Commons Zero][1]Pixabay

+

+Is it a small tablet or large phone? Is it a phone or broadcast sensor? Is it a server or virtual desktop cluster? Is x86 emulating ARM, or vice-versa? Is Linux inspiring Windows, or the other way around? Is it microcode or hardware? Is it firmware or software? Is it microkernel or hypervisor? Is it a security or quality update? _Is anything in my device the same as yesterday? When we observe our evolving devices and their remote services, what can we question and measure?_

+

+### General Purpose vs. Special Purpose Ecosystems

+

+The general-purpose computer now lives in a menagerie of special-purpose devices and information appliances. Yet software and hardware components _within_ devices are increasingly flexible, blurring category boundaries. With hardware virtualization on x86 and ARM platforms, the ecosystems of multiple operating systems can coexist on a single device. Can a modular and extensible multi-vendor architecture compete with the profitability of vertically integrated products from a single vendor?

+

+Operating systems evolved alongside applications for lucrative markets. PC desktops were driven by business productivity and media creation. Web browsers abstracted OS differences, as software revenue shifted to e-commerce, services, and advertising. Mobile devices added sensors, radios and hardware decoders for content and communication. Apple, now the most profitable computer company, vertically integrates software and services with sensors and hardware. Other companies monetize data, increasing demand for memory and storage optimization.

+

+Some markets require security or safety certifications: automotive, aviation, marine, cross domain, industrial control, finance, energy, medical, and embedded devices. As software "eats the world," how can we [modernize][5]vertical markets without the economies of scale seen in enterprise and consumer markets? One answer comes from device architectures based on hardware virtualization, Xen, [disaggregation][6], OpenEmbedded Linux and measured launch. [OpenXT][7] derivatives use this extensible, open-source base to enforce policy for specialized applications on general-purpose hardware, while reusing interoperable components.

+

+[OpenEmbedded][8] Linux supports a range of x86 and ARM devices, while Xen isolates operating systems and [unikernels][9]. Applications and drivers from multiple ecosystems can run concurrently, expanding technical and licensing options. Special-purpose software can be securely composed with general-purpose software in isolated VMs, anchored by a hardware-assisted root of trust defined by customer and OEM policies. This architecture allows specialist software vendors to share platform and hardware support costs, while supporting emerging and legacy software ecosystems that have different rates of change.

+

+### On the Shoulders of Hardware, Firmware and Software Developers

+

+###

+

+ _System Architecture, from NIST SP800-193 (Draft), Platform Firmware Resiliency_

+

+By the time a user-facing software application begins executing on a powered-on hardware device, an array of firmware and software is already running on the platform. Special-purpose applications’ security and safety assertions are dependent on platform firmware and the developers of a computing device’s “root of trust.”

+

+If we consider the cosmological “[Turtles All The Way Down][2]” question for a computing device, the root of trust is the lowest-level combination of hardware, firmware and software that is initially trusted to perform critical security functions and persist state. Hardware components used in roots of trust include the TCG's Trusted Platform Module ([TPM][10]), ARM’s [TrustZone][11]-enabled Trusted Execution Environment ([TEE][12]), Apple’s [Secure Enclave][13] co-processor ([SEP][14]), and Intel's Management Engine ([ME][15]) in x86 CPUs. [TPM 2.0][16]was approved as an ISO standard in 2015 and is widely available in 2017 devices.

+

+TPMs enable key authentication, integrity measurement and remote attestation. TPM key generation uses a hardware random number generator, with private keys that never leave the chip. TPM integrity measurement functions ensure that sensitive data like private keys are only used by trusted code. When software is provisioned, its cryptographic hash is used to extend a chain of hashes in TPM Platform Configuration Registers (PCRs). When the device boots, sensitive data is only unsealed if measurements of running software can recreate the PCR hash chain that was present at the time of sealing. PCRs record the aggregate result of extending hashes, while the TPM Event Log records the hash chain.

+

+Measurements are calculated by hardware, firmware and software external to the TPM. There are Static (SRTM) and Dynamic (DRTM) Roots of Trust for Measurement. SRTM begins at device boot when the BIOS boot block measures BIOS before execution. The BIOS then execute, extending configuration and option ROM measurements into static PCRs 0-7\. TPM-aware boot loaders like TrustedGrub can extend a measurement chain from BIOS up to the [Linux kernel][17]. These software identity measurements enable relying parties to make trusted decisions within [specific workflows][18].

+

+DRTM enables "late launch" of a trusted environment from an untrusted one at an arbitrary time, using Intel's Trusted Execution Technology ([TXT][19]) or AMD's Secure Virtual Machine ([SVM][20]). With Intel TXT, the CPU instruction SENTER resets CPUs to a known state, clears dynamic PCRs 17-22 and validates the Intel SINIT ACM binary to measure Intel’s tboot MLE, which can then measure Xen, Linux or other components. In 2008, Carnegie Mellon's [Flicker][21] used late launch to minimize the Trusted Computing Base (TCB) for isolated execution of sensitive code on AMD devices, during the interval between suspend/resume of untrusted Linux.

+

+If DRTM enables launch of a trusted Xen or Linux environment without reboot, is SRTM still needed? Yes, because [attacks][22] are possible via privileged System Management Mode (SMM) firmware, UEFI Boot/Runtime Services, Intel ME firmware, or Intel Active Management Technology (AMT) firmware. Measurements for these components can be extended into static PCRs, to ensure they have not been modified since provisioning. In 2015, Intel released documentation and reference code for an SMI Transfer Monitor ([STM][23]), which can isolate SMM firmware on VT-capable systems. As of September 2017, an OEM-supported STM is not yet available to improve the security of Intel TXT.

+

+Can customers secure devices while retaining control over firmware? UEFI Secure Boot requires a signed boot loader, but customers can define root certificates. Intel [Boot Guard][24] provides OEMs with validation of the BIOS boot block. _Verified Boot_ requires a signed boot block and the OEM's root certificate is fused into the CPU to restrict firmware. _Measured Boot_ extends the boot block hash into a TPM PCR, where it can be used for measured launch of customer-selected firmware. Sadly, no OEM has yet shipped devices which implement ONLY the Measured Boot option of Boot Guard.

+

+### Measured Launch with Xen on General Purpose Devices

+

+[OpenXT 7.0][25] has entered release candidate status, with support for Kaby Lake devices, TPM 2.0, OE [meta-measured][3], and [forward seal][26] (upgrade with pre-computed PCRs).

+

+[OpenXT 6.0][27] on a Dell T20 Haswell Xeon microserver, after adding a SATA controller, low-power AMD GPU and dual-port Broadcom NIC, can be configured with measured launch of Windows 7 GPU p/t, FreeNAS 9.3 SATA p/t, pfSense 2.3.4, Debian Wheezy, OpenBSD 6.0, and three NICs, one per passthrough driver VM.

+