mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-01-31 23:30:11 +08:00

Merge pull request #2 from LCTT/master

This commit is contained in:

commit

dd830d5a0a

95

published/20160511 LEDE and OpenWrt.md

Normal file

95

published/20160511 LEDE and OpenWrt.md

Normal file

@ -0,0 +1,95 @@

|

||||

LEDE 和 OpenWrt 分裂之争

|

||||

===================

|

||||

|

||||

对于家用 WiFi 路由器和接入点来说,[OpenWrt][1] 项目可能是最广为人知的 Linux 发行版;在 12 年以前,它产自现在有名的 Linksys WRT54G 路由器的源代码。(2016 年)五月初,当一群 OpenWrt 核心开发者 [宣布][2] 他们将开始着手 OpenWrt 的一个副产品 (或者,可能算一个分支)叫 [Linux 嵌入开发环境][3] (LEDE)时,OpenWrt 用户社区陷入一片巨大的混乱中。为什么产生分裂对公众来说并不明朗,而且 LEDE 宣言惊到了一些其他 OpenWrt 开发者也暗示这团队的内部矛盾。

|

||||

|

||||

LEDE 宣言被 Jo-Philipp Wich 于五月三日发往所有 OpenWrt 开发者列表和新 LEDE 开发者列表。它将 LEDE 描述为“OpenWrt 社区的一次重启” 和 “OpenWrt 项目的一个副产品” ,希望产生一个 “注重透明性、合作和权利分散”的 Linux 嵌入式开发社区。

|

||||

|

||||

给出的重启的原因是 OpenWrt 遭受着长期以来存在且不能从内部解决的问题 —— 换句话说,关于内部处理方式和政策。例如,宣言称,开发者的数目在不断减少,却没有接纳新开发者的方式(而且貌似没有授权委托访问给新开发者的方法)。宣言说到,项目的基础设施不可靠(例如,去年服务器挂掉在这个项目中也引发了相当多的矛盾),但是内部不合和单点错误阻止了修复它。内部和从这个项目到外面世界也存在着“交流、透明度和合作”的普遍缺失。最后,一些技术缺陷被引述:不充分的测试、缺乏常规维护,以及窘迫的稳固性与文档。

|

||||

|

||||

该宣言继续描述 LEDE 重启将怎样解决这些问题。所有交流频道都会打开供公众使用,决策将在项目范围内的投票决出,合并政策将放宽等等。更详细的说明可以在 LEDE 站点的[规则][4]页找到。其他细节中,它说贡献者将只有一个阶级(也就是,没有“核心开发者”这样拥有额外权利的群体),简单的少数服从多数投票作出决定,并且任何被这个项目管理的基础设施必须有三个以上管理员账户。在 LEDE 邮件列表, Hauke Mehrtens [补充][5]到,该项目将会努力把补丁投递到上游项目 —— 这是过去 OpenWrt 被批判的一点,尤其是对 Linux 内核。

|

||||

|

||||

除了 Wich,这个宣言被 OpenWrt 贡献者 John Crispin、 Daniel Golle、 Felix Fietkau、 Mehrtens、 Matthias Schiffer 和 Steven Barth 共同签署,并以给其他有兴趣参与的人访问 LEDE 站点的邀请作为了宣言结尾。

|

||||

|

||||

### 回应和问题

|

||||

|

||||

有人可能会猜想 LEDE 组织者预期他们的宣言会有或积极或消极的反响。毕竟,细读宣言中批判 OpenWrt 项目暗示了 LEDE 阵营发现有一些 OpenWrt 项目成员难以共事(例如,“单点错误” 或 “内部不和”阻止了基础设施的修复)。

|

||||

|

||||

并且,确实,有很多消极回应。OpenWrt 创立者之一 Mike Baker [回应][6] 了一些警告,反驳所有 LEDE 宣言中的结论并称“像‘重启’这样的词语都是含糊不清的,且具有误导性的,而且 LEDE 项目未能揭晓其真实本质。”与此同时,有人关闭了那些在 LEDE 宣言上署名的开发者的 @openwrt.org 邮件入口;当 Fietkau [提出反对][7], Baker [回复][8]账户“暂时停用”是因为“还不确定 LEDE 能不能代表 OpenWrt。” 另一个 OpenWrt 核心成员 Imre Kaloz [写][9]到,他们现在所抱怨的 OpenWrt 的“大多数[破]事就是 LEDE 团队弄出来的”。

|

||||

|

||||

但是大多数 OpenWrt 列表的回应对该宣言表示困惑。邮件列表成员不明确 LEDE 团队是否将对 OpenWrt [继续贡献][10],或导致了这次分裂的架构和内部问题的[确切本质][11]是什么。 Baker 的第一反应是对宣言中引述的那些问题缺乏公开讨论表示难过:“我们意识到当前的 OpenWrt 项目遭受着许多的问题,”但“我们希望有机会去讨论并尝试着解决”它们。 Baker 作出结论:

|

||||

|

||||

> 我们想强调,我们确实希望能够公开的讨论,并解决掉手头事情。我们的目标是与所有能够且希望对 OpenWrt 作出贡献的参与者共事,包括 LEDE 团队。

|

||||

|

||||

除了有关新项目的初心的问题之外,一些邮件列表订阅者提出了 LEDE 是否与 OpenWrt 有相同的使用场景定位,给新项目取一个听起来更一般的名字的疑惑。此外,许多人,像 Roman Yeryomin,对为什么这些问题需要 LEDE 团队的离开(来解决)[表示了疑惑][12],特别是,与此同时,LEDE 团队由大部分活跃核心 OpenWrt 开发者构成。一些列表订阅者,像 Michael Richardson,甚至不清楚[谁还会继续开发][13] OpenWrt。

|

||||

|

||||

### 澄清

|

||||

|

||||

LEDE 团队尝试着深入阐释他们的境况。在 Fietkau 给 Baker 的回复中,他说在 OpenWrt 内部关于有目的地改变的讨论会很快变得“有毒,”因此导致没有进展。而且:

|

||||

|

||||

> 这些讨论的要点在于那些掌握着基础设施关键部分的人精力有限却拒绝他人的加入和帮助,甚至是面对无法及时解决的重要问题时也是这样。

|

||||

|

||||

> 这种像单点错误一样的事已经持续了很多年了,没有任何有意义的进展来解决它。

|

||||

|

||||

Wich 和 Fietkau 都没有明显指出具体的人,虽然在列表的其他人可能会想到这个基础设施和 OpenWrt 的内部决策问题要归咎于某些人。 Daniel Dickinson [陈述][14]到:

|

||||

|

||||

> 我的印象是 Kaloz (至少) 以基础设施为胁来保持控制,并且根本性的问题是 OpenWrt 是*不*民主的,而且忽视那些真正在 OpenWrt 工作的人想要的是什么,无视他们的愿望,因为他/他们把控着要害。

|

||||

|

||||

另一方面, Luka Perkov [指出][15] 很多 OpemWrt 开发者想从 Subversion 转移到 Git,但 Fietkau 却阻止这种变化。

|

||||

|

||||

看起来是 OpenWrt 的管理结构并非如预期般发挥作用,其结果导致个人冲突爆发,而且由于没有完好定义的流程,某些人能够简单的忽视或阻止提议的变化。明显,这不是一个能长期持续的模式。

|

||||

|

||||

五月六日,Crispin 在一个新的帖子中[写给][16] OpenWrt 列表,尝试着重构 LEDE 项目宣言。他说,这并不是意味着“敌对或分裂”行为,只是与结构失衡的 OpenWrt 做个清晰的划分并以新的方式开始。问题在于“不要归咎于一次单独的事件、一个人或者一次口水战”,他说,“我们想与过去自己造成的错误和多次作出的错误管理决定分开”。 Crispin 也承认宣言没有把握好,说 LEDE 团队 “弄糟了发起纲领。”

|

||||

|

||||

Crispin 的邮件似乎没能使 Kaloz 满意,她[坚持认为][17] Crispin(作为发行经理)和 Fietkau(作为领头开发者)可以轻易地在 OpenWrt 内部作出想要的改变。但是讨论的下文后来变得沉寂;之后 LEDE 或者 OpenWrt 哪边会发生什么还有待观察。

|

||||

|

||||

### 目的

|

||||

|

||||

对于那些想要探究 LEDE 所认为有问题的事情的更多细节的 OpenWrt 成员来说,有更多的信息来源可以为这个问题提供线索。在公众宣言之前,LEDE 组织花了几周谈论他们的计划,会议的 IRC 日志现已[发布][18]。特别有趣的是,三月三十日的[会议][19]包含了这个项目目标的细节讨论。

|

||||

|

||||

其中包括一些针对 OpenWrt 的基础设施的抱怨,像项目的 Trac 工单追踪器的缺点。它充斥着不完整的漏洞报告和“我也是”的评论,Wich 说,结果几乎没有贡献者使用它。此外,他们也在 Github 上追踪 bug,人们对这件事感到困惑,这使得工单应该在哪里讨论不明了。

|

||||

|

||||

这些 IRC 讨论也定下了开发流程本身。LEDE 团队想作出些改变,以使用会合并到主干的阶段开发分支为开端,与 OpenWrt 所使用的“直接提交到主干”方式不同。该项目也将提供基于时间的发行版,并通过只发行已被成功测试的二进制模块来鼓励用户测试,由社区而不是核心开发者在实际的硬件上进行测试。

|

||||

|

||||

最后,这些 IRC 讨论也确定了 LEDE 团队的目的不是用它的宣言吓唬 OpenWrt。Crispin 提到 LEDE 首先是“半公开的”并渐渐做得更公开。 Wich 解释说他希望 LEDE 是“中立的、专业的,并打开大门欢迎 OpenWrt 以便将来的合并”。不幸的是,前期发起工作并不是做得很好。

|

||||

|

||||

在一封邮件中, Fietkau 补充到 OpenWrt 核心开发者确实在任务中遇到瓶颈,像补丁复审和基础设施维护这些事情让他们完成不了其他工作,比如配置下载镜像和改良构建系统。在 LEDE 宣言之后短短几天内,他说,团队成功解决了镜像和构建系统任务,而这些已被搁置多年。

|

||||

|

||||

> 我们在 LEDE 所做的事情很多是基于转移到 Github 的去中心化软件包开发经验,并放弃了软件包应如何被维护的许多控制。这样最终有效减少了我们的工作量,而且我们有了很多更活跃的开发者。

|

||||

|

||||

> 我们真的希望为核心开发做一些类似的事,但是基于我们想作出更大改变的经验,我们觉得在 OpenWrt 项目内做不到。

|

||||

|

||||

修复基础设施也将收获其他好处,他说,就比如改进了用于管理签署发布版本的密码的系统。团队正在考虑在某些情况下非上游补丁的规则,像需要补丁的描述和为什么没有发送到上游的解释。他也提到很多留下的 OpenWrt 开发者表示有兴趣加入 LEDE,相关当事人正试图弄清楚他们是否会重新合并该项目。

|

||||

|

||||

有人希望 LEDE 更为扁平的管理模式和更为透明的分工会在困扰 OpenWrt 的方面取得成功。解决最初的宣言中被诟病的沟通方面的问题会是最大的障碍。如果那个过程处理得好,那么,未来 LEDE 和 OpenWrt 可能能够求同存异并协作。否则,之后两个团队可能一起被迫发展到比以前拥有更少资源的方向,这也许不是开发者或用户想看到的。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://lwn.net/Articles/686767/

|

||||

|

||||

作者:[Nathan Willis][a]

|

||||

译者:[XYenChi](https://github.com/XYenChi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://lwn.net/Articles/686767/

|

||||

[1]:https://openwrt.org/

|

||||

[2]:https://lwn.net/Articles/686180/

|

||||

[3]:https://www.lede-project.org/

|

||||

[4]:https://www.lede-project.org/rules.html

|

||||

[5]:http://lists.infradead.org/pipermail/lede-dev/2016-May/000080.html

|

||||

[6]:https://lwn.net/Articles/686988/

|

||||

[7]:https://lwn.net/Articles/686989/

|

||||

[8]:https://lwn.net/Articles/686990/

|

||||

[9]:https://lwn.net/Articles/686991/

|

||||

[10]:https://lwn.net/Articles/686995/

|

||||

[11]:https://lwn.net/Articles/686996/

|

||||

[12]:https://lwn.net/Articles/686992/

|

||||

[13]:https://lwn.net/Articles/686993/

|

||||

[14]:https://lwn.net/Articles/686998/

|

||||

[15]:https://lwn.net/Articles/687001/

|

||||

[16]:https://lwn.net/Articles/687003/

|

||||

[17]:https://lwn.net/Articles/687004/

|

||||

[18]:http://meetings.lede-project.org/lede-adm/2016/?C=M;O=A

|

||||

[19]:http://meetings.lede-project.org/lede-adm/2016/lede-adm.2016-03-30-11.05.log.html

|

||||

@ -0,0 +1,86 @@

|

||||

促使项目团队作出改变的五步计划

|

||||

============================================================

|

||||

|

||||

|

||||

|

||||

目的是任何团队组建的首要之事。如果一个人足以实现那个目的,那么就没有必要组成团队。而且如果没有重要目标,你根本不需要一个团队。但只要任务需要的专业知识比一个人所拥有的更多,我们就会遇到集体参与的问题——如果处理不当,会使你脱离正轨。

|

||||

|

||||

想象一群人困在洞穴中。没有一个人具备如何出去的全部知识,所以每个人要协作,心路常开,在想要做的事情上尽力配合。当(且仅当)组建了适当的工作团队之后,才能为实现团队的共同目标创造出合适的环境。

|

||||

|

||||

但确实有人觉得待在洞穴中很舒适而且只想待在那里。在组织里,领导者们如何掌控那些实际上抵触改善、待在洞穴中觉得舒适的人?同时该如何找到拥有共同目标但是不在自己组织的人?

|

||||

|

||||

我从事指导国际销售培训,刚开始甚至很少有人认为我的工作有价值。所以,我想出一套使他们信服的战术。那个战术非常成功以至于我决定深入研究它并与各位[分享][2]。

|

||||

|

||||

### 获得支持

|

||||

|

||||

为了建立公司强大的企业文化,有人会反对改变,并且从幕后打压任何改变的提议。他们希望每个人都待在那个舒适的洞穴里。例如,当我第一次接触到海外销售培训,我受到了一些关键人物的严厉阻挠。他们迫使其他人相信某个东京人做不了销售培训——只要基本的产品培训就行了。

|

||||

|

||||

尽管我最终解决了这个问题,但我那时候真的不知道该怎么办。所以,我开始研究顾问们在改变公司里抗拒改变的人的想法这个问题上该如何给出建议。从学者 [Laurence Haughton][3] 的研究中,我发现一般对于改变的提议,组织中 83% 的人最开始不会支持你。大约 17% _会_从一开始就支持你,但是只要看到一个实验案例成功之后,他们觉得这个主意安全可行了,60% 的人会支持你。最后,有部分人会反对任何改变,无论它有多棒。

|

||||

|

||||

我研究的步骤:

|

||||

|

||||

* 从试验项目开始

|

||||

* 开导洞穴人

|

||||

* 快速跟进

|

||||

* 开导洞穴首领

|

||||

* 全局展开

|

||||

|

||||

### 1、 从试验项目开始

|

||||

|

||||

找到高价值且成功率较高的项目——而不是大的、成本高的、周期长的、全局的行动。然后,找到能看到项目价值、理解它的价值并能为之奋斗的关键人物。这些人不应该只是“老好人”或者“朋友”;他们必须相信项目的目标而且拥有推进项目的能力或经验。不要急于求成。只要足够支持你研究并保持进度即可。

|

||||

|

||||

个人而言,我在新加坡的一个小型车辆代理商那里举办了自己的第一场销售研讨会。虽然并不是特别成功,但足以让人们开始讨论销售训练会达到怎样的效果。那时候的我困在洞穴里(那是一份我不想做的工作)。这个试验销售训练是我走出困境的蓝图。

|

||||

|

||||

### 2、 开导洞穴人

|

||||

|

||||

洞穴(CAVE)实际上是我从 Laurence Haughton 那里听来的缩略词。它代表着 Citizens Against Virtually Everything。(LCTT 译注,此处一语双关前文提及的洞穴。)

|

||||

|

||||

你得辨别这些人,因为他们会暗地里阻挠项目的进展,特别是早期脆弱的时候。他们容易黑化:总是消极。他们频繁使用“但是”、“如果”和“为什么”,只是想推脱你。他们询问轻易不可得的细节信息。他们花费过多的时间在问题上,而不是寻找解决方案。他们认为每个失败都是一个趋势。他们总是对人而不是对事。他们作出反对建议的陈述却又不能简单确认。

|

||||

|

||||

避开洞穴人;不要让他们太早加入项目的讨论。他们固守成见,因为他们看不到改变所具有的价值。他们安居于洞穴,所以试着让他们去做些其他事。你应该找出我上面提到那 17% 的人群中的关键人物,那些想要改变的人,并且跟他们开一个非常隐秘的准备会。

|

||||

|

||||

我在五十铃汽车(股东之一是通用汽车公司)的时候,销售训练项目开始于一个销往世界上其他小国家的合资分销商,主要是非洲、南亚、拉丁美洲和中东。我的个人团队由通用汽车公司雪佛兰的人、五十铃产品经理和分公司的销售计划员工组成。隔绝其他任何人于这个圈子之外。

|

||||

|

||||

### 3、 快速跟进

|

||||

|

||||

洞穴人总是慢吞吞的,那么你就迅速行动起来。如果你在他们参与之前就有了小成就的经历,他们对你团队产生消极影响的能力将大大减弱——你要在他们提出之前就解决他们必然反对的问题。再一次,选择一个成功率高的试验项目,很快能出结果的。然后宣传成功,就像广告上的加粗标题。

|

||||

|

||||

当我在新加坡研讨会上所言开始流传时,其他地区开始意识到销售训练的好处。仅在新加坡研讨会之后,我就被派到马来西亚开展了四次以上。

|

||||

|

||||

### 4、 开导洞穴首领

|

||||

|

||||

只要你取得了第一个小项目的成功,就针对能影响洞穴首领的关键人物推荐项目。让团队继续该项目以告诉关键人物成功的经历。一线人员甚至顾客也能提供有力的证明。 洞穴管理者往往只着眼于销量和收益,那么就宣扬项目在降低开支、减少浪费和增加销量方面的价值。

|

||||

|

||||

自新加坡的第一次研讨会及之后,我向直接掌握了五十铃销售渠道的前线销售部门员工和通用汽车真正想看到进展的人极力宣传他们的成功。当他们接受了之后,他们会向上级提出培训请求并让其看到分公司销量的提升。

|

||||

|

||||

### 5、 全局展开

|

||||

|

||||

一旦一把手站在了自己这边,立马向整个组织宣告成功的试验项目。讨论项目的扩展。

|

||||

|

||||

用上面的方法,在 21 年的职业生涯中,我在世界各地超过 60 个国家举办了研讨会。我确实走出了洞穴——并且真的看到了广阔的世界。

|

||||

|

||||

(题图:opensource.com)

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

作者简介:

|

||||

|

||||

Ron McFarland - Ron McFarland 已在日本工作 40 年,从事国际销售、销售管理和在世界范围内扩展销售业务 30 载有余。他曾去过或就职于 80 多个国家。在过去的 14 年里, Ron 为总部位于东京的日本硬件切割厂在美国和欧洲各地建立分销商。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/open-organization/17/1/escape-the-cave

|

||||

|

||||

作者:[Ron McFarland][a]

|

||||

译者:[XYenChi](https://github.com/XYenChi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://opensource.com/users/ron-mcfarland

|

||||

[1]:https://opensource.com/open-organization/17/1/escape-the-cave?rate=dBJIKVJy720uFj0PCfa1JXDZKkMwozxV8TB2qJnoghM

|

||||

[2]:http://www.slideshare.net/RonMcFarland1/creating-change-58994683

|

||||

[3]:http://www.laurencehaughton.com/

|

||||

[4]:https://opensource.com/user/68021/feed

|

||||

[5]:https://opensource.com/open-organization/17/1/escape-the-cave#comments

|

||||

[6]:https://opensource.com/users/ron-mcfarland

|

||||

@ -0,0 +1,66 @@

|

||||

为什么 DevOps 如我们所知道的那样,是安全的终结

|

||||

==========

|

||||

|

||||

|

||||

|

||||

安全难以推行。在企业管理者迫使开发团队尽快发布程序的大环境下,很难说服他们花费有限的时间来修补安全漏洞。但是鉴于所有网络攻击中有 84% 发生在应用层,作为一个组织是无法承担其开发团队不包括安全性带来的后果。

|

||||

|

||||

DevOps 的崛起为许多安全负责人带来了困境。Sonatype 的前 CTO [Josh Corman][2] 说:“这是对安全的威胁,但这也是让安全变得更好的机会。” Corman 是一个坚定的[将安全和 DevOps 实践整合起来创建 “坚固的 DevOps”][3]的倡导者。_Business Insights_ 与 Corman 谈论了安全和 DevOps 共同的价值,以及这些共同价值如何帮助组织更少地受到中断和攻击的影响。

|

||||

|

||||

### 安全和 DevOps 实践如何互惠互利?

|

||||

|

||||

**Josh Corman:** 一个主要的例子是 DevOps 团队对所有可测量的东西进行检测的倾向。安全性一直在寻找更多的情报和遥测。你可以获取许多 DevOps 团队正在测量的信息,并将这些信息输入到你的日志管理或 SIEM (安全信息和事件管理系统)。

|

||||

|

||||

一个 OODA 循环(<ruby>观察<rt>observe</rt></ruby>、<ruby>定向<rt>orient</rt></ruby>、<ruby>决定<rt>decide</rt></ruby>、<ruby>行为<rt>act</rt></ruby>)的前提是有足够普遍的眼睛和耳朵,以注意到窃窃私语和回声。DevOps 为你提供无处不在的仪器。

|

||||

|

||||

### 他们有分享其他文化观点吗?

|

||||

|

||||

**JC:** “严肃对待你的代码”是一个共同的价值观。例如,由 Netflix 编写的软件工具 Chaos Monkey 是 DevOps 团队的分水岭。它是为了测试亚马逊网络服务的弹性和可恢复性而创建的,Chaos Monkey 使得 Netflix 团队更加强大,更容易为中断做好准备。

|

||||

|

||||

所以现在有个想法是我们的系统需要测试,因此,James Wickett 和我及其他人决定做一个邪恶的、带有攻击性的 Chaos Monkey,这就是 GAUNTLT 项目的来由。它基本上是一堆安全测试, 可以在 DevOps 周期和 DevOps 工具链中使用。它也有非常适合 DevOps 的API。

|

||||

|

||||

### 企业安全和 DevOps 价值在哪里相交?

|

||||

|

||||

**JC:** 这两个团队都认为复杂性是一切事情的敌人。例如,[安全人员和 Rugged DevOps 人员][4]实际上可以说:“看,我们在我们的项目中使用了 11 个日志框架 - 也许我们不需要那么多,也许攻击面和复杂性可能会让我们受到伤害或者损害产品的质量或可用性。”

|

||||

|

||||

复杂性往往是许多事情的敌人。通常情况下,你不会很难说服 DevOps 团队在架构层面使用更好的建筑材料:使用最新的、最不易受攻击的版本,并使用较少的组件。

|

||||

|

||||

### “更好的建筑材料”是什么意思?

|

||||

|

||||

**JC:** 我是世界上最大的开源仓库的保管人,所以我能看到他们在使用哪些版本,里面有哪些漏洞,何时他们没有修复漏洞,以及等了多久。例如,某些日志记录框架从不会修复任何错误。其中一些会在 90 天内修复了大部分的安全漏洞。人们越来越多地遭到攻击,因为他们使用了一个毫无安全的框架。

|

||||

|

||||

除此之外,即使你不知道日志框架的质量,拥有 11 个不同的框架会变得非常笨重、出现 bug,还有额外的工作和复杂性。你暴露在漏洞中的风险是非常大的。你想把时间花在修复大量的缺陷上,还是在制造下一个大的破坏性的事情上?

|

||||

|

||||

[Rugged DevOps 的关键是软件供应链管理][5],其中包含三个原则:使用更少和更好的供应商、使用这些供应商的最高质量的部分、并跟踪这些部分,以便在发生错误时,你可以有一个及时和敏捷的响应。

|

||||

|

||||

### 所以变更管理也很重要。

|

||||

|

||||

**JC:** 是的,这是另一个共同的价值。我发现,当一家公司想要执行诸如异常检测或净流量分析等安全测试时,他们需要知道“正常”的样子。让人们失误的许多基本事情与仓库和补丁管理有关。

|

||||

|

||||

我在 _Verizon 数据泄露调查报告_中看到,追踪去年被成功利用的漏洞后,其中 97% 归结为 10 个 CVE(常见漏洞和风险),而这 10 个已经被修复了十多年。所以,我们羞于谈论高级间谍活动。我们没有做基本的补丁工作。现在,我不是说如果你修复这 10 个CVE,那么你就没有被利用,而是这占据了人们实际失误的最大份额。

|

||||

|

||||

[DevOps 自动化工具][6]的好处是它们已经成为一个意外的变更管理数据库。其真实反应了谁在哪里什么时候做了变更。这是一个巨大的胜利,因为我们经常对安全性有最大影响的因素无法控制。你承受了 CIO 和 CTO 做出的选择的后果。随着 IT 通过自动化变得更加严格和可重复,你可以减少人为错误的机会,并且哪里发生了变化更加可追溯。

|

||||

|

||||

### 你认为什么是最重要的共同价值?

|

||||

|

||||

**JC:** DevOps 涉及到过程和工具链,但我认为定义这种属性的是文化,特别是同感。 DevOps 有用是因为开发人员和运维团队能够更好地了解彼此,并做出更明智的决策。不是在解决孤岛中的问题,而是为了活动流程和目标解决。如果你向 DevOps 的团队展示安全如何能使他们变得更好,那么作为回馈他们往往会问:“那么,我们是否有任何选择让你的生活更轻松?”因为他们通常不知道他们做的 X、Y 或 Z 的选择使它无法包含安全性。

|

||||

|

||||

对于安全团队,驱动价值的方法之一是在寻求帮助之前变得更有所帮助,在我们告诉 DevOps 团队要做什么之前提供定性和定量的价值。你必须获得 DevOps 团队的信任,并获得发挥的权利,然后才能得到回报。它通常比你想象的快很多。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://techbeacon.com/why-devops-end-security-we-know-it

|

||||

|

||||

作者:[Mike Barton][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://twitter.com/intent/follow?original_referer=https%3A%2F%2Ftechbeacon.com%2Fwhy-devops-end-security-we-know-it%3Fimm_mid%3D0ee8c5%26cmp%3Dem-webops-na-na-newsltr_20170310&ref_src=twsrc%5Etfw®ion=follow_link&screen_name=mikebarton&tw_p=followbutton

|

||||

[1]:https://techbeacon.com/resources/application-security-devops-true-state?utm_source=tb&utm_medium=article&utm_campaign=inline-cta

|

||||

[2]:https://twitter.com/joshcorman

|

||||

[3]:https://techbeacon.com/want-rugged-devops-team-your-release-security-engineers

|

||||

[4]:https://techbeacon.com/rugged-devops-rsa-6-takeaways-security-ops-pros

|

||||

[5]:https://techbeacon.com/josh-corman-security-devops-how-shared-team-values-can-reduce-threats

|

||||

[6]:https://techbeacon.com/devops-automation-best-practices-how-much-too-much

|

||||

@ -1,21 +1,23 @@

|

||||

OpenGL 与 Go 教程第三节:实现游戏

|

||||

OpenGL 与 Go 教程(三)实现游戏

|

||||

============================================================

|

||||

|

||||

[第一节: Hello, OpenGL][8] | [第二节: 绘制游戏面板][9] | [第三节:实现游戏功能][10]

|

||||

- [第一节: Hello, OpenGL][8]

|

||||

- [第二节: 绘制游戏面板][9]

|

||||

- [第三节:实现游戏功能][10]

|

||||

|

||||

该教程的完整源代码可以从 [GitHub][11] 上获得。

|

||||

该教程的完整源代码可以从 [GitHub][11] 上找到。

|

||||

|

||||

欢迎回到《OpenGL 与 Go 教程》!如果你还没有看过 [第一节][12] 和 [第二节][13],那就要回过头去看一看。

|

||||

|

||||

到目前为止,你应该懂得如何创建网格系统以及创建代表方格中每一个单元的格子阵列。现在可以开始把网格当作游戏面板实现《Conway's Game of Life》。

|

||||

到目前为止,你应该懂得如何创建网格系统以及创建代表方格中每一个单元的格子阵列。现在可以开始把网格当作游戏面板实现<ruby>康威生命游戏<rt>Conway's Game of Life</rt></ruby>。

|

||||

|

||||

开始吧!

|

||||

|

||||

### 实现 《Conway’s Game》

|

||||

### 实现康威生命游戏

|

||||

|

||||

《Conway's Game》的其中一个要点是所有 cell 必须同时基于当前 cell 在面板中的状态确定下一个 cell 的状态。也就是说如果 Cell (X=3,Y=4)在计算过程中状态发生了改变,那么邻近的 cell (X=4,Y=4)必须基于(X=3,T=4)的状态决定自己的状态变化,而不是基于自己现在的状态。简单的讲,这意味着我们必须遍历 cell ,确定下一个 cell 的状态,在绘制之前,不改变他们的当前状态,然后在下一次循环中我们将新状态应用到游戏里,依此循环往复。

|

||||

康威生命游戏的其中一个要点是所有<ruby>细胞<rt>cell</rt></ruby>必须同时基于当前细胞在面板中的状态确定下一个细胞的状态。也就是说如果细胞 `(X=3,Y=4)` 在计算过程中状态发生了改变,那么邻近的细胞 `(X=4,Y=4)` 必须基于 `(X=3,Y=4)` 的状态决定自己的状态变化,而不是基于自己现在的状态。简单的讲,这意味着我们必须遍历细胞,确定下一个细胞的状态,而在绘制之前不改变他们的当前状态,然后在下一次循环中我们将新状态应用到游戏里,依此循环往复。

|

||||

|

||||

为了完成这个功能,我们需要在 cell 结构体中添加两个布尔型变量:

|

||||

为了完成这个功能,我们需要在 `cell` 结构体中添加两个布尔型变量:

|

||||

|

||||

```

|

||||

type cell struct {

|

||||

@ -29,6 +31,8 @@ type cell struct {

|

||||

}

|

||||

```

|

||||

|

||||

这里我们添加了 `alive` 和 `aliveNext`,前一个是细胞当前的专题,后一个是经过计算后下一回合的状态。

|

||||

|

||||

现在添加两个函数,我们会用它们来确定 cell 的状态:

|

||||

|

||||

```

|

||||

@ -39,22 +43,22 @@ func (c *cell) checkState(cells [][]*cell) {

|

||||

|

||||

liveCount := c.liveNeighbors(cells)

|

||||

if c.alive {

|

||||

// 1\. 当任何一个存活的 cell 的附近少于 2 个存活的 cell 时,该 cell 将会消亡,就像人口过少所导致的结果一样

|

||||

// 1. 当任何一个存活的 cell 的附近少于 2 个存活的 cell 时,该 cell 将会消亡,就像人口过少所导致的结果一样

|

||||

if liveCount < 2 {

|

||||

c.aliveNext = false

|

||||

}

|

||||

|

||||

// 2\. 当任何一个存活的 cell 的附近有 2 至 3 个存活的 cell 时,该 cell 在下一代中仍然存活。

|

||||

// 2. 当任何一个存活的 cell 的附近有 2 至 3 个存活的 cell 时,该 cell 在下一代中仍然存活。

|

||||

if liveCount == 2 || liveCount == 3 {

|

||||

c.aliveNext = true

|

||||

}

|

||||

|

||||

// 3\. 当任何一个存活的 cell 的附近多于 3 个存活的 cell 时,该 cell 将会消亡,就像人口过多所导致的结果一样

|

||||

// 3. 当任何一个存活的 cell 的附近多于 3 个存活的 cell 时,该 cell 将会消亡,就像人口过多所导致的结果一样

|

||||

if liveCount > 3 {

|

||||

c.aliveNext = false

|

||||

}

|

||||

} else {

|

||||

// 4\. 任何一个消亡的 cell 附近刚好有 3 个存活的 cell,该 cell 会变为存活的状态,就像重生一样。

|

||||

// 4. 任何一个消亡的 cell 附近刚好有 3 个存活的 cell,该 cell 会变为存活的状态,就像重生一样。

|

||||

if liveCount == 3 {

|

||||

c.aliveNext = true

|

||||

}

|

||||

@ -95,9 +99,11 @@ func (c *cell) liveNeighbors(cells [][]*cell) int {

|

||||

}

|

||||

```

|

||||

|

||||

更加值得注意的是 liveNeighbors 函数里在返回地方,我们返回的是当前处于存活状态的 cell 的邻居个数。我们定义了一个叫做 add 的内嵌函数,它会对 X 和 Y 坐标做一些重复性的验证。它所做的事情是检查我们传递的数字是否超出了范围——比如说,如果 cell(X=0,Y=5)想要验证它左边的 cell,它就得验证面板另一边的 cell(X=9,Y=5),Y 轴与之类似。

|

||||

在 `checkState` 中我们设置当前状态(`alive`) 等于我们最近迭代结果(`aliveNext`)。接下来我们计数邻居数量,并根据游戏的规则来决定 `aliveNext` 状态。该规则是比较清晰的,而且我们在上面的代码当中也有说明,所以这里不再赘述。

|

||||

|

||||

在 add 内嵌函数后面,我们给当前 cell 附近的八个 cell 分别调用 add 函数,示意如下:

|

||||

更加值得注意的是 `liveNeighbors` 函数里,我们返回的是当前处于存活(`alive`)状态的细胞的邻居个数。我们定义了一个叫做 `add` 的内嵌函数,它会对 `X` 和 `Y` 坐标做一些重复性的验证。它所做的事情是检查我们传递的数字是否超出了范围——比如说,如果细胞 `(X=0,Y=5)` 想要验证它左边的细胞,它就得验证面板另一边的细胞 `(X=9,Y=5)`,Y 轴与之类似。

|

||||

|

||||

在 `add` 内嵌函数后面,我们给当前细胞附近的八个细胞分别调用 `add` 函数,示意如下:

|

||||

|

||||

```

|

||||

[

|

||||

@ -109,9 +115,9 @@ func (c *cell) liveNeighbors(cells [][]*cell) int {

|

||||

]

|

||||

```

|

||||

|

||||

在该示意中,每一个叫做 N 的 cell 是与 C 相邻的 cell。

|

||||

在该示意中,每一个叫做 N 的细胞是 C 的邻居。

|

||||

|

||||

现在是我们的主函数,在我们执行循环核心游戏的地方,调用每个 cell 的 checkState 函数进行绘制:

|

||||

现在是我们的 `main` 函数,这里我们执行核心游戏循环,调用每个细胞的 `checkState` 函数进行绘制:

|

||||

|

||||

```

|

||||

func main() {

|

||||

@ -129,6 +135,8 @@ func main() {

|

||||

}

|

||||

```

|

||||

|

||||

现在我们的游戏逻辑全都设置好了,我们需要修改细胞绘制函数来跳过绘制不存活的细胞:

|

||||

|

||||

```

|

||||

func (c *cell) draw() {

|

||||

if !c.alive {

|

||||

@ -140,7 +148,10 @@ func (c *cell) draw() {

|

||||

}

|

||||

```

|

||||

|

||||

现在完善这个函数。回到 makeCells 函数,我们用 0.0 到 1.0 之间的一个随机数来设置游戏的初始状态。我们会定义一个大小为 0.15 的常量阈值,也就是说每个 cell 都有 15% 的几率处于存活状态。

|

||||

如果我们现在运行这个游戏,你将看到一个纯黑的屏幕,而不是我们辛苦工作后应该看到生命模拟。为什么呢?其实这正是模拟在工作。因为我们没有活着的细胞,所以就一个都不会绘制出来。

|

||||

|

||||

|

||||

现在完善这个函数。回到 `makeCells` 函数,我们用 `0.0` 到 `1.0` 之间的一个随机数来设置游戏的初始状态。我们会定义一个大小为 `0.15` 的常量阈值,也就是说每个细胞都有 15% 的几率处于存活状态。

|

||||

|

||||

```

|

||||

import (

|

||||

@ -174,11 +185,13 @@ func makeCells() [][]*cell {

|

||||

}

|

||||

```

|

||||

|

||||

接下来在循环中,在用 newCell 函数创造一个新的 cell 时,我们根据随机数的大小设置它的存活状态,随机数在 0.0 到 1.0 之间,如果比阈值(0.15)小,就是存活状态。再次强调,这意味着每个 cell 在开始时都有 15% 的几率是存活的。你可以修改数值大小,增加或者减少当前游戏中存活的 cell。我们还把 aliveNext 设成 alive 状态,否则在第一次迭代之后我们会发现一大片 cell 消亡了,这是因为 aliveNext 将永远是 false。

|

||||

我们首先增加两个引入:随机(`math/rand`)和时间(`time`),并定义我们的常量阈值。然后在 `makeCells` 中我们使用当前时间作为随机种子,给每个游戏一个独特的起始状态。你也可也指定一个特定的种子值,来始终得到一个相同的游戏,这在你想重放某个有趣的模拟时很有用。

|

||||

|

||||

现在接着往下看,运行它,你很有可能看到 cell 们一闪而过,但你却无法理解这是为什么。原因可能在于你的电脑太快了,在你能够看清楚之前就运行了(甚至完成了)模拟过程。

|

||||

接下来在循环中,在用 `newCell` 函数创造一个新的细胞时,我们根据随机浮点数的大小设置它的存活状态,随机数在 `0.0` 到 `1.0` 之间,如果比阈值(`0.15`)小,就是存活状态。再次强调,这意味着每个细胞在开始时都有 15% 的几率是存活的。你可以修改数值大小,增加或者减少当前游戏中存活的细胞。我们还把 `aliveNext` 设成 `alive` 状态,否则在第一次迭代之后我们会发现一大片细胞消亡了,这是因为 `aliveNext` 将永远是 `false`。

|

||||

|

||||

降低游戏速度,在主循环中引入一个 frames-per-second 限制:

|

||||

现在继续运行它,你很有可能看到细胞们一闪而过,但你却无法理解这是为什么。原因可能在于你的电脑太快了,在你能够看清楚之前就运行了(甚至完成了)模拟过程。

|

||||

|

||||

让我们降低游戏速度,在主循环中引入一个帧率(FPS)限制:

|

||||

|

||||

```

|

||||

const (

|

||||

@ -223,7 +236,7 @@ const (

|

||||

)

|

||||

```

|

||||

|

||||

|

||||

|

||||

|

||||

试着修改常量,看看它们是怎么影响模拟过程的 —— 这是你用 Go 语言写的第一个 OpenGL 程序,很酷吧?

|

||||

|

||||

@ -231,20 +244,18 @@ const (

|

||||

|

||||

这是《OpenGL 与 Go 教程》的最后一节,但是这不意味着到此而止。这里有些新的挑战,能够增进你对 OpenGL (以及 Go)的理解。

|

||||

|

||||

1. 给每个 cell 一种不同的颜色。

|

||||

2. 让用户能够通过命令行参数指定格子尺寸,帧率,种子和阈值。在 GitHub 上的 [github.com/KyleBanks/conways-gol][4] 里你可以看到一个已经实现的程序。

|

||||

1. 给每个细胞一种不同的颜色。

|

||||

2. 让用户能够通过命令行参数指定格子尺寸、帧率、种子和阈值。在 GitHub 上的 [github.com/KyleBanks/conways-gol][4] 里你可以看到一个已经实现的程序。

|

||||

3. 把格子的形状变成其它更有意思的,比如六边形。

|

||||

4. 用颜色表示 cell 的状态 —— 比如,在第一帧把存活状态的格子设成绿色,如果它们存活了超过三帧的时间,就变成黄色。

|

||||

5. 如果模拟过程结束了,就自动关闭窗口,也就是说所有 cell 都消亡了,或者是最后两帧里没有格子的状态有改变。

|

||||

4. 用颜色表示细胞的状态 —— 比如,在第一帧把存活状态的格子设成绿色,如果它们存活了超过三帧的时间,就变成黄色。

|

||||

5. 如果模拟过程结束了,就自动关闭窗口,也就是说所有细胞都消亡了,或者是最后两帧里没有格子的状态有改变。

|

||||

6. 将着色器源代码放到单独的文件中,而不是把它们用字符串的形式放在 Go 的源代码中。

|

||||

|

||||

### 总结

|

||||

|

||||

希望这篇教程对想要入门 OpenGL (或者是 Go)的人有所帮助!这很有趣,因此我也希望理解学习它也很有趣。

|

||||

|

||||

正如我所说的,OpenGL 可能是非常恐怖的,但只要你开始着手了就不会太差。你只用制定一个个可达成的小目标,然后享受每一次成功,因为尽管 OpenGL 不会总像它看上去的那么难,但也肯定有些难懂的东西。我发现,当遇到一个难于用 go-gl 方式理解的 OpenGL 问题时,你总是可以参考一下在网上更流行的当作教程的 C 语言代码,这很有用。通常 C 语言和 Go 语言的唯一区别是在 Go 中,gl 的前缀是 gl. 而不是 GL_。这极大地增加了你的绘制知识!

|

||||

|

||||

[第一节: Hello, OpenGL][14] | [第二节: 绘制游戏面板][15] | [第三节:实现游戏功能][16]

|

||||

正如我所说的,OpenGL 可能是非常恐怖的,但只要你开始着手了就不会太差。你只用制定一个个可达成的小目标,然后享受每一次成功,因为尽管 OpenGL 不会总像它看上去的那么难,但也肯定有些难懂的东西。我发现,当遇到一个难于理解用 go-gl 生成的代码的 OpenGL 问题时,你总是可以参考一下在网上更流行的当作教程的 C 语言代码,这很有用。通常 C 语言和 Go 语言的唯一区别是在 Go 中,gl 函数的前缀是 `gl.` 而不是 `gl`,常量的前缀是 `gl` 而不是 `GL_`。这可以极大地增加了你的绘制知识!

|

||||

|

||||

该教程的完整源代码可从 [GitHub][17] 上获得。

|

||||

|

||||

@ -419,22 +430,22 @@ func (c *cell) checkState(cells [][]*cell) {

|

||||

|

||||

liveCount := c.liveNeighbors(cells)

|

||||

if c.alive {

|

||||

// 1\. 当任何一个存活的 cell 的附近少于 2 个存活的 cell 时,该 cell 将会消亡,就像人口过少所导致的结果一样

|

||||

// 1. 当任何一个存活的 cell 的附近少于 2 个存活的 cell 时,该 cell 将会消亡,就像人口过少所导致的结果一样

|

||||

if liveCount < 2 {

|

||||

c.aliveNext = false

|

||||

}

|

||||

|

||||

// 2\. 当任何一个存活的 cell 的附近有 2 至 3 个存活的 cell 时,该 cell 在下一代中仍然存活。

|

||||

// 2. 当任何一个存活的 cell 的附近有 2 至 3 个存活的 cell 时,该 cell 在下一代中仍然存活。

|

||||

if liveCount == 2 || liveCount == 3 {

|

||||

c.aliveNext = true

|

||||

}

|

||||

|

||||

// 3\. 当任何一个存活的 cell 的附近多于 3 个存活的 cell 时,该 cell 将会消亡,就像人口过多所导致的结果一样

|

||||

// 3. 当任何一个存活的 cell 的附近多于 3 个存活的 cell 时,该 cell 将会消亡,就像人口过多所导致的结果一样

|

||||

if liveCount > 3 {

|

||||

c.aliveNext = false

|

||||

}

|

||||

} else {

|

||||

// 4\. 任何一个消亡的 cell 附近刚好有 3 个存活的 cell,该 cell 会变为存活的状态,就像重生一样。

|

||||

// 4. 任何一个消亡的 cell 附近刚好有 3 个存活的 cell,该 cell 会变为存活的状态,就像重生一样。

|

||||

if liveCount == 3 {

|

||||

c.aliveNext = true

|

||||

}

|

||||

@ -570,9 +581,9 @@ func compileShader(source string, shaderType uint32) (uint32, error) {

|

||||

|

||||

via: https://kylewbanks.com/blog/tutorial-opengl-with-golang-part-3-implementing-the-game

|

||||

|

||||

作者:[kylewbanks ][a]

|

||||

作者:[kylewbanks][a]

|

||||

译者:[GitFuture](https://github.com/GitFuture)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -584,14 +595,14 @@ via: https://kylewbanks.com/blog/tutorial-opengl-with-golang-part-3-implementing

|

||||

[5]:https://kylewbanks.com/category/golang

|

||||

[6]:https://kylewbanks.com/category/opengl

|

||||

[7]:https://twitter.com/kylewbanks

|

||||

[8]:https://kylewbanks.com/blog/tutorial-opengl-with-golang-part-1-hello-opengl

|

||||

[9]:https://kylewbanks.com/blog/tutorial-opengl-with-golang-part-2-drawing-the-game-board

|

||||

[8]:https://linux.cn/article-8933-1.html

|

||||

[9]:https://linux.cn/article-8937-1.html

|

||||

[10]:https://kylewbanks.com/blog/tutorial-opengl-with-golang-part-3-implementing-the-game

|

||||

[11]:https://github.com/KyleBanks/conways-gol

|

||||

[12]:https://kylewbanks.com/blog/tutorial-opengl-with-golang-part-1-hello-opengl

|

||||

[12]:https://linux.cn/article-8933-1.html

|

||||

[13]:https://kylewbanks.com/blog/[Part%202:%20Drawing%20the%20Game%20Board](/blog/tutorial-opengl-with-golang-part-2-drawing-the-game-board)

|

||||

[14]:https://kylewbanks.com/blog/tutorial-opengl-with-golang-part-1-hello-opengl

|

||||

[15]:https://kylewbanks.com/blog/tutorial-opengl-with-golang-part-2-drawing-the-game-board

|

||||

[14]:https://linux.cn/article-8933-1.html

|

||||

[15]:https://linux.cn/article-8937-1.html

|

||||

[16]:https://kylewbanks.com/blog/tutorial-opengl-with-golang-part-3-implementing-the-game

|

||||

[17]:https://github.com/KyleBanks/conways-gol

|

||||

[18]:https://twitter.com/kylewbanks

|

||||

@ -0,0 +1,211 @@

|

||||

介绍 Flashback,一个互联网模拟工具

|

||||

============================================================

|

||||

|

||||

> Flashback 用于测试目的来模拟 HTTP 和 HTTPS 资源,如 Web 服务和 REST API。

|

||||

|

||||

|

||||

|

||||

在 LinkedIn,我们经常开发需要与第三方网站交互的 Web 应用程序。我们还采用自动测试,以确保我们的软件在发布到生产环境之前的质量。然而,测试只是在它可靠时才有用。

|

||||

|

||||

考虑到这一点,有外部依赖关系的测试是有很大的问题的,例如在第三方网站上。这些外部网站可能会没有通知地发生改变、遭受停机,或者由于互联网的不可靠性暂时无法访问。

|

||||

|

||||

如果我们的一个测试依赖于能够与第三方网站通信,那么任何故障的原因都很难确定。失败可能是因为 LinkedIn 的内部变更、第三方网站的维护人员进行的外部变更,或网络基础设施的问题。你可以想像,与第三方网站的交互可能会有很多失败的原因,因此你可能想要知道,我将如何处理这个问题?

|

||||

|

||||

好消息是有许多互联网模拟工具可以帮助。其中一个是 [Betamax][4]。它通过拦截 Web 应用程序发起的 HTTP 连接,之后进行重放的方式来工作。对于测试,Betamax 可以用以前记录的响应替换 HTTP 上的任何交互,它可以非常可靠地提供这个服务。

|

||||

|

||||

最初,我们选择在 LinkedIn 的自动化测试中使用 Betamax。它工作得很好,但我们遇到了一些问题:

|

||||

|

||||

* 出于安全考虑,我们的测试环境没有接入互联网。然而,与大多数代理一样,Betamax 需要 Internet 连接才能正常运行。

|

||||

* 我们有许多需要使用身份验证协议的情况,例如 OAuth 和 OpenId。其中一些协议需要通过 HTTP 进行复杂的交互。为了模拟它们,我们需要一个复杂的模型来捕获和重放请求。

|

||||

|

||||

为了应对这些挑战,我们决定基于 Betamax 的思路,构建我们自己的互联网模拟工具,名为 Flashback。我们也很自豪地宣布 Flashback 现在是开源的。

|

||||

|

||||

### 什么是 Flashback?

|

||||

|

||||

Flashback 用于测试目的来模拟 HTTP 和 HTTPS 资源,如 Web 服务和 [REST][5] API。它记录 HTTP/HTTPS 请求并重放以前记录的 HTTP 事务 - 我们称之为“<ruby>场景<rt>scene</rt></ruby>”,这样就不需要连接到 Internet 才能完成测试。

|

||||

|

||||

Flashback 也可以根据请求的部分匹配重放场景。它使用的是“匹配规则”。匹配规则将传入请求与先前记录的请求相关联,然后将其用于生成响应。例如,以下代码片段实现了一个基本匹配规则,其中测试方法“匹配”[此 URL][6]的传入请求。

|

||||

|

||||

HTTP 请求通常包含 URL、方法、标头和正文。Flashback 允许为这些组件的任意组合定义匹配规则。Flashback 还允许用户向 URL 查询参数,标头和正文添加白名单或黑名单标签。

|

||||

|

||||

例如,在 OAuth 授权流程中,请求查询参数可能如下所示:

|

||||

|

||||

```

|

||||

oauth_consumer_key="jskdjfljsdklfjlsjdfs",

|

||||

oauth_nonce="ajskldfjalksjdflkajsdlfjasldfja;lsdkj",

|

||||

oauth_signature="asdfjaklsdjflasjdflkajsdklf",

|

||||

oauth_signature_method="HMAC-SHA1",

|

||||

oauth_timestamp="1318622958",

|

||||

oauth_token="asdjfkasjdlfajsdklfjalsdjfalksdjflajsdlfa",

|

||||

oauth_version="1.0"

|

||||

```

|

||||

|

||||

这些值许多将随着每个请求而改变,因为 OAuth 要求客户端每次为 `oauth_nonce` 生成一个新值。在我们的测试中,我们需要验证 `oauth_consumer_key`、`oauth_signature_method` 和 `oauth_version` 的值,同时确保 `oauth_nonce`、`oauth_signature`、`oauth_timestamp` 和 `oauth_token` 存在于请求中。Flashback 使我们有能力创建我们自己的匹配规则来实现这一目标。此功能允许我们测试随时间变化的数据、签名、令牌等的请求,而客户端没有任何更改。

|

||||

|

||||

这种灵活的匹配和在不连接互联网的情况下运行的功能是 Flashback 与其他模拟解决方案不同的特性。其他一些显著特点包括:

|

||||

|

||||

* Flashback 是一种跨平台和跨语言解决方案,能够测试 JVM(Java虚拟机)和非 JVM(C++、Python 等)应用程序。

|

||||

* Flashback 可以随时生成 SSL/TLS 证书,以模拟 HTTPS 请求的安全通道。

|

||||

|

||||

### 如何记录 HTTP 事务

|

||||

|

||||

使用 Flashback 记录 HTTP 事务以便稍后重放是一个比较简单的过程。在我们深入了解流程之前,我们首先列出一些术语:

|

||||

|

||||

* `Scene` :场景存储以前记录的 HTTP 事务 (以 JSON 格式),它可以在以后重放。例如,这里是一个[Flashback 场景][1]示例。

|

||||

* `Root Path` :根路径是包含 Flashback 场景数据的目录的文件路径。

|

||||

* `Scene Name` :场景名称是给定场景的名称。

|

||||

* `Scene Mode` :场景模式是使用场景的模式, 即“录制”或“重放”。

|

||||

* `Match Rule` :匹配规则确定传入的客户端请求是否与给定场景的内容匹配的规则。

|

||||

* `Flashback Proxy` :Flashback 代理是一个 HTTP 代理,共有录制和重放两种操作模式。

|

||||

* `Host` 和 `Port` :代理主机和端口。

|

||||

|

||||

为了录制场景,你必须向目的地址发出真实的外部请求,然后 HTTPS 请求和响应将使用你指定的匹配规则存储在场景中。在录制时,Flashback 的行为与典型的 MITM(中间人)代理完全相同 - 只有在重放模式下,连接流和数据流仅限于客户端和代理之间。

|

||||

|

||||

要实际看下 Flashback,让我们创建一个场景,通过执行以下操作捕获与 example.org 的交互:

|

||||

|

||||

1、 取回 Flashback 的源码:

|

||||

|

||||

```

|

||||

git clone https://github.com/linkedin/flashback.git

|

||||

```

|

||||

|

||||

2、 启动 Flashback 管理服务器:

|

||||

|

||||

```

|

||||

./startAdminServer.sh -port 1234

|

||||

```

|

||||

|

||||

3、 注意上面的 Flashback 将在本地端口 5555 上启动录制模式。匹配规则需要完全匹配(匹配 HTTP 正文、标题和 URL)。场景将存储在 `/tmp/test1` 下。

|

||||

|

||||

4、 Flashback 现在可以记录了,所以用它来代理对 example.org 的请求:

|

||||

|

||||

```

|

||||

curl http://www.example.org -x localhost:5555 -X GET

|

||||

```

|

||||

|

||||

5、 Flashback 可以(可选)在一个记录中记录多个请求。要完成录制,[关闭 Flashback][8]。

|

||||

|

||||

6、 要验证已记录的内容,我们可以在输出目录(`/tmp/test1`)中查看场景的内容。它应该[包含以下内容][9]。

|

||||

|

||||

[在 Java 代码中使用 Flashback][10]也很容易。

|

||||

|

||||

### 如何重放 HTTP 事务

|

||||

|

||||

要重放先前存储的场景,请使用与录制时使用的相同的基本设置。唯一的区别是[将“场景模式”设置为上述步骤 3 中的“播放”][11]。

|

||||

|

||||

验证响应来自场景而不是外部源的一种方法,是在你执行步骤 1 到 6 时临时禁用 Internet 连接。另一种方法是修改场景文件,看看响应是否与文件中的相同。

|

||||

|

||||

这是 [Java 中的一个例子][12]。

|

||||

|

||||

### 如何记录并重播 HTTPS 事务

|

||||

|

||||

使用 Flashback 记录并重放 HTTPS 事务的过程非常类似于 HTTP 事务的过程。但是,需要特别注意用于 HTTPS SSL 组件的安全证书。为了使 Flashback 作为 MITM 代理,必须创建证书颁发机构(CA)证书。在客户端和 Flashback 之间创建安全通道时将使用此证书,并允许 Flashback 检查其代理的 HTTPS 请求中的数据。然后将此证书存储为受信任的源,以便客户端在进行调用时能够对 Flashback 进行身份验证。有关如何创建证书的说明,有很多[类似这样][13]的资源是非常有帮助的。大多数公司都有自己的管理和获取证书的内部策略 - 请务必用你们自己的方法。

|

||||

|

||||

这里值得一提的是,Flashback 仅用于测试目的。你可以随时随地将 Flashback 与你的服务集成在一起,但需要注意的是,Flashback 的记录功能将需要存储所有的数据,然后在重放模式下使用它。我们建议你特别注意确保不会无意中记录或存储敏感成员数据。任何可能违反贵公司数据保护或隐私政策的行为都是你的责任。

|

||||

|

||||

一旦涉及安全证书,HTTP 和 HTTPS 之间在记录设置方面的唯一区别是添加了一些其他参数。

|

||||

|

||||

* `RootCertificateInputStream`: 表示 CA 证书文件路径或流。

|

||||

* `RootCertificatePassphrase`: 为 CA 证书创建的密码。

|

||||

* `CertificateAuthority`: CA 证书的属性

|

||||

|

||||

[查看 Flashback 中用于记录 HTTPS 事务的代码][14],它包括上述条目。

|

||||

|

||||

用 Flashback 重放 HTTPS 事务的过程与录制相同。唯一的区别是场景模式设置为“播放”。这在[此代码][15]中演示。

|

||||

|

||||

### 支持动态修改

|

||||

|

||||

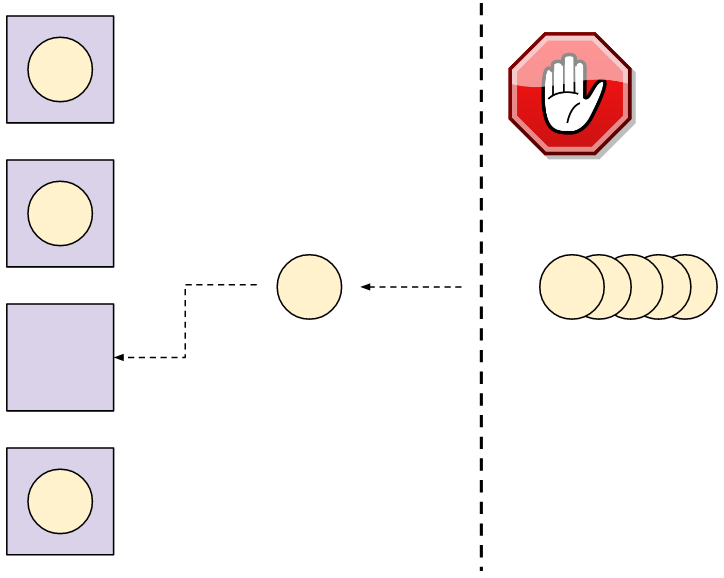

为了测试灵活性,Flashback 允许你动态地更改场景和匹配规则。动态更改场景允许使用不同的响应(如 `success`、`time_out`、`rate_limit` 等)测试相同的请求。[场景更改][16]仅适用于我们已经 POST 更新外部资源的场景。以下图为例。

|

||||

|

||||

|

||||

|

||||

能够动态[更改匹配规则][17]可以使我们测试复杂的场景。例如,我们有一个使用情况,要求我们测试 Twitter 的公共和私有资源的 HTTP 调用。对于公共资源,HTTP 请求是不变的,所以我们可以使用 “MatchAll” 规则。然而,对于私人资源,我们需要使用 OAuth 消费者密码和 OAuth 访问令牌来签名请求。这些请求包含大量具有不可预测值的参数,因此静态 MatchAll 规则将无法正常工作。

|

||||

|

||||

### 使用案例

|

||||

|

||||

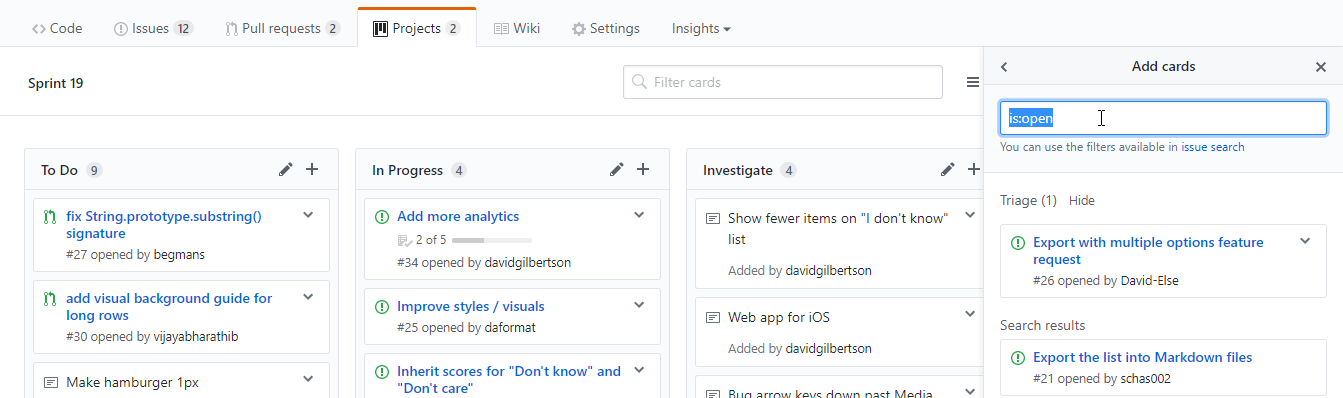

在 LinkedIn,Flashback 主要用于在集成测试中模拟不同的互联网提供商,如下图所示。第一张图展示了 LinkedIn 生产数据中心内的一个内部服务,通过代理层,与互联网提供商(如 Google)进行交互。我们想在测试环境中测试这个内部服务。

|

||||

|

||||

|

||||

|

||||

第二和第三张图表展示了我们如何在不同的环境中录制和重放场景。记录发生在我们的开发环境中,用户在代理启动的同一端口上启动 Flashback。从内部服务到提供商的所有外部请求将通过 Flashback 而不是我们的代理层。在必要场景得到记录后,我们可以将其部署到我们的测试环境中。

|

||||

|

||||

|

||||

|

||||

在测试环境(隔离并且没有 Internet 访问)中,Flashback 在与开发环境相同的端口上启动。所有 HTTP 请求仍然来自内部服务,但响应将来自 Flashback 而不是 Internet 提供商。

|

||||

|

||||

|

||||

|

||||

### 未来方向

|

||||

|

||||

我们希望将来可以支持非 HTTP 协议(如 FTP 或 JDBC),甚至可以让用户使用 MITM 代理框架来自行注入自己的定制协议。我们将继续改进 Flashback 设置 API,使其更容易支持非 Java 语言。

|

||||

|

||||

### 现在为一个开源项目

|

||||

|

||||

我们很幸运能够在 GTAC 2015 上发布 Flashback。在展会上,有几名观众询问是否将 Flashback 作为开源项目发布,以便他们可以将其用于自己的测试工作。

|

||||

|

||||

### Google TechTalks:GATC 2015 - 模拟互联网

|

||||

|

||||

<iframe allowfullscreen="" frameborder="0" height="315" src="https://www.youtube.com/embed/6gPNrujpmn0?origin=https://opensource.com&enablejsapi=1" width="560" id="6gPNrujpmn0" data-sdi="true"></iframe>

|

||||

|

||||

我们很高兴地宣布,Flashback 现在以 BSD 两句版许可证开源。要开始使用,请访问 [Flashback GitHub 仓库][18]。

|

||||

|

||||

_该文原始发表在[LinkedIn 工程博客上][2]。获得转载许可_

|

||||

|

||||

### 致谢

|

||||

|

||||

Flashback 由 [Shangshang Feng][19]、[Yabin Kang][20] 和 [Dan Vinegrad][21] 创建,并受到 [Betamax][22] 启发。特别感谢 [Hwansoo Lee][23]、[Eran Leshem][24]、[Kunal Kandekar][25]、[Keith Dsouza][26] 和 [Kang Wang][27] 帮助审阅代码。同样感谢我们的管理层 - [Byron Ma][28]、[Yaz Shimizu][29]、[Yuliya Averbukh][30]、[Christopher Hazlett][31] 和 [Brandon Duncan][32] - 感谢他们在开发和开源 Flashback 中的支持。

|

||||

|

||||

|

||||

(题图:Opensource.com)

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

作者简介:

|

||||

|

||||

Shangshang Feng - Shangshang 是 LinkedIn 纽约市办公室的高级软件工程师。在 LinkedIn 他从事了三年半的网关平台工作。在加入 LinkedIn 之前,他曾在 Thomson Reuters 和 ViewTrade 证券的基础设施团队工作。

|

||||

|

||||

---------

|

||||

|

||||

via: https://opensource.com/article/17/4/flashback-internet-mocking-tool

|

||||

|

||||

作者:[Shangshang Feng][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[jasminepeng](https://github.com/jasminepeng)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://opensource.com/users/shangshangfeng

|

||||

[1]:https://gist.github.com/anonymous/17d226050d8a9b79746a78eda9292382

|

||||

[2]:https://engineering.linkedin.com/blog/2017/03/flashback-mocking-tool

|

||||

[3]:https://opensource.com/article/17/4/flashback-internet-mocking-tool?rate=Jwt7-vq6jP9kS7gOT6f6vgwVlZupbyzWsVXX41ikmGk

|

||||

[4]:https://github.com/betamaxteam/betamax

|

||||

[5]:https://en.wikipedia.org/wiki/Representational_state_transfer

|

||||

[6]:https://gist.github.com/anonymous/91637854364287b38897c0970aad7451

|

||||

[7]:https://gist.github.com/anonymous/2f5271191edca93cd2e03ce34d1c2b62

|

||||

[8]:https://gist.github.com/anonymous/f899ebe7c4246904bc764b4e1b93c783

|

||||

[9]:https://gist.github.com/sf1152/c91d6d62518fe62cc87157c9ce0e60cf

|

||||

[10]:https://gist.github.com/anonymous/fdd972f1dfc7363f4f683a825879ce19

|

||||

[11]:https://gist.github.com/anonymous/ae1c519a974c3bc7de2a925254b6550e

|

||||

[12]:https://gist.github.com/anonymous/edcc1d60847d51b159c8fd8a8d0a5f8b

|

||||

[13]:https://jamielinux.com/docs/openssl-certificate-authority/introduction.html

|

||||

[14]:https://gist.github.com/anonymous/091d13179377c765f63d7bf4275acc11

|

||||

[15]:https://gist.github.com/anonymous/ec6a0fd07aab63b7369bf8fde69c1f16

|

||||

[16]:https://gist.github.com/anonymous/1f1660280acb41277fbe2c257bab2217

|

||||

[17]:https://gist.github.com/anonymous/0683c43f31bd916b76aff348ff87f51b

|

||||

[18]:https://github.com/linkedin/flashback

|

||||

[19]:https://www.linkedin.com/in/shangshangfeng

|

||||

[20]:https://www.linkedin.com/in/benykang

|

||||

[21]:https://www.linkedin.com/in/danvinegrad/

|

||||

[22]:https://github.com/betamaxteam/betamax

|

||||

[23]:https://www.linkedin.com/in/hwansoo/

|

||||

[24]:https://www.linkedin.com/in/eranl/

|

||||

[25]:https://www.linkedin.com/in/kunalkandekar/

|

||||

[26]:https://www.linkedin.com/in/dsouzakeith/

|

||||

[27]:https://www.linkedin.com/in/kang-wang-44960b4/

|

||||

[28]:https://www.linkedin.com/in/byronma/

|

||||

[29]:https://www.linkedin.com/in/yazshimizu/

|

||||

[30]:https://www.linkedin.com/in/yuliya-averbukh-818a41/

|

||||

[31]:https://www.linkedin.com/in/chazlett/

|

||||

[32]:https://www.linkedin.com/in/dudcat/

|

||||

[33]:https://opensource.com/user/125361/feed

|

||||

[34]:https://opensource.com/users/shangshangfeng

|

||||

@ -1,21 +1,13 @@

|

||||

当你只想将事情搞定时,为什么开放式工作这么难?

|

||||

============================================================

|

||||

|

||||

### 学习使用开放式决策框架来写一本书

|

||||

> 学习使用开放式决策框架来写一本书

|

||||

|

||||

|

||||

>图片来源 : opensource.com

|

||||

|

||||

|

||||

GSD(get stuff done 的缩写,即搞定)指导着我的工作方式。数年来,我将各种方法论融入我日常工作的习惯中,包括精益方法的反馈循环,和敏捷开发的迭代优化,以此来更好地 GSD(如果把 GSD 当作动词的话)。这意味着我必须非常有效地利用我的时间:列出清晰,各自独立的目标;标记已完成的项目;用迭代的方式地持续推进项目进度。但是当我们默认使用开放的时仍然能够 GSD 吗?又或者 GSD 的方法完全行不通呢?大多数人都认为这会导致糟糕的状况,但我发现事实并不一定这样。

|

||||

GSD(get stuff done 的缩写,即搞定)指导着我的工作方式。数年来,我将各种方法论融入我日常工作的习惯中,包括精益方法的反馈循环,和敏捷开发的迭代优化,以此来更好地 GSD(如果把 GSD 当作动词的话)。这意味着我必须非常有效地利用我的时间:列出清晰、各自独立的目标;标记已完成的项目;用迭代的方式地持续推进项目进度。但是当我们以开放为基础时仍然能够 GSD 吗?又或者 GSD 的方法完全行不通呢?大多数人都认为这会导致糟糕的状况,但我发现事实并不一定这样。

|

||||

|

||||

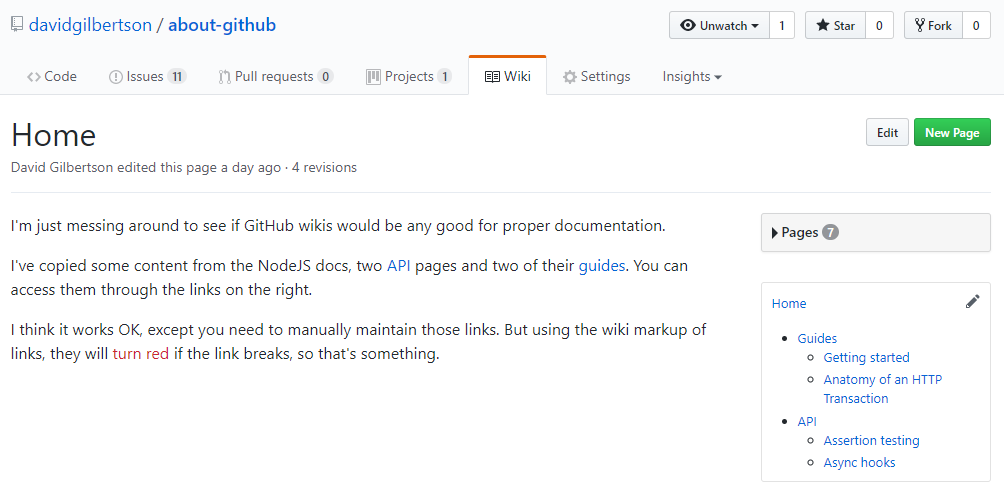

在开放的环境中工作,遵循[开放式决策框架][6]中的指导,会让项目起步变慢。但是在最近的一个项目中,我们作出了一个决定,一个从开始就正确的决定:以开放的方式工作,并与我们的社群一起合作。

|

||||

|

||||

关于开放式组织的资料

|

||||

|

||||

* [下载《开放式组织 IT 文化变革指南》][1]

|

||||

* [下载《开放式组织领袖手册》][2]

|

||||

* [什么是开放式组织][3]

|

||||

* [什么是开放决策][4]

|

||||

在开放的环境中工作,遵循<ruby>[开放式决策框架][6]<rt>Open Decision Framework</rt></ruby>中的指导,会让项目起步变慢。但是在最近的一个项目中,我们作出了一个决定,一个从开始就正确的决定:以开放的方式工作,并与我们的社群一起合作。

|

||||

|

||||

这是我们能做的最好的决定。

|

||||

|

||||

@ -23,13 +15,13 @@ GSD(get stuff done 的缩写,即搞定)指导着我的工作方式。数

|

||||

|

||||

### 建立社区

|

||||

|

||||

2014 年 10 月,我接手了一个新的项目:当时红帽的 CEO Jim Whitehurst 即将推出一本新书《开放式组织》,我要根据书中提出的概念,建立一个社区。“太棒了,这听起来是一个挑战,我加入了!”我这样想。但不久,[冒牌者综合征][7]便出现了,我又开始想:“我们究竟要做什么呢?怎样才算成功呢?”

|

||||

2014 年 10 月,我接手了一个新的项目:当时红帽的 CEO Jim Whitehurst 即将推出一本新书<ruby>《开放式组织》<rt>The Open Organization</rt></ruby>,我要根据书中提出的概念,建立一个社区。“太棒了,这听起来是一个挑战,我加入了!”我这样想。但不久,[冒牌者综合征][7]便出现了,我又开始想:“我们究竟要做什么呢?怎样才算成功呢?”

|

||||

|

||||

让我剧透一下,在这本书的结尾处,Jim 鼓励读者访问 Opensource.com,继续探讨 21 世纪的开放和管理。所以,在 2015 年 5 月,我们的团队在网站上建立了一个新的板块来讨论这些想法。我们计划讲一些故事,就像我们在 Opensource.com 上常做的那样,只不过这次围绕着书中的观点与概念。之后,我们每周都发布新的文章,在 Twitter 上举办了一个在线的读书俱乐部,还将《开放式组织》打造成了系列书籍。

|

||||

|

||||

我们内部独自完成了该系列书籍的前三期,每隔六个月发布一期。每完成一期,我们就向社区发布。然后我们继续完成下一期的工作,如此循环下去。

|

||||

|

||||

这种工作方式,让我们看到了很大的成功。近 3000 人订阅了[该系列的新书][9],《开放式组织领袖手册》。我们用 6 个月的周期来完成这个项目,这样新书的发行日正好是前书的两周年纪念日。

|

||||

这种工作方式,让我们看到了很大的成功。近 3000 人订阅了[该系列的新书][9]:《开放式组织领袖手册》。我们用 6 个月的周期来完成这个项目,这样新书的发行日正好是前书的两周年纪念日。

|

||||

|

||||

在这样的背景下,我们完成这本书的方式是简单直接的:针对开放工作这个主题,我们收集了最好的故事,并将它们组织起来形成文章,招募作者填补一些内容上的空白,使用开源工具调整字体样式,与设计师一起完成封面,最终发布这本书。这样的工作方式使得我们能按照自己的时间线(GSD)全速前进。到[第三本书][10]时,我们的工作流已经基本完善了。

|

||||

|

||||

@ -39,59 +31,55 @@ GSD(get stuff done 的缩写,即搞定)指导着我的工作方式。数

|

||||

|

||||

开放式决策框架列出了组成开放决策制定过程的 4 个阶段。下面是我们在每个阶段中的工作情况(以及开放是如何帮助完成工作的)。

|

||||

|

||||

### 1\. 构思

|

||||

#### 1、 构思

|

||||

|

||||

我们首先写了一份草稿,罗列了对项目设想的愿景。我们需要拿出东西来和潜在的“顾客”分享(在这个例子中,“顾客”指潜在的利益相关者和作者)。然后我们约了一些领域专家面谈,这些专家能够给我们直接的诚实的意见。这些专家表现出的热情与他们提供的指导验证了我们的想法,同时提出了反馈意见使我们能继续向前。如果我们没有得到这些验证,我们会退回到我们最初的想法,再决定从哪里重新开始。

|

||||

|

||||

### 2\. 计划与研究

|

||||

#### 2、 计划与研究

|

||||

|

||||

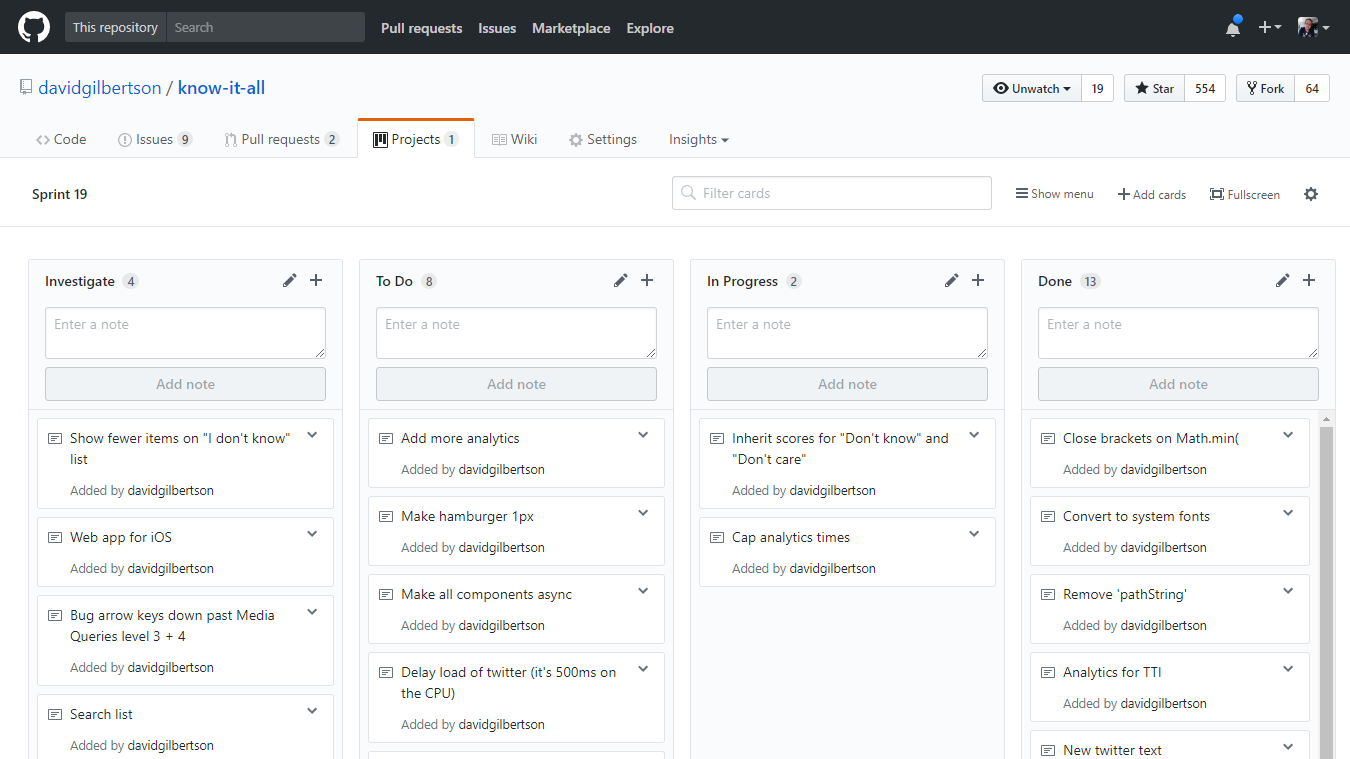

经过几次面谈,我们准备在 [Opensource.com 上公布这个项目][11]。同时,我们在 [Github 上也公布了这个项目][12], 提供了项目描述,预计的时间线,并阐明了我们所受的约束。这次公布得到了很好的效果,我们最初计划的目录中欠缺了一些内容,在项目公布之后的 72 小时内就被补充完整了。另外(也是更重要的),读者针对一些章节,提出了本不在我们计划中的想法,但是读者觉得这些想法能够补充我们最初设想的版本。

|

||||

经过几次面谈,我们准备在 [Opensource.com 上公布这个项目][11]。同时,我们在 [Github 上也启动了这个项目][12],提供了项目描述、预计的时间线,并阐明了我们所受的约束。这次公布得到了很好的效果,我们最初计划的目录中欠缺了一些内容,在项目公布之后的 72 小时内就被补充完整了。另外(也是更重要的),读者针对一些章节,提出了本不在我们计划中的想法,但是读者觉得这些想法能够补充我们最初设想的版本。

|

||||

|

||||

我们体会到了 [Linus 法则][16]: "With more eyes, all _typos_ are shallow."

|

||||

回顾过去,我觉得在项目的第一和第二个阶段,开放项目并不会影响我们搞定项目的能力。事实上,这样工作有一个很大的好处:发现并填补内容的空缺。我们不只是填补了空缺,我们是迅速地填补了空缺,并且还是用我们自己从未考虑过的点子。这并不一定要求我们做更多的工作,只是改变了我们的工作方式。我们动用有限的人脉,邀请别人来写作,再组织收到的内容,设置上下文,将人们导向正确的方向。

|

||||

|

||||

回顾过去,我觉得在项目的第一和第二个阶段,开放项目并不会影响我们搞定项目的能力。事实上,这样工作有一个很大的好处:发现并填补内容的空缺。我们不只是填补了空缺,我们是迅速地就填补了空缺,并且还是用我们自己从未考虑过的点子。这并不一定要求我们做更多的工作,只是改变了我们的工作方式。我们动用有限的人脉,邀请别人来写作,再组织收到的内容,设置上下文,将人们导向正确的方向。

|

||||

|

||||

### 3\. 设计,开发和测试

|

||||

#### 3、 设计,开发和测试

|

||||

|

||||

项目的这个阶段完全围绕项目管理,管理一些像猫一样特立独行的人,并处理项目的预期。我们有明确的截止时间,我们提前沟通,频繁沟通。我们还使用了一个战略:列出了贡献者和利益相关者,在项目的整个过程中向他们告知项目的进度,尤其是我们在 Github 上标出的里程碑。

|

||||

|

||||

最后,我们的书需要一个名字。我们收集了许多反馈,指出书名应该是什么,更重要的反馈指出了书名不应该是什么。我们通过 [Github 上的 issue][13] 收集反馈意见,并公开表示我们的团队将作最后的决定。当我们准备宣布最后的书名时,我的同事 Bryan Behrenshausen 做了很好的工作,[分享了我们作出决定的过程][14]。人们似乎对此感到高兴——即使他们不同意我们最后的书名。

|

||||

最后,我们的书需要一个名字。我们收集了许多反馈,指出书名应该是什么,更重要的是反馈指出了书名不应该是什么。我们通过 [Github 上的工单][13]收集反馈意见,并公开表示我们的团队将作最后的决定。当我们准备宣布最后的书名时,我的同事 Bryan Behrenshausen 做了很好的工作,[分享了我们作出决定的过程][14]。人们似乎对此感到高兴——即使他们不同意我们最后的书名。

|

||||

|

||||

书的“测试”阶段需要大量的[校对][15]。社区成员真的参与到回答这个“求助”贴中来。我们在 GitHub issue 上收到了大约 80 条意见,汇报校对工作的进度(更不用说通过电子邮件和其他反馈渠道获得的许多额外的反馈)。

|

||||

书的“测试”阶段需要大量的[校对][15]。社区成员真的参与到回答这个“求助”贴中来。我们在 GitHub 工单上收到了大约 80 条意见,汇报校对工作的进度(更不用说通过电子邮件和其他反馈渠道获得的许多额外的反馈)。

|

||||

|

||||

关于搞定任务:在这个阶段,我们亲身体会了 [Linus 法则][16]:"With more eyes, all _typos_ are shallow." 如果我们像前三本书一样自己独立完成,那么整个校对的负担就会落在我们的肩上(就像这些书一样)!相反,社区成员慷慨地帮我们承担了校对的重担,我们的工作从自己校对(尽管我们仍然做了很多工作)转向管理所有的 change requests。对我们团队来说,这是一个受大家欢迎的改变;对社区来说,这是一个参与的机会。如果我们自己做的话,我们肯定能更快地完成校对,但是在开放的情况下,我们在截止日期之前发现了更多的错误,这一点毋庸置疑。

|

||||

关于搞定任务:在这个阶段,我们亲身体会了 [Linus 法则][16]:“<ruby>众目之下,_笔误_无所遁形。<rt>With more eyes, all _typos_ are shallow.</rt></ruby>” 如果我们像前三本书一样自己独立完成,那么整个校对的负担就会落在我们的肩上(就像这些书一样)!相反,社区成员慷慨地帮我们承担了校对的重担,我们的工作从自己校对(尽管我们仍然做了很多工作)转向管理所有的 change requests。对我们团队来说,这是一个受大家欢迎的改变;对社区来说,这是一个参与的机会。如果我们自己做的话,我们肯定能更快地完成校对,但是在开放的情况下,我们在截止日期之前发现了更多的错误,这一点毋庸置疑。

|

||||

|

||||

### 4\. Launch

|

||||

|

||||

### 4\. 发布

|

||||

#### 4、 发布

|

||||

|

||||

好了,我们现在推出了这本书的最终版本。(或者只是第一版?)

|

||||

|

||||

遵循开放决策框架是《IT 文化变革指南》成功的关键。

|

||||

|

||||

我们把发布分为两个阶段。首先,根据我们的公开的项目时间表,在最终日期之前的几天,我们安静地推出了这本书,以便让我们的社区贡献者帮助我们测试[下载表格][17]。第二阶段也就是现在,这本书的[通用版][18]的正式公布。当然,我们在发布后的仍然接受反馈,开源方式也正是如此。

|

||||

|

||||

### 成就解锁

|

||||

|

||||

遵循开放式决策框架是《IT 文化变革指南》成功的关键。通过与客户和利益相关者的合作,分享我们的制约因素,工作透明化,我们甚至超出了自己对图书项目的期望。

|

||||

遵循开放式决策框架是<ruby>《IT 文化变革指南》<rt>Guide to IT Culture Change</rt></ruby>成功的关键。通过与客户和利益相关者的合作,分享我们的制约因素,工作透明化,我们甚至超出了自己对图书项目的期望。

|

||||

|

||||

我对整个项目中的合作,反馈和活动感到非常满意。虽然有一段时间内没有像我想要的那样快速完成任务,这让我有一种焦虑感,但我很快就意识到,开放这个过程实际上让我们能完成更多的事情。基于上面我的概述这一点显而易见。

|

||||

|

||||

所以也许我应该重新考虑我的 GSD 心态,并将其扩展到 GMD:Get **more** done,搞定**更多**工作,并且就这个例子说,取得更好的结果。

|

||||

所以也许我应该重新考虑我的 GSD 心态,并将其扩展到 GMD:Get **More** Done,搞定**更多**工作,并且就这个例子说,取得更好的结果。

|

||||

|

||||

(题图:opensource.com)

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

作者简介:

|

||||

|

||||

Jason Hibbets - Jason Hibbets 是 Red Hat 企业营销中的高级社区传播者,也是 Opensource.com 的社区经理。 他自2003年以来一直在 Red Hat,并且是开源城市基金会的创立者。之前的职位包括高级营销专员,项目经理,Red Hat 知识库维护人员和支持工程师。

|

||||

Jason Hibbets - Jason Hibbets 是 Red Hat 企业营销中的高级社区传播者,也是 Opensource.com 的社区经理。 他自2003 年以来一直在 Red Hat,并且是开源城市基金会的创立者。之前的职位包括高级营销专员、项目经理、Red Hat 知识库维护人员和支持工程师。

|

||||

|

||||

-----------

|

||||

|

||||

via: https://opensource.com/open-organization/17/6/working-open-and-gsd

|

||||

|

||||

作者:[Jason Hibbets ][a]

|

||||

作者:[Jason Hibbets][a]

|

||||

译者:[explosic4](https://github.com/explosic4)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

311

published/20170617 What all you need to know about HTML5.md

Normal file

311

published/20170617 What all you need to know about HTML5.md

Normal file

@ -0,0 +1,311 @@

|

||||

关于 HTML5 你需要了解的基础知识

|

||||

============================================================

|

||||

|

||||

|

||||

|

||||

> HTML5 是第五个且是当前的 HTML 版本,它是用于在万维网上构建和呈现内容的标记语言。本文将帮助读者了解它。

|

||||

|

||||

HTML5 通过 W3C 和<ruby>Web 超文本应用技术工作组<rt>Web Hypertext Application Technology Working Group</rt></ruby>之间的合作发展起来。它是一个更高版本的 HTML,它的许多新元素可以使你的页面更加语义化和动态。它是为所有人提供更好的 Web 体验而开发的。HTML5 提供了很多的功能,使 Web 更加动态和交互。

|

||||

|

||||

HTML5 的新功能是:

|

||||

|

||||

* 新标签,如 `<header>` 和 `<section>`

|

||||

* 用于 2D 绘图的 `<canvas>` 元素

|

||||

* 本地存储

|

||||

* 新的表单控件,如日历、日期和时间

|

||||

* 新媒体功能

|

||||

* 地理位置

|

||||

|

||||

HTML5 还不是正式标准(LCTT 译注:HTML5 已于 2014 年成为“推荐标准”),因此,并不是所有的浏览器都支持它或其中一些功能。开发 HTML5 背后最重要的原因之一是防止用户下载并安装像 Silverlight 和 Flash 这样的多个插件。

|

||||

|

||||

**新标签和元素**

|

||||

|

||||

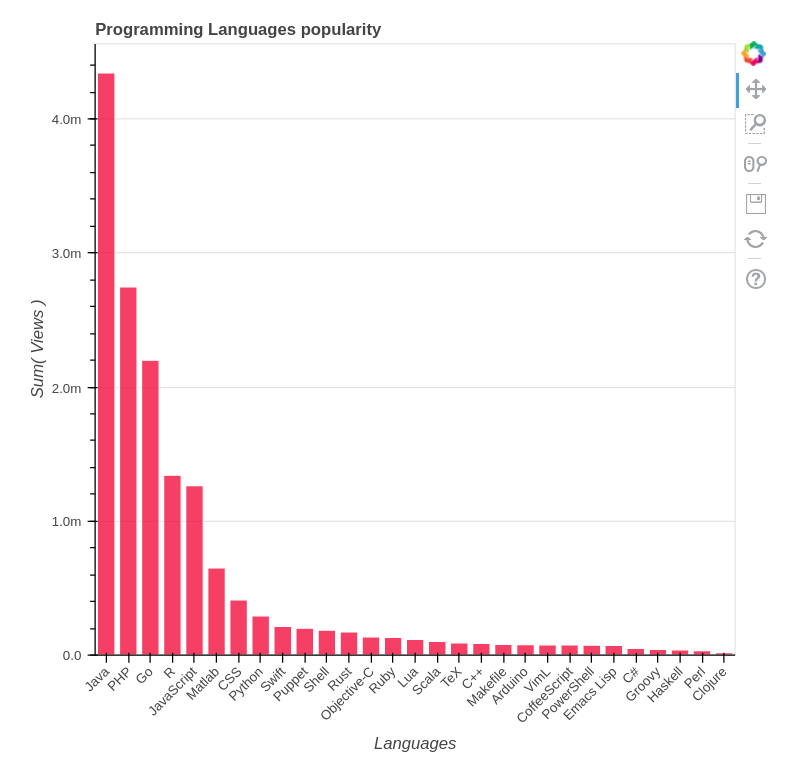

- **语义化元素:** 图 1 展示了一些有用的语义化元素。

|

||||

- **表单元素:** HTML5 中的表单元素如图 2 所示。

|

||||

- **图形元素:** HTML5 中的图形元素如图 3 所示。

|

||||

- **媒体元素:** HTML5 中的新媒体元素如图 4 所示。

|

||||

|

||||

|

||||

[][3]

|

||||

|

||||

*图 1:语义化元素*

|

||||

|

||||

[][4]

|

||||

|

||||

*图 2:表单元素*

|

||||

|

||||

[][5]

|

||||

|

||||

*图 3:图形元素*

|

||||

|

||||

[][6]

|

||||

|

||||

*图 4:媒体元素*

|

||||

|

||||

### HTML5 的高级功能

|

||||

|

||||

#### 地理位置

|

||||

|

||||

这是一个 HTML5 API,用于获取网站用户的地理位置,用户必须首先允许网站获取他或她的位置。这通常通过按钮和/或浏览器弹出窗口来实现。所有最新版本的 Chrome、Firefox、IE、Safari 和 Opera 都可以使用 HTML5 的地理位置功能。

|

||||

|

||||

地理位置的一些用途是:

|

||||

|

||||

* 公共交通网站

|

||||

* 出租车及其他运输网站

|

||||

* 电子商务网站计算运费

|

||||

* 旅行社网站

|

||||

* 房地产网站

|

||||

* 在附近播放的电影的电影院网站

|

||||

* 在线游戏

|

||||

* 网站首页提供本地标题和天气

|

||||

* 工作职位可以自动计算通勤时间

|

||||

|

||||

**工作原理:** 地理位置通过扫描位置信息的常见源进行工作,其中包括以下:

|

||||

|

||||

* 全球定位系统(GPS)是最准确的

|

||||

* 网络信号 - IP地址、RFID、Wi-Fi 和蓝牙 MAC地址

|

||||

* GSM/CDMA 蜂窝 ID

|

||||

* 用户输入

|

||||

|

||||

该 API 提供了非常方便的函数来检测浏览器中的地理位置支持:

|

||||

|

||||

```

|

||||

if (navigator.geolocation) {

|

||||

// do stuff

|

||||

}

|

||||

```

|

||||

`getCurrentPosition` API 是使用地理位置的主要方法。它检索用户设备的当前地理位置。该位置被描述为一组地理坐标以及航向和速度。位置信息作为位置对象返回。

|

||||

|

||||

语法是:

|

||||

|

||||

```

|

||||

getCurrentPosition(showLocation, ErrorHandler, options);

|

||||

```

|

||||

|

||||

* `showLocation`:定义了检索位置信息的回调方法。

|

||||

* `ErrorHandler`(可选):定义了在处理异步调用时发生错误时调用的回调方法。

|

||||

* `options` (可选): 定义了一组用于检索位置信息的选项。

|

||||

|

||||

我们可以通过两种方式向用户提供位置信息:测地和民用。

|

||||

|

||||

1. 描述位置的测地方式直接指向纬度和经度。

|

||||

2. 位置信息的民用表示法是人类可读的且容易理解。

|

||||

|

||||

如下表 1 所示,每个属性/参数都具有测地和民用表示。

|

||||

|

||||

[][7]

|

||||

|

||||

图 5 包含了一个位置对象返回的属性集。

|

||||

|

||||

[][8]

|

||||

|

||||

*图5:位置对象属性*

|

||||

|

||||

#### 网络存储

|

||||

|

||||

在 HTML 中,为了在本机存储用户数据,我们需要使用 JavaScript cookie。为了避免这种情况,HTML5 已经引入了 Web 存储,网站利用它在本机上存储用户数据。

|

||||

|

||||

与 Cookie 相比,Web 存储的优点是:

|

||||

|

||||

* 更安全

|

||||

* 更快

|

||||

* 存储更多的数据

|

||||

* 存储的数据不会随每个服务器请求一起发送。只有在被要求时才包括在内。这是 HTML5 Web 存储超过 Cookie 的一大优势。

|

||||

|

||||

有两种类型的 Web 存储对象:

|

||||

|

||||

1. 本地 - 存储没有到期日期的数据。

|

||||

2. 会话 - 仅存储一个会话的数据。

|

||||

|

||||

**如何工作:** `localStorage` 和 `sessionStorage` 对象创建一个 `key=value` 对。比如: `key="Name"`,` value="Palak"`。

|

||||

|

||||

这些存储为字符串,但如果需要,可以使用 JavaScript 函数(如 `parseInt()` 和 `parseFloat()`)进行转换。

|

||||

|

||||

下面给出了使用 Web 存储对象的语法:

|

||||

|

||||

- 存储一个值:

|

||||

- `localStorage.setItem("key1", "value1");`

|

||||

- `localStorage["key1"] = "value1";`

|

||||

- 得到一个值:

|

||||

- `alert(localStorage.getItem("key1"));`

|

||||

- `alert(localStorage["key1"]);`

|

||||

- 删除一个值:

|

||||

-`removeItem("key1");`

|

||||

- 删除所有值:

|

||||

- `localStorage.clear();`

|

||||

|

||||

#### 应用缓存(AppCache)

|

||||

|

||||

使用 HTML5 AppCache,我们可以使 Web 应用程序在没有 Internet 连接的情况下脱机工作。除 IE 之外,所有浏览器都可以使用 AppCache(截止至此时)。

|

||||

|

||||

应用缓存的优点是:

|

||||

|

||||

* 网页浏览可以脱机

|

||||

* 页面加载速度更快

|

||||

* 服务器负载更小

|

||||

|

||||

`cache manifest` 是一个简单的文本文件,其中列出了浏览器应缓存的资源以进行脱机访问。 `manifest` 属性可以包含在文档的 HTML 标签中,如下所示:

|

||||

|

||||

```

|

||||

<html manifest="test.appcache">

|

||||

...

|

||||

</html>

|

||||

```

|

||||

|

||||

它应该在你要缓存的所有页面上。

|

||||

|

||||

缓存的应用程序页面将一直保留,除非:

|

||||

|

||||

1. 用户清除它们

|

||||

2. `manifest` 被修改

|

||||

3. 缓存更新

|

||||

|

||||

#### 视频

|

||||

|

||||

在 HTML5 发布之前,没有统一的标准来显示网页上的视频。大多数视频都是通过 Flash 等不同的插件显示的。但 HTML5 规定了使用 video 元素在网页上显示视频的标准方式。

|

||||

|

||||

目前,video 元素支持三种视频格式,如表 2 所示。

|

||||

|

||||

[][9]

|

||||

|

||||

下面的例子展示了 video 元素的使用:

|

||||

|

||||

```

|

||||

<! DOCTYPE HTML>

|

||||

<html>

|

||||

<body>

|

||||

|

||||

<video src=" vdeo.ogg" width="320" height="240" controls="controls">

|

||||

|

||||

This browser does not support the video element.

|

||||

|

||||

</video>

|

||||

|

||||

</body>

|

||||

</html>

|

||||

```

|

||||

|

||||

例子使用了 Ogg 文件,并且可以在 Firefox、Opera 和 Chrome 中使用。要使视频在 Safari 和未来版本的 Chrome 中工作,我们必须添加一个 MPEG4 和 WebM 文件。

|

||||

|

||||

`video` 元素允许多个 `source` 元素。`source` 元素可以链接到不同的视频文件。浏览器将使用第一个识别的格式,如下所示:

|

||||

|

||||

```

|

||||

<video width="320" height="240" controls="controls">

|

||||

<source src="vdeo.ogg" type="video/ogg" />

|

||||

<source src=" vdeo.mp4" type="video/mp4" />

|

||||

<source src=" vdeo.webm" type="video/webm" />

|

||||

This browser does not support the video element.

|

||||

</video>

|

||||

```

|

||||

|

||||

[][10]

|

||||

|

||||

*图6:Canvas 的输出*

|

||||

|

||||

#### 音频

|

||||

|

||||

对于音频,情况类似于视频。在 HTML5 发布之前,在网页上播放音频没有统一的标准。大多数音频也通过 Flash 等不同的插件播放。但 HTML5 规定了通过使用音频元素在网页上播放音频的标准方式。音频元素用于播放声音文件和音频流。

|

||||

|

||||

目前,HTML5 `audio` 元素支持三种音频格式,如表 3 所示。

|

||||

|

||||

[][11]

|

||||

|

||||

`audio` 元素的使用如下所示:

|

||||

|

||||

```

|

||||

<! DOCTYPE HTML>

|

||||

<html>

|

||||

<body>

|

||||

|

||||

<audio src=" song.ogg" controls="controls">

|

||||

|

||||

This browser does not support the audio element.

|

||||

|

||||

</video>

|

||||

|

||||

</body>

|

||||

</html>

|

||||

```

|

||||

|

||||

此例使用 Ogg 文件,并且可以在 Firefox、Opera 和 Chrome 中使用。要在 Safari 和 Chrome 的未来版本中使 audio 工作,我们必须添加一个 MP3 和 Wav 文件。

|

||||

|

||||

`audio` 元素允许多个 `source` 元素,它可以链接到不同的音频文件。浏览器将使用第一个识别的格式,如下所示:

|

||||

|

||||

```

|

||||

<audio controls="controls">

|

||||

<source src="song.ogg" type="audio/ogg" />

|

||||

<source src="song.mp3" type="audio/mpeg" />

|

||||

|

||||

This browser does not support the audio element.

|

||||

|

||||

</audio>

|

||||

```

|

||||

|

||||

#### 画布(Canvas)

|

||||

|

||||

要在网页上创建图形,HTML5 使用 画布 API。我们可以用它绘制任何东西,并且它使用 JavaScript。它通过避免从网络下载图像而提高网站性能。使用画布,我们可以绘制形状和线条、弧线和文本、渐变和图案。此外,画布可以让我们操作图像中甚至视频中的像素。你可以将 `canvas` 元素添加到 HTML 页面,如下所示:

|

||||

|

||||

```

|

||||

<canvas id="myCanvas" width="200" height="100"></canvas>

|

||||

```

|

||||

|

||||

画布元素不具有绘制元素的功能。我们可以通过使用 JavaScript 来实现绘制。所有绘画应在 JavaScript 中。

|

||||

|

||||

```

|

||||

<script type="text/javascript">

|

||||

var c=document.getElementById("myCanvas");

|

||||

var cxt=c.getContext("2d");

|

||||

cxt.fillStyle="blue";

|

||||

cxt.storkeStyle = "red";

|

||||

cxt.fillRect(10,10,100,100);

|

||||

cxt.storkeRect(10,10,100,100);

|

||||

</script>

|

||||

```

|

||||

|

||||

以上脚本的输出如图 6 所示。

|

||||

|

||||

你可以绘制许多对象,如弧、圆、线/垂直梯度等。

|

||||

|

||||

### HTML5 工具

|

||||

|

||||

为了有效操作,所有熟练的或业余的 Web 开发人员/设计人员都应该使用 HTML5 工具,当需要设置工作流/网站或执行重复任务时,这些工具非常有帮助。它们提高了网页设计的可用性。

|

||||

|

||||

以下是一些帮助创建很棒的网站的必要工具。

|

||||

|

||||

- **HTML5 Maker:** 用来在 HTML、JavaScript 和 CSS 的帮助下与网站内容交互。非常容易使用。它还允许我们开发幻灯片、滑块、HTML5 动画等。

|

||||

- **Liveweave:** 用来测试代码。它减少了保存代码并将其加载到屏幕上所花费的时间。在编辑器中粘贴代码即可得到结果。它非常易于使用,并为一些代码提供自动完成功能,这使得开发和测试更快更容易。

|

||||

- **Font dragr:** 在浏览器中预览定制的 Web 字体。它会直接载入该字体,以便你可以知道看起来是否正确。也提供了拖放界面,允许你拖动字形、Web 开放字体和矢量图形来马上测试。

|

||||

- **HTML5 Please:** 可以让我们找到与 HTML5 相关的任何内容。如果你想知道如何使用任何一个功能,你可以在 HTML Please 中搜索。它提供了支持的浏览器和设备的有用资源的列表,语法,以及如何使用元素的一般建议等。

|

||||

- **Modernizr:** 这是一个开源工具,用于给访问者浏览器提供最佳体验。使用此工具,你可以检测访问者的浏览器是否支持 HTML5 功能,并加载相应的脚本。

|

||||

- **Adobe Edge Animate:** 这是必须处理交互式 HTML 动画的 HTML5 开发人员的有用工具。它用于数字出版、网络和广告领域。此工具允许用户创建无瑕疵的动画,可以跨多个设备运行。

|

||||

- **Video.js:** 这是一款基于 JavaScript 的 HTML5 视频播放器。如果要将视频添加到你的网站,你应该使用此工具。它使视频看起来不错,并且是网站的一部分。

|

||||

- **The W3 Validator:** W3 验证工具测试 HTML、XHTML、SMIL、MathML 等中的网站标记的有效性。要测试任何网站的标记有效性,你必须选择文档类型为 HTML5 并输入你网页的 URL。这样做之后,你的代码将被检查,并将提供所有错误和警告。

|

||||

- **HTML5 Reset:** 此工具允许开发人员在 HTML5 中重写旧网站的代码。你可以使用这些工具为你网站的访问者提供一个良好的网络体验。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

Palak Shah

|

||||

|

||||

作者是高级软件工程师。她喜欢探索新技术,学习创新概念。她也喜欢哲学。你可以通过 palak311@gmail.com 联系她。

|

||||

|

||||

--------------------

|

||||

via: http://opensourceforu.com/2017/06/introduction-to-html5/

|

||||

|

||||

作者:[Palak Shah][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://opensourceforu.com/author/palak-shah/

|

||||

[1]:http://opensourceforu.com/2017/06/introduction-to-html5/#disqus_thread

|

||||

[2]:http://opensourceforu.com/author/palak-shah/

|

||||

[3]:http://opensourceforu.com/wp-content/uploads/2017/05/Figure-1-7.jpg

|

||||

[4]:http://opensourceforu.com/wp-content/uploads/2017/05/Figure-2-5.jpg

|

||||

[5]:http://opensourceforu.com/wp-content/uploads/2017/05/Figure-3-2.jpg

|

||||

[6]:http://opensourceforu.com/wp-content/uploads/2017/05/Figure-4-2.jpg

|

||||

[7]:http://opensourceforu.com/wp-content/uploads/2017/05/table-1.jpg

|

||||

[8]:http://opensourceforu.com/wp-content/uploads/2017/05/Figure5-1.jpg

|

||||

[9]:http://opensourceforu.com/wp-content/uploads/2017/05/table-2.jpg

|

||||

[10]:http://opensourceforu.com/wp-content/uploads/2017/05/Figure6-1.jpg

|

||||

[11]:http://opensourceforu.com/wp-content/uploads/2017/05/table-3.jpg

|

||||

80

published/20170707 The changing face of the hybrid cloud.md

Normal file

80

published/20170707 The changing face of the hybrid cloud.md

Normal file

@ -0,0 +1,80 @@

|

||||

混合云的变化

|

||||

============================================================

|

||||

|

||||

> 围绕云计算的概念和术语仍然很新,但是也在不断的改进。

|

||||

|

||||

|

||||

|

||||

|

||||

不管怎么看,云计算也只有十多年的发展时间。一些我们习以为常的云计算的概念和术语仍然很新。美国国家标准与技术研究所(NIST)文档显示,一些已经被熟悉的术语定义在 2011 年才被发布,例如基础设施即服务(IaaS),而在此之前它就以草案的形式广泛流传。

|

||||

|

||||

在该文档中其它定义中,有一个叫做<ruby>混合云<rt>hybrid cloud</rt></ruby>。让我们回溯一下该术语在这段期间的变化是很有启发性的。云基础设施已经超越了相对简单的分类。此外,它还强调了开源软件的使用者所熟悉的优先级,例如灵活性、可移植性、选择性,已经被运用到了混合云上。

|

||||

|

||||

NIST 对混合云最初的定义主要集中于<ruby>云爆发<rt>cloud bursting</rt></ruby>,你能使用内部的基础设施去处理一个基本的计算负荷,但是如果你的负荷量暴涨,可以将多出来的转为使用公有云。与之密切联系的是加强私有云与公有云之间 API 的兼容性,甚至是创造一个现货市场来提供最便宜的容量。

|

||||

|

||||

Nick Carr 在 [The Big Switch][10] 一书中提出一个概念,云是一种计算单元,其与输电网类似。这个故事不错,但是即使在早期,[这种类比的局限性也变得很明显][11]。计算不是以电流方式呈现的一种物品。需要关注的是,公有云提供商以及 OpenStack 一类的开源云软件激增的新功能,可见许多用户并不仅仅是寻找最便宜的通用计算能力。

|

||||

|

||||

云爆发的概念基本上忽略了计算是与数据相联系的现实,你不可能只移动洪水般突如其来的数据而不承担巨大的带宽费用,以及不用为转移需要花费的时间而操作。Dave McCrory 发明了 “<ruby>数据引力<rt>data gravity</rt></ruby>”一词去描述这个限制。

|

||||

|

||||

那么既然混合云有如此负面的情况,为什么我们现在还要再讨论混合云?

|

||||

|

||||

正如我说的,混合云的最初的构想是在云爆发的背景下诞生的。云爆发强调的是快速甚至是即时的将工作环境从一个云转移到另一个云上;然而,混合云也意味着应用和数据的移植性。确实,如之前 [2011 年我在 CNET 的文章][12]中写到:“我认为过度关注于全自动的工作转换给我们自己造成了困扰,我们真正应该关心的是,如果供应商不能满意我们的需求或者尝试将我们锁定在其平台上时,我们是否有将数据从一个地方到另一个地方的迁移能力。”

|

||||

|

||||

从那以后,探索云之间的移植性有了进一步的进展。

|

||||

|

||||

Linux 是云移植性的关键,因为它能运行在各种地方,无论是从裸机到内部虚拟基础设施,还是从私有云到公有云。Linux 提供了一个完整、可靠的平台,其具有稳定的 API 接口,且可以依靠这些接口编写程序。

|

||||

|

||||

被广泛采纳的容器进一步加强了 Linux 提供应用在云之间移植的能力。通过提供一个包含了应用的基础配置环境的镜像,应用在开发、测试和最终运行环境之间移动时容器提供了可移植性和兼容性。

|

||||

|

||||

Linux 容器被应用到要求可移植性、可配置性以及独立性的许多方面上。不管是预置的云,还是公有云,以及混合云都是如此。

|

||||

|

||||

容器使用的是基于镜像的部署模式,这让在不同环境中分享一个应用或者具有全部基础环境的服务集变得容易了。

|

||||

|

||||

在 OCI 支持下开发的规范定义了容器镜像的内容及其所依赖、环境、参数和一些镜像正确运行所必须的要求。在标准化的作用下,OCI 为许多其它工具提供了一个机会,它们现在可以依靠稳定的运行环境和镜像规范了。

|

||||

|

||||

同时,通过 Gluster 和 Ceph 这类的开源技术,分布式存储能提供数据在云上的可移植性。 物理约束限制了如何快速简单地把数据从一个地方移动到另一个地方;然而,随着组织部署和使用不同类型的基础架构,他们越来越渴望一个不受物理、虚拟和云资源限制的开放的软件定义储存平台。

|

||||

|

||||

尤其是在数据存储需求飞速增长的情况下,由于预测分析,物联网和实时监控的趋势。[2016 年的一项研究表明][13],98% 的 IT 决策者认为一个更敏捷的存储解决方案对他们的组织是有利的。在同一个研究中,他们列举出不恰当的存储基础设施是最令他们组织受挫的事情之一。

|

||||

|

||||

混合云表现出的是提供在不同计算能力和资源之间合适的移植性和兼容性。其不仅仅是将私有云和公有云同时运用在一个应用中。它是一套多种类型的服务,其中的一部分可能是你们 IT 部门建立和操作的,而另一部分可能来源于外部。

|

||||

|

||||

它们可能是软件即服务(SaaS)应用的混合,例如邮件和客户关系管理(CRM)。被 Kubernetes 这类开源软件协调在一起的容器平台越来越受新开发应用的欢迎。你的组织可能正在运用某一家大型云服务提供商来做一些事情。同时你也能在私有云或更加传统的内部基础设施上操作一些你自己的基础设施。

|

||||

|

||||

这就是现在混合云的现状,它能被归纳为两个选择,选择最合适的基础设施和服务,以及选择把应用和数据从一个地方移动到另一个你想的地方。

|

||||

|

||||

**相关阅读: [多重云和混合云有什么不同?][6]**

|

||||

|

||||

(题图 : [Flickr 使用者: theaucitron][9] (CC BY-SA 2.0))

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

作者简介:

|

||||

|

||||

Gordon Haff 是红帽云的布道者,常受到业内和客户的高度赞赏,帮助红帽云组合方案的发展。他是《Computing Next: How the Cloud Opens the Future》的作者,除此之外他还有许多出版物。在红帽之前,Gordon 写了大量的研究简报,经常被纽约时报等出版物在 IT 类话题上引用,在产品和市场策略上给予客户建议。他职业生涯的早期,在 Data General 他负责将各种不同的计算机系统,从微型计算机到大型的 UNIX 服务器,引入市场。他有麻省理工学院和达特茅斯学院的工程学位,还是康奈尔大学约翰逊商学院的工商管理学硕士。

|

||||

|

||||

-----

|

||||

|

||||

via: https://opensource.com/article/17/7/hybrid-cloud

|

||||

|

||||

作者:[Gordon Haff (Red Hat)][a]

|

||||

译者:[ZH1122](https://github.com/ZH1122)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://opensource.com/users/ghaff

|

||||

[1]:https://opensource.com/resources/cloud?src=cloud_resource_menu1

|

||||

[2]:https://opensource.com/resources/what-is-openstack?src=cloud_resource_menu2

|

||||

[3]:https://opensource.com/resources/what-is-kubernetes?src=cloud_resource_menu3

|

||||

[4]:https://opensource.com/16/12/yearbook-why-operating-system-matters?src=cloud_resource_menu4

|

||||

[5]:https://opensource.com/business/16/10/interview-andy-cathrow-anchore?src=cloud_resource_menu5

|

||||

[6]:https://enterprisersproject.com/article/2017/7/multi-cloud-vs-hybrid-cloud-whats-difference

|

||||

[7]:https://opensource.com/article/17/7/hybrid-cloud?rate=ztmV2D_utD03cID1u41Al08w0XFm6rXXwCJdTwqI4iw

|

||||

[8]:https://opensource.com/user/21220/feed

|

||||

[9]:https://www.flickr.com/photos/theaucitron/5810163712/in/photolist-5p9nh3-6EkSKG-6EgGEF-9hYBcr-abCSpq-9zbjDz-4PVqwm-9RqBfq-abA2T4-4nXfwv-9RQkdN-dmjSdA-84o2ER-abA2Wp-ehyhPC-7oFYrc-4nvqBz-csMQXb-nRegFf-ntS23C-nXRyaB-6Xw3Mq-cRMaCq-b6wkkP-7u8sVQ-yqcg-6fTmk7-bzm3vU-6Xw3vL-6EkzCQ-d3W8PG-5MoveP-oMWsyY-jtMME6-XEMwS-2SeRXT-d2hjzJ-p2ZZVZ-7oFYoX-84r6Mo-cCizvm-gnnsg5-77YfPx-iDjqK-8gszbW-6MUZEZ-dhtwtk-gmpTob-6TBJ8p-mWQaAC/

|

||||

[10]:http://www.nicholascarr.com/?page_id=21

|

||||

[11]:https://www.cnet.com/news/there-is-no-big-switch-for-cloud-computing/

|

||||

[12]:https://www.cnet.com/news/cloudbursting-or-just-portable-clouds/

|

||||

[13]:https://www.redhat.com/en/technologies/storage/vansonbourne

|

||||

[14]:https://opensource.com/users/ghaff

|

||||

[15]:https://opensource.com/users/ghaff

|

||||

@ -1,22 +1,17 @@

|

||||

translating by sugarfillet

|

||||

Functional testing Gtk+ applications in C

|

||||

============================================================

|

||||

用 C 语言对 Gtk+ 应用进行功能测试

|

||||

========

|

||||

|

||||

### Learn how to test your application's function with this simple tutorial.

|

||||

> 这个简单教程教你如何测试你应用的功能

|

||||

|

||||

|

||||

|

||||

|

||||

Image by :

|

||||

自动化测试用来保证你程序的质量以及让它以预想的运行。单元测试只是检测你算法的某一部分,而并不注重各组件间的适应性。这就是为什么会有功能测试,它有时也称为集成测试。

|

||||

|

||||

opensource.com

|

||||

功能测试简单地与你的用户界面进行交互,无论它是网站还是桌面应用。为了展示功能测试如何工作,我们以测试一个 Gtk+ 应用为例。为了简单起见,这个教程里,我们使用 Gtk+ 2.0 教程的示例。

|

||||

|

||||

Automated tests are required to ensure your program's quality and that it works as expected. Unit tests examine only certain parts of your algorithm, but don't look at how each component fits together. That's where functional testing, sometimes referred as integration testing, comes in.

|

||||

### 基础设置

|

||||

|

||||

A functional test basically interacts with your user interface, whether through a website or a desktop application. To show you how that works, let's look at how to test a Gtk+ application. For simplicity, in this tutorial let's use the [Tictactoe][6] example from the Gtk+ 2.0 tutorial.

|

||||

|

||||

### Basic setup

|

||||

|

||||

For every functional test, you usually define some global variables, such as "user interaction delay" or "timeout until a failure is indicated" (i.e., when an event doesn't occur until the specified time and the application is doomed).

|

||||

对于每一个功能测试,你通常需要定义一些全局变量,比如 “用户交互时延” 或者 “失败的超时时间”(也就是说,如果在指定的时间内一个事件没有发生,程序就要中断)。

|

||||

|

||||

```

|

||||

#define TTT_FUNCTIONAL_TEST_UTIL_IDLE_CONDITION(f) ((TttFunctionalTestUtilIdleCondition)(f))

|

||||

@ -29,7 +24,7 @@ struct timespec ttt_functional_test_util_default_timeout = {

|

||||

};

|

||||

```

|

||||

|

||||

Now we can implement our dead-time functions. Here, we'll use the **usleep** function in order to get the desired delay.

|

||||

现在我们可以实现我们自己的超时函数。这里,为了能够得到期望的延迟,我们采用 `usleep` 函数。

|

||||

|

||||

```

|

||||

void

|

||||

@ -45,7 +40,7 @@ ttt_functional_test_util_reaction_time_long()

|

||||

}

|

||||

```

|

||||

|

||||