mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-21 02:10:11 +08:00

commit

dd1ced936a

@ -0,0 +1,88 @@

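The Strangest, Most Unique Linux Distros
================================================================================
From consumer-focused distros such as Ubuntu, Fedora, Mint, and elementary OS to more obscure, minimal, and enterprise-focused ones such as Slackware, Arch Linux, and RHEL, I thought I had seen them all. Could there be anything beyond these? In fact, the Linux ecosystem is remarkably diverse, and there is one for everyone. Let's discuss some of the strangest and most unique Linux distros out there, the ones that represent the true diversity of open source platforms.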

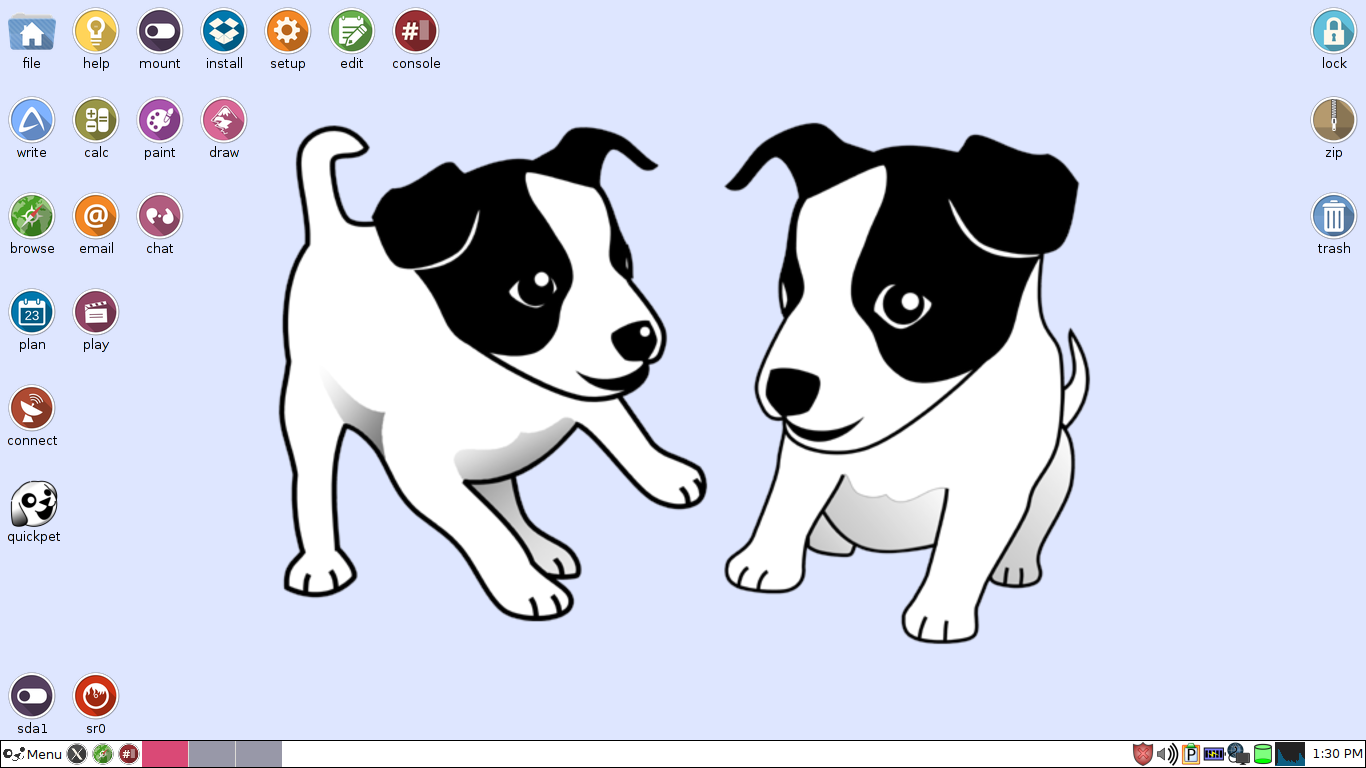

### Puppy Linux

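An operating system about one-tenth the size of an ordinary DVD: that's Puppy Linux. The entire OS is just 100 MB! It can also run from RAM, which makes it unusually fast even on older PCs. You can even remove the boot medium once the operating system has started! What could be better than that? System requirements are minimal, most hardware is detected automatically, and it comes loaded with software that covers your basic needs. [Try Puppy Linux here][1].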

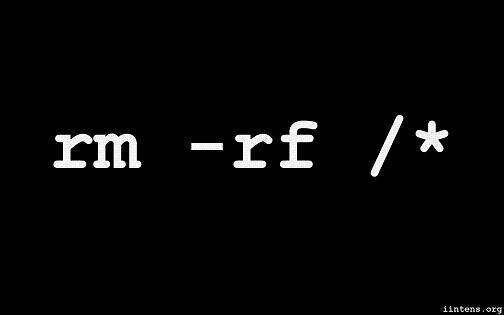

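### Suicide Linux

Did the name scare you? I imagine it did. 'Any time - any time - you type any remotely incorrect command, the interpreter creatively resolves it into `rm -rf /` and wipes your hard drive.' It's as simple as that. I really want to know who is confident enough to install [Suicide Linux][2] on a production machine. **Warning: do NOT try this on production machines!** If you're interested, the whole thing is now available as a neat [DEB package][3].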

### PapyrOS

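It's "strange" in a good way. PapyrOS is trying to bring Android's Material Design language to a brand-new Linux distribution. Though the project is in its early stages, it already looks very promising. The project page says the OS is 80% complete, and the first alpha release can be expected soon after. We did a short story on [PapyrOS][4] when it was first announced, and by the looks of it, it might even become a trendsetter. If you're interested, follow the project on [Google+][5] and contribute via [BountySource][6].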

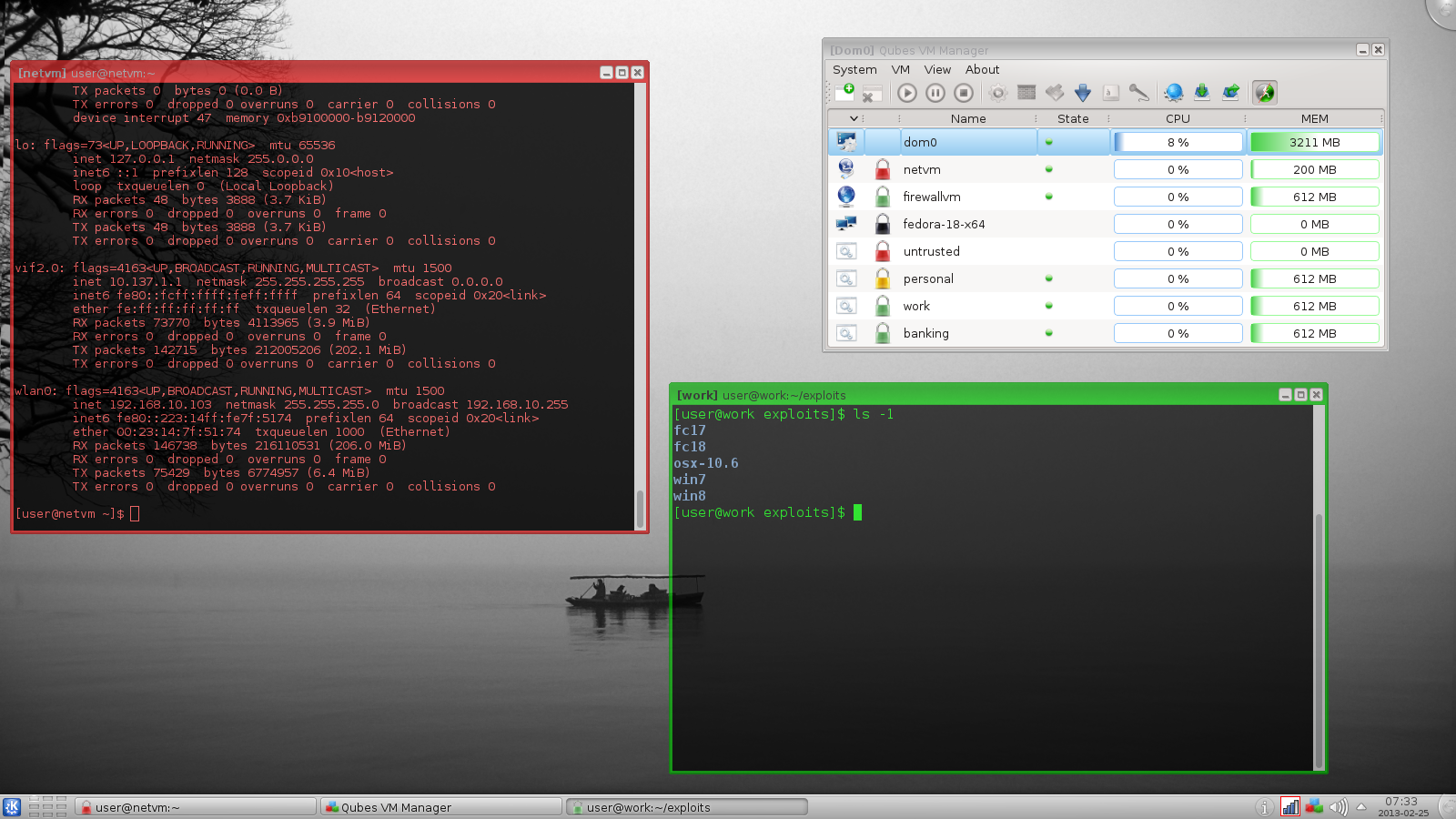

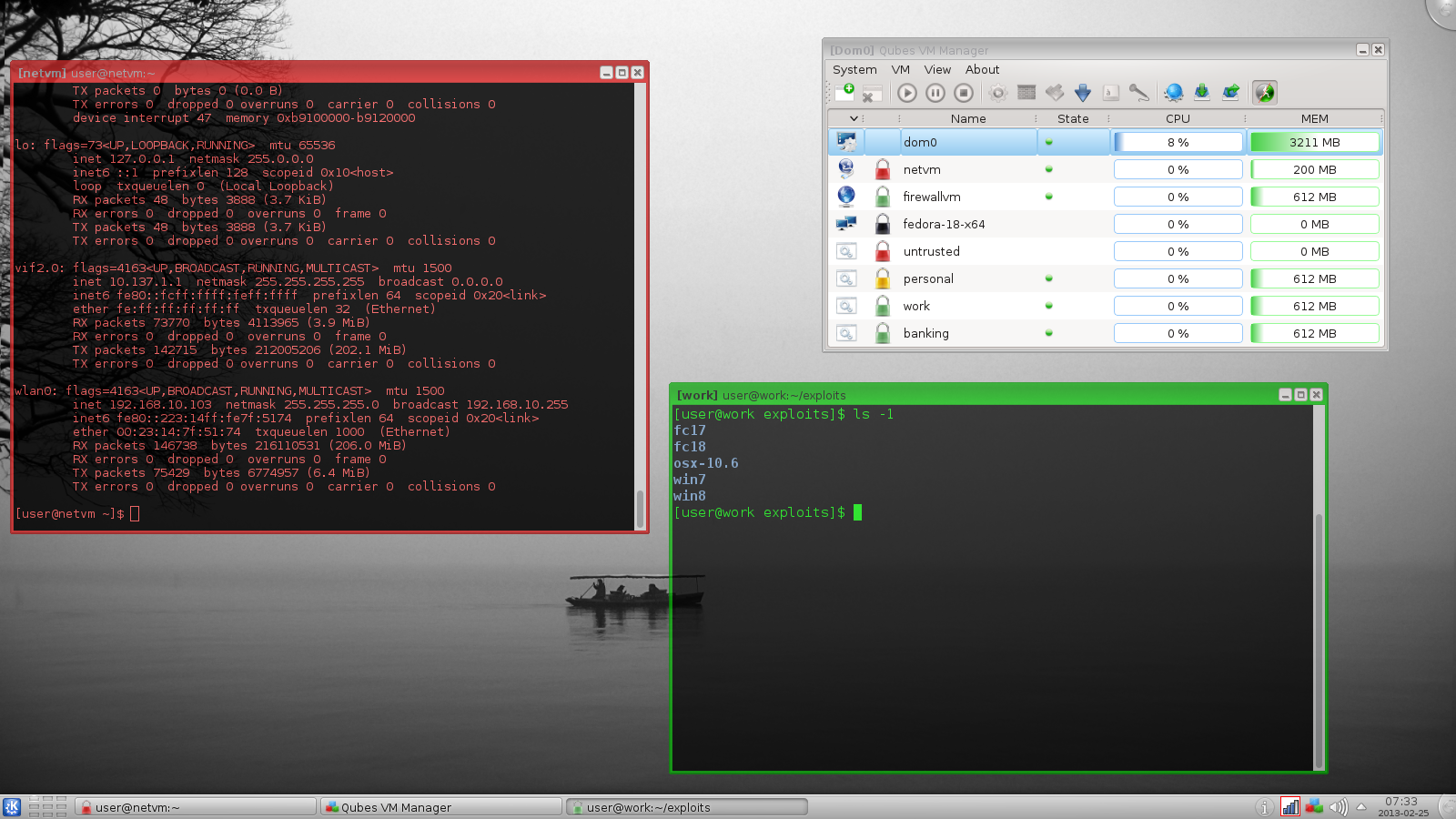

### Qubes OS

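Qubes is an open source operating system designed to provide strong security through a [security by compartmentalization][14] approach. Its premise is that no desktop environment can be perfect and bug-free. By implementing a 'security by isolation' approach, [Qubes Linux][7] tries to address these problems. Qubes is based on Xen, the X Window System, and Linux, and can run most Linux applications and use most Linux drivers. Qubes was selected as a finalist for the Access Innovation Prize 2014 for Endpoint Security Solution.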

### Ubuntu Satanic Edition

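Ubuntu SE is a distribution based on Ubuntu. "It brings together the best of free software and free metal music" in one comprehensive package of themes, wallpapers, and even some heavy metal music sourced from talented new artists. Although the project no longer appears to be actively developed, Ubuntu Satanic Edition is strange even in its name. [Ubuntu SE (slightly NSFW)][8].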

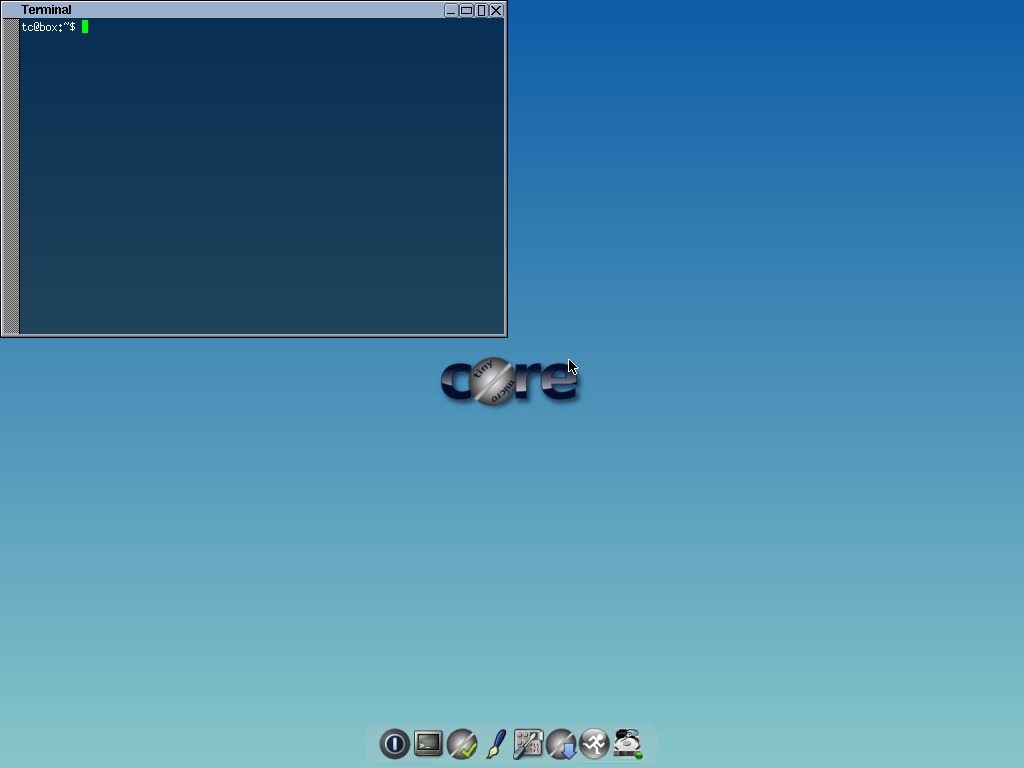

### Tiny Core Linux

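Puppy Linux not small enough? Try this. Tiny Core Linux is a 12 MB graphical Linux desktop! Yes, you read that right. One major caveat: it is not a complete desktop, nor is all hardware fully supported. It contains only the core needed to boot into a very minimal X desktop with wired internet access. There is even a version without a GUI, called Micro Core Linux, which is just 9 MB. [Tiny Core Linux][9].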

### NixOS

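NixOS is a Linux distribution aimed at power users, with a unique approach to package and configuration management. In other distributions, actions such as upgrades can be dangerous. Upgrading a package can cause other packages to break, and upgrading an entire system can feel less reliable than reinstalling from scratch. On top of that, you can't safely test what the result of a configuration change will be, and there is typically no "undo". In NixOS, the entire system is built by the Nix package manager from a description in a purely functional build language. This means that building a new configuration cannot overwrite previous configurations. Most of the other features follow from this. Nix stores all packages in isolation from each other. More about NixOS [here][10].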
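
The idea that a new configuration never overwrites an old one can be illustrated with a toy model. The sketch below is written in Python purely for illustration and is not how Nix is implemented: `store_path`, `ToyStore`, and the `/toy-store` prefix are hypothetical names, and the hashing scheme is an assumption chosen only to show that different inputs give different, coexisting paths.

```python
import hashlib
import json


def store_path(name, inputs):
    """Derive a toy store path from the package name and all of its build inputs."""
    canonical = json.dumps({"name": name, "inputs": inputs}, sort_keys=True)
    digest = hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:16]
    return f"/toy-store/{digest}-{name}"


class ToyStore:
    """In-memory stand-in for a package store whose entries are never overwritten."""

    def __init__(self):
        self.paths = {}        # store path -> build inputs
        self.generations = []  # history of activated paths, oldest first

    def build(self, name, inputs):
        path = store_path(name, inputs)
        # Identical inputs map to the same path; changed inputs get a new path.
        self.paths.setdefault(path, dict(inputs))
        self.generations.append(path)
        return path

    def rollback(self):
        """Drop the newest generation and return the one before it."""
        if len(self.generations) < 2:
            raise RuntimeError("no earlier generation to roll back to")
        self.generations.pop()
        return self.generations[-1]
```

Because each build lands at a path derived from its inputs, "undo" in this model amounts to pointing back at an earlier path that was never modified.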


### GoboLinux

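This is another very strange Linux distribution. What makes it so different from the rest is its unique rearrangement of the filesystem. It has its own subdirectory tree, where all of its files and programs are stored. GoboLinux does not have a package database, because the filesystem is its database. In some ways, this arrangement is similar to what is seen in OS X.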

### Hannah Montana Linux

This is a Linux distribution based on Kubuntu with a Hannah Montana themed boot screen, KDM (KDE Display Manager), icon set, ksplash, plasma, color scheme, and wallpapers (I'm so sorry). [Here is the link][12]. The project is no longer active.

### RLSD Linux

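It is an extremely minimalistic, small, lightweight, and security-hardened text-based operating system built on Linux. The developer says, "It's a unique distribution that provides a selection of console applications and home-grown security features which might appeal to hackers." [RLSD Linux][13].

Did we miss any even stranger distributions? Let us know.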

--------------------------------------------------------------------------------

via: http://www.techdrivein.com/2015/08/the-strangest-most-unique-linux-distros.html

Author: Manuel Jose
Translator: [FSSlc](https://github.com/FSSlc)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

[1]:http://puppylinux.org/main/Overview%20and%20Getting%20Started.htm
[2]:http://qntm.org/suicide
[3]:http://sourceforge.net/projects/suicide-linux/files/
[4]:http://www.techdrivein.com/2015/02/papyros-material-design-linux-coming-soon.html
[5]:https://plus.google.com/communities/109966288908859324845/stream/3262a3d3-0797-4344-bbe0-56c3adaacb69
[6]:https://www.bountysource.com/teams/papyros
[7]:https://www.qubes-os.org/
[8]:http://ubuntusatanic.org/
[9]:http://tinycorelinux.net/
[10]:https://nixos.org/
[11]:http://www.gobolinux.org/
[12]:http://hannahmontana.sourceforge.net/
[13]:http://rlsd2.dimakrasner.com/
[14]:https://en.wikipedia.org/wiki/Compartmentalization_(information_security)

@ -0,0 +1,53 @@


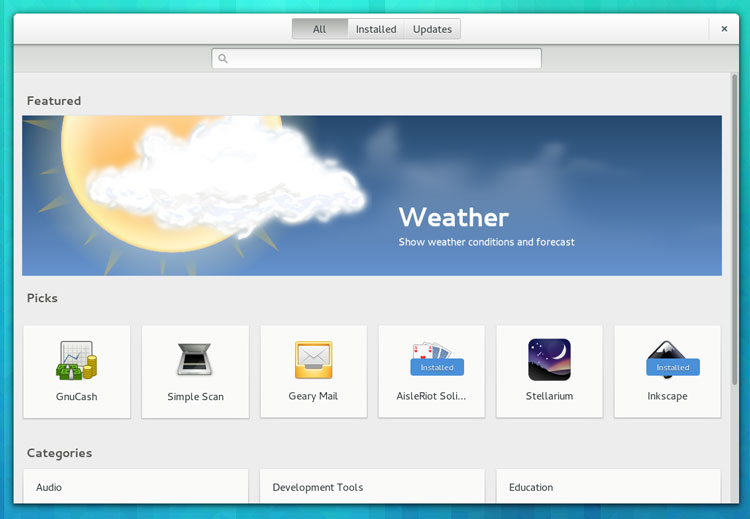

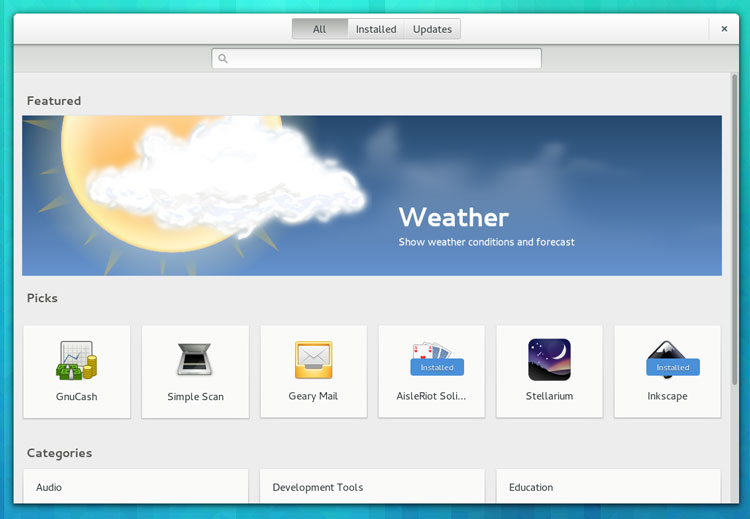

Ubuntu Software Centre To Be Replaced in 16.04 LTS
================================================================================

*The Ubuntu Software Centre is to be replaced in Ubuntu 16.04 LTS.*

Users of the Xenial Xerus desktop will find that the familiar (and somewhat cumbersome) Ubuntu Software Centre is no longer available.

According to current plans, GNOME's [Software application][1] will take its place as the default package management utility on the Unity 7-based desktop.


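*GNOME Software*

As a result of the switch, new plugins will be created to support the Software Centre's ratings, reviews, and paid app features.

The decisions were taken at a recent desktop sprint held at Canonical HQ in London.

"We are more confident in our ability to add support for Snaps to GNOME Software Centre (sic) than we are to Ubuntu Software Centre. And so, right now, it looks like we will be replacing [the USC] with GNOME Software Centre", explains Ubuntu desktop manager Will Cooke at the Ubuntu Online Summit.

The GNOME 3.18 stack will also be included in Ubuntu 16.04, with select apps updated to their GNOME 3.20 versions 'as and when it makes sense', adds Will Cooke.

We recently ran a poll on Twitter asking how you install software on Ubuntu. The results suggest that few of you will mourn the passing of the incumbent Software Centre...

Which of these do you use to install software on Ubuntu?

- Software Centre
- Terminal
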

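### Other Apps Being Dropped in Ubuntu 16.04 ###

The Ubuntu Software Centre is not the only app being dropped in Xenial Xerus.

Disc burning utility Brasero and instant messaging app **Empathy** are also to be removed from the default install image.

Neither app is considered to be under active development, and with more laptops lacking optical drives and the rise of web and mobile-based chat services, they look increasingly obsolete.

If you do have a use for them, don't panic: both Brasero and Empathy will **still be available to install on Ubuntu from the archives**.

It's not all removals and replacements: one new desktop app is set to be included by default, GNOME Calendar.
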

--------------------------------------------------------------------------------

via: http://www.omgubuntu.co.uk/2015/11/the-ubuntu-software-centre-is-being-replace-in-16-04-lts

Author: [Sam Tran][a]
Translator: [strugglingyouth](https://github.com/strugglingyouth)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

[a]:https://plus.google.com/111008502832304483939?rel=author
[1]:https://wiki.gnome.org/Apps/Software

112

published/LetsEncrypt.md

Normal file


@ -0,0 +1,112 @@

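# A New Era of SSL/TLS Encryption - Let's Encrypt

According to the official Let's Encrypt blog, the Let's Encrypt service will officially open to the public next week (November 16).

Let's Encrypt is a new certificate authority (CA) led and developed by the Internet Security Research Group (ISRG). The project aims to develop a free and open automated CA suite and to offer the public free certificate issuance, lowering the financial, technical, and educational costs of secure communication. Over the past year, ISRG drafted the [ACME protocol][1] and produced the first implementation of it: the server [Boulder][2] and the client [letsencrypt][3].

For why Let's Encrypt excites us so much, and for how HTTPS protects our communications, see [A Brief Look at the Background and Basics of HTTPS and SSL/TLS][4].
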

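## The ACME Protocol

Let's Encrypt would not exist without the drafting of the ACME (Automated Certificate Management Environment) protocol.

To talk about ACME, we first have to mention how traditional CAs validate requests. The certificates issued by Let's Encrypt are domain-validated (DV) certificates. To obtain one, the domain owner must complete at least one of the following challenges to prove ownership of the domain:

* Prove control of the email address listed in the domain's Whois record;
* Prove control of a common administrative mailbox for the domain (such as addresses beginning with `admin@` or `postmaster@`);
* Publish a string supplied by the CA in a DNS TXT record;
* Publish a string supplied by the CA at a specific path under a URL that contains the domain.
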

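It is easy to see that the last option is the simplest one to automate. The [Simple HTTP][5] challenge in the ACME protocol uses a similar method to validate domains that have never had a certificate issued. The protocol requires that a request to `http://<domain>/.well-known/acme-challenge/<specified string>` return a specific string.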
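
The shape of this check can be sketched in a few lines. The Python below is only an illustration under stated assumptions: `challenge_url` and `challenge_passed` are hypothetical helper names, and the exact response body that the ACME draft requires is not reproduced here, so the expected string is simply passed in by the caller.

```python
from urllib.parse import quote


def challenge_url(domain, token):
    """Build the URL that would be fetched for an HTTP-based challenge."""
    # The token is percent-escaped so it cannot change the path structure.
    return f"http://{domain}/.well-known/acme-challenge/{quote(token, safe='')}"


def challenge_passed(response_body, expected):
    """Compare what the web server returned with the string that is expected."""
    return response_body.strip() == expected
```

A client would place the expected string at that path on the web server, and the validating side would fetch the URL and compare the two values.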

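
The client that implements this protocol, [letsencrypt][3], does more than that, however. It can not only perform standalone domain validation together with the [Boulder][2] server over ACME, but can also automatically configure common web server software (currently Nginx and Apache) to complete validation.
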

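## Let's Encrypt Free Certificate Issuance

For most webmasters, encrypting a web server means a considerable expense for certificate issuance, plus a difficult configuration. According to the [2010 Internet SSL Survey (PDF)][6] published by SSL Labs a few years ago, more than half of web servers failed to use their server certificates correctly. The main problems were certificates not trusted by browsers, certificates that did not match the domain name, expired certificates, misconfigured certificate chains, and the use of protocols and algorithms with known flaws. On top of that, renewing an expired certificate and revoking a leaked one still require tedious manual work.

Fortunately, after a long period of development and testing, the Let's Encrypt free certificate service has finally arrived. Until Let's Encrypt's own root CA is widely trusted, a cross-signature from IdenTrust's root certificate on Let's Encrypt's intermediate CA means that most browsers already trust certificates issued by Let's Encrypt.
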

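## Using letsencrypt

Because the official Let's Encrypt issuance service is not yet public, you can only try the development version. This version issues a test certificate whose CA is identified as `happy hacker fake CA`; note that this certificate is not trusted.

To get the development version, simply run `$ git clone https://github.com/letsencrypt/letsencrypt`.

The following [usage instructions][7] are taken from the official Let's Encrypt website.
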

### Issuing Certificates

The `letsencrypt` tool can help you handle certificate requests and validation.

#### Automatically Configuring the Web Server

The following command automatically installs the new certificate into Nginx or Apache.

```
$ letsencrypt run
```


#### Issuing a Certificate Standalone

The following command places the new certificate in the current directory.

```
$ letsencrypt -d example.com auth
```


### Renewing Certificates

By default, the `letsencrypt` tool helps you track the expiry dates of your current certificates and renews them automatically when needed. To renew manually, run the following.

```
$ letsencrypt renew --cert-path example-cert.pem
```


### Revoking Certificates

List the currently managed certificates in a menu and choose one to revoke.

```
$ letsencrypt revoke
```

You can also revoke a single certificate, or all certificates that belong to a given private key.

```
$ letsencrypt revoke --cert-path example-cert.pem
```

```
$ letsencrypt revoke --key-path example-key.pem
```


## Dockerized letsencrypt

If you don't want letsencrypt to configure your web server automatically, running a standalone copy in Docker is a good option. All you need to do is run the following on a system with Docker installed:

```
$ sudo docker run -it --rm -p 443:443 -p 80:80 --name letsencrypt \
    -v "/etc/letsencrypt:/etc/letsencrypt" \
    -v "/var/lib/letsencrypt:/var/lib/letsencrypt" \
    quay.io/letsencrypt/letsencrypt:latest auth
```

You can then quickly issue a free, trusted DV certificate for your own web server!


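## Things to Note About Let's Encrypt

* The DV certificates Let's Encrypt currently issues only validate ownership of the domain; they do not verify the identity of its owner;
* Unlike other CAs, Let's Encrypt does not offer insurance payouts for security incidents;
* Let's Encrypt does not currently offer wildcard certificates;
* Let's Encrypt certificates are valid for only 90 days and must be renewed after that (renewal can be automated).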
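
The 90-day lifetime in the last item makes the renewal arithmetic worth spelling out. This is a minimal Python sketch, not part of the `letsencrypt` tool: the function names are hypothetical, and the 30-day renewal margin is an assumption chosen for illustration rather than a Let's Encrypt rule.

```python
from datetime import date, timedelta

VALIDITY_DAYS = 90      # certificate lifetime stated in the list above
RENEW_MARGIN_DAYS = 30  # hypothetical safety margin, an assumption


def expiry_date(issued):
    """Return the day on which a certificate issued on `issued` expires."""
    return issued + timedelta(days=VALIDITY_DAYS)


def needs_renewal(issued, today):
    """True once `today` falls inside the renewal margin before expiry."""
    return today >= expiry_date(issued) - timedelta(days=RENEW_MARGIN_DAYS)
```

A scheduled job could call `needs_renewal` daily and trigger the renewal command only when it returns true.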


That's all for this introduction to Let's Encrypt. Let's witness this internet security revolution together.


[1]: https://github.com/letsencrypt/acme-spec
[2]: https://github.com/letsencrypt/boulder
[3]: https://github.com/letsencrypt/letsencrypt
[4]: https://linux.cn/article-5175-1.html
[5]: https://letsencrypt.github.io/acme-spec/#simple-http
[6]: https://community.qualys.com/servlet/JiveServlet/download/38-1636/Qualys_SSL_Labs-State_of_SSL_2010-v1.6.pdf
[7]: https://letsencrypt.org/howitworks/

@ -1,57 +0,0 @@

translation by strugglingyouth
Ubuntu Software Centre To Be Replaced in 16.04 LTS
================================================================================

The USC Will Be Replaced

**The Ubuntu Software Centre is to be replaced in Ubuntu 16.04 LTS.**

Users of the Xenial Xerus desktop will find that the familiar (and somewhat cumbersome) Ubuntu Software Centre is no longer available.

GNOME’s [Software application][1] will, according to current plans, take its place as the default package management utility on the Unity 7-based desktop.

GNOME Software


New plugins will be created to support the Software Centre’s ratings, reviews and paid app features as a result of the switch.

The decisions were taken at a recent desktop Sprint held at Canonical HQ in London.

“We are more confident in our ability to add support for Snaps to GNOME Software Centre (sic) than we are to Ubuntu Software Centre. And so, right now, it looks like we will be replacing [the USC] with GNOME Software Centre”, explains Ubuntu desktop manager Will Cooke at the Ubuntu Online Summit.

The GNOME 3.18 stack will also be included in Ubuntu 16.04, with select apps updated to their GNOME 3.20 versions ‘as and when it makes sense’, adds Will Cooke.

We recently ran a poll on Twitter asking how you install software on Ubuntu. The results suggest that few of you will mourn the passing of the incumbent Software Centre…

Note: poll items
Which of these do you use to install software on #Ubuntu?

- Software Centre
- Terminal


### Other Apps Being Dropped in Ubuntu 16.04 ###


The Ubuntu Software Centre is not the only app set to be given the heave-ho in Xenial Xerus.

Disc burning utility Brasero and instant messaging app **Empathy** are also to be removed from the default install image.

Neither app is considered to be under active development, and with the march of laptops lacking optical drives and of web and mobile-based chat services, they may also be seen as increasingly obsolete.

If you do have a use for them, don’t panic: both Brasero and Empathy will **still be available to install on Ubuntu from the archives**.

It’s not all removals and replacements, as one new desktop app is set to be included by default: GNOME Calendar.


--------------------------------------------------------------------------------

via: http://www.omgubuntu.co.uk/2015/11/the-ubuntu-software-centre-is-being-replace-in-16-04-lts

Author: [Sam Tran][a]
Translator: [Translator ID](https://github.com/译者ID)
Proofreader: [Proofreader ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

[a]:https://plus.google.com/111008502832304483939?rel=author
[1]:https://wiki.gnome.org/Apps/Software

@ -1,125 +0,0 @@

Open Source Alternatives to LastPass
================================================================================
LastPass is a cross-platform password management program. For Linux, it is available as a plugin for Firefox, Chrome, and Opera. LastPass Sesame is available for Ubuntu/Debian and Fedora. There is also a version of LastPass compatible with Firefox Portable for installing on a USB key. And with LastPass Pocket for Ubuntu/Debian, Fedora and openSUSE, there's good coverage. While LastPass is a highly rated service, it is proprietary software. LastPass has also recently been acquired by LogMeIn. If you're looking for an open source alternative, this article is for you.

We all face information overload. Whether you conduct business online, read for your job, or just read for pleasure, the internet is a vast source of information. Retaining that information on a long-term basis can be difficult. However, it is essential to recall certain items of information immediately. Passwords are one such example.

As a computer user, you face the dilemma of choosing the same password or a unique password for each service or web site you use. Matters are complicated because some sites place restrictions on the selection of the password. For example, a site may insist on a minimum number of characters, capital letters, numerals, and other characters, which makes choosing the same password for every site impossible. More importantly, there are good security reasons not to duplicate passwords. This inevitably means that individuals will simply have too many passwords to remember. One solution is to keep the passwords in written form. However, this is also highly insecure.

Instead of trying to remember an endless array of passwords, a popular solution is to use password manager software. In fact, this type of software is an essential tool for the active internet user. It makes it easy to retrieve, manage and secure all of your passwords. Most passwords are encrypted, either by the program or the filesystem. Consequently, the user only has to remember a single password. Password managers encourage users to choose unique, non-intuitive strong passwords for each service.

To provide an insight into the quality of software available for Linux, I introduce four excellent open source alternatives to LastPass.


### KeePassX ###


KeePassX is a multi-platform port of KeePass, an open source and cross-platform password manager. This utility helps you to manage your passwords in a secure way. You can put all your passwords in one database, which is locked with one master key or a key-disk. This means users only need to remember a single master password or insert the key-disk to unlock the whole database.

The databases are encrypted using the AES (alias Rijndael) or Twofish algorithm with a 256-bit key.
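
To make the "one master password, one 256-bit key" idea concrete, here is a minimal Python sketch of stretching a password into a 256-bit key. It is an illustration only and does not reproduce KeePassX's own key transformation: PBKDF2-HMAC-SHA256 is swapped in as a widely available stand-in, and `derive_key`, `new_salt`, and the iteration count are assumptions.

```python
import hashlib
import os

KEY_BYTES = 32  # 256 bits, the key size mentioned above


def derive_key(master_password, salt, iterations=100_000):
    """Stretch a master password into a 256-bit key with PBKDF2-HMAC-SHA256."""
    return hashlib.pbkdf2_hmac(
        "sha256", master_password.encode("utf-8"), salt, iterations, dklen=KEY_BYTES
    )


def new_salt():
    """Generate a random 16-byte salt to keep next to the encrypted database."""
    return os.urandom(16)
```

The same password and salt always give the same key, so only the salt needs to be stored; the key itself can be recomputed each time the database is unlocked.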


Features include:

- Extensive management, with a title for each entry for better identification
- Determine different expiration dates
- Insertion of attachments
- User-defined symbols for groups and entries
- Fast entry duplication
- Sorting entries in groups
- Search function: in specific groups or in the complete database
- Auto-Type, a feature that allows you to e.g. log in to a web page by pressing a single key combination. KeePassX does the rest of the typing for you. Auto-Type reads the title of the currently active window on your screen and matches it to the configured database entries
- Database security, with access to the KeePassX database being granted either with a password, a key-file (e.g. a CD or a memory-stick) or both
- Automatic generation of secure passwords
- Precaution features: a quality indicator for chosen passwords, and hiding all passwords behind asterisks
- Encryption: either the Advanced Encryption Standard (AES) or the Twofish algorithm is used, with encryption of the database in 256 bit sized increments
- Import and export of entries. Import from PwManager (*.pwm) and KWallet (*.xml) files, export as text file (*.txt)

- Website: [www.keepassx.org][1]
- Developer: KeePassX Team
- License: GNU GPL v2
- Version Number: 0.4.3


### Encryptr ###


Encryptr is an open source zero-knowledge cloud-based password manager / e-wallet powered by Crypton. Crypton is a JavaScript library that allows developers to write web applications where the server knows nothing of the contents a user is storing.

Encryptr stores your sensitive data, such as passwords, credit card data, PINs, or access codes, in the cloud. However, because it was built on the zero-knowledge Crypton framework, Encryptr ensures that only the user has the ability to access or read the confidential information.

Being cross-platform, it allows users to securely access their confidential data from a single account from the cloud, no matter where they are.

Features include:

- Very secure: the zero-knowledge Crypton framework only ever encrypts or decrypts your data locally on your device
- Simple to use
- Cloud based
- Stores three types of data: passwords, credit card numbers and general key/value pairs
- Optional "Notes" field for all entries
- Filtering / searching the entry list
- Local encrypted caching of entries to speed up load time

- Website: [encryptr.org][2]
- Developer: Tommy Williams
- License: GNU GPL v3
- Version Number: 1.2.0


### RatticDB ###


RatticDB is an open source Django based password management service.

RatticDB is built for 'Password Lifecycle Management' and is not simply a 'Password Storage Engine'. RatticDB aims to help you keep track of what passwords need to be changed and when. It does not include application level encryption.

Features include:

- Simple ACL scheme
- Change Queue feature that allows users to see when they need to update passwords for the applications they use
- Ansible configurations

- Website: [rattic.org][3]
- Developer: Daniel Hall
- License: GNU GPL v2
- Version Number: 1.3.1


### Seahorse ###


Seahorse is a GNOME front end for GnuPG, the GNU Privacy Guard program. Its goal is to provide an easy to use key management tool, along with an easy to use interface for encryption operations.

It is a tool for secure communications and data storage. Data encryption and digital signature creation can easily be performed through a GUI, and key management operations can easily be carried out through an intuitive interface.

Additionally, Seahorse includes a Gedit plugin, Nautilus integration for handling files, an applet for managing clipboard contents, an agent for storing private passphrases, and a GnuPG and OpenSSH key manager.

Features include:

- Encrypt/decrypt/sign files and text
- Manage your keys and keyring
- Synchronize your keys and your keyring with key servers
- Sign keys and publish
- Cache your passphrase so you don't have to keep typing it
- Backup your keys and keyring
- Add an image in any GDK supported format as an OpenPGP photo ID
- Create SSH keys, configure them, cache them
- Internationalization support

- Website: [www.gnome.org/projects/seahorse][4]
- Developer: Jacob Perkins, Jose Carlos Garcia Sogo, Jean Schurger, Stef Walter, Adam Schreiber
- License: GNU GPL v2
- Version Number: 3.18.0


--------------------------------------------------------------------------------

via: http://www.linuxlinks.com/article/20151108125950773/LastPassAlternatives.html

Translator: [Translator ID](https://github.com/译者ID)
Proofreader: [Proofreader ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

[1]:http://www.keepassx.org/
[2]:https://encryptr.org/
[3]:http://rattic.org/
[4]:http://www.gnome.org/projects/seahorse/

@ -1,102 +0,0 @@

zpl1025 translating
A Brief History of AIX, HP-UX, Solaris, BSD, and Linux
================================================================================

Always remember that when doors close on you, other doors open. [Ken Thompson][1] and [Dennis Ritchie][2] are a great example of this saying. They were two of the best information technology specialists of the **20th** century, as they created the **UNIX** system, which is considered one of the most influential and inspirational pieces of software ever written.


### The UNIX systems beginning at Bell Labs ###

**UNIX**, which was originally called **UNICS** (**UN**iplexed **I**nformation and **C**omputing **S**ervice), has a great family and was not born by itself. The grandfather of UNIX was **CTSS** (**C**ompatible **T**ime **S**haring **S**ystem) and the father was the **Multics** (**MULT**iplexed **I**nformation and **C**omputing **S**ervice) project, which supported interactive timesharing for mainframe computers by huge communities of users.

UNIX was born at **Bell Labs** in **1969**, created by **Ken Thompson** and later **Dennis Ritchie**. These two great researchers and scientists worked on a collaborative project with **General Electric** and the **Massachusetts Institute of Technology** to create an interactive timesharing system called Multics.

Multics was created to combine timesharing with other technological advances, allowing users to phone the computer from remote terminals, then edit documents, read e-mail, run calculations, and so on.

Over the next five years, AT&T invested millions of dollars in the Multics project. It purchased a mainframe computer called the GE-645 and dedicated to the effort top researchers at Bell Labs such as Ken Thompson, Stuart Feldman, Dennis Ritchie, M. Douglas McIlroy, Joseph F. Ossanna, and Robert Morris. The project was very ambitious, but it fell troublingly behind schedule. In the end, AT&T's leaders decided to leave the project.

Bell Labs managers decided to stop any further work on operating systems, which left many researchers frustrated and upset. But thanks to Thompson, Ritchie, and some researchers who ignored their bosses’ instructions and continued working with love in their labs, UNIX was created as one of the greatest operating systems of all time.

UNIX started its life on a PDP-7 minicomputer, which was a testing machine for Thompson’s ideas about operating system design and a platform for Thompson and Ritchie’s game simulation, called Space Travel.

> “What we wanted to preserve was not just a good environment in which to do programming, but a system around which a fellowship could form. We knew from experience that the essence of communal computing, as supplied by remote-access, time-shared machines, is not just to type programs into a terminal instead of a keypunch, but to encourage close communication,” Dennis Ritchie said.

UNIX came close to being the first system under which a programmer could sit down directly at a machine and start composing programs on the fly, exploring possibilities and testing while composing. Throughout its lifetime, UNIX has kept growing in capability by attracting skilled volunteer effort from programmers impatient with the limitations of other operating systems.

UNIX received its first funding, for a PDP-11/20, in 1970; the UNIX operating system was then officially named and could run on the PDP-11/20. The first real job for UNIX came in 1971: supporting word processing for the patent department at Bell Labs.


### The C revolution on UNIX systems ###

Dennis Ritchie invented a higher-level programming language called “**C**” in **1972**; later he decided with Ken Thompson to rewrite UNIX in “C” to make the system more portable. They wrote and debugged almost 100,000 lines of code that year. The migration to “C” resulted in highly portable software that required only a relatively small amount of machine-dependent code to be replaced when porting UNIX to another computing platform.

UNIX was first formally presented to the outside world in 1973 at the Symposium on Operating Systems Principles, where Dennis Ritchie and Ken Thompson delivered a paper. AT&T then released Version 5 of the UNIX system and licensed it to educational institutions, and in 1975 it licensed Version 6 of UNIX to companies for the first time, at a cost of **$20,000**. The most widely used version of UNIX was Version 7 in 1980, for which anybody could purchase a license, although the license terms were very restrictive. The license included the source code and the machine-dependent kernel, which was written in PDP-11 assembly language. In general, versions of UNIX systems were identified by the editions of their user manuals.


### The AIX System ###


In **1983**, **Microsoft** planned to make **Xenix** the multiuser successor to MS-DOS, and that year the Xenix-based Altos 586, with **512 KB** of RAM and a **10 MB** hard drive, was produced at a cost of $8,000. By 1984, there were 100,000 UNIX installations around the world for System V Release 2. In 1986, 4.3BSD was released, including an internet name server, and the **AIX system** was announced by **IBM**, with an installation base of over 250,000. AIX is based on Unix System V; it also has BSD roots and is a hybrid of both.

AIX was the first operating system to introduce a **journaled file system (JFS)** and an integrated Logical Volume Manager (LVM). IBM ported AIX to its RS/6000 platform by 1989. Version 5L, introduced in 2001, was a breakthrough release that provided Linux affinity and logical partitioning with the Power4 servers.

AIX introduced virtualization in 2004 with AIX 5.3 and Advanced Power Virtualization (APV), which offered simultaneous multi-threading, micro-partitioning, and shared processor pools.

In 2007, IBM started to enhance its virtualization product, coinciding with the AIX 6.1 release and the Power6 architecture. It also rebranded Advanced Power Virtualization as PowerVM.

The enhancements included a form of workload partitioning called WPARs, which are similar to Solaris zones/Containers, but with much better functionality.


### The HP-UX System ###


**Hewlett-Packard’s UNIX (HP-UX)** was originally based on System V Release 3. The system initially ran exclusively on the PA-RISC HP 9000 platform. Version 1 of HP-UX was released in 1984.

Version 9 introduced SAM, its character-based graphical user interface (GUI), from which one can administer the system. Version 10, introduced in 1995, brought some changes to the layout of the system files and directory structure, which made it similar to AT&T SVR4.

Version 11 was introduced in 1997. It was HP’s first release to support 64-bit addressing. In 2000, this release was rebranded as 11i, as HP introduced operating environments and bundled groups of layered applications for specific information technology purposes.

In 2001, Version 11.20 was introduced with support for Itanium systems. HP-UX was the first UNIX to use ACLs (Access Control Lists) for file permissions, and it was also one of the first to introduce built-in support for a Logical Volume Manager.

Nowadays, HP-UX uses Veritas as its primary file system, due to a partnership between Veritas and HP.

HP-UX is up to release 11iv3, update 4.


### The Solaris System ###

|

||||

|

||||

The Sun’s UNIX version, **Solaris**, was the successor of **SunOS**, which was founded in 1992. SunOS was originally based on the BSD (Berkeley Software Distribution) flavor of UNIX but SunOS versions 5.0 and later were based on Unix System V Release 4 which was rebranded as Solaris.

|

||||

|

||||

SunOS version 1.0 was introduced with support for Sun-1 and Sun-2 systems in 1983. Version 2.0 was introduced later in 1985. In 1987, Sun and AT&T announced that they would collaborate on a project to merge System V and BSD into only one release, based on SVR4.

|

||||

|

||||

The Solaris 2.4 was first Sparc/x86 release by Sun. The last release of the SunOS was version 4.1.4 announced in November 1994. The Solaris 7 was the first 64-bit Ultra Sparc release and it added native support for file system metadata logging.

|

||||

|

||||

Solaris 9 was introduced in 2002, with support for Linux capabilities and Solaris Volume Manager. Solaris 10 followed in 2005 and brought a number of innovations, such as Solaris Containers, the new ZFS file system, and Logical Domains.

|

||||

|

||||

Solaris is presently at version 10; its latest update was released in 2008.

|

||||

|

||||

### Linux ###

|

||||

|

||||

By 1991 there was a growing need for a free alternative to the commercial systems. **Linus Torvalds** therefore set out to create a new free operating system kernel, which eventually became **Linux**. Linux started as a small number of C files under a license that prohibited commercial distribution. Linux is a UNIX-like system, but it is distinct from UNIX.

|

||||

|

||||

Version 3.18 was released in December 2014 under the GNU General Public License. IBM said that more than 18 million lines of code are open source and available to developers.

|

||||

|

||||

The GNU General Public License has become one of the most widely used free software licenses. In accordance with open source principles, it grants individuals and organizations the freedom to run, study, modify, and distribute copies of the software’s code.

|

||||

|

||||

### UNIX vs. Linux: Technical Overview ###

|

||||

|

||||

- Linux encourages more diversity: its developers come from a wider range of backgrounds, with different experiences and opinions.

|

||||

- Linux runs on a wider range of platforms and architectures than UNIX.

|

||||

- Developers of UNIX commercial editions have a specific target platform and audience in mind for their operating system.

|

||||

- **Linux is more secure than UNIX** as it is less affected by virus threats or malware attacks. Linux has had about 60-100 viruses to date, but at the same time none of them are currently spreading. On the other hand, UNIX has had 85-120 viruses but some of them are still spreading.

|

||||

- UNIX commands, tools, and other elements rarely change, and many interfaces and command-line arguments remain the same in later versions of UNIX.

|

||||

- Some Linux development projects, such as Debian, are funded on a voluntary basis. Others maintain a community version of a commercial Linux distribution, such as openSUSE for SUSE and Fedora for Red Hat.

|

||||

- Traditional UNIX is about scaling up, whereas Linux is about scaling out.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.unixmen.com/brief-history-aix-hp-ux-solaris-bsd-linux/

|

||||

|

||||

Author: [M.el Khamlichi][a]

Translator: [Translator ID](https://github.com/译者ID)

Proofreader: [Proofreader ID](https://github.com/校对者ID)

|

||||

|

||||

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

[a]:http://www.unixmen.com/author/pirat9/

|

||||

[1]:http://www.unixmen.com/ken-thompson-unix-systems-father/

|

||||

[2]:http://www.unixmen.com/dennis-m-ritchie-father-c-programming-language/

|

||||

@ -1,38 +0,0 @@

|

||||

Nautilus File Search Is About To Get A Big Power Up

|

||||

================================================================================

|

||||

|

||||

|

||||

**Finding stray files and folders in Nautilus is about to get a whole lot easier.**

|

||||

|

||||

A new **search filter** for the default [GNOME file manager][1] is in development. It makes heavy use of GNOME’s spiffy pop-over menus in an effort to offer a simpler way to narrow in on search results and find exactly what you’re after.

|

||||

|

||||

Developer Georges Stavracas is working on the new UI and [describes][2] the new editor as “cleaner, saner and more intuitive”.

|

||||

|

||||

Based on a video he’s [uploaded to YouTube][3] demoing the new approach – which he hasn’t made available for embedding – he’s not wrong.

|

||||

|

||||

> “Nautilus has very complex but powerful internals, which allows us to do many things. And indeed, there is code for the many options in there. So, why did it used to look so poorly implemented/broken?”, he writes on his blog.

|

||||

|

||||

The question is part rhetorical; the new search filter interface surfaces many of these ‘powerful internals’ to the user. Searches can be filtered *ad hoc* based on content type, name, or date range.

|

||||

|

||||

Changing anything in an app like Nautilus is likely to upset some users, so, as helpful and straightforward as the new UI seems, it could come in for some heat.

|

||||

|

||||

Not that worry of discontent seems to hamper progress (though the outcry at the [removal of ‘type ahead’ search][4] in 2014 still rings loud in many ears, no doubt). GNOME 3.18, [released last month][5], introduced a new file progress dialog to Nautilus and better integration for remote shares, including Google Drive.

|

||||

|

||||

Stavracas’ search filter is not yet merged into Files’ trunk, but the reworked search UI is tentatively targeted for inclusion in GNOME 3.20, due spring next year.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.omgubuntu.co.uk/2015/10/new-nautilus-search-filter-ui

|

||||

|

||||

Author: [Joey-Elijah Sneddon][a]

Translator: [Translator ID](https://github.com/译者ID)

Proofreader: [Proofreader ID](https://github.com/校对者ID)

|

||||

|

||||

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

[a]:https://plus.google.com/117485690627814051450/?rel=author

|

||||

[1]:https://wiki.gnome.org/Apps/Nautilus

|

||||

[2]:http://feaneron.com/2015/10/12/the-new-search-for-gnome-files-aka-nautilus/

|

||||

[3]:https://www.youtube.com/watch?v=X2sPRXDzmUw

|

||||

[4]:http://www.omgubuntu.co.uk/2014/01/ubuntu-14-04-nautilus-type-ahead-patch

|

||||

[5]:http://www.omgubuntu.co.uk/2015/09/gnome-3-18-release-new-features

|

||||

@ -1,114 +0,0 @@

|

||||

translating by Ezio

|

||||

|

||||

|

||||

How to Setup DockerUI - a Web Interface for Docker

|

||||

================================================================================

|

||||

Docker is becoming more popular day by day. The idea of running a complete operating system inside a container rather than inside a virtual machine is an awesome one. Docker has made the lives of millions of system administrators and developers much easier by letting them get their work done in no time. It is an open source technology that provides an open platform to pack, ship, share, and run any application as a lightweight container, regardless of which operating system runs on the host. It places no restrictions on language, framework, or packaging system, and it can run anywhere, at any time, from small home computers to high-end servers. Running and managing Docker containers can be somewhat difficult and time-consuming, so there is a web-based application named DockerUI that makes both pretty simple. DockerUI is especially useful to people who are not very familiar with the Linux command line and want to run containerized applications. It is an open source, web-based application best known for its beautiful design and its simple interface for running and managing Docker containers.

|

||||

|

||||

Here are some easy steps for setting up Docker Engine with DockerUI on a Linux machine.

|

||||

|

||||

### 1. Installing Docker Engine ###

|

||||

|

||||

First of all, we'll install Docker Engine on our Linux machine. Thanks to its developers, Docker is very easy to install on any major Linux distribution. To install it, we'll run the command below that matches the distribution we are running.

|

||||

|

||||

#### On Ubuntu/Fedora/CentOS/RHEL/Debian ####

|

||||

|

||||

Docker maintainers have written an awesome script that can be used to install Docker Engine on Ubuntu 15.04/14.10/14.04, CentOS 6.x/7, Fedora 22, RHEL 7, and Debian 8.x. The script recognizes the Linux distribution installed on our machine, adds the required repository to the system, updates the local repository index, and finally installs Docker Engine and its dependencies from that repository. To install Docker Engine using the script, we'll run the following command as root or with sudo.

|

||||

|

||||

# curl -sSL https://get.docker.com/ | sh
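To give a feel for the distribution check described above, here is a minimal sketch that reads the `ID` field from an os-release style file. It is only an assumption about one way to detect a distribution, not the logic of the official install script; `detect_distro` is a hypothetical helper name, and older releases such as CentOS 6.x may not ship `/etc/os-release` at all.

```shell
#!/bin/sh
# Sketch only: print the distribution ID from an os-release style file.
# detect_distro is a hypothetical helper, not part of the Docker install script.
detect_distro() {
    release_file="${1:-/etc/os-release}"
    if [ -r "$release_file" ]; then
        # Source the file in a subshell so its variables do not leak out.
        ( . "$release_file" && printf '%s\n' "${ID:-unknown}" )
    else
        printf 'unknown\n'
    fi
}

# Prints an ID such as "ubuntu" or "debian", or "unknown" if the file is missing.
detect_distro
```

Knowing the ID beforehand makes it easier to pick the matching subsection below when the script does not support our distribution.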

|

||||

|

||||

#### On OpenSuse/SUSE Linux Enterprise ####

|

||||

|

||||

To install Docker Engine on a machine running openSUSE 13.1/13.2 or SUSE Linux Enterprise Server 12, we simply need the zypper command, because the latest Docker Engine is available in the official repository. To do so, we'll run the following command as root or with sudo.

|

||||

|

||||

# zypper in docker

|

||||

|

||||

#### On ArchLinux ####

|

||||

|

||||

Docker is available in the official Arch Linux repository as well as in the AUR, whose packages are maintained by the community. So we have two options for installing Docker on Arch Linux. To install Docker from the official Arch repository, we'll run the following pacman command.

|

||||

|

||||

# pacman -S docker

|

||||

|

||||

But if we want to install Docker from the Arch User Repository (AUR), we'll need to execute the following command.

|

||||

|

||||

# yaourt -S docker-git

|

||||

|

||||

### 2. Starting Docker Daemon ###

|

||||

|

||||

After Docker is installed, we'll start the Docker daemon so that we can run and manage Docker containers. We'll run one of the following commands, depending on our init system, to start the daemon.

|

||||

|

||||

#### On SysVinit ####

|

||||

|

||||

# service docker start

|

||||

|

||||

#### On Systemd ####

|

||||

|

||||

# systemctl start docker
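If we are unsure which init system a machine uses, a small check can pick between the two commands above. The sketch below assumes the common convention that the directory `/run/systemd/system` exists only when systemd is the running init; `start_docker_cmd` is a hypothetical helper name, and it only prints the command so that we can review it before running it as root.

```shell
#!/bin/sh
# Sketch: choose the start command based on the running init system.
# start_docker_cmd is a hypothetical helper name.
start_docker_cmd() {
    # The optional argument overrides the directory to check (useful for testing).
    if [ -d "${1:-/run/systemd/system}" ]; then
        printf 'systemctl start docker\n'
    else
        printf 'service docker start\n'
    fi
}

# Print the command rather than running it, so it can be reviewed first.
start_docker_cmd
```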

|

||||

|

||||

### 3. Installing DockerUI ###

|

||||

|

||||

Installing DockerUI is even easier than installing Docker Engine. We just need to pull the dockerui image from the Docker Hub registry and run it inside a container. To do so, we'll simply run the following command.

|

||||

|

||||

# docker run -d -p 9000:9000 --privileged -v /var/run/docker.sock:/var/run/docker.sock dockerui/dockerui

|

||||

|

||||

|

||||

|

||||

In the command above, the DockerUI web application server listens on port 9000 by default, so we publish that port with the -p flag. With the -v flag, we mount the Docker socket into the container. The --privileged flag is required on hosts using SELinux.
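The -p flag takes the form `HOST_PORT:CONTAINER_PORT`, so the host side can differ from DockerUI's port 9000. As a hedged illustration, the sketch below assembles the same command for a host port of our choosing and prints it; `dockerui_run_cmd` and `host_port` are illustrative names, not part of Docker or DockerUI.

```shell
#!/bin/sh
# Sketch: assemble the DockerUI command for a chosen host port.
# dockerui_run_cmd and host_port are illustrative names.
dockerui_run_cmd() {
    host_port="${1:-9000}"
    # -p HOST:CONTAINER publishes container port 9000 on the chosen host port.
    # -v mounts the Docker socket so DockerUI can talk to the daemon.
    printf 'docker run -d -p %s:9000 --privileged -v /var/run/docker.sock:/var/run/docker.sock dockerui/dockerui\n' "$host_port"
}

# Print the command for host port 8080 instead of 9000.
dockerui_run_cmd 8080
```

With this mapping, the web interface would be reached on port 8080 of the host, while the application inside the container still listens on 9000.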

|

||||

|

||||

After executing the above command, we'll check whether the dockerui container is running with the following command.

|

||||

|

||||

# docker ps

|

||||

|

||||

|

||||

|

||||

### 4. Pulling an Image ###

|

||||

|

||||

Currently, we cannot pull an image directly from DockerUI, so we'll need to pull a Docker image from the Linux console/terminal. To do so, we'll run the following command.

|

||||

|

||||

# docker pull ubuntu

|

||||

|

||||

|

||||

|

||||

The above command will pull the image named ubuntu from the official [Docker Hub][1]. Similarly, we can pull any other images we require that are available in the hub.

|

||||

|

||||

### 5. Managing with DockerUI ###

|

||||

|

||||

With the dockerui container started, we can now use it to start, pause, stop, and remove Docker containers and images, and to perform the many other operations DockerUI offers. First of all, we'll open the web application in our web browser by pointing it to http://ip-address:9000 or http://mydomain.com:9000, according to the configuration of our system. By default, no login is required to access it, but we can configure our web server to add authentication. To start a container, we first need an image of the application we want to run in it.

|

||||

|

||||

#### Create a Container ####

|

||||

|

||||

To create a container, we go to the Images section and click the ID of the image we want to create a container from. We then click the Create button, after which we are asked to enter the required properties for our container. Once everything is set, we click Create again to finally create the container.

|

||||

|

||||

|

||||

|

||||

#### Stop a Container ####

|

||||

|

||||

To stop a container, we go to the Containers page and select the container we want to stop. Then we click the Stop option under the Actions drop-down menu.

|

||||

|

||||

|

||||

|

||||

#### Pause and Resume ####

|

||||

|

||||

To pause a container, we select it by ticking its check box and then click the Pause option under Actions. This pauses the running container; we can resume it by selecting the Unpause option from the Actions drop-down menu.

|

||||

|

||||

#### Kill and Remove ####

|

||||

|

||||

As with the tasks above, it's pretty easy to kill or remove a container or an image. We just check the required container or image and then click the Kill or Remove button, according to our need.

|

||||

|

||||

### Conclusion ###

|

||||

|

||||

DockerUI makes good use of the Docker Remote API to provide an awesome web interface for managing Docker containers. Its developers have designed and built the application in pure HTML and JavaScript. It is currently incomplete and under heavy development, so we don't recommend it for production use yet. It makes it easy for users to manage their containers and images with a few clicks, without needing to type commands for small jobs. If we want to contribute to DockerUI, we can simply visit its [Github Repository][2]. If you have any questions, suggestions, or feedback, please write them in the comment box below so that we can improve or update our content. Thank you!

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://linoxide.com/linux-how-to/setup-dockerui-web-interface-docker/

|

||||

|

||||

Author: [Arun Pyasi][a]

Translator: [oska874](https://github.com/oska874)

Proofreader: [Proofreader ID](https://github.com/校对者ID)

|

||||

|

||||

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

[a]:http://linoxide.com/author/arunp/

|

||||

[1]:https://hub.docker.com/

|

||||

[2]:https://github.com/crosbymichael/dockerui/

|

||||

@ -1,513 +0,0 @@

|

||||

translating by struggling

|

||||

9 Tips for Improving WordPress Performance

|

||||

================================================================================

|

||||

WordPress is the single largest platform for website creation and web application delivery worldwide. About [a quarter][1] of all sites are now built on open-source WordPress software, including sites for eBay, Mozilla, RackSpace, TechCrunch, CNN, MTV, the New York Times, and the Wall Street Journal.

|

||||

|

||||

WordPress.com, the most popular site for user-created blogs, also runs on WordPress open source software. [NGINX powers WordPress.com][2]. Among WordPress customers, many sites start on WordPress.com and then move to hosted WordPress open-source software; more and more of these sites use NGINX software as well.

|

||||

|

||||

WordPress’ appeal is its simplicity, both for end users and for implementation. However, the architecture of a WordPress site presents problems when usage ramps upward – and several steps, including caching and combining WordPress and NGINX, can solve these problems.

|

||||

|

||||

In this blog post, we provide nine performance tips to help overcome typical WordPress performance challenges:

|

||||

|

||||

- [Cache static resources][3]

|

||||

- [Cache dynamic files][4]

|

||||

- [Move to NGINX][5]

|

||||

- [Add permalink support to NGINX][6]

|

||||

- [Configure NGINX for FastCGI][7]

|

||||

- [Configure NGINX for W3_Total_Cache][8]

|

||||

- [Configure NGINX for WP-Super-Cache][9]

|

||||

- [Add security precautions to your NGINX configuration][10]

|

||||

- [Configure NGINX to support WordPress Multisite][11]

|

||||

|

||||

### WordPress Performance on LAMP Sites ###

|

||||

|

||||

Most WordPress sites are run on a traditional LAMP software stack: the Linux OS, Apache web server software, MySQL database software – often on a separate database server – and the PHP programming language. Each of these is a very well-known, widely used, open source tool. Most people in the WordPress world “speak” LAMP, so it’s easy to get help and support.

|

||||

|

||||

When a user visits a WordPress site running on the Linux/Apache combination, the browser creates six to eight connections per user. As the user moves around the site, PHP assembles each page on the fly, grabbing resources from the MySQL database to answer requests.

|

||||

|

||||

LAMP stacks work well for anywhere from a few to, perhaps, hundreds of simultaneous users. However, sudden increases in traffic are common online and – usually – a good thing.

|

||||

|

||||

But when a LAMP-stack site gets busy, with the number of simultaneous users climbing into the many hundreds or thousands, it can develop serious bottlenecks. Two main causes of bottlenecks are:

|

||||

|

||||

1. The Apache web server – Apache consumes substantial resources for each and every connection. If Apache accepts too many simultaneous connections, memory can be exhausted and performance slows because data has to be paged back and forth to disk. If connections are limited to protect response time, new connections have to wait, which also leads to a poor user experience.

|

||||

1. The PHP/MySQL interaction – Together, an application server running PHP and a MySQL database server can serve a maximum number of requests per second. When the number of requests exceeds the maximum, users have to wait. Exceeding the maximum by a relatively small amount can cause a large slowdown in responsiveness for all users. Exceeding it by two or more times can cause significant performance problems.

|

||||

|

||||

The performance bottlenecks in a LAMP site are particularly resistant to the usual instinctive response, which is to upgrade to more powerful hardware – more CPUs, more disk space, and so on. Incremental increases in hardware performance can’t keep up with the exponential increases in demand for system resources that Apache and the PHP/MySQL combination experience when they get overloaded.

|

||||

|

||||

The leading alternative to a LAMP stack is a LEMP stack – Linux, NGINX, MySQL, and PHP. (In the LEMP acronym, the E stands for the sound at the start of “engine-x.”) We describe a LEMP stack in [Tip 3][12].

|

||||

|

||||

### Tip 1. Cache Static Resources ###

|

||||

|

||||

Static resources are unchanging files such as CSS files, JavaScript files, and image files. These files often make up half or more of the data on a web page. The remainder of the page is dynamically generated content like comments in a forum, a performance dashboard, or personalized content (think Amazon.com product recommendations).

|

||||

|

||||

Caching static resources has two big benefits:

|

||||

|

||||

- Faster delivery to the user – The user gets the static file from their browser cache or a caching server closer to them on the Internet. These are sometimes big files, so reducing latency for them helps a lot.

|

||||

- Reduced load on the application server – Every file that’s retrieved from a cache is one less request the web server has to process. The more you cache, the more you avoid thrashing because resources have run out.

|

||||

|

||||

To support browser caching, set the correct HTTP headers for static files. Look into the HTTP Cache-Control header, specifically the max-age setting, the Expires header, and Entity tags. You can find a good introduction [here][13].

|

||||

|

||||

When local caching is enabled and a user requests a previously accessed file, the browser first checks whether the file is in its cache. If so, it asks the web server whether the file has changed. If the file hasn’t changed, the web server can respond immediately with code 304 (Not Modified), instead of returning code 200 (OK) and retrieving and delivering the file again.
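The decision the web server makes for such a conditional request can be modelled in a few lines. The sketch below is a toy model under simplifying assumptions, not how any particular server implements it: both arguments are Unix timestamps, and `conditional_status` is a hypothetical function name.

```shell
#!/bin/sh
# Toy model of the server-side decision for a conditional request.
# Arguments are Unix timestamps: when the browser's cached copy was last
# modified, and when the file on the server was last modified.
conditional_status() {
    cached_mtime="$1"
    server_mtime="$2"
    if [ "$server_mtime" -le "$cached_mtime" ]; then
        # The cached copy is still current, so no body needs to be sent.
        printf '304 Not Modified\n'
    else
        # The file changed after the browser cached it, so send it in full.
        printf '200 OK\n'
    fi
}

conditional_status 1445000000 1445000000   # prints "304 Not Modified"
conditional_status 1445000000 1445003600   # prints "200 OK"
```

The saving comes from the first case: the 304 response carries headers only, so a large image or script is not transferred again.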

|

||||

|

||||

To support caching beyond the browser, consider the Tips below, and consider a content delivery network (CDN). CDNs are a popular and powerful tool for caching, but we don’t describe them in detail here. Consider a CDN after you implement the other techniques mentioned here. Also, CDNs may be less useful as you transition your site from HTTP/1.x to the new HTTP/2 standard; investigate and test as needed to find the right answer for your site.

|

||||

|

||||

If you move to NGINX Plus or the open source NGINX software as part of your software stack, as suggested in [Tip 3][14], then configure NGINX to cache static resources. Use the following configuration, replacing www.example.com with the URL of your web server.

|

||||

|

||||

server {

|

||||

# substitute your web server's URL for www.example.com

|

||||

server_name www.example.com;

|

||||

root /var/www/example.com/htdocs;

|

||||

index index.php;

|

||||

|

||||

access_log /var/log/nginx/example.com.access.log;

|

||||

error_log /var/log/nginx/example.com.error.log;

|

||||

|

||||

location / {

|

||||

try_files $uri $uri/ /index.php?$args;

|

||||

}

|

||||

|

||||

location ~ \.php$ {

|

||||

try_files $uri =404;

|

||||

include fastcgi_params;

|

||||

# substitute the socket, or address and port, of your WordPress server

|

||||

fastcgi_pass unix:/var/run/php5-fpm.sock;

|

||||

#fastcgi_pass 127.0.0.1:9000;

|

||||

}

|

||||

|

||||

location ~* \.(ogg|ogv|svg|svgz|eot|otf|woff|mp4|ttf|css|rss|atom|js|jpg|jpeg|gif|png|ico|zip|tgz|gz|rar|bz2|doc|xls|exe|ppt|tar|mid|midi|wav|bmp|rtf)$ {

|

||||

expires max;

|

||||

log_not_found off;

|

||||

access_log off;

|

||||

}

|

||||

}

|

||||

|

||||

### Tip 2. Cache Dynamic Files ###

|

||||

|

||||

WordPress generates web pages dynamically, meaning that it generates a given web page every time it is requested (even if the result is the same as the time before). This means that users always get the freshest content.

|

||||

|

||||

Think of a user visiting a blog post that has comments enabled at the bottom of the post. You want the user to see all comments – even a comment that just came in a moment ago. Dynamic content makes this happen.

|

||||

|

||||

But now let’s say that the blog post is getting ten or twenty requests per second. The application server might start to thrash under the pressure of trying to regenerate the page so often, causing big delays. The goal of delivering the latest content to new visitors becomes relevant only in theory, because they have to wait so long to get the page in the first place.

|

||||

|

||||

To prevent page delivery from slowing down due to increasing load, cache the dynamic file. This makes the file less dynamic, but makes the whole system more responsive.

|

||||

|

||||

To enable caching in WordPress, use one of several popular plug-ins – described below. A WordPress caching plug-in asks for a fresh page, then caches it for a brief period of time – perhaps just a few seconds. So, if the site is getting several requests a second, most users get their copy of the page from the cache. This helps the retrieval time for all users:

|

||||

|

||||

- Most users get a cached copy of the page. The application server does no work at all.

|

||||

- Users who do get a fresh copy get it fast. The application server only has to generate a fresh page every so often. When the server does generate a fresh page (for the first user to come along after the cached page expires), it does this much faster because it’s not overloaded with requests.
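A little arithmetic shows why even a very short cache lifetime helps. The sketch below assumes steady traffic (at least one request in every cache lifetime) and ignores logged-in users; `cache_savings` is a hypothetical helper, and the numbers are illustrative rather than measurements.

```shell
#!/bin/sh
# cache_savings REQ_PER_SEC TTL_SECONDS WINDOW_SECONDS
# Prints how many page renders the backend performs over the window,
# with and without a cache that keeps each page for TTL_SECONDS.
cache_savings() {
    rps="$1"; ttl="$2"; window="$3"
    total=$((rps * window))
    # With caching, one render per TTL period, rounded up.
    renders=$(( (window + ttl - 1) / ttl ))
    printf 'requests=%d renders_without_cache=%d renders_with_cache=%d\n' \
        "$total" "$total" "$renders"
}

# 20 requests per second, pages cached for 5 seconds, observed for one minute.
cache_savings 20 5 60
# prints: requests=1200 renders_without_cache=1200 renders_with_cache=12
```

In this example the application server renders the page 12 times instead of 1200, while no visitor sees content more than five seconds old.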

|

||||

|

||||

You can cache dynamic files for WordPress running on a LAMP stack or on a [LEMP stack][15] (described in [Tip 3][16]). There are several caching plug-ins you can use with WordPress. Here are the most popular caching plug-ins and caching techniques, listed from the simplest to the most powerful:

|

||||

|

||||

- [Hyper-Cache][17] and [Quick-Cache][18] – These two plug-ins create a single PHP file for each WordPress page or post. This supports some dynamic functionality while bypassing much WordPress core processing and the database connection, creating a faster user experience. They don’t bypass all PHP processing, so they don’t give the same performance boost as the following options. They also don’t require changes to the NGINX configuration.

|

||||

- [WP Super Cache][19] – The most popular caching plug-in for WordPress. It has many settings, which are presented through an easy-to-use interface. We show a sample NGINX configuration in [Tip 7][20].

|

||||

- [W3 Total Cache][21] – This is the second most popular cache plug-in for WordPress. It has even more option settings than WP Super Cache, making it a powerful but somewhat complex option. For a sample NGINX configuration, see [Tip 6][22].

|

||||

- [FastCGI][23] – CGI stands for Common Gateway Interface, a language-neutral way to request and receive files on the Internet. FastCGI is not a plug-in but a way to interact with a cache. FastCGI can be used in Apache as well as in NGINX, where it’s the most popular dynamic caching approach; we describe how to configure NGINX to use it in [Tip 5][24].

|

||||

|

||||

The documentation for these plug-ins and techniques explains how to configure them in a typical LAMP stack. Configuration options include database and object caching; minification for HTML, CSS, and JavaScript files; and integration options for popular CDNs. For NGINX configuration, see the Tips referenced in the list.

|

||||

|

||||

**Note**: Caches do not work for users who are logged into WordPress, because their view of WordPress pages is personalized. (For most sites, only a small minority of users are likely to be logged in.) Also, most caches do not show a cached page to users who have recently left a comment, as that user will want to see their comment appear when they refresh the page. To cache the non-personalized content of a page, you can use a technique called [fragment caching][25], if it’s important to overall performance.

|

||||

|

||||

### Tip 3. Move to NGINX ###

|

||||

|

||||

As mentioned above, Apache can cause performance problems when the number of simultaneous users rises above a certain point – perhaps hundreds of simultaneous users. Apache allocates substantial resources to each connection, and therefore tends to run out of memory. Apache can be configured to limit connections to avoid exhausting memory, but that means, when the limit is exceeded, new connection requests have to wait.

|

||||

|

||||

In addition, Apache loads another copy of the mod_php module into memory for every connection, even if it’s only serving static files (images, CSS, JavaScript, etc.). This consumes even more resources for each connection and limits the capacity of the server further.

|

||||

|

||||

To start solving these problems, move from a LAMP stack to a LEMP stack – replace Apache with (e)NGINX. NGINX handles many thousands of simultaneous connections in a fixed memory footprint, so you don’t have to experience thrashing, nor limit simultaneous connections to a small number.

|

||||

|

||||

NGINX also deals with static files better, with built-in, easily tuned [caching][26] controls. The load on the application server is reduced, and your site can serve far more traffic with a faster, more enjoyable experience for your users.

|

||||

|

||||

You can use NGINX on all the web servers in your deployment, or you can put an NGINX server “in front” of Apache as a reverse proxy – the NGINX server receives client requests, serves static files, and sends PHP requests to Apache, which processes them.

|

||||

|

||||

For dynamically generated pages – the core use case for the WordPress experience – choose a caching tool, as described in [Tip 2][27]. In the Tips below, you can find NGINX configuration suggestions for FastCGI, W3_Total_Cache, and WP-Super-Cache. (Hyper-Cache and Quick-Cache don’t require changes to NGINX configuration.)

|

||||

|

||||

**Tip.** Caches are typically saved to disk, but you can use [tmpfs][28] to store the cache in memory and increase performance.

|

||||

|

||||

Setting up NGINX for WordPress is easy. Just follow these four steps, which are described in further detail in the indicated Tips:

|

||||

|

||||

1. Add permalink support – Add permalink support to NGINX. This eliminates dependence on the **.htaccess** configuration file, which is Apache-specific. See [Tip 4][29].

|

||||

1. Configure for caching – Choose a caching tool and implement it. Choices include FastCGI cache, W3 Total Cache, WP Super Cache, Hyper Cache, and Quick Cache. See Tips [5][30], [6][31], and [7][32].

|

||||

1. Implement security precautions – Adopt best practices for WordPress security on NGINX. See [Tip 8][33].

|

||||

1. Configure WordPress Multisite – If you use WordPress Multisite, configure NGINX for a subdirectory, subdomain, or multiple-domain architecture. See [Tip 9][34].

|

||||

|

||||

### Tip 4. Add Permalink Support to NGINX ###

|

||||

|

||||

Many WordPress sites depend on **.htaccess** files, which are required for several WordPress features, including permalink support, plug-ins, and file caching. NGINX does not support **.htaccess** files. Fortunately, you can use NGINX’s simple, yet comprehensive, configuration language to achieve most of the same functionality.

|

||||

|

||||

You can enable [Permalinks][35] in WordPress with NGINX by including the following location block in your main [server][36] block. (This location block is also included in other code samples below.)

|

||||

|

||||

The **try_files** directive tells NGINX to check whether the requested URL exists as a file (**$uri**) or directory (**$uri/**) in the document root, **/var/www/example.com/htdocs**. If not, NGINX does an internal redirect to **/index.php**, passing the query string arguments as parameters.

|

||||

|

||||

server {

|

||||

server_name example.com www.example.com;

|

||||

root /var/www/example.com/htdocs;

|

||||

index index.php;

|

||||

|

||||

access_log /var/log/nginx/example.com.access.log;

|

||||

error_log /var/log/nginx/example.com.error.log;

|

||||

|

||||

location / {

|

||||

try_files $uri $uri/ /index.php?$args;

|

||||

}

|

||||

}
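To make the lookup order of `try_files $uri $uri/ /index.php?$args` concrete, the following sketch imitates it against a temporary directory. It is a simplified model for illustration, not NGINX's implementation: `resolve_try_files` is a hypothetical function, and the file and directory names are made up.

```shell
#!/bin/sh
# resolve_try_files DOCROOT URI ARGS
# Mimics the order: existing file, then existing directory, then /index.php.
resolve_try_files() {
    docroot="$1"; uri="$2"; args="$3"
    if [ -f "$docroot$uri" ]; then
        printf '%s\n' "$uri"               # $uri matched a file
    elif [ -d "$docroot$uri" ]; then
        printf '%s/\n' "${uri%/}"          # $uri/ matched a directory
    else
        printf '/index.php?%s\n' "$args"   # fall back to WordPress
    fi
}

# Build a throwaway document root with one file and one directory.
docroot=$(mktemp -d)
mkdir "$docroot/wp-content"
: > "$docroot/style.css"

resolve_try_files "$docroot" /style.css ""                       # /style.css
resolve_try_files "$docroot" /wp-content ""                      # /wp-content/
resolve_try_files "$docroot" /2015/10/hello-world "preview=true" # /index.php?preview=true

rm -r "$docroot"
```

The last case is what makes permalinks work: a pretty URL that matches no file or directory is handed to `index.php`, and WordPress decides which post to show.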

|

||||

|

||||

### Tip 5. Configure NGINX for FastCGI ###

|

||||

|

||||

NGINX can cache responses from FastCGI applications like PHP. This method offers the best performance.

|

||||

|

||||

For NGINX open source, compile in the third-party module [ngx_cache_purge][37], which provides cache purging capability, and use the configuration code below. NGINX Plus includes its own implementation of this code.

|

||||

|

||||

When using FastCGI, we recommend you install the [Nginx Helper plug-in][38] and use a configuration such as the one below, especially the use of **fastcgi_cache_key** and the location block including **fastcgi_cache_purge**. The plug-in automatically purges your cache when a page or a post is published or modified, a new comment is published, or the cache is manually purged from the WordPress Admin Dashboard.

The Nginx Helper plug-in can also add a short HTML snippet to the bottom of your pages, confirming the cache is working and displaying some statistics. (You can also confirm the cache is functioning properly using the [$upstream_cache_status][39] variable.)
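
As a minimal sketch of the **$upstream_cache_status** check mentioned above, the variable can be exposed in a response header and inspected from a browser or command-line client. The header name `X-Cache-Status` is an assumption chosen for illustration, not something NGINX or the plug-in requires, and the fragment is meant to be merged into the PHP location block of the full configuration rather than used on its own.

```nginx
# Fragment only: add this directive to the existing PHP location block.
location ~ \.php$ {
    # ... the fastcgi_* directives from the full configuration go here ...

    # Report the cache result (for example HIT, MISS, BYPASS or EXPIRED)
    # to the client. "X-Cache-Status" is an arbitrary, illustrative name.
    add_header X-Cache-Status $upstream_cache_status;
}
```

Requesting the same page twice should then typically show `MISS` followed by `HIT`, while requests that set **$skip_cache** (such as those from logged-in users) should report `BYPASS`.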

    fastcgi_cache_path /var/run/nginx-cache levels=1:2
                       keys_zone=WORDPRESS:100m inactive=60m;
    fastcgi_cache_key "$scheme$request_method$host$request_uri";

    server {
        server_name example.com www.example.com;
        root /var/www/example.com/htdocs;
        index index.php;

        access_log /var/log/nginx/example.com.access.log;
        error_log /var/log/nginx/example.com.error.log;

        set $skip_cache 0;

        # POST requests and urls with a query string should always go to PHP
        if ($request_method = POST) {
            set $skip_cache 1;
        }

        if ($query_string != "") {
            set $skip_cache 1;
        }

        # Don't cache uris containing the following segments
        if ($request_uri ~* "/wp-admin/|/xmlrpc.php|wp-.*.php|/feed/|index.php|sitemap(_index)?.xml") {
            set $skip_cache 1;
        }

        # Don't use the cache for logged in users or recent commenters
        if ($http_cookie ~* "comment_author|wordpress_[a-f0-9]+|wp-postpass|wordpress_no_cache|wordpress_logged_in") {
            set $skip_cache 1;
        }

        location / {
            try_files $uri $uri/ /index.php?$args;
        }

        location ~ \.php$ {
            try_files $uri /index.php;
            include fastcgi_params;
            fastcgi_pass unix:/var/run/php5-fpm.sock;
            fastcgi_cache_bypass $skip_cache;
            fastcgi_no_cache $skip_cache;
            fastcgi_cache WORDPRESS;
            fastcgi_cache_valid 60m;
        }

        location ~ /purge(/.*) {
            fastcgi_cache_purge WORDPRESS "$scheme$request_method$host$1";
        }

        location ~* ^.+\.(ogg|ogv|svg|svgz|eot|otf|woff|mp4|ttf|css|rss|atom|js|jpg|jpeg|gif|png|ico|zip|tgz|gz|rar|bz2|doc|xls|exe|ppt|tar|mid|midi|wav|bmp|rtf)$ {
            access_log off;
            log_not_found off;
            expires max;
        }

        location = /robots.txt {
            access_log off;
            log_not_found off;
        }
