mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-01-28 23:20:10 +08:00

commit

dcad6d3941

@ -1,35 +1,43 @@

|

||||

Linux / Unix / Mac OS X 中的 30 个方便的 Bash shell 别名

|

||||

30 个方便的 Bash shell 别名

|

||||

======

|

||||

bash 别名不是把别的,只不过是指向命令的快捷方式而已。`alias` 命令允许用户只输入一个单词就运行任意一个命令或一组命令(包括命令选项和文件名)。执行 `alias` 命令会显示一个所有已定义别名的列表。你可以在 [~/.bashrc][1] 文件中自定义别名。使用别名可以在命令行中减少输入的时间,使工作更流畅,同时增加生产率。

|

||||

|

||||

bash <ruby>别名<rt>alias</rt></ruby>只不过是指向命令的快捷方式而已。`alias` 命令允许用户只输入一个单词就运行任意一个命令或一组命令(包括命令选项和文件名)。执行 `alias` 命令会显示一个所有已定义别名的列表。你可以在 [~/.bashrc][1] 文件中自定义别名。使用别名可以在命令行中减少输入的时间,使工作更流畅,同时增加生产率。

|

||||

|

||||

本文通过 30 个 bash shell 别名的实际案例演示了如何创建和使用别名。

|

||||

|

||||

![30 Useful Bash Shell Aliase For Linux/Unix Users][2]

|

||||

|

||||

## bash alias 的那些事

|

||||

### bash alias 的那些事

|

||||

|

||||

bash shell 中的 alias 命令的语法是这样的:

|

||||

|

||||

### 如何列出 bash 别名

|

||||

```

|

||||

alias [alias-name[=string]...]

|

||||

```

|

||||

|

||||

#### 如何列出 bash 别名

|

||||

|

||||

输入下面的 [alias 命令][3]:

|

||||

|

||||

输入下面的 [alias 命令 ][3]:

|

||||

```

|

||||

alias

|

||||

```

|

||||

|

||||

结果为:

|

||||

|

||||

```

|

||||

alias ..='cd ..'

|

||||

alias amazonbackup='s3backup'

|

||||

alias apt-get='sudo apt-get'

|

||||

...

|

||||

|

||||

```

|

||||

|

||||

默认 alias 命令会列出当前用户定义好的别名。

|

||||

`alias` 命令默认会列出当前用户定义好的别名。

|

||||

|

||||

### 如何定义或者说创建一个 bash shell 别名

|

||||

#### 如何定义或者创建一个 bash shell 别名

|

||||

|

||||

使用下面语法 [创建别名][4]:

|

||||

|

||||

使用下面语法 [创建别名 ][4]:

|

||||

```

|

||||

alias name=value
alias name='command'

|

||||

@ -38,19 +46,22 @@ alias name = '/path/to/script'

|

||||

alias name='/path/to/script.pl arg1'

|

||||

```

|

||||

|

||||

举个例子,输入下面命令并回车就会为常用的 `clear`( 清除屏幕)命令创建一个别名 **c**:

|

||||

举个例子,输入下面命令并回车就会为常用的 `clear`(清除屏幕)命令创建一个别名 `c`:

|

||||

|

||||

```

|

||||

alias c='clear'

|

||||

```
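A minimal sketch of defining the alias and confirming that the shell registered it. Note that there must be no whitespace around `=`; with spaces, the shell parses `c`, `=` and the value as separate arguments rather than as one definition. The exact text printed by `alias c` differs slightly between shells, so no output is shown here.

```shell
# Define the alias; there must be no whitespace around "=".
alias c='clear'

# Print the stored definition to confirm that it was registered.
alias c
```

In a non-interactive bash script, alias expansion is off by default, so this is mainly useful at an interactive prompt or in `~/.bashrc`.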

|

||||

|

||||

然后输入字母 `c` 而不是 `clear` 后回车就会清除屏幕了:

|

||||

|

||||

```

|

||||

c

|

||||

```

|

||||

|

||||

### 如何临时性地禁用 bash 别名

|

||||

#### 如何临时性地禁用 bash 别名

|

||||

|

||||

下面语法可以[临时性地禁用别名][5]:

|

||||

|

||||

下面语法可以[临时性地禁用别名 ][5]:

|

||||

```

|

||||

## path/to/full/command

|

||||

/usr/bin/clear

|

||||

@ -60,37 +71,43 @@ c

|

||||

command ls

|

||||

```
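The three forms above can be sketched side by side. This assumes an `ls` alias like the one defined later in the article and that `ls` lives at `/bin/ls`, which may differ on your system.

```shell
alias ls='ls --color=auto'

# Each form below bypasses the alias for a single invocation.
\ls /          # a backslash before the name suppresses alias expansion
command ls /   # the "command" builtin skips aliases and shell functions
/bin/ls /      # a command given by its full path is never alias-expanded
```

None of these removes the alias; the next plain `ls` at an interactive prompt uses it again.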

|

||||

|

||||

### 如何删除 bash 别名

|

||||

#### 如何删除 bash 别名

|

||||

|

||||

使用 [unalias 命令来删除别名][6]。其语法为:

|

||||

|

||||

使用 [unalias 命令来删除别名 ][6]。其语法为:

|

||||

```

|

||||

unalias aliasname

|

||||

unalias foo

|

||||

```

|

||||

|

||||

例如,删除我们之前创建的别名 `c`:

|

||||

|

||||

```

|

||||

unalias c

|

||||

```
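A short sketch of checking that the removal worked: looking up an alias that no longer exists returns a non-zero status, which the last line uses to print a message. The message text is only illustrative.

```shell
alias c='clear'   # define it first so there is something to remove
unalias c

# Looking up a removed alias fails, so the fallback message is printed.
alias c 2>/dev/null || echo "c is no longer an alias"
```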

|

||||

|

||||

你还需要用文本编辑器删掉 [~/.bashrc 文件 ][1] 中的别名定义(参见下一部分内容)。

|

||||

你还需要用文本编辑器删掉 [~/.bashrc 文件][1] 中的别名定义(参见下一部分内容)。

|

||||

|

||||

### 如何让 bash shell 别名永久生效

|

||||

#### 如何让 bash shell 别名永久生效

|

||||

|

||||

别名 `c` 在当前登录会话中依然有效。但当你登出或重启系统后,别名 `c` 就没有了。为了防止出现这个问题,将别名定义写入 [~/.bashrc file][1] 中,输入:

|

||||

|

||||

```

|

||||

vi ~/.bashrc

|

||||

```

|

||||

|

||||

输入下行内容让别名 `c` 对当前用户永久有效:

|

||||

|

||||

```

|

||||

alias c='clear'

|

||||

```
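Instead of editing the file by hand, the line can also be appended from the shell. The sketch below is one possible approach, not part of the original article; `add_alias_line` is a hypothetical helper name, and it only appends when an identical line is not already present, so running it twice does not create duplicates.

```shell
# add_alias_line FILE LINE: append LINE to FILE unless an identical line exists.
add_alias_line() {
    grep -qxF -- "$2" "$1" 2>/dev/null || printf '%s\n' "$2" >> "$1"
}

# Typical use (commented out so the sketch does not touch your real file):
# add_alias_line ~/.bashrc "alias c='clear'"
```

After changing `~/.bashrc`, open a new shell or run `. ~/.bashrc` for the alias to take effect in the current session.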

|

||||

|

||||

保存并关闭文件就行了。系统级的别名(也就是对所有用户都生效的别名) 可以放在 `/etc/bashrc` 文件中。请注意,alias 命令内建于各种 shell 中,包括 ksh,tcsh/csh,ash,bash 以及其他 shell。

|

||||

保存并关闭文件就行了。系统级的别名(也就是对所有用户都生效的别名)可以放在 `/etc/bashrc` 文件中。请注意,`alias` 命令内建于各种 shell 中,包括 ksh,tcsh/csh,ash,bash 以及其他 shell。

|

||||

|

||||

### 关于特权权限判断

|

||||

#### 关于特权权限判断

|

||||

|

||||

可以将下面代码加入 `~/.bashrc`:

|

||||

|

||||

```

|

||||

# if user is not root, pass all commands via sudo #

|

||||

if [ $UID -ne 0 ]; then

|

||||

@ -99,9 +116,10 @@ if [ $UID -ne 0 ]; then

|

||||

fi

|

||||

```
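Part of the block above is elided by the diff. As a rough, self-contained illustration of the same idea, the sketch below uses `id -u`, which also works in shells that do not set `$UID`. The two aliases are assumptions chosen for illustration and are not taken from the elided lines.

```shell
# $UID is set by bash; "id -u" gives the same number in any POSIX shell.
if [ "$(id -u)" -ne 0 ]; then
    # Illustrative aliases only; pick the commands you actually need.
    alias reboot='sudo reboot'
    alias update='sudo apt-get upgrade'
fi
```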

|

||||

|

||||

### 定义与操作系统类型相关的别名

|

||||

#### 定义与操作系统类型相关的别名

|

||||

|

||||

可以将下面代码加入 `~/.bashrc` [使用 case 语句][7]:

|

||||

|

||||

可以将下面代码加入 `~/.bashrc` [使用 case 语句 ][7]:

|

||||

```

|

||||

### Get os name via uname ###

|

||||

_myos="$(uname)"

|

||||

@ -115,13 +133,14 @@ case $_myos in

|

||||

esac

|

||||

```

|

||||

|

||||

## 30 个 bash shell 别名的案例

|

||||

### 30 个 bash shell 别名的案例

|

||||

|

||||

你可以定义各种类型的别名来节省时间并提高生产率。

|

||||

|

||||

### #1:控制 ls 命令的输出

|

||||

#### #1:控制 ls 命令的输出

|

||||

|

||||

[ls 命令列出目录中的内容][8] 而你可以对输出进行着色:

|

||||

|

||||

[ls 命令列出目录中的内容 ][8] 而你可以对输出进行着色:

|

||||

```

|

||||

## Colorize the ls output ##

|

||||

alias ls='ls --color=auto'

|

||||

@ -133,7 +152,8 @@ alias ll = 'ls -la'

|

||||

alias l.='ls -d .* --color=auto'

|

||||

```

|

||||

|

||||

### #2:控制 cd 命令的行为

|

||||

#### #2:控制 cd 命令的行为

|

||||

|

||||

```

|

||||

## get rid of command not found ##

|

||||

alias cd..='cd ..'

|

||||

@ -147,9 +167,10 @@ alias .4= 'cd ../../../../'

|

||||

alias .5='cd ../../../../..'

|

||||

```

|

||||

|

||||

### #3:控制 grep 命令的输出

|

||||

#### #3:控制 grep 命令的输出

|

||||

|

||||

[grep 命令是一个用于在纯文本文件中搜索匹配正则表达式的行的命令行工具][9]:

|

||||

|

||||

[grep 命令是一个用于在纯文本文件中搜索匹配正则表达式的行的命令行工具 ][9]:

|

||||

```

|

||||

## Colorize the grep command output for ease of use (good for log files)##

|

||||

alias grep='grep --color=auto'

|

||||

@ -157,44 +178,51 @@ alias egrep = 'egrep --color=auto'

|

||||

alias fgrep='fgrep --color=auto'

|

||||

```

|

||||

|

||||

### #4:让计算器默认开启 math 库

|

||||

#### #4:让计算器默认开启 math 库

|

||||

|

||||

```

|

||||

alias bc='bc -l'

|

||||

```

|

||||

|

||||

### #4:生成 sha1 数字签名

|

||||

#### #4:生成 sha1 数字签名

|

||||

|

||||

```

|

||||

alias sha1='openssl sha1'

|

||||

```

|

||||

|

||||

### #5:自动创建父目录

|

||||

#### #5:自动创建父目录

|

||||

|

||||

[mkdir 命令][10] 用于创建目录:

|

||||

|

||||

[mkdir 命令 ][10] 用于创建目录:

|

||||

```

|

||||

alias mkdir='mkdir -pv'

|

||||

```

|

||||

|

||||

### #6:为 diff 输出着色

|

||||

#### #6:为 diff 输出着色

|

||||

|

||||

你可以[使用 diff 来一行行第比较文件][11] 而一个名为 `colordiff` 的工具可以为 diff 输出着色:

|

||||

|

||||

你可以[使用 diff 来一行行第比较文件 ][11] 而一个名为 colordiff 的工具可以为 diff 输出着色:

|

||||

```

|

||||

# install colordiff package :)

|

||||

alias diff='colordiff'

|

||||

```

|

||||

|

||||

### #7:让 mount 命令的输出更漂亮,更方便人类阅读

|

||||

#### #7:让 mount 命令的输出更漂亮,更方便人类阅读

|

||||

|

||||

```

|

||||

alias mount='mount |column -t'

|

||||

```

|

||||

|

||||

### #8:简化命令以节省时间

|

||||

#### #8:简化命令以节省时间

|

||||

|

||||

```

|

||||

# handy short cuts #

|

||||

alias h='history'
alias j='jobs -l'

|

||||

```

|

||||

|

||||

### #9:创建一系列新命令

|

||||

#### #9:创建一系列新命令

|

||||

|

||||

```

|

||||

alias path='echo -e ${PATH//:/\\n}'
alias now='date +"%T"'

|

||||

@ -202,7 +230,8 @@ alias nowtime =now

|

||||

alias nowdate='date +"%d-%m-%Y"'

|

||||

```
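The `path` alias above relies on bash's `${PATH//:/\\n}` pattern substitution together with `echo -e`. For shells without that substitution, a portable variant can be sketched with `tr`; `show_path` is a hypothetical function name used only for this example.

```shell
# show_path [LIST]: print each entry of a colon-separated list on its own line.
# Defaults to $PATH when no argument is given.
show_path() {
    printf '%s\n' "${1:-$PATH}" | tr ':' '\n'
}

show_path
```

A function is used instead of an alias here because it can take an optional argument, which makes the behavior easy to check on a short list such as `show_path /usr/bin:/bin`.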

|

||||

|

||||

### #10:设置 vim 为默认编辑器

|

||||

#### #10:设置 vim 为默认编辑器

|

||||

|

||||

```

|

||||

alias vi=vim
alias svi='sudo vi'

|

||||

@ -210,7 +239,8 @@ alias vis = 'vim "+set si"'

|

||||

alias edit='vim'

|

||||

```

|

||||

|

||||

### #11:控制网络工具 ping 的输出

|

||||

#### #11:控制网络工具 ping 的输出

|

||||

|

||||

```

|

||||

# Stop after sending count ECHO_REQUEST packets #

|

||||

alias ping='ping -c 5'

|

||||

@ -219,16 +249,18 @@ alias ping = 'ping -c 5'

|

||||

alias fastping='ping -c 100 -i 0.2'

|

||||

```

|

||||

|

||||

### #12:显示打开的端口

|

||||

#### #12:显示打开的端口

|

||||

|

||||

使用 [netstat 命令][12] 可以快速列出服务区中所有的 TCP/UDP 端口:

|

||||

|

||||

使用 [netstat 命令 ][12] 可以快速列出服务区中所有的 TCP/UDP 端口:

|

||||

```

|

||||

alias ports='netstat -tulanp'

|

||||

```

|

||||

|

||||

### #13:唤醒休眠额服务器

|

||||

#### #13:唤醒休眠的服务器

|

||||

|

||||

[Wake-on-LAN (WOL) 是一个以太网标准][13],可以通过网络消息来开启服务器。你可以使用下面别名来[快速激活 nas 设备][14] 以及服务器:

|

||||

|

||||

[Wake-on-LAN (WOL) 是一个以太网标准 ][13],可以通过网络消息来开启服务器。你可以使用下面别名来[快速激活 nas 设备 ][14] 以及服务器:

|

||||

```

|

||||

## replace mac with your actual server mac address #

|

||||

alias wakeupnas01='/usr/bin/wakeonlan 00:11:32:11:15:FC'

|

||||

@ -236,9 +268,10 @@ alias wakeupnas02 = '/usr/bin/wakeonlan 00:11:32:11:15:FD'

|

||||

alias wakeupnas03='/usr/bin/wakeonlan 00:11:32:11:15:FE'

|

||||

```

|

||||

|

||||

### #14:控制防火墙 (iptables) 的输出

|

||||

#### #14:控制防火墙 (iptables) 的输出

|

||||

|

||||

[Netfilter 是一款 Linux 操作系统上的主机防火墙][15]。它是 Linux 发行版中的一部分,且默认情况下是激活状态。[这里列出了大多数 Liux 新手防护入侵者最常用的 iptables 方法][16]。

|

||||

|

||||

[Netfilter 是一款 Linux 操作系统上的主机防火墙 ][15]。它是 Linux 发行版中的一部分,且默认情况下是激活状态。[这里列出了大多数 Liux 新手防护入侵者最常用的 iptables 方法 ][16]。

|

||||

```

|

||||

## shortcut for iptables and pass it via sudo#

|

||||

alias ipt='sudo /sbin/iptables'

|

||||

@ -251,7 +284,8 @@ alias iptlistfw = 'sudo /sbin/iptables -L FORWARD -n -v --line-numbers'

|

||||

alias firewall=iptlist

|

||||

```

|

||||

|

||||

### #15:使用 curl 调试 web 服务器 /cdn 上的问题

|

||||

#### #15:使用 curl 调试 web 服务器 / CDN 上的问题

|

||||

|

||||

```

|

||||

# get web server headers #

|

||||

alias header='curl -I'

|

||||

@ -260,7 +294,8 @@ alias header = 'curl -I'

|

||||

alias headerc='curl -I --compressed'

|

||||

```

|

||||

|

||||

### #16:增加安全性

|

||||

#### #16:增加安全性

|

||||

|

||||

```

|

||||

# do not delete / or prompt if deleting more than 3 files at a time #

|

||||

alias rm='rm -I --preserve-root'

|

||||

@ -276,9 +311,10 @@ alias chmod = 'chmod --preserve-root'

|

||||

alias chgrp='chgrp --preserve-root'

|

||||

```

|

||||

|

||||

### #17:更新 Debian Linux 服务器

|

||||

#### #17:更新 Debian Linux 服务器

|

||||

|

||||

[apt-get 命令][17] 用于通过因特网安装软件包 (ftp 或 http)。你也可以一次性升级所有软件包:

|

||||

|

||||

[apt-get 命令 ][17] 用于通过因特网安装软件包 (ftp 或 http)。你也可以一次性升级所有软件包:

|

||||

```

|

||||

# distro specific - Debian / Ubuntu and friends #

|

||||

# install with apt-get

|

||||

@ -289,25 +325,27 @@ alias updatey = "sudo apt-get --yes"

|

||||

alias update='sudo apt-get update && sudo apt-get upgrade'

|

||||

```

|

||||

|

||||

### #18:更新 RHEL / CentOS / Fedora Linux 服务器

|

||||

#### #18:更新 RHEL / CentOS / Fedora Linux 服务器

|

||||

|

||||

[yum 命令][18] 是 RHEL / CentOS / Fedora Linux 以及其他基于这些发行版的 Linux 上的软件包管理工具:

|

||||

|

||||

[yum 命令 ][18] 是 RHEL / CentOS / Fedora Linux 以及其他基于这些发行版的 Linux 上的软件包管理工具:

|

||||

```

|

||||

## distro specific RHEL/CentOS ##
alias update='yum update'
alias updatey='yum -y update'

|

||||

```

|

||||

|

||||

### #19:优化 sudo 和 su 命令

|

||||

#### #19:优化 sudo 和 su 命令

|

||||

|

||||

```

|

||||

# become root #

|

||||

alias root='sudo -i'
alias su='sudo -i'

|

||||

```

|

||||

|

||||

### #20:使用 sudo 执行 halt/reboot 命令

|

||||

#### #20:使用 sudo 执行 halt/reboot 命令

|

||||

|

||||

[shutdown 命令 ][19] 会让 Linux / Unix 系统关机:

|

||||

[shutdown 命令][19] 会让 Linux / Unix 系统关机:

|

||||

```

|

||||

# reboot / halt / poweroff

|

||||

alias reboot='sudo /sbin/reboot'

|

||||

@ -316,7 +354,8 @@ alias halt = 'sudo /sbin/halt'

|

||||

alias shutdown='sudo /sbin/shutdown'

|

||||

```

|

||||

|

||||

### #21:控制 web 服务器

|

||||

#### #21:控制 web 服务器

|

||||

|

||||

```

|

||||

# also pass it via sudo so whoever is admin can reload it without calling you #

|

||||

alias nginxreload='sudo /usr/local/nginx/sbin/nginx -s reload'

|

||||

@ -327,7 +366,8 @@ alias httpdreload = 'sudo /usr/sbin/apachectl -k graceful'

|

||||

alias httpdtest='sudo /usr/sbin/apachectl -t && /usr/sbin/apachectl -t -D DUMP_VHOSTS'

|

||||

```

|

||||

|

||||

### #22:与备份相关的别名

|

||||

#### #22:与备份相关的别名

|

||||

|

||||

```

|

||||

# if cron fails or if you want backup on demand just run these commands #

|

||||

# again pass it via sudo so whoever is in admin group can start the job #

|

||||

@ -342,7 +382,8 @@ alias rsnapshotmonthly = 'sudo /home/scripts/admin/scripts/backup/wrapper.rsnaps

|

||||

alias amazonbackup=s3backup

|

||||

```

|

||||

|

||||

### #23:桌面应用相关的别名 - 按需播放的 avi/mp3 文件

|

||||

#### #23:桌面应用相关的别名 - 按需播放的 avi/mp3 文件

|

||||

|

||||

```

|

||||

## play video files in a current directory ##

|

||||

# cd ~/Download/movie-name

|

||||

@ -364,10 +405,10 @@ alias nplaymp3 = 'for i in /nas/multimedia/mp3/*.mp3; do mplayer "$i"; done'

|

||||

alias music='mplayer --shuffle *'

|

||||

```

|

||||

|

||||

#### #24:设置系统管理相关命令的默认网卡

|

||||

|

||||

### #24:设置系统管理相关命令的默认网卡

|

||||

[vnstat 一款基于终端的网络流量检测器][20]。[dnstop 是一款分析 DNS 流量的终端工具][21]。[tcptrack 和 iftop 命令显示][22] TCP/UDP 连接方面的信息,它监控网卡并显示其消耗的带宽。

|

||||

|

||||

[vnstat 一款基于终端的网络流量检测器 ][20]。[dnstop 是一款分析 DNS 流量的终端工具 ][21]。[tcptrack 和 iftop 命令显示 ][22] TCP/UDP 连接方面的信息,它监控网卡并显示其消耗的带宽。

|

||||

```

|

||||

## All of our servers eth1 is connected to the Internets via vlan / router etc ##

|

||||

alias dnstop='dnstop -l 5 eth1'

|

||||

@ -381,7 +422,8 @@ alias ethtool = 'ethtool eth1'

|

||||

alias iwconfig='iwconfig wlan0'

|

||||

```

|

||||

|

||||

### #25:快速获取系统内存,cpu 使用,和 gpu 内存相关信息

|

||||

#### #25:快速获取系统内存,cpu 使用,和 gpu 内存相关信息

|

||||

|

||||

```

|

||||

## pass options to free ##

|

||||

alias meminfo='free -m -l -t'

|

||||

@ -404,9 +446,10 @@ alias cpuinfo = 'lscpu'

|

||||

alias gpumeminfo='grep -i --color memory /var/log/Xorg.0.log'

|

||||

```

|

||||

|

||||

### #26:控制家用路由器

|

||||

#### #26:控制家用路由器

|

||||

|

||||

`curl` 命令可以用来 [重启 Linksys 路由器][23]。

|

||||

|

||||

curl 命令可以用来 [重启 Linksys 路由器 ][23]。

|

||||

```

|

||||

# Reboot my home Linksys WAG160N / WAG54 / WAG320 / WAG120N Router / Gateway from *nix.

|

||||

alias rebootlinksys="curl -u 'admin:my-super-password' 'http://192.168.1.2/setup.cgi?todo=reboot'"

|

||||

@ -415,15 +458,17 @@ alias rebootlinksys = "curl -u 'admin:my-super-password' 'http://192.168.1.2/set

|

||||

alias reboottomato="ssh admin@192.168.1.1 /sbin/reboot"

|

||||

```

|

||||

|

||||

### #27 wget 默认断点续传

|

||||

#### #27 wget 默认断点续传

|

||||

|

||||

[GNU wget 是一款用来从 web 下载文件的自由软件][25]。它支持 HTTP,HTTPS,以及 FTP 协议,而且它也支持断点续传:

|

||||

|

||||

[GNU Wget 是一款用来从 web 下载文件的自由软件 ][25]。它支持 HTTP,HTTPS,以及 FTP 协议,而且它页支持断点续传:

|

||||

```

|

||||

## this one saved my butt so many times ##
alias wget='wget -c'

|

||||

```

|

||||

|

||||

### #28 使用不同浏览器来测试网站

|

||||

#### #28 使用不同浏览器来测试网站

|

||||

|

||||

```

|

||||

## this one saved my butt so many times ##
alias ff4='/opt/firefox4/firefox'

|

||||

@ -438,9 +483,10 @@ alias ff =ff13

|

||||

alias browser=chrome

|

||||

```

|

||||

|

||||

### #29:关于 ssh 别名的注意事项

|

||||

#### #29:关于 ssh 别名的注意事项

|

||||

|

||||

不要创建 ssh 别名,代之以 `~/.ssh/config` 这个 OpenSSH SSH 客户端配置文件。它的选项更加丰富。下面是一个例子:

|

||||

|

||||

```

|

||||

Host server10

|

||||

Hostname 1.2.3.4

|

||||

@ -451,12 +497,13 @@ Host server10

|

||||

TCPKeepAlive yes

|

||||

```

|

||||

|

||||

然后你就可以使用下面语句连接 peer1 了:

|

||||

然后你就可以使用下面语句连接 server10 了:

|

||||

|

||||

```

|

||||

$ ssh server10

|

||||

```

|

||||

|

||||

### #30:现在该分享你的别名了

|

||||

#### #30:现在该分享你的别名了

|

||||

|

||||

```

|

||||

## set some other defaults ##

|

||||

@ -486,27 +533,26 @@ alias cdnmdel = '/home/scripts/admin/cdn/purge_cdn_cache --profile akamai --stdi

|

||||

alias amzcdnmdel='/home/scripts/admin/cdn/purge_cdn_cache --profile amazon --stdin'

|

||||

```

|

||||

|

||||

## 结论

|

||||

### 总结

|

||||

|

||||

本文总结了 *nix bash 别名的多种用法:

|

||||

|

||||

1。为命令设置默认的参数(例如通过 `alias ethtool='ethtool eth0'` 设置 ethtool 命令的默认参数为 eth0)。

|

||||

2。修正错误的拼写(通过 `alias cd。.='cd .。'`让 `cd。.` 变成 `cd .。`)。

|

||||

3。缩减输入。

|

||||

4。设置系统中多版本命令的默认路径(例如 GNU/grep 位于 /usr/local/bin/grep 中而 Unix grep 位于 /bin/grep 中。若想默认使用 GNU grep 则设置别名 `grep='/usr/local/bin/grep'` )。

|

||||

5。通过默认开启命令(例如 rm,mv 等其他命令)的交互参数来增加 Unix 的安全性。

|

||||

6。为老旧的操作系统(比如 MS-DOS 或者其他类似 Unix 的操作系统)创建命令以增加兼容性(比如 `alias del=rm` )。

|

||||

1. 为命令设置默认的参数(例如通过 `alias ethtool='ethtool eth0'` 设置 ethtool 命令的默认参数为 eth0)。

|

||||

2. 修正错误的拼写(通过 `alias cd..='cd ..'`让 `cd..` 变成 `cd ..`)。

|

||||

3. 缩减输入。

|

||||

4. 设置系统中多版本命令的默认路径(例如 GNU/grep 位于 `/usr/local/bin/grep` 中而 Unix grep 位于 `/bin/grep` 中。若想默认使用 GNU grep 则设置别名 `grep='/usr/local/bin/grep'` )。

|

||||

5. 通过默认开启命令(例如 `rm`,`mv` 等其他命令)的交互参数来增加 Unix 的安全性。

|

||||

6. 为老旧的操作系统(比如 MS-DOS 或者其他类似 Unix 的操作系统)创建命令以增加兼容性(比如 `alias del=rm`)。

|

||||

|

||||

我已经分享了多年来为了减少重复输入命令而使用的别名。若你知道或使用的哪些 bash/ksh/csh 别名能够减少输入,请在留言框中分享。

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.cyberciti.biz/tips/bash-aliases-mac-centos-linux-unix.html

|

||||

|

||||

作者:[nixCraft][a]

|

||||

译者:[lujun9972](https://github.com/lujun9972)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,103 +1,115 @@

|

||||

一个树莓派 3 的新手指南

|

||||

树莓派 3 的新手指南

|

||||

======

|

||||

> 这个教程将帮助你入门<ruby>树莓派 3<rt>Raspberry Pi 3</rt></ruby>。

|

||||

|

||||

|

||||

|

||||

这篇文章是我的使用树莓派 3 创建新项目的每周系列文章的一部分。该系列的第一篇文章专注于入门,它主要讲使用 PIXEL 桌面去安装树莓派、设置网络以及其它的基本组件。

|

||||

这篇文章是我的使用树莓派 3 创建新项目的每周系列文章的一部分。该系列的这个第一篇文章专注于入门,它主要讲安装 Raspbian 和 PIXEL 桌面,以及设置网络和其它的基本组件。

|

||||

|

||||

### 你需要:

|

||||

|

||||

* 一台树莓派 3

|

||||

* 一个 5v 2mAh 带 USB 接口的电源适配器

|

||||

* 至少 8GB 容量的 Micro SD 卡

|

||||

* Wi-Fi 或者以太网线

|

||||

* 散热片

|

||||

* 键盘和鼠标

|

||||

* 一台 PC 显示器

|

||||

* 一台用于准备 microSD 卡的 Mac 或者 PC

|

||||

* 一台树莓派 3

|

||||

* 一个 5v 2mAh 带 USB 接口的电源适配器

|

||||

* 至少 8GB 容量的 Micro SD 卡

|

||||

* Wi-Fi 或者以太网线

|

||||

* 散热片

|

||||

* 键盘和鼠标

|

||||

* 一台 PC 显示器

|

||||

* 一台用于准备 microSD 卡的 Mac 或者 PC

|

||||

|

||||

|

||||

|

||||

现在市面上有很多基于 Linux 操作系统的树莓派,这种树莓派你可以直接安装它,但是,如果你是第一次接触树莓派,我推荐使用 NOOBS,它是树莓派官方的操作系统安装器,它安装操作系统到设备的过程非常简单。

|

||||

现在有很多基于 Linux 操作系统可用于树莓派,你可以直接安装它,但是,如果你是第一次接触树莓派,我推荐使用 NOOBS,它是树莓派官方的操作系统安装器,它安装操作系统到该设备的过程非常简单。

|

||||

|

||||

在你的电脑上从 [这个链接][1] 下载 NOOBS。它是一个 zip 压缩文件。如果你使用的是 MacOS,可以直接双击它,MacOS 会自动解压这个文件。如果你使用的是 Windows,右键单击它,选择“解压到这里”。

|

||||

|

||||

如果你运行的是 Linux,如何去解压 zip 文件取决于你的桌面环境,因为,不同的桌面环境下解压文件的方法不一样,但是,使用命令行可以很容易地完成解压工作。

|

||||

如果你运行的是 Linux 桌面,如何去解压 zip 文件取决于你的桌面环境,因为,不同的桌面环境下解压文件的方法不一样,但是,使用命令行可以很容易地完成解压工作。

|

||||

|

||||

`$ unzip NOOBS.zip`

|

||||

```

|

||||

$ unzip NOOBS.zip

|

||||

```

|

||||

|

||||

不管它是什么操作系统,打开解压后的文件,你看到的应该是如下图所示的样子:

|

||||

|

||||

![content][3] Swapnil Bhartiya

|

||||

![content][3]

|

||||

|

||||

现在,在你的 PC 上插入 Micro SD 卡,将它格式化成 FAT32 格式的文件系统。在 MacOS 上,使用磁盘实用工具去格式化 Micro SD 卡:

|

||||

|

||||

![format][4] Swapnil Bhartiya

|

||||

![format][4]

|

||||

|

||||

在 Windows 上,只需要右键单击这个卡,然后选择“格式化”选项。如果是在 Linux 上,不同的桌面环境使用不同的工具,就不一一去讲解了。在这里我写了一个教程,[在 Linux 上使用命令行接口][5] 去格式化 SD 卡为 Fat32 文件系统。

|

||||

在 Windows 上,只需要右键单击这个卡,然后选择“格式化”选项。如果是在 Linux 上,不同的桌面环境使用不同的工具,就不一一去讲解了。在这里我写了一个教程,[在 Linux 上使用命令行界面][5] 去格式化 SD 卡为 Fat32 文件系统。

|

||||

|

||||

在你拥有了 FAT32 格式的文件系统后,就可以去拷贝下载的 NOOBS 目录的内容到这个卡的根目录下。如果你使用的是 MacOS 或者 Linux,可以使用 rsync 将 NOOBS 的内容传到 SD 卡的根目录中。在 MacOS 或者 Linux 中打开终端应用,然后运行如下的 rsync 命令:

|

||||

在你的卡格式成了 FAT32 格式的文件系统后,就可以去拷贝下载的 NOOBS 目录的内容到这个卡的根目录下。如果你使用的是 MacOS 或者 Linux,可以使用 `rsync` 将 NOOBS 的内容传到 SD 卡的根目录中。在 MacOS 或者 Linux 中打开终端应用,然后运行如下的 rsync 命令:

|

||||

|

||||

`rsync -avzP /path_of_NOOBS /path_of_sdcard`

|

||||

```

|

||||

rsync -avzP /path_of_NOOBS /path_of_sdcard

|

||||

```

|

||||

|

||||

一定要确保选择了 SD 卡的根目录,在我的案例中(在 MacOS 上),它是:

|

||||

|

||||

`rsync -avzP /Users/swapnil/Downloads/NOOBS_v2_2_0/ /Volumes/U/`

|

||||

```

|

||||

rsync -avzP /Users/swapnil/Downloads/NOOBS_v2_2_0/ /Volumes/U/

|

||||

```

|

||||

|

||||

或者你也可以拷贝粘贴 NOOBS 目录中的内容。一定要确保将 NOOBS 目录中的内容全部拷贝到 Micro SD 卡的根目录下,千万不能放到任何的子目录中。

|

||||

|

||||

现在可以插入这张 Micro SD 卡到树莓派 3 中,连接好显示器、键盘鼠标和电源适配器。如果你拥有有线网络,我建议你使用它,因为有线网络下载和安装操作系统更快。树莓派将引导到 NOOBS,它将提供一个供你去选择安装的分发版列表。从第一个选项中选择树莓派,紧接着会出现如下图的画面。

|

||||

现在可以插入这张 MicroSD 卡到树莓派 3 中,连接好显示器、键盘鼠标和电源适配器。如果你拥有有线网络,我建议你使用它,因为有线网络下载和安装操作系统更快。树莓派将引导到 NOOBS,它将提供一个供你去选择安装的分发版列表。从第一个选项中选择 Raspbian,紧接着会出现如下图的画面。

|

||||

|

||||

![raspi config][6] Swapnil Bhartiya

|

||||

![raspi config][6]

|

||||

|

||||

在你安装完成后,树莓派将重新启动,你将会看到一个欢迎使用树莓派的画面。现在可以去配置它,并且去运行系统更新。大多数情况下,我们都是在没有外设的情况下使用树莓派的,都是使用 SSH 基于网络远程去管理它。这意味着你不需要为了管理树莓派而去为它接上鼠标键盘和显示器。

|

||||

在你安装完成后,树莓派将重新启动,你将会看到一个欢迎使用树莓派的画面。现在可以去配置它,并且去运行系统更新。大多数情况下,我们都是在没有外设的情况下使用树莓派的,都是使用 SSH 基于网络远程去管理它。这意味着你不需要为了管理树莓派而去为它接上鼠标、键盘和显示器。

|

||||

|

||||

开始使用它的第一步是,配置网络(假如你使用的是 Wi-Fi)。点击顶部面板上的网络图标,然后在出现的网络列表中,选择你要配置的网络并为它输入正确的密码。

|

||||

|

||||

![wireless][7] Swapnil Bhartiya

|

||||

![wireless][7]

|

||||

|

||||

恭喜您,无线网络的连接配置完成了。在进入下一步的配置之前,你需要找到你的网络为树莓派分配的 IP 地址,因为远程管理会用到它。

|

||||

|

||||

打开一个终端,运行如下的命令:

|

||||

|

||||

`ifconfig`

|

||||

```

|

||||

ifconfig

|

||||

```

|

||||

|

||||

现在,记下这个设备的 wlan0 部分的 IP 地址。它一般显示为 “inet addr”

|

||||

现在,记下这个设备的 `wlan0` 部分的 IP 地址。它一般显示为 “inet addr”。

|

||||

|

||||

现在,可以去启用 SSH 了,在树莓派上打开一个终端,然后打开 raspi-config 工具。

|

||||

现在,可以去启用 SSH 了,在树莓派上打开一个终端,然后打开 `raspi-config` 工具。

|

||||

|

||||

`sudo raspi-config`

|

||||

```

|

||||

sudo raspi-config

|

||||

```

|

||||

|

||||

树莓派的默认用户名和密码分别是 “pi” 和 “raspberry”。在上面的命令中你会被要求输入密码。树莓派配置工具的第一个选项是去修改默认密码,我强烈推荐你修改默认密码,尤其是你基于网络去使用它的时候。

|

||||

|

||||

第二个选项是去修改主机名,如果在你的网络中有多个树莓派时,主机名用于区分它们。一个有意义的主机名可以很容易在网络上识别每个设备。

|

||||

|

||||

然后进入到接口选项,去启用摄像头、SSH、以及 VNC。如果你在树莓派上使用了一个涉及到多媒体的应用程序,比如,家庭影院系统或者 PC,你也可以去改变音频输出选项。缺省情况下,它的默认输出到 HDMI 接口,但是,如果你使用外部音响,你需要去改变音频输出设置。转到树莓派配置工具的高级配置选项,选择音频,然后选择 3.5mm 作为默认输出。

|

||||

然后进入到接口选项,去启用摄像头、SSH、以及 VNC。如果你在树莓派上使用了一个涉及到多媒体的应用程序,比如,家庭影院系统或者 PC,你也可以去改变音频输出选项。缺省情况下,它的默认输出到 HDMI 接口,但是,如果你使用外部音响,你需要去改变音频输出设置。转到树莓派配置工具的高级配置选项,选择音频,然后选择 “3.5mm” 作为默认输出。

|

||||

|

||||

[小提示:使用箭头键去导航,使用回车键去选择]

|

||||

|

||||

一旦所有的改变被应用, 树莓派将要求重新启动。你可以从树莓派上拔出显示器、鼠标键盘,以后可以通过网络来管理它。现在可以在你的本地电脑上打开终端。如果你使用的是 Windows,你可以使用 Putty 或者去读我的文章 - 怎么在 Windows 10 上安装 Ubuntu Bash。

|

||||

一旦应用了所有的改变, 树莓派将要求重新启动。你可以从树莓派上拔出显示器、鼠标键盘,以后可以通过网络来管理它。现在可以在你的本地电脑上打开终端。如果你使用的是 Windows,你可以使用 Putty 或者去读我的文章 - 怎么在 Windows 10 上安装 Ubuntu Bash。

|

||||

|

||||

在你的本地电脑上输入如下的 SSH 命令:

|

||||

|

||||

`ssh pi@IP_ADDRESS_OF_Pi`

|

||||

```

|

||||

ssh pi@IP_ADDRESS_OF_Pi

|

||||

```

|

||||

|

||||

在我的电脑上,这个命令是这样的:

|

||||

|

||||

`ssh pi@10.0.0.161`

|

||||

```

|

||||

ssh pi@10.0.0.161

|

||||

```

|

||||

|

||||

输入它的密码,你登入到树莓派了!现在你可以从一台远程电脑上去管理你的树莓派。如果你希望通过因特网去管理树莓派,可以去阅读我的文章 - [如何在你的计算机上启用 RealVNC][8]。

|

||||

|

||||

在该系列的下一篇文章中,我将讲解使用你的树莓派去远程管理你的 3D 打印机。

|

||||

|

||||

**这篇文章是作为 IDG 投稿网络的一部分发表的。[想加入吗?][9]**

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.infoworld.com/article/3176488/linux/a-beginner-s-guide-to-raspberry-pi-3.html

|

||||

|

||||

作者:[Swapnil Bhartiya][a]

|

||||

译者:[qhwdw](https://github.com/qhwdw)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,113 @@

|

||||

Creating swap space with the fdisk and fallocate commands

|

||||

======

|

||||

|

||||

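Swap space holds memory contents once physical memory (RAM) fills up. When RAM runs out, Linux moves inactive pages from memory into swap space, freeing RAM for the system to use. Even so, swap space should not be treated as a replacement for physical memory.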

|

||||

|

||||

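In most cases, the recommended swap size is 1 to 2 times the size of physical memory. In other words, if you have 8 GB of RAM, swap space should be between 8 GB and 16 GB.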

|

||||
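As a rough illustration of the 1x to 2x rule of thumb, the sketch below computes the suggested range from the installed RAM. It assumes a Linux system where `/proc/meminfo` reports `MemTotal` in kB; `swap_range_mb` is a hypothetical helper name.

```shell
# swap_range_mb RAM_MB: print the suggested minimum and maximum swap size in MB
# (1x and 2x RAM, following the rule of thumb).
swap_range_mb() {
    echo "$1 $(( $1 * 2 ))"
}

# On Linux, /proc/meminfo reports MemTotal in kB.
if [ -r /proc/meminfo ]; then
    ram_kb=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)
    swap_range_mb $(( ${ram_kb:-0} / 1024 ))
fi
```

For a machine with 8192 MB of RAM, `swap_range_mb 8192` prints `8192 16384`.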

|

||||

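If no swap is configured, the system may kill running processes or applications once memory is exhausted, which can bring the system down. In this article we will learn how to add swap space to a Linux system. There are two ways to do it: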

|

||||

|

||||

- Using the fdisk command
- Using the fallocate command

|

||||

|

||||

### Method 1 (using the fdisk command)

|

||||

|

||||

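Usually the first disk in the system is named `/dev/sda`, and its partitions are named `/dev/sda1`, `/dev/sda2`, and so on. In this article we use a disk with two primary partitions, `/dev/sda1` and `/dev/sda2`, and we will use `/dev/sda3` as the swap partition.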

|

||||

|

||||

First, create a new partition:

|

||||

|

||||

```

|

||||

$ fdisk /dev/sda

|

||||

```

|

||||

|

||||

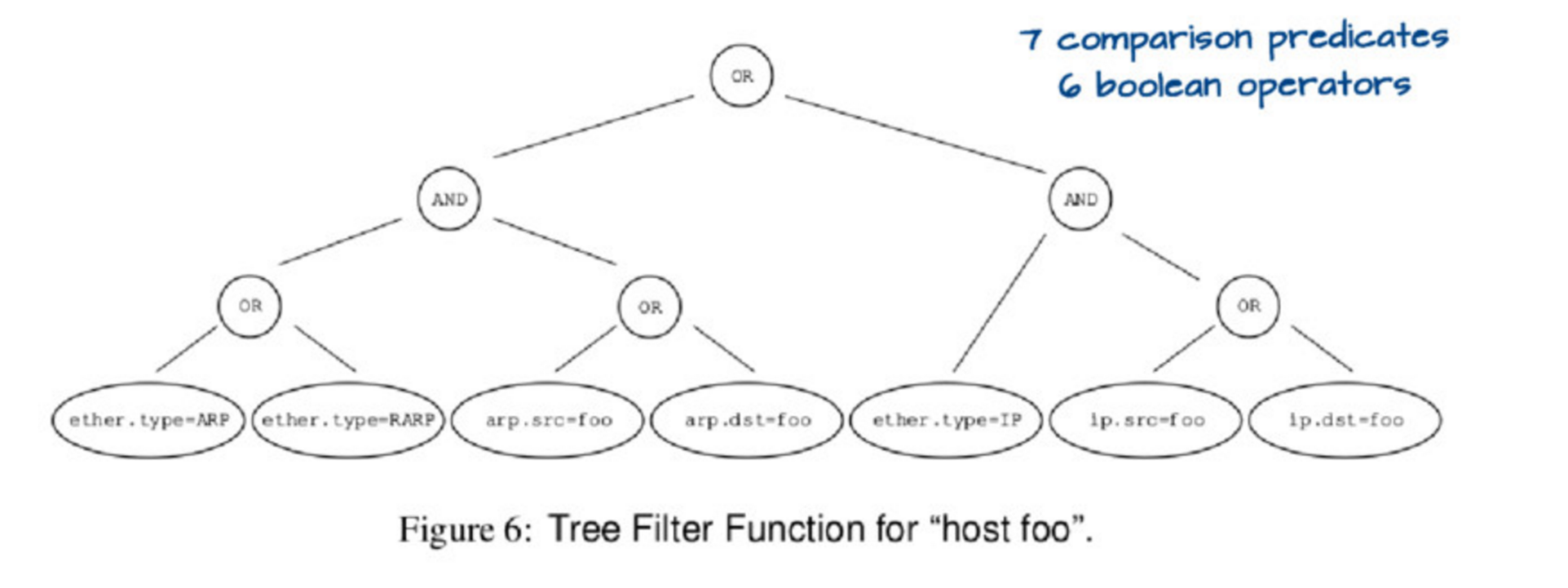

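Press `n` to create a new partition. You will be asked for the first cylinder; just press Enter to accept the default. You will then be asked for the last cylinder; here we specify the size of the swap partition (for example 1000 MB) by typing `+1000M`.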

|

||||

|

||||

![swap][2]

|

||||

|

||||

We have now created a 1000 MB partition, but we have not set its type yet. Press `t` and then Enter to set the partition type.

|

||||

|

||||

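Enter the partition number, `3` in our case, and then the partition type code. The type code for a swap partition is `82` (press `l` to list all available partition types). Finally, press `w` to write the partition table to disk.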

|

||||

|

||||

![swap][4]

|

||||

|

||||

Next, format the swap partition with the `mkswap` command:

|

||||

|

||||

```

|

||||

$ mkswap /dev/sda3

|

||||

```

|

||||

|

||||

Then activate the new swap partition:

|

||||

|

||||

```

|

||||

$ swapon /dev/sda3

|

||||

```

|

||||

|

||||

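However, the swap partition will not be activated automatically after a reboot. To make it permanent, add an entry to the `/etc/fstab` file. Open `/etc/fstab` and add the following line: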

|

||||

|

||||

```

|

||||

$ vi /etc/fstab

|

||||

|

||||

/dev/sda3 swap swap defaults 0 0

|

||||

```

|

||||

|

||||

Save and close the file. The swap partition will now be available after every reboot.

|

||||

|

||||

### Method 2 (using the fallocate command)

|

||||

|

||||

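I recommend this method because it is the simplest and fastest way to create swap space. `fallocate` is one of the most underrated and least used commands. It preallocates blocks, and therefore size, for a file.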

|

||||

|

||||

To create swap space with `fallocate`, first create a file named `swap_space` under the `/` directory and allocate 2 GB to it:

|

||||

|

||||

```

|

||||

$ fallocate -l 2G /swap_space

|

||||

```

|

||||

|

||||

Run the following command to verify the file size:

|

||||

|

||||

```

|

||||

$ ls -lh /swap_space

|

||||

```

|

||||

|

||||

Then change the file permissions to make `/swap_space` more secure:

|

||||

|

||||

```

|

||||

$ chmod 600 /swap_space

|

||||

```

|

||||

|

||||

Now only root can read and write the file. Next, format it as swap (LCTT translator's note: although `swap_space` is a file, we treat it like a partition here):

|

||||

|

||||

```

|

||||

$ mkswap /swap_space

|

||||

```

|

||||

|

||||

Then enable the swap space:

|

||||

|

||||

```

|

||||

$ swapon /swap_space

|

||||

```
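The steps of this method can be collected into one small function. This is only a sketch: `make_swapfile` and the `DRY_RUN` variable are names introduced for the example, and running it for real requires root privileges. With `DRY_RUN` set, the commands are printed instead of executed, which makes it safe to preview.

```shell
# make_swapfile PATH SIZE: create, protect, format, and enable a swap file.
# With DRY_RUN set to a non-empty value, the commands are printed, not run.
make_swapfile() {
    run=${DRY_RUN:+echo}
    $run fallocate -l "$2" "$1" &&
    $run chmod 600 "$1" &&
    $run mkswap "$1" &&
    $run swapon "$1"
}

# Preview the steps without touching the system:
DRY_RUN=1 make_swapfile /swap_space 2G
```

Chaining the steps with `&&` stops at the first failure, so the file is not enabled as swap if allocation or formatting did not succeed.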

|

||||

|

||||

The swap file has to be enabled again after every reboot. To make it persistent, edit `/etc/fstab` as before and add the following line:

|

||||

|

||||

```

|

||||

/swap_space swap swap sw 0 0

|

||||

```
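To double-check the result, the swap entries in `/etc/fstab` can be compared with what the kernel currently has active. The sketch below assumes the standard whitespace-separated fstab layout, in which the third field is the filesystem type; `list_fstab_swap` is a hypothetical helper name.

```shell
# list_fstab_swap [FILE]: print the first field of every non-comment fstab
# entry whose filesystem type (third field) is "swap".
list_fstab_swap() {
    awk '$1 !~ /^#/ && $3 == "swap" { print $1 }' "${1:-/etc/fstab}"
}

# Entries that will be activated at boot, then what is active right now (Linux).
if [ -r /etc/fstab ]; then list_fstab_swap; fi
if [ -r /proc/swaps ]; then cat /proc/swaps; fi
```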

|

||||

|

||||

Save and exit the file. The swap space will now be enabled at every boot. After a reboot, run `free -m` in a terminal to check that it is active.

|

||||

|

||||

That concludes this tutorial. I hope it was easy to follow; if you have any questions, feel free to ask.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://linuxtechlab.com/create-swap-using-fdisk-fallocate/

|

||||

|

||||

Author: [Shusain][a]
Translator: [lujun9972](https://github.com/lujun9972)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

[a]:http://linuxtechlab.com/author/shsuain/

|

||||

[1]:https://i1.wp.com/linuxtechlab.com/wp-content/plugins/a3-lazy-load/assets/images/lazy_placeholder.gif?resize=668%2C211

|

||||

[2]:https://i0.wp.com/linuxtechlab.com/wp-content/uploads/2017/02/fidsk.jpg?resize=668%2C211

|

||||

[3]:https://i1.wp.com/linuxtechlab.com/wp-content/plugins/a3-lazy-load/assets/images/lazy_placeholder.gif?resize=620%2C157

|

||||

[4]:https://i0.wp.com/linuxtechlab.com/wp-content/uploads/2017/02/fidsk-swap-select.jpg?resize=620%2C157

|

||||

@ -1,23 +1,26 @@

|

||||

在 RHEL/CentOS 系统上使用 YUM History 命令回滚升级操作

|

||||

在 RHEL/CentOS 系统上使用 YUM history 命令回滚升级操作

|

||||

======

|

||||

|

||||

为服务器打补丁是 Linux 系统管理员的一项重要任务,为的是让系统更加稳定,性能更加优化。厂商经常会发布一些安全/高危的补丁包,相关软件需要升级以防范潜在的安全风险。

|

||||

|

||||

Yum (Yellowdog Update Modified) 是 CentOS 和 RedHat 系统上用的 RPM 包管理工具,Yum history 命令允许系统管理员将系统回滚到上一个状态,但由于某些限制,回滚不是在所有情况下都能成功,有时 yum 命令可能什么都不做,有时可能会删掉一些其他的包。

|

||||

Yum (Yellowdog Update Modified) 是 CentOS 和 RedHat 系统上用的 RPM 包管理工具,`yum history` 命令允许系统管理员将系统回滚到上一个状态,但由于某些限制,回滚不是在所有情况下都能成功,有时 `yum` 命令可能什么都不做,有时可能会删掉一些其他的包。

|

||||

|

||||

我建议你在升级之前还是要做一个完整的系统备份,而 yum history 并不能用来替代系统备份的。系统备份能让你将系统还原到任意时候的节点状态。

|

||||

我建议你在升级之前还是要做一个完整的系统备份,而 `yum history` 并不能用来替代系统备份的。系统备份能让你将系统还原到任意时候的节点状态。

|

||||

|

||||

**推荐阅读:**

|

||||

**(#)** [在 RHEL/CentOS 系统上使用 YUM 命令管理软件包 ][1]

|

||||

**(#)** [在 Fedora 系统上使用 DNF (YUM 的一个分支) 命令管理软件包 ][2]

|

||||

**(#)** [如何让 History 命令显示日期和时间 ][3]

|

||||

|

||||

某些情况下,安装的应用程序在升级了补丁之后不能正常工作或者出现一些错误(可能是由于库不兼容或者软件包升级导致的),那该怎么办呢?

|

||||

- [在 RHEL/CentOS 系统上使用 YUM 命令管理软件包][1]

|

||||

- [在 Fedora 系统上使用 DNF (YUM 的一个分支)命令管理软件包 ][2]

|

||||

- [如何让 history 命令显示日期和时间][3]

|

||||

|

||||

某些情况下,安装的应用程序在升级了补丁之后不能正常工作或者出现一些错误(可能是由于库不兼容或者软件包升级导致的),那该怎么办呢?

|

||||

|

||||

与应用开发团队沟通,并找出导致库和软件包的问题所在,然后使用 `yum history` 命令进行回滚。

|

||||

|

||||

与应用开发团队沟通,并找出导致库和软件包的问题所在,然后使用 yum history 命令进行回滚。

|

||||

**注意:**

|

||||

|

||||

* 它不支持回滚 selinux,selinux-policy-*,kernel,glibc (以及依赖 glibc 的包,比如 gcc)。

|

||||

* 不建议将系统降级到更低的版本(比如 CentOS 6.9 降到 CentOS 6.8),这回导致系统处于不稳定的状态

|

||||

* 它不支持回滚 selinux,selinux-policy-*,kernel,glibc (以及依赖 glibc 的包,比如 gcc)。

|

||||

* 不建议将系统降级到更低的版本(比如 CentOS 6.9 降到 CentOS 6.8),这会导致系统处于不稳定的状态

|

||||

|

||||

让我们先来看看系统上有哪些包可以升级,然后挑选出一些包来做实验。

|

||||

|

||||

@ -66,10 +69,10 @@ Upgrade 4 Package(s)

|

||||

|

||||

Total download size: 5.5 M

|

||||

Is this ok [y/N]: n

|

||||

|

||||

```

|

||||

|

||||

你会发现 `git` 包可以被升级,那我们就用它来实验吧。运行下面命令获得软件包的版本信息(当前安装的版本和可以升级的版本)。

|

||||

你会发现 `git` 包可以被升级,那我们就用它来实验吧。运行下面命令获得软件包的版本信息(当前安装的版本和可以升级的版本)。

|

||||

|

||||

```

|

||||

# yum list git

|

||||

Loaded plugins: fastestmirror, security

|

||||

@ -80,10 +83,10 @@ Installed Packages

|

||||

git.x86_64 1.7.1-8.el6 @base

|

||||

Available Packages

|

||||

git.x86_64 1.7.1-9.el6_9 updates

|

||||

|

||||

```

|

||||

|

||||

运行下面命令来将 `git` 从 `1.7.1-8` 升级到 `1.7.1-9`。

|

||||

|

||||

```

|

||||

# yum update git

|

||||

Loaded plugins: fastestmirror, presto

|

||||

@ -147,27 +150,29 @@ Dependency Updated:

|

||||

perl-Git.noarch 0:1.7.1-9.el6_9

|

||||

|

||||

Complete!

|

||||

|

||||

```

|

||||

|

||||

验证升级后的 `git` 版本.

|

||||

|

||||

```

|

||||

# yum list git

|

||||

Installed Packages

|

||||

git.x86_64 1.7.1-9.el6_9 @updates

|

||||

|

||||

or

|

||||

或

|

||||

# rpm -q git

|

||||

git-1.7.1-9.el6_9.x86_64

|

||||

|

||||

```

|

||||

|

||||

现在我们成功升级这个软件包,可以对它进行回滚了. 步骤如下.

|

||||

现在我们成功升级这个软件包,可以对它进行回滚了。步骤如下。

|

||||

|

||||

### 使用 YUM history 命令回滚升级操作

|

||||

|

||||

首先,使用下面命令获取 yum 操作的 id。下面的输出很清晰地列出了所有需要的信息,例如操作 id、谁做的这个操作(用户名)、操作日期和时间、操作的动作(安装还是升级)、操作影响的包数量。

|

||||

|

||||

首先,使用下面命令获取yum操作id. 下面的输出很清晰地列出了所有需要的信息,例如操作 id, 谁做的这个操作(用户名), 操作日期和时间, 操作的动作(安装还是升级), 操作影响的包数量.

|

||||

```

|

||||

# yum history

|

||||

or

|

||||

或

|

||||

# yum history list all

|

||||

Loaded plugins: fastestmirror, presto

|

||||

ID | Login user | Date and time | Action(s) | Altered

|

||||

@ -185,10 +190,10 @@ ID | Login user | Date and time | Action(s) | Altered

|

||||

3 | root | 2016-10-18 12:53 | Install | 1

|

||||

2 | root | 2016-09-30 10:28 | E, I, U | 31 EE

|

||||

1 | root | 2016-07-26 11:40 | E, I, U | 160 EE

|

||||

|

||||

```

|

||||

|

||||

上面命令现实有两个包受到了影响,因为 git 还升级了它的依赖包 **perl-Git**. 运行下面命令来查看关于操作的详细信息.

|

||||

上面命令显示有两个包受到了影响,因为 `git` 还升级了它的依赖包 `perl-Git`。 运行下面命令来查看关于操作的详细信息。

|

||||

|

||||

```

|

||||

# yum history info 13

|

||||

Loaded plugins: fastestmirror, presto

|

||||

@ -214,7 +219,8 @@ history info

|

||||

|

||||

```

|

||||

|

||||

运行下面命令来回滚 `git` 包到上一个版本.

|

||||

运行下面命令来回滚 `git` 包到上一个版本。

|

||||

|

||||

```

|

||||

# yum history undo 13

|

||||

Loaded plugins: fastestmirror, presto

|

||||

@ -279,21 +285,21 @@ Installed:

|

||||

git.x86_64 0:1.7.1-8.el6 perl-Git.noarch 0:1.7.1-8.el6

|

||||

|

||||

Complete!

|

||||

|

||||

```

|

||||

|

||||

回滚后, 使用下面命令来检查降级包的版本.

|

||||

回滚后,使用下面命令来检查降级包的版本。

|

||||

|

||||

```

|

||||

# yum list git

|

||||

or

|

||||

或

|

||||

# rpm -q git

|

||||

git-1.7.1-8.el6.x86_64

|

||||

|

||||

```

|

||||

|

||||

### 使用YUM downgrade 命令回滚升级

|

||||

|

||||

此外,我们也可以使用 YUM downgrade 命令回滚升级.

|

||||

此外,我们也可以使用 YUM `downgrade` 命令回滚升级。

|

||||

|

||||

```

|

||||

# yum downgrade git-1.7.1-8.el6 perl-Git-1.7.1-8.el6

|

||||

Loaded plugins: search-disabled-repos, security, ulninfo

|

||||

@ -346,14 +352,14 @@ Installed:

|

||||

git.x86_64 0:1.7.1-8.el6 perl-Git.noarch 0:1.7.1-8.el6

|

||||

|

||||

Complete!

|

||||

|

||||

```

|

||||

|

||||

**Note:** You must downgrade the dependent packages as well. Otherwise yum removes the current version of each dependency instead of downgrading it, because the `downgrade` command does not handle dependencies for you.
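Because every dependency has to be named explicitly, it can help to build the command from one version string so the package list stays consistent. This is a minimal sketch, assuming all packages share the same version-release (true for `git` and `perl-Git` in this article, but not in general); `build_downgrade_cmd` is a hypothetical helper that only prints the command rather than running it.

```shell
#!/bin/sh
# Hypothetical helper: print a `yum downgrade` command that pins every
# listed package to the same version-release. Nothing is executed.
build_downgrade_cmd() {
    version=$1
    shift
    cmd="yum downgrade"
    for pkg in "$@"; do
        cmd="$cmd $pkg-$version"
    done
    printf '%s\n' "$cmd"
}

# Package names and version are the ones used in this article.
build_downgrade_cmd 1.7.1-8.el6 git perl-Git
```

Printing first and running second makes it easy to review the full package list before anything on the system changes.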

|

||||

|

||||

### For Fedora users

|

||||

|

||||

The commands are the same; just change the package manager name from `yum` to `dnf`.

|

||||

|

||||

```

|

||||

# dnf list git

|

||||

# dnf history

|

||||

@ -361,7 +367,6 @@ Complete!

|

||||

# dnf history undo

|

||||

# dnf list git

|

||||

# dnf downgrade git-1.7.1-8.el6 perl-Git-1.7.1-8.el6

|

||||

|

||||

```
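For scripts that have to run on both CentOS/RHEL and Fedora, one option is to pick the tool at run time. This is a minimal sketch, assuming `dnf` should be preferred whenever it is on the `PATH`; `pick_pkg_manager` is a hypothetical name, and the last line only prints the command it would run.

```shell
#!/bin/sh
# Hypothetical helper: prefer dnf when available, otherwise fall back to yum.
pick_pkg_manager() {
    if command -v dnf >/dev/null 2>&1; then
        echo dnf
    else
        echo yum
    fi
}

pm=$(pick_pkg_manager)
# Print, rather than execute, the history command for the chosen tool.
echo "$pm history"
```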

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

@ -370,7 +375,7 @@ via: https://www.2daygeek.com/rollback-fallback-updates-downgrade-packages-cento

|

||||

|

||||

Author: [2daygeek][a]
Translator: [lujun9972](https://github.com/lujun9972)
Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/).

|

||||

|

||||

@ -1,7 +1,7 @@

|

||||

How to automatically log out inactive users after a period of time on Linux

|

||||

======

|

||||

|

||||

|

||||

|

||||

|

||||

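Imagine this scenario: you have a server that many users across your network access regularly. Some of them may forget to log out and leave their sessions connected. We all know how dangerous an unattended session is, because someone could use it to deliberately damage the system. As a system administrator, would you go and check every system to see whether users have logged out? That is unnecessary, and with hundreds or thousands of machines on the network it would take far too long. Instead, you can have users logged out automatically once a local or SSH session has been inactive for a given time. This tutorial shows how to do that on Unix-like systems. It is not hard at all. Follow along.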

|

||||

|

||||

@ -11,32 +11,40 @@

|

||||

|

||||

#### Method 1:

|

||||

|

||||

Edit the `~/.bashrc` or `~/.bash_profile` file:

|

||||

|

||||

```

|

||||

$ vi ~/.bashrc

|

||||

```

|

||||

|

||||

Or:

|

||||

|

||||

```

|

||||

$ vi ~/.bash_profile

|

||||

```

|

||||

|

||||

Add the following line to it:

|

||||

|

||||

```

|

||||

TMOUT=100

|

||||

```

|

||||

|

||||

This logs the user out automatically after 100 seconds of inactivity. You can set the value to whatever you need. Save and close the file.
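If you would rather not open an editor, the same line can be added from the command line. The sketch below is one way to do it idempotently, assuming the timeout is set with a plain `TMOUT=` line; `set_tmout` is a hypothetical helper, and the demo writes to a temporary file rather than your real `~/.bashrc`.

```shell
#!/bin/sh
# Hypothetical helper: set TMOUT=<seconds> in a shell rc file, replacing
# any earlier TMOUT= line so repeated runs do not pile up duplicates.
set_tmout() {
    rc_file=$1
    seconds=$2
    tmp_file=$(mktemp) || return 1
    # Keep every line except old TMOUT assignments (grep exits 1 if none remain).
    if [ -f "$rc_file" ]; then
        grep -v '^TMOUT=' "$rc_file" > "$tmp_file" || true
    fi
    echo "TMOUT=$seconds" >> "$tmp_file"
    # Copy the contents back so the original file keeps its owner and mode.
    cat "$tmp_file" > "$rc_file"
    rm -f "$tmp_file"
}

# Demo on a scratch file standing in for ~/.bashrc.
demo_rc=$(mktemp)
echo 'alias ll="ls -l"' > "$demo_rc"
set_tmout "$demo_rc" 300
set_tmout "$demo_rc" 100
cat "$demo_rc"
```

Calling the helper twice leaves a single `TMOUT=` line with the latest value, which is the point of filtering out old assignments first.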

|

||||

|

||||

Run the following command to apply the change:

|

||||

|

||||

```

|

||||

$ source ~/.bashrc

|

||||

```

|

||||

|

||||

Or:

|

||||

|

||||

```

|

||||

$ source ~/.bash_profile

|

||||

```

|

||||

|

||||

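Now leave the session idle for 100 seconds. After 100 seconds of inactivity you will see the following message, and the user will be logged out of the session automatically.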

|

||||

|

||||

```

|

||||

timed out waiting for input: auto-logout

|

||||

Connection to 192.168.43.2 closed.

|

||||

@ -44,13 +52,16 @@ Connection to 192.168.43.2 closed.

|

||||

|

||||

Users can easily change this setting, because the `~/.bashrc` file is owned by the users themselves.

|

||||

|

||||

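To change the timeout, edit the line added above and run `source ~/.bashrc` to apply the change. To remove it, delete the line and start a new session, since re-sourcing the file does not unset a variable that is already set in the current shell.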

|

||||

|

||||

Alternatively, a user can disable the timeout by running one of the following commands:

|

||||

```

|

||||

$ export TMOUT=0

|

||||

```

|

||||

|

||||

Or:

|

||||

|

||||

```

|

||||

$ unset TMOUT

|

||||

```

|

||||

@ -59,14 +70,16 @@ $ unset TMOUT

|

||||

|

||||

#### Method 2:

|

||||

|

||||

Log in as the root user.

|

||||

|

||||

Create a new file named `autologout.sh`.

|

||||

|

||||

```

|

||||

# vi /etc/profile.d/autologout.sh

|

||||

```

|

||||

|

||||

Add the following content:

|

||||

|

||||

```

|

||||

TMOUT=100

|

||||

readonly TMOUT

|

||||

@ -76,55 +89,58 @@ export TMOUT

|

||||

Save and close the file.

|

||||

|

||||

Make it executable:

|

||||

|

||||

```

|

||||

# chmod +x /etc/profile.d/autologout.sh

|

||||

```

|

||||

|

||||

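Now log out or reboot the system. Inactive users will be logged out automatically after 100 seconds. Ordinary users can no longer change this setting even if they want to keep their sessions alive; they will be forced out after 100 seconds.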
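The reason users cannot undo this setting is the `readonly` attribute: once a variable is marked read-only, the shell refuses to change or unset it for the rest of the session. Here is a minimal sketch that demonstrates this in a throwaway subshell, so it does not affect your current session; the variable `tmout_result` is only there for illustration.

```shell
#!/bin/sh
# Run the attempt in a subshell so the readonly flag never reaches the
# calling shell. The subshell's exit status tells us whether unset worked.
if ( TMOUT=100; readonly TMOUT; unset TMOUT ) 2>/dev/null; then
    tmout_result="TMOUT was removed"
else
    tmout_result="TMOUT is protected"
fi
echo "$tmout_result"
```

The same protection applies to `export TMOUT=0`, which is why the two escape routes shown for Method 1 stop working here.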

|

||||

|

||||

Both methods apply to local and remote sessions alike, that is, to users logged in locally and to users logged in over SSH from remote systems. Next, let's see how to log out only inactive SSH sessions automatically while leaving local sessions alone.

|

||||

|

||||

#### Method 3:

|

||||

|

||||

With this method, only SSH session users are logged out automatically after a period of inactivity.

|

||||

|

||||

Edit the `/etc/ssh/sshd_config` file:

|

||||

|

||||

```

|

||||

$ sudo vi /etc/ssh/sshd_config

|

||||

```

|

||||

|

||||

Add or modify the following lines:

|

||||

|

||||

```

|

||||

ClientAliveInterval 100

|

||||

ClientAliveCountMax 0

|

||||

```

|

||||

|

||||

Save and close the file. Restart the sshd service to apply the change.

|

||||

|

||||

```

|

||||

$ sudo systemctl restart sshd

|

||||

```
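Before relying on the new behavior, it can be worth double-checking which values the file actually sets. The following is a minimal sketch, assuming a simple `Keyword value` layout with no `Match` blocks or `Include` files; `sshd_option` is a hypothetical helper, and the demo reads a scratch file rather than the real `/etc/ssh/sshd_config`. Note also that `ClientAliveCountMax 0` is version-sensitive: to my knowledge OpenSSH 8.2 and later treat 0 as disabling termination altogether, so check `sshd_config(5)` on your system.

```shell
#!/bin/sh
# Hypothetical helper: print the value of an sshd_config keyword.
# Keywords are case-insensitive and the first occurrence wins.
sshd_option() {
    awk -v key="$2" '
        /^[ \t]*#/ { next }                  # skip comment lines
        tolower($1) == tolower(key) {
            print $2
            exit
        }' "$1"
}

# Scratch file standing in for /etc/ssh/sshd_config.
demo_conf=$(mktemp)
cat > "$demo_conf" <<'EOF'
# Idle-session settings from this article
ClientAliveInterval 100
ClientAliveCountMax 0
EOF

interval=$(sshd_option "$demo_conf" ClientAliveInterval)
countmax=$(sshd_option "$demo_conf" clientalivecountmax)
echo "interval=$interval countmax=$countmax"
rm -f "$demo_conf"
```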

|

||||

|

||||

Now log in to this system over SSH from a remote system. After 100 seconds the SSH session closes automatically and you will see the following message:

|

||||

|

||||

```

|

||||

$ Connection to 192.168.43.2 closed by remote host.

|

||||

Connection to 192.168.43.2 closed.

|

||||

```

|

||||

|

||||

From now on, anyone who logs in to this system over SSH from a remote system will be logged out automatically after 100 seconds of inactivity.

|

||||

|

||||

I hope you find this useful. I will be back soon with another practical guide. If you find our guides helpful, please share them on your social networks and support us!

|

||||

|

||||

Good luck!

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.ostechnix.com/auto-logout-inactive-users-period-time-linux/

|

||||

|

||||

Author: [SK][a]
Translator: [lujun9972](https://github.com/lujun9972)
Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/).

|

||||

|

||||

@ -1,13 +1,13 @@

|

||||

The Linux fmt command: usage and examples

|

||||

======

|

||||

|

||||

Sometimes you need to format the contents of a text file. For example, the file has one word per line and the task is to put all the words on a single line. You could do it by hand, of course, but nobody enjoys such time-consuming manual work. And that is just one example; real-world tasks come in all shapes.

|

||||

|

||||

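Fortunately, there is a command that covers at least part of your text-formatting needs: `fmt`. This tutorial discusses the basics of `fmt` and some of the main features it provides. All commands and instructions here were tested on Ubuntu 16.04 LTS.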

|

||||

|

||||

### The Linux fmt command

|

||||

|

||||

`fmt` is a simple text-formatting tool that anyone can run from the command line. Its basic syntax is:

|

||||

|

||||

```

|

||||

fmt [-WIDTH] [OPTION]... [FILE]...

|

||||

@ -15,15 +15,13 @@ fmt [-WIDTH] [OPTION]... [FILE]...

|

||||

|

||||

Its man page says:

|

||||

|

||||

> Reformat each paragraph in the FILE(s), writing to standard output. The option `-WIDTH` is an abbreviated form of `--width=DIGITS`.

|

||||

|

||||

The following Q&A-style examples should give you a good idea of how `fmt` works.

|

||||

|

||||

### Q1. How do I use fmt to format text onto a single line?

|

||||

|

||||

The basic form of the `fmt` command (with no options) does this. You only need to pass the file name as an argument.

|

||||

|

||||

```

|

||||

fmt [file-name]

|

||||

@ -33,9 +31,9 @@ fmt [file-name]

|

||||

|

||||

[![format contents of file in single line][1]][2]

|

||||

|

||||

As you can see, the multiple lines in the file have been formatted onto a single line. Note that this does not modify the original file (file1).
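In case the screenshot is not available, the same behavior can be reproduced with a throwaway file. This is a minimal sketch, assuming `fmt` from GNU coreutils; the file contents are made up for illustration.

```shell
#!/bin/sh
# Create a scratch file with one word per line.
words_file=$(mktemp)
printf 'hello\nfrom\nfmt\n' > "$words_file"

# With no options, fmt joins the lines of a paragraph into filled lines.
joined=$(fmt "$words_file")
echo "$joined"

# The source file still holds three separate lines.
line_count=$(($(wc -l < "$words_file")))
echo "lines in original file: $line_count"
rm -f "$words_file"
```

To keep the result, redirect the output to a new file; `fmt` itself never writes back to its input.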

|

||||

|

||||

### Q2. How do I change the maximum line width?

|

||||

|

||||

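By default, the maximum line width in the output of `fmt` is 75. If you want, you can change it with the `-w` option, which takes a number giving the new line width as its argument.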

|

||||

|

||||

@ -47,7 +45,7 @@ fmt -w [n] [file-name]

|

||||

|

||||

[![change maximum line width][3]][4]
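To see the width limit in action without the screenshot, here is a minimal sketch, assuming GNU `fmt`; the sample sentence and the width of 20 are arbitrary. It reports the longest output line instead of relying on exactly where `fmt` chooses to break.

```shell
#!/bin/sh
sample='the quick brown fox jumps over the lazy dog and keeps running'

# Refill the text so that no output line is wider than 20 columns.
wrapped=$(printf '%s\n' "$sample" | fmt -w 20)
printf '%s\n' "$wrapped"

# Report the length of the longest line that fmt produced.
longest=$(printf '%s\n' "$wrapped" | awk 'length($0) > max { max = length($0) } END { print max + 0 }')
echo "longest line: $longest"
```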

|

||||

|

||||

### Q3. How do I make fmt highlight the first line?

|

||||

|

||||

This works by giving the first line a different indentation from the rest, which you can do with the `-t` option.

|

||||

|

||||

@ -57,7 +55,7 @@ fmt -t [file-name]

|

||||

|

||||

[![make fmt highlight the first line][5]][6]

|

||||

|

||||

### Q4. How do I use fmt to split long lines?

|

||||

|

||||

`fmt` can also split long lines. Use the `-s` option to enable this behavior.

|

||||

|

||||

@ -69,9 +67,9 @@ fmt -s [file-name]

|

||||

|

||||

[![make fmt split long lines][7]][8]
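The difference between plain `fmt` and `fmt -s` is easiest to see on short lines: `-s` only splits lines that are too long and never joins short ones. This is a minimal sketch, assuming GNU `fmt`; the input text is made up.

```shell
#!/bin/sh
short_lines='one
two'

# Default mode refills the paragraph, so the two short lines are joined.
refilled=$(printf '%s\n' "$short_lines" | fmt)
# Split-only mode leaves short lines alone.
split_only=$(printf '%s\n' "$short_lines" | fmt -s)

echo "default: $refilled"
printf 'split-only:\n%s\n' "$split_only"
```

That makes `-s` the safer choice for files such as code listings or addresses, where existing line breaks carry meaning.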

|

||||

|

||||

### Q5. How do I get uniform spacing between words and between sentences?

|

||||

|

||||

`fmt` provides a `-u` option that separates words with a single space and sentences with two spaces. You can use it like this:

|

||||

|

||||

```

|

||||

fmt -u [file-name]

|

||||

@ -81,7 +79,7 @@ fmt -u [file-name]

|

||||

|

||||

### Conclusion

|

||||

|

||||

True, `fmt` does not offer many features, but that does not make it any less widely useful, because you never know when you will need it. This tutorial covered the main options `fmt` provides. For more details, see the tool's [man page][9].

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

@ -90,7 +88,7 @@ via: https://www.howtoforge.com/linux-fmt-command/

|

||||

|

||||

Author: [Himanshu Arora][a]
Translator: [lujun9972](https://github.com/lujun9972)
Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/).

|

||||

|

||||

76

published/20170919 What Are Bitcoins.md

Normal file


@ -0,0 +1,76 @@

|

||||

What are bitcoins?

|

||||

======

|

||||

|

||||

|

||||

|

||||

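[Bitcoin][1] is a digital currency, or electronic cash, that relies on peer-to-peer technology to complete transactions. Because it uses a peer-to-peer network as its backbone, Bitcoin offers a community resembling a managed economy. In other words, Bitcoin does away with centralized management of money and promotes community management instead. Most of the software for mining and managing Bitcoin is also open source.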

|

||||

|

||||

The first Bitcoin software was developed by Satoshi Nakamoto, based on open source cryptographic protocols. The smallest unit of Bitcoin is called a satoshi, which is one hundred-millionth of a bitcoin (0.00000001 BTC).
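As a quick arithmetic check on the unit: 0.00000001 BTC per satoshi means one bitcoin is 100,000,000 satoshi, so converting is a single multiplication. Here is a minimal sketch using `awk` for the decimal math; `btc_to_satoshi` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: convert an amount in BTC to satoshi.
# 1 BTC = 100,000,000 satoshi; %.0f rounds away floating-point noise.
btc_to_satoshi() {
    awk -v btc="$1" 'BEGIN { printf "%.0f\n", btc * 100000000 }'
}

btc_to_satoshi 0.00000001
btc_to_satoshi 1.5
```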

|

||||

|

||||

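One should not underestimate the boundaries Bitcoin removes in the digital economy. For example, Bitcoin eliminates a central authority's administrative control over money and hands control and management to the community as a whole. In addition, the fact that Bitcoin is based on open source cryptographic protocols makes it an open field, subject to sharp dynamics such as value fluctuation, deflation, and inflation. As many internet users become aware of the privacy of their online transactions, Bitcoin is becoming more popular than ever. Those who know the dark web and how it works, however, can confirm that some people started using it long ago.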

|

||||

|

||||

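On the downside, Bitcoin is also very secure for anonymous payments, which can pose a threat to security or personal health. For example, dark web markets are major suppliers and retailers of imported drugs and even weapons, and the use of Bitcoin on the dark web facilitates such criminal activity. Even so, used properly, Bitcoin has many benefits and can eliminate some of the economic failings caused by centralized monetary management. Bitcoin also allows money to be exchanged anywhere in the world. Its use can reduce counterfeiting, printing, and devaluation of currency. And because it relies on a peer-to-peer network as its backbone, with authority over transaction records distributed, transactions are more secure.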

|

||||

|

||||

Other advantages of Bitcoin include:

|

||||

|

||||

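- In online commerce, Bitcoin promotes security of funds and full control over them. Buyers are protected from merchants who might try to charge extra for a lower-cost service. Buyers can also choose not to share personal information after a transaction, and because personal information is hidden, they are protected from identity theft.
- Bitcoin is an alternative in the face of common monetary mishaps such as loss, freezing, or damage. It is always advisable, however, to back up your bitcoins and encrypt them with a password.
- Online purchases and payments made with Bitcoin incur little or no fees, which makes it more affordable to use.
- Unlike with other electronic currencies, merchants also face less fraud risk because Bitcoin transactions are irreversible. Bitcoin is useful even in times of high crime and fraud, because it is hard to con someone on an open public ledger (the blockchain).
- Bitcoin is also hard to manipulate, because it is open source and its cryptographic protocols are very secure.
- Transactions can be verified and approved anytime, anywhere. That is the level of flexibility a digital currency offers.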

|

||||

|

||||

Also read: [Bitkey: a Linux distribution dedicated to conducting Bitcoin transactions][2]

|

||||

|

||||

### How to mine bitcoins, and the applications for essential Bitcoin management tasks

|

||||

|

||||

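As with any digital currency, mining and managing Bitcoin requires additional software. There are many open source Bitcoin management programs that make it easy to make payments, receive payments, and encrypt and back up your bitcoins, as well as plenty of Bitcoin mining software. There are also websites such as [Freebitcoin][4], where you earn free bitcoins by viewing ads, and MoonBitcoin, another site where you can sign up for free and earn bitcoins. These are convenient if you have spare time and a sizable circle of contacts to bring in. Many sites offer Bitcoin mining, and it is easy to sign up and start. One of the main tricks is to recruit as many people as possible to build a large network.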

|

||||

|

||||

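Applications you need when working with Bitcoin include a Bitcoin wallet, which lets you hold bitcoins safely. It is like using a physical wallet to keep hard currency, except that here it exists in digital form. Wallets can be downloaded here: [Bitcoin wallet][6]. Similar applications include [Blockchain][7], which works much like a Bitcoin wallet.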

|

||||

|

||||

The screenshots below show the two mining sites, Freebitco and MoonBitco, respectively.

|

||||

|

||||

[][8]

|

||||

|

||||

[][9]

|

||||

|

||||

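There are many ways to obtain bitcoins, including using Bitcoin mining rigs, buying bitcoins on exchanges, and mining free bitcoins online. Bitcoins can be bought on sites such as [MtGox][10] (LCTT translator's note: this article is rather old and this exchange has since shut down), [bitNZ][11], [Bitstamp][12], [BTC-E][13], and [VertEx][14], and these sites provide open source applications. Such applications include Bitminter, [5OMiner][15], [BFG Miner][16], and others. They use graphics card and processor capabilities to generate bitcoins. How efficiently a personal computer mines bitcoins depends largely on the type of graphics card and the processor of the mining rig. (LCTT translator's note: mining on a personal computer is now almost pointless.) There are also many secure online storage services for backing up bitcoins, and these sites offer Bitcoin storage for free. Examples of Bitcoin management sites include [xapo][17] and [BlockChain][18]. Signing up on these sites requires a valid email address and phone number for verification. Xapo provides additional security through a phone app that asks you to confirm every new login.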

|

||||

|

||||

### Disadvantages of Bitcoin

|

||||

|

||||

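The many advantages of using Bitcoin cannot be ignored. Because Bitcoin is still in its infancy, however, it faces several points of resistance. For example, most people are not fully aware of Bitcoin and how it works. This lack of awareness can be eased through education and outreach. Bitcoin users also face volatility, because demand for bitcoins exceeds the amount of currency available. Over the longer term, though, volatility should decrease as many more people start using Bitcoin.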

|

||||

|

||||

### Room for improvement

|

||||

|

||||

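Given how young [Bitcoin technology][19] is, there is still room for changes that make it more secure and reliable. Over the longer term, Bitcoin should mature enough to offer the flexibility of an ordinary currency. For Bitcoin to succeed, more people need to know about it, in addition to being given information on how it works and what its benefits are.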

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.linuxandubuntu.com/home/things-you-need-to-know-about-bitcoins

|

||||

|

||||

Author: [LINUXANDUBUNTU][a]
Translator: [Flowsnow](https://github.com/Flowsnow)
Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/).

|

||||

|

||||

[a]:http://www.linuxandubuntu.com/

|

||||

[1]:http://www.linuxandubuntu.com/home/bitkey-a-linux-distribution-dedicated-for-conducting-bitcoin-transactions

|

||||

[2]:http://www.linuxandubuntu.com/home/bitkey-a-linux-distribution-dedicated-for-conducting-bitcoin-transactions

|

||||

[3]:http://www.linuxandubuntu.com/home/things-you-need-to-know-about-bitcoins

|

||||

[4]:https://freebitco.in/?r=2167375

|

||||

[5]:http://moonbit.co.in/?ref=c637809a5051

|

||||

[6]:https://bitcoin.org/en/choose-your-wallet

|

||||

[7]:https://blockchain.info/wallet/

|

||||

[8]:http://www.linuxandubuntu.com/uploads/2/1/1/5/21152474/freebitco-bitcoin-mining-site_orig.jpg

|

||||

[9]:http://www.linuxandubuntu.com/uploads/2/1/1/5/21152474/moonbitcoin-bitcoin-mining-site_orig.png

|

||||

[10]:http://mtgox.com/

|

||||

[11]:https://en.bitcoin.it/wiki/BitNZ

|

||||

[12]:https://www.bitstamp.net/

|

||||

[13]:https://btc-e.com/

|

||||

[14]:https://www.vertexinc.com/

|

||||

[15]:https://www.downloadcloud.com/bitcoin-miner-software.html

|

||||

[16]:https://github.com/luke-jr/bfgminer

|

||||

[17]:https://xapo.com/

|

||||

[18]:https://www.blockchain.com/

|

||||

[19]:https://en.wikipedia.org/wiki/Bitcoin

|

||||

@ -1,14 +1,14 @@

|

||||

Install and use YouTube-DL on Ubuntu 16.04

|

||||

======

|

||||

|

||||

Youtube-dl is a free and open source command-line video download tool for fetching videos from Youtube and similar sites. Besides Youtube it currently supports Facebook, Dailymotion, Google Video, Yahoo, and more. It is written in Python and needs a Python interpreter to run. It supports many operating systems, including Windows, Mac, and Unix. Youtube-dl can also resume interrupted downloads, download every video in a channel or playlist, add custom titles, use a proxy, and more.

|

||||

|

||||

In this article, we will learn how to install and use Youtube-dl and Youtube-dlg on Ubuntu 16.04. We will also learn how to download Youtube videos in different qualities and formats.

|

||||

|

||||

### Prerequisites

|

||||

|

||||

* A server running Ubuntu 16.04.
* A non-root user with sudo privileges.

|

||||

|

||||

Let's start by upgrading the system to the latest version with the following commands:

|

||||

|

||||

@ -21,37 +21,37 @@ sudo apt-get upgrade -y

|

||||

|

||||

### Installing Youtube-dl

|

||||

|

||||

By default, Youtube-dl is not in the Ubuntu 16.04 repositories, so you need to download it from the official site. You can do that with the `curl` command:

|

||||

|

||||

First, install `curl` with the following command:

|

||||

|

||||

```

|

||||

sudo apt-get install curl -y

|

||||

```

|

||||

|

||||

Then download the `youtube-dl` binary:

|

||||

|

||||

```

|

||||

sudo curl -L https://yt-dl.org/latest/youtube-dl -o /usr/bin/youtube-dl

|

||||

```

|

||||

|

||||

Next, change the permissions of the `youtube-dl` binary with the following command:

|

||||

|

||||

```

|

||||

sudo chmod 755 /usr/bin/youtube-dl

|

||||

```
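The download and permission steps can be wrapped into one small function. This is a minimal sketch, not an official installer: `install_youtube_dl` and the `DRY_RUN` variable are hypothetical names, the URL and default destination are the ones from this article, and writing to `/usr/bin` for real would need root privileges.

```shell
#!/bin/sh
# Hypothetical helper: download youtube-dl and make it executable.
# With DRY_RUN set, each command is printed instead of executed.
install_youtube_dl() {
    url="https://yt-dl.org/latest/youtube-dl"
    dest=${1:-/usr/bin/youtube-dl}
    run=${DRY_RUN:+echo}
    $run curl -L "$url" -o "$dest" && $run chmod 755 "$dest"
}

# Show what would be executed without touching the system.
DRY_RUN=1
install_youtube_dl /tmp/youtube-dl
```

Chaining the two commands with `&&` means the permissions are only changed if the download succeeded.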

|

||||

|

||||

`youtube-dl` is now installed, and you can move on to the next step.

|

||||

|

||||

### Using Youtube-dl

|

||||

|

||||

Run the following command to list all the options of `youtube-dl`:

|

||||

|

||||

```

|

||||

youtube-dl --help

|

||||

```

|

||||

|

||||

`youtube-dl` supports many video formats, such as MP4, WebM, 3gp, and FLV. You can list all the formats available for a given video with the following command:

|

||||

|

||||

```

|

||||

youtube-dl -F https://www.youtube.com/watch?v=j_JgXJ-apXs

|

||||

@ -94,6 +94,7 @@ youtube-dl -f 18 https://www.youtube.com/watch?v=j_JgXJ-apXs

|

||||

```

|

||||

|

||||

This command downloads the video in mp4 format at 640x360 resolution:

|

||||

|

||||

```

|

||||

[youtube] j_JgXJ-apXs: Downloading webpage

|

||||

[youtube] j_JgXJ-apXs: Downloading video info webpage

|

||||

@ -101,7 +102,6 @@ youtube-dl -f 18 https://www.youtube.com/watch?v=j_JgXJ-apXs

|

||||

[youtube] j_JgXJ-apXs: Downloading MPD manifest

|

||||

[download] Destination: B.A. PASS 2 Trailer no 2 _ Filmybox-j_JgXJ-apXs.mp4

|

||||

[download] 100% of 6.90MiB in 00:47

|

||||

|

||||

```

|

||||

|

||||

If you want to download a Youtube video as mp3 audio, that is possible too:

|

||||

@ -122,7 +122,7 @@ youtube-dl -citw https://www.youtube.com/channel/UCatfiM69M9ZnNhOzy0jZ41A

|

||||

youtube-dl --proxy http://proxy-ip:port https://www.youtube.com/watch?v=j_JgXJ-apXs

|

||||

```

|

||||

|

||||

To download multiple Youtube videos with a single command, first save the URLs of all the videos in a file (say, `youtube-list.txt`), then run the following command:

|

||||

|

||||

```

|

||||

youtube-dl -a youtube-list.txt

|

||||

@ -130,7 +130,7 @@ youtube-dl -a youtube-list.txt
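A batch file is just plain text with one URL per line. The sketch below prepares one and counts the entries that would be downloaded. It assumes, per the `youtube-dl` help text for `-a`, that lines starting with `#`, `;` or `]` are treated as comments; `count_batch_urls` is a hypothetical helper, and the list is written to a temporary file rather than `youtube-list.txt`.

```shell
#!/bin/sh
# Write a batch file in a scratch location; both URLs appear in this article.
list_file=$(mktemp)
cat > "$list_file" <<'EOF'
# videos to fetch
https://www.youtube.com/watch?v=j_JgXJ-apXs

https://www.youtube.com/channel/UCatfiM69M9ZnNhOzy0jZ41A
EOF

# Hypothetical helper: count non-empty, non-comment lines in a batch file.
count_batch_urls() {
    grep -v -e '^[[:space:]]*$' -e '^[]#;]' "$1" | wc -l
}

url_count=$(($(count_batch_urls "$list_file")))
echo "URLs queued: $url_count"
# Print, rather than run, the download command.
echo "would run: youtube-dl -a $list_file"
```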

|

||||

|

||||

### Installing the Youtube-dl GUI

|

||||

|

||||

If you want a graphical interface, `youtube-dlg` is your best choice. `youtube-dlg` is a free and open source front end for `youtube-dl` written in wxPython.

|

||||

|

||||

This tool is not in the default Ubuntu 16.04 repositories either, so you need to add a PPA for it.

|

||||

|

||||

@ -138,14 +138,14 @@ youtube-dl -a youtube-list.txt

|

||||

sudo add-apt-repository ppa:nilarimogard/webupd8

|

||||

```

|

||||

|

||||

Next, update the package index and install `youtube-dlg`:

|

||||

|

||||

```

|

||||

sudo apt-get update -y

|

||||

sudo apt-get install youtube-dlg -y

|

||||

```

|

||||

|

||||

Once youtube-dlg is installed, you can launch it from the Unity Dash:

|

||||

|

||||

[![][2]][3]

|

||||

|

||||

@ -157,14 +157,13 @@ sudo apt-get install youtube-dlg -y

|

||||

|

||||

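Congratulations! You have successfully installed youtube-dl and youtube-dlg on your Ubuntu 16.04 server. You can now easily download videos from Youtube and any other site youtube-dl supports, in any format and size.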

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.howtoforge.com/tutorial/install-and-use-youtube-dl-on-ubuntu-1604/

|

||||

|

||||

Author: [Hitesh Jethva][a]
Translator: [lujun9972](https://github.com/lujun9972)
Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/).

|

||||

|

||||

144

published/20171119 10 Best LaTeX Editors For Linux.md

Normal file


@ -0,0 +1,144 @@

|

||||

10 best LaTeX editors for Linux

|

||||

======

|

||||

|

||||

**Brief: Once you get over the learning curve, there is nothing quite like LaTeX. Here are the best LaTeX editors for Linux and other platforms.**

|

||||

|

||||

### What is LaTeX?

|

||||

|

||||

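[LaTeX][1] is a document preparation system. Unlike in a plain text editor, you cannot just write plain text in a LaTeX editor; you also have to use LaTeX commands to structure the content of the document.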

|

||||

|

||||

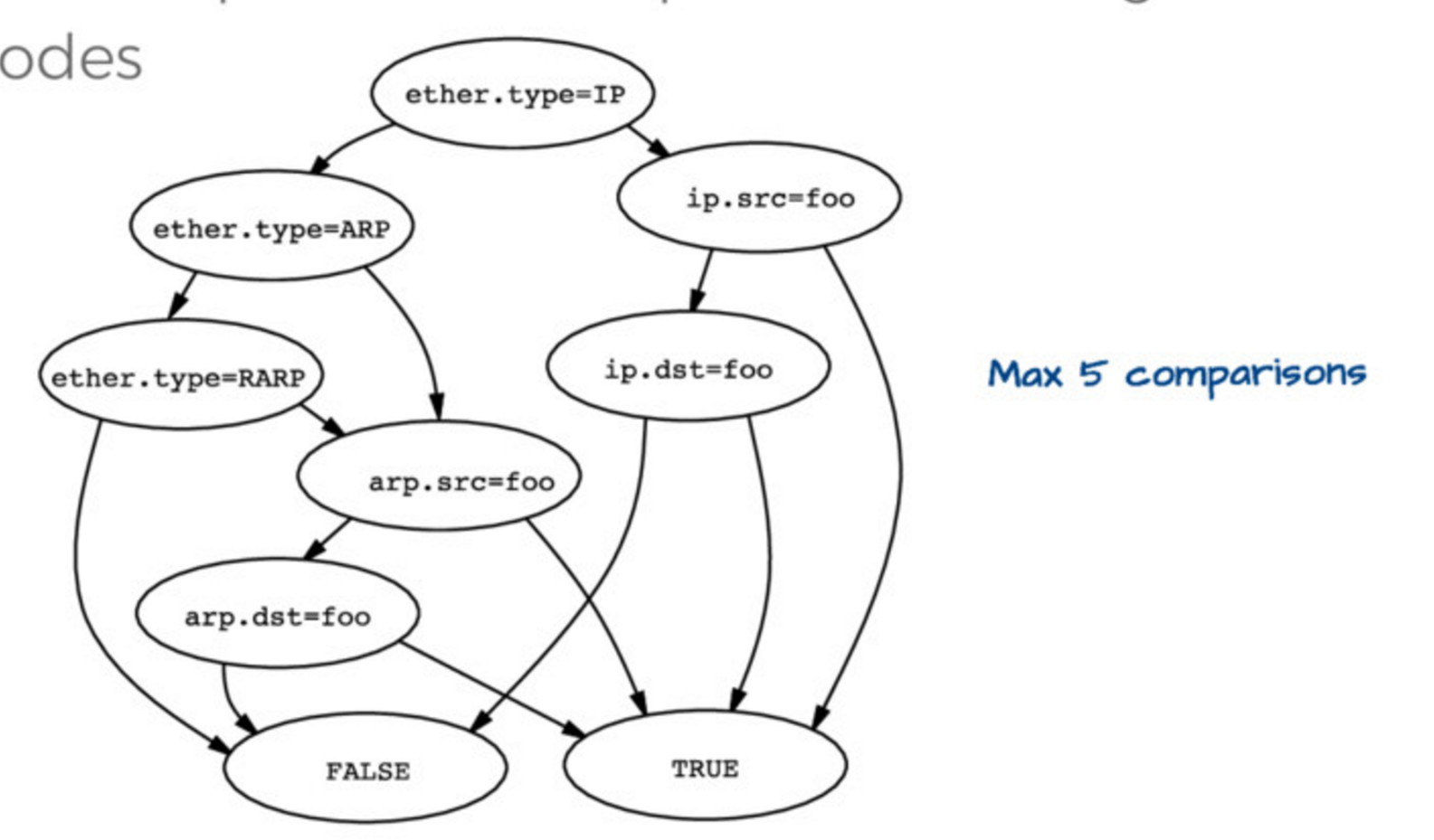

![LaTeX 示例][3]

|

||||

|

||||

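LaTeX editors are generally used for scientific research documents or books published for academic purposes. Most importantly, LaTeX makes life easier when you have to deal with documents full of complex mathematical notation. Using a LaTeX editor is certainly fun, but it is not always that useful unless you have specific requirements for the document you are writing.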

|

||||

|

||||

### Why should you use LaTeX?

|

||||

|

||||

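Well, as I mentioned above, using a LaTeX editor means you have specific needs. You do not need a geek's brain to tinker with a LaTeX editor, but for people who are used to ordinary text editors it is not a very productive solution.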

|

||||

|

||||

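If you are looking for a tool to craft a document and have no interest in spending time formatting text, a LaTeX editor may be just what you need. In a LaTeX editor you only specify the type of document, and the font and sizes are set up for you accordingly. No wonder it is considered one of the [best open source tools for writers][4].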

|

||||

|

||||

Do note, however, that a LaTeX editor is not an automated tool: you first have to learn some LaTeX commands so that it formats your text precisely.

|

||||

|

||||

### 10 best LaTeX editors for Linux

|

||||

|

||||

To be clear up front, the list below is in no particular order; the editor at number 3 is not necessarily better than the one at number 7.

|

||||

|

||||

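#### 1. LyX

![][5]

[LyX][6] is an open source LaTeX editor and one of the best document processors available on the web. LyX helps you focus on your writing and forget about formatting words, which is what every LaTeX editor should do. It lets you manage document content differently depending on the kind of document. Once it is installed, you can control many things in the document, such as margins, headers, footers, spacing, indentation, tables, and so on.

If you are busy crafting scientific documents, research papers, or similar work, you will be glad to have LyX's formula editor, one of its signature features. LyX also includes a set of tutorials to get you started without much hassle.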

|

||||

|

||||

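#### 2. Texmaker

![][7]

[Texmaker][8] is considered one of the best LaTeX editors for the GNOME desktop environment. It has a very nice user interface, which makes for a great user experience, and it is also regarded as one of the most useful LaTeX editors. If you convert to PDF often, you will find Texmaker faster than other editors. You can preview what your document will finally look like while you write, and the symbols you need are easy to find.

Texmaker also offers extensive keyboard shortcut support. Why not give it a try?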

|

||||

|

||||

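#### 3. TeXstudio

![][9]

If you want a LaTeX editor that offers a decent level of customization along with an easy-to-use interface, [TeXstudio][10] is a perfect choice. Its UI is simple but not crude. TeXstudio has syntax highlighting, comes with an integrated viewer, lets you check references, and bundles some other helper tools.

It also supports some cool features such as auto-completion, link overlay, bookmarks, and multiple cursors, which make writing LaTeX documents easier than before.

TeXstudio is actively maintained, which makes it a compelling choice for both beginners and advanced writers.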

|

||||

|

||||

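#### 4. Gummi

![][11]

[Gummi][12] is a very simple LaTeX editor based on the GTK+ toolkit. You will not find many fancy options in it, but if you just want to start writing right away, Gummi is our recommendation. It supports exporting documents to PDF, offers syntax highlighting, and helps with some basic error checking. Although it is no longer actively maintained on GitHub, it still works well.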

|

||||

|

||||

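#### 5. TeXpen

![][13]

[TeXpen][14] is another simple LaTeX editor. It gives you auto-completion, though its user interface may not impress you. If you do not care about the interface and want a super easy LaTeX editor, TeXpen will meet your needs. TeXpen can also correct or improve the English grammar and expressions used in your document.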

|

||||

|

||||

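#### 6. ShareLaTeX

![][15]

[ShareLaTeX][16] is an online LaTeX editor. If you want to collaborate on a document with someone or with a group of friends, this is what you need.

It offers a free plan and several paid plans. Even students from Harvard and Oxford use it for their personal projects. The free plan also lets you add one collaborator.

The paid plans let you sync with GitHub and Dropbox and keep a full document revision history. You can have multiple collaborators on each plan. There are also separate pricing plans for students.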

|

||||

|

||||

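#### 7. Overleaf

![][17]

[Overleaf][18] is another online LaTeX editor. Like ShareLaTeX, it offers separate pricing plans for professionals and students. It also has a free plan with which you can sync with GitHub, check your revision history, and add multiple collaborators.

It limits the number of files per project, so in most cases this should not bother you if you know your way around LaTeX files.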

|

||||

|

||||

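#### 8. Authorea

![][19]

[Authorea][20] is a wonderful online LaTeX editor, although it may not be the best when it comes to pricing. The free plan has a 100 MB upload limit and allows only one private document at a time. The paid plans offer more perks, but they are not the cheapest either. The only reason to choose Authorea should be its user interface. If you love tools with an impressive interface, do not miss it.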

|

||||

|

||||

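#### 9. Papeeria

![][21]

[Papeeria][22] is the cheapest online LaTeX editor you can find on the web, considering that it is as reliable as the others. You cannot work on private projects if you use it for free, but if you prefer public projects, it lets you create an unlimited number of them with unlimited collaborators. Its standout features are a very simple plot builder and Git sync at no extra cost. If you opt for a paid plan, you get the ability to create 10 private projects.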

|

||||

|

||||

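#### 10. Kile

![Kile LaTeX editor][23]

Last on our list of the best LaTeX editors is [Kile][24]. Some people swear by Kile, largely because of the features it offers.

Kile is more than an editor: it is an IDE along the lines of Eclipse that provides a complete environment for documents and projects. Besides quick compilation and preview, you get features such as command auto-completion, inserting citations, and organizing documents by chapter. You really should try Kile to see its potential.

Kile is available for both Linux and Windows.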

|

||||

|

||||

### Conclusion

|

||||

|

||||

So these are the LaTeX editors we recommend; you can use them on Ubuntu or any other Linux distribution.

|

||||

|

||||

Of course, we may have missed some interesting LaTeX editors that run on Linux. If you know of any, let us know in the comments below.

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://itsfoss.com/LaTeX-editors-linux/

|

||||

|

||||

Author: [Ankush Das][a]
Translator: [FSSlc](https://github.com/FSSlc)
Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/).

|

||||

|

||||

[a]:https://itsfoss.com/author/ankush/

|

||||

[1]:https://www.LaTeX-project.org/

|

||||

[3]:https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2017/11/latex-sample-example.jpeg

|

||||

[4]:https://itsfoss.com/open-source-tools-writers/

|

||||

[5]:https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2017/10/lyx_latex_editor.jpg

|

||||

[6]:https://www.LyX.org/

|