mirror of https://github.com/LCTT/TranslateProject.git, synced 2025-02-19 00:30:12 +08:00

commit dc1b4cdff5

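@@ -8,45 +8,44 @@ Copyright (C) 2015 greenbytes GmbH

### Sources ###

You can get the Apache distribution from [here][1]. Apache 2.4.17 and later support HTTP/2. I will not repeat the instructions for building the server; there are good guides in many places, for example [here][2].
You can get the Apache release from [here][1]. Apache 2.4.17 and later support HTTP/2. I will not repeat the instructions for building this server; there are good guides in many places, for example [here][2].

(Any links to experiments? Tell me on Twitter: @icing)
(Any links to experimental packages of this? Tell me on Twitter: @icing)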

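#### Building with HTTP/2 support ####
#### Building with HTTP/2 support ####

Before you build the distribution, you need to **configure** it. There are thousands of options. The ones relevant to HTTP/2 are:
Before you build the release, you need to **configure** it. There are thousands of options. The ones relevant to HTTP/2 are:

- **--enable-http2**

Enables the 'http2' module, which implements the protocol inside the Apache server.
Enables the 'http2' module, which implements this protocol inside the Apache server.

- **--with-nghttp2=<dir>**
- **--with-nghttp2=\<dir>**

Specifies a non-default location for the libnghttp2 library that the http2 module needs. If nghttp2 is in a default location, the configure process picks it up automatically.

- **--enable-nghttp2-staticlib-deps**

A rarely used option that you can use to link the nghttp2 library statically into the server. On most platforms, it only takes effect when no shared nghttp2 library can be found.
A rarely used option, for when you want to link the nghttp2 library statically into the server. On most platforms, it is only useful when no shared nghttp2 library can be found.

If you want to build nghttp2 yourself, see the documentation at [nghttp2.org][3]. The latest Fedora and other distributions already ship this library.
If you want to build nghttp2 yourself, see the documentation at [nghttp2.org][3]. The latest Fedora and other releases already ship this library.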

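#### TLS support ####

Most people want to use HTTP/2 with browsers, and browsers only support it over TLS connections (URLs starting with **https://**). You need some configuration for that, which I describe below. But first you need a TLS library that supports the ALPN extension.
Most people want to use HTTP/2 with browsers, and browsers only support HTTP/2 when they use TLS connections (URLs starting with **https://**). You need some configuration for that, which I describe below. But first you need a TLS library that supports the ALPN extension.

ALPN is used to negotiate the protocol between server and client. If the TLS library on your server does not implement ALPN, clients can only talk to it over HTTP/1.1. So, which libraries can be linked with Apache and support it?

ALPN is used to mask the protocol between server and client. If the TLS library on your server does not implement ALPN, clients can only talk to it over HTTP/1.1. So, what exactly connects to Apache? And what supports it?
- **OpenSSL 1.0.2** and later.
- ??? (I do not know of any others)

- **OpenSSL 1.0.2**, coming soon.
- ???

If your OpenSSL library comes with your Linux distribution, the version number used there may differ from that of the official OpenSSL releases. If in doubt, check with your Linux distribution.
If your OpenSSL library comes with your Linux release, the version number used there may differ from that of the official OpenSSL versions. If in doubt, check with your Linux release.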
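As a rough illustration of the version requirement above, the following sketch checks an OpenSSL version tuple against 1.0.2. The helper name `openssl_supports_alpn` is made up for this example, and the check looks at the OpenSSL that Python itself is linked against, which is not necessarily the library your Apache uses. Because distributions may backport features, the `ssl.HAS_ALPN` flag is probably the more reliable signal.

```python
import ssl


def openssl_supports_alpn(version_info):
    """Return True if an OpenSSL version tuple is 1.0.2 or later.

    version_info is assumed to look like ssl.OPENSSL_VERSION_INFO,
    i.e. (major, minor, fix, patch, status). Hypothetical helper.
    """
    return tuple(version_info[:3]) >= (1, 0, 2)


if __name__ == "__main__":
    # ssl.HAS_ALPN reports whether the linked TLS library offers ALPN.
    print(ssl.OPENSSL_VERSION)
    print("ALPN available:", ssl.HAS_ALPN)
    print("version check:", openssl_supports_alpn(ssl.OPENSSL_VERSION_INFO))
```

Running the file directly prints the details for the local Python build; the function alone can be reused for version tuples obtained elsewhere.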

### Configuration ###

Another good tip for your server is to set a suitable log level for the http2 module. Add the following configuration:

    # somewhere there is a line like this
    # a line like this, placed somewhere
    LoadModule http2_module modules/mod_http2.so

    <IfModule http2_module>

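@@ -62,38 +61,37 @@ ALPN 用来屏蔽服务器和客户端之间的协议。如果你服务器上 TL

So, assume you have built and deployed your server, your TLS library is up to date, you start the server and open your browser... How do you know it is working?

If you have not added anything else to your server configuration, it most likely is not.
If you have not added any other server configuration, it most likely is not.

You need to tell the server where to use the protocol. By default, the HTTP/2 protocol is not enabled on your server. Because this is the safe route, you may need an existing deployment before you can continue.
You need to tell the server where to use this protocol. By default, the HTTP/2 protocol is not enabled on your server, because that is the safer route and lets your existing deployments keep working.

You enable the HTTP/2 protocol with the **Protocols** command:
You can enable the HTTP/2 protocol with the new **Protocols** directive:

    # for a https server
    # for an https server
    Protocols h2 http/1.1
    ...

    # for a http server
    # for an http server
    Protocols h2c http/1.1

You can add this to the general server or to specific **vhosts**.
You can add this to the whole server or to specific **vhosts**.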

#### SSL parameters ####

HTTP/2 has some special requirements for TLS (SSL). See [https:// connections][4] for details.
HTTP/2 has some special requirements for TLS (SSL). See the "https:// connections" section below for details.

### http:// connections (h2c) ###

Although no browser supports the HTTP/2 protocol yet, http:// URLs also work, thanks to the support in mod_h[ttp]2. The only thing you need to do to enable it is to configure Protocols in **httpd.conf**:
Although no browser supports it yet, the HTTP/2 protocol also works over http:// URLs, and mod_h[ttp]2 supports that as well. The only thing you need to do is enable it in your Protocols configuration:

    # for a http server
    # for an http server
    Protocols h2c http/1.1


There are some clients (and client libraries) that support **h2c**. I will discuss them below:

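#### curl ####

curl, the command line client for network resources maintained by Daniel Stenberg, supports it of course. If you have curl on your system, there is a simple way to check whether it supports http/2:
curl, the command line client for accessing network resources maintained by Daniel Stenberg, supports it of course. If you have curl on your system, there is a simple way to check whether it supports http/2:

    sh> curl -V
    curl 7.43.0 (x86_64-apple-darwin15.0) libcurl/7.43.0 SecureTransport zlib/1.2.5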
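The version output is cut off by the diff here, so the following is only a sketch of how the check could be automated. It assumes that a curl build with HTTP/2 support lists `HTTP2` on the `Features:` line of `curl -V`; the function name and the sample strings in the usage note are invented for illustration.

```python
def curl_has_http2(version_output):
    """Return True if `curl -V` output lists HTTP2 among its features."""
    for line in version_output.splitlines():
        if line.startswith("Features:"):
            # Everything after the colon is a space-separated feature list.
            return "HTTP2" in line.split(":", 1)[1].split()
    return False
```

For example, feeding it a captured output whose feature line reads `Features: IPv6 Largefile HTTP2 SSL` yields `True`, while an output without the `HTTP2` token yields `False`.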

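@@ -126,11 +124,11 @@ Daniel Stenberg 维护的网络资源命令行客户端 curl 当然支持。如

Congratulations: if you see a line containing **...101 Switching...**, it is working!

There are cases where the Upgrade to HTTP/2 does not happen. If your first request has no content, for example when you upload a file, no Upgrade is triggered. The [h2c limitations][5] section explains this in detail.
There are cases where the upgrade (Upgrade) to HTTP/2 does not happen. If your first request carries content data (a body), for example when you upload a file, no upgrade is triggered. The [h2c limitations][5] section explains this in detail.

#### nghttp ####

nghttp2 has a client and a server that can be built along with it. If you have the client on your system, you can verify your installation simply by fetching a resource:
nghttp2 can build its own client and server alongside the library. If you have that client on your system, you can verify your installation simply by fetching a resource:

    sh> nghttp -uv http://<yourserver>/
    [ 0.001] Connected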

@@ -151,7 +149,7 @@ nghttp2 有能一起编译的客户端和服务器。如果你的系统中有客

This is very similar to the Upgrade output we saw in the **curl** example above.

Hidden among the command line arguments is one that enables **h2c**: **-u**. It instructs **nghttp** to perform the HTTP/1 Upgrade process. But what if we leave it out?
There is another way to use **h2c** that does without the **-u** command line argument. That argument instructs **nghttp** to perform the HTTP/1 upgrade process. But what if we leave it out?

    sh> nghttp -v http://<yourserver>/
    [ 0.002] Connected

@@ -166,36 +164,33 @@ nghttp2 有能一起编译的客户端和服务器。如果你的系统中有客
    :scheme: http
    ...

The connection immediately shows HTTP/2! This is what the protocol calls direct mode. It happens when the client sends a special sequence of 24 bytes to the server:
The connection immediately uses HTTP/2! This is what the protocol calls "direct" mode. It happens when the client sends a special sequence of 24 bytes to the server:

    0x505249202a20485454502f322e300d0a0d0a534d0d0a0d0a
    or in ASCII: PRI * HTTP/2.0\r\n\r\nSM\r\n\r\n

or in ASCII:

    PRI * HTTP/2.0\r\n\r\nSM\r\n\r\n

A server that supports **h2c** switches to HTTP/2 immediately when it sees this on a new connection. An HTTP/1.1 server regards it as a funny request, answers it and closes the connection.
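The hex string and its ASCII form quoted above can be checked against each other with a few lines of Python. The helper `starts_with_h2_preface` is a hypothetical name, and the check is only a sketch of what a server might do with the first bytes of a new connection, not how mod_http2 is implemented.

```python
# The 24-byte sequence quoted in the text, without the leading "0x".
PREFACE_HEX = "505249202a20485454502f322e300d0a0d0a534d0d0a0d0a"
PREFACE = bytes.fromhex(PREFACE_HEX)


def starts_with_h2_preface(first_bytes):
    """Return True if a new connection opens with the direct-mode bytes."""
    return first_bytes.startswith(PREFACE)


if __name__ == "__main__":
    print(len(PREFACE), PREFACE)
```

A connection that opens with an ordinary request line such as `GET / HTTP/1.1` fails the check, which corresponds to the HTTP/1.1 path described above.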

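Therefore, **direct** mode is only suitable for clients that know for sure that the server supports HTTP/2, for example because a previous Upgrade succeeded.
Therefore, **direct** mode is only suitable for clients that know for sure that the server supports HTTP/2, for example when a previous upgrade has succeeded.

The charm of **direct** mode is zero overhead, and it works for all requests, even those without a body (see [h2c limitations][6]). Direct mode is enabled by default on any server that supports the h2c protocol. If you want to disable it, add the following configuration directive to your server:
The charm of **direct** mode is zero overhead, and it works for all requests, even those that carry a request body (see [h2c limitations][6]).

Note: the following line is struck through

    H2Direct off

Note: the following line is struck through

For the 2.4.17 distribution, **H2Direct** is enabled by default on cleartext connections. However, some modules are not compatible with this. Therefore, in the next distribution the default will be **off**, and if you want your server to support it, you need to set:
For the 2.4.17 release, **H2Direct** is enabled by default on cleartext connections. However, some modules are not compatible with this. Therefore, in the next release the default will be **off**, and if you want your server to support it, you need to set:

    H2Direct on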

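### https:// connections (h2) ###

Once your mod_h[ttp]2 supports h2c connections, it is time to enable **h2** as well, because today's browsers support it together with **https:**.
When your mod_h[ttp]2 can handle h2c connections, you can enable its sibling **h2** as well; today's browsers support it only together with **https:**.

The HTTP/2 standard imposes some extra requirements on https: (TLS) connections. The ALPN extension has already been mentioned above. Another requirement is that no cipher from a specific [blacklist][7] is used.
The HTTP/2 standard imposes some extra requirements on https: (TLS) connections. The ALPN extension has already been mentioned above. Another requirement is that encryption algorithms on a specific [blacklist][7] must not be used.

Although the current version of **mod_h[ttp]2** does not enforce these ciphers (it may in the future), most clients do. If you open an **h2** server in your browser with inappropriate ciphers, you will see the vague warning **INADEQUATE_SECURITY** and the browser will refuse the connection.
Although the current version of **mod_h[ttp]2** does not enforce these algorithms (it may in the future), most clients do. If you point your browser at an **h2** server that uses inappropriate algorithms, you will see the unspecific warning **INADEQUATE_SECURITY** and the browser will refuse the connection.

An acceptable Apache SSL configuration looks like this:
A workable Apache SSL configuration looks like this:

    SSLCipherSuite ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-AES256-GCM-SHA384:DHE-RSA-AES128-GCM-SHA256:DHE-DSS-AES128-GCM-SHA256:kEDH+AESGCM:ECDHE-RSA-AES128-SHA256:ECDHE-ECDSA-AES128-SHA256:ECDHE-RSA-AES128-SHA:ECDHE-ECDSA-AES128-SHA:ECDHE-RSA-AES256-SHA384:ECDHE-ECDSA-AES256-SHA384:ECDHE-RSA-AES256-SHA:ECDHE-ECDSA-AES256-SHA:DHE-RSA-AES128-SHA256:DHE-RSA-AES128-SHA:DHE-DSS-AES128-SHA256:DHE-RSA-AES256-SHA256:DHE-DSS-AES256-SHA:DHE-RSA-AES256-SHA:!aNULL:!eNULL:!EXPORT:!DES:!RC4:!3DES:!MD5:!PSK
    SSLProtocol All -SSLv2 -SSLv3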
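To get a feel for which entries of such a cipher string a strict HTTP/2 client is likely to accept, the sketch below sorts OpenSSL-style names with a simple heuristic: ephemeral key exchange (`ECDHE-` or `DHE-` prefix) combined with an AEAD cipher (`GCM` or `CHACHA20` in the name). This is an assumption-laden approximation and not the blacklist from the HTTP/2 specification itself; both function names are invented for this example.

```python
def likely_h2_acceptable(openssl_name):
    """Heuristic only: ephemeral key exchange plus an AEAD cipher."""
    ephemeral = openssl_name.startswith(("ECDHE-", "DHE-"))
    aead = "GCM" in openssl_name or "CHACHA20" in openssl_name
    return ephemeral and aead


def split_cipher_string(cipher_string):
    """Split an SSLCipherSuite value into (probably fine, worth checking).

    Exclusions such as !aNULL and aliases such as kEDH+AESGCM are skipped,
    because they do not name a single suite.
    """
    ok, check = [], []
    for item in cipher_string.split(":"):
        if not item or item.startswith("!") or "+" in item:
            continue
        (ok if likely_h2_acceptable(item) else check).append(item)
    return ok, check
```

Applied to the configuration above, the GCM suites would land in the first list and the CBC-based ones (for example `ECDHE-RSA-AES128-SHA`) in the second, which is where a closer look at the specification's list is worthwhile.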

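@@ -203,11 +198,11 @@ HTTP/2 标准对 https:(TLS)连接增加了一些额外的要求。上面已

(Yes, that is indeed long.)

There are other SSL configuration parameters that should be tuned, though they do not have to be: **SSLSessionCache**, **SSLUseStapling** and so on. These are covered elsewhere, for example in the blog by Ilya Grigorik, [High Performance Browser Networking][8].
There are other SSL configuration parameters that should be tuned, but need not be: **SSLSessionCache**, **SSLUseStapling** and so on. These are covered elsewhere, for example in the excellent blog by Ilya Grigorik: [High Performance Browser Networking][8].

#### curl ####

Back in the shell with curl (see the [curl h2c section][9] for the requirements), you can also check your server with a simple curl command:
Back in the shell with curl (see the "curl h2c" section above for the requirements), you can also check your server with a simple curl command:

    sh> curl -v --http2 https://<yourserver>/
    ...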

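@@ -220,9 +215,9 @@ HTTP/2 标准对 https:(TLS)连接增加了一些额外的要求。上面已

Congratulations, it works! If it does not, possible reasons are:

- Your curl does not support HTTP/2. See [checking curl][10].
- Your curl does not support HTTP/2. See the "checking curl" section above.
- Your openssl version is too old to support ALPN.
- Your certificate could not be verified, or your cipher configuration was not accepted. Try adding the command line option -k to disable the checks in curl. If that works, you still need to fix your SSL configuration and certificate.
- Your certificate could not be verified, or your algorithm configuration was not accepted. Try adding the command line option -k to disable those checks in curl. If that works, fix your SSL configuration and certificate.

#### nghttp ####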

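@@ -246,11 +241,11 @@ HTTP/2 标准对 https:(TLS)连接增加了一些额外的要求。上面已
    The negotiated protocol: http/1.1
    [ERROR] HTTP/2 protocol was not selected. (nghttp2 expects h2)

This means ALPN is working, but the h2 protocol was not selected. You need to select that protocol on your server as described above. If selecting it in the vhost section does not work at first, try selecting it in the general section.
This means ALPN is working, but the h2 protocol was not selected. You need to check the Protocols configuration on your server as described above. If setting it in the vhost section does not work at first, try setting it in the general section.

#### Firefox ####

Update: Steffen Land from [Apache Lounge][11] pointed me to the [Firefox HTTP/2 indicator add-on][12]. You can see how many places already use h2 (hint: Apache Lounge has been using h2 for a while now...)
Update: Steffen Land from [Apache Lounge][11] told me that [there is an HTTP/2 indicator add-on for Firefox][12]. You can see how many places already use h2 (hint: Apache Lounge has been using h2 for a while now...)

In Firefox you can open the developer tools and look at HTTP/2 connections in the network tab there. When you have enabled HTTP/2 and reload an html page, you will see something like this: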

|

||||

|

||||

@ -260,9 +255,9 @@ Update: [Apache Lounge][11] 的 Steffen Land 告诉我 [Firefox HTTP/2 指示

|

||||

|

||||

#### Google Chrome ####

|

||||

|

||||

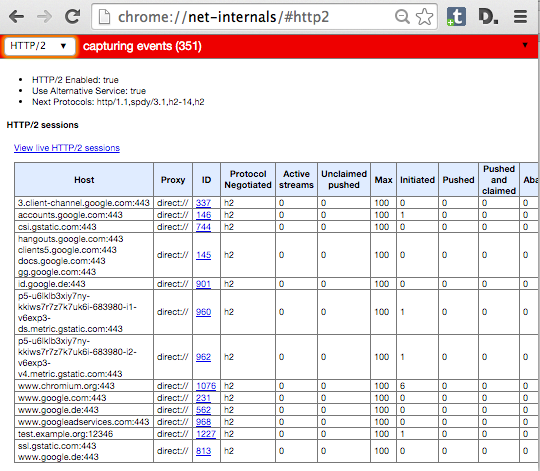

在 Google Chrome 中,你在开发者工具中看不到 HTTP/2 指示器。相反,Chrome 用特殊的地址 **chrome://net-internals/#http2** 给出了相关信息。

|

||||

在 Google Chrome 中,你在开发者工具中看不到 HTTP/2 指示器。相反,Chrome 用特殊的地址 **chrome://net-internals/#http2** 给出了相关信息。(LCTT 译注:Chrome 已经有一个 “HTTP/2 and SPDY indicator” 可以很好的在地址栏识别 HTTP/2 连接)

|

||||

|

||||

如果你在服务器中打开了一个页面并在 Chrome 那个页面查看,你可以看到类似下面这样:

|

||||

如果你打开了一个服务器的页面,可以在 Chrome 中查看那个 net-internals 页面,你可以看到类似下面这样:

|

||||

|

||||

|

||||

|

||||

@ -276,21 +271,21 @@ Windows 10 中 Internet Explorer 的继任者 Edge 也支持 HTTP/2。你也可

|

||||

|

||||

#### Safari ####

|

||||

|

||||

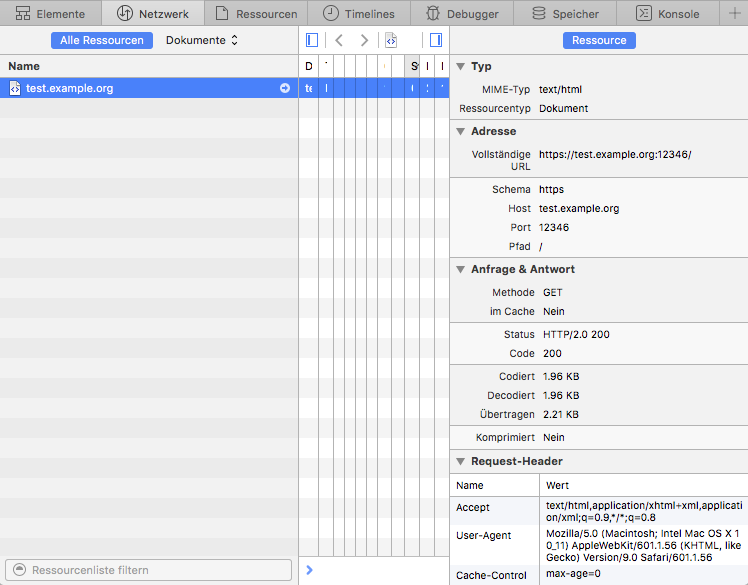

在 Apple 的 Safari 中,打开开发者工具,那里有个网络标签页。重新加载你的服务器页面并在开发者工具中选择显示了加载的行。如果你启用了在右边显示详细试图,看 **状态** 部分。那里显示了 **HTTP/2.0 200**,类似:

|

||||

在 Apple 的 Safari 中,打开开发者工具,那里有个网络标签页。重新加载你的服务器上的页面,并在开发者工具中选择显示了加载的那行。如果你启用了在右边显示详细视图,看 **Status** 部分。那里显示了 **HTTP/2.0 200**,像这样:

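#### Renegotiation ####

Renegotiation of an https: connection means that certain TLS parameters change on a running connection. In Apache httpd, you can change TLS parameters through configuration files in a directory. When a request for a resource in a particular location arrives, the configured TLS parameters are compared with the current ones. If they differ, renegotiation is triggered.
Renegotiation of an https: connection means that certain TLS parameters change on a running connection. In Apache httpd, you can change TLS parameters in a directory configuration. When a request comes in for a resource in a particular location, the configured TLS parameters are compared with the current ones. If they differ, renegotiation is triggered.

The most common cases for this are cipher changes and client authentication. You can require clients to authenticate when they access particular locations, or you can use more secure, CPU-intensive ciphers for particular resources.
The most common cases for this are algorithm changes and client certificates. You can require clients to authenticate when they access particular locations, or, for particular resources, you can use more secure algorithms that put more load on the CPU.

However good your idea may be, renegotiation **cannot** happen in HTTP/2. If 100+ requests go to the same place, when would renegotiation happen, and for which one?
But however good your idea may be, renegotiation **cannot** happen in HTTP/2. With 100+ requests on the same connection, when should renegotiation take place?

For such configurations, the current **mod_h[ttp]2** cannot yet keep you safe. If you have a site that uses TLS renegotiation, do not enable h2 on it!
For such configurations, the current **mod_h[ttp]2** has no solution yet. If you have a site that uses TLS renegotiation, do not enable h2 on it!

Of course, we will solve this in a later distribution and then you can enable it safely.
Of course, we will solve this in a later release, and then you can enable it safely.

### Limitations ###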

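@@ -298,45 +293,45 @@ https: 连接重新协商是指正在运行的连接中特定的 TLS 参数会

Modules that implement protocols other than HTTP may be incompatible with **mod_http2**. This will certainly be the case when the other protocol requires the server to send data first.

**NNTP** is one example of such a protocol. If you have **mod_nntp_like_ssl** configured in your server, do not even load mod_http2. Wait for the next distribution.
**NNTP** is one example of such a protocol. If you have **mod\_nntp\_like\_ssl** configured in your server, then do not load mod_http2. Wait for the next release.

#### h2c limitations ####

The **h2c** implementation still has some limitations that you should be aware of:

#### Deny h2c in virtual hosts ####
##### Deny h2c in virtual hosts #####

You cannot deny **h2c direct** for specific virtual hosts. **Direct** is triggered when the connection is established, before any request has been seen, which makes it impossible to know in advance which virtual host Apache needs to look up.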

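#### Upgrade request body ####
##### Upgrade when there is request data #####

The **h2c** upgrade does not work for requests that have a body. Those are PUT and POST requests (used for form submissions and uploads). If you write a client, you may handle the request with a simple GET, or use the option * to trigger the upgrade.
The **h2c** upgrade does not work for requests that carry data. Those are PUT and POST requests (used for form submissions and uploads). If you write a client, you may precede those requests with a simple GET or OPTIONS * to trigger the upgrade.

The reason is obvious from a technical point of view, but in case you want to know: during the upgrade, the connection is in a half-insane state. The request arrives in HTTP/1.1 format, while the response uses HTTP/2. If the request has a body, the server needs to read the whole body before it sends the response, because the response may need answers from the client for flow control. But while the HTTP/1.1 request is still being sent, the client cannot yet handle an HTTP/2 connection.
The reason is obvious from a technical point of view, but in case you want to know: during the upgrade, the connection is in a half-insane state. The request arrives in HTTP/1.1 format, while the response uses HTTP/2 frames. If the request has a data part, the server needs to read all of that data before it sends the response, because the response may need answers from the client for flow control and other things. But while the HTTP/1.1 request is still being sent, the client is not yet able to talk HTTP/2.

To make behaviour predictable, several server implementors decided not to upgrade on any request with a body, even if the body is small.
To make behaviour predictable, several servers chose in their implementation not to upgrade on any request that carries request data, even if that data is small.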
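A client author could follow the advice above by sending a body-less request first. The sketch below builds such a request as raw bytes; the header names (`Upgrade: h2c`, `Connection: Upgrade, HTTP2-Settings`, `HTTP2-Settings`) come from the HTTP/2 specification rather than from this article, the function name is invented, and the empty `HTTP2-Settings` value assumes that an empty SETTINGS payload (all defaults) is acceptable to the server.

```python
def h2c_upgrade_request(host, method="OPTIONS", target="*"):
    """Build a body-less HTTP/1.1 request that asks for an upgrade to h2c.

    The HTTP2-Settings value is the base64url-encoded SETTINGS payload;
    an empty payload encodes to an empty string.
    """
    if method not in ("GET", "HEAD", "OPTIONS"):
        raise ValueError("use a request without a body to trigger the upgrade")
    lines = [
        f"{method} {target} HTTP/1.1",
        f"Host: {host}",
        "Connection: Upgrade, HTTP2-Settings",
        "Upgrade: h2c",
        "HTTP2-Settings: ",
        "",  # blank line that ends the header block
        "",
    ]
    return "\r\n".join(lines).encode("ascii")
```

Once the server has answered with the 101 response, the actual PUT or POST can be sent over the now-upgraded connection.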

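#### Upgrade on 302s ####
##### Upgrade on a 302 #####

The h2c upgrade currently also does not work when a redirect occurs. It seems that a rewrite can happen before mod_http2 gets involved. This certainly does not break anything, but it may confuse you when you test such a site.
When a redirect occurs, the current h2c upgrade also does not work. It seems that a rewrite can happen before mod_http2 gets involved. This certainly does not break anything, but it may confuse you when you test such a site.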

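#### h2 limitations ####

Here are some limitations of the h2 implementation that you should be aware of:

#### Connection reuse ####
##### Connection reuse #####

The HTTP/2 protocol allows TLS connections to be reused under certain conditions: if you have a wildcard certificate or one with several AltSubject names, browsers may reuse an existing connection. For example:

You have a certificate for **a.example.org** that also carries the additional name **b.example.org**. You open the url **https://a.example.org/** in your browser and load **https://b.example.org/** in another tab.
You have a certificate for **a.example.org** that also carries the additional name **b.example.org**. You open the URL **https://a.example.org/** in your browser and load **https://b.example.org/** in another tab.

Before opening a new connection, the browser sees that it already has a connection to **a.example.org** and that the certificate is also valid for **b.example.org**. So it sends the request to the second tab over the first connection.
Before opening a new connection, the browser sees that it already has a connection to **a.example.org** and that the certificate is also valid for **b.example.org**. So it sends the second tab's request over the first connection.

This connection reuse is intentional: it lets sites that invested in HTTP/1 sharding for efficiency take advantage of HTTP/2 without much change.
This connection reuse is intentional: it lets sites that used HTTP/1 sharding to improve efficiency take advantage of HTTP/2 without much change.

Apache **mod_h[ttp]2** does not fully implement this yet. If **a.example.org** and **b.example.org** are different virtual hosts, Apache will not allow such connection reuse and will answer the browser with the status code **421 wrong request**. The browser then realizes that it needs to open a new connection to **b.example.org**. This still works, only with some loss of efficiency.
Apache **mod_h[ttp]2** does not fully implement this yet. If **a.example.org** and **b.example.org** are different virtual hosts, Apache will not allow such connection reuse and will answer the browser with the status code **421 Misdirected Request**. The browser then realizes that it needs to open a new connection to **b.example.org**. This still works, only with some loss of efficiency.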
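The browser-side decision in the example can be sketched as a name check against the certificate of the existing connection. This is a simplified illustration with invented function names: it only handles exact names and a wildcard in the leftmost label, and real browsers apply further conditions before they reuse a connection.

```python
def name_matches(pattern, host):
    """Match a certificate name, allowing a wildcard in the leftmost label only."""
    p_labels = pattern.lower().split(".")
    h_labels = host.lower().split(".")
    if len(p_labels) != len(h_labels):
        return False
    if p_labels[0] == "*":
        return p_labels[1:] == h_labels[1:]
    return p_labels == h_labels


def may_reuse_connection(cert_names, new_host):
    """Return True if the certificate of an open connection covers new_host."""
    return any(name_matches(name, new_host) for name in cert_names)
```

With the certificate from the example, `may_reuse_connection(["a.example.org", "b.example.org"], "b.example.org")` is true, so the second tab's request goes over the first connection, and the server may still answer 421 if the two names map to different virtual hosts.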

We expect proper checks in the next release.
We expect suitable checks in the next release.

Münster, 12.10.2015,

@@ -355,7 +350,7 @@ via: https://icing.github.io/mod_h2/howto.html

Author: [icing][a]
Translator: [ictlyh](http://mutouxiaogui.cn/blog/)
Proofreader: [校对者ID](https://github.com/校对者ID)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

@@ -1,3 +1,4 @@
translating wi-cuckoo
A Repository with 44 Years of Unix Evolution
================================================================================
### Abstract ###
@@ -199,4 +200,4 @@ via: http://www.dmst.aueb.gr/dds/pubs/conf/2015-MSR-Unix-History/html/Spi15c.htm
[50]:http://www.dmst.aueb.gr/dds/pubs/conf/2015-MSR-Unix-History/html/Spi15c.html#tthFrefAAI
[51]:http://ftp.netbsd.org/pub/NetBSD/NetBSD-current/src/share/misc/bsd-family-tree
[52]:http://www.dmst.aueb.gr/dds/pubs/conf/2015-MSR-Unix-History/html/Spi15c.html#tthFrefAAJ
[53]:https://github.com/dspinellis/unix-history-make
[53]:https://github.com/dspinellis/unix-history-make

@@ -0,0 +1,450 @@
Getting started with Docker by Dockerizing this Blog
======================
>This article covers the basic concepts of Docker and how to Dockerize an application by creating a custom Dockerfile
>Written by Benjamin Cane on 2015-12-01 10:00:00

Docker is an interesting technology that over the past 2 years has gone from an idea to being used by organizations all over the world to deploy applications. In today's article I am going to cover how to get started with Docker by "Dockerizing" an existing application. The application in question is actually this very blog!

## What is Docker

Before we dive into learning the basics of Docker, let's first understand what Docker is and why it is so popular. Docker is an operating system container management tool that allows you to easily manage and deploy applications by making it easy to package them within operating system containers.

### Containers vs. Virtual Machines

Containers may not be as familiar as virtual machines, but they are another method of providing **Operating System Virtualization**. However, they differ quite a bit from standard virtual machines.

Standard virtual machines generally include a full Operating System, OS Packages and eventually an Application or two. This is made possible by a Hypervisor which provides hardware virtualization to the virtual machine. This allows for a single server to run many standalone operating systems as virtual guests.

Containers are similar to virtual machines in that they allow a single server to run multiple operating environments; these environments, however, are not full operating systems. Containers generally only include the necessary OS Packages and Applications. They do not generally contain a full operating system or hardware virtualization. This also means that containers have a smaller overhead than traditional virtual machines.

Containers and Virtual Machines are often seen as conflicting technologies; however, this is often a misunderstanding. Virtual Machines are a way to take a physical server and provide a fully functional operating environment that shares those physical resources with other virtual machines. A Container is generally used to isolate a running process within a single host to ensure that the isolated processes cannot interact with other processes within that same system. In fact, containers are closer to **BSD Jails** and `chroot`'ed processes than to full virtual machines.

### What Docker provides on top of containers

Docker itself is not a container runtime environment; in fact, Docker is container technology agnostic, with efforts planned for Docker to support [Solaris Zones](https://blog.docker.com/2015/08/docker-oracle-solaris-zones/) and [BSD Jails](https://wiki.freebsd.org/Docker). What Docker provides is a method of managing, packaging, and deploying containers. While these types of functions may exist to some degree for virtual machines, they traditionally have not existed for most container solutions, and the ones that existed were not as easy to use or as fully featured as Docker.

Now that we know what Docker is, let's start learning how Docker works by first installing Docker and deploying a public pre-built container.

## Starting with Installation
As Docker is not installed by default, step 1 will be to install the Docker package; since our example system is running Ubuntu 14.04, we will do this using the Apt package manager.

```
# apt-get install docker.io
Reading package lists... Done
Building dependency tree
Reading state information... Done
The following extra packages will be installed:
  aufs-tools cgroup-lite git git-man liberror-perl
Suggested packages:
  btrfs-tools debootstrap lxc rinse git-daemon-run git-daemon-sysvinit git-doc
  git-el git-email git-gui gitk gitweb git-arch git-bzr git-cvs git-mediawiki
  git-svn
The following NEW packages will be installed:
  aufs-tools cgroup-lite docker.io git git-man liberror-perl
0 upgraded, 6 newly installed, 0 to remove and 0 not upgraded.
Need to get 7,553 kB of archives.
After this operation, 46.6 MB of additional disk space will be used.
Do you want to continue? [Y/n] y
```

To check if any containers are running we can execute the `docker` command using the `ps` option.

```
# docker ps
CONTAINER ID        IMAGE               COMMAND             CREATED             STATUS              PORTS               NAMES
```

The `ps` function of the `docker` command works similarly to the Linux `ps` command. It will show available Docker containers and their current status. Since we have not started any Docker containers yet, the command shows no running containers.

## Deploying a pre-built nginx Docker container
One of my favorite features of Docker is the ability to deploy a pre-built container in the same way you would deploy a package with `yum` or `apt-get`. To explain this better let's deploy a pre-built container running the nginx web server. We can do this by executing the `docker` command again, however, this time with the `run` option.

```
# docker run -d nginx
Unable to find image 'nginx' locally
Pulling repository nginx
5c82215b03d1: Download complete
e2a4fb18da48: Download complete
58016a5acc80: Download complete
657abfa43d82: Download complete
dcb2fe003d16: Download complete
c79a417d7c6f: Download complete
abb90243122c: Download complete
d6137c9e2964: Download complete
85e566ddc7ef: Download complete
69f100eb42b5: Download complete
cd720b803060: Download complete
7cc81e9a118a: Download complete
```

The `run` function of the `docker` command tells Docker to find a specified Docker image and start a container running that image. By default, Docker containers run in the foreground, meaning when you execute `docker run` your shell will be bound to the container's console and the process running within the container. In order to launch this Docker container in the background I included the `-d` (**detach**) flag.
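When `docker run` is driven from a script rather than typed by hand, it helps to assemble the argument list in one place. The following is a small sketch, not part of the original walkthrough: the function name is made up, and it only covers the `-d` flag discussed above plus the `--name` option of `docker run`.

```python
import shlex


def docker_run_command(image, detach=True, name=None):
    """Build the argument list for `docker run`, optionally detached."""
    cmd = ["docker", "run"]
    if detach:
        cmd.append("-d")  # run in the background instead of the foreground
    if name:
        cmd += ["--name", name]
    cmd.append(image)
    return cmd


if __name__ == "__main__":
    # Print the command as it would be typed in a shell.
    print(" ".join(shlex.quote(part) for part in docker_run_command("nginx")))
```

The resulting list can be handed to `subprocess.run` on a machine where Docker is installed.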

By executing `docker ps` again we can see the nginx container running.

```
# docker ps
CONTAINER ID        IMAGE               COMMAND                CREATED             STATUS              PORTS               NAMES
f6d31ab01fc9        nginx:latest        nginx -g 'daemon off   4 seconds ago       Up 3 seconds        443/tcp, 80/tcp     desperate_lalande
```

In the above output we can see the running container `desperate_lalande` and that this container has been built from the `nginx:latest` image.

### Docker Images
Images are one of Docker's key features and are similar to virtual machine images. Like a virtual machine image, a Docker image is a container that has been saved and packaged. Docker, however, doesn't stop at the ability to create images. It also includes the ability to distribute those images via Docker repositories, which are a similar concept to package repositories. This is what gives Docker the ability to deploy an image like you would deploy a package with `yum`. To get a better understanding of how this works let's look back at the output of the `docker run` execution.

```
# docker run -d nginx
Unable to find image 'nginx' locally
```

The first message we see is that `docker` could not find an image named nginx locally. The reason we see this message is that when we executed `docker run` we told Docker to start up a container based on an image named **nginx**. Since Docker is starting a container based on a specified image, it needs to first find that image. Before checking any remote repository, Docker first checks locally to see if there is a local image with the specified name.

Since this system is brand new there is no Docker image with the name nginx, which means Docker will need to download it from a Docker repository.

```
Pulling repository nginx
5c82215b03d1: Download complete
e2a4fb18da48: Download complete
58016a5acc80: Download complete
657abfa43d82: Download complete
dcb2fe003d16: Download complete
c79a417d7c6f: Download complete
abb90243122c: Download complete
d6137c9e2964: Download complete
85e566ddc7ef: Download complete
69f100eb42b5: Download complete
cd720b803060: Download complete
7cc81e9a118a: Download complete
```

This is exactly what the second part of the output is showing us. By default, Docker uses the [Docker Hub](https://hub.docker.com/) repository, which is a repository service that Docker (the company) runs.

Like GitHub, Docker Hub is free for public repositories but requires a subscription for private repositories. It is possible, however, to deploy your own Docker repository; in fact, it is as easy as `docker run registry`. For this article we will not be deploying a custom registry service.
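The lookup order described in this section (local images first, then a repository) can be modeled in a few lines. This is a conceptual sketch only: `local_images` and `pull` are stand-ins invented for the example and do not correspond to Docker's internals, and the only rule borrowed from Docker is that a name without a tag is treated as `:latest`.

```python
def with_default_tag(image):
    """Append ':latest' when the image reference carries no tag."""
    last_part = image.rsplit("/", 1)[-1]  # ignore a registry port such as :5000
    return image if ":" in last_part else image + ":latest"


def resolve_image(image, local_images, pull):
    """Return (reference, source), checking the local store before pulling.

    local_images is a set of 'name:tag' strings and pull is a callable that
    fetches a reference from a repository; both are hypothetical stand-ins.
    """
    ref = with_default_tag(image)
    if ref in local_images:
        return ref, "local"
    pull(ref)
    local_images.add(ref)
    return ref, "pulled"
```

Calling `resolve_image("nginx", set(), print)` pulls on the first call; a second call with the same set reports `"local"`, since the image is then already present on the system.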

### Stopping and Removing the Container
Before moving on to building a custom Docker container let's first clean up our Docker environment. We will do this by stopping the container from earlier and removing it.

To start a container we executed `docker` with the `run` option; to stop this same container we simply need to execute `docker` with the `kill` option, specifying the container name.

```
# docker kill desperate_lalande
desperate_lalande
```

If we execute `docker ps` again we will see that the container is no longer running.

```
# docker ps
CONTAINER ID        IMAGE               COMMAND             CREATED             STATUS              PORTS               NAMES
```

However, at this point we have only stopped the container; while it may no longer be running, it still exists. By default, `docker ps` will only show running containers; if we add the `-a` (all) flag it will show all containers, running or not.

```
# docker ps -a
CONTAINER ID        IMAGE               COMMAND                CREATED             STATUS                           PORTS               NAMES
f6d31ab01fc9        5c82215b03d1        nginx -g 'daemon off   4 weeks ago         Exited (-1) About a minute ago                       desperate_lalande
```

In order to fully remove the container we can use the `docker` command with the `rm` option.

```
# docker rm desperate_lalande
desperate_lalande
```

While this container has been removed, we still have an **nginx** image available. If we were to run `docker run -d nginx` again, the container would be started without having to fetch the nginx image again. This is because Docker already has a saved copy on our local system.

To see a full list of local images we can simply run the `docker` command with the `images` option.

```
# docker images
REPOSITORY          TAG                 IMAGE ID            CREATED             VIRTUAL SIZE
nginx               latest              9fab4090484a        5 days ago          132.8 MB
```

## Building our own custom image
At this point we have used a few basic Docker commands to start, stop and remove a common pre-built image. In order to "Dockerize" this blog however, we are going to have to build our own Docker image and that means creating a **Dockerfile**.

With most virtual machine environments, if you wish to create an image of a machine you need to first create a new virtual machine, install the OS, install the application and then finally convert it to a template or image. With Docker, however, these steps are automated via a Dockerfile. A Dockerfile is a way of providing build instructions to Docker for the creation of a custom image. In this section we are going to build a custom Dockerfile that can be used to deploy this blog.

### Understanding the Application
Before we can jump into creating a Dockerfile we first need to understand what is required to deploy this blog.

The blog itself is actually static HTML pages generated by a custom static site generator that I wrote named **hamerkop**. The generator is very simple and more about getting the job done for this blog specifically. All the code and source files for this blog are available via a public [GitHub](https://github.com/madflojo/blog) repository. In order to deploy this blog we simply need to grab the contents of the GitHub repository, install **Python** along with some **Python** modules and execute the `hamerkop` application. To serve the generated content we will use **nginx**, which means we will also need **nginx** to be installed.

So far this should be a pretty simple Dockerfile, but it will show us quite a bit of the [Dockerfile Syntax](https://docs.docker.com/v1.8/reference/builder/). To get started we can clone the GitHub repository and create a Dockerfile with our favorite editor; `vi` in my case.

```
# git clone https://github.com/madflojo/blog.git
Cloning into 'blog'...
remote: Counting objects: 622, done.
remote: Total 622 (delta 0), reused 0 (delta 0), pack-reused 622
Receiving objects: 100% (622/622), 14.80 MiB | 1.06 MiB/s, done.
Resolving deltas: 100% (242/242), done.
Checking connectivity... done.
# cd blog/
# vi Dockerfile
```

### FROM - Inheriting a Docker image
The first instruction of a Dockerfile is the `FROM` instruction. This is used to specify an existing Docker image to use as our base image. This basically provides us with a way to inherit another Docker image. In this case we will be starting with the same **nginx** image we were using before. If we wanted to start with a blank slate, we could use the **Ubuntu** Docker image by specifying `ubuntu:latest`.

```
## Dockerfile that generates an instance of http://bencane.com

FROM nginx:latest
MAINTAINER Benjamin Cane <ben@bencane.com>
```

In addition to the `FROM` instruction, I also included a `MAINTAINER` instruction which is used to show the Author of the Dockerfile.

As Docker supports using `#` as a comment marker, I will be using this syntax quite a bit to explain the sections of this Dockerfile.
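To make the structure of the file concrete, here is a minimal sketch that splits a Dockerfile into its instructions while skipping blank lines and `#` comments. It is an illustration written for this walkthrough, not how Docker itself parses the file, and it deliberately ignores details such as line continuations.

```python
def parse_dockerfile(text):
    """Return (INSTRUCTION, arguments) pairs, skipping blanks and # comments.

    Line continuations with a trailing backslash are not handled here.
    """
    steps = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        instruction, _, args = line.partition(" ")
        steps.append((instruction.upper(), args.strip()))
    return steps


DOCKERFILE = """\
## Dockerfile that generates an instance of http://bencane.com

FROM nginx:latest
MAINTAINER Benjamin Cane <ben@bencane.com>
"""

if __name__ == "__main__":
    for number, (instruction, args) in enumerate(parse_dockerfile(DOCKERFILE)):
        print(f"Step {number} : {instruction} {args}")
```

The comment line and the blank line produce no step, which leaves two instructions for the Dockerfile as written so far.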

|

||||

|

||||

### Running a test build

Since we inherited the **nginx** Docker image, our current Dockerfile also inherited all the instructions within the [Dockerfile](https://github.com/nginxinc/docker-nginx/blob/08eeb0e3f0a5ee40cbc2bc01f0004c2aa5b78c15/Dockerfile) used to build that **nginx** image. This means that even at this point we are able to build a Docker image from this Dockerfile and run a container from that image. The resulting image will essentially be the same as the **nginx** image, but we will run through a build of this Dockerfile now, and a few more times as we go, to help explain the Docker build process.

To start the build from a Dockerfile we can simply execute the `docker` command with the **build** option.

```
# docker build -t blog /root/blog
Sending build context to Docker daemon 23.6 MB
Sending build context to Docker daemon
Step 0 : FROM nginx:latest
---> 9fab4090484a
Step 1 : MAINTAINER Benjamin Cane <ben@bencane.com>
---> Running in c97f36450343
---> 60a44f78d194
Removing intermediate container c97f36450343
Successfully built 60a44f78d194
```

In the above example I used the `-t` (**tag**) flag to "tag" the image as "blog". This essentially allows us to name the image; without a tag, the image would only be callable via the **Image ID** that Docker assigns. In this case the **Image ID** is `60a44f78d194`, which we can see in the `docker` command's build success message.

In addition to the `-t` flag, I also specified the directory `/root/blog`. This directory is the "build directory", which is the directory that contains the Dockerfile and any other files necessary to build this container.

Now that we have run through a successful build, let's start customizing this image.

### Using RUN to execute apt-get

The static site generator used to generate the HTML pages is written in **Python**, so the first custom task we should perform within this `Dockerfile` is to install Python. To install the Python package we will use the Apt package manager. This means we will need to specify within the Dockerfile that `apt-get update` and `apt-get install python-dev` are executed; we can do this with the `RUN` instruction.

```
## Dockerfile that generates an instance of http://bencane.com

FROM nginx:latest
MAINTAINER Benjamin Cane <ben@bencane.com>

## Install python and pip
RUN apt-get update
RUN apt-get install -y python-dev python-pip
```

In the above we are simply using the `RUN` instruction to tell Docker that when it builds this image it will need to execute the specified `apt-get` commands. The interesting part of this is that these commands are only executed within the context of this container. This means that even though `python-dev` and `python-pip` are being installed within the container, they are not being installed on the host itself. Or to put it more simply: within the container the `pip` command will execute; outside the container, the `pip` command does not exist.

It is also important to note that the Docker build process does not accept user input during the build. This means that any commands being executed by the `RUN` instruction must complete without user input. This adds a bit of complexity to the build process, as many applications require user input during installation. For our example, none of the commands executed by `RUN` require user input.
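
To make the "no user input" requirement concrete, here is a minimal sketch of a Dockerfile whose `apt-get` step cannot stop and wait for a prompt. It is written into a scratch directory so it does not touch `/root/blog`. The `build_dir` variable, the `blog-noninteractive` image name, the use of `DEBIAN_FRONTEND=noninteractive`, and the merging of the two `apt-get` commands into one `RUN` are assumptions added for illustration; the package names are the ones used in this article and may not exist on newer base images.

```shell
# Hypothetical scratch directory, used so the sketch does not touch /root/blog.
build_dir="$(mktemp -d)"

# Write a small Dockerfile that keeps the apt-get step non-interactive.
cat > "$build_dir/Dockerfile" <<'EOF'
FROM nginx:latest
# -y answers apt-get's confirmation prompt automatically.
# DEBIAN_FRONTEND=noninteractive keeps package configuration from prompting.
RUN apt-get update && \
    DEBIAN_FRONTEND=noninteractive apt-get install -y python-dev python-pip
EOF

# Print the command that would build it; nothing is built by this sketch.
echo "build with: docker build -t blog-noninteractive $build_dir"
```

The `-y` flag is what our own Dockerfile already relies on; the environment variable only matters for packages that ask configuration questions during installation.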

### Installing Python modules

With **Python** installed we now need to install some Python modules. To do this outside of Docker, we would generally use the `pip` command and reference a file within the blog's Git repository named `requirements.txt`. In an earlier step we used the `git` command to "clone" the blog's GitHub repository to the `/root/blog` directory; this is also the directory in which we created the `Dockerfile`. This is important, as it means the contents of the Git repository are accessible to Docker during the build process.

When executing a build, Docker will set the context of the build to the specified "build directory". This means that any files within that directory and below can be used during the build process; files outside of that directory (outside of the build context) are inaccessible.
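
Because everything under the build directory is sent to the Docker daemon as the build context, it can help to exclude files the build never reads. The following is only a sketch: it uses a scratch directory in place of `/root/blog`, and it assumes that the `.git` directory and editor swap files are not needed by any `COPY` instruction.

```shell
# Hypothetical scratch directory standing in for the build directory.
build_dir="$(mktemp -d)"

# .dockerignore lists patterns, one per line, that Docker leaves out of the build context.
cat > "$build_dir/.dockerignore" <<'EOF'
.git
*.swp
EOF

# Show what would be excluded from the context.
cat "$build_dir/.dockerignore"
```

A smaller context means less data in the "Sending build context to Docker daemon" step, though whether it is worth doing depends on what the repository contains.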

In order to install the required Python modules we will need to copy the `requirements.txt` file from the build directory into the container. We can do this using the `COPY` instruction within the `Dockerfile`.


```
## Dockerfile that generates an instance of http://bencane.com

FROM nginx:latest
MAINTAINER Benjamin Cane <ben@bencane.com>

## Install python and pip
RUN apt-get update
RUN apt-get install -y python-dev python-pip

## Create a directory for required files
RUN mkdir -p /build/

## Add requirements file and run pip
COPY requirements.txt /build/
RUN pip install -r /build/requirements.txt
```

Within the `Dockerfile` we added 3 instructions. The first instruction uses `RUN` to create a `/build/` directory within the container. This directory will hold any application files needed to generate the static HTML pages. The second instruction is the `COPY` instruction, which copies the `requirements.txt` file from the "build directory" (`/root/blog`) into the `/build` directory within the container. The third uses the `RUN` instruction to execute the `pip` command, installing all the modules specified within the `requirements.txt` file.

`COPY` is an important instruction to understand when building custom images. Without specifically copying the file within the Dockerfile, this Docker image would not contain the `requirements.txt` file. With Docker containers everything is isolated; unless a dependency is explicitly added by an instruction within the Dockerfile, the container is not likely to include it.

### Re-running a build

Now that we have a few customization tasks for Docker to perform, let's try another build of the blog image.

```
# docker build -t blog /root/blog
Sending build context to Docker daemon 19.52 MB
Sending build context to Docker daemon
Step 0 : FROM nginx:latest
---> 9fab4090484a
Step 1 : MAINTAINER Benjamin Cane <ben@bencane.com>
---> Using cache
---> 8e0f1899d1eb
Step 2 : RUN apt-get update
---> Using cache
---> 78b36ef1a1a2
Step 3 : RUN apt-get install -y python-dev python-pip
---> Using cache
---> ef4f9382658a
Step 4 : RUN mkdir -p /build/
---> Running in bde05cf1e8fe
---> f4b66e09fa61
Removing intermediate container bde05cf1e8fe
Step 5 : COPY requirements.txt /build/
---> cef11c3fb97c
Removing intermediate container 9aa8ff43f4b0
Step 6 : RUN pip install -r /build/requirements.txt
---> Running in c50b15ddd8b1
Downloading/unpacking jinja2 (from -r /build/requirements.txt (line 1))
Downloading/unpacking PyYaml (from -r /build/requirements.txt (line 2))
<truncated to reduce noise>
Successfully installed jinja2 PyYaml mistune markdown MarkupSafe
Cleaning up...
---> abab55c20962
Removing intermediate container c50b15ddd8b1
Successfully built abab55c20962
```

From the above build output we can see the build was successful, but we can also see another interesting message: `---> Using cache`. This message is telling us that Docker was able to use its build cache during the build of this image.

#### Docker build cache

When Docker is building an image, it doesn't just build a single image; it actually builds multiple images throughout the build process. In fact, we can see from the above output that after each "Step" Docker is creating a new image.

```
Step 5 : COPY requirements.txt /build/
---> cef11c3fb97c
```

The last line from the above snippet is actually Docker informing us of the creation of a new image; it does this by printing the **Image ID**, `cef11c3fb97c`. The useful thing about this approach is that Docker is able to use these images as a cache during subsequent builds of the **blog** image. This allows Docker to speed up the build process for new builds of the same container. If we look at the example above, we can see that rather than installing the `python-dev` and `python-pip` packages again, Docker was able to use a cached image. However, since Docker was unable to find a cached build that executed the `mkdir` command, each subsequent step was executed.

The Docker build cache is a bit of a gift and a curse; the reason for this is that the decision to use the cache or to rerun the instruction is made within a very narrow scope. For example, if there was a change to the `requirements.txt` file, Docker would detect this change during the build and start fresh from that point forward. It does this because it can view the contents of the `requirements.txt` file. The execution of the `apt-get` commands, however, is another story. If the **Apt** repository that provides the Python packages were to contain a newer version of the `python-pip` package, Docker would not be able to detect the change and would simply use the build cache. This means that an older package may be installed. While this may not be a major issue for the `python-pip` package, it could be a problem if the cache held a package with a known vulnerability.

For this reason it is useful to periodically rebuild the image without using Docker's cache. To do this you can simply specify `--no-cache=True` when executing a Docker build.
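
As a concrete form of the periodic rebuild, the sketch below runs the build with the cache disabled. It assumes the build directory is `/root/blog` as in the earlier steps; the `BUILD_DIR` variable is a hypothetical convenience for other layouts, not something this article defines. `--no-cache` is a boolean flag, so writing it bare should behave the same as giving it a true value.

```shell
# Build directory from the article; BUILD_DIR is a hypothetical override.
build_dir="${BUILD_DIR:-/root/blog}"

# Rebuild only where the docker CLI and the Dockerfile are actually present.
if command -v docker >/dev/null 2>&1 && [ -f "$build_dir/Dockerfile" ]; then
    # --no-cache makes Docker re-run every instruction instead of reusing cached images.
    docker build --no-cache -t blog "$build_dir"
else
    echo "would run: docker build --no-cache -t blog $build_dir"
fi
```

A rebuild like this takes longer because every step, including the `apt-get` commands, runs again, which is exactly what picks up newer packages.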

## Deploying the rest of the blog

With the Python packages and modules installed, what remains is to copy the required application files and run the `hamerkop` application. To do this we will simply use more `COPY` and `RUN` instructions.

```
## Dockerfile that generates an instance of http://bencane.com

FROM nginx:latest
MAINTAINER Benjamin Cane <ben@bencane.com>

## Install python and pip
RUN apt-get update
RUN apt-get install -y python-dev python-pip

## Create a directory for required files
RUN mkdir -p /build/

## Add requirements file and run pip
COPY requirements.txt /build/
RUN pip install -r /build/requirements.txt

## Add blog code and required files
COPY static /build/static
COPY templates /build/templates
COPY hamerkop /build/
COPY config.yml /build/
COPY articles /build/articles

## Run Generator
RUN /build/hamerkop -c /build/config.yml
```

Now that we have the rest of the build instructions, let's run through another build and verify that the image builds successfully.


```
# docker build -t blog /root/blog/
Sending build context to Docker daemon 19.52 MB
Sending build context to Docker daemon
Step 0 : FROM nginx:latest
---> 9fab4090484a
Step 1 : MAINTAINER Benjamin Cane <ben@bencane.com>
---> Using cache
---> 8e0f1899d1eb
Step 2 : RUN apt-get update
---> Using cache
---> 78b36ef1a1a2
Step 3 : RUN apt-get install -y python-dev python-pip
---> Using cache
---> ef4f9382658a
Step 4 : RUN mkdir -p /build/
---> Using cache
---> f4b66e09fa61
Step 5 : COPY requirements.txt /build/
---> Using cache
---> cef11c3fb97c
Step 6 : RUN pip install -r /build/requirements.txt
---> Using cache
---> abab55c20962
Step 7 : COPY static /build/static
---> 15cb91531038
Removing intermediate container d478b42b7906
Step 8 : COPY templates /build/templates
---> ecded5d1a52e
Removing intermediate container ac2390607e9f
Step 9 : COPY hamerkop /build/
---> 59efd1ca1771
Removing intermediate container b5fbf7e817b7
Step 10 : COPY config.yml /build/
---> bfa3db6c05b7
Removing intermediate container 1aebef300933
Step 11 : COPY articles /build/articles
---> 6b61cc9dde27
Removing intermediate container be78d0eb1213
Step 12 : RUN /build/hamerkop -c /build/config.yml
---> Running in fbc0b5e574c5
Successfully created file /usr/share/nginx/html//2011/06/25/checking-the-number-of-lwp-threads-in-linux
Successfully created file /usr/share/nginx/html//2011/06/checking-the-number-of-lwp-threads-in-linux
<truncated to reduce noise>
Successfully created file /usr/share/nginx/html//archive.html
Successfully created file /usr/share/nginx/html//sitemap.xml
---> 3b25263113e1
Removing intermediate container fbc0b5e574c5
Successfully built 3b25263113e1
```

### Running a custom container

With a successful build we can now start our custom container by running the `docker` command with the `run` option, similar to how we started the nginx container earlier.

```
# docker run -d -p 80:80 --name=blog blog
5f6c7a2217dcdc0da8af05225c4d1294e3e6bb28a41ea898a1c63fb821989ba1
```

Once again the `-d` (**detach**) flag was used to tell Docker to run the container in the background. However, there are also two new flags. The first new flag is `--name`, which is used to give the container a user-specified name. In the earlier example we did not specify a name, so Docker randomly generated one. The second new flag is `-p`, which allows users to map a port on the host machine to a port within the container.

The base **nginx** image we used exposes port 80 for the HTTP service. By default, ports bound within a Docker container are not bound on the host system as a whole. In order for external systems to access ports exposed within a container, the ports must be mapped from a host port to a container port using the `-p` flag. The command above maps port 80 on the host to port 80 within the container. If we wished to map port 8080 on the host to port 80 within the container, we could do so by specifying the ports in the following syntax: `-p 8080:80`.
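
The alternative mapping can be sketched as follows. The container name `blog-8080` is a hypothetical choice made here so that it does not clash with the `blog` container started earlier; the `blog` image is the one built in this article.

```shell
# Host port 8080 is forwarded to port 80 inside the container.
host_port=8080
container_port=80
mapping="${host_port}:${container_port}"

if command -v docker >/dev/null 2>&1; then
    # Start a second container from the blog image with the alternative mapping.
    docker run -d -p "$mapping" --name=blog-8080 blog
    # List the port mappings Docker recorded for that container.
    docker port blog-8080
else
    echo "would run: docker run -d -p $mapping --name=blog-8080 blog"
fi
```

With this mapping the site would be reachable on port 8080 of the host, while nginx still listens on port 80 inside the container.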

From the above command it appears that our container was started successfully; we can verify this by executing `docker ps`.

```
# docker ps
CONTAINER ID   IMAGE         COMMAND                CREATED         STATUS         PORTS                         NAMES
d264c7ef92bd   blog:latest   nginx -g 'daemon off   3 seconds ago   Up 3 seconds   443/tcp, 0.0.0.0:80->80/tcp   blog
```

## Wrapping up

At this point we have a running custom Docker container. While we touched on a few Dockerfile instructions within this article, we have yet to discuss all of them. For a full list of Dockerfile instructions you can check out [Docker's reference page](https://docs.docker.com/v1.8/reference/builder/), which explains the instructions very well.

Another good resource is their [Dockerfile Best Practices page](https://docs.docker.com/engine/articles/dockerfile_best-practices/), which contains quite a few best practices for building custom Dockerfiles. Some of these tips are very useful, such as strategically ordering the commands within the Dockerfile. In the above examples our Dockerfile has the `COPY` instruction for the `articles` directory as the last `COPY` instruction. The reason for this is that the `articles` directory will change quite often. It's best to put instructions that will change often at the lowest point possible within the Dockerfile, so that as many of the earlier steps as possible can be served from the cache.

In this article we covered how to start a pre-built container and how to build, then deploy, a custom container. While there is quite a bit to learn about Docker, this article should give you a good idea of how to get started. Of course, as always, if you think there is anything that should be added, drop it in the comments below.

--------------------------------------

via: http://bencane.com/2015/12/01/getting-started-with-docker-by-dockerizing-this-blog/?utm_source=feedburner&utm_medium=feed&utm_campaign=Feed%3A+bencane%2FSAUo+%28Benjamin+Cane%29

Author: Benjamin Cane

Translator: [translator ID](https://github.com/译者ID)

Proofreader: [proofreader ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](http://linux.cn/)


translation by strugglingyouth

Install Wetty on CentOS/RHEL 6.X
================================================================================

What is Wetty?

As a system administrator, you probably connect to remote servers using a program such as GNOME Terminal (or the like) if you’re on a Linux desktop, or an SSH client such as PuTTY if you have a Windows machine, while you perform other tasks like browsing the web or checking your email. Wetty lets you reach a Linux terminal from the web browser instead, as the steps below show.

### Step 1: Install epel repo ###

    # wget http://download.fedoraproject.org/pub/epel/6/i386/epel-release-6-8.noarch.rpm
    # rpm -ivh epel-release-6-8.noarch.rpm

### Step 2: Install dependencies ###

    # yum install epel-release git nodejs npm -y

### Step 3: After installing these dependencies, clone the GitHub repository ###

    # git clone https://github.com/krishnasrinivas/wetty

### Step 4: Run Wetty ###

    # cd wetty
    # npm install

### Step 5: Start Wetty and access the Linux terminal from a web browser ###

    # node app.js -p 8080

### Step 6: Wetty through HTTPS ###

    # openssl req -x509 -newkey rsa:2048 -keyout key.pem -out cert.pem -days 365 -nodes

Complete the prompts that follow.
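
Since the certificate prompts have to be completed by hand, a non-interactive variant can be handy for scripted setups. This is a sketch rather than part of the original steps: the `-subj` value `/CN=localhost` is a placeholder assumption and should be replaced with your server's host name. The file names `key.pem` and `cert.pem` match the ones used in Step 6.

```shell
# Generate a self-signed certificate and an unencrypted key without interactive prompts.
# -subj supplies the certificate subject on the command line; /CN=localhost is a placeholder.
openssl req -x509 -newkey rsa:2048 -nodes \
    -keyout key.pem -out cert.pem -days 365 \
    -subj "/CN=localhost"

# Inspect the subject and validity dates of the generated certificate.
openssl x509 -in cert.pem -noout -subject -dates
```

Browsers will still warn about a certificate generated this way, because it is self-signed.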

### Step 7: Launch Wetty via HTTPS ###

    # nohup node app.js --sslkey key.pem --sslcert cert.pem -p 8080 &

### Step 8: Add a user for Wetty ###

    # useradd <username>
    # passwd <username>

### Step 9: Access Wetty ###

    http://Your_IP-Address:8080

If you launched Wetty with the SSL options from Step 7, use `https://` instead. Log in with the credentials you created for Wetty in Step 8.

Enjoy!

--------------------------------------------------------------------------------

via: http://www.unixmen.com/install-wetty-centosrhel-6-x/

Author: [Debojyoti Das][a]
Translator: [strugglingyouth](https://github.com/strugglingyouth)
Proofreader: [proofreader ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)
