Mirror of https://github.com/LCTT/TranslateProject.git (synced 2025-03-30 02:40:11 +08:00)

Commit dbbaa6bfd6: Merge remote-tracking branch 'upstream/master'


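How the four components of a distributed tracing system work together
======

> Learn about the main architectural decisions in distributed tracing and how the pieces fit together.

Ten years ago, essentially the only people thinking seriously about distributed tracing were academics and a handful of large internet companies. Today, it has become table stakes for any organization adopting microservices. The rationale is well established: microservices fail in surprising ways, and distributed tracing is the best way to describe and diagnose those failures.

That said, once you set out to integrate distributed tracing into your own application, you will quickly realize that the term "distributed tracing" means different things to different people. Furthermore, the tracing ecosystem is crowded with partially overlapping projects with similar charters. This article describes the four (potentially) independent components of a distributed tracing system and how they fit together.

### Distributed tracing: A mental model

Most mental models for tracing descend from [Google's Dapper paper][1]. [OpenTracing][2] uses similar terms, so we have borrowed the following terminology from that project:

![Tracing][3]

* **Trace**: The description of a transaction as it moves through a distributed system.
* **Span**: A named, timed operation representing a piece of the workflow. Spans accept key:value tags as well as fine-grained, timestamped, structured logs attached to the particular span instance.
* **Span context**: Trace information that accompanies the distributed transaction, including when it passes from service to service over the network or through a message bus. The span context contains the trace identifier, the span identifier, and any other data that the tracing system needs to propagate to the downstream service.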
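To make the three terms concrete, here is a minimal C sketch of how a tracer might hold them in memory. The names (`span_context`, `span`, `span_start`, `span_set_tag`, `span_finish`) are hypothetical and are not the OpenTracing API; the structured per-span logs are left out for brevity, and a counter stands in for the random IDs a real tracer would generate.

```c
#include <stdint.h>
#include <string.h>
#include <time.h>

/* Hypothetical data model for illustration only (not the OpenTracing API). */

typedef struct {
    uint64_t trace_id_hi, trace_id_lo; /* 128-bit trace ID, shared by all spans of a trace */
    uint64_t span_id;                  /* unique to this span */
} span_context;

typedef struct {
    const char *key;
    const char *value;
} span_tag;

#define MAX_TAGS 8

typedef struct {
    const char *operation_name;   /* e.g. "GET /checkout" */
    span_context context;         /* what gets propagated downstream */
    uint64_t parent_span_id;      /* 0 means this is a root span */
    struct timespec start, finish;
    span_tag tags[MAX_TAGS];
    size_t tag_count;
} span;

/* Toy ID source: a counter. A real tracer would use random IDs. */
static uint64_t next_id(void) {
    static uint64_t counter = 0;
    return ++counter;
}

/* Start a span; pass the parent's context for a child, or NULL for a root. */
void span_start(span *s, const char *name, const span_context *parent) {
    memset(s, 0, sizeof *s);
    s->operation_name = name;
    if (parent) {                 /* child: stay in the parent's trace */
        s->context.trace_id_hi = parent->trace_id_hi;
        s->context.trace_id_lo = parent->trace_id_lo;
        s->parent_span_id = parent->span_id;
    } else {                      /* root: begin a new trace */
        s->context.trace_id_hi = next_id();
        s->context.trace_id_lo = next_id();
    }
    s->context.span_id = next_id();
    timespec_get(&s->start, TIME_UTC);
}

/* Attach a key:value tag; returns -1 when the fixed tag array is full. */
int span_set_tag(span *s, const char *key, const char *value) {
    if (s->tag_count == MAX_TAGS)
        return -1;
    s->tags[s->tag_count].key = key;
    s->tags[s->tag_count].value = value;
    s->tag_count++;
    return 0;
}

void span_finish(span *s) {
    timespec_get(&s->finish, TIME_UTC);
}
```

A child span copies the trace ID from its parent's span context and records the parent's span ID, which is what lets an analysis system stitch the spans of one trace back together.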

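If you would like to dig into the details of this mental model, please refer to the [OpenTracing specification][4].

### The four big pieces

From the perspective of an application-layer distributed tracing system, a modern software system looks like the following diagram:

![Tracing][5]

The components in a modern software system can be broken down into three categories:

* **Application and business logic**: Your code.
* **Widely shared libraries**: Other people's code.
* **Widely shared services**: Other people's infrastructure.

These three categories have different requirements, and they drive the design of the distributed tracing system that monitors the application. The resulting design yields four important pieces:

* **A tracing instrumentation API**: Decorates application code.
* **Wire protocol**: The specification for what gets sent alongside application data in RPC requests.
* **Data protocol**: The specification for what gets sent asynchronously (out of band) to your analysis system.
* **Analysis system**: A database and interactive UI for working with the trace data.

To explain this further, we'll dig into the details that drive this design. If you just want my suggestions, skip to the four big solutions below.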

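### Requirements, details, and explanations

Application code, shared libraries, and shared services differ notably in how they are operated, and those differences heavily affect the requirements for instrumenting them.

#### Instrumenting application code and business logic

In any particular microservice, most of the code written by the microservice developer is application or business logic. This is the code that defines domain-specific operations; typically, it contains whatever special, unique logic justified the creation of a new microservice in the first place. Almost by definition, **this code is usually not shared or otherwise present in more than one service.**

That said, you still need to understand it, which means it must somehow be instrumented. Some monitoring and tracing analysis systems auto-instrument code using black-box agents, while others expect explicit white-box instrumentation. For the latter, abstract tracing APIs offer many practical advantages for microservice-specific application code:

* An abstract API allows you to swap in new monitoring tools without rewriting instrumentation code. You may want to change cloud providers, vendors, and monitoring technologies, and a huge pile of non-portable instrumentation code would add meaningful overhead and friction to that process.
* It turns out there are other interesting uses for instrumentation beyond production monitoring. Existing projects use the same tracing instrumentation to power testing tools, distributed debuggers, "chaos engineering" fault injectors, and other meta-applications.
* But most importantly, what happens when an application component gets extracted into a shared library? That leads us to: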

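#### Instrumenting shared libraries

The utility code that appears in most applications (code that handles network requests, database calls, disk writes, threading, concurrency management, and so on) is generally generic rather than specific to any particular application. This code is packaged into libraries and frameworks, which can then be loaded into many microservices and deployed into many different environments.

The real difference is this: with shared code, someone else is the user. If you attempt to instrument this shared code, you will notice a couple of common issues:

* You need an API to write the instrumentation. However, your library doesn't know which analysis system is being used. There are many choices, and the libraries running in the same application must not make incompatible choices.
* The task of injecting span contexts into, and extracting them from, request headers often falls on RPC libraries, since those packages encapsulate all the network-handling code. However, a shared library cannot know which tracing protocol each application is using.
* Finally, you don't want to force users to adopt conflicting dependencies. Most users have different dependencies and operational styles. Even if they all use gRPC, will they be bound to the same version of gRPC? So any monitoring API your library bundles for tracing must be free of dependencies.

**So, an abstract API that (a) has no dependencies, (b) is wire-protocol agnostic, and (c) works with popular vendors and analysis systems should be a requirement for instrumenting shared library code.**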
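Those three requirements can be illustrated with a small C sketch. Everything in it is hypothetical: the `tracer` struct of function pointers, the no-op default, `set_global_tracer`, the `http_get` "library" function, and the `x-toy-trace-id` header are made up for illustration and are not OpenTracing's actual interface. The point is the shape: the library calls only through the interface, depends on nothing vendor-specific, and leaves the header format to whichever tracer the application installs.

```c
#include <stdio.h>
#include <string.h>

/* Hypothetical, dependency-free tracer interface (not the OpenTracing API). */

/* The RPC library supplies this callback, so the tracer never needs to know
 * how headers are stored (the "carrier"). */
typedef void (*set_header_fn)(void *carrier, const char *key, const char *value);

typedef struct tracer {
    void *(*start_span)(struct tracer *self, const char *operation);
    void (*finish_span)(struct tracer *self, void *span);
    void (*inject)(struct tracer *self, void *span, set_header_fn set, void *carrier);
} tracer;

/* No-op default: instrumented libraries work unchanged when no tracer is installed. */
static void *noop_start(tracer *self, const char *op) { (void)self; (void)op; return NULL; }
static void noop_finish(tracer *self, void *span) { (void)self; (void)span; }
static void noop_inject(tracer *self, void *span, set_header_fn set, void *carrier) {
    (void)self; (void)span; (void)set; (void)carrier;
}
static tracer noop_tracer = { noop_start, noop_finish, noop_inject };
static tracer *global_tracer = &noop_tracer;

/* The application, not the library, picks the implementation at startup. */
void set_global_tracer(tracer *t) { global_tracer = t ? t : &noop_tracer; }

/* --- Inside a hypothetical shared HTTP client library --- */
#define MAX_HEADERS 4
typedef struct { char lines[MAX_HEADERS][128]; int count; } header_list;

void header_list_init(header_list *h) { memset(h, 0, sizeof *h); }

static void add_header(void *carrier, const char *key, const char *value) {
    header_list *h = carrier;
    if (h->count < MAX_HEADERS)
        snprintf(h->lines[h->count++], sizeof h->lines[0], "%s: %s", key, value);
}

void http_get(const char *path, header_list *headers) {
    void *span = global_tracer->start_span(global_tracer, path);
    global_tracer->inject(global_tracer, span, add_header, headers);
    /* ... send the request with its headers here ... */
    global_tracer->finish_span(global_tracer, span);
}

/* --- A toy vendor tracer the application might install --- */
static void *toy_start(tracer *self, const char *op) { (void)self; (void)op; return NULL; }
static void toy_finish(tracer *self, void *span) { (void)self; (void)span; }
static void toy_inject(tracer *self, void *span, set_header_fn set, void *carrier) {
    (void)self; (void)span;
    set(carrier, "x-toy-trace-id", "42"); /* hypothetical header name and value */
}
tracer toy_tracer = { toy_start, toy_finish, toy_inject };
```

Swapping `toy_tracer` for another vendor's implementation requires no change to `http_get`, which is the property the bold requirement above asks for.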

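#### Instrumenting shared services

Finally, sometimes entire services (or sets of microservices) are general-purpose enough that many independent applications use them. These shared services are often hosted and managed by third parties; examples include cache servers, message queues, and databases.

It's important to understand that, from the perspective of application developers, shared services are essentially black boxes. It is not possible to inject your application's monitoring into a shared service. Instead, the hosted service often runs its own monitoring solution.

### The four big solutions

Therefore, an abstract tracing API would help libraries emit data and inject/extract span context. A standard wire protocol would help black-box services interconnect, and a standard data format would help separate analysis systems consolidate their data. Let's look at some promising options for solving these problems.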

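#### Tracing API: The OpenTracing project

As shown above, we need a tracing API to instrument application code. To extend this instrumentation into shared libraries, where most of the span context injection and extraction takes place, the API must be abstracted in certain critical ways.

The [OpenTracing][2] project aims to solve this problem for library developers. OpenTracing is a vendor-neutral tracing API that comes with no dependencies and is quickly gaining support from many monitoring systems. This means that if libraries ship with native OpenTracing instrumentation baked in, tracing is enabled automatically when a monitoring system connects at application startup.

Personally, as someone who has been writing, shipping, and operating open source software for over a decade, I find it profoundly satisfying to work on the OpenTracing project and finally solve this observability puzzle.

In addition to the API, the OpenTracing project maintains a growing list of contributed instrumentation, some of which can be found [here][6]. If you would like to get involved, whether by contributing an instrumentation plugin, natively instrumenting your own OSS libraries, or just asking a question, come say hi on [Gitter][7].

#### Wire protocol: The trace-context HTTP headers

For monitoring systems to interoperate, and to mitigate the migration issues that arise when switching from one monitoring system to another, a standard wire protocol is needed for propagating span context.

The [W3C Distributed Trace Context Community Group][8] is hard at work defining this standard. Currently, the focus is on defining a set of standard HTTP headers. The latest draft of the specification can be found [here][9]. If you have questions for the group, the [mailing list][10] and the [Gitter chat room][11] are great places to ask.

(LCTT translator's note: This article was originally published in May 2018; the community's work may have progressed since then.)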
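To illustrate what such a standard header carries, the sketch below writes and reads a span context using the `traceparent` layout from the W3C Trace Context specification as later published: version `00`, a 32-hex-digit trace ID, a 16-hex-digit parent span ID, and 2 hex digits of flags, separated by dashes. That layout is an assumption relative to the 2018 draft linked above, which may have differed. `format_traceparent` and `parse_traceparent` are hypothetical helper names, and the parser is deliberately lenient rather than conformant.

```c
#include <inttypes.h>
#include <stdint.h>
#include <stdio.h>
#include <string.h>

/* Assumed layout: "00-" + 32 hex trace-id + "-" + 16 hex parent-id + "-" + 2 hex flags */
#define TRACEPARENT_LEN 55

/* Outgoing request (inject): returns 55, or -1 if buf is too small. */
int format_traceparent(char *buf, size_t cap, uint64_t trace_hi, uint64_t trace_lo,
                       uint64_t span_id, int sampled) {
    int n = snprintf(buf, cap, "00-%016" PRIx64 "%016" PRIx64 "-%016" PRIx64 "-%02x",
                     trace_hi, trace_lo, span_id, sampled ? 1u : 0u);
    return (n < 0 || (size_t)n >= cap) ? -1 : n;
}

/* Incoming request (extract): returns 0 on success, -1 otherwise.
 * Only version "00" is handled, and sscanf's %x also accepts uppercase digits. */
int parse_traceparent(const char *value, uint64_t *trace_hi, uint64_t *trace_lo,
                      uint64_t *span_id, unsigned *flags) {
    if (strlen(value) != TRACEPARENT_LEN)
        return -1;
    int matched = sscanf(value, "00-%16" SCNx64 "%16" SCNx64 "-%16" SCNx64 "-%2x",
                         trace_hi, trace_lo, span_id, flags);
    return matched == 4 ? 0 : -1;
}
```

An RPC library would call the formatting function when sending a request and the parsing function when receiving one, which is the inject/extract step described in the shared-library section.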

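#### Data protocol (doesn't exist yet!!)

For black-box services, where it is not possible to install a tracer or otherwise interact with the program, a data protocol is needed to export data from the system.

Work on this data format and protocol is still at an early stage and is mostly happening within the context of the W3C Distributed Trace Context Working Group. There is particular interest in defining higher-level concepts, such as RPC calls, database statements, and so on, in a standard data schema. This would allow tracing systems to make assumptions about what kind of data will be available. The OpenTracing project is also working on this issue by defining a [standard set of tags][12]. The plan is for these two efforts to dovetail with each other.

Note that there is a middle ground available today. For "network appliances" that the application developer operates but does not want to compile or otherwise modify, dynamic linking can help. The primary examples are service meshes and proxies, such as Envoy or NGINX. In this situation, an OpenTracing-compliant tracer can be compiled as a shared object and then dynamically linked into the executable at runtime. This option is currently provided by the [C++ OpenTracing API][13]. For Java, an OpenTracing [Tracer Resolver][14] is also under development.

These solutions work well for services that support dynamic linking and are deployed by the application developer. In the long run, however, a standard data protocol could solve this problem more broadly.

#### Analysis system: A service for extracting insights from trace data

Last but not least, there is now no shortage of tracing and monitoring solutions. A list of monitoring systems known to be compatible with OpenTracing can be found [here][15], but there are many more options beyond it. I encourage you to research your options, and I hope you find the framework provided in this article useful when comparing them. In addition to rating monitoring systems on their operational characteristics (not to mention whether you like the UI and features), make sure you think about the three big pieces above, their relative importance to you, and how the tracing system you are interested in provides a solution for each.

### Conclusion

In the end, how important each piece is depends heavily on who you are and what kind of system you are building. For example, open source library authors are very interested in the OpenTracing API, while service developers tend to be more interested in the trace-context specification. When someone says one piece is more important than the others, they usually mean "one piece is more important to me than the others."

However, the reality is this: distributed tracing has become a necessity for monitoring modern systems. When designing the building blocks for these systems, the age-old approach of "decouple where you can" still holds true. Cleanly decoupled components are the best way to maintain flexibility and forward compatibility when building a system as cross-cutting as a distributed monitoring system.

Thanks for reading! Hopefully, when you're ready to implement tracing in your own application, you now have a guide to understanding which pieces people are talking about and how they fit together.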

--------------------------------------------------------------------------------

via: https://opensource.com/article/18/5/distributed-tracing

Author: [Ted Young][a]
Topic selection: [lujun9972](https://github.com/lujun9972)
Translator: [chenmu-kk](https://github.com/chenmu-kk)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]:https://opensource.com/users/tedsuo
[1]:https://research.google.com/pubs/pub36356.html
[2]:http://opentracing.io/
[3]:https://opensource.com/sites/default/files/styles/panopoly_image_original/public/uploads/tracing1_0.png?itok=dvDTX0JJ (Tracing)
[4]:https://github.com/opentracing/specification/blob/master/specification.md
[5]:https://opensource.com/sites/default/files/styles/panopoly_image_original/public/uploads/tracing2_0.png?itok=yokjNLZk (Tracing)
[6]:https://github.com/opentracing-contrib/
[7]:https://gitter.im/opentracing/public
[8]:https://www.w3.org/community/trace-context/
[9]:https://w3c.github.io/distributed-tracing/report-trace-context.html
[10]:http://lists.w3.org/Archives/Public/public-trace-context/
[11]:https://gitter.im/TraceContext/Lobby
[12]:https://github.com/opentracing/specification/blob/master/semantic_conventions.md
[13]:https://github.com/opentracing/opentracing-cpp
[14]:https://github.com/opentracing-contrib/java-tracerresolver
[15]:http://opentracing.io/documentation/pages/supported-tracers
[16]:https://events.linuxfoundation.org/kubecon-eu-2018/
[17]:https://events.linuxfoundation.org/events/kubecon-cloudnativecon-north-america-2018/

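MidnightBSD Could Be Your Gateway to FreeBSD
======

[FreeBSD][1] is an open source operating system that descends from the famous [Berkeley Software Distribution][2] (BSD). The first version of FreeBSD was released in 1993, and it is still going strong. Around 2007, Lucas Holt wanted to create a fork of FreeBSD that made use of the [GnuStep][3] implementation of the OpenStep (now Cocoa) Objective-C frameworks, widget toolkit, and application development tools. To that end, he began developing the MidnightBSD desktop distribution.

MidnightBSD (named after Lucas's cat, Midnight) is still being actively (albeit slowly) developed. The latest stable release (0.8.6) has been available since August 2017 (LCTT translator's note: as of the publication of this translation, the current release is 1.2, released on 2019/10/31). Although the BSD distributions aren't what you would call user-friendly, getting one installed is a great way to familiarize yourself with a text-based (ncurses) installer and with finishing an installation from the command line.

In the end, you'll wind up with a very reliable desktop distribution based on a fork of FreeBSD. It takes a bit of effort, but if you're a Linux user looking to stretch your skills... this is a good place to start.

I'll walk you through the process of installing MidnightBSD, adding a graphical desktop environment, and then installing applications.

### Installation

As I mentioned, this is a text-based (ncurses) installation process, so you won't find anything to point and click here. Instead, you'll use your keyboard's `Tab` and arrow keys. Once you've downloaded the [latest release][4], burn it to a CD/DVD or USB drive and boot your machine (or create a virtual machine in [VirtualBox][5]). The installer will open and give you three options (Figure 1). Select "Install" with your keyboard's arrow keys and press Enter.

![MidnightBSD installer][6]

*Figure 1: Launching the MidnightBSD installer.*

There are quite a few screens to go through here. Many of them are self-explanatory:

1. Set a non-default keymap (yes/no)
2. Set the hostname
3. Add optional system components (documentation, games, 32-bit compatibility, system source code)
4. Partition the hard drive
5. Set the administrator password
6. Configure the network interface
7. Select the region (time zone)
8. Enable services (such as ssh)
9. Add users (Figure 2)

![Adding a user][7]

*Figure 2: Adding a user to the system.*

After you've added users to the system, you'll be dropped into a window (Figure 3) where you can take care of anything you might have forgotten to configure or want to reconfigure. If you don't need to make any changes, select "Exit", and your configurations will be applied.

![Applying your configurations][8]

*Figure 3: Applying your configuration.*

In the next window, when prompted, select "No", and the system will reboot. Once MidnightBSD reboots, you're ready for the next phase of the installation.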

### Post-install

When your newly installed MidnightBSD boots, you'll find yourself at a command prompt. At this point, there is no graphical interface. To install applications, MidnightBSD relies on the `mport` tool. Let's say you want to install the Xfce desktop environment. To do this, log into MidnightBSD and issue the following commands:

```
sudo mport index
sudo mport install xorg
```

You now have the Xorg window server installed, which will allow you to install a desktop environment. Install Xfce with the command:

```
sudo mport install xfce
```

Xfce is now installed. However, we need to enable it to start with the `startx` command. To do this, let's first install the nano editor. Issue the command:

```
sudo mport install nano
```

With nano installed, issue the command:

```
nano ~/.xinitrc
```

That file needs to contain only one line:

```
exec startxfce4
```

Save and close that file. If you now issue the command `startx`, the Xfce desktop environment will start. You should start to feel a bit more at home (Figure 4).

![Xfce][9]

*Figure 4: The Xfce desktop interface is ready to serve.*

Since you won't always want to have to issue the `startx` command, you'll want to enable the login daemon. However, it's not installed. To install this subsystem, issue the command:

```
sudo mport install mlogind
```

When the installation completes, enable mlogind at boot by adding an entry to the `/etc/rc.conf` file. At the bottom of the `rc.conf` file, add the following:

```
mlogind_enable="YES"
```

Save and close that file. Now, when you boot (or reboot) the machine, you should be greeted by the graphical login screen. At the time of writing, after logging in, I wound up with a blank screen and the dreaded X cursor. Unfortunately, there doesn't seem to be a fix for this at the moment. So, to gain access to your desktop environment, you must use the `startx` command.

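### Installing applications

Out of the box, you won't find many applications available. If you attempt to install applications with `mport`, you'll quickly become frustrated, because very few can be found. To get around this, we need to check out the list of available mport software using the `svnlite` command. Go back to the terminal window and issue the command:

```
svnlite co http://svn.midnightbsd.org/svn/mports/trunk mports
```

Once that completes, you should see a new directory named `~/mports`. Change into that directory with the command `cd ~/mports`. Issue the `ls` command, and you should see a number of categories (Figure 5).

![applications][10]

*Figure 5: The categories of applications now available to mport.*

Want to install Firefox? If you look in the `www` directory, you'll see a `linux-firefox` listing. Issue the command:

```
sudo mport install linux-firefox
```

You should now see a Firefox entry in the Xfce desktop menu. Go through all of the categories and install all the software you need with the `mport` command.

### A sad caveat

One sad little caveat is that the only version of an office suite that `mport` can find (via `svnlite`) is OpenOffice 3. That's very outdated. And although Abiword is found in the `~/mports/editors` directory, it doesn't seem to be installable. Even after installing OpenOffice 3, it errors out with an Exec format error. In other words, you won't be doing much in the way of office productivity with MidnightBSD. But, hey, if you have an old Palm Pilot lying around, you can always install pilot-link. All told, the available software doesn't make for an incredibly useful desktop distribution... at least not for the average user. However, if you want to develop on MidnightBSD, you'll find plenty of tools available to install (check the `~/mports/devel` directory). You could even install Drupal with the command:

```
sudo mport install drupal7
```

Of course, after that you'll need to create a database (MySQL is already installed), install Apache (`sudo mport install apache24`), and configure the necessary Apache directives.

Clearly, what is installed and what can be installed is something of a hodgepodge of applications, systems, and services. But with enough work, you could wind up with a distribution that serves a specific purpose.

### Enjoy the \*BSD goodness

And that's how you can get MidnightBSD up and running as a somewhat useful desktop distribution. It's not as quick and easy as many other Linux distributions, but if you want a distribution that makes you think, this might be exactly what you're looking for. Although most of the competition has far more software ready to install, MidnightBSD is certainly an interesting challenge that every Linux enthusiast or admin should try.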

--------------------------------------------------------------------------------

via: https://www.linux.com/learn/intro-to-linux/2018/5/midnightbsd-could-be-your-gateway-freebsd

Author: [Jack Wallen][a]
Topic selection: [lujun9972](https://github.com/lujun9972)
Translator: [robsean](https://github.com/robsean)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]:https://www.linux.com/users/jlwallen
[1]:https://www.freebsd.org/
[2]:https://en.wikipedia.org/wiki/Berkeley_Software_Distribution
[3]:https://en.wikipedia.org/wiki/GNUstep
[4]:http://www.midnightbsd.org/download/
[5]:https://www.virtualbox.org/
[6]:https://lcom.static.linuxfound.org/sites/lcom/files/midnight_1.jpg (MidnightBSD installer)
[7]:https://lcom.static.linuxfound.org/sites/lcom/files/midnight_2.jpg (Adding a user)
[8]:https://lcom.static.linuxfound.org/sites/lcom/files/mightnight_3.jpg (Applying your configurations)
[9]:https://lcom.static.linuxfound.org/sites/lcom/files/midnight_4.jpg (Xfce)
[10]:https://lcom.static.linuxfound.org/sites/lcom/files/midnight_5.jpg (applications)
[11]:https://training.linuxfoundation.org/linux-courses/system-administration-training/introduction-to-linux

[#]: collector: (lujun9972)
[#]: translator: (wxy)
[#]: reviewer: (wxy)
[#]: publisher: (wxy)
[#]: url: (https://linux.cn/article-11810-1.html)
[#]: subject: (Getting started with OpenSSL: Cryptography basics)
[#]: via: (https://opensource.com/article/19/6/cryptography-basics-openssl-part-1)
[#]: author: (Marty Kalin https://opensource.com/users/mkalindepauledu/users/akritiko/users/clhermansen)

Getting started with OpenSSL: Cryptography basics
======

> Need a primer on cryptography basics, especially regarding OpenSSL? Read on.

This article is the first of two on cryptography basics using [OpenSSL][2], a production-grade library and toolkit popular on Linux and other systems. (To install the most recent version of OpenSSL, see [here][3].) OpenSSL utilities are available at the command line, and programs can call functions from the OpenSSL libraries. The sample program for this article is in C, the source language for the OpenSSL libraries.

The two articles in this series cover, collectively, cryptographic hashes, digital signatures, encryption and decryption, and digital certificates. You can find the code and command-line examples in a ZIP file from [my website][4].

Let's start with a review of the SSL in the OpenSSL name.

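### A brief history of OpenSSL

[Secure Socket Layer][5] (SSL) is a cryptographic protocol that Netscape released in 1995. This protocol layer can sit atop HTTP, thereby providing the S for *secure* in HTTPS. The SSL protocol provides various security services, including two that are central in HTTPS:

* Peer authentication (aka mutual challenge): Each side of a connection authenticates the identity of the other side. If Alice and Bob are to exchange messages over SSL, then each first authenticates the identity of the other.
* Confidentiality: A sender encrypts messages before sending them over a channel. The receiver then decrypts each received message. This process safeguards network conversations. Even if eavesdropper Eve intercepts an encrypted message from Alice to Bob (a *man-in-the-middle* attack), Eve finds it computationally infeasible to decrypt this message.

These two key SSL services, in turn, are tied to others that get less attention. For example, SSL supports message integrity, which assures that a received message is the same as the one sent. This feature is implemented with hash functions, which likewise come with the OpenSSL toolkit.

SSL is versioned (e.g., SSLv2 and SSLv3), and in 1999 Transport Layer Security (TLS) emerged as a similar protocol based upon SSLv3. TLSv1 and SSLv3 are alike, but not enough so to work together. Nonetheless, it is common to refer to SSL/TLS as if they were one and the same protocol. For example, OpenSSL functions often have SSL in the name even when TLS rather than SSL is in play. Furthermore, invoking the OpenSSL command-line utilities begins with the term `openssl`.

The documentation for OpenSSL is spotty beyond the man pages, which become unwieldy given how big the OpenSSL toolkit is. Command-line and code examples are one way to bring the main topics into focus together. Let's start with a familiar example (accessing a website over HTTPS) and use this example to pick apart the cryptographic pieces of interest.

### An HTTPS client

The `client` program shown here connects to Google over HTTPS: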

```
/* compilation: gcc -o client client.c -lssl -lcrypto */
#include <stdio.h>
#include <stdlib.h>
#include <string.h>      /* memset */
#include <openssl/bio.h> /* Basic Input/Output streams */
#include <openssl/err.h> /* errors */
#include <openssl/ssl.h> /* core library */
#define BuffSize 1024

void report_and_exit(const char* msg) {
  perror(msg);
  ERR_print_errors_fp(stderr);
  exit(-1);
}

void init_ssl() {
  SSL_load_error_strings();
  SSL_library_init();
}

void cleanup(SSL_CTX* ctx, BIO* bio) {
  SSL_CTX_free(ctx);
  BIO_free_all(bio);
}

void secure_connect(const char* hostname) {
  char name[BuffSize];
  char request[BuffSize];
  char response[BuffSize];

  /* TLSv1_2_client_method is deprecated as of OpenSSL 1.1.0, where
     TLS_client_method() is the version-flexible replacement */
  const SSL_METHOD* method = TLSv1_2_client_method();
  if (NULL == method) report_and_exit("TLSv1_2_client_method...");

  SSL_CTX* ctx = SSL_CTX_new(method);
  if (NULL == ctx) report_and_exit("SSL_CTX_new...");

  /* load the truststore before connecting: certificates are verified
     during the handshake, so a store loaded afterward has no effect */
  if (!SSL_CTX_load_verify_locations(ctx,
                                     "/etc/ssl/certs/ca-certificates.crt", /* truststore */
                                     "/etc/ssl/certs/")) /* more truststore */
    report_and_exit("SSL_CTX_load_verify_locations...");

  BIO* bio = BIO_new_ssl_connect(ctx);
  if (NULL == bio) report_and_exit("BIO_new_ssl_connect...");

  SSL* ssl = NULL;

  /* link bio channel, SSL session, and server endpoint */
  snprintf(name, sizeof(name), "%s:%s", hostname, "https");
  BIO_get_ssl(bio, &ssl);                  /* session */
  SSL_set_mode(ssl, SSL_MODE_AUTO_RETRY);  /* robustness */
  SSL_set_tlsext_host_name(ssl, hostname); /* SNI: request this host's certificate */
  BIO_set_conn_hostname(bio, name);        /* prepare to connect */

  /* try to connect */
  if (BIO_do_connect(bio) <= 0) {
    cleanup(ctx, bio);
    report_and_exit("BIO_do_connect...");
  }

  /* check the result of verifying the server's certificates */
  long verify_flag = SSL_get_verify_result(ssl);
  if (verify_flag != X509_V_OK)
    fprintf(stderr,
            "##### Certificate verification error (%i) but continuing...\n",
            (int) verify_flag);

  /* now fetch the homepage as sample data */
  snprintf(request, sizeof(request),
           "GET / HTTP/1.1\r\nHost: %s\r\nConnection: Close\r\n\r\n",
           hostname);
  BIO_puts(bio, request);

  /* read HTTP response from server and print to stdout */
  while (1) {
    memset(response, '\0', sizeof(response));
    int n = BIO_read(bio, response, BuffSize - 1); /* leave room for '\0' */
    if (n <= 0) break; /* 0 is end-of-stream, < 0 is an error */
    fputs(response, stdout);
  }

  cleanup(ctx, bio);
}

int main() {
  init_ssl();

  const char* hostname = "www.google.com";
  fprintf(stderr, "Trying an HTTPS connection to %s...\n", hostname);
  secure_connect(hostname);

  return 0;
}
```

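The program can be compiled and executed from the command line (note the lowercase L in `-lssl` and `-lcrypto`):

```
gcc -o client client.c -lssl -lcrypto
```

This program tries to open a secure connection to the website [www.google.com][13]. As part of the TLS handshake with the Google web server, the `client` program receives one or more digital certificates, which the program tries (but, on my system, fails) to verify. Nonetheless, the `client` program goes on to fetch the Google homepage through the secure channel. The program depends on the security artifacts mentioned earlier, although only a digital certificate stands out in the code. The other artifacts remain behind the scenes and are explained in detail later.

Generally, a client program in C or C++ that opened an HTTP (non-secure) channel would use constructs such as a *file descriptor* for a *network socket*, which is an endpoint in a connection between two processes (e.g., the `client` program and the Google web server). A file descriptor, in turn, is a non-negative integer value that identifies, within a program, any file-like construct that the program opens. Such a program also would use a structure to specify details about the web server's address.

None of these relatively low-level constructs occurs in the `client` program, as the OpenSSL library wraps the socket infrastructure and address specification in higher-level security constructs. The result is a straightforward API. Here's a first look at the security details in the example `client` program.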

* The program begins by loading the relevant OpenSSL libraries, with my function `init_ssl` making two calls into OpenSSL:

```
SSL_load_error_strings();
SSL_library_init();
```

* The next initialization step tries to get a security *context*, a framework of information required to establish and maintain a secure channel to the web server. TLS 1.2 is used in the example, as shown in this call to an OpenSSL library function:

```
const SSL_METHOD* method = TLSv1_2_client_method(); /* TLS 1.2 */
```

If the call succeeds, then the `method` pointer is passed to the library function that creates the context of type `SSL_CTX`:

```
SSL_CTX* ctx = SSL_CTX_new(method);
```

The `client` program checks for errors on each of these critical library calls and terminates if either call fails.

* Two other OpenSSL artifacts now come into play: a security session of type `SSL`, which manages the secure connection from start to finish; and a secured stream of type `BIO` (Basic Input/Output), which is used to communicate with the web server. The `BIO` stream is generated with this call:

```
BIO* bio = BIO_new_ssl_connect(ctx);
```

Note that the all-important context is the argument. The `BIO` type is the OpenSSL wrapper for the `FILE` type in C. This wrapper secures the input and output streams between the `client` program and Google's web server.

* With the `SSL_CTX` and `BIO` in hand, the program then links them together in an SSL session. Three library calls do the work:

```
BIO_get_ssl(bio, &ssl);                 /* session */
SSL_set_mode(ssl, SSL_MODE_AUTO_RETRY); /* robustness */
BIO_set_conn_hostname(bio, name);       /* prepare to connect */
```

The secure connection itself is established through this call:

```
BIO_do_connect(bio);
```

If this last call does not succeed, the `client` program terminates; otherwise, the connection is ready to support a confidential conversation between the `client` program and the Google web server.

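During the handshake with the web server, the `client` program receives one or more digital certificates, which authenticate the server's identity. However, the `client` program does not send a certificate of its own, which means that the authentication is one-way. (Web servers typically are configured **not** to expect a client certificate.) Despite the failed verification of the web server's certificates, the `client` program continues by fetching the Google homepage through the secure channel to the web server.

Why does the attempt to verify a Google certificate fail? A typical OpenSSL installation has the directory `/etc/ssl/certs`, which includes the `ca-certificates.crt` file. The directory and the file together contain digital certificates that OpenSSL trusts out of the box and accordingly constitute a *truststore*. The truststore can be updated as needed, in particular, to include newly trusted certificates and to remove ones no longer trusted.

The `client` program receives three certificates from the Google web server, but the OpenSSL truststore on my machine does not contain exact matches. As presently written, the `client` program does not pursue the matter by, for example, verifying the digital signature on a Google certificate (a signature that vouches for the certificate). If that signature were trusted, then the certificate containing it should be trusted as well. Nonetheless, the `client` program goes on to fetch and then print Google's homepage. The next section gets into more detail.

### The hidden security pieces in the client program

Let's start with the visible security artifact in the client example (the digital certificate) and consider how other security artifacts relate to it. The dominant layout standard for a digital certificate is X509, and a production-grade certificate is issued by a certificate authority (CA) such as [Verisign][14].

A digital certificate contains various pieces of information (e.g., activation and expiration dates, and a domain name for the owner), including the issuer's identity and *digital signature*, which is an encrypted *cryptographic hash* value. A certificate also has an unencrypted hash value that serves as its identifying *fingerprint*.

A hash value results from mapping an arbitrary number of bits to a fixed-length digest. What the bits represent (an accounting report, a novel, or perhaps a digital movie) is irrelevant. For example, the Message Digest version 5 (MD5) hash algorithm maps input bits of whatever length to a 128-bit hash value, whereas the SHA1 (Secure Hash Algorithm version 1) algorithm maps input bits to a 160-bit hash value. Different input bits result in different (indeed, statistically unique) hash values. The next article goes into further detail and focuses on what makes a hash function cryptographic.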

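Digital certificates differ in type (e.g., root, intermediate, and end-entity certificates) and form a hierarchy that reflects these types. As the name suggests, a *root* certificate sits atop the hierarchy, and the certificates under it inherit whatever trust the root certificate has. The OpenSSL libraries and most modern programming languages have an X509 data type together with functions that deal with such certificates. The certificate from Google has an X509 format, and the `client` program checks whether this certificate is `X509_V_OK`.

X509 certificates are based upon public-key infrastructure (PKI), which includes algorithms (RSA is the dominant one) for generating *key pairs*: a public key and its paired private key. A public key is an identity: [Amazon's][15] public key identifies it, and my public key identifies me. A private key is meant to be kept secret by its owner.

The keys in a pair have standard uses. A public key can be used to encrypt a message, and the private key from the same pair can then be used to decrypt the message. A private key also can be used to sign a document or other electronic artifact (e.g., a program or an email), and the public key from the pair can then be used to verify the signature. The following two examples fill in some details.

In the first example, Alice distributes her public key to the world, including Bob. Bob then encrypts a message with Alice's public key and sends the encrypted message to Alice. The message encrypted with Alice's public key is decrypted with her private key (presumably hers alone), like so:

```
             +------------------+ encrypted msg  +-------------------+
Bob's msg--->|Alice's public key|--------------->|Alice's private key|---> Bob's msg
             +------------------+                +-------------------+
```

Decrypting the message without Alice's private key is possible in principle, but infeasible in practice given a sound cryptographic key-pair system such as RSA.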
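The encrypt-with-public, decrypt-with-private flow in the diagram can be traced with textbook RSA and deliberately tiny numbers. This C sketch uses the well-known toy key built from the primes 61 and 53 (modulus 3233, public exponent 17, private exponent 2753). It omits the padding that real RSA requires and is far too small to be secure, so treat it purely as an illustration of the arithmetic; the function names are hypothetical.

```c
#include <stdint.h>

/* Toy RSA key from the classic textbook example: p = 61, q = 53.
 * Insecure (tiny modulus, no padding): illustration only. */
#define TOY_N 3233u /* modulus n = p * q, part of both keys */
#define TOY_E 17u   /* public exponent: (n, e) is Alice's public key */
#define TOY_D 2753u /* private exponent: (n, d) is Alice's private key */

/* Compute base^exp mod m by square-and-multiply (m is small, so no overflow). */
static uint64_t mod_pow(uint64_t base, uint64_t exp, uint64_t m) {
    uint64_t result = 1;
    base %= m;
    while (exp > 0) {
        if (exp & 1)
            result = (result * base) % m;
        base = (base * base) % m;
        exp >>= 1;
    }
    return result;
}

/* Bob encrypts with Alice's public key; requires 0 <= msg < n. */
uint64_t toy_encrypt(uint64_t msg) { return mod_pow(msg, TOY_E, TOY_N); }

/* Alice decrypts with her private key. */
uint64_t toy_decrypt(uint64_t cipher) { return mod_pow(cipher, TOY_D, TOY_N); }
```

With this key, Bob's message 65 encrypts to 2790, and only the private exponent turns 2790 back into 65. Deriving that exponent from the public key amounts to factoring the modulus, which is trivial for 3233 but infeasible for the much larger moduli used in practice.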

Now, for the second example, consider signing a document to certify its authenticity. The signature algorithm uses the private key from a pair to process a cryptographic hash of the document to be signed:

```
                    +-------------------+
Hash of document--->|Alice's private key|--->Alice's digital signature of the document
                    +-------------------+
```

Assume that Alice digitally signs a contract sent to Bob. Bob then can use the public key from Alice's key pair to verify the signature:

```
                                             +------------------+
Alice's digital signature of the document--->|Alice's public key|--->verified or not
                                             +------------------+
```

It's infeasible to forge Alice's signature without Alice's private key: hence, it's in Alice's interest to keep her private key secret.
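The signing flow reverses the roles of the two keys. Here is the same kind of toy, unpadded textbook RSA in C (key from the primes 61 and 53), with a small integer standing in for the document's cryptographic hash; real signature schemes hash the document and pad the result before applying the private key. All names are hypothetical, and the key is far too small to be secure.

```c
#include <stdbool.h>
#include <stdint.h>

/* Toy RSA key (p = 61, q = 53); insecure, no padding: illustration only. */
#define TOY_N 3233u
#define TOY_E 17u   /* public exponent */
#define TOY_D 2753u /* private exponent */

/* Compute base^exp mod m by square-and-multiply. */
static uint64_t mod_pow(uint64_t base, uint64_t exp, uint64_t m) {
    uint64_t result = 1;
    base %= m;
    while (exp > 0) {
        if (exp & 1)
            result = (result * base) % m;
        base = (base * base) % m;
        exp >>= 1;
    }
    return result;
}

/* Alice signs: apply her PRIVATE exponent to the (toy) hash. */
uint64_t toy_sign(uint64_t hash) { return mod_pow(hash % TOY_N, TOY_D, TOY_N); }

/* Bob verifies: apply Alice's PUBLIC exponent and compare with the hash he computed. */
bool toy_verify(uint64_t hash, uint64_t signature) {
    return mod_pow(signature, TOY_E, TOY_N) == hash % TOY_N;
}
```

Changing either the hash or the signature makes verification fail, which is why a forger would need Alice's private exponent.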

None of these security pieces, except for the digital certificate, is explicit in the `client` program. The next article fills in the details with examples that use the OpenSSL utilities and library functions.

### OpenSSL from the command line

In the meantime, let's take a look at the OpenSSL command-line utilities: in particular, a utility to inspect the certificates from a web server during the TLS handshake. Invoking the OpenSSL utilities begins with the `openssl` command, followed by a combination of arguments and flags to specify the desired operation.

Consider this command:

```
openssl list-cipher-algorithms
```

The output is a list of associated algorithms that make up a cipher suite. Here's the start of the list, with comments to clarify the acronyms:

```
AES-128-CBC              ## Advanced Encryption Standard, Cipher Block Chaining
AES-128-CBC-HMAC-SHA1    ## Hash-based Message Authentication Code with SHA1 hashes
AES-128-CBC-HMAC-SHA256  ## ditto, but SHA256 rather than SHA1
...
```

The next command, using the argument `s_client`, opens a secure connection to [www.google.com][13] and prints screens full of information about this connection:

```
openssl s_client -connect www.google.com:443 -showcerts
```

The port number 443 is the standard one used by web servers for receiving HTTPS rather than HTTP connections. (For HTTP, the standard port is 80.) The `client` program connects to this same host and port. If the attempted connection succeeds, the three digital certificates from Google are displayed together with information about the secure session, the cipher suite in play, and related items. For example, here is a slice of output from near the start, which announces that a *certificate chain* is forthcoming. The encoding for the certificates is base64:

```
Certificate chain
 0 s:/C=US/ST=California/L=Mountain View/O=Google LLC/CN=www.google.com
   i:/C=US/O=Google Trust Services/CN=Google Internet Authority G3
-----BEGIN CERTIFICATE-----
MIIEijCCA3KgAwIBAgIQdCea9tmy/T6rK/dDD1isujANBgkqhkiG9w0BAQsFADBU
MQswCQYDVQQGEwJVUzEeMBwGA1UEChMVR29vZ2xlIFRydXN0IFNlcnZpY2VzMSUw
...
```

A major site such as Google usually sends multiple certificates for authentication.

The output ends with summary information about the TLS session, including specifics on the cipher suite:

```
SSL-Session:
    Protocol  : TLSv1.2
    Cipher    : ECDHE-RSA-AES128-GCM-SHA256
    Session-ID: A2BBF0E4991E6BBBC318774EEE37CFCB23095CC7640FFC752448D07C7F438573
...
```

The protocol TLS 1.2 is used in the `client` program, and the `Session-ID` uniquely identifies the connection between the `openssl` utility and the Google web server. The `Cipher` entry can be parsed as follows:

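* `ECDHE` (Elliptic Curve Diffie Hellman Ephemeral) is an effective and efficient algorithm for managing the TLS handshake. In particular, ECDHE solves the "key-distribution problem" by ensuring that both parties in a connection (for example, the `client` program and the Google web server) use the same encryption/decryption key, which is known as the *session key*. The follow-up article digs into the details.
* `RSA` (Rivest Shamir Adleman) is the dominant public-key cryptosystem, named after the three academics who first described it in the late 1970s. The key pairs in play are generated with the RSA algorithm.
* `AES128` (Advanced Encryption Standard) is a block cipher that encrypts and decrypts blocks of bits. (The alternative is a stream cipher, which encrypts and decrypts bits one at a time.) The cipher is symmetric in that the same key is used to encrypt and to decrypt, which raises the key-distribution problem in the first place. AES supports key sizes of 128 (used here), 192, and 256 bits: the larger the key, the better the protection.

  In general, key sizes for symmetric cryptosystems such as AES are smaller than those for asymmetric (key-pair based) systems such as RSA. For example, a 1024-bit RSA key is relatively small, whereas a 256-bit key is currently the largest for AES.

* `GCM` (Galois Counter Mode) handles the repeated application of a cipher (in this case, AES128) during a secured conversation. AES128 blocks are only 128 bits in size, and a secure conversation is likely to consist of many AES128 blocks sent from one side to the other. GCM is efficient and commonly paired with AES128.
* `SHA256` (Secure Hash Algorithm 256 bits) is the cryptographic hash algorithm in play. The hash values produced are 256 bits in size, although even larger values are possible with SHA.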
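The same breakdown can be done mechanically. The sketch below splits the `Cipher` value from the session summary into the roles described above, and uses Python's `hashlib` to confirm that a SHA-256 digest is 256 bits long. `parse_suite` is a hypothetical helper, not an OpenSSL API, and it assumes the five-part `KEYEXCHANGE-AUTH-CIPHER-MODE-HASH` layout seen here. Not every OpenSSL suite name has that shape, so the helper rejects anything else.

```python
import hashlib


def parse_suite(name):
    """Split a five-part OpenSSL-style suite name into its roles (hypothetical helper)."""
    parts = name.split("-")
    if len(parts) != 5:
        raise ValueError(f"unexpected suite layout: {name}")
    kx, auth, cipher, mode, digest_alg = parts
    return {
        "key_exchange": kx,        # ECDHE: agrees on the shared session key
        "authentication": auth,    # RSA: key pair behind the server's certificate
        "cipher": cipher,          # AES128: symmetric block cipher, 128-bit key
        "mode": mode,              # GCM: how the block cipher is applied block after block
        "hash": digest_alg,        # SHA256: cryptographic hash algorithm
    }


suite = parse_suite("ECDHE-RSA-AES128-GCM-SHA256")
for role, value in suite.items():
    print(f"{role:15} {value}")

# SHA-256 always yields a 256-bit (32-byte) digest, whatever the input size.
digest = hashlib.sha256(b"hello").digest()
print(len(digest) * 8)  # 256
```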

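Cipher suites are in continual development. Not so long ago, for example, Google used the RC4 stream cipher (Ron's Cipher version 4, developed by Ron Rivest of RSA fame). RC4 now has known vulnerabilities, which presumably accounts, at least in part, for Google's switch to AES128.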

### Wrapping up

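This first look at OpenSSL, through a secure web client written in C and various command-line examples, has brought to the fore a handful of topics in need of more clarification. [The next article gets into the details][17], starting with cryptographic hashes and ending with a fuller discussion of how digital certificates address the key-distribution challenge.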

--------------------------------------------------------------------------------

via: https://opensource.com/article/19/6/cryptography-basics-openssl-part-1

Author: [Marty Kalin][a]
Topic selection: [lujun9972][b]
Translator: [wxy](https://github.com/wxy)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[a]: https://opensource.com/users/mkalindepauledu
[b]: https://github.com/lujun9972
[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/BUSINESS_3reasons.png?itok=k6F3-BqA (A lock on the side of a building)
[2]: https://www.openssl.org/
[3]: https://www.howtoforge.com/tutorial/how-to-install-openssl-from-source-on-linux/
[4]: http://condor.depaul.edu/mkalin
[5]: https://en.wikipedia.org/wiki/Transport_Layer_Security
[6]: https://en.wikipedia.org/wiki/Netscape
[7]: http://www.opengroup.org/onlinepubs/009695399/functions/perror.html
[8]: http://www.opengroup.org/onlinepubs/009695399/functions/exit.html
[9]: http://www.opengroup.org/onlinepubs/009695399/functions/sprintf.html
[10]: http://www.opengroup.org/onlinepubs/009695399/functions/fprintf.html
[11]: http://www.opengroup.org/onlinepubs/009695399/functions/memset.html
[12]: http://www.opengroup.org/onlinepubs/009695399/functions/puts.html
[13]: http://www.google.com
[14]: https://www.verisign.com
[15]: https://www.amazon.com
[16]: http://www.google.com:443
[17]: https://opensource.com/article/19/6/cryptography-basics-openssl-part-2

[#]: collector: (lujun9972)
[#]: translator: (wxy)
[#]: reviewer: (wxy)
[#]: publisher: (wxy)
[#]: url: (https://linux.cn/article-11822-1.html)
[#]: subject: (How the Linux screen tool can save your tasks – and your sanity – if SSH is interrupted)
[#]: via: (https://www.networkworld.com/article/3441777/how-the-linux-screen-tool-can-save-your-tasks-and-your-sanity-if-ssh-is-interrupted.html)
[#]: author: (Sandra Henry-Stocker https://www.networkworld.com/author/Sandra-Henry_Stocker/)

How the Linux screen tool can save your tasks, and your sanity, if SSH is interrupted
======

> The Linux screen command can be a lifesaver when you need to ensure long-running tasks don't get killed when an SSH session is interrupted. Here's how to use it.

|

||||

|

||||

|

||||

|

||||

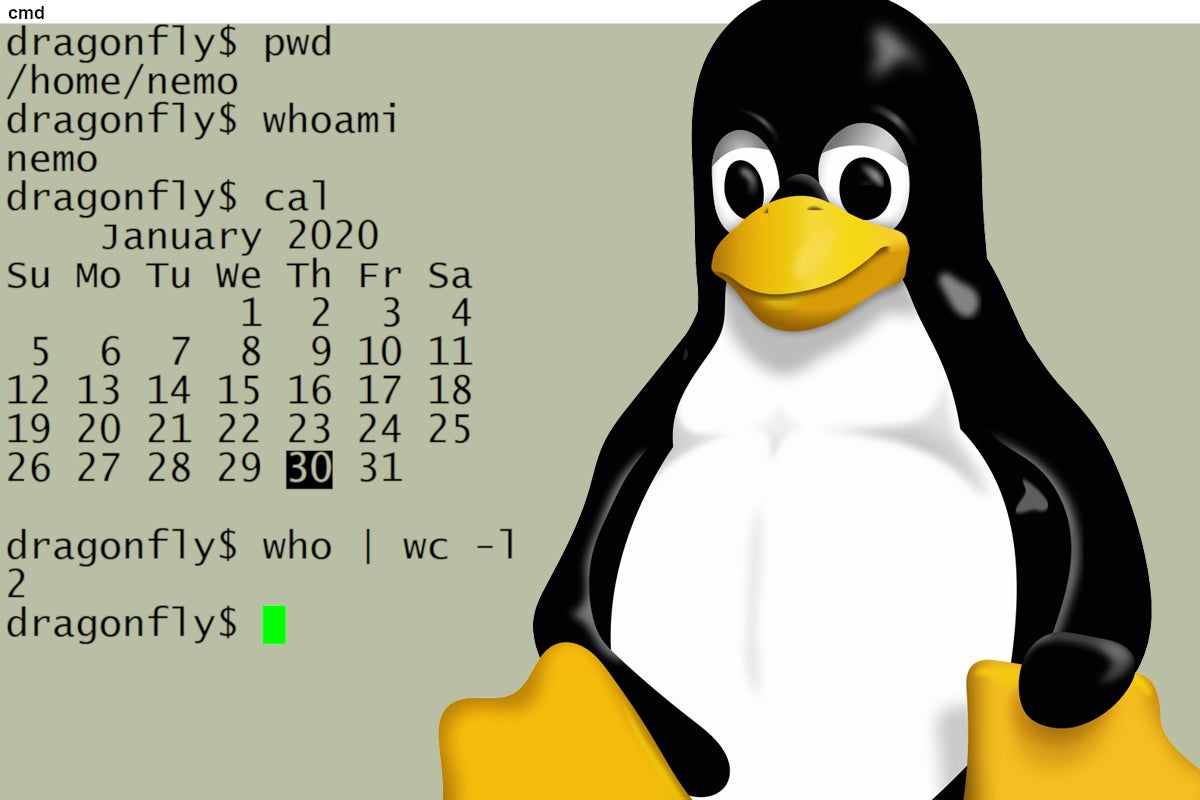

如果因 SSH 会话断开而不得不重启一个耗时的进程,那么你可能会很高兴了解一个有趣的工具,可以用来避免此问题:`screen` 工具。

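`screen` is a terminal multiplexer that allows you to run many terminal sessions within a single SSH session, detaching from them and reattaching to them as needed. The process for doing this is surprisingly simple and involves only a handful of commands.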

To start a `screen` session, you simply type `screen` within your SSH session. You then start your long-running process, type `Ctrl+A Ctrl+D` to detach from the session, and type `screen -r` to reattach when the time is right.

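If you're going to run more than one `screen` session, a better option is to give each session a meaningful name that will help you remember which task is being handled in it. Using this approach, you name each session when you start it with a command like this: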

```
$ screen -S slow-build
```

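Once you have multiple sessions running, reattaching to one requires that you pick it from the list. In the commands below, we list the currently running sessions before reattaching one of them. Notice that initially both sessions are marked as being detached.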

```
$ screen -ls
There are screens on:
        6617.check-backups      (09/26/2019 04:35:30 PM)        (Detached)
        1946.slow-build (09/26/2019 02:51:50 PM)        (Detached)
2 Sockets in /run/screen/S-shs
```

Reattaching to the session then requires that you supply the name you assigned to it. For example:

```
$ screen -r slow-build
```

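The process you left running in the detached session keeps running while you do other work. If you use one of these `screen` sessions to list the `screen` sessions, you can see that the session currently reattached is once again listed as `Attached`.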

```
$ screen -ls
There are screens on:
        6617.check-backups      (09/26/2019 04:35:30 PM)        (Attached)
        1946.slow-build (09/26/2019 02:51:50 PM)        (Detached)
2 Sockets in /run/screen/S-shs.
```
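If you manage many sessions from scripts, the listing above is easy to parse. The sketch below extracts the PID, name, and state from each session line so a script could, for example, pick out the detached sessions. `parse_screen_ls` is a hypothetical helper. It assumes the line format shown above and session names without whitespace. The sample text is copied from the output above, although the leading whitespace on your system may differ.

```python
import re

# Matches session lines such as
#   "6617.check-backups (09/26/2019 04:35:30 PM) (Detached)"
# Assumption: session names contain no whitespace.
SESSION_RE = re.compile(r"^\s*(\d+)\.(\S+)\s.*\((Attached|Detached)\)\s*$")


def parse_screen_ls(output):
    """Return a list of (pid, name, state) tuples from `screen -ls` text."""
    sessions = []
    for line in output.splitlines():
        match = SESSION_RE.match(line)
        if match:  # the header and the "Sockets in" lines do not match
            pid, name, state = match.groups()
            sessions.append((int(pid), name, state))
    return sessions


sample = """There are screens on:
    6617.check-backups (09/26/2019 04:35:30 PM) (Attached)
    1946.slow-build (09/26/2019 02:51:50 PM) (Detached)
2 Sockets in /run/screen/S-shs."""

detached = [name for _, name, state in parse_screen_ls(sample) if state == "Detached"]
print(detached)  # ['slow-build']
```

In a real script, the input would come from something like `subprocess.run(["screen", "-ls"], capture_output=True, text=True).stdout`.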

You can query the version of `screen` you're running with the `-version` option.

```
$ screen -version
Screen version 4.06.02 (GNU) 23-Oct-17
```

### Installing screen

If `which screen` doesn't report a path like the one below, `screen` probably isn't installed on your system.

```
$ which screen
/usr/bin/screen
```

If you need to install it, one of the following commands is probably right for your system:

```
sudo apt install screen    # Debian, Ubuntu, and derivatives
sudo yum install screen    # RHEL, CentOS, and older Fedora releases
```

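The `screen` tool comes in handy whenever you need to run time-consuming processes that could be interrupted if your SSH session disconnects for any reason. And, as you've just seen, it's very easy to use and manage.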

Here's a recap of the commands used above:

```
screen -S <process description>    start a session
Ctrl+A Ctrl+D                      detach from a session
screen -ls                         list sessions
screen -r <process description>    reattach to a session
```

While there is more to learn about `screen`, including additional ways to move between `screen` sessions, this should be enough to get you started with this handy tool.

--------------------------------------------------------------------------------

via: https://www.networkworld.com/article/3441777/how-the-linux-screen-tool-can-save-your-tasks-and-your-sanity-if-ssh-is-interrupted.html

Author: [Sandra Henry-Stocker][a]
Topic selection: [lujun9972][b]
Translator: [wxy](https://github.com/wxy)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[a]: https://www.networkworld.com/author/Sandra-Henry_Stocker/
[b]: https://github.com/lujun9972
[1]: https://www.youtube.com/playlist?list=PL7D2RMSmRO9J8OTpjFECi8DJiTQdd4hua
[2]: https://www.networkworld.com/article/3440100/take-the-intelligent-route-with-consumption-based-storage.html?utm_source=IDG&utm_medium=promotions&utm_campaign=HPE20773&utm_content=sidebar ( Take the Intelligent Route with Consumption-Based Storage)
[3]: https://www.facebook.com/NetworkWorld/
[4]: https://www.linkedin.com/company/network-world

61

published/202001/20191015 How GNOME uses Git.md

Normal file

[#]: collector: (lujun9972)
[#]: translator: (wxy)
[#]: reviewer: (wxy)
[#]: publisher: (wxy)
[#]: url: (https://linux.cn/article-11806-1.html)
[#]: subject: (How GNOME uses Git)
[#]: via: (https://opensource.com/article/19/10/how-gnome-uses-git)
[#]: author: (Molly de Blanc https://opensource.com/users/mollydb)

A non-technical contributor's view of the GNOME project's move to GitLab
======

> The decision to centralize GNOME projects on GitLab is creating benefits across the community, not just for developers.

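![red panda][1]

"What's your GitLab?" is one of the first questions I was asked on my first day working for the [GNOME Foundation][2], the nonprofit that supports GNOME projects, including the [desktop environment][3], [GTK][4], and [GStreamer][5]. The person was asking for my username on [GNOME's GitLab instance][6]. In my time with GNOME, I've been asked for my GitLab a lot.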

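We use GitLab for basically everything. On a typical day, I get several issues and reference bug reports, and I occasionally need to modify a file. I don't do this in the capacity of a developer or a sysadmin. I'm involved with the Engagement and Inclusion & Diversity (I&D) teams. I write newsletters for Friends of GNOME and interview contributors to the project. I work on sponsorships for GNOME events. I don't write code, yet I use GitLab every day.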

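The GNOME project has been managed in a lot of ways over the past two decades. Different parts of the project used different systems to track changes to code, collaborate, and share information both as a project and as a social space. However, the project decided that it needed to be more integrated, and it took about a year from conception to completion.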

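There were a number of reasons GNOME wanted to switch to a single tool for use across the community. External projects touch GNOME, and giving them an easier way to interact with resources was important for the project, both to support the community and to grow the ecosystem. We also wanted to track GNOME's metrics better: the number of contributors, the type and number of contributions, and the development progress of different parts of the project.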

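When it came time to pick a collaboration tool, we considered what we needed. One of the most important requirements was that it had to be hosted by the GNOME community. Being hosted by a third party wasn't an option, which ruled out services like GitHub and Atlassian. And, of course, it had to be free software. It quickly became obvious that the only real contender was GitLab. We also wanted to make sure contributing would be easy, and GitLab has features like single sign-on, which allows people to log in with GitHub, Google, GitLab.com, and GNOME accounts.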

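We agreed that GitLab was the way to go, and we began to migrate from many tools to a single one. GNOME board member [Carlos Soriano][7] led the change. With lots of support from GitLab and the GNOME community, we completed the process in May 2018.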

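There was a lot of hope that moving to GitLab would help grow the community and make contributing easier. Because GNOME previously used so many different tools, including Bugzilla and CGit, it's hard to measure quantitatively how the switch has affected the number of contributions. We can track some statistics more clearly, though, such as the nearly 10,000 issues closed and 7,085 merge requests merged between June and November 2018. People feel that the community has grown and become more welcoming, and that contributing is, in fact, easier.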

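People come to free software from all sorts of starting points, and it's important to level the playing field by providing better resources and extra support for the people who need them. Git, as a tool, is widely used, and more and more people arrive in free software with those skills ready to go. Self-hosting GitLab offers the perfect opportunity to combine the familiarity of Git with the feature-rich, user-friendly environment that GitLab provides.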

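It's been a little over a year since the switch to GitLab, and the change is really noticeable. Continuous integration (CI) has been a huge benefit for development, and it is now integrated into nearly every part of GNOME. Teams that aren't doing code development have also switched to the GitLab ecosystem for their work. Whether it's using issue tracking to manage assigned tasks or version control to share and manage assets, even teams like Engagement and I&D have taken up GitLab.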

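It can be hard for a community, even one developing free software, to adapt to a new technology or tool. It is especially hard in a case like GNOME, a project that [recently turned 22][8]. After more than two decades of building a project like GNOME, with so many parts used by so many people and organizations, the migration was possible only thanks to the hard work of the GNOME community and generous assistance from GitLab.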

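I find it very convenient to work on a project that uses Git for version control. It's a system that feels comfortable and familiar, one of the tools that stays consistent between workplaces and hobby projects. As a new member of the GNOME community, it was great to be able to jump in and just use GitLab. As a community builder, it's inspiring to see the results: more associated projects joining and entering the ecosystem; new contributors and community members making their first contributions to the project; and an increased ability to measure the work we're doing to know whether it's effective and successful.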

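It's great that so many teams doing completely different things (such as what they're working on and what skills they're using) agreed to centralize on one tool, especially one that is considered a standard across open source. As a contributor to GNOME, I really appreciate that we're using GitLab.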

--------------------------------------------------------------------------------

via: https://opensource.com/article/19/10/how-gnome-uses-git

Author: [Molly de Blanc][a]
Topic selection: [lujun9972][b]
Translator: [wxy](https://github.com/wxy)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[a]: https://opensource.com/users/mollydb
[b]: https://github.com/lujun9972
[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/redpanda_firefox_pet_animal.jpg?itok=aSpKsyna (red panda)
[2]: https://www.gnome.org/foundation/
[3]: https://gnome.org/
[4]: https://www.gtk.org/
[5]: https://gstreamer.freedesktop.org/
[6]: https://gitlab.gnome.org/
[7]: https://twitter.com/csoriano1618?lang=en
[8]: https://opensource.com/article/19/8/poll-favorite-gnome-version

[#]: collector: (lujun9972)
[#]: translator: (alim0x)
[#]: reviewer: (wxy)
[#]: publisher: (wxy)
[#]: url: (https://linux.cn/article-11831-1.html)
[#]: subject: (My Linux story: Learning Linux in the 90s)
[#]: via: (https://opensource.com/article/19/11/learning-linux-90s)
[#]: author: (Mike Harris https://opensource.com/users/mharris)

My Linux story: Learning Linux in the 90s
======

> This is a story of how I learned Linux before the age of WiFi, when distributions came in the form of a CD.

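Most people probably don't remember what the computing industry, or everyday life, looked like in 1996. But I remember that year very clearly. I was a sophomore in high school in the middle of Kansas, and it was the start of my journey into free and open source software (FOSS).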

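I'm getting ahead of myself here. I was interested in computers even before 1996. I grew up on my family's first Apple ][e, followed many years later by an IBM Personal System/2. (Yes, there were definitely some generational skips along the way.) The IBM PS/2 had a very exciting feature: a 1200 baud Hayes modem.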

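I don't remember how, but early on, I got the phone number of a local [BBS][2]. Once I dialed into it, I could get a list of other BBSes in the area, and my adventure into networked computing began.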

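In 1995, the people [lucky enough][3] to have a home internet connection spent less than 30 minutes a month using it. That internet was nothing like our modern services delivered over satellite, fiber, CATV coax, or any version of copper lines. Most homes dialed in with a modem connected to their phone line. (This was long before cellphones were pervasive, and most people had just one home phone line.) Although it depended on where you lived, I don't think there were many independent internet service providers (ISPs) back then, so most people got service from a handful of big names, including America Online, CompuServe, and Prodigy.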

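The service you did get was very slow: even at dial-up's revolutionary peak of 56K, you could only expect a maximum of about 3.5Kbps. If you wanted to try Linux, downloading a 200MB to 800MB ISO image or (more realistically) a set of floppy disk images took a commitment of time, determination, and less phone usage.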

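I went with the easier route: in 1996, I ordered a "tri-Linux" CD set from a major Linux distributor. These discs provided three distributions; mine included Debian 1.1 (the first stable release of Debian), Red Hat Linux 3.0.3, and Slackware 3.1 (nicknamed Slackware '96). As I recall, the discs were purchased from an online store called [Linux Systems Labs][4]. The store doesn't exist anymore, but in the 90s and early 00s, such distributors were common, and so were multi-disc Linux sets. This set is from 1998, but it gives you an idea of what they included: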

![A tri-linux CD set][5]

![A tri-linux CD set][6]

On a fateful day in the summer of 1996, while living in a new and relatively rural city in Kansas, I made my first attempt at installing and using Linux. Throughout that summer, I tried all three distributions on the tri-Linux CD set. They all ran beautifully on my mom's older Pentium 75MHz computer.

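I ended up choosing [Slackware][7] 3.1 as my preferred distribution, probably more because of how its terminal looked than because of the more important factors one should consider before choosing a distribution.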

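I was up and running. I connected to an "off-brand" ISP (a local provider in the area), dialing in on my family's second phone line (ordered to accommodate all my internet use). It was heaven. I had a dual-boot computer (Microsoft Windows 95 and Slackware 3.1) that worked wonderfully. I still dialed into the BBSes I knew and loved and played online BBS games like Trade Wars, Usurper, and Legend of the Red Dragon.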

I remember spending days in #Linux on EFNet (IRC), helping other users answer their Linux questions and interacting with the moderation crew.

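More than 20 years after my first attempt at using Linux at home, I am entering my fifth year as a consultant for Red Hat, still using Linux (now Fedora) as my daily driver, and still on IRC helping people who want to use Linux.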

--------------------------------------------------------------------------------

via: https://opensource.com/article/19/11/learning-linux-90s

Author: [Mike Harris][a]
Topic selection: [lujun9972][b]
Translator: [alim0x](https://github.com/alim0x)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[a]: https://opensource.com/users/mharris
[b]: https://github.com/lujun9972
[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/bus-cloud.png?itok=vz0PIDDS (Sky with clouds and grass)
[2]: https://en.wikipedia.org/wiki/Bulletin_board_system
[3]: https://en.wikipedia.org/wiki/Global_Internet_usage#Internet_users
[4]: https://web.archive.org/web/19961221003003/http://lsl.com/
[5]: https://opensource.com/sites/default/files/20191026_142009.jpg (A tri-linux CD set)
[6]: https://opensource.com/sites/default/files/20191026_142020.jpg (A tri-linux CD set)
[7]: http://slackware.com

[#]: collector: (lujun9972)
[#]: translator: (wxy)
[#]: reviewer: (wxy)
[#]: publisher: (wxy)
[#]: url: (https://linux.cn/article-11814-1.html)
[#]: subject: (What's your favorite terminal emulator?)
[#]: via: (https://opensource.com/article/19/12/favorite-terminal-emulator)
[#]: author: (Opensource.com https://opensource.com/users/admin)

What's your favorite terminal emulator?
======

> We asked our community to tell us about their experience with terminal emulators. Here are a few of the responses we received.

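Your choice of terminal emulator can say a lot about your workflow. Is the ability to work without a mouse a must-have? Do you prefer tabs or windows? What other reasons guide your choice of terminal emulator? Does it need a cool factor? Take our poll or leave us a comment to tell us about your favorite terminal emulator. How many have you tried?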

We asked our community to tell us about their experience with terminal emulators. Here are a few of the responses we received.

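"My favorite terminal emulator is Tilix, customized with Powerline. I love that it supports multiple terminals open in a single window." —Dan Arel

"[urxvt][2]. It's simple to configure via a file, lightweight, and readily available in most package manager repositories." —Brian Tomlinson

"gnome-terminal remains my go-to, even though I don't use GNOME anymore. :)" —Justin W. Flory

"Terminator, at this point, on FC31. I just started using it, but I like its split-screen feature, and it feels light enough for me. I'm investigating its plugins." —Marc Maxwell

"I switched to Tilix a while back, and it does everything I need a terminal to do. :) Multiple panes, notifications, lean, and it runs my tmux sessions great." —Kevin Fenzi

"alacritty. It's optimized for speed, implemented in Rust, and generally feature-packed, but, honestly speaking, I only care about one feature: configurable inter-glyph spacing that allows me to condense my font further." —Alexander Sosedkin

"I'm old and grumpy: KDE Konsole. With tmux in it if the session is remote." —Marcin Juszkiewicz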

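"iTerm2 on macOS. Yes, it's open source. :-) Terminator on Linux." —Patrick Mullins

"I've been using alacritty for a year or two now, but recently I started using cool-retro-term in fullscreen mode whenever I have to run a script with a lot of output, because it looks cool and makes me feel cool. This is important to me." —Nick Childers

"I love Tilix, partly because it's good at staying out of the way (I usually just run it full screen with tmux inside), but also for its custom hotlinking support: in my terminal, text like 'rhbz#1234' is a hotlink that takes me to Bugzilla. The same goes for LaunchPad issues, Gerrit change IDs for OpenStack, and so on." —Lars Kellogg-Stedman

"Eterm; presentations also look best in cool-retro-term with the Vintage profile." —Ivan Horvath

"+1 for Tilix. It's the best option for GNOME users, in my opinion!" —Eric Rich

"urxvt. Fast, small, configurable, and extendable via Perl plugins, which can make it mouseless." —Roman Dobosz

"Konsole is the best, and it's the only app from the KDE project that I use. Highlighting all search results is a killer feature that, as far as I know, no other Linux terminal has (I'd be glad to be proven wrong). It's the best for searching compilation errors and output logs." —Jan Horak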

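"I used Terminator a lot in the past. Now I've cloned its theme (the dark one) in Tilix, and I don't miss a thing. It's easy to move between tabs. That's all." —Alberto Fanjul Alonso

"I started my journey with Terminator, and over the past three years or so I've completely switched over to Tilix." —Mike Harris

"I use Drop Down Terminal X. It's a very simple extension for GNOME 3 that lets me pull up a terminal at the stroke of a single key (`F12` for me). It also supports tabs, which is pretty much all I need." —Germán Pulido

"xfce4-terminal: Wayland support, zoom, no borders, no title bar, no scroll bar. That's all I want from a terminal emulator; for everything else, I have tmux. I want my terminal emulator to use as much screen space as possible, because I usually have an editor (Vim) and a REPL side by side in tmux panes." —Martin Kourim

"Don't ask; the answer is Fish! ;-)" —Eric Schabell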

--------------------------------------------------------------------------------

via: https://opensource.com/article/19/12/favorite-terminal-emulator

Author: [Opensource.com][a]
Topic selection: [lujun9972][b]
Translator: [wxy](https://github.com/wxy)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[a]: https://opensource.com/users/admin
[b]: https://github.com/lujun9972
[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/osdc_terminals_0.png?itok=XwIRERsn (Terminal window with green text)
[2]: https://opensource.com/article/19/10/why-use-rxvt-terminal

[#]: collector: (lujun9972)
[#]: translator: (robsean)
[#]: reviewer: (wxy)
[#]: publisher: (wxy)
[#]: url: (https://linux.cn/article-11819-1.html)
[#]: subject: (Enable your Python game player to run forward and backward)
[#]: via: (https://opensource.com/article/19/12/python-platformer-game-run)
[#]: author: (Seth Kenlon https://opensource.com/users/seth)

Enable your Python game player to run forward and backward
======

> Let your player run free by enabling the side-scroller effect in your Python platformer using the Pygame module.

This is part 9 in an ongoing series about creating video games in Python 3 using the Pygame module. Previous articles are:

* [Learn how to program in Python by building a simple dice game][2]
* [Build a game framework with Python using the Pygame module][3]
* [How to add a player to your Python game][4]
* [Using Pygame to move your game character around][5]
* [How to add an enemy to your Python game][6]
* [Put platforms in a Pygame game][12]
* [Simulate gravity in your Python game][7]
* [Add jumping to your Python platformer game][8]

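In previous entries of this series about creating video games in [Python 3][9] using the [Pygame][10] module, you designed your level-design layout, but some portion of your level probably extended past your viewable screen. The ubiquitous solution to that problem in platformer games is, as the term "side-scroller" suggests, scrolling.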

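The key to scrolling is to make the platforms around the player sprite move when the player sprite gets close to the edge of the screen. This creates the illusion that the screen is a "camera" panning across the game world.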

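This scrolling trick requires two dead zones, one near each edge of the screen. When your avatar reaches a dead zone, it stands still while the world scrolls past.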

### Putting the scroll in side-scroller

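If you want your player to be able to go backward, you need one trigger point for going forward and one for going backward. These two points are simply two variables. Set each of them about 100 or 200 pixels from its screen edge, and create them in your setup section. In the following code, the first two lines are for context, so just add the lines after them: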

```
player_list.add(player)
steps = 10
forwardx  = 600   # x position that triggers scrolling forward
backwardx = 230   # x position that triggers scrolling backward
```

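In the main loop, check whether your player sprite is at the `forwardx` or `backwardx` scroll point. If so, move all platforms either left or right, depending on whether the world is moving forward or backward. In the following code, the final three lines are only for your reference: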

```
    # scroll the world forward
    if player.rect.x >= forwardx:
        scroll = player.rect.x - forwardx
        player.rect.x = forwardx
        for p in plat_list:
            p.rect.x -= scroll

    # scroll the world backward
    if player.rect.x <= backwardx:
        scroll = backwardx - player.rect.x
        player.rect.x = backwardx
        for p in plat_list:
            p.rect.x += scroll

    ## scrolling code above
    world.blit(backdrop, backdropbox)
    player.gravity() # check gravity
    player.update()
```

Launch your game and try it out.

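![Scrolling the world in Pygame][11]

Scrolling works as expected, but you may notice a small problem when you scroll the world around your player and non-player sprites: the enemy sprite doesn't scroll along with the world. Unless you want your enemy sprite to pursue your player endlessly, you need to modify the enemy code so that the enemy is left behind when your player makes a quick retreat.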

### Enemy scroll

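In your main loop, you must apply the same scrolling rules to your enemies' positions as to your platforms. Because your game world will (presumably) have more than one enemy in it, the rules are applied to your enemy list rather than to an individual enemy sprite. That's one of the advantages of grouping similar elements into lists.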

Only the final two lines (the loop over `enemy_list`) are new; the rest is shown for context. Add them to your main loop:

```
    # scroll the world forward
    if player.rect.x >= forwardx:
        scroll = player.rect.x - forwardx
        player.rect.x = forwardx
        for p in plat_list:
            p.rect.x -= scroll
        for e in enemy_list:
            e.rect.x -= scroll
```

To scroll in the other direction:

```
    # scroll the world backward
    if player.rect.x <= backwardx:
        scroll = backwardx - player.rect.x
        player.rect.x = backwardx
        for p in plat_list:
            p.rect.x += scroll
        for e in enemy_list:
            e.rect.x += scroll
```

Launch the game again and see what happens.
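To see the dead-zone logic in isolation, the sketch below reimplements it without Pygame so it can be run and tested on its own. The `Rect` dataclass is a hypothetical stand-in for `pygame.Rect` that keeps only the x coordinate. `scroll_world` is a hypothetical helper, not part of the tutorial's code. It applies a single shift to every list you pass in, which is the same idea as looping over `plat_list` and `enemy_list` in the main loop.

```python
from dataclasses import dataclass


@dataclass
class Rect:
    """Hypothetical stand-in for pygame.Rect; only the x coordinate matters here."""
    x: int


def scroll_world(player, groups, forwardx, backwardx):
    """Pin the player between backwardx and forwardx and move the world instead.

    `groups` is an iterable of lists of Rect-like objects (platforms, enemies, ...).
    Returns the signed shift applied to the world (negative = scrolled forward).
    """
    shift = 0
    if player.x >= forwardx:
        shift = -(player.x - forwardx)  # world slides left as the player runs forward
        player.x = forwardx
    elif player.x <= backwardx:
        shift = backwardx - player.x    # world slides right as the player runs backward
        player.x = backwardx
    for group in groups:
        for obj in group:
            obj.x += shift
    return shift


player = Rect(620)
platforms = [Rect(0), Rect(700)]
enemies = [Rect(650)]
moved = scroll_world(player, [platforms, enemies], forwardx=600, backwardx=230)
print(moved, player.x, [p.x for p in platforms], [e.x for e in enemies])
# -20 600 [-20, 680] [630]
```

Because every object receives the same shift, platforms and enemies keep their positions relative to each other, while the player appears to stay put inside the dead zone.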

Here's all the code you've written for this Python platformer so far:

```
#!/usr/bin/env python3
# draw a world
# add a player and player control
# add player movement
# add enemy and basic collision
# add platform
# add gravity
# add jumping
# add scrolling

# GNU All-Permissive License
# Copying and distribution of this file, with or without modification,
# are permitted in any medium without royalty provided the copyright
# notice and this notice are preserved. This file is offered as-is,
# without any warranty.

import pygame
import sys
import os

'''
Objects
'''

class Platform(pygame.sprite.Sprite):
    # x location, y location, img width, img height, img file
    def __init__(self,xloc,yloc,imgw,imgh,img):
        pygame.sprite.Sprite.__init__(self)
        self.image = pygame.image.load(os.path.join('images',img)).convert()
        self.image.convert_alpha()
        self.rect = self.image.get_rect()
        self.rect.y = yloc
        self.rect.x = xloc

class Player(pygame.sprite.Sprite):
    '''
    Spawn a player
    '''
    def __init__(self):
        pygame.sprite.Sprite.__init__(self)
        self.movex = 0
        self.movey = 0
        self.frame = 0
        self.health = 10
        self.collide_delta = 0
        self.jump_delta = 6
        self.score = 1
        self.images = []
        for i in range(1,9):
            img = pygame.image.load(os.path.join('images','hero' + str(i) + '.png')).convert()
            img.convert_alpha()
            img.set_colorkey(ALPHA)
            self.images.append(img)
        self.image = self.images[0]
        self.rect = self.image.get_rect()

    def jump(self,platform_list):
        self.jump_delta = 0

    def gravity(self):
        self.movey += 3.2 # how fast player falls

        if self.rect.y > worldy and self.movey >= 0:
            self.movey = 0
            self.rect.y = worldy-ty

    def control(self,x,y):
        '''
        control player movement
        '''
        self.movex += x
        self.movey += y

    def update(self):
        '''
        Update sprite position
        '''

        self.rect.x = self.rect.x + self.movex
        self.rect.y = self.rect.y + self.movey

        # moving left
        if self.movex < 0:
            self.frame += 1
            if self.frame > ani*3:
                self.frame = 0
            self.image = self.images[self.frame//ani]

        # moving right
        if self.movex > 0:
            self.frame += 1
            if self.frame > ani*3:
                self.frame = 0
            self.image = self.images[(self.frame//ani)+4]

        # collisions
        enemy_hit_list = pygame.sprite.spritecollide(self, enemy_list, False)
        for enemy in enemy_hit_list:
            self.health -= 1
            #print(self.health)

        plat_hit_list = pygame.sprite.spritecollide(self, plat_list, False)
        for p in plat_hit_list:
            self.collide_delta = 0 # stop jumping
            self.movey = 0
            if self.rect.y > p.rect.y:
                self.rect.y = p.rect.y+ty
            else:
                self.rect.y = p.rect.y-ty

        ground_hit_list = pygame.sprite.spritecollide(self, ground_list, False)
        for g in ground_hit_list:
            self.movey = 0
            self.rect.y = worldy-ty-ty
            self.collide_delta = 0 # stop jumping
            if self.rect.y > g.rect.y:
                self.health -=1
                print(self.health)

        if self.collide_delta < 6 and self.jump_delta < 6:
            self.jump_delta = 6*2
            self.movey -= 33 # how high to jump
            self.collide_delta += 6
            self.jump_delta += 6

class Enemy(pygame.sprite.Sprite):
    '''
    Spawn an enemy
    '''
    def __init__(self,x,y,img):
        pygame.sprite.Sprite.__init__(self)
        self.image = pygame.image.load(os.path.join('images',img))
        self.movey = 0
        #self.image.convert_alpha()
        #self.image.set_colorkey(ALPHA)
        self.rect = self.image.get_rect()
        self.rect.x = x
        self.rect.y = y
        self.counter = 0

    def move(self):
        '''
        enemy movement
        '''
        distance = 80
        speed = 8

        self.movey += 3.2

        if self.counter >= 0 and self.counter <= distance:
            self.rect.x += speed
        elif self.counter >= distance and self.counter <= distance*2:
            self.rect.x -= speed
        else:
            self.counter = 0

        self.counter += 1

        if not self.rect.y >= worldy-ty-ty:
            self.rect.y += self.movey

        plat_hit_list = pygame.sprite.spritecollide(self, plat_list, False)
        for p in plat_hit_list:
            self.movey = 0
            if self.rect.y > p.rect.y:
                self.rect.y = p.rect.y+ty
            else:
                self.rect.y = p.rect.y-ty

        ground_hit_list = pygame.sprite.spritecollide(self, ground_list, False)
        for g in ground_hit_list:
            self.rect.y = worldy-ty-ty

class Level():
    def bad(lvl,eloc):
        if lvl == 1:
            enemy = Enemy(eloc[0],eloc[1],'yeti.png') # spawn enemy
            enemy_list = pygame.sprite.Group() # create enemy group
            enemy_list.add(enemy) # add enemy to group

        if lvl == 2:
            print("Level " + str(lvl) )

        return enemy_list

    def loot(lvl,lloc):
        print(lvl)

    def ground(lvl,gloc,tx,ty):
        ground_list = pygame.sprite.Group()
        i=0
        if lvl == 1: