mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-02-03 23:40:14 +08:00

Merge remote-tracking branch 'LCTT/master'

This commit is contained in:

commit

db0bb54799

@ -1,42 +1,44 @@

|

||||

使用 Ledger 记录(财务)情况

|

||||

======

|

||||

|

||||

自 2005 年搬到加拿大以来,我使用 [Ledger CLI][1] 来跟踪我的财务状况。我喜欢纯文本的方式,它支持虚拟信封意味着我可以同时将我的银行帐户余额和我的虚拟分配到不同的目录下。以下是我们如何使用这些虚拟信封分别管理我们的财务状况。

|

||||

|

||||

每个月,我都有一个条目将我生活开支分配到不同的目录中,包括家庭开支的分配。W- 不要求太多, 所以我要谨慎地处理这两者之间的差别和我自己的生活费用。我们处理它的方式是我支付固定金额,这是贷记我支付的杂货。由于我们的杂货总额通常低于我预算的家庭开支,因此任何差异都会留在标签上。我过去常常给他写支票,但最近我只是支付偶尔额外的大笔费用。

|

||||

|

||||

这是个示例信封分配:

|

||||

|

||||

```

|

||||

2014.10.01 * Budget

|

||||

[Envelopes:Living]

|

||||

[Envelopes:Household] $500

|

||||

;; More lines go here

|

||||

|

||||

```

|

||||

|

||||

这是设置的信封规则之一。它鼓励我正确地分类支出。所有支出都从我的 “Play” 信封中取出。

|

||||

|

||||

```

|

||||

= /^Expenses/

|

||||

(Envelopes:Play) -1.0

|

||||

|

||||

```

|

||||

|

||||

这个为家庭支出报销 “Play” 信封,将金额从 “Household” 信封转移到 “Play” 信封。

|

||||

|

||||

```

|

||||

= /^Expenses:House$/

|

||||

(Envelopes:Play) 1.0

|

||||

(Envelopes:Household) -1.0

|

||||

|

||||

```

|

||||

|

||||

我有一套定期的支出来模拟我的预算中的家庭开支。例如,这是 10 月份的。

|

||||

|

||||

```

|

||||

2014.10.1 * House

|

||||

Expenses:House

|

||||

Assets:Household $-500

|

||||

|

||||

```

|

||||

|

||||

这是杂货交易的形式:

|

||||

|

||||

```

|

||||

2014.09.28 * No Frills

|

||||

Assets:Household:Groceries $70.45

|

||||

@ -61,7 +63,7 @@ via: http://sachachua.com/blog/2014/11/keeping-financial-score-ledger/

|

||||

作者:[Sacha Chua][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,15 +1,17 @@

|

||||

Mesos 和 Kubernetes:不是竞争者

|

||||

======

|

||||

|

||||

> 人们经常用 x 相对于 y 这样的术语来考虑问题,但是它并不是一个技术对另一个技术的问题。Ben Hindman 在这里解释了 Mesos 是如何对另外一种技术进行补充的。

|

||||

|

||||

|

||||

|

||||

Mesos 的起源可以追溯到 2009 年,当时,Ben Hindman 还是加州大学伯克利分校研究并行编程的博士生。他们在 128 核的芯片上做大规模的并行计算,并尝试去解决多个问题,比如怎么让软件和库在这些芯片上运行更高效。他与同学们讨论能否借鉴并行处理和多线程的思想,并将它们应用到集群管理上。

|

||||

Mesos 的起源可以追溯到 2009 年,当时,Ben Hindman 还是加州大学伯克利分校研究并行编程的博士生。他们在 128 核的芯片上做大规模的并行计算,以尝试去解决多个问题,比如怎么让软件和库在这些芯片上运行更高效。他与同学们讨论能否借鉴并行处理和多线程的思想,并将它们应用到集群管理上。

|

||||

|

||||

Hindman 说 "最初,我们专注于大数据” 。那时,大数据非常热门,并且 Hadoop 是其中一个热门技术。“我们发现,人们在集群上运行像 Hadoop 这样的程序与运行多线程应用和并行应用很相似。Hindman 说。

|

||||

Hindman 说 “最初,我们专注于大数据” 。那时,大数据非常热门,而 Hadoop 就是其中的一个热门技术。“我们发现,人们在集群上运行像 Hadoop 这样的程序与运行多线程应用及并行应用很相似。”Hindman 说。

|

||||

|

||||

但是,它们的效率并不高,因此,他们开始去思考,如何通过集群管理和资源管理让它们运行的更好。”我们查看了那个时间很多的不同技术“ Hindman 回忆道。

|

||||

但是,它们的效率并不高,因此,他们开始去思考,如何通过集群管理和资源管理让它们运行的更好。“我们查看了那个时期很多的各种技术” Hindman 回忆道。

|

||||

|

||||

然而,Hindman 和他的同事们,决定去采用一种全新的方法。”我们决定去对资源管理创建一个低级的抽象,然后在此之上运行调度服务和做其它的事情。“ Hindman 说,“基本上,这就是 Mesos 的本质 —— 将资源管理部分从调度部分中分离出来。”

|

||||

然后,Hindman 和他的同事们决定去采用一种全新的方法。“我们决定对资源管理创建一个低级的抽象,然后在此之上运行调度服务和做其它的事情。” Hindman 说,“基本上,这就是 Mesos 的本质 —— 将资源管理部分从调度部分中分离出来。”

|

||||

|

||||

他成功了,并且 Mesos 从那时开始强大了起来。

|

||||

|

||||

@ -17,21 +19,21 @@ Hindman 说 "最初,我们专注于大数据” 。那时,大数据非常热

|

||||

|

||||

这个项目发起于 2009 年。在 2010 年时,团队决定将这个项目捐献给 Apache 软件基金会(ASF)。它在 Apache 孵化,并于 2013 年成为顶级项目(TLP)。

|

||||

|

||||

为什么 Mesos 社区选择 Apache 软件基金会有很多的原因,比如,Apache 许可证,以及他们已经拥有了一个充满活力的此类项目的许多其它社区。

|

||||

为什么 Mesos 社区选择 Apache 软件基金会有很多的原因,比如,Apache 许可证,以及基金会已经拥有了一个充满活力的其它此类项目的社区。

|

||||

|

||||

与影响力也有关系。许多在 Mesos 上工作的人,也参与了 Apache,并且许多人也致力于像 Hadoop 这样的项目。同时,来自 Mesos 社区的许多人也致力于其它大数据项目,比如 Spark。这种交叉工作使得这三个项目 —— Hadoop、Mesos、以及 Spark —— 成为 ASF 的项目。

|

||||

与影响力也有关系。许多在 Mesos 上工作的人也参与了 Apache,并且许多人也致力于像 Hadoop 这样的项目。同时,来自 Mesos 社区的许多人也致力于其它大数据项目,比如 Spark。这种交叉工作使得这三个项目 —— Hadoop、Mesos,以及 Spark —— 成为 ASF 的项目。

|

||||

|

||||

与商业也有关系。许多公司对 Mesos 很感兴趣,并且开发者希望它能由一个中立的机构来维护它,而不是让它成为一个私有项目。

|

||||

|

||||

### 谁在用 Mesos?

|

||||

|

||||

更好的问题应该是,谁不在用 Mesos?从 Apple 到 Netflix 每个都在用 Mesos。但是,Mesos 也面临任何技术在早期所面对的挑战。”最初,我要说服人们,这是一个很有趣的新技术。它叫做“容器”,因为它不需要使用虚拟机“ Hindman 说。

|

||||

更好的问题应该是,谁不在用 Mesos?从 Apple 到 Netflix 每个都在用 Mesos。但是,Mesos 也面临任何技术在早期所面对的挑战。“最初,我要说服人们,这是一个很有趣的新技术。它叫做‘容器’,因为它不需要使用虚拟机” Hindman 说。

|

||||

|

||||

从那以后,这个行业发生了许多变化,现在,只要与别人聊到基础设施,必然是从”容器“开始的 —— 感谢 Docker 所做出的工作。今天再也不需要说服工作了,而在 Mesos 出现的早期,前面提到的像 Apple、Netflix、以及 PayPal 这样的公司。他们已经知道了容器化替代虚拟机给他们带来的技术优势。”这些公司在容器化成为一种现象之前,已经明白了容器化的价值所在“, Hindman 说。

|

||||

从那以后,这个行业发生了许多变化,现在,只要与别人聊到基础设施,必然是从”容器“开始的 —— 感谢 Docker 所做出的工作。今天再也不需要做说服工作了,而在 Mesos 出现的早期,前面提到的像 Apple、Netflix,以及 PayPal 这样的公司。他们已经知道了容器替代虚拟机给他们带来的技术优势。“这些公司在容器成为一种现象之前,已经明白了容器的价值所在”, Hindman 说。

|

||||

|

||||

可以在这些公司中看到,他们有大量的容器而不是虚拟机。他们所做的全部工作只是去管理和运行这些容器,并且他们欣然接受了 Mesos。在 Mesos 早期就使用它的公司有 Apple、Netflix、PayPal、Yelp、OpenTable、和 Groupon。

|

||||

可以在这些公司中看到,他们有大量的容器而不是虚拟机。他们所做的全部工作只是去管理和运行这些容器,并且他们欣然接受了 Mesos。在 Mesos 早期就使用它的公司有 Apple、Netflix、PayPal、Yelp、OpenTable 和 Groupon。

|

||||

|

||||

“大多数组织使用 Mesos 来运行任意需要的服务” Hindman 说,“但也有些公司用它做一些非常有趣的事情,比如,数据处理、数据流、分析负载和应用程序。“

|

||||

“大多数组织使用 Mesos 来运行各种服务” Hindman 说,“但也有些公司用它做一些非常有趣的事情,比如,数据处理、数据流、分析任务和应用程序。“

|

||||

|

||||

这些公司采用 Mesos 的其中一个原因是,资源管理层之间有一个明晰的界线。当公司运营容器的时候,Mesos 为他们提供了很好的灵活性。

|

||||

|

||||

@ -43,11 +45,11 @@ Hindman 说 "最初,我们专注于大数据” 。那时,大数据非常热

|

||||

|

||||

人们经常用 x 相对于 y 这样的术语来考虑问题,但是它并不是一个技术对另一个技术的问题。大多数的技术在一些领域总是重叠的,并且它们可以是互补的。“我不喜欢将所有的这些东西都看做是竞争者。我认为它们中的一些与另一个在工作中是互补的,” Hindman 说。

|

||||

|

||||

“事实上,名字 Mesos 表示它处于 ‘中间’;它是一种中间的 OS,” Hindman 说,“我们有一个容器调度器的概念,它能够运行在像 Mesos 这样的东西之上。当 Kubernetes 刚出现的时候,我们实际上在 Mesos 的生态系统中接受它的,并将它看做是运行在 Mesos 之上、DC/OS 之中的另一种方式的容器。”

|

||||

“事实上,名字 Mesos 表示它处于 ‘中间’;它是一种中间的操作系统”, Hindman 说,“我们有一个容器调度器的概念,它能够运行在像 Mesos 这样的东西之上。当 Kubernetes 刚出现的时候,我们实际上在 Mesos 的生态系统中接受了它,并将它看做是在 Mesos 上的 DC/OS 中运行容器的另一种方式。”

|

||||

|

||||

Mesos 也复活了一个名为 [Marathon][1](一个用于 Mesos 和 DC/OS 的容器编排器)的项目,它在 Mesos 生态系统中是做的最好的容器编排器。但是,Marathon 确实无法与 Kubernetes 相比较。“Kubernetes 比 Marathon 做的更多,因此,你不能将它们简单地相互交换,” Hindman 说,“与此同时,我们在 Mesos 中做了许多 Kubernetes 中没有的东西。因此,这些技术之间是互补的。”

|

||||

Mesos 也复活了一个名为 [Marathon][1](一个用于 Mesos 和 DC/OS 的容器编排器)的项目,它成为了 Mesos 生态系统中最重要的成员。但是,Marathon 确实无法与 Kubernetes 相比较。“Kubernetes 比 Marathon 做的更多,因此,你不能将它们简单地相互交换,” Hindman 说,“与此同时,我们在 Mesos 中做了许多 Kubernetes 中没有的东西。因此,这些技术之间是互补的。”

|

||||

|

||||

不要将这些技术视为相互之间是敌对的关系,它们应该被看做是对行业有益的技术。它们不是技术上的重复;它们是多样化的。据 Hindman 说,“对于开源领域的终端用户来说,这可能会让他们很困惑,因为他们很难去知道哪个技术适用于哪种负载,但这是被称为开源的这种东西最令人讨厌的本质所在。“

|

||||

不要将这些技术视为相互之间是敌对的关系,它们应该被看做是对行业有益的技术。它们不是技术上的重复;它们是多样化的。据 Hindman 说,“对于开源领域的终端用户来说,这可能会让他们很困惑,因为他们很难去知道哪个技术适用于哪种任务,但这是这个被称之为开源的本质所在。“

|

||||

|

||||

这只是意味着有更多的选择,并且每个都是赢家。

|

||||

|

||||

@ -58,7 +60,7 @@ via: https://www.linux.com/blog/2018/6/mesos-and-kubernetes-its-not-competition

|

||||

作者:[Swapnil Bhartiya][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[qhwdw](https://github.com/qhwdw)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,33 +1,35 @@

|

||||

使用 Open edX 托管课程入门

|

||||

使用 Open edX 托管课程

|

||||

======

|

||||

|

||||

> Open edX 为各种规模和类型的组织提供了一个强大而多功能的开源课程管理的解决方案。要不要了解一下。

|

||||

|

||||

|

||||

|

||||

[Open edX 平台][2] 是一个免费和开源的课程管理系统,它是 [全世界][3] 都在使用的大规模网络公开课(MOOCs)以及小型课程和培训模块的托管平台。在 Open edX 的 [第七个主要发行版][1] 中,到现在为止,它已经提供了超过 8,000 个原创课程和 5000 万个课程注册数。你可以使用你自己的本地设备或者任何行业领先的云基础设施服务提供商来安装这个平台,但是,随着项目的[服务提供商][4]名单越来越长,来自它们中的软件即服务(SaaS)的可用模型也越来越多了。

|

||||

[Open edX 平台][2] 是一个自由开源的课程管理系统,它是 [全世界][3] 都在使用的大规模网络公开课(MOOC)以及小型课程和培训模块的托管平台。在 Open edX 的 [第七个主要发行版][1] 中,到现在为止,它已经提供了超过 8,000 个原创课程和 5000 万个课程注册数。你可以使用你自己的本地设备或者任何行业领先的云基础设施服务提供商来安装这个平台,而且,随着项目的[服务提供商][4]名单越来越长,来自它们中的软件即服务(SaaS)的可用模型也越来越多了。

|

||||

|

||||

Open edX 平台被来自世界各地的顶尖教育机构、私人公司、公共机构、非政府组织、非营利机构、以及教育技术初创企业广泛地使用,并且项目服务提供商的全球社区持续让越来越小的组织可以访问这个平台。如果你打算向广大的读者设计和提供教育内容,你应该考虑去使用 Open edX 平台。

|

||||

Open edX 平台被来自世界各地的顶尖教育机构、私人公司、公共机构、非政府组织、非营利机构,以及教育技术初创企业广泛地使用,并且该项目的服务提供商全球社区不断地让甚至更小的组织也可以访问这个平台。如果你打算向广大的读者设计和提供教育内容,你应该考虑去使用 Open edX 平台。

|

||||

|

||||

### 安装

|

||||

|

||||

安装这个软件有多种方式,这可能是不一个不受欢迎的惊喜,至少刚开始是这样。但是不管你是以何种方式 [安装 Open edX][5],最终你都得到的是有相同功能的应用程序。默认安装包含一个为在线学习者提供的、全功能的学习管理系统(LMS),和一个全功能的课程管理工作室(CMS),CMS 可以让你的讲师团队用它来编写原创课程内容。你可以把 CMS 当做是课程内容设计和管理的 “[Wordpress][6]”,把 LMS 当做是课程销售、分发、和消费的 “[Magento][7]”。

|

||||

安装这个软件有多种方式,这可能有点让你难以选择,至少刚开始是这样。但是不管你是以何种方式 [安装 Open edX][5],最终你都得到的是有相同功能的应用程序。默认安装包含一个为在线学习者提供的、全功能的学习管理系统(LMS),和一个全功能的课程管理工作室(CMS),CMS 可以让你的讲师团队用它来编写原创课程内容。你可以把 CMS 当做是课程内容设计和管理的 “[Wordpress][6]”,把 LMS 当做是课程销售、分发、和消费的 “[Magento][7]”。

|

||||

|

||||

Open edX 是设备无关的和完全响应式的应用软件,并且不用花费很多的努力就可发布一个原生的 iOS 和 Android apps,它可以无缝地集成到你的实例后端。Open edX 平台的代码库、原生移动应用、以及安装脚本都发布在 [GitHub][8] 上。

|

||||

Open edX 是设备无关的、完全响应式的应用软件,并且不用花费很多的努力就可发布一个原生的 iOS 和 Android 应用,它可以无缝地集成到你的实例后端。Open edX 平台的代码库、原生移动应用、以及安装脚本都发布在 [GitHub][8] 上。

|

||||

|

||||

#### 有何期望

|

||||

|

||||

Open edX 平台的 [GitHub 仓库][9] 包含适用于各种类型组织的、性能很好的、产品级的代码。来自数百个机构的数千名程序员定期为 edX 仓库做贡献,并且这个平台是一个名副其实的,研究如何去构建和管理一个复杂的企业级应用的好案例。因此,尽管你可能会遇到大量的类似如何将平台迁移到生产环境中的问题,但是你不应该对 Open edX 平台代码库本身的质量和健状性担忧。

|

||||

Open edX 平台的 [GitHub 仓库][9] 包含适用于各种类型的组织的、性能很好的、产品级的代码。来自数百个机构的数千名程序员经常为 edX 仓库做贡献,并且这个平台是一个名副其实的、研究如何去构建和管理一个复杂的企业级应用的好案例。因此,尽管你可能会遇到大量的类似“如何将平台迁移到生产环境中”的问题,但是你无需对 Open edX 平台代码库本身的质量和健状性担忧。

|

||||

|

||||

通过少量的培训,你的讲师就可以去设计很好的在线课程。但是请记住,Open edX 是通过它的 [XBlock][10] 组件架构可扩展的,因此,通过他们和你的努力,你的讲师将有可能将好的课程变成精品课程。

|

||||

通过少量的培训,你的讲师就可以去设计不错的在线课程。但是请记住,Open edX 是通过它的 [XBlock][10] 组件架构进行扩展的,因此,通过他们和你的努力,你的讲师将有可能将不错的课程变成精品课程。

|

||||

|

||||

这个平台在单服务器环境下也运行的很好,并且它是高度模块化的,几乎可以进行无限地水平扩展。它也是主题化的和本地化的,平台的功能和外观可以根据你的需要进行几乎无限制地调整。平台在你的设备上可以按需安装并可靠地运行。

|

||||

|

||||

#### 一些封装要求

|

||||

#### 需要一些封装

|

||||

|

||||

请记住,有大量的 edX 软件模块是不包含在默认安装中的,并且这些模块提供的经常都是组织所需要的功能。比如,分析模块、电商模块、以及课程的通知/公告模块都是不包含在默认安装中的,并且这些单独的模块都是值得安装的。另外,在数据备份/恢复和系统管理方面要完全依赖你自己去处理。幸运的是,有关这方面的内容,社区有越来越多的文档和如何去做的文章。你可以通过 Google 和 Bing 去搜索,以帮助你在生产环境中安装它们。

|

||||

请记住,有大量的 edX 软件模块是不包含在默认安装中的,并且这些模块提供的经常都是各种组织所需要的功能。比如,分析模块、电商模块,以及课程的通知/公告模块都是不包含在默认安装中的,并且这些单独的模块都是值得安装的。另外,在数据备份/恢复和系统管理方面要完全依赖你自己去处理。幸运的是,有关这方面的内容,社区有越来越多的文档和如何去做的文章。你可以通过 Google 和 Bing 去搜索,以帮助你在生产环境中安装它们。

|

||||

|

||||

虽然有很多文档良好的程序,但是根据你的技能水平,配置 [oAuth][11] 和 [SSL/TLS][12],以及使用平台的 [REST API][13] 可能对你是一个挑战。另外,一些组织要求将 MySQL 和/或 MongoDB 数据库在中心化环境中管理,如果你正好是这种情况,你还需要将这些服务从默认平台安装中分离出来。edX 设计团队已经尽可能地为你做了简化,但是由于它是一个非常重大的更改,因此可能需要一些时间去实现。

|

||||

|

||||

如果你面临资源和/或技术上的困难 —— 不要气馁,Open edX 社区 SaaS 提供商,像 [appsembler][14] 和 [eduNEXT][15],提供了引人入胜的替代方案去进行 DIY 安装,尤其是如果你只适应窗口方式操作。

|

||||

如果你面临资源和/或技术上的困难 —— 不要气馁,Open edX 社区 SaaS 提供商,像 [appsembler][14] 和 [eduNEXT][15],提供了引人入胜的替代方案去进行 DIY 安装,尤其是如果你只想简单购买就行。

|

||||

|

||||

### 技术栈

|

||||

|

||||

@ -35,7 +37,7 @@ Open edX 平台的 [GitHub 仓库][9] 包含适用于各种类型组织的、性

|

||||

|

||||

![edx-architecture.png][24]

|

||||

|

||||

Open edX 技术栈(CC BY,来自 edX)

|

||||

*Open edX 技术栈(CC BY,来自 edX)*

|

||||

|

||||

将这些组件安装并配置好本身就是一件非常不容易的事情,但是以这样的一种方式将所有的组件去打包,并适合于任意规模和复杂性的组织,并且能够按他们的需要进行任意调整搭配而无需在代码上做重大改动,看起来似乎是不可能的事情 —— 它就是这种情况,直到你看到主要的平台配置参数安排和命名是多少的巧妙和直观。请注意,平台的组织结构有一个学习曲线,但是,你所学习的一切都是值的去学习的,不仅是对这个项目,对一般意义上的大型 IT 项目都是如此。

|

||||

|

||||

@ -43,7 +45,7 @@ Open edX 技术栈(CC BY,来自 edX)

|

||||

|

||||

### 采用

|

||||

|

||||

edX 项目能够迅速得到世界范围内的采纳,很大程度上取决于软件的运行情况。这一点也不奇怪,这个项目成功地吸引了大量才华卓越的人参与其中,他们作为程序员、项目顾问、翻译者、技术作者、以及博客作者参与了项目的贡献。一年一次的 [Open edX 会议][27]、[官方的 edX Google Group][28]、以及 [Open edX 服务提供商名单][4] 是了解这个多样化的、不断成长的生态系统的非常好的起点。我作为相对而言的新人,我发现参与和直接从事这个项目的各个方面是非常容易的。

|

||||

edX 项目能够迅速得到世界范围内的采纳,很大程度上取决于该软件的运行情况。这一点也不奇怪,这个项目成功地吸引了大量才华卓越的人参与其中,他们作为程序员、项目顾问、翻译者、技术作者、以及博客作者参与了项目的贡献。一年一次的 [Open edX 会议][27]、[官方的 edX Google Group][28]、以及 [Open edX 服务提供商名单][4] 是了解这个多样化的、不断成长的生态系统的非常好的起点。我作为相对而言的新人,我发现参与和直接从事这个项目的各个方面是非常容易的。

|

||||

|

||||

祝你学习之旅一切顺利,并且当你构思你的项目时,你可以随时联系我。

|

||||

|

||||

@ -54,7 +56,7 @@ via: https://opensource.com/article/18/6/getting-started-open-edx

|

||||

作者:[Lawrence Mc Daniel][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[qhwdw](https://github.com/qhwdw)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,200 @@

|

||||

iWant – The Decentralized Peer To Peer File Sharing Commandline Application

|

||||

======

|

||||

|

||||

|

||||

|

||||

A while ago, we have written a guide about two file sharing utilities named [**transfer.sh**][1], a free web service that allows you to share files over Internet easily and quickly, and [**PSiTransfer**][2], a simple open source self-hosted file sharing solution. Today, we will see yet another file sharing utility called **“iWant”**. It is a free and open source CLI-based decentralized peer to peer file sharing application.

|

||||

|

||||

What’s makes it different from other file sharing applications? You might wonder. Here are some prominent features of iWant.

|

||||

|

||||

* It’s commandline application. You don’t need any memory consuming GUI utilities. You need only the Terminal.

|

||||

* It is decentralized. That means your data will not be stored in any central location. So, there is no central point of failure.

|

||||

* iWant allows you to pause the download and you can resume it later when you want. You don’t need to download it from beginning, it just resumes the downloads from where you left off.

|

||||

* Any changes made in the files in the shared directory (such as deletion, addition, modification) will be reflected instantly in the network.

|

||||

* Just like torrents, iWant downloads the files from multiple peers. If any seeder left the group or failed to respond, it will continue the download from another seeder.

|

||||

* It is cross-platform, so, you can use it in GNU/Linux, MS Windows, and Mac OS X.

|

||||

|

||||

|

||||

|

||||

### iWant – A CLI-based Decentralized Peer To Peer File Sharing Solution

|

||||

|

||||

#### Install iWant

|

||||

|

||||

iWant can be easily installed using PIP package manager. Make sure you have pip installed in your Linux distribution. if it is not installed yet, refer the following guide.

|

||||

|

||||

[How To Manage Python Packages Using Pip](https://www.ostechnix.com/manage-python-packages-using-pip/)

|

||||

|

||||

After installing PIP, make sure you have installed the the following dependencies:

|

||||

|

||||

* libffi-dev

|

||||

* libssl-dev

|

||||

|

||||

|

||||

|

||||

Say for example, on Ubuntu, you can install these dependencies using command:

|

||||

```

|

||||

$ sudo apt-get install libffi-dev libssl-dev

|

||||

|

||||

```

|

||||

|

||||

Once all dependencies installed, install iWant using the following command:

|

||||

```

|

||||

$ sudo pip install iwant

|

||||

|

||||

```

|

||||

|

||||

We have now iWant in our system. Let us go ahead and see how to use it to transfer files over network.

|

||||

|

||||

#### Usage

|

||||

|

||||

First, start iWant server using command:

|

||||

```

|

||||

$ iwanto start

|

||||

|

||||

```

|

||||

|

||||

At the first time, iWant will ask the Shared and Download folder’s location. Enter the actual location of both folders. Then, choose which interface you want to use:

|

||||

|

||||

Sample output would be:

|

||||

```

|

||||

Shared/Download folder details looks empty..

|

||||

Note: Shared and Download folder cannot be the same

|

||||

SHARED FOLDER(absolute path):/home/sk/myshare

|

||||

DOWNLOAD FOLDER(absolute path):/home/sk/mydownloads

|

||||

Network interface available

|

||||

1. lo => 127.0.0.1

|

||||

2. enp0s3 => 192.168.43.2

|

||||

Enter index of the interface:2

|

||||

now scanning /home/sk/myshare

|

||||

[Adding] /home/sk/myshare 0.0

|

||||

Updating Leader 56f6d5e8-654e-11e7-93c8-08002712f8c1

|

||||

[Adding] /home/sk/myshare 0.0

|

||||

connecting to 192.168.43.2:1235 for hashdump

|

||||

|

||||

```

|

||||

|

||||

If you see an output something like above, you can start using iWant right away.

|

||||

|

||||

Similarly, start iWant service on all systems in the network, assign valid Shared and Downloads folder’s location, and select the network interface card.

|

||||

|

||||

The iWant service will keep running in the current Terminal window until you press **CTRL+C** to quit it. You need to open a new tab or new Terminal window to use iWant.

|

||||

|

||||

iWant usage is very simple. It has few commands as listed below.

|

||||

|

||||

* **iwanto start** – Starts iWant server.

|

||||

* **iwanto search <name>** – Search for files.

|

||||

* **iwanto download <hash>** – Download a file.

|

||||

* **iwanto share <path>** – Change the Shared folder’s location.

|

||||

* **iwanto download to <destination>** – Change the Download folder’s location.

|

||||

* **iwanto view config** – View Shared and Download folders.

|

||||

* **iwanto –version** – Displays the iWant version.

|

||||

* **iwanto -h** – Displays the help section.

|

||||

|

||||

|

||||

|

||||

Allow me to show you some examples.

|

||||

|

||||

**Search files**

|

||||

|

||||

To search for a file, run:

|

||||

```

|

||||

$ iwanto search <filename>

|

||||

|

||||

```

|

||||

|

||||

Please note that you don’t need to specify the accurate name.

|

||||

|

||||

Example:

|

||||

```

|

||||

$ iwanto search command

|

||||

|

||||

```

|

||||

|

||||

The above command will search for any files that contains the string “command”.

|

||||

|

||||

Sample output from my Ubuntu system:

|

||||

```

|

||||

Filename Size Checksum

|

||||

------------------------------------------- ------- --------------------------------

|

||||

/home/sk/myshare/THE LINUX COMMAND LINE.pdf 3.85757 efded6cc6f34a3d107c67c2300459911

|

||||

|

||||

```

|

||||

|

||||

**Download files**

|

||||

|

||||

You can download the files from any system on your network. To download a file, just mention the hash (checksum) of the file as shown below. You can get hash value of a share using “iwanto search” command.

|

||||

```

|

||||

$ iwanto download efded6cc6f34a3d107c67c2300459911

|

||||

|

||||

```

|

||||

|

||||

The file will be saved in your Download location (/home/sk/mydownloads/ in my case).

|

||||

```

|

||||

Filename: /home/sk/mydownloads/THE LINUX COMMAND LINE.pdf

|

||||

Size: 3.857569 MB

|

||||

|

||||

```

|

||||

|

||||

**View configuration**

|

||||

|

||||

To view the configuration i.e the Shared and Download folders, run:

|

||||

```

|

||||

$ iwanto view config

|

||||

|

||||

```

|

||||

|

||||

Sample output:

|

||||

```

|

||||

Shared folder:/home/sk/myshare

|

||||

Download folder:/home/sk/mydownloads

|

||||

|

||||

```

|

||||

|

||||

**Change Shared and Download folder’s location**

|

||||

|

||||

You can change the Shared folder and Download folder location to some other path like below.

|

||||

```

|

||||

$ iwanto share /home/sk/ostechnix

|

||||

|

||||

```

|

||||

|

||||

Now, the Shared location has been changed to /home/sk/ostechnix location.

|

||||

|

||||

Also, you can change the Downloads location using command:

|

||||

```

|

||||

$ iwanto download to /home/sk/Downloads

|

||||

|

||||

```

|

||||

|

||||

To view the changes made, run the config command:

|

||||

```

|

||||

$ iwanto view config

|

||||

|

||||

```

|

||||

|

||||

**Stop iWant**

|

||||

|

||||

Once you done with iWant, you can quit it by pressing **CTRL+C**.

|

||||

|

||||

If it is not working by any chance, it might be due to Firewall or your router doesn’t support multicast. You can view all logs in** ~/.iwant/.iwant.log** file. For more details, refer the project’s GitHub page provided at the end.

|

||||

|

||||

And, that’s all. Hope this tool helps. I will be here again with another interesting guide. Till then, stay tuned with OSTechNix!

|

||||

|

||||

Cheers!

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.ostechnix.com/iwant-decentralized-peer-peer-file-sharing-commandline-application/

|

||||

|

||||

作者:[SK][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.ostechnix.com/author/sk/

|

||||

[1]:https://www.ostechnix.com/easy-fast-way-share-files-internet-command-line/

|

||||

[2]:https://www.ostechnix.com/psitransfer-simple-open-source-self-hosted-file-sharing-solution/

|

||||

@ -1,270 +0,0 @@

|

||||

BriFuture is translating

|

||||

|

||||

You don't know Bash: An introduction to Bash arrays

|

||||

======

|

||||

|

||||

|

||||

|

||||

Although software engineers regularly use the command line for many aspects of development, arrays are likely one of the more obscure features of the command line (although not as obscure as the regex operator `=~`). But obscurity and questionable syntax aside, [Bash][1] arrays can be very powerful.

|

||||

|

||||

### Wait, but why?

|

||||

|

||||

Writing about Bash is challenging because it's remarkably easy for an article to devolve into a manual that focuses on syntax oddities. Rest assured, however, the intent of this article is to avoid having you RTFM.

|

||||

|

||||

#### A real (actually useful) example

|

||||

|

||||

To that end, let's consider a real-world scenario and how Bash can help: You are leading a new effort at your company to evaluate and optimize the runtime of your internal data pipeline. As a first step, you want to do a parameter sweep to evaluate how well the pipeline makes use of threads. For the sake of simplicity, we'll treat the pipeline as a compiled C++ black box where the only parameter we can tweak is the number of threads reserved for data processing: `./pipeline --threads 4`.

|

||||

|

||||

### The basics

|

||||

|

||||

`--threads` parameter that we want to test:

|

||||

```

|

||||

allThreads=(1 2 4 8 16 32 64 128)

|

||||

|

||||

```

|

||||

|

||||

The first thing we'll do is define an array containing the values of theparameter that we want to test:

|

||||

|

||||

In this example, all the elements are numbers, but it need not be the case—arrays in Bash can contain both numbers and strings, e.g., `myArray=(1 2 "three" 4 "five")` is a valid expression. And just as with any other Bash variable, make sure to leave no spaces around the equal sign. Otherwise, Bash will treat the variable name as a program to execute, and the `=` as its first parameter!

|

||||

|

||||

Now that we've initialized the array, let's retrieve a few of its elements. You'll notice that simply doing `echo $allThreads` will output only the first element.

|

||||

|

||||

To understand why that is, let's take a step back and revisit how we usually output variables in Bash. Consider the following scenario:

|

||||

```

|

||||

type="article"

|

||||

|

||||

echo "Found 42 $type"

|

||||

|

||||

```

|

||||

|

||||

Say the variable `$type` is given to us as a singular noun and we want to add an `s` at the end of our sentence. We can't simply add an `s` to `$type` since that would turn it into a different variable, `$types`. And although we could utilize code contortions such as `echo "Found 42 "$type"s"`, the best way to solve this problem is to use curly braces: `echo "Found 42 ${type}s"`, which allows us to tell Bash where the name of a variable starts and ends (interestingly, this is the same syntax used in JavaScript/ES6 to inject variables and expressions in [template literals][2]).

|

||||

|

||||

So as it turns out, although Bash variables don't generally require curly brackets, they are required for arrays. In turn, this allows us to specify the index to access, e.g., `echo ${allThreads[1]}` returns the second element of the array. Not including brackets, e.g.,`echo $allThreads[1]`, leads Bash to treat `[1]` as a string and output it as such.

|

||||

|

||||

Yes, Bash arrays have odd syntax, but at least they are zero-indexed, unlike some other languages (I'm looking at you, `R`).

|

||||

|

||||

### Looping through arrays

|

||||

|

||||

Although in the examples above we used integer indices in our arrays, let's consider two occasions when that won't be the case: First, if we wanted the `$i`-th element of the array, where `$i` is a variable containing the index of interest, we can retrieve that element using: `echo ${allThreads[$i]}`. Second, to output all the elements of an array, we replace the numeric index with the `@` symbol (you can think of `@` as standing for `all`): `echo ${allThreads[@]}`.

|

||||

|

||||

#### Looping through array elements

|

||||

|

||||

With that in mind, let's loop through `$allThreads` and launch the pipeline for each value of `--threads`:

|

||||

```

|

||||

for t in ${allThreads[@]}; do

|

||||

|

||||

./pipeline --threads $t

|

||||

|

||||

done

|

||||

|

||||

```

|

||||

|

||||

#### Looping through array indices

|

||||

|

||||

Next, let's consider a slightly different approach. Rather than looping over array elements, we can loop over array indices:

|

||||

```

|

||||

for i in ${!allThreads[@]}; do

|

||||

|

||||

./pipeline --threads ${allThreads[$i]}

|

||||

|

||||

done

|

||||

|

||||

```

|

||||

|

||||

Let's break that down: As we saw above, `${allThreads[@]}` represents all the elements in our array. Adding an exclamation mark to make it `${!allThreads[@]}` will return the list of all array indices (in our case 0 to 7). In other words, the `for` loop is looping through all indices `$i` and reading the `$i`-th element from `$allThreads` to set the value of the `--threads` parameter.

|

||||

|

||||

This is much harsher on the eyes, so you may be wondering why I bother introducing it in the first place. That's because there are times where you need to know both the index and the value within a loop, e.g., if you want to ignore the first element of an array, using indices saves you from creating an additional variable that you then increment inside the loop.

|

||||

|

||||

### Populating arrays

|

||||

|

||||

So far, we've been able to launch the pipeline for each `--threads` of interest. Now, let's assume the output to our pipeline is the runtime in seconds. We would like to capture that output at each iteration and save it in another array so we can do various manipulations with it at the end.

|

||||

|

||||

#### Some useful syntax

|

||||

|

||||

But before diving into the code, we need to introduce some more syntax. First, we need to be able to retrieve the output of a Bash command. To do so, use the following syntax: `output=$( ./my_script.sh )`, which will store the output of our commands into the variable `$output`.

|

||||

|

||||

The second bit of syntax we need is how to append the value we just retrieved to an array. The syntax to do that will look familiar:

|

||||

```

|

||||

myArray+=( "newElement1" "newElement2" )

|

||||

|

||||

```

|

||||

|

||||

#### The parameter sweep

|

||||

|

||||

Putting everything together, here is our script for launching our parameter sweep:

|

||||

```

|

||||

allThreads=(1 2 4 8 16 32 64 128)

|

||||

|

||||

allRuntimes=()

|

||||

|

||||

for t in ${allThreads[@]}; do

|

||||

|

||||

runtime=$(./pipeline --threads $t)

|

||||

|

||||

allRuntimes+=( $runtime )

|

||||

|

||||

done

|

||||

|

||||

```

|

||||

|

||||

And voilà!

|

||||

|

||||

### What else you got?

|

||||

|

||||

In this article, we covered the scenario of using arrays for parameter sweeps. But I promise there are more reasons to use Bash arrays—here are two more examples.

|

||||

|

||||

#### Log alerting

|

||||

|

||||

In this scenario, your app is divided into modules, each with its own log file. We can write a cron job script to email the right person when there are signs of trouble in certain modules:``

|

||||

```

|

||||

# List of logs and who should be notified of issues

|

||||

|

||||

logPaths=("api.log" "auth.log" "jenkins.log" "data.log")

|

||||

|

||||

logEmails=("jay@email" "emma@email" "jon@email" "sophia@email")

|

||||

|

||||

|

||||

|

||||

# Look for signs of trouble in each log

|

||||

|

||||

for i in ${!logPaths[@]};

|

||||

|

||||

do

|

||||

|

||||

log=${logPaths[$i]}

|

||||

|

||||

stakeholder=${logEmails[$i]}

|

||||

|

||||

numErrors=$( tail -n 100 "$log" | grep "ERROR" | wc -l )

|

||||

|

||||

|

||||

|

||||

# Warn stakeholders if recently saw > 5 errors

|

||||

|

||||

if [[ "$numErrors" -gt 5 ]];

|

||||

|

||||

then

|

||||

|

||||

emailRecipient="$stakeholder"

|

||||

|

||||

emailSubject="WARNING: ${log} showing unusual levels of errors"

|

||||

|

||||

emailBody="${numErrors} errors found in log ${log}"

|

||||

|

||||

echo "$emailBody" | mailx -s "$emailSubject" "$emailRecipient"

|

||||

|

||||

fi

|

||||

|

||||

done

|

||||

|

||||

```

|

||||

|

||||

#### API queries

|

||||

|

||||

Say you want to generate some analytics about which users comment the most on your Medium posts. Since we don't have direct database access, SQL is out of the question, but we can use APIs!

|

||||

|

||||

To avoid getting into a long discussion about API authentication and tokens, we'll instead use [JSONPlaceholder][3], a public-facing API testing service, as our endpoint. Once we query each post and retrieve the emails of everyone who commented, we can append those emails to our results array:

|

||||

```

|

||||

endpoint="https://jsonplaceholder.typicode.com/comments"

|

||||

|

||||

allEmails=()

|

||||

|

||||

|

||||

|

||||

# Query first 10 posts

|

||||

|

||||

for postId in {1..10};

|

||||

|

||||

do

|

||||

|

||||

# Make API call to fetch emails of this posts's commenters

|

||||

|

||||

response=$(curl "${endpoint}?postId=${postId}")

|

||||

|

||||

|

||||

|

||||

# Use jq to parse the JSON response into an array

|

||||

|

||||

allEmails+=( $( jq '.[].email' <<< "$response" ) )

|

||||

|

||||

done

|

||||

|

||||

```

|

||||

|

||||

Note here that I'm using the [`jq` tool][4] to parse JSON from the command line. The syntax of `jq` is beyond the scope of this article, but I highly recommend you look into it.

|

||||

|

||||

As you might imagine, there are countless other scenarios in which using Bash arrays can help, and I hope the examples outlined in this article have given you some food for thought. If you have other examples to share from your own work, please leave a comment below.

|

||||

|

||||

### But wait, there's more!

|

||||

|

||||

Since we covered quite a bit of array syntax in this article, here's a summary of what we covered, along with some more advanced tricks we did not cover:

|

||||

|

||||

Syntax Result `arr=()` Create an empty array `arr=(1 2 3)` Initialize array `${arr[2]}` Retrieve third element `${arr[@]}` Retrieve all elements `${!arr[@]}` Retrieve array indices `${#arr[@]}` Calculate array size `arr[0]=3` Overwrite 1st element `arr+=(4)` Append value(s) `str=$(ls)` Save `ls` output as a string `arr=( $(ls) )` Save `ls` output as an array of files `${arr[@]:s:n}` Retrieve elements at indices `n` to `s+n`

|

||||

|

||||

### One last thought

|

||||

|

||||

As we've discovered, Bash arrays sure have strange syntax, but I hope this article convinced you that they are extremely powerful. Once you get the hang of the syntax, you'll find yourself using Bash arrays quite often.

|

||||

|

||||

#### Bash or Python?

|

||||

|

||||

Which begs the question: When should you use Bash arrays instead of other scripting languages such as Python?

|

||||

|

||||

To me, it all boils down to dependencies—if you can solve the problem at hand using only calls to command-line tools, you might as well use Bash. But for times when your script is part of a larger Python project, you might as well use Python.

|

||||

|

||||

For example, we could have turned to Python to implement the parameter sweep, but we would have ended up just writing a wrapper around Bash:

|

||||

```

|

||||

import subprocess

|

||||

|

||||

|

||||

|

||||

all_threads = [1, 2, 4, 8, 16, 32, 64, 128]

|

||||

|

||||

all_runtimes = []

|

||||

|

||||

|

||||

|

||||

# Launch pipeline on each number of threads

|

||||

|

||||

for t in all_threads:

|

||||

|

||||

cmd = './pipeline --threads {}'.format(t)

|

||||

|

||||

|

||||

|

||||

# Use the subprocess module to fetch the return output

|

||||

|

||||

p = subprocess.Popen(cmd, stdout=subprocess.PIPE, shell=True)

|

||||

|

||||

output = p.communicate()[0]

|

||||

|

||||

all_runtimes.append(output)

|

||||

|

||||

```

|

||||

|

||||

Since there's no getting around the command line in this example, using Bash directly is preferable.

|

||||

|

||||

#### Time for a shameless plug

|

||||

|

||||

If you enjoyed this article, there's more where that came from! [Register here to attend OSCON][5], where I'll be presenting the live-coding workshop [You Don't Know Bash][6] on July 17, 2018. No slides, no clickers—just you and me typing away at the command line, exploring the wondrous world of Bash.

|

||||

|

||||

This article originally appeared on [Medium][7] and is republished with permission.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/5/you-dont-know-bash-intro-bash-arrays

|

||||

|

||||

作者:[Robert Aboukhalil][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://opensource.com/users/robertaboukhalil

|

||||

[1]:https://opensource.com/article/17/7/bash-prompt-tips-and-tricks

|

||||

[2]:https://developer.mozilla.org/en-US/docs/Web/JavaScript/Reference/Template_literals

|

||||

[3]:https://github.com/typicode/jsonplaceholder

|

||||

[4]:https://stedolan.github.io/jq/

|

||||

[5]:https://conferences.oreilly.com/oscon/oscon-or

|

||||

[6]:https://conferences.oreilly.com/oscon/oscon-or/public/schedule/detail/67166

|

||||

[7]:https://medium.com/@robaboukhalil/the-weird-wondrous-world-of-bash-arrays-a86e5adf2c69

|

||||

@ -0,0 +1,59 @@

|

||||

What is the Difference Between the macOS and Linux Kernels

|

||||

======

|

||||

Some people might think that there are similarities between the macOS and the Linux kernel because they can handle similar commands and similar software. Some people even think that Apple’s macOS is based on Linux. The truth is that both kernels have very different histories and features. Today, we will take a look at the difference between macOS and Linux kernels.

|

||||

|

||||

![macOS vs Linux][1]

|

||||

|

||||

### History of macOS Kernel

|

||||

|

||||

We will start with the history of the macOS kernel. In 1985, Steve Jobs left Apple due to a falling out with CEO John Sculley and the Apple board of directors. He then founded a new computer company named [NeXT][2]. Jobs wanted to get a new computer (with a new operating system) to market quickly. To save time, the NeXT team used the [Mach kernel][3] from Carnegie Mellon and parts of the BSD code base to created the [NeXTSTEP operating system][4].

|

||||

|

||||

NeXT never became a financial success, due in part to Jobs’ habit of spending money like he was still at Apple. Meanwhile, Apple had tried unsuccessfully on several occasions to update their operating system, even going so far as to partner with IBM. In 1997, Apple purchased NeXT for $429 million. As part of the deal, Steve Jobs returned to Apple and NeXTSTEP became the foundation of macOS and iOS.

|

||||

|

||||

### History of Linux Kernel

|

||||

|

||||

Unlike the macOS kernel, Linux was not created as part of a commercial endeavor. Instead, it was [created in 1991 by Finnish computer science student Linus Torvalds][5]. Originally, the kernel was written to the specifications of Linus’ computer because he wanted to take advantage of its new 80386 processor. Linus posted the code for his new kernel to [the Usenet in August of 1991][6]. Soon, he was receiving code and feature suggestions from all over the world. The following year Orest Zborowski ported the X Window System to Linux, giving it the ability to support a graphical user interface.

|

||||

|

||||

Over the last 27 years, Linux has slowly grown and gained features. It’s no longer a student’s small-time project. Now it runs most of the [world’s][7] [computing devices][8] and the [world’s supercomputers][9]. Not too shabby.

|

||||

|

||||

### Features of the macOS Kernel

|

||||

|

||||

The macOS kernel is officially known as XNU. The [acronym][10] stands for “XNU is Not Unix.” According to [Apple’s Github page][10], XNU is “a hybrid kernel combining the Mach kernel developed at Carnegie Mellon University with components from FreeBSD and C++ API for writing drivers”. The BSD subsystem part of the code is [“typically implemented as user-space servers in microkernel systems”][11]. The Mach part is responsible for low-level work, such as multitasking, protected memory, virtual memory management, kernel debugging support, and console I/O.

|

||||

|

||||

### Features of Linux Kernel

|

||||

|

||||

While the macOS kernel combines the feature of a microkernel ([Mach][12])) and a monolithic kernel ([BSD][13]), Linux is solely a monolithic kernel. A [monolithic kernel][14] is responsible for managing the CPU, memory, inter-process communication, device drivers, file system, and system server calls.

|

||||

|

||||

### Difference between Mac and Linux kernel in one line

|

||||

|

||||

The macOS kernel (XNU) has been around longer than Linux and was based on a combination of two even older code bases. On the other hand, Linux is newer, written from scratch, and is used on many more devices.

|

||||

|

||||

If you found this article interesting, please take a minute to share it on social media, Hacker News or [Reddit][15].

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://itsfoss.com/mac-linux-difference/

|

||||

|

||||

作者:[John Paul][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://itsfoss.com/author/john/

|

||||

[1]:https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2018/07/macos-vs-linux-kernels.jpeg

|

||||

[2]:https://en.wikipedia.org/wiki/NeXT

|

||||

[3]:https://en.wikipedia.org/wiki/Mach_(kernel)

|

||||

[4]:https://en.wikipedia.org/wiki/NeXTSTEP

|

||||

[5]:https://www.cs.cmu.edu/%7Eawb/linux.history.html

|

||||

[6]:https://groups.google.com/forum/#!original/comp.os.minix/dlNtH7RRrGA/SwRavCzVE7gJ

|

||||

[7]:https://www.zdnet.com/article/sorry-windows-android-is-now-the-most-popular-end-user-operating-system/

|

||||

[8]:https://www.linuxinsider.com/story/31855.html

|

||||

[9]:https://itsfoss.com/linux-supercomputers-2017/

|

||||

[10]:https://github.com/apple/darwin-xnu

|

||||

[11]:http://osxbook.com/book/bonus/ancient/whatismacosx/arch_xnu.html

|

||||

[12]:https://en.wikipedia.org/wiki/Mach_(kernel

|

||||

[13]:https://en.wikipedia.org/wiki/FreeBSD

|

||||

[14]:https://www.howtogeek.com/howto/31632/what-is-the-linux-kernel-and-what-does-it-do/

|

||||

[15]:http://reddit.com/r/linuxusersgroup

|

||||

@ -0,0 +1,54 @@

|

||||

5 Firefox extensions to protect your privacy

|

||||

======

|

||||

|

||||

|

||||

|

||||

In the wake of the Cambridge Analytica story, I took a hard look at how far I had let Facebook penetrate my online presence. As I'm generally concerned about single points of failure (or compromise), I am not one to use social logins. I use a password manager and create unique logins for every site (and you should, too).

|

||||

|

||||

What I was most perturbed about was the pervasive intrusion Facebook was having on my digital life. I uninstalled the Facebook mobile app almost immediately after diving into the Cambridge Analytica story. I also [disconnected all apps, games, and websites][1] from Facebook. Yes, this will change your experience on Facebook, but it will also protect your privacy. As a veteran with friends spread out across the globe, maintaining the social connectivity of Facebook is important to me.

|

||||

|

||||

I went about the task of scrutinizing other services as well. I checked Google, Twitter, GitHub, and more for any unused connected applications. But I know that's not enough. I need my browser to be proactive in preventing behavior that violates my privacy. I began the task of figuring out how best to do that. Sure, I can lock down a browser, but I need to make the sites and tools I use work while trying to keep them from leaking data.

|

||||

|

||||

Following are five tools that will protect your privacy while using your browser. The first three extensions are available for Firefox and Chrome, while the latter two are only available for Firefox.

|

||||

|

||||

### Privacy Badger

|

||||

|

||||

[Privacy Badger][2] has been my go-to extension for quite some time. Do other content or ad blockers do a better job? Maybe. The problem with a lot of content blockers is that they are "pay for play." Meaning they have "partners" that get whitelisted for a fee. That is the antithesis of why content blockers exist. Privacy Badger is made by the Electronic Frontier Foundation (EFF), a nonprofit entity with a donation-based business model. Privacy Badger promises to learn from your browsing habits and requires minimal tuning. For example, I have only had to whitelist a handful of sites. Privacy Badger also allows granular controls of exactly which trackers are enabled on what sites. It's my #1, must-install extension, no matter the browser.

|

||||

|

||||

### DuckDuckGo Privacy Essentials

|

||||

|

||||

The search engine DuckDuckGo has typically been privacy-conscious. [DuckDuckGo Privacy Essentials][3] works across major mobile devices and browsers. It's unique in the sense that it grades sites based on the settings you give them. For example, Facebook gets a D, even with Privacy Protection enabled. Meanwhile, [chrisshort.net][4] gets a B with Privacy Protection enabled and a C with it disabled. If you're not keen on EFF or Privacy Badger for whatever reason, I would recommend DuckDuckGo Privacy Essentials (choose one, not both, as they essentially do the same thing).

|

||||

|

||||

### HTTPS Everywhere

|

||||

|

||||

[HTTPS Everywhere][5] is another extension from the EFF. According to HTTPS Everywhere, "Many sites on the web offer some limited support for encryption over HTTPS, but make it difficult to use. For instance, they may default to unencrypted HTTP or fill encrypted pages with links that go back to the unencrypted site. The HTTPS Everywhere extension fixes these problems by using clever technology to rewrite requests to these sites to HTTPS." While a lot of sites and browsers are getting better about implementing HTTPS, there are a lot of sites that still need help. HTTPS Everywhere will try its best to make sure your traffic is encrypted.

|

||||

|

||||

### NoScript Security Suite

|

||||

|

||||

[NoScript Security Suite][6] is not for the faint of heart. While the Firefox-only extension "allows JavaScript, Java, Flash, and other plugins to be executed only by trusted websites of your choice," it doesn't do a great job at figuring out what your choices are. But, make no mistake, a surefire way to prevent leaking data is not executing code that could leak it. NoScript enables that via its "whitelist-based preemptive script blocking." This means you will need to build the whitelist as you go for sites not already on it. Note that NoScript is only available for Firefox.

|

||||

|

||||

### Facebook Container

|

||||

|

||||

[Facebook Container][7] makes Firefox the only browser where I will use Facebook. "Facebook Container works by isolating your Facebook identity into a separate container that makes it harder for Facebook to track your visits to other websites with third-party cookies." This means Facebook cannot snoop on activity happening elsewhere in your browser. Suddenly those creepy ads will stop appearing so frequently (assuming you uninstalled the Facebook app from your mobile devices). Using Facebook in an isolated space will prevent any additional collection of data. Remember, you've given Facebook data already, and Facebook Container can't prevent that data from being shared.

|

||||

|

||||

These are my go-to extensions for browser privacy. What are yours? Please share them in the comments.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/7/firefox-extensions-protect-privacy

|

||||

|

||||

作者:[Chris Short][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://opensource.com/users/chrisshort

|

||||

[1]:https://www.facebook.com/help/211829542181913

|

||||

[2]:https://www.eff.org/privacybadger

|

||||

[3]:https://duckduckgo.com/app

|

||||

[4]:https://chrisshort.net

|

||||

[5]:https://www.eff.org/https-everywhere

|

||||

[6]:https://noscript.net/

|

||||

[7]:https://addons.mozilla.org/en-US/firefox/addon/facebook-container/

|

||||

@ -0,0 +1,200 @@

|

||||

A sysadmin's guide to network management

|

||||

======

|

||||

|

||||

|

||||

|

||||

If you're a sysadmin, your daily tasks include managing servers and the data center's network. The following Linux utilities and commands—from basic to advanced—will help make network management easier.

|

||||

|

||||

In several of these commands, you'll see `<fqdn>`, which stands for "fully qualified domain name." When you see this, substitute your website URL or your server (e.g., `server-name.company.com`), as the case may be.

|

||||

|

||||

### Ping

|

||||

|

||||

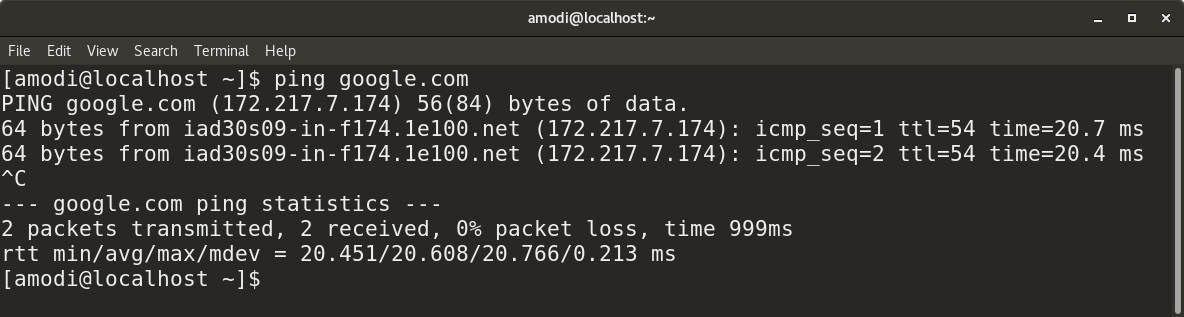

As the name suggests, `ping` is used to check the end-to-end connectivity from your system to the one you are trying to connect to. It uses [ICMP][1] echo packets that travel back to your system when a ping is successful. It's also a good first step to check system/network connectivity. You can use the `ping` command with IPv4 and IPv6 addresses. (Read my article "[How to find your IP address in Linux][2]" to learn more about IP addresses.)

|

||||

|

||||

**Syntax:**

|

||||

|

||||

* IPv4: `ping <ip address>/<fqdn>`

|

||||

* IPv6: `ping6 <ip address>/<fqdn>`

|

||||

|

||||

|

||||

|

||||

You can also use `ping` to resolve names of websites to their corresponding IP address, as shown below:

|

||||

|

||||

|

||||

|

||||

### Traceroute

|

||||

|

||||

`ping` checks end-to-end connectivity, the `traceroute` utility tells you all the router IPs on the path you travel to reach the end system, website, or server. `traceroute` is usually is the second step after `ping` for network connection debugging.

|

||||

|

||||

This is a nice utility for tracing the full network path from your system to another. Wherechecks end-to-end connectivity, theutility tells you all the router IPs on the path you travel to reach the end system, website, or server.is usually is the second step afterfor network connection debugging.

|

||||

|

||||

**Syntax:**

|

||||

|

||||

* `traceroute <ip address>/<fqdn>`

|

||||

|

||||

|

||||

|

||||

### Telnet

|

||||

|

||||

**Syntax:**

|

||||

|

||||

* `telnet <ip address>/<fqdn>` is used to [telnet][3] into any server.

|

||||

|

||||

|

||||

|

||||

### Netstat

|

||||

|

||||

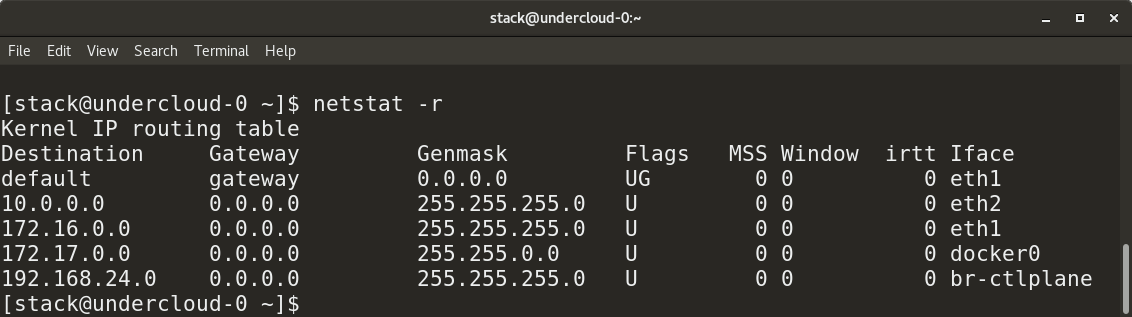

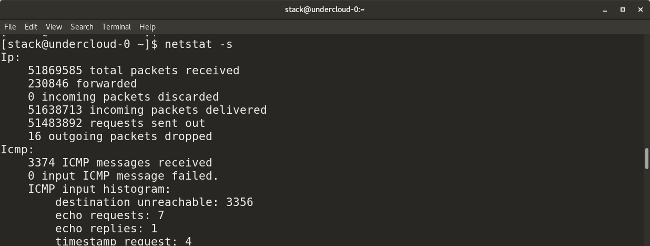

The network statistics (`netstat`) utility is used to troubleshoot network-connection problems and to check interface/port statistics, routing tables, protocol stats, etc. It's any sysadmin's must-have tool.

|

||||

|

||||

**Syntax:**

|

||||

|

||||

* `netstat -l` shows the list of all the ports that are in listening mode.

|

||||

* `netstat -a` shows all ports; to specify only TCP, use `-at` (for UDP use `-au`).

|

||||

* `netstat -r` provides a routing table.

|

||||

|

||||

|

||||

|

||||

* `netstat -s` provides a summary of statistics for each protocol.

|

||||

|

||||

|

||||

|

||||

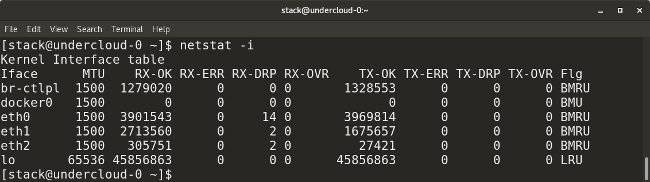

* `netstat -i` displays transmission/receive (TX/RX) packet statistics for each interface.

|

||||

|

||||

|

||||

|

||||

### Nmcli

|

||||

|

||||

`nmcli` is a good utility for managing network connections, configurations, etc. It can be used to control Network Manager and modify any device's network configuration details.

|

||||

|

||||

**Syntax:**

|

||||

|

||||

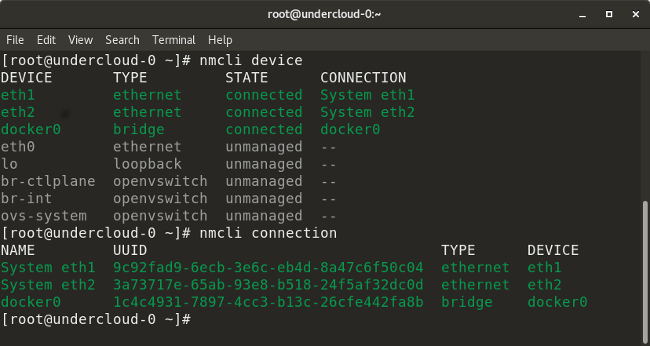

* `nmcli device` lists all devices on the system.

|

||||

|

||||

* `nmcli device show <interface>` shows network-related details of the specified interface.

|

||||

|

||||

* `nmcli connection` checks a device's connection.

|

||||

|

||||

* `nmcli connection down <interface>` shuts down the specified interface.

|

||||

|

||||

* `nmcli connection up <interface>` starts the specified interface.

|

||||

|

||||

* `nmcli con add type vlan con-name <connection-name> dev <interface> id <vlan-number> ipv4 <ip/cidr> gw4 <gateway-ip>` adds a virtual LAN (VLAN) interface with the specified VLAN number, IP address, and gateway to a particular interface.

|

||||

|

||||

|

||||

|

||||

|

||||

### Routing

|

||||

|

||||

There are many commands you can use to check and configure routing. Here are some useful ones:

|

||||

|

||||

**Syntax:**

|

||||

|

||||

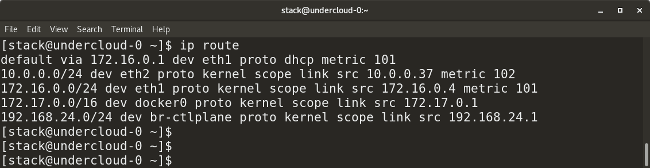

* `ip route` shows all the current routes configured for the respective interfaces.

|

||||

|

||||

|

||||

|

||||

* `route add default gw <gateway-ip>` adds a default gateway to the routing table.

|

||||

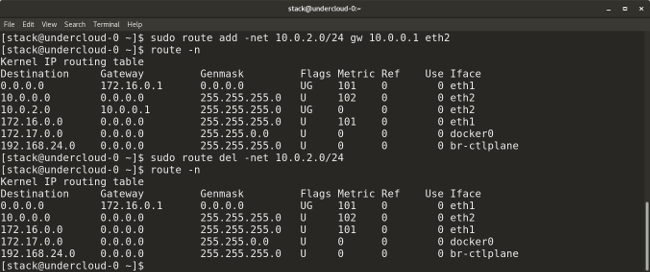

* `route add -net <network ip/cidr> gw <gateway ip> <interface>` adds a new network route to the routing table. There are many other routing parameters, such as adding a default route, default gateway, etc.

|

||||

* `route del -net <network ip/cidr>` deletes a particular route entry from the routing table.

|

||||

|

||||

|

||||

|

||||

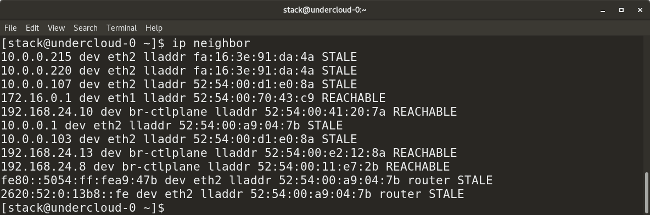

* `ip neighbor` shows the current neighbor table and can be used to add, change, or delete new neighbors.

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

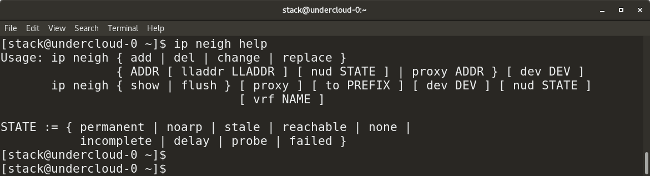

* `arp` (which stands for address resolution protocol) is similar to `ip neighbor`. `arp` maps a system's IP address to its corresponding MAC (media access control) address.

|

||||

|

||||

|

||||

|

||||

### Tcpdump and Wireshark

|

||||

|

||||

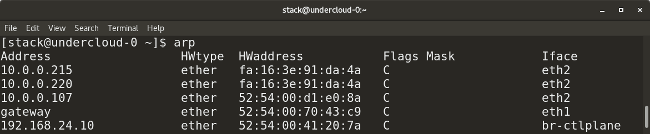

Linux provides many packet-capturing tools like `tcpdump`, `wireshark`, `tshark`, etc. They are used to capture network traffic in packets that are transmitted/received and hence are very useful for a sysadmin to debug any packet losses or related issues. For command-line enthusiasts, `tcpdump` is a great tool, and for GUI users, `wireshark` is a great utility to capture and analyze packets. `tcpdump` is a built-in Linux utility to capture network traffic. It can be used to capture/show traffic on specific ports, protocols, etc.

|

||||

|

||||

**Syntax:**

|

||||

|

||||

* `tcpdump -i <interface-name>` shows live packets from the specified interface. Packets can be saved in a file by adding the `-w` flag and the name of the output file to the command, for example: `tcpdump -w <output-file.> -i <interface-name>`.

|

||||

|

||||

|

||||

|

||||

* `tcpdump -i <interface> src <source-ip>` captures packets from a particular source IP.

|

||||

* `tcpdump -i <interface> dst <destination-ip>` captures packets from a particular destination IP.

|

||||

* `tcpdump -i <interface> port <port-number>` captures traffic for a specific port number like 53, 80, 8080, etc.

|

||||

* `tcpdump -i <interface> <protocol>` captures traffic for a particular protocol, like TCP, UDP, etc.

|

||||

|

||||

|

||||

|

||||

### Iptables

|

||||

|

||||

`iptables` is a firewall-like packet-filtering utility that can allow or block certain traffic. The scope of this utility is very wide; here are some of its most common uses.

|

||||

|

||||

**Syntax:**

|

||||

|

||||

* `iptables -L` lists all existing `iptables` rules.

|

||||

* `iptables -F` deletes all existing rules.

|

||||

|

||||

|

||||

|

||||

The following commands allow traffic from the specified port number to the specified interface:

|

||||

|

||||

* `iptables -A INPUT -i <interface> -p tcp –dport <port-number> -m state –state NEW,ESTABLISHED -j ACCEPT`

|

||||

* `iptables -A OUTPUT -o <interface> -p tcp -sport <port-number> -m state – state ESTABLISHED -j ACCEPT`

|

||||

|

||||

|

||||

|

||||

The following commands allow loopback access to the system:

|

||||

|

||||

* `iptables -A INPUT -i lo -j ACCEPT`

|

||||

* `iptables -A OUTPUT -o lo -j ACCEPT`

|

||||

|

||||

|

||||

|

||||

### Nslookup

|

||||

|

||||

The `nslookup` tool is used to obtain IP address mapping of a website or domain. It can also be used to obtain information on your DNS server, such as all DNS records on a website (see the example below). A similar tool to `nslookup` is the `dig` (Domain Information Groper) utility.

|

||||

|

||||

**Syntax:**

|

||||

|

||||

* `nslookup <website-name.com>` shows the IP address of your DNS server in the Server field, and, below that, gives the IP address of the website you are trying to reach.

|

||||

* `nslookup -type=any <website-name.com>` shows all the available records for the specified website/domain.

|

||||

|

||||

|

||||

|

||||

### Network/interface debugging

|

||||

|

||||

Here is a summary of the necessary commands and files used to troubleshoot interface connectivity or related network issues.

|

||||

|

||||

**Syntax:**

|

||||

|

||||

* `ss` is a utility for dumping socket statistics.

|

||||

* `nmap <ip-address>`, which stands for Network Mapper, scans network ports, discovers hosts, detects MAC addresses, and much more.

|

||||

* `ip addr/ifconfig -a` provides IP addresses and related info on all the interfaces of a system.

|

||||

* `ssh -vvv user@<ip/domain>` enables you to SSH to another server with the specified IP/domain and username. The `-vvv` flag provides "triple-verbose" details of the processes going on while SSH'ing to the server.

|

||||

* `ethtool -S <interface>` checks the statistics for a particular interface.

|

||||

* `ifup <interface>` starts up the specified interface.

|

||||

* `ifdown <interface>` shuts down the specified interface.

|

||||

* `systemctl restart network` restarts a network service for the system.

|

||||

* `/etc/sysconfig/network-scripts/<interface-name>` is an interface configuration file used to set IP, network, gateway, etc. for the specified interface. DHCP mode can be set here.

|

||||

* `/etc/hosts` this file contains custom host/domain to IP mappings.

|

||||

* `/etc/resolv.conf` specifies the DNS nameserver IP of the system.

|

||||

* `/etc/ntp.conf` specifies the NTP server domain.

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/7/sysadmin-guide-networking-commands

|

||||

|

||||

作者:[Archit Modi][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://opensource.com/users/architmodi

|

||||

[1]:https://en.wikipedia.org/wiki/Internet_Control_Message_Protocol

|

||||

[2]:https://opensource.com/article/18/5/how-find-ip-address-linux

|

||||

[3]:https://en.wikipedia.org/wiki/Telnet

|

||||

@ -0,0 +1,101 @@

|

||||

Anbox: How To Install Google Play Store And Enable ARM (libhoudini) Support, The Easy Way

|

||||

======

|

||||

**[Anbox][1], or Android in a Box, is a free and open source tool that allows running Android applications on Linux.** It works by running the Android runtime environment in an LXC container, recreating the directory structure of Android as a mountable loop image, while using the native Linux kernel to execute applications.

|

||||

|

||||

Its key features are security, performance, integration and convergence (scales across different form factors), according to its website.

|

||||

|

||||

**Using Anbox, each Android application or game is launched in a separate window, just like system applications** , and they behave more or less like regular windows, showing up in the launcher, can be tiled, etc.

|

||||

|

||||

By default, Anbox doesn't ship with the Google Play Store or support for ARM applications. To install applications you must download each app APK and install it manually using adb. Also, installing ARM applications or games doesn't work by default with Anbox - trying to install ARM apps results in the following error being displayed:

|

||||

```

|

||||

Failed to install PACKAGE.NAME.apk: Failure [INSTALL_FAILED_NO_MATCHING_ABIS: Failed to extract native libraries, res=-113]

|

||||

|

||||

```

|

||||

|

||||

You can set up both Google Play Store and support for ARM applications (through libhoudini) manually for Android in a Box, but it's a quite complicated process. **To make it easier to install Google Play Store and Google Play Services on Anbox, and get it to support ARM applications and games (using libhoudini), the folks at[geeks-r-us.de][2] (linked article is in German) have created a [script][3] that automates these tasks.**

|

||||

|

||||

Before using this, I'd like to make it clear that not all Android applications and games work in Anbox, even after integrating libhoudini for ARM support. Some Android applications and games may not show up in the Google Play Store at all, while others may be available for installation but will not work. Also, some features may not be available in some applications.

|

||||

|

||||

### Install Google Play Store and enable ARM applications / games support on Anbox (Android in a Box)

|

||||

|

||||

These instructions will obviously not work if Anbox is not already installed on your Linux desktop. If you haven't already, install Anbox by following the installation instructions found

|

||||

|

||||

`anbox.appmgr`

|

||||

|

||||

at least once after installing Anbox and before using this script, to avoid running into issues.

|

||||

|

||||

1\. Install the required dependencies (`wget` , `lzip` , `unzip` and `squashfs-tools`).

|

||||

|

||||

In Debian, Ubuntu or Linux Mint, use this command to install the required dependencies:

|

||||

```

|

||||

sudo apt install wget lzip unzip squashfs-tools

|

||||

|

||||

```

|

||||

|

||||

2\. Download and run the script that automatically downloads and installs Google Play Store (and Google Play Services) and libhoudini (for ARM apps / games support) on your Android in a Box installation.

|

||||

|

||||

**Warning: never run a script you didn't write without knowing what it does. Before running this script, check out its [code][4]. **

|

||||

|

||||

To download the script, make it executable and run it on your Linux desktop, use these commands in a terminal:

|

||||

```

|

||||

wget https://raw.githubusercontent.com/geeks-r-us/anbox-playstore-installer/master/install-playstore.sh

|

||||

chmod +x install-playstore.sh

|

||||

sudo ./install-playstore.sh

|

||||

|

||||

```

|

||||

|

||||

3\. To get Google Play Store to work in Anbox, you need to enable all the permissions for both Google Play Store and Google Play Services

|

||||

|

||||

To do this, run Anbox:

|

||||

```

|

||||

anbox.appmgr

|

||||

|

||||

```

|

||||

|

||||

Then go to `Settings > Apps > Google Play Services > Permissions` and enable all available permissions. Do the same for Google Play Store!

|

||||

|

||||

You should now be able to login using a Google account into Google Play Store.

|

||||

|

||||

Without enabling all permissions for Google Play Store and Google Play Services, you may encounter an issue when trying to login to your Google account, with the following error message: " _Couldn't sign in. There was a problem communicating with Google servers. Try again later_ ", as you can see in this screenshot:

|

||||

|

||||

After logging in, you can disable some of the Google Play Store / Google Play Services permissions.

|

||||

|

||||

**If you're encountering some connectivity issues when logging in to your Google account on Anbox,** make sure the `anbox-bride.sh` is running:

|

||||

|

||||

* to start it:

|

||||

|

||||

|

||||

```

|

||||

sudo /snap/anbox/current/bin/anbox-bridge.sh start

|

||||

|

||||

```

|

||||

|

||||

* to restart it:

|

||||

|

||||

|

||||

```

|

||||

sudo /snap/anbox/current/bin/anbox-bridge.sh restart

|

||||

|

||||

```

|

||||

|

||||

You may also need to install the dnsmasq package if you continue to have connectivity issues with Anbox, according to

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.linuxuprising.com/2018/07/anbox-how-to-install-google-play-store.html

|

||||

|

||||

作者:[Logix][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://plus.google.com/118280394805678839070

|

||||

[1]:https://anbox.io/

|

||||

[2]:https://geeks-r-us.de/2017/08/26/android-apps-auf-dem-linux-desktop/

|

||||

[3]:https://github.com/geeks-r-us/anbox-playstore-installer/

|

||||

[4]:https://github.com/geeks-r-us/anbox-playstore-installer/blob/master/install-playstore.sh

|

||||

[5]:https://docs.anbox.io/userguide/install.html

|

||||

[6]:https://github.com/anbox/anbox/issues/118#issuecomment-295270113

|

||||

@ -0,0 +1,68 @@

|

||||

Boost your typing with emoji in Fedora 28 Workstation

|

||||

======

|

||||

|

||||

|

||||

|

||||