mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-27 02:30:10 +08:00

Merge branch 'master' of https://github.com/runningwater/TranslateProject

This commit is contained in:

commit

da09e5286c

published

20140611 Little Known Apache Mesos Project Helps Mesosphere Raise $10M From Andreessen Horowitz.md20140624 Super Pi Brothers.md20140701 Get OpenVPN up and running, enjoy your privacy.md20140702 Wine 1.7.21 (Development Version) Released--Install in RedHat and Debian Based Systems.md20140709 How to set up two-factor authentication for SSH login on Linux.md20140711 How to Install Lightweight Budgie Desktop in Ubuntu 14.04.md20140711 How to simulate key press and mouse movement in Linux.md20140714 Linux slabtop command--Display Kernel Slab Cache Information.md20140714 Test read or write speed of usb and ssd drives with dd command on Linux.md20140718 Linux Kernel Testing and Debugging 4.md20140718 Linux Kernel Testing and Debugging 5.md20140718 Linux Kernel Testing and Debugging 6.md20140722 Budgie Desktop 5.1 Is a Superb New Desktop Environment for Conservative Users.md20140723 How to configure chroot SFTP in Linux.md20140729 Linux FAQs with Answers--How to check which fonts are used in a PDF document.md20140731 Top 10 Free Linux Games.md20140807 Linux FAQs with Answers--How to fix 'fatal error--jsoncpp or json or json.h--No such file or directory'.md20140811 StuntRally 2.4 Is the Most Advanced Free Racing Game on Linux.md20140818 How To Schedule A Shutdown In Ubuntu 14.04 [Quick Tip].md

sources

news

20140811 StuntRally 2.4 Is the Most Advanced Free Racing Game on Linux.md20140826 Munich Council--LiMux Demise Has Been Greatly Exaggerated.md20140828 GIMP 2.8.12 Released--Here' s How to Install it on Ubuntu.md20140829 Red Hat Shake-up, Desktop Users, and Outta Time.mdMunich Council- LiMux Demise Has Been Greatly Exaggerated.md

talk

20140610 How does the cloud affect the everyday linux user.md20140819 KDE Plasma 5--For those Linux users undecided on the kernel' s future.md20140826 Linus Torvalds Promotes Linux for Desktops, Embedded Computing.md20140826 Linus Torvalds Started a Revolution on August 25 1991 Happy Birthday Linux.md20140828 Interesting facts about Linux.md20140829 Linux Doesn't Need to Own the Desktop.md

tech

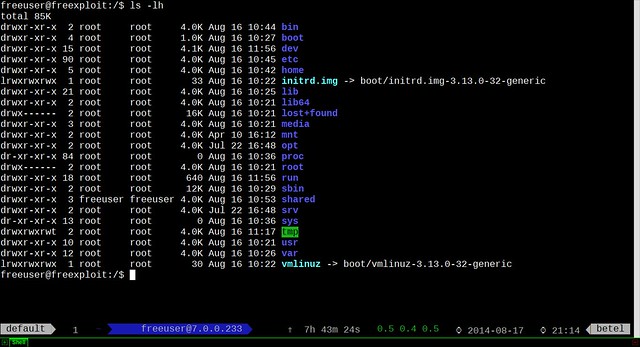

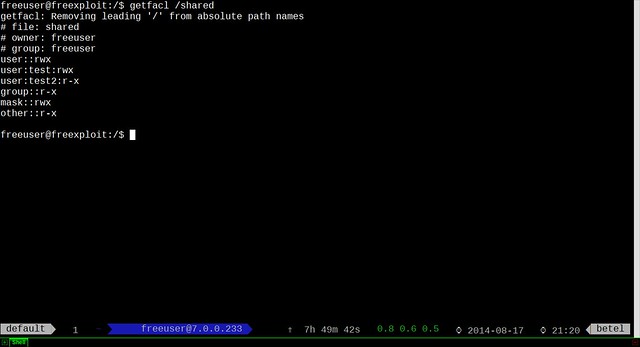

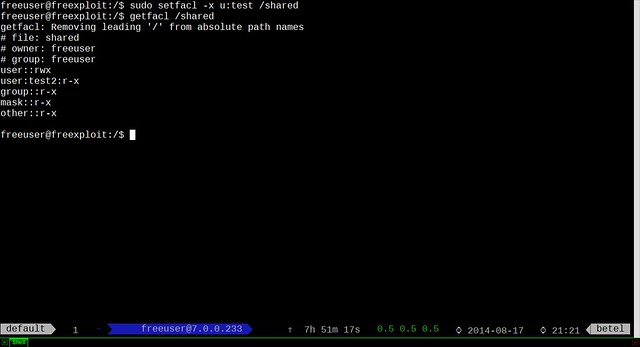

20140722 How to manage DigitalOcean VPS droplets from the command line on Linux.md20140808 How to install Puppet server and client on CentOS and RHEL.md20140811 Disable or Password Protect Single User Mode or RHEL ro CentOS ro 5.x ro 6.x.md20140813 How to remove file metadata on Linux.md20140818 How to configure Access Control Lists (ACLs) on Linux.md20140819 How to Encrypt Email in Linux.md20140825 Linux FAQs with Answers--How to enable Nux Dextop repository on CentOS or RHEL.md20140826 20 Postfix Interview Questions and Answers.md20140826 How to configure SNMPv3 on ubuntu 14.04 server.md20140826 Linux Tutorial--Install Ansible Configuration Management And IT Automation Tool.md20140828 How to create a site-to-site IPsec VPN tunnel using Openswan in Linux.md20140828 Setup Thin Provisioning Volumes in Logical Volume Management (LVM)--Part IV.md20140829 6 Interesting Funny Commands of Linux (Fun in Terminal) – Part II.md20140829 Fun in Linux Terminal--Play with Word and Character Counts.md

translated

talk

20140611 Little Known Apache Mesos Project Helps Mesosphere Raise $10M From Andreessen Horowitz.md20140825 China Will Change The Way All Software Is Bought And Sold.md20140826 Linus Torvalds is my hero, says 13 year old Zachary DuPont.md

tech

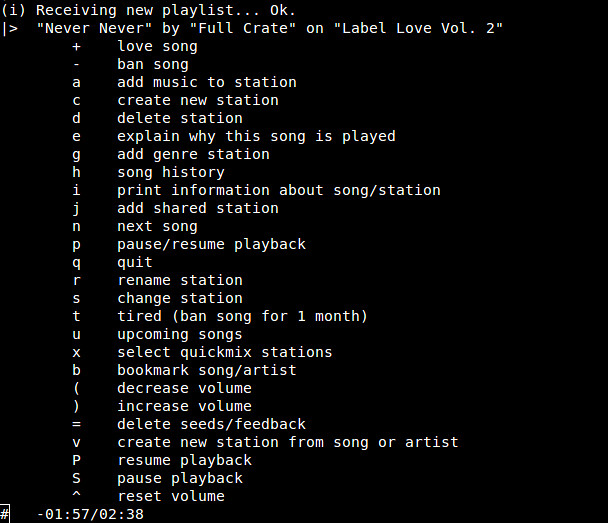

20140716 Linux FAQs with Answers--How to define PATH environment variable for sudo commands.md20140722 How to manage DigitalOcean VPS droplets from the command line on Linux.md20140808 How to install Puppet server and client on CentOS and RHEL.md20140811 Disable or Password Protect Single User Mode or RHEL ro CentOS ro 5.x ro 6.x.md20140813 How to remove file metadata on Linux.md20140815 How to manage a WiFi connection from the command line.md20140818 How to configure Access Control Lists (ACLs) on Linux.md20140819 Linux Systemd--Start or Stop or Restart Services in RHEL or CentOS 7.md20140825 Linux FAQs with Answers--How to fix 'failed to run aclocal--No such file or directory'.md20140825 Linux FAQs with Answers--How to install Shutter on CentOS.md20140825 Linux FAQs with Answers--How to show a MAC learning table of Linux bridge.md20140825 Linux Terminal--speedtest_cli checks your real bandwidth speed.mdHow to listen to Internet radio from the command line on Linux.md

@ -0,0 +1,50 @@

|

||||

鲜为人知的 Apache Mesos 项目帮助 Mesosphere 公司得到了千万美元投资

|

||||

================================================================================

|

||||

|

||||

|

||||

[Mesosphere][1],一家试图围绕鲜为人知的 Apache Mesos 项目开展商业活动的公司,刚刚从 Andreessen Horowitz 那里获得了 1000 万美元投资。以下是为什么这个项目能够吸引如此巨款的原因。

|

||||

|

||||

事实上 Mesos 这款自动扩放软件已经出现了五年了。据 Mesosphere 的CEO及联合创始人 Florian Leibert 所述,Mesos 已经在 Twitter 内已经管理了超过 50,000 个以上的CPU。此外, EBay, AirBnB, Netflix 还有 HubSpot 也是这款软件的使用者。

|

||||

|

||||

当那些互联网巨头发现 Mesos 的时候,这项技术却并不为大多数企业所知。但它确实可以满足一些公司在他们内部的数据中心上应用公共云的一些技术的需求。

|

||||

|

||||

Mesos 管理集群机器,根据需要自动扩放应用。它在每台机器上只依赖很少的软件,它由一个主调度程序协调。据 Leibert 所说,其CPU 占用为 0 并且几乎不消耗任何内存。在其工作的每台机器上的该软件会向调度程序报告关于虚拟机或者服务器的容量信息,接着调度程序向目标机器分派任务。

|

||||

|

||||

“如果一项任务终断并且没有返回任何结果,主调度程序知道如何重新调度它和它所用的资源在哪里。” Mesosphere 的资深副总裁 Matt Trifiro 说。

|

||||

|

||||

Mesos 能自动扩放一系列的任务,包括 Hadoop 数据库,Ruby on Rails 节点,以及 Cassandra 。

|

||||

|

||||

使用 Mesos 使得 Hubspot 削减了一半的 AWS(Amazon Web Services) 的费用支出,Liebert 说道。这是因为 Mesos 能够在目标机器之间有效地分配作业量的原因。

|

||||

|

||||

然而,Mesos 更有可能应用到那些试图真正地在内部创建一个类 AWS 环境的企业,一位来自 451 Research 的分析员 Jay Lyman 说。AWS 提供一些[自动扩放工具][3],但大多数公司对于在公共云基础设施上运行所有东西还是感到不安。与此同时,他们并不想着反对他们的开发者采用 AWS 那样的公共云中可用的优异性能。他们希望他们的私有云能集成这些可用的优点。

|

||||

|

||||

“如你所见,类似 AWS 风格的界面风格,与监控、命令、操控以及稳定性相融合,” Liebert 继续说道。

|

||||

|

||||

Mesos 既可以在一个私有云上也可以在 AWS 上运行,向企业提供最有效率地使用其内部云的方法,并在需要扩放时自动切换到 AWS 去。

|

||||

|

||||

但是,从另外的方面说 Mesos 也是有一些缺点的。它[并不能运行][4]任何 Windows 操作系统或者比较古老的应用比如说 SAP 软件。

|

||||

|

||||

不过,Lyman 说,“假如一个团队拥有长时期使用云的经历,他们大概早就对 Linux 操作系统情有独钟了。”

|

||||

|

||||

在将来,Mesosphere 能够支持 Windows 操作系统是很有可能的。最初,像 Puppet 和 Chef 这样的技术也只支持 Linux 操作系统,Lyman 表示。“这只是早期 Mesosphere 的特性。现在它还是不太成熟,” 他又说道。

|

||||

|

||||

Mesosphere 正瞄向大部分使用现代编程技术构建了越来越多的运行于 Linux 的应用的企业,以及 Twitter 和 Netflix 这种在初创时还没有 Mesos 类似技术的第一代 Web 2.0 公司。“这是早期两类最常见的客户概况,” Trifiro 说。

|

||||

|

||||

年终之前,Mesosphere 希望发布包含文档的商业产品,通过技术支持与颁发许可证来获得营收。Mesosphere 已开发一款名为 Marathon 的大规模扩放编制工具,并且支持 Docker 集成。它现在免费提供打包好的 Mesos 发行版,希望以此占有未来的市场。

|

||||

|

||||

Mesosphere 同时也正在为少数早期的顾客工作。它帮助 HubSpot 实施有关 Mesos 的搭建。

|

||||

|

||||

Mesosphere 在这个领域并不唯一。Rightscale,Scalr 以及现在归 Dell 所有的 Enstratius,全都提供了一些各种版本的扩放或云管理技术。Mesosphere 强调说,Mesos 及其公司自己开发的技术在单独机器中创建服务器集群方面的表现远胜于市场上的其他同类软件。来自 Andreessen 的新投资一定会帮助 Meos 获得更大的动力。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://thenewstack.io/little-known-apache-mesos-project-helps-mesosphere-raise-10m-from-andreessen/

|

||||

|

||||

译者:[SteveArcher](https://github.com/SteveArcher) 校对:[ wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[1]:http://mesosphere.io/

|

||||

[2]:http://mesos.apache.org/

|

||||

[3]:http://aws.amazon.com/autoscaling/

|

||||

[4]:http://mesosphere.io/learn/

|

||||

@ -1,23 +1,27 @@

|

||||

超级树莓派兄弟

|

||||

================================================================================

|

||||

我已经不象以前那样玩那么多游戏了。虽然之前我当然花费了生命里的无数时间在任天堂,SNES,或是之后在我电脑上的第一人称射击游戏(只在Linux下,谢谢),如今,我更愿意把空余时间花在我累积起来的许多其他非游戏爱好上。但是最近,我发现自己又抹掉了Wii手柄上的灰尘,这样就可以玩一玩我重新购买的NES和SNES游戏了。不过问题是,这些游戏需要用到一些特别的控制器,而且我已经有一个修改过的SNES控制器可以通过USB连接。这已经有足够的理由让我去寻找一个更合适的方案。当然,我也可以简单地接上三个甚至四个手柄,然后在客厅里面堆满游戏。但是我已经习惯于把我的CD和DVD都提取成文件,然后在中心媒体服务器上挑选着听或是看。所以如果每次我想换游戏的时候,不用起身去翻游戏卡带,那就完美了。当然,这意味着得使用模拟器。尽管之前我在一个改动过的Xbox上成功过,不过可惜它已经不在我手上了。然后我觉得一定有什么人已经在树莓派上实现过这种平台,结果是肯定的,在简单地搜索和一些命令之后,我在一个剩下的树莓派上搭起来一个完美的怀旧游戏中心。

|

||||

我已经不象以前那样玩那么多游戏了。虽然之前我当然花费了生命里的无数时间在任天堂,SNES,或是之后在我电脑上的第一人称射击游戏(只在Linux下,谢谢),如今,我更愿意把空余时间花在我累积起来的许多其他非游戏爱好上。

|

||||

|

||||

但是最近,我发现自己又抹掉了Wii手柄上的灰尘,这样就可以玩一玩我重新购买的NES和SNES游戏了。不过问题是,这些游戏需要用到一些特别的控制器,而且我已经有一个修改过的SNES控制器可以通过USB连接。这已经有足够的理由让我去寻找一个更合适的方案。

|

||||

|

||||

当然,我也可以简单地接上三个甚至四个手柄,然后在客厅里面堆满游戏。但是我已经习惯于把我的CD和DVD都提取成文件,然后在中心媒体服务器上挑选着听或是看。所以如果每次我想换游戏的时候,不用起身去翻游戏卡带,那就完美了。

|

||||

|

||||

当然,这意味着得使用模拟器。尽管之前我在一个改动过的Xbox上成功过,不过可惜它已经不在我手上了。然后我觉得一定有什么人已经在树莓派上实现过这种平台,结果是肯定的,在简单地搜索和一些命令之后,我在一个剩下的树莓派上搭起来一个完美的怀旧游戏中心。

|

||||

|

||||

树莓派项目的一个优点是,有大量的用户在使用相同的硬件。对我来说,这意味着我不用完整地参考别人的指引再根据自己的需求做出必要的改动,而只需要简单地完全按照别人的指导做就行了。在我这件事情上,我找到了RetroPie项目,它把你安装时需要用到的所有命令都包到了一个单一的大脚本中。在执行完后,你就完整地安装并配置好了RetroArch,它集成了所有的主流模拟器以及一个统一的配置方式,再加上一个在树莓派上开机启动的EmulationStation图形界面,通过它可以只用手柄就能方便地定位到你想玩的游戏。

|

||||

|

||||

### 安装RetroPie ###

|

||||

|

||||

在安装RetroPie之前,你可能需要确认一下你的Raspbian版本(树莓派默认的Linux发行版,这也是这个项目假设你在用的)是不是最新的,包括有没有新的固件。这只需要几个通用的`apt`命令。虽然,在这一步里你当然可以接个键盘到树莓派上,不过我觉得用`ssh`登录到树莓派上更方便。之后直接复制和粘贴下面的命令:

|

||||

在安装RetroPie之前,你可能需要确认一下你的Raspbian版本(树莓派默认的Linux发行版,这也是这个项目假设你在用的)是不是最新的,包括有没有新的固件。这只需要几个通用的`apt`命令。虽然,在这一步里你可以接个键盘到树莓派上,不过我觉得用`ssh`登录到树莓派上更方便。之后直接复制和粘贴下面的命令:

|

||||

|

||||

$ sudo apt-get update

|

||||

$ sudo apt-get -y upgrade

|

||||

|

||||

现在树莓派已经更新到最新了,再确认一下是否安装了git和dialog,然后可以通过git来下载RetroPie:

|

||||

|

||||

|

||||

$ sudo apt-get -y install git dialog

|

||||

$ cd

|

||||

$ git clone --depth=0

|

||||

↪git://github.com/petrockblog/RetroPie-Setup.git

|

||||

$ git clone --depth=0 git://github.com/petrockblog/RetroPie-Setup.git

|

||||

|

||||

执行完上边的命令后会创建一个RetroPie-Setup目录,里面有主要的安装脚本。之后你只需要进去这个目录,并运行安装脚本:

|

||||

|

||||

@ -29,9 +33,9 @@

|

||||

|

||||

|

||||

|

||||

#### 图1. RetroPie安装菜单 ####

|

||||

*图1. RetroPie安装菜单*

|

||||

|

||||

在vanilla Raspbian固件版本中,这一步会需要很长时间,因为有大量不同的包需要下载和安装。在安装完成之后,返回在RetroPie安装主界面中,在主菜单里选择SETUP,在之后的二级菜单里,你可以调整设置,例如是否开机启动EmulationStation(推荐打开)以及是否允许欢迎界面。在我这里,我两个都允许了,因为我希望这个设备是一个独立的模拟游戏机。不过你需要了解的是,如果你确实允许了EmulationStation开机自动启动,你仍然可以ssh登录到机器上然后执行原始的RetroPie安装配置脚本来改变这个设置。

|

||||

在vanilla Raspbian固件版本中,这一步会需要很长时间,因为有大量不同的包需要下载和安装。在安装完成之后,返回在RetroPie安装主界面中,在主菜单里选择SETUP,在之后的二级菜单里,你可以调整设置,例如是否开机启动EmulationStation(推荐打开)以及是否允许欢迎界面。在我这里,我两个都允许了,因为我希望这个设备是一个独立的模拟游戏机。不过你需要了解的是,即便你打开了EmulationStation开机自动启动,你仍然可以ssh登录到机器上然后执行原始的RetroPie安装配置脚本来改变这个设置。

|

||||

|

||||

### 添加ROM ###

|

||||

|

||||

@ -46,11 +50,11 @@

|

||||

|

||||

### EmulationStation ###

|

||||

|

||||

重启完之后,当看到EmulationStation界面时应该会很高兴,之后它会提示你设定控制杆,游戏手柄,或键盘按键,这样就可以控制EmulationStation菜单了。不过注意一下,这并不会影响手柄在游戏里的按键定义,只是用于控制EmulationStation菜单的。在设定完手柄后,你应该可以按下向右或向左方向键来切换不同的模拟器菜单了。在我这里,我将会在游戏里用到手柄上的所有按钮,所以我特别将另一个键盘上的键映射到菜单功能,这样在我玩完一个游戏后,不用重启树莓派就可以退出来。

|

||||

重启完之后,你会很高兴地看到EmulationStation界面,之后它会提示你设定控制杆,游戏手柄,或键盘按键,这样就可以控制EmulationStation菜单了。不过注意一下,这并不会影响手柄在游戏里的按键定义,只是用于控制EmulationStation菜单的。在设定完手柄后,你应该可以按下向右或向左方向键来切换不同的模拟器菜单了。在我这里,我将会在游戏里用到手柄上的所有按钮,所以我特别将另一个键盘上的键映射到菜单功能,这样在我玩完一个游戏后,不用重启树莓派就可以退出来。

|

||||

|

||||

EmulationStation只会显示已经侦测到ROM的模拟器,所以,如果你还没有拷贝ROM的话,得先做这件事情,然后可能还得重启一下才会有效果。而且,默认情况下,你的手柄没有为任何游戏做配置,但是,如果你在EmulationStation里一直按向右键足够多次以后,会弹出输入设定界面,你可以在里面映射手柄按键。有一个亮点是,当你设定好按键后,它会相应地应用到其他模拟器中。

|

||||

|

||||

就是这些了。在这之后,你可以浏览你收藏的各种游戏,然后按下绑定到确定的那个按键开始游戏。一开始我还担心树莓派可能不够强劲来玩我的游戏,但是直到现在,我试过地所有游戏都可以完美地运行。

|

||||

就是这些了。在这之后,你可以浏览你收藏的各种游戏,然后按下绑定到“确定”的那个按键开始游戏。一开始我还担心树莓派可能不够强劲来玩我的游戏,但是直到现在,我试过地所有游戏都可以完美地运行。

|

||||

|

||||

### 资源 ###

|

||||

|

||||

@ -62,7 +66,7 @@ RetroPie安装文档:[https://github.com/petrockblog/RetroPie-Setup][2]

|

||||

|

||||

via: http://www.linuxjournal.com/content/super-pi-brothers

|

||||

|

||||

译者:[zpl1025](https://github.com/zpl1025) 校对:[校对者ID](https://github.com/校对者ID)

|

||||

译者:[zpl1025](https://github.com/zpl1025) 校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,43 +1,42 @@

|

||||

2q1w2007翻译中

|

||||

搭建并运行OpenVPN,享受你的隐私生活

|

||||

十步搭建 OpenVPN,享受你的隐私生活

|

||||

================================================================================

|

||||

|

||||

|

||||

> 我们支持保护隐私,不为我们有自己的秘密需要保护,只是我们认为保护隐私应该成为一项基本人权。所以我们坚信无论谁在什么时候行使这项权利,都应该不受拘束的获取必须的工具和服务。OpenVPN就是这样一种服务并且有多种工具(客户端) 来让我们利用并享受这种服务。

|

||||

> 我们支持保护隐私,不为我们有自己的秘密需要保护,只是我们认为保护隐私应该成为一项基本人权。所以我们坚信无论谁在什么时候行使这项权利,都应该不受拘束的获取必须的工具和服务。OpenVPN就是这样一种服务并且有多种工具(客户端) 来让我们利用并享受这种服务。

|

||||

|

||||

通过与一个[OpenVPN][1]服务器建立连接,我们基本上在我们的设备和远端运行OpenVPN的主机之间建立了一个安全的通信通道。尽管在两个端点之间的通信可能被截获,但是信息是经过高强度加密的所以实际上它对于攻击者没什么用。OpenVPN除了扮演加密通信通道的调解人,我们也可以通过设置使服务器扮演因特网网管的角色。通过这种方式,我们可以连接任何不安全的Wifi,然后迅速的链接到远程的OpenVPN服务器并在不需要考虑偷窥的人或者无聊的管理员的情况下运行需要上网的程序。(注意:OpenVPN服务器旁还是需要信任的管理员的。)

|

||||

通过与一个[OpenVPN][1]服务器建立连接,我们基本上在我们的设备和远端运行OpenVPN的主机之间建立了一个安全的通信通道。尽管在两个端点之间的通信可能被截获,但是信息是经过高强度加密的所以实际上它对于攻击者没什么用。OpenVPN除了扮演加密通信通道的调解人,我们也可以通过设置使服务器扮演互联网网关的角色。通过这种方式,我们可以**连接任何不安全的Wifi**,然后迅速的链接到远程的OpenVPN服务器,然后在不需要考虑偷窥的人或者无聊的管理员的情况下运行需要上网的程序。(注意:OpenVPN服务器旁还是需要信任的管理员的。)

|

||||

|

||||

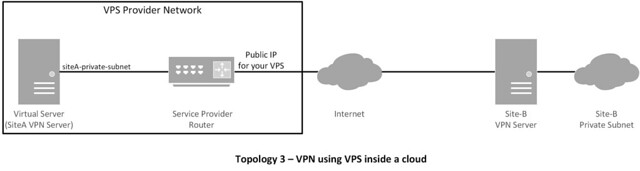

这篇文章将一步一步的教会你如何在[Ubuntu Server 14.04 LTS][2]上安装OpenVPN。OpenVPN所在的主机可能是云上的一台VPS,一台在我们家里某台电脑上运行的虚拟机,或者是一个老到你都快忘了的设备。

|

||||

这篇文章将一步一步的教会你如何在[Ubuntu Server 14.04 LTS][2]上安装OpenVPN。OpenVPN所在的主机可能是云上的一台VPS,一台在我们家里某台电脑上运行的虚拟机,或者是一个老到你都快忘了的设备。

|

||||

|

||||

### 第一步 -- 准备系统 ###

|

||||

### 第一步 准备系统 ###

|

||||

|

||||

我们需要Ubuntu Server主机的一个命令行终端,比如通过SSH从远程访问它。首先需要更新它的本地仓库数据:

|

||||

我们需要Ubuntu Server主机的一个命令行终端,比如通过SSH从远程访问它。首先需要更新它的本地仓库数据:

|

||||

|

||||

sub0@delta:~$ sudo apt-get update

|

||||

|

||||

进行操作系统和已安装的包的升级,输入:

|

||||

进行操作系统和已安装的包的升级,输入:

|

||||

|

||||

sub0@delta:~$ sudo apt-get dist-upgrade

|

||||

|

||||

如果升级了新内核,那就需要重启。当更新完成后,就该安装OpenVPN了:

|

||||

如果升级了新内核,那就需要重启。当更新完成后,就该安装OpenVPN了:

|

||||

|

||||

sub0@delta:~$ sudo apt-get -y install openvpn easy-rsa dnsmasq

|

||||

|

||||

注意,我们用apt-get安装了三个包:

|

||||

注意,我们用apt-get安装了三个包:

|

||||

|

||||

- openvpn提供了OpenVPN的核心

|

||||

- easy-rsa包含了一些有用的密钥管理脚本

|

||||

- dnsmasq是当我们的OpenVPN所在的主机将扮演客户端的路由器时会用到的域名服务器

|

||||

|

||||

### 第二步 -- 生成证书和私钥 ###

|

||||

### 第二步 生成证书和私钥 ###

|

||||

|

||||

这是安装OpenVPN中最重要和最关键的一步,目的是建立公钥基础设施(PKI)。包括如下内容:

|

||||

这是安装OpenVPN中最重要和最关键的一步,目的是建立公钥基础设施(PKI)。包括如下内容:

|

||||

|

||||

- 为OpenVPN服务器创建一个证书(公钥)和一个私钥

|

||||

- 为每个OpenVPN客户端创建证书和私钥

|

||||

- 建立一个证书颁发机构(CA)并创建证书和私钥。这个私钥用来给OpenVPN服务器和客户端的证书签名

|

||||

|

||||

从最后一个做起,我们先建立一个目录:

|

||||

从最后一个做起,我们先建立一个目录:

|

||||

|

||||

sub0@delta:~$ sudo mkdir /etc/openvpn/easy-rsa

|

||||

|

||||

@ -45,11 +44,11 @@

|

||||

|

||||

sub0@delta:~$ sudo cp -r /usr/share/easy-rsa/* /etc/openvpn/easy-rsa

|

||||

|

||||

在我们创建CA的私钥之前,我们先编辑/etc/openvpn/easy-rsa/vars(我们喜欢用nano,不过这只是我们的喜好,你爱用什么用什么):

|

||||

在我们创建CA的私钥之前,我们先编辑/etc/openvpn/easy-rsa/vars(我们喜欢用nano编辑器,不过这只是我们的喜好,你爱用什么用什么):

|

||||

|

||||

sub0@delta:~$ sudo nano /etc/openvpn/easy-rsa/vars

|

||||

|

||||

在文件的尾部,我们设置主证书和密钥的信息:

|

||||

在文件的尾部,我们设置主证书和密钥的信息:

|

||||

|

||||

export KEY_COUNTRY="GR"

|

||||

export KEY_PROVINCE="Central Macedonia"

|

||||

@ -61,9 +60,9 @@

|

||||

export KEY_OU="Parabing"

|

||||

export KEY_ALTNAMES="VPNsRUS"

|

||||

|

||||

你可以根据自己的情况设置不同的值。特别注意最后KEY_ALTNAMES这一行,尽管这不是原本vars文件中有的但是我们还是把它加到文件的尾部,不然build-ca脚本会运行失败。

|

||||

你可以根据自己的情况设置不同的值。特别注意最后KEY_ALTNAMES这一行,尽管这不是原本vars文件中有的但是我们还是把它加到文件的尾部,不然build-ca脚本会运行失败。

|

||||

|

||||

保存更改,我们得按[CTRL+O]然后按[Enter]。想退出nano,请按[CTRL+X]。现在,我们要获得root访问权限,继续生成主证书和私钥(LCTT译注:请注意命令行账户发生了改变):

|

||||

保存更改,我们得按[CTRL+O]然后按[Enter]。想退出nano,请按[CTRL+X]。现在,我们要获得root访问权限,继续生成主证书和私钥(LCTT译注:请注意命令行账户发生了改变):

|

||||

|

||||

sub0@delta:~$ sudo su

|

||||

root@delta:/home/sub0# cd /etc/openvpn/easy-rsa

|

||||

@ -93,11 +92,11 @@

|

||||

Email Address [nobody@parabing.com]:

|

||||

root@delta:/etc/openvpn/easy-rsa#

|

||||

|

||||

在我们的实例中,所有问题的答案都选择了默认的。在运行了build-ca脚本后,我们就获得了主证书文件(keys/ca.crt)和对应的私钥(keys/ca.key)。私钥必须不计代价的保密。

|

||||

在我们的例子中,所有问题的答案都选择了默认的。在运行了build-ca脚本后,我们就获得了主证书文件(keys/ca.crt)和对应的私钥(keys/ca.key)。私钥必须不计代价的保密。

|

||||

|

||||

### 第三步 -- 生成OpenVPN服务器的证书和私钥 ###

|

||||

### 第三步 生成OpenVPN服务器的证书和私钥 ###

|

||||

|

||||

在我们为OpenVPN服务器生成证书和密钥之前,我们得给他起个名。我决定把它叫"delta",然后运行build-key-server脚本来获取证书和密钥:

|

||||

在我们为OpenVPN服务器生成证书和密钥之前,我们得给他起个名。我决定把它叫"delta",然后运行build-key-server脚本来获取证书和密钥:

|

||||

|

||||

root@delta:/etc/openvpn/easy-rsa# sh build-key-server delta

|

||||

Generating a 1024 bit RSA private key

|

||||

@ -145,11 +144,11 @@

|

||||

Data Base Updated

|

||||

root@delta:/etc/openvpn/easy-rsa#

|

||||

|

||||

当脚本成功运行完的时候,我们就得到了服务器的证书(keys/delta.crt)和私钥(keys/delta.key)。注意服务器证书被CA的私钥签名了。

|

||||

当脚本成功运行完的时候,我们就得到了服务器的证书(keys/delta.crt)和私钥(keys/delta.key)。注意服务器证书被CA的私钥签名了。

|

||||

|

||||

### 第四步 -- 生成Diffie-Hellman参数 ###

|

||||

### 第四步 生成Diffie-Hellman参数 ###

|

||||

|

||||

幸亏有了Diffie-Hellman参数,我们才能在不安全的通信通道里安全的交换密钥。为了生成它我们需要键入:

|

||||

幸亏有了Diffie-Hellman参数,我们才能在不安全的通信通道里安全的交换密钥。为了生成它我们需要键入:

|

||||

|

||||

root@delta:/etc/openvpn/easy-rsa# sh build-dh

|

||||

Generating DH parameters, 2048 bit long safe prime, generator 2

|

||||

@ -162,7 +161,7 @@

|

||||

.......................................++*++*++*

|

||||

root@delta:/etc/openvpn/easy-rsa#

|

||||

|

||||

证书,私钥和包含Diffie-Hellman参数的文件已生成,它们都储存在/etc/openvpn/easy-rsa/keys,所以我们到现在为止已经有如下五个文件了:

|

||||

证书,私钥和包含Diffie-Hellman参数的文件已生成,它们都储存在/etc/openvpn/easy-rsa/keys,所以我们到现在为止已经有如下五个文件了:

|

||||

|

||||

1. **ca.crt** – 证书颁发机构(CA)的证书

|

||||

2. **ca.key** – CA的私钥

|

||||

@ -177,9 +176,9 @@

|

||||

root@delta:/etc/openvpn/easy-rsa/keys# cd ..

|

||||

root@delta:/etc/openvpn/easy-rsa#

|

||||

|

||||

### 第五步 -- 为OpenVPN客户端生成证书和私钥 ###

|

||||

### 第五步 为OpenVPN客户端生成证书和私钥 ###

|

||||

|

||||

试想我们的笔记本要连接OpenVPN服务器。为了实现这个很常见的情况,我们首先需要为客户端(比如:我们的笔记本)生成证书和私钥,在/etc/openvpn/easy-rsa有一个脚本帮我们完成这项工作:

|

||||

试想我们的笔记本要连接OpenVPN服务器。为了实现这个很常见的情况,我们首先需要为客户端(比如:我们的笔记本)生成证书和私钥,在/etc/openvpn/easy-rsa有一个脚本帮我们完成这项工作:

|

||||

|

||||

root@delta:/etc/openvpn/easy-rsa# source vars

|

||||

NOTE: If you run ./clean-all, I will be doing a rm -rf on /etc/openvpn/easy-rsa/keys

|

||||

@ -229,7 +228,7 @@

|

||||

Data Base Updated

|

||||

root@delta:/etc/openvpn/easy-rsa#

|

||||

|

||||

我们为密钥选取的名字是"laptop",当build-key脚本运行完之后,我们就得到了在keys/laptop.crt的证书和在keys/laptop.key的私钥。有了这两个文件和CA的证书,我们得把这三个文件拷贝到用户有(比如用户sub0)权访问的地方。比如我们可以在用户的home目录中新建一个目录并把三个文件拷贝过去:

|

||||

我们为密钥选取的名字是"laptop",当build-key脚本运行完之后,我们就得到了在keys/laptop.crt的证书和在keys/laptop.key的私钥。有了这两个文件和CA的证书,我们得把这三个文件拷贝到用户有(比如用户sub0)权访问的地方。比如我们可以在用户的home目录中新建一个目录并把三个文件拷贝过去:

|

||||

|

||||

root@delta:/etc/openvpn/easy-rsa# mkdir /home/sub0/ovpn-client

|

||||

root@delta:/etc/openvpn/easy-rsa# cd keys

|

||||

@ -238,11 +237,11 @@

|

||||

root@delta:/etc/openvpn/easy-rsa/keys# cd ..

|

||||

root@delta:/etc/openvpn/easy-rsa#

|

||||

|

||||

ovpn-client文件夹必须安全的拷贝到我们的笔记本电脑上。我们可以给多个客户端分发这三个文件。当然了,等我们需要一个不一样的证书-私钥对的时候只要再次运行build-key脚本即可。

|

||||

ovpn-client文件夹必须安全的拷贝到我们的笔记本电脑上。我们可以给多个客户端分发这三个文件。当然了,等我们需要一个不一样的证书-私钥对的时候只要再次运行build-key脚本即可。

|

||||

|

||||

### 第六步 -- OpenVPN服务器设置 ###

|

||||

|

||||

等会我们的OpenVPN服务器就要启动并运行了。但是开始的时候,我们需要更改一些设置。在/usr/share/doc/openvpn/examples/sample-config-files中有一个示例配置文件,它很适合我们的教程,这个文件叫server.conf.gz:

|

||||

等会我们的OpenVPN服务器就要启动并运行了。但是开始的时候,我们需要更改一些设置。在/usr/share/doc/openvpn/examples/sample-config-files中有一个示例配置文件,它很适合我们的教程,这个文件叫server.conf.gz:

|

||||

|

||||

root@delta:/etc/openvpn/easy-rsa# cd /etc/openvpn

|

||||

root@delta:/etc/openvpn# cp /usr/share/doc/openvpn/examples/sample-config-files/server.conf.gz .

|

||||

@ -250,18 +249,18 @@ ovpn-client文件夹必须安全的拷贝到我们的笔记本电脑上。我们

|

||||

root@delta:/etc/openvpn# mv server.conf delta.conf

|

||||

root@delta:/etc/openvpn#

|

||||

|

||||

如你所见,我们把server.conf.gz拷贝到/etc/openvpn,解压并重命名到delta.conf。你可以按个人喜好给OpenVPN服务器配置文件取名字,但是它必须有".conf"扩展名。我们现在用nano打开配置文件:

|

||||

如你所见,我们把server.conf.gz拷贝到/etc/openvpn,解压并重命名到delta.conf。你可以按个人喜好给OpenVPN服务器配置文件取名字,但是它必须有".conf"扩展名。我们现在用nano打开配置文件:

|

||||

|

||||

root@delta:/etc/openvpn# nano delta.conf

|

||||

|

||||

下面是我们应该做出的更改。

|

||||

|

||||

- 首先,定位到这一行

|

||||

- 首先,定位到这一行

|

||||

|

||||

cert server.crt

|

||||

key server.key

|

||||

|

||||

确认OpenVPN服务器证书和私钥的位置和名称,在我们的例子中,这两行要改成

|

||||

确认OpenVPN服务器证书和私钥的位置和名称,在我们的例子中,这两行要改成

|

||||

|

||||

cert delta.crt

|

||||

key delta.key

|

||||

@ -274,18 +273,18 @@ ovpn-client文件夹必须安全的拷贝到我们的笔记本电脑上。我们

|

||||

|

||||

dh dh2048.pem

|

||||

|

||||

- 在配置文件的末尾,我们添加下面这两行:

|

||||

- 在配置文件的末尾,我们添加下面这两行:

|

||||

|

||||

push "redirect-gateway def1"

|

||||

push "dhcp-option DNS 10.8.0.1"

|

||||

|

||||

最后这两行指示客户端用OpenVPN作为默认的网关,并用10.8.0.1作为DNS服务器。注意10.8.0.1是OpenVPN启动时自动创建的隧道接口的IP。如果客户用别的域名解析服务,那么我们就得提防不安全的DNS服务器。为了避免这种泄露,我们建议所有OpenVPN客户端使用10.8.0.1作为DNS服务器。

|

||||

最后这两行指示客户端用OpenVPN作为默认的网关,并用10.8.0.1作为DNS服务器。注意10.8.0.1是OpenVPN启动时自动创建的隧道接口的IP。如果客户用别的域名解析服务,那么我们就得提防不安全的DNS服务器。为了避免这种泄露,我们建议所有OpenVPN客户端使用10.8.0.1作为DNS服务器。

|

||||

|

||||

我们以这种方式来开始运行OpenVPN服务器:

|

||||

|

||||

root@delta:/etc/openvpn# service openvpn start

|

||||

|

||||

默认的,OpenVPN服务器监听1194/UDP端口。一种查看的方法是使用netstat工具:

|

||||

默认的,OpenVPN服务器监听1194/UDP端口。一种查看的方法是使用netstat工具:

|

||||

|

||||

root@delta:/etc/openvpn# netstat -anup

|

||||

Active Internet connections (servers and established)

|

||||

@ -297,11 +296,11 @@ ovpn-client文件夹必须安全的拷贝到我们的笔记本电脑上。我们

|

||||

udp6 0 0 :::60622 :::* 555/dhclient

|

||||

udp6 0 0 :::53 :::* 2756/dnsmasq

|

||||

|

||||

看起来一切运行的不错,但是我们还没设置DNS服务器呢。

|

||||

看起来一切运行的不错,但是我们还没设置DNS服务器呢。

|

||||

|

||||

### 第七步 -- 为OpenVPN客户端搭建DNS ###

|

||||

### 第七步 为OpenVPN客户端搭建DNS ###

|

||||

|

||||

这就是为什么我们要安装dnsmasq,打开它的配置文件。

|

||||

这就是为什么我们要安装dnsmasq的原因,打开它的配置文件。

|

||||

|

||||

root@delta:/etc/openvpn# nano /etc/dnsmasq.conf

|

||||

|

||||

@ -321,13 +320,13 @@ ovpn-client文件夹必须安全的拷贝到我们的笔记本电脑上。我们

|

||||

|

||||

bind-interfaces

|

||||

|

||||

为了让dnsmasq应用这些更改,我们重启它:

|

||||

为了让dnsmasq应用这些更改,我们重启它:

|

||||

|

||||

root@delta:/etc/openvpn# service dnsmasq restart

|

||||

* Restarting DNS forwarder and DHCP server dnsmasq [ OK ]

|

||||

root@delta:/etc/openvpn#

|

||||

|

||||

现在,dnamasq在本地回环(lo)和隧道(tun0)接口监听DNS请求。netstat的输出看起来是这个样子的:

|

||||

现在,dnamasq在本地回环(lo)和隧道(tun0)接口监听DNS请求。netstat的输出看起来是这个样子的:

|

||||

|

||||

root@delta:/etc/openvpn# netstat -anup

|

||||

Active Internet connections (servers and established)

|

||||

@ -339,13 +338,13 @@ ovpn-client文件夹必须安全的拷贝到我们的笔记本电脑上。我们

|

||||

udp 0 0 0.0.0.0:68 0.0.0.0:* 638/dhclient

|

||||

udp6 0 0 :::39148 :::* 638/dhclient

|

||||

|

||||

### 第八步 -- 路由功能 ###

|

||||

### 第八步 路由功能 ###

|

||||

|

||||

我们希望在一些"盒子"或虚拟机上运行的OpneVPN有路由的功能,这意味着要开启IP转发.为了打开它,我们用root账户键入:

|

||||

我们希望在一些机器或虚拟机上运行的OpneVPN有路由的功能,这意味着要开启IP转发。为了打开它,我们用root账户键入:

|

||||

|

||||

root@delta:/etc/openvpn# echo "1" > /proc/sys/net/ipv4/ip_forward

|

||||

|

||||

为了让这个设置重启也好用,我们编辑 /etc/sysctl.conf:

|

||||

为了让这个设置重启也好用,我们编辑 /etc/sysctl.conf:

|

||||

|

||||

root@delta:/etc/openvpn# nano /etc/sysctl.conf

|

||||

|

||||

@ -365,7 +364,7 @@ ovpn-client文件夹必须安全的拷贝到我们的笔记本电脑上。我们

|

||||

root@delta:/etc/openvpn# iptables -t nat -A POSTROUTING -s 10.8.0.0/24 -o eth0 -j MASQUERADE

|

||||

root@delta:/etc/openvpn#

|

||||

|

||||

当然了,我们希望每次Ubuntu启动的时候,这些规则都好用。所以我们得把它们加到/etc/rc.local里:

|

||||

当然了,我们希望每次Ubuntu启动的时候,这些规则都好用。所以我们得把它们加到/etc/rc.local里:

|

||||

|

||||

#!/bin/sh -e

|

||||

#

|

||||

@ -393,11 +392,11 @@ ovpn-client文件夹必须安全的拷贝到我们的笔记本电脑上。我们

|

||||

|

||||

service dnsmasq restart

|

||||

|

||||

> 这非常重要:在系统启动时,dnsmasq会尝试在OpenVPN之前启动。但是OpenVPN启动之前是没有隧道(tun0)接口的,所以dnsmasq自然就挂了。过了一阵,当/etc/rc.local读到隧道(tun0)接口出现时,它会在这时重启dnsmasq然后就一切如你所愿了。

|

||||

> 这非常重要:在系统启动时,dnsmasq会尝试在OpenVPN之前启动。但是OpenVPN启动之前是没有隧道(tun0)接口的,所以dnsmasq自然就挂了。过了一阵,当/etc/rc.local读到隧道(tun0)接口出现时,它会在这时重启dnsmasq然后就一切如你所愿了。

|

||||

|

||||

### 第九步 -- 客户端设置 ###

|

||||

### 第九步 客户端设置 ###

|

||||

|

||||

在第五步,我们在用户的home目录里我们建立了ovpn-client文件夹(在我们的例子里是/home/sub0)。在哪里有CA的证书和客户端证书和私钥。现在只缺客户端配置文件了,在/usr/share/doc/openvpn/examples/sample-config-files有一个示例配置文件:

|

||||

在第五步,我们在用户的home目录里我们建立了ovpn-client文件夹(在我们的例子里是/home/sub0)。在哪里有CA的证书和客户端证书和私钥。现在只缺客户端配置文件了,在/usr/share/doc/openvpn/examples/sample-config-files有一个示例配置文件:

|

||||

|

||||

root@delta:/etc/openvpn# exit

|

||||

exit

|

||||

@ -405,19 +404,19 @@ service dnsmasq restart

|

||||

sub0@delta:~/ovpn-client$ cp /usr/share/doc/openvpn/examples/sample-config-files/client.conf .

|

||||

sub0@delta:~/ovpn-client$

|

||||

|

||||

我们编需要辑client.conf,定位到这一行:

|

||||

我们需要编辑client.conf,定位到这一行:

|

||||

|

||||

remote my-server-1 1194

|

||||

|

||||

"my-server-1"是一个占位符,现在我们要把它换成我们自己服务器的公网域名或IP。如果我们已经给服务器分配域名了,那只要把它填到my-server-1的位置。如果没有域名,那么得获取公网IP。如何获取呢?一种方式是键入下列命令:

|

||||

"my-server-1"是一个占位符,现在我们要把它换成我们自己服务器的公网域名或IP。如果我们已经给服务器分配域名了,那只要把它填到my-server-1的位置。如果没有域名,那么得获取公网IP。如何获取呢?一种方式是键入下列命令:

|

||||

|

||||

sub0@delta:~/ovpn-client$ curl ipecho.net/plain ; echo

|

||||

|

||||

(如果不是一个数字的IP地址, 或是发生错误,那就等会再试。)所以我们现在知道我们的服务器公网IP了,但是它是动态的还是静态的呢?当我们把服务器架设在家或者办公室的时候,极有可能就是动态IP。如果是动态IP的话,可以用免费的动态域名服务(DDNS),比如[No-IP](http://www.noip.com)的服务。如果使用No-IP,假设我们选择了免费的域名dnsalias.net,那么这一行应该像这样填写:

|

||||

(如果不是一个数字的IP地址, 或是发生错误,那就等会再试。)所以我们现在知道我们的服务器公网IP了,但是它是动态的还是静态的呢?当我们把服务器架设在家或者办公室的时候,极有可能就是动态IP。如果是动态IP的话,可以用免费的动态域名服务(DDNS),比如[No-IP](http://www.noip.com)的服务。如果使用No-IP,假设我们选择了免费的域名dnsalias.net,那么这一行应该像这样填写:

|

||||

|

||||

remote ovpn.dnsalias.net 1194

|

||||

|

||||

"ovpn"是我们给服务器起的主机名。如果我们的服务器在云上,那么它可能有一个静态IP。如果有静态IP的话,那么这一行应该是这样的:

|

||||

"ovpn"是我们给服务器起的主机名。如果我们的服务器在云上,那么它可能有一个静态IP。如果有静态IP的话,那么这一行应该是这样的:

|

||||

|

||||

remote 1.2.3.4 1194

|

||||

|

||||

@ -426,56 +425,56 @@ service dnsmasq restart

|

||||

cert client.crt

|

||||

key client.key

|

||||

|

||||

在我们的例子里,客户端的证书和密钥的名字分别是laptop.crt和laptop.key所以我们的client.conf要包含下面这两行:

|

||||

在我们的例子里,客户端的证书和密钥的名字分别是laptop.crt和laptop.key所以我们的client.conf要包含下面这两行:

|

||||

|

||||

cert laptop.crt

|

||||

key laptop.key

|

||||

|

||||

在确认保存client.conf的修改之后,我们需要安全的把整个ovpn-client文件夹传输到客户端。一种方式是使用scp命令(安全拷贝或在SSH上拷贝)。另一种方式由优秀而免费的软件FileZilla提供,使用在SSH上运行的FTP(SFTP)。

|

||||

在确认保存client.conf的修改之后,我们需要安全的把整个ovpn-client文件夹传输到客户端。一种方式是使用scp命令(安全拷贝或在SSH上拷贝)。另一种方式由优秀而免费的软件FileZilla提供,使用在SSH上运行的FTP(SFTP)。

|

||||

|

||||

### 第十步 -- 连接并测试 ###

|

||||

### 第十步 连接并测试 ###

|

||||

|

||||

|

||||

|

||||

|

||||

所以我们到底怎么样才能链接到远程的OpenVPN服务器的呢?它完全取决于我们手中现有的设备类型,当然也取决于所运行的操作系统。我们将在四种不同类别的操作系统上运行,Linux, Windows, OS X和iOS/Android。注意,无论在什么设备和系统上,我们都得在OpenVPN服务器的本地网络外才能连接成功。此外,如果在服务器前有防火墙,我们需要增加一条这样的规则:

|

||||

所以我们到底怎么样才能链接到远程的OpenVPN服务器的呢?它完全取决于我们手中现有的设备类型,当然也取决于所运行的操作系统。我们将在四种不同类别的操作系统上运行,Linux, Windows, OS X和iOS/Android。注意,无论在什么设备和系统上,我们都得在OpenVPN服务器的本地网络外才能连接成功。此外,如果在服务器前有防火墙,我们需要增加一条这样的规则:

|

||||

|

||||

*把所有从1194/UDP端口收到的包转发到服务器公网接口的1194/UDP端口。*

|

||||

|

||||

这是一个简单的防火墙规则。事不宜迟,让我们与我们难以置信的OpenVPN服务器建立第一个连接吧。

|

||||

这是一个简单的防火墙规则。事不宜迟,让我们与我们难以置信的OpenVPN服务器建立第一个连接吧。

|

||||

|

||||

**Linux**: 我们只需安装openvpn包。一种连接远程OpenVPN服务器的方式是新建一个终端,切换到ovpn-client文件夹并以root身份或使用sudo来键入下列命令:

|

||||

**Linux**: 我们只需安装openvpn包。一种连接远程OpenVPN服务器的方式是新建一个终端,切换到ovpn-client文件夹并以root身份或使用sudo来键入下列命令:

|

||||

|

||||

/usr/sbin/openvpn --config client.conf

|

||||

|

||||

任何时候,如果我们需要终止OpenVPN,按[CTRL+C]就行了。

|

||||

任何时候如果我们需要终止OpenVPN,按[CTRL+C]就行了。

|

||||

|

||||

**Windows**: 有一个免费的OpenVPN客户端软件叫做[OpenVPN Desktop Client][3]。 配置文件client.conf需要重命名成client.ovpn,这就是我们需要提供给OpenVPN Desktop Client的文件。程序会读取client.ovpn并给OpenVPN服务器生成一个新的连接配置。

|

||||

**Windows**: 有一个免费的OpenVPN客户端软件叫做[OpenVPN Desktop Client][3]。 配置文件client.conf需要重命名成client.ovpn,这就是我们需要提供给OpenVPN Desktop Client的文件。程序会读取client.ovpn并给OpenVPN服务器生成一个新的连接配置。

|

||||

|

||||

|

||||

|

||||

**OS X**: [tunnelblick][4]是一款可以连接OpenVPN的免费开源OS X软件。[Viscosity][5]也可以但它是商业软件,不过我们喜欢。Viscosity会读取client.conf并给OpenVPN服务器生成一个新的连接配置。

|

||||

**OS X**: [tunnelblick][4]是一款可以连接OpenVPN的免费开源OS X软件。[Viscosity][5]也可以但它是商业软件,不过我们喜欢。Viscosity会读取client.conf并给OpenVPN服务器生成一个新的连接配置。

|

||||

|

||||

**iOS/Android**: OpenVPN connect是绝佳的选择。它是免费的且可以从[App Store][6]和[Google Play store][7]获得

|

||||

|

||||

不管是什么平台,有时我们想检验我们是否真的使用OpenVPN连接了。一种检验方法是完成下面这简单的4步:

|

||||

不管是什么平台,有时我们想检验我们是否真的使用OpenVPN连接了。一种检验方法是完成下面这简单的4步:

|

||||

|

||||

在连接到OpenVPN服务器前我们需要…

|

||||

|

||||

- 打开[whatip.com][8]记录我们的公网IP

|

||||

- 打开[dnsleaktest.com][10],运行标准测试(standard test),记录我们的域名解析服务器

|

||||

- 打开[dnsleaktest.com][10],运行标准测试(standard test),记录我们的域名解析服务器

|

||||

|

||||

|

||||

|

||||

在连接到OpenVPN服务器后重复这两部。如果我们获取到两个不同的公网IP,这意味着我们的网络出口已在远端OpenVPN服务器那。此外,如果获取了两个不同的域名解析服务器,那么就不存在DNS泄露的问题了。

|

||||

在连接到OpenVPN服务器后重复这两部。如果我们获取到两个不同的公网IP,这意味着我们的网络出口已在远端OpenVPN服务器那。此外,如果获取了两个不同的域名解析服务器,那么就不存在DNS泄露的问题了。

|

||||

|

||||

### 感言 ###

|

||||

|

||||

我用三个不同的OpenVPN服务器,都是定制的。 一个运行在希腊Thessaloniki的家庭办公室的pfSense路由。当我不在办公室时,我用这个服务器安全的连接到局域网。剩下的两个服务器在两个不同的VPS上,一个在冰岛雷克雅未克,另一个在美国纽泽西州。当我在外且需要任意用一个WiFi热点的时候,我不必考虑安全问题:我只需简单的连接到雷克雅未克的服务器然后正常上网。有时我想看看限制用户地理位置在美国的服务。在这种不太常见的情况下,新泽西的服务器就派上用场了,当我连接时,我就获得了美国的一个公网IP,这样就可以访问有地理位置限制的服务了。值得注意的是,一些服务会把一些知名的VPN公司的IP列入黑名单。这是在你选的VPS提供商建立自己的OpenVPN*十分重要*的一个优点:这不大可能被列入黑名单.

|

||||

我用了三个不同的OpenVPN服务器,都是定制的。 一个运行在希腊Thessaloniki的家庭办公室的pfSense路由。当我不在办公室时,我用这个服务器安全的连接到局域网。剩下的两个服务器在两个不同的VPS上,一个在冰岛雷克雅未克,另一个在美国纽泽西州。当我在外面且需要任意用一个WiFi热点的时候,我不必考虑安全问题:我只需简单的连接到雷克雅未克的服务器然后正常上网。有时我想看看那些限制用户地理位置在美国的服务。在这种不太常见的情况下,新泽西的服务器就派上用场了,当我连接时,我就获得了美国的一个公网IP,这样就可以访问有地理位置限制的服务了。值得注意的是,一些服务会把一些知名的VPN公司的IP列入黑名单。这是在你选的VPS提供商建立自己的OpenVPN*十分重要*的一个优点:这不大可能被列入黑名单。

|

||||

|

||||

无论你的物理位置在哪, OpenVPN确保客户端和服务器之间的数据流是高度加密的。没有OpenVPN的数据则是另一种情况。 取决于不同的应用层协议,它可能仍然是加密的,但它也可能是未加密的。所以除非你对OpenVPN服务器和它的本地网络有绝对的控制权,你不能完全相信另一端的管理员。这种精神是显而易见的:如果你真的在乎你自己的隐私,那么你需要注意你的行为可能在破坏它。

|

||||

无论你的物理位置在哪, OpenVPN确保客户端和服务器之间的数据流是高度加密的。没有OpenVPN的数据则是另一种情况。 取决于不同的应用层协议,它可能仍然是加密的,但它也可能是未加密的。所以除非你对OpenVPN服务器和它的本地网络有绝对的控制权,你不能完全相信另一端的管理员。这种精神是显而易见的:如果你真的在乎你自己的隐私,那么你需要注意你的行为可能在破坏它。

|

||||

|

||||

一个例子是我们有希望点对点传输。我们有一个在云上配置好的OpenVPN服务器。当需要任意用一个WiFi热点的时候,你没有丝毫的担心,因为你连在OpenVPN服务器上。然后你打开你最喜欢的电子邮件客户端从一个依然使用SMTP的老服务器收信。你猜会发生什么?你的用户名和密码以未加密的纯文本格式离开OpenVPN服务器。与此同时一个在你OpenVPN服务器附近的带宽管理员很容易就嗅探出你的证书并记录到他们越来越长的名叫"random happy people.txt"的列表。

|

||||

一个例子是我们希望点对点传输。我们有一个在云上配置好的OpenVPN服务器。当需要任意用一个WiFi热点的时候,你没有丝毫的担心,因为你连在OpenVPN服务器上。然后你打开你最喜欢的电子邮件客户端从一个依然使用SMTP的老服务器收信。你猜会发生什么?你的用户名和密码以未加密的纯文本格式离开OpenVPN服务器。与此同时一个在你OpenVPN服务器附近的鬼鬼祟祟的管理员很容易就嗅探出你的证书并记录到他们越来越长的名叫"random happy people.txt"的列表。

|

||||

|

||||

所以你该做什么?很简单。你应该继续使用OpenVPN服务器,但不要使用应用了旧的或不安全的协议的应用程序。

|

||||

|

||||

@ -485,7 +484,7 @@ service dnsmasq restart

|

||||

|

||||

via: http://parabing.com/2014/06/openvpn-on-ubuntu/

|

||||

|

||||

译者:[2q1w2007](https://github.com/2q1w2007) 校对:[校对者ID](https://github.com/校对者ID)

|

||||

译者:[2q1w2007](https://github.com/2q1w2007) 校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,45 +1,27 @@

|

||||

2q1w2007翻译中

|

||||

Wine 1.7.21 (开发者版本) 发布 – 在基于RedHat或Debian的系统上安装

|

||||

在基于RedHat或Debian的系统上安装 Wine 1.7

|

||||

================================================================================

|

||||

Wine,Linux上最流行也是最有力的软件, 用来不出问题的在Linux平台上运行Windows程序和游戏。

|

||||

Wine,Linux上最流行也是最有力的软件, 可以顺利地在Linux平台上运行Windows程序和游戏。

|

||||

|

||||

|

||||

|

||||

|

||||

在Linux上安装Wine(开发者版本)

|

||||

这篇文章教你怎么在像CentOS, Fedora, Ubuntu, Linux Mint一样基于**Red Hat**和**Debian**的系统上安装最新的**Wine 1.7**。

|

||||

|

||||

**WineHQ** 团队, 最近发布了一个新的开发者版本**Wine 1.7.21**,带来了许多新特性和几个修正。

|

||||

### 在Linux安装 Wine 1.7 ###

|

||||

|

||||

Wine的团队, 坚持每周更新开发者版本并带来了许多新特性和修正。 每个新版本都带来对新程序和游戏的支持,这让Wine成为最流行的也是想在Linux平台运行基于Windows的软件的用户的必备工具.

|

||||

|

||||

根据changelog, 该版本有如下关键的新特性:

|

||||

|

||||

- C runtime中添加临界区的支持.

|

||||

- Unicode 升级到 Unicode 7.

|

||||

- 新增交错 PNG 编码支持.

|

||||

- Packager 库初始化 stub

|

||||

- 修改了几个bug.

|

||||

|

||||

更多深度的改变信息在 [changelog][1] .

|

||||

|

||||

这篇文章教你怎么在像CentOS, Fedora, Ubuntu, Linux Mint一样基于**Red Hat**和**Debian**的系统上安装最新的**Wine 1.7.21**。

|

||||

|

||||

### 在Linux安装 Wine 1.7.21 开发者版本 ###

|

||||

|

||||

不幸的, 在基于**Red Hat**的系统上没有官方的 Wine 仓库,所以唯一的安装方式是从源码编译。你需要安装一些依赖的包比如gcc, flex, bison, libX11-devel freetype-devel 和 Development Tools,这些包用来从源码编译Wine。我们可以用**YUM**命令安装他们。

|

||||

不幸的, 在基于**Red Hat**的系统上没有官方的 Wine 仓库,所以唯一的安装方式是从源码编译。你需要安装一些依赖的包比如gcc, flex, bison, libX11-devel freetype-devel 和 Development Tools,这些包用来从源码编译Wine。我们可以用**yum**命令安装他们。

|

||||

|

||||

#### 在 RedHat, Fedora 和 CentOS 上 ####

|

||||

|

||||

# yum -y groupinstall 'Development Tools'

|

||||

# yum -y install flex bison libX11-devel freetype-devel

|

||||

|

||||

接下来,下载最新的开发者版本(即**1.7.21**)并用下面的命令提取出来。

|

||||

接下来,下载最新的开发版本(如**1.7.21**)并用下面的命令解压。

|

||||

|

||||

$ cd /tmp

|

||||

$ wget http://citylan.dl.sourceforge.net/project/wine/Source/wine-1.7.21.tar.bz2

|

||||

$ tar -xvf wine-1.7.21.tar.bz2 -C /tmp/

|

||||

|

||||

现在,是时候以普通用户身份编译并搭建Wine的安装程序了。(**注意**: 根据机器性能和网络速度的不同,安装过程需要 **15-20** 分钟,安装过程中会要求输入 **root** 密码。)

|

||||

|

||||

现在,要以普通用户身份编译并搭建Wine的安装程序。(**注意**: 根据机器性能和网络速度的不同,安装过程需要 **15-20** 分钟,安装过程中会要求输入 **root** 密码。)

|

||||

|

||||

**32位系统上**

|

||||

|

||||

@ -61,15 +43,13 @@ Wine的团队, 坚持每周更新开发者版本并带来了许多新特性和

|

||||

$ sudo apt-get update

|

||||

$ sudo apt-get install wine 1.7 winetricks

|

||||

|

||||

**注意**: 写这篇文章的时候版本是 **1.7.20** 新版本还没上传到仓库,但当新版本可用时上面的命令将安装 **1.7.21** 。

|

||||

|

||||

一旦装完了,你可以以如下方式运行基于Windows的软件和游戏。

|

||||

|

||||

$ wine notepad

|

||||

$ wine notepad.exe

|

||||

$ wine c:\\windows\\notepad.exe

|

||||

|

||||

**注意**: 请记住,这是个开发者版本,不要用在生产环境。 建议此版本只用在测试用途

|

||||

**注意**: 请记住,如果是开发版本不要用在生产环境。 建议只用在测试用途

|

||||

|

||||

如果你想安装最近的稳定版Wine, 请看下面的文章, 在文章里介绍了在几乎所以Linux系统中安装Wine的方法

|

||||

|

||||

@ -80,21 +60,11 @@ Wine的团队, 坚持每周更新开发者版本并带来了许多新特性和

|

||||

|

||||

- [WineHQ Homepage][4]

|

||||

|

||||

----------

|

||||

|

||||

|

||||

|

||||

#### Ravi Saive ####

|

||||

|

||||

Owner at [TecMint.com][5]

|

||||

|

||||

Simple Word a Computer Geek and Linux Guru who loves to share tricks and tips on Internet. Most Of My Servers runs on Open Source Platform called Linux.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.tecmint.com/install-wine-in-linux/

|

||||

|

||||

译者:[2q1w2007](https://github.com/2q1w2007) 校对:[校对者ID](https://github.com/校对者ID)

|

||||

译者:[2q1w2007](https://github.com/2q1w2007) 校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,37 +1,111 @@

|

||||

如何为Linux系统中的SSH添加双重认证

|

||||

================================================================================

|

||||

近来很多知名企业都出现了密码泄露,业内对多重认证的呼声也越来越高。在这种多重认证的系统中,用户需要通过两种不同的认证程序:提供他们知道的信息(如 用户名/密码),再借助其他工具提供用户所不知道的信息(如 用手机生成的一次性密码)。这种组合方式常叫做双因子认证或者两阶段验证。

|

||||

|

||||

为了鼓励广泛采用双因子认证的方式,Google公司发布了[Google Authenticator][1],一款开源的,可基于开放规则(如 HMAP/基于时间)生成一次性密码的软件。这是一款跨平台软件,可运行在Linux, [Android][2], [iOS][3]。Google公司同时也支持插件式鉴别模块PAM(pluggable authentication module),使其能和其他也适用PAM进行验证的工具(如OpenSSH)协同工作。

|

||||

在本教程中,我们将叙述集成OpenSSH和Google提供的认证器实现**如何为SSH服务设置双因子认证**。我将使用一款[Android][4]设备来生成一次性密码,本教程中需要两样兵器:(1)一台运行着OpenSSH服务的Linux终端,(2)一台安卓设备。

|

||||

|

||||

在本教程中,我们将叙述集成OpenSSH和Google提供的认证器实现**如何为SSH服务设置双因子认证**。我将使用一款[Android][4]设备来生成一次性密码,本教程中需要两样武器:(1)一台运行着OpenSSH服务的Linux终端,(2)一台安卓设备。

|

||||

|

||||

### 在Linux系统中安装Google Authenticator ###

|

||||

|

||||

第一步需要在运行着OpenSSH服务的Linux主机上安装Google认证器。按照[安装指南] [5]的步骤安装Google认证器及其PAM模块。

|

||||

第一步需要在运行着OpenSSH服务的Linux主机上安装Google认证器。按照如下步骤安装Google认证器及其PAM模块。

|

||||

|

||||

#### 用安装包安装 Google Authenticator ####

|

||||

|

||||

如果你不想自己构建 Google Authenticator,在几个 Linux 发行版上有已经编译好的安装包。安装包里面包含 Google Authenticator 二进制程序和 PAM 模块。

|

||||

|

||||

在 Ubuntu 上安装 Google Authenticator:

|

||||

|

||||

$ sudo apt-get install libpam-google-authenticator

|

||||

|

||||

在 Fedora 上安装 Google Authenticator:

|

||||

|

||||

$ sudo yum install google-authenticator

|

||||

|

||||

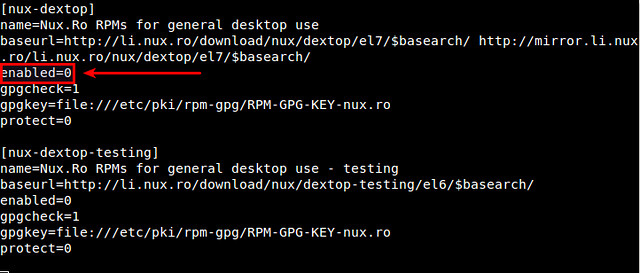

在 CentOS 上安装 Google Authenticator ,需要首先启用 EPEL 软件库,然后运行如下命令:

|

||||

|

||||

$ sudo yum install google-authenticator

|

||||

|

||||

如果不想使用已经编译好的安装包,或者你的 Linux 发行版不在此列,可以自行编译:

|

||||

|

||||

#### 在 Linux 上 Google Authenticator ####

|

||||

|

||||

首先,安装构建 Google Authenticator 所需的软件包。

|

||||

|

||||

在 Debian、 Ubuntu 或 Linux Mint 上:

|

||||

|

||||

$ sudo apt-get install wget make gcc libpam0g-dev

|

||||

|

||||

在 CentOS、 Fedora 或 RHEL上:

|

||||

|

||||

$ sudo yum install wget make gcc pam-devel

|

||||

|

||||

然后下载 Google Authenticator 的源代码,并按如下命令编译。

|

||||

|

||||

$ wget https://google-authenticator.googlecode.com/files/libpam-google-authenticator-1.0-source.tar.bz2

|

||||

$ tar xvfvj libpam-google-authenticator-1.0-source.tar.bz2

|

||||

$ cd libpam-google-authenticator-1.0

|

||||

$ make

|

||||

|

||||

如果构建成功,你会在目录中看到 pam_google_authenticator.so 和 google-authenticator 两个文件。

|

||||

|

||||

最后,将 Google Authenticator 安装到合适位置

|

||||

|

||||

$ sudo make install

|

||||

|

||||

|

||||

#### 排错 ####

|

||||

|

||||

当编译 Google Authenticator 时出现如下错误:

|

||||

|

||||

fatal error: security/pam_appl.h: No such file or directory

|

||||

|

||||

要修复这个问题,请安装如下依赖包。

|

||||

|

||||

在 Debian、 Ubuntu 或 Linux Mint 上:

|

||||

|

||||

$ sudo apt-get install libpam0g-dev

|

||||

|

||||

在 CentOS、 Fedora 或 RHEL 上:

|

||||

|

||||

$ sudo yum install pam-devel

|

||||

|

||||

|

||||

当Google认证器安装好后,你需要在Linux主机上创建验证密钥,并且在安卓设备上注册,注意这项配置操作是一次性的。我们将详细叙述如何完成这些操作:

|

||||

|

||||

### 生成验证密钥 ###

|

||||

|

||||

在Linux主机上运行Google认证器

|

||||

|

||||

$ google-authenticator

|

||||

|

||||

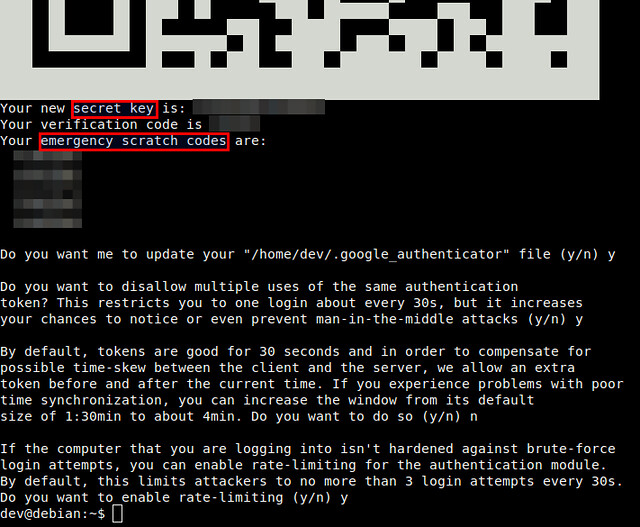

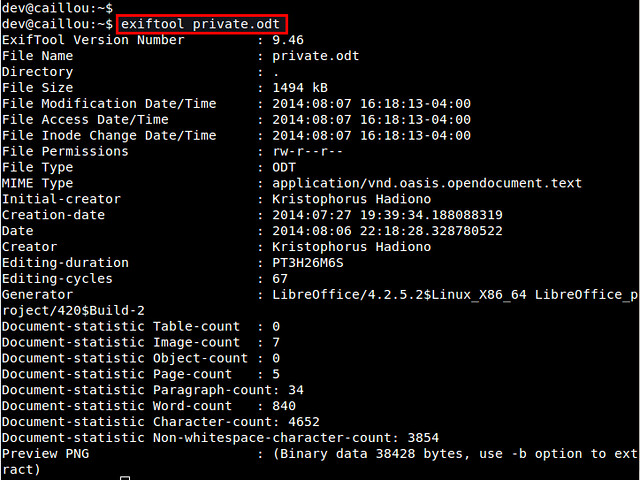

你将看到一个QR码,它使用图形保存了我们数字形态的密钥。一会我们要用到它在安卓设备上完成配置。

|

||||

你将看到一个QR码,它使用如下图形表示我们数字形态的密钥。一会我们要用到它在安卓设备上完成配置。

|

||||

|

||||

|

||||

|

||||

|

||||

Google认证器会问一些问题,如果你不确定,就回答"Yes"。这个应急备用验证码(图中 emergency scratch codes)可以在你丢失被绑定的安卓设备的情况下恢复访问,并且设备也不再生成一次性密码。所以最好将应急备用验证码妥善保存。

|

||||

Google认证器会问一些问题,如果你不确定,就回答"Yes"。这个应急备用验证码(图中 emergency scratch codes)可以在你由于丢失了绑定的安卓设备的情况下(所以不能得到生成的一次性密码)恢复访问。最好将应急备用验证码妥善保存。

|

||||

|

||||

### 在安卓设备上运行Google认证器 ###

|

||||

|

||||

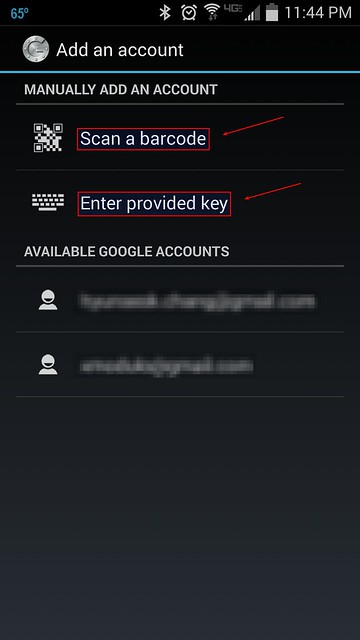

我们需要在安卓设备上安装[Google Authenticator app][6]才能完成双因子认证,到Google Play下载并安装一个。在安卓设备上运行Google认证器,找到下图所示中的配置菜单。

|

||||

|

||||

|

||||

|

||||

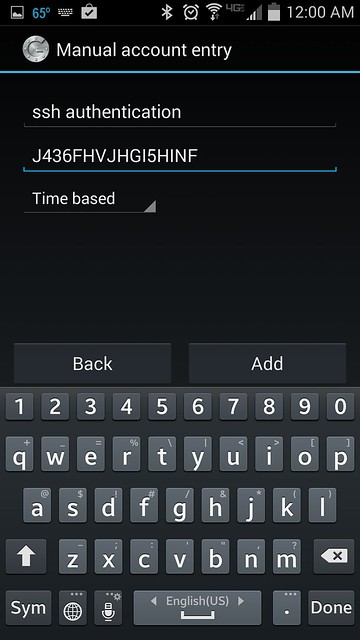

你可以选择"Scan a barcode" 或者"Enter provided key"选项。"Scan a barcode"允许你扫描QR码来完成密钥的输入,在此可能需要先安装扫描软件[Barcode Scanner app][7]。如果选择"Enter provided key"选项,你可以使用键盘输入验证密钥,如下图所示:

|

||||

|

||||

|

||||

|

||||

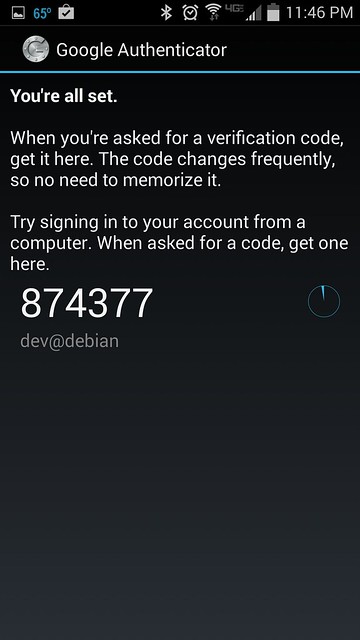

无论采用上述两种选项的任何方式,一旦成功,你将看到注册成功提示和一次性密码,如下图所示:

|

||||

|

||||

|

||||

|

||||

### 为SSH服务器用Google认证器 ###

|

||||

|

||||

最终我们需要修改两个文件来完成集成Google认证器和OpenSSH服务这临门一脚。

|

||||

|

||||

首先,修改PAM配置文件,命令和需添加的内容如下:

|

||||

|

||||

$ sudo vi /etc/pam.d/sshd

|

||||

|

||||

----------

|

||||

@ -39,6 +113,7 @@ Google认证器会问一些问题,如果你不确定,就回答"Yes"。这个

|

||||

auth required pam_google_authenticator.so

|

||||

|

||||

然后打开SSH配置文件,找到参数ChallengeResponseAuthentication,并启用它。

|

||||

|

||||

$ sudo vi /etc/ssh/sshd_config

|

||||

|

||||

----------

|

||||

@ -47,35 +122,40 @@ ChallengeResponseAuthentication yes

|

||||

|

||||

|

||||

最后,重启SSH服务。

|

||||

在 Ubuntu, Debian, Linux Mint:

|

||||

|

||||

在 Ubuntu、 Debian 和 Linux Mint 上:

|

||||

|

||||

$ sudo service ssh restart

|

||||

|

||||

在Fedora:

|

||||

在Fedora (或 CentOS/RHEL 7)上:

|

||||

|

||||

$ sudo systemctl restart sshd

|

||||

|

||||

在CentOS 或 RHEL:

|

||||

在CentOS 6.x或 RHEL 6.x上:

|

||||

|

||||

$ sudo service sshd restart

|

||||

|

||||

### 验证双因子认证 ###

|

||||

|

||||

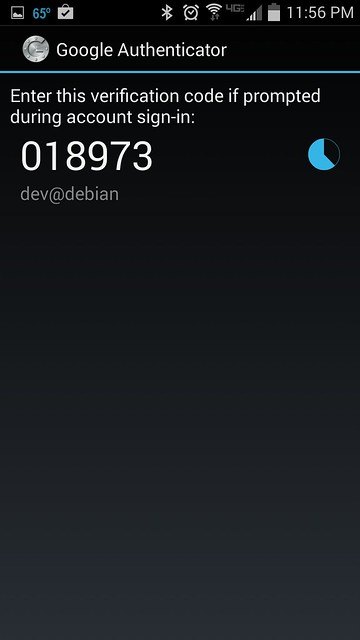

在绑定的安卓设备上运行Google认证器,获得一个一次性验证码,该验证码30秒内有效,一旦过期,将重新生成一个新的验证码。

|

||||

|

||||

|

||||

|

||||

现在和往常一样,使用SSH登录终端

|

||||

|

||||

$ ssh user@ssh_server

|

||||

|

||||

当提示你输入验证码的时候,输入我们刚获得的验证码。验证成功后,再输入SSH的登录密码。

|

||||

|

||||

|

||||

|

||||

双因子认证通过在用户密码前新增一层来有效的保护我们脆弱的用户密码。你可以使用Google认证器来保护我们其他的密码,如Google账户, WordPress.com, Dropbox.com, Outlook.com等等。是否使用这项技术,取决于我们自己,但采用双因子认证已经是行业的大趋势了。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://xmodulo.com/2014/07/two-factor-authentication-ssh-login-linux.html

|

||||

|

||||

译者:[nd0104](https://github.com/nd0104) 校对:[校对者ID](https://github.com/校对者ID)

|

||||

译者:[nd0104](https://github.com/nd0104) 校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,16 +1,17 @@

|

||||

怎样在ubuntu 14.04上安装轻量级的Budgie桌面

|

||||

================================================================================

|

||||

**如果你在推特上关注了我们,你可能看见了我们最近分享的一张截图,和这张截图一起的还有对它所展示的桌面进行命名的邀请。 **

|

||||

|

||||

你猜对了吗? 答案就是budgie —— 一种为基于openSUSE 的linux发行版Evolve OS所设计,但不仅仅只能用于 Evolve OS的简易桌面环境。

|

||||

**如果你在推特上关注了我们,你可能看见了我们最近分享的一张截图,和这张截图一起的还有对它所展示的桌面进行命名的邀请。**

|

||||

|

||||

你猜对了吗? 答案就是[Budgie][1] —— 一种为基于openSUSE 的linux发行版Evolve OS所设计、但不仅仅只能用于 Evolve OS的简易桌面环境。

|

||||

|

||||

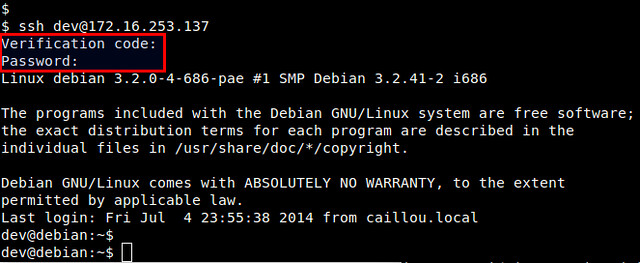

|

||||

|

||||

我们第一次描写Budgie是在三月份,当时我们被它的整洁,小巧的美感,灵活的架构,还有重复使用在当今大多数发行版中所使用的GNOME 3.10 成熟技术中的公共部分和标堆栈的决定所折服。

|

||||

我们第一次提到Budgie是在三月份,当时我们被它的整洁、小巧的美感、灵活的架构,还有再次使用在当今大多数发行版中所使用的GNOME 3.10 成熟技术中的公共部分和标堆栈的决定所折服。

|

||||

|

||||

我对此项目的领导者LKey Doherty所作出的开发选择非常佩服。无可否认另起炉灶有它的优点,但决定从上游的项目获取帮助将可以整个项目进展得更快,无论是在发展方面(更轻的技术负担)还是在用户可使用方面(更容易在其它发行版上运行)。

|

||||

我对此项目的领导者Ikey Doherty所作出的开发选择非常佩服。无可否认另起炉灶有它的优点,但决定从上游的项目获取帮助将可以整个项目进展得更快,无论是在发展方面(更轻的技术负担)还是在用户可使用方面(更容易在其它发行版上运行)。

|

||||

|

||||

政治因素选择除外,这款桌面以干净,小巧向谷歌Chrome OS的Ash桌面表示敬意。如果你不介意有些许粗糙的边缘,那它值得你玩玩。那么怎样在Ubuntu安装Budgie呢?

|

||||

除了政治因素选择以外,这款桌面干净、小巧,向谷歌Chrome OS的Ash桌面致敬。如果你不介意有些许粗糙的边缘,那它值得你玩玩。那么怎样在Ubuntu安装Budgie呢?

|

||||

|

||||

###非官方的PPA是不正式的 ###

|

||||

|

||||

@ -18,37 +19,38 @@

|

||||

|

||||

但如果你很懒,想不费周折就在Ubuntu 14.04 LTS(或者一个基于它的发行版)运行Budgie,那么你可以通过比较容易的途径来实现。

|

||||

|

||||

添加一个非官方的Unofficial PPA,刷新你的软件源然后进行安装。几分钟后在这个家庭中你将有一位名叫Bob的新叔叔,并且有一个新的桌面可以玩耍。

|

||||

只要添加一个**非官方的PPA**,刷新你的软件源然后进行安装。几分钟后在这个家庭中你将有一位[名叫Bob][2]的新叔叔,并且有一个新的桌面可以玩耍。

|

||||

|

||||

###添加Budgie PPA ###

|

||||

|

||||

将以下命令复制进一个打开的终端窗口,在提示过后输入你的密码(如果需要的话)

|

||||

将以下命令复制进一个打开的终端窗口,在提示过后输入你的密码(如果需要的话):

|

||||

|

||||

sudo add-apt-repository ppa:sukso96100/budgie-desktop

|

||||

sudo apt-get update && sudo apt-get install budgie-desktop

|

||||

|

||||

### 登入Budgie会话 ###

|

||||

|

||||

安装完成后你就可以在Unity欢迎界面的会话选择器中选择Budgie会话了。(别忘了以后要把选择项改回到稳定的桌面环境)

|

||||

安装完成后你就可以在Unity欢迎界面的会话选择器中选择“Budgie”了。(别忘了以后要把选择项改回到稳定的桌面环境)

|

||||

|

||||

### 注意 ###

|

||||

|

||||

**budgie是不稳定,不完善的,它在Ubuntu上也没有被正式支持。它正在积极开发中,缺少一些特性,包括(但不仅仅只有这些):不支持网络管理,没有音量控制小程序(键盘工作良好),没有通知系统,无法将应用程序“固定”在任务栏。**

|

||||

**budgie是不稳定、不完善的,并且它在Ubuntu上也没有被正式支持。**它正在积极开发中,功能还仍然有些缺失,包括但不仅限于:不支持网络管理,没有音量控制小程序(键盘按键工作良好),没有通知系统,无法将应用程序“固定”在任务栏。

|

||||

|

||||

它对UBUNTU的叠加滚动条和一些GTK主题的支持也不是很好,而且在使用upstart的发行版(例如ubuntu,即使它正在改变之中)中会话管理器(例如,注销,重启等等)将无法工作。

|

||||

它对UBUNTU的叠加滚动条、一些GTK主题的支持也不是很好,而且在使用upstart的发行版(例如ubuntu,[即使它正在改变之中][3])中会话管理器(例如,注销,重启等等)将无法工作。

|

||||

|

||||

一个应变方法是:禁用叠加滚动条,设置一个默认主题,通过在终端中使用以下命名来退出会话。

|

||||

一个应变方法是:禁用叠加滚动条,设置一个默认主题,通过在终端中使用以下命名来退出会话:

|

||||

|

||||

gnome-session-quit

|

||||

|

||||

想着脑海中所有的这些警告,我建议想使用稳定的,可靠的系统的人现在暂时不要使用它。

|

||||

脑海中有了上述这些警告后,我得建议那些自己的理智倾向于使用稳定、可靠的系统的人现在暂时不要使用它。

|

||||

|

||||

**而剩下那些狂热的业余爱好者们呢?好吧,请在下面留言告诉我们你觉得它如何。我给Bob让路。**

|

||||

|

||||

而作为狂热一族的业余体验呢?请在下面评论,让我们了解你的想法。我将退出而让Bob来接手。

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.omgubuntu.co.uk/2014/07/install-budgie-evolve-os-desktop-ubuntu-14-04

|

||||

|

||||

译者:[Love-xuan](https://github.com/译者ID) 校对:[校对者ID](https://github.com/校对者ID)

|

||||

译者:[Love-xuan](https://github.com/Love-xuan) 校对:[reinoir](https://github.com/reinoi)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,7 +1,8 @@

|

||||

在Linux中模拟击键和鼠标移动

|

||||

================================================================================

|

||||

<pre><code>

|

||||

你是否曾经拥有一个梦

|

||||

——你的计算机

|

||||

——你的计算机`

|

||||

可以自动为你干活?

|

||||

或许,并非因为

|

||||

你刚看了终结者。

|

||||

@ -27,9 +28,10 @@

|

||||

xdotool可以通过读取文本文件

|

||||

模拟击键的旋律

|

||||

以及鼠标的曼舞

|

||||

|

||||

</code></pre>

|

||||

|

||||

### 让Xdotool在Linux定居 ###

|

||||

|

||||

对于Ubuntu,Debian或者Linux Mint,你能够只做:

|

||||

|

||||

$ sudo apt-get install xdotool

|

||||

@ -64,7 +66,7 @@

|

||||

|

||||

$ xdotool type ''

|

||||

|

||||

这些对于基本的击键而言已经足够了。但是,xdotool的众多长处之一,就是它可以获取特定窗口的焦点。它可以获取右边的窗口,然后在里面输入,同时阻止所有你记录的按键,让那些动作随风而逝吧。要获得该功能,一个简单的命令可以搞定:

|

||||

这些对于基本的击键而言已经足够了。但是,xdotool的众多长处之一,就是它可以获取特定窗口的焦点。它可以获取右边的窗口,然后在里面输入,所有你记录下的按键都不会人间蒸发,而是老老实实的如你所愿的出现在那里。要获得该功能,一个简单的命令可以搞定:

|

||||

|

||||

$ xdotool search --name [name of the window] key [keys to press]

|

||||

|

||||

@ -80,7 +82,7 @@

|

||||

|

||||

这会让鼠标移动到(x,y),然后点击鼠标左键。“1”代表鼠标左键,“2”则是滚轮,“3”则是右键。

|

||||

|

||||

最后,一旦你这些命令根植于你脑海,你也许想要实际转储于文件来编辑并试着玩玩。鉴于此,就会有超过一个语句以上的内容了。你可以写的就是一个bash脚本了:

|

||||

最后,一旦你这些命令根植于你脑海,你也许想要实际转储于文件来编辑并试着玩玩。鉴于此,就会有超过一个语句以上的内容了。你需要的就是写一个bash脚本了:

|

||||

|

||||

#!/bin/bash

|

||||

|

||||

@ -96,7 +98,7 @@

|

||||

|

||||

### 意外收获 ###

|

||||

|

||||

作为本文的一个意外收获,这里是xdotool的一个具体实例。你可能听说过,也可能没听说过Bing,微软的搜索引擎。在后面的实例中,你从没听过Bing奖励吧:一个程序,可以让你用Bing积分兑取亚马逊的礼物卡和其它的一些礼物卡。要赚取这些积分,你可以每天在Bing上搜索累计达30次,每次搜索你都会获得0.5个积分。换句话说,你必须把Bing设为默认搜索引擎,并每天使用它。

|

||||

作为本文的一个意外收获,这里是xdotool的一个具体实例。你可能听说过,也可能没听说过Bing —— 微软的搜索引擎。在后面的实例中,你会看到你可能从没听过Bing奖励:一个程序,可以让你用Bing积分兑取亚马逊的礼物卡和其它的一些礼物卡。要赚取这些积分,你可以每天在Bing上搜索累计达30次,每次搜索你都会获得0.5个积分。换句话说,你必须把Bing设为默认搜索引擎,并每天使用它。

|

||||

|

||||

或者,你可以使用xdotool脚本,在这个脚本中,会自动聚焦到Firefox(你可以用你喜欢的浏览器来取代它),并使用fortune命令生成一些随机单词来实施搜索。大约30秒之内,你的日常搜索任务就完成了。

|

||||

|

||||

@ -115,7 +117,7 @@

|

||||

done

|

||||

|

||||

|

||||

下面来个小结吧:我真的很喜欢xdotool,即便它完整功能超越了本文涵盖的范围。这对于脚本和任务自动化而言,确实是种平易的方式。负面的问题是,它可能不是最有效率的一个。但我要再说一遍,它忠于职守了,而且学习起来也不是那么麻烦。

|

||||

下面来个小结吧:我真的很喜欢xdotool,即便它的完整功能超越了本文涵盖的范围。这对于脚本和任务自动化而言,确实是种平易的方式。负面的问题是,它可能不是最有效率的一个。但我要再说一遍,它忠于职守了,而且学习起来也不是那么麻烦。

|

||||

|

||||

你对xdotool怎么看呢?你是否更喜欢另外一个自动化工具,而不是它呢?为什么呢?请在评论中告诉我们吧。

|

||||

|

||||

@ -123,7 +125,7 @@

|

||||

|

||||

via: http://xmodulo.com/2014/07/simulate-key-press-mouse-movement-linux.html

|

||||

|

||||

译者:[GOLinux](https://github.com/GOLinux) 校对:[校对者ID](https://github.com/校对者ID)

|

||||

译者:[GOLinux](https://github.com/GOLinux) 校对:[ wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,7 +1,7 @@

|

||||

Linux slabtop命令——显示内核片缓存信息

|

||||

================================================================================

|

||||

|

||||

Linux内核需要为临时对象如任务或者设备结构和节点分配内存,缓存分配器管理着这些对象类型的缓存。现代Linux内核部署了该缓存分配器以持有缓存,称之为片。不同类型的片缓存由片分配器维护。本文集中讨论slabtop命令,该命令显示了实时内核片缓存信息。

|

||||

Linux内核需要为临时对象如任务或者设备结构和节点分配内存,缓存分配器管理着这些类型对象的缓存。现代Linux内核部署了该缓存分配器以持有缓存,称之为片。不同类型的片缓存由片分配器维护。本文集中讨论slabtop命令,该命令显示了实时内核片缓存信息。

|

||||

|

||||

### 1. 命令用法: ###

|

||||

|

||||

@ -12,7 +12,7 @@ Linux内核需要为临时对象如任务或者设备结构和节点分配内存

|

||||

你可以在前面设置“sudo”来运行该命令,默认输出见下图:

|

||||

|

||||

|

||||

|

||||

|

||||

要退出slabtop,只需敲‘q’,就像在top命令中那样。

|

||||

|

||||

### 2. Slabtop选项: ###

|

||||

@ -31,7 +31,7 @@ Linux内核需要为临时对象如任务或者设备结构和节点分配内存

|

||||

|

||||

#### 2.3 输出一次: ####

|

||||

|

||||

-o或--once选项不会刷新输出,它仅仅将一次输出结果丢给STDOUT,然后退出。

|

||||

-o或--once选项不会刷新输出,它仅仅将一次输出结果丢给STDOUT,然后退出。

|

||||

|

||||

|

||||

|

||||

@ -2,17 +2,17 @@ Linux系统中使用 DD 命令测试 USB 和 SSD 硬盘的读写速度

|

||||

================================================================================

|

||||

### 磁盘驱动器速度 ###

|

||||

|

||||

磁盘驱动器的速度是以在一个单位时间内能读写数据量的多少来衡量的。DD 命令是一个简单的命令行工具,它可用对磁盘进行任意数据块的读取和写入,同时可以度量读取写入的速度。

|

||||

磁盘驱动器的速度是以一个单位时间内读写数据量的多少来衡量的。DD 命令是一个简单的命令行工具,它可用对磁盘进行任意数据块的读取和写入,同时可以度量读取写入的速度。

|

||||

|

||||

在这篇文章中,我们将会使用 DD 命令来测试 USB 和 SSD 磁盘的读取和写入速度。

|

||||

|

||||

数据传输速度不但取决于驱动盘本身,而且还与连接的接口有关。比如, USB 2.0 端口的最大传输速度是 35 兆字节/秒,所以如果您把一个支持高速传输的 USB 3.0 驱动盘插入 USB 2.0 端口的话,它实际的传输速度将是 2.0 端口的上下限。

|

||||

数据传输速度不但取决于驱动盘本身,而且还与连接的接口有关。比如, USB 2.0 端口的最大传输速度是 35 兆字节/秒,所以如果您把一个支持高速传输的 USB 3.0 驱动盘插入 USB 2.0 端口的话,它实际的传输速度将是 2.0 端口的下限。

|

||||

|

||||

这对于 SSD 也是一样的。 SSD 连接的 SATA 端口有不同的类型。平均是 375 兆字节/秒的 SATA 2.0 端口理论上最大传输速度是 3 Gbit/秒,而 SATA 3.0 是这个速度的两倍。

|

||||

|

||||

### 测试方法 ###

|

||||

|

||||

挂载上驱动盘,终端上进入此盘目录下。然后使用 DD 命令,首先写入固定大小块的一个文件,接着读取这个文件。

|

||||

挂载上驱动盘,从终端进入此盘目录下。然后使用 DD 命令,首先写入固定大小块的一个文件,接着读取这个文件。

|

||||

|

||||

DD 命令通用语法格式如下:

|

||||

|

||||

@ -22,11 +22,11 @@ DD 命令通用语法格式如下:

|

||||

|

||||

### 固态硬盘 ###

|

||||

|

||||

我们使用的是一块“三星 Evo 120G” 的固态硬盘。它性价比很高,很适合刚开始用固态硬盘的用户,也是我的第一块固态硬盘,并且在市场上表现的也非常不错。

|

||||

我们使用的是一块“三星 Evo 120G” 的固态硬盘。它性价比很高,很适合刚开始用固态硬盘的用户,也是我的第一块固态硬盘,并且也是市场上效果最好的固态硬盘之一。

|

||||

|

||||

这次实验中,我们把硬盘接在 SATA 2.0 端口上。

|

||||

|

||||

#### 写入速度 ####

|

||||

|

||||

#### 写入速度 ####

|

||||

|

||||

首先让我们写入固态硬盘

|

||||

|

||||

@ -35,7 +35,7 @@ DD 命令通用语法格式如下:

|

||||

1024+0 records out

|

||||

1073741824 bytes (1.1 GB) copied, 4.82364 s, 223 MB/s

|

||||

|

||||

的大小实际上是相当大的。你可以尝试用更小的尺寸如 64 K甚至是 4K 的。

|

||||

的大小实际上是相当大的。你可以尝试用更小的尺寸如 64K 甚至是 4K 的。

|

||||

|

||||

#### 读取速度 ####

|

||||

|

||||

@ -52,7 +52,7 @@ DD 命令通用语法格式如下:

|

||||

165118+0 records out

|

||||

676323328 bytes (676 MB) copied, 3.0114 s, 225 MB/s

|

||||

|

||||

在 Arch Linux 的维基页上有一整页的关于从同的厂商,如英特尔、三星、Sandisk 等提供的各类固态硬盘的读/写速度的信息。点击如下的 url 可得到得到想着信息。

|

||||

在 Arch Linux 的维基页上有一整页的关于不同的厂商,如英特尔、三星、Sandisk 等提供的各类固态硬盘 读/写速度的信息。点击如下的 url 可以查看相关信息。

|

||||

|

||||

[https://wiki.archlinux.org/index.php/SSD_Benchmarking][1]

|

||||

|

||||

@ -60,7 +60,7 @@ DD 命令通用语法格式如下:

|

||||

|

||||

此次实验我们会测量普通的 USB/随身笔的读写速度。驱动盘都是接入标准的 USB 2.0 端口的。首先用的是一个 4GB 大小的 sony USB 驱动盘,随后用的是一个 16GB 大小的 strontium 驱动盘。

|

||||

|

||||

首先把驱动盘插入端口,并挂载上,使其可读的。然后从命令行下面进入挂载的文件目录下。

|

||||

首先把驱动盘插入端口,并挂载上,使其可读。然后从命令行下面进入挂载的文件目录下。

|

||||

|

||||

#### Sony 4GB - 写入 ####

|

||||

|

||||

@ -75,11 +75,11 @@ DD 命令通用语法格式如下:

|

||||

|

||||

#### Sony 4GB - 读取 ####

|

||||

|

||||

把相同的文件读取回来,测试速度。首先运行如下命令清除内存缓存

|

||||

把相同的文件读取回来,测试速度。首先运行如下命令清除内存缓存。

|

||||

|

||||

$ sudo sh -c "sync && echo 3 > /proc/sys/vm/drop_caches"

|

||||

|

||||

现在就可以使用 DD 命令来读取文件了

|

||||

现在就可以使用 DD 命令来读取文件了。

|

||||

|

||||

# dd if=./largefile of=/dev/null bs=8k

|

||||

8000+0 records in

|

||||

@ -92,7 +92,7 @@ DD 命令通用语法格式如下:

|

||||

|

||||

上面实验中, USB 驱动盘插入USB 2.0 端口,读取的速度达到了 24.7兆字节/秒,这是很不错的读速度。但写入速度就不敢恭维了。

|

||||

|

||||

下面让我们用 Strontium 的 16GB 的驱动盘来做相同的实验。虽然 Strontium 的 USB 驱动盘很稳定,但它也是一款很便宜的品牌。

|

||||

下面让我们用 16GB 的 Strontium 驱动盘来做相同的实验。虽然 Strontium 的 USB 驱动盘很稳定,但它也是一款很便宜的品牌。

|

||||

|

||||

#### Strontium 16gb 盘写入速度 ####

|

||||

|

||||

@ -136,4 +136,4 @@ via: http://www.binarytides.com/linux-test-drive-speed/

|

||||

[1]:http://wiki.archlinux.org/index.php/SSD_Benchmarking

|

||||

[2]:http://en.wikipedia.org/wiki/USB

|

||||

[e]:m00n.silv3r@gmail.com

|

||||

[g]:http://plus.google.com/117145272367995638274/posts

|

||||

[g]:http://plus.google.com/117145272367995638274/posts

|

||||

@ -1,4 +1,4 @@

|

||||

Linux 内核测试和调试 - 4

|

||||

Linux 内核测试和调试(4)

|

||||

================================================================================

|

||||

### 自动测试工具 ###

|

||||

|

||||

@ -38,11 +38,11 @@ Linux 内核本身包含很多调试功能,比如 kmemcheck 和 kmemleak。

|

||||

|

||||

#### kmemcheck ####

|

||||

|

||||

> kmemcheck 是一个动态检查工具,可以检测出一些未被初始化的内存(LCTT:内核态使用这些内存可能会造成系统崩溃)并发出警告。它的功能与 Valgrind 类似,只是 Valgrind 运行在用户态,而 kmemchecke 运行在内核态。编译内核时加上 CONFIG_KMEMCHECK 选项打开 kmemcheck 调试功能。你可以阅读 Documentation/kmemcheck.txt 来学习如何配置使用这个功能,以及如何看懂调试结果。

|

||||

> kmemcheck 是一个动态检查工具,可以检测出一些未被初始化的内存(LCTT:内核态使用这些内存可能会造成系统崩溃)并发出警告。它的功能与 Valgrind 类似,只是 Valgrind 运行在用户态,而 kmemchecke 运行在内核态。编译内核时加上 `CONFIG_KMEMCHECK` 选项打开 kmemcheck 调试功能。你可以阅读 Documentation/kmemcheck.txt 来学习如何配置使用这个功能,以及如何看懂调试结果。

|

||||

|

||||

#### kmemleak ####

|

||||

|

||||

> kmemleak 通过类似于垃圾收集器的功能来检测内核是否有内存泄漏问题。而 kmemleak 与垃圾收集器的不同之处在于前者不会释放孤儿目标(LCTT:不会再被使用的、应该被释放而没被释放的内存区域),而是将它们打印到 /sys/kernel/debug/kmemleak 文件中。用户态的 Valgrind 也有一个类似的功能,使用 --leak-check 选项可以检测并报错内存泄漏问题,但并不释放这个孤儿内存。编译内核时使用 CONFIG_DEBUG_KMEMLEAK 选项打开 kmemcleak 调试功能。阅读 Documentation/kmemleak.txt 来学习怎么使用这个工具并读懂调试结果。

|

||||

> kmemleak 通过类似于垃圾收集器的功能来检测内核是否有内存泄漏问题。而 kmemleak 与垃圾收集器的不同之处在于前者不会释放孤儿目标(LCTT:不会再被使用的、应该被释放而没被释放的内存区域),而是将它们打印到 /sys/kernel/debug/kmemleak 文件中。用户态的 Valgrind 也有一个类似的功能,使用 --leak-check 选项可以检测并报错内存泄漏问题,但并不释放这个孤儿内存。编译内核时使用 `CONFIG_DEBUG_KMEMLEAK` 选项打开 kmemcleak 调试功能。阅读 Documentation/kmemleak.txt 来学习怎么使用这个工具并读懂调试结果。

|

||||

|

||||

### 内核调试接口 ###

|

||||

|

||||

@ -54,13 +54,13 @@ Linux 内核通过配置选项、调试用的 API、接口和框架来支持动

|

||||

|

||||

### 调试的 API ###

|

||||

|

||||

调试 API 的一个很好的例子是 DMA-debug,用来调试驱动是否错误使用了 DMA 提供的 API。它会跟踪每个设备的映射关系,检测程序有没有试图为一些根本不存在的映射执行“取消映射”操作,检测代码建立 DMA 映射后可能产生的“映射丢失”的错误。内核配置选项 CONFIG_HAVE_DMA_APT_DEBUG 和 CONFIG_DMA_API_DEBUG 可以为内核提供这个功能。其中,CONFIG_DMA_API_DEBUG 选项启用后,内核调用 DMA 的 API 的同时也会调用 Debug-dma 接口。举例来说,当一个驱动调用 dma_map_page() 函数来映射一个 DMA 缓存时,dma_map_page() 会调用debug_dma_map_page() 函数来跟踪这个缓存,直到驱动调用 dma_unmap_page() 来取消映射。详细内容请参考[使用 DMA 调试 API 检测潜在的数据污染和内存泄漏问题][3]。

|

||||

调试 API 的一个很好的例子是 DMA-debug,用来调试驱动是否错误使用了 DMA 提供的 API。它会跟踪每个设备的映射关系,检测程序有没有试图为一些根本不存在的映射执行“取消映射”操作,检测代码建立 DMA 映射后可能产生的“映射丢失”的错误。内核配置选项 `CONFIG_HAVE_DMA_APT_DEBUG` 和 `CONFIG_DMA_API_DEBUG` 可以为内核提供这个功能。其中,`CONFIG_DMA_API_DEBUG` 选项启用后,内核调用 DMA 的 API 的同时也会调用 Debug-dma 接口。举例来说,当一个驱动调用 `dma_map_page()` 函数来映射一个 DMA 缓存时,`dma_map_page()` 会调用`debug_dma_map_page()` 函数来跟踪这个缓存,直到驱动调用 `dma_unmap_page()` 来取消映射。详细内容请参考[使用 DMA 调试 API 检测潜在的数据污染和内存泄漏问题][3]。

|

||||

|

||||

### 动态调试 ###

|

||||

|

||||

动态调试功能就是你可以决定在程序运行过程中是否要 pr_debug(), dev_dbg(), print_hex_dump_debug(), print_hex_dump_bytes() 这些函数正常运行起来。什么意思?当程序运行过程中出现错误时,你可以指定程序打印有针对性的、详细的调试信息。这功能牛逼极了,我们不再需要为了添加调试代码定位一个问题,而重新编译安装内核。你可以指定 CONDIF_DYNAMIC_DEBUG 选项打开动态调试功能,然后通过 /sys/kernel/debug/dynamic_debug/control 接口指定要打印哪些调试日志。下面分别列出代码级别和模块级别打印日志的操作方法:

|

||||

动态调试功能就是你可以决定在程序运行过程中是否要 `pr_debug()`, `dev_dbg()`, `print_hex_dump_debug()`, `print_hex_dump_bytes()` 这些函数正常运行起来。什么意思?当程序运行过程中出现错误时,你可以指定程序打印有针对性的、详细的调试信息。这功能牛逼极了,我们不再需要为了添加调试代码定位一个问题,而重新编译安装内核。你可以指定 `CONDIF_DYNAMIC_DEBUG` 选项打开动态调试功能,然后通过 `/sys/kernel/debug/dynamic_debug/control` 接口指定要打印哪些调试日志。下面分别列出代码级别和模块级别打印日志的操作方法:

|

||||

|

||||

让 kernel/power/suspend.c 源码第340行的 pr_debug() 函数打印日志:

|

||||

让 kernel/power/suspend.c 源码第340行的 `pr_debug()` 函数打印日志:

|

||||

|

||||

echo 'file suspend.c line 340 +p' > /sys/kernel/debug/dynamic_debug/control

|

||||

|

||||

@ -72,7 +72,7 @@ Linux 内核通过配置选项、调试用的 API、接口和框架来支持动

|

||||

|

||||

> 编辑 /etc/modprobe.d/modname.conf 文件(没有这个文件就创建一个),添加 dyndbg='plmft' 选项。然而对于哪些通过 initramfs 加载的驱动来说,这个配置基本无效(LCTT:免费奉送点比较高级的知识哈。系统启动时,需要先让 initramfs 挂载一个虚拟的文件系统,然后再挂载启动盘上的真实文件系统。这个虚拟文件系统里面的文件是 initramfs 自己提供的,也就是说你在真实的文件系统下面配置了 /etc/modprobe.d/modname.conf 这个文件,initramfs 是压根不去理会的。站在内核驱动的角度看:如果内核驱动在 initramfs 过程中被加载到内核,这个驱动读取到的 /etc/modprobe.d/modname.conf 是 initramfs 提供的,而不是你编辑的那个。所以会有上述“写了配置文件后重启依然无效”的结论)。对于这种刁民,呃,刁驱动,我们需要修改 grub 配置文件,在 kernel 那一行添加 module.dyndbg='plmft' 参数,这样你的驱动就可以开机启动动态调试功能了。

|

||||

|

||||

想打印更详细的调试信息,可以使用 dynamic_debug.verbose=1 选项。参考 Documentation/dynamic-debug-howto.txt 文件获取更多信息。

|

||||

想打印更详细的调试信息,可以使用 `dynamic_debug.verbose=1` 选项。参考 Documentation/dynamic-debug-howto.txt 文件获取更多信息。

|

||||

|

||||

### 设置追踪点 ###

|

||||

|

||||

@ -135,7 +135,7 @@ Linux 内核通过配置选项、调试用的 API、接口和框架来支持动

|

||||

|

||||

via:http://www.linuxjournal.com/content/linux-kernel-testing-and-debugging?page=0,3

|

||||

|

||||

译者:[bazz2](https://github.com/bazz2) 校对:[校对者ID](https://github.com/校对者ID)

|

||||

译者:[bazz2](https://github.com/bazz2) 校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,4 +1,4 @@

|

||||

Linux 内核测试和调试 - 5

|

||||

Linux 内核测试和调试(5)

|

||||

================================================================================

|

||||

### 仿真环境下进行 Linux 电源管理子系统测试 ###

|

||||

|

||||

@ -85,6 +85,6 @@ git bisect 是一个非常有用非常强大的工具,用于将 git 上的一

|

||||

|

||||

via: http://www.linuxjournal.com/content/linux-kernel-testing-and-debugging?page=0,4

|

||||

|

||||

译者:[bazz2](https://github.com/bazz2) 校对:[校对者ID](https://github.com/校对者ID)

|

||||

译者:[bazz2](https://github.com/bazz2) 校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

@ -1,4 +1,4 @@

|

||||

Linux 内核的测试和调试 - 6

|

||||

Linux 内核的测试和调试(6)

|

||||

================================================================================

|

||||

### Linux 内核补丁测试 ###

|

||||

|

||||

@ -12,7 +12,7 @@ Linux 内核的测试和调试 - 6

|

||||

|

||||

如果你对你的补丁测试结果感到很满意,你就可以提交补丁了。请确保提交 commit 的信息要描述得非常清楚。要让内核维护者和其他开发者看懂补丁所修改的内容,这一点非常重要。生成补丁后,执行 scripts/checkpatch.pl 脚本,找到 checkpatch 是产生的错误或警告(如果有的话),修复它们。重新生成补丁,直到补丁通过这个脚本的测试。重新测试这个补丁。将本补丁用于其他的内核源码上,保证不会有冲突产生。

|

||||

|

||||

现在你做好提交补丁的准备了。先运行 scriptst/get_maintainer.pl 来确认你应该把补丁发给哪个内核维护者。注意不要以附件形式发送补丁,而是以纯文本形式粘贴在邮件里面。确保你的邮件客户端可以发送纯文本信息,你可以试试给自己发送一份补丁邮件来测试你的邮件客户端的功能。收到自己的邮件后,运行 checkpatch 命令并给自己的内核源码打上你的补丁。如果这两部都能通过,你就可以给 Linux 邮箱列表发送补丁了。使用 git send-email 命令是提交补丁最安全的方式,可以避免你的邮箱的兼容性问题。你的 .gitconfig 文件里面需要配置好有效的 smtp 服务器,详细操作参考 git 的帮助文档。

|

||||

现在你做好提交补丁的准备了。先运行 `scriptst/get_maintainer.pl` 来确认你应该把补丁发给哪个内核维护者。注意不要以附件形式发送补丁,而是以纯文本形式粘贴在邮件里面。确保你的邮件客户端可以发送纯文本信息,你可以试试给自己发送一份补丁邮件来测试你的邮件客户端的功能。收到自己的邮件后,运行 checkpatch 命令并给自己的内核源码打上你的补丁。如果这两部都能通过,你就可以给 Linux 邮箱列表发送补丁了。使用 git send-email 命令是提交补丁最安全的方式,可以避免你的邮箱的兼容性问题。你的 .gitconfig 文件里面需要配置好有效的 smtp 服务器,详细操作参考 git 的帮助文档。

|

||||

|

||||

更多提交补丁的规矩,请参考下面的资料:

|

||||

|

||||

@ -111,7 +111,7 @@ Shuah Khan 是三星公司开源组的高级 Linux 内核开发工程师。

|

||||

|

||||

via: http://www.linuxjournal.com/content/linux-kernel-testing-and-debugging?page=0,5

|

||||

|

||||

译者:[bazz2](https://github.com/bazz2) 校对:[校对者ID](https://github.com/校对者ID)

|

||||

译者:[bazz2](https://github.com/bazz2) 校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,14 +1,14 @@

|

||||

Budgie桌面5.1 对保守的用户来说是一个极好的新桌面环境

|

||||

Budgie桌面5.1 :给保守用户的超好桌面环境

|

||||

================================================================================

|

||||

|

||||

|

||||

**Evolve OS的开发者Ikey Doherty,制作了这款叫做Budgie Desktop的新桌面环境并且发布了它的新版本。**

|

||||

|

||||

Evolve OS还没有发布,但开发者正积极地进行关于它的工作。系统中并没有采用一个现有的桌面环境,他决定最好还是制作一个自己的。这个桌面基于GNOME并用了不少GNOME的包,但它看起来却截然不同。事实上,它使用了与MATE和Cinnamon桌面相同的模式,尽管Budgie似乎更有现代感更优美一些。

|

||||

Evolve OS还没有发布,但开发者正积极地进行关于它的工作。系统中并没有采用任何现有的桌面环境,他们决定最好还是制作一个自己的。这个桌面基于GNOME并用了不少GNOME的包,但它看起来却截然不同。事实上,它使用了与MATE和Cinnamon桌面相同的模式,尽管Budgie似乎更有现代感更优美一些。

|

||||

|

||||

有趣的是,桌面这个关键的技术部分却先于其将要服务的操作系统发布了,但是潜在的用户完全不必惊讶。由此也出现了可用于Ubuntu 14.04 LTS 和Ubuntu 14.10的[PPA][1],但这不是官方的。Arch Linux用户也将还会在AUR库中发现新的桌面环境。

|

||||

有趣的是,桌面这个关键的技术部分却先于其将要服务的操作系统发布了,但是对此感兴趣的用户完全不必惊讶。由此也出现了可用于Ubuntu 14.04 LTS 和Ubuntu 14.10的[PPA][1],但这不是官方的。Arch Linux用户也将还会在AUR库中发现新的桌面环境。

|

||||

|

||||

“从V4版本以来,几乎所有的改变都与面板有关。它已被使用Vala语言重写,这降低了维护开销也大大降低了新贡献者进入的门槛。所以,如果你使用OBS的话,当你于(希望是)今天在OBS上获取到更新,或者对Evolve OS用户,你已经安装了更新,你将只能感觉到很小的视觉差异。我的想法是不去改变外观,而是重写代码来使它更好些。”

|

||||

“从V4版本以来,几乎所有的改变都与面板有关。它已被使用Vala语言重写,这降低了维护开销也大大降低了新贡献者进入的门槛。所以,如果你使用OBS的话,当你(希望是)今天在OBS上获取到更新,或者对Evolve OS用户,你已经安装了更新,你将只能感觉到很小的视觉差异。我的想法是不去改变外观,而是重写代码来使它更好些。”

|

||||

|

||||

“将它重写成Vala语言的程序付出了很多努力,但马上就会见到成效。将来桌面整个都将会用Vala重写,成为‘第二次写’——第二次我们会做的更好”Ikey Doherty在发布[公告][2]中这样说。

|

||||

|

||||

@ -30,7 +30,7 @@ via: http://news.softpedia.com/news/Budgie-Desktop-5-1-Is-a-Superb-New-Desktop-E

|

||||

|

||||

原文作者:[Silviu Stahie][a]

|

||||

|

||||

译者:[linuhap](https://github.com/linuhap) 校对:[校对者ID](https://github.com/校对者ID)

|

||||

译者:[linuhap](https://github.com/linuhap) 校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,12 +1,12 @@

|

||||

在Linux中为SFTP配置chroot环境

|

||||

在 Linux 中为非 SSH 用户配置 SFTP 环境

|

||||

================================================================================

|

||||

在**某些环境**中,系统管理员想要允许极少数用户传输文件到Linux盒子中,而非ssh。要实现这一目的,我们可以使用**SFTP**,并为其构建chroot环境。

|

||||

在**某些环境**中,系统管理员想要允许极少数用户在可以传输文件到Linux机器中,但是不允许使用 SSH。要实现这一目的,我们可以使用**SFTP**,并为其构建chroot环境。

|

||||

|

||||

### SFTP & chroot背景: ###

|

||||

|

||||

**SFTP**是值**SSH文件传输协议(SSH File Transfer protocol)或安全文件传输协议(Secure File Transfer Protocol)**,它提供了任何可信数据流下的文件访问、文件传输以及文件管理功能。当我们为SFTP配置chroot环境后,只有被许可的用户可以访问,并被限制到它们的**家目录**中,或者我们可以这么说:被许可的用户将处于牢笼环境中,在此环境中它们甚至不能切换它们的目录。

|

||||

**SFTP**是指**SSH文件传输协议(SSH File Transfer protocol)或安全文件传输协议(Secure File Transfer Protocol)**,它提供了可信数据流下的文件访问、文件传输以及文件管理功能。当我们为SFTP配置chroot环境后,只有被许可的用户可以访问,并被限制到他们的**家目录**中,换言之:被许可的用户将处于牢笼环境中,在此环境中它们甚至不能切换它们的目录。

|

||||

|

||||

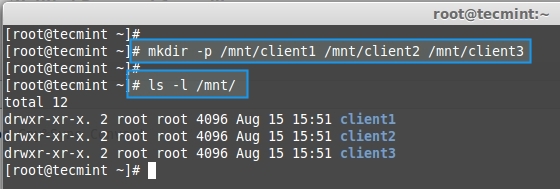

在本文中,我们将配置**RHEL 6.X** & **CentOS 6.X中的SFTP Chroot环境**。我们开启一个用户帐号‘**Jack**’,该用户将被允许在Linux盒子上传输文件,但没有ssh访问权限。

|

||||

在本文中,我们将配置**RHEL 6.X** 和 **CentOS 6.X中的SFTP Chroot环境**。我们开启一个用户帐号‘**Jack**’,该用户将被允许在Linux机器上传输文件,但没有ssh访问权限。

|

||||

|

||||

### 步骤:1 创建组 ###

|

||||

|

||||

@ -14,7 +14,7 @@

|

||||

|

||||

### 步骤:2 分配附属组(sftp_users)给用户 ###

|

||||

|

||||

如果用户在系统上不存在,使用以下命令创建:

|

||||

如果用户在系统上不存在,使用以下命令创建( LCTT 译注:这里给用户指定了一个不能登录的 shell,以防止通过 ssh 登录):

|

||||

|

||||

[root@localhost ~]# useradd -G sftp_users -s /sbin/nologin jack

|

||||

[root@localhost ~]# passwd jack

|

||||

@ -23,7 +23,7 @@

|

||||

|

||||

[root@localhost ~]# usermod –G sftp_users -s /sbin/nologin jack

|

||||

|

||||

**注意**:如果你想要修改用户的**默认家目录**,那么在useradd和usermod命令中使用‘**-d**’选项,并设置**合适的权限**。

|

||||

**注意**:如果你想要修改用户的**默认家目录**,那么可以在useradd和usermod命令中使用‘**-d**’选项,并设置**合适的权限**。

|

||||

|

||||

### 步骤:3 现在编辑配置文件 “/etc/ssh/sshd_config” ###

|

||||

|

||||

@ -33,7 +33,7 @@

|

||||

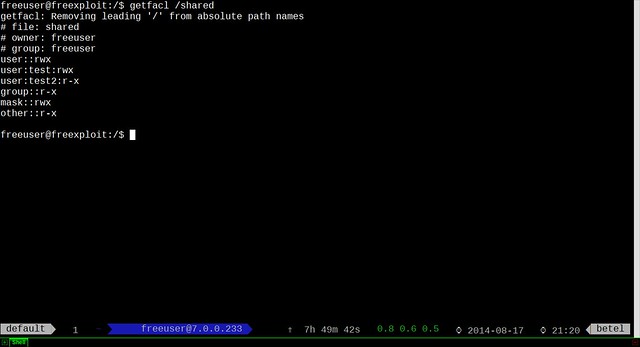

Subsystem sftp internal-sftp

|

||||

|

||||