mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-27 02:30:10 +08:00

Merge remote-tracking branch 'LCTT/master' into 20190811-How-to-measure-the-health-of-an-open-source-community

This commit is contained in:

commit

d8d9ff3fe0

@ -0,0 +1,69 @@

|

||||

使用 MacSVG 创建 SVG 动画

|

||||

======

|

||||

|

||||

> 开源 SVG:墙上的魔法字。

|

||||

|

||||

|

||||

|

||||

新巴比伦的摄政王[伯沙撒][1]没有注意到他在盛宴期间神奇地[书写在墙上的文字][2]。但是,如果他在公元前 539 年有一台笔记本电脑和良好的互联网连接,他可能会通过在浏览器上阅读 SVG 来避开那些讨厌的波斯人。

|

||||

|

||||

出现在网页上的动画文本和对象是建立用户兴趣和参与度的好方法。有几种方法可以实现这一点,例如视频嵌入、动画 GIF 或幻灯片 —— 但你也可以使用[可缩放矢量图形(SVG)][3]。

|

||||

|

||||

SVG 图像与 JPG 不同,因为它可以缩放而不会丢失其分辨率。矢量图像是由点而不是像素创建的,所以无论它放大到多大,它都不会失去分辨率或像素化。充分利用可缩放的静态图像的一个例子是网站的徽标。

|

||||

|

||||

### 动起来,动起来

|

||||

|

||||

你可以使用多种绘图程序创建 SVG 图像,包括开源的 [Inkscape][4] 和 Adobe Illustrator。让你的图像“能动起来”需要更多的努力。幸运的是,有一些开源解决方案甚至可以引起伯沙撒的注意。

|

||||

|

||||

[MacSVG][5] 是一款可以让你的图像动起来的工具。你可以在 [GitHub][6] 上找到源代码。

|

||||

|

||||

根据其[官网][5]说,MacSVG 由阿肯色州康威的 Douglas Ward 开发,是一个“用于设计 HTML5 SVG 艺术和动画的开源 Mac OS 应用程序”。

|

||||

|

||||

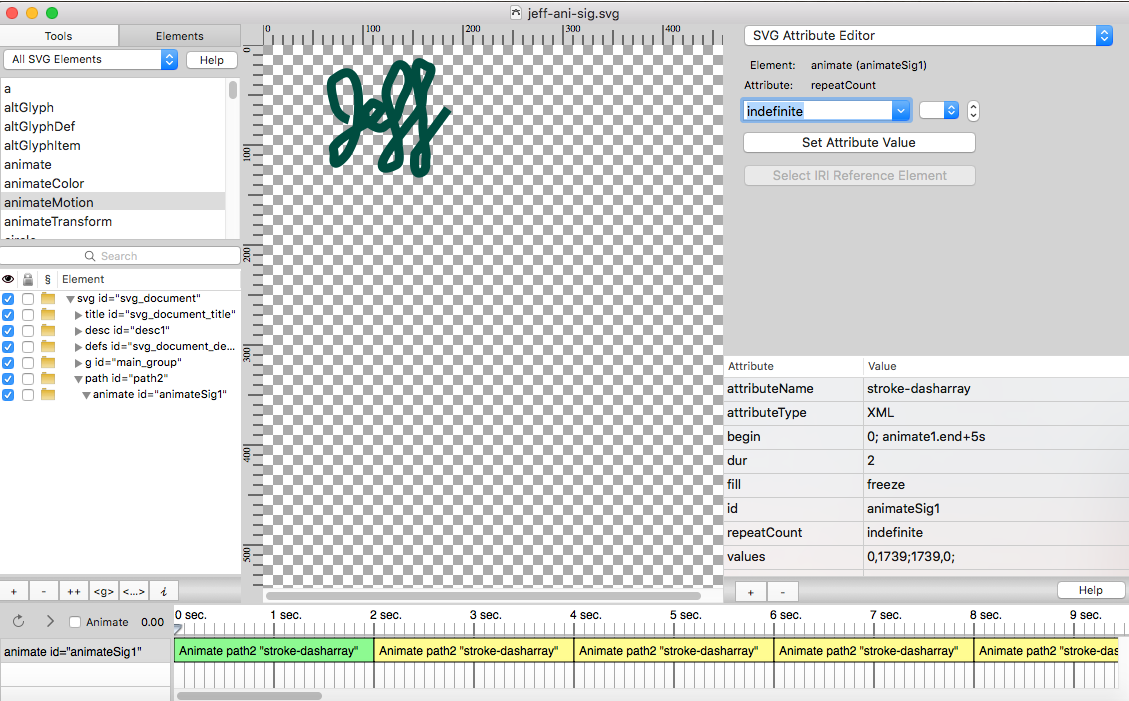

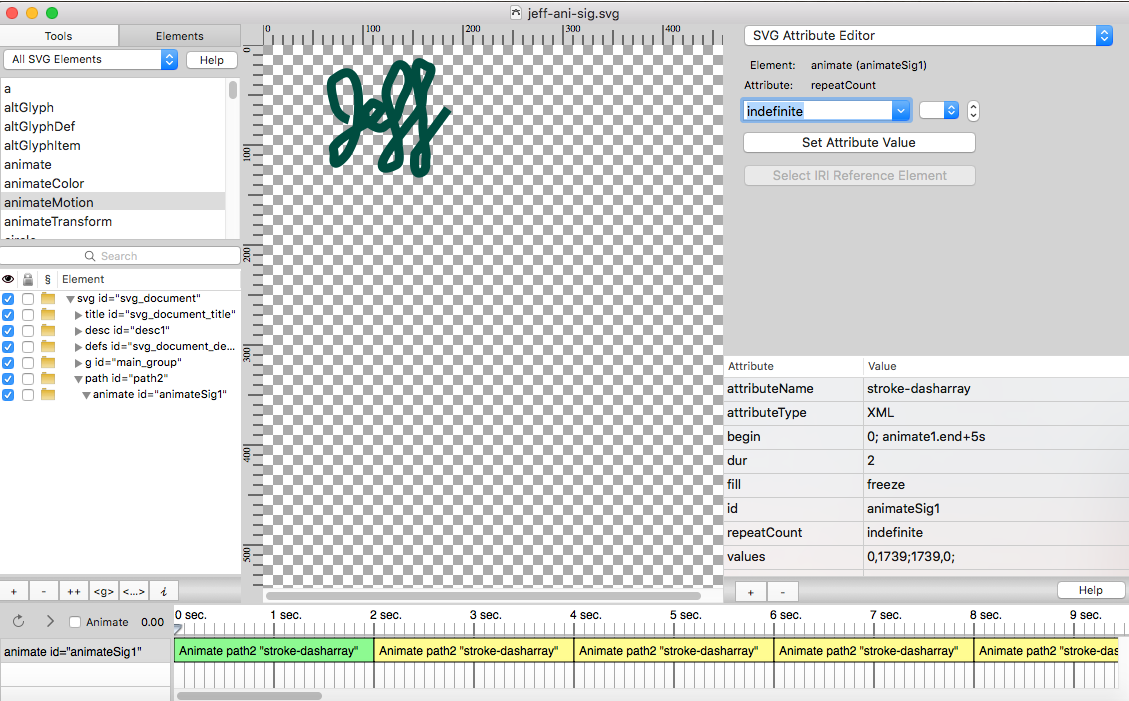

我想使用 MacSVG 来创建一个动画签名。我承认我发现这个过程有点令人困惑,并且在我第一次尝试创建一个实际的动画 SVG 图像时失败了。

|

||||

|

||||

|

||||

|

||||

重要的是首先要了解要展示的书法内容实际写的是什么。

|

||||

|

||||

动画文字背后的属性是 [stroke-dasharray][7]。将该术语分成三个单词有助于解释正在发生的事情:“stroke” 是指用笔(无论是物理的笔还是数字化笔)制作的线条或笔画。“dash” 意味着将笔划分解为一系列折线。“array” 意味着将整个东西生成为数组。这是一个简单的概述,但它可以帮助我理解应该发生什么以及为什么。

|

||||

|

||||

使用 MacSVG,你可以导入图形(.PNG)并使用钢笔工具描绘书写路径。我使用了草书来表示我的名字。然后,只需应用该属性来让书法动画起来、增加和减少笔划的粗细、改变其颜色等等。完成后,动画的书法将导出为 .SVG 文件,并可以在网络上使用。除书写外,MacSVG 还可用于许多不同类型的 SVG 动画。

|

||||

|

||||

### 在 WordPress 中书写

|

||||

|

||||

我准备在我的 [WordPress][8] 网站上传和分享我的 SVG 示例,但我发现 WordPress 不允许进行 SVG 媒体导入。幸运的是,我找到了一个方便的插件:Benbodhi 的 [SVG 支持][9]插件允许快速、轻松地导入我的 SVG,就像我将 JPG 导入媒体库一样。我能够在世界各地向巴比伦人展示我[写在墙上的魔法字][10]。

|

||||

|

||||

我在 [Brackets][11] 中开源了 SVG 的源代码,结果如下:

|

||||

|

||||

```

|

||||

<?xml version="1.0" encoding="utf-8" standalone="yes"?>

|

||||

<!DOCTYPE svg PUBLIC "-//W3C//DTD SVG 1.1//EN" "http://www.w3.org/Graphics/SVG/1.1/DTD/svg11.dtd">

|

||||

<svg xmlns="http://www.w3.org/2000/svg" xmlns:xlink="http://www.w3.org/1999/xlink" xmlns:dc="http://purl.org/dc/elements/1.1/" xmlns:cc="http://web.resource.org/cc/" xmlns:rdf="http://www.w3.org/1999/02/22-rdf-syntax-ns#" xmlns:sodipodi="http://sodipodi.sourceforge.net/DTD/sodipodi-0.dtd" xmlns:inkscape="http://www.inkscape.org/namespaces/inkscape" height="360px" style="zoom: 1;" cursor="default" id="svg_document" width="480px" baseProfile="full" version="1.1" preserveAspectRatio="xMidYMid meet" viewBox="0 0 480 360"><title id="svg_document_title">Path animation with stroke-dasharray</title><desc id="desc1">This example demonstrates the use of a path element, an animate element, and the stroke-dasharray attribute to simulate drawing.</desc><defs id="svg_document_defs"></defs><g id="main_group"></g><path stroke="#004d40" id="path2" stroke-width="9px" d="M86,75 C86,75 75,72 72,61 C69,50 66,37 71,34 C76,31 86,21 92,35 C98,49 95,73 94,82 C93,91 87,105 83,110 C79,115 70,124 71,113 C72,102 67,105 75,97 C83,89 111,74 111,74 C111,74 119,64 119,63 C119,62 110,57 109,58 C108,59 102,65 102,66 C102,67 101,75 107,79 C113,83 118,85 122,81 C126,77 133,78 136,64 C139,50 147,45 146,33 C145,21 136,15 132,24 C128,33 123,40 123,49 C123,58 135,87 135,96 C135,105 139,117 133,120 C127,123 116,127 120,116 C124,105 144,82 144,81 C144,80 158,66 159,58 C160,50 159,48 161,43 C163,38 172,23 166,22 C160,21 155,12 153,23 C151,34 161,68 160,78 C159,88 164,108 163,113 C162,118 165,126 157,128 C149,130 152,109 152,109 C152,109 185,64 185,64 " fill="none" transform=""><animate values="0,1739;1739,0;" attributeType="XML" begin="0; animate1.end+5s" id="animateSig1" repeatCount="indefinite" attributeName="stroke-dasharray" fill="freeze" dur="2"></animate></path></svg>

|

||||

```

|

||||

|

||||

你会使用 MacSVG 做什么?

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/10/macsvg-open-source-tool-animation

|

||||

|

||||

作者:[Jeff Macharyas][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[wxy](https://github.com/wxy)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/rikki-endsley

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://en.wikipedia.org/wiki/Belshazzar

|

||||

[2]: https://en.wikipedia.org/wiki/Belshazzar%27s_feast

|

||||

[3]: https://en.wikipedia.org/wiki/Scalable_Vector_Graphics

|

||||

[4]: https://inkscape.org/

|

||||

[5]: https://macsvg.org/

|

||||

[6]: https://github.com/dsward2/macSVG

|

||||

[7]: https://gist.github.com/mbostock/5649592

|

||||

[8]: https://macharyas.com/

|

||||

[9]: https://wordpress.org/plugins/svg-support/

|

||||

[10]: https://macharyas.com/index.php/2018/10/14/open-source-svg/

|

||||

[11]: http://brackets.io/

|

||||

167

published/20181220 Getting started with Prometheus.md

Normal file

167

published/20181220 Getting started with Prometheus.md

Normal file

@ -0,0 +1,167 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (wxy)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-11234-1.html)

|

||||

[#]: subject: (Getting started with Prometheus)

|

||||

[#]: via: (https://opensource.com/article/18/12/introduction-prometheus)

|

||||

[#]: author: (Michael Zamot https://opensource.com/users/mzamot)

|

||||

|

||||

Prometheus 入门

|

||||

======

|

||||

|

||||

> 学习安装 Prometheus 监控和警报系统并编写它的查询。

|

||||

|

||||

|

||||

|

||||

[Prometheus][1] 是一个开源的监控和警报系统,它直接从目标主机上运行的代理程序中抓取指标,并将收集的样本集中存储在其服务器上。也可以使用像 `collectd_exporter` 这样的插件推送指标,尽管这不是 Promethius 的默认行为,但在主机位于防火墙后面或位于安全策略禁止打开端口的某些环境中它可能很有用。

|

||||

|

||||

Prometheus 是[云原生计算基金会(CNCF)][2]的一个项目。它使用<ruby>联合模型<rt>federation model</rt></ruby>进行扩展,该模型使得一个 Prometheus 服务器能够抓取另一个 Prometheus 服务器的数据。这允许创建分层拓扑,其中中央系统或更高级别的 Prometheus 服务器可以抓取已从下级实例收集的聚合数据。

|

||||

|

||||

除 Prometheus 服务器外,其最常见的组件是[警报管理器][3]及其输出器。

|

||||

|

||||

警报规则可以在 Prometheus 中创建,并配置为向警报管理器发送自定义警报。然后,警报管理器处理和管理这些警报,包括通过电子邮件或第三方服务(如 [PagerDuty][4])等不同机制发送通知。

|

||||

|

||||

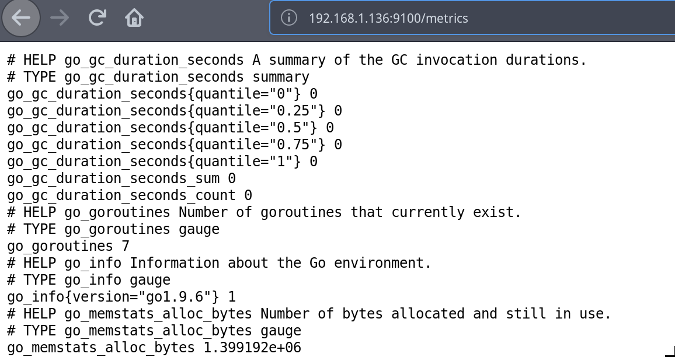

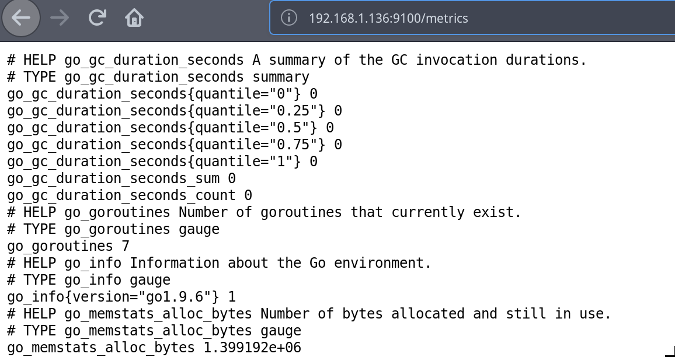

Prometheus 的输出器可以是库、进程、设备或任何其他能将 Prometheus 抓取的指标公开出去的东西。 这些指标可在端点 `/metrics` 中获得,它允许 Prometheus 无需代理直接抓取它们。本文中的教程使用 `node_exporter` 来公开目标主机的硬件和操作系统指标。输出器的输出是明文的、高度可读的,这是 Prometheus 的优势之一。

|

||||

|

||||

此外,你可以将 Prometheus 作为后端,配置 [Grafana][5] 来提供数据可视化和仪表板功能。

|

||||

|

||||

### 理解 Prometheus 的配置文件

|

||||

|

||||

抓取 `/metrics` 的间隔秒数控制了时间序列数据库的粒度。这在配置文件中定义为 `scrape_interval` 参数,默认情况下设置为 60 秒。

|

||||

|

||||

在 `scrape_configs` 部分中为每个抓取作业设置了目标。每个作业都有自己的名称和一组标签,可以帮助你过滤、分类并更轻松地识别目标。一项作业可以有很多目标。

|

||||

|

||||

### 安装 Prometheus

|

||||

|

||||

在本教程中,为简单起见,我们将使用 Docker 安装 Prometheus 服务器和 `node_exporter`。Docker 应该已经在你的系统上正确安装和配置。对于更深入、自动化的方法,我推荐 Steve Ovens 的文章《[如何使用 Ansible 与 Prometheus 建立系统监控][6]》。

|

||||

|

||||

在开始之前,在工作目录中创建 Prometheus 配置文件 `prometheus.yml`,如下所示:

|

||||

|

||||

```

|

||||

global:

|

||||

scrape_interval: 15s

|

||||

evaluation_interval: 15s

|

||||

|

||||

scrape_configs:

|

||||

- job_name: 'prometheus'

|

||||

|

||||

static_configs:

|

||||

- targets: ['localhost:9090']

|

||||

|

||||

- job_name: 'webservers'

|

||||

|

||||

static_configs:

|

||||

- targets: ['<node exporter node IP>:9100']

|

||||

```

|

||||

|

||||

通过运行以下命令用 Docker 启动 Prometheus:

|

||||

|

||||

```

|

||||

$ sudo docker run -d -p 9090:9090 -v

|

||||

/path/to/prometheus.yml:/etc/prometheus/prometheus.yml

|

||||

prom/prometheus

|

||||

```

|

||||

|

||||

默认情况下,Prometheus 服务器将使用端口 9090。如果此端口已在使用,你可以通过在上一个命令的后面添加参数 `--web.listen-address="<IP of machine>:<port>"` 来更改它。

|

||||

|

||||

在要监视的计算机中,使用以下命令下载并运行 `node_exporter` 容器:

|

||||

|

||||

```

|

||||

$ sudo docker run -d -v "/proc:/host/proc" -v "/sys:/host/sys" -v

|

||||

"/:/rootfs" --net="host" prom/node-exporter --path.procfs

|

||||

/host/proc --path.sysfs /host/sys --collector.filesystem.ignored-

|

||||

mount-points "^/(sys|proc|dev|host|etc)($|/)"

|

||||

```

|

||||

|

||||

出于本文练习的目的,你可以在同一台机器上安装 `node_exporter` 和 Prometheus。请注意,生产环境中在 Docker 下运行 `node_exporter` 是不明智的 —— 这仅用于测试目的。

|

||||

|

||||

要验证 `node_exporter` 是否正在运行,请打开浏览器并导航到 `http://<IP of Node exporter host>:9100/metrics`,这将显示收集到的所有指标;也即是 Prometheus 将要抓取的相同指标。

|

||||

|

||||

|

||||

|

||||

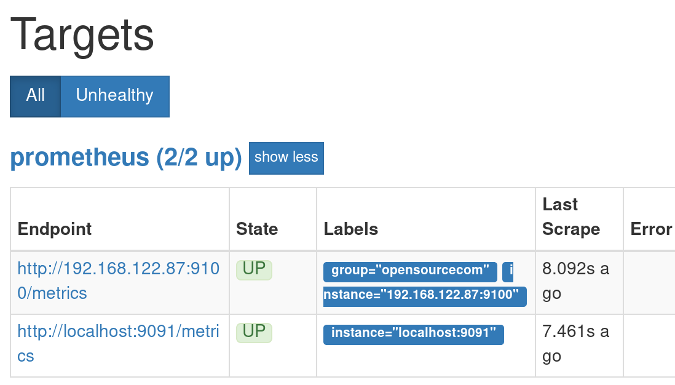

要确认 Prometheus 服务器安装成功,打开浏览器并导航至:<http://localhost:9090>。

|

||||

|

||||

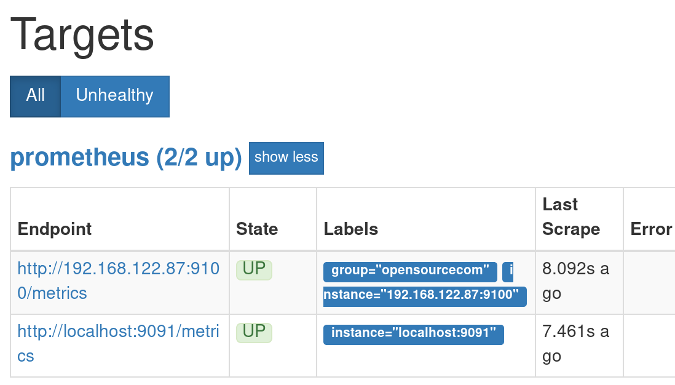

你应该看到了 Prometheus 的界面。单击“Status”,然后单击“Targets”。在 “Status” 下,你应该看到你的机器被列为 “UP”。

|

||||

|

||||

|

||||

|

||||

### 使用 Prometheus 查询

|

||||

|

||||

现在是时候熟悉一下 [PromQL][7](Prometheus 的查询语法)及其图形化 Web 界面了。转到 Prometheus 服务器上的 `http://localhost:9090/graph`。你将看到一个查询编辑器和两个选项卡:“Graph” 和 “Console”。

|

||||

|

||||

Prometheus 将所有数据存储为时间序列,使用指标名称标识每个数据。例如,指标 `node_filesystem_avail_bytes` 显示可用的文件系统空间。指标的名称可以在表达式框中使用,以选择具有此名称的所有时间序列并生成即时向量。如果需要,可以使用选择器和标签(一组键值对)过滤这些时间序列,例如:

|

||||

|

||||

```

|

||||

node_filesystem_avail_bytes{fstype="ext4"}

|

||||

```

|

||||

|

||||

过滤时,你可以匹配“完全相等”(`=`)、“不等于”(`!=`),“正则匹配”(`=~`)和“正则排除匹配”(`!~`)。以下示例说明了这一点:

|

||||

|

||||

要过滤 `node_filesystem_avail_bytes` 以显示 ext4 和 XFS 文件系统:

|

||||

|

||||

```

|

||||

node_filesystem_avail_bytes{fstype=~"ext4|xfs"}

|

||||

```

|

||||

|

||||

要排除匹配:

|

||||

|

||||

```

|

||||

node_filesystem_avail_bytes{fstype!="xfs"}

|

||||

```

|

||||

|

||||

你还可以使用方括号得到从当前时间往回的一系列样本。你可以使用 `s` 表示秒,`m` 表示分钟,`h` 表示小时,`d` 表示天,`w` 表示周,而 `y` 表示年。使用时间范围时,返回的向量将是范围向量。

|

||||

|

||||

例如,以下命令生成从五分钟前到现在的样本:

|

||||

|

||||

```

|

||||

node_memory_MemAvailable_bytes[5m]

|

||||

```

|

||||

|

||||

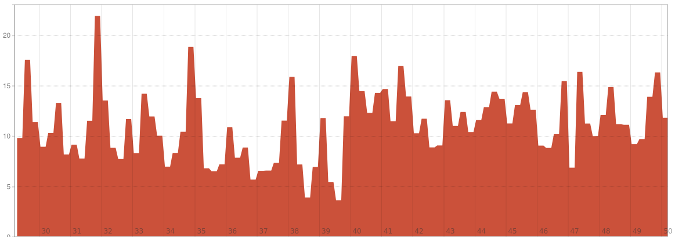

Prometheus 还包括了高级查询的功能,例如:

|

||||

|

||||

```

|

||||

100 * (1 - avg by(instance)(irate(node_cpu_seconds_total{job='webservers',mode='idle'}[5m])))

|

||||

```

|

||||

|

||||

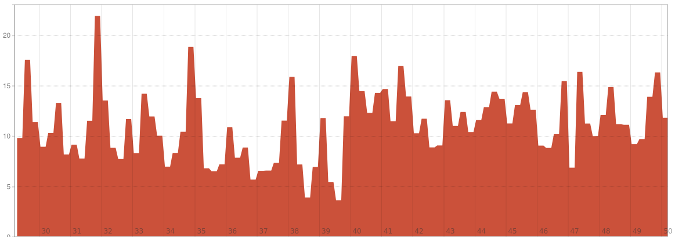

请注意标签如何用于过滤作业和模式。指标 `node_cpu_seconds_total` 返回一个计数器,`irate()`函数根据范围间隔的最后两个数据点计算每秒的变化率(意味着该范围可以小于五分钟)。要计算 CPU 总体使用率,可以使用 `node_cpu_seconds_total` 指标的空闲(`idle`)模式。处理器的空闲比例与繁忙比例相反,因此从 1 中减去 `irate` 值。要使其为百分比,请将其乘以 100。

|

||||

|

||||

|

||||

|

||||

### 了解更多

|

||||

|

||||

Prometheus 是一个功能强大、可扩展、轻量级、易于使用和部署的监视工具,对于每个系统管理员和开发人员来说都是必不可少的。出于这些原因和其他原因,许多公司正在将 Prometheus 作为其基础设施的一部分。

|

||||

|

||||

要了解有关 Prometheus 及其功能的更多信息,我建议使用以下资源:

|

||||

|

||||

+ 关于 [PromQL][8]

|

||||

+ 什么是 [node_exporters 集合][9]

|

||||

+ [Prometheus 函数][10]

|

||||

+ [4 个开源监控工具] [11]

|

||||

+ [现已推出:DevOps 监控工具的开源指南] [12]

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/12/introduction-prometheus

|

||||

|

||||

作者:[Michael Zamot][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[wxy](https://github.com/wxy)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/mzamot

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://prometheus.io/

|

||||

[2]: https://www.cncf.io/

|

||||

[3]: https://prometheus.io/docs/alerting/alertmanager/

|

||||

[4]: https://en.wikipedia.org/wiki/PagerDuty

|

||||

[5]: https://grafana.com/

|

||||

[6]: https://opensource.com/article/18/3/how-use-ansible-set-system-monitoring-prometheus

|

||||

[7]: https://prometheus.io/docs/prometheus/latest/querying/basics/

|

||||

[8]: https://prometheus.io/docs/prometheus/latest/querying/basics/

|

||||

[9]: https://github.com/prometheus/node_exporter#collectors

|

||||

[10]: https://prometheus.io/docs/prometheus/latest/querying/functions/

|

||||

[11]: https://opensource.com/article/18/8/open-source-monitoring-tools

|

||||

[12]: https://opensource.com/article/18/8/now-available-open-source-guide-devops-monitoring-tools

|

||||

150

published/20190318 Let-s try dwm - dynamic window manager.md

Normal file

150

published/20190318 Let-s try dwm - dynamic window manager.md

Normal file

@ -0,0 +1,150 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (wxy)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-11235-1.html)

|

||||

[#]: subject: (Let’s try dwm — dynamic window manager)

|

||||

[#]: via: (https://fedoramagazine.org/lets-try-dwm-dynamic-window-manger/)

|

||||

[#]: author: (Adam Šamalík https://fedoramagazine.org/author/asamalik/)

|

||||

|

||||

试试动态窗口管理器 dwm 吧

|

||||

======

|

||||

|

||||

![][1]

|

||||

|

||||

如果你崇尚效率和极简主义,并且正在为你的 Linux 桌面寻找新的窗口管理器,那么你应该尝试一下<ruby>动态窗口管理器<rt>dynamic window manager</rt></ruby> dwm。以不到 2000 标准行的代码写就的 dwm,是一个速度极快而功能强大,且可高度定制的窗口管理器。

|

||||

|

||||

你可以在平铺、单片和浮动布局之间动态选择,使用标签将窗口组织到多个工作区,并使用键盘快捷键快速导航。本文将帮助你开始使用 dwm。

|

||||

|

||||

### 安装

|

||||

|

||||

要在 Fedora 上安装 dwm,运行:

|

||||

|

||||

```

|

||||

$ sudo dnf install dwm dwm-user

|

||||

```

|

||||

|

||||

`dwm` 包会安装窗口管理器本身,`dwm-user` 包显著简化了配置,本文稍后将对此进行说明。

|

||||

|

||||

此外,为了能够在需要时锁定屏幕,我们还将安装 `slock`,这是一个简单的 X 显示锁屏。

|

||||

|

||||

```

|

||||

$ sudo dnf install slock

|

||||

```

|

||||

|

||||

当然,你可以根据你的个人喜好使用其它的锁屏。

|

||||

|

||||

### 快速入门

|

||||

|

||||

要启动 dwm,在登录屏选择 “dwm-user” 选项。

|

||||

|

||||

![][2]

|

||||

|

||||

登录后,你将看到一个非常简单的桌面。事实上,顶部唯一的一个面板列出了代表工作空间的 9 个标签和一个代表窗户布局的 `[]=` 符号。

|

||||

|

||||

#### 启动应用

|

||||

|

||||

在查看布局之前,首先启动一些应用程序,以便你可以随时使用布局。可以通过按 `Alt+p` 并键入应用程序的名称,然后回车来启动应用程序。还有一个快捷键 `Alt+Shift+Enter` 用于打开终端。

|

||||

|

||||

现在有一些应用程序正在运行了,请查看布局。

|

||||

|

||||

#### 布局

|

||||

|

||||

默认情况下有三种布局:平铺布局,单片布局和浮动布局。

|

||||

|

||||

平铺布局由条形图上的 `[]=` 表示,它将窗口组织为两个主要区域:左侧为主区域,右侧为堆叠区。你可以按 `Alt+t` 激活平铺布局。

|

||||

|

||||

![][3]

|

||||

|

||||

平铺布局背后的想法是,主窗口放在主区域中,同时仍然可以看到堆叠区中的其他窗口。你可以根据需要在它们之间快速切换。

|

||||

|

||||

要在两个区域之间交换窗口,请将鼠标悬停在堆叠区中的一个窗口上,然后按 `Alt+Enter` 将其与主区域中的窗口交换。

|

||||

|

||||

![][4]

|

||||

|

||||

单片布局由顶部栏上的 `[N]` 表示,可以使你的主窗口占据整个屏幕。你可以按 `Alt+m` 切换到它。

|

||||

|

||||

最后,浮动布局可让你自由移动和调整窗口大小。它的快捷方式是 `Alt+f`,顶栏上的符号是 `><>`。

|

||||

|

||||

#### 工作区和标签

|

||||

|

||||

每个窗口都分配了一个顶部栏中列出的标签(1-9)。要查看特定标签,请使用鼠标单击其编号或按 `Alt+1..9`。你甚至可以使用鼠标右键单击其编号,一次查看多个标签。

|

||||

|

||||

通过使用鼠标突出显示后,并按 `Alt+Shift+1..9`,窗口可以在不同标签之间移动。

|

||||

|

||||

### 配置

|

||||

|

||||

为了使 dwm 尽可能简约,它不使用典型的配置文件。而是你需要修改代表配置的 C 语言头文件,并重新编译它。但是不要担心,在 Fedora 中你只需要简单地编辑主目录中的一个文件,而其他一切都会在后台发生,这要归功于 Fedora 的维护者提供的 `dwm-user` 包。

|

||||

|

||||

首先,你需要使用类似于以下的命令将文件复制到主目录中:

|

||||

|

||||

```

|

||||

$ mkdir ~/.dwm

|

||||

$ cp /usr/src/dwm-VERSION-RELEASE/config.def.h ~/.dwm/config.h

|

||||

```

|

||||

|

||||

你可以通过运行 `man dwm-start` 来获取确切的路径。

|

||||

|

||||

其次,只需编辑 `~/.dwm/config.h` 文件。例如,让我们配置一个新的快捷方式:通过按 `Alt+Shift+L` 来锁定屏幕。

|

||||

|

||||

考虑到我们已经安装了本文前面提到的 `slock` 包,我们需要在文件中添加以下两行以使其工作:

|

||||

|

||||

在 `/* commands */` 注释下,添加:

|

||||

|

||||

```

|

||||

static const char *slockcmd[] = { "slock", NULL };

|

||||

```

|

||||

|

||||

添加下列行到 `static Key keys[]` 中:

|

||||

|

||||

```

|

||||

{ MODKEY|ShiftMask, XK_l, spawn, {.v = slockcmd } },

|

||||

```

|

||||

|

||||

最终,它应该看起来如下:

|

||||

|

||||

```

|

||||

...

|

||||

/* commands */

|

||||

static char dmenumon[2] = "0"; /* component of dmenucmd, manipulated in spawn() */

|

||||

static const char *dmenucmd[] = { "dmenu_run", "-m", dmenumon, "-fn", dmenufont, "-nb", normbgcolor, "-nf", normfgcolor, "-sb", selbgcolor, "-sf", selfgcolor, NULL };

|

||||

static const char *termcmd[] = { "st", NULL };

|

||||

static const char *slockcmd[] = { "slock", NULL };

|

||||

|

||||

static Key keys[] = {

|

||||

/* modifier key function argument */

|

||||

{ MODKEY|ShiftMask, XK_l, spawn, {.v = slockcmd } },

|

||||

{ MODKEY, XK_p, spawn, {.v = dmenucmd } },

|

||||

{ MODKEY|ShiftMask, XK_Return, spawn, {.v = termcmd } },

|

||||

...

|

||||

```

|

||||

|

||||

保存文件。

|

||||

|

||||

最后,按 `Alt+Shift+q` 注销,然后重新登录。`dwm-user` 包提供的脚本将识别你已更改主目录中的`config.h` 文件,并会在登录时重新编译 dwm。因为 dwm 非常小,它快到你甚至都不会注意到它重新编译了。

|

||||

|

||||

你现在可以尝试按 `Alt+Shift+L` 锁定屏幕,然后输入密码并按回车键再次登录。

|

||||

|

||||

### 总结

|

||||

|

||||

如果你崇尚极简主义并想要一个非常快速而功能强大的窗口管理器,dwm 可能正是你一直在寻找的。但是,它可能不适合初学者,你可能需要做许多其他配置才能按照你的喜好进行配置。

|

||||

|

||||

要了解有关 dwm 的更多信息,请参阅该项目的主页: <https://dwm.suckless.org/>。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://fedoramagazine.org/lets-try-dwm-dynamic-window-manger/

|

||||

|

||||

作者:[Adam Šamalík][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[wxy](https://github.com/wxy)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://fedoramagazine.org/author/asamalik/

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://fedoramagazine.org/wp-content/uploads/2019/03/dwm-magazine-image-816x345.png

|

||||

[2]: https://fedoramagazine.org/wp-content/uploads/2019/03/choosing-dwm-1024x469.png

|

||||

[3]: https://fedoramagazine.org/wp-content/uploads/2019/03/dwm-desktop-1024x593.png

|

||||

[4]: https://fedoramagazine.org/wp-content/uploads/2019/03/Screenshot-2019-03-15-at-11.12.32-1024x592.png

|

||||

@ -1,38 +1,38 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (MjSeven)

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-11243-1.html)

|

||||

[#]: subject: (How To Check Linux Package Version Before Installing It)

|

||||

[#]: via: (https://www.ostechnix.com/how-to-check-linux-package-version-before-installing-it/)

|

||||

[#]: author: (sk https://www.ostechnix.com/author/sk/)

|

||||

|

||||

How To Check Linux Package Version Before Installing It

|

||||

如何在安装之前检查 Linux 软件包的版本?

|

||||

======

|

||||

|

||||

![Check Linux Package Version][1]

|

||||

|

||||

Most of you will know how to [**find the version of an installed package**][2] in Linux. But, what would you do to find the packages’ version which are not installed in the first place? No problem! This guide describes how to check Linux package version before installing it in Debian and its derivatives like Ubuntu. This small tip might be helpful for those wondering what version they would get before installing a package.

|

||||

大多数人都知道如何在 Linux 中[查找已安装软件包的版本][2],但是,你会如何查找那些还没有安装的软件包的版本呢?很简单!本文将介绍在 Debian 及其衍生品(如 Ubuntu)中,如何在软件包安装之前检查它的版本。对于那些想在安装之前知道软件包版本的人来说,这个小技巧可能会有所帮助。

|

||||

|

||||

### Check Linux Package Version Before Installing It

|

||||

### 在安装之前检查 Linux 软件包版本

|

||||

|

||||

There are many ways to find a package’s version even if it is not installed already in DEB-based systems. Here I have given a few methods.

|

||||

在基于 DEB 的系统中,即使软件包还没有安装,也有很多方法可以查看他的版本。接下来,我将一一介绍。

|

||||

|

||||

##### Method 1 – Using Apt

|

||||

#### 方法 1 – 使用 Apt

|

||||

|

||||

The quick and dirty way to check a package version, simply run:

|

||||

检查软件包的版本的懒人方法:

|

||||

|

||||

```

|

||||

$ apt show <package-name>

|

||||

```

|

||||

|

||||

**Example:**

|

||||

**示例:**

|

||||

|

||||

```

|

||||

$ apt show vim

|

||||

```

|

||||

|

||||

**Sample output:**

|

||||

**示例输出:**

|

||||

|

||||

```

|

||||

Package: vim

|

||||

@ -67,23 +67,21 @@ Description: Vi IMproved - enhanced vi editor

|

||||

N: There is 1 additional record. Please use the '-a' switch to see it

|

||||

```

|

||||

|

||||

As you can see in the above output, “apt show” command displays, many important details of the package such as,

|

||||

正如你在上面的输出中看到的,`apt show` 命令显示了软件包许多重要的细节,例如:

|

||||

|

||||

1. package name,

|

||||

2. version,

|

||||

3. origin (from where the vim comes from),

|

||||

4. maintainer,

|

||||

5. home page of the package,

|

||||

6. dependencies,

|

||||

7. download size,

|

||||

8. description,

|

||||

9. and many.

|

||||

1. 包名称,

|

||||

2. 版本,

|

||||

3. 来源(vim 来自哪里),

|

||||

4. 维护者,

|

||||

5. 包的主页,

|

||||

6. 依赖,

|

||||

7. 下载大小,

|

||||

8. 简介,

|

||||

9. 其他。

|

||||

|

||||

因此,Ubuntu 仓库中可用的 Vim 版本是 **8.0.1453**。如果我把它安装到我的 Ubuntu 系统上,就会得到这个版本。

|

||||

|

||||

|

||||

So, the available version of Vim package in the Ubuntu repositories is **8.0.1453**. This is the version I get if I install it on my Ubuntu system.

|

||||

|

||||

Alternatively, use **“apt policy”** command if you prefer short output:

|

||||

或者,如果你不想看那么多的内容,那么可以使用 `apt policy` 这个命令:

|

||||

|

||||

```

|

||||

$ apt policy vim

|

||||

@ -98,7 +96,7 @@ vim:

|

||||

500 http://archive.ubuntu.com/ubuntu bionic/main amd64 Packages

|

||||

```

|

||||

|

||||

Or even shorter:

|

||||

甚至更短:

|

||||

|

||||

```

|

||||

$ apt list vim

|

||||

@ -107,17 +105,17 @@ vim/bionic-updates,bionic-security 2:8.0.1453-1ubuntu1.1 amd64

|

||||

N: There is 1 additional version. Please use the '-a' switch to see it

|

||||

```

|

||||

|

||||

**Apt** is the default package manager in recent Ubuntu versions. So, this command is just enough to find the detailed information of a package. It doesn’t matter whether given package is installed or not. This command will simply list the given package’s version along with all other details.

|

||||

`apt` 是 Ubuntu 最新版本的默认包管理器。因此,这个命令足以找到一个软件包的详细信息,给定的软件包是否安装并不重要。这个命令将简单地列出给定包的版本以及其他详细信息。

|

||||

|

||||

##### Method 2 – Using Apt-get

|

||||

#### 方法 2 – 使用 Apt-get

|

||||

|

||||

To find a package version without installing it, we can use **apt-get** command with **-s** option.

|

||||

要查看软件包的版本而不安装它,我们可以使用 `apt-get` 命令和 `-s` 选项。

|

||||

|

||||

```

|

||||

$ apt-get -s install vim

|

||||

```

|

||||

|

||||

**Sample output:**

|

||||

**示例输出:**

|

||||

|

||||

```

|

||||

NOTE: This is only a simulation!

|

||||

@ -136,19 +134,19 @@ Inst vim (2:8.0.1453-1ubuntu1.1 Ubuntu:18.04/bionic-updates, Ubuntu:18.04/bionic

|

||||

Conf vim (2:8.0.1453-1ubuntu1.1 Ubuntu:18.04/bionic-updates, Ubuntu:18.04/bionic-security [amd64])

|

||||

```

|

||||

|

||||

Here, -s option indicates **simulation**. As you can see in the output, It performs no action. Instead, It simply performs a simulation to let you know what is going to happen when you install the Vim package.

|

||||

这里,`-s` 选项代表 **模拟**。正如你在输出中看到的,它不执行任何操作。相反,它只是模拟执行,好让你知道在安装 Vim 时会发生什么。

|

||||

|

||||

You can substitute “install” option with “upgrade” option to see what will happen when you upgrade a package.

|

||||

你可以将 `install` 选项替换为 `upgrade`,以查看升级包时会发生什么。

|

||||

|

||||

```

|

||||

$ apt-get -s upgrade vim

|

||||

```

|

||||

|

||||

##### Method 3 – Using Aptitude

|

||||

#### 方法 3 – 使用 Aptitude

|

||||

|

||||

**Aptitude** is an ncurses and commandline-based front-end to APT package manger in Debian and its derivatives.

|

||||

在 Debian 及其衍生品中,`aptitude` 是一个基于 ncurses(LCTT 译注:ncurses 是终端基于文本的字符处理的库)和命令行的前端 APT 包管理器。

|

||||

|

||||

To find the package version with Aptitude, simply run:

|

||||

使用 aptitude 来查看软件包的版本,只需运行:

|

||||

|

||||

```

|

||||

$ aptitude versions vim

|

||||

@ -156,7 +154,7 @@ p 2:8.0.1453-1ubuntu1

|

||||

p 2:8.0.1453-1ubuntu1.1 bionic-security,bionic-updates 500

|

||||

```

|

||||

|

||||

You can also use simulation option ( **-s** ) to see what would happen if you install or upgrade package.

|

||||

你还可以使用模拟选项(`-s`)来查看安装或升级包时会发生什么。

|

||||

|

||||

```

|

||||

$ aptitude -V -s install vim

|

||||

@ -167,33 +165,29 @@ Need to get 1,152 kB of archives. After unpacking 2,852 kB will be used.

|

||||

Would download/install/remove packages.

|

||||

```

|

||||

|

||||

Here, **-V** flag is used to display detailed information of the package version.

|

||||

|

||||

Similarly, just substitute “install” with “upgrade” option to see what would happen if you upgrade a package.

|

||||

这里,`-V` 标志用于显示软件包的详细信息。

|

||||

|

||||

```

|

||||

$ aptitude -V -s upgrade vim

|

||||

```

|

||||

|

||||

Another way to find the non-installed package’s version using Aptitude command is:

|

||||

类似的,只需将 `install` 替换为 `upgrade` 选项,即可查看升级包会发生什么。

|

||||

|

||||

```

|

||||

$ aptitude search vim -F "%c %p %d %V"

|

||||

```

|

||||

|

||||

Here,

|

||||

这里,

|

||||

|

||||

* **-F** is used to specify which format should be used to display the output,

|

||||

* **%c** – status of the given package (installed or not installed),

|

||||

* **%p** – name of the package,

|

||||

* **%d** – description of the package,

|

||||

* **%V** – version of the package.

|

||||

* `-F` 用于指定应使用哪种格式来显示输出,

|

||||

* `%c` – 包的状态(已安装或未安装),

|

||||

* `%p` – 包的名称,

|

||||

* `%d` – 包的简介,

|

||||

* `%V` – 包的版本。

|

||||

|

||||

当你不知道完整的软件包名称时,这非常有用。这个命令将列出包含给定字符串(即 vim)的所有软件包。

|

||||

|

||||

|

||||

This is helpful when you don’t know the full package name. This command will list all packages that contains the given string (i.e vim).

|

||||

|

||||

Here is the sample output of the above command:

|

||||

以下是上述命令的示例输出:

|

||||

|

||||

```

|

||||

[...]

|

||||

@ -207,17 +201,17 @@ p vim-voom Vim two-pane out

|

||||

p vim-youcompleteme fast, as-you-type, fuzzy-search code completion engine for Vim 0+20161219+git

|

||||

```

|

||||

|

||||

##### Method 4 – Using Apt-cache

|

||||

#### 方法 4 – 使用 Apt-cache

|

||||

|

||||

**Apt-cache** command is used to query APT cache in Debian-based systems. It is useful for performing many operations on APT’s package cache. One fine example is we can [**list installed applications from a certain repository/ppa**][3].

|

||||

`apt-cache` 命令用于查询基于 Debian 的系统中的 APT 缓存。对于要在 APT 的包缓存上执行很多操作时,它很有用。一个很好的例子是我们可以从[某个仓库或 ppa 中列出已安装的应用程序][3]。

|

||||

|

||||

Not just installed applications, we can also find the version of a package even if it is not installed. For instance, the following command will find the version of Vim package:

|

||||

不仅是已安装的应用程序,我们还可以找到软件包的版本,即使它没有被安装。例如,以下命令将找到 Vim 的版本:

|

||||

|

||||

```

|

||||

$ apt-cache policy vim

|

||||

```

|

||||

|

||||

Sample output:

|

||||

示例输出:

|

||||

|

||||

```

|

||||

vim:

|

||||

@ -231,19 +225,19 @@ vim:

|

||||

500 http://archive.ubuntu.com/ubuntu bionic/main amd64 Packages

|

||||

```

|

||||

|

||||

As you can see in the above output, Vim is not installed. If you wanted to install it, you would get version **8.0.1453**. It also displays from which repository the vim package is coming from.

|

||||

正如你在上面的输出中所看到的,Vim 并没有安装。如果你想安装它,你会知道它的版本是 **8.0.1453**。它还显示 vim 包来自哪个仓库。

|

||||

|

||||

##### Method 5 – Using Apt-show-versions

|

||||

#### 方法 5 – 使用 Apt-show-versions

|

||||

|

||||

**Apt-show-versions** command is used to list installed and available package versions in Debian and Debian-based systems. It also displays the list of all upgradeable packages. It is quite handy if you have a mixed stable/testing environment. For instance, if you have enabled both stable and testing repositories, you can easily find the list of applications from testing and also you can upgrade all packages in testing.

|

||||

在 Debian 和基于 Debian 的系统中,`apt-show-versions` 命令用于列出已安装和可用软件包的版本。它还显示所有可升级软件包的列表。如果你有一个混合的稳定或测试环境,这是非常方便的。例如,如果你同时启用了稳定和测试仓库,那么你可以轻松地从测试库找到应用程序列表,还可以升级测试库中的所有软件包。

|

||||

|

||||

Apt-show-versions is not installed by default. You need to install it using command:

|

||||

默认情况下系统没有安装 `apt-show-versions`,你需要使用以下命令来安装它:

|

||||

|

||||

```

|

||||

$ sudo apt-get install apt-show-versions

|

||||

```

|

||||

|

||||

Once installed, run the following command to find the version of a package,for example Vim:

|

||||

安装后,运行以下命令查找软件包的版本,例如 Vim:

|

||||

|

||||

```

|

||||

$ apt-show-versions -a vim

|

||||

@ -253,15 +247,15 @@ vim:amd64 2:8.0.1453-1ubuntu1.1 bionic-updates archive.ubuntu.com

|

||||

vim:amd64 not installed

|

||||

```

|

||||

|

||||

Here, **-a** switch prints all available versions of the given package.

|

||||

这里,`-a` 选项打印给定软件包的所有可用版本。

|

||||

|

||||

If the given package is already installed, you need not to use **-a** option. In that case, simply run:

|

||||

如果已经安装了给定的软件包,那么就不需要使用 `-a` 选项。在这种情况下,只需运行:

|

||||

|

||||

```

|

||||

$ apt-show-versions vim

|

||||

```

|

||||

|

||||

And, that’s all. If you know any other methods, please share them in the comment section below. I will check and update this guide.

|

||||

差不多完了。如果你还了解其他方法,在下面的评论中分享,我将检查并更新本指南。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -269,8 +263,8 @@ via: https://www.ostechnix.com/how-to-check-linux-package-version-before-install

|

||||

|

||||

作者:[sk][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

译者:[MjSeven](https://github.com/MjSeven)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -69,17 +69,17 @@ package org.opensource.demo.singleton;

|

||||

|

||||

public class OpensourceSingleton {

|

||||

|

||||

private static OpensourceSingleton uniqueInstance;

|

||||

private static OpensourceSingleton uniqueInstance;

|

||||

|

||||

private OpensourceSingleton() {

|

||||

}

|

||||

private OpensourceSingleton() {

|

||||

}

|

||||

|

||||

public static OpensourceSingleton getInstance() {

|

||||

if (uniqueInstance == null) {

|

||||

uniqueInstance = new OpensourceSingleton();

|

||||

}

|

||||

return uniqueInstance;

|

||||

}

|

||||

public static OpensourceSingleton getInstance() {

|

||||

if (uniqueInstance == null) {

|

||||

uniqueInstance = new OpensourceSingleton();

|

||||

}

|

||||

return uniqueInstance;

|

||||

}

|

||||

|

||||

}

|

||||

```

|

||||

@ -102,20 +102,20 @@ package org.opensource.demo.singleton;

|

||||

|

||||

public class ImprovedOpensourceSingleton {

|

||||

|

||||

private volatile static ImprovedOpensourceSingleton uniqueInstance;

|

||||

private volatile static ImprovedOpensourceSingleton uniqueInstance;

|

||||

|

||||

private ImprovedOpensourceSingleton() {}

|

||||

private ImprovedOpensourceSingleton() {}

|

||||

|

||||

public static ImprovedOpensourceSingleton getInstance() {

|

||||

if (uniqueInstance == null) {

|

||||

synchronized (ImprovedOpensourceSingleton.class) {

|

||||

if (uniqueInstance == null) {

|

||||

uniqueInstance = new ImprovedOpensourceSingleton();

|

||||

}

|

||||

}

|

||||

}

|

||||

return uniqueInstance;

|

||||

}

|

||||

public static ImprovedOpensourceSingleton getInstance() {

|

||||

if (uniqueInstance == null) {

|

||||

synchronized (ImprovedOpensourceSingleton.class) {

|

||||

if (uniqueInstance == null) {

|

||||

uniqueInstance = new ImprovedOpensourceSingleton();

|

||||

}

|

||||

}

|

||||

}

|

||||

return uniqueInstance;

|

||||

}

|

||||

|

||||

}

|

||||

```

|

||||

@ -141,20 +141,20 @@ package org.opensource.demo.factory;

|

||||

|

||||

public class OpensourceFactory {

|

||||

|

||||

public OpensourceJVMServers getServerByVendor([String][18] name) {

|

||||

if(name.equals("Apache")) {

|

||||

return new Tomcat();

|

||||

}

|

||||

else if(name.equals("Eclipse")) {

|

||||

return new Jetty();

|

||||

}

|

||||

else if (name.equals("RedHat")) {

|

||||

return new WildFly();

|

||||

}

|

||||

else {

|

||||

return null;

|

||||

}

|

||||

}

|

||||

public OpensourceJVMServers getServerByVendor(String name) {

|

||||

if(name.equals("Apache")) {

|

||||

return new Tomcat();

|

||||

}

|

||||

else if(name.equals("Eclipse")) {

|

||||

return new Jetty();

|

||||

}

|

||||

else if (name.equals("RedHat")) {

|

||||

return new WildFly();

|

||||

}

|

||||

else {

|

||||

return null;

|

||||

}

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

@ -164,9 +164,9 @@ public class OpensourceFactory {

|

||||

package org.opensource.demo.factory;

|

||||

|

||||

public interface OpensourceJVMServers {

|

||||

public void startServer();

|

||||

public void stopServer();

|

||||

public [String][18] getName();

|

||||

public void startServer();

|

||||

public void stopServer();

|

||||

public String getName();

|

||||

}

|

||||

```

|

||||

|

||||

@ -176,17 +176,17 @@ public interface OpensourceJVMServers {

|

||||

package org.opensource.demo.factory;

|

||||

|

||||

public class WildFly implements OpensourceJVMServers {

|

||||

public void startServer() {

|

||||

[System][19].out.println("Starting WildFly Server...");

|

||||

}

|

||||

public void startServer() {

|

||||

System.out.println("Starting WildFly Server...");

|

||||

}

|

||||

|

||||

public void stopServer() {

|

||||

[System][19].out.println("Shutting Down WildFly Server...");

|

||||

}

|

||||

public void stopServer() {

|

||||

System.out.println("Shutting Down WildFly Server...");

|

||||

}

|

||||

|

||||

public [String][18] getName() {

|

||||

return "WildFly";

|

||||

}

|

||||

public String getName() {

|

||||

return "WildFly";

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

@ -209,9 +209,9 @@ package org.opensource.demo.observer;

|

||||

|

||||

public interface Topic {

|

||||

|

||||

public void addObserver([Observer][22] observer);

|

||||

public void deleteObserver([Observer][22] observer);

|

||||

public void notifyObservers();

|

||||

public void addObserver(Observer observer);

|

||||

public void deleteObserver(Observer observer);

|

||||

public void notifyObservers();

|

||||

}

|

||||

```

|

||||

|

||||

@ -226,39 +226,39 @@ import java.util.List;

|

||||

import java.util.ArrayList;

|

||||

|

||||

public class Conference implements Topic {

|

||||

private List<Observer> listObservers;

|

||||

private int totalAttendees;

|

||||

private int totalSpeakers;

|

||||

private [String][18] nameEvent;

|

||||

private List<Observer> listObservers;

|

||||

private int totalAttendees;

|

||||

private int totalSpeakers;

|

||||

private String nameEvent;

|

||||

|

||||

public Conference() {

|

||||

listObservers = new ArrayList<Observer>();

|

||||

}

|

||||

public Conference() {

|

||||

listObservers = new ArrayList<Observer>();

|

||||

}

|

||||

|

||||

public void addObserver([Observer][22] observer) {

|

||||

listObservers.add(observer);

|

||||

}

|

||||

public void addObserver(Observer observer) {

|

||||

listObservers.add(observer);

|

||||

}

|

||||

|

||||

public void deleteObserver([Observer][22] observer) {

|

||||

int i = listObservers.indexOf(observer);

|

||||

if (i >= 0) {

|

||||

listObservers.remove(i);

|

||||

}

|

||||

}

|

||||

public void deleteObserver(Observer observer) {

|

||||

int i = listObservers.indexOf(observer);

|

||||

if (i >= 0) {

|

||||

listObservers.remove(i);

|

||||

}

|

||||

}

|

||||

|

||||

public void notifyObservers() {

|

||||

for (int i=0, nObservers = listObservers.size(); i < nObservers; ++ i) {

|

||||

[Observer][22] observer = listObservers.get(i);

|

||||

observer.update(totalAttendees,totalSpeakers,nameEvent);

|

||||

}

|

||||

}

|

||||

public void notifyObservers() {

|

||||

for (int i=0, nObservers = listObservers.size(); i < nObservers; ++ i) {

|

||||

Observer observer = listObservers.get(i);

|

||||

observer.update(totalAttendees,totalSpeakers,nameEvent);

|

||||

}

|

||||

}

|

||||

|

||||

public void setConferenceDetails(int totalAttendees, int totalSpeakers, [String][18] nameEvent) {

|

||||

this.totalAttendees = totalAttendees;

|

||||

this.totalSpeakers = totalSpeakers;

|

||||

this.nameEvent = nameEvent;

|

||||

notifyObservers();

|

||||

}

|

||||

public void setConferenceDetails(int totalAttendees, int totalSpeakers, String nameEvent) {

|

||||

this.totalAttendees = totalAttendees;

|

||||

this.totalSpeakers = totalSpeakers;

|

||||

this.nameEvent = nameEvent;

|

||||

notifyObservers();

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

@ -269,8 +269,8 @@ public class Conference implements Topic {

|

||||

```

|

||||

package org.opensource.demo.observer;

|

||||

|

||||

public interface [Observer][22] {

|

||||

public void update(int totalAttendees, int totalSpeakers, [String][18] nameEvent);

|

||||

public interface Observer {

|

||||

public void update(int totalAttendees, int totalSpeakers, String nameEvent);

|

||||

}

|

||||

```

|

||||

|

||||

@ -281,27 +281,27 @@ public interface [Observer][22] {

|

||||

```

|

||||

package org.opensource.demo.observer;

|

||||

|

||||

public class MonitorConferenceAttendees implements [Observer][22] {

|

||||

private int totalAttendees;

|

||||

private int totalSpeakers;

|

||||

private [String][18] nameEvent;

|

||||

private Topic topic;

|

||||

public class MonitorConferenceAttendees implements Observer {

|

||||

private int totalAttendees;

|

||||

private int totalSpeakers;

|

||||

private String nameEvent;

|

||||

private Topic topic;

|

||||

|

||||

public MonitorConferenceAttendees(Topic topic) {

|

||||

this.topic = topic;

|

||||

topic.addObserver(this);

|

||||

}

|

||||

public MonitorConferenceAttendees(Topic topic) {

|

||||

this.topic = topic;

|

||||

topic.addObserver(this);

|

||||

}

|

||||

|

||||

public void update(int totalAttendees, int totalSpeakers, [String][18] nameEvent) {

|

||||

this.totalAttendees = totalAttendees;

|

||||

this.totalSpeakers = totalSpeakers;

|

||||

this.nameEvent = nameEvent;

|

||||

printConferenceInfo();

|

||||

}

|

||||

public void update(int totalAttendees, int totalSpeakers, String nameEvent) {

|

||||

this.totalAttendees = totalAttendees;

|

||||

this.totalSpeakers = totalSpeakers;

|

||||

this.nameEvent = nameEvent;

|

||||

printConferenceInfo();

|

||||

}

|

||||

|

||||

public void printConferenceInfo() {

|

||||

[System][19].out.println(this.nameEvent + " has " + totalSpeakers + " speakers and " + totalAttendees + " attendees");

|

||||

}

|

||||

public void printConferenceInfo() {

|

||||

System.out.println(this.nameEvent + " has " + totalSpeakers + " speakers and " + totalAttendees + " attendees");

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

|

||||

@ -1,8 +1,8 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (Scvoet)

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: translator: (scvoet)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-11232-1.html)

|

||||

[#]: subject: (How To Add ‘New Document’ Option In Right Click Context Menu In Ubuntu 18.04)

|

||||

[#]: via: ((https://www.ostechnix.com/how-to-add-new-document-option-in-right-click-context-menu-in-ubuntu-18-04/)

|

||||

[#]: author: (sk https://www.ostechnix.com/author/sk/)

|

||||

@ -12,25 +12,25 @@

|

||||

|

||||

![Add 'New Document' Option In Right Click Context Menu In Ubuntu 18.04 GNOME desktop][1]

|

||||

|

||||

前几天,我在各种在线资源站点上收集关于 [**Linux 包管理**][2] 的参考资料。在我想创建一个用于保存笔记的文件,我突然发现我的 Ubuntu 18.04 LTS 桌面并没有“创建文件”的按钮了,它好像离奇失踪了。在谷歌一下后,我发现原来“新建文档”按钮不再被集成在 Ubuntu GNOME 版本中了。庆幸的是,我找到了一个在 Ubuntu 18.04 LTS 桌面的右键单击菜单中添加“新建文档”按钮的简易解决方案。

|

||||

前几天,我在各种在线资源站点上收集关于 [Linux 包管理器][2] 的参考资料。在我想创建一个用于保存笔记的文件,我突然发现我的 Ubuntu 18.04 LTS 桌面并没有“新建文件”的按钮了,它好像离奇失踪了。在谷歌一下后,我发现原来“新建文档”按钮不再被集成在 Ubuntu GNOME 版本中了。庆幸的是,我找到了一个在 Ubuntu 18.04 LTS 桌面的右键单击菜单中添加“新建文档”按钮的简易解决方案。

|

||||

|

||||

就像你在下方截图中看到的一样,Nautilus 文件管理器的右键单击菜单中并没有“新建文件”按钮。

|

||||

|

||||

![][3]

|

||||

|

||||

Ubuntu 18.04 移除了右键点击菜单中的“新建文件”的选项。

|

||||

*Ubuntu 18.04 移除了右键点击菜单中的“新建文件”的选项。*

|

||||

|

||||

如果你想添加此一按钮,请按照以下步骤进行操作。

|

||||

如果你想添加此按钮,请按照以下步骤进行操作。

|

||||

|

||||

### 在 Ubuntu 的右键单击菜单中添加“新建文件”按钮

|

||||

|

||||

首先,你需要确保您的系统中有 **~/Templates** 文件夹。如果没有的话,可以按照下面的命令进行创建。

|

||||

首先,你需要确保你的系统中有 `~/Templates` 文件夹。如果没有的话,可以按照下面的命令进行创建。

|

||||

|

||||

```

|

||||

$ mkdir ~/Templates

|

||||

```

|

||||

|

||||

然后打开终端应用并使用 cd 命令进入 **~/Templates** 文件夹:

|

||||

然后打开终端应用并使用 `cd` 命令进入 `~/Templates` 文件夹:

|

||||

|

||||

```

|

||||

$ cd ~/Templates

|

||||

@ -50,11 +50,11 @@ $ touch "Empty Document"

|

||||

|

||||

![][4]

|

||||

|

||||

新开一个 Nautilus 文件管理器,然后检查一下右键单击菜单中是否成功添加了“新建文档”按钮。

|

||||

新打开一个 Nautilus 文件管理器,然后检查一下右键单击菜单中是否成功添加了“新建文档”按钮。

|

||||

|

||||

![][5]

|

||||

|

||||

在 Ubuntu 18.04 的右键单击菜单中添加“新建文件”按钮

|

||||

*在 Ubuntu 18.04 的右键单击菜单中添加“新建文件”按钮*

|

||||

|

||||

如上图所示,我们重新启用了“新建文件”的按钮。

|

||||

|

||||

@ -73,7 +73,7 @@ $ touch New\ PyScript.py

|

||||

|

||||

在“新建文件”子菜单中给不同的文件类型添加按钮

|

||||

|

||||

注意,所有文件都应该创建在 **~/Templates** 文件夹下。

|

||||

注意,所有文件都应该创建在 `~/Templates` 文件夹下。

|

||||

|

||||

现在,打开 Nautilus 并检查“新建文件” 菜单中是否有相应的新建文件按钮。

|

||||

|

||||

@ -93,14 +93,13 @@ via: https://www.ostechnix.com/how-to-add-new-document-option-in-right-click-con

|

||||

|

||||

作者:[sk][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID][c]

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

译者:[scvoet](https://github.com/scvoet)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux 中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://www.ostechnix.com/author/sk/

|

||||

[b]: https://github.com/lujun9972

|

||||

[c]: https://github.com/scvoet

|

||||

[1]: https://www.ostechnix.com/wp-content/uploads/2019/07/Add-New-Document-Option-In-Right-Click-Context-Menu-1-720x340.png

|

||||

[2]: https://www.ostechnix.com/linux-package-managers-compared-appimage-vs-snap-vs-flatpak/

|

||||

[3]: https://www.ostechnix.com/wp-content/uploads/2019/07/new-document-option-missing.png

|

||||

@ -0,0 +1,113 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (geekpi)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-11238-1.html)

|

||||

[#]: subject: (Find Out How Long Does it Take To Boot Your Linux System)

|

||||

[#]: via: (https://itsfoss.com/check-boot-time-linux/)

|

||||

[#]: author: (Abhishek Prakash https://itsfoss.com/author/abhishek/)

|

||||

|

||||

你的 Linux 系统开机时间已经击败了 99% 的电脑

|

||||

======

|

||||

|

||||

当你打开系统电源时,你会等待制造商的徽标出现,屏幕上可能会显示一些消息(以非安全模式启动),然后是 [Grub][1] 屏幕、操作系统加载屏幕以及最后的登录屏。

|

||||

|

||||

你检查过这花费了多长时间么?也许没有。除非你真的需要知道,否则你不会在意开机时间。

|

||||

|

||||

但是如果你很想知道你的 Linux 系统需要很长时间才能启动完成呢?使用秒表是一种方法,但在 Linux 中,你有一种更好、更轻松地了解系统启动时间的方法。

|

||||

|

||||

### 在 Linux 中使用 systemd-analyze 检查启动时间

|

||||

|

||||

|

||||

|

||||

无论你是否喜欢,[systemd][3] 运行在大多数流行的 Linux 发行版中。systemd 有许多管理 Linux 系统的工具。其中一个就是 `systemd-analyze`。

|

||||

|

||||

`systemd-analyze` 命令为你提供最近一次启动时运行的服务数量以及消耗时间的详细信息。

|

||||

|

||||

如果在终端中运行以下命令:

|

||||

|

||||

```

|

||||

systemd-analyze

|

||||

```

|

||||

|

||||

你将获得总启动时间以及固件、引导加载程序、内核和用户空间所消耗的时间:

|

||||

|

||||

```

|

||||

Startup finished in 7.275s (firmware) + 13.136s (loader) + 2.803s (kernel) + 12.488s (userspace) = 35.704s

|

||||

|

||||

graphical.target reached after 12.408s in userspace

|

||||

```

|

||||

|

||||

正如你在上面的输出中所看到的,我的系统花了大约 35 秒才进入可以输入密码的页面。我正在使用戴尔 XPS Ubuntu。它使用 SSD 存储,尽管如此,它还需要很长时间才能启动。

|

||||

|

||||

不是那么令人印象深刻,是吗?为什么不共享你们系统的启动时间?我们来比较吧。

|

||||

|

||||

你可以使用以下命令将启动时间进一步细分为每个单元:

|

||||

|

||||

```

|

||||

systemd-analyze blame

|

||||

```

|

||||

|

||||

这将生成大量输出,所有服务按所用时间的降序列出。

|

||||

|

||||

```

|

||||

7.347s plymouth-quit-wait.service

|

||||

6.198s NetworkManager-wait-online.service

|

||||

3.602s plymouth-start.service

|

||||

3.271s plymouth-read-write.service

|

||||

2.120s apparmor.service

|

||||

1.503s [email protected]

|

||||

1.213s motd-news.service

|

||||

908ms snapd.service

|

||||

861ms keyboard-setup.service

|

||||

739ms fwupd.service

|

||||

702ms bolt.service

|

||||

672ms dev-nvme0n1p3.device

|

||||

608ms [email protected]:intel_backlight.service

|

||||

539ms snap-core-7270.mount

|

||||

504ms snap-midori-451.mount

|

||||

463ms snap-screencloud-1.mount

|

||||

446ms snapd.seeded.service

|

||||

440ms snap-gtk\x2dcommon\x2dthemes-1313.mount

|

||||

420ms snap-core18-1066.mount

|

||||

416ms snap-scrcpy-133.mount

|

||||

412ms snap-gnome\x2dcharacters-296.mount

|

||||

```

|

||||

|

||||

#### 额外提示:改善启动时间

|

||||

|

||||

如果查看此输出,你可以看到网络管理器和 [plymouth][4] 都消耗了大量的启动时间。

|

||||

|

||||

Plymouth 负责你在 Ubuntu 和其他发行版中在登录页面出现之前的引导页面。网络管理器负责互联网连接,可以关闭它来加快启动时间。不要担心,在你登录后,你可以正常使用 wifi。

|

||||

|

||||

```

|

||||

sudo systemctl disable NetworkManager-wait-online.service

|

||||

```

|

||||

|

||||

如果要还原更改,可以使用以下命令:

|

||||

|

||||

```

|

||||

sudo systemctl enable NetworkManager-wait-online.service

|

||||

```

|

||||

|

||||

请不要在不知道用途的情况下自行禁用各种服务。这可能会产生危险的后果。

|

||||

|

||||

现在你知道了如何检查 Linux 系统的启动时间,为什么不在评论栏分享你的系统的启动时间?

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://itsfoss.com/check-boot-time-linux/

|

||||

|

||||

作者:[Abhishek Prakash][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://itsfoss.com/author/abhishek/

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://www.gnu.org/software/grub/

|

||||

[2]: https://i0.wp.com/itsfoss.com/wp-content/uploads/2019/08/linux-boot-time.jpg?resize=800%2C450&ssl=1

|

||||

[3]: https://en.wikipedia.org/wiki/Systemd

|

||||

[4]: https://wiki.archlinux.org/index.php/Plymouth

|

||||

@ -1,8 +1,8 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (geekpi)

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-11236-1.html)

|

||||

[#]: subject: (How to Get Linux Kernel 5.0 in Ubuntu 18.04 LTS)

|

||||

[#]: via: (https://itsfoss.com/ubuntu-hwe-kernel/)

|

||||

[#]: author: (Abhishek Prakash https://itsfoss.com/author/abhishek/)

|

||||

@ -10,31 +10,31 @@

|

||||

如何在 Ubuntu 18.04 LTS 中获取 Linux 5.0 内核

|

||||

======

|

||||

|

||||

_ **最近发布的 Ubuntu 18.04.3 包括 Linux 5.0 内核中的几个新功能和改进,但默认情况下没有安装。本教程演示了如何在 Ubuntu 18.04 LTS 中获取 Linux 5 内核。** _

|

||||

> 最近发布的 Ubuntu 18.04.3 包括 Linux 5.0 内核中的几个新功能和改进,但默认情况下没有安装。本教程演示了如何在 Ubuntu 18.04 LTS 中获取 Linux 5 内核。

|

||||

|

||||

[Subscribe to It’s FOSS YouTube Channel for More Videos][1]

|

||||

|

||||

|

||||

[Ubuntu 18.04 的第三个“点发布版”在这里][2],它带来了新的稳定版本的 GNOME 组件、livepatch 桌面集成和内核 5.0。

|

||||

[Ubuntu 18.04 的第三个“点发布版”已经发布][2],它带来了新的稳定版本的 GNOME 组件、livepatch 桌面集成和内核 5.0。

|

||||

|

||||

可是等等!什么是“点发布版”(point release)?让我先解释一下。

|

||||

可是等等!什么是“<ruby>小数点版本<rt>point release</rt></ruby>”?让我先解释一下。

|

||||

|

||||

### Ubuntu LTS 点发布版

|

||||

### Ubuntu LTS 小数点版本

|

||||

|

||||

Ubuntu 18.04 于 2018 年 4 月发布,由于它是一个长期支持 (LTS) 版本,它将一直支持到 2023 年。从那时起,已经有许多 bug 修复,安全更新和软件升级。如果你今天下载 Ubuntu 18.04,你需要在[在安装 Ubuntu 后首先安装这些更新][3]。

|

||||

Ubuntu 18.04 于 2018 年 4 月发布,由于它是一个长期支持 (LTS) 版本,它将一直支持到 2023 年。从那时起,已经有许多 bug 修复、安全更新和软件升级。如果你今天下载 Ubuntu 18.04,你需要在[在安装 Ubuntu 后首先安装这些更新][3]。

|

||||

|

||||

当然,这不是一种理想情况。这就是 Ubuntu 提供这些“点发布版”的原因。点发布版包含所有功能和安全更新以及自 LTS 版本首次发布以来添加的 bug 修复。如果你今天下载 Ubuntu,你会得到 Ubuntu 18.04.3 而不是 Ubuntu 18.04。这节省了在新安装的 Ubuntu 系统上下载和安装数百个更新的麻烦。

|

||||

当然,这不是一种理想情况。这就是 Ubuntu 提供这些“小数点版本”的原因。点发布版包含所有功能和安全更新以及自 LTS 版本首次发布以来添加的 bug 修复。如果你今天下载 Ubuntu,你会得到 Ubuntu 18.04.3 而不是 Ubuntu 18.04。这节省了在新安装的 Ubuntu 系统上下载和安装数百个更新的麻烦。

|

||||

|

||||

好了!现在你知道“点发布版”的概念了。你如何升级到这些点发布版?答案很简单。只需要像平时一样[更新你的 Ubuntu 系统][4],这样你将在最新的点发布版上了。

|

||||

好了!现在你知道“小数点版本”的概念了。你如何升级到这些小数点版本?答案很简单。只需要像平时一样[更新你的 Ubuntu 系统][4],这样你将在最新的小数点版本上了。

|

||||

|

||||

你可以[查看 Ubuntu 版本][5]来了解正在使用的版本。我检查了一下,因为我用的是 Ubuntu 18.04.3,我以为我的内核会是 5。当我[查看 Linux 内核版本][6]时,它仍然是基本内核 4.15。

|

||||

|

||||

![Ubuntu Version And Linux Kernel Version Check][7]

|

||||

|

||||

这是为什么?如果 Ubuntu 18.04.3 有 Linux 5.0 内核,为什么它仍然使用 Linux 4.15 内核?这是因为你必须通过选择 LTS 支持栈(通常称为 HWE)手动请求在 Ubuntu LTS 中安装新内核。

|

||||

这是为什么?如果 Ubuntu 18.04.3 有 Linux 5.0 内核,为什么它仍然使用 Linux 4.15 内核?这是因为你必须通过选择 LTS <ruby>支持栈<rt>Enablement Stack</rt></ruby>(通常称为 HWE)手动请求在 Ubuntu LTS 中安装新内核。

|

||||

|

||||

### 使用 HWE 在Ubuntu 18.04 中获取 Linux 5.0 内核

|

||||

|

||||

默认情况下,Ubuntu LTS 将保持在最初发布的 Linux 内核上。 [硬件支持栈][9](HWE)为现有的 Ubuntu LTS 版本提供了更新的内核和 xorg 支持。

|

||||

默认情况下,Ubuntu LTS 将保持在最初发布的 Linux 内核上。<ruby>[硬件支持栈][9]<rt>hardware enablement stack</rt></ruby>(HWE)为现有的 Ubuntu LTS 版本提供了更新的内核和 xorg 支持。

|

||||

|

||||

最近发生了一些变化。如果你下载了 Ubuntu 18.04.2 或更新的桌面版本,那么就会为你启用 HWE,默认情况下你将获得新内核以及常规更新。

|

||||

|

||||

@ -54,7 +54,7 @@ sudo apt-get install --install-recommends linux-generic-hwe-18.04

|

||||

|

||||

完成 HWE 内核的安装后,重启系统。现在你应该拥有更新的 Linux 内核了。

|

||||

|

||||

**你在 Ubuntu 18.04 中获取 5.0 内核了么?**

|

||||

### 你在 Ubuntu 18.04 中获取 5.0 内核了么?

|

||||

|

||||

请注意,下载并安装了 Ubuntu 18.04.2 的用户已经启用了 HWE。所以这些用户将能轻松获取 5.0 内核。

|

||||

|

||||

@ -69,7 +69,7 @@ via: https://itsfoss.com/ubuntu-hwe-kernel/

|

||||

作者:[Abhishek Prakash][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,81 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (wxy)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-11241-1.html)

|

||||

[#]: subject: (GNOME and KDE team up on the Linux desktop, docs for Nvidia GPUs open up, a powerful new way to scan for firmware vulnerabilities, and more news)

|

||||

[#]: via: (https://opensource.com/article/19/8/news-august-17)

|

||||

[#]: author: (Scott Nesbitt https://opensource.com/users/scottnesbitt)

|

||||

|

||||

开源新闻综述:GNOME 和 KDE 达成合作、Nvidia 开源 GPU 文档

|

||||

======

|

||||

|

||||

> 不要错过两周以来最大的开源头条新闻。

|

||||

|

||||

![Weekly news roundup with TV][1]

|

||||

|

||||

在本期开源新闻综述中,我们将介绍两种新的强大数据可视化工具、Nvidia 开源其 GPU 文档、激动人心的新工具、确保自动驾驶汽车的固件安全等等!

|

||||

|

||||

### GNOME 和 KDE 在 Linux 桌面上达成合作伙伴

|

||||

|

||||

Linux 在桌面计算机上一直处于分裂状态。在最近的一篇[公告][2]中称,“两个主要的 Linux 桌面竞争对手,[GNOME 基金会][3] 和 [KDE][4] 已经同意合作。”

|

||||

|

||||

这两个组织将成为今年 11 月在巴塞罗那举办的 [Linux App Summit(LAS)2019][5] 的赞助商。这一举措在某种程度上似乎是对桌面计算不再是争夺支配地位的最佳场所的回应。无论是什么原因,Linux 桌面的粉丝们都有新的理由希望未来出现一个标准化的 GUI 环境。

|

||||

|

||||

### 新的开源数据可视化工具

|

||||

|

||||

这个世界上很少有不是由数据驱动的。除非数据以人们可以互动的形式出现,否则它并不是很好使用。最近开源的两个数据可视化项目正在尝试使数据更有用。

|

||||

|

||||

第一个工具名为 **Neuroglancer**,由 [Google 的研究团队][6]创建。它“使神经科医生能够在交互式可视化中建立大脑神经通路的 3D 模型。”Neuroglancer 通过使用神经网络追踪大脑中的神经元路径并构建完整的可视化来实现这一点。科学家已经使用了 Neuroglancer(你可以[从 GitHub 取得][7])通过扫描果蝇的大脑来建立一个交互式地图。

|

||||

|

||||

第二个工具来自一个不太能想到的的来源:澳大利亚信号理事会。这是该国家类似 NSA 的机构,它“开源了[内部数据可视化和分析工具][8]之一。”这个被称为 **[Constellation][9]** 的工具可以“识别复杂数据集中的趋势和模式,并且能够扩展到‘数十亿输入’。”该机构总干事迈克•伯吉斯表示,他希望“这一工具将有助于产生有利于所有澳大利亚人的科学和其他方面的突破。”鉴于它是开源的,它可以使整个世界受益。

|

||||

|

||||

### Nvidia 开始发布 GPU 文档

|

||||

|

||||

多年来,图形处理单元(GPU)制造商 Nvidia 并没有做出什么让开源项目轻松开发其产品的驱动程序的努力。现在,该公司通过[发布 GPU 硬件文档][10]向这些项目迈出了一大步。

|

||||

|

||||

该公司根据 MIT 许可证发布的文档[可在 GitHub 上获取][11]。它涵盖了几个关键领域,如设备初始化、内存时钟/调整和电源状态。据硬件新闻网站 Phoronix 称,开发了 Nvidia GPU 的开源驱动程序的 Nouveau 项目将是率先使用该文档来推动其开发工作的项目之一。

|

||||

|

||||

### 用于保护固件的新工具

|

||||

|

||||

似乎每周都有的消息称,移动设备或连接互联网的小设备中出现新漏洞。通常,这些漏洞存在于控制设备的固件中。自动驾驶汽车服务 Cruise [发布了一个开源工具][12],用于在这些漏洞成为问题之前捕获这些漏洞。

|

||||

|

||||

该工具被称为 [FwAnalzyer][13]。它检查固件代码中是否存在许多潜在问题,包括“识别潜在危险的可执行文件”,并查明“任何错误遗留的调试代码”。Cruise 的工程师 Collin Mulliner 曾帮助开发该工具,他说通过在代码上运行 FwAnalyzer,固件开发人员“现在能够检测并防止各种安全问题。”

|

||||

|

||||

### 其它新闻

|

||||

|

||||

* [为什么洛杉矶决定将未来寄予开源][14]

|

||||

* [麻省理工学院出版社发布了关于开源出版软件的综合报告][15]

|

||||

* [华为推出鸿蒙操作系统,不会放弃 Android 智能手机][16]

|

||||

|

||||

*一如既往地感谢 Opensource.com 的工作人员和主持人本周的帮助。*

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/19/8/news-august-17

|

||||

|

||||

作者:[Scott Nesbitt][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[wxy](https://github.com/wxy)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/scottnesbitt

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/weekly_news_roundup_tv.png?itok=B6PM4S1i (Weekly news roundup with TV)

|

||||

[2]: https://www.zdnet.com/article/gnome-and-kde-work-together-on-the-linux-desktop/

|

||||

[3]: https://www.gnome.org/

|

||||

[4]: https://kde.org/

|

||||

[5]: https://linuxappsummit.org/

|

||||

[6]: https://www.cbronline.com/news/brain-mapping-google-ai

|

||||

[7]: https://github.com/google/neuroglancer

|

||||

[8]: https://www.computerworld.com.au/article/665286/australian-signals-directorate-open-sources-data-analysis-tool/

|

||||

[9]: https://www.constellation-app.com/

|

||||

[10]: https://www.phoronix.com/scan.php?page=news_item&px=NVIDIA-Open-GPU-Docs

|

||||

[11]: https://github.com/nvidia/open-gpu-doc

|

||||

[12]: https://arstechnica.com/information-technology/2019/08/self-driving-car-service-open-sources-new-tool-for-securing-firmware/

|

||||

[13]: https://github.com/cruise-automation/fwanalyzer

|

||||

[14]: https://www.techrepublic.com/article/why-la-decided-to-open-source-its-future/

|

||||

[15]: https://news.mit.edu/2019/mit-press-report-open-source-publishing-software-0808

|

||||

[16]: https://www.itnews.com.au/news/huawei-unveils-harmony-operating-system-wont-ditch-android-for-smartphones-529432

|

||||

@ -0,0 +1,105 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (LiVES Video Editor 3.0 is Here With Significant Improvements)

|

||||

[#]: via: (https://itsfoss.com/lives-video-editor/)

|

||||

[#]: author: (Ankush Das https://itsfoss.com/author/ankush/)

|

||||

|

||||

LiVES Video Editor 3.0 is Here With Significant Improvements

|

||||

======

|

||||

|

||||

We recently covered a list of [best open source video editors][1]. LiVES is one of those open source video editors, available for free.

|

||||

|

||||

Even though a lot of users are still waiting for the release on Windows, a major update just popped up for LiVES Video Editor (i.e v3.0.1 as the latest package) on Linux. The new upgrade includes some new features and improvements.

|

||||

|

||||

In this article, I’ll cover the key improvements in the new version and I’ll also mention the steps to install it on your Linux system.

|

||||

|

||||

### LiVES Video Editor 3.0: New Changes

|

||||

|

||||

![Lives Video Editor Loading in Zorin OS][2]

|

||||

|

||||

Overall, with this major update – LiVES Video Editor aims to have a smoother playback, prevent unwanted crashes, optimized video recording, and making the online video downloader more useful.

|

||||

|

||||

The list of changes are:

|

||||

|

||||

* Render silence to end of video if necessary during rendering.

|

||||

* Improvements to openGL playback plugin, including much smoother playback.

|

||||

* Re-enable Advanced options for the openGL playback plugin.

|

||||

* Allow “Enough” in VJ / Pre-decode all frames

|

||||

* Refactor code for timebase calculations during playback (better a/v synch).

|

||||

* Overhaul external audio and audio recording to improve accuracy and use fewer CPU cycles.

|

||||

* Auto switch to internal audio when entering multitack mode.

|

||||

* Show correct effects state (on / off) when reshowing effect mapper window.

|

||||

* Eliminate some race conditions between the audio and video threads.

|

||||

* Improvements to online video downloader, clip size and format can now be selected, added an update option.

|

||||

* Implemented reference counting for realtime effect instances.

|

||||

* Extensively rewrote the main interface, cleaning up the code and making many visual improvements.

|

||||

* Optimized recording when video generators are running.

|

||||

* Improvements to the projectM filter wrapper, including SDL2 support.

|

||||

* Added an option to invert the Z-order in multitrack compositor (rear layers can now overlay front ones).

|

||||

* Added support for musl libc

|

||||

* Updated translations for Ukranian

|

||||

|

||||

|

||||

|

||||

While some of the points listed can just go over your head if you are not an advanced video editor. But, in a nutshell, all of these things make ‘LiVES Video Editor’ a better open source video editing software.

|

||||

|

||||

[][3]

|

||||

|

||||

Suggested read VidCutter Lets You Easily Trim And Merge Videos In Linux

|

||||

|

||||

### Installing LiVES Video Editor on Linux

|

||||

|

||||

LiVES is normally available in the repository of all major Linux distributions. However, you may not find the latest version on your software center yet. So, if you want to install it that way – you’ll have to wait.

|

||||

|

||||

If you want to install it manually, you can get the RPM packages for Fedora/Open SUSE from its download page. The source is also available for Linux distros.

|

||||

|

||||

[Download LiVES Video Editor][4]

|

||||

|

||||

For Ubuntu (or Ubuntu-based distros), you can add the [unofficial PPA][5] maintained by [Ubuntuhandbook][6]. Here’s how to do it:

|

||||

|

||||

**1.** Launch the terminal and enter the following command:

|

||||

|

||||

```

|

||||

sudo add-apt-repository ppa:ubuntuhandbook1/lives

|

||||

```

|

||||

|

||||