mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-12 01:40:10 +08:00

commit

d8d4deed04

@ -1,6 +1,6 @@

|

||||

10个实用的关于linux中Squid代理服务器的面试问答

|

||||

10个关于linux中Squid代理服务器的实用面试问答

|

||||

================================================================================

|

||||

不仅是系统管理员和网络管理员时不时会听到“代理服务器”这个词,我们也经常听到。代理服务器已经是一种企业的文化,而且那是需要时间来积累的。它现在也在一些小型的学校或者大型跨国公司的自助餐厅里得到了实现。Squid(也可做代理服务)就是这样一个应用程序,它既可以被作为代理服务器,同时也是在其同类工具中比较被广泛使用的一种。

|

||||

不仅是系统管理员和网络管理员时不时会听到“代理服务器”这个词,我们也经常听到。代理服务器已经成为一种企业常态,而且经常会接触到它。它现在也出现在一些小型的学校或者大型跨国公司的自助餐厅里。Squid(常被视作代理服务的代名词)就是这样一个应用程序,它不但可以被作为代理服务器,其同时也是在该类工具中比较被广泛使用的一种。

|

||||

|

||||

本文旨在提高你在遇到关于代理服务器面试点时的一些基本应对能力。

|

||||

|

||||

@ -10,12 +10,13 @@

|

||||

|

||||

### 1. 什么是代理服务器?代理服务器在计算机网络中有什么用途? ###

|

||||

|

||||

> **回答** : 代理服务器是指那些作为客户端和资源提供商或服务器之间的中间件的物理机或者应用程序。客户端从代理服务器中寻找文件、页面或者是数据而且代理服务器能处理客户端与服务器之间所有复杂事务从而满足客户端的生成的需求。

|

||||

代理服务器是WWW(万维网)的支柱,它们其中大部分都是Web代理。一台代理服务器能处理客户端与服务器之间的复杂通信事务。此外,它在网络上提供的是匿名信息那就意味着你的身份和浏览痕迹都是安全的。代理可以去配置允许哪些网站的客户能看到,哪些网站被屏蔽了。

|

||||

> **回答** : 代理服务器是指那些作为客户端和资源提供商或服务器之间的中间件的物理机或者应用程序。客户端从代理服务器中寻找文件、页面或者是数据,而且代理服务器能处理客户端与服务器之间所有复杂事务,从而满足客户端的生成的需求。

|

||||

|

||||

代理服务器是WWW(万维网)的支柱,它们其中大部分都是Web代理。一台代理服务器能处理客户端与服务器之间的复杂通信事务。此外,它在网络上提供的是匿名信息(LCTT 译注:指浏览者的 IP、浏览器信息等被隐藏),这就意味着你的身份和浏览痕迹都是安全的。代理可以去配置允许哪些网站的客户能看到,哪些网站被屏蔽了。

|

||||

|

||||

### 2. Squid是什么? ###

|

||||

|

||||

> **回答** : Squid是一个在GNU/GPL协议下发布的即可作为代理服务器同时也可作为Web缓存守护进程的应用软件。Squid主要是支持像HTTP和FTP那样的协议但是对其它的协议比如HTTPS,SSL,TLS等同样也能支持。其特点是Web缓存守护进程通过从经常上访问的网站里缓存Web和DNS从而让上网速度更快。Squid支持所有的主流平台,包括Linux,UNIX,微软公司的Windows和苹果公司的Mac。

|

||||

> **回答** : Squid是一个在GNU/GPL协议下发布的既可作为代理服务器,同时也可作为Web缓存守护进程的应用软件。Squid主要是支持像HTTP和FTP那样的协议,但是对其它的协议比如HTTPS,SSL,TLS等同样也能支持。其特点是Web缓存守护进程通过从经常上访问的网站里缓存Web和DNS数据,从而让上网速度更快。Squid支持所有的主流平台,包括Linux,UNIX,微软公司的Windows和苹果公司的Mac。

|

||||

|

||||

### 3. Squid的默认端口是什么?怎么去修改它的操作端口? ###

|

||||

|

||||

@ -66,17 +67,17 @@ f. 保存配置文件并退出,重启Squid服务让其生效。

|

||||

|

||||

# service squid restart

|

||||

|

||||

### 5. 在Squid中什么是媒体范围限制和部分下载? ###

|

||||

### 5. 在Squid中什么是媒体范围限制(Media Range Limitation)和部分下载? ###

|

||||

|

||||

> **回答** : 媒体范围限制是Squid的一种特殊的功能,它只从服务器中获取所需要的数据而不是整个文件。这个功能很好的实现了用户在各种视频流媒体网站如YouTube和Metacafe看视频时,可以点击视频中的进度条来选择进度,因此整个视频不用全部都加载,除了一些需要的部分。

|

||||

|

||||

Squid部分下载功能的特点是很好地实现了在Windows更新时下载的文件能以一个个小数据包的形式暂停。正因为它的这个特点,正在下载文件的Windows机器能不用担心数据会丢失,从而进行恢复下载。Squid让媒体范围限制和部分下载功能只在存储一个完整文件的复件之后实现。此外,当用户指向另一个页面时,Squid要以某种方式进行特殊地配置,部分下载下来的文件才会不被删除且留有缓存。

|

||||

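Squid's partial download feature suits cases such as Windows Update, where files are downloaded as small packets and the download can be paused. Because of this, a Windows machine that is downloading a file can resume later without fear of losing data. Squid's Media Range Limitation and partial download features only work after a complete copy of the file has been stored. In addition, when the user navigates to another page, the partially downloaded file is deleted and not kept in the cache unless Squid has been specifically configured otherwise.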

|

||||

|

||||

### 6. 什么是Squid的反向代理? ###

|

||||

|

||||

> **回答** : 反向代理是Squid的一个特点,这个功能被用来加快最终用户的上网速度。缩写为 ‘RS’ 的原服务器包含了所有资源,而代理服务器则叫 ‘PS’ 。客户端寻找RS所提供的数据,第一次指定的数据和它的复件会经过多次配置从RS上存储在PS上。这样的话每次从PS上请求的数据就等于就是从原服务器上获取的。这样就会减轻网络拥堵,减少CPU使用率,降低网络资源的利用率从而缓解原来实际服务器的负载压力。但是RS统计不了总流量的数据因为PS分担了部分原服务器的任务。‘X-Forwarded-For HTTP’ 就能记录下通过HTTP代理或负载均衡方式连接到RS的客户端最原始的IP地址。

|

||||

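> **Answer** : Reverse proxying is a Squid feature used to speed up web access for end users. Below, 'RS' denotes the origin server that holds the resources, and 'PS' denotes the proxy server. On the first request, the PS fetches the data from the RS and stores a copy for the configured amount of time. Each later request is then served from the PS as though it came from the origin server. This eases network congestion, lowers CPU usage, and reduces network resource consumption, which relieves the load on the origin server. The drawback is that the RS cannot measure total traffic, because the PS takes over part of its work. The 'X-Forwarded-For' HTTP header can be used to record the original IP address of a client that reaches the RS through an HTTP proxy or a load balancer.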

|

||||

|

||||

严格意义上来说,用单个Squid服务器同时作为正向代理服务器和反向代理服务器是可行的。

|

||||

从技术上说,用单个Squid服务器同时作为正向代理服务器和反向代理服务器是可行的。

|

||||

|

||||

### 7. 由于Squid能作为一个Web缓存守护进程,那缓存可以删除吗?怎么删除? ###

|

||||

|

||||

@ -91,7 +92,7 @@ b. 创建交换分区目录。

|

||||

|

||||

# squid -z

|

||||

|

||||

### 8. 你身边有一台客户机,而你正在工作,如果想要限制儿童的访问时间段,你会怎么去设置那个场景? ###

|

||||

### 8. 你有一台工作中的机器可以访问代理服务器,如果想要限制你的孩子的访问时间,你会怎么去设置那个场景? ###

|

||||

|

||||

Set the allowed access window to three hours, from 4 PM to 7 PM, Monday through Friday.

|

||||

|

||||

@ -114,9 +115,9 @@ c. 重启Squid服务。

|

||||

|

||||

### 10. Squid的缓存会存储到哪里? ###

|

||||

|

||||

> **回答** : Squid存储的缓存是位于 ‘/var/spool/squid’ 的特殊目录下。

|

||||

> **回答** : Squid存储的缓存是位于 ‘/var/spool/squid’ 的特定目录下。

|

||||

|

||||

以上就是全部内容了,很快我还会带着其它有趣的内容回到这里,届时还请继续关注Tecmint。别忘了告诉我们你的反馈和评论。

|

||||

以上就是全部内容了,很快我还会带着其它有趣的内容回到这里。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -124,7 +125,7 @@ via: http://www.tecmint.com/squid-interview-questions/

|

||||

|

||||

作者:[Avishek Kumar][a]

|

||||

译者:[ZTinoZ](https://github.com/ZTinoZ)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -8,12 +8,13 @@

|

||||

|

||||

### 添加窗口按钮 ###

|

||||

|

||||

处于一些未知的原因,GNOME的开发者们决定对标准的窗口按钮(关闭,最小化,最大化)不屑一顾,而支持只有单个关闭按钮的窗口了。我缺少了最大化按钮(虽然你可以简单地拖动窗口到屏幕顶部来将它最大化),然而也可以通过在标题栏右击选择最小化或者最大化来进行最小化/最大化操作。这种变化仅仅增加了操作步骤,因此缺少最小化按钮实在搞得人云里雾里。所幸的是,有个简单的修复工具可以解决这个问题,下面说说怎样做吧:

|

||||

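For reasons unknown, the GNOME developers decided to shrug off the standard window buttons (Close, Minimize, Maximize) in favor of windows with a single Close button. I can understand the lack of a Maximize button (you can simply drag a window to the top of the screen to maximize it), and you can also minimize or maximize by right-clicking the title bar and choosing the action. That change only adds steps, though, so the lack of a Minimize button confounds me. Fortunately, there is a simple fix, and here is how to apply it: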

|

||||

|

||||

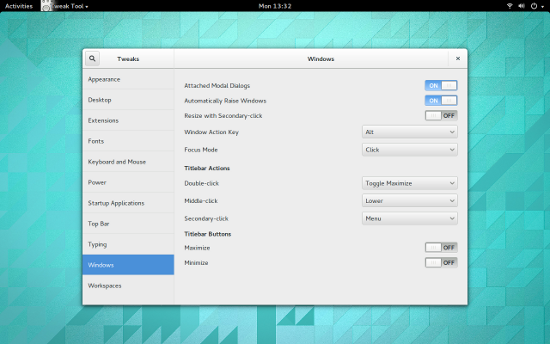

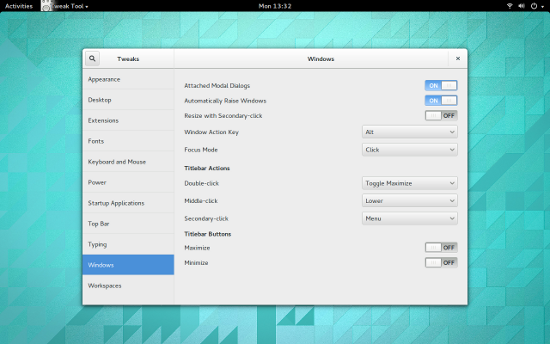

默认情况下,你应该安装了GNOME优化工具。通过该工具,你可以打开最大化或最小化按钮(图1)。

|

||||

默认情况下,你应该安装了GNOME优化工具(GNOME Tweak Tool)。通过该工具,你可以打开最大化或最小化按钮(图1)。

|

||||

|

||||

|

||||

Figure 1: 添加回最小化按钮到GNOME 3窗口

|

||||

<center>

|

||||

|

||||

*图 1: 添加回最小化按钮到GNOME 3窗口*</center>

|

||||

|

||||

添加完后,你就可以看到最小化按钮了,它在关闭按钮的左边,等着为你服务呢。你的窗口现在管理起来更方便了。

|

||||

|

||||

@ -27,36 +28,39 @@ Figure 1: 添加回最小化按钮到GNOME 3窗口

|

||||

|

||||

### 添加扩展 ###

|

||||

|

||||

GNOME 3的最佳特性之一,就是shell扩展,这些扩展为GNOME带来了全部种类的有用的特性。关于shell扩展,没必要从包管理器去安装。你可以访问[GNOME Shell扩展][2]站点,搜索你想要添加的扩展,点击扩展列表,点击打开按钮,然后扩展就安装完成了;或者你也可以从GNOME优化工具中添加它们(你在网站上会找到更多可用的扩展)。

|

||||

GNOME 3的最佳特性之一,就是shell扩展,这些扩展为GNOME带来了各种类别的有用特性。关于shell扩展,没必要从包管理器去安装。你可以访问[GNOME Shell扩展][2]站点,搜索你想要添加的扩展,点击扩展列表,点击打开按钮,然后扩展就安装完成了;或者你也可以从GNOME优化工具中添加它们(你在网站上会找到更多可用的扩展)。

|

||||

|

||||

注:你可能需要在浏览器中允许扩展安装。如果出现这样的情况,你会在第一次访问GNOME Shell扩展站点时见到警告信息。当出现提示时,只要点击允许即可。

|

||||

|

||||

令人印象更为深刻的(而又得心应手的扩展)之一,就是[Dash to Dock][3]。

|

||||

令人印象更为深刻的(而又得心应手的)扩展之一,就是[Dash to Dock][3]。

|

||||

|

||||

该扩展将Dash移出应用程序概览,并将它转变为相当标准的停靠栏(图2)。

|

||||

|

||||

|

||||

Figure 2: Dash to Dock添加一个停靠栏到GNOME 3.

|

||||

<center>

|

||||

|

||||

*图 2: Dash to Dock添加一个停靠栏到GNOME 3*</center>

|

||||

|

||||

当你添加应用程序到Dash后,他们也将被添加到Dash to Dock。你也可以通过点击Dock底部的6点图标访问应用程序概览。

|

||||

|

||||

还有大量其它扩展聚焦于讲GNOME 3打造成一个更为高效的桌面,在这些更好的扩展中,包括以下这些:

|

||||

还有大量其它扩展致力于将GNOME 3打造成一个更为高效的桌面,在这些不错的扩展中,包括以下这些:

|

||||

|

||||

- [最近项目][4]: 添加一个最近使用项目的下拉菜单到面板。

|

||||

- [搜索Firefox书签提供者][5]: 从概览搜索(并启动)书签。

|

||||

- [Firefox书签搜索][5]: 从概览搜索(并启动)书签。

|

||||

- [跳转列表][6]: 添加一个跳转列表弹出菜单到Dash图标(该扩展可以让你快速打开和程序关联的新文档,甚至更多)

|

||||

- [待办列表][7]: 添加一个下拉列表到面板,它允许你添加项目到该列表。

|

||||

- [网页搜索对话框][8]: 允许你通过敲击Ctrl+空格来快速搜索网页并输入一个文本字符串(结果在新的浏览器标签页中显示)。

|

||||

- [网页搜索框][8]: 允许你通过敲击Ctrl+空格来快速搜索网页并输入一个文本字符串(结果在新的浏览器标签页中显示)。

|

||||

|

||||

### 添加一个完整停靠栏 ###

|

||||

|

||||

如果Dash to dock对于而言功能还是太有限(你想要通知区域,甚至更多),那么向你推荐我最喜爱的停靠栏之一[Cairo Dock][9](图3)。

|

||||

如果Dash to dock对于你而言功能还是太有限(你想要“通知区域”,甚至更多),那么向你推荐我最喜爱的停靠栏之一[Cairo Dock][9](图3)。

|

||||

|

||||

|

||||

Figure 3: Cairo Dock待命

|

||||

<center>

|

||||

|

||||

在Cairo Dock添加到GNOME 3后,你的体验将成倍地增长。从你的发行版的包管理器中安装这个优秀的停靠栏吧。

|

||||

*图 3: Cairo Dock待命*</center>

|

||||

|

||||

不必将GNOME 3看作是一个效率不高的,用户不友好的桌面。只要稍作调整,GNOME 3可以成为和其它可用的桌面一样强大而用户友好的桌面。

|

||||

在将Cairo Dock添加到GNOME 3后,你的体验将成倍地增长。从你的发行版的包管理器中安装这个优秀的停靠栏吧。

|

||||

|

||||

不要将GNOME 3看作是一个效率不高的,用户不友好的桌面。只要稍作调整,GNOME 3可以成为和其它可用的桌面一样强大而用户友好的桌面。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -64,7 +68,7 @@ via: http://www.linux.com/learn/tutorials/781916-easy-steps-to-make-gnome-3-more

|

||||

|

||||

作者:[Jack Wallen][a]

|

||||

译者:[GOLinux](https://github.com/GOLinux)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

Proofreader: [wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,4 +1,4 @@

|

||||

什么时候Linux才能完美

|

||||

什么时候Linux才能完美?

|

||||

================================================================================

|

||||

前几天我的同事兼损友,Ken Starks,在FOSS Force上发表了[一篇文章][1],关于他最喜欢发牢骚的内容:Linux系统中那些不能正常工作的事情。这次他抱怨的是在Mint里使用KDE时碰到的字体问题。这对于Ken来说也不是什么新鲜事了。过去他写了一些文章,关于各种Linux发行版中的缺陷从来都没有被认真修复过。他的观点是,这些在一次又一次的发布中从没有被修复过的“小问题”,对于Linux桌面系统在赢得大众方面的失败需要负主要责任。

|

||||

|

||||

@ -14,21 +14,21 @@

|

||||

|

||||

### 也不全是这样子的 ###

|

||||

|

||||

早在2002年的时候,我第一次安装使用GNU/Linux,像大多数美国人那样,我搞不定拨号连接,在我呆的这个小地方当时宽带还没普及。我在当地Best Buy商店里花了差不多70美元买了用热缩膜包装的Mandrake 9.0的Powerpack版,当时那里同时在卖Mandrake和Red Hat,现在仍然还在经营桌面业务。

|

||||

早在2002年的时候,我第一次安装使用GNU/Linux,像大多数美国人那样,我搞不定拨号连接,在我呆的这个小地方当时宽带还没普及。我在当地Best Buy商店里花了差不多70美元买了用热缩膜包装的Mandrake 9.0的Powerpack版,当时那里同时在卖Mandrake和Red Hat,现在仍然还在经营桌面PC业务。

|

||||

|

||||

在那个恐龙时代,Mandrake被认为是易用的Linux发行版中做的最好的。它安装简单,还有人说比Windows还简单,它自带的分区工具更是让划分磁盘像切苹果馅饼一样简单。不过实际上,Linux老手们经常公开嘲笑Mandrake,暗示易用的Linux不是真的Linux。

|

||||

|

||||

但是我很喜欢它,感觉来到了一个全新的世界。再也不用担心Windows的蓝屏死机和几乎每天一死了。不幸的是,之前在Windows下“能用”的很多外围设备也随之而去。

|

||||

|

||||

安装完Mandrake之后我要做的第一件事就是,把我的小白盒拿给[Dragonware Computers][2]的Michelle,把便宜的winmodem换成硬件调制解调器。就算,一个硬件猫意味着计算机响应更快,但是计算机商店却在40英里外的地方,并不是很方便,而且费用我也有点压力。

|

||||

安装完Mandrake之后我要做的第一件事就是,把我的小白盒拿给[Dragonware Computers][2]的Michelle,把便宜的winmodem换成硬件调制解调器。就算是一个硬件猫意味着计算机响应更快,但是计算机商店却在40英里外的地方,并不是很方便,而且费用对我也有点压力。

|

||||

|

||||

但是我不介意。我对Microsoft并不感冒--而且使用一个“不同”的操作系统让我感觉自己就像一个计算机天才。

|

||||

|

||||

打印机也是个麻烦,但是这个问题对于Mandrake还好,不像其他大多数发行版还需要命令行里的操作才能解决。Mandrake提供了一个华丽的图形界面来设置打印机-如果你正好幸运的有一台能在Linux下工作的打印机的话。很多,不是大多数,都不行。

|

||||

打印机也是个麻烦,但是这个问题对于Mandrake还好,不像其他大多数发行版还需要命令行里的操作才能解决。Mandrake提供了一个华丽的图形界面来设置打印机-如果你正好幸运的有一台能在Linux下工作的打印机的话。很多打印机——就算不是大多数——都不行。

|

||||

|

||||

我的还在保修期的Lexmark,在Windows下比其他打印机多出很多华而不实的小功能,厂商并不支持Linux版本,但是我找到一个多少能用的开源逆向工程驱动。它能在Mozilla浏览器里正常打印网页,但是在Star Office软件里打印的话会是用很小的字体塞到页面的右上角里。打印机还会发出很大的机械响声,让我想起了汽车变速箱在报废时发出的噪音。

|

||||

|

||||

Star Office问题的变通方案是把所有文字都保存到文本文件,然后在文本编辑器里打印。而对于那个听上去像是打印机处于自解体模式的噪音?我的方法是尽量不要打印。

|

||||

Star Office问题的变通方案是把所有文字都保存到文本文件,然后在文本编辑器里打印。而对于那个听上去像是打印机处于天魔解体模式的噪音?我的方法是尽量不要打印。

|

||||

|

||||

### 更多的其他问题-对我来说太多了都快忘了 ###

|

||||

|

||||

@ -36,12 +36,13 @@ Star Office问题的变通方案是把所有文字都保存到文本文件,然

|

||||

|

||||

好吧,我还有个并口扫描仪,在我转移到Linux之前两个星期买的,之后它就基本是块砖了,因为没有Linux下的驱动。

|

||||

|

||||

我的观点是在那个年代里这些都不重要。我们大多数人都习惯了修改配置文件之类的事情,即便是运行微软产品的“IBM兼容”计算机。就像那个年代的大多数用户,我刚学开始接触使用命令行的DOS机器,在它上面打印机需要针对每个程序单独设置,而且写写简单的autoexec.bat是必须的技能。

|

||||

我的观点是在那个年代里这些都不重要。我们大多数人都习惯了修改配置文件之类的事情,即便是运行微软产品的“IBM兼容”计算机。就像那个年代的大多数用户,我刚学开始接触使用命令行的DOS机器,在它上面打印机需要针对每个程序单独设置,而且写写简单的autoexec.bat是必备的技能。

|

||||

|

||||

|

||||

Linux就像1966年的“山羊”

|

||||

<center></center>

|

||||

|

||||

能够摆弄操作系统内部的配置是能够拥有一台计算机的一个简单部分。我们大多数使用计算机的人要么是极客或是希望成为极客。我们为这种能够调整计算机按我们想要的方式运行的能力而感到骄傲。我们就是那个年代里高科技版本的好男孩,他们会在周六下午在树荫下改装他们肌肉车上的排气管,通风管,化油器之类的。

|

||||

<center>Linux就像1966年的“山羊”</center>

|

||||

|

||||

那时,能够摆弄操作系统内部的配置是能够拥有一台计算机的一个简单部分。我们大多数使用计算机的人要么是极客或是希望成为极客。我们为这种能够调整计算机按我们想要的方式运行的能力而感到骄傲。我们就是那个年代里高科技版本的好男孩,他们会在周六下午在树荫下改装他们肌肉车上的排气管,通风管,化油器之类的。

|

||||

|

||||

### 不过现在大家不是这样使用计算机的 ###

|

||||

|

||||

@ -59,7 +60,7 @@ via: http://fossforce.com/2014/08/when-linux-was-perfect-enough/

|

||||

|

||||

作者:Christine Hall

|

||||

译者:[zpl1025](https://github.com/zpl1025)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,20 +1,17 @@

|

||||

使用Clonezilla对硬盘进行镜像和克隆

|

||||

================================================================================

|

||||

|

||||

|

||||

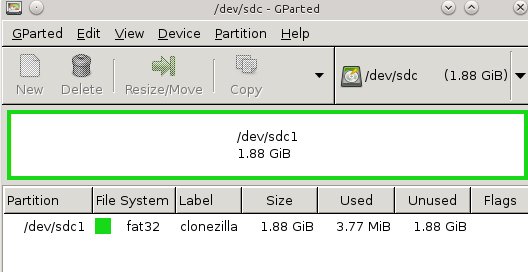

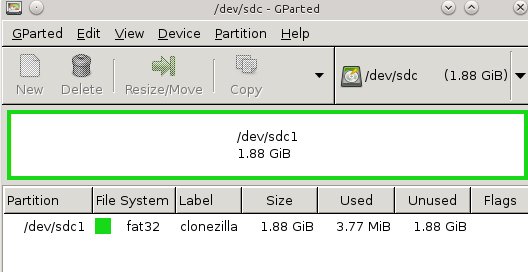

图1: 在USB存储棒上为Clonezilla创建分区

|

||||

Clonezilla是一个用于Linux,Free-Net-OpenBSD,Mac OS X,Windows以及Minix的分区和磁盘克隆程序。它支持所有主要的文件系统,包括EXT,NTFS,FAT,XFS,JFS和Btrfs,LVM2,以及VMWare的企业集群文件系统VMFS3和VMFS5。Clonezilla支持32位和64位系统,同时支持旧版BIOS和UEFI BIOS,并且同时支持MBR和GPT分区表。它是一个用于完整备份Windows系统和所有安装于上的应用软件的好工具,而我喜欢用它来为Linux测试系统做备份,以便我可以在其上做疯狂的实验搞坏后,可以快速恢复它们。

|

||||

|

||||

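Clonezilla is a partition and disk cloning application for Linux, FreeBSD, NetBSD, OpenBSD, Mac OS X, Windows, and Minix. It supports all the major file systems, including EXT, NTFS, FAT, XFS, JFS, and Btrfs, as well as LVM2 and VMWare's enterprise clustering file systems VMFS3 and VMFS5. Clonezilla supports 32-bit and 64-bit systems, both legacy BIOS and UEFI BIOS, and both MBR and GPT partition tables. It is a good tool for making a complete backup of a Windows system together with all of its installed applications, and I like it for backing up Linux test systems so that I can restore them quickly after my crazy experiments break them.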

|

||||

Clonezilla也可以使用dd命令来备份不支持的文件系统,该命令可以复制块而非文件,因而不必在意文件系统。简单点说,就是Clonezilla可以复制任何东西。(关于块的快速说明:磁盘扇区是磁盘上最小的可编址存储单元,而块是由单个或者多个扇区组成的逻辑数据结构。)

|

||||

|

||||

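Clonezilla can also use the dd command to back up file systems it does not support. dd copies blocks rather than files, so it does not need to understand the file system. In short, Clonezilla can copy anything. (A quick note on blocks: a disk sector is the smallest addressable storage unit on a disk, and a block is a logical data structure made up of one or more sectors.)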
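To make the block-versus-file distinction concrete, here is a minimal sketch that runs `dd` against a scratch file instead of a real disk. The file names and block sizes are assumptions chosen for illustration, and this is not meant to show how Clonezilla itself invokes `dd`.

```shell
# Work in a throwaway directory so that no real disk is touched.
workdir=$(mktemp -d)

# Create a 1 MiB stand-in for a "partition", filled with random bytes.
dd if=/dev/urandom of="$workdir/source.img" bs=1024 count=1024 2>/dev/null

# Copy it block by block. dd never interprets the bytes it copies,
# so the same command works whatever file system the data holds.
dd if="$workdir/source.img" of="$workdir/copy.img" bs=512 2>/dev/null

# cmp -s exits with status 0 only when both files are byte-for-byte identical.
if cmp -s "$workdir/source.img" "$workdir/copy.img"; then
    dd_result="identical"
else
    dd_result="different"
fi
echo "$dd_result"
```

Against a real device the input would be a device node and the command would need root privileges, so double-check `if=` and `of=` before running anything of the kind.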

|

||||

|

||||

Clonezilla分为两个版本:Clonezilla Live和Clonezilla Server Edition(SE)。Clonezilla Live对于将单个计算机克隆岛本地存储设备或者网络共享来说是一流的。而Clonezilla SE则适合更大的部署,用于一次性快速多点克隆整个网络中的PC。Clonezilla SE是一个神奇的软件,我们将在今后讨论。今天,我们将创建一个Clonezilla Live USB存储棒,克隆某个系统,然后恢复它。

|

||||

Clonezilla分为两个版本:Clonezilla Live和Clonezilla Server Edition(SE)。Clonezilla Live对于将单个计算机克隆到本地存储设备或者网络共享来说是一流的。而Clonezilla SE则适合更大的部署,用于一次性快速多点克隆整个网络中的PC。Clonezilla SE是一个神奇的软件,我们将在今后讨论。今天,我们将创建一个Clonezilla Live USB存储棒,克隆某个系统,然后恢复它。

|

||||

|

||||

### Clonezilla和Tuxboot ###

|

||||

|

||||

当你访问下载页时,你会看到[稳定版和可选稳定发行版][1]。也有测试版本,如果你有兴趣帮助改善Clonezilla,那么我推荐你使用此版本。稳定版基于Debian,不含有非自由软件。可选稳定版基于Ubuntu,包含有一些非自由固件,并支持UEFI安全启动。

|

||||

|

||||

在你[下载Clonezilla][2]后,请安装[Tuxboot][3]来复制Clonezilla到USB存储棒。Tuxboot是一个Unetbootin的修改版,它支持Clonezilla;你不能使用Unetbootin,因为它无法工作。安装Tuxboot有点让人头痛,然而Ubuntu用户通过个人包归档压缩包(PPA)方便地安装:

|

||||

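After you [download Clonezilla][2], install [Tuxboot][3] to copy Clonezilla to a USB stick. Tuxboot is a modified version of Unetbootin that supports Clonezilla; plain Unetbootin will not work with it. Installing Tuxboot can be a bit of a headache, but Ubuntu users can install it easily from a Personal Package Archive (PPA):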

|

||||

|

||||

$ sudo apt-add-repository ppa:thomas.tsai/ubuntu-tuxboot

|

||||

$ sudo apt-get update

|

||||

@ -22,18 +19,24 @@ Clonezilla分为两个版本:Clonezilla Live和Clonezilla Server Edition(SE

|

||||

|

||||

如果你没有运行Ubuntu,并且你的发行版不包含打包好的Tuxboot版本,那么请[下载源代码tarball][4],并遵循README.txt文件中的说明来编译并安装。

|

||||

|

||||

安装完Tuxboot后,就可以使用它来创建你精巧的可直接启动的Clonezilla USB存储棒了。首先,创建一个最小200MB的FAT 32分区;图1(上面)展示了使用GParted来进行分区。我喜欢使用标签,比如“Clonezilla”,这会让我知道它是个什么东西。该例子中展示了将一个2GB的存储棒格式化成一个单个分区。

|

||||

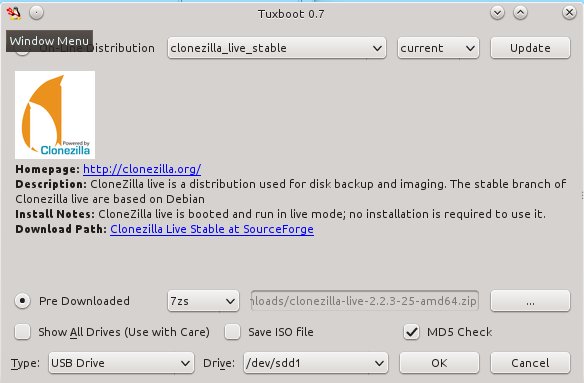

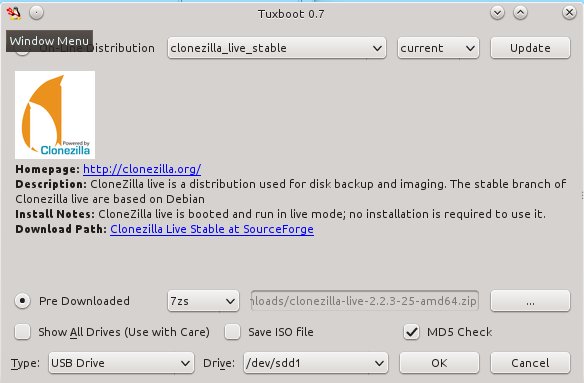

Then fire up Tuxboot (figure 2). Check "Pre-downloaded" and click the button with the ellipsis to select your Clonezilla file. It should find your USB stick automatically, and you should check the partition number to make sure it found the right one. In my example that is /dev/sdd1. Click OK, and when it's finished click Exit. It asks you if you want to reboot now, but don't worry because it won't. Now you have a nice portable Clonezilla USB stick you can use almost anywhere.

|

||||

然后,启动Tuxboot(图2)。选中“预下载的(Pre-downloaded)”然后点击带省略号的按钮来选择Clonezilla文件。它会自动发现你的USB存储棒,而你需要选中分区号来确保它找到的是正确的那个,我的例子中是/dev/sdd1。点击确定,然后当它完成后点击退出。它会问你是否要重启动,请不要担心,因为它不会的。现在你有一个精巧的便携式Clonezilla USB存储棒了,你可以随时随地使用它了。

|

||||

<center></center>

|

||||

|

||||

|

||||

图2: 启动Tuxboot

|

||||

<center>*图1: 在USB存储棒上为Clonezilla创建分区*</center>

|

||||

|

||||

|

||||

安装完Tuxboot后,就可以使用它来创建你精巧的可直接启动的Clonezilla USB存储棒了。首先,创建一个最小200MB的FAT 32分区;图1(上图)展示了使用GParted来进行分区。我喜欢使用类似“Clonezilla”这样的标签,这会让我知道它是个什么东西。该例子中展示了将一个2GB的存储棒格式化成一个单个分区。

|

||||

|

||||

然后,启动Tuxboot(图2)。选中“预下载的(Pre-downloaded)”然后点击带省略号的按钮来选择Clonezilla文件。它会自动发现你的USB存储棒,而你需要选中分区号来确保它找到的是正确的那个,我的例子中是/dev/sdd1。点击确定,然后当它完成后点击退出。它会问你是否要重启动,不要担心,现在不用重启。现在你有一个精巧的便携式Clonezilla USB存储棒了,你可以随时随地使用它了。

|

||||

|

||||

<center></center>

|

||||

|

||||

<center>*图2: 启动Tuxboot*</center>

|

||||

|

||||

### 创建磁盘镜像 ###

|

||||

|

||||

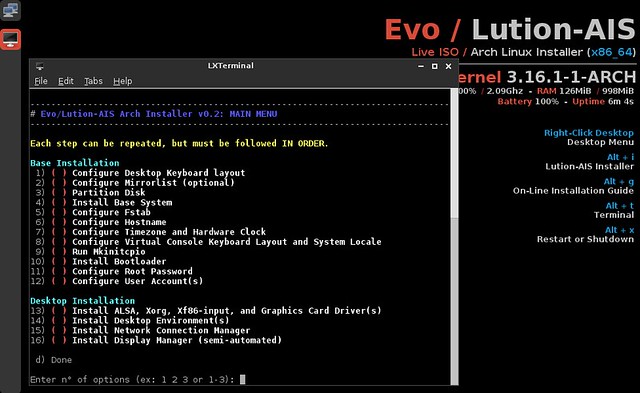

在你想要备份的计算机上启动Clonezilla USB存储棒,第一个映入你眼帘的是常规的启动菜单。启动到默认条目。你会被问及使用何种语言和键盘,而当你到达启动Clonezilla菜单时,请选择启动Clonezilla。在下一级菜单中选择设备镜像,然后进入下一屏。

|

||||

|

||||

这一屏有点让人摸不着头脑,里头有什么local_dev,ssh_server,samba_server,以及nfs_server之类的选项。这里就是要你选择将备份的镜像拷贝到哪里,目标分区或者驱动器必须和你要拷贝的卷要一样大,甚至更大。如果你选择local_dev,那么你需要一个足够大的本地分区来存储你的镜像。附加USB硬盘驱动器是一个不错的,快速而又简单的选项。如果你选择任何服务器选项,你需要有线连接到服务器,并提供IP地址并登录上去。我将使用一个本地分区,这就是说要选择local_dev。

|

||||

这一屏有点让人摸不着头脑,里头有什么local_dev,ssh_server,samba_server,以及nfs_server之类的选项。这里就是要你选择将备份的镜像拷贝到哪里,目标分区或者驱动器必须和你要拷贝的卷要一样大,甚至更大。如果你选择local_dev,那么你需要一个足够大的本地分区来存储你的镜像。附加的USB硬盘驱动器是一个不错的,快速而又简单的选项。如果你选择任何服务器选项,你需要能连接到服务器,并提供IP地址并登录上去。我将使用一个本地分区,这就是说要选择local_dev。

|

||||

|

||||

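When you select local_dev, Clonezilla scans all locally attached storage devices, including hard drives and USB storage devices, and then lists every partition. Select the partition where you want to store the image; Clonezilla then asks which directory to use and lists the directories. Choose the directory you need, and the next screen shows all mounts along with their used and available space. Press Enter to move on to the next screen, where you choose between Beginner and Expert mode. I chose Beginner mode.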

|

||||

|

||||

@ -41,12 +44,13 @@ Then fire up Tuxboot (figure 2). Check "Pre-downloaded" and click the button wit

|

||||

|

||||

下一屏中,它会问你新建镜像的名称。在接受默认名称,或者输入你自己的名称后,进入下一屏。Clonezilla会扫描你所有的分区并创建一个检查列表,你可以从中选择你想要拷贝的。选择完后,在下一屏中会让你选择是否进行文件系统检查并修复。我才没这耐心,所以直接跳过了。

|

||||

|

||||

下一屏中,会问你是否想要Clonezilla检查你新创建的镜像,以确保它是可恢复的。选是吧,确保万无一失。接下来,它会给你一个命令行提示,如果你想用命令行而非GUI,那么你必须再次按回车。你需要再次确认,并输入y来确认制作拷贝。

|

||||

下一屏中,会问你是否想要Clonezilla检查你新创建的镜像,以确保它是可恢复的。选“是”吧,确保万无一失。接下来,它会给你一个命令行提示,如果你想用命令行而非GUI,那么你必须再次按回车。你需要再次确认,并输入y来确认制作拷贝。

|

||||

|

||||

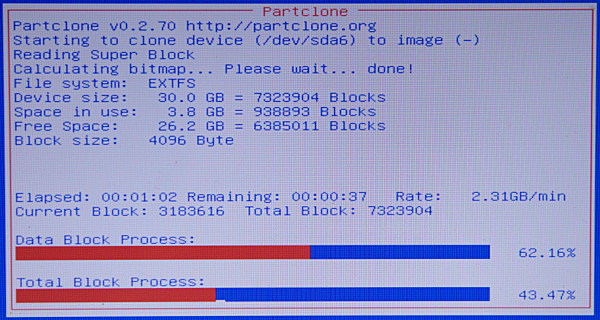

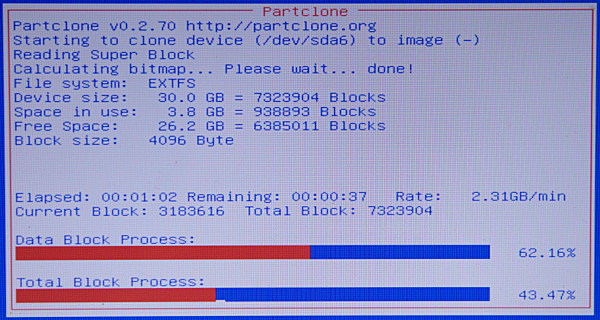

在Clonezilla创建新镜像的时候,你可以好好欣赏一下这个友好的红、白、蓝三色的进度屏(图3)。

|

||||

|

||||

|

||||

图3: 守候创建新镜像

|

||||

<center></center>

|

||||

|

||||

<center>*图3: 守候创建新镜像*</center>

|

||||

|

||||

全部完成后,按回车然后选择重启,记得拔下你的Clonezilla USB存储棒。正常启动计算机,然后去看看你新创建的Clonezilla镜像吧。你应该看到像下面这样的东西:

|

||||

|

||||

@ -81,7 +85,7 @@ via: http://www.linux.com/learn/tutorials/783416-how-to-image-and-clone-hard-dri

|

||||

|

||||

作者:[Carla Schroder][a]

|

||||

译者:[GOLinux](https://github.com/GOLinux)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,155 @@

|

||||

15 Practical Examples of the 'cd' Command in Linux

===========================

In Linux, the **'cd' (change directory)** command is one of the most important and most frequently used commands, for newcomers and system administrators alike. For administrators of headless servers, '**cd**' is the only way to move into a directory to check logs, run a program, application, or script, and carry out every other task. For newcomers, it is one of the very first commands to learn hands-on.

*15 cd command examples in Linux*

With that in mind, here are **15** basic '**cd**' commands, including tricks and shortcuts that can noticeably reduce the time and effort you spend in the terminal.

### Tutorial Details ###

- Command name: cd
- Stands for: change directory
- Availability: all Linux distributions
- Execution: command line
- Permission: access to your own directory or any other assigned directory
- Level: basic/beginner

1. Change from the current directory to /usr/local

    avi@tecmint:~$ cd /usr/local
    avi@tecmint:/usr/local$

2. Change from the current directory to /usr/local/lib using an absolute path

    avi@tecmint:/usr/local$ cd /usr/local/lib
    avi@tecmint:/usr/local/lib$

3. Change from the current directory to /usr/local/lib using a relative path

    avi@tecmint:/usr/local$ cd lib
    avi@tecmint:/usr/local/lib$

4. **(a)** Switch back to the previous working directory

    avi@tecmint:/usr/local/lib$ cd -
    /usr/local
    avi@tecmint:/usr/local$

**(b)** Change from the current directory to its parent directory

    avi@tecmint:/usr/local/lib$ cd ..
    avi@tecmint:/usr/local$
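The difference between `cd -` and `cd ..` is easy to blur, so here is a small sketch using scratch directories (the directory names are made up for the demonstration). `cd -` returns to whatever directory `$OLDPWD` records, while `cd ..` always moves to the parent of the current directory.

```shell
# Build a scratch tree: $base/a/b and $base/c (hypothetical names).
# pwd -P resolves symlinks so that later comparisons are reliable.
base=$(cd "$(mktemp -d)" && pwd -P)
mkdir -p "$base/a/b" "$base/c"

cd "$base/a/b"          # start here
cd "$base/c"            # jump somewhere unrelated; OLDPWD is now $base/a/b

cd - >/dev/null         # back to the previous directory (cd - prints it, so silence that)
after_dash=$(pwd -P)

cd ..                   # up one level, from $base/a/b to $base/a
after_dotdot=$(pwd -P)

echo "cd - went to:  $after_dash"
echo "cd .. went to: $after_dotdot"
```

Running `cd -` repeatedly toggles between the two most recent directories, which is handy when working in two places at once.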

|

||||

|

||||

5. Show the last working directory we left (using the '-' switch)

    avi@tecmint:/usr/local$ cd --
    /home/avi

(In bash, `--` only marks the end of options, so `cd --` behaves like a plain `cd` and moves to the home directory; `cd -` is the form that returns to the previous directory.)

6. Move two levels up from the current directory

    avi@tecmint:/usr/local$ cd ../../
    avi@tecmint:/$

7. Return to the user's home directory from anywhere

    avi@tecmint:/usr/local$ cd ~
    avi@tecmint:~$

or

    avi@tecmint:/usr/local$ cd
    avi@tecmint:~$

8. Change the working directory to the current working directory (LCTT note: what is the point of that?!)

    avi@tecmint:~/Downloads$ cd .
    avi@tecmint:~/Downloads$

or

    avi@tecmint:~/Downloads$ cd ./
    avi@tecmint:~/Downloads$

9. Your current directory is "/usr/local/lib/python3.4/dist-packages" and you want to change to "/home/avi/Desktop/" with a single command, by moving up until you reach '/' and then using the absolute path from there

    avi@tecmint:/usr/local/lib/python3.4/dist-packages$ cd ../../../../../home/avi/Desktop/
    avi@tecmint:~/Desktop$

10. Change from the current working directory to /var/www/html without typing the path in full, using TAB

    avi@tecmint:/var/www$ cd /v<TAB>/w<TAB>/h<TAB>
    avi@tecmint:/var/www/html$

11. Change from the current directory to /etc/v__ _. Oops, you have forgotten the directory's name, and you do not want to use TAB

    avi@tecmint:~$ cd /etc/v*
    avi@tecmint:/etc/vbox$

**Note:** if only one directory starts with '**v**', this moves to '**vbox**'. If several directories start with '**v**' and the command line gives no further criteria, the shell expands the pattern to all of them in alphabetical order. Older versions of bash then move to the first match, while newer versions may instead reject the command with a "too many arguments" error.
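Because the outcome of a pattern that matches several directories can depend on the shell and its version, it may be safer to pick the first match explicitly. The following is a minimal sketch on a scratch directory; the names `vbox` and `vim` are stand-ins, not a claim about what your /etc contains.

```shell
# Scratch directory with two entries that both start with "v" (hypothetical names).
base=$(cd "$(mktemp -d)" && pwd -P)
mkdir "$base/vbox" "$base/vim"

# The shell expands the pattern into a sorted list before cd ever runs.
# Loading the matches into the positional parameters lets us choose one.
set -- "$base"/v*
echo "matches: $#"

cd "$1"                 # explicitly take the first match in sorted order
first_match=$(pwd -P)
echo "$first_match"
```

Note that `set --` overwrites the script's own positional parameters, so in a larger script this is best done inside a function.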

|

||||

|

||||

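12. You want to change to the home directory of user '**av**' (you are not sure whether it is avi or avt), without using **TAB**

    avi@tecmint:/etc$ cd /home/av?
    avi@tecmint:~$

13. pushd and popd in Linux

pushd and popd are built-in commands in bash and several other shells. They save the current working directory in memory (on a directory stack) and later read a directory back from memory to make it the current directory, so both commands also change directory.

    avi@tecmint:~$ pushd /var/www/html
    /var/www/html ~
    avi@tecmint:/var/www/html$

The command above saves the current directory in memory and then changes to the requested directory. As soon as popd is run, it takes the saved directory location out of memory and makes it the current directory.

    avi@tecmint:/var/www/html$ popd
    ~
    avi@tecmint:~$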

|

||||

|

||||

14. Change to a directory whose name contains a space

    avi@tecmint:~$ cd test\ tecmint/
    avi@tecmint:~/test tecmint$

or

    avi@tecmint:~$ cd 'test tecmint'
    avi@tecmint:~/test tecmint$

or

    avi@tecmint:~$ cd "test tecmint"/
    avi@tecmint:~/test tecmint$
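The same quoting rule matters when the directory name is held in a variable, which is the usual situation in scripts. Here is a small sketch on a scratch directory; the variable names are invented for the example.

```shell
# Create a scratch directory whose name contains a space.
base=$(cd "$(mktemp -d)" && pwd -P)
mkdir "$base/test tecmint"

target="$base/test tecmint"

# Quoting the expansion keeps the name together as ONE argument.
# Unquoted, $target would be split at the space into two arguments.
cd "$target"
quoted_pwd=$(pwd -P)
echo "$quoted_pwd"
```

As a rule of thumb, writing `cd "$dir"` with the quotes costs nothing and avoids surprises with spaces and wildcard characters in names.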

|

||||

|

||||

15. Change from the current directory to Downloads and list its contents (with a single command)

    avi@tecmint:/usr$ cd ~/Downloads && ls
    ...
    .
    service_locator_in.xls
    sources.list
    teamviewer_linux_x64.deb
    tor-browser-linux64-3.6.3_en-US.tar.xz
    .
    ...
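The `&&` in a one-liner like this is doing real work: `ls` runs only if `cd` succeeded. A minimal sketch of that behaviour, using a scratch directory with invented names, might look like this. It runs each pair in a subshell so that the caller's working directory is left alone.

```shell
# Scratch setup: one directory that exists, with a single file in it.
base=$(cd "$(mktemp -d)" && pwd -P)
mkdir "$base/Downloads"
touch "$base/Downloads/sources.list"

# cd succeeds, so ls runs and lists the directory we just entered.
listing=$(cd "$base/Downloads" && ls)
echo "$listing"

# cd fails here, so ls is never run and nothing from the wrong
# directory gets listed by accident.
if (cd "$base/no-such-dir" 2>/dev/null && ls); then
    ls_outcome="listed"
else
    ls_outcome="skipped"
fi
echo "$ls_outcome"
```

With `;` instead of `&&`, the second command would run regardless, which is rarely what you want after a failed `cd`.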

|

||||

|

||||

We have tried to show you how Linux works in as few words as possible and in our usual friendly tone.

That's all for now. I'll be back soon with another interesting topic.

---

via: http://www.tecmint.com/cd-command-in-linux/

Author: [Avishek Kumar][a]
Translator: [su-kaiyao](https://github.com/su-kaiyao)
Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](http://linux.cn/)

[a]:http://www.tecmint.com/author/avishek/

|

||||

|

||||

@ -1,4 +1,4 @@

|

||||

Linux FAQ -- 如何在CentOS或者RHEL上启用Nux Dextop仓库

|

||||

Linux有问必答:如何在CentOS或者RHEL上启用Nux Dextop仓库

|

||||

================================================================================

|

||||

> **问题**: 我想要安装一个在Nux Dextop仓库的RPM包。我该如何在CentOS或者RHEL上设置Nux Dextop仓库?

|

||||

|

||||

@ -6,7 +6,7 @@ Linux FAQ -- 如何在CentOS或者RHEL上启用Nux Dextop仓库

|

||||

|

||||

要在CentOS或者RHEL上启用Nux Dextop,遵循下面的步骤。

|

||||

|

||||

首先,要理解Nux Dextop被设计与EPEL仓库共存。因此,你需要使用Nux Dexyop仓库前先[启用 EPEL][2]。

|

||||

First, be aware that Nux Dextop is designed to coexist with the EPEL repository. You therefore need to [enable EPEL][2] before using the Nux Dextop repository.

|

||||

|

||||

启用EPEL后,用下面的命令安装Nux Dextop仓库。

|

||||

|

||||

@ -26,13 +26,13 @@ Linux FAQ -- 如何在CentOS或者RHEL上启用Nux Dextop仓库

|

||||

|

||||

### 对于 Repoforge/RPMforge 用户 ###

|

||||

|

||||

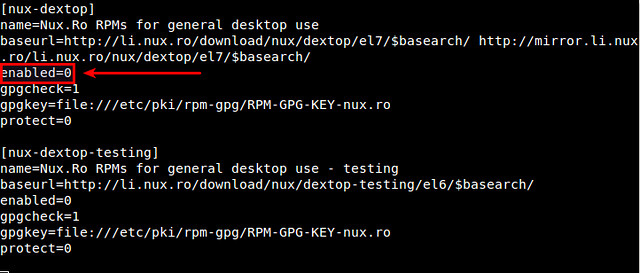

据作者所说,Nux Dextop目前所知会与其他第三方库比如Repoforge和ATrpms相冲突。因此,如果你启用了除了EPEL的其他第三方库,强烈建议你将Nux Dextop仓库设置成“default off”(默认关闭)状态。就是用文本编辑器打开/etc/yum.repos.d/nux-dextop.repo,并且在nux-desktop下面将"enabled=1" 改成 "enabled=0"。

|

||||

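According to its author, Nux Dextop is known to conflict with other third-party repositories such as Repoforge and ATrpms. If you have enabled any third-party repository other than EPEL, it is therefore strongly recommended that you set the Nux Dextop repository to "default off". To do so, open /etc/yum.repos.d/nux-dextop.repo in a text editor and change "enabled=1" to "enabled=0" under the nux-dextop section.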
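The manual edit could also be scripted. The following is a sketch only: it works on a scratch copy whose contents are a made-up stand-in for the real repo file (the section names and keys shown are assumptions about its layout), and it uses a sed address range to confine the change to one section.

```shell
workdir=$(mktemp -d)
repo="$workdir/nux-dextop.repo"

# Hypothetical stand-in for /etc/yum.repos.d/nux-dextop.repo.
cat > "$repo" <<'EOF'
[nux-dextop]
name=example main section
enabled=1

[other-section]
name=example second section
enabled=1
EOF

# Flip enabled=1 to enabled=0 only between the [nux-dextop] header
# and the next section header; every other section is left alone.
sed '/^\[nux-dextop]$/,/^\[/ s/^enabled=1$/enabled=0/' "$repo" > "$repo.new"

disabled_count=$(grep -c '^enabled=0$' "$repo.new")
enabled_count=$(grep -c '^enabled=1$' "$repo.new")
echo "disabled: $disabled_count, still enabled: $enabled_count"
```

On a real system the result would have to be written back to the file under /etc with root privileges, so inspect the output first. If yum-utils is installed, `yum-config-manager --disable nux-dextop` is another way to reach the same state.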

|

||||

|

||||

$ sudo vi /etc/yum.repos.d/nux-dextop.repo

|

||||

$ sudo vi /etc/yum.repos.d/nux-dextop.repo

|

||||

|

||||

|

||||

|

||||

当你无论何时从Nux Dextop仓库安装包时,显式地用下面的命令启用仓库。

|

||||

From then on, whenever you install a package from the Nux Dextop repository, enable the repository explicitly with the following command.

|

||||

|

||||

$ sudo yum --enablerepo=nux-dextop install <package-name>

|

||||

|

||||

@ -41,7 +41,7 @@ $ sudo vi /etc/yum.repos.d/nux-dextop.repo

|

||||

via: http://ask.xmodulo.com/enable-nux-dextop-repository-centos-rhel.html

|

||||

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

Proofreader: [wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,4 +1,4 @@

|

||||

Linux有问必答——如何修复“运行aclocal失败:没有该文件或目录”

|

||||

Linux有问必答:如何修复“运行aclocal失败:没有该文件或目录”

|

||||

================================================================================

|

||||

> **问题**:我试着在Linux上构建一个程序,该程序的开发版本是使用“autogen.sh”脚本进行的。当我运行它来创建配置脚本时,却发生了下面的错误:

|

||||

>

|

||||

@ -24,7 +24,7 @@ Linux有问必答——如何修复“运行aclocal失败:没有该文件或

|

||||

via: http://ask.xmodulo.com/fix-failed-to-run-aclocal.html

|

||||

|

||||

译者:[GOLinux](https://github.com/GOLinux)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,5 +1,7 @@

|

||||

Email生日快乐

|

||||

他发明了 Email ?

|

||||

================================================================================

|

||||

[编者按:本文所述的 Email 发明人的观点存在很大的争议,请读者留意,以我的观点来看,其更应该被称作为某个 Email 应用系统的发明人,其所发明的一些功能和特性,至今沿用。——wxy]

|

||||

|

||||

**一个印度裔美国人用他天才的头脑发明了电子邮件,而从此以后我们没有哪一天可以离开电子邮件。**

|

||||

|

||||

|

||||

@ -18,6 +20,6 @@ via: http://www.efytimes.com/e1/fullnews.asp?edid=147170

|

||||

|

||||

作者:Sanchari Banerjee

|

||||

译者:[zpl1025](https://github.com/zpl1025)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

@ -1,6 +1,7 @@

|

||||

在Ubuntu14.04上安装UberWriterMarkdown编辑器

|

||||

================================================================================

|

||||

下面将展示如何通过官方的PPA源在Ubuntu14.04上安装UberWriter编辑器

|

||||

这是一篇快速教程指导我们如何通过官方的PPA源在Ubuntu14.04上安装UberWriter编辑器。

|

||||

|

||||

[UberWriter][1]是一款Ubuntu下的Markdown编辑器,它简洁的界面能让我们更致力于编辑文字。UberWriter利用了[pandoc][3](一个格式转换器)。但由于UberWriter的UI是基于GTK3的,因此不能完全兼容Unity桌面系统。以下是对UberWriter功能的列举:

|

||||

|

||||

- 简洁的界面

|

||||

@ -13,7 +14,7 @@

|

||||

|

||||

### 在Ubuntu14.04上安装UberWriter ###

|

||||

|

||||

UberWriter可以在[Ubuntu软件中心][4]中找到但是安装需要支付$5。如果你真的喜欢这款编辑器并想为开发者提供一些资金支持的话,我很建议你购买它。

|

||||

UberWriter可以在[Ubuntu软件中心][4]中找到但是安装需要支付5刀。如果你真的喜欢这款编辑器并想为开发者提供一些资金支持的话,我很建议你购买它。

|

||||

|

||||

除此之外,UberWriter也能通过官方的PPA源来免费安装。通过如下命令:

|

||||

|

||||

@ -29,16 +30,15 @@ UberWriter可以在[Ubuntu软件中心][4]中找到但是安装需要支付$5。

|

||||

|

||||

|

||||

|

||||

当想要导出到PDF的时候会提示先安装texlive。

|

||||

我尝试导出到PDF的时候被提示安装texlive。

|

||||

|

||||

|

||||

|

||||

虽然导出到HTML和ODT格式是好的。

|

||||

|

||||

在Linux下还有一些其他的markdown编辑器。[Remarkable][5]是一款能够实时预览的编辑器,但UberWriter不能。如果你在寻找文本编辑器的话,你以可以试试[Texmaker LaTeX editor][6]。

|

||||

|

||||

系统这次展示能够帮你在Ubuntu14.04上成功安装UberWriter。我猜想UberWriter在Ubuntu12.04,Linux Mint 17,Elementary OS和其他在Ubuntu的基础上的Linux发行版上也能成功安装。

|

||||

在Linux下还有一些其他的markdown编辑器。[Remarkable][5]是一款能够实时预览的编辑器,UberWriter却不能,不过总的来说它是一款很不错的应用。如果你在寻找文本编辑器的话,你可以试试[Texmaker LaTeX editor][6]。

|

||||

|

||||

I hope this tutorial helps you install UberWriter on Ubuntu 14.04. I expect UberWriter can also be installed on Ubuntu 12.04, Linux Mint 17, Elementary OS, and other Ubuntu-based Linux distributions.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -46,7 +46,7 @@ via: http://itsfoss.com/install-uberwriter-markdown-editor-ubuntu-1404/

|

||||

|

||||

作者:[Abhishek][a]

|

||||

译者:[John](https://github.com/johnhoow)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[Caroline](https://github.com/carolinewuyan)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,12 +1,12 @@

|

||||

QuiteRSS: Linux桌面的RSS阅读器

|

||||

================================================================================

|

||||

[QuiteRSS][1]是一个自由[开源][2]的RSS/Atome阅读器。它可以在Windows、Linux和Mac上运行。它用C++/QT编写,所以它有许多的特点。

|

||||

[QuiteRSS][1] is a free, [open source][2] RSS/Atom reader. It runs on Windows, Linux, and Mac. It is written in C++/Qt and has many distinctive features.

|

||||

|

||||

QuiteRSS的界面让我想起Lotus Notes mail,会有很多RSS信息排列在大小合适的方块上,你可以通过标签分组。需要查找东西时,只需在下面板上打开RSS信息。

|

||||

QuiteRSS的界面让我想起Lotus Notes mail,会有很多RSS信息排列在右侧面板上,你可以通过标签分组。点击一个 RSS 条目时,会在下方的面板里面显示该信息。

|

||||

|

||||

|

||||

|

||||

除了上述功能,它还有一个广告屏蔽器,一个报纸输出视图,通过URL特性导入RSS等众多功能。你可以在[这里][3]查找到完整的功能列表。

|

||||

除了上述功能,它还有一个广告屏蔽器,一个报纸视图,通过URL导入RSS源等众多功能。你可以在[这里][3]查找到完整的功能列表。

|

||||

|

||||

### 在 Ubuntu 和 Linux Mint 上安装 QuiteRSS ###

|

||||

|

||||

@ -20,19 +20,19 @@ QuiteRSS在Ubuntu 14.04 和 Linux Mint 17中可用。你可以通过以下命令

|

||||

sudo apt-get update

|

||||

sudo apt-get install quiterss

|

||||

|

||||

上面的命令在所有基于Ubuntu的发行版都支持,比如Linux Mint, Elementary OS, Linux Lite, Pinguy OS等等。对于其他Linux发行版和平台上,你可以从 [下载页][5]获得源码来安装。

|

||||

上面的命令支持所有基于Ubuntu的发行版,比如Linux Mint, Elementary OS, Linux Lite, Pinguy OS等等。对于其他Linux发行版和平台上,你可以从 [下载页][5]获得源码来安装。

|

||||

|

||||

### 卸载 QuiteRSS ###

|

||||

|

||||

用下方命令卸载 QuiteRSS:

|

||||

用下列命令卸载 QuiteRSS:

|

||||

|

||||

sudo apt-get remove quiterss

|

||||

|

||||

如果你使用了PPA,你还需要从源列表中把仓库删除:

|

||||

If you used the PPA, you should also remove the repository from your sources list:

|

||||

|

||||

sudo add-apt-repository --remove ppa:quiterss/quiterss

|

||||

|

||||

QuiteRSS是一个不错的开源RSS阅读器,尽管我更喜欢[Feedly][6]。尽管现在 Feedly 还没有Linux桌面程序,但是你依然可以在网页浏览器中使用。希望你会觉得QuiteRSS值得在桌面Linux一试。

|

||||

QuiteRSS是一个不错的开源RSS阅读器,尽管我更喜欢[Feedly][6]。不过现在 Feedly 还没有Linux桌面程序,但是你依然可以在网页浏览器中使用。希望你会觉得QuiteRSS值得在桌面Linux一试。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -1,6 +1,6 @@

|

||||

Linux有问必答——如何查找并移除Ubuntu上陈旧的PPA仓库

|

||||

================================================================================

|

||||

> **问题**:我试着通过运行apt-get update命令来再次同步包索引文件,但是却出现了“404 无法找到”的错误,看起来似乎是我不能从先前添加的第三方PPA仓库中获取最新的索引。我怎样才能清楚这些破损而且陈旧的PPA仓库呢?

|

||||

> **问题**:我试着通过运行apt-get update命令来再次同步包索引文件,但是却出现了“404 无法找到”的错误,看起来似乎是我不能从先前添加的第三方PPA仓库中获取最新的索引。我怎样才能清除这些破损而且陈旧的PPA仓库呢?

|

||||

|

||||

Err http://ppa.launchpad.net trusty/main amd64 Packages

|

||||

404 Not Found

|

||||

@ -12,7 +12,7 @@ Linux有问必答——如何查找并移除Ubuntu上陈旧的PPA仓库

|

||||

|

||||

E: Some index files failed to download. They have been ignored, or old ones used instead.

|

||||

|

||||

但你试着更新APT包索引时,“404 无法找到”错误总是会在版本更新之后发生。就是说,在你升级你的Ubuntu发行版后,你在旧的版本上添加的一些第三方PPA仓库就不再受新版本的支持。在此种情况下,你可以像下面这样来**鉴别并清除那些破损的PPA仓库**。

|

||||

当你试着更新APT包索引时,“404 无法找到”错误总是会在版本更新之后发生。就是说,在你升级你的Ubuntu发行版后,你在旧的版本上添加的一些第三方PPA仓库就不再受新版本的支持。在此种情况下,你可以像下面这样来**鉴别并清除那些破损的PPA仓库**。

|

||||

|

||||

首先,找出那些引起“404 无法找到”错误的PPA。

|

||||

|

||||

@ -22,7 +22,7 @@ Linux有问必答——如何查找并移除Ubuntu上陈旧的PPA仓库

|

||||

|

||||

在本例中,Ubuntu Trusty不再支持的PPA仓库是“ppa:finalterm/daily”。

|

||||

|

||||

去吧,去[移除PPA仓库][1]。

|

||||

去[移除PPA仓库][1]吧。

|

||||

|

||||

$ sudo add-apt-repository --remove ppa:finalterm/daily

|

||||

|

||||

@ -30,14 +30,14 @@ Linux有问必答——如何查找并移除Ubuntu上陈旧的PPA仓库

|

||||

|

||||

|

||||

|

||||

在移除所有过时PPA仓库后,重新运行“apt-get update”命令来检查它们是否都被移除。

|

||||

在移除所有过时的PPA仓库后,重新运行“apt-get update”命令来检查它们是否都被成功移除。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://ask.xmodulo.com/find-remove-obsolete-ppa-repositories-ubuntu.html

|

||||

|

||||

译者:[GOLinux](https://github.com/GOLinux)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[Caroline](https://github.com/carolinewuyan)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,27 +1,26 @@

|

||||

2q1w2007 translating

|

||||

One of the World's Smallest Distros, Tiny Core, Gets a New Update

|

||||

One of the World's Smallest Distros, Tiny Core, Gets an Update

|

||||

================================================================================

|

||||

|

||||

|

||||

Tiny Core

|

||||

|

||||

**Robert Shingledecker has just released the latest available final version of Tiny Core 5.4, which also makes it one of the smallest distributions in the world**

|

||||

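**Robert Shingledecker has announced that the final version of the Tiny Core 5.4 Linux operating system is now available for download, which also makes it one of the smallest distributions in the world.**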

|

||||

|

||||

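The name of the distribution says it all, but the developers have still integrated some interesting packages and a lightweight desktop. This latest iteration had only one release candidate, and it will be the quietest release ever.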

|

||||

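The name of the distribution says it all, but the developers have still integrated some interesting packages and a lightweight desktop to go with it. This latest iteration had only one release candidate, and it is also one of the quietest releases so far.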

|

||||

|

||||

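The developers say on the official website: "Tiny Core is a simple example to demonstrate what the Core Project can offer. It provides a 12 MB FLTK/FLWM desktop. Users have complete control over the applications provided and any additional hardware. You can use it on a desktop, a laptop or a server; this is chosen by the user when installing additional applications from the online repository, or by compiling most of what you need with the tools provided."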

|

||||

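The developers say on the official website: "Tiny Core is a simple example of what the Core Project can offer. It provides a 12 MB FLTK/FLWM desktop. Users have complete control over the applications provided and any additional hardware. You can use it on a desktop, a laptop or a server; this is chosen by the user when installing additional applications from the online repository, or by compiling most of what you need with the tools provided."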

|

||||

|

||||

According to the changelog, an NFS entry has been added, 'Done' is now displayed on a new line, and udev has been updated to 174 to fix a race condition.

|

||||

|

||||

The complete list of changes and updates can be found in the official [announcement][1].

|

||||

|

||||

You can download Tiny Core Linux 5.4:

|

||||

You can download Tiny Core Linux 5.4 from the following links:

|

||||

|

||||

- [Tiny Core Linux 5.4 (ISO)][2][iso] [14 MB]

|

||||

- [Tiny Core Plus 5.4 (ISO)][3][iso] [72 MB]

|

||||

- [Core 5.4 (ISO)][4][iso] [8.90 MB]

|

||||

|

||||

All of these releases are Live, so you can try them before installing.

|

||||

All of these distributions are Live, so you can try them before installing.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -29,7 +28,7 @@ via: http://news.softpedia.com/news/One-of-the-Smallest-Distros-in-the-World-Tin

|

||||

|

||||

作者:[Silviu Stahie][a]

|

||||

译者:[2q1w2007](https://github.com/2q1w2007)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[Caroline](https://github.com/carolinewuyan)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -37,4 +36,4 @@ via: http://news.softpedia.com/news/One-of-the-Smallest-Distros-in-the-World-Tin

|

||||

[1]:http://forum.tinycorelinux.net/index.php/topic,17487.0.html

|

||||

[2]:http://distro.ibiblio.org/pub/linux/distributions/tinycorelinux/5.x/x86/release/TinyCore-5.4.iso

|

||||

[3]:http://repo.tinycorelinux.net/5.x/x86/release/CorePlus-5.4.iso

|

||||

[4]:http://distro.ibiblio.org/tinycorelinux/5.x/x86/release/Core-current.iso

|

||||

[4]:http://distro.ibiblio.org/tinycorelinux/5.x/x86/release/Core-current.iso

|

||||

@ -0,0 +1,41 @@

|

||||

GNOME控制中心3.14 RC1修复了大量潜在崩溃问题

|

||||

================================================================================

|

||||

|

||||

|

||||

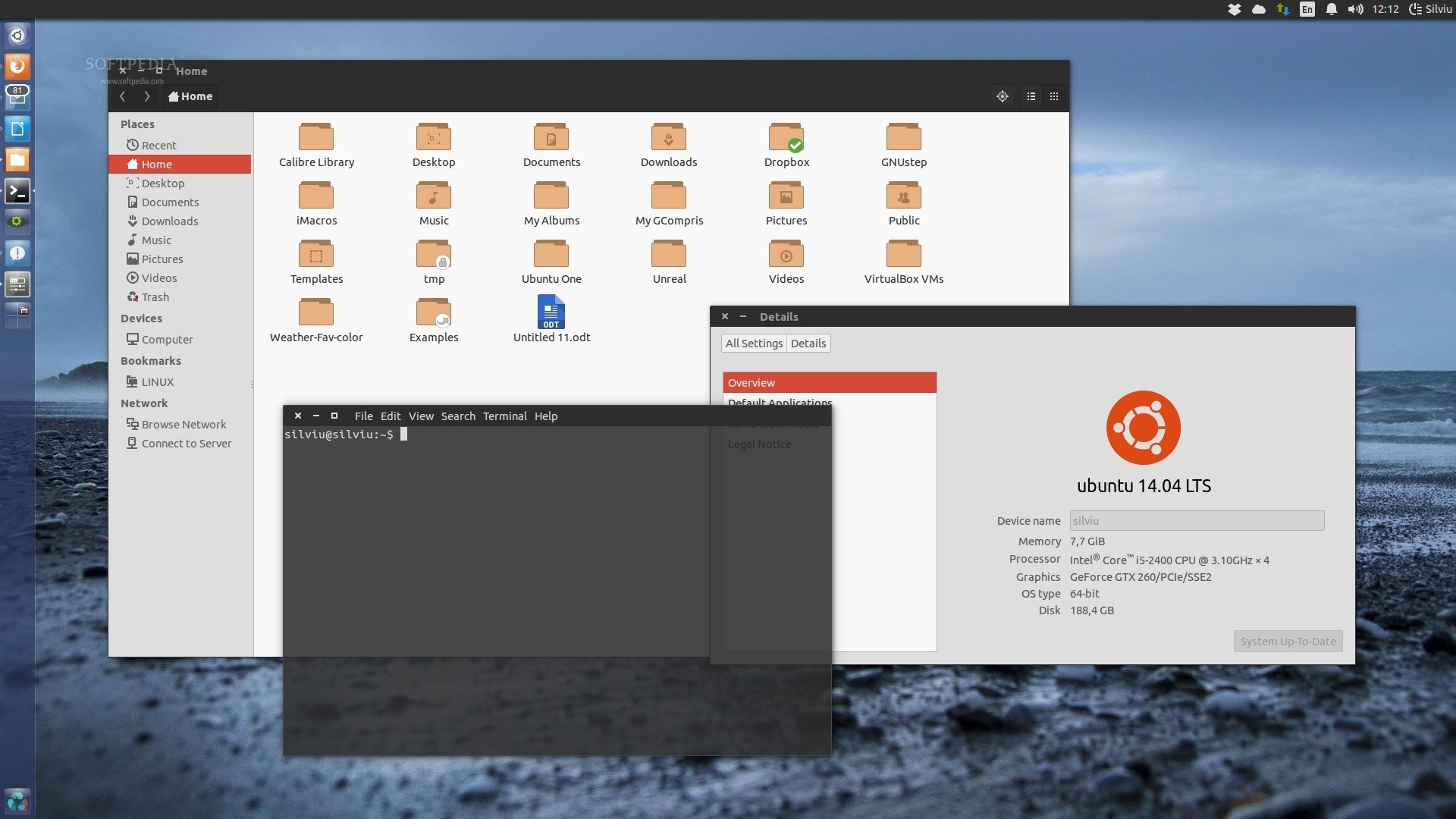

Arch Linux下的GNOME控制中心

|

||||

|

||||

**GNOME控制中心,可以在GNOME中更改你的桌面各个方面设置的主界面,已经升级至3.14 RC1,伴随而来的是大量来自GNOME stack的包。**

|

||||

|

||||

GNOME控制中心是在GNOME生态系统中十分重要的软件之一,尽管不是所有的用户意识到了它的存在。GNOME控制中心是管理由GNOME驱动的操作系统中所有设置的部分,就像你从截图里看到的那样。

|

||||

|

||||

GNOME控制中心不是很经常被宣传,它实际上是GNOME stack中为数不多的源代码包和安装后的应用名称不同的软件包。源代码包的名字为GNOME控制中心,但用户经常看到的应用名称是“设置”或“系统设置”,取决于开发者的选择。

|

||||

|

||||

### GNOME控制中心 3.14 RC1 带来哪些新东西 ###

|

||||

|

||||

通过更新日志可以得知,升级了libgd以修复GdNotification主题,切换视图时背景选择对话框不再重新调整大小,选择对话框由三个不同视图组合而成,修复Flickr支持中的一个内存泄漏,在“日期和时间”中不再使用硬编码的字体大小,修复改换窗口管理器(或重启)时引起的崩溃,更改无线网络启用时可能引起的崩溃也已被修复,以及纠正了更多可能的WWAN潜在崩溃因素。

|

||||

|

||||

此外,现在热点仅在设备活动时运行,所有虚拟桥接现在是隐藏的,不再显示VPN连接的底层设备,默认不显示空文件夹列表,解决了几个UI填充问题,输入焦点现在重新回到了账户对话框,将年份设置为0时导致的崩溃已修复,“Wi-Fi热点”属性居中,修复了打开启用热点时弹出警告的问题,以及现在打开热点失败时将弹出错误信息。

|

||||

|

||||

完整的变动,更新以及bug修复,参见官方[更新日志][1]。

|

||||

|

||||

你可以下载GNOME控制中心 3.14 RC1:

|

||||

|

||||

- [tar.xz (3.12.1 稳定版)][2][sources] [6.50 MB]

|

||||

- [tar.xz (3.14 RC1 开发版)][3][sources] [6.60 MB]

|

||||

|

||||

这里提供的仅仅是源代码包,你必须自己编译以测试GNOME控制中心。除非你真的知道自己在做什么,否则你应该等到完整的GNOME stack在源中可用时再使用。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://news.softpedia.com/news/GNOME-Control-Center-3-14-RC1-Correct-Lots-of-Potential-Crashes-458986.shtml

|

||||

|

||||

作者:[Silviu Stahie][a]

|

||||

译者:[alim0x](https://github.com/alim0x)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://news.softpedia.com/editors/browse/silviu-stahie

|

||||

[1]:http://ftp.acc.umu.se/pub/GNOME/sources/gnome-control-center/3.13/gnome-control-center-3.13.92.news

|

||||

[2]:http://ftp.acc.umu.se/pub/GNOME/sources/gnome-control-center/3.12/gnome-control-center-3.12.1.tar.xz

|

||||

[3]:http://ftp.acc.umu.se/pub/GNOME/sources/gnome-control-center/3.13/gnome-control-center-3.13.92.tar.xz

|

||||

@ -0,0 +1,36 @@

|

||||

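Embracing Open Source Is Now Popular in Europe
================================================================================

It looks like embracing [open source][1] has recently become popular in European countries. Only last month we heard that [Turin became the first Italian city to officially adopt open source products][2]. Another northern Italian city, [Udine][3], has announced that it is ditching Microsoft Office and migrating to [OpenOffice][4].

Udine has a population of 100,000 and its administration has about 900 computers, all running Microsoft Windows and its default product suite. According to the [budget document][5], the migration will start around December with 80 new computers. The older computers will then be migrated to OpenOffice.

The migration is expected to save licensing fees that would otherwise cost about 400 euros per computer, 360,000 euros in total. But cost saving is not the only goal of the migration; getting regular software upgrades is also a factor.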
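The total follows directly from the two figures quoted above. As a quick sanity check, here is a minimal sketch in shell arithmetic; the variable names are illustrative only and the inputs are the article's approximate numbers, not official budget data.

```shell
# Figures quoted in the article; variable names are illustrative only.
computers=900          # approximate number of computers in Udine's administration
fee_per_computer=400   # approximate licence cost per computer, in euros

# Multiply the two figures to get the total licence cost avoided.
total=$((computers * fee_per_computer))
echo "Estimated licence cost avoided: ${total} euros"
```

The result matches the 360,000 euros given in the text.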

|

||||

|

||||

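Of course, moving from Microsoft Office to OpenOffice will not be entirely smooth. However, the city-wide training plan is to start with a small number of employees using computers that have OpenOffice installed.

As I said before, this seems to be a trend in Europe. Earlier this year, following [the Canary Islands in Spain][7], [the French city of Toulouse also saved 1 million euros by using LibreOffice][6]. Geneva, just across the border from France, [has also shown signs of moving towards open source][8]. On the other side of the world, government bodies in [Tamil Nadu][9] and the Indian state of Kerala have also ditched Microsoft for open source software.

Along with the economic downturn, I think the death of Windows XP has been a blessing for open source. Whatever the reason, I am glad to see this list growing. What do you think?

--------------------------------------------------------------------------------

via: http://itsfoss.com/udine-open-source/

Author: [Abhishek][a]

Translator: [geekpi](https://github.com/geekpi)

Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](http://linux.cn/)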

|

||||

|

||||

[a]:http://itsfoss.com/author/Abhishek/

|

||||

[1]:http://itsfoss.com/category/open-source-software/

|

||||

[2]:http://linux.cn/article-3602-1.html

|

||||

[3]:http://en.wikipedia.org/wiki/Udine

|

||||

[4]:https://www.openoffice.org/

|

||||

[5]:http://www.comune.udine.it/opencms/opencms/release/ComuneUdine/comune/Rendicontazione/PEG/PEG_2014/index.html?lang=it&style=1&expfolder=???+NavText+???

|

||||

[6]:http://linux.cn/article-3575-1.html

|

||||

[7]:http://itsfoss.com/canary-islands-saves-700000-euro-open-source/

|

||||

[8]:http://itsfoss.com/170-primary-public-schools-geneva-switch-ubuntu/

|

||||

[9]:http://linux.cn/article-2744-1.html

|

||||

@ -4,25 +4,17 @@

|

||||

|

||||

|

||||

|

||||

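**Alongside its other projects, Canonical is also developing the Unity desktop environment and the Mir display server. The development team has just released a small update to let us know what has been happening**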

|

||||

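**Alongside its other projects, Canonical is also developing the Unity desktop environment and the Mir display server. The development team has just released a small update, from which we can see what progress has been made**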

|

||||

|

||||

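Ubuntu developers may be concentrating on some important releases, such as the upcoming Ubuntu 14.10 (Utopic Unicorn) or the new Ubuntu Touch for mobile devices, but they are also involved in projects such as Mir and Unity 8.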

|

||||

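Ubuntu developers may be concentrating on some important releases, such as the upcoming Ubuntu 14.10 (Utopic Unicorn) or the new Ubuntu Touch for mobile devices, but they are also involved in projects like Mir and Unity 8.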

|

||||

|

||||

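The current generation of Ubuntu uses the Unity 7 desktop environment, but the next generation has been in the works for a long time. Together with the new display server, already in the mobile version of Ubuntu, but it will eventually be brought to the desktop as well.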

|

||||

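The current generation of Ubuntu uses the Unity 7 desktop environment, but the next generation has been in the works for a long time. Together with the new display server, it is already in the mobile version of Ubuntu, and it will eventually be brought to the desktop as well.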

|

||||

|

||||

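Kevin Gunn, the lead of both projects, regularly publishes progress information from the developers and the changes made during the week, although these are only rough outlines.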

|

||||

|

||||

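According to the [development team][1], some touch/high-difficulty issues have been fixed, several translation issues have also been fixed, some Dash UI-related issues have been fixed, the team is currently working on Mir 0.8, Mir 0.7.2 has been promoted, and work on some high-priority bugs is also under way.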

|

||||

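According to the [development team][1], some touch/trigger-corner issues have been fixed, several translation issues have also been fixed, some Dash UI-related issues have been fixed, the team is currently working on Mir 0.8, Mir 0.7.2 is about to be promoted, and work on some high-priority bugs is also under way.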

|

||||

|

||||

You can download Ubuntu Next

|

||||

|

||||

- [Ubuntu 14.10 Daily Build (ISO) 64-bit][2]

|

||||

- [Ubuntu 14.10 Daily Build (ISO) 32-bit][3]

|

||||

- [Ubuntu 14.10 Daily Build (ISO) 64-bit Mac][4]

|

||||

- [Ubuntu Desktop Next 14.10 Daily Build (ISO) 64-bit][5]

|

||||

- [Ubuntu Desktop Next 14.10 Daily Build (ISO) 32-bit][6]

|

||||

|

||||

It features the new Unity 8 and Mir, but it is not complete yet. It will stay that way for a while, until there is a clear direction.

|

||||

You can [download Ubuntu Next][7] to try out the new Unity 8 and Mir features, but it is not stable enough yet. It will take some time to mature.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -30,7 +22,7 @@ via: http://news.softpedia.com/news/Mir-and-Unity-8-Update-Arrive-from-Ubuntu-De

|

||||

|

||||

作者:[Silviu Stahie][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,48 @@

|

||||

Netflix支持 Ubuntu 上原生回放

|

||||

================================================================================

|

||||

|

||||

|

||||

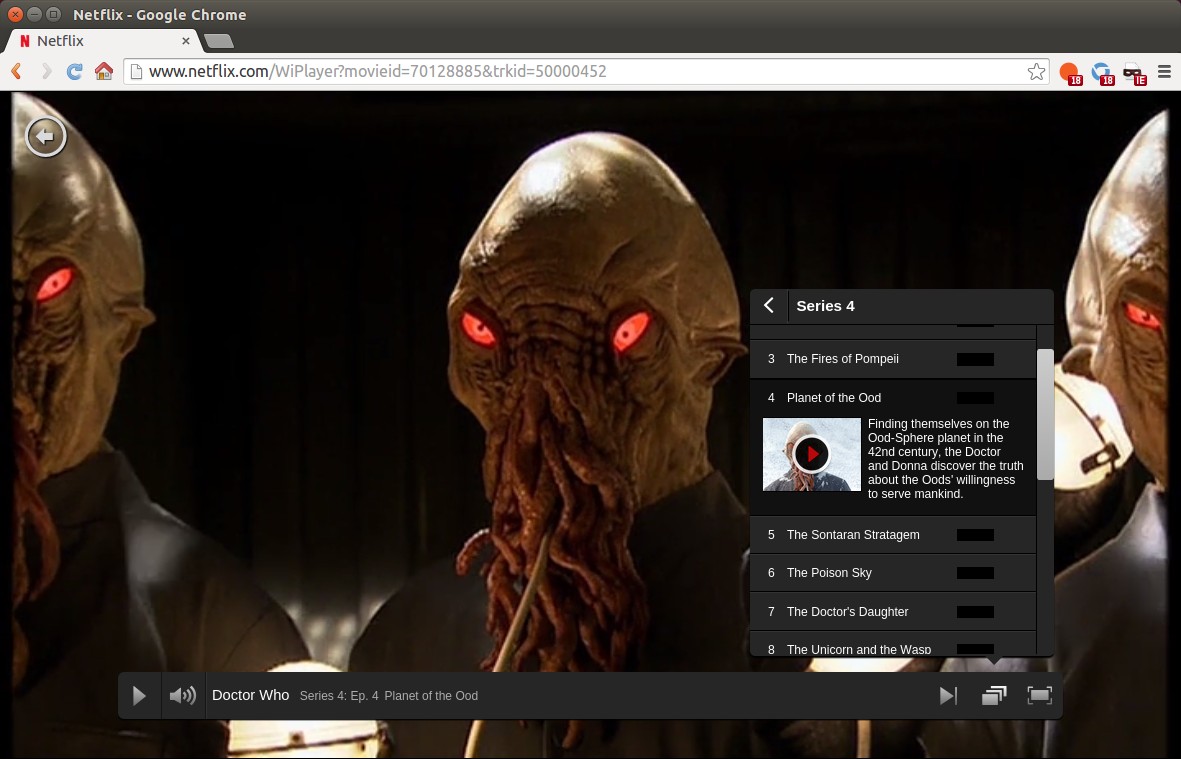

**我们[上个月说的Netflix 的原生Linux支持很接近了][1],现在终于有了,我们只需几个简单的步骤就可以在Ubuntu桌面上启用HTML 5视频流了。

|

||||

|

||||

现在Netflix更近一步提供了支持。它希望给Ubuntu带来真正的开箱即用的Netflix回放。现在只需要更新**网络安全(Network Security Services,NSS)**服务库就行。

|

||||

|

||||

### 原生Netflix? Neato. ###

|

||||

|

||||

在一封发给Ubuntu开发者邮件列表的[邮件中][2],Netflix的Paul Adolph解释了现在的情况:

|

||||

|

||||

> “如果NSS的版本是3.16.2或者更高的话,Netflix可以在Ubuntu 14.04的稳定版Chrome中播放。如果版本超过了14.04,Netflix会作出一些调整,以避免用户必须对浏览器的 User-Agent 参数进行一些修改才能播放。”

|

||||

|

||||

[LCTT 译注:此处原文是“14.02”,疑是笔误,应该是指Ubuntu 14.04。]

|

||||

|

||||

很快要发布的Ubuntu 14.10提供了更新的[NSS v3.17][3], 而目前大多数用户使用的版本 Ubuntu 14.04 LTS 提供的是 v3.15.x。
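Whether a given system already meets the 3.16.2 requirement comes down to a version comparison. The following is a minimal sketch, not an official Netflix or Ubuntu tool: `version_at_least` is a hypothetical helper name, it relies on the version sort (`-V`) of GNU `sort`, and the commented usage assumes the library is packaged as `libnss3`.

```shell
# Hypothetical helper: succeed (exit 0) if version $1 is >= version $2.
# Relies on `sort -V` (version sort) from GNU coreutils.
version_at_least() {
    lowest=$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)
    [ "$lowest" = "$2" ]
}

# Example with the versions mentioned in the article:
if version_at_least "3.17" "3.16.2"; then
    echo "NSS 3.17 meets the 3.16.2 requirement"
fi

# On a real Ubuntu system the installed version could be read like this
# (assumes the package is named libnss3; strips epoch and Debian revision):
#   v=$(dpkg-query -W -f='${Version}' libnss3); v=${v#*:}; v=${v%%-*}
#   version_at_least "$v" "3.16.2" && echo "NSS is new enough"
```

Comparing the versions as plain strings or numbers would be unreliable here, since a multi-part version such as 3.16.2 is not a number; a version-aware sort avoids that.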

|

||||

|

||||

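NSS is a set of libraries that supports client and server applications with a range of security features, including SSL, TLS, PKCS and other security standards. To let Ubuntu LTS users get native HTML5 Netflix as soon as possible, Paul asked:

> "What is the process of getting a new NSS version into the update stream? Or can someone provide me with the right contact?"

Earlier this year Netflix began offering HTML5 video playback on Windows 8.1 and OS X Yosemite without any extra downloads or plugins. This is made possible by the [Encrypted Media Extensions][4] feature.

While we wait for this discussion to progress (and, hopefully, to be fully resolved), you can still [follow this guide][5] to get HTML5 Netflix working on Ubuntu.

Update: 9/19

Since this article was published, Canonical has confirmed that the required version of the NSS library will be shipped as planned in the next "security update", and Ubuntu 14.04 LTS is expected to receive it within two weeks.

The news pleased Netflix's Paul Adolph, who responded that once the package is updated he will "remove the User-Agent filtering for Netflix HTML5 playback in Chrome, so that changing the UA is no longer needed".

--------------------------------------------------------------------------------

via: http://www.omgubuntu.co.uk/2014/09/netflix-linux-html5-nss-change-request

Author: [Joey-Elijah Sneddon][a]

Translator: [geekpi](https://github.com/geekpi)

Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](http://linux.cn/)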

|

||||

|

||||

[a]:https://plus.google.com/117485690627814051450/?rel=author

|

||||

[1]:http://www.omgubuntu.co.uk/2014/08/netflix-linux-html5-support-plugins

|

||||

[2]:https://lists.ubuntu.com/archives/ubuntu-devel-discuss/2014-September/015048.html

|

||||

[3]:https://developer.mozilla.org/en-US/docs/Mozilla/Projects/NSS/NSS_3.17_release_notes

|

||||

[4]:http://en.wikipedia.org/wiki/Encrypted_Media_Extensions

|

||||

[5]:http://www.omgubuntu.co.uk/2014/08/netflix-linux-html5-support-plugins

|

||||

103

published/20140922 Ten Blogs Every Ubuntu User Must Follow.md

Normal file

@ -0,0 +1,103 @@

|

||||

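Ten Blogs Every Ubuntu User Must Follow
================================================================================

**Which websites should we follow to learn more about Ubuntu?**

This is a question beginners often ask. Here I will tell you about my 10 favorite blogs, which help us solve problems and keep us up to date with news about every Ubuntu release. No, I am not talking about Linux in general or things like shell scripting. I am talking about the experience a regular user wants from Ubuntu as a smooth Linux desktop system.

These websites help you fix the problems you run into, point you to all kinds of applications and bring you the latest news from the Ubuntu world. They will help you get to know Ubuntu better. So, listed below are my 10 favorite blogs, which cover every aspect of Ubuntu.

### Ten Blogs Every Ubuntu User Must Follow ###

Since I write for the itsfoss website, I deliberately left it out of the list. I also did not include [Planet Ubuntu][1], because it is not suitable for beginners. Without further ado, let's look at **the best Ubuntu blogs** (in no particular order):

### [OMG! Ubuntu!][2] ###

This is a site just for Ubuntu enthusiasts. No matter how small, if it has to do with Ubuntu, OMG! Ubuntu! will cover it. The blog mainly covers news and applications. You can also find some Ubuntu tutorials here, but not many.

This blog keeps you informed about everything happening in the Ubuntu world.

### [Web Upd8][3] ###

Web Upd8 is my favorite blog. Besides covering news, it has plenty of easy-to-follow tutorials. Web Upd8 also maintains several PPAs. The blogger, [Andrei][4], sometimes answers your questions in the comments, which can be very helpful.

This is a site where you can follow the news and learn from tutorials.

### [Noobs Lab][5] ###

Like Web Upd8, Noobs Lab has plenty of tutorials and news, and it probably has the largest collection of themes and icons available in PPAs.

If you are a newbie, go and take a look at Noobs Lab.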

|

||||

|

||||

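### [Linux Scoop][6] ###

Most blogs are "text blogs": you learn a tutorial by reading instructions and looking at screenshots. Linux Scoop, on the other hand, has lots of screencasts to help beginners learn; it is entirely a video blog.

If you prefer watching videos to reading, Linux Scoop should suit you best.

### [Ubuntu Geek][7] ###

This is a relatively old blog. It covers a wide range of topics and has many quick installation tutorials and how-tos. Sometimes, though, I find some of its tutorial articles lacking in depth; of course, that may just be my personal opinion.

For quick tips, go to Ubuntu Geek.

### [Tech Drive-in][8] ###

This site does not seem to be updated as often as it used to be, perhaps because Manuel is busy with his work, but it still has a lot to offer. News, tutorials and application reviews are the highlights of this blog.

Frequently featured in the [Ubuntu Newsletter][9], Tech Drive-in is definitely a site worth learning from.

### [UbuntuHandbook][10] ###

Quick tips, news and tutorials are the USP of UbuntuHandbook. [Ji m][11] has also recently been maintaining some PPAs. I must say, in all seriousness, that the blog's pages could look better; that is purely my personal opinion.

UbuntuHandbook is really handy.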

|

||||

|

||||

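### [Unixmen][12] ###

This site is maintained by many people together, and it is not limited to Ubuntu; it covers many other Linux distributions as well. It has its own forum to help users.

Keep up with Unixmen.

### [The Mukt][13] ###

The Mukt is the new incarnation of Muktware. Muktware was a Linux organization that gradually faded away and was reborn as The Mukt. Muktware was a serious Linux and open source blog, while The Mukt covers a much wider range of topics, including tech news, geek news and sometimes entertainment news (does that sound like a bit of a mash-up?). The Mukt also carries plenty of Ubuntu news you may be interested in.

The Mukt is not just a blog; it is a cultural trend.

### [LinuxG][14] ###

LinuxG is a site where you can find all kinds of "how to install" articles. Almost every article starts with the sentence "Hello Linux Geeksters, as you may know...", and the blog could do better on variety of topics. I often find that some articles lack depth and were written in a hurry, but it is still a good place to keep track of the latest versions of applications.

This is a good place to get a quick look at new applications and their latest versions.

### Do you have any other good sites? ###

These are the Ubuntu blogs I visit regularly. I know there are many sites I am not aware of that may be better than the ones I listed. So feel free to share your favorite Ubuntu blogs in the comments below.

--------------------------------------------------------------------------------

via: http://itsfoss.com/ten-blogs-every-ubuntu-user-must-follow/

Author: [Abhishek][a]

Translator: [barney-ro](https://github.com/barney-ro)

Proofreader: [Caroline](https://github.com/carolinewuyan)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](http://linux.cn/)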

|

||||

|

||||

[a]:http://itsfoss.com/author/Abhishek/

|

||||

[1]:http://planet.ubuntu.com/

|

||||

[2]:http://www.omgubuntu.co.uk/

|

||||

[3]:http://www.webupd8.org/

|

||||

[4]:https://plus.google.com/+AlinAndrei

|

||||

[5]:http://www.noobslab.com/

|

||||

[6]:http://linuxscoop.com/

|

||||

[7]:http://www.ubuntugeek.com/

|

||||

[8]:http://www.techdrivein.com/

|

||||

[9]:https://lists.ubuntu.com/mailman/listinfo/ubuntu-news

|

||||

[10]:http://ubuntuhandbook.org/

|

||||

[11]:https://plus.google.com/u/0/+JimUbuntuHandbook

|

||||

[12]:http://www.unixmen.com/

|

||||

[13]:http://www.themukt.com/

|

||||

[14]:http://linuxg.net/

|

||||

@ -0,0 +1,42 @@

|

||||

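Debian 8 "Jessie" to Have GNOME as the Default Desktop
================================================================================
> Debian's GNOME team has made substantial progress

<center></center>

<center>*The GNOME 3.14 desktop*</center>

**The Debian project developers have spent a long time deciding whether Xfce, GNOME or some other desktop environment should be the default, but it now looks like GNOME has won.**

[As we mentioned two days ago][1], the GNOME 3.14 packages have been uploaded to the Debian Testing (Debian 8 "Jessie") repositories, which was a pleasant surprise. Normally, the GNOME maintainers are not this quick to add any kind of package, let alone a desktop environment.

As it turns out, the debate over the default desktop for the upcoming Debian 8 release has been settled, although that word may be a bit too strong. In any case, some developers have always wanted Xfce, others prefer GNOME, and MATE also appears to be an option for quite a few people.

### Most likely, GNOME will be the default desktop environment of Debian 8 "Jessie" ###

We say "most likely" because no final agreement has been reached, but GNOME appears to be far ahead. Debian maintainer and developer Joey Hess explained why.

"Based on the preliminary results at https://wiki.debian.org/DebianDesktop/Requalification/Jessie, some of the required data is not yet available, but at this point I am 80% sure that GNOME is ahead. In particular, this is due to accessibility and, to some extent, systemd integration. On accessibility: GNOME and MATE are ahead by a large margin. Some other desktops have had their accessibility support in Debian improved, partly driven by this process, but they still need significant upstream work."

"On systemd / etc. integration: Xfce, MATE and others are struggling to keep up with the changes taking place in this area; hopefully there will be time to sort these issues out during the freeze, once the technology stack stops changing. So this is not a complete rejection of these desktops, but judging by the current state, GNOME is the choice going forward," Joey Hess [added][2].

The developer also said in his message that the GNOME team in Debian is [very passionate][3] about the project it maintains, while the Debian Xfce team has been the real obstacle in deciding the default desktop.

In any case, Debian 8 "Jessie" has no specific release date, and there is no indication of when it might be released. GNOME 3.14, on the other hand, has already been released (you may have seen the news), and it will soon be ready for testing in Debian.

We should also thank Jordi Mallach, one of the maintainers of the GNOME packages in Debian, for pointing us to the right information.

--------------------------------------------------------------------------------

via: http://news.softpedia.com/news/Debian-8-quot-Jessie-quot-to-Have-GNOME-as-the-Default-Desktop-459665.shtml

Author: [Silviu Stahie][a]

Translator: [fbigun](https://github.com/fbigun)

Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](http://linux.cn/)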

|

||||

|

||||

[a]:http://news.softpedia.com/editors/browse/silviu-stahie

|

||||

[1]:http://news.softpedia.com/news/Debian-8-quot-Jessie-quot-to-Get-GNOME-3-14-459470.shtml

|

||||

[2]:http://anonscm.debian.org/cgit/tasksel/tasksel.git/commit/?id=dce99f5f8d84e4c885e6beb4cc1bb5bb1d9ee6d7

|

||||

[3]:http://news.softpedia.com/news/Debian-Maintainer-Says-that-Xfce-on-Debian-Will-Not-Meet-Quality-Standards-GNOME-Is-Needed-454962.shtml

|

||||

@ -0,0 +1,29 @@

|

||||

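End of the Line for Red Hat Enterprise Linux 5
================================================================================
In March 2007, Red Hat first announced its [Red Hat Enterprise Linux 5][1] (RHEL) platform. Although it may look ordinary today, RHEL 5 was notable for being the first major Red Hat release to emphasize virtualization, a feature that modern distributions now take for granted.

The original plan was to give RHEL 5 a seven-year life, but that changed in 2012, when Red Hat [extended][2] standard support for RHEL 5 to 10 years.

This past week, Red Hat released RHEL 5.11, the final minor milestone release of the RHEL 5.x series. Red Hat is now entering a support phase called "production 3" that will last three years. During this phase no new features will be added to the platform, and Red Hat will only provide security fixes with critical impact and urgent-priority bug fixes.

Jim Totton, vice president and general manager of Red Hat's Platform Business Unit, said in a statement: "Red Hat is committed to a long, stable product lifecycle, which brings key benefits to enterprise customers who rely on Red Hat Enterprise Linux for their critical applications. While RHEL 5.11 is the final minor release of the RHEL 5 platform, it provides security and reliability enhancements to keep the platform viable for the next several years."

The new enhancements include security and stability updates, including improvements to the way Red Hat helps users debug their systems.

There are also some new storage drivers to support new storage adapters, as well as improved support for running RHEL on VMware ESXi.

A big security improvement is the update of OpenSCAP to version 1.0.8. Red Hat first supported OpenSCAP in the [RHEL 5.7 milestone update][3] in May 2011. OpenSCAP is an open source implementation of the Security Content Automation Protocol (SCAP) framework, which is used to create a standardized approach to maintaining secure systems.

--------------------------------------------------------------------------------

via: http://www.linuxplanet.com/news/end-of-the-line-for-red-hat-enterprise-linux-5.html

Author: Sean Michael Kerner

Translator: [geekpi](https://github.com/geekpi)

Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](http://linux.cn/)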

|

||||

|

||||

[1]:http://www.internetnews.com/ent-news/article.php/3665641

|

||||

[2]:http://www.serverwatch.com/server-news/red-hat-extends-linux-support.html

|

||||

[3]:http://www.internetnews.com/skerner/2011/05/red-hat-enterprise-linux-57-ad.html

|

||||

@ -0,0 +1,39 @@

|

||||

Second Bugfix Release for KDE Plasma 5 Arrives with Lots of Changes
================================================================================
> A new Plasma 5 release is out, bringing a new look

<center></center>

<center>*KDE Plasma 5*</center>

### The second bugfix release of Plasma 5 is out and available for download ###

Bugfix releases for KDE Plasma 5 keep arriving, and its new desktop experience will be an integral part of the KDE ecosystem.

The [announcement][1] reads: "This release, plasma-5.0.2, adds a month's worth of new translations and fixes from KDE's contributors. The bug fixes are typically small but important, such as fixing untranslated text, using the correct icons and fixing overlapping files with KDELibs 4 software. It also adds a month's hard work of translations to make support in other languages even more complete."

This desktop is not yet installed by default in any Linux distribution, and that will remain the case for a while, until it has been fully tested.

The developers also explained that the updated packages can be reviewed in the Kubuntu Plasma 5 development release.

If you need them yourself, you can also download the source packages.

- [KDE Plasma Packages][2]
- [KDE Plasma Sources][3]

If you decide to compile it, you need to be aware that KDE Plasma 5.0.2 is a complex set of software, and you may have to work through quite a few problems.

--------------------------------------------------------------------------------

via: http://news.softpedia.com/news/Second-Bugfix-Release-for-KDE-Plasma-5-Arrives-with-Lots-of-Changes-459688.shtml

Author: [Silviu Stahie][a]

Translator: [geekpi](https://github.com/geekpi)

Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](http://linux.cn/)

|

||||

|

||||

[a]:http://news.softpedia.com/editors/browse/silviu-stahie

|

||||

[1]:http://kde.org/announcements/plasma-5.0.2.php

|

||||

[2]:https://community.kde.org/Plasma/Packages

|

||||

[3]:http://kde.org/info/plasma-5.0.2.php

|

||||

@ -1,37 +0,0 @@

|

||||

Microsoft Lobby Denies the State of Chile Access to Free Software

|

||||

================================================================================

|

||||

|

||||

Fuerza Chile

|

||||

|

||||

Fresh on the heels of the entire Munich and Linux debacle, another story involving Microsoft and free software has popped up across the world, in Chile. A prolific magazine from the South American country says that the powerful Microsoft lobby managed to turn around a law that would allow the authorities to use free software.

|

||||

|

||||

The story broke out from a magazine called El Sábado de El Mercurio, which explains in great detail how the Microsoft lobby works and how it can overturn a law that may harm its financial interests.

|

||||

|

||||

An independent member of the Chilean Parliament, Vlado Mirosevic, pushed a bill that would allow the state to consider free software when the authorities needed to purchase or renew licenses. The state of Chile spends $2.7 billion (€2 billion) on licenses from various companies, including Microsoft.

|

||||

|

||||

According to [ubuntizando.com][1], Microsoft representatives met with Vlado Mirosevic shortly after he announced his intentions, but the bill passed the vote, with 64 votes in favor, 12 abstentions, and one vote against it. That one vote was cast by Daniel Farcas, a member of a Chilean party.

|

||||

|

||||

A while later, the same member of the Parliament, Daniel Farcas, proposed another bill that actually nullified the effects of the previous one that had just been adopted. To make things even more interesting, some of the people who voted in favor of the first law also voted in favor of the second one.

|

||||

|

||||

The new bill is even more egregious, because it aggressively pushes for the adoption of proprietary software. Companies that choose to use proprietary software will receive certain tax breaks, which makes it very hard for free software to get adopted.

|

||||

|

||||

Microsoft has been in the news in the last few days because the [German city of Munich that adopted Linux][2] and dropped Windows system from its administration was considering, supposedly, returning to proprietary software.

|

||||

|

||||

This new situation in Chile gives us a sample of the kind of pull a company like Microsoft has, and it shows us just how fragile laws really are. This is not the first time a company has tried to bend the laws of a country to maximize its profits, but the advent of free software and the clear financial advantages that it offers are really making a dent.

|

||||

|

||||

Five years ago, few people or governments would have considered adopting free software, but the quality of that software has risen dramatically and it has become a real competition [for the likes of Microsoft][3].

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://news.softpedia.com/news/Microsoft-Lobby-Denies-the-State-of-Chile-Access-to-Free-Software-455598.shtml

|

||||

|

||||

作者:[Silviu Stahie][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://news.softpedia.com/editors/browse/silviu-stahie

|

||||

[1]:http://www.ubuntizando.com/2014/08/20/microsoft-chile-y-el-poder-del-lobby/

|

||||

[2]:http://news.softpedia.com/news/Munich-Disappointed-with-Linux-Plans-to-Switch-Back-to-Windows-455405.shtml

|

||||

[3]:http://news.softpedia.com/news/Munich-Switching-to-Windows-from-Linux-Is-Proof-that-Microsoft-Is-Still-an-Evil-Company-455510.shtml

|

||||

@ -1,40 +0,0 @@

|

||||

Transport Tycoon Deluxe Remake OpenTTD 1.4.2 Is an Almost Perfect Sim

|

||||

================================================================================

|

||||

|

||||

Transport Tycoon

|

||||

|

||||

**OpenTTD 1.4.2, an open source simulation game based on the popular Microprose title Transport Tycoon written by Chris Sawyer, has been officially released.**

|

||||

|

||||

Transport Tycoon is a very old game that was originally launched back in 1995, but it made such a huge impact on the gaming community that, even almost 20 years later, it still has a powerful fan base.

|

||||

|

||||

In fact, Transport Tycoon Deluxe had such an impact on the gaming industry that it managed to spawn an entire generation of similar games and it has yet to be surpassed by any new title, even though many have tried.

|

||||

|

||||

Despite the aging graphics, the developers of OpenTTD have tried to provide new challenges for the fans of the original games. To put things into perspective, the original game is already two decades old. That means that someone who was 20 years old back then is now in his forties and he is the main audience for OpenTTD.

|

||||

|

||||

"OpenTTD is modelled after the original Transport Tycoon game by Chris Sawyer and enhances the game experience dramatically. Many features were inspired by TTDPatch while others are original," reads the official announcement.

|

||||

|

||||

OpenTTD features bigger maps (up to 64 times in size), a stable multiplayer mode for up to 255 players in 15 companies, a dedicated server mode and an in-game console for administration, IPv6 and IPv4 support for all client and server communication, new pathfinding algorithms that make vehicles go where you want them to, different configurable models for vehicle acceleration, and much more.

|

||||

|

||||

According to the changelog, awk is now used instead of trying to convince cpp to preprocess nfo files, CMD_CLEAR_ORDER_BACKUP is no longer suppressed by pause modes, the Wrong breakdown sound is no longer played for ships, integer overflow in the acceleration code is no longer causing either too low acceleration or too high acceleration, incorrectly saved order backups are now discarded when clients join, and the game no longer crashes when trying to show an error about vehicle in a NewGRF and the NewGRF was not loaded at all.

|

||||

|

||||

Also, the Slovak language no longer uses space as group separator in numbers, the parameter bound checks are now tighter on GSCargoMonitor functions, the days in dates are not represented by ordinal numbers in all languages, and the incorrect usage of string commands in the base language has been fixed.

|

||||

|

||||

Check out the [changelog][1] for a complete list of updates and fixes.

|

||||

|

||||

Download OpenTTD 1.4.2:

|

||||

|

||||

- [http://www.openttd.org/en/download-stable][2]

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://news.softpedia.com/news/Transport-Tycoon-Deluxe-Remake-OpenTTD-1-4-2-Is-an-Almost-Perfect-Sim-455715.shtml

|

||||

|

||||

作者:[Silviu Stahie][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://news.softpedia.com/editors/browse/silviu-stahie

|

||||

[1]:http://ftp.snt.utwente.nl/pub/games/openttd/binaries/releases/1.4.2/changelog.txt

|

||||

[2]:http://www.openttd.org/en/download-stable

|

||||

@ -1,41 +0,0 @@

|

||||

[sailing]

|

||||

Munich Council: LiMux Demise Has Been Greatly Exaggerated

|

||||

================================================================================

|

||||

|

||||

LiMux – Munich City Council’s Official OS

|

||||

|

||||

A Munich city council spokesman has attempted to clarify the reasons behind its [plan to re-examine the role of open-source][1] software in local government IT systems.

|

||||

|

||||

The response comes after numerous German media outlets revealed that the city’s incoming mayor has asked for a report into the use of LiMux, the open-source Linux distribution used by more than 80% of municipalities.

|

||||

|

||||

Reports quoted an unnamed city official, who claimed employees were ‘suffering’ from having to use open-source software. Others called it an ‘expensive failure’, with the deputy mayor, Josef Schmid, saying the move was ‘driven by ideology’, not financial prudence.

|

||||

|

||||

With Munich often viewed as the poster child for large Linux migrations, news of the potential renege quickly went viral. Now council spokesman Stefan Hauf has attempted to bring clarity to the situation.

|

||||

|

||||

### ‘Plans for the future’ ###

|

||||

|

||||

Hauf confirms that the city’s new mayor has requested a review of the city’s IT systems, including its choice of operating systems. But the report is not, as implied in earlier reports, solely tasked with deciding whether to return to using Microsoft Windows.

|

||||

|

||||

**“It’s about the organisation, the costs, performance and the usability and satisfaction of the users,”** [Techrepublic][2] quote him as saying.

|

||||

|

||||

**“[It's about gathering the] facts so we can decide and make a proposal for the city council how to proceed in future.”**

|

||||

|

||||

Hauf also confirms that council staff have, and do, complain about LiMux, but that the majority of issues stem from compatibility issues in OpenOffice, something a potential switch to LibreOffice could solve.

|

||||

|

||||