mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-15 01:50:08 +08:00

Merge remote-tracking branch 'LCTT/master'

commit

d6eb33ee6b

@ -1,37 +1,39 @@

|

||||

四个开源的Android邮件客户端

|

||||

四个开源的 Android 邮件客户端

|

||||

======

|

||||

Email 现在还没有绝迹,而且现在大部分邮件都来自于移动设备。

|

||||

|

||||

> Email 现在还没有绝迹,而且现在大部分邮件都来自于移动设备。

|

||||

|

||||

|

||||

|

||||

现在一些年轻人正将邮件称之为“老年人的交流方式”,然而事实却是邮件绝对还没有消亡。虽然[协作工具][1],社交媒体,和短信很常用,但是它们还没做好取代邮件这种必要的商业(和社交)通信工具。

|

||||

现在一些年轻人正将邮件称之为“老年人的交流方式”,然而事实却是邮件绝对还没有消亡。虽然[协作工具][1]、社交媒体,和短信很常用,但是它们还没做好取代邮件这种必要的商业(和社交)通信工具的准备。

|

||||

|

||||

考虑到邮件还没有消失,并且(很多研究表明)人们都是在移动设备上阅读邮件,拥有一个好的移动邮件客户端就变得很关键。如果你是一个想使用开源的邮件客户端的 Android 用户,事情就变得有点棘手了。

|

||||

|

||||

我们提供了四个开源的 Andorid 邮件客户端供选择。其中两个可以通过 Andorid 官方应用商店 [Google Play][2] 下载。你也可以在 [Fossdroid][3] 或者 [F-Droid][4] 这些开源 Android 应用库中找到他们。(下方有每个应用的具体下载方式。)

|

||||

|

||||

### K-9 Mail

|

||||

|

||||

[K-9 Mail][5] 拥有几乎和 Android 一样长的历史——它起源于 Android 1.0 邮件客户端的一个补丁。它支持 IMAP 和 WebDAV、多用户、附件、emojis 和其他经典的邮件客户端功能。它的[用户文档][6]提供了关于安装、启动、安全、阅读和发送邮件等等的帮助。

|

||||

[K-9 Mail][5] 拥有几乎和 Android 一样长的历史——它起源于 Android 1.0 邮件客户端的一个补丁。它支持 IMAP 和 WebDAV、多用户、附件、emoji 和其它经典的邮件客户端功能。它的[用户文档][6]提供了关于安装、启动、安全、阅读和发送邮件等等的帮助。

|

||||

|

||||

K-9 基于 [Apache 2.0][7] 协议开源,[源码][8]可以从 GitHub 上获得. 应用可以从 [Google Play][9]、[Amazon][10] 和 [F-Droid][11] 上下载。

|

||||

|

||||

### p≡p

|

||||

|

||||

正如它的全称,”Pretty Easy Privacy”说的那样,[p≡p][12] 主要关注于隐私和安全通信。它提供自动的、端到端的邮件和附件加密(但要求你的收件人也要能够加密邮件——否则,p≡p会警告你的邮件将不加密发出)。

|

||||

正如它的全称,”Pretty Easy Privacy”说的那样,[p≡p][12] 主要关注于隐私和安全通信。它提供自动的、端到端的邮件和附件加密(但要求你的收件人也要能够加密邮件——否则,p≡p 会警告你的邮件将不加密发出)。

|

||||

|

||||

你可以从 GitLab 获得[源码][13](基于 [GPLv3][14] 协议),并且可以从应用的官网上找到相应的[文档][15]。应用可以在 [Fossdroid][16] 上免费下载或者在 [Google Play][17] 上支付一点儿象征性的费用下载。

|

||||

|

||||

### InboxPager

|

||||

|

||||

[InboxPager][18] 允许你通过 SSL/TLS 协议收发邮件信息,这也表明如果你的邮件提供商(比如 Gmail )没有默认开启这个功能的话,你可能要做一些设置。(幸运的是, InboxPager 提供了 Gmail的[设置教程][19]。)它同时也支持通过 OpenKeychain 应用进行 OpenPGP 机密。

|

||||

[InboxPager][18] 允许你通过 SSL/TLS 协议收发邮件信息,这也表明如果你的邮件提供商(比如 Gmail )没有默认开启这个功能的话,你可能要做一些设置。(幸运的是, InboxPager 提供了 Gmail 的[设置教程][19]。)它同时也支持通过 OpenKeychain 应用进行 OpenPGP 加密。

|

||||

|

||||

InboxPager 基于 [GPLv3][20] 协议,其源码可从 GitHub 获得,并且应用可以从 [F-Droid][21] 下载。

|

||||

|

||||

### FairEmail

|

||||

|

||||

[FairEmail][22] 是一个极简的邮件客户端,它的功能集中于读写信息,没有任何多余的可能拖慢客户端的功能。它支持多个帐号和用户,消息线程,加密等等。

|

||||

[FairEmail][22] 是一个极简的邮件客户端,它的功能集中于读写信息,没有任何多余的可能拖慢客户端的功能。它支持多个帐号和用户、消息线索、加密等等。

|

||||

|

||||

它基于 [GPLv3][23] 协议开源,[源码][24]可以从GitHub上获得。你可以在 [Fossdroid][25] 上下载 FairEamil; 对 Google Play 版本感兴趣的人可以从 [testing the software][26] 获得应用。

|

||||

它基于 [GPLv3][23] 协议开源,[源码][24]可以从 GitHub 上获得。你可以在 [Fossdroid][25] 上下载 FairEamil;对 Google Play 版本感兴趣的人可以从 [testing the software][26] 获得应用。

|

||||

|

||||

肯定还有更多的开源 Android 客户端(或者上述软件的加强版本)——活跃的开发者们可以关注一下。如果你知道还有哪些优秀的应用,可以在评论里和我们分享。

|

||||

|

||||

@ -42,7 +44,7 @@ via: https://opensource.com/article/18/10/open-source-android-email-clients

|

||||

作者:[Opensource.com][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[zianglei][c]

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

275

sources/tech/20180612 Systemd Services- Reacting to Change.md

Normal file

@ -0,0 +1,275 @@

|

||||

Systemd Services: Reacting to Change

|

||||

======

|

||||

|

||||

|

||||

|

||||

[I have one of these Compute Sticks][1] (Figure 1) and use it as an all-purpose server. It is inconspicuous and silent and, as it is built around an x86 architecture, I don't have problems getting it to work with drivers for my printer, and that’s what it does most days: it interfaces with the shared printer and scanner in my living room.

|

||||

|

||||

![ComputeStick][3]

|

||||

|

||||

An Intel ComputeStick. Euro coin for size.

|

||||

|

||||

[Used with permission][4]

|

||||

|

||||

Most of the time it is idle, especially when we are out, so I thought it would be a good idea to use it as a surveillance system. The device doesn't come with its own camera, and it wouldn't need to be spying all the time. I also didn't want to have to start the image capturing by hand because this would mean having to log into the Stick using SSH and fire up the process by writing commands in the shell before rushing out the door.

|

||||

|

||||

So I thought that the thing to do would be to grab a USB webcam and have the surveillance system fire up automatically just by plugging it in. Bonus points if the surveillance system fired up also after the Stick rebooted, and it found that the camera was connected.

|

||||

|

||||

In prior installments, we saw that [systemd services can be started or stopped by hand][5] or [when certain conditions are met][6]. Those conditions are not limited to when the OS reaches a certain state in the boot up or powerdown sequence but can also be when you plug in new hardware or when things change in the filesystem. You do that by combining a Udev rule with a systemd service.

|

||||

|

||||

### Hotplugging with Udev

|

||||

|

||||

Udev rules live in the _/etc/udev/rules.d_ directory and are usually a single line containing _conditions_ and _assignments_ that lead to an _action_.

|

||||

|

||||

That was a bit cryptic. Let's try again:

|

||||

|

||||

Typically, in a Udev rule, you tell Udev what to look for when a device is connected. For example, you may want to check if the make and model of a device you just plugged in correspond to the make and model of the device you are telling Udev to wait for. Those are the _conditions_ mentioned earlier.

|

||||

|

||||

Then you may want to change some stuff so you can use the device easily later. An example of that would be to change the read and write permissions to a device: if you plug in a USB printer, you're going to want users to be able to read information from the printer (the user's printing app would want to know the model, make, and whether it is ready to receive print jobs or not) and write to it, that is, send stuff to print. Changing the read and write permissions for a device is done using one of the _assignments_ you read about earlier.

|

||||

|

||||

Finally, you will probably want the system to do something when the conditions mentioned above are met, like start a backup application to copy important files when a certain external hard disk drive is plugged in. That is an example of an _action_ mentioned above.

|

||||

|

||||

With that in mind, ponder this:

|

||||

|

||||

```

|

||||

ACTION=="add", SUBSYSTEM=="video4linux", ATTRS{idVendor}=="03f0", ATTRS{idProduct}=="e207", SYMLINK+="mywebcam", TAG+="systemd", MODE="0666", ENV{SYSTEMD_WANTS}="webcam.service"

|

||||

```

|

||||

|

||||

The first part of the rule,

|

||||

|

||||

```

|

||||

ACTION=="add", SUBSYSTEM=="video4linux", ATTRS{idVendor}=="03f0",

|

||||

ATTRS{idProduct}=="e207" [etc... ]

|

||||

```

|

||||

|

||||

shows the conditions that the device has to meet before the system does any of the other things you want it to do. The device has to be added (`ACTION=="add"`) to the machine, and it has to be integrated into the `video4linux` subsystem. To make sure the rule is applied only when the correct device is plugged in, you also have to make sure Udev correctly identifies the manufacturer (`ATTRS{idVendor}=="03f0"`) and the model (`ATTRS{idProduct}=="e207"`) of the device.

|

||||

|

||||

In this case, we're talking about this device (Figure 2):

|

||||

|

||||

![webcam][8]

|

||||

|

||||

The HP webcam used in this experiment.

|

||||

|

||||

[Used with permission][4]

|

||||

|

||||

Notice how you use `==` to indicate that each of these is a comparison, not an assignment. You would read the above snippet of the rule like this:

|

||||

|

||||

```

|

||||

if the device is added and the device controlled by the video4linux subsystem

|

||||

and the manufacturer of the device is 03f0 and the model is e207, then...

|

||||

```

|

||||

|

||||

But where do you get all this information? Where do you find the action that triggers the event, the manufacturer, model, and so on? You will probably have to use several sources. You can get the `idVendor` and `idProduct` by plugging the webcam into your machine and running `lsusb`:

|

||||

|

||||

```

|

||||

lsusb

|

||||

Bus 002 Device 002: ID 8087:0024 Intel Corp. Integrated Rate Matching Hub

|

||||

Bus 002 Device 001: ID 1d6b:0002 Linux Foundation 2.0 root hub

|

||||

Bus 004 Device 001: ID 1d6b:0003 Linux Foundation 3.0 root hub

|

||||

Bus 003 Device 003: ID 03f0:e207 Hewlett-Packard

|

||||

Bus 003 Device 001: ID 1d6b:0002 Linux Foundation 2.0 root hub

|

||||

Bus 001 Device 003: ID 04f2:b1bb Chicony Electronics Co., Ltd

|

||||

Bus 001 Device 002: ID 8087:0024 Intel Corp. Integrated Rate Matching Hub

|

||||

Bus 001 Device 001: ID 1d6b:0002 Linux Foundation 2.0 root hub

|

||||

```

|

||||

|

||||

The webcam I’m using is made by HP, and you can only see one HP device in the list above. The `ID` gives you the manufacturer and the model numbers separated by a colon (`:`). If you have more than one device by the same manufacturer and are not sure which is which, unplug the webcam, run `lsusb` again and check what's missing.
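
If you would rather not copy the IDs by hand, a small filter can pull them out of the `lsusb` output. The following is only a sketch: `usb_ids` is a hypothetical helper name, and the sample line is copied from the listing above so the snippet runs without the webcam attached.

```shell
#!/bin/sh
# Sample line copied from the lsusb listing above. On a real system you
# would run `lsusb | usb_ids` instead of feeding in a fixed string.
sample='Bus 003 Device 003: ID 03f0:e207 Hewlett-Packard'

# usb_ids (hypothetical helper): for each lsusb line on stdin, print the
# vendor ID and the product ID separated by a space.
usb_ids() {
    awk '{ split($6, id, ":"); print id[1], id[2] }'
}

ids=$(printf '%s\n' "$sample" | usb_ids)
echo "idVendor/idProduct: $ids"
```

The first value is what goes into `ATTRS{idVendor}` and the second into `ATTRS{idProduct}`.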

|

||||

|

||||

OR...

|

||||

|

||||

Unplug the webcam, wait a few seconds, run the command `udevadm monitor --environment` and then plug the webcam back in again. When you do that with the HP webcam, you get:

|

||||

|

||||

```

|

||||

udevadm monitor --environment

|

||||

UDEV [35776.495221] add /devices/pci0000:00/0000:00:1c.3/0000:04:00.0

|

||||

/usb3/3-1/3-1:1.0/input/input21/event11 (input)

|

||||

.MM_USBIFNUM=00

|

||||

ACTION=add

|

||||

BACKSPACE=guess

|

||||

DEVLINKS=/dev/input/by-path/pci-0000:04:00.0-usb-0:1:1.0-event

|

||||

/dev/input/by-id/usb-Hewlett_Packard_HP_Webcam_HD_2300-event-if00

|

||||

DEVNAME=/dev/input/event11

|

||||

DEVPATH=/devices/pci0000:00/0000:00:1c.3/0000:04:00.0/

|

||||

usb3/3-1/3-1:1.0/input/input21/event11

|

||||

ID_BUS=usb

|

||||

ID_INPUT=1

|

||||

ID_INPUT_KEY=1

|

||||

ID_MODEL=HP_Webcam_HD_2300

|

||||

ID_MODEL_ENC=HP\x20Webcam\x20HD\x202300

|

||||

ID_MODEL_ID=e207

|

||||

ID_PATH=pci-0000:04:00.0-usb-0:1:1.0

|

||||

ID_PATH_TAG=pci-0000_04_00_0-usb-0_1_1_0

|

||||

ID_REVISION=1020

|

||||

ID_SERIAL=Hewlett_Packard_HP_Webcam_HD_2300

|

||||

ID_TYPE=video

|

||||

ID_USB_DRIVER=uvcvideo

|

||||

ID_USB_INTERFACES=:0e0100:0e0200:010100:010200:030000:

|

||||

ID_USB_INTERFACE_NUM=00

|

||||

ID_VENDOR=Hewlett_Packard

|

||||

ID_VENDOR_ENC=Hewlett\x20Packard

|

||||

ID_VENDOR_ID=03f0

|

||||

LIBINPUT_DEVICE_GROUP=3/3f0/e207:usb-0000:04:00.0-1/button

|

||||

MAJOR=13

|

||||

MINOR=75

|

||||

SEQNUM=3162

|

||||

SUBSYSTEM=input

|

||||

USEC_INITIALIZED=35776495065

|

||||

XKBLAYOUT=es

|

||||

XKBMODEL=pc105

|

||||

XKBOPTIONS=

|

||||

XKBVARIANT=

|

||||

```

|

||||

|

||||

That may look like a lot to process, but check this out: the `ACTION` field early in the list tells you what event just happened, i.e., that a device got added to the system. You can also see the name of the device spelled out on several of the lines, so you can be pretty sure that it is the device you are looking for. The output also shows the manufacturer's ID number (`ID_VENDOR_ID=03f0`) and the model number (`ID_MODEL_ID=e207`).

|

||||

|

||||

This gives you three of the four values the condition part of the rule needs. You may be tempted to think that it gives you the fourth, too, because there is also a line that says:

|

||||

|

||||

```

|

||||

SUBSYSTEM=input

|

||||

```

|

||||

|

||||

Be careful! Although it is true that a USB webcam is a device that provides input (as do a keyboard and a mouse), it also belongs to the _usb_ subsystem, and several others. This means that your webcam gets added to several subsystems and looks like several devices. If you pick the wrong subsystem, your rule may not work as you want it to, or, indeed, at all.

|

||||

|

||||

So, the next thing you have to check is which subsystems the webcam has been added to, so you can pick the correct one. To do that, unplug your webcam again and run:

|

||||

|

||||

```

|

||||

ls /dev/video*

|

||||

```

|

||||

|

||||

This will show you all the video devices connected to the machine. If you are using a laptop, most come with a built-in webcam and it will probably show up as `/dev/video0`. Plug your webcam back in and run `ls /dev/video*` again.

|

||||

|

||||

Now you should see one more video device (probably `/dev/video1`).

|

||||

|

||||

Now you can find out all the subsystems it belongs to by running `udevadm info -a /dev/video1`:

|

||||

|

||||

```

|

||||

udevadm info -a /dev/video1

|

||||

|

||||

Udevadm info starts with the device specified by the devpath and then

|

||||

walks up the chain of parent devices. It prints for every device

|

||||

found, all possible attributes in the udev rules key format.

|

||||

A rule to match, can be composed by the attributes of the device

|

||||

and the attributes from one single parent device.

|

||||

|

||||

looking at device '/devices/pci0000:00/0000:00:1c.3/0000:04:00.0

|

||||

/usb3/3-1/3-1:1.0/video4linux/video1':

|

||||

KERNEL=="video1"

|

||||

SUBSYSTEM=="video4linux"

|

||||

DRIVER==""

|

||||

ATTR{dev_debug}=="0"

|

||||

ATTR{index}=="0"

|

||||

ATTR{name}=="HP Webcam HD 2300: HP Webcam HD"

|

||||

|

||||

[etc...]

|

||||

```

|

||||

|

||||

The output goes on for quite a while, but what you're interested in is right at the beginning: `SUBSYSTEM=="video4linux"`. This is a line you can literally copy and paste right into your rule. The rest of the output (not shown for brevity) gives you a couple more nuggets, like the manufacturer and model IDs, again in a format you can copy and paste into your rule.
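
To avoid scrolling through the whole listing, you can filter it. This is a minimal sketch: `first_subsystem` is a hypothetical helper, and the sample text is an excerpt of the output above. On a real machine you would pipe `udevadm info -a /dev/video1` into it instead.

```shell
#!/bin/sh
# Excerpt of the `udevadm info -a /dev/video1` output shown above.
sample='    KERNEL=="video1"
    SUBSYSTEM=="video4linux"
    DRIVER==""
    ATTR{name}=="HP Webcam HD 2300: HP Webcam HD"'

# first_subsystem (hypothetical helper): print the first SUBSYSTEM== line
# from stdin with its leading whitespace removed. Parent devices are
# listed with SUBSYSTEMS== (plural), which this pattern does not match.
first_subsystem() {
    sed -n 's/^[[:space:]]*\(SUBSYSTEM==.*\)$/\1/p' | head -n 1
}

subsystem=$(printf '%s\n' "$sample" | first_subsystem)
echo "$subsystem"
```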

|

||||

|

||||

Now that you have a way of unambiguously identifying the device and the event that should trigger the action, it is time to tinker with the device.

|

||||

|

||||

The next section in the rule, `SYMLINK+="mywebcam", TAG+="systemd", MODE="0666"`, tells Udev to do three things. First, it creates a symbolic link, _/dev/mywebcam_, that points to the device (e.g. _/dev/video1_). This is because you cannot predict what the system is going to call the device by default. When you have a built-in webcam and you hotplug a new one, the built-in webcam will usually be _/dev/video0_ while the external one will become _/dev/video1_. However, if you boot your computer with the external USB webcam plugged in, that could be reversed: the internal webcam can become _/dev/video1_ and the external one _/dev/video0_. This means that, although your image-capturing script (which you will see later on) always needs to point to the external webcam device, you can't rely on it being _/dev/video0_ or _/dev/video1_. To solve this problem, you tell Udev to create a symbolic link whose name never changes at the moment the device is added to the _video4linux_ subsystem, and you make your script point to that.

|

||||

|

||||

The second thing you do is add `"systemd"` to the list of Udev tags associated with this rule. This tells Udev that the action that the rule will trigger will be managed by systemd, that is, it will be some sort of systemd service.

|

||||

|

||||

Notice how in both cases you use the `+=` operator. This adds the value to a list, which means you can add more than one value to `SYMLINK` and `TAG`.

|

||||

|

||||

The `MODE` key, on the other hand, can only contain one value (hence you use the simple `=` assignment operator). What `MODE` does is tell Udev who can read from or write to the device. If you are familiar with `chmod` (and, if you are reading this, you should be), you will also be familiar with [how you can express permissions using numbers][9]. That is what this is: `0666` means " _give read and write privileges to the device to everybody_ ".

|

||||

|

||||

At last, `ENV{SYSTEMD_WANTS}="webcam.service"` tells Udev what systemd service to run.

|

||||

|

||||

Save this rule into a file called _90-webcam.rules_ (or something like that) in _/etc/udev/rules.d_ and you can load it either by rebooting your machine, or by running:

|

||||

|

||||

```

|

||||

sudo udevadm control --reload-rules && sudo udevadm trigger

|

||||

```
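
Because Udev reads each rule as one logical line, it can be convenient to generate the file for your own device rather than typing it. The sketch below writes the rule to a temporary directory so it is safe to run as-is. The variable names are made up for illustration, and copying the result into _/etc/udev/rules.d_ is left to you.

```shell
#!/bin/sh
# Illustrative values: replace them with the IDs of your own webcam.
vendor='03f0'
product='e207'
service='webcam.service'

# Write to a scratch directory instead of /etc/udev/rules.d, so nothing
# on the system changes until you copy the file over yourself.
outdir=$(mktemp -d)
rulefile="$outdir/90-webcam.rules"

# The whole rule goes on a single line of the output file.
printf 'ACTION=="add", SUBSYSTEM=="video4linux", ATTRS{idVendor}=="%s", ATTRS{idProduct}=="%s", SYMLINK+="mywebcam", TAG+="systemd", MODE="0666", ENV{SYSTEMD_WANTS}="%s"\n' \
    "$vendor" "$product" "$service" > "$rulefile"

cat "$rulefile"
```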

|

||||

|

||||

### Service at Last

|

||||

|

||||

The service the Udev rule triggers is ridiculously simple:

|

||||

|

||||

```

|

||||

# webcam.service

|

||||

|

||||

[Service]

|

||||

Type=simple

|

||||

ExecStart=/home/[user name]/bin/checkimage.sh

|

||||

```

|

||||

|

||||

Basically, it just runs the _checkimage.sh_ script stored in your personal _bin/_ directory and pushes it to the background. [This is something you saw how to do in prior installments][5]. It may seem like a small thing, but because the Udev rule tags the webcam with `systemd`, systemd now also represents the webcam as a special kind of unit called a _device_ unit, and that unit is what pulls in your service. Congratulations.

|

||||

|

||||

As for the _checkimage.sh_ script _webcam.service_ calls, there are several ways of grabbing an image from a webcam and comparing it to a prior one to check for changes (which is what _checkimage.sh_ does), but this is how I did it:

|

||||

|

||||

```

|

||||

#!/bin/bash

|

||||

# This is the checkimage.sh script

|

||||

|

||||

mplayer -vo png -frames 1 tv:// -tv driver=v4l2:width=640:height=480:device=/dev/mywebcam &>/dev/null

|

||||

mv 00000001.png /home/[user name]/monitor/monitor.png

|

||||

|

||||

while true

|

||||

do

|

||||

mplayer -vo png -frames 1 tv:// -tv driver=v4l2:width=640:height=480:device=/dev/mywebcam &>/dev/null

|

||||

mv 00000001.png /home/[user name]/monitor/temp.png

|

||||

|

||||

imagediff=`compare -metric mae /home/[user name]/monitor/monitor.png /home/[user name]/monitor/temp.png /home/[user name]/monitor/diff.png 2>&1 > /dev/null | cut -f 1 -d " "`

|

||||

if [ `echo "$imagediff > 700.0" | bc` -eq 1 ]

|

||||

then

|

||||

mv /home/[user name]/monitor/temp.png /home/[user name]/monitor/monitor.png

|

||||

fi

|

||||

|

||||

sleep 0.5

|

||||

done

|

||||

```

|

||||

|

||||

Start by using [MPlayer][10] to grab a frame ( _00000001.png_ ) from the webcam. Notice how we point `mplayer` to the `mywebcam` symbolic link we created in our Udev rule, instead of to `video0` or `video1`. Then you transfer the image to the _monitor/_ directory in your home directory. Then run an infinite loop that does the same thing again and again, but also uses [Image Magick's _compare_ tool][11] to see if there are any differences between the last image captured and the one that is already in the _monitor/_ directory.

|

||||

|

||||

If the images are different enough (the script uses a threshold of 700.0 on the value `compare` reports), it means something has moved within the webcam's frame. The script overwrites the original image with the new image and continues comparing, waiting for some more movement.
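
The comparison at the heart of the loop can be tried out without a camera. The sketch below swaps `bc` for `awk` (a substitution made here only because `awk` is installed almost everywhere) and uses made-up samples of the kind of string the script parses. `has_moved` is a hypothetical helper name.

```shell
#!/bin/sh
# has_moved (hypothetical helper): succeed when the first number in the
# metric string is greater than the threshold.
has_moved() {
    metric=$1             # e.g. "812.5 (0.0124)", a made-up sample value
    threshold=$2
    value=${metric%% *}   # keep only the text before the first space
    awk -v v="$value" -v t="$threshold" 'BEGIN { exit !(v > t) }'
}

if has_moved "812.5 (0.0124)" 700.0; then
    result_high="movement"
else
    result_high="still"
fi

if has_moved "12.3 (0.0002)" 700.0; then
    result_low="movement"
else
    result_low="still"
fi

echo "$result_high $result_low"
```

Keeping the test in a function of its own makes it easy to experiment with different thresholds before touching the capture loop.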

|

||||

|

||||

### Plugged

|

||||

|

||||

With all the bits and pieces in place, when you plug your webcam in, your Udev rule will be triggered and will start the _webcam.service_. The _webcam.service_ will execute _checkimage.sh_ in the background, and _checkimage.sh_ will start taking pictures every half a second. You will know because your webcam's LED will flash every time it takes a snap.

|

||||

|

||||

As always, if something goes wrong, run

|

||||

|

||||

```

|

||||

systemctl status webcam.service

|

||||

```

|

||||

|

||||

to check what your service and script are up to.

|

||||

|

||||

### Coming up

|

||||

|

||||

You may be wondering: Why overwrite the original image? Surely you would want to see what's going on if the system detects any movement, right? You would be right, but as you will see in the next installment, leaving things as they are and processing the images using yet another type of systemd unit makes things nice, clean and easy.

|

||||

|

||||

Just wait and see.

|

||||

|

||||

Learn more about Linux through the free ["Introduction to Linux"][12] course from The Linux Foundation and edX.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.linux.com/blog/intro-to-linux/2018/6/systemd-services-reacting-change

|

||||

|

||||

作者:[Paul Brown][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://www.linux.com/users/bro66

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://www.intel.com/content/www/us/en/products/boards-kits/compute-stick/stk1a32sc.html

|

||||

[2]: https://www.linux.com/files/images/fig01png

|

||||

[3]: https://www.linux.com/sites/lcom/files/styles/floated_images/public/fig01.png?itok=cfEHN5f1 (ComputeStick)

|

||||

[4]: https://www.linux.com/licenses/category/used-permission

|

||||

[5]: https://www.linux.com/blog/learn/intro-to-linux/2018/5/writing-systemd-services-fun-and-profit

|

||||

[6]: https://www.linux.com/blog/learn/2018/5/systemd-services-beyond-starting-and-stopping

|

||||

[7]: https://www.linux.com/files/images/fig02png

|

||||

[8]: https://www.linux.com/sites/lcom/files/styles/floated_images/public/fig02.png?itok=esFv4BdM (webcam)

|

||||

[9]: https://chmod-calculator.com/

|

||||

[10]: https://mplayerhq.hu/design7/news.html

|

||||

[11]: https://www.imagemagick.org/script/compare.php

|

||||

[12]: https://training.linuxfoundation.org/linux-courses/system-administration-training/introduction-to-linux

|

||||

@ -0,0 +1,152 @@

|

||||

Systemd Services: Monitoring Files and Directories

|

||||

======

|

||||

|

||||

|

||||

|

||||

So far in this systemd multi-part tutorial, we’ve covered [how to start and stop a service by hand][1], [how to start a service when booting your OS and have it stop on power down][2], and [how to boot a service when a certain device is detected][3]. This installment does something different yet again and covers how to create a unit that starts a service when something changes in the filesystem. For the practical example, you'll see how you can use one of these units to extend the [surveillance system we talked about last time][4].

|

||||

|

||||

### Where we left off

|

||||

|

||||

[Last time we saw how the surveillance system took pictures, but it did nothing with them][3]. In fact, it even overwrote the last picture it took when it detected movement so as not to fill the storage of the device.

|

||||

|

||||

Does that mean the system is useless? Not by a long shot. Because, you see, systemd offers yet another type of unit, _paths_ , that can help you out. _Path_ units allow you to trigger a service when an event happens in the filesystem, say, when a file gets deleted or a directory accessed. And overwriting an image is exactly the kind of event we are talking about here.

|

||||

|

||||

### Anatomy of a Path Unit

|

||||

|

||||

A systemd path unit takes the extension _.path_ , and it monitors a file or directory. A _.path_ unit calls another unit (usually a _.service_ unit with the same name) when something happens to the monitored file or directory. For example, if you have a _picchanged.path_ unit to monitor the snapshot from your webcam, you will also have a _picchanged.service_ that will execute a script when the snapshot is overwritten.

|

||||

|

||||

Path units contain a new section, `[Path]`, with a few more directives. First, you have the what-to-watch-for directives:

|

||||

|

||||

* **`PathExists=`** monitors whether the file or directory exists. If it does, the associated unit gets triggered. `PathExistsGlob=` works in a similar fashion, but lets you use globbing, like when you use `ls *.jpg` to search for all the JPEG images in a directory. This lets you check, for example, whether a file with a certain extension exists.

|

||||

  * **`PathChanged=`** watches a file or directory and activates the configured unit whenever it changes. It is not activated on every write to the watched file but only when a monitored file open for writing is changed and then closed. The associated unit is executed when the file is closed.

|

||||

* **`PathModified=`** , on the other hand, does activate the unit when anything is changed in the file you are monitoring, even before you close the file.

|

||||

* **`DirectoryNotEmpty=`** does what it says on the box, that is, it activates the associated unit if the monitored directory contains files or subdirectories.

|

||||

|

||||

|

||||

|

||||

Then, we have `Unit=` that tells the _.path_ which _.service_ unit to activate, in case you want to give it a different name to that of your _.path_ unit; `MakeDirectory=` can be `true` or `false` (or `1` or `0`, or `yes` or `no`) and creates the directory you want to monitor before monitoring starts. Obviously, using `MakeDirectory=` in combination with `PathExists=` does not make sense. However, `MakeDirectory=` can be used in combination with `DirectoryMode=`, which you use to set the mode (permissions) of the new directory. If you don't use `DirectoryMode=`, the default permissions for the new directory are `0755`.
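
To see how these directives fit together, here is a sketch of a path unit that uses several of them. The unit names (`incoming.path`, `process-incoming.service`) and the watched directory are hypothetical, and the file is written to a scratch directory rather than to _/etc/systemd/system_, so running the snippet changes nothing on your system.

```shell
#!/bin/sh
unitdir=$(mktemp -d)

# Quoting 'EOF' keeps the shell from expanding anything inside the unit.
cat > "$unitdir/incoming.path" <<'EOF'
# incoming.path (hypothetical example)
[Path]
# Create the directory first if it is missing, with restricted permissions.
MakeDirectory=yes
DirectoryMode=0750
# Fire as soon as the directory contains at least one entry.
DirectoryNotEmpty=/srv/incoming
# The name differs from the .path unit, so it has to be spelled out.
Unit=process-incoming.service
EOF

cat "$unitdir/incoming.path"
```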

|

||||

|

||||

### Building _picchanged.path_

|

||||

|

||||

All these directives are very useful, but you will be just looking for changes made to one single file, so your _.path_ unit is very simple:

|

||||

|

||||

```

|

||||

#picchanged.path

|

||||

[Unit]

|

||||

Wants= webcam.service

|

||||

|

||||

[Path]

|

||||

PathChanged= /home/[user name]/monitor/monitor.jpg

|

||||

```

|

||||

|

||||

In the `[Unit]` section, there is a line that says:

|

||||

|

||||

```

|

||||

Wants= webcam.service

|

||||

```

|

||||

|

||||

The `Wants=` directive is the preferred way of starting up a unit the current unit needs to work properly. [`webcam.service` is the name you gave the surveillance service that you saw in the previous article][3] and is the service that actually controls the webcam and makes it take a snap every half second. This means it’s _picchanged.path_ that is going to start up _webcam.service_ now, and not the [Udev rule you saw in the prior article][3]. You will use the Udev rule to start _picchanged.path_ instead.

|

||||

|

||||

To summarize: the Udev rule pulls in your new _picchanged.path_ unit, which, in turn, pulls in the _webcam.service_ as a requirement for everything to work perfectly.

|

||||

|

||||

The "thing" that _picchanged.path_ monitors is the _monitor.jpg_ file in the _monitor/_ directory in your home directory. As you saw last time, _webcam.service_ called a script, _checkimage.sh_ , which took a picture at the beginning of its execution and stored it in _monitor/monitor.jpg_. _checkimage.sh_ then took another picture, _temp.jpg_ , and compared it with _monitor.jpg_. If it found significant differences (like when somebody walks into frame), the script overwrote _monitor.jpg_ with _temp.jpg_. That is when _picchanged.path_ fires.

|

||||

|

||||

As you haven't included a `Unit=` directive in your _.path_ unit, systemd expects a matching _picchanged.service_ unit, which it will trigger when _/home/[user name]/monitor/monitor.jpg_ gets modified:

|

||||

|

||||

```

|

||||

#picchanged.service

|

||||

[Service]

|

||||

Type= simple

|

||||

ExecStart= /home/[user name]/bin/picmonitor.sh

|

||||

```

|

||||

|

||||

For the time being, let’s make _picmonitor.sh_ save a time-stamped copy of _monitor.jpg_ every time changes get detected:

|

||||

|

||||

```

|

||||

#!/bin/bash

|

||||

# This is the picmonitor.sh script

|

||||

|

||||

cp /home/[user name]/monitor/monitor.jpg /home/[user name]/monitor/"`date`.jpg"

|

||||

```
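
A bare `date` produces file names with spaces and colons in them, which are awkward to handle later. One possible variation, shown here as a sketch on throwaway files, is to give `date` an explicit format. The `snapshot_name` helper and the `monitor-` prefix are assumptions, not part of the original script.

```shell
#!/bin/sh
# snapshot_name (hypothetical helper): build a file name such as
# monitor-20180612-153045.jpg from the current date and time.
snapshot_name() {
    printf 'monitor-%s.jpg\n' "$(date +%Y%m%d-%H%M%S)"
}

# Work on throwaway files so the sketch runs anywhere.
workdir=$(mktemp -d)
: > "$workdir/monitor.jpg"          # empty stand-in for the real snapshot

name=$(snapshot_name)
cp "$workdir/monitor.jpg" "$workdir/$name"
echo "saved $name"
```

Names built this way also sort chronologically in a plain `ls`, although two changes within the same second would share one name.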

|

||||

|

||||

### Udev Changes

|

||||

|

||||

You have to change the custom Udev rule you wrote in [the previous installment][3] so everything works. Edit the rules file you saved in _/etc/udev/rules.d/_ ( _90-webcam.rules_ , if you used the name suggested last time) so instead of looking like this:

|

||||

|

||||

```

|

||||

ACTION=="add", SUBSYSTEM=="video4linux", ATTRS{idVendor}=="03f0", ATTRS{idProduct}=="e207", SYMLINK+="mywebcam", TAG+="systemd", MODE="0666", ENV{SYSTEMD_WANTS}="webcam.service"

|

||||

```

|

||||

|

||||

It looks like this:

|

||||

|

||||

```

|

||||

ACTION=="add", SUBSYSTEM=="video4linux", ATTRS{idVendor}=="03f0", ATTRS{idProduct}=="e207", SYMLINK+="mywebcam", TAG+="systemd", MODE="0666", ENV{SYSTEMD_WANTS}="picchanged.path"

|

||||

```

|

||||

|

||||

The new rule, instead of calling _webcam.service_ , now calls _picchanged.path_ when your webcam gets detected. (Note that you will have to change the `idVendor` and `idProduct` to those of your own webcam; you saw how to find these out previously.)
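
If you prefer to make the change from the command line, a `sed` substitution will do it. This sketch works on a scratch copy containing the rule from above, so it is safe to run. On a real system you would apply the same expression to your own rules file with root privileges and then reload the rules.

```shell
#!/bin/sh
workdir=$(mktemp -d)
rulefile="$workdir/webcam.rules"

# Scratch copy of the original rule.
printf '%s\n' 'ACTION=="add", SUBSYSTEM=="video4linux", ATTRS{idVendor}=="03f0", ATTRS{idProduct}=="e207", SYMLINK+="mywebcam", TAG+="systemd", MODE="0666", ENV{SYSTEMD_WANTS}="webcam.service"' > "$rulefile"

# Point SYSTEMD_WANTS at the path unit instead of the service.
updated=$(sed 's/ENV{SYSTEMD_WANTS}="webcam\.service"/ENV{SYSTEMD_WANTS}="picchanged.path"/' "$rulefile")
echo "$updated"
```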

|

||||

|

||||

For the record, I also changed _checkimage.sh_ from using PNG to JPEG images. I did this because I found some dependency problems with PNG images when working with _mplayer_ on some versions of Debian. _checkimage.sh_ now looks like this:

|

||||

|

||||

```

|

||||

#!/bin/bash

|

||||

|

||||

mplayer -vo jpeg -frames 1 tv:// -tv driver=v4l2:width=640:height=480:device=/dev/mywebcam &>/dev/null

|

||||

mv 00000001.jpg /home/paul/monitor/monitor.jpg

|

||||

|

||||

while true

|

||||

do

|

||||

mplayer -vo jpeg -frames 1 tv:// -tv driver=v4l2:width=640:height=480:device=/dev/mywebcam &>/dev/null

|

||||

mv 00000001.jpg /home/paul/monitor/temp.jpg

|

||||

|

||||

imagediff=`compare -metric mae /home/paul/monitor/monitor.jpg /home/paul/monitor/temp.jpg /home/paul/monitor/diff.png 2>&1 > /dev/null | cut -f 1 -d " "`

|

||||

|

||||

if [ `echo "$imagediff > 700.0" | bc` -eq 1 ]

|

||||

then

|

||||

mv /home/paul/monitor/temp.jpg /home/paul/monitor/monitor.jpg

|

||||

fi

|

||||

|

||||

sleep 0.5

|

||||

done

|

||||

```

|

||||

|

||||

### Firing up

|

||||

|

||||

This is a multi-unit service that, when all its bits and pieces are in place, you don't have to worry much about: you plug in the designated webcam (or boot the machine with the webcam already connected), _picchanged.path_ gets started thanks to the Udev rule and takes over, bringing up the _webcam.service_ and starting to check on the snaps. There is nothing else you need to do.

|

||||

|

||||

### Conclusion

|

||||

|

||||

Having the process split into two doesn't only help explain how path units work, but it’s also very useful for debugging. One service does not "touch" the other in any way, which means that you could, for example, improve the "motion detection" part, and it would be very easy to roll back if things didn't work as expected.

|

||||

|

||||

Admittedly, the example is a bit goofy, as there are definitely [better ways of monitoring movement using a webcam][5]. But remember: the main aim of these articles is to help you learn how systemd units work within a context.

|

||||

|

||||

Next time, we'll finish up with systemd units by looking at some of the other types of units available and show how to improve your home-monitoring system further by setting up a service that sends images to another machine.

|

||||

|

||||

Learn more about Linux through the free ["Introduction to Linux"][6] course from The Linux Foundation and edX.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.linux.com/blog/learn/intro-to-linux/2018/6/systemd-services-monitoring-files-and-directories

|

||||

|

||||

Author: [Paul Brown][a]
Topic selection: [lujun9972][b]
Translator: [Translator ID](https://github.com/译者ID)
Proofreader: [Proofreader ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

|

||||

|

||||

[a]: https://www.linux.com/users/bro66

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://www.linux.com/blog/learn/intro-to-linux/2018/5/writing-systemd-services-fun-and-profit

|

||||

[2]: https://www.linux.com/blog/learn/2018/5/systemd-services-beyond-starting-and-stopping

|

||||

[3]: https://www.linux.com/blog/intro-to-linux/2018/6/systemd-services-reacting-change

|

||||

[4]: https://www.linux.com/blog/learn/intro-to-linux/2018/6/systemd-services-monitoring-files-and-directories

|

||||

[5]: https://www.linux.com/learn/how-operate-linux-spycams-motion

|

||||

[6]: https://training.linuxfoundation.org/linux-courses/system-administration-training/introduction-to-linux

|

||||

@ -1,170 +0,0 @@

|

||||

translating---geekpi

|

||||

|

||||

How To Quickly Serve Files And Folders Over HTTP In Linux

|

||||

======

|

||||

|

||||

|

||||

|

||||

Today, I came across a whole bunch of methods to share a single file or an entire directory with other systems on your local area network via a web browser. I tested all of them on my Ubuntu test machine, and everything worked just fine as described below. If you ever wondered how to easily and quickly serve files and folders over HTTP in Unix-like operating systems, one of the following methods will definitely help.

|

||||

|

||||

### Serve Files And Folders Over HTTP In Linux

|

||||

|

||||

**Disclaimer:** All the methods given here are meant to be used within a secure local area network. Since these methods don't have any security mechanism, it is **not recommended to use them in production**. You have been warned!

|

||||

|

||||

#### Method 1 – Using SimpleHTTPServer (Python)

|

||||

|

||||

We have already written a brief guide on setting up a simple HTTP server to share files and directories instantly; see the following link. If you have a system with Python installed, this method is quite handy.
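
The linked guide is not reproduced here. As a minimal sketch, assuming it relies on Python's built-in web server modules (`SimpleHTTPServer` on Python 2, `http.server` on Python 3), the command to run from the directory you want to share depends on the Python version. The helper name `python_serve_cmd` is hypothetical; it only prints the command and does not start a server.

```shell
# Hypothetical helper: print the built-in web server command for a given
# Python major version. The port defaults to 8000 (an assumption).
python_serve_cmd() {
    case "$1" in
        2) echo "python2 -m SimpleHTTPServer ${2:-8000}" ;;
        3) echo "python3 -m http.server ${2:-8000}" ;;
        *) echo "unsupported Python major version: $1" >&2; return 1 ;;
    esac
}
```

Run the printed command inside the directory to share, and stop the server with CTRL+C.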

|

||||

|

||||

#### Method 2 – Using Quickserve (Python)

|

||||

|

||||

This method is specifically for Arch Linux and its variants. Check the following link for more details.

|

||||

|

||||

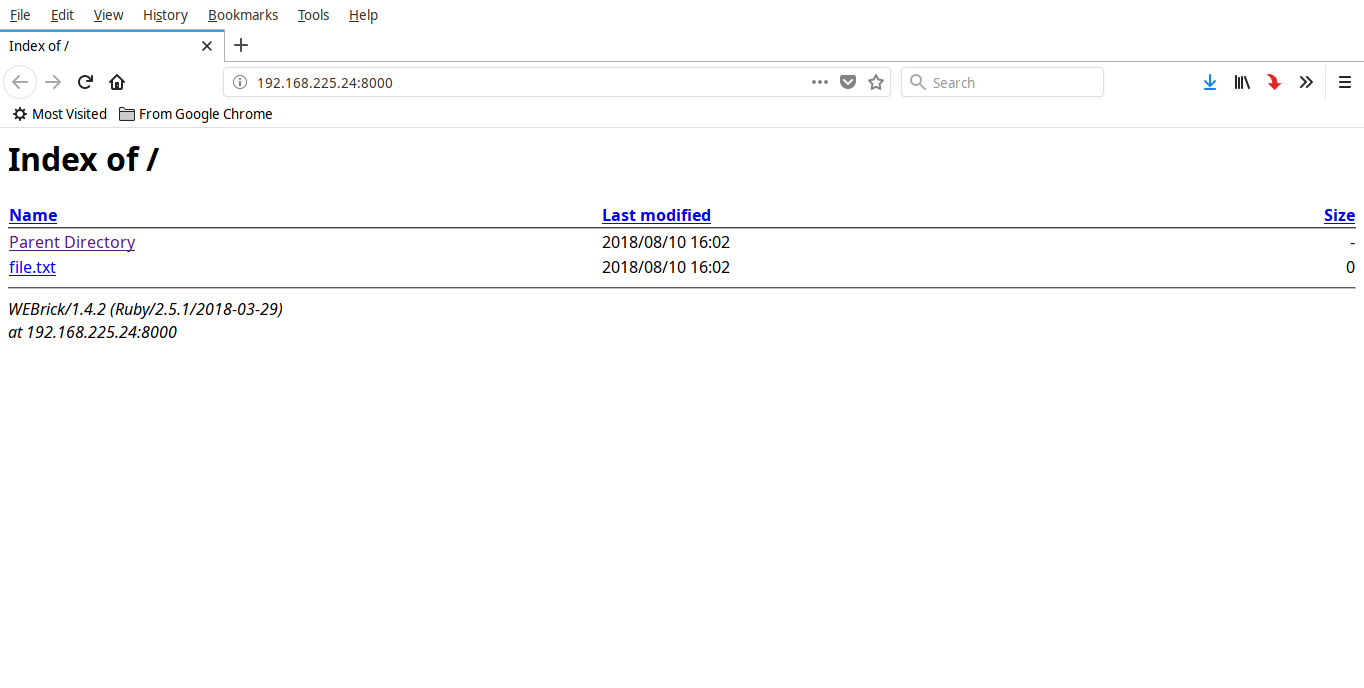

#### Method 3 – Using Ruby

|

||||

|

||||

In this method, we use Ruby to serve files and folders over HTTP in Unix-like systems. Install Ruby and Rails as described in the following link.

|

||||

|

||||

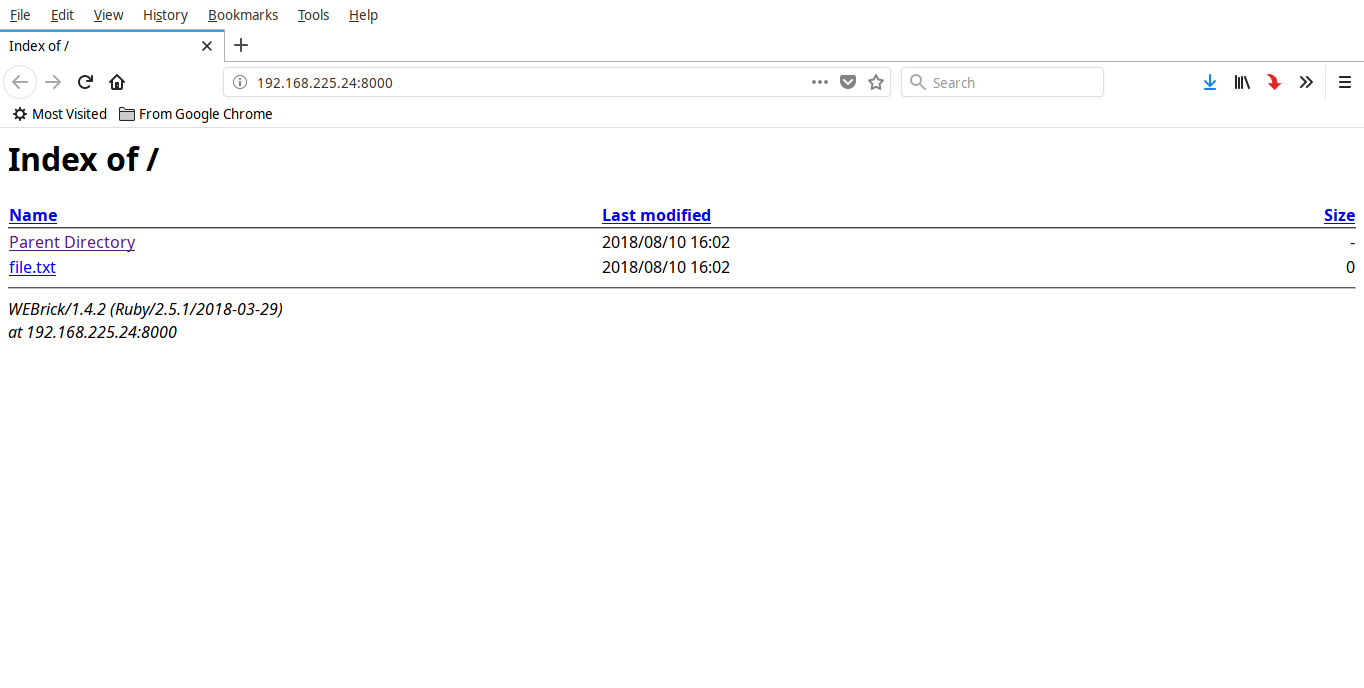

Once Ruby is installed, go to the directory that you want to share over the network, for example ostechnix:

|

||||

```

|

||||

$ cd ostechnix

|

||||

|

||||

```

|

||||

|

||||

And, run the following command:

|

||||

```

|

||||

$ ruby -run -ehttpd . -p8000

|

||||

[2018-08-10 16:02:55] INFO WEBrick 1.4.2

|

||||

[2018-08-10 16:02:55] INFO ruby 2.5.1 (2018-03-29) [x86_64-linux]

|

||||

[2018-08-10 16:02:55] INFO WEBrick::HTTPServer#start: pid=5859 port=8000

|

||||

|

||||

```

|

||||

|

||||

Make sure port 8000 is open in your router or firewall. If the port is already in use by another service, use a different port.
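
To check whether a port is already taken before starting the server, one option is to look at the listening sockets reported by `ss -ltn`. The following is a minimal sketch, assuming the usual `ss` column layout in which the fourth column is the local address and port. The function name `port_is_listening` is hypothetical.

```shell
# Hypothetical helper: succeeds if the given TCP port shows up as a local
# listening port in `ss -ltn` output read from stdin.
port_is_listening() {
    awk -v port="$1" '
        # Skip the header row; column 4 is "Local Address:Port"
        NR > 1 && $4 ~ (":" port "$") { found = 1 }
        END { exit !found }
    '
}

# Usage: ss -ltn | port_is_listening 8000 && echo "port 8000 is busy"
```

Reading the listing from stdin keeps the helper easy to try out on saved output as well as on a live system.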

|

||||

|

||||

You can now access the contents of this folder from any remote system using URL – **http://<IP-address>:8000/**.

To stop sharing, press **CTRL+C**.

|

||||

|

||||

#### Method 4 – Using Http-server (NodeJS)

|

||||

|

||||

[**Http-server**][1] is a simple, production ready command line http-server written in NodeJS. It requires zero configuration and can be used to instantly share files and directories via web browser.

|

||||

|

||||

Install NodeJS as described below.

|

||||

|

||||

Once NodeJS is installed, run the following command to install http-server.

|

||||

```

|

||||

$ npm install -g http-server

|

||||

|

||||

```

|

||||

|

||||

Now, go to any directory and share its contents over HTTP as shown below.

|

||||

```

|

||||

$ cd ostechnix

|

||||

|

||||

$ http-server -p 8000

|

||||

Starting up http-server, serving ./

|

||||

Available on:

|

||||

http://127.0.0.1:8000

|

||||

http://192.168.225.24:8000

|

||||

http://192.168.225.20:8000

|

||||

Hit CTRL-C to stop the server

|

||||

|

||||

```

|

||||

|

||||

Now, you can access the contents of this directory from local or remote systems in the network using URL – **http://<ip-address>:8000**.

|

||||

|

||||

|

||||

|

||||

To stop sharing, press **CTRL+C**.

|

||||

|

||||

#### Method 5 – Using Miniserve (Rust)

|

||||

|

||||

[**Miniserve**][2] is yet another command line utility that allows you to quickly serve files over HTTP. It is a very fast, easy-to-use, cross-platform utility written in the **Rust** programming language. Unlike the above utilities/methods, it provides authentication support, so you can set up a username and password for the shares.

|

||||

|

||||

Install Rust in your Linux system as described in the following link.

|

||||

|

||||

After installing Rust, run the following command to install miniserve:

|

||||

```

|

||||

$ cargo install miniserve

|

||||

|

||||

```

|

||||

|

||||

Alternatively, you can download a binary from [**the releases page**][3] and make it executable.

|

||||

```

|

||||

$ chmod +x miniserve-linux

|

||||

|

||||

```

|

||||

|

||||

Then you can run it with the following command (assuming the miniserve binary was downloaded to the current working directory):

|

||||

```

|

||||

$ ./miniserve-linux <path-to-share>

|

||||

|

||||

```

|

||||

|

||||

**Usage**

|

||||

|

||||

To serve a directory:

|

||||

```

|

||||

$ miniserve <path-to-directory>

|

||||

|

||||

```

|

||||

|

||||

**Example:**

|

||||

```

|

||||

$ miniserve /home/sk/ostechnix/

|

||||

miniserve v0.2.0

|

||||

Serving path /home/sk/ostechnix at http://[::]:8080, http://localhost:8080

|

||||

Quit by pressing CTRL-C

|

||||

|

||||

```

|

||||

|

||||

Now, you can access the share from the local system itself using the URL – **<http://localhost:8080>** and/or from a remote system using the URL – **http://<ip-address>:8080**.

|

||||

|

||||

To serve a single file:

|

||||

```

|

||||

$ miniserve <path-to-file>

|

||||

|

||||

```

|

||||

|

||||

**Example:**

|

||||

```

|

||||

$ miniserve ostechnix/file.txt

|

||||

|

||||

```

|

||||

|

||||

Serve file/folder with username and password:

|

||||

```

|

||||

$ miniserve --auth joe:123 <path-to-share>

|

||||

|

||||

```

|

||||

|

||||

Bind to multiple interfaces:

|

||||

```

|

||||

$ miniserve -i 192.168.225.1 -i 10.10.0.1 -i ::1 -- <path-to-share>

|

||||

|

||||

```

|

||||

|

||||

As you can see, I have given only 5 methods. But there are a few more methods in the link attached at the end of this guide. Go and test them as well. Also, bookmark it and revisit it from time to time to check for new additions to the list.

And, that’s all for now. Hope this was useful. More good stuff to come. Stay tuned!

|

||||

|

||||

Cheers!

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.ostechnix.com/how-to-quickly-serve-files-and-folders-over-http-in-linux/

|

||||

|

||||

Author: [SK][a]
Topic selection: [lujun9972](https://github.com/lujun9972)
Translator: [Translator ID](https://github.com/译者ID)
Proofreader: [Proofreader ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

|

||||

|

||||

[a]:https://www.ostechnix.com/author/sk/

|

||||

[1]:https://www.npmjs.com/package/http-server

|

||||

[2]:https://github.com/svenstaro/miniserve

|

||||

[3]:https://github.com/svenstaro/miniserve/releases

|

||||

@ -1,3 +1,5 @@

|

||||

Translating by jlztan

|

||||

|

||||

Convert files at the command line with Pandoc

|

||||

======

|

||||

|

||||

|

||||

@ -1,3 +1,5 @@

|

||||

### translating by way-ww

|

||||

|

||||

4 Must-Have Tools for Monitoring Linux

|

||||

======

|

||||

|

||||

|

||||

@ -1,3 +1,4 @@

|

||||

zianglei translating

|

||||

Chrony – An Alternative NTP Client And Server For Unix-like Systems

|

||||

======

|

||||

|

||||

|

||||

@ -0,0 +1,368 @@

|

||||

How Do We Find Out The Installed Packages Came From Which Repository?

|

||||

======

|

||||

Sometimes you want to know which repository an installed package came from. This helps with troubleshooting when you run into package conflicts.

[Third-party vendor repositories][1] often hold the latest version of a package, and that can cause incompatibility problems when you install other packages.

On Linux, you can install a package on your system even when it is not available in your distribution.

You can also install the latest version of a package when your distribution doesn’t ship it. How?

This is where third-party repositories come into the picture. They let users install any package available in those repositories.

Almost all distributions allow third-party repositories. Some distributions officially suggest a few third-party repositories that do not replace base packages; for example, CentOS officially suggests installing the [EPEL repository][2].

Details of the [major repositories][1] are below.

|

||||

|

||||

* **`CentOS:`** [EPEL][2], [ELRepo][3], etc. are [CentOS Community Approved Repositories][4].
* **`Fedora:`** [RPMfusion repo][5] is commonly used by most of the [Fedora][6] users.
* **`ArchLinux:`** ArchLinux community repository contains packages that have been adopted by Trusted Users from the Arch User Repository.
* **`openSUSE:`** [Packman repo][7] offers various additional packages for openSUSE, especially but not limited to multimedia related applications and libraries that are on the openSUSE Build Service application blacklist. It’s the largest external repository of openSUSE packages.
* **`Ubuntu:`** Personal Package Archives (PPAs) are a kind of repository. Developers create them in order to distribute their software. You can find this information on the PPA’s Launchpad page. Also, you can enable the Canonical partner repositories.

|

||||

|

||||

|

||||

|

||||

### What Is a Repository?

A software repository is a central place that stores software packages for applications.

All Linux distributions maintain their own repositories, which let users retrieve and install packages on their machines.

Each vendor offers its own package management tool to work with its repositories: search, install, update, upgrade, remove, and so on.

Most Linux distributions are free of charge, except RHEL and SUSE; to access their repositories you need to buy a subscription.

|

||||

|

||||

### Why do we need to enable third party repositories?

|

||||

|

||||

In Linux, installing a package from source is not advisable, as it can cause many issues when upgrading the package or the system. That is why we are advised to install packages from a repository instead of from source.

|

||||

|

||||

### How Do We Find Out The Installed Packages Came From Which Repository on RHEL/CentOS Systems?

|

||||

|

||||

This can be done in multiple ways. Here we will be giving you all the possible options and you can choose which one is best for you.

|

||||

|

||||

### Method-1: Using Yum Command

|

||||

|

||||

RHEL and CentOS systems use RPM packages, so we can use the [Yum Package Manager][8] to get this information.

YUM stands for Yellowdog Updater, Modified. It is an open-source command-line front-end package-management utility for RPM-based systems such as Red Hat Enterprise Linux (RHEL) and CentOS.

Yum is the primary tool for getting, installing, deleting, querying, and managing RPM packages from distribution repositories, as well as other third-party repositories.

|

||||

|

||||

```

|

||||

# yum info apachetop

|

||||

Loaded plugins: fastestmirror

|

||||

Loading mirror speeds from cached hostfile

|

||||

* epel: epel.mirror.constant.com

|

||||

Installed Packages

|

||||

Name : apachetop

|

||||

Arch : x86_64

|

||||

Version : 0.15.6

|

||||

Release : 1.el7

|

||||

Size : 65 k

|

||||

Repo : installed

|

||||

From repo : epel

|

||||

Summary : A top-like display of Apache logs

|

||||

URL : https://github.com/tessus/apachetop

|

||||

License : BSD

|

||||

Description : ApacheTop watches a logfile generated by Apache (in standard common or

|

||||

: combined logformat, although it doesn't (yet) make use of any of the extra

|

||||

: fields in combined) and generates human-parsable output in realtime.

|

||||

```

|

||||

|

||||

The **`apachetop`** package comes from the **`epel repo`**, as shown in the `From repo` field.
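
When checking many packages, it can be handy to pull out just the `From repo` field instead of reading the whole listing. The following is a minimal sketch that reads `yum info` output on stdin; the function name `from_repo` is hypothetical, and it assumes the `Field : value` layout shown in the output above.

```shell
# Hypothetical helper: print the value of the "From repo" field from
# `yum info <package>` output read on stdin (prints nothing if absent).
from_repo() {
    # Split each line on the colon and the spaces surrounding it
    awk -F' *: *' '$1 == "From repo" { print $2; exit }'
}

# Usage: yum info apachetop | from_repo
```

The field only appears for installed packages, so an empty result usually means the package is not installed or yum has no record of its origin.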

|

||||

|

||||

### Method-2: Using Yumdb Command

|

||||

|

||||

`yumdb info` provides information similar to `yum info`, and additionally shows the package checksum data and type, the user who installed the package, and the install reason. Since version 3.2.26, yum stores this additional information outside of the rpm database. In the `reason` field, `user` indicates the package was installed by the user, and `dep` means it was brought in as a dependency.

|

||||

|

||||

```

|

||||

# yumdb info lighttpd

|

||||

Loaded plugins: fastestmirror

|

||||

lighttpd-1.4.50-1.el7.x86_64

|

||||

checksum_data = a24d18102ed40148cfcc965310a516050ed437d728eeeefb23709486783a4d37

|

||||

checksum_type = sha256

|

||||

command_line = --enablerepo=epel install lighttpd apachetop aria2 atop axel

|

||||

from_repo = epel

|

||||

from_repo_revision = 1540756729

|

||||

from_repo_timestamp = 1540757483

|

||||

installed_by = 0

|

||||

origin_url = https://epel.mirror.constant.com/7/x86_64/Packages/l/lighttpd-1.4.50-1.el7.x86_64.rpm

|

||||

reason = user

|

||||

releasever = 7

|

||||

var_contentdir = centos

|

||||

var_infra = stock

|

||||

var_uuid = ce328b07-9c0a-4765-b2ad-59d96a257dc8

|

||||

```

|

||||

|

||||

The **`lighttpd`** package is coming from **`epel repo`**.

|

||||

|

||||

### Method-3: Using RPM Command

|

||||

|

||||

The [RPM command][9] (RPM stands for Red Hat Package Manager) is a powerful command-line package management utility for RPM-based distributions such as RHEL, CentOS, Fedora, openSUSE and Mageia.

The utility allows you to install, upgrade, remove, query and verify software on your Linux system or server. RPM files have the .rpm extension. An RPM package is built with its required libraries and dependencies so that it does not conflict with other packages installed on your system.

|

||||

|

||||

```

|

||||

# rpm -qi apachetop

|

||||

Name : apachetop

|

||||

Version : 0.15.6

|

||||

Release : 1.el7

|

||||

Architecture: x86_64

|

||||

Install Date: Mon 29 Oct 2018 06:47:49 AM EDT

|

||||

Group : Applications/Internet

|

||||

Size : 67020

|

||||

License : BSD

|

||||

Signature : RSA/SHA256, Mon 22 Jun 2015 09:30:26 AM EDT, Key ID 6a2faea2352c64e5

|

||||

Source RPM : apachetop-0.15.6-1.el7.src.rpm

|

||||

Build Date : Sat 20 Jun 2015 09:02:37 PM EDT

|

||||

Build Host : buildvm-22.phx2.fedoraproject.org

|

||||

Relocations : (not relocatable)

|

||||

Packager : Fedora Project

|

||||

Vendor : Fedora Project

|

||||

URL : https://github.com/tessus/apachetop

|

||||

Summary : A top-like display of Apache logs

|

||||

Description :

|

||||

ApacheTop watches a logfile generated by Apache (in standard common or

|

||||

combined logformat, although it doesn't (yet) make use of any of the extra

|

||||

fields in combined) and generates human-parsable output in realtime.

|

||||

```

|

||||

|

||||

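`rpm -qi` does not print a repository name. Here the Vendor and Build Host fields (Fedora Project) show that the **`apachetop`** package was built by the Fedora Project, which is consistent with it coming from the **`epel repo`**.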

|

||||

|

||||

### Method-4: Using Repoquery Command

|

||||

|

||||

repoquery is a program for querying information from YUM repositories similarly to rpm queries.

|

||||

|

||||

```

|

||||

# repoquery -i httpd

|

||||

|

||||

Name : httpd

|

||||

Version : 2.4.6

|

||||

Release : 80.el7.centos.1

|

||||

Architecture: x86_64

|

||||

Size : 9817285

|

||||

Packager : CentOS BuildSystem

|

||||

Group : System Environment/Daemons

|

||||

URL : http://httpd.apache.org/

|

||||

Repository : updates

|

||||

Summary : Apache HTTP Server

|

||||

Source : httpd-2.4.6-80.el7.centos.1.src.rpm

|

||||

Description :

|

||||

The Apache HTTP Server is a powerful, efficient, and extensible

|

||||

web server.

|

||||

```

|

||||

|

||||

The **`httpd`** package is coming from **`CentOS updates repo`**.

|

||||

|

||||

### How Do We Find Out The Installed Packages Came From Which Repository on Fedora System?

|

||||

|

||||

DNF stands for Dandified yum. It is the next generation of the yum package manager (a fork of Yum) and uses the hawkey/libsolv library as its back end. Aleš Kozumplík started working on DNF in Fedora 18, and it was finally launched in Fedora 22.

The [dnf command][10] is used to install, update, search for and remove packages on Fedora 22 and later systems. It resolves dependencies automatically, which makes package installation smooth.

|

||||

|

||||

```

|

||||

$ dnf info tilix

|

||||

Last metadata expiration check: 27 days, 10:00:23 ago on Wed 04 Oct 2017 06:43:27 AM IST.

|

||||

Installed Packages

|

||||

Name : tilix

|

||||

Version : 1.6.4

|

||||

Release : 1.fc26

|

||||

Arch : x86_64

|

||||

Size : 3.6 M

|

||||

Source : tilix-1.6.4-1.fc26.src.rpm

|

||||

Repo : @System

|

||||

From repo : updates

|

||||

Summary : Tiling terminal emulator

|

||||

URL : https://github.com/gnunn1/tilix

|

||||

License : MPLv2.0 and GPLv3+ and CC-BY-SA

|

||||

Description : Tilix is a tiling terminal emulator with the following features:

|

||||

:

|

||||

: - Layout terminals in any fashion by splitting them horizontally or vertically

|

||||

: - Terminals can be re-arranged using drag and drop both within and between

|

||||

: windows

|

||||

: - Terminals can be detached into a new window via drag and drop

|

||||

: - Input can be synchronized between terminals so commands typed in one

|

||||

: terminal are replicated to the others

|

||||

: - The grouping of terminals can be saved and loaded from disk

|

||||

: - Terminals support custom titles

|

||||

: - Color schemes are stored in files and custom color schemes can be created by

|

||||

: simply creating a new file

|

||||

: - Transparent background

|

||||

: - Supports notifications when processes are completed out of view

|

||||

:

|

||||

: The application was written using GTK 3 and an effort was made to conform to

|

||||

: GNOME Human Interface Guidelines (HIG).

|

||||

```

|

||||

|

||||

The **`tilix`** package is coming from **`Fedora updates repo`**.

|

||||

|

||||

### How Do We Find Out The Installed Packages Came From Which Repository on openSUSE System?

|

||||

|

||||

Zypper is a command line package manager which makes use of libzypp. [Zypper command][11] provides functions like repository access, dependency solving, package installation, etc.

|

||||

|

||||

```

|

||||

$ zypper info nano

|

||||

|

||||

Loading repository data...

|

||||

Reading installed packages...

|

||||

|

||||

|

||||

Information for package nano:

|

||||

-----------------------------

|

||||

Repository : Main Repository (OSS)

|

||||

Name : nano

|

||||

Version : 2.4.2-5.3

|

||||

Arch : x86_64

|

||||

Vendor : openSUSE

|

||||

Installed Size : 1017.8 KiB

|

||||

Installed : No

|

||||

Status : not installed

|

||||

Source package : nano-2.4.2-5.3.src

|

||||

Summary : Pico editor clone with enhancements

|

||||

Description :

|

||||

GNU nano is a small and friendly text editor. It aims to emulate

|

||||

the Pico text editor while also offering a few enhancements.

|

||||

```

|

||||

|

||||

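The **`nano`** package is provided by the **`openSUSE Main repo (OSS)`**. Note that in this sample output the package is reported as not installed, so the Repository field shows where it is available.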

|

||||

|

||||

### How Do We Find Out The Installed Packages Came From Which Repository on ArchLinux System?

|

||||

|

||||

The name of the [Pacman command][12] is short for package manager. pacman is a simple command-line utility to install, build, remove and manage Arch Linux packages. Pacman uses libalpm (the Arch Linux Package Management (ALPM) library) as a back end to perform all the actions.

|

||||

|

||||

```

|

||||

# pacman -Ss chromium

|

||||

extra/chromium 48.0.2564.116-1

|

||||

The open-source project behind Google Chrome, an attempt at creating a safer, faster, and more stable browser

|

||||

extra/qt5-webengine 5.5.1-9 (qt qt5)

|

||||

Provides support for web applications using the Chromium browser project

|

||||

community/chromium-bsu 0.9.15.1-2

|

||||

A fast paced top scrolling shooter

|

||||

community/chromium-chromevox latest-1

|

||||

Causes the Chromium web browser to automatically install and update the ChromeVox screen reader extention. Note: This

|

||||

package does not contain the extension code.

|

||||

community/fcitx-mozc 2.17.2313.102-1

|

||||

Fcitx Module of A Japanese Input Method for Chromium OS, Windows, Mac and Linux (the Open Source Edition of Google Japanese

|

||||

Input)

|

||||

```

|

||||

|

||||

`pacman -Ss` searches the sync databases and prefixes every match with its repository name, so the **`chromium`** package is coming from **`ArchLinux extra repo`**.
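
Because `pacman -Ss` matches package names and descriptions loosely, the listing often contains several packages. The following is a minimal sketch that picks the repository of one exact package name from such a listing read on stdin. The function name `repo_of` is hypothetical, and it assumes the `repo/name version` line layout shown above.

```shell
# Hypothetical helper: print the repository of an exactly named package
# from `pacman -Ss <term>` output read on stdin.
repo_of() {
    # Split on "/" and on single spaces: $1 is the repo, $2 the package name
    awk -F'[/ ]' -v pkg="$1" '$1 != "" && $2 == pkg { print $1; exit }'
}

# Usage: pacman -Ss chromium | repo_of chromium
```

Description lines are skipped because they are indented or do not have the package name as their second field.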

|

||||

|

||||

Alternatively, we can use the following option to get the detailed information about the package.

|

||||

|

||||

```

|

||||

# pacman -Si chromium

|

||||

Repository : extra

|

||||

Name : chromium

|

||||

Version : 48.0.2564.116-1

|

||||

Description : The open-source project behind Google Chrome, an attempt at creating a safer, faster, and more stable browser

|

||||

Architecture : x86_64

|

||||

URL : http://www.chromium.org/

|

||||

Licenses : BSD

|

||||

Groups : None

|

||||

Provides : None

|

||||

Depends On : gtk2 nss alsa-lib xdg-utils bzip2 libevent libxss icu libexif libgcrypt ttf-font systemd dbus

|

||||

flac snappy speech-dispatcher pciutils libpulse harfbuzz libsecret libvpx perl perl-file-basedir

|

||||

desktop-file-utils hicolor-icon-theme

|

||||

Optional Deps : kdebase-kdialog: needed for file dialogs in KDE

|

||||

gnome-keyring: for storing passwords in GNOME keyring

|

||||

kwallet: for storing passwords in KWallet

|

||||

Conflicts With : None

|

||||

Replaces : None

|

||||

Download Size : 44.42 MiB

|

||||

Installed Size : 172.44 MiB

|

||||

Packager : Evangelos Foutras

|

||||

Build Date : Fri 19 Feb 2016 04:17:12 AM IST

|

||||

Validated By : MD5 Sum SHA-256 Sum Signature

|

||||

```

|

||||

|

||||

The **`chromium`** package is coming from **`ArchLinux extra repo`**.

|

||||

|

||||

### How Do We Find Out The Installed Packages Came From Which Repository on Debian Based Systems?

|

||||

|

||||

This can be done in two ways on Debian-based systems such as Ubuntu and Linux Mint.

|

||||

|

||||

### Method-1: Using apt-cache Command

|

||||

|

||||

The [apt-cache command][13] can display much of the information stored in APT’s internal database. This information is a sort of cache since it is gathered from the different sources listed in the sources.list file. This happens during the apt update operation.

|

||||

|

||||

```

|

||||

$ apt-cache policy python3

|

||||

python3:

|

||||

Installed: 3.6.3-0ubuntu2

|

||||

Candidate: 3.6.3-0ubuntu3

|

||||

Version table:

|

||||

3.6.3-0ubuntu3 500

|

||||

500 http://in.archive.ubuntu.com/ubuntu artful-updates/main amd64 Packages

|

||||

 *** 3.6.3-0ubuntu2 500

|

||||

500 http://in.archive.ubuntu.com/ubuntu artful/main amd64 Packages

|

||||

100 /var/lib/dpkg/status

|

||||

```

|

||||

|

||||

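The installed **`python3`** version (3.6.3-0ubuntu2) is coming from the **`Ubuntu artful/main repo`**; the candidate upgrade (3.6.3-0ubuntu3) is available from the **`Ubuntu updates repo`**.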
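
In `apt-cache policy` output, the installed version is the row marked `***`, and the lines indented beneath it list the sources that carry that version. The following is a minimal sketch that prints only those sources from output read on stdin. The function name `installed_sources` is hypothetical, and the sketch assumes the usual indentation of the version table (version rows indented less than source rows).

```shell
# Hypothetical helper: print "URL suite/component" for every archive that
# carries the installed version, from `apt-cache policy <pkg>` on stdin.
installed_sources() {
    awk '
        /^ \*\*\* /   { in_installed = 1; next }  # row of the installed version
        /^     [^ ]/  { in_installed = 0 }        # row of any other version
        # Source rows: skip the local dpkg status entry, print the rest
        in_installed && $2 != "/var/lib/dpkg/status" { print $2, $3 }
    '
}

# Usage: apt-cache policy python3 | installed_sources
```

If nothing is printed, the installed version is only known from the local dpkg status file, which usually means it was installed manually or its repository is no longer configured.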

|

||||

|

||||

### Method-2: Using apt Command

|

||||

|

||||

The [APT command][14] (APT stands for Advanced Packaging Tool) is a replacement for apt-get, much like DNF took the place of YUM. It is a feature-rich command-line tool that brings together in one command the features of tools such as apt-cache, dpkg, apt-cdrom, apt-config and apt-key, along with several unique features of its own. For example, we can easily install local .deb packages through APT, which we can’t do through apt-get. apt-get was replaced by APT because of features that were missing from apt-get and never added.

|

||||

|

||||

```

|

||||

$ apt -a show notepadqq

|

||||

Package: notepadqq

|

||||

Version: 1.3.2-1~artful1

|

||||

Priority: optional

|

||||

Section: editors

|

||||

Maintainer: Daniele Di Sarli

|

||||

Installed-Size: 1,352 kB

|

||||

Depends: notepadqq-common (= 1.3.2-1~artful1), coreutils (>= 8.20), libqt5svg5 (>= 5.2.1), libc6 (>= 2.14), libgcc1 (>= 1:3.0), libqt5core5a (>= 5.9.0~beta), libqt5gui5 (>= 5.7.0), libqt5network5 (>= 5.2.1), libqt5printsupport5 (>= 5.2.1), libqt5webkit5 (>= 5.6.0~rc), libqt5widgets5 (>= 5.2.1), libstdc++6 (>= 5.2)

|

||||

Download-Size: 356 kB

|

||||

APT-Sources: http://ppa.launchpad.net/notepadqq-team/notepadqq/ubuntu artful/main amd64 Packages

|

||||

Description: Notepad++-like editor for Linux

|

||||

Text editor with support for multiple programming

|

||||

languages, multiple encodings and plugin support.

|

||||

|

||||

Package: notepadqq

|

||||

Version: 1.2.0-1~artful1

|

||||

Status: install ok installed

|

||||

Priority: optional

|

||||

Section: editors

|

||||

Maintainer: Daniele Di Sarli

|

||||

Installed-Size: 1,352 kB

|

||||

Depends: notepadqq-common (= 1.2.0-1~artful1), coreutils (>= 8.20), libqt5svg5 (>= 5.2.1), libc6 (>= 2.14), libgcc1 (>= 1:3.0), libqt5core5a (>= 5.9.0~beta), libqt5gui5 (>= 5.7.0), libqt5network5 (>= 5.2.1), libqt5printsupport5 (>= 5.2.1), libqt5webkit5 (>= 5.6.0~rc), libqt5widgets5 (>= 5.2.1), libstdc++6 (>= 5.2)

|

||||

Homepage: http://notepadqq.altervista.org

|

||||

Download-Size: unknown

|

||||

APT-Manual-Installed: yes

|

||||

APT-Sources: /var/lib/dpkg/status

|

||||

Description: Notepad++-like editor for Linux

|

||||

Text editor with support for multiple programming

|

||||

languages, multiple encodings and plugin support.

|

||||

```

|

||||

|

||||

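The available **`notepadqq`** version (1.3.2) is coming from **`Launchpad PPA`**; the record for the installed version (1.2.0) only lists `/var/lib/dpkg/status` as its source.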

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.2daygeek.com/how-do-we-find-out-the-installed-packages-came-from-which-repository/

|

||||

|

||||

Author: [Prakash Subramanian][a]
Topic selection: [lujun9972][b]
Translator: [Translator ID](https://github.com/译者ID)
Proofreader: [Proofreader ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

|

||||

|

||||

[a]: https://www.2daygeek.com/author/prakash/

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://www.2daygeek.com/category/repository/

|

||||

[2]: https://www.2daygeek.com/install-enable-epel-repository-on-rhel-centos-scientific-linux-oracle-linux/

|

||||

[3]: https://www.2daygeek.com/install-enable-elrepo-on-rhel-centos-scientific-linux/

|

||||

[4]: https://www.2daygeek.com/additional-yum-repositories-for-centos-rhel-fedora-systems/

|

||||

[5]: https://www.2daygeek.com/install-enable-rpm-fusion-repository-on-centos-fedora-rhel/

|

||||

[6]: https://fedoraproject.org/wiki/Third_party_repositories

|

||||

[7]: https://www.2daygeek.com/install-enable-packman-repository-on-opensuse-leap/

|

||||

[8]: https://www.2daygeek.com/yum-command-examples-manage-packages-rhel-centos-systems/

|

||||

[9]: https://www.2daygeek.com/rpm-command-examples/

|

||||

[10]: https://www.2daygeek.com/dnf-command-examples-manage-packages-fedora-system/

|

||||

[11]: https://www.2daygeek.com/zypper-command-examples-manage-packages-opensuse-system/

|

||||

[12]: https://www.2daygeek.com/pacman-command-examples-manage-packages-arch-linux-system/

|

||||

[13]: https://www.2daygeek.com/apt-get-apt-cache-command-examples-manage-packages-debian-ubuntu-systems/

|

||||

[14]: https://www.2daygeek.com/apt-command-examples-manage-packages-debian-ubuntu-systems/

|

||||

@ -1,3 +1,5 @@

|

||||

translating---geekpi

|

||||

|

||||

8 creepy commands that haunt the terminal | Opensource.com

|

||||

======

|

||||

|

||||

|

||||

@ -0,0 +1,407 @@

|

||||

Getting started with a local OKD cluster on Linux

|

||||

======

|

||||

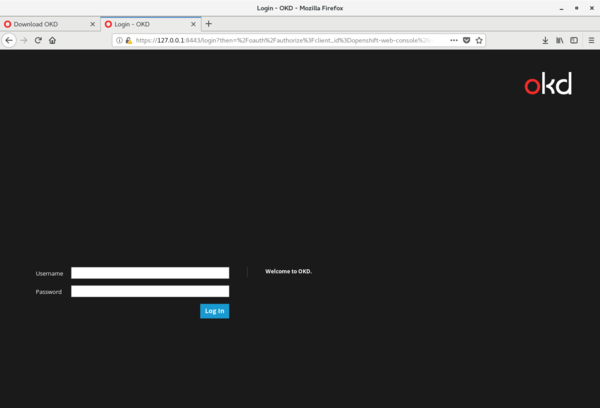

Try out OKD, the community edition of the OpenShift container platform, with this tutorial.

|

||||

|

||||

|

||||

OKD is the open source upstream community edition of Red Hat's OpenShift container platform. OKD is a container management and orchestration platform based on [Docker][1] and [Kubernetes][2].

|

||||

|

||||

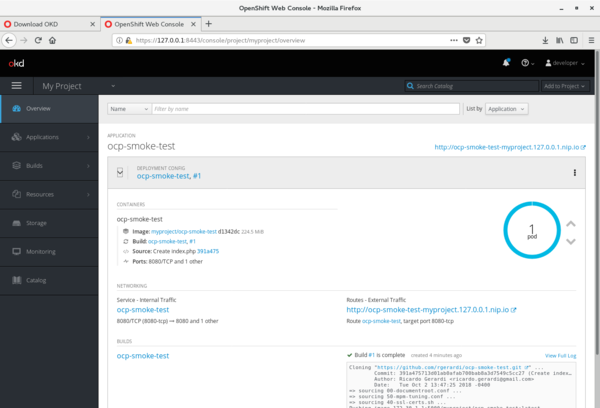

OKD is a complete solution to manage, deploy, and operate containerized applications that (in addition to the features provided by Kubernetes) includes an easy-to-use web interface, automated build tools, routing capabilities, and monitoring and logging aggregation features.

|

||||

|

||||

OKD provides several deployment options aimed at different requirements with single or multiple master nodes, high-availability capabilities, logging, monitoring, and more. You can create OKD clusters as small or as large as you need.

|

||||

|

||||

In addition to these deployment options, OKD provides a way to create a local, all-in-one cluster on your own machine using the oc command-line tool. This is a great option if you want to try OKD locally without committing the resources to create a larger multi-node cluster, or if you want to have a local cluster on your machine as part of your workflow or development process. In this case, you can create and deploy the applications locally using the same APIs and interfaces required to deploy the application on a larger scale. This process ensures a seamless integration that prevents issues with applications that work in the developer's environment but not in production.

|

||||

|

||||

This tutorial will show you how to create an OKD cluster using **oc cluster up** on a Linux box.

|

||||

|

||||

### 1\. Install Docker

|

||||

|

||||

The **oc cluster up** command creates a local OKD cluster on your machine using Docker containers. In order to use this command, you need Docker installed on your machine. For OKD version 3.9 and later, Docker 1.13 is the minimum recommended version. If Docker is not installed on your system, install it by using your distribution package manager. For example, on CentOS or RHEL, install Docker with this command:

|

||||

|