mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-01-13 22:30:37 +08:00

commit

d66dad95a5

@ -0,0 +1,159 @@

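MidnightBSD: Perhaps Your Gateway to FreeBSD
======

[FreeBSD][1] is an open source operating system derived from the famous [Berkeley Software Distribution][2] (BSD). The first version of FreeBSD was released in 1993, and it is still under development. Around 2007, Lucas Holt set out to create a fork of FreeBSD that would make use of [GnuStep][3], an implementation of the OpenStep (now Cocoa) Objective-C frameworks, widget toolkit, and application development tools. To that end, he began developing the MidnightBSD desktop distribution.

MidnightBSD (named after Lucas's cat, Midnight) is still in active, albeit slow, development. The latest stable release (0.8.6) has been available since August 2017 (LCTT translator's note: as of the publication of this translation, the current release is 1.2, released on 2019/10/31). Although BSD distributions are not what you would call user-friendly, installing one is a good way to get familiar with a text-based (ncurses) installer and with finishing an installation from the command line.

In the end, you will have a very reliable desktop distribution that is a fork of FreeBSD. It takes some effort, but if you are a Linux user looking to expand your skills, this is a good place to start.

I will walk you through installing MidnightBSD, adding a graphical desktop environment, and then installing applications.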

### Installation

As I mentioned, this is a text-based (ncurses) installation, so there is nothing to click with a mouse. Instead, you will use the `Tab` and arrow keys on your keyboard. After you download the [latest release][4], burn it to a CD/DVD or write it to a USB drive, and boot your machine (or create a virtual machine in [VirtualBox][5]). The installer opens and gives you three options (Figure 1). Use the arrow keys to select "Install" and press Enter.

![MidnightBSD installer][6]

*Figure 1: Launching the MidnightBSD installer.*

There are quite a few screens to go through here. Many of them are self-explanatory:

1. Set a non-default key mapping (yes/no)
2. Set the hostname
3. Add optional system components (documentation, games, 32-bit compatibility, system source code)
4. Partition the hard disk
5. Administrator password
6. Configure the network interfaces
7. Select the region (time zone)
8. Enable services (such as ssh)
9. Add users (Figure 2)

![Adding a user][7]

*Figure 2: Adding a user to the system.*

After you add a user to the system, you are taken to a window (Figure 3) where you can take care of anything you forgot to configure or want to reconfigure. If you don't need to make any changes, select "Exit", and your configuration will be applied.

![Applying your configurations][8]

*Figure 3: Applying your configuration.*

In the next window, select "No" when prompted, and the system will reboot. Once MidnightBSD has rebooted, you are ready for the next phase of the installation.

### 后安装阶段

|

||||||

|

|

||||||

|

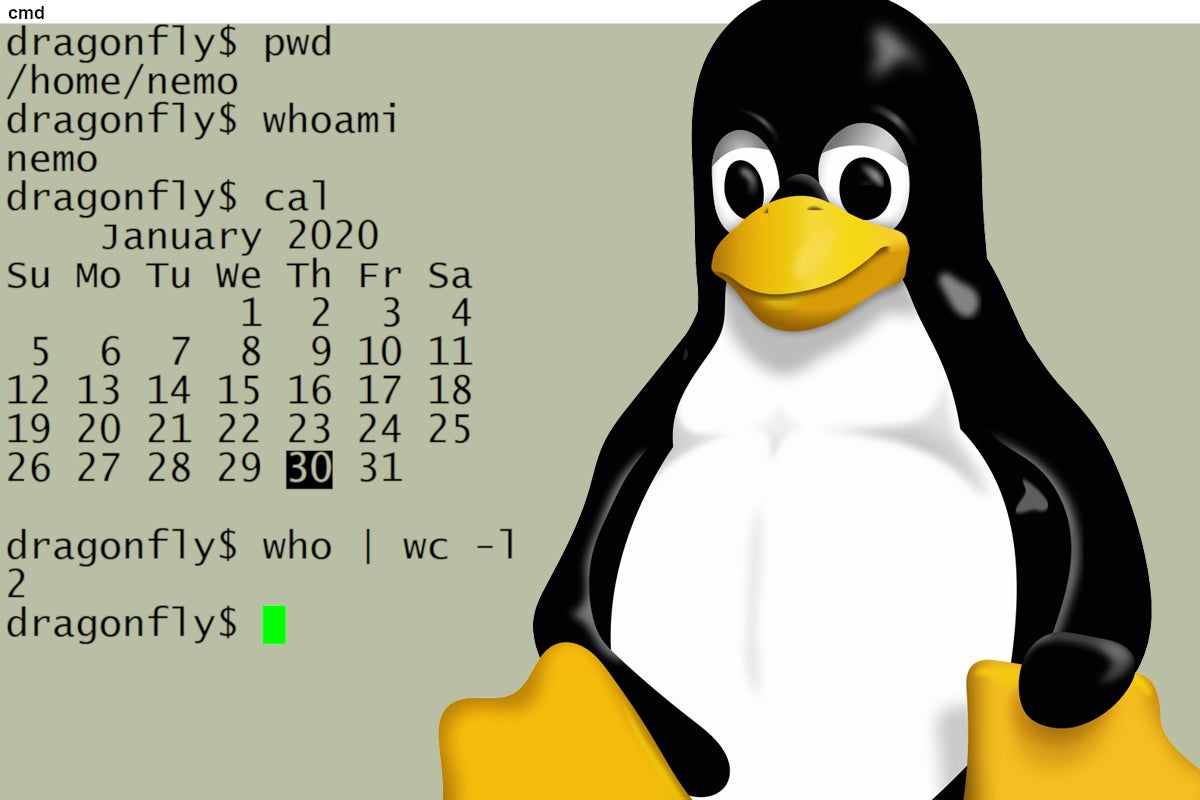

当你最新安装的 MidnightBSD 启动时,你将发现你自己处于命令提示符当中。此刻,还没有图形界面。要安装应用程序,MidnightBSD 依赖于 `mport` 工具。比如说你想安装 Xfce 桌面环境。为此,登录到 MidnightBSD 中,并发出下面的命令:

|

||||||

|

|

||||||

|

```

|

||||||

|

sudo mport index

|

||||||

|

sudo mport install xorg

|

||||||

|

```

|

||||||

|

|

||||||

|

你现在已经安装好 Xorg 窗口服务器了,它允许你安装桌面环境。使用命令来安装 Xfce :

|

||||||

|

|

||||||

|

```

|

||||||

|

sudo mport install xfce

|

||||||

|

```

|

||||||

|

|

||||||

|

现在 Xfce 已经安装好。不过,我们必须让它同命令 `startx` 一起启用。为此,让我们先安装 nano 编辑器。发出命令:

|

||||||

|

|

||||||

|

```

|

||||||

|

sudo mport install nano

|

||||||

|

```

|

||||||

|

|

||||||

|

随着 nano 安装好,发出命令:

|

||||||

|

|

||||||

|

```

|

||||||

|

nano ~/.xinitrc

|

||||||

|

```

|

||||||

|

|

||||||

|

这个文件仅包含一行内容:

|

||||||

|

|

||||||

|

```

|

||||||

|

exec startxfce4

|

||||||

|

```
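
The same file can also be written without opening an editor. What follows is a minimal sketch rather than part of the original walkthrough: `write_xinitrc` is a hypothetical helper name, and it assumes a POSIX shell with `cp` and `printf` available.

```shell
# Sketch: write a one-line xinitrc file without opening an editor.
# write_xinitrc is a hypothetical helper; it overwrites the target file.
write_xinitrc() {
    target=$1   # e.g. "$HOME/.xinitrc"
    # Keep a copy of any existing file before replacing it.
    if [ -f "$target" ]; then
        cp "$target" "$target.bak"
    fi
    printf 'exec startxfce4\n' > "$target"
}
```

Calling `write_xinitrc "$HOME/.xinitrc"` should leave the same single line that the nano session above produces.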

|

||||||

|

|

||||||

|

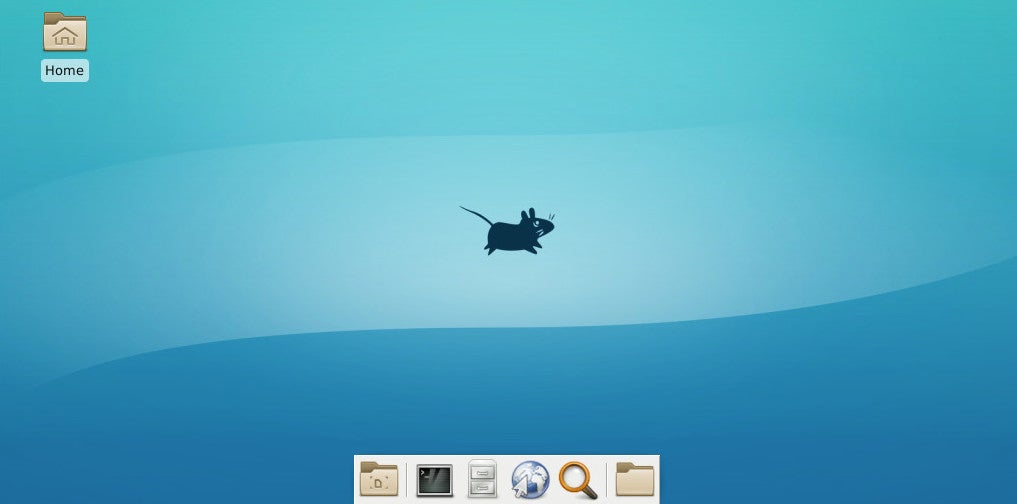

保存并关闭这个文件。如果你现在发出命令 `startx`, Xfce 桌面环境将会启动。你应该会感到有点熟悉了吧(图 4)。

|

||||||

|

|

||||||

|

![ Xfce][9]

|

||||||

|

|

||||||

|

*图 4: Xfce 桌面界面已准备好服务。*

|

||||||

|

|

||||||

|

因为你不会总是想必须发出命令 `startx`,你希望启用登录守护进程。然而,它却没有安装。要安装这个子系统,发出命令:

|

||||||

|

|

||||||

|

```

|

||||||

|

sudo mport install mlogind

|

||||||

|

```

|

||||||

|

|

||||||

|

当完成安装后,通过在 `/etc/rc.conf` 文件中添加一个项目来在启动时启用 mlogind。在 `rc.conf` 文件的底部,添加以下内容:

|

||||||

|

|

||||||

|

```

|

||||||

|

mlogind_enable=”YES”

|

||||||

|

```
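
If you would rather script this step, the entry can be appended only when it is missing, so that running the step twice does not duplicate it. This is a sketch under assumptions: `enable_in_rc_conf` is a hypothetical helper, not a MidnightBSD tool, and it only matches a line that is exactly identical to the setting.

```shell
# Sketch: add a setting to an rc.conf-style file only if it is not already there.
# enable_in_rc_conf is a hypothetical helper, not a MidnightBSD tool.
enable_in_rc_conf() {
    rc_file=$1   # e.g. /etc/rc.conf
    setting=$2   # e.g. mlogind_enable="YES"

    # -x matches whole lines, -F treats the setting as a literal string.
    if ! grep -qxF "$setting" "$rc_file" 2>/dev/null; then
        printf '%s\n' "$setting" >> "$rc_file"
    fi
}
```

Run as root, `enable_in_rc_conf /etc/rc.conf 'mlogind_enable="YES"'` would have the same effect as the manual edit. Note the plain double quotes around `YES`; typographic quotes would not be read correctly.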

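Save and close the file. Now, when you boot (or reboot) the machine, you should be greeted by the graphical login screen. At the time of writing, after logging in I ended up with a blank screen and the annoying X cursor. Unfortunately, there does not seem to be a fix for this at the moment. So, to reach your desktop environment, you have to use the `startx` command.

### Installing applications

Out of the box, you won't find many applications available. If you try to install applications with `mport`, you will quickly get frustrated, because only a few can be found. To get around this, we need to check out the list of available mport software using the `svnlite` command. Go back to the terminal window and issue the command:

```
svnlite co http://svn.midnightbsd.org/svn/mports/trunk mports
```

Once that completes, you should see a new directory named `~/mports`. Change into that directory with the command `cd ~/mports`. Issue the `ls` command, and you should see a number of categories (Figure 5).

![applications][10]

*Figure 5: The application categories now available to mport.*

Do you want to install Firefox? If you look in the `www` directory, you will see a listing for `linux-firefox`. Issue the command:

```
sudo mport install linux-firefox
```

You should now see a Firefox entry in the Xfce desktop menu. Go through all of the categories and use the `mport` command to install whatever software you need.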

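### A sad caveat

One sad little caveat is that the only office suite `mport` (via `svnlite`) can find is OpenOffice 3, which is seriously out of date. And although Abiword can be found in the `~/mports/editors` directory, it does not appear to be installable. Even after OpenOffice 3 is installed, it fails with an Exec format error. In other words, you won't be able to do much in the way of office productivity with MidnightBSD. But hey, if you happen to have an old Palm Pilot lying around, you can install pilot-link. In short, the available software does not make for an especially useful desktop distribution, at least not for the average user. However, if you want to develop on MidnightBSD, you will find plenty of tools available to install (check out the `~/mports/devel` directory). You can even install Drupal with the command:

```
sudo mport install drupal7
```

Of course, after that you will need to create a database (MySQL is already installed), install Apache (`sudo mport install apache24`), and set up the necessary Apache configuration.

Clearly, what is installed and what can be installed is a hodgepodge of applications, systems, and services. But with enough work, you could end up with a distribution that serves a specific purpose.

### Enjoy the \*BSD goodness

That is how to get MidnightBSD up and running as a reasonably useful desktop distribution. It is not as quick and easy as many Linux distributions, but if you want a distribution that makes you think, this could be exactly what you are looking for. Although most of the competition offers far more applications to install, MidnightBSD is certainly an interesting challenge that any Linux enthusiast or administrator should try.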

--------------------------------------------------------------------------------

via: https://www.linux.com/learn/intro-to-linux/2018/5/midnightbsd-could-be-your-gateway-freebsd

Author: [Jack Wallen][a]
Topic selection: [lujun9972](https://github.com/lujun9972)
Translator: [robsean](https://github.com/robsean)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]:https://www.linux.com/users/jlwallen
[1]:https://www.freebsd.org/
[2]:https://en.wikipedia.org/wiki/Berkeley_Software_Distribution
[3]:https://en.wikipedia.org/wiki/GNUstep
[4]:http://www.midnightbsd.org/download/
[5]:https://www.virtualbox.org/
[6]:https://lcom.static.linuxfound.org/sites/lcom/files/midnight_1.jpg (MidnightBSD installer)
[7]:https://lcom.static.linuxfound.org/sites/lcom/files/midnight_2.jpg (Adding a user)
[8]:https://lcom.static.linuxfound.org/sites/lcom/files/mightnight_3.jpg (Applying your configurations)
[9]:https://lcom.static.linuxfound.org/sites/lcom/files/midnight_4.jpg (Xfce)
[10]:https://lcom.static.linuxfound.org/sites/lcom/files/midnight_5.jpg (applications)
[11]:https://training.linuxfoundation.org/linux-courses/system-administration-training/introduction-to-linux

236

published/20190407 Manage multimedia files with Git.md

Normal file

@ -0,0 +1,236 @@

[#]: collector: (lujun9972)
[#]: translator: (svtter)
[#]: reviewer: (wxy)
[#]: publisher: (wxy)
[#]: url: (https://linux.cn/article-11889-1.html)
[#]: subject: (Manage multimedia files with Git)
[#]: via: (https://opensource.com/article/19/4/manage-multimedia-files-git)
[#]: author: (Seth Kenlon https://opensource.com/users/seth)

Manage multimedia files with Git
======

> In the final article in our series on little-known uses of Git, learn how to use Git to track large multimedia files in your projects.

Git is a tool built specifically for version control of source code. As a result, it is rarely used on projects and in industries that are not primarily plain text. However, the advantages of an asynchronous workflow are very appealing, especially in the growing number of industries that combine serious computing with serious artistic work, including web design, visual effects, video games, publishing, currency design (yes, that is a real industry), education, and many more.

In this series, we have shared six little-known ways to use Git. In this final article, we look at software that brings the advantages of Git to managing multimedia files.

### The problem with managing multimedia files in Git

It is well known that Git does not handle non-text files very well, but that doesn't stop us from trying. Here is an example of copying a photo file into a Git repository:

```
$ du -hs
108K .
$ cp ~/photos/dandelion.tif .
$ git add dandelion.tif
$ git commit -m 'added a photo'
[master (root-commit) fa6caa7] two photos
1 file changed, 0 insertions(+), 0 deletions(-)
create mode 100644 dandelion.tif
$ du -hs
1.8M .
```

Nothing unusual so far. Adding a 1.8 MB photo to a directory results in a directory that is 1.8 MB in size. So next, let's try removing the file:

```
$ git rm dandelion.tif
$ git commit -m 'deleted a photo'
$ du -hs
828K .
```

Here we can see a problem: after a committed file is removed, the repository is still roughly eight times its original size (up from 108K to 828K). We could run the test several times to get a better average, but this simple demonstration matches my experience. Committing non-text files costs little at first, but the longer a project stays active, the more changes people make to static content, and the more those fractions add up. As a Git repository grows larger, the main cost is usually speed. The time to perform pulls and pushes goes from the time it takes to sip your coffee to the time it takes to wonder whether your network is down.

Why does static content cause a Git repository to keep growing? Files made up of text allow Git to pull in only the parts that changed. Raster images and music files make as much sense to Git as they would to you if you looked at the binary data inside a .png or .wav file. So Git simply takes all of the data and makes a new copy of it, even if only a single pixel changed from one image to the next.
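
The growth described above can be reproduced in a throwaway repository. The following is a minimal sketch, assuming `git`, `mktemp`, and `/dev/urandom` are available; `demo_binary_growth` is a hypothetical helper name, and the 1 MiB of random data merely stands in for a photo. It prints the size of `.git` in KiB after each of two commits.

```shell
# Sketch: show how a Git repository grows when a binary file changes slightly.
# demo_binary_growth is a hypothetical helper; it works in a throwaway directory.
demo_binary_growth() {
    repo=$(mktemp -d) || return 1
    (
        cd "$repo" || exit 1
        git init -q .
        # 1 MiB of random bytes stands in for a photo; random data does not compress.
        head -c 1048576 /dev/urandom > photo.bin
        git add photo.bin
        git -c user.name=demo -c user.email=demo@example.com commit -q -m 'add photo'
        du -sk .git | cut -f1

        # Change the file by a single byte and commit again.
        printf 'x' >> photo.bin
        git add photo.bin
        git -c user.name=demo -c user.email=demo@example.com commit -q -m 'tweak photo'
        du -sk .git | cut -f1
    )
    rm -rf "$repo"
}
```

On a typical setup, the second number should come out at roughly double the first, because each version of the file is stored as its own object. Running `git gc` may pack the objects more tightly, but media that is already compressed usually leaves little to save.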

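### Git-portal

In practice, many multimedia projects don't need or want to track the history of their media. The media portion of a project tends to have a different life cycle than the text or code portion. Media assets generally move in one direction: a picture starts as a pencil sketch and arrives at its destination as a digital painting. And although the text may be rolled back to an earlier version, the art only moves forward. Media in a project is rarely bound to a specific version. The exceptions are usually graphics that reflect datasets, typically tables, graphs, or charts that can be done in a text-based format such as SVG.

So, on many projects that involve both text (whether narrative prose or code) and media, Git is an acceptable solution for file management, as long as there is a playground outside the version control cycle for artists to play in.

![Graphic showing relationship between art assets and Git][2]

An easy way to enable that is [Git-portal][3], a Bash script armed with Git hooks that moves your asset files to a directory outside Git's purview and replaces them with symlinks. Git commits the symlinks (sometimes called aliases or shortcuts), which are trivially small, so all that is committed are your text files and whatever symlinks represent your media assets. Because the replacement files are symlinks, your project continues to function as expected, since the local machine follows them to their "real" counterparts. Git-portal maintains the project's directory structure when it swaps out a file for a symlink, so if you decide that Git-portal is not right for your project, or you need to build a version of the project without symlinks (for distribution, say), it is easy to reverse the process.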
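
To make the move-and-symlink idea concrete, here is a minimal sketch of the general technique. It is not Git-portal's actual code: `send_through_portal` is a hypothetical helper, and it assumes the file sits directly in the current directory.

```shell
# Sketch of the idea only; this is not Git-portal's actual code.
# send_through_portal is a hypothetical helper for a file in the current directory.
send_through_portal() {
    file=$1
    mkdir -p _portal
    # Move the real file out of the way, then leave a relative symlink behind.
    mv "$file" "_portal/$file"
    ln -s "_portal/$file" "$file"
}
```

After `send_through_portal song.mid`, reading `song.mid` still yields the original data, but what Git would see at that path is only a small symlink.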

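Git-portal can also sync static assets remotely through `rsync`, so users can set up a remote storage location to serve as a central, authoritative source.

Git-portal is an ideal solution for multimedia projects, including video games, tabletop games, virtual reality projects with big 3D model renders and textures, [books][4] with graphics and .odt exports, collaborative [blog websites][5], music projects, and much more. It is not uncommon for an artist to perform version control within an application, in the form of layers (in the graphics world) and tracks (in the music world), so Git adds nothing to the multimedia project files themselves. The power of Git is leveraged for the other parts of an artistic project (prose and narrative, project management, subtitle files, credits, marketing copy, documentation, and so on), while structured remote backups are put to use by the artists.

#### Install Git-portal

RPM packages of Git-portal are available for download and installation at <https://klaatu.fedorapeople.org/git-portal>.

Alternatively, you can install Git-portal manually from its home on GitLab. It is just a Bash script and some Git hooks (which are also Bash scripts), but it requires a quick build process so that it knows where to install itself:

```
$ git clone https://gitlab.com/slackermedia/git-portal.git git-portal.clone
$ cd git-portal.clone
$ ./configure
$ make
$ sudo make install
```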

#### Use Git-portal

Git-portal is used alongside Git. This means that, as with all large-file extensions to Git, there are some added steps to remember. But you only need Git-portal when dealing with your media assets, so it is easy to remember unless you have been treating large files the same as text files (which is rare for Git users). There is one setup step you must do to use Git-portal in a project:

```
$ mkdir bigproject.git
$ cd !$
$ git init
$ git-portal init
```

Git-portal's `init` function creates a `_portal` directory in your Git repository and adds it to your `.gitignore` file.

Using Git-portal in your daily routine integrates smoothly with Git. A good example is a MIDI-based music project: the project files produced by the music workstation are text-based, but the MIDI files are binary data:

```
$ ls -1
_portal
song.1.qtr
song.qtr
song-Track_1-1.mid
song-Track_1-3.mid
song-Track_2-1.mid
$ git add song*qtr
$ git-portal song-Track*mid
$ git add song-Track*mid
```

If you look in the `_portal` directory, you will find the original MIDI files. The files in their place are symlinks to `_portal`, which keeps the music workstation working as expected:

```
$ ls -lG
[...] _portal/
[...] song.1.qtr
[...] song.qtr
[...] song-Track_1-1.mid -> _portal/song-Track_1-1.mid*
[...] song-Track_1-3.mid -> _portal/song-Track_1-3.mid*
[...] song-Track_2-1.mid -> _portal/song-Track_2-1.mid*
```

As with Git, you can also add a directory of files:

```
$ cp -r ~/synth-presets/yoshimi .
$ git-portal add yoshimi
Directories cannot go through the portal. Sending files instead.
$ ls -lG _portal/yoshimi
[...] yoshimi.stat -> ../_portal/yoshimi/yoshimi.stat*
```

Removal works as expected, but when removing something from `_portal`, you should use `git-portal rm` instead of `git rm`. Using Git-portal ensures that the file is removed from `_portal` as well:

```
$ ls
_portal/ song.qtr song-Track_1-3.mid@ yoshimi/
song.1.qtr song-Track_1-1.mid@ song-Track_2-1.mid@
$ git-portal rm song-Track_1-3.mid
rm 'song-Track_1-3.mid'
$ ls _portal/
song-Track_1-1.mid* song-Track_2-1.mid* yoshimi/
```

If you forget to use Git-portal and run plain `git rm` instead, then you have to remove the file under `_portal` manually:

```
$ git rm song-Track_1-1.mid
rm 'song-Track_1-1.mid'
$ ls _portal/
song-Track_1-1.mid* song-Track_2-1.mid* yoshimi/
$ trash _portal/song-Track_1-1.mid
```

Git-portal's only other function is to list all of your current symlinks and find any that may have become broken, which can sometimes happen if files move around in a project directory:

```
$ mkdir foo
$ mv yoshimi foo
$ git-portal status
bigproject.git/song-Track_2-1.mid: symbolic link to _portal/song-Track_2-1.mid
bigproject.git/foo/yoshimi/yoshimi.stat: broken symbolic link to ../_portal/yoshimi/yoshimi.stat
```
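
A similar check for broken symlinks can be done with standard tools. The sketch below is an assumption-laden stand-in, not what `git-portal status` runs internally: `list_broken_links` is a hypothetical helper built on POSIX `find` and `test`.

```shell
# Sketch: list symlinks under a directory whose targets no longer exist.
# list_broken_links is a hypothetical helper, not part of Git-portal.
list_broken_links() {
    dir=${1:-.}
    # -type l selects symlinks; `test -e` follows the link and fails if the target is gone.
    find "$dir" -type l ! -exec test -e {} \; -print
}
```

Run from the project root as `list_broken_links .`, it prints one path per broken link and nothing when every link resolves.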

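If you are using Git-portal for a personal project and maintaining your own backups, this is technically all you need to know about Git-portal. If you want to add collaborators, or you want Git-portal to manage backups the way Git does, you can create a remote.

#### Add a Git-portal remote

Adding a remote location for Git-portal is done through Git's existing remote function. Git-portal implements Git hooks (scripts hidden in your repository's `.git` directory) that look at your remotes for any whose name begins with `_portal`. If one is found, it attempts to `rsync` to the remote location and synchronize files. Git-portal performs this action any time you do a Git push or a Git merge (or a Git pull, which is really just a fetch and an automatic merge).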
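
The remote lookup can be pictured with a small filter over `git remote -v` output. This is only a sketch of the idea: `portal_push_url` is a hypothetical helper, and Git-portal's real hooks may work differently.

```shell
# Sketch: pick the push URL of a remote whose name begins with _portal
# out of `git remote -v` style output read from standard input.
# portal_push_url is a hypothetical helper; Git-portal's real hooks may differ.
portal_push_url() {
    awk '$1 ~ /^_portal/ && $3 == "(push)" { print $2; exit }'
}
```

In a repository, `git remote -v | portal_push_url` would print the location that a hook could then hand to `rsync`, or nothing if no such remote exists.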

If you have only cloned Git repositories, then you may never have added a remote yourself. It is a standard Git procedure:

```
$ git remote add origin git@gitdawg.com:seth/bigproject.git
$ git remote -v
origin git@gitdawg.com:seth/bigproject.git (fetch)
origin git@gitdawg.com:seth/bigproject.git (push)
```

The name `origin` is a popular convention for your main Git repository, and it makes sense to use it for your Git data. Your Git-portal data, however, is stored separately, so you must create a second remote to tell Git-portal where to push to and pull from. Depending on your Git host, you may need a separate server, because a Git host with limited space is unlikely to accept gigabytes of media assets. Or maybe you are on a server that only lets you access your Git repository and not external storage directories:

```
$ git remote add _portal seth@example.com:/home/seth/git/bigproject_portal
$ git remote -v
origin git@gitdawg.com:seth/bigproject.git (fetch)
origin git@gitdawg.com:seth/bigproject.git (push)
_portal seth@example.com:/home/seth/git/bigproject_portal (fetch)
_portal seth@example.com:/home/seth/git/bigproject_portal (push)
```

You may not want to give all of your users individual accounts on your server, and you don't have to. To provide access to the server hosting a repository's large file assets, you can run a Git frontend like [Gitolite][8], or you can use `rrsync` (restricted rsync).

Now you can push your Git data to your remote Git repository and your Git-portal data to your remote portal:

```
$ git push origin HEAD
master destination detected
Syncing _portal content...
sending incremental file list
sent 9,305 bytes received 18 bytes 1,695.09 bytes/sec
total size is 60,358,015 speedup is 6,474.10
Syncing _portal content to example.com:/home/seth/git/bigproject_portal
```

If you have Git-portal installed and a `_portal` remote configured, your `_portal` directory will be synchronized, getting new content from the server and sending fresh content with every push. While you don't have to do a Git commit and push to sync with the server (a user could just use `rsync` directly), I find it useful to require commits for artistic changes. It integrates artists and their digital assets into the rest of the workflow, and it provides useful metadata about project progress and velocity.

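### Other options

If Git-portal is too simple for you, there are other options for managing large files with Git. [Git Large File Storage][9] (LFS) is a fork of a defunct project called git-media and is maintained and supported by GitHub. It requires special commands (such as `git lfs track` to protect large files from being tracked by Git) and requires the user to maintain a `.gitattributes` file to update which files in the repository are tracked by LFS. For large files, it supports **only** HTTP and HTTPS remotes, so you must configure your LFS server so that users can authenticate over HTTP rather than SSH or `rsync`.

A more flexible option than LFS is [git-annex][10], which you can learn more about in my article on [managing large binary blobs in Git][11] (ignore the parts about the deprecated git-media, as its former flexibility was not carried over into its successor, Git LFS). Git-annex is a flexible and elegant solution. It has a detailed system for adding, deleting, and moving large files within a repository. Because it is flexible and powerful, there are lots of new commands and rules to learn, so take a look at its [documentation][12].

If, however, your needs are simple and you like a solution that combines existing technology to do simple and obvious tasks, then Git-portal might be the right tool for the job.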

--------------------------------------------------------------------------------

via: https://opensource.com/article/19/4/manage-multimedia-files-git

Author: [Seth Kenlon][a]
Topic selection: [lujun9972][b]
Translator: [svtter](https://github.com/svtter)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]: https://opensource.com/users/seth
[b]: https://github.com/lujun9972
[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/video_editing_folder_music_wave_play.png?itok=-J9rs-My (video editing dashboard)
[2]: https://opensource.com/sites/default/files/uploads/git-velocity.jpg (Graphic showing relationship between art assets and Git)
[3]: http://gitlab.com/slackermedia/git-portal.git
[4]: https://www.apress.com/gp/book/9781484241691
[5]: http://mixedsignals.ml
[6]: mailto:git@gitdawg.com
[7]: mailto:seth@example.com
[8]: https://opensource.com/article/19/4/file-sharing-git
[9]: https://git-lfs.github.com/
[10]: https://git-annex.branchable.com/
[11]: https://opensource.com/life/16/8/how-manage-binary-blobs-git-part-7
[12]: https://git-annex.branchable.com/walkthrough/

@ -0,0 +1,208 @@

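[#]: collector: (lujun9972)
[#]: translator: (robsean)
[#]: reviewer: (wxy)
[#]: publisher: (wxy)
[#]: url: (https://linux.cn/article-11881-1.html)
[#]: subject: (How to Go About Linux Boot Time Optimisation)
[#]: via: (https://opensourceforu.com/2019/10/how-to-go-about-linux-boot-time-optimisation/)
[#]: author: (B Thangaraju https://opensourceforu.com/author/b-thangaraju/)

How to Go About Linux Boot Time Optimisation
======

![][2]

> Booting an embedded device or a piece of telecommunications equipment quickly is crucial for time-critical applications, and it also plays a very important role in improving the user experience. This article gives some important tips on how to improve the boot time of any device.

Fast booting or fast rebooting plays a crucial role in many situations. Booting an embedded device quickly is critical for keeping all services highly available and performing well. Imagine a telecommunications device running a Linux operating system without fast booting enabled: every system, service, and user that depends on that particular embedded device could be affected. It is very important that such devices keep their services highly available, and fast booting and rebooting play a crucial role in that.

A small failure or shutdown of a telecom device, even for a few seconds, can wreak havoc for countless users on the Internet. Hence, it is very important for many time-critical and telecommunications devices to incorporate fast booting to help them get back to work quickly. Let's understand the Linux boot process from Figure 1.

![Figure 1: Boot-up procedure][3]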

### Monitoring tools and the boot-up procedure

A user should take note of a number of factors before making changes to a machine. These include the current boot speed of the machine, as well as the services, processes, or applications that consume resources and increase the boot time.

#### Bootchart

To monitor the boot speed and the various services that start during boot, you can install bootchart with the following command:

```
sudo apt-get install pybootchartgui
```

Every time you boot, bootchart saves a png file in the log directory, which lets you view that file to understand the system's boot-up process and services. To get to it, use the following command:

```
cd /var/log/bootchart
```

You may need an application to view png files. Feh is an X11 image viewer aimed at console users. Unlike most other image viewers, it does not have a fancy graphical user interface; it simply displays pictures. Feh can be used to view the png files. You can install it with the following command:

```
sudo apt-get install feh
```

You can view the png files using `feh xxxx.png`.

![Figure 2: Bootchart][4]

Figure 2 shows a bootchart png file being viewed.
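
Since a new chart is written on every boot, it can be handy to pick out the most recent one. The following is a minimal sketch, assuming the charts live in `/var/log/bootchart` as described above; `newest_png` is a hypothetical helper, and it assumes file names without newlines.

```shell
# Sketch: print the most recently modified .png in a directory.
# newest_png is a hypothetical helper; it assumes file names without newlines.
newest_png() {
    dir=${1:-/var/log/bootchart}
    # ls -t sorts by modification time, newest first.
    ls -t "$dir"/*.png 2>/dev/null | head -n 1
}
```

With feh installed, `feh "$(newest_png)"` would then open the chart from the latest boot.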

#### systemd-analyze

However, bootchart is no longer needed on Ubuntu versions after 15.10. To get brief information about the boot speed, use the following command:

```
systemd-analyze
```

![Figure 3: Output of systemd-analyze][5]

Figure 3 shows the output of the `systemd-analyze` command.

The command `systemd-analyze blame` prints a list of all running units, ordered by the time they took to initialise. This information is very useful and can be used to optimise boot times. `systemd-analyze blame` does not display results for services of the simple type (`Type=simple`), because systemd considers such services to start immediately; hence, no initialisation delay can be measured.

![Figure 4: Output of systemd-analyze blame][6]

Figure 4 shows the output of `systemd-analyze blame`.

The following command prints a tree of the time-critical chain of units:

```
systemd-analyze critical-chain
```

Figure 5 shows the output of the `systemd-analyze critical-chain` command.

![Figure 5: Output of systemd-analyze critical-chain][7]

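### Steps to reduce the boot time

Shown below are some of the steps that can be taken to reduce the boot time.

#### BUM (Boot-Up Manager)

BUM is a runlevel configuration editor that lets you configure init services when the system boots up or reboots. It displays a list of every service that can be started at boot. You can toggle individual services on and off. BUM has a very clean graphical user interface and is very easy to use.

On Ubuntu 14.04, BUM can be installed with the following command:

```
sudo apt-get install bum
```

To install it on versions after 15.10, download the package from the link http://apt.ubuntu.com/p/bum.

Start with the basics and disable services related to the scanner and printer. You can also disable Bluetooth and any other unwanted devices and services if you are not using them. I strongly recommend that you study the basics of a service before disabling it, as doing so might affect the machine or the operating system. Figure 6 shows the GUI of BUM.

![Figure 6: BUM][8]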

#### Editing the rc file

To edit the rc file, you need to go to the rc directory. This can be done with the following command:

```
cd /etc/init.d
```

However, root privileges are needed to access `init.d`, which basically contains start/stop scripts that are used to control (start, stop, reload, restart) daemons while the system is running or during boot.

The `rc` file in `init.d` is called a run control script. During boot, `init` executes the `rc` script and plays its role. To improve the boot speed, we can make changes to the `rc` file. Open the `rc` file (once you are in the `init.d` directory) using any file editor.

For example, after typing `vim rc`, you can change `CONCURRENCY=none` to `CONCURRENCY=shell`. The latter allows certain start-up scripts to be executed simultaneously rather than serially.

In the latest versions of the kernel, the value should be changed to `CONCURRENCY=makefile`.
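
The same change can be previewed without touching the original file. This is a sketch under assumptions: `set_concurrency` is a hypothetical helper, and it assumes the setting appears at the start of a line as `CONCURRENCY=...`.

```shell
# Sketch: print an rc-style file with its CONCURRENCY setting replaced.
# set_concurrency is a hypothetical helper; it writes to stdout and leaves the file untouched.
set_concurrency() {
    rc_file=$1   # e.g. /etc/init.d/rc
    mode=$2      # e.g. shell or makefile
    sed "s/^CONCURRENCY=.*/CONCURRENCY=$mode/" "$rc_file"
}
```

For example, `set_concurrency /etc/init.d/rc shell > /tmp/rc.new` writes the modified copy to a scratch file, which can be compared with the original using `diff` before it is copied into place as root.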

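Figures 7 and 8 compare the boot times before and after editing the `rc` file. The improvement in boot speed is noticeable: the boot time before editing the `rc` file was 50.98 seconds, whereas the boot time after making the changes was 23.85 seconds.

However, the changes mentioned above do not work on operating systems after Ubuntu 15.10, because operating systems with the latest kernel use systemd files rather than `init.d` files.

![Figure 7: Boot speed before making changes to the rc file][9]

![Figure 8: Boot speed after making changes to the rc file][10]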
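
To put the two measurements quoted for the `rc` change in perspective, the relative saving can be worked out with a one-line calculation. This is a small sketch; `percent_faster` is a hypothetical helper that takes the before and after times in seconds.

```shell
# Sketch: percentage reduction between two boot times given in seconds.
# percent_faster is a hypothetical helper.
percent_faster() {
    awk -v before="$1" -v after="$2" \
        'BEGIN { printf "%.1f\n", (before - after) / before * 100 }'
}
```

`percent_faster 50.98 23.85` prints `53.2`, so the boot time quoted above dropped by a little more than half.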

#### E4rat

E4rat stands for e4 "reduced access time" (for the ext4 file system only). It is a project developed by Andreas Rid and Gundolf Kiefer. E4rat is an application that helps achieve a fast boot through defragmentation. It also speeds up application start-up. E4rat eliminates seek times and rotational delays by physically reallocating files, thus achieving a high disk transfer rate.

E4rat is available as a .deb package, which you can download from its official website, http://e4rat.sourceforge.net/.

Ubuntu's default ureadahead package conflicts with e4rat, so it has to be removed, along with ubuntu-minimal, using the following command:

```
sudo dpkg --purge ureadahead ubuntu-minimal
```

Now install the dependencies for e4rat using the following command:

```
sudo apt-get install libblkid1 e2fslibs
```

Open the downloaded .deb file and install it. Boot data now needs to be gathered properly for e4rat to work.

Follow the steps given below to get e4rat running correctly and to increase the boot speed.

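* Access the Grub menu during boot. This can be done by holding down the `shift` key while the system boots.
* Choose the option (kernel version) that is normally used to boot, and press `e`.
* Look for the line starting with `linux /boot/vmlinuz` and add the following at the end of the line (press the space bar after the last character on the line): `init=/sbin/e4rat-collect`. Alternatively, try `quiet splash vt.handoff=7 init=/sbin/e4rat-collect`.
* Now press `Ctrl+x` to continue booting. This lets e4rat collect data after the boot. Work on the machine, opening and closing applications for the next two minutes.
* Access the log file by going to the e4rat folder with the following command: `cd /var/lib/e4rat`.
* If you do not find any log file, repeat the process above. Once the log file is there, access the Grub menu again and press `e` on your option.
* Enter `single` at the end of the same line that you edited earlier. This gets you to the command line. If a different menu appears, choose Resume normal boot. If you do not get to a command prompt for some reason, press `Ctrl+Alt+F1`.
* Enter your login details once you see the login prompt.
* Now enter the following command: `sudo e4rat-realloc /var/lib/e4rat/startup.log`. This process takes a while, depending on the machine's disk speed.
* Now restart your machine using the following command: `sudo shutdown -r now`.
* Now we need to configure Grub to run e4rat at every boot.
* Access the grub file using any editor. For example, `gksu gedit /etc/default/grub`.
* Look for the line starting with `GRUB_CMDLINE_LINUX_DEFAULT=`, and add the following between the quotes and before any existing options: `init=/sbin/e4rat-preload`.
* It should look like this: `GRUB_CMDLINE_LINUX_DEFAULT="init=/sbin/e4rat-preload quiet splash"`.
* Save and close the grub file, and update Grub using `sudo update-grub`.
* Reboot the system, and you will notice a marked change in the boot speed.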
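
The Grub edit in the last few steps can be previewed on a copy before the real file is changed. The following is a minimal sketch, assuming the file contains a line that starts with `GRUB_CMDLINE_LINUX_DEFAULT="`; `prepend_grub_option` is a hypothetical helper that prints the result and does not modify the file.

```shell
# Sketch: print a copy of a GRUB defaults file with an option placed first
# inside the quotes of GRUB_CMDLINE_LINUX_DEFAULT.
# prepend_grub_option is a hypothetical helper; the option must not contain '|', '&', or backslashes.
prepend_grub_option() {
    grub_file=$1   # e.g. /etc/default/grub
    option=$2      # e.g. init=/sbin/e4rat-preload
    # '&' in the replacement stands for the matched text, so the option lands right after the quote.
    sed "s|^GRUB_CMDLINE_LINUX_DEFAULT=\"|&$option |" "$grub_file"
}
```

For instance, `prepend_grub_option /etc/default/grub init=/sbin/e4rat-preload` prints the file with the option inserted, so the output can be reviewed before it replaces the original and `sudo update-grub` is run.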

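Figures 9 and 10 show the difference between the boot times before and after installing e4rat. The improvement in boot speed is noticeable: booting took 22.32 seconds before using e4rat, whereas it took 9.065 seconds after using e4rat.

![Figure 9: Boot speed before using e4rat][11]

![Figure 10: Boot speed after using e4rat][12]

### A few simple tweaks

A good boot speed can also be achieved with very small tweaks, two of which are listed below.

#### SSD

Using a solid-state drive rather than a normal hard disk or other storage device will certainly improve the boot speed. SSDs also help speed up file transfers and running applications.

#### Disabling the graphical user interface

The graphical user interface, desktop graphics, and window animations take up a lot of resources. Disabling the GUI is another good way to achieve a good boot speed.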

--------------------------------------------------------------------------------

via: https://opensourceforu.com/2019/10/how-to-go-about-linux-boot-time-optimisation/

Author: [B Thangaraju][a]
Topic selection: [lujun9972][b]
Translator: [robsean](https://github.com/robsean)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]: https://opensourceforu.com/author/b-thangaraju/
[b]: https://github.com/lujun9972
[1]: https://i0.wp.com/opensourceforu.com/wp-content/uploads/2019/10/Screenshot-from-2019-10-07-13-16-32.png?&ssl=1 (Screenshot from 2019-10-07 13-16-32)
[2]: https://i0.wp.com/opensourceforu.com/wp-content/uploads/2019/10/Screenshot-from-2019-10-07-13-16-32.png?fit=700%2C499&ssl=1
[3]: https://i2.wp.com/opensourceforu.com/wp-content/uploads/2019/10/fig-1.png?ssl=1
[4]: https://i0.wp.com/opensourceforu.com/wp-content/uploads/2019/10/fig-2.png?ssl=1
[5]: https://i1.wp.com/opensourceforu.com/wp-content/uploads/2019/10/fig-3.png?ssl=1
[6]: https://i0.wp.com/opensourceforu.com/wp-content/uploads/2019/10/fig-4.png?ssl=1
[7]: https://i2.wp.com/opensourceforu.com/wp-content/uploads/2019/10/fig-5.png?ssl=1
[8]: https://i0.wp.com/opensourceforu.com/wp-content/uploads/2019/10/fig-6.png?ssl=1
[9]: https://i2.wp.com/opensourceforu.com/wp-content/uploads/2019/10/fig-7.png?ssl=1
[10]: https://i1.wp.com/opensourceforu.com/wp-content/uploads/2019/10/fig-8.png?ssl=1
[11]: https://i2.wp.com/opensourceforu.com/wp-content/uploads/2019/10/fig-9.png?ssl=1
[12]: https://i0.wp.com/opensourceforu.com/wp-content/uploads/2019/10/fig-10.png?ssl=1

@ -1,49 +1,45 @@

|

|||||||

[#]: collector: (lujun9972)

|

[#]: collector: (lujun9972)

|

||||||

[#]: translator: (mengxinayan)

|

[#]: translator: (mengxinayan)

|

||||||

[#]: reviewer: ( )

|

[#]: reviewer: (wxy)

|

||||||

[#]: publisher: ( )

|

[#]: publisher: (wxy)

|

||||||

[#]: url: ( )

|

[#]: url: (https://linux.cn/article-11870-1.html)

|

||||||

[#]: subject: (8 Commands to Check Memory Usage on Linux)

|

[#]: subject: (8 Commands to Check Memory Usage on Linux)

|

||||||

[#]: via: (https://www.2daygeek.com/linux-commands-check-memory-usage/)

|

[#]: via: (https://www.2daygeek.com/linux-commands-check-memory-usage/)

|

||||||

[#]: author: (Magesh Maruthamuthu https://www.2daygeek.com/author/magesh/)

|

[#]: author: (Magesh Maruthamuthu https://www.2daygeek.com/author/magesh/)

|

||||||

|

|

||||||

检查 Linux 中内存使用情况的8条命令

|

检查 Linux 中内存使用情况的 8 条命令

|

||||||

======

|

======

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

Linux 并不像 Windows,你经常不会有图形界面可供使用,特别是在服务器环境中。

|

Linux 并不像 Windows,你经常不会有图形界面可供使用,特别是在服务器环境中。

|

||||||

|

|

||||||

作为一名 Linux 管理员,知道如何获取当前可用的和已经使用的资源情况,比如内存、CPU、磁盘等,是相当重要的。

|

作为一名 Linux 管理员,知道如何获取当前可用的和已经使用的资源情况,比如内存、CPU、磁盘等,是相当重要的。如果某一应用在你的系统上占用了太多的资源,导致你的系统无法达到最优状态,那么你需要找到并修正它。

|

||||||

|

|

||||||

如果某一应用在你的系统上占用了太多的资源,导致你的系统无法达到最优状态,那么你需要找到并修正它。

|

如果你想找到消耗内存前十名的进程,你需要去阅读这篇文章:[如何在 Linux 中找出内存消耗最大的进程][1]。

|

||||||

|

|

||||||

如果你想找到消耗内存前十名的进程,你需要去阅读这篇文章: **[在 Linux 系统中找到消耗内存最多的 10 个进程][1]** 。

|

在 Linux 中,命令能做任何事,所以使用相关命令吧。在这篇教程中,我们将会给你展示 8 个有用的命令来即查看在 Linux 系统中内存的使用情况,包括 RAM 和交换分区。

|

||||||

|

|

||||||

在 Linux 中,命令能做任何事,所以使用相关命令吧。

|

创建交换分区在 Linux 系统中是非常重要的,如果你想了解如何创建,可以去阅读这篇文章:[在 Linux 系统上创建交换分区][2]。

|

||||||

|

|

||||||

在这篇教程中,我们将会给你展示 8 个有用的命令来即查看在 Linux 系统中内存的使用情况,包括 RAM 和交换分区。

|

|

||||||

|

|

||||||

创建交换分区在 Linux 系统中是非常重要的,如果你想了解如何创建,可以去阅读这篇文章: **[在 Linux 系统上创建交换分区][2]** 。

|

|

||||||

|

|

||||||

下面的命令可以帮助你以不同的方式查看 Linux 内存使用情况。

|

下面的命令可以帮助你以不同的方式查看 Linux 内存使用情况。

|

||||||

|

|

||||||

* free 命令

|

* `free` 命令

|

||||||

* /proc/meminfo 文件

|

* `/proc/meminfo` 文件

|

||||||

* vmstat 命令

|

* `vmstat` 命令

|

||||||

* ps_mem 命令

|

* `ps_mem` 命令

|

||||||

* smem 命令

|

* `smem` 命令

|

||||||

* top 命令

|

* `top` 命令

|

||||||

* htop 命令

|

* `htop` 命令

|

||||||

* glances 命令

|

* `glances` 命令

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

### 1)如何使用 free 命令查看 Linux 内存使用情况

|

### 1)如何使用 free 命令查看 Linux 内存使用情况

|

||||||

|

|

||||||

**[Free 命令][3]** 是被 Linux 管理员广泛使用地命令。但是它提供的信息比 “/proc/meminfo” 文件少。

|

[free 命令][3] 是被 Linux 管理员广泛使用的主要命令。但是它提供的信息比 `/proc/meminfo` 文件少。

|

||||||

|

|

||||||

Free 命令会分别展示物理内存和交换分区内存中已使用的和未使用的数量,以及内核使用的缓冲区和缓存。

|

`free` 命令会分别展示物理内存和交换分区内存中已使用的和未使用的数量,以及内核使用的缓冲区和缓存。

|

||||||

|

|

||||||

这些信息都是从 “/proc/meminfo” 文件中获取的。

|

这些信息都是从 `/proc/meminfo` 文件中获取的。

|

||||||

|

|

||||||

```

|

```

|

||||||

# free -m

|

# free -m

|

||||||

@ -52,24 +48,18 @@ Mem: 15867 9199 1702 3315 4965 3039

|

|||||||

Swap: 17454 666 16788

|

Swap: 17454 666 16788

|

||||||

```

|

```

|

||||||

|

|

||||||

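* **total:** total amount of memory

|

* `total`: total amount of memory

|

||||||

* **used:** memory currently used by running processes (used = total - free - buff/cache)

|

* `used`: memory used by currently running processes (`used` = `total` - `free` - `buff/cache`)

|

||||||

* **free:** unused memory (free = total - used - buff/cache)

|

* `free`: unused memory (`free` = `total` - `used` - `buff/cache`)

|

||||||

* **shared:** memory shared between two or more processes

|

* `shared`: memory shared between two or more processes

|

||||||

* **buffers:** memory reserved by the kernel to hold process queue requests

|

* `buffers`: memory reserved by the kernel to hold process queue requests

|

||||||

* **cache:** size of the page cache that holds recently used files in RAM

|

* `cache`: size of the page cache that holds recently used files in RAM

|

||||||

* **buff/cache:** combined memory used by buffers and cache

|

* `buff/cache`: combined memory used by buffers and cache

|

||||||

* **available:** memory available for starting new applications, not counting swap

|

* `available`: memory available for starting new applications (not counting swap)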

|
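As a rough check of the `used` formula in the list above, here is a minimal Java sketch that applies it to the `Mem:` row from the sample output. The class and method names are hypothetical, and the column order (total, used, free, shared, buff/cache, available) is an assumption based on the field list.

```java
class FreeUsed {
    // used = total - free - buff/cache, with all values in MiB as printed by `free -m`
    static long used(long total, long free, long buffCache) {
        return total - free - buffCache;
    }

    public static void main(String[] args) {
        // Values read from the sample "Mem:" row: total=15867, free=1702, buff/cache=4965
        long computed = used(15867, 1702, 4965);
        System.out.println("computed used = " + computed + " MiB");
    }
}
```

The formula gives 9200 MiB, within 1 MiB of the 9199 that `free` printed; the small gap is presumably because each column is rounded to whole MiB separately.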

||||||

|

|

||||||

|

|

||||||

|

|

||||||

### 2) How to check Linux memory usage with the /proc/meminfo file

|

### 2) How to check Linux memory usage with the /proc/meminfo file

|

||||||

|

|

||||||

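The “/proc/meminfo” file is a virtual file that contains a variety of real-time information about memory usage.

|

The `/proc/meminfo` file is a virtual file that contains a variety of real-time information about memory usage. It reports memory statistics in kB, and most of its fields are hard to interpret. Even so, it contains useful information about memory usage.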

|

||||||

|

|

||||||

It reports memory statistics in kB, and most of its fields are hard to interpret.

|

|

||||||

|

|

||||||

Even so, it contains useful information about memory usage.

|

|
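Because the file is plain text in a `Key: value kB` layout, it is easy to read from a program. The following is a minimal Java sketch, not part of the original article; the class name `MemInfoParser` is hypothetical, and `MemAvailable` may be absent on older kernels, in which case the lookup returns `null`.

```java
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.LinkedHashMap;
import java.util.Map;

class MemInfoParser {
    // Parses lines such as "MemTotal:  16248572 kB" into a map of field name -> number.
    // Lines without a unit (for example "HugePages_Total: 0") are handled the same way.
    static Map<String, Long> parse(String text) {
        Map<String, Long> fields = new LinkedHashMap<>();
        for (String line : text.split("\n")) {
            int colon = line.indexOf(':');
            if (colon < 0) continue;                      // not a "Key: value" line
            String key = line.substring(0, colon).trim();
            String[] parts = line.substring(colon + 1).trim().split("\\s+");
            if (parts[0].isEmpty()) continue;             // no value after the colon
            fields.put(key, Long.parseLong(parts[0]));
        }
        return fields;
    }

    public static void main(String[] args) throws Exception {
        String text = new String(Files.readAllBytes(Paths.get("/proc/meminfo")));
        Map<String, Long> info = parse(text);
        System.out.println("MemTotal:     " + info.get("MemTotal") + " kB");
        System.out.println("MemAvailable: " + info.get("MemAvailable") + " kB");
    }
}
```

Keeping the parsing separate from the file read makes the logic testable on any platform, since `/proc/meminfo` exists only on Linux.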

||||||

|

|

||||||

```

|

```

|

||||||

# cat /proc/meminfo

|

# cat /proc/meminfo

|

||||||

@ -126,12 +116,9 @@ DirectMap1G: 2097152 kB

|

|||||||

|

|

||||||

### 3) How to check Linux memory usage with the vmstat command

|

### 3) How to check Linux memory usage with the vmstat command

|

||||||

|

|

||||||

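The **[vmstat command][4]** is another useful tool that reports virtual memory statistics.

|

The [vmstat command][4] is another useful tool that reports virtual memory statistics.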

|

||||||

|

|

||||||

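vmstat reports information about processes, memory, paging, block I/O, traps, disks, and CPU activity.

|

`vmstat` reports information about processes, memory, paging, block I/O, traps, disks, and CPU activity. `vmstat` does not require special permissions, and it can help identify system bottlenecks.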

|

||||||

|

|

||||||

|

|

||||||

vmstat does not require special permissions, and it can help identify system bottlenecks.

|

|

||||||

|

|

||||||

```

|

```

|

||||||

# vmstat

|

# vmstat

|

||||||

@ -143,54 +130,31 @@ procs -----------memory---------- ---swap-- -----io---- -system-- ------cpu-----

|

|||||||

|

|

||||||

For the meaning of each field, read the descriptions below.

|

For the meaning of each field, read the descriptions below.

|

||||||

|

|

||||||

**Procs**

|

* `procs`: processes

|

||||||

|

* `r`: number of runnable processes (running or waiting to run)

|

||||||

* **r:** number of runnable processes (running or waiting to run)

|

* `b`: number of processes in uninterruptible sleep

|

||||||

* **b:** number of processes in uninterruptible sleep

|

* `memory`: memory

|

||||||

|

* `swpd`: amount of virtual memory used

|

||||||

|

* `free`: amount of idle memory

|

||||||

|

* `buff`: amount of memory used as buffers

|

||||||

**Memory**

|

* `cache`: amount of memory used as cache

|

||||||

|

* `inact`: amount of inactive memory (with the `-a` option)

|

||||||

* **swpd:** amount of virtual memory used

|

* `active`: amount of active memory (with the `-a` option)

|

||||||

* **free:** amount of idle memory

|

* `Swap`: swap space

|

||||||

* **buff:** amount of memory used as buffers

|

* `si`: amount of memory swapped in from disk per second

|

||||||

* **cache:** amount of memory used as cache

|

* `so`: amount of memory swapped out to disk per second

|

||||||

* **inact:** amount of inactive memory (-a option)

|

* `IO`: input/output

|

||||||

* **active:** amount of active memory (-a option)

|

* `bi`: blocks received from a block device (blocks/s)

|

||||||

|

* `bo`: blocks sent to a block device (blocks/s)

|

||||||

|

* `System`: system

|

||||||

|

* `in`: number of interrupts per second, including the clock

|

||||||

**Swap**

|

* `cs`: number of context switches per second

|

||||||

|

* `CPU`: the following are percentages of total CPU time

|

||||||

* **si:** amount of memory swapped in from disk (/s).

|

* `us`: time spent running non-kernel code (user time, including nice time)

|

||||||

* **so:** amount of memory swapped out to disk (/s).

|

* `sy`: time spent running kernel code (system time)

|

||||||

|

* `id`: time spent idle. Prior to Linux 2.5.41, this included I/O wait time

|

||||||

|

* `wa`: time spent waiting for I/O. Prior to Linux 2.5.41, this was included in idle time

|

||||||

|

* `st`: time stolen from a virtual machine. Prior to Linux 2.6.11, this was reported as unknown

|

||||||

**IO**

|

|

||||||

|

|

||||||

* **bi:** blocks received from a block device (blocks/s).

|

|

||||||

* **bo:** blocks sent to a block device (blocks/s).

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

**System**

|

|

||||||

|

|

||||||

* **in:** number of interrupts per second, including the clock.

|

|

||||||

* **cs:** number of context switches per second.

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

**CPU: the following are percentages of total CPU time**

|

|

||||||

|

|

||||||

* **us:** time spent running non-kernel code (user time, including nice time)

|

|

||||||

* **sy:** time spent running kernel code (system time)

|

|

||||||

* **id:** time spent idle. Prior to Linux 2.5.41, this included I/O wait time

|

|

||||||

* **wa:** time spent waiting for I/O. Prior to Linux 2.5.41, this was included in idle time

|

|

||||||

* **st:** time stolen from a virtual machine. Prior to Linux 2.6.11, this was reported as unknown

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

Run the following command for detailed information.

|

Run the following command for detailed information.

|

||||||

|

|

||||||

@ -226,9 +190,7 @@ procs -----------memory---------- ---swap-- -----io---- -system-- ------cpu-----

|

|||||||

```

|

```

|

||||||

### 4) How to check Linux memory usage with the ps_mem command

|

### 4) How to check Linux memory usage with the ps_mem command

|

||||||

|

|

||||||

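**[ps_mem][5]** is a simple Python script for viewing current memory usage.

|

[ps_mem][5] is a simple Python script for viewing current memory usage. It determines how much memory each program uses (per program, not per process).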

|

||||||

|

|

||||||

It determines how much memory each program uses (per program, not per process).

|

|

||||||

|

|

||||||

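It calculates each program's memory use as follows: total used = private memory of the program's processes + shared memory of the program's processes.

|

It calculates each program's memory use as follows: total used = private memory of the program's processes + shared memory of the program's processes.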

|

||||||

|

|

||||||

@ -287,13 +249,11 @@ procs -----------memory---------- ---swap-- -----io---- -system-- ------cpu-----

|

|||||||

|

|

||||||

### 5) How to check Linux memory usage with the smem command

|

### 5) How to check Linux memory usage with the smem command

|

||||||

|

|

||||||

**[smem][6]** is a tool that provides a variety of memory usage reports for Linux systems. Unlike existing tools, smem can report Proportional Set Size (PSS), Unique Set Size (USS), and Resident Set Size (RSS).

|

[smem][6] is a tool that provides a variety of memory usage reports for Linux systems. Unlike existing tools, `smem` can report Proportional Set Size (PSS), Unique Set Size (USS), and Resident Set Size (RSS).

|

||||||

|

|

||||||

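Proportional Set Size (PSS): the amount of memory used by libraries and applications in the virtual memory system.

|

- Proportional Set Size (PSS): the amount of memory used by libraries and applications in the virtual memory system.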

|

||||||

|

- Unique Set Size (USS): reports unshared memory.

|

||||||

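Unique Set Size (USS): reports unshared memory.

|

- Resident Set Size (RSS): physical memory in use (usually shared among multiple processes); it typically overstates a process's actual memory use.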

|

||||||

|

|

||||||

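Resident Set Size (RSS): physical memory in use (usually shared among multiple processes); it typically overstates a process's actual memory use.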

|

|
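The one-line definitions above are terse. As background that is not taken from this article: USS is usually described as the pages private to one process, RSS as every resident page the process maps (shared pages counted in full), and PSS as the private pages plus each shared page divided by the number of processes sharing it. A minimal Java sketch with made-up sizes, using hypothetical names throughout:

```java
class SetSizes {
    // sharedKb[i] is the size of the i-th shared mapping; sharers[i] is how many processes map it.
    static double pss(long privateKb, long[] sharedKb, int[] sharers) {
        double total = privateKb;
        for (int i = 0; i < sharedKb.length; i++) {
            total += (double) sharedKb[i] / sharers[i];   // each process is charged an equal share
        }
        return total;
    }

    // RSS charges every shared mapping in full to this process.
    static long rss(long privateKb, long[] sharedKb) {
        long total = privateKb;
        for (long s : sharedKb) total += s;
        return total;
    }

    public static void main(String[] args) {
        long privateKb = 1000;          // USS: memory only this process uses (illustrative)
        long[] sharedKb = {600, 400};   // two shared libraries (illustrative sizes)
        int[] sharers = {3, 2};         // mapped by 3 and by 2 processes respectively
        System.out.println("USS = " + privateKb + " kB");
        System.out.println("PSS = " + pss(privateKb, sharedKb, sharers) + " kB");
        System.out.println("RSS = " + rss(privateKb, sharedKb) + " kB");
    }
}
```

With these numbers USS is 1000, PSS is 1400, and RSS is 2000, which illustrates why summing RSS over all processes counts shared libraries more than once.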

||||||

|

|

||||||

```

|

```

|

||||||

# smem -tk

|

# smem -tk

|

||||||

@ -338,11 +298,9 @@ procs -----------memory---------- ---swap-- -----io---- -system-- ------cpu-----

|

|||||||

|

|

||||||

### 6) How to check Linux memory usage with the top command

|

### 6) How to check Linux memory usage with the top command

|

||||||

|

|

||||||

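The **[top command][7]** is one of the commands Linux administrators use most often to view the resource usage of processes.

|

The [top command][7] is one of the commands Linux administrators use most often to view the resource usage of processes.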

|

||||||

|

|

||||||

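It shows the system's total memory, memory currently in use, free memory, and total memory used by buffers.

|

It shows the system's total memory, memory currently in use, free memory, and total memory used by buffers. It also shows total swap, swap currently in use, free swap, and total memory used by the cache.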

|

||||||

|

|

||||||

It also shows total swap, swap currently in use, free swap, and total memory used by the cache.

|

|

||||||

|

|

||||||

```

|

```

|

||||||

# top -b | head -10

|

# top -b | head -10

|

||||||

@ -370,25 +328,23 @@ KiB Swap: 17873388 total, 17873388 free, 0 used. 9179772 avail Mem

|

|||||||

|

|

||||||

### 7) How to check Linux memory usage with the htop command

|

### 7) How to check Linux memory usage with the htop command

|

||||||

|

|

||||||

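The **[htop command][8]** is an interactive process viewer for Linux/Unix systems. It is a text-mode application developed by Hisham, and it requires the ncurses library.

|

The [htop command][8] is an interactive process viewer for Linux/Unix systems. It is a text-mode application developed by Hisham, and it requires the ncurses library.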

|

||||||

|

|

||||||

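It is designed as a replacement for the top command.

|

It is designed as a replacement for the `top` command. It is similar to `top`, but it lets you scroll vertically and horizontally so that you can see every process on the system.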

|

||||||

|

|

||||||

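It is similar to top, but it lets you scroll vertically and horizontally so that you can see every process on the system.

|

`htop` uses color in its display, an added advantage that is very helpful when tracking system performance.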

|

||||||

|

|

||||||

htop uses color in its display, an added advantage that is very helpful when tracking system performance.

|

|

||||||

|

|

||||||

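You can also perform process-related tasks, such as killing a process or changing its priority, without entering its process ID (PID).

|

You can also perform process-related tasks, such as killing a process or changing its priority, without entering its process ID (PID).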

|

||||||

|

|

||||||

[![][9]][10]

|

![][10]

|

||||||

|

|

||||||

### 8) How to check Linux memory usage with the glances command

|

### 8) How to check Linux memory usage with the glances command

|

||||||

|

|

||||||

**[Glances][11]** is a cross-platform system monitoring tool written in Python.

|

[Glances][11] is a cross-platform system monitoring tool written in Python.

|

||||||

|

|

||||||

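You can see everything in one place: CPU usage, memory usage, running processes, network interfaces, disk I/O, RAID, sensors, file system information, Docker, system information, uptime, and more.

|

You can see everything in one place: CPU usage, memory usage, running processes, network interfaces, disk I/O, RAID, sensors, file system information, Docker, system information, uptime, and more.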

|

||||||

|

|

||||||

![][9]

|

![][12]

|

||||||

|

|

||||||

--------------------------------------------------------------------------------

|

--------------------------------------------------------------------------------

|

||||||

|

|

||||||

@ -397,20 +353,21 @@ via: https://www.2daygeek.com/linux-commands-check-memory-usage/

|

|||||||

作者:[Magesh Maruthamuthu][a]

|

作者:[Magesh Maruthamuthu][a]

|

||||||

选题:[lujun9972][b]

|

选题:[lujun9972][b]

|

||||||

译者:[萌新阿岩](https://github.com/mengxinayan)

|

译者:[萌新阿岩](https://github.com/mengxinayan)

|

||||||

校对:[校对者ID](https://github.com/校对者ID)

|

校对:[wxy](https://github.com/wxy)

|

||||||

|

|

||||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||||

|

|

||||||

[a]: https://www.2daygeek.com/author/magesh/

|

[a]: https://www.2daygeek.com/author/magesh/

|

||||||

[b]: https://github.com/lujun9972

|

[b]: https://github.com/lujun9972

|

||||||

[1]: https://www.2daygeek.com/how-to-find-high-cpu-consumption-processes-in-linux/

|

[1]: https://linux.cn/article-11542-1.html

|

||||||

[2]: https://www.2daygeek.com/add-extend-increase-swap-space-memory-file-partition-linux/

|

[2]: https://linux.cn/article-9579-1.html

|

||||||

[3]: https://www.2daygeek.com/free-command-to-check-memory-usage-statistics-in-linux/

|

[3]: https://linux.cn/article-8314-1.html

|

||||||

[4]: https://www.2daygeek.com/linux-vmstat-command-examples-tool-report-virtual-memory-statistics/

|

[4]: https://linux.cn/article-8157-1.html

|

||||||

[5]: https://www.2daygeek.com/ps_mem-report-core-memory-usage-accurately-in-linux/

|

[5]: https://linux.cn/article-8639-1.html

|

||||||

[6]: https://www.2daygeek.com/smem-linux-memory-usage-statistics-reporting-tool/

|

[6]: https://linux.cn/article-7681-1.html

|

||||||

[7]: https://www.2daygeek.com/linux-top-command-linux-system-performance-monitoring-tool/

|

[7]: https://www.2daygeek.com/linux-top-command-linux-system-performance-monitoring-tool/

|

||||||

[8]: https://www.2daygeek.com/linux-htop-command-linux-system-performance-resource-monitoring-tool/

|

[8]: https://www.2daygeek.com/linux-htop-command-linux-system-performance-resource-monitoring-tool/

|

||||||

[9]: data:image/gif;base64,R0lGODlhAQABAIAAAAAAAP///yH5BAEAAAAALAAAAAABAAEAAAIBRAA7

|

[9]: data:image/gif;base64,R0lGODlhAQABAIAAAAAAAP///yH5BAEAAAAALAAAAAABAAEAAAIBRAA7

|

||||||

[10]: https://www.2daygeek.com/wp-content/uploads/2019/12/linux-commands-check-memory-usage-2.jpg

|

[10]: https://www.2daygeek.com/wp-content/uploads/2019/12/linux-commands-check-memory-usage-2.jpg

|

||||||

[11]: https://www.2daygeek.com/linux-glances-advanced-real-time-linux-system-performance-monitoring-tool/

|

[11]: https://www.2daygeek.com/linux-glances-advanced-real-time-linux-system-performance-monitoring-tool/

|

||||||

|

[12]: https://www.2daygeek.com/wp-content/uploads/2019/12/linux-commands-check-memory-usage-3.jpg

|

||||||

@ -0,0 +1,57 @@

|

|||||||

|

[#]: collector: (lujun9972)

|

||||||

|

[#]: translator: (Morisun029)

|

||||||

|

[#]: reviewer: (wxy)

|

||||||

|

[#]: publisher: (wxy)

|

||||||

|

[#]: url: (https://linux.cn/article-11875-1.html)

|

||||||

|

[#]: subject: (Top CI/CD resources to set you up for success)

|

||||||

|

[#]: via: (https://opensource.com/article/19/12/cicd-resources)

|

||||||

|

[#]: author: (Jessica Cherry https://opensource.com/users/jrepka)

|

||||||

|

|

||||||

|

Top CI/CD resources to set you up for success

|

||||||

|

======

|

||||||

|

|

||||||

|

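> Continuous integration and continuous deployment were key topics in 2019 as organizations looked to achieve seamless, flexible, and scalable deployments.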

|

||||||

|

|

||||||

|

![Plumbing tubes in many directions][1]

|

||||||

|

|

||||||

|

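2019 was a great year for CI/CD and DevOps. Opensource.com authors shared how they are moving toward agile and scrum while focusing on seamless, flexible, and scalable deployments. Here are some of the key CI/CD articles we published in 2019.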

|

||||||

|

|

||||||

|

### Learning and improving your CI/CD skills

|

||||||

|

|

||||||

|

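Some of our favorite articles focus on hands-on CI/CD experience and cover a lot of ground. The usual starting point is a [Jenkins][2] pipeline, and Bryant Son's article [Building CI/CD pipelines with Jenkins][3] gives you enough experience to start building your first pipeline. In [Automated acceptance testing with a DevOps pipeline][4], Daniel Oh provides important information about acceptance testing, including various CI/CD applications you can use for testing on your own. My own article, [Security scanning your DevOps pipeline][5], is a very short introduction to setting up security in a pipeline using the Jenkins platform.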

|

||||||

|

|

||||||

|

### Delivery workflow

|

||||||

|

|

||||||

|

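As Jithin Emmanuel shares in [Screwdriver: A scalable build platform for continuous delivery][6], workflow matters when learning how to use and improve your CI/CD skills, especially where pipelines are concerned. In [Why Spinnaker matters to CI/CD][7], Emily Burns explains why it pays to use CI/CD workflows flexibly to build exactly what you need. Willy-Peter Schaub also praises the idea of a single unified pipeline for all products, so that you can [build every product consistently in one CI/CD pipeline][8]. These articles give you a good sense of what happens once team members join the workflow.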

|

||||||

|

|

||||||

|

### How CI/CD affects the enterprise

|

||||||

|

|

||||||

|

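2019 was also a year for recognizing the business impact of CI/CD and how it affects day-to-day operations. Agnieszka Gancarczyk shared the results of the Red Hat survey [Small Scale Scrum vs. Large Scale Scrum][9], including respondents' differing views on scrum, the agile movement, and their effect on teams. Will Kelly's [How continuous deployment impacts the entire organization][10] also touches on the importance of open communication. Daniel Oh highlights the importance of metrics and observability in [3 types of metric dashboards for DevOps teams][11]. Finally, Ann Marie Fred's excellent article [Don't test in production? Test in production!][12] details why it is important to test in production before acceptance testing.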

|

||||||

|

|

||||||

|

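Thank you to the many contributors who shared their insights with Opensource.com readers in 2019. I look forward to learning more from them about the evolution of CI/CD in 2020.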

|

||||||

|

|

||||||

|

--------------------------------------------------------------------------------

|

||||||

|

|

||||||

|

via: https://opensource.com/article/19/12/cicd-resources

|

||||||

|

|

||||||

|

作者:[Jessica Cherry][a]

|

||||||

|

选题:[lujun9972][b]

|

||||||

|

译者:[Morisun029](https://github.com/Morisun029)

|

||||||

|

校对:[wxy](https://github.com/wxy)

|

||||||

|

|

||||||

|

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||||

|

|

||||||

|

[a]: https://opensource.com/users/jrepka

|

||||||

|

[b]: https://github.com/lujun9972

|

||||||

|

[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/plumbing_pipes_tutorial_how_behind_scenes.png?itok=F2Z8OJV1 (Plumbing tubes in many directions)

|

||||||

|

[2]: https://jenkins.io/

|

||||||

|

[3]: https://linux.cn/article-11546-1.html

|

||||||

|

[4]: https://opensource.com/article/19/4/devops-pipeline-acceptance-testing

|

||||||

|

[5]: https://opensource.com/article/19/7/security-scanning-your-devops-pipeline

|

||||||

|

[6]: https://opensource.com/article/19/3/screwdriver-cicd

|

||||||

|

[7]: https://opensource.com/article/19/8/why-spinnaker-matters-cicd

|

||||||

|

[8]: https://opensource.com/article/19/7/cicd-pipeline-rule-them-all

|

||||||

|

[9]: https://opensource.com/article/19/3/small-scale-scrum-vs-large-scale-scrum

|

||||||

|

[10]: https://opensource.com/article/19/7/organizational-impact-continuous-deployment

|

||||||

|

[11]: https://linux.cn/article-11183-1.html

|

||||||

|

[12]: https://opensource.com/article/19/5/dont-test-production

|

||||||

@ -1,22 +1,24 @@

|

|||||||

[#]: collector: (lujun9972)

|

[#]: collector: (lujun9972)

|

||||||

[#]: translator: (laingke)

|

[#]: translator: (laingke)

|

||||||

[#]: reviewer: ( )

|

[#]: reviewer: (wxy)

|

||||||

[#]: publisher: ( )

|

[#]: publisher: (wxy)

|

||||||

[#]: url: ( )

|

[#]: url: (https://linux.cn/article-11857-1.html)

|

||||||

[#]: subject: (Data streaming and functional programming in Java)

|

[#]: subject: (Data streaming and functional programming in Java)

|

||||||

[#]: via: (https://opensource.com/article/20/1/javastream)

|

[#]: via: (https://opensource.com/article/20/1/javastream)

|

||||||

[#]: author: (Marty Kalin https://opensource.com/users/mkalindepauledu)

|

[#]: author: (Marty Kalin https://opensource.com/users/mkalindepauledu)

|

||||||

|

|

||||||

Data streaming and functional programming in Java

|

Data streaming and functional programming in Java

|

||||||

======

|

======

|

||||||

Learn how to use the stream API and functional programming constructs in Java 8.

|

|

||||||

![computer screen ][1]

|

|

||||||

|

|

||||||

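When Java SE 8 (also known as core Java 8) was introduced in 2014, it brought changes that fundamentally affect programming in the language. The changes have two closely linked parts: the stream API and functional programming constructs. This article uses code examples, from the basics through advanced features, to introduce each part and show how the two interact.

|

> Learn how to use the stream API and functional programming constructs in Java 8.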

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

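When Java SE 8 (also known as core Java 8) was introduced in 2014, it brought changes that fundamentally affect programming in the language. The changes have two closely linked parts: the stream API and functional programming constructs. This article uses code examples, from the basics through advanced features, to introduce each part and show how the two interact.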

|

||||||

|

|

||||||

### The basics

|

### The basics

|

||||||

|

|

||||||

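The stream API is a concise, high-level way to iterate over the elements in a data sequence. The packages `java.util.stream` and `java.util.function` contain the new libraries for the stream API and the related functional programming constructs. Of course, a code example is worth a thousand words.

|

The stream API is a concise, high-level way to iterate over the elements in a data sequence. The packages `java.util.stream` and `java.util.function` contain the new libraries for the stream API and the related functional programming constructs. Of course, a code example is worth a thousand words.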

|

||||||

|

|

||||||

The code segment below populates a `List` with about 2,000 random integer values:

|

The code segment below populates a `List` with about 2,000 random integer values:

|

||||||

|

|

||||||

@ -26,7 +28,9 @@ List<Integer> list = new ArrayList<Integer>(); // 空 list

|

|||||||

for (int i = 0; i < 2048; i++) list.add(rand.nextInt()); // populate it

|

for (int i = 0; i < 2048; i++) list.add(rand.nextInt()); // populate it

|

||||||

```

|

```

|

||||||

|

|

||||||

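Another `for` loop could be used to iterate over the populated list and collect the even values into another list. The stream API offers a cleaner way to do the same:

|

Another `for` loop could be used to iterate over the populated list and collect the even values into another list.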

|

||||||

|

|

||||||

|

The stream API offers a cleaner way to do the same:

|

||||||

|

|

||||||

```

|

```

|

||||||

List <Integer> evens = list

|

List <Integer> evens = list

|

||||||

@ -37,43 +41,42 @@ List <Integer> evens = list

|

|||||||

|

|

||||||

This example has three functions from the stream API:

|

This example has three functions from the stream API:

|

||||||

|

|

||||||

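> The `stream` function turns a **collection** into a stream, which is a conveyor belt of values accessible one at a time. Streaming is lazy (and therefore efficient) in that the values are produced as needed rather than all at once.

|

- The `stream` function turns a **collection** into a stream, which is a conveyor belt of values accessible one at a time. Streaming is lazy (and therefore efficient) in that the values are produced as needed rather than all at once.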

|

||||||

|

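- The `filter` function determines which streamed values, if any, get through to the next stage in the processing pipeline, the `collect` stage. The `filter` function is higher-order in that its argument is a function, in this example a lambda expression, which is an unnamed function and is at the center of Java's new functional programming constructs.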

|

||||||

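> The `filter` function determines which streamed values, if any, get through to the next stage in the processing pipeline, the `collect` stage. The `filter` function is _higher-order_ in that its argument is a function, in this example a lambda expression, which is an unnamed function and is at the center of Java's new functional programming constructs.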

|

|

||||||

|

|

||||||

The lambda syntax departs radically from traditional Java:

|

The lambda syntax departs radically from traditional Java:

|

||||||

|

|

||||||

```

|

```

|

||||||

`n -> (n & 0x1) == 0`

|

n -> (n & 0x1) == 0

|

||||||

```

|

```

|

||||||

|

|

||||||

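The arrow (a minus sign immediately followed by a greater-than sign) separates the argument list on the left from the function's body on the right. The argument `n` is not explicitly typed, although it could be; in either case, the compiler works out that `n` is an `Integer`. If there were multiple arguments, they would be enclosed in parentheses and separated by commas.

|

The arrow (a minus sign immediately followed by a greater-than sign) separates the argument list on the left from the function's body on the right. The argument `n` is not explicitly typed, although it could be; in either case, the compiler works out that `n` is an `Integer`. If there were multiple arguments, they would be enclosed in parentheses and separated by commas.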

|

||||||

|

|

||||||

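In this example, the body checks whether an integer's lowest-order (rightmost) bit is zero, which indicates an even value. A filter should return a boolean value. There is no explicit `return` in the function's body, although there could be. When the body is a single expression, as it is here, the value of that expression is the returned value; a body written as a block in braces needs an explicit `return`. In this example, written in the spirit of lambda programming, the body consists of the single, simple boolean expression `(n & 0x1) == 0`.

|

In this example, the body checks whether an integer's lowest-order (rightmost) bit is zero, which indicates an even value. A filter should return a boolean value. There is no explicit `return` in the function's body, although there could be. When the body is a single expression, as it is here, the value of that expression is the returned value; a body written as a block in braces needs an explicit `return`. In this example, written in the spirit of lambda programming, the body consists of the single, simple boolean expression `(n & 0x1) == 0`.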

|

||||||

|

|

||||||

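> The `collect` function gathers the even values into a list whose reference is `evens`. As an example below illustrates, the `collect` function is thread-safe and therefore works correctly even if the filtering operation is shared among multiple threads.

|

- The `collect` function gathers the even values into a list whose reference is `evens`. As an example below illustrates, the `collect` function is thread-safe and therefore works correctly even if the filtering operation is shared among multiple threads.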

|
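The three functions described above can be put together into a small runnable program. This is a minimal sketch rather than the article's exact listing: the class name `EvenFilter` and the helper `collectEvens` are hypothetical, and `main` also shows the same predicate written with an inferred type, an explicit type, and a block body.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Random;
import java.util.function.Predicate;
import java.util.stream.Collectors;

class EvenFilter {
    // Collects the even values of a list with stream -> filter -> collect.
    static List<Integer> collectEvens(List<Integer> list) {
        return list
            .stream()                       // turn the collection into a stream
            .filter(n -> (n & 0x1) == 0)    // keep values whose lowest bit is 0
            .collect(Collectors.toList());  // gather the survivors into a new list
    }

    public static void main(String[] args) {
        // The same test written three ways.
        Predicate<Integer> inferred = n -> (n & 0x1) == 0;            // type inferred
        Predicate<Integer> typed = (Integer n) -> (n & 0x1) == 0;     // explicit type
        Predicate<Integer> block = n -> { return (n & 0x1) == 0; };   // block body needs return

        Random rand = new Random();
        List<Integer> list = new ArrayList<Integer>();
        for (int i = 0; i < 2048; i++) list.add(rand.nextInt());      // populate it

        List<Integer> evens = collectEvens(list);
        System.out.println(evens.size() + " of " + list.size() + " values are even");
        System.out.println(inferred.test(4) + " " + typed.test(4) + " " + block.test(4));
    }
}
```

The bit test also works for negative values, because in two's complement the lowest bit of every even integer is zero.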

||||||

|

|

||||||

### Convenience functions and easy multithreading

|

### Convenience functions and easy multithreading

|

||||||

|

|

||||||

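In a production environment, a data stream might have a file or a network connection as its source. For learning the stream API, Java provides types such as `IntStream`, which can generate streams with elements of various types. Here is an `IntStream` example:

|

In a production environment, a data stream might have a file or a network connection as its source. For learning the stream API, Java provides types such as `IntStream`, which can generate streams with elements of various types. Here is an `IntStream` example: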

|

||||||

|

|

||||||

```

|

```

|

||||||

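IntStream // integer stream

|

IntStream // integer stream

|

||||||

.range(1, 2048) // generate a stream of ints in this range (upper bound excluded)

|

.range(1, 2048) // generate a stream of ints in this range (upper bound excluded)

|

||||||

.parallel() // partition the data for multiple threads

|

.parallel() // partition the data for multiple threads

|

||||||

.filter(i -> ((i & 0x1) > 0)) // parity check: let only odd values through

|

.filter(i -> ((i & 0x1) > 0)) // parity check: let only odd values through

|

||||||

.forEach(System.out::println); // print each value

|

.forEach(System.out::println); // print each value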

|

||||||

```

|

```

|

||||||

|

|

||||||

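The `IntStream` type includes a `range` function that generates a stream of integer values within a specified range, in this case from 1 up to, but not including, 2,048, in increments of 1. The `parallel` function automatically partitions the work among multiple threads, each of which does the filtering and printing. (The number of threads typically matches the number of CPUs on the host system.) The argument to the `forEach` function is a _method reference_, in this case a reference to the `println` method encapsulated in `System.out`, which is of type `PrintStream`. The syntax for method and constructor references is discussed later.

|

The `IntStream` type includes a `range` function that generates a stream of integer values within a specified range, in this case from 1 up to, but not including, 2048, in increments of 1. The `parallel` function automatically partitions the work among multiple threads, each of which does the filtering and printing. (The number of threads typically matches the number of CPUs on the host system.) The argument to the `forEach` function is a *method reference*, in this case a reference to the `println` method encapsulated in `System.out`, which is of type `PrintStream`. The syntax for method and constructor references is discussed later.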

|

||||||

|

|

||||||

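Because of the multithreading, the integer values are printed in an arbitrary order overall but in sequence within a given thread. For example, if thread T1 prints 409 and 411, then T1 does so in the order 409-411, but some other thread might print 2,045 beforehand. The threads behind the `parallel` call execute concurrently, so the order of their output is indeterminate.

|

Because of the multithreading, the integer values are printed in an arbitrary order overall but in sequence within a given thread. For example, if thread T1 prints 409 and 411, then T1 does so in the order 409-411, but some other thread might print 2045 beforehand. The threads behind the `parallel` call execute concurrently, so the order of their output is indeterminate.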

|
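To see the pipeline's result without depending on print order, the odd values can be counted or gathered instead of printed. The following is a minimal sketch with hypothetical names (`OddCount`, `countOdds`, `oddsInOrder`); it relies on `IntStream.range` excluding its upper bound and on `toArray` respecting the stream's encounter order even when the stream is parallel.

```java
import java.util.Arrays;
import java.util.stream.IntStream;

class OddCount {
    // Counts the odd values in [from, to); the upper bound is excluded by range().
    static long countOdds(int from, int to) {
        return IntStream
            .range(from, to)
            .parallel()                      // partition the work among threads
            .filter(i -> (i & 0x1) > 0)      // keep only odd values
            .count();
    }

    // Gathers the odd values; unlike forEach, toArray keeps them in encounter order.
    static int[] oddsInOrder(int from, int to) {
        return IntStream
            .range(from, to)
            .parallel()
            .filter(i -> (i & 0x1) > 0)
            .toArray();
    }

    public static void main(String[] args) {
        System.out.println(countOdds(1, 2048));                 // odd values among 1..2047
        System.out.println(Arrays.toString(oddsInOrder(1, 10)));
    }
}
```

If printing in order is the goal, `forEachOrdered` can replace `forEach`, at the cost of some of the parallel speedup.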

||||||

|

|

||||||

### The map/reduce pattern

|

### The map/reduce pattern

|

||||||

|

|

||||||

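The _map/reduce_ pattern has become popular for processing large datasets. A map/reduce macro operation is built from two micro-operations. The data are first scattered (_mapped_) among various workers, and the separate results are then gathered together, perhaps into a single value, which is the _reduction_. Reduction can take different forms, as the following examples illustrate.

|

The *map/reduce* pattern has become popular for processing large datasets. A map/reduce macro operation is built from two micro-operations. The data are first scattered (mapped) among various workers, and the separate results are then gathered together, perhaps into a single value, which is the reduction. Reduction can take different forms, as the following examples illustrate.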

|

||||||

|

|

||||||

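Instances of the `Number` class below represent integer values with either **EVEN** or **ODD** parity:

|

Instances of the `Number` class below represent integer values with either `EVEN` or `ODD` parity: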

|

||||||

|

|

||||||

```

|

```

|

||||||

public class Number {

|

public class Number {

|

||||||

@ -92,7 +95,7 @@ public class Number {

|

|||||||

}

|

}

|

||||||

```

|

```

|

||||||

|

|

||||||

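The following code illustrates map/reduce with a `Number` stream, showing that the stream API can handle not only primitive types such as `int` and `float` but also programmer-defined class types.

|

The following code illustrates map/reduce with a `Number` stream, showing that the stream API can handle not only primitive types such as `int` and `float` but also programmer-defined class types.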

|

||||||

|

|

||||||

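In the code segment below, a list of random integer values is streamed with the `parallelStream` function rather than `stream`. The `parallelStream` variant, like the `parallel` function introduced earlier, does automatic multithreading.

|

In the code segment below, a list of random integer values is streamed with the `parallelStream` function rather than `stream`. The `parallelStream` variant, like the `parallel` function introduced earlier, does automatic multithreading.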

|

||||||

|

|

||||||

@ -115,14 +118,14 @@ System.out.println("The sum of the randomly generated values is: " + sum4All);

|

|||||||

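The method reference `Number::getValue` could be replaced by the lambda expression below. The argument `n` is one of the `Number` instances in the stream:

|

The method reference `Number::getValue` could be replaced by the lambda expression below. The argument `n` is one of the `Number` instances in the stream: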

|

||||||

|

|

||||||

```

|

```

|

||||||

`mapToInt(n -> n.getValue())`

|

mapToInt(n -> n.getValue())

|

||||||

```

|

```

|

||||||

|

|

||||||

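In general, lambdas and method references are interchangeable: if a higher-order function such as `mapToInt` can take one form as an argument, then it can take the other as well. The two functional programming constructs have the same purpose, which is to perform some customized operation on data passed in as arguments. Choosing between the two is often a matter of convenience. For example, a lambda can be written without an encapsulating class, whereas a method cannot. My habit is to use a lambda unless the appropriate encapsulated method is already at hand.

|

In general, lambda expressions and method references are interchangeable: if a higher-order function such as `mapToInt` can take one form as an argument, then it can take the other as well. The two functional programming constructs have the same purpose, which is to perform some customized operation on data passed in as arguments. Choosing between the two is often a matter of convenience. For example, a lambda can be written without an encapsulating class, whereas a method cannot. My habit is to use a lambda unless the appropriate encapsulated method is already at hand.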

|
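The interchangeability can be checked directly by summing the same stream both ways. This is a minimal sketch: `Num` is a hypothetical stand-in for the article's `Number` class (whose full source is not reproduced in this view), and `of` is a hypothetical helper for building a list.

```java
import java.util.ArrayList;
import java.util.List;

class SumDemo {
    // Hypothetical stand-in for the article's Number class: it only wraps an int.
    static class Num {
        private final int value;
        Num(int value) { this.value = value; }
        int getValue() { return value; }
    }

    // Hypothetical helper that wraps plain ints in Num instances.
    static List<Num> of(int... values) {
        List<Num> nums = new ArrayList<>();
        for (int v : values) nums.add(new Num(v));
        return nums;
    }

    static int sumWithMethodRef(List<Num> nums) {
        return nums.parallelStream().mapToInt(Num::getValue).sum();      // method reference
    }

    static int sumWithLambda(List<Num> nums) {
        return nums.parallelStream().mapToInt(n -> n.getValue()).sum();  // equivalent lambda
    }

    public static void main(String[] args) {
        List<Num> nums = of(1, 2, 3, 4);
        System.out.println(sumWithMethodRef(nums) + " " + sumWithLambda(nums));
    }
}
```

Both forms compile to the same functional interface (`ToIntFunction`), so the choice is purely one of readability.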

||||||

|

|

||||||

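The `sum` function at the end of the current example does the reduction in a thread-safe manner by combining the partial sums from the `parallelStream` threads. However, the programmer is responsible for ensuring that, during the multithreading induced by the `parallelStream` call, the programmer's own function calls (in this case, to `getValue`) are thread-safe.

|

The `sum` function at the end of the current example does the reduction in a thread-safe manner by combining the partial sums from the `parallelStream` threads. However, the programmer is responsible for ensuring that, during the multithreading induced by the `parallelStream` call, the programmer's own function calls (in this case, to `getValue`) are thread-safe.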

|

||||||

|

|

||||||

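The last point deserves emphasis. Lambda syntax encourages the writing of _pure functions_, which are functions whose return values depend only on the arguments, if any, passed in; a pure function has no side effects such as updating a `static` field in a class. Pure functions are therefore thread-safe, and the stream API works best if the functional arguments passed to higher-order functions, such as `filter` and `map`, are pure functions.

|

The last point deserves emphasis. Lambda syntax encourages the writing of pure functions, which are functions whose return values depend only on the arguments, if any, passed in; a pure function has no side effects such as updating a `static` field in a class. Pure functions are therefore thread-safe, and the stream API works best if the functional arguments passed to higher-order functions, such as `filter` and `map`, are pure functions.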

|

||||||

|

|

||||||

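For finer-grained control, there is another stream API function, named `reduce`, that could be used to sum the values in the `Number` stream:

|

For finer-grained control, there is another stream API function, named `reduce`, that could be used to sum the values in the `Number` stream: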

|

||||||

|

|

||||||

@ -135,11 +138,10 @@ Integer sum4AllHarder = listOfNums

|

|||||||

|

|

||||||

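This version of the `reduce` function takes two arguments, the second of which is a function:

|

This version of the `reduce` function takes two arguments, the second of which is a function: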

|

||||||

|

|

||||||

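> The first argument (in this case, zero) is the _identity_ value, which serves as the initial value for the reduction and as the default value should the stream run dry during the reduction.

|

- The first argument (in this case, zero) is the *identity* value, which serves as the initial value for the reduction and as the default value should the stream run dry during the reduction.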

|

||||||

|

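- The second argument is the *accumulator*, in this case a lambda expression with two arguments: the first (`sofar`) is the running sum, and the second (`next`) is the next value from the stream. The running sum and the next value are added to update the accumulator. Keep in mind that both the `map` and the `reduce` functions now execute in a multithreaded context because of the `parallelStream` call at the start.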

|

||||||

|

|

||||||

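> The second argument is the _accumulator_, in this case a lambda expression with two arguments: the first (`sofar`) is the running sum, and the second (`next`) is the next value from the stream. The running sum and the next value are added to update the accumulator. Keep in mind that both the `map` and the `reduce` functions now execute in a multithreaded context because of the `parallelStream` call at the start.

|

In the examples so far, stream values are collected and then reduced, but, in general, the `Collectors` in the stream API can accumulate values without reducing them to a single value. The collection activity can produce arbitrarily rich data structures, as the next code segment illustrates. The example uses the same `listOfNums` as the preceding examples: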

|
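The two-argument `reduce` described above can be tried on a plain list of integers. This is a minimal sketch with a hypothetical class name, `ReduceDemo`; it streams `Integer` values directly, whereas the article's version first maps `Number` instances to their values.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

class ReduceDemo {
    static int sum(List<Integer> values) {
        return values
            .parallelStream()
            .reduce(0,                                 // identity: start value and default
                    (sofar, next) -> sofar + next);    // accumulator: running sum + next value
    }

    public static void main(String[] args) {
        System.out.println(sum(Arrays.asList(1, 2, 3, 4)));
        System.out.println(sum(new ArrayList<Integer>()));   // empty stream yields the identity
    }
}
```

In a parallel stream the accumulator may be applied to partial results in any grouping, so it should be associative and the identity should leave values unchanged; addition with zero satisfies both.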

||||||

|

|

||||||

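In the examples so far, stream values are collected and then reduced, but, in general, the `Collectors` in the stream API can accumulate values without reducing them to a single value. The collection activity can produce arbitrarily rich data structures, as the next code segment illustrates. The example uses the same `listOfNums` as the preceding examples: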

|

|

||||||

|

|

||||||

```

|

```

|

||||||

Map<Number.Parity, List<Number>> numMap = listOfNums

|

Map<Number.Parity, List<Number>> numMap = listOfNums

|

||||||

@ -150,7 +152,7 @@ List<Number> evens = numMap.get(Number.Parity.EVEN);

|

|||||||

List<Number> odds = numMap.get(Number.Parity.ODD);

|

List<Number> odds = numMap.get(Number.Parity.ODD);

|

||||||

```

|

```

|

||||||

|

|

||||||

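The `numMap` in the first line refers to a `Map` whose key is a `Number` parity (**ODD** or **EVEN**) and whose value is a `List` of `Number` instances with that parity. Once again, the processing is multithreaded through the `parallelStream` call, and the `collect` call then assembles (in a thread-safe manner) the partial results into the single `Map` to which `numMap` refers. The `get` method is then called twice on `numMap`, once to get the `evens` and a second time to get the `odds`.

|

The `numMap` in the first line refers to a `Map` whose key is a `Number` parity (`ODD` or `EVEN`) and whose value is a `List` of `Number` instances with that parity. Once again, the processing is multithreaded through the `parallelStream` call, and the `collect` call then assembles (in a thread-safe manner) the partial results into the single `Map` to which `numMap` refers. The `get` method is then called twice on `numMap`, once to get the `evens` and a second time to get the `odds`.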

|
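Grouping by parity can be reproduced in a self-contained form. This is a minimal sketch with hypothetical names (`GroupDemo`, `parityOf`, `byParity`); it groups plain `Integer` values rather than the article's `Number` instances, and it assumes the article's collector is `Collectors.groupingBy`, since the original pipeline is not shown in full here.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

class GroupDemo {
    enum Parity { EVEN, ODD }

    // Classifier used as the grouping key.
    static Parity parityOf(int n) {
        return (n & 0x1) == 0 ? Parity.EVEN : Parity.ODD;
    }

    // Splits the values into two lists keyed by parity.
    static Map<Parity, List<Integer>> byParity(List<Integer> values) {
        return values
            .parallelStream()
            .collect(Collectors.groupingBy(GroupDemo::parityOf));
    }

    public static void main(String[] args) {
        Map<Parity, List<Integer>> map = byParity(Arrays.asList(1, 2, 3, 4, 5));
        System.out.println("evens: " + map.get(Parity.EVEN));
        System.out.println("odds:  " + map.get(Parity.ODD));
    }
}
```

If no value has a given parity, the map has no entry for that key and `get` returns `null`, so callers may want `getOrDefault` with an empty list.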

||||||

|

|

||||||

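The utility function `dumpList` again uses the higher-order `forEach` function from the stream API:

|

The utility function `dumpList` again uses the higher-order `forEach` function from the stream API: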

|

||||||

|

|

||||||

@ -184,7 +186,7 @@ Value: 41 (parity: odd)

|

|||||||

|

|

||||||

### Functional constructs for code simplification

|

### Functional constructs for code simplification

|

||||||

|

|

||||||